
Planning For, Interpreting & Using Assessment Data
Gary Williams, Ed.D., Instructional Assessment Specialist, Crafton Hills College, gwilliams@craftonhills.edu
Fred Trapp, Ph.D., Administrative Dean, Institutional Research/Academic Services, Long Beach City College, ftrapp@lbcc.edu
October 2007

Goals of the Presentation
• “De-mystify” the assessment process
• Provide practical approaches & examples for assessing student learning
• Answer questions posed by attendees that pertain to their assessment challenges

What’s It All About?
• An ongoing process aimed at understanding and improving student learning.
• Faculty making learning expectations explicit and public.
• Faculty setting appropriate standards for learning quality.

What’s It All About?
• Systematically gathering, analyzing, and interpreting evidence to determine how well student performance matches agreed-upon faculty expectations & standards.
• Using results to document, explain, and improve teaching & learning performance.
Source: Tom Angelo, AAHE Bulletin, November 1995

Roles of Assessment
• “We assess to assist, assess to advance, assess to adjust”:
  – Assist: provide formative feedback to guide student performance
  – Advance: summative assessment of student readiness for what’s next
  – Adjust: continuous improvement of curriculum and pedagogy
Source: Ruth Stiehl, The Assessment Primer: Creating a Flow of Learning Evidence (2007)

Formulating Questions for Assessment
• Curriculum is designed backward; students journey forward:
  – What do students need to DO “out there” that we’re responsible for “in here”? (Stiehl)
    • Subsequent roles in life (work, future study, etc.)
  – How do students demonstrate the intended learning now?
  – What kinds of evidence must we collect, and how do we collect it?

Assessment Questions & Strategies: Factors to Consider
• Meeting Standards
  – Does the program meet or exceed certain standards?
  – Criterion reference, commonly state or national standards
• Comparing to Others
  – How does the student or program compare to others?
  – Norm reference: other students, programs, or institutions

Assessment Questions & Strategies: Factors to Consider
• Measuring Goal Attainment
  – Does the student or program do a good job at what it sets out to accomplish?
  – Internal reference: goals and educational objectives compared to actual performance
  – Formative and student-centered
  – Professional judgment about evidence is common

Assessment Questions & Strategies: Factors to Consider
• Developing Talent and Improving Programs
  – Has the student or program improved?
  – How can the student’s program and learning experience be improved even further?
  – Formative and developmental
  – Variety of assessment tools and sources of evidence

Choosing Assessment Tools
• Depends upon the “unit of analysis”:
  – Course
  – Program
  – Degree/general education
  – Co-curricular
• Also depends upon overall learning expectations

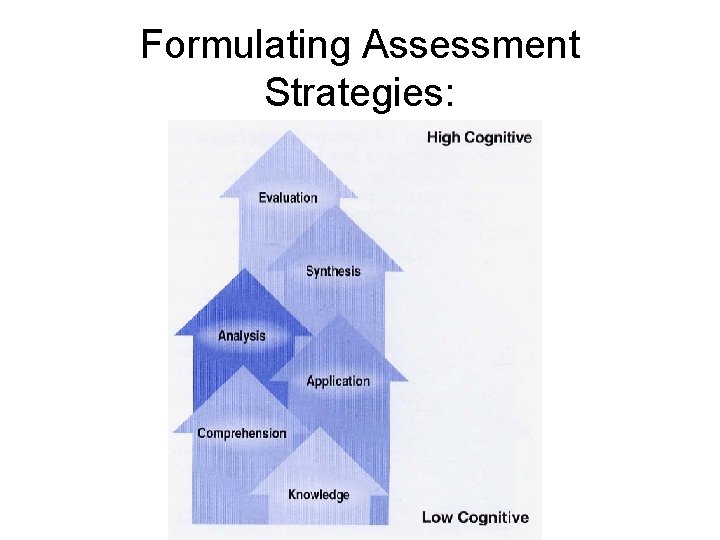

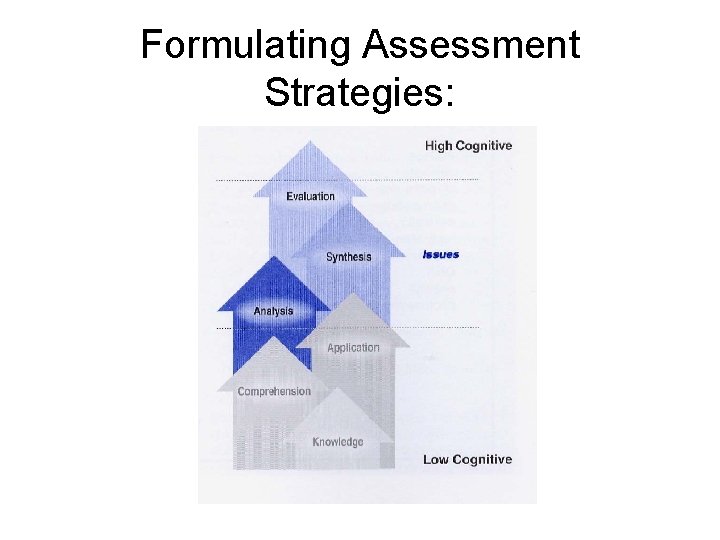

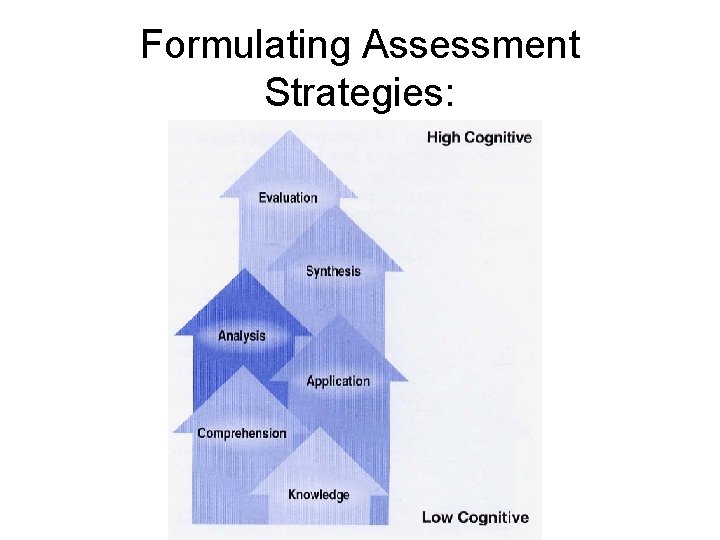

Formulating Assessment Strategies:

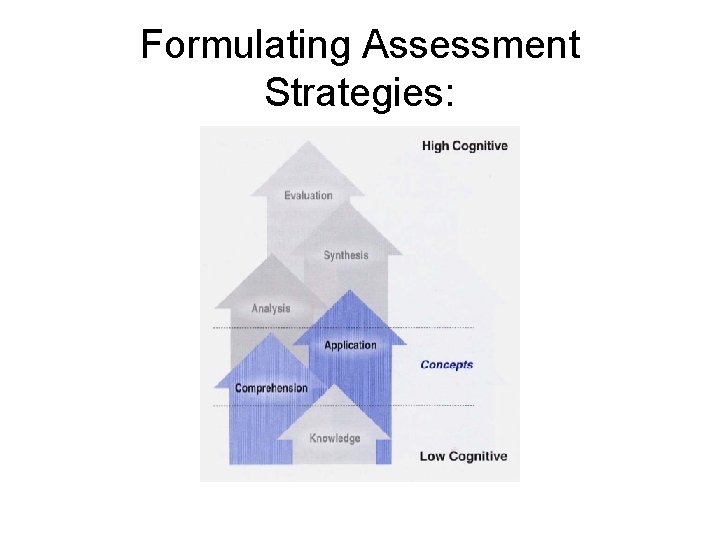

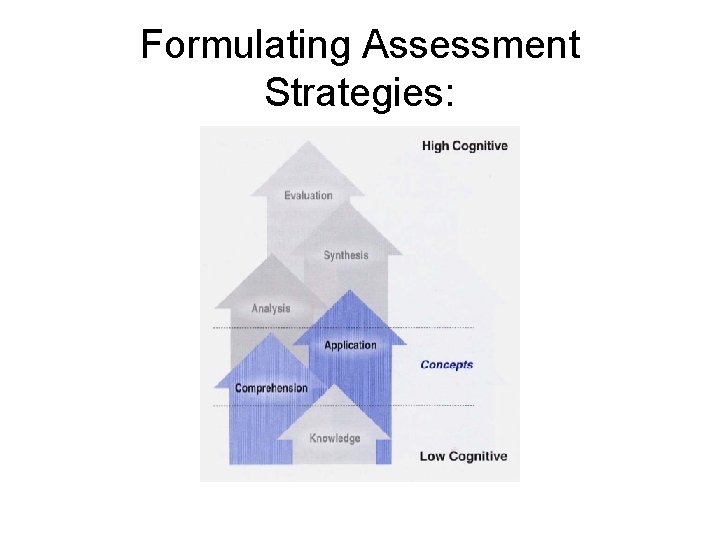

Formulating Assessment Strategies:

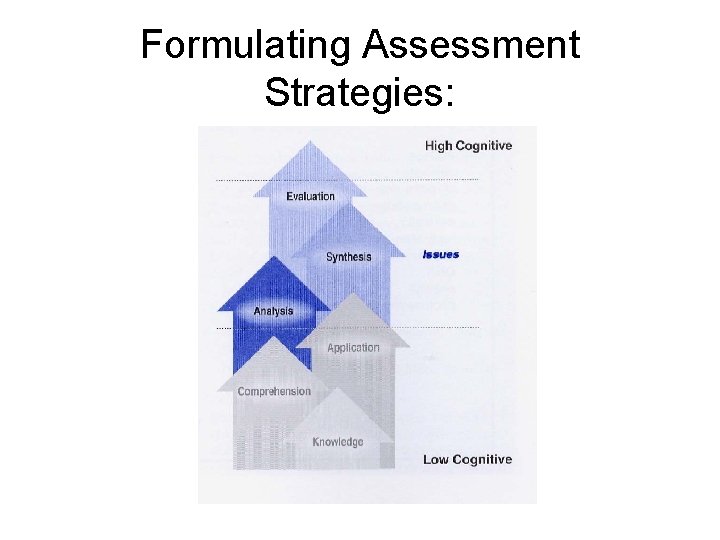

Formulating Assessment Strategies:

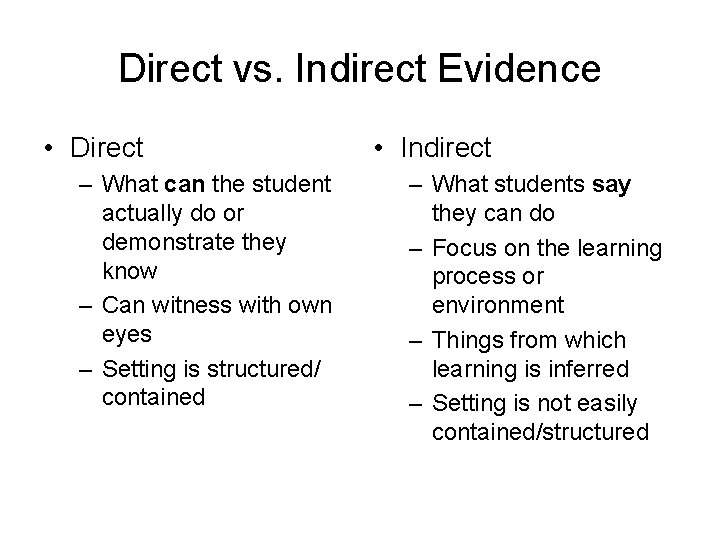

Direct vs. Indirect Evidence
• Direct
  – What students can actually do, or demonstrate that they know
  – Can be witnessed firsthand
  – Setting is structured/contained
• Indirect
  – What students say they can do
  – Focus on the learning process or environment
  – Things from which learning is inferred
  – Setting is not easily contained/structured

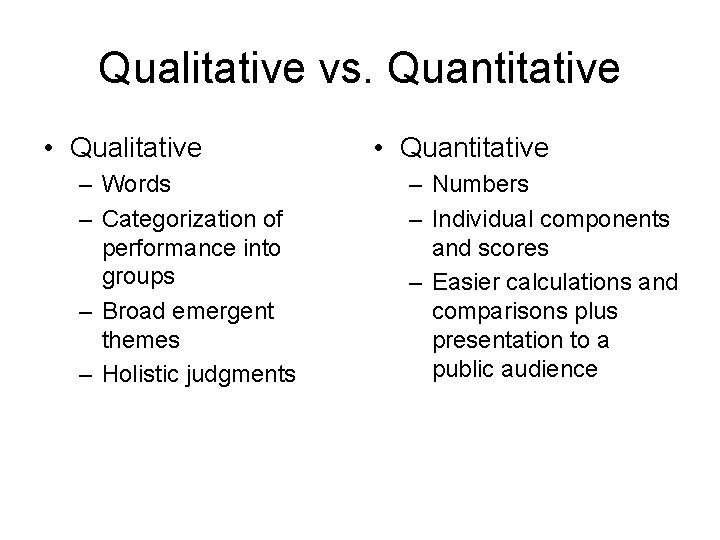

Qualitative vs. Quantitative
• Qualitative
  – Words
  – Categorization of performance into groups
  – Broad emergent themes
  – Holistic judgments
• Quantitative
  – Numbers
  – Individual components and scores
  – Easier to calculate, compare, and present to a public audience

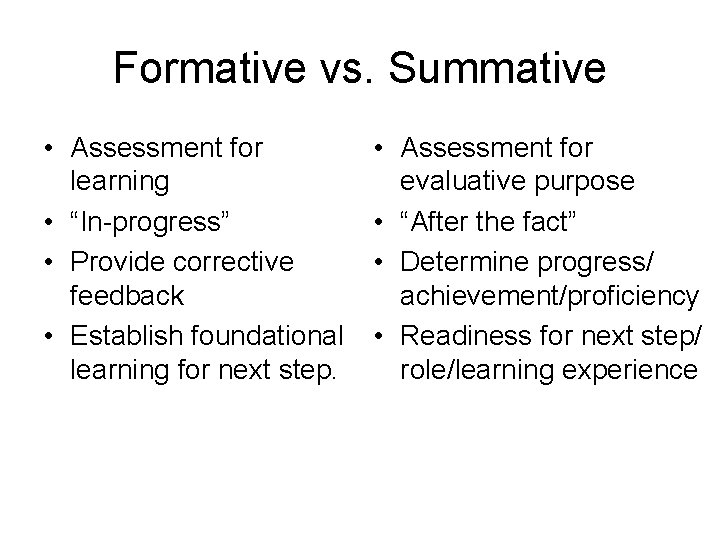

Formative vs. Summative
• Formative
  – Assessment for learning
  – “In-progress”
  – Provide corrective feedback
  – Establish foundational learning for the next step
• Summative
  – Assessment for evaluative purposes
  – “After the fact”
  – Determine progress/achievement/proficiency
  – Readiness for the next step/role/learning experience

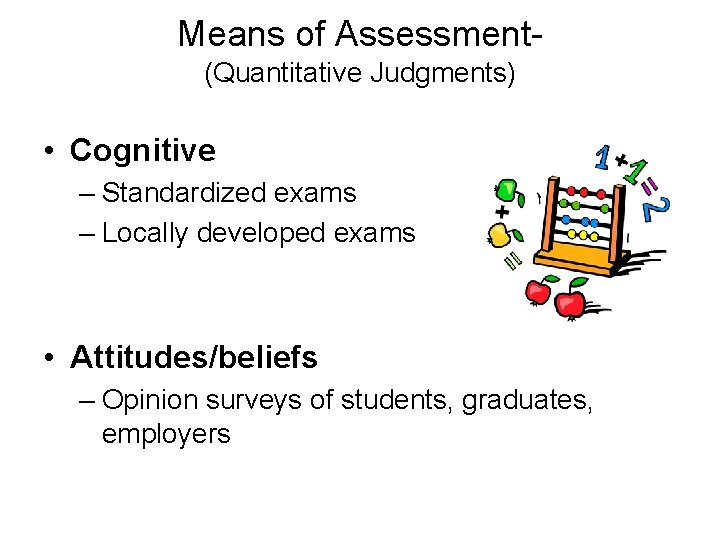

Means of Assessment (Quantitative Judgments)
• Cognitive
  – Standardized exams
  – Locally developed exams
• Attitudes/beliefs
  – Opinion surveys of students, graduates, employers

Means of Assessment (Qualitative Judgments)
• Cognitive
  – Embedded classroom assignments
• Behavior/performances (skills applications)
  – Portfolios
  – Public performances
  – Juried competitions
  – Internships
  – Simulations
  – Practical demonstrations
• Attitudes/beliefs
  – Focus groups

Interpreting Results: How Good Is Good Enough?
• Norm Referencing
  – Comparing student achievement against other students doing the same task
• Criterion Referencing
  – Criteria and standards of judgment developed within the institution
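To make the contrast concrete, here is a minimal Python sketch that reads the same score both ways. The section scores, the cut score of 15, and the helper names `percentile_rank` and `meets_criterion` are hypothetical; percentile rank uses one simple definition (share of peers scoring strictly lower), and other definitions exist.

```python
from bisect import bisect_left


def percentile_rank(score, peer_scores):
    """Norm-referenced view: percent of peer scores strictly below `score`."""
    ranked = sorted(peer_scores)
    below = bisect_left(ranked, score)  # number of peers scoring lower
    return 100.0 * below / len(ranked)


def meets_criterion(score, cut_score):
    """Criterion-referenced view: does the score reach a fixed standard?"""
    return score >= cut_score


# Hypothetical rubric totals (out of 20) for one section, and an assumed
# cut score set by faculty; neither comes from the presentation.
section_scores = [8, 9, 10, 11, 12, 13, 14, 16]
CUT_SCORE = 15

for s in (14, 16):
    print(f"score {s}: percentile rank {percentile_rank(s, section_scores):.1f}, "
          f"meets criterion: {meets_criterion(s, CUT_SCORE)}")
```

With this made-up, weaker section, a score of 14 sits at the 75th percentile yet falls short of the locally set standard: "good compared with others" is not the same as "good enough."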

Are Results Valid and Reliable?
• Validity
• Reliability
• Authentic assessment
• Important questions or easy questions?
• Inform teaching and learning?
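Reliability in particular can be checked when two faculty members score the same student work with a shared rubric. The sketch below is one common option, not a method from the presentation: Cohen's kappa, which corrects raw agreement for chance as kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and p_e is the agreement expected from each rater's own score distribution. The ratings and function names are hypothetical.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Share of items on which both raters assigned the same rubric level."""
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters scoring the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must score the same non-empty set of items")
    n = len(rater_a)
    p_o = percent_agreement(rater_a, rater_b)
    # Chance agreement: product of each rater's marginal share per level.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[level] * counts_b[level] for level in counts_a) / (n * n)
    if p_e == 1:
        return 1.0  # both raters used one identical level for every item
    return (p_o - p_e) / (1 - p_e)


# Hypothetical rubric levels (1-4) from two readers scoring the same ten essays.
reader_1 = [4, 3, 3, 2, 4, 1, 2, 3, 3, 4]
reader_2 = [4, 3, 2, 2, 4, 1, 2, 3, 4, 4]

print(f"percent agreement: {percent_agreement(reader_1, reader_2):.0%}")
print(f"Cohen's kappa: {cohens_kappa(reader_1, reader_2):.2f}")
```

Here the readers agree on 8 of 10 essays (80%), but kappa is lower (about 0.73) because some of that agreement would be expected by chance alone.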

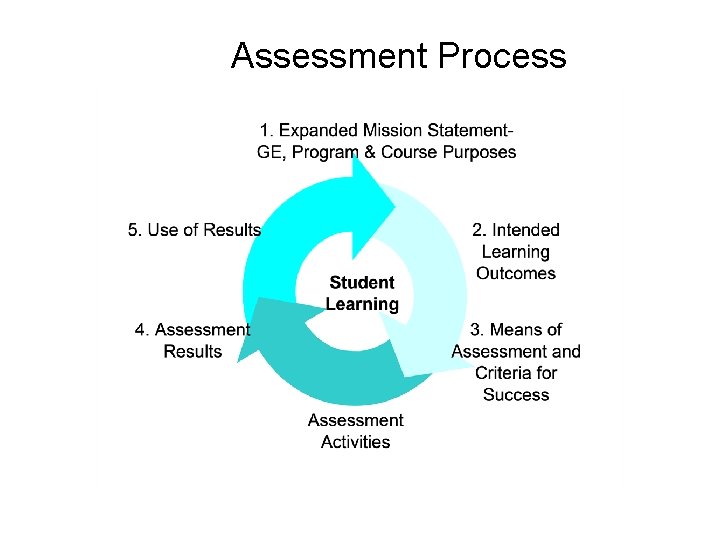

How Does Assessment Data Inform Decision-Making?
• Goal: making sound curricular and pedagogical decisions based on evidence
• Assessment questions are tied to instructional goals.
• Assessment methods yield data that are valid & reliable.
• A variety of measures are considered.
• Assessment is an ongoing cycle.

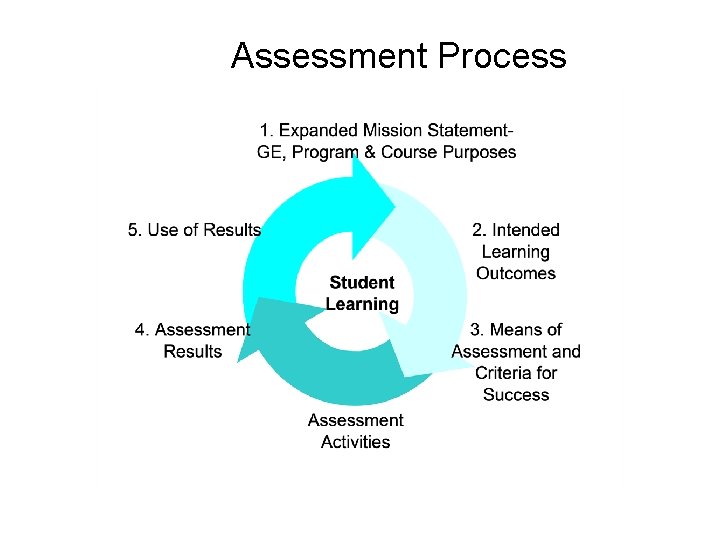

Assessment Process

Collaboration Among Faculty, Administration & Researchers
• Assessment, the auto, and a road trip: an analogy
  – Who should drive the car?
  – Who provides the car, gas, insurance, and maintenance?
  – Who brings the maps, directions, repair manual, tool kit, and first aid kit, and stimulates conversation along the journey?

Why Faculty Are the Drivers
• Faculty have the primary responsibility for facilitating learning (delivery of instruction)
• Faculty are already heavily involved in assessment (classroom, matriculation)
• Faculty are the content experts
• Who knows better than faculty what students should learn?

Who Provides the Car and Keeps Gas in It? Administrators!

Role of Administrators
• Establish that an assessment program is important at the institution
• Ensure the college’s mission and goals reflect a focus on student learning
• Institutionalize the practice of data-driven decision-making (curriculum change, pedagogy, planning, budget, program review)
• Create a neutral, safe environment for dialogue

Where Does IR Sit in the Car?

Roles of Researchers
• Serve as a resource on assessment methods
• Assist in the selection/design and validation of assessment instruments
• Provide expertise on data collection, analysis, interpretation, reporting, and use of results
• Facilitate dialogue: train and explain
• Help faculty improve their assessment efforts

Faculty DON’Ts…
• Avoid the SLO process or rely on others to do it for you.
• Rely on outdated evaluation/grading models to tell you how your students are learning.
• Use only one measure to assess learning.
• Criticize or inhibit the assessment efforts of others.

Faculty DOs…
• Participate in the SLO assessment cycle.
• Make your learning expectations explicit.
• Use assessment opportunities to teach as well as to evaluate.
• Dialogue with colleagues about assessment methods and data.
• Focus on assessment as a continuous improvement cycle.

Questions From the Field…