PixelGAN Autoencoders
Alireza Makhzani, Brendan Frey, University of Toronto
Liu Ze, Dec 30th, 2017, University of Science and Technology of China

Outline
1. Background
• PixelCNNs
• Variational Autoencoders
• Adversarial Autoencoders
2. PixelGAN Autoencoders
• Limitations of VAE/AAE
• Structure and Training
• Benefits of PixelGAN Autoencoders
3. Experiments
4. Conclusion

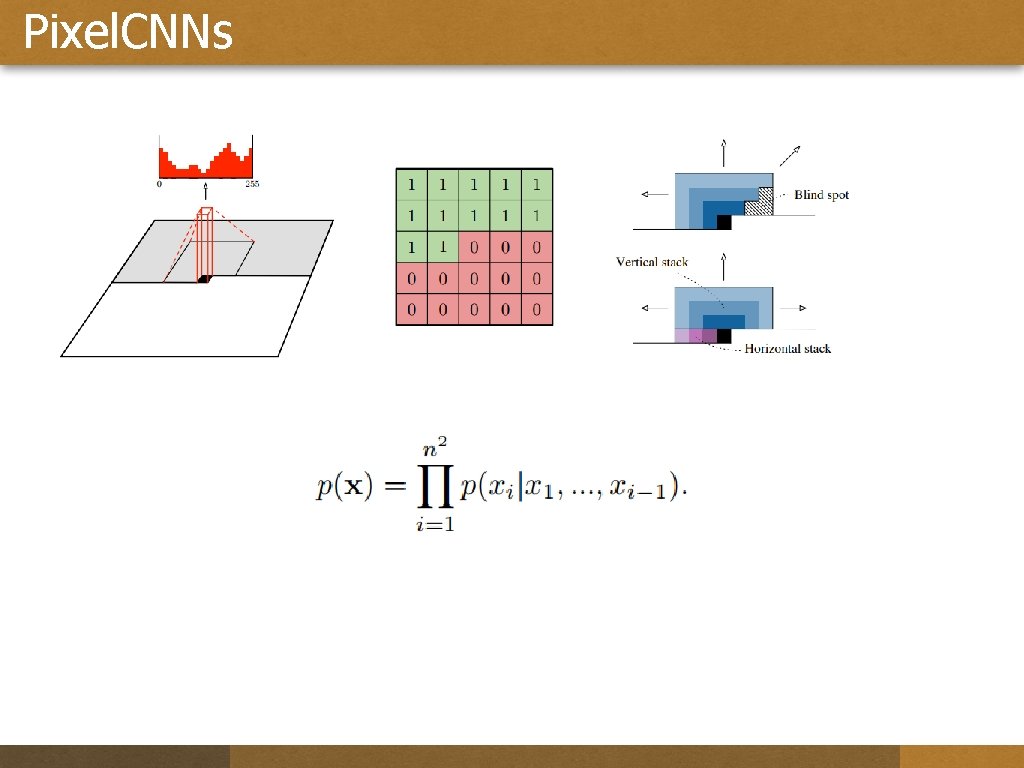

PixelCNNs

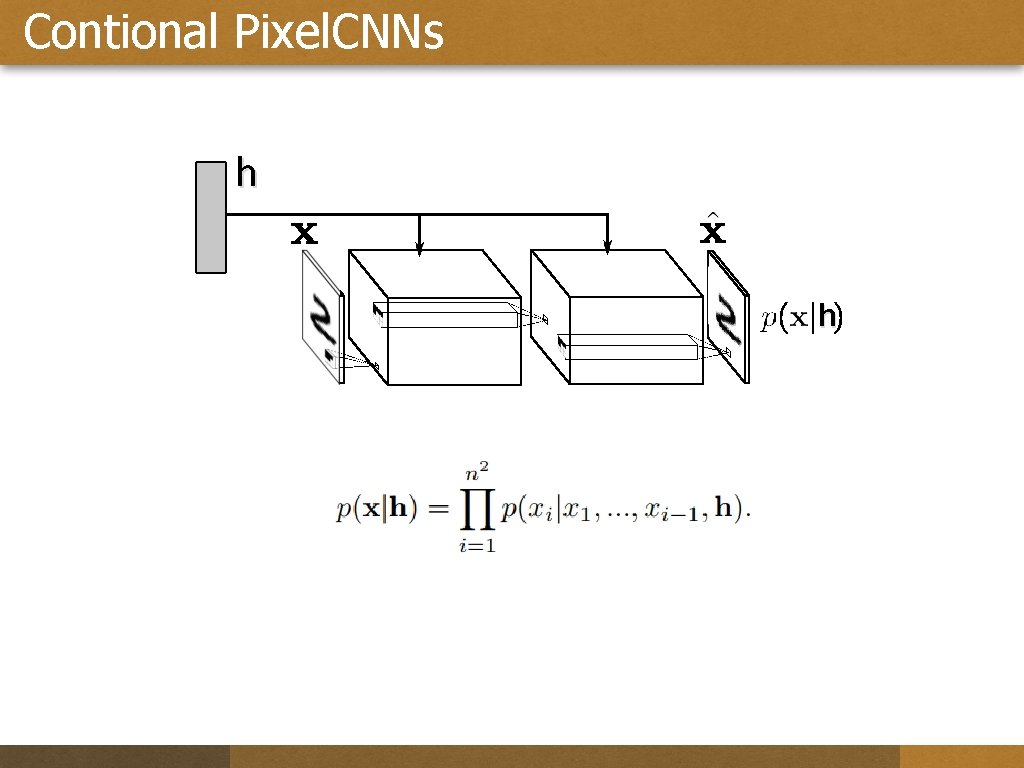

Conditional PixelCNNs

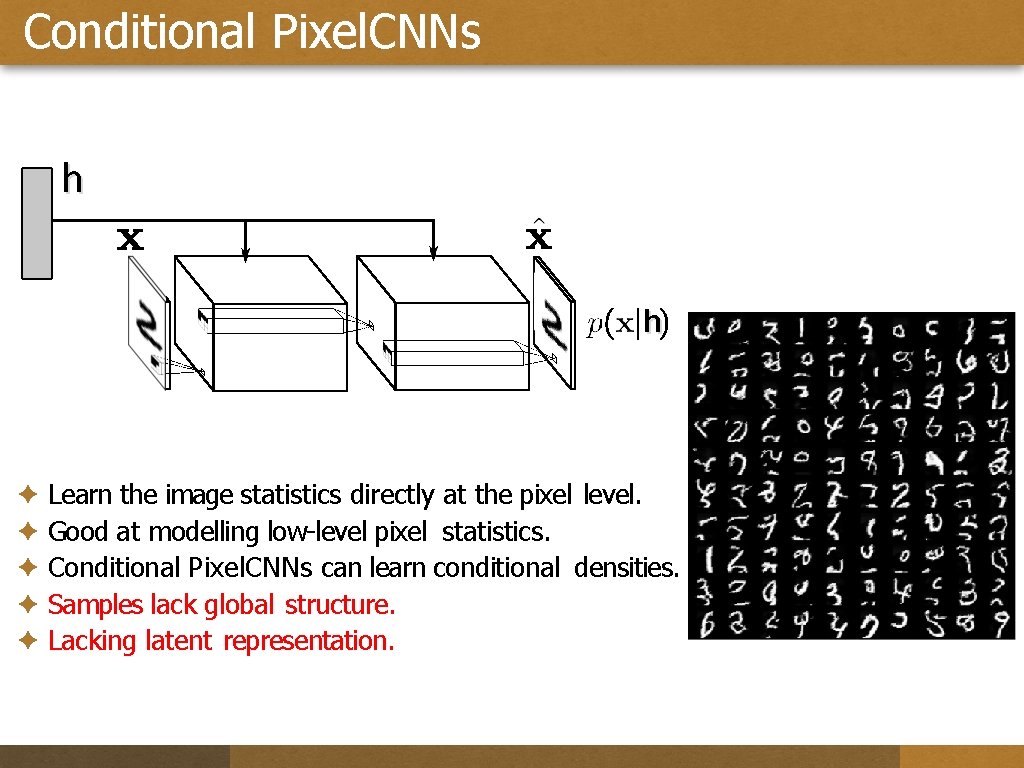

Conditional PixelCNNs
✦ Learn the image statistics directly at the pixel level.
✦ Good at modelling low-level pixel statistics.
✦ Conditional PixelCNNs can learn conditional densities.
✦ Samples lack global structure.
✦ No latent representation.
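A PixelCNN models an image autoregressively, p(x) = ∏_i p(x_i | x_<i), and a conditional PixelCNN adds a conditioning vector h, giving p(x | h) = ∏_i p(x_i | x_<i, h). The pixel ordering is enforced with masked convolutions. Below is a minimal sketch of the spatial mask for one k×k kernel, assuming raster-scan ordering and a single channel (the per-colour-channel masking of the original PixelCNN is omitted); the function name `pixelcnn_mask` is hypothetical.

```python
def pixelcnn_mask(k, mask_type):
    """Return a k x k spatial mask (list of lists of 0/1) for a masked convolution.

    Raster-scan order: a pixel may only see pixels in rows above it and
    pixels to its left in the same row. Type "A" (first layer) also hides
    the centre pixel, so the prediction for x_i never sees x_i itself.
    Type "B" (later layers) keeps the centre, because its input is already
    a feature map that depends only on x_<i.
    """
    if k % 2 != 1:
        raise ValueError("kernel size must be odd")
    if mask_type not in ("A", "B"):
        raise ValueError("mask_type must be 'A' or 'B'")
    c = k // 2  # centre index
    mask = [[0] * k for _ in range(k)]
    for r in range(k):
        for col in range(k):
            if r < c:  # rows strictly above the centre
                mask[r][col] = 1
            elif r == c and col < c:  # left of the centre in the centre row
                mask[r][col] = 1
            elif r == c and col == c and mask_type == "B":
                mask[r][col] = 1  # the centre itself, only for type B
    return mask


for row in pixelcnn_mask(3, "A"):
    print(row)
```

In a conditional PixelCNN the vector h typically enters each layer as an extra learned term added to the pre-activations, so the masks themselves stay the same.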

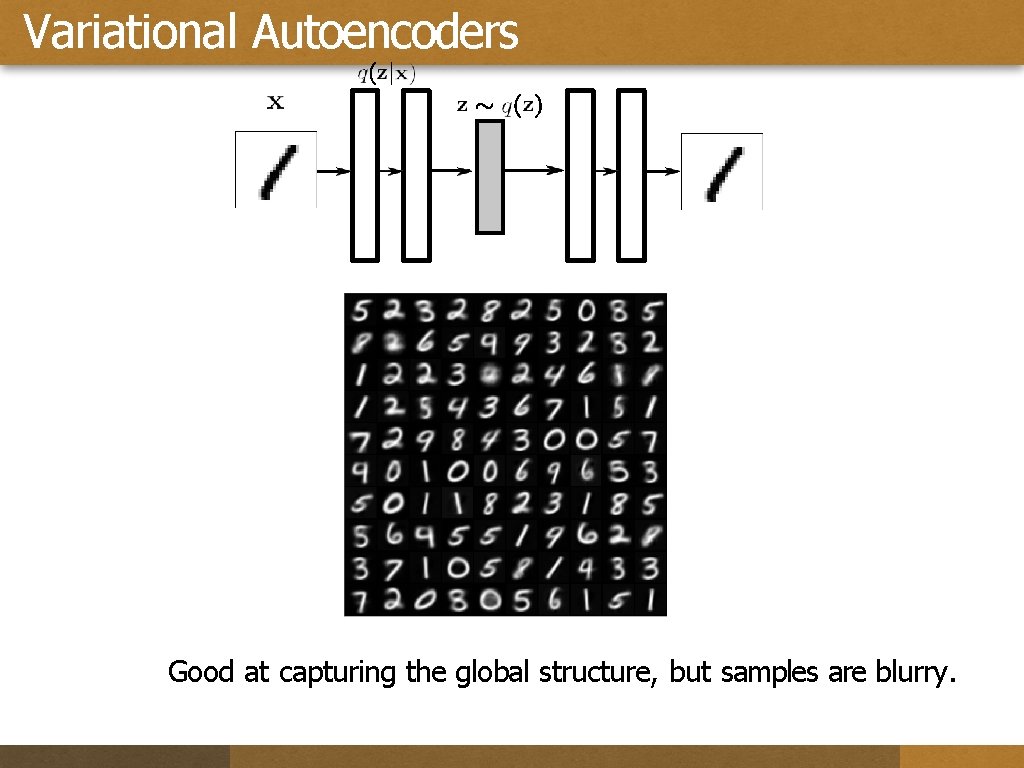

Variational Autoencoders
Good at capturing global structure, but samples are blurry.
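In a VAE the encoder outputs the mean and log-variance of a diagonal Gaussian q(z|x), a code is sampled with the reparameterization trick, and the KL term to the prior has a closed form. A minimal stdlib sketch, assuming a standard normal prior N(0, I); the function names are hypothetical.

```python
import math
import random


def reparameterize(mu, logvar, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1), elementwise."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu, logvar):
    """Closed-form KL(N(mu, diag(exp(logvar))) || N(0, I)), summed over dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, logvar))


rng = random.Random(0)
z = reparameterize([0.5, -1.0], [0.0, -2.0], rng)
print(z)
print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0
```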

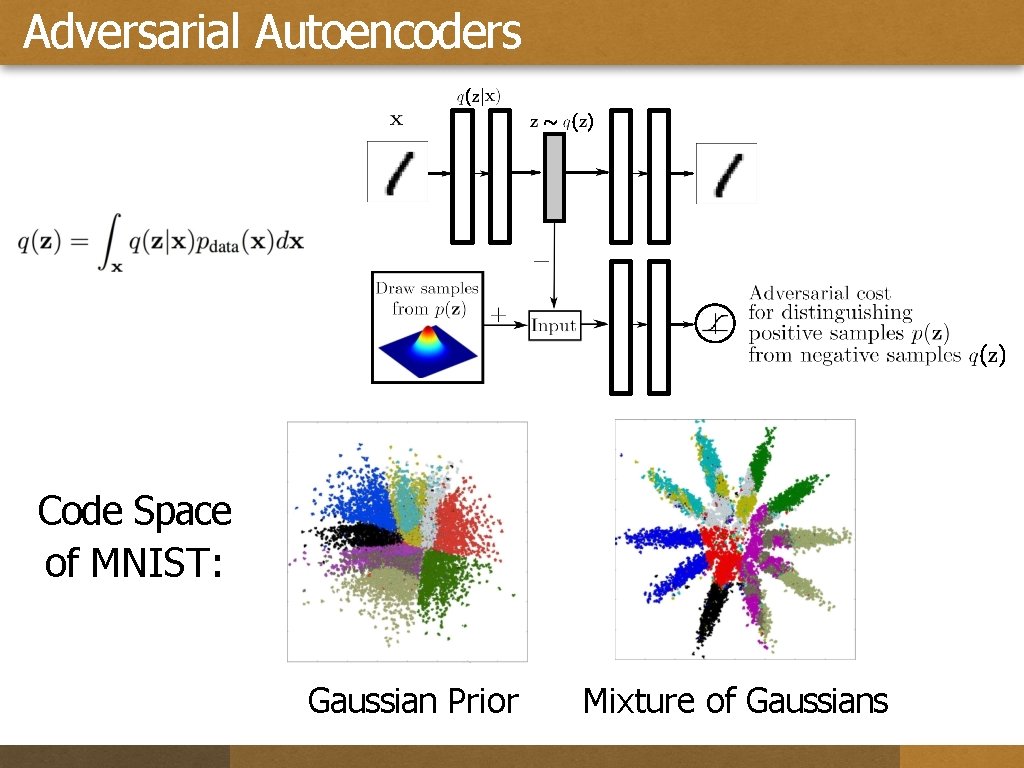

Adversarial Autoencoders
Code space of MNIST (figure panels: Gaussian prior; mixture of Gaussians prior)
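An adversarial autoencoder shapes the code space by training a discriminator to tell samples of the chosen prior p(z) from codes produced by the encoder, so imposing a mixture-of-Gaussians prior only requires a sampler for it. A minimal sketch of a 2-D mixture of 10 Gaussians; placing the component means evenly on a circle, and the `radius` and `std` values, are assumptions for illustration, and the function name is hypothetical.

```python
import math
import random


def sample_mixture_of_gaussians(n, rng, n_components=10, radius=4.0, std=0.5):
    """Draw n points from a 2-D mixture of isotropic Gaussians.

    Component means sit evenly spaced on a circle of the given radius
    (an illustrative layout). Returns a list of (x, y, component) tuples.
    """
    samples = []
    for _ in range(n):
        k = rng.randrange(n_components)  # pick a component uniformly
        angle = 2.0 * math.pi * k / n_components  # mean of component k
        mx, my = radius * math.cos(angle), radius * math.sin(angle)
        samples.append((rng.gauss(mx, std), rng.gauss(my, std), k))
    return samples


rng = random.Random(0)
prior_batch = sample_mixture_of_gaussians(5, rng)
for x, y, k in prior_batch:
    print(f"component {k}: ({x:.2f}, {y:.2f})")
```

Batches like `prior_batch` play the role of "real" inputs to the discriminator, while the encoder's codes play the role of "fake" ones.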

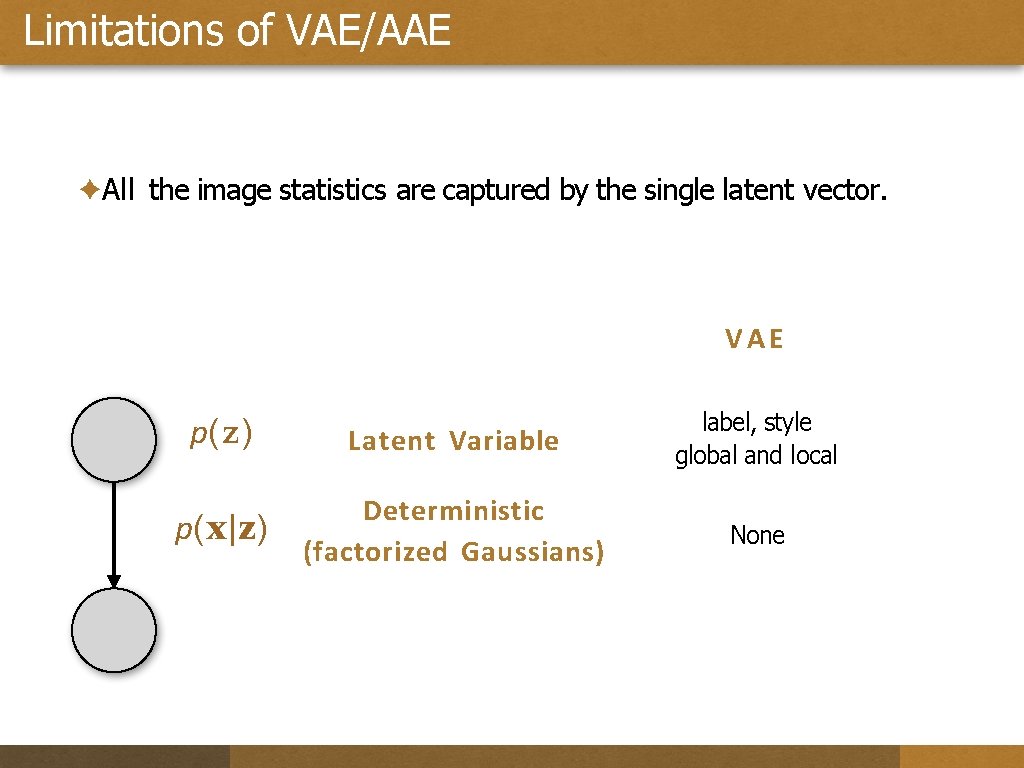

Limitations of VAE/AAE
✦ All the image statistics are captured by the single latent vector.
VAE
• p(z) (latent variable): label, style, global and local statistics
• p(x|z) (deterministic, factorized Gaussians): none
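The slide's VAE entry says the decoder p(x|z) captures none of the statistics. With a factorized Gaussian decoder, log p(x|z) is a sum of independent per-pixel terms, so any dependency between pixels has to come through z. A minimal sketch, assuming a fixed variance shared by all pixels; `decoder_mean` stands in for the output μ(z) of a hypothetical decoder network.

```python
import math


def factorized_gaussian_log_likelihood(x, decoder_mean, sigma=1.0):
    """log p(x|z) = sum_i log N(x_i; mu_i(z), sigma^2) for a factorized decoder.

    Each pixel contributes an independent term: given z, no pixel's
    likelihood depends on the value of any other pixel.
    """
    log_norm = -0.5 * math.log(2.0 * math.pi * sigma * sigma)
    return sum(log_norm - (xi - mi) ** 2 / (2.0 * sigma * sigma)
               for xi, mi in zip(x, decoder_mean))


x = [0.0, 1.0, 0.5]
mean = [0.1, 0.9, 0.5]  # hypothetical decoder output mu(z)
print(factorized_gaussian_log_likelihood(x, mean))
```

Because the decoder only outputs per-pixel means, its best prediction under uncertainty is an average over plausible images, which is one common explanation for the blurry VAE samples.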

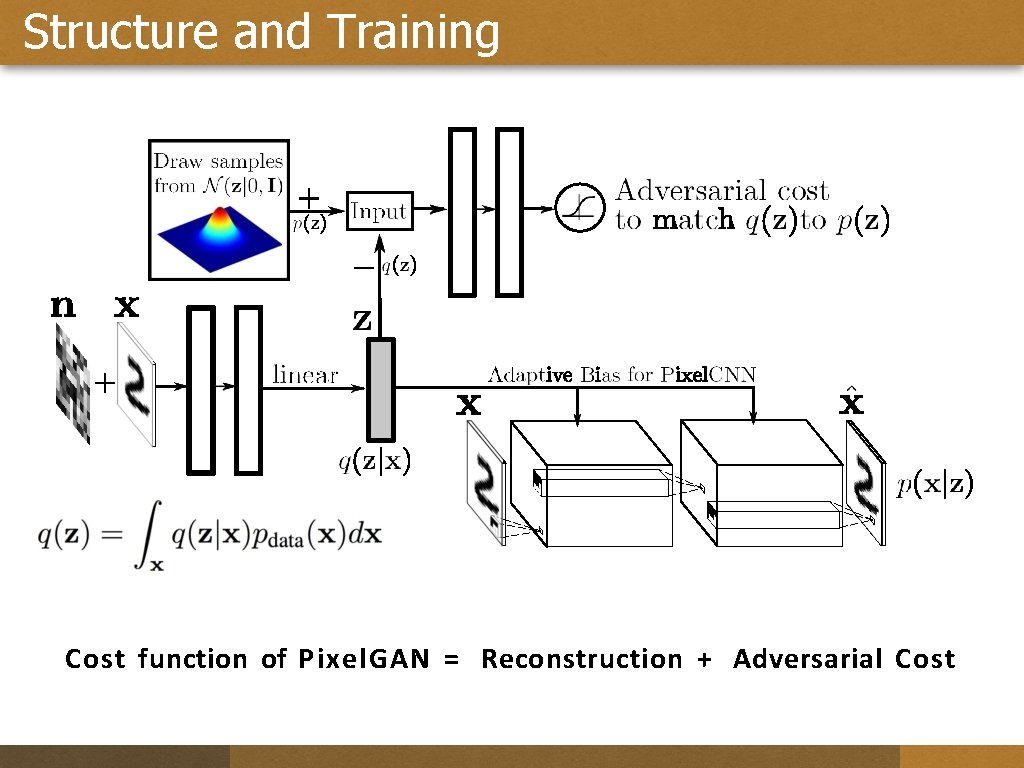

Structure and Training
Cost function of the PixelGAN autoencoder = reconstruction cost + adversarial cost
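The two terms of the cost can be sketched for a single example. This assumes a per-pixel softmax over discrete pixel values for the PixelCNN decoder (the toy uses 4 values instead of 256) and the standard non-saturating GAN losses on the latent code; the function names and toy inputs are hypothetical, and the schedule for updating the networks (for example, alternating reconstruction and regularization phases as in adversarial autoencoders) is not shown.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax of a list of floats."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - lse for v in logits]


def reconstruction_cost(pixel_logits, x):
    """Negative log-likelihood -log p(x|z) under an autoregressive decoder.

    pixel_logits[i] are the decoder's logits for pixel i, computed from
    x_<i and the code z; x[i] is the observed integer pixel value.
    """
    return -sum(log_softmax(lg)[xi] for lg, xi in zip(pixel_logits, x))


def discriminator_cost(d_prior, d_code):
    """Discriminator: label prior samples z ~ p(z) real, encoder codes fake.

    d_prior and d_code are discriminator probabilities in (0, 1).
    """
    return -(math.log(d_prior) + math.log(1.0 - d_code))


def encoder_adversarial_cost(d_code):
    """Encoder (generator) cost: make its code look like a prior sample."""
    return -math.log(d_code)


# Toy example: 2 pixels, 4 possible values each.
logits = [[2.0, 0.0, 0.0, 0.0], [0.0, 0.0, 3.0, 0.0]]
x = [0, 2]
total = reconstruction_cost(logits, x) + encoder_adversarial_cost(0.4)
print(total)
```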

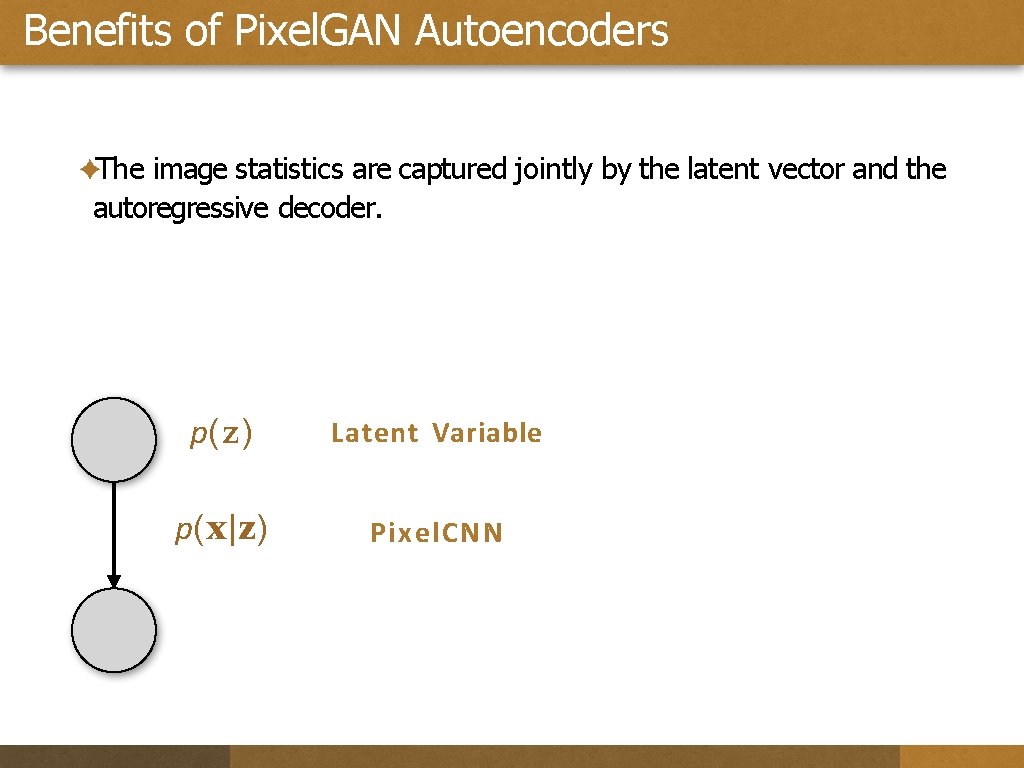

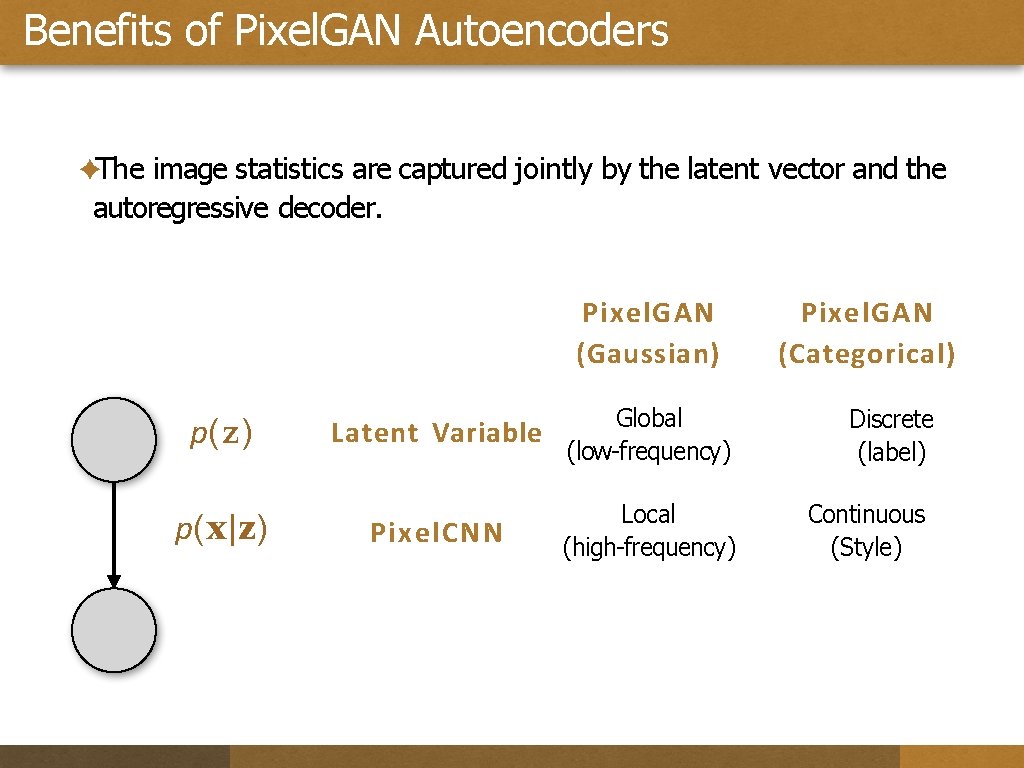

Benefits of PixelGAN Autoencoders
✦ The image statistics are captured jointly by the latent vector and the autoregressive decoder.
• p(z): latent variable
• p(x|z): PixelCNN
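With a PixelCNN as p(x|z), an image is generated one pixel at a time, each pixel conditioned on the code z and on the pixels already generated, which is what lets the statistics be split between z and the decoder. A minimal sketch of ancestral sampling for binary pixels; `toy_conditional` is a hypothetical stand-in for a trained PixelCNN that returns p(x_i = 1 | x_<i, z).

```python
import random


def sample_autoregressive(conditional, z, n_pixels, rng):
    """Sample x_1..x_n in raster order from prod_i p(x_i | x_<i, z)."""
    x = []
    for i in range(n_pixels):
        p_one = conditional(x, z, i)  # sees only the pixels sampled so far
        x.append(1 if rng.random() < p_one else 0)
    return x


def toy_conditional(prev, z, i):
    """Hypothetical model: z sets the overall ink level (global),
    while the previous pixel nudges the next one upward (local)."""
    base = z  # z in [0, 1] acts as a global brightness code
    if prev and prev[-1] == 1:
        base = min(1.0, base + 0.3)
    return base


rng = random.Random(0)
print(sample_autoregressive(toy_conditional, 0.2, 16, rng))
print(sample_autoregressive(toy_conditional, 0.8, 16, rng))
```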

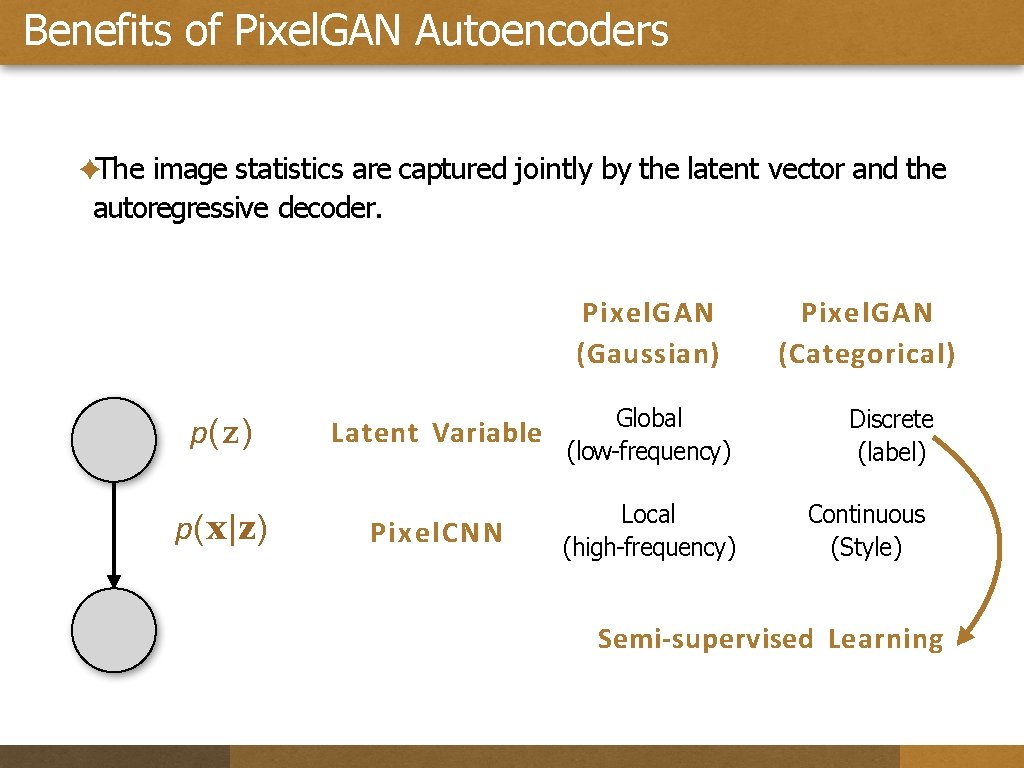

Benefits of PixelGAN Autoencoders
✦ The image statistics are captured jointly by the latent vector and the autoregressive decoder.
PixelGAN (Gaussian prior)
• p(z) (latent variable): global (low-frequency) statistics
• p(x|z) (PixelCNN): local (high-frequency) statistics
PixelGAN (Categorical prior)
• p(z) (latent variable): discrete (label) information
• p(x|z) (PixelCNN): continuous (style) information
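With a categorical prior, the discriminator compares the encoder's softmax output q(y|x) against one-hot samples from p(y), which tends to push the encoder toward confident, label-like codes while the PixelCNN models the continuous style. A minimal sketch of the two kinds of input the discriminator would see, assuming a uniform prior over K categories; K = 10 and the helper names are illustrative assumptions.

```python
import math
import random


def sample_categorical_prior(n, k, rng):
    """Draw n one-hot vectors from a uniform categorical prior over k classes."""
    batch = []
    for _ in range(n):
        onehot = [0.0] * k
        onehot[rng.randrange(k)] = 1.0
        batch.append(onehot)
    return batch


def softmax(logits):
    """Encoder output head: turn logits into a probability vector q(y|x)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]


rng = random.Random(0)
real = sample_categorical_prior(3, 10, rng)  # discriminator "real" inputs
fake = [softmax([rng.gauss(0, 1) for _ in range(10)])]  # one hypothetical encoder output
print(real[0])
print([round(p, 3) for p in fake[0]])
```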

Benefits of PixelGAN Autoencoders (continued): semi-supervised learning

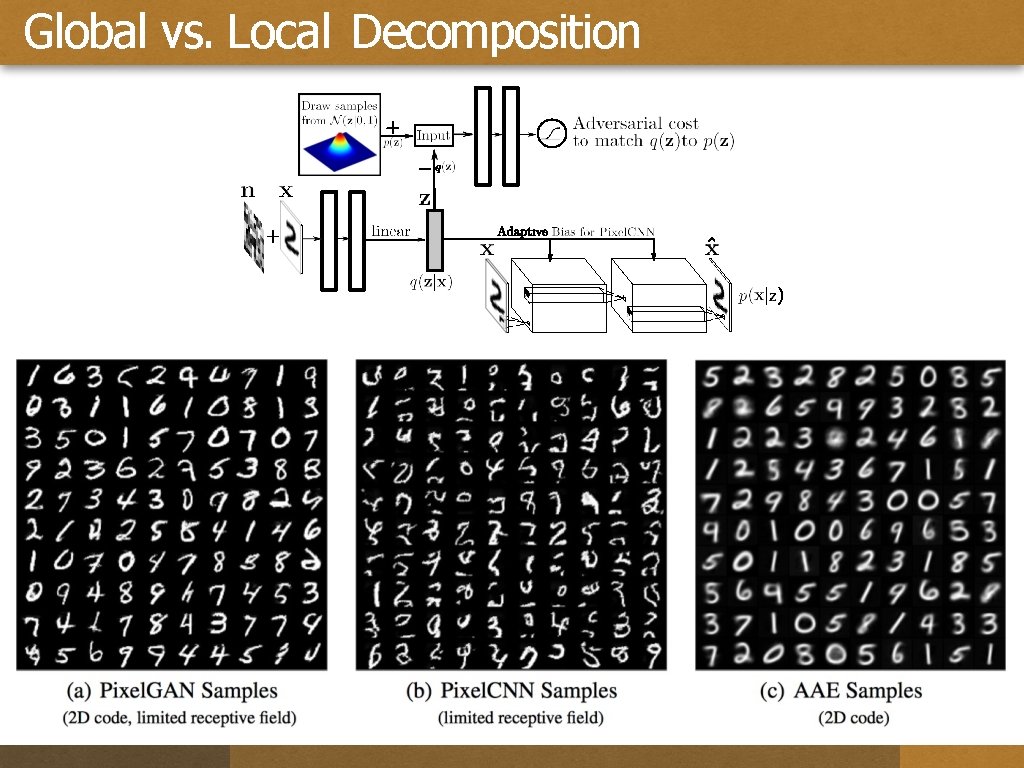

Global vs. Local Decomposition

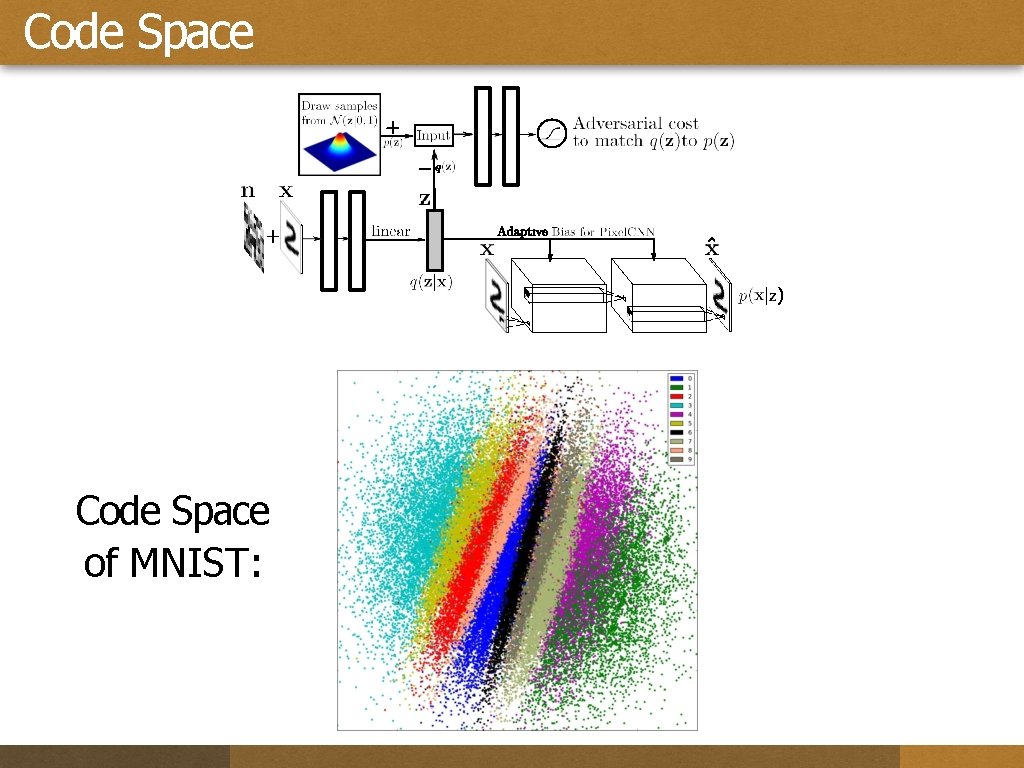

Code Space of MNIST

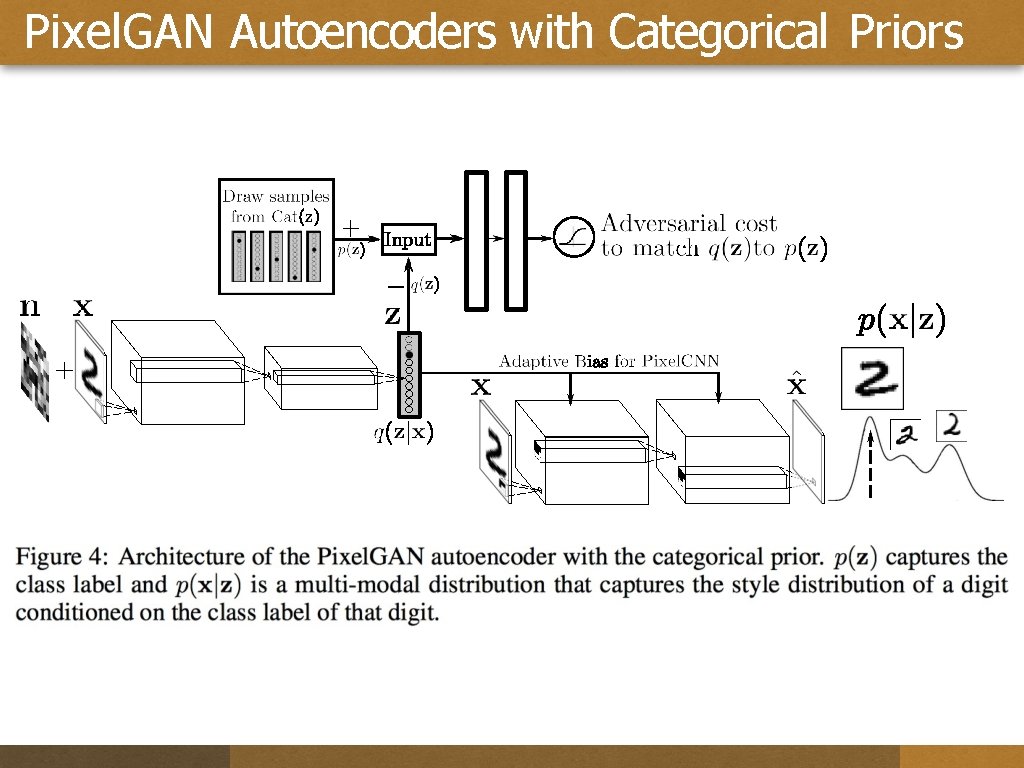

PixelGAN Autoencoders with Categorical Priors

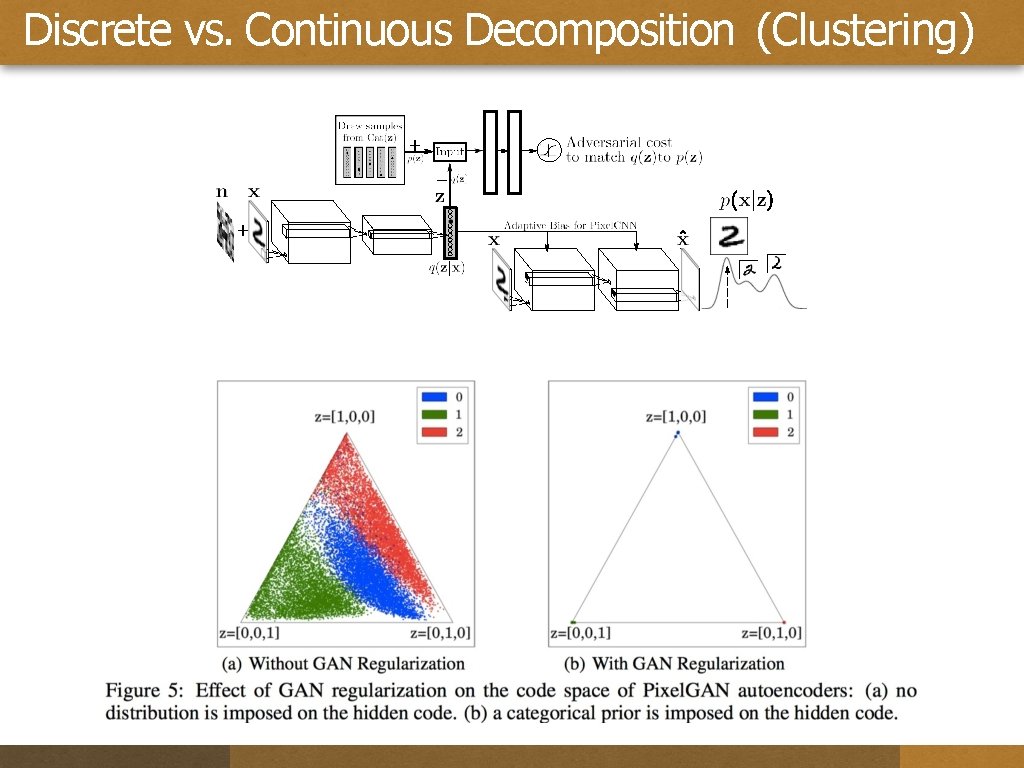

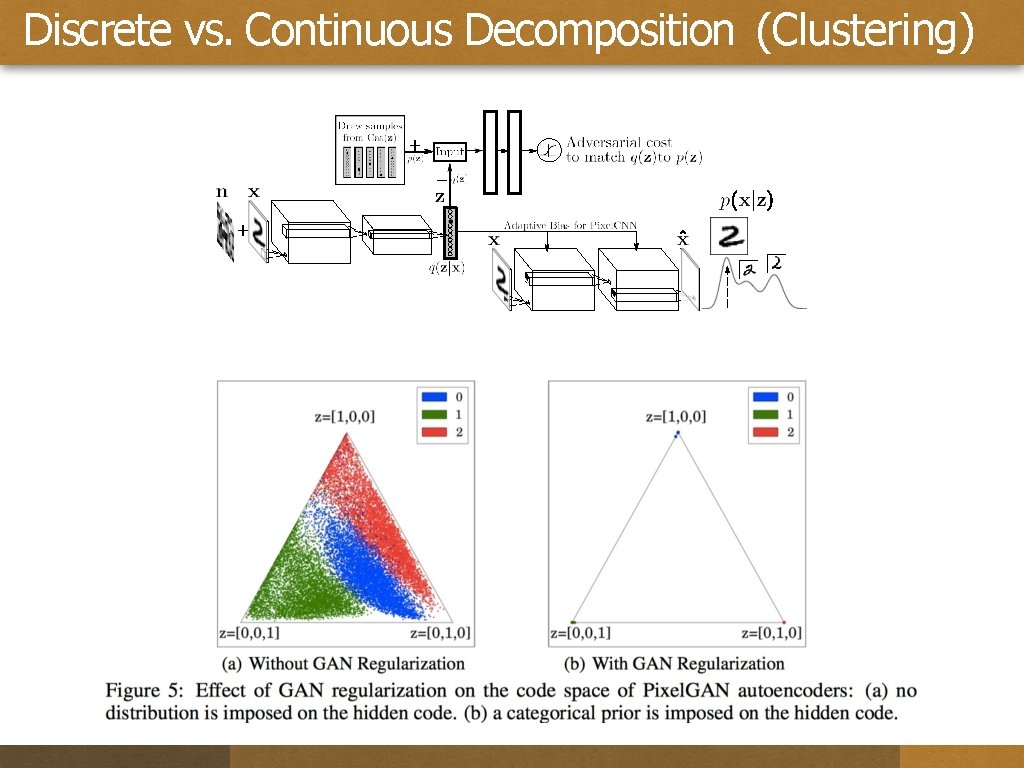

Discrete vs. Continuous Decomposition (Clustering)

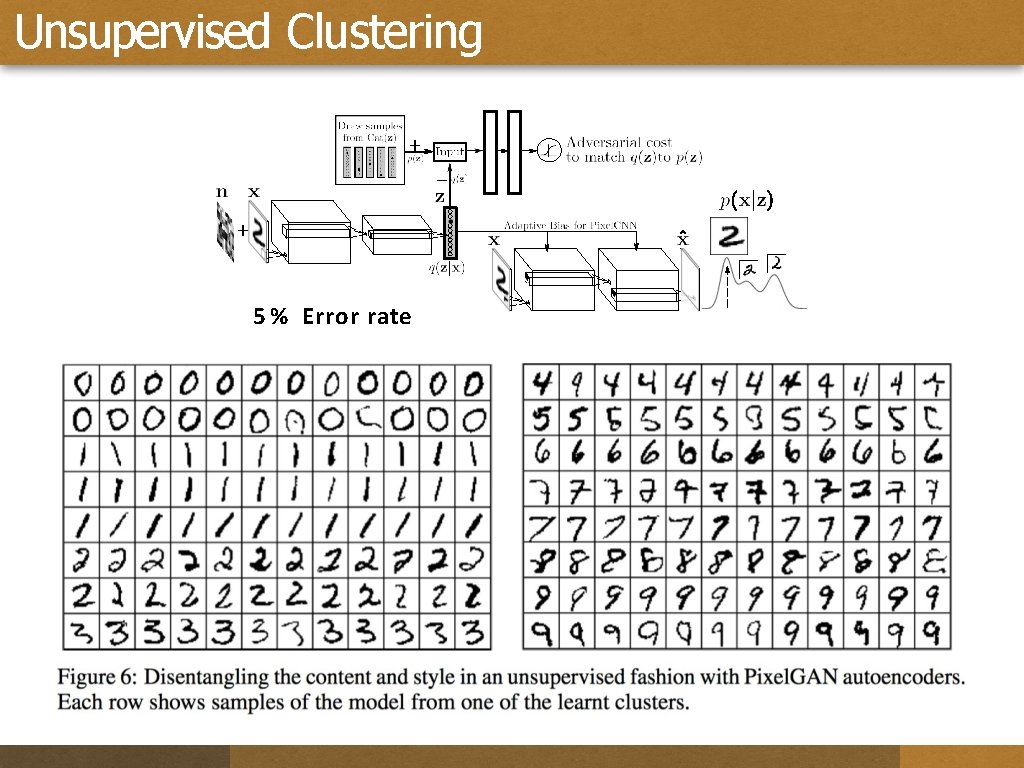

Unsupervised Clustering
Error rate: 5%
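A clustering error rate requires turning clusters into a classifier. One common protocol, assumed here because the slide does not state which one was used, maps each cluster to the most frequent true label among its members and counts the points whose label differs; `clustering_error` is a hypothetical name.

```python
from collections import Counter, defaultdict


def clustering_error(cluster_ids, labels):
    """Error rate after assigning each cluster its majority true label."""
    members = defaultdict(list)
    for c, y in zip(cluster_ids, labels):
        members[c].append(y)
    # Points that agree with their cluster's majority label count as correct.
    correct = sum(Counter(ys).most_common(1)[0][1] for ys in members.values())
    return 1.0 - correct / len(labels)


clusters = [0, 0, 0, 1, 1, 2, 2, 2]
labels = [3, 3, 5, 7, 7, 1, 1, 1]
print(clustering_error(clusters, labels))  # 0.125
```

Since several clusters may map to the same label, this protocol also works when more clusters than classes are used.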

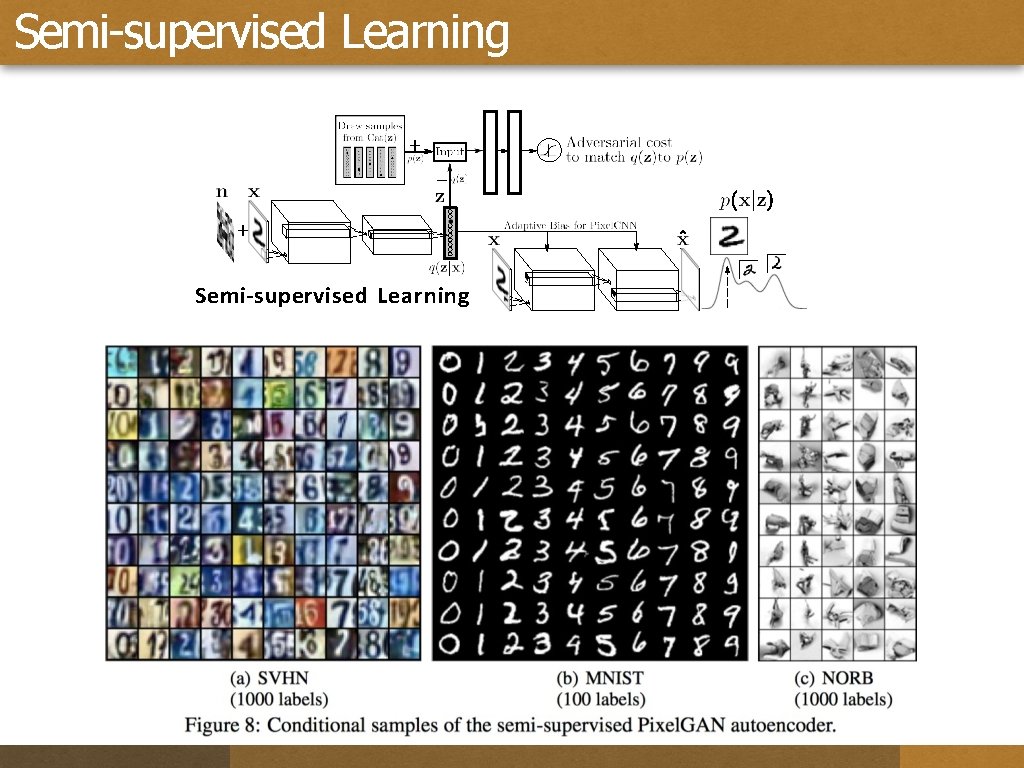

Semi-supervised Learning

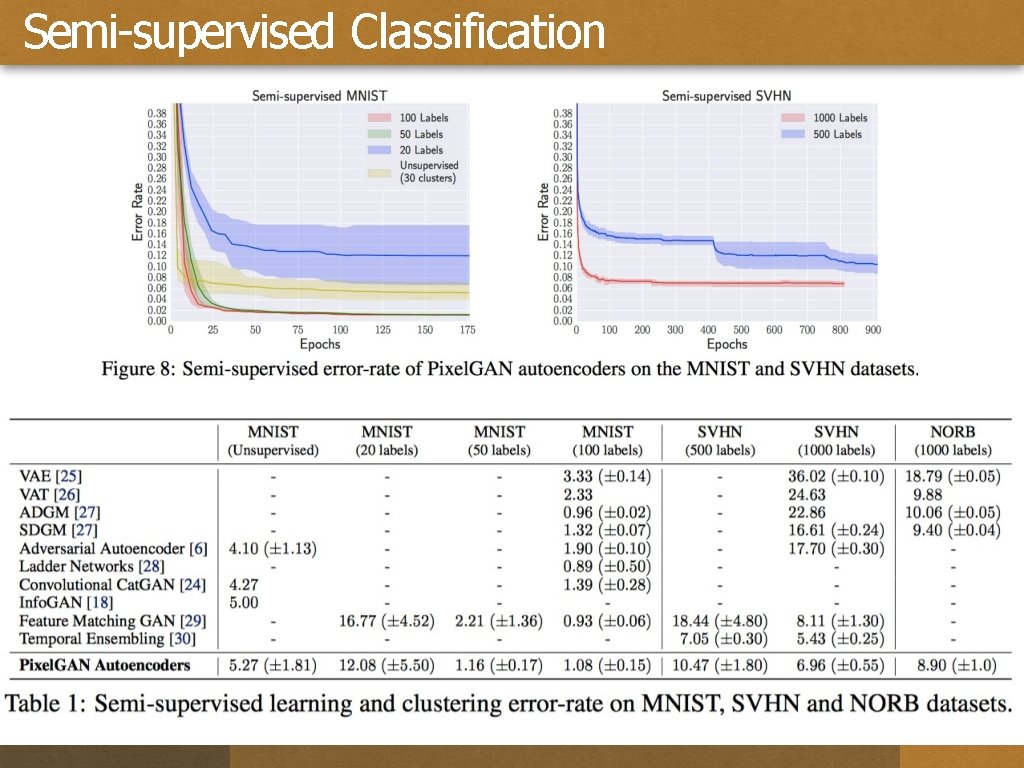

Semi-supervised Classification
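For semi-supervised classification, the categorical code q(y|x) can double as the classifier: labeled examples add a cross-entropy term, while all examples contribute the unsupervised reconstruction and adversarial costs. A minimal sketch of the supervised term and the argmax prediction, assuming the encoder's softmax probabilities are given; the helper names are hypothetical, and how this term is weighted or scheduled against the other costs is not shown.

```python
import math


def supervised_cross_entropy(probs, labels, eps=1e-12):
    """Mean -log q(y = label | x) over a labeled mini-batch.

    probs[n] is the encoder's softmax output for example n; eps guards log(0).
    """
    return -sum(math.log(p[y] + eps) for p, y in zip(probs, labels)) / len(labels)


def predict(probs):
    """Classify each example by the most probable category of its code."""
    return [max(range(len(p)), key=p.__getitem__) for p in probs]


batch_probs = [[0.7, 0.2, 0.1], [0.1, 0.1, 0.8]]
batch_labels = [0, 2]
print(supervised_cross_entropy(batch_probs, batch_labels))
print(predict(batch_probs))  # [0, 2]
```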

Conclusion
A. Proposed the PixelGAN autoencoder, a generative autoencoder that combines a generative PixelCNN with a GAN inference network that can impose arbitrary priors on the latent code.
B. Showed that imposing different distributions as the prior enables us to learn a latent representation that captures the type of statistics we care about, while the remaining structure of the image is captured by the PixelCNN decoder.
C. Demonstrated the application of PixelGAN autoencoders to downstream tasks such as semi-supervised learning, and discussed other potential uses of these architectures, such as learning cross-domain relations between two different domains.

Thank you!
