Pipelining for Multi Core Architectures 1 MultiCore Technology

- Slides: 31

Pipelining for Multi. Core Architectures 1

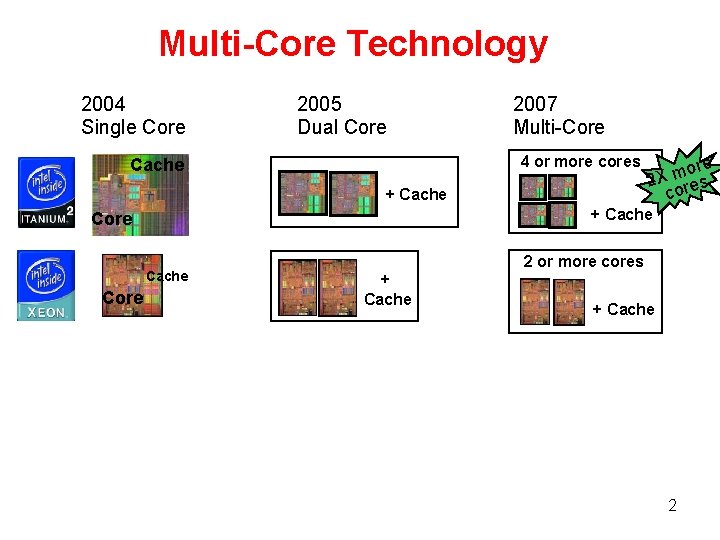

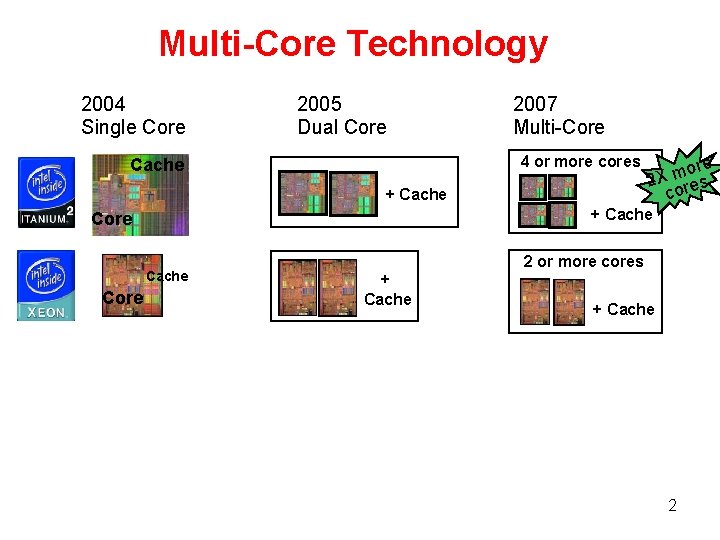

Multi-Core Technology 2004 Single Core 2005 Dual Core 2007 Multi Core 4 or more cores Cache + Cache Core ore m 2 X res co 2 or more cores + Cache 2

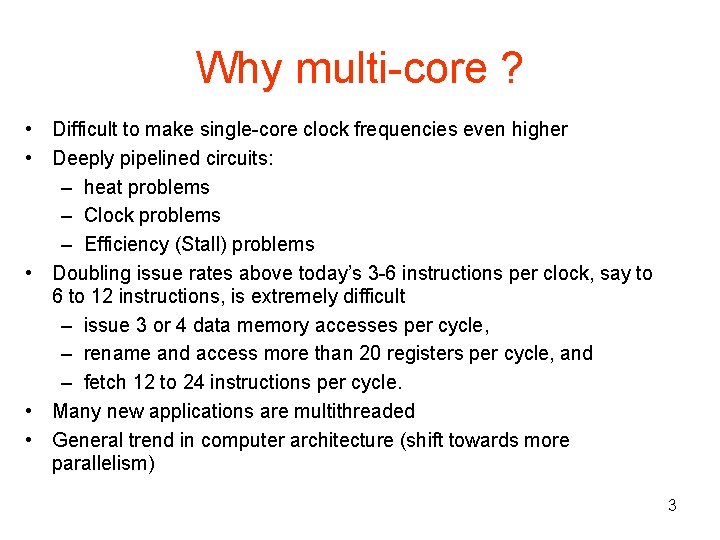

Why multi core ? • Difficult to make single core clock frequencies even higher • Deeply pipelined circuits: – heat problems – Clock problems – Efficiency (Stall) problems • Doubling issue rates above today’s 3 6 instructions per clock, say to 6 to 12 instructions, is extremely difficult – issue 3 or 4 data memory accesses per cycle, – rename and access more than 20 registers per cycle, and – fetch 12 to 24 instructions per cycle. • Many new applications are multithreaded • General trend in computer architecture (shift towards more parallelism) 3

Instruction level parallelism • Parallelism at the machine instruction level • The processor can re order, pipeline instructions, split them into microinstructions, do aggressive branch prediction, etc. • Instruction level parallelism enabled rapid increases in processor speeds over the last 15 years 4

Thread level parallelism (TLP) • This is parallelism on a more coarser scale • Server can serve each client in a separate thread (Web server, database server) • A computer game can do AI, graphics, and sound in three separate threads • Single core superscalar processors cannot fully exploit TLP • Multi core architectures are the next step in processor evolution: explicitly exploiting TLP 5

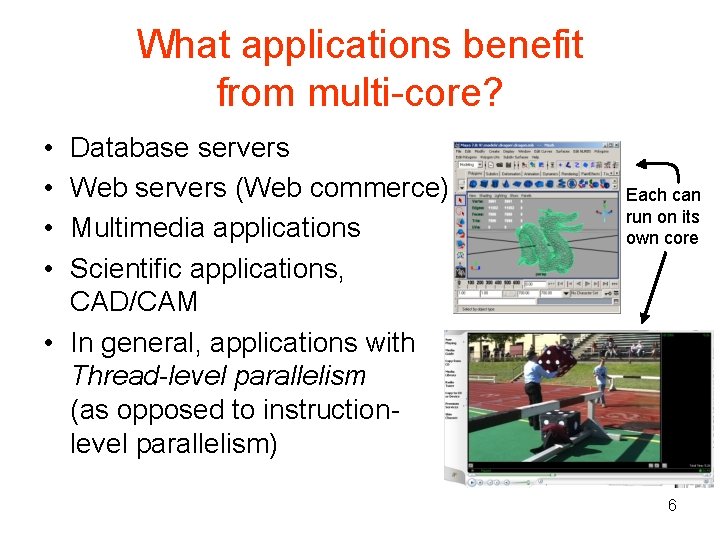

What applications benefit from multi core? • • Database servers Web servers (Web commerce) Multimedia applications Scientific applications, CAD/CAM • In general, applications with Thread-level parallelism (as opposed to instruction level parallelism) Each can run on its own core 6

More examples • Editing a photo while recording a TV show through a digital video recorder • Downloading software while running an anti virus program • “Anything that can be threaded today will map efficiently to multi core” • BUT: some applications difficult to parallelize 7

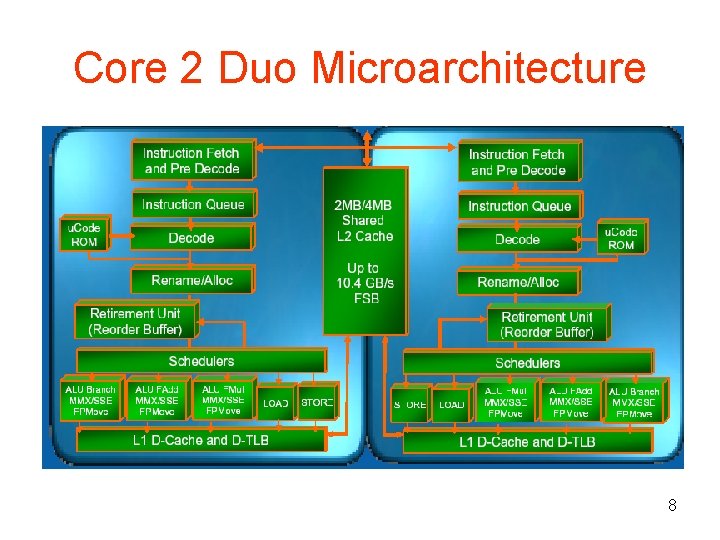

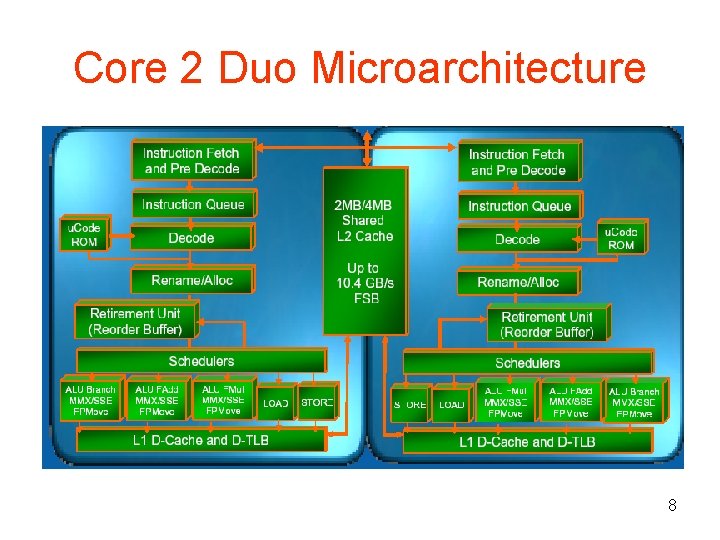

Core 2 Duo Microarchitecture 8

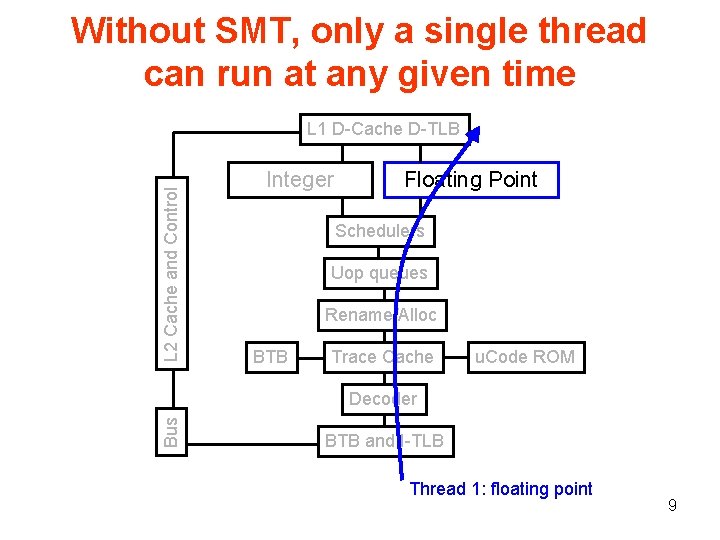

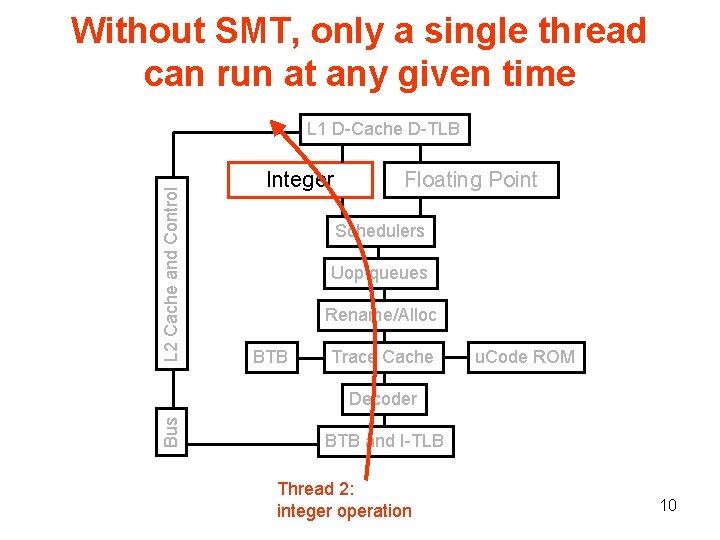

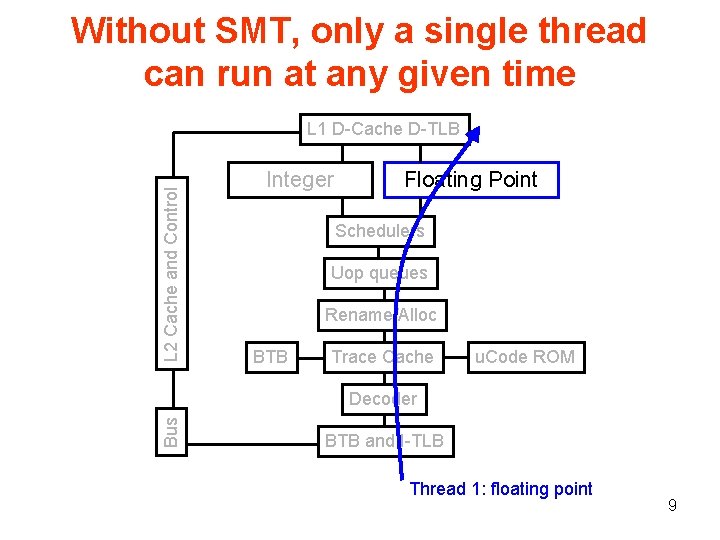

Without SMT, only a single thread can run at any given time L 2 Cache and Control L 1 D Cache D TLB Integer Floating Point Schedulers Uop queues Rename/Alloc BTB Trace Cache u. Code ROM Bus Decoder BTB and I TLB Thread 1: floating point 9

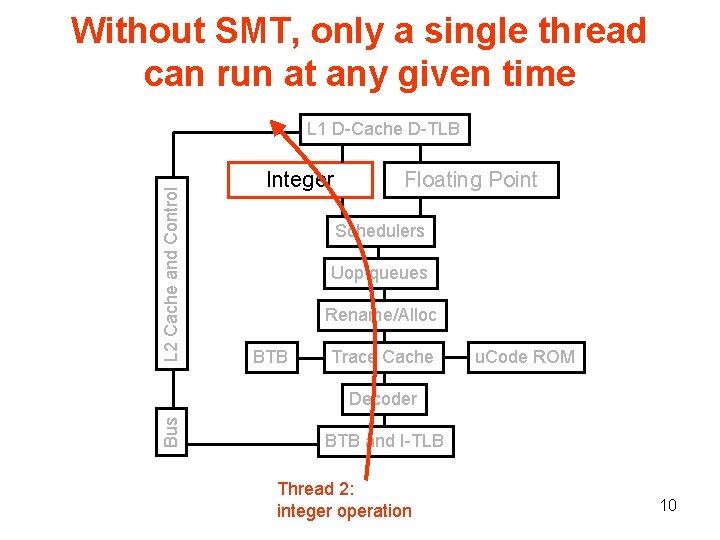

Without SMT, only a single thread can run at any given time L 2 Cache and Control L 1 D Cache D TLB Integer Floating Point Schedulers Uop queues Rename/Alloc BTB Trace Cache u. Code ROM Bus Decoder BTB and I TLB Thread 2: integer operation 10

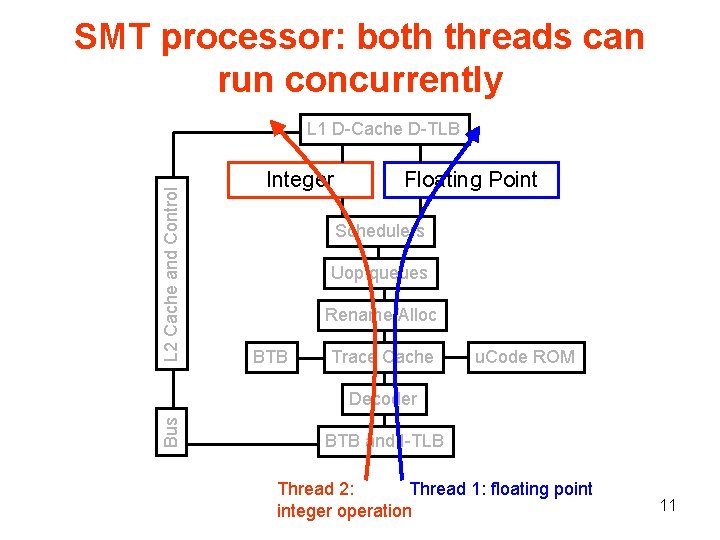

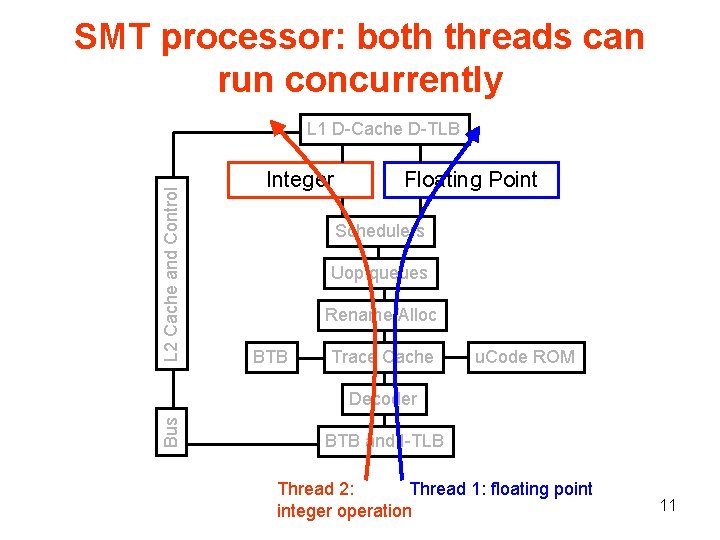

SMT processor: both threads can run concurrently L 2 Cache and Control L 1 D Cache D TLB Integer Floating Point Schedulers Uop queues Rename/Alloc BTB Trace Cache u. Code ROM Bus Decoder BTB and I TLB Thread 2: Thread 1: floating point integer operation 11

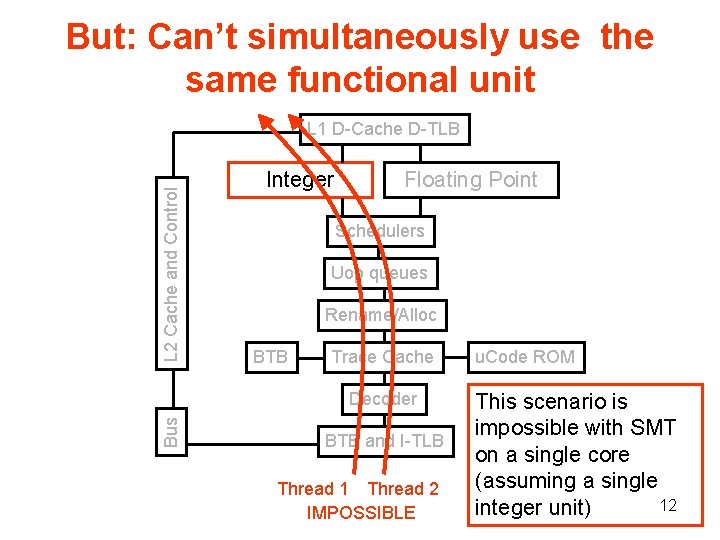

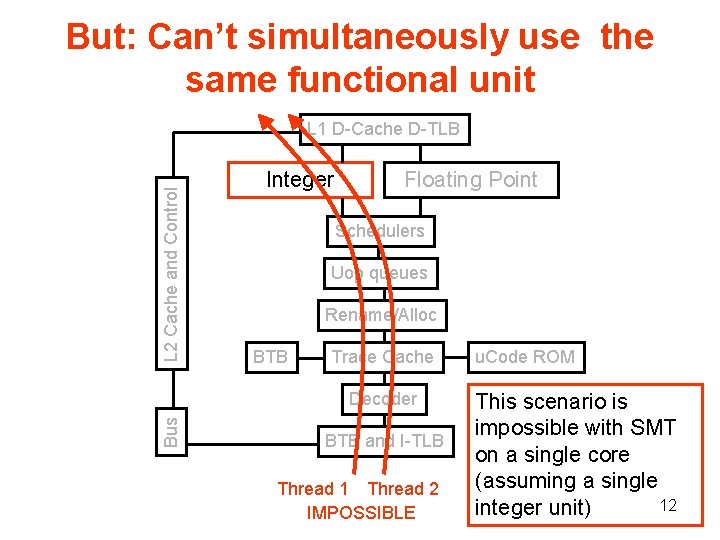

But: Can’t simultaneously use the same functional unit L 2 Cache and Control L 1 D Cache D TLB Integer Floating Point Schedulers Uop queues Rename/Alloc BTB Trace Cache Bus Decoder BTB and I TLB Thread 1 Thread 2 IMPOSSIBLE u. Code ROM This scenario is impossible with SMT on a single core (assuming a single 12 integer unit)

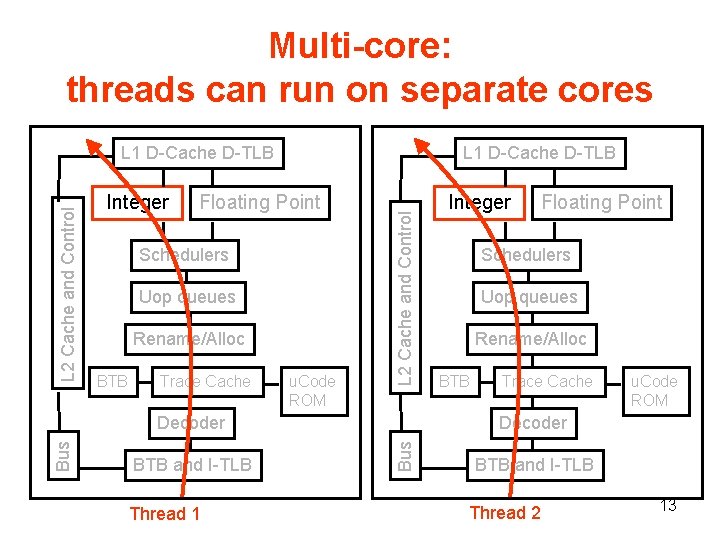

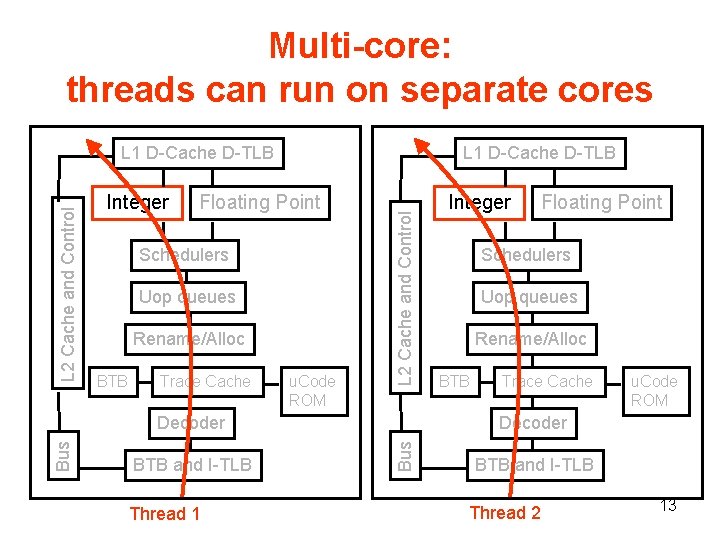

Multi-core: threads can run on separate cores Integer L 1 D Cache D TLB Floating Point Schedulers Uop queues Rename/Alloc BTB Trace Cache u. Code ROM L 2 Cache and Control L 1 D Cache D TLB BTB and I TLB Thread 1 Floating Point Schedulers Uop queues Rename/Alloc BTB Trace Cache u. Code ROM Decoder Bus Decoder Integer BTB and I TLB Thread 2 13

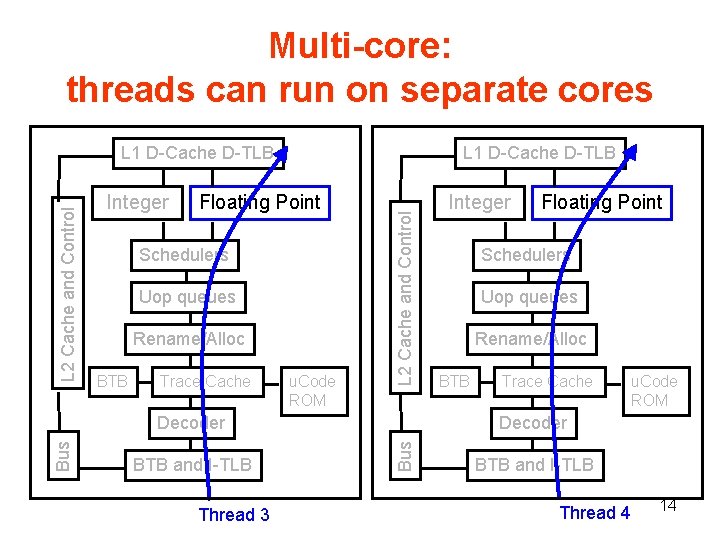

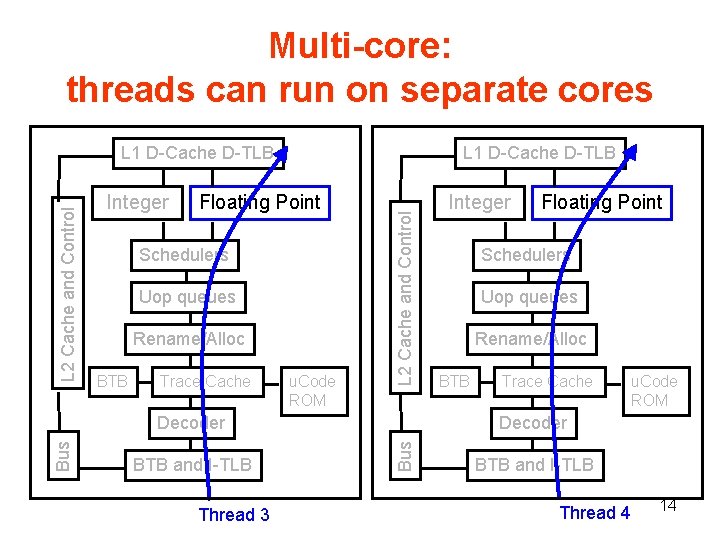

Multi-core: threads can run on separate cores Integer L 1 D Cache D TLB Floating Point Schedulers Uop queues Rename/Alloc BTB Trace Cache u. Code ROM L 2 Cache and Control L 1 D Cache D TLB BTB and I TLB Thread 3 Floating Point Schedulers Uop queues Rename/Alloc BTB Trace Cache u. Code ROM Decoder Bus Decoder Integer BTB and I TLB Thread 4 14

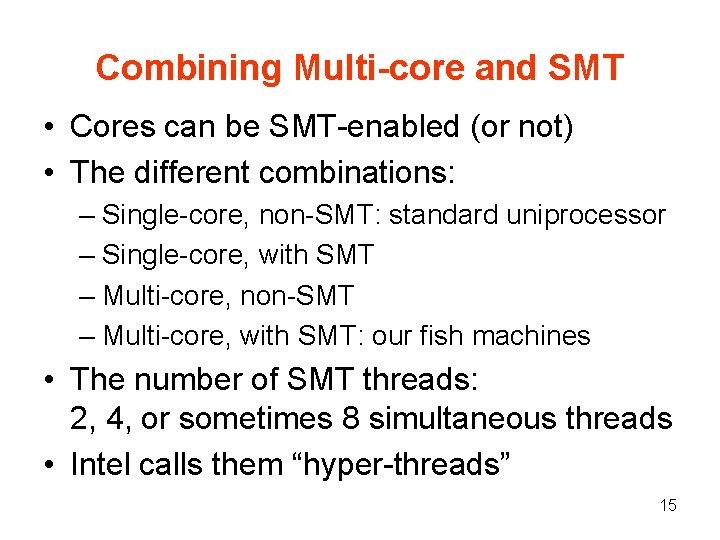

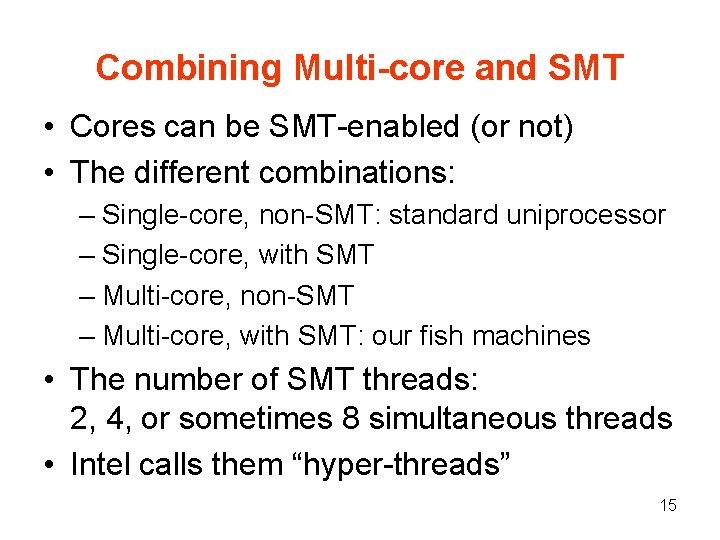

Combining Multi-core and SMT • Cores can be SMT enabled (or not) • The different combinations: – Single core, non SMT: standard uniprocessor – Single core, with SMT – Multi core, non SMT – Multi core, with SMT: our fish machines • The number of SMT threads: 2, 4, or sometimes 8 simultaneous threads • Intel calls them “hyper threads” 15

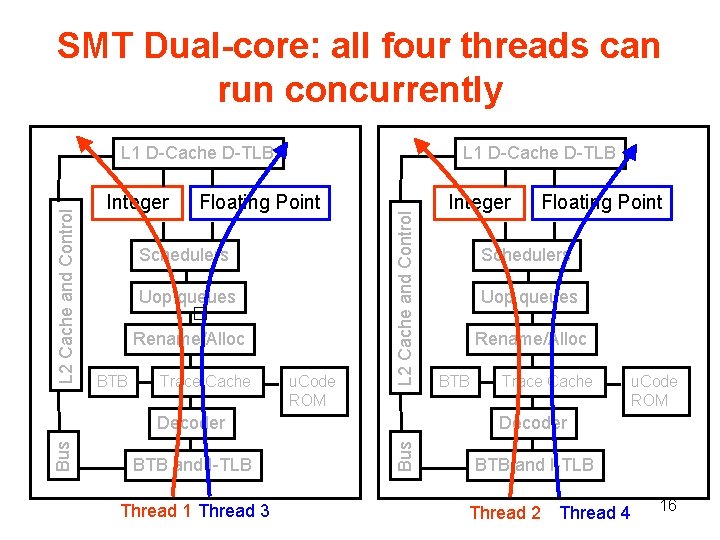

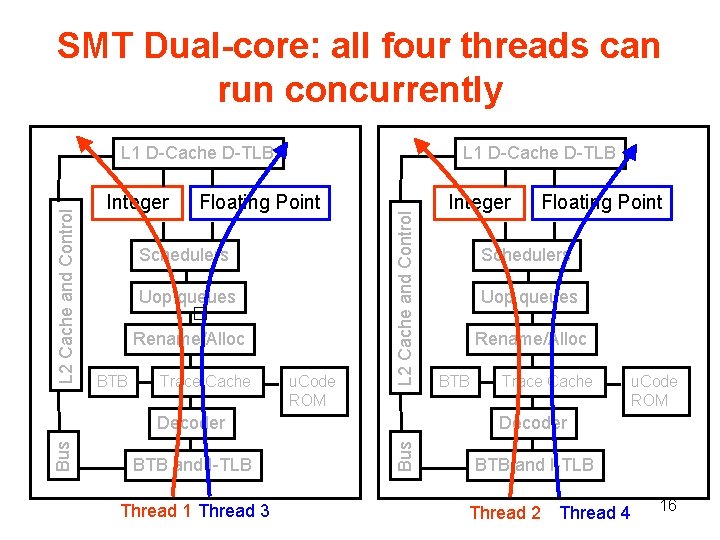

SMT Dual-core: all four threads can run concurrently Integer L 1 D Cache D TLB Floating Point Schedulers Uop queues � Rename/Alloc BTB Trace Cache u. Code ROM L 2 Cache and Control L 1 D Cache D TLB BTB and I TLB Thread 1 Thread 3 Floating Point Schedulers Uop queues Rename/Alloc BTB Trace Cache u. Code ROM Decoder Bus Decoder Integer BTB and I TLB Thread 2 Thread 4 16

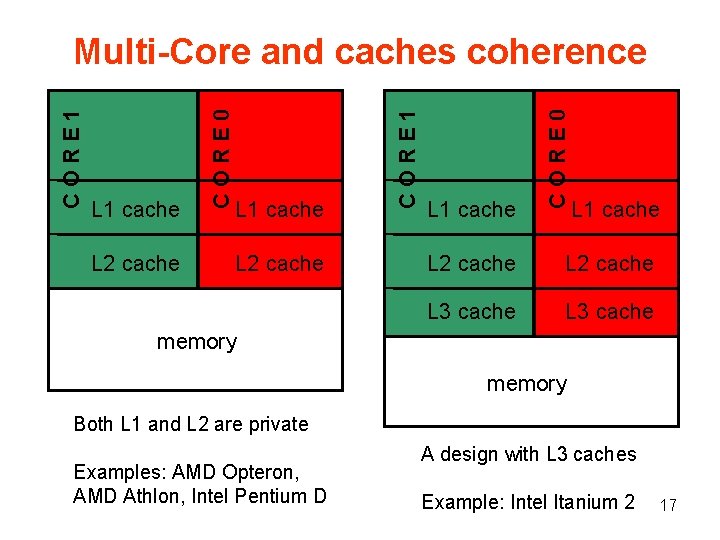

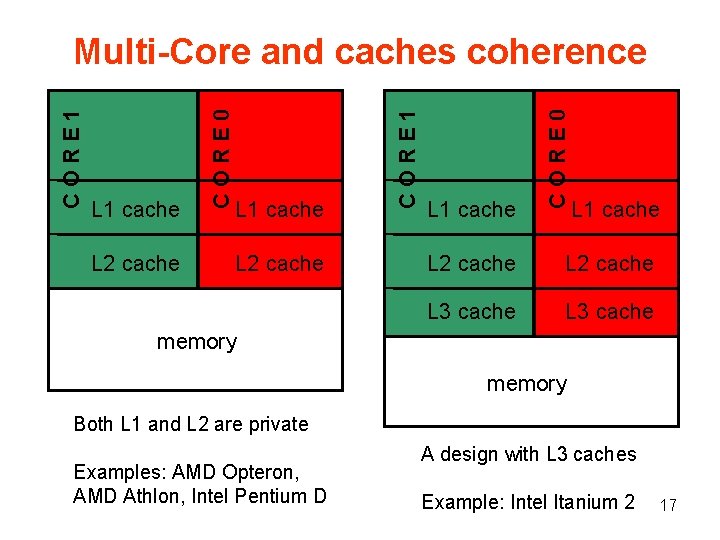

L 2 cache L 1 cache CORE 0 L 1 cache CORE 1 L 1 cache CORE 0 CORE 1 Multi-Core and caches coherence L 1 cache L 2 cache L 3 cache memory Both L 1 and L 2 are private Examples: AMD Opteron, AMD Athlon, Intel Pentium D A design with L 3 caches Example: Intel Itanium 2 17

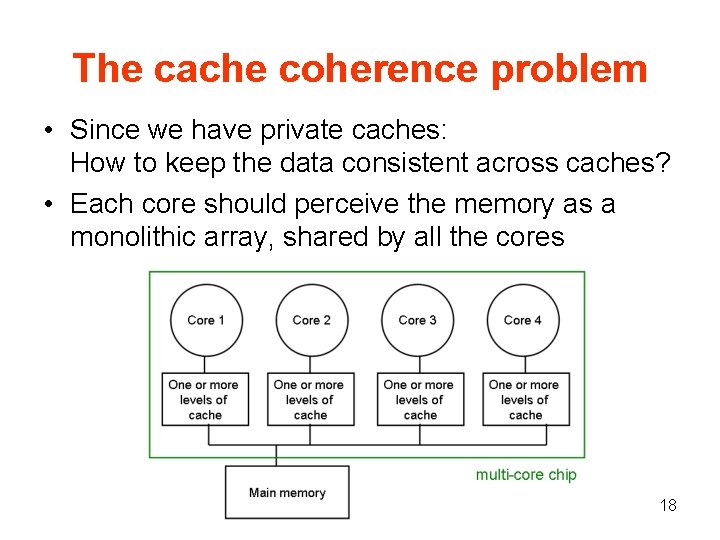

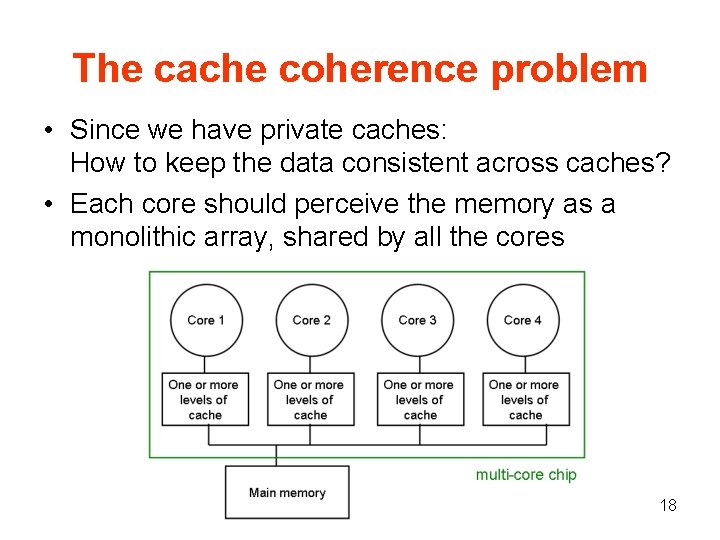

The cache coherence problem • Since we have private caches: How to keep the data consistent across caches? • Each core should perceive the memory as a monolithic array, shared by all the cores 18

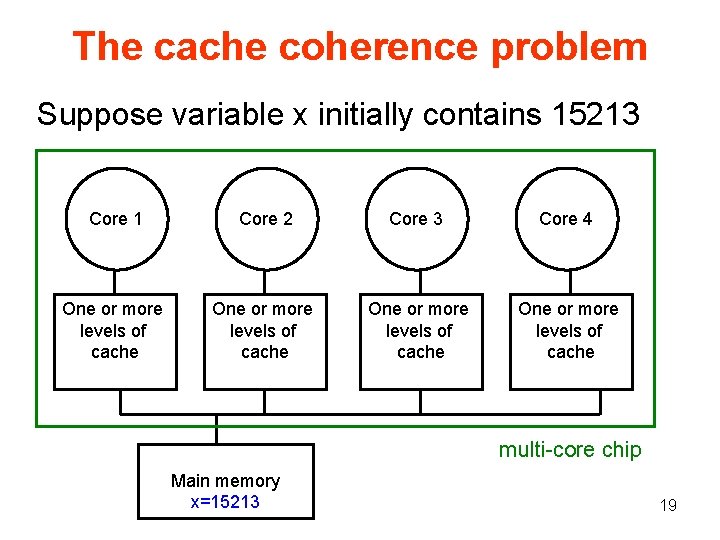

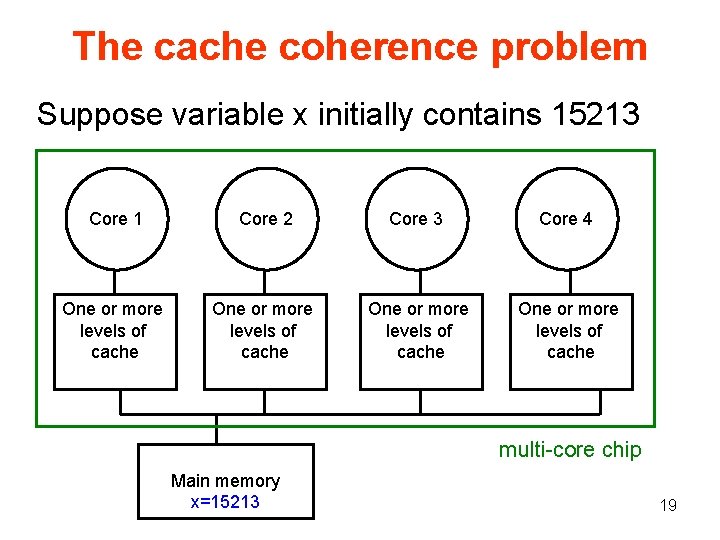

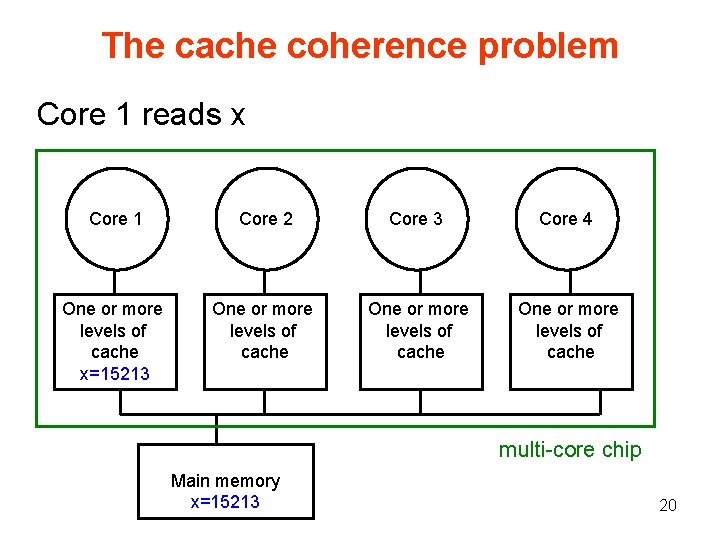

The cache coherence problem Suppose variable x initially contains 15213 Core 1 Core 2 Core 3 Core 4 One or more levels of cache multi core chip Main memory x=15213 19

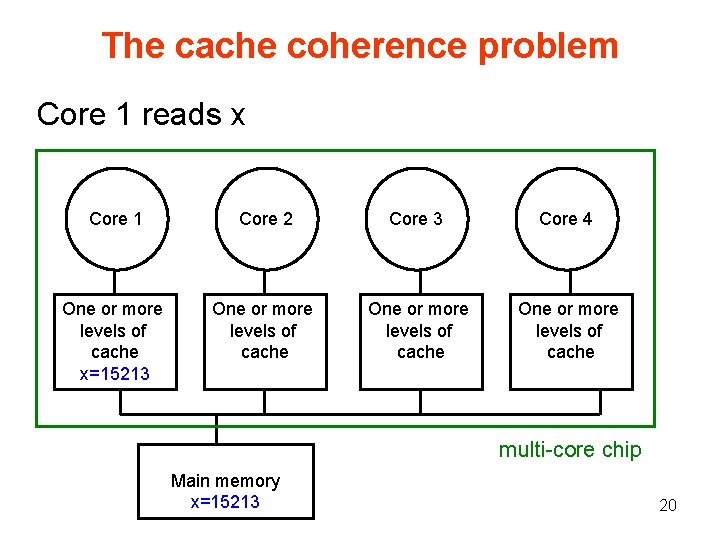

The cache coherence problem Core 1 reads x Core 1 Core 2 Core 3 Core 4 One or more levels of cache x=15213 One or more levels of cache multi core chip Main memory x=15213 20

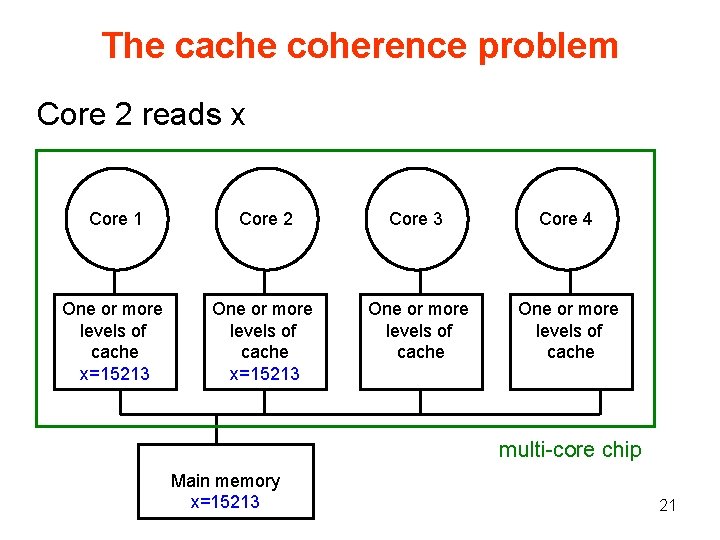

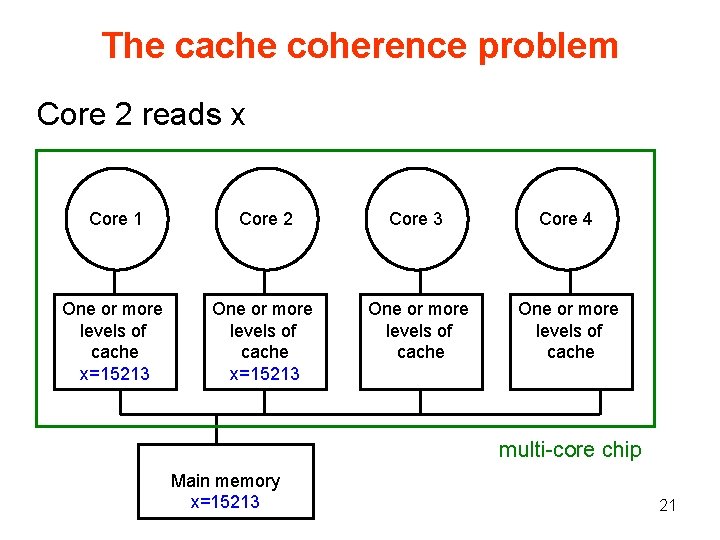

The cache coherence problem Core 2 reads x Core 1 Core 2 Core 3 Core 4 One or more levels of cache x=15213 One or more levels of cache multi core chip Main memory x=15213 21

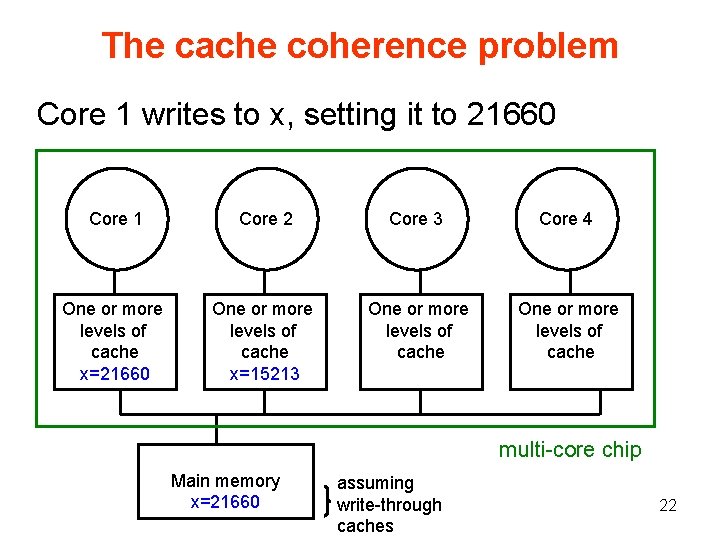

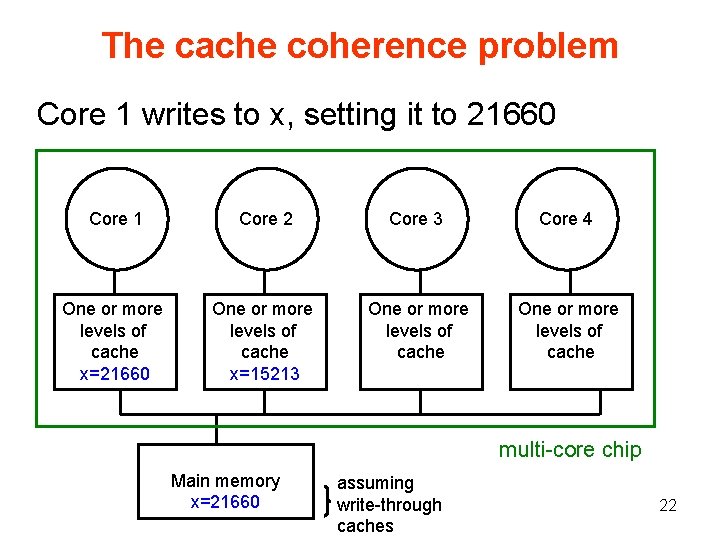

The cache coherence problem Core 1 writes to x, setting it to 21660 Core 1 Core 2 Core 3 Core 4 One or more levels of cache x=21660 One or more levels of cache x=15213 One or more levels of cache multi core chip Main memory x=21660 assuming write through caches 22

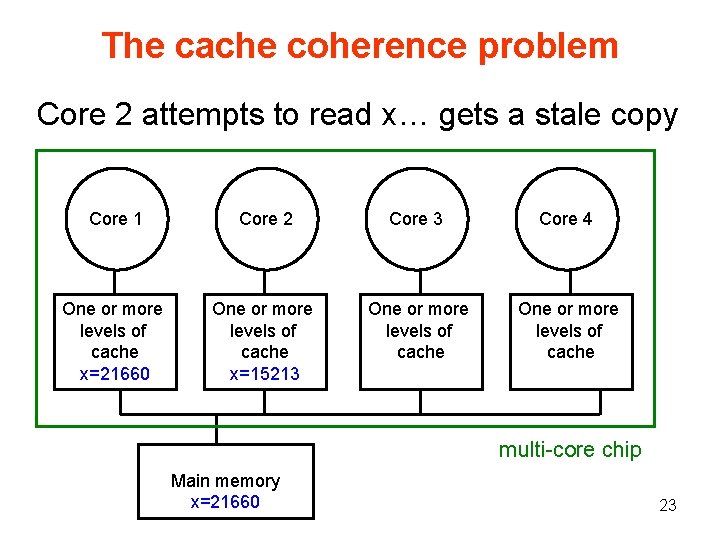

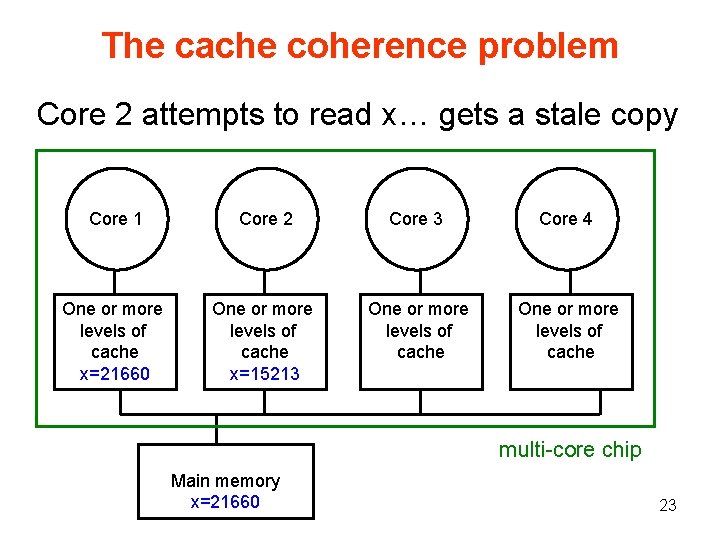

The cache coherence problem Core 2 attempts to read x… gets a stale copy Core 1 Core 2 Core 3 Core 4 One or more levels of cache x=21660 One or more levels of cache x=15213 One or more levels of cache multi core chip Main memory x=21660 23

The Memory Wall Problem 24

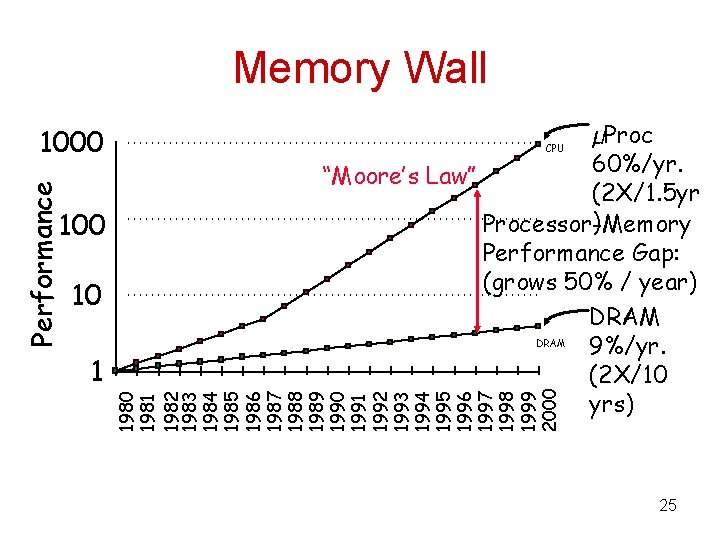

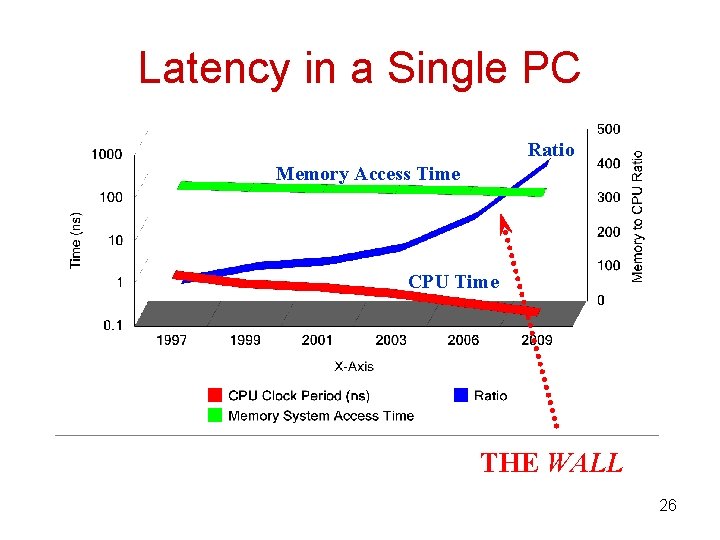

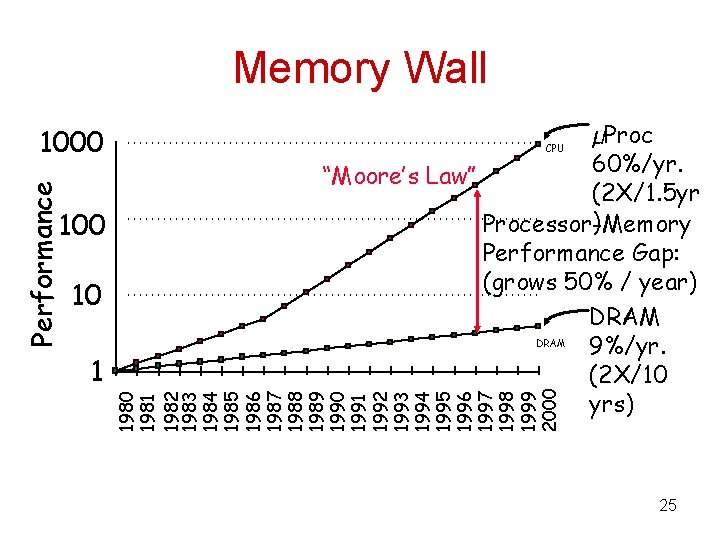

Memory Wall 100 10 1 µProc 60%/yr. “Moore’s Law” (2 X/1. 5 yr ) Processor-Memory Performance Gap: (grows 50% / year) DRAM 9%/yr. (2 X/10 yrs) CPU 1980 1981 1982 1983 1984 1985 1986 1987 1988 1989 1990 1991 1992 1993 1994 1995 1996 1997 1998 1999 2000 Performance 1000 25

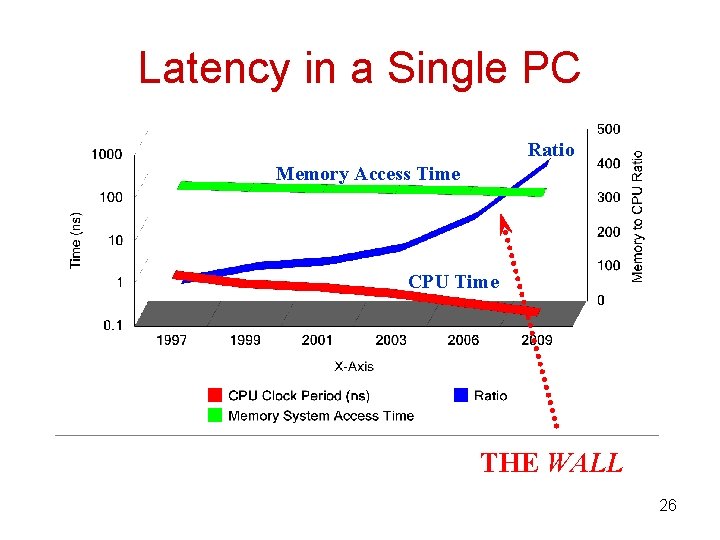

Latency in a Single PC Ratio Memory Access Time CPU Time THE WALL 26

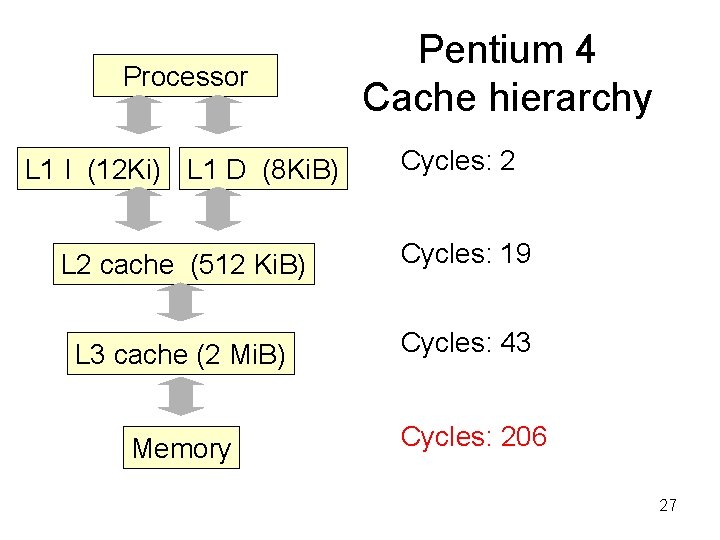

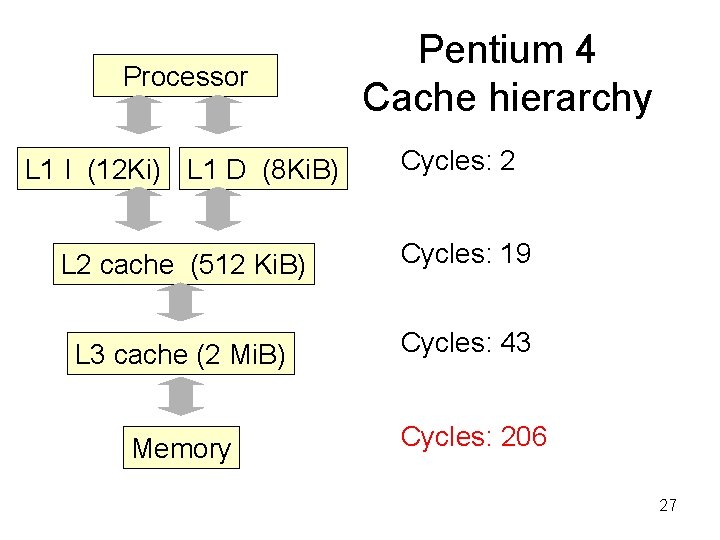

Processor Pentium 4 Cache hierarchy L 1 I (12 Ki) L 1 D (8 Ki. B) Cycles: 2 L 2 cache (512 Ki. B) Cycles: 19 L 3 cache (2 Mi. B) Cycles: 43 Memory Cycles: 206 27

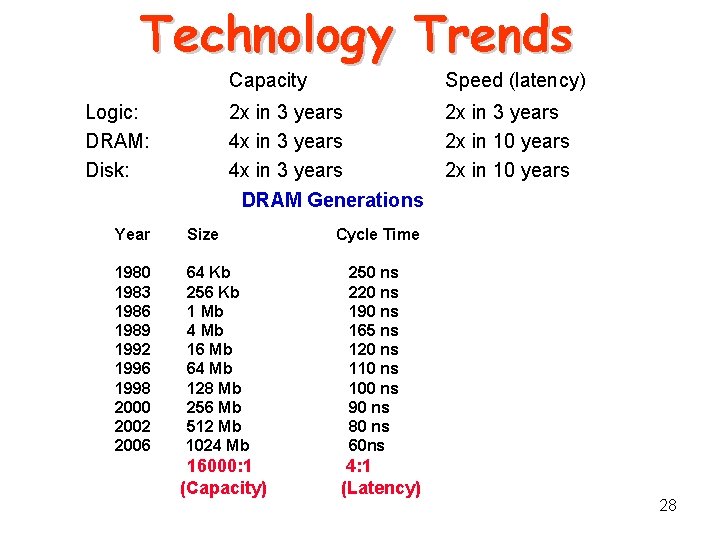

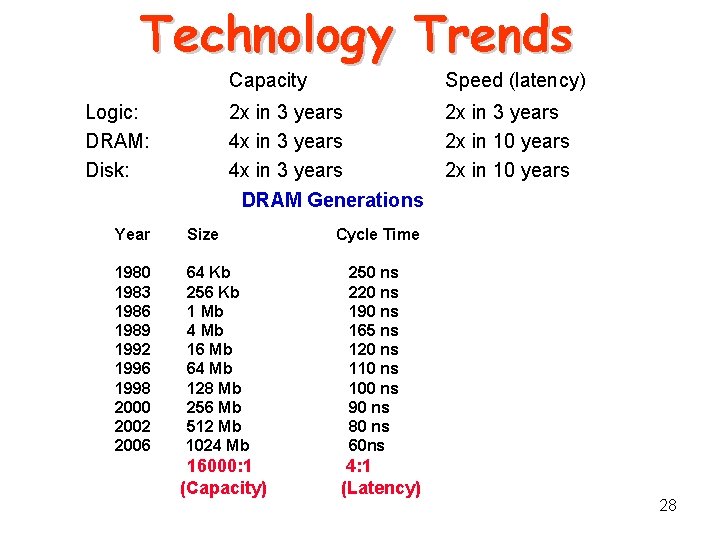

Technology Trends Logic: DRAM: Disk: Capacity Speed (latency) 2 x in 3 years 4 x in 3 years 2 x in 10 years DRAM Generations Year Size 1980 1983 1986 1989 1992 1996 1998 2000 2002 2006 64 Kb 256 Kb 1 Mb 4 Mb 16 Mb 64 Mb 128 Mb 256 Mb 512 Mb 1024 Mb 16000: 1 (Capacity) Cycle Time 250 ns 220 ns 190 ns 165 ns 120 ns 110 ns 100 ns 90 ns 80 ns 60 ns 4: 1 (Latency) 28

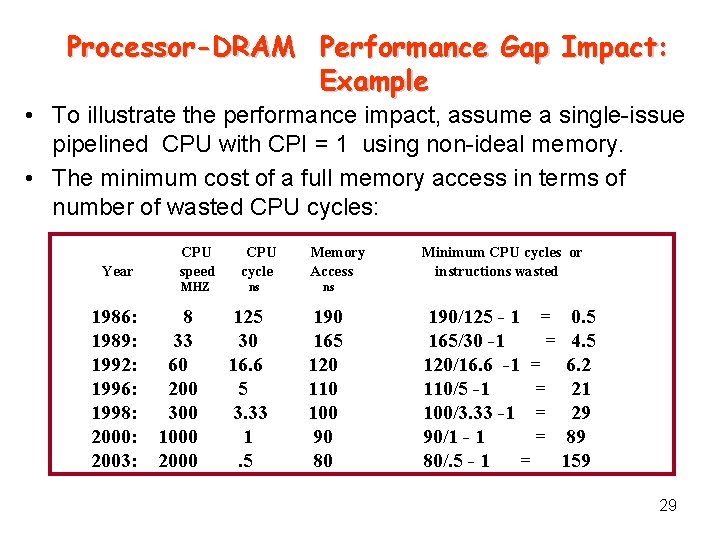

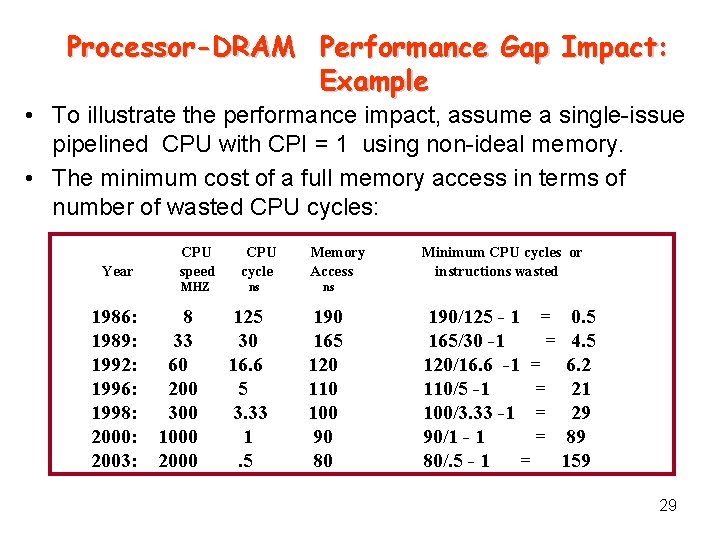

Processor-DRAM Performance Gap Impact: Example • To illustrate the performance impact, assume a single issue pipelined CPU with CPI = 1 using non ideal memory. • The minimum cost of a full memory access in terms of number of wasted CPU cycles: Year CPU speed MHZ 1986: 8 1989: 33 1992: 60 1996: 200 1998: 300 2000: 1000 2003: 2000 CPU cycle ns 125 30 16. 6 5 3. 33 1. 5 Memory Access Minimum CPU cycles or instructions wasted ns 190 165 120 110 100 90 80 190/125 - 1 = 0. 5 165/30 -1 = 4. 5 120/16. 6 -1 = 6. 2 110/5 -1 = 21 100/3. 33 -1 = 29 90/1 - 1 = 89 80/. 5 - 1 = 159 29

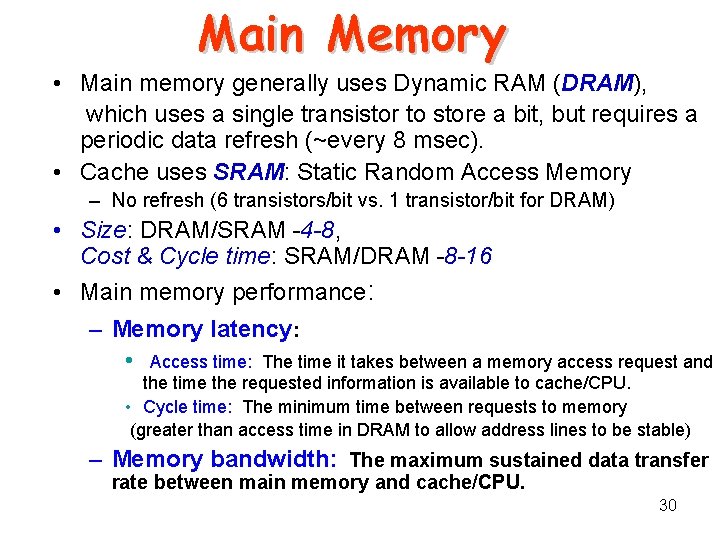

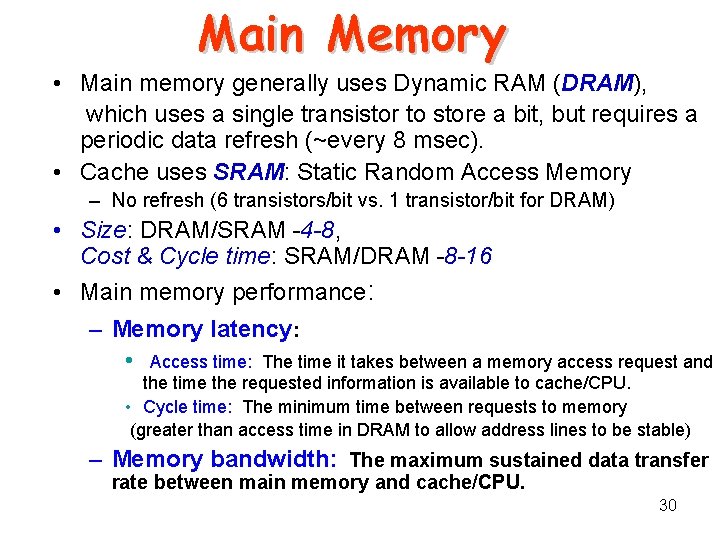

Main Memory • Main memory generally uses Dynamic RAM (DRAM), which uses a single transistor to store a bit, but requires a periodic data refresh (~every 8 msec). • Cache uses SRAM: Static Random Access Memory – No refresh (6 transistors/bit vs. 1 transistor/bit for DRAM) • Size: DRAM/SRAM 4 -8, Cost & Cycle time: SRAM/DRAM 8 -16 • Main memory performance: – Memory latency: • Access time: The time it takes between a memory access request and the time the requested information is available to cache/CPU. • Cycle time: The minimum time between requests to memory (greater than access time in DRAM to allow address lines to be stable) – Memory bandwidth: The maximum sustained data transfer rate between main memory and cache/CPU. 30

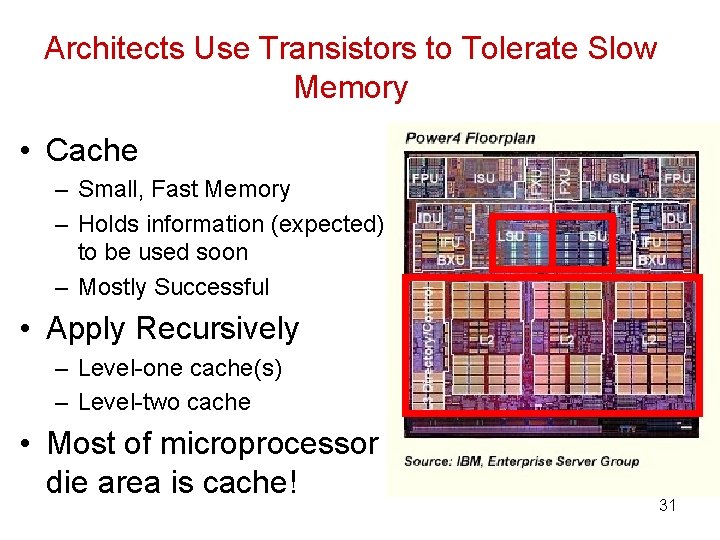

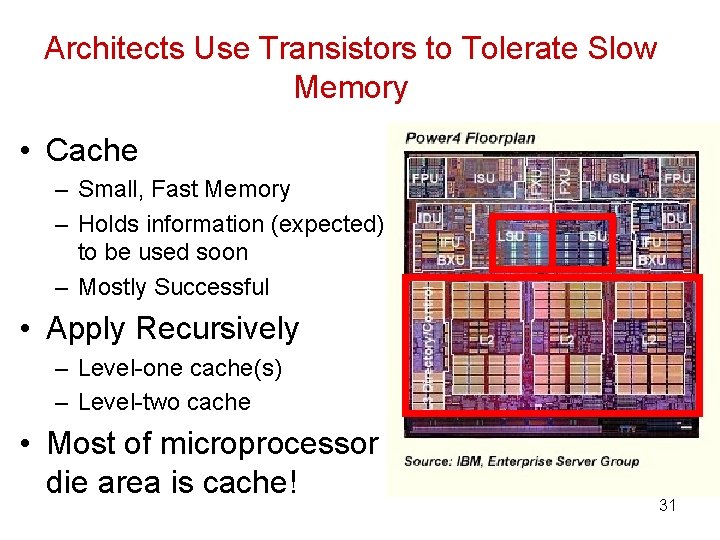

Architects Use Transistors to Tolerate Slow Memory • Cache – Small, Fast Memory – Holds information (expected) to be used soon – Mostly Successful • Apply Recursively – Level one cache(s) – Level two cache • Most of microprocessor die area is cache! 31