Pipelined Processor Design: COE 308 Computer Architecture

- Slides: 59

Pipelined Processor Design
COE 308 Computer Architecture
Prof. Muhamed Mudawar
Computer Engineering Department
King Fahd University of Petroleum and Minerals

Presentation Outline

- Pipelining versus Serial Execution
- Pipelined Datapath
- Pipelined Control
- Pipeline Hazards
- Data Hazards and Forwarding
- Load Delay, Hazard Detection, and Stall Unit
- Control Hazards
- Delayed Branch and Dynamic Branch Prediction

Pipelined Processor Design COE 308 – Computer Architecture © Muhamed Mudawar – slide 2

Pipelining Example

- Laundry example with three stages:
  1. Wash a dirty load of clothes
  2. Dry the wet clothes
  3. Fold and put the clothes into drawers
- Each stage takes 30 minutes to complete
- Four loads of clothes (A, B, C, D) to wash, dry, and fold

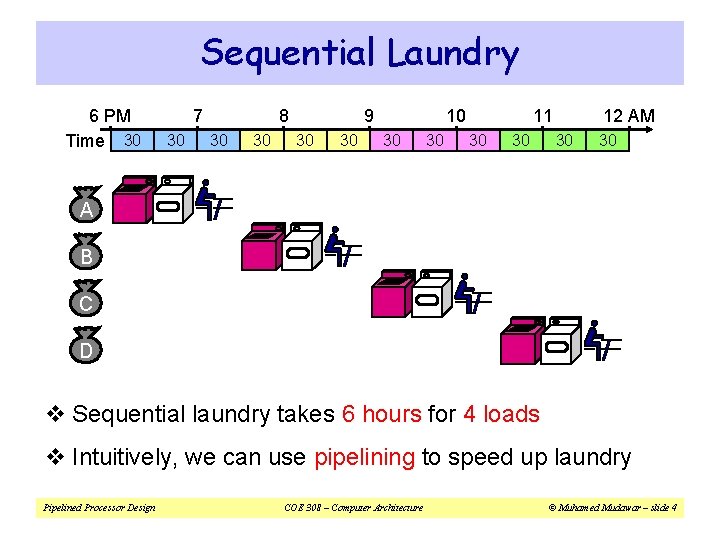

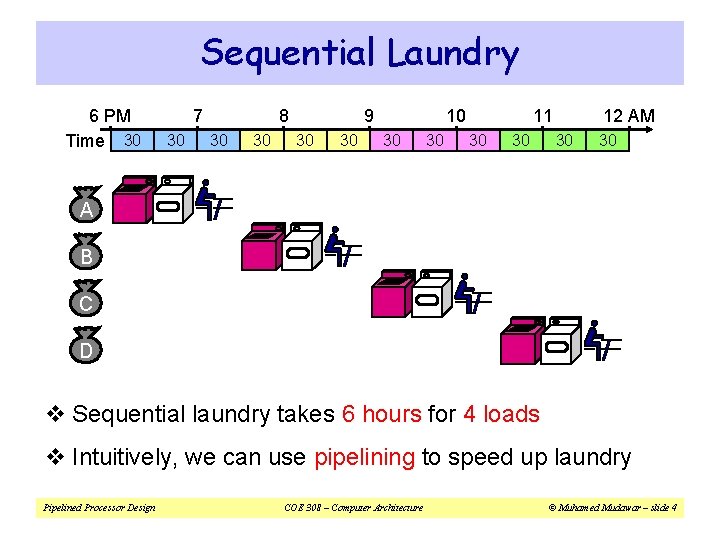

Sequential Laundry

[Timing diagram: loads A, B, C, D run one after another from 6 PM to 12 AM, each load taking three 30-minute stages]

- Sequential laundry takes 6 hours for 4 loads
- Intuitively, we can use pipelining to speed up laundry

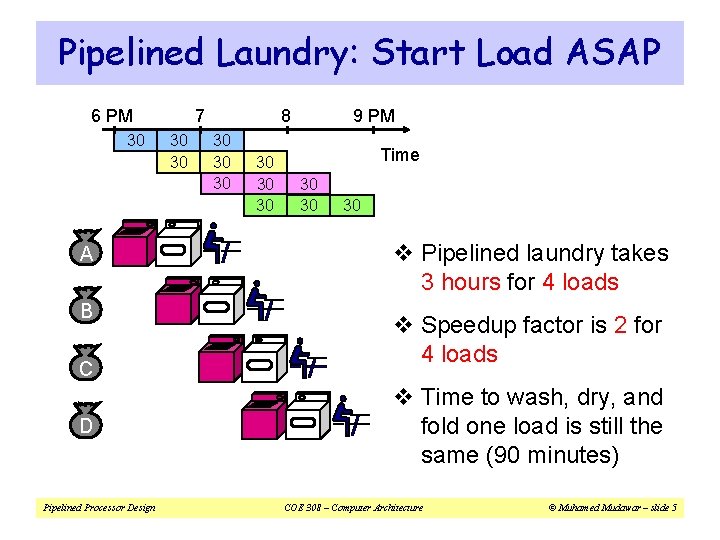

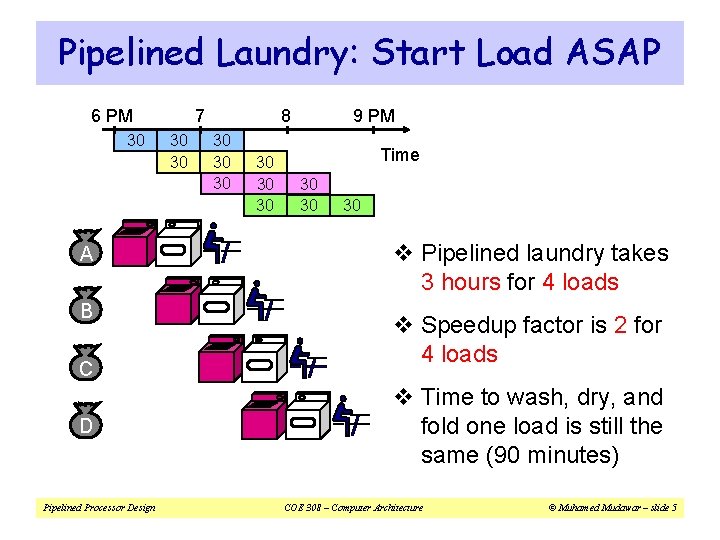

Pipelined Laundry: Start Load ASAP

[Timing diagram: loads A, B, C, D overlap in 30-minute steps from 6 PM to 9 PM]

- Pipelined laundry takes 3 hours for 4 loads
- Speedup factor is 2 for 4 loads
- Time to wash, dry, and fold one load is still the same (90 minutes)

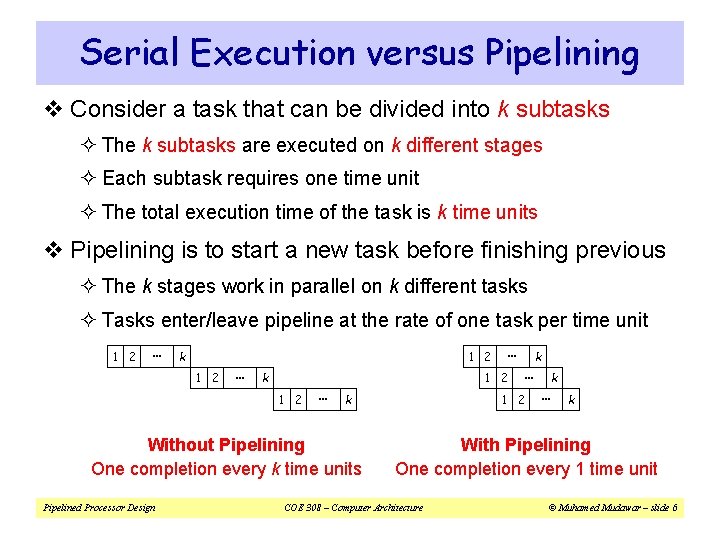

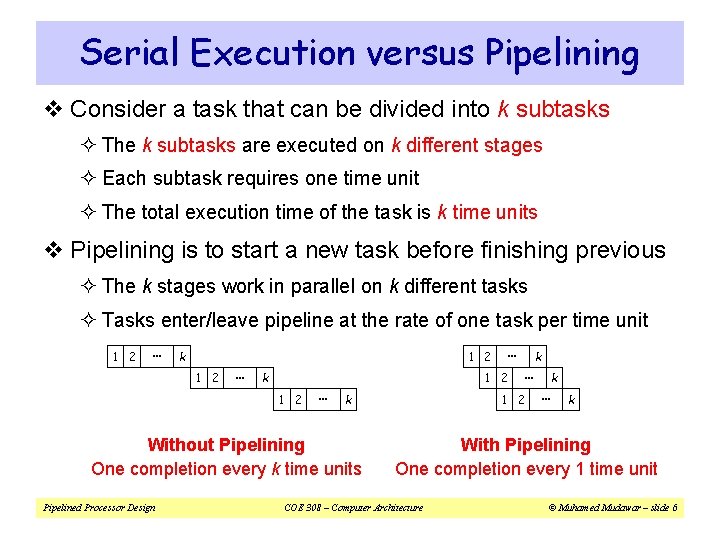

Serial Execution versus Pipelining

- Consider a task that can be divided into k subtasks
  - The k subtasks are executed on k different stages
  - Each subtask requires one time unit
  - The total execution time of the task is k time units
- Pipelining starts a new task before the previous one has finished
  - The k stages work in parallel on k different tasks
  - Tasks enter and leave the pipeline at the rate of one task per time unit
- Without pipelining: one completion every k time units
- With pipelining: one completion every 1 time unit

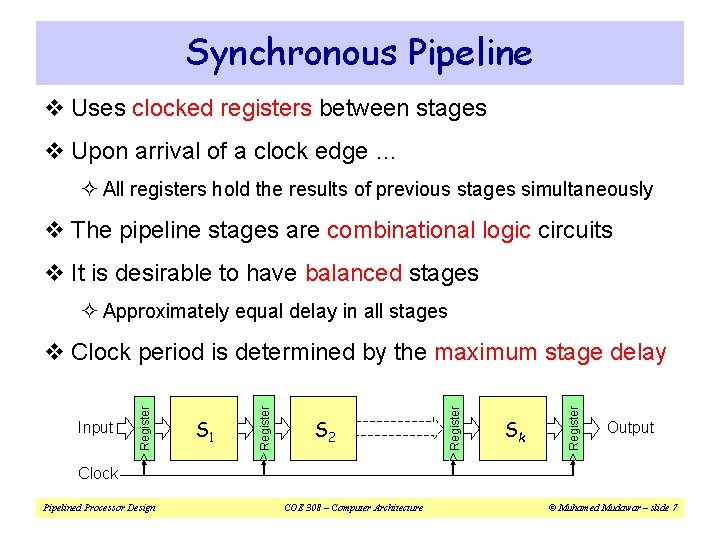

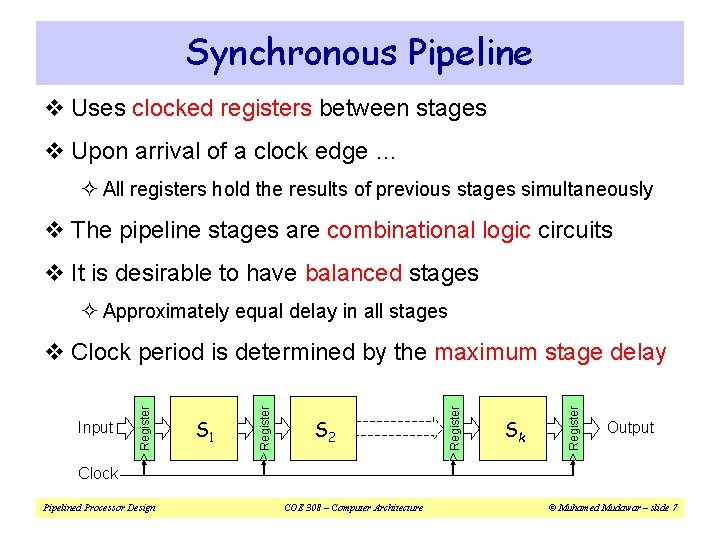

Synchronous Pipeline

- Uses clocked registers between stages
- Upon arrival of a clock edge, all registers hold the results of previous stages simultaneously
- The pipeline stages are combinational logic circuits
- It is desirable to have balanced stages
  - Approximately equal delay in all stages
- Clock period is determined by the maximum stage delay

[Diagram: stages S1, S2, ..., Sk separated by clocked registers between Input and Output, all driven by a common Clock]

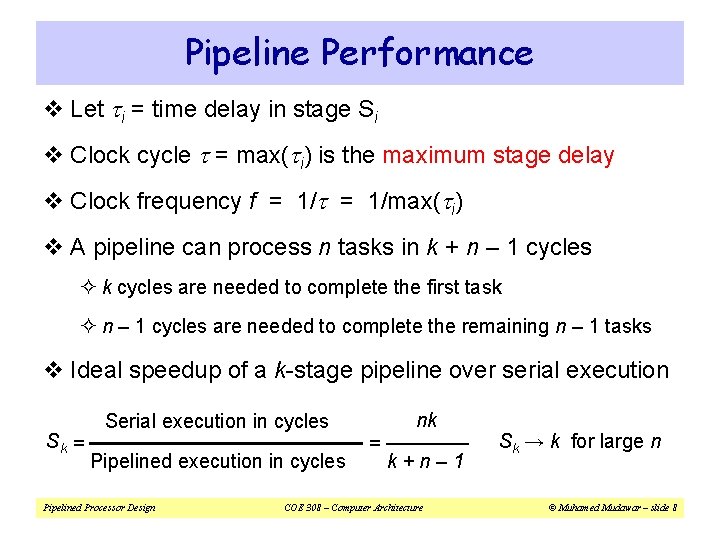

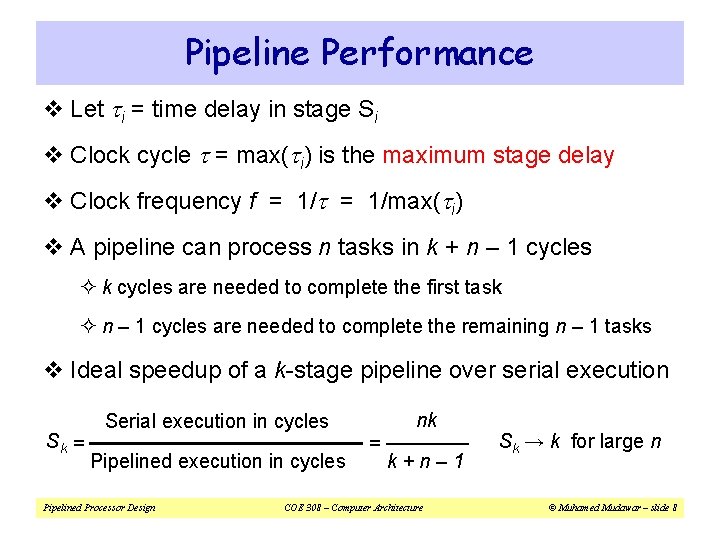

Pipeline Performance

- Let $t_i$ = time delay in stage $S_i$
- Clock cycle $t = \max(t_i)$ is the maximum stage delay
- Clock frequency $f = 1/t = 1/\max(t_i)$
- A pipeline can process n tasks in k + n - 1 cycles
  - k cycles are needed to complete the first task
  - n - 1 cycles are needed to complete the remaining n - 1 tasks
- Ideal speedup of a k-stage pipeline over serial execution:

$$S_k = \frac{\text{Serial execution in cycles}}{\text{Pipelined execution in cycles}} = \frac{nk}{k + n - 1}, \qquad S_k \to k \text{ for large } n$$
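The cycle counts and the ideal speedup formula can be checked numerically. The following is a minimal Python sketch; the function names are illustrative and not part of the slides. With k = 3 and n = 4 it reproduces the laundry example (6 time slots of 30 minutes, speedup 2).

```python
def serial_cycles(k: int, n: int) -> int:
    """Cycles to run n tasks one after another, each taking k cycles."""
    return n * k


def pipeline_cycles(k: int, n: int) -> int:
    """Cycles for n tasks on a k-stage pipeline:
    k cycles for the first task, then one more cycle per remaining task."""
    return k + n - 1


def ideal_speedup(k: int, n: int) -> float:
    """S_k = nk / (k + n - 1); approaches k as n grows."""
    return serial_cycles(k, n) / pipeline_cycles(k, n)


if __name__ == "__main__":
    # Laundry example: 3 stages, 4 loads
    print(pipeline_cycles(3, 4), ideal_speedup(3, 4))
    # A 5-stage pipeline gets closer to a speedup of 5 as n grows
    for n in (10, 1_000, 100_000):
        print(n, ideal_speedup(5, n))
```

The speedup only approaches k when n is large, because the k - 1 cycles spent filling the pipeline are amortized over more tasks.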

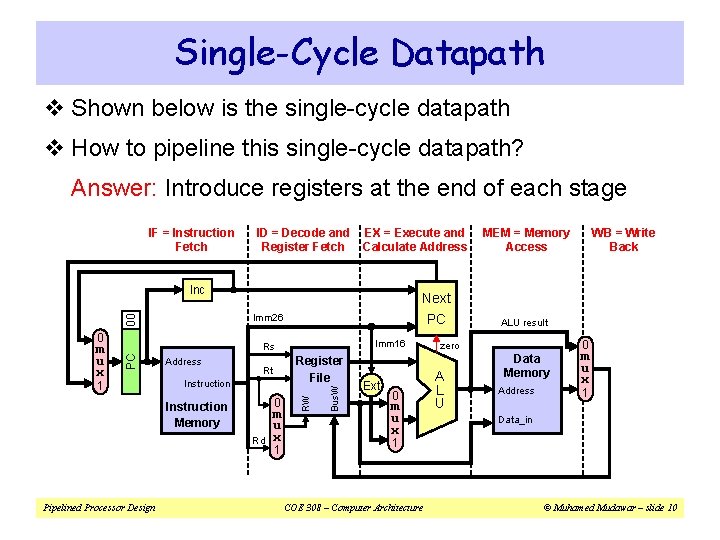

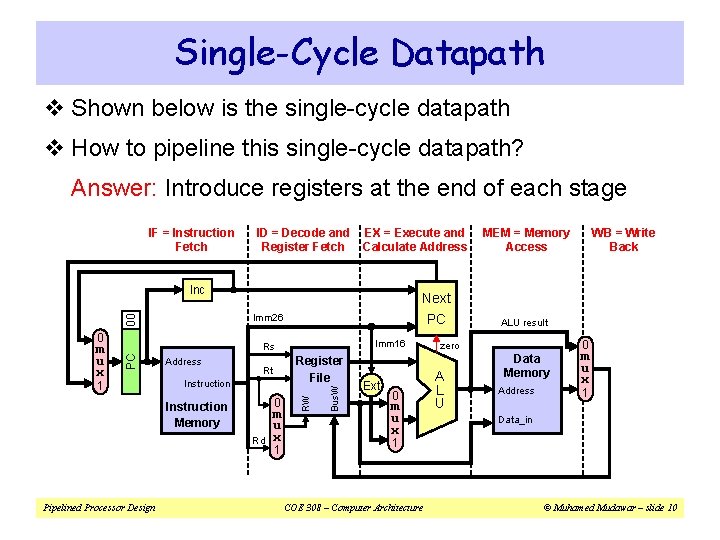

Single-Cycle Datapath

- Shown below is the single-cycle datapath
- How to pipeline this single-cycle datapath?
  - Answer: introduce registers at the end of each stage
- The five stages:
  - IF = Instruction Fetch
  - ID = Decode and Register Fetch
  - EX = Execute and Calculate Address
  - MEM = Memory Access
  - WB = Write Back

[Datapath diagram: PC, Instruction Memory, Register File, Ext, ALU, Data Memory, Next PC, and multiplexers]

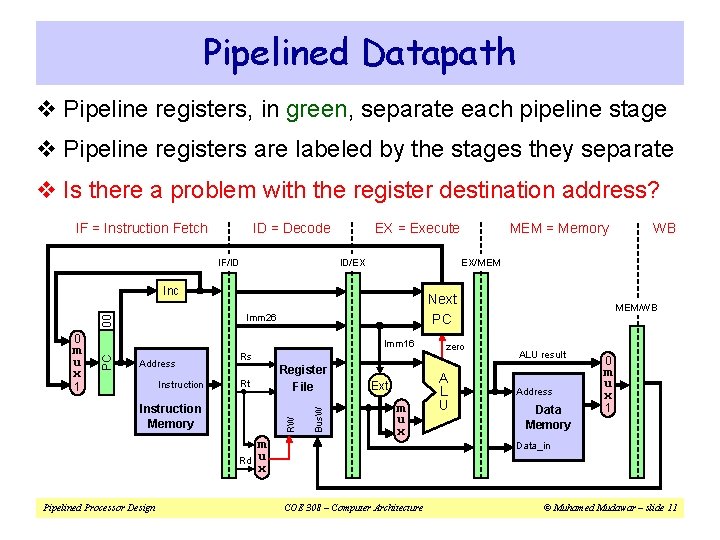

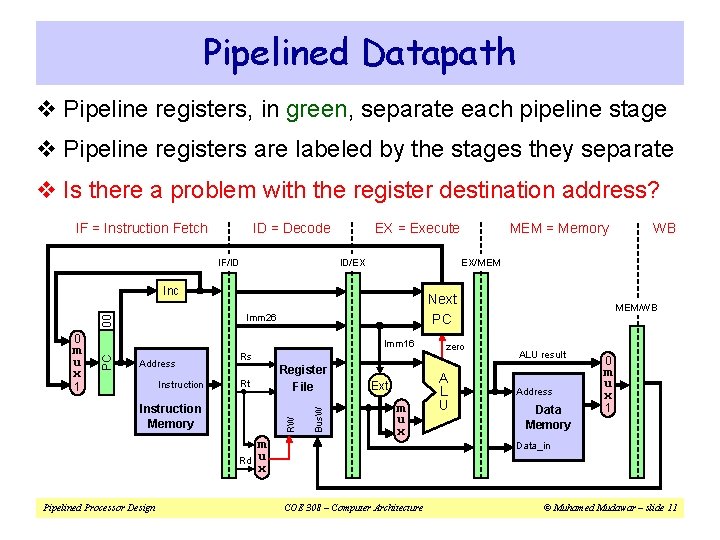

Pipelined Datapath

- Pipeline registers, in green, separate each pipeline stage
- Pipeline registers are labeled by the stages they separate: IF/ID, ID/EX, EX/MEM, MEM/WB
- Is there a problem with the register destination address?

[Datapath diagram: the single-cycle datapath divided into IF, ID, EX, MEM, and WB stages by the pipeline registers]

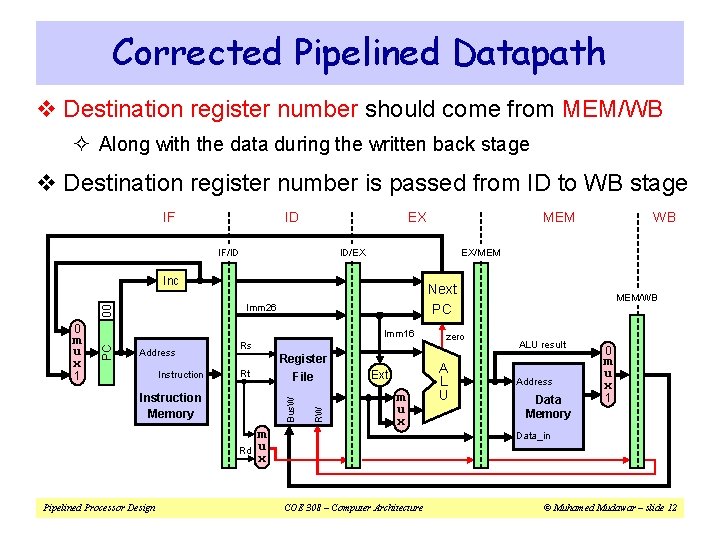

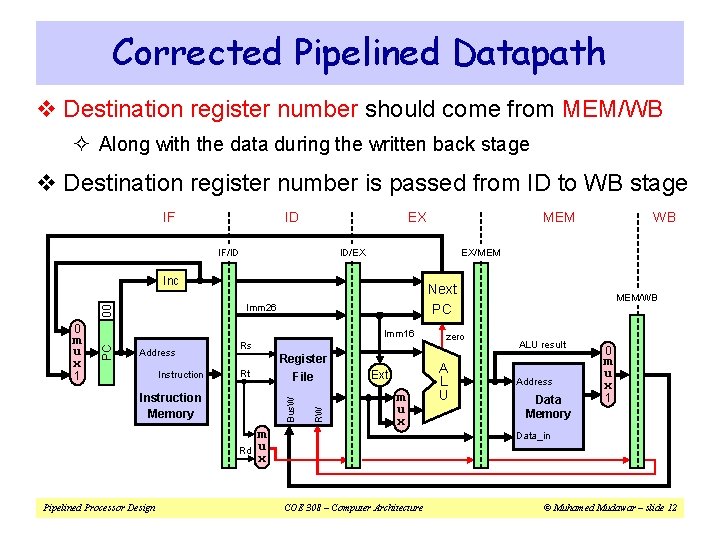

Corrected Pipelined Datapath

- Destination register number should come from MEM/WB
  - Along with the data during the write back stage
- Destination register number is passed from the ID stage to the WB stage

[Datapath diagram: the destination register number travels through ID/EX, EX/MEM, and MEM/WB back to the RW input of the Register File]

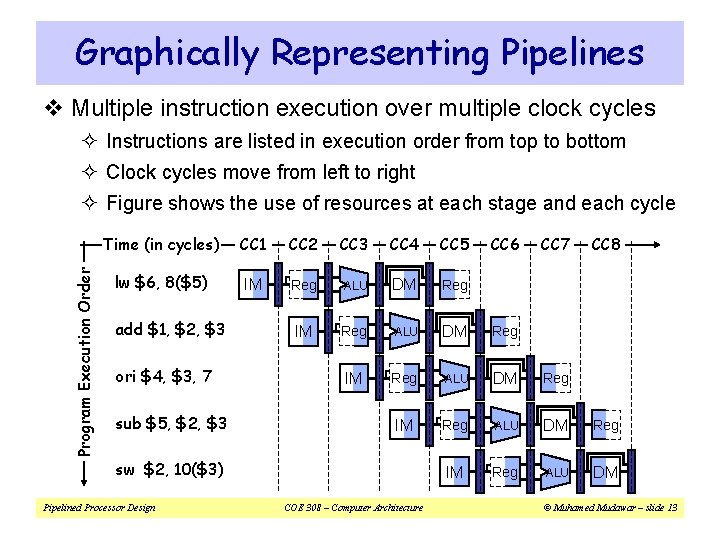

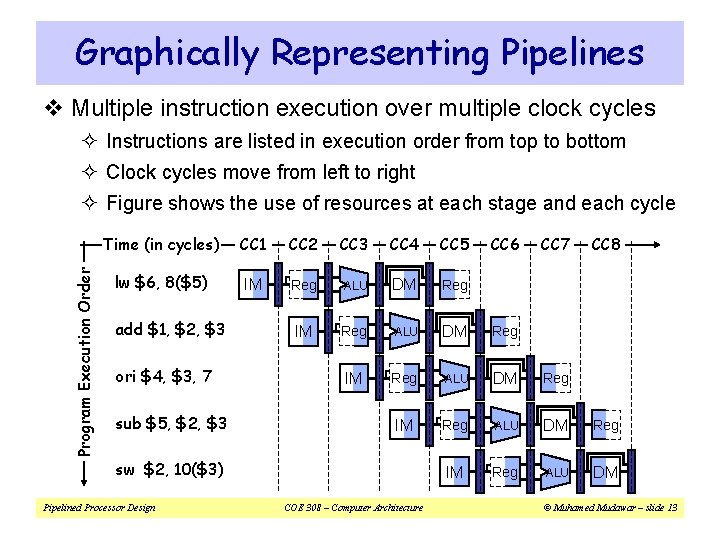

Graphically Representing Pipelines

- Multiple instruction execution over multiple clock cycles
  - Instructions are listed in execution order from top to bottom
  - Clock cycles move from left to right
  - Figure shows the use of resources at each stage and each cycle

[Figure: program execution order versus time in cycles (CC 1 to CC 8); each instruction uses IM, Reg, ALU, DM, Reg in successive cycles]

    lw  $6, 8($5)
    add $1, $2, $3
    ori $4, $3, 7
    sub $5, $2, $3
    sw  $2, 10($3)

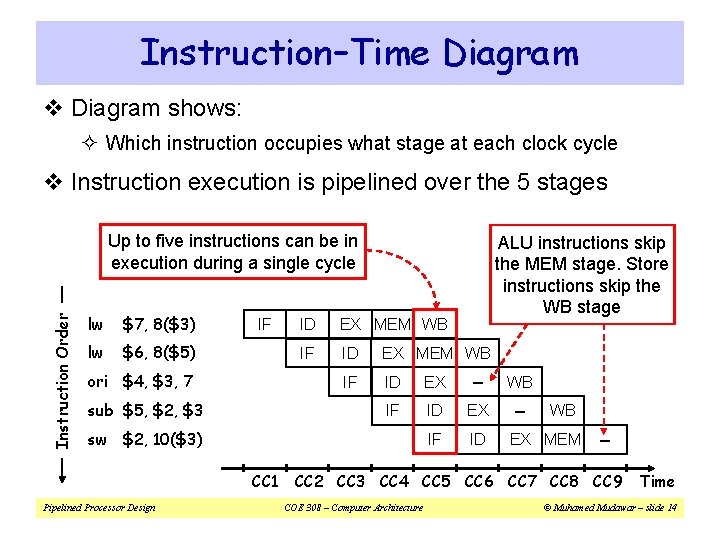

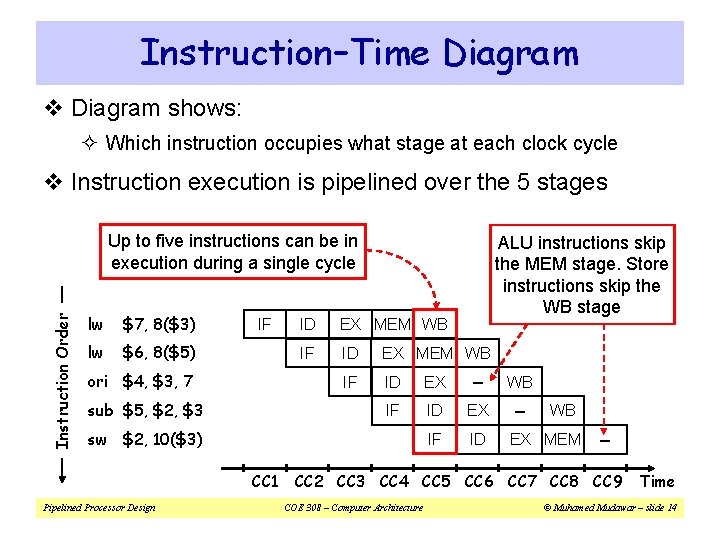

Instruction–Time Diagram

- Diagram shows which instruction occupies what stage at each clock cycle
- Instruction execution is pipelined over the 5 stages
- Up to five instructions can be in execution during a single cycle
- ALU instructions skip the MEM stage
- Store instructions skip the WB stage

| Instruction | CC 1 | CC 2 | CC 3 | CC 4 | CC 5 | CC 6 | CC 7 | CC 8 | CC 9 |
|---|---|---|---|---|---|---|---|---|---|
| lw $7, 8($3) | IF | ID | EX | MEM | WB | | | | |
| lw $6, 8($5) | | IF | ID | EX | MEM | WB | | | |
| ori $4, $3, 7 | | | IF | ID | EX | – | WB | | |
| sub $5, $2, $3 | | | | IF | ID | EX | – | WB | |
| sw $2, 10($3) | | | | | IF | ID | EX | MEM | – |

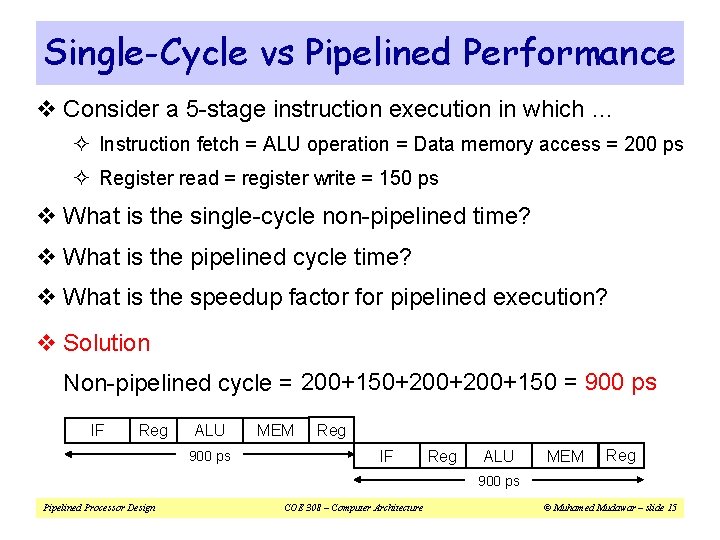

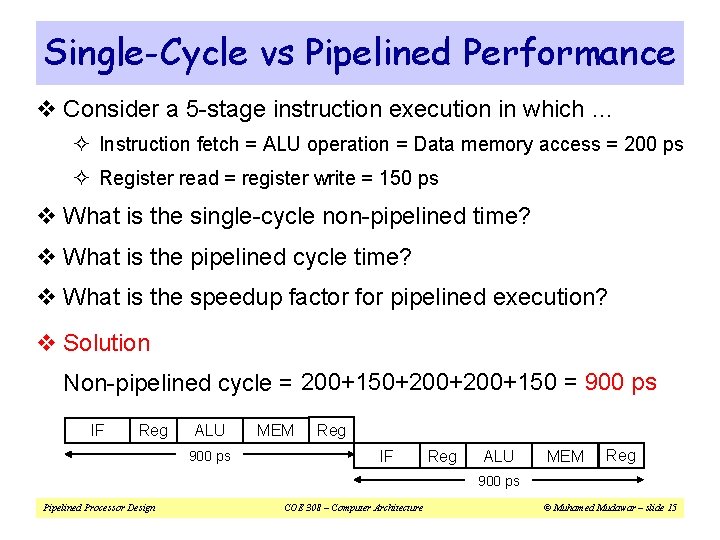

Single-Cycle vs Pipelined Performance

- Consider a 5-stage instruction execution in which:
  - Instruction fetch = ALU operation = Data memory access = 200 ps
  - Register read = register write = 150 ps
- What is the single-cycle non-pipelined time?
- What is the pipelined cycle time?
- What is the speedup factor for pipelined execution?
- Solution: Non-pipelined cycle = 200 + 150 + 200 + 200 + 150 = 900 ps

[Timing diagram: each instruction passes through IF, Reg, ALU, MEM, Reg within one 900 ps cycle]

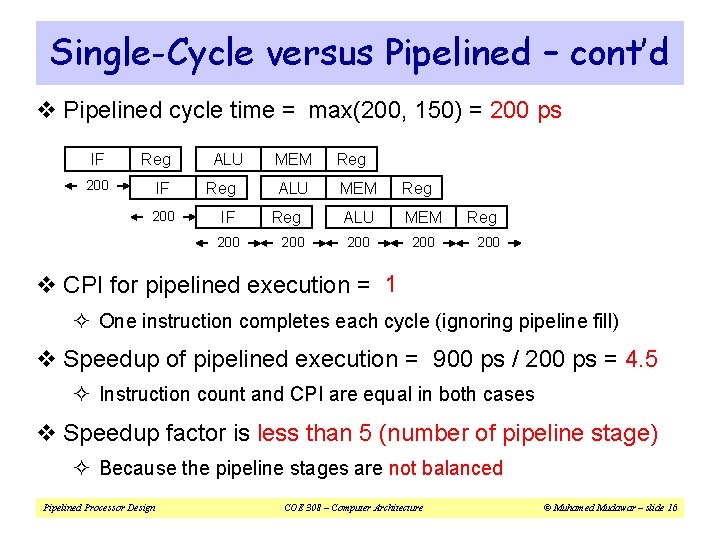

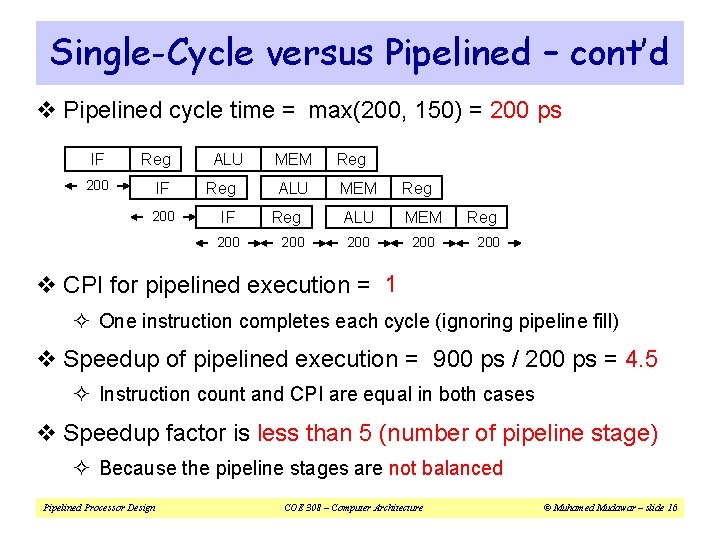

Single-Cycle versus Pipelined – cont’d

- Pipelined cycle time = max(200, 150) = 200 ps

[Timing diagram: instructions overlap, each stage (IF, Reg, ALU, MEM, Reg) occupying one 200 ps cycle]

- CPI for pipelined execution = 1
  - One instruction completes each cycle (ignoring pipeline fill)
- Speedup of pipelined execution = 900 ps / 200 ps = 4.5
  - Instruction count and CPI are equal in both cases
- Speedup factor is less than 5 (the number of pipeline stages)
  - Because the pipeline stages are not balanced
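The arithmetic of this example can be reproduced with a short Python sketch. The dictionary and function names below are illustrative assumptions; only the stage delays come from the example.

```python
# Stage delays from the example, in picoseconds
STAGE_DELAYS_PS = {
    "instruction fetch": 200,
    "register read": 150,
    "ALU operation": 200,
    "data memory access": 200,
    "register write": 150,
}


def single_cycle_time(delays: dict) -> int:
    """A single-cycle design must fit every stage into one clock cycle."""
    return sum(delays.values())


def pipelined_cycle_time(delays: dict) -> int:
    """A pipelined design is clocked by its slowest stage."""
    return max(delays.values())


def speedup(delays: dict) -> float:
    """Ratio of cycle times, valid when instruction count and CPI are equal."""
    return single_cycle_time(delays) / pipelined_cycle_time(delays)


if __name__ == "__main__":
    print(single_cycle_time(STAGE_DELAYS_PS))     # non-pipelined cycle in ps
    print(pipelined_cycle_time(STAGE_DELAYS_PS))  # pipelined cycle in ps
    print(speedup(STAGE_DELAYS_PS))
```

If all five stages took 200 ps, the same functions would give a speedup of exactly 5, which illustrates why unbalanced stages cost performance.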

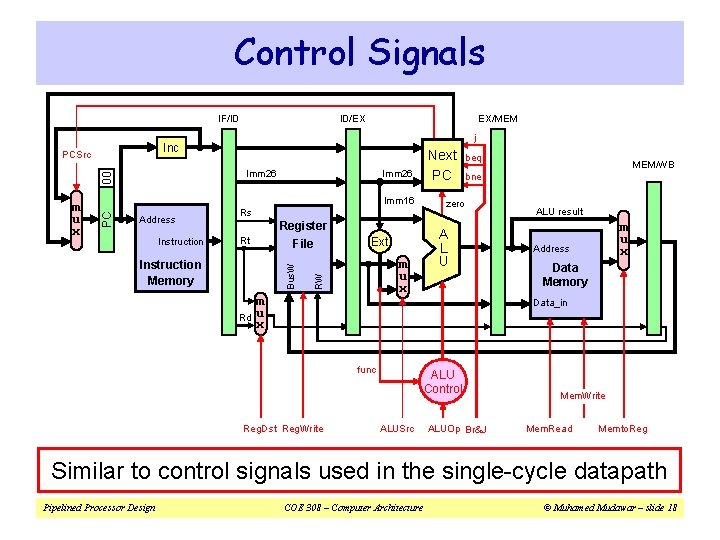

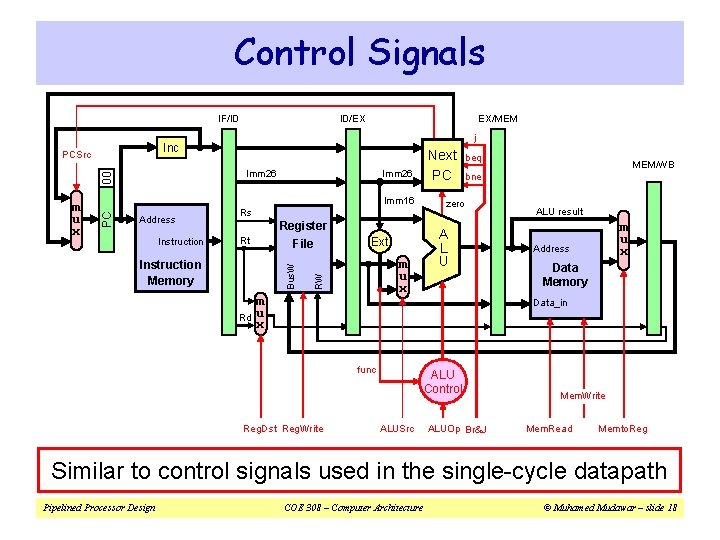

Control Signals

[Datapath diagram annotated with the control signals: RegDst, RegWrite, ALUSrc, ALUOp, ALU Control (func), beq, bne, j, PCSrc, MemRead, MemWrite, MemtoReg]

- Similar to control signals used in the single-cycle datapath

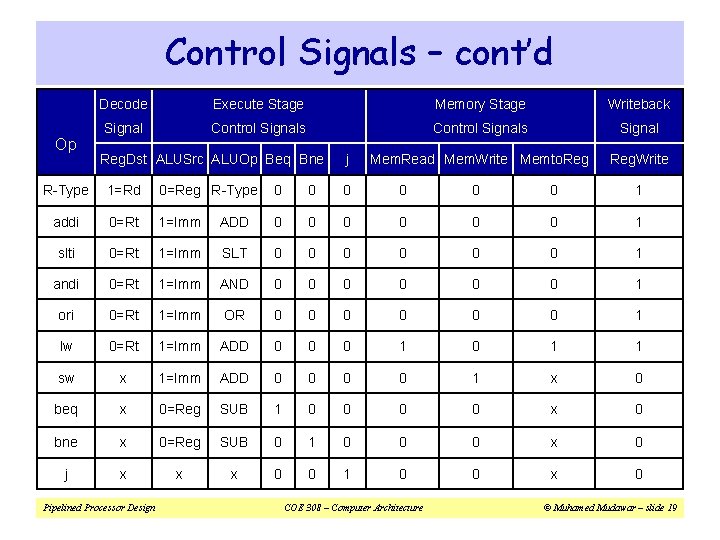

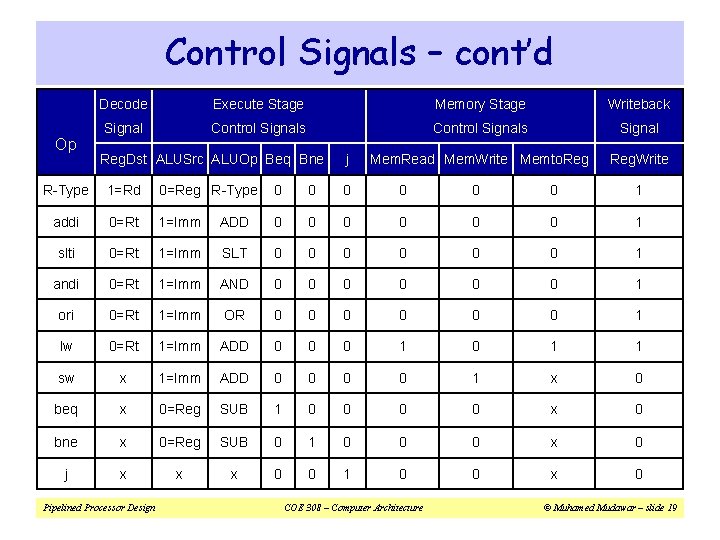

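Control Signals – cont’d

Signals are grouped by the stage that uses them. Decode stage: RegDst. Execute stage: ALUSrc, ALUOp, Beq, Bne, j. Memory stage: MemRead, MemWrite, MemtoReg. Write back stage: RegWrite.

| Op | RegDst | ALUSrc | ALUOp | Beq | Bne | j | MemRead | MemWrite | MemtoReg | RegWrite |
|---|---|---|---|---|---|---|---|---|---|---|
| R-Type | 1=Rd | 0=Reg | R-Type | 0 | 0 | 0 | 0 | 0 | 0 | 1 |
| addi | 0=Rt | 1=Imm | ADD | 0 | 0 | 0 | 0 | 0 | 0 | 1 |
| slti | 0=Rt | 1=Imm | SLT | 0 | 0 | 0 | 0 | 0 | 0 | 1 |
| andi | 0=Rt | 1=Imm | AND | 0 | 0 | 0 | 0 | 0 | 0 | 1 |
| ori | 0=Rt | 1=Imm | OR | 0 | 0 | 0 | 0 | 0 | 0 | 1 |
| lw | 0=Rt | 1=Imm | ADD | 0 | 0 | 0 | 1 | 0 | 1 | 1 |
| sw | x | 1=Imm | ADD | 0 | 0 | 0 | 0 | 1 | x | 0 |
| beq | x | 0=Reg | SUB | 1 | 0 | 0 | 0 | 0 | x | 0 |
| bne | x | 0=Reg | SUB | 0 | 1 | 0 | 0 | 0 | x | 0 |
| j | x | x | x | 0 | 0 | 1 | 0 | 0 | x | 0 |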

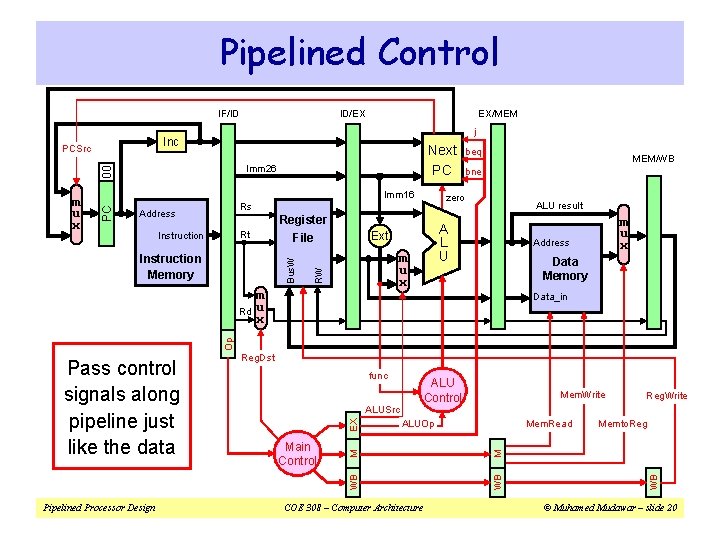

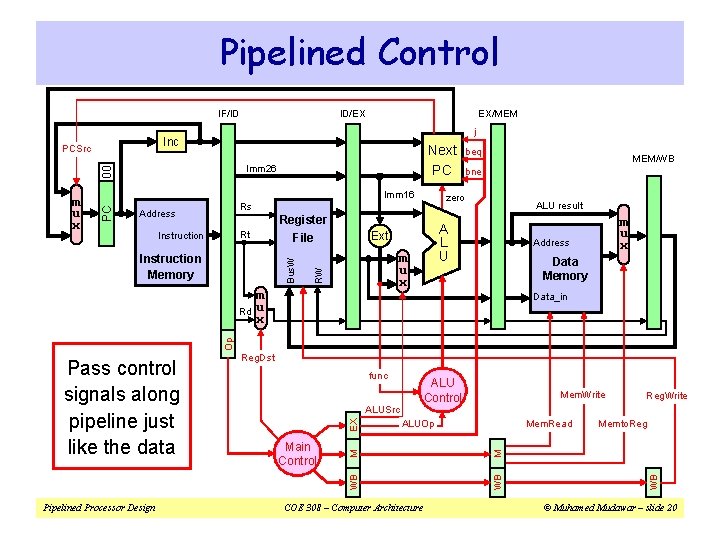

Pipelined Control

[Datapath diagram: Main Control decodes Op in the ID stage and produces the EX, M, and WB control signal groups, which are carried through the ID/EX, EX/MEM, and MEM/WB pipeline registers]

- Pass control signals along the pipeline just like the data

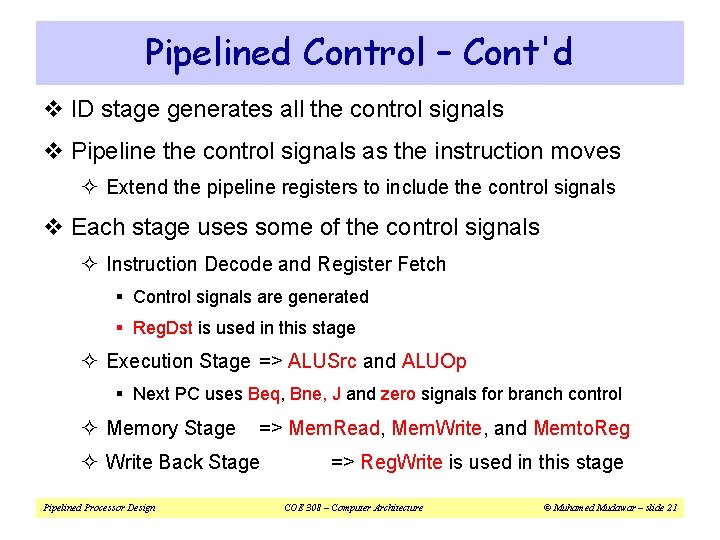

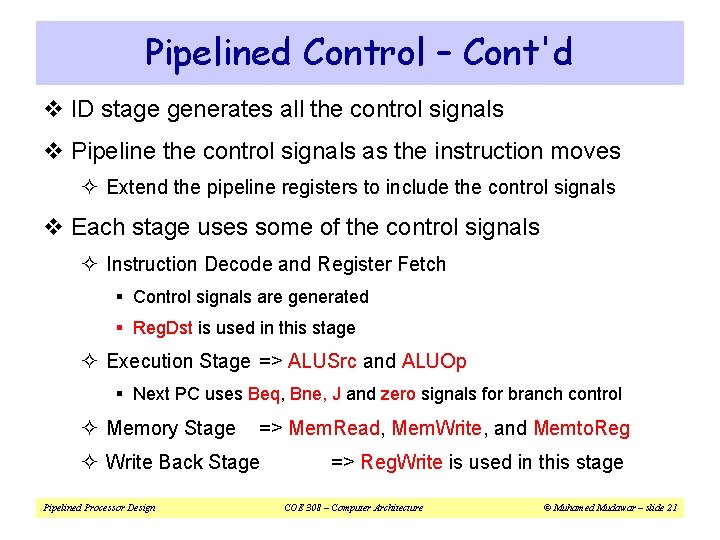

Pipelined Control – Cont'd

- ID stage generates all the control signals
- Pipeline the control signals as the instruction moves
  - Extend the pipeline registers to include the control signals
- Each stage uses some of the control signals
  - Instruction Decode and Register Fetch
    - Control signals are generated
    - RegDst is used in this stage
  - Execution Stage => ALUSrc and ALUOp
    - Next PC uses Beq, Bne, J and zero signals for branch control
  - Memory Stage => MemRead, MemWrite, and MemtoReg
  - Write Back Stage => RegWrite is used in this stage

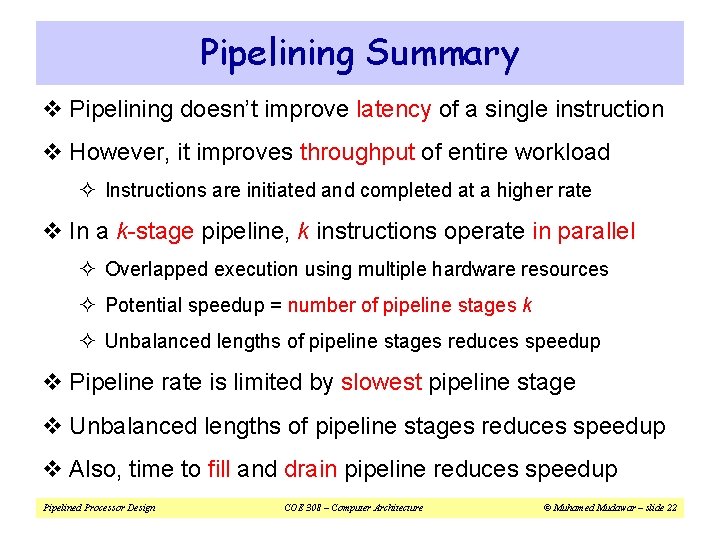

Pipelining Summary

- Pipelining doesn’t improve the latency of a single instruction
- However, it improves the throughput of the entire workload
  - Instructions are initiated and completed at a higher rate
- In a k-stage pipeline, k instructions operate in parallel
  - Overlapped execution using multiple hardware resources
  - Potential speedup = number of pipeline stages k
- Pipeline rate is limited by the slowest pipeline stage
- Unbalanced lengths of pipeline stages reduce speedup
- Also, the time to fill and drain the pipeline reduces speedup

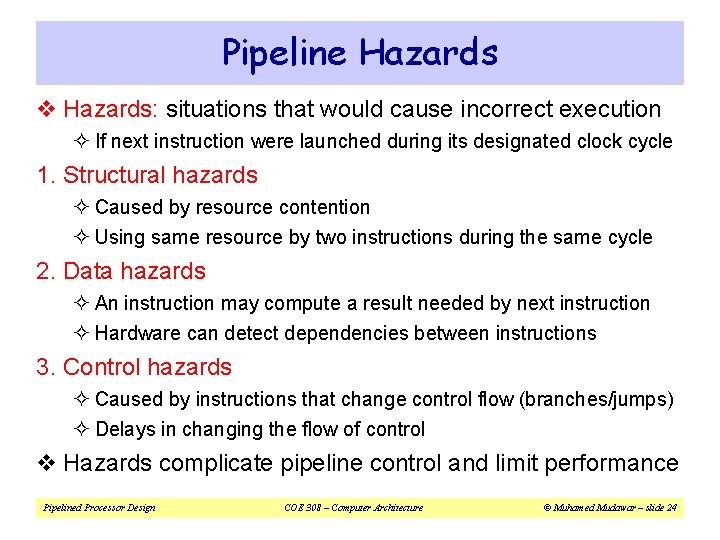

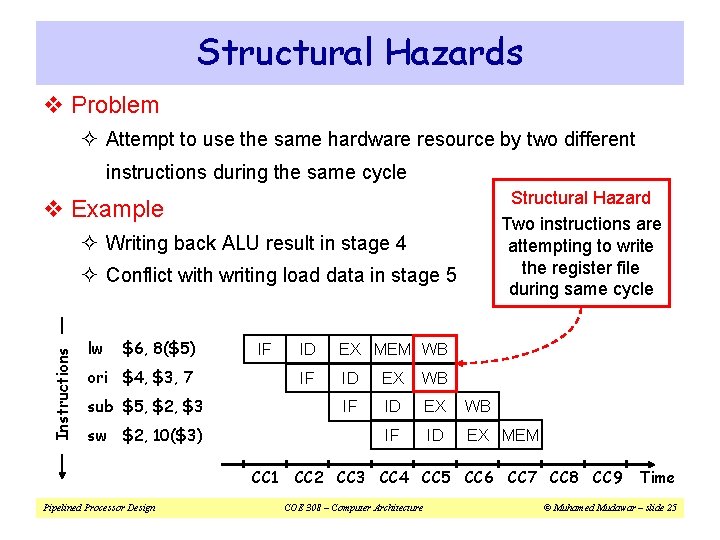

Pipeline Hazards

- Hazards: situations that would cause incorrect execution
  - If the next instruction were launched during its designated clock cycle

1. Structural hazards
   - Caused by resource contention
   - Two instructions use the same resource during the same cycle
2. Data hazards
   - An instruction may compute a result needed by the next instruction
   - Hardware can detect dependencies between instructions
3. Control hazards
   - Caused by instructions that change control flow (branches/jumps)
   - Delays in changing the flow of control

- Hazards complicate pipeline control and limit performance

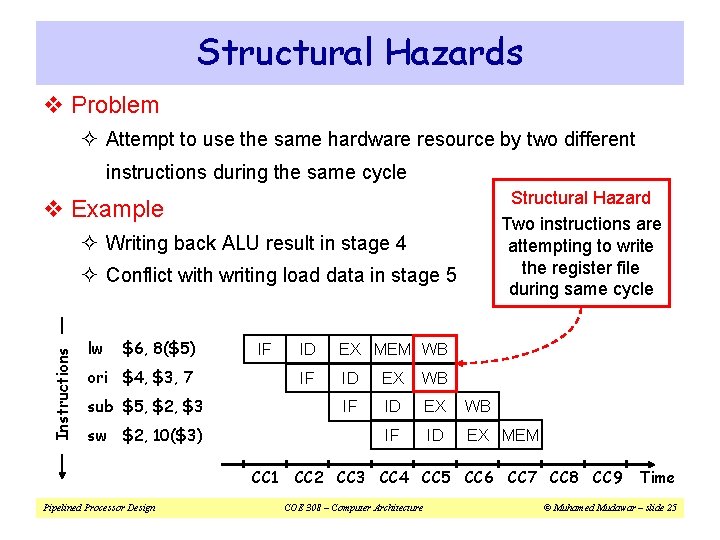

Structural Hazards

- Problem
  - Attempt to use the same hardware resource by two different instructions during the same cycle
- Example
  - Writing back the ALU result in stage 4
  - Conflicts with writing load data in stage 5
- Structural hazard: two instructions are attempting to write the register file during the same cycle

[Timing diagram (CC 1 to CC 9): lw $6, 8($5); ori $4, $3, 7; sub $5, $2, $3; sw $2, 10($3)]

Resolving Structural Hazards

- Serious hazard:
  - Hazard cannot be ignored
- Solution 1: Delay access to the resource
  - Must have a mechanism to delay instruction access to the resource
  - Delay all write backs to the register file to stage 5
    - ALU instructions bypass stage 4 (memory) without doing anything
- Solution 2: Add more hardware resources (more costly)
  - Add more hardware to eliminate the structural hazard
  - Redesign the register file to have two write ports
    - First write port can be used to write back ALU results in stage 4
    - Second write port can be used to write back load data in stage 5

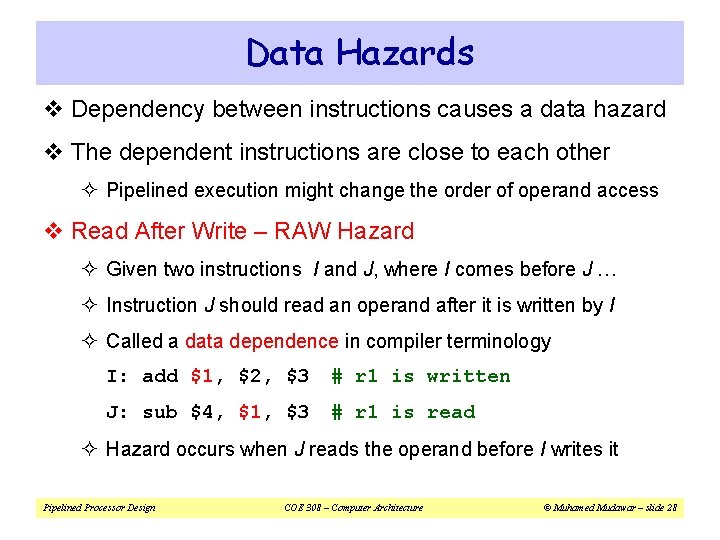

Data Hazards

- Dependency between instructions causes a data hazard
- The dependent instructions are close to each other
  - Pipelined execution might change the order of operand access
- Read After Write (RAW) hazard
  - Given two instructions I and J, where I comes before J
  - Instruction J should read an operand after it is written by I
  - Called a data dependence in compiler terminology
  - Hazard occurs when J reads the operand before I writes it

        I: add $1, $2, $3    # $1 is written
        J: sub $4, $1, $3    # $1 is read

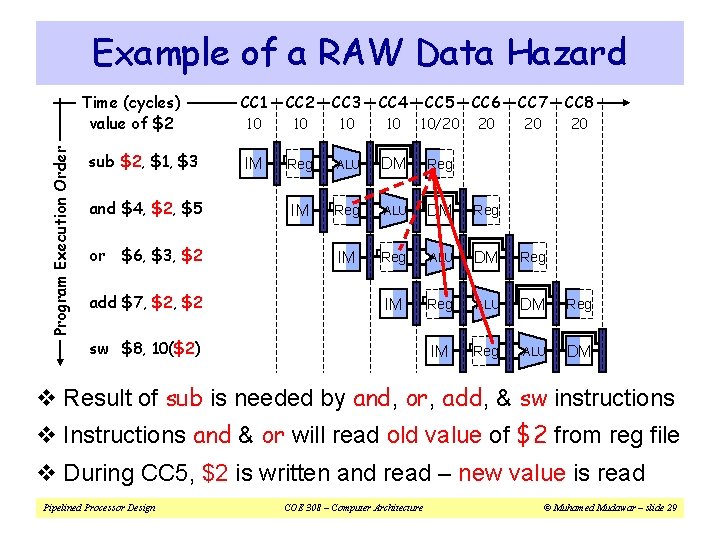

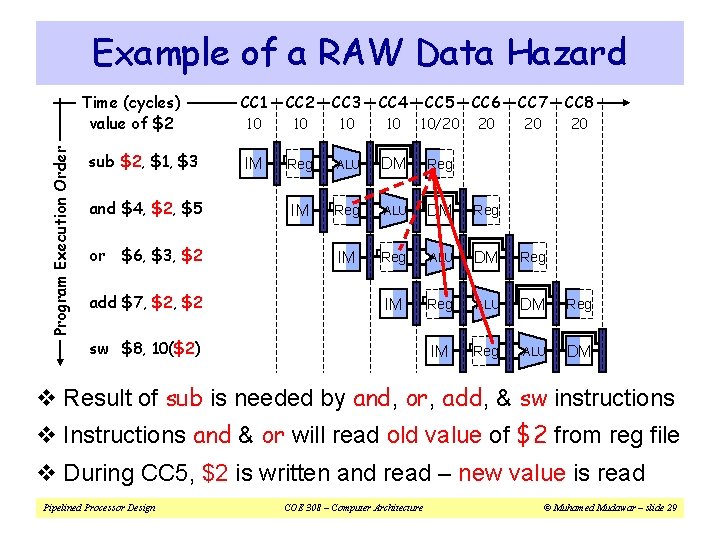

Example of a RAW Data Hazard

[Pipeline diagram: program execution order versus time (CC 1 to CC 8). The value of $2 is 10 through CC 4, changes from 10 to 20 during CC 5, and is 20 afterwards]

    sub $2, $1, $3
    and $4, $2, $5
    or  $6, $3, $2
    add $7, $2, $2
    sw  $8, 10($2)

- Result of sub is needed by the and, or, add, and sw instructions
- The and and or instructions will read the old value of $2 from the register file
- During CC 5, $2 is both written and read: the new value is read

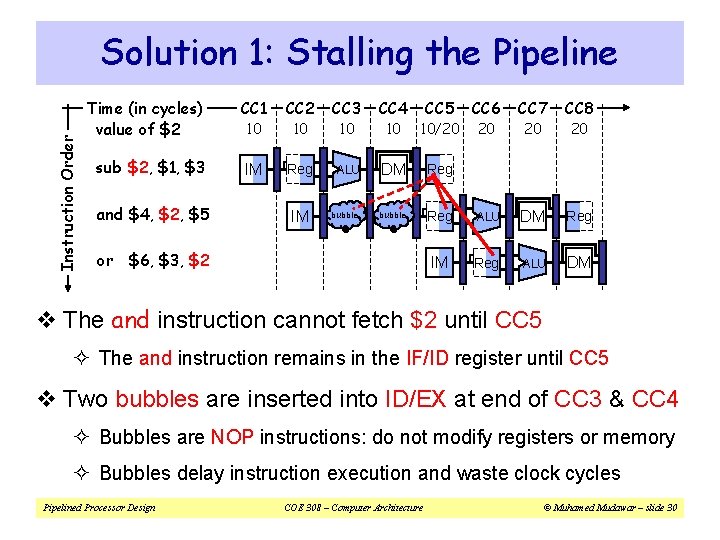

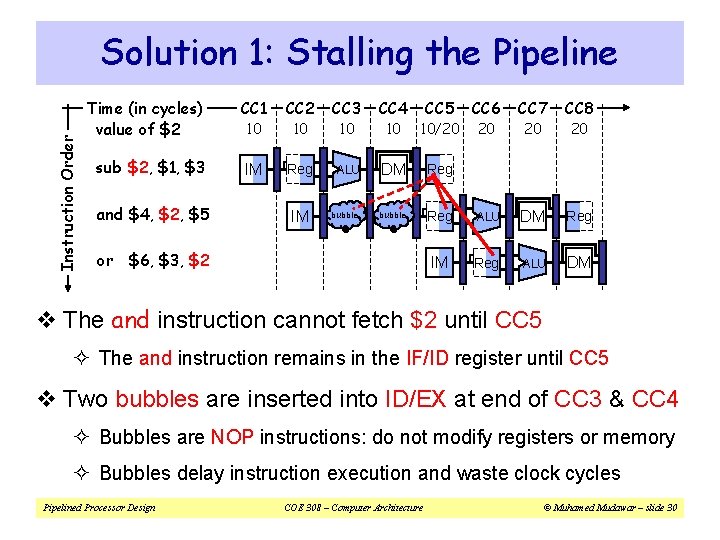

Solution 1: Stalling the Pipeline

[Pipeline diagram (CC 1 to CC 8): sub $2, $1, $3; and $4, $2, $5; or $6, $3, $2, with two bubbles inserted after sub. The value of $2 is 10 through CC 4, 10/20 during CC 5, and 20 afterwards]

- The and instruction cannot fetch $2 until CC 5
  - The and instruction remains in the IF/ID register until CC 5
- Two bubbles are inserted into ID/EX at the end of CC 3 and CC 4
  - Bubbles are NOP instructions: they do not modify registers or memory
  - Bubbles delay instruction execution and waste clock cycles

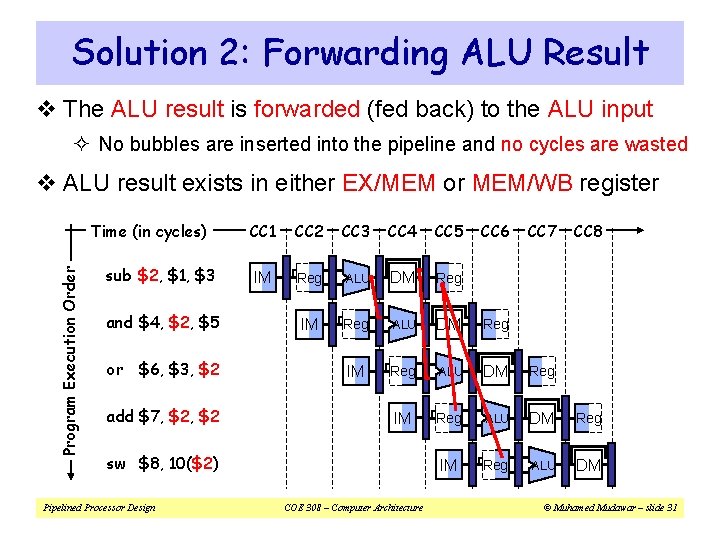

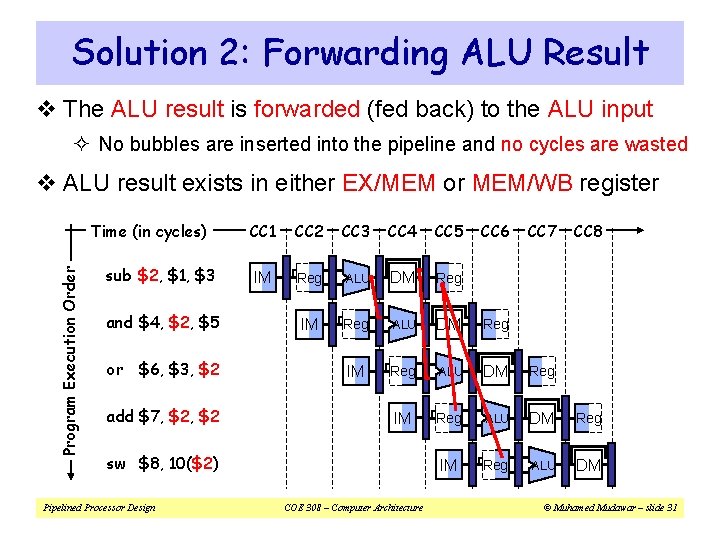

Solution 2: Forwarding ALU Result

- The ALU result is forwarded (fed back) to the ALU input
  - No bubbles are inserted into the pipeline and no cycles are wasted
- The ALU result exists in either the EX/MEM or the MEM/WB register

[Pipeline diagram (CC 1 to CC 8): forwarding paths from sub to the instructions that follow it]

    sub $2, $1, $3
    and $4, $2, $5
    or  $6, $3, $2
    add $7, $2, $2
    sw  $8, 10($2)

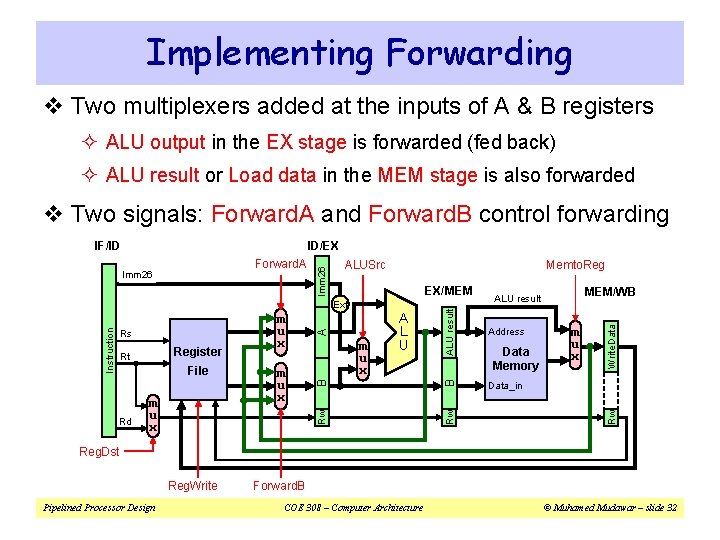

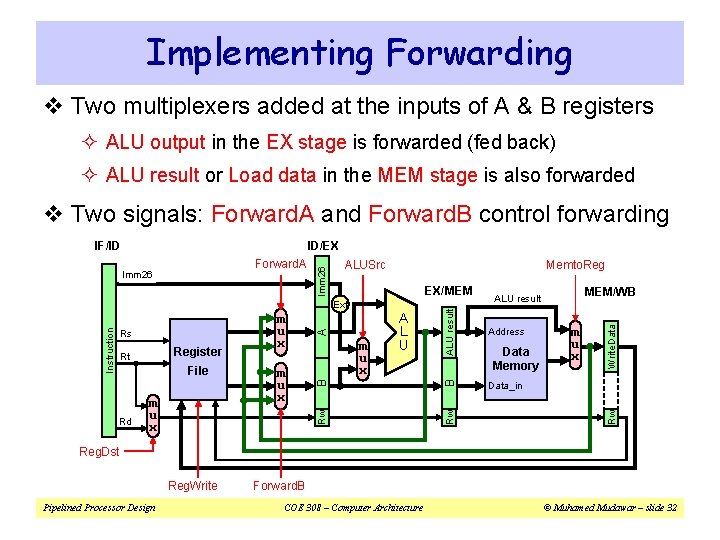

Implementing Forwarding

- Two multiplexers are added at the inputs of the A and B registers
  - ALU output in the EX stage is forwarded (fed back)
  - ALU result or load data in the MEM stage is also forwarded
- Two signals, ForwardA and ForwardB, control forwarding

[Datapath diagram: forwarding multiplexers, controlled by ForwardA and ForwardB, select among the Register File outputs, the ALU result, and the MEM stage write data]

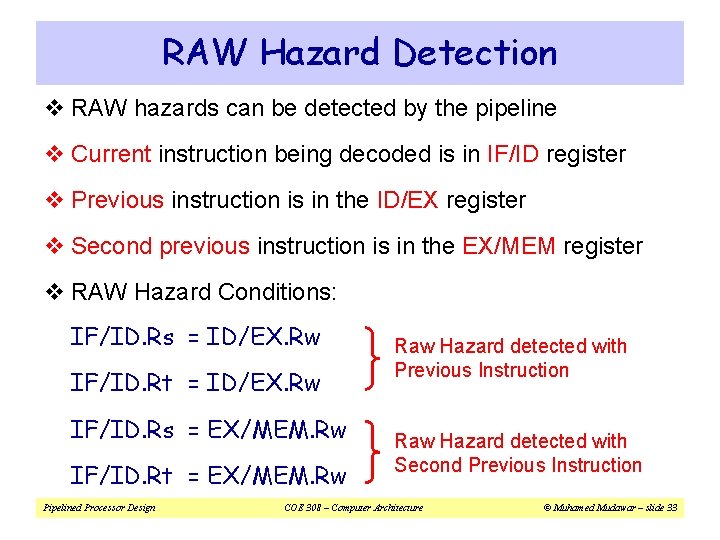

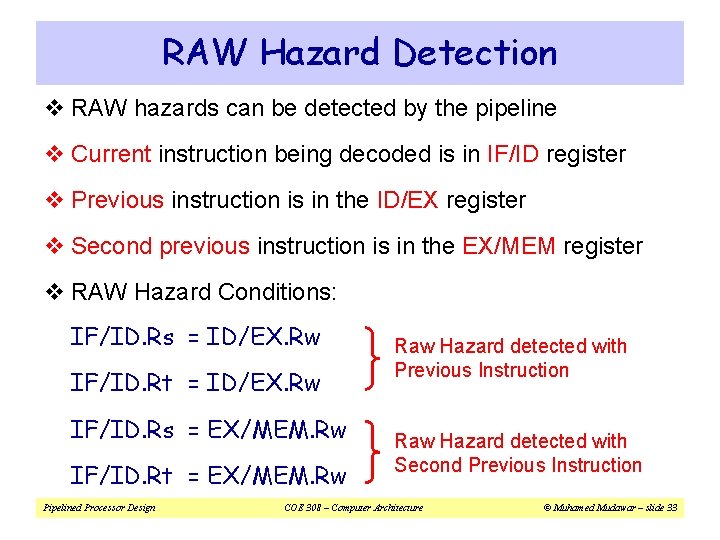

RAW Hazard Detection

- RAW hazards can be detected by the pipeline
- Current instruction being decoded is in the IF/ID register
- Previous instruction is in the ID/EX register
- Second previous instruction is in the EX/MEM register
- RAW hazard conditions:
  - IF/ID.Rs = ID/EX.Rw or IF/ID.Rt = ID/EX.Rw: RAW hazard detected with the previous instruction
  - IF/ID.Rs = EX/MEM.Rw or IF/ID.Rt = EX/MEM.Rw: RAW hazard detected with the second previous instruction

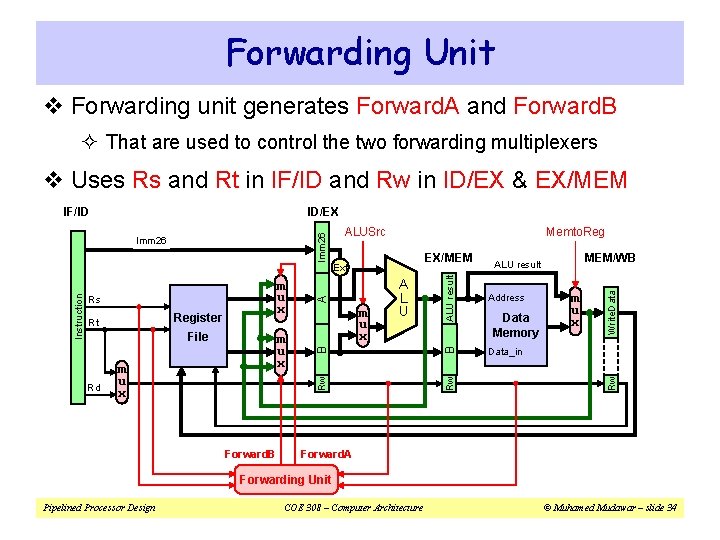

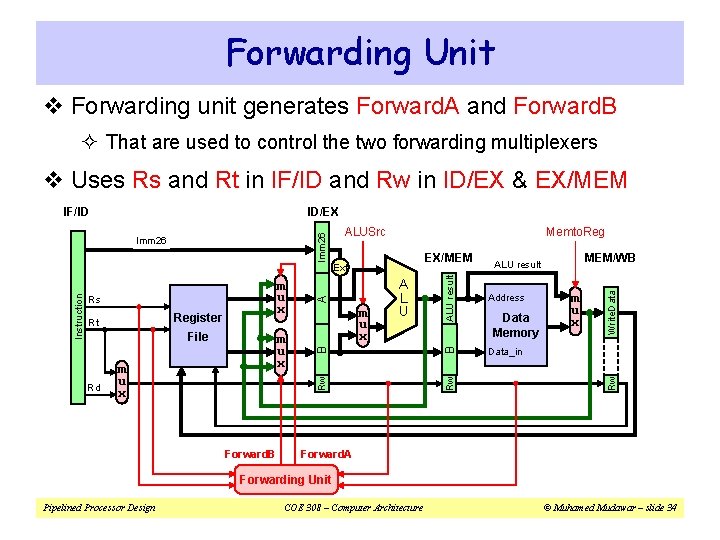

Forwarding Unit

- Forwarding unit generates ForwardA and ForwardB
  - These are used to control the two forwarding multiplexers
- Uses Rs and Rt in IF/ID and Rw in ID/EX and EX/MEM

[Datapath diagram: the Forwarding Unit compares Rs and Rt from IF/ID with Rw from ID/EX and EX/MEM and drives ForwardA and ForwardB]

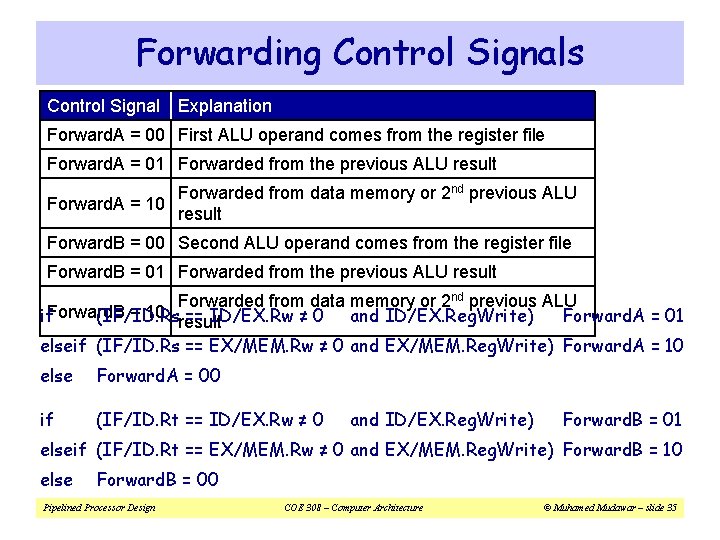

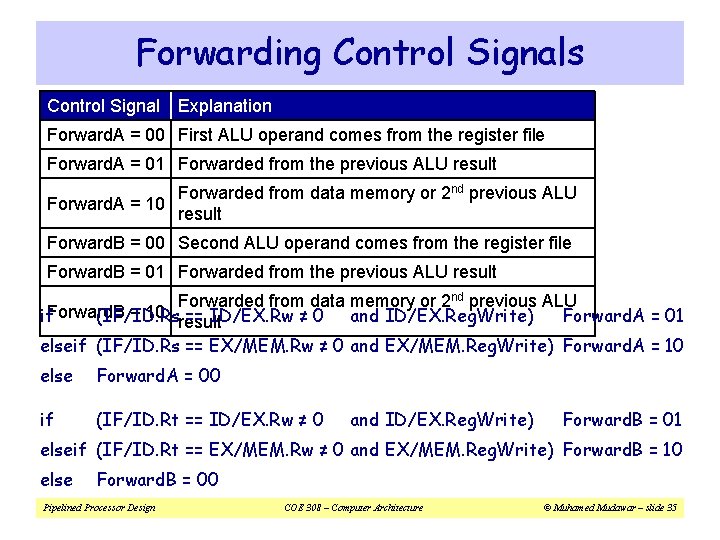

Forwarding Control Signals

| Control Signal | Explanation |
|---|---|
| ForwardA = 00 | First ALU operand comes from the register file |
| ForwardA = 01 | Forwarded from the previous ALU result |
| ForwardA = 10 | Forwarded from data memory or 2nd previous ALU result |
| ForwardB = 00 | Second ALU operand comes from the register file |
| ForwardB = 01 | Forwarded from the previous ALU result |
| ForwardB = 10 | Forwarded from data memory or 2nd previous ALU result |

    if     (IF/ID.Rs == ID/EX.Rw ≠ 0 and ID/EX.RegWrite)    ForwardA = 01
    elseif (IF/ID.Rs == EX/MEM.Rw ≠ 0 and EX/MEM.RegWrite)  ForwardA = 10
    else                                                    ForwardA = 00

    if     (IF/ID.Rt == ID/EX.Rw ≠ 0 and ID/EX.RegWrite)    ForwardB = 01
    elseif (IF/ID.Rt == EX/MEM.Rw ≠ 0 and EX/MEM.RegWrite)  ForwardB = 10
    else                                                    ForwardB = 00
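The forwarding conditions can be expressed as a small executable model. This is a Python sketch rather than a hardware description; the `PipeReg` class and the function names are assumptions introduced for illustration, and only the comparison logic follows the conditions stated for ForwardA and ForwardB.

```python
from dataclasses import dataclass


@dataclass
class PipeReg:
    """Fields of a pipeline register that matter for forwarding (hypothetical model)."""
    rw: int = 0              # destination register number (Rw)
    reg_write: bool = False  # RegWrite control signal


def forward_select(src: int, id_ex: PipeReg, ex_mem: PipeReg) -> int:
    """Return the 2-bit forwarding select for one source register in IF/ID."""
    # The previous instruction (in ID/EX) has priority: it holds the newest value
    if id_ex.reg_write and id_ex.rw != 0 and src == id_ex.rw:
        return 0b01
    # Otherwise try the second previous instruction (in EX/MEM)
    if ex_mem.reg_write and ex_mem.rw != 0 and src == ex_mem.rw:
        return 0b10
    # No hazard: the operand comes from the register file
    return 0b00


def forwarding_unit(rs: int, rt: int, id_ex: PipeReg, ex_mem: PipeReg):
    """Return (ForwardA, ForwardB) for the instruction being decoded."""
    return forward_select(rs, id_ex, ex_mem), forward_select(rt, id_ex, ex_mem)


if __name__ == "__main__":
    # sub $8, $4, $7 is decoded while add $7, $5, $6 is in ID/EX
    # and lw $4, 100($9) is in EX/MEM
    fa, fb = forwarding_unit(4, 7, PipeReg(7, True), PipeReg(4, True))
    print(format(fa, "02b"), format(fb, "02b"))
```

Checking the ID/EX match first matters when both older instructions write the same register: the younger of the two holds the value the program expects.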

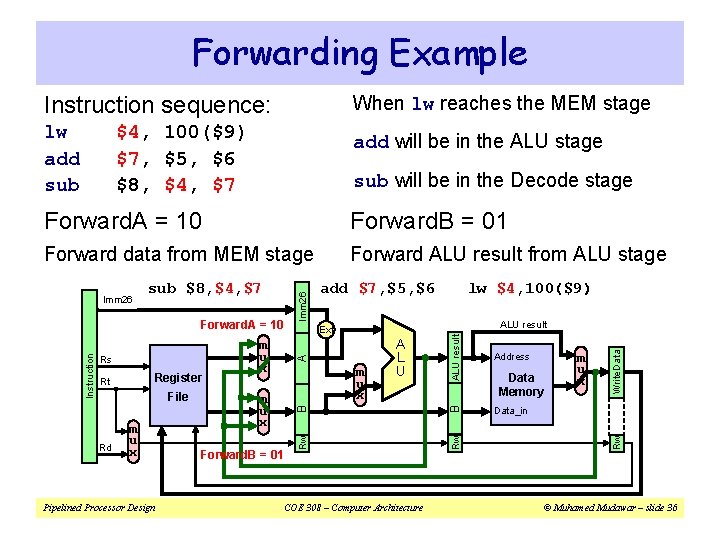

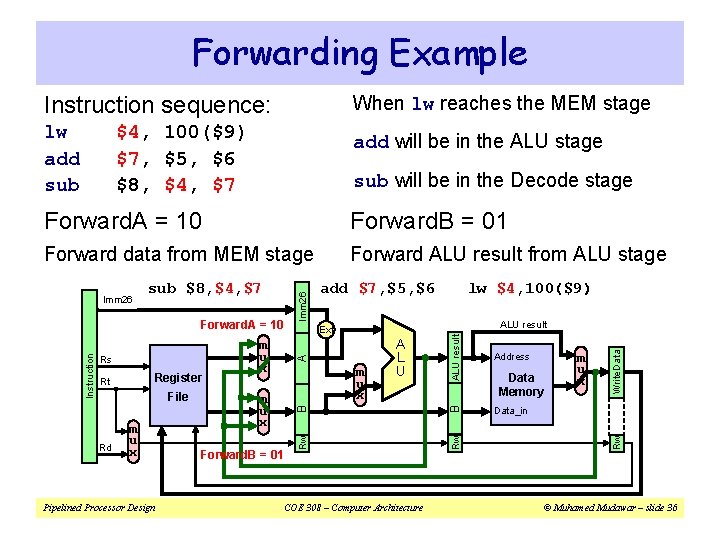

Forwarding Example

Instruction sequence:

    lw  $4, 100($9)
    add $7, $5, $6
    sub $8, $4, $7

- When lw reaches the MEM stage:
  - add will be in the ALU stage
  - sub will be in the Decode stage
- ForwardA = 10: forward data from the MEM stage
- ForwardB = 01: forward the ALU result from the ALU stage

[Datapath diagram: sub $8, $4, $7 in the Decode stage, add $7, $5, $6 in the ALU stage, lw $4, 100($9) in the MEM stage]

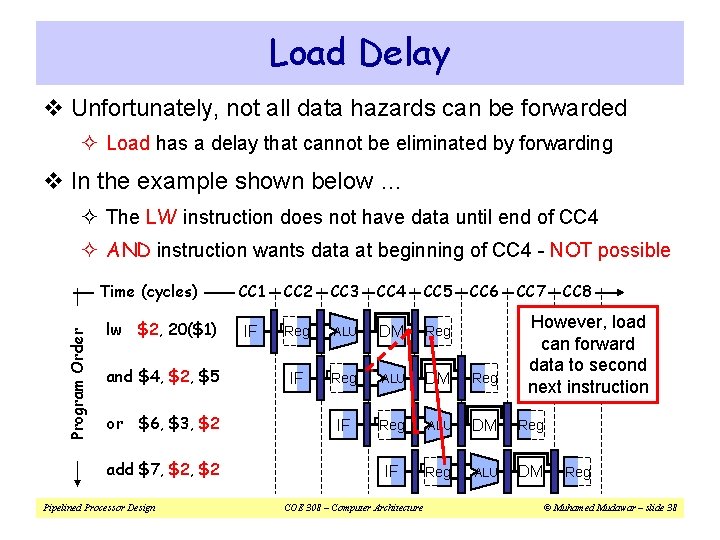

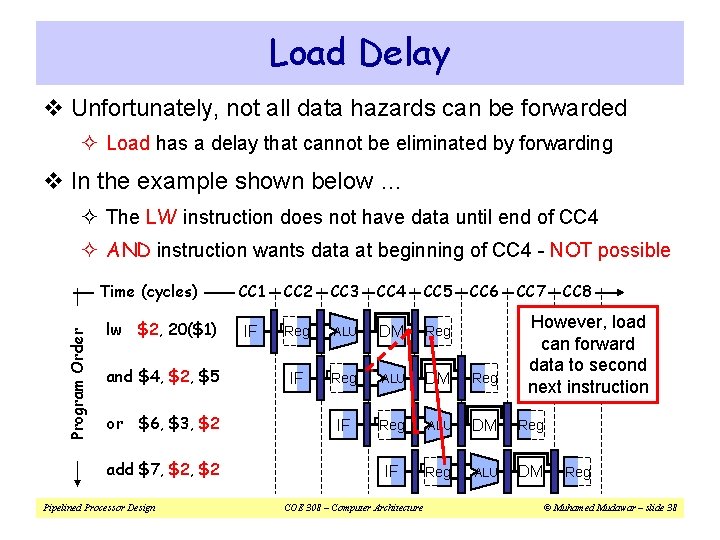

Load Delay

- Unfortunately, not all data hazards can be resolved by forwarding
  - Load has a delay that cannot be eliminated by forwarding
- In the example shown below:
  - The lw instruction does not have the data until the end of CC 4
  - The and instruction wants the data at the beginning of CC 4: NOT possible
- However, load can forward data to the second next instruction

[Pipeline diagram (CC 1 to CC 8): forwarding paths from lw to the instructions that follow it]

    lw  $2, 20($1)
    and $4, $2, $5
    or  $6, $3, $2
    add $7, $2, $2

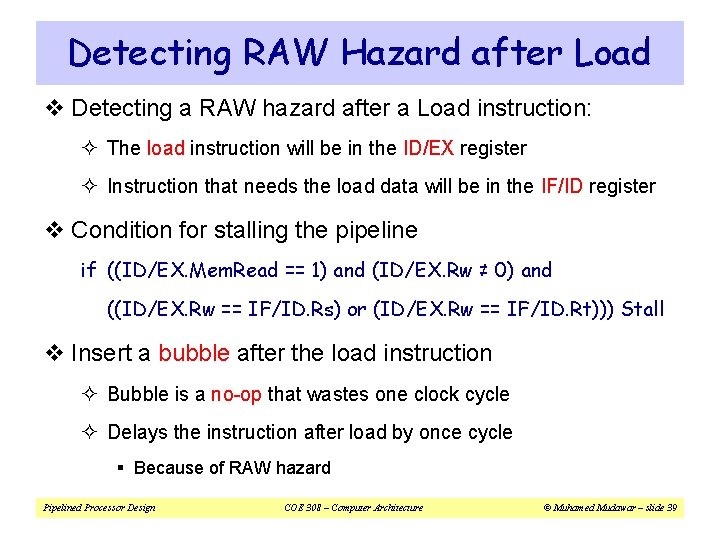

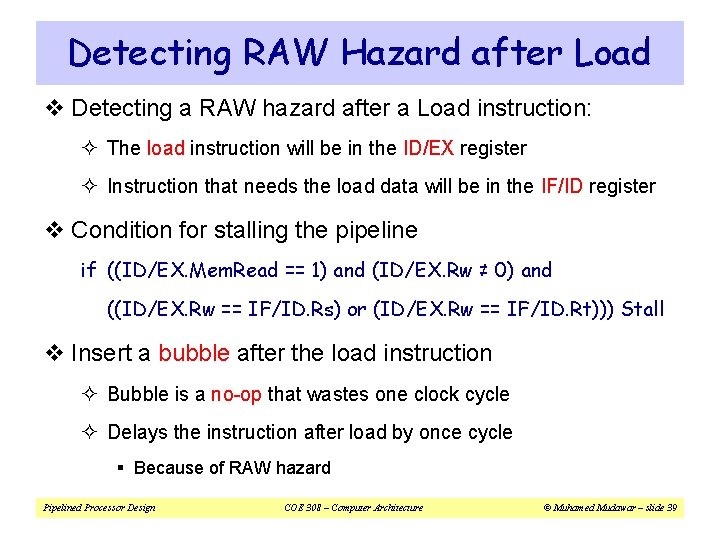

Detecting RAW Hazard after Load

- Detecting a RAW hazard after a load instruction:
  - The load instruction will be in the ID/EX register
  - The instruction that needs the load data will be in the IF/ID register
- Condition for stalling the pipeline:

      if ((ID/EX.MemRead == 1) and (ID/EX.Rw ≠ 0) and
          ((ID/EX.Rw == IF/ID.Rs) or (ID/EX.Rw == IF/ID.Rt))) Stall

- Insert a bubble after the load instruction
  - Bubble is a no-op that wastes one clock cycle
  - Delays the instruction after the load by one cycle
    - Because of the RAW hazard
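The stall condition translates directly into a boolean function. The Python sketch below uses hypothetical parameter names that mirror the pipeline register fields in the condition.

```python
def must_stall(id_ex_mem_read: bool, id_ex_rw: int, if_id_rs: int, if_id_rt: int) -> bool:
    """Load-use hazard check: stall when the instruction in ID/EX is a load
    whose destination (not register 0) is a source of the instruction in IF/ID."""
    return bool(
        id_ex_mem_read
        and id_ex_rw != 0
        and (id_ex_rw == if_id_rs or id_ex_rw == if_id_rt)
    )


if __name__ == "__main__":
    # lw $2, 20($1) in ID/EX, and $4, $2, $5 in IF/ID
    print(must_stall(True, 2, 2, 5))
    # add $2, $1, $3 in ID/EX is not a load, so forwarding is enough
    print(must_stall(False, 2, 2, 5))
```

The check against register 0 reflects that writes to $0 have no effect in MIPS, so a dependence on it is never a real hazard.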

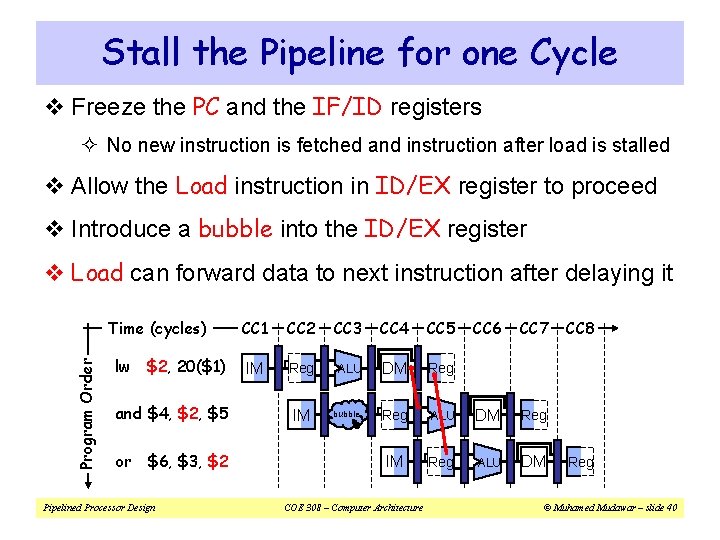

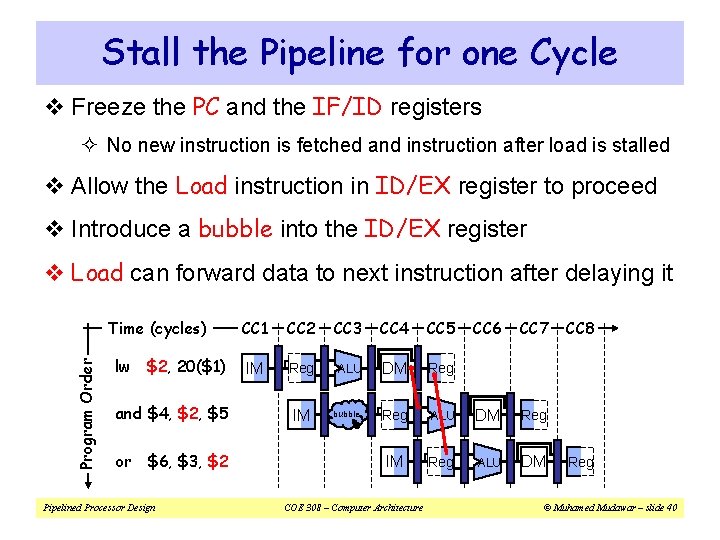

Stall the Pipeline for one Cycle

- Freeze the PC and the IF/ID registers
  - No new instruction is fetched and the instruction after the load is stalled
- Allow the load instruction in the ID/EX register to proceed
- Introduce a bubble into the ID/EX register
- Load can forward data to the next instruction after delaying it

[Pipeline diagram (CC 1 to CC 8): lw $2, 20($1); and $4, $2, $5 delayed by one bubble; or $6, $3, $2]

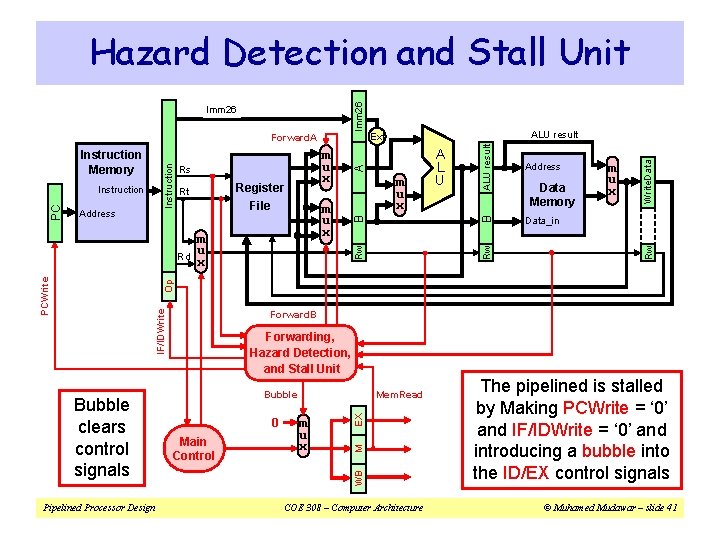

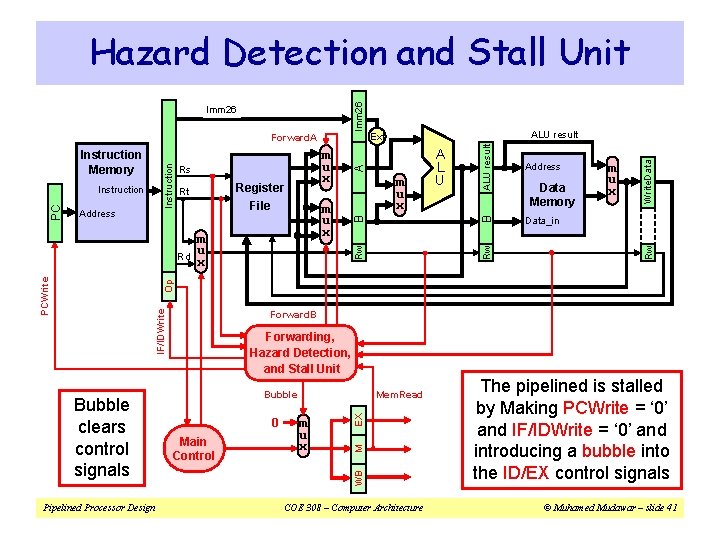

Forwarding, Hazard Detection, and Stall Unit

[Datapath diagram: the Hazard Detection and Stall Unit reads MemRead from ID/EX and drives PCWrite, IF/IDWrite, and a multiplexer that selects either the Main Control outputs or 0 (bubble); the forwarding logic drives ForwardA and ForwardB]

- Bubble clears the control signals
- The pipeline is stalled by making PCWrite = '0' and IF/IDWrite = '0' and introducing a bubble into the ID/EX control signals

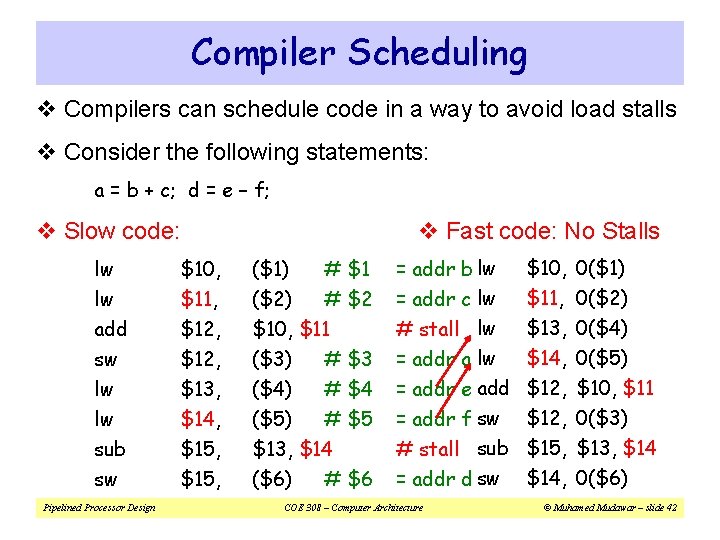

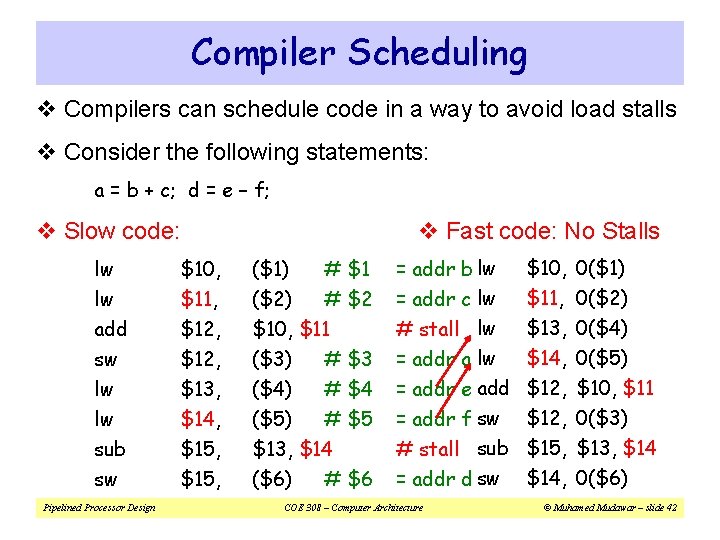

Compiler Scheduling

- Compilers can schedule code in a way to avoid load stalls
- Consider the following statements:

      a = b + c; d = e - f;

- Slow code:

      lw  $10, ($1)        # $1 = addr b
      lw  $11, ($2)        # $2 = addr c
      add $12, $10, $11    # stall
      sw  $12, ($3)        # $3 = addr a
      lw  $13, ($4)        # $4 = addr e
      lw  $14, ($5)        # $5 = addr f
      sub $15, $13, $14    # stall
      sw  $15, ($6)        # $6 = addr d

- Fast code: no stalls

      lw  $10, 0($1)
      lw  $11, 0($2)
      lw  $13, 0($4)
      lw  $14, 0($5)
      add $12, $10, $11
      sw  $12, 0($3)
      sub $15, $13, $14
      sw  $15, 0($6)
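The effect of scheduling can be checked by counting load-use stalls in each sequence. The Python sketch below assumes a pipeline with full forwarding, where the only stall is a one-cycle bubble when an instruction uses the result of the load immediately before it. The `Instr` tuple and the two program lists are an illustrative encoding of the code for `a = b + c; d = e - f;`, storing the difference from `$15`.

```python
from typing import List, NamedTuple, Optional, Tuple


class Instr(NamedTuple):
    op: str
    dest: Optional[int]     # destination register, None for stores
    srcs: Tuple[int, ...]   # source registers


def count_load_stalls(program: List[Instr]) -> int:
    """Count instructions that read the destination of the load just before them."""
    stalls = 0
    for prev, cur in zip(program, program[1:]):
        if prev.op == "lw" and prev.dest not in (None, 0) and prev.dest in cur.srcs:
            stalls += 1
    return stalls


slow_code = [
    Instr("lw", 10, (1,)), Instr("lw", 11, (2,)),
    Instr("add", 12, (10, 11)), Instr("sw", None, (12, 3)),
    Instr("lw", 13, (4,)), Instr("lw", 14, (5,)),
    Instr("sub", 15, (13, 14)), Instr("sw", None, (15, 6)),
]

fast_code = [
    Instr("lw", 10, (1,)), Instr("lw", 11, (2,)),
    Instr("lw", 13, (4,)), Instr("lw", 14, (5,)),
    Instr("add", 12, (10, 11)), Instr("sw", None, (12, 3)),
    Instr("sub", 15, (13, 14)), Instr("sw", None, (15, 6)),
]

if __name__ == "__main__":
    print("slow:", count_load_stalls(slow_code))
    print("fast:", count_load_stalls(fast_code))
```

Only adjacent pairs need checking, because a load can forward its data to the second next instruction without a stall.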

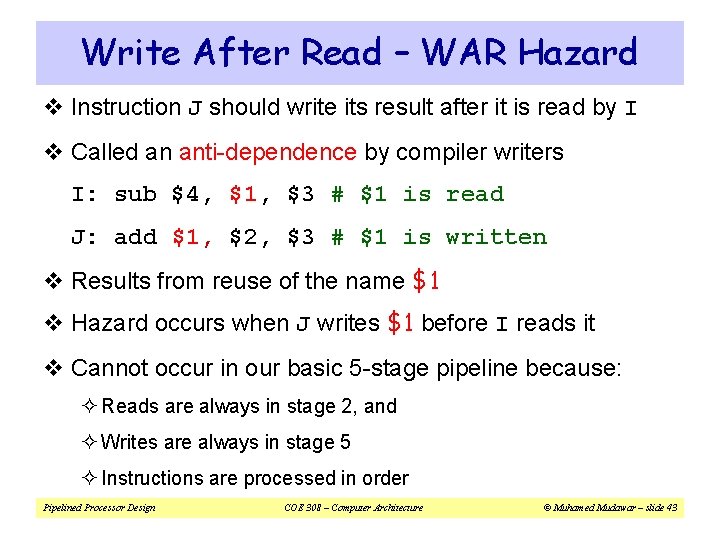

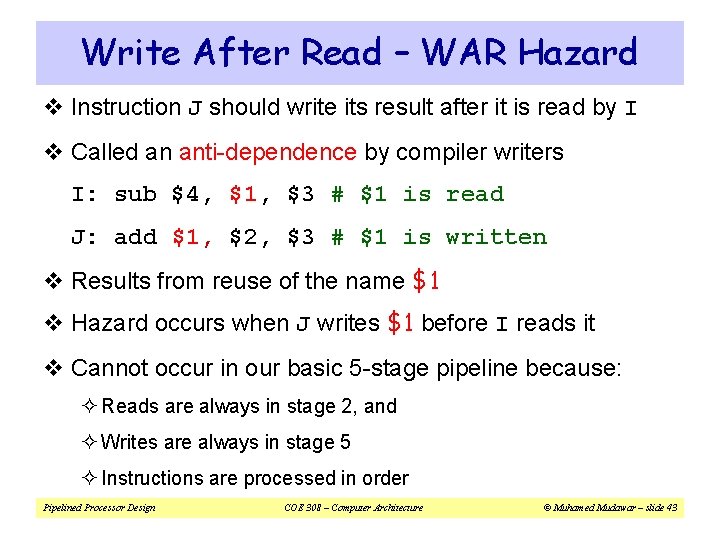

Write After Read – WAR Hazard

- Instruction J should write its result after it is read by I
- Called an anti-dependence by compiler writers

      I: sub $4, $1, $3    # $1 is read
      J: add $1, $2, $3    # $1 is written

- Results from reuse of the name $1
- Hazard occurs when J writes $1 before I reads it
- Cannot occur in our basic 5-stage pipeline because:
  - Reads are always in stage 2
  - Writes are always in stage 5
  - Instructions are processed in order

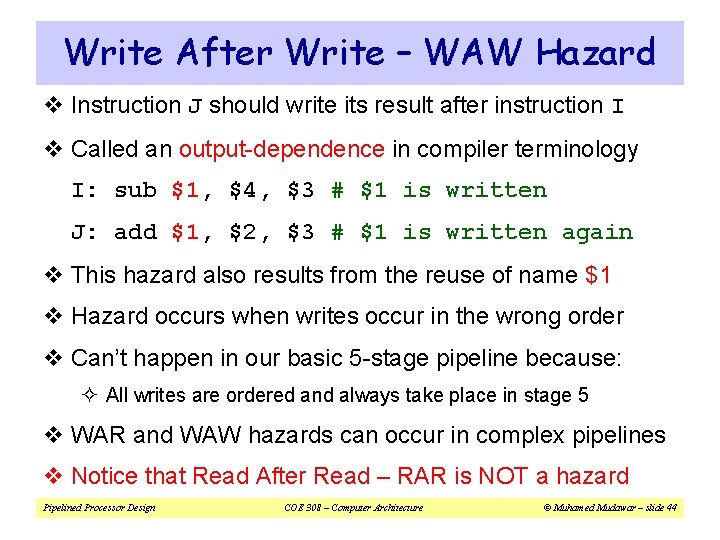

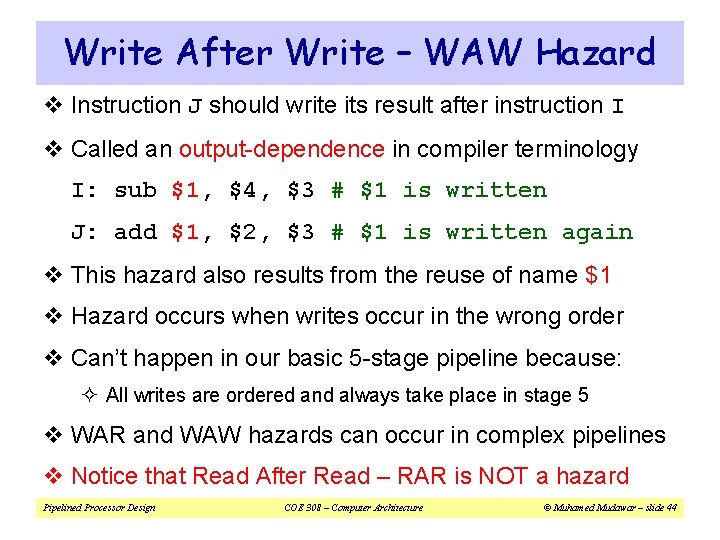

Write After Write – WAW Hazard

- Instruction J should write its result after instruction I
- Called an output-dependence in compiler terminology

      I: sub $1, $4, $3    # $1 is written
      J: add $1, $2, $3    # $1 is written again

- This hazard also results from the reuse of the name $1
- Hazard occurs when writes occur in the wrong order
- Can’t happen in our basic 5-stage pipeline because:
  - All writes are ordered and always take place in stage 5
- WAR and WAW hazards can occur in complex pipelines
- Notice that Read After Read (RAR) is NOT a hazard

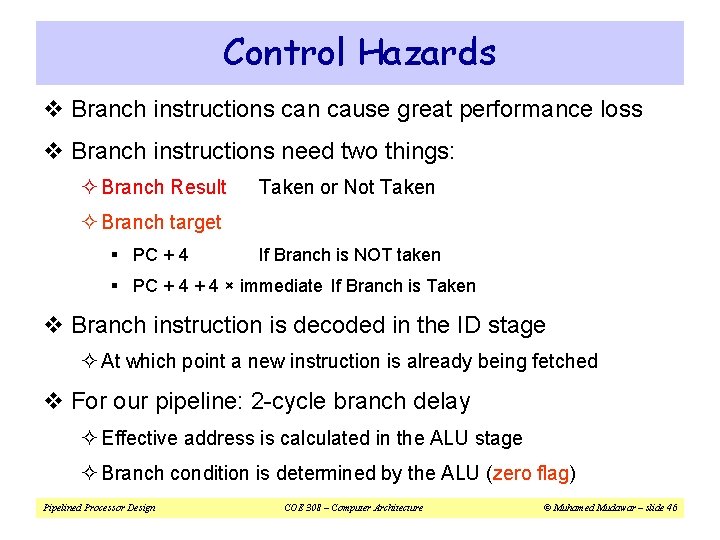

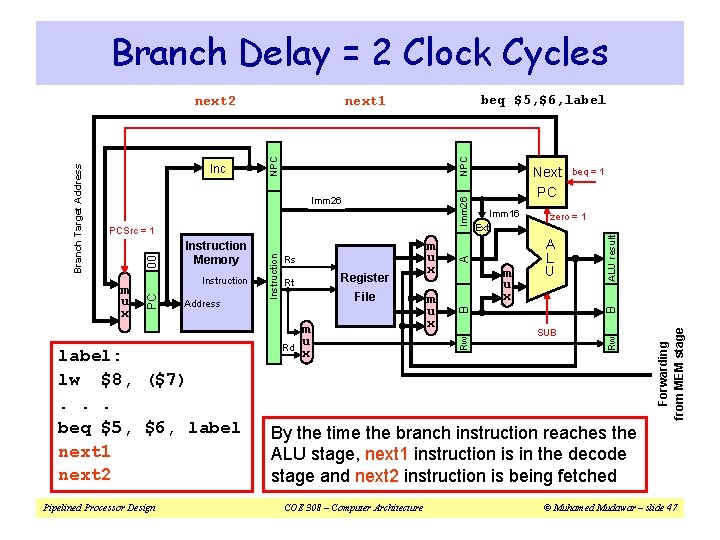

Control Hazards

- Branch instructions can cause great performance loss
- Branch instructions need two things:
  - Branch result: taken or not taken
  - Branch target
    - PC + 4 if the branch is NOT taken
    - PC + 4 + 4 × immediate if the branch is taken
- Branch instruction is decoded in the ID stage
  - At which point a new instruction is already being fetched
- For our pipeline: 2-cycle branch delay
  - Branch target address is calculated in the ALU stage
  - Branch condition is determined by the ALU (zero flag)
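The next-PC computation can be sketched as follows. This Python sketch assumes the usual MIPS convention in which the 16-bit immediate is sign-extended, multiplied by 4, and added to PC + 4; the function names are illustrative.

```python
def sign_extend16(imm: int) -> int:
    """Interpret the low 16 bits of imm as a two's complement value."""
    imm &= 0xFFFF
    return imm - 0x10000 if imm & 0x8000 else imm


def next_pc(pc: int, imm16: int, taken: bool) -> int:
    """Next PC for a branch at address pc (32-bit wraparound)."""
    if taken:
        # Taken: target is relative to the instruction after the branch
        return (pc + 4 + 4 * sign_extend16(imm16)) & 0xFFFFFFFF
    # Not taken: fall through to the next sequential instruction
    return (pc + 4) & 0xFFFFFFFF


if __name__ == "__main__":
    print(hex(next_pc(0x1000, 3, True)))       # forward branch
    print(hex(next_pc(0x1000, 0xFFFF, True)))  # immediate of -1: branch to itself
    print(hex(next_pc(0x1000, 3, False)))      # not taken
```

A negative immediate gives a backward branch, which is the typical case for the branch that closes a loop.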

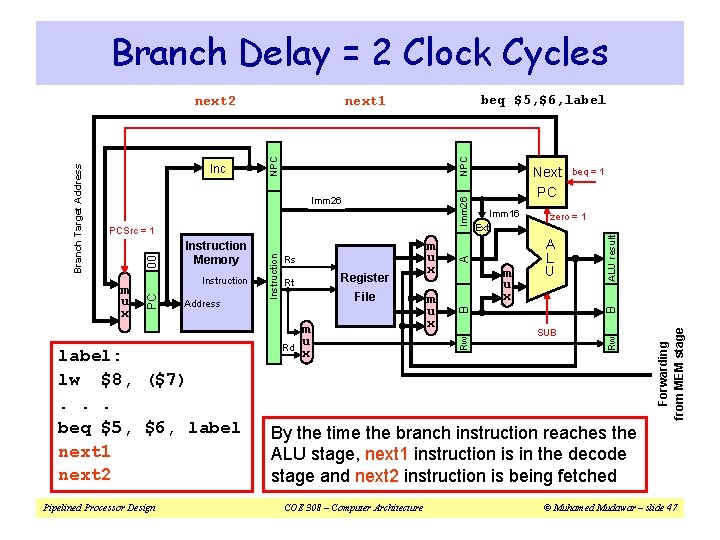

Branch Delay = 2 Clock Cycles

    label: lw  $8, ($7)
           . . .
           beq $5, $6, label
           next1
           next2

[Datapath diagram: beq in the ALU stage performs SUB with zero = 1, beq = 1, and PCSrc = 1, selecting the branch target address as the next PC; an operand is forwarded from the MEM stage]

- By the time the branch instruction reaches the ALU stage, the next1 instruction is in the decode stage and the next2 instruction is being fetched

2-Cycle Branch Delay

- The Next1 and Next2 instructions will be fetched anyway
- Pipeline should flush Next1 and Next2 if the branch is taken
- Otherwise, they can be executed if the branch is not taken

[Pipeline diagram (cc1 to cc7): beq $5, $6, label, followed by Next1 and Next2, which turn into bubbles when the branch is taken, then the branch target instruction at label]

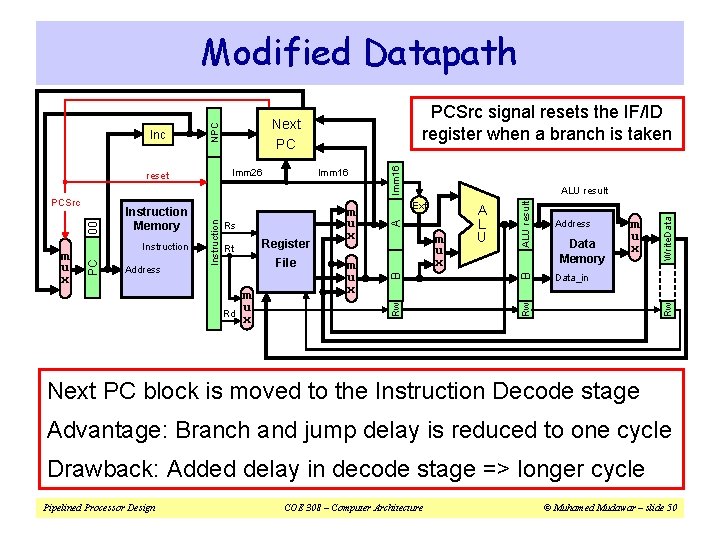

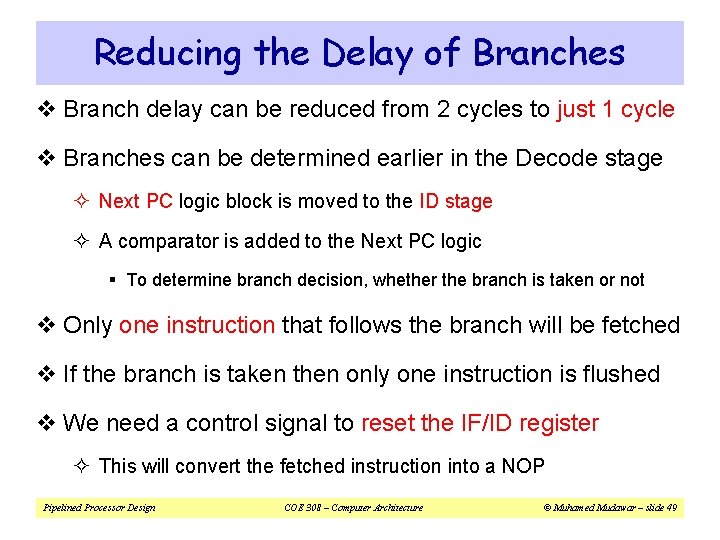

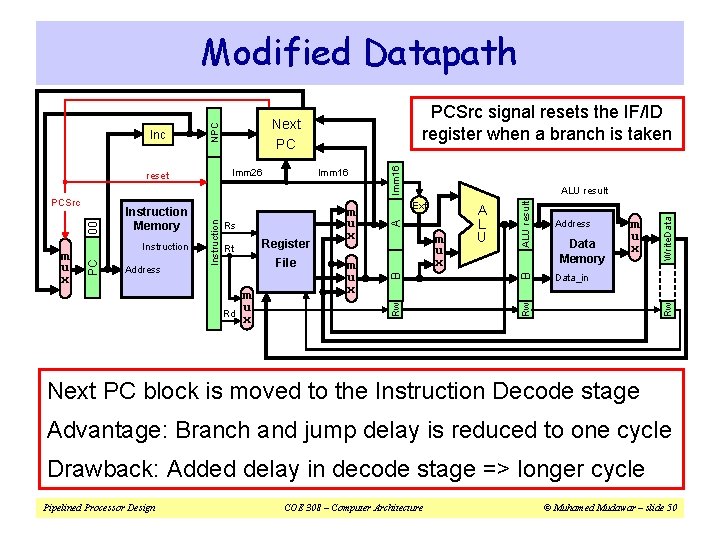

Reducing the Delay of Branches

- Branch delay can be reduced from 2 cycles to just 1 cycle
- Branches can be determined earlier, in the Decode stage
  - Next PC logic block is moved to the ID stage
  - A comparator is added to the Next PC logic
    - To determine the branch decision, whether the branch is taken or not
- Only one instruction that follows the branch will be fetched
- If the branch is taken then only one instruction is flushed
- We need a control signal to reset the IF/ID register
  - This will convert the fetched instruction into a NOP

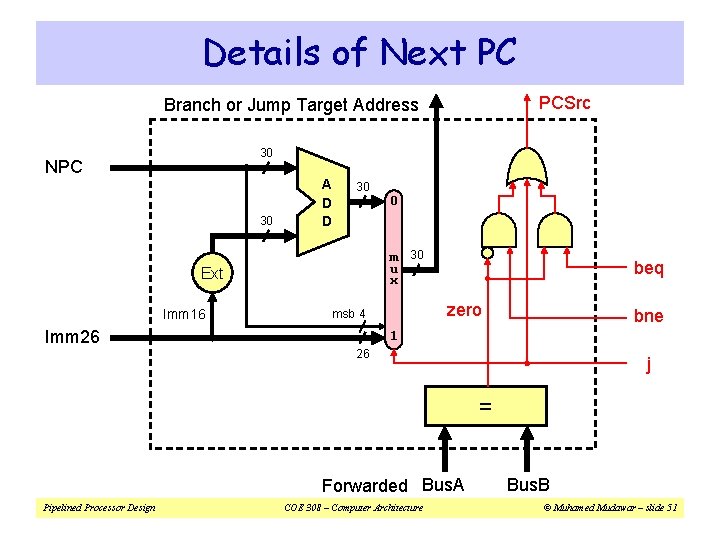

Modified Datapath

[Datapath diagram: the Next PC block sits in the Instruction Decode stage and PCSrc drives the reset input of the IF/ID register]

- PCSrc signal resets the IF/ID register when a branch is taken
- Next PC block is moved to the Instruction Decode stage
- Advantage: branch and jump delay is reduced to one cycle
- Drawback: added delay in the decode stage => longer cycle

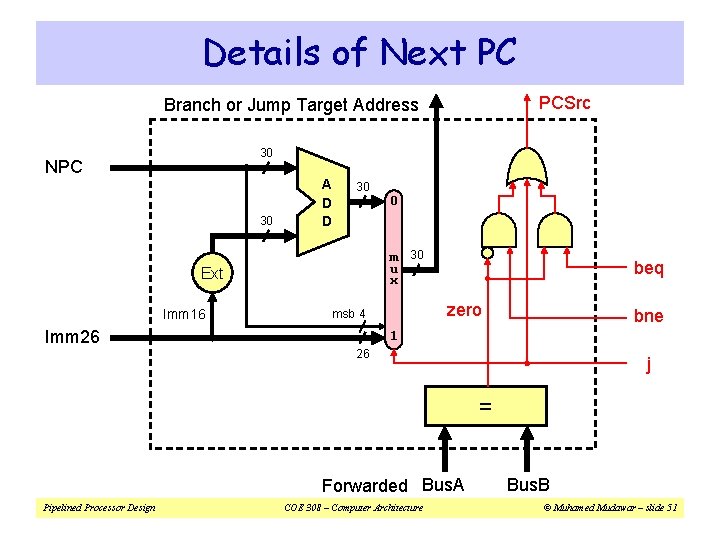

Details of Next PC

[Logic diagram: inputs NPC (30 bits), Imm16 (sign-extended), Imm26 combined with the 4 most significant bits of NPC, forwarded BusA and BusB feeding an equality comparator (zero), and the beq, bne, and j signals; outputs PCSrc and the Branch or Jump Target Address]

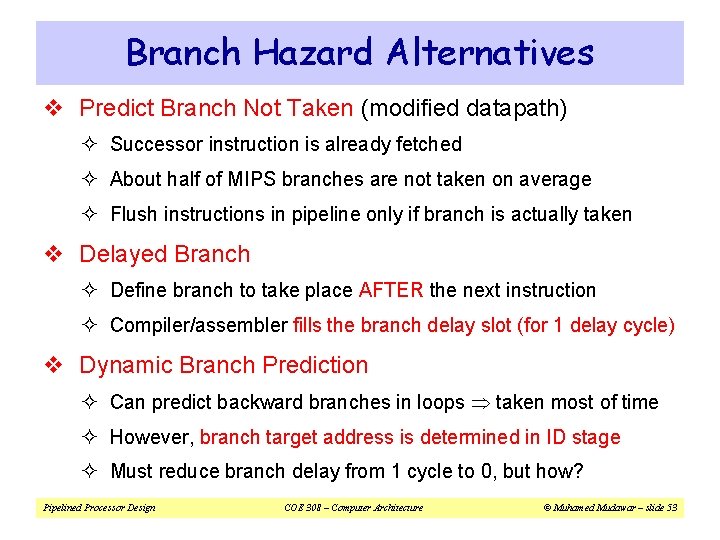

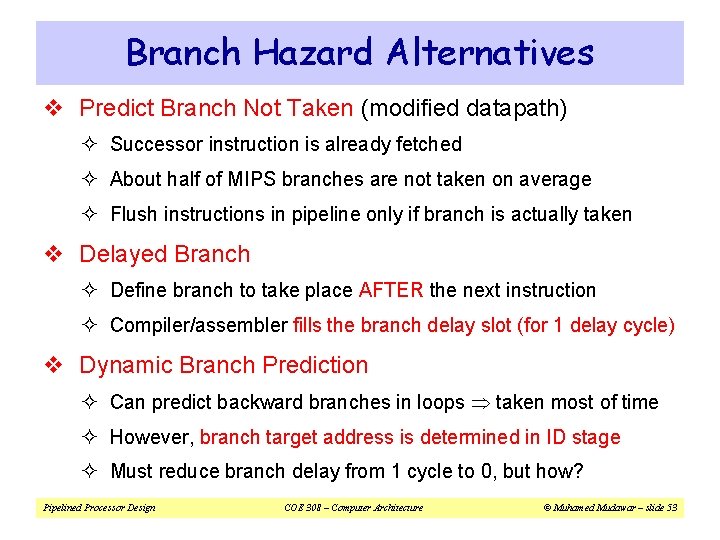

Branch Hazard Alternatives

- Predict Branch Not Taken (modified datapath)
  - Successor instruction is already fetched
  - About half of MIPS branches are not taken on average
  - Flush instructions in the pipeline only if the branch is actually taken
- Delayed Branch
  - Define branch to take place AFTER the next instruction
  - Compiler/assembler fills the branch delay slot (for 1 delay cycle)
- Dynamic Branch Prediction
  - Can predict backward branches in loops as taken most of the time
  - However, branch target address is determined in the ID stage
  - Must reduce branch delay from 1 cycle to 0, but how?

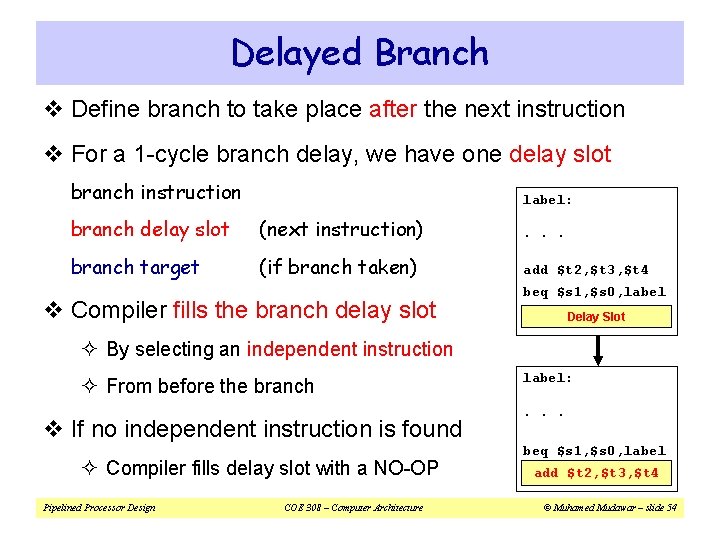

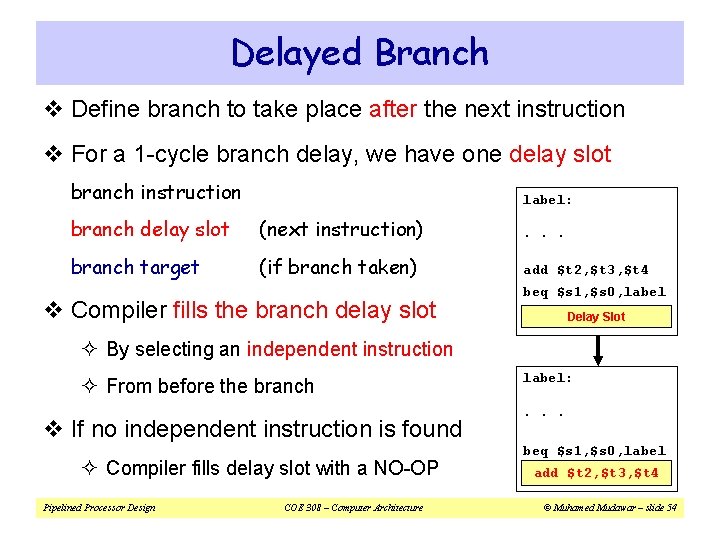

Delayed Branch

- Define branch to take place after the next instruction
- For a 1-cycle branch delay, we have one delay slot:

      branch instruction
      branch delay slot (next instruction)
      . . .
      branch target (if branch taken)

- Compiler fills the branch delay slot
  - By selecting an independent instruction
  - From before the branch
- If no independent instruction is found
  - Compiler fills the delay slot with a NO-OP

Before filling the delay slot:

    label: . . .
           add $t2, $t3, $t4
           beq $s1, $s0, label
           (delay slot)

After filling the delay slot:

    label: . . .
           beq $s1, $s0, label
           add $t2, $t3, $t4

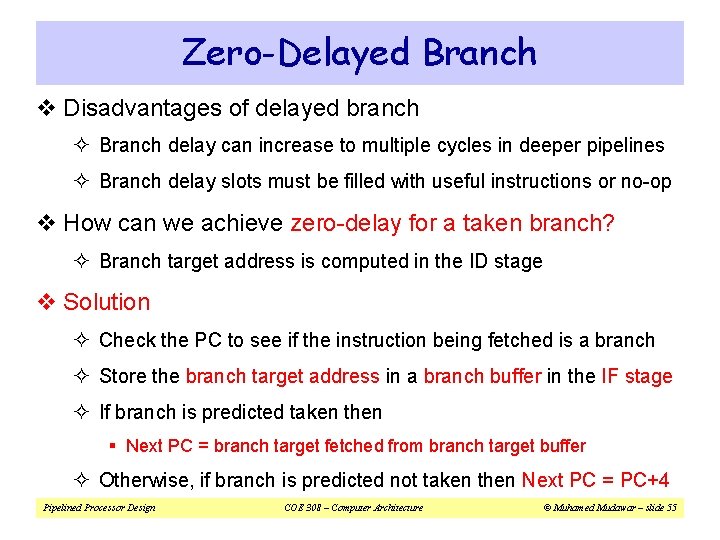

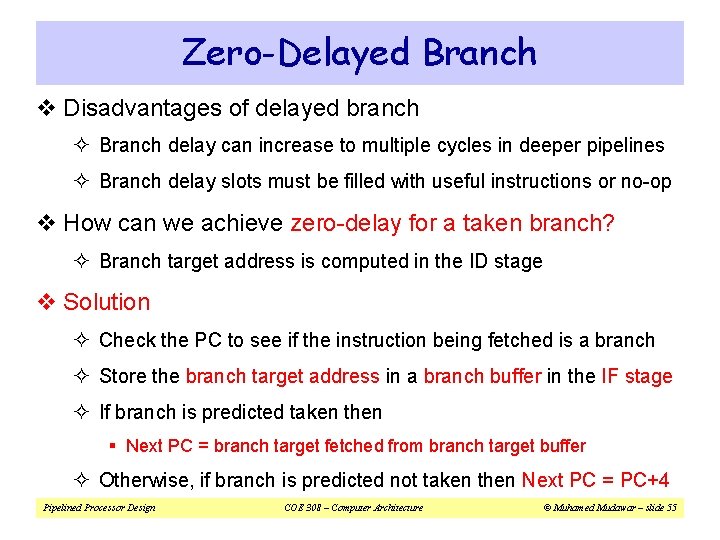

Zero-Delayed Branch

- Disadvantages of delayed branch
  - Branch delay can increase to multiple cycles in deeper pipelines
  - Branch delay slots must be filled with useful instructions or no-ops
- How can we achieve zero delay for a taken branch?
  - Branch target address is computed in the ID stage
- Solution
  - Check the PC to see if the instruction being fetched is a branch
  - Store the branch target address in a branch target buffer in the IF stage
  - If the branch is predicted taken then
    - Next PC = branch target fetched from the branch target buffer
  - Otherwise, if the branch is predicted not taken then Next PC = PC + 4

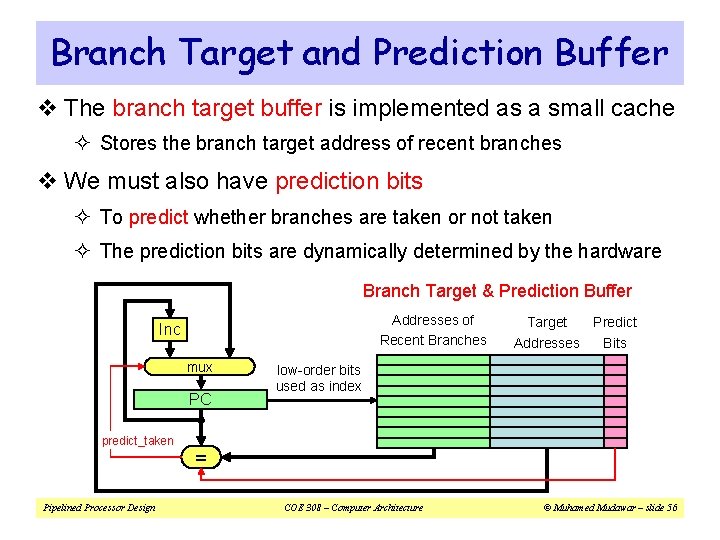

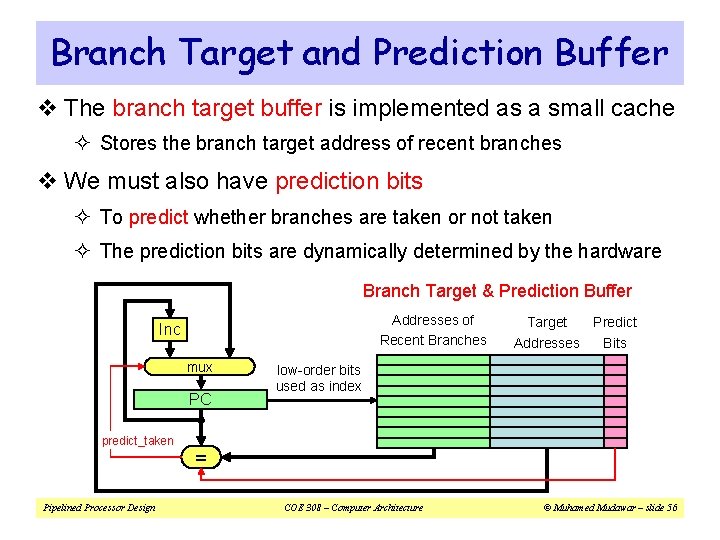

Branch Target and Prediction Buffer

- The branch target buffer is implemented as a small cache
  - Stores the branch target address of recent branches
- We must also have prediction bits
  - To predict whether branches are taken or not taken
  - The prediction bits are dynamically determined by the hardware

[Diagram: Branch Target & Prediction Buffer with columns Addresses of Recent Branches, Target Addresses, and Predict Bits; the low-order bits of the PC are used as the index, an equality comparator checks the stored branch address, and predict_taken selects between the incremented PC and the stored target through a mux]
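A branch target and prediction buffer can be modeled in a few lines. The Python sketch below assumes a direct-mapped organization with one prediction bit per entry and an arbitrary size of 16 entries; the class and method names are hypothetical.

```python
class BranchTargetBuffer:
    """Direct-mapped buffer: each entry holds (branch address, target, predict bit)."""

    def __init__(self, entries: int = 16):
        self.entries = entries
        self.table = [None] * entries

    def _index(self, pc: int) -> int:
        # Low-order bits of the word address select the entry
        return (pc >> 2) % self.entries

    def next_pc(self, pc: int) -> int:
        """Predict the next fetch address during the IF stage."""
        entry = self.table[self._index(pc)]
        # The stored address must match: another branch may share the index
        if entry is not None and entry[0] == pc and entry[2]:
            return entry[1]   # predicted taken: fetch from the stored target
        return pc + 4         # no entry or predicted not taken

    def update(self, pc: int, target: int, taken: bool) -> None:
        """Record the actual outcome once the branch is resolved."""
        self.table[self._index(pc)] = (pc, target, taken)


if __name__ == "__main__":
    btb = BranchTargetBuffer()
    print(hex(btb.next_pc(0x40)))   # unknown branch: falls through
    btb.update(0x40, 0x20, True)
    print(hex(btb.next_pc(0x40)))   # now predicted taken to 0x20
```

Comparing the stored branch address plays the role of the cache tag check, so an instruction that merely maps to the same entry is not redirected.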

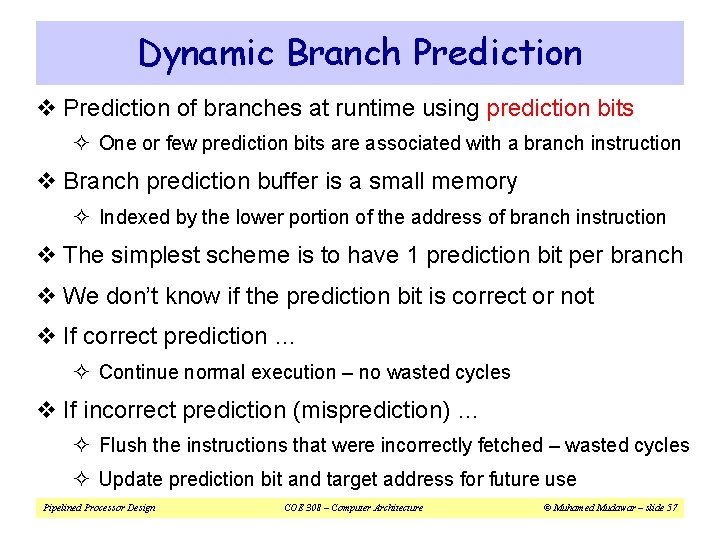

Dynamic Branch Prediction

- Prediction of branches at runtime using prediction bits
  - One or a few prediction bits are associated with a branch instruction
- Branch prediction buffer is a small memory
  - Indexed by the lower portion of the address of the branch instruction
- The simplest scheme is to have 1 prediction bit per branch
- We don’t know in advance whether the prediction is correct
- If the prediction is correct:
  - Continue normal execution: no wasted cycles
- If the prediction is incorrect (misprediction):
  - Flush the instructions that were incorrectly fetched: wasted cycles
  - Update the prediction bit and target address for future use

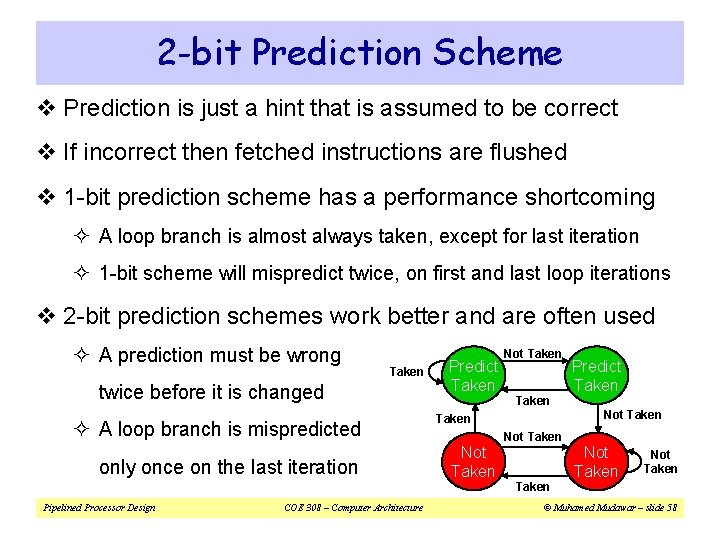

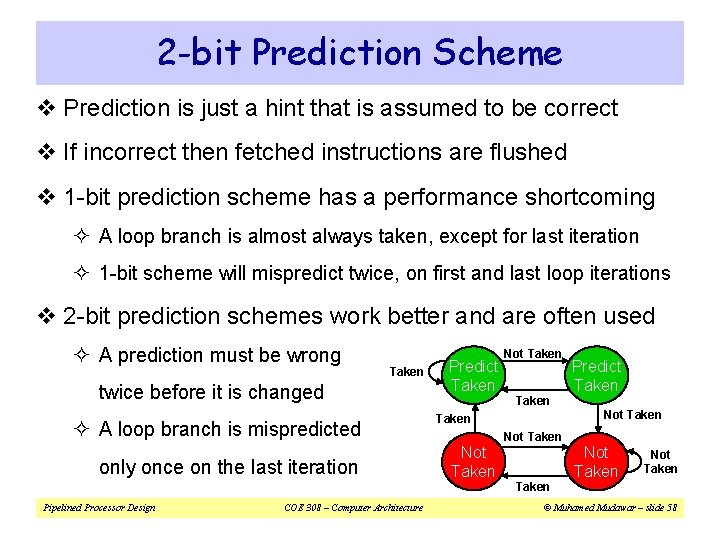

2-bit Prediction Scheme

- Prediction is just a hint that is assumed to be correct
- If incorrect then the fetched instructions are flushed
- 1-bit prediction scheme has a performance shortcoming
  - A loop branch is almost always taken, except for the last iteration
  - 1-bit scheme will mispredict twice, on the first and last loop iterations
- 2-bit prediction schemes work better and are often used
  - A prediction must be wrong twice before it is changed
  - A loop branch is mispredicted only once, on the last iteration

[State diagram: predict taken and predict not taken states with Taken and Not Taken transitions]
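The difference between the two schemes can be seen by simulating a loop branch. The Python sketch below models the 2-bit scheme as a saturating counter, which is one common variant and an assumption here, since the slide's state diagram is not recoverable. The outcome sequence (a 10-iteration loop executed 3 times) is also illustrative.

```python
from typing import List


def mispredictions_1bit(outcomes: List[bool], initial: bool = True) -> int:
    """1-bit scheme: predict whatever the branch did last time."""
    prediction = initial
    misses = 0
    for taken in outcomes:
        if prediction != taken:
            misses += 1
        prediction = taken   # remember the most recent outcome
    return misses


def mispredictions_2bit(outcomes: List[bool], counter: int = 3) -> int:
    """2-bit saturating counter: states 2 and 3 predict taken, 0 and 1 not taken."""
    misses = 0
    for taken in outcomes:
        if (counter >= 2) != taken:
            misses += 1
        # Move one step toward the actual outcome, saturating at 0 and 3
        counter = min(counter + 1, 3) if taken else max(counter - 1, 0)
    return misses


# Loop branch: taken 9 times, then not taken on the last iteration
loop_outcomes = ([True] * 9 + [False]) * 3

if __name__ == "__main__":
    print("1-bit:", mispredictions_1bit(loop_outcomes))
    print("2-bit:", mispredictions_2bit(loop_outcomes))
```

With both predictors starting in a taken state, the 1-bit scheme misses on the loop exit and again on re-entry, while the 2-bit counter misses only on each loop exit.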

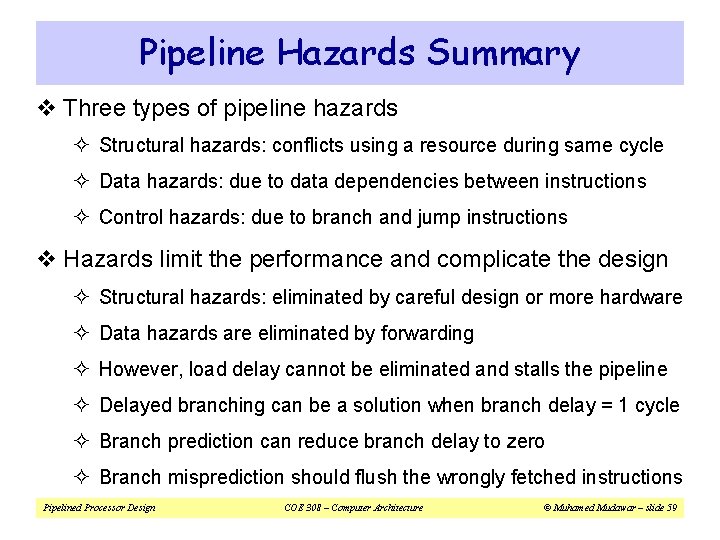

Pipeline Hazards Summary

- Three types of pipeline hazards
  - Structural hazards: conflicts using a resource during the same cycle
  - Data hazards: due to data dependencies between instructions
  - Control hazards: due to branch and jump instructions
- Hazards limit the performance and complicate the design
  - Structural hazards are eliminated by careful design or more hardware
  - Data hazards are eliminated by forwarding
  - However, load delay cannot be eliminated and stalls the pipeline
  - Delayed branching can be a solution when branch delay = 1 cycle
  - Branch prediction can reduce branch delay to zero
  - Branch misprediction should flush the wrongly fetched instructions