PipeSwitch: Fast Pipelined Context Switching for Deep Learning Applications

- Slides: 46

PipeSwitch: Fast Pipelined Context Switching for Deep Learning Applications. Zhihao Bai, Zhen Zhang, Yibo Zhu, Xin Jin 1

Deep learning powers intelligent applications in many domains 2

Training and inference 3
- Training: high throughput
- Inference: low latency

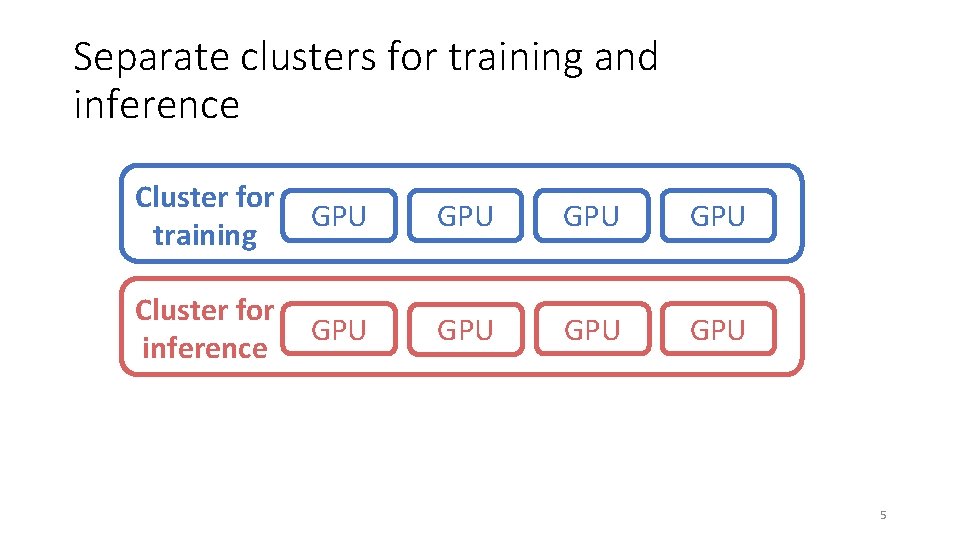

GPU clusters for DL workloads 4

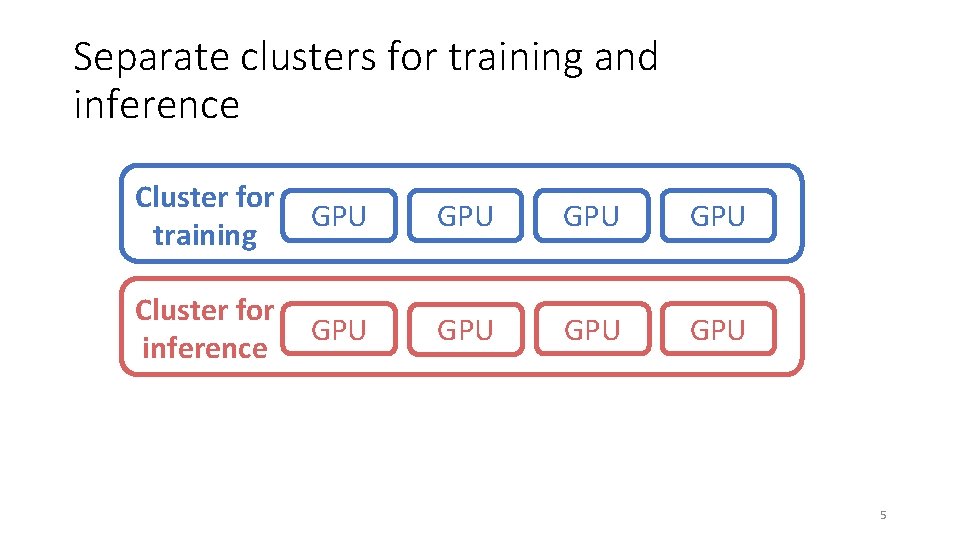

Separate clusters for training and inference 5
- Cluster for training (GPUs)
- Cluster for inference (GPUs)

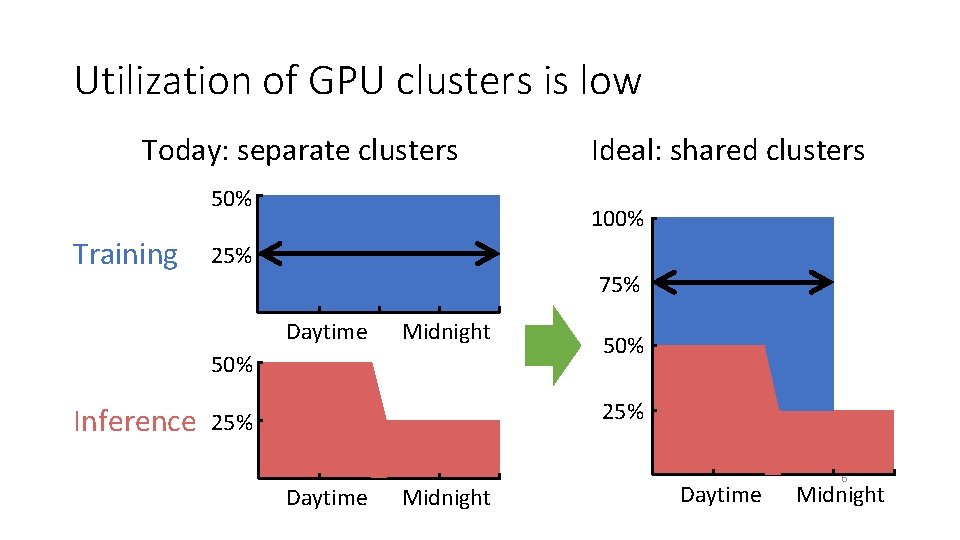

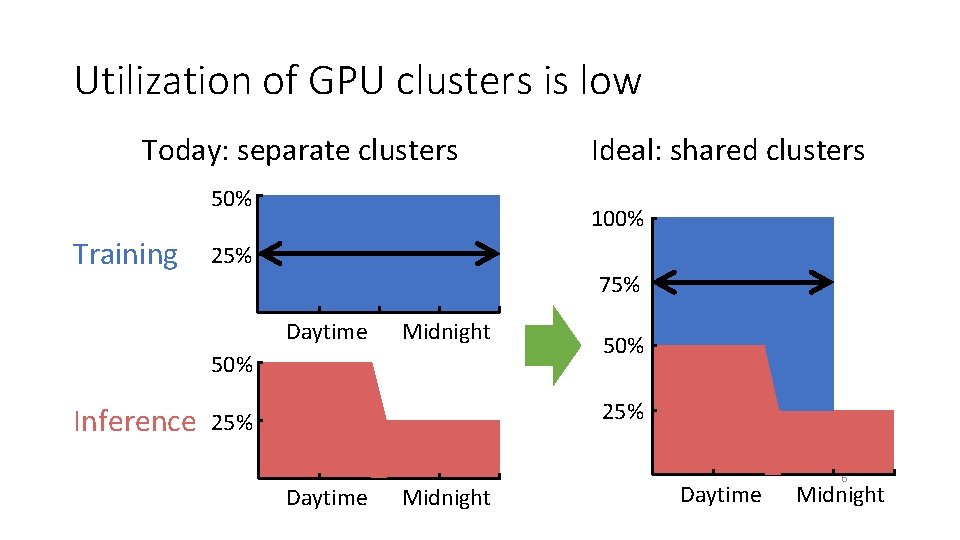

Utilization of GPU clusters is low 6
- Today: separate clusters (utilization charts for training and for inference, daytime vs. midnight)
- Ideal: shared clusters (utilization chart, daytime vs. midnight)

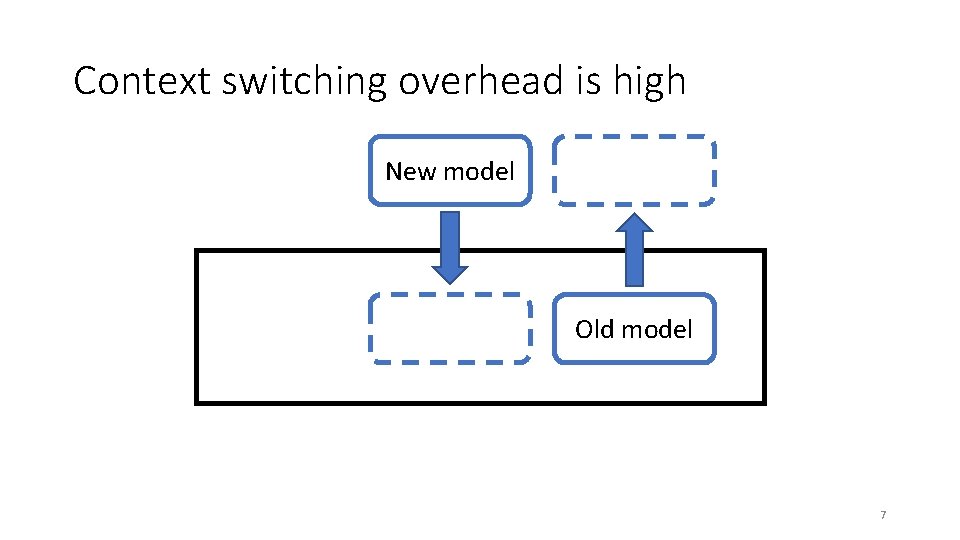

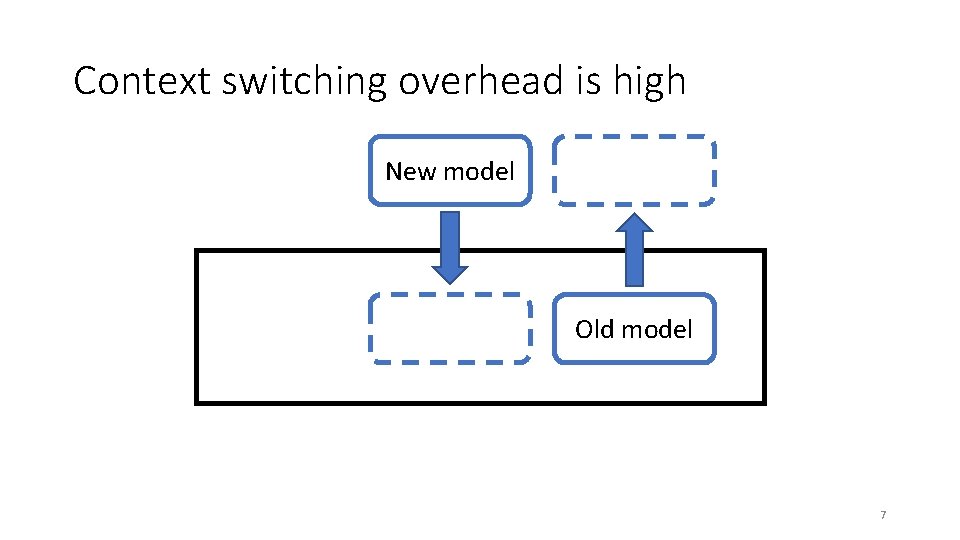

Context switching overhead is high (figure labels: old model, new model) 7

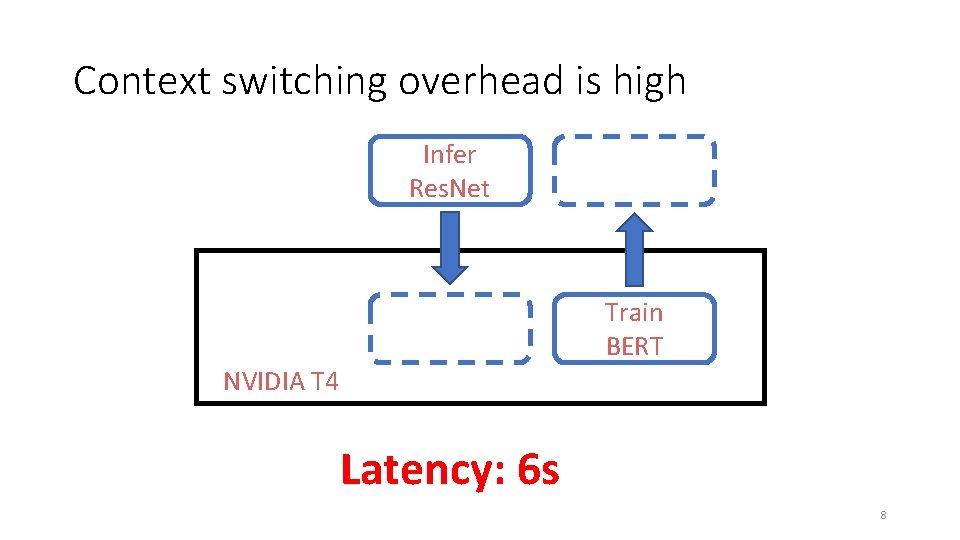

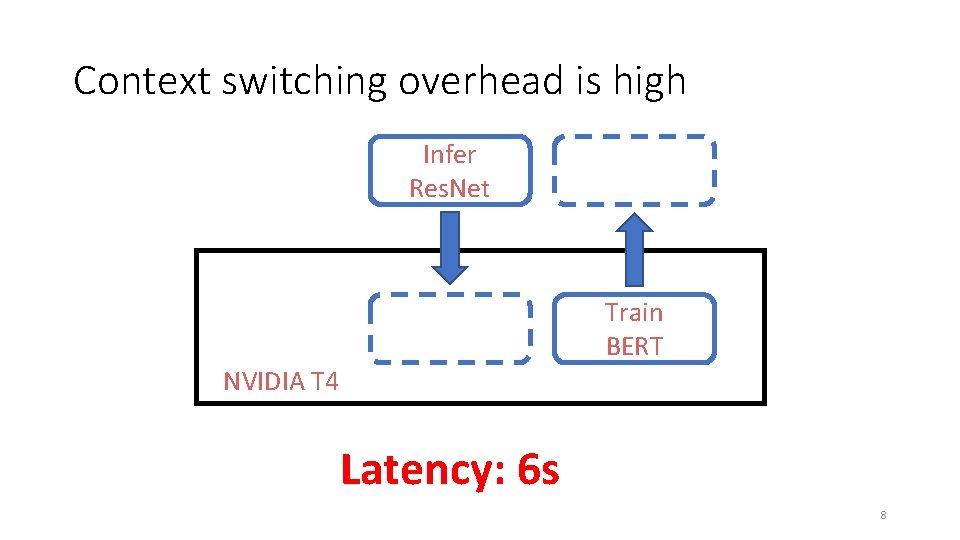

Context switching overhead is high 8
- Infer ResNet
- Train BERT
- NVIDIA T4
- Latency: 6 s

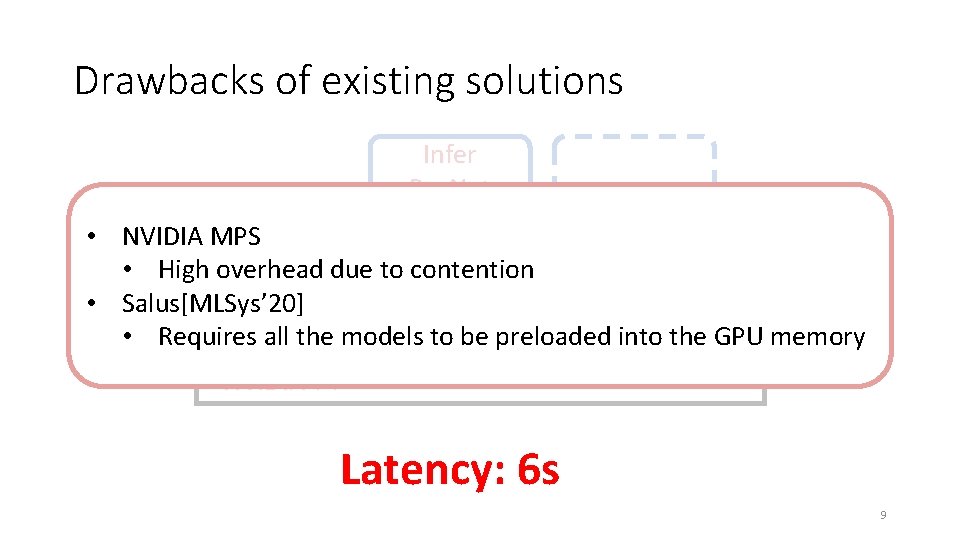

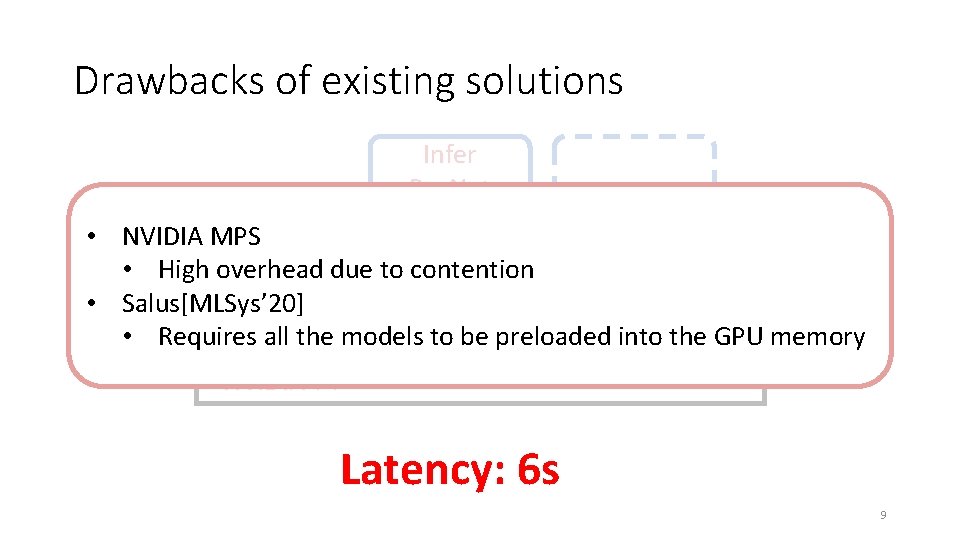

Drawbacks of existing solutions 9
- NVIDIA MPS
  - High overhead due to contention
- Salus [MLSys '20]
  - Requires all the models to be preloaded into the GPU memory
- Figure labels: Infer ResNet, Train BERT, NVIDIA T4, latency: 6 s

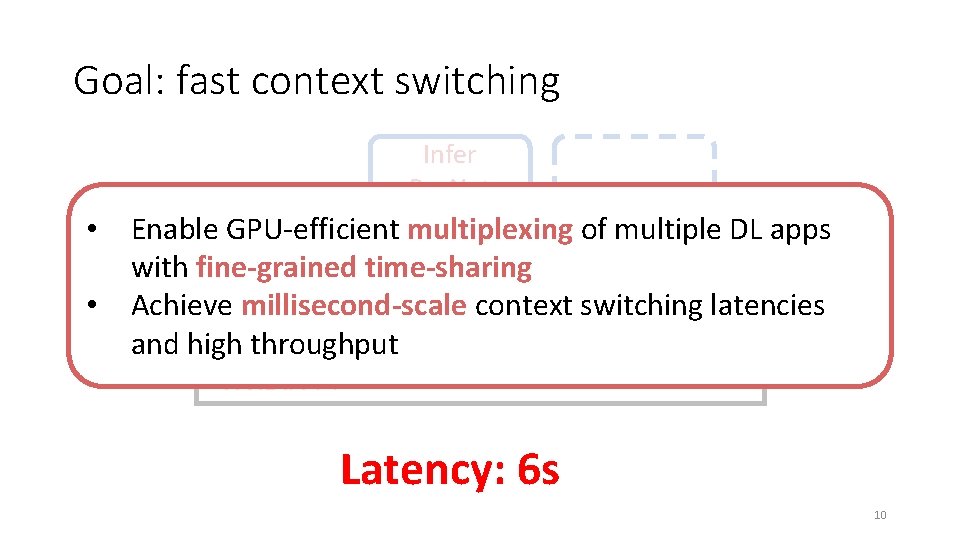

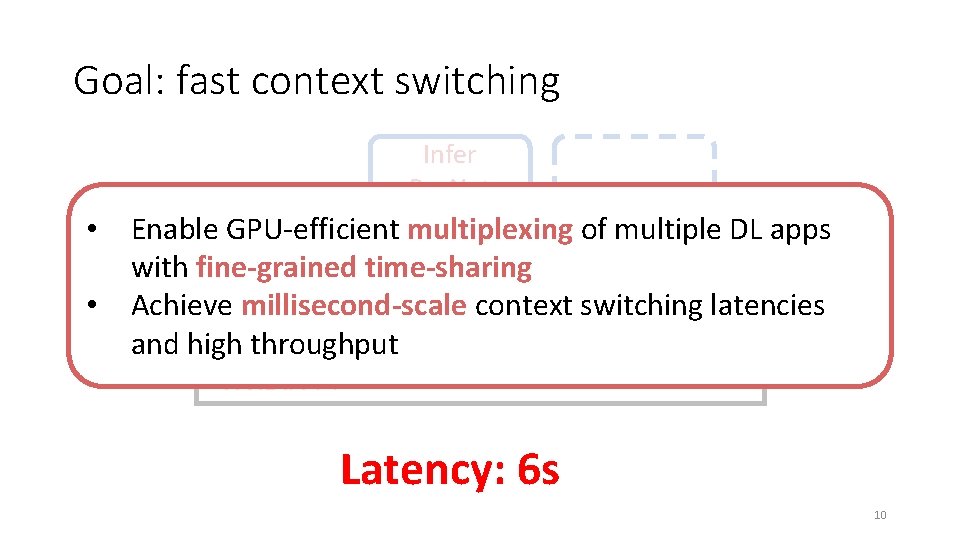

Goal: fast context switching 10
- Enable GPU-efficient multiplexing of multiple DL apps with fine-grained time-sharing
- Achieve millisecond-scale context switching latencies and high throughput
- Figure labels: Infer ResNet, Train BERT, NVIDIA T4, latency: 6 s

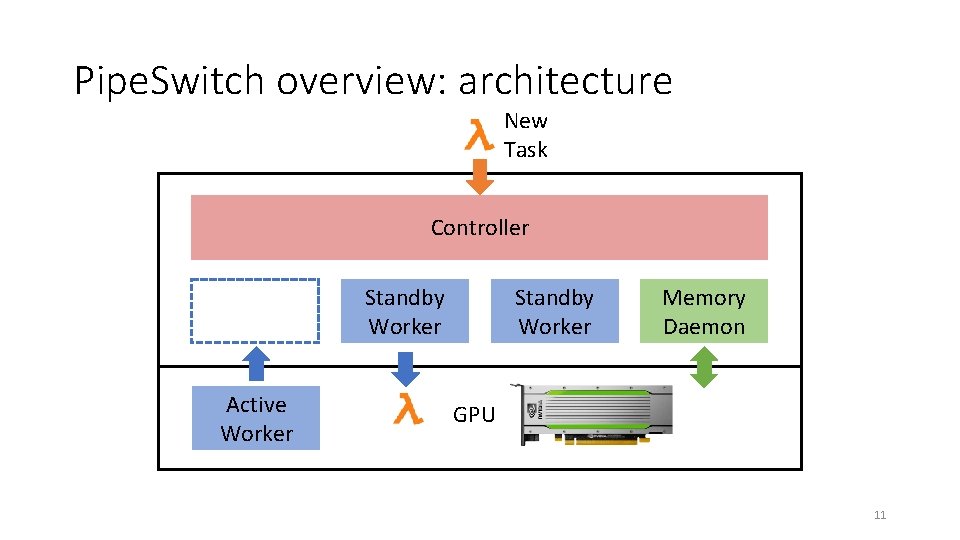

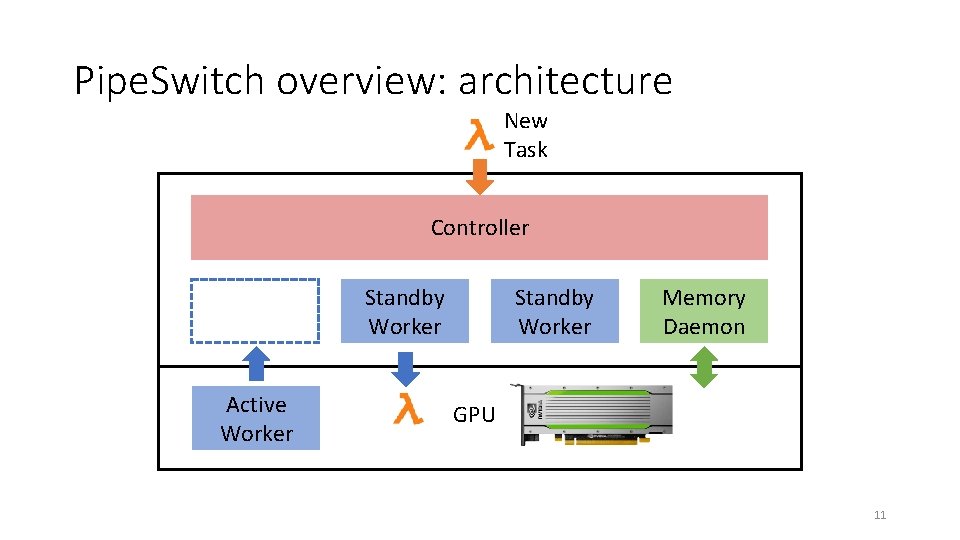

PipeSwitch overview: architecture 11
- Components shown: new task, controller, active worker, standby workers, memory daemon, GPU

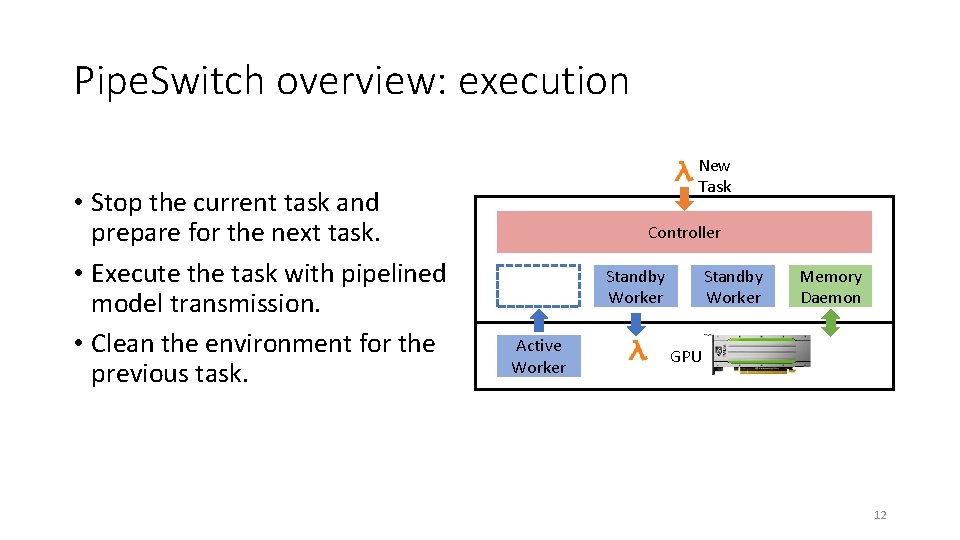

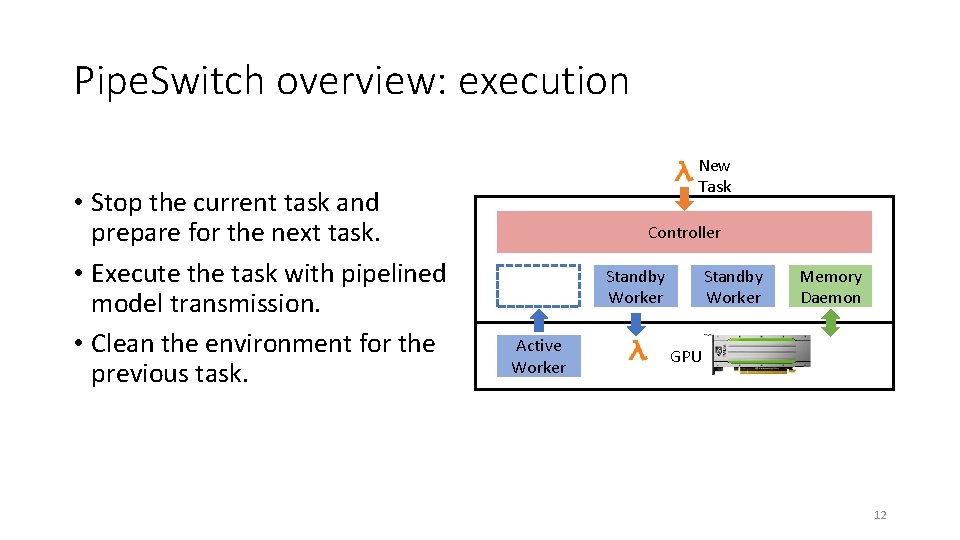

PipeSwitch overview: execution 12
- Stop the current task and prepare for the next task.
- Execute the task with pipelined model transmission.
- Clean the environment for the previous task.
- Components shown: new task, controller, active worker, standby workers, memory daemon, GPU

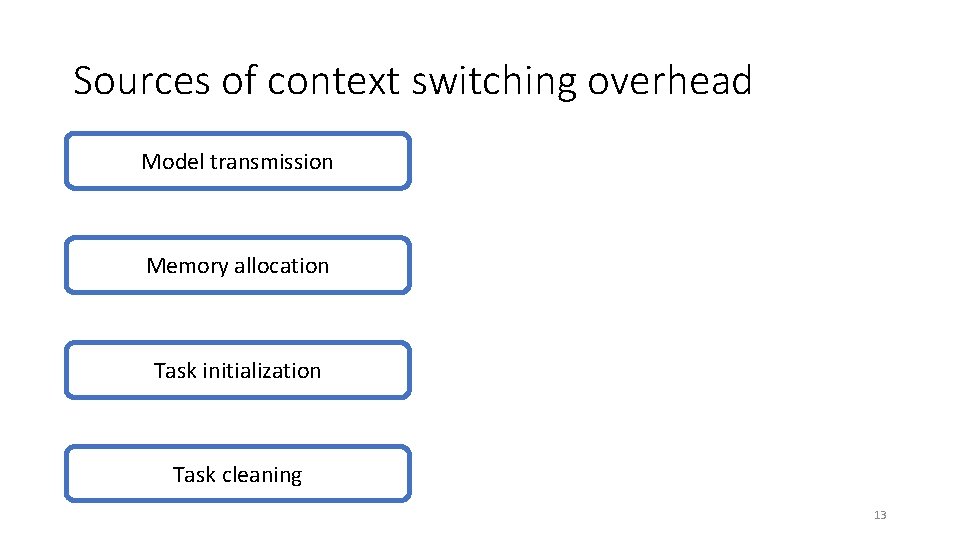

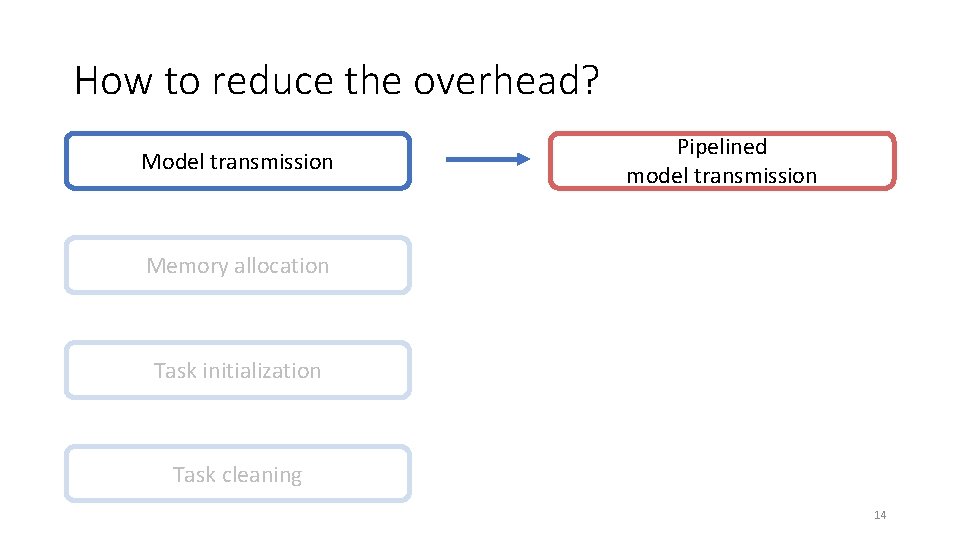

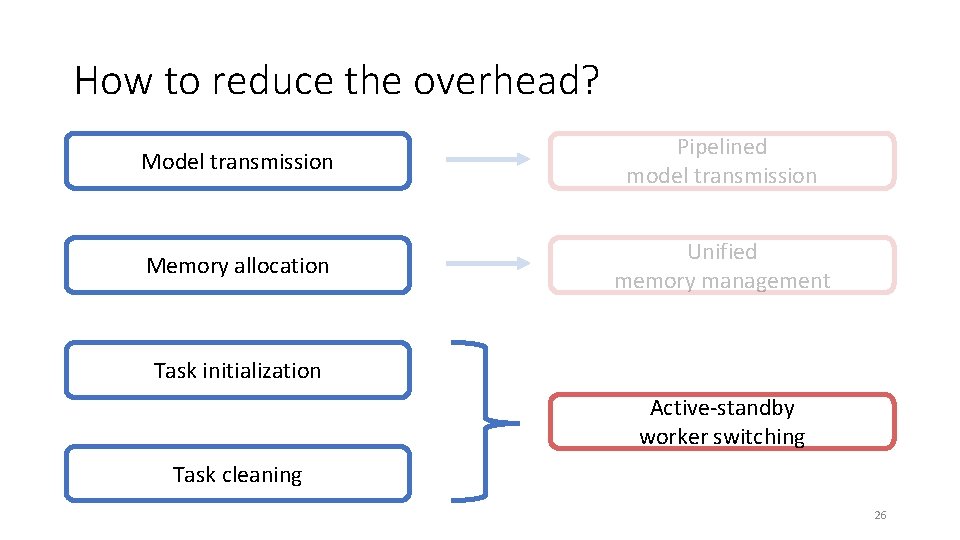

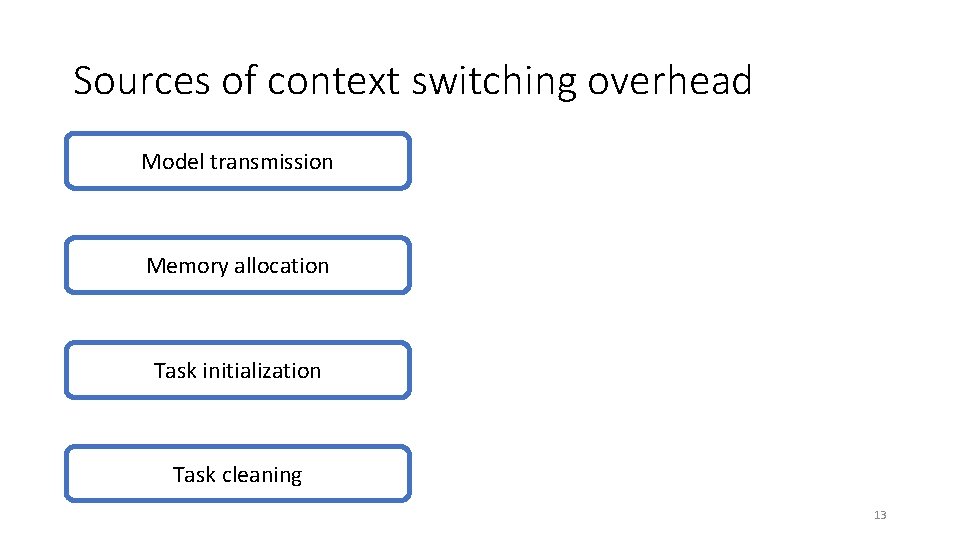

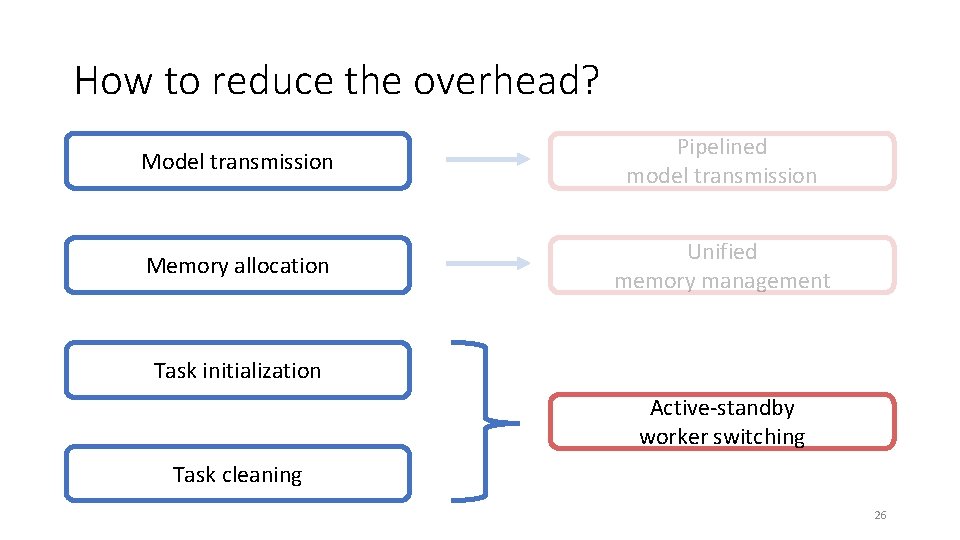

Sources of context switching overhead 13
- Model transmission
- Memory allocation
- Task initialization
- Task cleaning

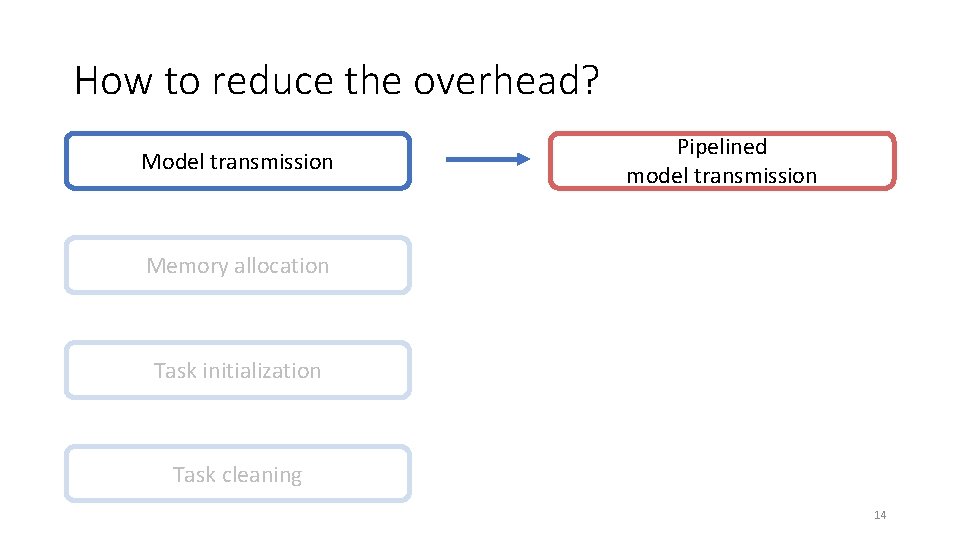

How to reduce the overhead? 14
- Model transmission: pipelined model transmission
- Memory allocation
- Task initialization
- Task cleaning

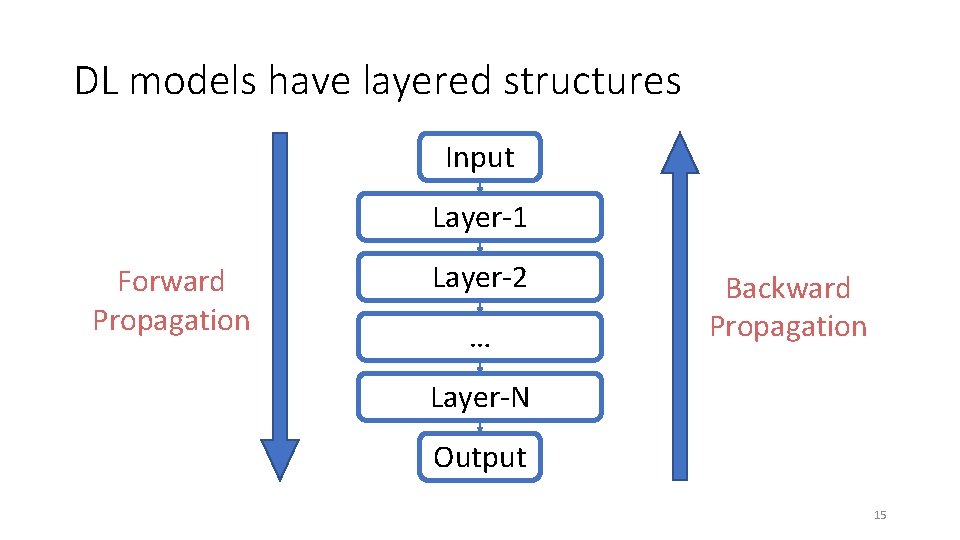

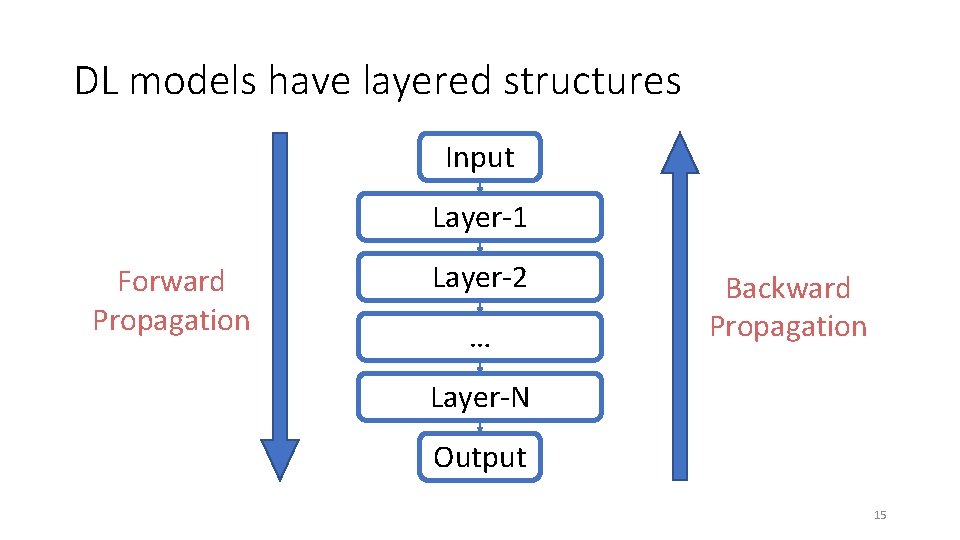

DL models have layered structures 15
- Input, Layer-1, Layer-2, …, Layer-N, Output
- Forward propagation runs from the input toward the output; backward propagation runs in the opposite direction

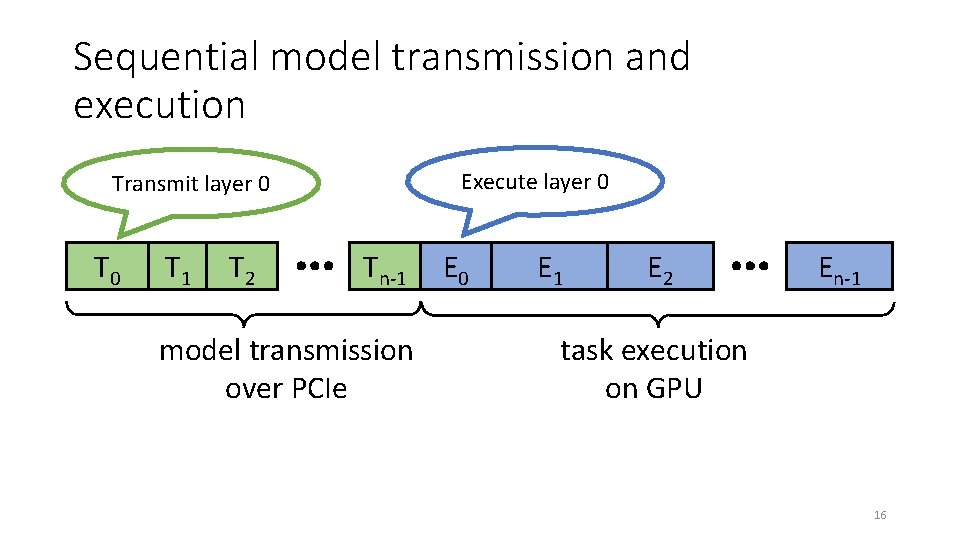

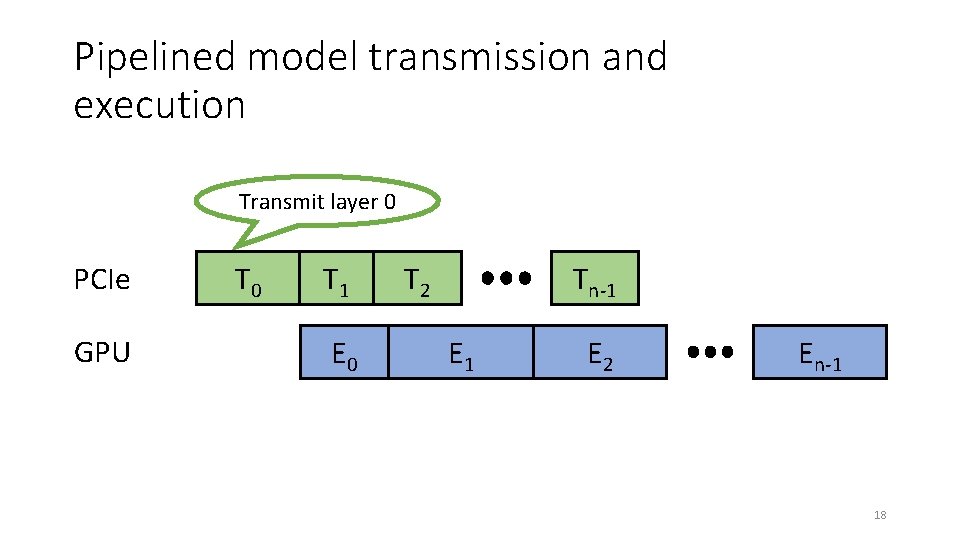

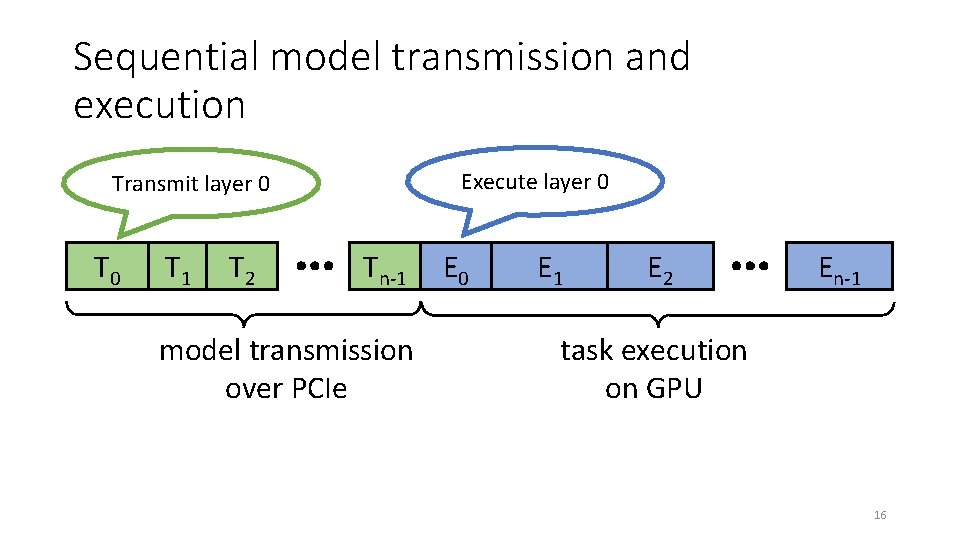

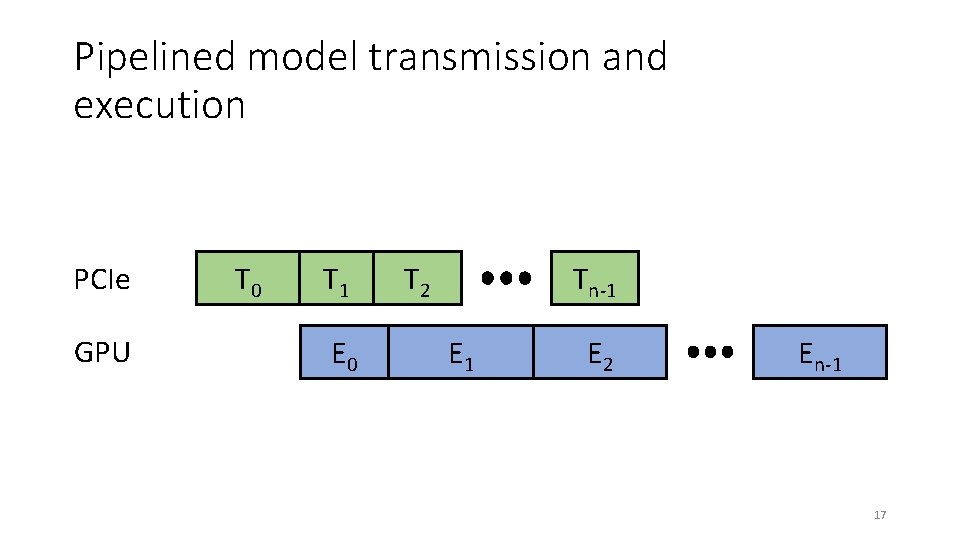

Sequential model transmission and execution 16
- Model transmission over PCIe: T0 (transmit layer 0), T1, T2, …, Tn-1
- Task execution on GPU: E0 (execute layer 0), E1, E2, …, En-1

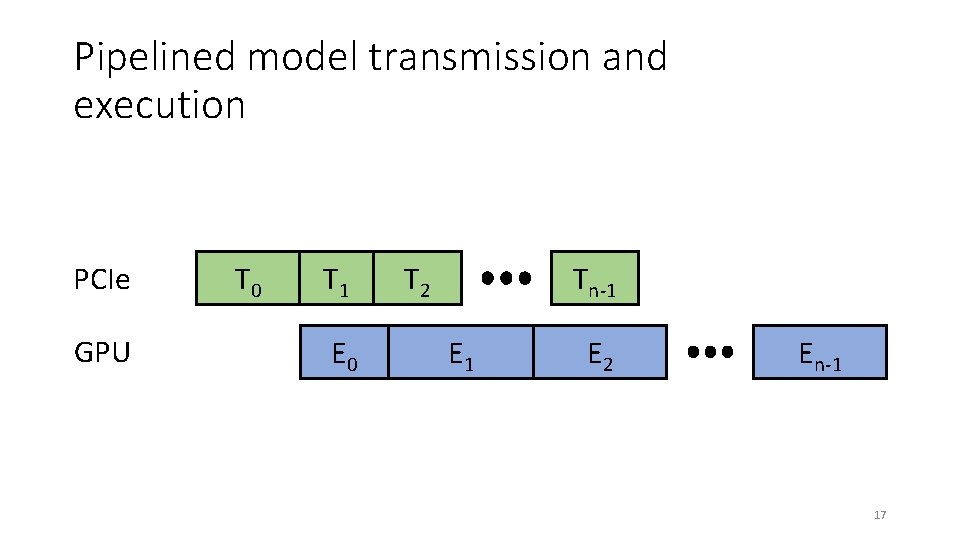

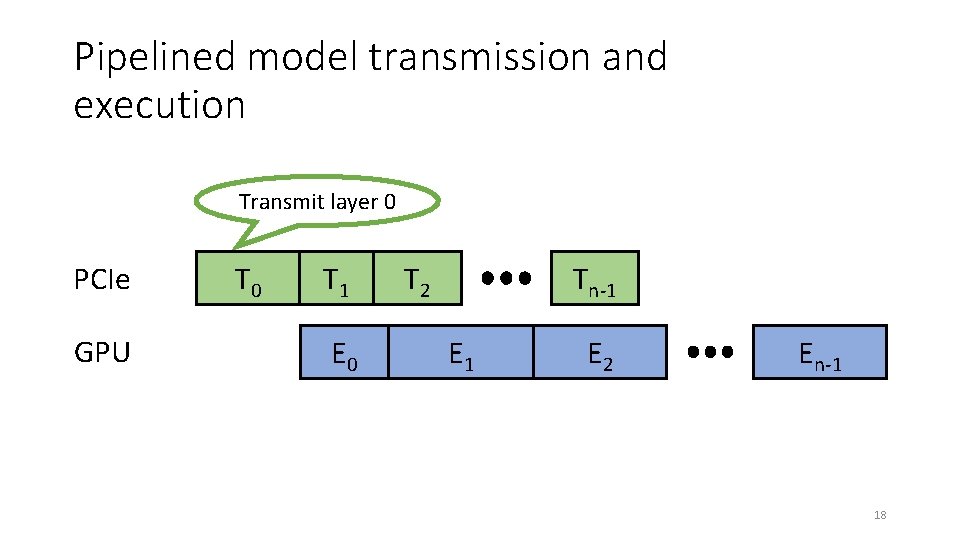

Pipelined model transmission and execution 17
- PCIe: T0, T1, T2, …, Tn-1
- GPU: E0, E1, E2, …, En-1

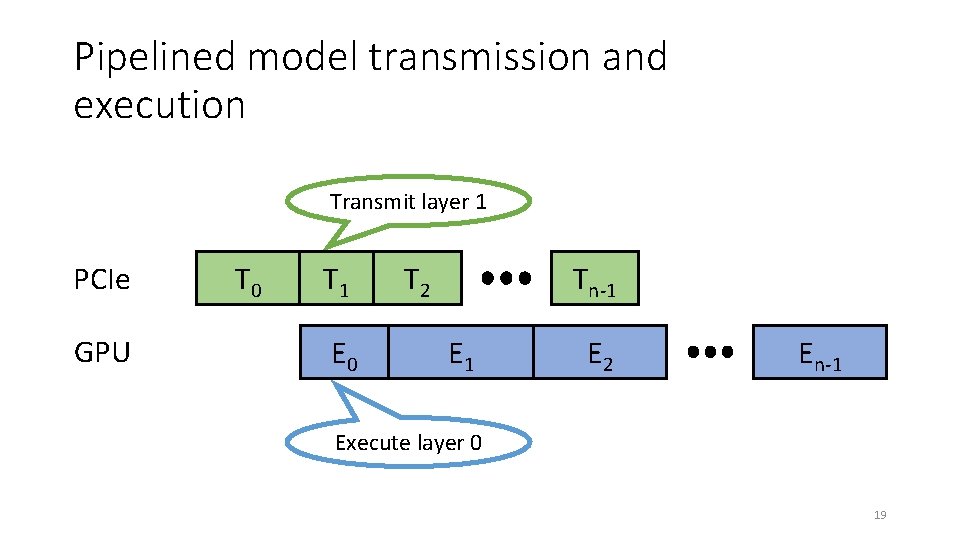

Pipelined model transmission and execution 18
- PCIe: T0, T1, T2, …, Tn-1
- GPU: E0, E1, E2, …, En-1
- Step shown: transmit layer 0

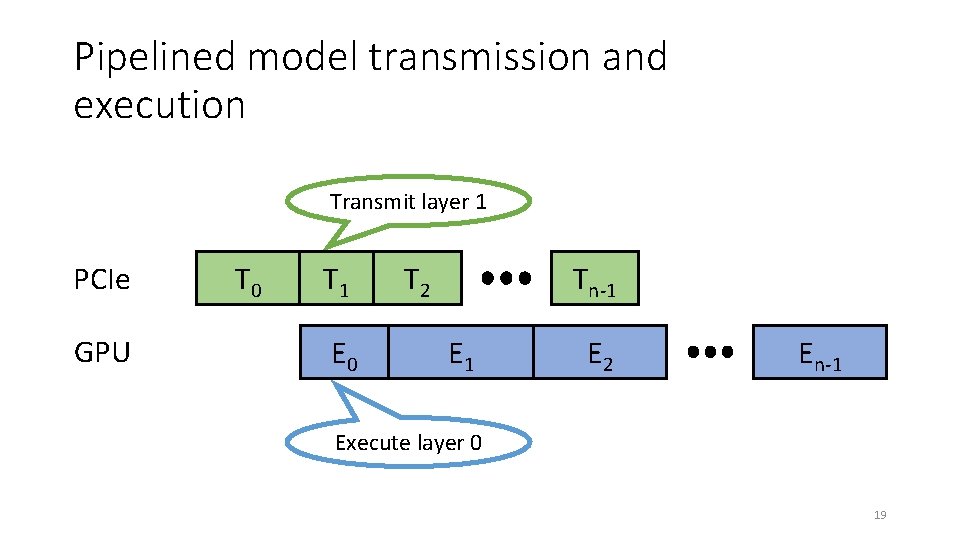

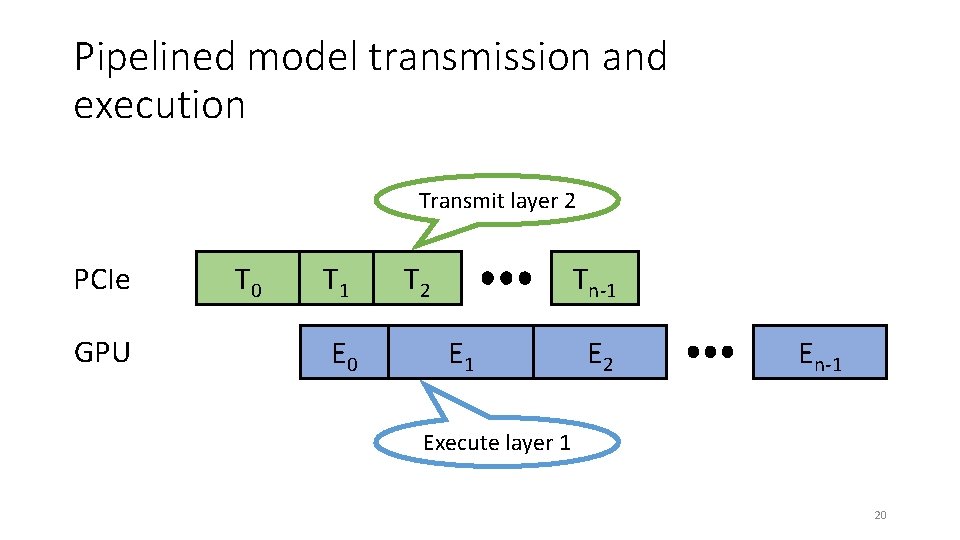

Pipelined model transmission and execution 19
- PCIe: T0, T1, T2, …, Tn-1
- GPU: E0, E1, E2, …, En-1
- Step shown: transmit layer 1 while executing layer 0

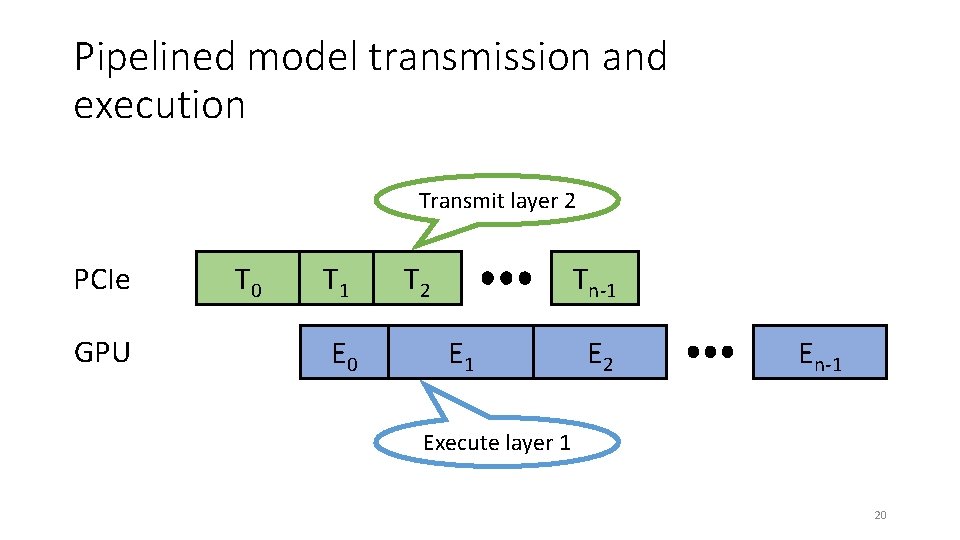

Pipelined model transmission and execution 20
- PCIe: T0, T1, T2, …, Tn-1
- GPU: E0, E1, E2, …, En-1
- Step shown: transmit layer 2 while executing layer 1
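The difference between the sequential and the pipelined timelines can be checked with a small timing model. This is a minimal sketch, not PipeSwitch code: the function names and the per-layer durations are hypothetical, and it assumes one PCIe link, one GPU, and no per-transfer overhead.

```python
from typing import Sequence


def sequential_finish(transmit: Sequence[float], execute: Sequence[float]) -> float:
    """Transmit every layer first, then execute every layer."""
    return sum(transmit) + sum(execute)


def pipelined_finish(transmit: Sequence[float], execute: Sequence[float]) -> float:
    """Execute layer i as soon as it has arrived and layer i-1 has finished."""
    pcie_free = 0.0  # time at which the PCIe link finishes its current transfer
    gpu_free = 0.0   # time at which the GPU finishes its current layer
    for t, e in zip(transmit, execute):
        pcie_free += t                      # layer arrives on the GPU
        start = max(pcie_free, gpu_free)    # wait for both the data and the GPU
        gpu_free = start + e
    return gpu_free


# Hypothetical per-layer durations in milliseconds.
transmit_ms = [4.0, 4.0, 4.0, 4.0]
execute_ms = [3.0, 3.0, 3.0, 3.0]

print(sequential_finish(transmit_ms, execute_ms))  # 28.0
print(pipelined_finish(transmit_ms, execute_ms))   # 19.0
```

With these numbers only the last layer's execution is left exposed after the final transfer, so the pipelined run finishes at 19 ms instead of 28 ms.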

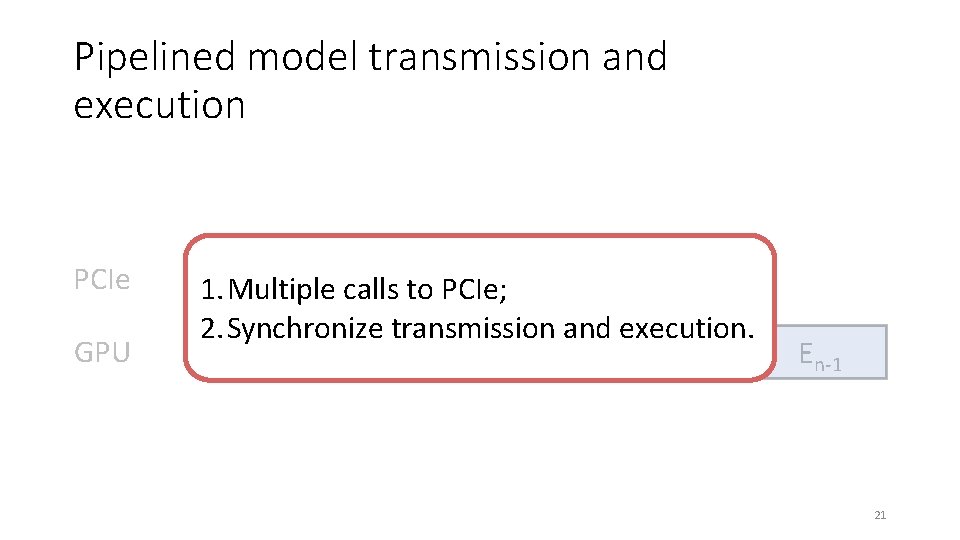

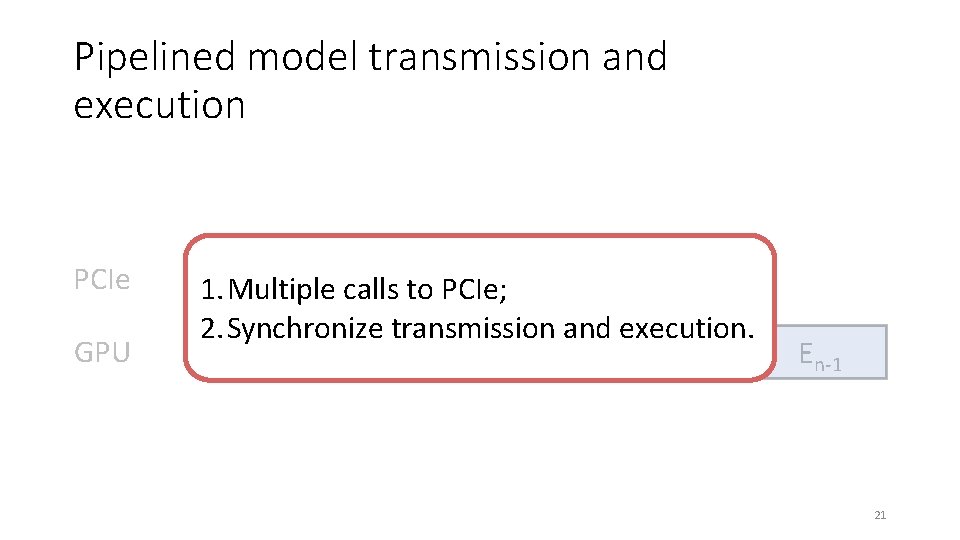

Pipelined model transmission and execution 21
- PCIe: T0, T1, T2, …, Tn-1
- GPU: E0, E1, E2, …, En-1
1. Multiple calls to PCIe
2. Synchronize transmission and execution

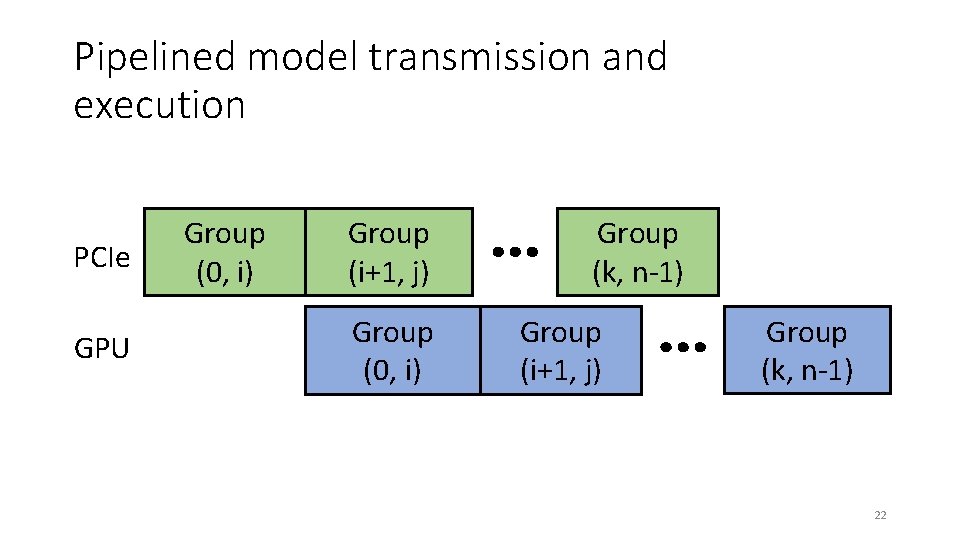

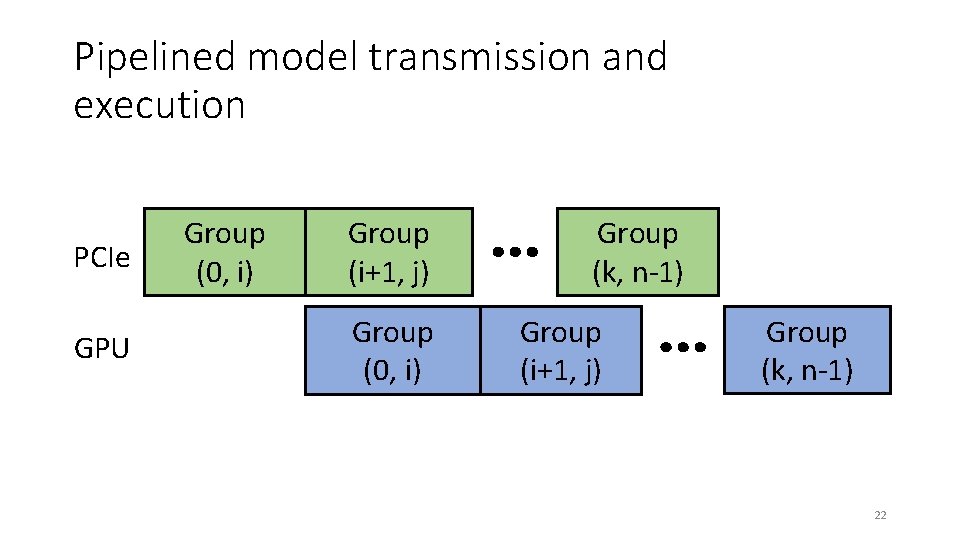

Pipelined model transmission and execution 22
- PCIe: Group(0, i), Group(i+1, j), …, Group(k, n-1)
- GPU: Group(0, i), Group(i+1, j), …, Group(k, n-1)

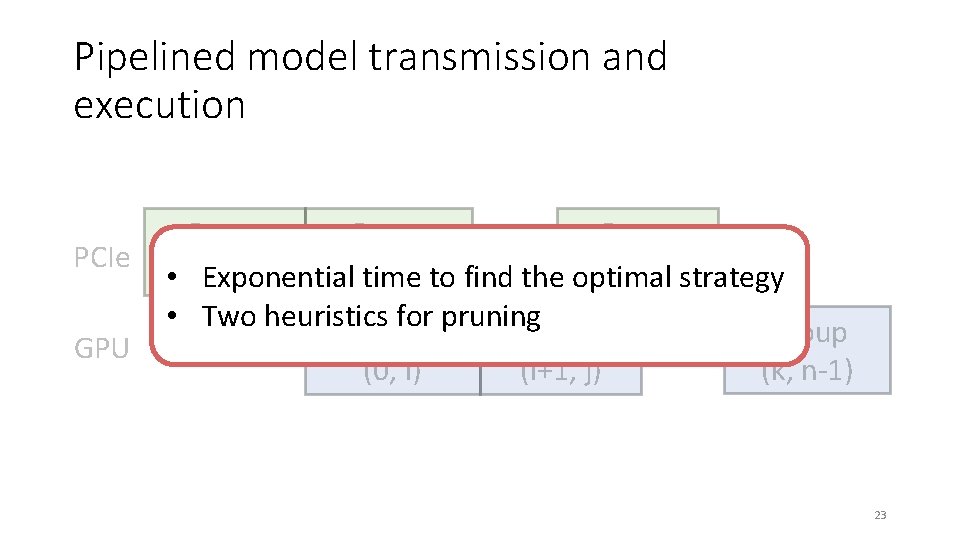

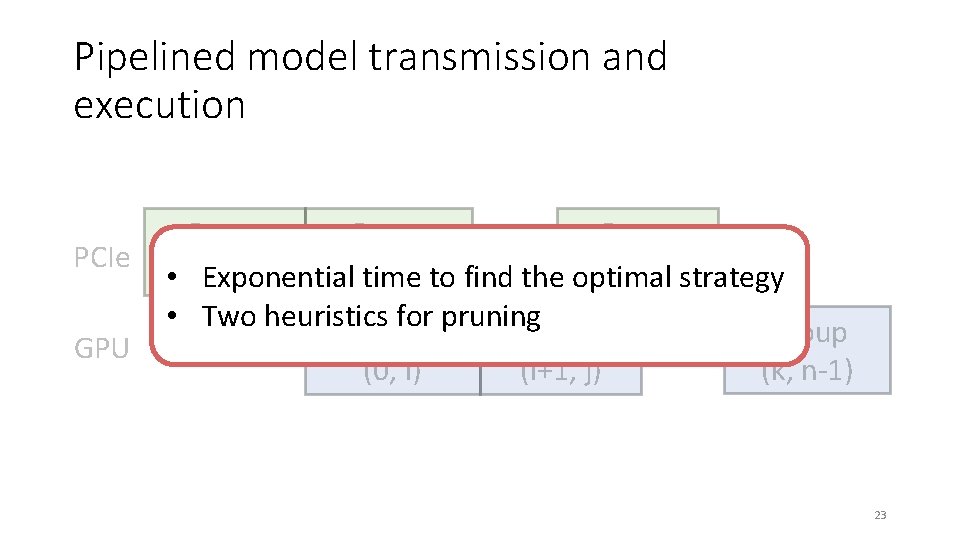

Pipelined model transmission and execution 23
- PCIe: Group(0, i), Group(i+1, j), …, Group(k, n-1)
- GPU: Group(0, i), Group(i+1, j), …, Group(k, n-1)
- Exponential time to find the optimal strategy
- Two heuristics for pruning
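To see why the search is exponential, the sketch below enumerates every way to split n layers into contiguous groups (2^(n-1) candidates) and picks the one with the earliest finish time. It is a brute-force illustration only: it does not implement the two pruning heuristics, and the cost model (a fixed `per_group_overhead` added to each group's transfer), the function names, and the durations are all assumptions made for this example.

```python
from itertools import product
from typing import Iterator, List, Sequence, Tuple

Group = Tuple[int, int]  # (first layer, last layer), both inclusive


def groupings(n: int) -> Iterator[List[Group]]:
    """Yield every split of layers 0..n-1 into contiguous groups."""
    for cuts in product([False, True], repeat=n - 1):
        groups, start = [], 0
        for i, cut in enumerate(cuts):
            if cut:  # close the current group after layer i
                groups.append((start, i))
                start = i + 1
        groups.append((start, n - 1))
        yield groups


def finish_time(groups: Sequence[Group], transmit: Sequence[float],
                execute: Sequence[float], per_group_overhead: float) -> float:
    """Pipelined finish time when each group is one transfer and one execution."""
    pcie_free = gpu_free = 0.0
    for first, last in groups:
        pcie_free += per_group_overhead + sum(transmit[first:last + 1])
        gpu_free = max(pcie_free, gpu_free) + sum(execute[first:last + 1])
    return gpu_free


def best_grouping(transmit: Sequence[float], execute: Sequence[float],
                  per_group_overhead: float) -> List[Group]:
    """Exhaustive search over all groupings (exponential in the layer count)."""
    return min(groupings(len(transmit)),
               key=lambda g: finish_time(g, transmit, execute, per_group_overhead))


# Hypothetical durations in milliseconds.
transmit_ms = [1.0, 1.0, 1.0, 1.0]
execute_ms = [1.0, 1.0, 1.0, 1.0]

print(len(list(groupings(4))))                     # 8
print(best_grouping(transmit_ms, execute_ms, 2.0)) # [(0, 2), (3, 3)]
```

Under this toy cost model, per-layer pipelining pays the overhead four times and a single group gives up all overlap; the best split lies in between.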

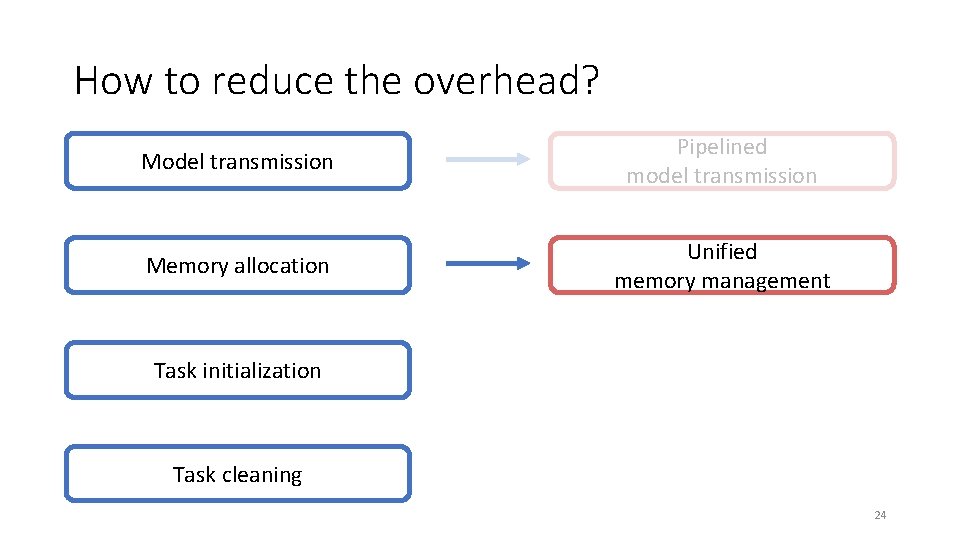

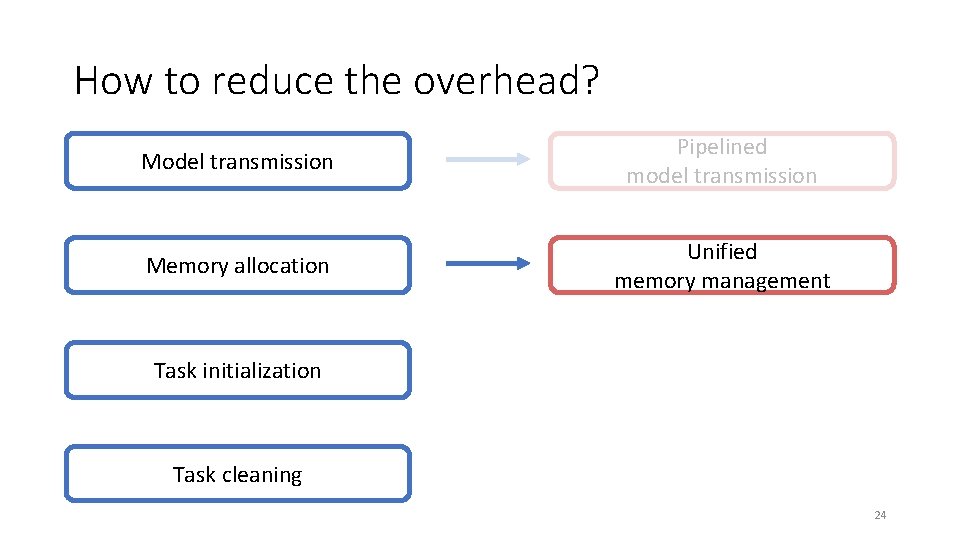

How to reduce the overhead? 24
- Model transmission: pipelined model transmission
- Memory allocation: unified memory management
- Task initialization
- Task cleaning

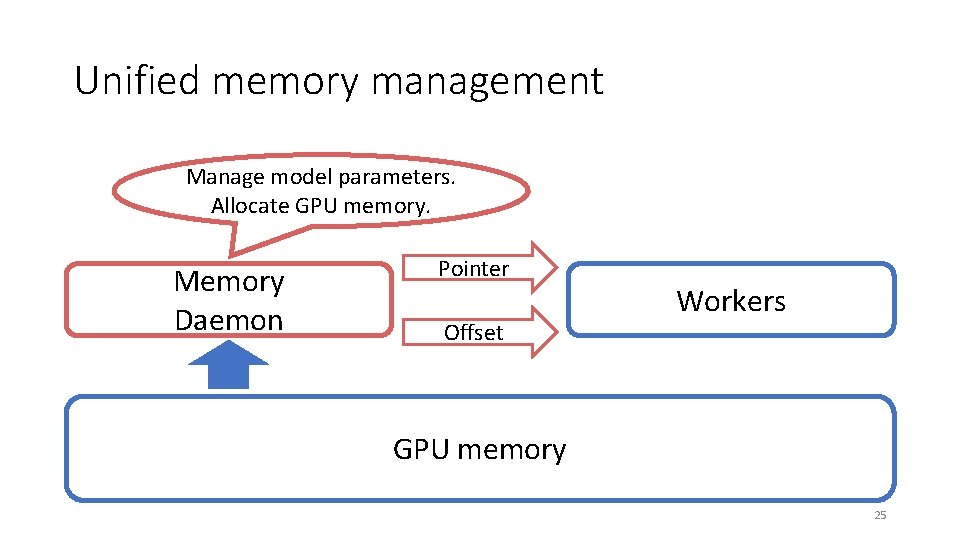

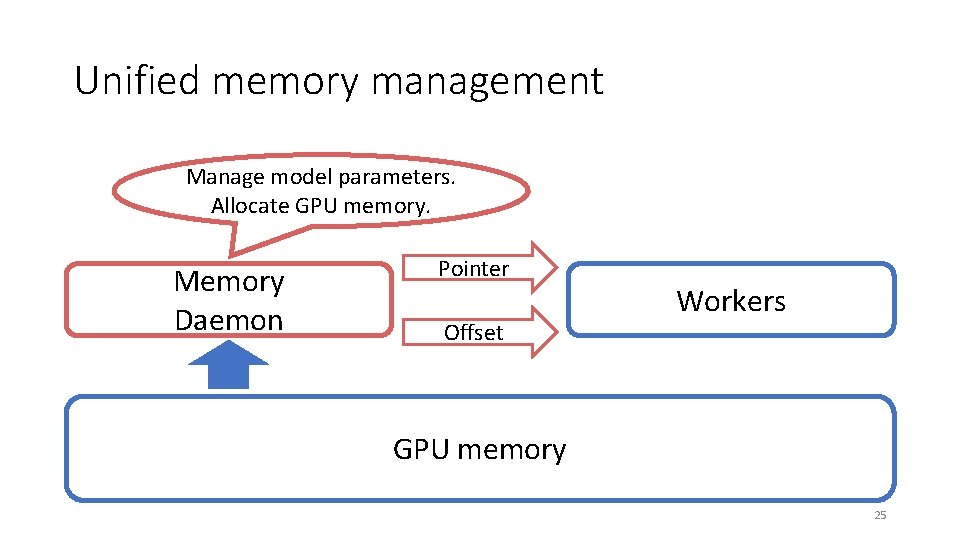

Unified memory management 25
- Memory daemon: manages model parameters and allocates GPU memory
- Workers: receive a pointer and an offset into GPU memory
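The pointer-plus-offset idea can be sketched as a daemon that makes one allocation up front and hands out regions of it. This is an illustrative sketch only: the `MemoryDaemon` and `Region` names are hypothetical, a `bytearray` stands in for GPU memory, and the real system's CUDA allocation and inter-process sharing are not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    offset: int  # start of the region relative to the shared base pointer
    size: int    # length of the region in bytes


class MemoryDaemon:
    """Owns one preallocated buffer and hands out (offset, size) regions."""

    def __init__(self, capacity: int) -> None:
        self.buffer = bytearray(capacity)  # stand-in for a single GPU allocation
        self.next_free = 0

    def allocate(self, size: int) -> Region:
        """Bump allocation: no per-request call into the underlying allocator."""
        if self.next_free + size > len(self.buffer):
            raise MemoryError("region does not fit in the preallocated buffer")
        region = Region(self.next_free, size)
        self.next_free += size
        return region

    def view(self, region: Region) -> memoryview:
        """What a worker would see: the base buffer sliced at its offset."""
        return memoryview(self.buffer)[region.offset:region.offset + region.size]

    def reset(self) -> None:
        """Reuse the whole buffer for the next task without freeing it."""
        self.next_free = 0


daemon = MemoryDaemon(capacity=1024)
params = daemon.allocate(256)
activations = daemon.allocate(512)
print(params.offset, activations.offset)  # 0 256
```

Because the buffer is allocated once, switching tasks in this sketch only moves an offset rather than freeing and reallocating memory.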

How to reduce the overhead? 26
- Model transmission: pipelined model transmission
- Memory allocation: unified memory management
- Task initialization, task cleaning: active-standby worker switching

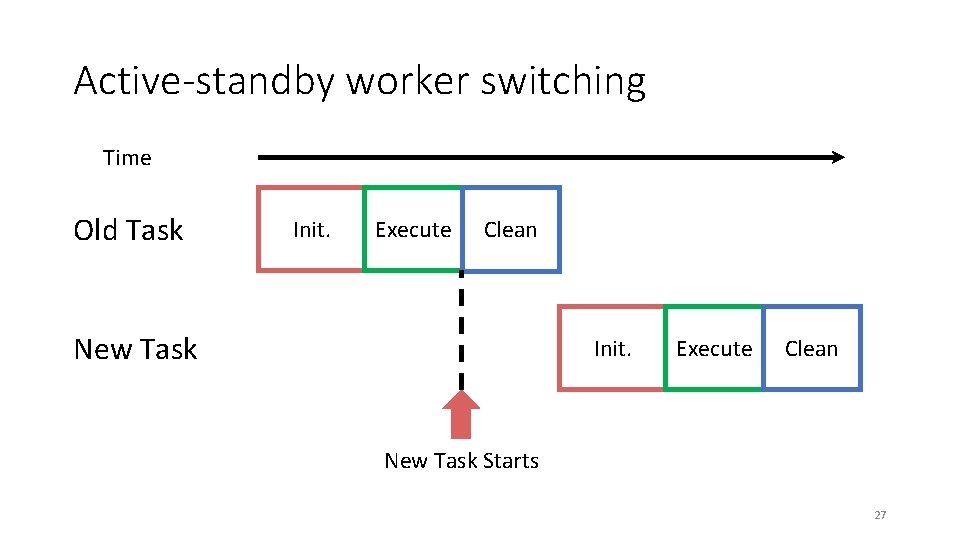

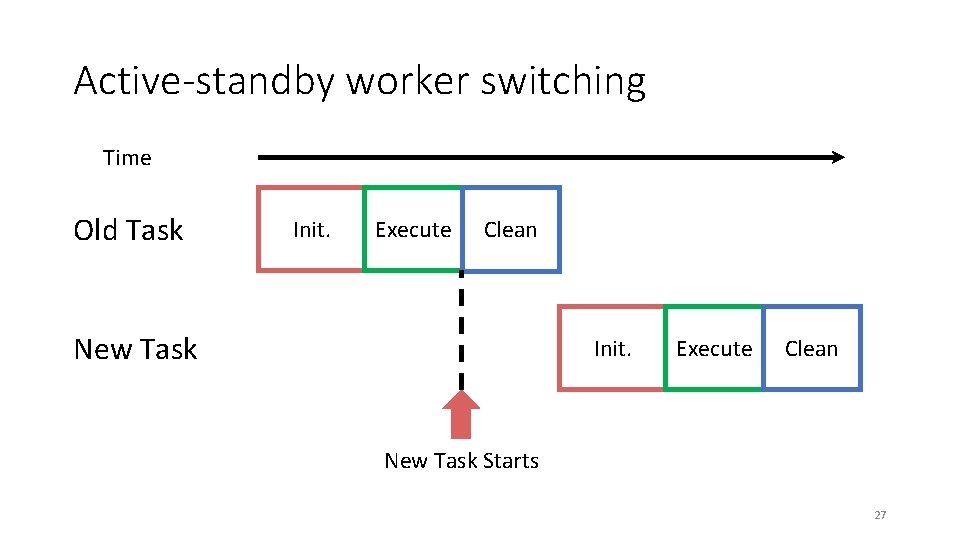

Active-standby worker switching 27
- Timeline, old task: Init., Execute, Clean
- Marker: new task starts

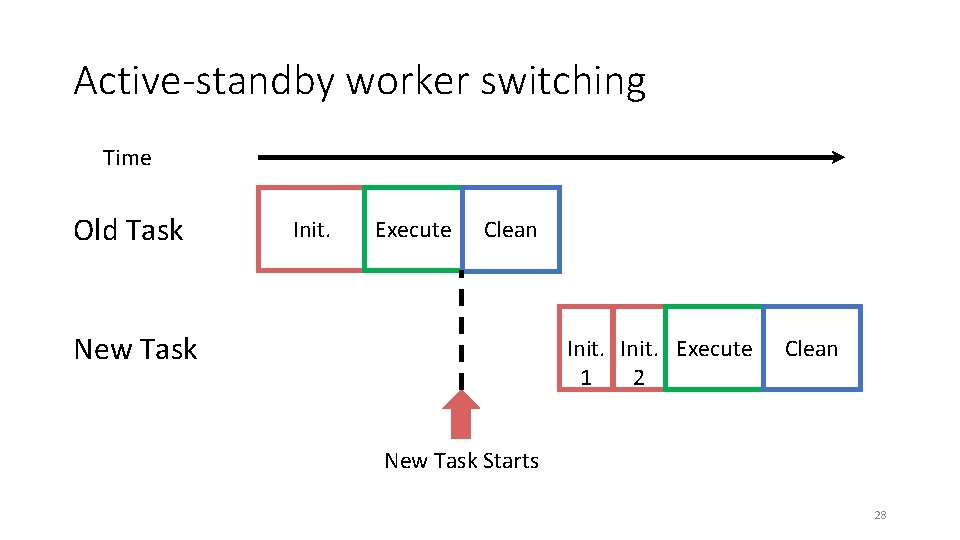

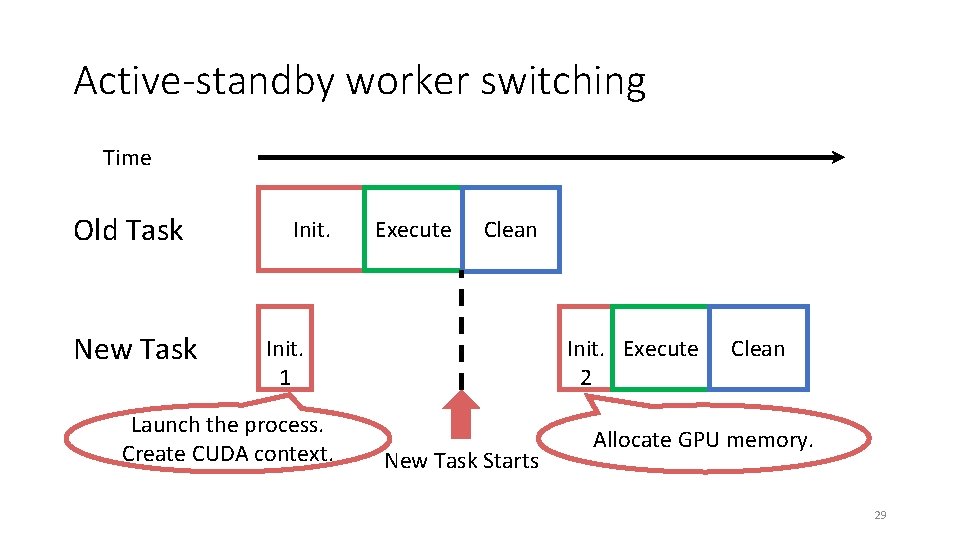

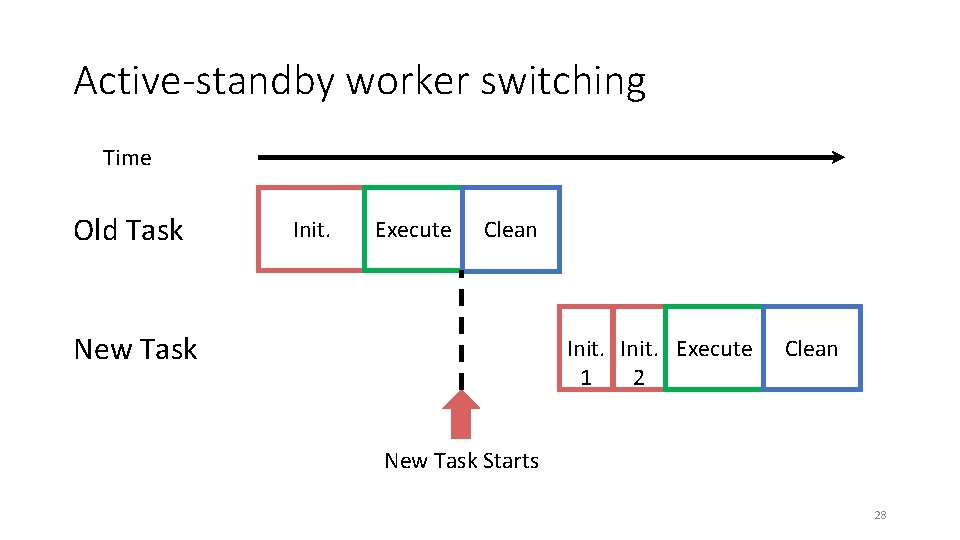

Active-standby worker switching 28
- Timeline, old task: Init., Execute, Clean
- Timeline, new task: Init. (parts 1 and 2), Execute, Clean
- Marker: new task starts

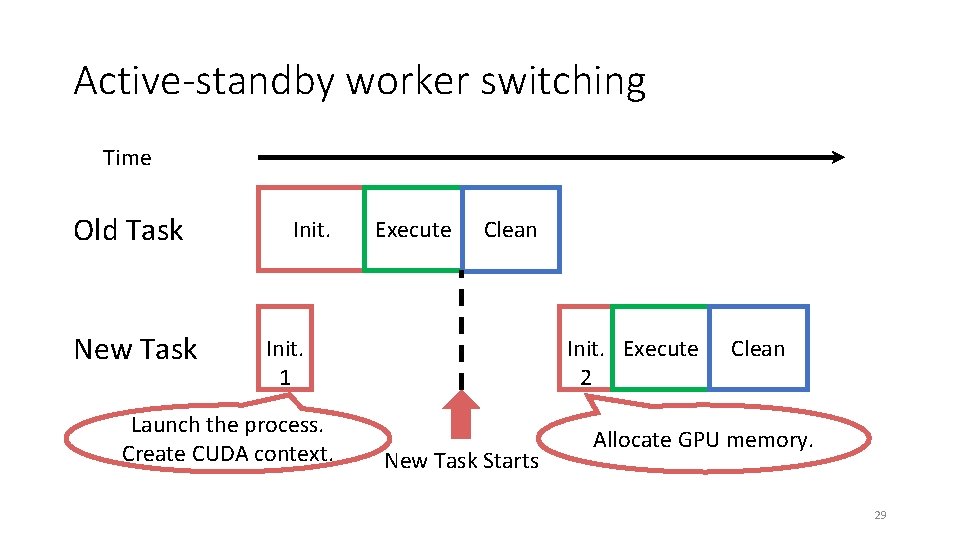

Active-standby worker switching 29
- Timeline, old task: Init., Execute, Clean
- Timeline, new task: Init. 1, Init. 2, Execute, Clean
- Init. 1: launch the process, create the CUDA context
- Init. 2: allocate GPU memory
- Marker: new task starts

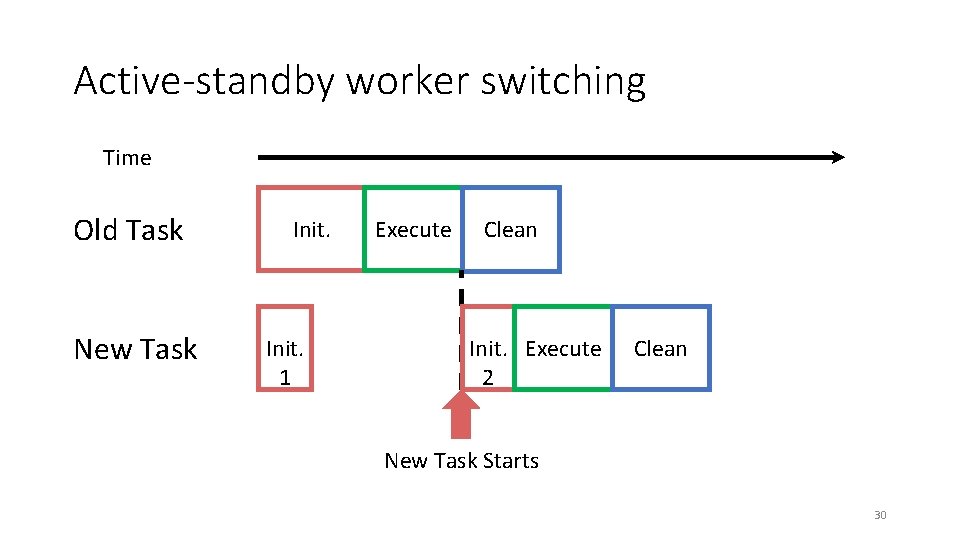

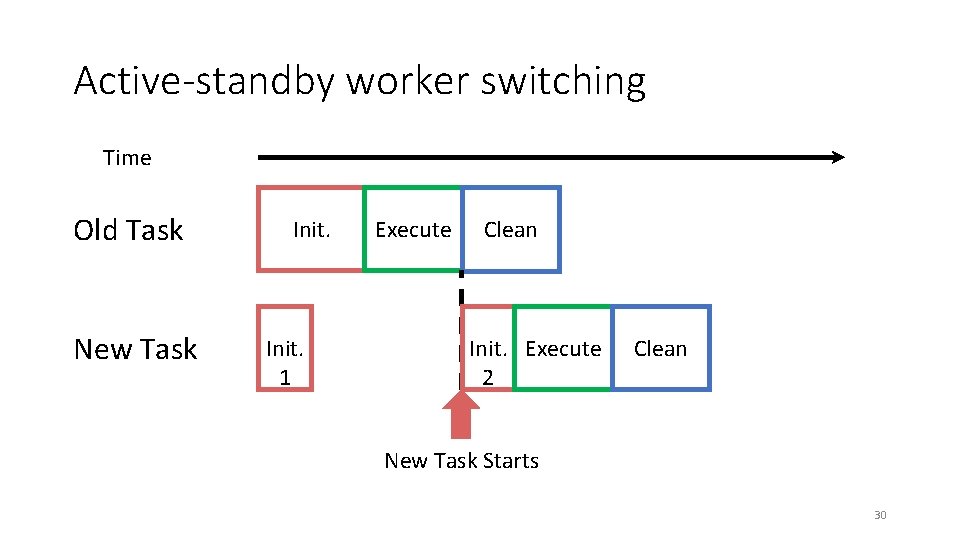

Active-standby worker switching 30
- Timeline, old task: Init., Execute, Clean
- Timeline, new task: Init. 1, Init. 2, Execute, Clean
- Marker: new task starts
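A back-of-the-envelope comparison shows what moving work off the critical path buys. This is a sketch under stated assumptions, not a measurement: it assumes Init. 1 has already been completed by a standby worker before the new task arrives, that the old task's cleaning overlaps with the new task rather than delaying it, and all durations and names below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Phases:
    init_1_ms: float  # launch the process, create the CUDA context
    init_2_ms: float  # allocate GPU memory
    clean_ms: float   # clean the environment of the old task


def stop_and_start_delay(p: Phases) -> float:
    """Clean the old task, then run all of the new task's initialization."""
    return p.clean_ms + p.init_1_ms + p.init_2_ms


def active_standby_delay(p: Phases) -> float:
    """Init. 1 was done in advance and cleaning is overlapped (assumptions)."""
    return p.init_2_ms


# Hypothetical durations in milliseconds.
phases = Phases(init_1_ms=5000.0, init_2_ms=10.0, clean_ms=1000.0)

print(stop_and_start_delay(phases))  # 6010.0
print(active_standby_delay(phases))  # 10.0
```

Only the part of initialization that cannot be prepared in advance remains before the new task can execute.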

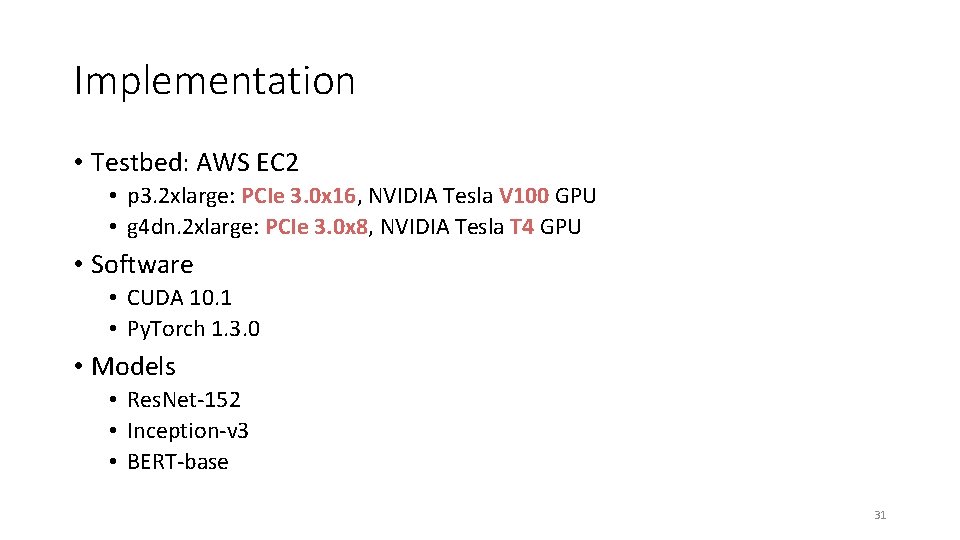

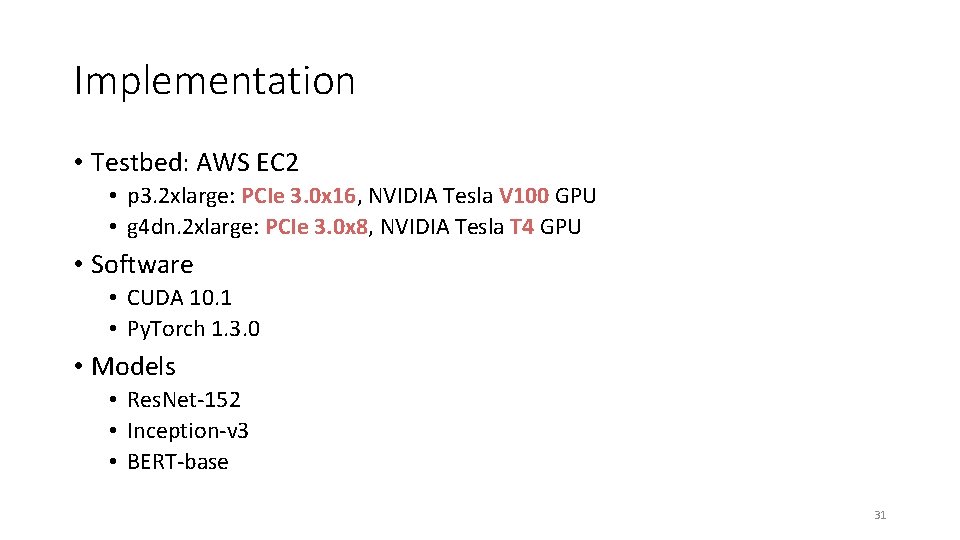

Implementation 31
- Testbed: AWS EC2
  - p3.2xlarge: PCIe 3.0 x16, NVIDIA Tesla V100 GPU
  - g4dn.2xlarge: PCIe 3.0 x8, NVIDIA Tesla T4 GPU
- Software
  - CUDA 10.1
  - PyTorch 1.3.0
- Models
  - ResNet-152
  - Inception-v3
  - BERT-base

Evaluation 32
- Can PipeSwitch satisfy SLOs?
- Can PipeSwitch provide high utilization?
- How well do the design choices of PipeSwitch work?


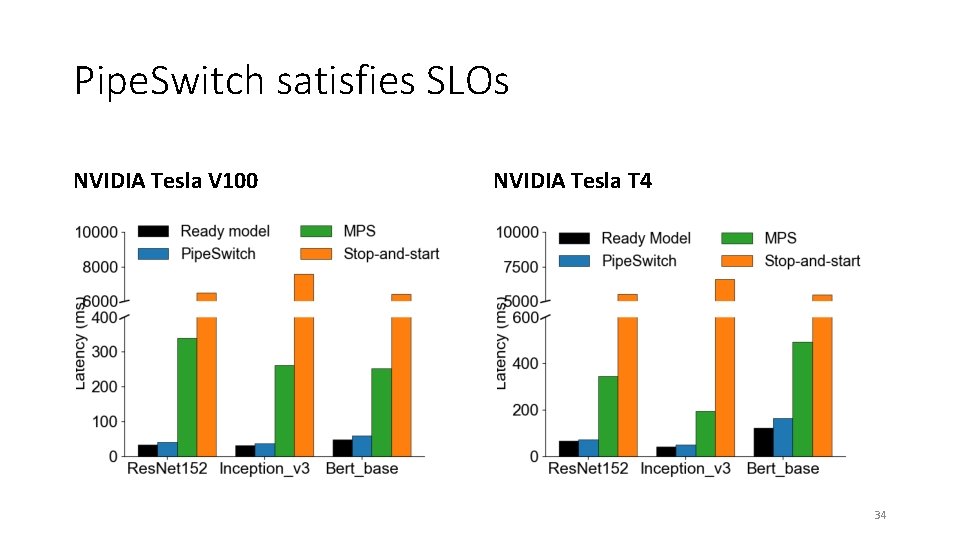

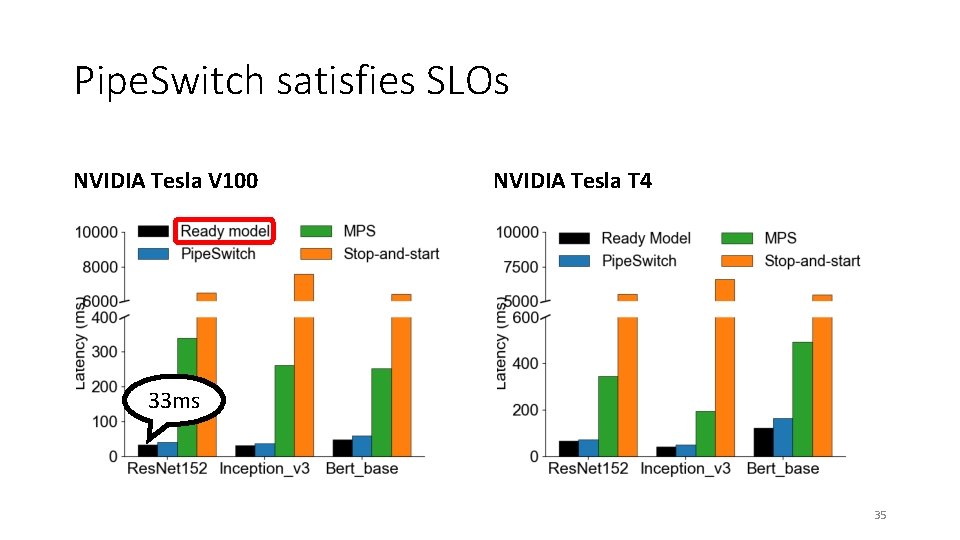

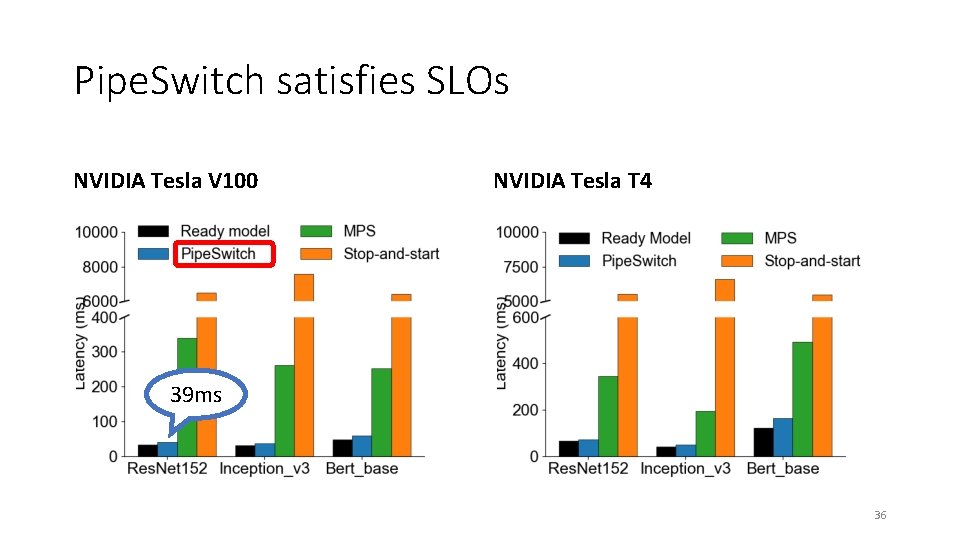

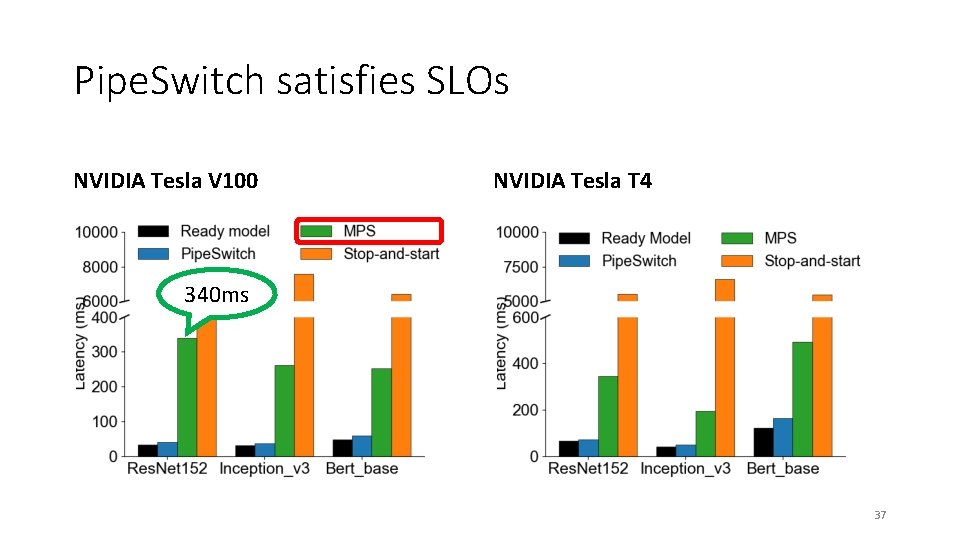

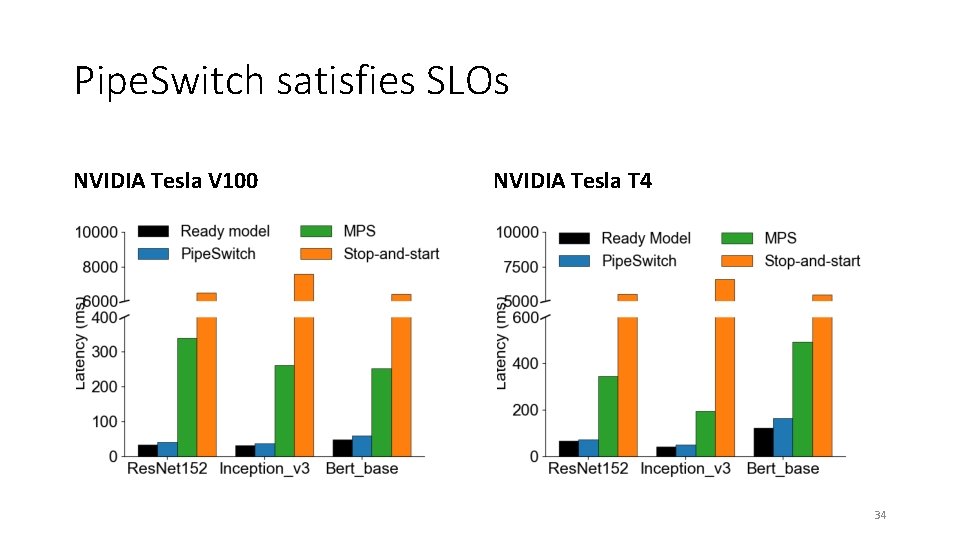

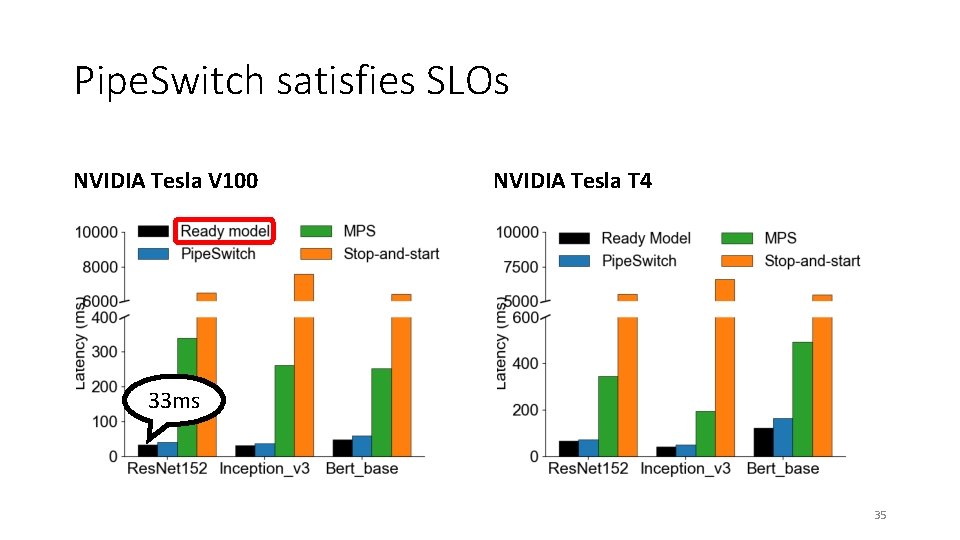

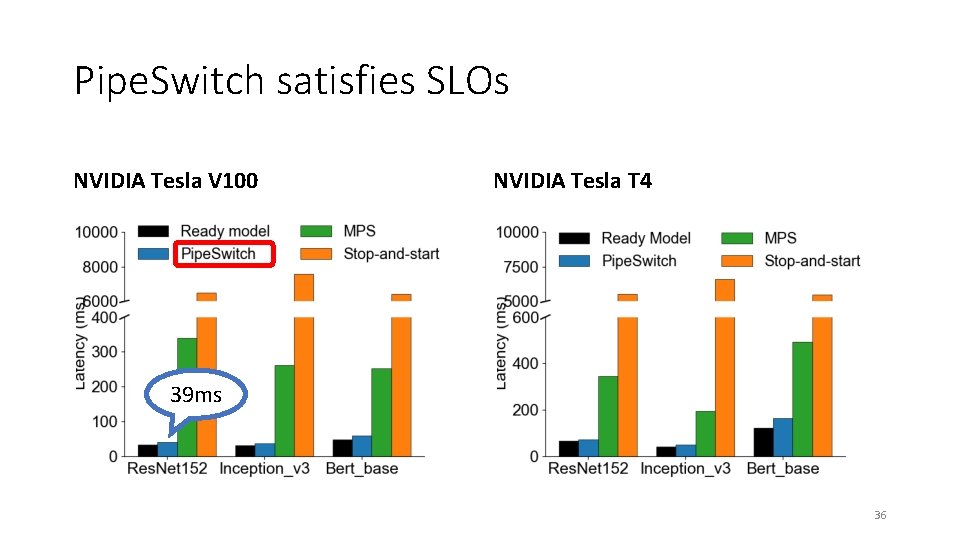

PipeSwitch satisfies SLOs (panels: NVIDIA Tesla V100, NVIDIA Tesla T4) 34

PipeSwitch satisfies SLOs (panels: NVIDIA Tesla V100, NVIDIA Tesla T4; highlighted value: 33 ms) 35

PipeSwitch satisfies SLOs (panels: NVIDIA Tesla V100, NVIDIA Tesla T4; highlighted value: 39 ms) 36

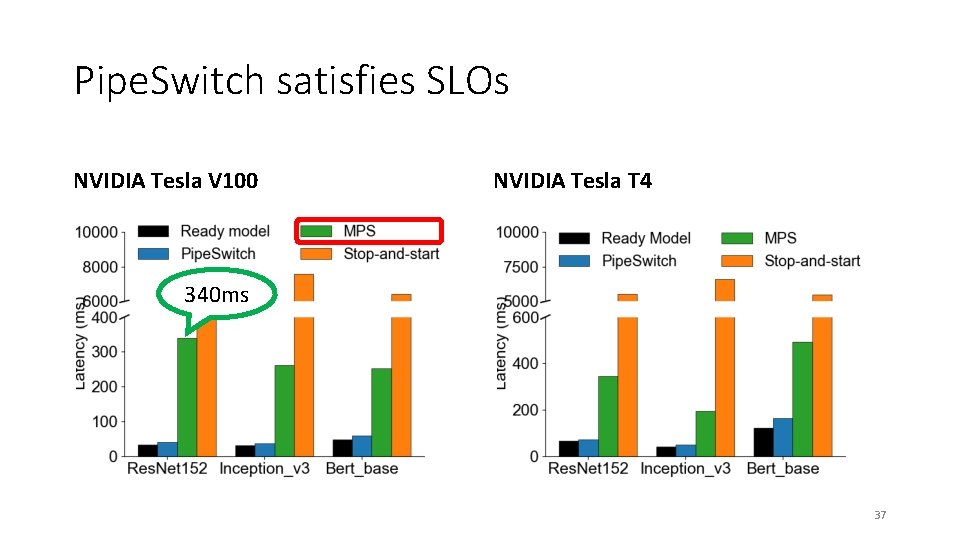

PipeSwitch satisfies SLOs (panels: NVIDIA Tesla V100, NVIDIA Tesla T4; highlighted value: 340 ms) 37

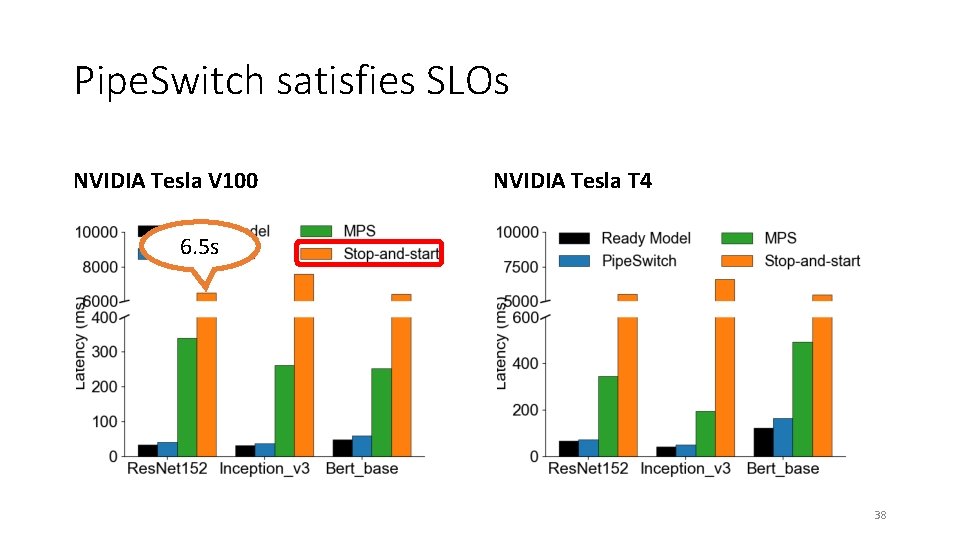

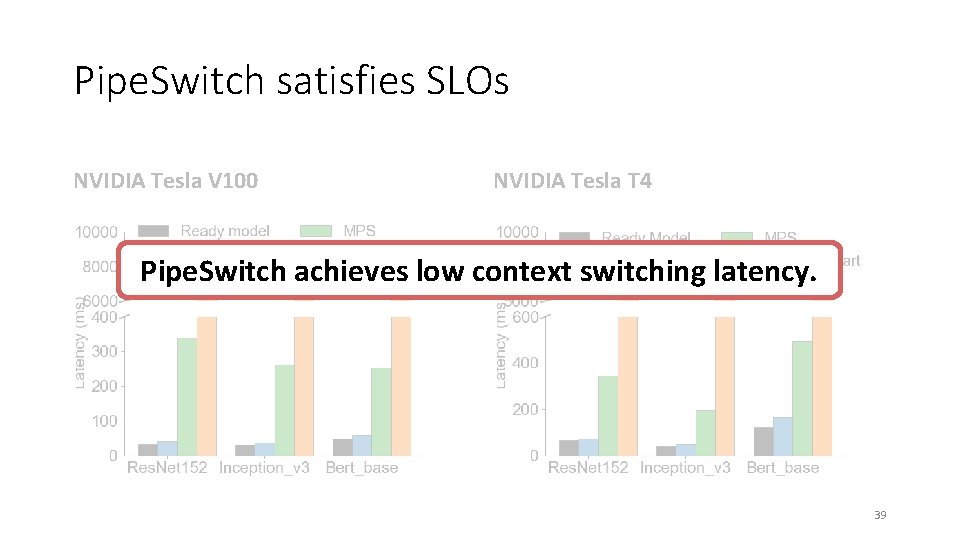

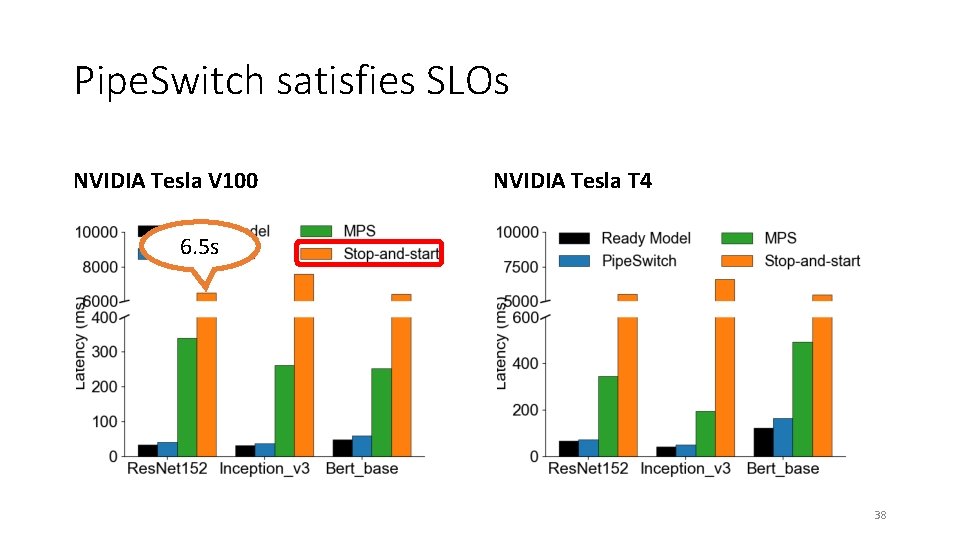

PipeSwitch satisfies SLOs (panels: NVIDIA Tesla V100, NVIDIA Tesla T4; highlighted value: 6.5 s) 38

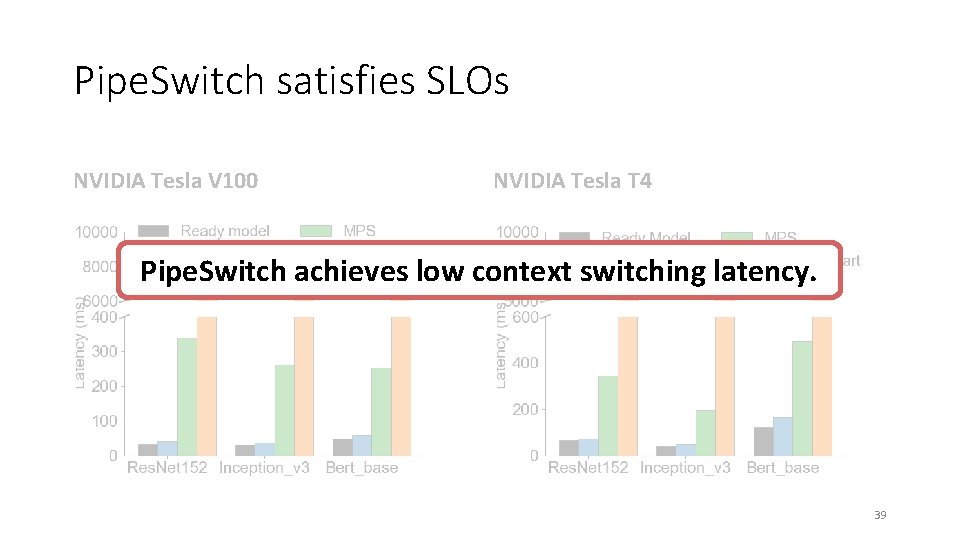

PipeSwitch satisfies SLOs 39
- Panels: NVIDIA Tesla V100, NVIDIA Tesla T4
- PipeSwitch achieves low context switching latency.

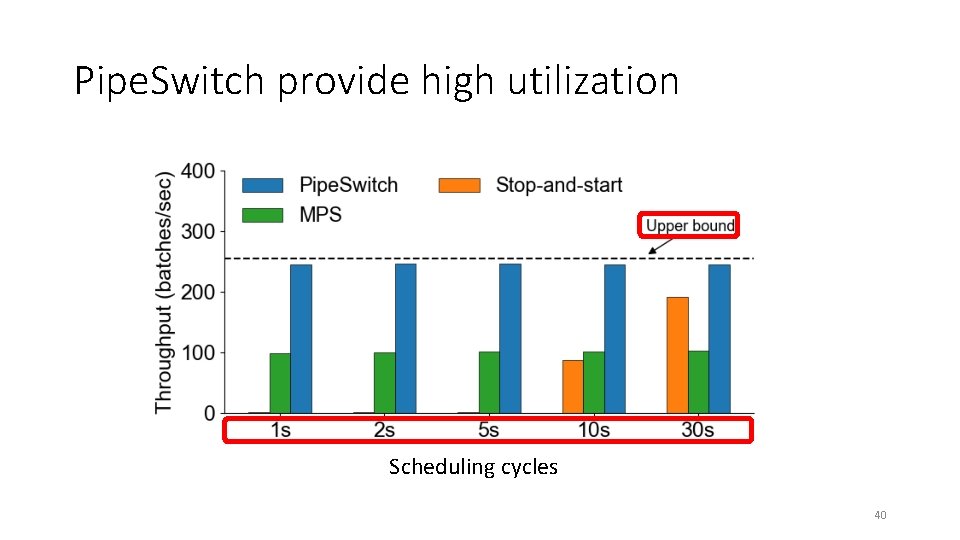

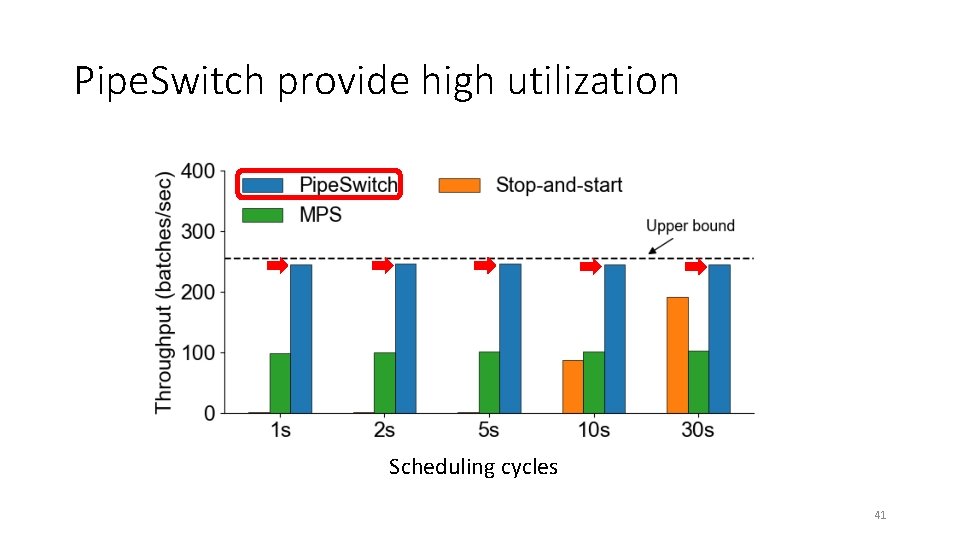

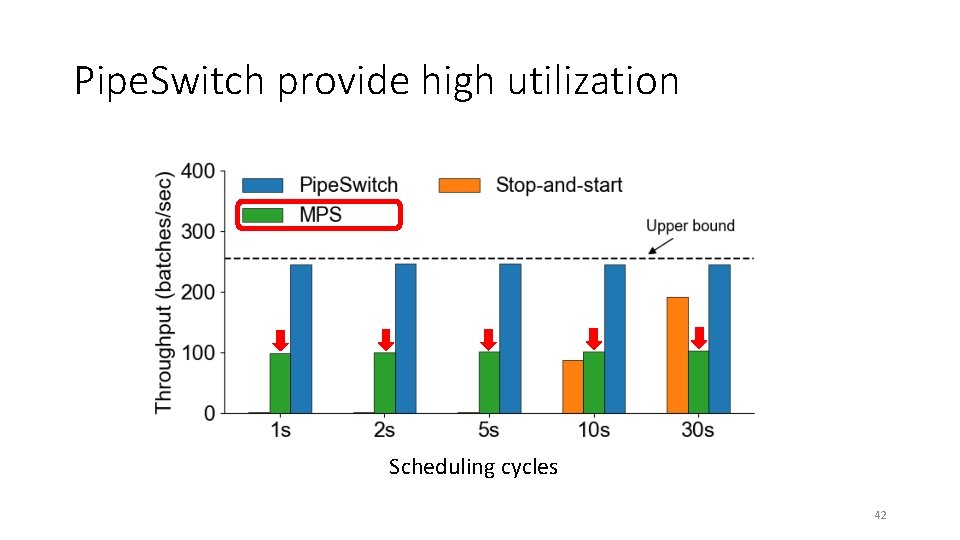

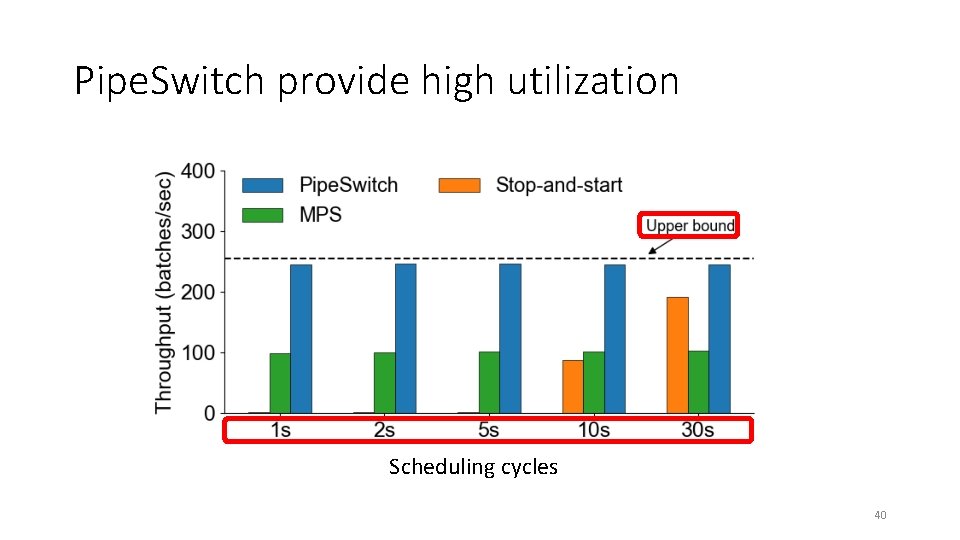

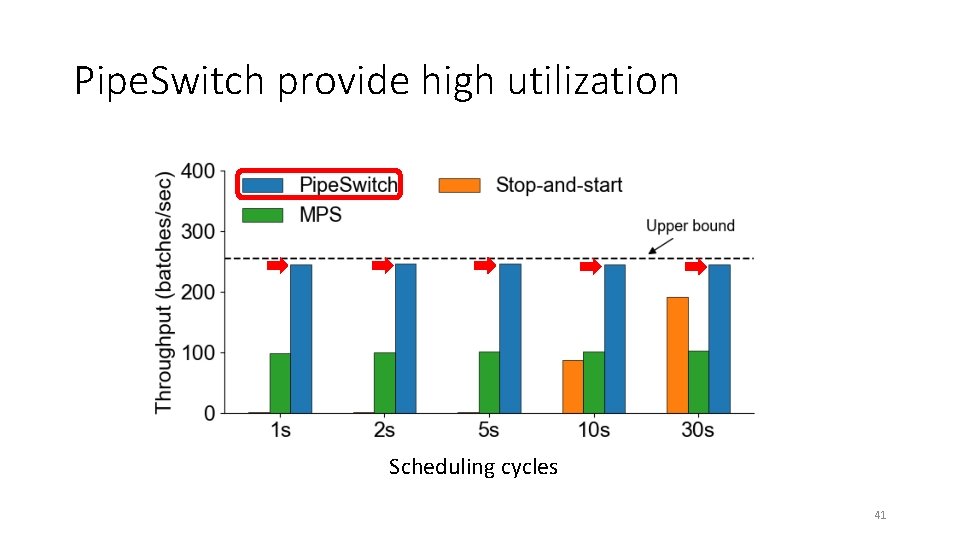

PipeSwitch provides high utilization (axis label: scheduling cycles) 40

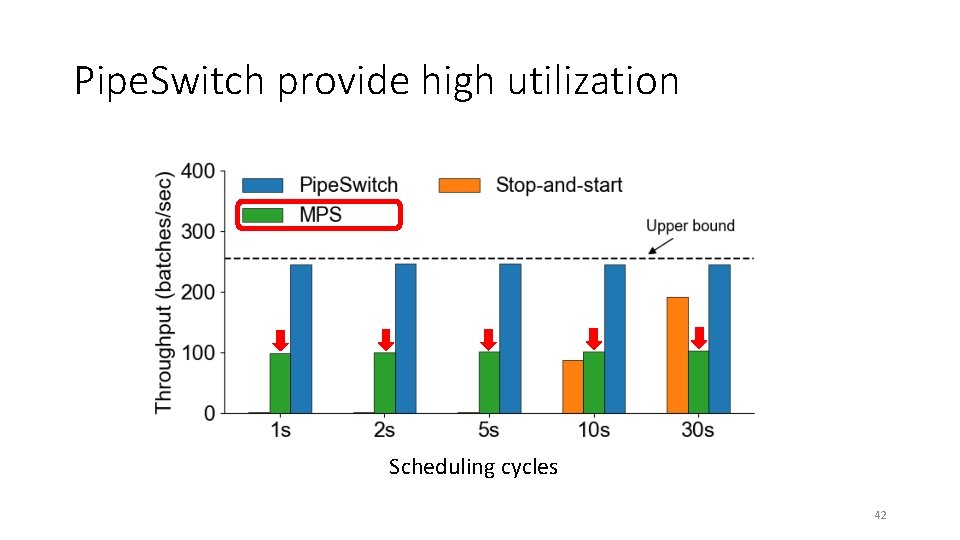


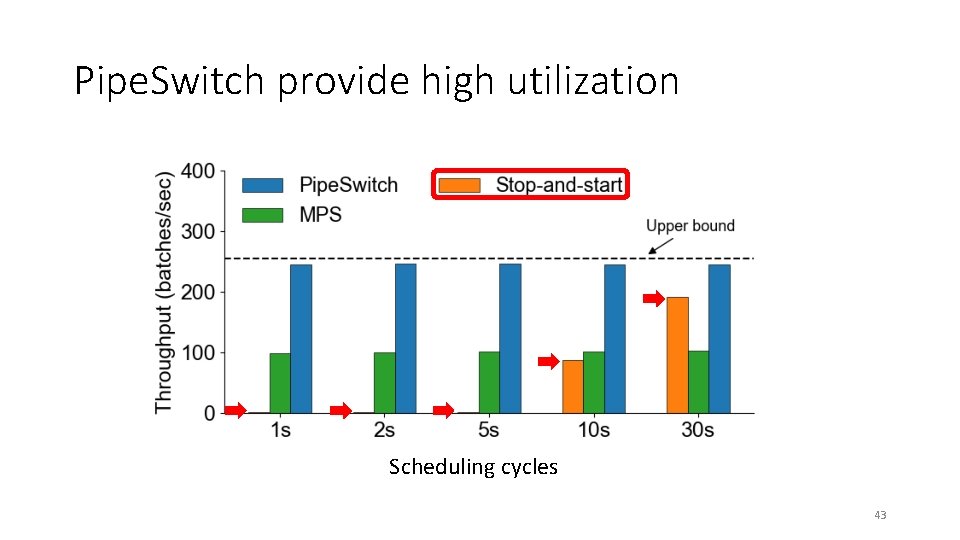


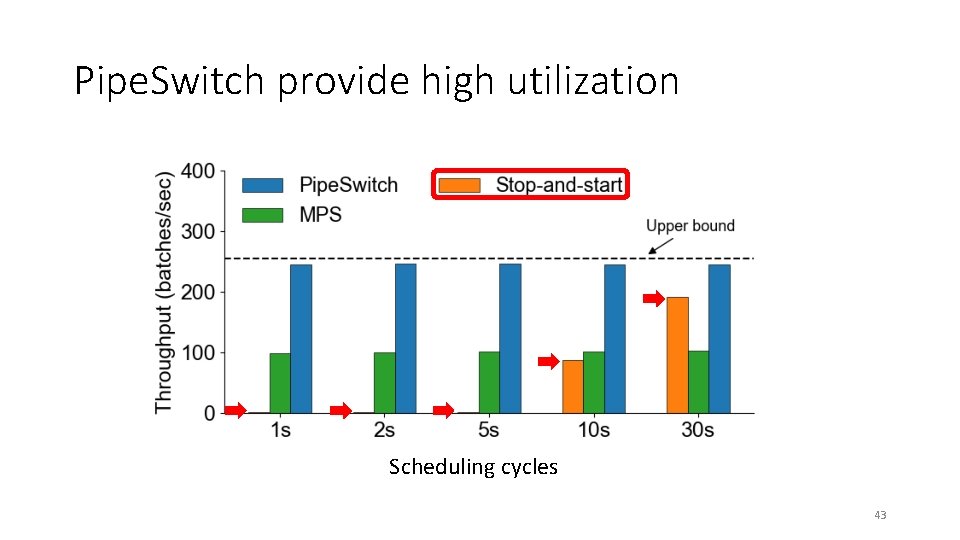

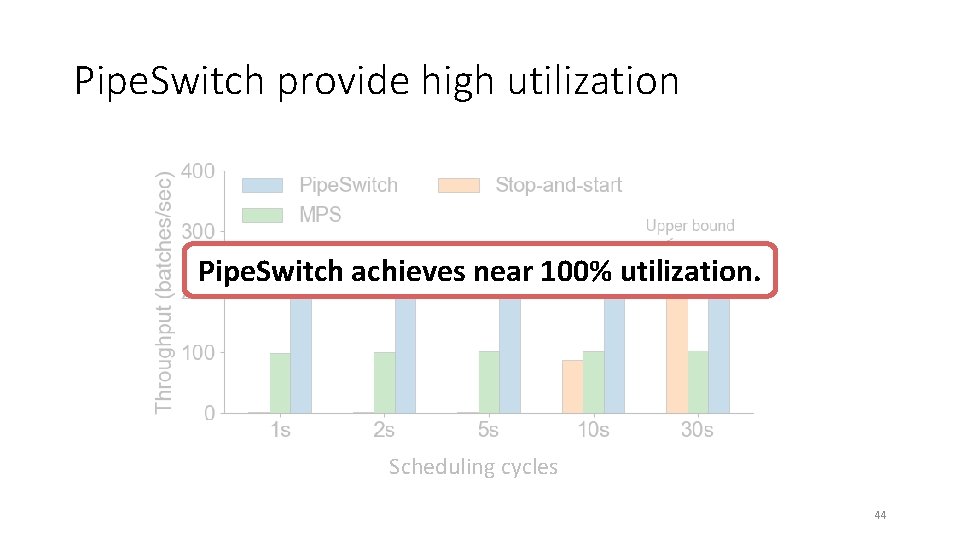


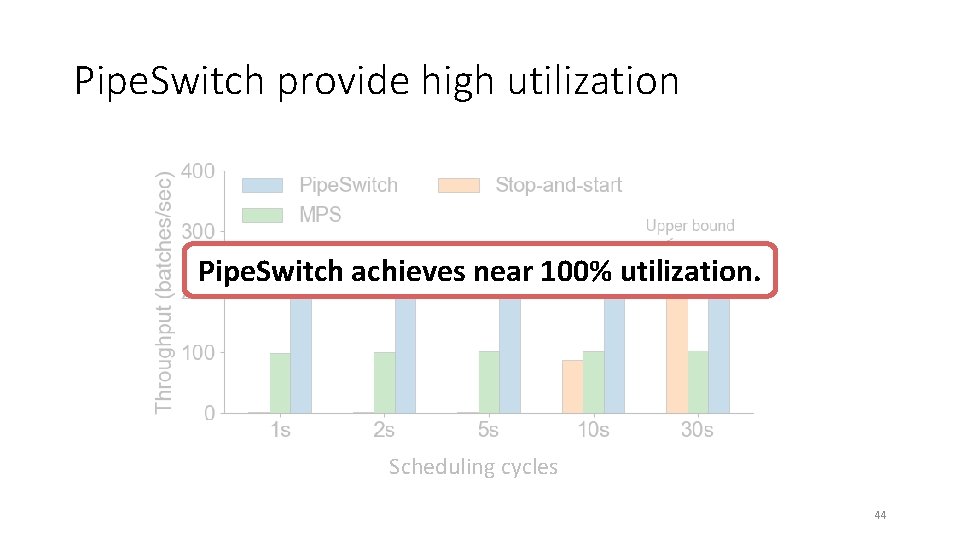

PipeSwitch provides high utilization 44
- Axis label: scheduling cycles
- PipeSwitch achieves near 100% utilization.

Summary 45
- GPU clusters for DL applications suffer from low utilization
  - Limited sharing between training and inference workloads
- PipeSwitch introduces pipelined context switching
  - Enables GPU-efficient multiplexing of DL apps with fine-grained time-sharing
  - Achieves millisecond-scale context switching latencies and high throughput

Thank you! zbai1@jhu.edu 46