Pictor: A Benchmarking Framework for Cloud 3D Applications

Tianyi Liu, Sen He, Sunzhou Huang, Danny Tsang, Lingjia Tang, Jason Mars, Wei Wang

Outline
1. Background: Cloud 3D System
2. The Pictor Framework
   • Intelligent Client
   • Performance Measurement Mechanism
3. Evaluation
   • Two Optimizations: Caching & Parallelization
4. Conclusion

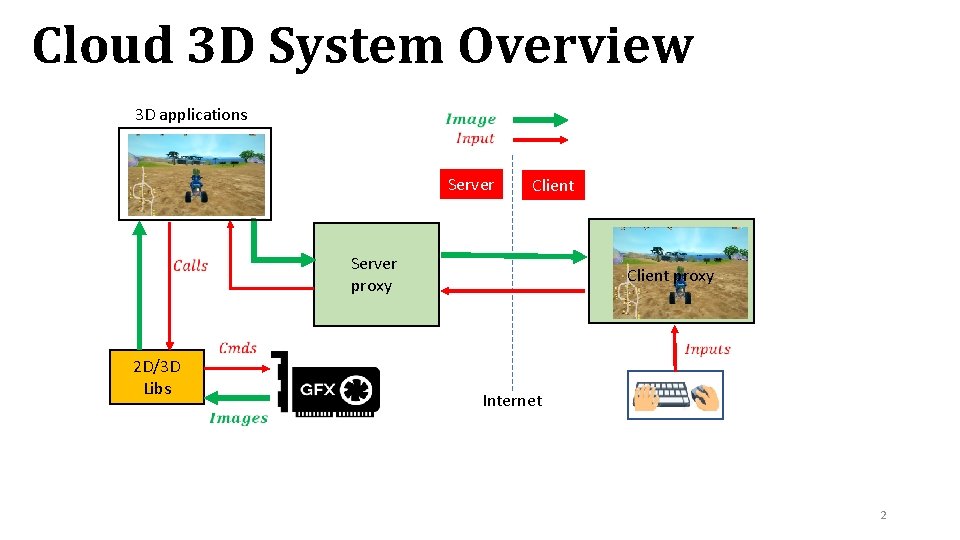

Cloud 3D System Overview (diagram). Labels: 3D applications, 2D/3D libs, server proxy, server, Internet, client proxy, client.

Motivation
• Cloud 3D applications are becoming a major type of workload for cloud datacenters.
• There is limited public research on how to design cloud systems that support 3D applications efficiently.
• Main reason: there is no standard benchmarking infrastructure for this new area, including benchmark suites and performance evaluation tools.

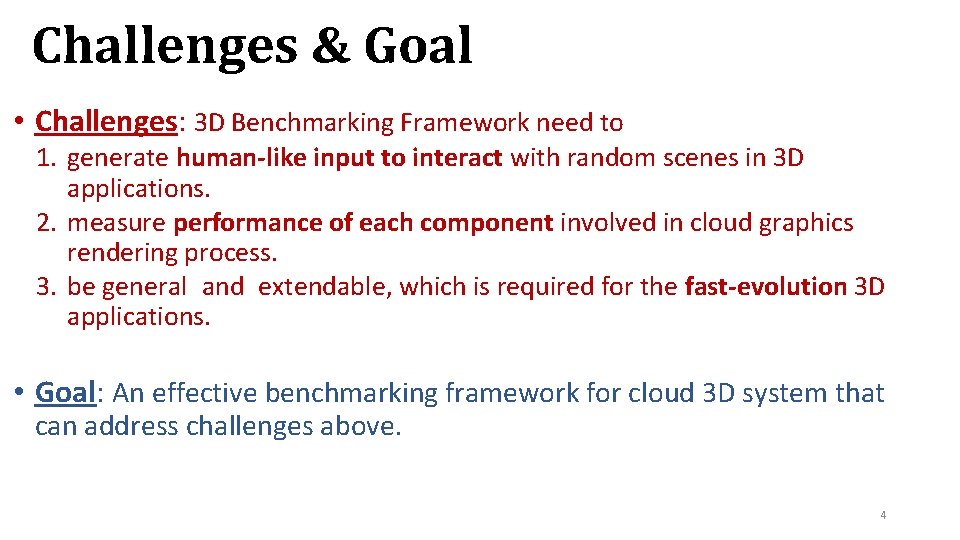

Challenges & Goal
• Challenges: a 3D benchmarking framework needs to
  1. generate human-like input to interact with random scenes in 3D applications;
  2. measure the performance of each component involved in the cloud graphics rendering process;
  3. be general and extensible, which is required for fast-evolving 3D applications.
• Goal: an effective benchmarking framework for cloud 3D systems that addresses the challenges above.


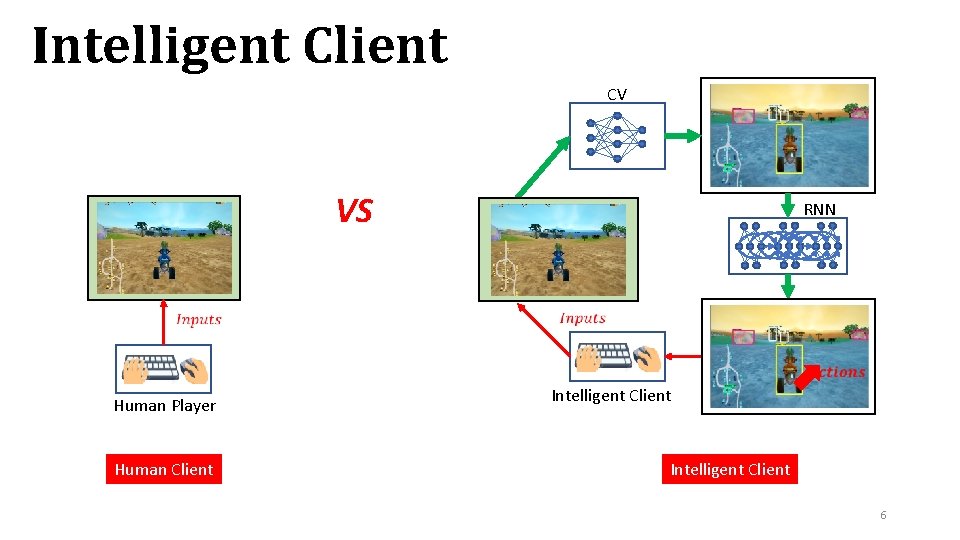

Intelligent Client vs. Human Player (diagram). Labels: human player (human client); intelligent client (CV, RNN).

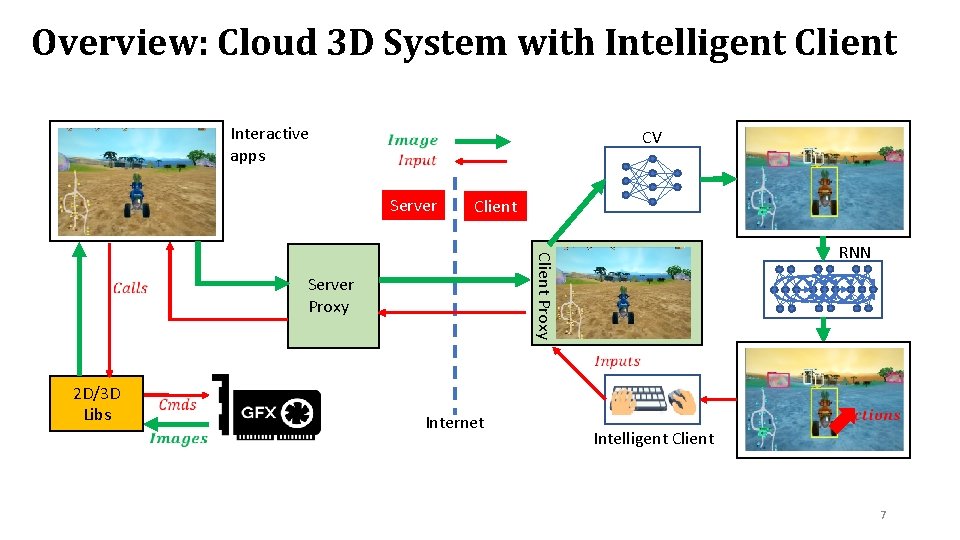

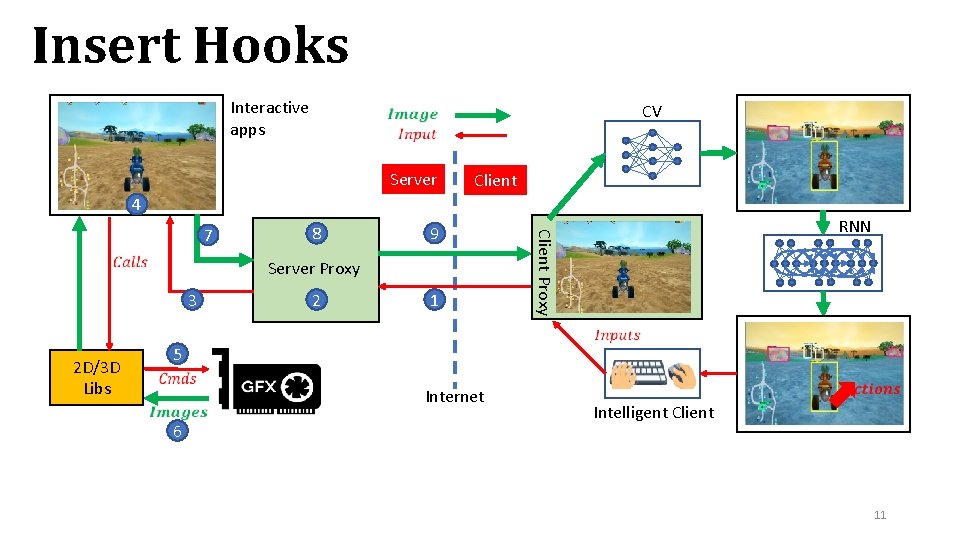

Overview: Cloud 3D System with Intelligent Client (diagram). Labels: interactive apps, 2D/3D libs, server proxy, server, Internet, client proxy, client, intelligent client (CV, RNN).


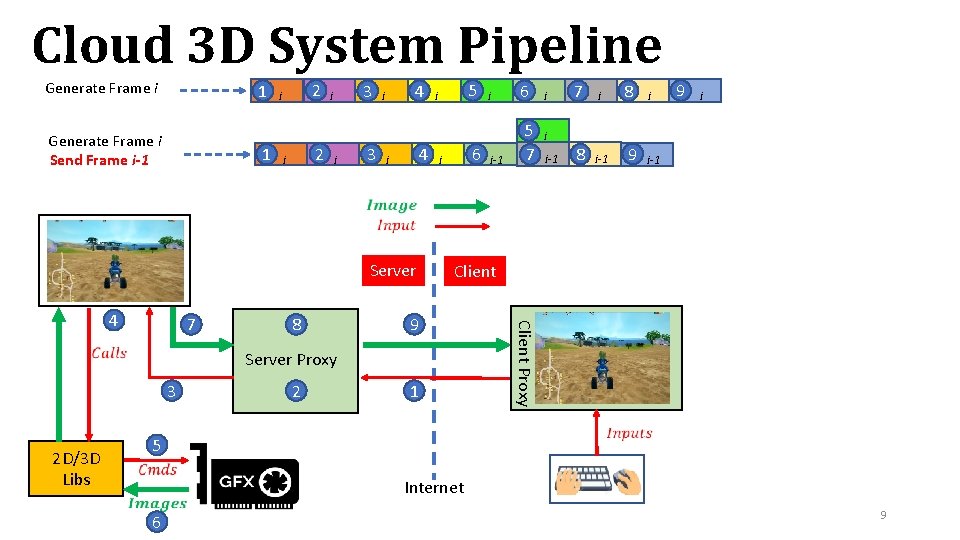

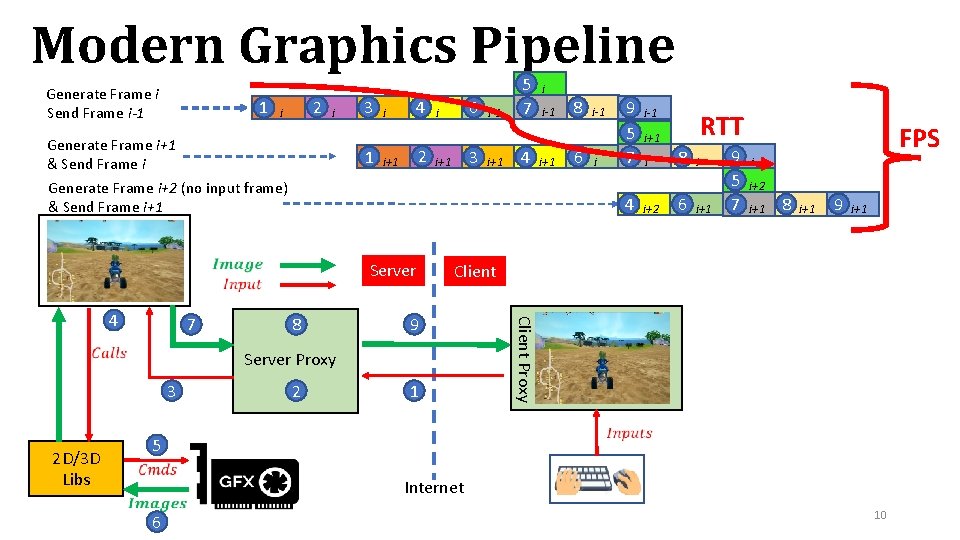

Cloud 3D System Pipeline (figure). Numbered stages 1 to 9 across client, client proxy, Internet, server proxy, server, and 2D/3D libs, shown on a timeline labeled "Generate Frame i" and "Send Frame i-1".

Modern Graphics Pipeline (figure). Stages 1 to 9 on a pipelined timeline with three phases: "Generate Frame i / Send Frame i-1", "Generate Frame i+1 & Send Frame i", and "Generate Frame i+2 (no input frame) & Send Frame i+1". RTT and FPS are marked on the timeline.

Insert Hooks (diagram). Hooks 1 to 9 are placed along the path through the intelligent client (CV, RNN), client proxy, Internet, server proxy, server (interactive apps), and 2D/3D libs.

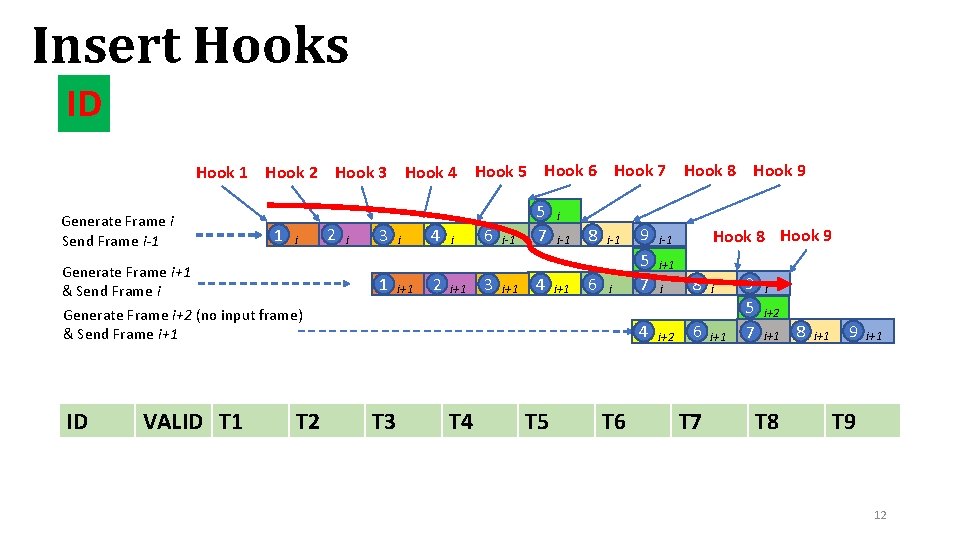

Insert Hooks (figure). Hook 1 to Hook 9 each write a timestamp (T1 to T9) into a per-frame record with the fields ID, VALID, T1, ..., T9. The records are shown against the pipelined timeline of frames i-1, i, i+1, and i+2.
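A minimal sketch of how such a per-frame record could be filled in by the nine hooks and then turned into per-stage times. The class name `FrameRecord`, the meaning of VALID as "all nine timestamps are present", and the definition of the round trip as T9 minus T1 are assumptions made for illustration; the slides do not spell them out.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

NUM_HOOKS = 9  # the slides show Hook 1 .. Hook 9


@dataclass
class FrameRecord:
    """One row of the timestamp table: ID, VALID, T1..T9 (hypothetical layout)."""
    frame_id: int
    valid: bool = False
    # timestamps[k] is T_k in milliseconds, or None while hook k has not fired
    timestamps: Dict[int, Optional[float]] = field(
        default_factory=lambda: {k: None for k in range(1, NUM_HOOKS + 1)}
    )

    def on_hook(self, hook: int, now_ms: float) -> None:
        """Store the timestamp taken at the given hook."""
        if not 1 <= hook <= NUM_HOOKS:
            raise ValueError(f"unknown hook {hook}")
        self.timestamps[hook] = now_ms
        # Assumption: a record becomes valid once every hook has fired.
        self.valid = all(t is not None for t in self.timestamps.values())

    def stage_times(self) -> Dict[str, float]:
        """Elapsed time between consecutive hooks, e.g. 'T1->T2'."""
        if not self.valid:
            raise ValueError("record is incomplete")
        return {
            f"T{k}->T{k + 1}": self.timestamps[k + 1] - self.timestamps[k]
            for k in range(1, NUM_HOOKS)
        }

    def round_trip(self) -> float:
        """Assumed round-trip time: last hook minus first hook."""
        if not self.valid:
            raise ValueError("record is incomplete")
        return self.timestamps[NUM_HOOKS] - self.timestamps[1]
```

In use, each hook would call `on_hook` with its own index and a clock reading, and the breakdown would be read from `stage_times()` once the record is valid.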


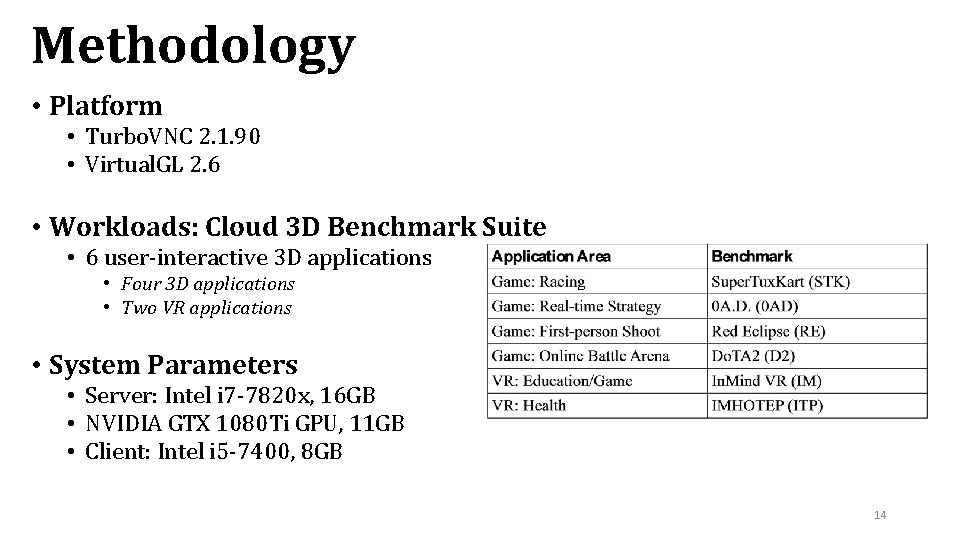

Methodology
• Platform
  • TurboVNC 2.1.90
  • VirtualGL 2.6
• Workloads: Cloud 3D Benchmark Suite
  • 6 user-interactive 3D applications
    • Four 3D applications
    • Two VR applications
• System parameters
  • Server: Intel i7-7820X, 16 GB; NVIDIA GTX 1080 Ti GPU, 11 GB
  • Client: Intel i5-7400, 8 GB

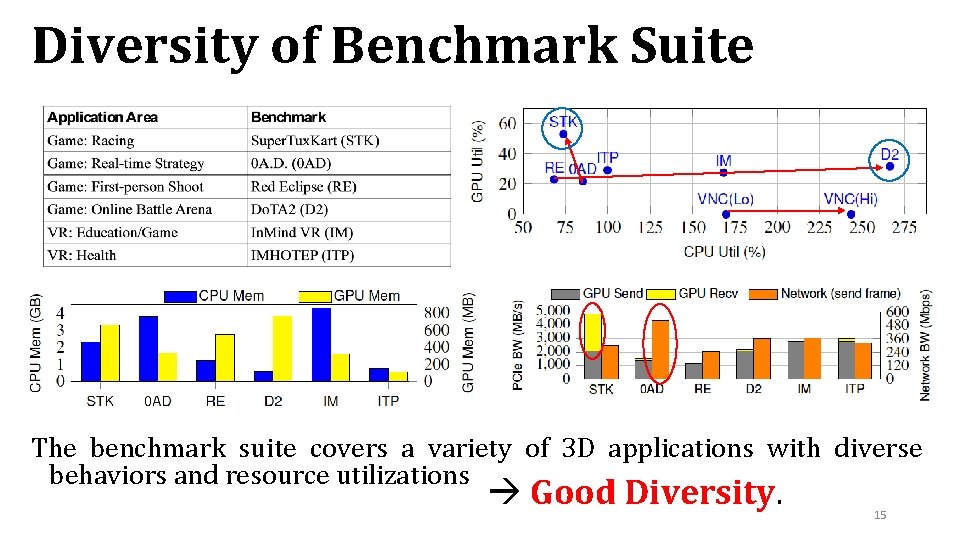

Diversity of Benchmark Suite
The benchmark suite covers a variety of 3D applications with diverse behaviors and resource utilization, so it has good diversity.

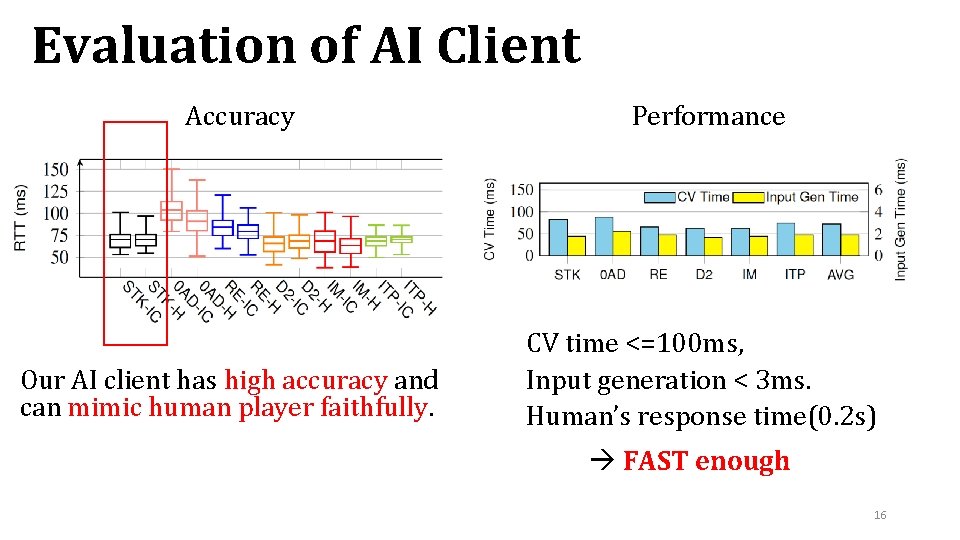

Evaluation of AI Client
• Accuracy: our AI client has high accuracy and can faithfully mimic a human player.
• Performance: CV time <= 100 ms and input generation < 3 ms, which is fast enough compared with a human's response time (0.2 s).

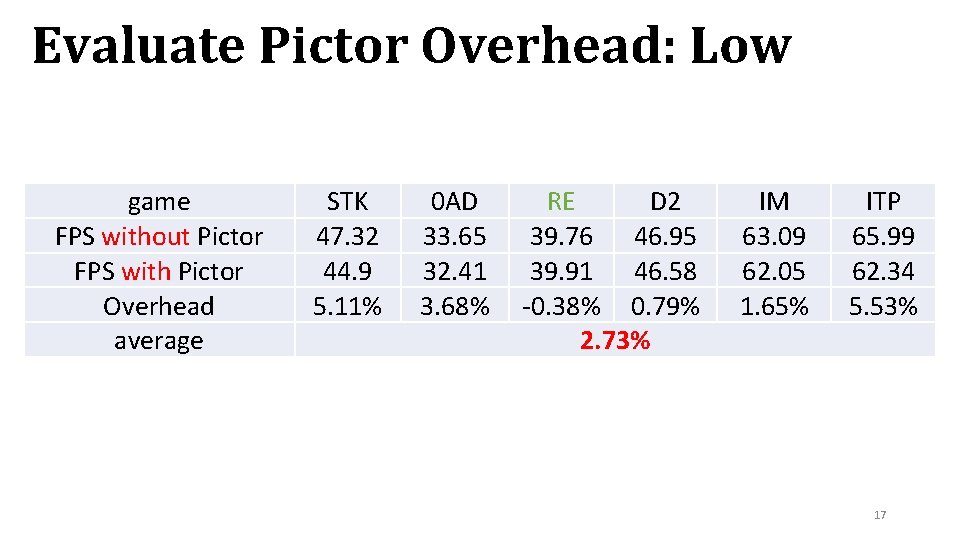

Evaluate Pictor Overhead: Low

| Game | FPS without Pictor | FPS with Pictor | Overhead |
|---|---|---|---|
| STK | 47.32 | 44.9 | 5.11% |
| 0AD | 33.65 | 32.41 | 3.68% |
| RE | 39.76 | 39.91 | -0.38% |
| D2 | 46.95 | 46.58 | 0.79% |
| IM | 63.09 | 62.05 | 1.65% |
| ITP | 65.99 | 62.34 | 5.53% |
| Average | | | 2.73% |
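The overhead column appears to be the relative FPS drop, (FPS without Pictor - FPS with Pictor) / FPS without Pictor. The sketch below recomputes it from the FPS values on the slide under that assumed formula; the function and variable names are illustrative.

```python
# (FPS without Pictor, FPS with Pictor) per benchmark, copied from the slide
fps = {
    "STK": (47.32, 44.9),
    "0AD": (33.65, 32.41),
    "RE": (39.76, 39.91),
    "D2": (46.95, 46.58),
    "IM": (63.09, 62.05),
    "ITP": (65.99, 62.34),
}


def overhead_percent(fps_without: float, fps_with: float) -> float:
    """Relative FPS loss caused by the measurement framework, in percent."""
    return (fps_without - fps_with) / fps_without * 100.0


overheads = {game: overhead_percent(a, b) for game, (a, b) in fps.items()}
average_overhead = sum(overheads.values()) / len(overheads)

for game, value in overheads.items():
    print(f"{game}: {value:.2f}%")
print(f"average: {average_overhead:.2f}%")
```

A negative value, as for RE, means the run with Pictor happened to be slightly faster, which suggests the overhead there is within measurement noise.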

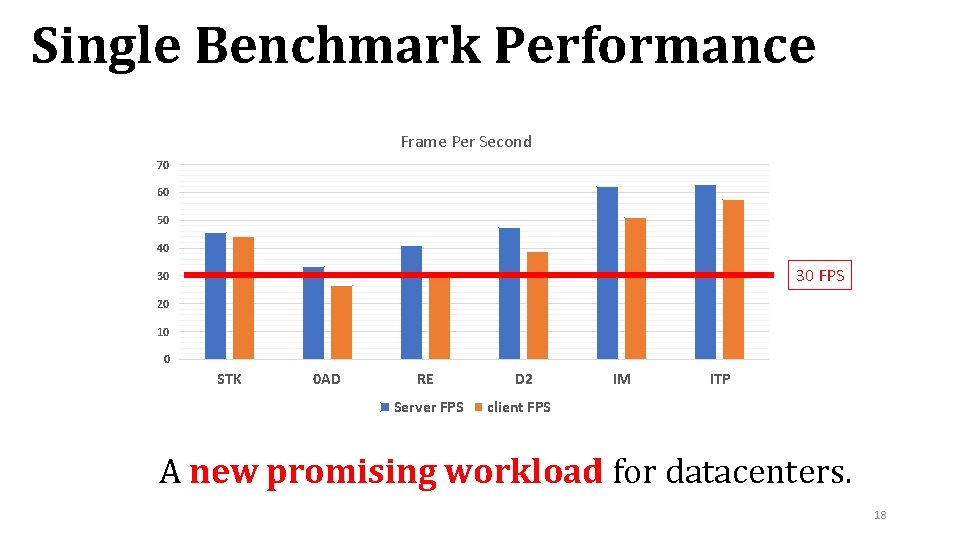

Single Benchmark Performance (figure). Server FPS and client FPS, in frames per second (axis 0 to 70, with 30 FPS marked), for STK, 0AD, RE, D2, IM, and ITP. Takeaway: a promising new workload for datacenters.

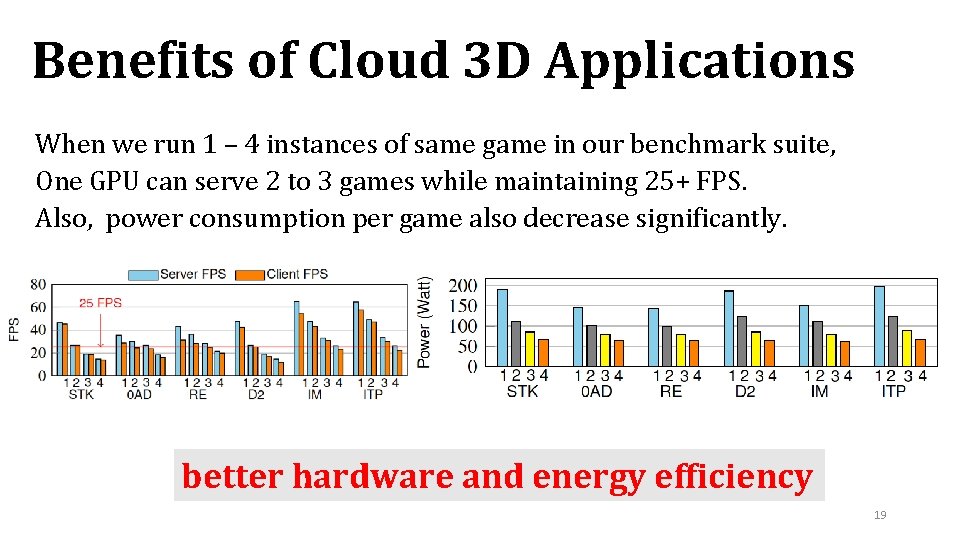

Benefits of Cloud 3D Applications
When we run 1 to 4 instances of the same game from our benchmark suite, one GPU can serve 2 to 3 games while maintaining 25+ FPS. Power consumption per game also decreases significantly. Takeaway: better hardware and energy efficiency.

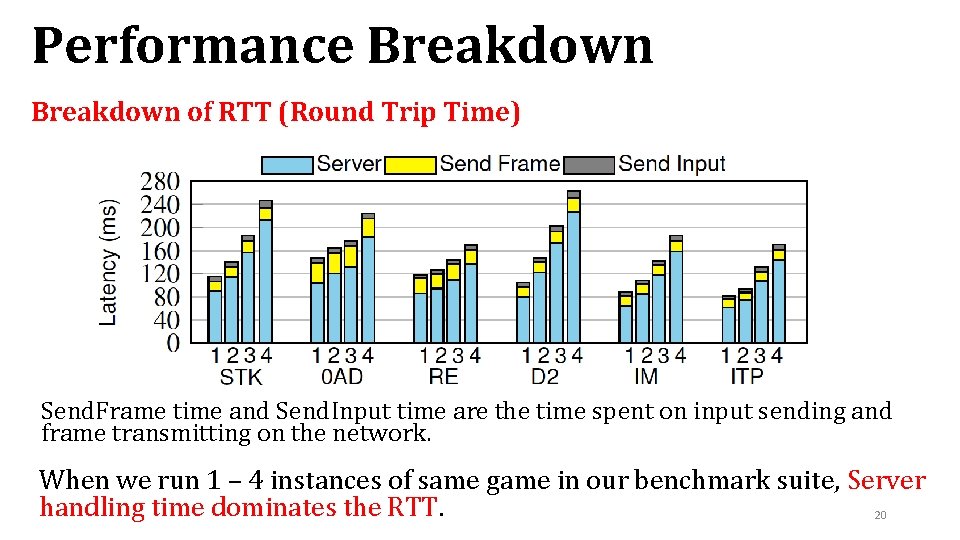

Performance Breakdown of RTT (Round Trip Time)
SendFrame time and SendInput time are the time spent on the network transmitting frames and sending inputs, respectively. When we run 1 to 4 instances of the same game from our benchmark suite, server handling time dominates the RTT.

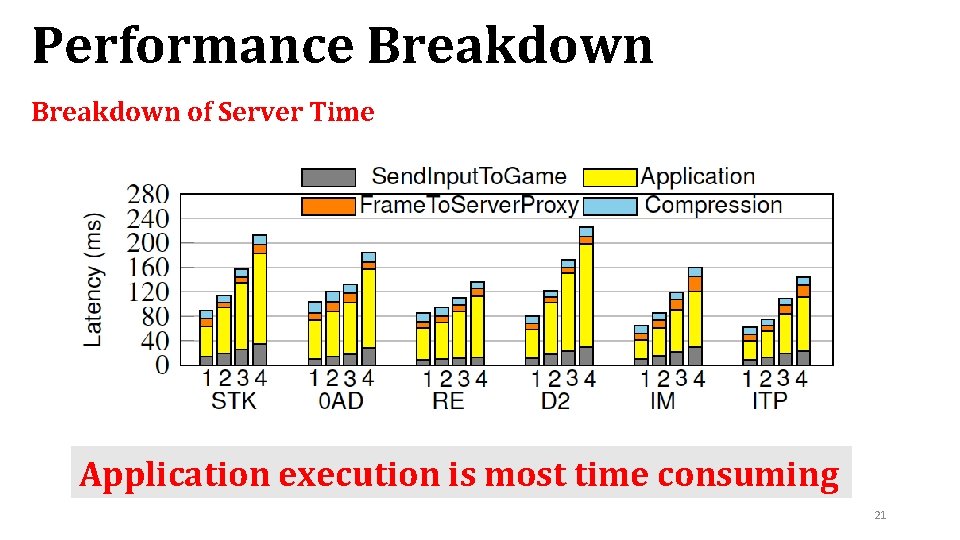

Performance Breakdown of Server Time
Application execution is the most time-consuming part.

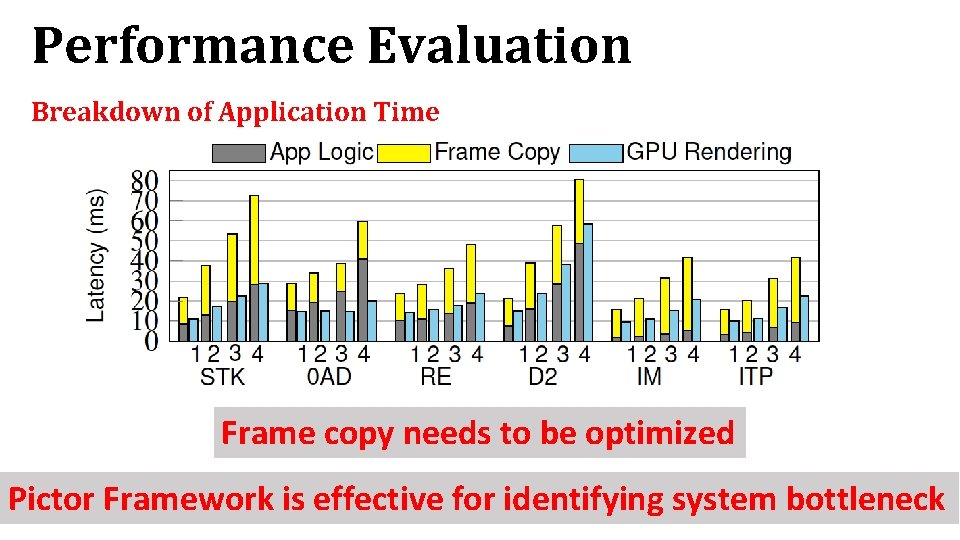

Performance Evaluation: Breakdown of Application Time
Frame copy needs to be optimized. The Pictor framework is effective at identifying system bottlenecks.


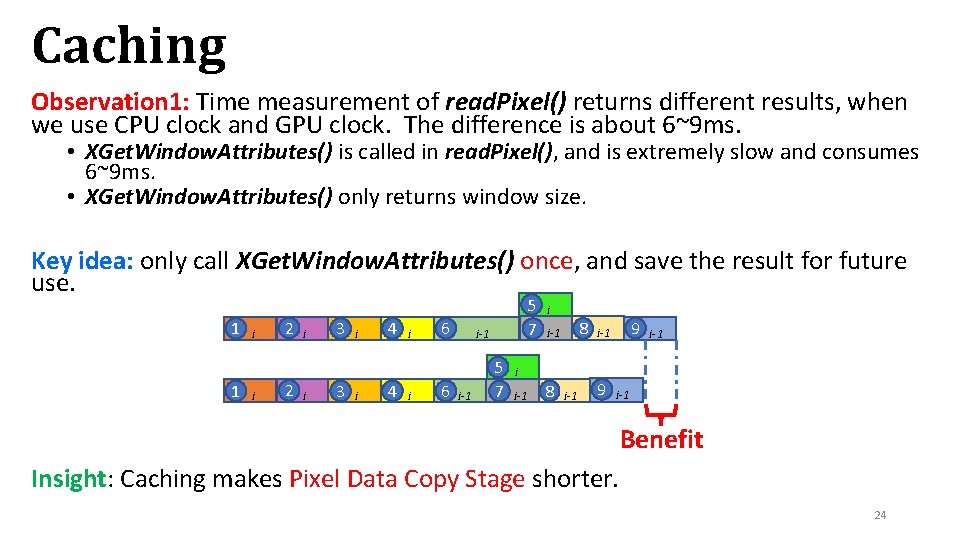

Caching
Observation 1: Timing readPixel() gives different results with the CPU clock and the GPU clock. The difference is about 6~9 ms.
• XGetWindowAttributes() is called in readPixel(); it is extremely slow and consumes 6~9 ms.
• The only value needed from XGetWindowAttributes() is the window size.
Key idea: call XGetWindowAttributes() only once and save the result for future use.
Benefit insight: caching makes the Pixel Data Copy stage shorter. (Figure: pipeline timelines of stages 1 to 9 for frames i-1 and i, before and after caching.)
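The caching idea can be sketched as a small memoizing wrapper. This is an illustration in Python rather than the framework's actual code: `WindowSizeCache` is a hypothetical name, `query` stands in for the slow XGetWindowAttributes() call, and the `invalidate` method is an added assumption for handling window resizes, which the slide does not discuss.

```python
from typing import Callable, Dict, Tuple

Size = Tuple[int, int]  # (width, height)


class WindowSizeCache:
    """Remember each window's size so the slow query runs only once per window."""

    def __init__(self, query: Callable[[int], Size]) -> None:
        self._query = query          # stand-in for the slow X11 attribute query
        self._sizes: Dict[int, Size] = {}
        self.slow_calls = 0          # how often the slow path was taken

    def size(self, window_id: int) -> Size:
        if window_id not in self._sizes:
            self.slow_calls += 1
            self._sizes[window_id] = self._query(window_id)
        return self._sizes[window_id]

    def invalidate(self, window_id: int) -> None:
        """Forget a cached size, e.g. after a resize (assumption, not on the slide)."""
        self._sizes.pop(window_id, None)


if __name__ == "__main__":
    cache = WindowSizeCache(lambda window_id: (1920, 1080))
    for _ in range(1000):            # one lookup per rendered frame
        cache.size(42)
    print(cache.slow_calls)          # the slow query ran once, not 1000 times
```

With a cost of 6~9 ms per call, skipping the query on every frame after the first removes that cost from the per-frame pixel copy.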

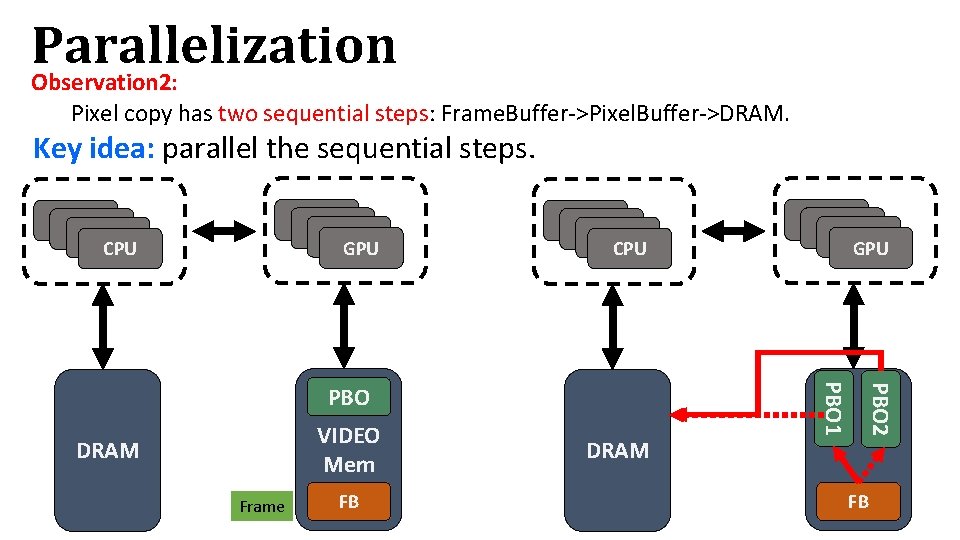

Parallelization
Observation 2: Pixel copy has two sequential steps: FrameBuffer -> PixelBuffer -> DRAM.
Key idea: parallelize the two sequential steps.
(Figure labels: CPU, GPU, frame buffer (FB), video memory, DRAM, a single PBO versus PBO 1 and PBO 2.)
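To see why overlapping the two copy steps helps, here is a small timing model rather than real OpenGL code. It assumes two pixel buffers used in alternation (as the PBO 1 / PBO 2 labels suggest), one GPU-side copy and one CPU-side copy in flight at a time, and fixed per-frame durations; the function names and durations are hypothetical.

```python
def sequential_copy_time(n_frames: int, fb_to_pbo_ms: float, pbo_to_dram_ms: float) -> float:
    """Total time when each frame does FB->PBO and then PBO->DRAM back to back."""
    return n_frames * (fb_to_pbo_ms + pbo_to_dram_ms)


def pipelined_copy_time(n_frames: int, fb_to_pbo_ms: float, pbo_to_dram_ms: float) -> float:
    """Total time with two PBOs: one is filled while the other is drained."""
    gpu_free = 0.0           # when the FB->PBO engine is next idle
    cpu_free = 0.0           # when the PBO->DRAM copy is next idle
    pbo_free = [0.0, 0.0]    # when each PBO has been fully drained
    for frame in range(n_frames):
        slot = frame % 2     # alternate between the two buffers
        # Filling needs an idle GPU copy engine and an already drained buffer.
        fill_start = max(gpu_free, pbo_free[slot])
        gpu_free = fill_start + fb_to_pbo_ms
        # Draining needs the fill to be done and the CPU copy to be idle.
        drain_start = max(cpu_free, gpu_free)
        cpu_free = drain_start + pbo_to_dram_ms
        pbo_free[slot] = cpu_free
    return cpu_free


if __name__ == "__main__":
    frames, fill_ms, drain_ms = 100, 2.0, 3.0   # illustrative durations only
    print(sequential_copy_time(frames, fill_ms, drain_ms))
    print(pipelined_copy_time(frames, fill_ms, drain_ms))
```

In this model the pipelined total approaches the frame count times the slower of the two steps, instead of the frame count times their sum.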

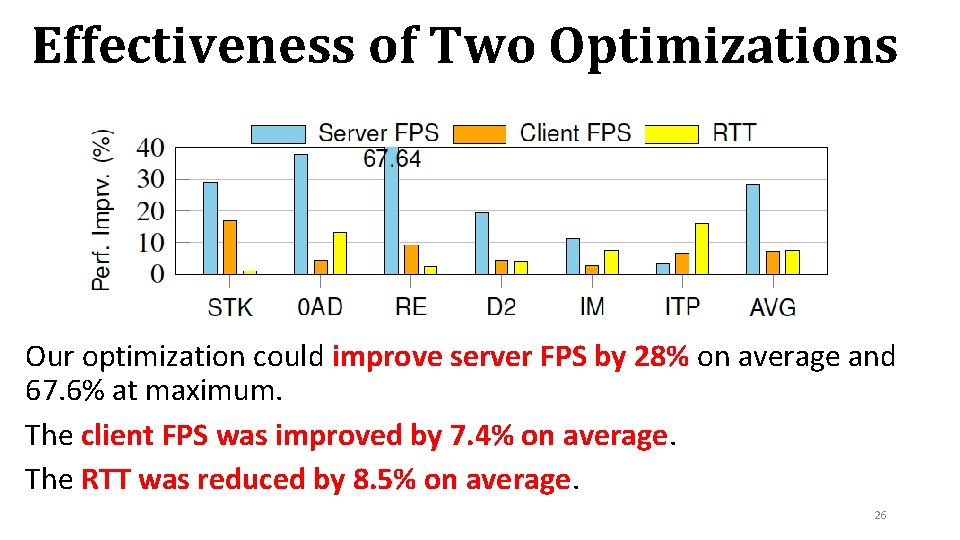

Effectiveness of Two Optimizations
Our optimizations improve server FPS by 28% on average and by up to 67.6%. Client FPS improves by 7.4% on average, and RTT is reduced by 8.5% on average.


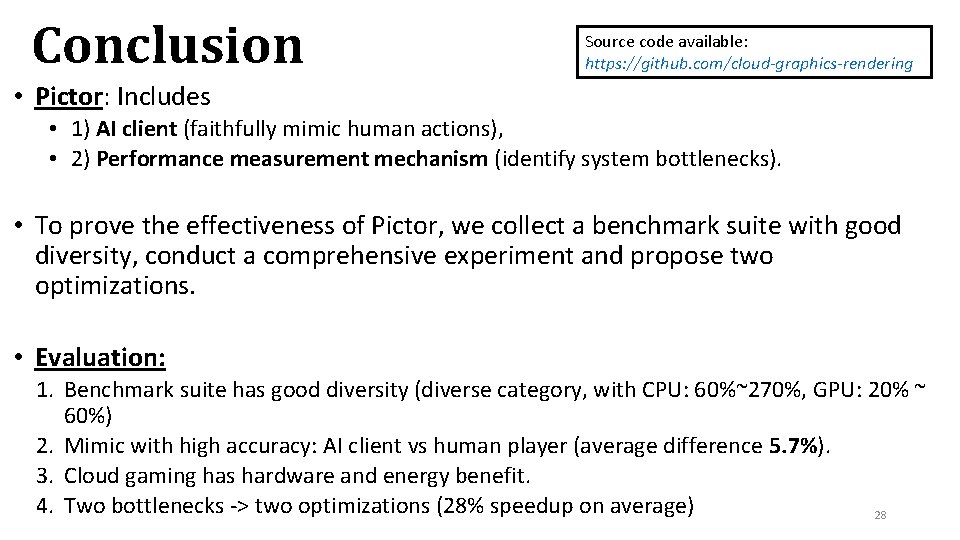

Conclusion
Source code available: https://github.com/cloud-graphics-rendering
• Pictor includes
  1) an AI client (faithfully mimics human actions),
  2) a performance measurement mechanism (identifies system bottlenecks).
• To demonstrate the effectiveness of Pictor, we collected a benchmark suite with good diversity, conducted comprehensive experiments, and proposed two optimizations.
• Evaluation:
  1. The benchmark suite has good diversity (diverse categories, with CPU: 60%~270%, GPU: 20%~60%).
  2. The AI client mimics a human player with high accuracy (average difference 5.7%).
  3. Cloud gaming has hardware and energy benefits.
  4. Two bottlenecks -> two optimizations (28% speedup on average).


Backup Slides

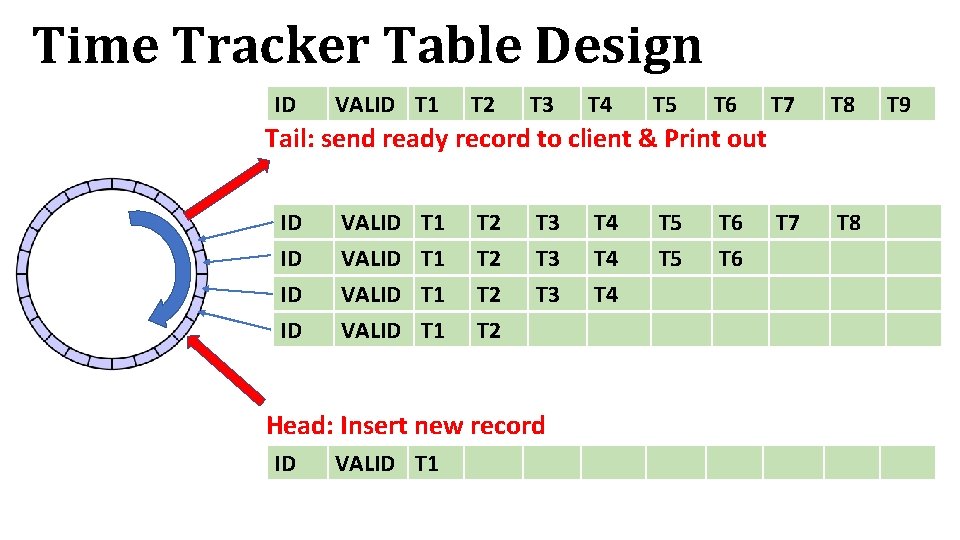

Time Tracker Table Design (figure). A table of per-frame records with the fields ID, VALID, T1, ..., T9.
• Head: insert new record.
• Tail: send ready record to client and print it out.
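One way to read the head/tail design is as a FIFO table: new records enter at the head, and completed records leave in order from the tail. The sketch below follows that reading; the class name, the dictionary layout, and the rule that a record is "ready" once all its timestamps are set are assumptions, and actually sending a record to the client is left to the caller.

```python
from collections import deque
from typing import Deque, Dict, List


class TimeTrackerTable:
    """FIFO of per-frame timestamp records (hypothetical reading of the slide)."""

    def __init__(self, num_stamps: int = 9) -> None:
        self.num_stamps = num_stamps
        self._records: Deque[dict] = deque()   # left end = tail, right end = head
        self._by_id: Dict[int, dict] = {}

    def insert(self, frame_id: int) -> None:
        """Head: insert a new, empty record."""
        record = {"id": frame_id, "valid": False, "t": [None] * self.num_stamps}
        self._records.append(record)
        self._by_id[frame_id] = record

    def stamp(self, frame_id: int, k: int, now_ms: float) -> None:
        """Store timestamp T_k (1-based) for the given frame."""
        record = self._by_id[frame_id]
        record["t"][k - 1] = now_ms
        # Assumption: VALID means every timestamp of the record has been set.
        record["valid"] = all(t is not None for t in record["t"])

    def pop_ready(self) -> List[dict]:
        """Tail: remove and return ready records, oldest first."""
        ready = []
        while self._records and self._records[0]["valid"]:
            record = self._records.popleft()
            del self._by_id[record["id"]]
            ready.append(record)
        return ready
```

Draining only from the tail keeps records in frame order, at the cost of holding back a finished record while an older one is still incomplete.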

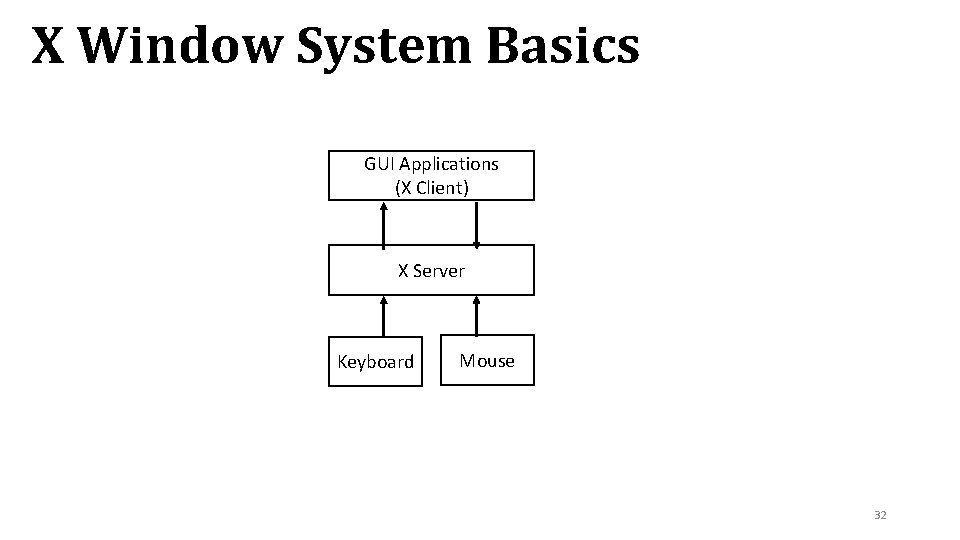

X Window System Basics (diagram). Labels: GUI applications (X clients), X server, keyboard, mouse.
