Phase Testing 1

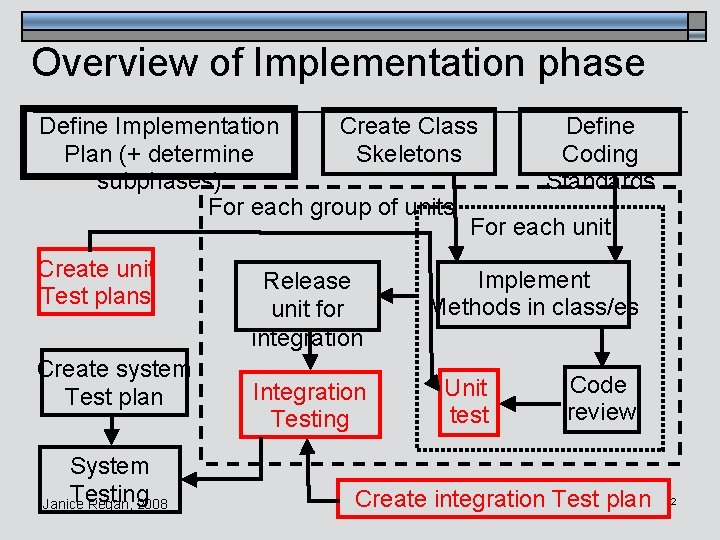

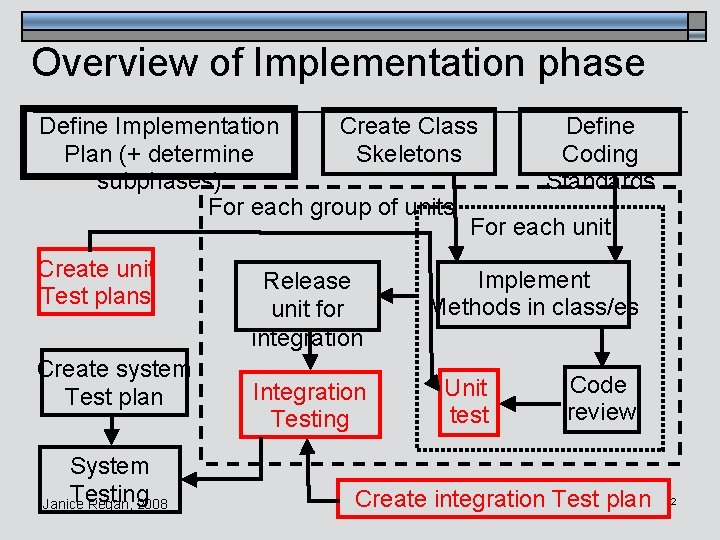

Overview of Implementation phase

- Define implementation plan (+ determine subphases)
- Create class skeletons
- Define coding standards
- Create system test plan
- For each group of units
  - Create integration test plan
  - For each unit
    - Create unit test plans
    - Implement methods in class/es
    - Unit test
    - Code review
    - Release unit for integration
  - Integration testing
- System testing

Janice Regan, 2008

Testing

- Goal: deliberately trying to cause failures in a software system in order to detect any defects that might be present
  - Test effectively: uncover as many defects as possible
  - Test efficiently: find the largest possible number of defects using the fewest possible tests

Basic Definitions

- Fault: a condition that causes the software to malfunction or fail
- Failure: the inability of a piece of software to perform according to its specifications (a violation of functional or non-functional requirements)
  - A piece of software has failed if its actual behaviour differs in any way from its expected behaviour

Basic Definitions

- Error: any discrepancy between an actual, measured value (the result of running the code) and a theoretical or predicted value (the expected result of the code, taken from the specifications)

A fault may produce a failure; a failure produces an error

- Failures are caused by faults, but not all faults cause failures. Unexercised faults will go undetected.
- If the software system has not failed during testing (no errors were produced), we still cannot guarantee that it is without faults
- Testing can only show the presence of faults
- A successful test does not prove that our system is faultless!

Faults and Failures: example

- Consider an array of integers, `myarray[]`; the array is declared to have 10 elements
- The code contains a loop to print the contents of the array. The array may be partially filled.
  - Loop to print k elements: `for (i=0; i<k; i++) {}`
  - There is no check in the code to prevent printing more values than the number of available array elements
- The code is tested for an array with 6 elements. No failures occur.
- There is an undetected fault in the code that will cause a failure if k > 10 (the loop then reads past the tenth element)
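The array example can be sketched in Python as follows. This is an illustration under assumptions rather than the slide's own code: `print_first` and `CAPACITY` are hypothetical names, and Python raises `IndexError` on an out-of-range index, whereas a C-style loop would silently read past the end of the array.

```python
CAPACITY = 10  # the array is declared with 10 elements, as in the slide


def print_first(myarray, k):
    """Return the first k elements as text. Fault: k is never checked."""
    lines = []
    for i in range(k):
        # Raises IndexError as soon as i reaches the end of the array.
        lines.append(str(myarray[i]))
    return "\n".join(lines)


myarray = [0] * CAPACITY

# The test from the slide: k = 6 touches only valid indices, so no failure occurs.
six_lines = print_first(myarray, 6)
assert six_lines.count("\n") == 5

# The fault was present all along; it becomes a failure only when a test
# asks for more elements than the array holds.
try:
    print_first(myarray, CAPACITY + 1)
    failed = False
except IndexError:
    failed = True
```

The test with 6 elements passes even though the missing bounds check is still in the code, which is why a passing test cannot show that the fault is absent.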

Test Plan

- Specifies how we will demonstrate that the software produces expected results and behaves according to the requirements specification
- Various tests (each representing a phase in our project schedule)
  - Unit test: usually included within the implementation plan
  - Integration test: may be included within the implementation plan
  - System test
  - User acceptance test

Unit Test Plan (not unit testing)

- Unit test planning activity (performed by the programmer)
  1. Select and create test cases
     - Plan how to test the unit in isolation from the rest of the system
- Related unit testing activity (performed by a tester, probably not the same person as the programmer)
  1. Implement planned test cases into a unit test driver
  2. Exercise (run) test cases and collect results
  3. Record and report test results

Unit Test

- Goal: ensure that a unit (class) works (e.g., methods' postconditions are satisfied)
- Testing "in isolation"
- Activities
  1. Implement the test cases created (write a unit test driver)
  2. Run unit test driver(s)
  3. Report results of tests
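The three activities can be sketched with Python's standard `unittest` module. The `Counter` class, its postconditions and the `run_driver` helper are hypothetical, invented only to show what a small unit test driver might look like.

```python
import unittest


class Counter:
    """Hypothetical unit under test: a counter that never goes below zero."""

    def __init__(self):
        self.value = 0

    def increment(self):
        self.value += 1  # postcondition: value is one more than before

    def decrement(self):
        if self.value == 0:
            raise ValueError("counter is already zero")
        self.value -= 1  # postcondition: value is one less than before


class CounterTest(unittest.TestCase):
    def setUp(self):
        # A fresh unit for every test case: testing "in isolation".
        self.counter = Counter()

    def test_increment_postcondition(self):
        before = self.counter.value
        self.counter.increment()
        self.assertEqual(self.counter.value, before + 1)

    def test_decrement_postcondition(self):
        self.counter.increment()
        self.counter.decrement()
        self.assertEqual(self.counter.value, 0)

    def test_decrement_at_zero_is_rejected(self):
        with self.assertRaises(ValueError):
            self.counter.decrement()


def run_driver():
    """Activity 2: run the test cases; the returned result feeds the report."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(CounterTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_driver()` runs all three test cases, and the returned result object (`testsRun`, `failures`, `errors`) holds what would go into the test report.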

Integration Test Plan (!= integration and test plan)

- Activity
  1. Select and create test cases
     - Plan how we shall test our builds
- The related phase is the Integration Test phase, where we exercise the integration test cases

Integration Test

- Goal: performed on a partially constructed software system (builds) to verify that, after integrating additional bits into our software, it still operates as planned (i.e., as specified in the requirements document)
- Testing "in context"
- Activities
  1. Write drivers/stubs
  2. Run integration test(s)
  3. Report results of tests
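A minimal sketch of a driver and a stub, assuming a build in which one unit is ready and the unit it depends on is not. `Checkout`, `PaymentStub` and `integration_driver` are hypothetical names used only for illustration.

```python
class PaymentStub:
    """Stub standing in for a payment unit that is not yet part of the build."""

    def __init__(self, approve):
        self.approve = approve
        self.charged = []

    def charge(self, amount):
        self.charged.append(amount)  # record the call so the driver can inspect it
        return self.approve          # canned answer instead of real behaviour


class Checkout:
    """Hypothetical unit already in the build; it depends on a payment unit."""

    def __init__(self, payment):
        self.payment = payment

    def buy(self, prices):
        total = sum(prices)
        return "paid" if self.payment.charge(total) else "declined"


def integration_driver():
    """Driver: exercises Checkout in context and collects pass/fail results."""
    results = {}

    ok_stub = PaymentStub(approve=True)
    outcome = Checkout(ok_stub).buy([5, 7])
    results["approved"] = outcome == "paid" and ok_stub.charged == [12]

    refusing_stub = PaymentStub(approve=False)
    results["declined"] = Checkout(refusing_stub).buy([5]) == "declined"

    return results
```

When the real payment unit joins a later build, the same driver can be rerun with the stub replaced, which checks that the software still operates as planned after the integration.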

System Testing

- Goal: ensure that the system actually does what the customer expects it to do
- For us, in 275, our system test plan is to successfully execute all of our use cases
- Activities
  1. Run the system test using the User Manual
  2. Report results of the test

User Acceptance Testing

- Goal: the client/users test our software system by mimicking real-world activities
- Users should also intentionally enter erroneous values to determine the robustness of the software system

Developing Test Cases

- We could test code using all possible inputs
  - Exhaustive testing is impractical
  - More extensive testing for mission-critical software
- Instead, we shall select a set of inputs (i.e., a set of test cases)
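A quick calculation shows why exhaustive testing is impractical. The figures are assumptions chosen for illustration: a method that takes two 32-bit integers (64 bits of input in total) and a test rig that runs one billion tests per second.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def exhaustive_test_years(input_bits, tests_per_second):
    """Years needed to try every possible input of the given total bit width."""
    total_inputs = 2 ** input_bits
    return total_inputs / tests_per_second / SECONDS_PER_YEAR


# Two 32-bit parameters give 2**64 input combinations.
years = exhaustive_test_years(input_bits=64, tests_per_second=1_000_000_000)
print(f"{years:.0f} years")  # roughly 585 years
```

Even for this tiny interface the run would take centuries, so a selected set of test cases is the only workable option.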

Test Case

- Selection of test cases is based on two strategies:
  - Black box testing strategy: select test cases by examining the expected behaviour and results of the software system
  - Glass (white) box testing strategy: select test cases by examining the internal structure of the code

Test Case Format

- Test id: uniquely identifies our test case
- Test purpose: what we are testing, coverage type, resulting sequence of statements, …
- Requirement #: from the SRS, to validate our test cases
- Inputs: input values and states of objects
- Testing procedure: steps to be performed by testers to execute this test case (the algorithm of the test driver for automated testing)
- Evaluation: steps to be performed by testers in order to evaluate whether the test is successful or not
- Expected behaviours and results: the test oracle
- Actual behaviours and results: one way to report
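One possible way to hold these fields in code is a small record type. The `TestCaseRecord` class and every value in the example (test id, requirement number, inputs) are placeholders invented for this sketch, not taken from a real SRS.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class TestCaseRecord:
    test_id: str                  # uniquely identifies the test case
    purpose: str                  # what is being tested
    requirement: str              # requirement number from the SRS
    inputs: dict                  # input values and object states
    procedure: list               # steps the tester (or driver) performs
    expected: Any                 # test oracle
    actual: Optional[Any] = None  # filled in when the test is run

    def passed(self):
        """Evaluation step: the test succeeds when actual matches expected."""
        return self.actual == self.expected


case = TestCaseRecord(
    test_id="UT-001",
    purpose="absolute value of a negative integer",
    requirement="SRS-0.0 (placeholder)",
    inputs={"x": -3},
    procedure=["call abs(x)", "record the returned value"],
    expected=3,
)

# Testing procedure: run the code under test and record the actual result.
case.actual = abs(case.inputs["x"])
```

After the run, `case.passed()` gives the evaluation, and the record itself can serve as the report entry for this test.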

Expected Results

- Expected results are created from the requirements specification, class invariants, postconditions of methods, etc.
- When expected results are not observed, a failure has occurred
- Be careful (when developing tests)
  - Keep in mind that the expected results themselves could be erroneous

Selecting Test Cases

- Selection of test cases is based on two strategies:
  - Black box testing strategy: select test cases by examining the expected behaviour and results of the software system
  - Glass (white) box testing strategy: select test cases by examining the internal structure of the code

Glass (White) Box Testing

- We treat the software system as a glass box
- We can see inside, i.e., we know about
  - Source code (steps taken by algorithms)
  - Internal data
  - Design documentation
- We can select test cases using our knowledge of the source code, hence test cases can exercise all aspects of each algorithm and its data
- More thorough but more time-consuming than black box testing

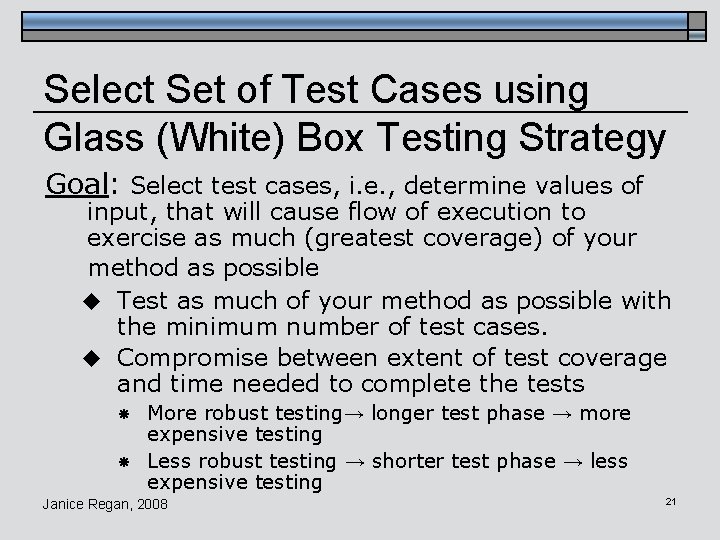

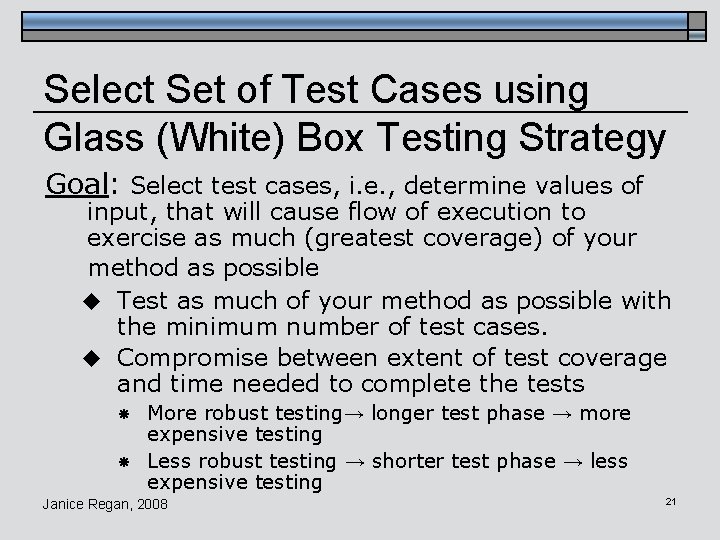

Select Set of Test Cases using Glass (White) Box Testing Strategy

- Goal: select test cases, i.e., determine values of input, that will cause the flow of execution to exercise as much of your method as possible (greatest coverage)
  - Test as much of your method as possible with the minimum number of test cases
  - Compromise between the extent of test coverage and the time needed to complete the tests
- More robust testing → longer test phase → more expensive testing
- Less robust testing → shorter test phase → less expensive testing
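A small sketch of choosing inputs by reading the code. The `classify` method and its thresholds are hypothetical. The cases were picked so that every return or raise statement, and each half of the compound condition, is exercised with only four tests.

```python
def classify(score):
    """Hypothetical method under test with three execution paths."""
    if score < 0 or score > 100:
        raise ValueError("score out of range")  # path A
    if score >= 50:
        return "pass"                           # path B
    return "fail"                               # path C


# One input per path (two for path A, one per half of the condition),
# chosen by inspecting the source above.
WHITE_BOX_CASES = [
    (-1, ValueError),   # path A, via score < 0
    (101, ValueError),  # path A, via score > 100
    (50, "pass"),       # path B
    (49, "fail"),       # path C
]


def run_cases(cases):
    """Run each case and report whether the actual outcome matched the expected one."""
    outcomes = []
    for value, expected in cases:
        try:
            actual = classify(value)
        except ValueError:
            actual = ValueError
        outcomes.append(actual == expected)
    return outcomes
```

Adding more inputs along the same paths would lengthen the test phase without exercising any new code, which is the coverage-versus-time compromise described above.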

Black Box Testing

- We treat the software system as a black box
- We cannot see inside, i.e., we know nothing about
  - Source code
  - Internal data
  - Design documentation
- So what do we know then?
  - Public interface of the function or class (methods, parameters)
  - Class skeleton (pre- and postconditions, description, invariants)
- Test cases are selected based on
  - Input values or situation
  - Expected behaviour and output of methods

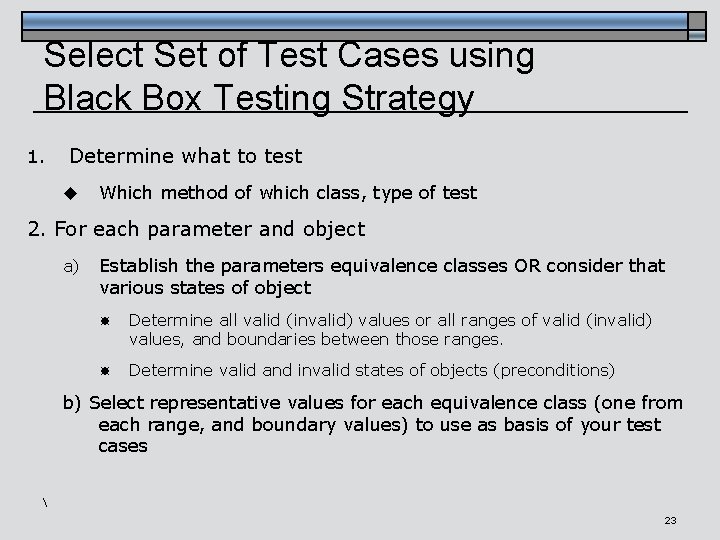

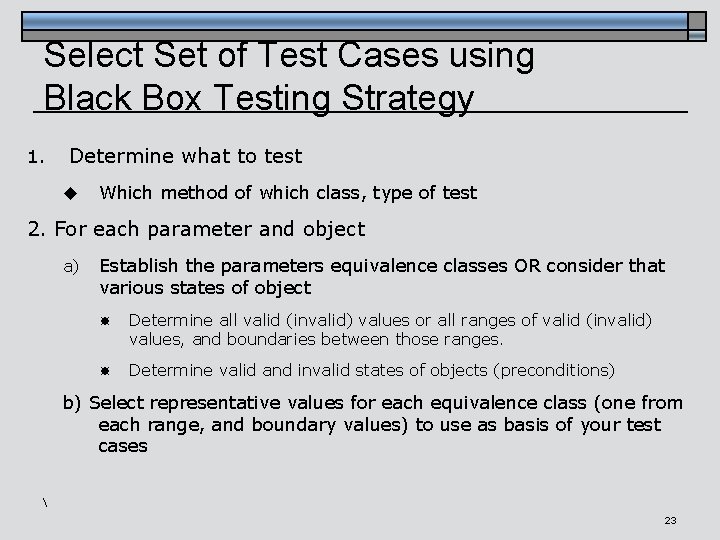

Select Set of Test Cases using Black Box Testing Strategy

1. Determine what to test
   - Which method of which class, type of test
2. For each parameter and object
   - a) Establish the parameter's equivalence classes OR consider the various states of the object
     - Determine all valid (invalid) values or all ranges of valid (invalid) values, and the boundaries between those ranges
     - Determine valid and invalid states of objects (preconditions)
   - b) Select representative values for each equivalence class (one from each range, plus boundary values) to use as the basis of your test cases
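The procedure above can be sketched for a single integer parameter. The example assumes a hypothetical method `is_valid_month` whose specification accepts 1 to 12. Only that specification is used to pick the values; the helper `select_values` and its offsets are illustrative choices.

```python
def select_values(low, high):
    """Representative and boundary values for an integer valid in [low, high]."""
    return {
        "invalid_below": [low - 10, low - 1],     # representative, boundary
        "valid": [low, (low + high) // 2, high],  # boundary, representative, boundary
        "invalid_above": [high + 1, high + 10],   # boundary, representative
    }


def is_valid_month(month):
    """Hypothetical method under test; the tests rely only on its specification."""
    return 1 <= month <= 12


def run_black_box_tests():
    """Check every selected value against the behaviour the specification predicts."""
    results = []
    for class_name, candidates in select_values(1, 12).items():
        expected = class_name == "valid"
        for value in candidates:
            results.append((value, is_valid_month(value) == expected))
    return results
```

Three equivalence classes yield seven test inputs here (-9, 0, 1, 6, 12, 13, 22), a small set that still probes both boundaries of the valid range.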