Ph D Final Exam Neural Network Ensonification Emulation

- Slides: 63

Ph.D. Final Exam
Neural Network Ensonification Emulation: Training and Application
JAE-BYUNG JUNG
Department of Electrical Engineering, University of Washington
August 8, 2001

Overview

- Review of adaptive sonar
- Neural network training for varying output nodes
  - On-line training
  - Batch-mode training
- Neural network inversion
- Sensitivity analysis
- Maximal area coverage problem
- Conclusions and ideas for future work

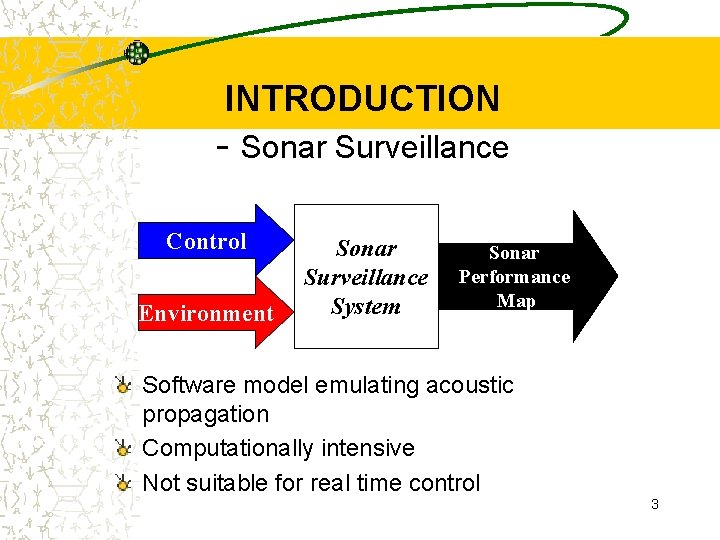

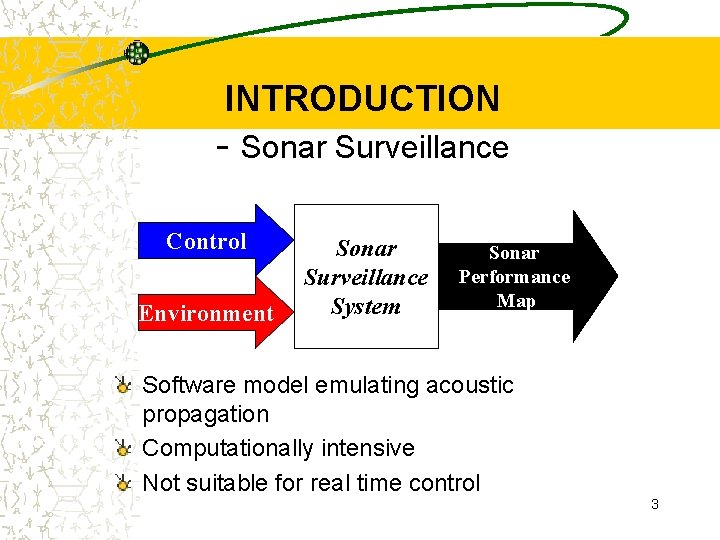

INTRODUCTION - Sonar Surveillance

- Diagram labels: Control, Environment, Sonar Surveillance System, Sonar Performance Map
- The sonar performance map is produced by a software model emulating acoustic propagation
  - Computationally intensive
  - Not suitable for real-time control

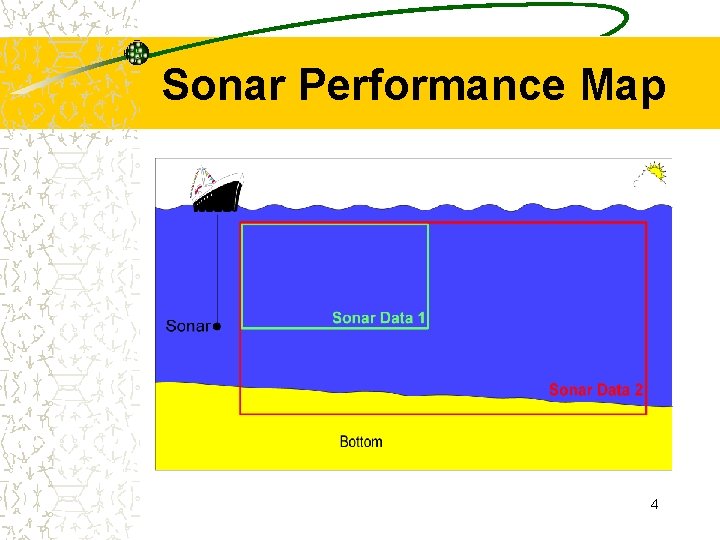

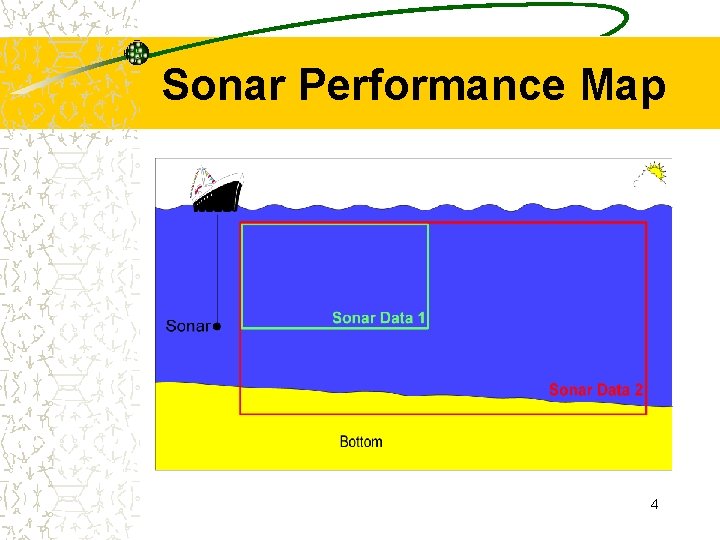

Sonar Performance Map

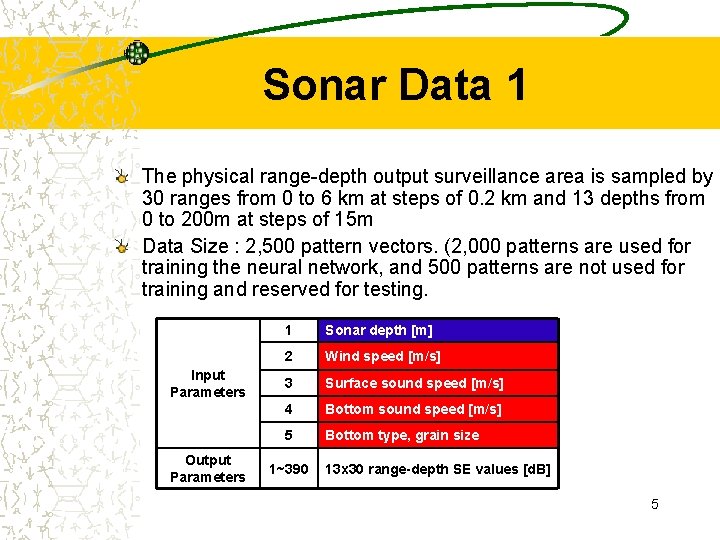

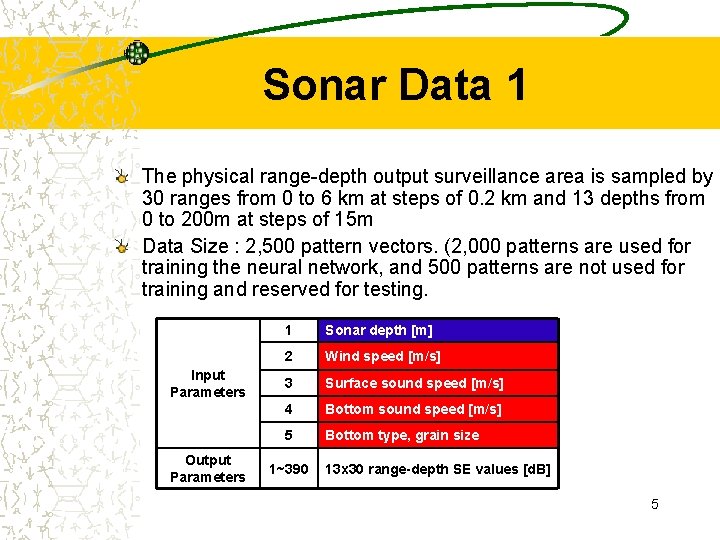

Sonar Data 1

- The physical range-depth output surveillance area is sampled at 30 ranges from 0 to 6 km in steps of 0.2 km and at 13 depths from 0 to 200 m in steps of 15 m.
- Data size: 2,500 pattern vectors (2,000 patterns are used for training the neural network; the remaining 500 are not used for training and are reserved for testing).

| Parameters | No. | Description |
|---|---|---|
| Input | 1 | Sonar depth [m] |
| Input | 2 | Wind speed [m/s] |
| Input | 3 | Surface sound speed [m/s] |
| Input | 4 | Bottom sound speed [m/s] |
| Input | 5 | Bottom type, grain size |
| Output | 1~390 | 13 x 30 range-depth SE values [dB] |

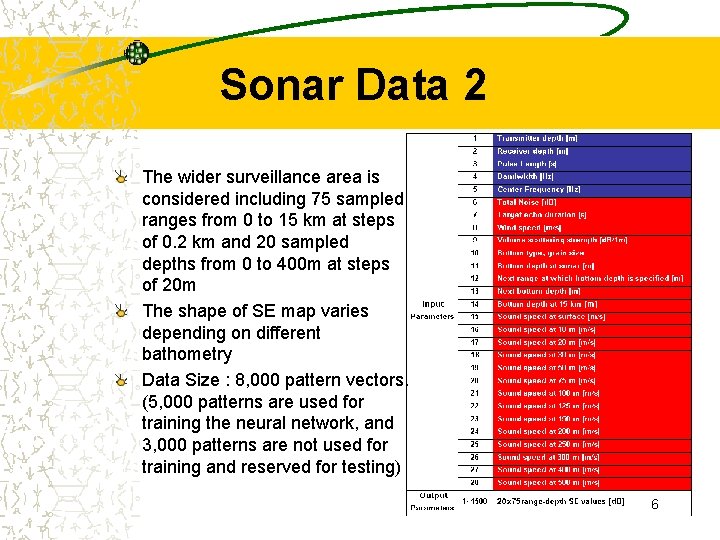

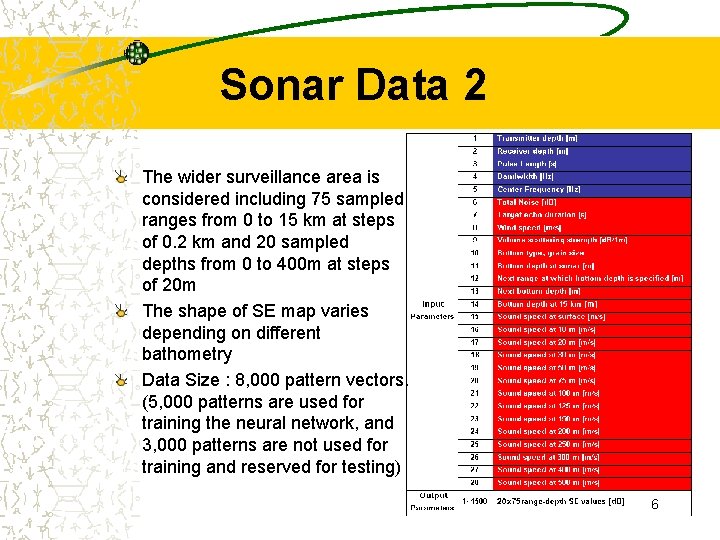

Sonar Data 2

- A wider surveillance area is considered, with 75 sampled ranges from 0 to 15 km in steps of 0.2 km and 20 sampled depths from 0 to 400 m in steps of 20 m.
- The shape of the SE map varies with the bathymetry.
- Data size: 8,000 pattern vectors (5,000 patterns are used for training the neural network; the remaining 3,000 are not used for training and are reserved for testing).

Neural Network Replacement

- Fast reproduction of the SE map
- Inversion (derivative existence)
- Real-time control (optimization)

Training NN

- High dimensionality of the output space
  - ⇒ Multi-layered perceptrons
  - ⇒ Neural smithing (e.g. input data jittering, pattern clipping, and weight decay …)
- Widely varying bathymetry
  - ⇒ Adaptive training strategy for flexible output dimensionality

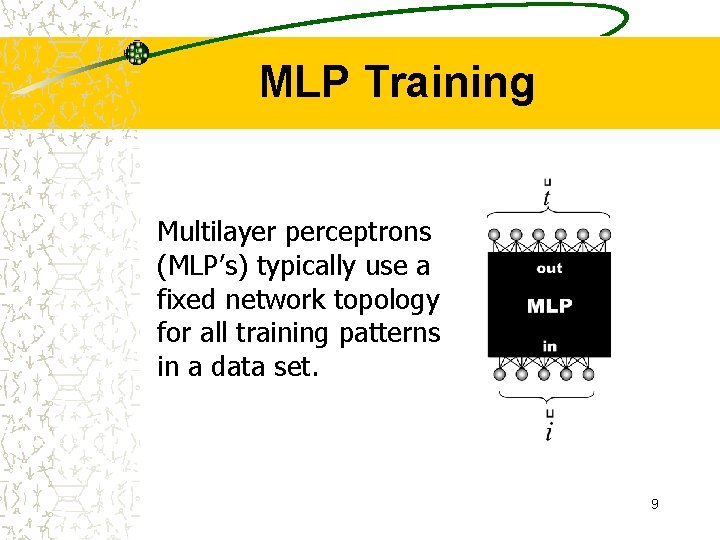

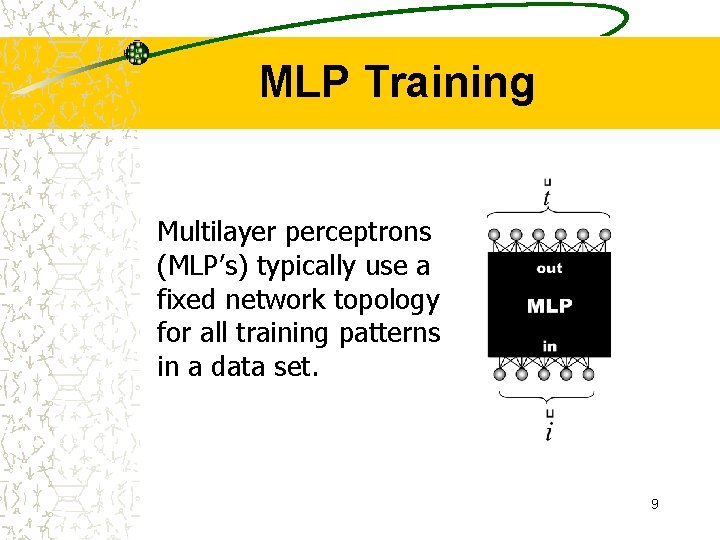

MLP Training

Multilayer perceptrons (MLPs) typically use a fixed network topology for all training patterns in a data set.

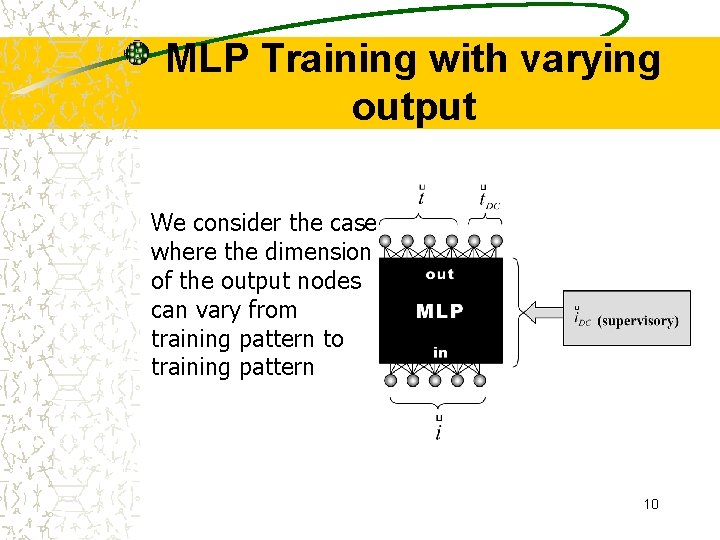

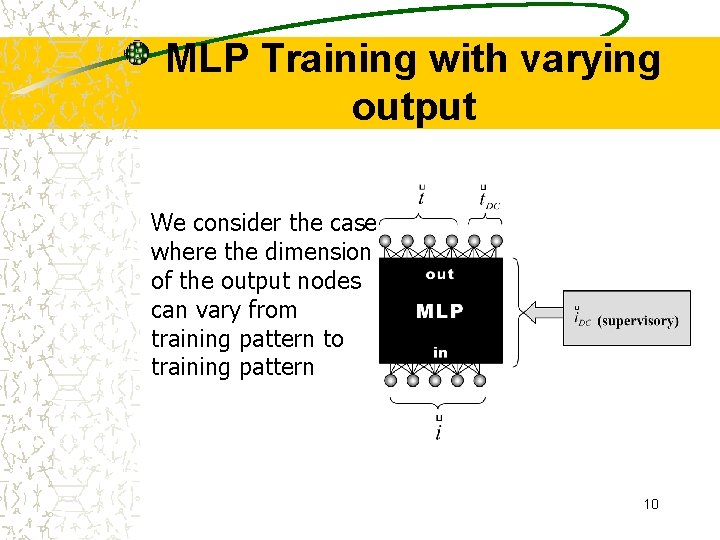

MLP Training with varying output

We consider the case where the dimension of the output nodes can vary from training pattern to training pattern.

Flexible Dimensionality

- Generally, an MLP must have a fixed network topology.
- A single neural network cannot handle a flexible network topology.
- One alternative is a modular neural network structure that has local experts for different dimension-specific training patterns.
- It becomes increasingly difficult, however, to implement a large number of neural networks as the number of local experts increases.

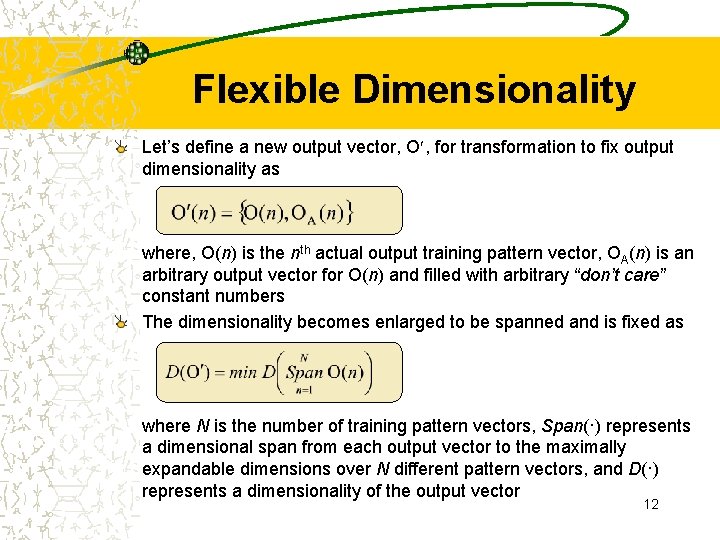

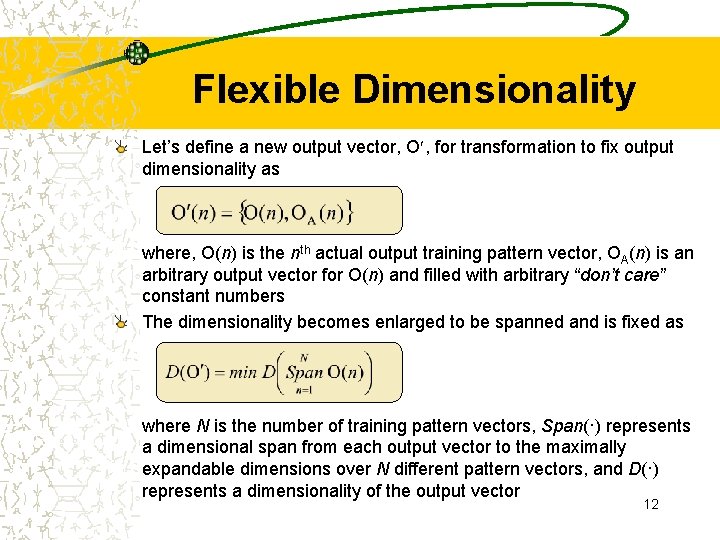

Flexible Dimensionality

Define a new output vector, $O'(n)$, as a transformation that fixes the output dimensionality:

$$O'(n) = \{O(n),\, O_A(n)\}$$

where $O(n)$ is the nth actual output training pattern vector and $O_A(n)$ is an arbitrary output vector for $O(n)$, filled with arbitrary "don't care" constants.

The dimensionality of $O'(n)$ is enlarged to the span of all output vectors and is then fixed, where N is the number of training pattern vectors, Span(·) represents a dimensional span from each output vector to the maximally expandable dimensions over the N different pattern vectors, and D(·) represents the dimensionality of an output vector.

Flexible Dimensionality

Train a single neural network using the fixed-dimensional output vector $O'(n)$ by either:

1. Filling $O_A(n)$ with an arbitrary constant value. High spatial frequency components are washed out.
2. Smearing neighborhood pixels into $O_A(n)$. The unnecessary part still has to be trained (longer training time).

$O_A(n)$ can be ignored when $O'(n)$ is projected onto $O(n)$ in the testing phase.

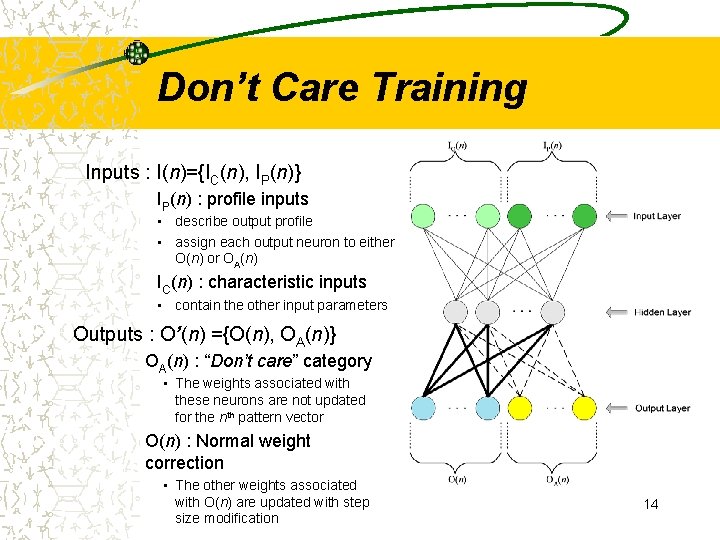

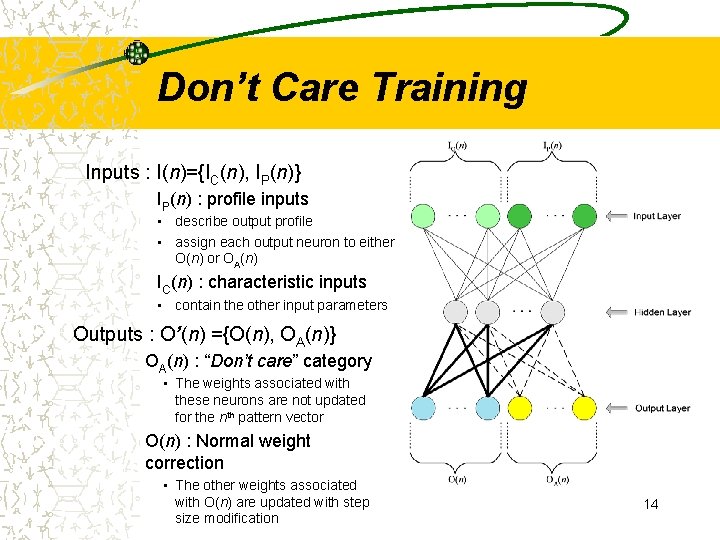

Don't Care Training

- Inputs: $I(n) = \{I_C(n),\, I_P(n)\}$
  - $I_P(n)$: profile inputs
    - describe the output profile
    - assign each output neuron to either $O(n)$ or $O_A(n)$
  - $I_C(n)$: characteristic inputs
    - contain the other input parameters
- Outputs: $O'(n) = \{O(n),\, O_A(n)\}$
  - $O_A(n)$: "don't care" category
    - The weights associated with these neurons are not updated for the nth pattern vector
  - $O(n)$: normal weight correction
    - The other weights, associated with $O(n)$, are updated with step size modification
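
The selective update described on this slide can be sketched for the output layer alone. This is a minimal illustration, not the thesis implementation: the function name `dont_care_update`, the sigmoid activation, the boolean `active` mask (standing in for the profile inputs), and the fixed `step` are all assumptions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dont_care_update(weights, hidden, target, active, step=0.1):
    """One on-line update of the output-layer weights (hypothetical helper).

    weights[i][j] connects hidden unit j to output neuron i.
    active[i] is True when output i belongs to O(n) and False when it
    belongs to the don't-care part OA(n) for this pattern.
    """
    for i, row in enumerate(weights):
        if not active[i]:
            continue  # don't-care neuron: its weights are left untouched
        net = sum(w * h for w, h in zip(row, hidden))
        out = sigmoid(net)
        # Local gradient of the squared error through the sigmoid.
        delta = (target[i] - out) * out * (1.0 - out)
        for j, h in enumerate(hidden):
            row[j] += step * delta * h
    return weights


# Three output neurons; the middle one is "don't care" for this pattern.
w = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
dont_care_update(w, hidden=[1.0, 0.5], target=[1.0, 0.0, 0.0],
                 active=[True, False, True])
```

In a full network the hidden-layer weights would receive backpropagated error only from the active output neurons, which is where the saving in training time would come from.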

Don't Care Training

Advantages
- Significantly reduced training time, because weights in the don't care category are not corrected
- The boundary problem is solved
- Fewer training vectors are required
- Focus on active nodes only

Drawbacks
- Rough weight space due to irregular weight correction
- Possibly leading to local minima

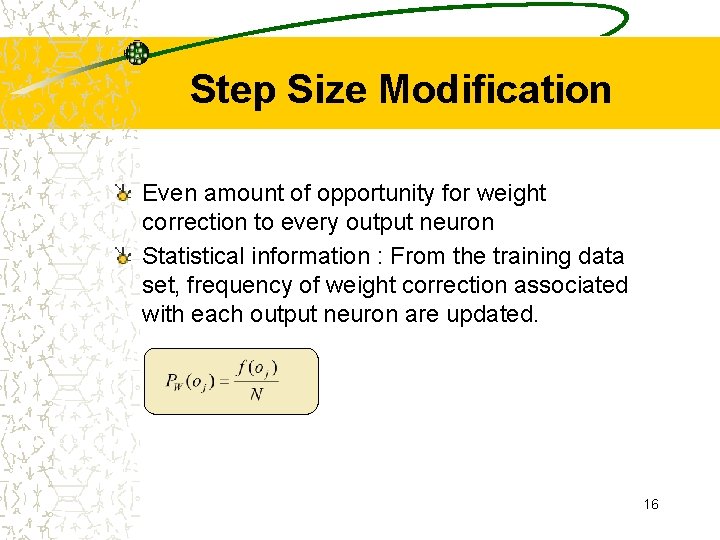

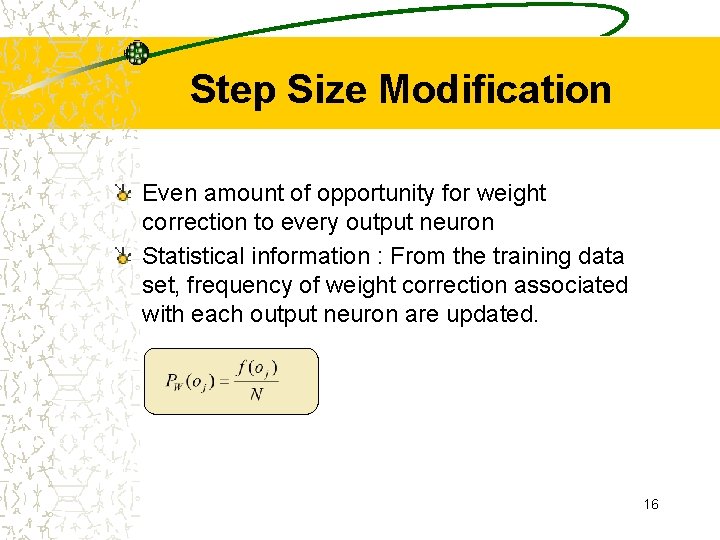

Step Size Modification

- Goal: give every output neuron an even amount of opportunity for weight correction.
- Statistical information: the frequency of weight correction associated with each output neuron is gathered from the training data set.
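
The slide's update rule is not recoverable from the text, so the following is only one plausible reading: count how often each output neuron is active in the training set and scale its step size inversely to that frequency. The names `update_frequencies` and `modified_step_sizes` and the inverse-frequency rule itself are assumptions.

```python
def update_frequencies(masks):
    """Count, per output neuron, how many patterns mark it as active."""
    counts = [0] * len(masks[0])
    for mask in masks:
        for i, is_active in enumerate(mask):
            counts[i] += int(is_active)
    return counts


def modified_step_sizes(masks, base_step=0.1):
    """Assumed rule: step_i = base_step * N / count_i.

    A neuron that is active in every pattern keeps base_step; a neuron
    that is rarely active gets a proportionally larger step. Neurons that
    are never active get 0.0 because they are never updated anyway.
    """
    n_patterns = len(masks)
    counts = update_frequencies(masks)
    return [base_step * n_patterns / c if c else 0.0 for c in counts]


masks = [
    [True, True, False],
    [True, False, False],
    [True, True, False],
    [True, False, False],
]
steps = modified_step_sizes(masks)
```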

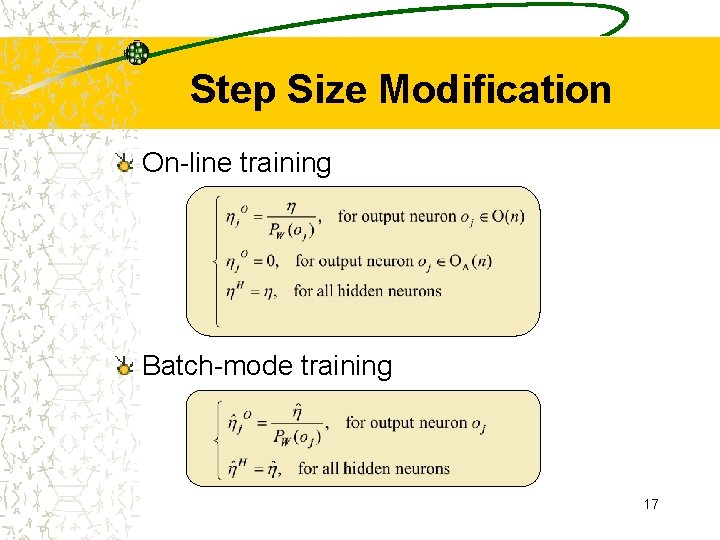

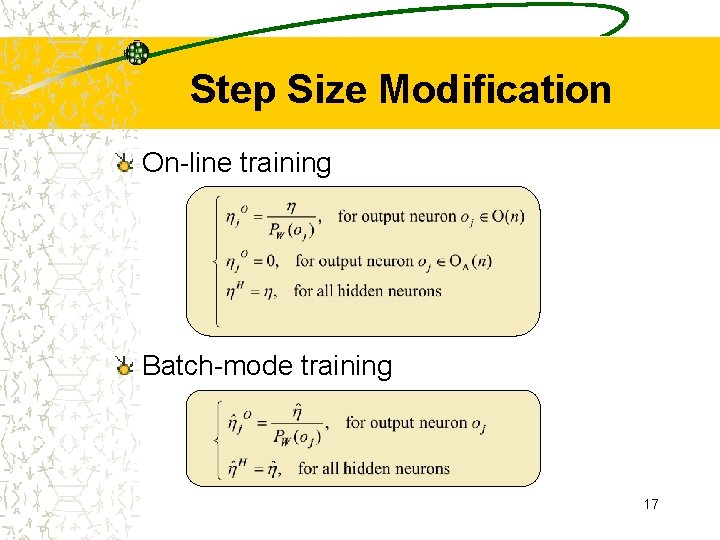

Step Size Modification

- On-line training
- Batch-mode training

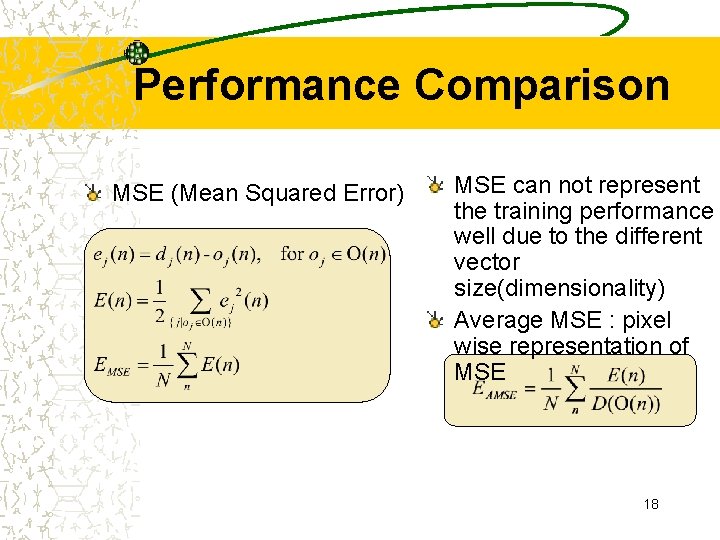

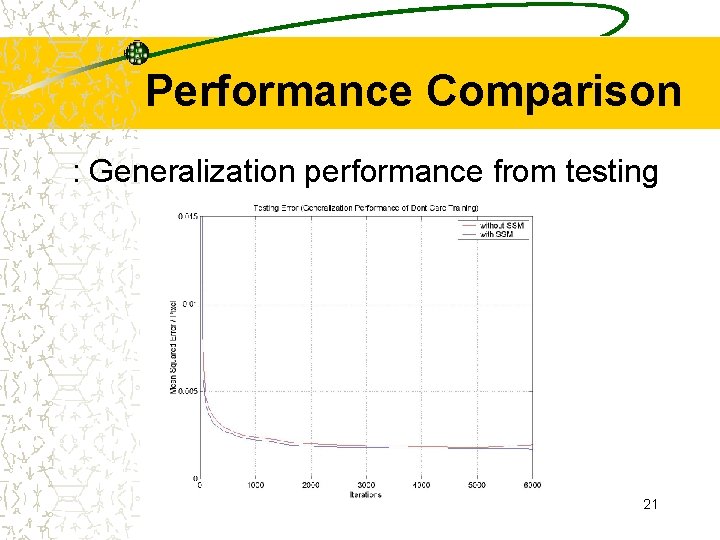

Performance Comparison

- MSE (mean squared error): MSE cannot represent the training performance well because the output vector size (dimensionality) differs from pattern to pattern.
- Average MSE: pixel-wise representation of MSE.
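
One way to read "pixel-wise" is to average the squared error over active output pixels only, so that patterns with many don't-care pixels are not favored. The sketch below assumes that reading; `average_mse` and the boolean masks are hypothetical names.

```python
def average_mse(outputs, targets, masks):
    """Squared error averaged over active pixels only, across all patterns."""
    total, n_active = 0.0, 0
    for out, tgt, mask in zip(outputs, targets, masks):
        for o, t, m in zip(out, tgt, mask):
            if m:  # skip don't-care pixels
                total += (t - o) ** 2
                n_active += 1
    return total / n_active


outputs = [[1.0, 0.0, 9.0], [2.0, 2.0, 2.0]]
targets = [[0.0, 0.0, 0.0], [2.0, 0.0, 2.0]]
masks = [[True, True, False], [True, True, True]]
score = average_mse(outputs, targets, masks)  # 5.0 / 5 active pixels
```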

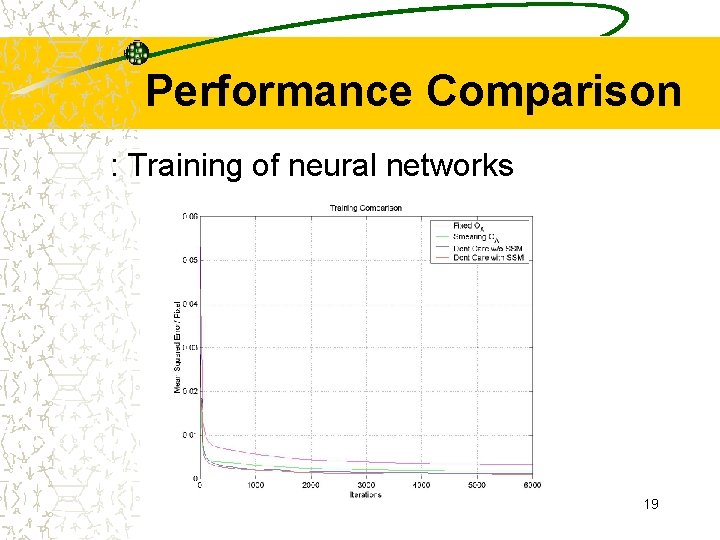

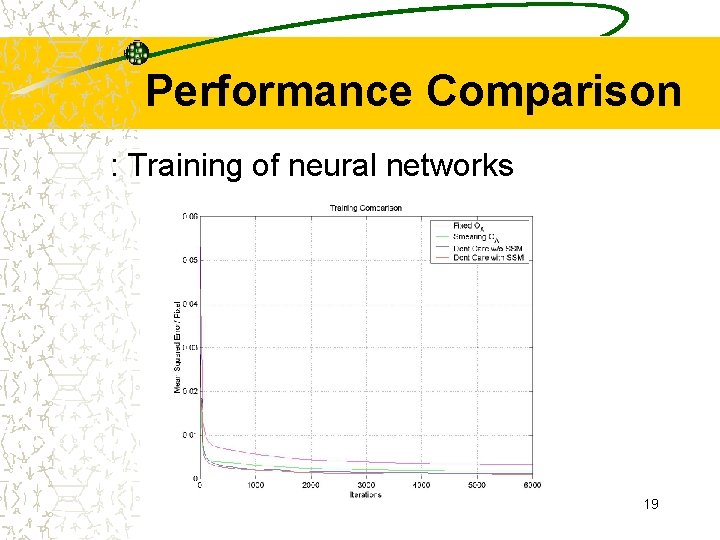

Performance Comparison: Training of neural networks

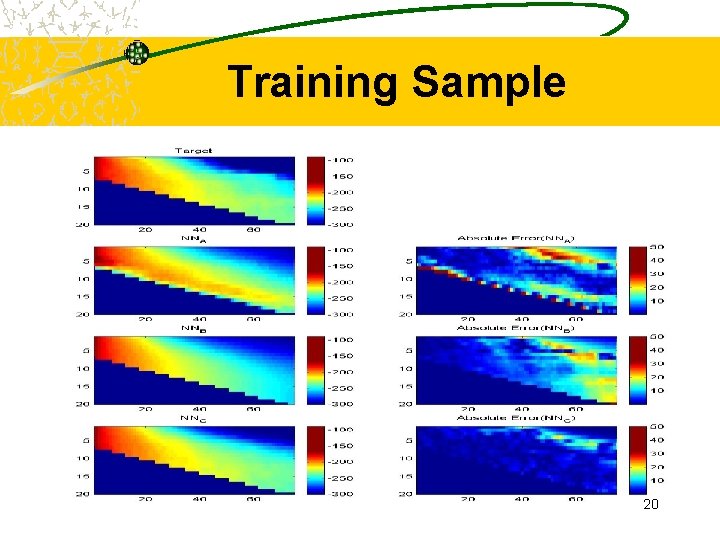

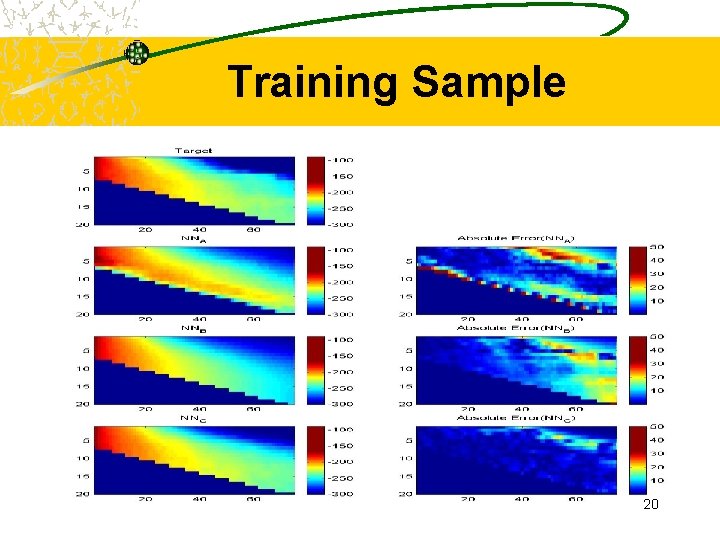

Training Sample

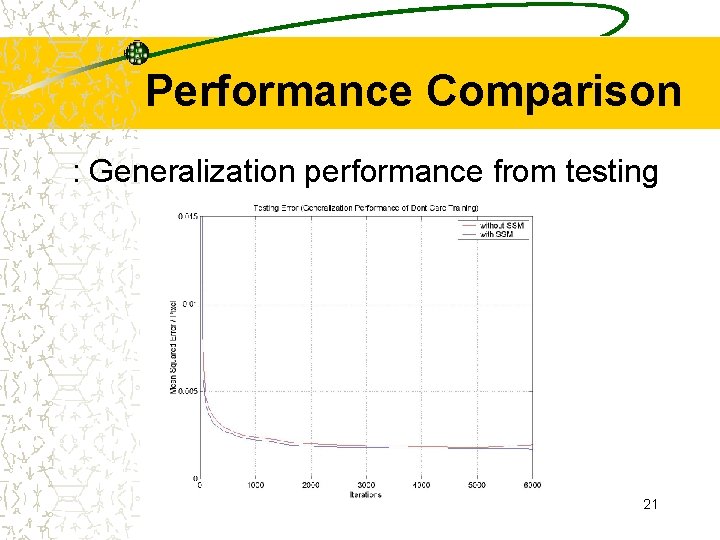

Performance Comparison: Generalization performance from testing error

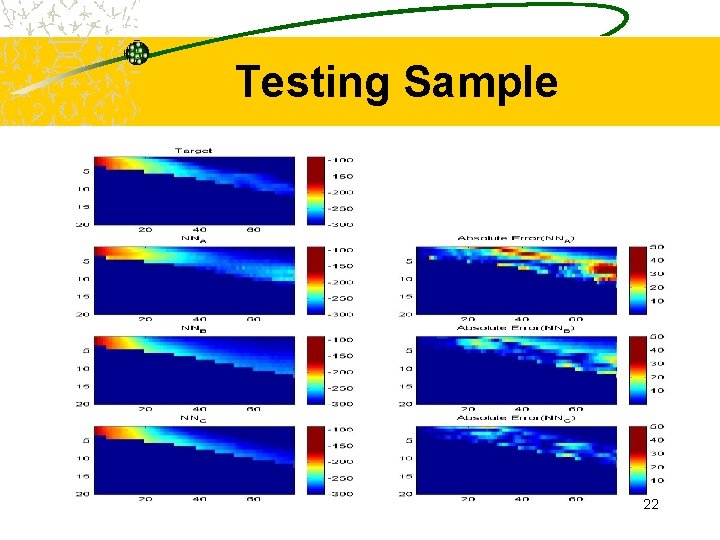

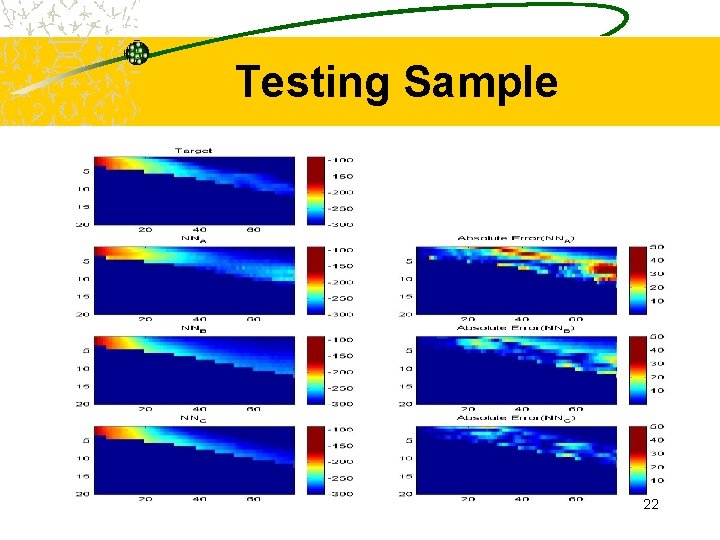

Testing Sample

Contributions: Training

A novel neural network learning algorithm for data sets with varying output node dimension is proposed.

- Selective weight update
  - Fast convergence
  - Improved accuracy
- Step size modification
  - Good generalization
  - Improved accuracy

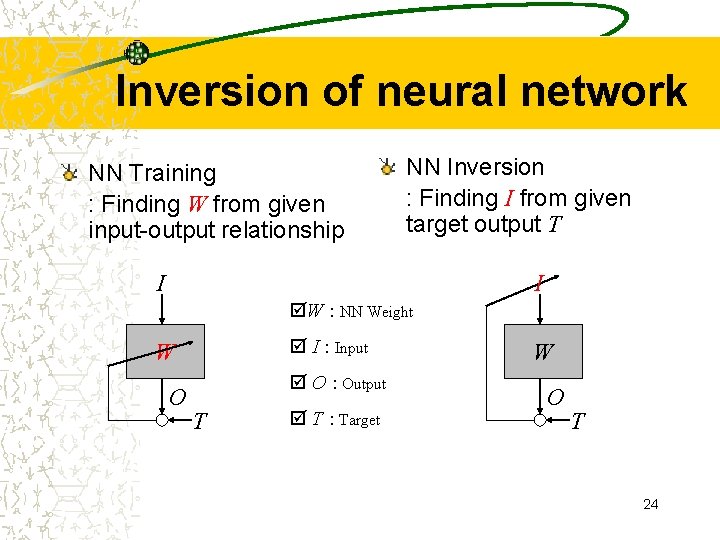

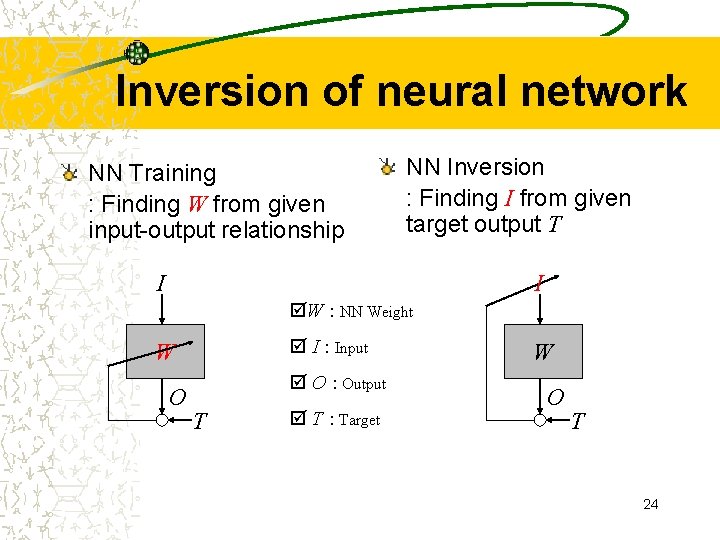

Inversion of neural network

- NN training: finding W from a given input-output relationship
- NN inversion: finding I from a given target output T
- Legend: W: NN weights, I: input, O: output, T: target

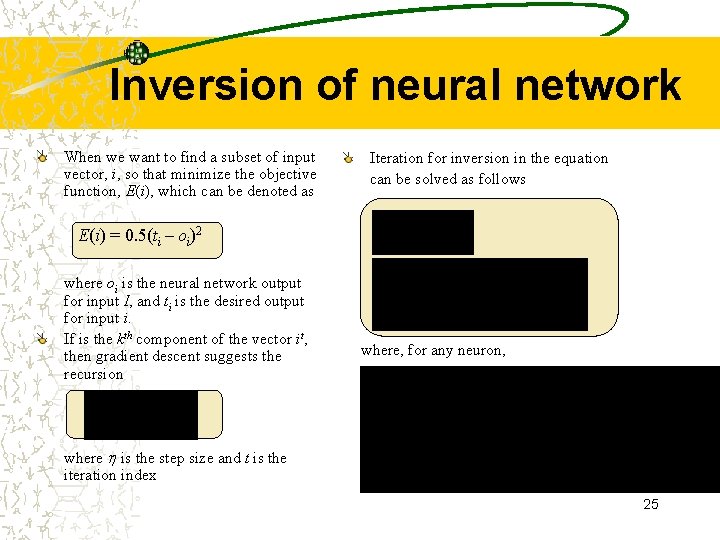

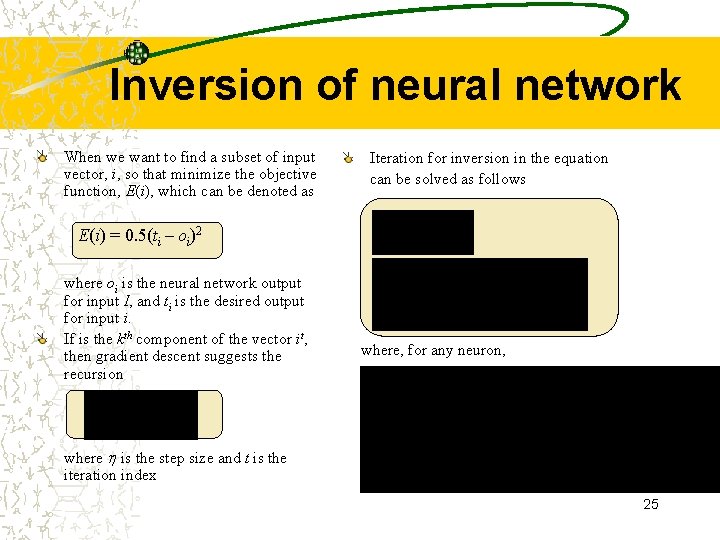

Inversion of neural network

We want to find a subset of the input vector, $i$, that minimizes the objective function

$$E(i) = \tfrac{1}{2}\,(t_i - o_i)^2$$

where $o_i$ is the neural network output for input $i$ and $t_i$ is the desired output for input $i$. If $i_k^t$ is the kth component of the vector $i^t$, then gradient descent suggests the recursion

$$i_k^{t+1} = i_k^t - \eta\,\frac{\partial E}{\partial i_k^t}$$

where the derivative is obtained, for any neuron, by backpropagating the output error through the trained weights, $\eta$ is the step size, and $t$ is the iteration index.

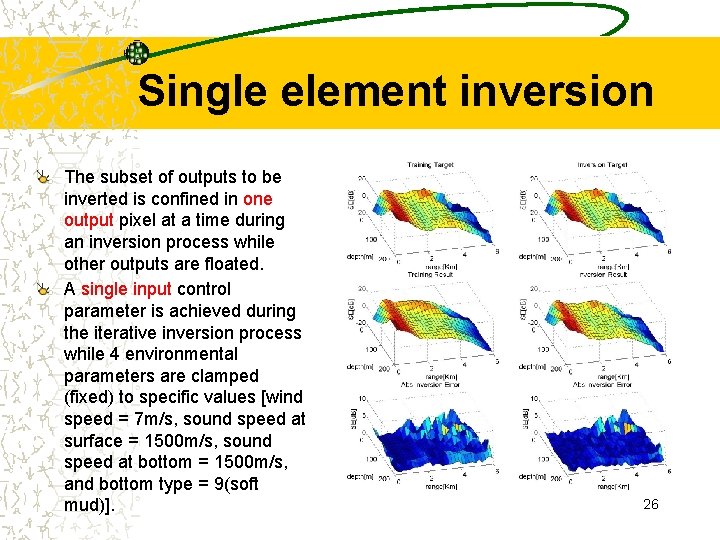

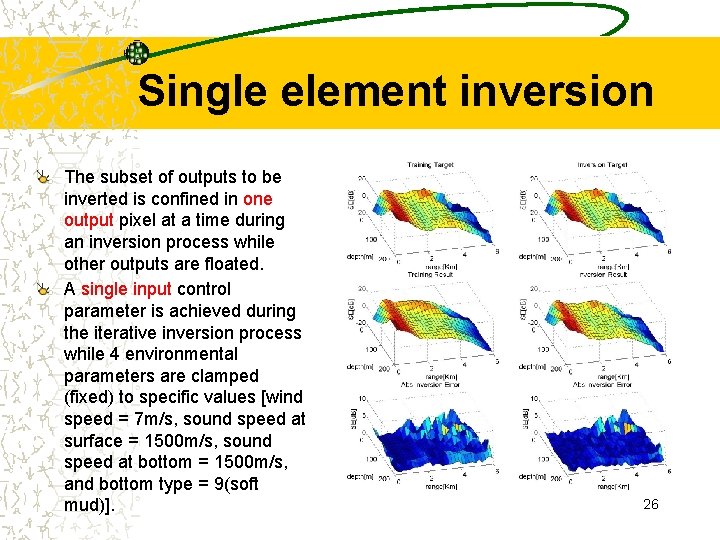

Single element inversion

The subset of outputs to be inverted is confined to one output pixel at a time during an inversion process, while the other outputs are floated. A single input control parameter (sonar depth) is found by the iterative inversion process while the 4 environmental parameters are clamped (fixed) to specific values: wind speed = 7 m/s, sound speed at surface = 1500 m/s, sound speed at bottom = 1500 m/s, and bottom type = 9 (soft mud).
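
Gradient-descent inversion with clamped inputs and floated outputs can be sketched on a toy network. Everything concrete here is an assumption for illustration: the one-hidden-layer sigmoid network, the weights, and the names `forward`, `invert`, `target_idx` (outputs being inverted) and `free_idx` (inputs allowed to move). It is not the trained sonar emulator.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_hid, w_out):
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hid]
    output = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w_out]
    return hidden, output


def invert(x0, target, target_idx, free_idx, w_hid, w_out, step=0.5, iters=200):
    """Minimize E = 0.5 * sum (t_i - o_i)^2 over the free inputs only."""
    x = list(x0)
    for _ in range(iters):
        hidden, output = forward(x, w_hid, w_out)
        # dE/dnet at the outputs; floated outputs contribute no error.
        d_out = [0.0] * len(output)
        for i, t in zip(target_idx, target):
            d_out[i] = (output[i] - t) * output[i] * (1.0 - output[i])
        # Backpropagate to the hidden layer.
        d_hid = [
            h * (1.0 - h) * sum(d_out[i] * w_out[i][j] for i in range(len(output)))
            for j, h in enumerate(hidden)
        ]
        # Update only the free inputs; clamped inputs keep their values.
        for k in free_idx:
            grad = sum(d_hid[j] * w_hid[j][k] for j in range(len(hidden)))
            x[k] -= step * grad
    return x


w_hid = [[0.5, -0.3], [0.8, 0.2]]   # toy weights, not trained
w_out = [[1.0, -1.0], [0.5, 0.5]]
x_start = [0.0, 1.0]                # input 1 is clamped at 1.0
x_found = invert(x_start, target=[0.6], target_idx=[0], free_idx=[0],
                 w_hid=w_hid, w_out=w_out)
```

Passing several indices in `target_idx` gives the multi-pixel case, and several indices in `free_idx` the multi-parameter case.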

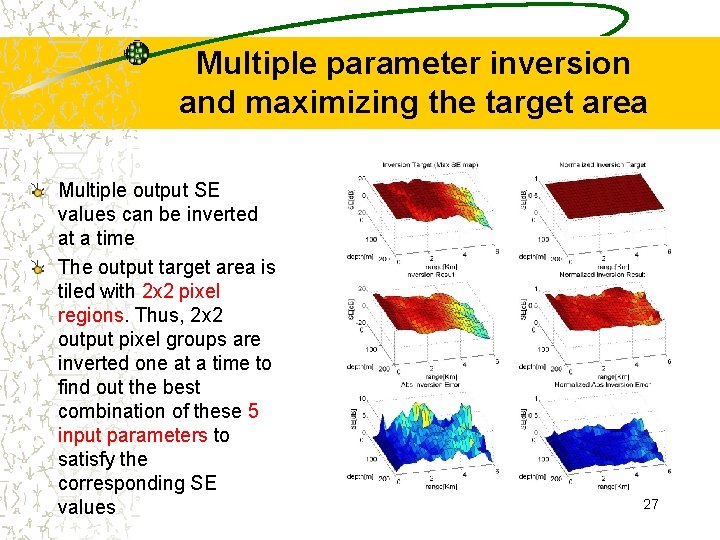

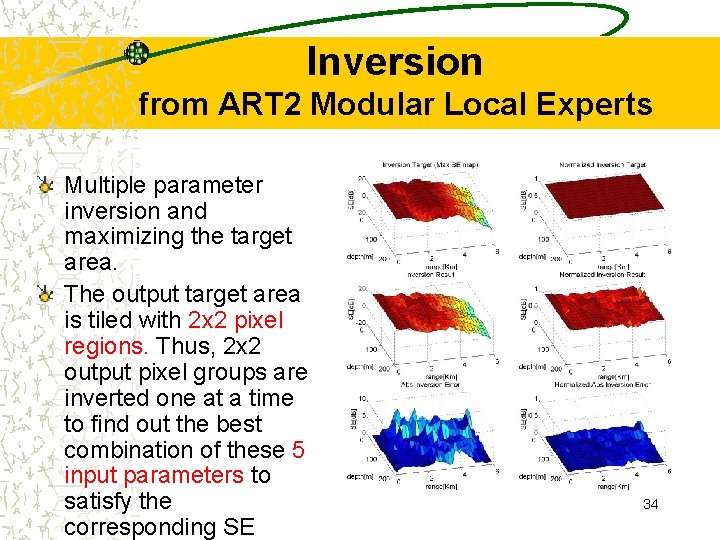

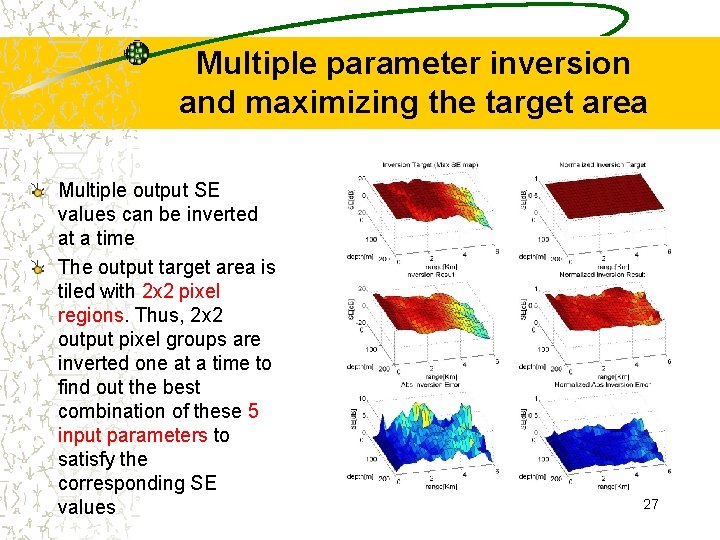

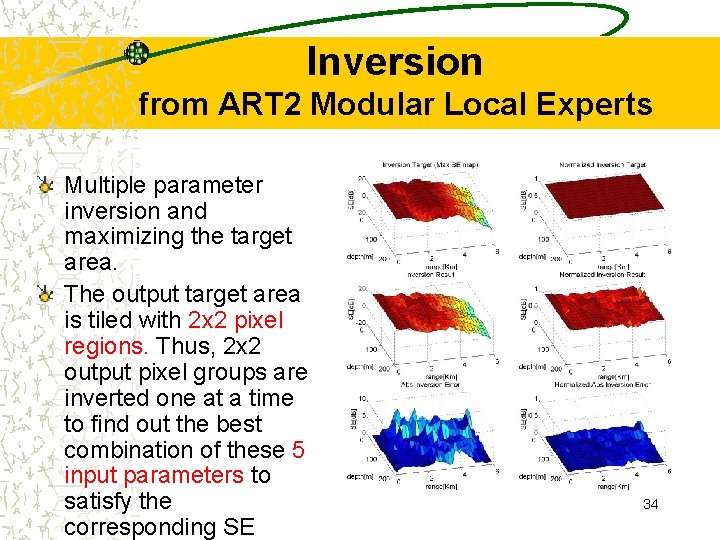

Multiple parameter inversion and maximizing the target area

Multiple output SE values can be inverted at a time. The output target area is tiled with 2 x 2 pixel regions. Thus, 2 x 2 output pixel groups are inverted one at a time to find the best combination of the 5 input parameters that satisfies the corresponding SE values.

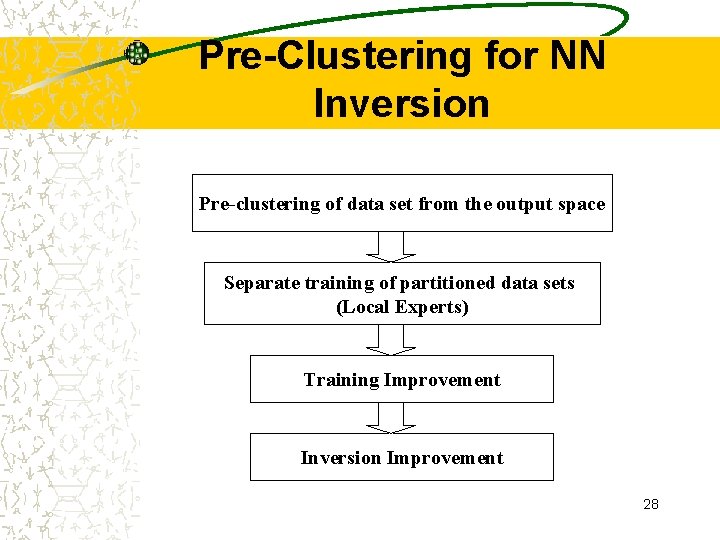

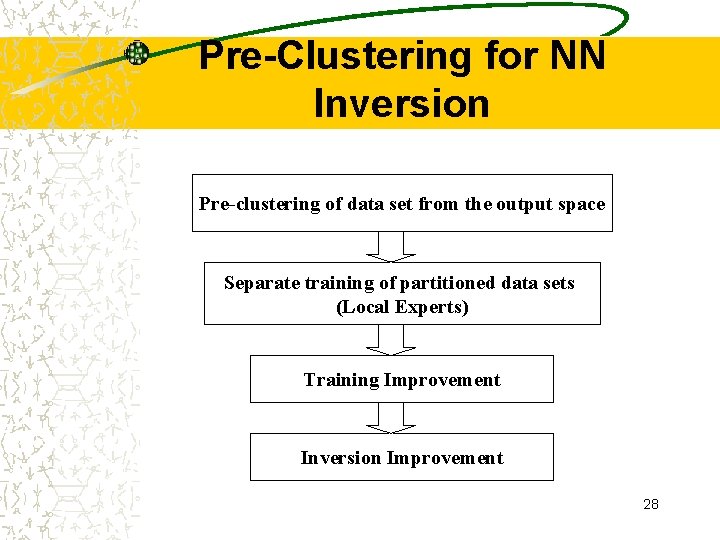

Pre-Clustering for NN Inversion

- Pre-clustering of the data set from the output space
- Separate training of the partitioned data sets (local experts)
- Training improvement
- Inversion improvement

Unsupervised Clustering

- Partitioning a collection of data points into a number of subgroups
- When the number of prototypes is known ⇒ K-NN, Fuzzy C-Means, …
- When no a priori information is available ⇒ ART, Kohonen SOFM, …

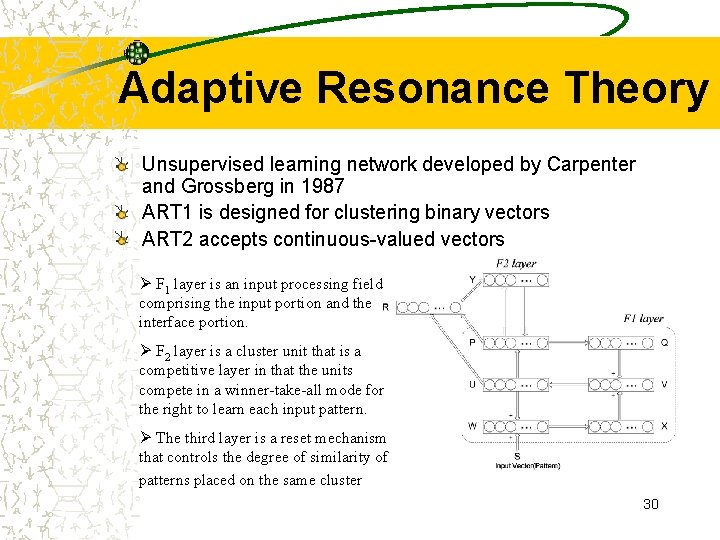

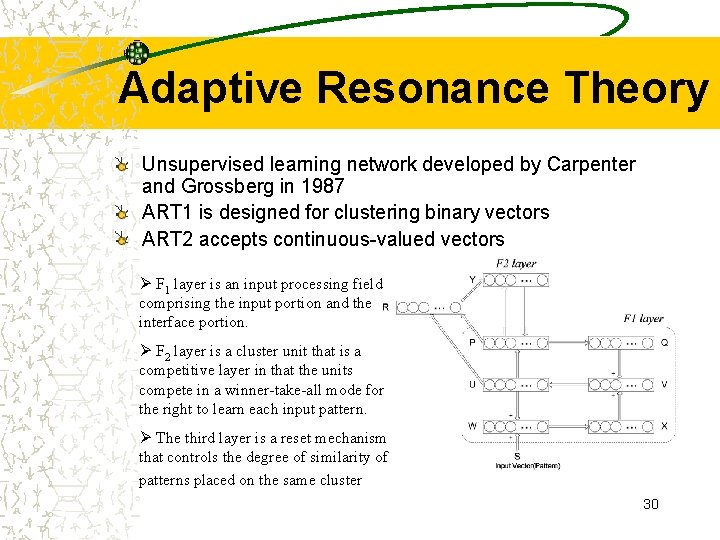

Adaptive Resonance Theory

- Unsupervised learning network developed by Carpenter and Grossberg in 1987
- ART1 is designed for clustering binary vectors
- ART2 accepts continuous-valued vectors
  - The F1 layer is an input processing field comprising the input portion and the interface portion.
  - The F2 layer is a cluster unit: a competitive layer in which the units compete in a winner-take-all mode for the right to learn each input pattern.
  - The third layer is a reset mechanism that controls the degree of similarity of patterns placed in the same cluster.

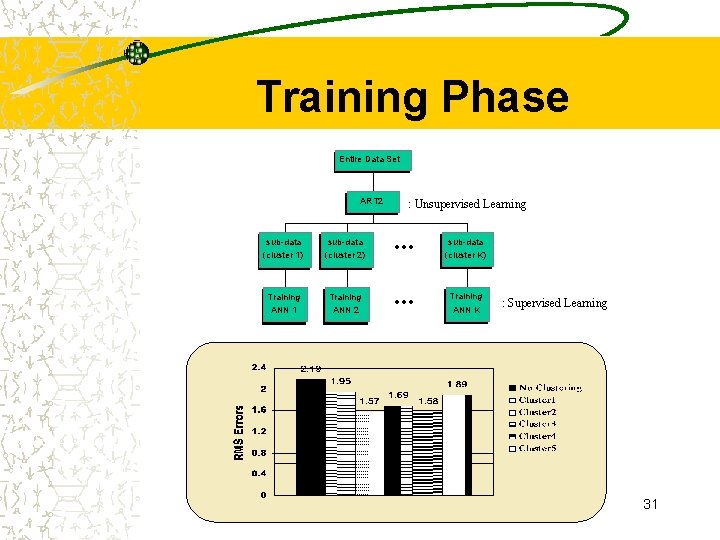

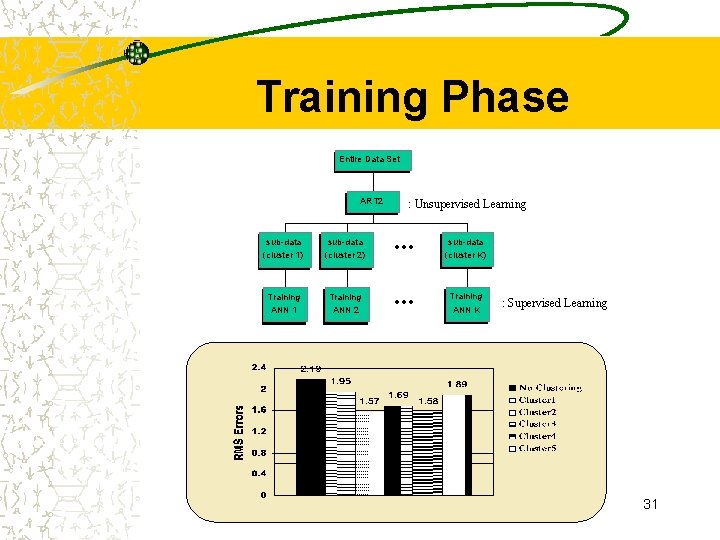

Training Phase

- Entire data set → ART2 (unsupervised learning) → sub-data (cluster 1), sub-data (cluster 2), …, sub-data (cluster K)
- Each sub-data set trains its own network (supervised learning): ANN 1, ANN 2, …, ANN K

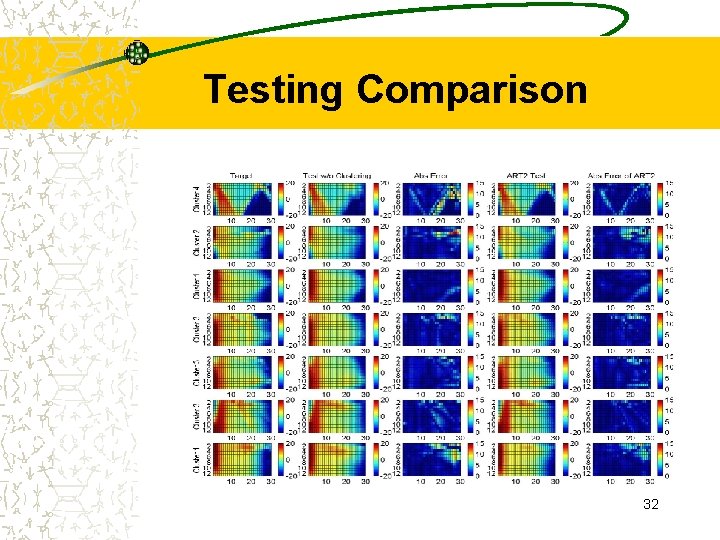

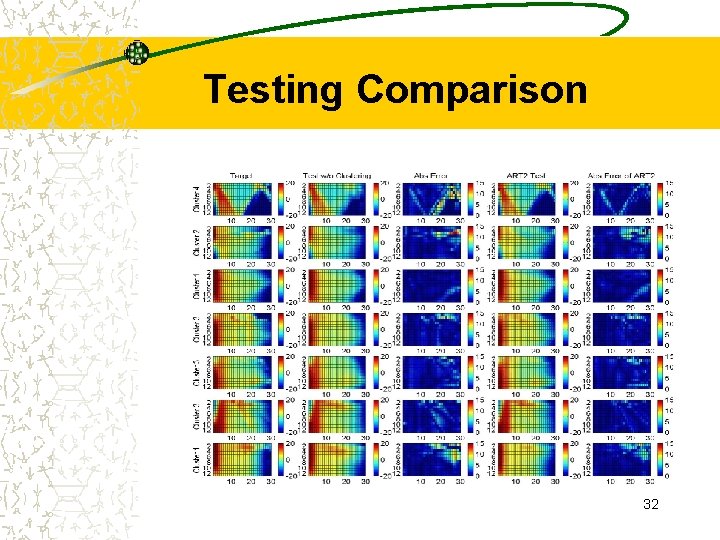

Testing Comparison

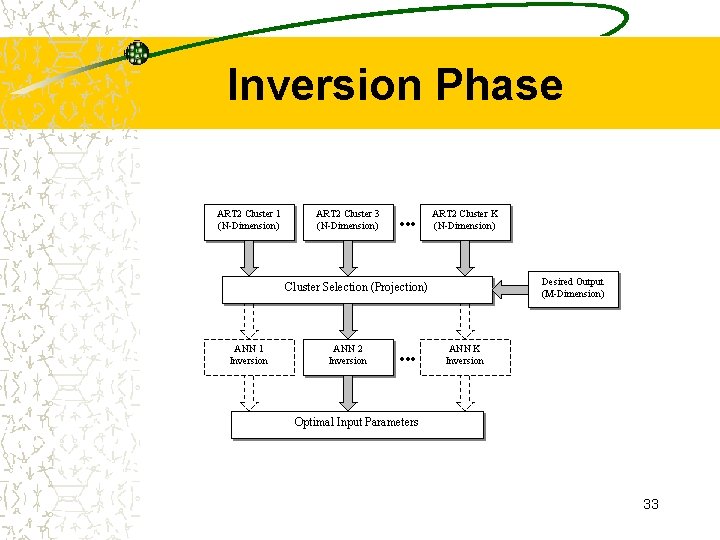

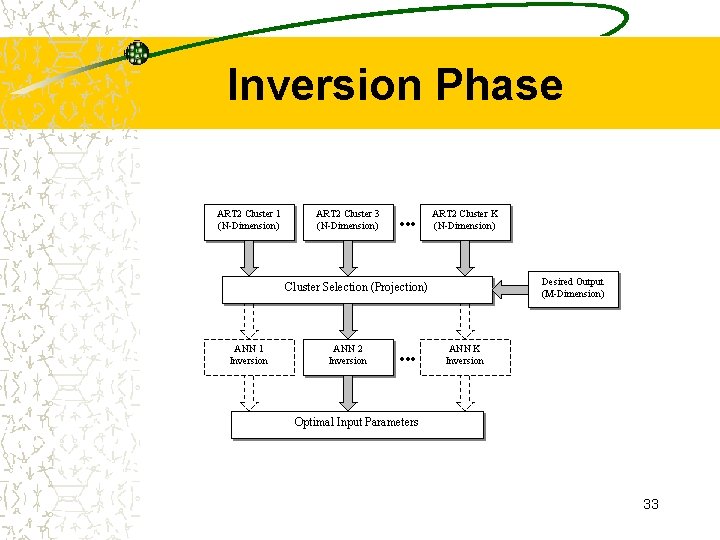

Inversion Phase

- Desired output (M-dimension) → cluster selection (projection) against the ART2 clusters 1 … K (N-dimension)
- Inversion of the selected local expert among ANN 1, ANN 2, …, ANN K → optimal input parameters
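
The cluster-selection step can be illustrated by comparing the M desired pixel values with the matching coordinates of each N-dimensional cluster prototype. The slide does not give the projection rule, so the squared Euclidean distance and the name `select_cluster` are assumptions.

```python
def select_cluster(desired, target_idx, prototypes):
    """Return the index of the prototype closest to the desired output.

    desired: M values for the output pixels listed in target_idx.
    prototypes: K cluster prototypes, each a full N-dimensional output vector.
    Only the coordinates in target_idx take part in the comparison.
    """
    def distance(proto):
        return sum((proto[i] - d) ** 2 for i, d in zip(target_idx, desired))

    return min(range(len(prototypes)), key=lambda k: distance(prototypes[k]))


prototypes = [
    [0.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 1.0, 1.0],
    [0.0, 1.0, 0.0, 1.0],
]
# Desired values for output pixels 1 and 2 only (M = 2, N = 4).
chosen = select_cluster([1.0, 0.0], [1, 2], prototypes)
```

The network trained on the chosen cluster would then be the one to invert for the desired output.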

Inversion from ART2 Modular Local Experts

Multiple parameter inversion and maximizing the target area. The output target area is tiled with 2 x 2 pixel regions. Thus, 2 x 2 output pixel groups are inverted one at a time to find the best combination of the 5 input parameters that satisfies the corresponding SE values.

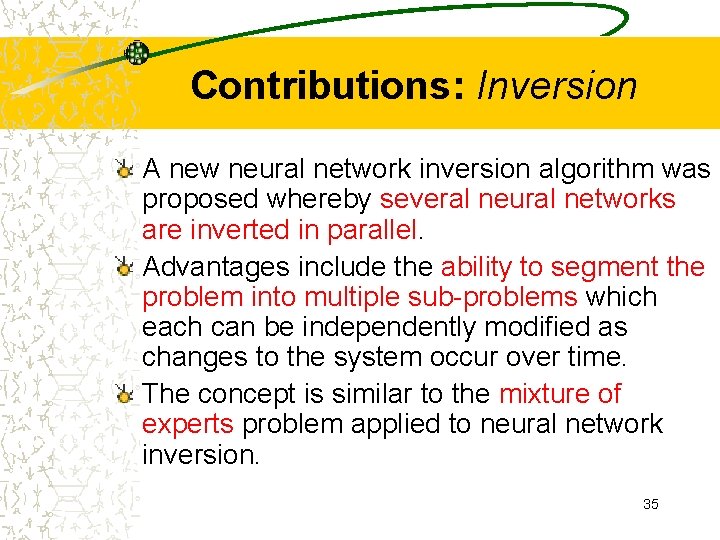

Contributions: Inversion

A new neural network inversion algorithm was proposed whereby several neural networks are inverted in parallel. Advantages include the ability to segment the problem into multiple sub-problems, each of which can be independently modified as changes to the system occur over time. The concept is similar to the mixture of experts problem applied to neural network inversion.

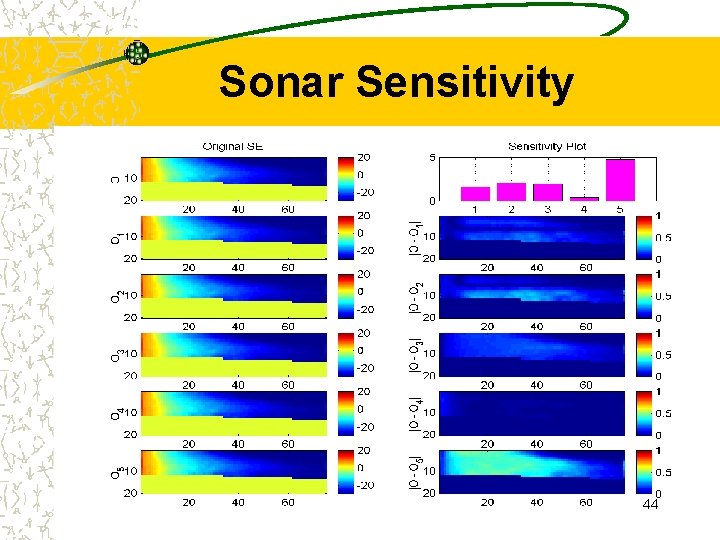

Sensitivity Analysis

- Feature selection, either while the neural network is being trained or after training.
  - Useful for eliminating superfluous input parameters [Rambhia],
  - reducing the dimension of the decision space, and
  - increasing the speed and accuracy of the system.
- When a network is implemented in hardware, the non-linearity occurring in the operation of various network components may make the network practically impossible to train [Jiao].
  - Very important in the investigation of non-ideal effects (an important issue from an engineering point of view).
- Once the neural network is trained, it is very important to determine which of the control parameters are critical to the decision making at a certain operating point.

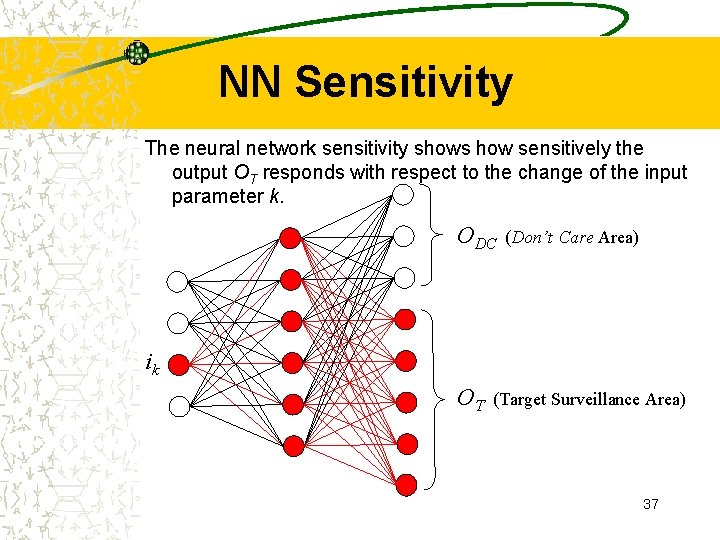

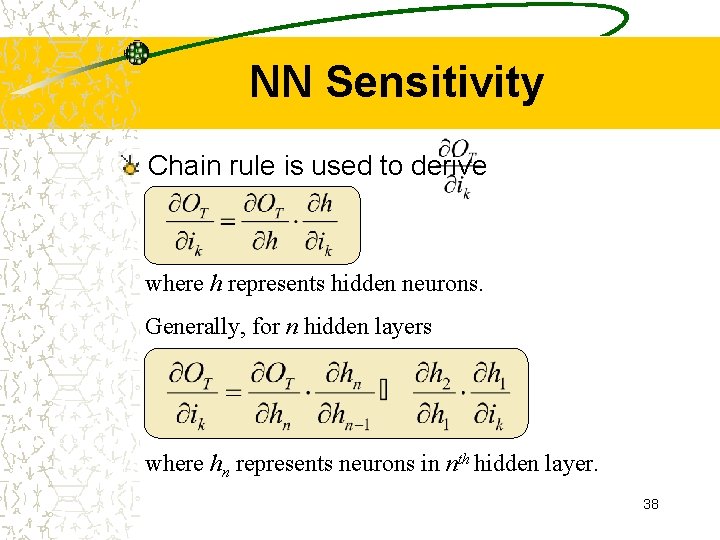

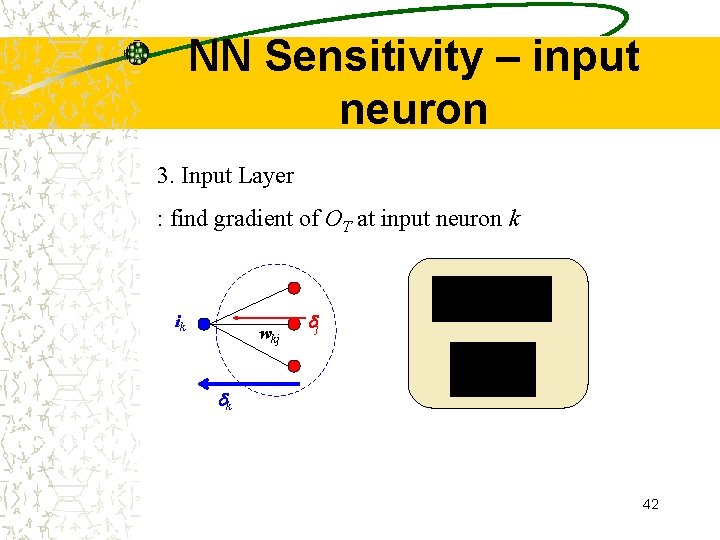

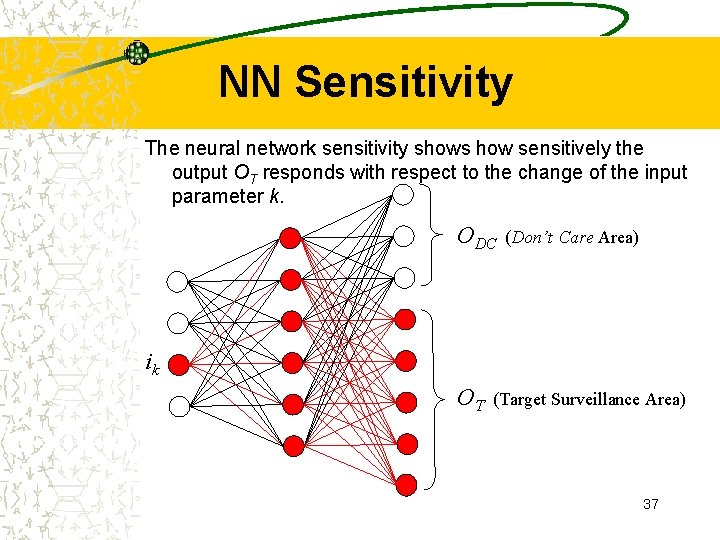

NN Sensitivity

The neural network sensitivity shows how sensitively the output $O_T$ responds to a change in the input parameter $i_k$.

Figure labels: $i_k$ (input), $O_T$ (target surveillance area), $O_{DC}$ (don't care area).

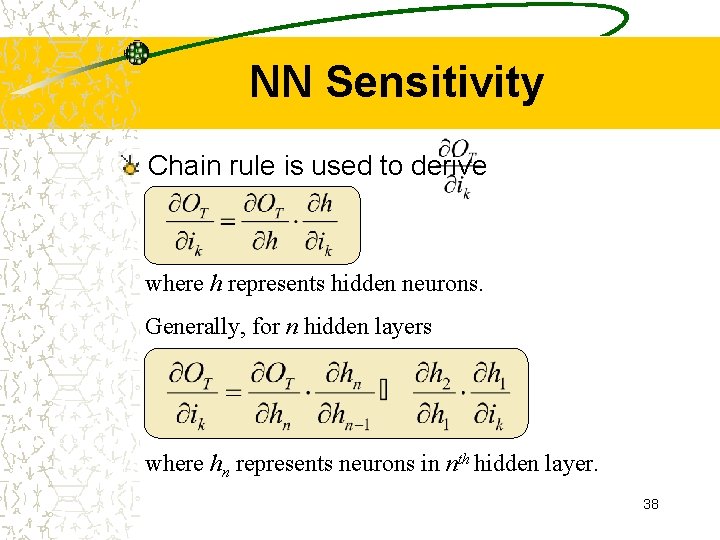

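NN Sensitivity

The chain rule is used to derive

$$\frac{\partial O_T}{\partial i_k} = \sum_{h} \frac{\partial O_T}{\partial h}\,\frac{\partial h}{\partial i_k}$$

where $h$ represents the hidden neurons. Generally, for $n$ hidden layers,

$$\frac{\partial O_T}{\partial i_k} = \sum_{h_n} \cdots \sum_{h_1} \frac{\partial O_T}{\partial h_n}\,\frac{\partial h_n}{\partial h_{n-1}} \cdots \frac{\partial h_1}{\partial i_k}$$

where $h_n$ represents the neurons in the nth hidden layer.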

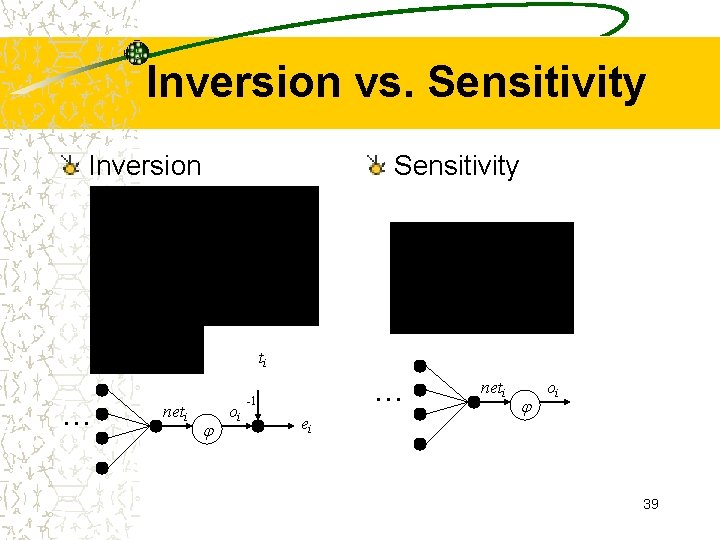

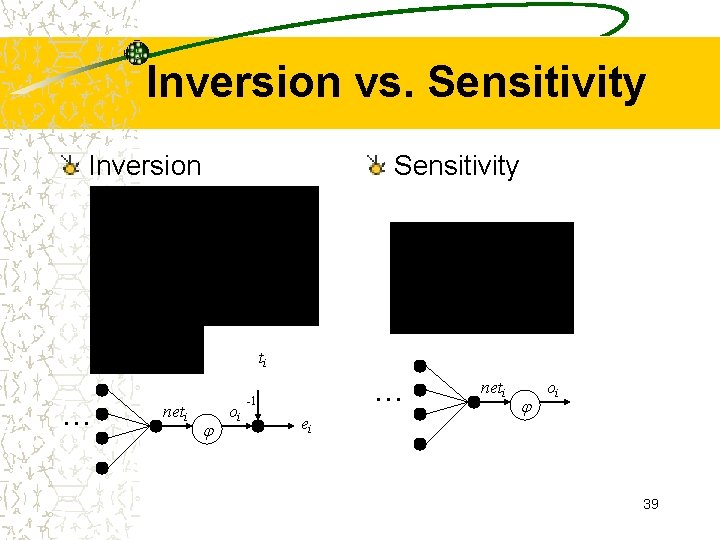

Inversion vs. Sensitivity

Figure: two panels, Inversion and Sensitivity; node labels $t_i$, $net_i$, $o_i$, $e_i$, and a $-1$ gain.

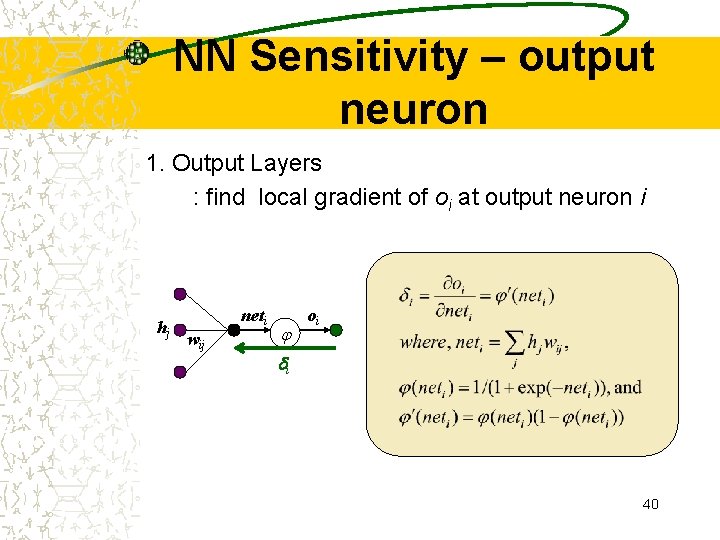

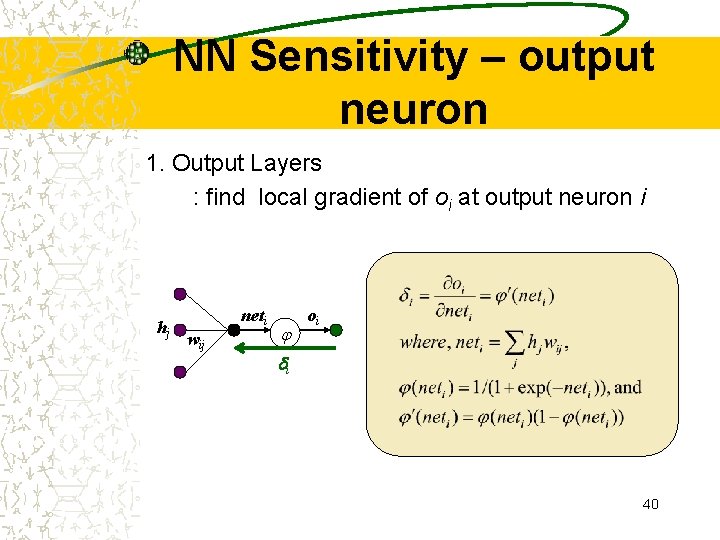

NN Sensitivity – output neuron

1. Output layers: find the local gradient of $o_i$ at output neuron $i$.

Figure labels: $h_j$, $w_{ij}$, $net_i$, $o_i$.

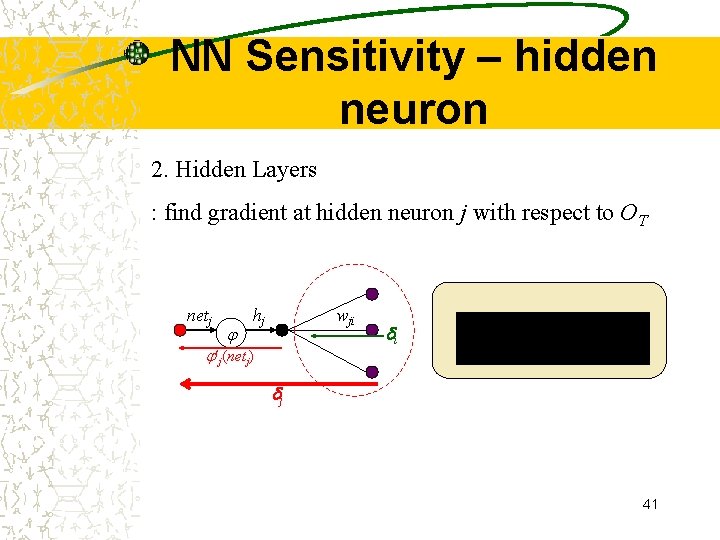

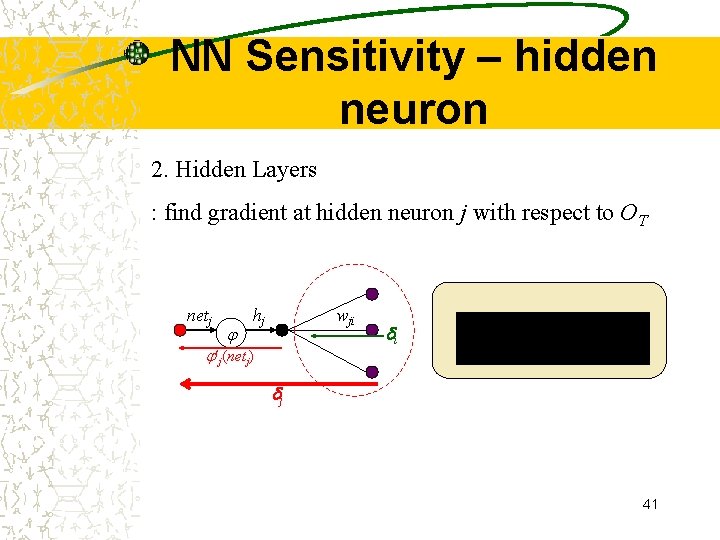

NN Sensitivity – hidden neuron

2. Hidden layers: find the gradient at hidden neuron $j$ with respect to $O_T$.

Figure labels: $net_j$, $h_j$, $w_{ji}$.

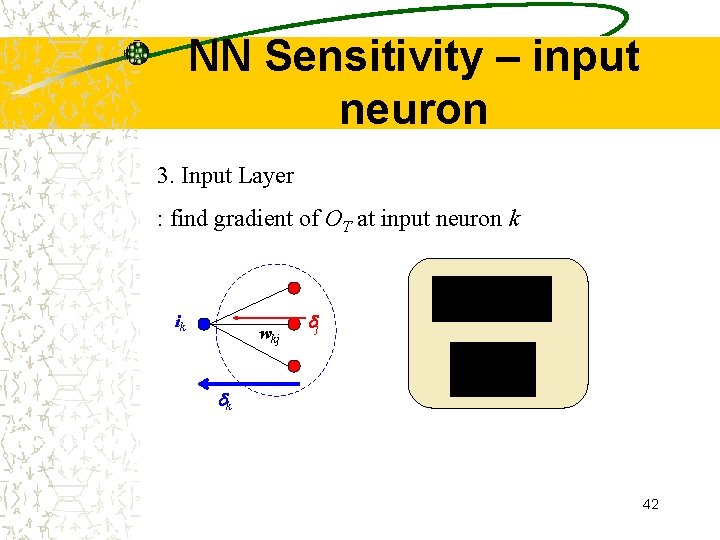

NN Sensitivity – input neuron

3. Input layer: find the gradient of $O_T$ at input neuron $k$.

Figure labels: $i_k$, $w_{kj}$.
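
The three steps (output layer, hidden layer, input layer) can be sketched for a one-hidden-layer sigmoid network. The network shape, the toy weights, and the choice to define $O_T$ as the sum of the target-area outputs are assumptions made for illustration; `sensitivity` and `forward` are hypothetical names.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_hid, w_out):
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hid]
    output = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w_out]
    return hidden, output


def sensitivity(x, k, target_idx, w_hid, w_out):
    """d(O_T)/d(x[k]), with O_T taken as the sum of the target outputs."""
    hidden, output = forward(x, w_hid, w_out)
    # Step 1: local gradient at each target output neuron.
    # Outputs in the don't-care area are simply left out.
    g_out = {i: output[i] * (1.0 - output[i]) for i in target_idx}
    # Step 2: gradient at each hidden neuron with respect to O_T.
    g_hid = [
        h * (1.0 - h) * sum(g_out[i] * w_out[i][j] for i in target_idx)
        for j, h in enumerate(hidden)
    ]
    # Step 3: gradient of O_T at input neuron k.
    return sum(g_hid[j] * w_hid[j][k] for j in range(len(hidden)))


w_hid = [[0.5, -0.3], [0.8, 0.2]]   # toy weights, not trained
w_out = [[1.0, -1.0], [0.5, 0.5]]
s0 = sensitivity([0.2, 0.7], 0, [0, 1], w_hid, w_out)
```

Unlike inversion, no target value enters the computation: the same backward pass propagates the output derivative itself instead of an error.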

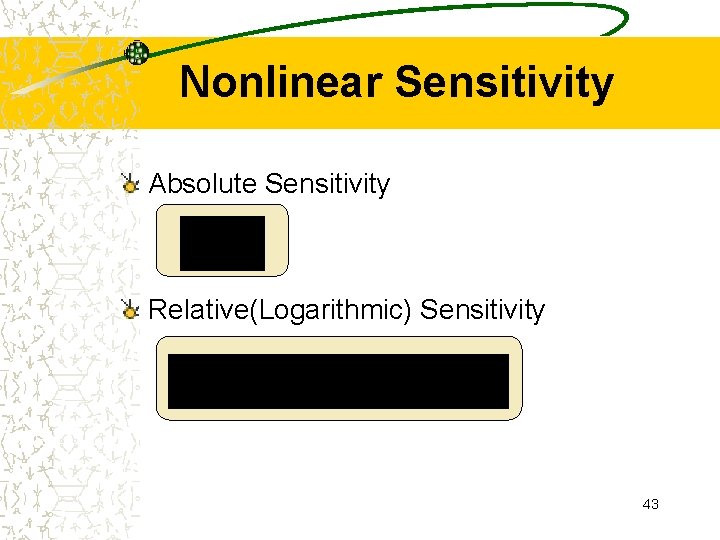

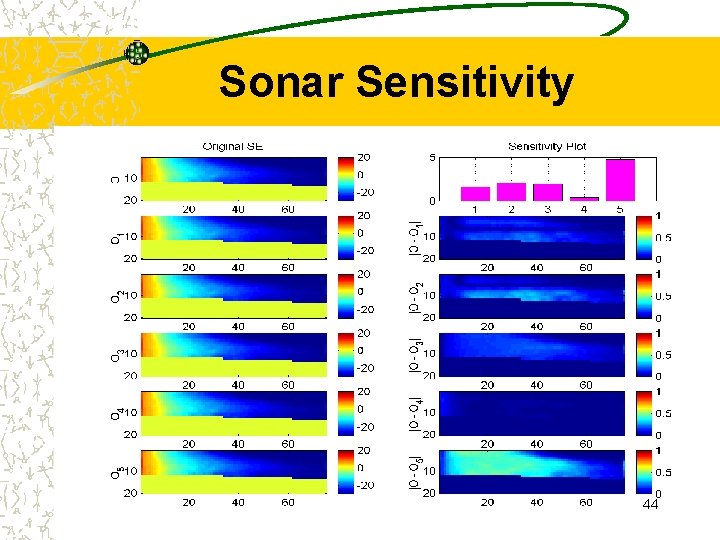

Nonlinear Sensitivity

- Absolute sensitivity
- Relative (logarithmic) sensitivity
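
The slide's own formulas are not recoverable from the text. For orientation, the textbook definitions of the two measures, written in the notation of the preceding slides (the symbols $S_{abs}$ and $S_{rel}$ are assumed names), are:

$$S_{abs} = \frac{\partial O_T}{\partial i_k}, \qquad S_{rel} = \frac{\partial \ln O_T}{\partial \ln i_k} = \frac{i_k}{O_T}\,\frac{\partial O_T}{\partial i_k}$$

The relative form is dimensionless, which makes inputs with different units (meters, m/s, grain size) comparable; it is only defined where $O_T$ and $i_k$ are nonzero.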

Sonar Sensitivity

Contributions: Sensitivity Analysis

Once the neural network is trained, it is very important to determine which of the control parameters are critical to the decision making at a certain operating point, that is, when the environmental situation and/or the control criteria are given.

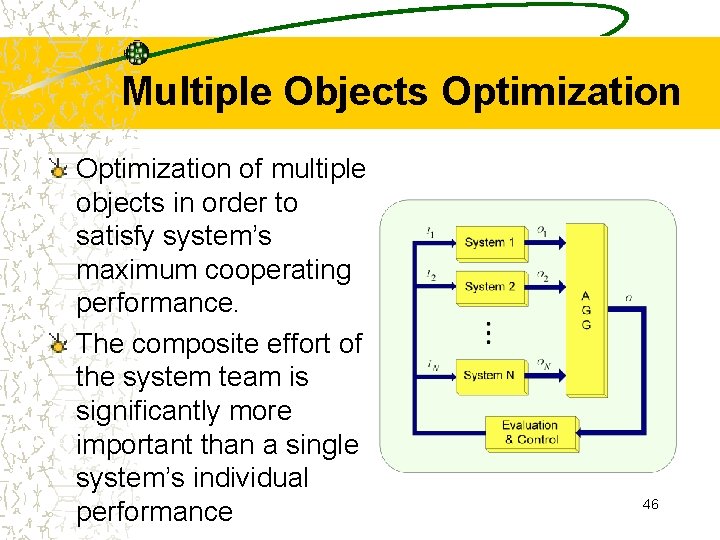

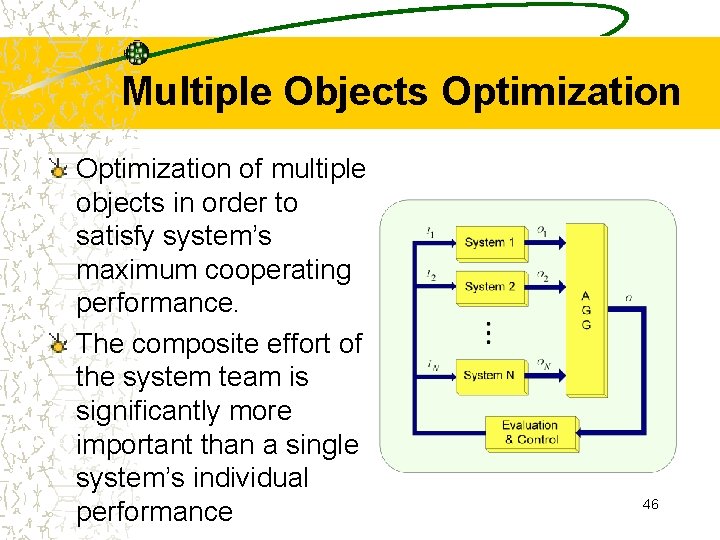

Multiple Objects

- Optimization of multiple objects so as to maximize the system's cooperative performance.
- The composite effort of the system team is significantly more important than a single system's individual performance.

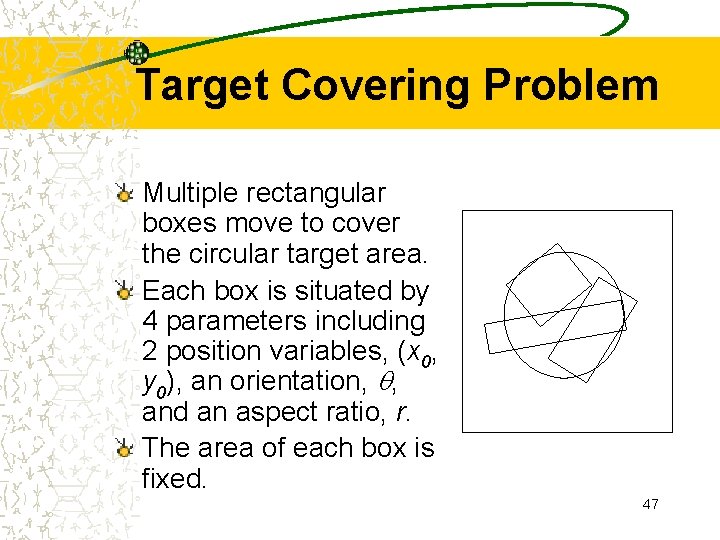

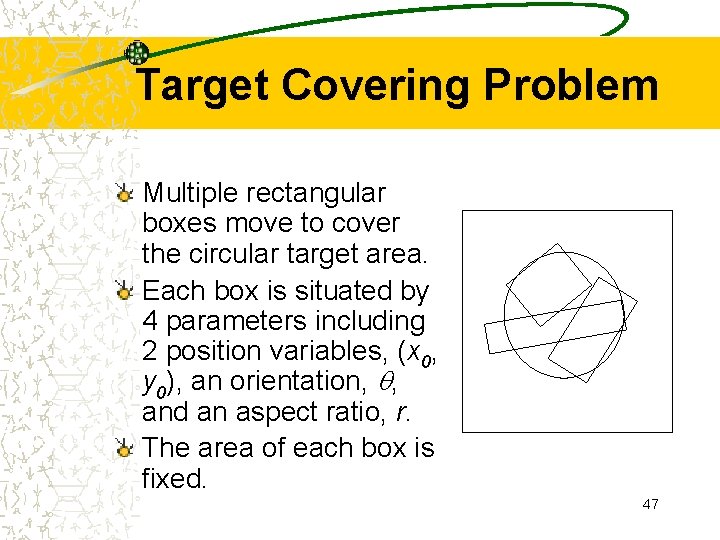

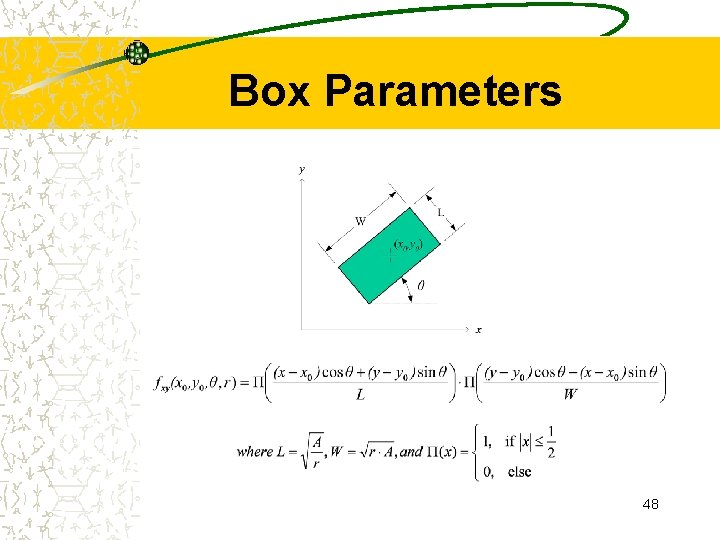

Target Covering Problem

- Multiple rectangular boxes move to cover the circular target area.
- Each box is specified by 4 parameters: 2 position variables, $(x_0, y_0)$, an orientation, $\theta$, and an aspect ratio, $r$.
- The area of each box is fixed.

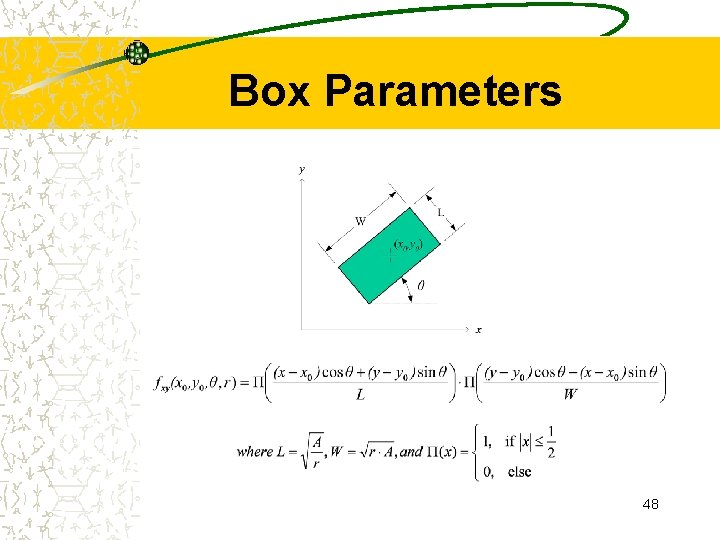

Box Parameters

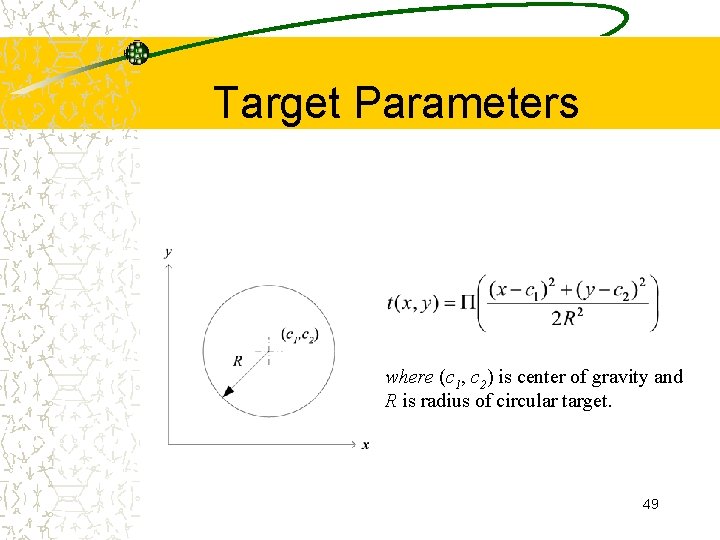

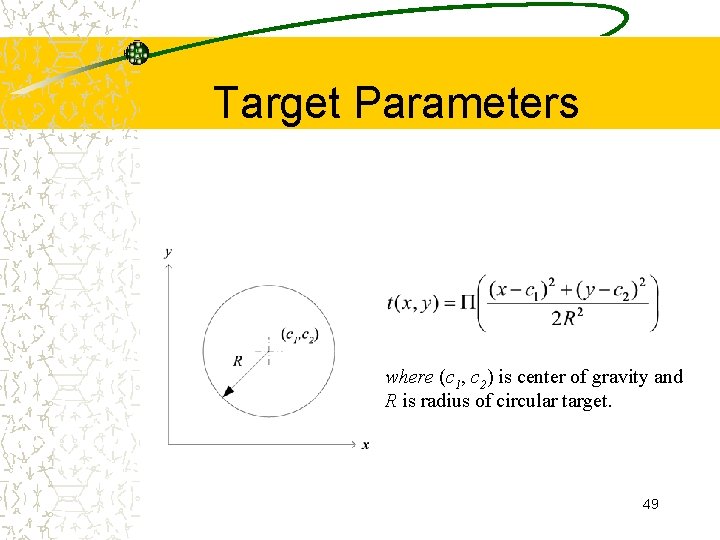

Target Parameters

where $(c_1, c_2)$ is the center of gravity and $R$ is the radius of the circular target.

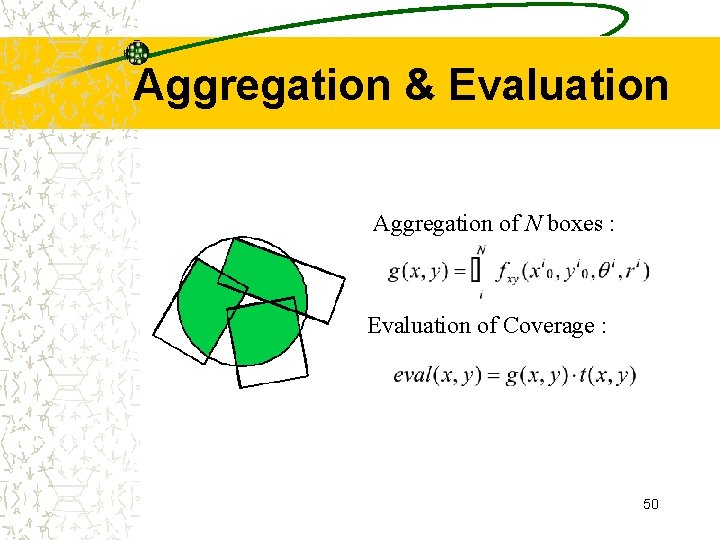

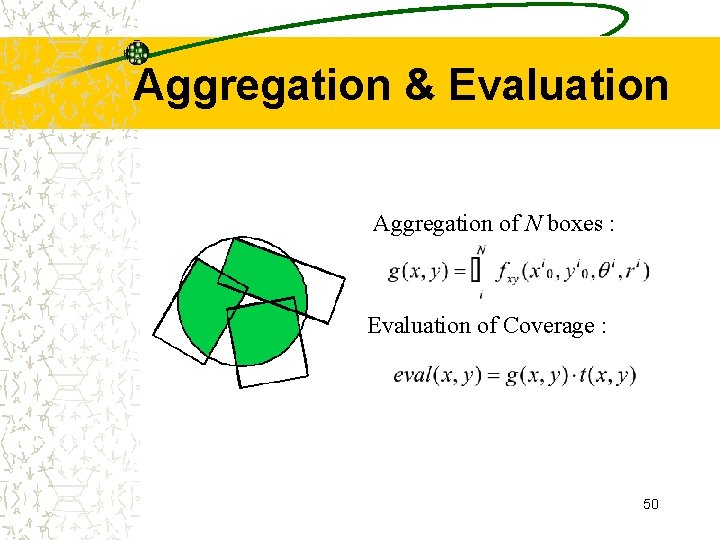

Aggregation & Evaluation

- Aggregation of N boxes
- Evaluation of coverage
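
The slide's aggregation and evaluation formulas are not recoverable, so the sketch below substitutes a simple pixel-counting version: the union of the boxes is the aggregation, and the covered fraction of the circular target is the evaluation. The names `inside_box` and `coverage`, the 256 x 256 grid, and the convention $r$ = width / height are assumptions; the target center (128, 128) and radius 64 are the values used in the experiments.

```python
import math


def inside_box(px, py, x0, y0, theta, r, area):
    """True if pixel (px, py) lies inside a rotated box of fixed area."""
    width = math.sqrt(area * r)    # assumption: r = width / height
    height = math.sqrt(area / r)
    dx, dy = px - x0, py - y0
    # Rotate the pixel into the box's own frame.
    u = dx * math.cos(theta) + dy * math.sin(theta)
    v = -dx * math.sin(theta) + dy * math.cos(theta)
    return abs(u) <= width / 2 and abs(v) <= height / 2


def coverage(boxes, area, center=(128, 128), radius=64, size=256):
    """Fraction of target pixels covered by the union of the boxes.

    boxes: list of (x0, y0, theta, r) tuples, all sharing the same area.
    """
    covered = total = 0
    for py in range(size):
        for px in range(size):
            if (px - center[0]) ** 2 + (py - center[1]) ** 2 > radius ** 2:
                continue  # pixel is outside the circular target
            total += 1
            if any(inside_box(px, py, *box, area) for box in boxes):
                covered += 1
    return covered / total


one_box = coverage([(128, 128, 0.3, 2.0)], area=2000)
two_boxes = coverage([(128, 128, 0.3, 2.0), (100, 128, 0.0, 1.0)], area=2000)
```

Because the union is taken before counting, overlapping boxes earn no extra credit, which is what pushes an optimizer to spread the boxes over the target.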

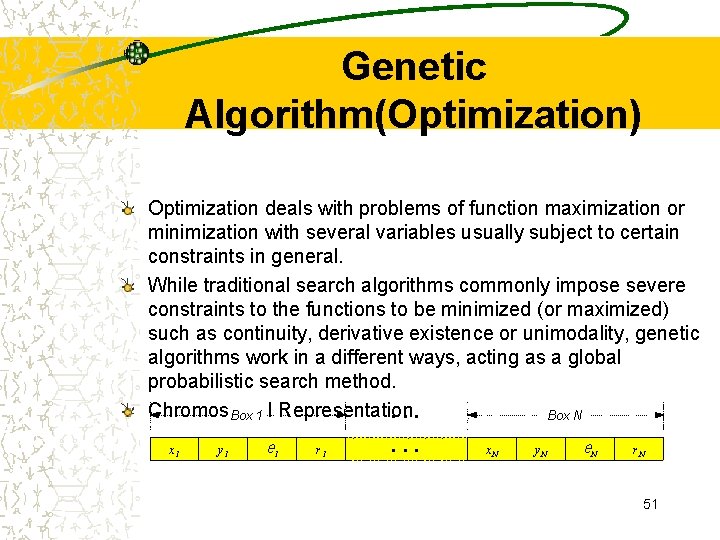

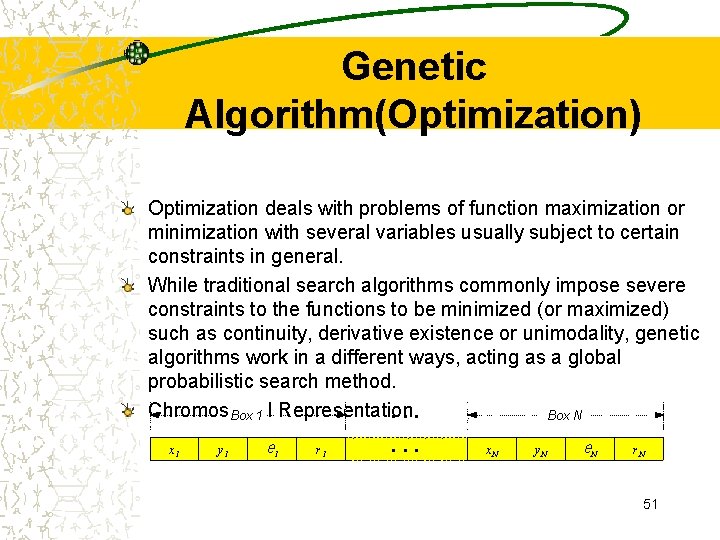

Genetic Algorithm (Optimization)

- Optimization deals with maximizing or minimizing a function of several variables, usually subject to certain constraints.
- Traditional search algorithms commonly impose severe constraints on the function to be minimized (or maximized), such as continuity, derivative existence, or unimodality. Genetic algorithms work differently, acting as a global probabilistic search method.
- Chromosomal representation: $[\,x_1\ y_1\ \theta_1\ r_1 \mid \dots \mid x_N\ y_N\ \theta_N\ r_N\,]$, one group of four genes per box (Box 1 … Box N).

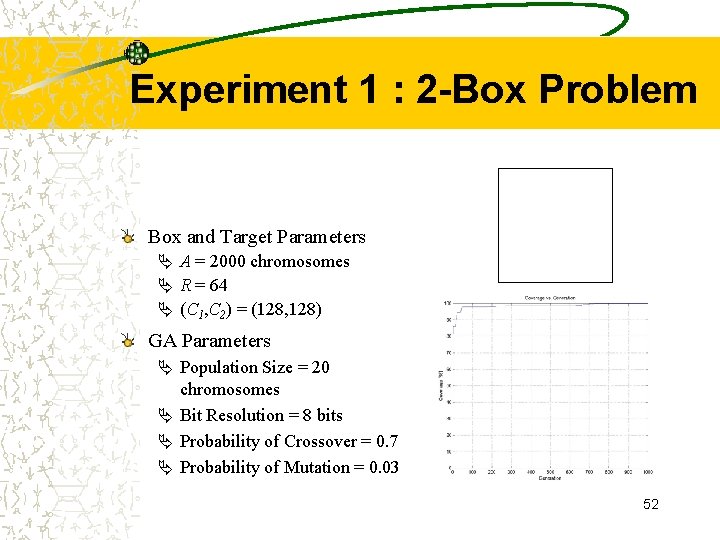

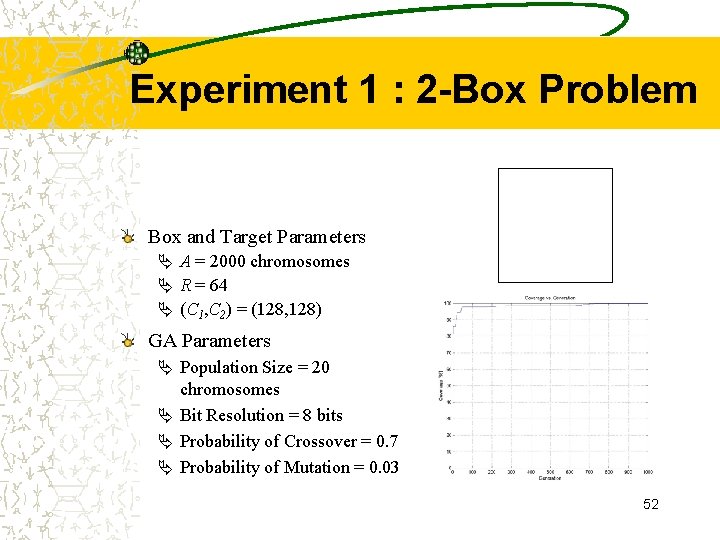

Experiment 1: 2-Box Problem

Box and target parameters
- A = 2000
- R = 64
- $(C_1, C_2)$ = (128, 128)

GA parameters
- Population size = 20 chromosomes
- Bit resolution = 8 bits
- Probability of crossover = 0.7
- Probability of mutation = 0.03
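
A small binary GA using the parameters listed above (population 20, 8 bits per gene, crossover 0.7, mutation 0.03) might look like the following. The slide does not state the selection scheme, crossover type, or stopping rule, so tournament selection, single-point crossover, elitism, and the generation count are assumptions, as are the names `decode` and `run_ga`. The fitness function is passed in; a bit-counting fitness stands in here for the box coverage.

```python
import random


def decode(bits, n_params, n_bits=8, lo=0.0, hi=255.0):
    """Map each n_bits-wide gene to a real value in [lo, hi]."""
    values = []
    for p in range(n_params):
        chunk = bits[p * n_bits:(p + 1) * n_bits]
        integer = int("".join(map(str, chunk)), 2)
        values.append(lo + (hi - lo) * integer / (2 ** n_bits - 1))
    return values


def tournament(pop, fitness, rng):
    a, b = rng.sample(pop, 2)
    return a if fitness(a) >= fitness(b) else b


def run_ga(fitness, n_genes, pop_size=20, p_cross=0.7, p_mut=0.03,
           generations=50, seed=0):
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_genes)] for _ in range(pop_size)]
    best = max(pop, key=fitness)
    for _ in range(generations):
        children = [best[:]]  # elitism (assumption): keep the best as is
        while len(children) < pop_size:
            mom = tournament(pop, fitness, rng)[:]
            dad = tournament(pop, fitness, rng)[:]
            if rng.random() < p_cross:  # single-point crossover
                cut = rng.randrange(1, n_genes)
                mom, dad = mom[:cut] + dad[cut:], dad[:cut] + mom[cut:]
            for child in (mom, dad):    # bit-flip mutation
                children.append(
                    [1 - g if rng.random() < p_mut else g for g in child])
        pop = children[:pop_size]
        best = max(pop, key=fitness)
    return best


# 2 boxes x 4 parameters x 8 bits = 64 genes; toy fitness counts the ones.
best = run_ga(sum, n_genes=64)
params = decode(best, n_params=8)
```

In the box problem each of the four parameters would need its own range (position in pixels, orientation in radians, aspect ratio), so `decode` would be called with a different `lo` and `hi` per gene.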

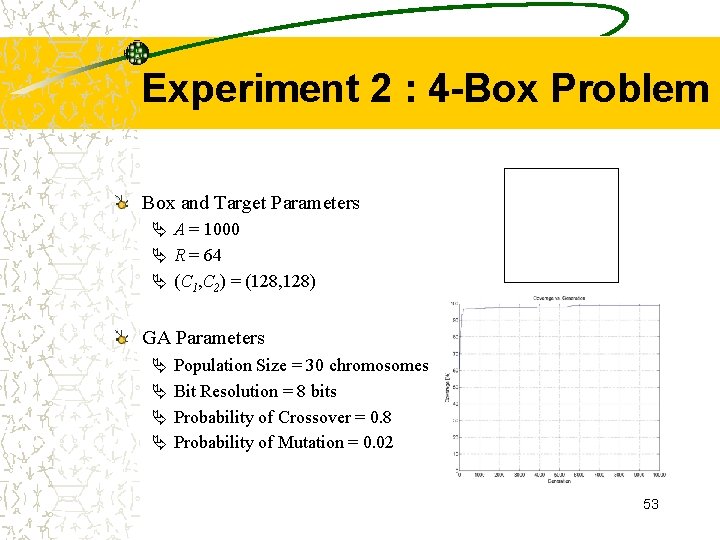

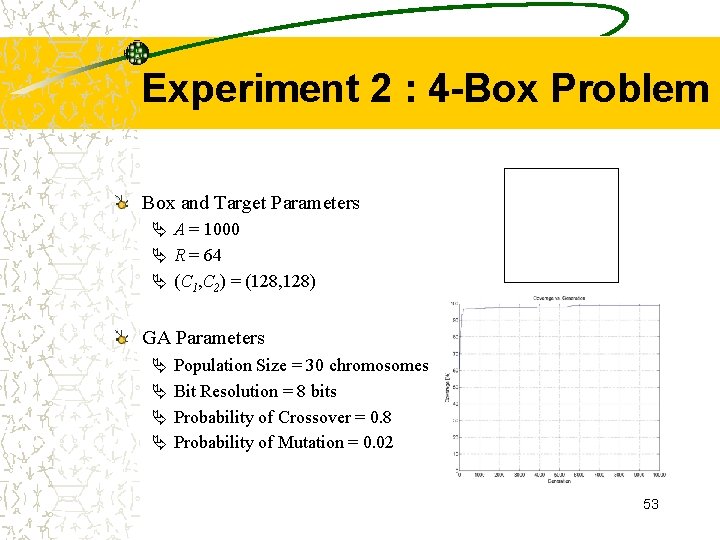

Experiment 2: 4-Box Problem

Box and target parameters
- A = 1000
- R = 64
- $(C_1, C_2)$ = (128, 128)

GA parameters
- Population size = 30 chromosomes
- Bit resolution = 8 bits
- Probability of crossover = 0.8
- Probability of mutation = 0.02

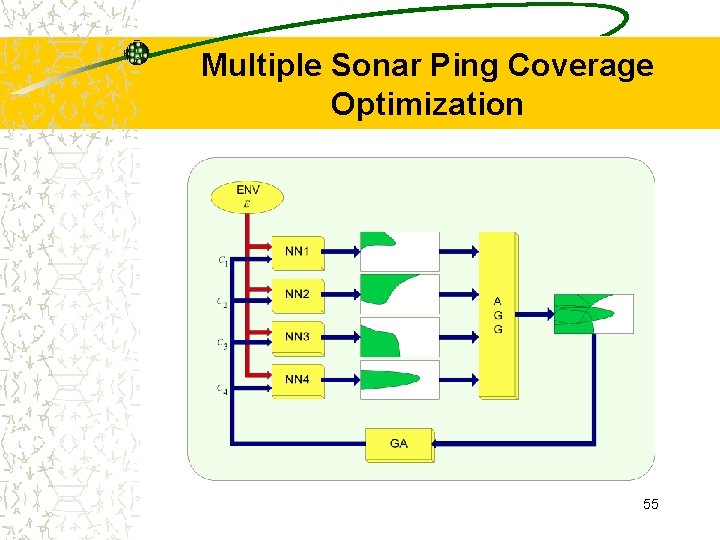

MULTIPLE SONAR PING OPTIMIZATION

- Sonar coverage: when only the output pixels above a certain threshold value are meaningful, those pixels in the output surveillance area are considered "covered".
- Maximizing the aggregated sonar coverage from a given number of pings allows minimizing counter-detection by the object being sought.
- A genetic algorithm based approach finds the best combination of control parameters when the environment is given.

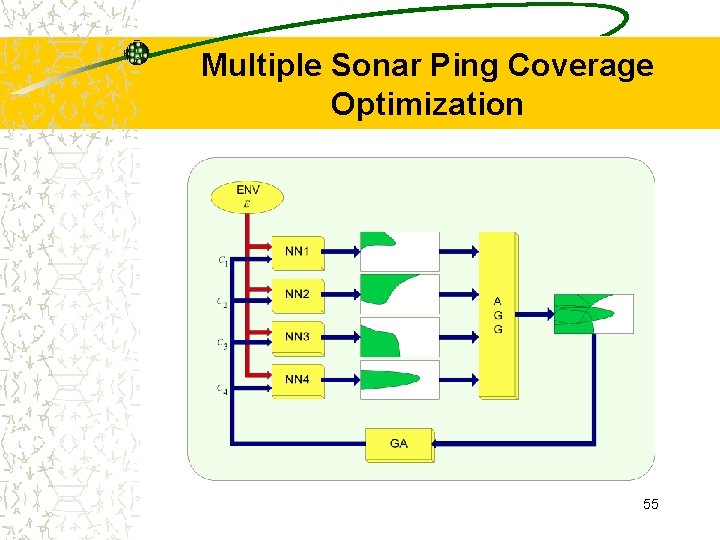

Multiple Sonar Ping Coverage Optimization

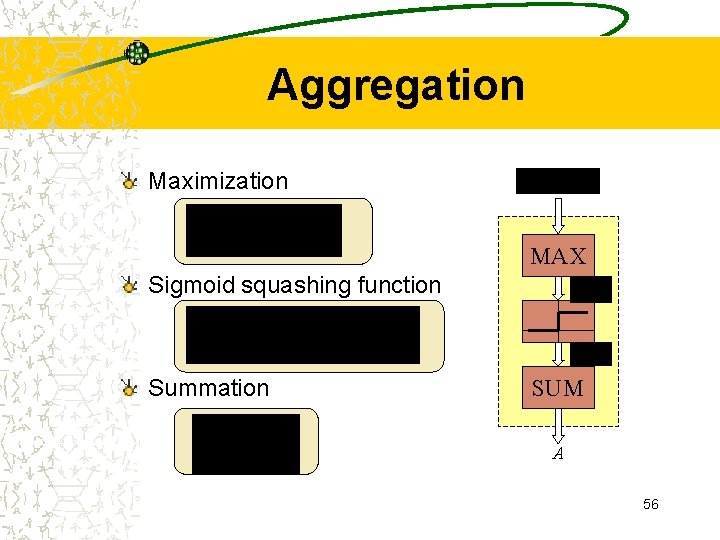

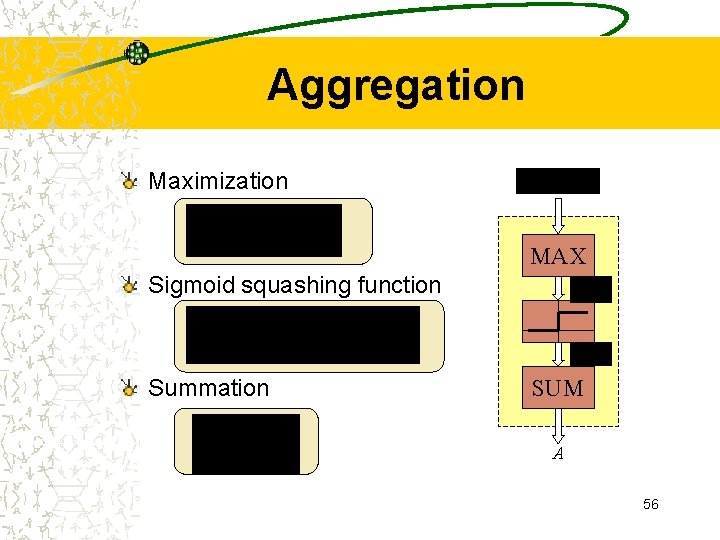

Aggregation

Figure labels: maximization (MAX), sigmoid squashing function, summation (SUM), A.
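
Only the figure labels survive, so the order of operations below is a guess: each ping's SE value is squashed by a sigmoid centered on the coverage threshold, the pings are combined pixel by pixel with MAX, and the result is summed over pixels to give the aggregated coverage A. The name `aggregated_coverage` and the `gain` parameter are assumptions.

```python
import math


def aggregated_coverage(se_maps, threshold, gain=1.0):
    """Soft coverage area A for a set of pings (assumed pipeline).

    se_maps: one flattened SE map per ping, all of the same length.
    """
    def squash(se):
        # Soft version of "SE above threshold means covered".
        return 1.0 / (1.0 + math.exp(-gain * (se - threshold)))

    n_pixels = len(se_maps[0])
    # MAX over pings: a pixel is as covered as its best ping makes it.
    best = [max(squash(m[p]) for m in se_maps) for p in range(n_pixels)]
    # SUM over pixels gives the aggregated coverage A.
    return sum(best)


maps = [[10.0, -10.0, 0.0], [-10.0, 10.0, 0.0]]
a_one = aggregated_coverage(maps[:1], threshold=0.0)
a_both = aggregated_coverage(maps, threshold=0.0)
```

With MAX as the aggregator, adding a ping can never reduce A, and a pixel already covered by one ping gains almost nothing from a second one.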

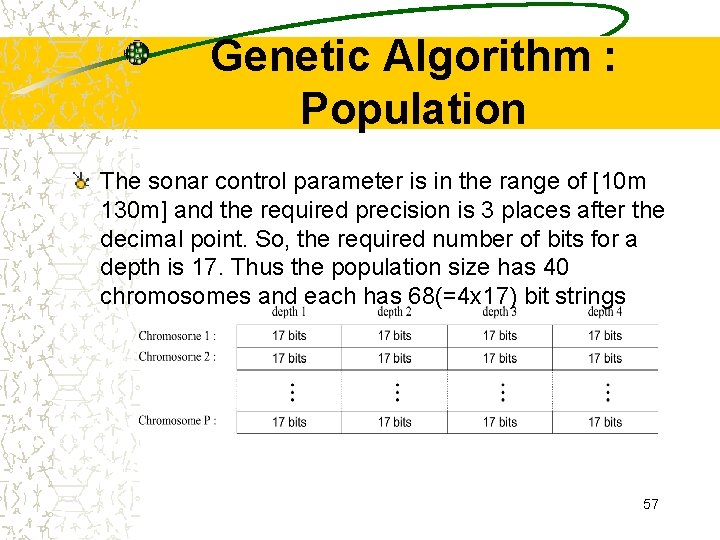

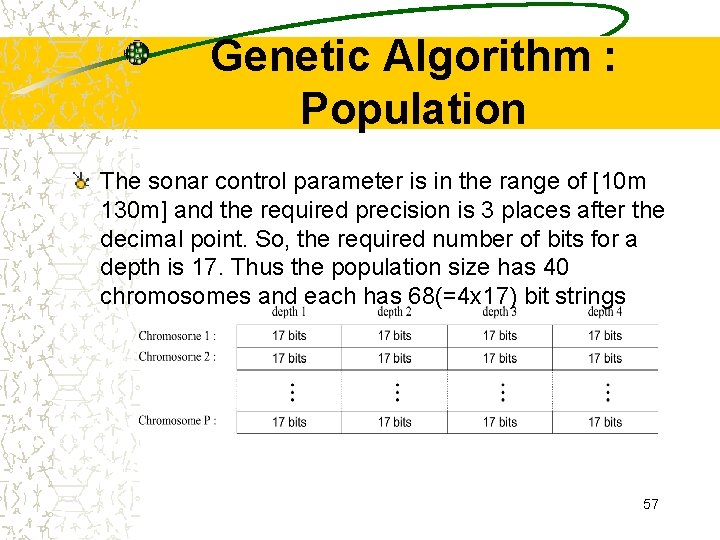

Genetic Algorithm: Population

The sonar control parameter (depth) lies in the range [10 m, 130 m] and the required precision is 3 places after the decimal point, so the range must be divided into at least 120 x 1000 = 120,000 steps. Because $2^{16} < 120{,}000 \le 2^{17}$, each depth needs 17 bits. The population has 40 chromosomes, each a bit string of length 68 (= 4 x 17).
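
The bit-length arithmetic can be checked in a few lines. The helper names `bits_needed` and `decode_depths` are hypothetical, and the linear mapping from integer code to depth is the usual binary-GA convention rather than something stated on the slide.

```python
import math


def bits_needed(lo, hi, decimals):
    """Smallest bit count that resolves [lo, hi] to the given precision."""
    steps = (hi - lo) * 10 ** decimals
    return math.ceil(math.log2(steps + 1))


def decode_depths(bits, n_pings=4, lo=10.0, hi=130.0):
    """Split one chromosome into n_pings equal genes and map each to a depth."""
    n_bits = len(bits) // n_pings
    depths = []
    for p in range(n_pings):
        chunk = bits[p * n_bits:(p + 1) * n_bits]
        integer = int("".join(map(str, chunk)), 2)
        depths.append(lo + (hi - lo) * integer / (2 ** n_bits - 1))
    return depths


n_bits = bits_needed(10, 130, 3)   # 17
chromosome_length = 4 * n_bits     # 68
shallowest = decode_depths([0] * chromosome_length)
deepest = decode_depths([1] * chromosome_length)
```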

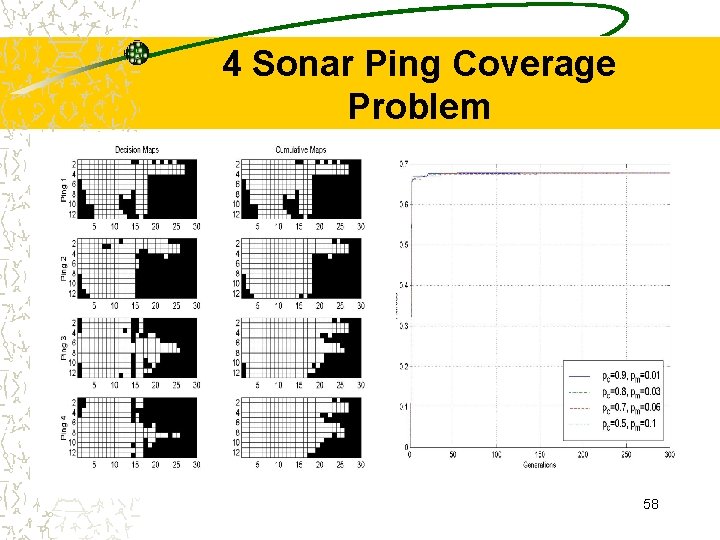

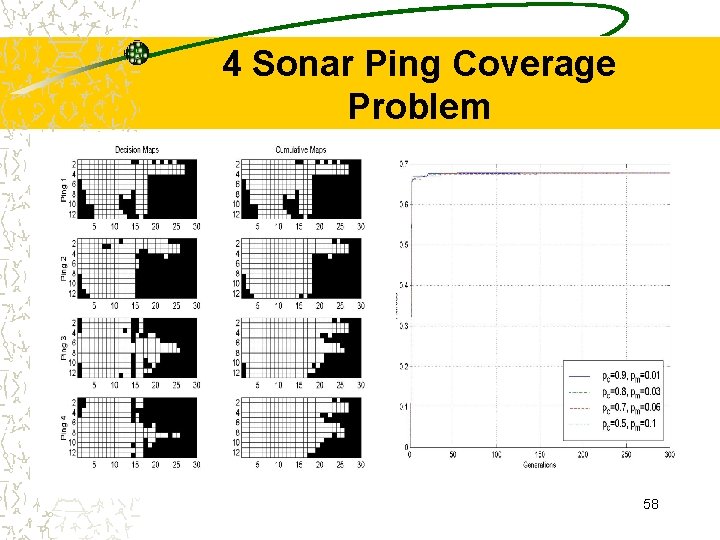

4 Sonar Ping Coverage Problem

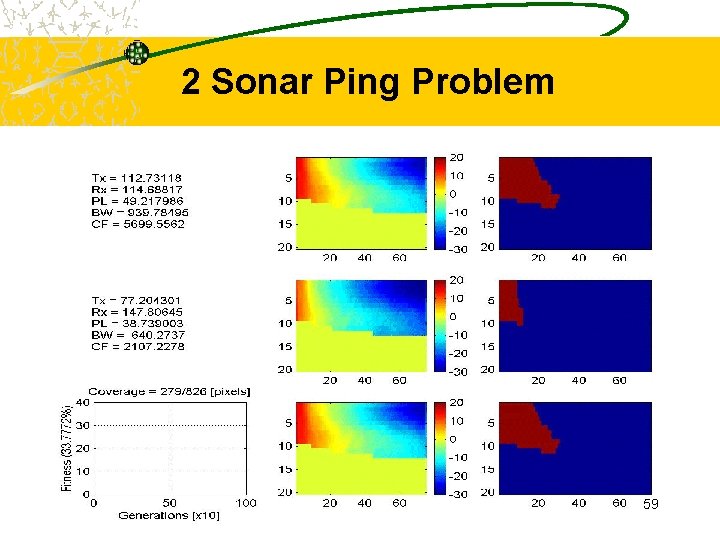

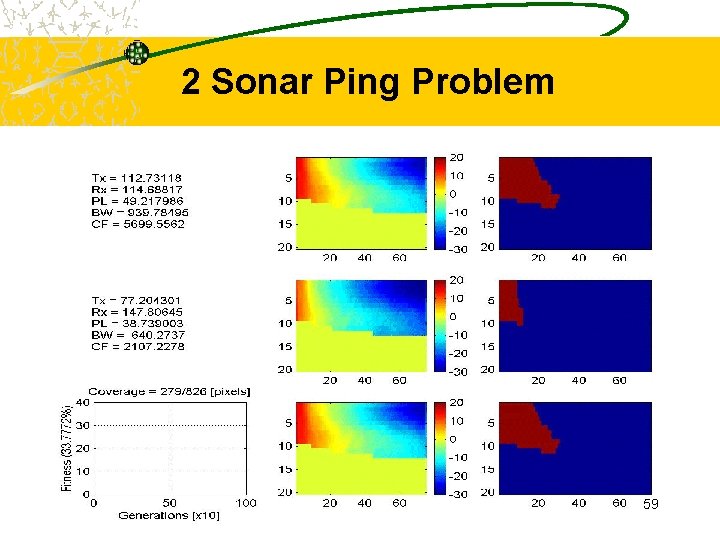

2 Sonar Ping Problem

Contributions: Maximal Area Coverage

- The systems need not be replications of each other but can, for example, specialize in different aspects of appeasing the fitness function.
- The search can be constrained; for example, a constraint-imposing module can be inserted.

Conclusions

- A novel neural network learning algorithm (don't care training with step size modification) for data sets with varying output dimension is proposed.
- A new neural network inversion algorithm was proposed whereby several neural networks are inverted in parallel, giving the ability to segment the problem into multiple sub-problems.
- The sensitivity of the neural network is investigated. Once the neural network is trained, it is important to determine which control parameters are critical to the decision making at a certain operating point. This can be done through input parameter sensitivity analysis.
- There exist numerous generalizations of the fundamental architecture of the maximal area coverage problem that allow application to a larger scope of problems.

Ideas for Future Works

- More work could be done on more accurate training of sonar data. In particular, multi-resolution neural networks could help extract discrete detection maps.
- Data pruning using nearest neighbor analysis before training, or query-based learning using sensitivity analysis during training, could improve the training time and/or accuracy.
- Extensive research is needed on the use of evolutionary algorithms to improve the inversion speed and precision. Particle swarm optimization or genetic algorithms could be considered for more flexibility in imposing feasibility constraints.
