
- Slides: 37

Personnel Selection

Selection

- What is selection?
  - Using scientific methodology to choose one alternative (job candidate) over another, drawing on:
    - Job analysis
    - Measurement
    - Statistics
- Why is selection important?
  - Decreases the likelihood of hiring “bad” employees
  - Increases the likelihood that people will be treated fairly when hiring decisions are made
    - Reduces discrimination
    - Reduces the likelihood of discrimination lawsuits
- What do I/O psychologists need to know about selection?
  - How to select predictors of job performance (the criterion problem)
  - How to accurately identify and validate predictors for specific jobs (job analysis)
    - Rely on cognitive and personality variables
  - How to reliably and validly measure these predictors
  - How to use these predictors to make selection decisions

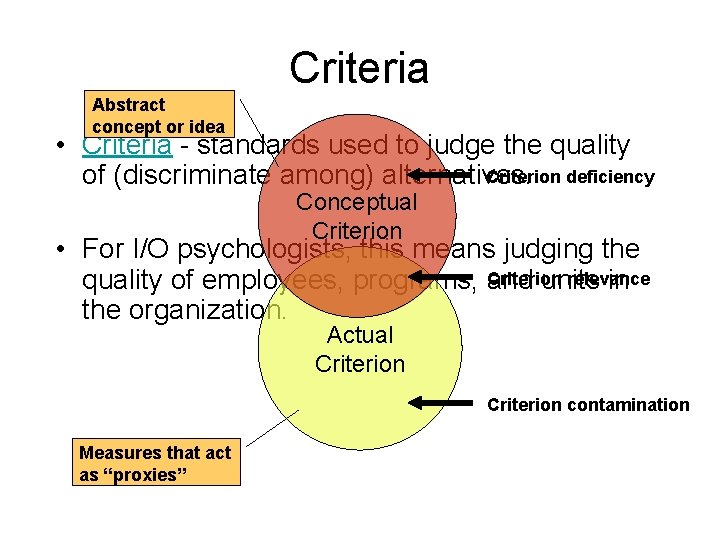

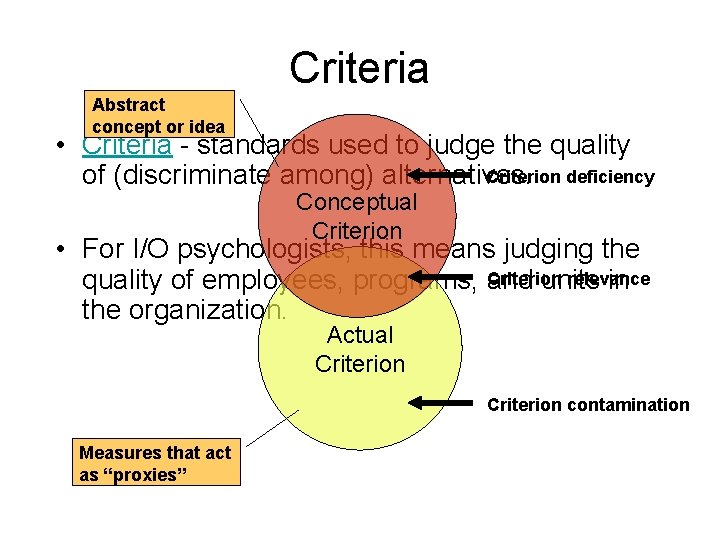

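Criteria

- Criteria: standards used to judge the quality of (discriminate among) alternatives.
- For I/O psychologists, this means judging the quality of employees, programs, and units in the organization.

[Figure: the conceptual criterion (an abstract concept or idea) overlaps the actual criterion (measures that act as “proxies”). The overlap is criterion relevance; the part of the conceptual criterion that the measures miss is criterion deficiency; the part of the actual criterion that falls outside the conceptual criterion is criterion contamination.]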

Illegal Criteria

- Title VII of the 1964 Civil Rights Act prohibits selection practices that have an unequal impact on members of a particular:
  - Race
  - Color
  - Sex
  - Religion
  - National origin

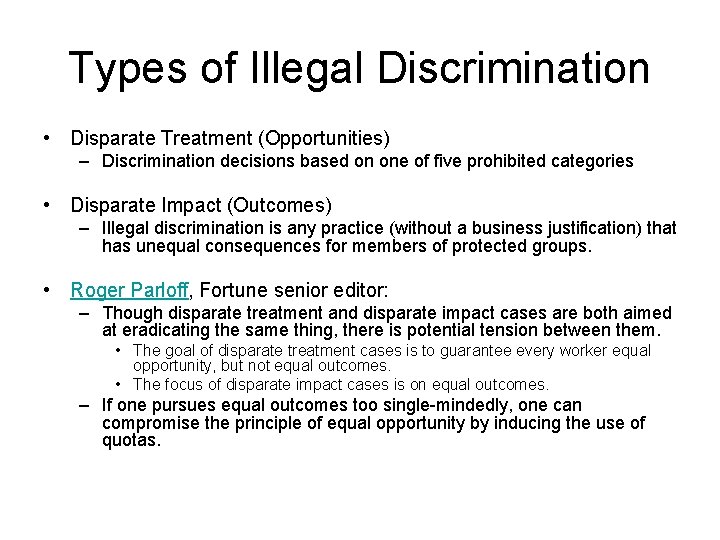

Types of Illegal Discrimination

- Disparate treatment (opportunities)
  - Decisions based on one of the five prohibited categories
- Disparate impact (outcomes)
  - Any practice (without a business justification) that has unequal consequences for members of protected groups
- Roger Parloff, Fortune senior editor:
  - Though disparate treatment and disparate impact cases are both aimed at eradicating the same thing, there is potential tension between them.
    - The goal of disparate treatment cases is to guarantee every worker equal opportunity, but not equal outcomes.
    - The focus of disparate impact cases is on equal outcomes.
  - If one pursues equal outcomes too single-mindedly, one can compromise the principle of equal opportunity by inducing the use of quotas.

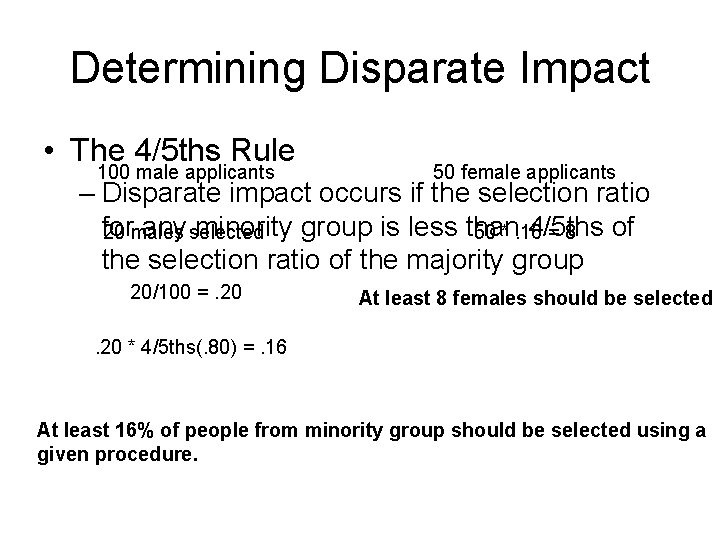

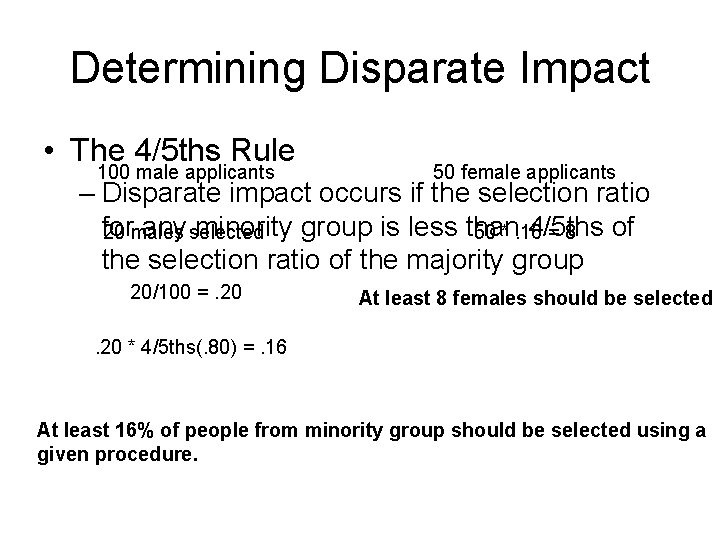

Determining Disparate Impact

- The 4/5ths Rule
  - Disparate impact occurs if the selection ratio for any minority group is less than 4/5ths of the selection ratio of the majority group.
- Example: 100 male applicants, 50 female applicants
  - 20 males selected: selection ratio = 20/100 = .20
  - .20 × 4/5 (.80) = .16, so at least 16% of applicants from the minority group should be selected using a given procedure
  - 50 × .16 = 8, so at least 8 females should be selected
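The arithmetic in the 4/5ths example can be checked with a short Python sketch. It follows the slide in comparing the minority group against the majority group's selection ratio; the function names (`selection_ratio`, `has_disparate_impact`, `minimum_minority_hires`) are hypothetical helpers, not part of any standard tool. Exact fractions are used so that 50 × .16 comes out as exactly 8 rather than a floating-point value just above it.

```python
import math
from fractions import Fraction

FOUR_FIFTHS = Fraction(4, 5)


def selection_ratio(selected: int, applicants: int) -> Fraction:
    """Proportion of a group's applicants who were selected, kept as an exact fraction."""
    return Fraction(selected, applicants)


def has_disparate_impact(minority_ratio: Fraction, majority_ratio: Fraction) -> bool:
    """True when the minority selection ratio is below 4/5 of the majority's."""
    return minority_ratio < FOUR_FIFTHS * majority_ratio


def minimum_minority_hires(minority_applicants: int, majority_ratio: Fraction) -> int:
    """Fewest minority selections that keep the group at or above the 4/5 threshold."""
    return math.ceil(FOUR_FIFTHS * majority_ratio * minority_applicants)


# Worked example from the slide: 20 of 100 male applicants selected, 50 female applicants.
male_ratio = selection_ratio(20, 100)
print(float(male_ratio), float(FOUR_FIFTHS * male_ratio))        # 0.2 0.16
print(minimum_minority_hires(50, male_ratio))                    # 8
print(has_disparate_impact(selection_ratio(7, 50), male_ratio))  # True (.14 < .16)
print(has_disparate_impact(selection_ratio(8, 50), male_ratio))  # False (.16 is not below .16)
```

Selecting 7 of the 50 women would fall short of the threshold, while 8 meets it exactly.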

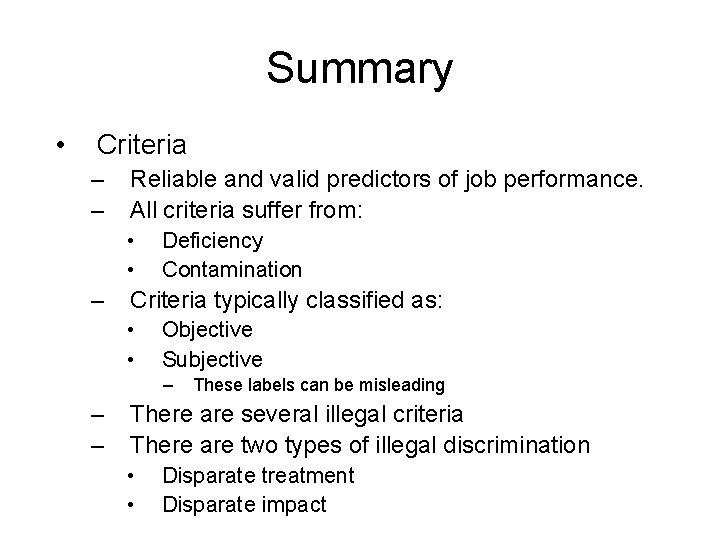

Summary

- Criteria
  - Should be reliable and valid measures of job performance
  - All criteria suffer from:
    - Deficiency
    - Contamination
  - Criteria are typically classified as:
    - Objective
    - Subjective
  - These labels can be misleading
- There are several illegal criteria
- There are two types of illegal discrimination:
  - Disparate treatment
  - Disparate impact

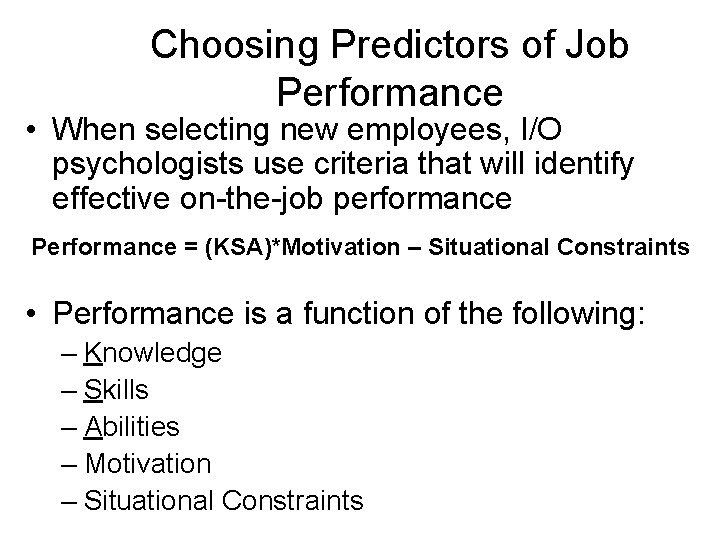

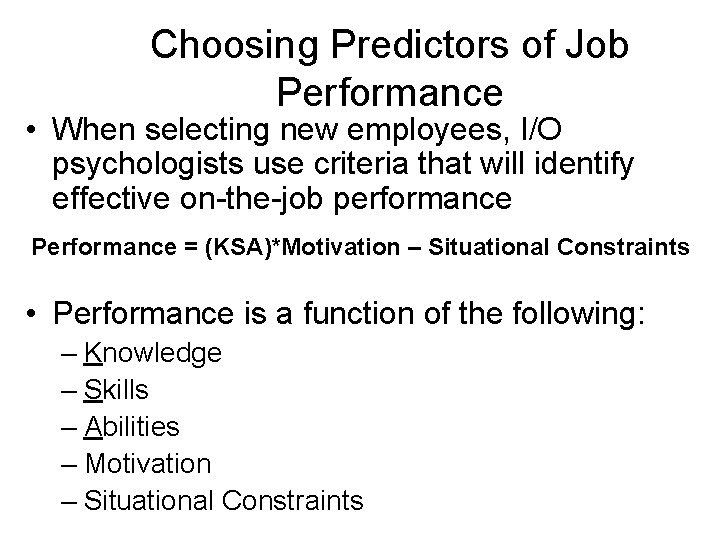

Choosing Predictors of Job Performance

- When selecting new employees, I/O psychologists use criteria that will identify effective on-the-job performance.
- Performance is a function of the following:
  - Knowledge
  - Skills
  - Abilities
  - Motivation
  - Situational constraints
- Performance = (KSA × Motivation) − Situational Constraints

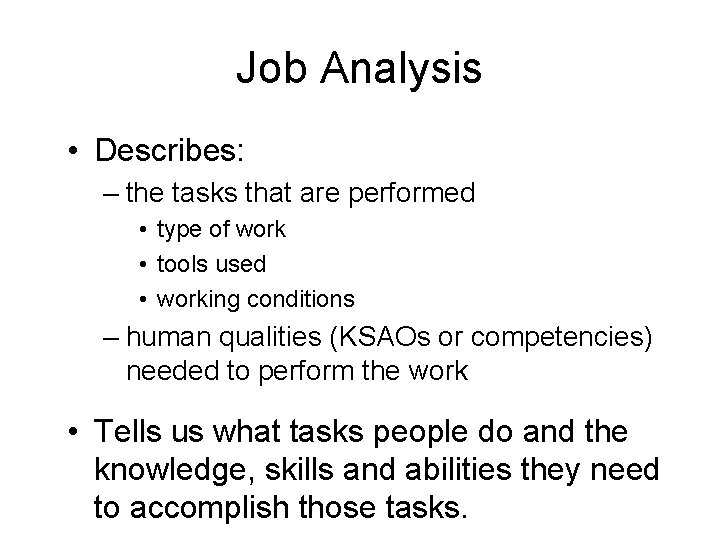

Job Analysis

- Describes:
  - The tasks that are performed
    - Type of work
    - Tools used
    - Working conditions
  - The human qualities (KSAOs, or competencies) needed to perform the work
- Tells us what tasks people do and the knowledge, skills, and abilities they need to accomplish those tasks.

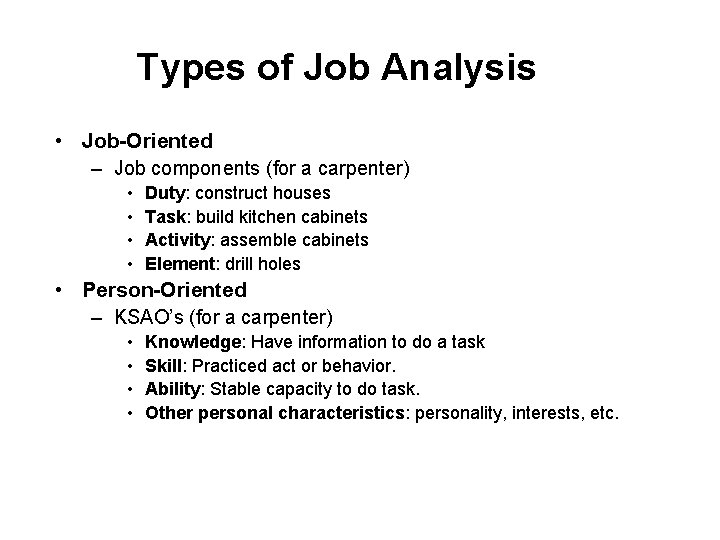

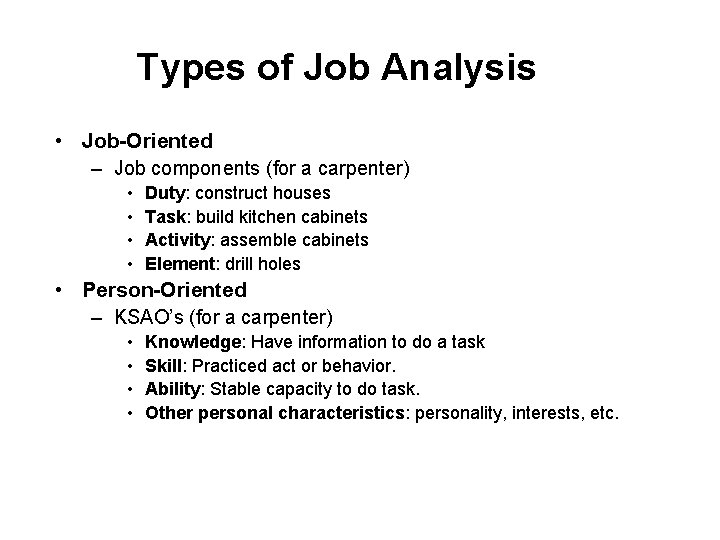

Types of Job Analysis

- Job-oriented: job components (for a carpenter)
  - Duty: construct houses
  - Task: build kitchen cabinets
  - Activity: assemble cabinets
  - Element: drill holes
- Person-oriented: KSAOs (for a carpenter)
  - Knowledge: having the information needed to do a task
  - Skill: a practiced act or behavior
  - Ability: a stable capacity to do a task
  - Other personal characteristics: personality, interests, etc.

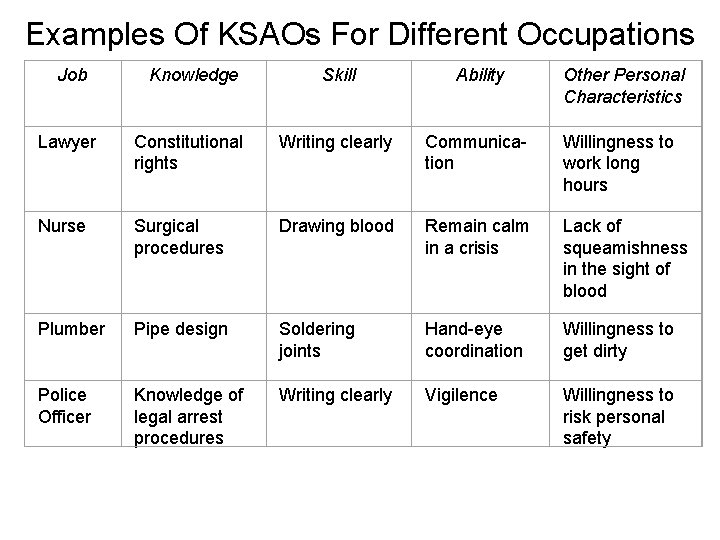

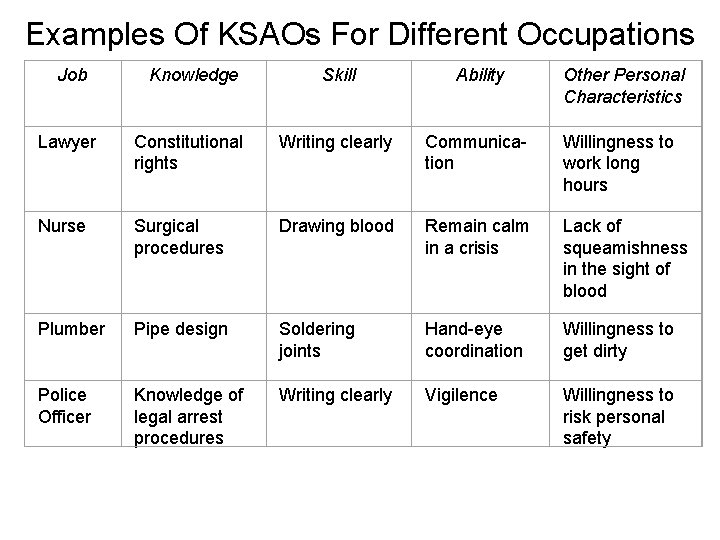

Examples of KSAOs for Different Occupations

| Job | Knowledge | Skill | Ability | Other personal characteristics |
|---|---|---|---|---|
| Lawyer | Constitutional rights | Writing clearly | Communication | Willingness to work long hours |
| Nurse | Surgical procedures | Drawing blood | Remaining calm in a crisis | Lack of squeamishness at the sight of blood |
| Plumber | Pipe design | Soldering joints | Hand-eye coordination | Willingness to get dirty |
| Police officer | Legal arrest procedures | Writing clearly | Vigilance | Willingness to risk personal safety |

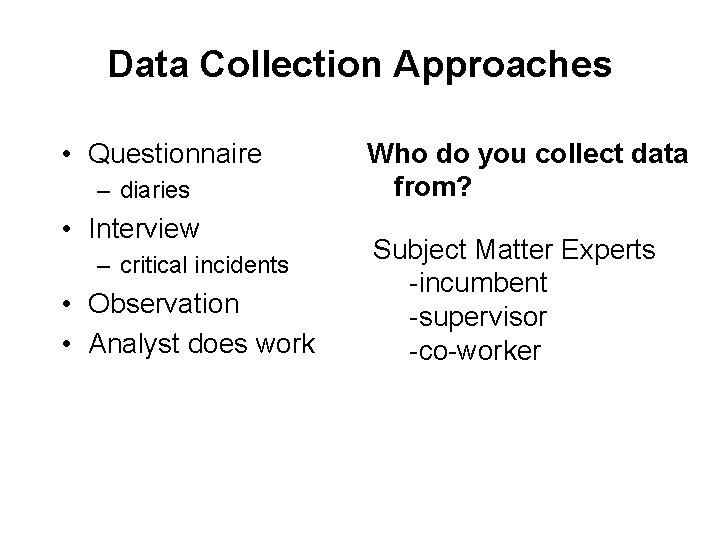

Data Collection Approaches

- Questionnaire
  - Diaries
- Interview
  - Critical incidents
- Observation
- Analyst does the work

Who do you collect data from? Subject matter experts (SMEs):

- Incumbents
- Supervisors
- Co-workers

Hiring the Best

- Job: Firefighter
- What are the major duties of a firefighter?
- What tasks are performed to complete each duty?
- Develop a set of KSAOs necessary for these tasks.
  - They should be usable for recruiting and evaluating.
- Challenges?
- What other information would you want? How would you get it?

Selection

- Predictors: any variable used to forecast a criterion
- Issues
  - Quality (reliability and validity)
  - Types
    - Psychological tests and inventories
    - Interviews
    - Assessment centers
    - Work samples and situational exercises
    - Biodata
    - Peer assessment
    - Letters of recommendation

Biographical Data

- Good questions are about events that are (Mael, 1991):
  - Historical
  - External
  - Discrete
  - Controllable (by the individual)
  - Verifiable
  - Equal access
  - Job relevant
  - Non-invasive
- Rational vs. empirical method

Biographical Data

- Strong criterion validity
  - Drug use and criminal history predict dysfunctional police behavior (Sarchione et al., 1998)
  - Not redundant with personality (McManus & Kelly, 1999)
- Measurement issues
  - Generalizability
  - Faking
  - Fairness
  - Privacy concerns

Interviews

- Structured vs. unstructured
- Information gathering vs. interpersonal behavior sample
- Situational interview
  - “How would you handle a circumstance in which you needed the help of a person you did not like?”
- Measurement issues
  - Structured interviews have more criterion-related validity
  - Value of unstructured interviews?
  - Illusion of validity
- Guidelines for structured interviews
  - The interviewer should know about the job
  - The interviewer should NOT have prior information about the interviewee
  - Rate individual dimensions AFTER the interview is over

Work Samples

- Perform a task under standardized conditions
- Historically used for blue-collar jobs
  - e.g., use of tools, demonstrating driving skills
- White-collar examples
  - e.g., giving a speech, interviewing a foreign worker, a test of basic chemistry knowledge
- Measurement issues
  - High criterion validity if the skills are similar to those used on the job
  - Costly to administer
  - Work best with mechanical, rather than people-oriented, tasks

Assessment Centers

- Realistic tasks done in groups
- Assessed by multiple raters rating multiple domains
- Multiple methods
  - In-basket exercise
  - Leaderless group exercise
- Strong criterion validity (e.g., teachers, police)
  - Overall scores predict job performance
- Measurement issues
  - Costly to administer
  - Ratings of different dimensions on the same task are too highly correlated
  - Ratings of the same dimension are not strongly correlated across tasks
  - Fix? Focus on behavior checklists and rater training

Drug Testing

- Opinion?
- People are more accepting of it if the job involves risks to others (Paronto et al., 2002)
- Measurement issues
  - Reliability is very high, but not perfect
  - Validity?
- Normand, Salyards, & Mahoney (1990)
  - Over 5,000 postal service applicants
  - Those who tested positive had 59% higher absenteeism and were 47% more likely to be fired
  - No differences in injuries or accidents

Letters of Recommendation

- Ever written a letter of recommendation for someone?
- Worst criterion validity of all commonly used assessment tools
  - Some use for screening out extremely bad candidates
- Measurement issues
  - Restriction of range
  - Writer bias/investment

Psychological Test Characteristics

- Group vs. individual
- Objective vs. open-ended
- Paper-and-pencil vs. performance
- Power vs. speed

Psychological Test Types

- Ability tests
  - Cognitive ability
  - Psychomotor ability
- Knowledge and skill (achievement) tests
- Integrity tests
- Personality tests
- Emotional intelligence tests
- Vocational interest tests

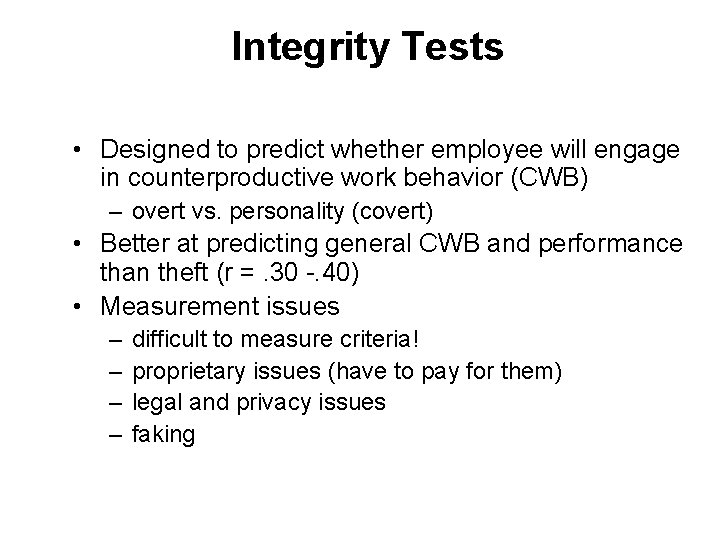

Integrity Tests

- Designed to predict whether an employee will engage in counterproductive work behavior (CWB)
  - Overt vs. personality-based (covert)
- Better at predicting general CWB and performance than theft (r = .30 to .40)
- Measurement issues
  - Criteria are difficult to measure!
  - Proprietary issues (you have to pay for them)
  - Legal and privacy issues
  - Faking

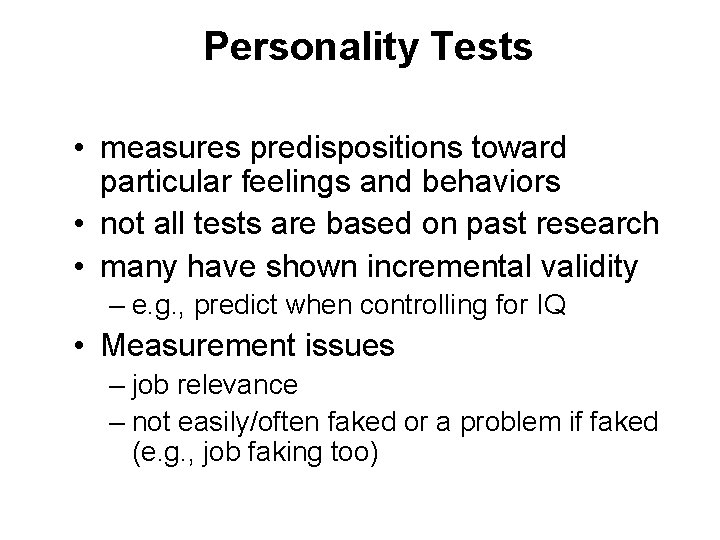

Personality Tests

- Measure predispositions toward particular feelings and behaviors
- Not all tests are based on past research
- Many have shown incremental validity
  - e.g., they predict performance even when controlling for IQ
- Measurement issues
  - Job relevance
  - Faking: not easily or often faked, and faking may not be a problem (e.g., people may “fake” on the job too)

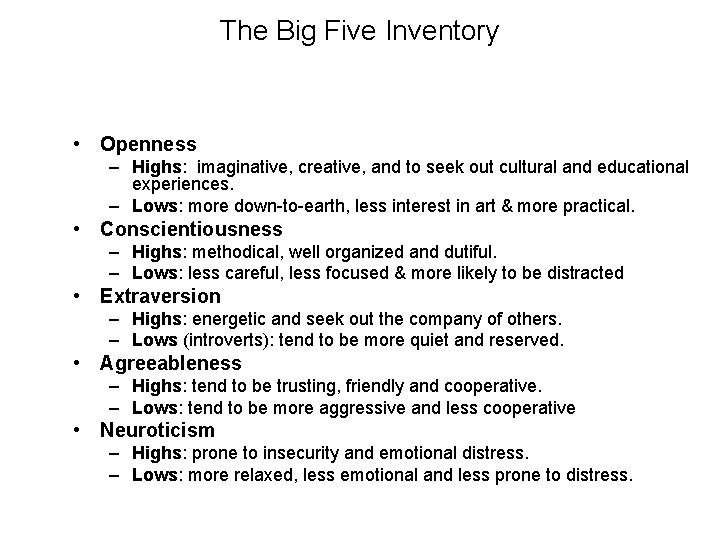

The Big Five Inventory

- Openness
  - Highs: imaginative and creative, and tend to seek out cultural and educational experiences
  - Lows: more down-to-earth, less interested in art, and more practical
- Conscientiousness
  - Highs: methodical, well organized, and dutiful
  - Lows: less careful, less focused, and more likely to be distracted
- Extraversion
  - Highs: energetic and seek out the company of others
  - Lows (introverts): tend to be quieter and more reserved
- Agreeableness
  - Highs: tend to be trusting, friendly, and cooperative
  - Lows: tend to be more aggressive and less cooperative
- Neuroticism
  - Highs: prone to insecurity and emotional distress
  - Lows: more relaxed, less emotional, and less prone to distress

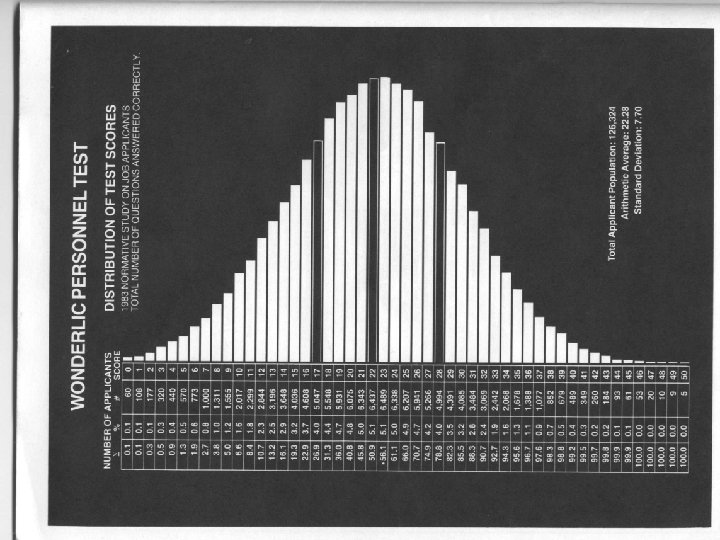

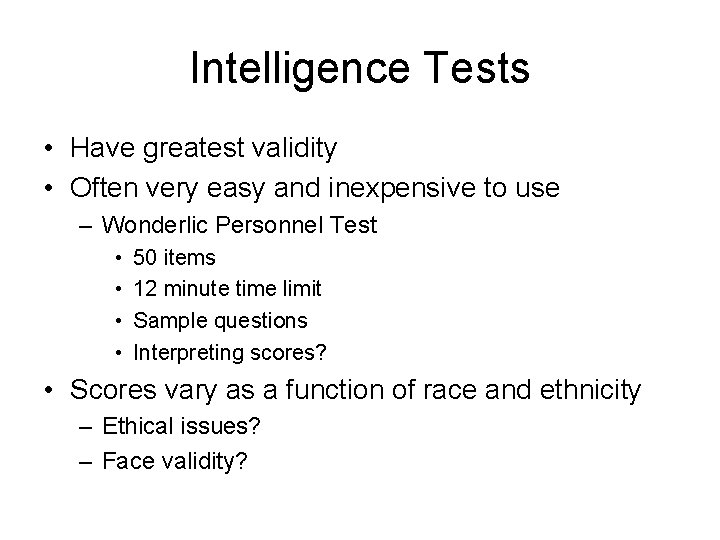

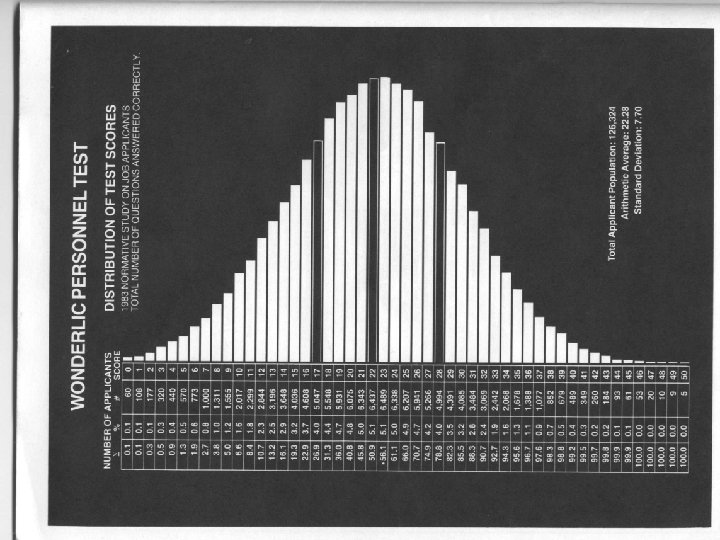

Intelligence Tests

- Have the greatest validity
- Often very easy and inexpensive to use
  - Wonderlic Personnel Test
    - 50 items
    - 12-minute time limit
    - Sample questions
    - Interpreting scores?
- Scores vary as a function of race and ethnicity
  - Ethical issues?
  - Face validity?

Determining “Test” Utility • Goal of testing is to make decisions about individuals on the basis of the amount of a given trait they possess. • A test should give us a “true” picture of a person’s traits • Test Score = True Score + Error
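A small simulation can make Test Score = True Score + Error concrete. This sketch assumes normally distributed true scores and errors, with errors independent across people and across administrations; the means, standard deviations, and helper names (`pearson_r`, `parallel_administrations`) are hypothetical. Under those assumptions reliability is the share of observed-score variance that is true-score variance, var(T) / (var(T) + var(E)), and the correlation between two parallel administrations should land close to that value.

```python
import random


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def parallel_administrations(n_people: int, true_sd: float, error_sd: float, seed: int = 1):
    """Simulate two parallel forms: each observed score = true score + fresh random error."""
    rng = random.Random(seed)
    true_scores = [rng.gauss(100.0, true_sd) for _ in range(n_people)]
    form_a = [t + rng.gauss(0.0, error_sd) for t in true_scores]
    form_b = [t + rng.gauss(0.0, error_sd) for t in true_scores]
    return form_a, form_b


TRUE_SD, ERROR_SD = 10.0, 5.0  # hypothetical values
expected_reliability = TRUE_SD**2 / (TRUE_SD**2 + ERROR_SD**2)  # var(T) / var(X) = 100 / 125
form_a, form_b = parallel_administrations(20_000, TRUE_SD, ERROR_SD)
print(f"expected {expected_reliability:.2f}, simulated {pearson_r(form_a, form_b):.2f}")
```

Raising `ERROR_SD` while holding `TRUE_SD` fixed lowers both numbers together, which is the sense in which error, not the true score, limits a test's reliability.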

Reliability and Validity

- Reliability
  - Test-retest
  - Parallel (alternate) forms
  - Internal consistency
- Validity
  - Face
  - Content
  - Criterion-related
  - Construct-related
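Internal consistency is often summarized with Cronbach's alpha, α = k/(k − 1) × (1 − Σ item variances / total-score variance) for k items. The sketch below computes it for a small, hypothetical 5-person, 4-item response matrix; alpha is one common internal-consistency index, not the only one, and `cronbach_alpha` is an illustrative helper rather than a library function.

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha; responses[i][j] is person i's score on item j.

    Population variances are used throughout; the n/(n - 1) correction would
    cancel in the ratio, so sample variances give the same alpha.
    """
    k = len(responses[0])
    items = list(zip(*responses))  # regroup scores by item (columns)
    sum_item_variances = sum(pvariance(item) for item in items)
    total_variance = pvariance([sum(person) for person in responses])
    return k / (k - 1) * (1 - sum_item_variances / total_variance)


# Hypothetical responses: 5 people x 4 items on a 1-5 scale.
responses = [
    [4, 5, 4, 5],
    [2, 3, 2, 2],
    [3, 3, 4, 3],
    [5, 4, 5, 5],
    [1, 2, 2, 1],
]
print(f"alpha = {cronbach_alpha(responses):.2f}")  # alpha = 0.96
```

The items here rank people almost identically, so alpha is high; items that disagreed about who scores high would push it toward zero.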

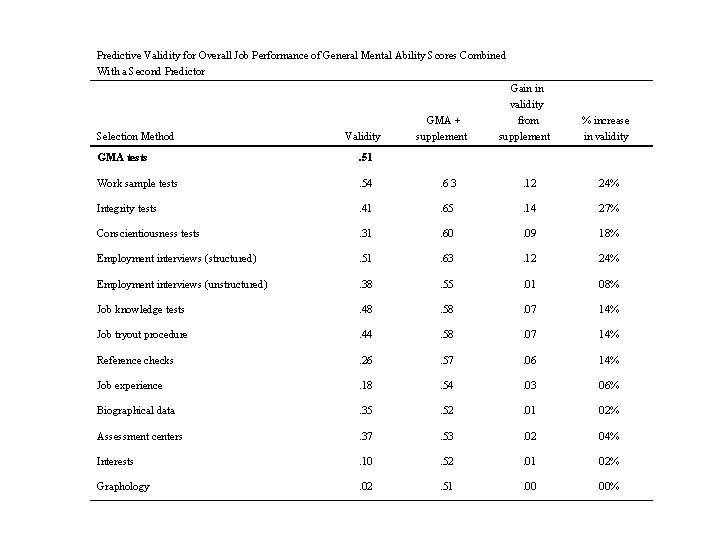

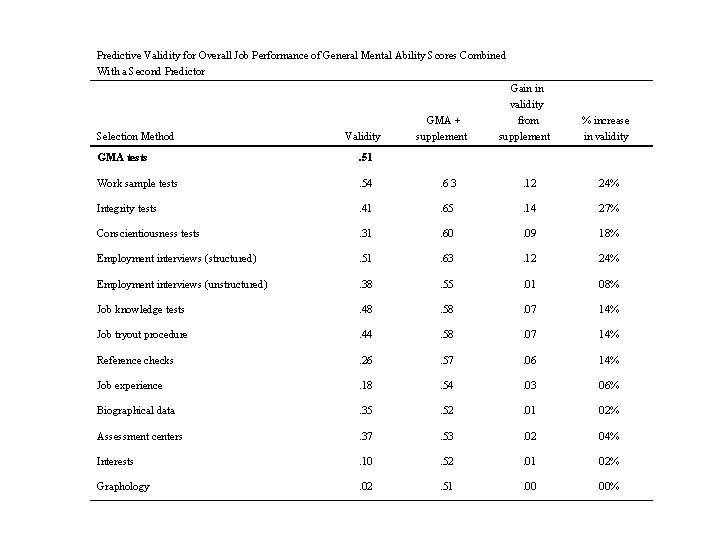

Predictive Validity for Overall Job Performance of General Mental Ability (GMA) Scores Combined With a Second Predictor

| Selection method | Validity | GMA + supplement | Gain in validity from supplement | % increase in validity |
|---|---|---|---|---|
| GMA tests | .51 | | | |
| Work sample tests | .54 | .63 | .12 | 24% |
| Integrity tests | .41 | .65 | .14 | 27% |
| Conscientiousness tests | .31 | .60 | .09 | 18% |
| Employment interviews (structured) | .51 | .63 | .12 | 24% |
| Employment interviews (unstructured) | .38 | .55 | .04 | 8% |
| Job knowledge tests | .48 | .58 | .07 | 14% |
| Job tryout procedure | .44 | .58 | .07 | 14% |
| Reference checks | .26 | .57 | .06 | 12% |
| Job experience | .18 | .54 | .03 | 6% |
| Biographical data | .35 | .52 | .01 | 2% |
| Assessment centers | .37 | .53 | .02 | 4% |
| Interests | .10 | .52 | .01 | 2% |
| Graphology | .02 | .51 | .00 | 0% |
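The “GMA + supplement” column is a multiple correlation, and for two predictors it follows from R² = (r₁² + r₂² − 2·r₁·r₂·r₁₂) / (1 − r₁₂²), where r₁₂ is the correlation between the predictors. This sketch assumes, for illustration only, that integrity and conscientiousness scores are uncorrelated with GMA (r₁₂ = 0); under that assumption it reproduces the table's .65 and .60. The helper name `multiple_r` and the overlapping case with r₁₂ = .50 are hypothetical.

```python
import math


def multiple_r(r_y1: float, r_y2: float, r_12: float) -> float:
    """Multiple correlation of a criterion with two predictors.

    r_y1, r_y2: validity of each predictor; r_12: correlation between the predictors.
    """
    r_squared = (r_y1**2 + r_y2**2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12**2)
    return math.sqrt(r_squared)


GMA_VALIDITY = 0.51
# ASSUMPTION: each supplement is treated as uncorrelated with GMA (r_12 = 0).
for name, validity in [("Integrity tests", 0.41), ("Conscientiousness tests", 0.31)]:
    combined = multiple_r(GMA_VALIDITY, validity, r_12=0.0)
    print(f"{name}: R = {combined:.2f}, gain = {combined - GMA_VALIDITY:.2f}")

# The same .41 predictor adds much less if it overlaps with GMA (hypothetical r_12 = .50).
print(f"Overlapping predictor: R = {multiple_r(GMA_VALIDITY, 0.41, r_12=0.5):.2f}")
```

The last line shows why a supplement's own validity is not the whole story: a predictor that duplicates what GMA already measures contributes little incremental validity.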

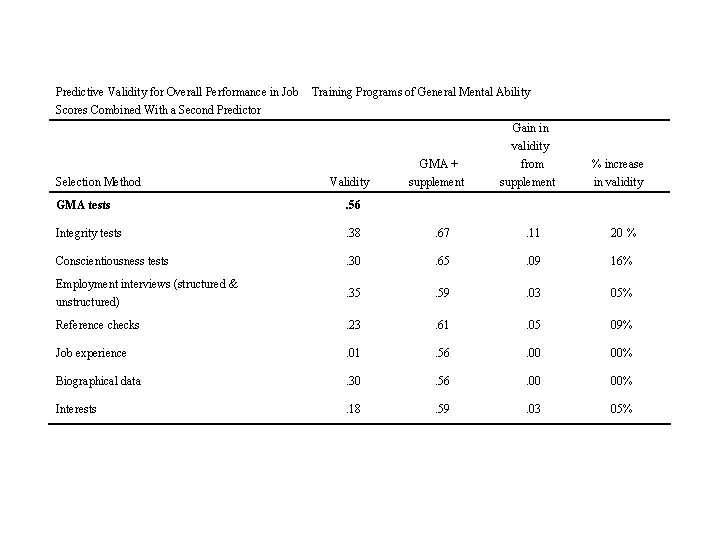

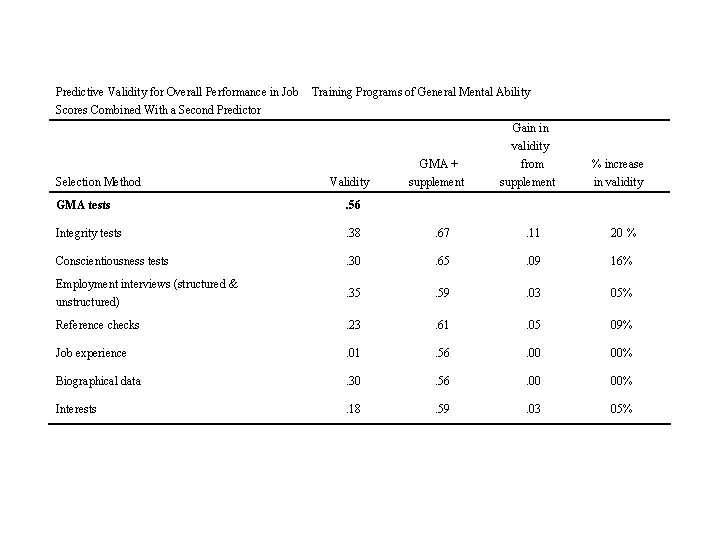

Predictive Validity for Overall Performance in Job Training Programs of General Mental Ability (GMA) Scores Combined With a Second Predictor

| Selection method | Validity | GMA + supplement | Gain in validity from supplement | % increase in validity |
|---|---|---|---|---|
| GMA tests | .56 | | | |
| Integrity tests | .38 | .67 | .11 | 20% |
| Conscientiousness tests | .30 | .65 | .09 | 16% |
| Employment interviews (structured and unstructured) | .35 | .59 | .03 | 5% |
| Reference checks | .23 | .61 | .05 | 9% |
| Job experience | .01 | .56 | .00 | 0% |
| Biographical data | .30 | .56 | .00 | 0% |
| Interests | .18 | .59 | .03 | 5% |
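The last two columns of these tables are derived from the first ones: the gain is the combined validity minus GMA's validity, and the percentage increase is that gain divided by GMA's validity. A minimal sketch for the training table, assuming (as the printed values suggest) that the percentage is computed from the rounded gain; `incremental_validity` is a hypothetical helper.

```python
def incremental_validity(gma_validity: float, combined_validity: float) -> tuple[float, int]:
    """Gain from adding a second predictor, and that gain as a % of GMA's validity."""
    gain = round(combined_validity - gma_validity, 2)
    percent_increase = round(100 * gain / gma_validity)
    return gain, percent_increase


GMA_TRAINING = 0.56
# "GMA + supplement" column of the training-performance table.
gma_plus = {
    "Integrity tests": 0.67,
    "Conscientiousness tests": 0.65,
    "Employment interviews": 0.59,
    "Reference checks": 0.61,
    "Job experience": 0.56,
    "Biographical data": 0.56,
    "Interests": 0.59,
}
for name, combined in gma_plus.items():
    gain, pct = incremental_validity(GMA_TRAINING, combined)
    print(f"{name}: gain {gain:.2f}, increase {pct}%")
```

The same function applies to the job-performance table by passing .51 as the GMA validity.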

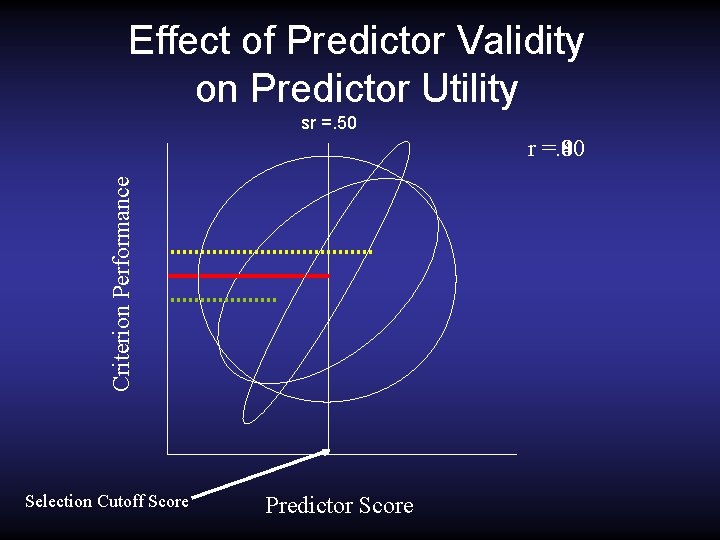

Factors Influencing Selection Quality

- Three factors influence selection quality:
  - Predictor validity
  - Selection ratio
  - Base rate

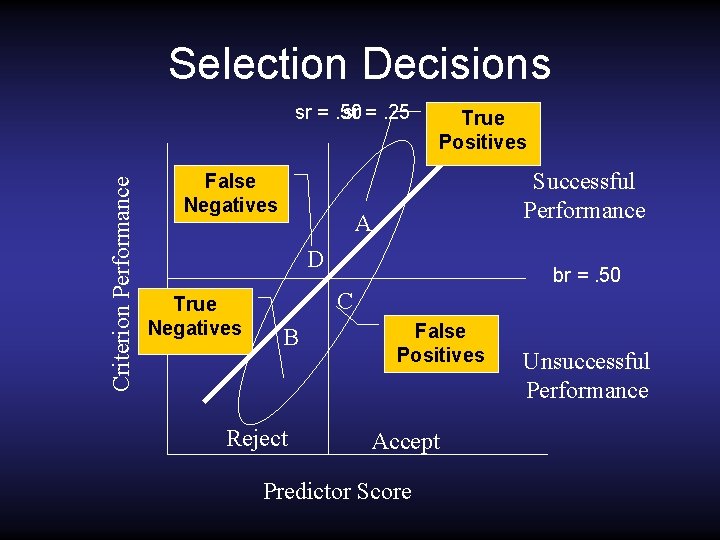

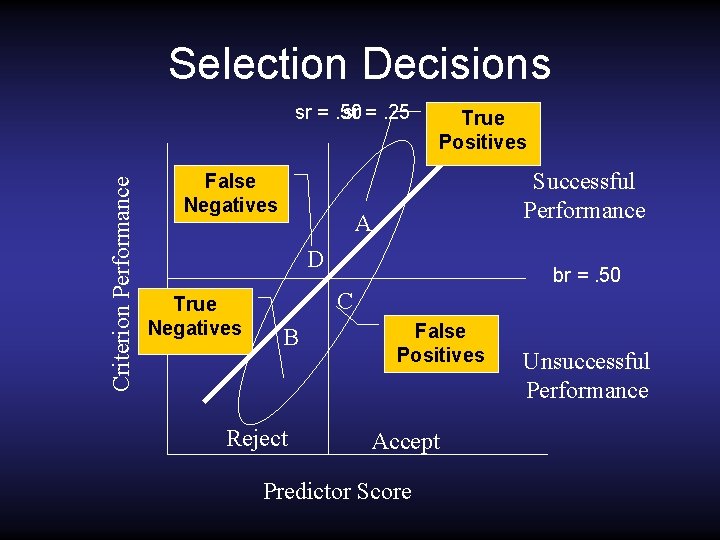

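Selection Decisions

[Figure: criterion performance (y-axis) plotted against predictor score (x-axis). A horizontal line at base rate br = .50 separates successful from unsuccessful performance; a vertical selection cutoff separates reject from accept, shown for sr = .50 and sr = .25. The four quadrants, labeled A to D, are true positives (accepted, successful), false positives (accepted, unsuccessful), true negatives (rejected, unsuccessful), and false negatives (rejected, successful). Score distributions are shown for r = 1.00 and r = .60.]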
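The four outcomes in the selection-decisions figure come from crossing two cutoffs: one on the predictor (accept or reject) and one on the criterion (successful or unsuccessful). This sketch classifies a handful of hypothetical applicants; the scores, both cutoffs, and the convention that a score exactly at a cutoff counts as accept/success are all assumptions made for illustration.

```python
from collections import Counter


def classify(predictor: float, criterion: float, predictor_cutoff: float, criterion_cutoff: float) -> str:
    """Place one applicant in a quadrant; scores at or above a cutoff count as accept/success."""
    accepted = predictor >= predictor_cutoff
    successful = criterion >= criterion_cutoff
    if accepted:
        return "true positive" if successful else "false positive"
    return "false negative" if successful else "true negative"


# Hypothetical (predictor score, criterion performance) pairs for 8 applicants.
applicants = [(90, 85), (82, 75), (75, 55), (70, 68), (65, 70), (55, 50), (48, 30), (40, 45)]
counts = Counter(classify(p, c, predictor_cutoff=70, criterion_cutoff=60) for p, c in applicants)

n = len(applicants)
selection_ratio = (counts["true positive"] + counts["false positive"]) / n  # share accepted
base_rate = (counts["true positive"] + counts["false negative"]) / n        # share who would succeed
success_ratio = counts["true positive"] / (counts["true positive"] + counts["false positive"])
print(selection_ratio, base_rate, success_ratio)  # 0.5 0.5 0.75
```

With a base rate of .50, hiring at random would yield successful employees half the time; using the predictor raises that to .75 among those accepted.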

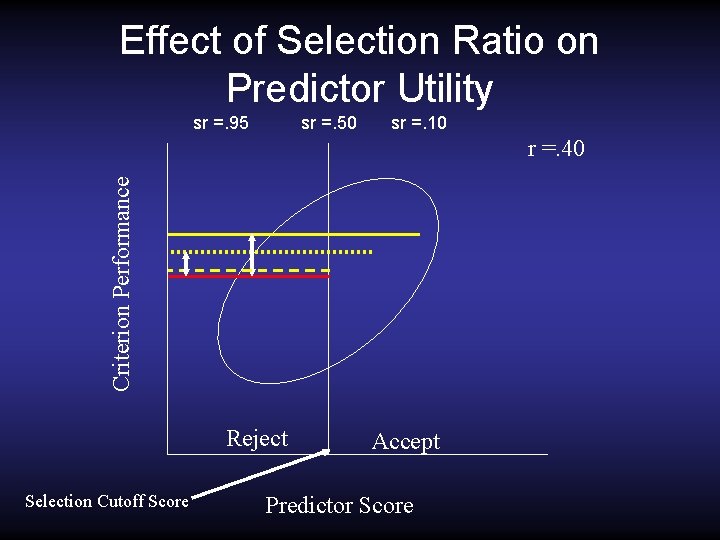

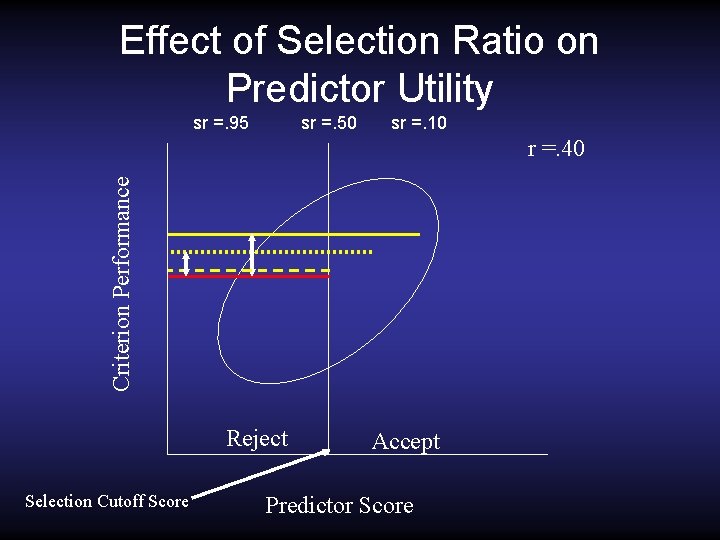

Effect of Selection Ratio on Predictor Utility

[Figure: criterion performance (y-axis) against predictor score (x-axis) for a predictor with r = .40, with selection cutoff scores separating reject from accept shown for sr = .95, .50, and .10.]

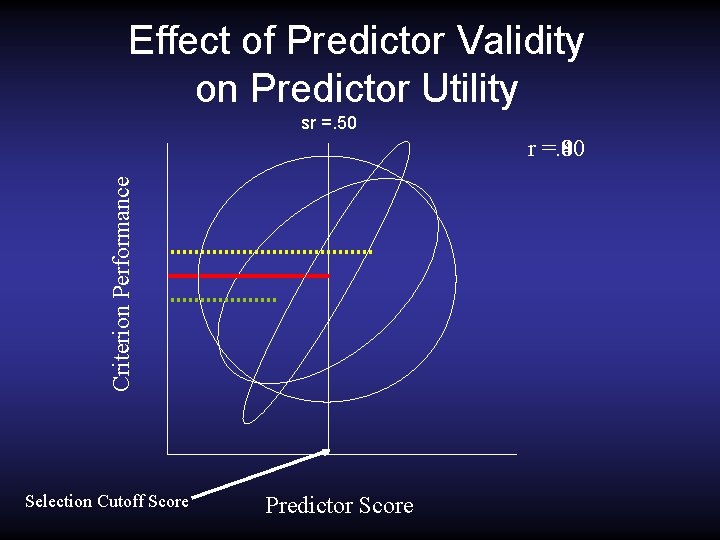

Effect of Predictor Validity on Predictor Utility

[Figure: criterion performance (y-axis) against predictor score (x-axis) at sr = .50, with the selection cutoff score shown for predictors with r = .00, .40, and .80.]
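The last two figures can be reproduced numerically. This Monte Carlo sketch assumes predictor and criterion scores are standard bivariate normal with correlation r (a common textbook assumption, not something stated on the slides) and estimates the share of accepted applicants who succeed for a given validity, selection ratio, and base rate. When sr = br = .50 there is an exact answer, 1/2 + arcsin(r)/π, which the simulation should approximate. `success_rate` is a hypothetical helper.

```python
import math
import random
from statistics import NormalDist


def success_rate(r: float, sr: float, br: float, n: int = 50_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the share of accepted applicants who succeed.

    Assumes predictor and criterion are standard bivariate normal with correlation r.
    """
    rng = random.Random(seed)
    predictor_cut = NormalDist().inv_cdf(1 - sr)  # accepts the top proportion sr
    criterion_cut = NormalDist().inv_cdf(1 - br)  # a proportion br would succeed if all were hired
    accepted = successes = 0
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        y = r * x + math.sqrt(1 - r * r) * rng.gauss(0.0, 1.0)
        if x >= predictor_cut:
            accepted += 1
            if y >= criterion_cut:
                successes += 1
    return successes / accepted


# Selection ratio effect (r = .40), then validity effect (sr = .50); base rate .50 throughout.
for sr in (0.95, 0.50, 0.10):
    print(f"r = .40, sr = {sr:.2f}: {success_rate(0.40, sr, 0.50):.2f} of hires succeed")
for r in (0.00, 0.40, 0.80):
    exact = 0.5 + math.asin(r) / math.pi  # closed form when sr = br = .50
    print(f"r = {r:.2f}, sr = .50: simulated {success_rate(r, 0.50, 0.50):.2f}, exact {exact:.2f}")
```

Both loops move in the direction the figures show: a smaller selection ratio or a more valid predictor raises the proportion of successful hires, while r = .00 leaves it at the base rate.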

Selection Strategies

- 3 basic strategies
  - Multiple regression
    - Assumes the relationships between predictors and criterion are linear
    - Assumes having a lot of one attribute compensates for having little of another
  - Multiple cutoff
    - Applicants must achieve a set minimum score on all predictors
  - Multiple hurdle
    - Applicants must achieve satisfactory scores on a number of predictors that are administered over time
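The difference between the compensatory (regression) strategy and the two non-compensatory strategies shows up clearly on a few applicants. In this sketch every number is hypothetical: two predictors scored 0 to 100, stand-in weights of .6 and .4 (not estimated from any data), a composite cutoff of 60, and a minimum of 50 on each predictor.

```python
# Hypothetical settings: two predictors scored 0-100 (e.g., a cognitive test, then an interview).
WEIGHTS = (0.6, 0.4)      # stand-in regression weights (not estimated from data)
COMPOSITE_CUTOFF = 60     # minimum weighted composite for the compensatory rule
CUTOFFS = (50, 50)        # minimum score required on each predictor


def compensatory(scores: tuple[int, int]) -> bool:
    """Regression-style rule: a high score on one predictor can offset a low score on another."""
    return sum(w * s for w, s in zip(WEIGHTS, scores)) >= COMPOSITE_CUTOFF


def multiple_cutoff(scores: tuple[int, int]) -> bool:
    """Every predictor must meet its minimum; no offsetting allowed."""
    return all(s >= c for s, c in zip(scores, CUTOFFS))


def multiple_hurdle(scores: tuple[int, int]) -> tuple[bool, int]:
    """Administer predictors in order and stop at the first failed hurdle.

    Returns (hired, number of predictors actually administered).
    """
    for administered, (s, c) in enumerate(zip(scores, CUTOFFS), start=1):
        if s < c:
            return False, administered
    return True, len(scores)


applicants = {"A": (90, 40), "B": (55, 55), "C": (45, 95), "D": (70, 70)}
for name, scores in applicants.items():
    print(name, compensatory(scores), multiple_cutoff(scores), multiple_hurdle(scores))
```

Applicant A is accepted under the compensatory rule but rejected by both non-compensatory rules, while B is the reverse. Multiple cutoff and multiple hurdle reach the same decisions here, but the hurdle approach screens C out after only one predictor, which is why it can save testing costs when predictors are administered over time.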