Performance Modeling in GPGPU
By Arun Bhandari
Course: HPC
Date: 01/28/12

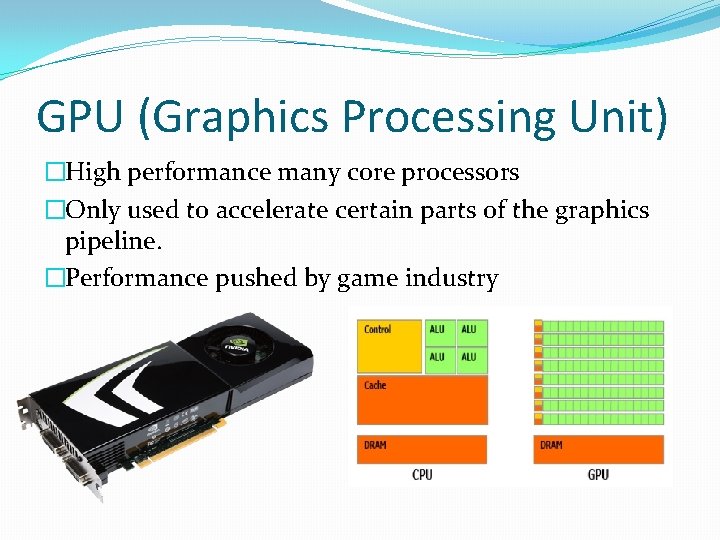

GPU (Graphics Processing Unit)

- High-performance many-core processors
- Traditionally used only to accelerate certain parts of the graphics pipeline
- Performance growth driven by the game industry

Introduction to GPGPU

- Stands for General-Purpose computing on Graphics Processing Units
- Also called GPU computing
- Use of the GPU for general-purpose computing
- Heterogeneous co-processing computing model

Why GPU for computing?

- GPU is fast
  - Massively parallel
- High memory bandwidth
- Programmable
  - NVIDIA CUDA, OpenCL
- Inexpensive desktop supercomputing
  - NVIDIA Tesla C1060: ~1 TFLOPS @ $1000

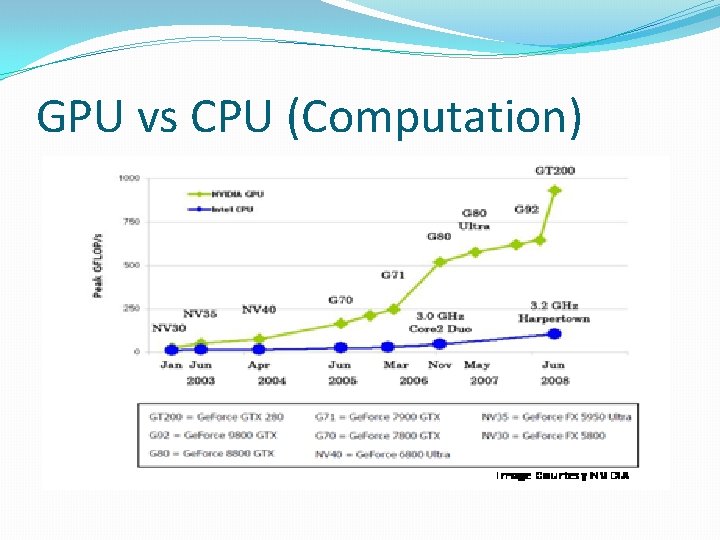

GPU vs CPU (Computation)

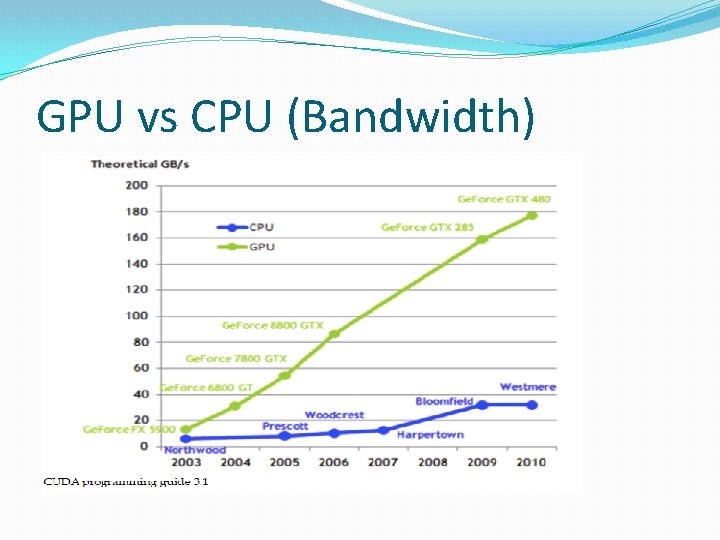

GPU vs CPU (Bandwidth)

Applications of GPGPU

- MATLAB
- Statistical physics
- Audio signal processing
- Speech processing
- Digital image processing
- Video processing
- Geometric computing
- Weather forecasting
- Climate research
- Bioinformatics
- Medical imaging
- Database operations
- Molecular modeling
- Control engineering
- Electronic design automation
- And many more

Programming Models

- Data-parallel processing
- High arithmetic intensity
- Coherent data access
- GPU programming languages
  - NVIDIA CUDA
  - OpenCL
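To make "high arithmetic intensity" concrete, here is a minimal sketch in plain Python (running on the CPU, for arithmetic only). The two kernels, SAXPY and dense matrix multiplication, are illustrative choices that do not appear in the slides, and the matrix figure assumes an idealized case in which every matrix element moves to or from memory exactly once.

```python
# Arithmetic intensity = floating-point operations per byte of memory traffic.
# Both kernels below are illustrative assumptions, not taken from the slides.

BYTES_PER_FLOAT = 4  # single precision


def arithmetic_intensity(flops, bytes_moved):
    """Return FLOPs performed per byte moved to or from memory."""
    return flops / bytes_moved


def saxpy_intensity():
    """y[i] = a * x[i] + y[i]: 2 FLOPs; read x[i], read y[i], write y[i]."""
    return arithmetic_intensity(2, 3 * BYTES_PER_FLOAT)


def matmul_intensity(n):
    """n x n matrix multiply: 2*n^3 FLOPs over three n x n matrices.

    Idealized: assumes each element of A, B and C is moved exactly once.
    """
    return arithmetic_intensity(2 * n**3, 3 * n * n * BYTES_PER_FLOAT)


print(f"SAXPY:           {saxpy_intensity():.3f} FLOP/byte")
print(f"matmul (n=1024): {matmul_intensity(1024):.1f} FLOP/byte")
```

Under these assumptions SAXPY stays at a fixed low intensity, so it tends to be limited by memory bandwidth, while the intensity of matrix multiplication grows with the problem size, which is the kind of workload the slide describes as a good fit.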

CUDA vs OpenCL

- Conceptually identical
  - work-item = thread
  - work-group = block
- Similar memory model
  - Global, local, shared memory
- Kernel, host program
- CUDA is highly optimized for NVIDIA GPUs
- OpenCL is portable across GPUs and CPUs from many vendors
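The thread/work-item correspondence can be sketched by emulating a one-dimensional launch in plain Python. This is a CPU-side illustration only; the helper names `cuda_global_index` and `launch_1d` are hypothetical. It relies on the standard CUDA index expression `blockIdx.x * blockDim.x + threadIdx.x`, which matches what OpenCL's `get_global_id(0)` returns when no global offset is used.

```python
def cuda_global_index(block_idx, block_dim, thread_idx):
    """Global 1D index as a CUDA kernel would compute it:
    blockIdx.x * blockDim.x + threadIdx.x.
    In OpenCL terms: get_group_id(0) * get_local_size(0) + get_local_id(0).
    """
    return block_idx * block_dim + thread_idx


def launch_1d(grid_dim, block_dim):
    """Enumerate (block, thread, global index) for an emulated 1D launch."""
    return [
        (block, thread, cuda_global_index(block, block_dim, thread))
        for block in range(grid_dim)
        for thread in range(block_dim)
    ]


# 3 blocks (work-groups) of 4 threads (work-items) each; sizes are arbitrary.
for block, thread, gid in launch_1d(grid_dim=3, block_dim=4):
    print(f"block/work-group {block}, thread/local id {thread} -> global id {gid}")
```

Each (block, thread) pair maps to a distinct global index, so a kernel can use that index to pick the one data element it is responsible for.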

GPU Optimization

- Maximize parallel execution
- Use large inputs
- Minimize host-device memory transfer overhead
- Avoid shared memory bank conflicts
- Use less expensive operators
  - Division: 32 cycles, multiplication: 4 cycles
  - *0.5 instead of /2.0
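The operator-cost point can be turned into a rough estimate. The sketch below is plain Python, uses the cycle counts quoted on the slide as given, and assumes a hypothetical workload size; real costs depend on the GPU generation and compiler flags, so treat the numbers as a model, not a measurement.

```python
DIV_CYCLES = 32  # cost of one division, as quoted on the slide
MUL_CYCLES = 4   # cost of one multiplication, as quoted on the slide


def estimated_cycles(n_ops, cycles_per_op):
    """Naive cost model: total cycles = operation count * cycles per operation."""
    return n_ops * cycles_per_op


n_ops = 1_000_000  # hypothetical number of halving operations
div_cost = estimated_cycles(n_ops, DIV_CYCLES)
mul_cost = estimated_cycles(n_ops, MUL_CYCLES)
print(f"division:       {div_cost} cycles")
print(f"multiplication: {mul_cost} cycles")
print(f"modeled ratio:  {div_cost / mul_cost:.0f}x")

# Replacing /2.0 with *0.5 does not change the result, because 0.5 is
# exactly representable in binary floating point.
samples = [0.0, 1.0, 3.0, 0.1, -7.25, 1e-300, 1e300]
print(all(x * 0.5 == x / 2.0 for x in samples))
```

The substitution is only exact when the reciprocal of the divisor is exactly representable, as with powers of two. Rewriting `x / 3.0` as `x * (1.0 / 3.0)` can change the last bit of the result.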

Conclusions

- GPU computing delivers high performance
- Many scientific computing problems are parallelizable
- Future of today's technology

Issues

- Not every problem is suitable for GPUs
- Unclear future performance growth of GPU hardware
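One common way to reason about which problems suit a GPU is Amdahl's law, which the slides do not name; it is brought in here as an assumption to illustrate the point. The sketch is plain Python, and the processor count of 240 and the parallel fractions are hypothetical values chosen for illustration.

```python
def amdahl_speedup(parallel_fraction, n_processors):
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n).

    parallel_fraction (p): share of the runtime that can run in parallel.
    n_processors (n): number of processing elements applied to that share.
    """
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_processors)


N_PROCESSORS = 240  # hypothetical processor count, for illustration only

for p in (0.50, 0.90, 0.99):
    speedup = amdahl_speedup(p, N_PROCESSORS)
    print(f"parallel fraction {p:.2f} -> modeled speedup {speedup:.1f}x")
```

In this model the serial part of a program bounds the achievable speedup no matter how many processors are added, which is one reason a problem with little parallel work gains little from a GPU.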

Questions?

- Slides: 12