Performance modeling in Germany: Why care about cluster performance?

G. Wellein, G. Hager, T. Zeiser, M. Meier
Regional Computing Center Erlangen (RRZE), Friedrich-Alexander-University Erlangen-Nuremberg
April 16, 2008, IDC hpcuserforum

HPC Centers in Germany: A view from Erlangen

[Map locations: Hannover, Berlin, FZ Jülich, Erlangen/Nürnberg, HLRS-Stuttgart, LRZ-München]

- Jülich Supercomputing Center: 8.9 TFlop/s IBM Power4+; 46 TFlop/s Blue Gene/L; 228 TFlop/s Blue Gene/P
- HLR Stuttgart: 12 TFlop/s NEC SX-8
- LRZ Munich: SGI Altix (62 TFlop/s)

16.04.2008 gerhard.wellein@rrze.uni-erlangen.de IDC - hpcuserforum

Friedrich-Alexander-University Erlangen-Nuremberg (FAU)

- 2nd largest university in Bavaria
- 26,500+ students
- 12,000+ employees
- 11 faculties
- 83 institutes
- 23 hospitals
- 265 chairs (C4 / W3)
- 141 fields of study
- 250 buildings scattered through 4 cities (Erlangen, Nuremberg, Fürth, Bamberg)

Figures as of WS 06/07. RRZE provides all "IT" services for the university.

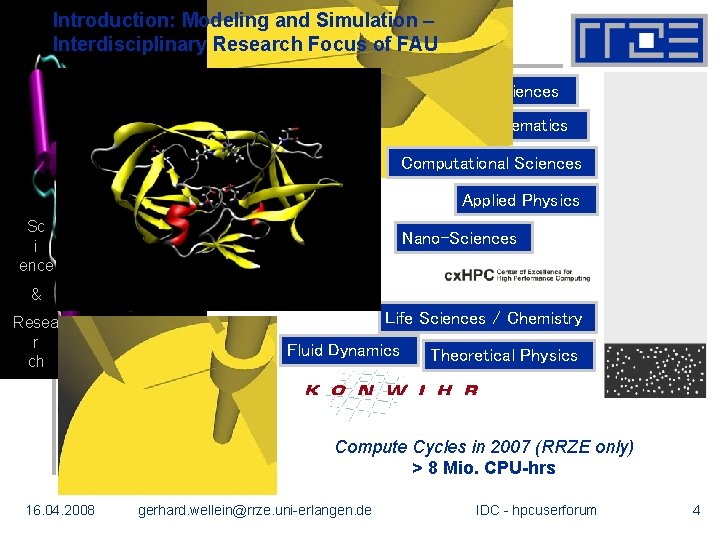

Introduction: Modeling and Simulation – Interdisciplinary Research Focus of FAU

Science & Research areas: Material Sciences, Applied Mathematics, Computational Sciences, Applied Physics, Nano-Sciences, Life Sciences / Chemistry, Fluid Dynamics, Theoretical Physics

Compute cycles in 2007 (RRZE only): > 8 million CPU-hrs

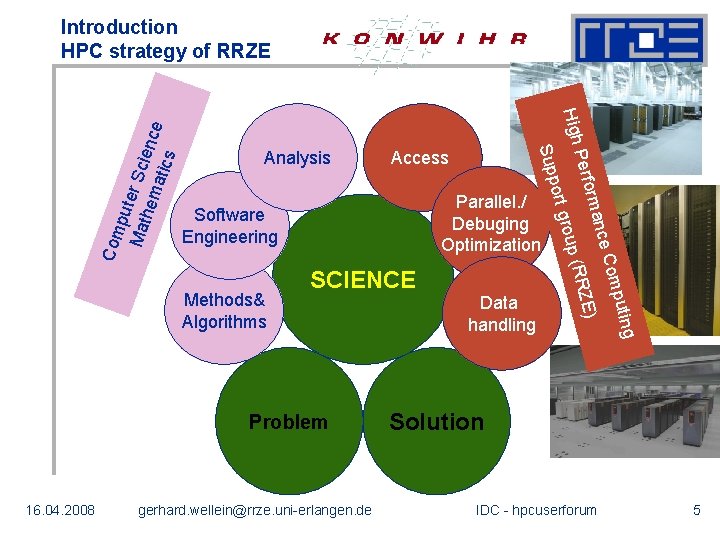

Introduction: HPC strategy of RRZE

[Diagram labels: High Performance Computing Support group (RRZE); SCIENCE; Computer Science; Mathematics; Problem; Solution; Access; Data handling; Parallelization / Debugging; Optimization; Software Engineering; Methods & Algorithms; Analysis]

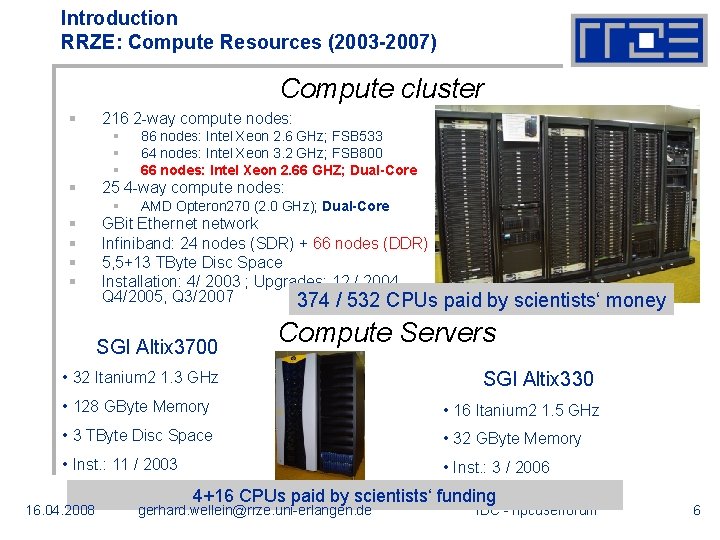

Introduction: RRZE Compute Resources (2003-2007)

Compute cluster
- 216 2-way compute nodes:
  - 86 nodes: Intel Xeon 2.6 GHz; FSB 533
  - 64 nodes: Intel Xeon 3.2 GHz; FSB 800
  - 66 nodes: Intel Xeon 2.66 GHz; Dual-Core
- 25 4-way compute nodes:
  - AMD Opteron 270 (2.0 GHz); Dual-Core
- GBit Ethernet network
- Infiniband: 24 nodes (SDR) + 66 nodes (DDR)
- 5.5 + 13 TByte disk space
- Installation: 4/2003; upgrades: 12/2004, Q4/2005, Q3/2007
- 374 / 532 CPUs paid by scientists' money

Compute servers
- SGI Altix 3700
  - 32 Itanium2 1.3 GHz
  - 128 GByte memory
  - 3 TByte disk space
  - Inst.: 11/2003
- SGI Altix 330
  - 16 Itanium2 1.5 GHz
  - 32 GByte memory
  - Inst.: 3/2006
- 4+16 CPUs paid by scientists' funding

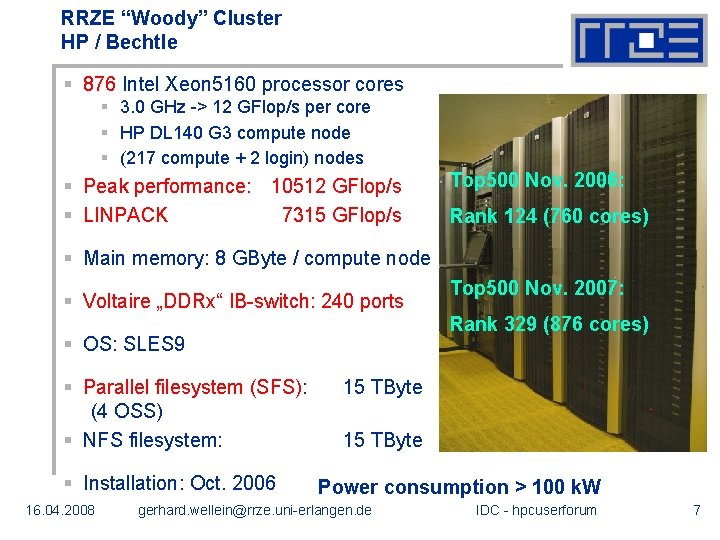

RRZE "Woody" Cluster (HP / Bechtle)

- 876 Intel Xeon 5160 processor cores
  - 3.0 GHz -> 12 GFlop/s per core
- HP DL140 G3 compute nodes
  - (217 compute + 2 login) nodes
- Peak performance: 10512 GFlop/s
- LINPACK: 7315 GFlop/s
- Top500 Nov. 2006: Rank 124 (760 cores)
- Top500 Nov. 2007: Rank 329 (876 cores)
- Main memory: 8 GByte / compute node
- Voltaire "DDRx" IB switch: 240 ports
- OS: SLES 9
- Parallel filesystem (SFS): (4 OSS)
- NFS filesystem:
- Disk capacity: 15 TByte
- Installation: Oct. 2006
- Power consumption > 100 kW
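The peak and LINPACK figures on this slide are related by simple arithmetic, sketched below in Python. The factor of 4 double-precision floating-point operations per cycle is an assumption inferred from the slide's "3.0 GHz -> 12 GFlop/s per core"; the function names are illustrative and not part of the talk.

```python
def peak_gflops(cores, clock_ghz, flops_per_cycle=4):
    """Theoretical peak in GFlop/s: cores * clock * flops per cycle.

    flops_per_cycle=4 is an assumption derived from 12 GFlop/s at 3.0 GHz.
    """
    return cores * clock_ghz * flops_per_cycle


def linpack_efficiency(linpack_gflops, peak):
    """Fraction of the theoretical peak that LINPACK actually achieves."""
    return linpack_gflops / peak


# Numbers taken from the slide: 876 cores at 3.0 GHz, LINPACK 7315 GFlop/s.
peak = peak_gflops(876, 3.0)
eff = linpack_efficiency(7315, peak)
print(f"peak = {peak:.0f} GFlop/s, LINPACK efficiency = {eff:.1%}")
```

With the slide's numbers, LINPACK reaches roughly 70% of the theoretical peak.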

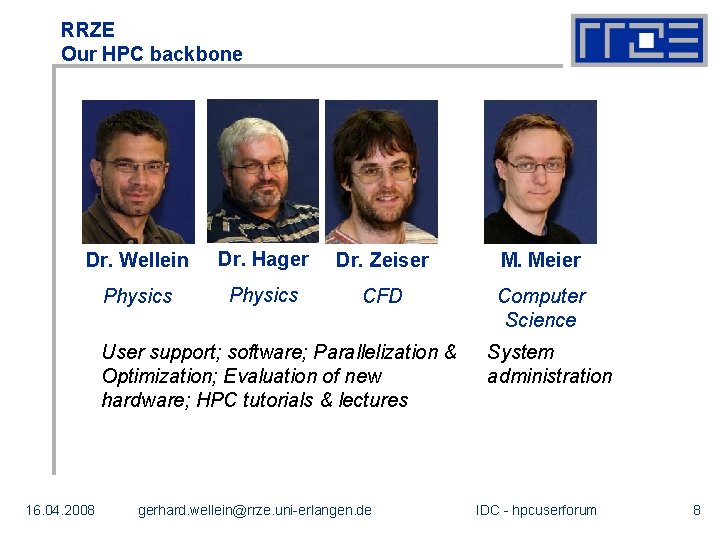

RRZE: Our HPC backbone

- Team: Dr. Wellein, Dr. Hager, Dr. Zeiser, M. Meier
- Backgrounds: Physics, CFD, Computer Science
- User support; software; parallelization & optimization; evaluation of new hardware; HPC tutorials & lectures
- System administration

Architecture of cluster nodes: ccNUMA – why care about it?

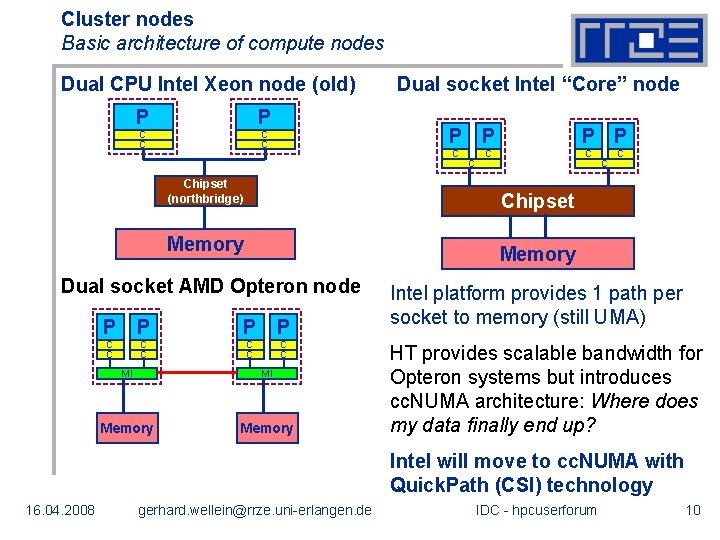

Cluster nodes: Basic architecture of compute nodes

[Diagrams: dual-CPU Intel Xeon node (old); dual-socket Intel "Core" node with chipset (northbridge); dual-socket AMD Opteron node. Labels: P, C, MI, Chipset, Memory]

- Intel platform provides 1 path per socket to memory (still UMA)
- HT provides scalable bandwidth for Opteron systems but introduces ccNUMA architecture: Where does my data finally end up?
- Intel will move to ccNUMA with QuickPath (CSI) technology

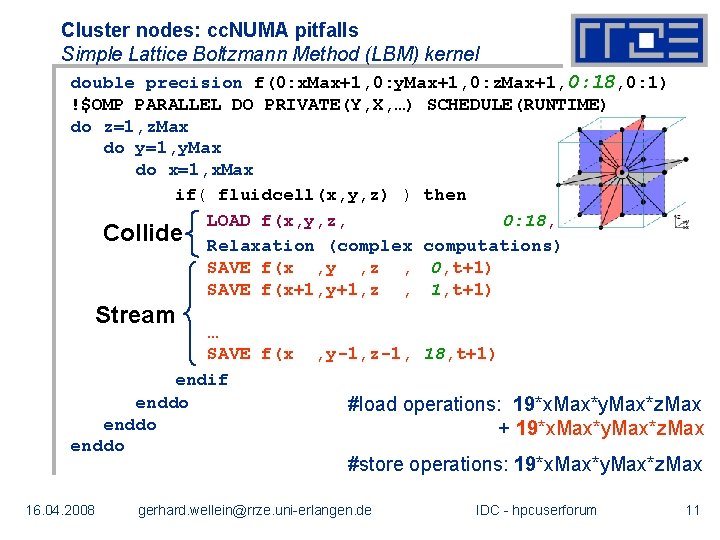

Cluster nodes: ccNUMA pitfalls
Simple Lattice Boltzmann Method (LBM) kernel

    double precision f(0:xMax+1, 0:yMax+1, 0:zMax+1, 0:18, 0:1)

    !$OMP PARALLEL DO PRIVATE(Y, X, …) SCHEDULE(RUNTIME)
    do z=1, zMax
      do y=1, yMax
        do x=1, xMax
          if( fluidcell(x, y, z) ) then
            ! Collide
            LOAD f(x, y, z, 0:18, t)
            Relaxation (complex computations)
            ! Stream
            SAVE f(x  , y  , z  ,  0, t+1)
            SAVE f(x+1, y+1, z  ,  1, t+1)
            …
            SAVE f(x  , y-1, z-1, 18, t+1)
          endif
        enddo
      enddo
    enddo

#load operations: 19*xMax*yMax*zMax + 19*xMax*yMax*zMax
#store operations: 19*xMax*yMax*zMax
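The load/store counts above translate into a memory-traffic estimate per lattice cell update. The Python sketch below rests on two assumptions that the slide does not state explicitly: that the second `19*xMax*yMax*zMax` load term is the write-allocate (read-for-ownership) traffic caused by the stores, and that the kernel is purely bound by main memory bandwidth. The function names and the unit "MLUPS" (million lattice updates per second) are illustrative.

```python
BYTES_PER_DOUBLE = 8


def bytes_per_cell_update(q=19, write_allocate=True):
    """Main memory traffic in bytes for one cell update with q distributions."""
    loads = q                            # LOAD f(x, y, z, 0:18, t)
    stores = q                           # SAVE f(..., 0:18, t+1)
    rfo = q if write_allocate else 0     # assumed: cache line read before each store
    return (loads + stores + rfo) * BYTES_PER_DOUBLE


def max_mlups(bandwidth_mb_per_s, q=19, write_allocate=True):
    """Upper bound in million lattice updates per second for a given bandwidth.

    MB/s divided by bytes per update gives millions of updates per second
    (assuming 1 MB = 10**6 bytes).
    """
    return bandwidth_mb_per_s / bytes_per_cell_update(q, write_allocate)


# 6149 MB/s is the STREAM TRIAD value reported later in the talk for the HP DL140 node.
print(bytes_per_cell_update())        # bytes per update with write-allocate
print(round(max_mlups(6149), 1))      # bandwidth-limited estimate for that node
```

Under these assumptions, each cell update moves 456 bytes, so the measured STREAM bandwidth of a node directly caps the achievable update rate.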

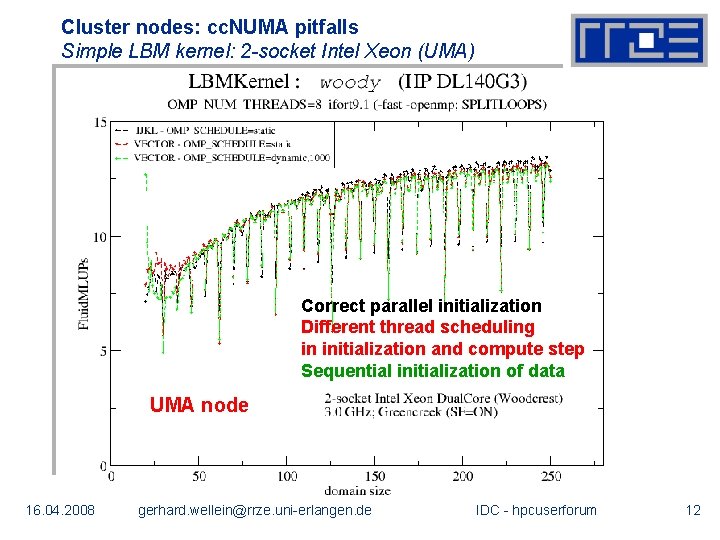

Cluster nodes: ccNUMA pitfalls
Simple LBM kernel: 2-socket Intel Xeon (UMA)

[Plot, UMA node. Curves: correct parallel initialization; different thread scheduling in initialization and compute step; sequential initialization of data]

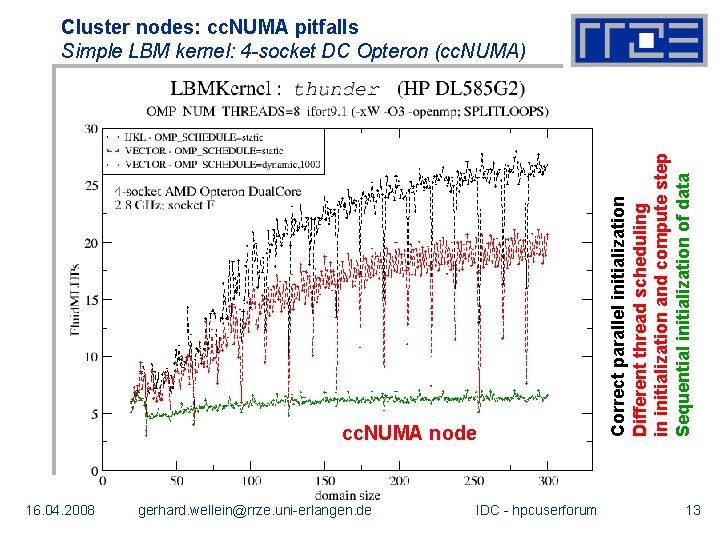

Cluster nodes: ccNUMA pitfalls
Simple LBM kernel: 4-socket DC Opteron (ccNUMA)

[Plot, ccNUMA node. Curves: correct parallel initialization; different thread scheduling in initialization and compute step; sequential initialization of data]
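The three initialization variants compared on these slides can be illustrated with a toy model of a first-touch page placement policy. The Python sketch below is not the benchmark from the talk: it assumes one thread per NUMA domain, page-granular placement, and hypothetical schedule names (`sequential`, `static`, `cyclic`). It only counts which fraction of pages a compute thread finds in its own domain.

```python
def assign(n_pages, n_domains, schedule):
    """Map each page index to the NUMA domain of the thread that handles it."""
    if schedule == "sequential":      # one thread touches everything
        return [0] * n_pages
    if schedule == "static":          # contiguous blocks, one per thread
        chunk = -(-n_pages // n_domains)   # ceiling division
        return [p // chunk for p in range(n_pages)]
    if schedule == "cyclic":          # round-robin, one page at a time
        return [p % n_domains for p in range(n_pages)]
    raise ValueError(f"unknown schedule: {schedule}")


def local_fraction(n_pages, n_domains, init_schedule, compute_schedule="static"):
    """Fraction of pages whose first-touch owner equals the computing thread's domain."""
    owner = assign(n_pages, n_domains, init_schedule)      # first touch decides placement
    worker = assign(n_pages, n_domains, compute_schedule)  # who accesses the page later
    return sum(o == w for o, w in zip(owner, worker)) / n_pages


for init in ("static", "cyclic", "sequential"):
    print(init, local_fraction(1024, 4, init))
```

In this model only the matching parallel initialization keeps all accesses local; with 4 domains, both a mismatched schedule and a sequential initialization leave three quarters of the pages remote.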

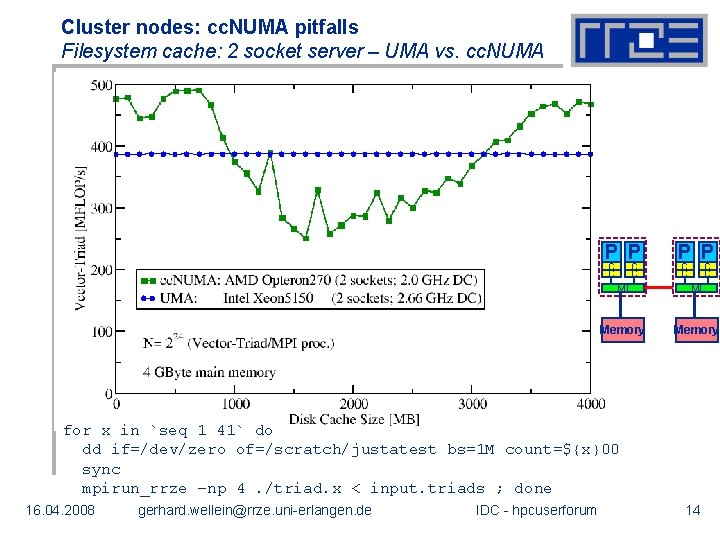

Cluster nodes: ccNUMA pitfalls
Filesystem cache: 2-socket server – UMA vs. ccNUMA

[Diagram: 2-socket node. Labels: P, C, MI, Memory]

    for x in `seq 1 41`
    do
      # write x*100 blocks of 1 MB to a scratch file, then flush dirty pages to disk
      dd if=/dev/zero of=/scratch/justatest bs=1M count=${x}00
      sync
      # run the triad benchmark with 4 MPI processes
      mpirun_rrze -np 4 ./triad.x < input.triads
    done

Main memory bandwidth – Did you ever check the stream number of your compute nodes?

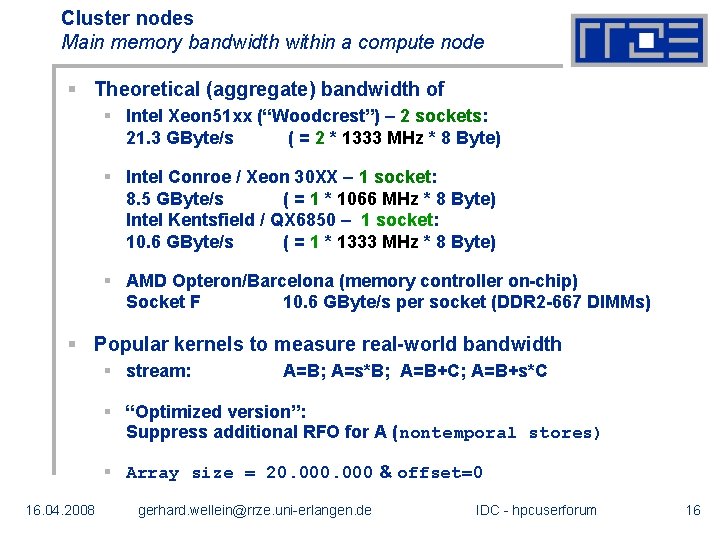

Cluster nodes: Main memory bandwidth within a compute node

- Theoretical (aggregate) bandwidth of
  - Intel Xeon 51xx ("Woodcrest") – 2 sockets: 21.3 GByte/s (= 2 * 1333 MHz * 8 Byte)
  - Intel Conroe / Xeon 30XX – 1 socket: 8.5 GByte/s (= 1 * 1066 MHz * 8 Byte)
  - Intel Kentsfield / QX6850 – 1 socket: 10.6 GByte/s (= 1 * 1333 MHz * 8 Byte)
  - AMD Opteron/Barcelona (memory controller on-chip), Socket F: 10.6 GByte/s per socket (DDR2-667 DIMMs)
- Popular kernels to measure real-world bandwidth
  - stream: A=B; A=s*B; A=B+C; A=B+s*C
  - "Optimized version": suppress additional RFO for A (nontemporal stores)
  - Array size = 20.000 & offset = 0
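The theoretical bandwidth figures above all follow the same formula: number of memory paths times transfer rate times 8 bytes per transfer. The following Python sketch reproduces that arithmetic; the function name is illustrative, and 1 GByte is assumed to mean 10^9 bytes.

```python
def theoretical_bandwidth_gbytes(paths, mega_transfers_per_s, bytes_per_transfer=8):
    """Theoretical bandwidth in GByte/s (1 GByte = 10**9 bytes assumed)."""
    return paths * mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9


# Values from the slide: front-side bus rates in MHz, 8 bytes per transfer.
print(round(theoretical_bandwidth_gbytes(2, 1333), 1))   # Xeon 51xx, 2 sockets
print(round(theoretical_bandwidth_gbytes(1, 1066), 1))   # Conroe / Xeon 30XX
print(round(theoretical_bandwidth_gbytes(1, 1333), 3))   # Kentsfield / QX6850
```

For the FSB1333 single-socket case the formula gives about 10.66 GByte/s, which the slide quotes as 10.6 GByte/s.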

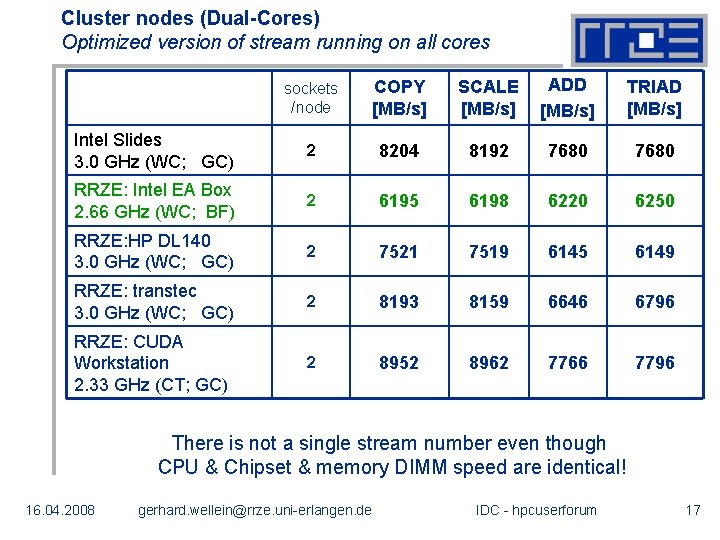

Cluster nodes (Dual-Cores)
Optimized version of stream running on all cores

| System | sockets/node | COPY [MB/s] | SCALE [MB/s] | ADD [MB/s] | TRIAD [MB/s] |
|---|---|---|---|---|---|
| Intel Slides 3.0 GHz (WC; GC) | 2 | 8204 | 8192 | 7680 | |
| RRZE: Intel EA Box 2.66 GHz (WC; BF) | 2 | 6195 | 6198 | 6220 | 6250 |
| RRZE: HP DL140 3.0 GHz (WC; GC) | 2 | 7521 | 7519 | 6145 | 6149 |
| RRZE: transtec 3.0 GHz (WC; GC) | 2 | 8193 | 8159 | 6646 | 6796 |
| RRZE: CUDA Workstation 2.33 GHz (CT; GC) | 2 | 8952 | 8962 | 7766 | 7796 |

Only three values are given for the "Intel Slides" row; they are listed in source order.

There is not a single stream number even though CPU & chipset & memory DIMM speed are identical!

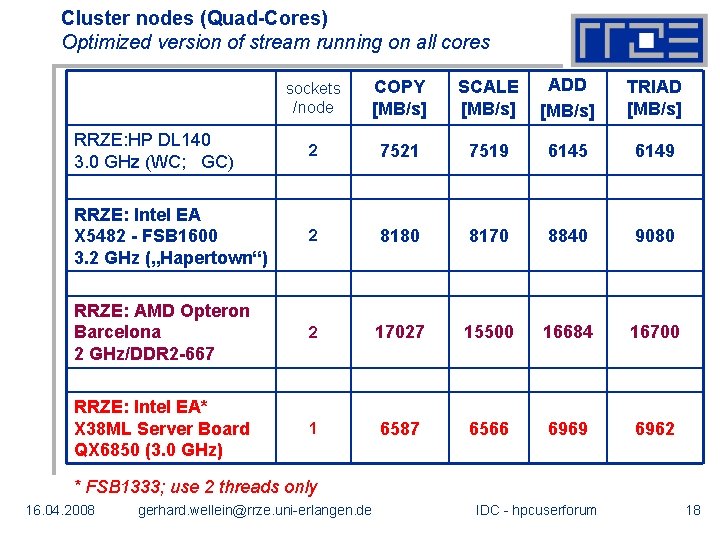

Cluster nodes (Quad-Cores)
Optimized version of stream running on all cores

| System | sockets/node | COPY [MB/s] | SCALE [MB/s] | ADD [MB/s] | TRIAD [MB/s] |
|---|---|---|---|---|---|
| RRZE: HP DL140 3.0 GHz (WC; GC) | 2 | 7521 | 7519 | 6145 | 6149 |
| RRZE: Intel EA X5482 - FSB1600 3.2 GHz ("Harpertown") | 2 | 8180 | 8170 | 8840 | 9080 |
| RRZE: AMD Opteron Barcelona 2 GHz / DDR2-667 | 2 | 17027 | 15500 | 16684 | 16700 |
| RRZE: Intel EA* X38ML Server Board QX6850 (3.0 GHz) | 1 | 6587 | 6566 | 6969 | 6962 |

\* FSB1333; use 2 threads only
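To put the measured numbers in context, the Python sketch below relates the TRIAD results from this table to the theoretical bandwidths quoted earlier in the talk (21.3 GByte/s for the 2-socket Woodcrest node, 10.6 GByte/s per Barcelona socket, 10.6 GByte/s for the QX6850). It assumes 1 GByte = 1000 MB; the dictionary keys and function name are illustrative.

```python
# (measured TRIAD in MB/s, theoretical bandwidth in GByte/s), both taken from the slides
SYSTEMS = {
    "HP DL140 Woodcrest, 2 sockets": (6149, 21.3),
    "AMD Barcelona, 2 sockets": (16700, 2 * 10.6),
    "Intel QX6850, 1 socket": (6962, 10.6),
}


def bandwidth_fraction(measured_mb_s, theoretical_gb_s):
    """Measured bandwidth as a fraction of the theoretical value."""
    return measured_mb_s / (theoretical_gb_s * 1000)


for name, (measured, theoretical) in SYSTEMS.items():
    print(f"{name}: {bandwidth_fraction(measured, theoretical):.1%}")
```

By this estimate the FSB-based Woodcrest node delivers under a third of its theoretical bandwidth, while the Barcelona node with on-chip memory controllers gets much closer to its nominal value.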

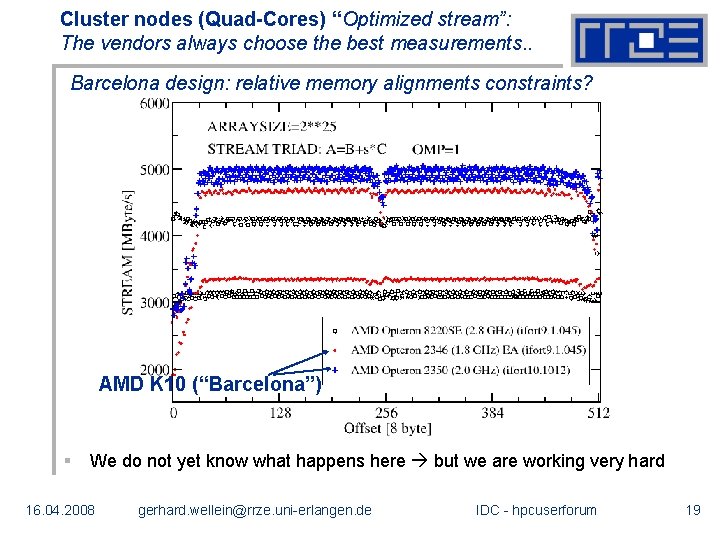

Cluster nodes (Quad-Cores)
"Optimized stream": The vendors always choose the best measurements…

[Plot: AMD K10 ("Barcelona")]

- Barcelona design: relative memory alignment constraints?
- We do not yet know what happens here but we are working very hard

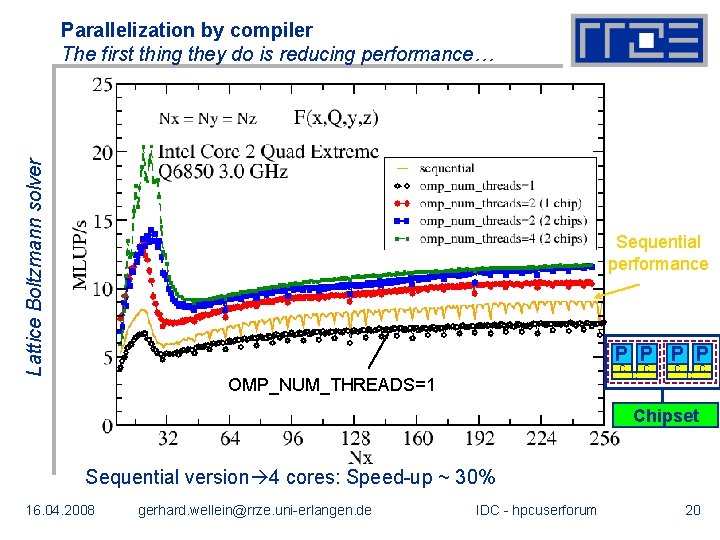

Lattice Boltzmann solver: Parallelization by compiler
The first thing they do is reduce performance…

[Plot: sequential performance. Labels: sequential version; OMP_NUM_THREADS=1]

4 cores: speed-up ~ 30%

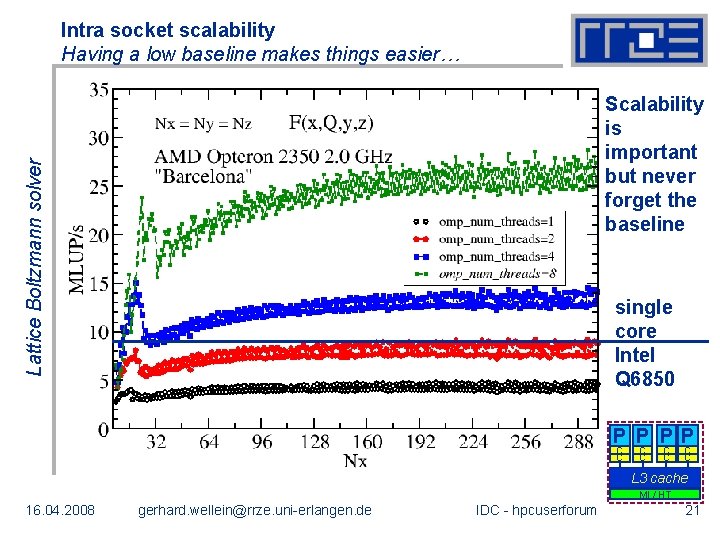

Lattice Boltzmann solver: Intra-socket scalability
Having a low baseline makes things easier…

[Plot labels: single core; Intel Q6850. Node diagram labels: P, C, L3 cache, MI / HT]

Scalability is important but never forget the baseline.

Experiences with cluster performance: Tales from the trenches…

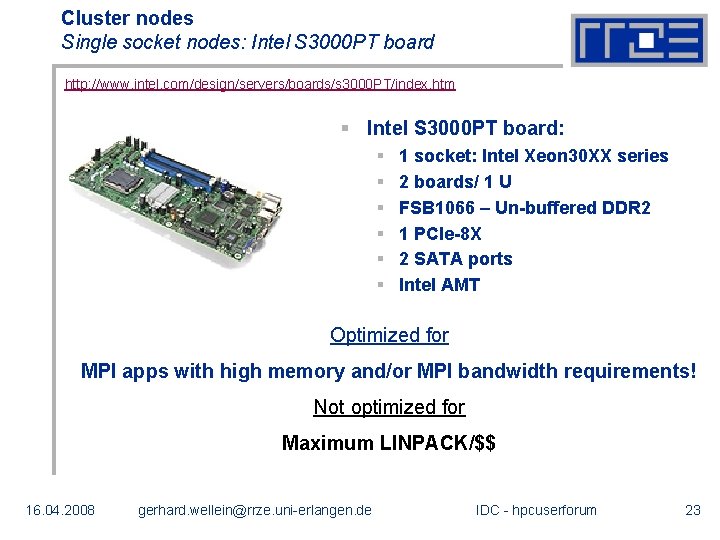

Cluster nodes: Single socket nodes: Intel S3000PT board
http://www.intel.com/design/servers/boards/s3000PT/index.htm

- Intel S3000PT board:
  - 1 socket: Intel Xeon 30XX series
  - 2 boards / 1U
  - FSB1066 – un-buffered DDR2
  - 1 PCIe-8X
  - 2 SATA ports
  - Intel AMT
- Optimized for MPI apps with high memory and/or MPI bandwidth requirements!
- Not optimized for maximum LINPACK/$$

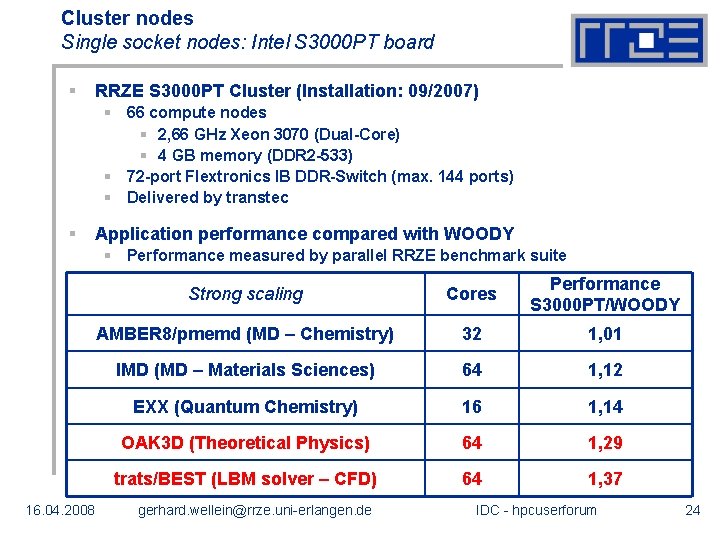

Cluster nodes: Single socket nodes: Intel S3000PT board

- RRZE S3000PT Cluster (Installation: 09/2007)
  - 66 compute nodes
  - 2.66 GHz Xeon 3070 (Dual-Core)
  - 4 GB memory (DDR2-533)
  - 72-port Flextronics IB DDR switch (max. 144 ports)
  - Delivered by transtec
- Application performance compared with WOODY
  - Performance measured by parallel RRZE benchmark suite
  - Strong scaling

| Application | Cores | Performance S3000PT/WOODY |
|---|---|---|
| AMBER 8/pmemd (MD – Chemistry) | 32 | 1.01 |
| IMD (MD – Materials Sciences) | 64 | 1.12 |
| EXX (Quantum Chemistry) | 16 | 1.14 |
| OAK3D (Theoretical Physics) | 64 | 1.29 |
| trats/BEST (LBM solver – CFD) | 64 | 1.37 |

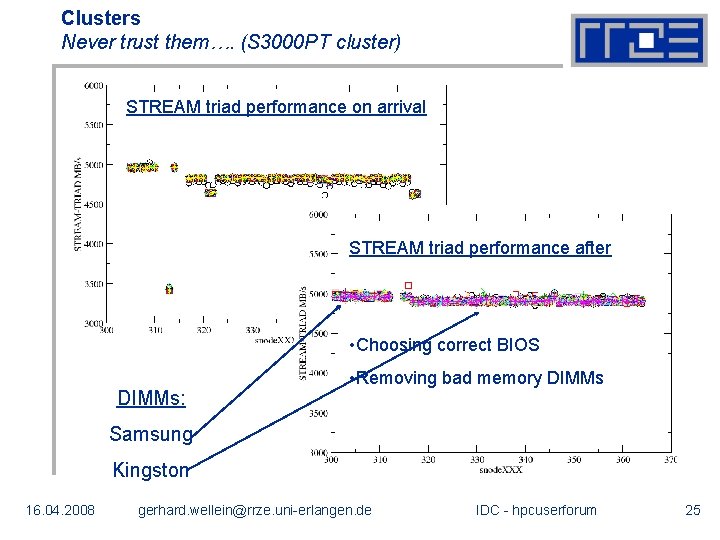

Clusters: Never trust them… (S3000PT cluster)

[Plots: STREAM triad performance on arrival; STREAM triad performance after the fixes below. Legend, DIMMs: Samsung, Kingston]

STREAM triad performance after
- Choosing correct BIOS
- Removing bad memory DIMMs

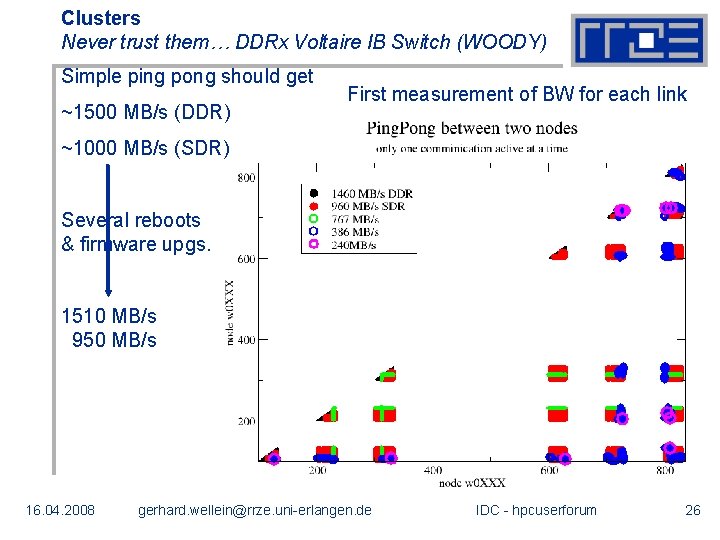

Clusters: Never trust them…
DDRx Voltaire IB switch (WOODY)

- Simple ping-pong should get ~1500 MB/s (DDR) or ~1000 MB/s (SDR)
- First measurement of bandwidth for each link
- Several reboots & firmware upgrades

[Plot annotations: 1510 MB/s; 950 MB/s]

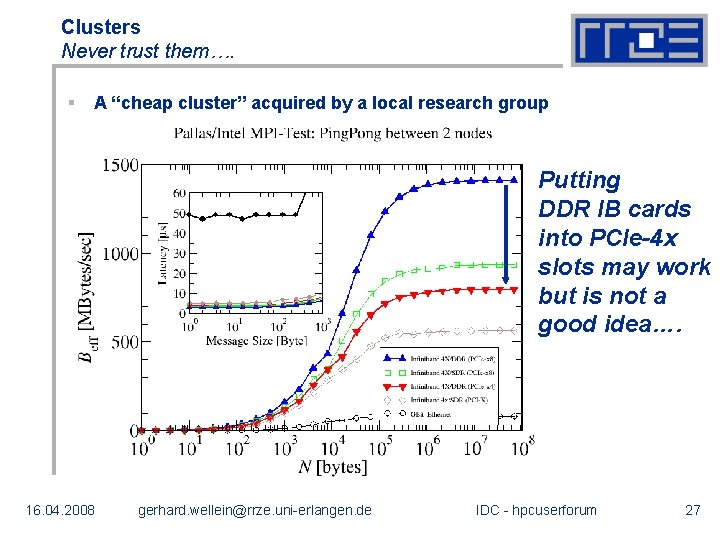

Clusters: Never trust them…

- A "cheap cluster" acquired by a local research group

Putting DDR IB cards into PCIe-4x slots may work but is not a good idea…

Clusters: Never trust anyone…

- A "cheap cluster" acquired by a local research group
  - "We were told that AMD is the best processor available!"
    2-way nodes (AMD Opteron Dual-Core 2.2 GHz) + DDR IB network
  - "Why buy a commercial compiler when a free one is available?"
    gfortran; SUN Studio 12
- Target application: AMBER9/pmemd

4 MPI processes:

| Configuration | Runtime [s] |
|---|---|
| gfortran + OpenMPI | 3500 |
| gfortran + Intel MPI | 3000 |
| One node of Woody (Intel64/9.1 + Intel MPI) | 2700 |

8 MPI processes:

| System | Compiler / MPI | Layout | Runtime [s] |
|---|---|---|---|
| Opteron cluster (2.2 GHz) | SUN Studio 12 / OpenMPI | 2*2*2 | 1930 |
| Woody cluster (3.0 GHz) | Intel64/9.1 / Intel MPI | 2*2*2 | 1430 |
| 2 x Intel QX6850 (3.0 GHz) | | 2*1*4 | 1440 |

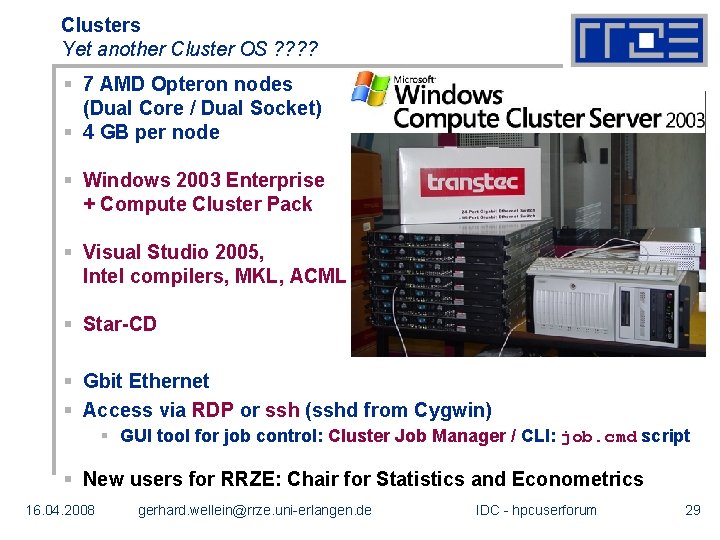

Clusters: Yet another cluster OS??

- 7 AMD Opteron nodes (Dual Core / Dual Socket)
- 4 GB per node
- Windows 2003 Enterprise + Compute Cluster Pack
- Visual Studio 2005, Intel compilers, MKL, ACML
- Star-CD
- GBit Ethernet
- Access via RDP or ssh (sshd from Cygwin)
- GUI tool for job control: Cluster Job Manager / CLI: job.cmd script
- New users for RRZE: Chair for Statistics and Econometrics

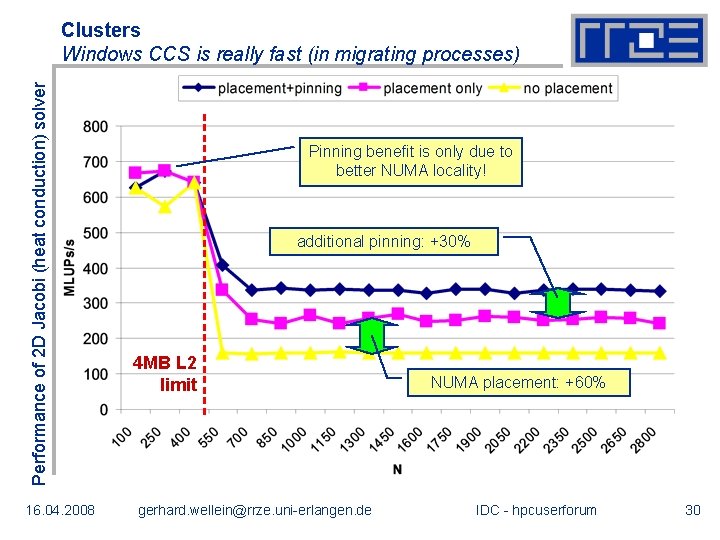

Clusters: Windows CCS is really fast (in migrating processes)

[Plot: performance of 2D Jacobi (heat conduction) solver. Annotations: 4 MB L2 limit; NUMA placement: +60%; additional pinning: +30%]

Pinning benefit is only due to better NUMA locality!

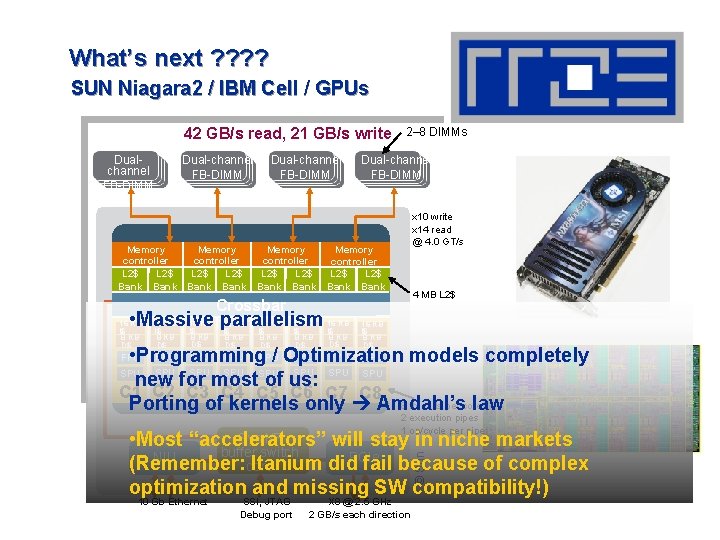

What's next?? SUN Niagara 2 / IBM Cell / GPUs

- Massive parallelism
- Programming / optimization models completely new for most of us:
  - Porting of kernels only
  - Amdahl's law
- Most "accelerators" will stay in niche markets (Remember: Itanium did fail because of complex core optimization and missing SW compatibility!)

[Block diagram, © Sun: cores C1 to C8 with 8 threads per core, 2 execution pipes, 1 op/cycle per pipe; FPU and SPU per core; 16 KB I$, 8 KB D$; crossbar; 4 MB L2$ in banks; memory controllers with dual-channel FB-DIMM, 2–8 DIMMs, x10 write / x14 read @ 4.0 GT/s, 42 GB/s read, 21 GB/s write; Sys I/F buffer switch; NIU with 10 Gb Ethernet; PCIe x8 @ 2.5 GHz, 2 GB/s each direction; SSI, JTAG debug port]
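The reference to Amdahl's law in connection with "porting of kernels only" can be made concrete with a short Python sketch. The formula is the standard Amdahl expression; the fractions and acceleration factors used below are hypothetical illustration values, not measurements from the talk.

```python
def amdahl_speedup(accelerated_fraction, acceleration):
    """Overall speedup when only part of the runtime is accelerated.

    accelerated_fraction: share of the original runtime spent in ported kernels (0..1)
    acceleration: speedup factor of those kernels on the accelerator
    """
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    serial_part = 1.0 - accelerated_fraction
    return 1.0 / (serial_part + accelerated_fraction / acceleration)


# Hypothetical example: kernels covering 90% of the runtime are ported.
for factor in (10, 100, 1000):
    print(factor, round(amdahl_speedup(0.9, factor), 2))
```

Even an arbitrarily fast accelerator cannot push the overall speedup beyond 10x in this example, because the unported 10% of the runtime remains.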

Summary

- Clusters provide tremendous compute capacity at a low price tag but they are far away from being
  - a standard product
  - designed for optimal performance on HPC apps
  - a solution for highly parallel high-end apps
- (Heterogeneous) multi-/many-core architectures will further improve price/performance ratio but increase programming complexity
- Most users of HPC systems will not be able to adequately address the challenges and problems pointed out in this talk!
