Performance Modeling and System Management for Multi-Component Online Services



Performance Modeling and System Management for Multi-Component Online Services
Christopher Stewart and Kai Shen, University of Rochester

![Motivational Research Challenge](https://slidetodoc.com/presentation_image_h/e6d21cfabdb79115bf48ac9ec7175ca5/image-2.jpg)

Motivational Research Challenge
“Their [very-large-scale systems] complexity approaches that of an urban community or the economy, where technicians may understand components, but can neither predict nor control the whole system.” (CRA Conference on Grand Research Challenges in Information Systems, September 2003)

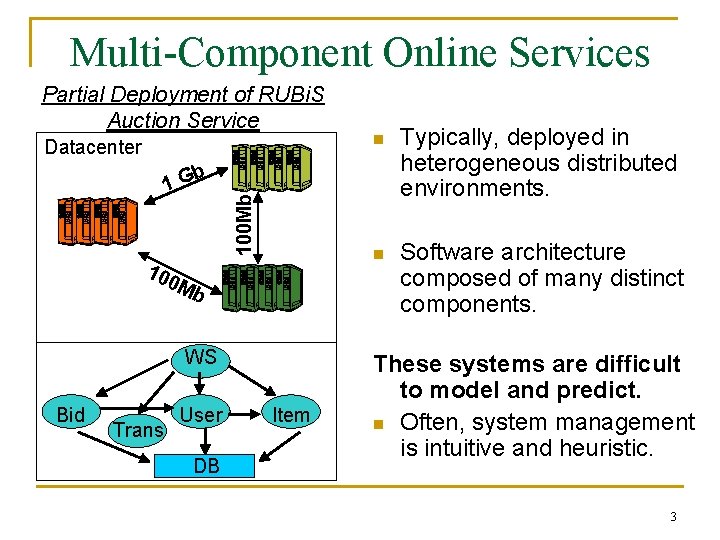

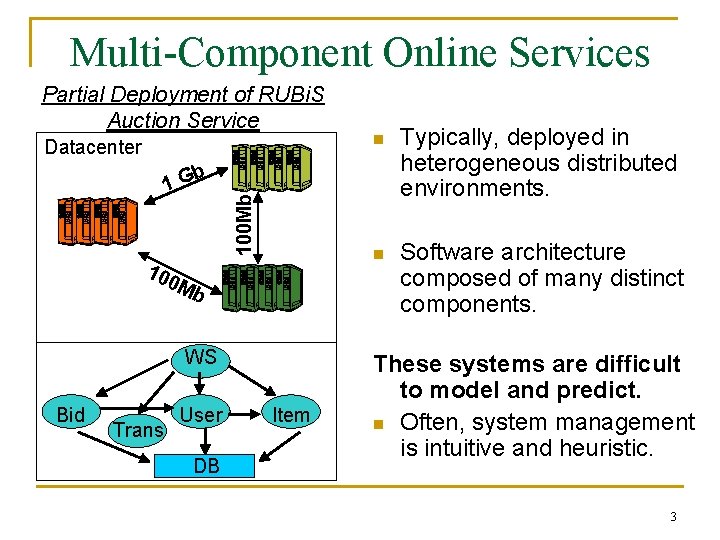

Multi-Component Online Services
- Typically deployed in heterogeneous distributed environments.
- Software architecture composed of many distinct components.
- These systems are difficult to model and predict.
- Often, system management is intuitive and heuristic.

Figure: Partial deployment of the RUBiS auction service in a datacenter (components WS, Bid, Trans, User, Item, and DB, connected by 100 Mb and 1 Gb links).

Talk Outline
1. Overview.
   - Contributions.
   - Approach and sub-goals.
2. Key application characteristics.
3. Models to predict whole-system performance.
4. Model-driven system management.
5. Related work and conclusion.

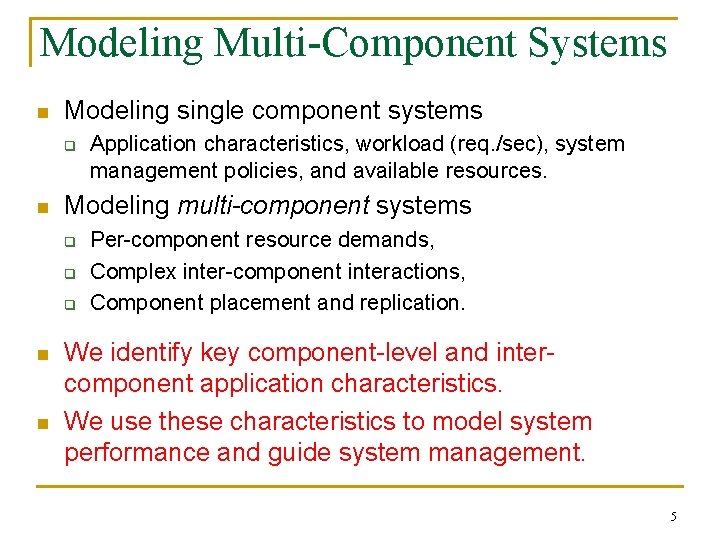

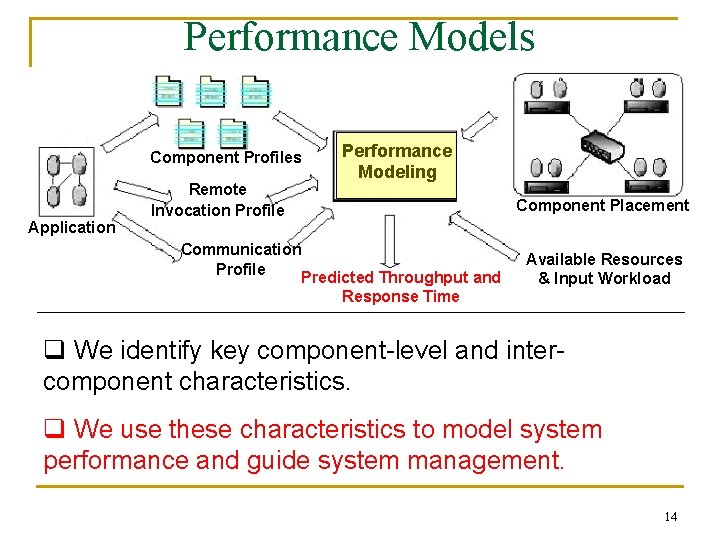

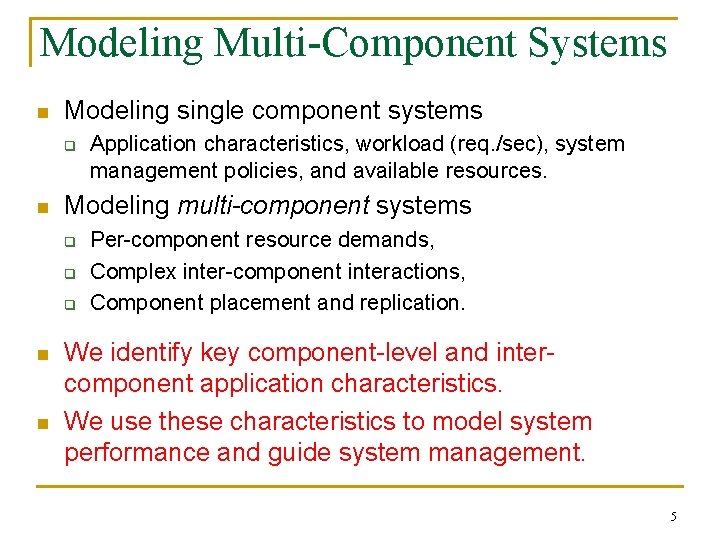

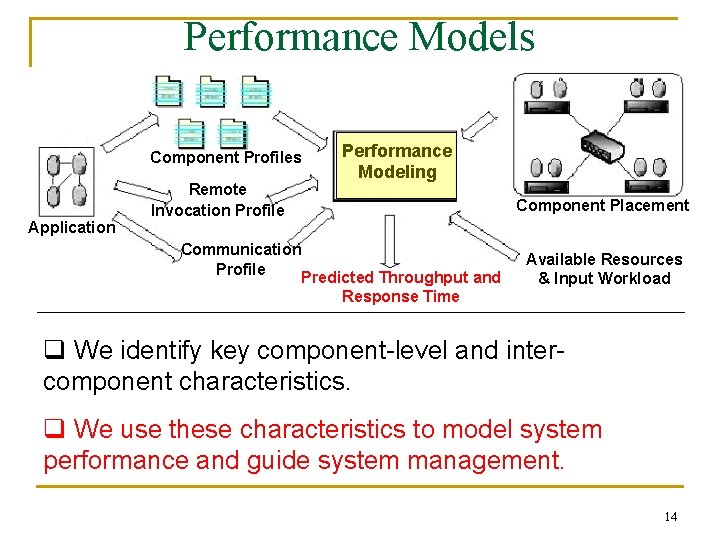

Modeling Multi-Component Systems
- Modeling single-component systems:
  - Application characteristics, workload (req./sec), system management policies, and available resources.
- Modeling multi-component systems:
  - Per-component resource demands,
  - Complex inter-component interactions,
  - Component placement and replication.
- We identify key component-level and inter-component application characteristics.
- We use these characteristics to model system performance and guide system management.

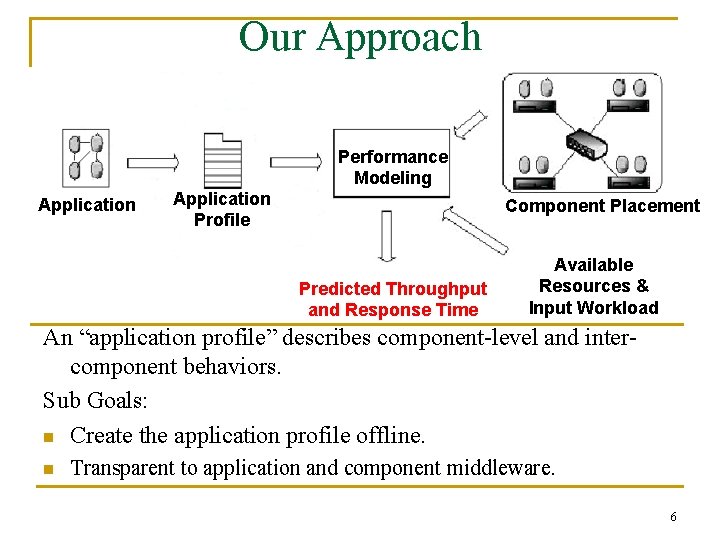

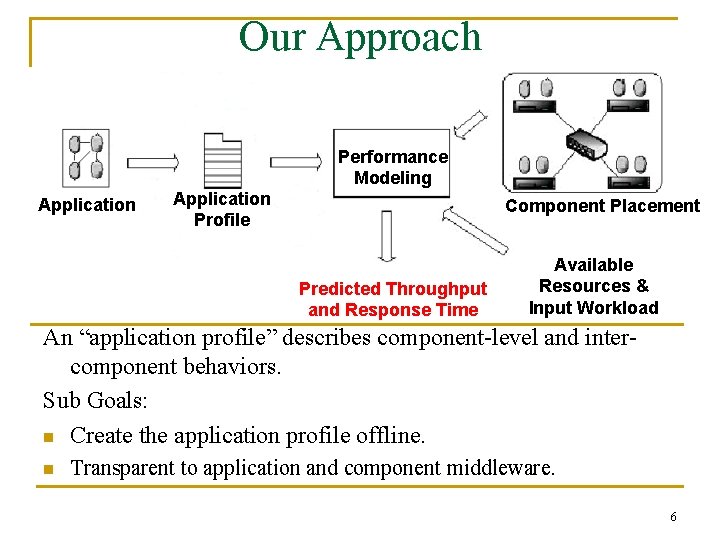

Our Approach
Figure: Performance modeling takes the application profile, component placement, available resources, and input workload as inputs and produces predicted throughput and response time.

An “application profile” describes component-level and inter-component behaviors.

Sub-goals:
- Create the application profile offline.
- Transparent to application and component middleware.

Talk Outline
1. Introduction.
2. Key application characteristics.
   - Per-component resource needs.
   - Inter-component communication.
   - Overhead of remote invocations.
3. Models to predict whole-system performance.
4. Model-driven system management.
5. Related work and conclusion.

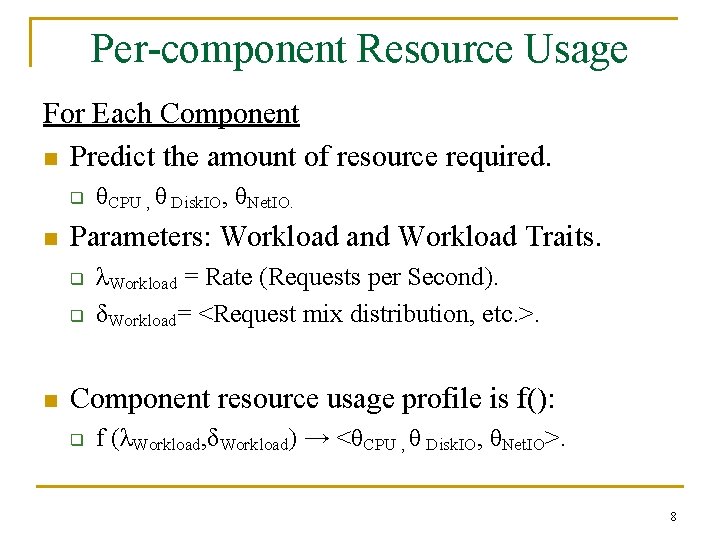

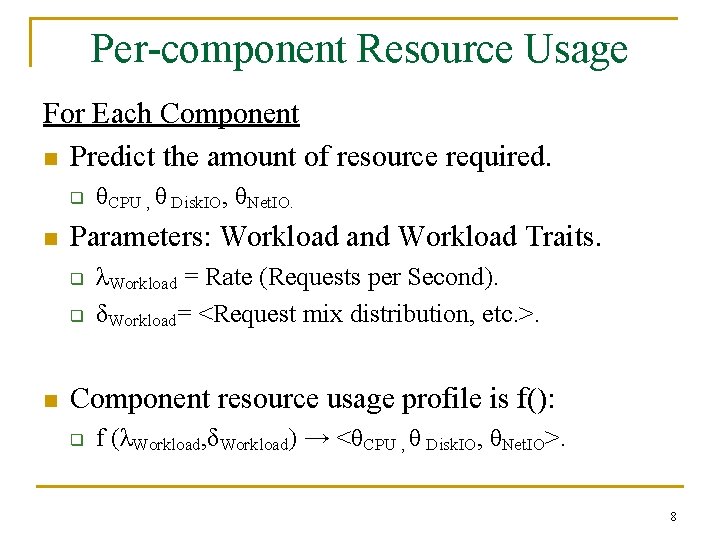

Per-component Resource Usage
- For each component, predict the amount of resource required: $\theta_{CPU}$, $\theta_{DiskIO}$, $\theta_{NetIO}$.
- Parameters: workload and workload traits.
  - $\lambda_{Workload}$ = rate (requests per second).
  - $\delta_{Workload}$ = ⟨request mix distribution, etc.⟩.
- The component resource usage profile is a function $f$:
  - $f(\lambda_{Workload}, \delta_{Workload}) \rightarrow \langle \theta_{CPU}, \theta_{DiskIO}, \theta_{NetIO} \rangle$
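
A minimal Python sketch of the profile function $f$, assuming (our assumption, not the slide's) that every request type has a fixed per-request cost, so usage grows linearly with $\lambda_{Workload}$ and $\delta_{Workload}$ reduces to request-mix fractions. `ResourceUsage`, `component_profile`, and the request-type names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ResourceUsage:
    cpu: float      # fraction of one CPU
    disk_io: float  # hypothetical unit: fraction of disk bandwidth
    net_io: float   # hypothetical unit: fraction of network bandwidth


def component_profile(rate: float,
                      mix: Dict[str, float],
                      per_request_cost: Dict[str, ResourceUsage]) -> ResourceUsage:
    """f(lambda, delta) -> <theta_CPU, theta_DiskIO, theta_NetIO>.

    Assumes usage is linear in the request rate: each request type
    contributes rate * share * (its per-request cost).
    """
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("request mix fractions must sum to 1")
    cpu = disk = net = 0.0
    for req_type, share in mix.items():
        cost = per_request_cost[req_type]
        cpu += rate * share * cost.cpu
        disk += rate * share * cost.disk_io
        net += rate * share * cost.net_io
    return ResourceUsage(cpu, disk, net)
```

For example, at 10 req./sec with an 80/20 mix of hypothetical "browse" and "bid" requests costing 0.5% and 2% CPU each, the sketch predicts 8% CPU for the component. A nonlinear component would need a different fitted $f$.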

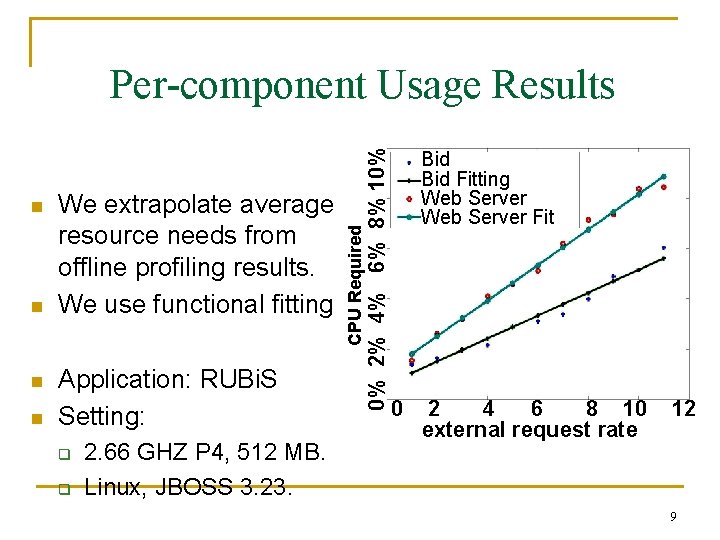

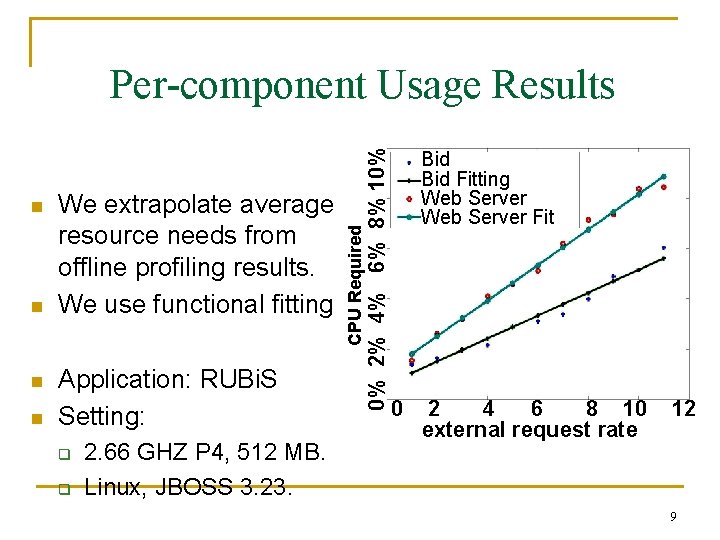

Per-component Usage Results
- We extrapolate average resource needs from offline profiling results.
- We use functional fitting.
- Application: RUBiS.
- Setting:
  - 2.66 GHz P4, 512 MB memory.
  - Linux, JBoss 3.2.3.

Figure: CPU required (0% to 10%) versus external request rate (0 to 12) for the Bid component and the Web Server, with their fitted curves.
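
The slide does not say which functional form is fitted. As a sketch, assuming a straight line is adequate, an ordinary least-squares fit over a few profiled (rate, CPU) points can then be extrapolated to higher rates. `fit_linear`, `extrapolate`, and the sample data are hypothetical.

```python
from typing import Sequence, Tuple


def fit_linear(rates: Sequence[float], usage: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares: usage ~ slope * rate + intercept."""
    n = len(rates)
    if n != len(usage) or n < 2:
        raise ValueError("need at least two paired samples")
    mean_x = sum(rates) / n
    mean_y = sum(usage) / n
    sxx = sum((x - mean_x) ** 2 for x in rates)
    if sxx == 0:
        raise ValueError("profiled rates must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(rates, usage))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def extrapolate(slope: float, intercept: float, rate: float) -> float:
    """Predicted average resource usage at an unprofiled request rate."""
    return slope * rate + intercept
```

Profiling at low rates keeps the offline runs cheap; the fit then predicts usage near saturation, which is only as good as the chosen functional form.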

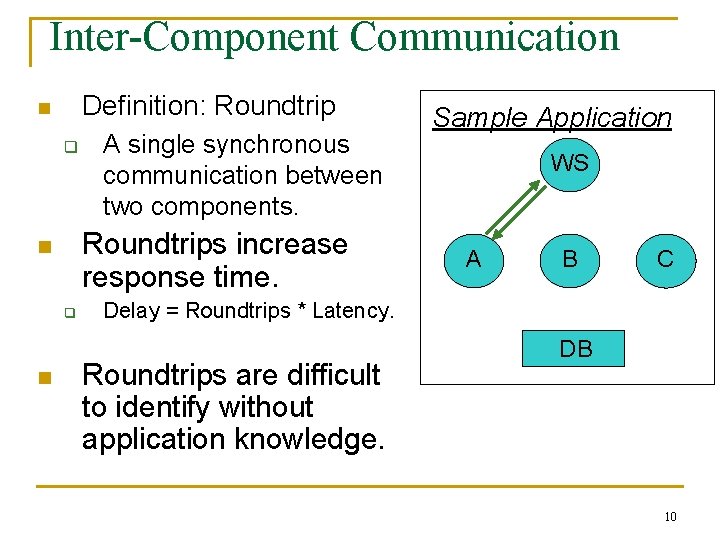

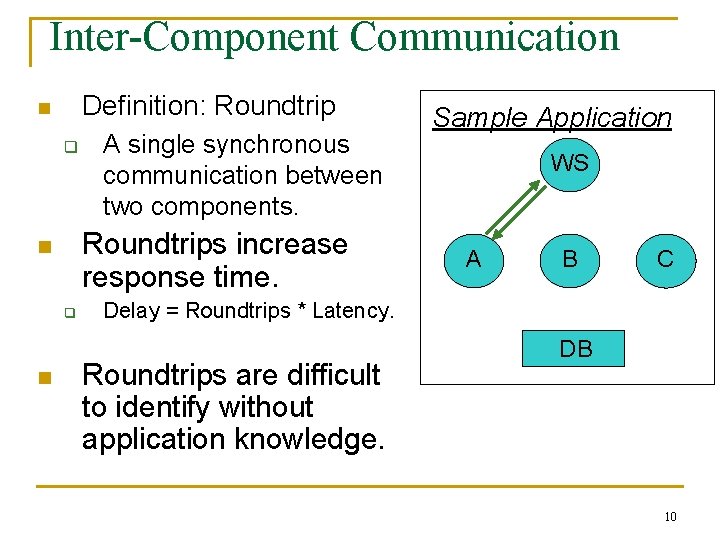

Inter-Component Communication
- Definition: a roundtrip is a single synchronous communication between two components.
- Roundtrips increase response time: Delay = Roundtrips × Latency.
- Roundtrips are difficult to identify without application knowledge.

Figure: Sample application with components WS, A, B, C, and DB.
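
Applying Delay = Roundtrips × Latency to a whole placement can be sketched as below, assuming (hypothetically) that only roundtrips between components on different nodes pay network latency and that co-located calls cost nothing on the network. The 150 μs latency is the per-roundtrip figure used in the deck's response-time example; `network_delay` and its arguments are hypothetical.

```python
from typing import Dict, Tuple


def network_delay(roundtrips: Dict[Tuple[str, str], float],
                  placement: Dict[str, str],
                  latency_s: float = 150e-6) -> float:
    """Per-request network delay: sum of cross-node roundtrips times latency.

    roundtrips maps a component pair to its average roundtrips per request;
    placement maps each component to the node hosting it.
    """
    delay = 0.0
    for (a, b), count in roundtrips.items():
        if placement[a] != placement[b]:  # assumption: local calls add no network delay
            delay += count * latency_s
    return delay
```

Because only cross-node pairs contribute, the same roundtrip profile yields different delays for different placements, which is what makes it useful for placement decisions.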

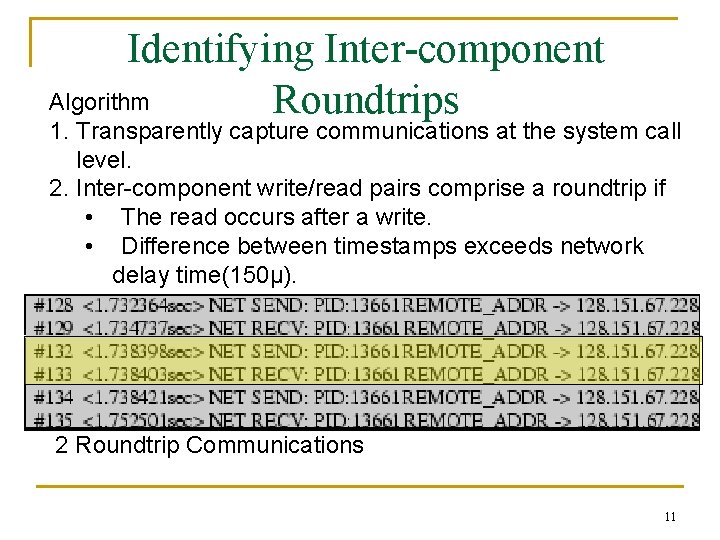

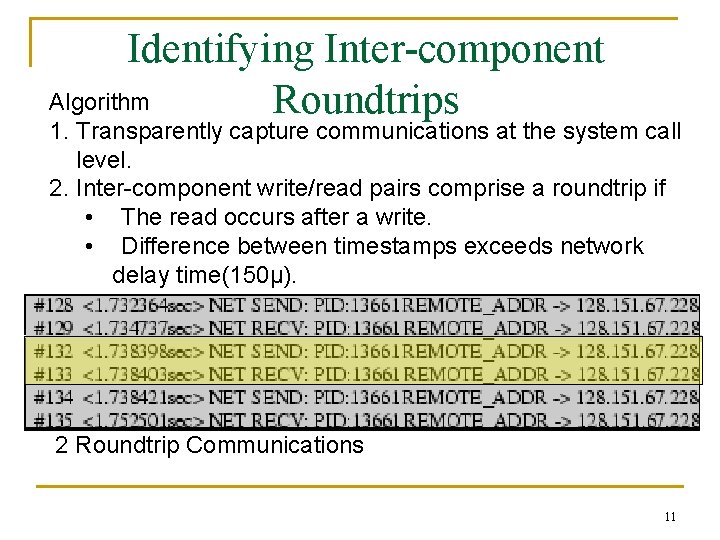

Identifying Inter-component Roundtrips
Algorithm:
1. Transparently capture communications at the system call level.
2. An inter-component write/read pair comprises a roundtrip if:
   - the read occurs after the write, and
   - the difference between their timestamps exceeds the network delay time (150 μs).

Figure: Example trace containing 2 roundtrip communications.
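
A sketch of the pairing rule, under assumptions the slide leaves open: events are recorded on the calling side as `(timestamp_s, component, peer, op)`, the first unmatched write on a connection starts a candidate roundtrip, and the next read on that connection closes it. The event format and `count_roundtrips` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

NETWORK_DELAY_S = 150e-6  # threshold from the slide: 150 microseconds

# (timestamp_s, component, peer, "write" | "read"), seen from the caller's side
Event = Tuple[float, str, str, str]


def count_roundtrips(events: Iterable[Event],
                     threshold_s: float = NETWORK_DELAY_S) -> Dict[Tuple[str, str], int]:
    """Count write/read pairs whose read follows the write by more than threshold_s."""
    pending_write: Dict[Tuple[str, str], float] = {}
    counts: Dict[Tuple[str, str], int] = defaultdict(int)
    for ts, comp, peer, op in sorted(events):  # process in timestamp order
        key = (comp, peer)
        if op == "write":
            pending_write.setdefault(key, ts)  # keep the first unmatched write
        elif op == "read" and key in pending_write:
            start = pending_write.pop(key)
            if ts - start > threshold_s:  # too-close pairs are not counted
                counts[key] += 1
    return dict(counts)
```

Dividing the per-pair counts by the number of requests in the trace gives average roundtrips per request, the quantity shown on the next slide.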

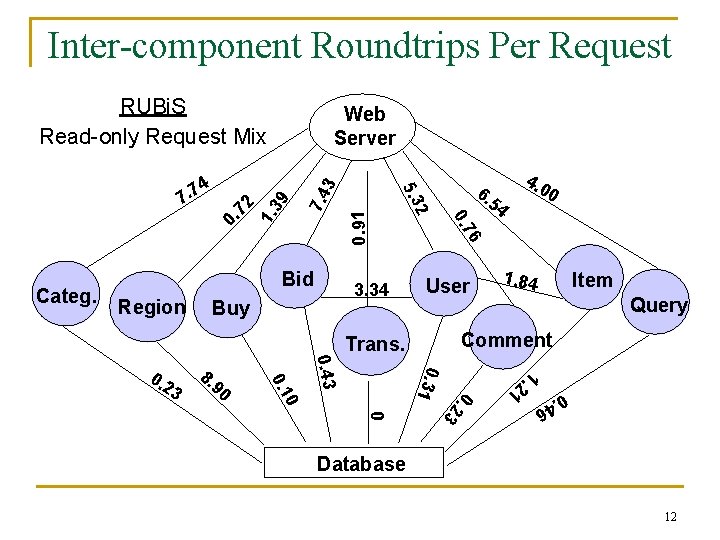

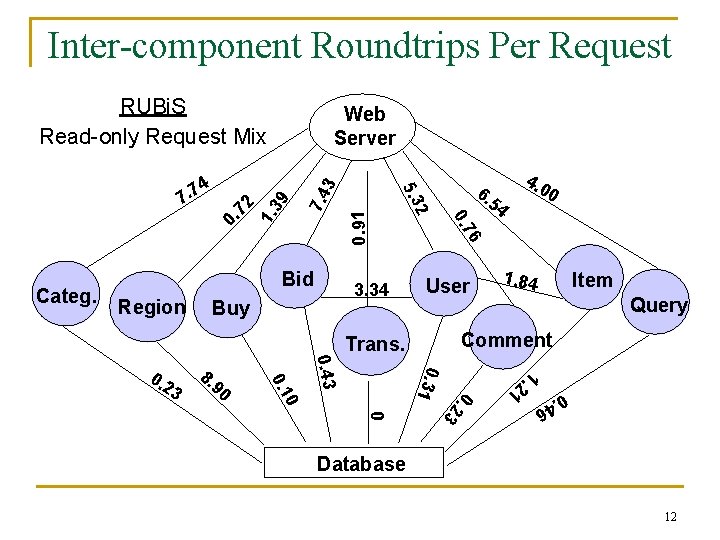

Inter-component Roundtrips Per Request
Figure: Average inter-component roundtrips per request for the RUBiS read-only request mix, drawn as a graph over the Web Server, Database, Buy, User, Item, Query, Comment, Trans., Region, Categ., and Bid components, with each edge labeled by its roundtrip count.

Talk Outline
1. Introduction.
2. Key application characteristics.
3. Models to predict whole-system performance.
   - Throughput.
   - Response time.
4. Model-driven system management.
5. Related work and conclusion.

Performance Models
Figure: Component profiles, the remote invocation profile, and the communication profile together form the application profile; performance modeling combines it with component placement, available resources, and input workload to predict throughput and response time.
- We identify key component-level and inter-component characteristics.
- We use these characteristics to model system performance and guide system management.

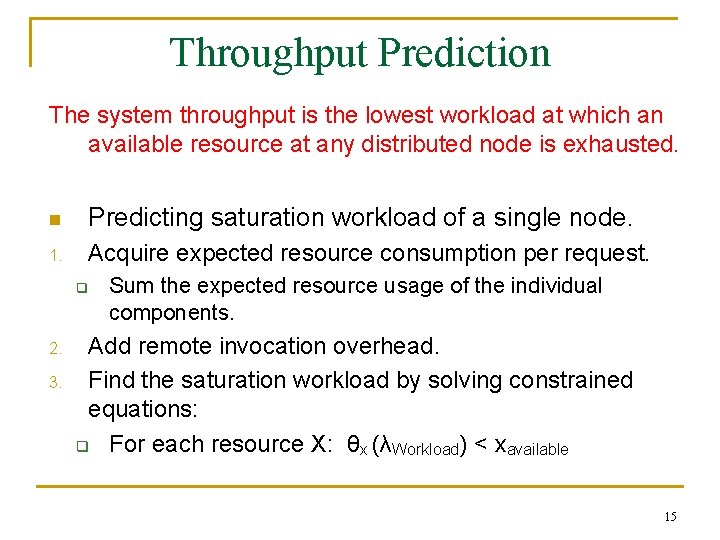

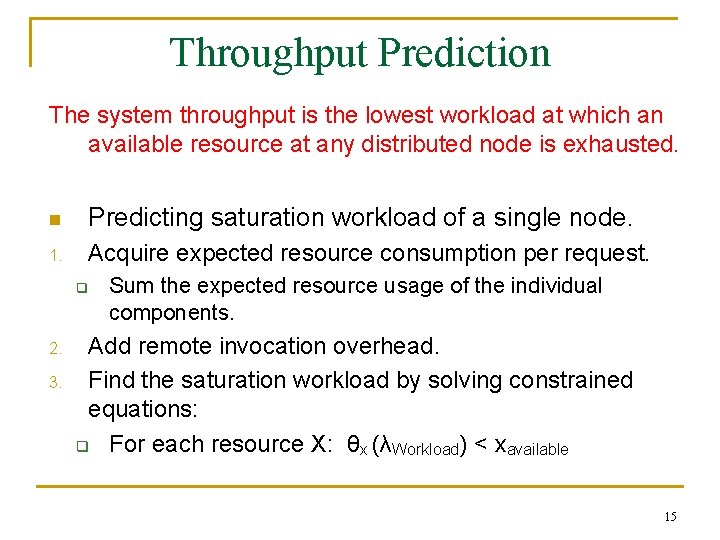

Throughput Prediction
The system throughput is the lowest workload at which an available resource at any distributed node is exhausted.
- Predicting the saturation workload of a single node:
  1. Acquire the expected resource consumption per request.
     - Sum the expected resource usage of the individual components.
  2. Add the remote invocation overhead.
  3. Find the saturation workload by solving constrained equations:
     - For each resource $X$: $\theta_X(\lambda_{Workload}) < X_{available}$
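
If each $\theta_X$ is linear in $\lambda_{Workload}$ (an assumption; the deck fits general functions), the constraint for resource $X$ reduces to $\lambda < X_{available} / c_X$, where $c_X$ is the per-request cost. A sketch that sums component costs and remote invocation overhead per node, then takes the minimum over resources and nodes; the dictionary layout and function names are hypothetical.

```python
from typing import Dict, List

Costs = Dict[str, float]  # resource name -> per-request cost (linear-usage assumption)


def node_saturation(per_request: Costs, available: Costs) -> float:
    """Supremum of rates satisfying per_request[X] * rate < available[X] for every X."""
    limits = [available[x] / c for x, c in per_request.items() if c > 0]
    return min(limits) if limits else float("inf")


def system_throughput(nodes: Dict[str, dict]) -> float:
    """Lowest saturation workload over all nodes (the first resource exhausted anywhere).

    Each node dict has: "components" (list of Costs), "remote_overhead" (Costs),
    and "available" (Costs).
    """
    best = float("inf")
    for node in nodes.values():
        per_request: Costs = {}
        components: List[Costs] = node["components"]
        for costs in components + [node["remote_overhead"]]:
            for res, c in costs.items():  # step 1 and 2: components plus RI overhead
                per_request[res] = per_request.get(res, 0.0) + c
        best = min(best, node_saturation(per_request, node["available"]))  # step 3
    return best
```

Leaving out `remote_overhead` corresponds to a model without the remote invocation term, which the validation slides show can overestimate throughput.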

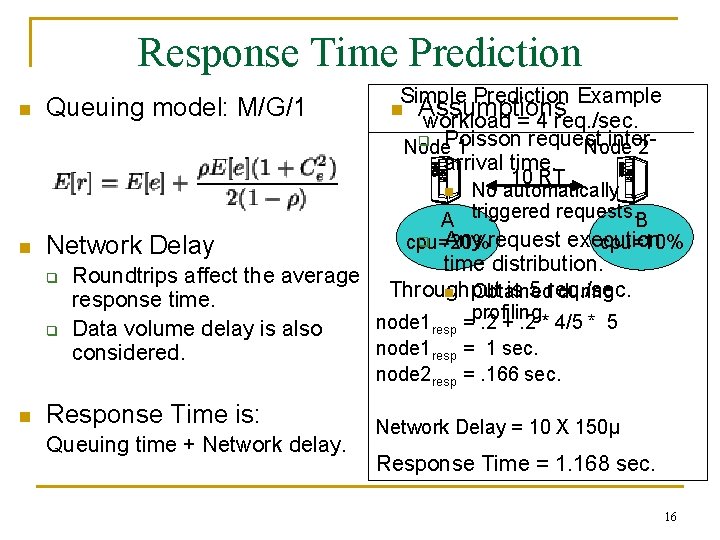

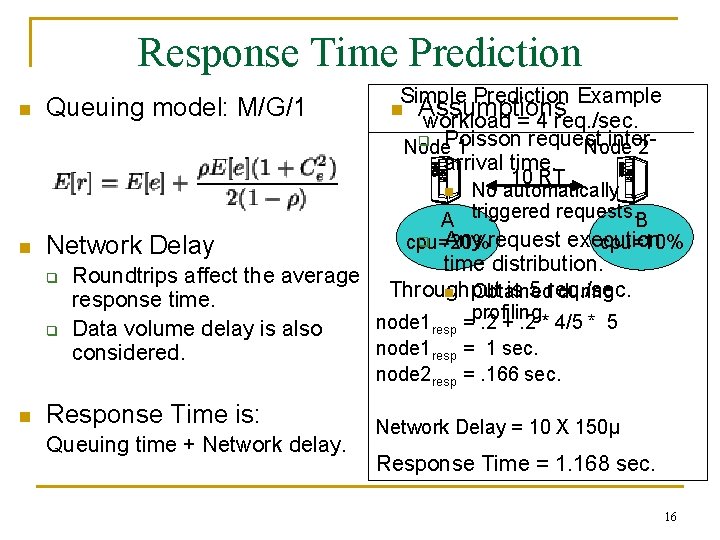

Response Time Prediction
- Queuing model: M/G/1.
  - Assumptions: Poisson request inter-arrival times; no automatically triggered requests; any request execution time distribution.
- Network delay:
  - Roundtrips, obtained during profiling, affect the average response time.
  - Data volume delay is also considered.
- Response time = queuing time + network delay.

Simple prediction example: workload = 4 req./sec; component A runs on Node 1 (cpu = 20%) and component B on Node 2 (cpu = 10%), with 10 roundtrips (RT) between them; throughput is 5 req./sec.
- Node 1 response: $0.2 + 0.2 \times \frac{4}{5} \times 5 = 1$ sec.
- Node 2 response: $0.166$ sec.
- Network delay $= 10 \times 150\,\mu s$.
- Response time $= 1.168$ sec.
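
The example's numbers can be reproduced with the Pollaczek-Khinchine mean-value formula for M/G/1, assuming (our reading) that cpu = 20% means 0.2 sec of CPU per request and that service times are exponential (squared coefficient of variation 1). Under that reading, Node 1 has utilization $4 \times 0.2 = 0.8$, so its response is $0.2 + 0.2 \times 0.8 / 0.2 = 1$ sec. `mg1_response_time` and `service_scv` are hypothetical names.

```python
def mg1_response_time(arrival_rate: float, mean_service: float,
                      service_scv: float = 1.0) -> float:
    """Mean M/G/1 response time (service + queueing) via Pollaczek-Khinchine.

    service_scv is the squared coefficient of variation of the service
    time; 1.0 corresponds to exponential service.
    """
    rho = arrival_rate * mean_service
    if rho >= 1.0:
        raise ValueError("node is saturated (utilization >= 1)")
    second_moment = (1.0 + service_scv) * mean_service ** 2  # E[S^2]
    waiting = arrival_rate * second_moment / (2.0 * (1.0 - rho))
    return mean_service + waiting


# The slide's example: 4 req./sec, 0.2 s and 0.1 s of CPU, 10 roundtrips of 150 us.
node1 = mg1_response_time(4.0, 0.2)
node2 = mg1_response_time(4.0, 0.1)
total = node1 + node2 + 10 * 150e-6
```

A less variable service distribution (`service_scv` below 1) lowers the queueing term, so the predicted response time depends on the profiled execution-time distribution, not just its mean.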

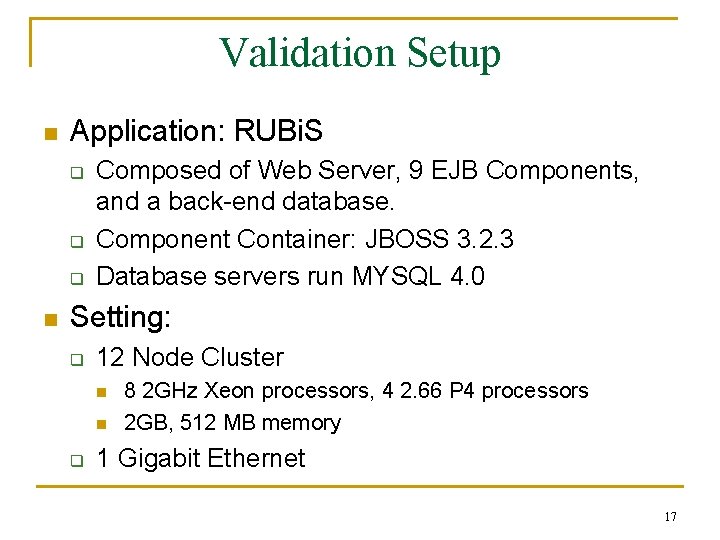

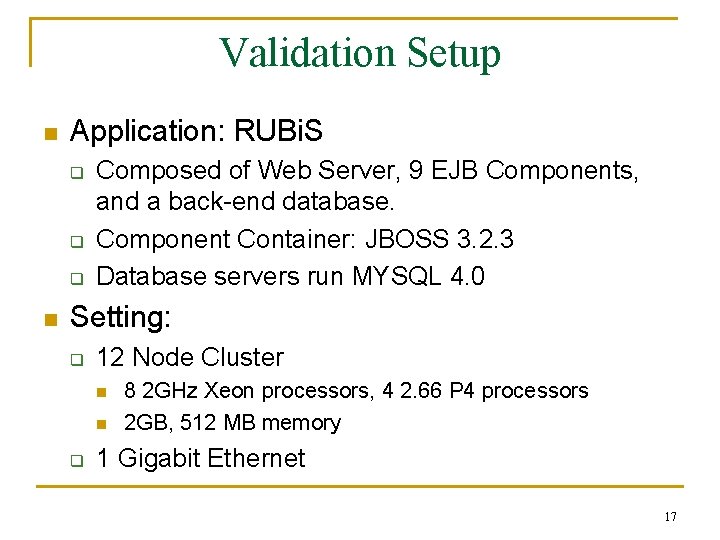

Validation Setup
- Application: RUBiS.
  - Composed of a Web Server, 9 EJB components, and a back-end database.
  - Component container: JBoss 3.2.3.
  - Database servers run MySQL 4.0.
- Setting: 12-node cluster.
  - 8 nodes with 2 GHz Xeon processors and 2 GB memory; 4 nodes with 2.66 GHz P4 processors and 512 MB memory.
  - 1 Gigabit Ethernet.

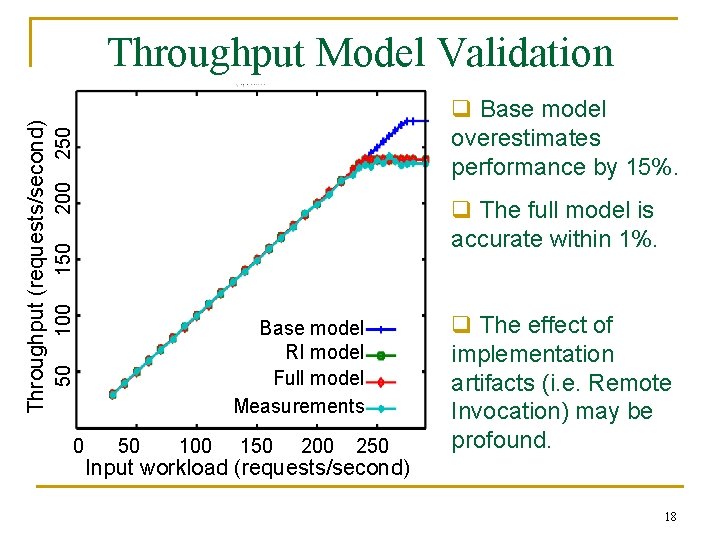

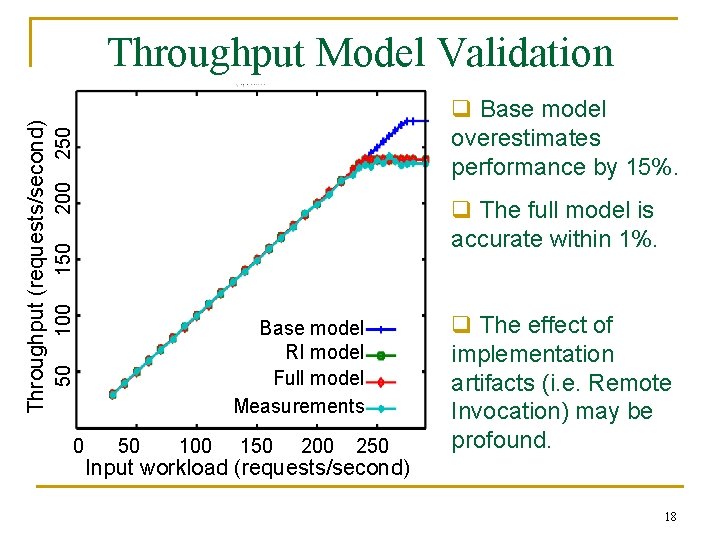

Throughput Model Validation
- Base model overestimates performance by 15%.
- The full model is accurate within 1%.
- The effect of implementation artifacts (i.e., remote invocation) may be profound.

Figure: Throughput (requests/second) versus input workload (requests/second) for the base model, RI model, full model, and measurements.

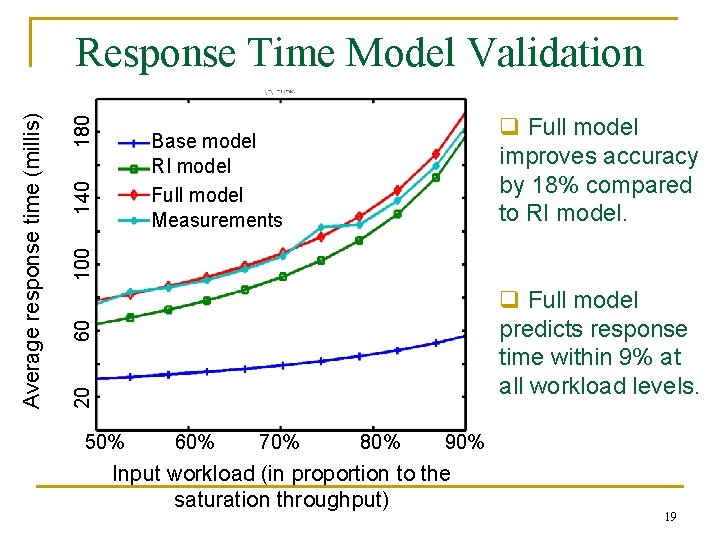

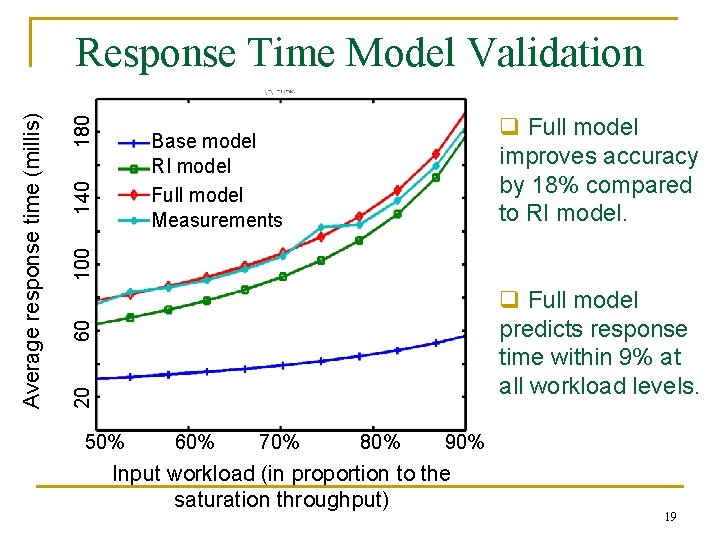

Response Time Model Validation
- Full model improves accuracy by 18% compared to the RI model.
- Full model predicts response time within 9% at all workload levels.

Figure: Average response time (ms) versus input workload (50% to 90% of the saturation throughput) for the base model, RI model, full model, and measurements.

Talk Outline
1. Introduction.
2. Key application characteristics.
3. Models to predict whole-system performance.
4. Model-driven system management.
   - Performance optimization techniques based upon accurate performance models.
5. Related work and conclusion.

System Management
- Current system management techniques are imprecise:
  - Inadequate system models.
  - Administrators may provide generic heuristics/tips.
- We use accurate model-based predictions to guide system management:
  - High-performance component placement.
  - Capacity planning / cost-effectiveness analysis.

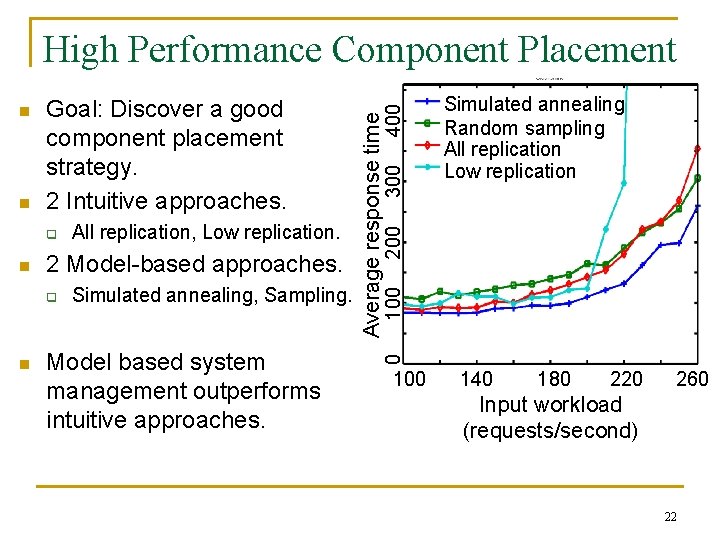

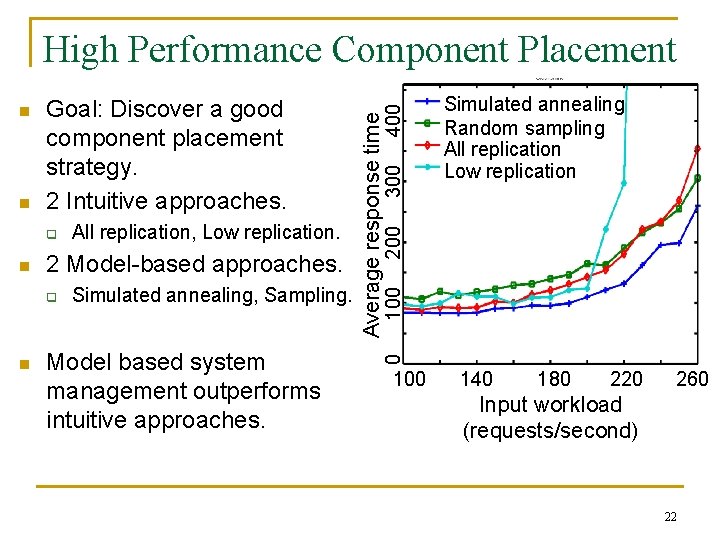

High Performance Component Placement
- Goal: discover a good component placement strategy.
- 2 intuitive approaches: all replication, low replication.
- 2 model-based approaches: simulated annealing, random sampling.
- Model-based system management outperforms intuitive approaches.

Figure: Average response time versus input workload (requests/second) for simulated annealing, random sampling, all replication, and low replication.
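
A toy simulated-annealing search over placements, to show the shape of the model-based approach. Everything here is an assumption rather than the deck's search: each component goes on exactly one node (no replication), and the cost is the bottleneck node's per-request CPU demand relative to capacity, whose reciprocal bounds saturation throughput under a linear-usage model. `bottleneck`, `anneal`, and all parameters are hypothetical.

```python
import math
import random
from typing import Dict, Tuple


def bottleneck(placement: Dict[str, str], demand: Dict[str, float],
               capacity: Dict[str, float]) -> float:
    """Hypothetical cost: highest per-request CPU demand relative to node capacity."""
    load = {node: 0.0 for node in capacity}
    for comp, node in placement.items():
        load[node] += demand[comp]
    return max(load[n] / capacity[n] for n in capacity)


def anneal(demand: Dict[str, float], capacity: Dict[str, float],
           steps: int = 5000, t0: float = 1.0, cooling: float = 0.999,
           seed: int = 0) -> Tuple[Dict[str, str], float]:
    rng = random.Random(seed)
    nodes, comps = sorted(capacity), sorted(demand)
    current = {c: rng.choice(nodes) for c in comps}  # random initial placement
    cur_cost = bottleneck(current, demand, capacity)
    best, best_cost = dict(current), cur_cost
    temp = t0
    for _ in range(steps):
        cand = dict(current)
        cand[rng.choice(comps)] = rng.choice(nodes)  # move one component
        cost = bottleneck(cand, demand, capacity)
        # Accept improvements always; accept worse moves with Metropolis probability.
        if cost <= cur_cost or rng.random() < math.exp((cur_cost - cost) / temp):
            current, cur_cost = cand, cost
            if cost < best_cost:
                best, best_cost = dict(cand), cost
        temp *= cooling  # geometric cooling schedule
    return best, best_cost
```

Random sampling, the other model-based approach, would instead draw independent placements and keep the best; annealing differs by walking from placement to placement and occasionally accepting worse ones to escape local minima.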

Related Work
- Application profiling:
  - Amza et al., 2002.
  - Barham et al., 2005 (Magpie).
- Performance modeling:
  - Doyle et al., 2003.
  - Urgaonkar et al., 2005.
- Distributed component placement:
  - Hunt and Scott, 1999 (Coign).
  - Amiri et al., 2000 (ABACUS).
  - Ivan et al., 2002.

Take Away Points
- We transparently identify and profile key application characteristics.
- Using application profiles, we accurately model system performance.
- We demonstrate the effectiveness of model-based system management.

www.cs.rochester.edu/~stewart/component.html


Extra Slides
Someone asked a good question.

Detailed Operating System Level Profiles
- We modified the Linux Trace Toolkit (LTT, Yaghmour).
- LTT instruments the Linux kernel with trace points and forwards them to a logging daemon.
- We added trace points at the CPU context switch, the socket-level interface, and disk I/O.
- Our approach is still applicable with a different OS and less accurate measurement techniques.

Additional Profiling Issues
- Nonlinear functional mappings:
  - Occur if requests have strong inter-dependencies, e.g., CPU contention on a spin lock.
  - The model is still applicable.
    - It may require more data points to acquire a decent approximation.
    - Worst-case scenario: table-based mappings [E. Anderson].
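
One simple form of table-based mapping, sketched here as a generic piecewise-linear lookup over measured (rate, usage) points; it is not necessarily the technique of the cited work. Clamping to the nearest measured value outside the profiled range (no extrapolation) is our assumption, and `TableMapping` is a hypothetical name.

```python
import bisect
from typing import List, Tuple


class TableMapping:
    """Piecewise-linear interpolation over measured (rate, usage) points."""

    def __init__(self, points: List[Tuple[float, float]]):
        if len(points) < 2:
            raise ValueError("need at least two measured points")
        pts = sorted(points)
        self.rates = [r for r, _ in pts]
        self.usage = [u for _, u in pts]

    def __call__(self, rate: float) -> float:
        rs, us = self.rates, self.usage
        if rate <= rs[0]:   # below the table: clamp (assumption)
            return us[0]
        if rate >= rs[-1]:  # above the table: clamp (assumption)
            return us[-1]
        i = bisect.bisect_right(rs, rate)  # rs[i - 1] <= rate < rs[i]
        r0, r1, u0, u1 = rs[i - 1], rs[i], us[i - 1], us[i]
        return u0 + (u1 - u0) * (rate - r0) / (r1 - r0)
```

The table captures arbitrary nonlinear shapes such as lock contention, at the price of needing measured points across the whole operating range.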

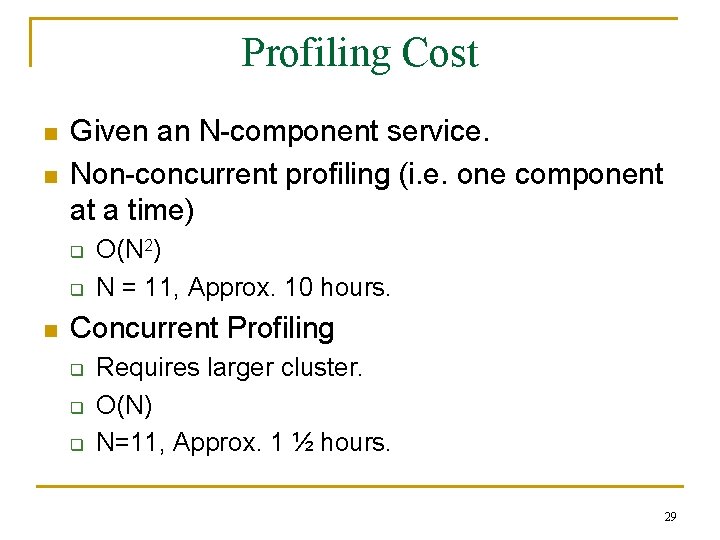

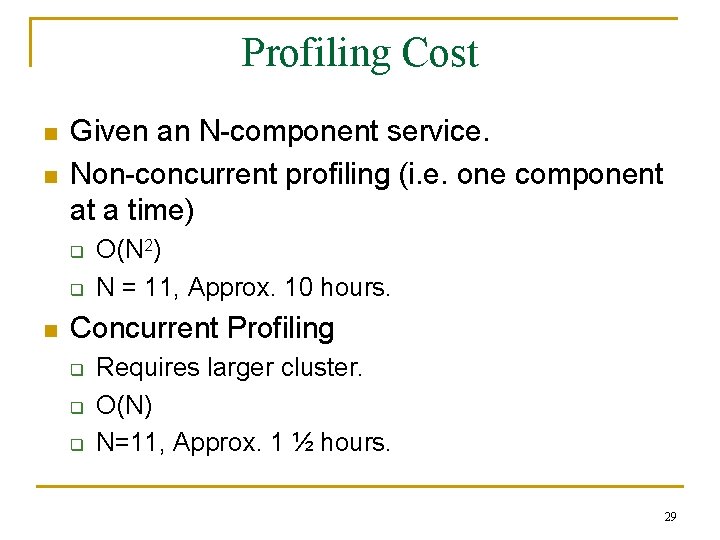

Profiling Cost
- Given an N-component service:
- Non-concurrent profiling (i.e., one component at a time):
  - O(N²).
  - N = 11: approx. 10 hours.
- Concurrent profiling:
  - Requires a larger cluster.
  - O(N).
  - N = 11: approx. 1½ hours.