- Slides: 49

Performance Issue? Machine Learning to the Rescue!

Agenda

- Introduction
- Measuring performance
- What is performance?
- How is traditional tuning done?
- Now for something completely different!
- Analyzing the data
- Introduction to terminology
- Introduction to the Random Forest
- Results
- Checking the results
- Verifying the model

About me

- Software architect at AMIS / Conclusion
- Several certifications: SOA, BPM, MCS, Java, SQL, PL/SQL, Mule, AWS, etc.
- Enthusiastic blogger: http://javaoraclesoa.blogspot.com
- Frequent presenter at conferences
- https://nl.linkedin.com/in/smeetsm
- @MaartenSmeetsNL

What is performance?

- Better performance means more work can be done using fewer resources or less time
- What is work?
  - Starting an application
  - Time required to process a single request (response time)
  - Number of requests that can be processed during a certain period (throughput)
- Which resources?
  - CPU / cores
  - Memory
  - Disk
- What uses resources to do work?
  - Application platform

Traditional performance tuning: trial and error!

- Use informed judgement to manipulate a specific variable
- Compare it to a baseline
- If it’s good, keep it, else revert
- Try another variable
- Continue until the performance is sufficient

Add a bit of Machine Learning: wouldn’t it help if you could more accurately determine which variable to manipulate before starting a trial and error process?
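The trial-and-error loop can be sketched in a few lines of Python. This is only an illustration: `tune`, `fake_measure`, the variable names and all numbers are hypothetical, and `fake_measure` stands in for a real load test.

```python
def tune(baseline, candidates, measure, target_ms=0.0):
    """Greedy trial and error: keep a change only if it beats the current best."""
    best_config = dict(baseline)
    best_ms = measure(best_config)           # the baseline measurement
    for variable, value in candidates:
        if best_ms <= target_ms:              # performance is sufficient: stop
            break
        trial = {**best_config, variable: value}
        trial_ms = measure(trial)
        if trial_ms < best_ms:                # good: keep it
            best_config, best_ms = trial, trial_ms
        # otherwise revert, i.e. leave best_config unchanged
    return best_config, best_ms


def fake_measure(config):
    """Hypothetical stand-in for a real load test; returns a response time in ms."""
    ms = 50.0
    if config["gc"] == "gc_b":
        ms -= 5.0
    if config["memory_mb"] >= 256:
        ms -= 10.0
    if config["cores"] > 2:
        ms += 2.0
    return ms


baseline = {"gc": "gc_a", "memory_mb": 128, "cores": 2}
candidates = [("gc", "gc_b"), ("cores", 4), ("memory_mb", 256)]
best_config, best_ms = tune(baseline, candidates, fake_measure)
print(best_config, best_ms)
```

Every candidate costs a full measurement, which is why knowing beforehand which variables matter saves time.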

What do you need to let the machine augment ‘informed judgement’?

- Lots of performance data!
  - Different kinds of ‘work’
    - Response times
    - Throughput
  - Different kinds of ‘resources’ for load generator and application
    - Cores
    - Memory
  - Variations in the application and platform
    - JVM GC algorithm
    - JVM version
    - Framework used
- A model to process the data
  - Understands the structure of the data
  - Is suitable for my data
  - Can tell me which variables are important

What did I measure? What did I manipulate?

- Measured: throughput (requests per second), response time
- Manipulated: framework, JVM (8, 11, 12, 13), garbage collection, memory, cores, concurrency

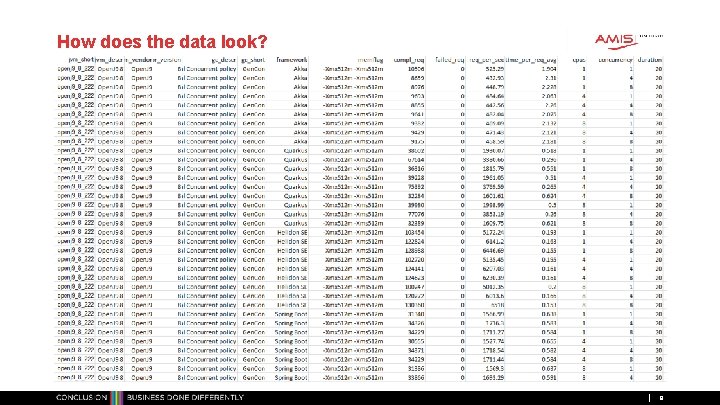

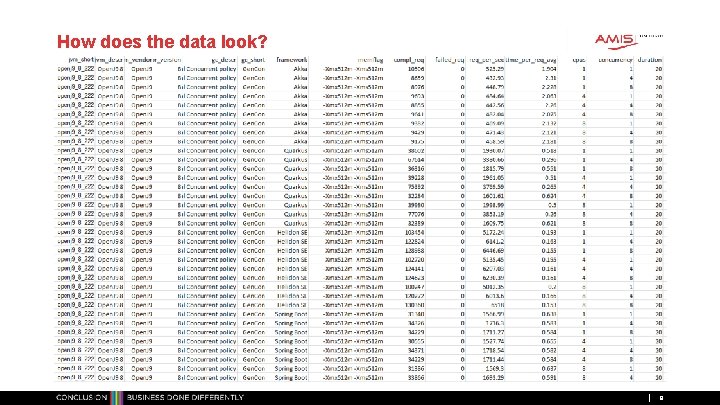

How does the data look?

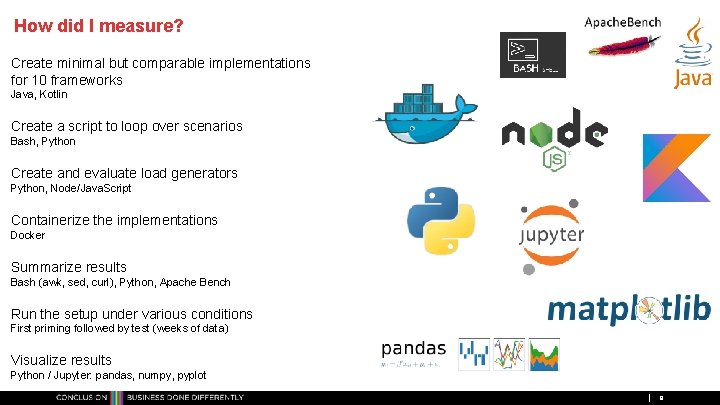

How did I measure?

- Create minimal but comparable implementations for 10 frameworks: Java, Kotlin
- Create a script to loop over scenarios: Bash, Python
- Create and evaluate load generators: Python, Node/JavaScript
- Containerize the implementations: Docker
- Summarize results: Bash (awk, sed, curl), Python, Apache Bench
- Run the setup under various conditions: first priming, followed by the test (weeks of data)
- Visualize results: Python / Jupyter (pandas, numpy, pyplot)
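Looping over scenarios could look roughly like this in Python. Only the JVM versions (8, 11, 12, 13) come from the slides; the framework names, GC names and resource levels are made-up placeholders.

```python
from itertools import product

# Hypothetical levels; the slides name the variables but not every value used.
frameworks = ["framework_a", "framework_b"]
jvm_versions = [8, 11, 12, 13]          # from the slides
gc_algorithms = ["gc_a", "gc_b"]
cores = [1, 2, 4]
memory_mb = [128, 256]
concurrency = [1, 2, 4]


def scenarios():
    """Yield one dict per combination of the manipulated variables."""
    names = ["framework", "jvm", "gc", "cores", "memory_mb", "concurrency"]
    levels = [frameworks, jvm_versions, gc_algorithms, cores, memory_mb, concurrency]
    for combo in product(*levels):
        yield dict(zip(names, combo))


all_scenarios = list(scenarios())
print(len(all_scenarios), "scenarios, first:", all_scenarios[0])
```

The number of scenarios grows multiplicatively with every added variable, which explains the weeks of measurement data.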

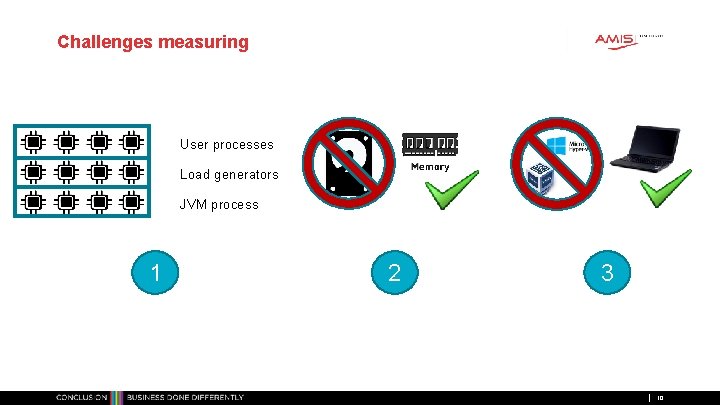

Challenges measuring

- User processes
- Load generators
- JVM process

Diagram labels: 1, 2, 3

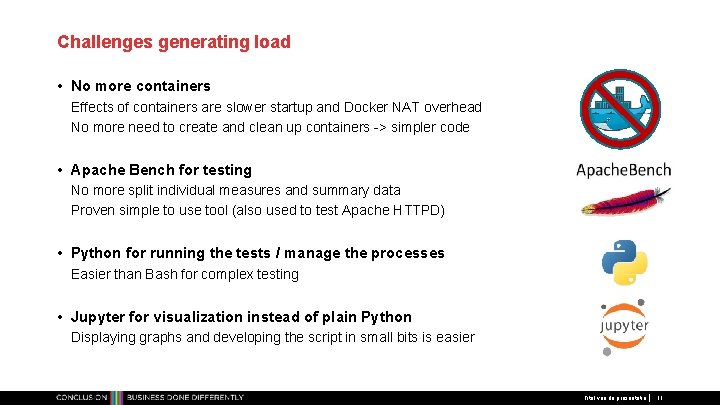

Challenges generating load

- No more containers
  - Containers caused slower startup and Docker NAT overhead
  - No more need to create and clean up containers, so the code is simpler
- Apache Bench for testing
  - No more split between individual measurements and summary data
  - A proven, simple-to-use tool (also used to test Apache HTTPD)
- Python for running the tests and managing the processes
  - Easier than Bash for complex testing
- Jupyter for visualization instead of plain Python
  - Displaying graphs and developing the script in small bits is easier
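A minimal sketch of driving Apache Bench from Python, assuming `ab` is installed and on the PATH. The helper names `run_ab` and `parse_ab` are hypothetical, and the sample report is hand-written in the layout `ab` prints, not a real measurement.

```python
import re
import subprocess


def run_ab(url, requests=1000, concurrency=4):
    """Run Apache Bench (-n total requests, -c concurrent requests); return its report."""
    cmd = ["ab", "-n", str(requests), "-c", str(concurrency), url]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def parse_ab(report):
    """Extract throughput and mean response time from an ab text report."""
    rps = re.search(r"Requests per second:\s+([\d.]+)", report)
    # The first "Time per request" line is the mean per request as seen by a client.
    tpr = re.search(r"Time per request:\s+([\d.]+) \[ms\] \(mean\)", report)
    return {
        "requests_per_second": float(rps.group(1)),
        "mean_response_ms": float(tpr.group(1)),
    }


# Hand-written sample so the parser can be tried without a running server.
sample_report = """\
Requests per second:    1234.56 [#/sec] (mean)
Time per request:       3.240 [ms] (mean)
Time per request:       0.810 [ms] (mean, across all concurrent requests)
"""
result = parse_ab(sample_report)
print(result)
```

Against a live service the call would be something like `parse_ab(run_ab("http://localhost:8080/"))`, where the URL is again only a placeholder.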

And now for some results!

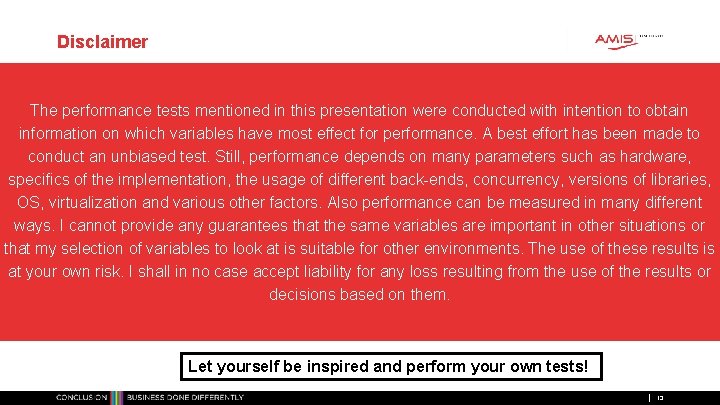

Disclaimer

The performance tests mentioned in this presentation were conducted with the intention of obtaining information on which variables have the most effect on performance. A best effort has been made to conduct an unbiased test. Still, performance depends on many parameters such as hardware, specifics of the implementation, the usage of different back-ends, concurrency, versions of libraries, OS, virtualization and various other factors. Also, performance can be measured in many different ways. I cannot provide any guarantees that the same variables are important in other situations or that my selection of variables to look at is suitable for other environments. The use of these results is at your own risk. I shall in no case accept liability for any loss resulting from the use of the results or decisions based on them. Let yourself be inspired and perform your own tests!

![Average response time ms Results JVM major version 14 Average response time [ms] Results JVM major version 14](https://slidetodoc.com/presentation_image/1c052988c871de1051c9b17c82b270ba/image-14.jpg)

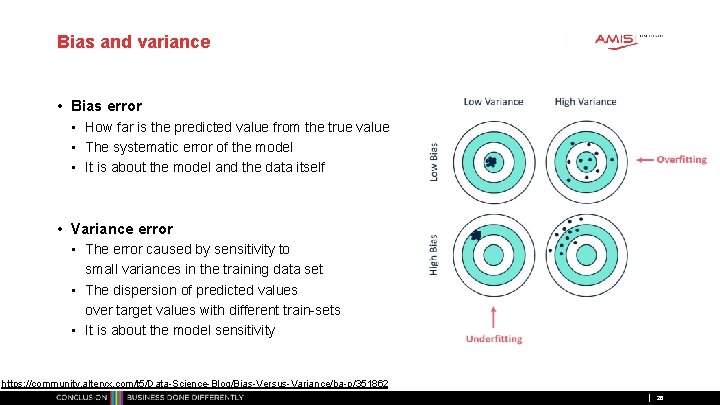

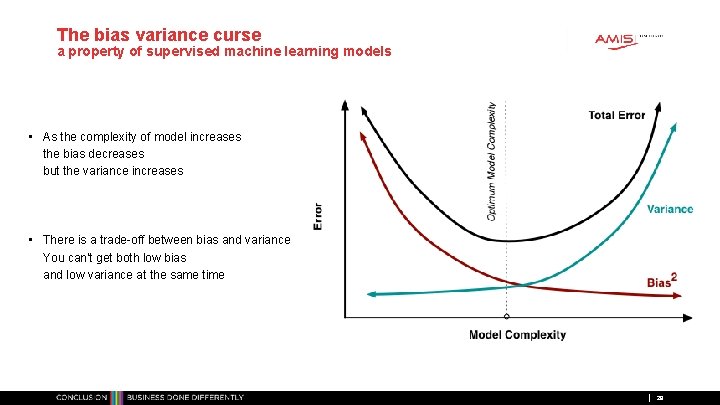

Results: JVM major version (average response time [ms])

![Average response time ms Results Framework and JVM 15 Average response time [ms] Results Framework and JVM 15](https://slidetodoc.com/presentation_image/1c052988c871de1051c9b17c82b270ba/image-15.jpg)

Results: Framework and JVM (average response time [ms])

![Average response time ms Results Garbage Collection Algorithm 16 Average response time [ms] Results Garbage Collection Algorithm 16](https://slidetodoc.com/presentation_image/1c052988c871de1051c9b17c82b270ba/image-16.jpg)

Results: Garbage Collection Algorithm (average response time [ms])

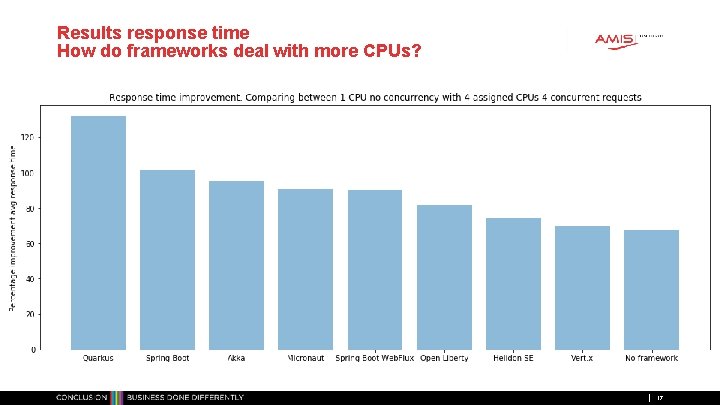

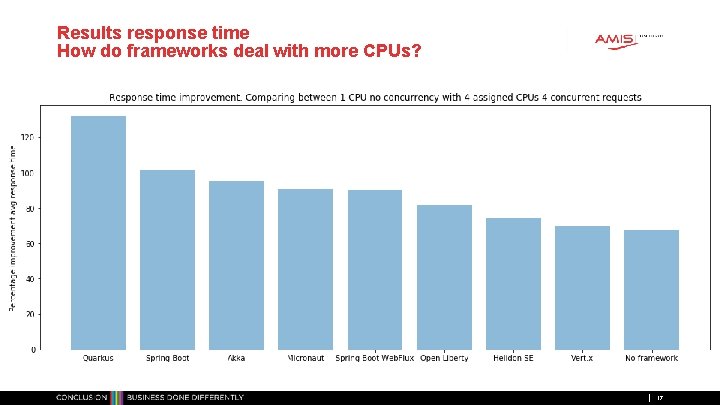

Results: response time

How do frameworks deal with more CPUs?

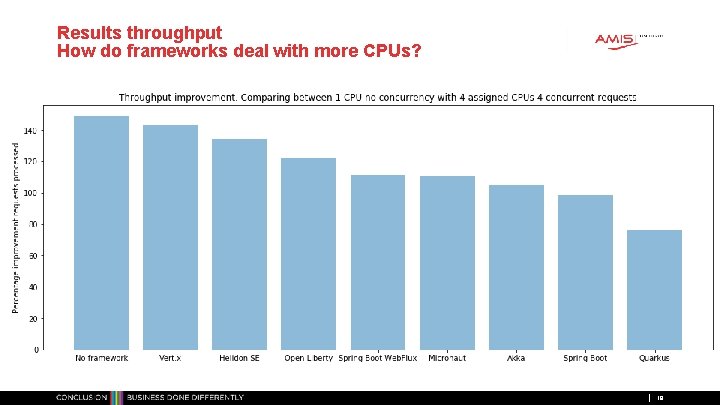

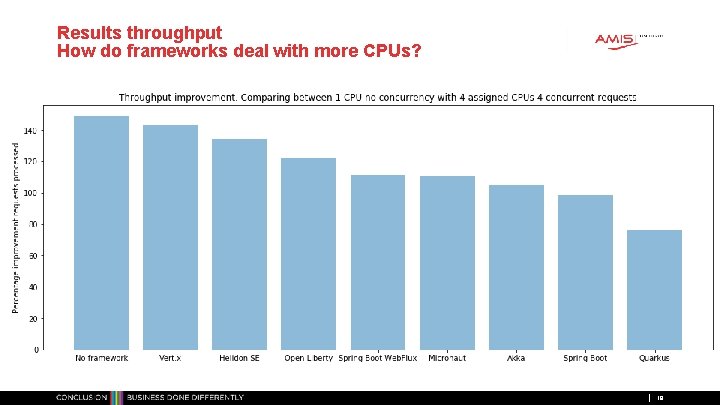

Results: throughput

How do frameworks deal with more CPUs?

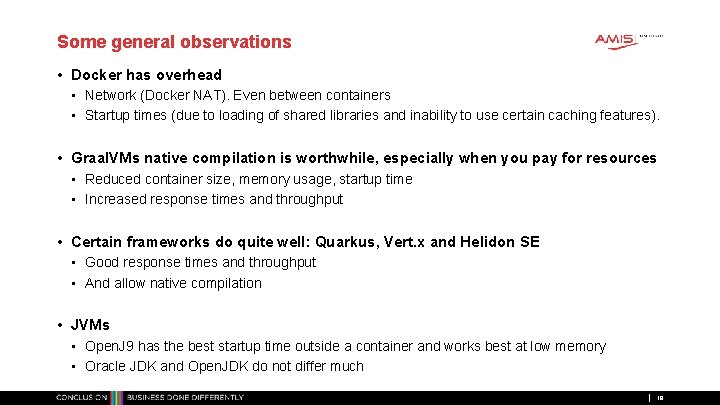

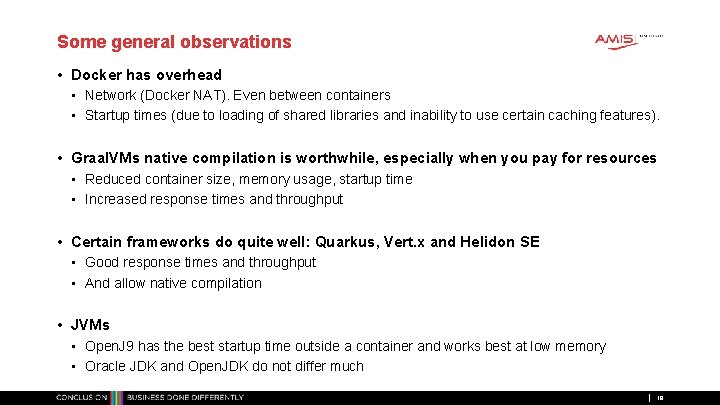

Some general observations

- Docker has overhead
  - Network (Docker NAT), even between containers
  - Startup times (due to loading of shared libraries and inability to use certain caching features)
- GraalVM’s native compilation is worthwhile, especially when you pay for resources
  - Reduced container size, memory usage, startup time
  - Increased response times and throughput
- Certain frameworks do quite well: Quarkus, Vert.x and Helidon SE
  - Good response times and throughput
  - And allow native compilation
- JVMs
  - OpenJ9 has the best startup time outside a container and works best at low memory
  - Oracle JDK and OpenJDK do not differ much

Lots of data. But what do I want to know?

What are the most important variables determining my performance? And which are the variables I can (more or less) safely ignore?

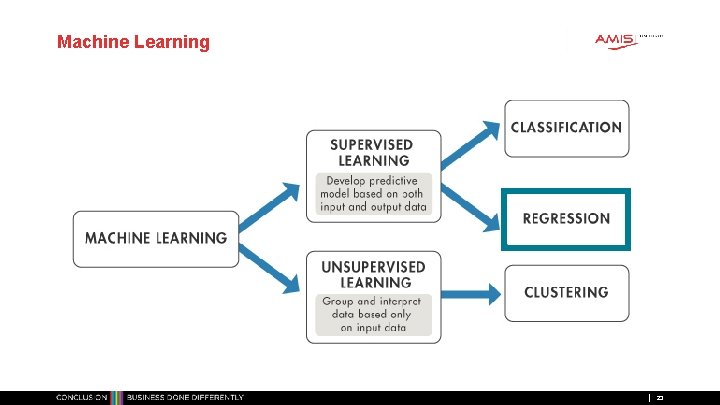

Can Machine Learning solve this for me?

Machine Learning

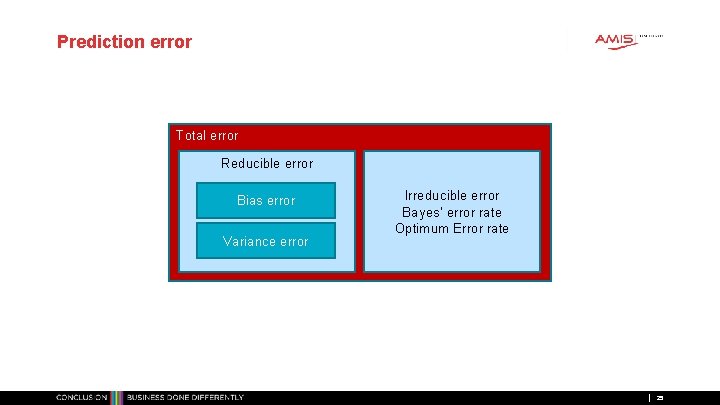

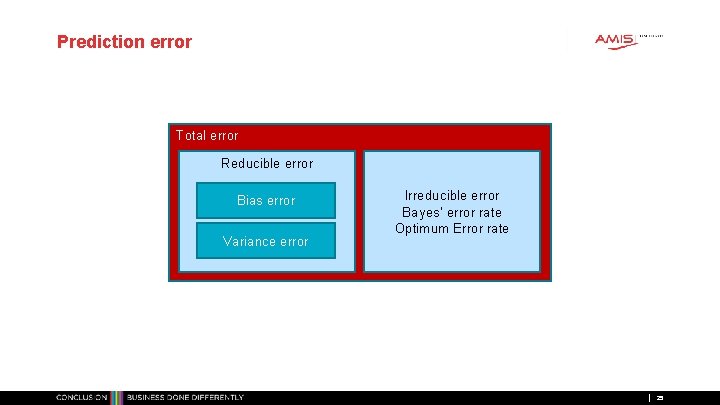

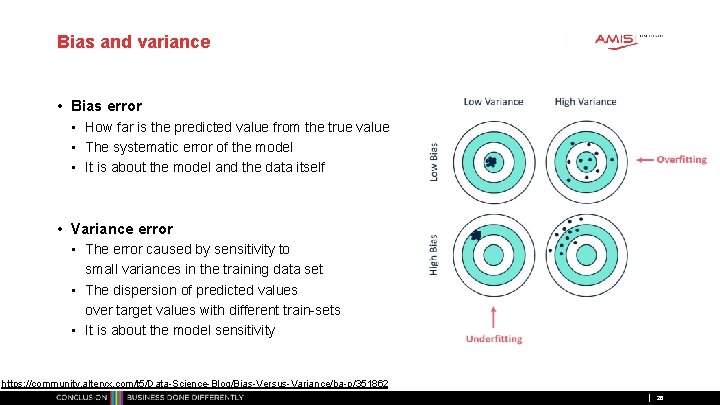

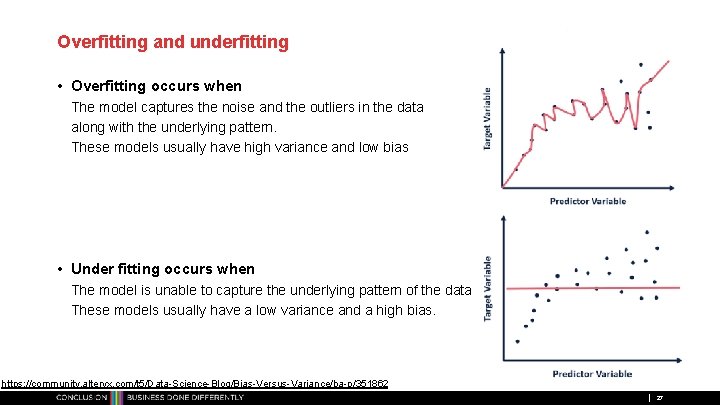

Machine Learning challenges

- Prediction error
  - Bias
  - Variance
- Overfitting and underfitting
- Selection and tuning of a model

Prediction error

- Total error
  - Reducible error
    - Bias error
    - Variance error
  - Irreducible error
    - Bayes’ error rate
    - Optimum error rate
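For squared-error loss, this split of the total error is commonly written as below. The notation is an assumption made here and does not come from the slides: $f$ is the true relationship, $\hat{f}$ the trained model, and $y = f(x) + \varepsilon$ with noise variance $\sigma^2$.

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible error}}
$$

The expectations are taken over different training sets. The first two terms are the reducible error; the last one stays no matter which model is chosen.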

Bias and variance

- Bias error
  - How far the predicted value is from the true value
  - The systematic error of the model
  - It is about the model and the data itself
- Variance error
  - The error caused by sensitivity to small variations in the training data set
  - The dispersion of predicted values over target values with different train sets
  - It is about the model’s sensitivity

https://community.alteryx.com/t5/Data-Science-Blog/Bias-Versus-Variance/ba-p/351862

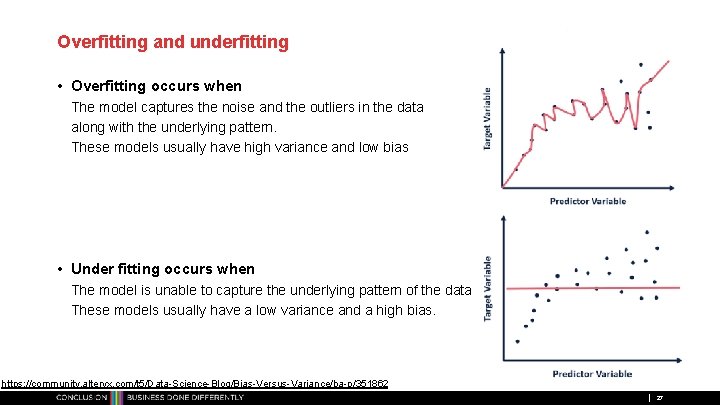

Overfitting and underfitting

- Overfitting occurs when the model captures the noise and the outliers in the data along with the underlying pattern. These models usually have high variance and low bias.
- Underfitting occurs when the model is unable to capture the underlying pattern of the data. These models usually have low variance and high bias.

https://community.alteryx.com/t5/Data-Science-Blog/Bias-Versus-Variance/ba-p/351862

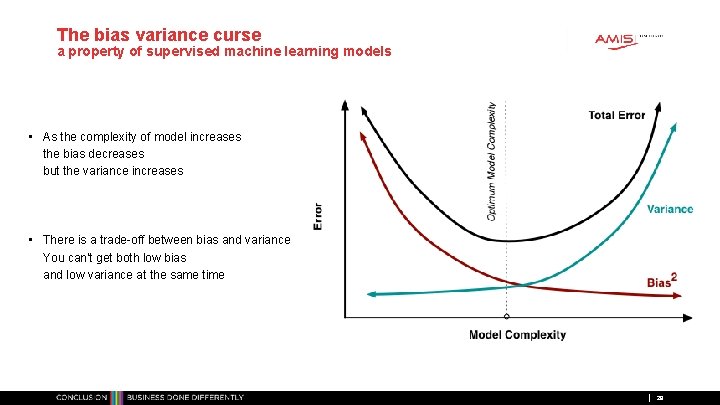

The bias-variance curse: a property of supervised machine learning models

- As the complexity of the model increases, the bias decreases but the variance increases
- There is a trade-off between bias and variance: you can’t get both low bias and low variance at the same time

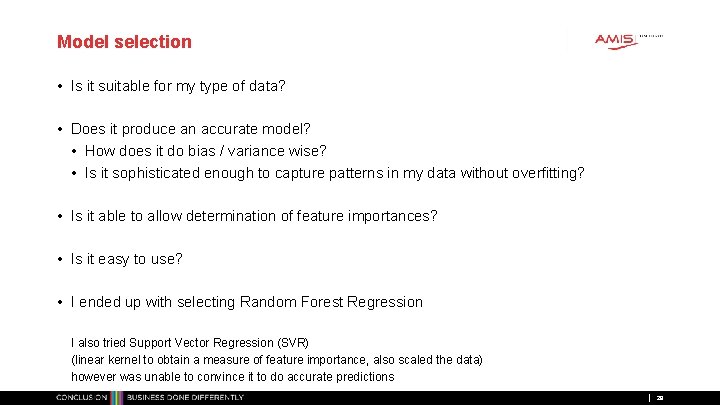

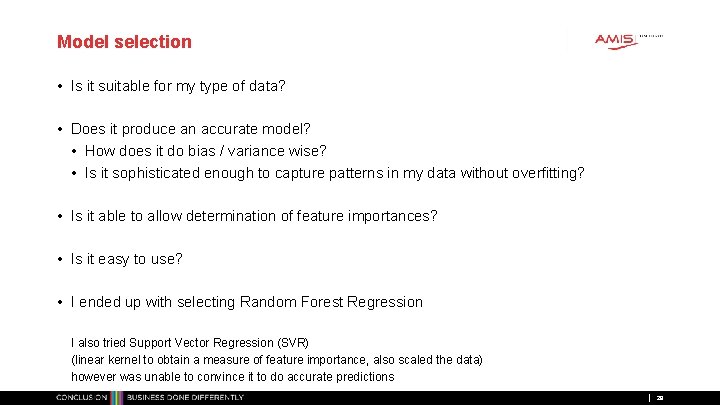

Model selection

- Is it suitable for my type of data?
- Does it produce an accurate model?
  - How does it do bias / variance wise?
  - Is it sophisticated enough to capture patterns in my data without overfitting?
- Does it allow determination of feature importances?
- Is it easy to use?
- I ended up selecting Random Forest Regression
  - I also tried Support Vector Regression (SVR), with a linear kernel to obtain a measure of feature importance and with scaled data, but was unable to get it to make accurate predictions

Why a Random Forest?

- Accurate predictions
  - Standard decision trees often have high variance and low bias: a high chance of overfitting (with ‘deep trees’, many nodes)
  - With a Random Forest, the bias remains low and the variance is reduced, so the chance of overfitting decreases
- Flexible
  - Can be used with many variables
  - Can be used for classification but also for regression
- Easy
  - 200 trees
  - Create a model to predict ytrain based on Xtrain
  - Use the model to do predictions based on Xtest
- Feature importance!

Introduction to the Random Forest

- Can be used for classification and regression
- A Random Forest can deal with many different features
- A Random Forest is a collection of Decision Trees whose results are averaged or majority voted

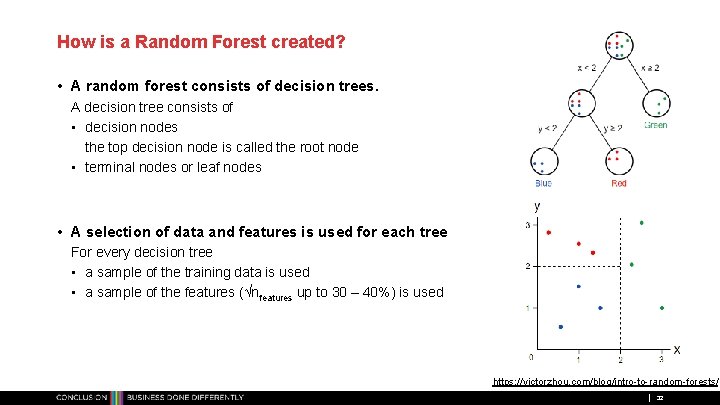

How is a Random Forest created?

- A random forest consists of decision trees. A decision tree consists of
  - decision nodes (the top decision node is called the root node)
  - terminal nodes or leaf nodes
- A selection of data and features is used for each tree. For every decision tree
  - a sample of the training data is used
  - a sample of the features (√nfeatures up to 30 to 40%) is used

https://victorzhou.com/blog/intro-to-random-forests/

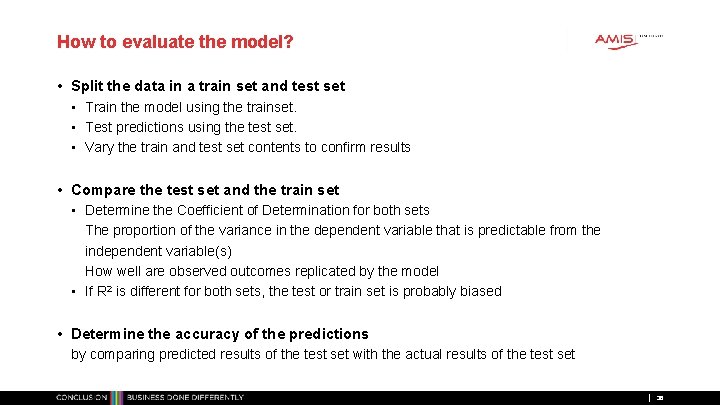

For an individual Decision Tree

- Find the best split in the data. This is the root node (decision node)
- In the first branch, find the best split again for that part of the data. That is the first sub node (decision node)
- Continue creating decision nodes until splitting doesn’t improve the situation. The average value of the target variable is assigned to the leaf (terminal node)
- Continue until there are only leaf nodes left or until a minimum value is reached
- Now the decision tree can be used to do a prediction based on the input features

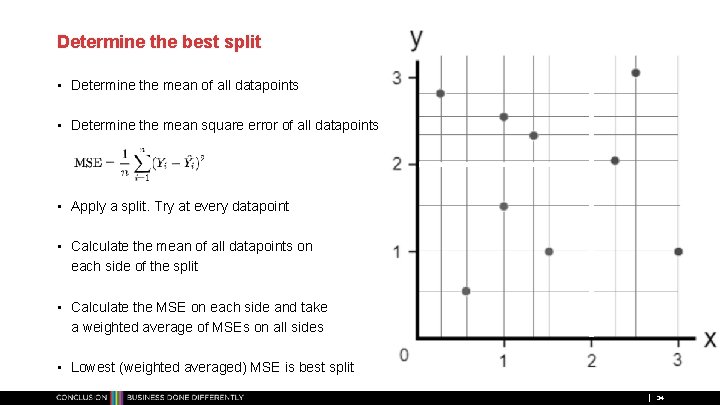

Determine the best split

- Determine the mean of all datapoints
- Determine the mean squared error of all datapoints
- Apply a split. Try at every datapoint
- Calculate the mean of all datapoints on each side of the split
- Calculate the MSE on each side and take a weighted average of the MSEs on all sides
- The lowest (weighted average) MSE is the best split
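The split search can be written out in plain Python for a single feature. This is a sketch under assumptions: the function names and the tiny data set are invented for illustration, and real libraries do the same thing far more efficiently.

```python
def mse(values):
    """Mean squared error of the values around their own mean."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def best_split(xs, ys):
    """Return (threshold, weighted_mse) of the best split on one feature."""
    pairs = sorted(zip(xs, ys))
    best = (None, mse(ys))                    # no split: MSE of all datapoints
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue                          # cannot split between equal feature values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        # Weighted average of the MSE on both sides of the candidate split.
        weighted = (len(left) * mse(left) + len(right) * mse(right)) / len(pairs)
        if weighted < best[1]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best = (threshold, weighted)
    return best


# Hypothetical measurements: response time drops sharply above 3 cores.
cores = [1, 2, 3, 4, 5, 6]
response_ms = [10.0, 11.0, 10.5, 4.0, 4.5, 3.5]
threshold, weighted_mse = best_split(cores, response_ms)
print(threshold, weighted_mse)
```

A decision tree repeats this search over every available feature at every node and keeps the feature and threshold with the lowest weighted MSE.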

Processing the ensemble of trees, called the Random Forest

- Take a set of variables
- Run them through every decision tree
- Determine a predicted target variable for each of the trees
- Average the result of all trees
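A toy forest shows the averaging step. This is a deliberately simplified sketch and not how a production library builds its trees: each "tree" is a single split (a stump) on one randomly chosen feature, trained on a bootstrap sample, and the data set is invented.

```python
import random


def fit_stump(rows, targets, feature):
    """Fit a one-split tree on a single feature; each side predicts its mean target."""
    best = None
    for threshold in sorted({row[feature] for row in rows})[1:]:
        left = [t for row, t in zip(rows, targets) if row[feature] < threshold]
        right = [t for row, t in zip(rows, targets) if row[feature] >= threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = (sum((t - left_mean) ** 2 for t in left)
               + sum((t - right_mean) ** 2 for t in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    if best is None:                          # feature is constant in this sample
        mean = sum(targets) / len(targets)
        return lambda row: mean
    _, threshold, left_mean, right_mean = best
    return lambda row: left_mean if row[feature] < threshold else right_mean


def fit_forest(rows, targets, n_trees=25, seed=0):
    """Train every tree on its own sample of the data and of the features."""
    rng = random.Random(seed)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]    # bootstrap sample of rows
        feature = rng.randrange(len(rows[0]))              # random feature for this tree
        trees.append(fit_stump([rows[i] for i in idx], [targets[i] for i in idx], feature))
    return trees


def predict(trees, row):
    """Run the row through every tree and average the predictions."""
    return sum(tree(row) for tree in trees) / len(trees)


# Hypothetical data: (cores, memory_mb) -> response time in ms.
rows = [(1, 128), (2, 256), (4, 512), (8, 1024)]
targets = [20.0, 11.0, 6.0, 4.0]
forest = fit_forest(rows, targets)
slow, fast = predict(forest, (1, 128)), predict(forest, (8, 1024))
print(slow, fast)
```

Each stump alone is a crude model, but the average over many of them trained on different samples is more stable, which is the variance reduction a Random Forest relies on.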

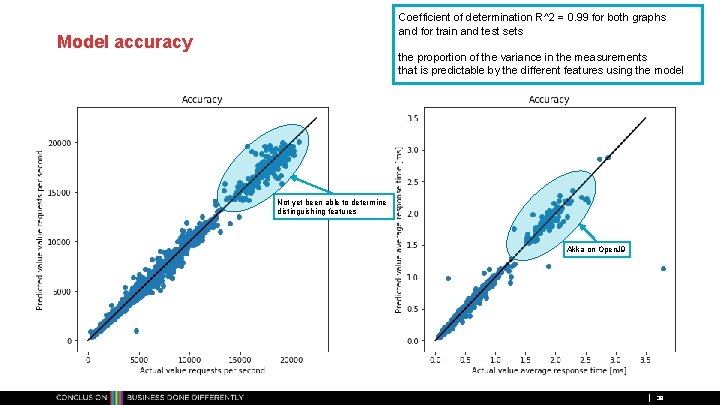

How to evaluate the model?

- Split the data into a train set and a test set
  - Train the model using the train set
  - Test predictions using the test set
  - Vary the train and test set contents to confirm results
- Compare the test set and the train set
  - Determine the Coefficient of Determination (R^2) for both sets: the proportion of the variance in the dependent variable that is predictable from the independent variable(s), i.e. how well observed outcomes are replicated by the model
  - If R^2 is different for both sets, the test or train set is probably biased
- Determine the accuracy of the predictions by comparing the predicted results of the test set with the actual results of the test set
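The evaluation procedure can be sketched with nothing but the standard library. To keep it short, a straight-line fit stands in for the Random Forest, and the data is synthetic; both are assumptions made for this illustration.

```python
import random


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


def train_test_split(points, test_fraction=0.25, seed=0):
    """Shuffle the data and cut it into a train set and a test set."""
    shuffled = points[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def fit_line(points):
    """Least-squares straight line; a stand-in for the real model."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    slope = (sum((x - mx) * (y - my) for x, y in points)
             / sum((x - mx) ** 2 for x, _ in points))
    return lambda x: my + slope * (x - mx)


# Synthetic data: a linear trend plus a small deterministic wobble.
points = [(x, 2.0 * x + 1.0 + ((x * 7) % 5 - 2) * 0.1) for x in range(20)]
train, test = train_test_split(points)
model = fit_line(train)
r2_train = r_squared([y for _, y in train], [model(x) for x, _ in train])
r2_test = r_squared([y for _, y in test], [model(x) for x, _ in test])
print(r2_train, r2_test)
```

Rerunning with a different `seed` varies the contents of both sets; if the two R^2 values stay close, the split is unlikely to be the cause of a good score.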

Back to the data

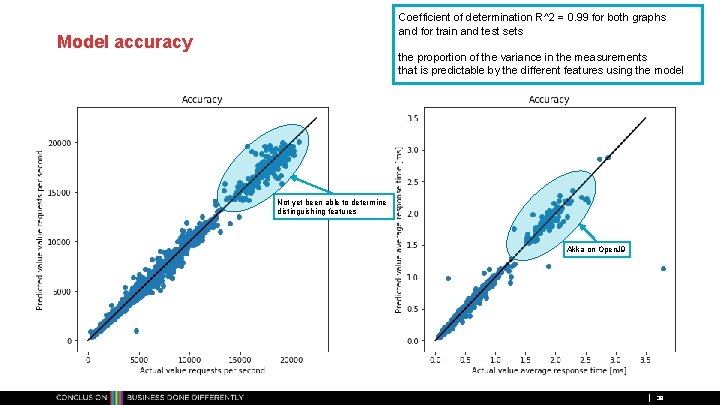

Coefficient of determination

- R^2 = 0.99 for both graphs and for train and test sets
- Model accuracy: the proportion of the variance in the measurements that is predictable by the different features using the model
- Not yet been able to determine distinguishing features
- Akka on OpenJ9

A Random Forest has a cool feature: you can determine the relative importance of features in the Decision Trees

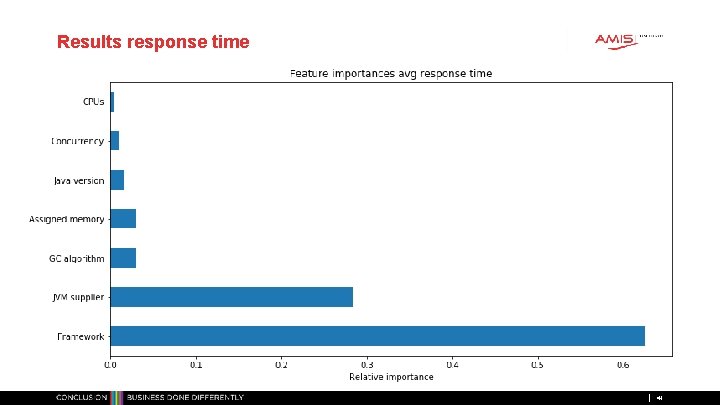

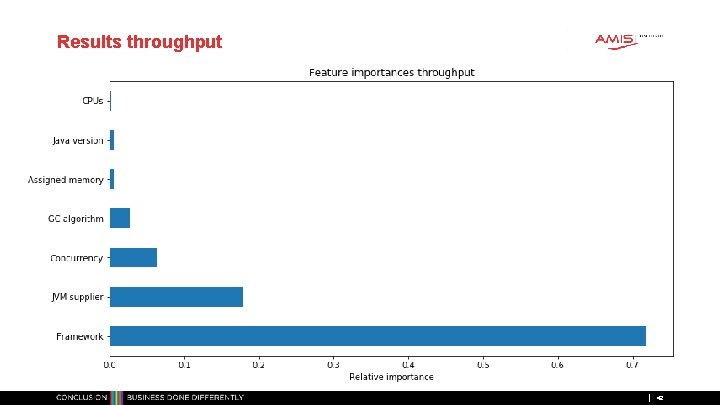

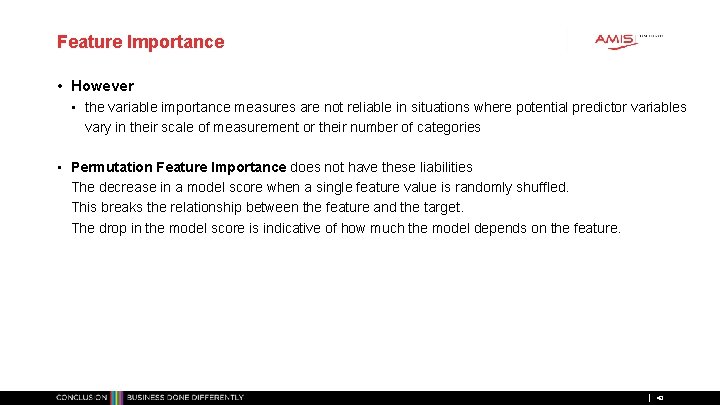

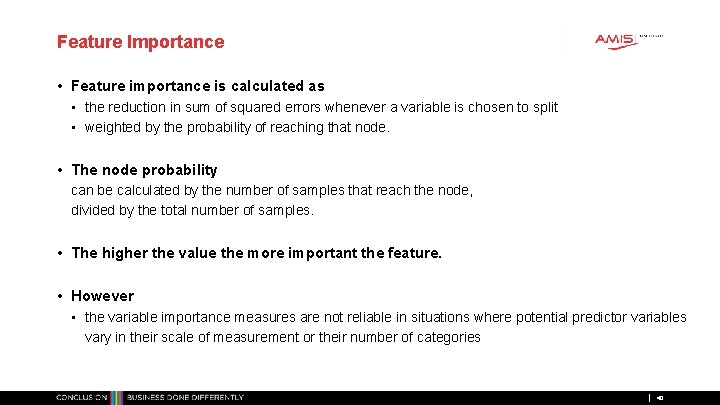

Feature Importance

- Feature importance is calculated as
  - the reduction in the sum of squared errors whenever a variable is chosen to split
  - weighted by the probability of reaching that node
- The node probability can be calculated as the number of samples that reach the node, divided by the total number of samples
- The higher the value, the more important the feature
- However
  - the variable importance measures are not reliable in situations where potential predictor variables vary in their scale of measurement or their number of categories
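Written as a formula, the calculation described here looks roughly as follows. The symbols are notation chosen for this note, not taken from the slides: $T_f$ is the set of decision nodes that split on feature $f$, $n_t$ the number of samples reaching node $t$, $N$ the total number of samples, and $\Delta_t$ the reduction in squared error achieved by the split at node $t$.

$$
\text{importance}(f) = \sum_{t \in T_f} \frac{n_t}{N}\,\Delta_t
$$

For a forest, the per-tree values are averaged over all trees.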

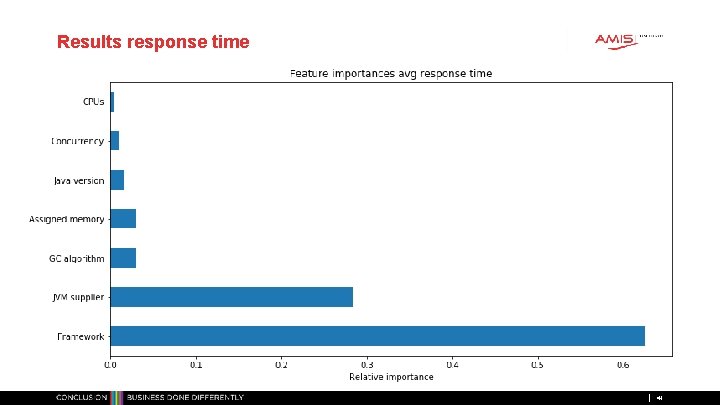

Results: response time

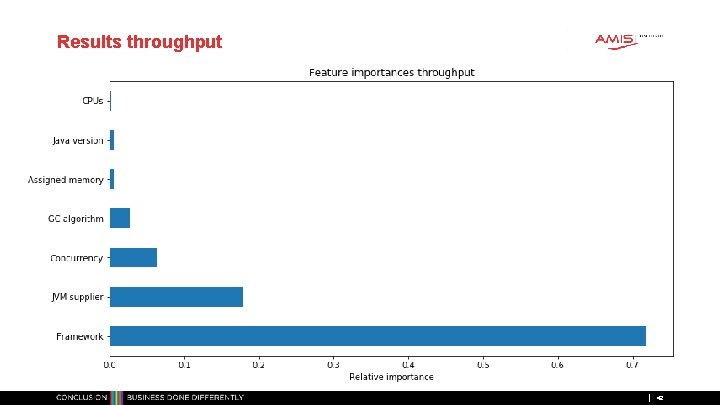

Results: throughput

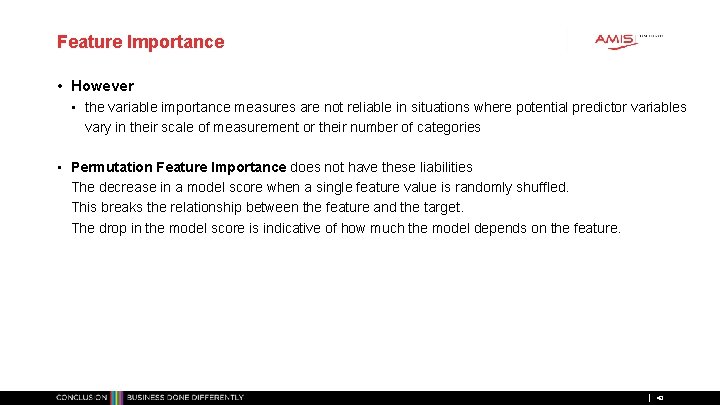

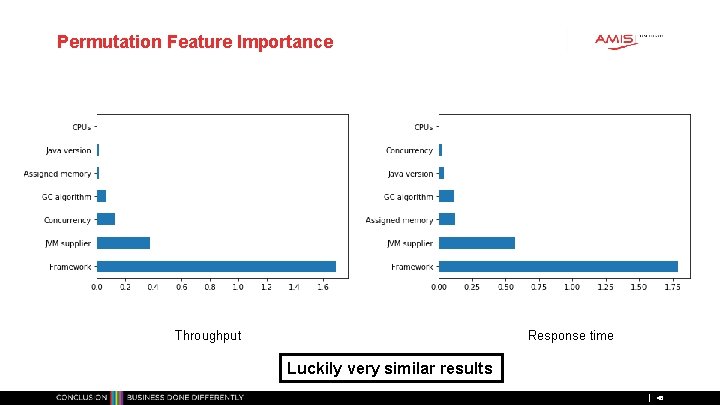

Feature Importance

- However
  - the variable importance measures are not reliable in situations where potential predictor variables vary in their scale of measurement or their number of categories
- Permutation Feature Importance does not have these liabilities
  - It is the decrease in a model score when the values of a single feature are randomly shuffled
  - This breaks the relationship between the feature and the target
  - The drop in the model score is indicative of how much the model depends on the feature

Permutation Feature Importance

- Train the baseline model and record the accuracy
- Shuffle the values of one feature in the selected dataset (this keeps the same variance)
- Pass the dataset to the model again to obtain predictions and calculate the accuracy. The feature importance is the difference between the benchmark score and the one from the modified (permuted) dataset
- Repeat for all features in the dataset
- The resulting score is a measure of how important the feature is
  - If the accuracy is greatly reduced, the feature is important
  - If the accuracy does not change much by randomizing a feature, the feature is not important
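These steps fit in a short standard-library sketch. Assumptions: the "trained model" is a hand-written function that depends on cores only, R^2 is used as the accuracy score, and the data is invented so that the outcome is easy to check.

```python
import random


def r_squared(actual, predicted):
    """Coefficient of determination, used here as the model score."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


def permutation_importance(model, rows, targets, n_repeats=20, seed=0):
    """Average drop in score per feature after shuffling that feature's column."""
    rng = random.Random(seed)
    baseline = r_squared(targets, [model(r) for r in rows])
    importances = []
    for feature in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[feature] for r in rows]
            rng.shuffle(column)               # same values, relation to target broken
            permuted = [r[:feature] + (v,) + r[feature + 1:]
                        for r, v in zip(rows, column)]
            score = r_squared(targets, [model(r) for r in permuted])
            drops.append(baseline - score)
        importances.append(sum(drops) / n_repeats)
    return importances


def model(row):
    """Hypothetical stand-in for a trained model: uses cores, ignores memory."""
    cores, _memory_mb = row
    return 100.0 / cores


# Hypothetical data set: (cores, memory_mb) rows with matching response times.
rows = [(1, 128), (1, 256), (2, 128), (2, 256), (4, 128), (4, 256), (8, 128), (8, 256)]
targets = [model(r) for r in rows]
cores_importance, memory_importance = permutation_importance(model, rows, targets)
print(cores_importance, memory_importance)
```

Shuffling the memory column leaves every prediction unchanged, so its importance is zero, while shuffling cores lowers the score.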

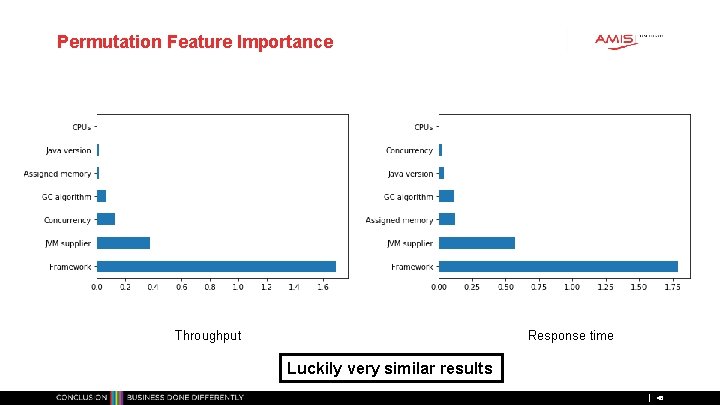

Permutation Feature Importance

- Panels: throughput, response time
- Luckily very similar results

How do you use this information?

When you’re having a performance issue

- Look at your application and the frameworks and logic used
  - Quarkus, Helidon SE, Vert.x are good
- Next try different JVMs
  - Oracle JDK and OpenJDK are good
  - SubstrateVM (GraalVM native) might be an option for fast startup and less memory
- Don’t pay much attention to
  - Memory
  - CPU
  - GC algorithm
  - JVM version

Of course, this holds within the scope of the tested variables / code. Can this model generalize outside of the tested variables?

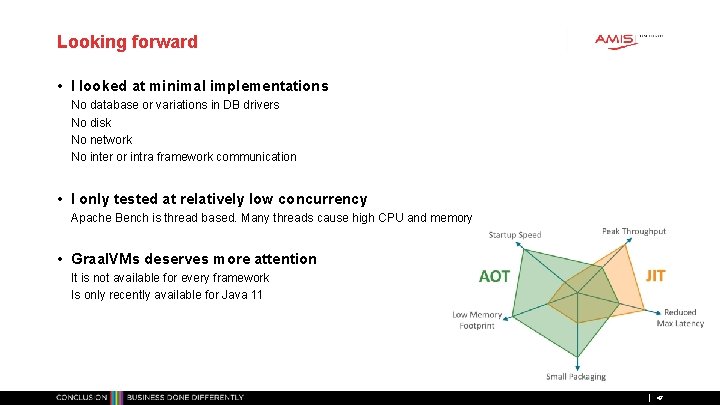

Looking forward

- I looked at minimal implementations
  - No database or variations in DB drivers
  - No disk
  - No network
  - No inter or intra framework communication
- I only tested at relatively low concurrency
  - Apache Bench is thread based. Many threads cause high CPU and memory usage
- GraalVM deserves more attention
  - It is not available for every framework
  - It is only recently available for Java 11

Finally

- The model has been created based on the supplied variables and data
  - I had to make choices and scope the test due to time limitations
  - Many more variables can be relevant!
  - How accurate are predictions outside the scope of measurements?
- Machine learning can help
  - Find patterns and generalize data (create a model)
  - Do predictions
  - Find out what is important in your data
  - Provide valuable insights
- Performance
  - is a great use case for machine learning (e.g. data with patterns, optimization challenges)
  - a fresh look by a machine can help us be more efficient in our tuning efforts