
- Slides: 29

Performance Dominik Göddeke

Overview
• Motivation and example PDE
  – Poisson problem
  – Discretization and data layouts
• Five points of attack for GPGPU

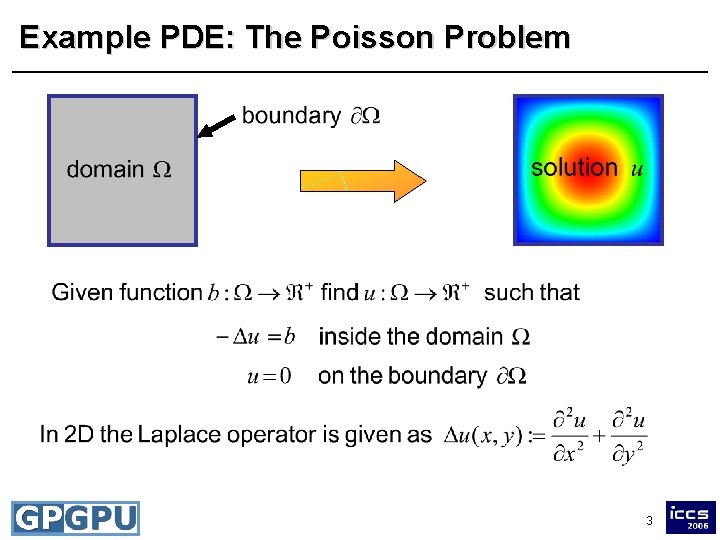

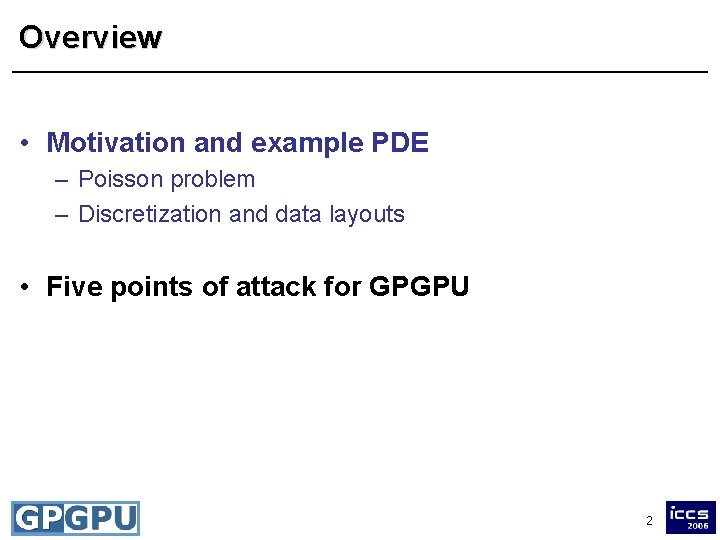

Example PDE: The Poisson Problem

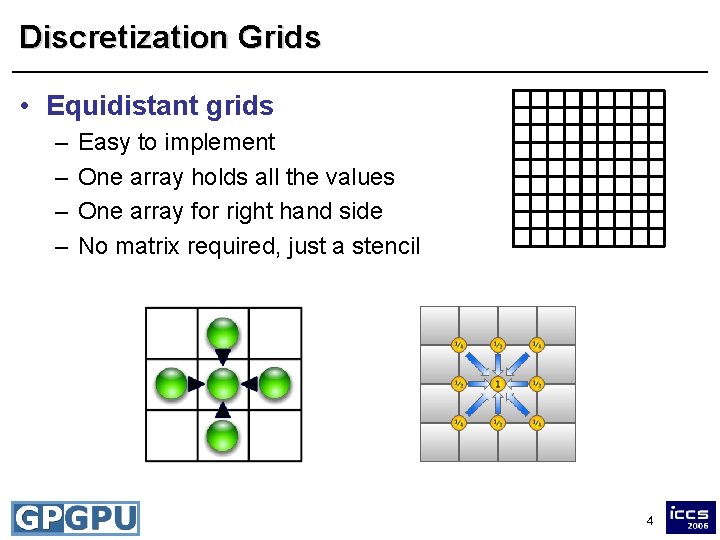

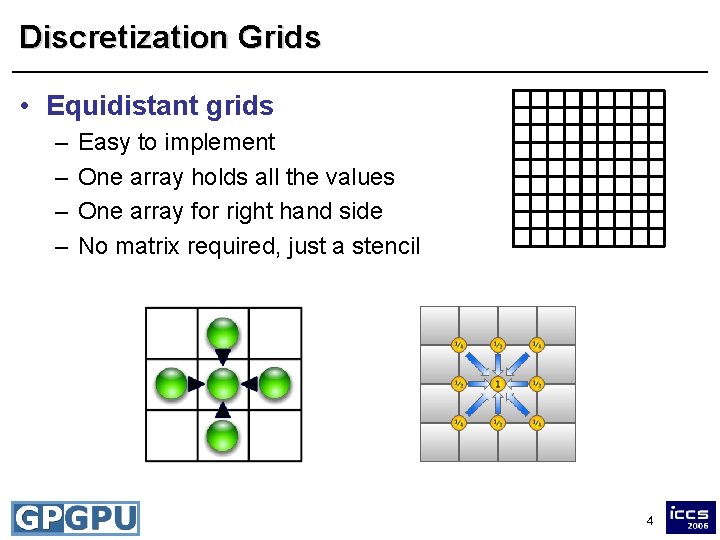

Discretization Grids
• Equidistant grids
  – Easy to implement
  – One array holds all the values
  – One array for right hand side
  – No matrix required, just a stencil
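A minimal CPU-side sketch in Python of what "no matrix required, just a stencil" can look like: one array for the values, one for the right hand side, and the five-point stencil applied directly. The function name, the flat row-major layout and the untouched boundary entries are assumptions made for illustration, not taken from the slides.

```python
def jacobi_sweep(x, b, n, h):
    """One Jacobi sweep for the 5-point Laplacian on an n x n equidistant grid.

    x and b are flat row-major lists of length n*n. Boundary entries of x
    are left untouched (assumed to hold Dirichlet values).
    """
    new = list(x)
    for j in range(1, n - 1):
        for i in range(1, n - 1):
            k = j * n + i
            # The stencil replaces the matrix row: four direct neighbours.
            neighbours = x[k - 1] + x[k + 1] + x[k - n] + x[k + n]
            # Solve (4*x_k - neighbours) / h^2 = b_k for x_k.
            new[k] = (h * h * b[k] + neighbours) / 4.0
    return new
```

On a 3 x 3 grid with a single interior node, one sweep solves that node's equation exactly, which makes a convenient sanity check.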

Discretization Grids
• Tensorproduct grids
  – Reasonably easy to implement
  – Banded matrix, each band represented as an individual array

[Figure: banded matrix, its bands stored as vectors, and the corresponding GPU arrays; image courtesy of Jens Krüger]
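The band-per-array layout can be sketched on the CPU as follows. This is an illustrative Python version under assumed conventions (a dictionary from diagonal offset to band, with `band[i]` holding the entry in row `i`); the slides do not prescribe this exact layout.

```python
def banded_matvec(bands, x):
    """Compute y = A x for a banded matrix.

    bands maps a diagonal offset d to a list `band` with band[i] = A[i][i + d].
    Entries that would fall outside the matrix are ignored.
    """
    n = len(x)
    y = [0.0] * n
    for offset, band in bands.items():
        for i in range(n):
            j = i + offset
            if 0 <= j < n:
                # One read into the band and one read into x per entry.
                y[i] += band[i] * x[j]
    return y


# Usage: 1D Laplacian (tridiagonal) applied to a linear vector.
laplace_1d = {-1: [-1.0] * 4, 0: [2.0] * 4, 1: [-1.0] * 4}
y = banded_matvec(laplace_1d, [1.0, 2.0, 3.0, 4.0])
```

Each band is traversed sequentially, which is what makes this layout map well to one GPU array per band.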

Discretization Grids
• Generalized tensorproduct grids
  – Generality vs. efficient data structures tailored for GPU
  – Global unstructured macro mesh, domain decomposition
  – (An-)isotropic refinement into local tensorproduct meshes
• Efficient compromise
  – Hide anisotropies locally and exploit fast solvers on regular sub-problems: excellent numerical convergence
  – Large problems become viable

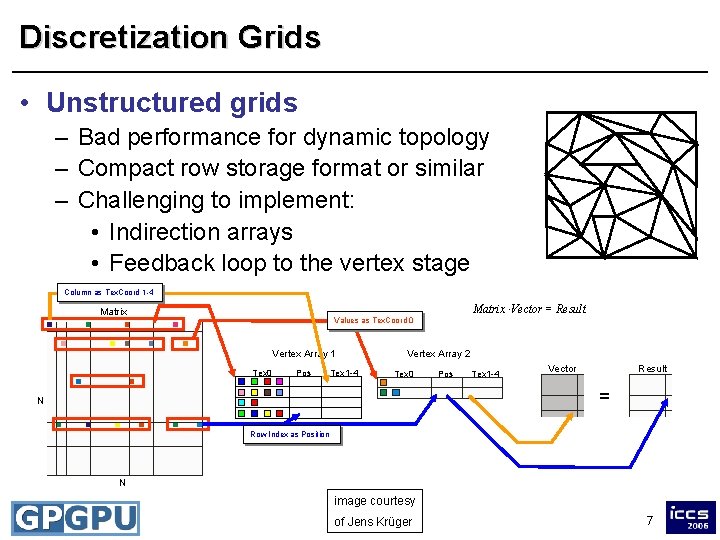

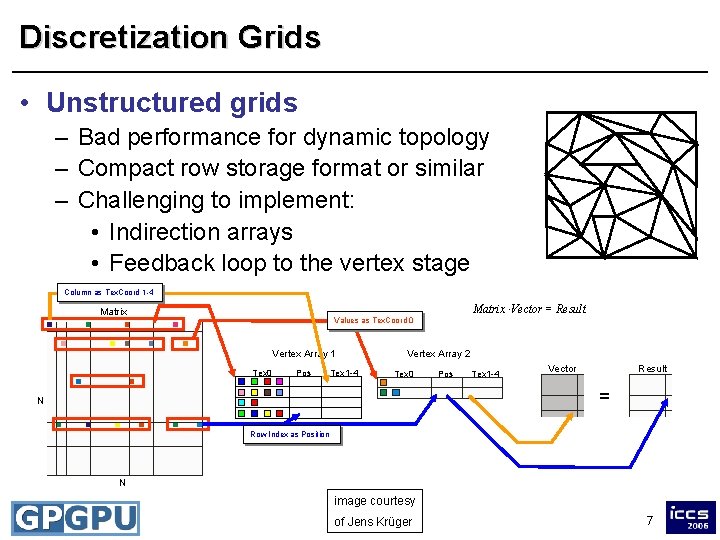

Discretization Grids
• Unstructured grids
  – Bad performance for dynamic topology
  – Compact row storage format or similar
  – Challenging to implement:
    • Indirection arrays
    • Feedback loop to the vertex stage

[Figure: Matrix × Vector = Result, with matrix values as texture coordinate 0, column index as texture coordinate 1, and row index as vertex position; image courtesy of Jens Krüger]
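To make the role of the indirection arrays concrete, here is a small CPU-side Python sketch of a matrix-vector product in a compressed-row layout, one common form of row-wise compact storage. The array names `values`, `col_idx` and `row_ptr` are conventional but are assumptions here, not names from the slides.

```python
def csr_matvec(values, col_idx, row_ptr, x):
    """Compute y = A x with A stored row by row.

    values  : nonzero entries, row after row
    col_idx : column index of each nonzero (the indirection array)
    row_ptr : row_ptr[r] .. row_ptr[r + 1] delimits the nonzeros of row r
    """
    y = []
    for row in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[row], row_ptr[row + 1]):
            # Indirect read: the position in x is itself read from memory.
            acc += values[k] * x[col_idx[k]]
        y.append(acc)
    return y
```

The dependent read `x[col_idx[k]]` is the part that breaks streaming access and makes this layout harder to map to the GPU than the banded case.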

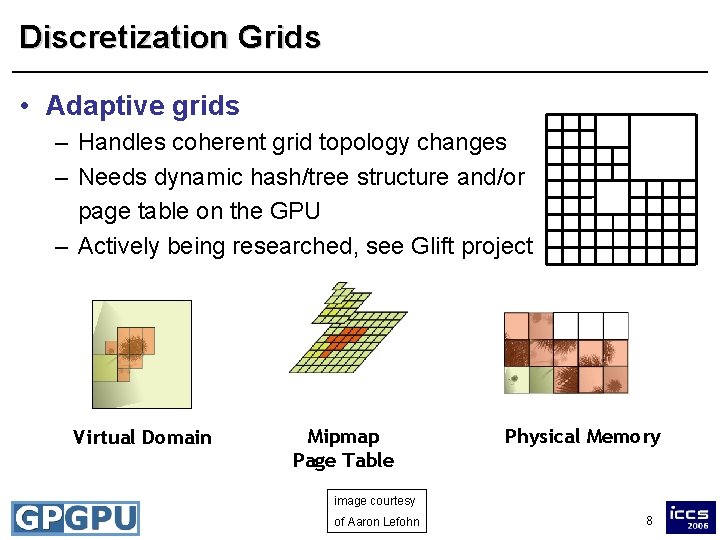

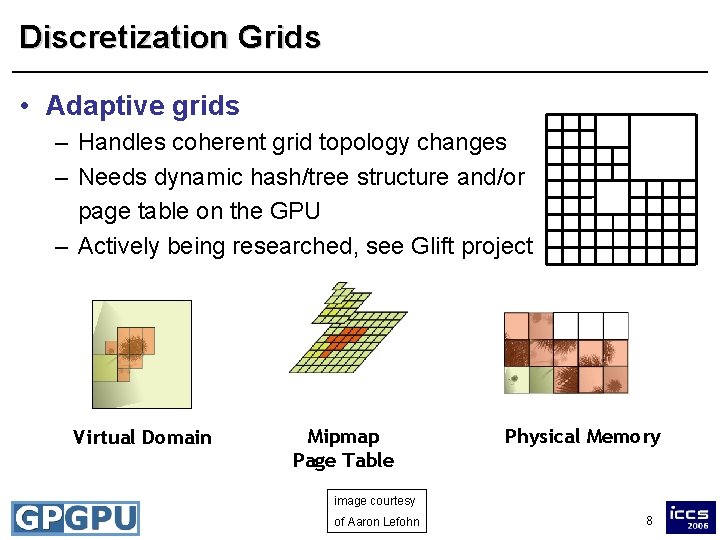

Discretization Grids
• Adaptive grids
  – Handles coherent grid topology changes
  – Needs dynamic hash/tree structure and/or page table on the GPU
  – Actively being researched, see Glift project

[Figure: virtual domain, mipmap page table, physical memory; image courtesy of Aaron Lefohn]

Overview
• Motivation and example PDE
• Five points of attack for GPGPU
  – Interpolation
  – On-chip bandwidth
  – Off-chip bandwidth
  – Overhead
  – Vectorization

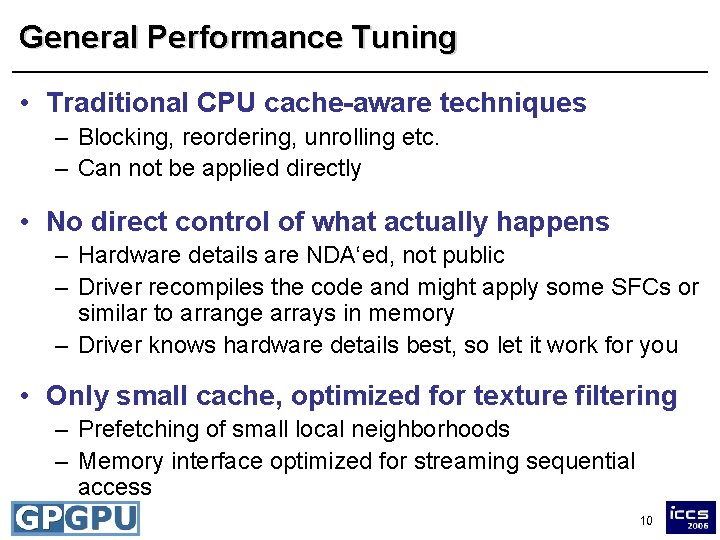

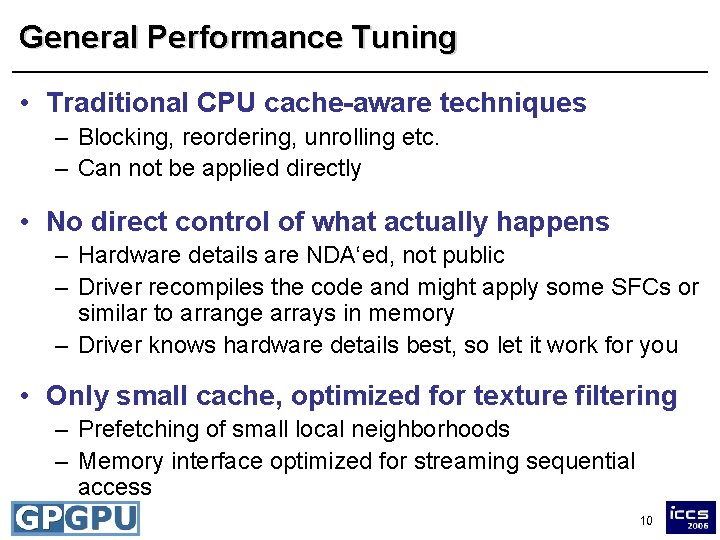

General Performance Tuning
• Traditional CPU cache-aware techniques
  – Blocking, reordering, unrolling etc.
  – Cannot be applied directly
• No direct control of what actually happens
  – Hardware details are under NDA, not public
  – Driver recompiles the code and might apply space-filling curves (SFCs) or similar to arrange arrays in memory
  – Driver knows hardware details best, so let it work for you
• Only small cache, optimized for texture filtering
  – Prefetching of small local neighborhoods
  – Memory interface optimized for streaming sequential access

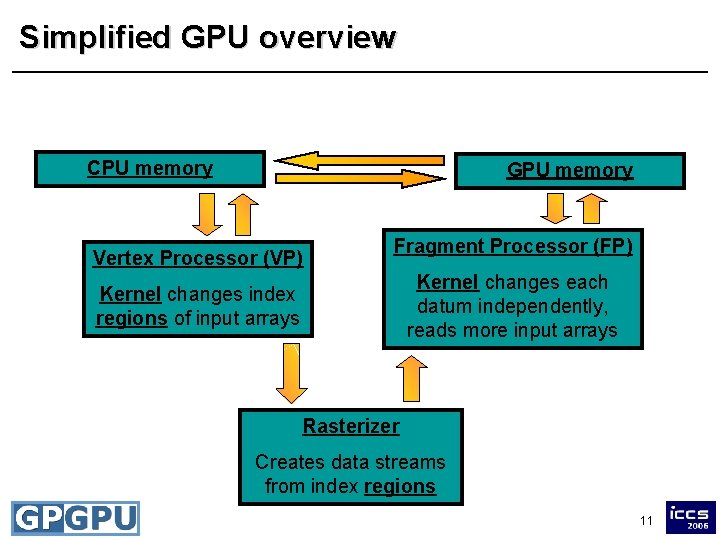

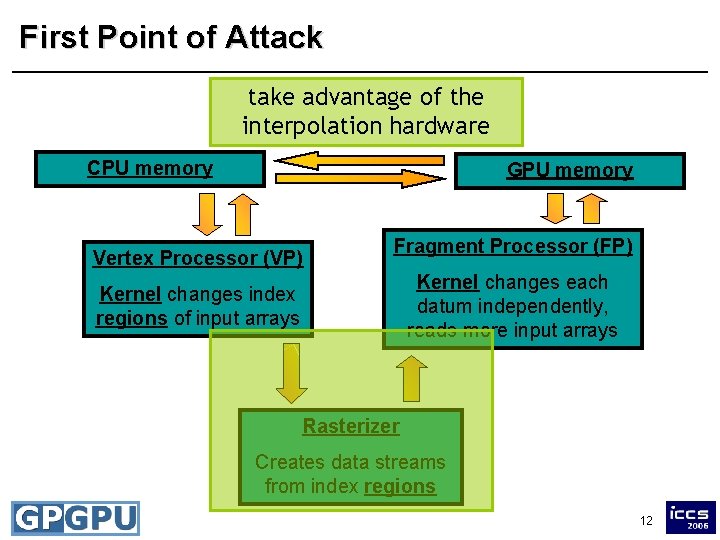

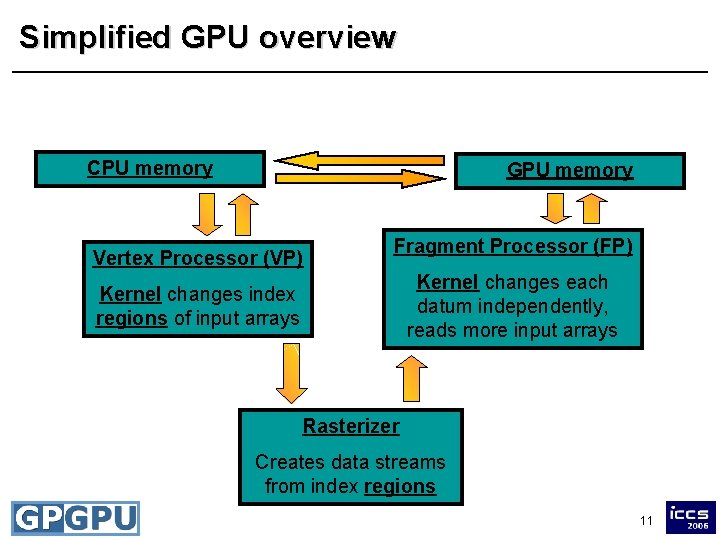

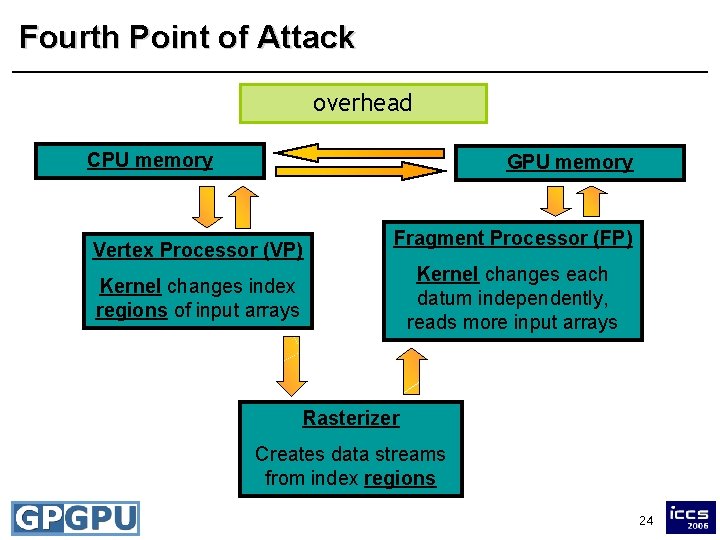

Simplified GPU overview
• CPU memory, GPU memory
• Vertex Processor (VP): kernel changes index regions of input arrays
• Rasterizer: creates data streams from index regions
• Fragment Processor (FP): kernel changes each datum independently, reads more input arrays

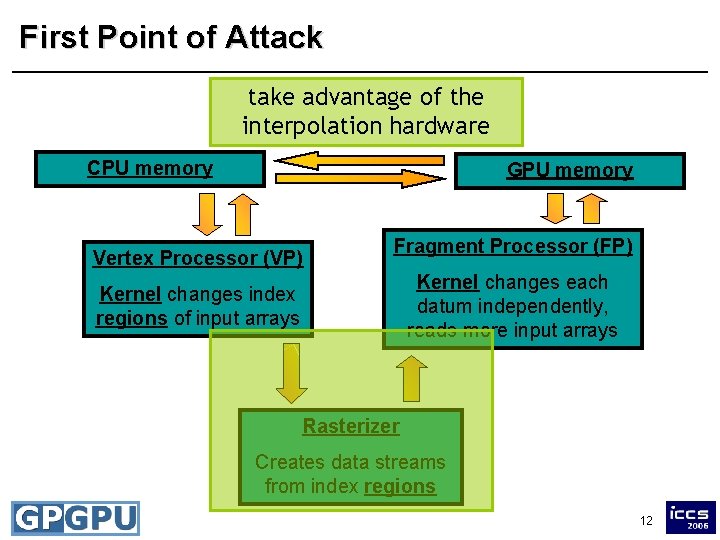

First Point of Attack: take advantage of the interpolation hardware

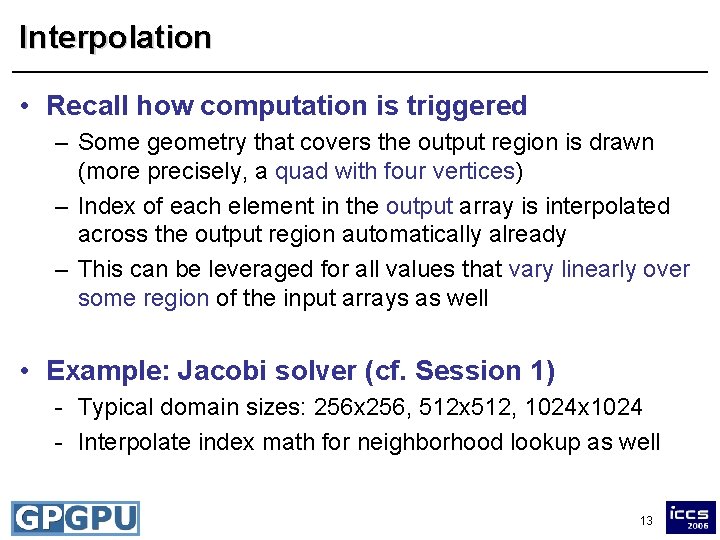

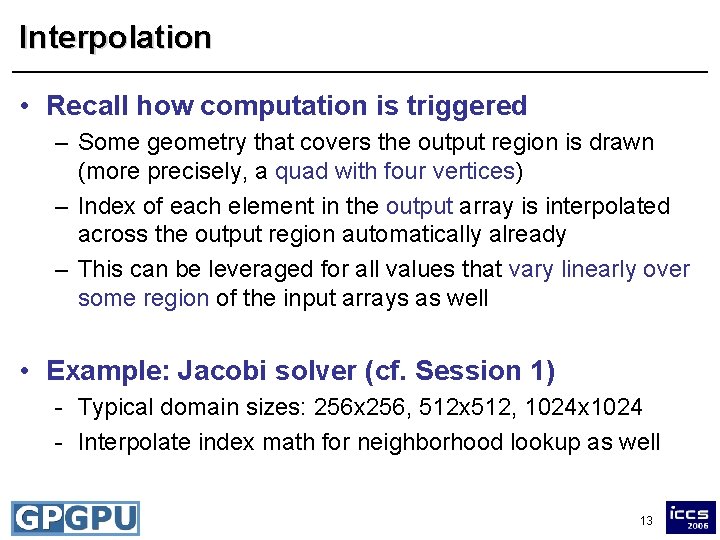

Interpolation
• Recall how computation is triggered
  – Some geometry that covers the output region is drawn (more precisely, a quad with four vertices)
  – The index of each element in the output array is interpolated across the output region automatically
  – This can be leveraged for all values that vary linearly over some region of the input arrays as well
• Example: Jacobi solver (cf. Session 1)
  – Typical domain sizes: 256 x 256, 512 x 512, 1024 x 1024
  – Interpolate index math for neighborhood lookup as well

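Interpolation Example

Before: the neighbor offsets are computed in the fragment program, i.e. 1024^2 times for a 1024 x 1024 domain.

    float jacobi (float2 center : WPOS,
                  uniform samplerRECT x,
                  uniform samplerRECT b,
                  uniform float one_over_h) : COLOR
    {
      // index math, executed once per fragment
      float2 left   = center - float2(1, 0);
      float2 right  = center + float2(1, 0);
      float2 bottom = center - float2(0, 1);
      float2 top    = center + float2(0, 1);

      float x_center = texRECT(x, center).x;
      float x_left   = texRECT(x, left).x;
      float x_right  = texRECT(x, right).x;
      float x_bottom = texRECT(x, bottom).x;
      float x_top    = texRECT(x, top).x;
      float rhs      = texRECT(b, center).x;

      float Ax = one_over_h * (4.0 * x_center - x_left - x_right - x_bottom - x_top);
      // the diagonal of A is 4 * one_over_h, so its inverse is:
      float inv_diag = 1.0 / (4.0 * one_over_h);
      return x_center + inv_diag * (rhs - Ax);
    }

After: extract the offset calculation to the vertex processor, where it is calculated only 4 times (once per vertex of the quad).

    void stencil (float4 position : POSITION,
                  out float4 center : HPOS,
                  out float2 left   : TEXCOORD0,
                  out float2 right  : TEXCOORD1,
                  out float2 bottom : TEXCOORD2,
                  out float2 top    : TEXCOORD3,
                  uniform float4x4 ModelViewMatrix)
    {
      center = mul(ModelViewMatrix, position);
      // assumes the quad's vertices are specified in texel coordinates
      left   = position.xy - float2(1, 0);
      right  = position.xy + float2(1, 0);
      bottom = position.xy - float2(0, 1);
      top    = position.xy + float2(0, 1);
    }

The fragment program then receives the offsets as input variables after interpolation:

    float jacobi (float2 center : WPOS,
                  in float2 left   : TEXCOORD0,
                  in float2 right  : TEXCOORD1,
                  in float2 bottom : TEXCOORD2,
                  in float2 top    : TEXCOORD3,
                  uniform samplerRECT x,
                  uniform samplerRECT b,
                  uniform float one_over_h) : COLOR
    {
      // no index math left: only lookups and the Jacobi update
      float x_center = texRECT(x, center).x;
      float x_left   = texRECT(x, left).x;
      float x_right  = texRECT(x, right).x;
      float x_bottom = texRECT(x, bottom).x;
      float x_top    = texRECT(x, top).x;
      float rhs      = texRECT(b, center).x;

      float Ax = one_over_h * (4.0 * x_center - x_left - x_right - x_bottom - x_top);
      float inv_diag = 1.0 / (4.0 * one_over_h);
      return x_center + inv_diag * (rhs - Ax);
    }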
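Why this works can be checked on the CPU: the offset coordinates vary linearly across the quad, so interpolating the values computed at the vertices reproduces the per-fragment result. The Python sketch below does this in one dimension only; the function names and the convention that pixel centres sit at `k + 0.5` are assumptions for illustration.

```python
def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def interpolated_left(px, n):
    """'left' coordinate at pixel centre px, interpolated across a quad of width n."""
    left_at_x0 = 0.0 - 1.0      # vertex program result at the quad's left edge
    left_at_xn = float(n) - 1.0  # vertex program result at the quad's right edge
    return lerp(left_at_x0, left_at_xn, px / n)


def per_fragment_left(px):
    """The same coordinate computed directly in every fragment."""
    return px - 1.0
```

Both functions agree at every pixel centre, but the interpolated variant needs the subtraction only at the vertices.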

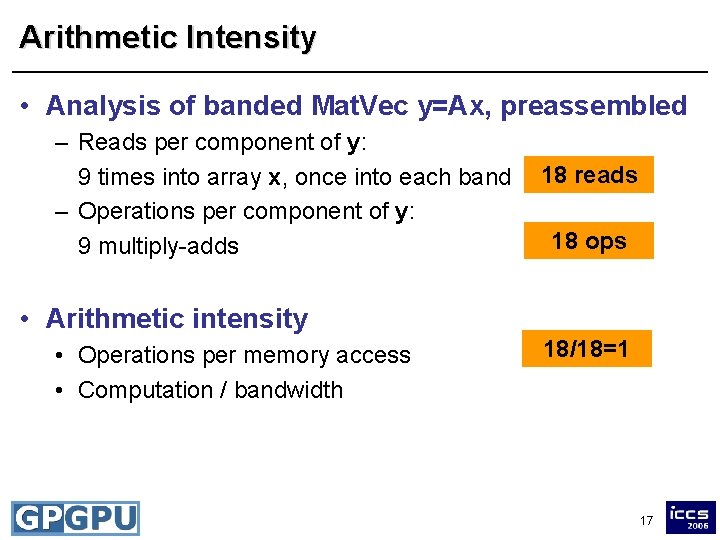

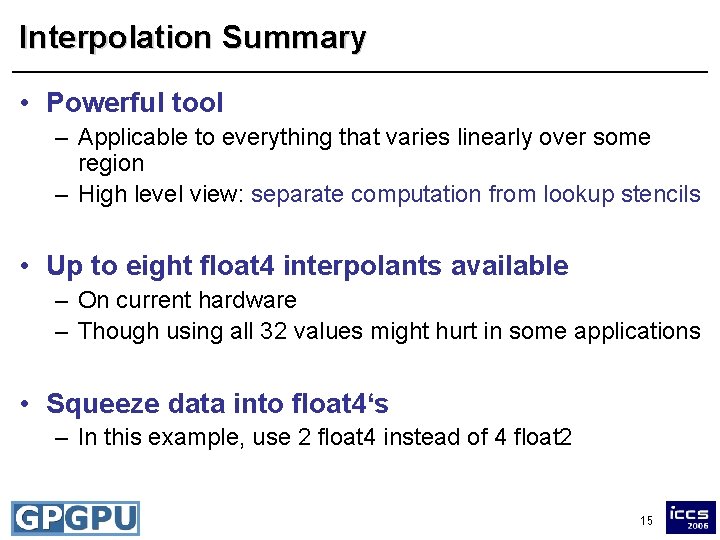

Interpolation Summary
• Powerful tool
  – Applicable to everything that varies linearly over some region
  – High level view: separate computation from lookup stencils
• Up to eight float4 interpolants available
  – On current hardware
  – Though using all 32 values might hurt in some applications
• Squeeze data into float4s
  – In this example, use 2 float4 instead of 4 float2

Second Point of Attack: on-chip bandwidth

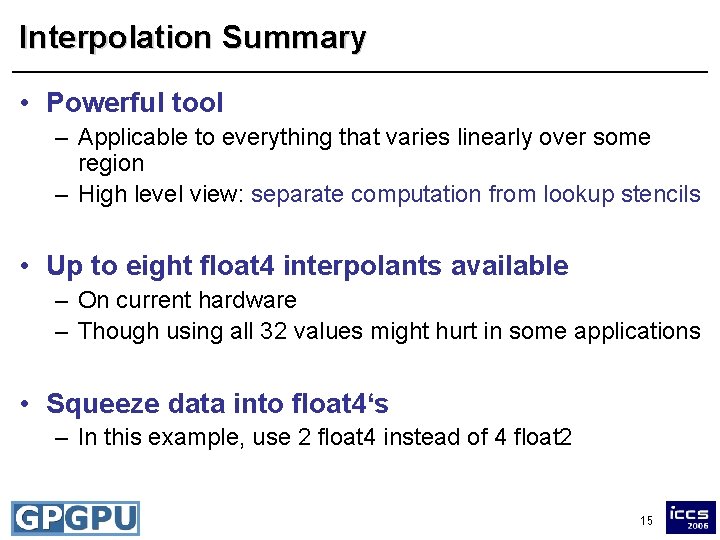

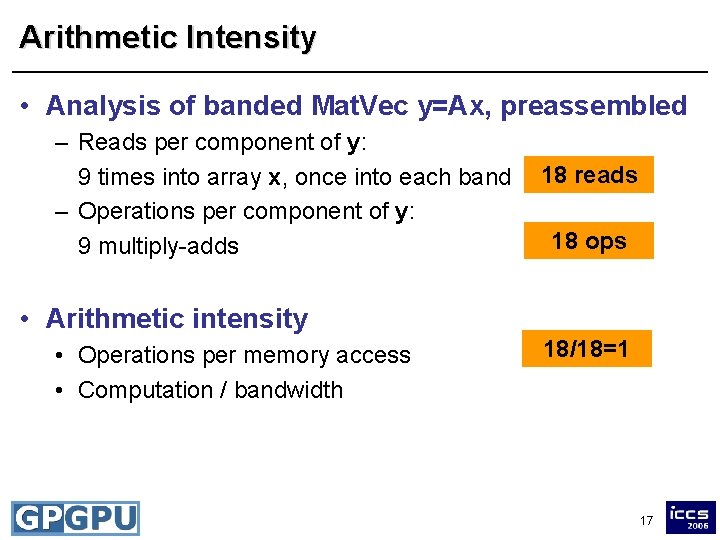

Arithmetic Intensity
• Analysis of banded MatVec y=Ax, preassembled
  – Reads per component of y: 9 times into array x, once into each band (18 reads)
  – Operations per component of y: 9 multiply-adds (18 ops)
• Arithmetic intensity
  – Operations per memory access
  – Computation / bandwidth: 18/18 = 1
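The count above can be written out as a small Python calculation. The second case, a constant stencil whose coefficients are not read from memory, is an assumed comparison added for illustration and is not analyzed on the slide.

```python
def arithmetic_intensity(ops, reads):
    """Operations per memory access."""
    return ops / reads


bands = 9

# Preassembled banded MatVec: per component of y, one read into x and one
# read into the band for each of the 9 bands; one multiply-add counts as 2 ops.
preassembled = arithmetic_intensity(ops=2 * bands, reads=2 * bands)

# Assumed comparison: constant stencil coefficients held in the kernel,
# so only the 9 reads into x remain.
constant_stencil = arithmetic_intensity(ops=2 * bands, reads=bands)
```

A value of 1 means every operation has to be paid for with a memory access, which points to a bandwidth-bound kernel.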

Precompute vs. Recompute
• Case 1: Application is compute-bound
  – High arithmetic intensity
  – Trade computation for memory access
  – Precompute as many values as possible and read them in from additional input arrays
• Try to maintain spatial coherence
  – Otherwise, performance will degrade
• Rule of thumb
  – Need approx. 7 basic arithmetic ops to hide latency
  – Do not precompute x^2 if you read in x anyway

Precompute vs. Recompute
• Case 2: Application is bandwidth-bound
  – Trade memory access for additional computation
• Example: Matrix assembly and Matrix-Vector multiplication
  – On-the-fly: recompute all entries in each MatVec
    • Lowest memory requirement
    • Good for simple entries or seldom use of matrix

Precompute vs. Recompute
  – Partial assembly: precompute only a few intermediate values
    • Allows balancing computation and bandwidth requirements
    • A good choice of precomputed results also requires little memory
  – Full assembly: precompute all entries of A
    • Read entries during MatVec
    • Good if other computations hide bandwidth problem
    • Otherwise try to use partial assembly
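The two extremes, on-the-fly and full assembly, can be contrasted in a short CPU-side Python sketch. It uses a hypothetical 1D diffusion operator with a made-up coefficient function `coeff`; the operator, the function names and the homogeneous boundary treatment are all assumptions chosen to keep the example small.

```python
def coeff(i, h):
    """Hypothetical diffusion coefficient between nodes i and i + 1."""
    return 1.0 + (i + 0.5) * h


def neighbours(x, i):
    """Left and right neighbour values, zero outside the domain."""
    left = x[i - 1] if i > 0 else 0.0
    right = x[i + 1] if i < len(x) - 1 else 0.0
    return left, right


def matvec_on_the_fly(x, h):
    """Recompute the matrix entries in every MatVec: more ops, fewer reads."""
    y = []
    for i in range(len(x)):
        lo, hi = coeff(i - 1, h), coeff(i, h)
        left, right = neighbours(x, i)
        y.append(((lo + hi) * x[i] - lo * left - hi * right) / (h * h))
    return y


def assemble(n, h):
    """Full assembly: store all three bands once."""
    lower = [-coeff(i - 1, h) / (h * h) for i in range(n)]
    diag = [(coeff(i - 1, h) + coeff(i, h)) / (h * h) for i in range(n)]
    upper = [-coeff(i, h) / (h * h) for i in range(n)]
    return lower, diag, upper


def matvec_assembled(bands, x):
    """Read precomputed entries during MatVec: fewer ops, more reads."""
    lower, diag, upper = bands
    y = []
    for i in range(len(x)):
        left, right = neighbours(x, i)
        y.append(lower[i] * left + diag[i] * x[i] + upper[i] * right)
    return y
```

Both variants compute the same product; which one is faster depends on whether the kernel is limited by computation or by bandwidth. Partial assembly would sit in between, for instance by storing only the `coeff` values.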

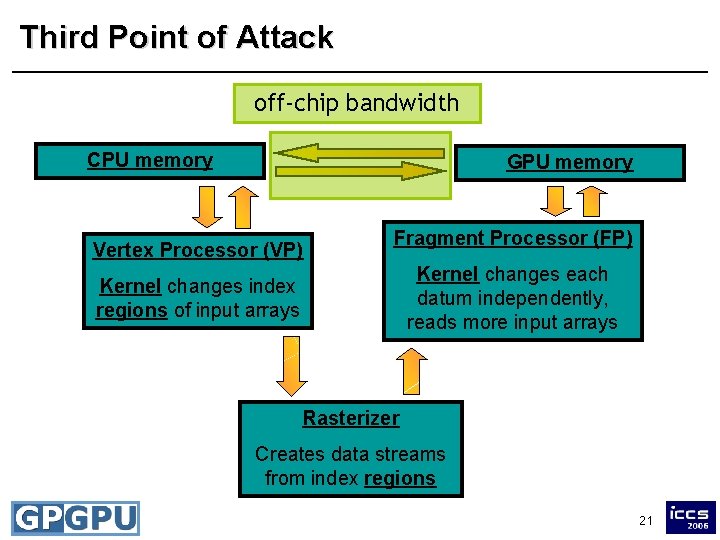

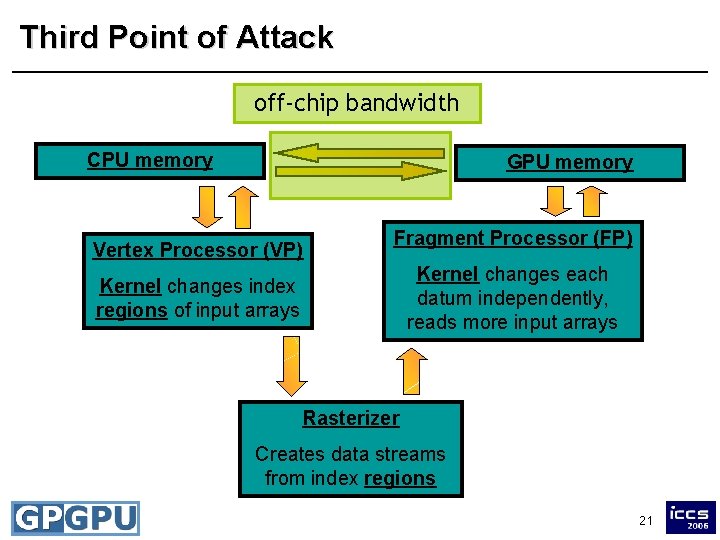

Third Point of Attack: off-chip bandwidth

CPU - GPU Barrier
• Transfer is potential bottleneck
  – Often less than 1 GB/s via PCIe bus
  – Readback to CPU memory always implies a global synchronization point (pipeline flush)
• Easy case
  – Application directly visualizes results
  – Only need to transfer initial data to the GPU in a preprocessing step
  – No readback required
  – Examples: Interactive visualization and fluid solvers (cf. Session 1)

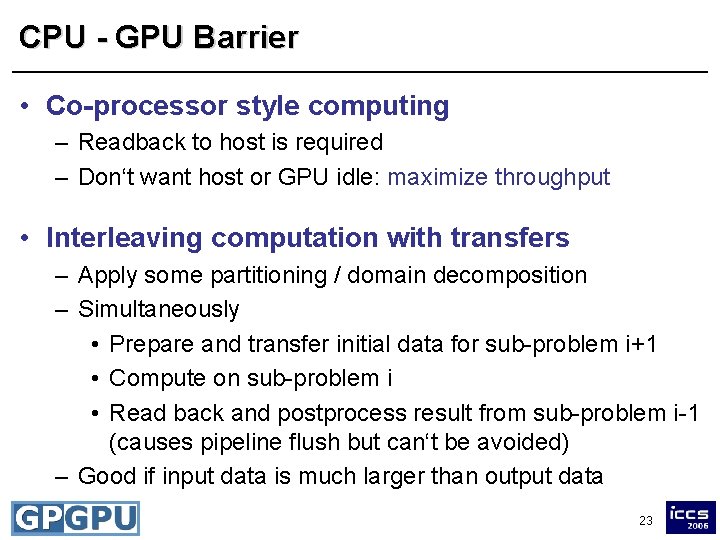

CPU - GPU Barrier
• Co-processor style computing
  – Readback to host is required
  – Don't want host or GPU idle: maximize throughput
• Interleaving computation with transfers
  – Apply some partitioning / domain decomposition
  – Simultaneously
    • Prepare and transfer initial data for sub-problem i+1
    • Compute on sub-problem i
    • Read back and postprocess result from sub-problem i-1 (causes pipeline flush but can't be avoided)
  – Good if input data is much larger than output data
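The interleaving can be pictured as a three-stage software pipeline. The Python sketch below only generates the schedule (which sub-problem is in which stage at each step); it performs no actual transfers, and the function name and tuple layout are assumptions for illustration.

```python
def pipeline_schedule(num_subproblems):
    """Return one (upload, compute, readback) tuple per time step.

    At step t, sub-problem t is uploaded, t - 1 is computed on, and the
    result of t - 2 is read back. None marks an idle stage.
    """
    def valid(i):
        return i if 0 <= i < num_subproblems else None

    # Two extra steps are needed to drain the pipeline at the end.
    return [(valid(t), valid(t - 1), valid(t - 2))
            for t in range(num_subproblems + 2)]


schedule = pipeline_schedule(3)
```

For three sub-problems only the middle step keeps all three stages busy, which illustrates why the scheme pays off mainly for many sub-problems.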

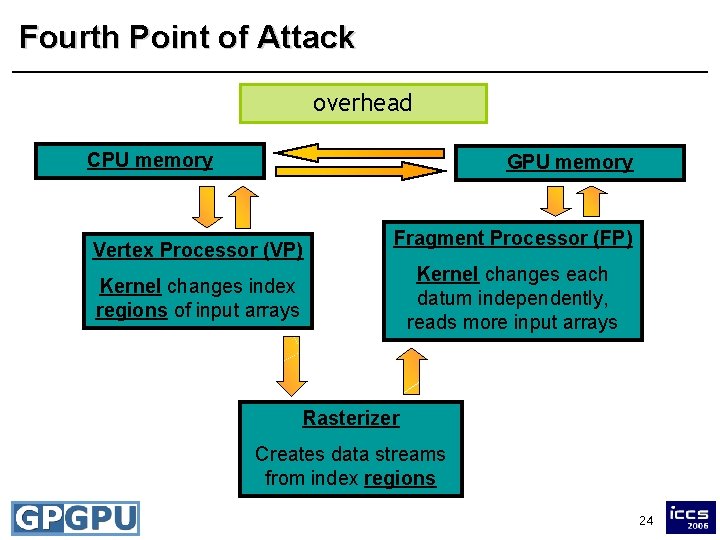

Fourth Point of Attack: overhead

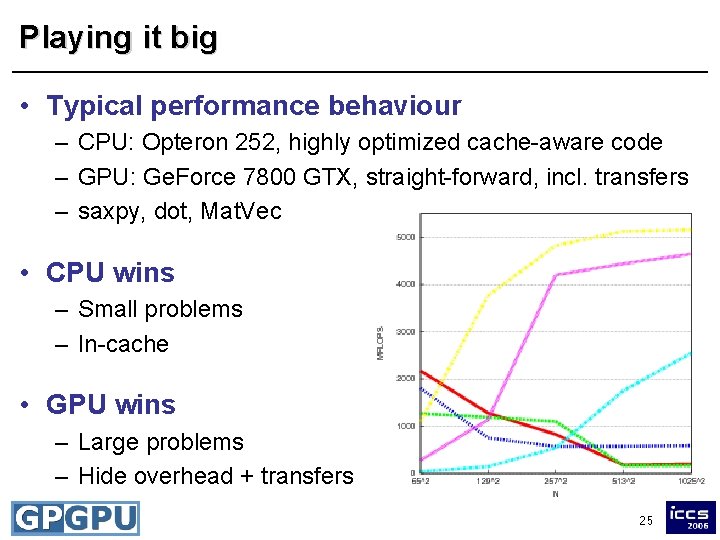

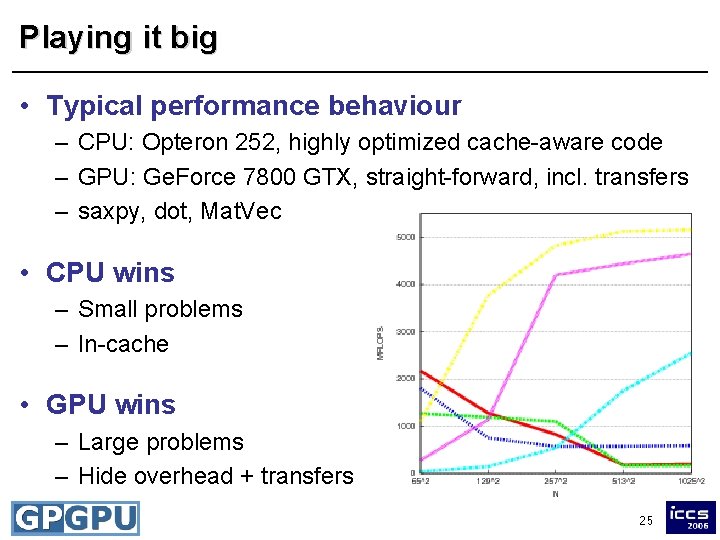

Playing it big
• Typical performance behaviour
  – CPU: Opteron 252, highly optimized cache-aware code
  – GPU: GeForce 7800 GTX, straightforward, incl. transfers
  – saxpy, dot, MatVec
• CPU wins
  – Small problems
  – In-cache
• GPU wins
  – Large problems
  – Hide overhead + transfers

Playing it big
• Nice analogy: Memory hierarchies
  – GPU memory is fast, comparable to in-cache on CPUs
  – Consider offloading to the GPU as manual prefetching
  – Always choose the type of memory that is fastest for the given chunk of data
• Lots of parallel threads in flight
  – Need lots of data elements to compute on
  – Otherwise, PEs won't be saturated
• Worst case and best case
  – Worst: offload saxpy for small N individually to the GPU
  – Best: offload whole solvers for large N to the GPU (e.g. a full MG cycle)

Fifth Point of Attack: instruction-level parallelism

Vectorization
• GPUs are designed to process 4-tuples of data
  – Same cost to compute on four float values as on one
  – Take advantage of co-issuing over the four components
• Swizzles
  – Swizzling components of the 4-tuples is free (no MOVs)
  – Example: data=(1, 2, 3, 4) yields data.zzyx=(3, 3, 2, 1)
  – Very useful for index math and storing values in float4s
• Problem
  – Challenging task to map data into RGBA
  – Very problem-specific, no rules of thumb
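The swizzle semantics from the example can be emulated on the CPU in a few lines of Python. The helper name `swizzle` is made up for illustration; on the GPU the reordering happens as part of the instruction rather than through a function call.

```python
def swizzle(vec, pattern):
    """Reorder or replicate the components of a 4-tuple, e.g. pattern 'zzyx'."""
    index = {"x": 0, "y": 1, "z": 2, "w": 3}
    return tuple(vec[index[c]] for c in pattern)


data = (1, 2, 3, 4)
result = swizzle(data, "zzyx")
```

Patterns may repeat components, as in `zzyx`, or use fewer than four of them, as in `xy`.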

Conclusions
• Be aware of potential bottlenecks
  – Know hardware capabilities
  – Analyze arithmetic intensity
  – Check memory access patterns
  – Run existing benchmarks, e.g. GPUbench (Stanford)
  – Minimize number of pipeline stalls
• Adapt algorithms
  – Try to work around bottlenecks
  – Reformulate algorithm to exploit the hardware more efficiently