Performance and Optimization

- Slides: 33


Measuring Performance
- The key measure of performance for a computing system is speed:
  - Response time, execution time, or latency.
  - Throughput.
- We have seen how to increase throughput while slightly increasing the execution time of each single instruction: pipeline design.
- We now concentrate on measuring total execution time.
- Total execution time can mean:
  - Elapsed time: includes all I/O, OS, and time spent on other jobs.
  - CPU time: time spent by the processor on your job.

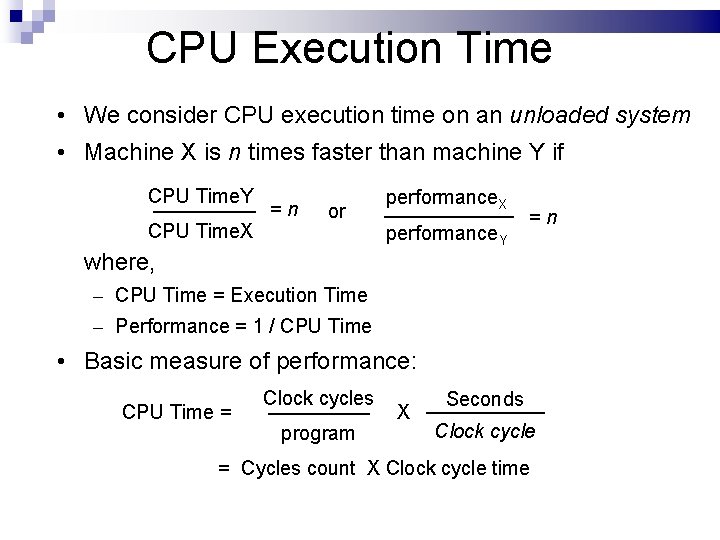

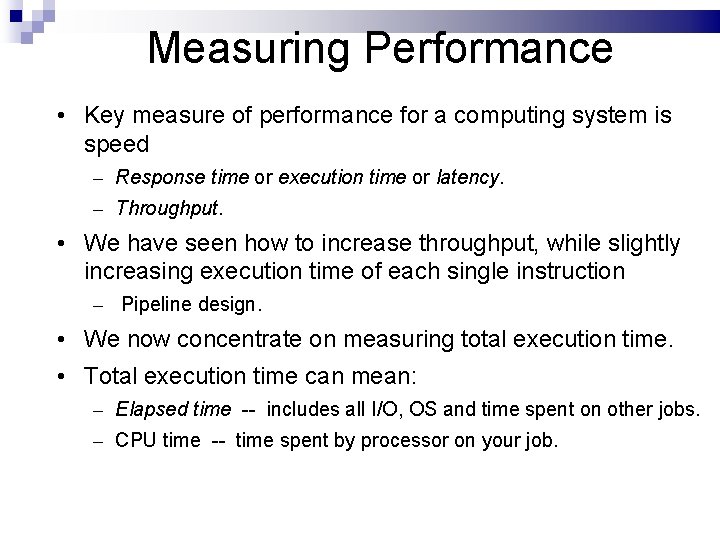

CPU Execution Time
- We consider CPU execution time on an unloaded system.
- Machine X is n times faster than machine Y if $\text{CPU Time}_Y / \text{CPU Time}_X = n$, or equivalently $\text{Performance}_X / \text{Performance}_Y = n$, where CPU Time = Execution Time and Performance = 1 / CPU Time.
- Basic measure of performance:

$$\text{CPU Time} = \frac{\text{Seconds}}{\text{Program}} = \frac{\text{Clock cycles}}{\text{Program}} \times \frac{\text{Seconds}}{\text{Clock cycle}} = \text{Cycles count} \times \text{Clock cycle time}$$

CPU Execution Time
- Clock cycle time is measured in nanoseconds ($10^{-9}$ s) or microseconds ($10^{-6}$ s).
- Clock cycle rate = 1 / (Clock cycle time) is measured in:
  - Megahertz (MHz): $10^6$ cycles/sec
  - Gigahertz (GHz): $10^9$ cycles/sec

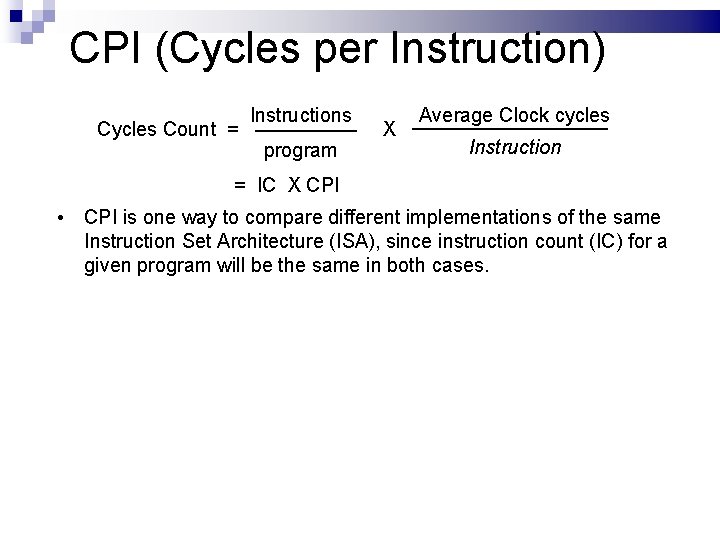

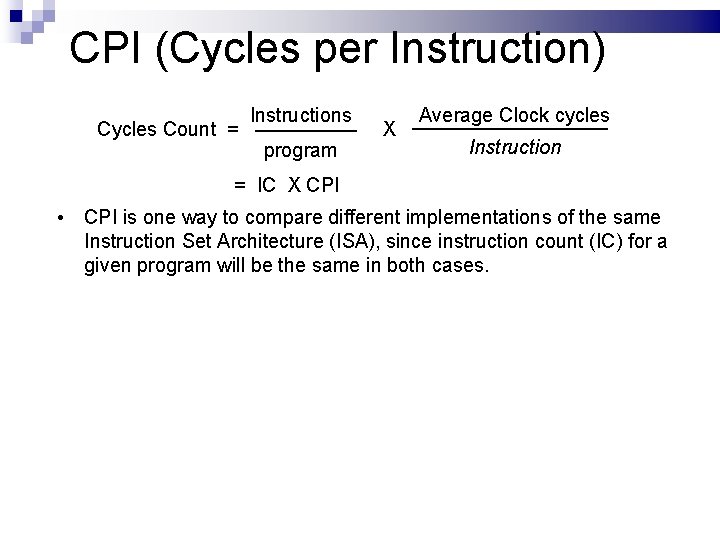

CPI (Cycles per Instruction)

$$\text{Cycles count} = \frac{\text{Instructions}}{\text{Program}} \times \frac{\text{Average clock cycles}}{\text{Instruction}} = \text{IC} \times \text{CPI}$$

- CPI is one way to compare different implementations of the same Instruction Set Architecture (ISA), since the instruction count (IC) for a given program is the same in both cases.

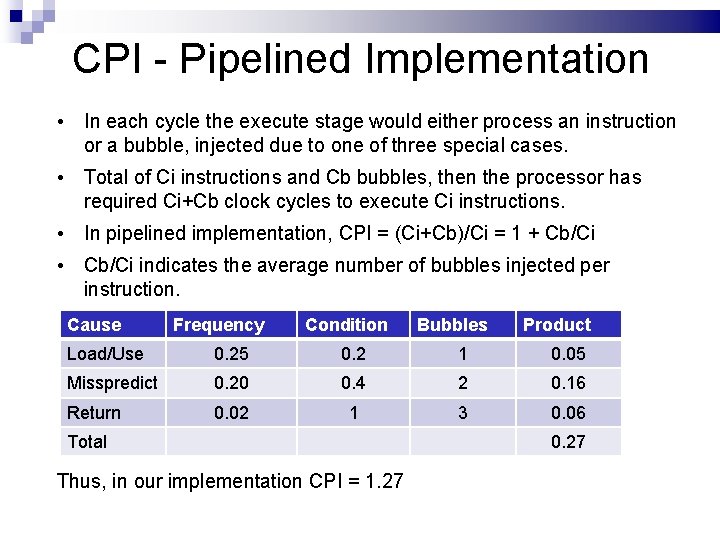

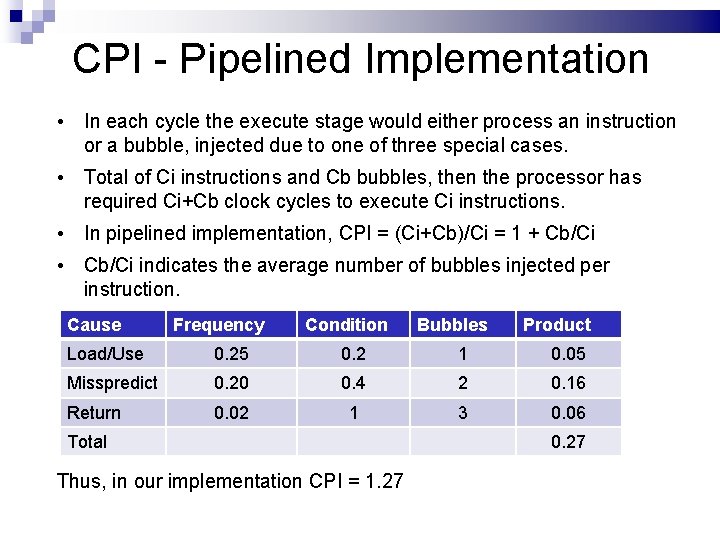

CPI - Pipelined Implementation
- In each cycle the execute stage processes either an instruction or a bubble; bubbles are injected due to one of three special cases (the load/use, mispredict, and return causes in the table below).
- If the stage has processed a total of $C_i$ instructions and $C_b$ bubbles, the processor has required $C_i + C_b$ clock cycles to execute $C_i$ instructions.
- In the pipelined implementation, $\text{CPI} = (C_i + C_b)/C_i = 1 + C_b/C_i$.
- $C_b/C_i$ is the average number of bubbles injected per instruction. For each cause it is Frequency × Condition × Bubbles (the Product column).

| Cause | Frequency | Condition | Bubbles | Product |
|---|---|---|---|---|
| Load/Use | 0.25 | 0.2 | 1 | 0.05 |
| Mispredict | 0.20 | 0.4 | 2 | 0.16 |
| Return | 0.02 | 1 | 3 | 0.06 |
| Total | | | | 0.27 |

Thus, in our implementation CPI = 1 + 0.27 = 1.27.
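The table arithmetic can be checked with a short C sketch. The struct and function names (`struct hazard`, `pipeline_cpi`) are illustrative assumptions, not part of the slides; the function simply sums Frequency × Condition × Bubbles over the causes and adds 1.

```c
#include <stddef.h>

/* One row of the hazard table: fraction of instructions of this kind,
   fraction of those for which the penalty condition holds, and the
   number of bubbles injected each time it does. */
struct hazard {
    const char *cause;
    double frequency;
    double condition;
    double bubbles;
};

/* CPI = 1 + Cb/Ci, where Cb/Ci (average bubbles per instruction) is the
   sum over all causes of frequency * condition * bubbles. */
double pipeline_cpi(const struct hazard *h, size_t n)
{
    double bubbles_per_instr = 0.0;
    for (size_t k = 0; k < n; k++)
        bubbles_per_instr += h[k].frequency * h[k].condition * h[k].bubbles;
    return 1.0 + bubbles_per_instr;
}
```

Called with the three rows of the table, it should return 1 + 0.27 = 1.27.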

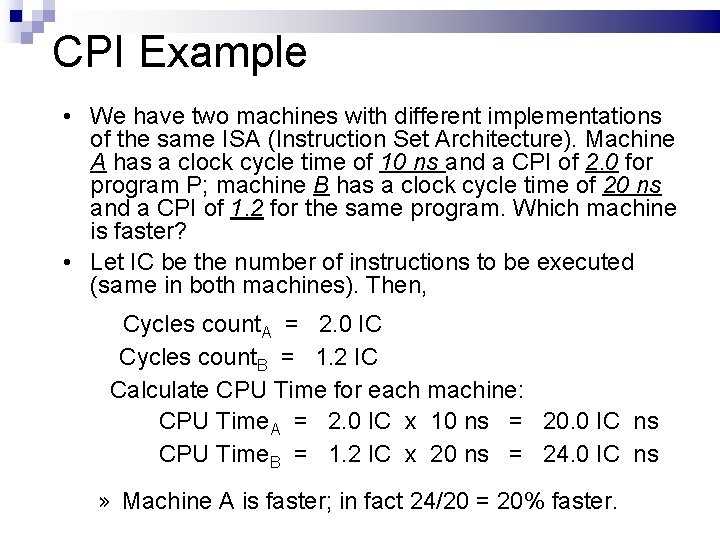

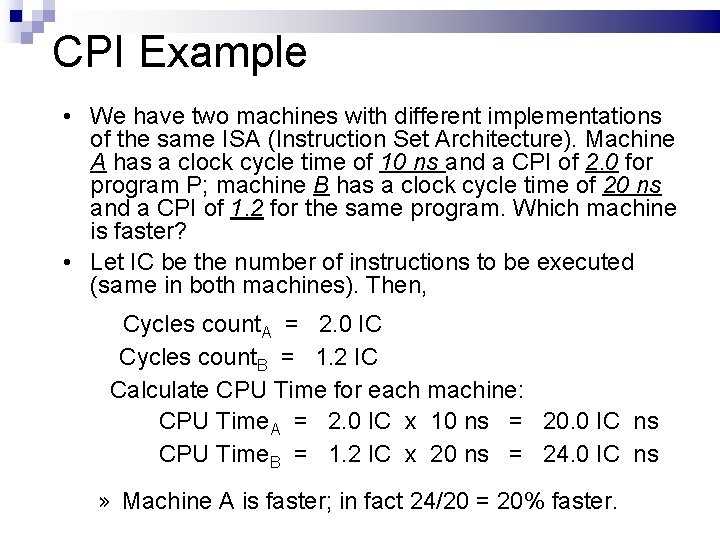

CPI Example
- We have two machines with different implementations of the same ISA (Instruction Set Architecture). Machine A has a clock cycle time of 10 ns and a CPI of 2.0 for program P; machine B has a clock cycle time of 20 ns and a CPI of 1.2 for the same program. Which machine is faster?
- Let IC be the number of instructions to be executed (the same on both machines). Then:
  - $\text{Cycles count}_A = 2.0 \times \text{IC}$
  - $\text{Cycles count}_B = 1.2 \times \text{IC}$
- Calculate the CPU time for each machine:
  - $\text{CPU Time}_A = 2.0 \times \text{IC} \times 10\text{ ns} = 20.0 \times \text{IC ns}$
  - $\text{CPU Time}_B = 1.2 \times \text{IC} \times 20\text{ ns} = 24.0 \times \text{IC ns}$
- Machine A is faster: $\text{CPU Time}_B / \text{CPU Time}_A = 24/20 = 1.2$, so A is 1.2 times (20%) faster.
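A minimal sketch of the same comparison in C, with hypothetical helper names. Because IC is identical on both machines it cancels, so the sketch works with time per instruction (CPI × clock cycle time) rather than total CPU time.

```c
/* Average CPU time per instruction in ns: CPI * clock cycle time.
   Multiplying by IC would give total CPU time; IC cancels in a ratio
   when both machines run the same program. */
double ns_per_instruction(double cpi, double cycle_time_ns)
{
    return cpi * cycle_time_ns;
}

/* How many times faster machine X is than machine Y:
   CPU Time_Y / CPU Time_X. */
double times_faster(double cpu_time_x, double cpu_time_y)
{
    return cpu_time_y / cpu_time_x;
}
```

`ns_per_instruction(2.0, 10.0)` gives 20 ns for machine A, `ns_per_instruction(1.2, 20.0)` gives about 24 ns for machine B, and `times_faster` on those two values gives about 1.2.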

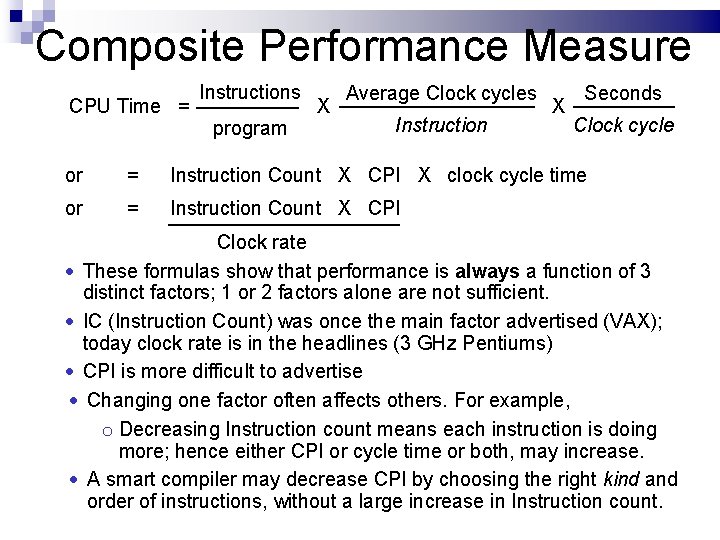

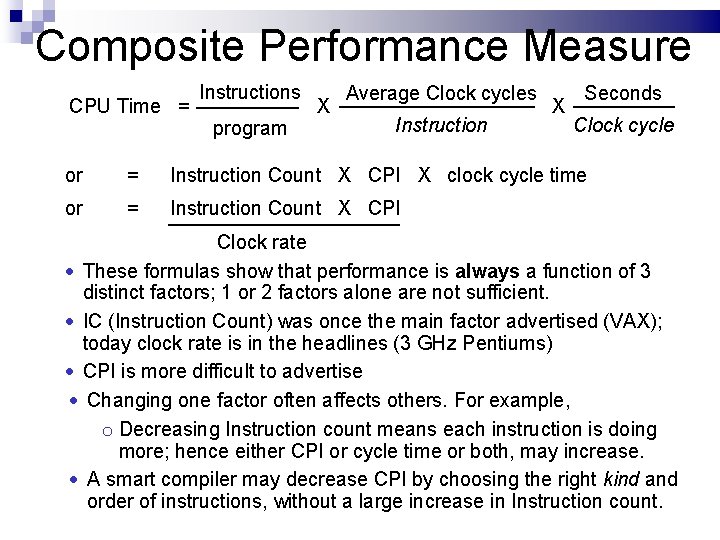

Composite Performance Measure

$$\text{CPU Time} = \frac{\text{Instructions}}{\text{Program}} \times \frac{\text{Average clock cycles}}{\text{Instruction}} \times \frac{\text{Seconds}}{\text{Clock cycle}}$$

or

$$\text{CPU Time} = \text{Instruction Count} \times \text{CPI} \times \text{Clock cycle time} = \frac{\text{Instruction Count} \times \text{CPI}}{\text{Clock rate}}$$

- These formulas show that performance is always a function of 3 distinct factors; 1 or 2 factors alone are not sufficient.
- IC (Instruction Count) was once the main factor advertised (VAX); today clock rate is in the headlines (3 GHz Pentiums).
- CPI is more difficult to advertise.
- Changing one factor often affects others. For example, decreasing the instruction count means each instruction does more work, so CPI, cycle time, or both may increase.
- A smart compiler may decrease CPI by choosing the right kind and order of instructions, without a large increase in instruction count.

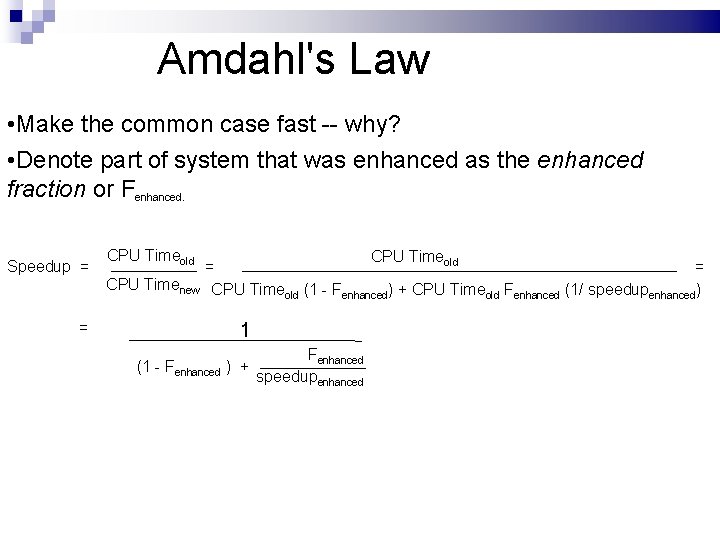

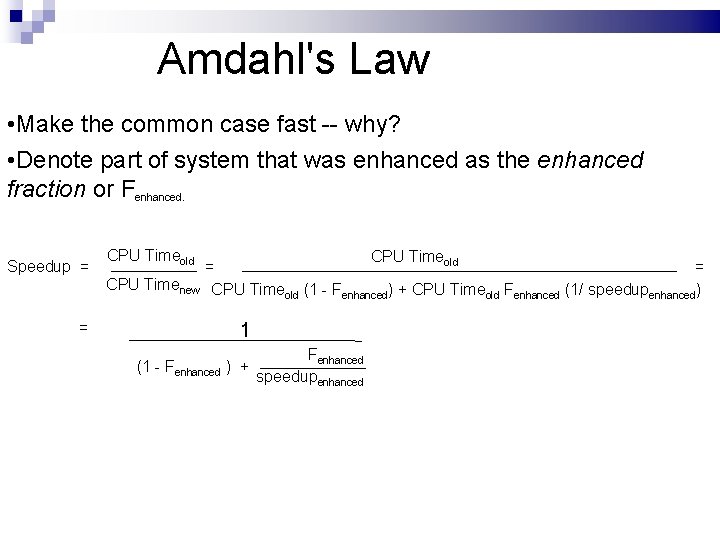

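Amdahl's Law
- Make the common case fast. Why?
- Denote the part of the system that was enhanced as the enhanced fraction, $F_{\text{enhanced}}$ (the fraction of the original execution time affected by the enhancement), and the speedup of that part as $\text{speedup}_{\text{enhanced}}$:

$$\text{Speedup} = \frac{\text{CPU Time}_{\text{old}}}{\text{CPU Time}_{\text{new}}} = \frac{\text{CPU Time}_{\text{old}}}{\text{CPU Time}_{\text{old}}\,(1 - F_{\text{enhanced}}) + \text{CPU Time}_{\text{old}}\,F_{\text{enhanced}} / \text{speedup}_{\text{enhanced}}} = \frac{1}{(1 - F_{\text{enhanced}}) + \dfrac{F_{\text{enhanced}}}{\text{speedup}_{\text{enhanced}}}}$$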
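The closed form on the right translates directly into code. This is a sketch; `amdahl_speedup` is a hypothetical name, and the arguments are assumed to satisfy 0 ≤ f_enhanced ≤ 1 and s_enhanced > 0.

```c
/* Amdahl's Law: overall speedup when a fraction f_enhanced of the original
   execution time is sped up by a factor s_enhanced; the rest is unchanged. */
double amdahl_speedup(double f_enhanced, double s_enhanced)
{
    return 1.0 / ((1.0 - f_enhanced) + f_enhanced / s_enhanced);
}
```

Even an effectively unlimited enhancement (say `s_enhanced = 1e12`) applied to half the execution time yields a speedup just below 2, which is the reason to make the common case fast.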

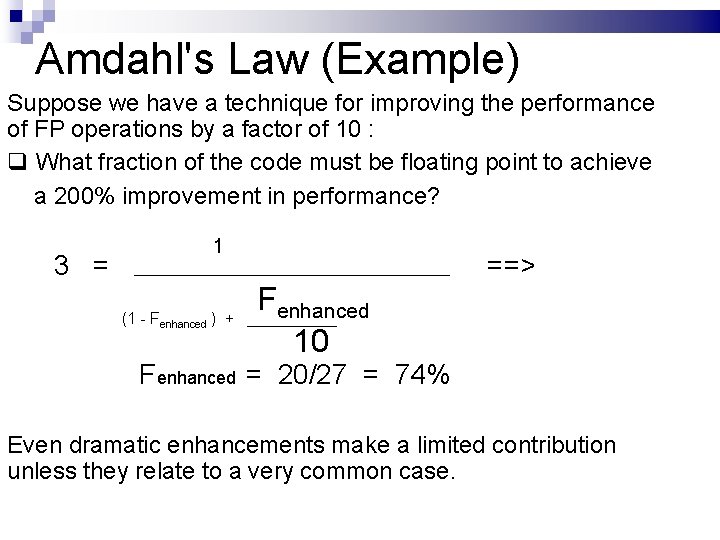

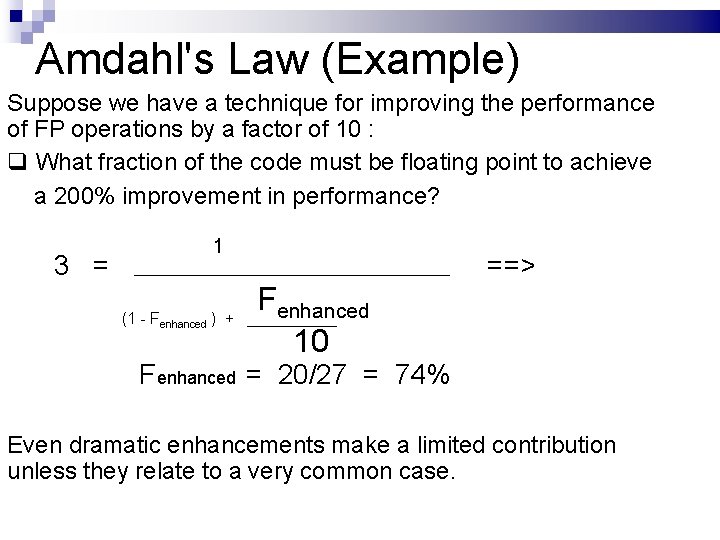

Amdahl's Law (Example)
Suppose we have a technique for improving the performance of FP operations by a factor of 10.
- What fraction of the execution time must be floating point to achieve a 200% improvement in performance (an overall speedup of 3)?

$$3 = \frac{1}{(1 - F_{\text{enhanced}}) + \dfrac{F_{\text{enhanced}}}{10}} \implies 1 - 0.9\,F_{\text{enhanced}} = \frac{1}{3} \implies F_{\text{enhanced}} = \frac{20}{27} \approx 74\%$$

Even dramatic enhancements make a limited contribution unless they relate to a very common case.
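Solving the same equation for $F_{\text{enhanced}}$ in general gives $F = (1 - 1/S)/(1 - 1/s)$, where S is the target overall speedup and s is the speedup of the enhanced part. A sketch in C, with `required_fraction` a hypothetical name and S > 1, s > 1 assumed:

```c
/* Inverse of Amdahl's Law: fraction of execution time that must be
   enhanced (by factor s_enhanced) to reach target_speedup overall.
   From 1/S = (1 - F) + F/s  =>  F = (1 - 1/S) / (1 - 1/s).
   A result greater than 1 means the target is unreachable. */
double required_fraction(double target_speedup, double s_enhanced)
{
    return (1.0 - 1.0 / target_speedup) / (1.0 - 1.0 / s_enhanced);
}
```

`required_fraction(3.0, 10.0)` returns 20/27 ≈ 0.74, matching the slide; asking for an overall speedup of 20 from a 10x enhancement returns a value above 1, since no fraction can achieve it.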

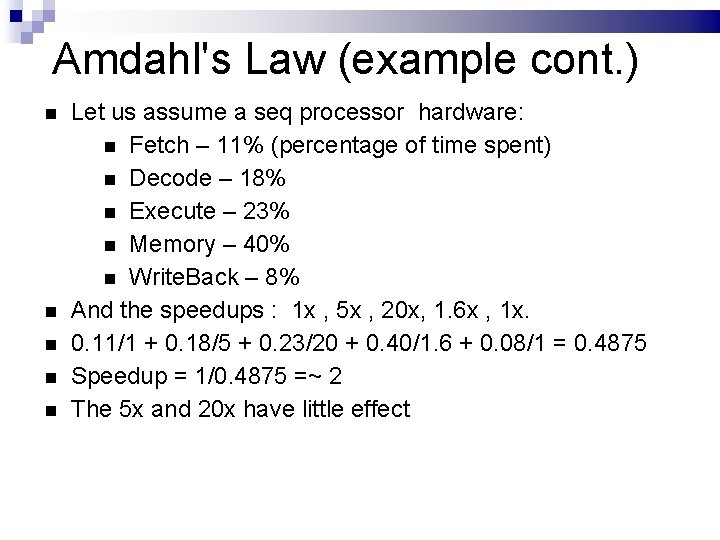

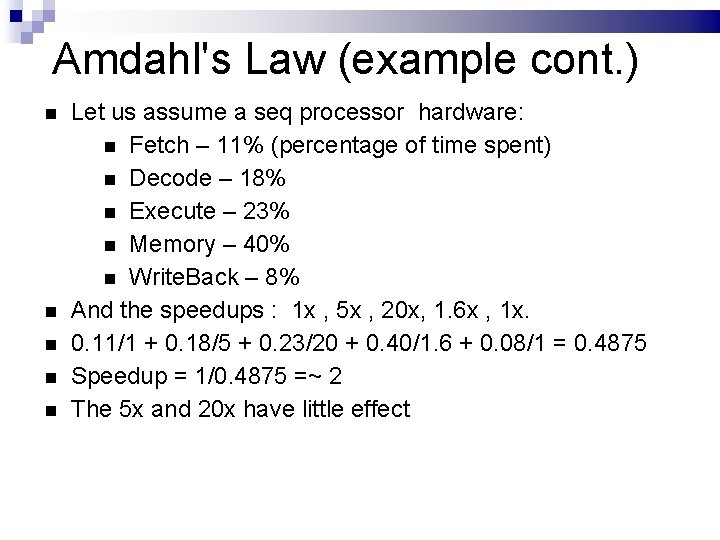

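Amdahl's Law (example cont.)
Let us assume a sequential (SEQ) processor with the following fraction of time spent in each stage, and the following per-stage speedups:

| Stage | Fraction of time | Speedup |
|---|---|---|
| Fetch | 11% | 1x |
| Decode | 18% | 5x |
| Execute | 23% | 20x |
| Memory | 40% | 1.6x |
| Write-Back | 8% | 1x |

$$\frac{\text{CPU Time}_{\text{new}}}{\text{CPU Time}_{\text{old}}} = \frac{0.11}{1} + \frac{0.18}{5} + \frac{0.23}{20} + \frac{0.40}{1.6} + \frac{0.08}{1} = 0.4875, \qquad \text{Speedup} = \frac{1}{0.4875} \approx 2$$

The 5x and 20x speedups have little effect on the result: the new execution time is dominated by the unimproved Fetch and Write-Back stages and the modestly improved Memory stage (0.44 of the 0.4875).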
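The per-stage calculation generalizes to any number of parts: the new time is the sum of each part's fraction divided by its speedup. A sketch, with `amdahl_multi` as a hypothetical name and the fractions assumed to sum to 1:

```c
#include <stddef.h>

/* Generalized Amdahl's Law. time[k] is the fraction of the original
   execution time spent in part k; speedup[k] is that part's speedup.
   New time = sum of time[k] / speedup[k]; overall speedup = 1 / new time. */
double amdahl_multi(const double *time, const double *speedup, size_t n)
{
    double new_time = 0.0;
    for (size_t k = 0; k < n; k++)
        new_time += time[k] / speedup[k];
    return 1.0 / new_time;
}
```

With the five stages above it computes a new time of 0.4875 and a speedup of about 2.05.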

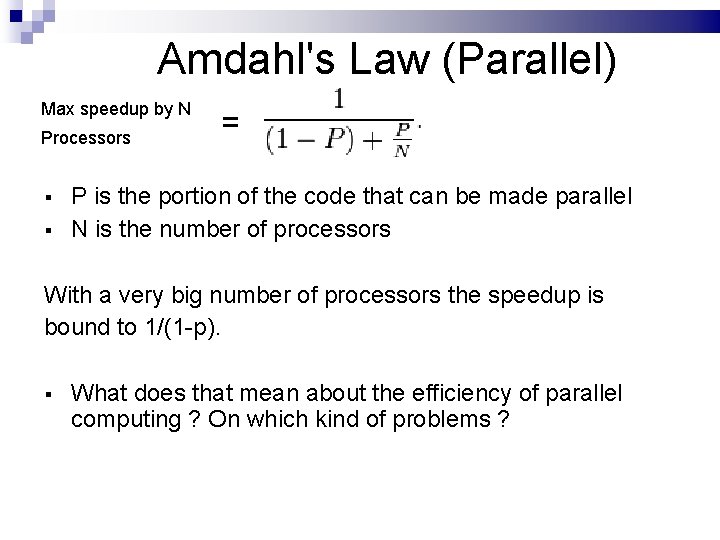

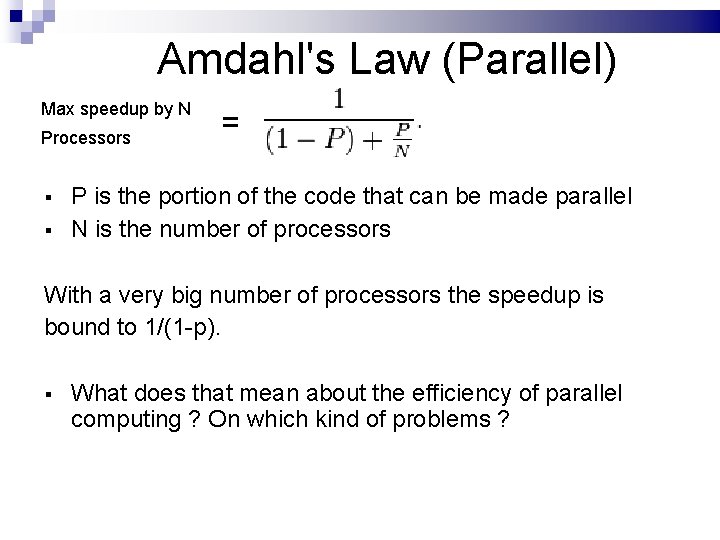

Amdahl's Law (Parallel)
Maximum speedup with N processors:

$$\text{Speedup}(N) = \frac{1}{(1 - P) + \dfrac{P}{N}}$$

- P is the portion of the code that can be made parallel.
- N is the number of processors.
- With a very large number of processors, the speedup is bounded by $1/(1 - P)$.
- What does that mean about the efficiency of parallel computing? On which kinds of problems?
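A sketch of the formula and its limit in C. The function names are hypothetical, and P is assumed to lie in [0, 1), since P = 1 makes the limit infinite.

```c
/* Amdahl's Law for N processors: the parallel portion p is divided among
   n_proc processors; the serial portion (1 - p) is not. */
double parallel_speedup(double p, unsigned n_proc)
{
    return 1.0 / ((1.0 - p) + p / n_proc);
}

/* Upper bound on the speedup as n_proc grows without limit. */
double parallel_speedup_limit(double p)
{
    return 1.0 / (1.0 - p);
}
```

For P = 0.95, 1024 processors give a speedup of about 19.6, already close to the bound of 1/(1 - 0.95) = 20; adding processors beyond that point buys very little.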

Important to Keep in Mind
- 90/10 rule: 90% of the time is spent in 10% of the code.
- Readability vs. performance.
- Time vs. memory.

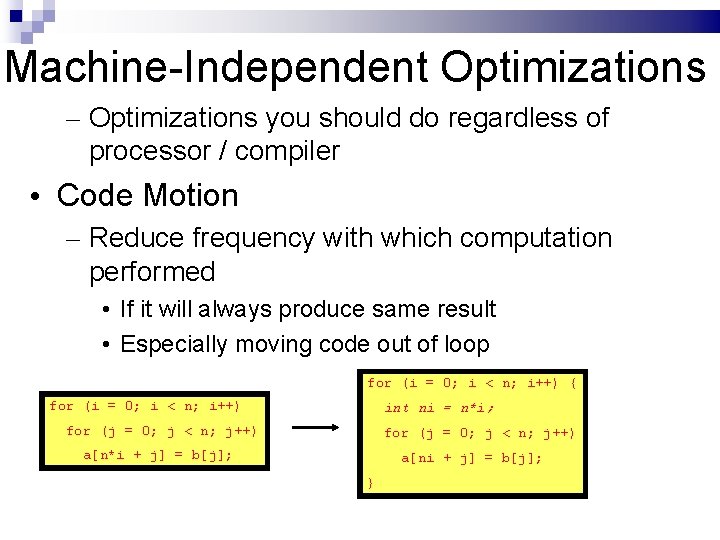

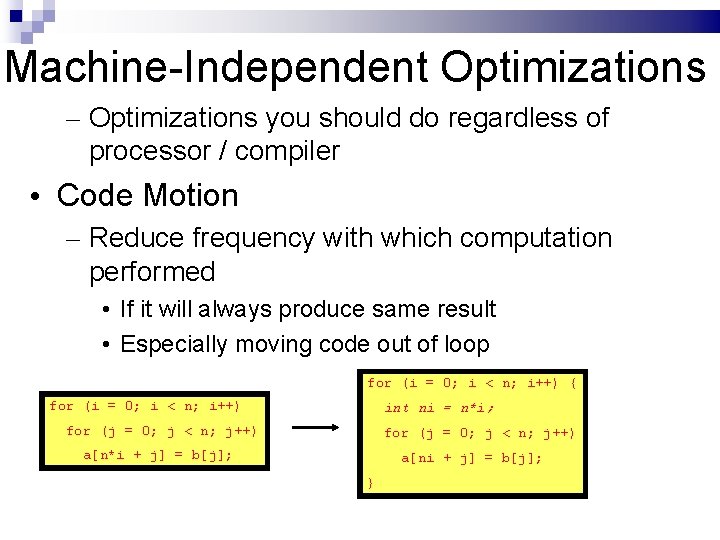

Machine-Independent Optimizations
- Optimizations you should do regardless of processor / compiler.

Code Motion
- Reduce the frequency with which a computation is performed,
  - if it will always produce the same result;
  - especially moving code out of a loop.

```c
/* Before */
for (i = 0; i < n; i++)
    for (j = 0; j < n; j++)
        a[n*i + j] = b[j];
```

```c
/* After: n*i is computed once per outer iteration */
for (i = 0; i < n; i++) {
    int ni = n*i;
    for (j = 0; j < n; j++)
        a[ni + j] = b[j];
}
```
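To check that code motion preserves behavior, both loop nests can be wrapped in functions and run on the same input. A minimal sketch; the function names and the flat `n × n` array layout are assumptions for illustration:

```c
/* Before: n*i is recomputed on every inner-loop iteration. */
void copy_rows(int *a, const int *b, int n)
{
    for (int i = 0; i < n; i++)
        for (int j = 0; j < n; j++)
            a[n*i + j] = b[j];
}

/* After code motion: n*i does not change inside the inner loop,
   so it is computed once per outer iteration. */
void copy_rows_moved(int *a, const int *b, int n)
{
    for (int i = 0; i < n; i++) {
        int ni = n*i;
        for (int j = 0; j < n; j++)
            a[ni + j] = b[j];
    }
}
```

Both functions fill every row of `a` with a copy of `b`; only the number of multiplications differs.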

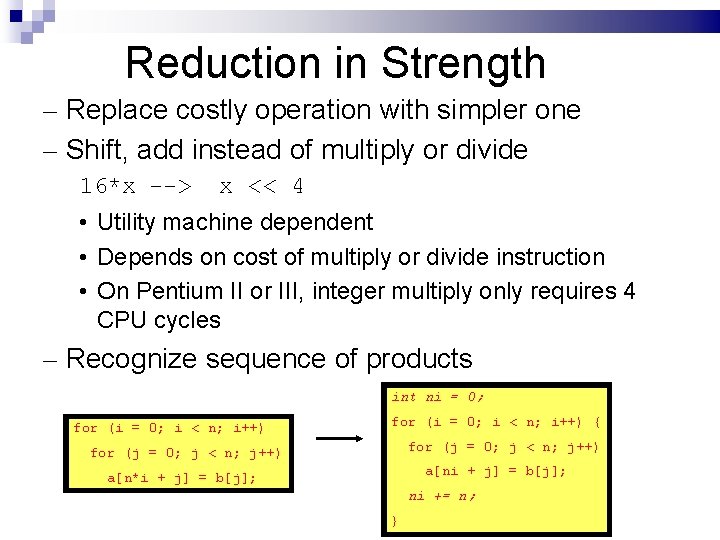

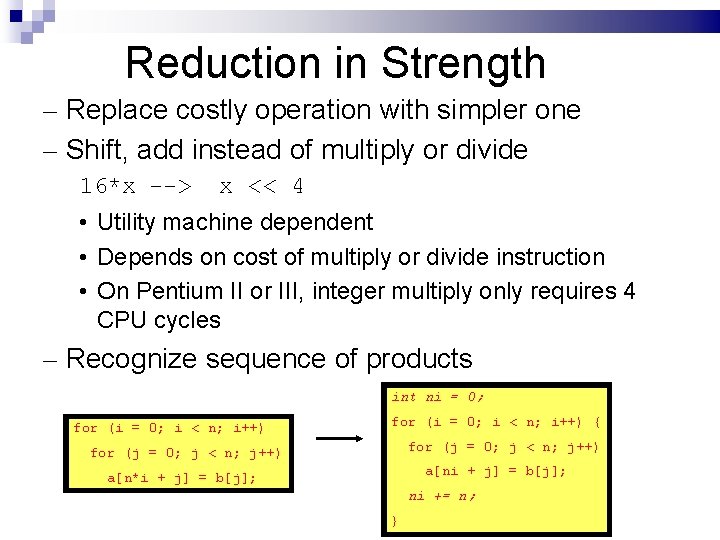

Reduction in Strength
- Replace a costly operation with a simpler one.
- Shift and add instead of multiply or divide: `16*x` --> `x << 4`.
  - Utility is machine dependent: it depends on the cost of the multiply or divide instruction.
  - On Pentium II or III, integer multiply requires only 4 CPU cycles.
- Recognize sequences of products:

```c
/* Before */
for (i = 0; i < n; i++)
    for (j = 0; j < n; j++)
        a[n*i + j] = b[j];
```

```c
/* After: the product n*i is replaced by a running sum */
int ni = 0;
for (i = 0; i < n; i++) {
    for (j = 0; j < n; j++)
        a[ni + j] = b[j];
    ni += n;
}
```
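Both forms of strength reduction from the slide, as compilable C. The names are hypothetical; `unsigned` is used for the shift because left-shifting a negative signed value is undefined behavior in C.

```c
/* Multiply by a power of two replaced by a shift.
   Equal to 16 * x for every unsigned x (both wrap modulo 2^width). */
unsigned times16(unsigned x)
{
    return x << 4;
}

/* Sequence of products n*i replaced by repeated addition. */
void copy_rows_reduced(int *a, const int *b, int n)
{
    int ni = 0;                 /* holds n*i at the top of each outer iteration */
    for (int i = 0; i < n; i++) {
        for (int j = 0; j < n; j++)
            a[ni + j] = b[j];
        ni += n;
    }
}
```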

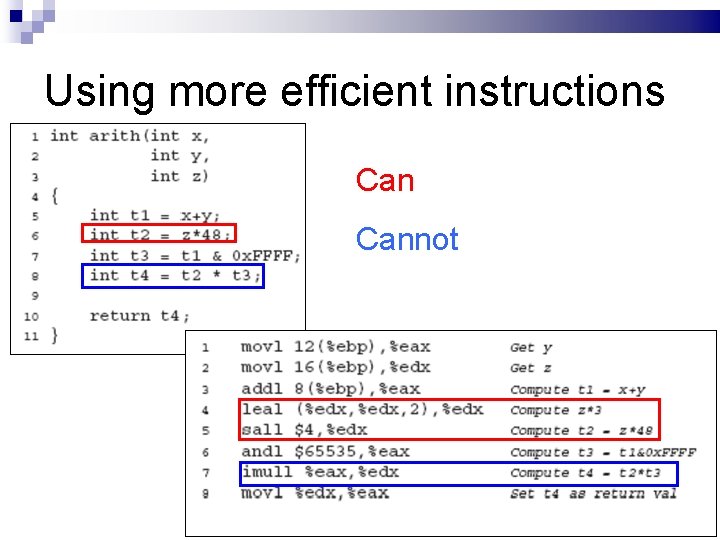

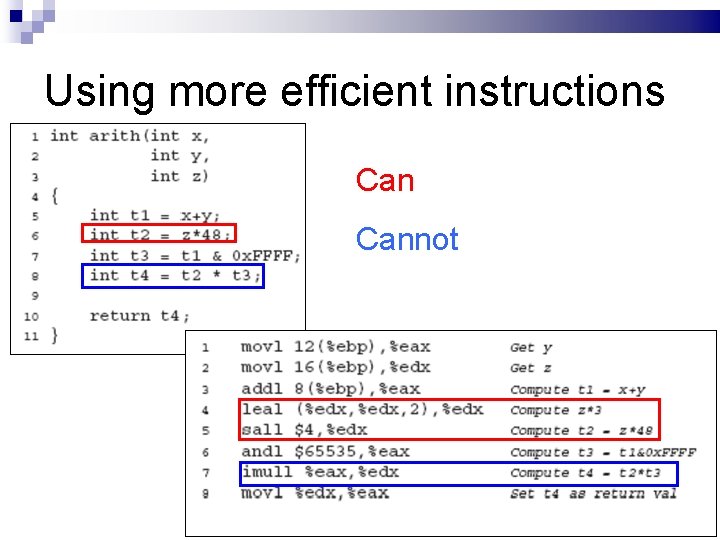

Using more efficient instructions Cannot

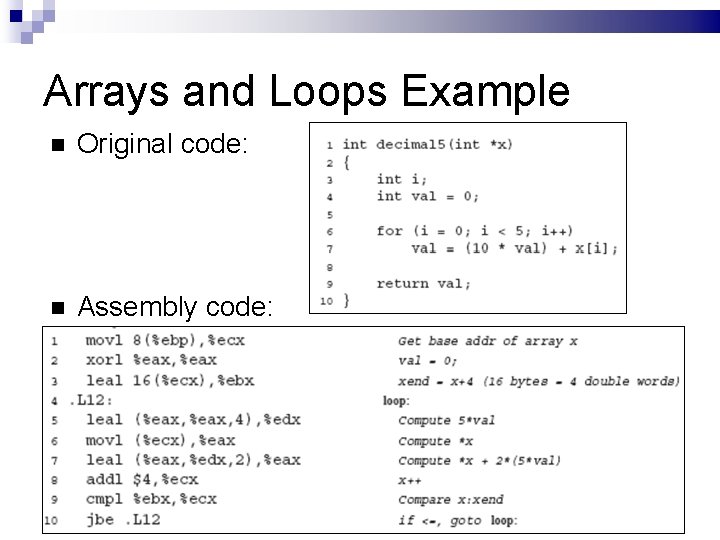

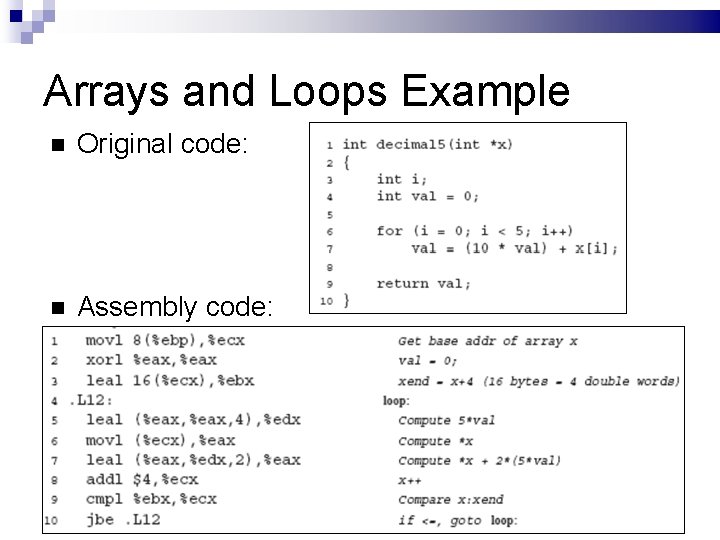

Arrays and Loops Example Original code: Assembly code:

Loop Optimization
Loop optimization is the process of increasing execution speed and reducing the overhead associated with loops. It plays an important role in improving cache performance and making effective use of parallel processing capabilities. Since most of a program's execution time is typically spent in loops, many compiler optimization techniques have been developed to make them faster.

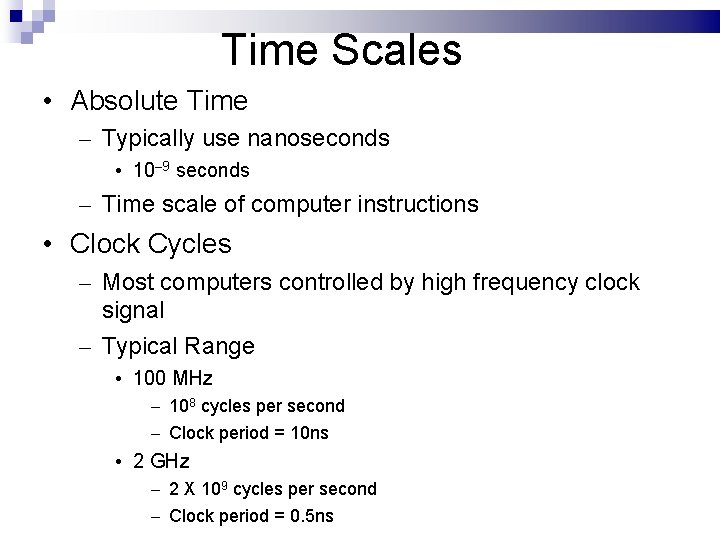

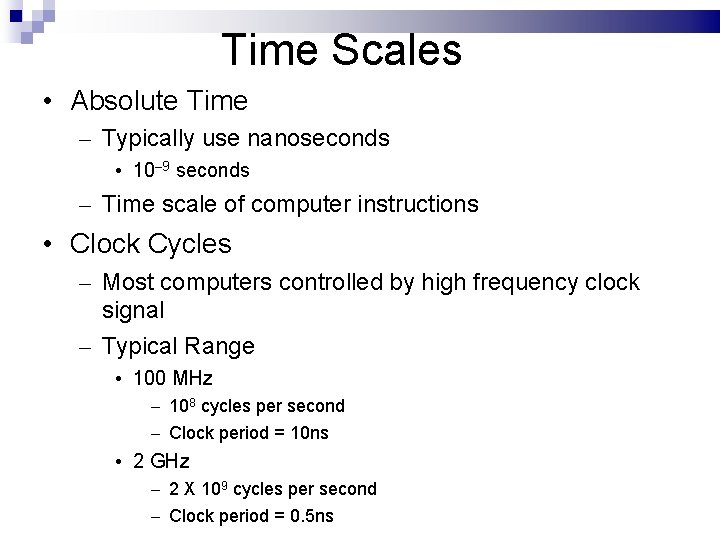

Time Scales
- Absolute time
  - Typically measured in nanoseconds ($10^{-9}$ seconds).
  - The time scale of computer instructions.
- Clock cycles
  - Most computers are controlled by a high-frequency clock signal.
  - Typical range:
    - 100 MHz: $10^8$ cycles per second, clock period = 10 ns.
    - 2 GHz: $2 \times 10^9$ cycles per second, clock period = 0.5 ns.
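The conversions on this slide (period = 1 / rate, and CPU time = cycles × period = cycles / rate) as a small C sketch with hypothetical function names:

```c
/* Clock period in nanoseconds for a clock rate given in hertz. */
double clock_period_ns(double rate_hz)
{
    return 1e9 / rate_hz;
}

/* CPU time in seconds = clock cycles * clock cycle time = cycles / rate. */
double cpu_time_s(double cycles, double rate_hz)
{
    return cycles / rate_hz;
}
```

`clock_period_ns(100e6)` gives 10 ns and `clock_period_ns(2e9)` gives 0.5 ns, matching the typical range above.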

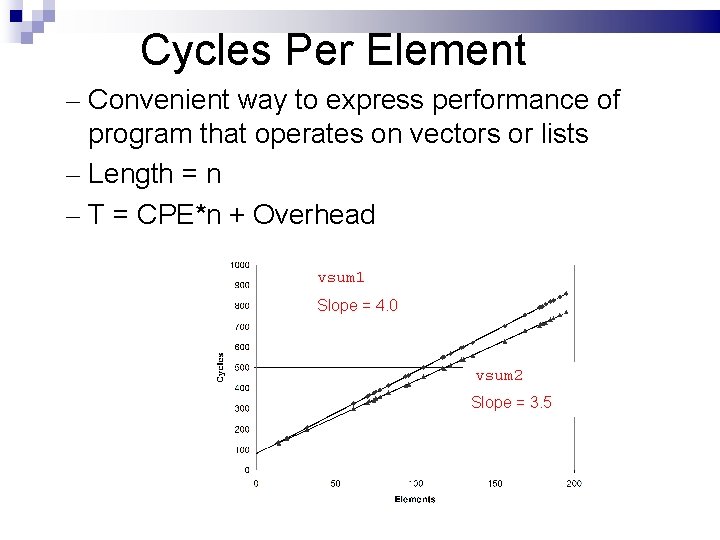

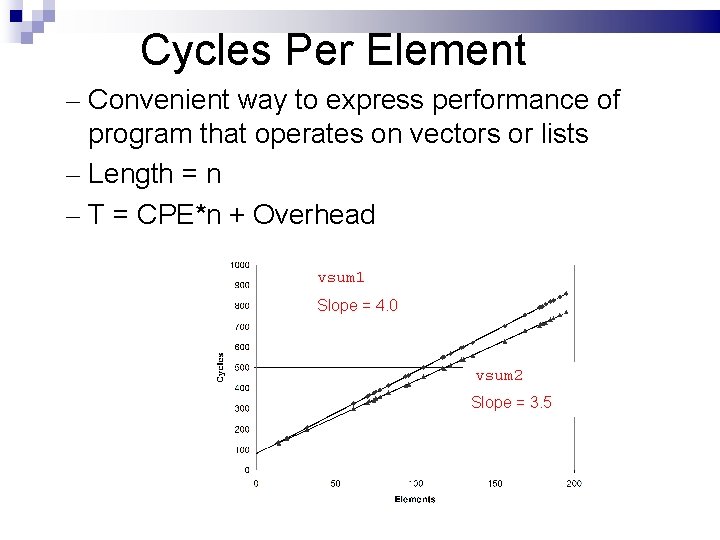

Cycles Per Element (CPE)
- A convenient way to express the performance of a program that operates on vectors or lists.
- For length n: $T = \text{CPE} \times n + \text{Overhead}$.
- Figure: vsum1 has slope 4.0 (CPE = 4.0); vsum2 has slope 3.5 (CPE = 3.5).
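Because $T = \text{CPE} \times n + \text{Overhead}$ is a straight line, CPE is its slope. A sketch that recovers CPE and overhead from two timings at different lengths; the names are hypothetical, and with real, noisy measurements a least-squares fit over many lengths would be more robust.

```c
/* Slope (CPE) and intercept (overhead) of T = CPE * n + Overhead. */
struct cpe_fit {
    double cpe;
    double overhead;
};

/* Fit the line through two measurements (n1, t1) and (n2, t2); n1 != n2. */
struct cpe_fit fit_cpe(double n1, double t1, double n2, double t2)
{
    struct cpe_fit f;
    f.cpe = (t2 - t1) / (n2 - n1);
    f.overhead = t1 - f.cpe * n1;
    return f;
}
```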

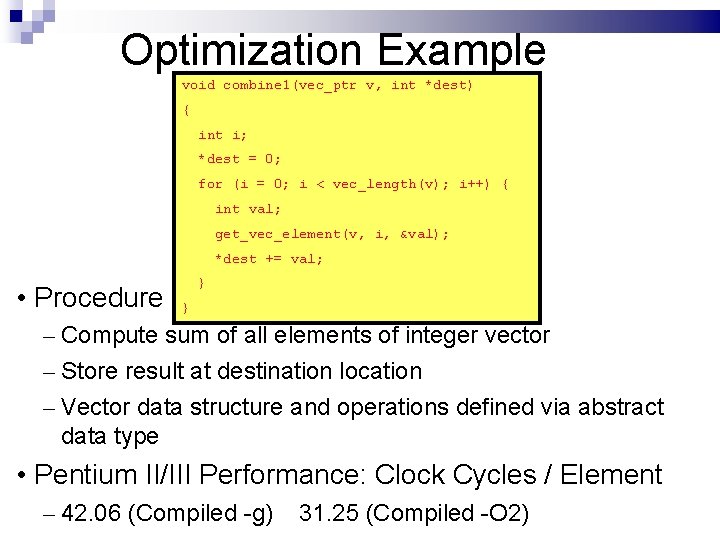

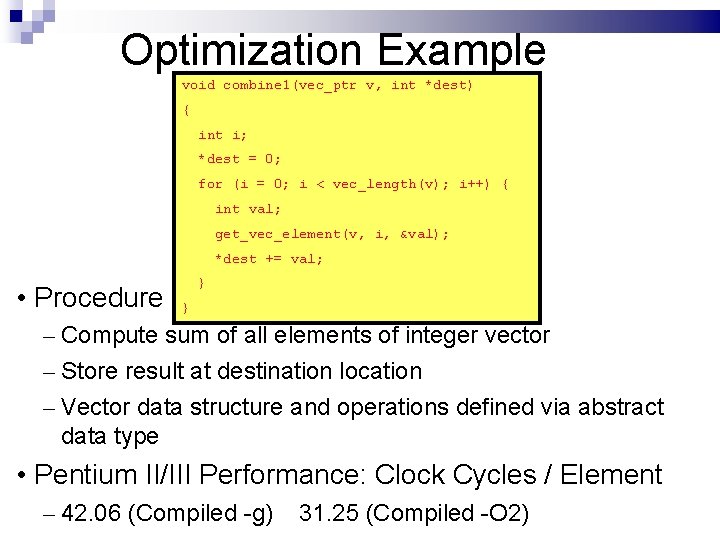

Optimization Example

```c
void combine1(vec_ptr v, int *dest)
{
    int i;
    *dest = 0;
    for (i = 0; i < vec_length(v); i++) {
        int val;
        get_vec_element(v, i, &val);
        *dest += val;
    }
}
```

- Procedure
  - Compute the sum of all elements of an integer vector.
  - Store the result at the destination location.
  - The vector data structure and operations are defined via an abstract data type.
- Pentium II/III performance, in clock cycles per element:
  - 42.06 (compiled `-g`)
  - 31.25 (compiled `-O2`)
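`combine1` depends on a vector ADT whose definition is not shown. The sketch below assumes a minimal one (a length plus a heap-allocated `int` array, created by a hypothetical `new_vec`) so the procedure can be compiled and run. The assumed `get_vec_element` does bounds checking, a cost the slides later identify as expensive.

```c
#include <stdlib.h>

/* Assumed vector ADT: the slides do not show its definition. */
typedef struct {
    int len;
    int *data;
} vec_rec, *vec_ptr;

/* Hypothetical constructor: zero-initialized vector of len elements. */
vec_ptr new_vec(int len)
{
    vec_ptr v = malloc(sizeof *v);
    if (v == NULL)
        return NULL;
    v->len = len;
    v->data = calloc(len > 0 ? (size_t)len : 1, sizeof *v->data);
    if (v->data == NULL) {
        free(v);
        return NULL;
    }
    return v;
}

int vec_length(vec_ptr v)
{
    return v->len;
}

/* Bounds-checked access: copy element index into *dest.
   Returns 1 on success, 0 if index is out of range. */
int get_vec_element(vec_ptr v, int index, int *dest)
{
    if (index < 0 || index >= v->len)
        return 0;
    *dest = v->data[index];
    return 1;
}

/* combine1 as on the slide: sum all elements into *dest. */
void combine1(vec_ptr v, int *dest)
{
    int i;
    *dest = 0;
    for (i = 0; i < vec_length(v); i++) {
        int val;
        get_vec_element(v, i, &val);
        *dest += val;
    }
}
```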

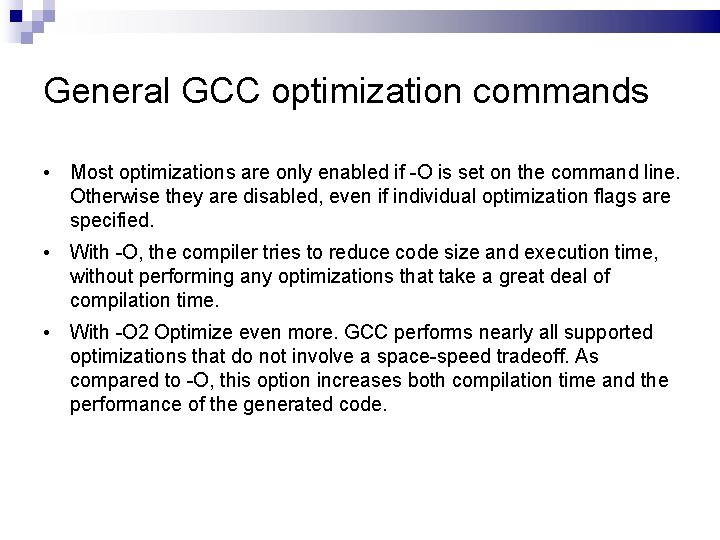

General GCC optimization commands
- Most optimizations are only enabled if an `-O` option is set on the command line. Otherwise they are disabled, even if individual optimization flags are specified.
- With `-O`, the compiler tries to reduce code size and execution time, without performing any optimizations that take a great deal of compilation time.
- With `-O2`, GCC optimizes even more: it performs nearly all supported optimizations that do not involve a space-speed tradeoff. Compared to `-O`, this option increases both compilation time and the performance of the generated code.

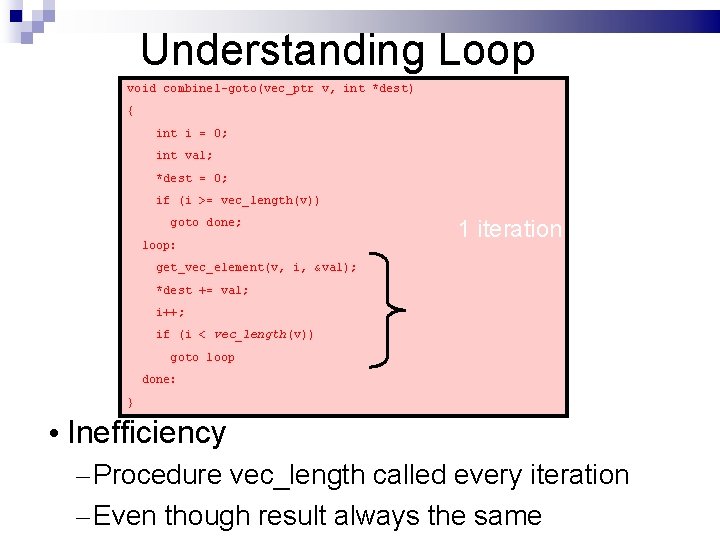

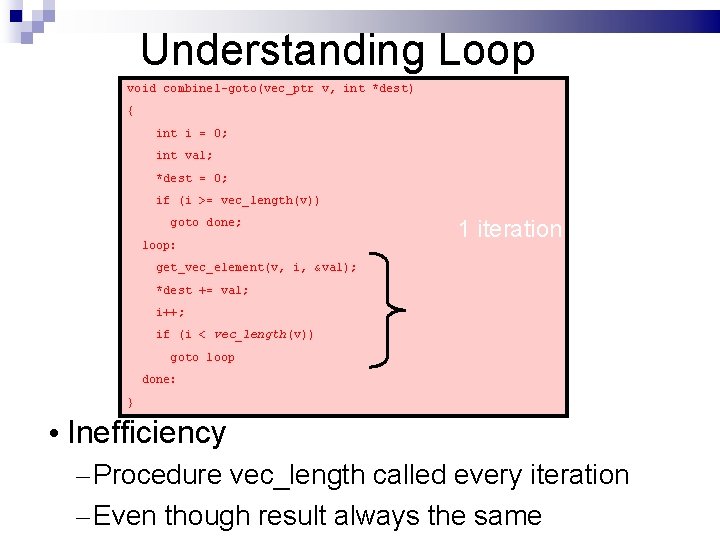

Understanding Loop

```c
void combine1_goto(vec_ptr v, int *dest)
{
    int i = 0;
    int val;
    *dest = 0;
    if (i >= vec_length(v))
        goto done;
loop:                           /* one iteration */
    get_vec_element(v, i, &val);
    *dest += val;
    i++;
    if (i < vec_length(v))
        goto loop;
done:
    ;                           /* a label must be followed by a statement */
}
```

- Inefficiency
  - Procedure `vec_length` is called every iteration,
  - even though the result is always the same.

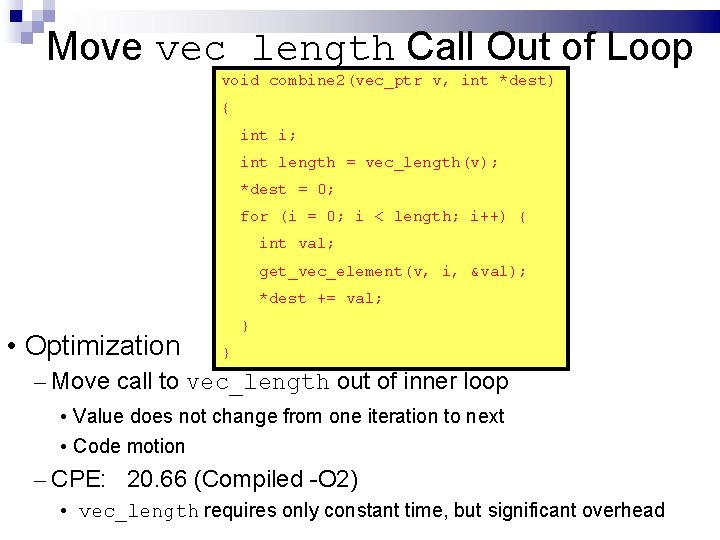

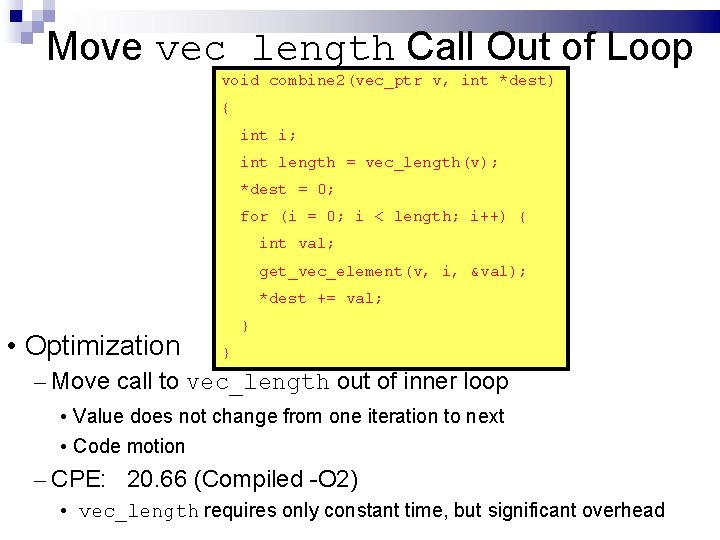

Move vec_length Call Out of Loop

```c
void combine2(vec_ptr v, int *dest)
{
    int i;
    int length = vec_length(v);
    *dest = 0;
    for (i = 0; i < length; i++) {
        int val;
        get_vec_element(v, i, &val);
        *dest += val;
    }
}
```

- Optimization
  - Move the call to `vec_length` out of the inner loop.
    - The value does not change from one iteration to the next.
    - This is code motion.
  - CPE: 20.66 (compiled `-O2`)
    - `vec_length` requires only constant time, but has significant overhead.

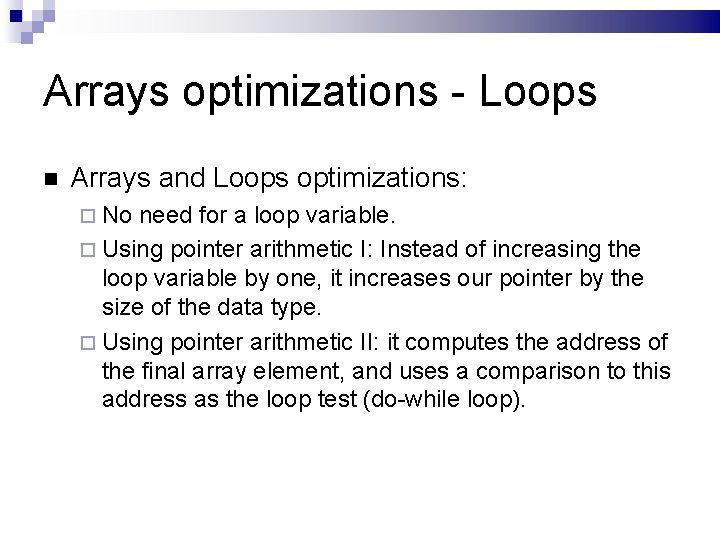

Arrays optimizations - Loops
Arrays and loops optimizations:
- No need for a loop variable.
- Using pointer arithmetic I: instead of incrementing a loop variable by one, increment the pointer itself; each increment advances it by the size of the data type.
- Using pointer arithmetic II: compute the address of the final array element, and use a comparison against this address as the loop test (do-while loop).
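The three loop styles described above, written as functions that sum an `int` array. A sketch only: the function names are hypothetical, and a modern optimizing compiler may well generate the same machine code for all three.

```c
/* Index-based loop: the loop variable i is scaled into an address each time. */
int sum_index(const int *data, int length)
{
    int sum = 0;
    for (int i = 0; i < length; i++)
        sum += data[i];
    return sum;
}

/* Pointer arithmetic I: no separate index; p advances by one element
   (sizeof(int) bytes) per iteration. */
int sum_ptr(const int *data, int length)
{
    int sum = 0;
    for (const int *p = data; p < data + length; p++)
        sum += *p;
    return sum;
}

/* Pointer arithmetic II: compute the address of the last element once and
   use it as the do-while test. The body runs at least once, so the empty
   case is handled before entering the loop. */
int sum_ptr_last(const int *data, int length)
{
    if (length <= 0)
        return 0;
    int sum = 0;
    const int *p = data;
    const int *last = data + length - 1;
    do {
        sum += *p;
        p++;
    } while (p <= last);
    return sum;
}
```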

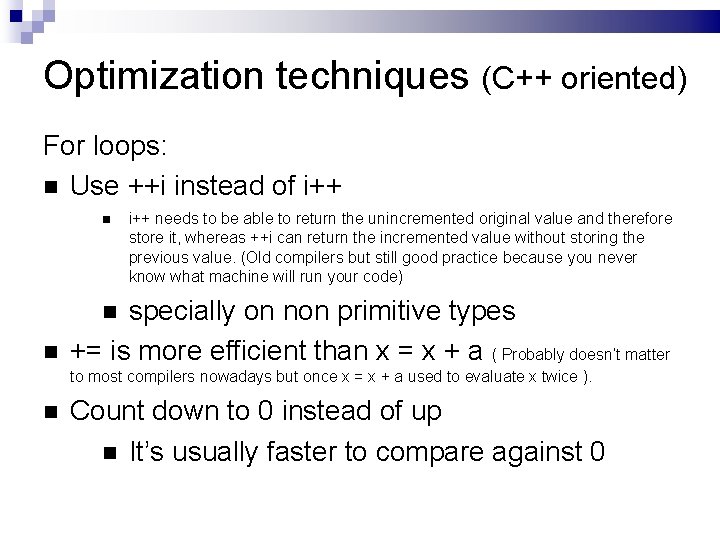

Optimization techniques (C++ oriented)
For loops:
- Use `++i` instead of `i++`: `i++` needs to be able to return the unincremented original value and therefore must store it, whereas `++i` can return the incremented value without storing the previous value. This mattered mostly with old compilers and matters especially for non-primitive types, but it is still good practice because you never know what machine will run your code.
- `x += a` is more efficient than `x = x + a`. (This probably doesn't matter to most compilers nowadays, but `x = x + a` once used to evaluate `x` twice.)
- Count down to 0 instead of up: it is usually faster to compare against 0.
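A count-down version of an array sum, as a sketch (the function name is hypothetical). Whether it is actually faster depends on the instruction set and compiler: the benefit comes from the loop test being a comparison with 0, which some instruction sets can perform without a separate compare instruction.

```c
/* Sum an array by counting the index down to 0.
   Uses prefix decrement, since the old value of i is never needed. */
int sum_count_down(const int *data, int length)
{
    int sum = 0;
    for (int i = length - 1; i >= 0; --i)   /* loop test compares i with 0 */
        sum += data[i];
    return sum;
}
```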

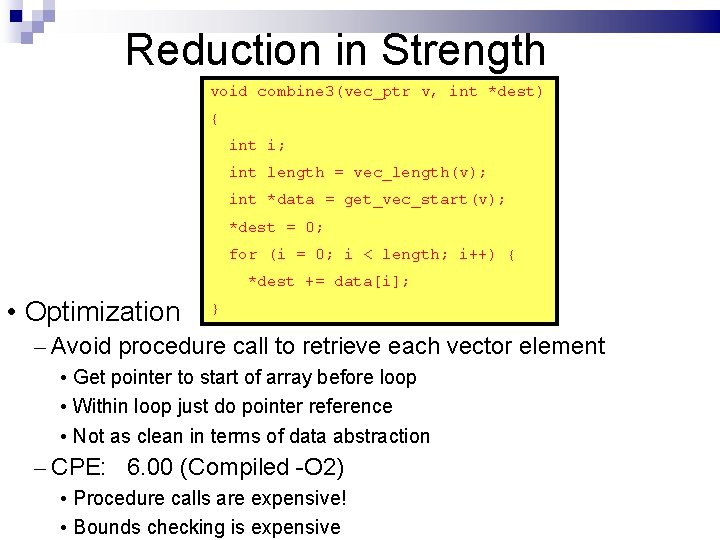

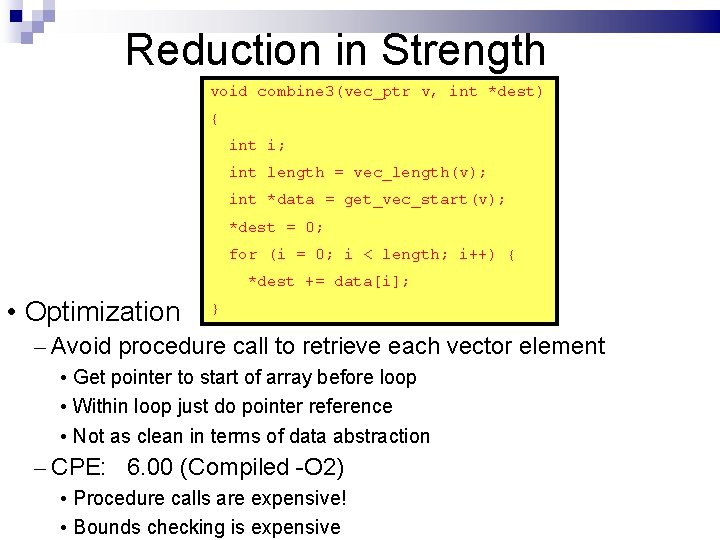

Reduction in Strength

```c
void combine3(vec_ptr v, int *dest)
{
    int i;
    int length = vec_length(v);
    int *data = get_vec_start(v);
    *dest = 0;
    for (i = 0; i < length; i++) {
        *dest += data[i];
    }
}
```

- Optimization
  - Avoid a procedure call to retrieve each vector element:
    - Get a pointer to the start of the array before the loop.
    - Within the loop, just do a pointer reference.
    - Not as clean in terms of data abstraction.
  - CPE: 6.00 (compiled `-O2`)
    - Procedure calls are expensive!
    - Bounds checking is expensive!

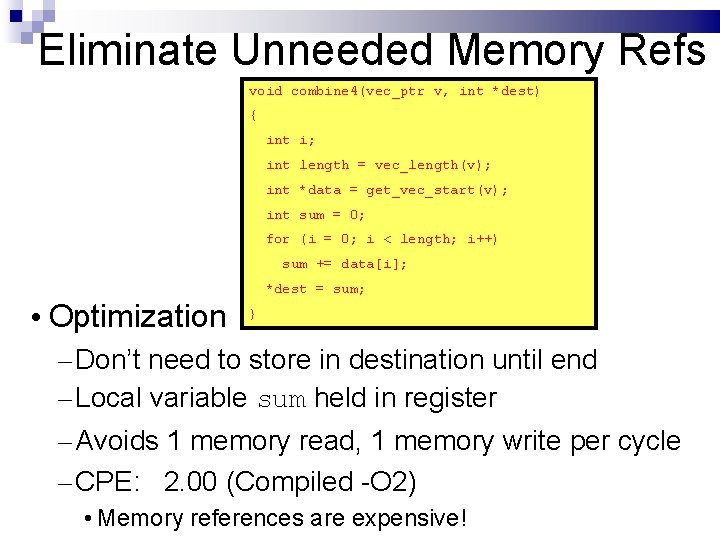

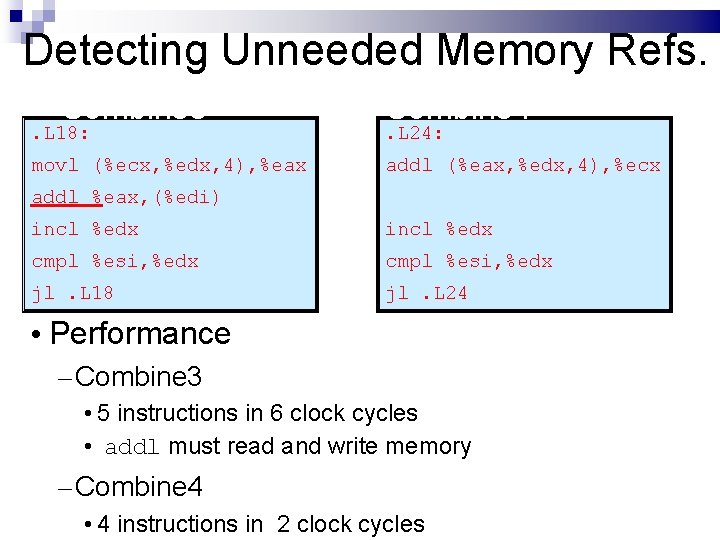

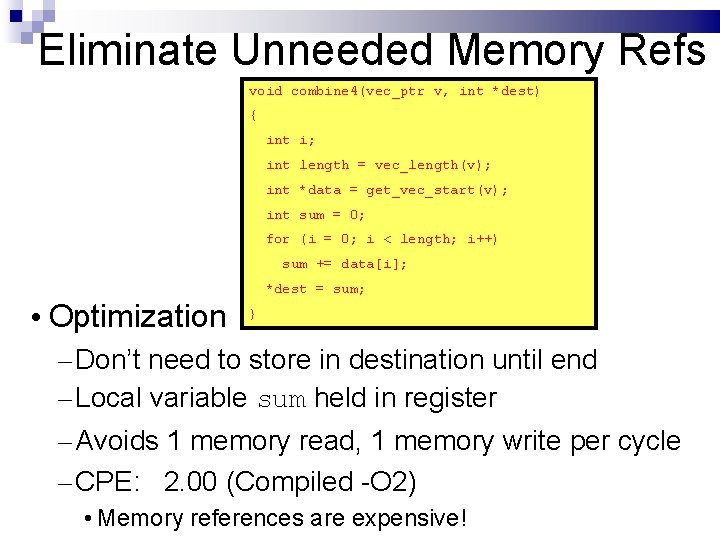

Eliminate Unneeded Memory Refs

```c
void combine4(vec_ptr v, int *dest)
{
    int i;
    int length = vec_length(v);
    int *data = get_vec_start(v);
    int sum = 0;
    for (i = 0; i < length; i++)
        sum += data[i];
    *dest = sum;
}
```

- Optimization
  - No need to store into the destination until the end.
  - The local variable `sum` is held in a register.
  - Avoids 1 memory read and 1 memory write per loop iteration.
  - CPE: 2.00 (compiled `-O2`)
    - Memory references are expensive!

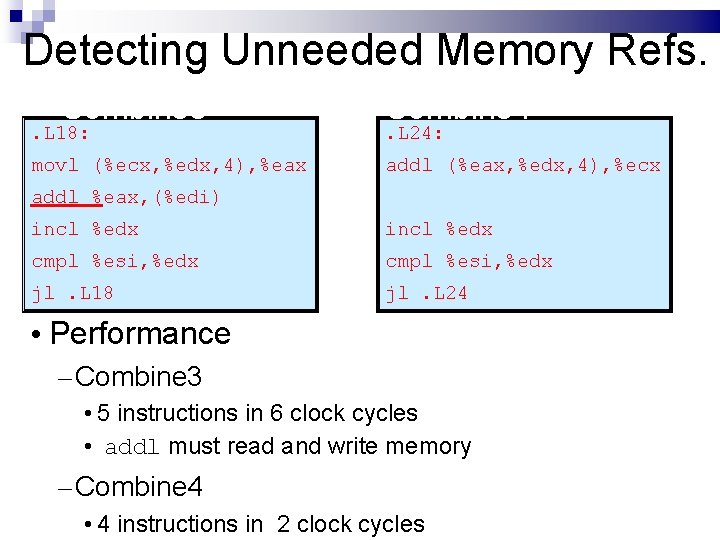

Detecting Unneeded Memory Refs.

Combine3:

```asm
.L18:
    movl (%ecx,%edx,4),%eax
    addl %eax,(%edi)
    incl %edx
    cmpl %esi,%edx
    jl .L18
```

Combine4:

```asm
.L24:
    addl (%eax,%edx,4),%ecx
    incl %edx
    cmpl %esi,%edx
    jl .L24
```

- Performance
  - Combine3: 5 instructions in 6 clock cycles; `addl` must read and write memory.
  - Combine4: 4 instructions in 2 clock cycles.

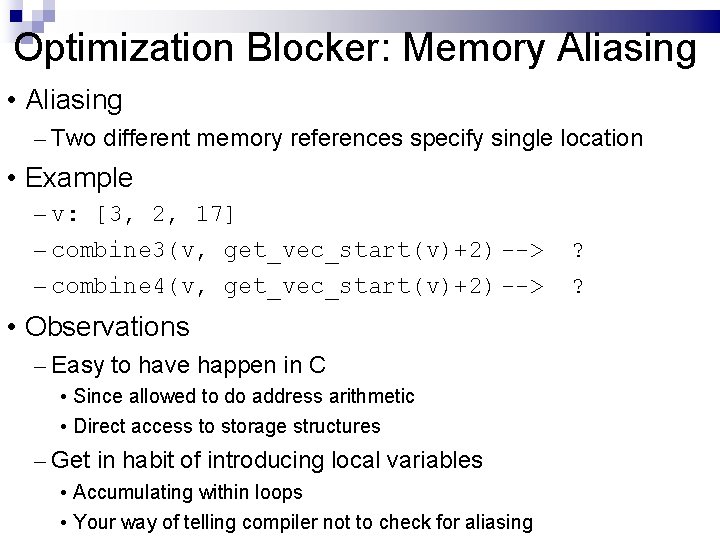

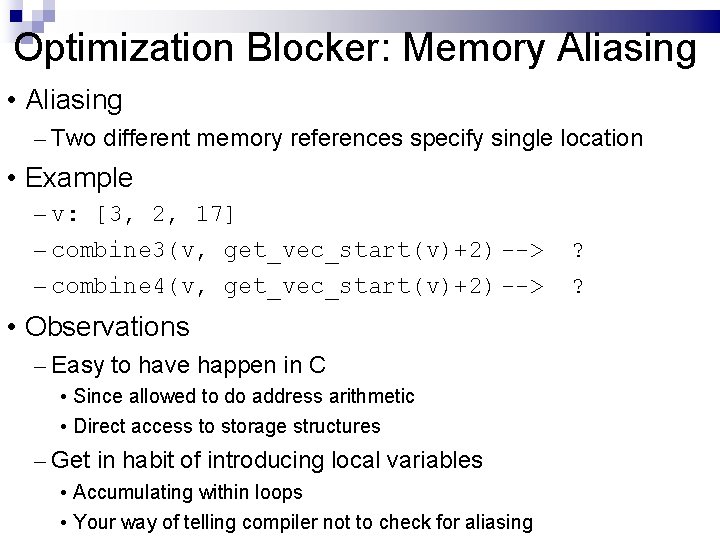

Optimization Blocker: Memory Aliasing
- Aliasing: two different memory references specify a single location.
- Example:
  - v: [3, 2, 17]
  - combine3(v, get_vec_start(v)+2) --> [3, 2, 10]
  - combine4(v, get_vec_start(v)+2) --> [3, 2, 22]
- Observations
  - Easy to have happen in C,
    - since C allows address arithmetic
    - and direct access to storage structures.
  - Get in the habit of introducing local variables,
    - accumulating within loops.
    - This is your way of telling the compiler not to check for aliasing.
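The aliasing example can be reproduced with array versions of `combine3` and `combine4`. In this sketch the vector ADT is replaced by a plain pointer and length (an assumption to keep it self-contained), and the function names are hypothetical. When `dest` points at the vector's last element, the two versions disagree, which is why a compiler cannot turn `combine3` into `combine4` on its own.

```c
/* combine3 over a plain array: reads and writes *dest every iteration,
   so a write through dest is visible to later reads of data[i]. */
void combine3_arr(const int *data, int length, int *dest)
{
    *dest = 0;
    for (int i = 0; i < length; i++)
        *dest += data[i];
}

/* combine4 over a plain array: accumulates in a local variable and
   stores to *dest only once, after all elements have been read. */
void combine4_arr(const int *data, int length, int *dest)
{
    int sum = 0;
    for (int i = 0; i < length; i++)
        sum += data[i];
    *dest = sum;
}
```

With `v = {3, 2, 17}` and `dest = v + 2`, `combine3_arr` first zeroes `v[2]` and then adds the running total to itself, leaving `{3, 2, 10}`; `combine4_arr` reads all three original values first and leaves `{3, 2, 22}`.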

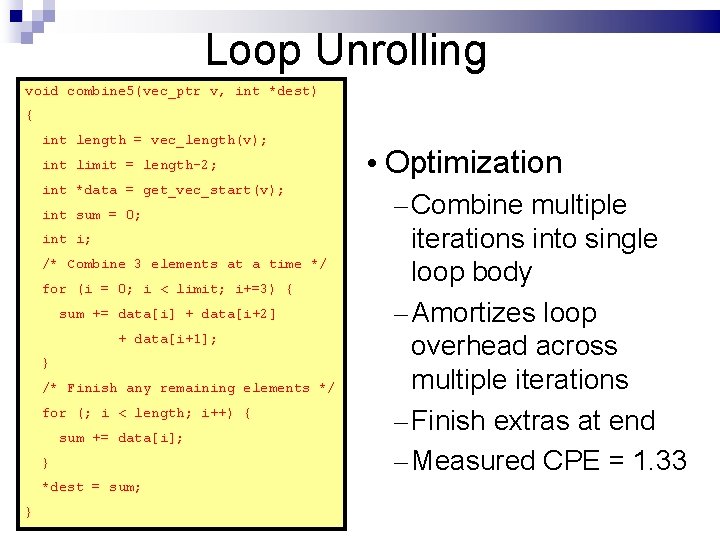

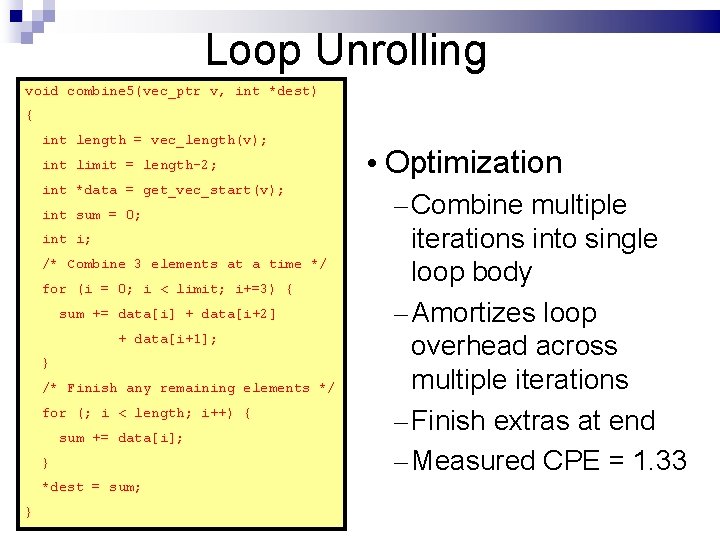

Loop Unrolling

```c
void combine5(vec_ptr v, int *dest)
{
    int length = vec_length(v);
    int limit = length - 2;
    int *data = get_vec_start(v);
    int sum = 0;
    int i;
    /* Combine 3 elements at a time */
    for (i = 0; i < limit; i += 3) {
        sum += data[i] + data[i+2] + data[i+1];
    }
    /* Finish any remaining elements */
    for (; i < length; i++) {
        sum += data[i];
    }
    *dest = sum;
}
```

- Optimization
  - Combine multiple iterations into a single loop body.
  - Amortizes loop overhead across multiple iterations.
  - Finish extras at the end.
  - Measured CPE = 1.33

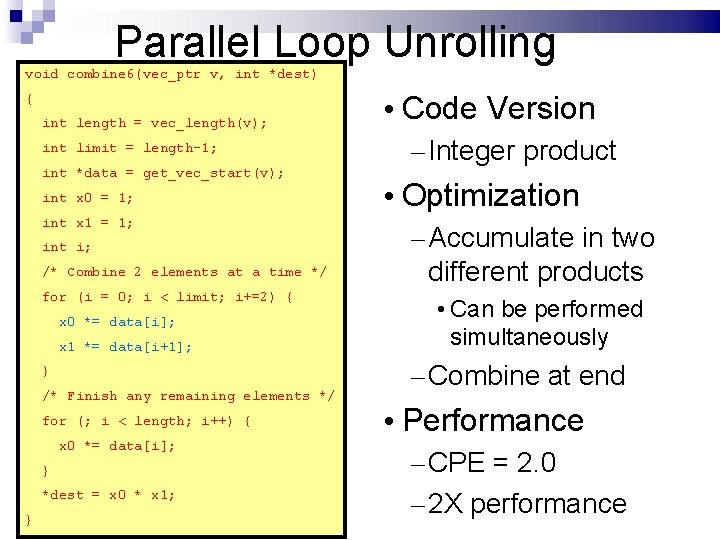

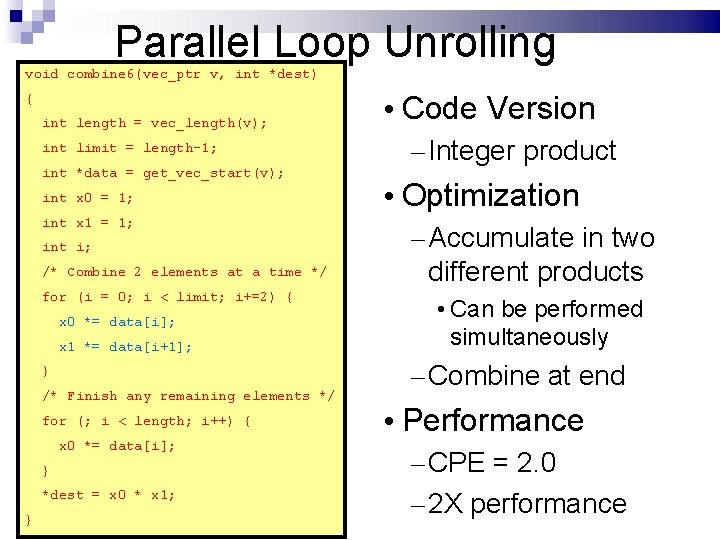

Parallel Loop Unrolling

```c
void combine6(vec_ptr v, int *dest)
{
    int length = vec_length(v);
    int limit = length - 1;
    int *data = get_vec_start(v);
    int x0 = 1;
    int x1 = 1;
    int i;
    /* Combine 2 elements at a time */
    for (i = 0; i < limit; i += 2) {
        x0 *= data[i];
        x1 *= data[i+1];
    }
    /* Finish any remaining elements */
    for (; i < length; i++) {
        x0 *= data[i];
    }
    *dest = x0 * x1;
}
```

- Code version
  - Integer product
- Optimization
  - Accumulate in two different products, which can be computed simultaneously.
  - Combine them at the end.
- Performance
  - CPE = 2.0
  - 2X performance
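A sketch of the two-accumulator idea on a plain array, compared with the straightforward product (names hypothetical). It uses `unsigned` so that overflow wraps instead of being undefined; with wrapping arithmetic, multiplication is associative and commutative, so regrouping into even and odd products gives exactly the same result.

```c
/* Straightforward product: every multiply depends on the previous one. */
unsigned product_seq(const unsigned *data, int length)
{
    unsigned x = 1;
    for (int i = 0; i < length; i++)
        x *= data[i];
    return x;
}

/* Two accumulators: even-indexed and odd-indexed elements form two
   independent dependency chains, combined once at the end. */
unsigned product_2acc(const unsigned *data, int length)
{
    unsigned x0 = 1, x1 = 1;
    int i;
    for (i = 0; i < length - 1; i += 2) {
        x0 *= data[i];
        x1 *= data[i + 1];
    }
    for (; i < length; i++)     /* at most one leftover element */
        x0 *= data[i];
    return x0 * x1;
}
```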

Code Optimizing
Time:
- Using efficient algorithms.
- Moving constant calculations outside of loops.
- Accessing memory as little as possible.
- Using more efficient instructions (shift vs. multiply).
- Minimizing calls to inefficient functions.

Memory:
- Using the smallest data types that fit.
- Using more efficient structures that provide the same functionality with less memory (see example later).
- Using as few variables as possible.