Performance Analysis using PAPI and Hardware Performance Counters

![PAPI High-level Example long values[NUM_EVENTS]; unsigned int Events[NUM_EVENTS]={PAPI_TOT_INS, PAPI_TOT_CYC}; /* Start the counters */ PAPI High-level Example long values[NUM_EVENTS]; unsigned int Events[NUM_EVENTS]={PAPI_TOT_INS, PAPI_TOT_CYC}; /* Start the counters */](https://slidetodoc.com/presentation_image/010b972b9f846a512fd87e3629d40fbc/image-13.jpg)

![Event set operations • Event set management PAPI_create_eventset, PAPI_add_event[s], PAPI_rem_event[s], PAPI_destroy_eventset • Event set Event set operations • Event set management PAPI_create_eventset, PAPI_add_event[s], PAPI_rem_event[s], PAPI_destroy_eventset • Event set](https://slidetodoc.com/presentation_image/010b972b9f846a512fd87e3629d40fbc/image-18.jpg)

![Simple example #include "papi. h“ #define NUM_EVENTS 2 int Events[NUM_EVENTS]={PAPI_FP_INS, PAPI_TOT_CYC}, Event. Set; long_long Simple example #include "papi. h“ #define NUM_EVENTS 2 int Events[NUM_EVENTS]={PAPI_FP_INS, PAPI_TOT_CYC}, Event. Set; long_long](https://slidetodoc.com/presentation_image/010b972b9f846a512fd87e3629d40fbc/image-19.jpg)

- Slides: 40

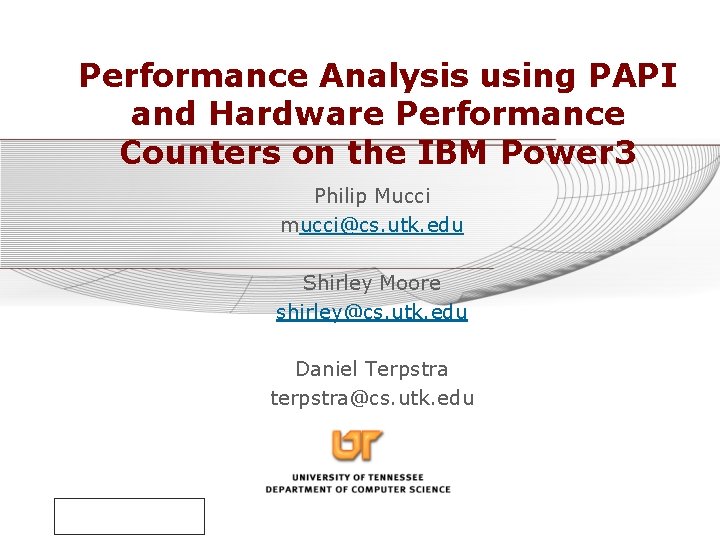

Performance Analysis using PAPI and Hardware Performance Counters on the IBM POWER3

Philip Mucci (mucci@cs.utk.edu), Shirley Moore (shirley@cs.utk.edu), Daniel Terpstra (terpstra@cs.utk.edu)

Hardware Counters

- A small set of registers that count events, which are occurrences of specific signals related to the processor’s function
- Monitoring these events facilitates correlation between the structure of the source/object code and the efficiency of the mapping of that code to the underlying architecture.

October 11, 2001, ScicomP 4, Knoxville, TN

Goals of PAPI

- Solid foundation for cross-platform performance analysis tools
- Free tool developers from reimplementing counter access
- Standardization between vendors, academics and users
- Encourage vendors to provide hardware and OS support for counter access
- Reference implementations for a number of HPC architectures
- Well documented and easy to use

Overview of PAPI

- Performance Application Programming Interface
- The purpose of the PAPI project is to design, standardize and implement a portable and efficient API to access the hardware performance monitor counters found on most modern microprocessors.
- Parallel Tools Consortium project: http://www.ptools.org/

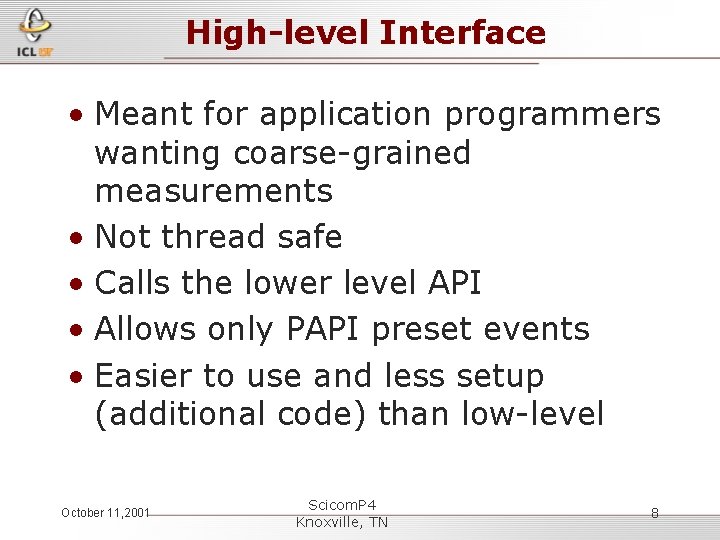

PAPI Counter Interfaces

PAPI provides three interfaces to the underlying counter hardware:

1. The low-level interface manages hardware events in user-defined groups called EventSets.
2. The high-level interface simply provides the ability to start, stop and read the counters for a specified list of events.
3. Graphical tools to visualize information.

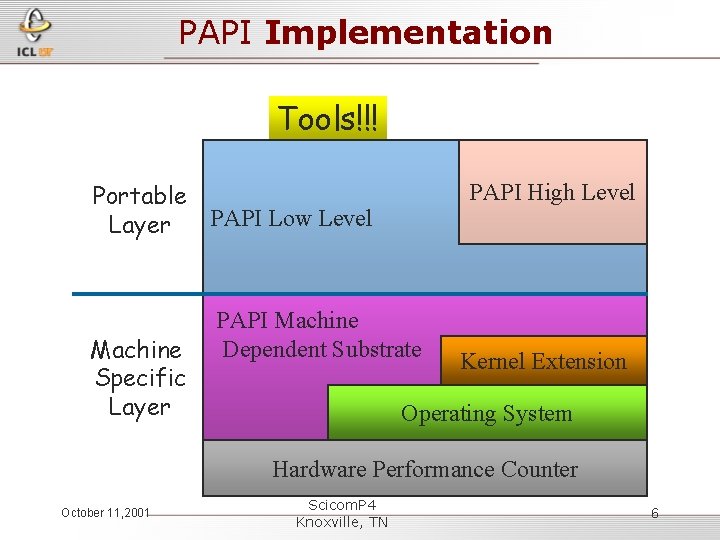

PAPI Implementation

The layers, from top to bottom:

- Tools
- Portable layer: PAPI High Level, PAPI Low Level
- Machine-specific layer: PAPI Machine Dependent Substrate, Kernel Extension, Operating System, Hardware Performance Counter

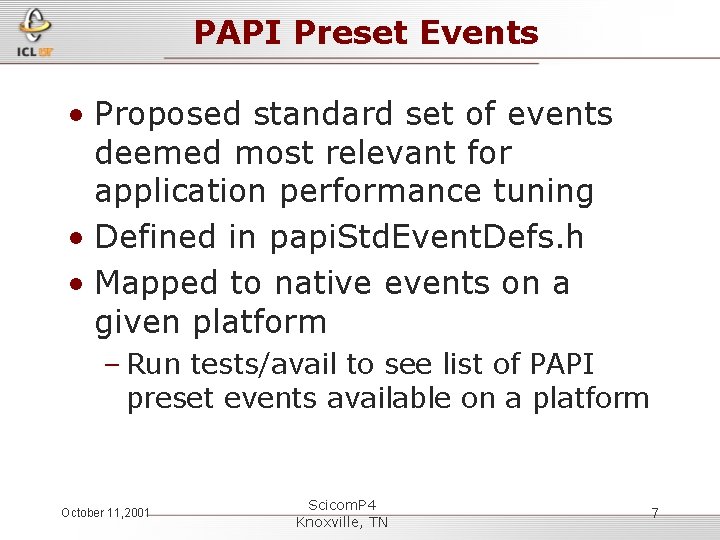

PAPI Preset Events

- Proposed standard set of events deemed most relevant for application performance tuning
- Defined in papiStdEventDefs.h
- Mapped to native events on a given platform
  - Run tests/avail to see the list of PAPI preset events available on a platform

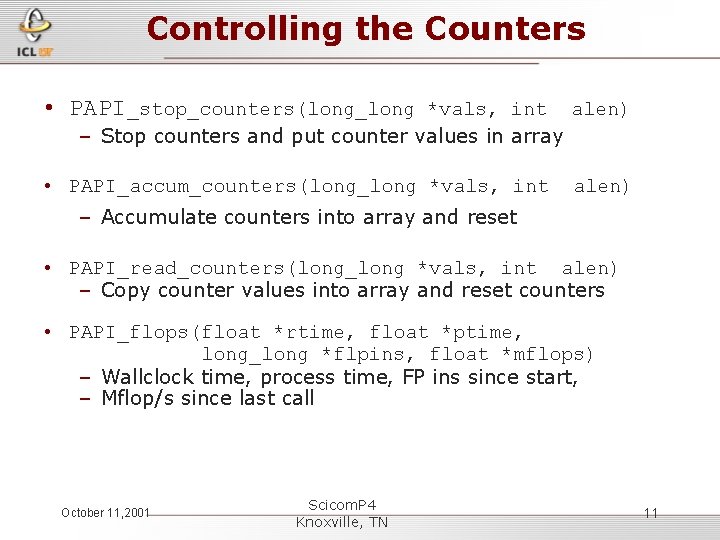

High-level Interface

- Meant for application programmers wanting coarse-grained measurements
- Not thread safe
- Calls the lower-level API
- Allows only PAPI preset events
- Easier to use and requires less setup (additional code) than the low-level interface

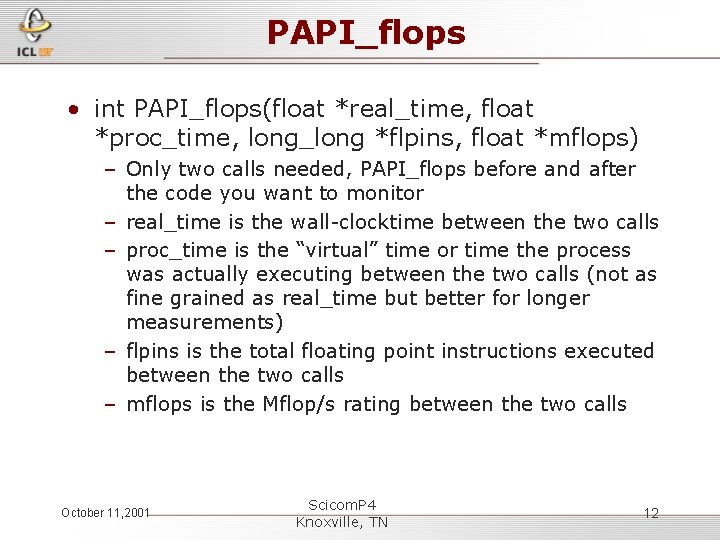

High-level API

| C interface | Fortran interface |
|---|---|
| PAPI_start_counters | PAPIF_start_counters |
| PAPI_read_counters | PAPIF_read_counters |
| PAPI_stop_counters | PAPIF_stop_counters |
| PAPI_accum_counters | PAPIF_accum_counters |
| PAPI_num_counters | PAPIF_num_counters |
| PAPI_flops | PAPIF_flops |

Using the High-level API

- int PAPI_num_counters(void)
  - Initializes PAPI (if needed)
  - Returns the number of hardware counters
- int PAPI_start_counters(int *events, int len)
  - Initializes PAPI (if needed)
  - Sets up an event set with the given counters
  - Starts counting in the event set
- int PAPI_library_init(int version)
  - Low-level routine implicitly called by the above

Controlling the Counters

- PAPI_stop_counters(long_long *vals, int alen)
  - Stop the counters and put the counter values in the array
- PAPI_accum_counters(long_long *vals, int alen)
  - Add the counter values into the array and reset the counters
- PAPI_read_counters(long_long *vals, int alen)
  - Copy the counter values into the array and reset the counters
- PAPI_flops(float *rtime, float *ptime, long_long *flpins, float *mflops)
  - Wall-clock time, process time and FP instructions since the start
  - Mflop/s since the last call
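
The difference between the read and accumulate calls is easy to miss: both reset the counters, but one overwrites the caller's array and the other adds into it. The sketch below models only those semantics in plain C; `sim_counter`, `sim_read` and `sim_accum` are hypothetical stand-ins for a running hardware counter and do not call PAPI.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model of one running counter; not a PAPI type. */
typedef struct {
    long long count; /* events seen since the last reset */
} sim_counter;

/* Models "read": copy the current count into *out, then reset. */
static void sim_read(sim_counter *c, long long *out)
{
    assert(c != NULL && out != NULL);
    *out = c->count;
    c->count = 0;
}

/* Models "accumulate": add the current count into *acc, then reset. */
static void sim_accum(sim_counter *c, long long *acc)
{
    assert(c != NULL && acc != NULL);
    *acc += c->count;
    c->count = 0;
}
```

Because the accumulate form adds rather than overwrites, the destination array has to be zeroed before the first call.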

PAPI_flops

- int PAPI_flops(float *real_time, float *proc_time, long_long *flpins, float *mflops)
  - Only two calls are needed: PAPI_flops before and after the code you want to monitor
  - real_time is the wall-clock time between the two calls
  - proc_time is the "virtual" time, i.e. the time the process was actually executing between the two calls (not as fine-grained as real_time, but better for longer measurements)
  - flpins is the total number of floating-point instructions executed between the two calls
  - mflops is the Mflop/s rating between the two calls
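
A Mflop/s rating is floating-point instructions divided by elapsed seconds, divided again by one million. A minimal sketch of that arithmetic follows; `mflops_rate` is a hypothetical helper, and since the slide does not say which time base PAPI_flops itself uses for its rating, the caller's choice of `seconds` is an assumption.

```c
#include <assert.h>

/* Hypothetical helper: Mflop/s from an instruction count and an
 * elapsed time in seconds (wall-clock or process time, caller's choice). */
static double mflops_rate(long long flpins, double seconds)
{
    assert(seconds > 0.0);
    return (double)flpins / seconds / 1.0e6;
}
```

For example, 2,000,000 floating-point instructions in half a second is a rating of 4 Mflop/s.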

![PAPI Highlevel Example long valuesNUMEVENTS unsigned int EventsNUMEVENTSPAPITOTINS PAPITOTCYC Start the counters PAPI High-level Example long values[NUM_EVENTS]; unsigned int Events[NUM_EVENTS]={PAPI_TOT_INS, PAPI_TOT_CYC}; /* Start the counters */](https://slidetodoc.com/presentation_image/010b972b9f846a512fd87e3629d40fbc/image-13.jpg)

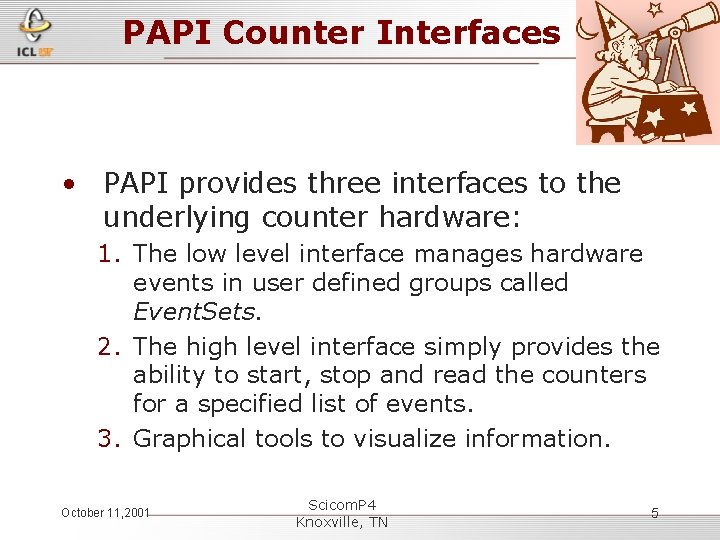

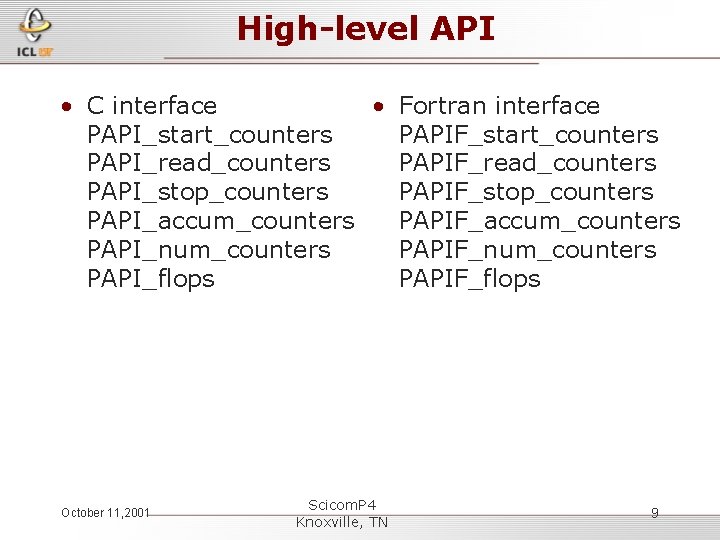

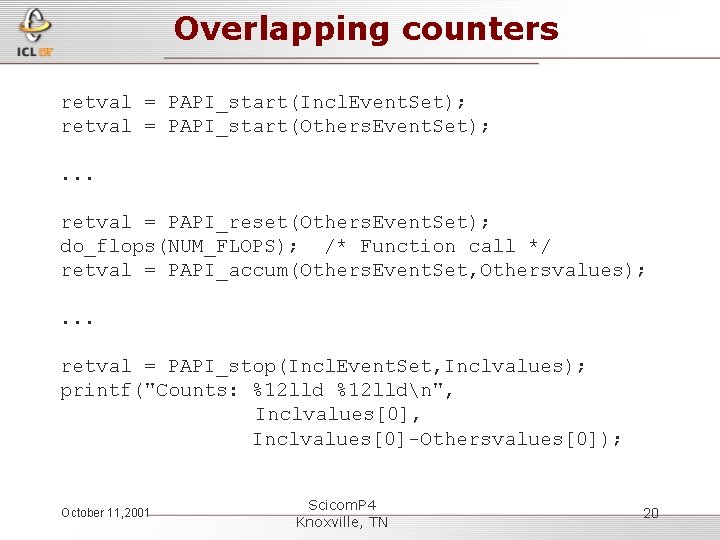

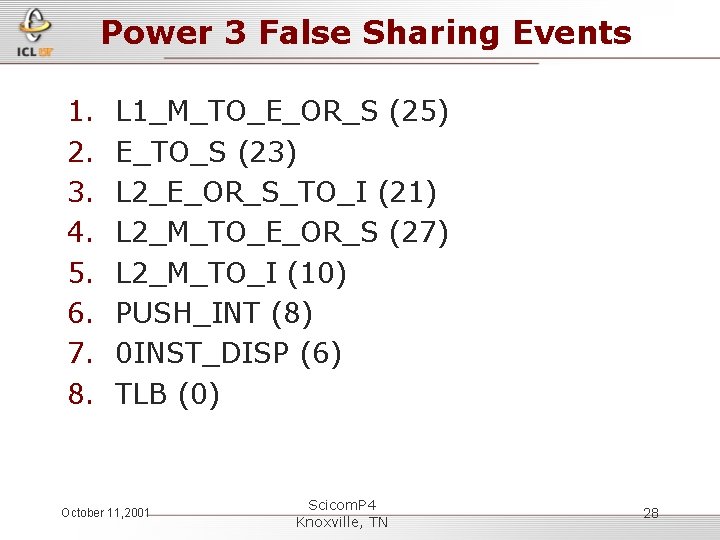

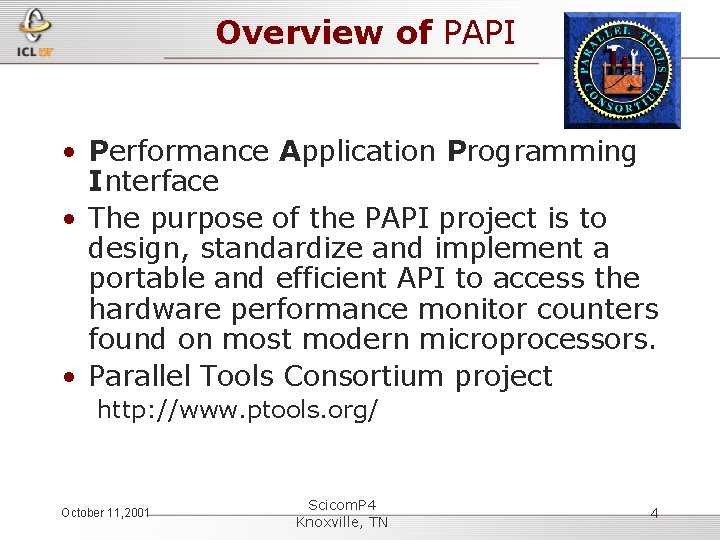

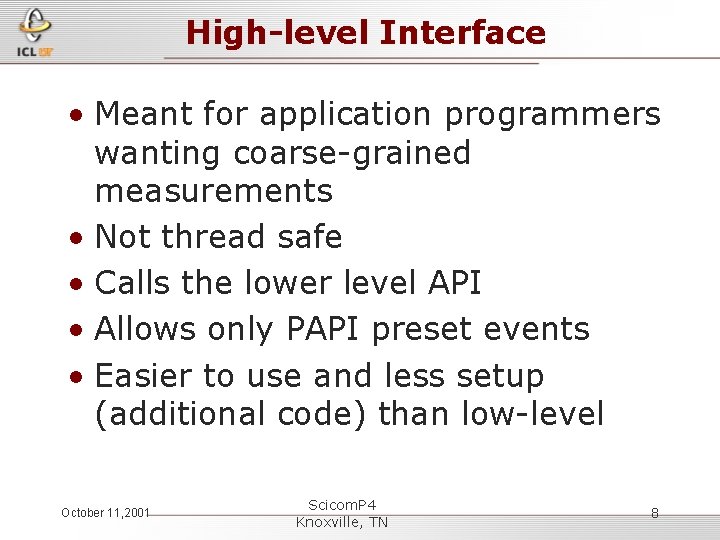

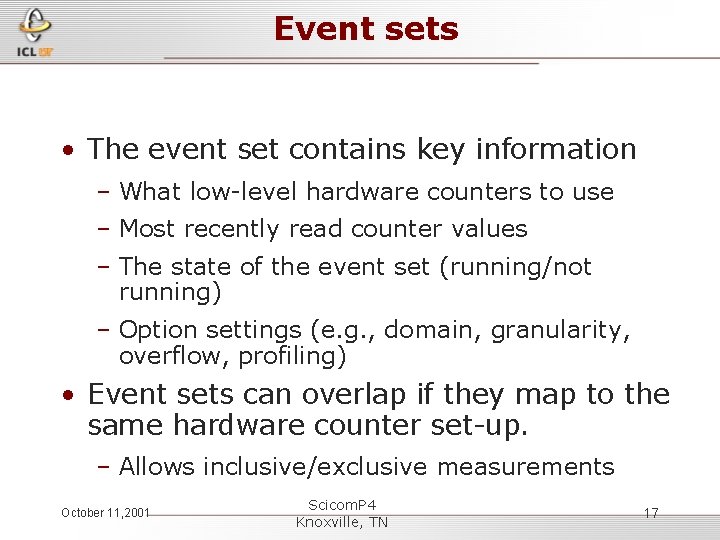

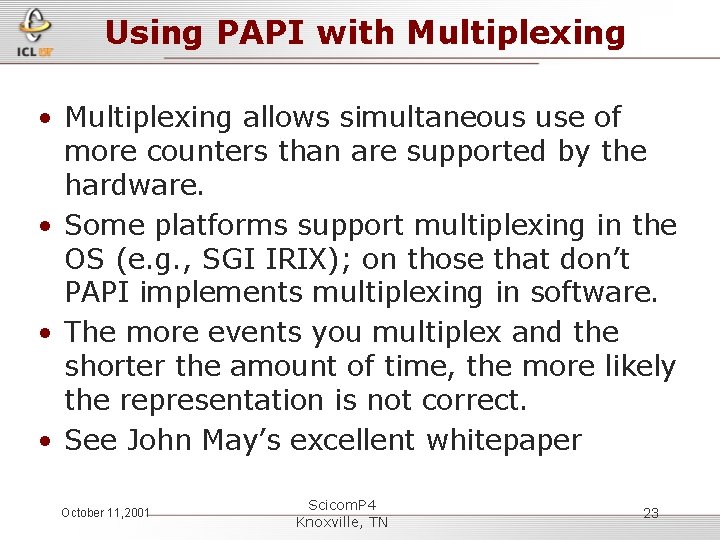

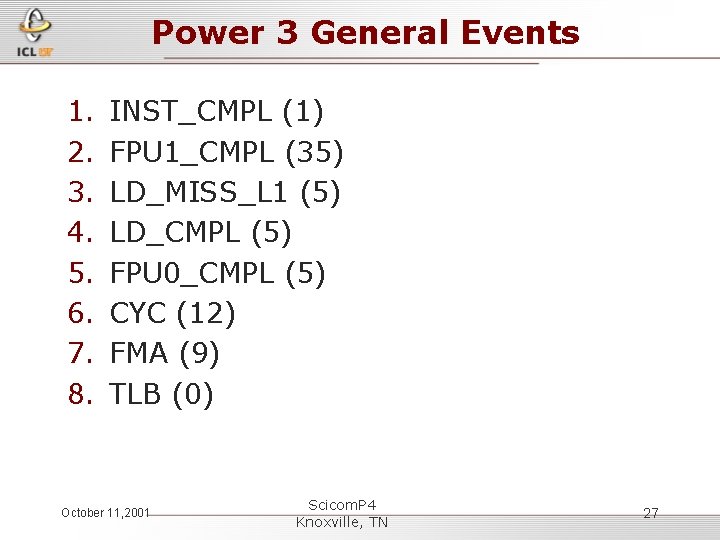

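PAPI High-level Example

```c
#include <stdio.h>
#include "papi.h"

#define NUM_EVENTS 2

void do_work(void);  /* code being measured, defined elsewhere */

int main(void)
{
    long_long values[NUM_EVENTS];  /* the counter calls take long_long, not long */
    int Events[NUM_EVENTS] = {PAPI_TOT_INS, PAPI_TOT_CYC};

    /* Start the counters */
    if (PAPI_start_counters(Events, NUM_EVENTS) != PAPI_OK) return 1;
    do_work();  /* What we are monitoring */
    /* Stop the counters and store the results in values */
    if (PAPI_stop_counters(values, NUM_EVENTS) != PAPI_OK) return 1;
    printf("%lld instructions, %lld cycles\n", values[0], values[1]);
    return 0;
}
```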
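
The two counts collected in this example are enough to compute instructions per cycle (IPC), one of the metrics the PCTM study later proposes to measure. A hedged sketch of the calculation, where `ipc` is a hypothetical helper taking values[0] (PAPI_TOT_INS) and values[1] (PAPI_TOT_CYC):

```c
#include <assert.h>

/* Hypothetical helper: instructions per cycle from total
 * instructions and total cycles for the same region. */
static double ipc(long long tot_ins, long long tot_cyc)
{
    assert(tot_cyc > 0);
    return (double)tot_ins / (double)tot_cyc;
}
```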

Return Codes

Low-level Interface

- Increased efficiency and functionality over the high-level PAPI interface
- About 40 functions
- Obtain information about the executable and the hardware
- Thread-safe
- Fully programmable
- Callbacks on counter overflow

Low-level Functionality

- Library initialization: PAPI_library_init, PAPI_thread_init, PAPI_shutdown
- Timing functions: PAPI_get_real_usec, PAPI_get_virt_usec, PAPI_get_real_cyc, PAPI_get_virt_cyc
- Inquiry functions
- Management functions
- Simple lock: PAPI_lock/PAPI_unlock

Event sets

- The event set contains key information:
  - What low-level hardware counters to use
  - Most recently read counter values
  - The state of the event set (running/not running)
  - Option settings (e.g., domain, granularity, overflow, profiling)
- Event sets can overlap if they map to the same hardware counter set-up.
  - Allows inclusive/exclusive measurements

![Event set operations Event set management PAPIcreateeventset PAPIaddevents PAPIremevents PAPIdestroyeventset Event set Event set operations • Event set management PAPI_create_eventset, PAPI_add_event[s], PAPI_rem_event[s], PAPI_destroy_eventset • Event set](https://slidetodoc.com/presentation_image/010b972b9f846a512fd87e3629d40fbc/image-18.jpg)

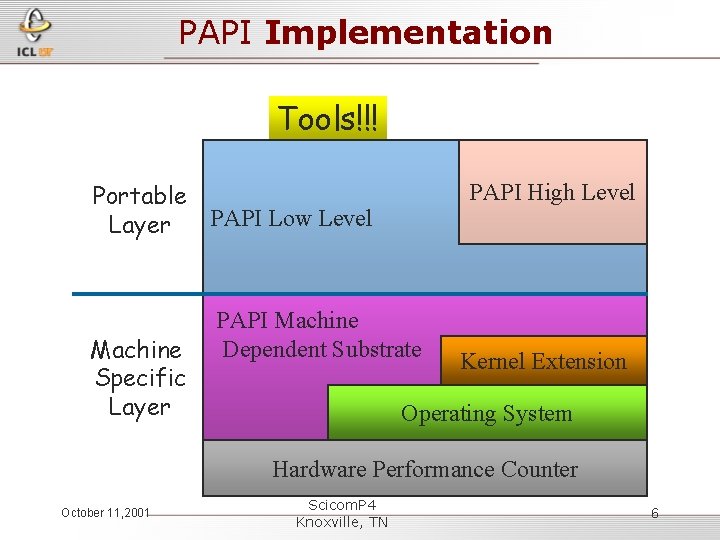

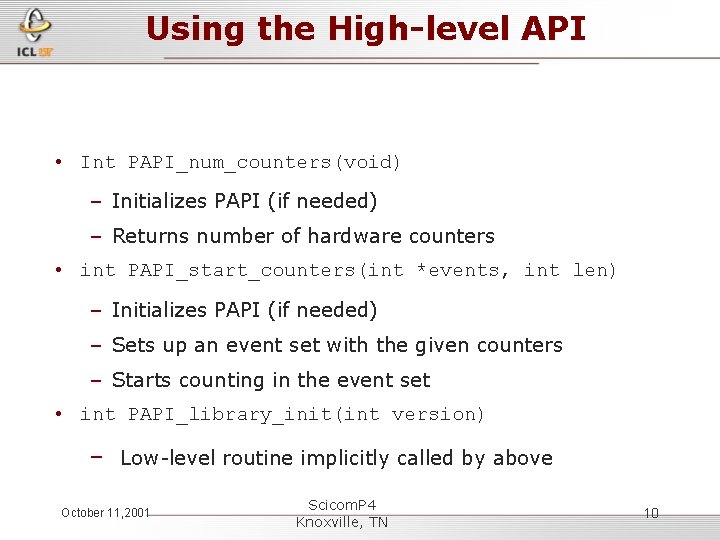

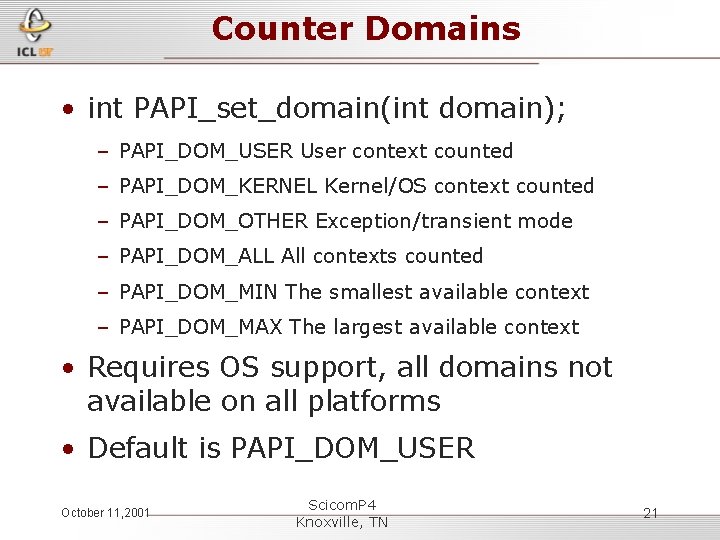

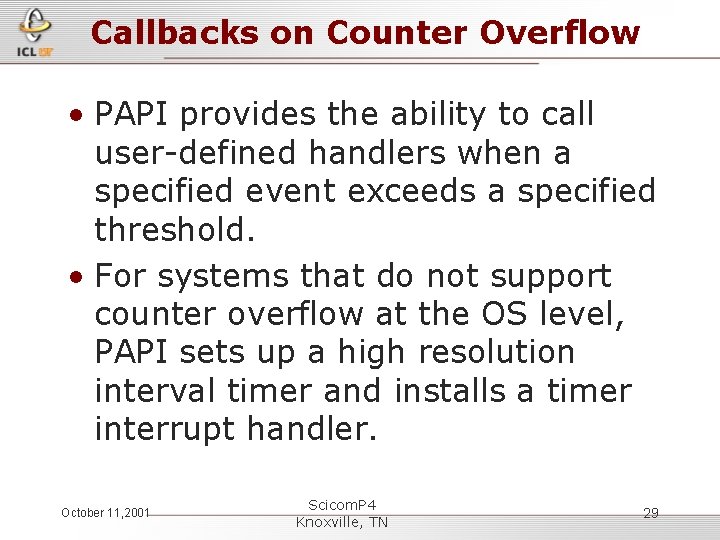

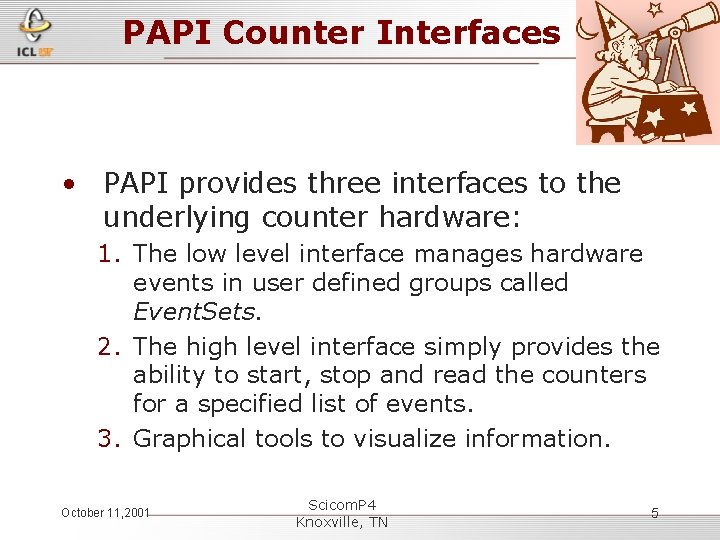

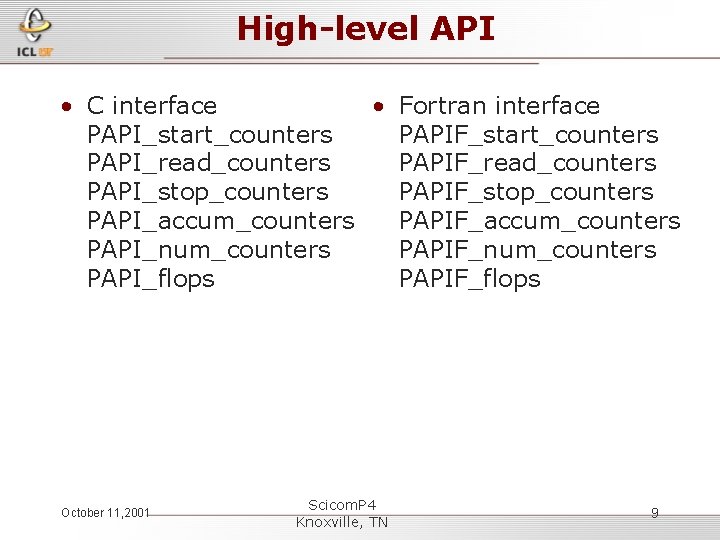

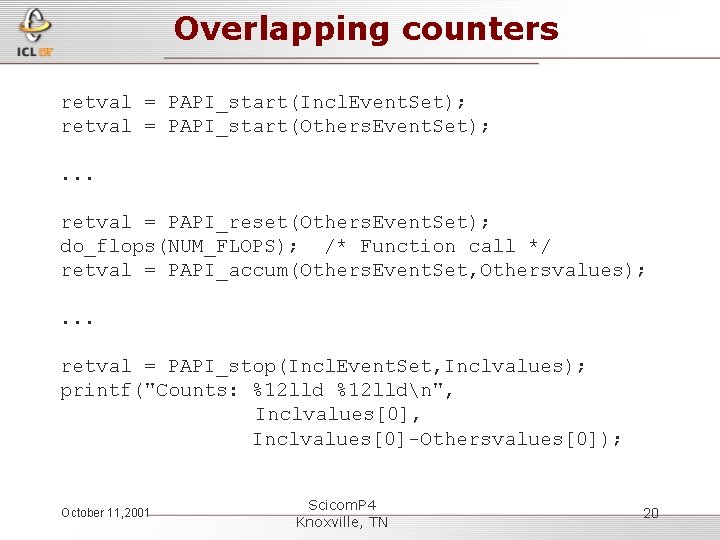

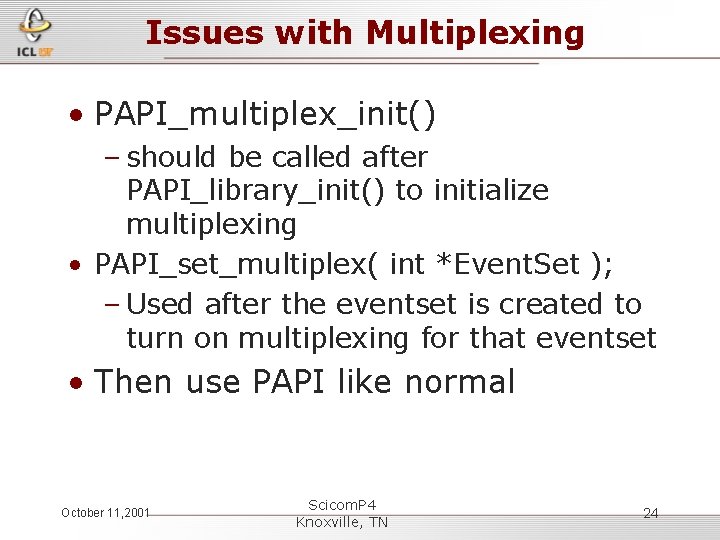

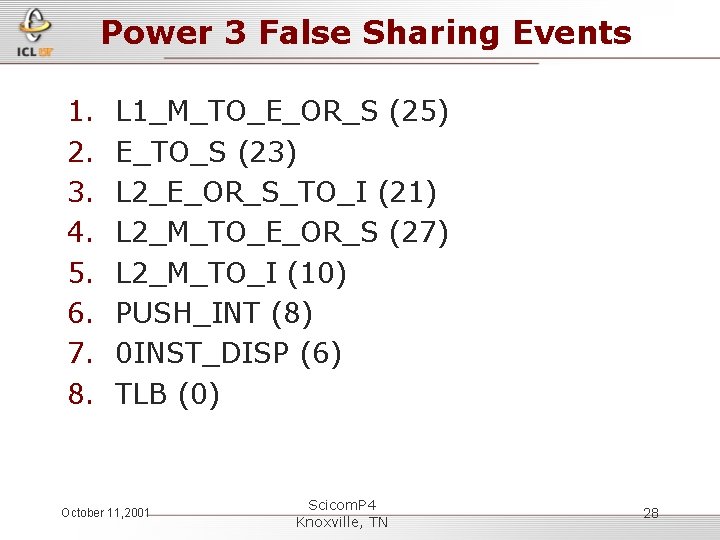

Event set operations

- Event set management: PAPI_create_eventset, PAPI_add_event[s], PAPI_rem_event[s], PAPI_destroy_eventset
- Event set control: PAPI_start, PAPI_stop, PAPI_read, PAPI_accum
- Event set inquiry: PAPI_query_event, PAPI_list_events, ...

![Simple example include papi h define NUMEVENTS 2 int EventsNUMEVENTSPAPIFPINS PAPITOTCYC Event Set longlong Simple example #include "papi. h“ #define NUM_EVENTS 2 int Events[NUM_EVENTS]={PAPI_FP_INS, PAPI_TOT_CYC}, Event. Set; long_long](https://slidetodoc.com/presentation_image/010b972b9f846a512fd87e3629d40fbc/image-19.jpg)

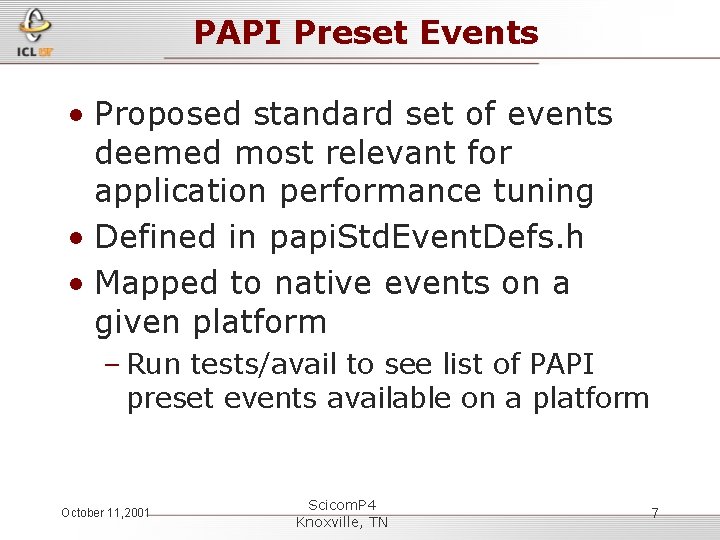

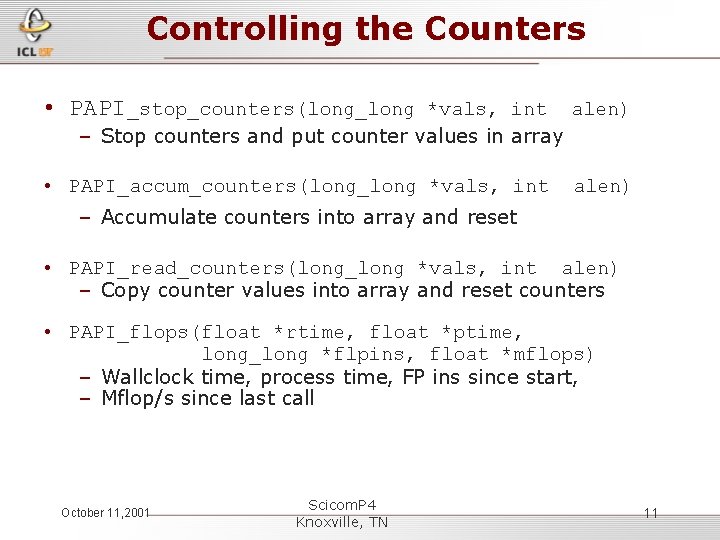

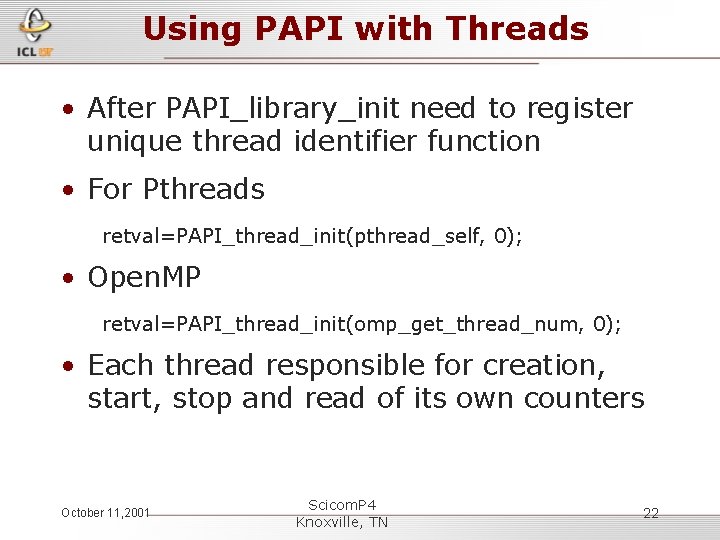

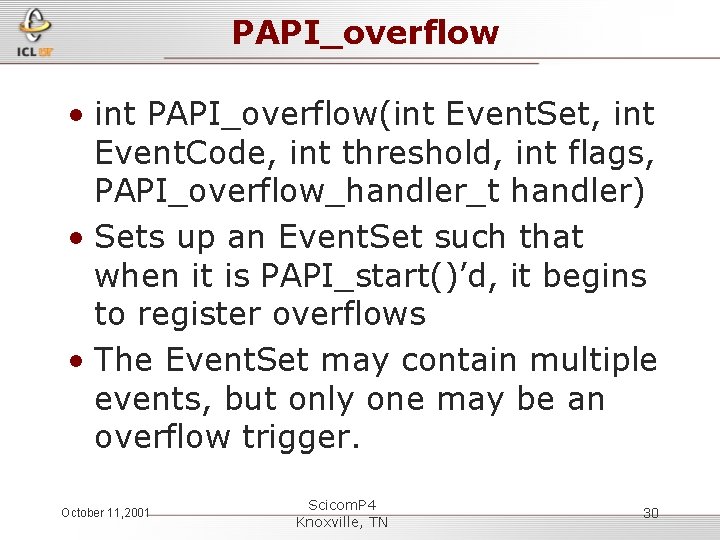

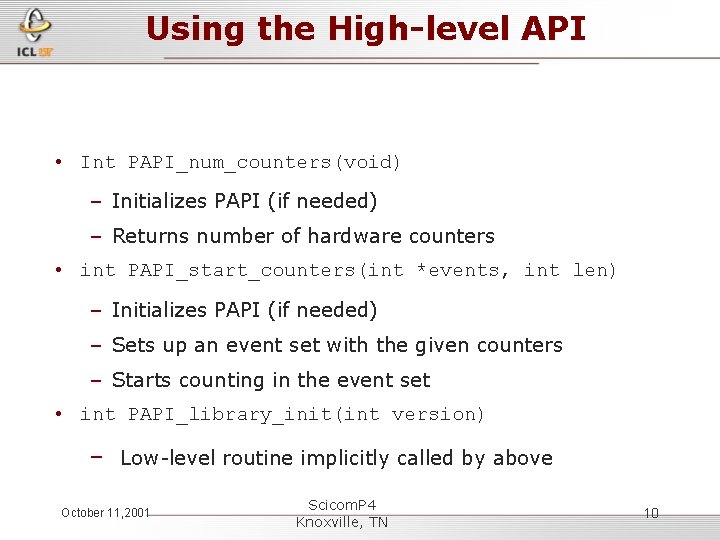

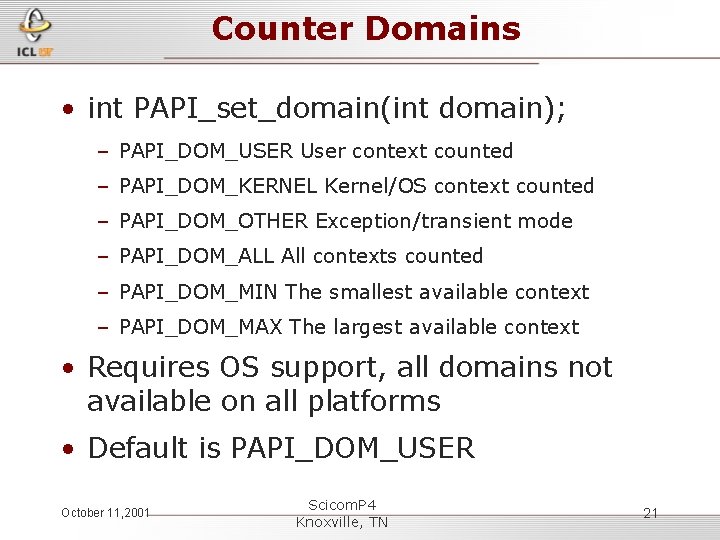

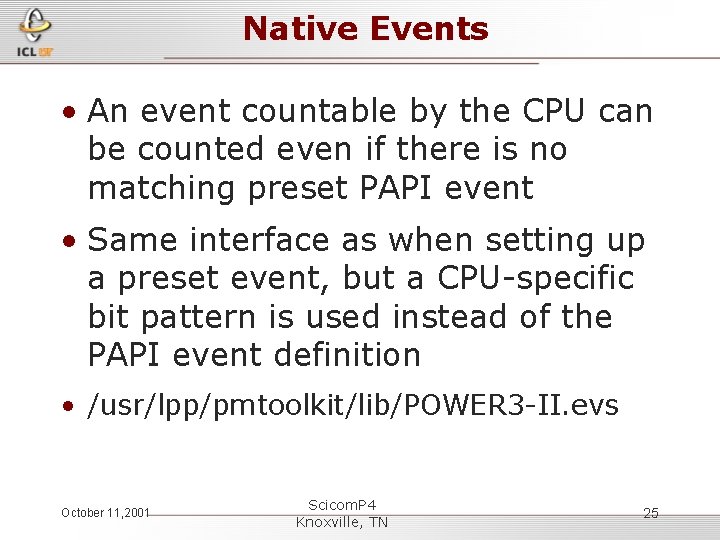

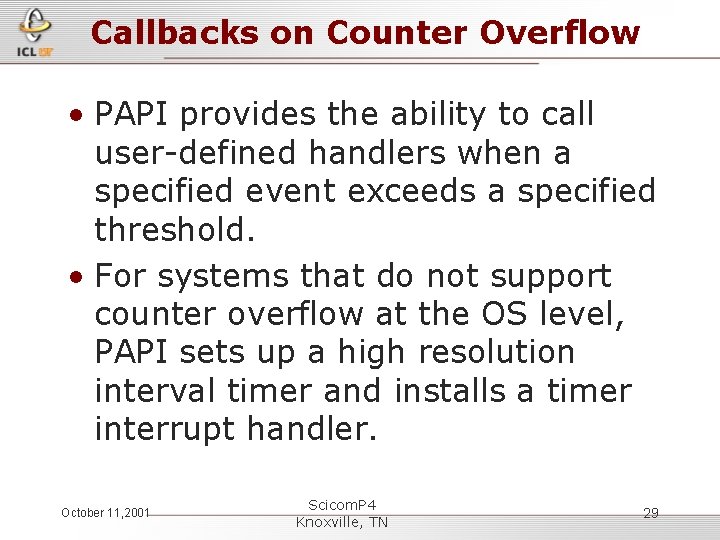

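Simple example

```c
#include <stdio.h>
#include "papi.h"

#define NUM_EVENTS 2

void do_work(void);  /* code being measured, defined elsewhere */

int main(void)
{
    int Events[NUM_EVENTS] = {PAPI_FP_INS, PAPI_TOT_CYC};
    int EventSet = PAPI_NULL;  /* must be PAPI_NULL before PAPI_create_eventset */
    long_long values[NUM_EVENTS];
    int retval;

    /* Initialize the library; success returns the version, not PAPI_OK */
    retval = PAPI_library_init(PAPI_VER_CURRENT);
    if (retval != PAPI_VER_CURRENT) return 1;
    /* Allocate space for the new eventset and do setup */
    if (PAPI_create_eventset(&EventSet) != PAPI_OK) return 1;
    /* Add flops and total cycles (&EventSet as on the slide; later PAPI
       releases take EventSet by value) */
    if (PAPI_add_events(&EventSet, Events, NUM_EVENTS) != PAPI_OK) return 1;
    /* Start the counters */
    if (PAPI_start(EventSet) != PAPI_OK) return 1;
    do_work();  /* What we want to monitor */
    /* Stop counters and store results in values */
    if (PAPI_stop(EventSet, values) != PAPI_OK) return 1;
    printf("FP instructions: %lld, cycles: %lld\n", values[0], values[1]);
    return 0;
}
```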

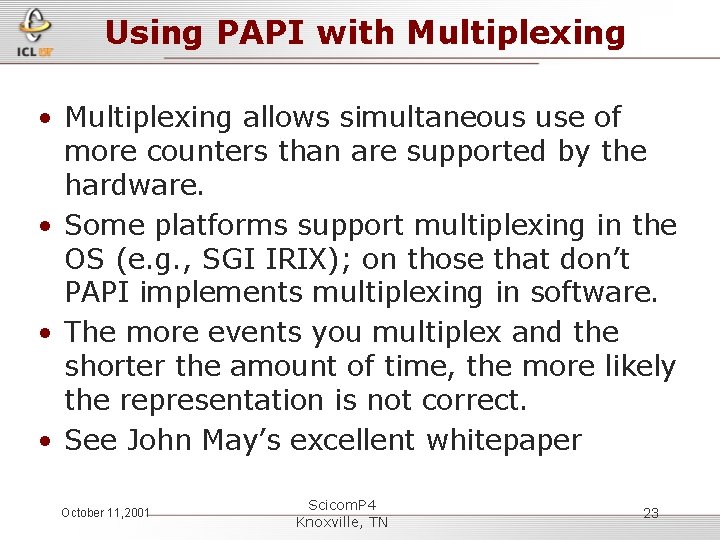

Overlapping counters

```c
/* Start both event sets */
retval = PAPI_start(InclEventSet);
retval = PAPI_start(OthersEventSet);
/* ... */
retval = PAPI_reset(OthersEventSet);
do_flops(NUM_FLOPS);  /* Function call */
/* Add this call's counts into Othersvalues (zero it beforehand) */
retval = PAPI_accum(OthersEventSet, Othersvalues);
/* ... */
retval = PAPI_stop(InclEventSet, Inclvalues);
/* Inclusive counts minus the do_flops() counts */
printf("Counts: %12lld\n", Inclvalues[0] - Othersvalues[0]);
```
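
To see why Inclvalues[0] - Othersvalues[0] leaves only the events outside do_flops(), the following PAPI-free simulation replays the same reset, accumulate and stop sequence on two modeled counters. The helper `outside_counts`, the `sim_counter` type and the per-phase event counts are hypothetical inputs for illustration.

```c
#include <assert.h>

typedef struct { long long count; } sim_counter; /* hypothetical, not PAPI */

/* outs[i]: events before call i; ins[i]: events inside do_flops() call i. */
static long long outside_counts(const long long *outs, const long long *ins, int calls)
{
    sim_counter incl = {0}, others = {0};
    long long othersvalue = 0;

    for (int i = 0; i < calls; i++) {
        /* Code outside do_flops(): both running sets see these events */
        incl.count += outs[i];
        others.count += outs[i];

        others.count = 0;            /* like PAPI_reset(OthersEventSet) */
        incl.count += ins[i];        /* events inside do_flops() */
        others.count += ins[i];
        othersvalue += others.count; /* like PAPI_accum(): add, then reset */
        others.count = 0;
    }
    /* like PAPI_stop(InclEventSet, ...) followed by the subtraction */
    return incl.count - othersvalue;
}
```

With 10 and 20 events outside two calls that each generate 100 and 300 events, the inclusive set sees 430, the accumulated set sees 400, and the difference is 30.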

Counter Domains

- int PAPI_set_domain(int domain);
  - PAPI_DOM_USER: user context counted
  - PAPI_DOM_KERNEL: kernel/OS context counted
  - PAPI_DOM_OTHER: exception/transient mode
  - PAPI_DOM_ALL: all contexts counted
  - PAPI_DOM_MIN: the smallest available context
  - PAPI_DOM_MAX: the largest available context
- Requires OS support; not all domains are available on all platforms
- Default is PAPI_DOM_USER

Using PAPI with Threads

- After PAPI_library_init, you need to register a function that returns a unique thread identifier
- For Pthreads: retval = PAPI_thread_init(pthread_self, 0);
- For OpenMP: retval = PAPI_thread_init(omp_get_thread_num, 0);
- Each thread is responsible for the creation, start, stop and read of its own counters

Using PAPI with Multiplexing

- Multiplexing allows simultaneous use of more counters than are supported by the hardware.
- Some platforms support multiplexing in the OS (e.g., SGI IRIX); on those that don't, PAPI implements multiplexing in software.
- The more events you multiplex and the shorter the measurement interval, the more likely it is that the reported counts are inaccurate.
- See John May's excellent whitepaper
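
A common way to turn a multiplexed count into an estimate is linear extrapolation: an event that was live on a physical counter for only part of the run has its count scaled by total time over active time. The sketch below assumes a uniform event rate, and `mpx_estimate` is a hypothetical helper; PAPI's own multiplexing code may estimate differently. The uniform-rate assumption is what breaks down when many events share few counters over a short interval.

```c
#include <assert.h>

/* Hypothetical estimator: scale an observed count by the fraction of
 * the run during which the event was actually being counted. */
static double mpx_estimate(long long observed, double active_time, double total_time)
{
    assert(active_time > 0.0 && total_time >= active_time);
    return (double)observed * (total_time / active_time);
}
```

An event counted 1000 times while live for 25 of 100 time units would be reported as roughly 4000.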

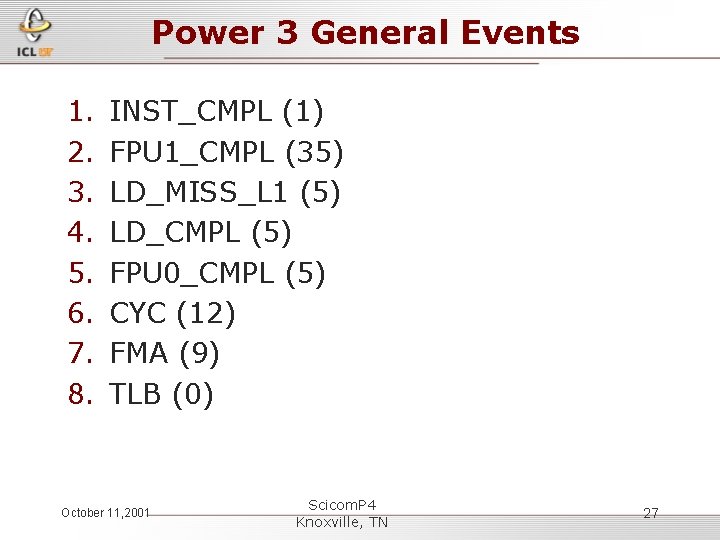

Issues with Multiplexing

- PAPI_multiplex_init()
  - Should be called after PAPI_library_init() to initialize multiplexing
- PAPI_set_multiplex(int *EventSet);
  - Used after the eventset is created to turn on multiplexing for that eventset
- Then use PAPI as normal

Native Events

- An event countable by the CPU can be counted even if there is no matching PAPI preset event
- Same interface as when setting up a preset event, but a CPU-specific bit pattern is used instead of the PAPI event definition
- /usr/lpp/pmtoolkit/lib/POWER3-II.evs

POWER3 Native Event Example

See … for the list of native events. The encoding for native events is:

- Lower 8 bits indicate the counter number: 0-7
- Bits 8-16 indicate the event number: 0-50

```c
native = (35 << 8) | 1;  /* FPU1_CMPL (event 35) on counter 1 */
PAPI_add_event(&EventSet, native);
```
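
The encoding above can be wrapped in small helpers. This sketch covers only the POWER3 scheme described on the slide; `power3_native`, `power3_counter` and `power3_event` are hypothetical names, and the range checks mirror the stated limits (counters 0-7, events 0-50).

```c
#include <assert.h>

/* Build a native event code: event number in bits 8-16, counter in bits 0-7. */
static int power3_native(int event, int counter)
{
    assert(counter >= 0 && counter <= 7);
    assert(event >= 0 && event <= 50);
    return (event << 8) | counter;
}

/* Recover the counter number from the low 8 bits. */
static int power3_counter(int native) { return native & 0xFF; }

/* Recover the event number from bits 8-16. */
static int power3_event(int native) { return (native >> 8) & 0x1FF; }
```

power3_native(35, 1) yields the same value as the slide's (35 << 8) | 1, namely 8961.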

POWER3 General Events

One event per counter, with its event number in parentheses:

1. INST_CMPL (1)
2. FPU1_CMPL (35)
3. LD_MISS_L1 (5)
4. LD_CMPL (5)
5. FPU0_CMPL (5)
6. CYC (12)
7. FMA (9)
8. TLB (0)

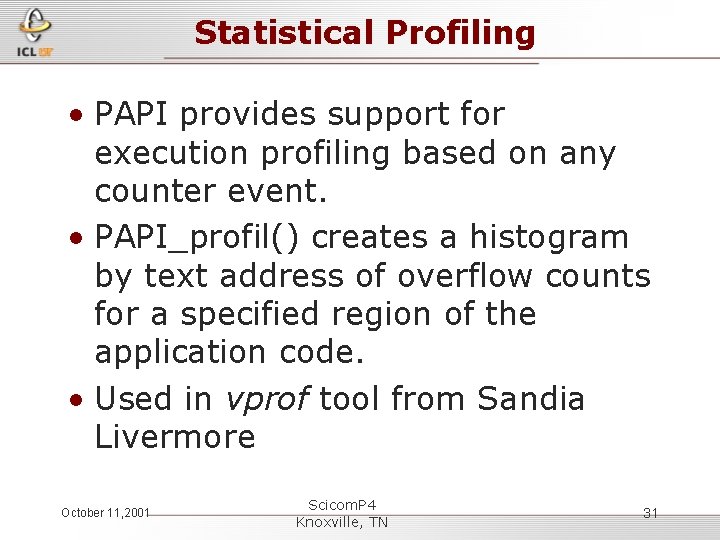

POWER3 False Sharing Events

1. L1_M_TO_E_OR_S (25)
2. E_TO_S (23)
3. L2_E_OR_S_TO_I (21)
4. L2_M_TO_E_OR_S (27)
5. L2_M_TO_I (10)
6. PUSH_INT (8)
7. 0INST_DISP (6)
8. TLB (0)

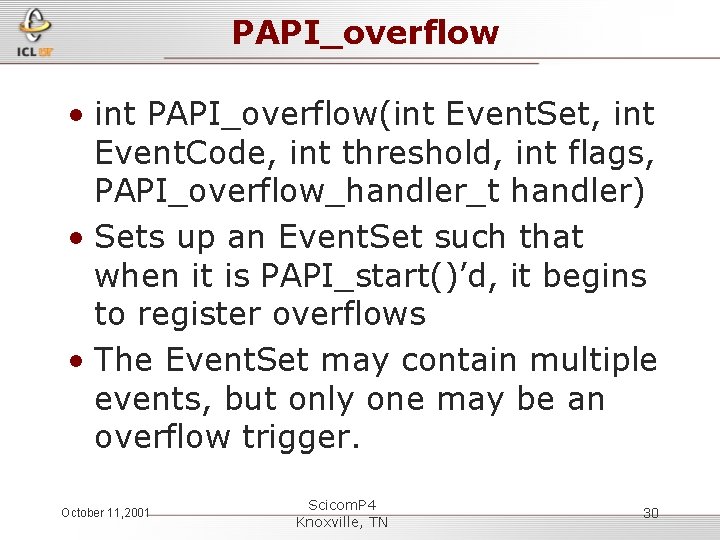

Callbacks on Counter Overflow

- PAPI provides the ability to call user-defined handlers when a specified event exceeds a specified threshold.
- For systems that do not support counter overflow at the OS level, PAPI sets up a high-resolution interval timer and installs a timer interrupt handler.

PAPI_overflow

- int PAPI_overflow(int EventSet, int EventCode, int threshold, int flags, PAPI_overflow_handler_t handler)
- Sets up an EventSet such that, once it is started with PAPI_start(), it begins to register overflows
- The EventSet may contain multiple events, but only one may be an overflow trigger.
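
The overflow mechanism can be pictured as a count that fires a callback each time it crosses the threshold and then starts over. The following simulation models that behavior without hardware or PAPI; `sim_count_with_overflow`, the `sim_overflow_handler` type and the example handler `count_calls` are hypothetical, and real overflow delivery (hardware interrupts, or the interval-timer fallback) is not modeled.

```c
#include <assert.h>

/* Hypothetical handler type: receives the running total and a user pointer. */
typedef void (*sim_overflow_handler)(long long total_seen, void *ctx);

/* Feed event increments in; invoke the handler once per threshold crossed. */
static long long sim_count_with_overflow(const long long *increments, int n,
                                         long long threshold,
                                         sim_overflow_handler handler, void *ctx)
{
    long long since_overflow = 0, total = 0;
    assert(threshold > 0);
    for (int i = 0; i < n; i++) {
        total += increments[i];
        since_overflow += increments[i];
        while (since_overflow >= threshold) {
            since_overflow -= threshold; /* restart counting toward the next overflow */
            handler(total, ctx);
        }
    }
    return total;
}

/* Example handler: counts how many overflows were delivered. */
static void count_calls(long long total_seen, void *ctx)
{
    (void)total_seen;
    (*(int *)ctx)++;
}
```

With a threshold of 100 and increments of 60, 60 and 130, the handler fires twice and the total is 250.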

Statistical Profiling

- PAPI provides support for execution profiling based on any counter event.
- PAPI_profil() creates a histogram of overflow counts by text address for a specified region of the application code.
- Used in the vprof tool from Sandia Livermore
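
The histogram that PAPI_profil() builds can be approximated by bucketing sampled program-counter addresses. The sketch below uses a plain bucket width in bytes; the real PAPI_profil() takes offset and scale arguments in the style of the UNIX profil() call, which this hypothetical `pc_histogram` helper does not reproduce.

```c
#include <assert.h>
#include <stddef.h>

/* Count each sampled PC that falls in [start, start + nbuckets * bucket_bytes).
 * The caller must zero buckets before the first call. */
static void pc_histogram(const unsigned long *pcs, size_t npcs,
                         unsigned long start, unsigned long bucket_bytes,
                         unsigned int *buckets, size_t nbuckets)
{
    assert(bucket_bytes > 0);
    for (size_t i = 0; i < npcs; i++) {
        if (pcs[i] < start)
            continue;                       /* below the profiled region */
        size_t b = (pcs[i] - start) / bucket_bytes;
        if (b < nbuckets)
            buckets[b]++;                   /* one more sample in this range */
    }
}
```

Samples at 0x1000 and 0x1004 land in the first 16-byte bucket, 0x1010 in the second and 0x1025 in the third; 0x0FFF is outside the region and ignored.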

PAPI Release Platforms

- Linux/x86, Windows 2000
  - Requires a patch to the Linux kernel, a driver for Windows
- Linux/IA-64
- Sun Solaris 2.8/Ultra I/II
- IBM AIX 4.3+/Power
  - Contact IBM for pmtoolkit
- SGI IRIX/MIPS
- Compaq Tru64/Alpha EV6 & EV67
  - Requires OS device driver from Compaq
- Cray T3E/Unicos

For More Information

- http://icl.cs.utk.edu/projects/papi/
  - Software and documentation
  - Reference materials
  - Papers and presentations
  - Third-party tools
  - Mailing lists

PERC: Performance Evaluation Research Center

- Developing a science for understanding the performance of scientific applications on high-end computer systems
- Developing engineering strategies for improving performance on these systems
- DOE Labs: ANL, LBNL, LLNL, ORNL
- Universities: UCSD, UIUC, UMD, UTK
- Funded by SciDAC: Scientific Discovery through Advanced Computing

PERC: Real-World Applications

- High Energy and Nuclear Physics
  - Shedding New Light on Exploding Stars: Terascale Simulations of Neutrino-Driven SuperNovae and Their NucleoSynthesis
  - Advanced Computing for 21st Century Accelerator Science and Technology
- Biology and Environmental Research
  - Collaborative Design and Development of the Community Climate System Model for Terascale Computers
- Fusion Energy Sciences
  - Numerical Computation of Wave-Plasma Interactions in Multidimensional Systems
- Advanced Scientific Computing
  - Terascale Optimal PDE Solvers (TOPS)
  - Applied Partial Differential Equations Center (APDEC)
  - Scientific Data Management (SDM)
- Chemical Sciences
  - Accurate Properties for Open-Shell States of Large Molecules
- …and more…

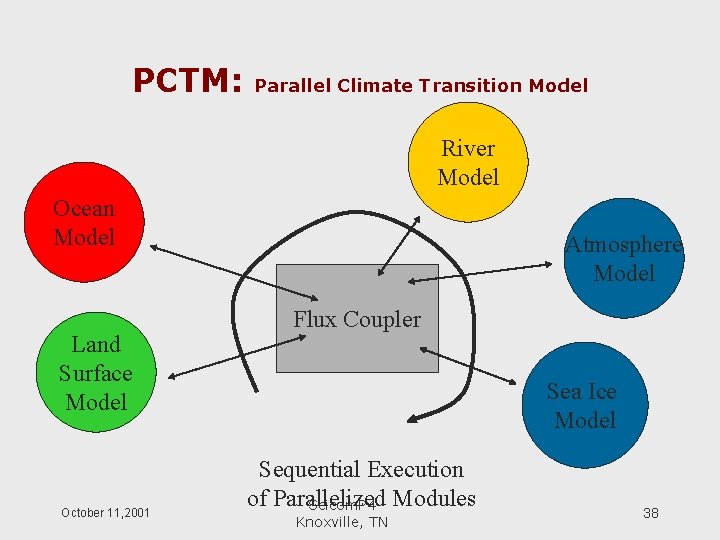

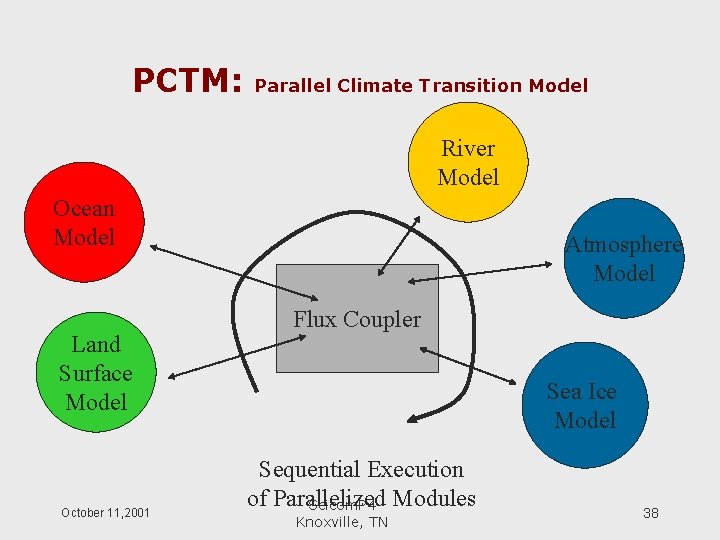

Parallel Climate Transition Model

- Components for Ocean, Atmosphere, Sea Ice, Land Surface and River Transport
- Developed by Warren Washington’s group at NCAR

1. POP: Parallel Ocean Program from LANL
2. CCM3: Community Climate Model 3.2 from NCAR, including LSM: Land Surface Model
3. ICE: CICE from LANL and CCSM from NCAR
4. RTM: River Transport Module from UT Austin

PCTM: The Code

- 132K lines of code
- Mostly Fortran 90 with some Fortran 77 and C
- The components run sequentially, one after another
- Each component is itself parallel
- The overall model must run as pure MPI
- The code is already instrumented with a timing library in the flux coupler
- Add PAPI code to measure 8 hardware metrics for each timer

PCTM: Parallel Climate Transition Model

Figure: River Model, Ocean Model, Land Surface Model, Atmosphere Model, Sea Ice Model and Flux Coupler (Sequential Execution of Parallelized Modules)

PCTM: Performance Questions

- Are we cache, functional unit or … bound?
- How does shared-memory MPI affect performance?
- How many tasks on a 4-way node?

PCTM: Answers

- No answers yet… Wait for SC2001
  - Measure coarse regions of code for:
    - Instructions per cycle
    - Stall cycles
    - Zero-instruction dispatch cycles
  - Measure those regions with multiplexing and inspect the code
  - Statistically profile candidates for improvement, line by line