Performance Analysis of Packet Classification Algorithms on Network

- Slides: 35

Performance Analysis of Packet Classification Algorithms on Network Processors

Deepa Srinivasan, IBM Corporation
Wu-chang Feng, Portland State University

November 18, 2004
IEEE Local Computer Networks

Network Processors
• Emerging platform for high-speed packet processing
  – Splice in a statistic here?
  – Provide device programmability while keeping performance
• Architectures differ, but common features include…
  – Multiple processing units executing in parallel
  – Instruction set customized for network applications
  – Binary image pre-determined at compile time

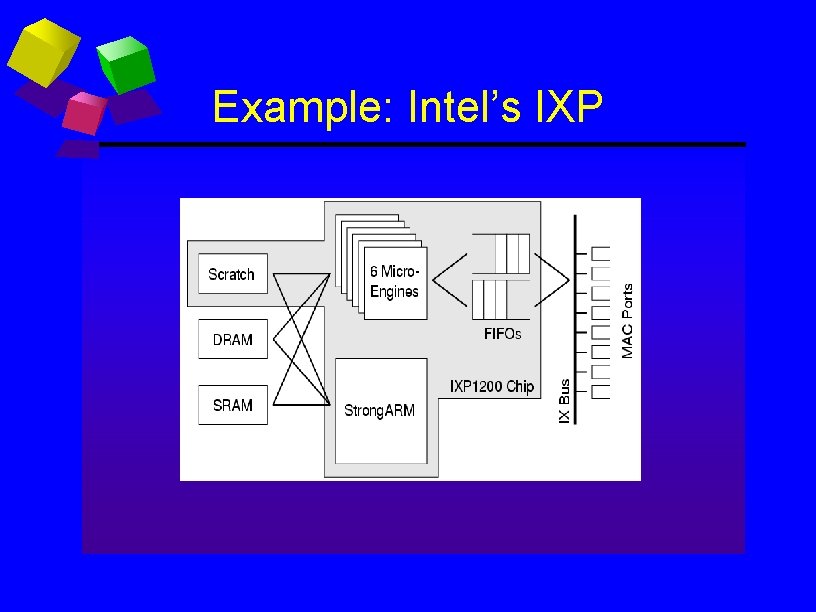

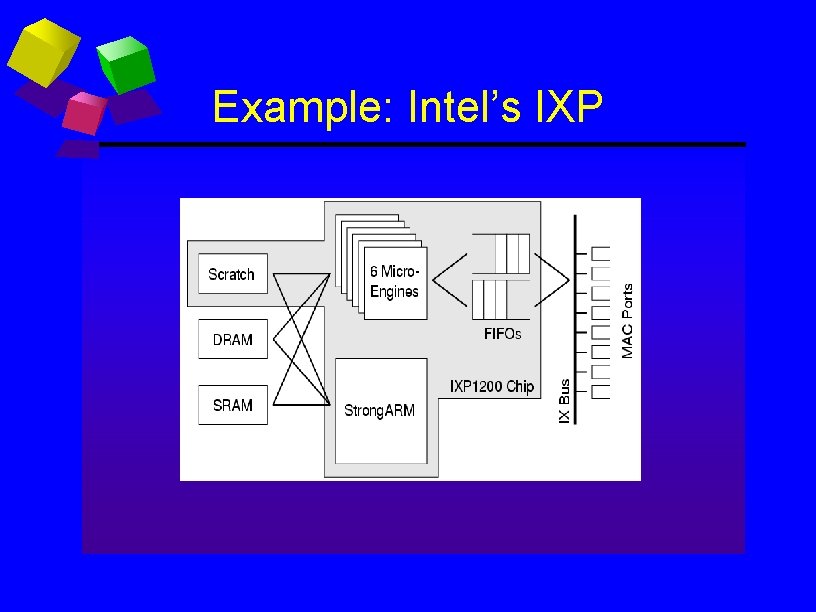

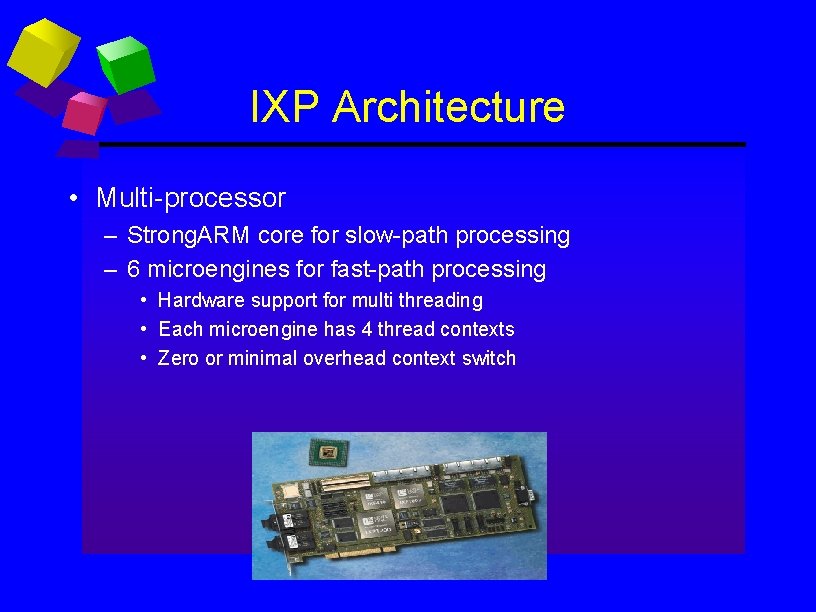

Example: Intel’s IXP

IXP Architecture
• Multi-processor
  – StrongARM core for slow-path processing
  – 6 microengines for fast-path processing
• Hardware support for multi-threading
• Each microengine has 4 thread contexts
• Zero or minimal overhead context switch

Motivation for study
• NPs offer a programmable, parallel alternative, but current packet processing algorithms are
  – Written for sequential execution, or
  – Designed using custom, invariant ASICs
• To use them on NPs
  – Algorithms must be mapped onto NPs in different ways, with each mapping having varying performance

Our study
• Examine several mappings of a packet classification algorithm onto NP hardware
• Identify general problems in performing such mappings

Why packet classification?
• Fundamental function performed by all network devices
  – Routers, switches, bridges, firewalls, IDS
• Increasing complexity makes packet classification the bottleneck
  – Increase in size of rulesets
  – Increase in dimension of rulesets
  – Algorithms must perform at high speed on the fast path

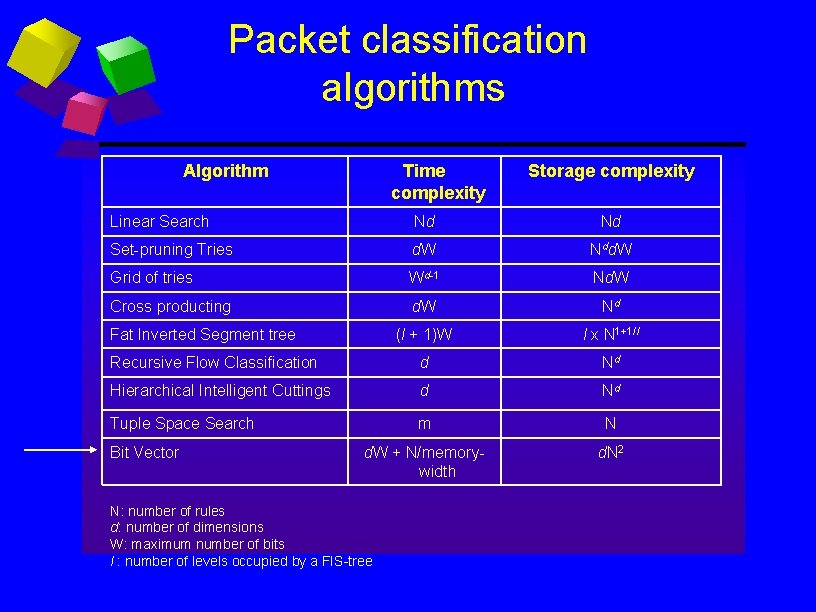

Picking an algorithm
• Many algorithms are sequential
  – Do not leverage inherent parallelism in NPs
• Several parallel algorithms
  – Bit Vector [Lakshman 98]
    • Parallel lookup implemented via FPGA
    • Maps well onto NP platform

Bit Vector algorithm
• T. V. Lakshman, D. Stiliadis, “High-speed policy-based packet forwarding using efficient multi-dimensional range matching”, SIGCOMM 1998.
  – Parallel search algorithm
  – Preprocessing phase
  – Two-stage classification phase
    • Perform lookup for each dimension in parallel
    • Combine results to determine matching rule

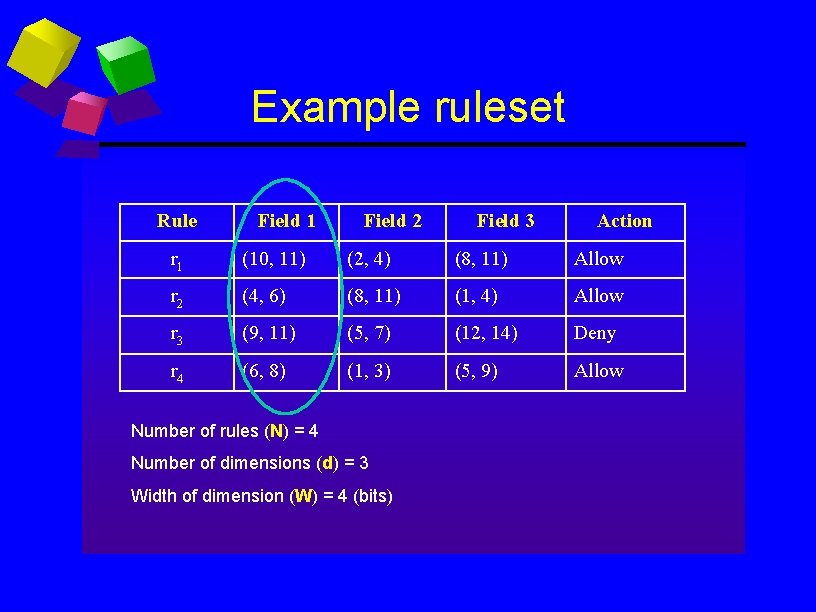

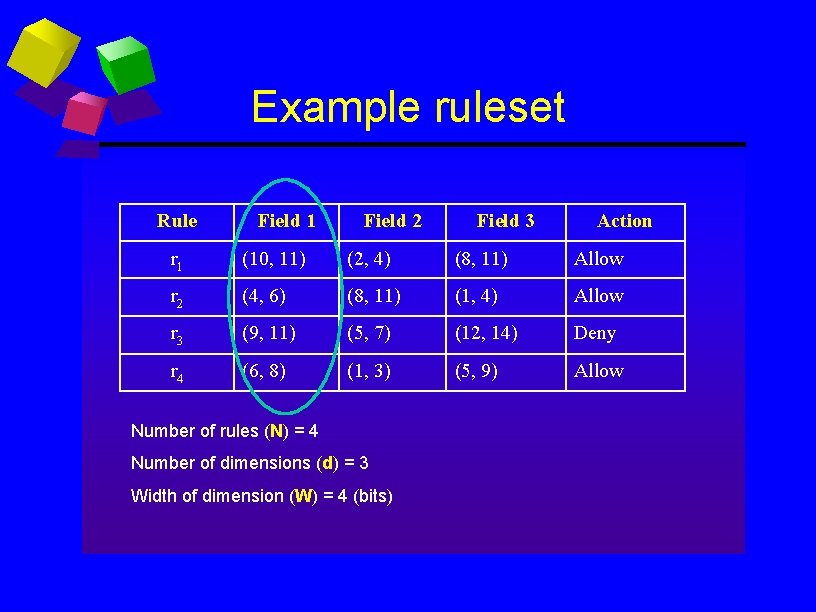

Example ruleset

| Rule | Field 1 | Field 2 | Field 3 | Action |
|------|----------|---------|----------|--------|
| r1 | (10, 11) | (2, 4) | (8, 11) | Allow |
| r2 | (4, 6) | (8, 11) | (1, 4) | Allow |
| r3 | (9, 11) | (5, 7) | (12, 14) | Deny |
| r4 | (6, 8) | (1, 3) | (5, 9) | Allow |

• Number of rules (N) = 4
• Number of dimensions (d) = 3
• Width of dimension (W) = 4 bits

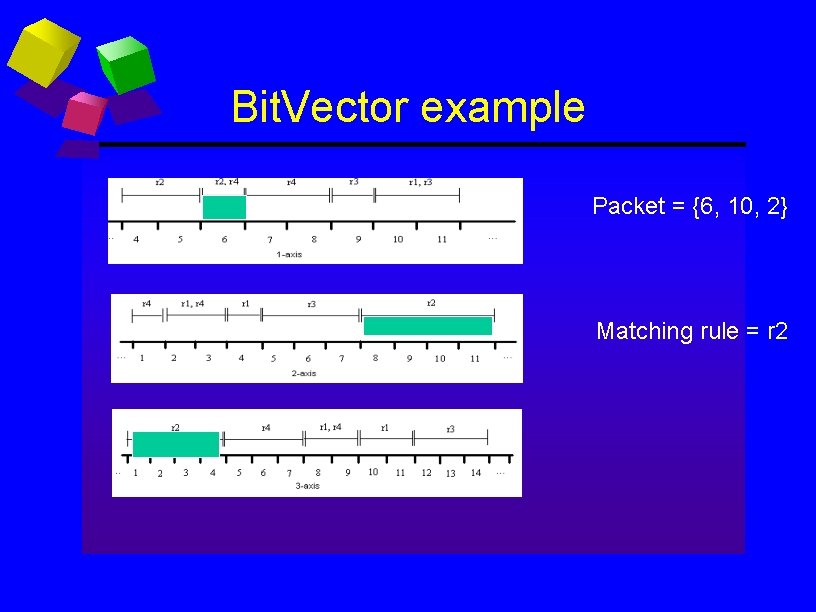

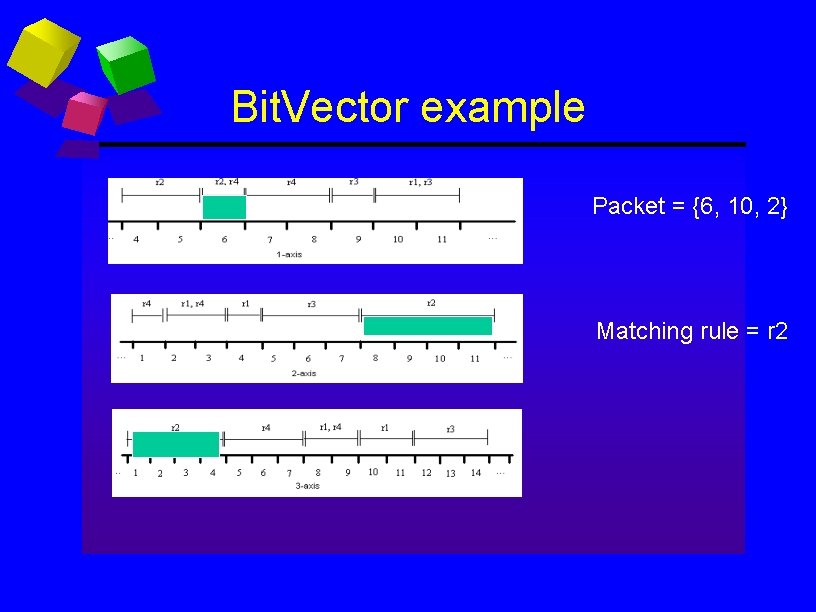

Bit Vector example
• Packet = {6, 10, 2}
• Matching rule = r2
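The example above can be reproduced with a minimal Python sketch of the Bit Vector idea, under a few assumptions that are not from the slides: rules earlier in the list are treated as higher priority, a binary search over interval start points stands in for the per-dimension trie, and the per-dimension lookups run one after another rather than in parallel. The names `preprocess`, `classify`, `RULES`, and `TABLES` are hypothetical.

```python
from bisect import bisect_right

W = 4  # width of each dimension in bits, from the example ruleset

# Example ruleset from the slides: inclusive (low, high) ranges per field.
RULES = [
    ("r1", [(10, 11), (2, 4), (8, 11)], "Allow"),
    ("r2", [(4, 6), (8, 11), (1, 4)], "Allow"),
    ("r3", [(9, 11), (5, 7), (12, 14)], "Deny"),
    ("r4", [(6, 8), (1, 3), (5, 9)], "Allow"),
]


def preprocess(rules, width=W):
    """Per dimension, build sorted interval start points and one bit vector per interval."""
    tables = []
    for k in range(len(rules[0][1])):
        # Every range start and every point just past a range end begins a new interval.
        starts = {0}
        for _, ranges, _ in rules:
            lo, hi = ranges[k]
            starts.add(lo)
            if hi + 1 < 2 ** width:
                starts.add(hi + 1)
        starts = sorted(starts)
        # Bit j of an interval's vector is set when rule j covers that interval.
        vectors = []
        for s in starts:
            bits = 0
            for j, (_, ranges, _) in enumerate(rules):
                lo, hi = ranges[k]
                if lo <= s <= hi:
                    bits |= 1 << j
            vectors.append(bits)
        tables.append((starts, vectors))
    return tables


def classify(packet, rules, tables):
    """Stage 1: look up each dimension. Stage 2: AND the bit vectors together."""
    result = (1 << len(rules)) - 1
    for value, (starts, vectors) in zip(packet, tables):
        idx = bisect_right(starts, value) - 1  # interval containing this value
        result &= vectors[idx]
    if result == 0:
        return None
    first = (result & -result).bit_length() - 1  # lowest set bit = first listed rule
    return rules[first]


TABLES = preprocess(RULES)
match = classify((6, 10, 2), RULES, TABLES)
print(match[0] if match else "no match")  # prints "r2"
```

On an NP, the loop body in `classify` is the part that would be spread across threads or microengines, with the final AND as the combining step.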

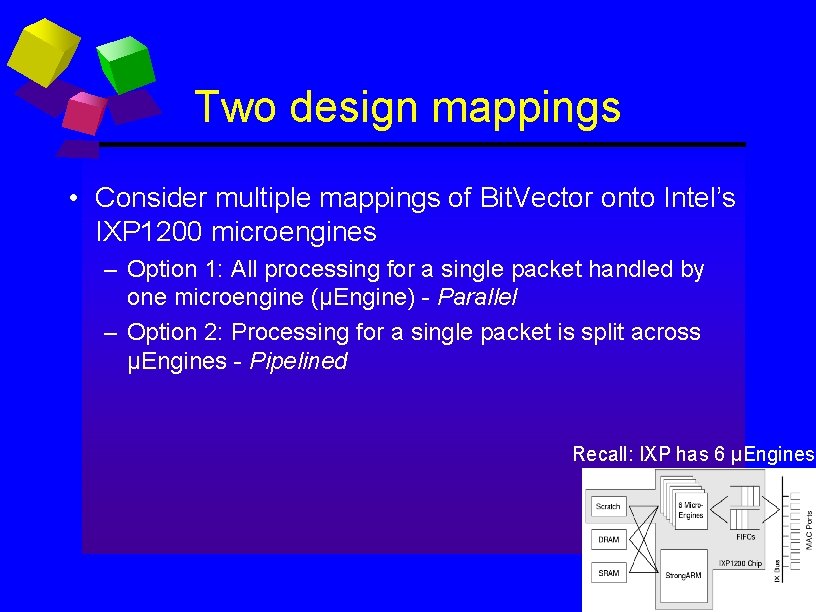

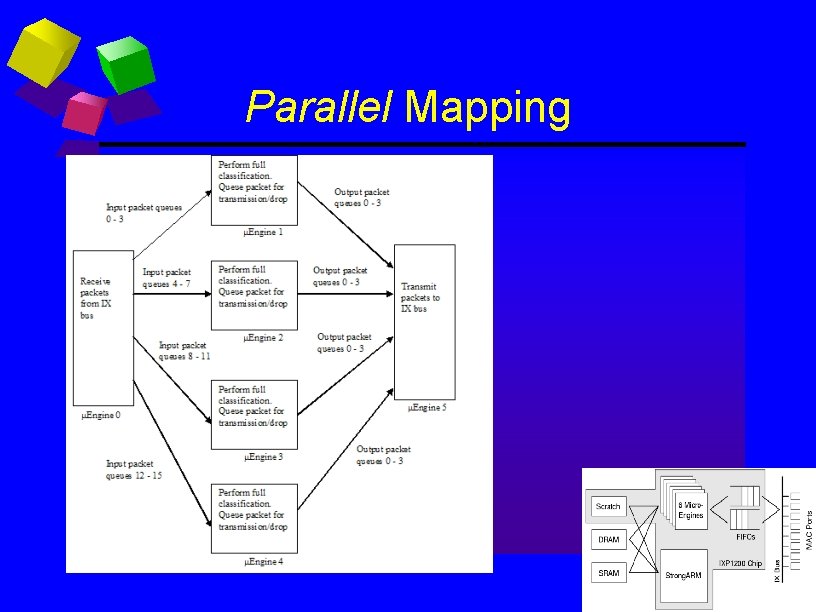

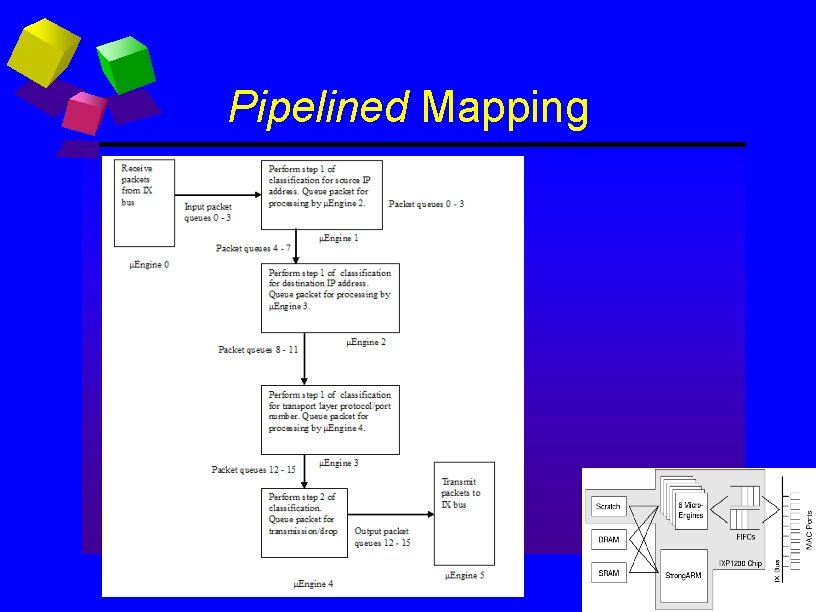

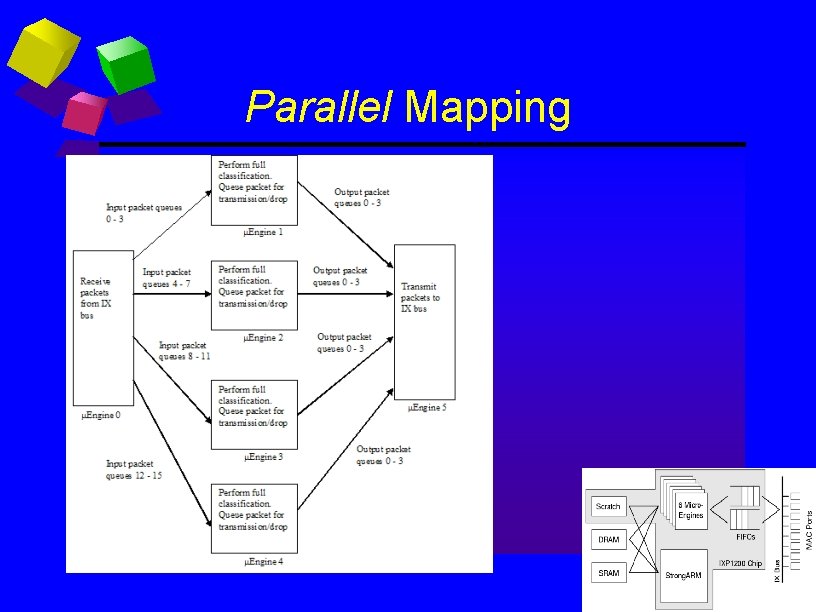

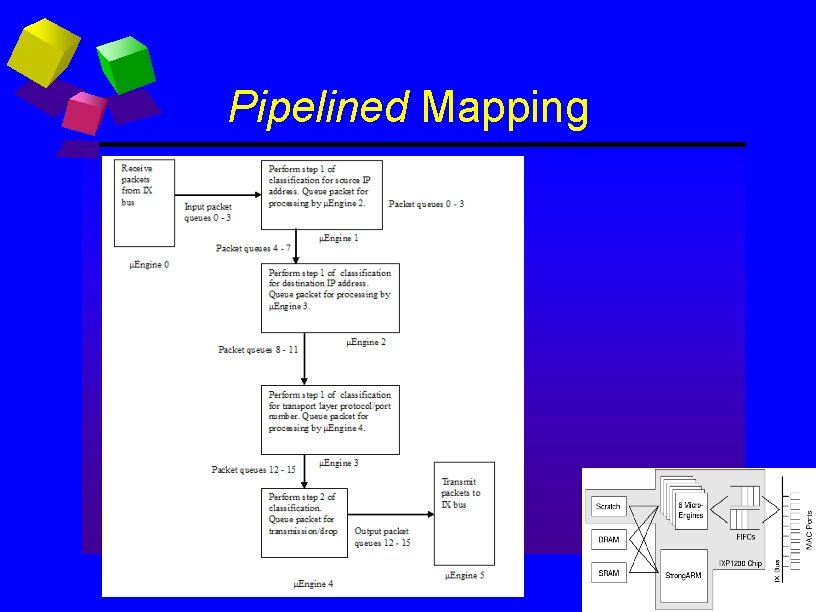

Two design mappings
• Consider multiple mappings of Bit Vector onto Intel’s IXP1200 microengines
  – Option 1 (Parallel): all processing for a single packet is handled by one microengine (μEngine)
  – Option 2 (Pipelined): processing for a single packet is split across μEngines
• Recall: IXP has 6 μEngines
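The difference between the two options can be illustrated with a toy Python model. This is only a sketch under stated assumptions: the three stage names are hypothetical (the exact pipeline split is not given in the text), packets are assigned to engines round-robin in the parallel case, and every engine that handles a packet is assumed to read its header from memory once. The stage count of three is chosen to agree with the later analysis slide, which notes that a header is read 3 times in the pipelined mapping.

```python
NUM_ENGINES = 6  # stated on the slides for the IXP

# Hypothetical stage names; the slides do not spell out the exact split.
STAGES = ("stage 1", "stage 2", "stage 3")


def parallel_schedule(num_packets, engines=NUM_ENGINES):
    """Option 1: one engine does all the work for a packet (round-robin assumed)."""
    return [[(pkt % engines, "all stages")] for pkt in range(num_packets)]


def pipelined_schedule(num_packets, stages=STAGES):
    """Option 2: each packet passes through every stage, one engine per stage."""
    return [[(engine, stage) for engine, stage in enumerate(stages)]
            for _ in range(num_packets)]


def header_reads(schedule):
    """Assumption: every engine that handles a packet reads its header once."""
    return sum(len(steps) for steps in schedule)


packets = 1000
print(header_reads(parallel_schedule(packets)))   # 1000
print(header_reads(pipelined_schedule(packets)))  # 3000
```

The model ignores timing entirely; it only counts how often packet state has to be fetched again when work moves between engines.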

Parallel Mapping

Pipelined Mapping

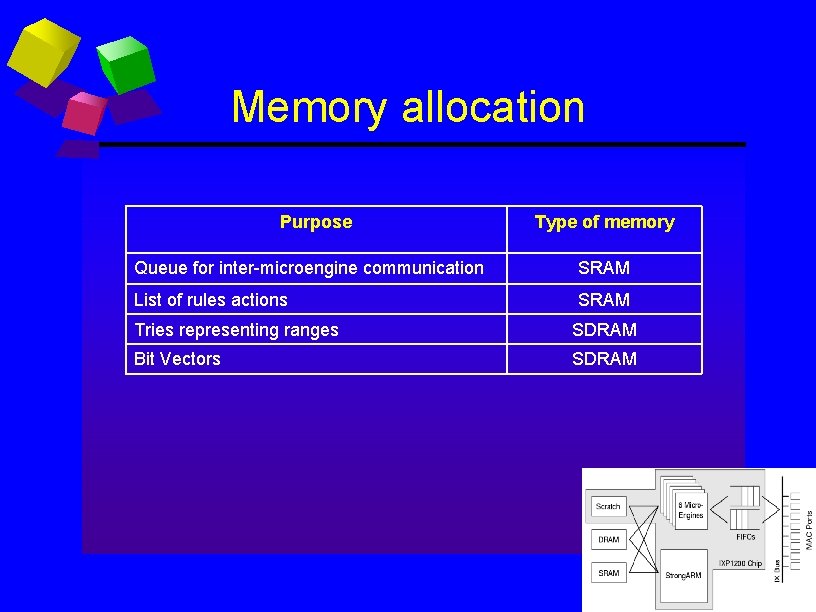

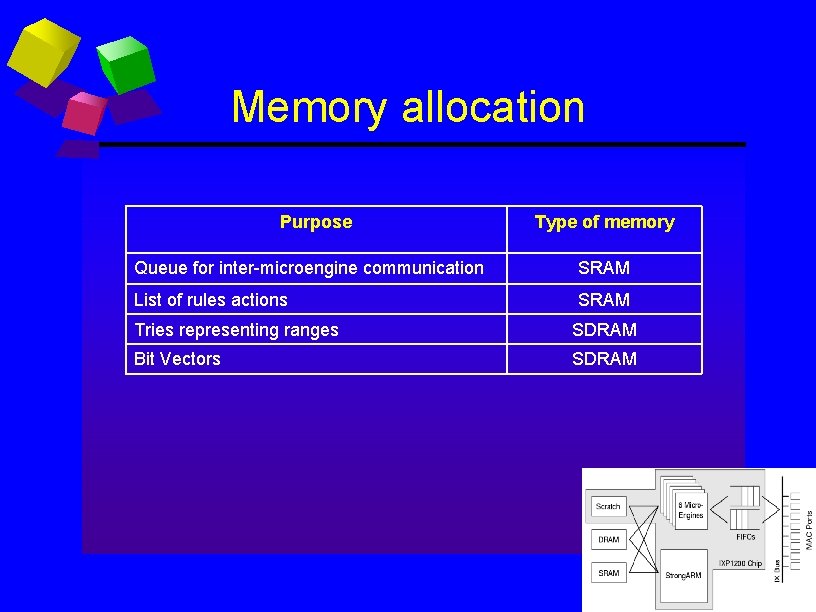

Memory allocation

| Purpose | Type of memory |
|---------|----------------|
| Queue for inter-microengine communication | SRAM |
| List of rules actions | SRAM |
| Tries representing ranges | SDRAM |
| Bit Vectors | SDRAM |

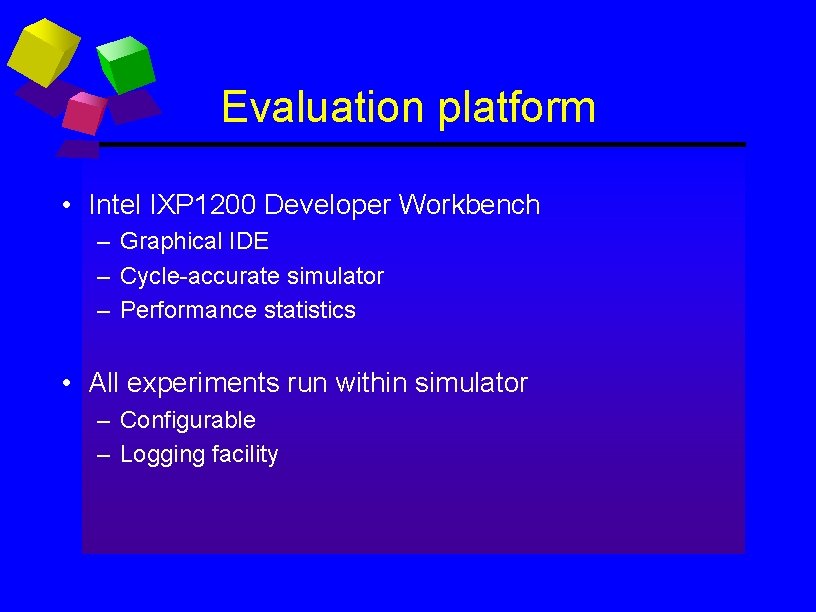

Evaluation platform
• Intel IXP1200 Developer Workbench
  – Graphical IDE
  – Cycle-accurate simulator
  – Performance statistics
• All experiments run within simulator
  – Configurable
  – Logging facility

Simulator configuration
• IXP1200 chip
  – 1K microstore
  – Core frequency (~165 MHz)
  – 4 ports receive data
• Simulations run until 75,000 packets received by IXP
  – Simulator sends packets as fast as possible
• Rulesets used
  – Experiments use a small, fixed set of rules
  – Availability of real-world firewall rulesets limited

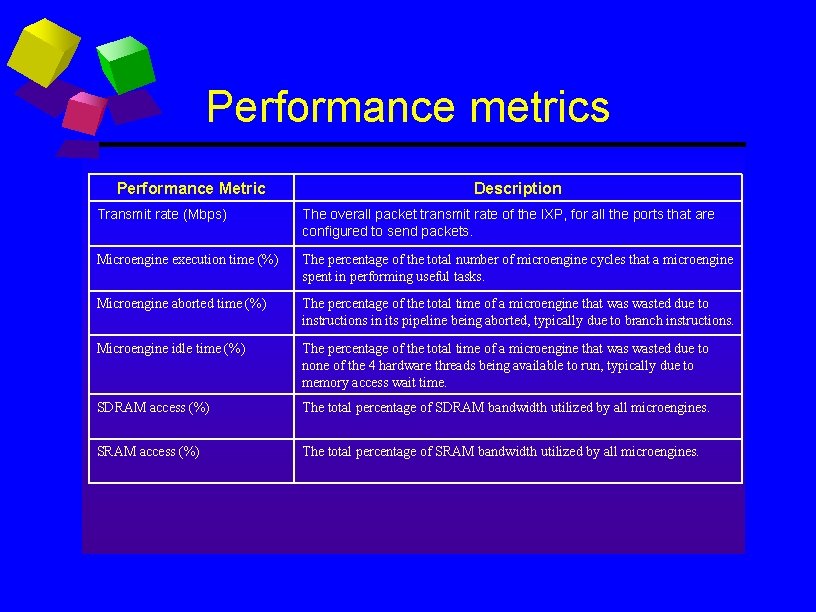

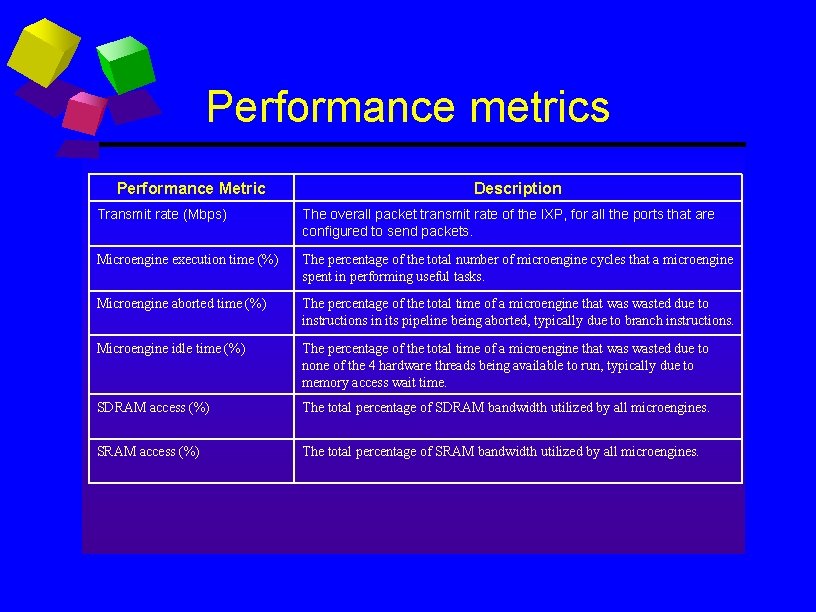

Performance metrics

| Performance metric | Description |
|--------------------|-------------|
| Transmit rate (Mbps) | The overall packet transmit rate of the IXP, across all ports that are configured to send packets. |
| Microengine execution time (%) | The percentage of total microengine cycles that a microengine spent performing useful tasks. |
| Microengine aborted time (%) | The percentage of a microengine's total time that was wasted because instructions in its pipeline were aborted, typically due to branch instructions. |
| Microengine idle time (%) | The percentage of a microengine's total time that was wasted because none of the 4 hardware threads was available to run, typically due to memory access wait time. |
| SDRAM access (%) | The percentage of total SDRAM bandwidth utilized by all microengines. |
| SRAM access (%) | The percentage of total SRAM bandwidth utilized by all microengines. |
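As a rough illustration of how such percentages relate to raw counters, the Python sketch below turns per-microengine cycle counts into the three time metrics and converts a bit count into a transmit rate. It assumes that executing, aborted, and idle cycles together account for all of a microengine's time, which the slides suggest but do not state. The class and function names are hypothetical, and the numbers in the usage lines are made up for the example; they are not results from the study.

```python
from dataclasses import dataclass


@dataclass
class EngineCycles:
    """Raw cycle counters for one microengine (hypothetical structure)."""
    executing: int
    aborted: int
    idle: int

    def breakdown(self):
        """Return each category as a percentage of the engine's total cycles."""
        total = self.executing + self.aborted + self.idle
        if total == 0:
            raise ValueError("no cycles recorded")
        return {
            "execution %": 100.0 * self.executing / total,
            "aborted %": 100.0 * self.aborted / total,
            "idle %": 100.0 * self.idle / total,
        }


def transmit_rate_mbps(bits_sent, cycles, core_hz):
    """Convert bits sent over a number of core cycles into megabits per second."""
    seconds = cycles / core_hz
    return bits_sent / seconds / 1e6


# Illustrative numbers only.
print(EngineCycles(executing=600, aborted=150, idle=250).breakdown())
print(transmit_rate_mbps(bits_sent=165_000_000, cycles=165_000_000, core_hz=165e6))
```

With these made-up inputs the breakdown is 60% execution, 15% aborted, and 25% idle, and the rate works out to 165 Mbps because the cycle count corresponds to exactly one second at a 165 MHz core clock.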

Results and Analysis

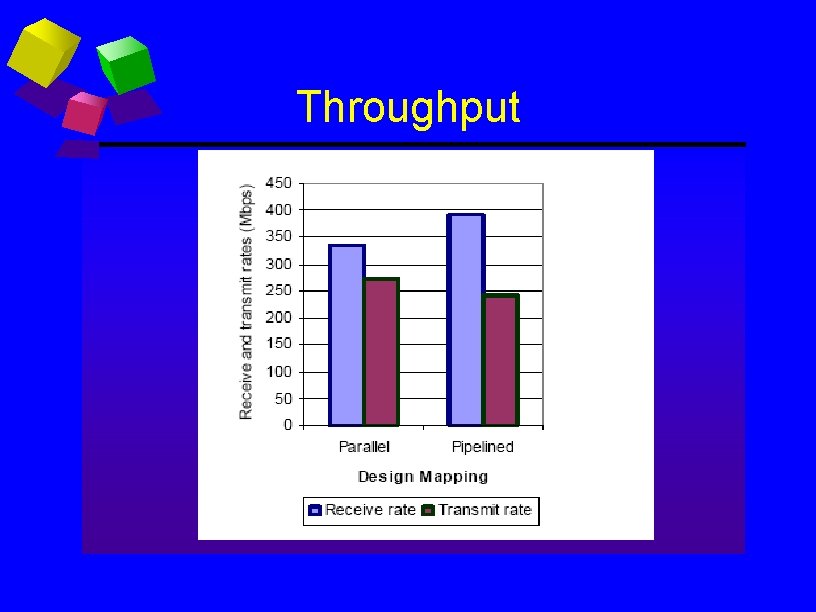

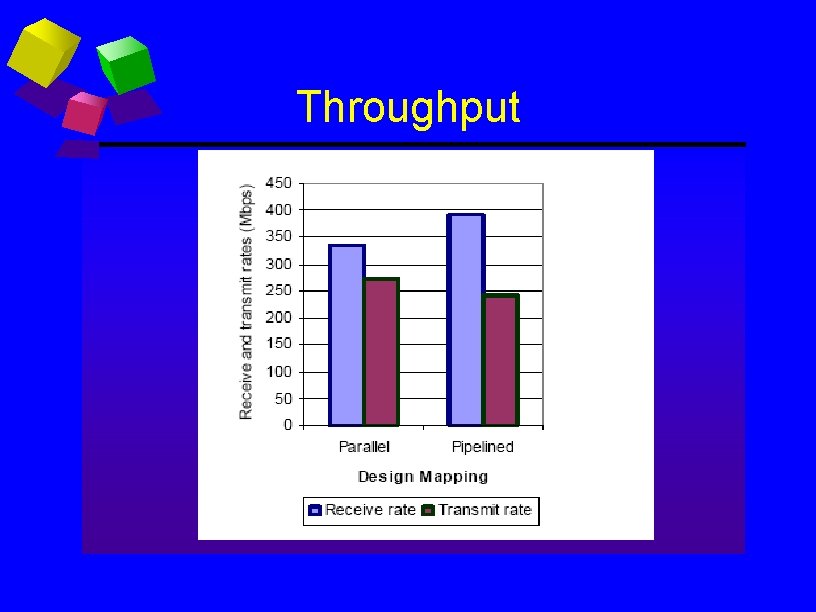

Throughput

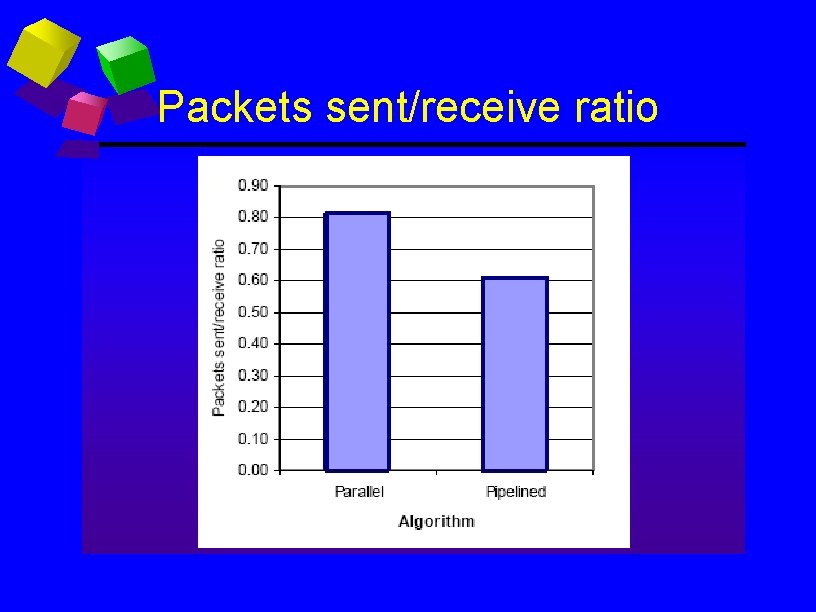

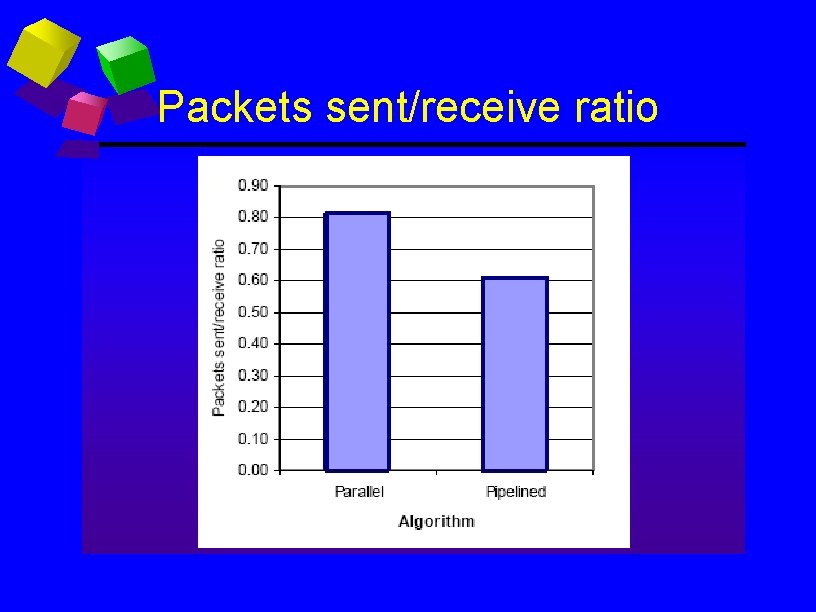

Packets sent/received ratio

Analysis
• Overall, Parallel performs better than Pipelined
• Pipelined: a single packet header in SDRAM is read multiple (3) times

Microengine utilization

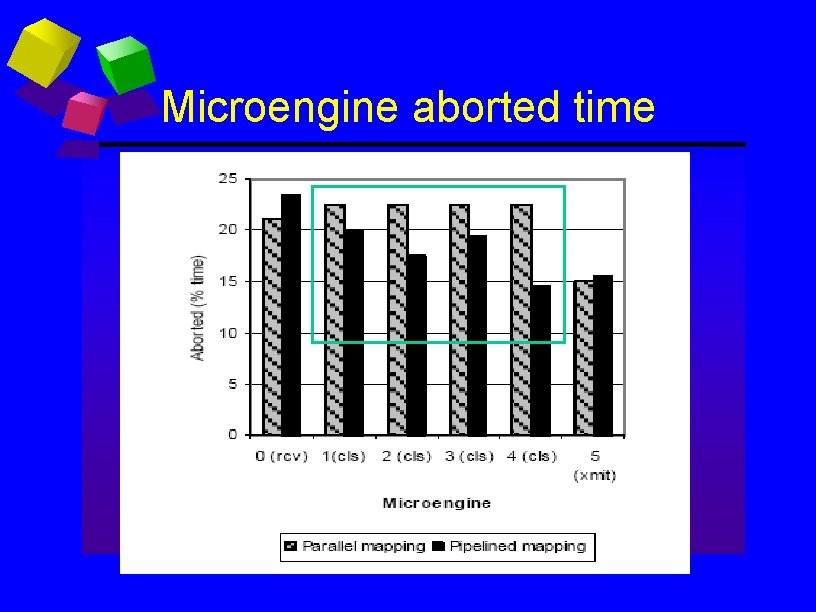

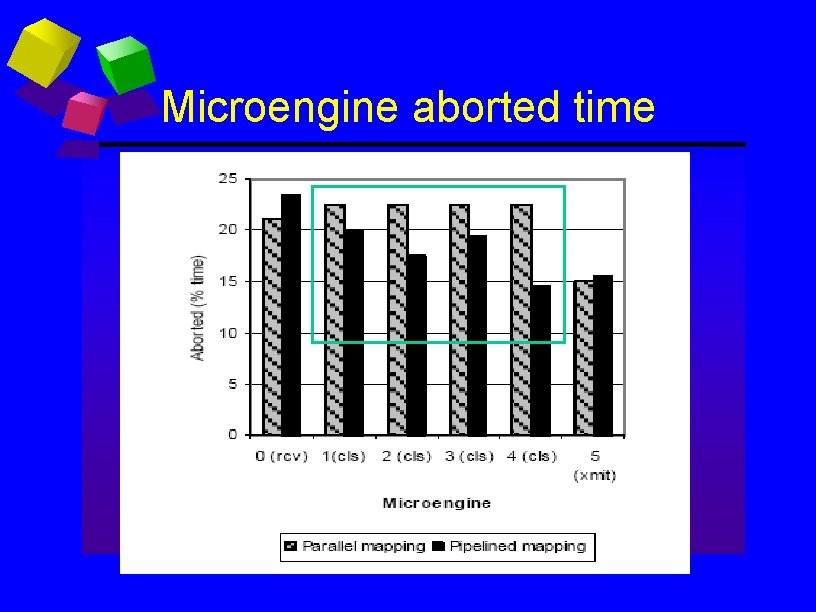

Microengine aborted time

Analysis
• Aborted time is typically caused by branch instructions
• Algorithms must reduce branch instructions to maximize throughput

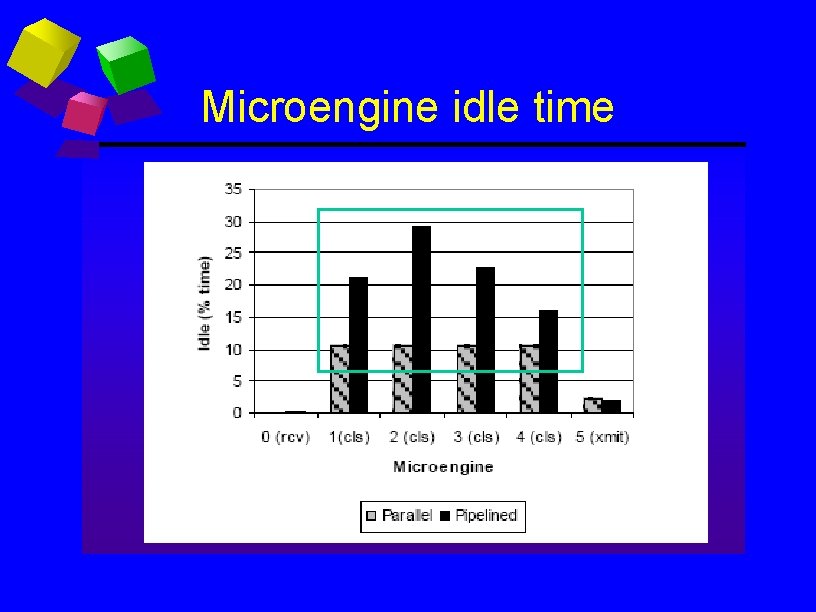

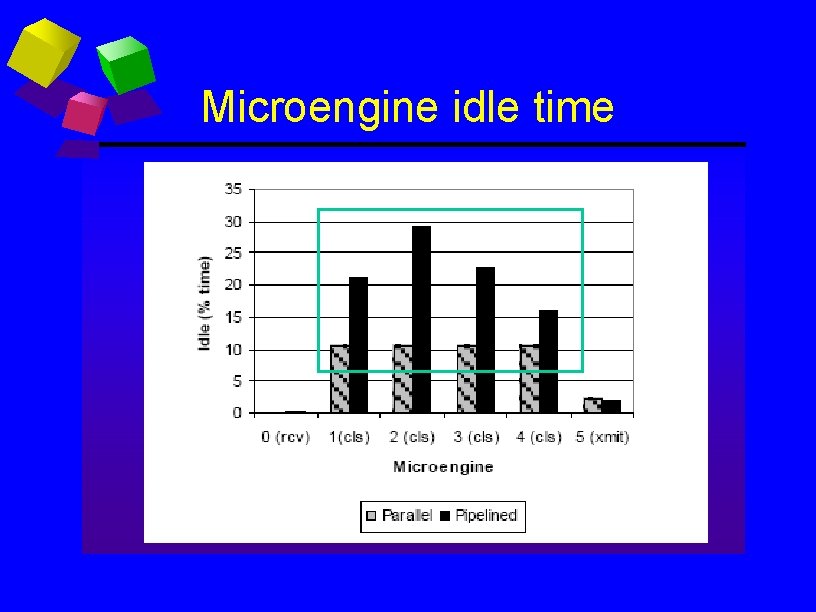

Microengine idle time

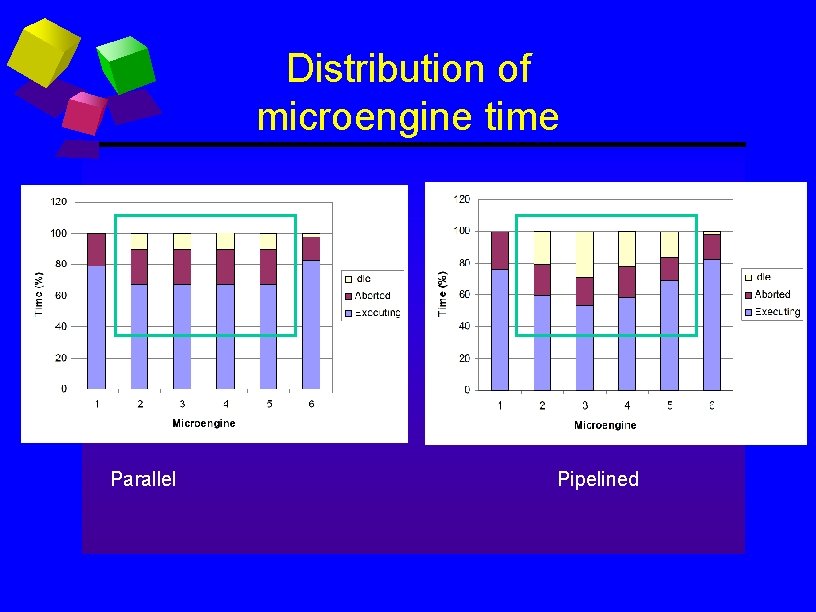

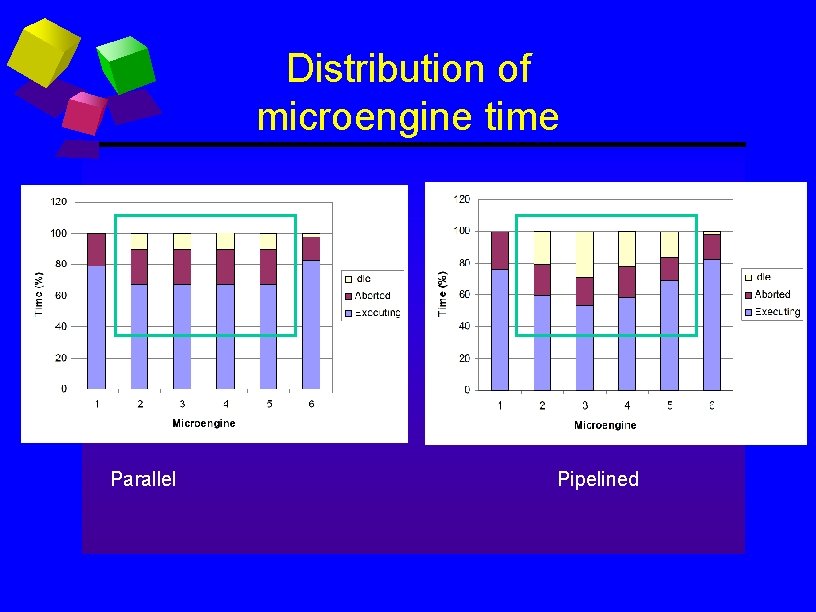

Distribution of microengine time (Parallel vs. Pipelined)

Analysis
• High microengine idle time in Pipelined due to memory latency
• Lower microengine aborted time in Pipelined due to what?

Discussion
• Pipelined mappings can bottleneck through memory
  – Repeated memory reads to send work from μEngine to μEngine
  – Direct hardware support for pipelining required
    • IXP2xxx = next-neighbor registers
    • Currently re-examining our results on IXP2400
• Algorithms with fewer branch instructions result in better microengine utilization (lower aborted time)

Conclusion
• Packet classification is a fundamental function
• Parallel nature of NPs is well-suited for parallel search algorithms

Conclusion
• Network processors offer high packet processing speed and programmability
  – Performance of an algorithm depends on the design mapping chosen
• Contributions
  – Demonstrated that mapping has considerable impact on performance
    • Pipelined mappings benefit from hardware support
    • Algorithms with fewer branch instructions result in better processor utilization

Future work
• Analyze other mappings
  – Split work across different hardware threads in a single microengine
  – Placement of data structures in different memory banks
• IXP2400
  – Examine how hardware features change trade-offs in algorithm mapping
• Algorithms designed specifically for network processors

Backup Slides

Definitions
• Packet classification: process of categorizing packets according to predefined rules
• Classifier or ruleset: collection of rules
• Dimension or field: packet header field used
• Rule: range of field values and action
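These definitions map naturally onto a small data structure. The Python sketch below is one possible encoding, not the representation used in the study: a `Rule` holds one inclusive range per dimension plus an action, a ruleset is an ordered list of rules, and `classify_linear` checks the rules in order, which corresponds to the linear search baseline. The names and the first-match-wins priority are assumptions; the rules themselves are taken from the example ruleset in the talk.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Rule:
    """A rule: one inclusive (low, high) range per dimension, plus an action."""
    name: str
    ranges: Tuple[Tuple[int, int], ...]
    action: str

    def matches(self, packet: Sequence[int]) -> bool:
        """True when every field value falls inside the rule's range for that field."""
        return all(lo <= value <= hi for value, (lo, hi) in zip(packet, self.ranges))


def classify_linear(packet: Sequence[int], ruleset: Sequence[Rule]) -> Optional[Rule]:
    """Return the first rule that matches the packet, or None (first match wins)."""
    for rule in ruleset:
        if rule.matches(packet):
            return rule
    return None


# Example ruleset from the talk (N = 4 rules, d = 3 dimensions).
RULESET = [
    Rule("r1", ((10, 11), (2, 4), (8, 11)), "Allow"),
    Rule("r2", ((4, 6), (8, 11), (1, 4)), "Allow"),
    Rule("r3", ((9, 11), (5, 7), (12, 14)), "Deny"),
    Rule("r4", ((6, 8), (1, 3), (5, 9)), "Allow"),
]

hit = classify_linear((6, 10, 2), RULESET)
print(hit.name, hit.action)  # prints "r2 Allow"
```

Each lookup touches up to N rules and d fields per rule, which is why faster schemes trade extra storage for fewer comparisons.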

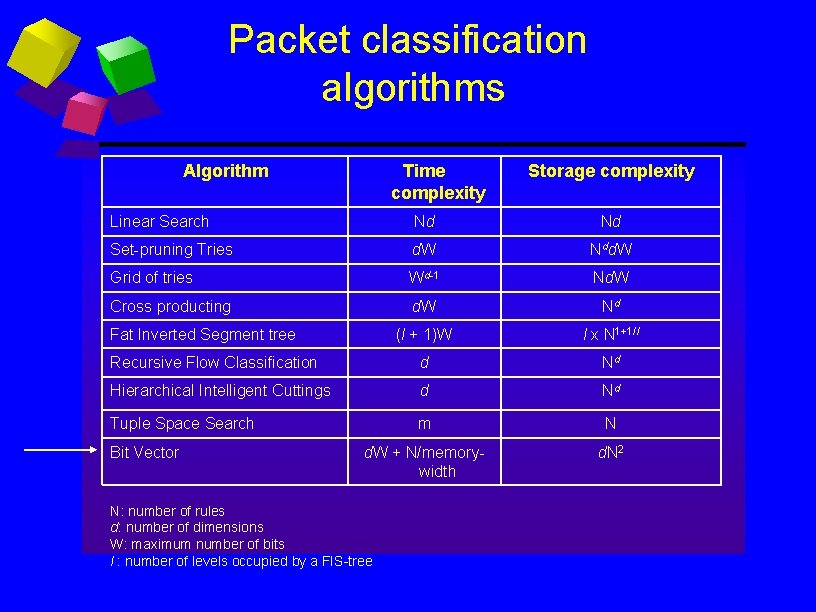

Packet classification algorithms

| Algorithm | Time complexity | Storage complexity |
|-----------|-----------------|--------------------|
| Linear Search | $Nd$ | $Nd$ |
| Set-pruning Tries | $dW$ | $N^{d}\,dW$ |
| Grid of tries | $W^{d-1}$ | $NdW$ |
| Cross producting | $dW$ | $N^{d}$ |
| Fat Inverted Segment tree | $(l+1)W$ | $l \times N^{1+1/l}$ |
| Recursive Flow Classification | $d$ | $N^{d}$ |
| Hierarchical Intelligent Cuttings | $d$ | $N^{d}$ |
| Tuple Space Search | $m$ | $N$ |
| Bit Vector | $dW + N/\text{memory width}$ | $dN^{2}$ |

• N: number of rules
• d: number of dimensions
• W: maximum number of bits
• l: number of levels occupied by a FIS-tree