Pentium IV-XEON: Computer architectures M

- Slides: 38


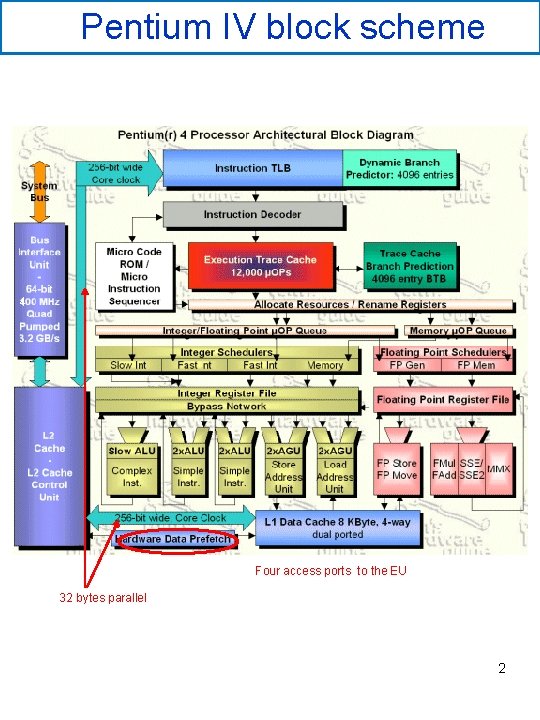

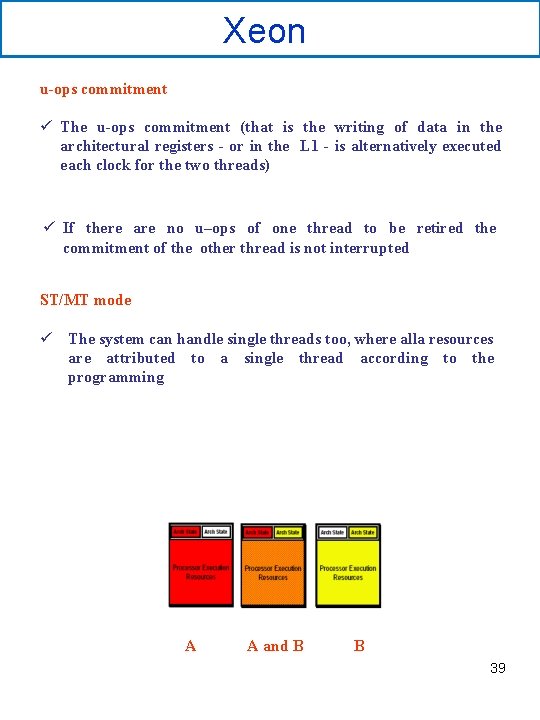

Pentium IV block scheme

[Figure: four access ports to the EU; 32 bytes in parallel]

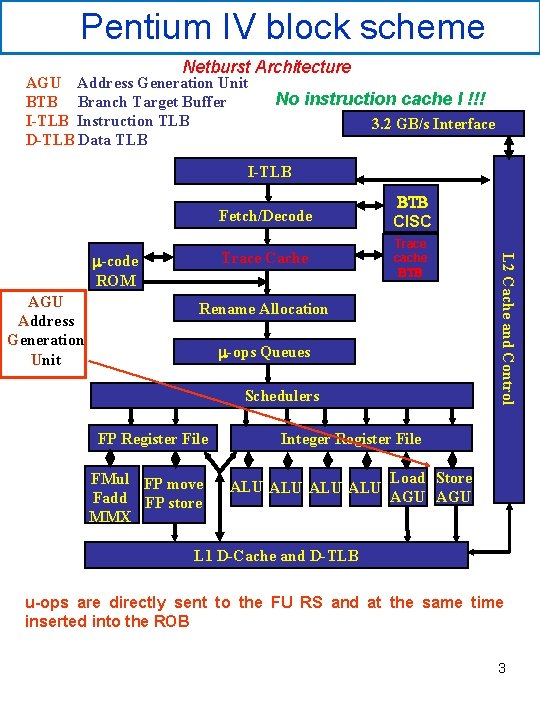

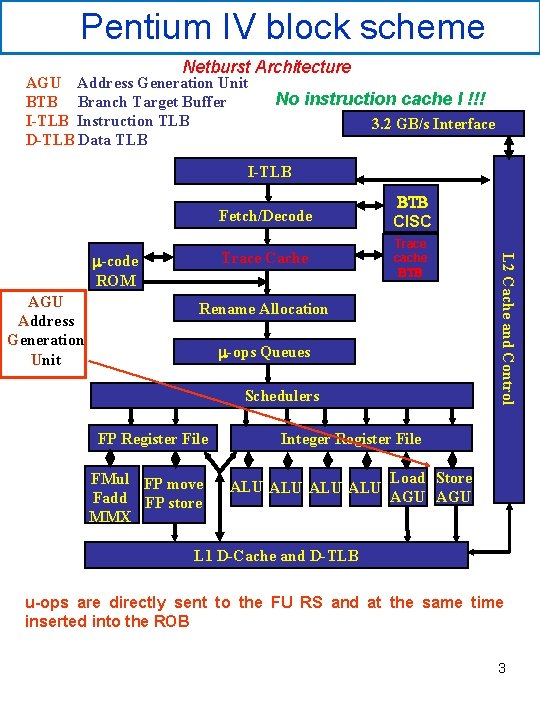

Pentium IV block scheme: NetBurst architecture

Legend: AGU = Address Generation Unit; BTB = Branch Target Buffer; I-TLB = Instruction TLB; D-TLB = Data TLB.

No L1 instruction cache!!!

[Figure: 3.2 GB/s bus interface; L2 cache and control; I-TLB and CISC BTB; fetch/decode with microcode ROM; trace cache with its BTB; rename/allocation; µ-op queues; schedulers; integer register file with ALUs and load/store AGUs; FP register file with FMul, FAdd, FP move, FP store and MMX; L1 D-cache and D-TLB]

µ-ops are sent directly to the RS of the FUs and, at the same time, inserted into the ROB.

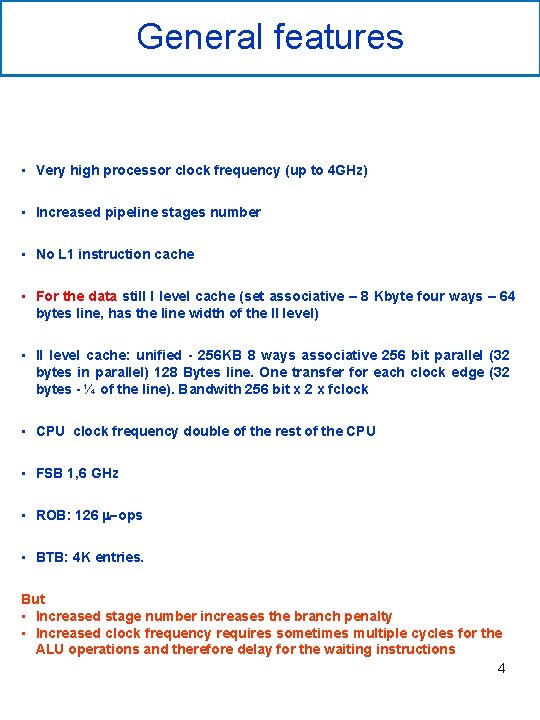

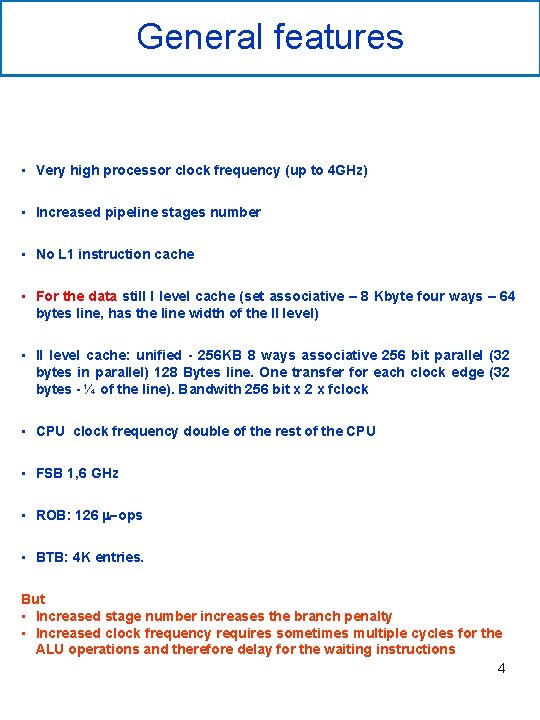

General features

- Very high processor clock frequency (up to 4 GHz)
- Increased number of pipeline stages
- No L1 instruction cache
- For data there is still an L1 cache: 8 KB, 4-way set associative, 64-byte lines (half the 128-byte L2 line)
- L2 cache: unified, 256 KB, 8-way set associative, 128-byte lines, 256-bit (32-byte) parallel path. There is one transfer on each clock edge (32 bytes, ¼ of the line), so the bandwidth is 256 bit × 2 × f_clock
- The ALU clock frequency is double that of the rest of the CPU
- FSB: 1.6 GHz
- ROB: 126 µ-ops
- BTB: 4 K entries

But:

- The increased number of stages increases the branch penalty
- The increased clock frequency sometimes requires multiple cycles for ALU operations, which delays the instructions waiting for their results
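The L2 bandwidth formula above (bus width × 2 transfers per clock × f_clock) can be written as a small calculator. This is only a sketch. The 1.5 GHz clock in the example is a hypothetical value chosen for illustration, and the function names are not taken from any Intel document.

```python
def l2_bandwidth_bytes_per_s(bus_width_bits: int, f_clock_hz: float,
                             transfers_per_clock: int = 2) -> float:
    """Peak bandwidth = bus width (bytes) x transfers per clock x clock frequency.
    transfers_per_clock=2 models one transfer on each clock edge."""
    return bus_width_bits / 8 * transfers_per_clock * f_clock_hz


def clocks_per_line(line_bytes: int, bus_width_bits: int,
                    transfers_per_clock: int = 2) -> float:
    """Clock periods needed to move one cache line over the bus."""
    bytes_per_clock = bus_width_bits / 8 * transfers_per_clock
    return line_bytes / bytes_per_clock


# 256-bit path, both clock edges, hypothetical 1.5 GHz clock
print(f"{l2_bandwidth_bytes_per_s(256, 1.5e9) / 1e9:.0f} GB/s")  # 96 GB/s
# a 128-byte L2 line = 4 transfers of 32 bytes = 2 clock periods
print(clocks_per_line(128, 256))  # 2.0
```

If the same path transferred data on only one clock edge (`transfers_per_clock=1`), the same line would take 4 clock periods.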

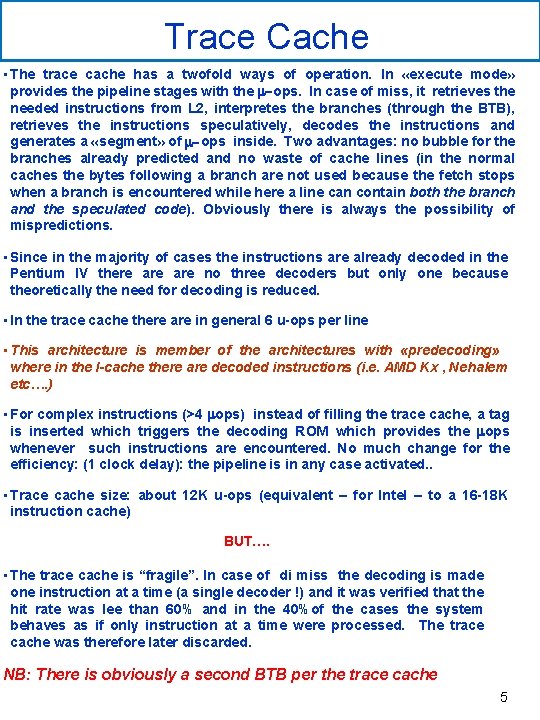

Trace Cache

- The trace cache has two modes of operation. In «execute mode» it supplies the pipeline stages with µ-ops. On a miss it switches to build mode: it retrieves the needed instructions from L2, interprets the branches (through the BTB), fetches the instructions speculatively, decodes them and builds a «segment» of µ-ops inside the cache. This has two advantages: there are no bubbles for branches that are already predicted, and no cache lines are wasted. In a conventional cache, the bytes following a taken branch are not used because fetch stops at the branch. Here, a line can contain both the branch and the speculated code. Mispredictions are, of course, still possible.
- Since in most cases the instructions are already decoded, the Pentium IV has only one decoder instead of three: in theory, the need for decoding is reduced.
- A trace cache line generally holds 6 µ-ops.
- This architecture belongs to the family with «predecoding», where the I-cache holds decoded instructions (e.g. AMD Kx, Nehalem, etc.).
- For complex instructions (more than 4 µ-ops), the trace cache is not filled. Instead, a tag is inserted that triggers the decoding ROM, which supplies the µ-ops whenever such an instruction is encountered. The efficiency cost is small (a 1-clock delay), since the pipeline is activated in any case.
- Trace cache size: about 12 K µ-ops (equivalent, according to Intel, to a 16-18 K instruction cache)

BUT…

- The trace cache is "fragile". On a miss, decoding proceeds one instruction at a time (a single decoder!). It was verified that the hit rate was less than 60%, so in 40% of the cases the system behaves as if only one instruction at a time were processed. The trace cache was therefore later discarded.

NB: There is obviously a second BTB for the trace cache.
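The build-mode behaviour described above can be sketched as follows. The sketch decodes along the *predicted* path, so a taken branch and its target share a segment. It then packs the µ-ops 6 per line and leaves only a ROM tag for instructions with more than 4 µ-ops. This is a simplified model, not Intel's implementation. `Instr`, `build_trace`, `predict_taken`, `max_lines` and the 2-byte instruction lengths are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

UOPS_PER_LINE = 6      # "in general 6 µ-ops per line"
MAX_INLINE_UOPS = 4    # longer instructions are left to the microcode ROM


@dataclass
class Instr:
    name: str
    uops: int                      # number of µ-ops produced by the decoder
    length: int = 2                # hypothetical byte length (fall-through step)
    target: Optional[int] = None   # branch target, None if not a branch


def build_trace(program: Dict[int, Instr], start: int,
                predict_taken: Callable[[int], bool],
                max_lines: int = 3) -> List[List[str]]:
    """Decode along the predicted path and pack the µ-ops into trace lines."""
    capacity = UOPS_PER_LINE * max_lines
    flat: List[str] = []
    pc = start
    while pc in program:
        ins = program[pc]
        if ins.uops > MAX_INLINE_UOPS:
            uops = [f"ROM:{ins.name}"]          # tag that triggers the microcode ROM
        else:
            uops = [f"{ins.name}.{i}" for i in range(max(ins.uops, 1))]
        if len(flat) + len(uops) > capacity:
            break                               # segment full: stop before this instruction
        flat.extend(uops)
        if ins.target is not None and predict_taken(pc):
            pc = ins.target                     # keep filling with the predicted target code
        else:
            pc += ins.length                    # fall through
    return [flat[i:i + UOPS_PER_LINE] for i in range(0, len(flat), UOPS_PER_LINE)]


program = {
    0: Instr("add", 1), 2: Instr("cmp", 1), 4: Instr("jne", 1, target=20),
    6: Instr("mov", 1),                          # skipped when jne is predicted taken
    20: Instr("sub", 1), 22: Instr("rep_movs", 6),
}
print(build_trace(program, 0, predict_taken=lambda pc: True))
# [['add.0', 'cmp.0', 'jne.0', 'sub.0', 'ROM:rep_movs']]
```

With the branch predicted taken, `mov` never enters the segment, and the code at address 20 follows `jne` in the same line.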

It was said

- Intel increased the clock frequency (and therefore the number of pipeline stages) only for marketing reasons…
- Shrinking the transistors allows the clock speed to be increased, but it also allows the functionality to be improved. This was not the case with the PIV.

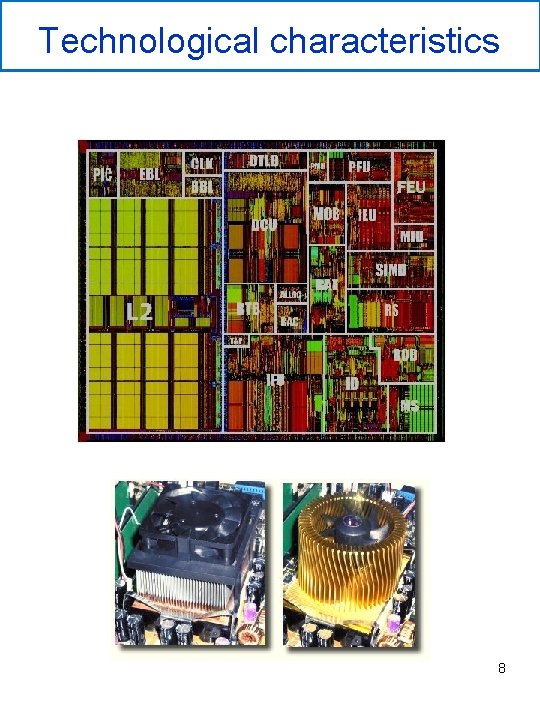

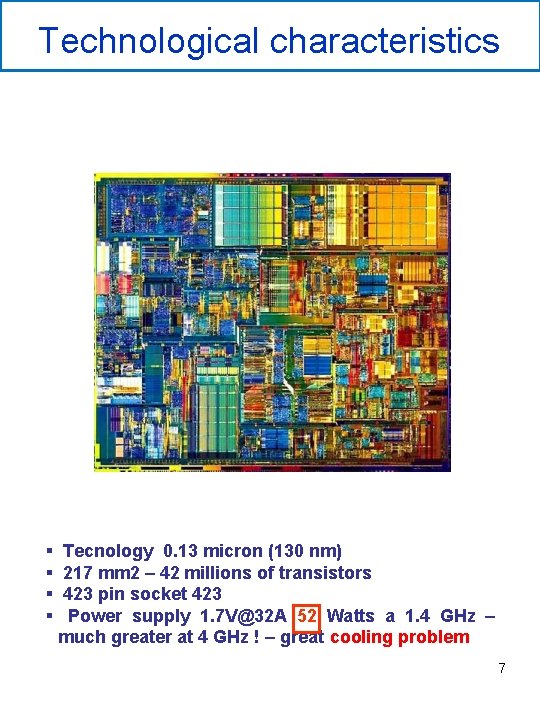

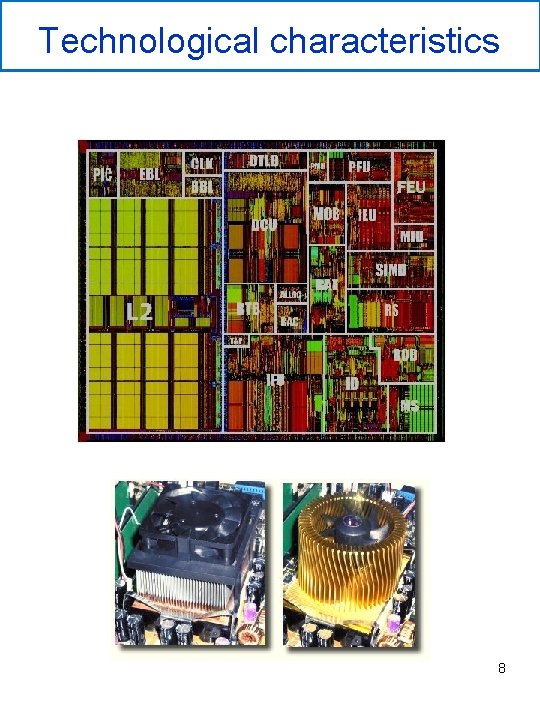

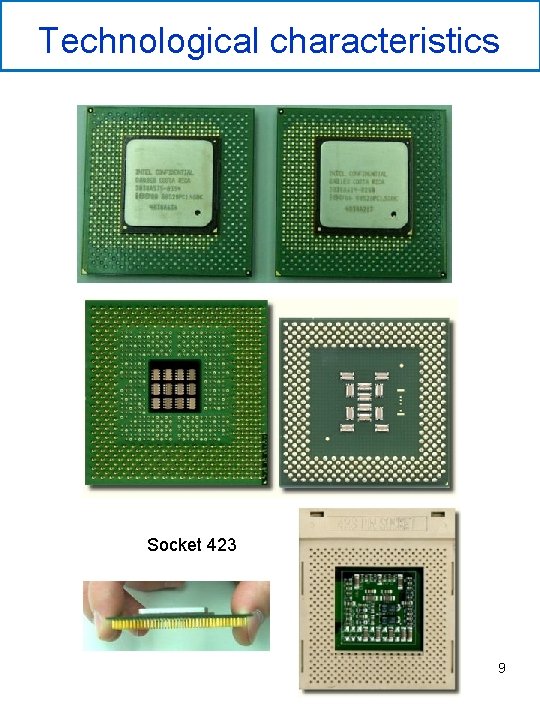

Technological characteristics

- Technology: 0.13 micron (130 nm), 217 mm², 42 million transistors
- 423-pin socket (Socket 423)
- Power supply: 1.7 V @ 32 A
- 52 W at 1.4 GHz, much greater at 4 GHz! This is a major cooling problem.
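A first-order estimate shows why power becomes a problem at 4 GHz. CMOS dynamic power scales roughly as C·V²·f. The sketch assumes unchanged voltage and switched capacitance and ignores leakage. Real parts changed voltage and process between these clock rates, so the result is only indicative.

```python
def scaled_dynamic_power(p_ref_w: float, f_ref_hz: float, f_new_hz: float,
                         v_ref: float = 1.0, v_new: float = 1.0) -> float:
    """First-order CMOS dynamic power scaling, P ~ C * V^2 * f.
    Assumes the switched capacitance C is unchanged and ignores leakage."""
    return p_ref_w * (v_new / v_ref) ** 2 * (f_new_hz / f_ref_hz)


# 52 W at 1.4 GHz, same voltage, pushed to 4 GHz
print(f"{scaled_dynamic_power(52, 1.4e9, 4.0e9):.0f} W")  # 149 W
```

Any voltage increase needed to reach the higher clock multiplies this figure by (V_new / V_ref)².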

Technological characteristics

Technological characteristics: Socket 423

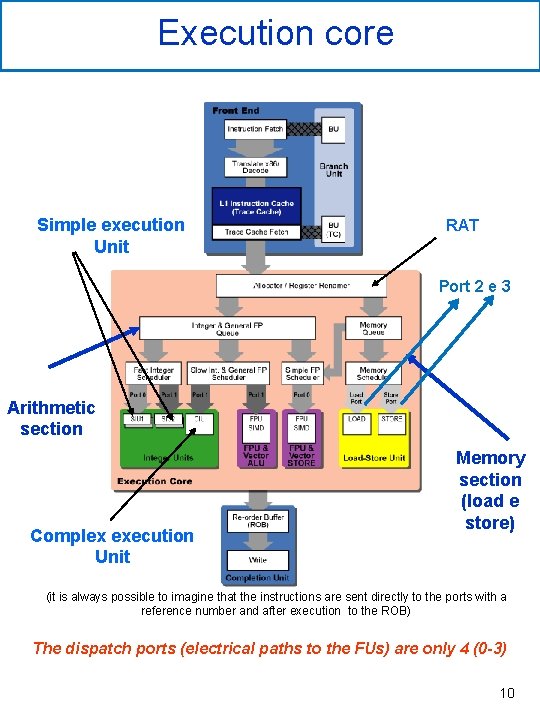

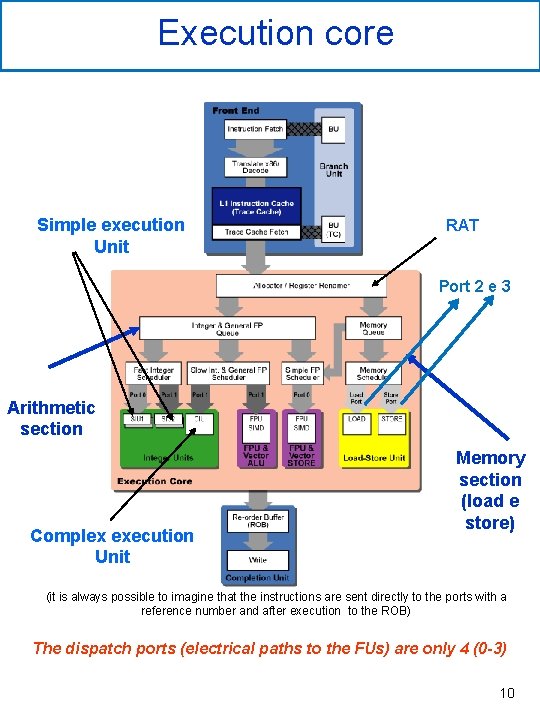

Execution core

[Figure: RAT; arithmetic section with the simple and complex execution units; memory section (load and store) on ports 2 and 3]

One can always imagine that the instructions are sent directly to the ports with a reference number and, after execution, to the ROB. There are only 4 dispatch ports (the electrical paths to the FUs), numbered 0-3.

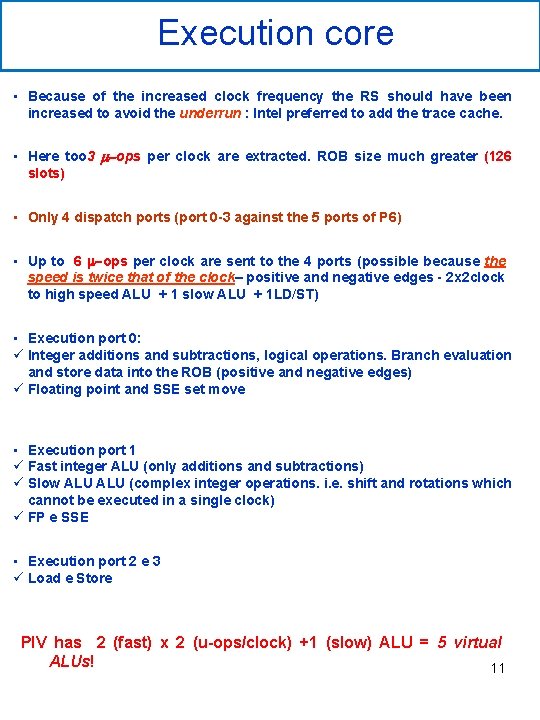

Execution core

- Because of the increased clock frequency, the RS should have been enlarged to avoid underruns: Intel preferred to add the trace cache.
- Here too, 3 µ-ops per clock are extracted. The ROB is much larger (126 slots).
- There are only 4 dispatch ports (ports 0-3, against the 5 ports of the P6).
- Up to 6 µ-ops per clock are sent to the 4 ports. This is possible because the fast ALUs run at twice the clock speed, using both the positive and negative edges: 2 × 2 to the high-speed ALUs + 1 to the slow ALU + 1 LD/ST.
- Execution port 0:
  - integer additions and subtractions, logical operations, branch evaluation, and store data into the ROB (positive and negative edges)
  - floating-point and SSE moves
- Execution port 1:
  - fast integer ALU (additions and subtractions only)
  - slow ALU (complex integer operations, e.g. shifts and rotations, which cannot be executed in a single clock)
  - FP and SSE
- Execution ports 2 and 3:
  - load and store

The PIV therefore has 2 (fast) × 2 (µ-ops/clock) + 1 (slow) = 5 virtual ALUs!
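The per-clock dispatch limits listed above can be modelled as a capacity per µ-op class. The model fills the slots in age order and leaves the excess µ-ops waiting for the next clock. This is a minimal sketch. The capacities follow the slide's "2 × 2 + 1 + 1" breakdown, while the class names (`fast_alu`, `slow_alu`, `mem`) and the oldest-first policy are assumptions.

```python
from collections import Counter
from typing import List, Tuple

# Per-clock dispatch capacity per µ-op class, following the slide's count
# "2 x 2 to the high-speed ALUs + 1 slow ALU + 1 LD/ST".
# The class names are illustrative labels, not Intel terminology.
CAPACITY = {
    "fast_alu": 2 * 2,   # two fast ALUs, each used on both clock edges
    "slow_alu": 1,
    "mem": 1,
}


def dispatch_one_clock(ready: List[str]) -> Tuple[List[str], List[str]]:
    """Send ready µ-ops (oldest first) until each class's capacity is used up."""
    used: Counter = Counter()
    sent, waiting = [], []
    for uop_class in ready:
        if used[uop_class] < CAPACITY[uop_class]:
            used[uop_class] += 1
            sent.append(uop_class)
        else:
            waiting.append(uop_class)   # retried in the next clock
    return sent, waiting


virtual_alus = CAPACITY["fast_alu"] + CAPACITY["slow_alu"]
peak_per_clock = sum(CAPACITY.values())
print(virtual_alus, peak_per_clock)  # 5 6
```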

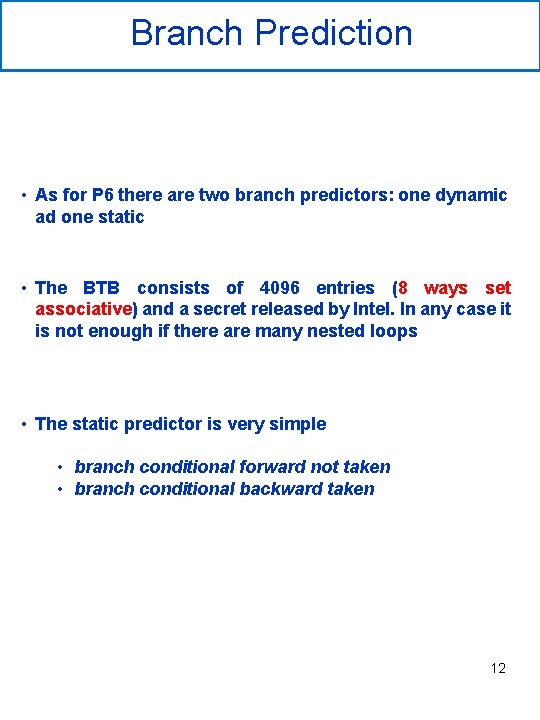

Branch Prediction

- As in the P6, there are two branch predictors: one dynamic and one static.
- The BTB has 4096 entries (8-way set associative) and uses a prediction algorithm that Intel has kept secret. In any case, it is not sufficient when there are many nested loops.
- The static predictor is very simple:
  - conditional forward branch: not taken
  - conditional backward branch: taken
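The static rule above (backward taken, forward not taken) fits in one comparison. The sketch also assumes a simple combination policy: the dynamic BTB prediction is used when the branch has an entry, and the static rule is the fallback. The BTB is reduced to a hypothetical dictionary from branch address to predicted direction.

```python
from typing import Dict


def static_predict_taken(branch_pc: int, target_pc: int) -> bool:
    """Static rule from the slide: backward branches (typically loop closings)
    are predicted taken, forward branches not taken."""
    return target_pc <= branch_pc


def predict(btb: Dict[int, bool], branch_pc: int, target_pc: int) -> bool:
    """Assumed policy: use the dynamic (BTB) prediction when the branch has an
    entry, otherwise fall back to the static rule."""
    if branch_pc in btb:
        return btb[branch_pc]
    return static_predict_taken(branch_pc, target_pc)
```

A loop-closing branch at 0x100 jumping back to 0x80 is predicted taken the first time it is seen, before the BTB has any history for it.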

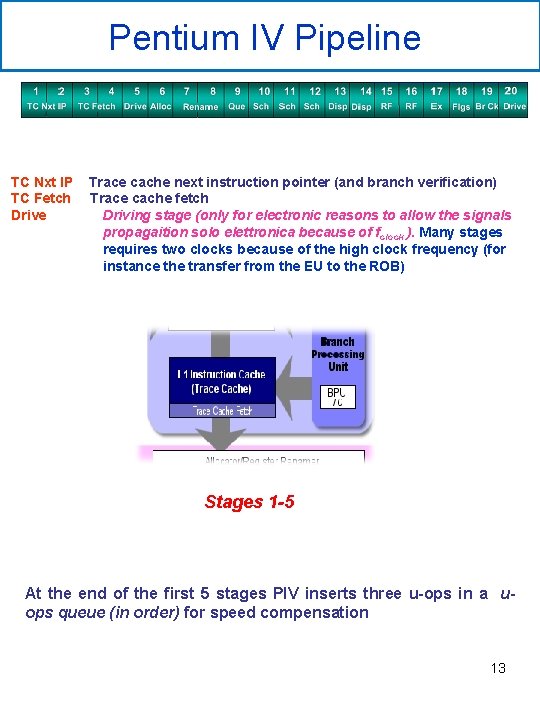

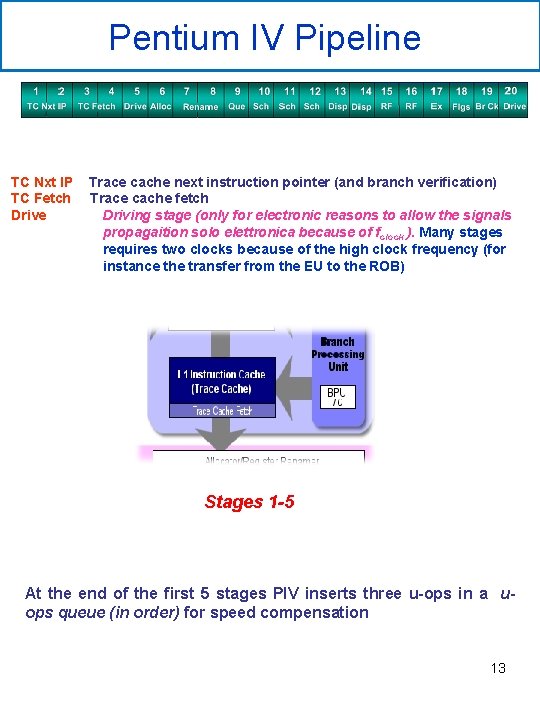

Pentium IV Pipeline (stages 1-5)

- TC Nxt IP: trace cache next instruction pointer (and branch verification)
- TC Fetch: trace cache fetch
- Drive: a stage needed only for electrical reasons, to let the signals propagate at the high clock frequency. Many stages take two clocks because of the high clock frequency (for instance, the transfer from the EU to the ROB).

At the end of the first 5 stages, the PIV inserts three µ-ops into a µ-op queue (in order) to compensate for speed differences.

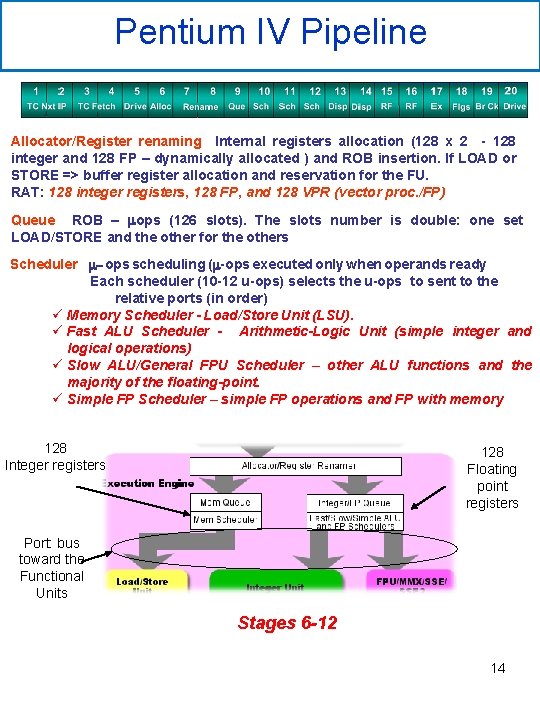

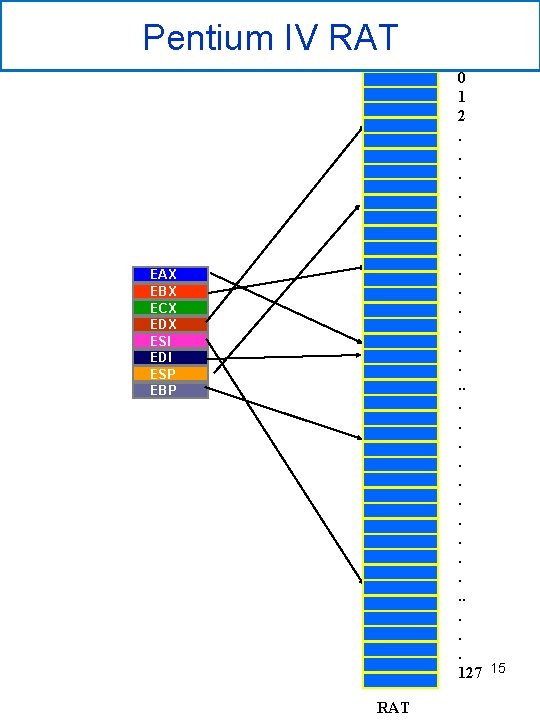

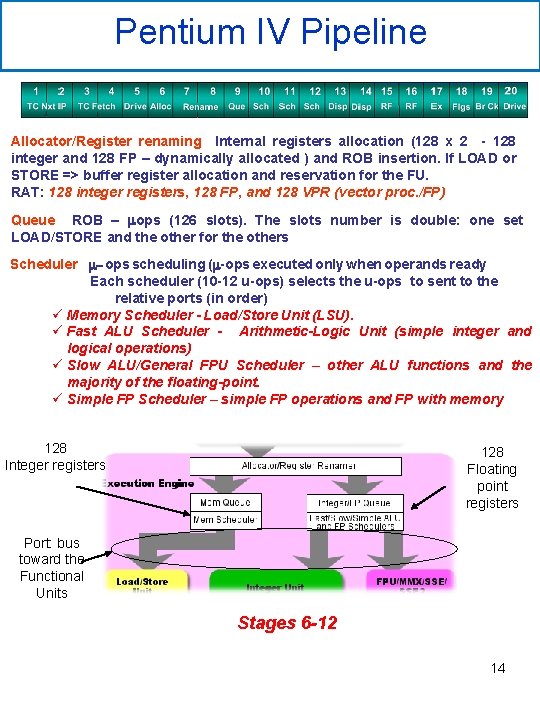

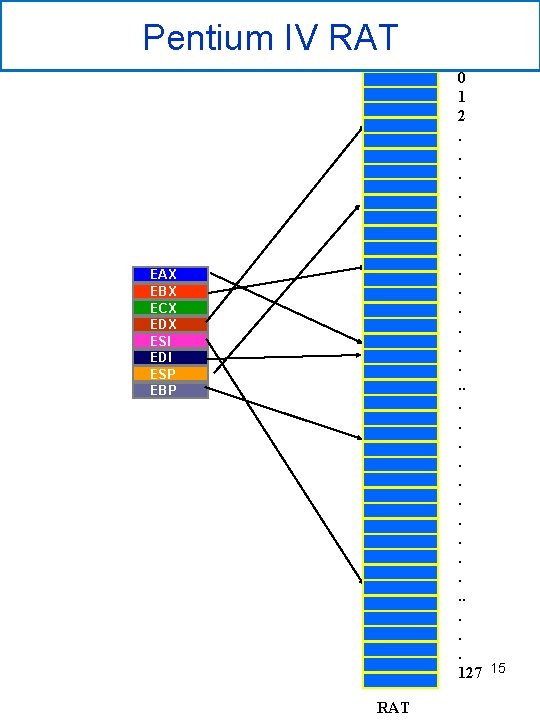

Pentium IV Pipeline (stages 6-12)

- Allocator/Register renaming: allocation of the internal registers (128 × 2: 128 integer and 128 FP, dynamically allocated) and insertion into the ROB. For a LOAD or STORE, a buffer register is allocated and the FU is reserved. RAT: 128 integer registers, 128 FP and 128 VPR (vector processing/FP).
- Queue: µ-ops in the ROB (126 slots). The queue is duplicated: one for LOAD/STORE µ-ops and one for all the others.
- Scheduler: µ-op scheduling (µ-ops are executed only when their operands are ready). Each scheduler (10-12 µ-ops) selects, in order, the µ-ops to send to its ports:
  - Memory Scheduler: Load/Store Unit (LSU)
  - Fast ALU Scheduler: Arithmetic-Logic Unit (simple integer and logical operations)
  - Slow ALU/General FPU Scheduler: the other ALU functions and most floating-point operations
  - Simple FP Scheduler: simple FP operations and FP memory operations

[Figure: 128 integer registers and 128 floating-point registers; Port = bus toward the Functional Units]

Pentium IV RAT

[Figure: the RAT maps the architectural registers EAX, EBX, ECX, EDX, ESI, EDI, ESP, EBP onto the physical registers 0 … 127]
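The RAT maps the 8 architectural integer registers onto the 128 physical ones. Each new result gets a fresh physical register, so later writers never overwrite values that older µ-ops still need. This is a minimal sketch. The in-order free list and the class and method names are assumptions, and freeing a physical register at retirement is not modelled.

```python
from typing import Dict, List, Tuple

ARCH_REGS = ["EAX", "EBX", "ECX", "EDX", "ESI", "EDI", "ESP", "EBP"]
NUM_PHYS = 128   # physical integer registers, as on the slide


class RAT:
    def __init__(self) -> None:
        # at reset, architectural register i is mapped to physical register i
        self.map: Dict[str, int] = {r: i for i, r in enumerate(ARCH_REGS)}
        self.free: List[int] = list(range(len(ARCH_REGS), NUM_PHYS))

    def rename(self, dst: str, srcs: List[str]) -> Tuple[int, List[int]]:
        """Rename one µ-op 'dst <- op(srcs)'."""
        phys_srcs = [self.map[s] for s in srcs]   # read sources before remapping dst
        if not self.free:
            raise RuntimeError("no free physical register: allocation stalls")
        new_dst = self.free.pop(0)                # fresh register removes WAR/WAW hazards
        self.map[dst] = new_dst
        # not modelled: the old mapping of dst is freed only when this µ-op retires
        return new_dst, phys_srcs


rat = RAT()
print(rat.rename("EAX", ["EAX", "EBX"]))  # (8, [0, 1])
print(rat.rename("EAX", ["EAX"]))         # (9, [8])
```

The second µ-op reads physical register 8, the value produced by the first one, even though both name EAX.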

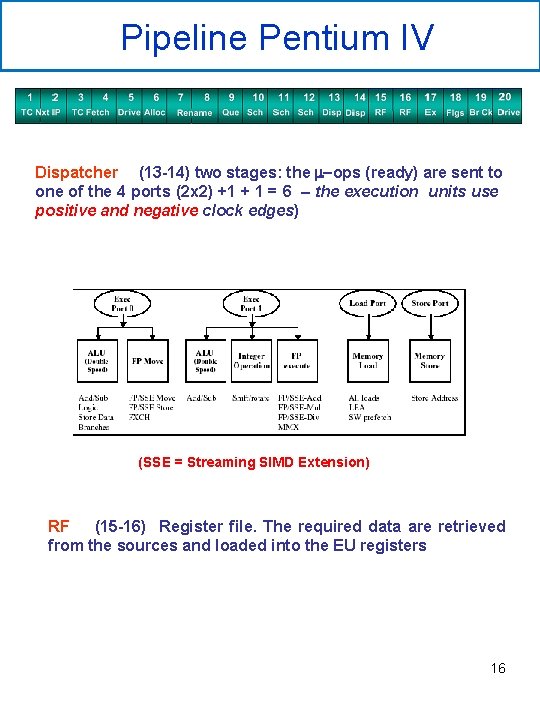

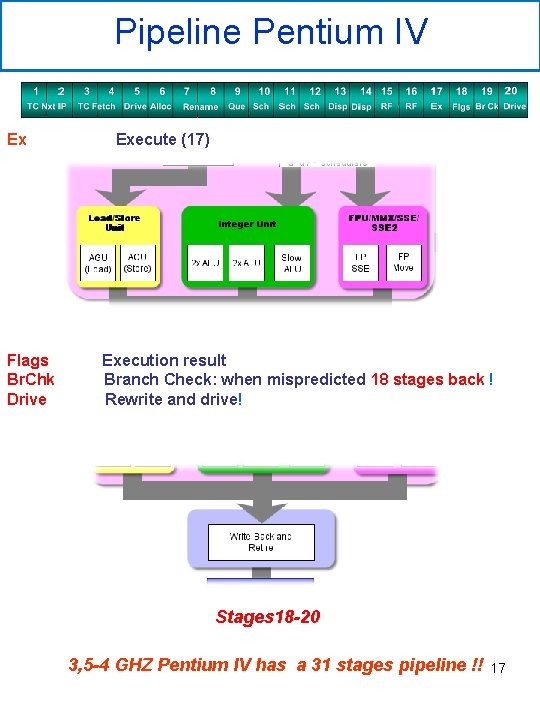

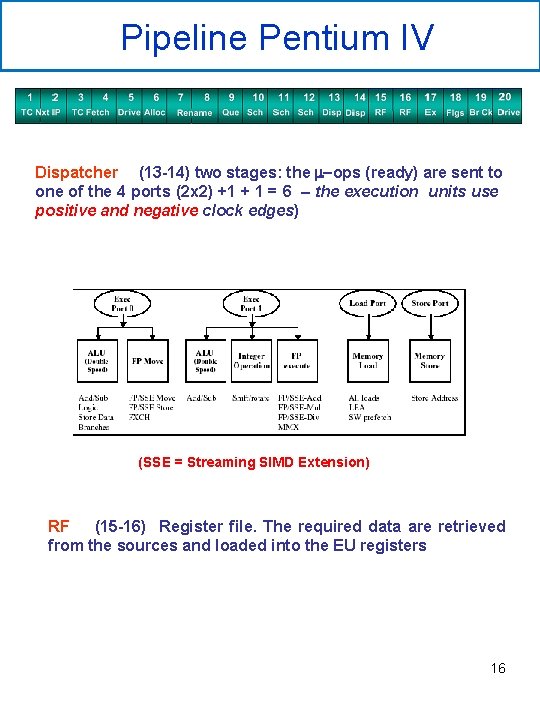

Pentium IV Pipeline (stages 13-16)

- Dispatcher (13-14), two stages: the ready µ-ops are sent to one of the 4 ports, up to (2 × 2) + 1 + 1 = 6 per clock, because the execution units use both the positive and negative clock edges (SSE = Streaming SIMD Extensions)
- RF (15-16), Register File: the required data are retrieved from their sources and loaded into the EU registers

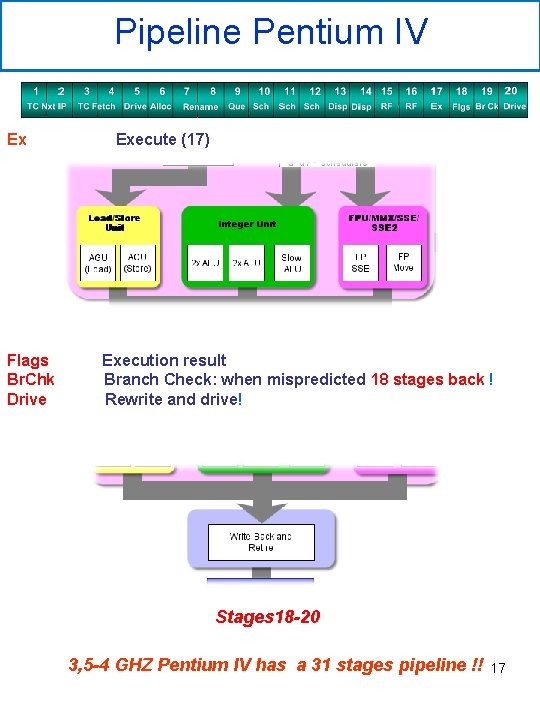

Pentium IV Pipeline (stages 17-20)

- Ex (17), Execute: execution result
- Flags (18)
- Br. Ck (19), Branch Check: on a misprediction, go back 18 stages!
- Drive (20): rewrite and drive!

The 3.5-4 GHz Pentium IV has a 31-stage pipeline!!
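Listing the 20 stages explicitly shows where the "18 stages back" comes from. A misprediction is detected at Br Ck (stage 19), and fetch restarts at stage 1. The slides give only the range 6-12 for allocation, renaming, queueing and scheduling. The per-stage split used here follows Intel's published Pentium 4 pipeline description and should be taken as an assumption.

```python
# Stage names of the 20-stage Pentium IV pipeline, in order (stage 1 first).
# The split of stages 6-12 follows Intel's published pipeline diagram;
# the slides only give the range.
STAGES = (
    ["TC Nxt IP"] * 2 + ["TC Fetch"] * 2 + ["Drive"]        # 1-5
    + ["Alloc"] + ["Rename"] * 2 + ["Que"] + ["Sch"] * 3    # 6-12
    + ["Disp"] * 2 + ["RF"] * 2                              # 13-16
    + ["Ex", "Flgs", "Br Ck", "Drive"]                       # 17-20
)


def stage_number(name: str) -> int:
    """1-based number of the first stage with this name."""
    return STAGES.index(name) + 1


# A mispredicted branch is discovered at Br Ck and fetch restarts at stage 1.
print(len(STAGES), stage_number("Br Ck") - 1)  # 20 18
```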

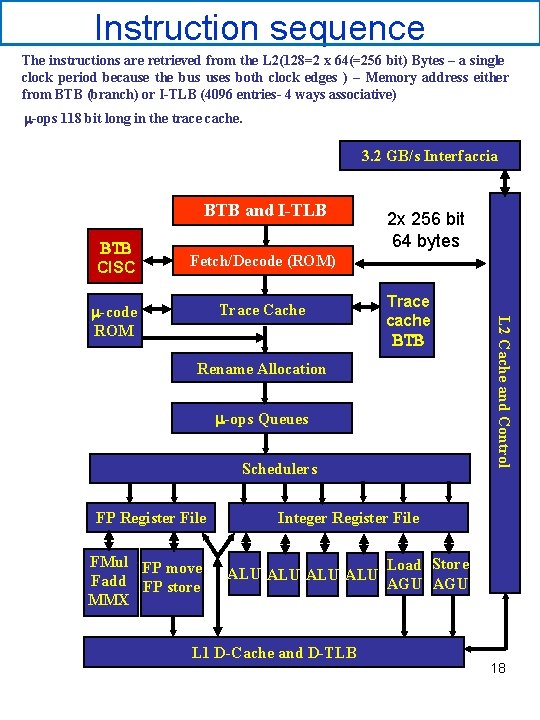

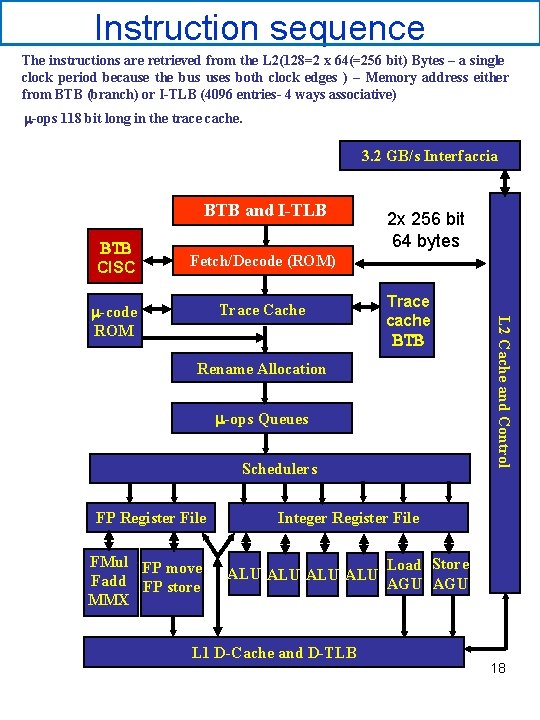

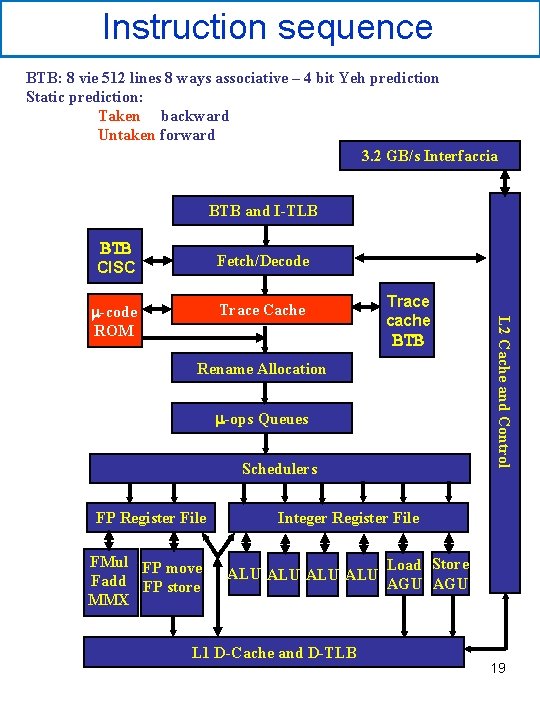

Instruction sequence

The instructions are retrieved from L2. A 128-byte line is 2 × 64 bytes, and 64 bytes (2 × 256 bits) are transferred in a single clock period because the bus uses both clock edges. The memory address comes either from the BTB (for a branch) or from the I-TLB (4096 entries, 4-way associative). In the trace cache, µ-ops are 118 bits long.

[Figure: Pentium IV block diagram; 3.2 GB/s bus interface, BTB and I-TLB, 2 × 256-bit (64-byte) path from L2 to Fetch/Decode (ROM), trace cache with its 512-entry BTB]

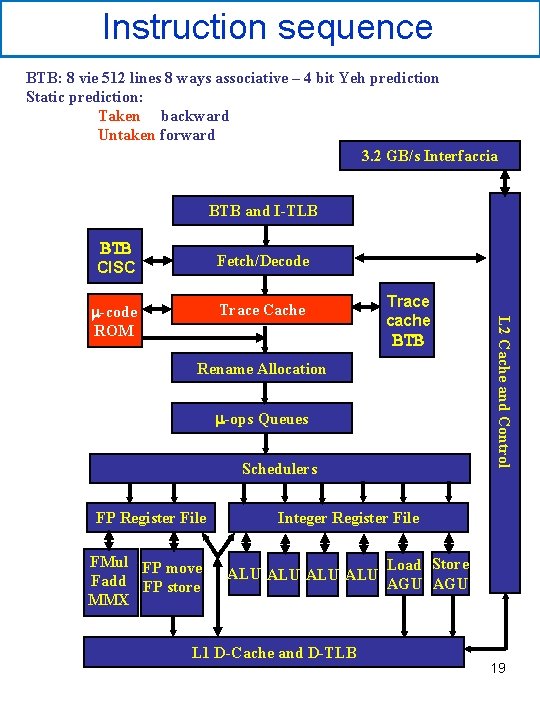

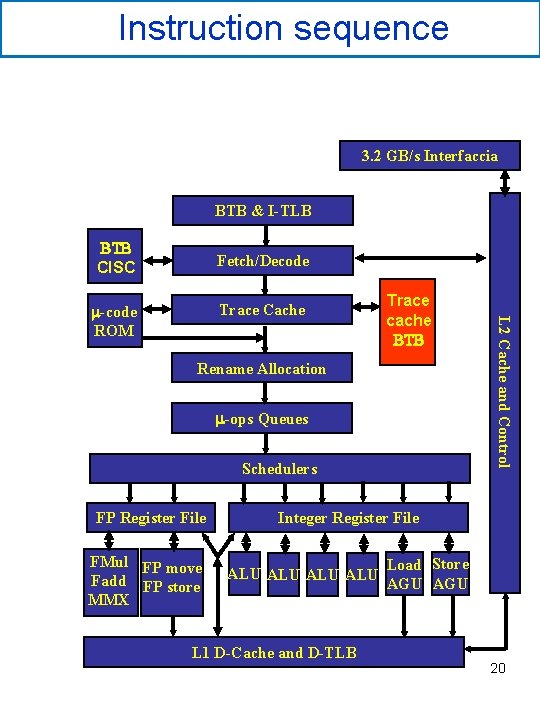

Instruction sequence

- Trace cache BTB: 512 entries, 8-way set associative, 4-bit Yeh prediction
- Static prediction: backward taken, forward not taken

[Figure: Pentium IV block diagram]
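"4-bit Yeh prediction" refers to a two-level adaptive scheme. The last 4 outcomes of a branch select a 2-bit saturating counter, so a repeating pattern such as a short loop's exit can be learned. Intel does not document the exact organization. The per-branch variant below (one history and one 16-counter table per branch) is an assumption chosen for clarity, and the class and method names are hypothetical.

```python
from typing import Dict, List

HIST_BITS = 4                       # "4-bit Yeh prediction"
MASK = (1 << HIST_BITS) - 1


class TwoLevelPredictor:
    """Two-level adaptive predictor in the style of Yeh and Patt. Assumed
    per-branch variant: each branch keeps its last 4 outcomes, and that
    history selects one of 16 two-bit saturating counters of the same branch."""

    def __init__(self) -> None:
        self.history: Dict[int, int] = {}
        self.counters: Dict[int, List[int]] = {}

    def _state(self, pc: int):
        h = self.history.setdefault(pc, 0)
        c = self.counters.setdefault(pc, [1] * (1 << HIST_BITS))  # weakly not taken
        return h, c

    def predict(self, pc: int) -> bool:
        h, c = self._state(pc)
        return c[h] >= 2

    def update(self, pc: int, taken: bool) -> None:
        h, c = self._state(pc)
        c[h] = min(c[h] + 1, 3) if taken else max(c[h] - 1, 0)
        self.history[pc] = ((h << 1) | int(taken)) & MASK   # shift in the new outcome


# a loop branch taken 3 times, then not taken (exit), repeatedly
p = TwoLevelPredictor()
pattern = [True, True, True, False]
for outcome in pattern * 3:          # training
    p.update(0x40, outcome)
hits = 0
for outcome in pattern * 2:
    hits += p.predict(0x40) == outcome
    p.update(0x40, outcome)
print(hits, "/ 8")  # 8 / 8
```

Each of the four positions in the loop pattern has a distinct 4-bit history, so even the loop exit is predicted correctly once the counters are trained.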

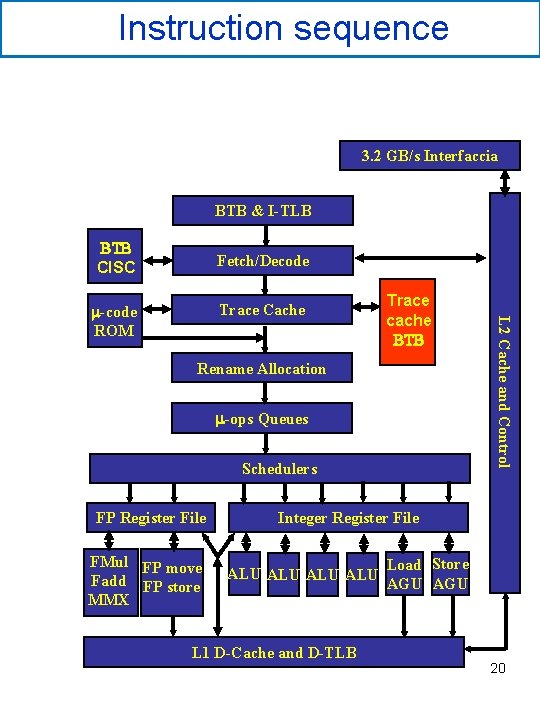

Instruction sequence

[Figure: Pentium IV block diagram]

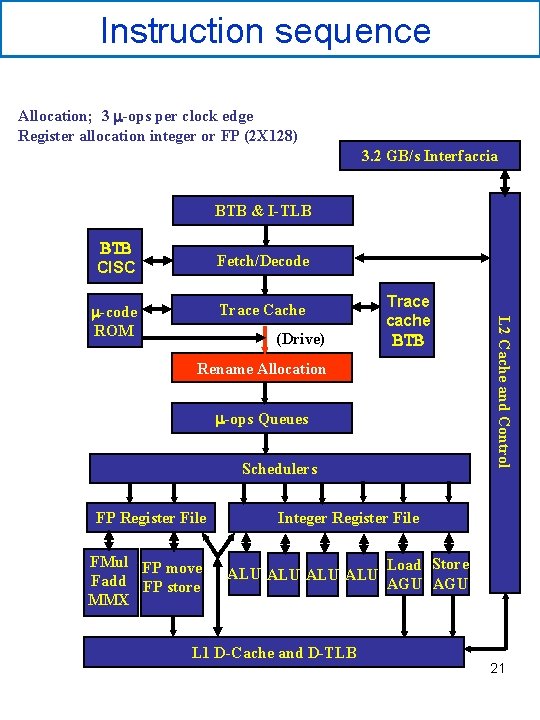

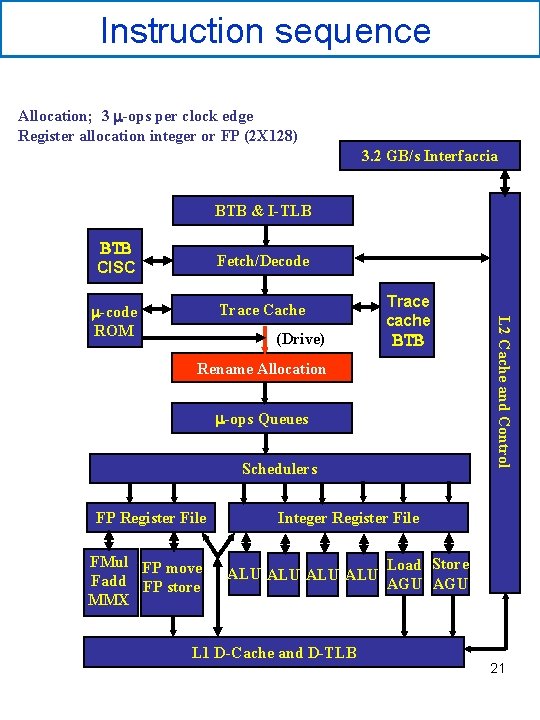

Instruction sequence

Allocation: 3 µ-ops per clock edge; integer or FP register allocation (2 × 128).

[Figure: Pentium IV block diagram, with the trace cache drive stage]

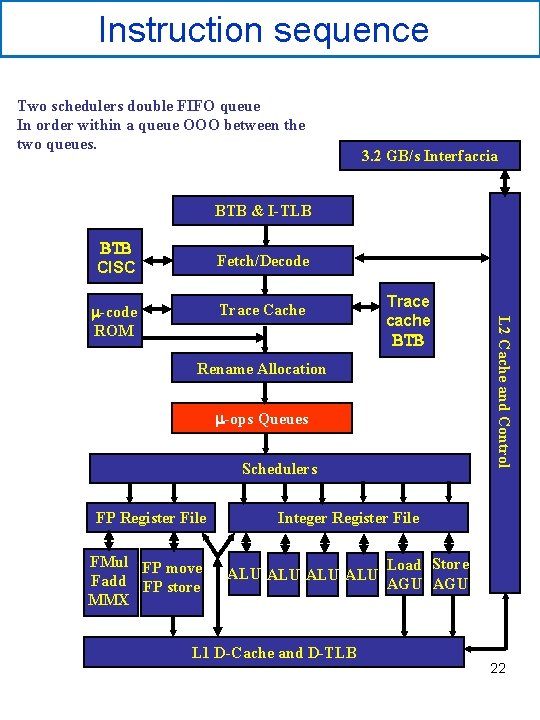

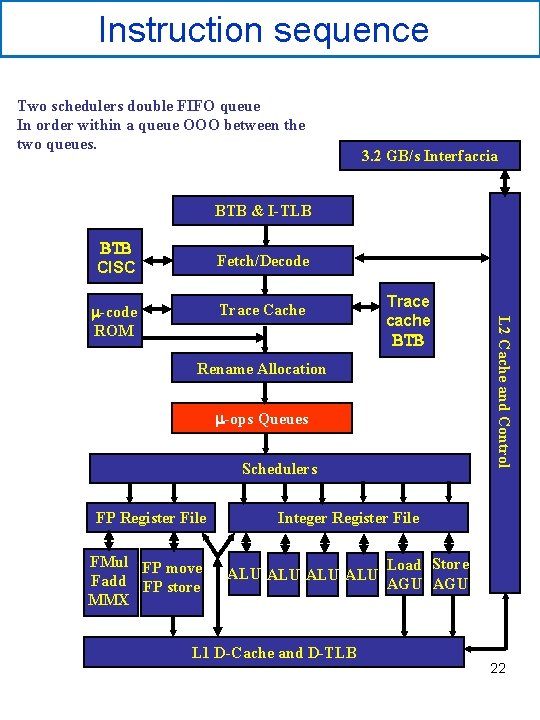

Instruction sequence

Two schedulers with a double FIFO queue: in order within a queue, out of order between the two queues.

[Figure: Pentium IV block diagram]
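"In order within a queue, out of order between the two queues" can be shown with two FIFO deques. Only the head of each queue may issue, so a stalled load holds back later loads but not the arithmetic µ-ops in the other queue. This is a sketch. Issuing at most one µ-op per queue per clock is a simplification, and the function and µ-op names are hypothetical.

```python
from collections import deque
from typing import Callable, Deque, List


def issue_one_clock(queues: List[Deque[str]], is_ready: Callable[[str], bool]) -> List[str]:
    """Each queue may issue its head µ-op if it is ready: in order within a queue.
    A blocked head only stalls its own queue: out of order between the queues.
    (Simplification: at most one µ-op per queue per clock.)"""
    issued = []
    for q in queues:
        if q and is_ready(q[0]):
            issued.append(q.popleft())
    return issued


mem_q = deque(["load1", "load2"])   # load1 waits for a cache miss
gen_q = deque(["add1", "add2"])
ready = {"load2", "add1", "add2"}


def is_ready(uop: str) -> bool:
    return uop in ready


print(issue_one_clock([mem_q, gen_q], is_ready))  # ['add1']
print(issue_one_clock([mem_q, gen_q], is_ready))  # ['add2']
ready.add("load1")                                # the miss is resolved
print(issue_one_clock([mem_q, gen_q], is_ready))  # ['load1']
```

`load2` is ready from the start but still waits behind `load1`, while the adds overtake both loads.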

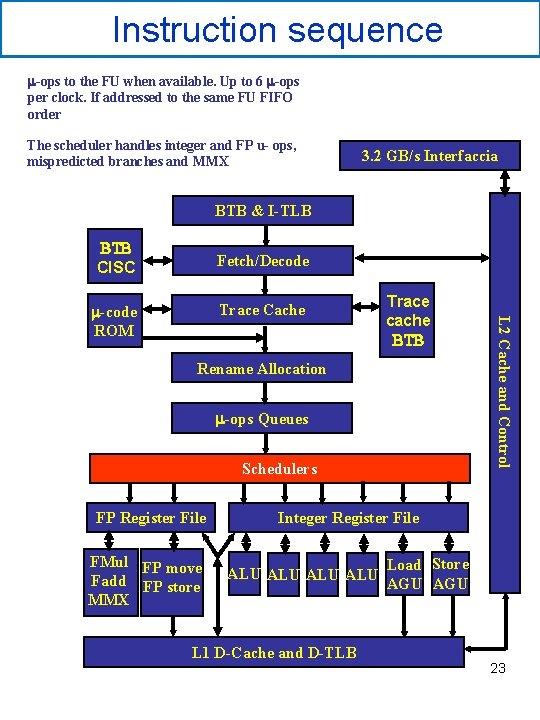

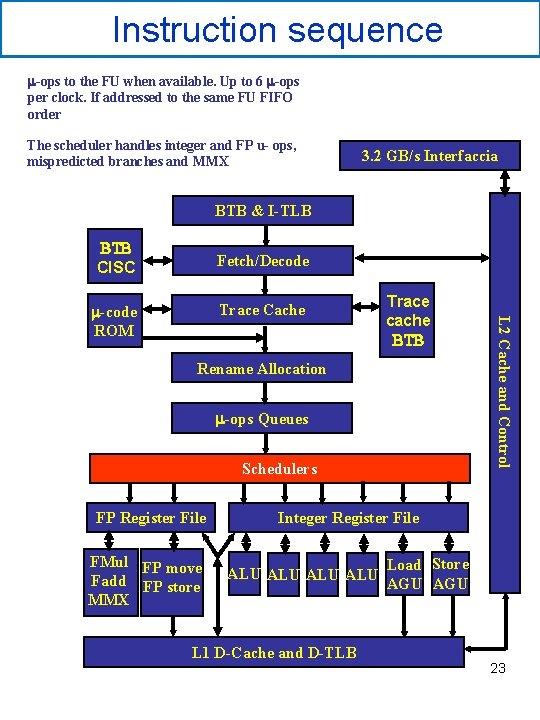

Instruction sequence

µ-ops are sent to the FUs when these are available, up to 6 µ-ops per clock. µ-ops addressed to the same FU are served in FIFO order. The scheduler handles integer and FP µ-ops, mispredicted branches and MMX.

[Figure: Pentium IV block diagram]

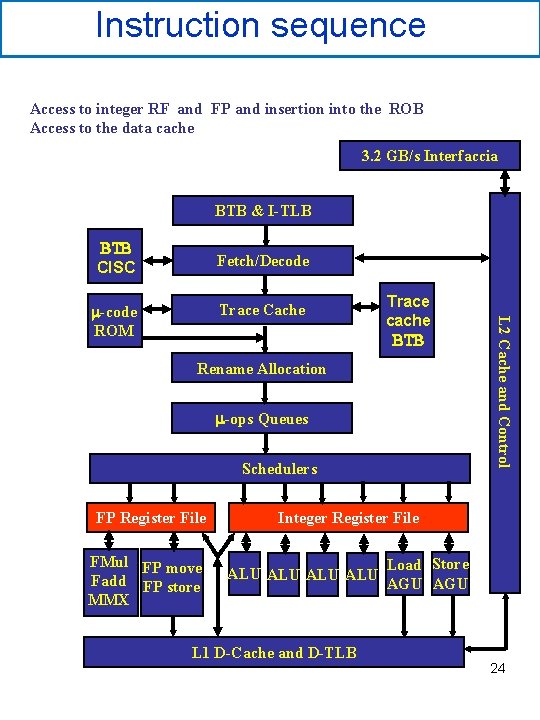

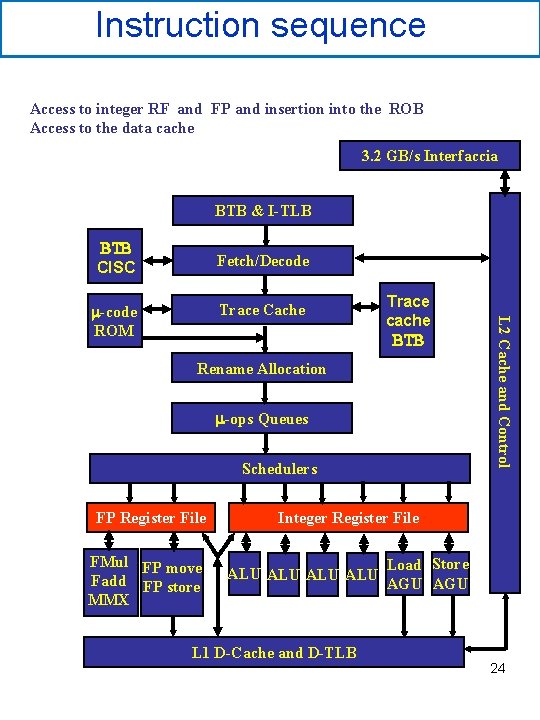

Instruction sequence

Access to the integer and FP register files and insertion into the ROB; access to the data cache.

[Figure: Pentium IV block diagram]

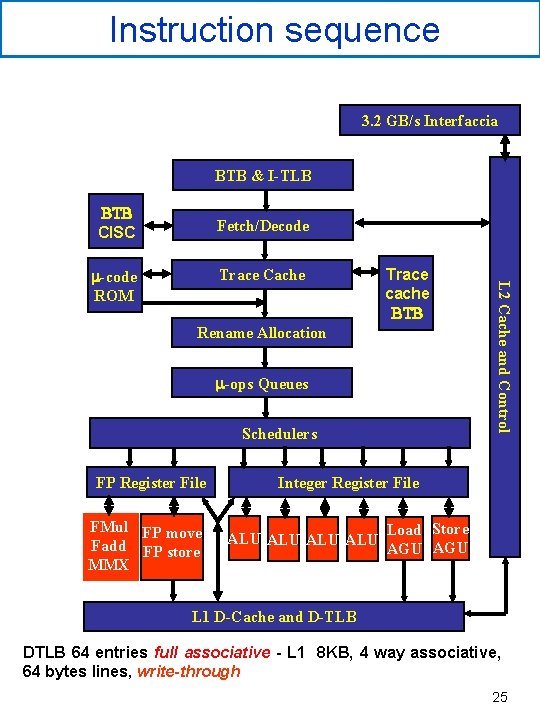

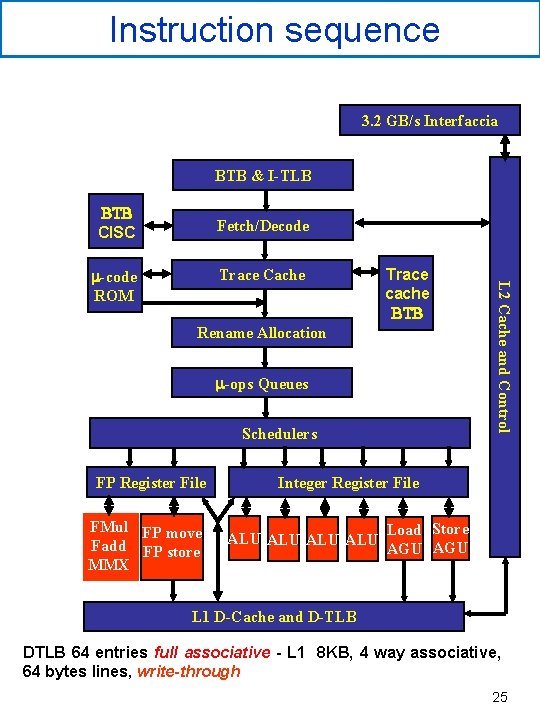

Instruction sequence

DTLB: 64 entries, fully associative. L1: 8 KB, 4-way set associative, 64-byte lines, write-through.

[Figure: Pentium IV block diagram]
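The L1 parameters above fix how an address is split. 8 KB / (4 ways × 64 B) gives 32 sets, so there are 6 offset bits, 5 index bits and the remaining bits form the tag. The helper names are hypothetical, and the example address 0x12345 is arbitrary. The write-through policy does not affect this split and is not modelled.

```python
from typing import Tuple


def cache_geometry(size_bytes: int, ways: int, line_bytes: int) -> Tuple[int, int, int]:
    """Return (sets, offset bits, index bits); sizes are assumed to be powers of two."""
    sets = size_bytes // (ways * line_bytes)
    offset_bits = line_bytes.bit_length() - 1
    index_bits = sets.bit_length() - 1
    return sets, offset_bits, index_bits


def split_address(addr: int, offset_bits: int, index_bits: int) -> Tuple[int, int, int]:
    """Split an address into (tag, set index, byte offset)."""
    offset = addr & ((1 << offset_bits) - 1)
    index = (addr >> offset_bits) & ((1 << index_bits) - 1)
    tag = addr >> (offset_bits + index_bits)
    return tag, index, offset


sets, ob, ib = cache_geometry(8 * 1024, 4, 64)   # L1 data cache from the slide
print(sets, ob, ib)                               # 32 6 5
print(split_address(0x12345, ob, ib))             # (36, 13, 5)
```

The same helper applied to the 256 KB, 8-way, 128-byte-line L2 gives 256 sets, 7 offset bits and 8 index bits.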

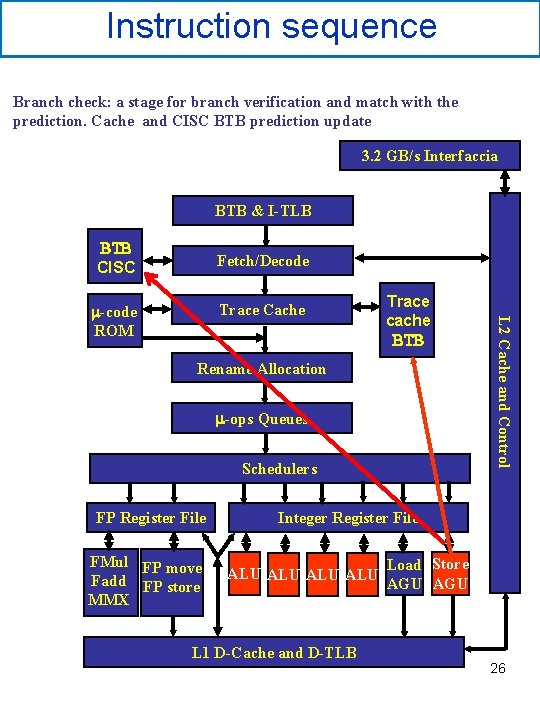

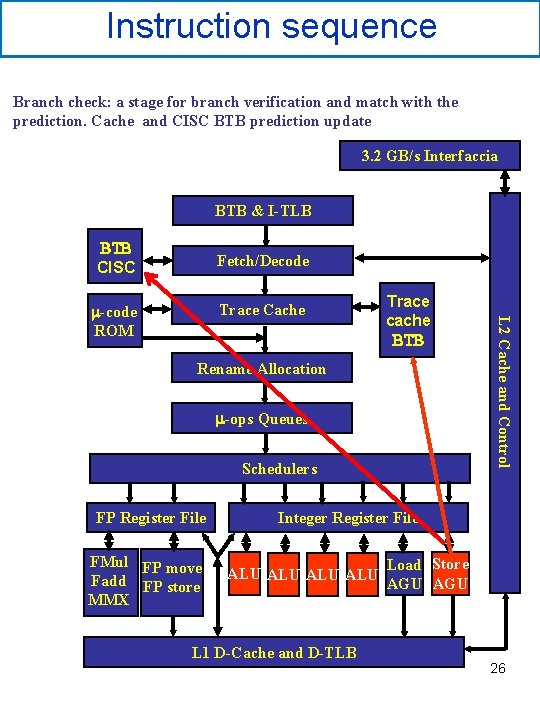

Instruction sequence

Branch check: a stage that verifies the branch and compares the outcome with the prediction. The predictions in the trace cache BTB and in the CISC BTB are updated.

[Figure: Pentium IV block diagram]

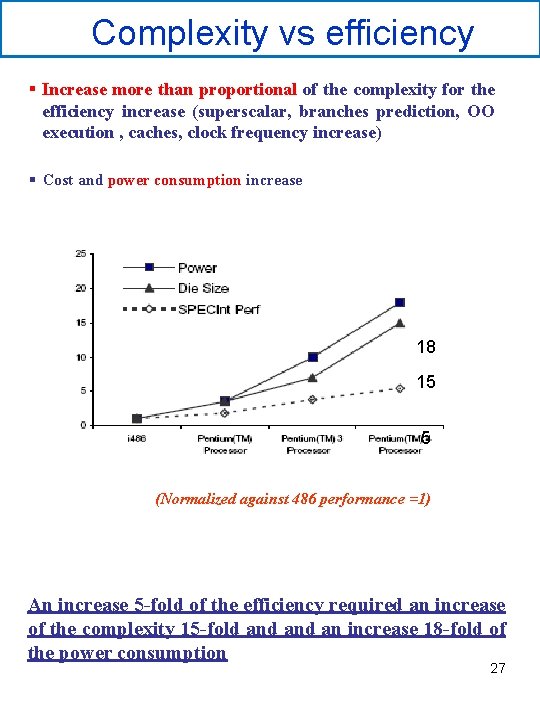

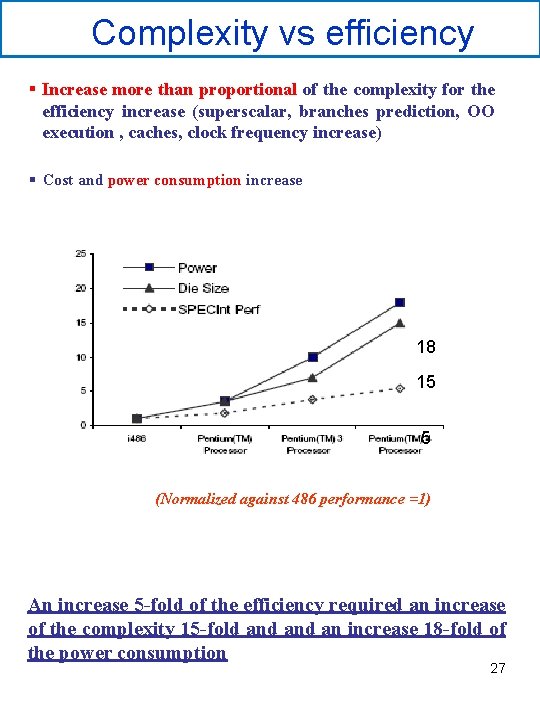

Complexity vs efficiency

- Efficiency gains (superscalar execution, branch prediction, OOO execution, caches, clock frequency increase) require a more than proportional increase in complexity
- Cost and power consumption increase

[Figure: power 18, complexity 15, performance 5 (normalized to 486 = 1)]

A 5-fold increase in efficiency required a 15-fold increase in complexity and an 18-fold increase in power consumption.
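Dividing the slide's normalized figures (486 = 1) makes the trend explicit. Per unit of complexity and per watt, the newer design delivers only about a third and a quarter, respectively, of what the 486 delivered:

```latex
\frac{\text{performance}}{\text{complexity}} = \frac{5}{15} \approx 0.33,
\qquad
\frac{\text{performance}}{\text{power}} = \frac{5}{18} \approx 0.28
```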

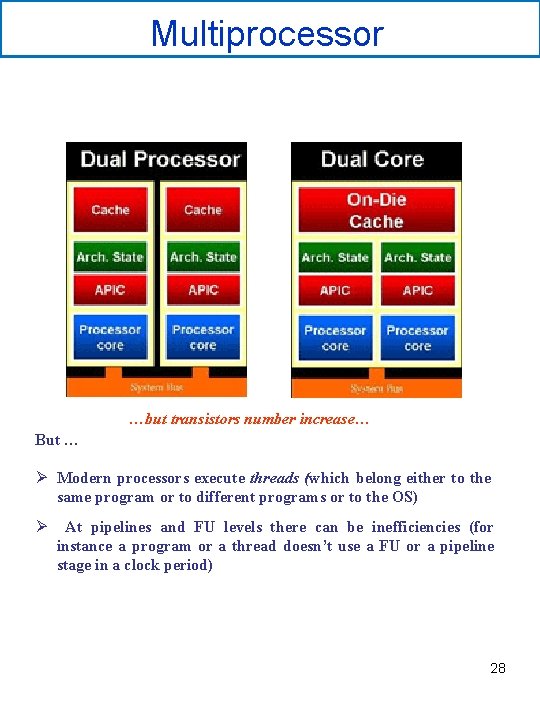

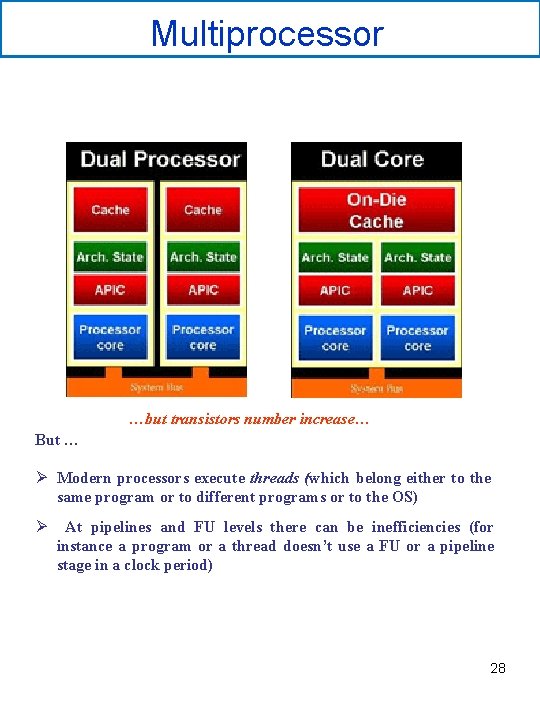

Multiprocessor

…but the number of transistors keeps increasing…

But…

- Modern processors execute threads (which belong to the same program, to different programs, or to the OS)
- At the pipeline and FU levels there can be inefficiencies (for instance, a program or thread does not use an FU or a pipeline stage in a given clock period)

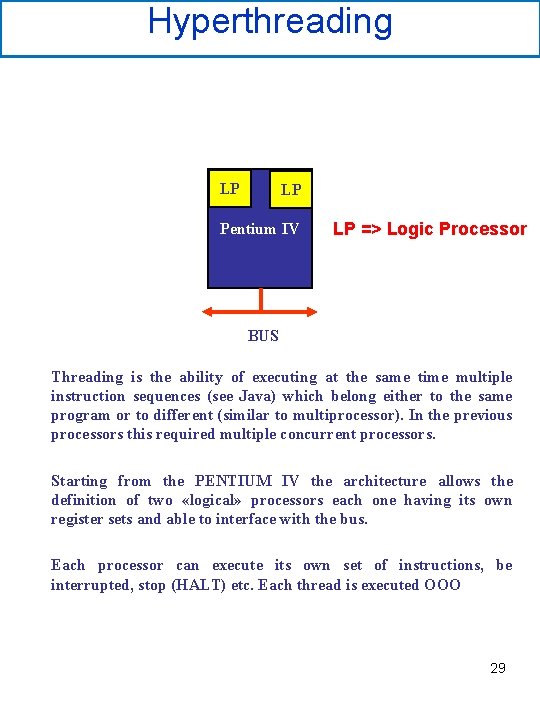

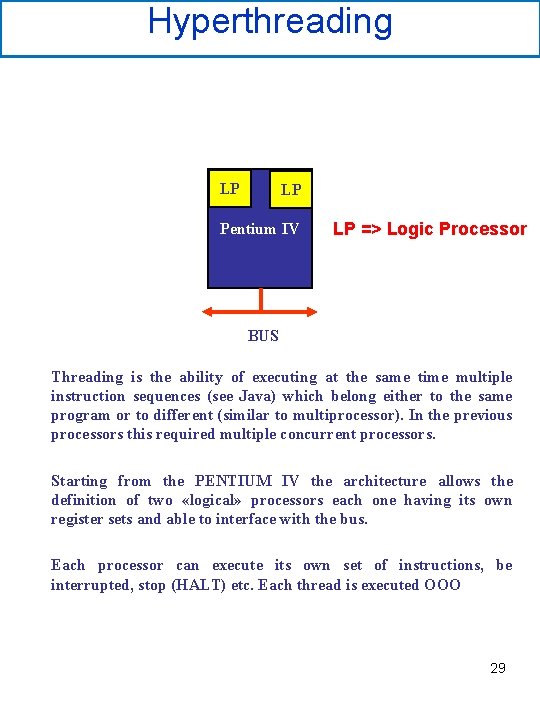

Hyperthreading

[Figure: two logical processors (LP) inside one Pentium IV, sharing the bus; LP = Logical Processor]

Threading is the ability to execute multiple instruction sequences at the same time (see Java), belonging either to the same program or to different programs (similar to a multiprocessor). In earlier processors this required multiple concurrent processors. Starting from the PENTIUM IV, the architecture allows the definition of two «logical» processors, each with its own register set and able to interface with the bus. Each logical processor can execute its own instruction stream, be interrupted, stop (HALT), etc. Each thread is executed OOO.

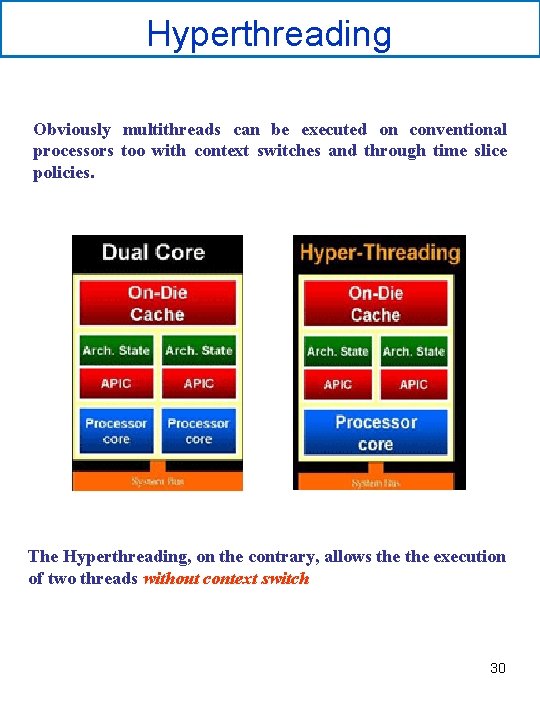

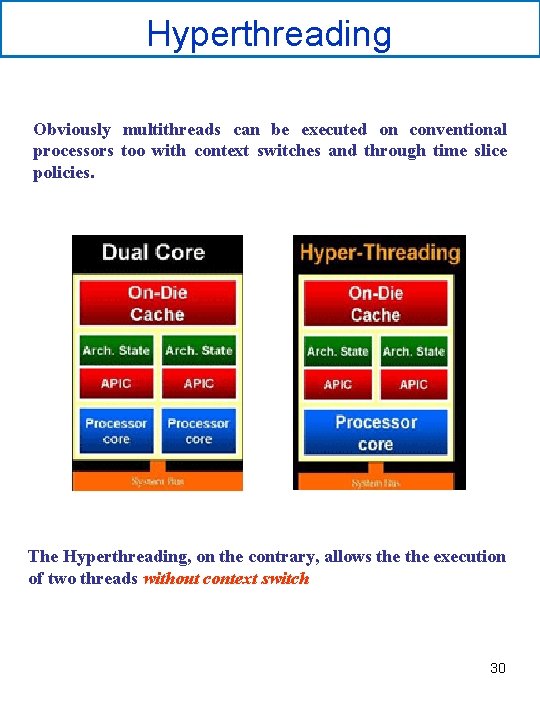

Hyperthreading

Multiple threads can, of course, also be executed on conventional processors through context switches and time-slice policies. Hyperthreading, by contrast, allows two threads to execute without a context switch.
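A toy model shows why sharing the core clock by clock helps. In each clock, the issue slots that one thread leaves idle (because of a miss or a dependency) can be filled by the other thread's µ-ops. The issue width of 3 and the per-clock demand lists are hypothetical. The model also ignores the slowdown each thread suffers when the two compete. This is why it overstates the gain compared with the 16-28% the slides report for real hardware.

```python
from typing import List

WIDTH = 3   # hypothetical number of µ-ops that can start per clock


def slot_utilization(*threads: List[int]) -> float:
    """Fraction of issue slots filled when the threads share the core clock by clock.
    threads[k][c] = µ-ops thread k could issue in clock c (all lists same length).
    Toy model: the slowdown each thread suffers from competition is ignored."""
    cycles = len(threads[0])
    used = sum(min(sum(t[c] for t in threads), WIDTH) for c in range(cycles))
    return used / (WIDTH * cycles)


a = [3, 0, 1, 2, 0, 1]   # thread A leaves slots idle (misses, dependencies)
b = [1, 2, 2, 0, 3, 1]   # thread B
print(f"A alone: {slot_utilization(a):.2f}")      # A alone: 0.39
print(f"A + B:   {slot_utilization(a, b):.2f}")   # A + B:   0.83
```

With time slicing, only one thread's µ-ops are available in any clock, so utilization stays at the single-thread level.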

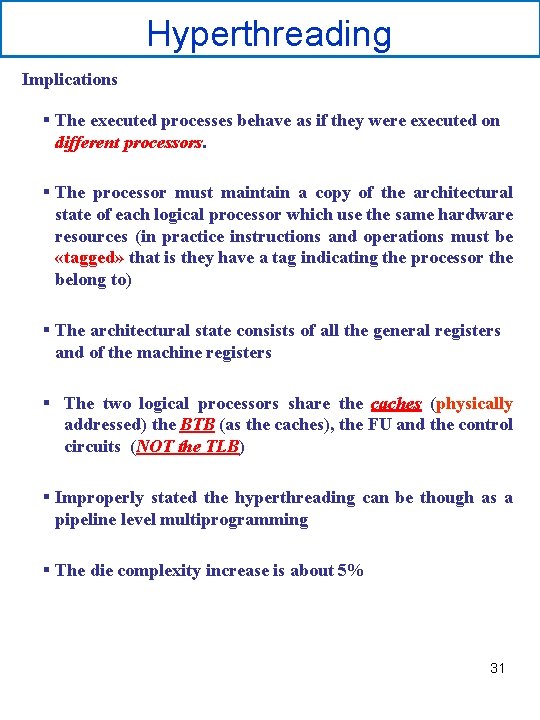

Hyperthreading Implications

- The executed processes behave as if they were running on different processors.
- The processor must maintain a copy of the architectural state of each logical processor, and the logical processors share the same hardware resources. In practice, instructions and operations must be «tagged», i.e. carry a tag indicating the logical processor they belong to.
- The architectural state consists of all the general registers and the machine registers.
- The two logical processors share the caches (physically addressed), the BTB (like the caches), the FUs and the control circuits (NOT the TLB).
- Loosely speaking, hyperthreading can be thought of as pipeline-level multiprogramming.
- The die complexity increase is about 5%.

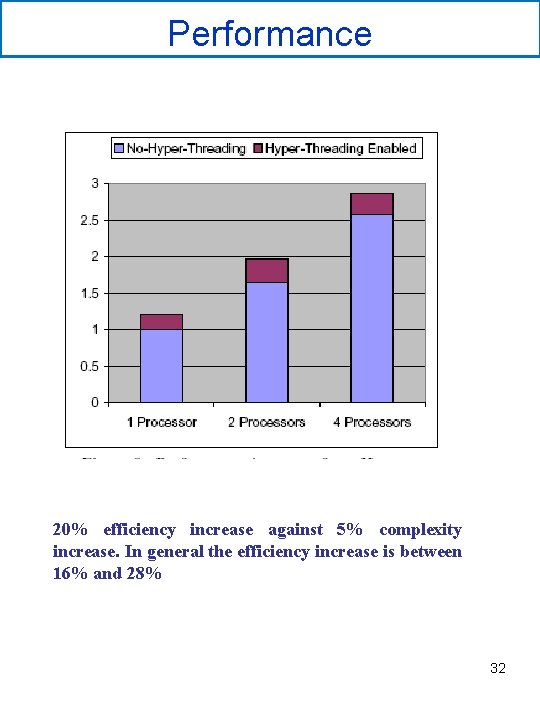

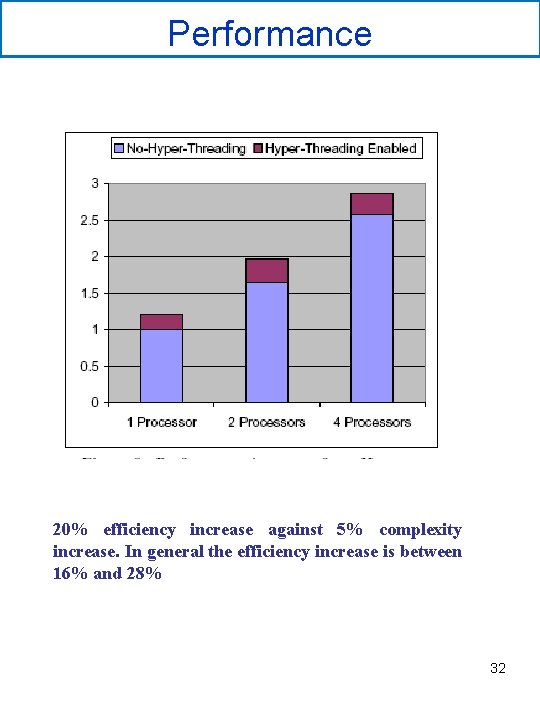

Performance

20% efficiency increase against a 5% complexity increase. In general, the efficiency increase is between 16% and 28%.

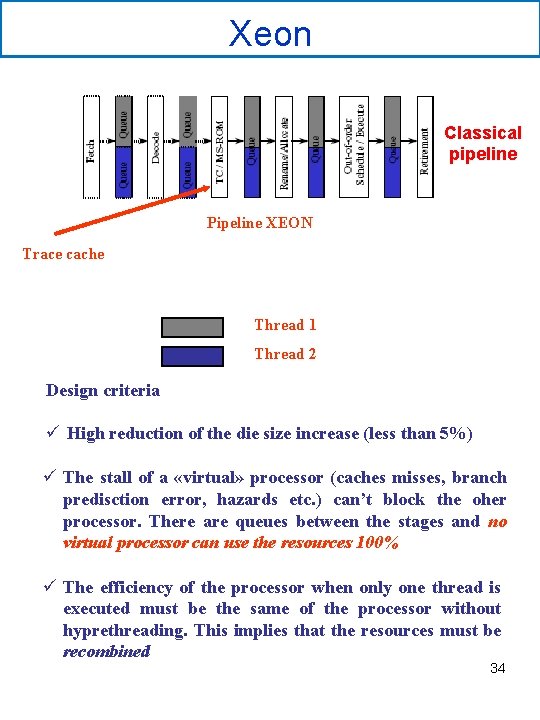

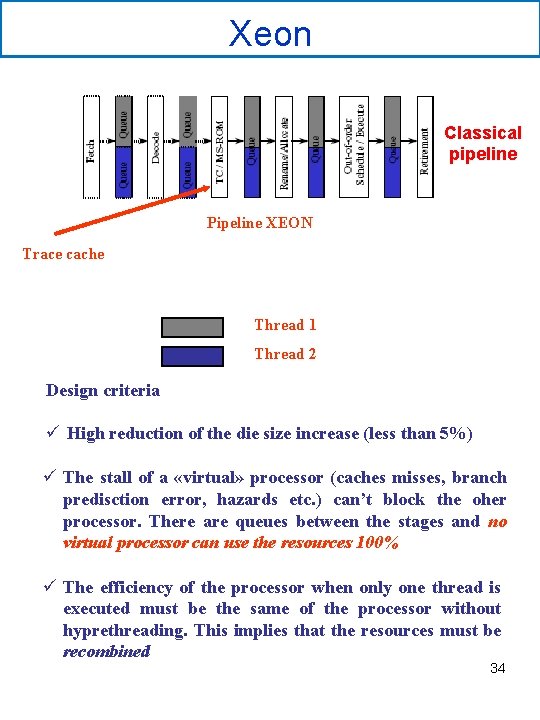

Xeon

[Figure: classical pipeline vs XEON pipeline; trace cache shared by Thread 1 and Thread 2]

Design criteria

- Minimal die size increase (less than 5%)
- A stall of one «virtual» processor (cache misses, branch mispredictions, hazards, etc.) must not block the other processor. There are queues between the stages, and no virtual processor can use 100% of the resources.
- When only one thread is executed, the processor must be as efficient as a processor without hyperthreading. This implies that the partitioned resources must be recombined.

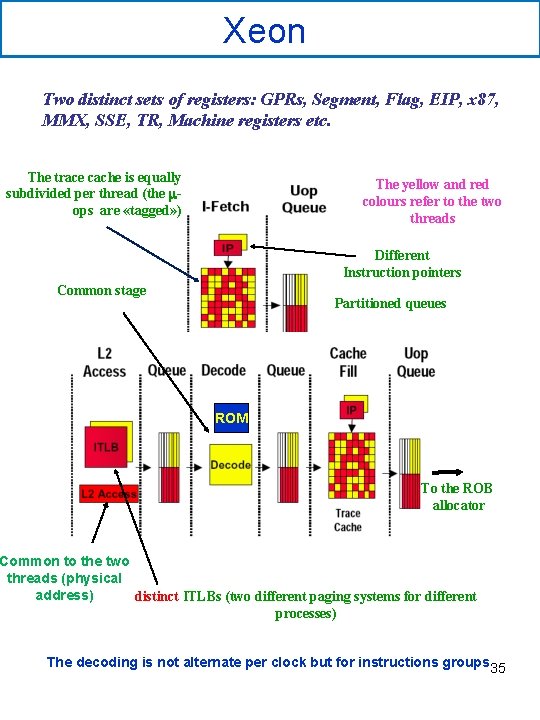

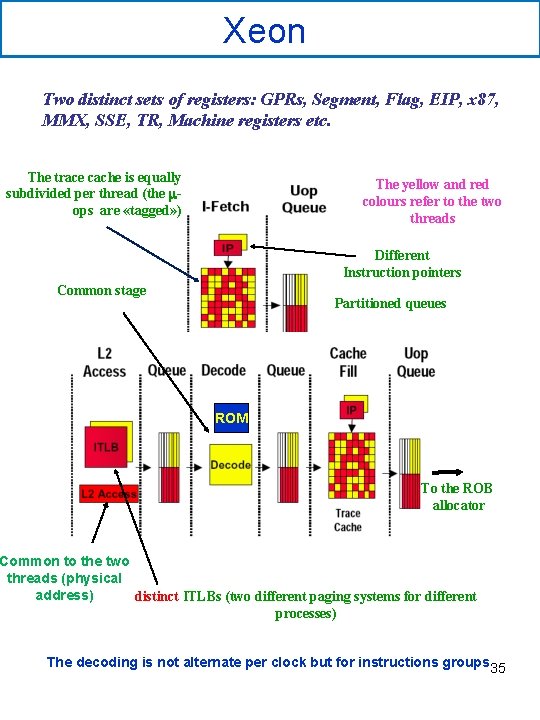

Xeon

- Two distinct register sets: GPRs, segment, flags, EIP, x87, MMX, SSE, TR, machine registers, etc.
- The trace cache is equally subdivided between the threads (the µ-ops are «tagged»).
- Decoding does not alternate every clock, but by groups of instructions.

[Figure: the yellow and red colours refer to the two threads. Distinct instruction pointers; common stages; partitioned queues; microcode ROM; path to the ROB allocator; structures common to the two threads (physical addresses); distinct ITLBs (two different paging systems for different processes)]

Xeon Execution Trace

If both threads request access at the same time, access is arbitrated and granted alternately, one clock each. If one thread is stalled, the other executes normally.

- The TC holds 12 K µ-ops and is 8-way set associative. All entries are "tagged" by thread (replacement per thread).
- Precise LRU replacement (shift register).
- The L1 (data), L2 and L3 (if present) caches are unified, i.e. shared by the two threads (physical addresses).
- The L1 data cache is 4-way associative, very fast, with 64-byte lines, and is write-through to L2.
- There is a common 4-way DTLB for L1 with "tagged" entries. Pages are 4 KB or 4 MB.
- L2 and L3 are 8-way associative with 128-byte lines.
- L2 access arbitration is FCFS, with a request queue in which at least one slot per thread is reserved.
- The L2 output queues are separate per thread, each with 64-byte slots.
- The BTB is partially shared (physical addresses). The branch history buffers are separate. The PHT is unique, with tagged entries. The RSBs are obviously separate (12 slots per thread).
- Unified caches can cause conflicts but also bring benefits (e.g. instructions prefetched by one thread can be used by the other, or a thread can use data produced by the other).
- The RAT registers are obviously replicated.
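Thread-tagged entries combined with LRU replacement kept "like a shift register" can be sketched for a single 8-way set. A lookup hits only if both the address tag and the owning thread match, so one logical processor never consumes another's trace. This is a simplification. A plain LRU over the whole set is used, and the per-thread replacement rule mentioned on the slide is not modelled. The class name is hypothetical.

```python
from typing import List, Tuple

WAYS = 8   # the TC is 8-way set associative


class TaggedLRUSet:
    """One set of an 8-way structure whose entries carry the owning thread's ID.
    LRU order is kept like a shift register: position 0 = most recently used,
    last position = least recently used."""

    def __init__(self) -> None:
        self.order: List[Tuple[int, int]] = []   # (thread_id, address tag)

    def access(self, thread_id: int, tag: int) -> bool:
        key = (thread_id, tag)
        hit = key in self.order             # a hit needs both the tag and the thread to match
        if hit:
            self.order.remove(key)          # move the entry to the MRU position
        elif len(self.order) == WAYS:
            self.order.pop()                # miss in a full set: evict the LRU entry
        self.order.insert(0, key)           # everything else shifts one position down
        return hit


s = TaggedLRUSet()
print(s.access(0, 0x1A), s.access(1, 0x1A), s.access(0, 0x1A))  # False False True
```

The same address tag misses for thread 1 because the entry belongs to thread 0, while thread 0's second access hits.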

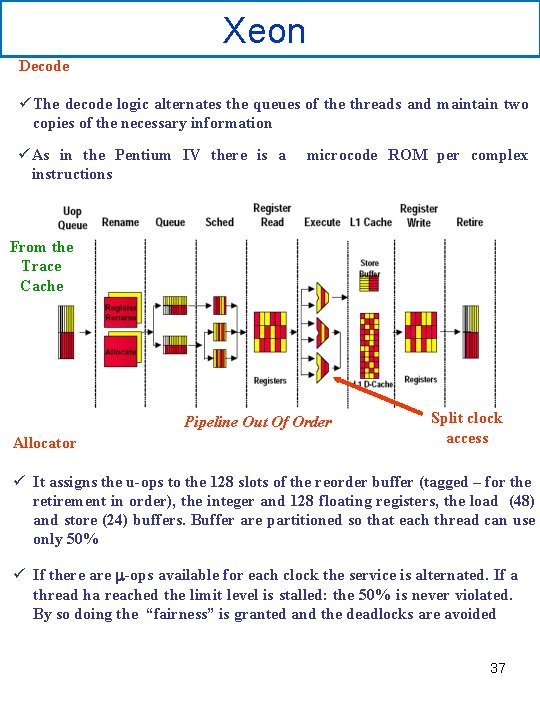

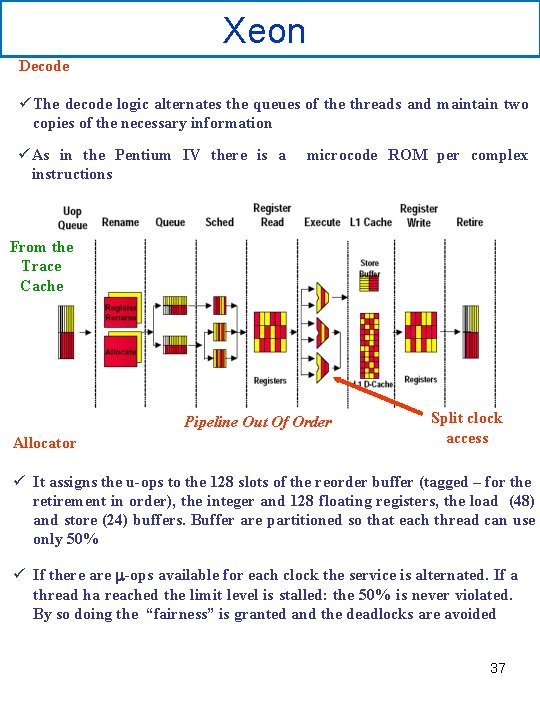

Xeon

Decode
- The decode logic alternates between the threads' queues and maintains two copies of the necessary information.
- As in the Pentium IV, there is a microcode ROM for complex instructions.

[Figure: from the trace cache to the out-of-order pipeline; split clock access]

Allocator
- It assigns the µ-ops to the 128 slots of the reorder buffer (tagged, for in-order retirement), to the 128 integer and 128 floating-point registers, and to the load (48) and store (24) buffers. The buffers are partitioned so that each thread can use only 50%.
- If µ-ops from both threads are available, service alternates every clock. If a thread has reached its limit, it is stalled: the 50% limit is never exceeded. In this way fairness is guaranteed and deadlocks are avoided.
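The allocator policy above combines two rules. Each thread may hold at most half of a shared buffer, and the allocator alternates between threads but does not wait for a stalled or idle one. The sketch below models the 128-slot ROB. The same class would model the 48-entry load and 24-entry store buffers. The names and the one-µ-op-per-clock rate are assumptions made for illustration.

```python
from typing import List, Optional


class PartitionedBuffer:
    """Shared buffer (ROB, load or store buffer) in which each of the two
    logical processors may hold at most half of the entries."""

    def __init__(self, size: int) -> None:
        self.limit = size // 2
        self.used = [0, 0]

    def try_alloc(self, thread: int) -> bool:
        if self.used[thread] >= self.limit:
            return False                 # thread at its 50% limit: it stalls
        self.used[thread] += 1
        return True

    def release(self, thread: int) -> None:
        self.used[thread] -= 1           # e.g. when a µ-op retires


def allocate_clock(buf: PartitionedBuffer, pending: List[int], last: int) -> Optional[int]:
    """One allocation clock: give priority to the thread not served last time;
    if it has nothing to allocate or is at its limit, serve the other one."""
    for t in (1 - last, last):
        if pending[t] and buf.try_alloc(t):
            pending[t] -= 1
            return t
    return None


rob = PartitionedBuffer(128)   # 128 ROB slots -> at most 64 per thread
pending = [100, 3]             # µ-ops waiting to be allocated, per thread
last, served = 1, []
for _ in range(200):
    t = allocate_clock(rob, pending, last)
    served.append(t)
    if t is not None:
        last = t
print(served[:7], rob.used)    # [0, 1, 0, 1, 0, 1, 0] [64, 3]
```

Once thread 1 runs out of µ-ops, thread 0 is served every clock, but it still stops at 64 entries. In single-thread mode, the slides say the partitions are recombined, which in this model would correspond to raising `limit` to the full buffer size.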

Xeon

Register Aliasing
- It assigns up to 16 physical registers to each of the 8 registers of a thread (16 × 8 = 128). It operates in parallel with the allocator.

After the renaming/allocator stage there are two distinct µ-op queues, one of them for memory operations.

Scheduling
- There are n schedulers, one for each functional unit.
- Up to 6 µ-ops per clock can be sent to the FUs. Each µ-op is deemed ready for execution according to the availability of its operands and of the functional unit.
- Each scheduler has a 12-entry queue from which it extracts the µ-ops to execute. In the same clock period, µ-ops of both threads can be extracted for execution.
- A thread cannot use all the queue slots of a functional unit.

Execution unit
- The FUs do not care which thread the µ-ops they execute come from. They read data from the «alias» registers of the ROB and write the results into the ROB.
- After execution, the state of the µ-ops is RR.

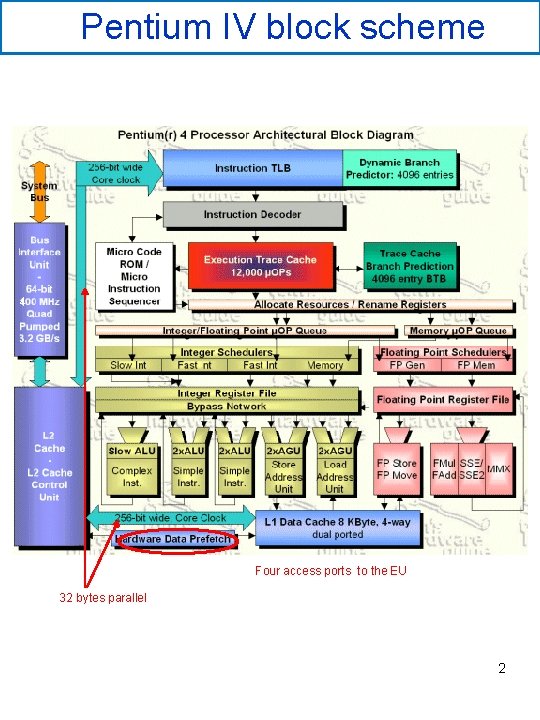

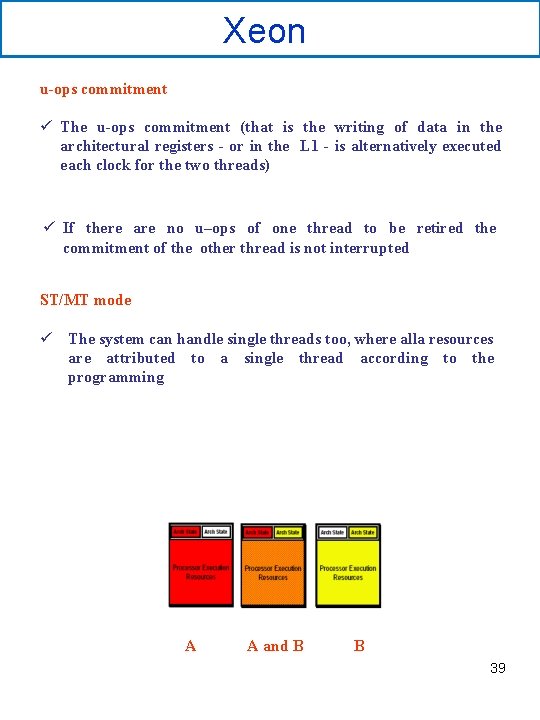

Xeon

µ-op commitment
- µ-op commitment (that is, the writing of data into the architectural registers, or into L1) alternates every clock between the two threads.
- If one thread has no µ-ops to retire, the commitment of the other thread is not interrupted.

ST/MT mode
- The system can also handle single threads: in that case, all the resources are assigned to a single thread, as programmed.

[Figure: ST/MT resource allocation for threads A and B]