PENALTY SELECTION METHODS IN NETWORK MODELS ANNA WYSOCKI

- Slides: 38

PENALTY SELECTION METHODS IN NETWORK MODELS ANNA WYSOCKI, M.S. awysocki@ucdavis.edu

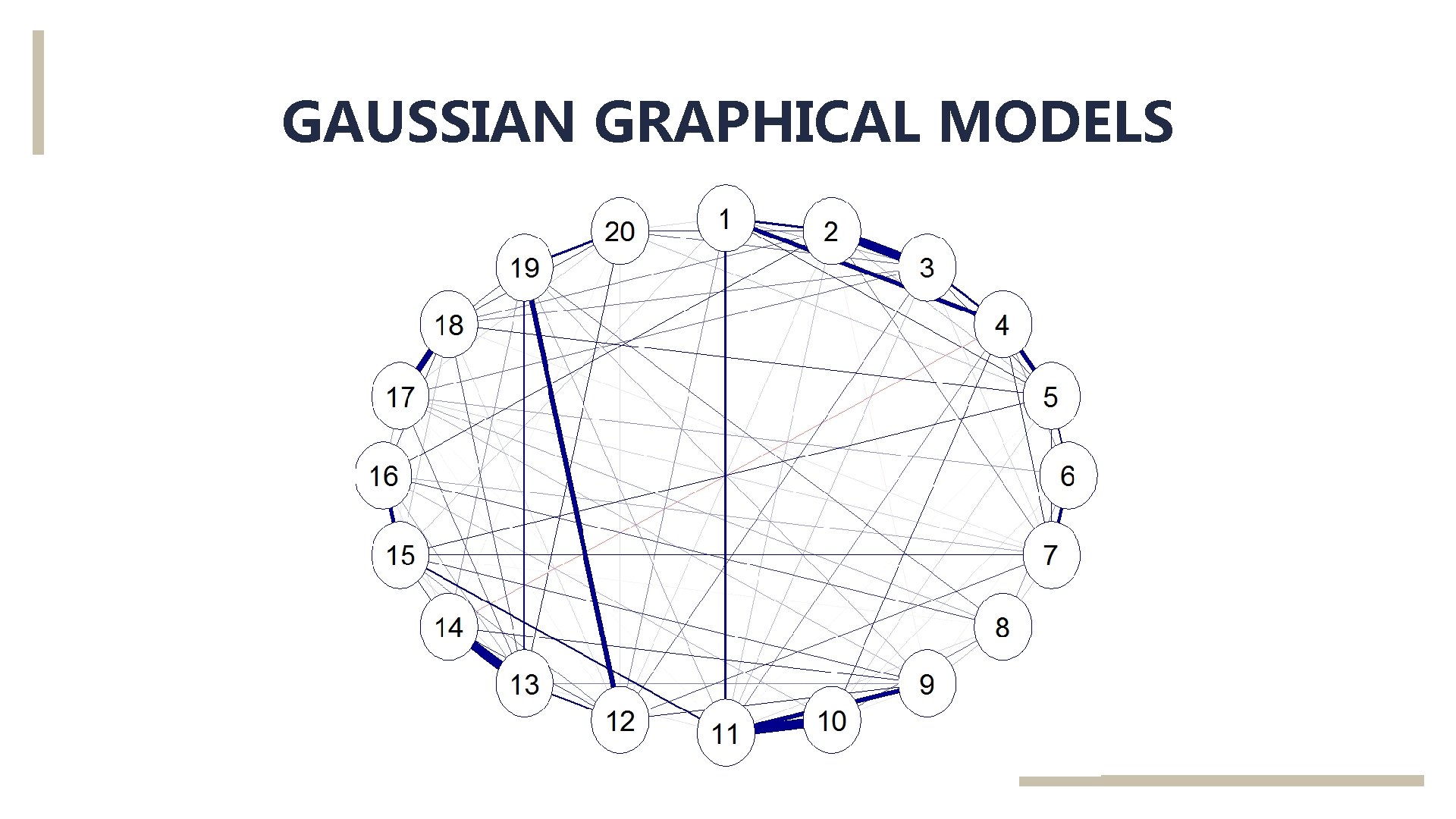

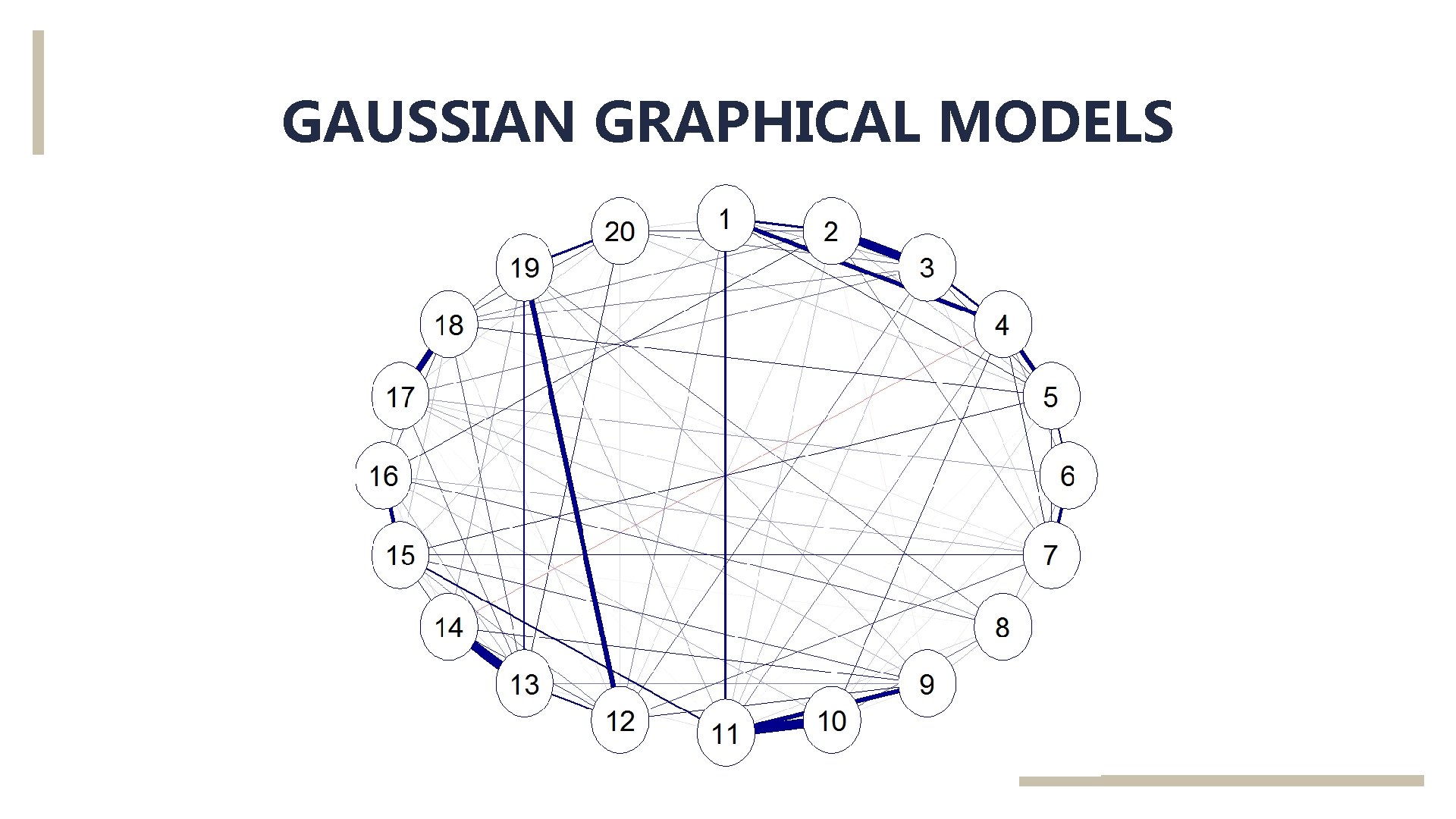

GAUSSIAN GRAPHICAL MODELS

How are network models estimated?

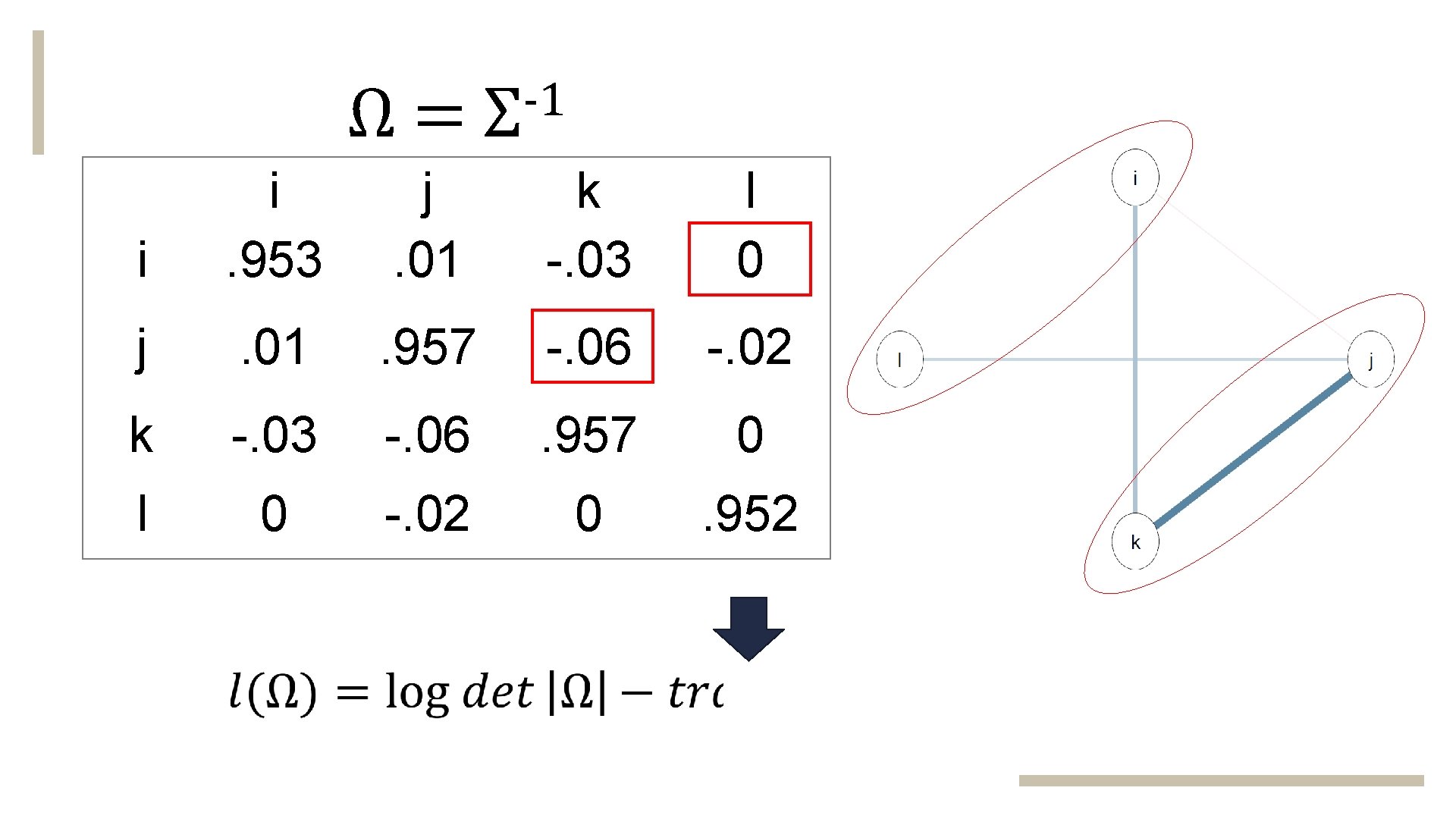

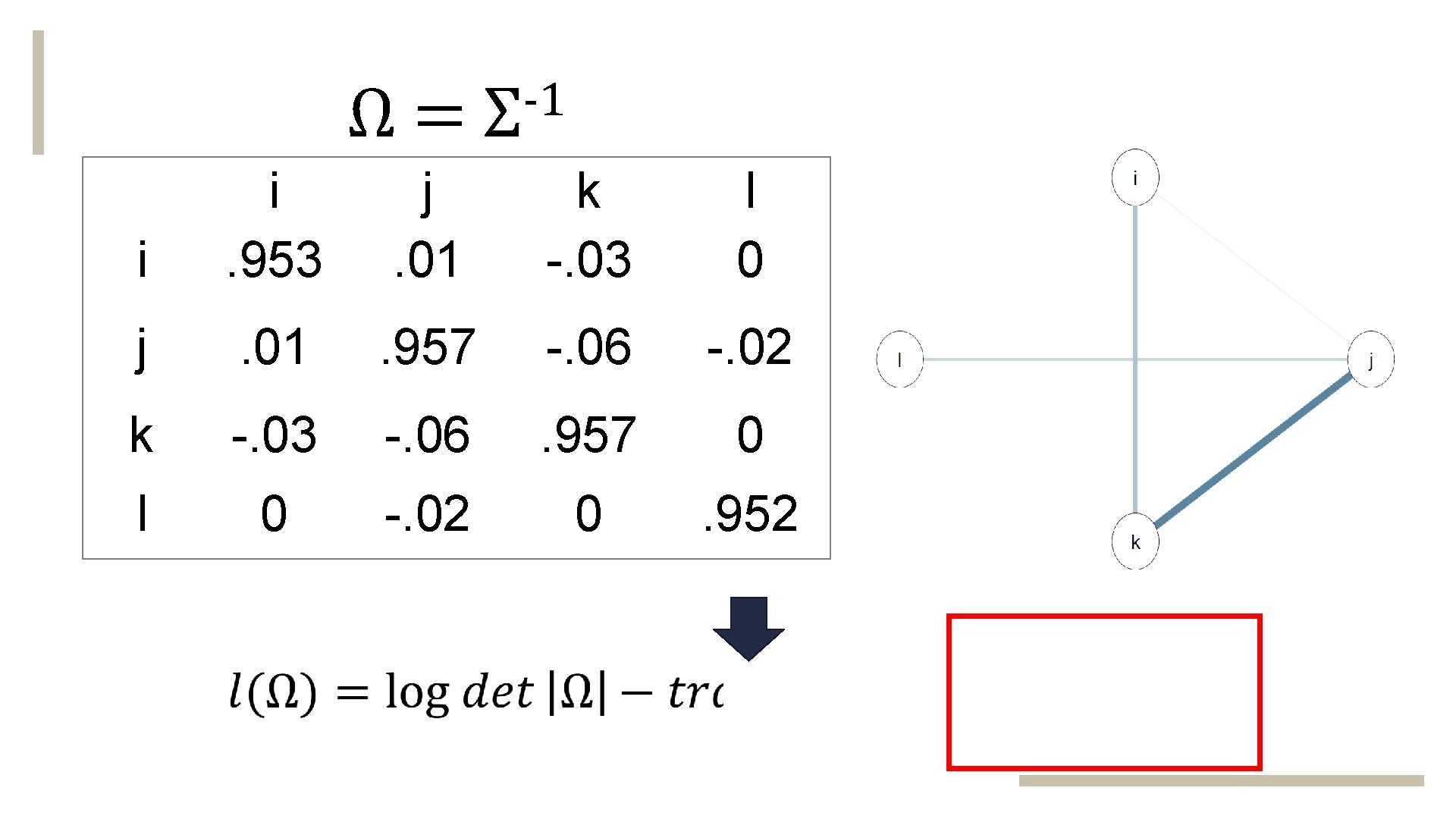

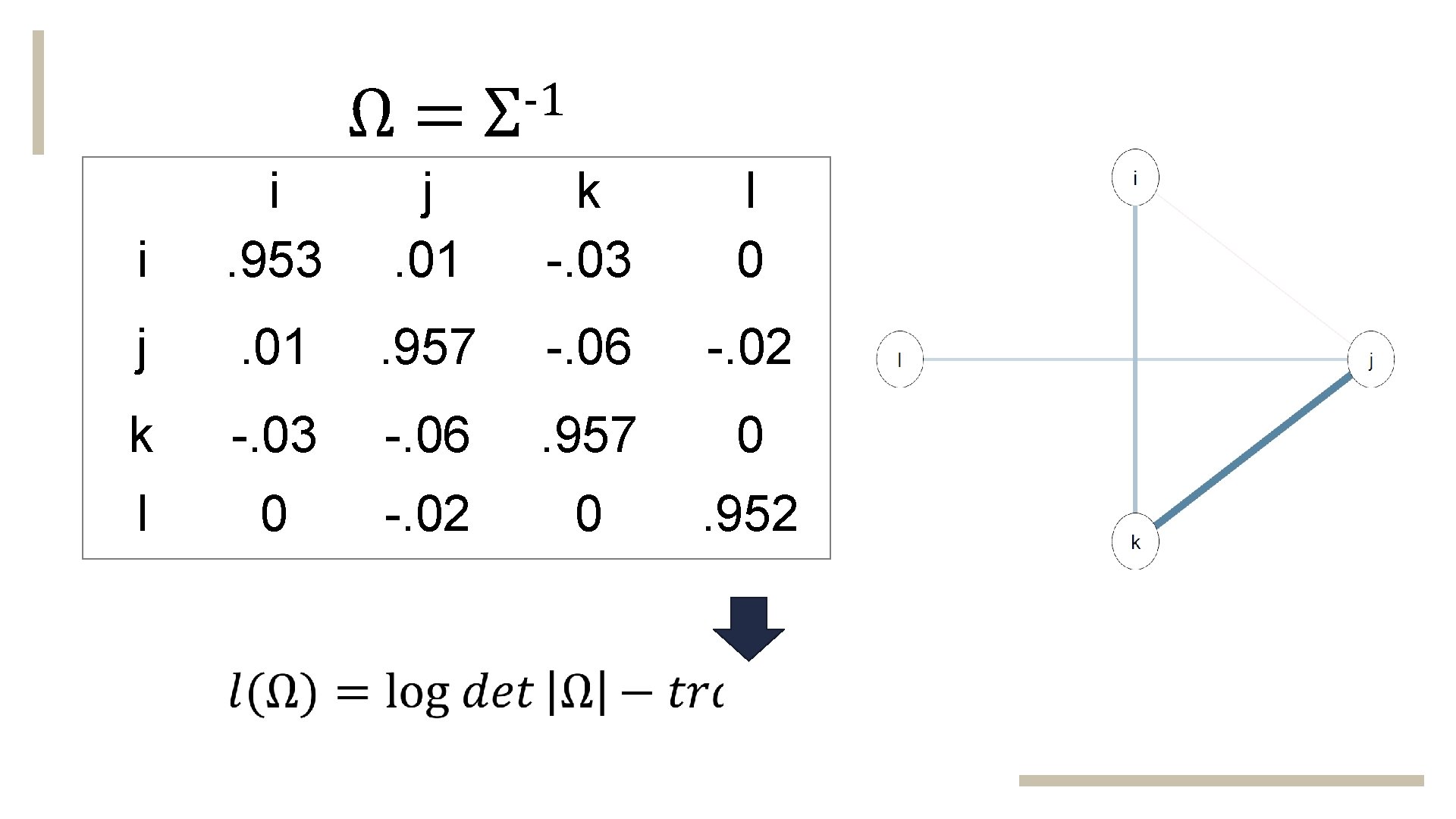

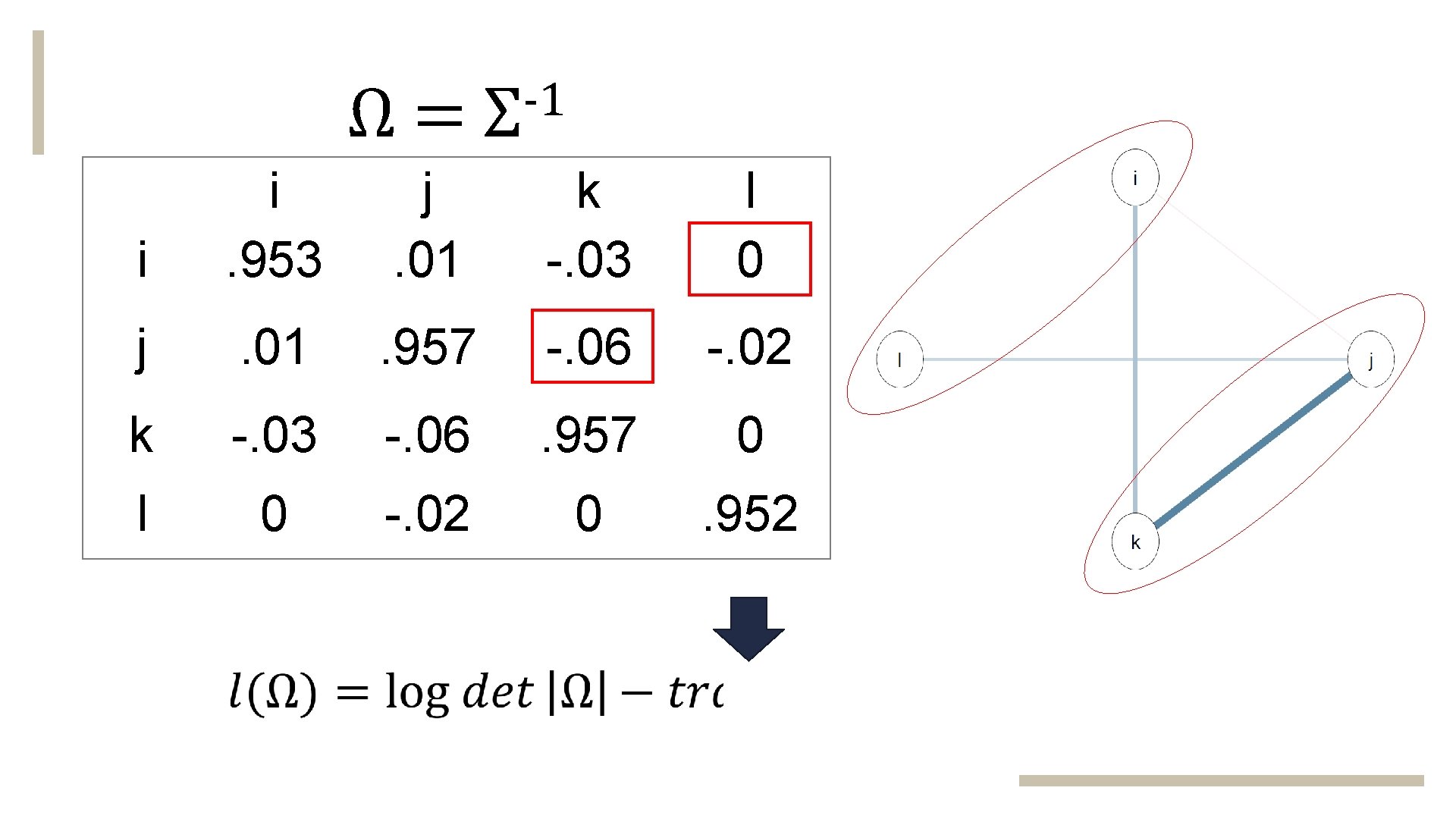

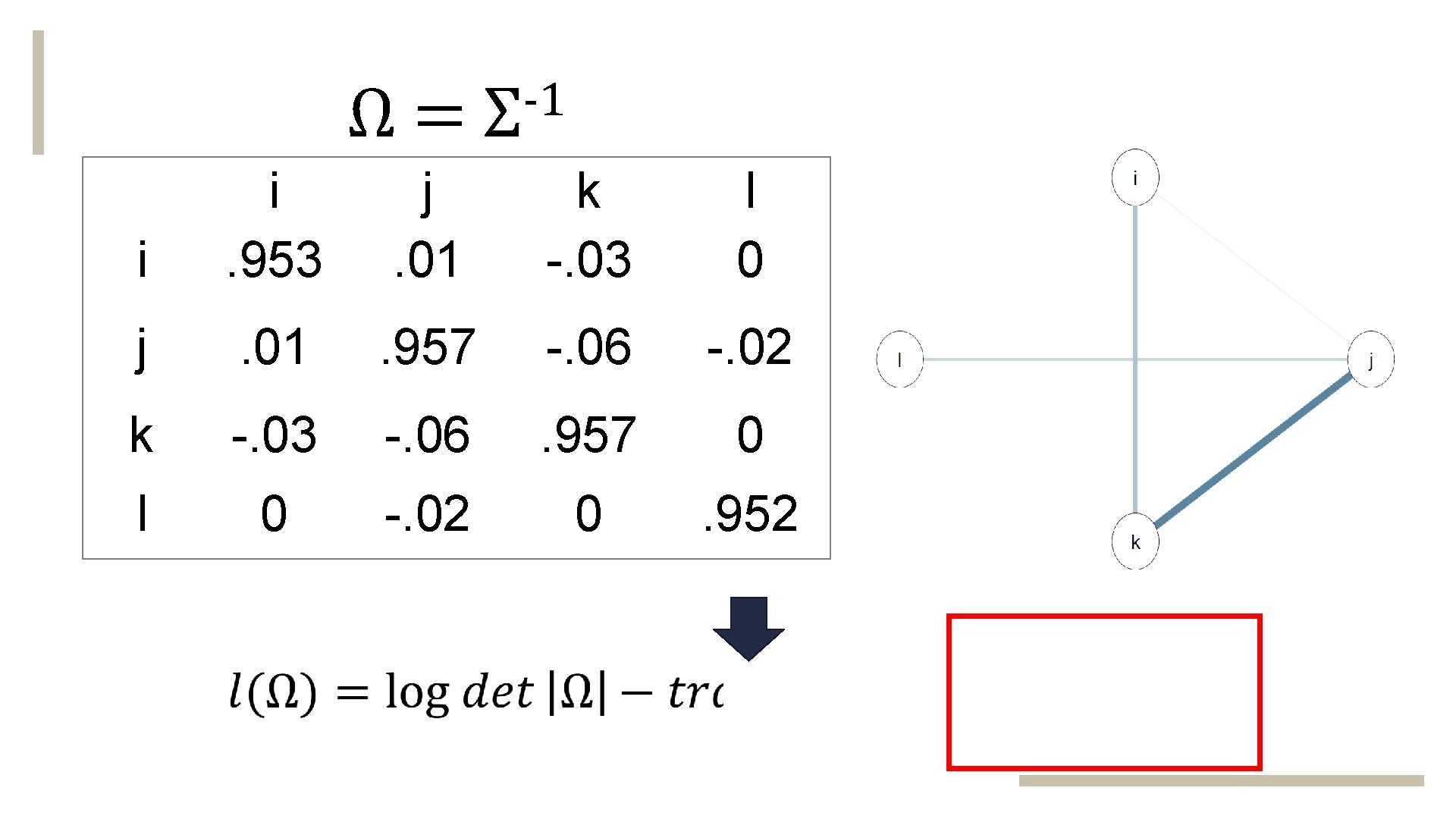

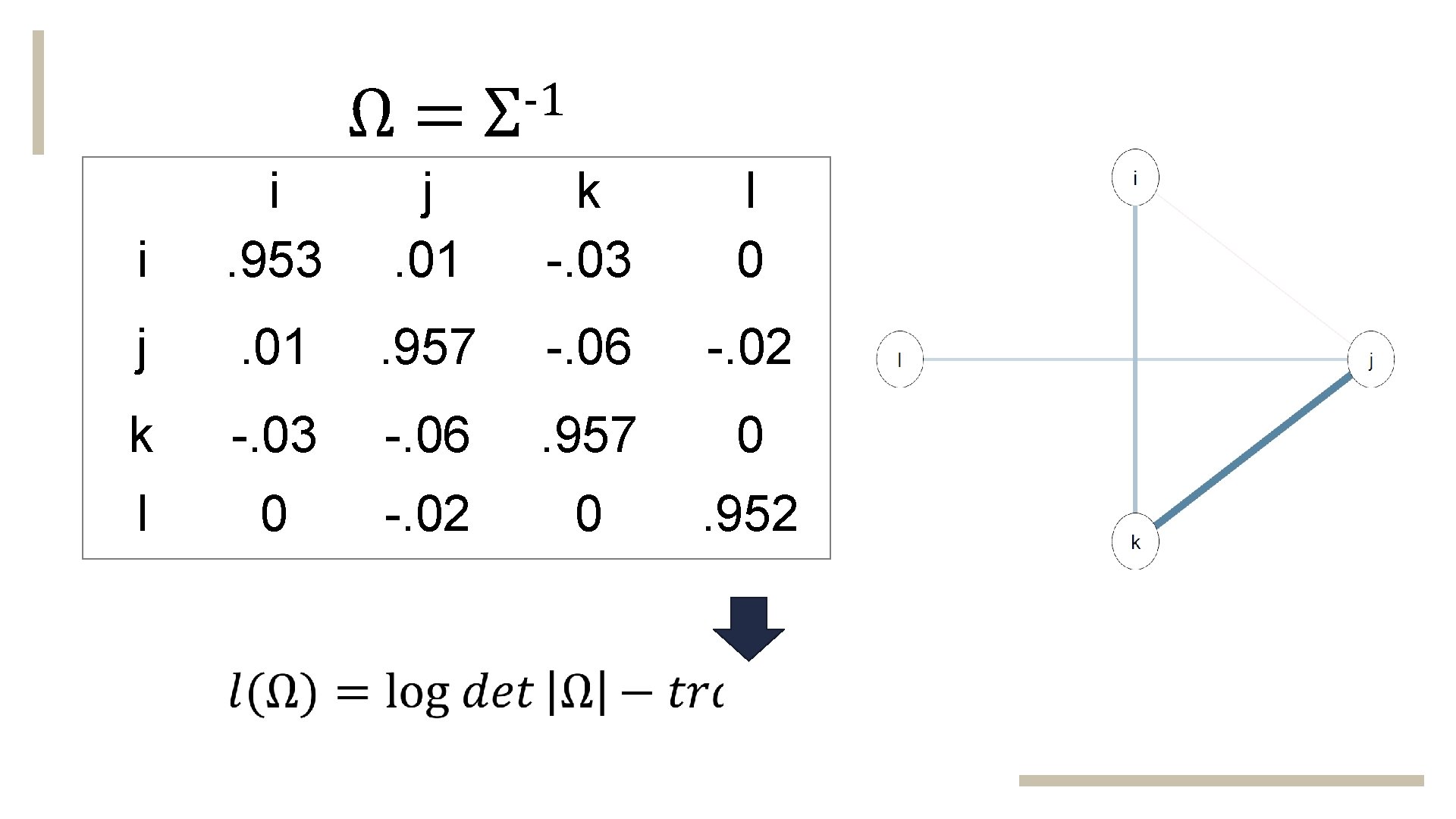

$$
\Omega = \Sigma^{-1} =
\begin{array}{c|cccc}
  & i & j & k & l \\ \hline
i & .953 & .01 & -.03 & 0 \\
j & .01 & .957 & -.06 & -.02 \\
k & -.03 & -.06 & .957 & 0 \\
l & 0 & -.02 & 0 & .952
\end{array}
$$
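A zero in the precision matrix Ω corresponds to a missing edge in a Gaussian graphical model, and the nonzero off-diagonal entries can be standardized into partial correlations with ρ_ij = -ω_ij / sqrt(ω_ii ω_jj). The sketch below applies that standard formula to the matrix from the slide. The function name `partial_correlations` is illustrative and not from the talk.

```python
import numpy as np

# Precision matrix from the slide (variables i, j, k, l).
omega = np.array([
    [0.953,  0.01, -0.03,  0.0],
    [0.01,  0.957, -0.06, -0.02],
    [-0.03, -0.06,  0.957, 0.0],
    [0.0,  -0.02,  0.0,   0.952],
])

def partial_correlations(precision):
    """Standardize a precision matrix: rho_ij = -omega_ij / sqrt(omega_ii * omega_jj)."""
    d = np.sqrt(np.diag(precision))
    pcor = -precision / np.outer(d, d)
    np.fill_diagonal(pcor, 1.0)  # diagonal set to 1 by convention
    return pcor

pcor = partial_correlations(omega)

# An edge is present wherever the off-diagonal partial correlation is nonzero.
adjacency = (pcor != 0) & ~np.eye(len(pcor), dtype=bool)
print(np.round(pcor, 3))
print(adjacency.astype(int))
```

Note the sign flip: a negative off-diagonal entry in Ω corresponds to a positive partial correlation.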

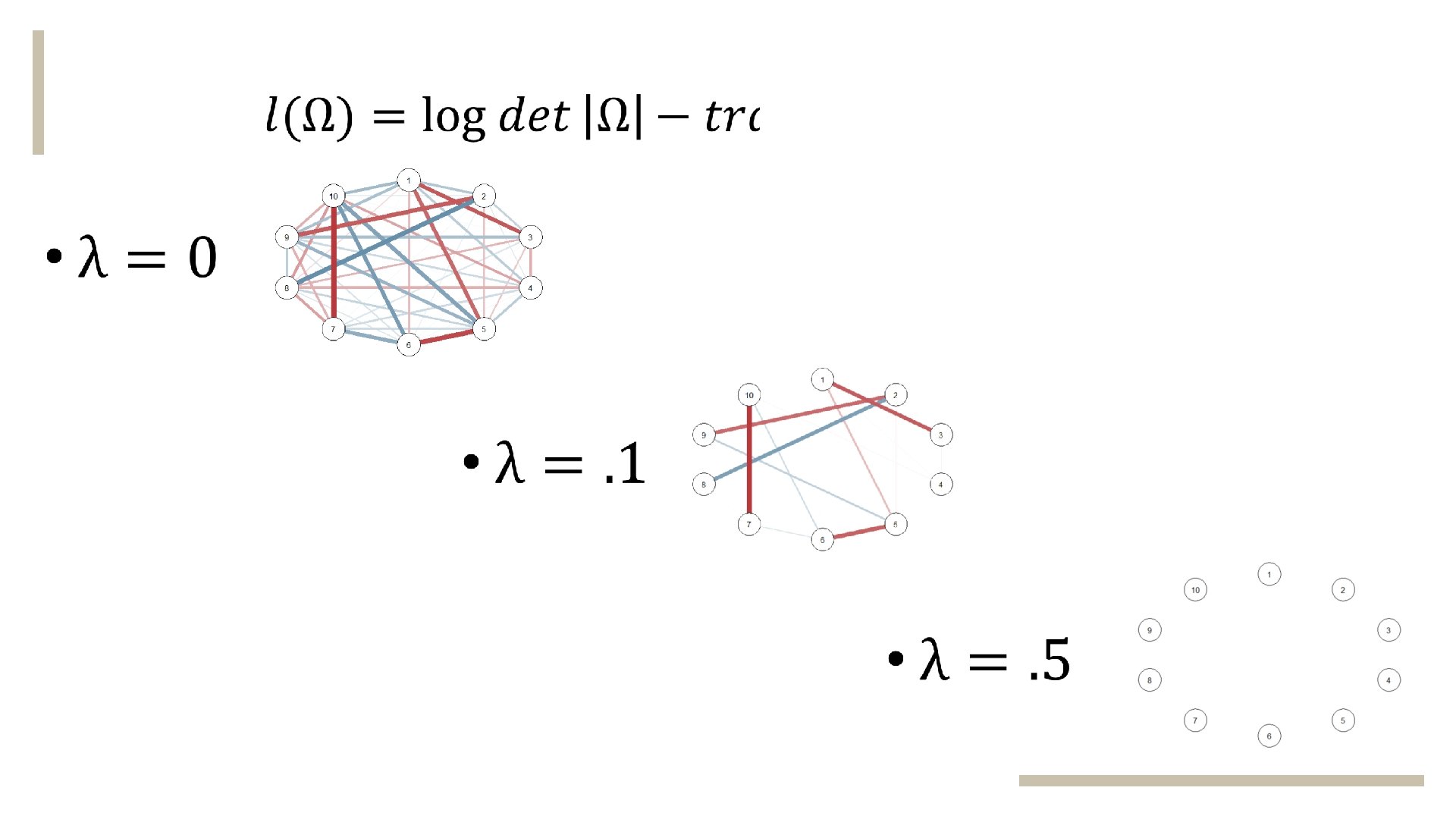

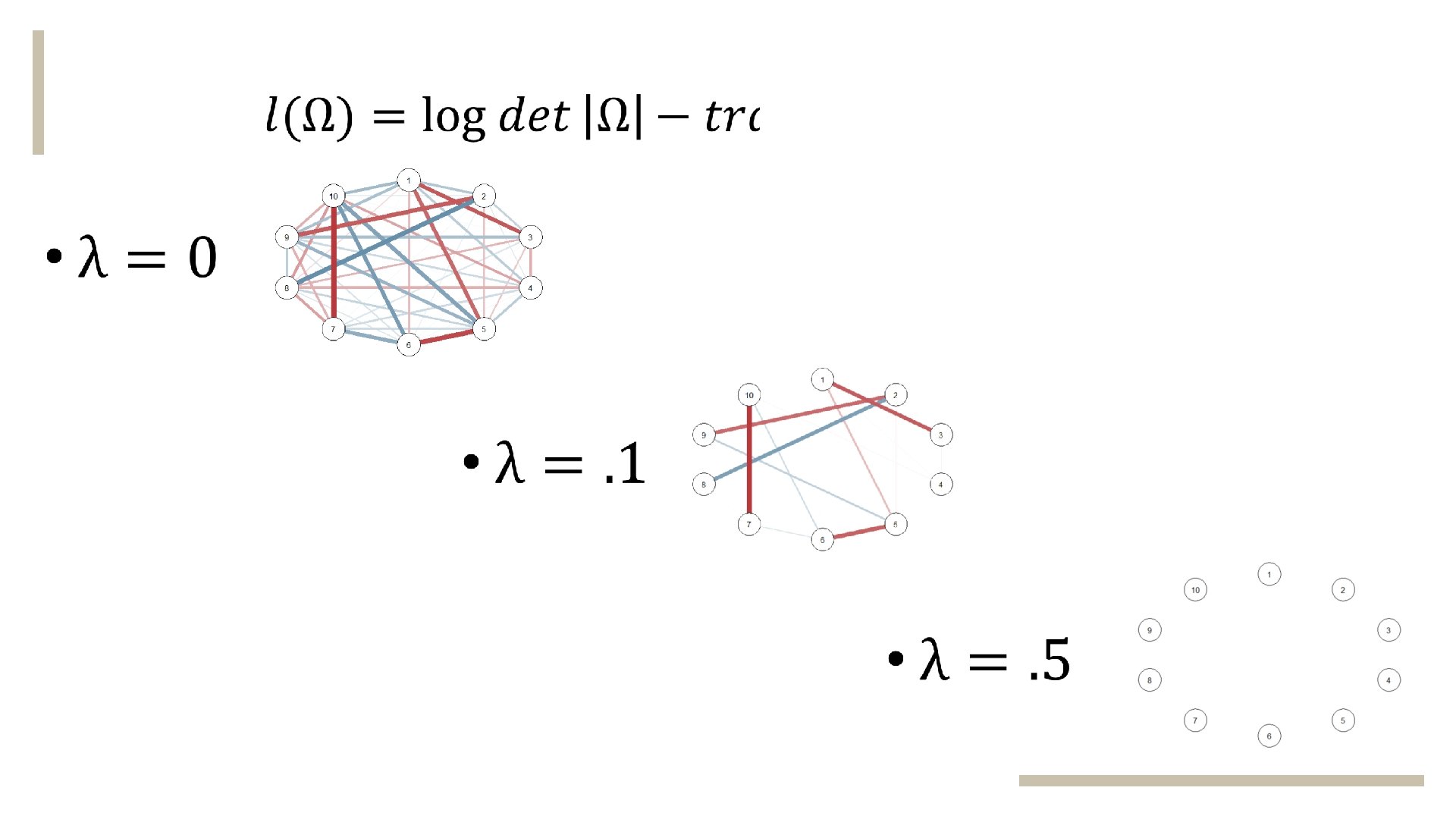

Choosing lambda is extremely important, because different values of lambda can yield different networks.
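To make the role of lambda concrete, here is the usual penalized likelihood objective, assuming the network is estimated with the graphical lasso (the slides do not name the estimator in the surviving text, so this is an assumption). The symbol S for the sample covariance matrix is also introduced here for illustration.

```latex
\hat{\Omega}_{\lambda}
  = \arg\max_{\Omega \succ 0}
    \left\{ \log\det\Omega \;-\; \operatorname{tr}(S\,\Omega)
            \;-\; \lambda \sum_{i \neq j} \lvert \omega_{ij} \rvert \right\}
```

A larger lambda shrinks more off-diagonal entries of Ω to exactly zero and so gives a sparser network. A lambda of zero gives back the unpenalized maximum likelihood estimate when S is invertible.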

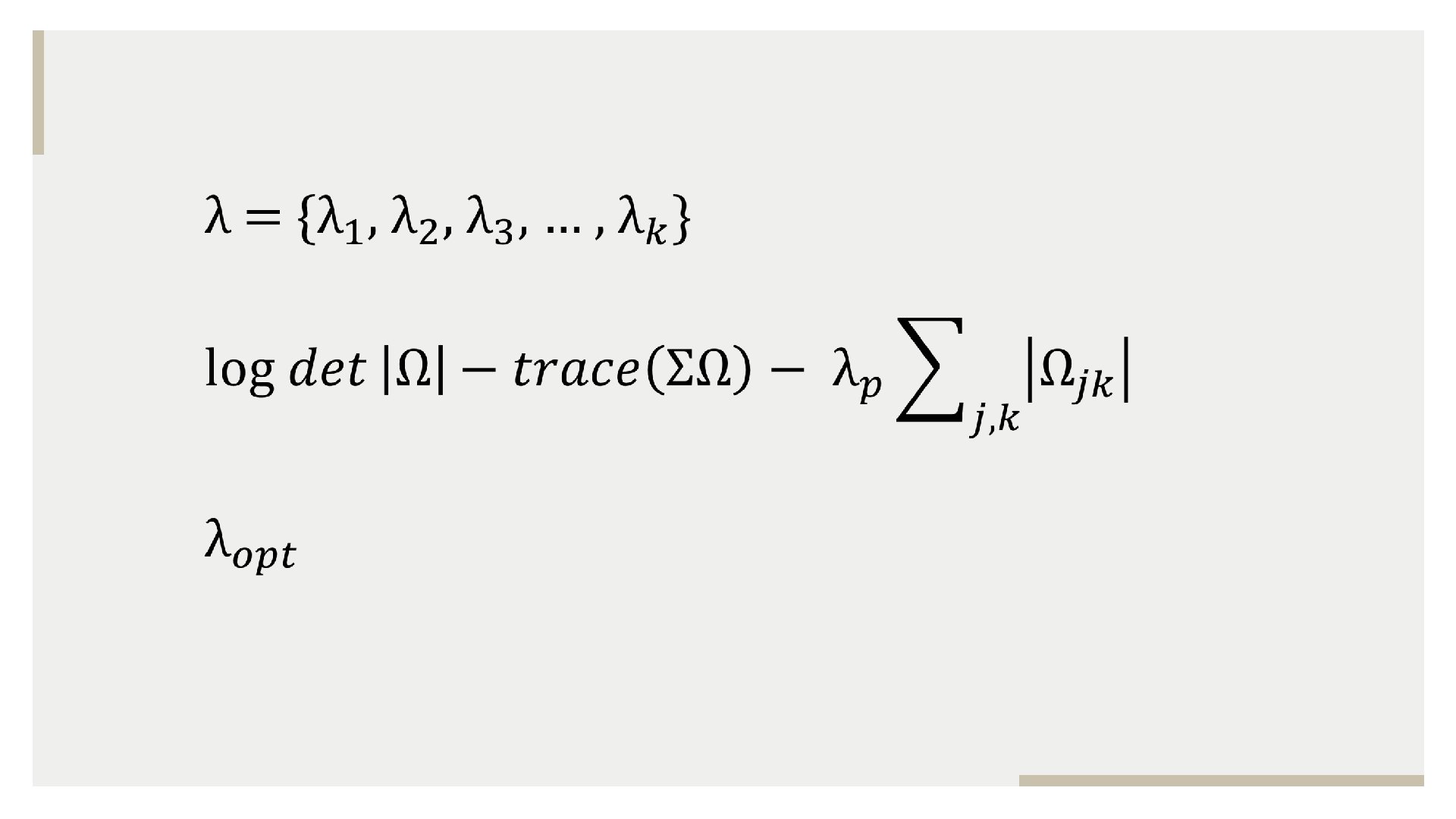

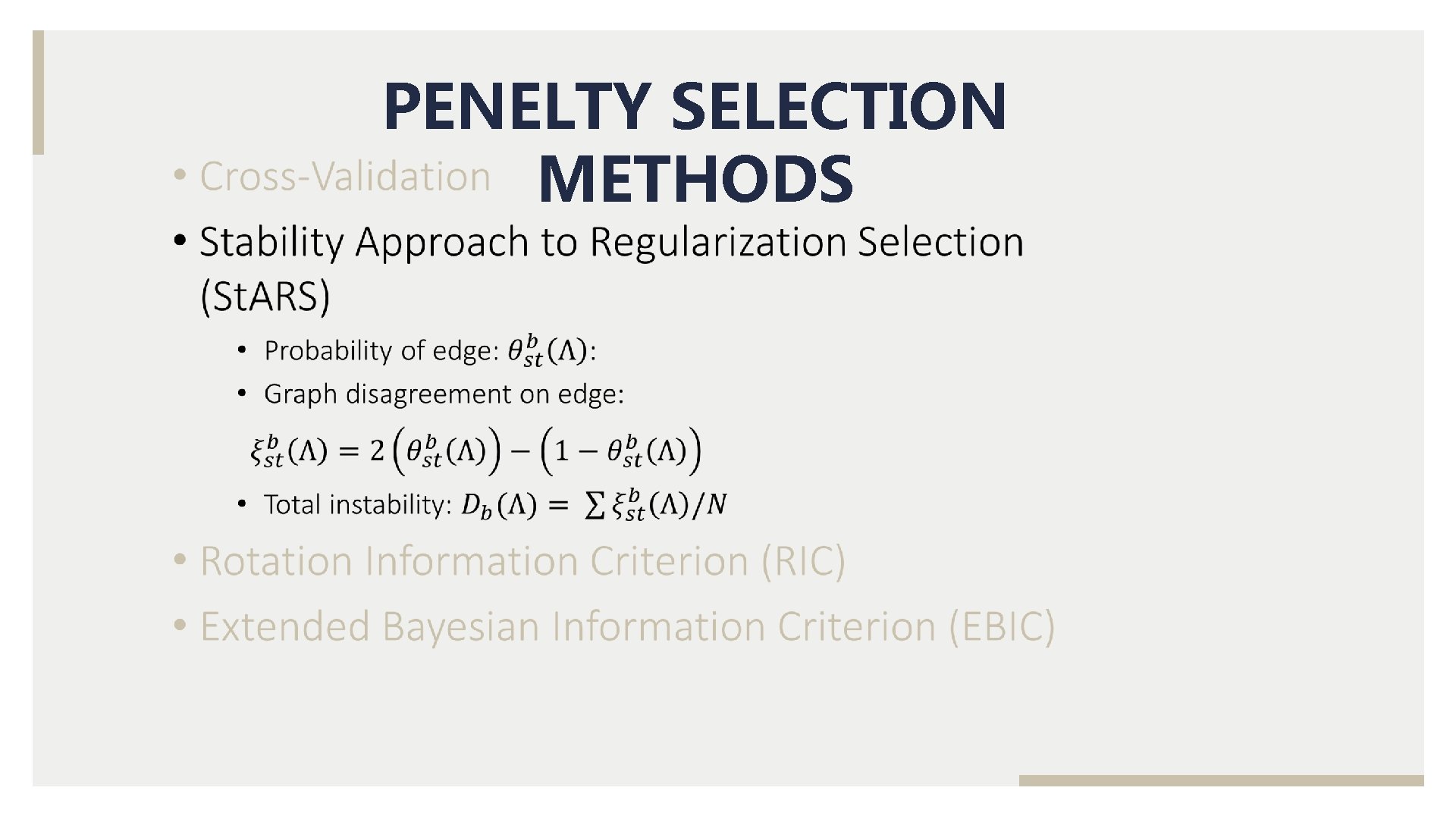

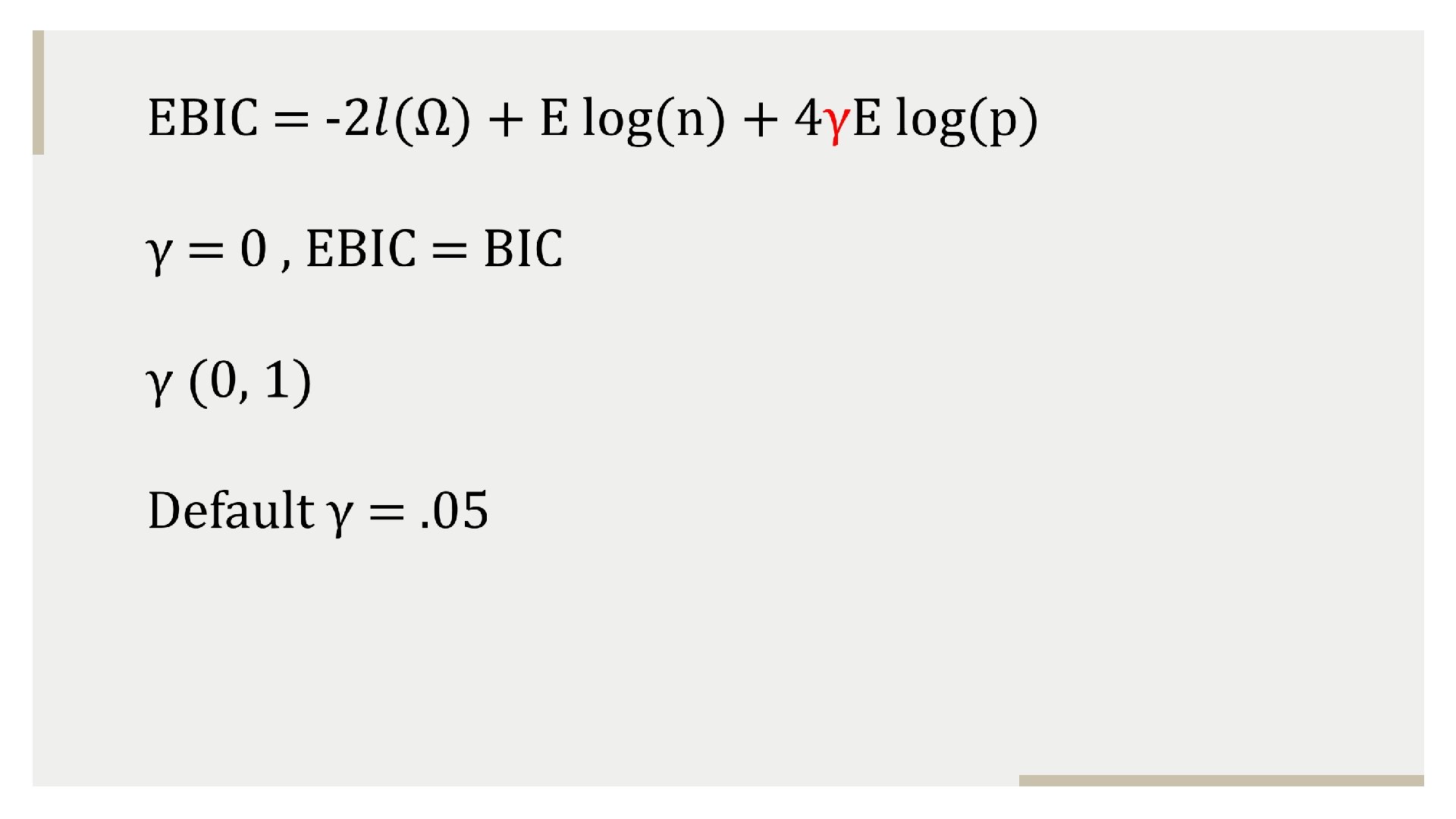

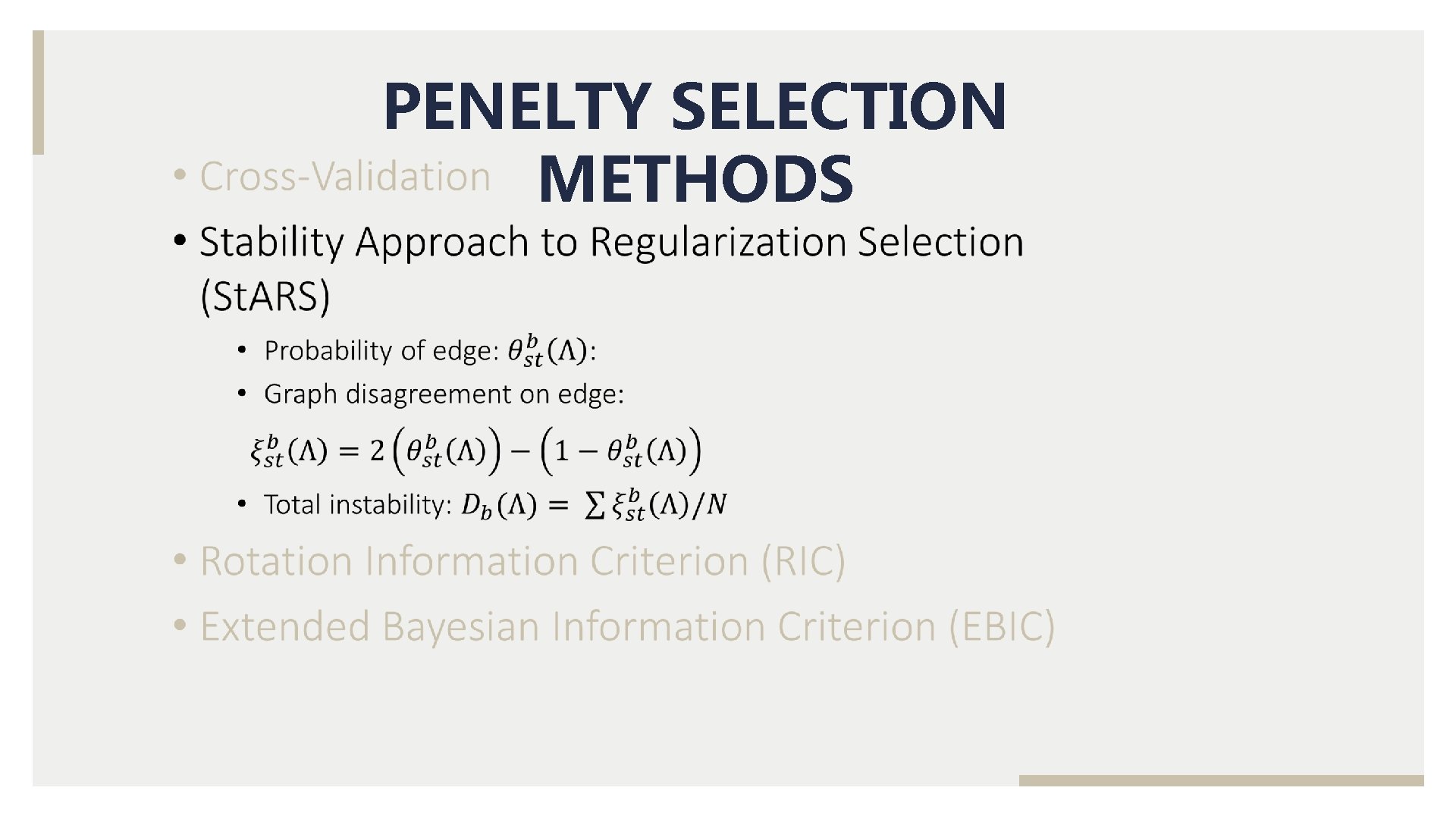

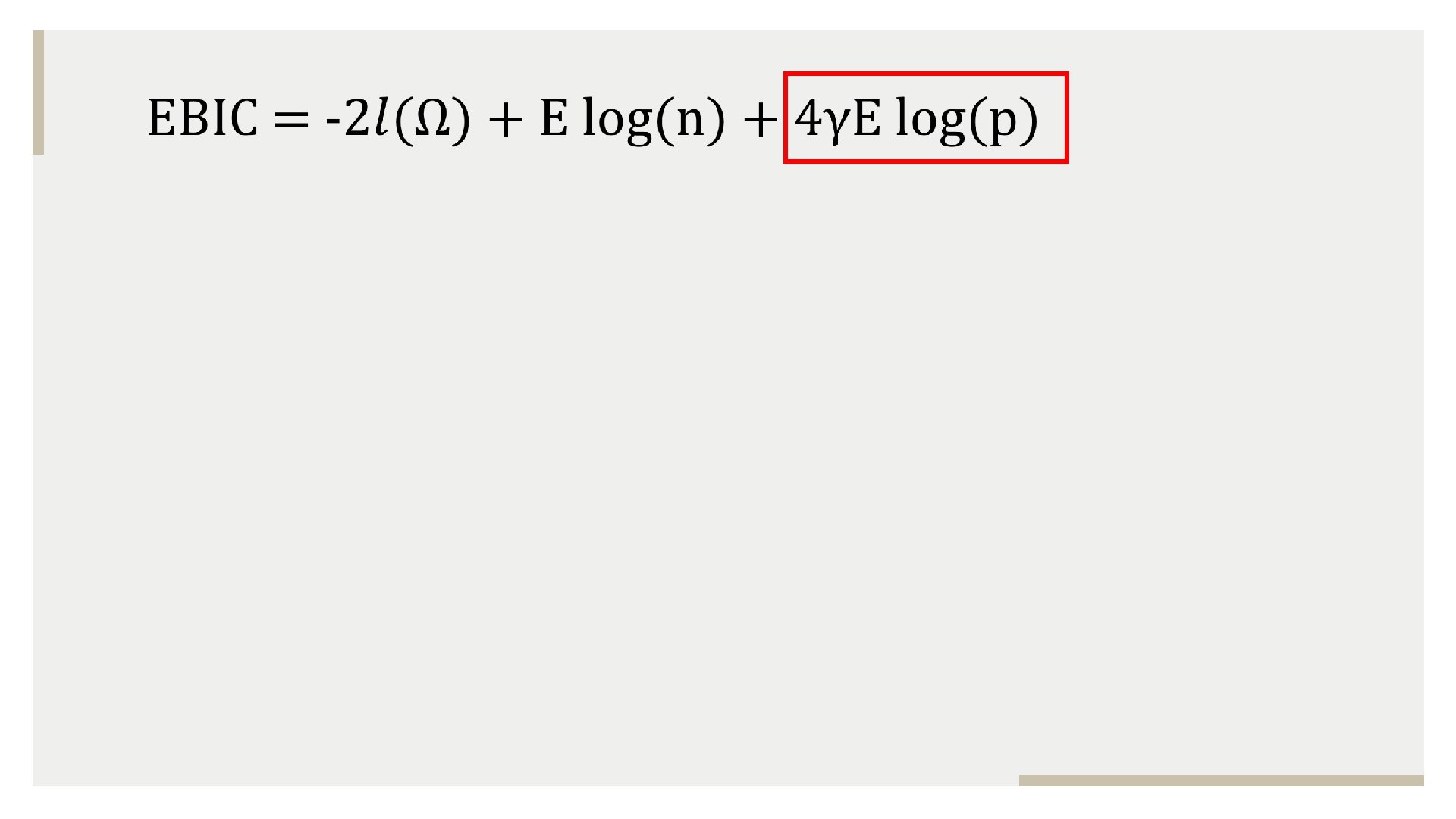

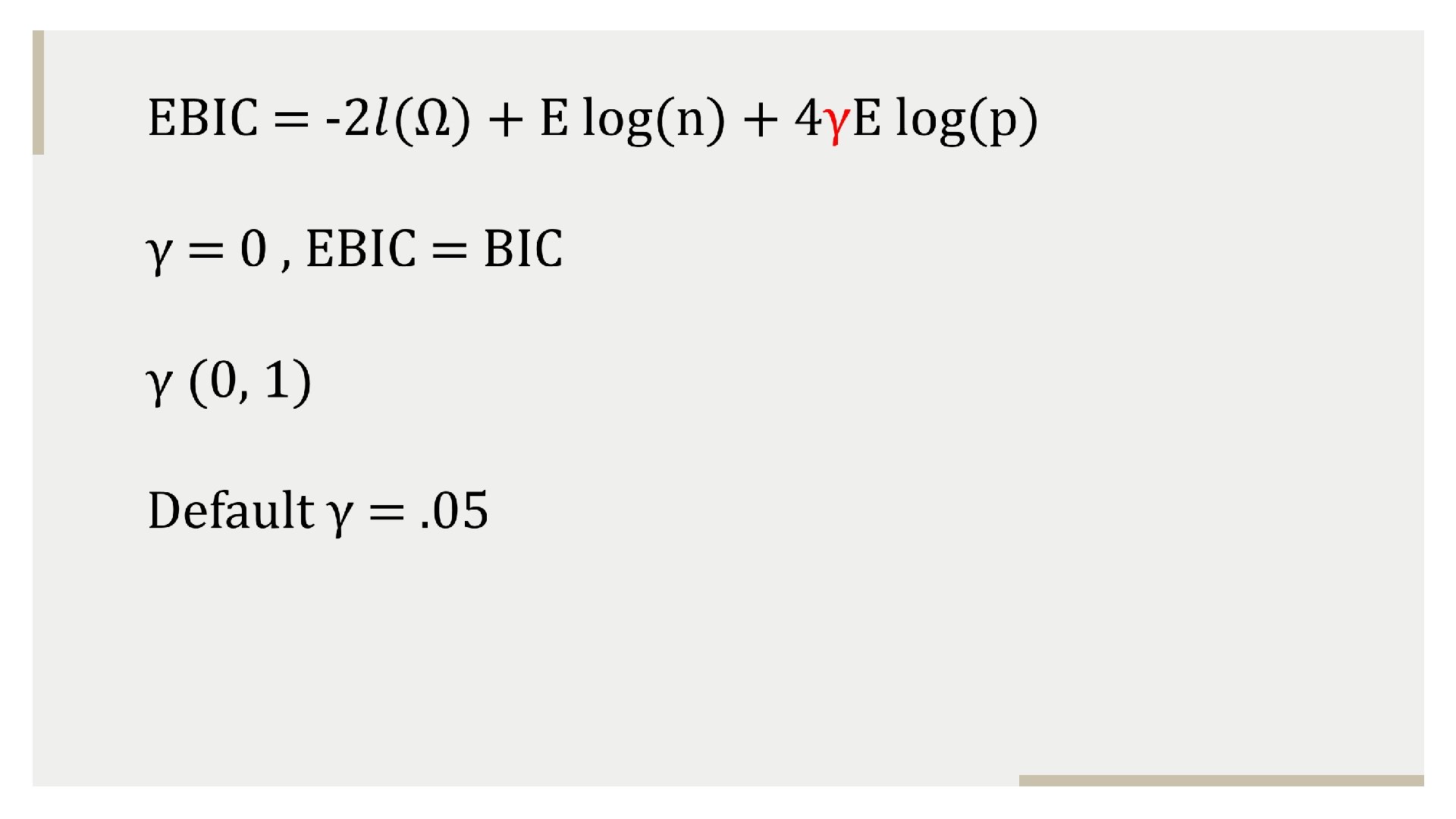

PENALTY SELECTION METHODS

- Cross-Validation (CV)
- Stability Approach to Regularization Selection (StARS)
- Rotation Information Criterion (RIC)
- Extended Bayesian Information Criterion (EBIC)
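As a rough illustration of how an information criterion scores one candidate network, the sketch below computes the EBIC for a Gaussian graphical model in its usual form, -2·loglik + |E|·log(n) + 4·γ·|E|·log(p), where |E| is the number of edges. The function name `ebic` and the default γ = 0.5 are assumptions made for this example, not values taken from the talk.

```python
import numpy as np

def ebic(precision, sample_cov, n, gamma=0.5):
    """EBIC of a Gaussian graphical model (lower is better).

    precision  : estimated precision matrix (p x p)
    sample_cov : sample covariance matrix (p x p)
    n          : sample size
    gamma      : extra penalty weight; gamma = 0 reduces to the ordinary BIC
    """
    p = precision.shape[0]
    sign, logdet = np.linalg.slogdet(precision)
    if sign <= 0:
        raise ValueError("precision matrix must have a positive determinant")
    # Gaussian log-likelihood up to an additive constant.
    loglik = 0.5 * n * (logdet - np.trace(sample_cov @ precision))
    # Number of edges = nonzero entries above the diagonal.
    n_edges = np.count_nonzero(np.triu(precision, k=1))
    return -2.0 * loglik + n_edges * np.log(n) + 4.0 * gamma * n_edges * np.log(p)

# Toy comparison: two correlated variables, with and without the edge.
S = np.array([[1.0, 0.5], [0.5, 1.0]])
with_edge = np.linalg.inv(S)
without_edge = np.eye(2)
print(ebic(with_edge, S, n=100), ebic(without_edge, S, n=100))
```

In practice the criterion would be evaluated for the network estimated at each candidate lambda, and the lambda with the lowest value kept.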

PENALTY SELECTION METHODS

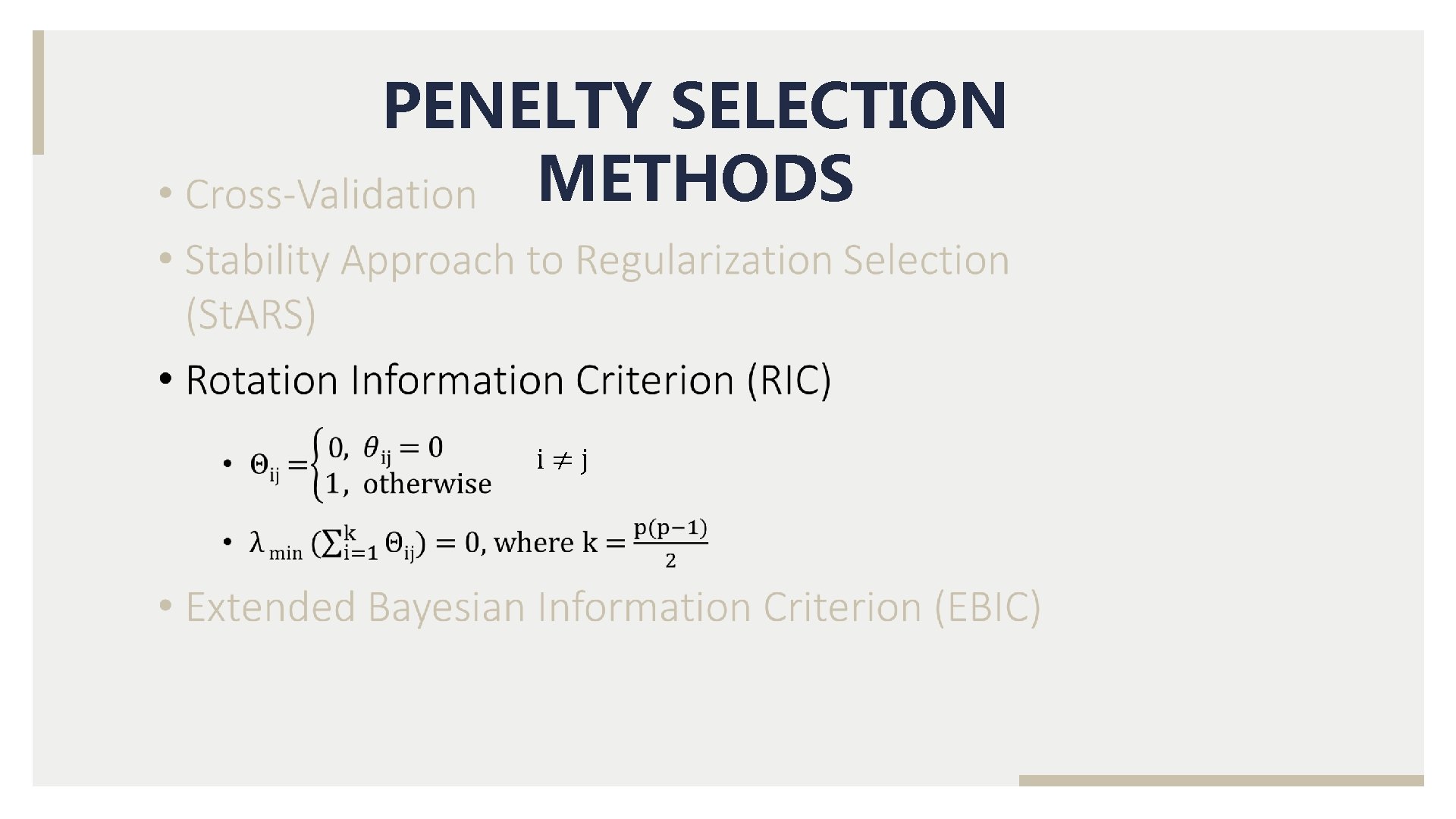

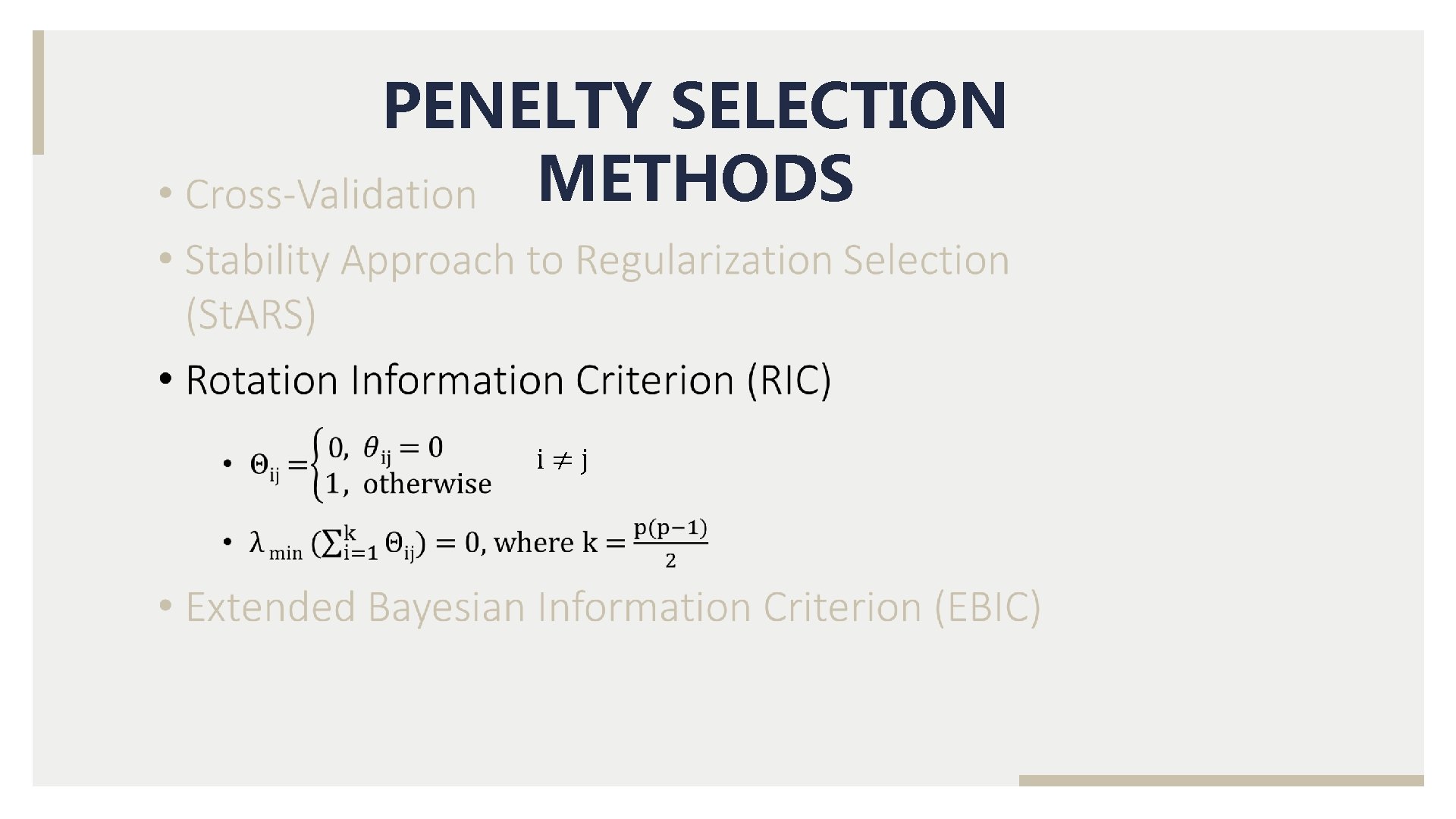

PENALTY SELECTION METHODS

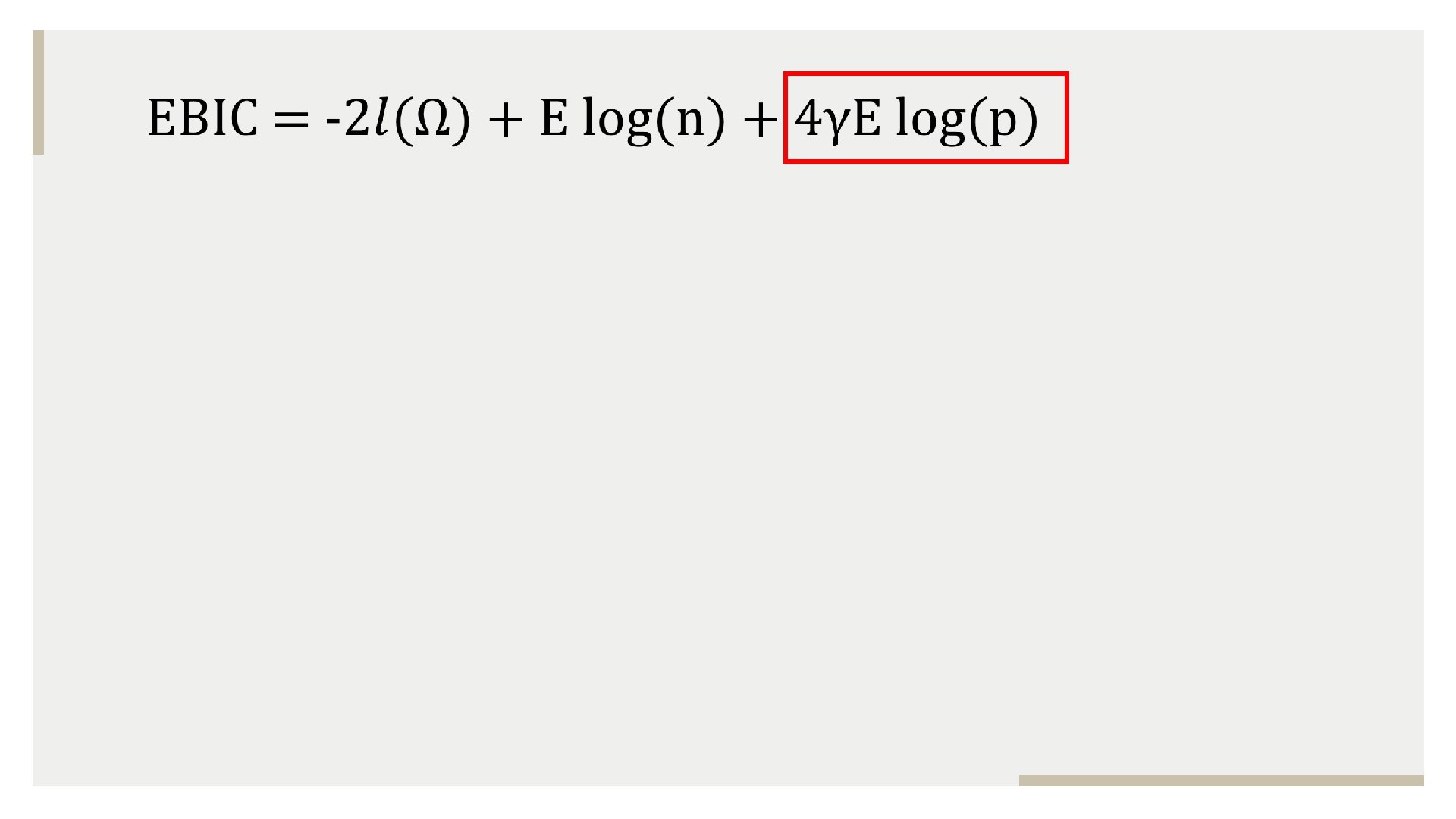

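The stability idea behind StARS can be sketched as follows: estimate a network on many subsamples at each lambda, measure how often each edge flips in and out, and keep the least-penalized lambda whose instability stays under a threshold. This is a minimal sketch that assumes the subsample networks have already been estimated. The function names and the threshold `beta=0.05` are assumptions for illustration, not details from the talk.

```python
import numpy as np

def stars_instability(adjacency_stack):
    """Average edge instability across subsamples for one lambda.

    adjacency_stack : array of shape (n_subsamples, p, p) holding 0/1 graphs.
    """
    stack = np.asarray(adjacency_stack, dtype=float)
    p = stack.shape[1]
    theta = stack.mean(axis=0)        # how often each edge is selected
    xi = 2.0 * theta * (1.0 - theta)  # per-edge instability, 0 when always in or always out
    upper = np.triu_indices(p, k=1)
    return xi[upper].mean()

def select_lambda(lambdas, instabilities, beta=0.05):
    """Smallest lambda whose running-max instability (from the sparsest fit down) is <= beta."""
    lambdas = np.asarray(lambdas, dtype=float)
    order = np.argsort(lambdas)[::-1]  # largest penalty first
    running_max = np.maximum.accumulate(np.asarray(instabilities, dtype=float)[order])
    ok = running_max <= beta
    if not ok.any():
        return None
    return float(lambdas[order][ok][-1])

# Two subsample graphs on three variables: edge (0, 1) is stable, edge (0, 2) is not.
g1 = np.array([[0, 1, 0], [1, 0, 0], [0, 0, 0]])
g2 = np.array([[0, 1, 1], [1, 0, 0], [1, 0, 0]])
print(stars_instability([g1, g2]))
```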

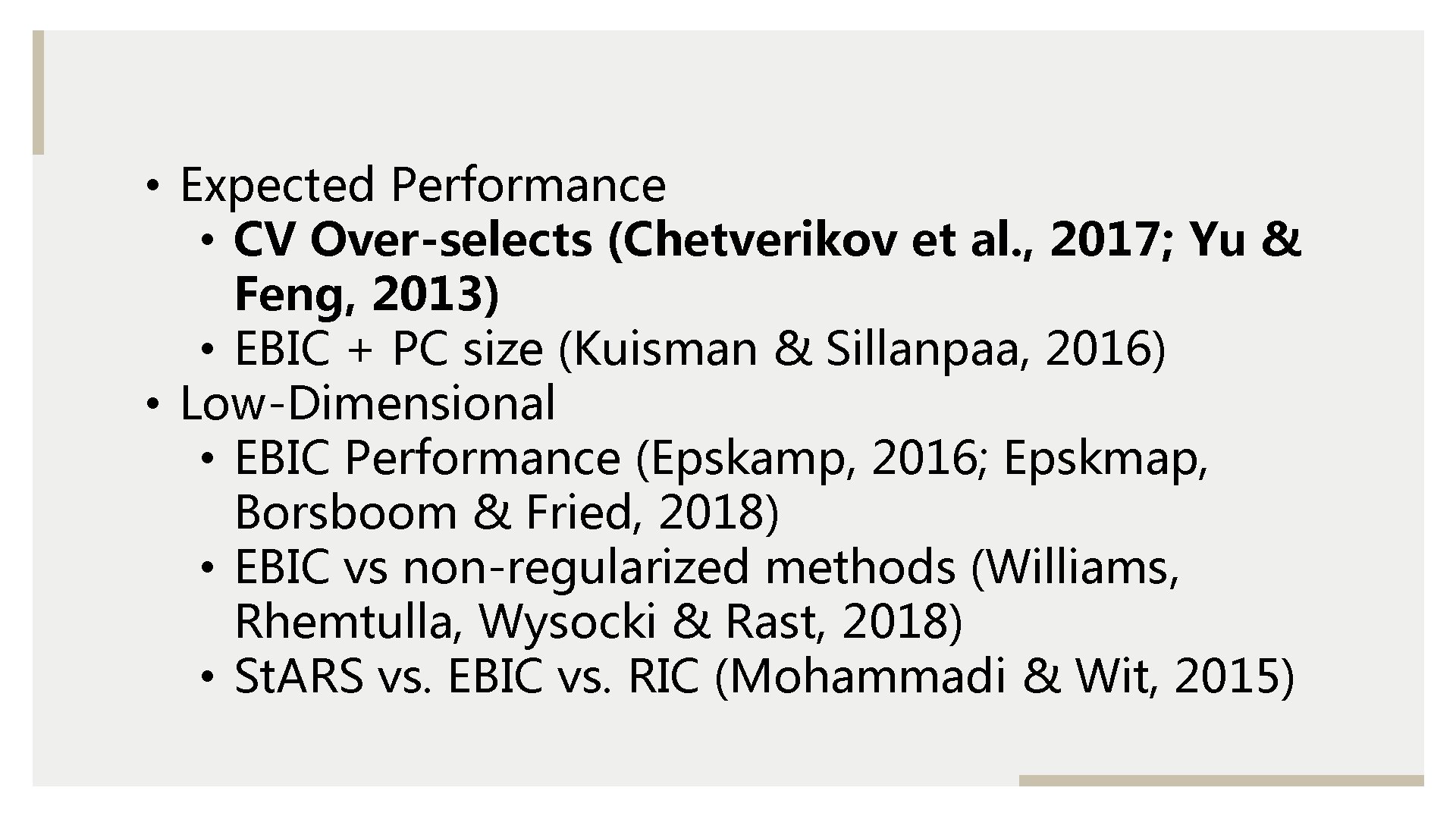

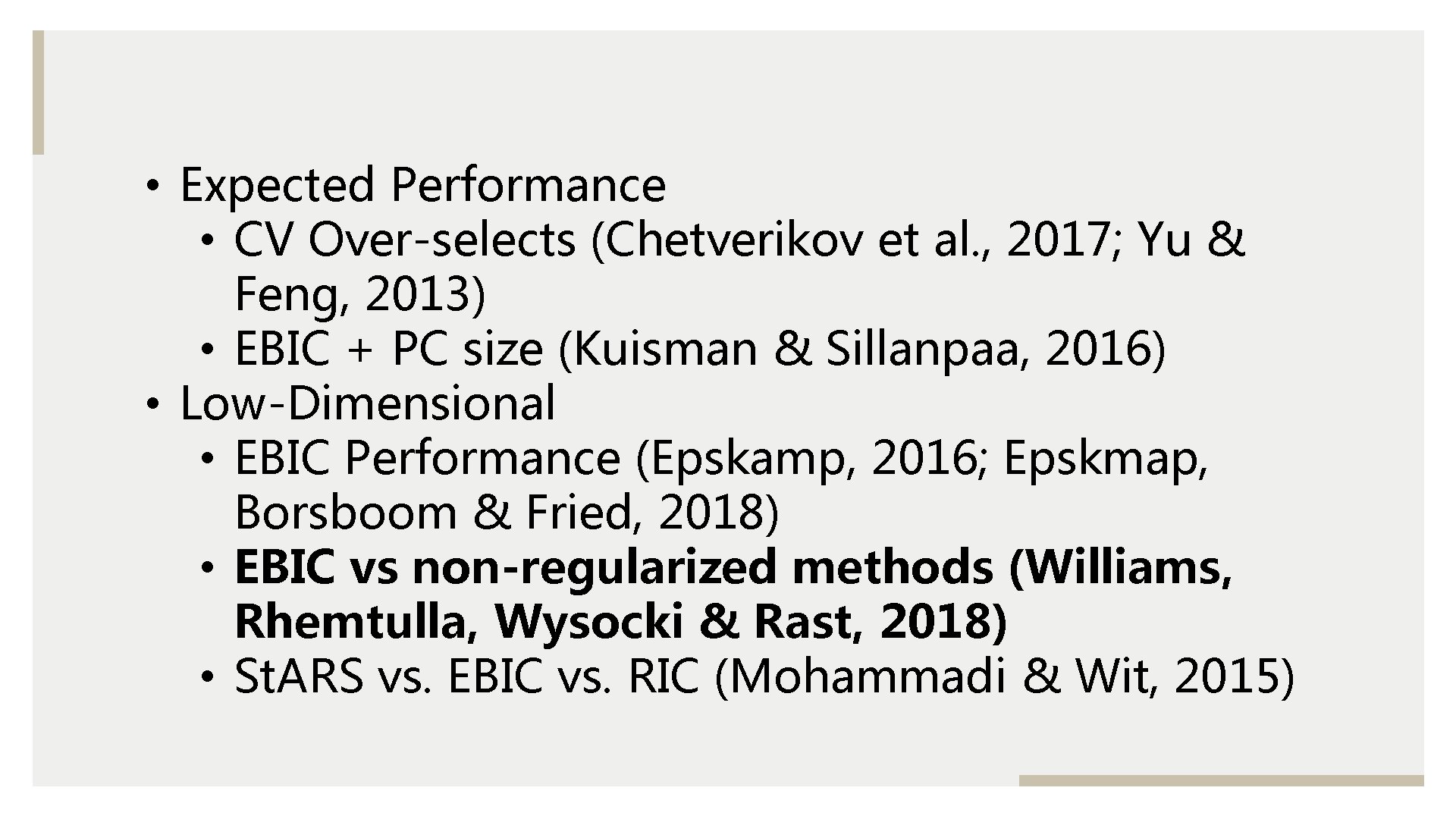

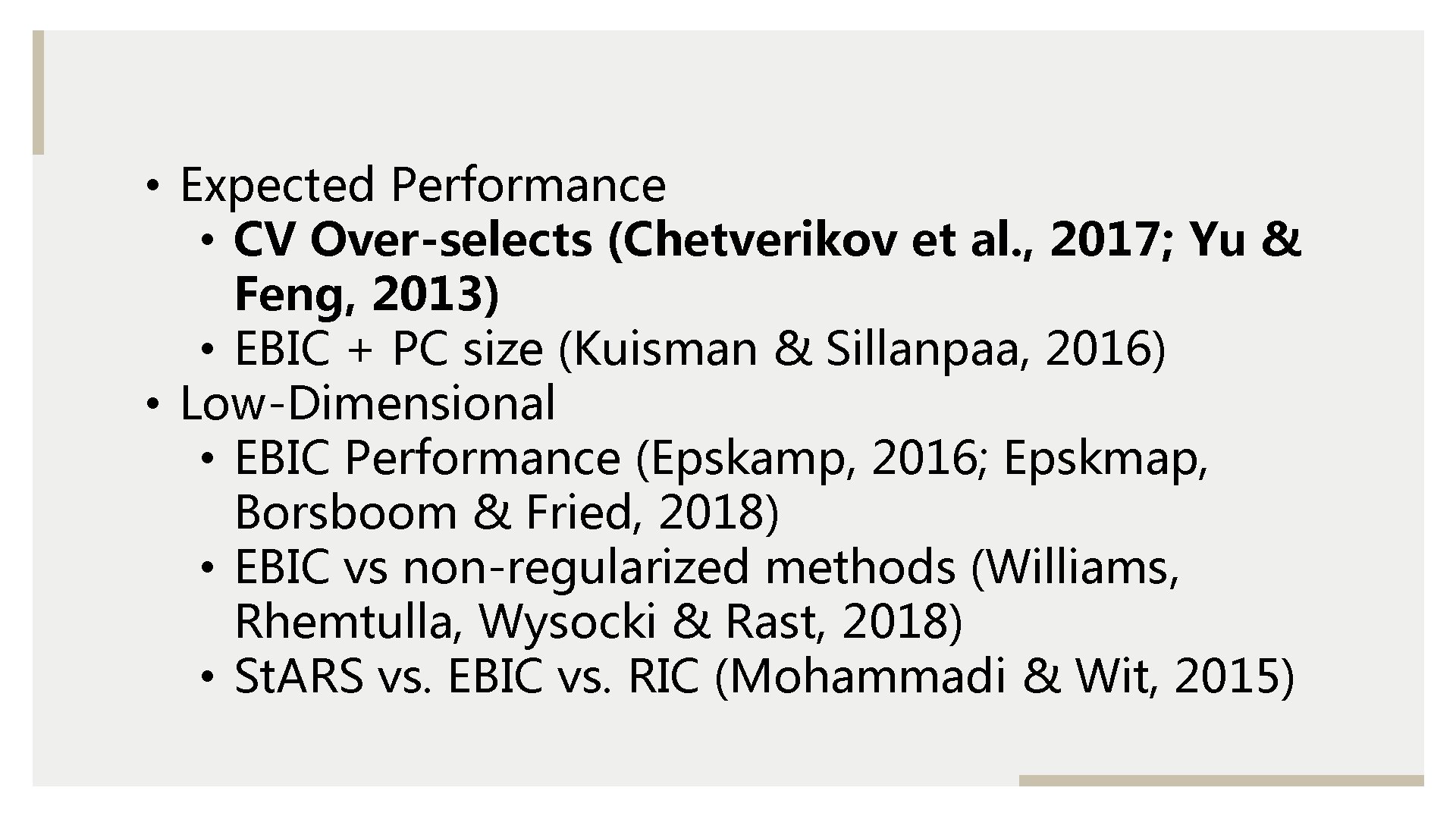

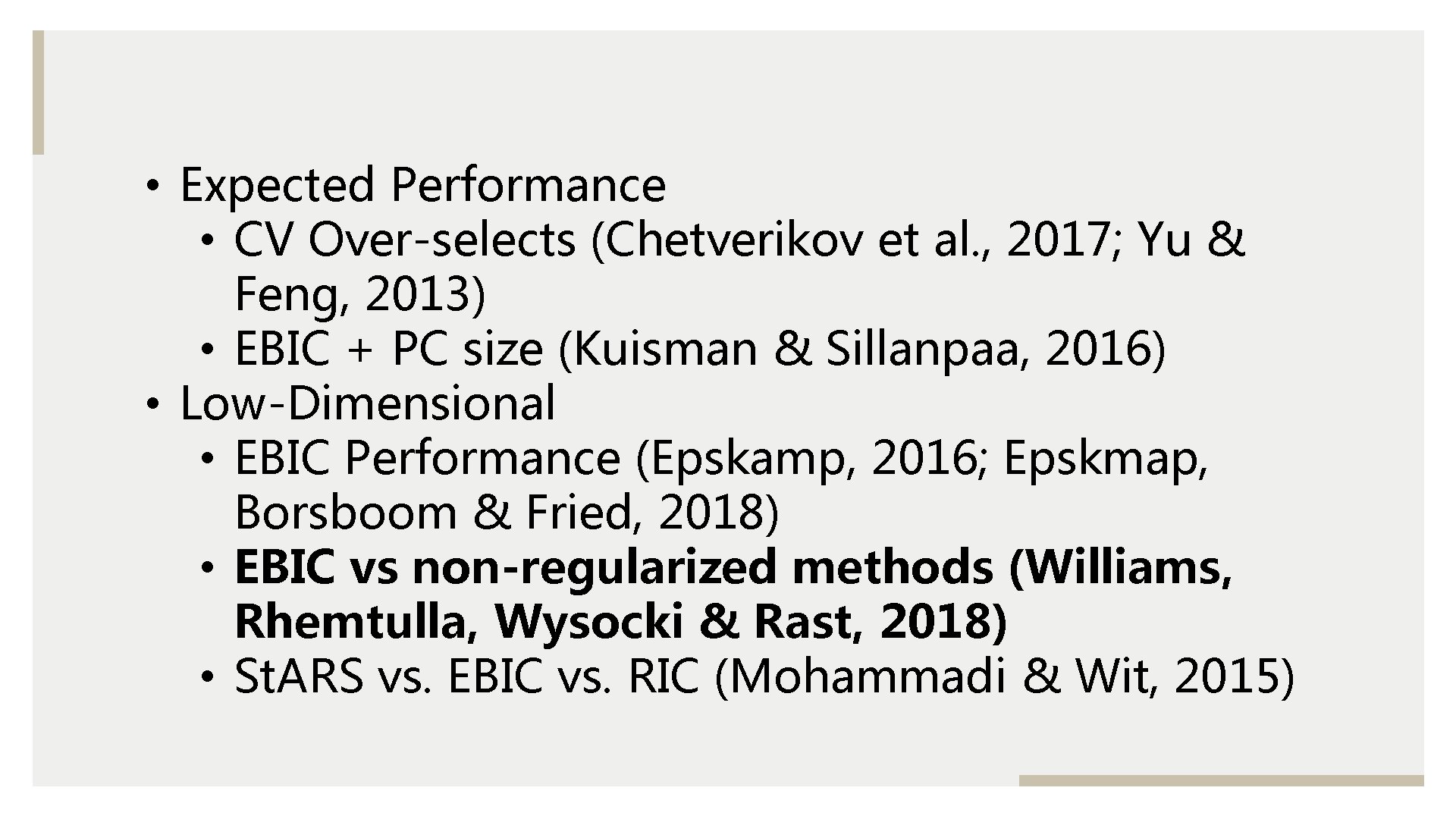

- Expected Performance
  - CV over-selects (Chetverikov et al., 2017; Yu & Feng, 2013)
  - EBIC + PC size (Kuismin & Sillanpää, 2016)
- Low-Dimensional
  - EBIC performance (Epskamp, 2016; Epskamp, Borsboom & Fried, 2018)
  - EBIC vs. non-regularized methods (Williams, Rhemtulla, Wysocki & Rast, 2018)
  - StARS vs. EBIC vs. RIC (Mohammadi & Wit, 2015)

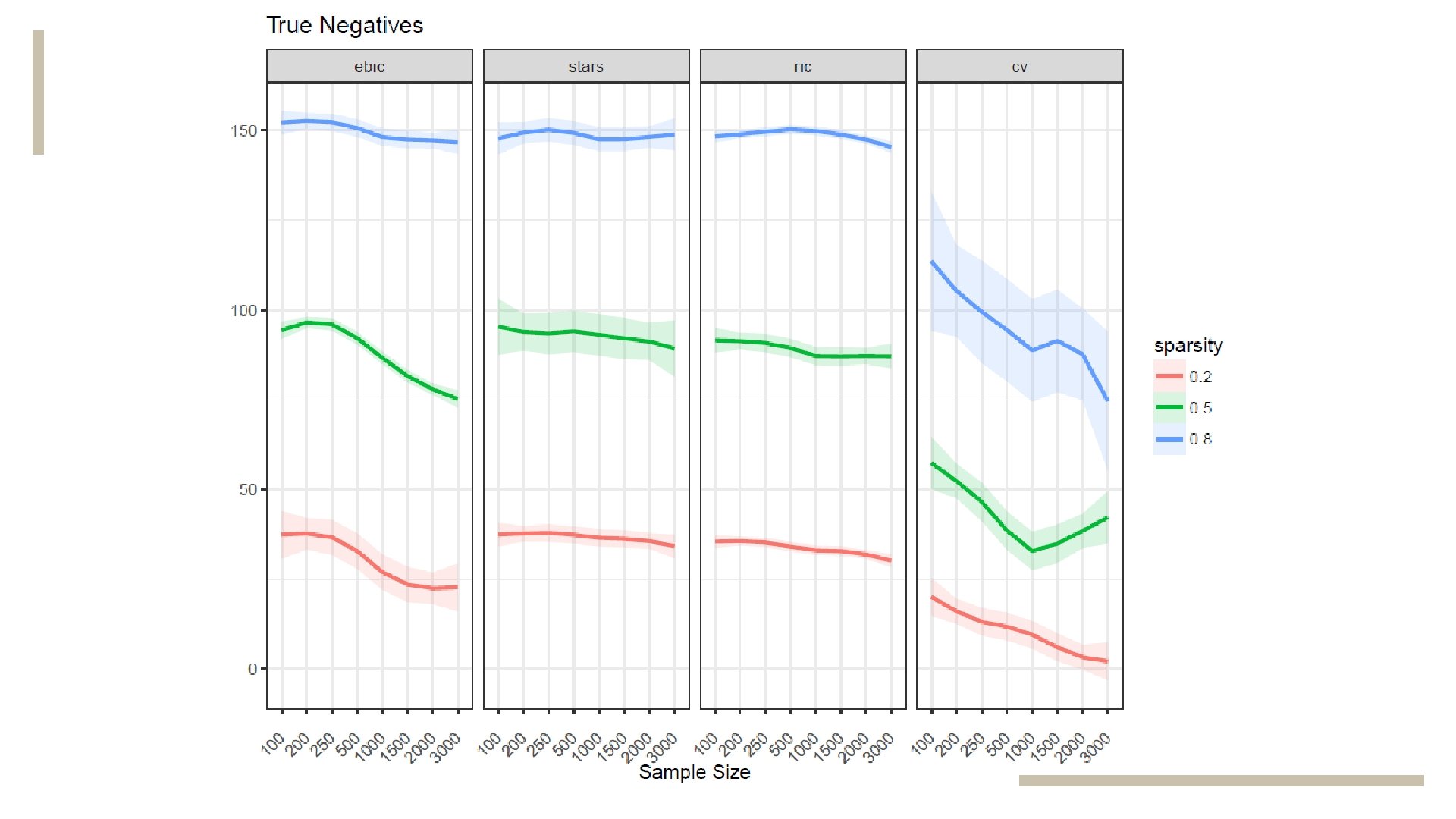

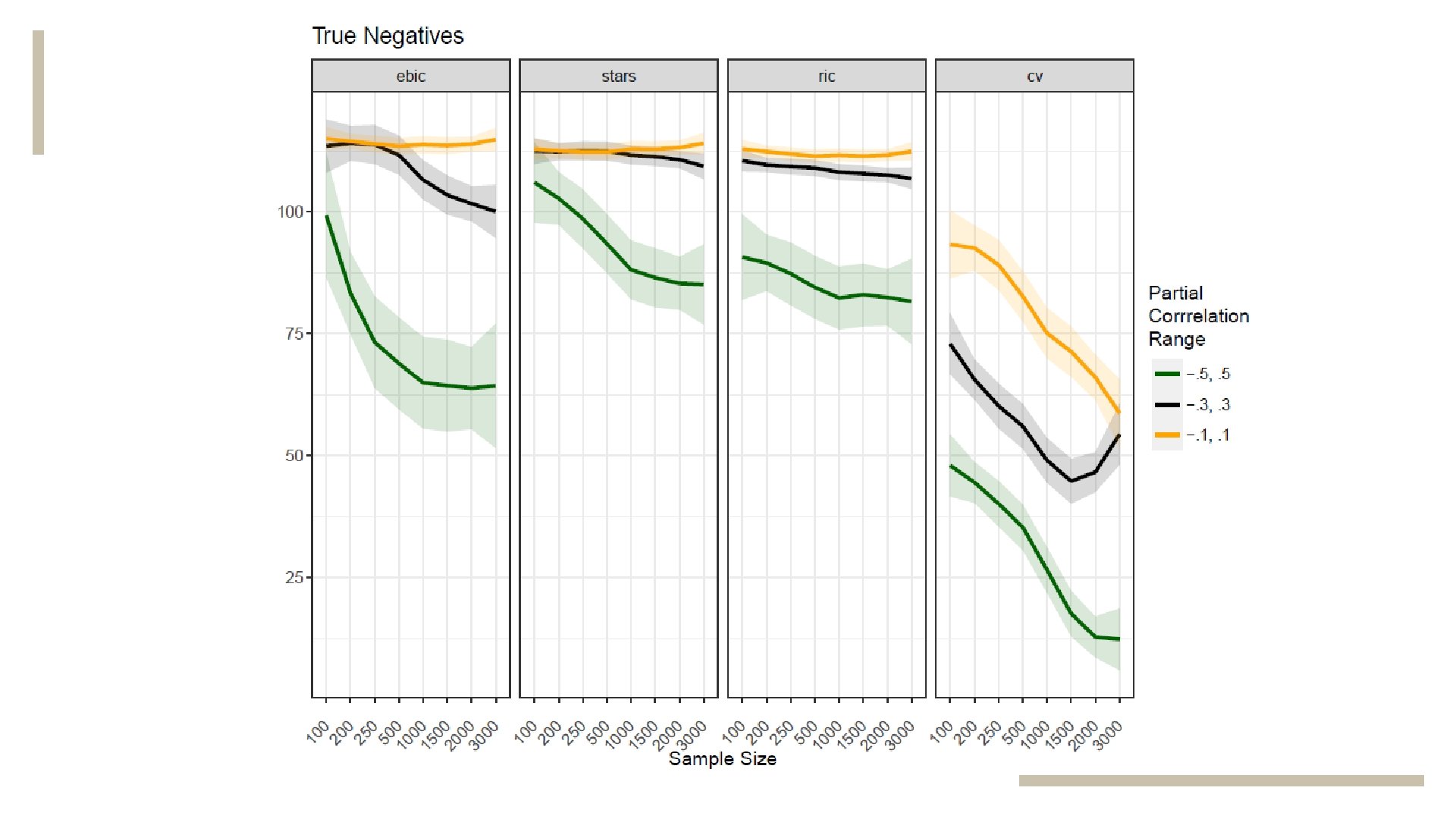

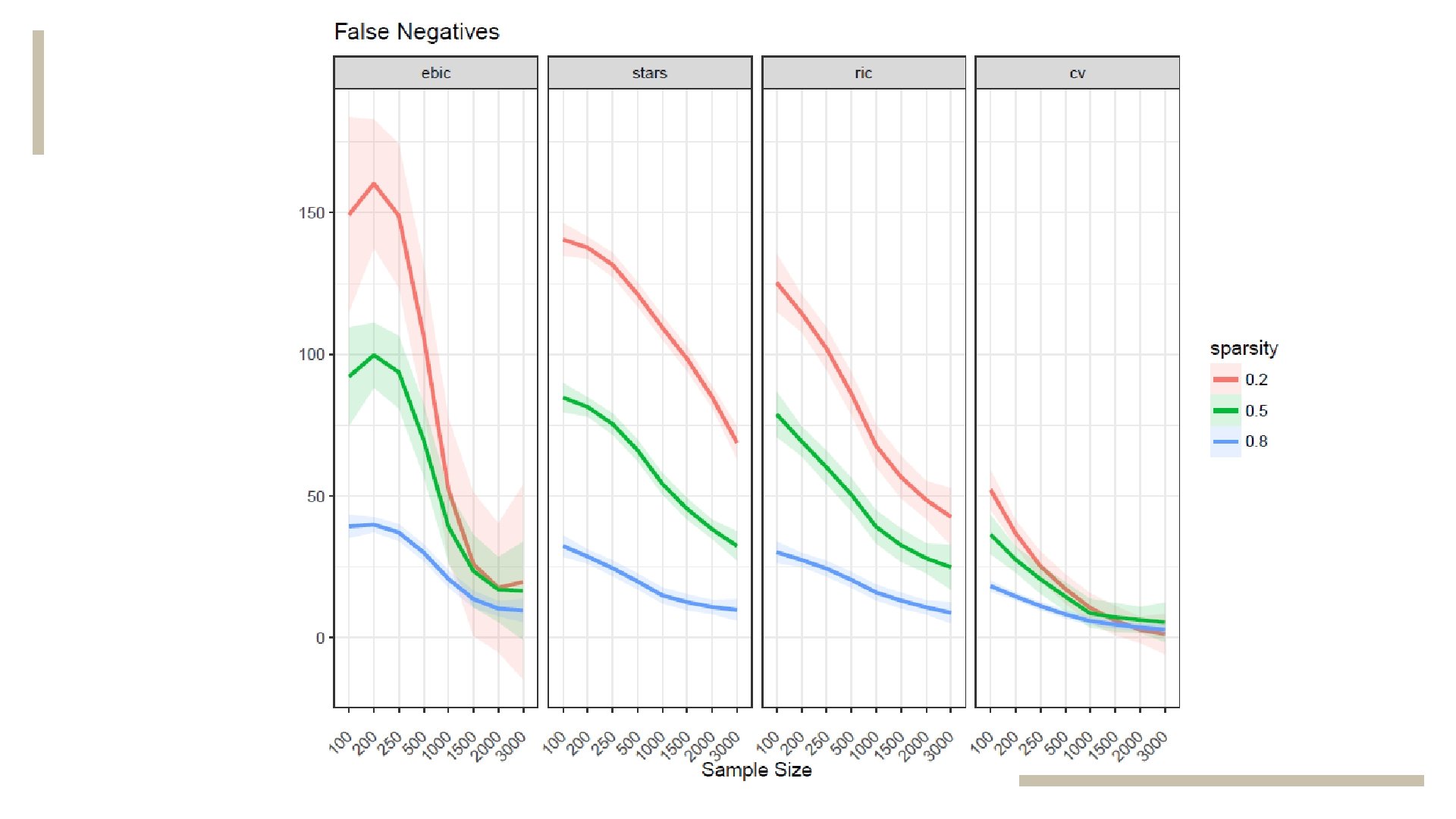

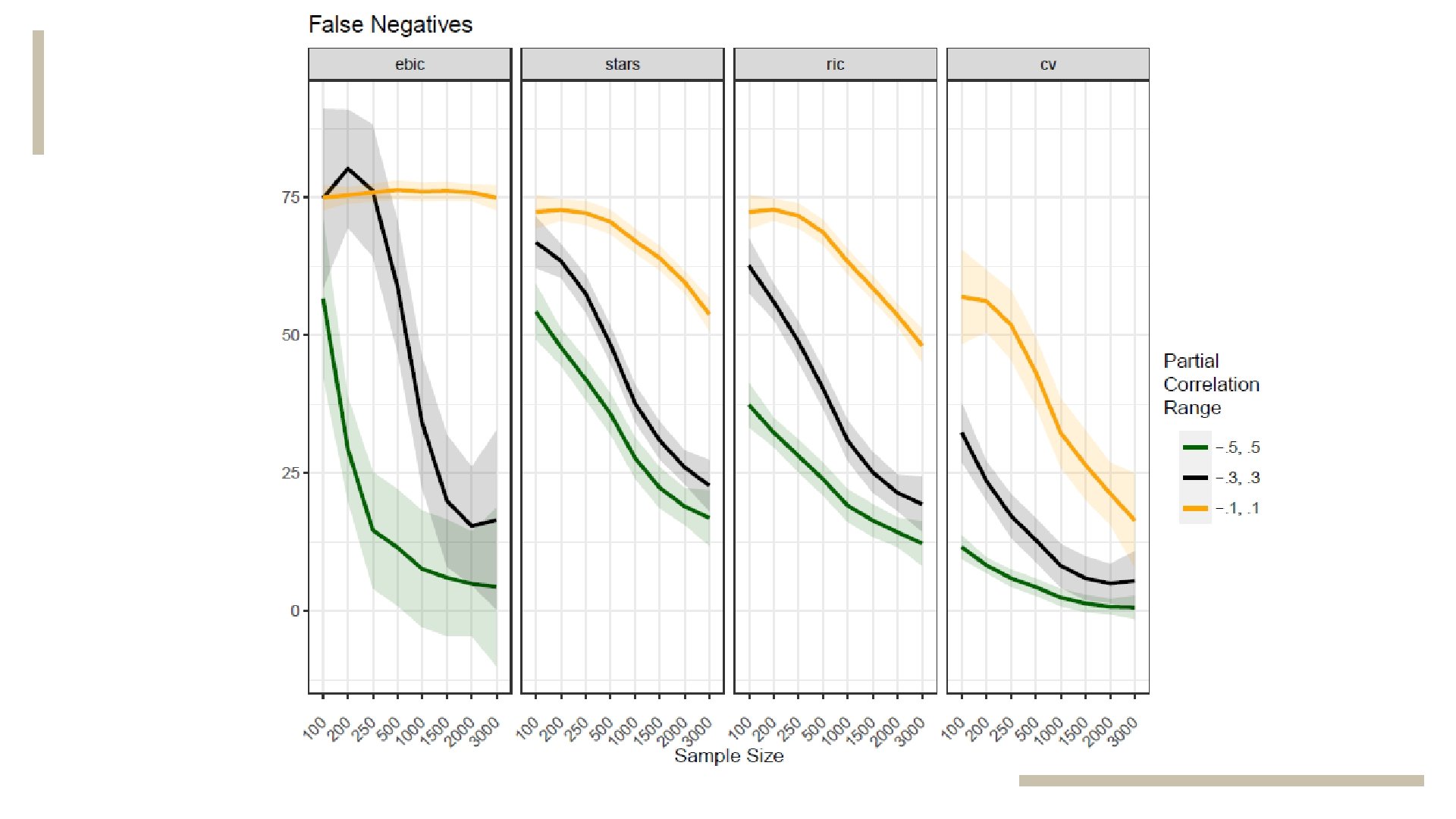

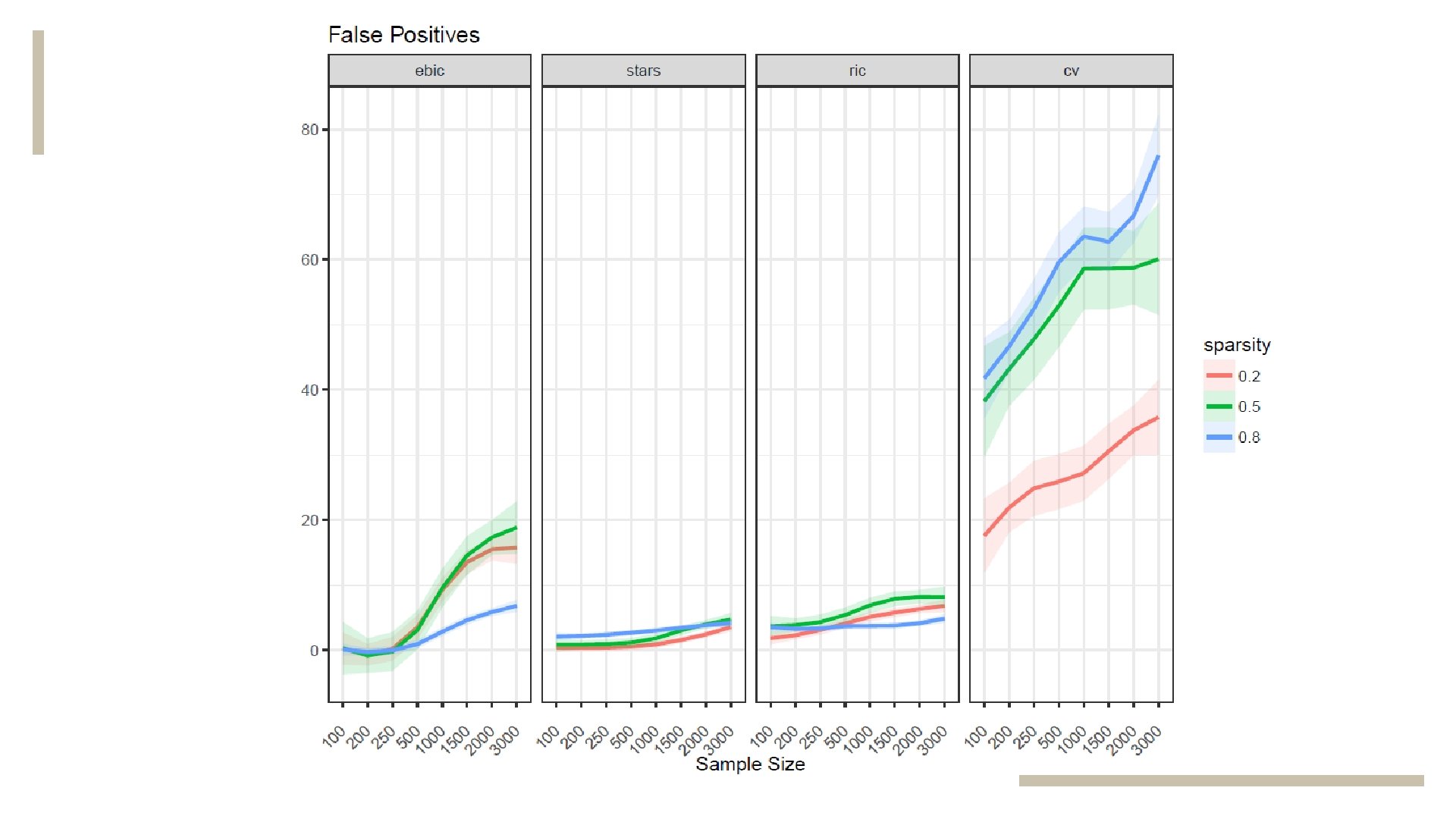

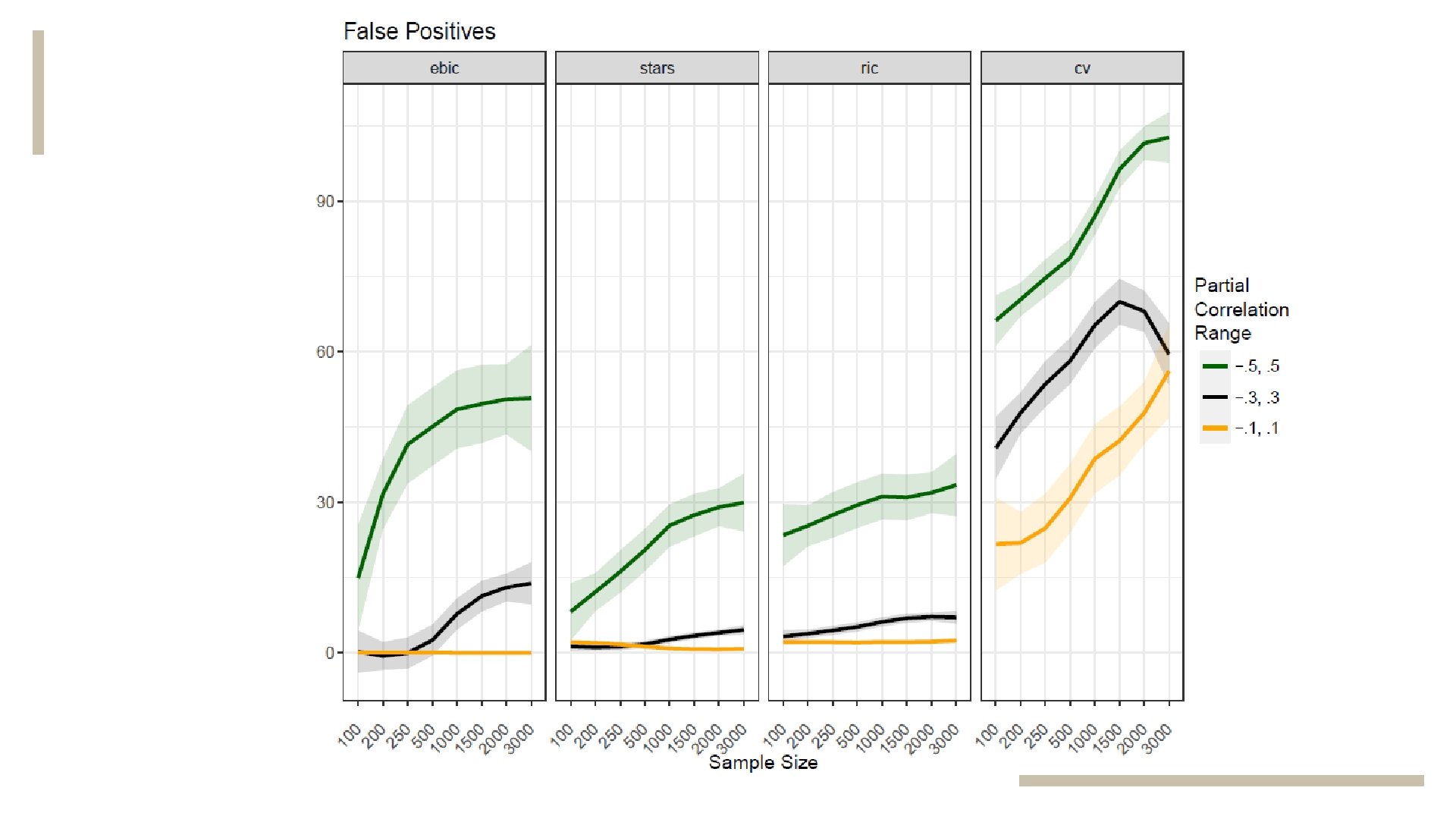

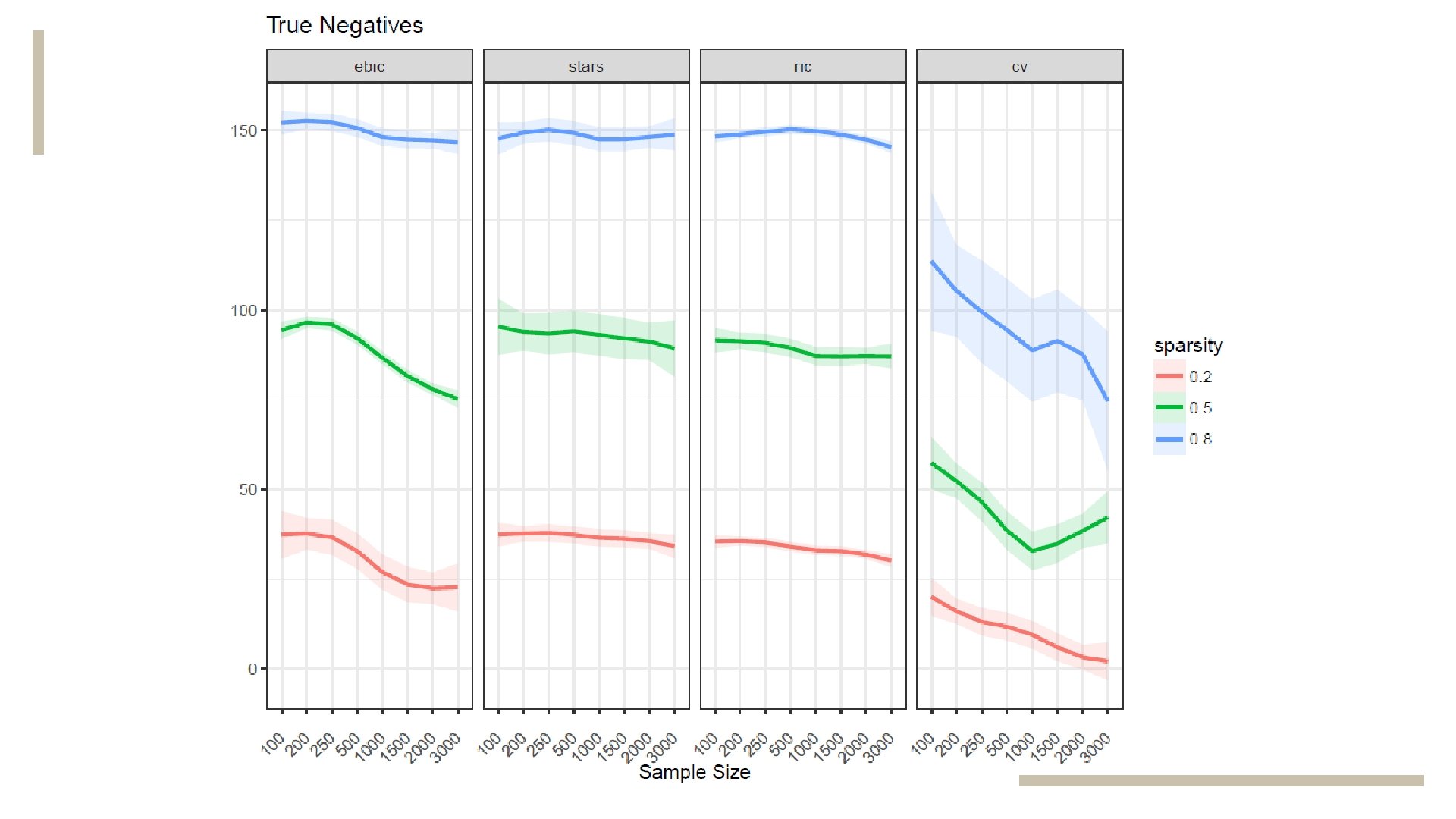

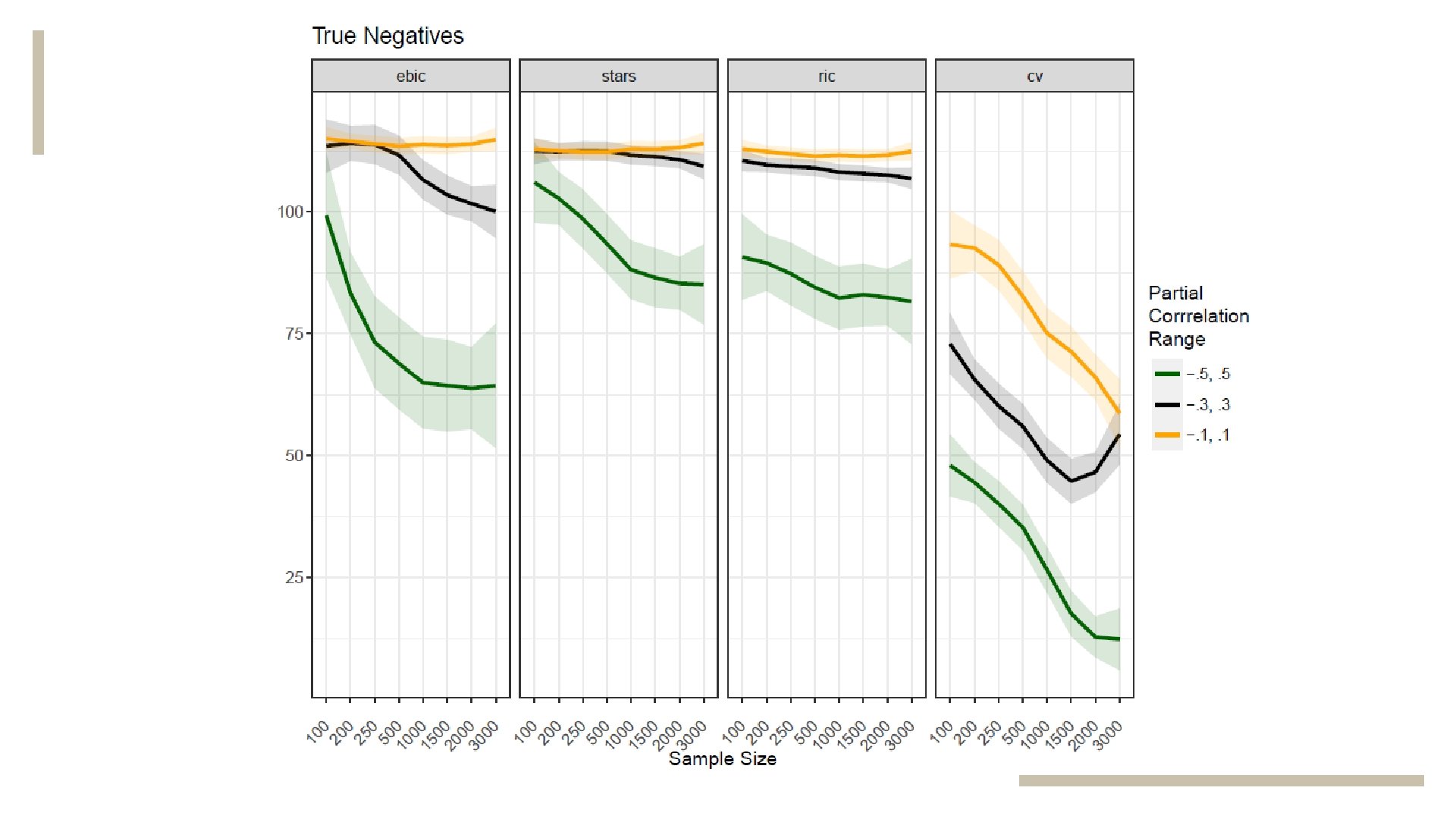

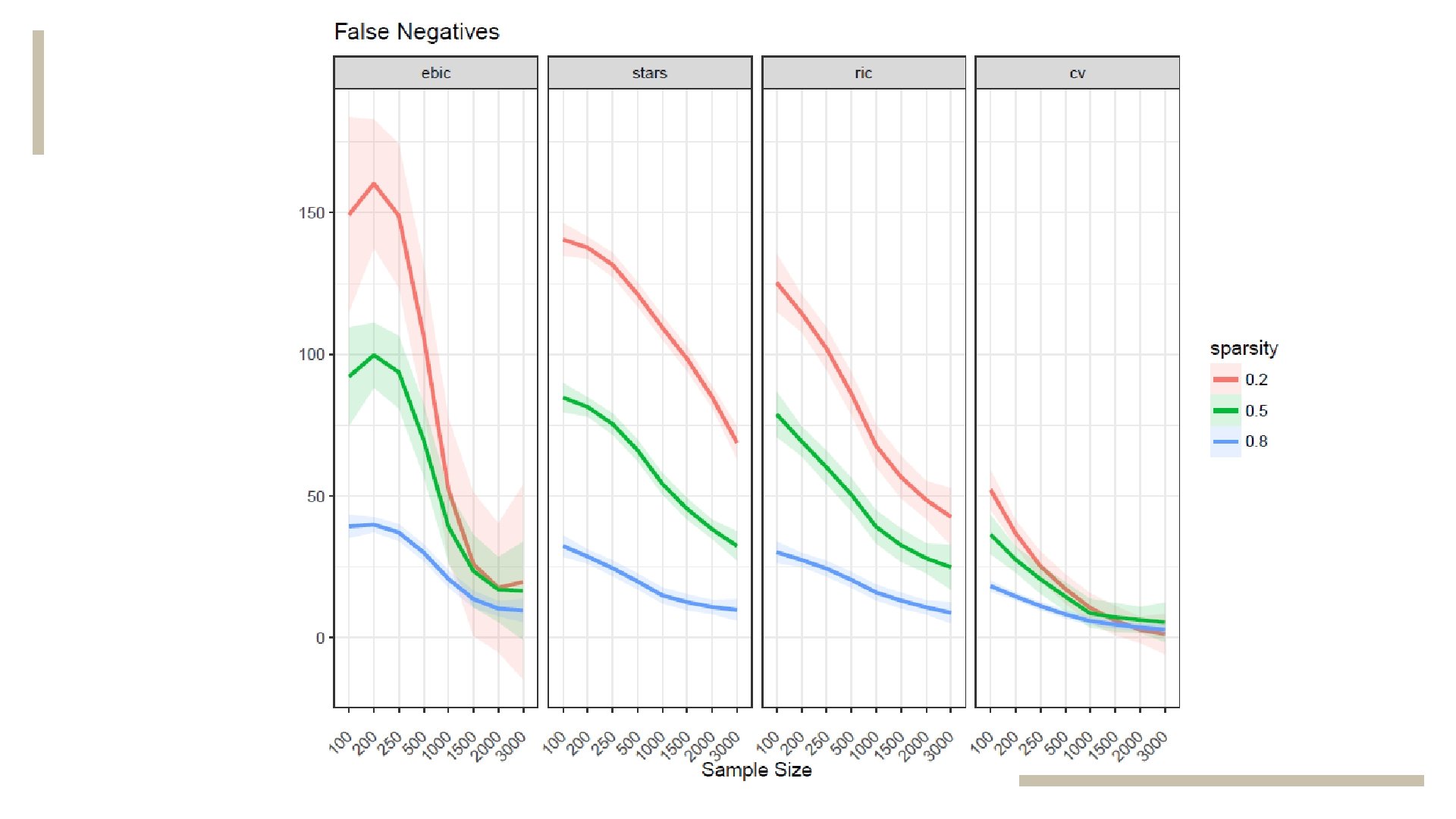

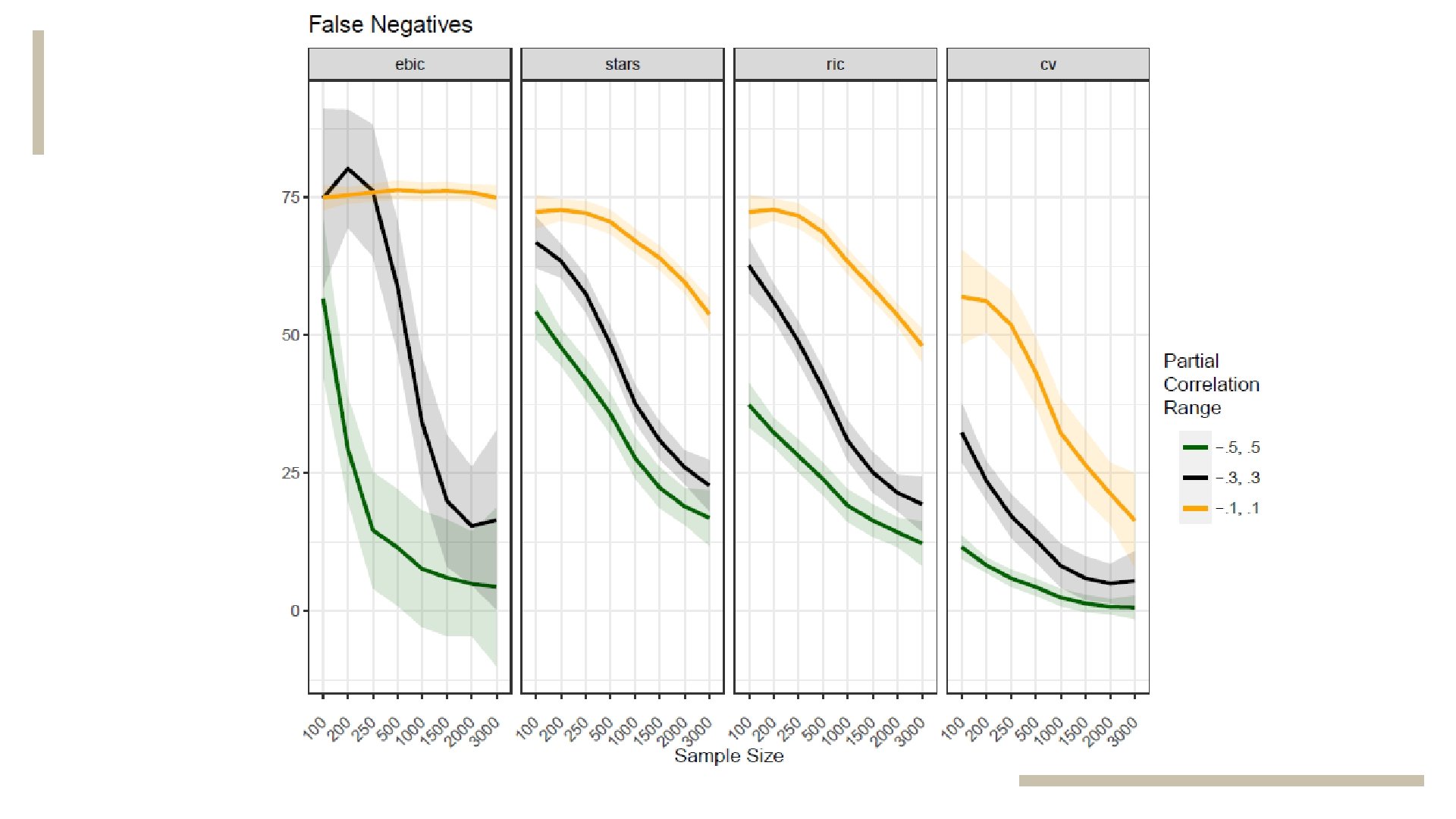

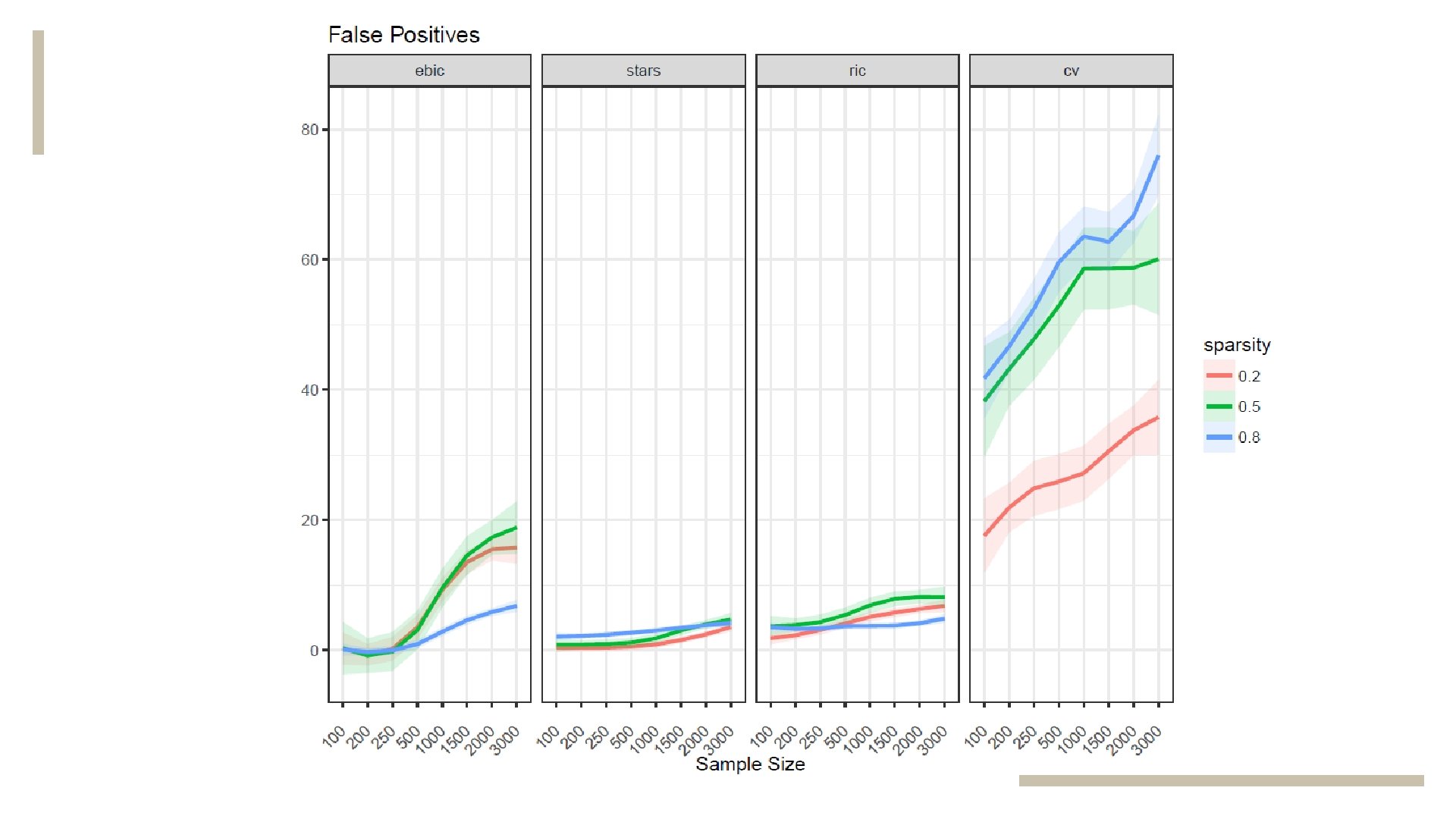

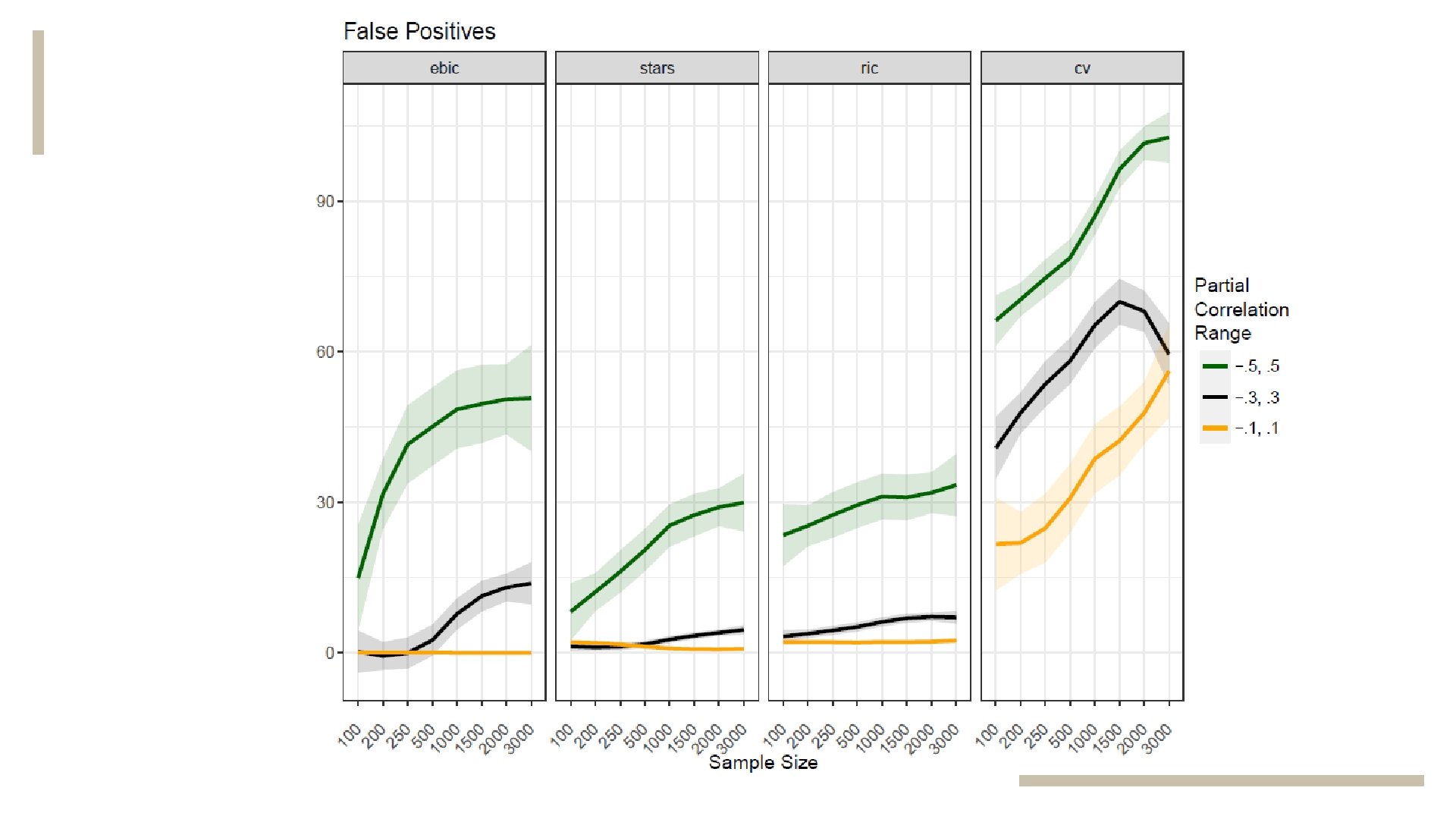

1. What is the best method for psychological data?
2. How does partial correlation size affect performance?
3. How does sparsity affect performance?

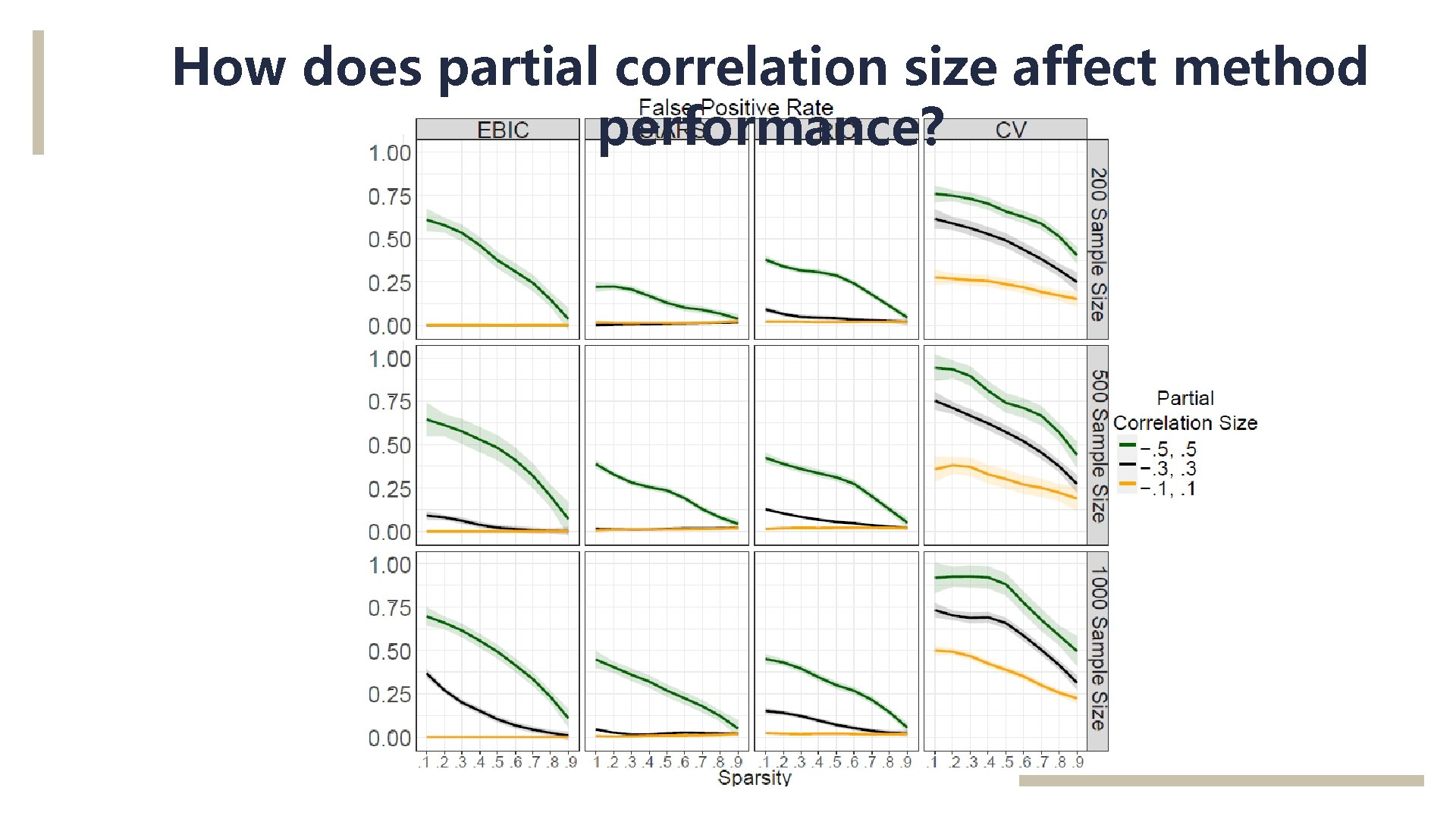

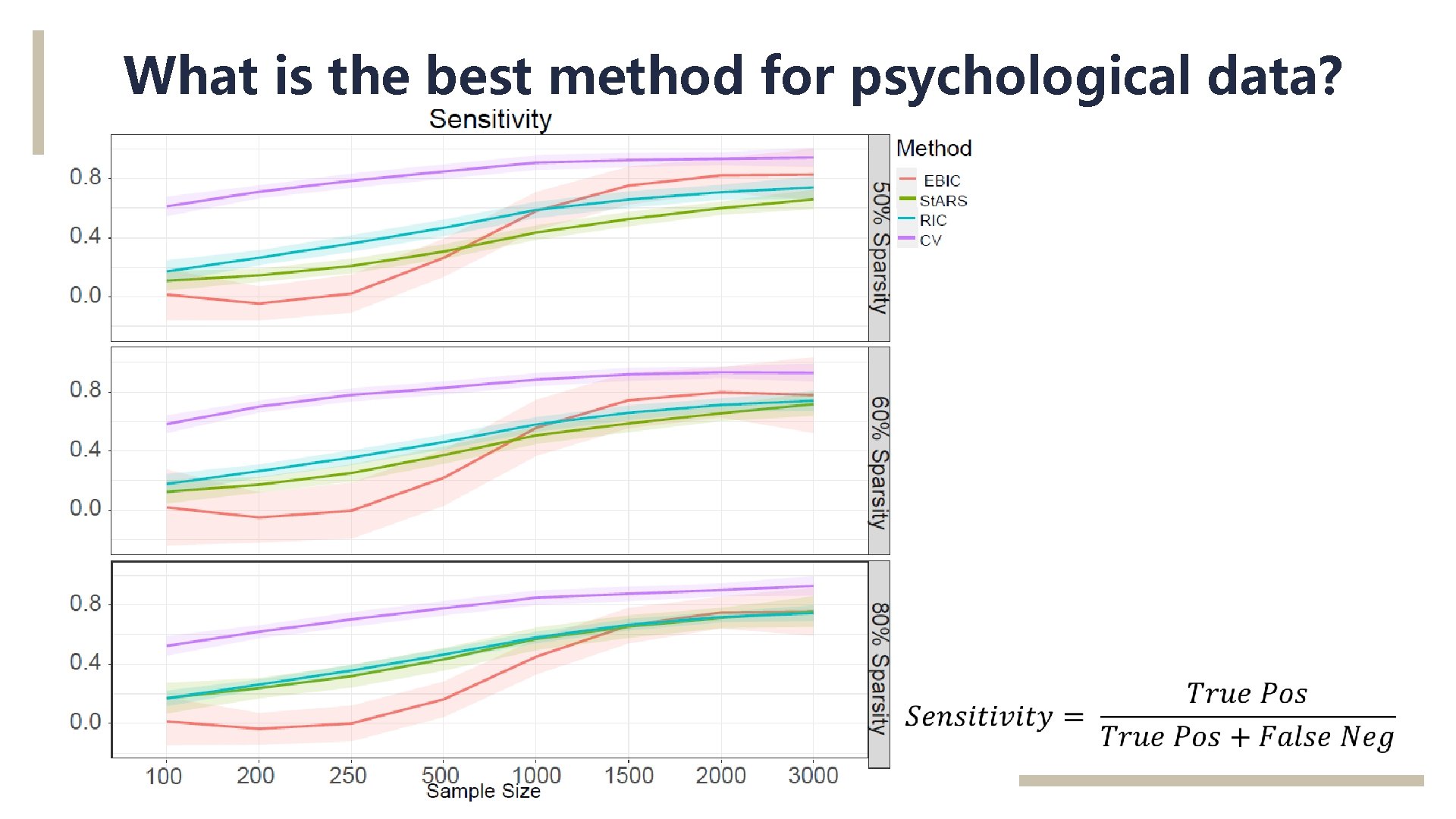

SIMULATION PROCEDURE

- Conditions
  - Sample Size (100, 250, 500, …, 3000)
  - Number of Variables (20)
  - Sparsity (.10, .20, …, .90)
  - Partial Correlation Range (-.1, .1; -.3, .3; -.5, .5)
- Performance Measures
  - False Positive Rate
  - Sensitivity
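The two performance measures can be computed by comparing the edges of the true network with those of the estimated one. The sketch below assumes an edge is any nonzero off-diagonal entry of the precision matrix. The function name `edge_recovery` and the toy matrices are illustrative only and are not the simulation code from the talk.

```python
import numpy as np

def edge_recovery(true_precision, est_precision):
    """Return (sensitivity, false_positive_rate) for edge detection."""
    p = true_precision.shape[0]
    upper = np.triu_indices(p, k=1)   # count each pair of variables once
    truth = true_precision[upper] != 0
    found = est_precision[upper] != 0
    tp = np.sum(truth & found)
    fn = np.sum(truth & ~found)
    fp = np.sum(~truth & found)
    tn = np.sum(~truth & ~found)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    false_positive_rate = fp / (fp + tn) if (fp + tn) else float("nan")
    return float(sensitivity), float(false_positive_rate)

# Toy example with 4 variables: true edges (0,1), (1,2), (2,3).
true_net = np.eye(4)
for a, b in [(0, 1), (1, 2), (2, 3)]:
    true_net[a, b] = true_net[b, a] = -0.2

# Estimated network recovers (0,1) and (1,2), misses (2,3), adds a false (0,3).
est_net = np.eye(4)
for a, b in [(0, 1), (1, 2), (0, 3)]:
    est_net[a, b] = est_net[b, a] = -0.1

print(edge_recovery(true_net, est_net))
```

Sensitivity is the share of true edges that were detected. The false positive rate is the share of truly absent edges that were wrongly included.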

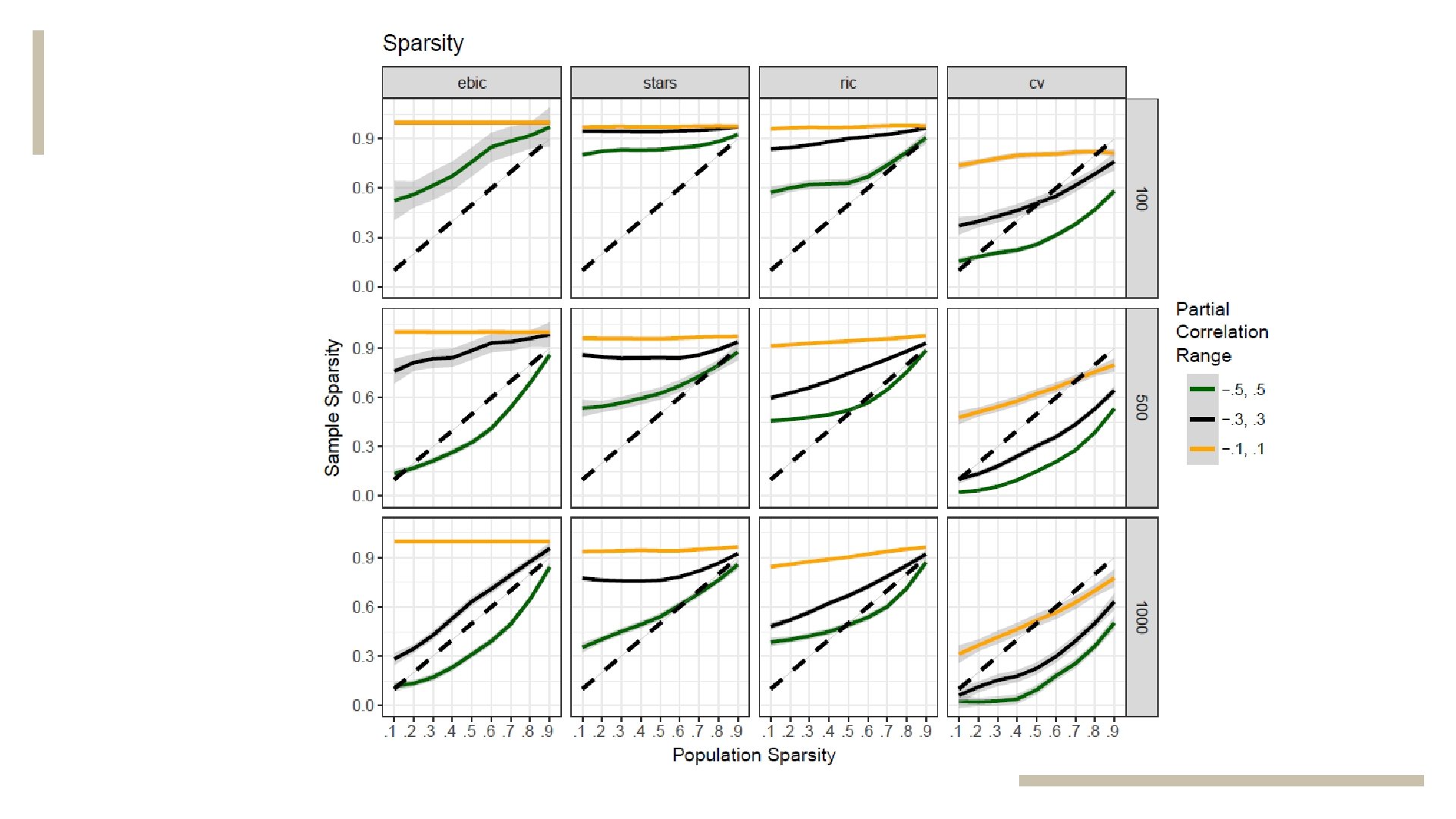

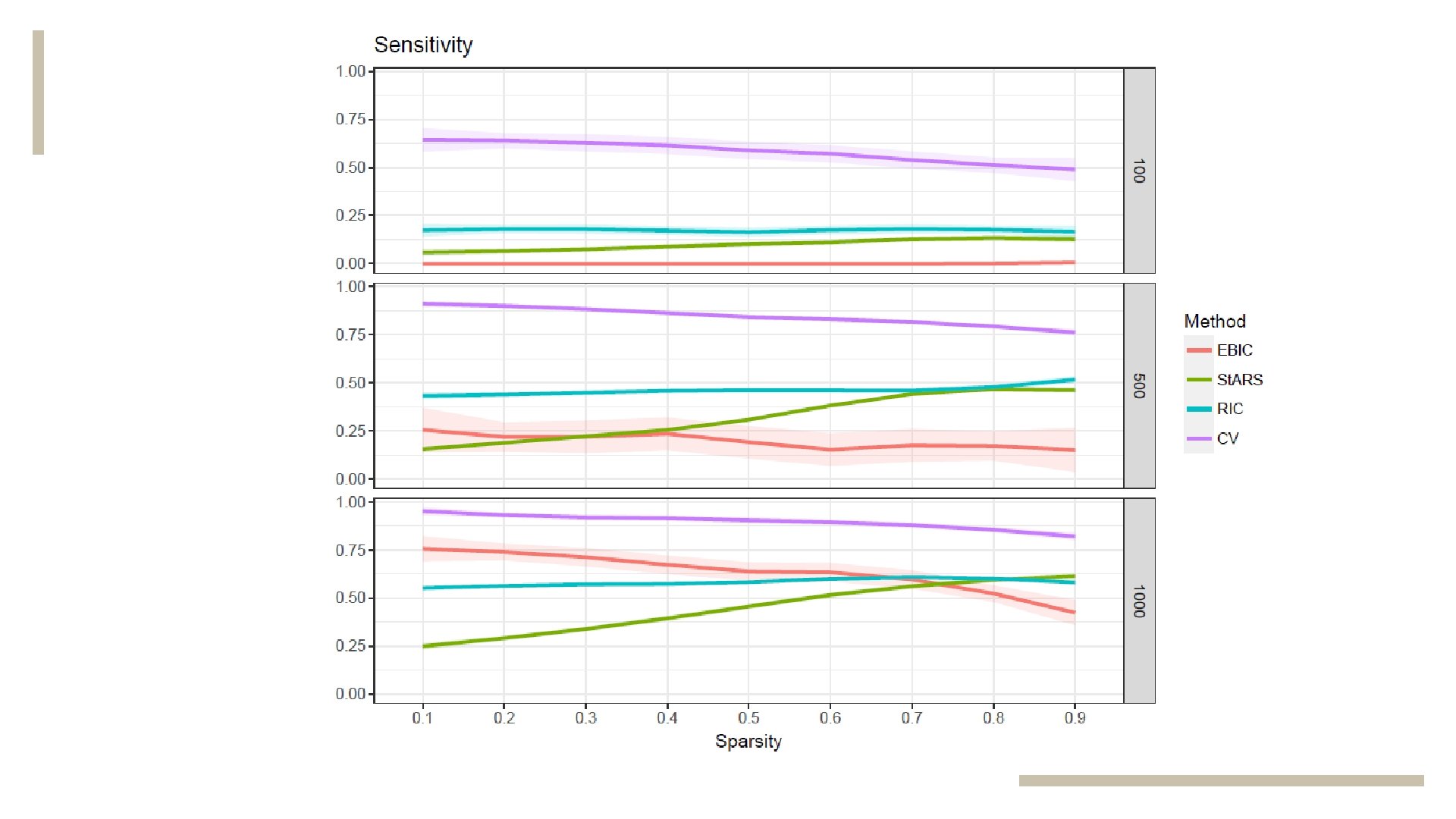

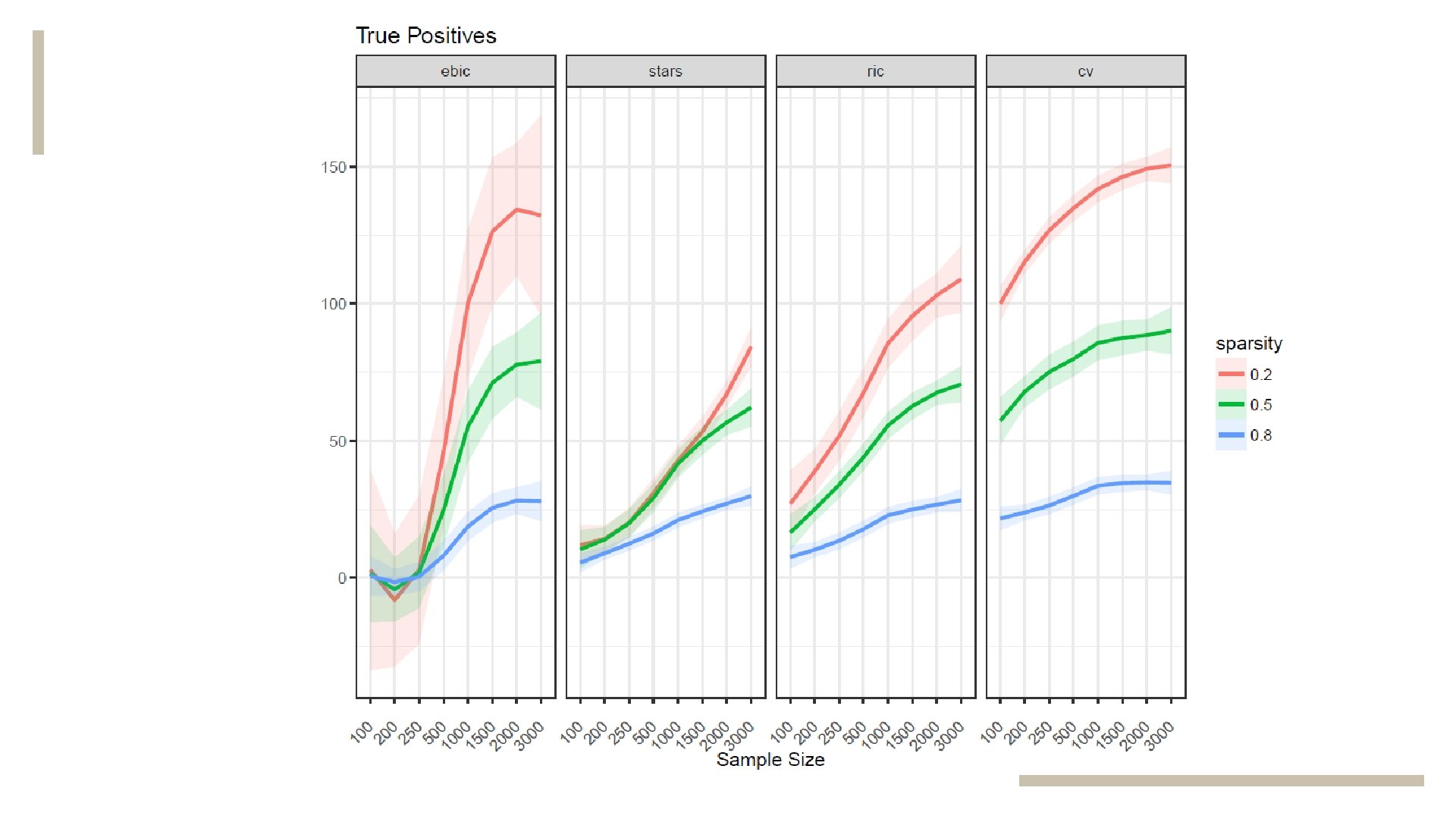

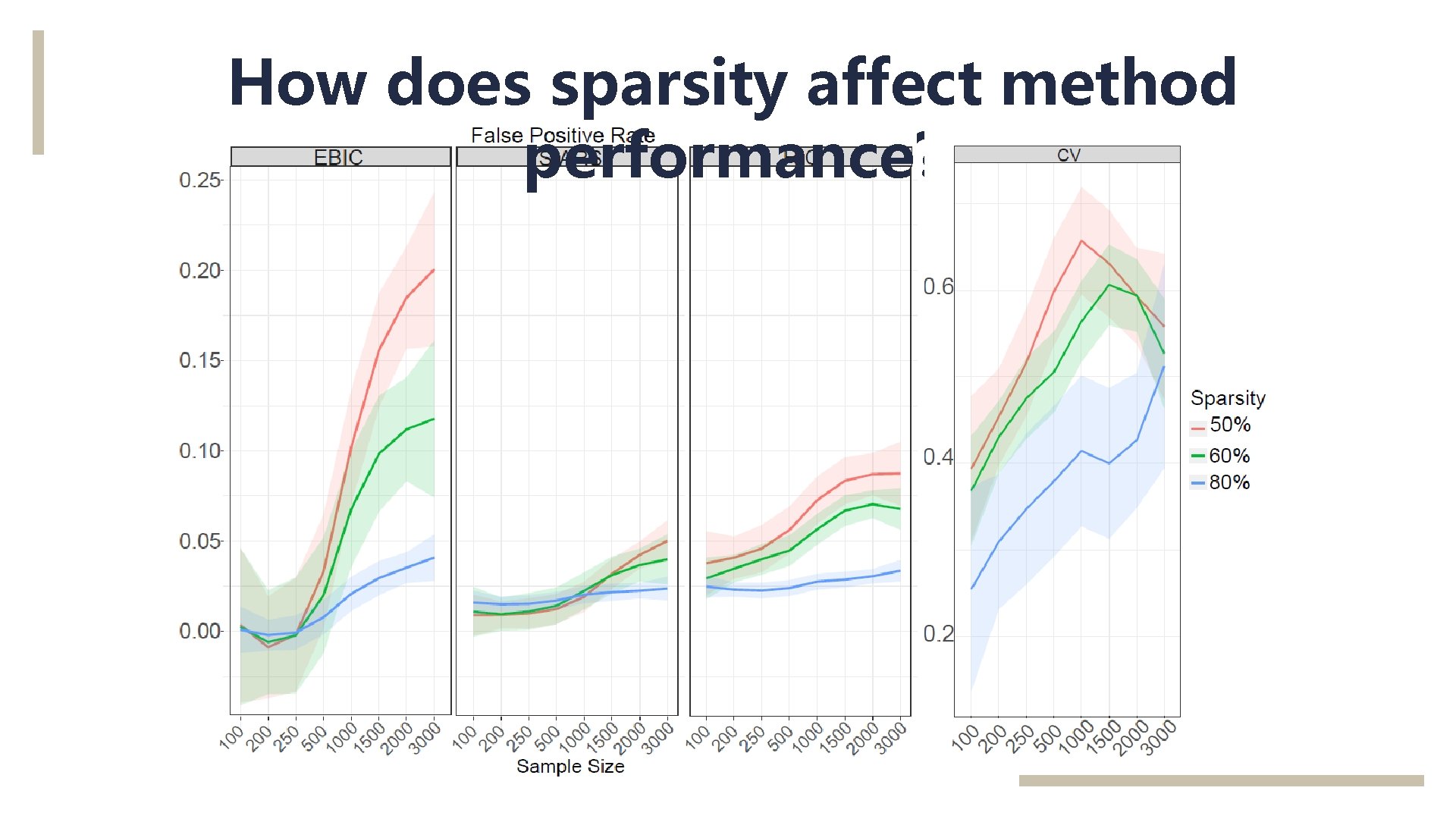

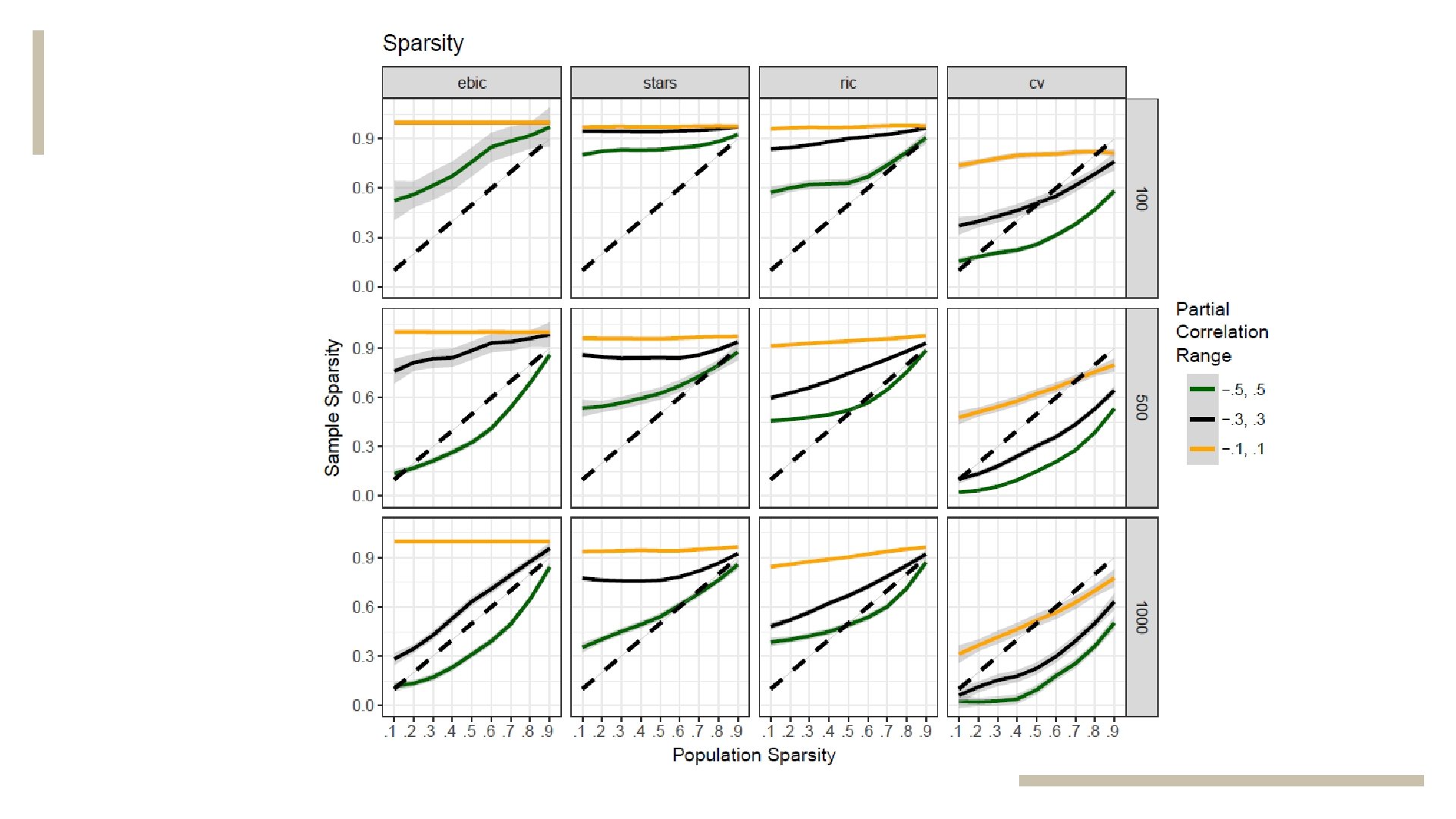

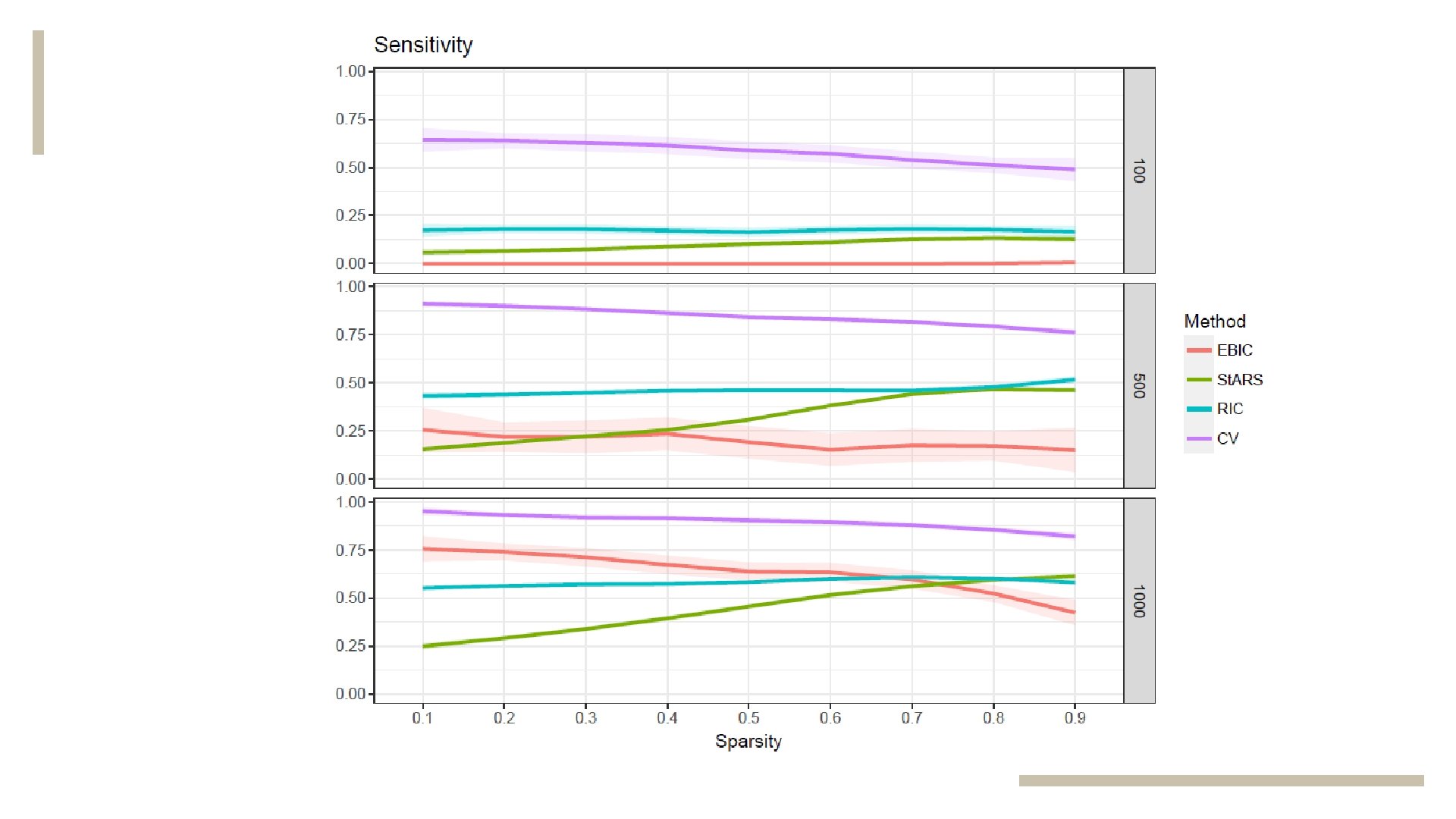

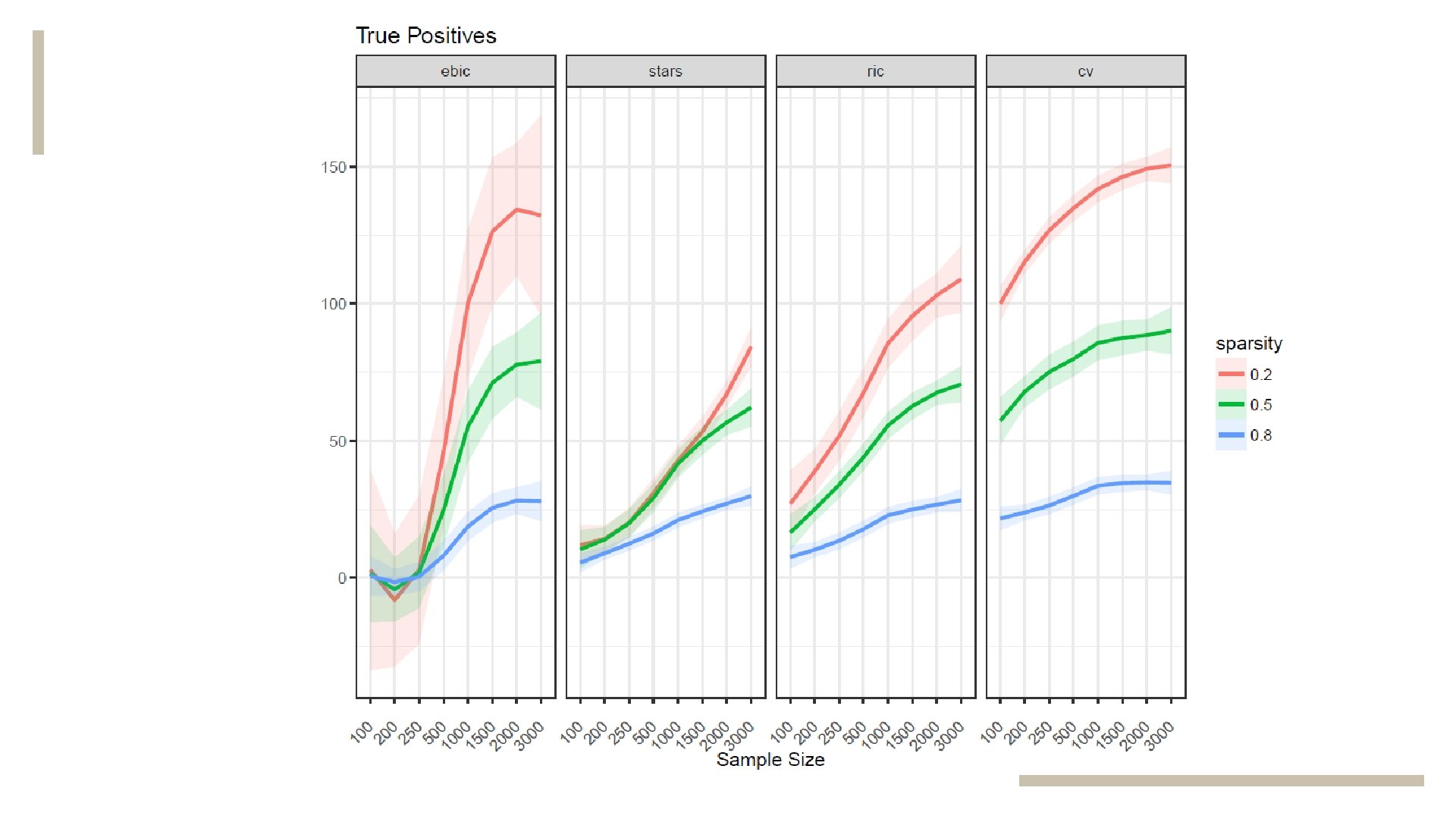

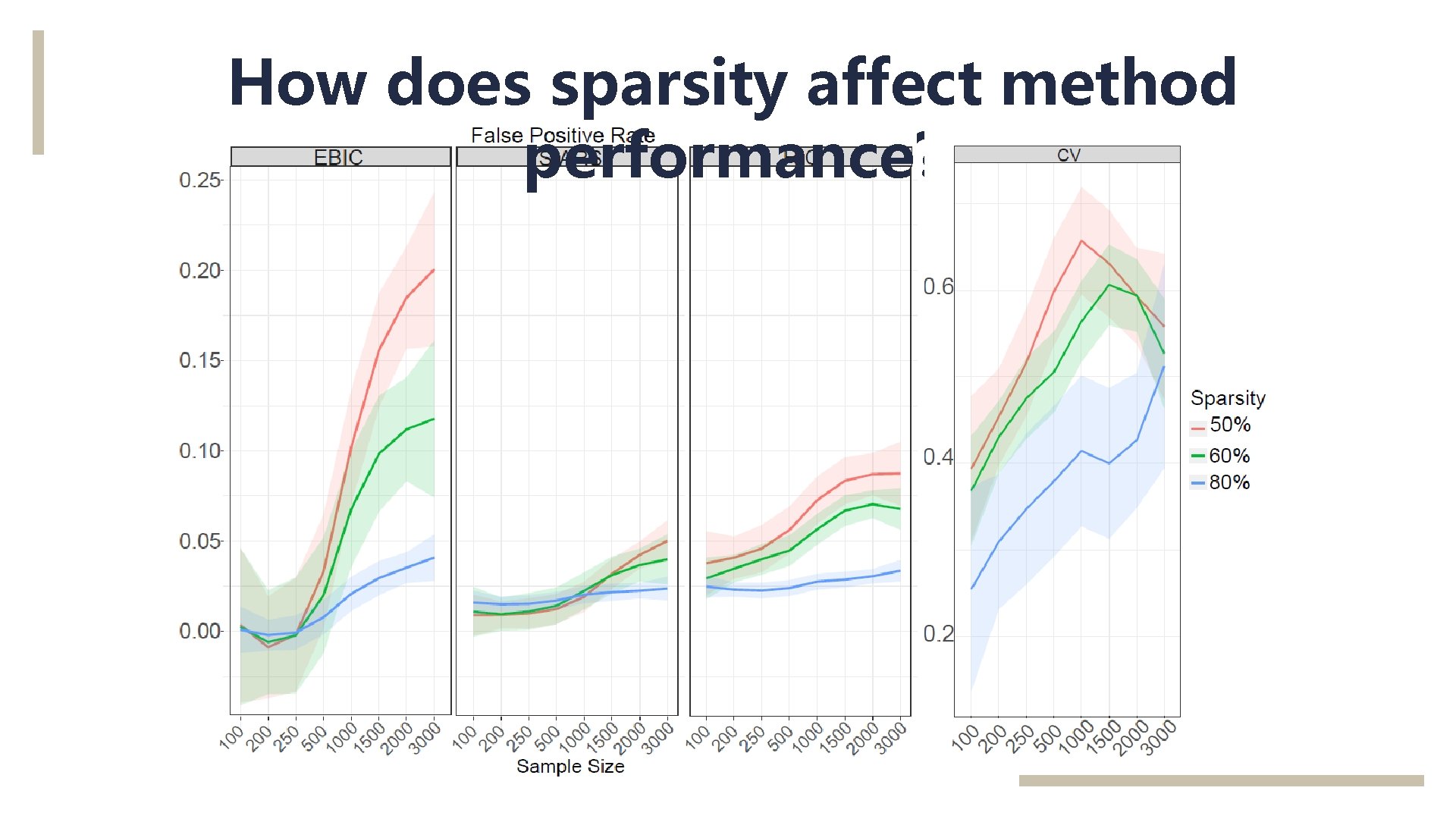

How does sparsity affect method performance?

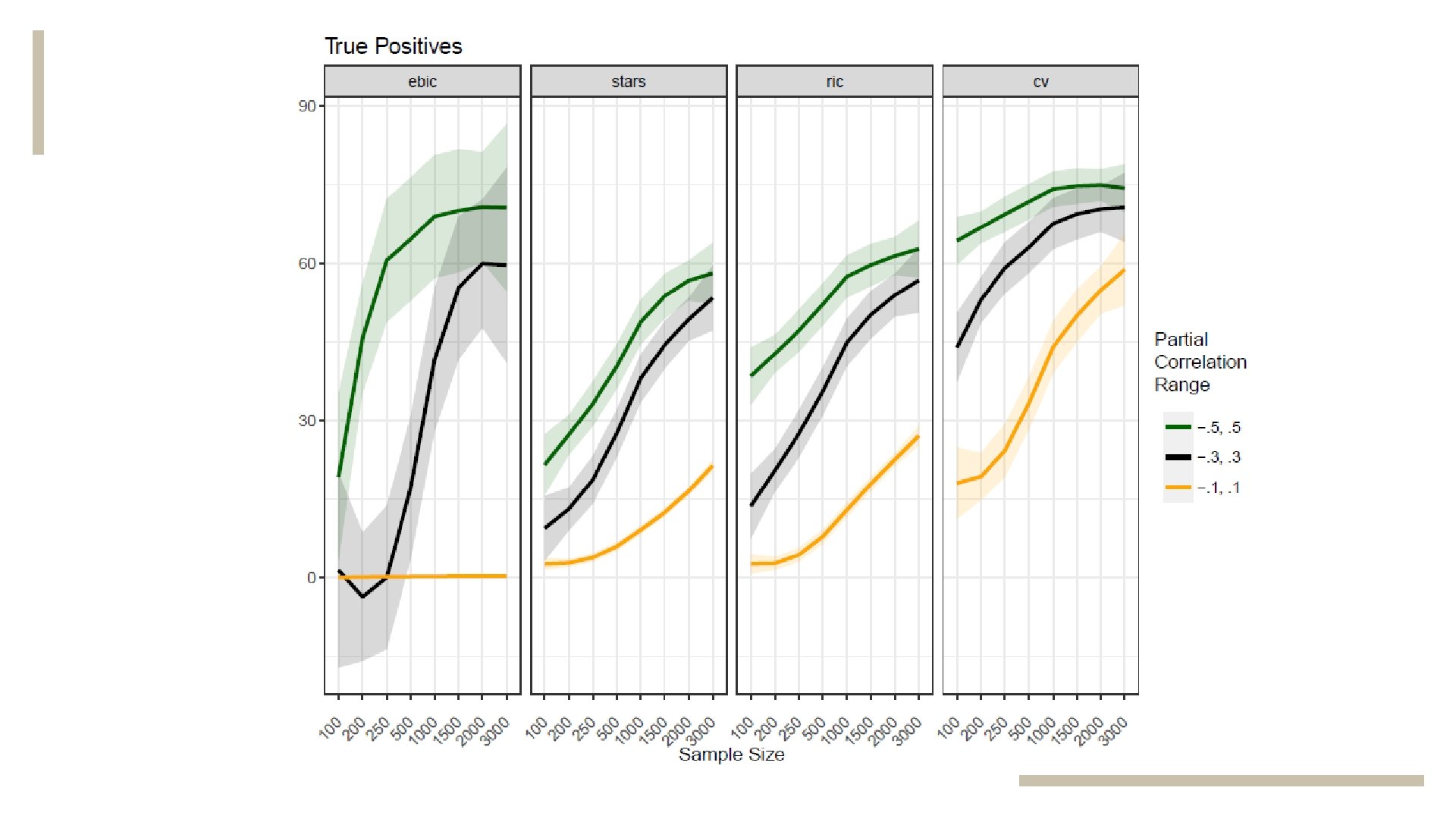

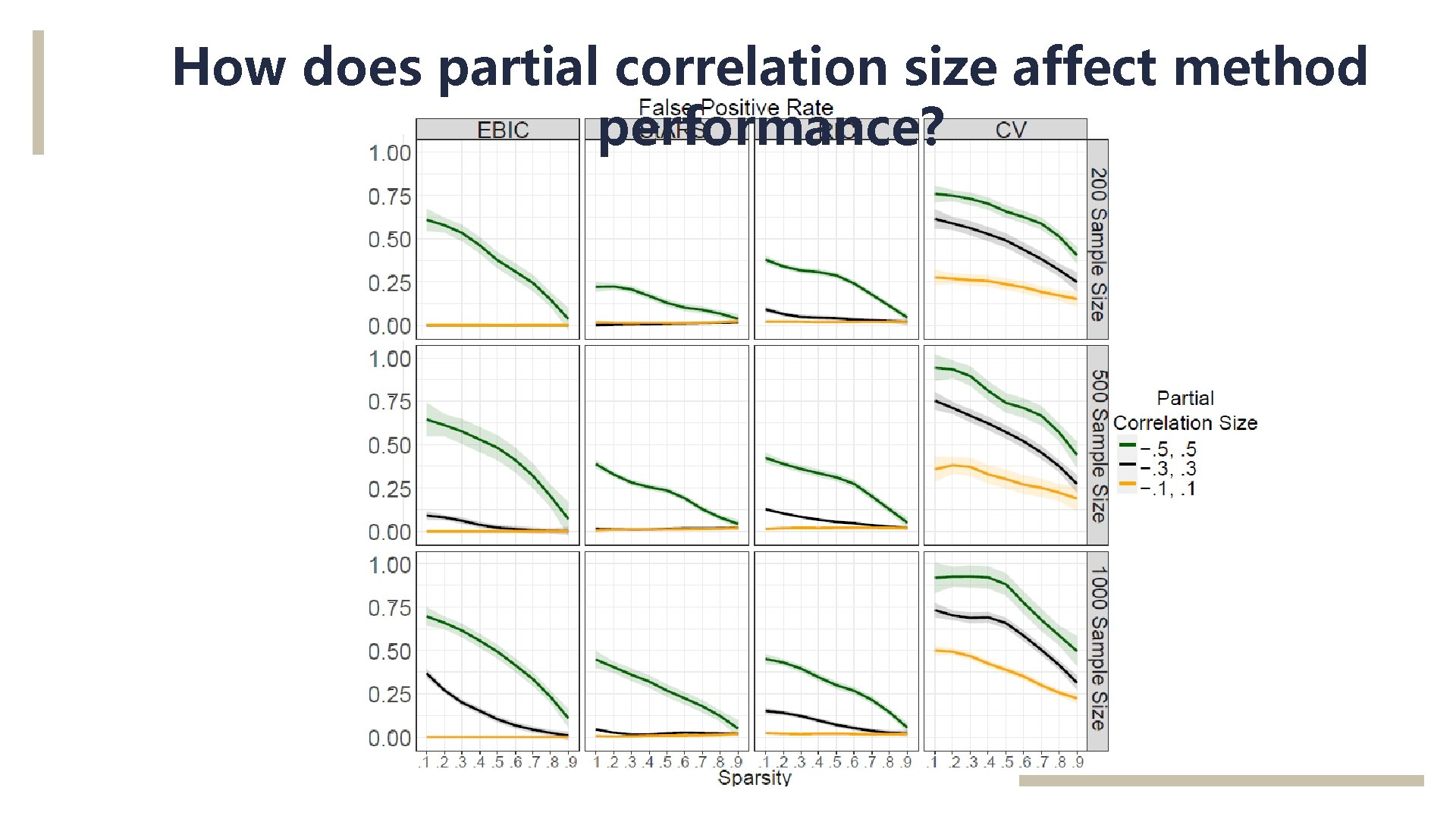

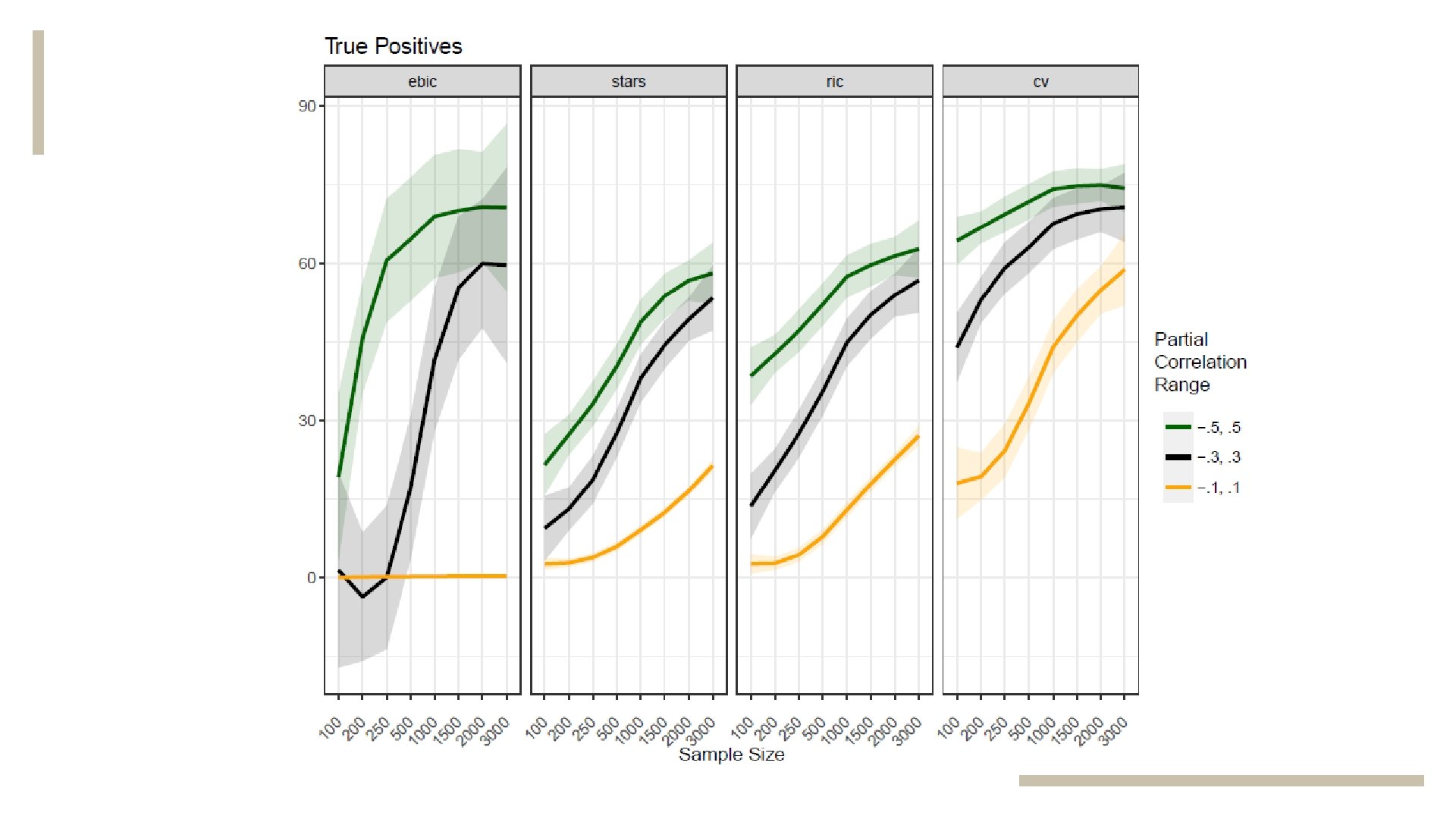

How does partial correlation size affect method performance?

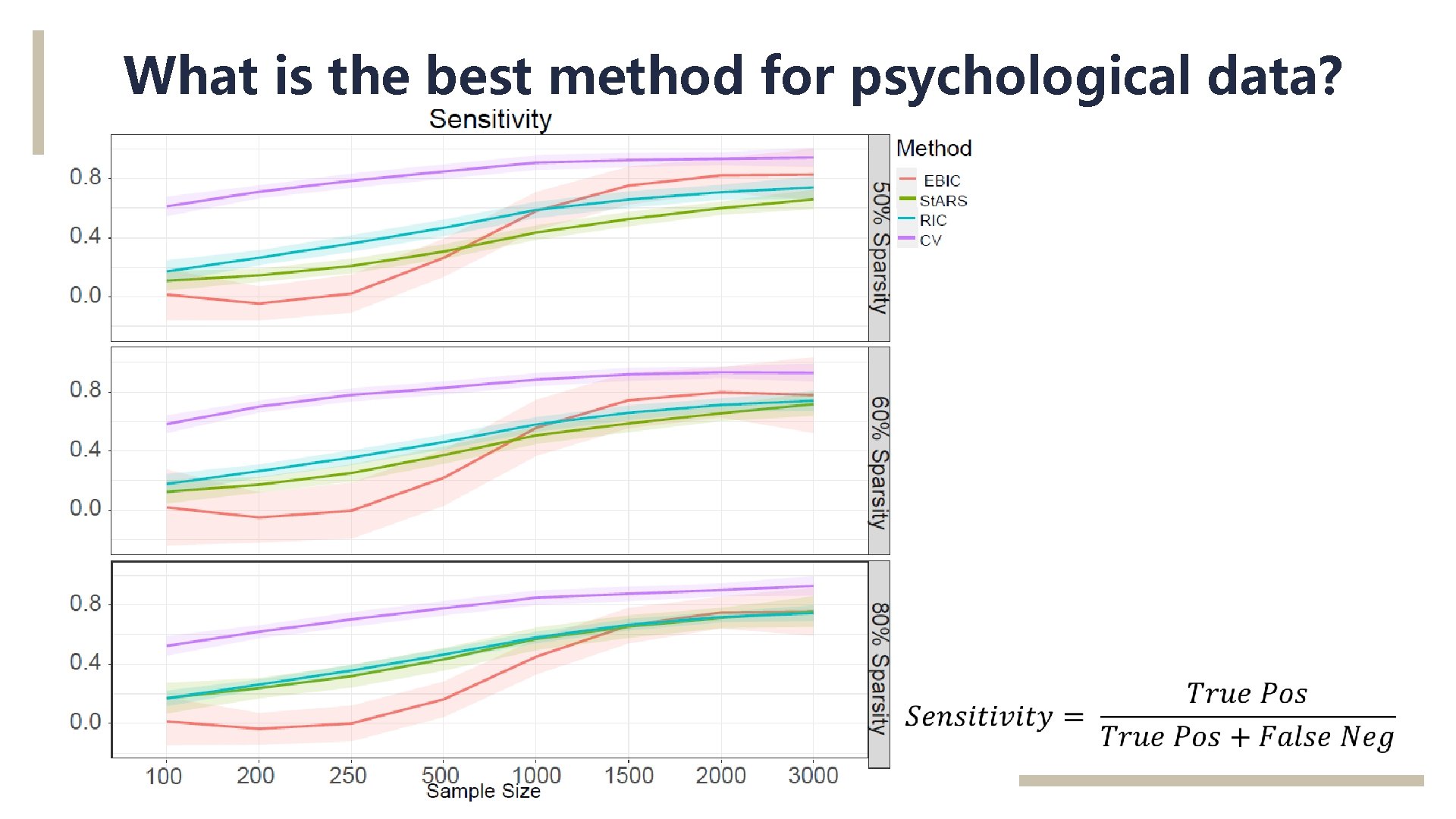

What is the best method for psychological data?

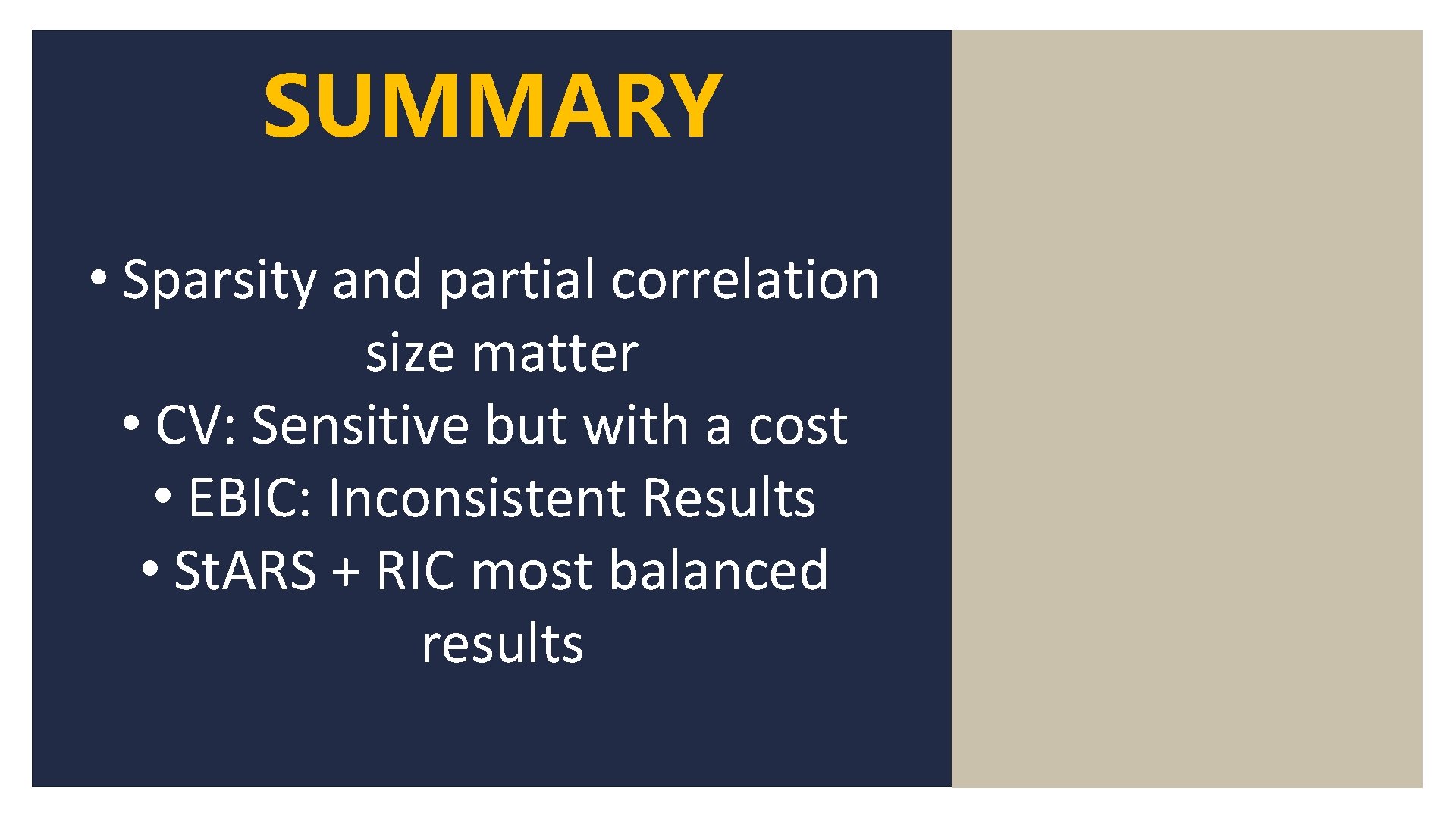

SUMMARY

- Sparsity and partial correlation size matter
- CV: Sensitive but with a cost
- EBIC: Inconsistent results
- StARS + RIC: Most balanced results

THANK YOU! ANNA WYSOCKI, M.S. awysocki@ucdavis.edu