
Peer-to-peer computing research: a fad? Frans Kaashoek kaashoek@lcs.mit.edu Joint work with: H. Balakrishnan, P. Druschel, J. Hellerstein, D. Karger, R. Karp, J. Kubiatowicz, B. Liskov, D. Mazières, R. Morris, S. Shenker, and I. Stoica (Berkeley, ICSI, MIT, NYU, and Rice)

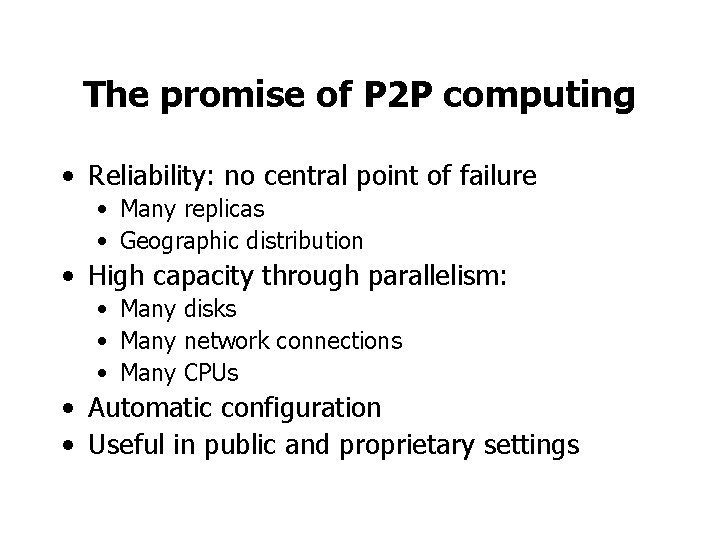

What is a P2P system? [Figure: nodes connected via the Internet] • A distributed system architecture: • No centralized control • Nodes are symmetric in function • Large number of unreliable nodes • Enabled by technology improvements

P2P: an exciting social development • Internet users cooperating to share, for example, music files • Napster, Gnutella, Morpheus, KaZaA, etc. • Lots of attention from the popular press: “The ultimate form of democracy on the Internet” “The ultimate threat to copyright protection on the Internet”

How to build critical services? • Many critical services use the Internet • Hospitals, government agencies, etc. • These services need to be robust against: • Node and communication failures • Load fluctuations (e.g., flash crowds) • Attacks (including DDoS)

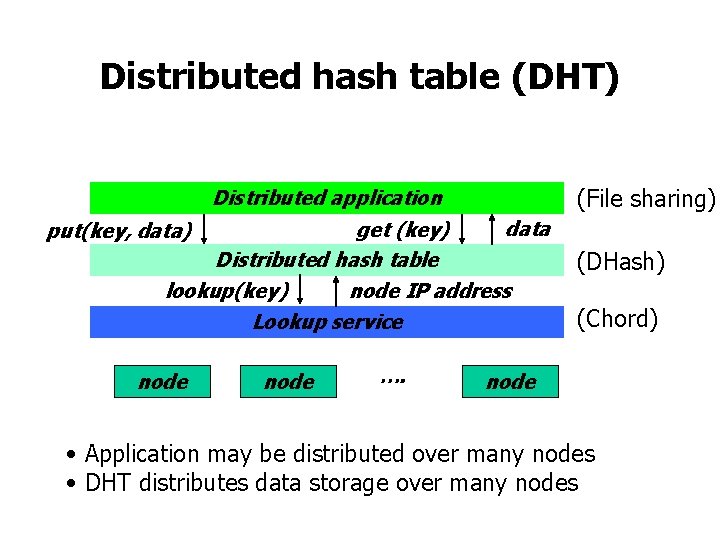

The promise of P2P computing • Reliability: no central point of failure • Many replicas • Geographic distribution • High capacity through parallelism: • Many disks • Many network connections • Many CPUs • Automatic configuration • Useful in public and proprietary settings

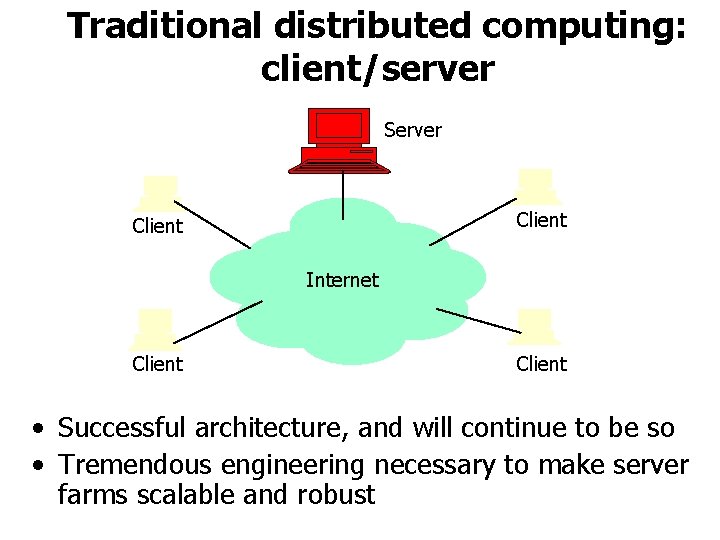

Traditional distributed computing: client/server [Figure: clients reach a server over the Internet] • Successful architecture, and will continue to be so • Tremendous engineering is necessary to make server farms scalable and robust

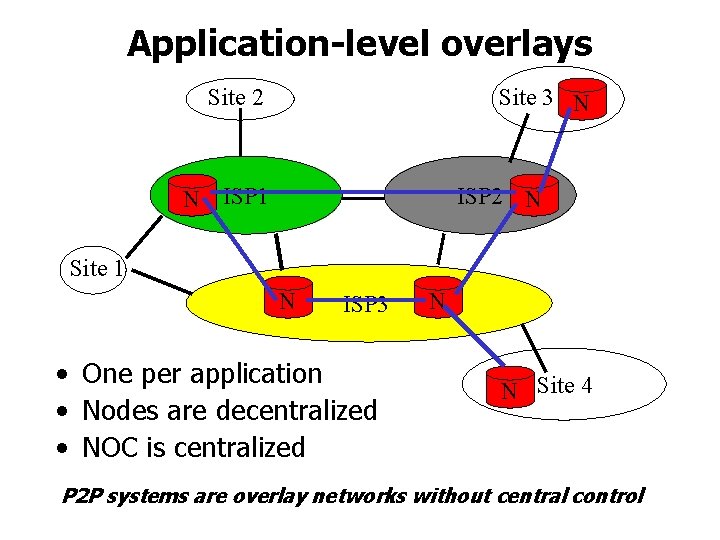

Application-level overlays [Figure: overlay nodes (N) at Sites 1 to 4, spread across ISP 1, ISP 2, and ISP 3] • One per application • Nodes are decentralized • NOC is centralized • P2P systems are overlay networks without central control

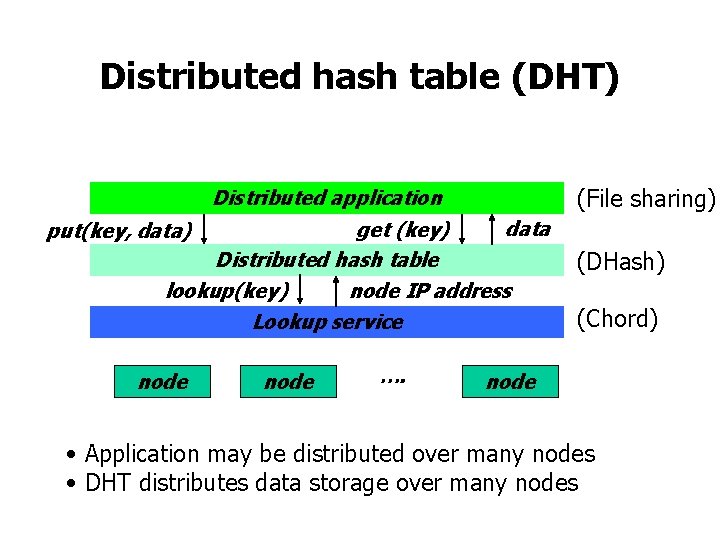

Distributed hash table (DHT) [Figure: a distributed application (e.g., file sharing) calls put(key, data) and get(key) → data on the distributed hash table (DHash); the DHT calls lookup(key) → node IP address on the lookup service (Chord); every layer runs across many nodes] • Application may be distributed over many nodes • DHT distributes data storage over many nodes

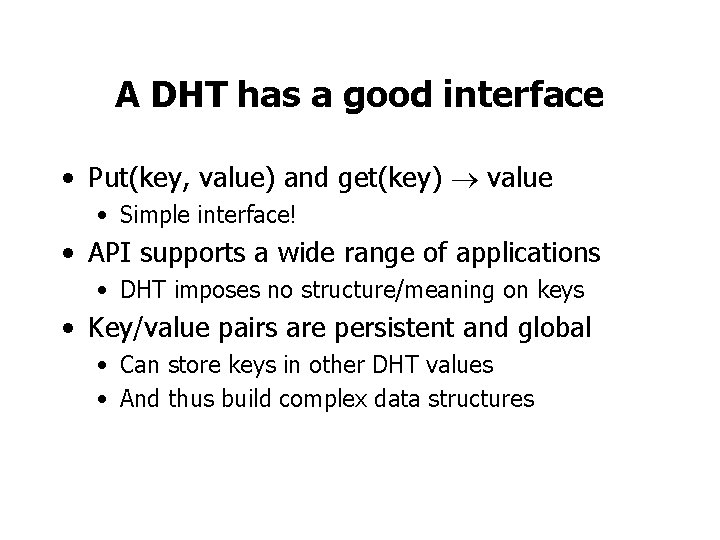

A DHT has a good interface • put(key, value) and get(key) → value • Simple interface! • API supports a wide range of applications • DHT imposes no structure/meaning on keys • Key/value pairs are persistent and global • Can store keys in other DHT values • And thus build complex data structures

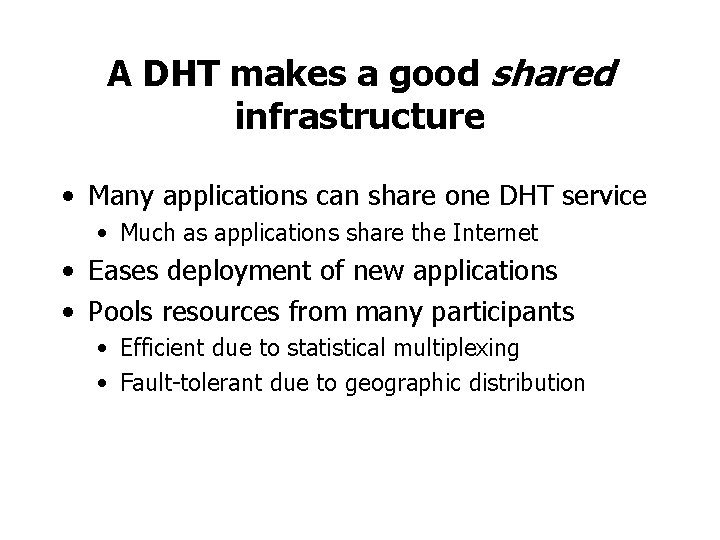

A DHT makes a good shared infrastructure • Many applications can share one DHT service • Much as applications share the Internet • Eases deployment of new applications • Pools resources from many participants • Efficient due to statistical multiplexing • Fault-tolerant due to geographic distribution


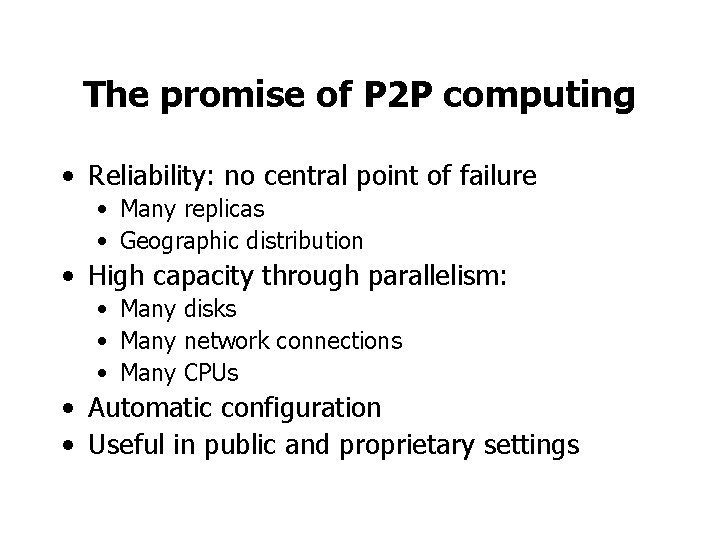

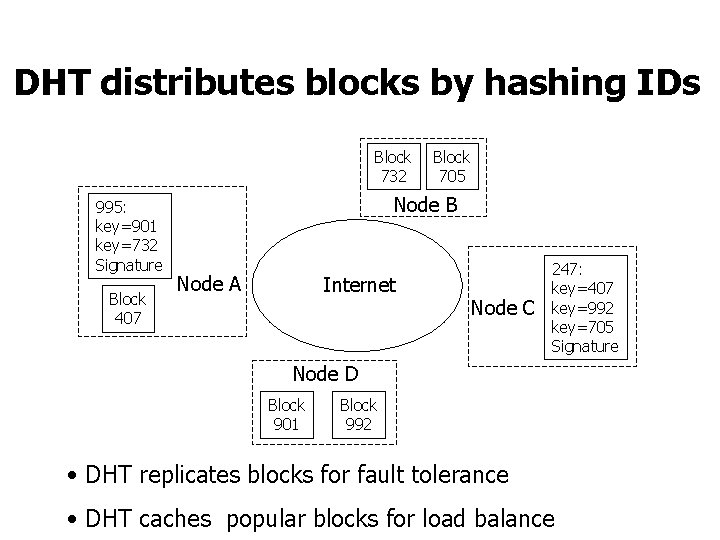

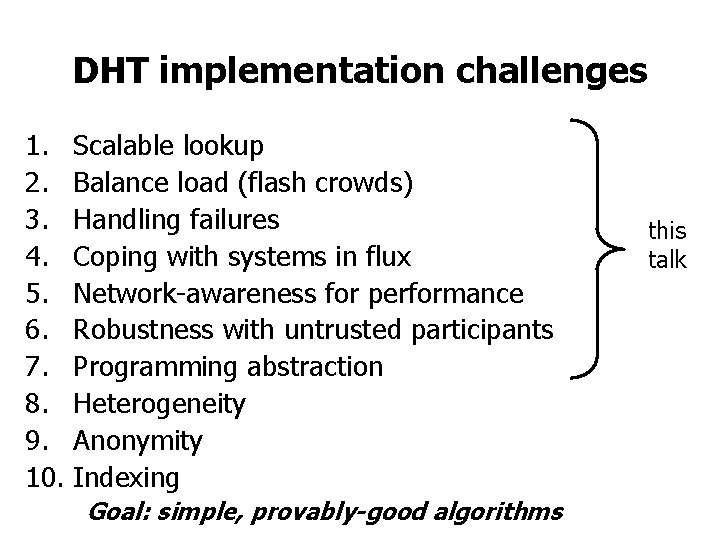

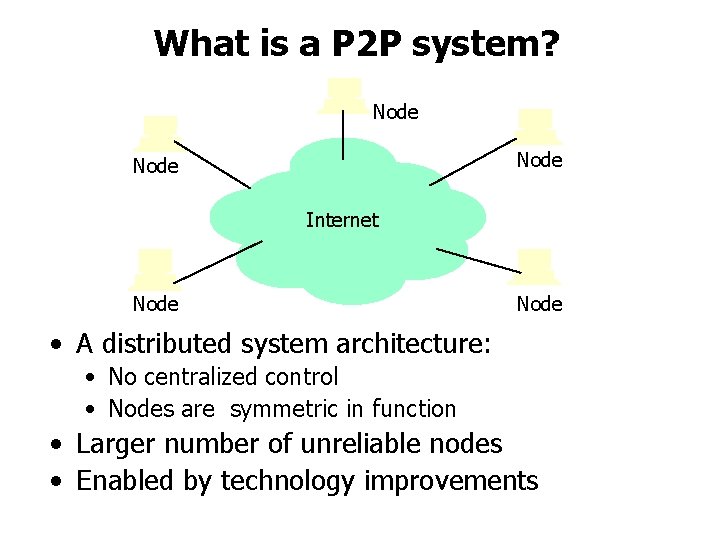

Many recent DHT-based projects • File sharing [CFS, OceanStore, PAST, Ivy, …] • Web cache [Squirrel, …] • Archival/Backup store [HiveNet, Mojo, Pastiche] • Censor-resistant stores [Eternity, FreeNet, …] • DB query and indexing [PIER, …] • Event notification [Scribe] • Naming systems [ChordDNS, Twine, …] • Communication primitives [i3, …] • Common thread: data is location-independent

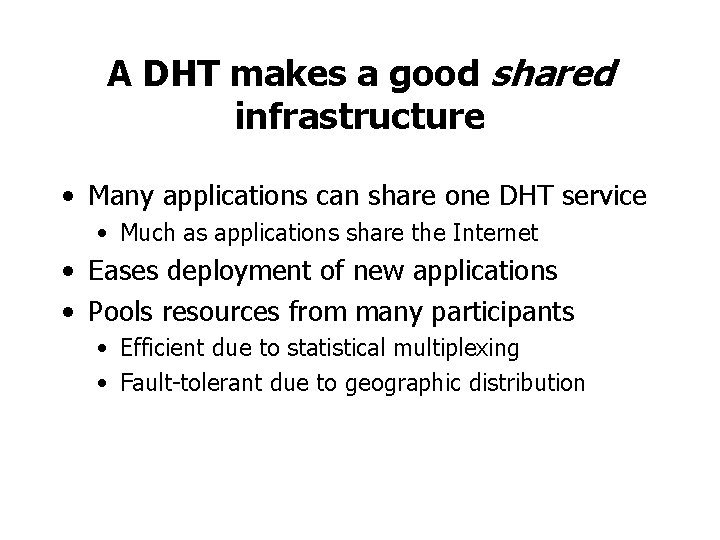

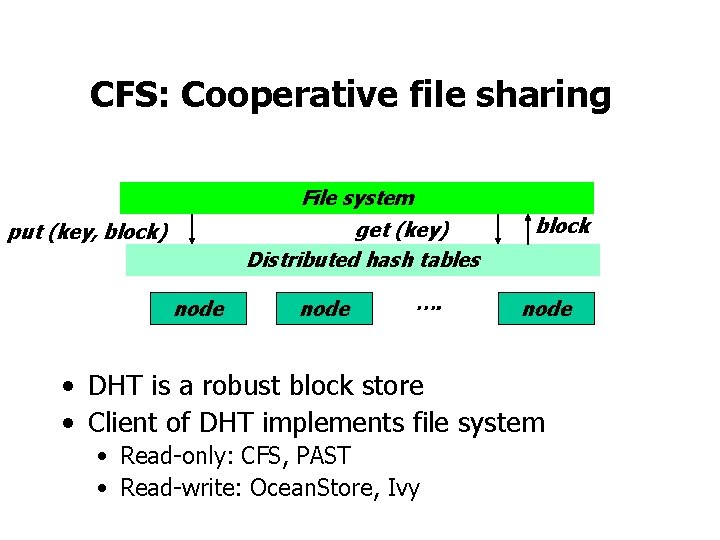

CFS: Cooperative file sharing [Figure: the file system calls put(key, block) and get(key) → block on a distributed hash table spread over many nodes] • DHT is a robust block store • Client of DHT implements file system • Read-only: CFS, PAST • Read-write: OceanStore, Ivy

File representation: self-authenticating data [Figure: a signed root block (995) lists keys 901 and 732; directory block 901 = SHA-1(directory block) maps “a.txt” to i-node ID 144; i-node block 144 = SHA-1(i-node block) lists data-block keys 431 and 795; 431 = SHA-1(data)] • Key = SHA-1(content of block) • Files and file systems form Merkle hash trees
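A minimal sketch of self-authenticating blocks, assuming a plain Python dict stands in for the DHT and that blocks hold newline-separated child keys. The names `store`, `put_block`, `get_block`, and `read_file` are hypothetical. The slide's root block carries a signature; this sketch skips signing and treats the root as an ordinary content-hashed block.

```python
import hashlib

store = {}  # stand-in for the DHT: hex SHA-1 key -> block bytes


def put_block(data):
    """Store a block under the SHA-1 hash of its content and return that key."""
    key = hashlib.sha1(data).hexdigest()
    store[key] = data
    return key


def get_block(key):
    """Fetch a block and check that it still hashes to the key it was requested by."""
    data = store[key]
    if hashlib.sha1(data).hexdigest() != key:
        raise ValueError("block does not match its key (corrupted or forged)")
    return data


# Build a tiny tree bottom-up: data blocks, then an i-node listing their keys,
# then a root block holding the i-node's key. (Signing the root is omitted.)
data_keys = [put_block(b"hello, "), put_block(b"world")]
inode_key = put_block("\n".join(data_keys).encode())
root_key = put_block(inode_key.encode())


def read_file(root):
    """Walk root -> i-node -> data blocks; every fetch is verified against its key."""
    inode = get_block(get_block(root).decode())
    return b"".join(get_block(k) for k in inode.decode().split("\n"))


print(read_file(root_key))  # b'hello, world'
```

Because each key is the hash of the content beneath it, the root key commits to the whole tree: altering any block makes `get_block` raise on the way down. Identical blocks also hash to the same key, so they are stored only once.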

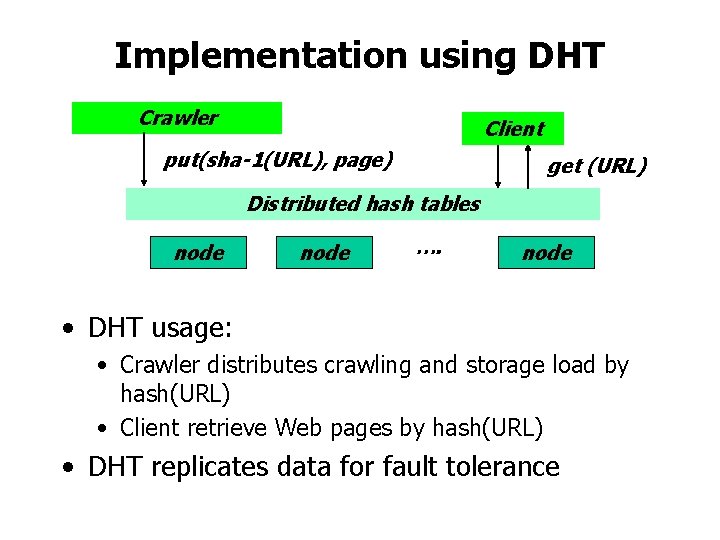

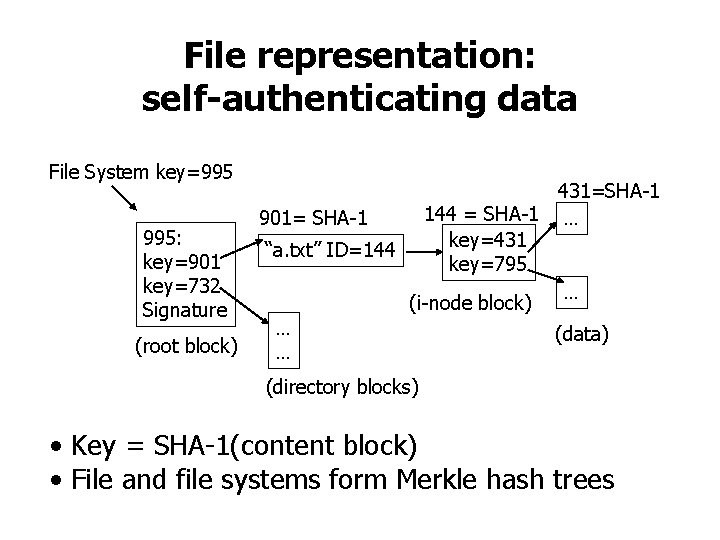

DHT distributes blocks by hashing IDs [Figure: root blocks 995 (keys 901, 732) and 247 (keys 407, 992, 705), plus blocks 407, 705, 732, 901, and 992, spread across Nodes A to D over the Internet] • DHT replicates blocks for fault tolerance • DHT caches popular blocks for load balance

Historical web archiver • Goal: make and archive a daily checkpoint of the Web • Estimates: • Web is about 57 Tbyte, compressed HTML+img • New data per day: 580 Gbyte ⇒ 128 Tbyte per year with 5 replicas • Design: • 12,810 nodes: 100 Gbyte disk each and 61 Kbit/s per node

Implementation using DHT [Figure: a crawler calls put(sha-1(URL), page) and a client calls get(URL) on a distributed hash table spread over many nodes] • DHT usage: • Crawler distributes crawling and storage load by hash(URL) • Client retrieves Web pages by hash(URL) • DHT replicates data for fault tolerance

Archival/backup store • Goal: archive on other users’ machines • Observations: • Many user machines are not backed up • Archiving requires significant manual effort • Many machines have lots of spare disk space • Using DHT: • Merkle tree to validate integrity of data • Administrative and financial costs are lower for all participants • Archives are robust (automatic off-site backups) • Blocks are stored once, if key = sha1(data)

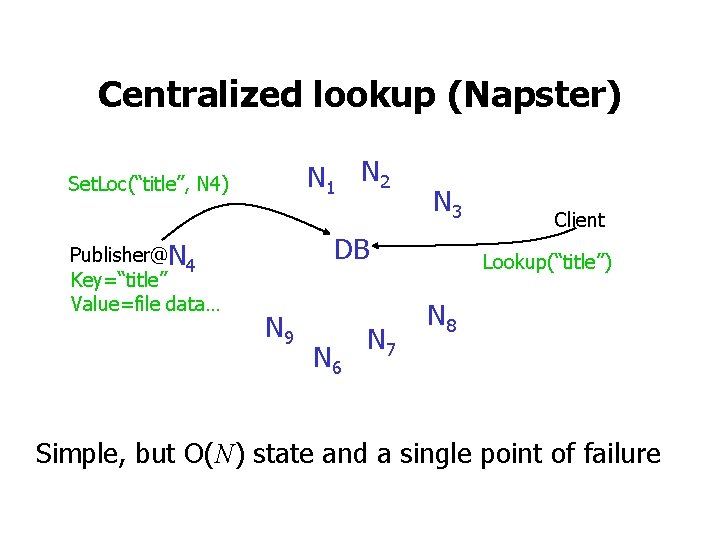

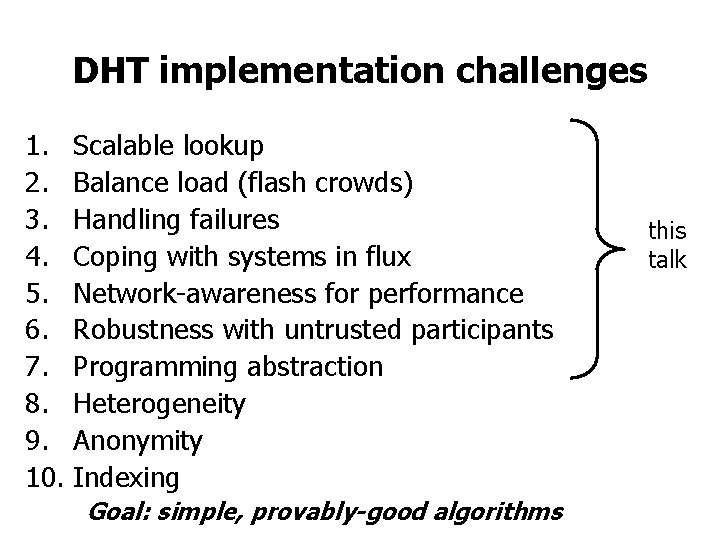

DHT implementation challenges 1. Scalable lookup 2. Balance load (flash crowds) 3. Handling failures 4. Coping with systems in flux 5. Network-awareness for performance 6. Robustness with untrusted participants 7. Programming abstraction 8. Heterogeneity 9. Anonymity 10. Indexing • Goal: simple, provably-good algorithms (this talk)

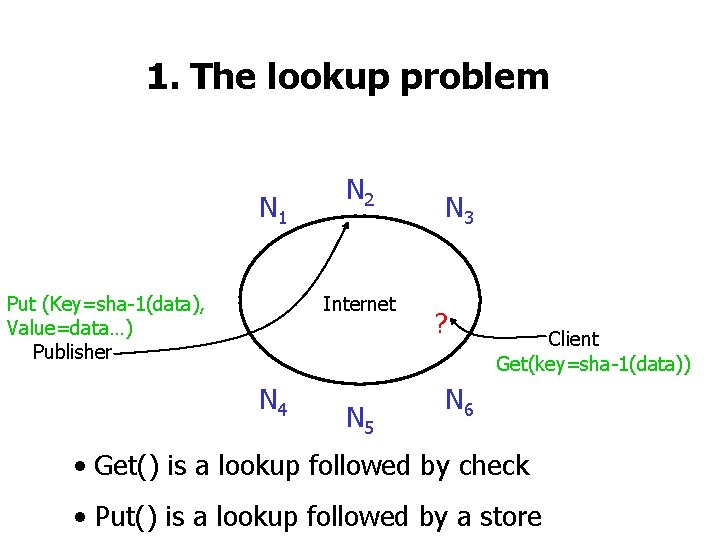

1. The lookup problem [Figure: a publisher at N1 calls put(key=sha-1(data), value=data…); a client elsewhere on the Internet calls get(key=sha-1(data)) and must find which of N1 to N6 holds it] • get() is a lookup followed by a check • put() is a lookup followed by a store
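The two bullets above can be sketched with a single-process toy, assuming nodes are placed on a ring by SHA-1 of a made-up address and that the responsible node is the key's successor (Chord's rule). `ToyDHT`, `sha1_int`, and the 192.0.2.x addresses are hypothetical; there is no networking here.

```python
import bisect
import hashlib


def sha1_int(data):
    """Map bytes to a 160-bit integer identifier."""
    return int.from_bytes(hashlib.sha1(data).digest(), "big")


class ToyDHT:
    """In-process stand-in for a DHT: one dict per node, nodes on a ring by SHA-1(address)."""

    def __init__(self, node_addrs):
        self.storage = {sha1_int(a.encode()): {} for a in node_addrs}
        self.ring = sorted(self.storage)

    def lookup(self, key):
        """Return the ID of the node responsible for key: its successor on the ring."""
        i = bisect.bisect_left(self.ring, key)
        return self.ring[i % len(self.ring)]

    def put(self, data):
        """put(): a lookup followed by a store, keyed by SHA-1 of the data."""
        key = sha1_int(data)
        self.storage[self.lookup(key)][key] = data
        return key

    def get(self, key):
        """get(): a lookup followed by a fetch and an integrity check."""
        data = self.storage[self.lookup(key)][key]
        if sha1_int(data) != key:
            raise ValueError("returned data does not hash to the requested key")
        return data


dht = ToyDHT(["192.0.2.1", "192.0.2.2", "192.0.2.3"])
k = dht.put(b"MP3 data")
print(dht.get(k))  # b'MP3 data'
```

The check in `get` is what makes it safe to accept data from an arbitrary node: the key itself says what the answer must hash to.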

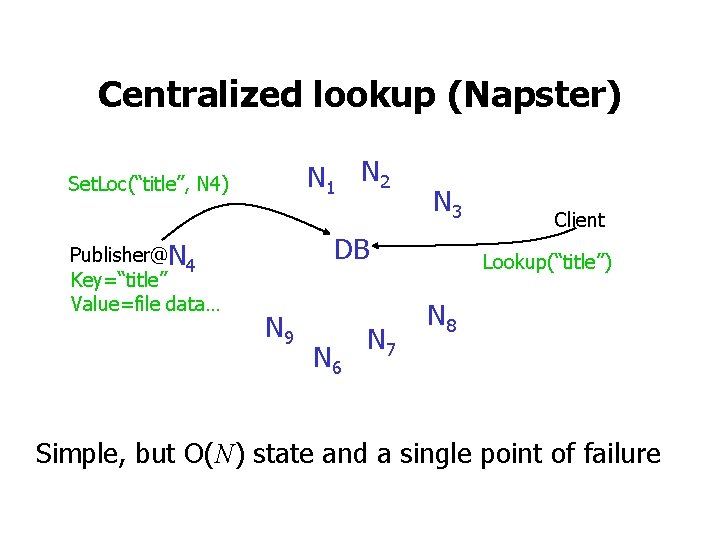

Centralized lookup (Napster) [Figure: publisher N4 registers SetLoc(“title”, N4) with a central DB; client N8 sends Lookup(“title”) to the DB; N4 holds key=“title”, value=file data…] • Simple, but O(N) state and a single point of failure

Flooded queries (Gnutella) [Figure: client N8 floods Lookup(“title”) through its neighbors among N1 to N9 until it reaches publisher N4, which holds key=“title”, value=MP3 data…] • Robust, but worst case O(N) messages per lookup
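A small sketch of flooding with a hop limit (TTL), to make the message cost concrete. The overlay links below are hypothetical (the slide does not give them), and real Gnutella details such as not echoing a query back to its sender are ignored. Because every node forwards to every neighbor, the message count tracks the number of links reached, not the length of the path to the item.

```python
from collections import deque


def flood_lookup(graph, start, has_item, ttl):
    """Gnutella-style flooding with a TTL.

    Every node that receives the query checks for the item and, while TTL remains,
    forwards it to all of its neighbors. Returns (nodes found holding the item,
    total query messages sent).
    """
    seen = {start}
    queue = deque([(start, ttl)])
    hits, messages = set(), 0
    while queue:
        node, t = queue.popleft()
        if has_item(node):
            hits.add(node)
        if t == 0:
            continue
        for nb in graph[node]:
            messages += 1              # each forward costs a message, duplicates included
            if nb not in seen:         # a node processes a given query only once
                seen.add(nb)
                queue.append((nb, t - 1))
    return hits, messages


# Hypothetical overlay topology; the slide does not specify the links.
graph = {
    "N1": ["N2", "N3"],
    "N2": ["N1", "N4"],
    "N3": ["N1", "N6"],
    "N4": ["N2", "N6", "N7"],
    "N6": ["N3", "N4", "N8"],
    "N7": ["N4", "N9"],
    "N8": ["N6", "N9"],
    "N9": ["N7", "N8"],
}
print(flood_lookup(graph, "N8", lambda n: n == "N4", ttl=3))  # ({'N4'}, 14)
```

With `ttl=1` the same query sends only 2 messages and finds nothing, which is the robustness/cost trade-off a flooding design has to tune.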

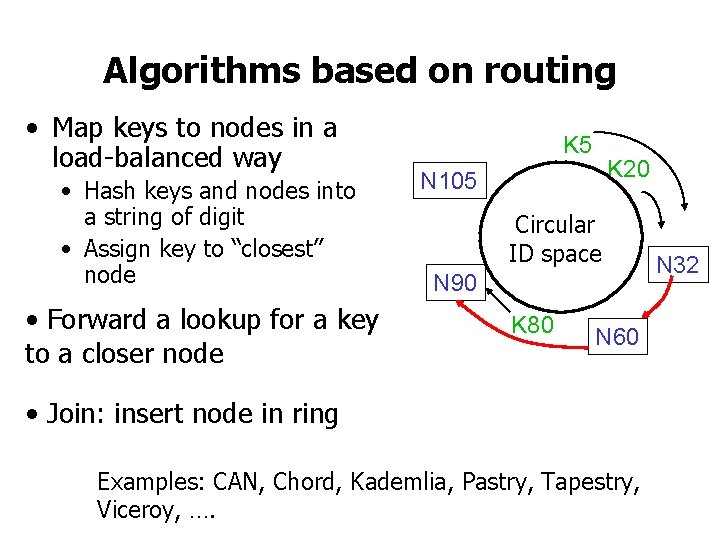

Algorithms based on routing • Map keys to nodes in a load-balanced way • Hash keys and nodes into a string of digits • Assign each key to the “closest” node • Forward a lookup for a key to a closer node • Join: insert node in ring [Figure: circular ID space with nodes N32, N60, N90, N105 and keys K5, K20, K80] • Examples: CAN, Chord, Kademlia, Pastry, Tapestry, Viceroy, …
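A minimal consistent-hashing sketch, assuming Chord's definition of “closest” (the first node clockwise from the key, its successor) and a hypothetical 7-bit ID space so the slide's small IDs fit. Other systems on the list define closeness differently.

```python
import bisect
import hashlib

M = 7              # hypothetical 7-bit circular ID space (IDs 0..127)
RING = 2 ** M


def ring_id(name):
    """Hash a node address or key name into the circular ID space via SHA-1."""
    return int.from_bytes(hashlib.sha1(name.encode()).digest(), "big") % RING


def successor(node_ids, key_id):
    """Return the first node ID at or after key_id, wrapping around the ring."""
    ids = sorted(node_ids)
    i = bisect.bisect_left(ids, key_id % RING)
    return ids[i % len(ids)]


nodes = [32, 60, 90, 105]          # node IDs from the slide
for k in (5, 20, 80):
    print(f"K{k} -> N{successor(nodes, k)}")
# K5 -> N32
# K20 -> N32
# K80 -> N90
```

A real deployment would place nodes with `ring_id(address)` and keys with `ring_id(key)`; the fixed IDs above just mirror the figure. When a node joins, only the keys between its predecessor and itself move, which is what keeps joins cheap.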

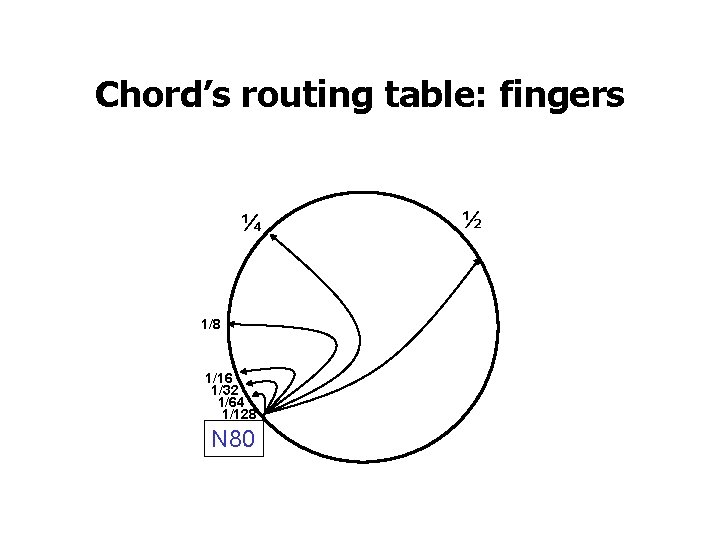

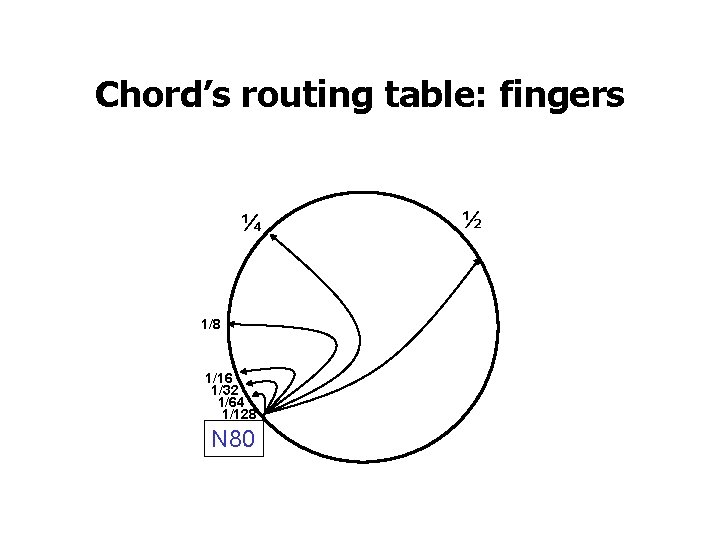

Chord’s routing table: fingers [Figure: node N80 with fingers reaching ½, ¼, 1/8, 1/16, 1/32, 1/64, and 1/128 of the way around the ring]

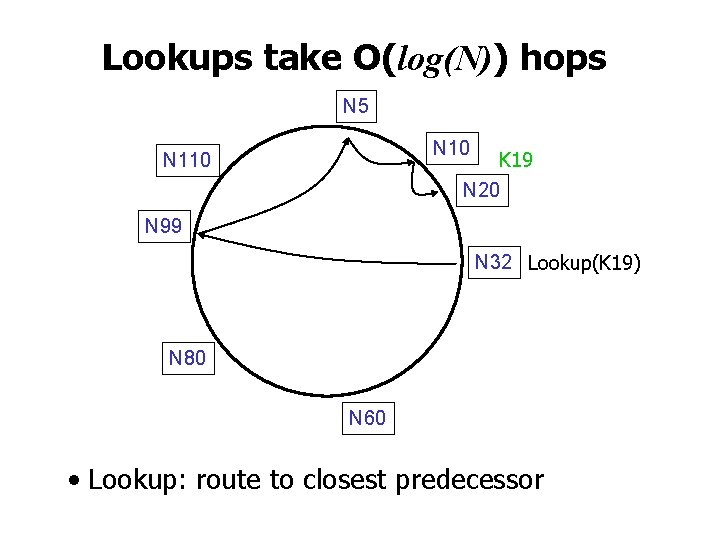

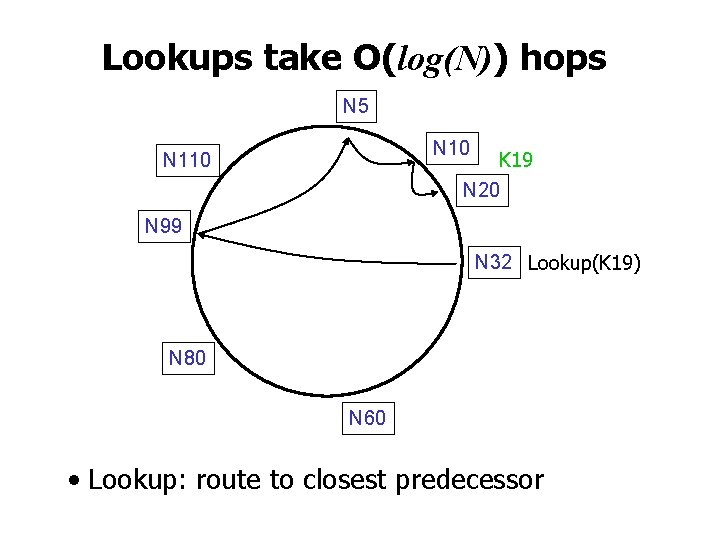

Lookups take O(log(N)) hops [Figure: Lookup(K19) on a ring of N5, N10, N20, N32, N60, N80, N99, N110; K19 is stored at N20] • Lookup: route to the closest predecessor
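A sketch of Chord-style finger tables and “route to the closest predecessor”, using the slide's node IDs in a hypothetical 7-bit ID space and assuming the lookup starts at N80. Finger i of node n points at successor(n + 2^i), so each finger covers a halving fraction of the ring. Every node's state is computed from a global list here, which a real system would not have.

```python
import bisect

M = 7                  # hypothetical 7-bit ID space (IDs 0..127)
RING = 2 ** M
NODES = sorted([5, 10, 20, 32, 60, 80, 99, 110])   # node IDs from the slide


def successor(x):
    """First node at or after x, clockwise."""
    i = bisect.bisect_left(NODES, x % RING)
    return NODES[i % len(NODES)]


def between(x, a, b):
    """True if x lies strictly inside the clockwise interval (a, b)."""
    if a < b:
        return a < x < b
    return x > a or x < b   # interval wraps past 0 (a == b means the whole ring except a)


def fingers(n):
    """finger[i] = successor(n + 2**i); finger[0] is n's immediate successor."""
    return [successor(n + 2 ** i) for i in range(M)]


def closest_preceding_finger(n, key):
    """The farthest finger of n that still lies before key."""
    for f in reversed(fingers(n)):
        if between(f, n, key):
            return f
    return n


def lookup(start, key):
    """Route toward key's predecessor; return (node storing key, forwarding hops)."""
    n, hops = start, 0
    while not (between(key, n, fingers(n)[0]) or key == fingers(n)[0]):
        n = closest_preceding_finger(n, key)
        hops += 1
    return fingers(n)[0], hops


print(fingers(80))      # [99, 99, 99, 99, 99, 5, 20]
print(lookup(80, 19))   # (20, 2): N80 -> N5 -> N10, whose successor N20 stores K19
```

Each forwarding step at least halves the remaining clockwise distance to the key, which is where the O(log N) hop bound comes from.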

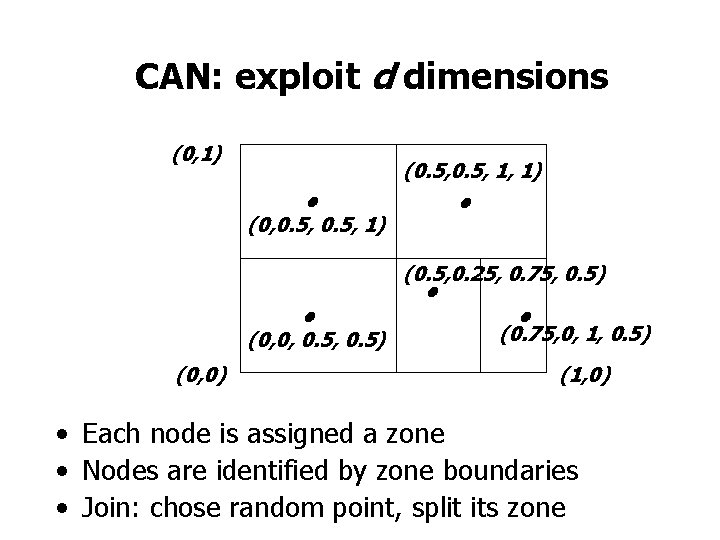

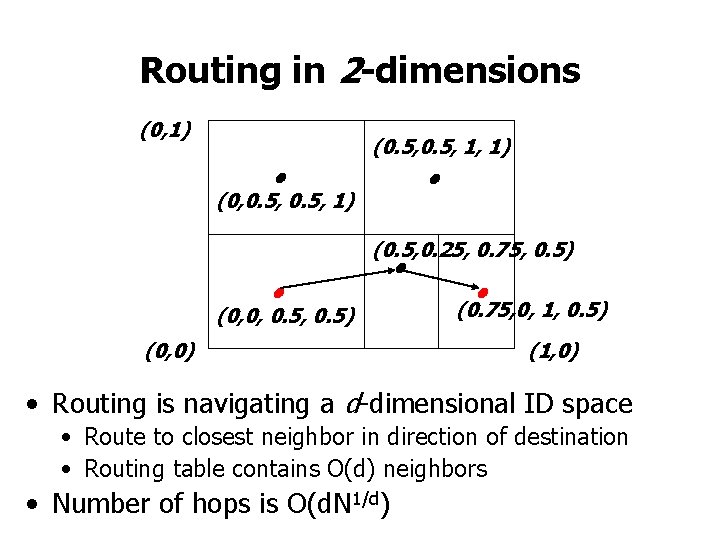

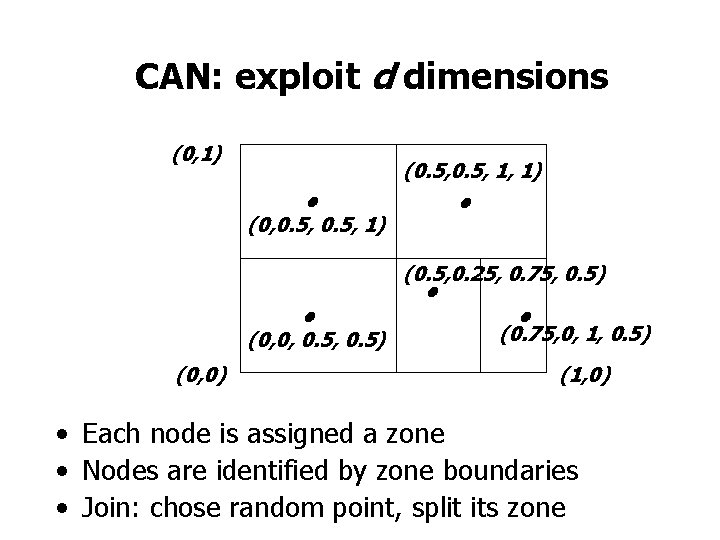

CAN: exploit d dimensions [Figure: the unit square with corners (0, 0), (1, 0), and (0, 1), split into zones labeled by their boundaries, e.g., (0.5, 0.25, 0.75, 0.5) and (0.75, 0, 1, 0.5)] • Each node is assigned a zone • Nodes are identified by zone boundaries • Join: choose a random point and split the zone that contains it

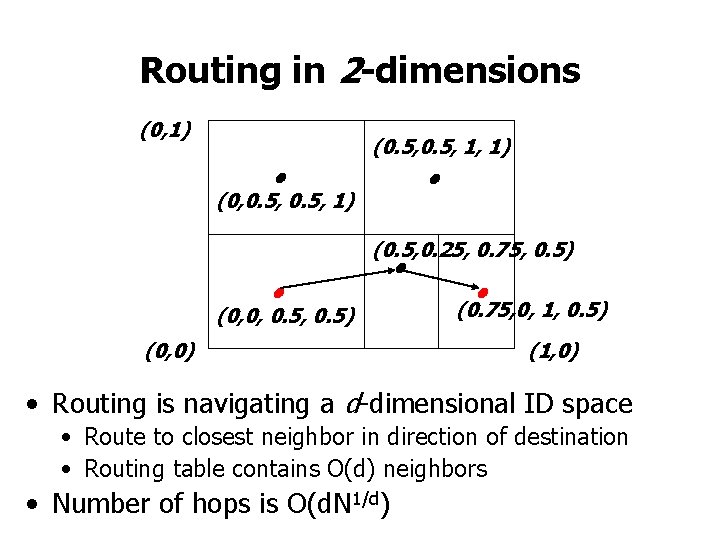

Routing in 2 dimensions [Figure: the unit square divided into zones] • Routing is navigating a d-dimensional ID space • Route to the closest neighbor in the direction of the destination • Routing table contains O(d) neighbors • Number of hops is O(d · N^(1/d))
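To see where O(d · N^(1/d)) comes from, here is a sketch of greedy routing under a simplifying assumption: the d-dimensional space is a torus split into a perfect grid of k^d equal zones (N = k^d), whereas real CAN zones are uneven because joins split them. Each zone has 2d neighbors, and each hop moves one zone along one dimension toward the destination.

```python
from itertools import product


def step_toward(a, b, k):
    """Move one cell from a toward b on a ring of k cells, taking the shorter way."""
    forward = (b - a) % k
    return (a + 1) % k if forward <= k - forward else (a - 1) % k


def can_route(src, dst, k):
    """Greedy routing on a d-dimensional torus split into k**d equal zones.

    Each hop moves to a neighboring zone (one cell along one dimension) that is
    closer to dst. Returns the zones visited, including src and dst.
    """
    cur = list(src)
    path = [tuple(cur)]
    while tuple(cur) != tuple(dst):
        dim = next(i for i in range(len(cur)) if cur[i] != dst[i])
        cur[dim] = step_toward(cur[dim], dst[dim], k)
        path.append(tuple(cur))
    return path


def average_hops(d, k):
    """Mean hop count over all ordered pairs of zones."""
    zones = list(product(range(k), repeat=d))
    total = sum(len(can_route(s, t, k)) - 1 for s in zones for t in zones)
    return total / len(zones) ** 2


print(len(can_route((0, 0), (2, 2), 4)) - 1)  # 4 hops
print(average_hops(2, 4))                     # 2.0, i.e. (d/4) * N**(1/d) with N = 16
```

For such a perfect grid with an even number of zones per side, the average is exactly (d/4) · N^(1/d) hops: adding dimensions shortens paths at the cost of more neighbors per node.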

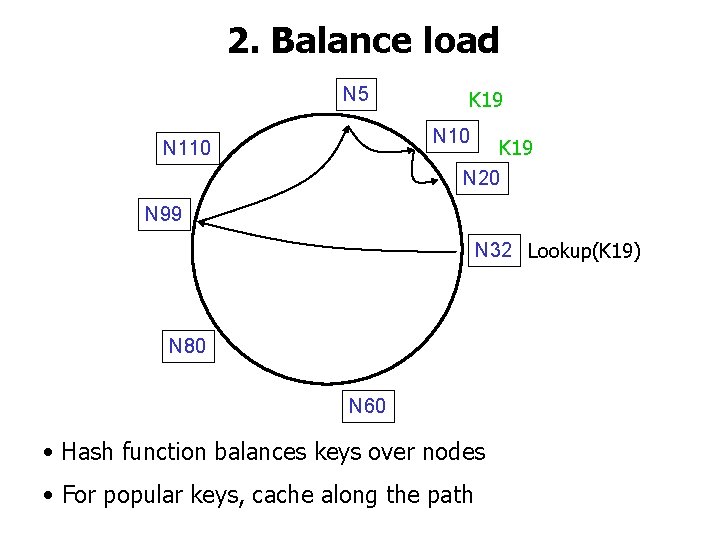

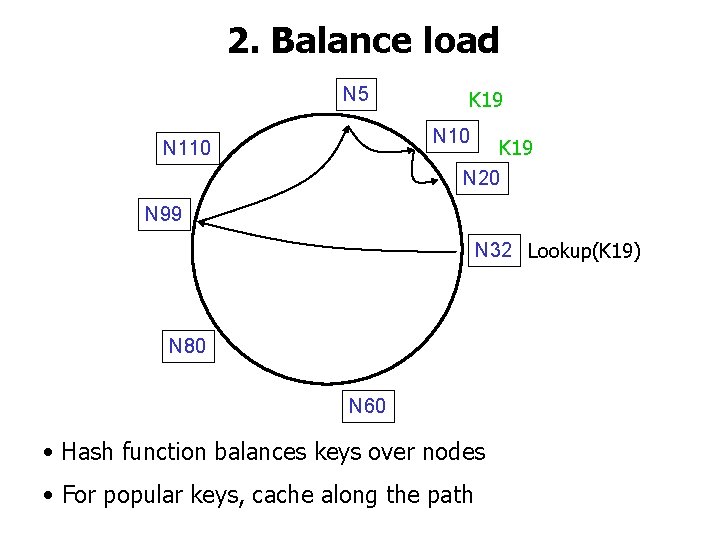

2. Balance load [Figure: Lookup(K19) on the Chord ring, with copies of K19 cached at nodes along the lookup path] • Hash function balances keys over nodes • For popular keys, cache along the path

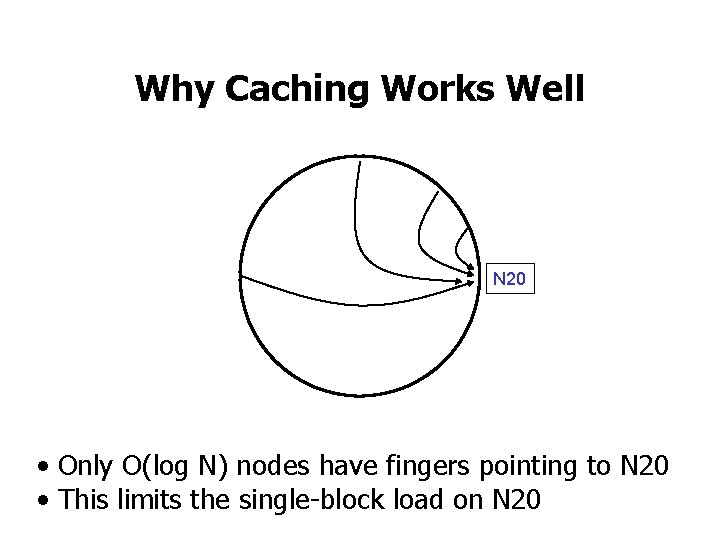

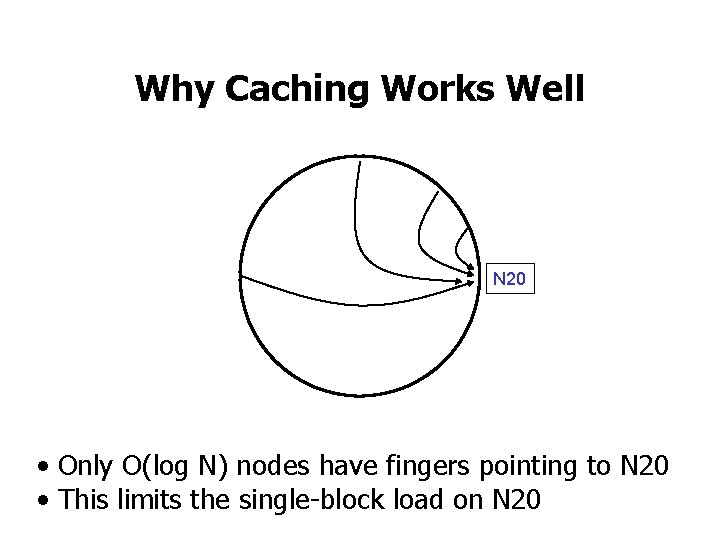

Why caching works well [Figure: fingers of other nodes pointing to N20] • Only O(log N) nodes have fingers pointing to N20 • This limits the single-block load on N20

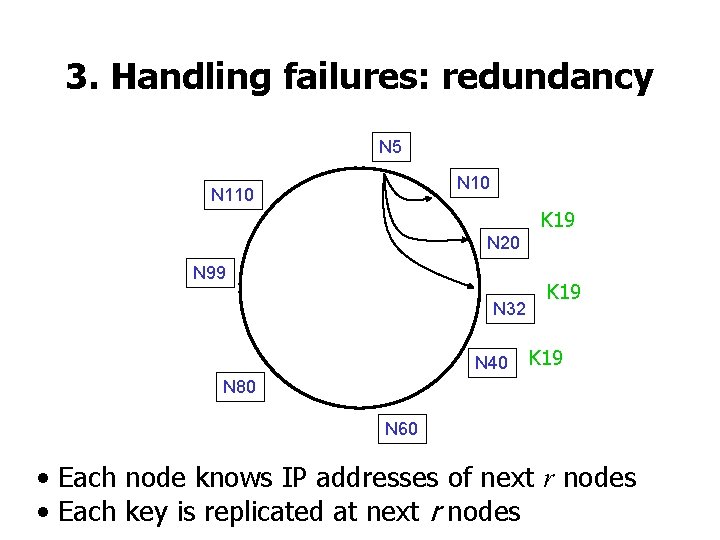

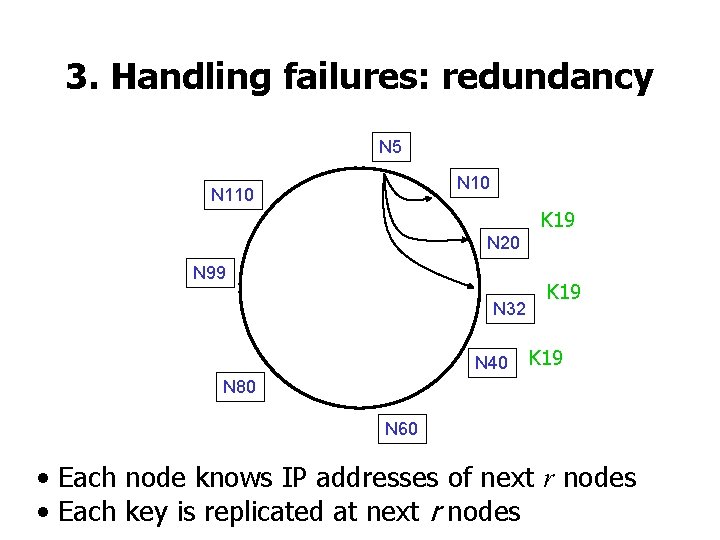

3. Handling failures: redundancy [Figure: a ring of N5, N10, N20, N32, N40, N60, N80, N99, N110, with K19 stored at N20 and replicated on the following nodes] • Each node knows the IP addresses of the next r nodes • Each key is replicated at the next r nodes
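A sketch of successor-list replication, assuming (as a simplification) that a key's r replicas are its successor plus the next r − 1 nodes clockwise, and that a client simply tries them in order. `replicas` and `fetch_node` are hypothetical names; node IDs come from the slide.

```python
import bisect

NODES = sorted([5, 10, 20, 32, 40, 60, 80, 99, 110])   # node IDs from the slide


def replicas(key, r):
    """Assumed placement: the key's successor followed by the next r - 1 nodes clockwise."""
    i = bisect.bisect_left(NODES, key) % len(NODES)
    return [NODES[(i + j) % len(NODES)] for j in range(min(r, len(NODES)))]


def fetch_node(key, r, alive):
    """Walk the replica list in order and return the first live node, or None."""
    return next((n for n in replicas(key, r) if n in alive), None)


print(replicas(19, 3))                              # [20, 32, 40]
print(fetch_node(19, 3, alive=set(NODES) - {20}))   # 32: N20 failed, the next replica answers
```

Because a node already tracks its next r successors for routing, the same list tells it where the replicas live, so a key stays reachable until all r of those nodes fail.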

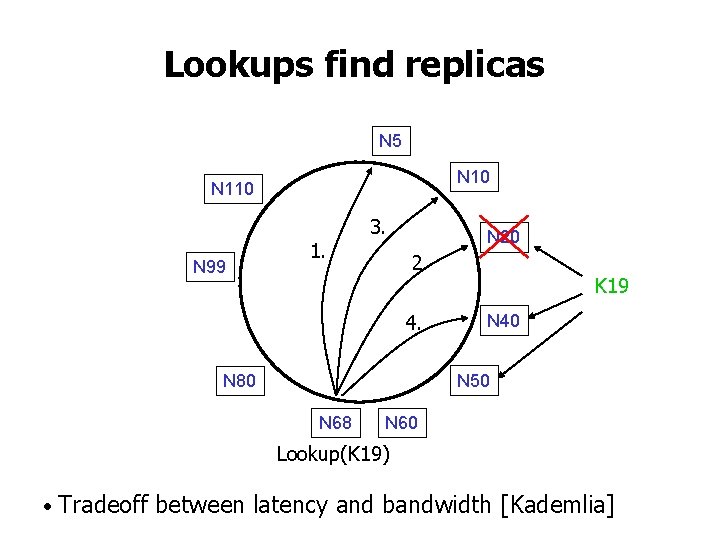

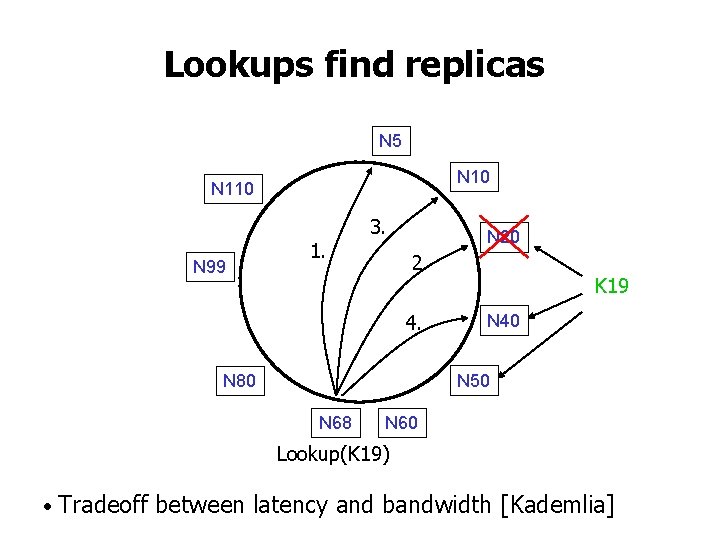

Lookups find replicas [Figure: a Lookup(K19), in four numbered steps, on a ring of N5, N10, N20, N40, N50, N60, N68, N80, N99, and N110 that holds replicas of K19] • Tradeoff between latency and bandwidth [Kademlia]

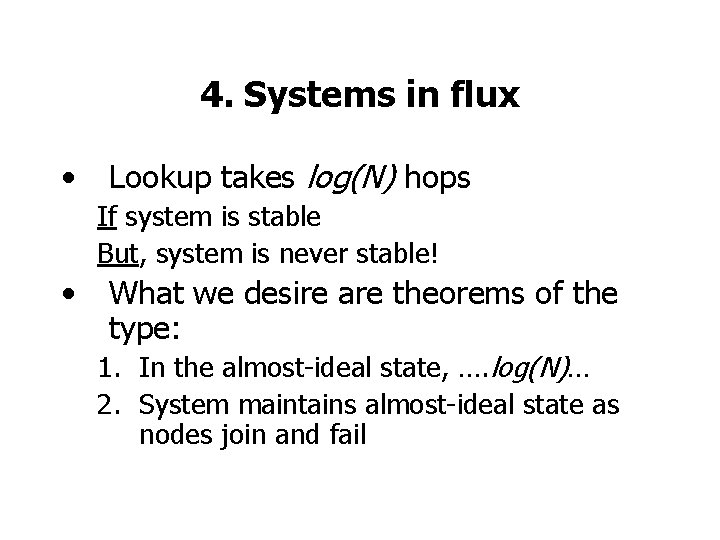

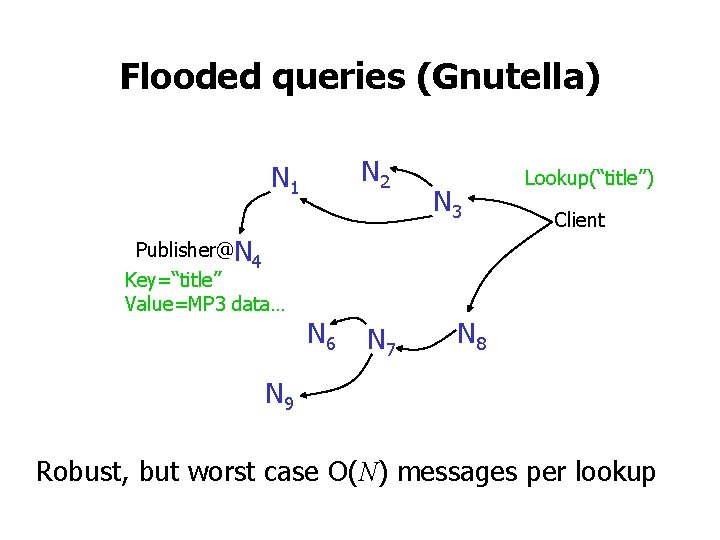

4. Systems in flux • Lookup takes log(N) hops if the system is stable • But the system is never stable! • What we desire are theorems of the type: 1. In the almost-ideal state, … log(N) … 2. The system maintains the almost-ideal state as nodes join and fail


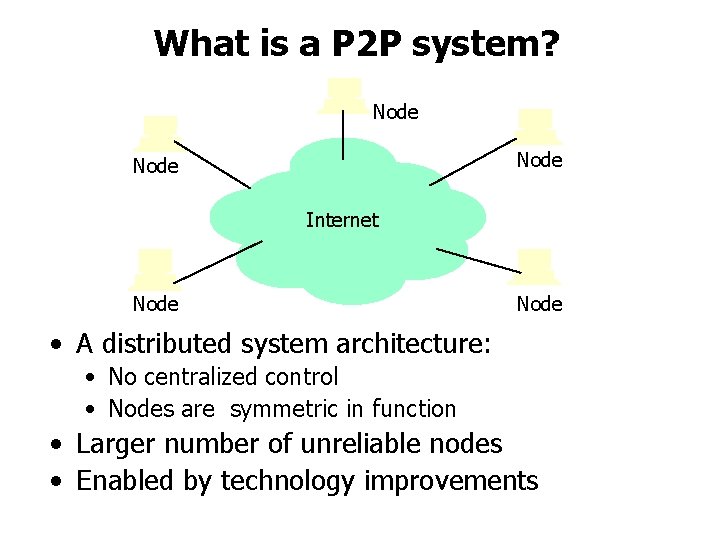

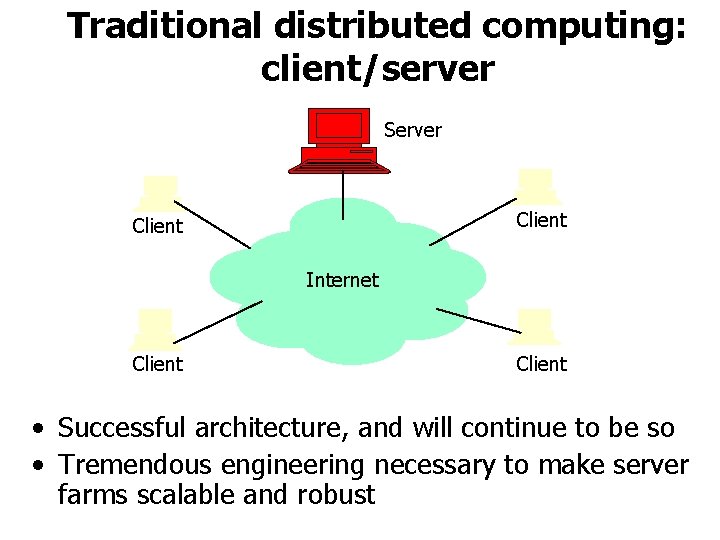

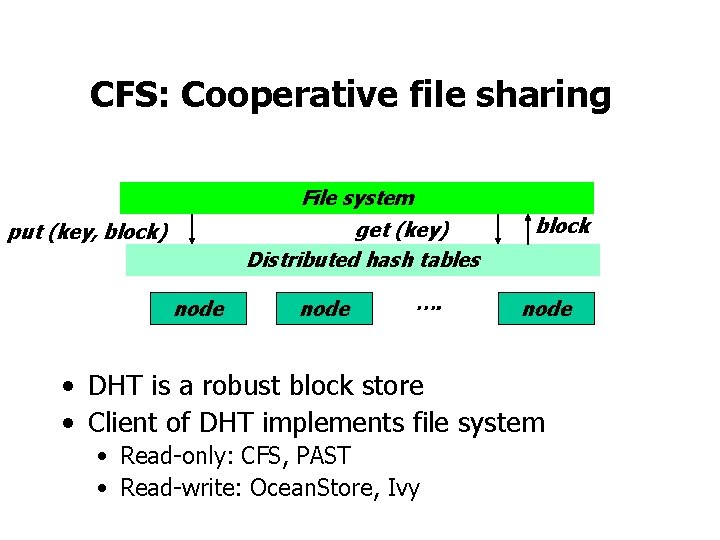

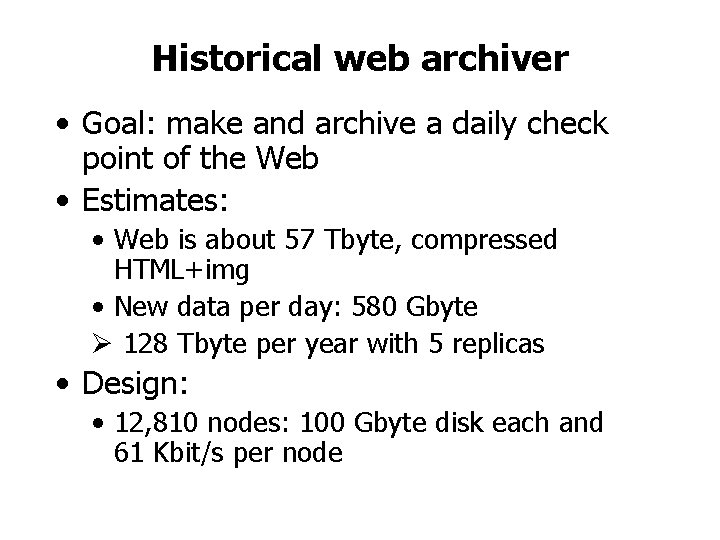

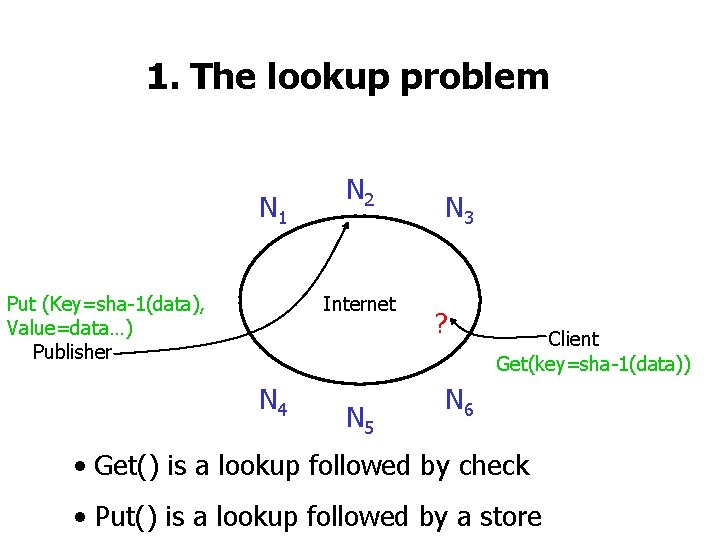

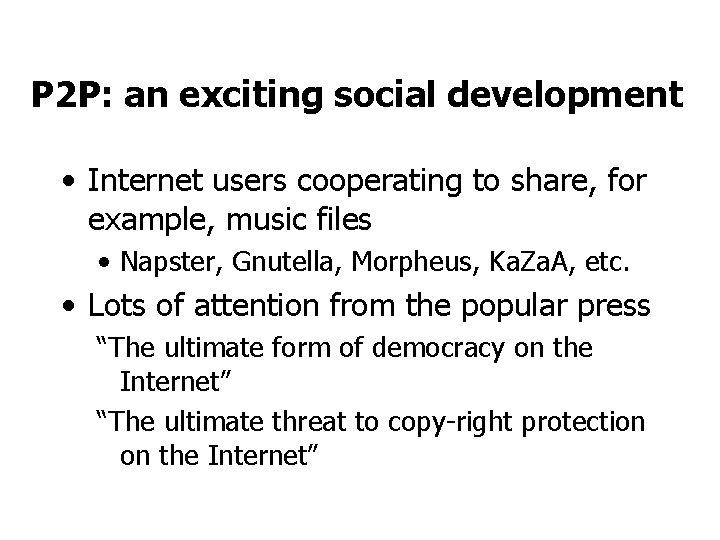

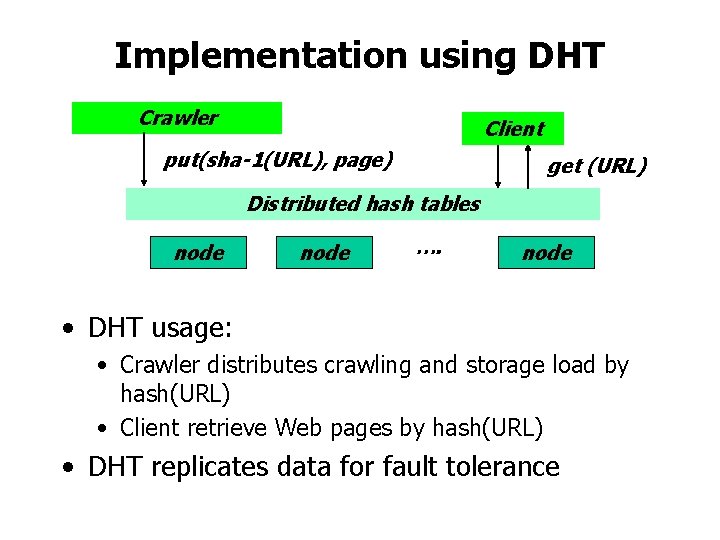

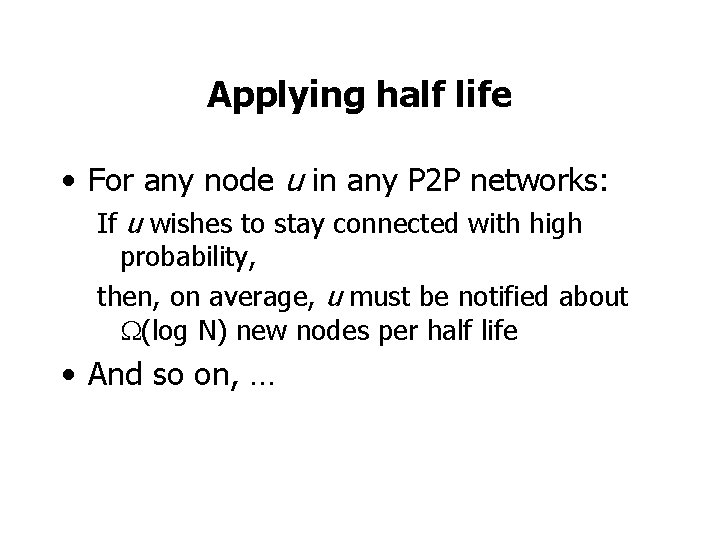

Half-life [Liben-Nowell 2002] [Figure: starting from N nodes, N new nodes join while N/2 old nodes leave] • Doubling time: time for N joins • Halving time: time for N/2 old nodes to fail • Half-life: MIN(doubling time, halving time)
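The definitions above translate directly into a small function over an event trace. This is a sketch: the parameter names and the trace are hypothetical, and rounding N/2 up when N is odd is an assumption.

```python
import math


def half_life(t, n_alive, join_times, leave_times):
    """Half-life at time t, following the slide's definitions.

    n_alive     -- N, the number of nodes alive at time t
    join_times  -- arrival times of new nodes (only those after t count)
    leave_times -- departure times of the N nodes alive at t (math.inf = never leaves)
    """
    joins = sorted(x for x in join_times if x > t)
    leaves = sorted(x for x in leave_times if x > t)
    half = math.ceil(n_alive / 2)  # assumption: round N/2 up when N is odd
    doubling = joins[n_alive - 1] - t if len(joins) >= n_alive else math.inf
    halving = leaves[half - 1] - t if len(leaves) >= half else math.inf
    return min(doubling, halving)


# Hypothetical trace: 4 nodes alive at t = 0.
print(half_life(0, 4, join_times=[1, 2, 5, 9], leave_times=[3, 4, 20, math.inf]))  # 4
```

Here doubling takes until t = 9 but half of the original nodes are gone by t = 4, so departures set the half-life.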

Applying half-life • For any node u in any P2P network: if u wishes to stay connected with high probability, then, on average, u must be notified about Ω(log N) new nodes per half-life • And so on, …

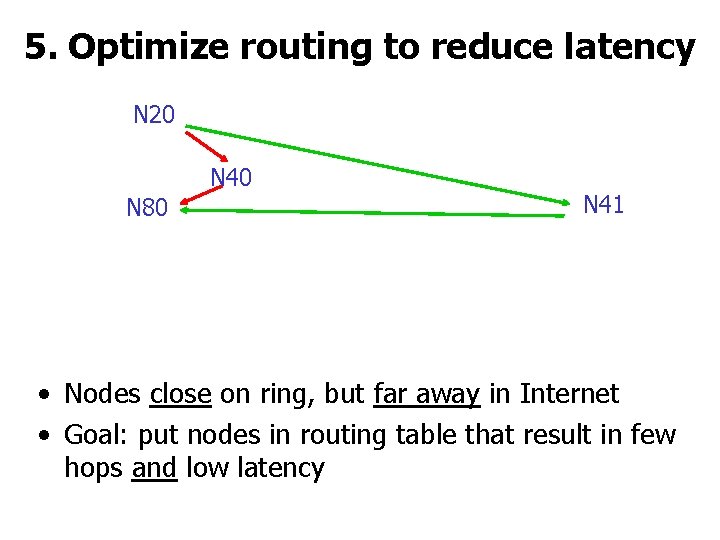

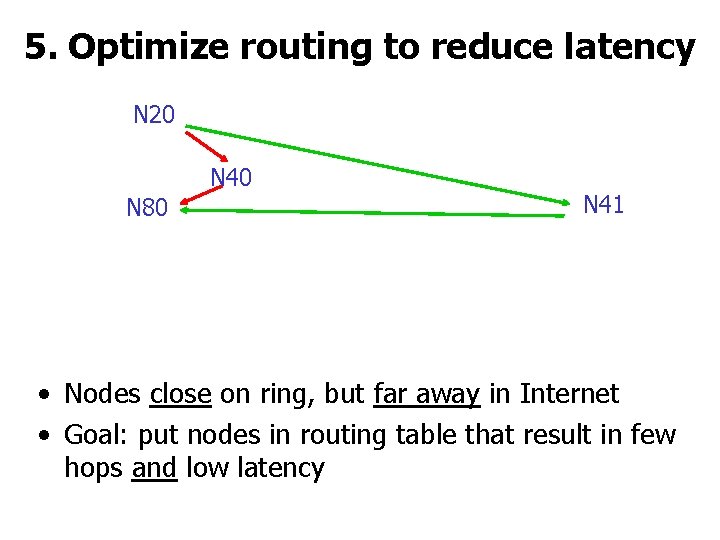

5. Optimize routing to reduce latency [Figure: ring nodes N20, N40, N41, and N80, placed far apart in the Internet] • Nodes can be close on the ring but far apart in the Internet • Goal: put nodes in the routing table that result in few hops and low latency

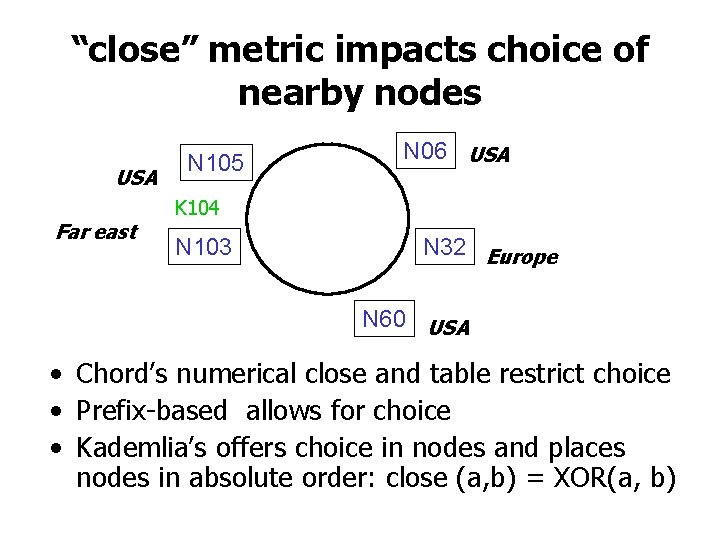

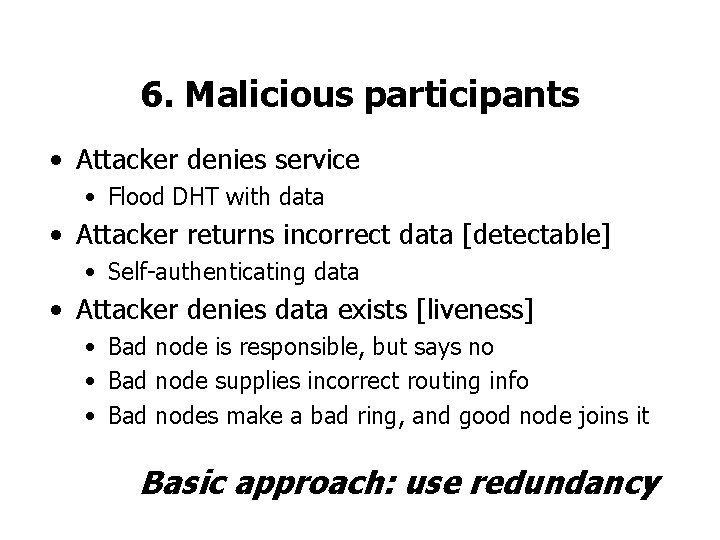

“Close” metric impacts choice of nearby nodes [Figure: key K104 and nodes N06, N32, N60, N103, and N105 spread across the USA, Europe, and the Far East] • Chord’s numerical closeness and finger table restrict choice • Prefix-based routing allows for choice • Kademlia offers choice in nodes and places nodes in an absolute order: close(a, b) = XOR(a, b)
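A sketch of the XOR metric using the slide's IDs; `xor_distance` and `k_closest` are hypothetical helper names. XOR distance is symmetric and gives one absolute order of nodes around any key, whereas Chord's clockwise distance from 104 to 103 is 127 in a 7-bit space but only 1 in the other direction.

```python
def xor_distance(a, b):
    """Kademlia-style distance between two IDs: bitwise XOR, read as an integer."""
    return a ^ b


def k_closest(node_ids, key, k):
    """The k node IDs nearest to key under XOR distance."""
    return sorted(node_ids, key=lambda n: xor_distance(n, key))[:k]


nodes = [105, 6, 103, 32, 60]          # node IDs from the slide
print(k_closest(nodes, 104, 3))        # [105, 103, 32]
print(xor_distance(103, 104), xor_distance(104, 103))   # 15 15
```

Nodes that share a long ID prefix with the key have small XOR distance, so any node with the right prefix is an acceptable routing-table entry, which is what leaves room to choose the nearby one.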

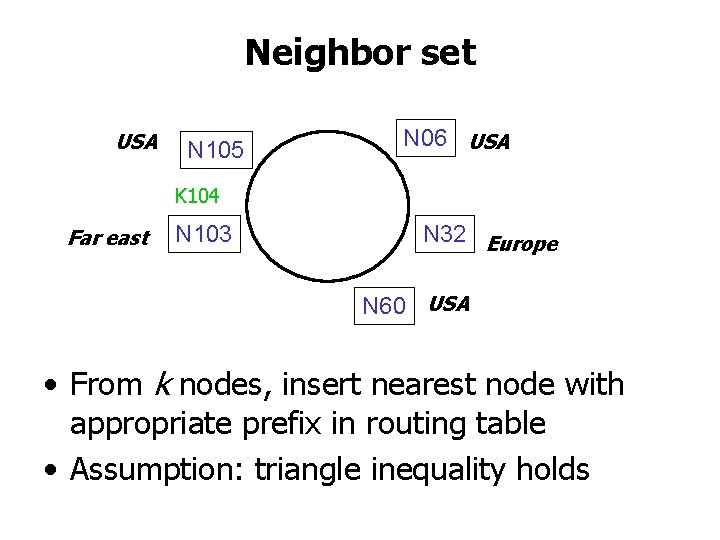

Neighbor set [Figure: key K104 and nodes N06, N32, N60, N103, and N105 in the USA, Europe, and the Far East] • From k nodes, insert the nearest node with the appropriate prefix in the routing table • Assumption: the triangle inequality holds

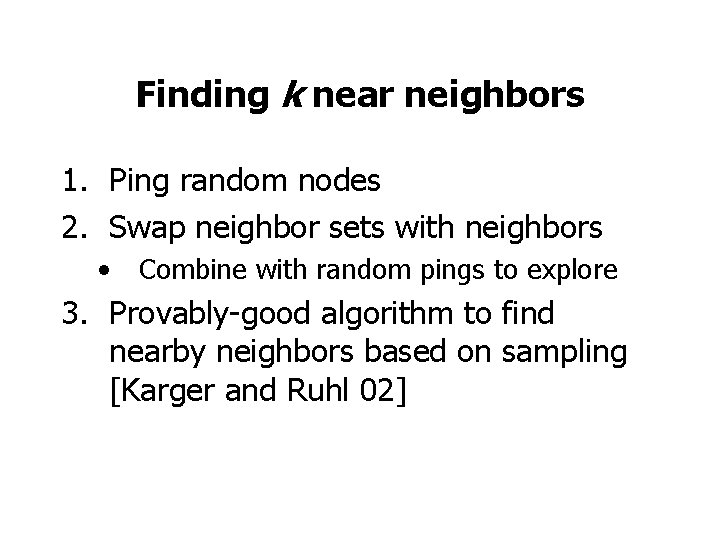

Finding k near neighbors 1. Ping random nodes 2. Swap neighbor sets with neighbors • Combine with random pings to explore 3. Provably-good algorithm to find nearby neighbors based on sampling [Karger and Ruhl 02]

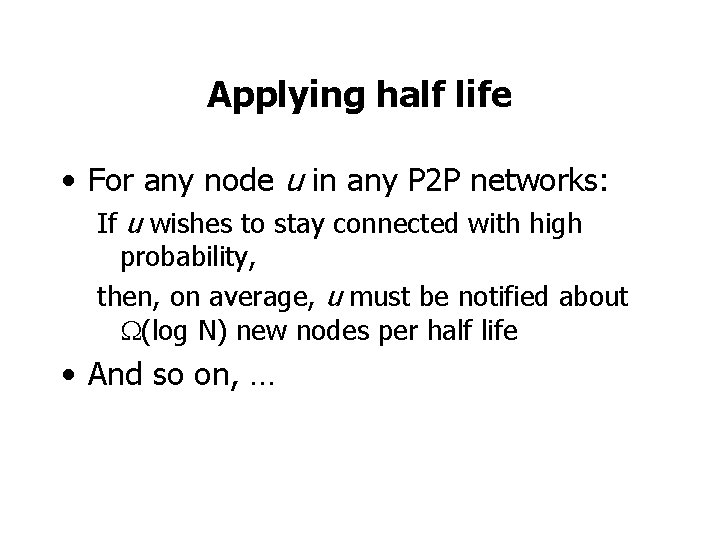

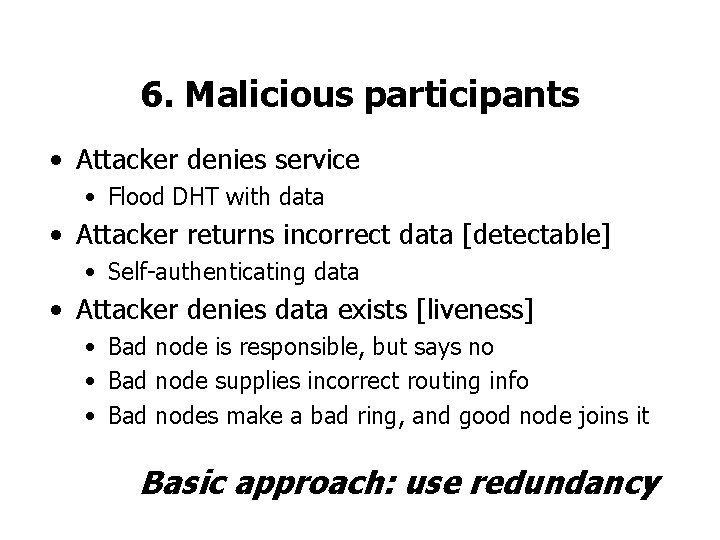

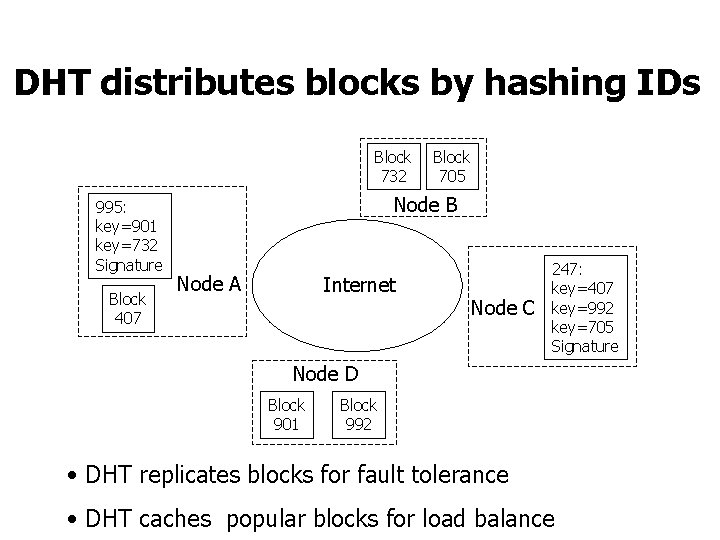

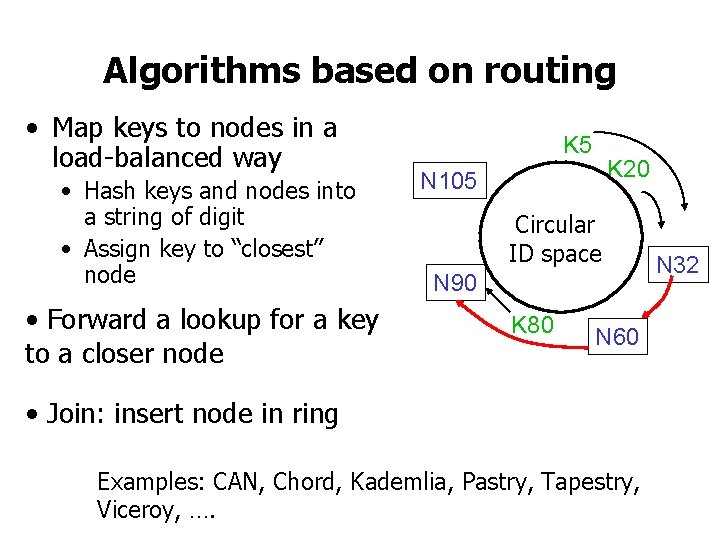

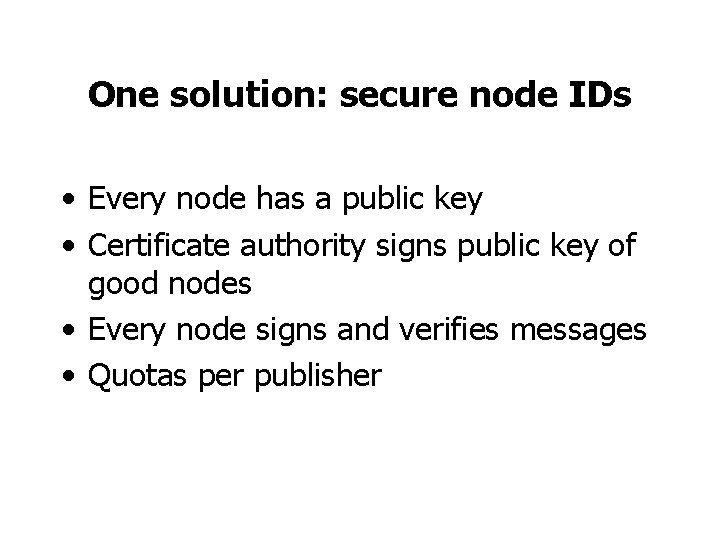

6. Malicious participants • Attacker denies service • Flood DHT with data • Attacker returns incorrect data [detectable] • Self-authenticating data • Attacker denies data exists [liveness] • Bad node is responsible, but says no • Bad node supplies incorrect routing info • Bad nodes make a bad ring, and a good node joins it • Basic approach: use redundancy


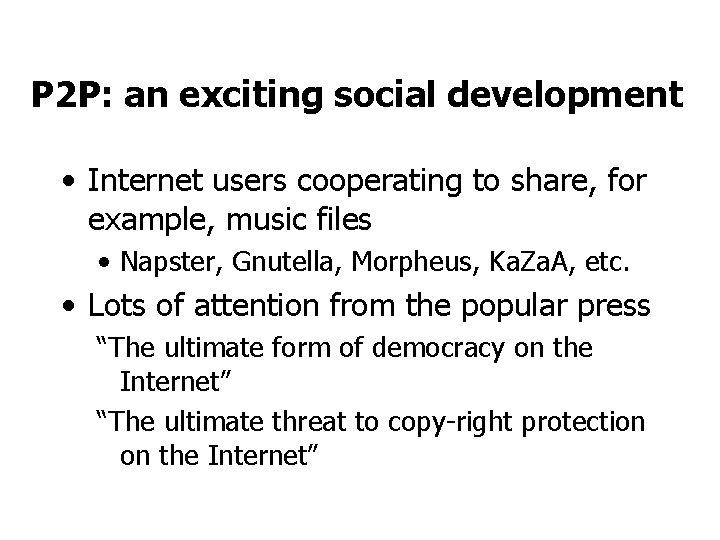

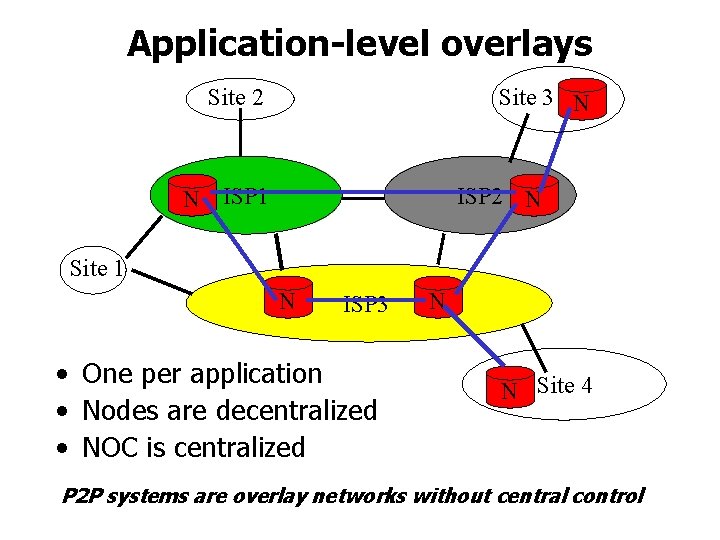

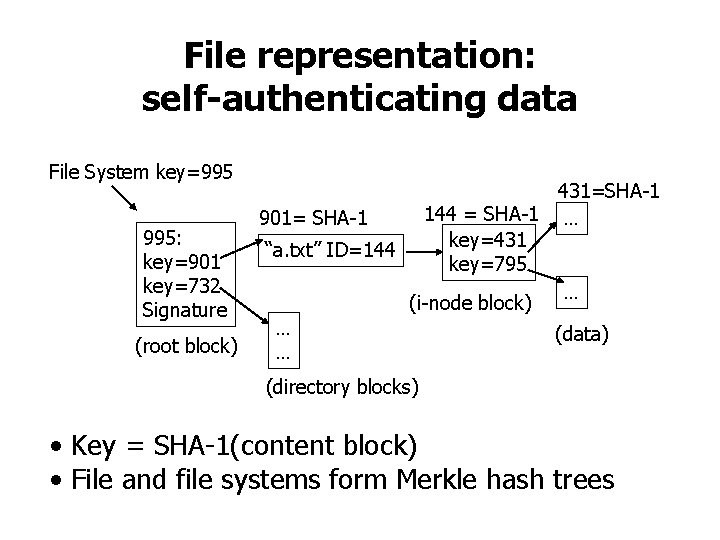

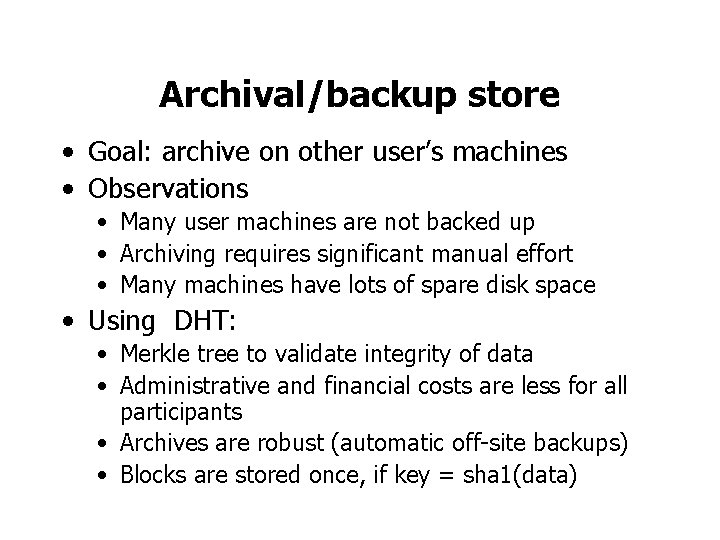

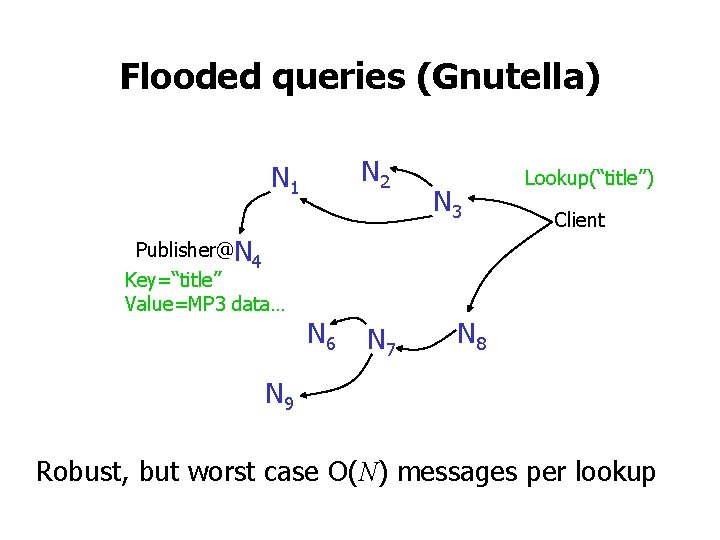

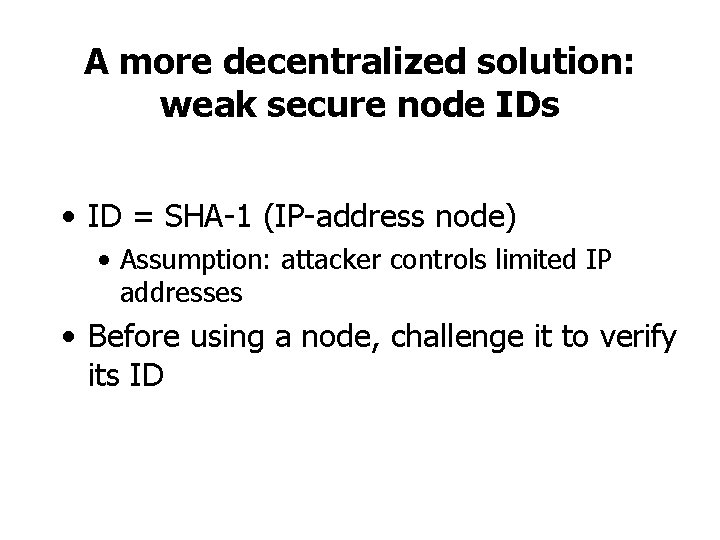

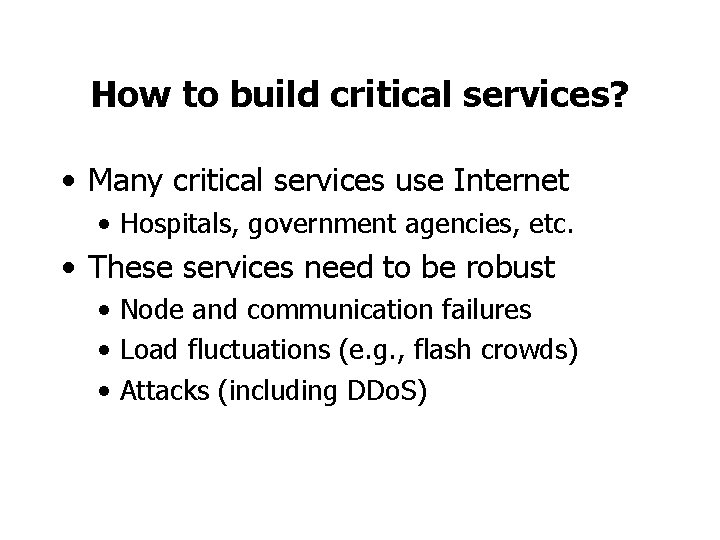

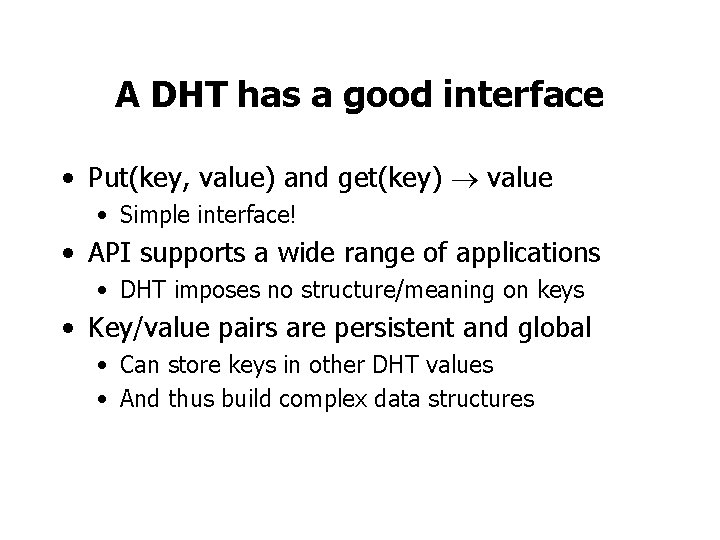

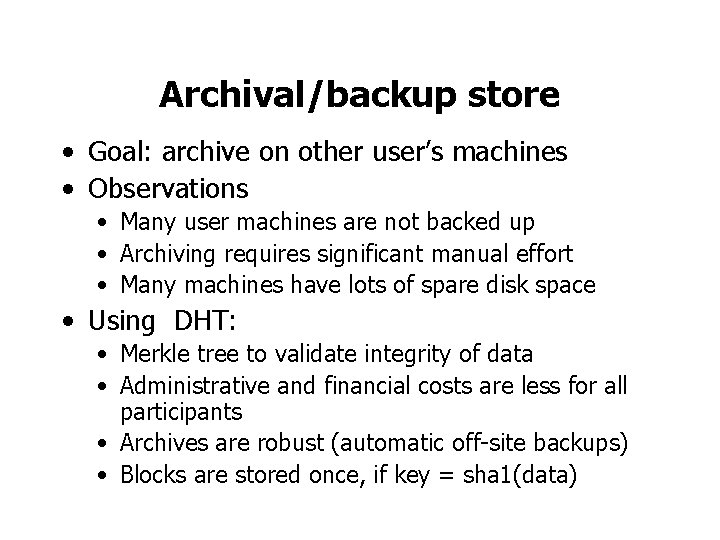

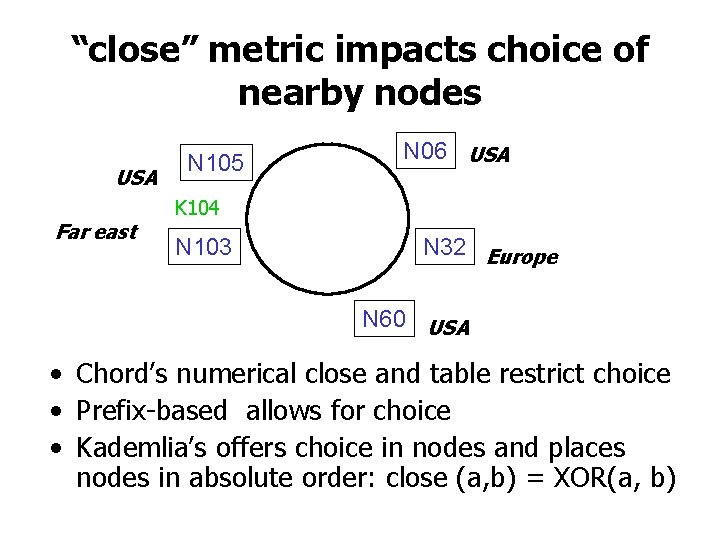

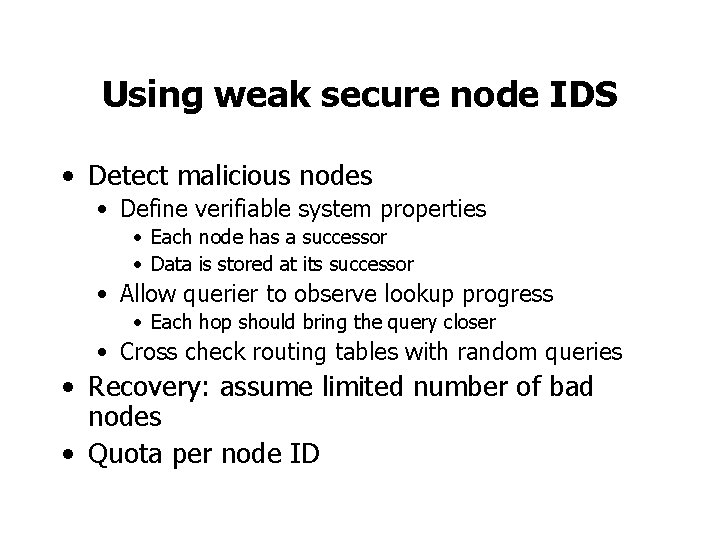

Sybil attack [Douceur 02] [Figure: a ring of nodes N5 to N110, some of them identities created by one attacker] • Attacker creates multiple identities • Attacker controls enough nodes to foil the redundancy ⇒ Need a way to control creation of node IDs

One solution: secure node IDs • Every node has a public key • Certificate authority signs public key of good nodes • Every node signs and verifies messages • Quotas per publisher

Another solution: exploit practical Byzantine protocols [Figure: a core set of servers alongside ring nodes N06, N32, N60, N103, and N105] • A core set of servers is pre-configured with keys and performs admission control [OceanStore] • The servers achieve consensus with a practical Byzantine recovery protocol [Castro and Liskov ’99 and ’00] • The servers serialize updates [OceanStore] or assign secure node IDs [Configuration service]

A more decentralized solution: weak secure node IDs • ID = SHA-1(IP address of node) • Assumption: attacker controls a limited number of IP addresses • Before using a node, challenge it to verify its ID
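A sketch of the ID check, assuming the ID is the hex SHA-1 of the IP address string and that a fresh random nonce sent to that address is how the challenge is done. `send_challenge` is a hypothetical transport hook, not a real API; in practice it would be a network round trip.

```python
import hashlib
import secrets


def node_id(ip):
    """Weak secure node ID: SHA-1 of the node's IP address string (hex digest)."""
    return hashlib.sha1(ip.encode("ascii")).hexdigest()


def verify_node(ip, claimed_id, send_challenge):
    """Accept a node only if (1) it answers a fresh nonce sent to `ip`, showing it
    receives traffic there, and (2) its claimed ID equals SHA-1 of that address.

    send_challenge(ip, nonce) is a hypothetical transport hook that delivers the
    nonce to whatever answers at `ip` and returns its reply.
    """
    nonce = secrets.token_hex(16)
    if send_challenge(ip, nonce) != nonce:
        return False
    return claimed_id == node_id(ip)


def honest(ip, nonce):
    """Simulated node that echoes the nonce back."""
    return nonce


print(verify_node("192.0.2.7", node_id("192.0.2.7"), honest))   # True
print(verify_node("192.0.2.7", node_id("192.0.2.8"), honest))   # False: ID not derived from this IP
```

An attacker can still mint one ID per IP address it controls, which is why the slide's assumption of limited IP addresses matters.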

Using weak secure node IDs • Detect malicious nodes • Define verifiable system properties • Each node has a successor • Data is stored at its successor • Allow querier to observe lookup progress • Each hop should bring the query closer • Cross-check routing tables with random queries • Recovery: assume a limited number of bad nodes • Quota per node ID
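The “each hop should bring the query closer” property is easy to check if the querier sees every hop. This sketch assumes a Chord-style ring in a hypothetical 7-bit ID space and checks only the hops up to the key's predecessor (the final successor lies just past the key, so its clockwise distance wraps around).

```python
RING = 2 ** 7  # hypothetical 7-bit ID space, as in the slides' small examples


def clockwise_distance(a, b):
    """Distance travelling clockwise from a to b on the ring."""
    return (b - a) % RING


def makes_progress(hops, key):
    """True if every reported hop is strictly closer (clockwise) to key than the previous one."""
    dists = [clockwise_distance(h, key) for h in hops]
    return all(later < earlier for earlier, later in zip(dists, dists[1:]))


print(makes_progress([80, 5, 10], 19))   # True: distances 67, 14, 9
print(makes_progress([80, 5, 99], 19))   # False: N99 is 48 away, farther than N5
```

A failed check does not say which node lied, but it tells the querier to stop trusting that path and retry through another route.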

Philosophical questions • How decentralized should systems be? • Gnutella versus content distribution network • Have a bit of both? (e.g., OceanStore) • Why does the distributed systems community have more problems with decentralized systems than the networking community? • “A distributed system is a system in which a computer you don’t know about renders your own computer unusable” • Internet (BGP, NetNews)

What are we doing at MIT? • Building a system based on Chord • Applications: CFS, Herodotus, Melody, Backup store, Ivy, … • Collaborate with other institutions • P2P workshop, PlanetLab • Berkeley, ICSI, NYU, and Rice as part of ITR • Building a large-scale testbed • RON, PlanetLab

Summary • Once we have DHTs, building large-scale, distributed applications is easy • Single, shared infrastructure for many applications • Robust in the face of failures and attacks • Scalable to a large number of servers • Self-configuring across administrative domains • Easy to program • Let’s build DHTs … stay tuned … http://project-iris.net

7. Programming abstraction • Blocks versus files • Database queries (join, etc.) • Mutable data (writers) • Atomicity of DHT operations