Pearson Test of English Academic Automated Scoring

- Slides: 43

29 July 2017, UECA PD Fest, Melbourne

Overview
1. General information
2. Automated testing
3. Automated scoring – Writing
4. Automated scoring – Speaking
5. Questions

Pearson Test of English (PTE) Academic
• Computer-based test of international, academic English
• The world’s first fully automatically scored high-stakes test of academic English, including Speaking and Writing
• All four skills (Listening, Speaking, Reading, Writing)
• 3 hours of testing
• Administered at Pearson’s certified test centres for high security
• 20 different tasks
• 11 performance-based tasks integrating multiple skills

Teachers’ opinion

“Only 9% of English teachers surveyed thought that computers are as effective as humans at assessing English ability.”

“Not only do computers score at least as accurately as humans, they have significant advantages that allow them to score even more accurately.”

Van Moere, A. (2016). The Magnificent Marking Machine. Pearson and ELTjam.

A bit of history
• Computers have been scoring writing for more than 20 years.
• Speech-recognition software has been scoring speaking tests for more than 15 years.
• Pearson has machine-scored over 20 million written and spoken responses from all over the globe.

Artificial intelligence
• Computer software ‘teaches’ computers to evaluate key features of language by being ‘fed’ large amounts of data, including scored test-taker and native-speaker samples of items.
• Statistical models are then created to predict how expert raters would rate new samples.
• The models are validated and the algorithms refined; the scoring engine then takes over the scoring.
• Outliers are scored by humans.
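The train-then-predict idea on this slide can be illustrated with a very small sketch. It is not Pearson's scoring engine: it assumes a single hypothetical numeric feature per response, fits a least-squares line to expert scores, and refers any response whose feature lies outside the range seen in training to a human rater. The names `fit_line` and `score_or_refer` and all data values are invented for the example.

```python
from statistics import mean


def fit_line(features, human_scores):
    """Least-squares fit of human_scores ~ slope * feature + intercept."""
    mx, my = mean(features), mean(human_scores)
    sxx = sum((x - mx) ** 2 for x in features)
    sxy = sum((x - mx) * (y - my) for x, y in zip(features, human_scores))
    slope = sxy / sxx
    return slope, my - slope * mx


def score_or_refer(feature, model, seen_range):
    """Return a predicted score, or None to refer an outlier to a human."""
    low, high = seen_range
    if not low <= feature <= high:
        return None  # outlier: unlike the training data, so not machine-scored
    slope, intercept = model
    return slope * feature + intercept


# Hypothetical training data: one feature value and one expert score per response.
train_features = [0.2, 0.4, 0.6, 0.8]
train_scores = [1, 2, 3, 4]
model = fit_line(train_features, train_scores)
seen = (min(train_features), max(train_features))

in_range = score_or_refer(0.5, model, seen)   # predicted by the model
referred = score_or_refer(1.5, model, seen)   # None: goes to a human rater
```

A production system would use many features and a validated model; the point here is only the division of labour between model prediction and human scoring of outliers.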

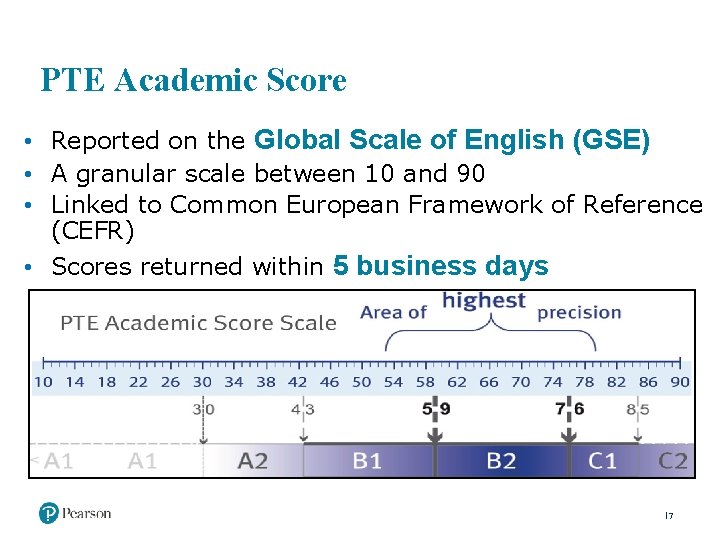

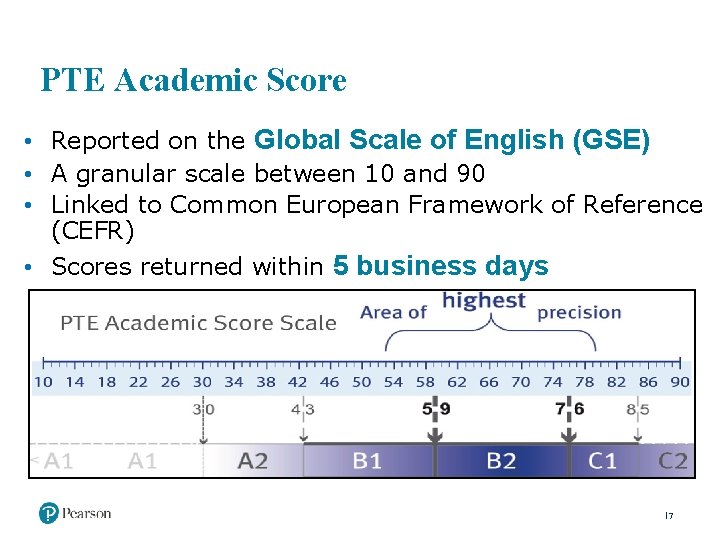

PTE Academic Score
• Reported on the Global Scale of English (GSE)
• A granular scale between 10 and 90
• Linked to the Common European Framework of Reference (CEFR)
• Scores returned within 5 business days

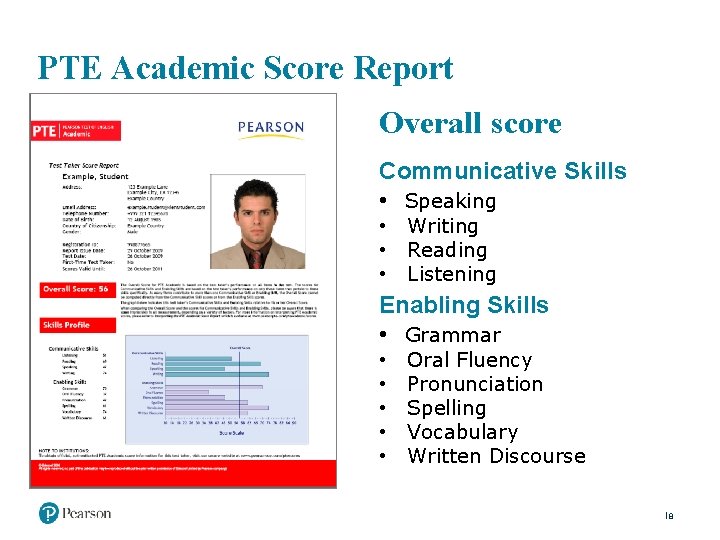

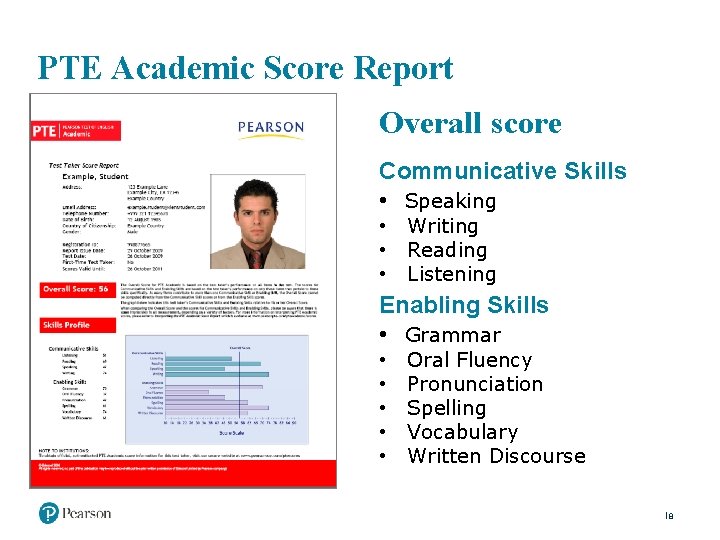

PTE Academic Score Report
Overall score
Communicative Skills
• Speaking
• Writing
• Reading
• Listening
Enabling Skills
• Grammar
• Oral Fluency
• Pronunciation
• Spelling
• Vocabulary
• Written Discourse

Why use automated scoring?
• Accuracy
• Speed
• Consistency
• Objectivity
• Efficiency

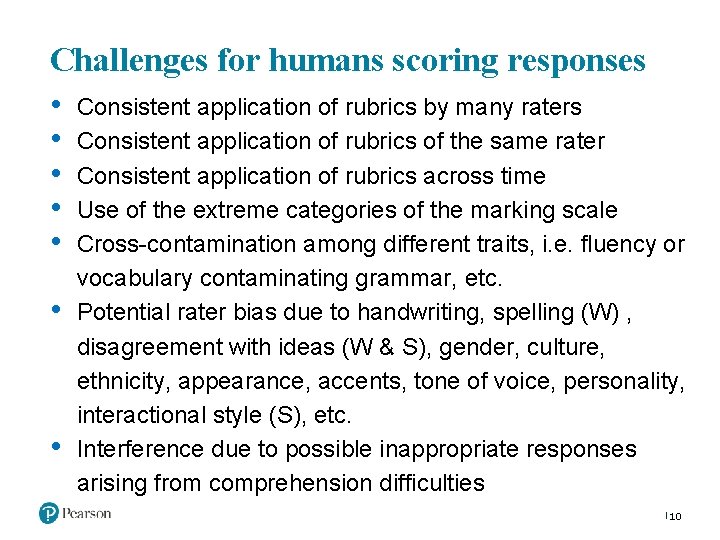

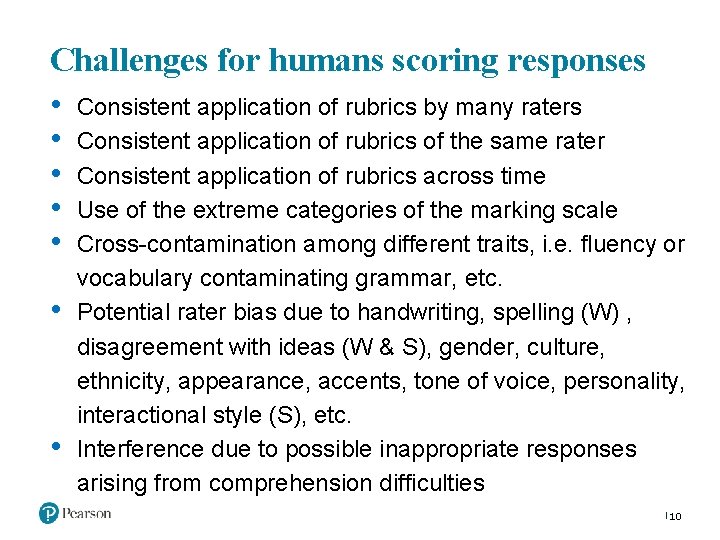

Challenges for humans scoring responses
• Consistent application of rubrics by many raters
• Consistent application of rubrics by the same rater
• Consistent application of rubrics across time
• Use of the extreme categories of the marking scale
• Cross-contamination among different traits, e.g. fluency or vocabulary contaminating grammar
• Potential rater bias due to handwriting, spelling (W), disagreement with ideas (W & S), gender, culture, ethnicity, appearance, accents, tone of voice, personality, interactional style (S), etc.
• Interference due to possible inappropriate responses arising from comprehension difficulties

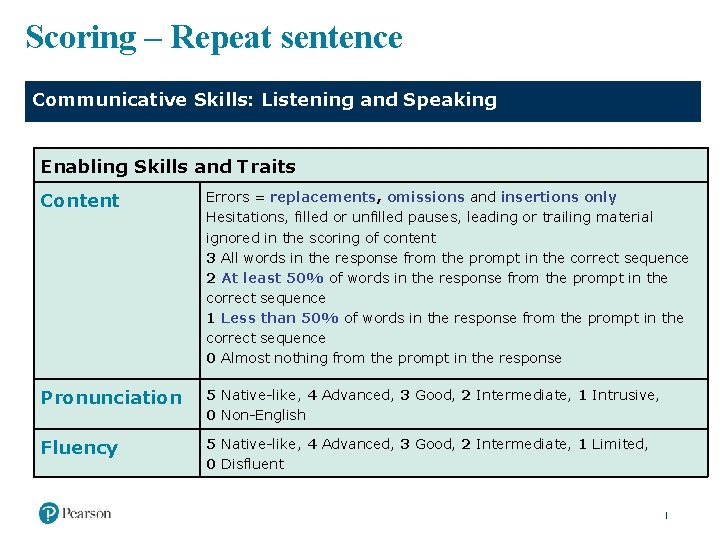

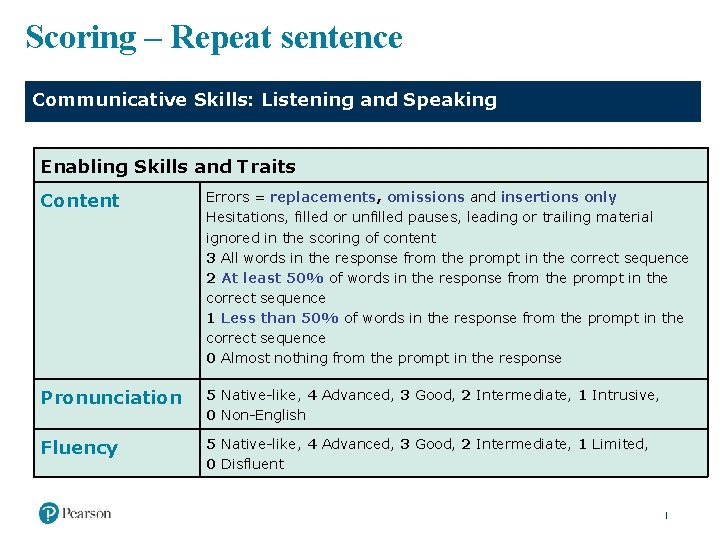

Scoring – Repeat sentence
Communicative Skills: Listening and Speaking
Enabling Skills and Traits

Content (errors = replacements, omissions and insertions only; hesitations, filled or unfilled pauses, and leading or trailing material are ignored in the scoring of content)
• 3: All words in the response from the prompt in the correct sequence
• 2: At least 50% of words in the response from the prompt in the correct sequence
• 1: Less than 50% of words in the response from the prompt in the correct sequence
• 0: Almost nothing from the prompt in the response

Pronunciation: 5 Native-like, 4 Advanced, 3 Good, 2 Intermediate, 1 Intrusive, 0 Non-English

Fluency: 5 Native-like, 4 Advanced, 3 Good, 2 Intermediate, 1 Limited, 0 Disfluent
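One way to read the Content rubric for Repeat sentence is as a word-sequence comparison. The sketch below is an interpretation, not the operational algorithm: it measures "words from the prompt in the correct sequence" with a longest common subsequence, treats a small hypothetical filler list as ignorable hesitations, and uses an assumed 10% cut-off for "almost nothing". The names `content_score`, `FILLERS` and `almost_nothing` are invented for the example.

```python
import re

FILLERS = {"um", "uh", "er"}  # hypothetical list of filled pauses to ignore


def words(text):
    """Lower-case word tokens with filled pauses removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in FILLERS]


def lcs_length(a, b):
    """Length of the longest common subsequence of two word lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, 1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def content_score(prompt, response, almost_nothing=0.1):
    """Map a response to the 3/2/1/0 content bands (assumed thresholds)."""
    p, r = words(prompt), words(response)
    if p and p == r:
        return 3  # no replacements, omissions or insertions
    fraction = lcs_length(p, r) / len(p) if p else 0.0
    if fraction < almost_nothing:
        return 0
    return 2 if fraction >= 0.5 else 1


prompt = "New York City is famous for its ethnic diversity."
score_exact = content_score(prompt, "New York City is famous for its ethnic diversity")
score_partial = content_score(prompt, "um New York City is famous for its diversity")
```

Under these assumptions an exact repetition lands in band 3, while a response with one omitted word and a filled pause lands in band 2.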

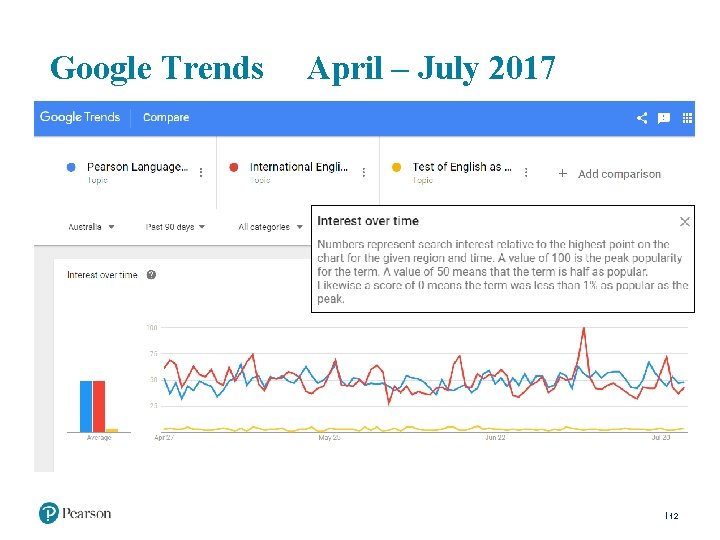

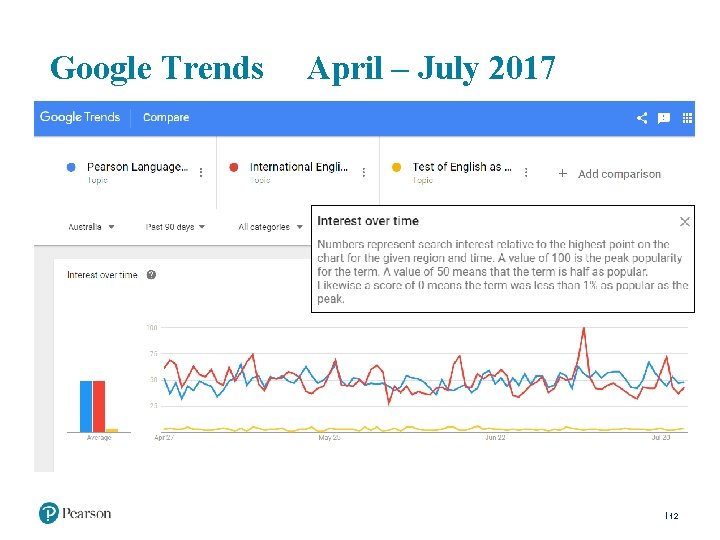

Google Trends April – July 2017

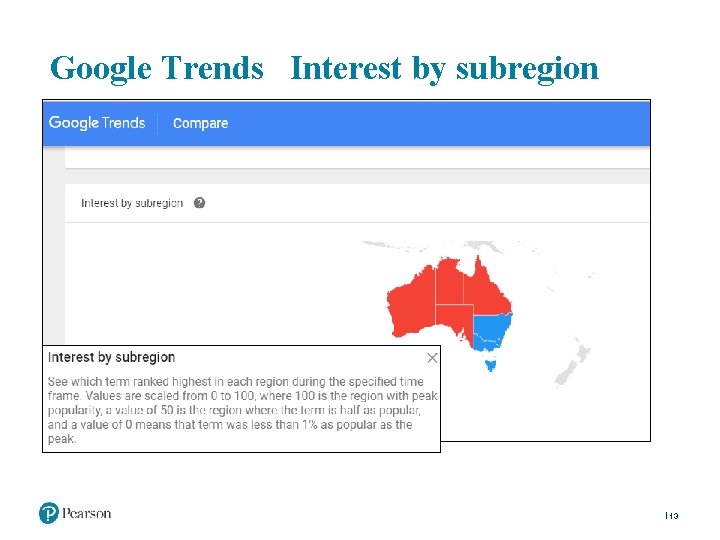

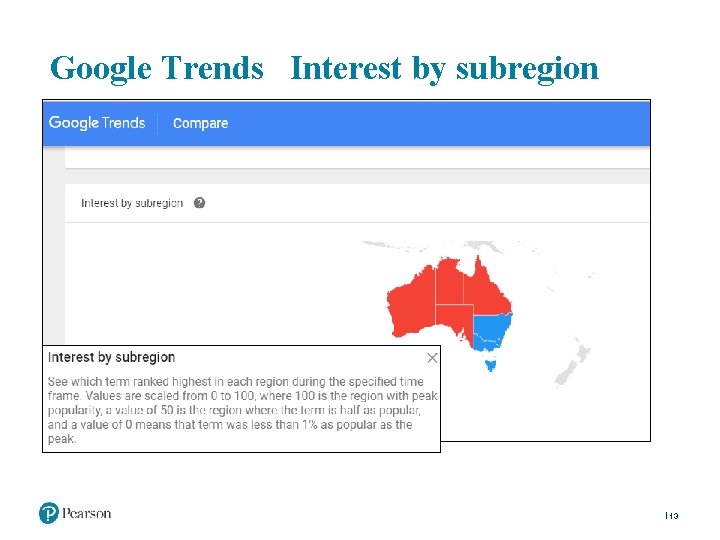

Google Trends: Interest by subregion

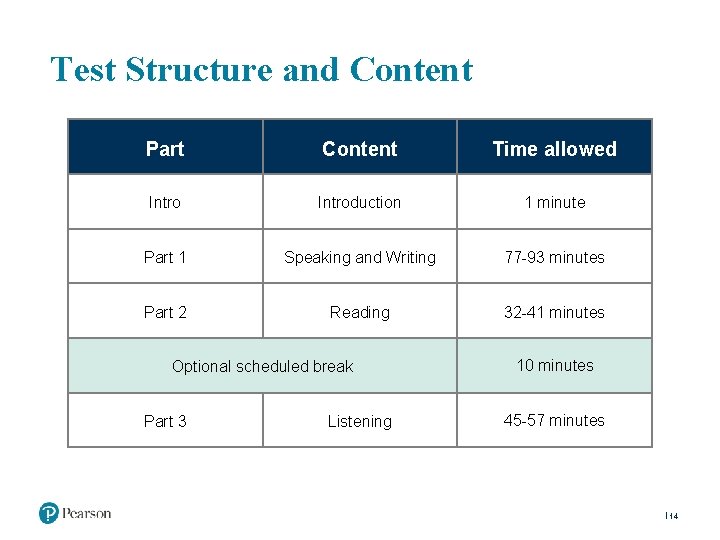

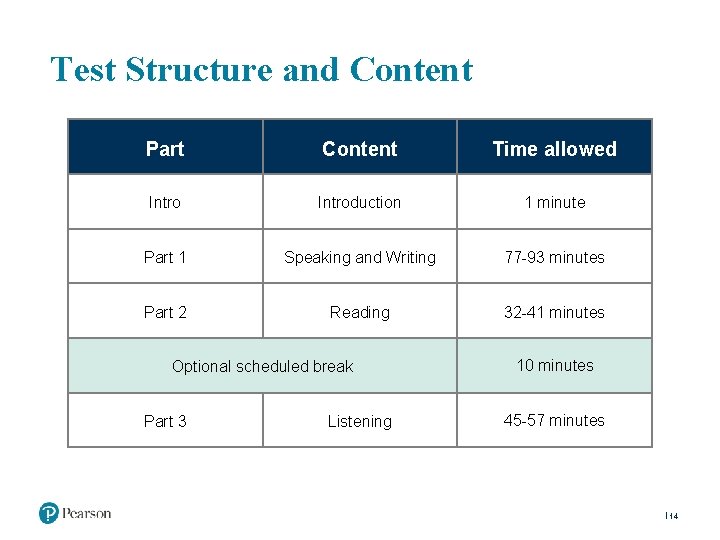

Test Structure and Content

| Part | Content | Time allowed |
|---|---|---|
| Introduction | | 1 minute |
| Part 1 | Speaking and Writing | 77–93 minutes |
| Part 2 | Reading | 32–41 minutes |
| | Optional scheduled break | 10 minutes |
| Part 3 | Listening | 45–57 minutes |

Automated testing

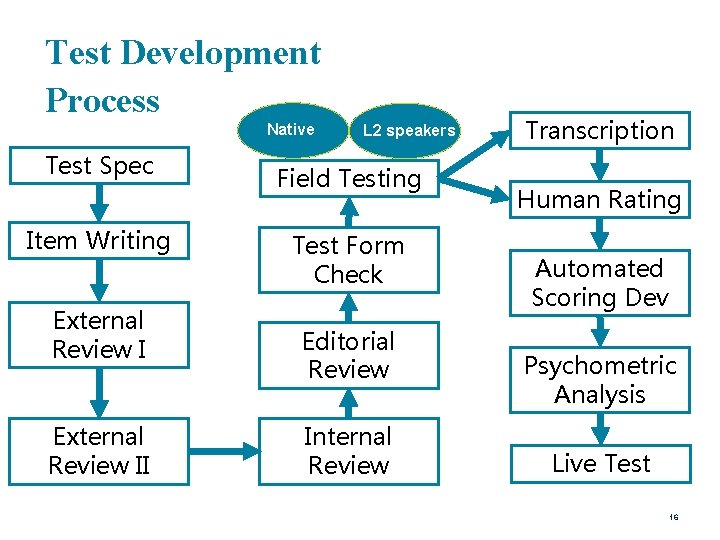

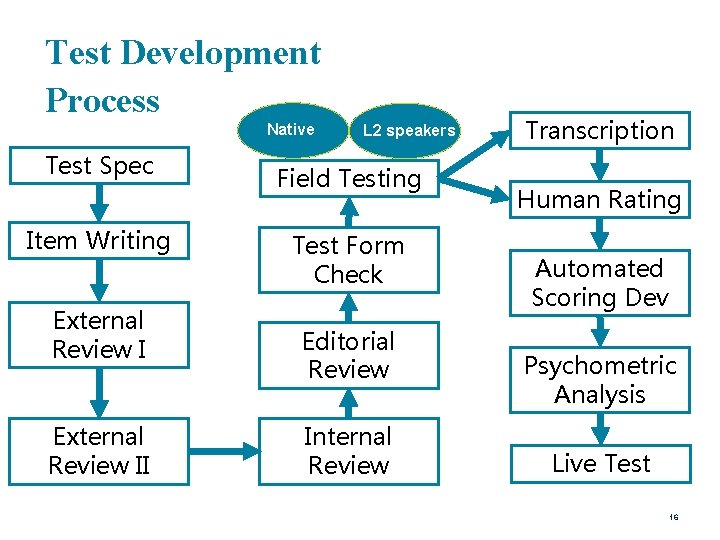

Test Development Process (flow diagram; stage labels as they appear on the slide): Test Spec, Item Writing, External Review II, Field Testing (native and L2 speakers), Test Form Check, Editorial Review, Internal Review, Transcription, Human Rating, Automated Scoring Dev, Psychometric Analysis, Live Test

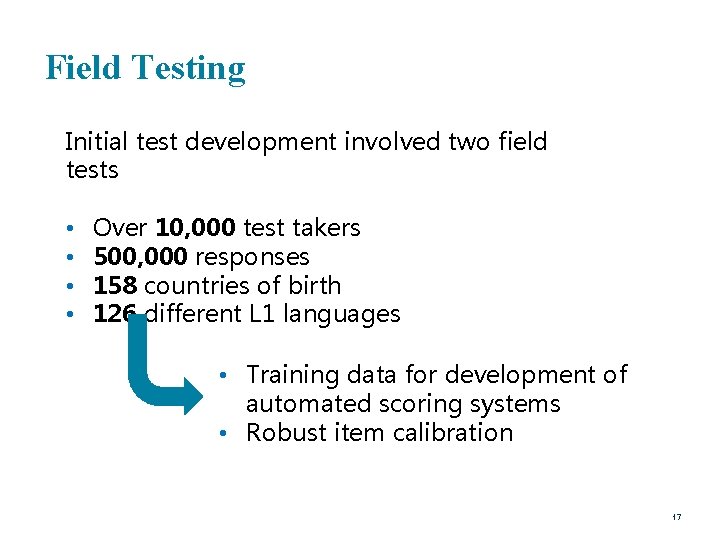

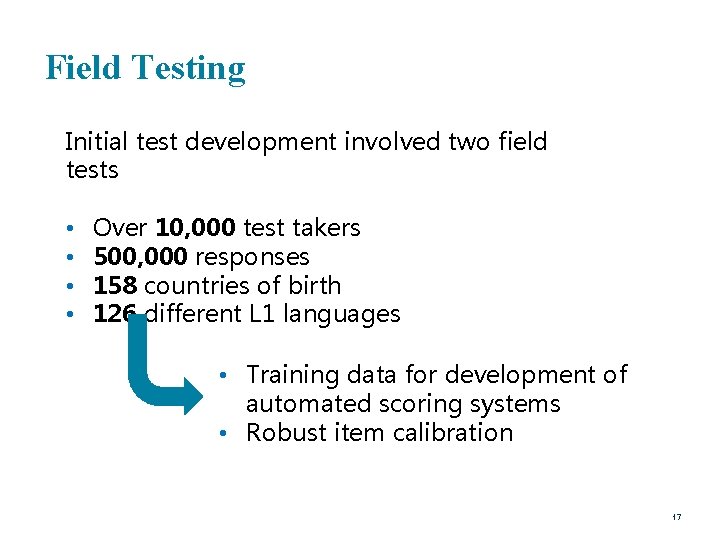

Field Testing
Initial test development involved two field tests
• Over 10,000 test takers
• 500,000 responses
• 158 countries of birth
• 126 different L1 languages
• Training data for development of automated scoring systems
• Robust item calibration

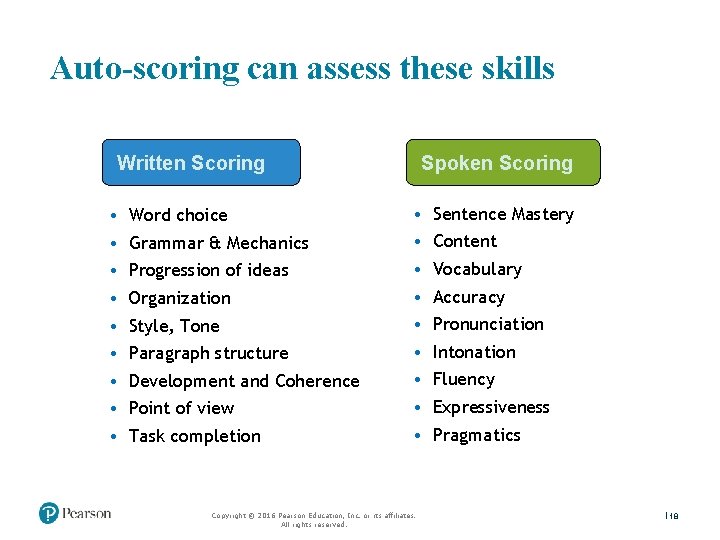

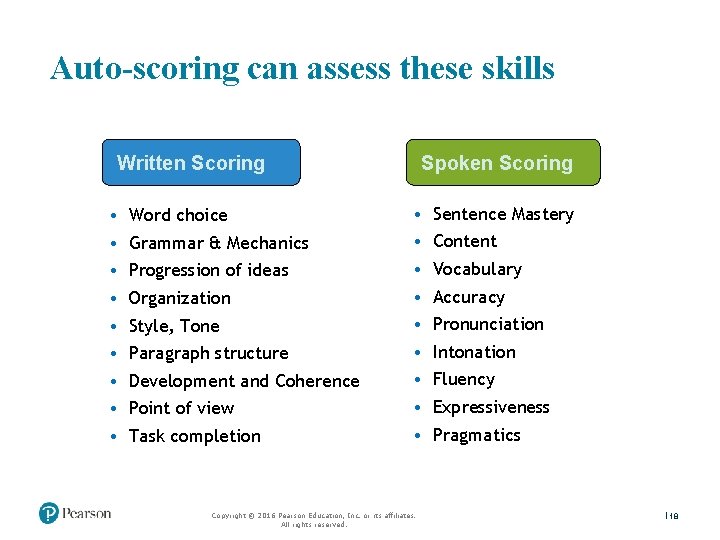

Auto-scoring can assess these skills
Written Scoring
• Word choice
• Grammar & Mechanics
• Progression of ideas
• Organization
• Style, Tone
• Paragraph structure
• Development and Coherence
• Point of view
• Task completion
Spoken Scoring
• Sentence Mastery
• Content
• Vocabulary
• Accuracy
• Pronunciation
• Intonation
• Fluency
• Expressiveness
• Pragmatics

Copyright © 2016 Pearson Education, Inc. or its affiliates. All rights reserved.

Automated Scoring – Writing

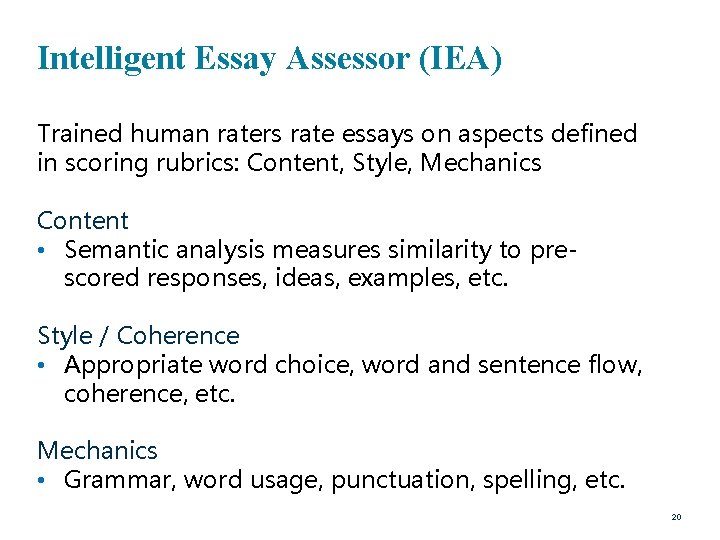

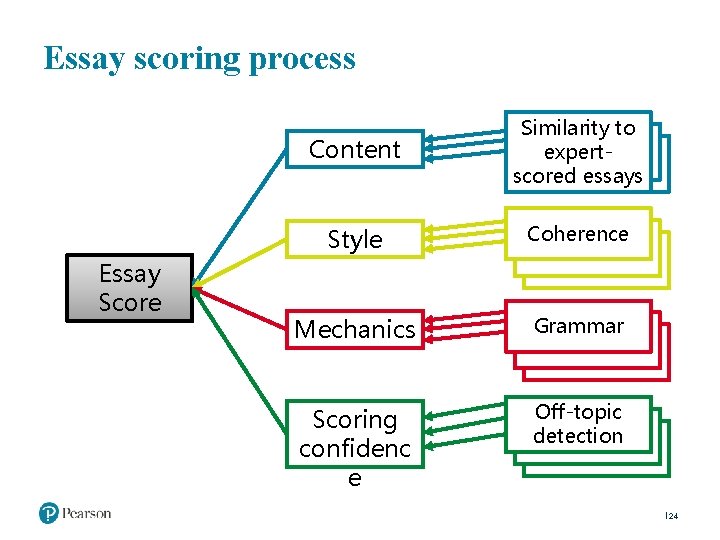

Intelligent Essay Assessor (IEA)
Trained human raters rate essays on aspects defined in scoring rubrics: Content, Style, Mechanics
• Content: semantic analysis measures similarity to prescored responses, ideas, examples, etc.
• Style / Coherence: appropriate word choice, word and sentence flow, coherence, etc.
• Mechanics: grammar, word usage, punctuation, spelling, etc.

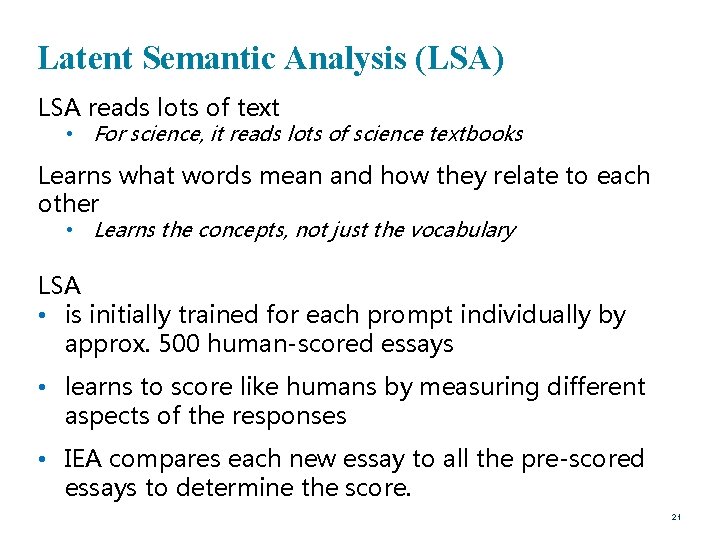

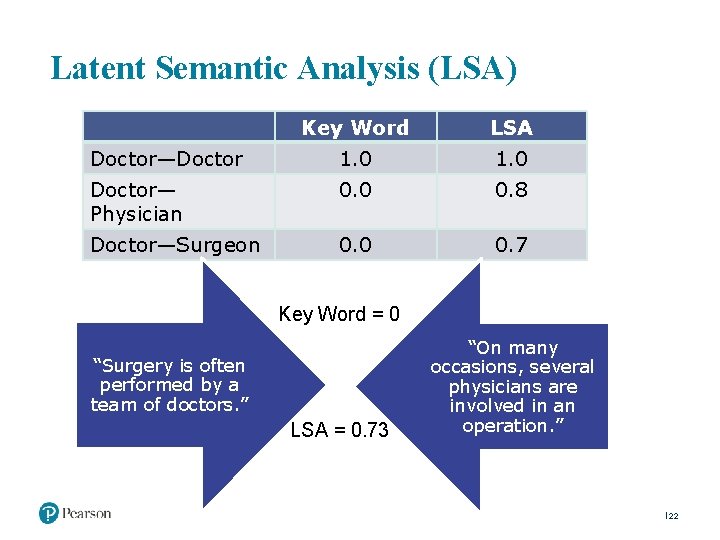

Latent Semantic Analysis (LSA)
LSA reads lots of text
• For science, it reads lots of science textbooks
LSA learns what words mean and how they relate to each other
• It learns the concepts, not just the vocabulary
LSA
• is initially trained for each prompt individually on approx. 500 human-scored essays
• learns to score like humans by measuring different aspects of the responses
IEA compares each new essay to all the pre-scored essays to determine the score.
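The last step, comparing a new essay with pre-scored essays, can be pictured as a similarity-weighted vote. The sketch below is a simplified stand-in rather than the IEA method: it uses plain bag-of-words vectors instead of LSA vectors and averages the scores of the `k` most similar pre-scored essays. The function names, the value of `k` and the toy essays are all assumptions made for illustration.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Word-count vector; a real system would use LSA vectors instead."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def predict_score(new_essay, scored_essays, k=2):
    """Similarity-weighted mean score of the k most similar pre-scored essays."""
    new_vec = bag_of_words(new_essay)
    nearest = sorted(
        ((cosine(new_vec, bag_of_words(text)), score) for text, score in scored_essays),
        reverse=True,
    )[:k]
    total = sum(sim for sim, _ in nearest)
    if total == 0:
        return None  # nothing similar enough: no machine score
    return sum(sim * score for sim, score in nearest) / total


# Hypothetical pre-scored essays (text, human score).
scored = [
    ("smoking harms health", 3),
    ("cats are nice pets", 0),
    ("smoking costs money", 2),
]
predicted = predict_score("smoking harms health", scored)
```

Returning `None` when nothing is similar mirrors the idea that unusual responses are not machine-scored.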

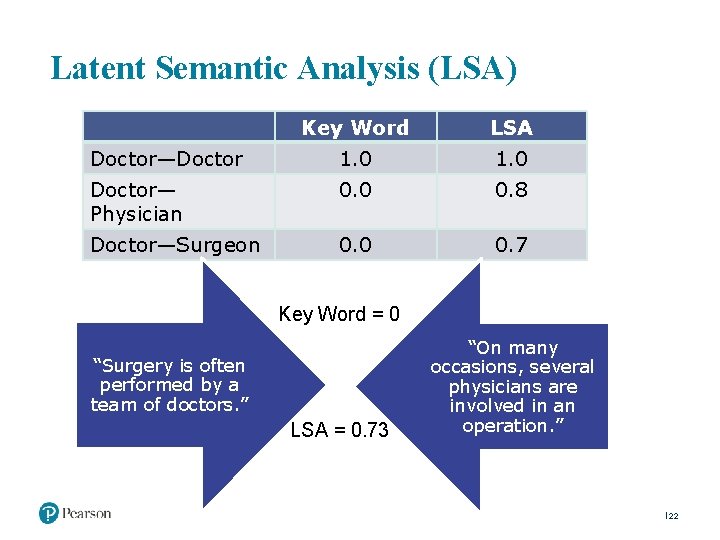

Latent Semantic Analysis (LSA)
Word-pair similarity, Key Word matching vs LSA
• Doctor–Doctor: 1.0
• Doctor–Physician: Key Word 0.0, LSA 0.8
• Doctor–Surgeon: Key Word 0.0, LSA 0.7
Sentence-pair similarity
• “Surgery is often performed by a team of doctors.”
• “On many occasions, several physicians are involved in an operation.”
• Key Word = 0, LSA = 0.73
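The contrast on this slide can be reproduced in miniature. The sketch below computes keyword overlap for the two sentences and then a cosine similarity in a tiny concept space. The concept vectors are hand-made stand-ins for what a trained LSA model would learn from a large corpus, so the second number illustrates the idea only and is not expected to match the 0.73 on the slide. `CONCEPTS` and all function names are hypothetical.

```python
import math
import re


def tokens(text):
    """Set of lower-case word tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))


def keyword_overlap(a, b):
    """Jaccard overlap of the two sentences' word sets."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


# Hypothetical 2-D concept vectors: (medical practitioner, medical procedure).
CONCEPTS = {
    "doctors": (1.0, 0.2),
    "physicians": (1.0, 0.1),
    "surgery": (0.3, 1.0),
    "operation": (0.2, 1.0),
}


def concept_vector(text):
    """Sum of the concept vectors of the known words in the text."""
    vecs = [CONCEPTS[t] for t in tokens(text) if t in CONCEPTS]
    return tuple(sum(c) for c in zip(*vecs)) if vecs else (0.0, 0.0)


def cosine(u, v):
    norm = math.hypot(*u) * math.hypot(*v)
    return sum(x * y for x, y in zip(u, v)) / norm if norm else 0.0


s1 = "Surgery is often performed by a team of doctors."
s2 = "On many occasions, several physicians are involved in an operation."
overlap = keyword_overlap(s1, s2)  # no shared words at all
concept_sim = cosine(concept_vector(s1), concept_vector(s2))
```

The two sentences share no words, so keyword matching sees them as unrelated, while any representation that places "doctors" near "physicians" and "surgery" near "operation" sees them as close.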

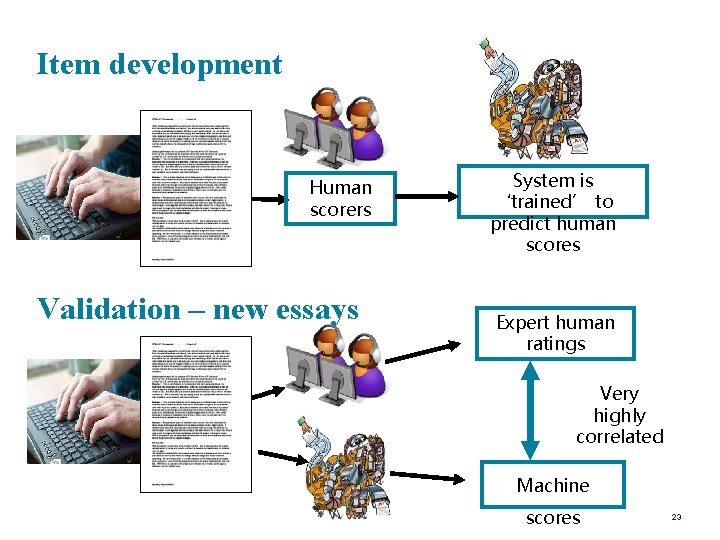

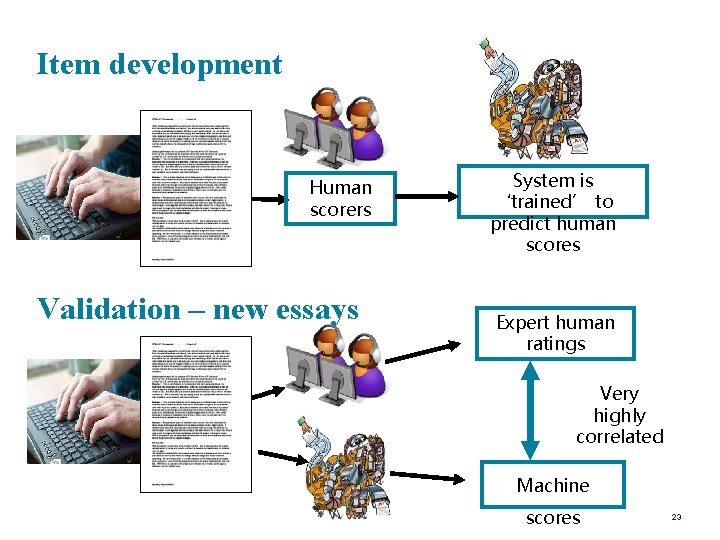

Training and validation (diagram): Item development → Human scorers → System is ‘trained’ to predict human scores → Validation on new essays. Expert human ratings and machine scores are very highly correlated.

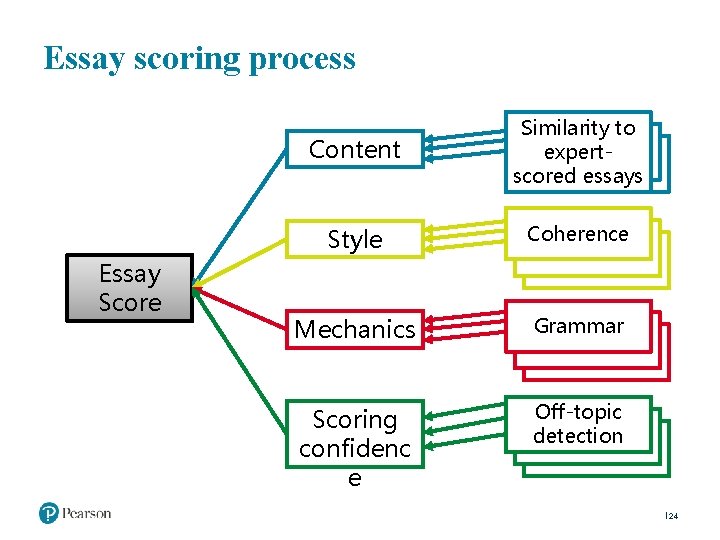

Essay scoring process (diagram): an essay is analysed for Content (similarity to expert-scored essays), Style (coherence) and Mechanics (grammar), which combine into the Essay Score. The diagram also shows scoring confidence and off-topic detection.

Other Intelligent Essay Assessor (IEA) features
• Detects off-topic or highly unusual essays
• Detects if the IEA may not score an essay well (low confidence rating)
• Detects embellishment with long words, non-standard language constructions, swear words, and essays that are too long or too short
• Detects surface features such as ‘thus’ and ‘therefore’
• Detects copying of sections of text to reach the word count
• Detects plagiarism

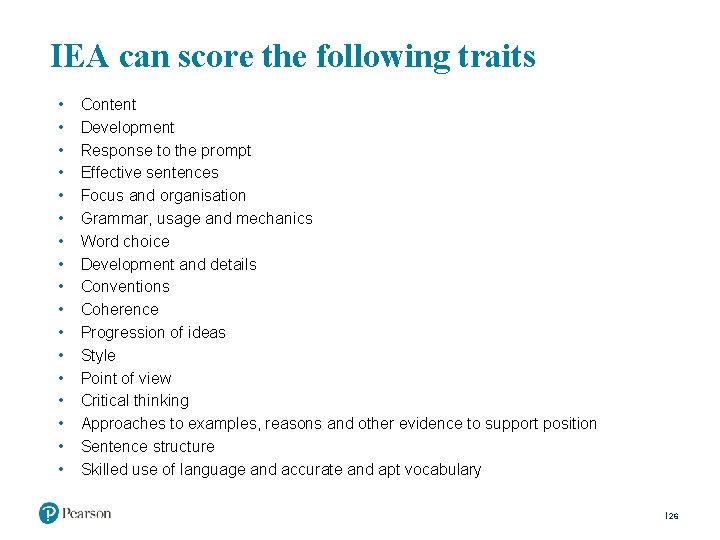

IEA can score the following traits
• Content
• Development
• Response to the prompt
• Effective sentences
• Focus and organisation
• Grammar, usage and mechanics
• Word choice
• Development and details
• Conventions
• Coherence
• Progression of ideas
• Style
• Point of view
• Critical thinking
• Approaches to examples, reasons and other evidence to support position
• Sentence structure
• Skilled use of language and accurate and apt vocabulary

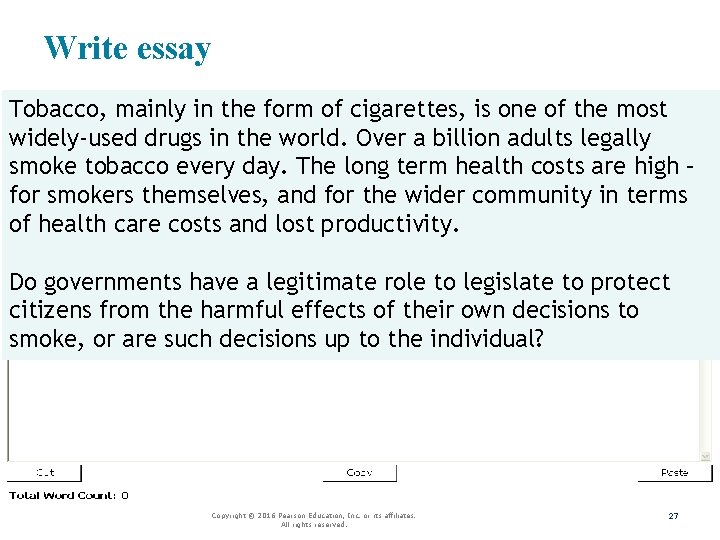

Write essay

Tobacco, mainly in the form of cigarettes, is one of the most widely-used drugs in the world. Over a billion adults legally smoke tobacco every day. The long term health costs are high – for smokers themselves, and for the wider community in terms of health care costs and lost productivity. Do governments have a legitimate role to legislate to protect citizens from the harmful effects of their own decisions to smoke, or are such decisions up to the individual?

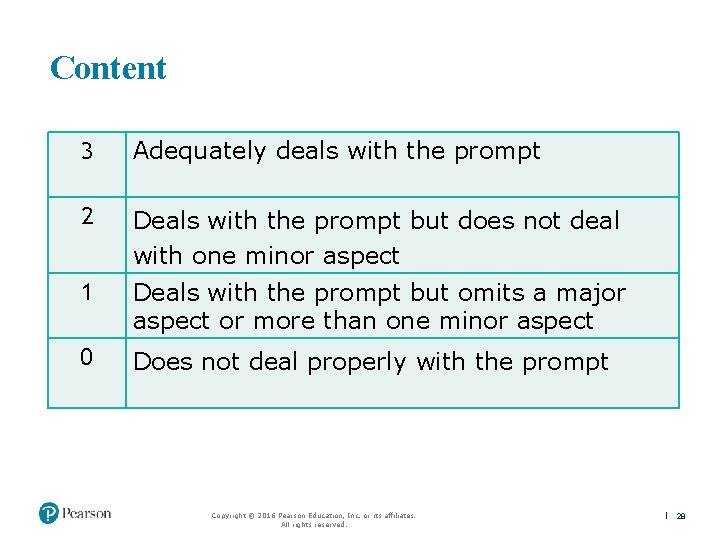

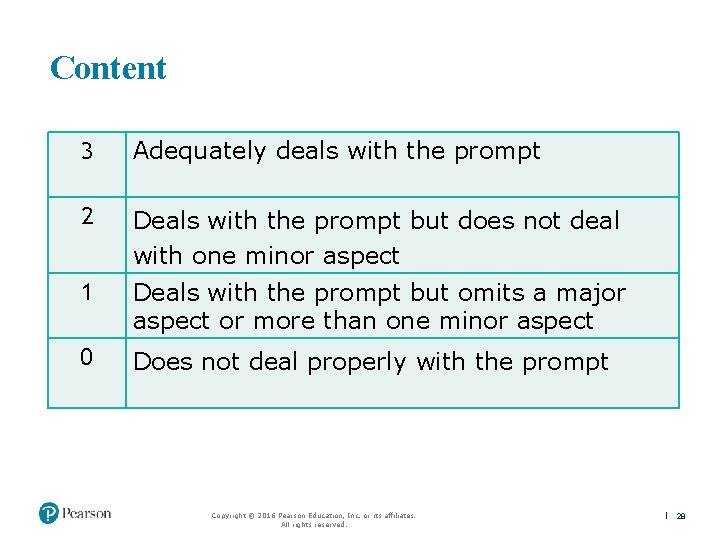

Content
• 3: Adequately deals with the prompt
• 2: Deals with the prompt but does not deal with one minor aspect
• 1: Deals with the prompt but omits a major aspect or more than one minor aspect
• 0: Does not deal properly with the prompt

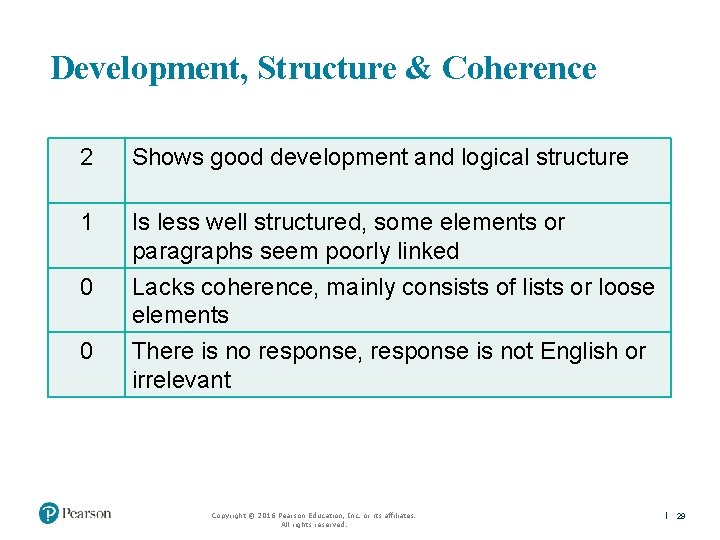

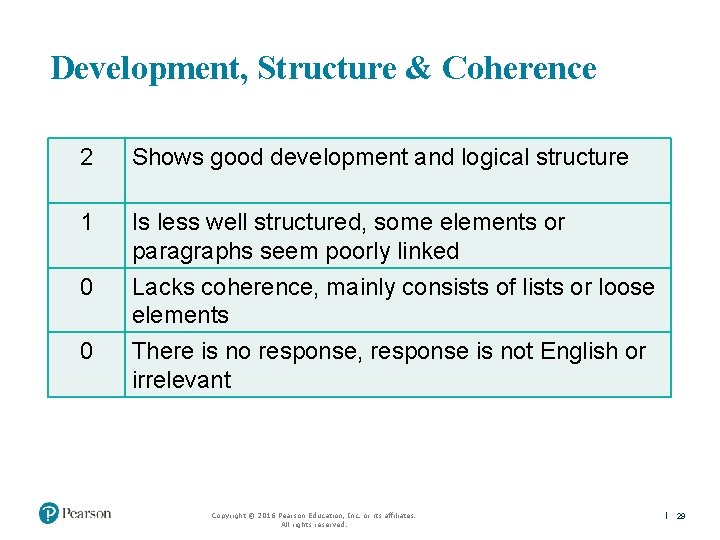

Development, Structure & Coherence
• 2: Shows good development and logical structure
• 1: Is less well structured, some elements or paragraphs seem poorly linked
• 0: Lacks coherence, mainly consists of lists or loose elements
• 0: There is no response, response is not English or irrelevant

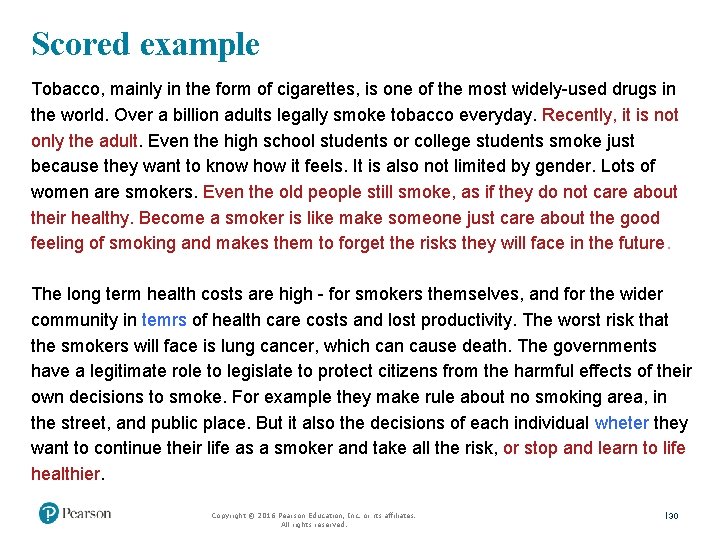

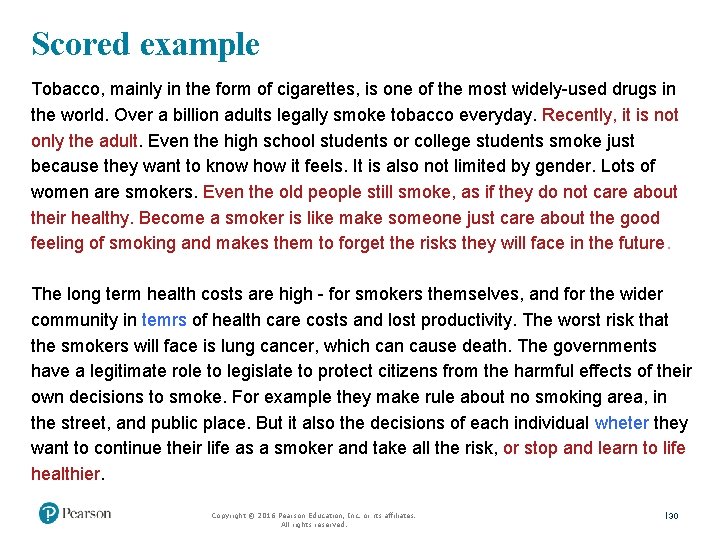

Scored example

Tobacco, mainly in the form of cigarettes, is one of the most widely-used drugs in the world. Over a billion adults legally smoke tobacco everyday. Recently, it is not only the adult. Even the high school students or college students smoke just because they want to know how it feels. It is also not limited by gender. Lots of women are smokers. Even the old people still smoke, as if they do not care about their healthy. Become a smoker is like make someone just care about the good feeling of smoking and makes them to forget the risks they will face in the future. The long term health costs are high - for smokers themselves, and for the wider community in temrs of health care costs and lost productivity. The worst risk that the smokers will face is lung cancer, which can cause death. The governments have a legitimate role to legislate to protect citizens from the harmful effects of their own decisions to smoke. For example they make rule about no smoking area, in the street, and public place. But it also the decisions of each individual wheter they want to continue their life as a smoker and take all the risk, or stop and learn to life healthier.

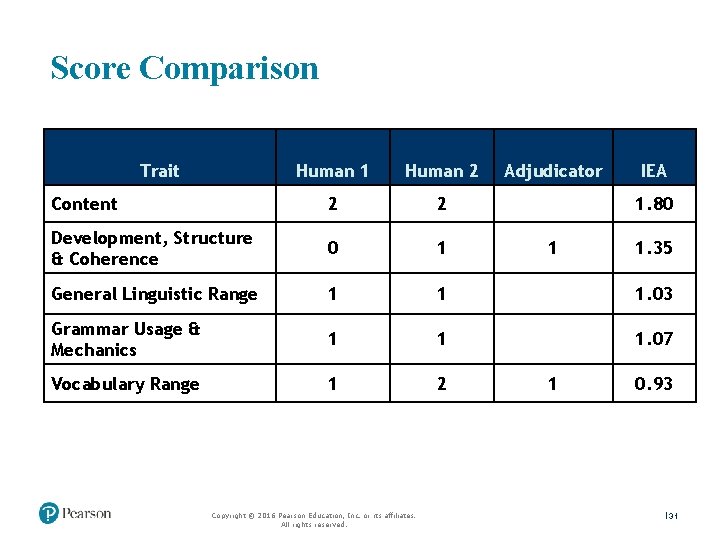

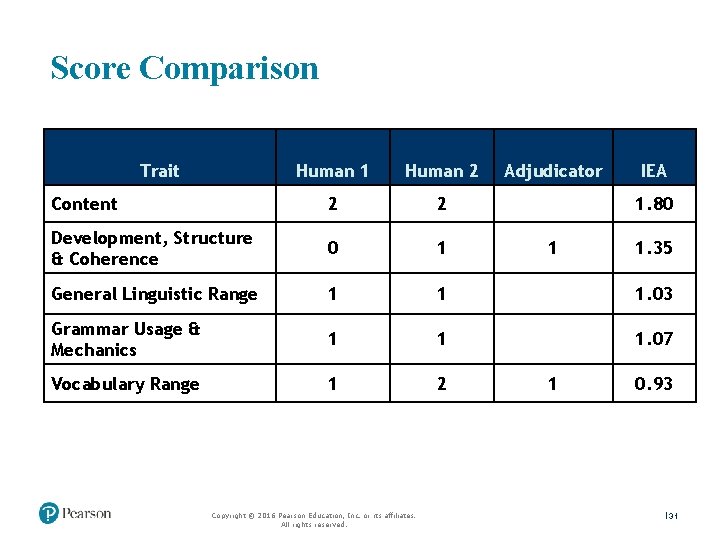

Score Comparison

| Trait | Human 1 | Human 2 | Adjudicator | IEA |
|---|---|---|---|---|
| Content | 2 | 2 | | 1.80 |
| Development, Structure & Coherence | 0 | 1 | 1 | 1.35 |
| General Linguistic Range | 1 | 1 | | 1.03 |
| Grammar Usage & Mechanics | 1 | 1 | | 1.07 |
| Vocabulary Range | 1 | 2 | 1 | 0.93 |

Automated Scoring – Speaking

It’s better than Siri!
Spontaneous vs constrained speech
• Siri reacts to spontaneous speech
• Test taker responses are constrained by the test item.

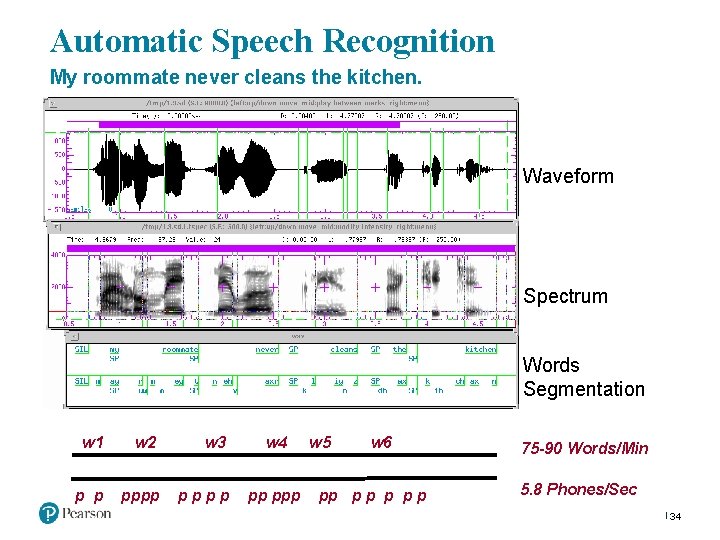

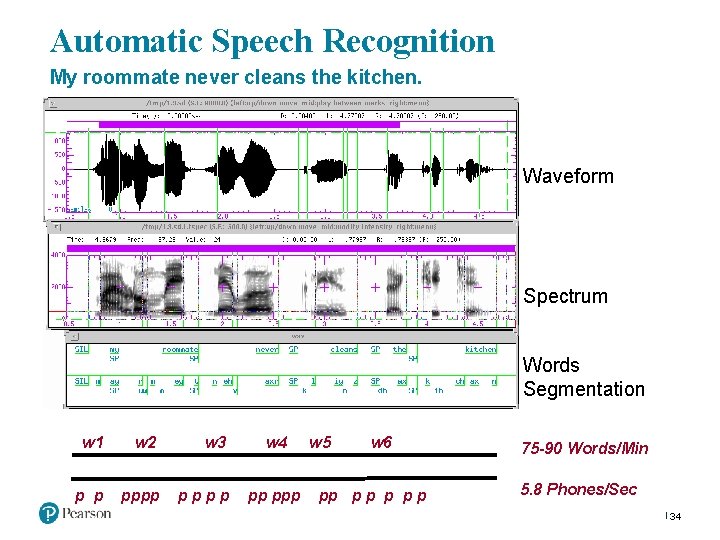

Automatic Speech Recognition (figure): the utterance “My roommate never cleans the kitchen.” shown as waveform, spectrum, words and segmentation into words (w1 to w6) and phones (p). Measures shown: 75–90 words/min, 5.8 phones/sec.

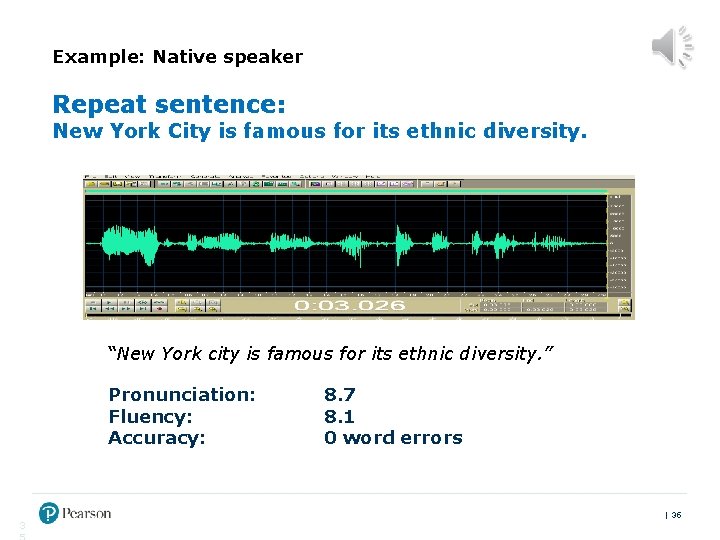

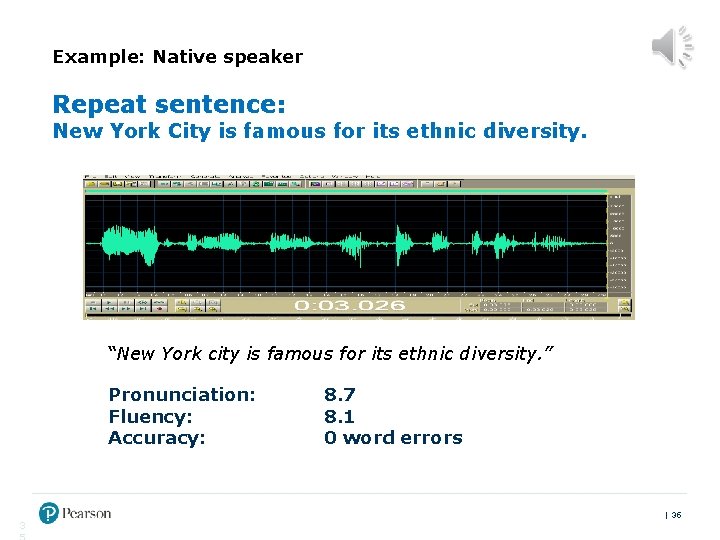

Example: Native speaker
Repeat sentence: New York City is famous for its ethnic diversity.
“New York city is famous for its ethnic diversity.”
• Pronunciation: 8.7
• Fluency: 8.1
• Accuracy: 0 word errors

Introducing PTE Academic

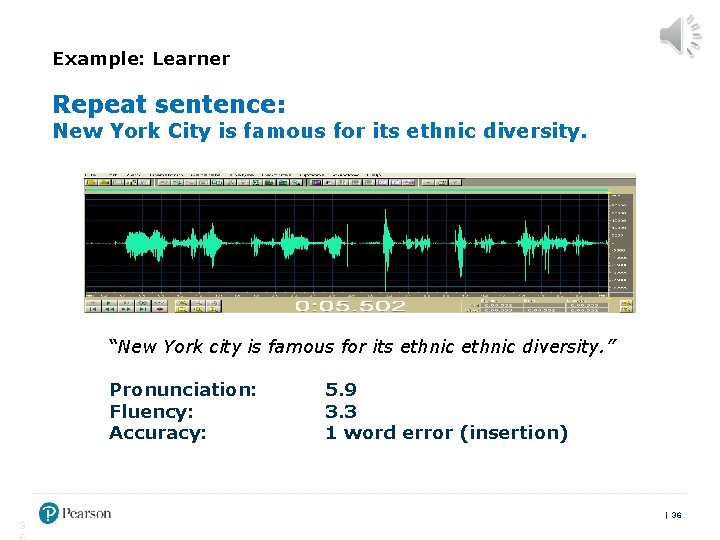

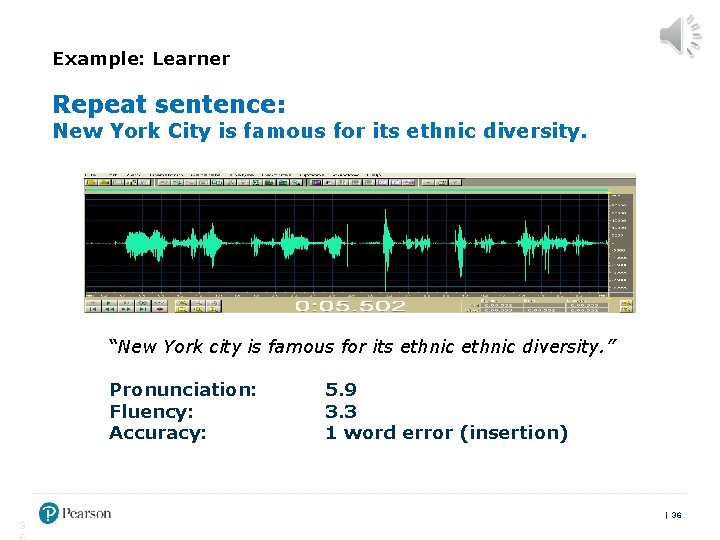

Example: Learner
Repeat sentence: New York City is famous for its ethnic diversity.
“New York city is famous for its ethnic diversity.”
• Pronunciation: 5.9
• Fluency: 3.3
• Accuracy: 1 word error (insertion)

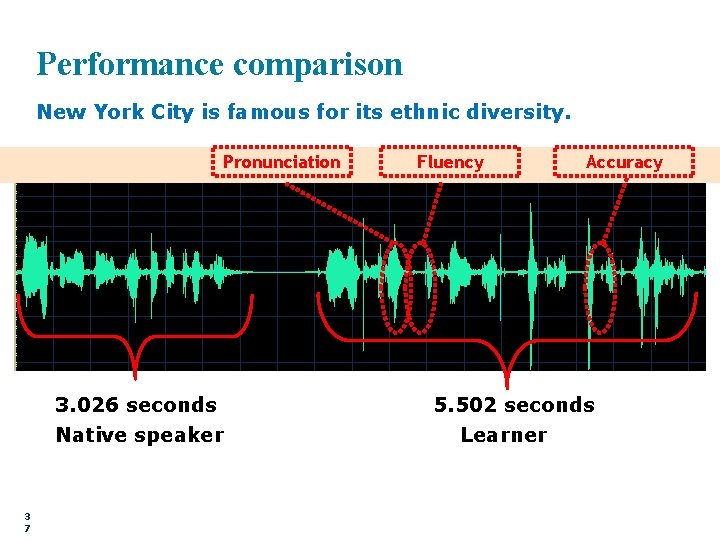

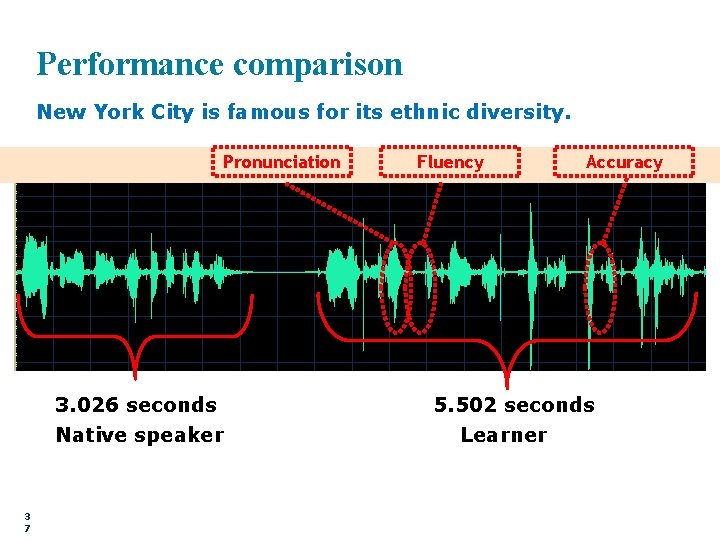

Performance comparison (figure): “New York City is famous for its ethnic diversity.” Native speaker: 3.026 seconds. Learner: 5.502 seconds. The two responses are compared on Pronunciation, Fluency and Accuracy.
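The two durations on this slide translate directly into speaking rates. The sketch below assumes that 3.026 s and 5.502 s are the total response times of the native speaker and the learner, and counts the nine words of the prompt rather than the words each speaker actually produced. Speaking rate is only one plausible timing feature; the slide does not say which features the scoring engine uses. The function name is invented for the example.

```python
def words_per_minute(word_count, duration_seconds):
    """Speaking rate in words per minute."""
    if duration_seconds <= 0:
        raise ValueError("duration must be positive")
    return word_count * 60.0 / duration_seconds


PROMPT = "New York City is famous for its ethnic diversity."
n_words = len(PROMPT.split())  # nine words in the prompt

# Durations taken from the slide; their assignment to speakers is assumed.
native_wpm = words_per_minute(n_words, 3.026)
learner_wpm = words_per_minute(n_words, 5.502)

print(f"native speaker: {native_wpm:.0f} words/min")
print(f"learner:        {learner_wpm:.0f} words/min")
```

On these assumptions the native speaker delivers the sentence at a little under twice the learner's rate.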

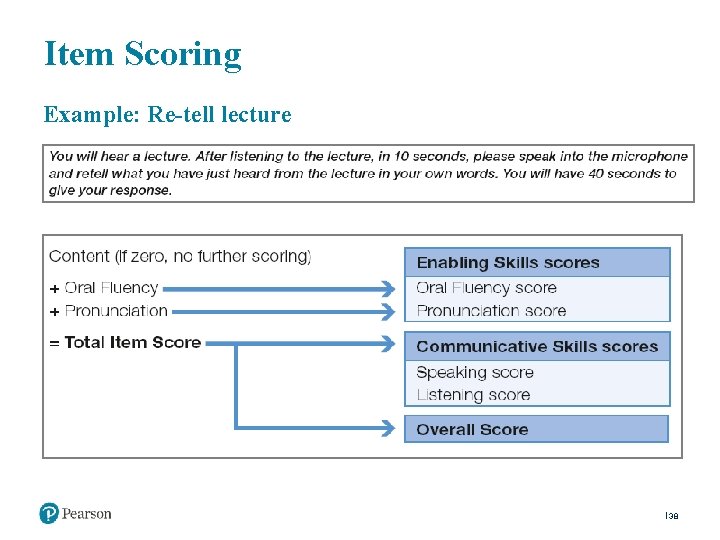

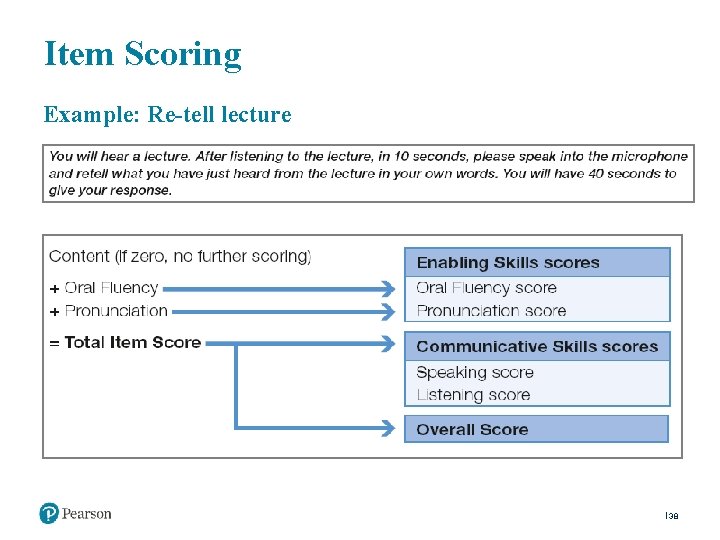

Item Scoring Example: Re-tell lecture

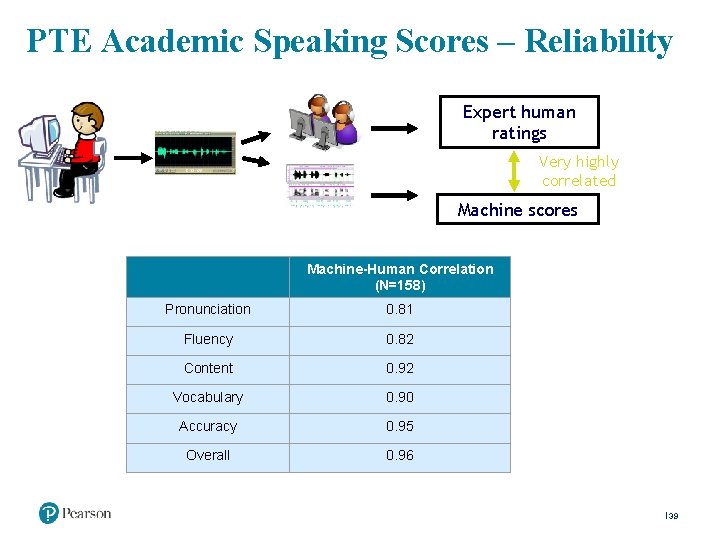

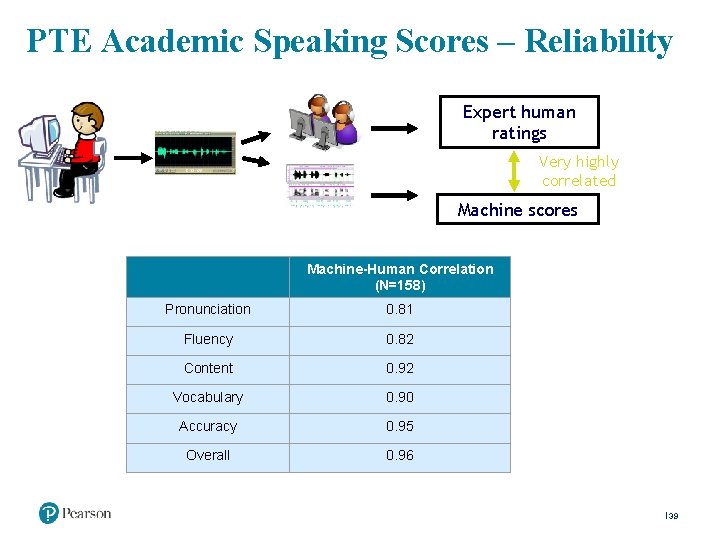

PTE Academic Speaking Scores – Reliability
Expert human ratings and machine scores are very highly correlated.

Machine-Human Correlation (N=158)

| Trait | Correlation |
|---|---|
| Pronunciation | 0.81 |
| Fluency | 0.82 |
| Content | 0.92 |
| Vocabulary | 0.90 |
| Accuracy | 0.95 |
| Overall | 0.96 |
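Machine-human agreement figures like those on this slide are typically correlation coefficients. The slide does not name the statistic, so the sketch below assumes Pearson's r and computes it for paired human and machine scores. The score lists are made-up illustration data, not PTE Academic results, and `pearson_r` is a hypothetical helper name.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length (at least 2 items)")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant scores")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical paired scores for five responses.
human_scores = [1, 2, 3, 4, 5]
machine_scores = [2, 1, 4, 3, 5]
r = pearson_r(human_scores, machine_scores)
```

A value near 1 means the machine ranks and spaces responses much as the human raters do; it does not by itself show that the two sets of scores are on the same scale.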

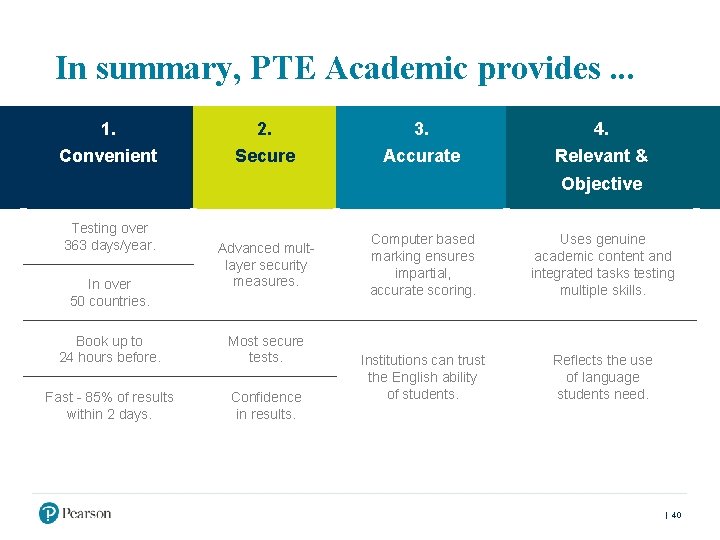

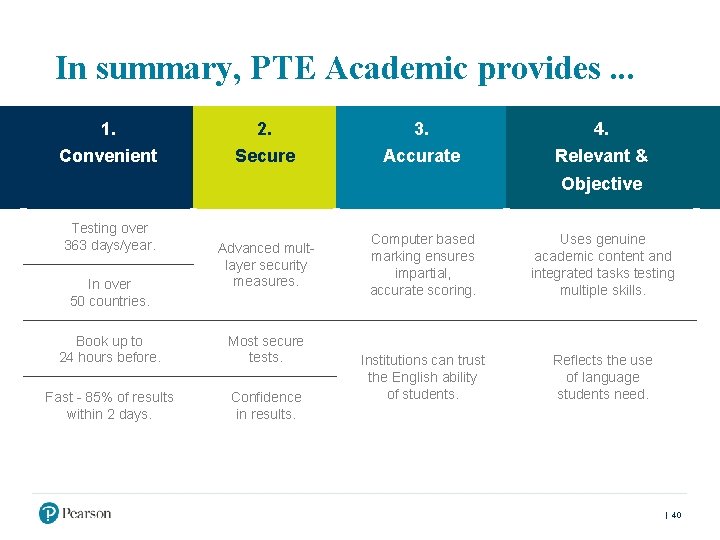

In summary, PTE Academic provides...
1. Convenient
2. Secure
3. Accurate
4. Relevant & Objective

• Testing over 363 days/year, in over 50 countries.
• Book up to 24 hours before.
• Fast: 85% of results within 2 days.
• Advanced multilayer security measures.
• Most secure tests.
• Confidence in results.
• Computer-based marking ensures impartial, accurate scoring.
• Institutions can trust the English ability of students.
• Uses genuine academic content and integrated tasks testing multiple skills.
• Reflects the use of language students need.

References
• Bernstein, J., Van Moere, A., & Cheng, J. (2010). Validating automated speaking tests. Language Testing, 27(3), 355–377.
• Bernstein, J., DeLund, D., Foltz, P., & Streeter, L. (2011). Pearson’s Automated Scoring of Writing, Speaking and Mathematics. Pearson.
• Brown, A., Iwashita, N., & McNamara, T. (2005). Rater Orientations and Test-Taker Performance on English-for-Academic-Purposes Speaking Tasks. ETS.
• Landauer, T. K., Foltz, P. W., & Laham, D. (1998). Introduction to Latent Semantic Analysis. Discourse Processes, 259–284.
• Landauer, T. K., Laham, D., & Foltz, P. W. (2003). Automated scoring and annotation of essays with the Intelligent Essay Assessor. Automated essay scoring: A cross-disciplinary perspective, 87–112.
• Van Moere, A. (2016). The Magnificent Marking Machine. Pearson and ELTjam.
• Zhang, M. (2013). Contrasting Automated and Human Scoring of Essays. R&D Connections, 21. ETS.

Questions?