Pattern Recognition: Neural Networks & Other Methods


Charles Tappert, Seidenberg School of CSIS, Pace University

Agenda
- Neural Network Definitions
- Linear Discriminant Functions
  - Simple Two-layer Perceptron
- Multilayer Neural Networks
- Example Multilayer Neural Network Study
- Non Neural Network Pattern Recognition Methods

Neural Network Definitions
- An artificial neural network (ANN) consists of artificial neuron units (threshold logic units) with weighted interconnections
- Perceptron is a term coined by Frank Rosenblatt in the late 1950s for an ANN
  - Unfortunately, the term Perceptron is often misconstrued to mean only a simple two-layer ANN
  - Therefore, we use the term “simple Perceptron” when referring to a simple two-layer ANN

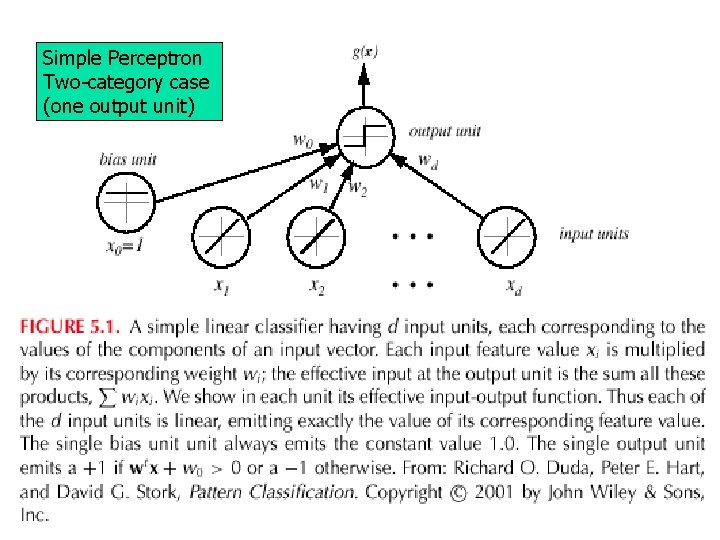

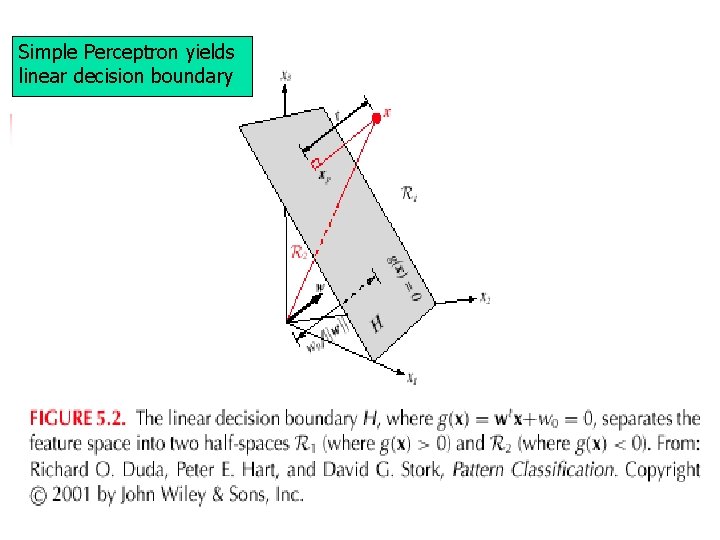

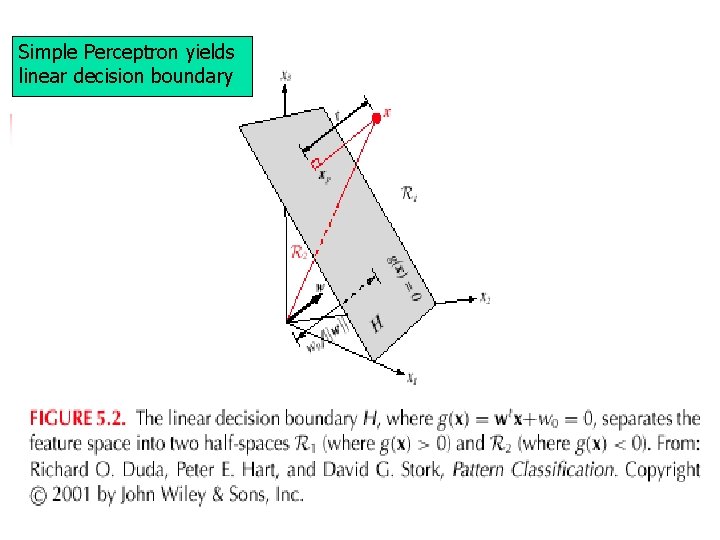

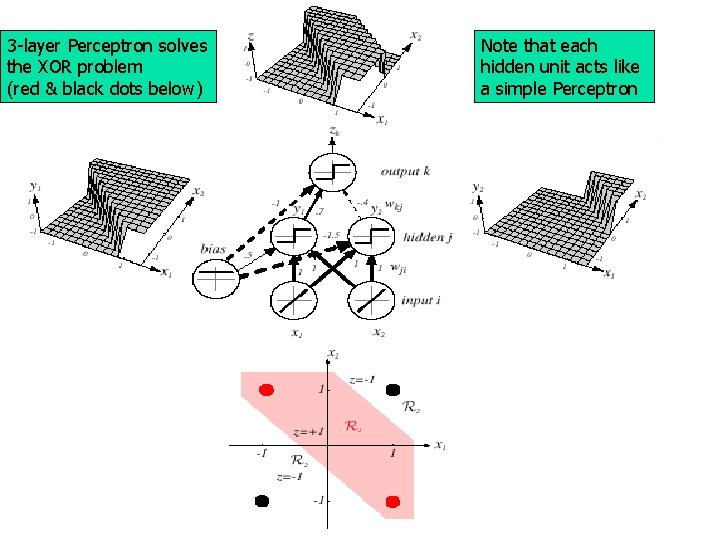

Linear Discriminant Functions
- Linear functions of parameters (e.g., features)
  - The inner product of an input vector and a weight vector
  - Hyperplane decision boundaries
- Methods of solution
  - Simple two-layer Perceptron
    - One weight set connecting input to output units
    - Some simple problems are unsolvable, e.g., XOR
  - Solve the linear algebra directly
  - Support Vector Machines (SVM)
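
A minimal sketch of a two-category linear discriminant, assuming the common form g(x) = w·x + w0 with class 1 chosen when g(x) > 0 and the hyperplane g(x) = 0 as the decision boundary. The function names and the weights are hypothetical; the weights place the boundary on the line x1 + x2 = 1.5, which happens to separate AND-style data.

```python
from typing import Sequence

def linear_discriminant(x: Sequence[float], w: Sequence[float], w0: float) -> float:
    """g(x) = w . x + w0: inner product of input and weight vectors plus a bias."""
    return sum(wi * xi for wi, xi in zip(w, x)) + w0

def classify(x: Sequence[float], w: Sequence[float], w0: float) -> int:
    """Two-category decision: class 1 on the positive side of the hyperplane, else class 2."""
    return 1 if linear_discriminant(x, w, w0) > 0 else 2

# Hypothetical weights: the boundary is the line x1 + x2 = 1.5
w, w0 = [1.0, 1.0], -1.5
for point in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(point, classify(point, w, w0))
```

Only (1, 1) lands on the positive side here. No choice of w and w0 can do the same for XOR, which is why the simple Perceptron fails on it.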

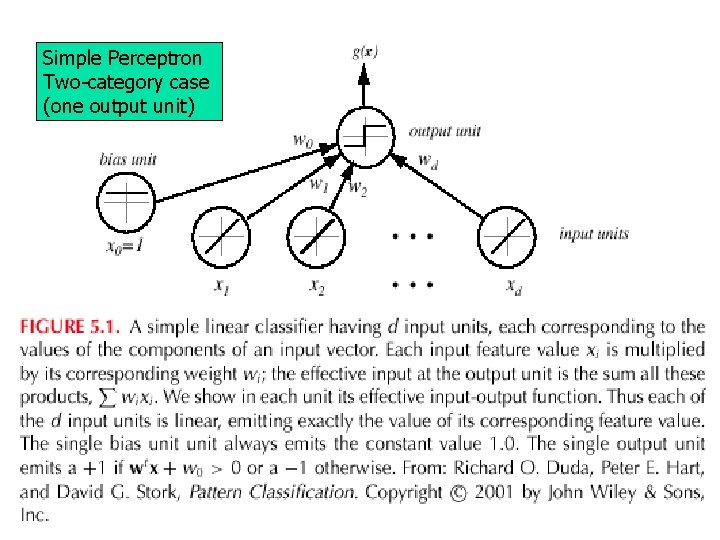

Simple Perceptron: Two-category case (one output unit)

The simple Perceptron yields a linear decision boundary
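
The sketch below applies a fixed-increment Perceptron update to a single output unit (labels +1/-1, with a separate bias term). The function name, learning rate, and epoch limit are illustrative assumptions, not taken from the slides. Trained on AND it reaches zero errors; on XOR it never does, because no single line separates the XOR classes.

```python
def train_perceptron(samples, labels, lr=1.0, max_epochs=100):
    """Fixed-increment Perceptron rule for one output unit.
    samples: feature tuples; labels: +1 or -1. Returns (weights, bias, converged)."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, t in zip(samples, labels):
            y = 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1
            if y != t:
                # Shift the hyperplane toward the misclassified sample
                w = [wi + lr * t * xi for wi, xi in zip(w, x)]
                b += lr * t
                errors += 1
        if errors == 0:  # a full epoch with no mistakes: the data are separated
            return w, b, True
    return w, b, False

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_labels = [-1, -1, -1, 1]
xor_labels = [-1, 1, 1, -1]
print(train_perceptron(inputs, and_labels)[2])  # True: AND is linearly separable
print(train_perceptron(inputs, xor_labels)[2])  # False: XOR is not
```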

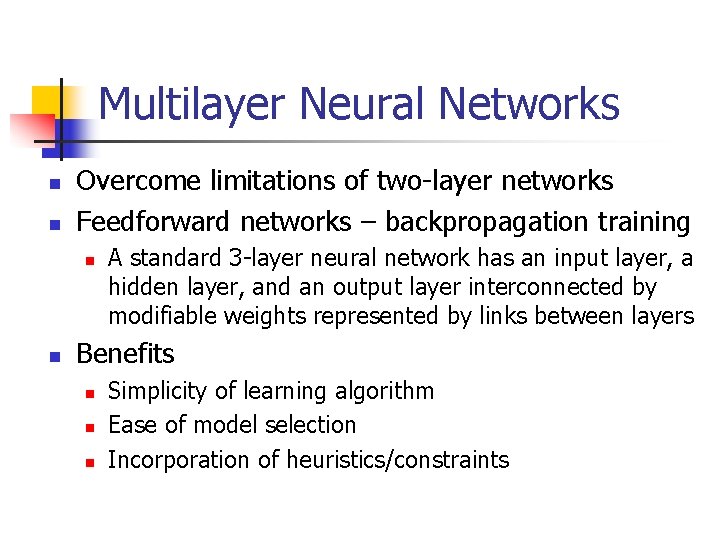

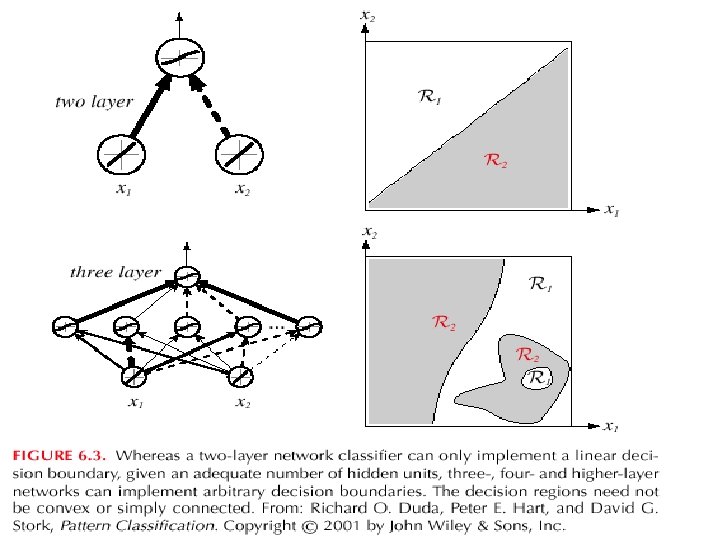

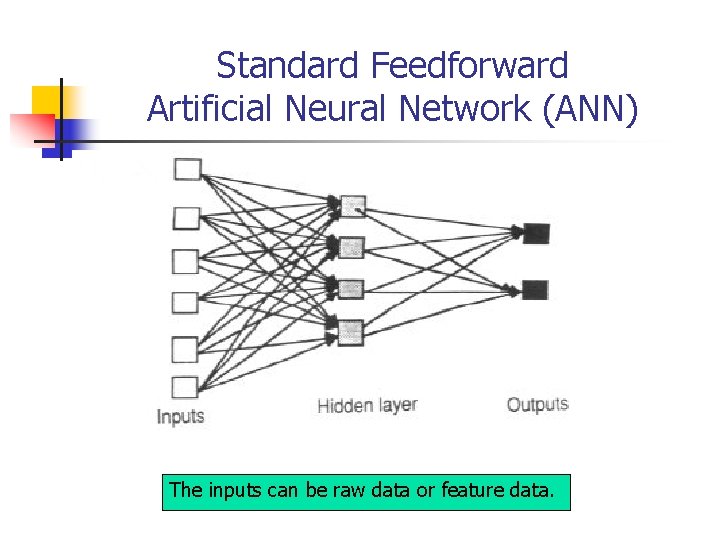

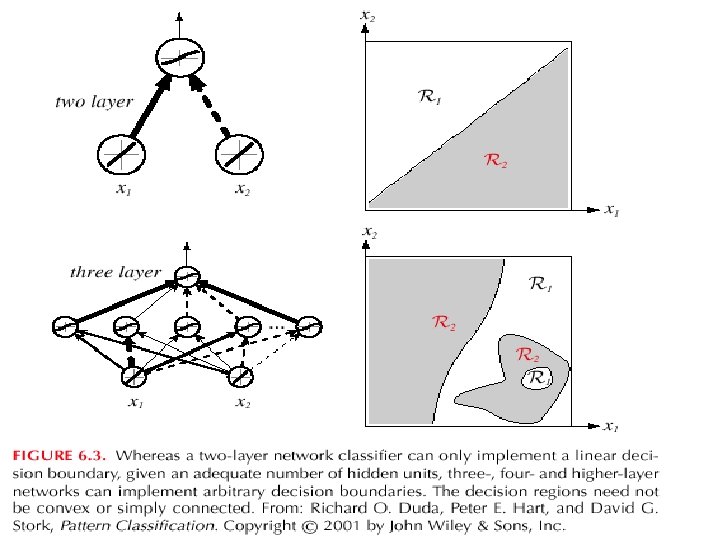

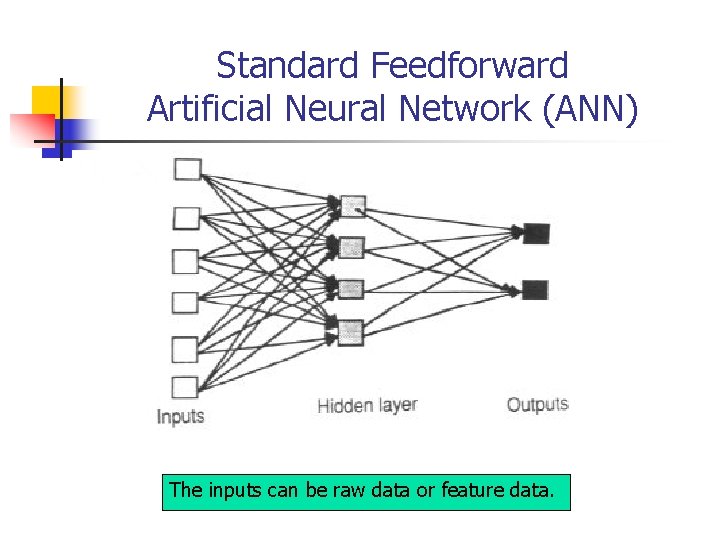

Multilayer Neural Networks
- Overcome limitations of two-layer networks
- Feedforward networks: backpropagation training
  - A standard 3-layer neural network has an input layer, a hidden layer, and an output layer, interconnected by modifiable weights represented by links between layers
- Benefits
  - Simplicity of learning algorithm
  - Ease of model selection
  - Incorporation of heuristics/constraints
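
A pure-Python sketch of backpropagation training for a standard 3-layer network, assuming sigmoid units and squared error. Bias terms are omitted for brevity, and the layer sizes, random weights, training pattern, and learning rate are all hypothetical.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, W2):
    """Input -> hidden -> output. W1[j][i] links input i to hidden unit j;
    W2[k][j] links hidden unit j to output unit k (no bias terms, a simplification)."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    y = [sigmoid(sum(w * hj for w, hj in zip(row, h))) for row in W2]
    return h, y

def squared_error(x, t, W1, W2) -> float:
    _, y = forward(x, W1, W2)
    return 0.5 * sum((tk - yk) ** 2 for tk, yk in zip(t, y))

def backprop_step(x, t, W1, W2, lr=0.1):
    """One gradient-descent update of both weight layers for a single pattern."""
    h, y = forward(x, W1, W2)
    # Output sensitivities: dE/dnet_k = (y_k - t_k) * y_k * (1 - y_k)
    d_out = [(yk - tk) * yk * (1.0 - yk) for yk, tk in zip(y, t)]
    # Hidden sensitivities: errors propagated back through the pre-update output weights
    d_hid = [hj * (1.0 - hj) * sum(d_out[k] * W2[k][j] for k in range(len(W2)))
             for j, hj in enumerate(h)]
    W2_new = [[W2[k][j] - lr * d_out[k] * h[j] for j in range(len(h))]
              for k in range(len(W2))]
    W1_new = [[W1[j][i] - lr * d_hid[j] * x[i] for i in range(len(x))]
              for j in range(len(W1))]
    return W1_new, W2_new

# Hypothetical sizes: 3 inputs, 4 hidden units, 2 outputs
rng = random.Random(0)
W1 = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(4)]
W2 = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(2)]
x, t = [1.0, 0.0, 1.0], [1.0, 0.0]
before = squared_error(x, t, W1, W2)
for _ in range(100):
    W1, W2 = backprop_step(x, t, W1, W2)
after = squared_error(x, t, W1, W2)
print(before, after)  # the error on this pattern shrinks with repeated steps
```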

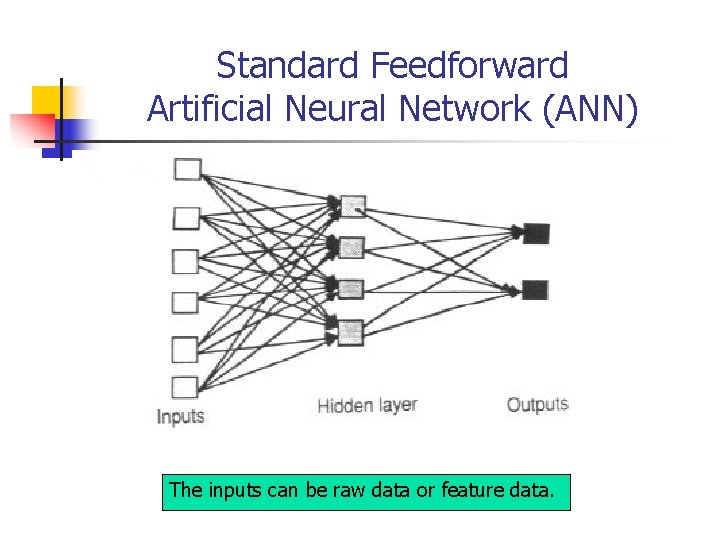

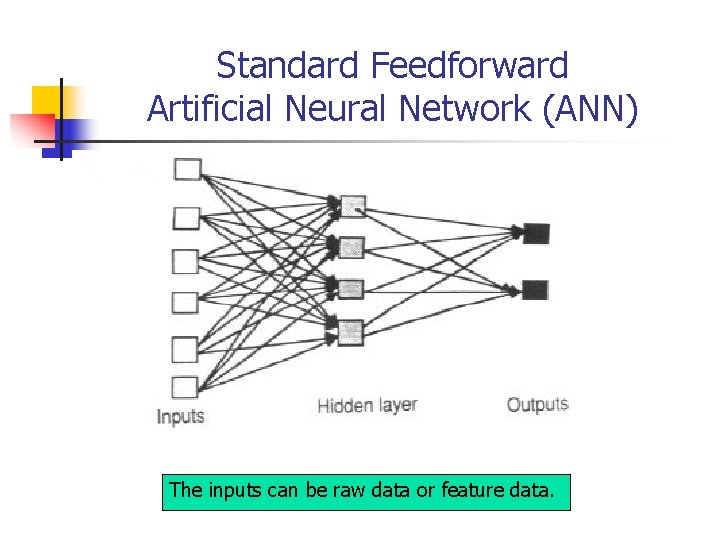

Standard Feedforward Artificial Neural Network (ANN): the inputs can be raw data or feature data.

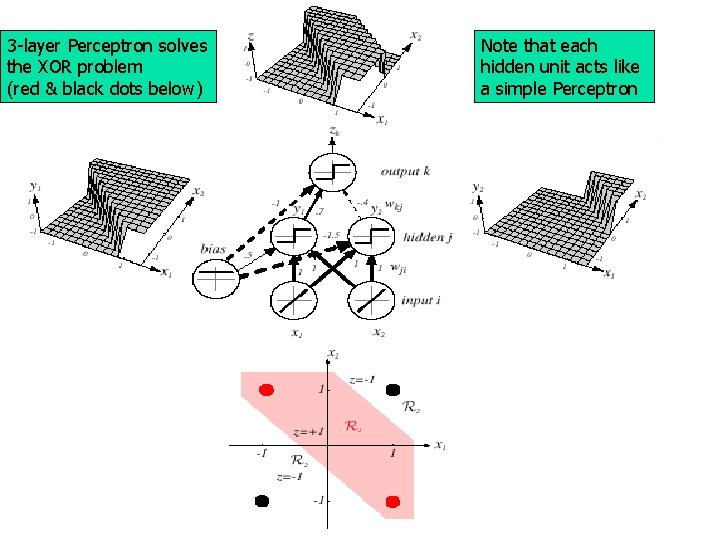

A 3-layer Perceptron solves the XOR problem (red and black dots below). Note that each hidden unit acts like a simple Perceptron.
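
To make the "each hidden unit acts like a simple Perceptron" point concrete, here is a hand-wired sketch with threshold units. The particular weights (an OR unit and a NAND unit feeding an AND output) are one illustrative choice, not necessarily the weights in the figure.

```python
def step(z: float) -> int:
    """Threshold logic unit: fires (1) when its net input is positive."""
    return 1 if z > 0 else 0

def xor_net(x1: int, x2: int) -> int:
    h1 = step(x1 + x2 - 0.5)    # hidden unit 1: a simple Perceptron computing OR
    h2 = step(-x1 - x2 + 1.5)   # hidden unit 2: a simple Perceptron computing NAND
    return step(h1 + h2 - 1.5)  # output unit: AND of the two hidden lines

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```

Each hidden unit draws one line in the input plane; the output unit keeps only the band between the two lines, which is exactly the XOR-true region.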

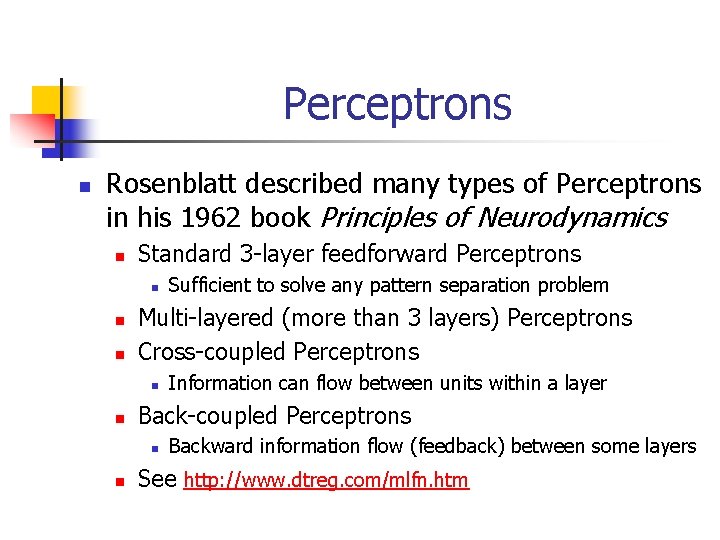

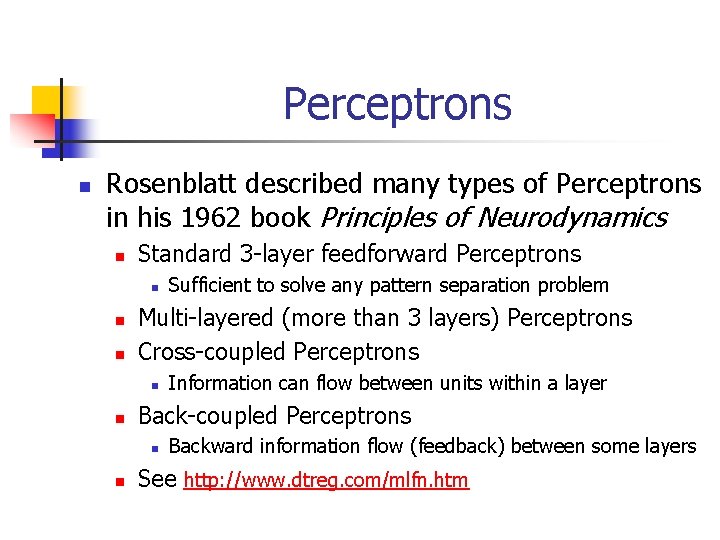

Perceptrons
- Rosenblatt described many types of Perceptrons in his 1962 book Principles of Neurodynamics
  - Standard 3-layer feedforward Perceptrons
    - Sufficient to solve any pattern separation problem
  - Multi-layered (more than 3 layers) Perceptrons
  - Cross-coupled Perceptrons
    - Information can flow between units within a layer
  - Back-coupled Perceptrons
    - Backward information flow (feedback) between some layers
- See http://www.dtreg.com/mlfn.htm

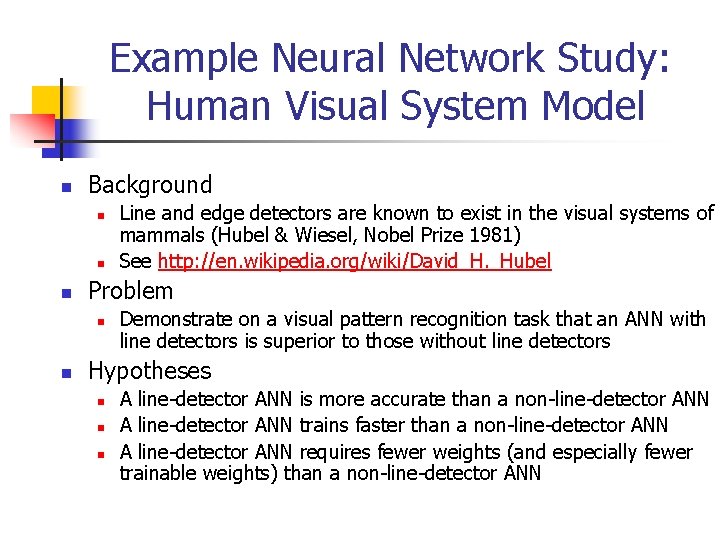

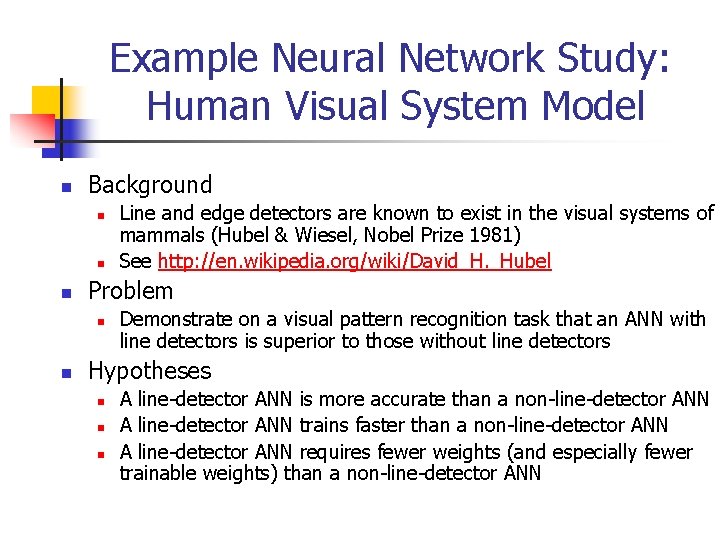

Example Neural Network Study: Human Visual System Model
- Background
  - Line and edge detectors are known to exist in the visual systems of mammals (Hubel & Wiesel, Nobel Prize 1981)
  - See http://en.wikipedia.org/wiki/David_H._Hubel
- Problem
  - Demonstrate on a visual pattern recognition task that an ANN with line detectors is superior to one without line detectors
- Hypotheses
  - A line-detector ANN is more accurate than a non-line-detector ANN
  - A line-detector ANN trains faster than a non-line-detector ANN
  - A line-detector ANN requires fewer weights (and especially fewer trainable weights) than a non-line-detector ANN

Example Neural Network Study: Human Visual System Model
- Introduction: make a case for the study
  - The Visual System
  - Biological Simulations of the Visual System
  - ANN approach to visual pattern recognition
  - ANNs Using Line and/or Edge Detectors
  - Current Study
- Methodology
- Experimental Results
- Conclusions and Future Work

The Visual System
- The Visual System Pathway
  - Eye, optic nerve, lateral geniculate nucleus, visual cortex
- Hubel and Wiesel
  - 1981 Nobel Prize for work in the early 1960s
  - Cat's visual cortex
    - Cats anesthetized, eyes open, with controlling muscles paralyzed to fix the stare in a specific direction
    - Thin microelectrodes measure activity in individual cells
    - Cells specifically sensitive to a line of light at a specific orientation
  - Key discovery: line and edge detectors

Biological Simulations of the Visual System: Computational Neuroscience
- The Hubel-Wiesel discoveries were instrumental in the creation of what is now called computational neuroscience
  - It studies brain function in terms of the information processing properties of the structures that make up the nervous system
  - It creates biologically detailed models of the brain
- November 2009: IBM announced that it had created the largest brain simulation to date on the Blue Gene supercomputer
  - A billion neurons and trillions of synapses, exceeding those in a cat's brain
  - http://www.popsci.com/technology/article/2009-11/digital-cat-brain-runs-blue-gene-supercomputer

Artificial Neural Network Approach
- Machine learning scientists have taken a different approach to visual pattern recognition, using simpler neural network models called ANNs
- The most common type of ANN used in pattern recognition is a 3-layer feedforward ANN
  - Input layer
  - Hidden layer
  - Output layer

Standard Feedforward Artificial Neural Network (ANN): the inputs can be raw data or feature data.

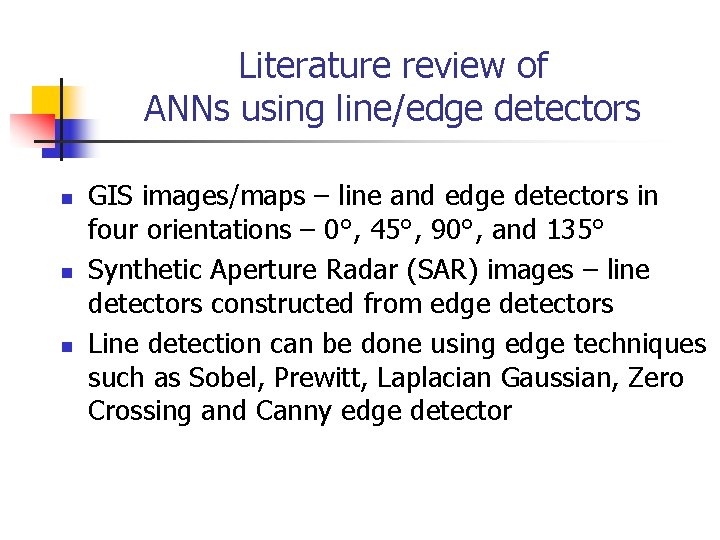

Literature review of ANNs using line/edge detectors
- GIS images/maps: line and edge detectors in four orientations (0°, 45°, 90°, and 135°)
- Synthetic Aperture Radar (SAR) images: line detectors constructed from edge detectors
- Line detection can be done using edge techniques such as Sobel, Prewitt, Laplacian of Gaussian, zero crossing, and the Canny edge detector

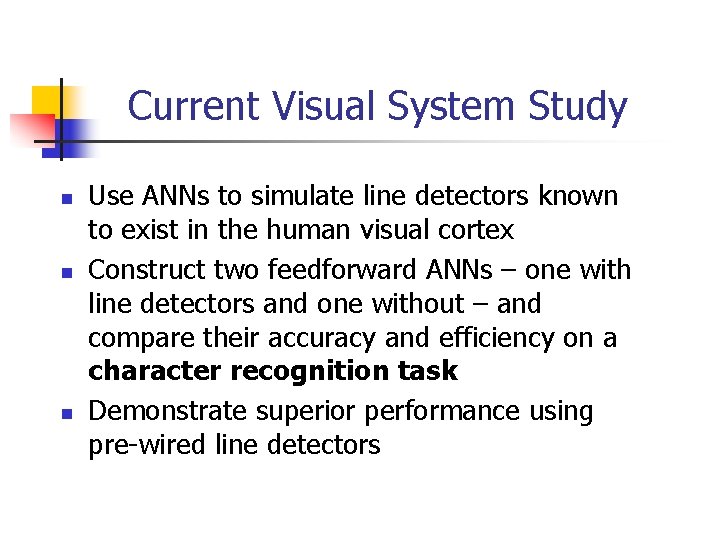

Current Visual System Study
- Use ANNs to simulate line detectors known to exist in the human visual cortex
- Construct two feedforward ANNs, one with line detectors and one without, and compare their accuracy and efficiency on a character recognition task
- Demonstrate superior performance using pre-wired line detectors

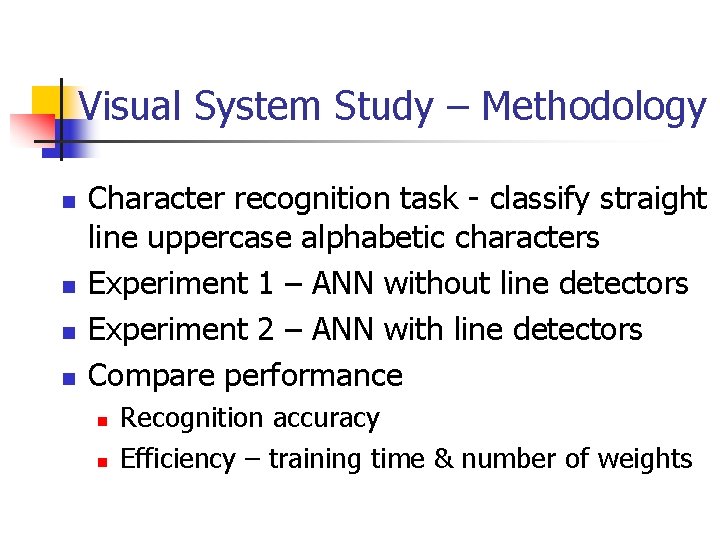

Visual System Study: Methodology
- Character recognition task: classify straight-line uppercase alphabetic characters
- Experiment 1: ANN without line detectors
- Experiment 2: ANN with line detectors
- Compare performance
  - Recognition accuracy
  - Efficiency: training time and number of weights

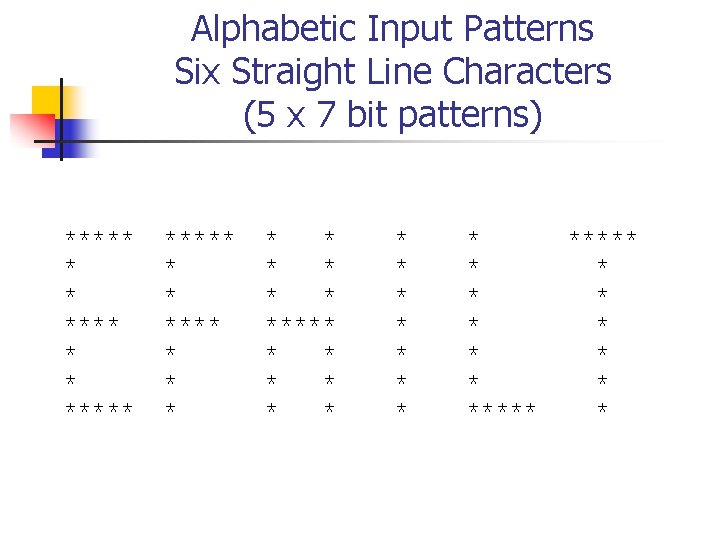

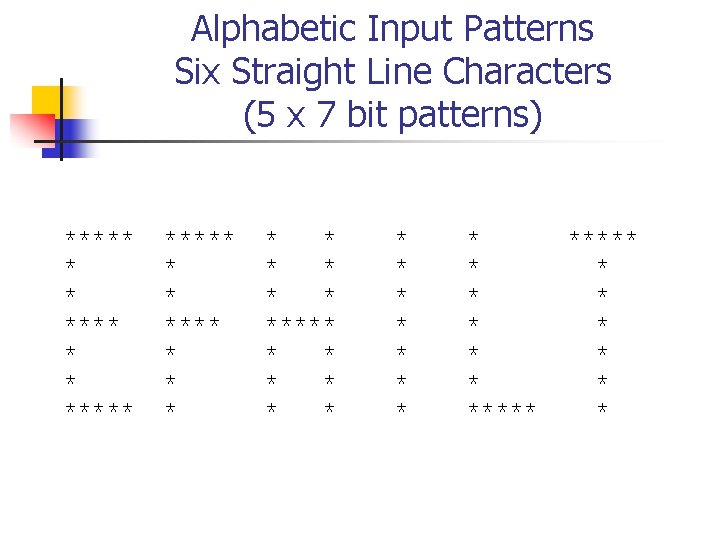

Alphabetic Input Patterns: Six Straight-Line Characters (E, F, H, I, L, T as 5 x 7 bit patterns)

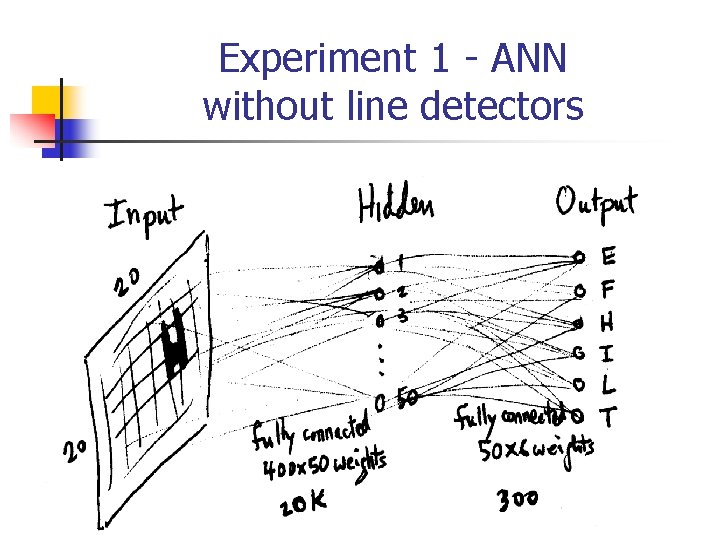

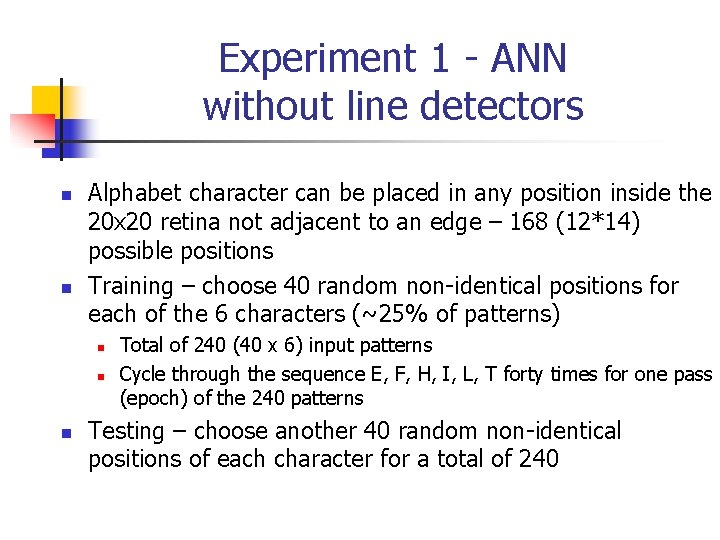

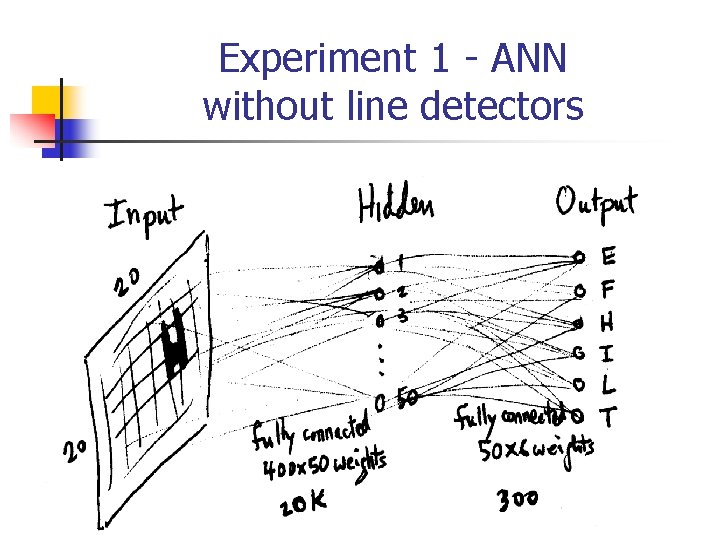

Experiment 1 - ANN without line detectors

Experiment 1 - ANN without line detectors
- An alphabetic character can be placed in any position inside the 20 x 20 retina that is not adjacent to an edge: 168 (12 x 14) possible positions
- Training: choose 40 random non-identical positions for each of the 6 characters (~25% of the 168 possible positions)
  - Total of 240 (40 x 6) input patterns
  - Cycle through the sequence E, F, H, I, L, T forty times for one pass (epoch) of the 240 patterns
- Testing: choose another 40 random non-identical positions of each character, for a total of 240
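
The position count and the sampling scheme can be sketched as follows. Assumptions not stated on the slide: positions are the (row, col) of a character's top-left corner, the random generator is seeded, and the 40 test positions per character are drawn disjoint from the 40 training positions. `valid_positions` and `make_split` are hypothetical names.

```python
import random

RETINA = 20            # 20 x 20 retina
CHAR_W, CHAR_H = 5, 7  # each character is a 5 x 7 bit pattern
LETTERS = "EFHILT"

def valid_positions():
    """Top-left corners (row, col) that keep every character pixel off the border rows/columns."""
    rows = range(1, RETINA - 1 - CHAR_H + 1)  # rows 1..12 -> 12 choices
    cols = range(1, RETINA - 1 - CHAR_W + 1)  # cols 1..14 -> 14 choices
    return [(r, c) for r in rows for c in cols]

def make_split(per_char=40, seed=0):
    """Distinct positions per letter; test positions kept disjoint from training (assumption)."""
    rng = random.Random(seed)
    train, test = {}, {}
    for ch in LETTERS:
        picks = rng.sample(valid_positions(), 2 * per_char)
        train[ch], test[ch] = picks[:per_char], picks[per_char:]
    # One epoch cycles through E, F, H, I, L, T forty times: 240 presentations
    epoch = [(ch, train[ch][i]) for i in range(per_char) for ch in LETTERS]
    return train, test, epoch

train, test, epoch = make_split()
print(len(valid_positions()), len(epoch), sum(len(v) for v in test.values()))  # 168 240 240
```

Since 40 / 168 is about 0.24, each character's training set covers roughly a quarter of its possible placements.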

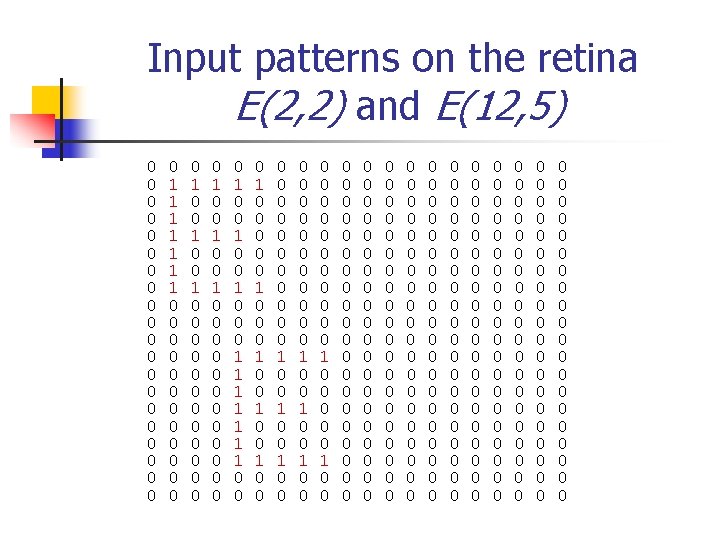

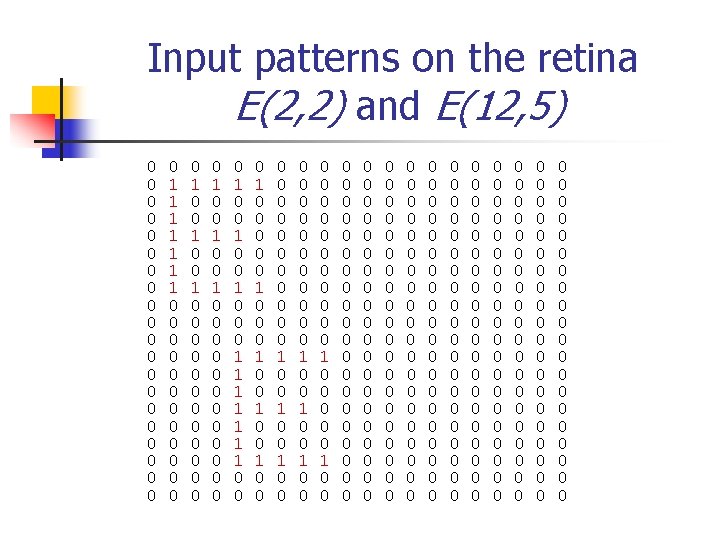

Input patterns on the retina: E(2, 2) and E(12, 5)
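
A sketch of how a character could be written onto the 20 x 20 binary retina. Two assumptions are labeled here: E(r, c) is read as the (row, col) of the top-left corner, and the 5 x 7 E bitmap below (with a 4-pixel middle bar) is a guess at the slide's pattern. `E_BITMAP` and `place` are hypothetical names.

```python
E_BITMAP = [  # assumed 5 x 7 E; '*' = pixel on
    "*****",
    "*....",
    "*....",
    "****.",
    "*....",
    "*....",
    "*****",
]

def place(bitmap, row, col, size=20):
    """Return a size x size 0/1 retina with the bitmap's top-left corner at (row, col)."""
    retina = [[0] * size for _ in range(size)]
    for dr, line in enumerate(bitmap):
        for dc, ch in enumerate(line):
            if ch == "*":
                retina[row + dr][col + dc] = 1
    return retina

for r, c in [(2, 2), (12, 5)]:
    retina = place(E_BITMAP, r, c)
    print(f"E({r}, {c}): {sum(map(sum, retina))} pixels on")
    for line in retina:
        print(" ".join(str(v) for v in line))
```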

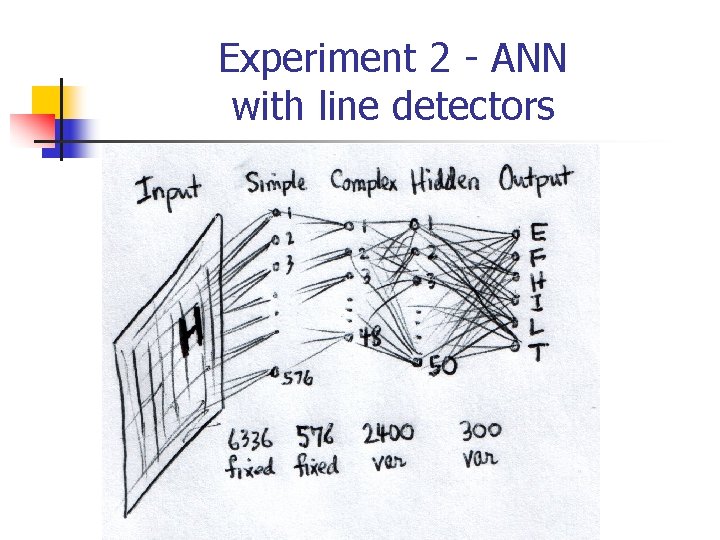

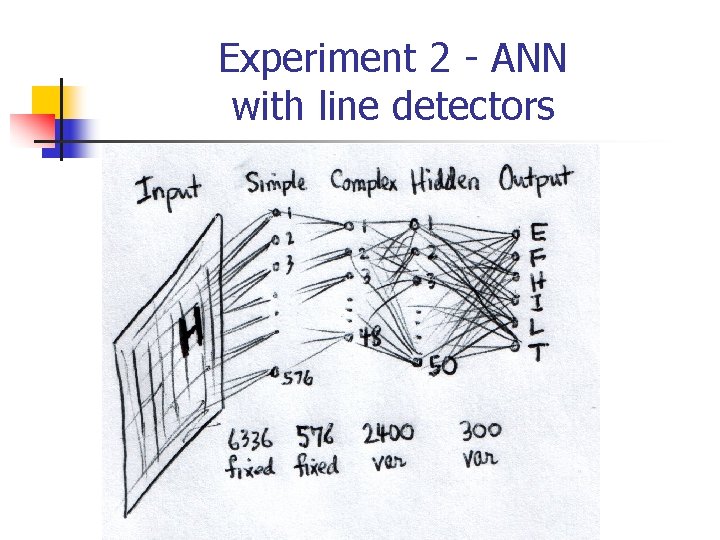

Experiment 2 - ANN with line detectors

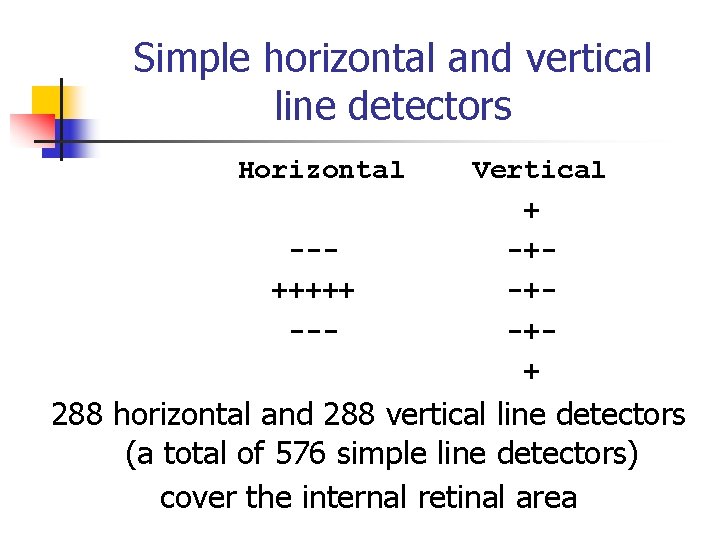

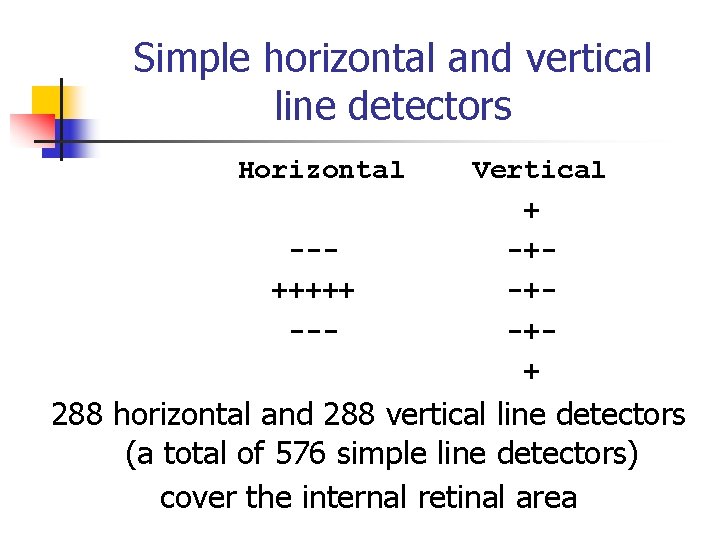

Simple horizontal and vertical line detectors (figure: + and - weight layouts of the horizontal and vertical detectors)
- 288 horizontal and 288 vertical line detectors (a total of 576 simple line detectors) cover the internal retinal area
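
The exact +/- layout of the slide's detectors cannot be recovered from the text, so the sketch below uses an assumed layout: a 3-pixel "+" segment lying inside the internal retinal area, flanked by "-" pixels on both sides, and a hypothetical firing threshold of 2. This particular choice happens to give 18 x 16 = 288 positions per orientation (576 in total), matching the slide's count, but it should be read as an illustration rather than the study's actual detectors.

```python
SIZE = 20   # 20 x 20 retina
LENGTH = 3  # assumed length of the '+' segment

def horizontal_detector(r, c, length=LENGTH):
    """'+' pixels along row r starting at column c; '-' pixels directly above and below."""
    plus = [(r, c + k) for k in range(length)]
    minus = [(r - 1, c + k) for k in range(length)] + [(r + 1, c + k) for k in range(length)]
    return plus, minus

def vertical_detector(r, c, length=LENGTH):
    """'+' pixels down column c starting at row r; '-' pixels directly left and right."""
    plus = [(r + k, c) for k in range(length)]
    minus = [(r + k, c - 1) for k in range(length)] + [(r + k, c + 1) for k in range(length)]
    return plus, minus

def all_detectors(size=SIZE, length=LENGTH):
    """Every placement whose '+' segment lies in the interior (rows/cols 1..size-2)."""
    interior = range(1, size - 1)             # 18 interior rows or columns
    starts = range(1, size - 1 - length + 1)  # 16 start positions for a 3-pixel segment
    horiz = [horizontal_detector(r, c, length) for r in interior for c in starts]
    vert = [vertical_detector(r, c, length) for r in starts for c in interior]
    return horiz, vert

def fires(retina, detector, threshold=2):
    """Hypothetical threshold unit: (sum of '+' pixels) - (sum of '-' pixels) >= threshold."""
    plus, minus = detector
    net = sum(retina[r][c] for r, c in plus) - sum(retina[r][c] for r, c in minus)
    return net >= threshold

horiz, vert = all_detectors()
print(len(horiz), len(vert), len(horiz) + len(vert))  # 288 288 576

# A horizontal bar on row 5, columns 3..7, excites a horizontal detector but not a vertical one
retina = [[0] * SIZE for _ in range(SIZE)]
for col in range(3, 8):
    retina[5][col] = 1
print(fires(retina, horizontal_detector(5, 4)), fires(retina, vertical_detector(4, 5)))  # True False
```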

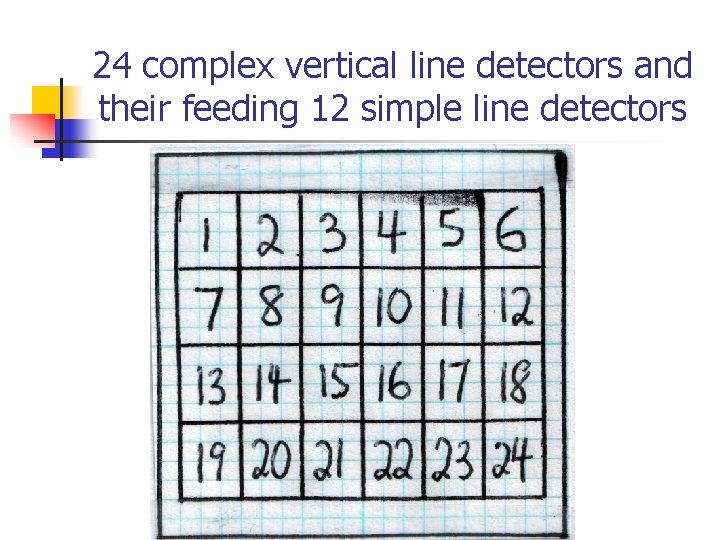

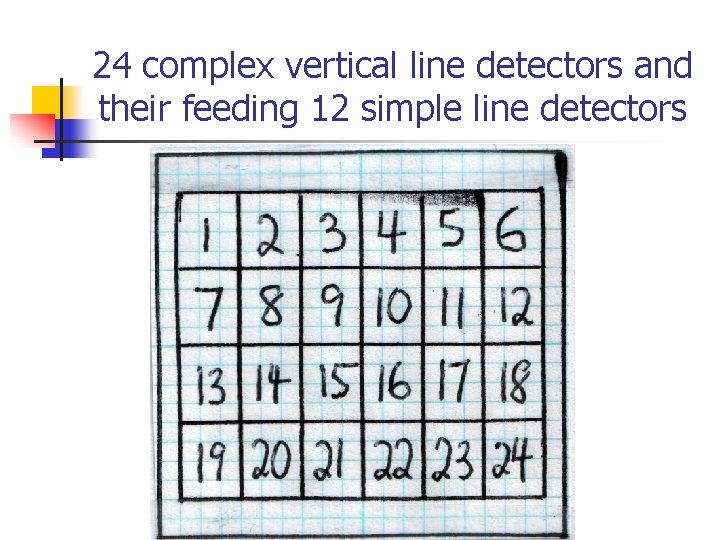

24 complex vertical line detectors, each fed by 12 simple line detectors (24 x 12 = 288 simple vertical detectors)

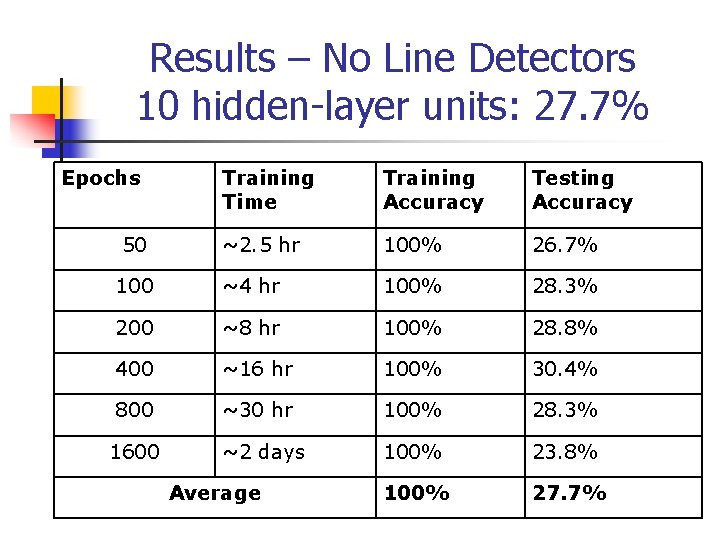

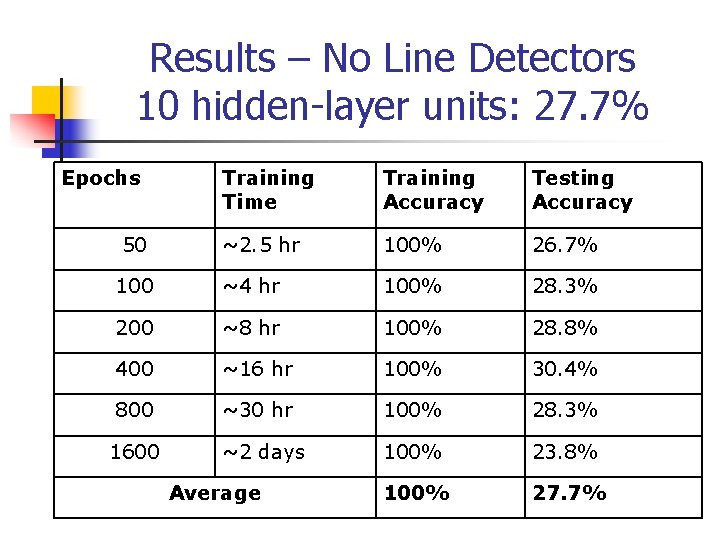

Results: No Line Detectors, 10 hidden-layer units (average testing accuracy 27.7%)

| Epochs | Training Time | Training Accuracy | Testing Accuracy |
|---|---|---|---|
| 50 | ~2.5 hr | 100% | 26.7% |
| 100 | ~4 hr | 100% | 28.3% |
| 200 | ~8 hr | 100% | 28.8% |
| 400 | ~16 hr | 100% | 30.4% |
| 800 | ~30 hr | 100% | 28.3% |
| 1600 | ~2 days | 100% | 23.8% |
| Average | | 100% | 27.7% |

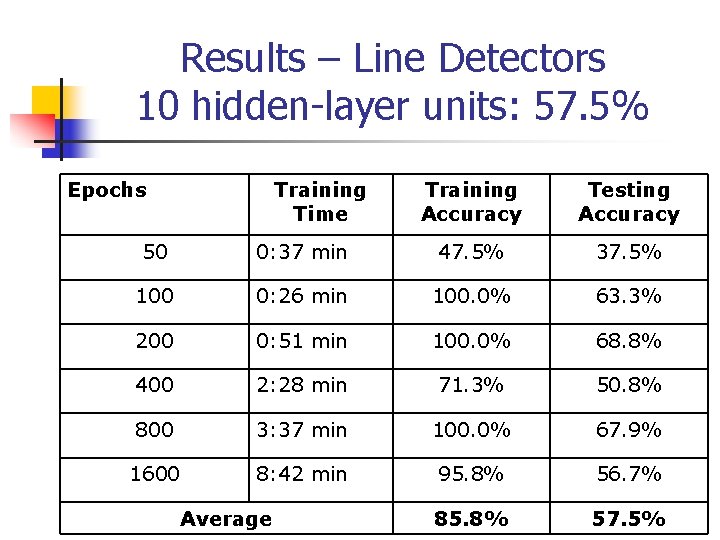

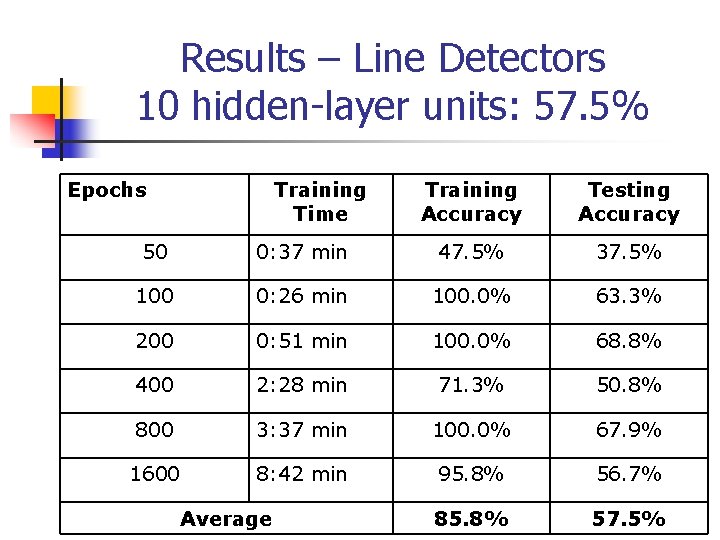

Results: Line Detectors, 10 hidden-layer units (average testing accuracy 57.5%)

| Epochs | Training Time | Training Accuracy | Testing Accuracy |
|---|---|---|---|
| 50 | 0:37 min | 47.5% | 37.5% |
| 100 | 0:26 min | 100.0% | 63.3% |
| 200 | 0:51 min | 100.0% | 68.8% |
| 400 | 2:28 min | 71.3% | 50.8% |
| 800 | 3:37 min | 100.0% | 67.9% |
| 1600 | 8:42 min | 95.8% | 56.7% |
| Average | | 85.8% | 57.5% |

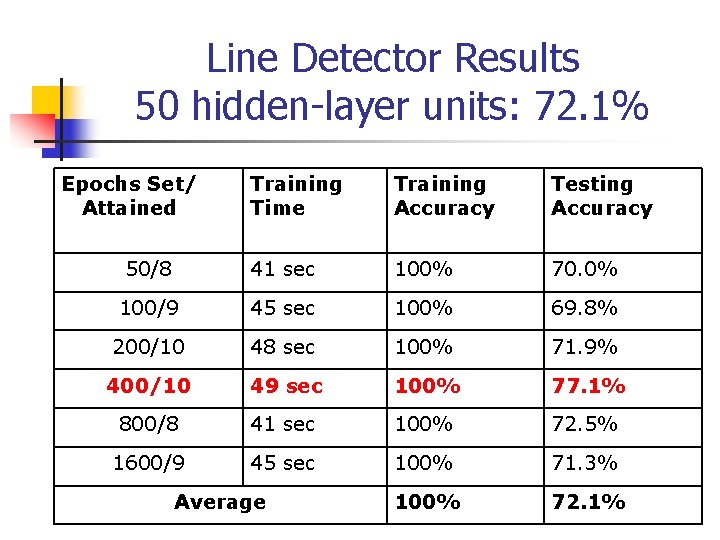

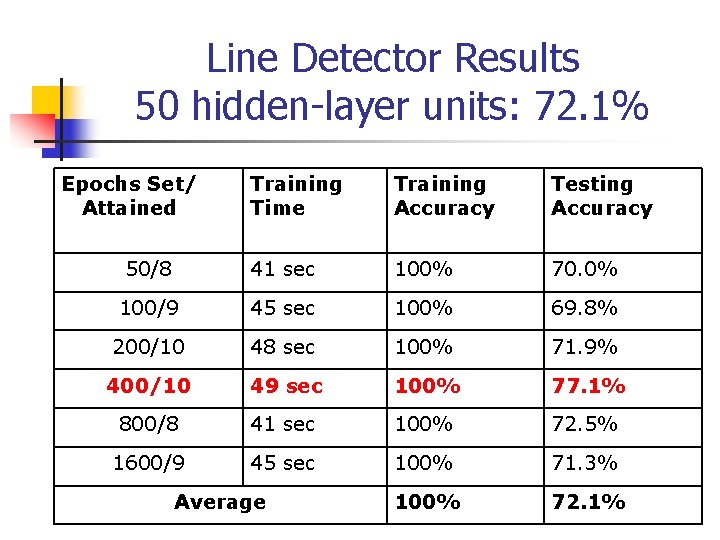

Line Detector Results: 50 hidden-layer units (average testing accuracy 72.1%)

| Epochs (Set/Attained) | Training Time | Training Accuracy | Testing Accuracy |
|---|---|---|---|
| 50/8 | 41 sec | 100% | 70.0% |
| 100/9 | 45 sec | 100% | 69.8% |
| 200/10 | 48 sec | 100% | 71.9% |
| 400/10 | 49 sec | 100% | 77.1% |
| 800/8 | 41 sec | 100% | 72.5% |
| 1600/9 | 45 sec | 100% | 71.3% |
| Average | | 100% | 72.1% |

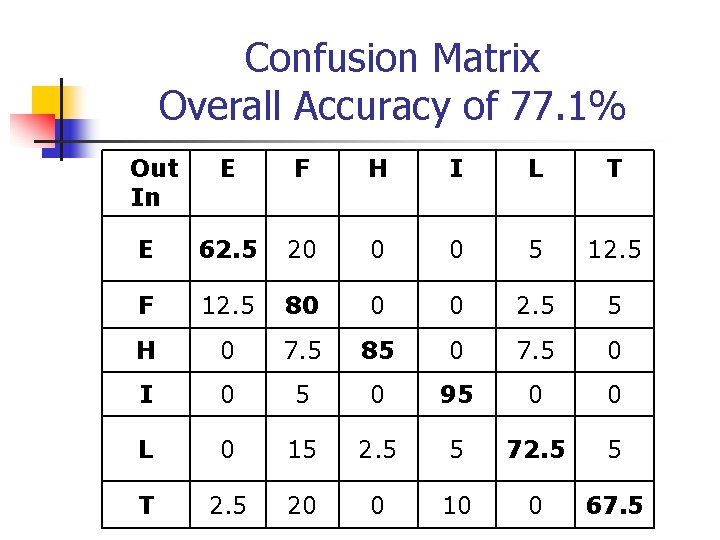

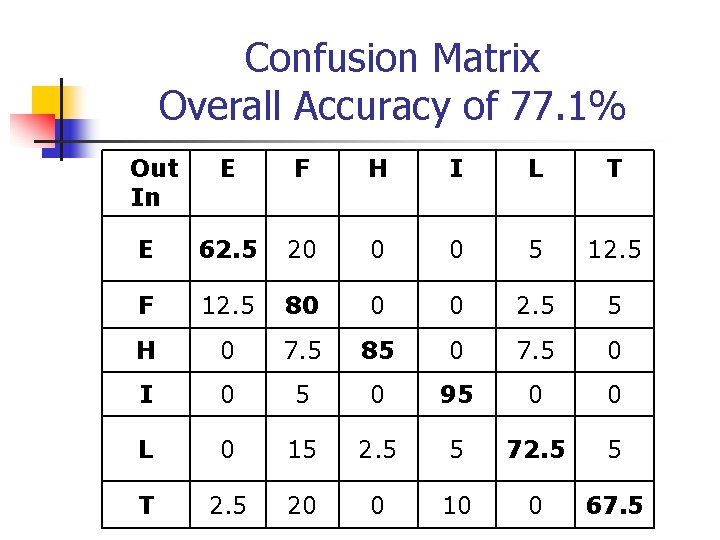

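Confusion Matrix: Overall Accuracy of 77.1% (rows: input character; columns: network output; entries in percent)

| In / Out | E | F | H | I | L | T |
|---|---|---|---|---|---|---|
| E | 62.5 | 20 | 0 | 0 | 5 | 12.5 |
| F | 12.5 | 80 | 0 | 0 | 2.5 | 5 |
| H | 0 | 7.5 | 85 | 0 | 7.5 | 0 |
| I | 0 | 5 | 0 | 95 | 0 | 0 |
| L | 0 | 15 | 2.5 | 5 | 72.5 | 5 |
| T | 2.5 | 20 | 0 | 10 | 0 | 67.5 |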
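
Because every character has the same number of test patterns (40, which is why every entry is a multiple of 2.5%), the overall accuracy is simply the mean of the diagonal. The sketch below checks this against the table; the helper names are illustrative.

```python
LABELS = "EFHILT"
# Rows: input character; columns: network output; entries in percent of 40 test patterns
CONFUSION = [
    [62.5, 20.0, 0.0, 0.0, 5.0, 12.5],
    [12.5, 80.0, 0.0, 0.0, 2.5, 5.0],
    [0.0, 7.5, 85.0, 0.0, 7.5, 0.0],
    [0.0, 5.0, 0.0, 95.0, 0.0, 0.0],
    [0.0, 15.0, 2.5, 5.0, 72.5, 5.0],
    [2.5, 20.0, 0.0, 10.0, 0.0, 67.5],
]

def overall_accuracy(matrix):
    """With equal class sizes, overall accuracy is the mean of the diagonal."""
    return sum(matrix[i][i] for i in range(len(matrix))) / len(matrix)

def correct_counts(matrix, per_class=40):
    """Convert diagonal percentages back into numbers of correctly recognized patterns."""
    return [round(matrix[i][i] * per_class / 100) for i in range(len(matrix))]

print(round(overall_accuracy(CONFUSION), 1))  # 77.1
print(dict(zip(LABELS, correct_counts(CONFUSION))), sum(correct_counts(CONFUSION)))  # 185 of 240
```

Read this way, the 77.1% corresponds to 185 of the 240 test patterns, and the largest single error is E or T being read as F (20% each).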

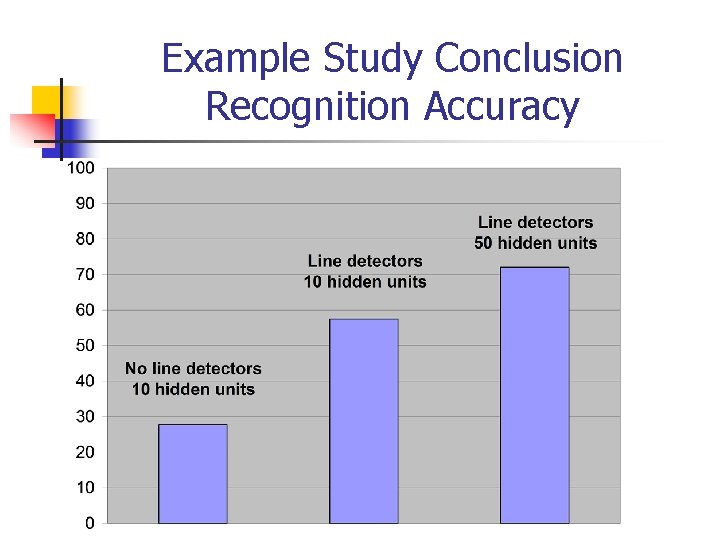

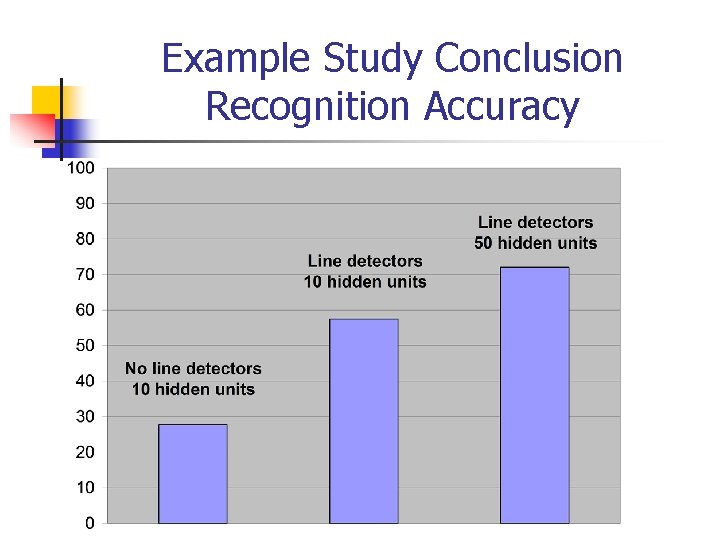

Example Study Conclusion: Recognition Accuracy

Example Study Conclusion: Efficiency of Training Time
- The ANN with line detectors resulted in a significantly more efficient network
  - Training time decreased by several orders of magnitude

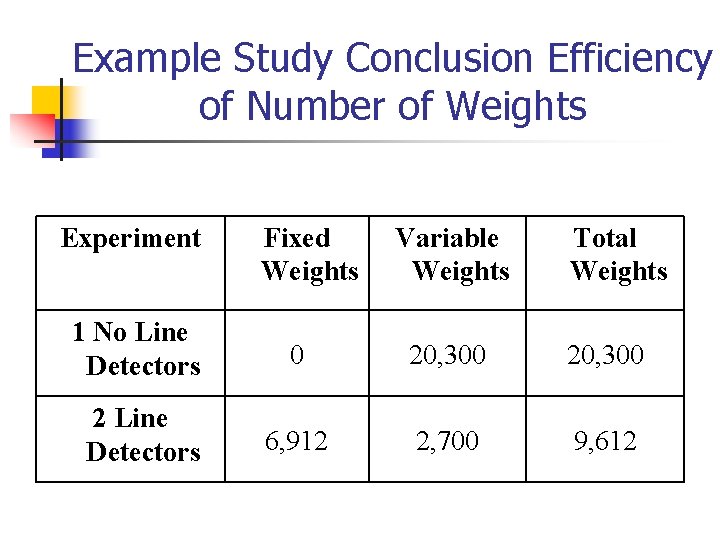

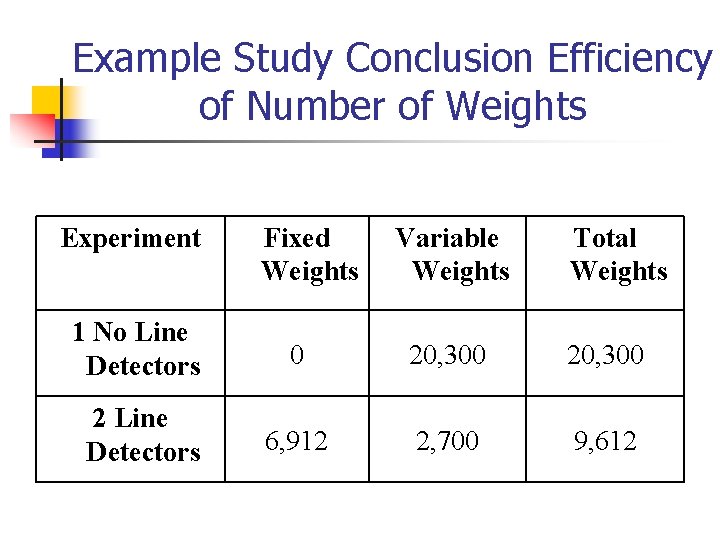

Example Study Conclusion: Efficiency of Number of Weights

| Experiment | Fixed Weights | Variable Weights | Total Weights |
|---|---|---|---|
| 1: No Line Detectors | 0 | 20,300 | 20,300 |
| 2: Line Detectors | 6,912 | 2,700 | 9,612 |
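
The variable-weight counts are consistent with a fully connected network of 50 hidden units and 6 outputs with no bias weights, fed either by the 400 retina pixels or by 48 complex line detectors. The 48 (24 vertical plus an assumed 24 horizontal complex detectors) and the no-bias convention are our assumptions, chosen because they reproduce the table; `trainable_weights` is a hypothetical helper.

```python
def trainable_weights(n_inputs, n_hidden=50, n_outputs=6):
    """Input->hidden plus hidden->output weights, no bias terms (assumption)."""
    return n_inputs * n_hidden + n_hidden * n_outputs

exp1 = trainable_weights(20 * 20)  # every retina pixel feeds the hidden layer
exp2 = trainable_weights(24 + 24)  # assumed 24 vertical + 24 horizontal complex detectors
print(exp1, exp2, 6912 + exp2)     # 20300 2700 9612
```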

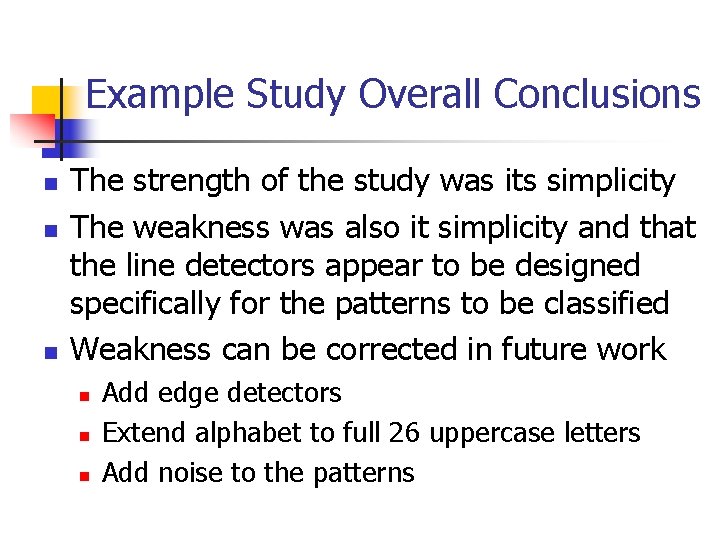

Example Study Overall Conclusions
- The strength of the study was its simplicity
- The weakness was also its simplicity, and that the line detectors appear to be designed specifically for the patterns to be classified
- These weaknesses can be corrected in future work
  - Add edge detectors
  - Extend the alphabet to the full 26 uppercase letters
  - Add noise to the patterns

Non Neural Network Methods
- Stochastic methods
- Nonmetric methods
- Unsupervised learning (clustering)

Stochastic Methods
- Rely on randomness to find model parameters
- Used for highly complex problems where gradient descent algorithms are unlikely to work
- Methods
  - Simulated annealing
  - Boltzmann learning
  - Genetic algorithms
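
A minimal simulated-annealing sketch for a one-dimensional objective with many local minima, where plain gradient descent tends to get stuck. The objective, step size, starting temperature, geometric cooling schedule, and iteration count are all hypothetical choices for illustration.

```python
import math
import random

def simulated_annealing(f, x0, step=1.0, t0=10.0, cooling=0.95, iters=2000, seed=0):
    """Random local moves; worse moves are accepted with probability exp(-(f_new - f_old) / T)."""
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best_x, best_f = x, fx
    t = t0
    for _ in range(iters):
        cand = x + rng.uniform(-step, step)
        fc = f(cand)
        # Always accept improvements; sometimes accept uphill moves while T is high
        if fc <= fx or rng.random() < math.exp(-(fc - fx) / t):
            x, fx = cand, fc
            if fx < best_f:
                best_x, best_f = x, fx
        t = max(t * cooling, 1e-9)  # geometric cooling, floored to avoid division by zero
    return best_x, best_f

def bumpy(x):
    # Hypothetical objective with many local minima
    return x * x + 10.0 * math.sin(3.0 * x)

best_x, best_f = simulated_annealing(bumpy, x0=8.0)
print(round(best_x, 2), round(best_f, 2))
```

Early on, the high temperature lets the search climb out of local minima; as T shrinks it behaves more and more like greedy descent.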

Nonmetric Methods
- Nominal data
  - No measure of distance between vectors
  - No notion of similarity or ordering
- Methods
  - Decision trees
  - Grammatical methods
    - e.g., finite state machines
  - Rule-based systems
    - e.g., propositional logic or first-order logic
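
Decision trees work on nominal data because a split only asks whether an attribute equals a value, never how far apart two values are. The sketch below computes the information gain (entropy reduction) used by ID3-style trees to pick a split; the attributes, values, and labels are hypothetical.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(rows, labels, attr):
    """Entropy reduction from splitting on a nominal attribute (equality tests only)."""
    n = len(labels)
    groups = {}
    for row, lab in zip(rows, labels):
        groups.setdefault(row[attr], []).append(lab)
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - remainder

# Hypothetical nominal data: no ordering exists between "red" and "green"
rows = [
    {"color": "red", "shape": "round"},
    {"color": "red", "shape": "square"},
    {"color": "green", "shape": "round"},
    {"color": "green", "shape": "square"},
]
labels = ["apple", "brick", "apple", "brick"]
print(information_gain(rows, labels, "shape"), information_gain(rows, labels, "color"))  # 1.0 0.0
```

A tree-growing procedure would split on `shape` first, since it removes all uncertainty about the class.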

Unsupervised Learning
- Often called clustering
- The system is not given a set of labeled patterns for training
- Instead, the system itself establishes the classes based on the regularities of the patterns

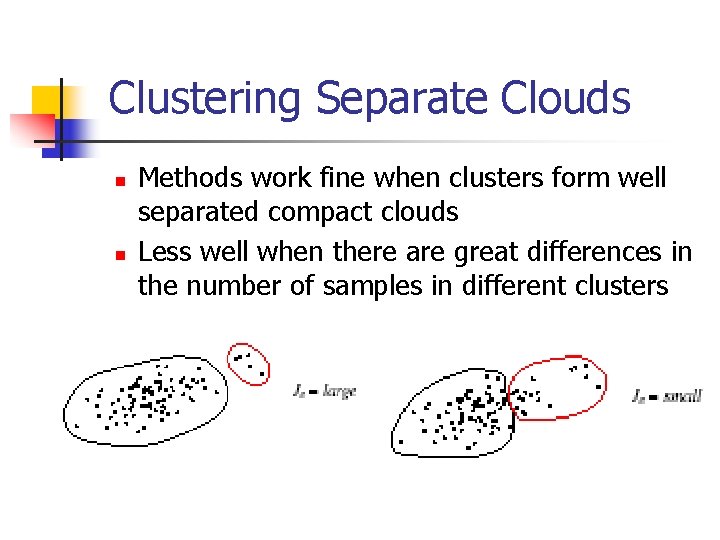

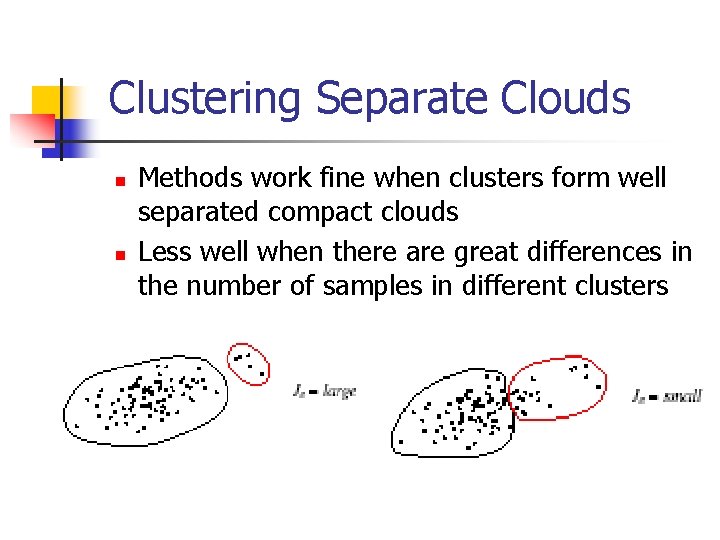

Clustering: Separate Clouds
- Methods work fine when clusters form well-separated, compact clouds
- They work less well when there are great differences in the number of samples in different clusters
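
The slides do not name a specific clustering algorithm; as one standard example, here is k-means (Lloyd's algorithm) on two well-separated compact clouds, the easy case described above. The deterministic farthest-point initialization and the sample points are assumptions made for repeatability.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iters=20):
    """Lloyd's k-means with a deterministic farthest-point start (assumption)."""
    centers = [points[0]]
    while len(centers) < k:
        # Next center: the point farthest from all centers chosen so far
        centers.append(max(points, key=lambda p: min(squared_distance(p, c) for c in centers)))
    clusters = []
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: squared_distance(p, centers[i]))
            clusters[nearest].append(p)
        # Move each center to the mean of its members (keep it if the cluster is empty)
        centers = [tuple(sum(vals) / len(cl) for vals in zip(*cl)) if cl else centers[i]
                   for i, cl in enumerate(clusters)]
    return centers, clusters

cloud_a = [(0, 0), (0, 1), (1, 0), (1, 1)]
cloud_b = [(10, 10), (10, 11), (11, 10), (11, 11)]
centers, clusters = kmeans(cloud_a + cloud_b, k=2)
print(centers)  # [(0.5, 0.5), (10.5, 10.5)]
```

Because k-means assigns each point to its nearest center, it favors compact clusters of similar extent, which relates to the caveat above about clusters of very different sizes.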

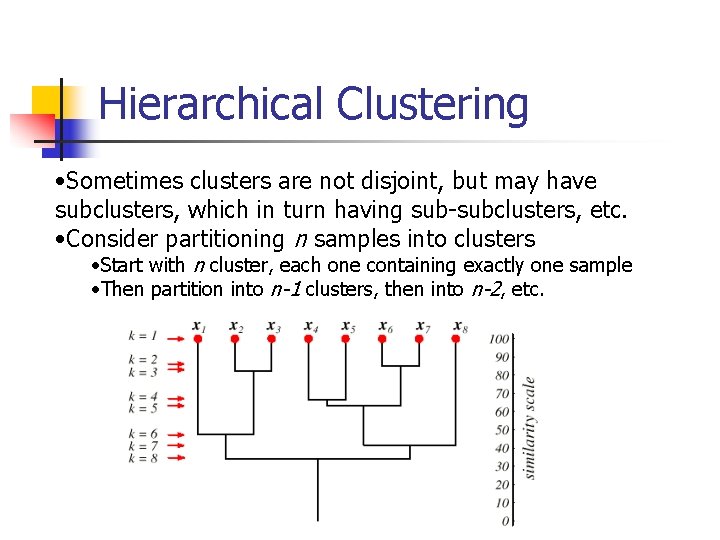

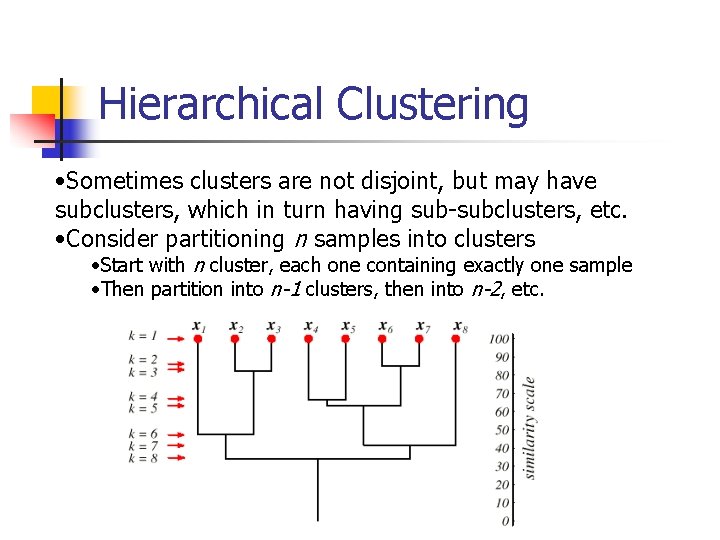

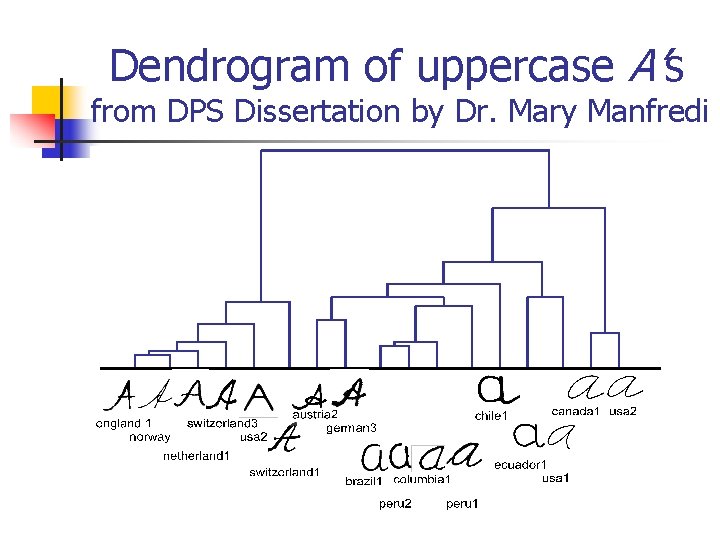

Hierarchical Clustering
- Sometimes clusters are not disjoint, but may have subclusters, which in turn have sub-subclusters, etc.
- Consider partitioning n samples into clusters
- Start with n clusters, each one containing exactly one sample
- Then partition into n-1 clusters, then into n-2, etc.
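
The bottom-up procedure above (n singleton clusters, then n-1, then n-2, and so on) can be sketched as agglomerative clustering. Single linkage (distance between the closest members) is an assumed choice here; other linkage rules give different dendrograms. The recorded merge distances are the levels at which a dendrogram joins its branches.

```python
def agglomerative(points, dist):
    """Start with n singleton clusters; repeatedly merge the closest pair (single linkage).
    Returns the merges as (distance, members), i.e. the levels of a dendrogram."""
    clusters = [[p] for p in points]
    merges = []
    while len(clusters) > 1:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = min(dist(a, b) for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        d, i, j = best
        merged = clusters[i] + clusters[j]
        merges.append((d, merged))
        # n clusters become n-1: drop the pair, add their union
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]
    return merges

for d, members in agglomerative([0, 1, 5, 20], dist=lambda a, b: abs(a - b)):
    print(d, sorted(members))
# 1 [0, 1]
# 4 [0, 1, 5]
# 15 [0, 1, 5, 20]
```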

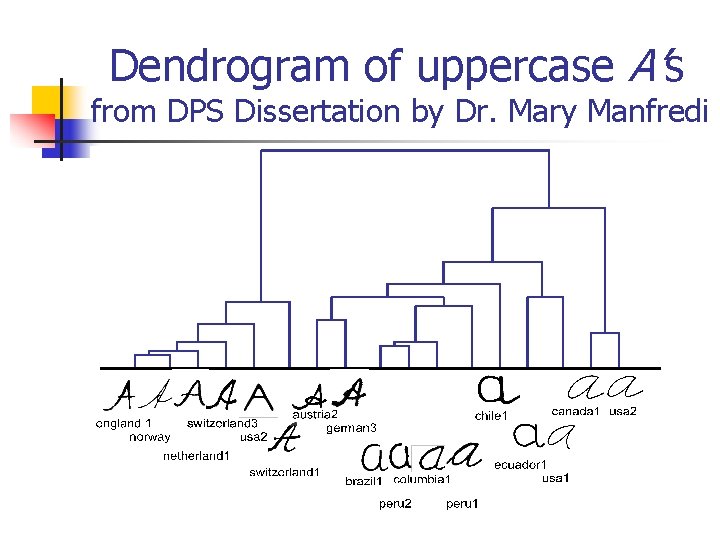

Dendrogram of uppercase A’s from the DPS dissertation by Dr. Mary Manfredi

Pattern Recognition DPS Dissertations (names in parentheses indicate dissertations in progress)
- Visual Systems: Rick Bassett, Sheb Bishop, Tom Lombardi
- Speech Recognition: Jonathan Law
- Handwriting Recognition: Mary Manfredi
- Natural Language Processing: Bashir Ahmed, (Ted Markowitz)
- Neural Networks: (John Casarella, Robb Zucker)
- Keystroke Biometric: Mary Curtin, Mary Villani
- Stylometry Biometric: (John Stewart)
- Fundamental Research: Kwang Lee, Carl Abrams, Robert Zack [using keystroke data]
- Other: Karina Hernandez, Mark Ritzmann [using keystroke data], (John Galatti)