Pattern Recognition for Embedded Vision

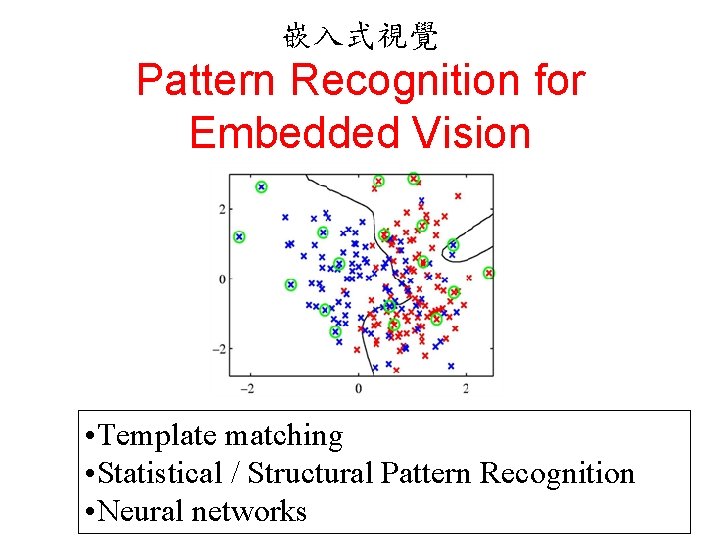

Embedded Vision: Pattern Recognition for Embedded Vision
• Template matching
• Statistical / Structural Pattern Recognition
• Neural networks

Embedded Vision System
Image acquisition → Image processing → Feature extraction → Decision making (pattern recognition)

Pattern Recognition models
1. Template matching.
2. Statistical pattern recognition: based on an underlying statistical model of patterns and pattern classes.
3. Structural (or syntactic) pattern recognition: pattern classes are represented by formal structures such as grammars, automata, strings, etc.
4. Neural networks: the classifier is represented as a network of cells modeling neurons of the human brain (connectionist approach).

Pattern Representation
• A pattern is represented by a set of d features, or attributes, viewed as a d-dimensional feature vector.

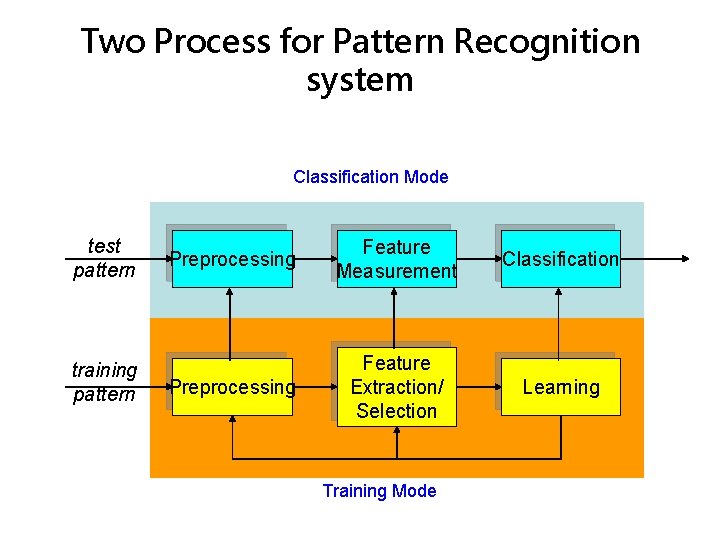

Two Modes of a Pattern Recognition System
• Classification mode: test pattern → Preprocessing → Feature measurement → Classification
• Training mode: training pattern → Preprocessing → Feature extraction / selection → Learning

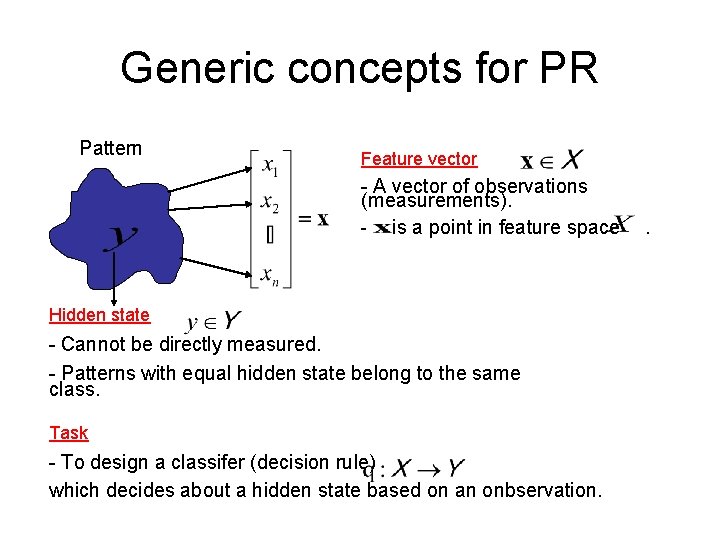

Generic concepts for PR
• Pattern
• Feature vector
  - A vector of observations (measurements).
  - A point in feature space.
• Hidden state
  - Cannot be directly measured.
  - Patterns with equal hidden state belong to the same class.
• Task
  - To design a classifier (decision rule) which decides about a hidden state based on an observation.

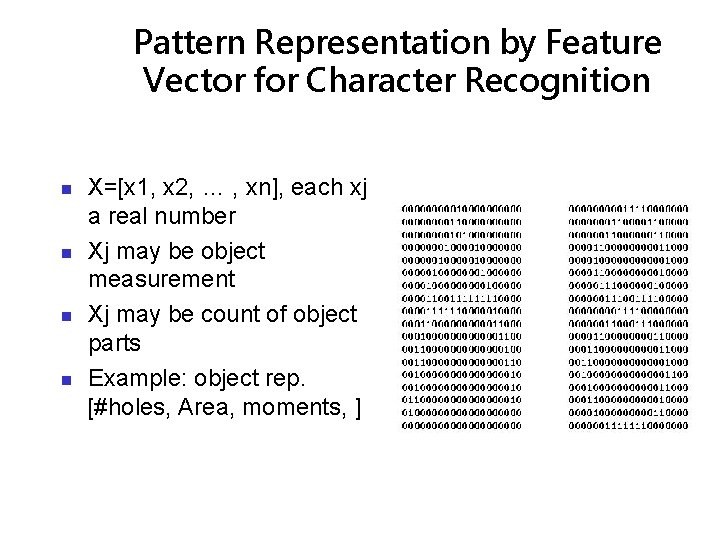

Pattern Representation by Feature Vector for Character Recognition
• X = [x_1, x_2, …, x_n], each x_j a real number.
• x_j may be an object measurement.
• x_j may be a count of object parts.
• Example: object representation [#holes, area, moments, …]

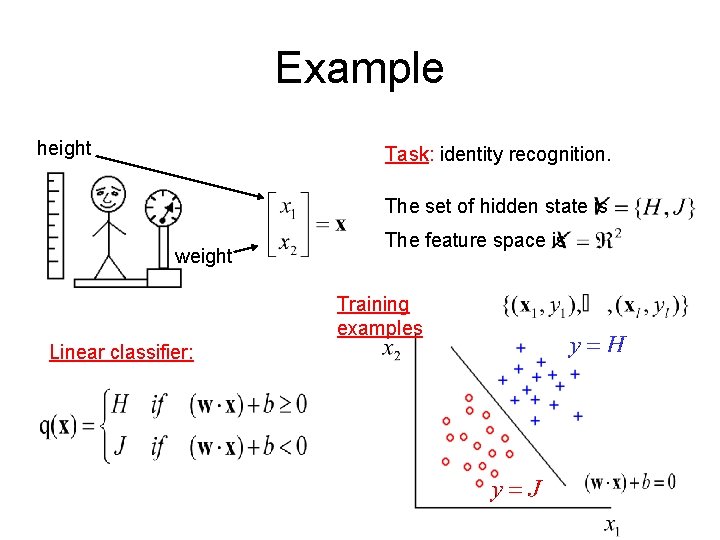
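As an illustration of turning an object into a feature vector, the sketch below computes the area and the normalized second-order central moments of a binary object mask with NumPy. The function name `shape_features` and the toy mask are hypothetical and not taken from the slides; counting holes is left out because it needs connected-component labeling.

```python
import numpy as np

def shape_features(mask):
    """Return the feature vector [area, mu20, mu02, mu11] of a binary mask.

    mu20, mu02 and mu11 are second-order central moments divided by the
    area (rows first, columns second). Hypothetical helper for illustration.
    """
    mask = np.asarray(mask, dtype=bool)
    rows, cols = np.nonzero(mask)          # coordinates of object pixels
    area = rows.size
    if area == 0:
        raise ValueError("mask contains no object pixels")
    dr = rows - rows.mean()                # offsets from the centroid
    dc = cols - cols.mean()
    mu20 = np.sum(dr ** 2) / area
    mu02 = np.sum(dc ** 2) / area
    mu11 = np.sum(dr * dc) / area
    return np.array([area, mu20, mu02, mu11], dtype=float)

# Toy object: a 3x3 square inside a 5x5 image.
mask = np.zeros((5, 5), dtype=int)
mask[1:4, 1:4] = 1
x = shape_features(mask)                   # one point in a 4-D feature space
```

Each entry of `x` is one real-valued feature x_j, so the object becomes a single point in feature space.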

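Example
Task: identity recognition from two measurements, height and weight (the axes of the figure).
• The set of hidden states is the set of identities to be distinguished.
• The feature space is the two-dimensional (height, weight) plane.
• Training examples are labeled (height, weight) points.
• Linear classifier: the decision depends on which side of a line in this plane the observation falls.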

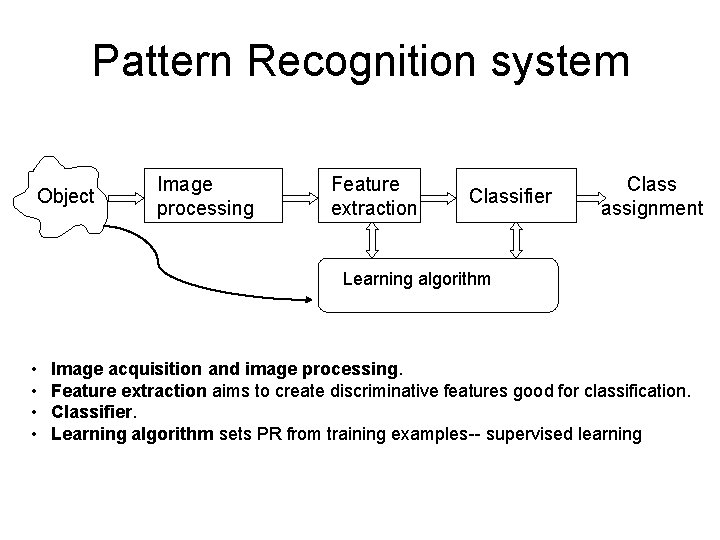
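A linear classifier of this kind can be sketched as the rule "decide by the sign of w·x + b". The example below learns w and b with the perceptron rule, which is one possible learning algorithm and not necessarily the one used on the slide. The height/weight values and the labels +1/-1 are made-up illustrative numbers, and the function names are hypothetical.

```python
import numpy as np

def train_perceptron(X, y, epochs=100):
    """Learn (w, b) so that sign(w.x + b) matches the labels y in {+1, -1}."""
    X = np.asarray(X, dtype=float)
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for xi, yi in zip(X, y):
            if yi * (w @ xi + b) <= 0:     # misclassified or on the boundary
                w += yi * xi
                b += yi
                errors += 1
        if errors == 0:                    # all training examples correct
            break
    return w, b

def classify(x, w, b):
    """Linear decision rule: +1 on one side of the line, -1 on the other."""
    return 1 if w @ np.asarray(x, dtype=float) + b >= 0 else -1

# Made-up training examples: (height in cm, weight in kg) with labels +1 / -1.
X = np.array([[190, 90], [185, 85], [195, 95],
              [160, 50], [155, 48], [165, 55]], dtype=float)
y = np.array([1, 1, 1, -1, -1, -1])

# Standardize the features so both axes have a comparable scale.
mean, std = X.mean(axis=0), X.std(axis=0)
Xs = (X - mean) / std

w, b = train_perceptron(Xs, y)
predictions = [classify(xi, w, b) for xi in Xs]
```

A new observation has to be standardized with the same `mean` and `std` before it is passed to `classify`.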

Pattern Recognition system
Object → Image processing → Feature extraction → Classifier → Class assignment, with a learning algorithm attached to the classifier.
• Image acquisition and image processing.
• Feature extraction aims to create discriminative features good for classification.
• Classifier.
• The learning algorithm sets up the PR system from training examples (supervised learning).

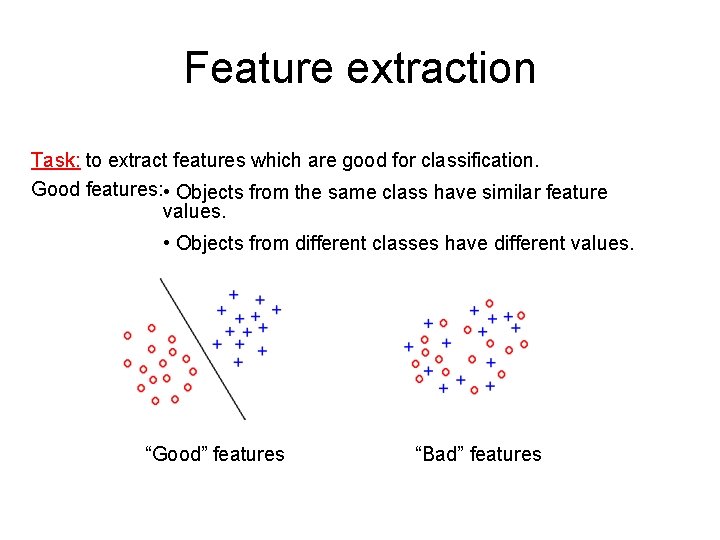

Feature extraction
Task: to extract features which are good for classification.
Good features:
• Objects from the same class have similar feature values.
• Objects from different classes have different values.
(Figure panels: “Good” features, “Bad” features)

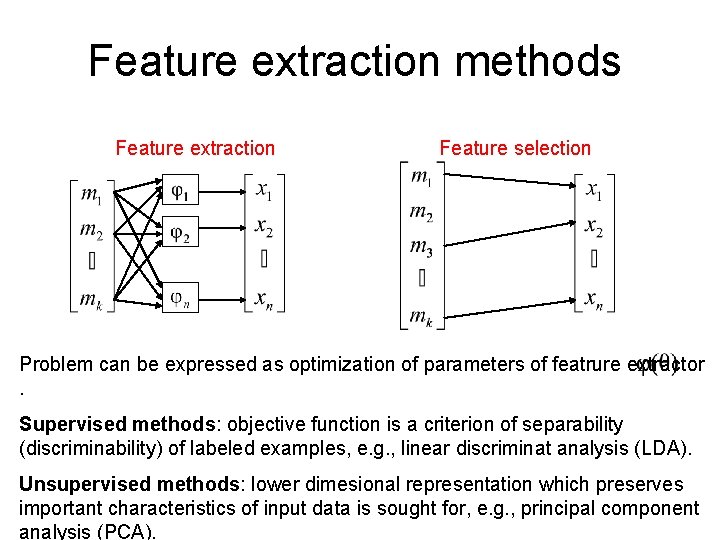
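One common way to put a number on "good" versus "bad" features for two classes is a Fisher-style ratio: the squared distance between class means divided by the sum of class variances. The slides do not name this measure, so treat it as one possible criterion. The function name and the sample values below are hypothetical.

```python
import numpy as np

def fisher_score(values, labels):
    """Two-class separability of a single feature.

    Large when class means are far apart (different classes differ) and
    class variances are small (same-class objects are similar).
    """
    values = np.asarray(values, dtype=float)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    if classes.size != 2:
        raise ValueError("this sketch handles exactly two classes")
    a = values[labels == classes[0]]
    b = values[labels == classes[1]]
    return (a.mean() - b.mean()) ** 2 / (a.var() + b.var())

labels = [0, 0, 0, 1, 1, 1]
good_feature = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]   # tight, well-separated classes
bad_feature = [1.0, 5.0, 3.0, 1.2, 4.8, 3.1]    # classes overlap completely

good_score = fisher_score(good_feature, labels)
bad_score = fisher_score(bad_feature, labels)
```

Here `good_score` is several orders of magnitude larger than `bad_score`, matching the two properties of good features listed above.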

Feature extraction methods
Two approaches: feature extraction and feature selection.
The problem can be expressed as optimization of the parameters of the feature extractor.
• Supervised methods: the objective function is a criterion of separability (discriminability) of labeled examples, e.g., linear discriminant analysis (LDA).
• Unsupervised methods: a lower-dimensional representation which preserves important characteristics of the input data is sought, e.g., principal component analysis (PCA).

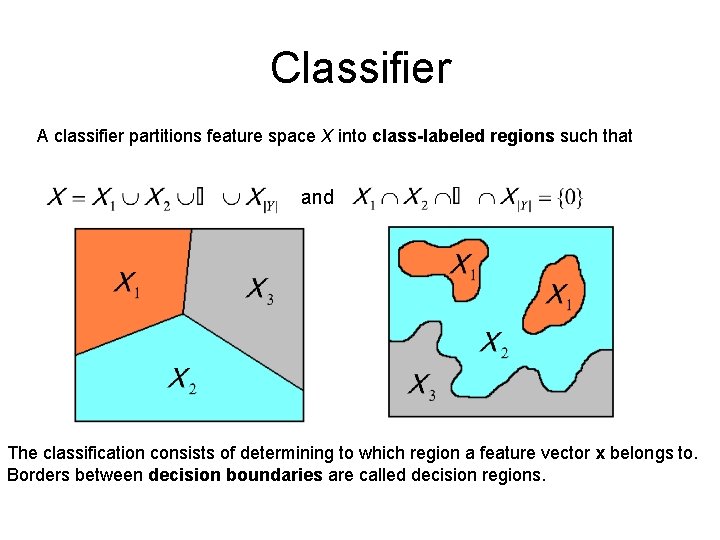
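To make the unsupervised case concrete, the following is a minimal PCA sketch using NumPy's SVD. It projects centered data onto the k directions of largest variance. The function name `pca` and the toy data are hypothetical; LDA is not shown.

```python
import numpy as np

def pca(X, k):
    """Project the rows of X onto their first k principal components.

    Returns (projected data, components as rows, mean of X).
    """
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    Xc = X - mean                              # PCA works on centered data
    # Rows of Vt are the principal directions, ordered by singular value.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:k]
    return Xc @ components.T, components, mean

# Toy data lying exactly on the line x2 = 2 * x1.
X = np.array([[0.0, 0.0], [1.0, 2.0], [2.0, 4.0], [3.0, 6.0]])
Z, components, mean = pca(X, k=1)

# Reconstruct from the 1-D representation; exact here because the data is 1-D.
X_rec = Z @ components + mean
```

The sign of each component is arbitrary, so only the direction (up to a factor of -1) is meaningful.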

Classifier
A classifier partitions the feature space $X$ into class-labeled regions $X_1, \dots, X_c$ (one per class) such that $X = X_1 \cup \dots \cup X_c$ and $X_i \cap X_j = \emptyset$ for $i \neq j$.
Classification consists of determining to which region a feature vector x belongs.
Borders between decision regions are called decision boundaries.

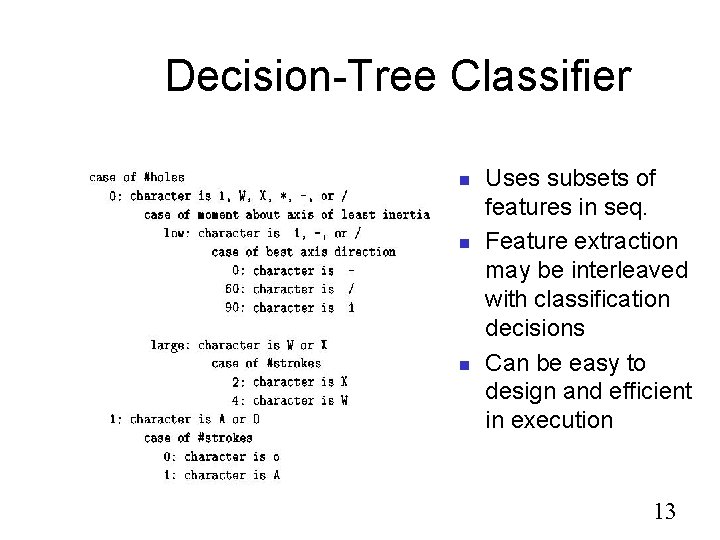

Decision-Tree Classifier (CSE 803, Fall 2014)
• Uses subsets of features in sequence.
• Feature extraction may be interleaved with classification decisions.
• Can be easy to design and efficient in execution.

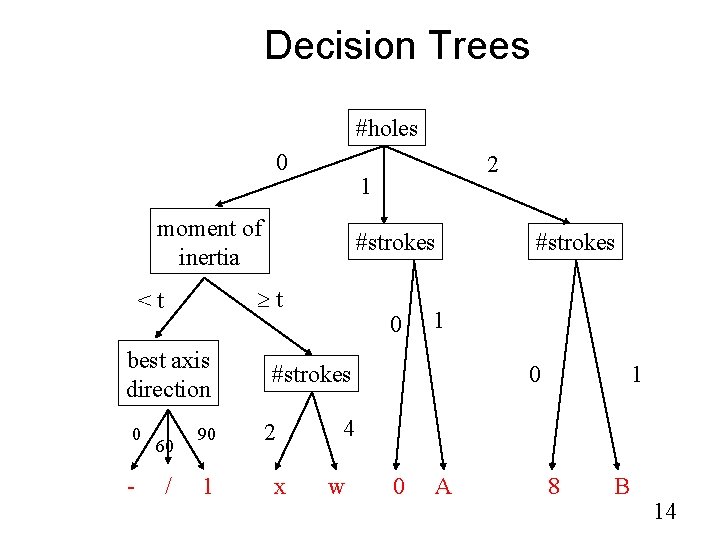

Decision Trees
(Figure: example decision tree whose nodes test #holes, moment of inertia, best axis direction, and #strokes, with character classes at the leaves.)

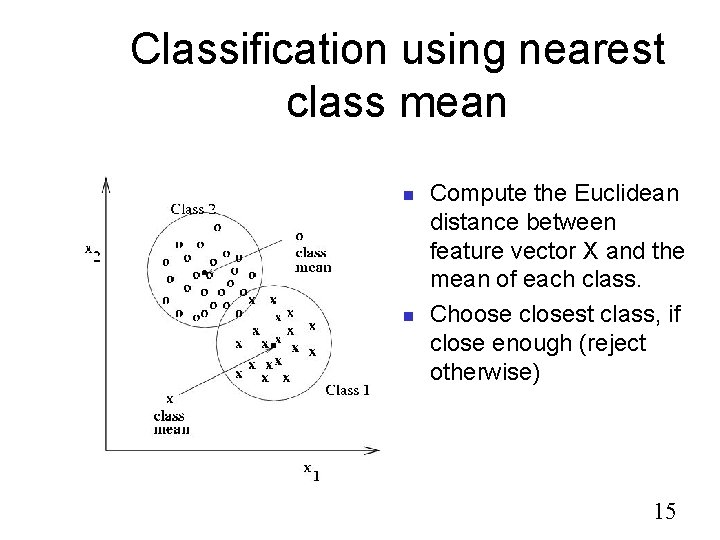
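The idea of interleaving feature extraction with classification decisions can be sketched as a hand-built tree in which a feature is computed only when a node asks for it. The tree shape, the class names, and the extractor functions below are hypothetical and do not reproduce the tree in the figure.

```python
def classify_lazy(obj, extractors):
    """Hand-built decision tree that extracts features on demand.

    `extractors` maps a feature name to a function computing it from `obj`.
    """
    cache = {}

    def feature(name):
        if name not in cache:              # compute each feature at most once
            cache[name] = extractors[name](obj)
        return cache[name]

    if feature("holes") == 0:
        # Only objects without holes need the stroke count.
        return "class_A" if feature("strokes") <= 1 else "class_B"
    return "class_C"

# Stand-in extractors that record when they run; real ones would
# analyze an image region instead of reading a dictionary.
calls = []

def count_holes(obj):
    calls.append("holes")
    return obj["holes"]

def count_strokes(obj):
    calls.append("strokes")
    return obj["strokes"]

extractors = {"holes": count_holes, "strokes": count_strokes}

label = classify_lazy({"holes": 1, "strokes": 3}, extractors)
calls_for_first_object = list(calls)       # strokes were never counted
```

For the object with one hole, the decision is reached after a single feature, which is where the efficiency in execution comes from.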

Classification using nearest class mean
• Compute the Euclidean distance between feature vector X and the mean of each class.
• Choose the closest class if it is close enough (reject otherwise).

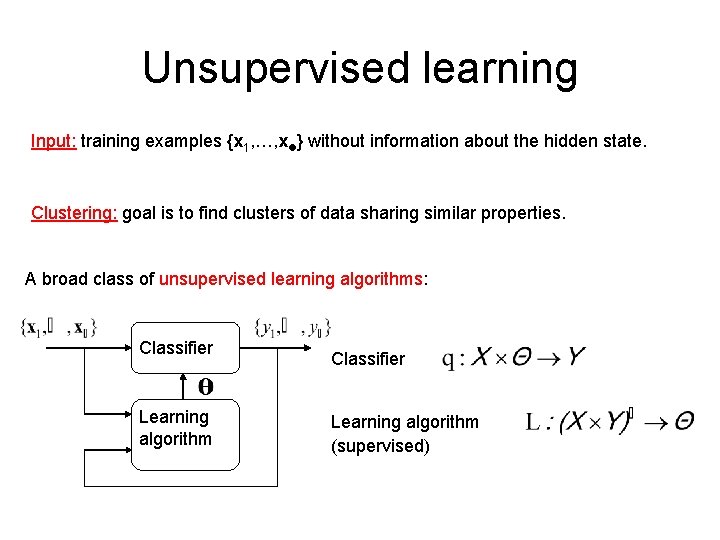

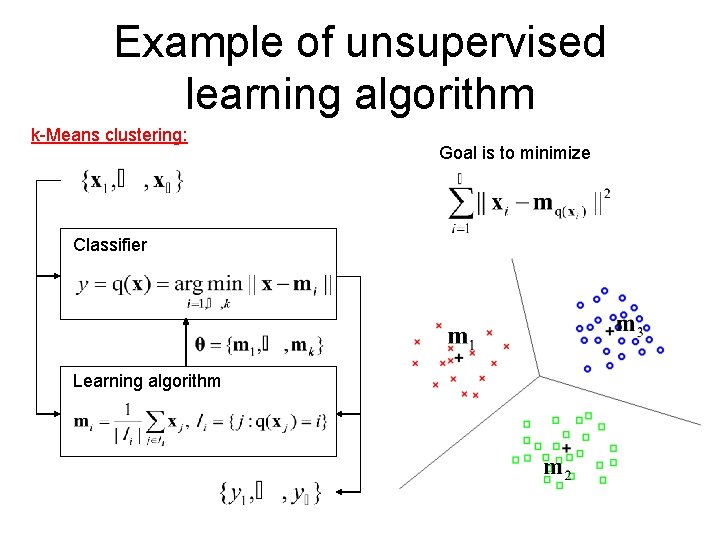
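A minimal sketch of nearest-class-mean classification with a reject option follows. The function names, the rejection threshold `max_distance`, and the toy data are assumptions made for illustration.

```python
import numpy as np

def fit_class_means(X, y):
    """Return a dict mapping each class label to the mean of its examples."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    return {label: X[y == label].mean(axis=0) for label in np.unique(y).tolist()}

def classify_nearest_mean(x, means, max_distance):
    """Return the label of the closest class mean, or None to reject."""
    x = np.asarray(x, dtype=float)
    label, dist = min(
        ((lab, np.linalg.norm(x - m)) for lab, m in means.items()),
        key=lambda pair: pair[1],
    )
    return label if dist <= max_distance else None

# Toy training set with two classes.
X = [[0, 0], [0, 2], [10, 10], [10, 12]]
y = ["a", "a", "b", "b"]
means = fit_class_means(X, y)              # a -> (0, 1), b -> (10, 11)

near_a = classify_nearest_mean([1, 1], means, max_distance=3.0)
near_b = classify_nearest_mean([9, 11], means, max_distance=3.0)
rejected = classify_nearest_mean([5, 6], means, max_distance=3.0)
```

The point `[5, 6]` lies about 7.07 from both means, so it is rejected rather than forced into a class.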

Unsupervised learning
Input: training examples x_1, x_2, … without information about the hidden state.
Clustering: the goal is to find clusters of data sharing similar properties.
A broad class of unsupervised learning algorithms is built from two blocks used in a loop: a classifier and a (supervised) learning algorithm.

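Example of an unsupervised learning algorithm: k-means clustering
• Classifier: assigns each example to the cluster with the nearest mean.
• Learning algorithm: recomputes each cluster mean from the examples assigned to it.
• Goal: minimize the sum of squared Euclidean distances between each example and the mean of its cluster.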
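The two alternating steps of k-means can be sketched in a few lines of NumPy. This is a basic version with random initialization from the data, written under the assumption that the standard k-means procedure is meant. The function names, the seed, and the toy data are hypothetical.

```python
import numpy as np

def assign(X, means):
    """Classifier step: index of the nearest mean for every example."""
    dists = np.linalg.norm(X[:, None, :] - means[None, :, :], axis=2)
    return dists.argmin(axis=1)

def k_means(X, k, n_iter=100, seed=0):
    """Return (labels, means, objective) for a basic k-means run."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialize the means with k distinct training examples.
    means = X[rng.choice(len(X), size=k, replace=False)].copy()
    for _ in range(n_iter):
        labels = assign(X, means)
        # Learning step: re-estimate each mean from its assigned examples.
        new_means = means.copy()
        for j in range(k):
            members = X[labels == j]
            if len(members) > 0:           # keep the old mean if a cluster empties
                new_means[j] = members.mean(axis=0)
        if np.allclose(new_means, means):
            break
        means = new_means
    labels = assign(X, means)
    objective = float(np.sum((X - means[labels]) ** 2))
    return labels, means, objective

# Toy data: two well-separated groups of three points each.
X = np.array([[0, 0], [0, 1], [1, 0],
              [10, 10], [10, 11], [11, 10]], dtype=float)
labels, means, objective = k_means(X, k=2)
```

The returned `objective` is the quantity being minimized: the sum of squared distances from each example to its cluster mean. In general k-means only finds a local minimum that depends on the initialization.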
