Patient Matching Algorithm Challenge Informational Webinar
Caitlin Ryan, PMP | IRIS Health Solutions LLC, Contract Support to ONC
Adam Culbertson, M.S. | HIMSS Innovator in Residence, ONC

Agenda
• ONC Overview
• Background on Matching
• About the Challenge
  » Eligibility Requirements
  » Registration
  » Project Submissions
  » Winners and Prizes
• Calculating Metrics
• Creating Test Data
• Challenge Q&A

Office of the National Coordinator for Health IT (ONC)
• The Office of the National Coordinator for Health Information Technology (ONC) is at the forefront of the administration’s health IT efforts and is a resource to the entire health system to support the adoption of health information technology and the promotion of nationwide health information exchange to improve health care.
• ONC is organizationally located within the Office of the Secretary for the U.S. Department of Health and Human Services (HHS).
• ONC is the principal federal entity charged with coordination of nationwide efforts to implement and use the most advanced health information technology and the electronic exchange of health information.

ONC Challenges Overview
• Challenges are hosted under the statutory authority of Section 105 of the America COMPETES Reauthorization Act of 2010 (Public Law No. 111-358).
• ONC Tech Lab - Innovation
  » Spotlight areas of high interest to ONC and HHS
  » Direct attention to new market opportunities
  » Continue work with start-up community and administer challenge contests
  » Increase awareness and uptake of new standards and data

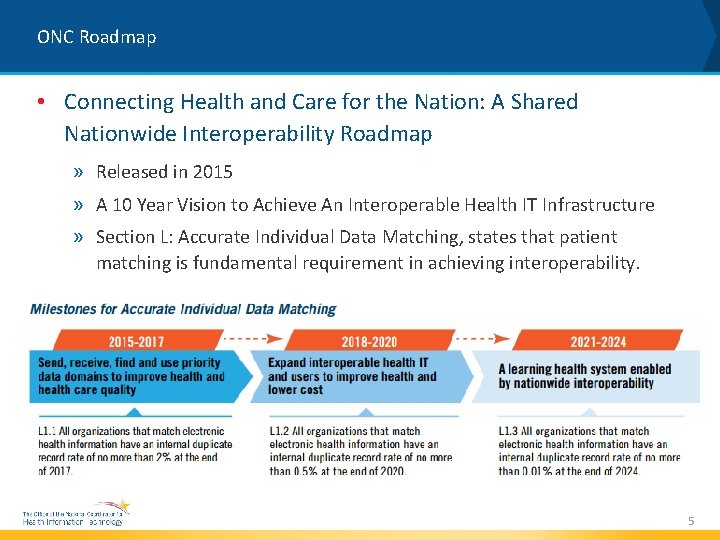

ONC Roadmap
• Connecting Health and Care for the Nation: A Shared Nationwide Interoperability Roadmap
  » Released in 2015
  » A 10 Year Vision to Achieve An Interoperable Health IT Infrastructure
  » Section L, Accurate Individual Data Matching, states that patient matching is a fundamental requirement in achieving interoperability.

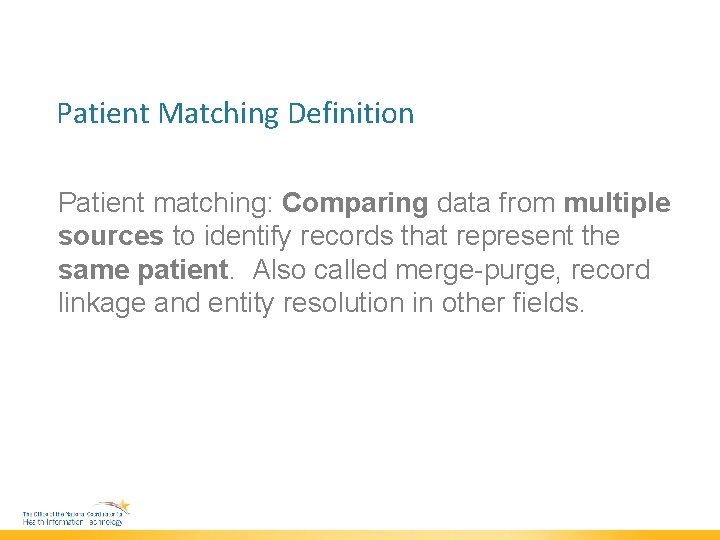

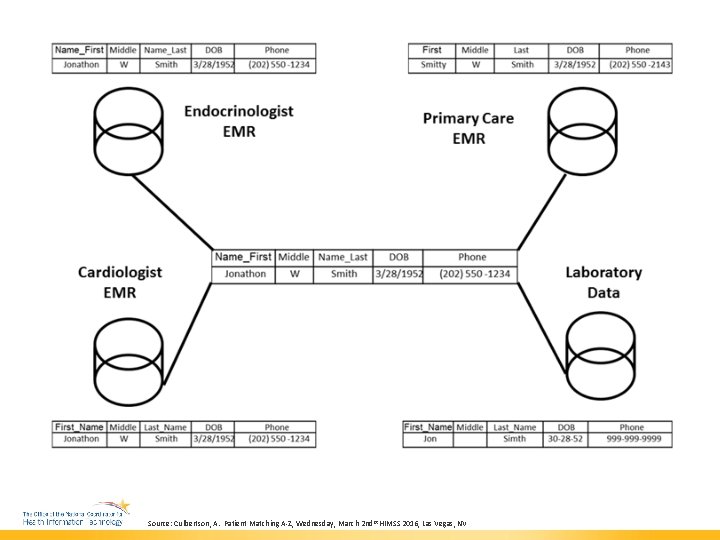

Patient Matching Definition Patient matching: Comparing data from multiple sources to identify records that represent the same patient. Also called merge-purge, record linkage and entity resolution in other fields.

Source: Culbertson, A., Patient Matching A-Z, Wednesday, March 2nd, HIMSS 2016, Las Vegas, NV

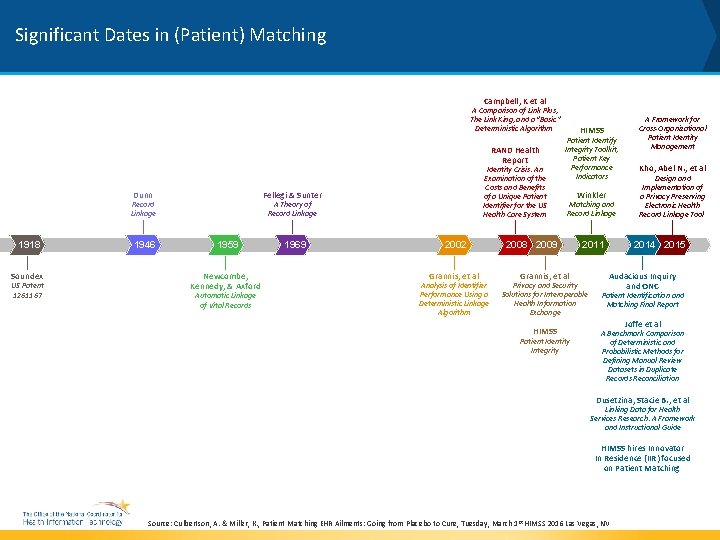

Significant Dates in (Patient) Matching
• 1918: Soundex, US Patent 1261167
• 1946: Dunn, Record Linkage
• 1959: Newcombe, Kennedy, & Axford, Automatic Linkage of Vital Records
• 1969: Fellegi & Sunter, A Theory of Record Linkage
• 2002: Grannis, et al., Analysis of Identifier Performance Using a Deterministic Linkage Algorithm
• 2008 to 2015 (timeline marks: 2008, 2009, 2011, 2014, 2015)
  » RAND Health Report, Identity Crisis: An Examination of the Costs and Benefits of a Unique Patient Identifier for the US Health Care System
  » Campbell, K., et al., A Comparison of Link Plus, The Link King, and a “Basic” Deterministic Algorithm
  » HIMSS Patient Identity Integrity Toolkit, Patient Key Performance Indicators
  » Winkler, Matching and Record Linkage
  » Grannis, et al.
  » Privacy and Security Solutions for Interoperable Health Information Exchange
  » HIMSS, Patient Identity Integrity
  » A Framework for Cross-Organizational Patient Identity Management
  » Kho, Abel N., et al., Design and Implementation of a Privacy Preserving Electronic Health Record Linkage Tool
  » Audacious Inquiry and ONC, Patient Identification and Matching Final Report
  » Joffe, et al., A Benchmark Comparison of Deterministic and Probabilistic Methods for Defining Manual Review Datasets in Duplicate Records Reconciliation
  » Dusetzina, Stacie B., et al., Linking Data for Health Services Research: A Framework and Instructional Guide
  » HIMSS hires Innovator In Residence (IIR) focused on Patient Matching
Source: Culbertson, A. & Miller, K., Patient Matching EHR Ailments: Going from Placebo to Cure, Tuesday, March 1st, HIMSS 2016, Las Vegas, NV

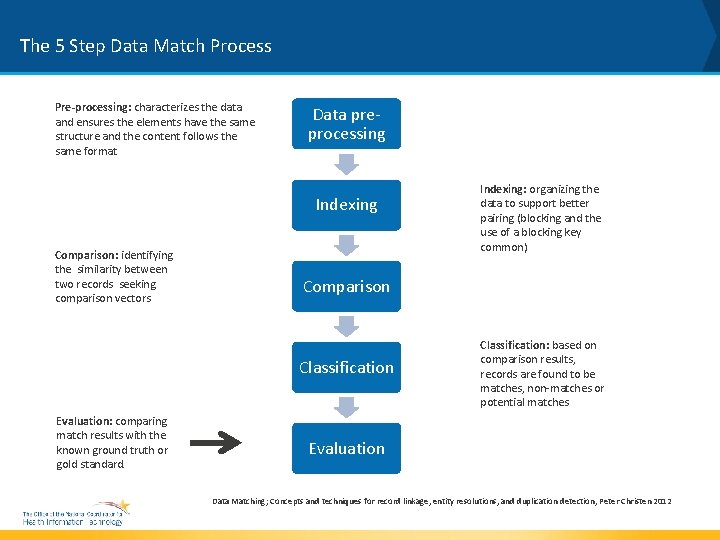

The 5 Step Data Match Process
1. Data pre-processing: characterizes the data and ensures the elements have the same structure and the content follows the same format
2. Indexing: organizing the data to support better pairing (blocking, commonly by means of a blocking key)
3. Comparison: identifying the similarity between two records, producing comparison vectors
4. Classification: based on comparison results, records are found to be matches, non-matches or potential matches
5. Evaluation: comparing match results with the known ground truth or gold standard
Source: Peter Christen, Data Matching: Concepts and Techniques for Record Linkage, Entity Resolution, and Duplicate Detection, 2012
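The first four steps can be sketched in a few lines of Python. This is a minimal illustration, not the method of any Challenge participant or vendor: the toy records, the field names, the blocking key (last-name initial plus birth year), and the thresholds are all assumptions chosen for the example. Step 5 (evaluation) needs an answer key and is not shown here.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from itertools import combinations

# Hypothetical toy records; field names are illustrative only.
records = [
    {"id": 1, "first": "John", "last": "Smith", "dob": "1990-01-01"},
    {"id": 2, "first": "Carol", "last": "Jones", "dob": "1973-02-04"},
    {"id": 3, "first": "Bobby", "last": "Johnson", "dob": "1955-03-09"},
    {"id": 4, "first": "Johnny ", "last": "SMITH", "dob": "1990-01-01"},
]

def preprocess(rec):
    """Step 1: normalize case and whitespace so fields are comparable."""
    return {k: v.strip().lower() if isinstance(v, str) else v
            for k, v in rec.items()}

def blocking_key(rec):
    """Step 2: index records; the key here is last-name initial + birth year."""
    return rec["last"][:1] + rec["dob"][:4]

def compare(a, b):
    """Step 3: comparison vector of per-field similarities in [0, 1]."""
    return [SequenceMatcher(None, a[f], b[f]).ratio()
            for f in ("first", "last", "dob")]

def classify(vector, upper=0.85, lower=0.60):
    """Step 4: threshold the mean similarity (thresholds are arbitrary)."""
    score = sum(vector) / len(vector)
    if score >= upper:
        return "match"
    if score >= lower:
        return "potential match"
    return "non-match"

def link(records):
    """Run steps 1 to 4 and return a decision for each candidate pair."""
    blocks = defaultdict(list)
    for rec in map(preprocess, records):
        blocks[blocking_key(rec)].append(rec)
    decisions = {}
    for block in blocks.values():
        # Only records sharing a blocking key are ever compared.
        for a, b in combinations(block, 2):
            decisions[(a["id"], b["id"])] = classify(compare(a, b))
    return decisions

results = link(records)
```

Because of blocking, only records 1 and 4 are compared in this toy data. That is the tradeoff blocking makes: far fewer comparisons, at the risk of never comparing true matches whose blocking keys differ.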

Problem
• Patient data matching has been noted as one of the key barriers to achieving interoperability in the Nation's Roadmap for Health IT
• Patient matching causes issues for over 50% of health information managers (1)
• The problem will grow as the volume of health data sharing increases
• Data quality issues make matching more complicated
• Lack of knowledge about patient matching algorithm performance, and limited adoption of metrics
(1) https://ehrintelligence.com/news/patient-matching-issues-hindering-50-of-him-professionals

Data Quality
• Data quality is key
  » Garbage in, garbage out
• Data entry errors compound data matching complexity
  » Various algorithmic solutions address these, but none is perfect
• Types of errors:
  » Missing or incomplete values
  » Inaccurate data
  » Fat finger errors
  » Information is out of date
  » Transposed names
  » Misspelled names

Solution
"If you can't measure it, you can't improve it." - Peter Drucker

ONC’s Patient Matching Algorithm Challenge
The goal of this challenge is to:
1. Bring about greater transparency and data on the performance of existing patient matching algorithms,
2. Spur the adoption of performance metrics for patient data matching algorithm vendors, and
3. Positively impact other aspects of patient matching such as deduplication and linking to clinical data.
Website: www.patientmatchingchallenge.gov

Eligibility Requirements
There is no age requirement for this challenge. All members of a team must meet the eligibility requirements.
• Shall have registered to participate in the Challenge under the challenge requirements by ONC.
• Shall have complied with all the stated requirements of the Challenge.
• Businesses must be incorporated in and maintain a primary place of business in the United States; individuals must be citizens or permanent residents of the United States.
• Shall not be an HHS employee.

Eligibility Requirements (cont’d)
• May not be a federal entity or federal employee acting within the scope of their employment.
• Federal grantees may not use federal funds to develop COMPETES Act challenge applications unless consistent with the purpose of their grant award.
• Federal contractors may not use federal funds from a contract to develop COMPETES Act challenge applications or to fund efforts in support of a COMPETES Act challenge submission.
• Participants must also agree to indemnify the Federal Government against third party claims for damages arising from or related to Challenge activities.

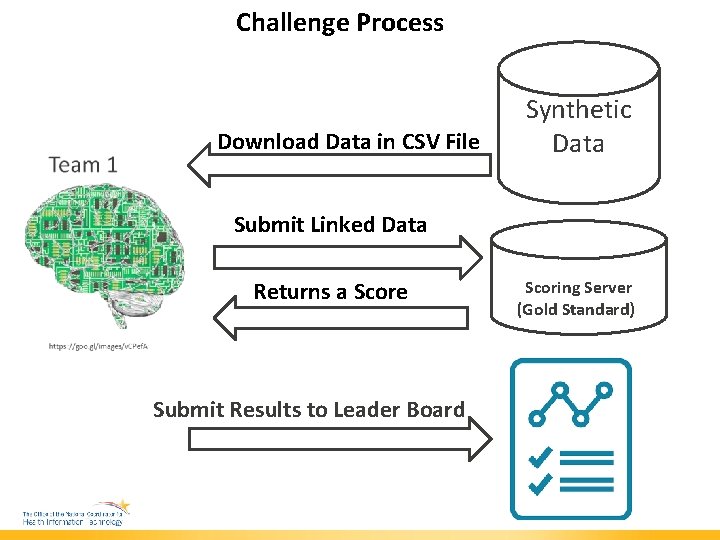

Challenge Process
• Register your team
• Unlock the test data set provided by ONC
• Run your algorithm against the data set
• Submit results for evaluation; they will be scored against an “answer key”
• Receive performance scores and appear on the Challenge leaderboard
• Repeat until satisfied with the result, until 100 submissions have been made, or until the end date has passed

Challenge Process (diagram): Synthetic Data Set → Download Data in CSV File → Submit Linked Data → Scoring Server (Gold Standard) → Returns a Score → Submit Results to Leader Board

Registration
• Visit the challenge website and fill in all required fields of the registration form
• Create a username and password (1 account per team)
• Enter a team name, which will be used on the leader board
  » Can be used to keep team identities private
• Acknowledge and agree to all terms and rules of the Challenge

Challenge Dataset
• Synthetically generated by Just Associates using a proprietary software algorithm
• Based on real-world data in an MPI and the actual data discrepancies observed in each field
• Known potential duplicate pairs mimic real-world scenarios
• Does not contain PHI
• Approximately 1M patient records
• Available early June; an email will be sent when the data set is made available

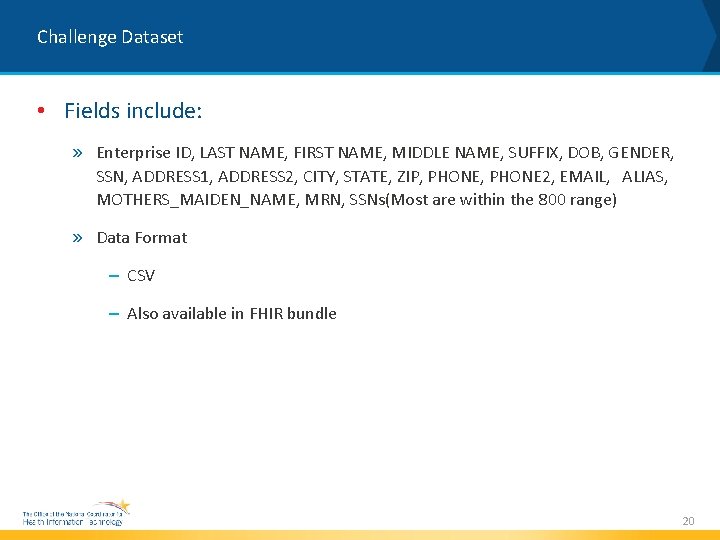

Challenge Dataset
• Fields include:
  » Enterprise ID, LAST NAME, FIRST NAME, MIDDLE NAME, SUFFIX, DOB, GENDER, SSN, ADDRESS 1, ADDRESS 2, CITY, STATE, ZIP, PHONE 2, EMAIL, ALIAS, MOTHERS_MAIDEN_NAME, MRN
  » SSNs: most are within the 800 range
• Data Format
  » CSV
  » Also available as a FHIR bundle

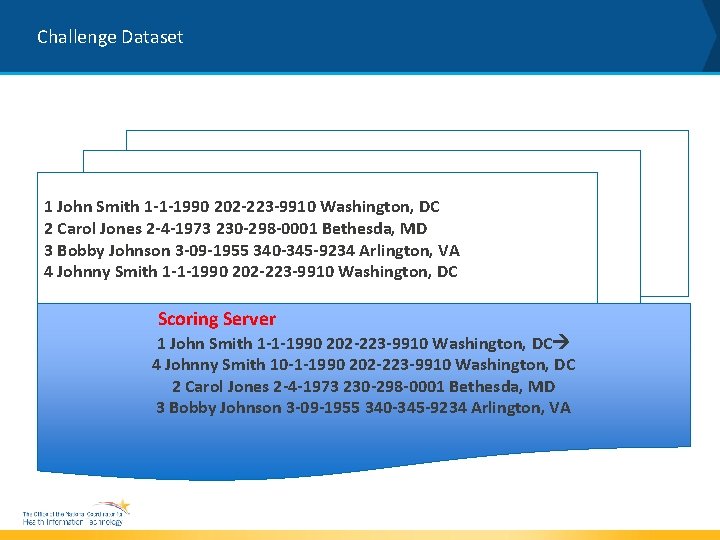

Challenge Dataset (example)
Records in the dataset:
1 | John Smith | 1-1-1990 | 202-223-9910 | Washington, DC
2 | Carol Jones | 2-4-1973 | 230-298-0001 | Bethesda, MD
3 | Bobby Johnson | 3-09-1955 | 340-345-9234 | Arlington, VA
4 | Johnny Smith | 1-1-1990 | 202-223-9910 | Washington, DC
Scoring Server:
1 | John Smith | 1-1-1990 | 202-223-9910 | Washington, DC
4 | Johnny Smith | 10-1-1990 | 202-223-9910 | Washington, DC
2 | Carol Jones | 2-4-1973 | 230-298-0001 | Bethesda, MD
3 | Bobby Johnson | 3-09-1955 | 340-345-9234 | Arlington, VA

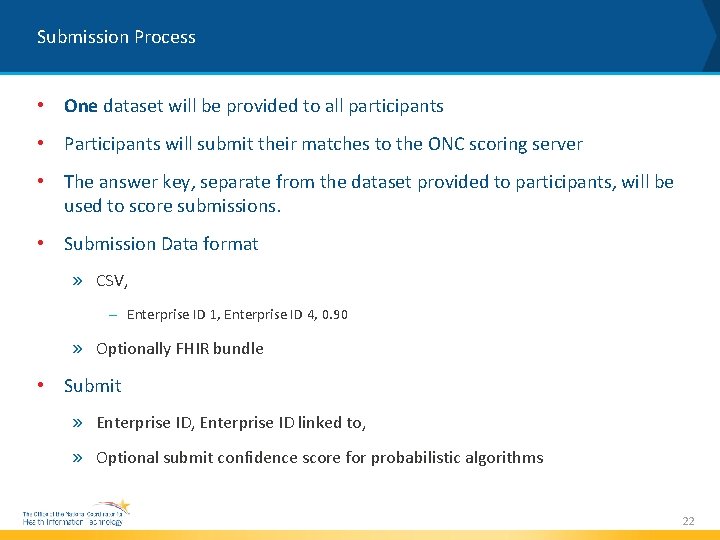

Submission Process
• One dataset will be provided to all participants
• Participants will submit their matches to the ONC scoring server
• The answer key, separate from the dataset provided to participants, will be used to score submissions
• Submission data format
  » CSV
    – Example: Enterprise ID 1, Enterprise ID 4, 0.90
  » Optionally, a FHIR bundle
• Submit
  » Enterprise ID, Enterprise ID linked to
  » Optionally, a confidence score for probabilistic algorithms
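A submission file in the CSV layout described above could be written as follows. This is a sketch only: the column order (Enterprise ID, linked Enterprise ID, optional confidence) follows the example on the slide, but the absence of a header row, the two-decimal confidence format, and the helper name `write_submission` are assumptions; the Challenge website is the authority on the exact format.

```python
import csv
import io

def write_submission(pairs, out):
    """Write one row per linked pair: id, linked id, optional confidence.

    `pairs` is an iterable of (enterprise_id, linked_enterprise_id, confidence)
    tuples; confidence may be None for deterministic algorithms.
    """
    writer = csv.writer(out, lineterminator="\n")
    for id_a, id_b, confidence in pairs:
        row = [id_a, id_b]
        if confidence is not None:
            # Assumed formatting: two decimal places, as in "0.90".
            row.append(f"{confidence:.2f}")
        writer.writerow(row)

# Usage with an in-memory buffer; pass an open file object to write to disk.
buffer = io.StringIO()
write_submission([(1, 4, 0.90), (2, 7, None)], buffer)
submission_text = buffer.getvalue()
```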

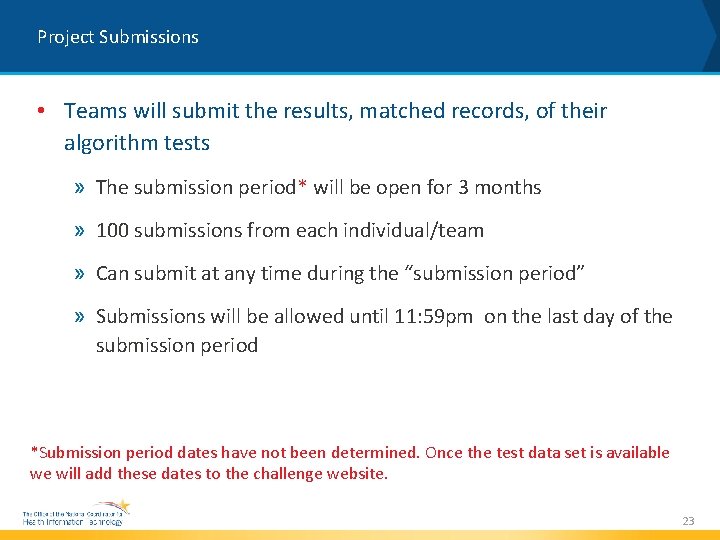

Project Submissions
• Teams will submit the results (matched records) of their algorithm tests
  » The submission period* will be open for 3 months
  » Up to 100 submissions from each individual/team
  » Can submit at any time during the submission period
  » Submissions will be allowed until 11:59 pm on the last day of the submission period
*Submission period dates have not been determined. Once the test data set is available we will add these dates to the challenge website.

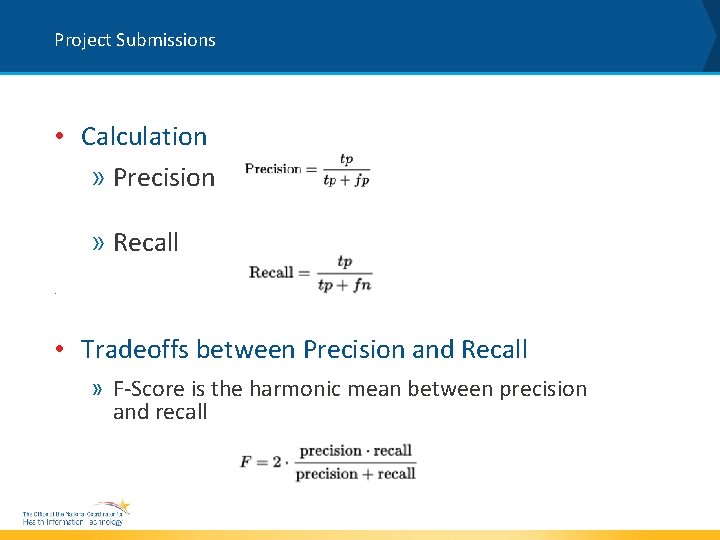

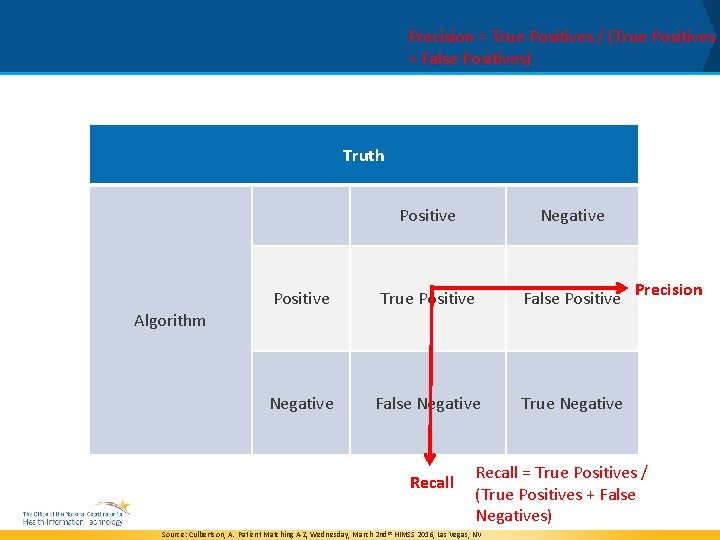

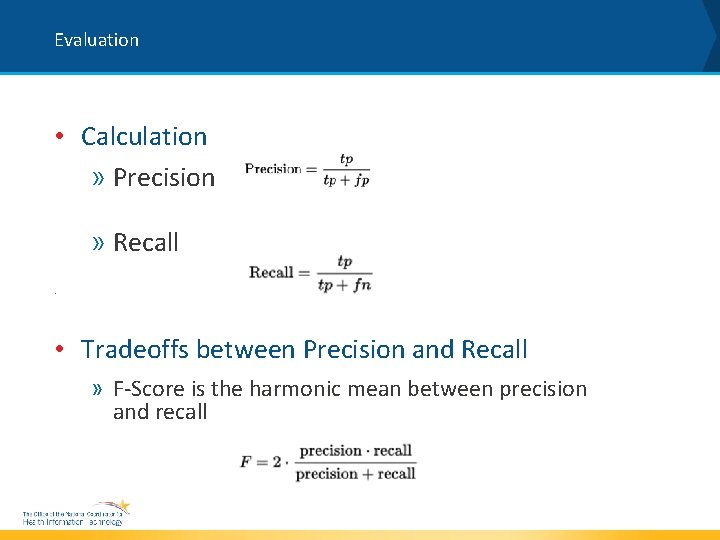

Project Submissions
• Calculation
  » Precision
  » Recall
• Tradeoffs between Precision and Recall
  » F-Score is the harmonic mean of precision and recall
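Written out, the harmonic mean takes the form below. The slides do not say whether the score is weighted, so this assumes the balanced F-Score (often called F1); the values P = 0.95 and R = 0.80 are made up for the worked example.

```latex
F = \frac{2 \, P \, R}{P + R},
\qquad
P = 0.95,\; R = 0.80
\;\Rightarrow\;
F = \frac{2 \times 0.95 \times 0.80}{0.95 + 0.80} = \frac{1.52}{1.75} \approx 0.869
```

The arithmetic mean of the same two values would be 0.875. The harmonic mean sits closer to the lower value, so a team cannot earn a high F-Score by maximizing precision while neglecting recall, or the reverse.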

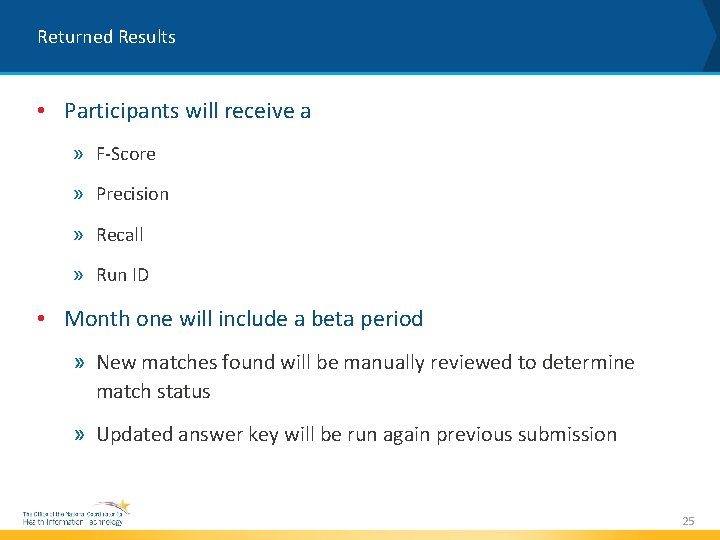

Returned Results
• Participants will receive:
  » F-Score
  » Precision
  » Recall
  » Run ID
• Month one will include a beta period
  » New matches found will be manually reviewed to determine match status
  » The updated answer key will be run again against previous submissions

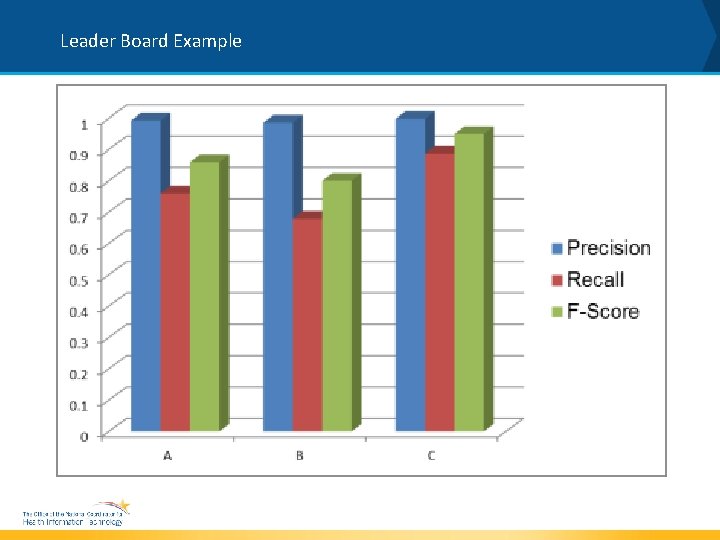

Leader Board Example

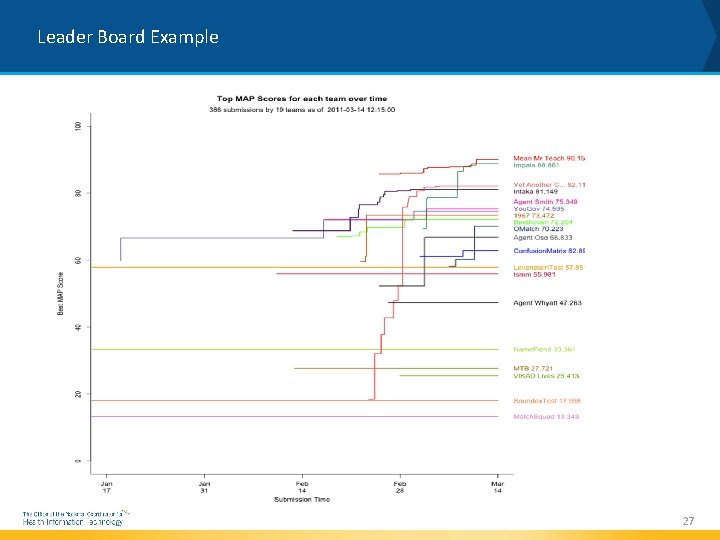


Winners and Prizes
The total prize purse for this challenge is $75,000. Judging will be based upon the empirical evaluation of the performance of the algorithms.
Highest F-Score:
• 1st: $25,000
• 2nd: $20,000
• 3rd: $15,000
Best in Category ($5,000 per category):
• Precision
• Recall
• Best first F-Score run

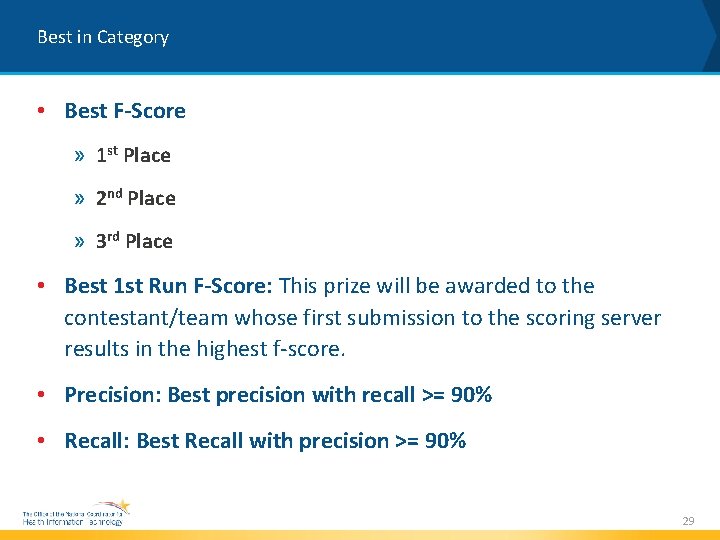

Best in Category
• Best F-Score
  » 1st Place
  » 2nd Place
  » 3rd Place
• Best 1st Run F-Score: This prize will be awarded to the contestant/team whose first submission to the scoring server results in the highest F-Score.
• Precision: Best precision with recall >= 90%
• Recall: Best recall with precision >= 90%
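The two threshold-based categories can be read as a filter followed by a maximum. The sketch below shows that reading on made-up leaderboard rows; the team names and scores are hypothetical, and the slides do not state how ties are broken, so ties simply fall to the first eligible row here.

```python
# Hypothetical leaderboard rows: (team, precision, recall).
runs = [
    ("team_a", 0.99, 0.85),
    ("team_b", 0.97, 0.91),
    ("team_c", 0.92, 0.96),
]

def best_precision(runs, min_recall=0.90):
    """Highest precision among runs whose recall is at least min_recall."""
    eligible = [r for r in runs if r[2] >= min_recall]
    return max(eligible, key=lambda r: r[1], default=None)

def best_recall(runs, min_precision=0.90):
    """Highest recall among runs whose precision is at least min_precision."""
    eligible = [r for r in runs if r[1] >= min_precision]
    return max(eligible, key=lambda r: r[2], default=None)

precision_winner = best_precision(runs)
recall_winner = best_recall(runs)
```

In this toy data team_a has the highest precision overall but is not eligible for the precision prize, because its recall falls below the 90% floor.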

Metrics for Algorithm Performance

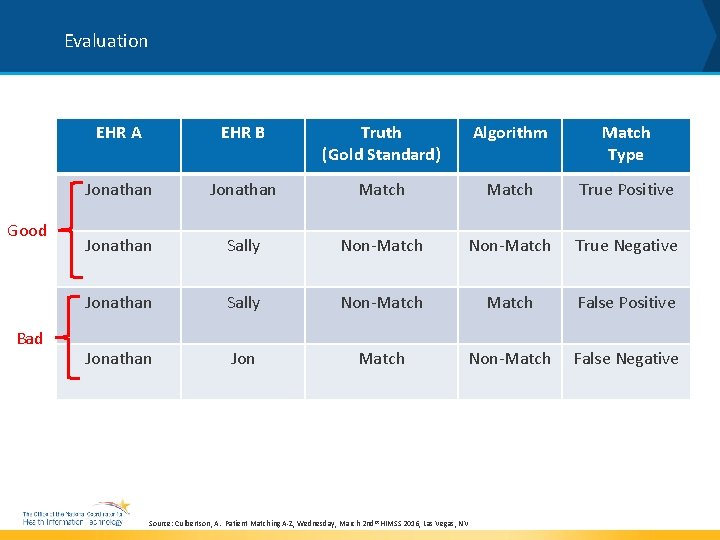

Patient Matching Goal
The ideal outcome of any matching exercise is correctly answering this one question hundreds or thousands of times: are these two things the same thing?
  » Correctly identifying all the true positives and true negatives while minimizing the number of errors (false positives and false negatives)
Source: Culbertson, A., Patient Matching A-Z, Wednesday, March 2nd, HIMSS 2016, Las Vegas, NV

Patient Matching Terminology
• True Positive: The algorithm links two records that represent the same patient
• True Negative: The algorithm does not link two records that don't represent the same patient
Source: Culbertson, A., Patient Matching A-Z, Wednesday, March 2nd, HIMSS 2016, Las Vegas, NV

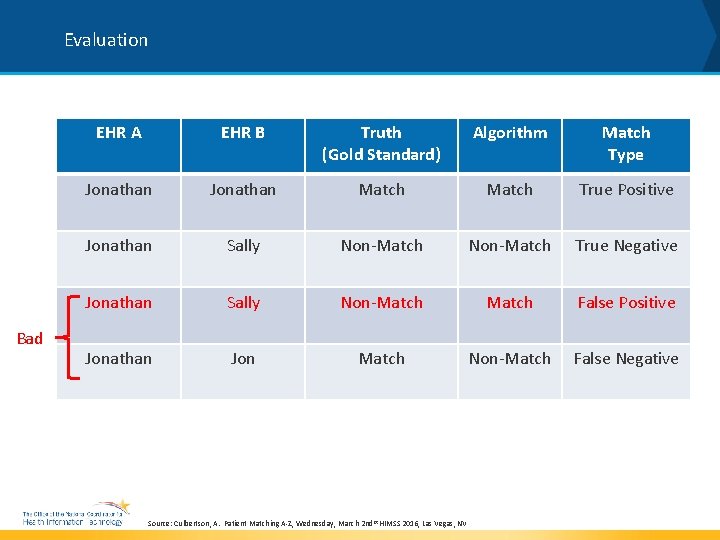

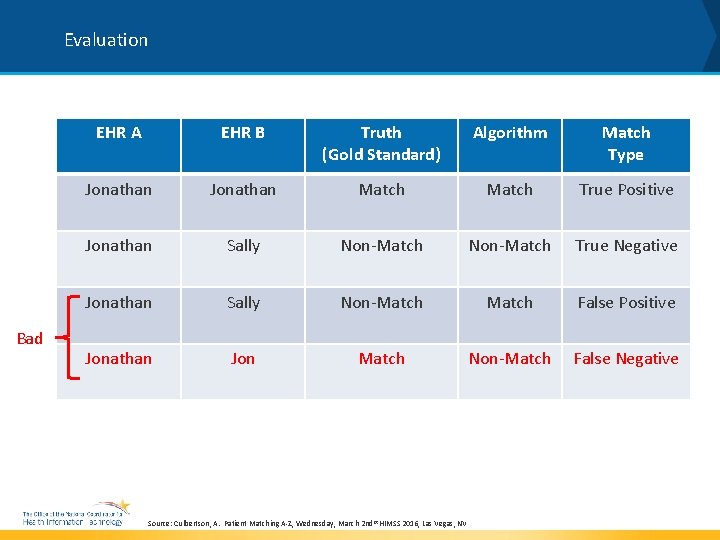

Patient Matching Terminology
• False Negative: The algorithm misses a record that should be matched
• False Positive: The algorithm creates a link between two records that don’t actually match
Source: Culbertson, A. & Miller, K., Patient Matching EHR Ailments: Going from Placebo to Cure, Tuesday, March 1st, HIMSS 2016, Las Vegas, NV

Evaluation
EHR A | EHR B | Truth (Gold Standard) | Algorithm | Match Type
Jonathan | Jonathan | Match | Match | True Positive (good)
Jonathan | Sally | Non-Match | Non-Match | True Negative (good)
Jonathan | Sally | Non-Match | Match | False Positive (bad)
Jonathan | Jon | Match | Non-Match | False Negative (bad)
Source: Culbertson, A., Patient Matching A-Z, Wednesday, March 2nd, HIMSS 2016, Las Vegas, NV


Evaluation
Algorithm \ Truth | Positive | Negative
Positive | True Positive | False Positive
Negative | False Negative | True Negative
Precision = True Positives / (True Positives + False Positives)
Recall = True Positives / (True Positives + False Negatives)
Source: Culbertson, A., Patient Matching A-Z, Wednesday, March 2nd, HIMSS 2016, Las Vegas, NV

Evaluation
• Calculation
  » Precision
  » Recall
• Tradeoffs between Precision and Recall
  » F-Score is the harmonic mean of precision and recall
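The three metrics can be computed from a set of submitted pairs and an answer key as sketched below. This illustrates the definitions only and is not the ONC scoring server's code: treating a pair as unordered, returning 0.0 when a denominator is zero, and using the balanced F-Score are all assumptions of this example.

```python
def normalize(pairs):
    """Treat (1, 4) and (4, 1) as the same link by ignoring order."""
    return {frozenset(pair) for pair in pairs}

def score(predicted, truth):
    """Return (precision, recall, f_score) for predicted links vs. truth."""
    predicted, truth = normalize(predicted), normalize(truth)
    tp = len(predicted & truth)   # links that are in the answer key
    fp = len(predicted - truth)   # links that are not in the answer key
    fn = len(truth - predicted)   # answer-key links the algorithm missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f_score = 2 * precision * recall / (precision + recall)
    return precision, recall, f_score

# Made-up example: two of three submitted links are correct,
# and one true link is missed.
truth = [(1, 4), (2, 7), (3, 9)]
predicted = [(4, 1), (2, 7), (5, 6)]
precision, recall, f_score = score(predicted, truth)
```

True negatives never enter the calculation. In a data set of about a million records nearly every possible pair is a non-match, so a metric that counted true negatives would look excellent for almost any algorithm.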

Creating Test Data Sets

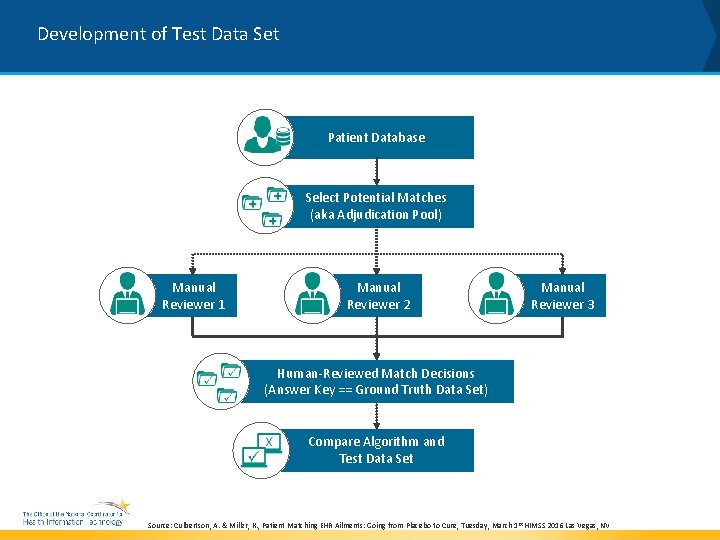

Development of Test Data Set (diagram): Patient Database → Select Potential Matches (aka Adjudication Pool) → Manual Reviewers 1, 2, and 3 → Human-Reviewed Match Decisions (Answer Key == Ground Truth Data Set) → Compare Algorithm and Test Data Set
Source: Culbertson, A. & Miller, K., Patient Matching EHR Ailments: Going from Placebo to Cure, Tuesday, March 1st, HIMSS 2016, Las Vegas, NV

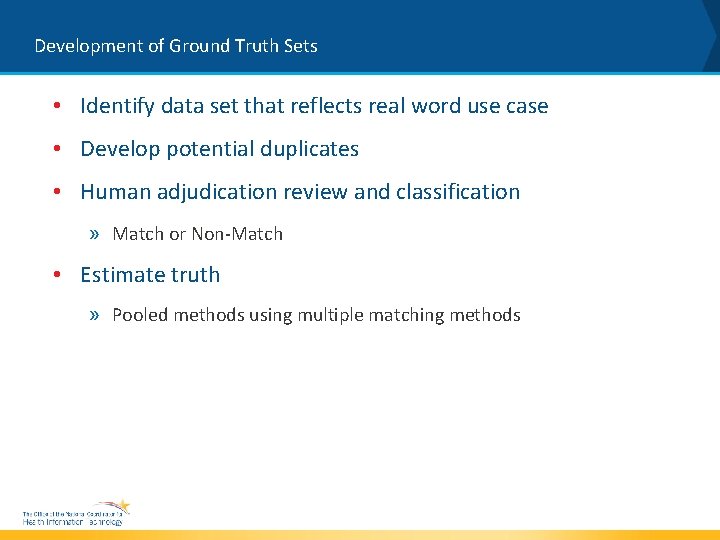

Development of Ground Truth Sets
• Identify a data set that reflects a real-world use case
• Develop potential duplicates
• Human adjudication review and classification
  » Match or Non-Match
• Estimate truth
  » Pooled methods using multiple matching methods

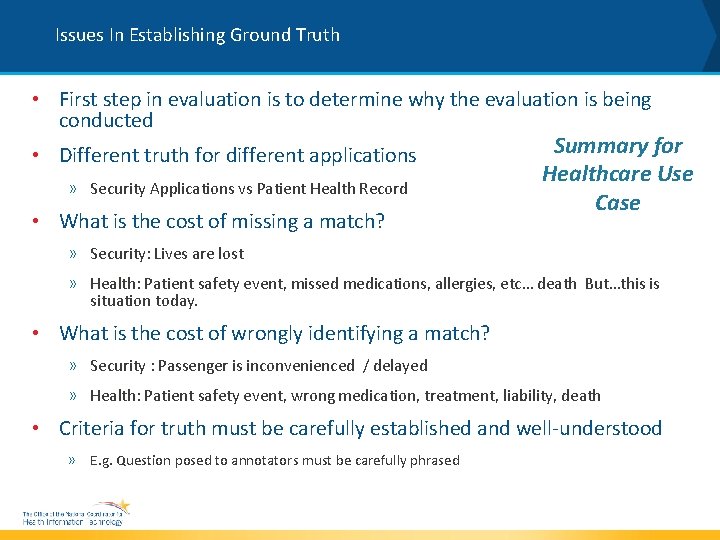

Issues In Establishing Ground Truth
• First step in evaluation is to determine why the evaluation is being conducted
• Different truth for different applications
  » Security applications vs. patient health record
• What is the cost of missing a match?
  » Security: Lives are lost
  » Health: Patient safety event, missed medications, allergies, etc., death
• What is the cost of wrongly identifying a match?
  » Security: Passenger is inconvenienced / delayed
  » Health: Patient safety event, wrong medication, treatment, liability, death
• Criteria for truth must be carefully established and well-understood
  » E.g., the question posed to annotators must be carefully phrased
Summary for Healthcare Use Case: both kinds of error carry patient safety costs. But… this is the situation today.

Issues In Establishing Ground Truth (cont’d)
• Different truth for different applications
  » Credit check
  » Security applications
  » Customer support
  » De-duplication of mailing lists
• What is the cost of missing a match?
  » New record entered into database
  » Irritated customer
  » Lives are lost
• Criteria for truth must be carefully established and well-understood by annotators
  » Question posed to annotators must be carefully phrased

Issues In Establishing Ground Truth (cont’d)
• How much time / expertise is available to judge (/discount) false positives?
• Needs to reflect a real-world test use case
• Evaluation results are only as good as the truth on which they are based
  » And only as appropriate as the evaluation is to the task that will be performed with the operational system
• Absolute recall is impossible to measure without a completely known test set (i.e., “You don’t know what you’re missing.”)
  » Estimate with pooled results

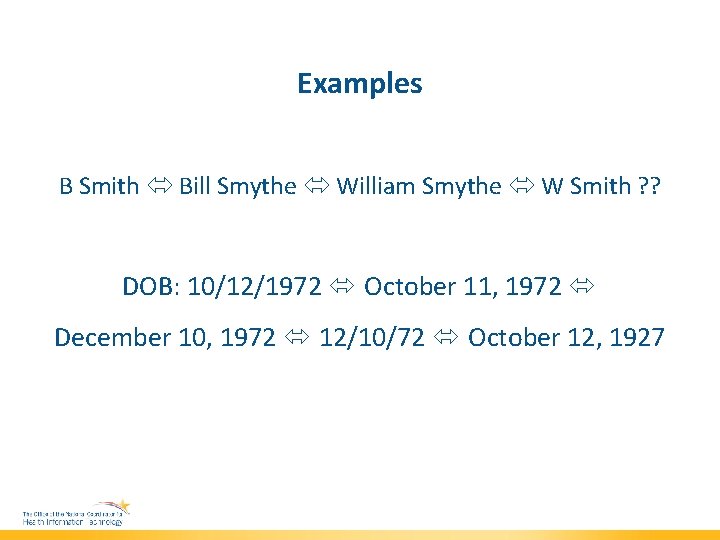

Examples
• Names: B Smith, Bill Smythe, William Smythe, W Smith ??
• DOB: 10/12/1972, October 11, 1972, December 10, 1972, 12/10/72, October 12, 1927
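The date-of-birth variants above illustrate common entry errors: an off-by-one day, a month/day swap, and rearranged year digits. The sketch below labels each variant relative to 10/12/1972. It is an illustration only; the accepted input formats, the month-first reading of slash-separated dates, and the category names are assumptions, and the two-digit year relies on Python's `%y` pivot (69 to 99 map to 1969 to 1999).

```python
from datetime import datetime

# Assumed input formats; slash-separated dates are read month-first.
FORMATS = ("%m/%d/%Y", "%m/%d/%y", "%B %d, %Y")

def parse(text):
    """Parse a date string using the first format that fits."""
    for fmt in FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")

def relation(a, b):
    """Describe how date b differs from date a (categories are illustrative)."""
    if a == b:
        return "exact"
    if (a.year, a.month, a.day) == (b.year, b.day, b.month):
        return "month/day swapped"
    if abs((a - b).days) == 1:
        return "one day apart"
    same_month_day = (a.month, a.day) == (b.month, b.day)
    if same_month_day and sorted(str(a.year)) == sorted(str(b.year)):
        return "year digits rearranged"
    return "different"

reference = parse("10/12/1972")
variants = ["October 11, 1972", "December 10, 1972", "12/10/72", "October 12, 1927"]
labels = {v: relation(reference, parse(v)) for v in variants}
```

Whether "12/10/72" is a swapped entry or a day-first rendering of the same date cannot be decided from the string alone, which is why such pairs typically end up as potential matches for human review.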

Get Involved
• Webinars on how to participate and challenge overview
  » May 24th
• Kicking off Patient Data Matching Algorithm Challenge in June
• Participant Discussion Board
• Website: www.patientmatchingchallenge.com

Acknowledgments
Thank you to the following individuals and organizations for their involvement in the planning and development of this challenge:
  » Debbie Bucci and the ONC Team
  » HIMSS North America, Tom Leary, HIMSS
  » Greg Downing, HHS Idea Lab, ONC
  » Jerry and Beth Just and the Just Associates Team
  » Keith Miller and Andy Gregorowicz, MITRE
  » Caitlin Ryan, IRIS Health Solutions
  » Capitol Consulting Corporation Team

Additional Questions
For additional questions or information, contact:
Adam Culbertson, adam.culbertson@hhs.gov
Debbie Bucci, debbie.bucci@hhs.gov (preferred)
Phone: 202-690-0213

Thank you for your interest!
The ONC Team
@ONC_HealthIT @HHSONC
