Particle swarm optimization or Genetic algorithm Yuzhao Wang

- Slides: 18

Particle Swarm Optimization or Genetic Algorithm?

Yuzhao Wang, Zhibin Yu, Qixiao Liu, Junqing Yu

SIAT, CAS; HUST

Motivation

- Big data frameworks: parameter tuning
  - Hundreds of configuration parameters (multivalued)
  - Critical for performance, so optimization is needed
  - Tuning parameters is time-consuming
  - Expert knowledge is needed
- Data collecting, model training, optimum searching
  - Genetic Algorithm (GA), Particle Swarm Optimization (PSO)
  - Random Forest, Support Vector Machine, ...

Outline

- Background
- Proposal and implementation
- Experimental evaluation

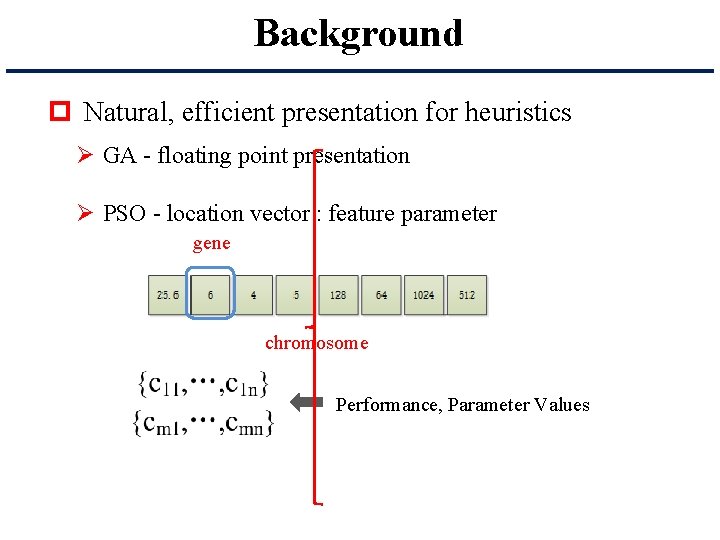

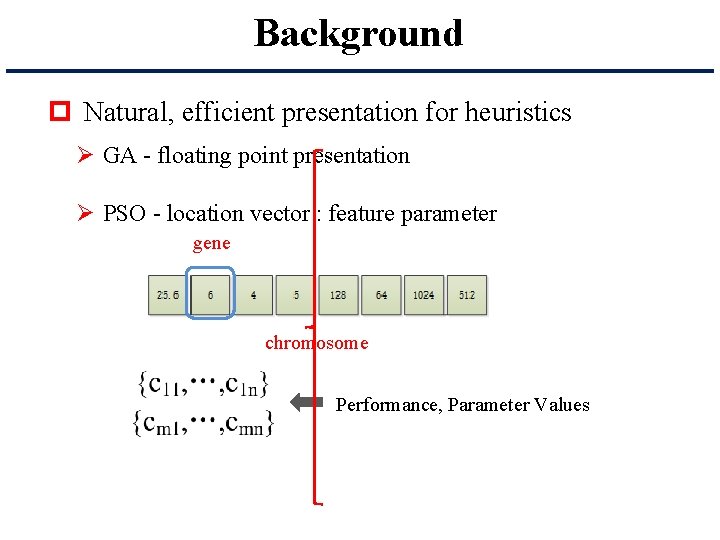

Background

- Natural, efficient representation for heuristics
  - GA: floating-point representation
  - PSO: location vector
- (Figure labels: feature, parameter, gene, chromosome; performance, parameter values)

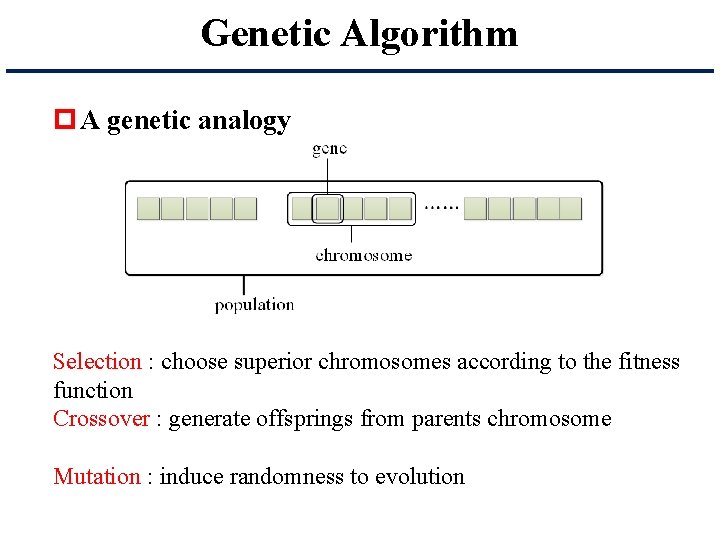

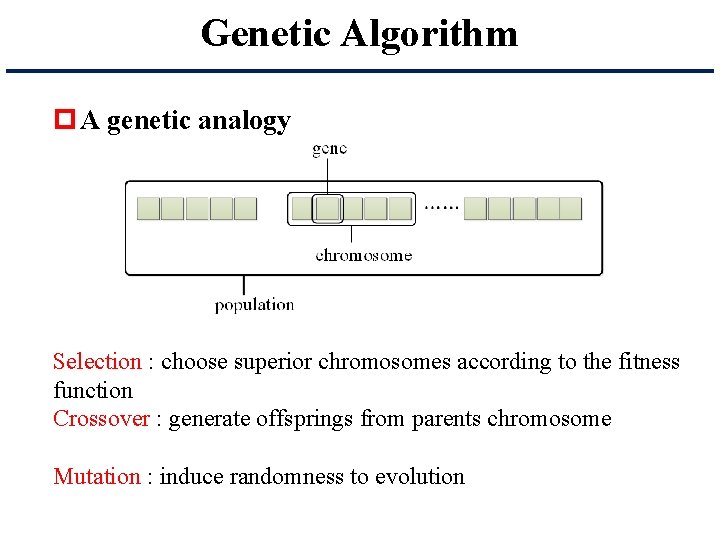

Genetic Algorithm

- A genetic analogy
  - Selection: choose superior chromosomes according to the fitness function
  - Crossover: generate offspring from parent chromosomes
  - Mutation: introduce randomness into the evolution
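The three operators can be sketched in a few lines of plain Python. This is a minimal illustration, not the 'genalg'-based implementation used in this work. The names `fitness` and `genetic_search`, the quadratic stand-in for the performance model, the single-point crossover, and all default values are assumptions made for the example.

```python
import random


def fitness(chromosome):
    # Hypothetical stand-in for the performance model: lower is better.
    return sum((gene - 0.5) ** 2 for gene in chromosome)


def genetic_search(dim=6, pop_size=20, elitism=2, mutation_chance=0.01,
                   iterations=50, seed=0):
    rng = random.Random(seed)
    # Each chromosome is a list of floating-point genes in [0, 1].
    population = [[rng.random() for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(iterations):
        # Selection: rank by fitness and carry the elite over unchanged.
        population.sort(key=fitness)
        next_gen = [c[:] for c in population[:elitism]]
        parents = population[:pop_size // 2]
        while len(next_gen) < pop_size:
            mother, father = rng.sample(parents, 2)
            # Crossover: splice two parents at a random cut point.
            cut = rng.randrange(1, dim)
            child = mother[:cut] + father[cut:]
            # Mutation: resample each gene with a small probability.
            child = [rng.random() if rng.random() < mutation_chance else gene
                     for gene in child]
            next_gen.append(child)
        population = next_gen
    return min(population, key=fitness)


best = genetic_search()
```

Because the elite chromosomes are copied unchanged, the best fitness in the population can never get worse from one generation to the next.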

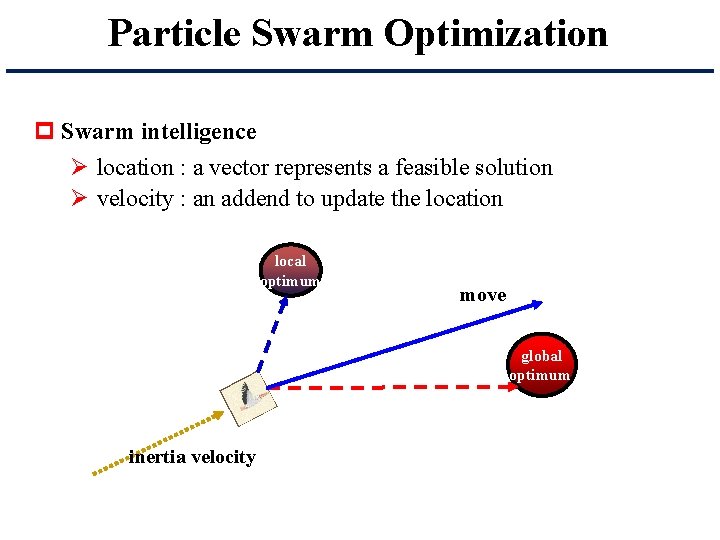

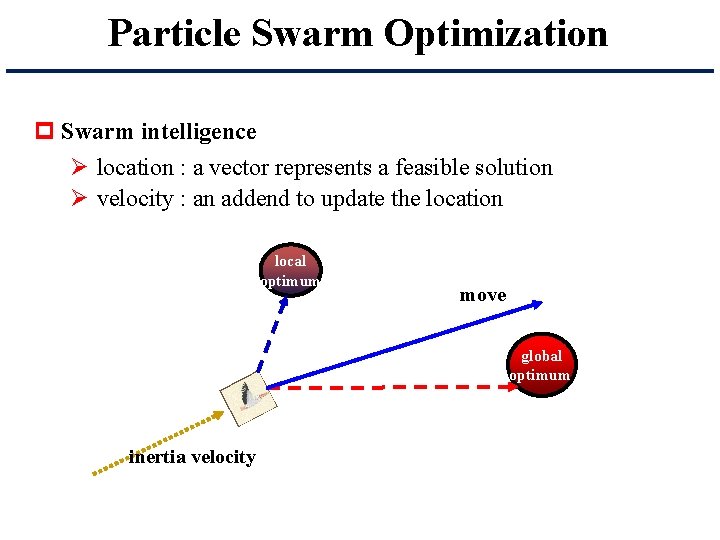

Particle Swarm Optimization

- Swarm intelligence
  - Location: a vector that represents a feasible solution
  - Velocity: an addend that updates the location
- (Figure labels: local optimum, global optimum, inertia, velocity, move)
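A minimal sketch of the location and velocity update, in plain Python rather than 'pyswarm'. The names `cost` and `particle_swarm_search`, the quadratic stand-in for the performance model, the [0, 1] search box, and the default inertia and learning factors are assumptions chosen for illustration only.

```python
import random


def cost(position):
    # Hypothetical stand-in for the performance model: lower is better.
    return sum((x - 0.5) ** 2 for x in position)


def particle_swarm_search(dim=6, swarm_size=20, inertia=0.7, c1=1.5, c2=1.5,
                          iterations=50, seed=0):
    rng = random.Random(seed)
    # Location: one vector per particle, each a feasible solution in [0, 1]^dim.
    positions = [[rng.random() for _ in range(dim)] for _ in range(swarm_size)]
    velocities = [[0.0] * dim for _ in range(swarm_size)]
    local_best = [p[:] for p in positions]
    global_best = min(local_best, key=cost)[:]
    for _ in range(iterations):
        for i in range(swarm_size):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # New velocity = inertia term + pull toward the particle's own
                # best location + pull toward the swarm's best location.
                velocities[i][d] = (
                    inertia * velocities[i][d]
                    + c1 * r1 * (local_best[i][d] - positions[i][d])
                    + c2 * r2 * (global_best[d] - positions[i][d])
                )
                # The velocity is added to the location; clamp to the box.
                moved = positions[i][d] + velocities[i][d]
                positions[i][d] = min(1.0, max(0.0, moved))
            if cost(positions[i]) < cost(local_best[i]):
                local_best[i] = positions[i][:]
                if cost(local_best[i]) < cost(global_best):
                    global_best = local_best[i][:]
    return global_best


best = particle_swarm_search()
```

Here `c1` and `c2` play the role of the local and global learning factors, and `inertia` scales how much of the previous velocity is kept.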

Outline

- Background
- Model and implementation
- Experimental evaluation

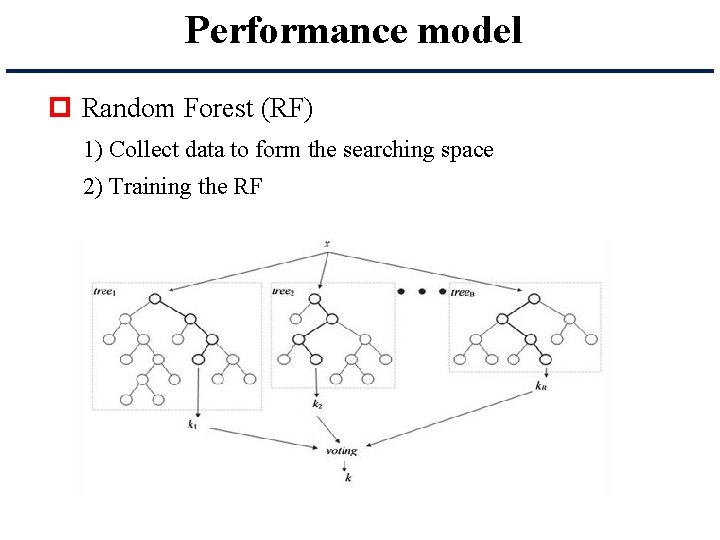

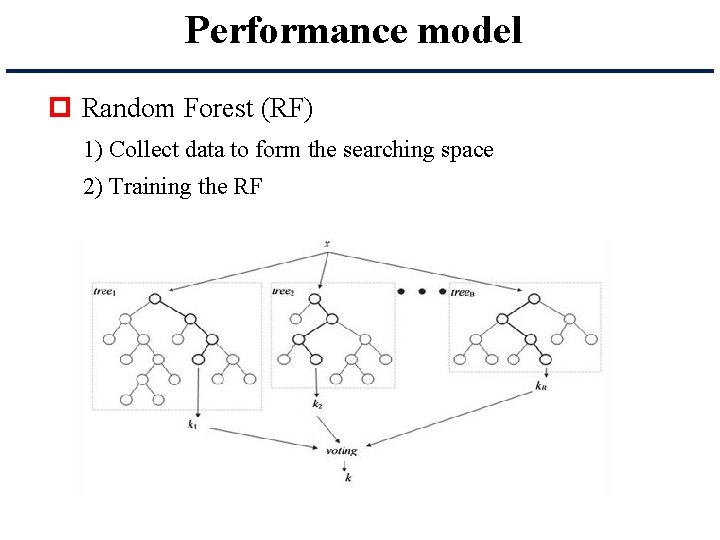

Performance model

- Random Forest (RF)
  1. Collect data to form the search space
  2. Train the RF

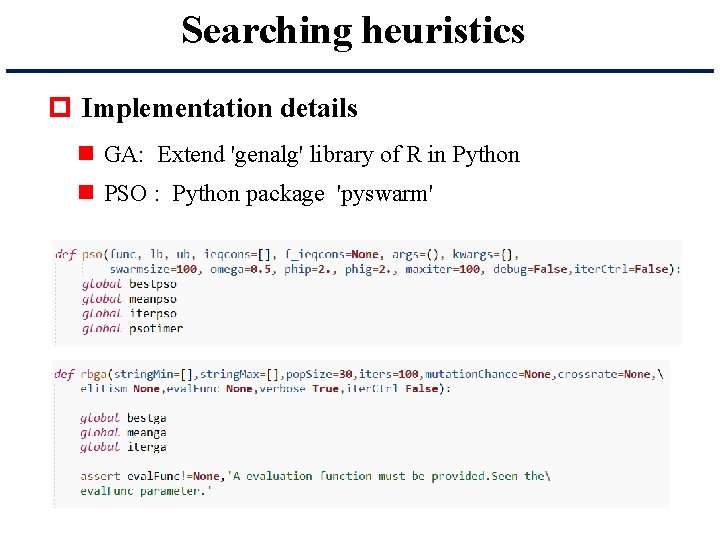

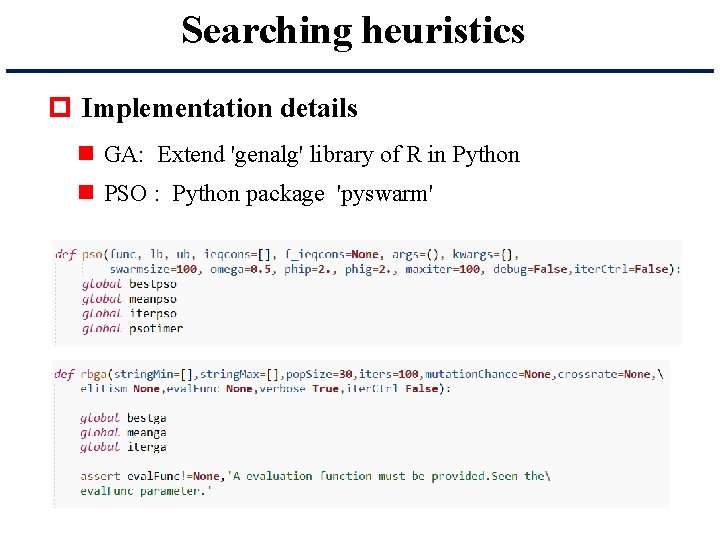

Searching heuristics

- Implementation details
  - GA: an extension of the R library 'genalg', implemented in Python
  - PSO: the Python package 'pyswarm'

Outline

- Background
- Model and implementation
- Experimental evaluation

Experimental setup

- Platform
  - Desktop: AMD quad-core processor, 4 GB DDR4 memory
  - Benchmark: PageRank
- Metric
  - Evaluation of the convergent state: standard deviation
    - iterate
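One plausible reading of the standard-deviation metric is sketched below: a population counts as converged once the spread of its fitness values drops below a threshold, and the convergence time is the first iteration at which that happens. The function names, the `tolerance` value, and the use of the population standard deviation are assumptions; the slides do not specify the exact criterion.

```python
import statistics


def has_converged(fitness_values, tolerance=1e-3):
    # Assumed criterion: the fitness values of all individuals in the
    # current iteration differ by less than `tolerance` in standard deviation.
    return statistics.pstdev(fitness_values) < tolerance


def iterations_to_converge(history, tolerance=1e-3):
    # `history` holds one list of fitness values per iteration.
    # Returns the index of the first converged iteration, or None.
    for iteration, fitness_values in enumerate(history):
        if has_converged(fitness_values, tolerance):
            return iteration
    return None


# Toy history for illustration only: the spread shrinks until it reaches zero.
history = [[1.0, 2.0, 3.0], [1.0, 1.5, 2.0], [1.0, 1.0, 1.0]]
converged_at = iterations_to_converge(history)
```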

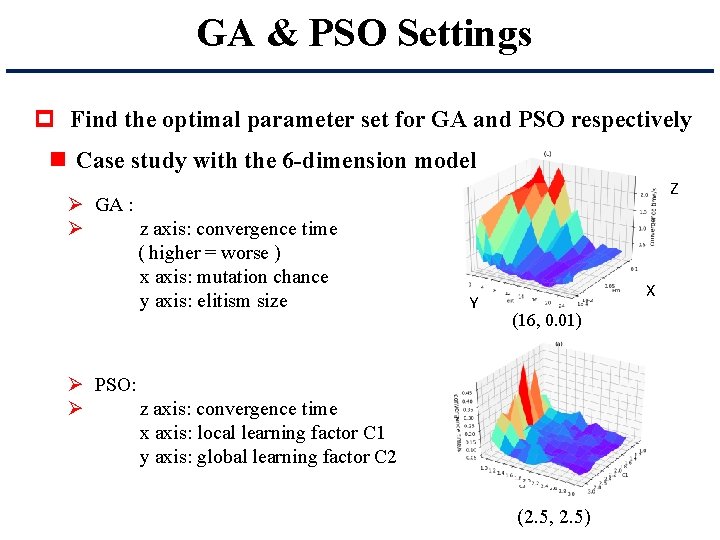

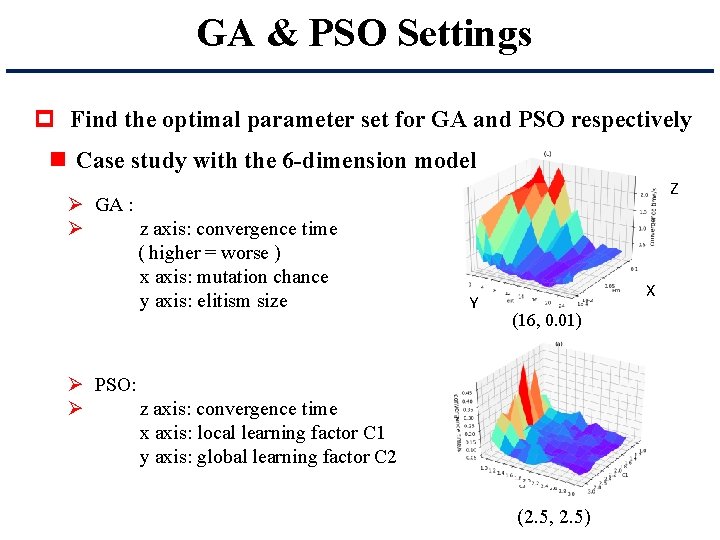

GA & PSO Settings

- Find the optimal parameter set for GA and PSO respectively
  - Case study with the 6-dimensional model
    - GA: z axis: convergence time (higher = worse); x axis: mutation chance; y axis: elitism size; marked point: (16, 0.01)
    - PSO: z axis: convergence time; x axis: local learning factor C1; y axis: global learning factor C2; marked point: (2.5, 2.5)

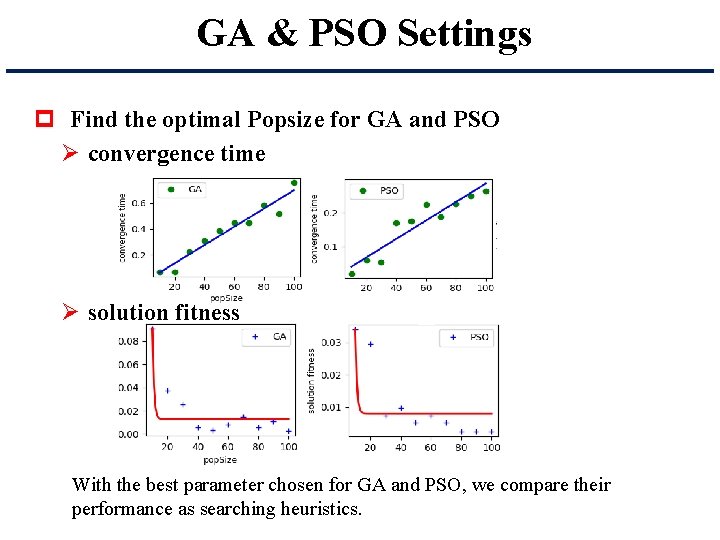

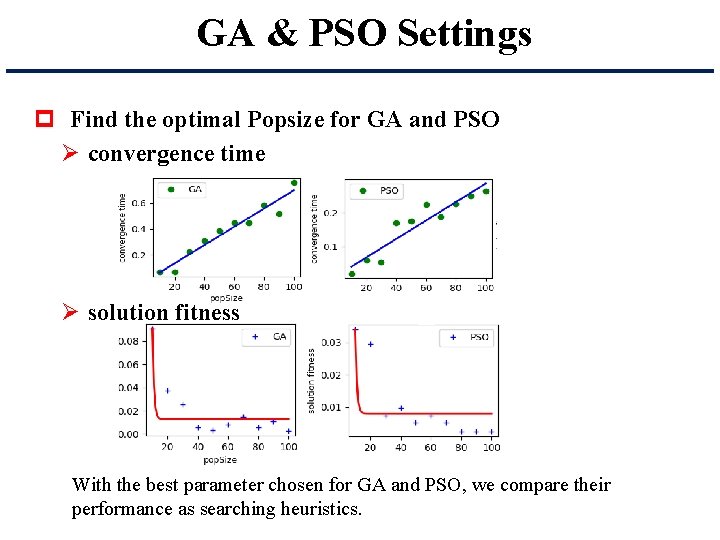

GA & PSO Settings

- Find the optimal population size (Popsize) for GA and PSO
  - Convergence time
  - Solution fitness

With the best parameters chosen for GA and PSO, we compare their performance as searching heuristics.

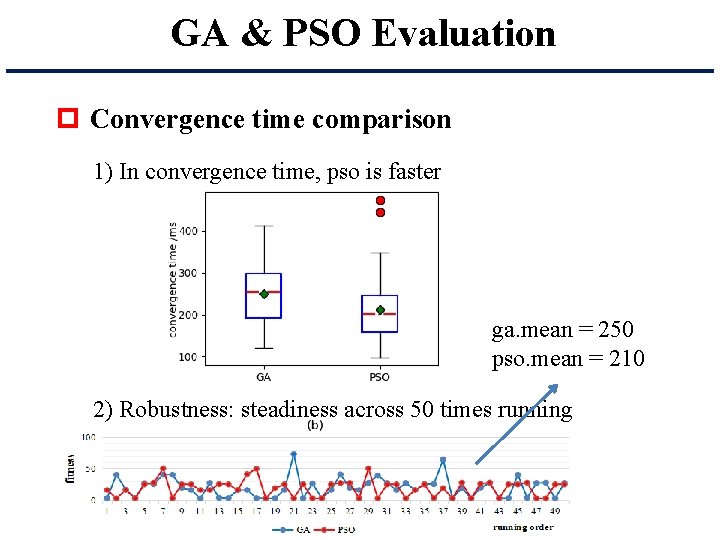

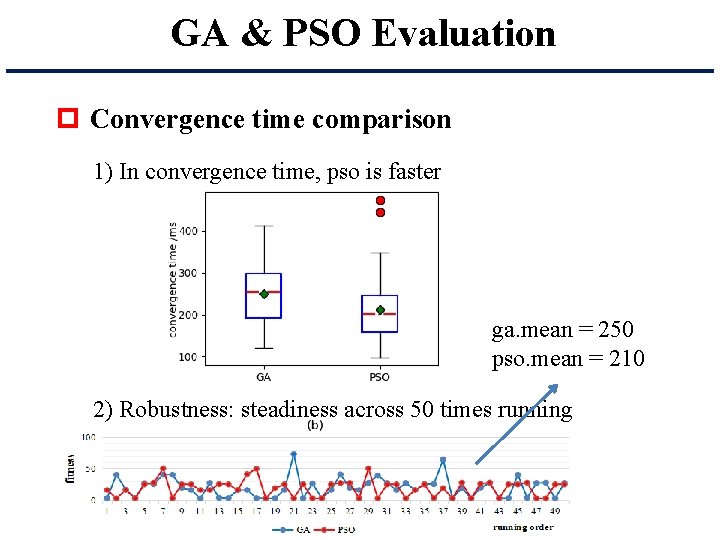

GA & PSO Evaluation

- Convergence time comparison
  1. Convergence time: PSO is faster than GA (ga.mean = 250, pso.mean = 210)
  2. Robustness: steadiness across 50 runs
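Both figures of merit reduce to simple statistics over repeated runs: the mean convergence time measures speed, and the standard deviation across runs measures steadiness. A minimal sketch follows; the helper name `summarize` and the sample values are made up for illustration and are not measurements from this evaluation.

```python
import statistics


def summarize(convergence_times):
    # Mean measures speed; sample standard deviation measures steadiness.
    return statistics.mean(convergence_times), statistics.stdev(convergence_times)


# Made-up convergence times for illustration only; not data from the slides.
sample_runs = [200, 220, 210, 190, 230]
mean_time, spread = summarize(sample_runs)
```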

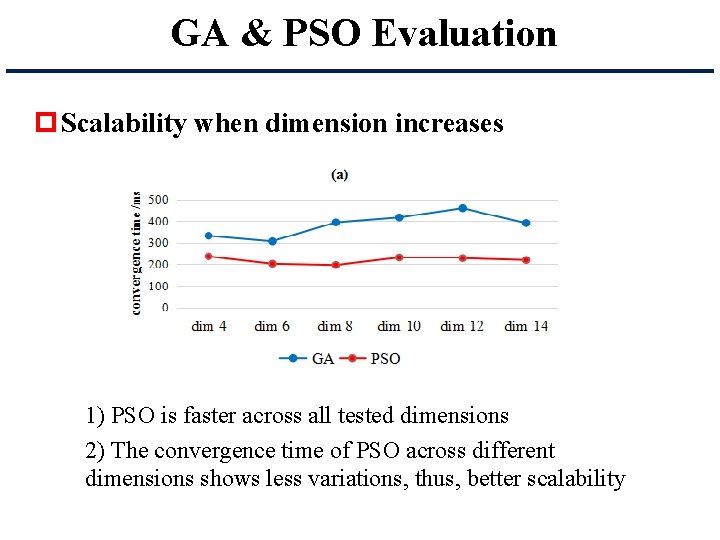

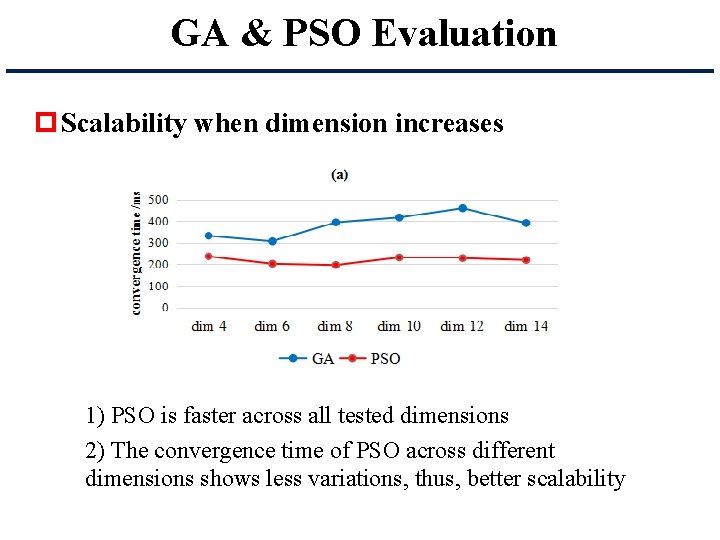

GA & PSO Evaluation

- Scalability as the dimension increases
  1. PSO is faster across all tested dimensions
  2. The convergence time of PSO varies less across dimensions, indicating better scalability

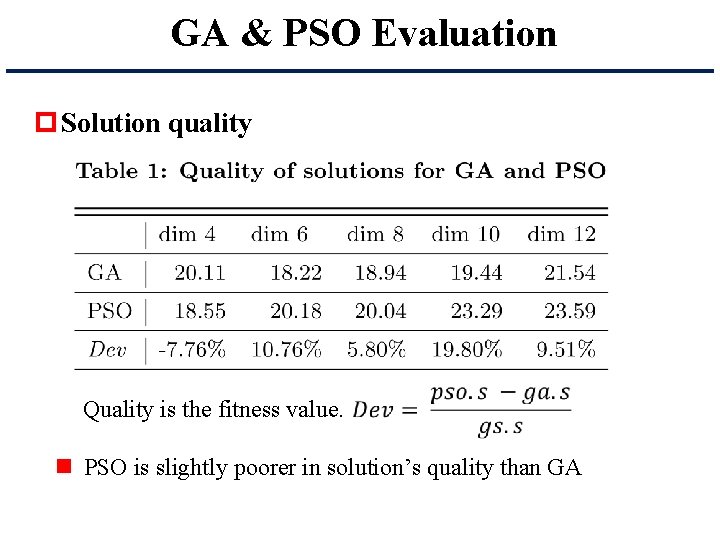

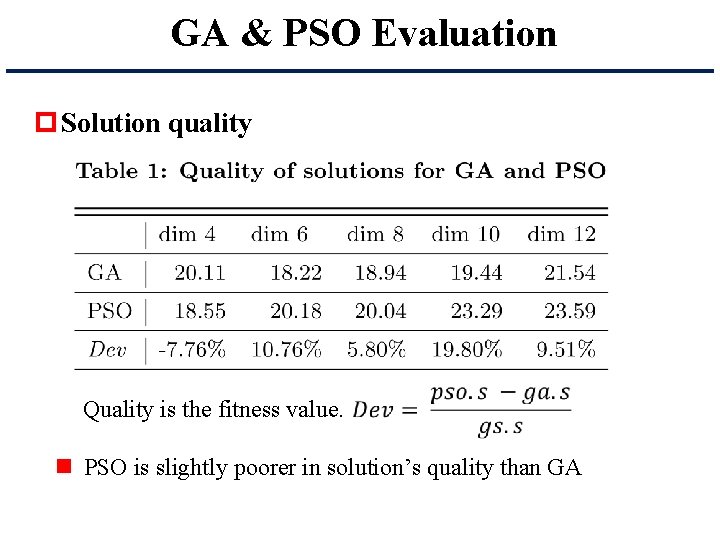

GA & PSO Evaluation

- Solution quality (quality is the fitness value)
  - PSO is slightly poorer than GA in solution quality

Conclusion

- PSO converges faster than GA (mean convergence time 210 vs. 250), while its solution quality is only slightly poorer.