
Particle Swarm Optimisation Representations for Simultaneous Clustering and Feature Selection

Andrew Lensen, Bing Xue, and Mengjie Zhang

Clustering

- Task of grouping similar instances into a number of natural clusters.
- Usually unsupervised: there is no separate test set, as there is no ground truth.
- Many challenges:
  - Very large search space (NP-hard).
  - How to measure cluster goodness? Compactness/density, separation, connectedness…
  - Number of clusters (K).
- Even harder when there are many features: higher complexity.

Feature Selection (FS)

- Why use all features? Some may be misleading, irrelevant, or redundant.
- FS selects a (small) subset of features to use.
- This reduces complexity and improves the interpretability of solutions.
- Evolutionary computation (EC) has been widely successful for FS in classification tasks, but there is very little work for clustering (despite it being a harder task).
- Simultaneously clustering and selecting features allows the most appropriate features to be selected (embedded FS).

![Related Work: NMA_CFS [1] algorithm, a genetic algorithm for simultaneous C + FS](https://slidetodoc.com/presentation_image_h2/d81b6f3ea318202c5154762292fbe19b/image-4.jpg)

Related Work

- NMA_CFS [1]: a genetic algorithm for simultaneous clustering and feature selection (C + FS).
  - Uses niching and local search techniques.
  - Uses a variable-length centroid representation to handle variable K, which causes crossover difficulties.
  - Tested on datasets with a small number of features (up to 30) and clusters (up to 7).
- Javani et al. [2]: a PSO method similar to NMA_CFS, but with K fixed.
- Other methods use EC for one of the clustering/FS tasks and a non-EC technique for the other (usually filter FS).
- Is it better to optimise both at once with EC?

![Compared Representations: centroid (K fixed) and medoid](https://slidetodoc.com/presentation_image_h2/d81b6f3ea318202c5154762292fbe19b/image-5.jpg)

Compared Representations

- Centroid (K fixed)
  - “Standard”: similar to [1, 2]
  - Dataset seeded
  - k-means seeded
- Medoid: previously unused for C + FS
  - K fixed
  - K unfixed

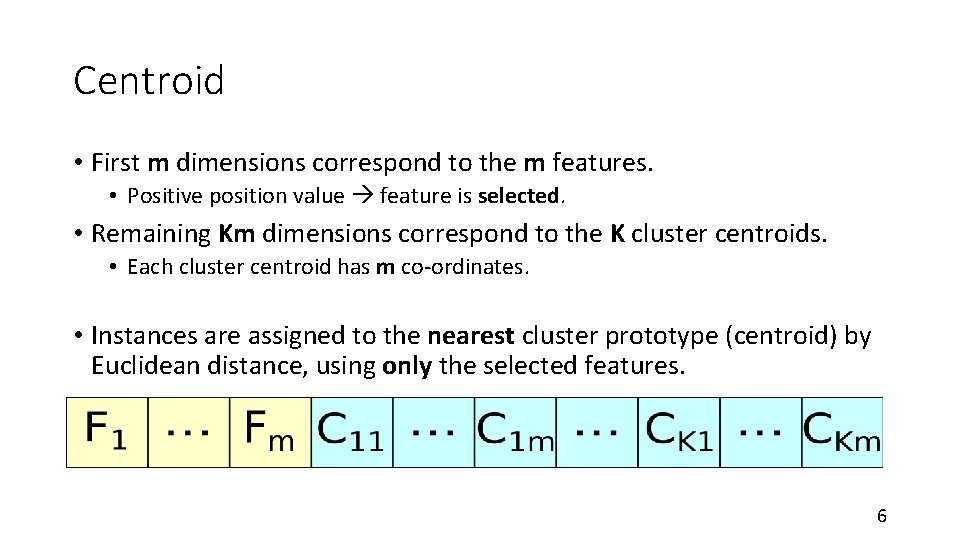

Centroid

- The first m dimensions correspond to the m features.
  - A positive position value means the feature is selected.
- The remaining K·m dimensions correspond to the K cluster centroids.
  - Each cluster centroid has m co-ordinates.
- Instances are assigned to the nearest cluster prototype (centroid) by Euclidean distance, using only the selected features.
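The decoding described on this slide can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names `decode_centroid_particle` and `assign_to_nearest`, the flat-list layout of the centroid block (centroid 0 first, then centroid 1, and so on), and the tie-breaking rule (lowest cluster index wins) are all assumptions made for the example.

```python
import math

def decode_centroid_particle(position, m, k):
    """Split a flat position vector into selected features and K centroids.

    Assumed layout: [m feature values | K blocks of m centroid co-ordinates].
    """
    if len(position) != m + k * m:
        raise ValueError("expected m + K*m dimensions")
    # First m dimensions: a feature is selected when its value is positive.
    selected = [j for j in range(m) if position[j] > 0]
    # Remaining K*m dimensions: K centroids with m co-ordinates each.
    centroids = [position[m + c * m: m + (c + 1) * m] for c in range(k)]
    return selected, centroids

def assign_to_nearest(instances, prototypes, selected):
    """Assign each instance to its nearest prototype (Euclidean distance),
    measuring distance over the selected features only."""
    labels = []
    for x in instances:
        dists = [
            math.sqrt(sum((x[j] - p[j]) ** 2 for j in selected))
            for p in prototypes
        ]
        # Ties go to the lowest cluster index (an assumption of this sketch).
        labels.append(dists.index(min(dists)))
    return labels

# Toy example: m = 2 features, K = 2 clusters; only feature 0 is selected.
position = [0.5, -0.3, 0.0, 9.0, 10.0, -9.0]
selected, centroids = decode_centroid_particle(position, m=2, k=2)
instances = [[1.0, 100.0], [9.0, -100.0]]
labels = assign_to_nearest(instances, centroids, selected)
```

Because feature 1 is not selected, its extreme values do not affect the assignment. If no feature is selected, every distance is zero and all instances fall into cluster 0; how the original method treats that case is not stated here.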

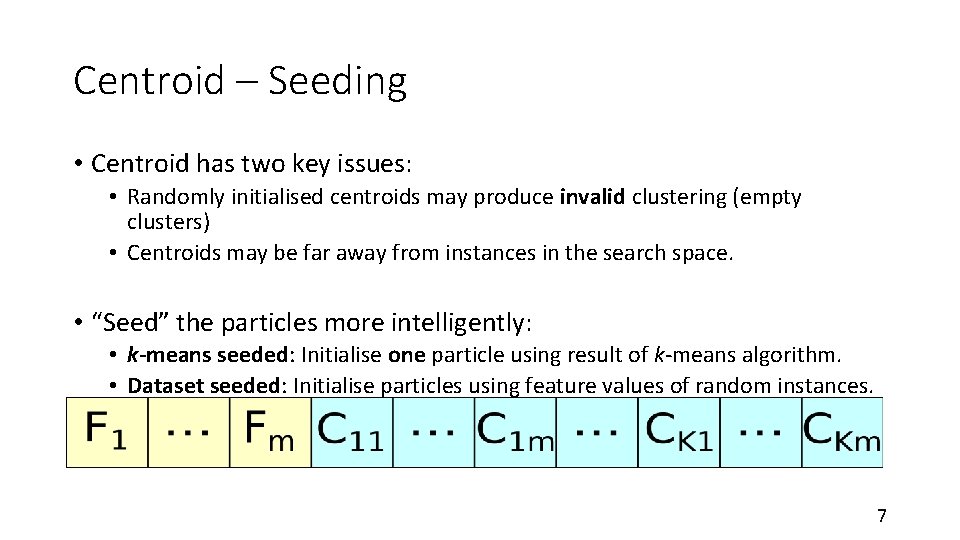

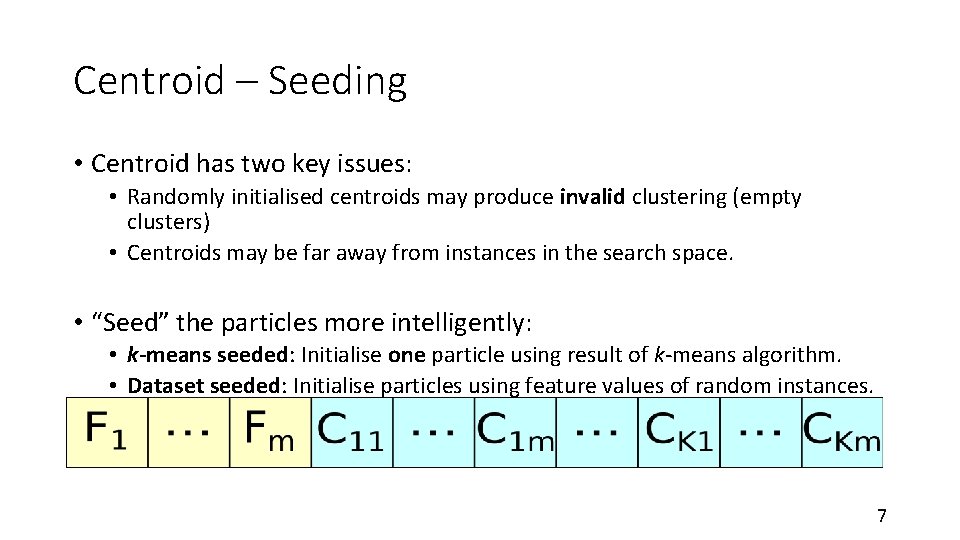

Centroid: Seeding

- The centroid representation has two key issues:
  - Randomly initialised centroids may produce an invalid clustering (empty clusters).
  - Centroids may be far away from instances in the search space.
- “Seed” the particles more intelligently:
  - k-means seeded: initialise one particle using the result of the k-means algorithm.
  - Dataset seeded: initialise particles using the feature values of random instances.
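Dataset seeding might look like the sketch below. The helper name `dataset_seeded_particle`, the [-1, 1] initial range for the feature-selection dimensions, and the choice to draw K distinct instances are assumptions for illustration; the slide only states that centroids are initialised from the feature values of random instances.

```python
import random

def dataset_seeded_particle(instances, k, rng):
    """Build one centroid-representation particle whose K centroids are
    copies of K randomly chosen instances from the dataset."""
    m = len(instances[0])
    # Feature-selection part: random values in [-1, 1] (assumed range).
    feature_part = [rng.uniform(-1.0, 1.0) for _ in range(m)]
    # Centroid part: feature values of K distinct random instances, so every
    # centroid starts at a point that actually occurs in the data.
    seeds = rng.sample(instances, k)
    centroid_part = [value for inst in seeds for value in inst]
    return feature_part + centroid_part

# Toy example: 3 instances with m = 2 features, K = 2 clusters.
data = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
particle = dataset_seeded_particle(data, k=2, rng=random.Random(0))
```

Starting each centroid on a real instance addresses the second issue directly, since no centroid begins far from the data.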

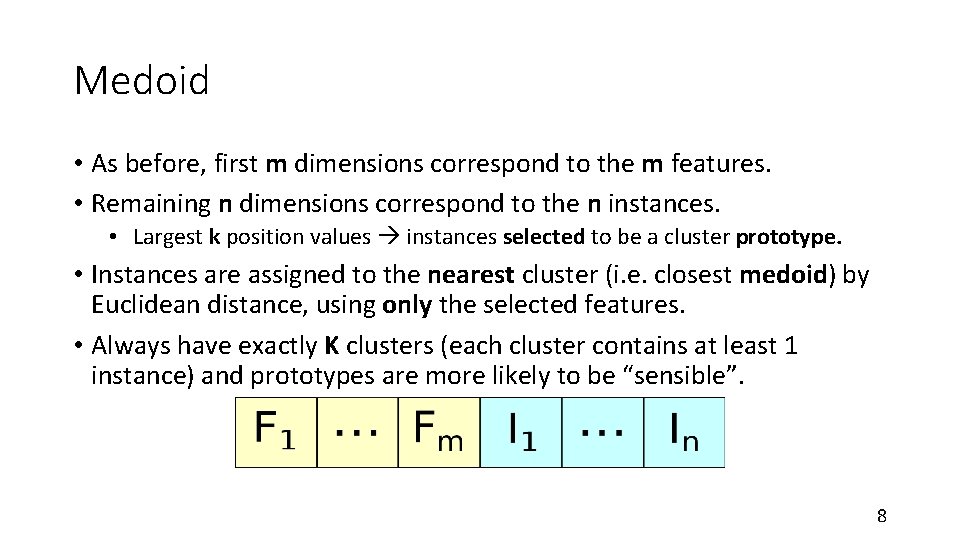

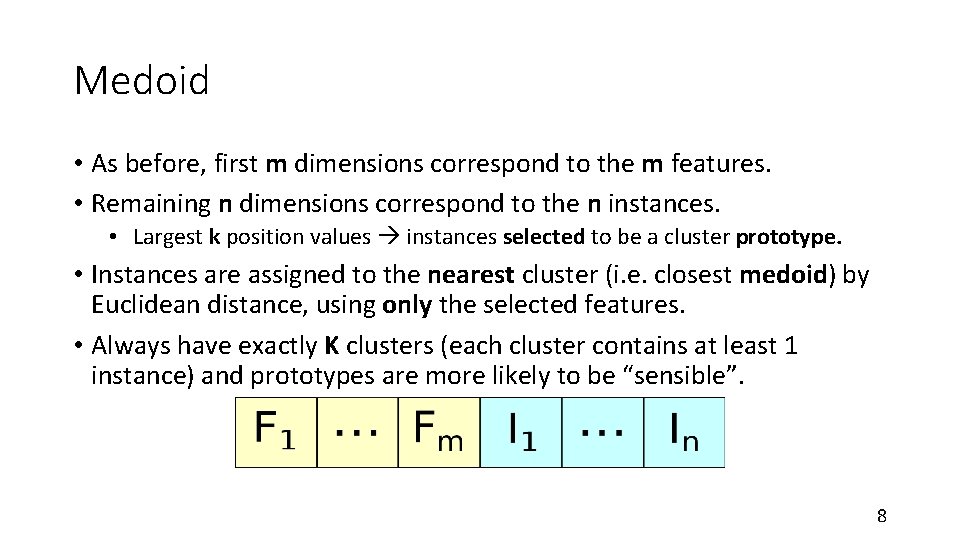

Medoid

- As before, the first m dimensions correspond to the m features.
- The remaining n dimensions correspond to the n instances.
- The instances with the K largest position values are selected as cluster prototypes (medoids).
- Instances are assigned to the nearest cluster (i.e. closest medoid) by Euclidean distance, using only the selected features.
- There are always exactly K clusters (each cluster contains at least 1 instance), and prototypes are more likely to be “sensible”.
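A minimal sketch of decoding the fixed-K medoid representation is shown below. The function name `decode_medoid_particle` is hypothetical, and the sketch assumes that features are selected by a positive position value, as in the centroid representation, and that ties between equal position values are broken by instance order.

```python
def decode_medoid_particle(position, m, n, k):
    """Decode [m feature values | n instance values] into the selected
    features and the indices of the K medoid instances."""
    if len(position) != m + n:
        raise ValueError("expected m + n dimensions")
    # Feature part: positive value means selected (assumed, "as before").
    selected = [j for j in range(m) if position[j] > 0]
    instance_part = position[m:m + n]
    # Rank instances by position value, largest first, and keep the top K.
    ranked = sorted(range(n), key=lambda i: instance_part[i], reverse=True)
    medoids = sorted(ranked[:k])
    return selected, medoids

# Toy example: m = 2 features, n = 4 instances, K = 2 medoids.
position = [0.2, -0.1, 0.1, 0.9, -0.4, 0.7]
selected, medoids = decode_medoid_particle(position, m=2, n=4, k=2)
```

Since every medoid is itself an instance, each of the K clusters contains at least its own medoid, which is why this representation cannot produce empty clusters.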

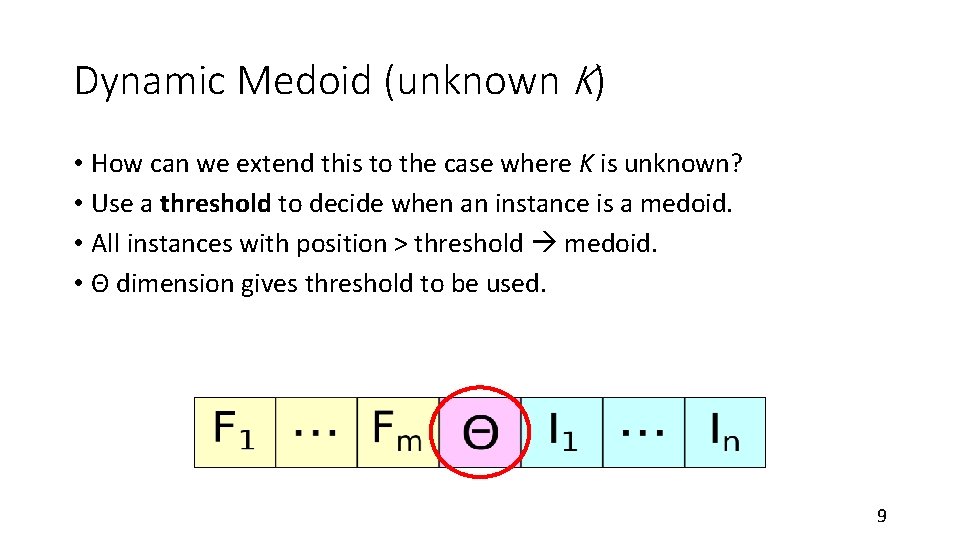

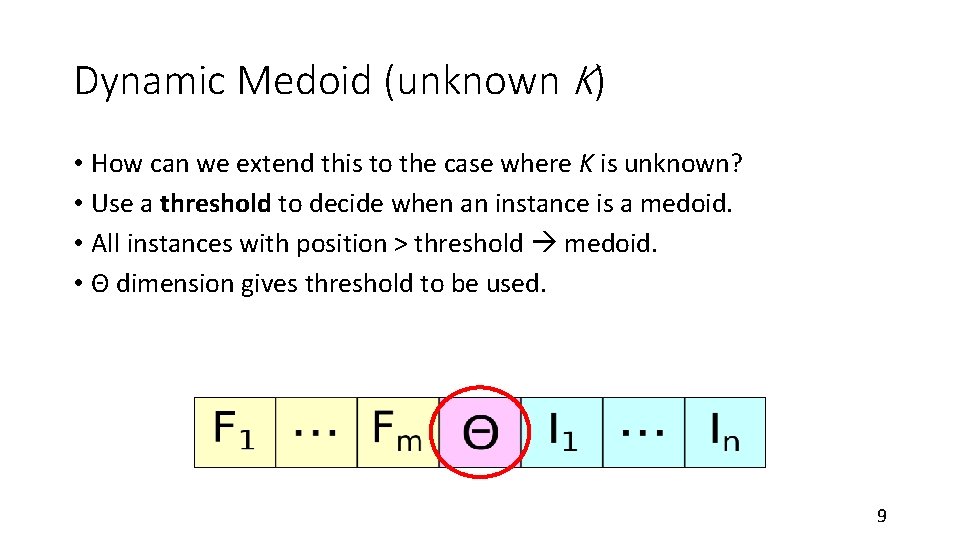

Dynamic Medoid (unknown K)

- How can we extend this to the case where K is unknown?
- Use a threshold to decide when an instance is a medoid.
- All instances with position > threshold are medoids.
- The Θ dimension gives the threshold to be used.
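The thresholding rule can be sketched as follows. The placement of the Θ dimension as the last element of the position vector is an assumption of this example, as is the function name `decode_dynamic_medoid_particle`.

```python
def decode_dynamic_medoid_particle(position, m, n):
    """Decode [m feature values | n instance values | threshold] into the
    selected features, the medoid indices, and the threshold itself."""
    if len(position) != m + n + 1:
        raise ValueError("expected m + n + 1 dimensions")
    selected = [j for j in range(m) if position[j] > 0]
    # Assumed layout: the threshold dimension comes last.
    threshold = position[m + n]
    # Every instance whose position value exceeds the threshold is a medoid,
    # so the number of clusters K is simply the number of medoids found.
    medoids = [i for i in range(n) if position[m + i] > threshold]
    return selected, medoids, threshold

# Toy example: m = 2 features, n = 4 instances, threshold = 0.4.
position = [0.3, 0.6, 0.8, 0.1, 0.5, -0.2, 0.4]
selected, medoids, threshold = decode_dynamic_medoid_particle(position, m=2, n=4)
k = len(medoids)
```

Note that a particle may yield no medoids at all if the threshold exceeds every instance value; how such particles are handled is not covered on the slide, so this sketch simply returns an empty list.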

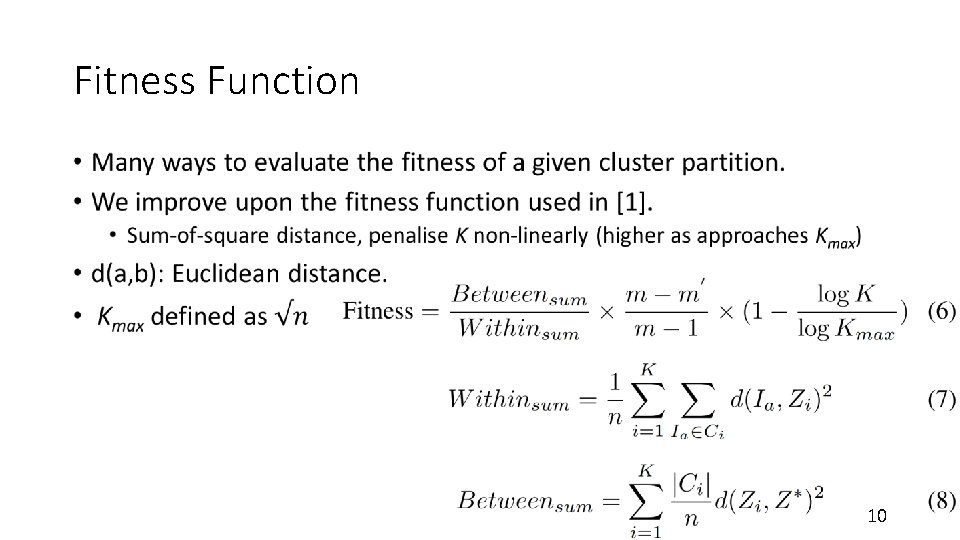

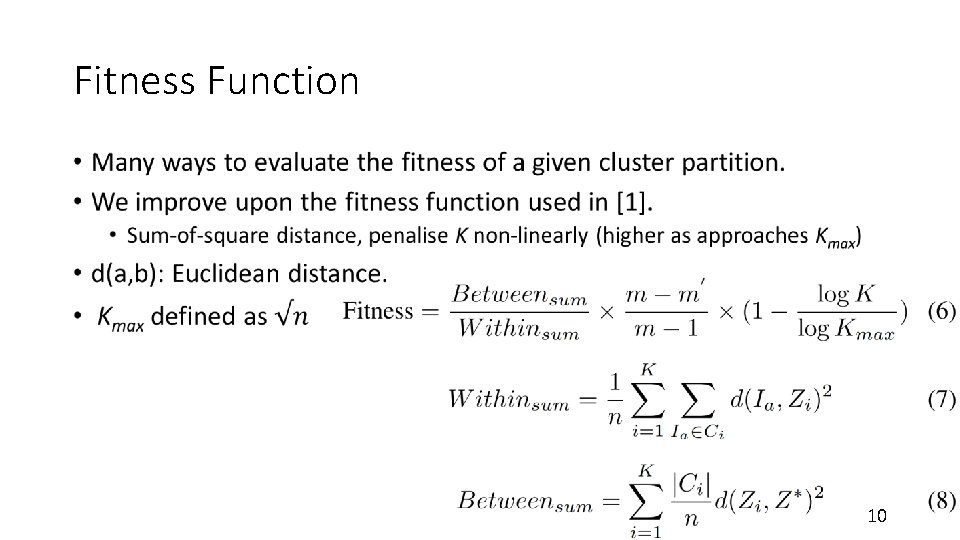

Fitness Function

Datasets

- Range of datasets with different numbers of features and classes (clusters).
- Real-world datasets are classification datasets commonly used in clustering.
  - Class labels removed for training!
- Tested on much larger datasets than existing work: 100 features, 40 clusters.

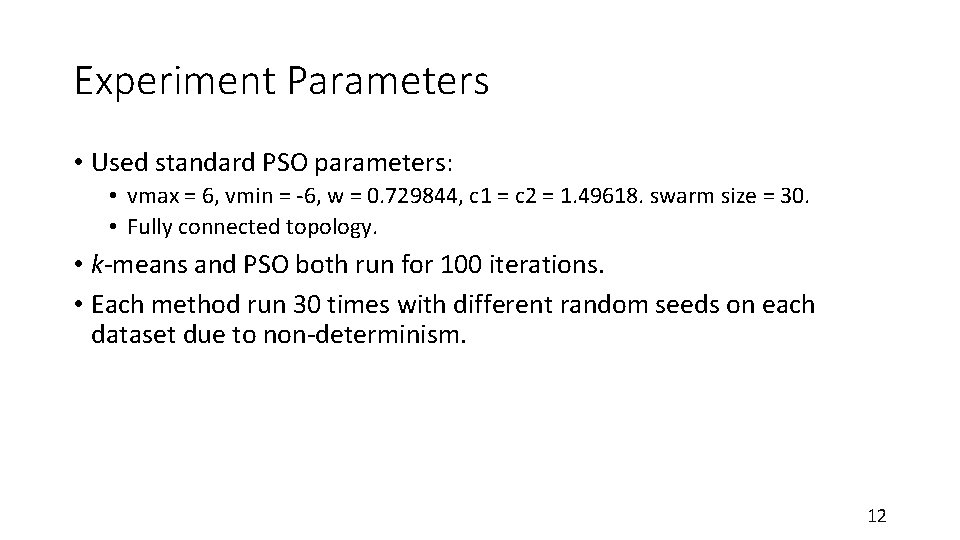

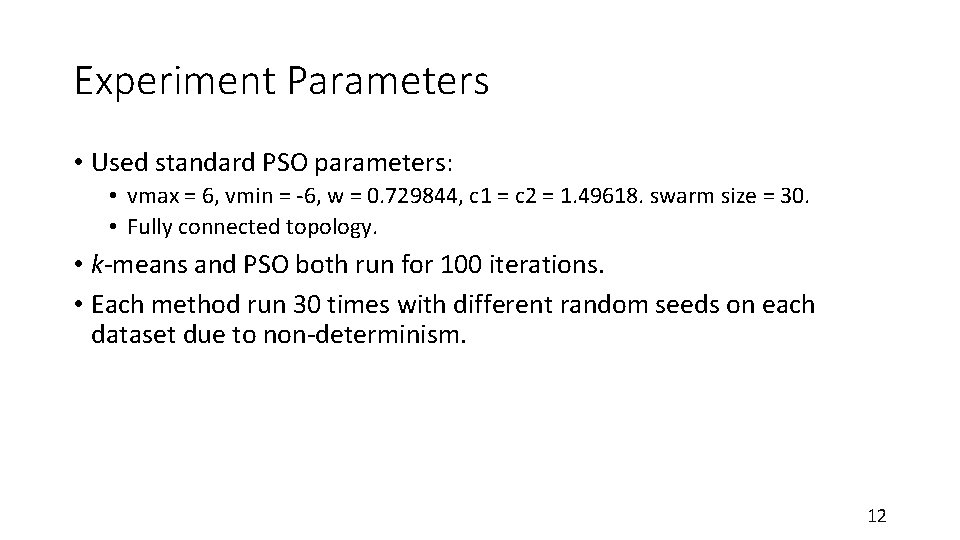

Experiment Parameters

- Used standard PSO parameters:
  - vmax = 6, vmin = -6, w = 0.729844, c1 = c2 = 1.49618, swarm size = 30.
  - Fully connected topology.
- k-means and PSO were both run for 100 iterations.
- Each method was run 30 times with different random seeds on each dataset, due to non-determinism.
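For reference, these parameters plug into the standard global-best PSO update as sketched below. This is the textbook update rule with velocity clamping, shown under the assumption that the experiments use it unmodified; the function name `pso_step` is hypothetical.

```python
import random

W = 0.729844          # inertia weight
C1 = C2 = 1.49618     # cognitive and social acceleration coefficients
V_MIN, V_MAX = -6.0, 6.0

def pso_step(x, v, pbest, gbest, rng):
    """One position/velocity update for a single particle.

    With a fully connected topology, gbest is the best position found by
    any particle in the swarm.
    """
    new_x, new_v = [], []
    for xi, vi, pi, gi in zip(x, v, pbest, gbest):
        r1, r2 = rng.random(), rng.random()
        vel = W * vi + C1 * r1 * (pi - xi) + C2 * r2 * (gi - xi)
        # Clamp the velocity to [vmin, vmax].
        vel = max(V_MIN, min(V_MAX, vel))
        new_v.append(vel)
        new_x.append(xi + vel)
    return new_x, new_v

# Toy example: the particle already sits on pbest and gbest, so only the
# inertia term contributes and large velocities are clamped.
x = [0.0, 0.0, 0.0]
new_x, new_v = pso_step(x, [10.0, -10.0, 1.0], pbest=x, gbest=x,
                        rng=random.Random(1))
```

In the toy example the first two velocity components are clamped to 6 and -6, while the third is scaled by the inertia weight alone.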

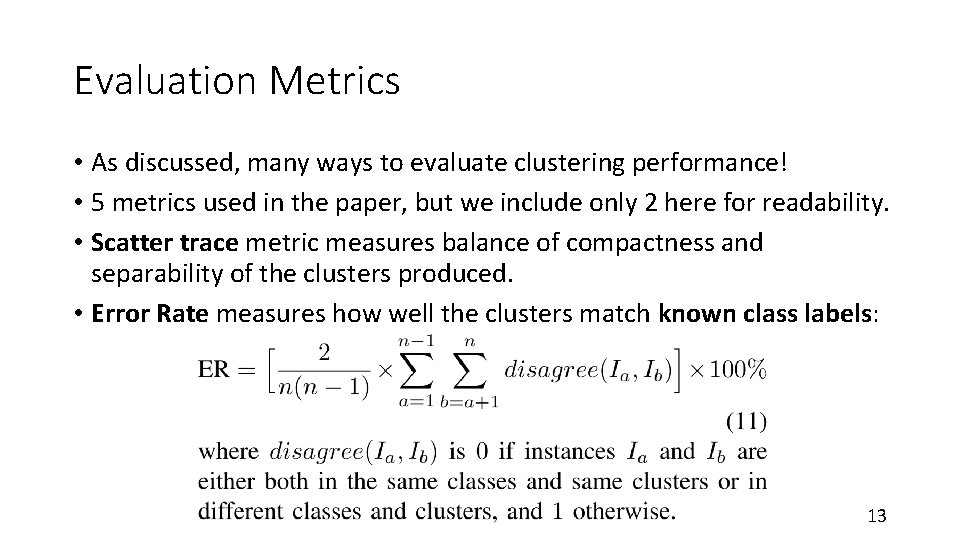

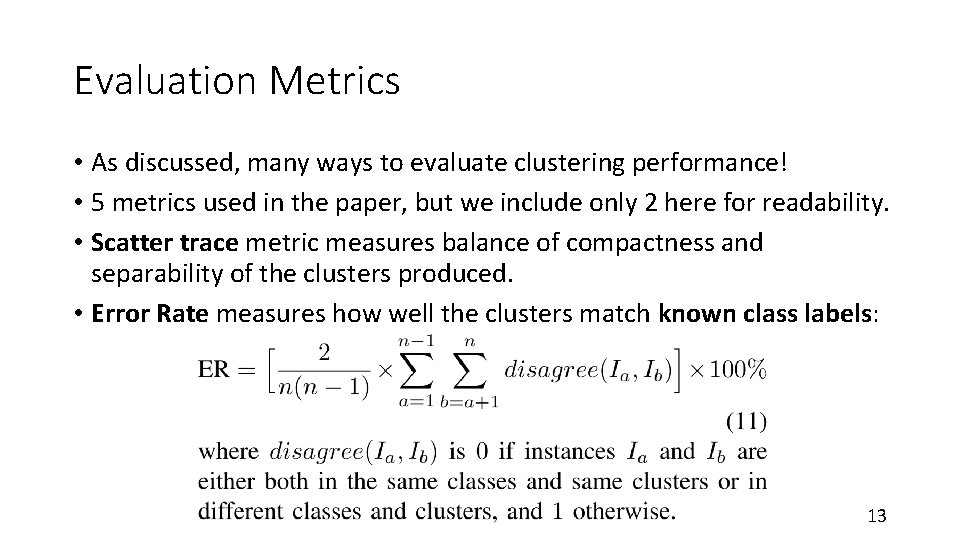

Evaluation Metrics

- As discussed, there are many ways to evaluate clustering performance!
- 5 metrics are used in the paper, but we include only 2 here for readability.
- The scatter trace metric measures the balance of compactness and separability of the clusters produced.
- Error Rate (ER) measures how well the clusters match known class labels:
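The exact Error Rate formula appears only on the slide image and is not reproduced here. One common way to compute such a measure, shown purely as an assumption, is to label each cluster with its majority class and count the instances that disagree with that label. The function name `error_rate` is hypothetical.

```python
from collections import Counter

def error_rate(cluster_labels, class_labels):
    """Fraction of instances whose class differs from the majority class
    of their cluster (majority-vote mapping; an assumed definition)."""
    by_cluster = {}
    for cluster, cls in zip(cluster_labels, class_labels):
        by_cluster.setdefault(cluster, []).append(cls)
    # For each cluster, the majority class counts as "correct".
    correct = sum(
        Counter(classes).most_common(1)[0][1]
        for classes in by_cluster.values()
    )
    return 1.0 - correct / len(class_labels)

# Toy example: cluster 0 holds two 'a' and one 'b'; cluster 1 holds three 'b'.
er = error_rate([0, 0, 0, 1, 1, 1], ["a", "a", "b", "b", "b", "b"])
```

Here one of the six instances disagrees with its cluster's majority class, so the sketch returns 1/6. Class labels are used only for this evaluation, never during the clustering itself.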

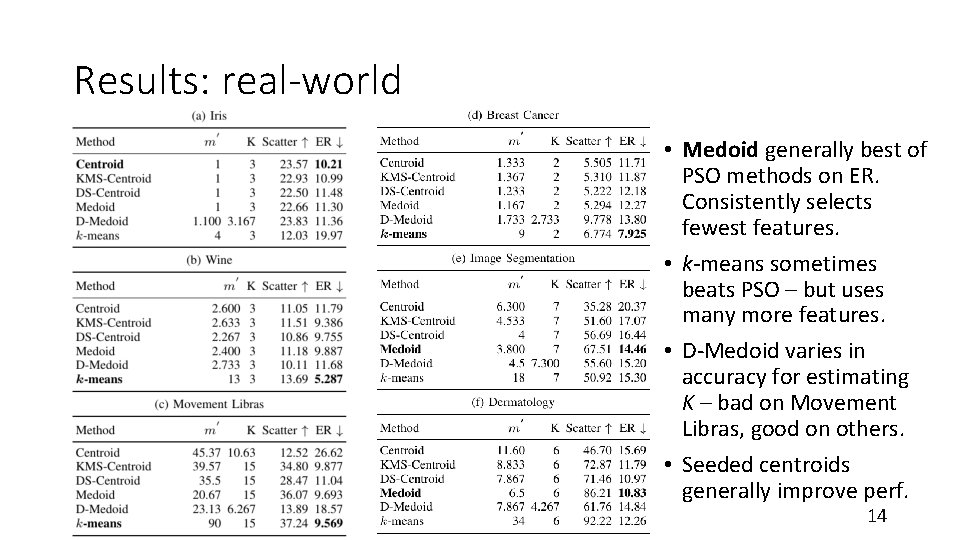

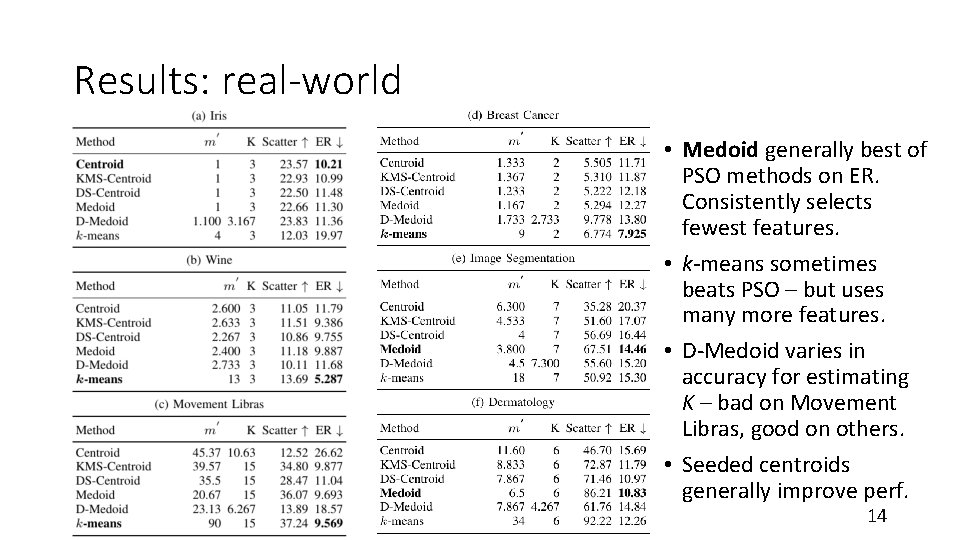

Results: real-world

- Medoid is generally the best of the PSO methods on ER, and consistently selects the fewest features.
- k-means sometimes beats PSO, but uses many more features.
- D-Medoid varies in its accuracy at estimating K: bad on Movement Libras, good on others.
- Seeded centroids generally improve performance.

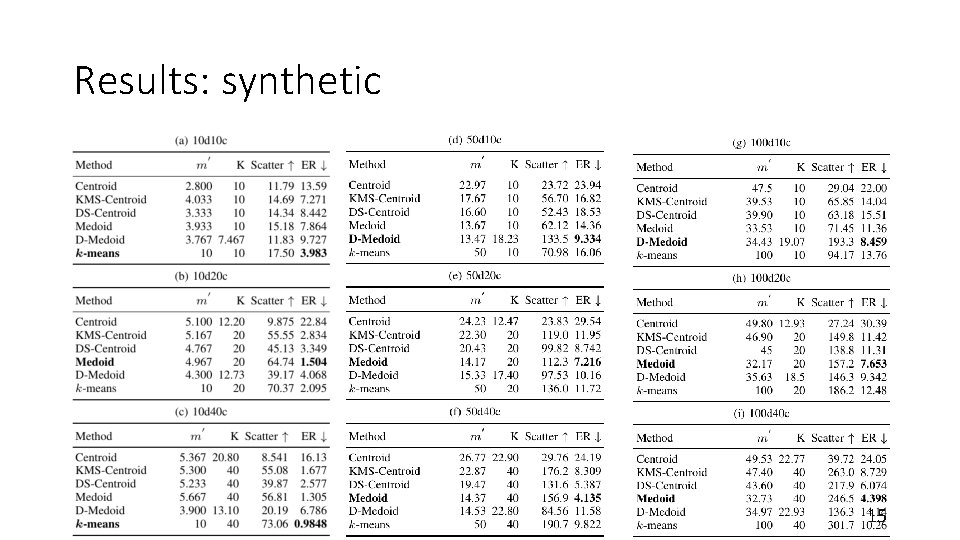

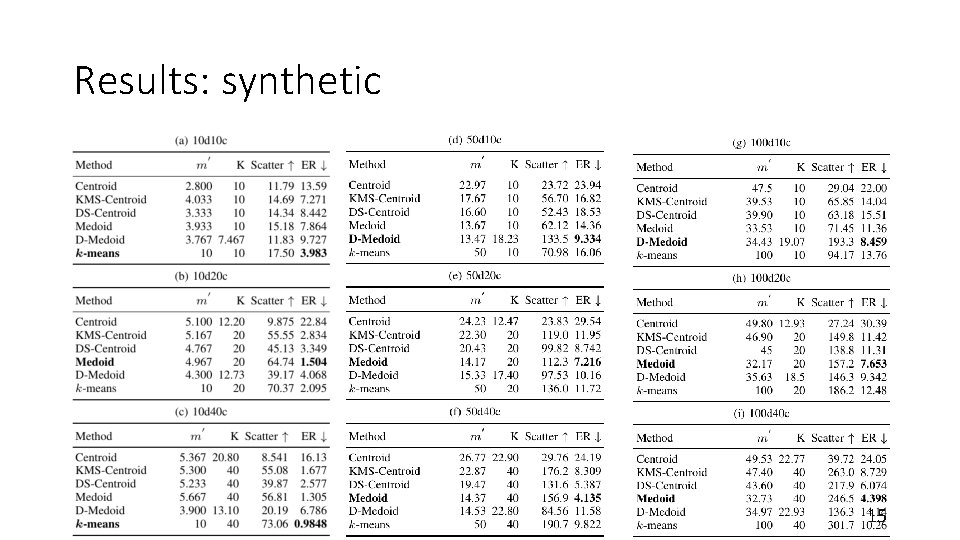

Results: synthetic

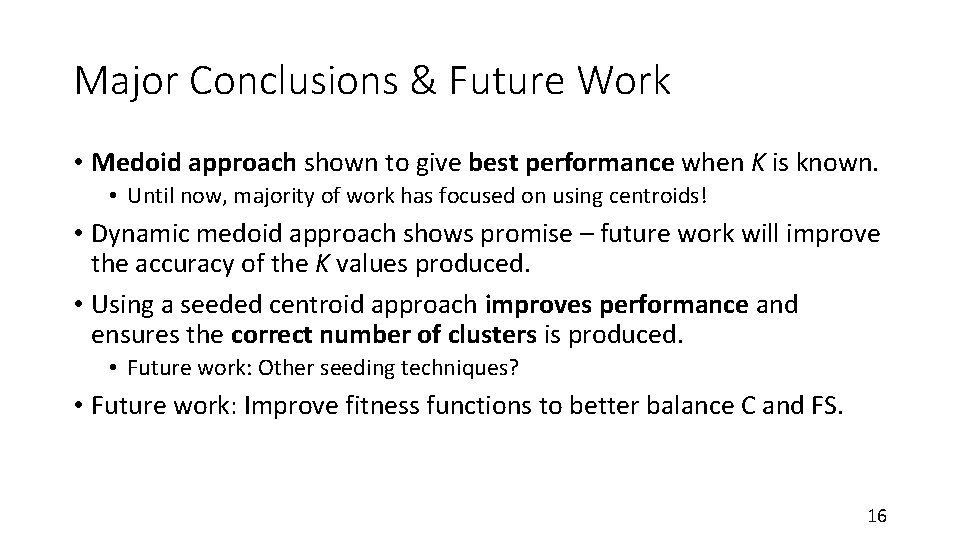

Major Conclusions & Future Work

- The medoid approach is shown to give the best performance when K is known.
  - Until now, the majority of work has focused on using centroids!
- The dynamic medoid approach shows promise; future work will improve the accuracy of the K values produced.
- Using a seeded centroid approach improves performance and ensures the correct number of clusters is produced.
  - Future work: other seeding techniques?
- Future work: improve fitness functions to better balance C and FS.

![Thanks, questions, and references](https://slidetodoc.com/presentation_image_h2/d81b6f3ea318202c5154762292fbe19b/image-17.jpg)

Thanks

Questions?

References

- [1] W. Sheng, X. Liu, and M. C. Fairhurst, “A niching memetic algorithm for simultaneous clustering and feature selection,” IEEE Trans. Knowl. Data Eng., vol. 20, no. 7, pp. 868–879, 2008.
- [2] M. Javani, K. Faez, and D. Aghlmandi, “Clustering and feature selection via PSO algorithm,” in International Symposium on Artificial Intelligence and Signal Processing (AISP). IEEE, 2011, pp. 71–76.