Partial and Semipartial Correlation: Working With Residuals



Questions

- Give a concrete example (names of variables, context) where it makes sense to compute a partial correlation. Why a partial rather than a semipartial?
- Give a concrete example where it makes sense to compute a semipartial correlation. Why a semipartial rather than a partial?
- Why is regression more closely related to semipartials than to partials?
- Why is the squared semipartial always less than or equal to the squared partial?
- How could you use ordinary regression to compute 3rd-order partials?

Partial Correlation

- People differ in many ways. When one difference is correlated with an outcome, we cannot tell whether the relationship is spurious.
- We would like to hold third variables constant, but often we cannot manipulate them.
- Instead, we can use statistical control.
- Statistical control is based on residuals. If we regress $X_2$ on $X_1$ and take the residuals of $X_2$, this part of $X_2$ is uncorrelated with $X_1$, so anything the $X_2$ residuals correlate with cannot be explained (linearly) by $X_1$.
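A minimal sketch of residual-based control in plain Python (no third-party libraries). The helper names (`mean`, `pearson`, `residualize`) and the five score pairs are hypothetical, chosen only to show that the residuals of $X_2$, after regressing it on $X_1$, are uncorrelated with $X_1$.

```python
from math import sqrt

def mean(v):
    return sum(v) / len(v)

def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def residualize(y, x):
    """Residuals of y after an ordinary least-squares regression on x (with intercept)."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    intercept = my - slope * mx
    return [b - (intercept + slope * a) for a, b in zip(x, y)]

# Hypothetical scores (illustration only)
x1 = [1, 2, 3, 4, 5]
x2 = [2, 1, 4, 3, 6]

e2 = residualize(x2, x1)                # the part of X2 not predictable from X1
print(round(pearson(x1, x2), 3))        # raw correlation: 0.822
print(abs(pearson(x1, e2)) < 1e-12)     # residuals vs. X1 are uncorrelated: True
```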

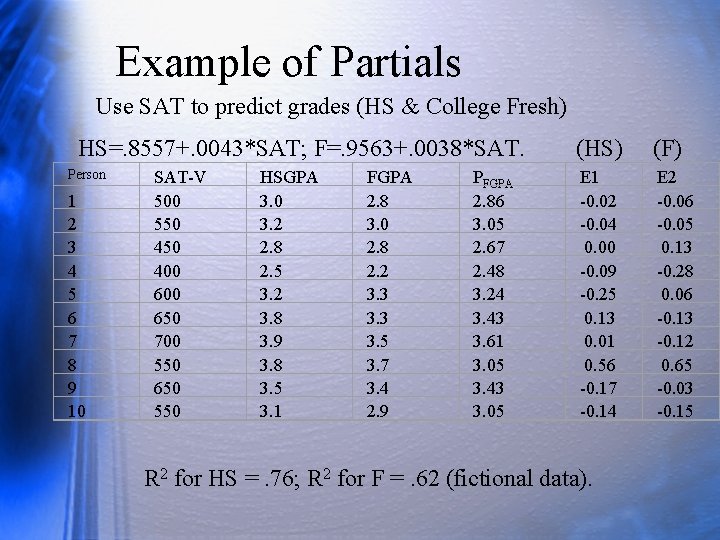

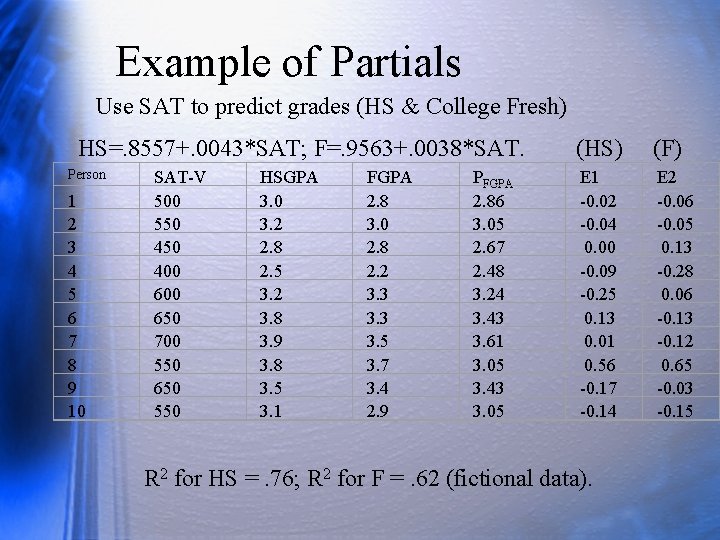

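Example of Partials

Use SAT-V to predict grades in high school (HSGPA) and in the freshman year of college (FGPA):

$\widehat{HSGPA} = .8557 + .0043\,SAT$; $\widehat{FGPA} = .9563 + .0038\,SAT$.

| Person | SAT-V | HSGPA | FGPA | PFGPA | E1 (HS) | E2 (F) |
|---|---|---|---|---|---|---|
| 1 | 500 | 3.0 | 2.8 | 2.86 | -0.02 | -0.06 |
| 2 | 550 | 3.2 | 3.0 | 3.05 | -0.04 | -0.05 |
| 3 | 450 | 2.8 | 2.8 | 2.67 | 0.00 | 0.13 |
| 4 | 400 | 2.5 | 2.2 | 2.48 | -0.09 | -0.28 |
| 5 | 600 | 3.2 | 3.3 | 3.24 | -0.25 | 0.06 |
| 6 | 650 | 3.8 | 3.3 | 3.43 | 0.13 | -0.13 |
| 7 | 700 | 3.9 | 3.5 | 3.61 | 0.01 | -0.12 |
| 8 | 550 | 3.8 | 3.7 | 3.05 | 0.56 | 0.65 |
| 9 | 650 | 3.5 | 3.4 | 3.43 | -0.17 | -0.03 |
| 10 | 550 | 3.1 | 2.9 | 3.05 | -0.14 | -0.15 |

PFGPA is the predicted FGPA. E1 and E2 are the residuals of HSGPA and FGPA, respectively, after regressing each on SAT-V. $R^2$ for HSGPA = .76; $R^2$ for FGPA = .66 (fictional data).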
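The example can be reproduced with a short plain-Python sketch. It assumes the ten SAT-V, HSGPA, and FGPA values listed in the table. `fit_line` and `pearson` are hypothetical helper names, not a library API.

```python
from math import sqrt

def mean(v):
    return sum(v) / len(v)

def fit_line(x, y):
    """Return (intercept, slope) of the OLS regression of y on x."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - slope * mx, slope

def pearson(x, y):
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

sat  = [500, 550, 450, 400, 600, 650, 700, 550, 650, 550]
hs   = [3.0, 3.2, 2.8, 2.5, 3.2, 3.8, 3.9, 3.8, 3.5, 3.1]
fgpa = [2.8, 3.0, 2.8, 2.2, 3.3, 3.3, 3.5, 3.7, 3.4, 2.9]

a_hs, b_hs = fit_line(sat, hs)   # about .8557 and .0043
a_f, b_f = fit_line(sat, fgpa)   # about .9563 and .0038

# Residuals: the part of each GPA that SAT-V does not predict
e1 = [y - (a_hs + b_hs * x) for x, y in zip(sat, hs)]
e2 = [y - (a_f + b_f * x) for x, y in zip(sat, fgpa)]

print(round(pearson(sat, hs) ** 2, 2), round(pearson(sat, fgpa) ** 2, 2))  # 0.76 0.66
print(round(pearson(e1, e2), 2))  # partial r of the two GPAs, SAT held constant: 0.75
```

With these values the printed $R^2$ for FGPA is about .66, which is .81 squared.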

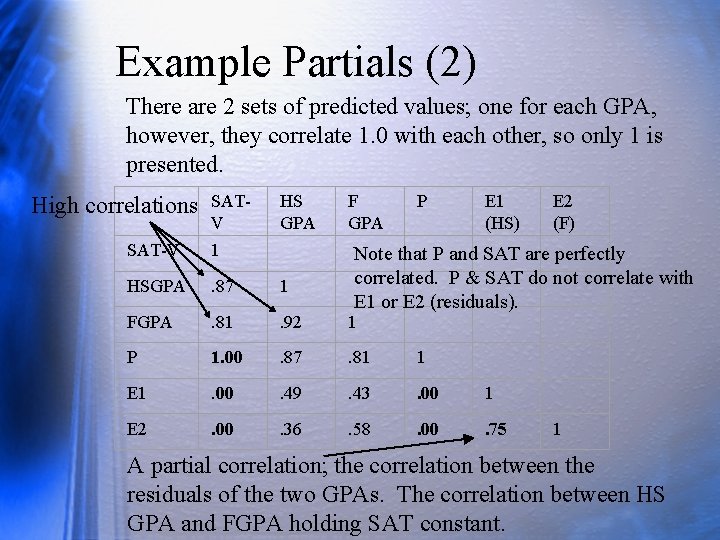

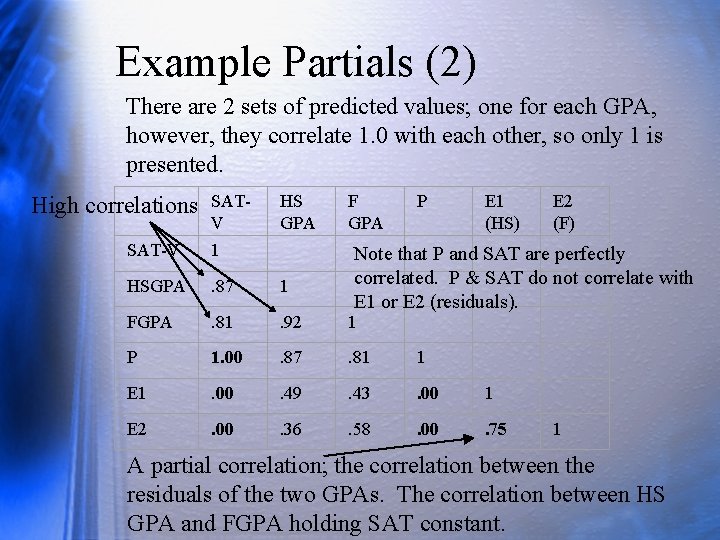

Example Partials (2)

There are two sets of predicted values, one for each GPA; however, they correlate 1.0 with each other, so only one (P) is presented. Note the high correlations among SAT-V and the two GPAs.

|  | SAT-V | HSGPA | FGPA | P | E1 (HS) | E2 (F) |
|---|---|---|---|---|---|---|
| SAT-V | 1 | | | | | |
| HSGPA | .87 | 1 | | | | |
| FGPA | .81 | .92 | 1 | | | |
| P | 1.00 | .87 | .81 | 1 | | |
| E1 (HS) | .00 | .49 | .43 | .00 | 1 | |
| E2 (F) | .00 | .36 | .58 | .00 | .75 | 1 |

Note that P and SAT-V are perfectly correlated, and neither P nor SAT-V correlates with E1 or E2 (the residuals). The .75 between E1 and E2 is a partial correlation: the correlation between the residuals of the two GPAs, that is, the correlation between HSGPA and FGPA holding SAT constant.

The Meaning of Partials

- The partial is the result of holding a third variable constant via residuals.
- It estimates what we would get if everyone had the same value of the third variable, e.g., the correlation between the two GPAs if everyone in the sample had an SAT of 500.
- Some examples of partials? Control for SES, prior experience, what else?

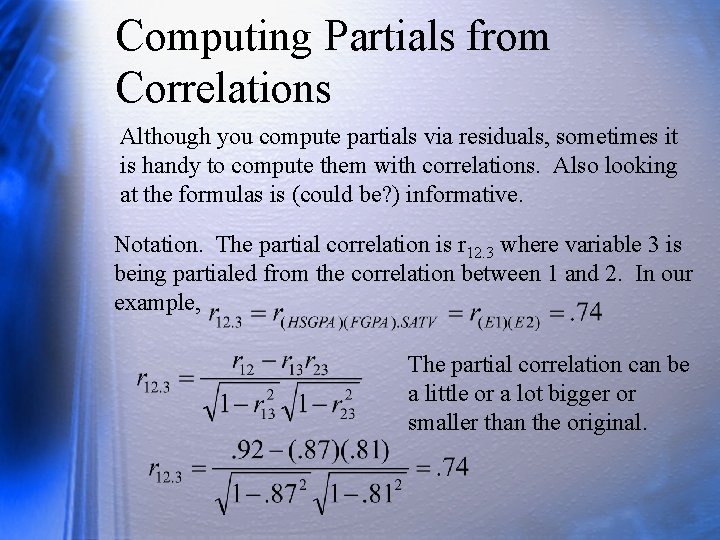

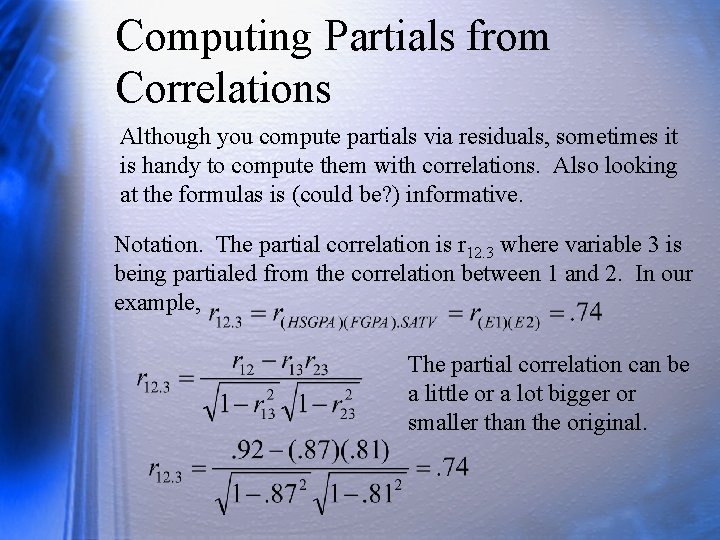

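Computing Partials from Correlations

Although you compute partials via residuals, sometimes it is handy to compute them from correlations. Looking at the formula can also be informative. Notation: the partial correlation is $r_{12.3}$, where variable 3 is partialed out of the correlation between variables 1 and 2:

$$r_{12.3} = \frac{r_{12} - r_{13}r_{23}}{\sqrt{(1 - r_{13}^2)(1 - r_{23}^2)}}$$

In our example (1 = HSGPA, 2 = FGPA, 3 = SAT-V), $r_{12.3} = \frac{.92 - (.87)(.81)}{\sqrt{(1 - .87^2)(1 - .81^2)}} \approx .74$, which matches the .75 found from the residuals within rounding error. The partial correlation can be a little or a lot bigger or smaller than the original correlation (here, .74 versus .92).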
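A minimal Python sketch of the standard first-order partial formula, $r_{12.3} = (r_{12} - r_{13}r_{23}) / \sqrt{(1 - r_{13}^2)(1 - r_{23}^2)}$, applied to the rounded correlations from the GPA example. The function name `partial_r` is hypothetical.

```python
from math import sqrt

def partial_r(r12, r13, r23):
    """First-order partial correlation r_{12.3}: variable 3 removed from both 1 and 2."""
    return (r12 - r13 * r23) / sqrt((1 - r13 ** 2) * (1 - r23 ** 2))

# Rounded correlations from the GPA example: 1 = HSGPA, 2 = FGPA, 3 = SAT-V
print(round(partial_r(0.92, 0.87, 0.81), 2))  # 0.74
```

Because the inputs are rounded to two places, the result (.74) differs slightly from the .75 obtained by correlating the residuals directly.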

The Order of a Partial

- If you partial one variable out of a correlation, the result is called a first-order partial correlation.
- If you partial two variables out of a correlation, the result is called a second-order partial correlation. There can also be 3rd-, 4th-, etc., order partials.
- Unpartialed (raw) correlations are called zero-order correlations because nothing is partialed out.
- You can use regression to find residuals and compute partial correlations from them. For example, for $r_{12.34}$, regress variable 1 on both 3 and 4, regress variable 2 on both 3 and 4, and then compute the correlation between the two sets of residuals.
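A plain-Python sketch of the residual route to a second-order partial, assuming ordinary least squares with an intercept. The four variables are hypothetical data, and `residualize2` and `partial_r` are illustrative helper names. As a cross-check, it also computes $r_{12.34}$ by partialing variable 4 out of first-order partials on variable 3; the two routes should agree up to floating-point error.

```python
from math import sqrt

def mean(v):
    return sum(v) / len(v)

def pearson(x, y):
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def residualize2(y, u, w):
    """Residuals of y after OLS regression on two predictors u and w (with intercept)."""
    my, mu, mw = mean(y), mean(u), mean(w)
    dy = [a - my for a in y]
    du = [a - mu for a in u]
    dw = [a - mw for a in w]
    suu = sum(a * a for a in du)
    sww = sum(a * a for a in dw)
    suw = sum(a * b for a, b in zip(du, dw))
    suy = sum(a * b for a, b in zip(du, dy))
    swy = sum(a * b for a, b in zip(dw, dy))
    det = suu * sww - suw ** 2          # nonzero as long as u and w are not collinear
    bu = (sww * suy - suw * swy) / det  # solve the 2x2 normal equations
    bw = (suu * swy - suw * suy) / det
    return [c - bu * a - bw * b for a, b, c in zip(du, dw, dy)]

def partial_r(r12, r13, r23):
    return (r12 - r13 * r23) / sqrt((1 - r13 ** 2) * (1 - r23 ** 2))

# Hypothetical scores on four variables (illustration only)
v1 = [2, 4, 5, 7, 8, 10, 11, 13]
v2 = [1, 3, 6, 6, 9, 9, 12, 14]
v3 = [3, 2, 5, 4, 7, 6, 9, 8]
v4 = [1, 1, 2, 3, 3, 4, 4, 6]

# Route 1: regress 1 and 2 on both 3 and 4, then correlate the two sets of residuals
r12_34 = pearson(residualize2(v1, v3, v4), residualize2(v2, v3, v4))

# Route 2: first-order partials on 3, then partial 4 out of those
r12, r13, r14 = pearson(v1, v2), pearson(v1, v3), pearson(v1, v4)
r23, r24, r34 = pearson(v2, v3), pearson(v2, v4), pearson(v3, v4)
r12_3 = partial_r(r12, r13, r23)
r14_3 = partial_r(r14, r13, r34)
r24_3 = partial_r(r24, r23, r34)
r12_34_formula = partial_r(r12_3, r14_3, r24_3)

print(abs(r12_34 - r12_34_formula) < 1e-9)  # True
```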

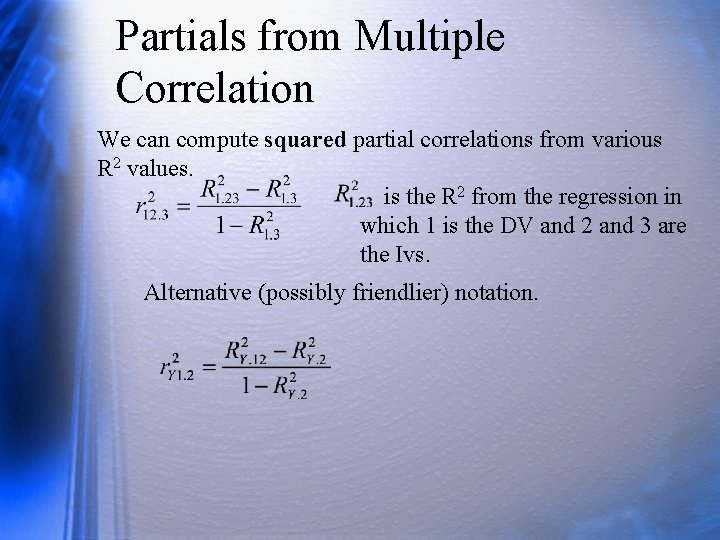

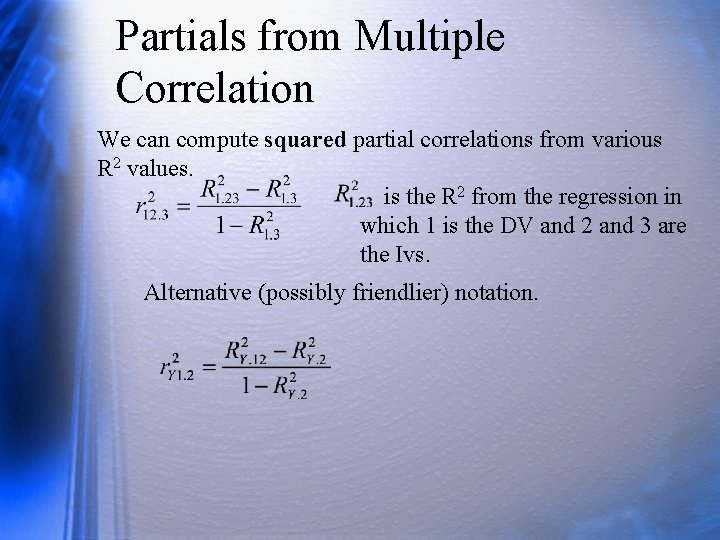

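Partials from Multiple Correlation

We can compute squared partial correlations from various $R^2$ values:

$$r_{12.3}^2 = \frac{R_{1.23}^2 - R_{1.3}^2}{1 - R_{1.3}^2}$$

Here $R_{1.23}^2$ is the $R^2$ from the regression in which 1 is the DV and 2 and 3 are the IVs, and $R_{1.3}^2$ is the $R^2$ with 3 as the only IV. Alternative (possibly friendlier) notation: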
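A minimal sketch checking the $R^2$ route numerically, assuming the rounded GPA-example correlations (1 = FGPA, 2 = HSGPA, 3 = SAT-V). For two predictors, $R_{1.23}^2 = (r_{12}^2 + r_{13}^2 - 2r_{12}r_{13}r_{23}) / (1 - r_{23}^2)$, and the squared partial is $(R_{1.23}^2 - R_{1.3}^2)/(1 - R_{1.3}^2)$. The function names are hypothetical.

```python
from math import sqrt

def r2_two_predictors(r12, r13, r23):
    """R^2 for predicting variable 1 from variables 2 and 3, computed from correlations."""
    return (r12 ** 2 + r13 ** 2 - 2 * r12 * r13 * r23) / (1 - r23 ** 2)

def partial_r(r12, r13, r23):
    return (r12 - r13 * r23) / sqrt((1 - r13 ** 2) * (1 - r23 ** 2))

# Rounded GPA-example correlations: 1 = FGPA, 2 = HSGPA, 3 = SAT-V
r12, r13, r23 = 0.92, 0.81, 0.87
R2_1_23 = r2_two_predictors(r12, r13, r23)  # 1 regressed on 2 and 3
R2_1_3 = r13 ** 2                           # 1 regressed on 3 alone
sq_partial = (R2_1_23 - R2_1_3) / (1 - R2_1_3)

print(round(sq_partial, 3), round(partial_r(r12, r13, r23) ** 2, 3))  # 0.554 0.554
```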

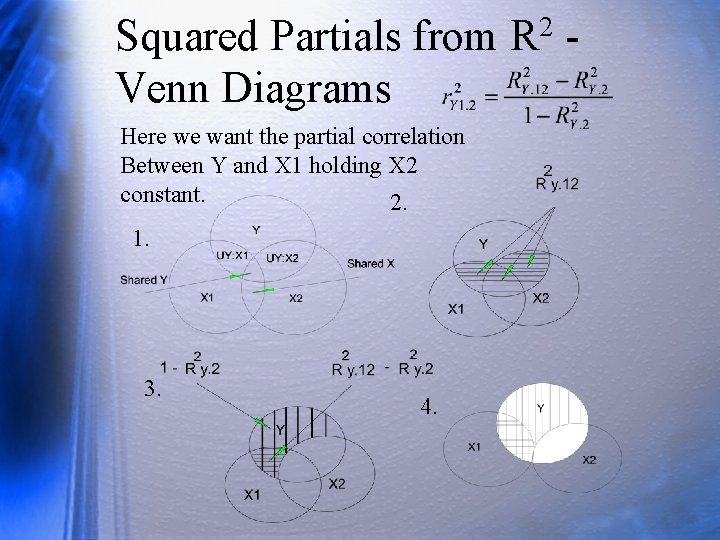

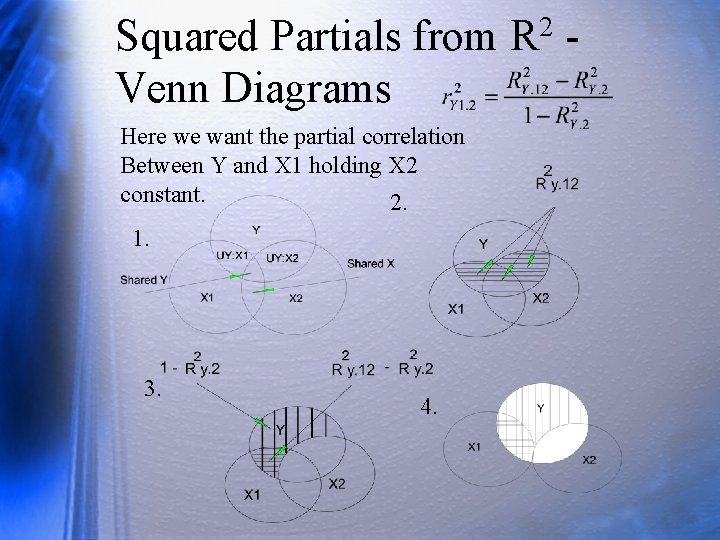

Squared Partials from $R^2$: Venn Diagrams

Here we want the partial correlation between Y and $X_1$ holding $X_2$ constant. (Venn diagram with areas labeled 1 to 4.)

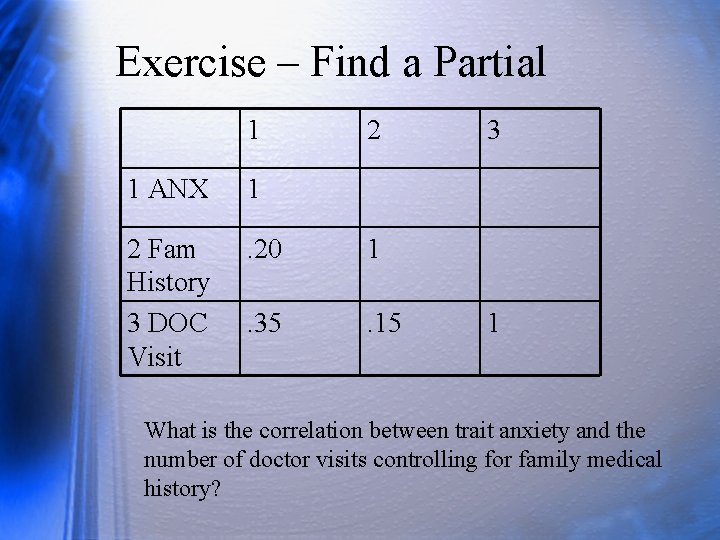

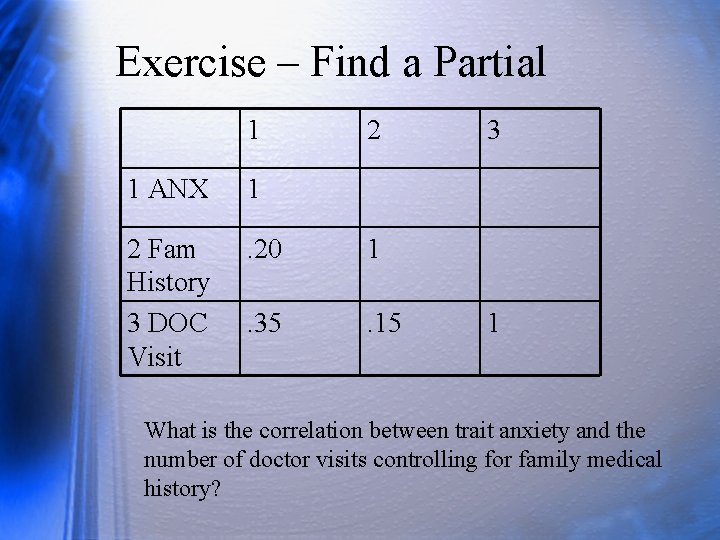

Exercise – Find a Partial

|  | 1 | 2 | 3 |
|---|---|---|---|
| 1 ANX | 1 | | |
| 2 Fam History | .20 | 1 | |
| 3 DOC Visit | .35 | .15 | 1 |

What is the correlation between trait anxiety and the number of doctor visits, controlling for family medical history?

Find a Partial (same correlation matrix as above: 1 = ANX, 2 = Fam History, 3 = DOC Visit; $r_{12} = .20$, $r_{13} = .35$, $r_{23} = .15$)
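A short Python sketch of the exercise computation, using the standard first-order partial formula and the correlations in the matrix. `partial_r` is a hypothetical helper name.

```python
from math import sqrt

def partial_r(r12, r13, r23):
    """Partial correlation of the first pair, with the third variable removed from both."""
    return (r12 - r13 * r23) / sqrt((1 - r13 ** 2) * (1 - r23 ** 2))

# Correlations from the exercise: 1 = ANX, 2 = Fam History, 3 = DOC Visit
r_anx_fam, r_anx_doc, r_fam_doc = 0.20, 0.35, 0.15

# Anxiety (1) with doctor visits (3), family history (2) partialed from both
r13_2 = partial_r(r_anx_doc, r_anx_fam, r_fam_doc)
print(round(r13_2, 2))  # 0.33
```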

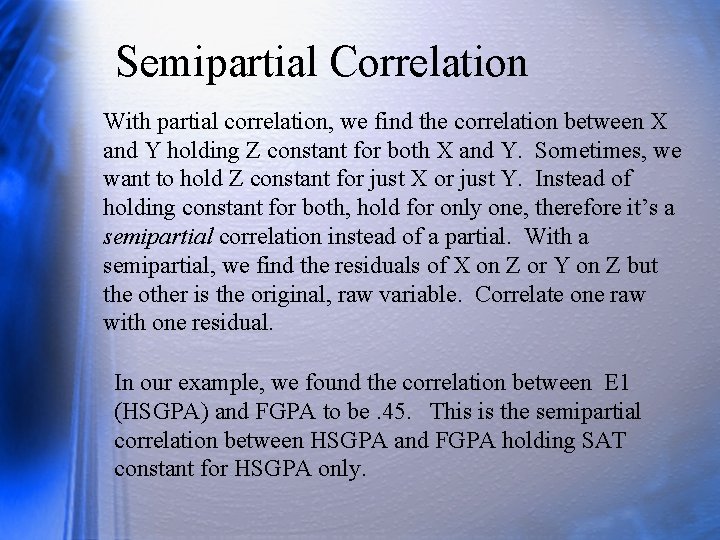

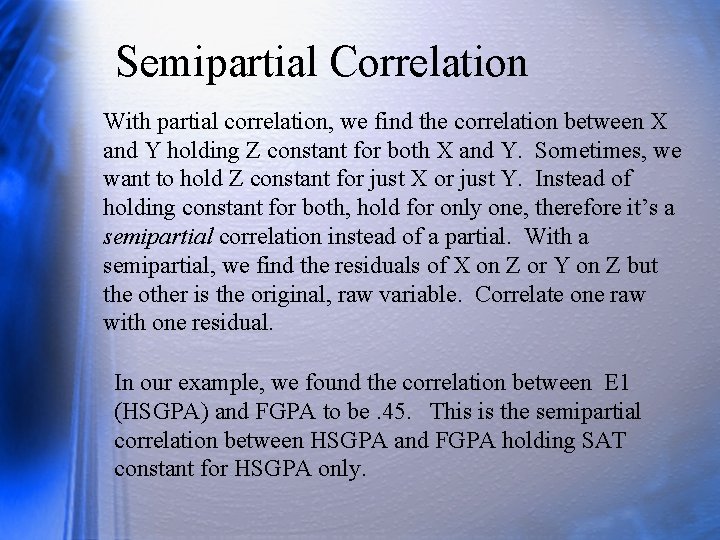

Semipartial Correlation

With a partial correlation, we find the correlation between X and Y holding Z constant for both X and Y. Sometimes we want to hold Z constant for just X or just Y. Because Z is held constant for only one of the two variables, the result is called a semipartial (or part) correlation rather than a partial. With a semipartial, we take the residuals of X on Z (or of Y on Z), but the other variable stays in its original, raw form: we correlate one raw variable with one residual. In our example, the correlation between E1 (the HSGPA residual) and FGPA is .43. This is the semipartial correlation between HSGPA and FGPA holding SAT constant for HSGPA only.
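A plain-Python sketch of the residual route to this semipartial, assuming the ten SAT-V, HSGPA, and FGPA values from the example table. Helper names are hypothetical. Only HSGPA is residualized on SAT-V; FGPA stays raw. The partial is printed alongside for comparison.

```python
from math import sqrt

def mean(v):
    return sum(v) / len(v)

def pearson(x, y):
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def residualize(y, x):
    """Residuals of y after OLS regression on x (with intercept)."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return [b - (my + slope * (a - mx)) for a, b in zip(x, y)]

sat  = [500, 550, 450, 400, 600, 650, 700, 550, 650, 550]
hs   = [3.0, 3.2, 2.8, 2.5, 3.2, 3.8, 3.9, 3.8, 3.5, 3.1]
fgpa = [2.8, 3.0, 2.8, 2.2, 3.3, 3.3, 3.5, 3.7, 3.4, 2.9]

e1 = residualize(hs, sat)                             # SAT held constant for HSGPA only
print(round(pearson(e1, fgpa), 2))                    # semipartial: 0.43
print(round(pearson(e1, residualize(fgpa, sat)), 2))  # partial, for comparison: 0.75
```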

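Semipartials from Correlations

Partial: $r_{12.3} = \frac{r_{12} - r_{13}r_{23}}{\sqrt{(1 - r_{13}^2)(1 - r_{23}^2)}}$

Semipartial: $r_{1(2.3)} = \frac{r_{12} - r_{13}r_{23}}{\sqrt{1 - r_{23}^2}}$

Note that $r_{1(2.3)}$ means the semipartial correlation between variables 1 and 2 where 3 is partialed only from 2. In our example (1 = FGPA, 2 = HSGPA, 3 = SAT-V), $r_{1(2.3)} = \frac{.92 - (.81)(.87)}{\sqrt{1 - .87^2}} \approx .44$, which agrees with the earlier result (.43) within rounding error.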
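A Python sketch of both formulas, $r_{12.3} = (r_{12} - r_{13}r_{23})/\sqrt{(1-r_{13}^2)(1-r_{23}^2)}$ and $r_{1(2.3)} = (r_{12} - r_{13}r_{23})/\sqrt{1-r_{23}^2}$, using the rounded GPA-example correlations (1 = FGPA, 2 = HSGPA, 3 = SAT-V). The function names are hypothetical. The two share a numerator; only the denominators differ.

```python
from math import sqrt

def semipartial_r(r12, r13, r23):
    """Semipartial r_{1(2.3)}: variable 3 removed from variable 2 only."""
    return (r12 - r13 * r23) / sqrt(1 - r23 ** 2)

def partial_r(r12, r13, r23):
    """Partial r_{12.3}: variable 3 removed from both 1 and 2."""
    return (r12 - r13 * r23) / sqrt((1 - r13 ** 2) * (1 - r23 ** 2))

# Rounded GPA-example correlations: 1 = FGPA, 2 = HSGPA, 3 = SAT-V
r12, r13, r23 = 0.92, 0.81, 0.87
print(round(semipartial_r(r12, r13, r23), 2))  # 0.44
print(round(partial_r(r12, r13, r23), 2))      # 0.74
```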

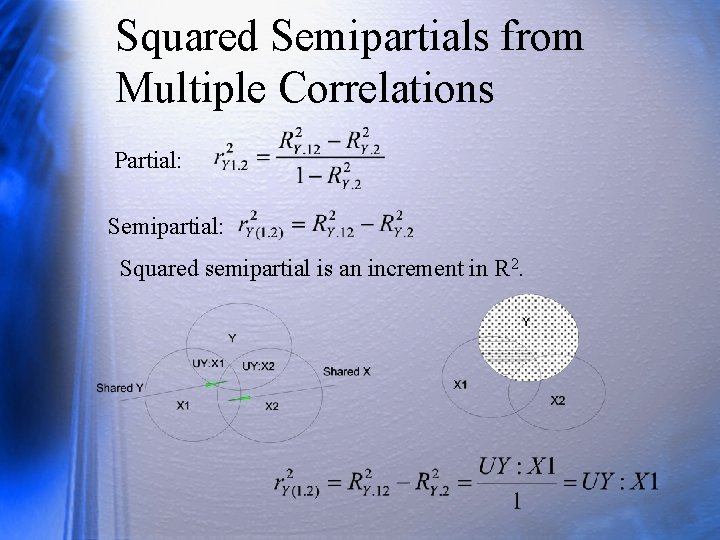

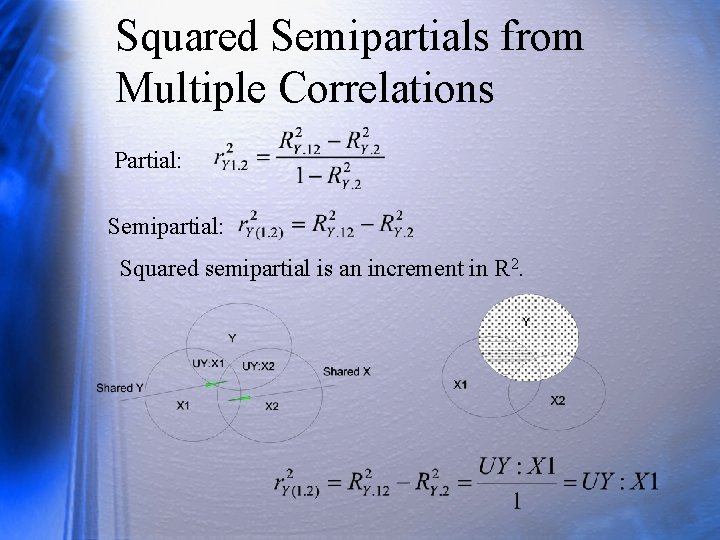

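Squared Semipartials from Multiple Correlations

Partial: $r_{12.3}^2 = \frac{R_{1.23}^2 - R_{1.3}^2}{1 - R_{1.3}^2}$

Semipartial: $r_{1(2.3)}^2 = R_{1.23}^2 - R_{1.3}^2$

The squared semipartial is the increment in $R^2$ when variable 2 is added to a regression that already contains variable 3.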
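A minimal numerical check that the squared semipartial equals the increment in $R^2$, assuming the rounded GPA-example correlations (1 = FGPA, 2 = HSGPA, 3 = SAT-V) and the two-predictor formula $R_{1.23}^2 = (r_{12}^2 + r_{13}^2 - 2r_{12}r_{13}r_{23})/(1 - r_{23}^2)$. Function names are hypothetical.

```python
from math import sqrt

def r2_two_predictors(r12, r13, r23):
    """R^2 for predicting variable 1 from variables 2 and 3, computed from correlations."""
    return (r12 ** 2 + r13 ** 2 - 2 * r12 * r13 * r23) / (1 - r23 ** 2)

def semipartial_r(r12, r13, r23):
    """Semipartial r_{1(2.3)}: variable 3 removed from variable 2 only."""
    return (r12 - r13 * r23) / sqrt(1 - r23 ** 2)

r12, r13, r23 = 0.92, 0.81, 0.87
increment = r2_two_predictors(r12, r13, r23) - r13 ** 2  # R^2_{1.23} - R^2_{1.3}
print(round(increment, 3), round(semipartial_r(r12, r13, r23) ** 2, 3))  # 0.191 0.191
```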

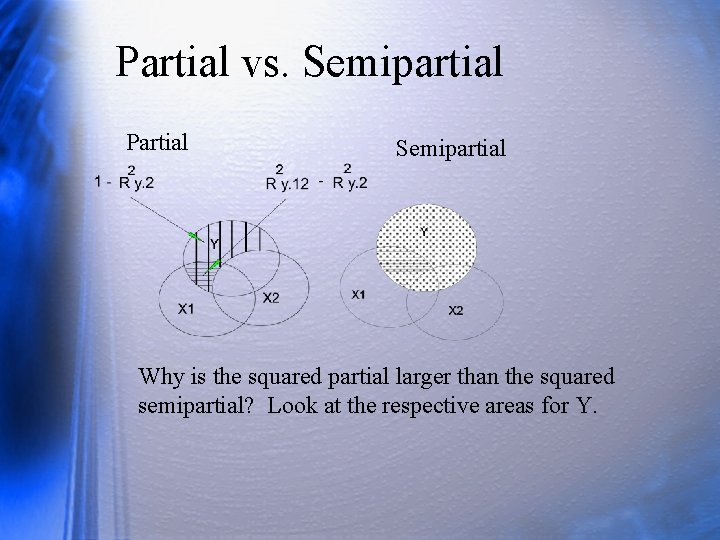

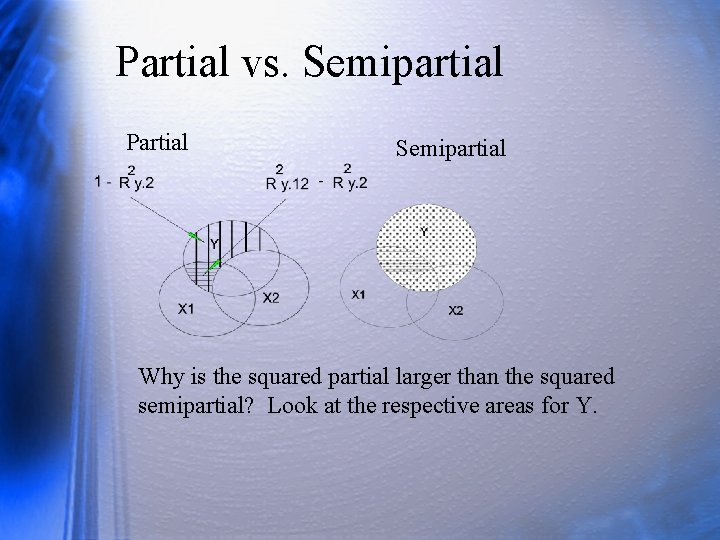

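Partial vs. Semipartial

Partial: $r_{12.3}^2 = \frac{R_{1.23}^2 - R_{1.3}^2}{1 - R_{1.3}^2}$; semipartial: $r_{1(2.3)}^2 = R_{1.23}^2 - R_{1.3}^2$.

Why is the squared partial at least as large as the squared semipartial? Look at the respective areas for Y.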

Regression and Semipartial Correlation

- Regression is essentially about semipartials.
- Each X is residualized on the other X variables.
- For each X we add to the equation, we ask, "What is the unique contribution of this X above and beyond the others?" That is the increment in $R^2$ when it is added last.
- We do NOT residualize Y, just X.
- It is a semipartial because X is residualized but Y is not.
- b is the slope of Y on X, holding the other X variables constant.
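A plain-Python sketch of the claim that a multiple-regression slope works through a residualized X: the slope for $X_1$ in the two-predictor equation equals the simple slope of raw Y on the residuals of $X_1$ after regressing it on $X_2$ (a result sometimes called the Frisch-Waugh-Lovell theorem). The data and helper names are hypothetical.

```python
def mean(v):
    return sum(v) / len(v)

def slope(x, y):
    """Simple OLS slope of y on x."""
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)

def residualize(y, x):
    """Residuals of y after OLS regression on x (with intercept)."""
    b, mx, my = slope(x, y), mean(x), mean(y)
    return [c - (my + b * (a - mx)) for a, c in zip(x, y)]

def two_predictor_slopes(y, u, w):
    """OLS slopes (b_u, b_w) for y regressed on u and w together (intercept included)."""
    my, mu, mw = mean(y), mean(u), mean(w)
    du = [a - mu for a in u]
    dw = [a - mw for a in w]
    dy = [a - my for a in y]
    suu = sum(a * a for a in du)
    sww = sum(a * a for a in dw)
    suw = sum(a * b for a, b in zip(du, dw))
    suy = sum(a * b for a, b in zip(du, dy))
    swy = sum(a * b for a, b in zip(dw, dy))
    det = suu * sww - suw ** 2
    return (sww * suy - suw * swy) / det, (suu * swy - suw * suy) / det

# Hypothetical scores (illustration only)
y  = [3, 5, 4, 8, 9, 11, 10, 14]
x1 = [1, 2, 2, 4, 5, 5, 6, 8]
x2 = [2, 1, 3, 3, 4, 6, 5, 7]

b1, b2 = two_predictor_slopes(y, x1, x2)
e1 = residualize(x1, x2)               # X1 residualized on the other X; Y is left raw
print(abs(b1 - slope(e1, y)) < 1e-9)   # True: same slope
```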

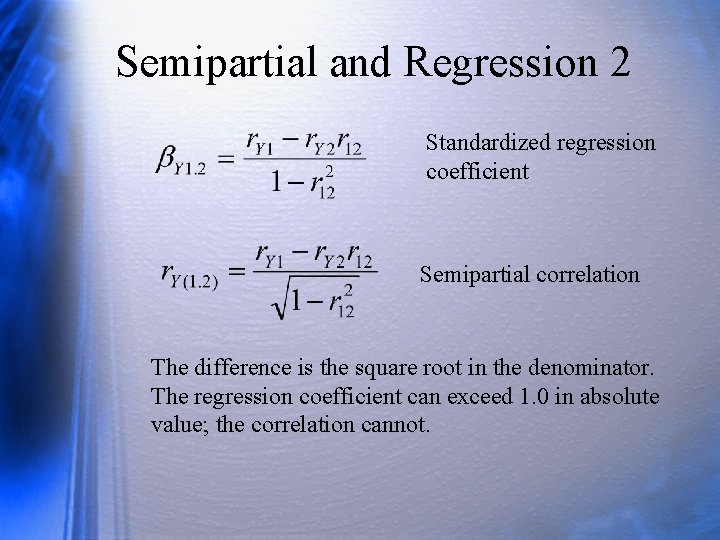

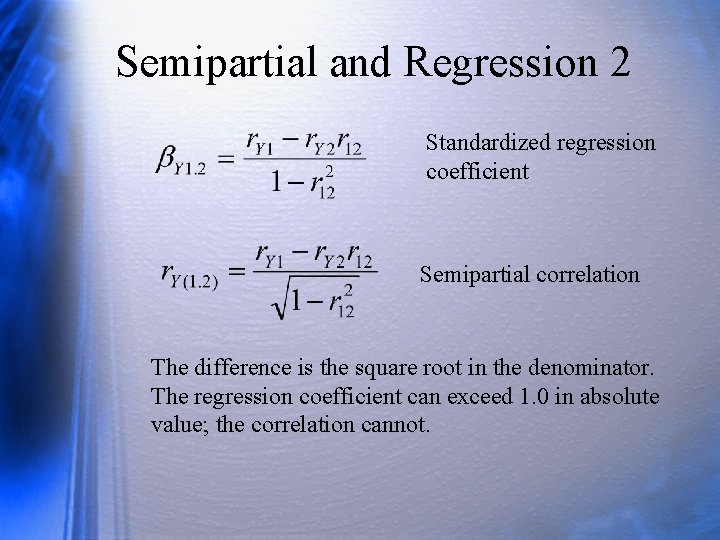

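Semipartial and Regression (2)

For two predictors:

Standardized regression coefficient: $\beta_1 = \frac{r_{y1} - r_{y2}r_{12}}{1 - r_{12}^2}$

Semipartial correlation: $r_{y(1.2)} = \frac{r_{y1} - r_{y2}r_{12}}{\sqrt{1 - r_{12}^2}}$

The difference is the square root in the denominator. The standardized regression coefficient can exceed 1.0 in absolute value; the correlation cannot.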
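A minimal sketch of the two-predictor formulas $\beta_1 = (r_{y1} - r_{y2}r_{12})/(1 - r_{12}^2)$ and $r_{y(1.2)} = (r_{y1} - r_{y2}r_{12})/\sqrt{1 - r_{12}^2}$, using hypothetical correlations (not from the slides) chosen so that the standardized coefficient exceeds 1.0 while the semipartial, being a correlation, stays below it.

```python
from math import sqrt

# Hypothetical correlations: Y with X1, Y with X2, X1 with X2
r_y1, r_y2, r_12 = 0.7, 0.2, 0.8

# A valid 3x3 correlation matrix must have a positive determinant
det = 1 + 2 * r_y1 * r_y2 * r_12 - r_y1 ** 2 - r_y2 ** 2 - r_12 ** 2
assert det > 0

beta1 = (r_y1 - r_y2 * r_12) / (1 - r_12 ** 2)   # standardized regression coefficient
sr1 = (r_y1 - r_y2 * r_12) / sqrt(1 - r_12 ** 2)  # semipartial correlation
print(round(beta1, 2), round(sr1, 2))  # 1.5 0.9
```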

Uses of Partial and Semipartial

- The partial correlation is most often used when some third variable Z is a plausible explanation of the correlation between X and Y.
  - Job characteristics and job satisfaction, controlling for negative affectivity (NA)
  - Cognitive ability and grades, controlling for SES
- The semipartial is most often used when we want to show that some variable adds incremental variance in Y above and beyond other X variables.
  - Pilot performance predicted from cognitive ability and motor skills
  - Patient well-being predicted from surgery and social support

Review

- Give a concrete example (names of variables, context) where it makes sense to compute a partial correlation. Why a partial rather than a semipartial?
- Give a concrete example where it makes sense to compute a semipartial correlation. Why a semipartial rather than a partial?

Suppressor Effects

- Suppressor effects are hard to understand, but:
  - Inspecting the correlations alone is not enough to tell a predictor's value.
  - You need to know about them to avoid looking dumb.
  - They show the problems with Venn diagrams.
- Think of an observed variable as a composite of different stuff, e.g., satisfaction with a car (price, prestige, etc.). Different predictors associate with different parts.

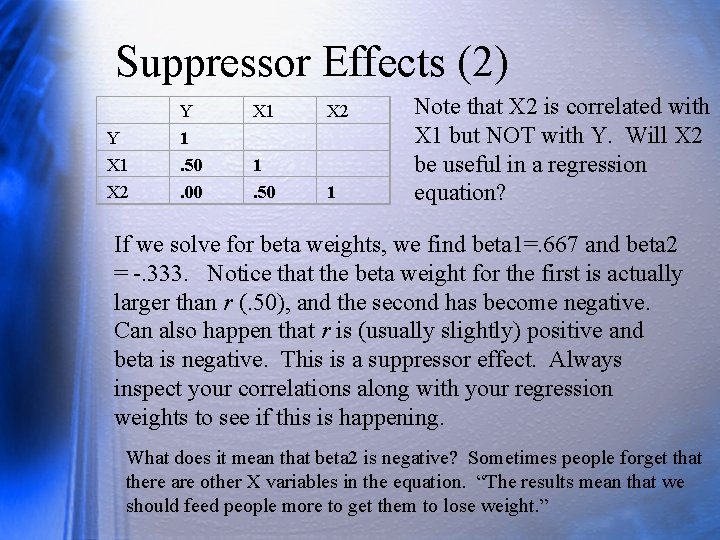

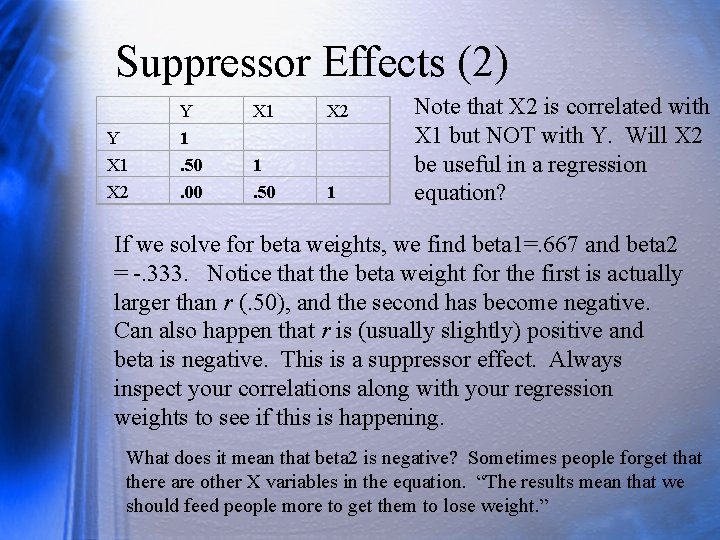

Suppressor Effects (2)

|  | Y | $X_1$ | $X_2$ |
|---|---|---|---|
| Y | 1 | .50 | .00 |
| $X_1$ | | 1 | .50 |
| $X_2$ | | | 1 |

Note that $X_2$ is correlated with $X_1$ but NOT with Y. Will $X_2$ be useful in a regression equation? If we solve for the beta weights, we find $\beta_1 = .667$ and $\beta_2 = -.333$. Notice that the beta weight for $X_1$ is actually larger than its r (.50), and the beta weight for $X_2$ has become negative. It can also happen that r is (usually slightly) positive and beta is negative. This is a suppressor effect. Always inspect your correlations along with your regression weights to see if this is happening.

What does it mean that $\beta_2$ is negative? Sometimes people forget that there are other X variables in the equation and conclude something like: "The results mean that we should feed people more to get them to lose weight."
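A short Python sketch that solves for the beta weights from the slide's correlations with the two-predictor formulas $\beta_1 = (r_{y1} - r_{y2}r_{12})/(1 - r_{12}^2)$ and $\beta_2 = (r_{y2} - r_{y1}r_{12})/(1 - r_{12}^2)$, and computes $R^2 = \beta_1 r_{y1} + \beta_2 r_{y2}$.

```python
# Correlations from the slide: r(Y, X1) = .50, r(Y, X2) = .00, r(X1, X2) = .50
r_y1, r_y2, r_12 = 0.50, 0.00, 0.50

# Standardized weights for two predictors
beta1 = (r_y1 - r_y2 * r_12) / (1 - r_12 ** 2)
beta2 = (r_y2 - r_y1 * r_12) / (1 - r_12 ** 2)
r2_full = beta1 * r_y1 + beta2 * r_y2           # R^2 with both predictors

print(round(beta1, 3), round(beta2, 3))         # 0.667 -0.333
print(round(r_y1 ** 2, 3), round(r2_full, 3))   # 0.25 0.333
```

Although $X_2$ has zero correlation with Y, adding it raises $R^2$ from .25 to about .33, which is why inspecting r alone is not enough.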

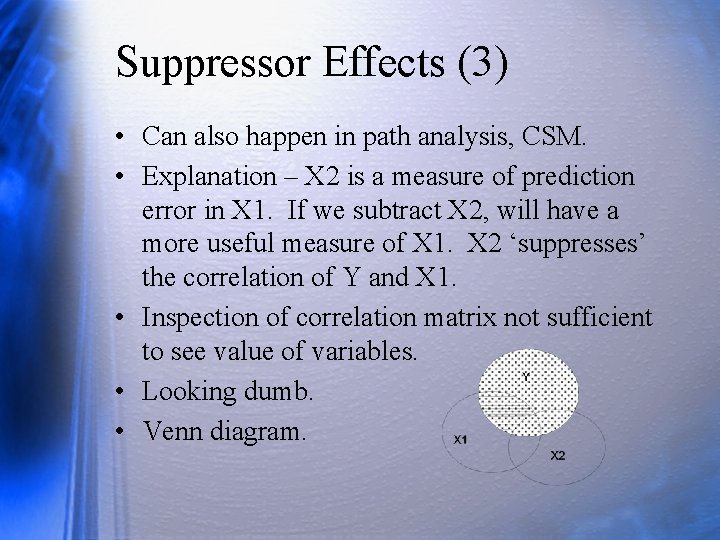

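Suppressor Effects (3)

- Suppression can also happen in path analysis and CSM.
- Explanation: $X_2$ is a measure of the prediction error in $X_1$ (the part of $X_1$ unrelated to Y). If we subtract $X_2$, we will have a more useful measure of $X_1$. $X_2$ "suppresses" this irrelevant variance, which would otherwise hold down the correlation between Y and $X_1$.
- Inspecting the correlation matrix is not sufficient to see the value of variables.
- Why it matters: avoiding looking dumb, and recognizing the limits of Venn diagrams.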

Review

- Why is the squared semipartial always less than or equal to the squared partial?
- Why is regression more closely related to semipartials than to partials?
- How could you use ordinary regression to compute 3rd-order partials?

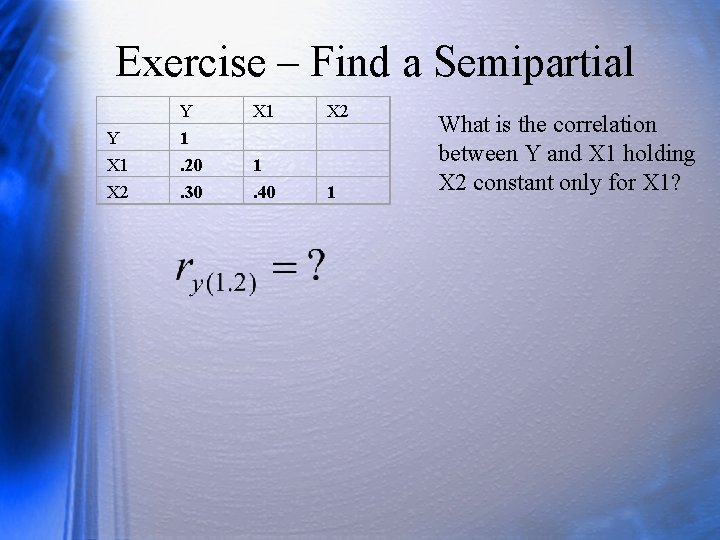

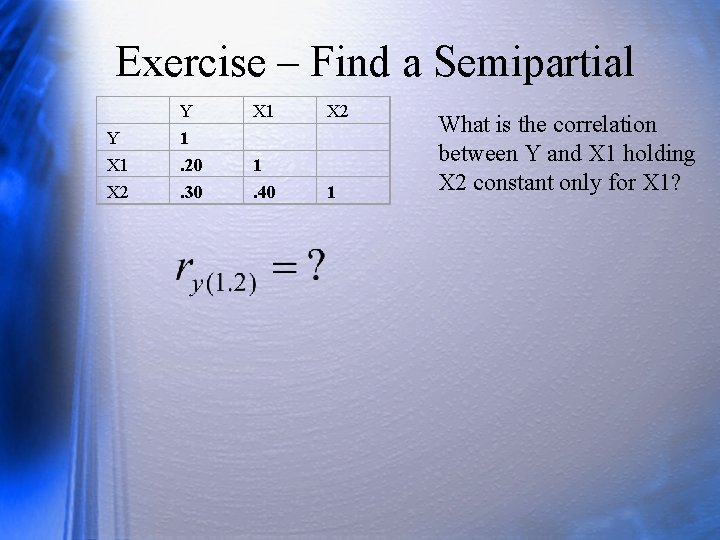

Exercise – Find a Semipartial

|  | Y | $X_1$ | $X_2$ |
|---|---|---|---|
| Y | 1 | .20 | .30 |
| $X_1$ | | 1 | .40 |
| $X_2$ | | | 1 |

What is the correlation between Y and $X_1$ holding $X_2$ constant only for $X_1$?

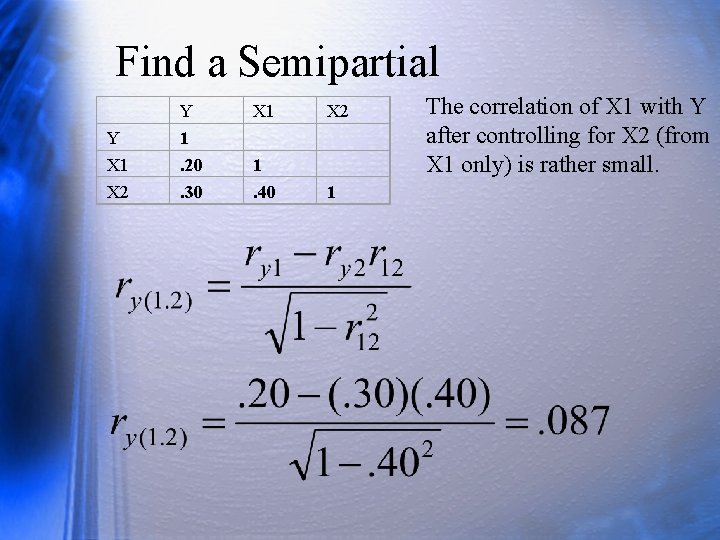

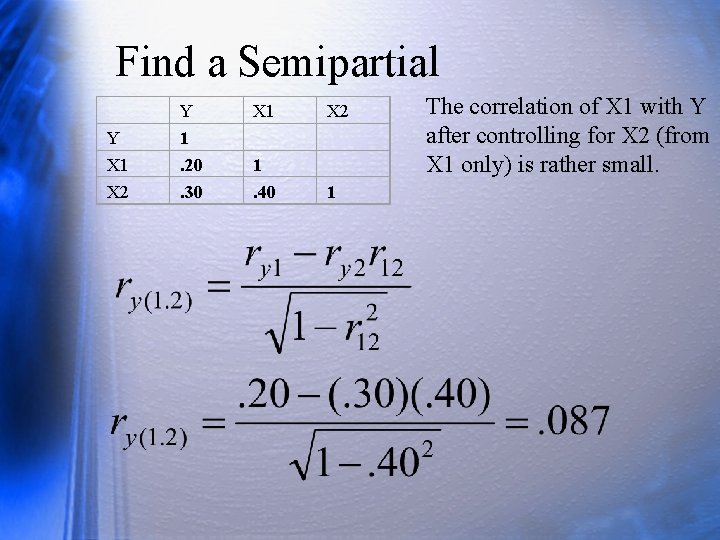

Find a Semipartial (same correlation matrix as above: $r_{Y1} = .20$, $r_{Y2} = .30$, $r_{12} = .40$)

The correlation of $X_1$ with Y after controlling for $X_2$ (from $X_1$ only) is rather small.
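A short Python sketch of the exercise computation, using the standard semipartial formula $r_{Y(1.2)} = (r_{Y1} - r_{Y2}r_{12})/\sqrt{1 - r_{12}^2}$ with the correlations in the matrix.

```python
from math import sqrt

# Correlations from the exercise
r_y1, r_y2, r_12 = 0.20, 0.30, 0.40

# Semipartial: X2 removed from X1 only; Y is left raw
sr = (r_y1 - r_y2 * r_12) / sqrt(1 - r_12 ** 2)
print(round(sr, 2))  # 0.09
```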

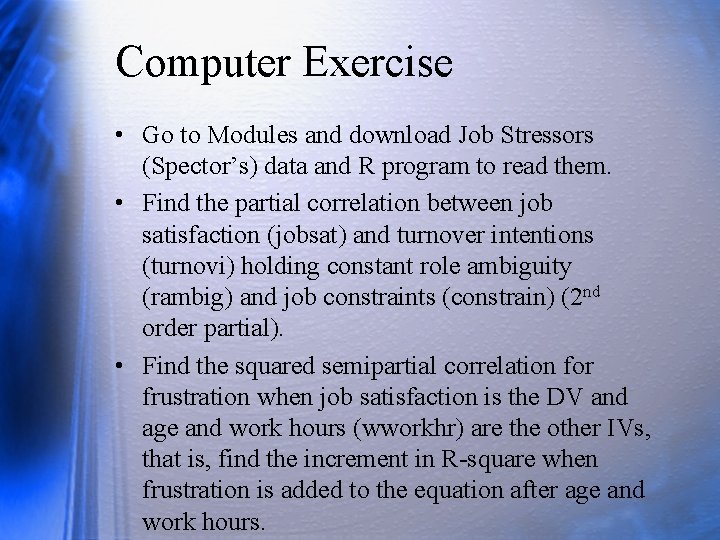

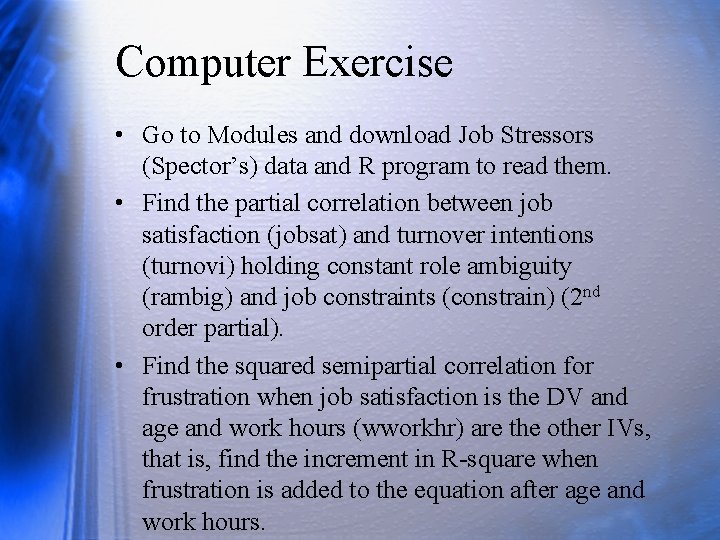

Computer Exercise

- Go to Modules and download the Job Stressors (Spector's) data and the R program to read them.
- Find the partial correlation between job satisfaction (jobsat) and turnover intentions (turnovi) holding constant role ambiguity (rambig) and job constraints (constrain) (a 2nd-order partial).
- Find the squared semipartial correlation for frustration when job satisfaction is the DV and age and work hours (wworkhr) are the other IVs; that is, find the increment in R-square when frustration is added to the equation after age and work hours.