Part 4 Current trends in parallel processing



Part 4 Current trends in parallel processing

- (1) Cluster computing
- (2) Grid computing

Traditional parallel processing

- Vector, shared-memory, or distributed-memory architecture
- Special-purpose technology and architecture
- Very expensive (millions to 100 million dollars), with a long development period
- One computer shared by a number of users
- Special operating systems and programming languages
- Limited number of users

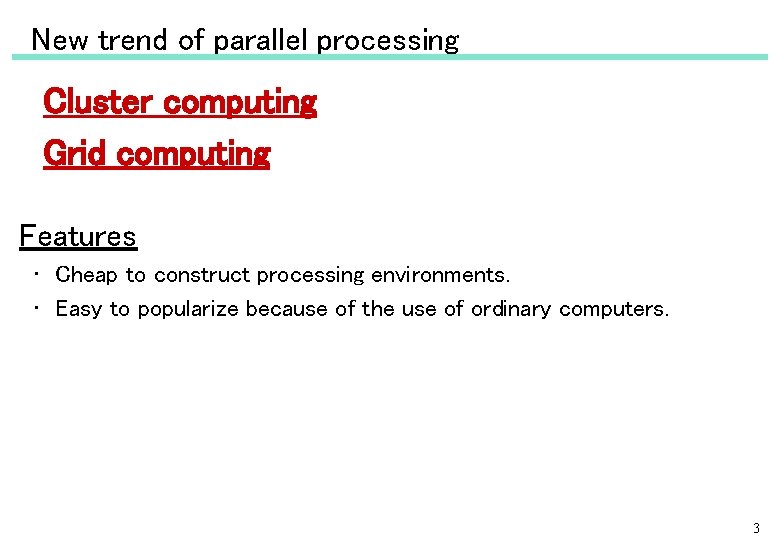

New trends in parallel processing

- Cluster computing
- Grid computing

Features

- Cheap to construct a processing environment.
- Easy to popularize because ordinary computers are used.

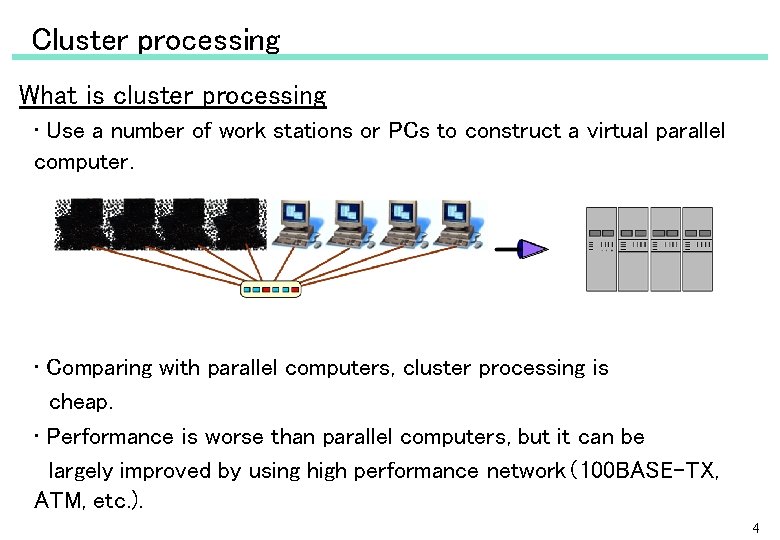

Cluster processing

What is cluster processing?

- A number of workstations or PCs are used to construct a virtual parallel computer.
- Compared with a dedicated parallel computer, a cluster is cheap.
- Performance is lower than that of a dedicated parallel computer, but it can be improved considerably by using a high-performance network (100BASE-TX, ATM, etc.).

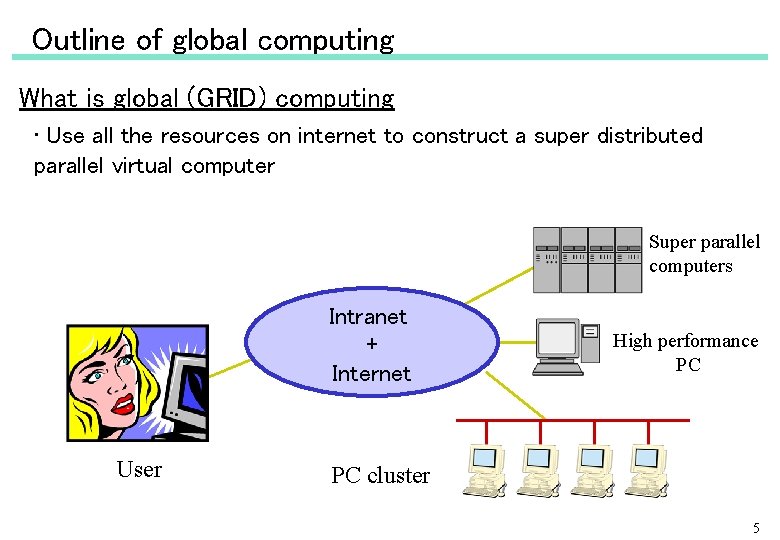

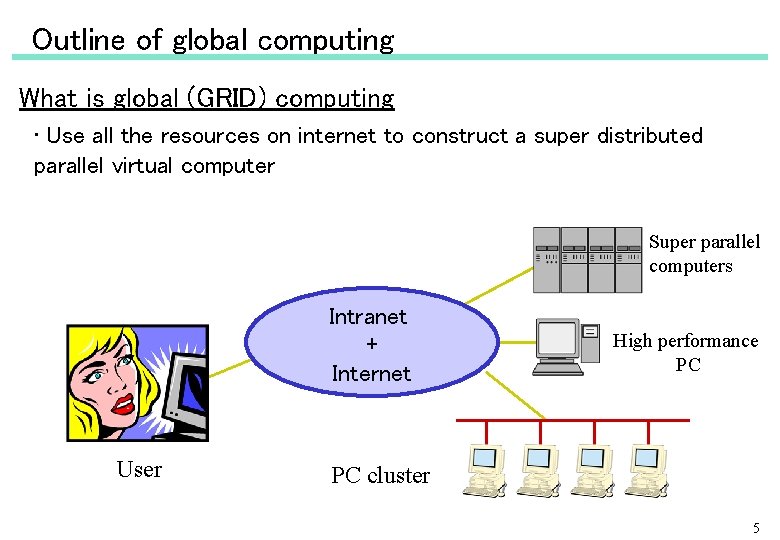

Outline of global computing

What is global (grid) computing?

- All the resources on the Internet are used to construct one very large distributed parallel virtual computer.

Figure labels: user, high-performance PC, PC cluster, super parallel computers, intranet + Internet.

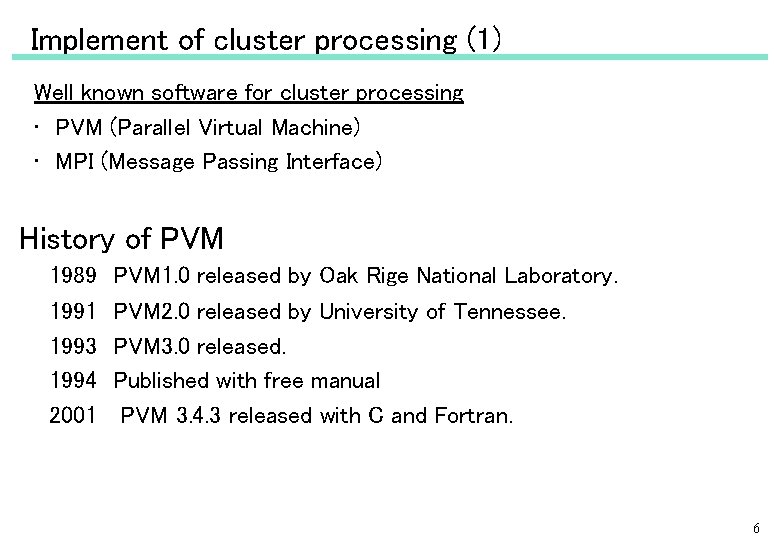

Implementation of cluster processing (1)

Well-known software for cluster processing

- PVM (Parallel Virtual Machine)
- MPI (Message Passing Interface)

History of PVM

- 1989: PVM 1.0 released by Oak Ridge National Laboratory.
- 1991: PVM 2.0 released by the University of Tennessee.
- 1993: PVM 3.0 released.
- 1994: Published together with a free manual.
- 2001: PVM 3.4.3 released with C and Fortran support.

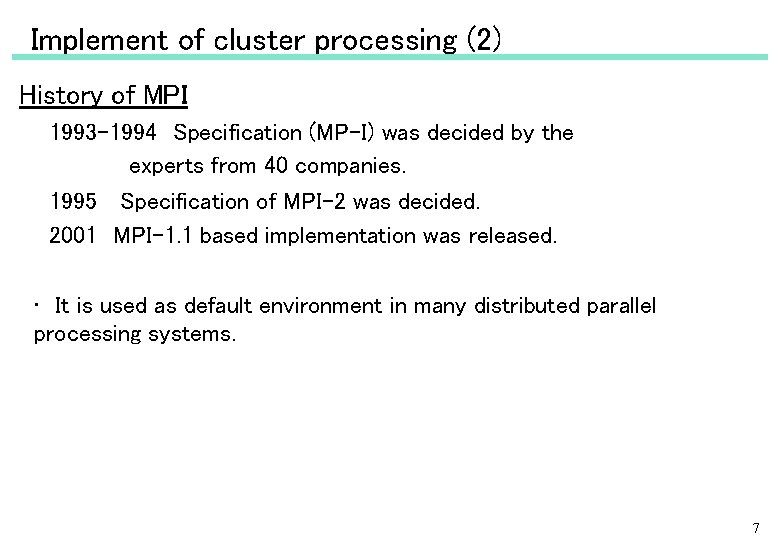

Implementation of cluster processing (2)

History of MPI

- 1993-1994: The MPI-1 specification was decided by experts from 40 organizations.
- 1995-1997: The MPI-2 specification was developed (finalized in 1997).
- 2001: An MPI-1.1 based implementation was released.
- MPI is used as the default environment in many distributed parallel processing systems.

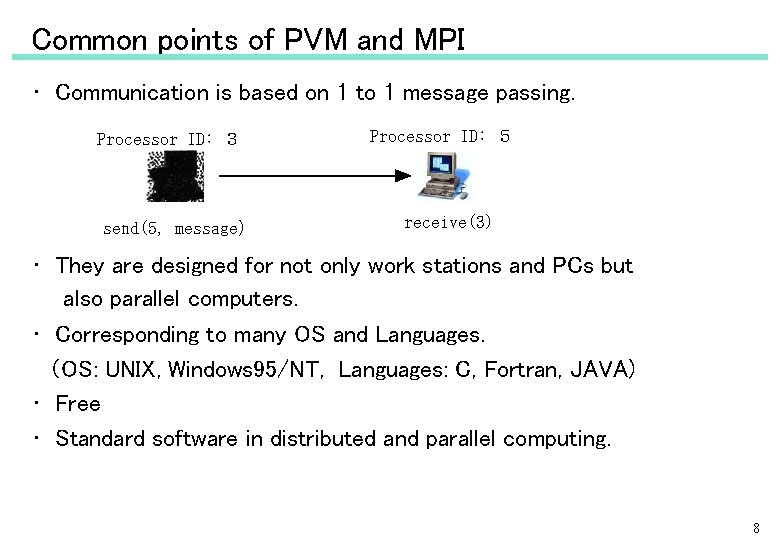

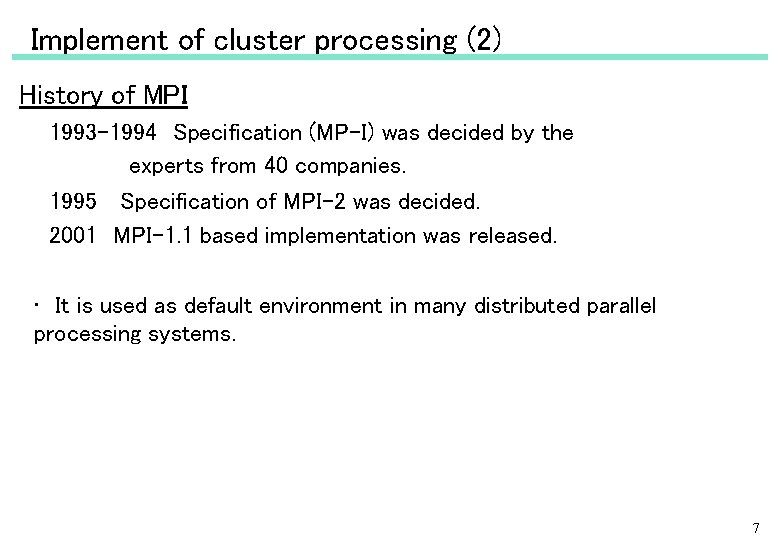

Common points of PVM and MPI

- Communication is based on one-to-one message passing. For example, processor 3 calls send(5, message) and processor 5 calls receive(3).
- They are designed not only for workstations and PCs but also for parallel computers.
- They are available for many operating systems and languages (OS: UNIX, Windows 95/NT; languages: C, Fortran, Java).
- They are free of charge.
- They are the standard software in distributed and parallel computing.
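The one-to-one pattern above (processor 3 sends, processor 5 receives) can be sketched in plain C without PVM or MPI installed. This is only an illustration under stated assumptions: a POSIX pipe stands in for the network link, two local processes stand in for processors 3 and 5, and `send_one_message` is a hypothetical helper name, not part of either library.

```c
#define _POSIX_C_SOURCE 200809L
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: a child process (playing processor 3) sends one
 * message to its parent (playing processor 5). A pipe stands in for the
 * network link; this is not PVM or MPI code.
 * Returns the number of bytes received, or -1 on error. */
ssize_t send_one_message(const char *message, char *received, size_t capacity)
{
    int link[2];                      /* link[0]: read end, link[1]: write end */
    if (capacity == 0 || pipe(link) == -1)
        return -1;

    pid_t sender = fork();
    if (sender == -1) {
        close(link[0]);
        close(link[1]);
        return -1;
    }

    if (sender == 0) {                /* processor 3: send(5, message) */
        close(link[0]);
        size_t len = strlen(message);
        ssize_t written = write(link[1], message, len);
        close(link[1]);
        _exit(written == (ssize_t)len ? 0 : 1);
    }

    /* processor 5: receive(3), reading until the sender closes the link */
    close(link[1]);
    size_t total = 0;
    ssize_t n = 0;
    while (total < capacity - 1 &&
           (n = read(link[0], received + total, capacity - 1 - total)) > 0)
        total += (size_t)n;
    received[total] = '\0';
    close(link[0]);

    int status = 0;
    if (waitpid(sender, &status, 0) == -1)
        return -1;
    if (n < 0 || !WIFEXITED(status) || WEXITSTATUS(status) != 0)
        return -1;
    return (ssize_t)total;
}
```

A caller passes the message text and a buffer for the received copy. In real PVM or MPI code the library handles addressing by task ID or rank, which this sketch replaces with a single fixed link.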

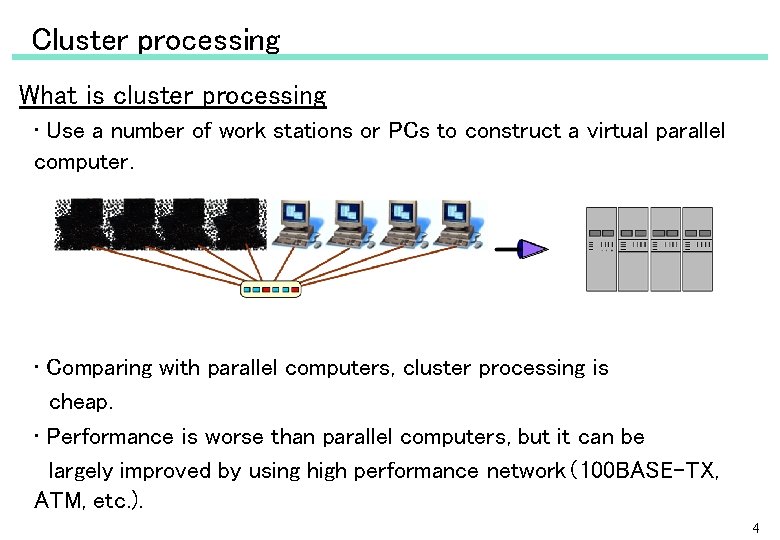

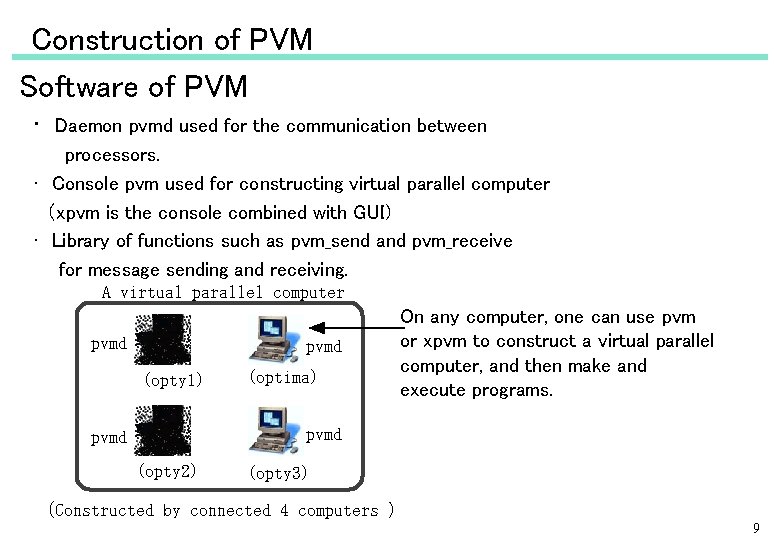

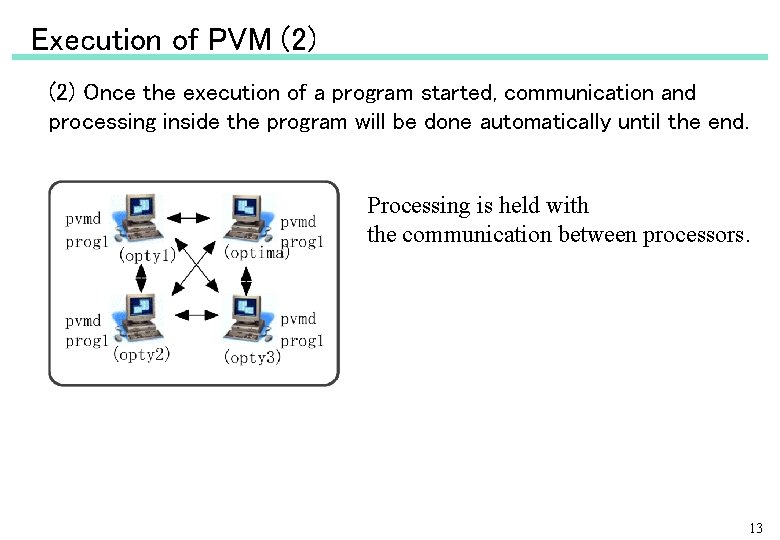

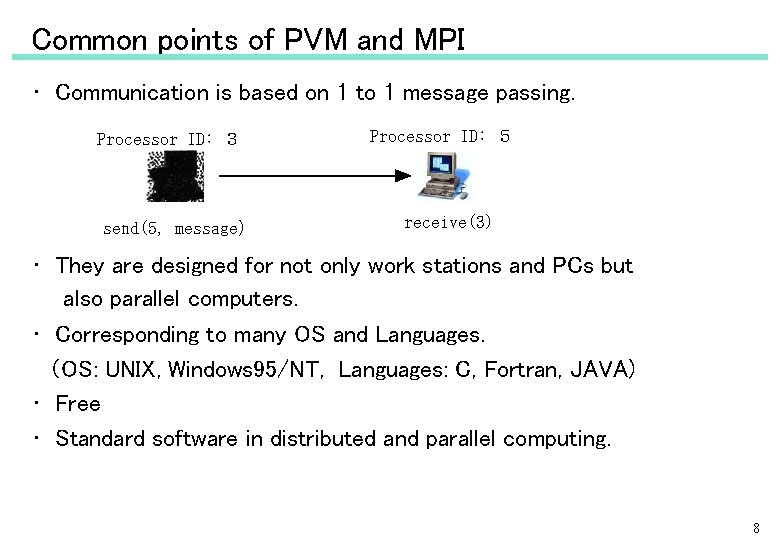

Construction of PVM

Software of PVM

- Daemon pvmd: used for the communication between processors.
- Console pvm: used for constructing the virtual parallel computer (xpvm is the console combined with a GUI).
- Library of functions such as pvm_send and pvm_recv for sending and receiving messages.

On any computer, one can use pvm or xpvm to construct a virtual parallel computer, and then build and execute programs.

Figure: a virtual parallel computer constructed by connecting four computers (optima, opty1, opty2, opty3), each running pvmd.

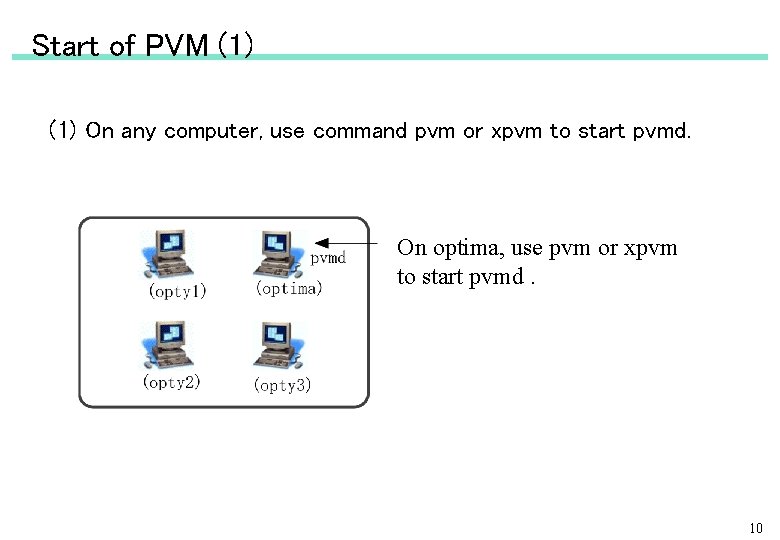

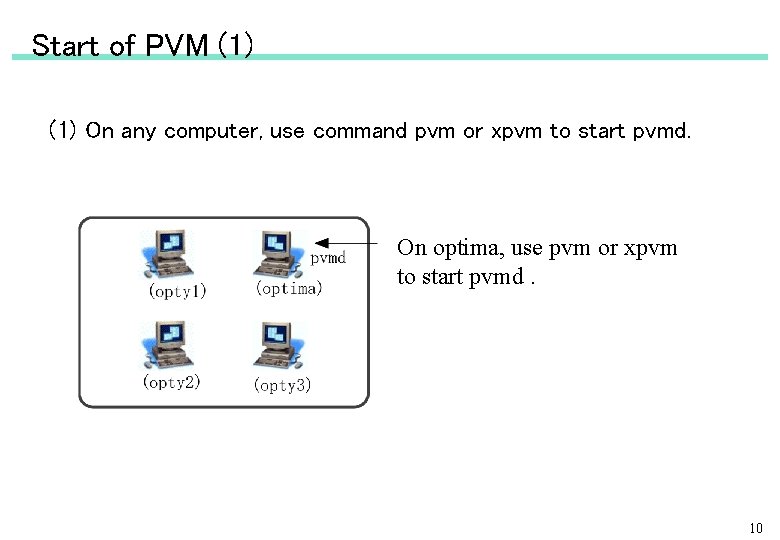

Start of PVM (1)

On any computer, use the command pvm or xpvm to start pvmd. In the example, pvm or xpvm is run on optima to start pvmd there.

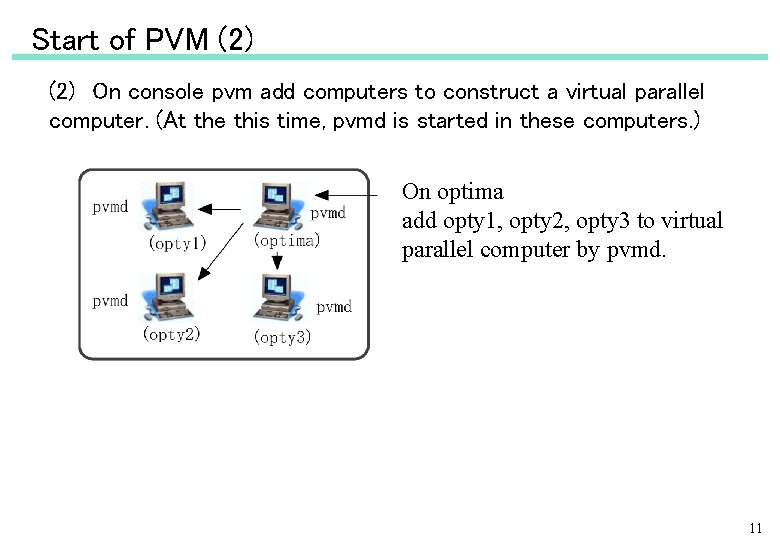

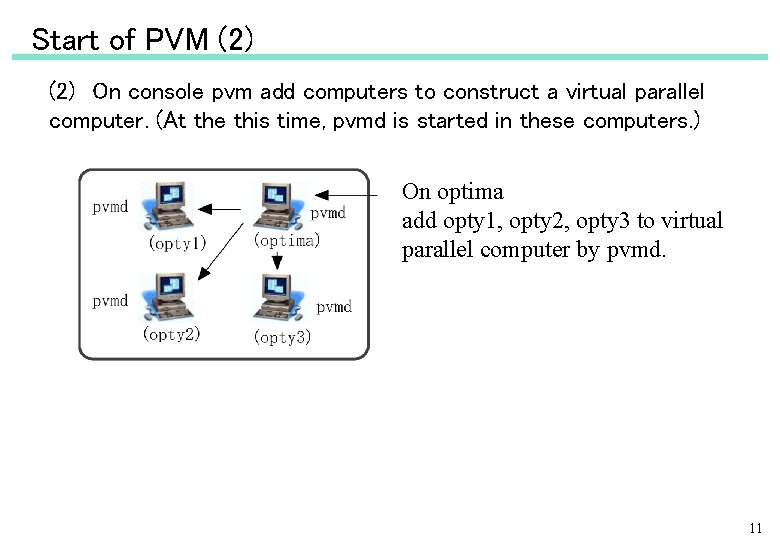

Start of PVM (2)

From the pvm console, add computers to construct the virtual parallel computer. (At this time, pvmd is started on those computers.) In the example, opty1, opty2, and opty3 are added to the virtual parallel computer from optima.

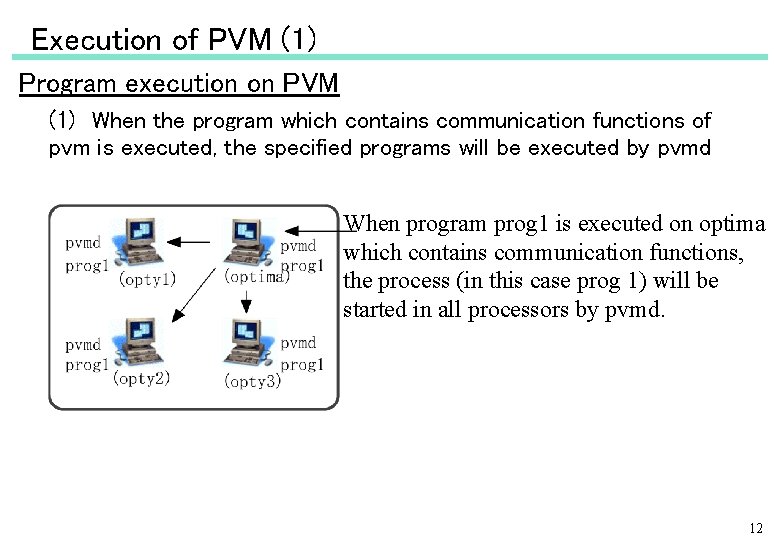

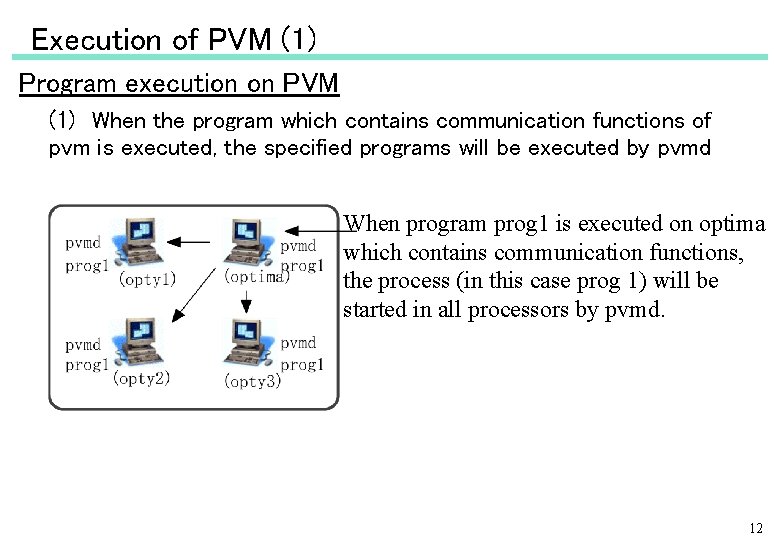

Execution of PVM (1)

Program execution on PVM (1): when a program that contains PVM communication functions is executed, pvmd starts the specified programs. In the example, when program prog1, which contains communication functions, is executed on optima, pvmd starts the process (in this case prog1) on all processors.

Execution of PVM (2)

Once execution of a program has started, communication and processing inside the program proceed automatically until the end. Processing advances through communication between the processors.
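The idea of one program running on several processors and combining results by communication can be sketched as follows. This is a minimal illustration, not PVM code: local processes created with `fork` stand in for the processors, pipes stand in for the network, and `parallel_sum` and `MAX_WORKERS` are hypothetical names chosen for this example. Error handling is kept minimal.

```c
#define _POSIX_C_SOURCE 200809L
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define MAX_WORKERS 16   /* arbitrary limit chosen for this sketch */

/* Hypothetical helper: sums the integers 1..n using `workers` processes.
 * Each worker sums its own slice and sends the partial result to the
 * master through a pipe. Returns the total, or -1 on error. */
long parallel_sum(long n, int workers)
{
    int links[MAX_WORKERS][2];
    pid_t pids[MAX_WORKERS];

    if (n < 0 || workers < 1 || workers > MAX_WORKERS)
        return -1;

    for (int w = 0; w < workers; w++) {
        if (pipe(links[w]) == -1)
            return -1;
        pids[w] = fork();
        if (pids[w] == -1)
            return -1;
        if (pids[w] == 0) {                       /* worker process */
            close(links[w][0]);
            long partial = 0;
            for (long i = (long)w + 1; i <= n; i += workers)
                partial += i;                     /* cyclic slice of 1..n */
            ssize_t sent = write(links[w][1], &partial, sizeof partial);
            close(links[w][1]);
            _exit(sent == (ssize_t)sizeof partial ? 0 : 1);
        }
        close(links[w][1]);                       /* master keeps the read end */
    }

    long total = 0;
    int ok = 1;
    for (int w = 0; w < workers; w++) {           /* master collects results */
        long partial = 0;
        int status = 0;
        if (read(links[w][0], &partial, sizeof partial) != (ssize_t)sizeof partial)
            ok = 0;
        close(links[w][0]);
        if (waitpid(pids[w], &status, 0) == -1 ||
            !WIFEXITED(status) || WEXITSTATUS(status) != 0)
            ok = 0;
        total += partial;
    }
    return ok ? total : -1;
}
```

For instance, `parallel_sum(100, 4)` splits the range across four workers and returns 5050. On a real cluster the workers would run on different machines, so the cost of sending each partial result depends on the network.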

![Using PVM (1): the pvm console](https://slidetodoc.com/presentation_image/d0e6eab366cbef4b403993fdd0f51f84/image-14.jpg)

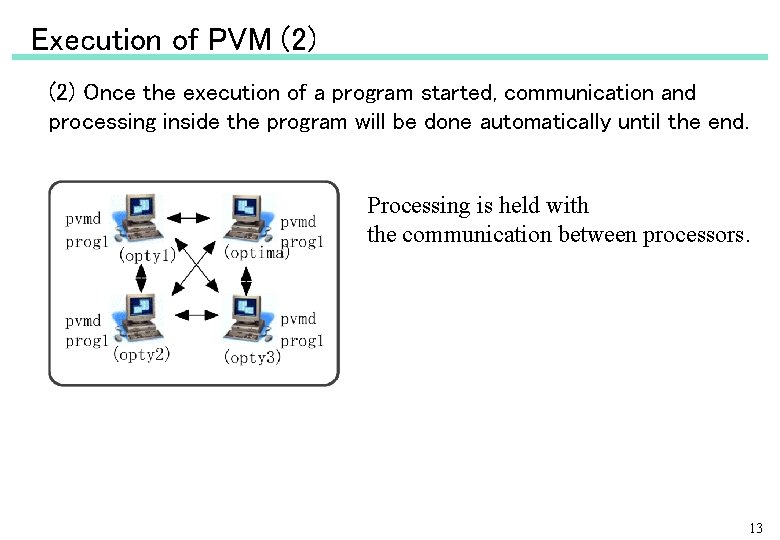

Using PVM (1)

Using the command pvm:

    chen[11]% pvm
    pvm> add opty1 opty2 opty3
    3 successful
                        HOST     DTID
                       opty1    80000
                       opty2    c0000
                       opty3   100000
    pvm> conf
    4 hosts, 1 data format
                        HOST     DTID     ARCH   SPEED       DSIG
                      optima    40000  X86SOL2    1000 0x00408841
                       opty1    80000  X86SOL2    1000 0x00408841
                       opty2    c0000  X86SOL2    1000 0x00408841
                       opty3   100000  X86SOL2    1000 0x00408841
    pvm> spawn edm4
    1 successful
    t80001
    pvm> halt
    Terminated
    chen[12]%

- add opty1 opty2 opty3: add opty1, opty2, and opty3 to the virtual computer.
- conf: show the configuration of the virtual parallel computer.
- spawn edm4: execute program edm4 on the virtual computer.
- halt: halt the virtual computer.

Using PVM (2)

- Using xpvm (X application)

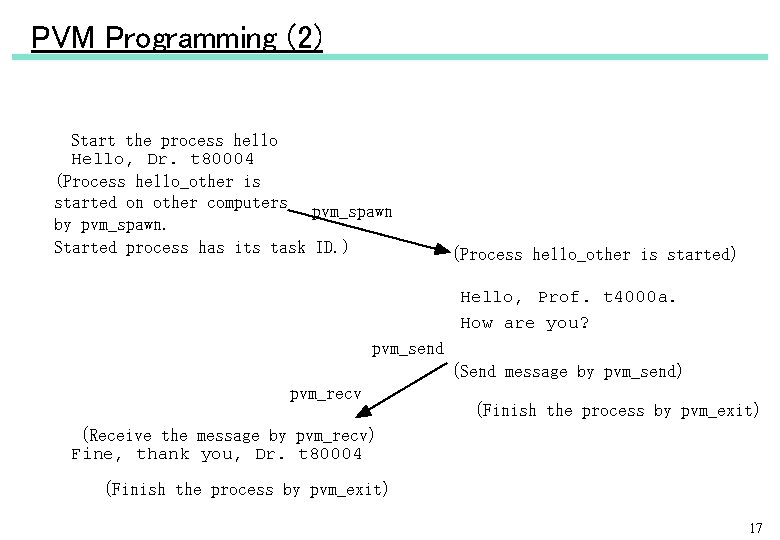

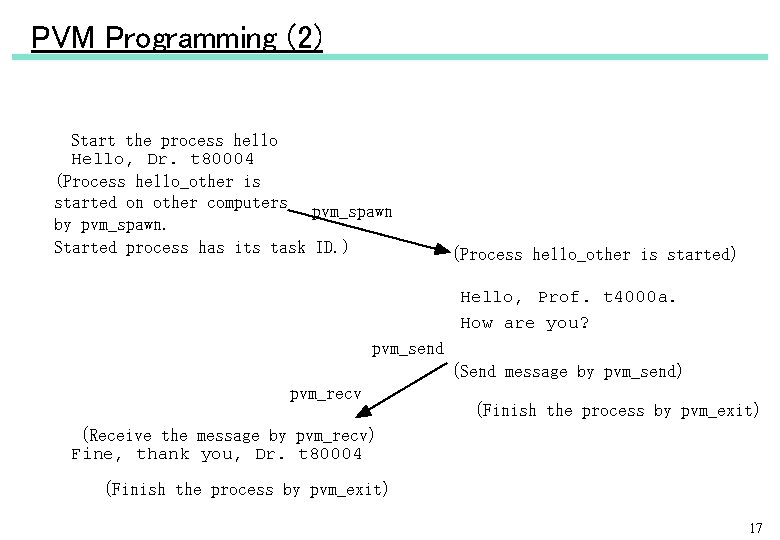

PVM programming (1)

Example (hello.c, hello_other.c)

PVM programming (2)

Process hello:

- Start the process hello.
- "Hello, Dr. t80004": process hello_other is started on other computers by pvm_spawn. The started process has its own task ID.
- Receive the message with pvm_recv.
- "Fine, thank you, Dr. t80004"
- Finish the process with pvm_exit.

Process hello_other:

- Process hello_other is started.
- "Hello, Prof. t4000a. How are you?"
- Send the message with pvm_send.
- Finish the process with pvm_exit.
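The spawn, send, receive, and exit sequence of hello and hello_other can be imitated without a PVM installation. The sketch below is an analogy only, not the original hello.c: `fork` plays the role of pvm_spawn, a local socket pair plays the role of the pvmd daemons, process IDs stand in for task IDs, and `hello_exchange` and `read_all` are hypothetical helper names. It covers one greeting and one reply rather than the full dialogue.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdio.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Reads until the peer stops sending; always NUL-terminates buf. */
static ssize_t read_all(int fd, char *buf, size_t capacity)
{
    size_t total = 0;
    ssize_t n = 0;
    while (total < capacity - 1 &&
           (n = read(fd, buf + total, capacity - 1 - total)) > 0)
        total += (size_t)n;
    buf[total] = '\0';
    return n < 0 ? -1 : (ssize_t)total;
}

/* Hypothetical helper: the parent ("hello") greets the child
 * ("hello_other") and stores the child's answer in `reply`.
 * Returns 0 on success, -1 on error. */
int hello_exchange(char *reply, size_t capacity)
{
    int sv[2];
    char buf[128];

    if (capacity == 0 || socketpair(AF_UNIX, SOCK_STREAM, 0, sv) == -1)
        return -1;

    pid_t parent = getpid();
    pid_t child = fork();                          /* cf. pvm_spawn */
    if (child == -1) {
        close(sv[0]);
        close(sv[1]);
        return -1;
    }

    if (child == 0) {                              /* hello_other */
        close(sv[0]);
        if (read_all(sv[1], buf, sizeof buf) < 0 ||         /* cf. pvm_recv */
            strncmp(buf, "Hello, Dr.", 10) != 0)
            _exit(1);
        int len = snprintf(buf, sizeof buf, "Hello, Prof. t%lx. How are you?",
                           (unsigned long)parent);
        if (send(sv[1], buf, (size_t)len, 0) != (ssize_t)len)  /* cf. pvm_send */
            _exit(1);
        close(sv[1]);
        _exit(0);                                  /* cf. pvm_exit */
    }

    /* hello */
    close(sv[1]);
    int ok = 1;
    int len = snprintf(buf, sizeof buf, "Hello, Dr. t%lx", (unsigned long)child);
    if (send(sv[0], buf, (size_t)len, 0) != (ssize_t)len)
        ok = 0;
    shutdown(sv[0], SHUT_WR);                      /* marks the end of the greeting */
    if (read_all(sv[0], reply, capacity) < 0)
        ok = 0;
    close(sv[0]);

    int status = 0;
    if (waitpid(child, &status, 0) == -1 ||
        !WIFEXITED(status) || WEXITSTATUS(status) != 0)
        ok = 0;
    return ok ? 0 : -1;
}
```

After a successful call, `reply` holds the child's greeting addressed to the parent's process ID. In actual PVM code the two roles are separate programs (hello.c and hello_other.c), and the task IDs come from the PVM library rather than from the operating system.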

Grid computing (1)

Distributed and parallel processing techniques

- TCP/IP-based tools such as telnet and rlogin
- Cluster processing software such as PVM and MPI
- WWW-related techniques such as HTTP, HTML, and CGI
- Agent-related techniques such as Plangent and Aglets
- ...

Most of these cannot be applied to grid computing without modification. (Some of them can be used as they are.)

Grid computing (2)

Abilities necessary for grid computing

- Generality: easy to add computers
- Security: unknown users, mobile users
- Fault tolerance
- Heterogeneity: language, OS, hardware, security level
- High performance: very high performance computers, very high speed networks
- Scalability: very large scale

Grid computing (3)

History of grid computing

- 1980s: Distributed computing
- 1990s: Gigabit networks
- I-WAY, 1995: Application
- Alliance (NCSA) Virtual Machine Room
- PACIs (NCSA/SDSC NSF National Technology Grid), from 1998
- NASA Information Power Grid, from 1999
- eGrid (European Grid), from 2000
- ApGrid (Asia-Pacific Grid), from 2000
- ...

Implementation of grid computing

Software for grid computing

- Toolkits: Globus, Legion, AppLeS
- Message passing: MPICH-G2
- GridRPC: Ninf, NetSolve, Nimrod
- Business software: United Devices, Entropia, Parabon, ...

Ninf was developed in Japan.

Achievements of Ninf

- Solving large-scale nonconvex quadratic programming problems by the SCRM (Kojima-Tuncel-Takeda) method: a world record was achieved using a large-scale cluster (128 processors).
- Solving cyclic polynomial equations by the homotopy method: a world record was achieved using a large-scale cluster (128 processors).

Summary (1)

Changes in parallel processing

- From parallel computers to cluster processing and grid computing
- Combined with high-performance networks
- Using popular technologies
- Using the Internet, which has huge processing capacity
- Parallel processing becomes possible for ordinary users
- Advanced technologies are necessary

Summary (2)

Cluster processing

- Widely used technologies are applied to parallel processing.
- Parallel processing becomes possible for ordinary users.

Grid computing

- Infrastructure technology built on state-of-the-art broadband networks
- Combination of network technology and computing technology
- Great progress in computer-related fields

Necessity of comprehensive research

- Parallel processing, languages, OS
- Networks
- Applications