Parole: Analysis, Perception and Automatic Recognition of Speech

Parole: Analysis, Perception and Automatic Recognition of Speech
Nancy, created in May 2001

Composition
- University staff: Jean-Paul Haton (Prof. UHP), Irina Illina (MC. Nancy 2), Joseph di Martino (MC. UHP), Odile Mella (MC. UHP), Kamel Smaïli (Prof. Nancy 2), Armelle Brun (MC. Nancy 2), David Langlois (MC. IUFM), Vincent Colotte (MC. UHP), Slim Ouni (MC. Nancy 2)
- CNRS staff: Anne Bonneau (CR), Dominique Fohr (CR), Yves Laprie (CR), Christophe Cerisara (CR)
- Invited scientist: Jacques Feldmar (INRIA)
- Doctoral students: Emmanuel Didiot, Pavel Kral, Joseph Razik, Blaise Potard, Vincent Robert, Sebastien Demange, Ghazi Bousselmi, Mathieu Camus, Farid Feïz, Guillaume Henry, Caroline Lavecchia
- Engineers: Alexandre Lafosse (CNRS), Julien Maire (CNRS), Christophe Antoine (INRIA)

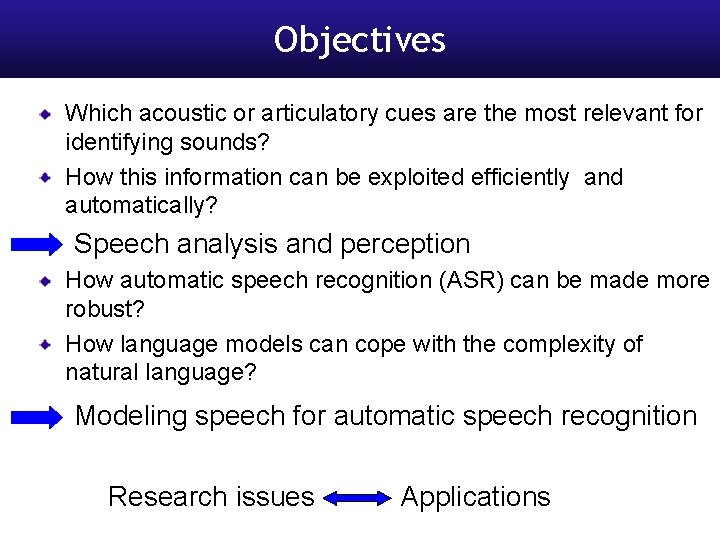

Objectives
Research issues:
- Speech analysis and perception: Which acoustic or articulatory cues are the most relevant for identifying sounds? How can this information be exploited efficiently and automatically?
- Modeling speech for automatic speech recognition: How can automatic speech recognition (ASR) be made more robust? How can language models cope with the complexity of natural language?
Figure: research issues and applications.

Results

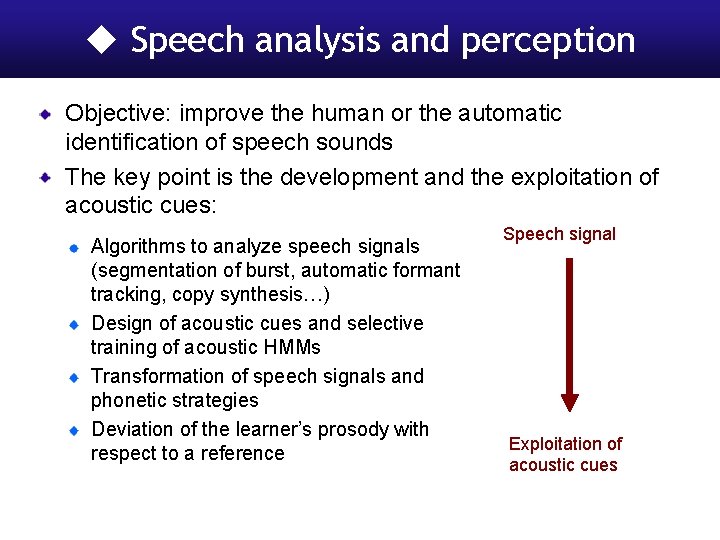

Speech analysis and perception
Objective: improve the human or automatic identification of speech sounds. The key point is the development and exploitation of acoustic cues:
- algorithms to analyze speech signals (burst segmentation, automatic formant tracking, copy synthesis…);
- design of acoustic cues and selective training of acoustic HMMs;
- transformation of speech signals and phonetic strategies;
- deviation of the learner's prosody with respect to a reference.
Figure: from the speech signal to the exploitation of acoustic cues.
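Automatic formant tracking is listed among the analysis algorithms. As an illustration only, and not the team's algorithm, the sketch below shows one classic per-frame approach: fit an LPC model with the Levinson-Durbin recursion, then pick local maxima of the LPC spectral envelope as formant candidates. The function names, the LPC order (8), and the frequency-grid size are assumptions; a real tracker would also enforce continuity of the candidates across frames.

```python
import cmath
import math
import random


def autocorrelation(frame, max_lag):
    """Biased autocorrelation r[0..max_lag] of one analysis frame."""
    n = len(frame)
    return [sum(frame[t] * frame[t + k] for t in range(n - k)) for k in range(max_lag + 1)]


def levinson_durbin(r, order):
    """LPC coefficients a[0..order], a[0] = 1, for A(z) = sum_k a[k] z^-k."""
    a = [1.0] + [0.0] * order
    err = r[0]
    for i in range(1, order + 1):
        acc = r[i] + sum(a[j] * r[i - j] for j in range(1, i))
        k = -acc / err                      # reflection coefficient
        prev = a[:]
        for j in range(1, i):
            a[j] = prev[j] + k * prev[i - j]
        a[i] = k
        err *= 1.0 - k * k                  # remaining prediction error
    return a


def formant_candidates(frame, fs, order=8, n_points=512):
    """Frequencies (Hz) of local maxima of the LPC envelope 1/|A(e^jw)|."""
    n = len(frame)
    # Hamming window before the LPC fit, to limit spectral leakage.
    windowed = [x * (0.54 - 0.46 * math.cos(2 * math.pi * t / (n - 1)))
                for t, x in enumerate(frame)]
    a = levinson_durbin(autocorrelation(windowed, order), order)
    freqs = [fs / 2 * i / (n_points - 1) for i in range(n_points)]
    env = [1.0 / abs(sum(a[k] * cmath.exp(-2j * math.pi * f / fs * k)
                         for k in range(order + 1)))
           for f in freqs]
    return [freqs[i] for i in range(1, n_points - 1)
            if env[i - 1] < env[i] >= env[i + 1]]


# Toy frame: sinusoids at 700 Hz and 1200 Hz stand in for formant energy, plus weak noise.
fs = 8000
rng = random.Random(0)
frame = [math.sin(2 * math.pi * 700 * t / fs) + 0.8 * math.sin(2 * math.pi * 1200 * t / fs)
         + 0.01 * rng.gauss(0.0, 1.0) for t in range(400)]
peaks = formant_candidates(frame, fs)
```

On this toy frame the envelope peaks fall close to 700 Hz and 1200 Hz; on real vowels, candidates from several consecutive frames would then be linked into tracks.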

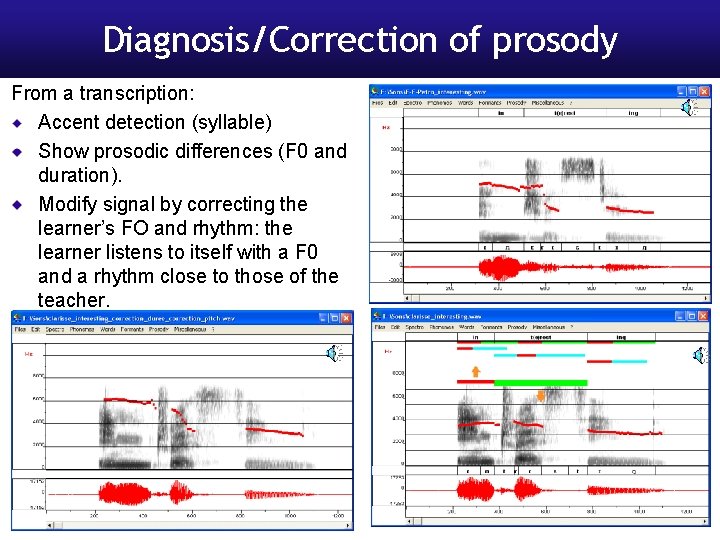

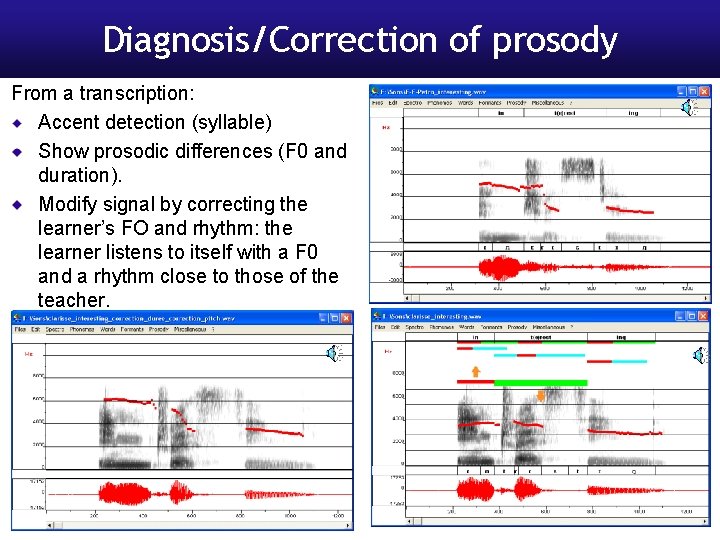

Diagnosis/correction of prosody
From a transcription:
- detect accents (at the syllable level);
- show prosodic differences (F0 and duration);
- modify the signal by correcting the learner's F0 and rhythm, so that learners hear themselves with an F0 and a rhythm close to those of the teacher.
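A minimal sketch of the comparison step only, assuming the learner's and teacher's utterances have already been segmented into syllables and aligned through the transcription. The `Syllable` record, its fields, and the toy values are hypothetical; the sketch computes the F0 shift (in semitones) and the time-scale factor per syllable, but does not perform the actual signal modification.

```python
import math
from dataclasses import dataclass


@dataclass
class Syllable:
    """Hypothetical per-syllable measurements, aligned via the transcription."""
    label: str
    f0_hz: float       # mean F0 over the syllable nucleus
    duration_s: float  # syllable duration in seconds


def semitones(f_from, f_to):
    """Pitch interval from f_from to f_to, in semitones (12 per octave)."""
    return 12.0 * math.log2(f_to / f_from)


def prosody_corrections(learner, teacher):
    """Per syllable: (label, F0 shift in semitones, time-scale factor).

    Applying the shift and the factor to the learner's syllable would bring
    its F0 and duration to the teacher's values (factor > 1 means lengthen).
    """
    if len(learner) != len(teacher):
        raise ValueError("syllable sequences must be aligned one-to-one")
    return [(s.label, semitones(s.f0_hz, t.f0_hz), t.duration_s / s.duration_s)
            for s, t in zip(learner, teacher)]


learner = [Syllable("bon", 200.0, 0.30), Syllable("jour", 200.0, 0.20)]
teacher = [Syllable("bon", 200.0, 0.15), Syllable("jour", 400.0, 0.30)]
corrections = prosody_corrections(learner, teacher)
```

Here "bon" needs no pitch change but should be shortened to half its length, while "jour" should be raised by an octave (12 semitones) and lengthened by a factor of about 1.5.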

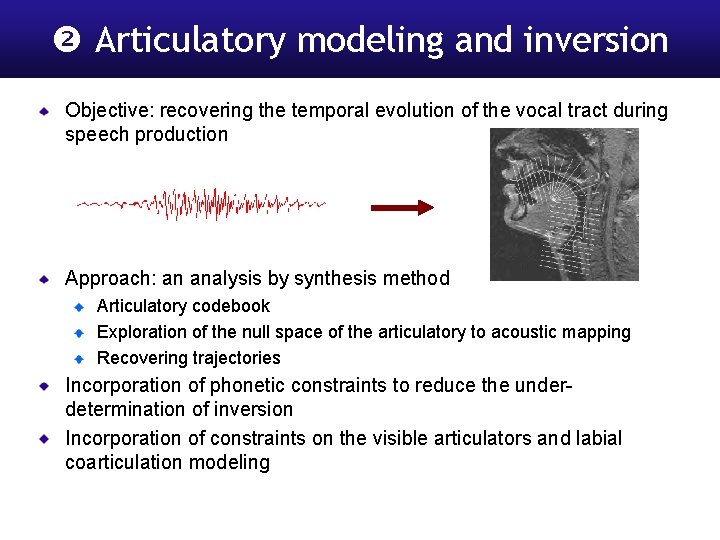

Articulatory modeling and inversion
Objective: recover the temporal evolution of the vocal tract during speech production.
Approach: an analysis-by-synthesis method:
- an articulatory codebook;
- exploration of the null space of the articulatory-to-acoustic mapping;
- recovery of trajectories;
- incorporation of phonetic constraints to reduce the underdetermination of inversion;
- incorporation of constraints on the visible articulators, and modeling of labial coarticulation.
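The codebook-plus-trajectory idea can be sketched as follows, under strong simplifying assumptions: the codebook is a toy list of (articulatory vector, formants) pairs rather than the output of a real articulatory model, a frame's candidates are all entries whose formants lie within a tolerance of the observed ones, and the smoothness criterion (Euclidean distance between consecutive articulatory vectors, minimized by dynamic programming) is our choice, not necessarily the team's. The toy codebook shows the underdetermination: two very different shapes yield the same formants, and continuity selects one.

```python
import math


def lookup(codebook, observed, tol):
    """Articulatory vectors whose synthesized formants lie within tol (Hz) of observed."""
    return [art for art, formants in codebook
            if all(abs(f - o) <= tol for f, o in zip(formants, observed))]


def recover_trajectory(codebook, observations, tol):
    """Pick one candidate per frame, minimizing total articulatory movement (Viterbi-style DP)."""
    candidates = [lookup(codebook, obs, tol) for obs in observations]
    if any(not c for c in candidates):
        raise ValueError("no codebook entry matches some frame; increase tol")
    cost = [0.0] * len(candidates[0])
    back = []  # back[t][i]: best predecessor in frame t of candidate i in frame t + 1
    for prev, cur in zip(candidates, candidates[1:]):
        new_cost, ptr = [], []
        for c in cur:
            best = min(range(len(prev)), key=lambda j: cost[j] + math.dist(prev[j], c))
            new_cost.append(cost[best] + math.dist(prev[best], c))
            ptr.append(best)
        cost = new_cost
        back.append(ptr)
    # Trace back from the cheapest final candidate.
    i = min(range(len(cost)), key=cost.__getitem__)
    path = [candidates[-1][i]]
    for frame, ptr in zip(reversed(candidates[:-1]), reversed(back)):
        i = ptr[i]
        path.append(frame[i])
    return path[::-1]


# Toy codebook: 2-D articulatory vectors (hypothetical) and their (F1, F2) in Hz.
codebook = [
    ((0.0, 0.0), (700, 1100)),
    ((0.4, 0.1), (650, 1300)),
    ((5.0, 5.0), (650, 1300)),  # same formants as the entry above, very different shape
]
observations = [(700, 1100), (655, 1290), (650, 1300)]
path = recover_trajectory(codebook, observations, tol=20)
```

Adding phonetic constraints, as the slide describes, would amount to removing or penalizing candidates that contradict the expected place of articulation before the dynamic programming step.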

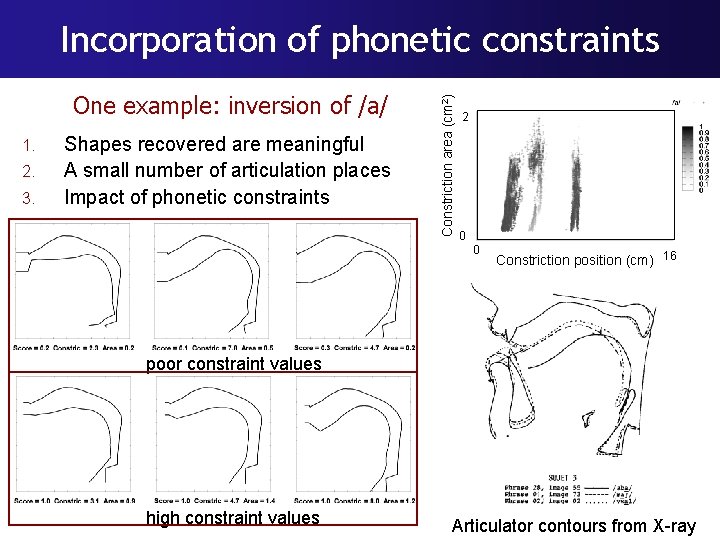

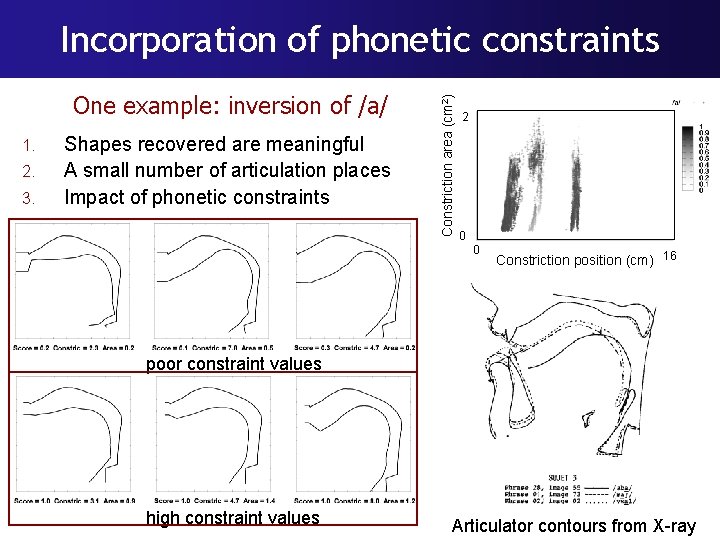

One example: inversion of /a/
1. The recovered shapes are meaningful.
2. A small number of places of articulation.
3. Impact of phonetic constraints.
Figure: constriction area (cm²) versus constriction position (cm) as phonetic constraints are incorporated, from poor to high constraint values; articulator contours from X-ray images.

Stochastic models for speech recognition
Objective: improve the robustness of speech recognition and design new models.
Achievements:
- Signal: a denoising method based on probabilistic matching between clean and noisy speech; a stochastic matching algorithm to handle non-stationary noise.
- Models: missing-data recognition; adaptation to speaker and noise.
- Core recognition platforms: ESPERE (medium-size vocabulary tasks) and ANTS (large vocabulary, used for audio stream annotation).
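Missing-data recognition is named but not detailed. One standard variant is marginalization, sketched below: with diagonal-covariance Gaussians, integrating out an unreliable feature simply drops its term from the log-likelihood. The reliability mask is assumed to be given (in practice it has to be estimated, for example from local SNR), and the two toy models and the function names are hypothetical; the slide does not say which missing-data variant the team uses.

```python
import math


def log_gauss_marginal(x, mean, var, reliable):
    """Diagonal-Gaussian log-likelihood using only the dimensions flagged reliable.

    With a diagonal covariance, integrating out an unreliable dimension
    amounts to dropping its term from the sum.
    """
    return sum(-0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
               for xi, m, v, ok in zip(x, mean, var, reliable) if ok)


# Two toy acoustic models (mean, variance) over a 2-D feature vector.
models = {"A": ([1.0, 0.0], [1.0, 1.0]), "B": ([0.0, 50.0], [1.0, 1.0])}
x = [1.0, 50.0]  # the second feature is assumed to be dominated by noise


def classify(x, reliable):
    """Return the name of the model with the highest (marginal) log-likelihood."""
    return max(models, key=lambda name: log_gauss_marginal(x, *models[name], reliable))


full = classify(x, [True, True])
masked = classify(x, [True, False])
```

Using every feature, the corrupted dimension drags the decision to model "B"; marginalizing it out lets the reliable feature select model "A".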

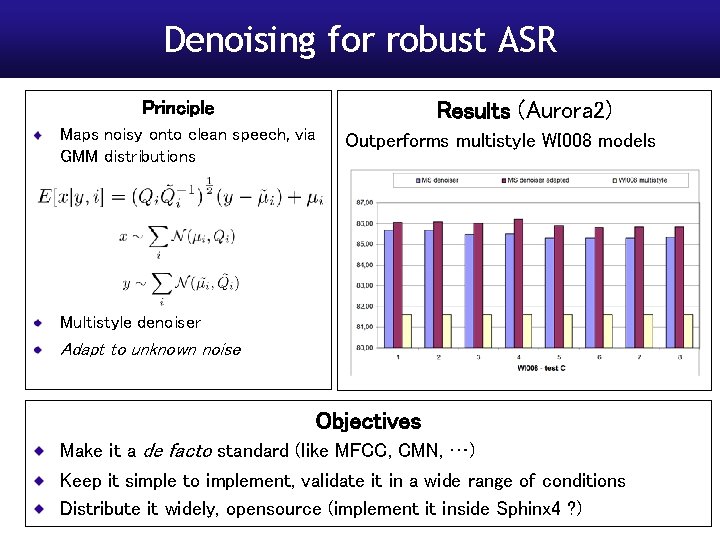

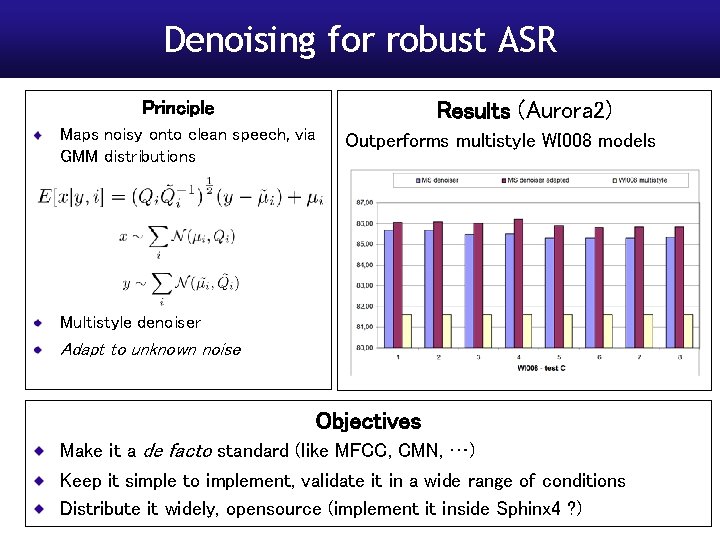

Denoising for robust ASR
Principle: map noisy speech onto clean speech via GMM distributions.
Results (Aurora 2):
- outperforms multistyle models with the WI008 front-end;
- multistyle denoiser;
- adapts to unknown noise.
Objectives:
- make it a de facto standard (like MFCC, CMN, …);
- keep it simple to implement, and validate it in a wide range of conditions;
- distribute it widely as open source (implement it inside Sphinx 4?).
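The slide gives only the principle of mapping noisy onto clean speech via GMMs. A well-known technique of this family is SPLICE-style correction, sketched below as an assumption rather than the team's exact method: a diagonal GMM models noisy features, a per-component offset r_k = E[x - y | k] is learned from stereo (noisy, clean) data, and the clean estimate is x̂ = y + Σ_k p(k|y) r_k. All names and the 1-D toy data are hypothetical, and the GMM itself is assumed to have been trained beforehand.

```python
import math


def log_gauss(y, mean, var):
    """Log-density of y under a diagonal Gaussian."""
    return sum(-0.5 * (math.log(2 * math.pi * v) + (yi - m) ** 2 / v)
               for yi, m, v in zip(y, mean, var))


def posteriors(y, gmm):
    """p(k | y) for a diagonal GMM given as [(weight, mean, var), ...]."""
    logs = [math.log(w) + log_gauss(y, m, v) for w, m, v in gmm]
    top = max(logs)
    probs = [math.exp(lp - top) for lp in logs]  # shift by the max for numerical stability
    z = sum(probs)
    return [p / z for p in probs]


def train_offsets(gmm, noisy, clean):
    """Per-component correction r_k = E[x - y | k], estimated from stereo frames."""
    dim = len(noisy[0])
    num = [[0.0] * dim for _ in gmm]
    den = [0.0] * len(gmm)
    for y, x in zip(noisy, clean):
        for k, p in enumerate(posteriors(y, gmm)):
            den[k] += p
            for d in range(dim):
                num[k][d] += p * (x[d] - y[d])
    return [[n / max(dk, 1e-12) for n in nk] for nk, dk in zip(num, den)]


def denoise(y, gmm, offsets):
    """Clean-speech estimate x_hat = y + sum_k p(k | y) r_k."""
    post = posteriors(y, gmm)
    return [yd + sum(p * r[d] for p, r in zip(post, offsets)) for d, yd in enumerate(y)]


# Toy 1-D example: noise shifts features by +2 near 0 and by +5 near 10.
gmm = [(0.5, [0.0], [1.0]), (0.5, [10.0], [1.0])]
noisy = [[-0.5], [0.0], [0.5], [9.5], [10.0], [10.5]]
clean = [[-2.5], [-2.0], [-1.5], [4.5], [5.0], [5.5]]
offsets = train_offsets(gmm, noisy, clean)
estimate_low = denoise([0.2], gmm, offsets)
estimate_high = denoise([10.1], gmm, offsets)
```

Because the correction is a posterior-weighted offset, it stays cheap at recognition time, which fits the stated goal of keeping the method simple to implement.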

Language modeling
Objective: models that can cope with the complexity of natural language.
Achievements:
- variable-length n-grams using phrases extracted from syntactic sequences of classes;
- a new method to select the best contextual language model, using a distant language model;
- a set of methods for topic identification: combining different methods outperforms the best single method by 10%; used for e-mail routing.
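To illustrate how phrases turn a fixed-order n-gram into a variable-length one, here is a minimal sketch that greedily merges the most frequent adjacent pair of tokens into a single phrase token. This is a swapped-in, frequency-based criterion: the team extracts phrases from syntactic sequences of classes, which is not reproduced here. The function names, the `_` joiner, `min_count`, and the toy corpus are assumptions.

```python
from collections import Counter


def merge_pair(tokens, pair, joiner="_"):
    """Replace every non-overlapping occurrence of pair with one phrase token."""
    out, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            out.append(tokens[i] + joiner + tokens[i + 1])
            i += 2
        else:
            out.append(tokens[i])
            i += 1
    return out


def extract_phrases(sentences, n_merges, min_count=2):
    """Greedily merge the most frequent adjacent pair, at most n_merges times."""
    sents = [list(s) for s in sentences]
    phrases = []
    for _ in range(n_merges):
        counts = Counter(p for s in sents for p in zip(s, s[1:]))
        if not counts:
            break
        pair, c = counts.most_common(1)[0]
        if c < min_count:
            break
        phrases.append(pair)
        sents = [merge_pair(s, pair) for s in sents]
    return phrases, sents


corpus = [
    "je voudrais un billet pour Paris".split(),
    "je voudrais un billet pour Nancy".split(),
    "je voudrais partir demain".split(),
]
phrases, merged = extract_phrases(corpus, n_merges=2)
```

After merging, an n-gram model counted over the rewritten sentences treats each phrase such as "je_voudrais" as one unit, so a trigram over phrases can span more than three words.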

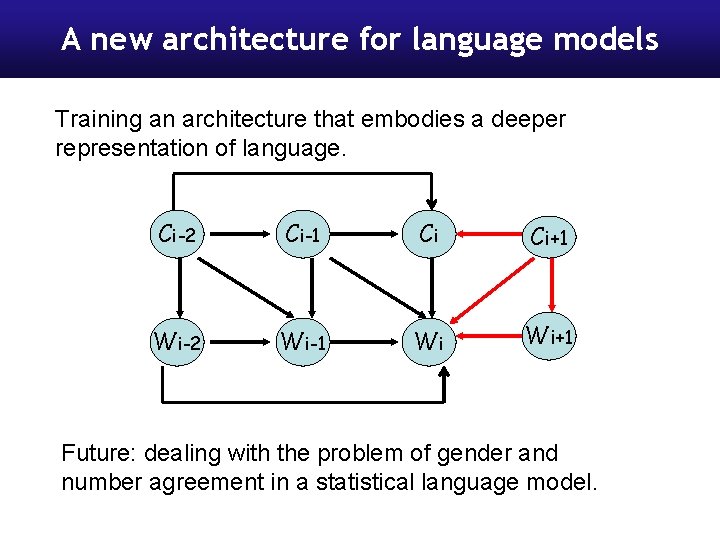

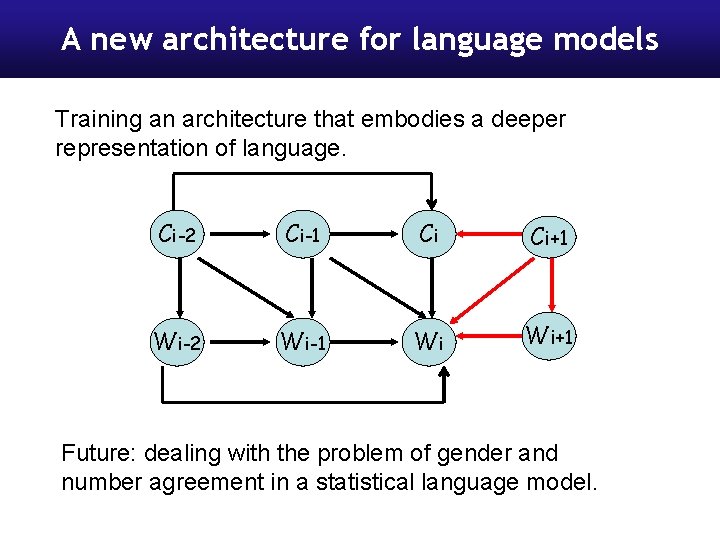

A new architecture for language models
Training an architecture that embodies a deeper representation of language.
Figure: class nodes C_{i-2}, C_{i-1}, C_i, C_{i+1} and word nodes W_{i-2}, W_{i-1}, W_i, W_{i+1}.
Future: handle gender and number agreement in a statistical language model.
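For reference only, the figure's pairing of classes and words recalls the standard class-based trigram, where c_i denotes the class of word w_i. The factorization below is that classic baseline, stated as an assumption; the slide describes the team's architecture as a deeper representation than this, and its exact form is not given.

```latex
P(w_i \mid w_{i-2}, w_{i-1}) \approx P(c_i \mid c_{i-2}, c_{i-1}) \, P(w_i \mid c_i)
```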

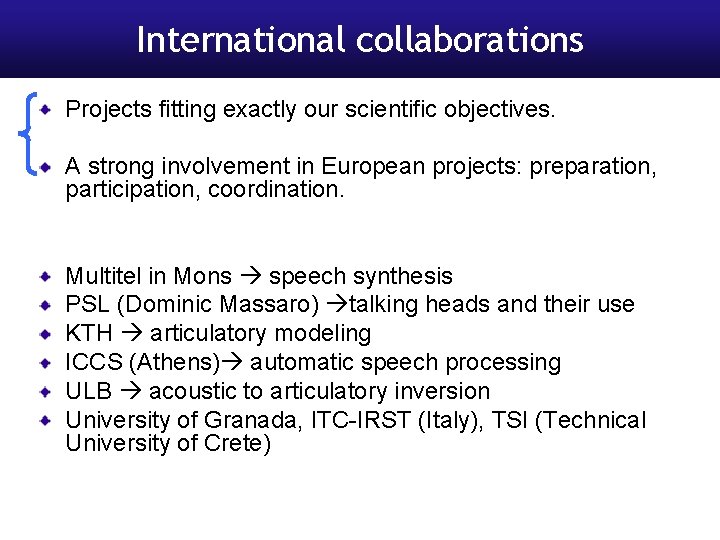

International collaborations
Projects that closely match our scientific objectives, with a strong involvement in European projects: preparation, participation, coordination.
- Multitel (Mons): speech synthesis
- PSL (Dominic Massaro): talking heads and their use
- KTH: articulatory modeling
- ICCS (Athens): automatic speech processing
- ULB: acoustic-to-articulatory inversion
- University of Granada, ITC-IRST (Italy), TSI (Technical University of Crete)

Objectives for the next four years

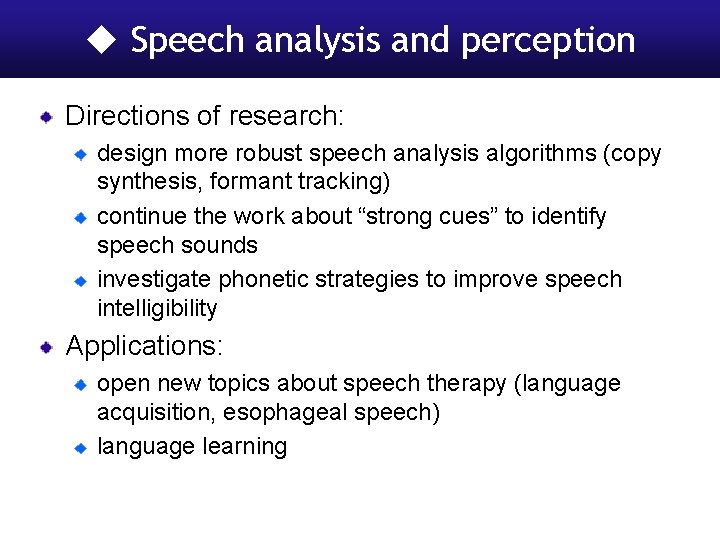

Speech analysis and perception
Directions of research:
- design more robust speech analysis algorithms (copy synthesis, formant tracking);
- continue the work on “strong cues” for identifying speech sounds;
- investigate phonetic strategies to improve speech intelligibility.
Applications:
- open new topics in speech therapy (language acquisition, esophageal speech);
- language learning.

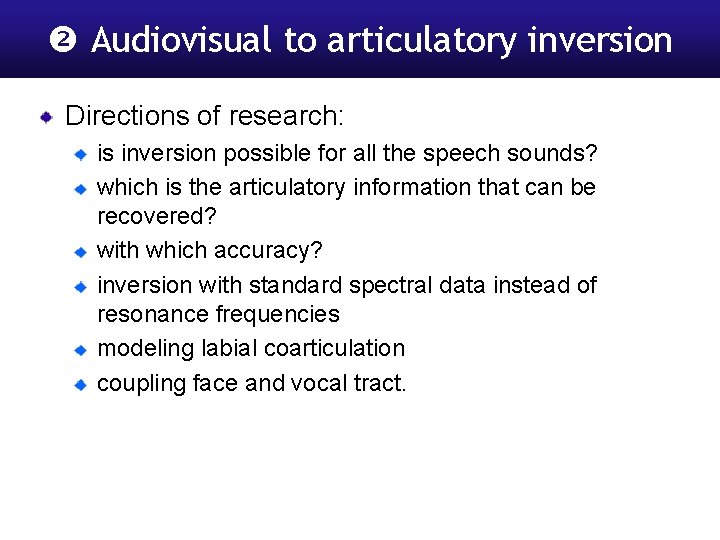

Audiovisual-to-articulatory inversion
Directions of research:
- Is inversion possible for all speech sounds?
- Which articulatory information can be recovered, and with what accuracy?
- inversion from standard spectral data instead of resonance frequencies;
- modeling of labial coarticulation;
- coupling of the face and the vocal tract.

Automatic speech recognition
The challenge is to increase the robustness of ASR.
Using external sources of information:
- design a theoretical framework that can incorporate different sources of information;
- one promising direction is to use well-recognized sounds that contain strong cues.
Applications: extend the usability of automatic speech recognition (non-native speakers, children…).

Language models
The challenge is to deal with the complexity of natural language in automatic speech recognition:
- How can relevant linguistic knowledge be retrieved automatically?
- How can linguistic units be made more informative by incorporating syntactic features?
- How can several forms of linguistic knowledge be used together in a single framework?
Application to speech translation: using phrases, i.e. relevant sequences of words, is a promising approach.

Multidisciplinarity has benefited each topic addressed by the team by:
- opening new directions of research: "strong acoustic cues"; confidence islands in automatic speech recognition, using models of well-realized sounds;
- helping to improve another branch of research: speech analysis to adjust the parameters of the Mel-frequency cepstral coefficient computation; probabilistic learning for inversion;
- providing tools from another research area addressed in the group: piloting a talking head through automatic speech recognition; speech alignment for language learning; language modeling to analyze sentences to be synthesized.

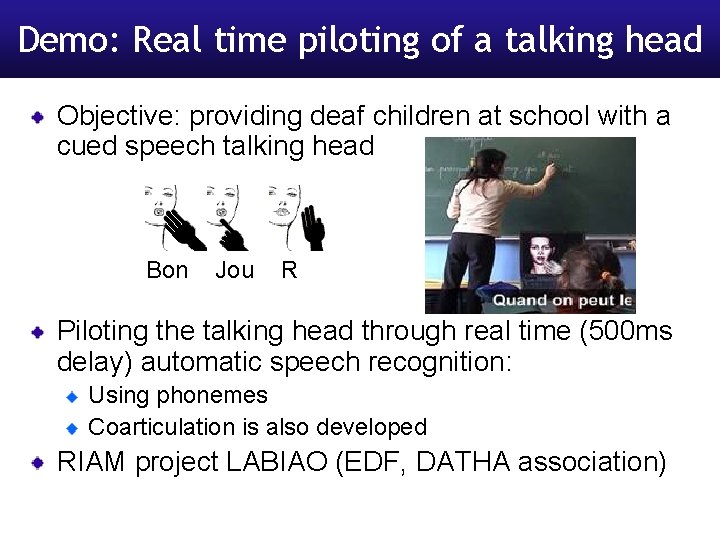

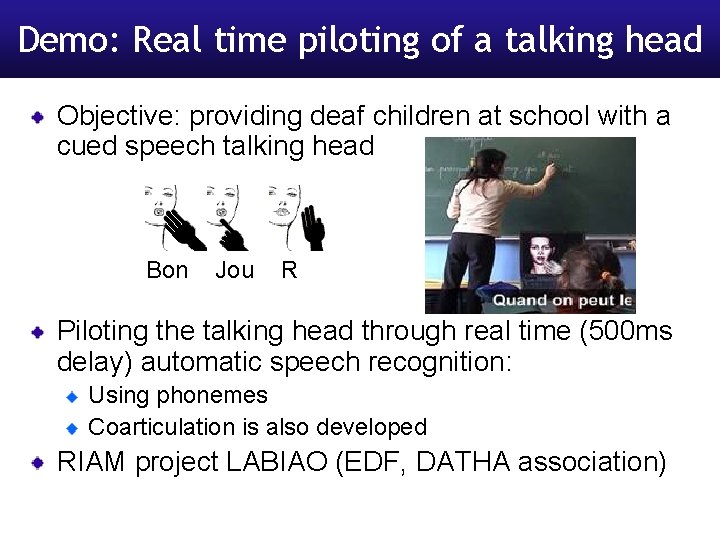

Demo: real-time piloting of a talking head
Objective: provide deaf children at school with a cued-speech talking head (example shown: "Bon Jou R", i.e. "Bonjour").
Piloting the talking head through real-time automatic speech recognition (500 ms delay):
- using phonemes;
- coarticulation is also being developed.
RIAM project LABIAO (EDF, DATHA association).

Conclusion
A deeper understanding and modeling of speech production, perception and comprehension, to allow natural interaction through speech.

Figure: white noise filtered with WinSnoori.