
Parameter Servers (slides courtesy of Aurick Qiao, Joseph Gonzalez, Wei Dai, and Jinliang Wei)

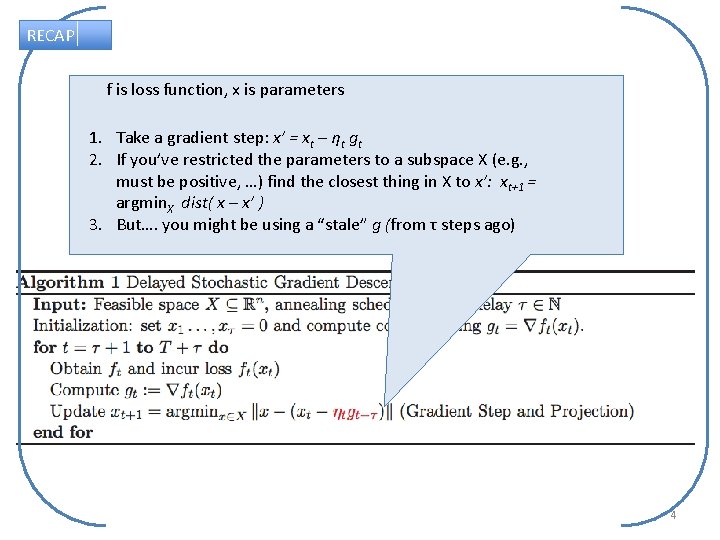

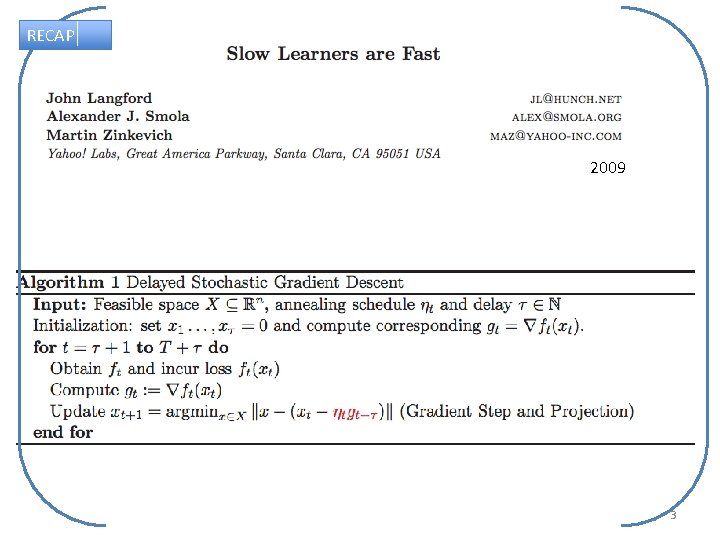

RECAP: Regret analysis for online optimization

RECAP: 2009

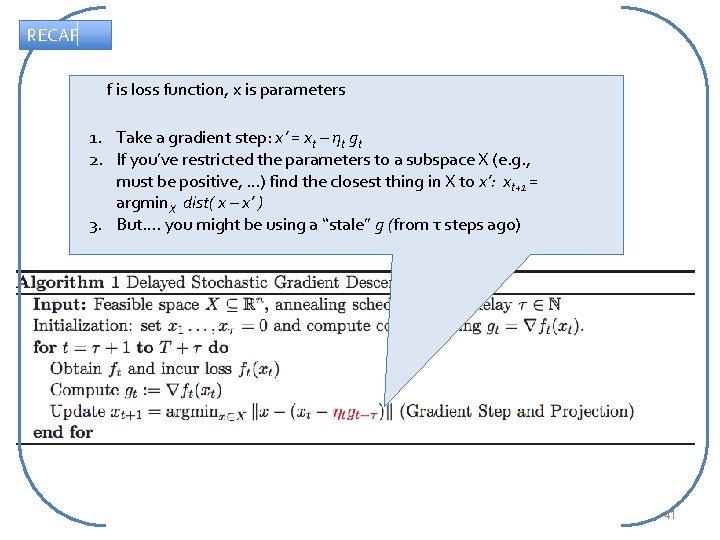

RECAP: $f$ is the loss function, $x$ the parameters.

1. Take a gradient step: $x' = x_t - \eta_t g_t$
2. If you've restricted the parameters to a subspace $X$ (e.g., must be positive, …), find the closest thing in $X$ to $x'$: $x_{t+1} = \arg\min_{x \in X} \mathrm{dist}(x, x')$
3. But… you might be using a "stale" $g$ (from $\tau$ steps ago)
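A minimal sketch of steps 1–3, assuming NumPy and two caller-supplied functions, `grad(x, t)` and `project(x)` (hypothetical names, not from the slides). Staleness is simulated by evaluating the gradient at the iterate from τ steps back, while the step itself is taken from the current iterate.

```python
import numpy as np

def delayed_projected_sgd(grad, project, x0, T, tau, eta0=0.1):
    """Projected gradient descent where step t uses a gradient that is tau steps stale.

    grad(x, t) -> gradient of f_t at x      (caller-supplied, hypothetical)
    project(x) -> closest point to x in X   (caller-supplied, hypothetical)
    """
    history = [np.asarray(x0, dtype=float)]
    for t in range(1, T + 1):
        # Parameters from tau steps ago (or x0 early on): the "stale" point.
        stale_x = history[max(0, len(history) - 1 - tau)]
        g = grad(stale_x, t)
        eta = eta0 / np.sqrt(t)                # decaying learning rate eta_t
        x_prime = history[-1] - eta * g        # 1. gradient step from the current x_t
        history.append(project(x_prime))       # 2. project x' back onto X
    return history[-1]
```

For example, minimizing ‖x − c‖² over nonnegative x with c = (1, −2) (so `project` is `np.maximum(x, 0.0)`) drives the iterates toward (1, 0) even with τ = 5. Keeping the whole history is only for clarity; a `collections.deque(maxlen=tau + 1)` would bound memory.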

RECAP: Regret: how much loss was incurred during learning, over and above the loss incurred with an optimal choice of $x$:

$$\mathrm{Regret} = \sum_{t=1}^{T} f_t(x_t) - \sum_{t=1}^{T} f_t(x^*)$$

Special case:

- $f_t$ is 1 if a mistake was made, 0 otherwise
- $f_t(x^*) = 0$ for the optimal $x^*$

Then Regret = # mistakes made in learning.
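To make the special case concrete, here is a small sketch assuming an online perceptron on linearly separable data (the perceptron is our choice of learner, not the slide's). Because a separating $x^*$ has $f_t(x^*) = 0$ on every example, counting mistakes is the same as computing regret.

```python
import numpy as np

def online_mistakes(X, y, epochs=1):
    """Online perceptron that counts its mistakes.

    f_t(w) = 1 if w misclassifies example t, else 0. If some x* separates the
    data, f_t(x*) = 0 for every t, so regret = number of mistakes.
    """
    w = np.zeros(X.shape[1])
    mistakes = 0
    for _ in range(epochs):
        for x_t, y_t in zip(X, y):
            if y_t * np.dot(w, x_t) <= 0:   # loss f_t(w) = 1
                mistakes += 1
                w = w + y_t * x_t           # perceptron update
    return mistakes
```

On the four points (±1, 0), (0, ±1) labeled by the sign of $x_1 + x_2$, the learner makes 2 mistakes in its first pass and none afterwards, so its regret stays at 2.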

RECAP: Theorem: you can find a learning rate so that the regret of delayed SGD is bounded by $O(\sqrt{\tau T})$, where $T$ = # timesteps and $\tau$ = staleness > 0.

RECAP: Theorem 8: you can do better if you assume (1) the examples are i.i.d. and (2) the gradients are smooth (analogous to the assumption about L). Then you can show a bound on the expected regret whose dominant term matches the no-delay loss.

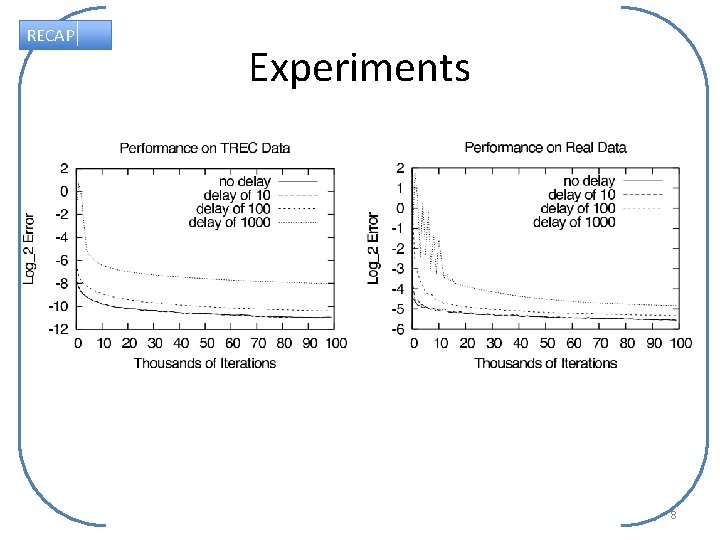

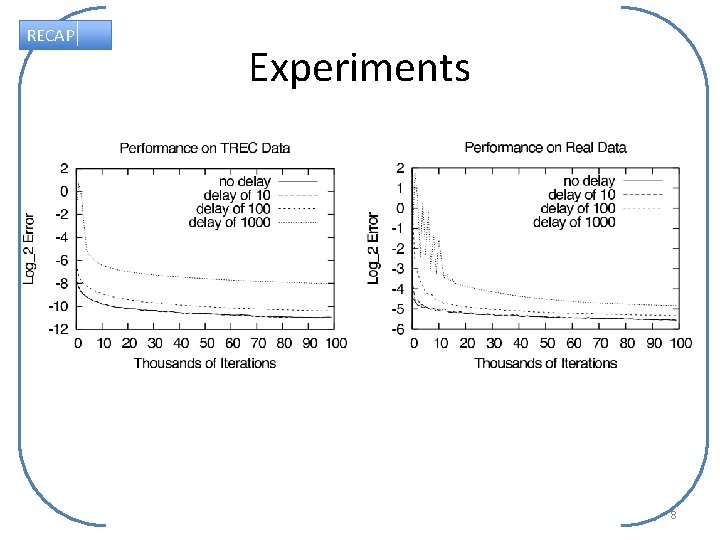

RECAP: Experiments

RECAP: Summary of "Slow Learners are Fast"

- Generalization of iterative parameter mixing
  - run multiple learners in parallel
  - conceptually they share the same weight/parameter vector, BUT…
- Learners share weights imperfectly
  - learners are almost synchronized
  - there's a bound τ on how stale the shared weights get

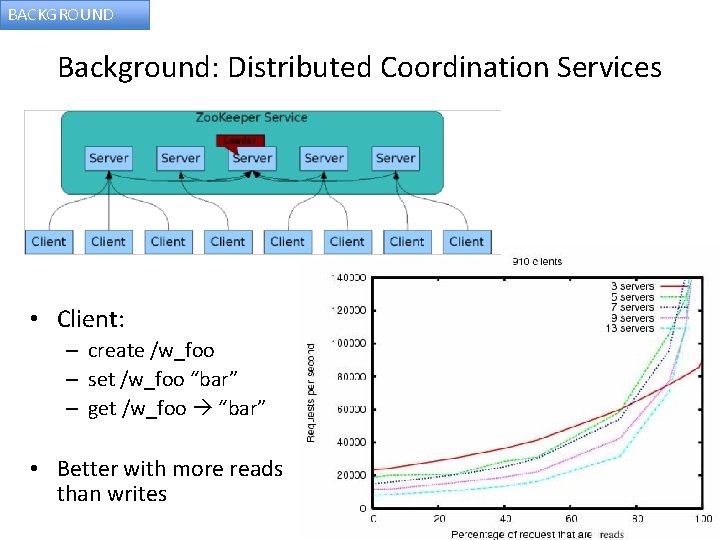

BACKGROUND: Distributed Coordination Services

- Example: Apache ZooKeeper
- Distributed processes coordinate through shared "data registers" (aka znodes), which look a bit like a shared in-memory filesystem

BACKGROUND: Distributed Coordination Services

- Client:
  - `create /w_foo`
  - `set /w_foo "bar"`
  - `get /w_foo` → "bar"
- Better with more reads than writes

Parameter Servers (slides courtesy of Aurick Qiao, Joseph Gonzalez, Wei Dai, and Jinliang Wei)

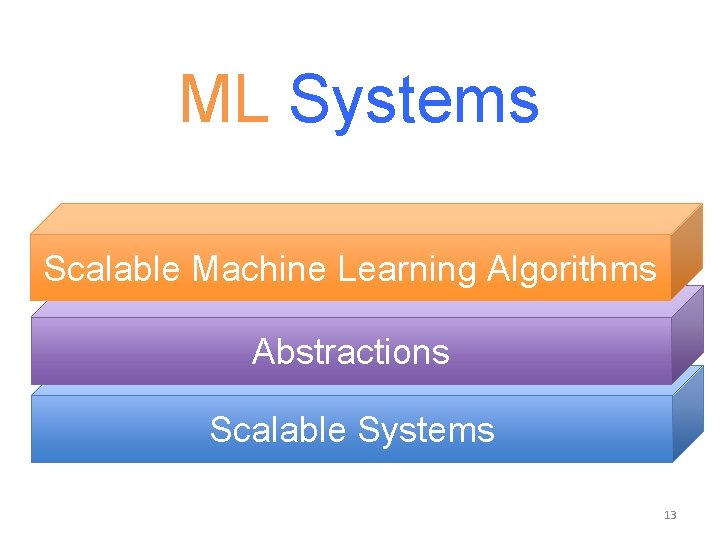

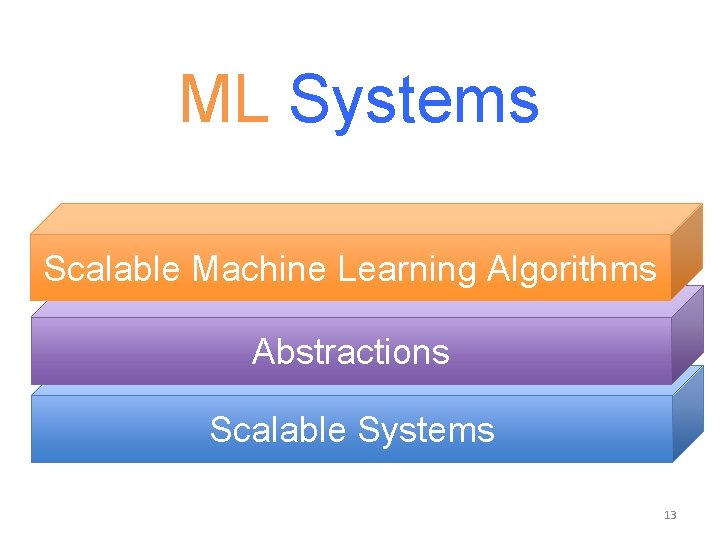

ML Systems (figure: scalable machine learning algorithms, abstractions, and scalable systems)

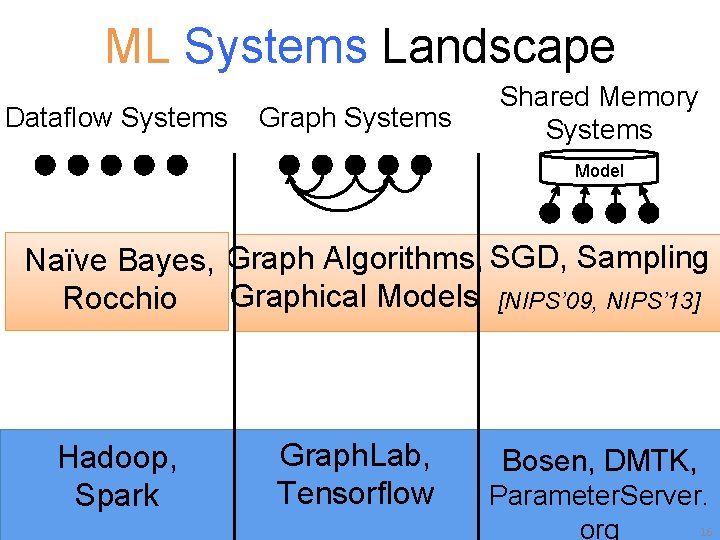

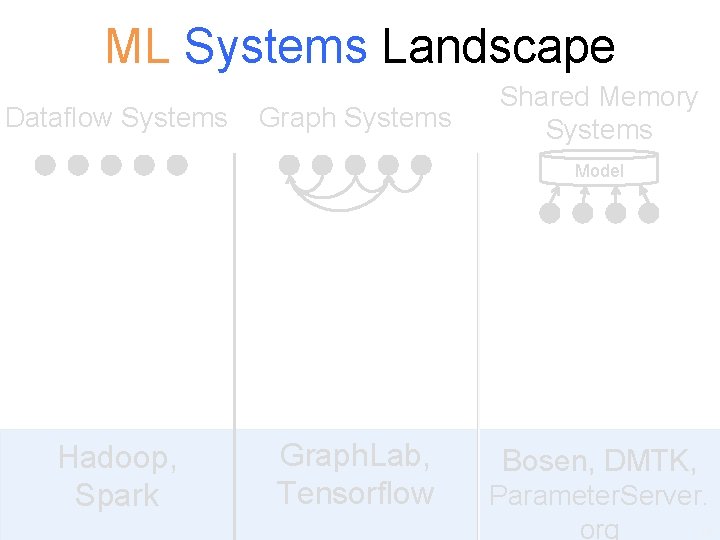

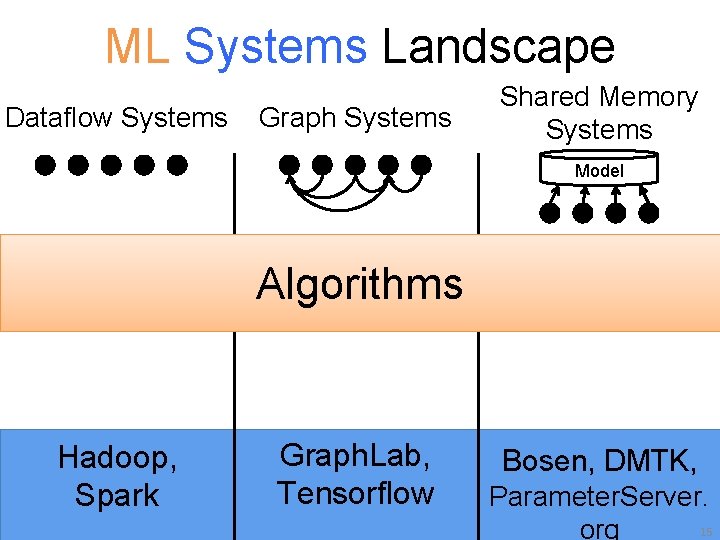

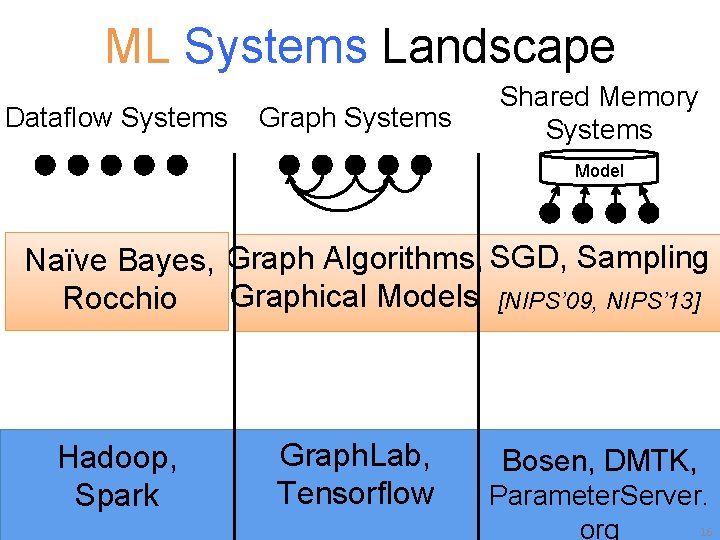


ML Systems Landscape

| | Dataflow Systems | Graph Systems | Shared Memory Systems |
|---|---|---|---|
| Model / algorithms | Naïve Bayes, Rocchio | Graph Algorithms, Graphical Models | SGD, Sampling [NIPS'09, NIPS'13] |
| Abstractions | PIG, GuineaPig, … | Vertex-Programs [UAI'10] | Parameter Server [VLDB'10] |
| Systems | Hadoop & Spark | GraphLab, Tensorflow | Bosen, DMTK, ParameterServer.org |

ML Systems Landscape (highlighting Shared Memory Systems: [NIPS'09, NIPS'13], Parameter Server [VLDB'10])

- Simple case: the parameters of the ML system are stored in a distributed hash table that is accessible through the network
- Parameter servers are used at Google, Yahoo, …
- Academic work by Smola, Xing, …
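A toy version of "parameters stored in a distributed hash table", assuming hash partitioning with one owner per key. The servers are simulated as local dicts, and the class and method names are hypothetical; a real PS would reach each shard over the network.

```python
import hashlib

class ShardedParamTable:
    """Toy distributed hash table: each key lives on exactly one 'server'.

    Servers are simulated as local dicts (illustrative only).
    """

    def __init__(self, num_servers):
        self.servers = [dict() for _ in range(num_servers)]

    def server_for(self, key):
        # Stable hash: Python's built-in hash() of a str is salted per process,
        # so every worker would disagree on ownership. Use a fixed digest instead.
        digest = hashlib.md5(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % len(self.servers)

    def get(self, key, default=0.0):
        return self.servers[self.server_for(key)].get(key, default)

    def set(self, key, value):
        self.servers[self.server_for(key)][key] = value
```

The stable digest matters. Every worker must compute the same owner for a key without coordinating, which is what lets the table act as shared memory.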

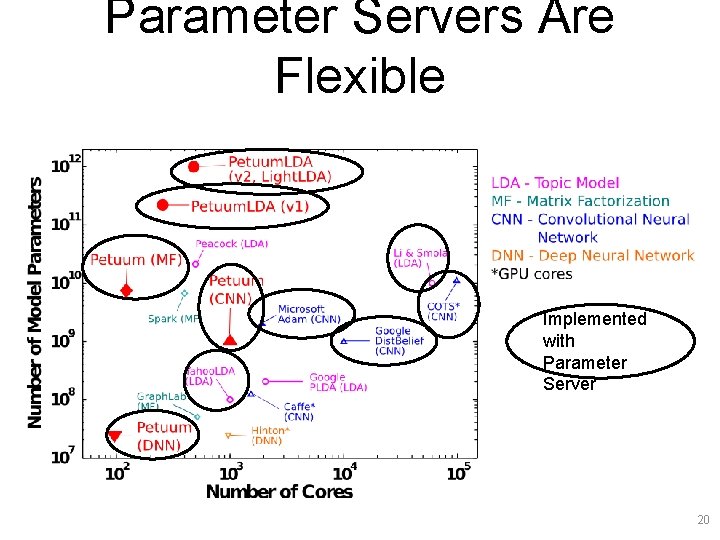

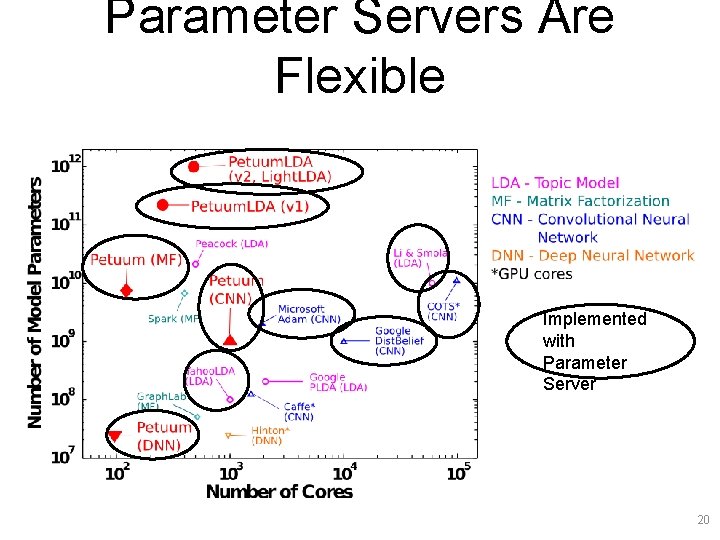

Parameter Servers Are Flexible (figure: algorithms implemented with a Parameter Server)

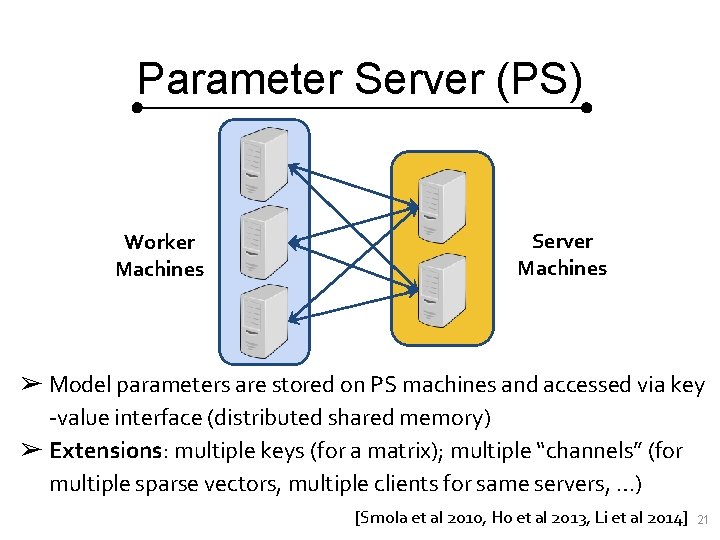

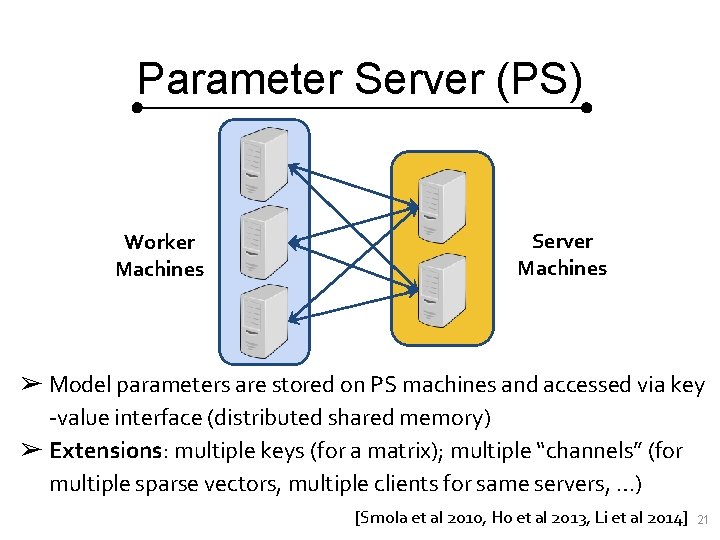

Parameter Server (PS) (figure: worker machines and server machines)

- Model parameters are stored on PS machines and accessed via a key-value interface (distributed shared memory)
- Extensions: multiple keys (for a matrix); multiple "channels" (for multiple sparse vectors, multiple clients for the same servers, …)

[Smola et al 2010, Ho et al 2013, Li et al 2014]

Parameter Server (PS) (figure: worker machines and server machines)

- Extension: a push/pull interface to send/receive the most recent copy of (a subset of) the parameters; blocking is optional
- Extension: can block until pushes/pulls with clock < (t − τ) complete

[Smola et al 2010, Ho et al 2013, Li et al 2014]
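A single-process sketch of push/pull with the optional clock-based blocking, assuming Python threads stand in for workers. `ClockedParamServer` and its methods are hypothetical names, and "pushes with clock < t − τ complete" is read as "every worker has finished at least t − τ iterations".

```python
import threading

class ClockedParamServer:
    """Toy in-process PS with push/pull and an optional staleness bound (illustrative only).

    Each worker calls clock(worker_id) after finishing an iteration. pull(keys, t, tau)
    blocks until every worker has finished all iterations with clock < t - tau,
    i.e. until min(worker clocks) >= t - tau. Without t/tau, pull never blocks.
    """

    def __init__(self, num_workers):
        self.params = {}
        self.clocks = [0] * num_workers          # iterations completed per worker
        self.cond = threading.Condition()

    def push(self, updates):
        """Apply a dict of additive updates {key: delta}."""
        with self.cond:
            for key, delta in updates.items():
                self.params[key] = self.params.get(key, 0.0) + delta

    def clock(self, worker_id):
        """Mark one more iteration of worker_id as complete and wake waiting pulls."""
        with self.cond:
            self.clocks[worker_id] += 1
            self.cond.notify_all()

    def pull(self, keys, t=None, tau=None, timeout=None):
        """Return current values for keys; optionally wait for the staleness bound."""
        with self.cond:
            if t is not None and tau is not None:
                ready = self.cond.wait_for(lambda: min(self.clocks) >= t - tau, timeout)
                if not ready:
                    raise TimeoutError("workers have not reached clock t - tau yet")
            return {key: self.params.get(key, 0.0) for key in keys}
```

A worker at clock 3 with τ = 1 that calls `pull(keys, t=3, tau=1)` waits until every worker has called `clock()` at least twice. Omitting `t` and `tau` gives the non-blocking behavior.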

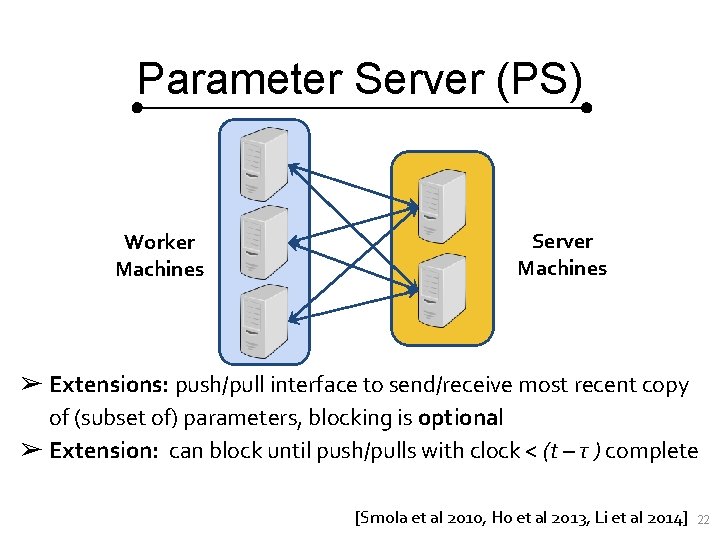

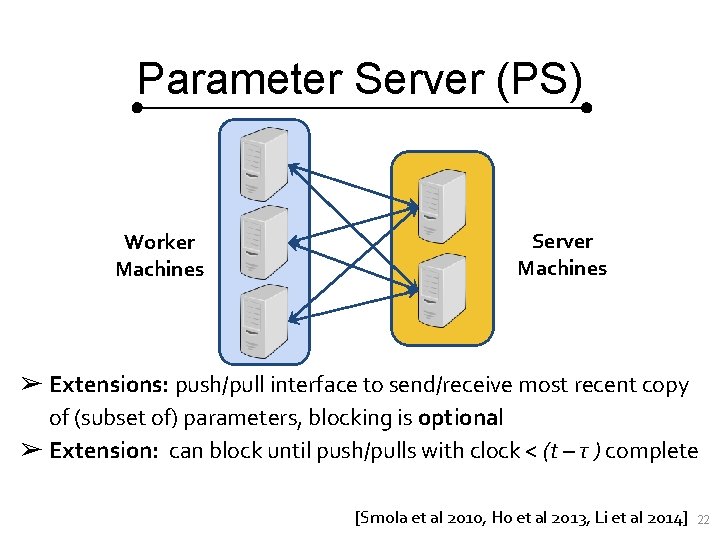

Data parallel learning with PS (figure: three parameter servers holding w1–w3, w4–w6, and w7–w9; the data is split across machines)

Data parallel learning with PS (figure: parameter servers holding w1–w3, w4–w6, and w7–w9; the data is split across machines)

1. Different parts of the model live on different servers.
2. Workers retrieve the parts they need, as they need them.

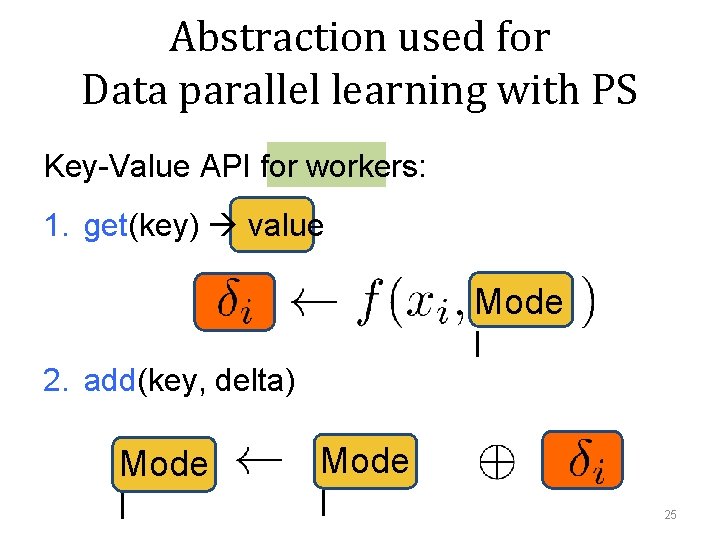

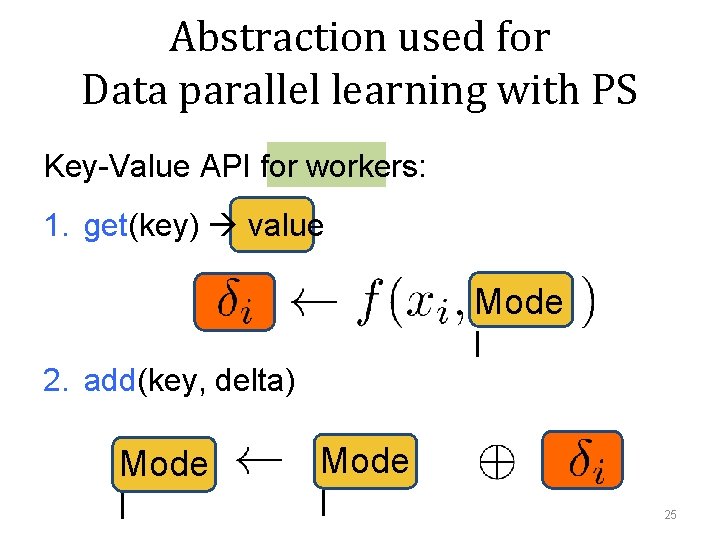

Abstraction used for data-parallel learning with a PS: a key-value API for workers (figure: both calls go to the model)

1. `get(key)` → value
2. `add(key, delta)`
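A minimal in-memory sketch of the two-call worker API (the class name `KeyValuePS` is hypothetical). It shows why `add` is preferred over a plain `set`: increments from different workers commute, so the server can apply them in any arrival order and reach the same value, up to floating-point rounding.

```python
class KeyValuePS:
    """Toy single-process PS exposing get(key) and add(key, delta) (illustrative)."""

    def __init__(self):
        self._table = {}

    def get(self, key):
        # Unseen keys read as 0.0 (as for an untouched parameter).
        return self._table.get(key, 0.0)

    def add(self, key, delta):
        # Additive update: order of arrival from different workers does not matter.
        self._table[key] = self._table.get(key, 0.0) + delta
```

Two servers that receive the same three deltas in different orders end up with identical values.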

PS vs Hadoop Map-Reduce

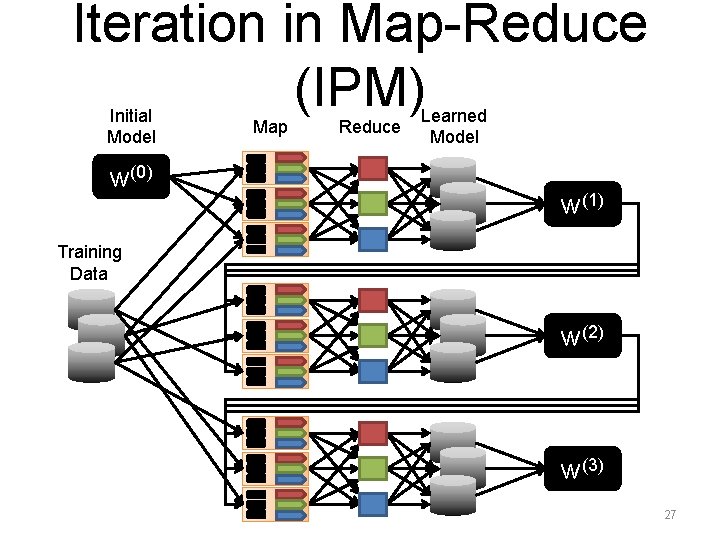

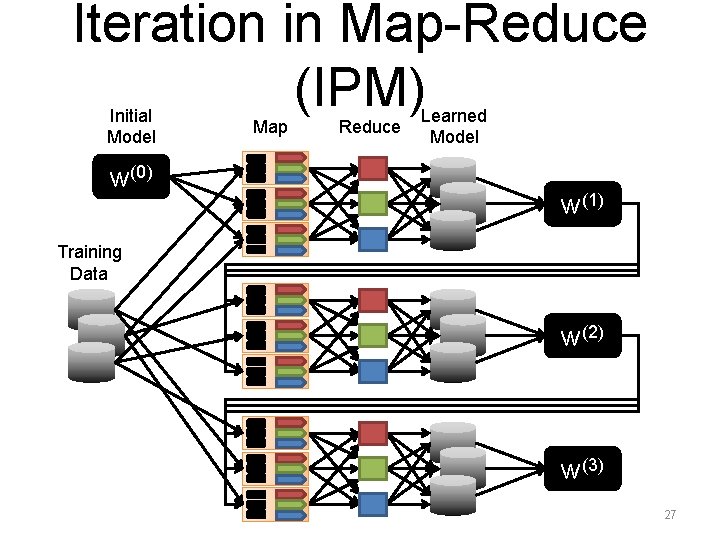

Iteration in Map-Reduce (IPM) (figure: Map and Reduce over the training data turn the initial model w(0) into learned models w(1), w(2), w(3), …)

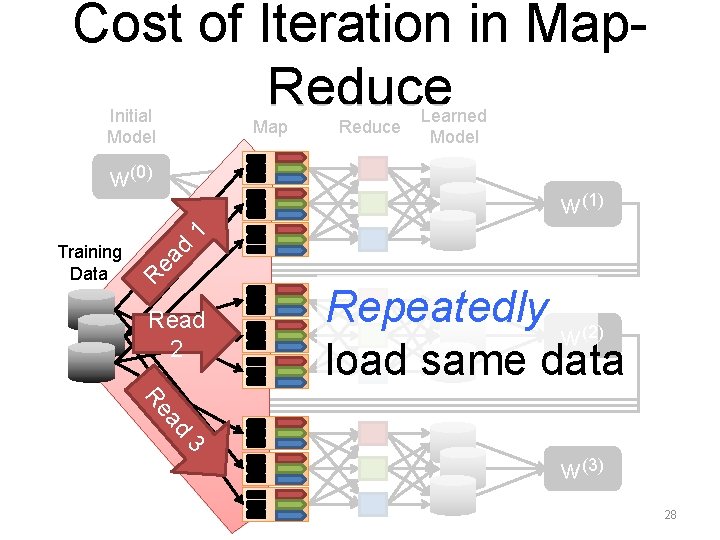

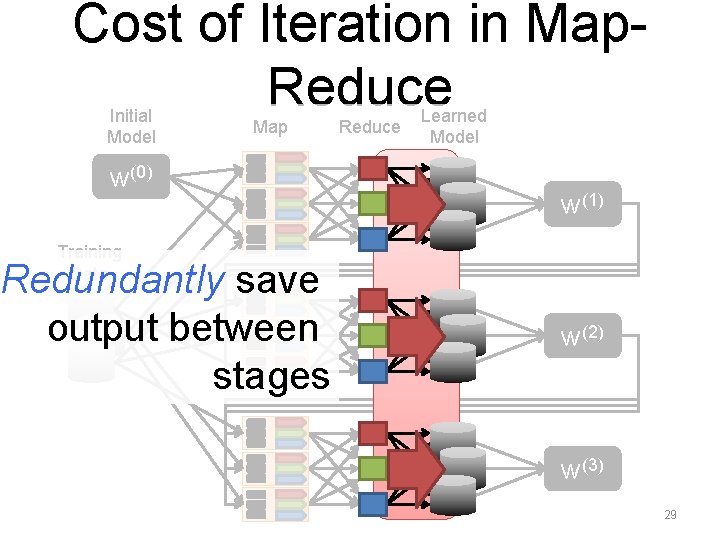

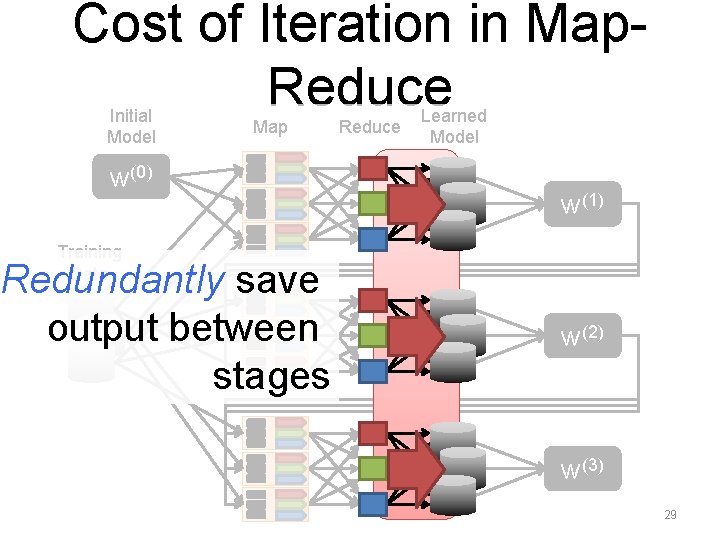

Cost of Iteration in MapReduce: repeatedly load the same data (figure: the training data is read again, Read 1, Read 2, Read 3, to produce each of w(1), w(2), w(3))

Cost of Iteration in MapReduce: redundantly save output between stages (figure: each model w(0), w(1), w(2), w(3) is saved after every Map/Reduce stage)
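A sketch of iterative parameter mixing written the way a MapReduce job would run it, assuming least-squares SGD as a stand-in learner (our choice; shard layout and function names are hypothetical). Every iteration re-reads all shards (map) and averages the local models (reduce). Those are the two costs pictured above.

```python
import numpy as np

def local_sgd(w, shard, eta=0.1):
    """'Map' side: one SGD pass over a shard for least squares, starting from w."""
    w = w.copy()
    for x, y in shard:
        w -= eta * (w @ x - y) * x
    return w

def iterative_parameter_mixing(shards, dim, iterations):
    """IPM: every iteration maps over ALL shards again and reduces by averaging."""
    w = np.zeros(dim)                                         # initial model w(0)
    for _ in range(iterations):
        local = [local_sgd(w, shard) for shard in shards]     # map: re-reads each shard
        w = np.mean(local, axis=0)                            # reduce: average -> next w
        # On Hadoop, this w would be written to disk and reloaded by the next job.
    return w
```

With noiseless data generated from w = (2, −1) and split into four shards, 20 IPM iterations recover (2, −1). On Hadoop, each of those iterations would be a separate job that reloads the shards and rewrites the model.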

Parameter Servers: Stale Synchronous Parallel Model (slides courtesy of Aurick Qiao, Joseph Gonzalez, Wei Dai, and Jinliang Wei)

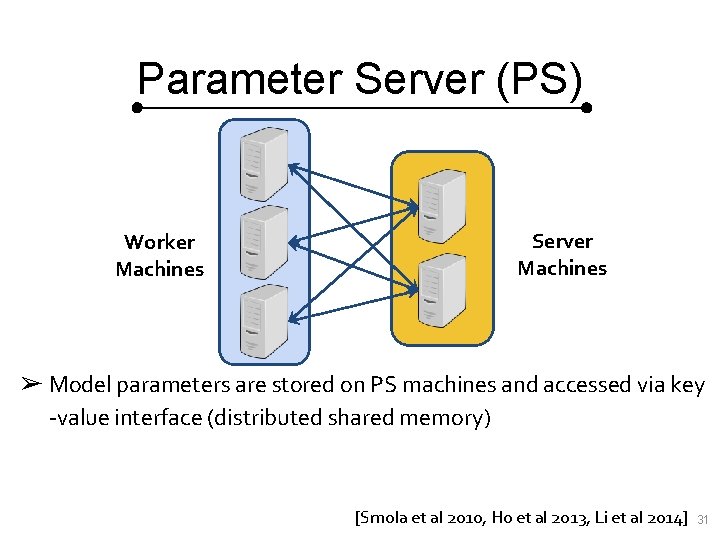

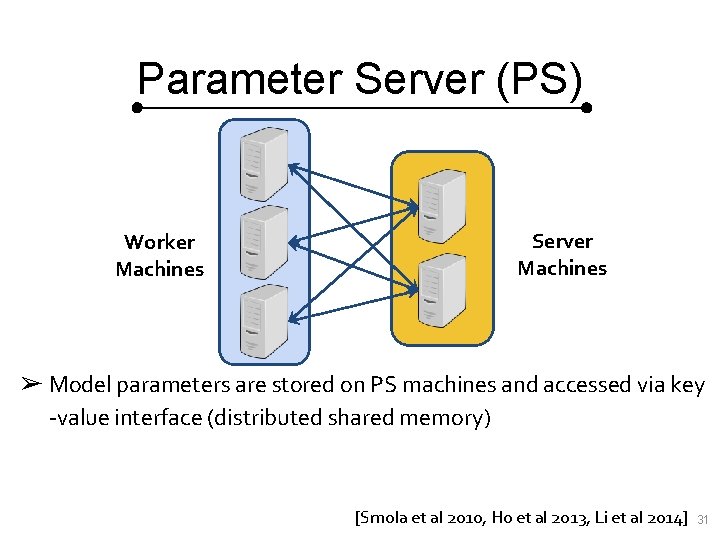

Parameter Server (PS) (figure: worker machines and server machines)

- Model parameters are stored on PS machines and accessed via a key-value interface (distributed shared memory)

[Smola et al 2010, Ho et al 2013, Li et al 2014]

Iterative ML Algorithms (figure: a worker reads its data and the model parameters)

- Topic model, matrix factorization, SVM, deep neural network, …

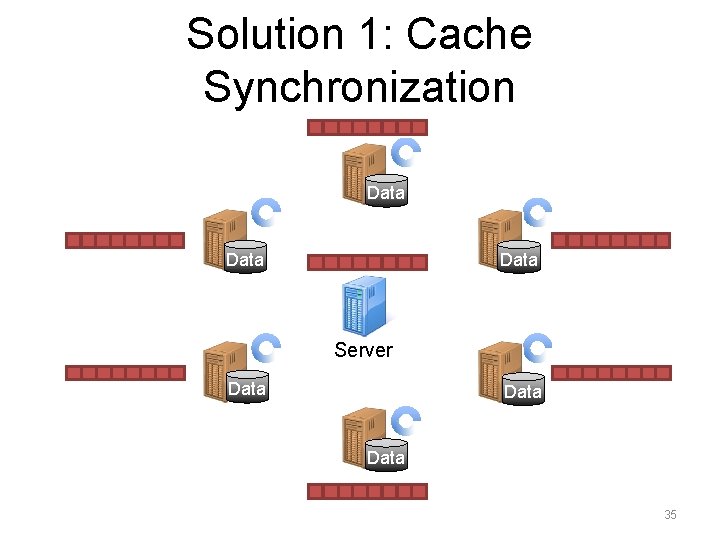

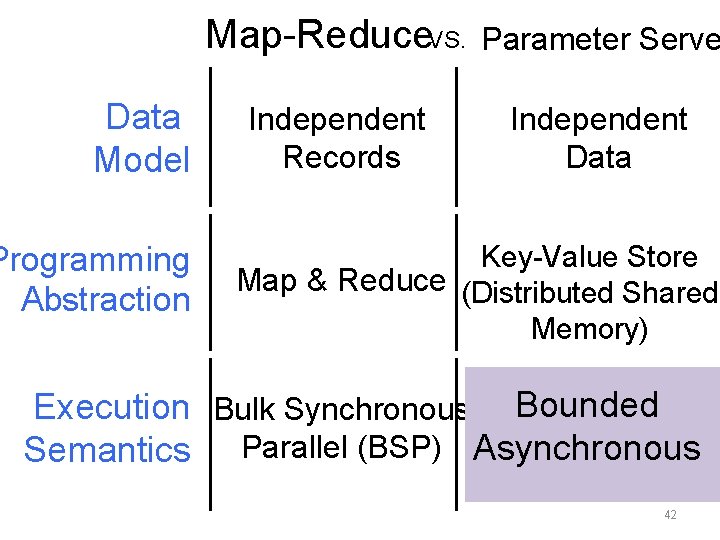

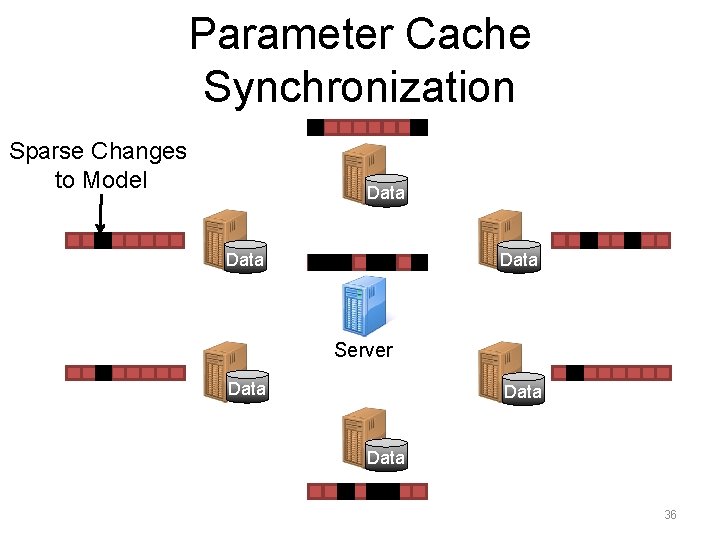

Map-Reduce vs. Parameter Server

| | Map-Reduce | Parameter Server |
|---|---|---|
| Data Model | Independent Records | Independent Data |
| Programming Abstraction | Map & Reduce | Key-Value Store (Distributed Shared Memory) |
| Execution Semantics | Bulk Synchronous Parallel (BSP) | ? |

The Problem: Networks Are Slow! (figure: worker machines call get(key) and add(key, delta) on server machines)

- The network is slow compared to local memory access
- We want to explore options for handling this…

[Smola et al 2010, Ho et al 2013, Li et al 2014]

Solution 1: Cache Synchronization (figure: workers, each with its own data, synchronizing with a server)

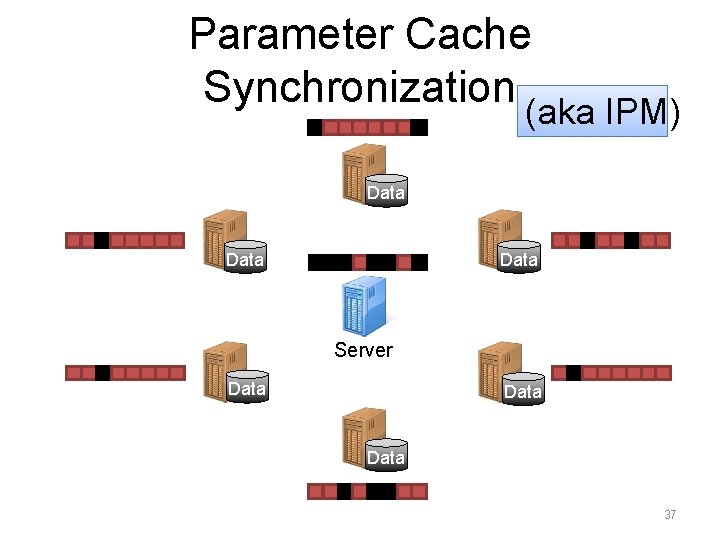

Parameter Cache Synchronization: sparse changes to the model (figure: workers, each with its own data, send sparse changes to the server)
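A sketch of a worker-side parameter cache that ships only sparse changes, assuming the get/add API from earlier. `DictServer` is a hypothetical in-process stand-in for a remote server, and it counts calls to mimic network round trips. Reads hit the server only on a cache miss. Writes are accumulated locally and flushed as one delta per changed key.

```python
class DictServer:
    """Stand-in for a remote PS shard with the get/add API (in-process dict)."""

    def __init__(self):
        self.table = {}
        self.calls = 0                       # simulated network round trips

    def get(self, key):
        self.calls += 1
        return self.table.get(key, 0.0)

    def add(self, key, delta):
        self.calls += 1
        self.table[key] = self.table.get(key, 0.0) + delta


class CachingWorker:
    """Worker-side cache: local reads/writes, periodic sparse flush (illustrative)."""

    def __init__(self, server):
        self.server = server
        self.cache = {}      # local copy of parameters read so far
        self.pending = {}    # accumulated, not-yet-sent deltas (only changed keys)

    def get(self, key):
        if key not in self.cache:
            self.cache[key] = self.server.get(key)   # round trip only on a miss
        return self.cache[key]

    def add(self, key, delta):
        self.cache[key] = self.get(key) + delta      # local view updates immediately
        self.pending[key] = self.pending.get(key, 0.0) + delta

    def sync(self):
        """Send one delta per changed key, then drop the cache so reads refresh."""
        for key, delta in self.pending.items():
            self.server.add(key, delta)
        sent = len(self.pending)
        self.pending.clear()
        self.cache.clear()
        return sent
```

Three local `add` calls on two keys cost two reads and, at `sync()`, two writes, no matter how many times each key was updated in between.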

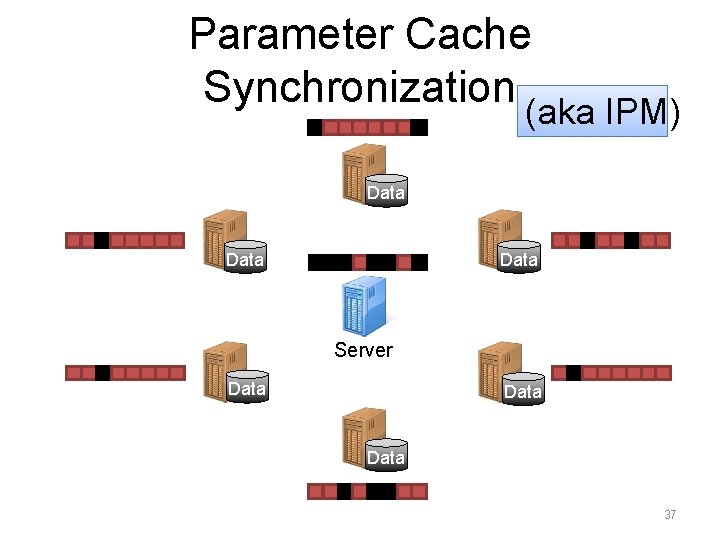

Parameter Cache Synchronization (aka IPM) (figure: workers, each with its own data, synchronizing with a server)

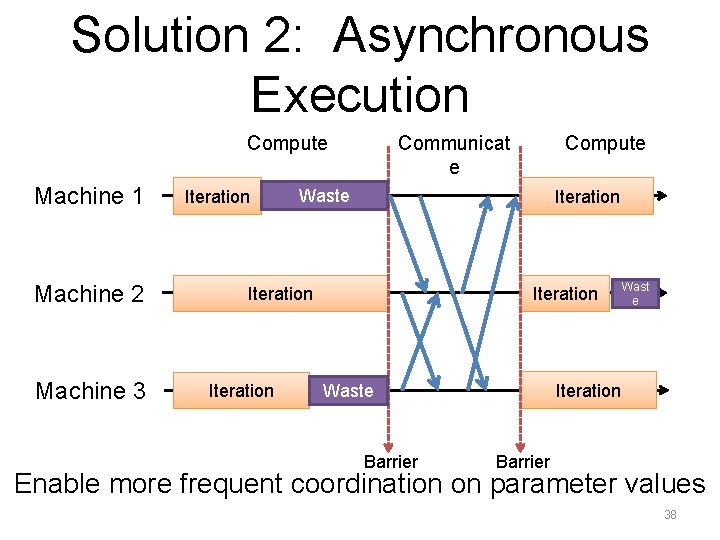

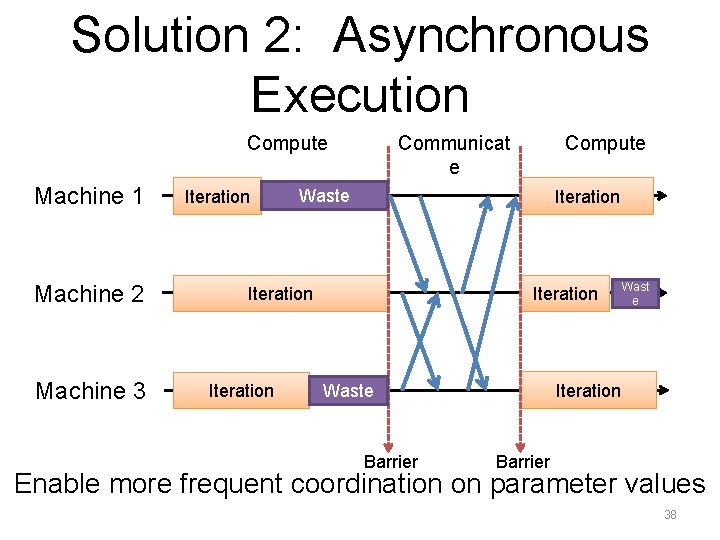

Solution 2: Asynchronous Execution (figure: under BSP, machines 1–3 each compute an iteration and communicate, then wait at a barrier for the slowest machine; that waiting time is wasted)

Enable more frequent coordination on parameter values.
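The waste at a barrier is easy to quantify. The sketch below assumes per-iteration compute times are known and ignores communication time. Each machine idles for the difference between the slowest machine's time and its own.

```python
def bsp_idle_time(iteration_times):
    """Per-machine idle time at a BSP barrier for one iteration.

    Every machine waits for the slowest, so machine i wastes max(times) - times[i].
    """
    slowest = max(iteration_times)
    return [slowest - t for t in iteration_times]


def bsp_utilization(iteration_times):
    """Fraction of machine-time spent computing rather than waiting."""
    return sum(iteration_times) / (len(iteration_times) * max(iteration_times))
```

With iteration times of 1.0, 1.5, and 3.0 seconds, the idle times are 2.0, 1.5, and 0.0 seconds, and utilization is 5.5/9 ≈ 61%. One straggler sets the pace for everyone.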

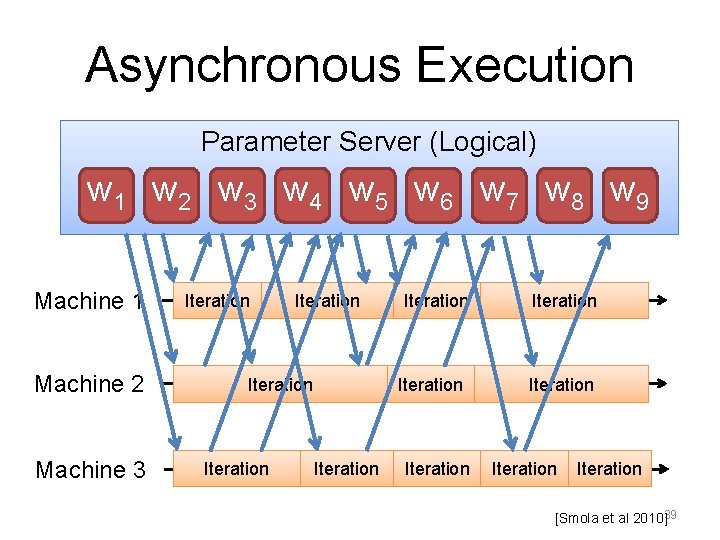

Asynchronous Execution (figure: a logical parameter server holding w1 … w9; machines 1–3 each run iterations at their own pace) [Smola et al 2010]

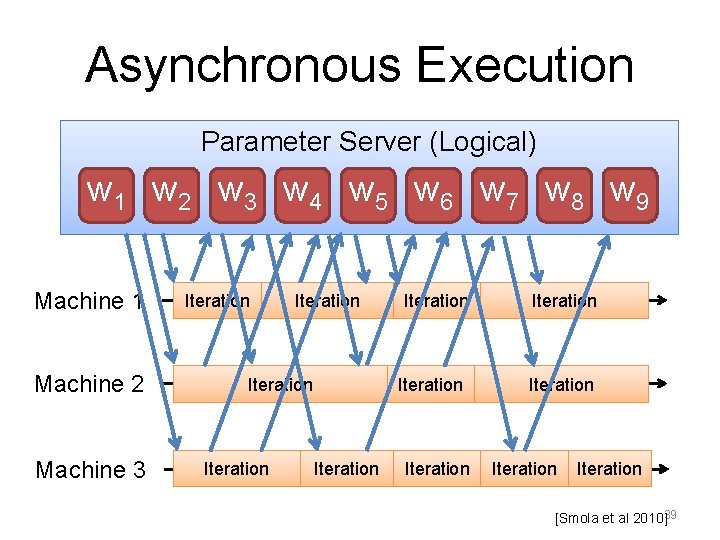

Asynchronous Execution

Problem: async lacks theoretical guarantees, because a distributed environment can have arbitrary delays from the network and from stragglers. But…

RECAP: $f$ is the loss function, $x$ the parameters.

1. Take a gradient step: $x' = x_t - \eta_t g_t$
2. If you've restricted the parameters to a subspace $X$ (e.g., must be positive, …), find the closest thing in $X$ to $x'$: $x_{t+1} = \arg\min_{x \in X} \mathrm{dist}(x, x')$
3. But… you might be using a "stale" $g$ (from $\tau$ steps ago)

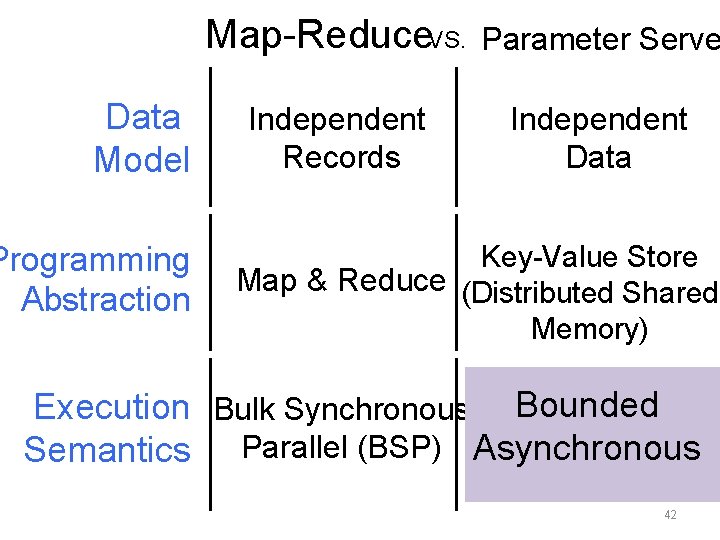

Map-Reduce vs. Parameter Server

| | Map-Reduce | Parameter Server |
|---|---|---|
| Data Model | Independent Records | Independent Data |
| Programming Abstraction | Map & Reduce | Key-Value Store (Distributed Shared Memory) |
| Execution Semantics | Bulk Synchronous Parallel (BSP) | Bounded Asynchronous |

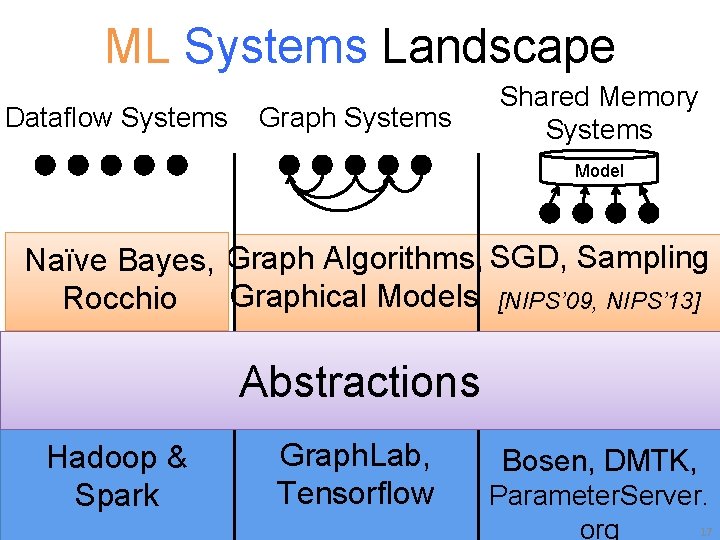

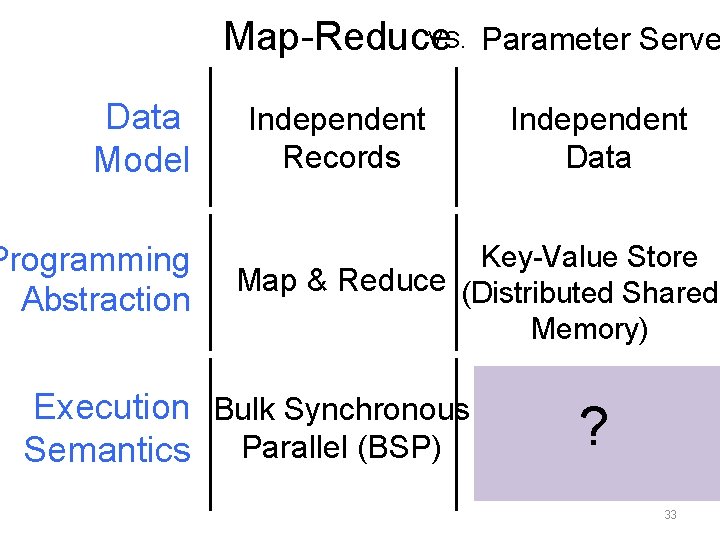

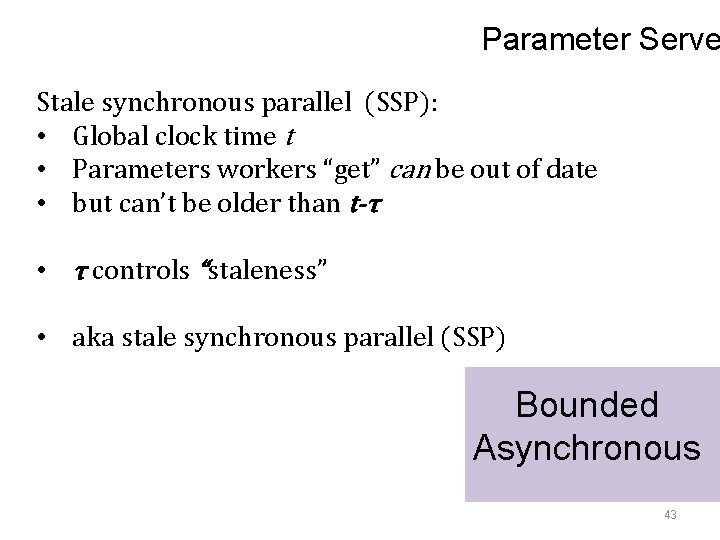

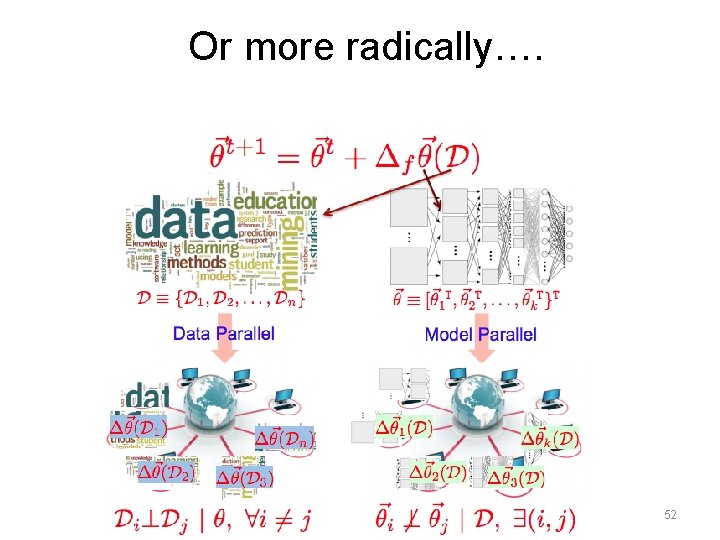

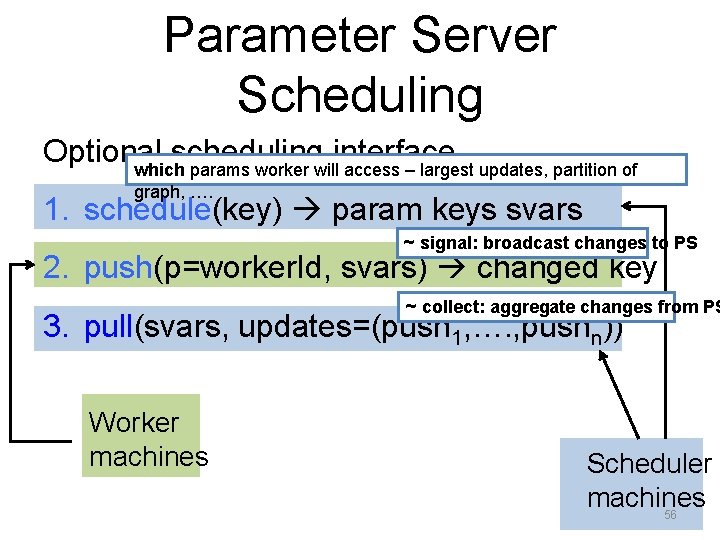

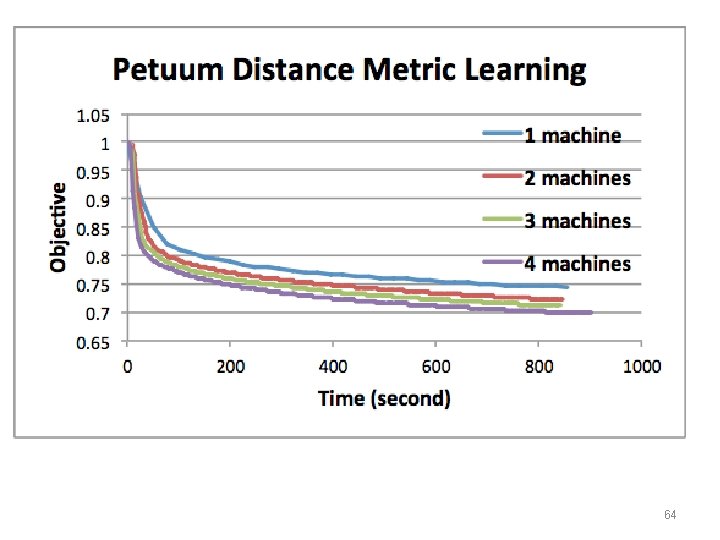

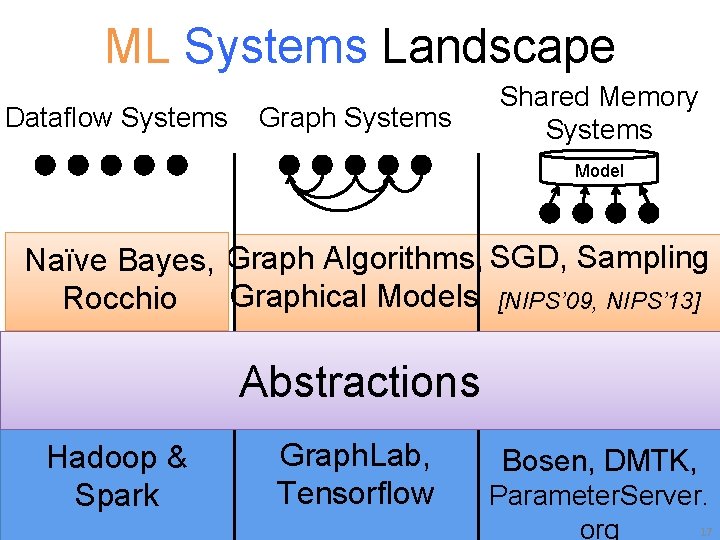

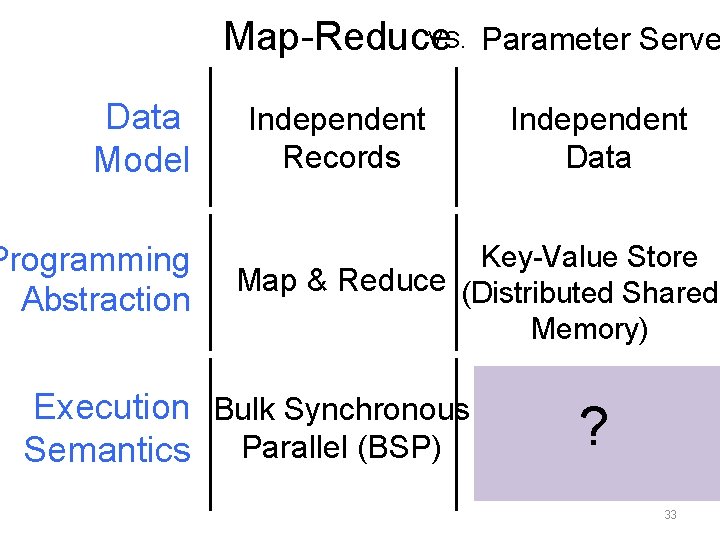

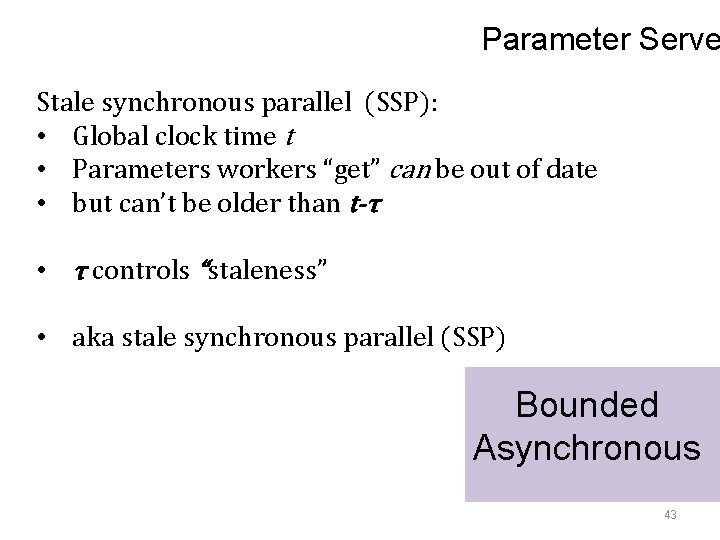

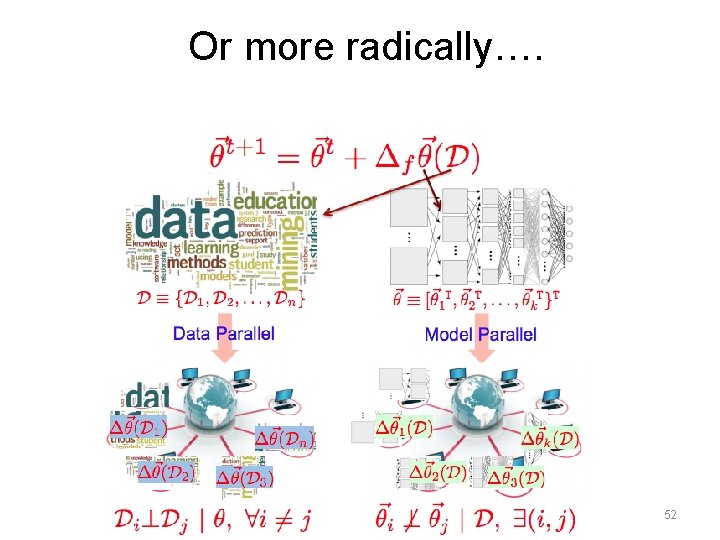

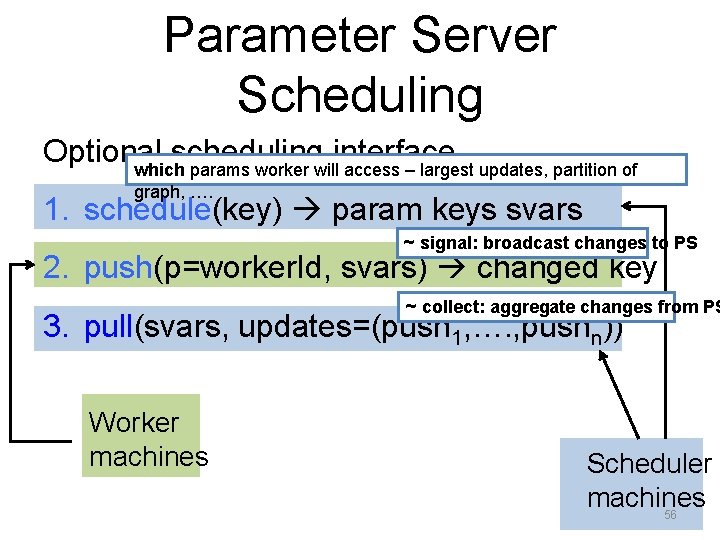

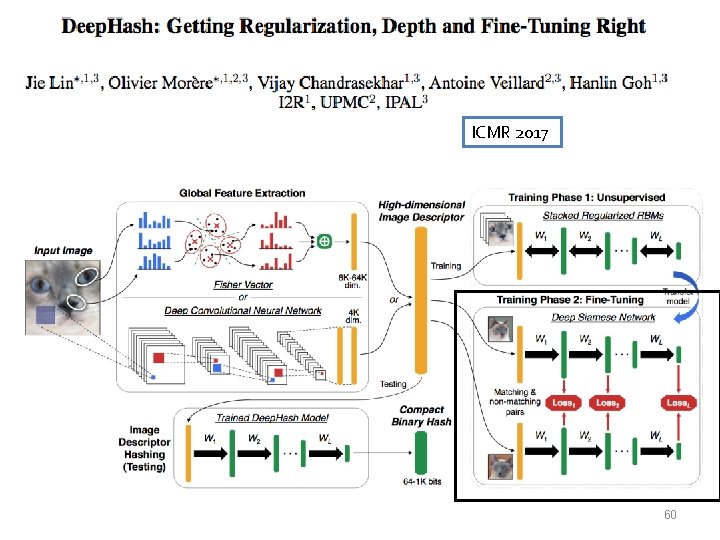

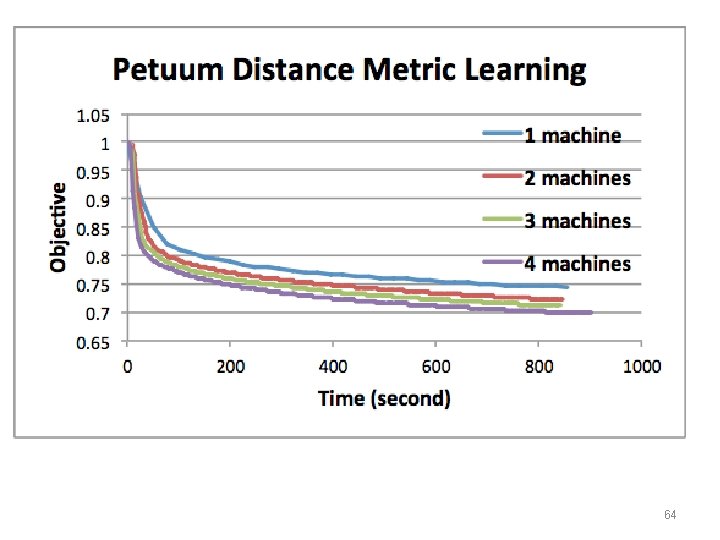

Parameter Server: stale synchronous parallel (SSP), aka bounded asynchronous

- Global clock time t
- Parameters that workers "get" can be out of date
- … but can't be older than t − τ
- τ controls "staleness"

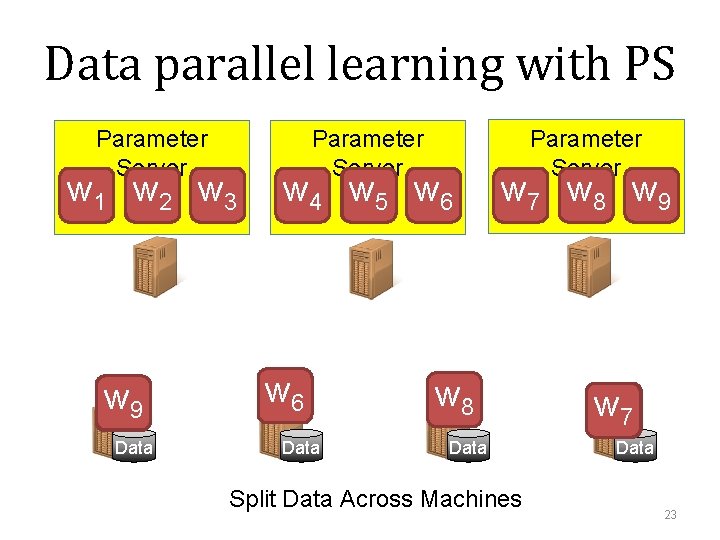

Stale Synchronous Parallel (SSP)

- Interpolates between BSP and async, and subsumes both
- Allows workers to usually run at their own pace
- The fastest and slowest threads are not allowed to drift more than s clocks apart
- Efficiently implemented: cache parameters

[Ho et al 2013]
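A small discrete-event simulation of the drift rule, assuming deterministic per-iteration durations. `durations[i][c]` is worker i's time for its c-th iteration, a hypothetical input format. A worker may start a new iteration only if its clock minus the slowest worker's clock is at most `staleness`. Setting 0 gives BSP, and a very large value behaves like fully asynchronous execution.

```python
def simulate_ssp(durations, staleness, num_clocks):
    """Return (finish time of the last worker, largest clock gap at any iteration start)."""
    n = len(durations)
    clocks = [0] * n            # iterations completed by each worker
    finish = [None] * n         # end time of the in-flight iteration; None if waiting
    now, max_gap = 0.0, 0
    while min(clocks) < num_clocks:
        slowest = min(clocks)
        # Start every idle worker that is within the staleness bound.
        for i in range(n):
            gap = clocks[i] - slowest
            if finish[i] is None and clocks[i] < num_clocks and gap <= staleness:
                finish[i] = now + durations[i][clocks[i]]
                max_gap = max(max_gap, gap)
        # Jump to the next completion event.
        now = min(f for f in finish if f is not None)
        for i in range(n):
            if finish[i] == now:
                clocks[i] += 1
                finish[i] = None
    return now, max_gap
```

Suppose each of three workers is the straggler (3 time units instead of 1) on a different iteration. Then BSP (staleness 0) needs 9 units, SSP with staleness 1 needs 6, and unbounded async needs 5, but async lets clocks drift 2 apart.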

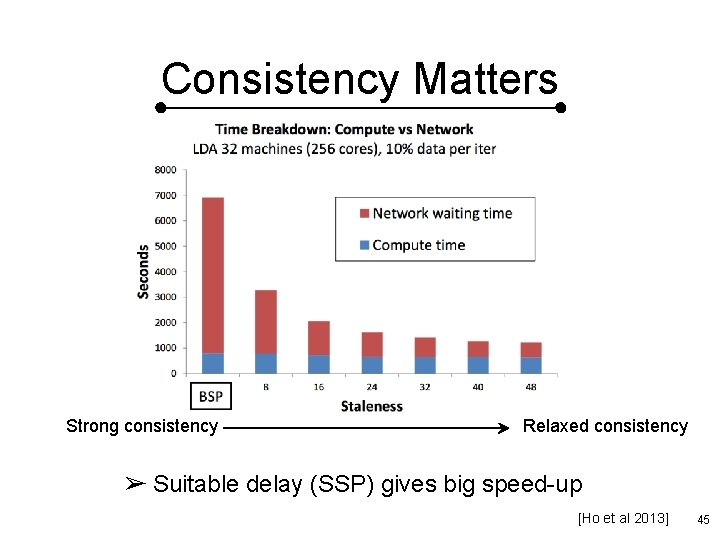

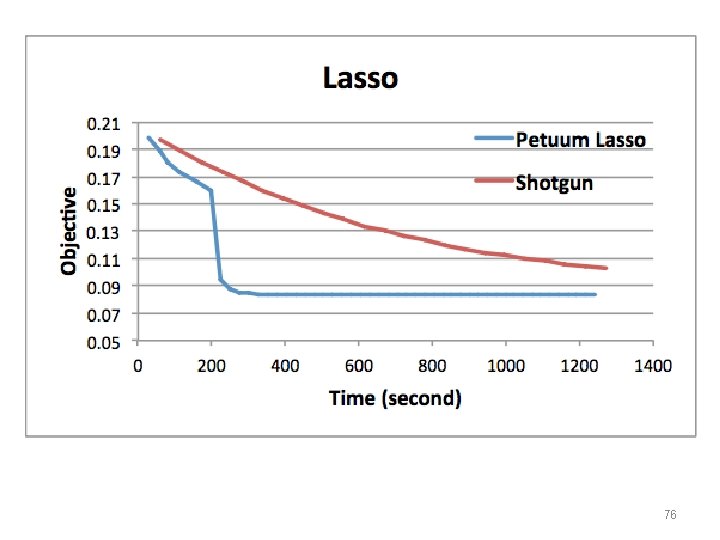

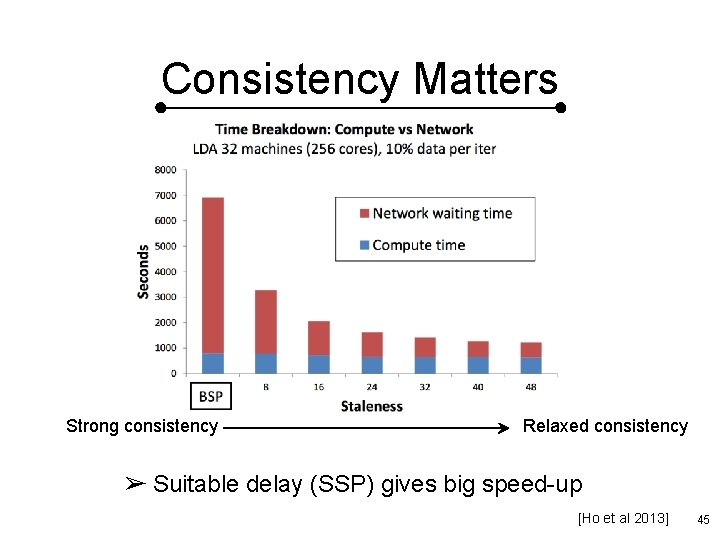

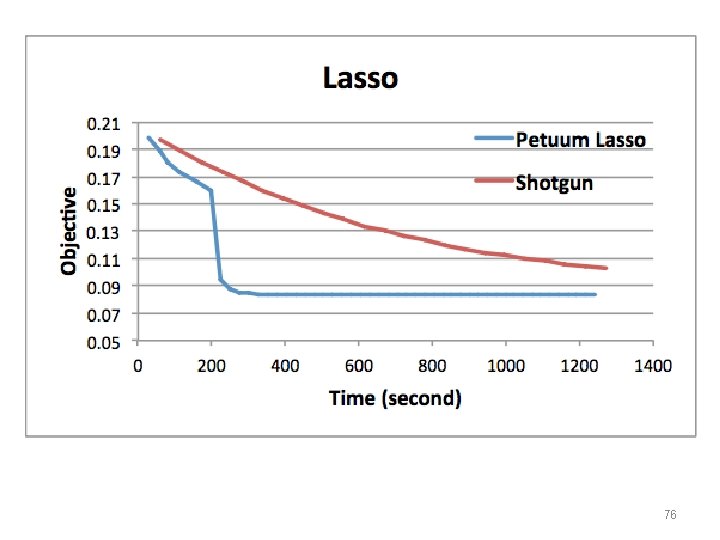

Consistency Matters (figure: strong consistency vs. relaxed consistency)

- A suitable delay (SSP) gives a big speed-up [Ho et al 2013]

![Stale Synchronous Parallel (SSP) [Ho et al 2013]](https://slidetodoc.com/presentation_image/1003a79ae3d448eee4fc2814e91ebb54/image-46.jpg)

Stale Synchronous Parallel (SSP) [Ho et al 2013]

Beyond the PS/SSP Abstraction…

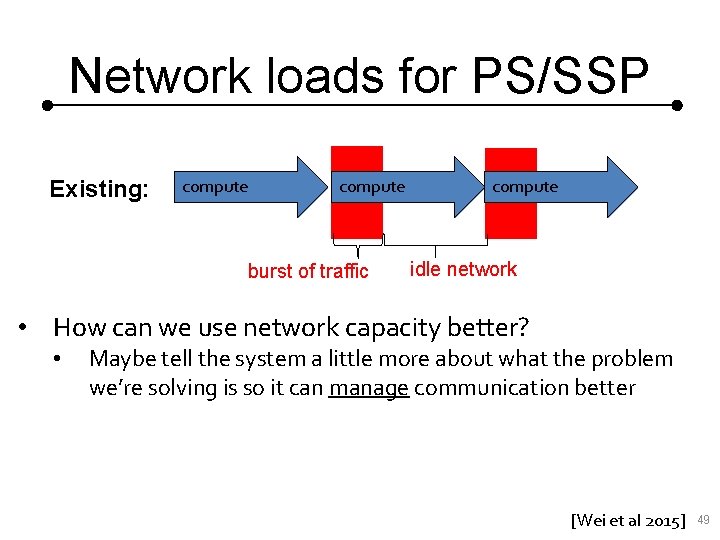

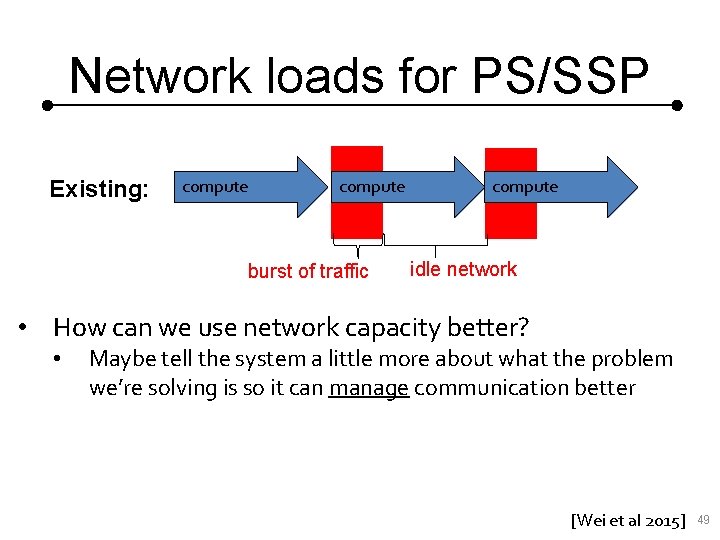

Network loads for PS/SSP (figure: existing systems alternate compute with a burst of traffic, then compute with an idle network)

- How can we use network capacity better?
- Maybe tell the system a little more about the problem we're solving, so it can manage communication better

[Wei et al 2015]

Ways to Manage Communication

- Model parameters are not equally important
- E.g., the majority of the parameters may converge in a few iterations
- Communicate the more important parameter values or updates
- The magnitude of the changes indicates importance
- Magnitude-based prioritization strategies
- Example: relative-magnitude prioritization

[Wei et al 2015]

We saw many of these ideas in the signal/collect paper.
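A sketch of magnitude-based prioritization under a fixed per-round bandwidth budget. We assume "relative magnitude" means the accumulated update divided by the current parameter value. That is our reading, not necessarily the exact rule in Wei et al. Updates that don't make the cut stay buffered for a later round.

```python
def prioritize_updates(pending, params, budget, eps=1e-8):
    """Choose which buffered updates to send when only `budget` fit in this round.

    Score = |accumulated delta| / (|current value| + eps)  (assumed definition of
    "relative magnitude"). Unsent updates stay buffered for later rounds.
    pending, params: dicts mapping key -> float.
    """
    score = {k: abs(d) / (abs(params.get(k, 0.0)) + eps) for k, d in pending.items()}
    chosen = sorted(score, key=score.get, reverse=True)[:budget]
    send = {k: pending[k] for k in chosen}
    keep = {k: d for k, d in pending.items() if k not in send}
    return send, keep
```

A change of 0.1 to a parameter worth 0.01 outranks a change of 5.0 to a parameter worth 100. Relative scoring favors parameters that are still moving a lot compared to their size.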

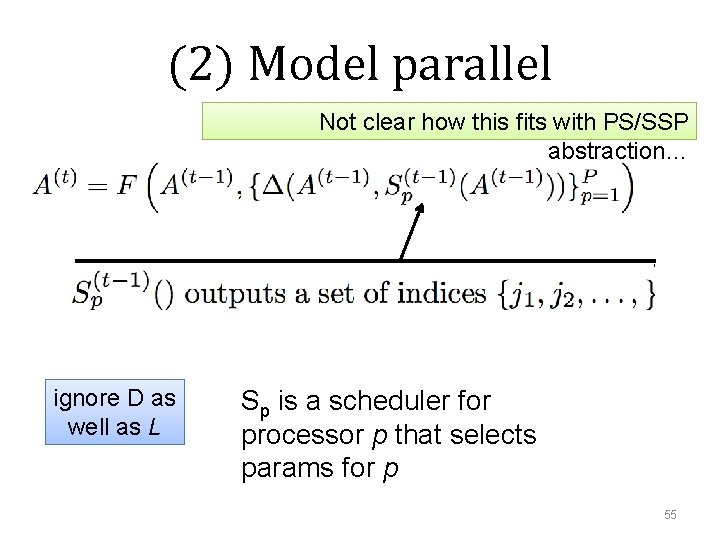

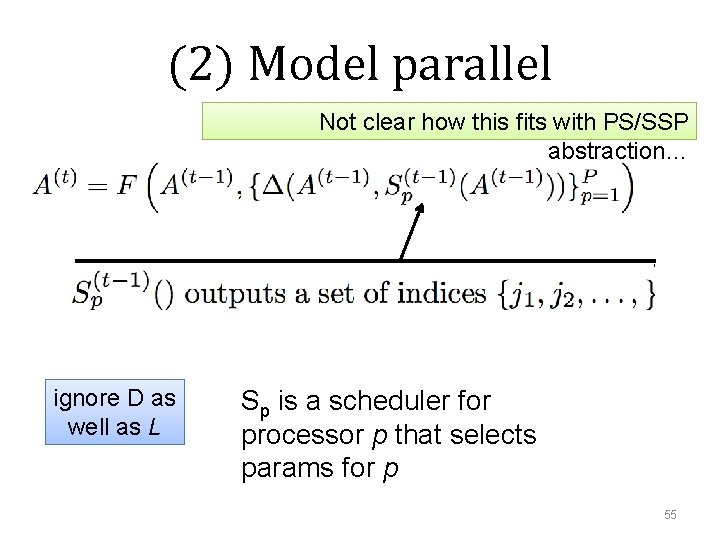

Or, more radically…

Iterative ML Algorithms (notation: A = params at time t, L = loss, Δ = gradient, D = data, F = update)

- Many ML algorithms are iterative-convergent
- Examples: optimization, sampling methods
- Topic model, matrix factorization, SVM, deep neural network, …
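Written as an equation with the slide's symbols (our rendering of the generic iterative-convergent form), each iteration applies the update function F to the previous parameters and to a gradient-like quantity computed from the data:

$$
A^{(t)} = F\!\left(A^{(t-1)},\ \Delta_L\big(A^{(t-1)}, D\big)\right)
$$

This is repeated until A stops changing. The data-parallel and model-parallel variants differ only in how the work behind Δ is split across workers.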

Iterative ML with a Parameter Server: (1) Data Parallel, a good fit for the PS/SSP abstraction

(figure annotations: Δ is the gradient of L; D_p is data shard p, assumed i.i.d.; the per-worker updates are usually combined by adding them, and each worker often adds its own contributions locally first, like a combiner)

- Each worker is assigned a data partition
- Model parameters are shared by workers
- Workers read and update the model parameters
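A sketch of one data-parallel iteration with additive updates, assuming gradient descent as the update rule and a caller-supplied `grad(A, D_p)` (hypothetical). Each worker sums over its own shard first, the combiner step, and the server then adds the P contributions.

```python
import numpy as np

def data_parallel_step(A, shards, grad, eta):
    """One data-parallel iteration: A(t) = A(t-1) - eta * sum_p grad(A(t-1), D_p).

    Worker p computes grad(A, D_p) on its own shard (a local sum over its
    examples, like a combiner); the PS adds the P contributions.
    """
    total = np.zeros_like(A)
    for D_p in shards:              # sequential here; parallel workers in a real PS
        total += grad(A, D_p)       # worker p's contribution ("add" to the PS)
    return A - eta * total          # new parameters A(t)
```

Take `grad = lambda A, D: np.sum(A - D, axis=0)`, the gradient of ½Σ‖A − x‖². Repeating the step with two shards {1, 2} and {3, 4} converges to their mean, 2.5, exactly as if one machine had seen all four points.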

(2) Model Parallel: not clear how this fits the PS/SSP abstraction…

- The update ignores D as well as L
- $S_p$ is a scheduler for processor p that selects the params for p
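One way to write the model-parallel update in the same notation, assuming F simply adds the workers' contributions (our rendering):

$$
A^{(t)} = A^{(t-1)} + \sum_{p=1}^{P} \Delta_p\!\left(A^{(t-1)},\ S_p\big(A^{(t-1)}\big)\right)
$$

Here $S_p$ returns the subset of parameters that processor p may update in this iteration. Each $\Delta_p$ changes only those parameters, which is why D and L drop out of the notation.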

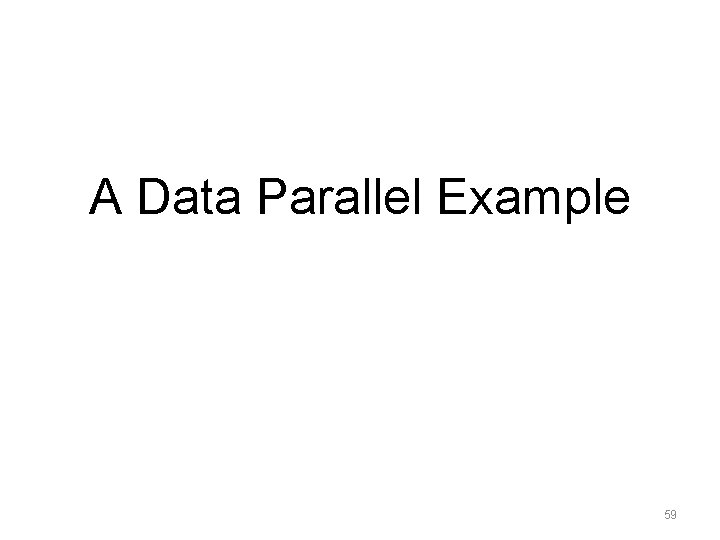

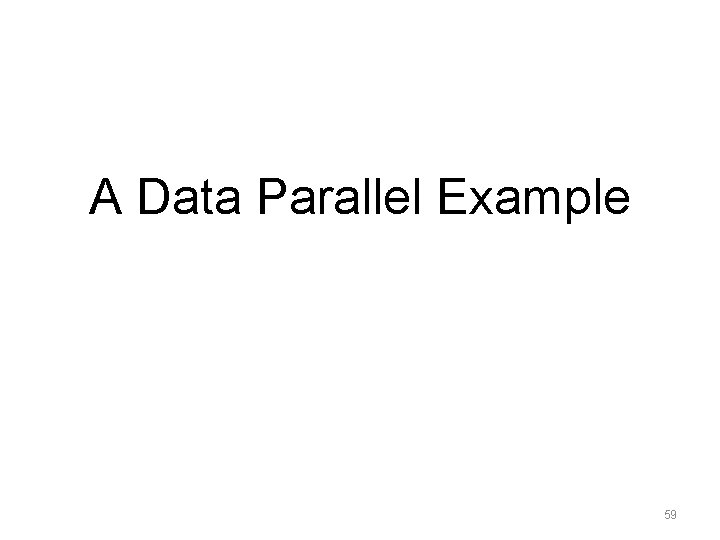

Parameter Server Scheduling: an optional scheduling interface that decides which params each worker will access (e.g., the largest updates, a partition of a graph, …)

1. `schedule(key)` → param keys `svars`
2. `push(p=workerId, svars)` → changed keys (~ signal: broadcast changes to the PS)
3. `pull(svars, updates=(push1, …, pushn))` (~ collect: aggregate changes from the PS)

(figure: worker machines and scheduler machines)
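A single-process sketch of the schedule/push/pull cycle. Only the three method names come from the slide. The signatures, the `compute_update(worker_id, key, value)` callback, and the "largest recent update first" policy are our assumptions.

```python
class ScheduledPS:
    """Toy, single-process sketch of the schedule/push/pull interface.

    Method names follow the slide; signatures, the scheduling policy, and the
    compute_update callback are illustrative assumptions.
    """

    def __init__(self, params, num_workers, compute_update):
        self.params = dict(params)
        self.num_workers = num_workers
        self.compute_update = compute_update                       # (worker_id, key, value) -> delta
        self.last_delta = {k: float("inf") for k in self.params}  # never-updated keys go first

    def schedule(self, k=None):
        """Pick up to k keys with the largest recent updates; split them round-robin."""
        keys = sorted(self.params, key=lambda key: abs(self.last_delta[key]), reverse=True)[:k]
        return [keys[p::self.num_workers] for p in range(self.num_workers)]

    def push(self, p, svars):
        """Worker p computes deltas for its scheduled keys (~ signal)."""
        return {key: self.compute_update(p, key, self.params[key]) for key in svars}

    def pull(self, svars, updates):
        """Scheduler aggregates all workers' deltas into the model (~ collect)."""
        round_delta = {}
        for upd in updates:
            for key, delta in upd.items():
                round_delta[key] = round_delta.get(key, 0.0) + delta
        for key, delta in round_delta.items():
            self.params[key] += delta
            self.last_delta[key] = delta
        return {key: self.params[key] for key in svars}
```

One round is `assign = ps.schedule()`, then `ups = [ps.push(p, assign[p]) for p in range(ps.num_workers)]`, then `ps.pull(all_keys, ups)`. After that round, `schedule(k)` favors the keys that just moved the most.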

Support for model-parallel programs

(figure: schedule() and pull() are centrally executed; push() is distributed)

Similar to signal-collect: schedule() defines the graph, workers push params to the scheduler, and the scheduler pulls to aggregate them and makes params available via get() and inc().

A Data Parallel Example

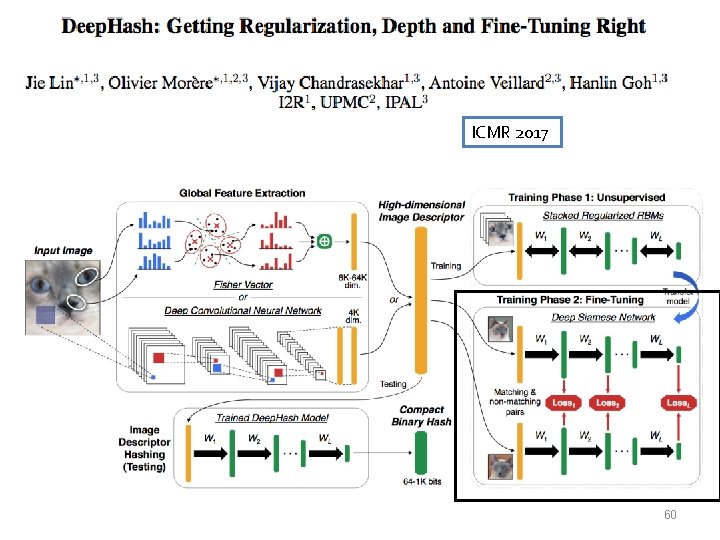

ICMR 2017

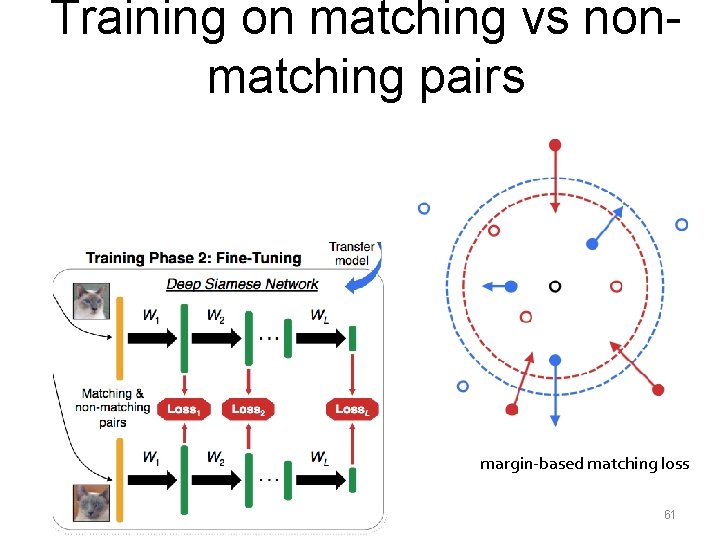

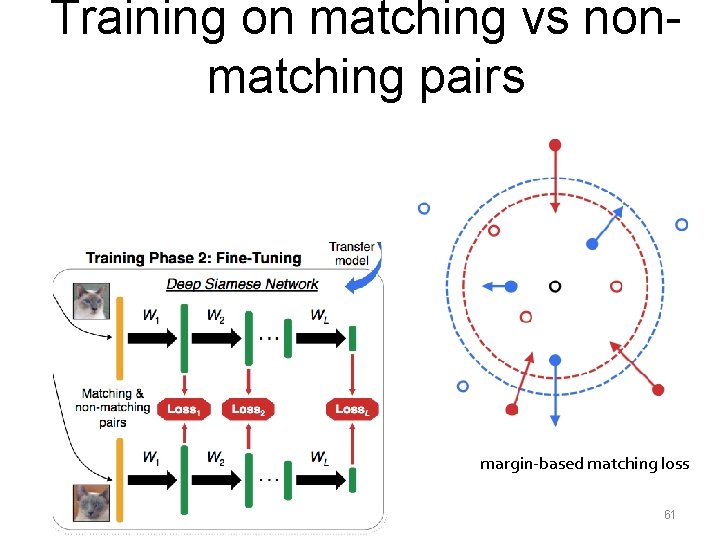

Training on matching vs. non-matching pairs (figure: margin-based matching loss)

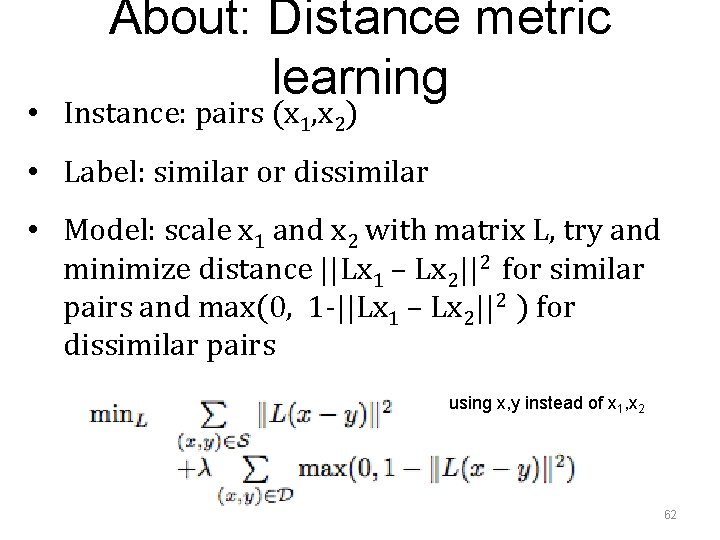

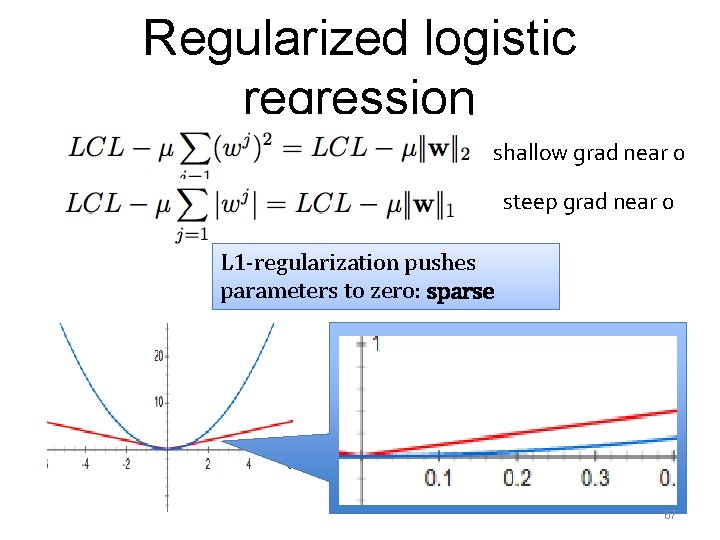

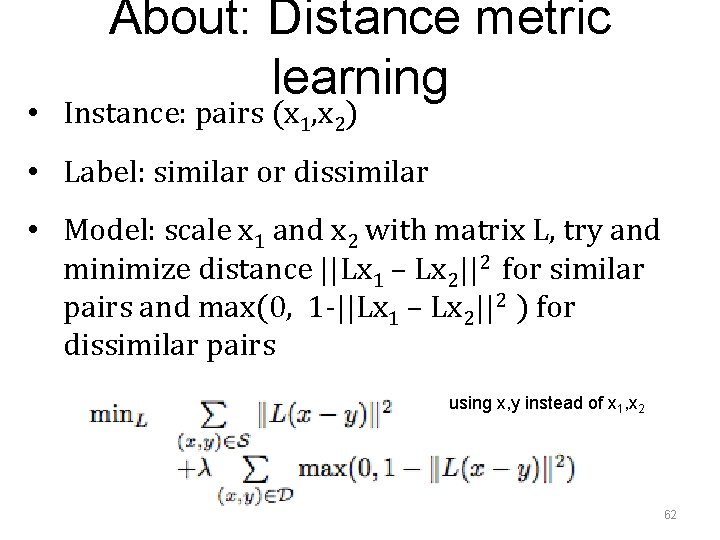

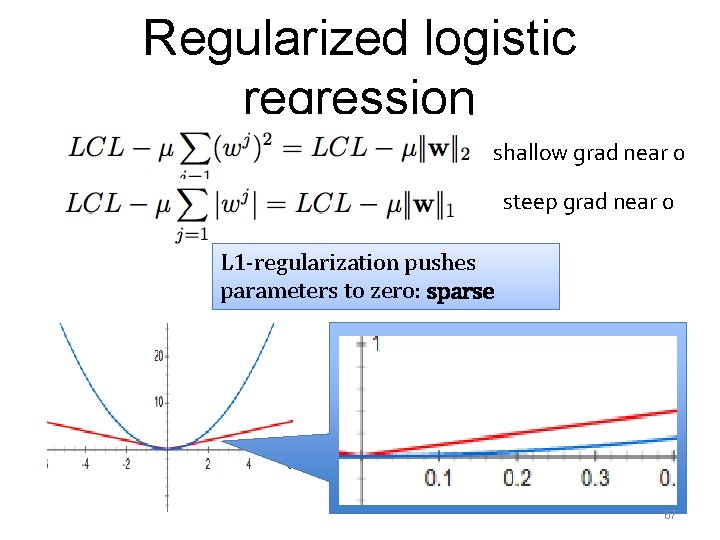

About: Distance metric learning

- Instance: pairs $(x_1, x_2)$
- Label: similar or dissimilar
- Model: scale $x_1$ and $x_2$ with a matrix $L$, and try to minimize the distance $\lVert Lx_1 - Lx_2 \rVert^2$ for similar pairs and $\max(0,\ 1 - \lVert Lx_1 - Lx_2 \rVert^2)$ for dissimilar pairs
- Notation: the figure uses x, y instead of $x_1$, $x_2$
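A per-pair loss and gradient for the model above, assuming NumPy and writing $v = x - y$, so that $d = \lVert Lv \rVert^2$ and $\partial d / \partial L = 2\,(Lv)\,v^\top$. This is what a data-parallel worker would compute before calling `add` on the entries of L. The function name is hypothetical.

```python
import numpy as np

def dml_loss_and_grad(L, x, y, similar):
    """Loss and gradient w.r.t. L for one pair, using v = x - y and d = ||L v||^2.

    similar pair:    loss = d,              grad = 2 (L v) v^T
    dissimilar pair: loss = max(0, 1 - d),  grad = -2 (L v) v^T if d < 1 else 0
    """
    v = x - y
    Lv = L @ v
    d = float(Lv @ Lv)
    outer = 2.0 * np.outer(Lv, v)          # d/dL of ||L v||^2
    if similar:
        return d, outer
    if d < 1.0:                            # inside the margin: push the pair apart
        return 1.0 - d, -outer
    return 0.0, np.zeros_like(L)           # hinge inactive: no gradient
```

With L = I and a similar pair at distance 1, one SGD step `L - 0.1 * grad` shrinks the pair's distance below 1, which is the intended effect.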

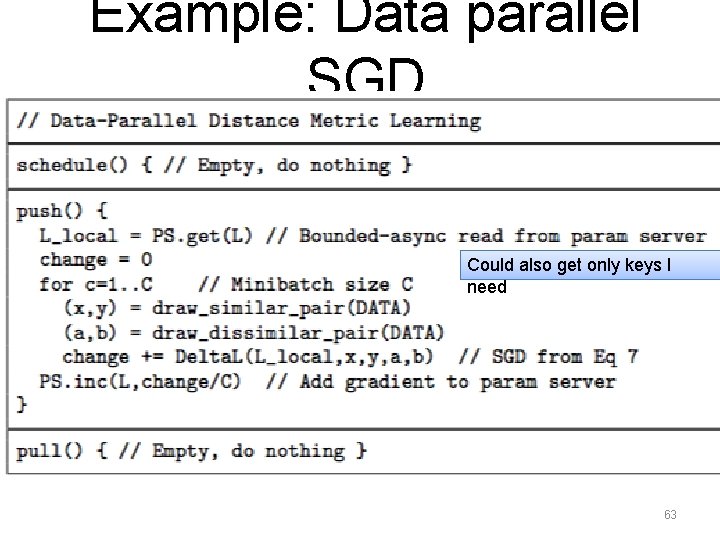

Example: Data-parallel SGD (note: could also get only the keys I need)


A Model Parallel Example: Lasso

Regularized logistic regression: replace the log conditional likelihood (LCL) with LCL + a penalty for large weights (e.g., an L2 penalty); alternative penalty (e.g., L1):

Regularized logistic regression (figure: the L2 penalty has a shallow gradient near 0; the L1 penalty has a steep gradient near 0)

L1-regularization pushes parameters to zero: sparse.
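The shallow-vs-steep contrast shows up directly in one update step. This sketch uses a gradient step for the L2 penalty (λ/2)‖w‖² and soft-thresholding, the proximal step for λ‖w‖₁, for the L1 penalty. Soft-thresholding is a standard technique we chose for the illustration, not one named on the slide.

```python
import numpy as np

def l2_shrink(w, lam, eta):
    """Gradient step on (lam/2)||w||^2: the pull lam*w fades near 0, so weights rarely hit 0."""
    return w - eta * lam * w

def l1_prox(w, lam, eta):
    """Soft-thresholding step for lam*||w||_1: a constant-size pull toward 0
    sets small weights exactly to 0, giving sparse models."""
    return np.sign(w) * np.maximum(np.abs(w) - eta * lam, 0.0)
```

Starting from w = (0.05, −0.3, 2.0) with λ = 1 and η = 0.1, the L2 step gives (0.045, −0.27, 1.8), so nothing becomes zero. The L1 step gives (0, −0.2, 1.9), so the small weight is zeroed out.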

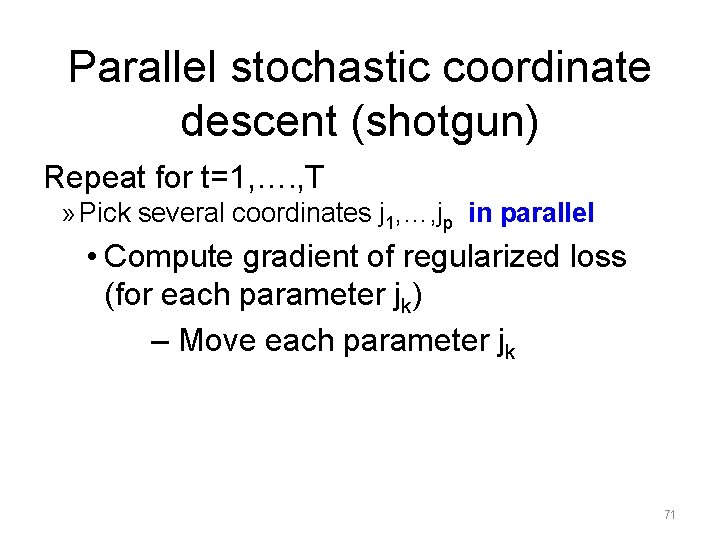

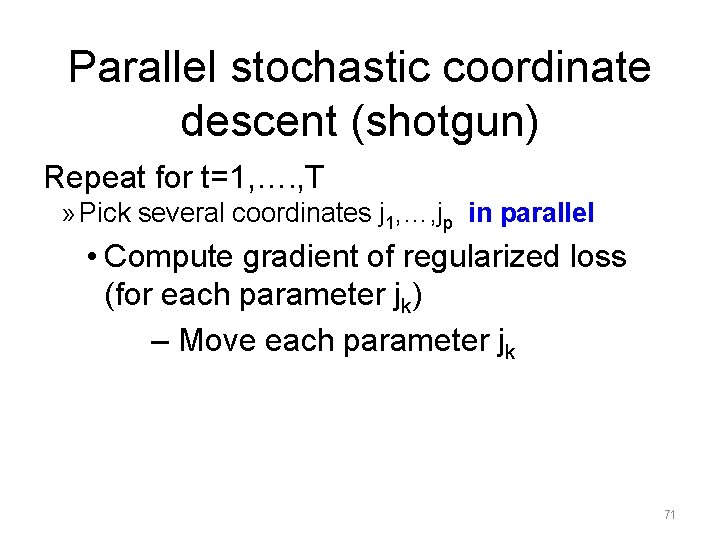

SGD

Repeat for t = 1, …, T:

- For each example:
  - Compute the gradient of the regularized loss (for that example)
  - Move all parameters in that direction (a little)

Coordinate descent

Repeat for t = 1, …, T:

- For each parameter j:
  - Compute the gradient of the regularized loss (for that parameter j)
  - Move that parameter j (a good ways, sometimes to its minimal value relative to the others)
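A sketch of cyclic coordinate descent for the Lasso objective ½‖y − Xw‖² + λ‖w‖₁, assuming NumPy and columns of X that are not all zero. Each coordinate is moved all the way to its minimizer given the others, via soft-thresholding, and the residual is kept in sync so each update costs O(n).

```python
import numpy as np

def soft_threshold(z, t):
    return np.sign(z) * max(abs(z) - t, 0.0)

def lasso_coordinate_descent(X, y, lam, iters=100):
    """Cyclic coordinate descent for 0.5*||y - Xw||^2 + lam*||w||_1."""
    n, d = X.shape
    w = np.zeros(d)
    col_sq = (X ** 2).sum(axis=0)          # ||X_j||^2 for each column
    r = y - X @ w                          # current residual
    for _ in range(iters):
        for j in range(d):
            if col_sq[j] == 0:
                continue                   # an all-zero column never changes the loss
            rho = X[:, j] @ r + col_sq[j] * w[j]       # X_j^T (residual without j)
            new_wj = soft_threshold(rho, lam) / col_sq[j]
            r -= X[:, j] * (new_wj - w[j])             # keep residual in sync
            w[j] = new_wj
    return w
```

With X = I, the solution is just y soft-thresholded by λ. For y = (3, 0.5, −2) and λ = 1 that gives (2, 0, −1), so the middle weight is exactly zero.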

Stochastic coordinate descent

Repeat for t = 1, …, T:

- Pick a random parameter j:
  - Compute the gradient of the regularized loss (for that parameter j)
  - Move that parameter j (a good ways, sometimes to its minimal value relative to the others)

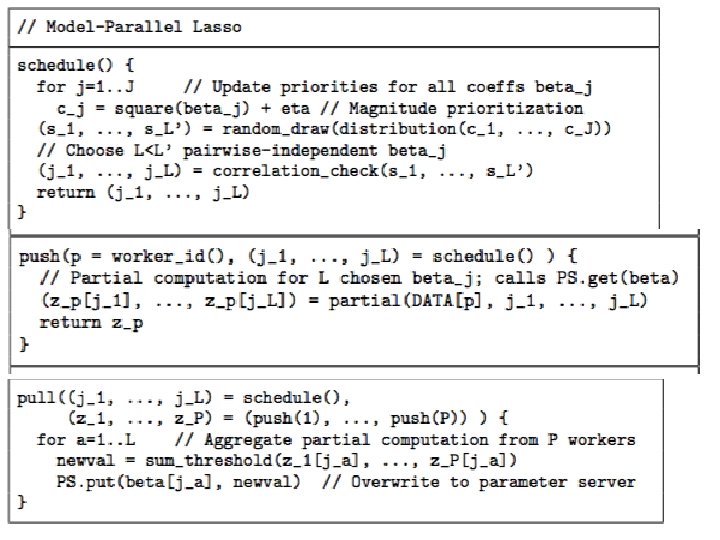

Parallel stochastic coordinate descent (shotgun)

Repeat for t = 1, …, T:

- Pick several coordinates $j_1, …, j_p$ in parallel:
  - Compute the gradient of the regularized loss (for each parameter $j_k$)
  - Move each parameter $j_k$
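A sequential simulation of the shotgun loop for the Lasso, assuming the same coordinate-wise update as plain coordinate descent. Each round picks P distinct coordinates at random. Every "processor" computes its update from the same current residual, as if they ran simultaneously, and then all P changes are applied together.

```python
import numpy as np

def shotgun_lasso(X, y, lam, P=2, iters=200, seed=0):
    """Simulated parallel stochastic coordinate descent for 0.5*||y - Xw||^2 + lam*||w||_1.

    Assumes no all-zero columns in X.
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    w = np.zeros(d)
    col_sq = (X ** 2).sum(axis=0)
    r = y - X @ w
    for _ in range(iters):
        js = rng.choice(d, size=min(P, d), replace=False)       # coordinates this round
        rho = X[:, js].T @ r + col_sq[js] * w[js]               # all from the SAME residual
        new = np.sign(rho) * np.maximum(np.abs(rho) - lam, 0.0) / col_sq[js]
        r -= X[:, js] @ (new - w[js])                           # apply all P updates at once
        w[js] = new
    return w
```

With orthogonal columns (X = I), simultaneous updates cannot interfere, so the result matches sequential coordinate descent. With strongly correlated columns and large P, the combined step can overshoot, which is the failure mode the next slide warns about.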

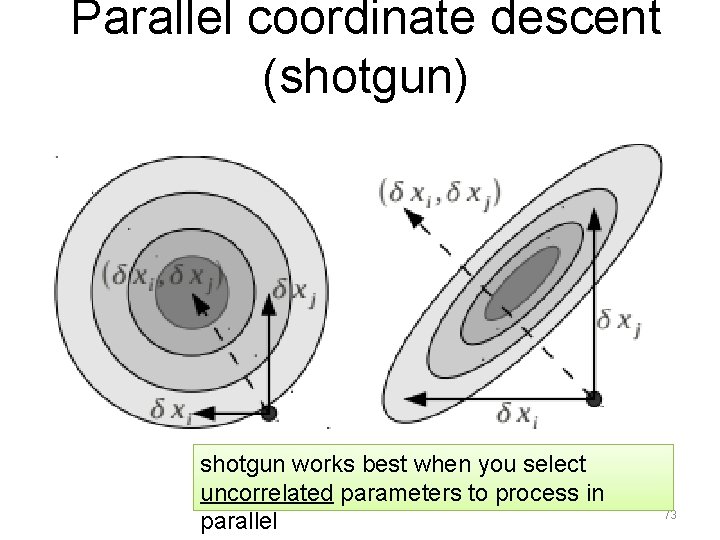

Parallel coordinate descent (shotgun)

Parallel coordinate descent (shotgun): shotgun works best when you select uncorrelated parameters to process in parallel.

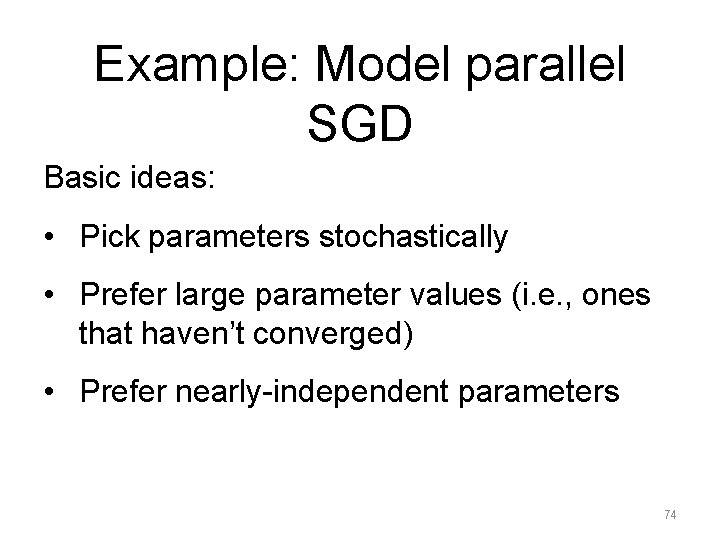

Example: Model parallel SGD

Basic ideas:

- Pick parameters stochastically
- Prefer large parameter values (i.e., ones that haven't converged)
- Prefer nearly-independent parameters
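A sketch of a scheduler that follows the three ideas. The policy details are our assumptions. Candidates are sampled with probability proportional to their recent change |δ|, and a candidate is kept only if its feature column has cosine similarity below a threshold with every column already chosen (for centered columns, cosine similarity equals correlation).

```python
import numpy as np

def schedule_params(delta, X, P, corr_threshold=0.1, seed=0):
    """Pick up to P coordinates to update in parallel (illustrative policy).

    1. Sample candidates with probability proportional to |delta| (not yet converged).
    2. Greedily keep candidates whose columns are nearly independent of those chosen.
    """
    rng = np.random.default_rng(seed)
    weights = np.abs(delta) + 1e-12                 # keep every coordinate reachable
    probs = weights / weights.sum()
    candidates = rng.choice(len(delta), size=len(delta), replace=False, p=probs)
    Xn = X / np.linalg.norm(X, axis=0)              # unit-norm columns
    chosen = []
    for j in candidates:
        if all(abs(Xn[:, j] @ Xn[:, k]) < corr_threshold for k in chosen):
            chosen.append(int(j))
        if len(chosen) == P:
            break
    return chosen
```

If two features are near-duplicates, at most one of them is scheduled in a round, no matter how large their pending changes are.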


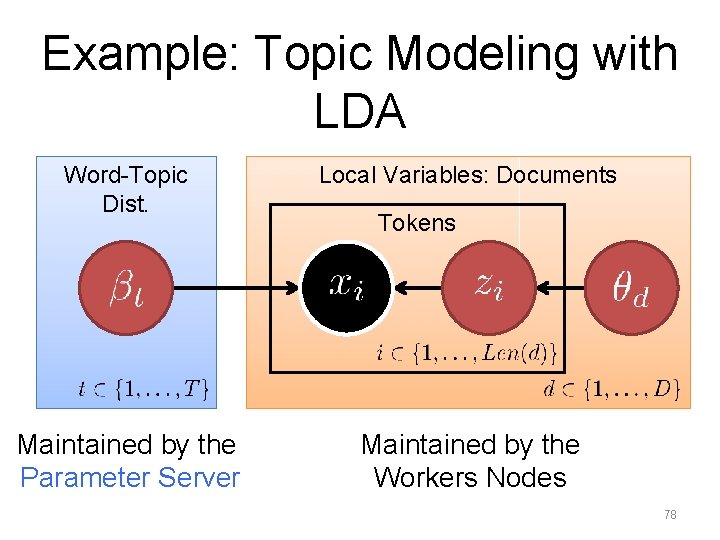

Case Study: Topic Modeling with LDA

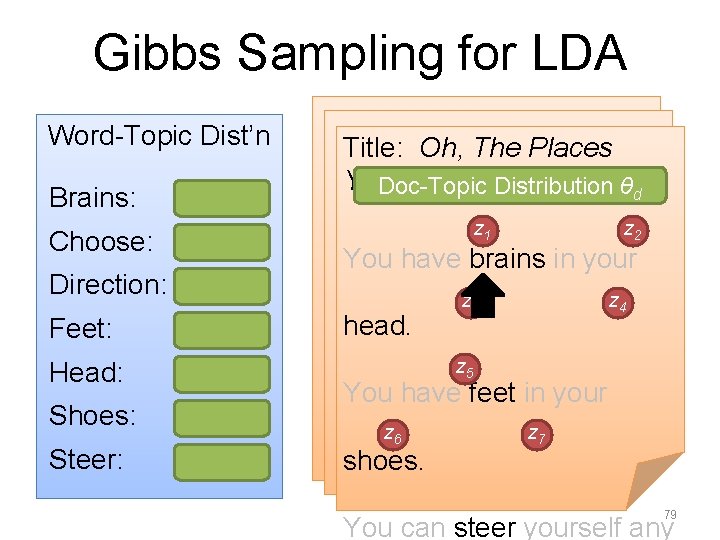

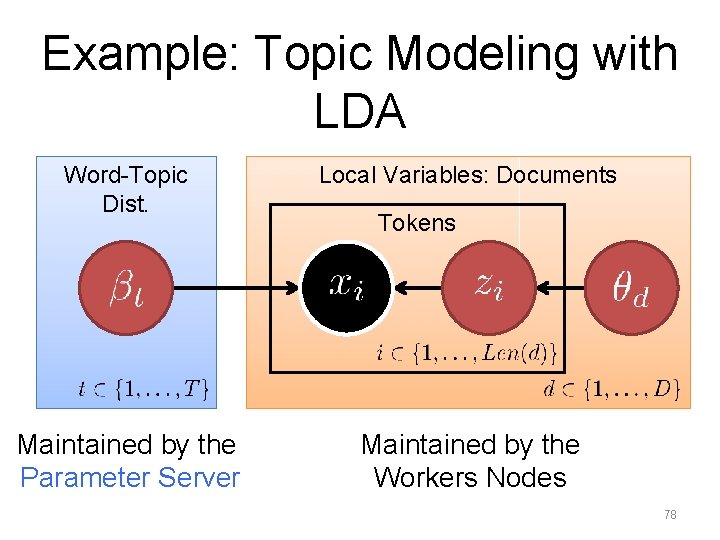

Example: Topic Modeling with LDA (figure: the word-topic distributions are maintained by the parameter server; the local variables, i.e. documents and their tokens, are maintained by the worker nodes)

Gibbs Sampling for LDA (figure: a word-topic distribution table with rows Brains, Choose, Direction, Feet, Head, Shoes, Steer; the doc-topic distribution θd; and topic assignments z1–z7 for the tokens of the document titled "Oh, The Places You'll Go!": "You have brains in your head. You have feet in your shoes. You can steer yourself any…")

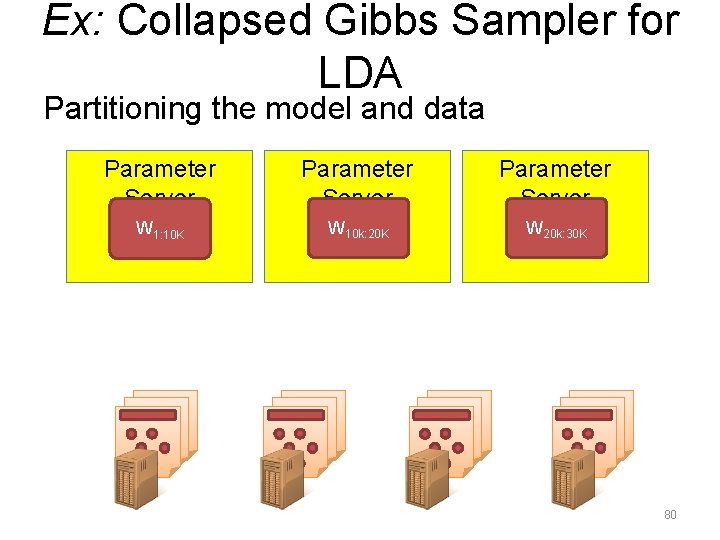

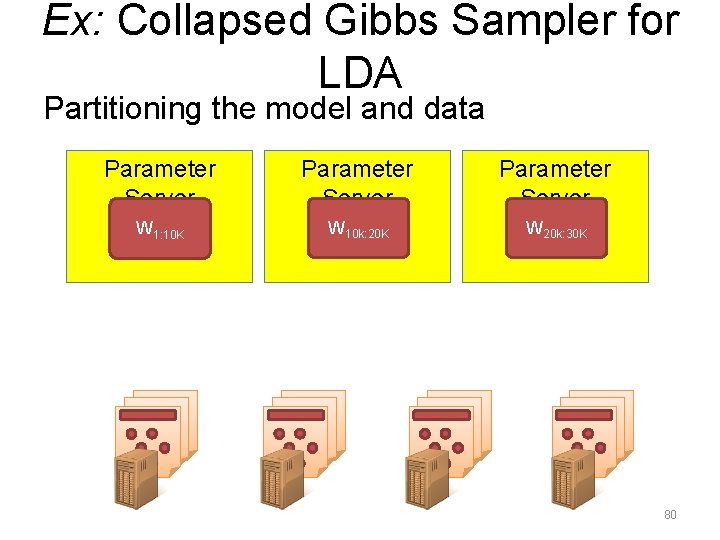

Ex: Collapsed Gibbs Sampler for LDA: partitioning the model and data (figure: parameter servers holding the words W1–10K, W10K–20K, and W20K–30K)
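A sketch of the range partitioning in the figure, assuming 0-based word ids and a 30K vocabulary split into three contiguous ranges (the constants are hypothetical, chosen to mirror the slide). A worker batches its requests so it sends one message per server rather than one per word.

```python
import bisect

RANGE_STARTS = [0, 10_000, 20_000]   # hypothetical: server s owns ids [start_s, start_{s+1})
VOCAB_SIZE = 30_000

def server_for_word(word_id):
    """Return the index of the server holding this word's topic-count row."""
    if not 0 <= word_id < VOCAB_SIZE:
        raise ValueError("word id out of range")
    return bisect.bisect_right(RANGE_STARTS, word_id) - 1

def group_by_server(word_ids):
    """Batch a worker's distinct word ids by owning server."""
    batches = {}
    for w in sorted(set(word_ids)):
        batches.setdefault(server_for_word(w), []).append(w)
    return batches
```

Contiguous ranges make ownership a single binary search. The trade-off is that a skewed word-frequency distribution can leave one range much hotter than the others, which hash partitioning would spread out.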

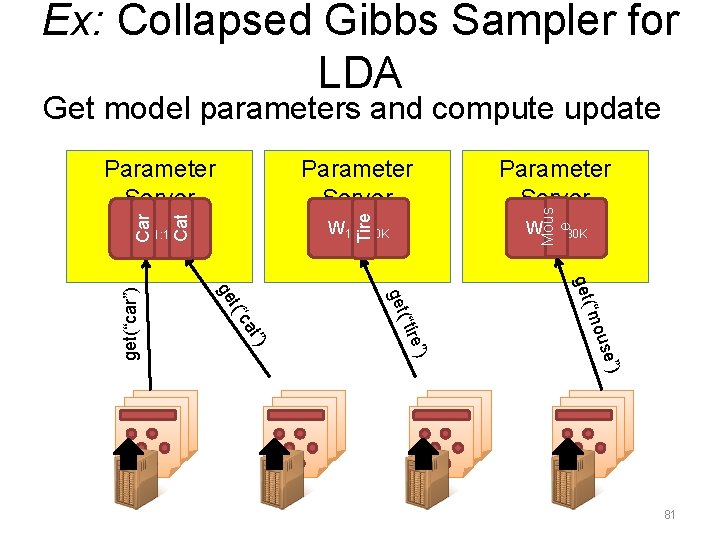

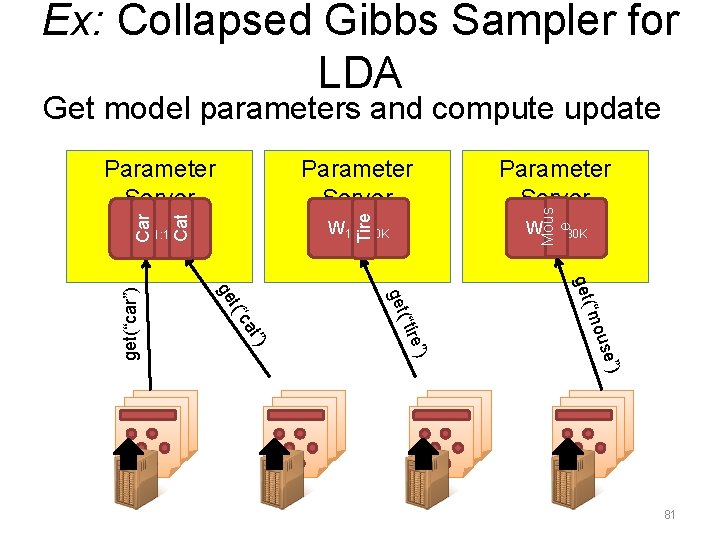

Ex: Collapsed Gibbs Sampler for LDA: get model parameters and compute update (figure: workers holding the words Mouse, Tire, Cat, and Car call get("mouse"), get("tire"), get("cat"), and get("car") on the parameter servers holding W1–10K, W10K–20K, and W20K–30K)

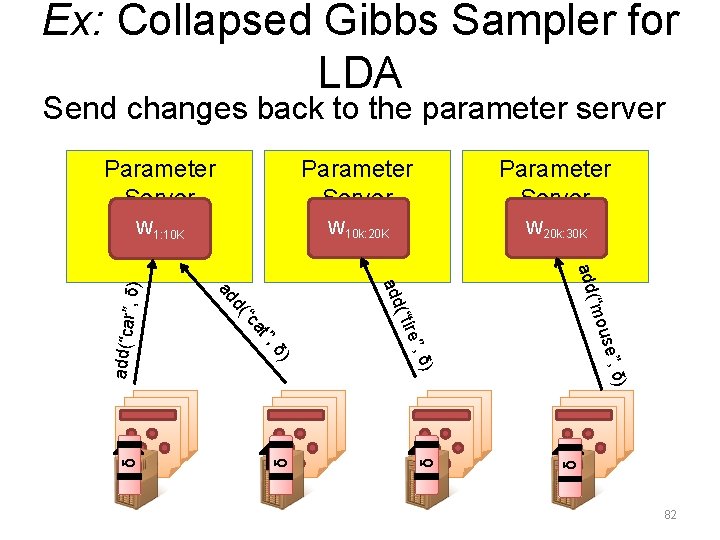

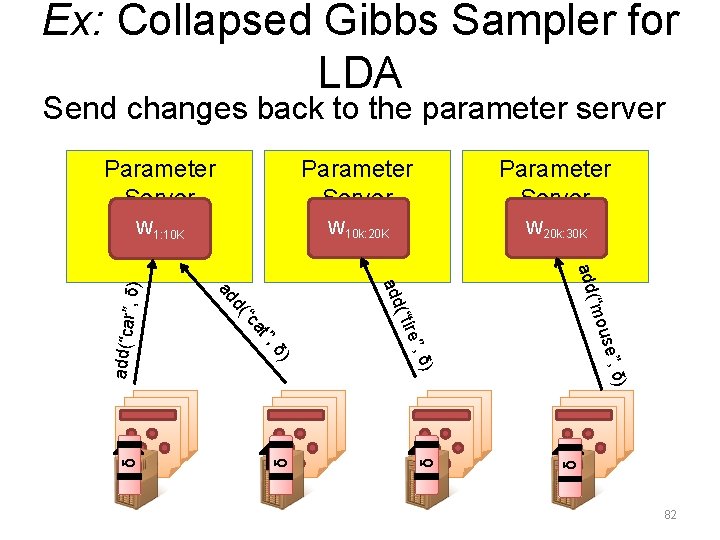

Ex: Collapsed Gibbs Sampler for LDA: send changes back to the parameter server (figure: workers call add("car", δ), add("mouse", δ), add("tire", δ), and add("cat", δ) on the parameter servers holding W1–10K, W10K–20K, and W20K–30K)
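A sketch of one collapsed Gibbs step for a single token. It uses the standard collapsed conditional $p(z = k \mid \cdot) \propto (n_{dk} + \alpha)(n_{wk} + \beta)/(n_k + V\beta)$. The worker samples from its cached copies of the counts (obtained with `get`) and returns the `add` deltas to send back. The function name, argument layout, and hyperparameter defaults are our assumptions.

```python
import numpy as np
from collections import defaultdict

def resample_token(word, old_topic, doc_topic, word_topic, topic_total,
                   V, alpha=0.1, beta=0.01, rng=None):
    """Collapsed Gibbs step for one token; returns (new_topic, word_deltas, total_deltas).

    doc_topic[k]        : topic counts of this token's document (worker-local)
    word_topic[(w, k)]  : worker's cached copy of word-topic counts (from get())
    topic_total[k]      : cached copy of per-topic totals
    V                   : vocabulary size; alpha, beta: Dirichlet hyperparameters
    """
    if rng is None:
        rng = np.random.default_rng()
    K = len(topic_total)
    # 1. Remove the token's current assignment from every count.
    doc_topic[old_topic] -= 1
    word_topic[(word, old_topic)] -= 1
    topic_total[old_topic] -= 1
    # 2. p(z = k | rest) is proportional to (n_dk + alpha)(n_wk + beta) / (n_k + V*beta).
    p = np.array([(doc_topic[k] + alpha) * (word_topic[(word, k)] + beta)
                  / (topic_total[k] + V * beta) for k in range(K)])
    new_topic = int(rng.choice(K, p=p / p.sum()))
    # 3. Add the token back under its new topic (local cache stays consistent).
    doc_topic[new_topic] += 1
    word_topic[(word, new_topic)] += 1
    topic_total[new_topic] += 1
    # 4. Only a changed assignment produces deltas for the parameter server.
    if new_topic == old_topic:
        return new_topic, {}, {}
    word_deltas = {(word, old_topic): -1, (word, new_topic): +1}
    total_deltas = {old_topic: -1, new_topic: +1}
    return new_topic, word_deltas, total_deltas
```

Passing `word_topic` as a `defaultdict(int)` lets unseen (word, topic) pairs read as zero. The returned deltas map one-to-one onto `add(key, δ)` calls, and a token whose topic does not change costs no network traffic.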

![LDA Samplers Comparison [Yuan et al 2015]](https://slidetodoc.com/presentation_image/1003a79ae3d448eee4fc2814e91ebb54/image-81.jpg)

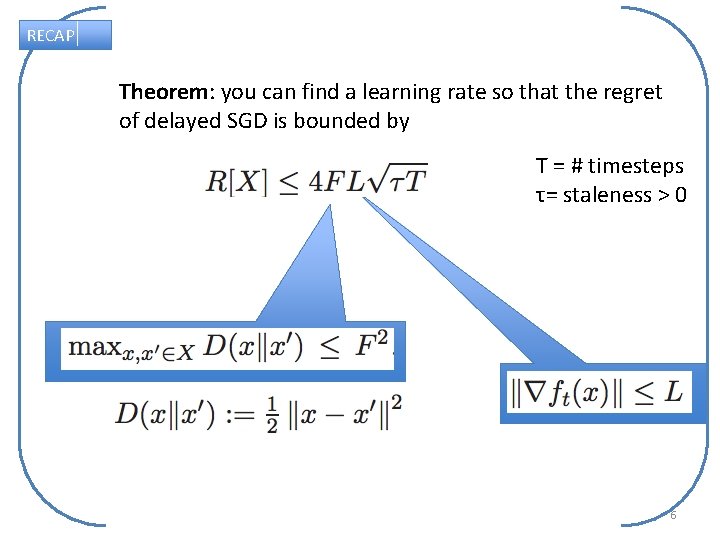

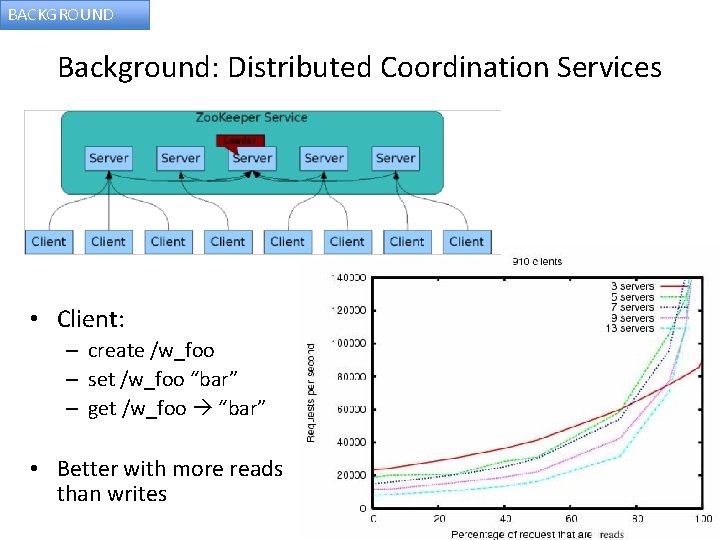

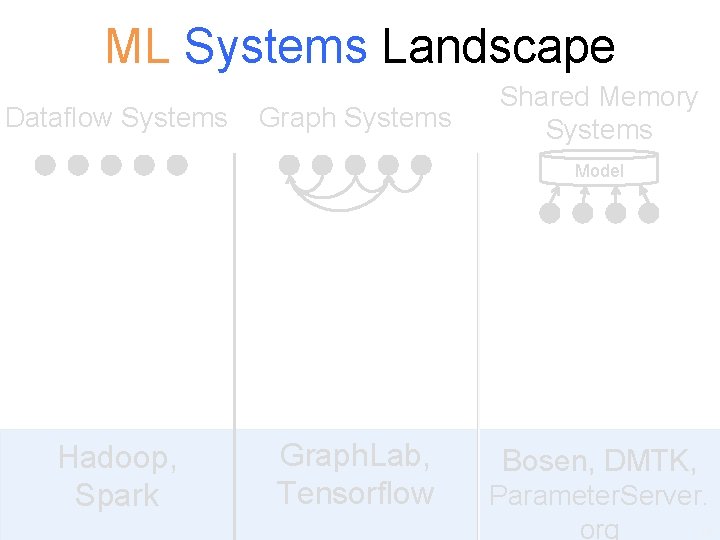

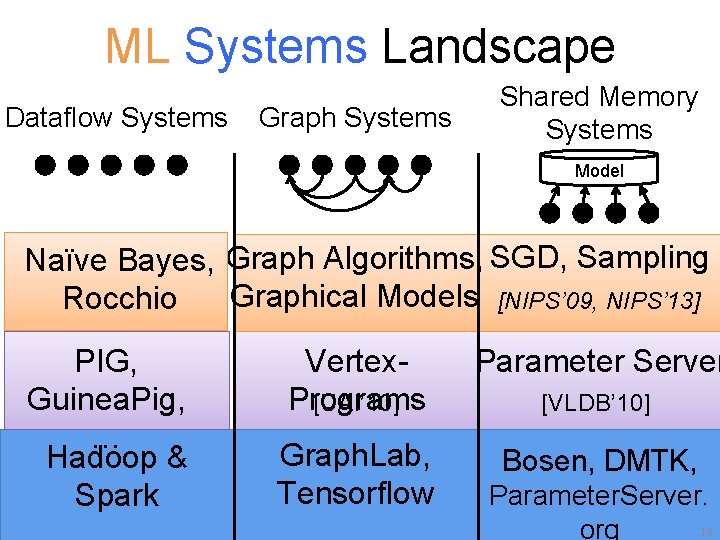

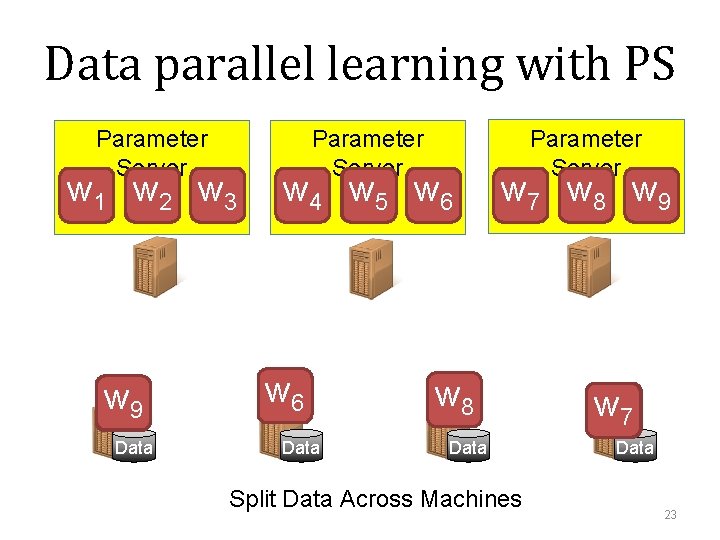

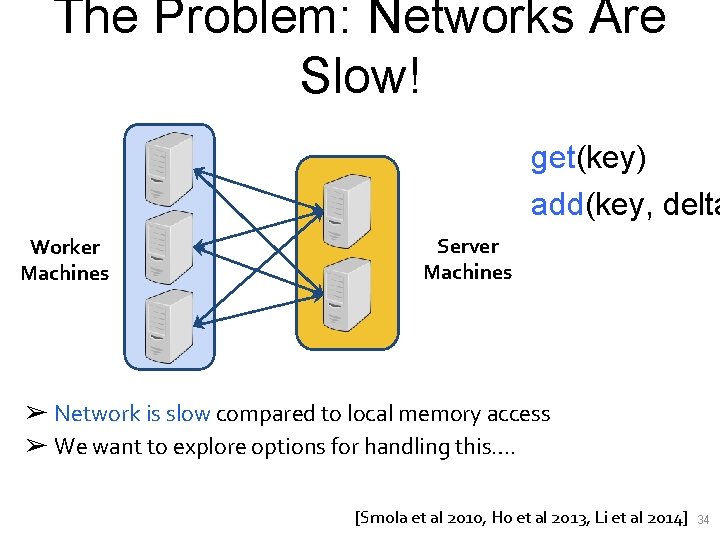

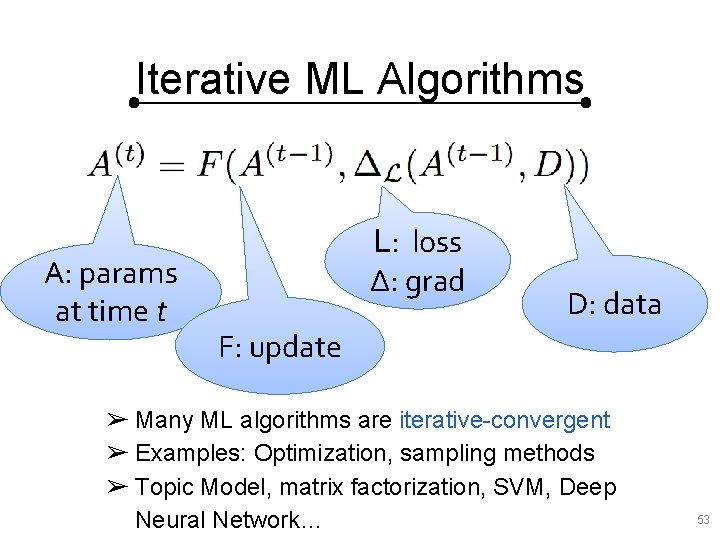

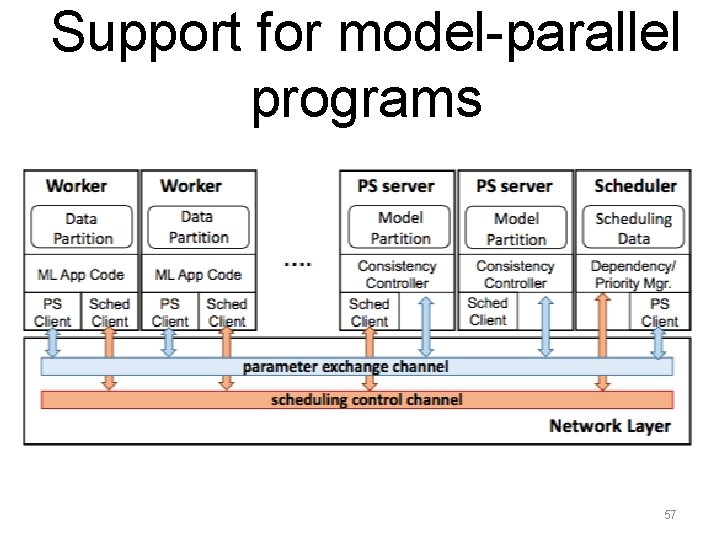

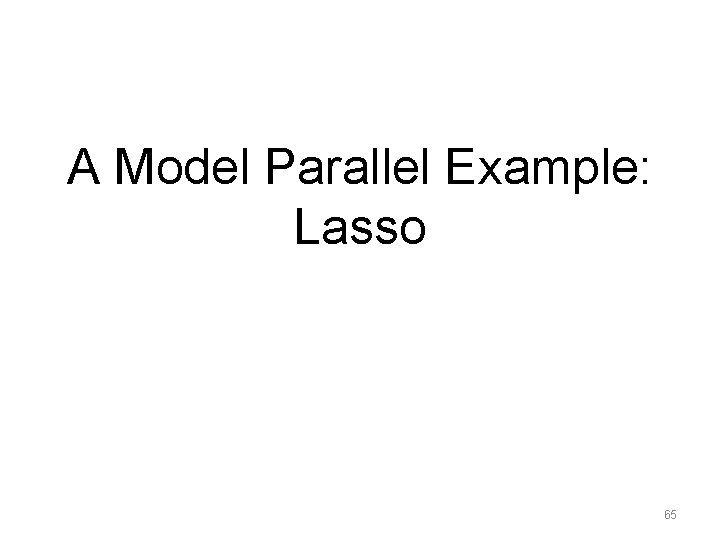

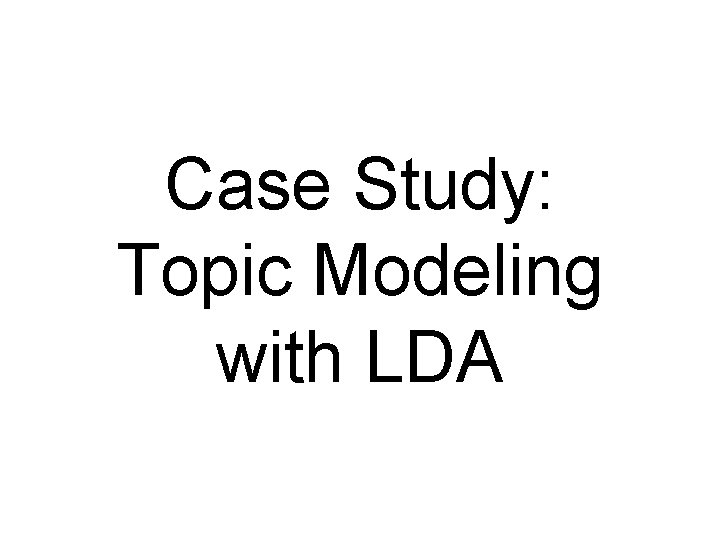

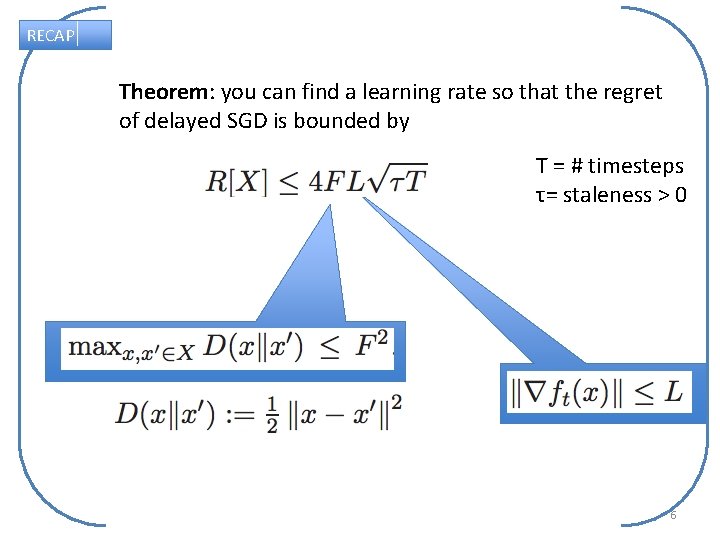

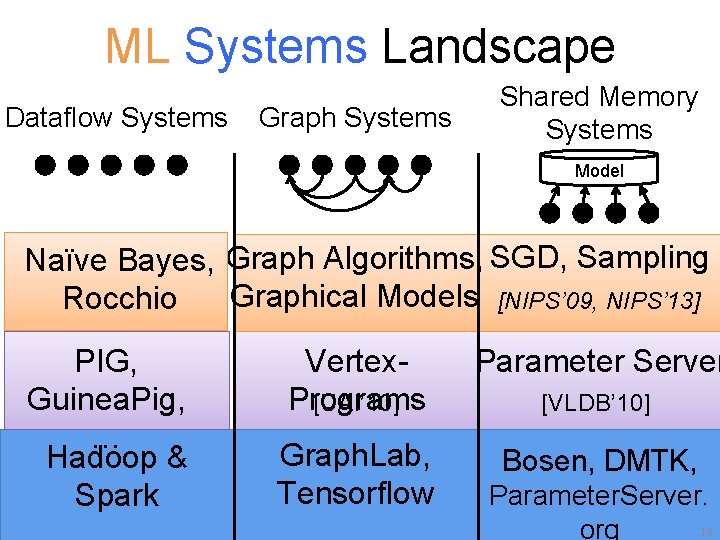

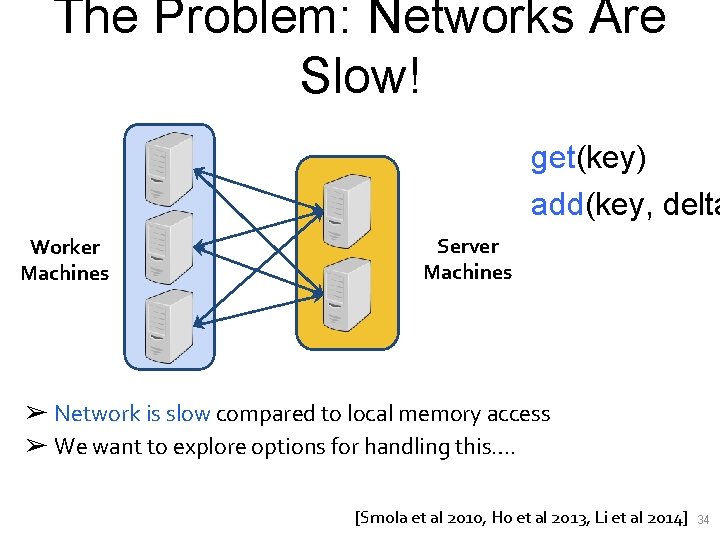

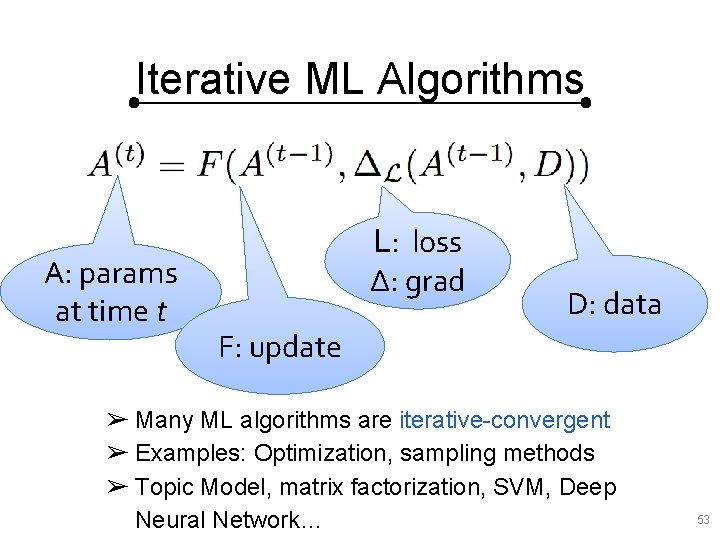

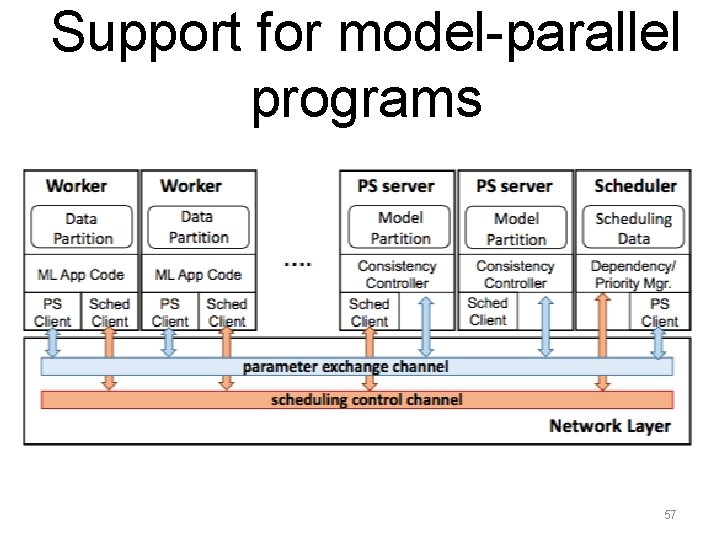

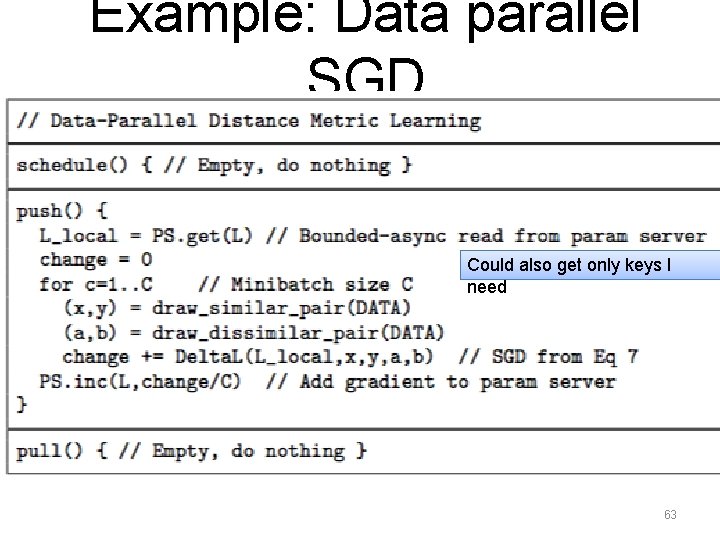

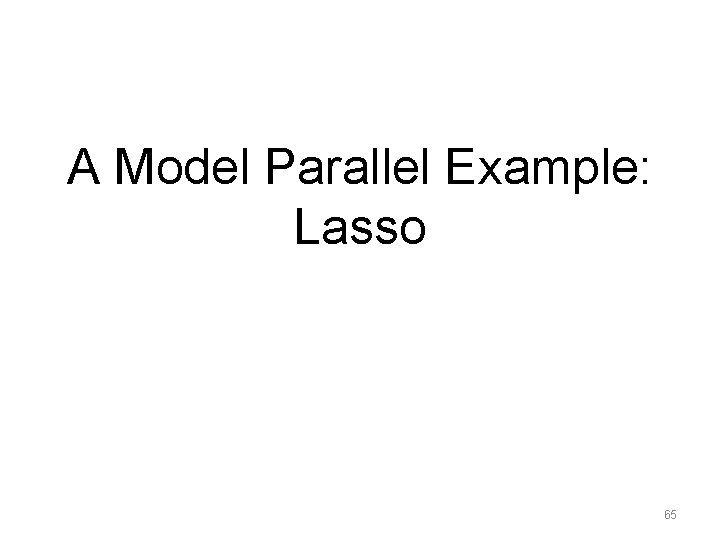

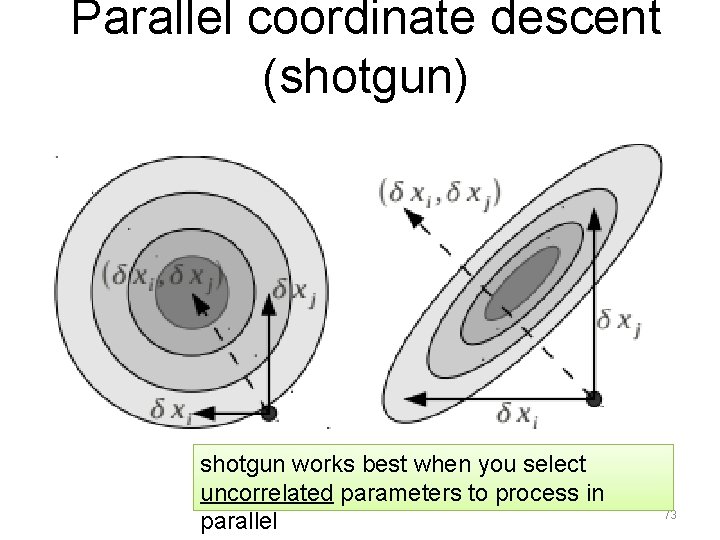

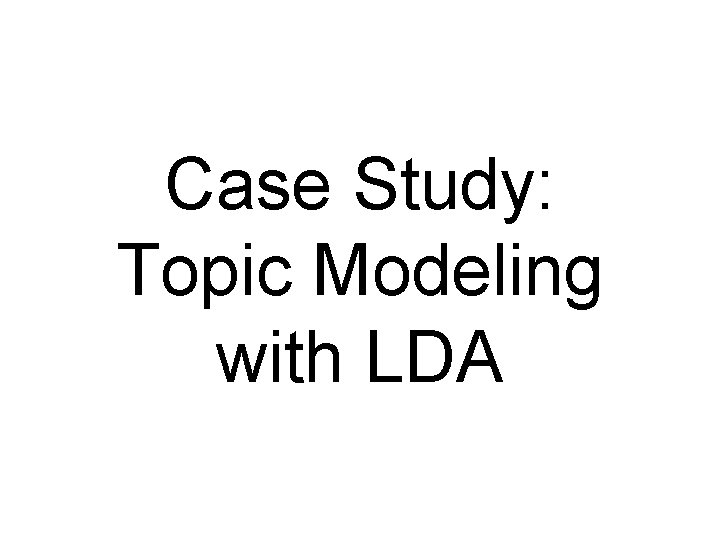

LDA Samplers Comparison [Yuan et al 2015]

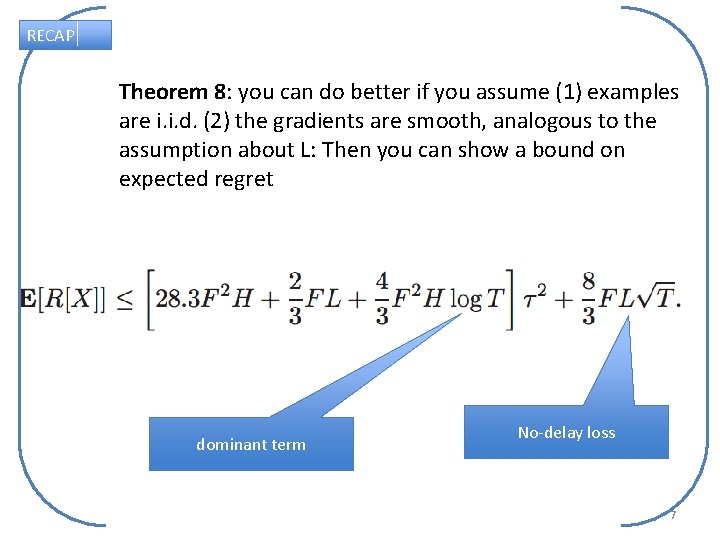

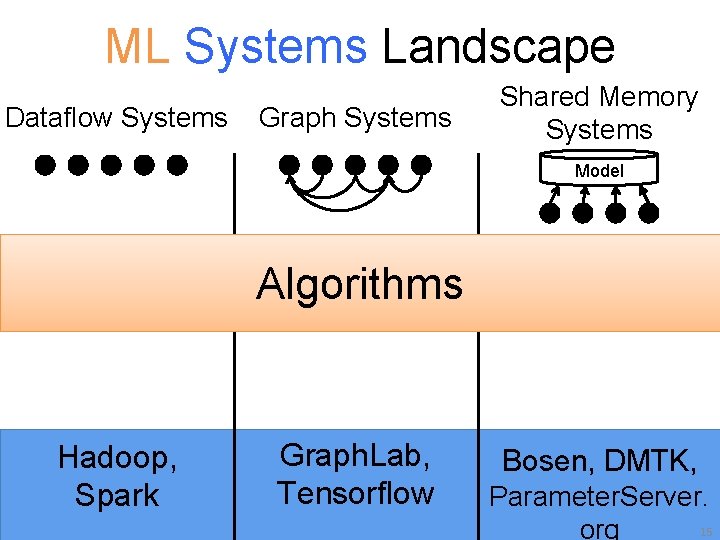

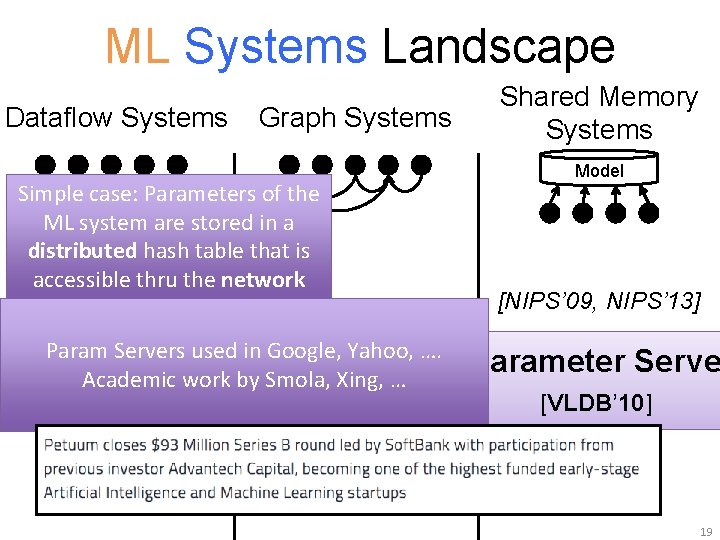

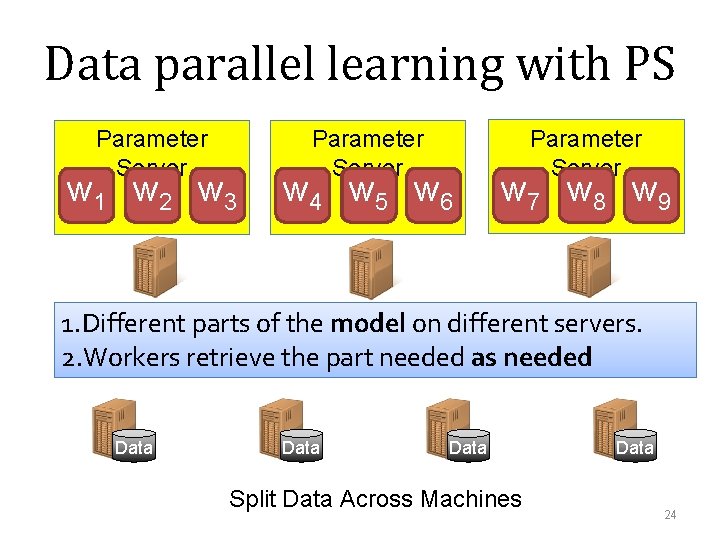

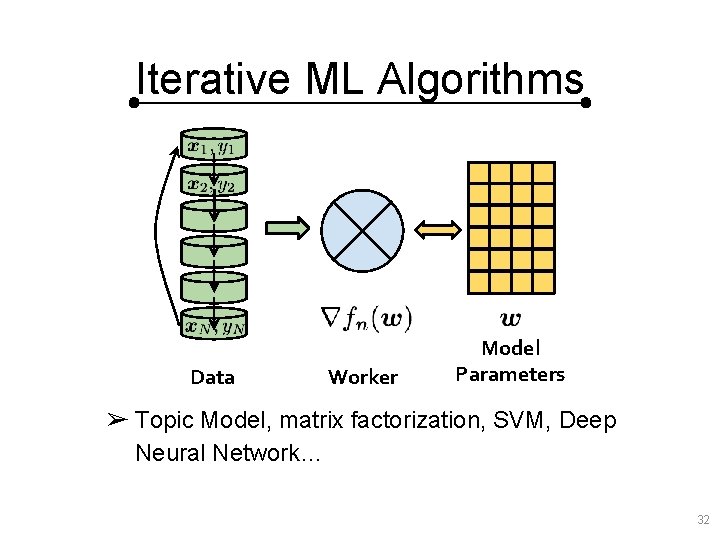

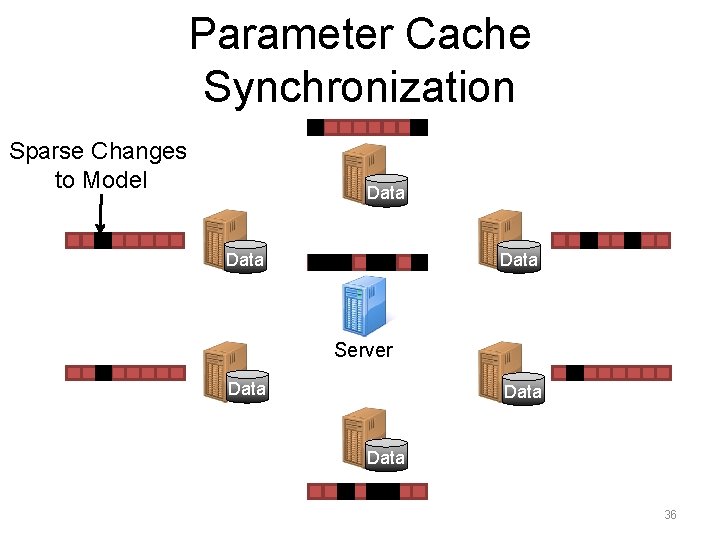

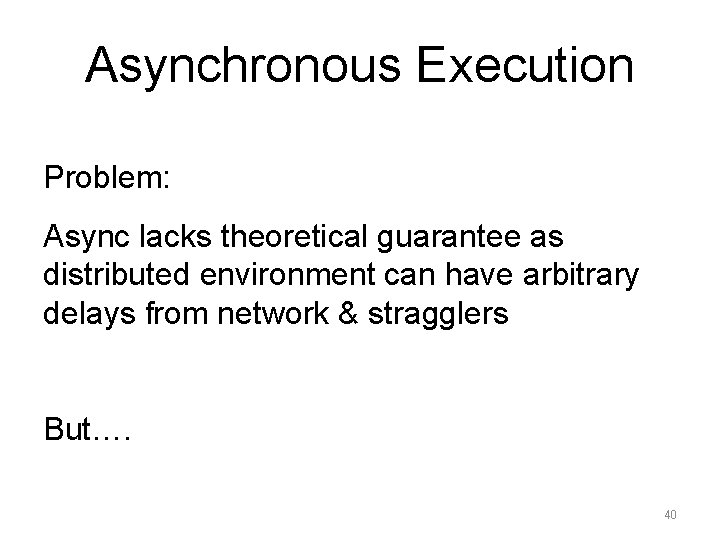

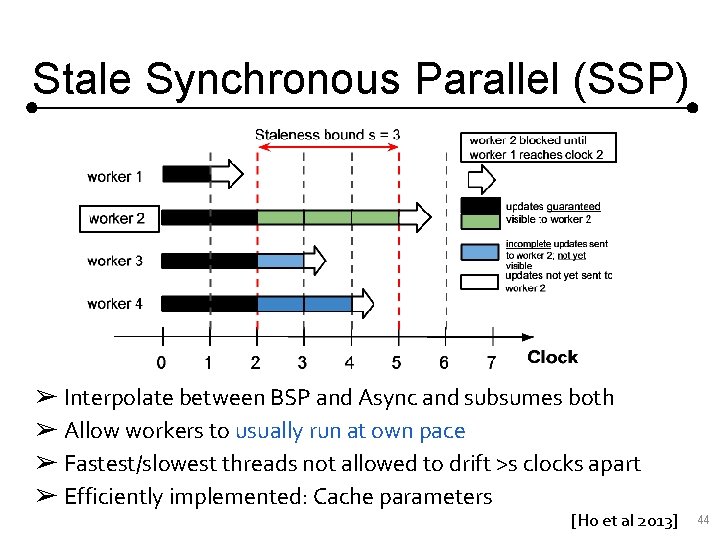

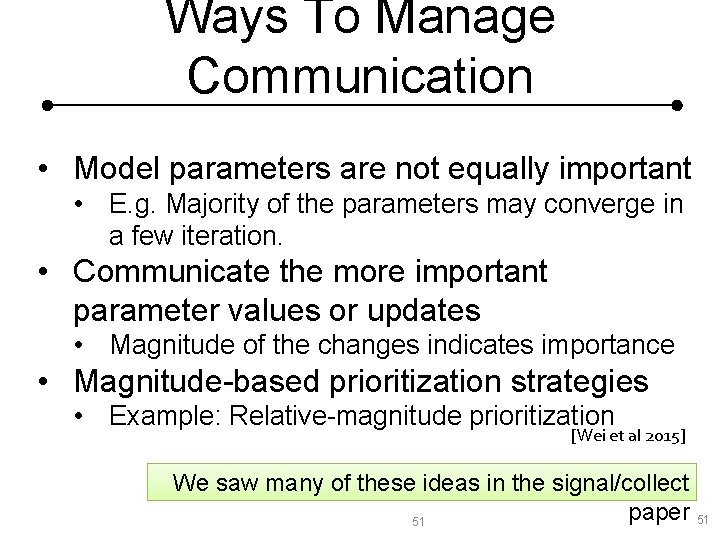

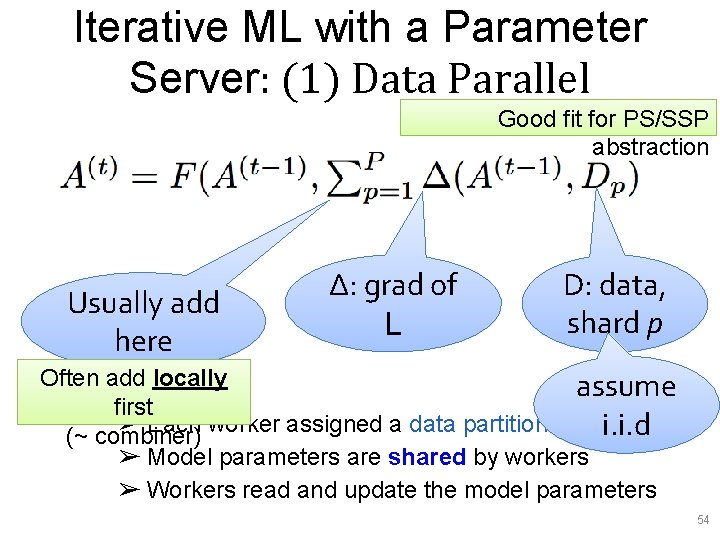

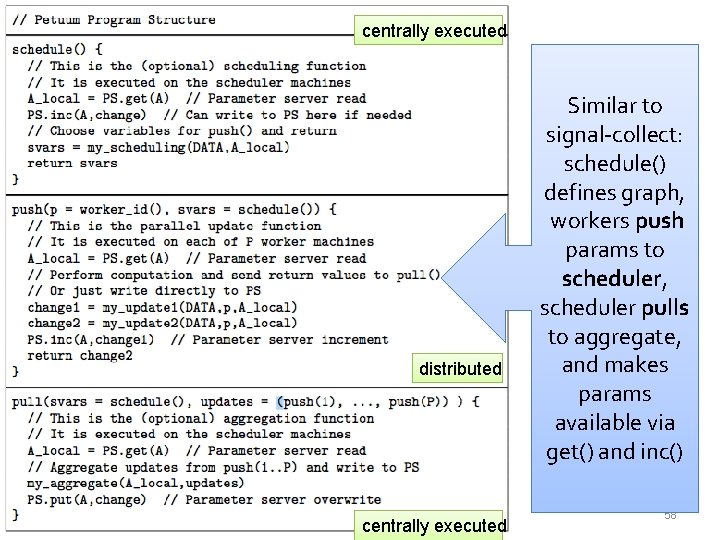

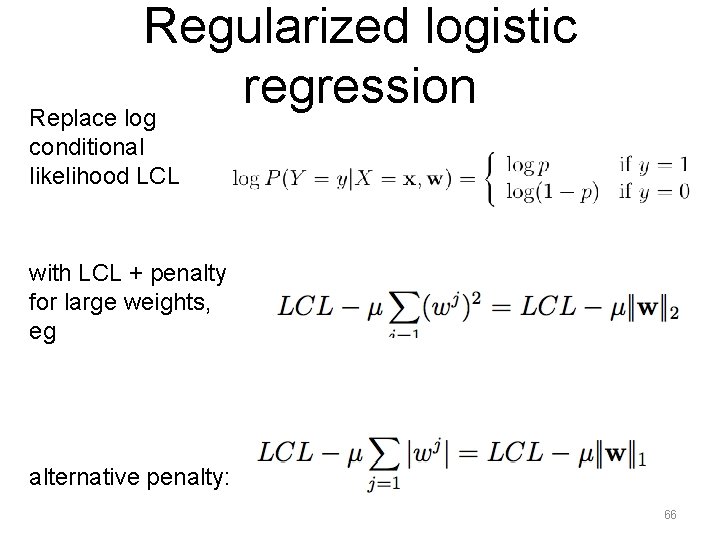

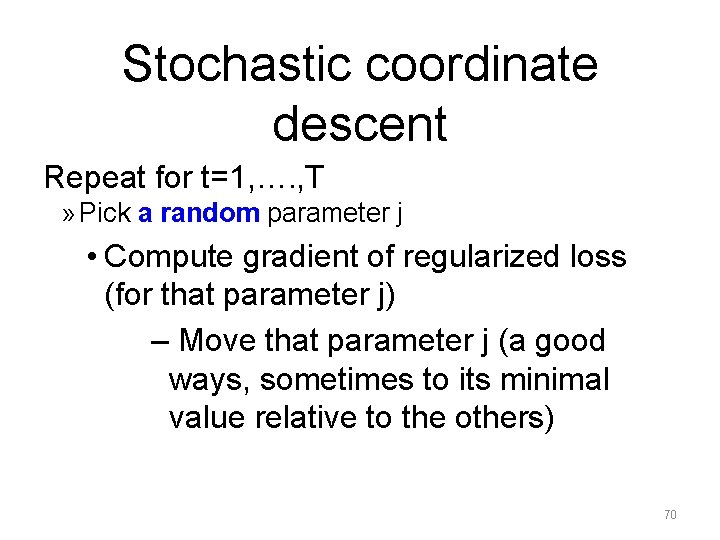

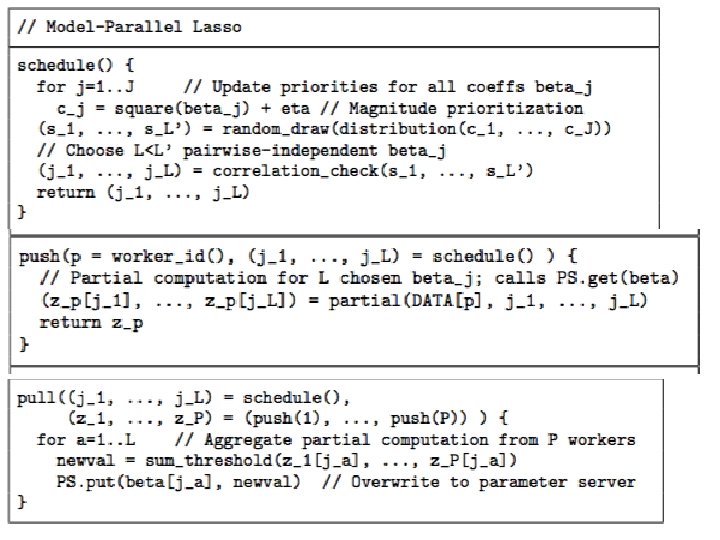

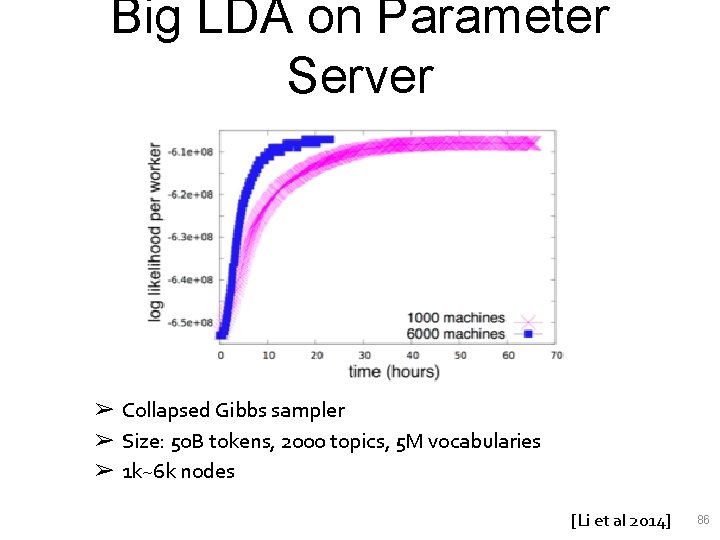

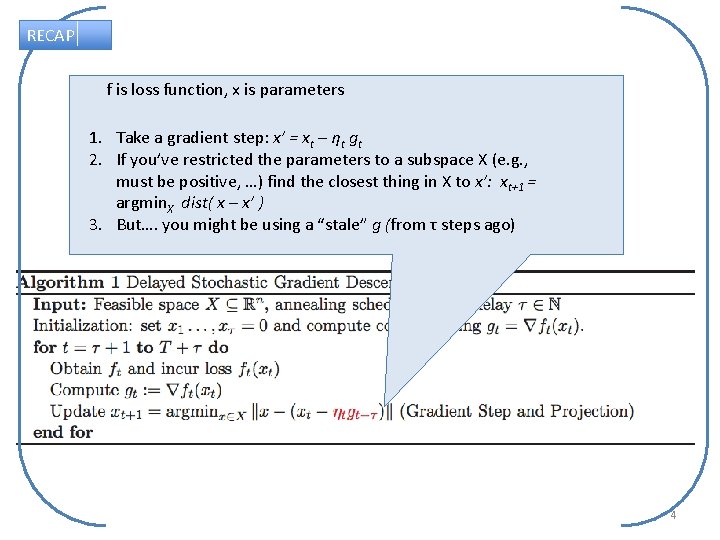

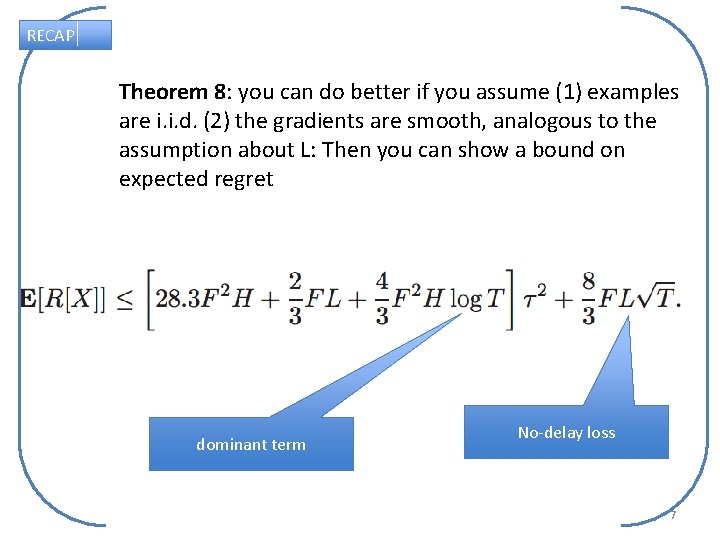

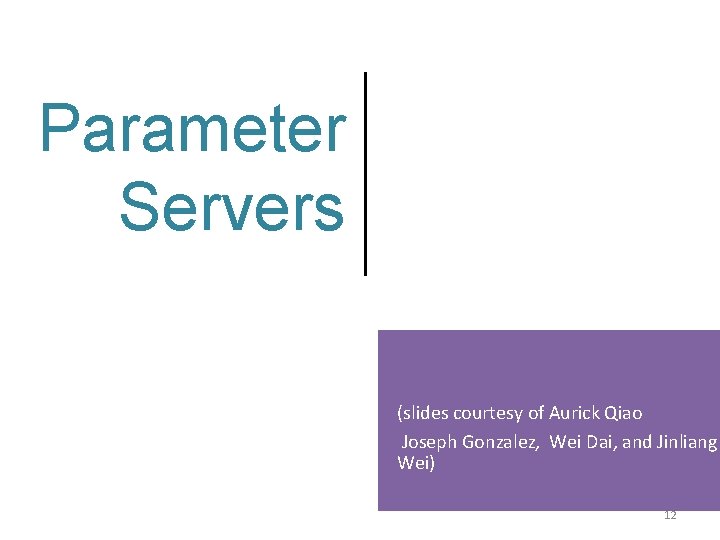

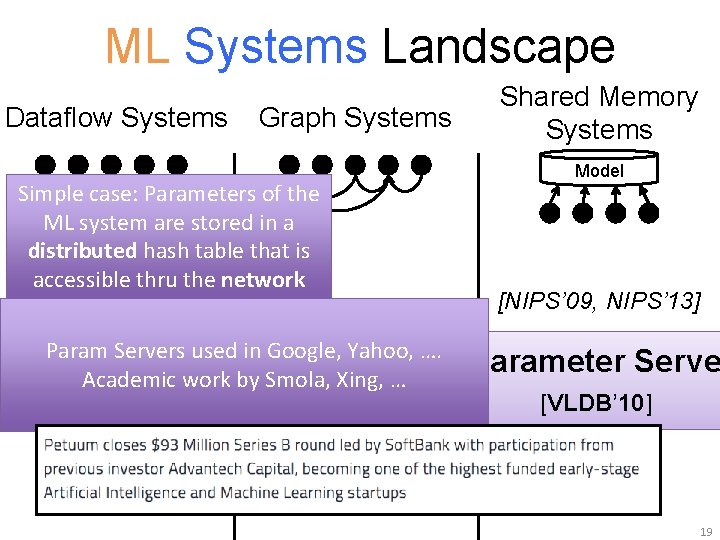

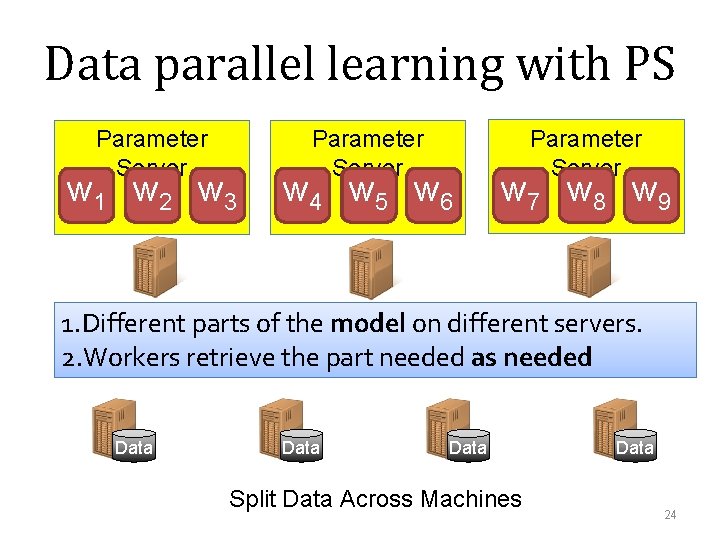

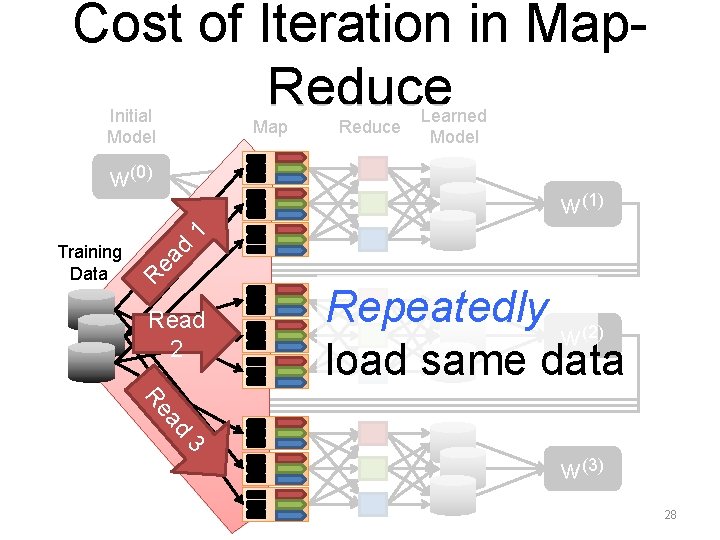

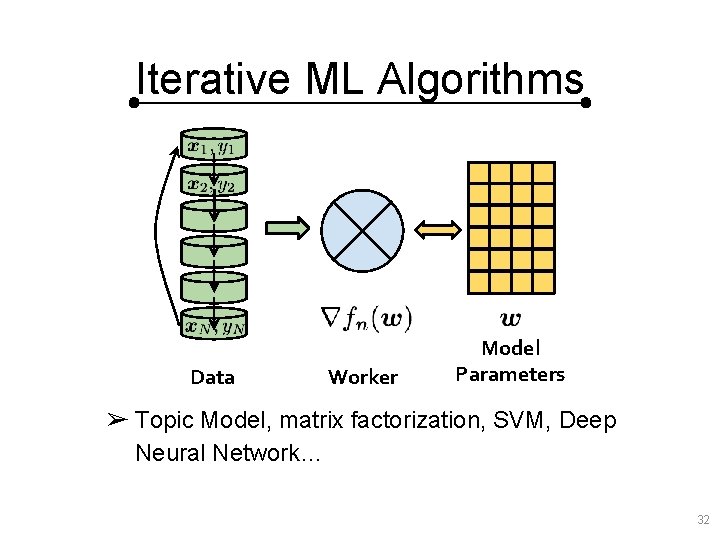

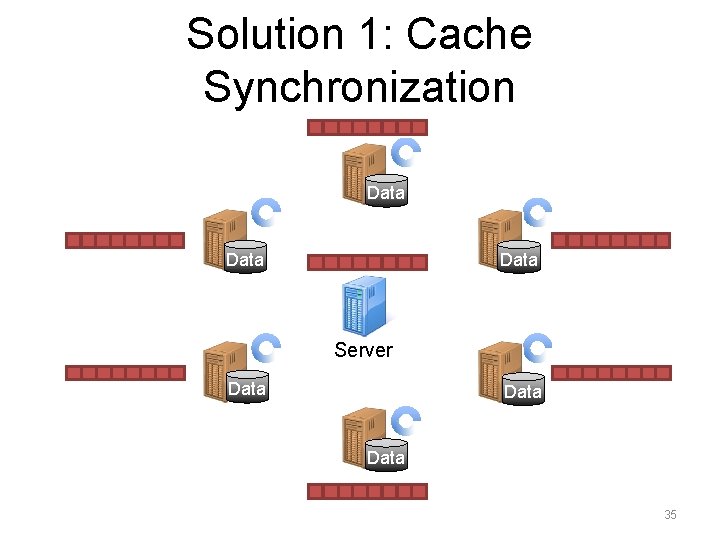

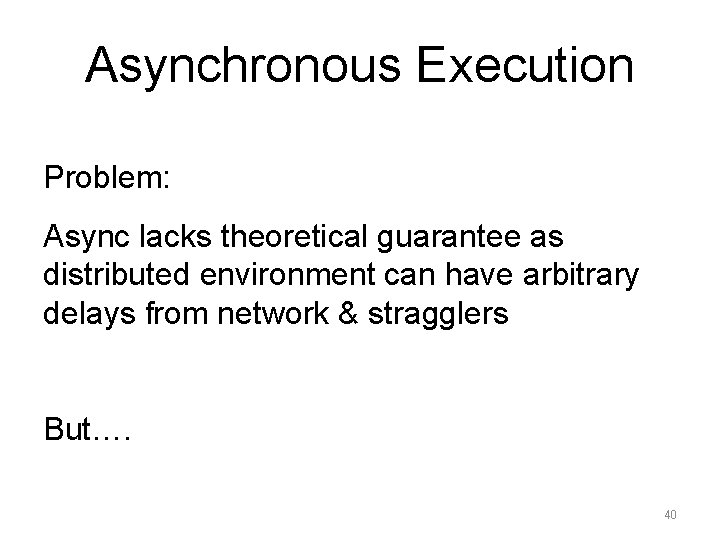

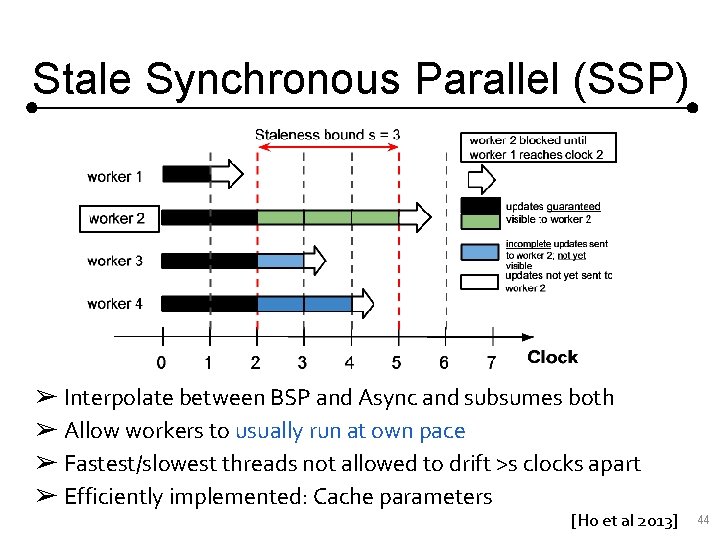

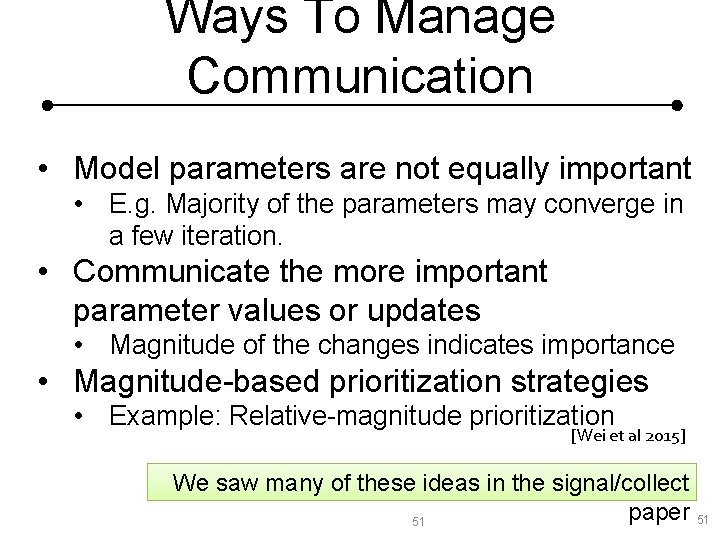

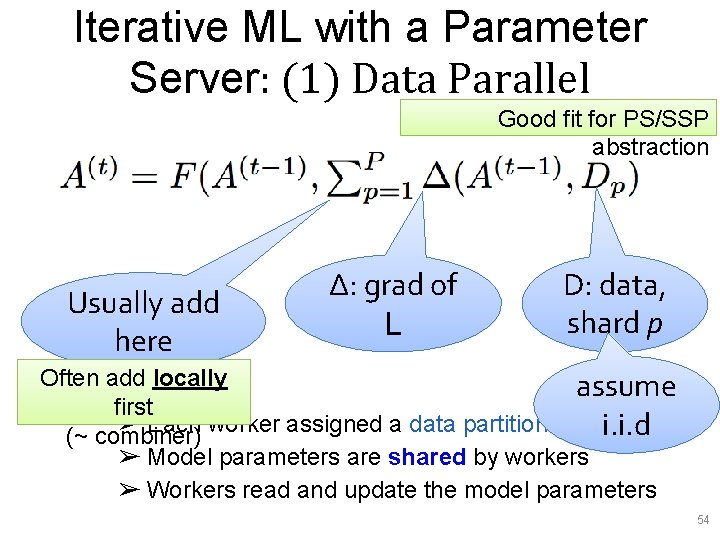

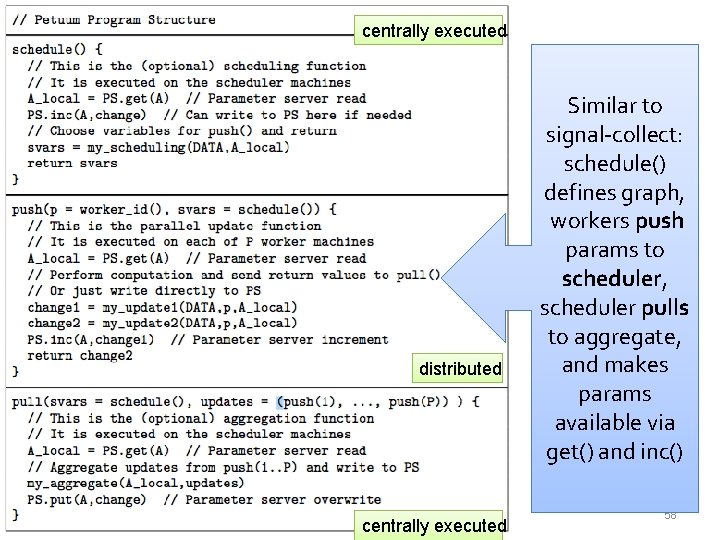

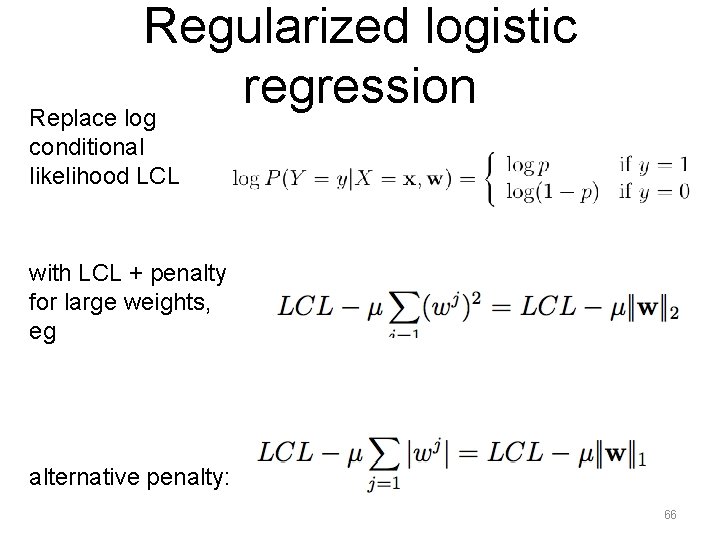

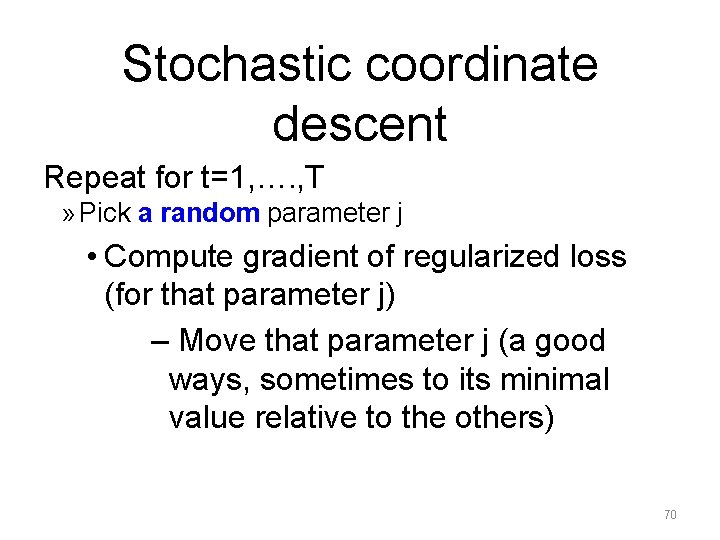

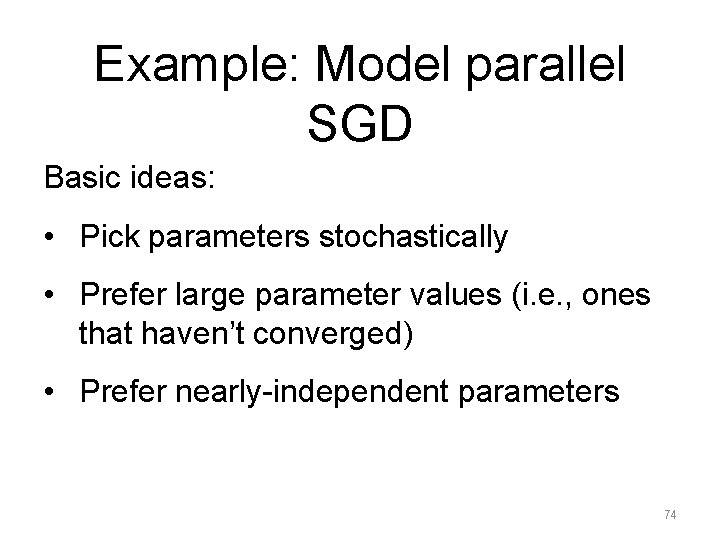

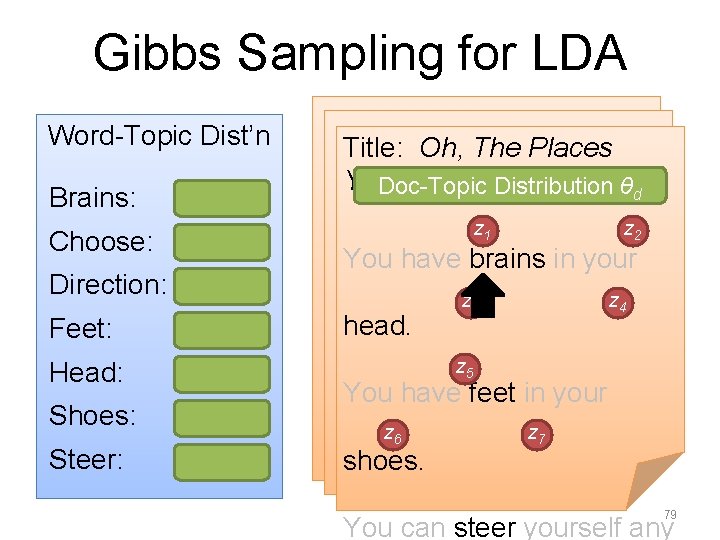

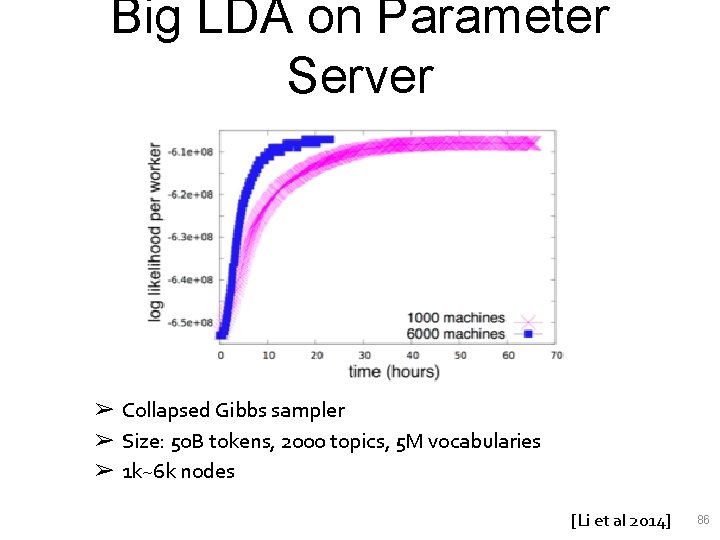

Big LDA on Parameter Server

- Collapsed Gibbs sampler
- Size: 50B tokens, 2000 topics, 5M-word vocabulary
- 1K–6K nodes

[Li et al 2014]

![LDA Scale Comparison](https://slidetodoc.com/presentation_image/1003a79ae3d448eee4fc2814e91ebb54/image-83.jpg)

LDA Scale Comparison

| | YahooLDA (SparseLDA) [1] | Parameter Server (SparseLDA) [2] | Tencent Peacock (SparseLDA) [3] | AliasLDA [4] | PetuumLDA (LightLDA) [5] |
|---|---|---|---|---|---|
| # of words (dataset size) | 20M documents | 50B | 4.5B | 100M | 200B |
| # of topics | 1000 | 2000 | 100K | 1024 | 1M |
| # of vocabularies | est. 100K [2] | 5M | 210K | 100K | 1M |
| Time to converge | N/A | 20 hrs | 6.6 hrs/iteration | 2 hrs | 60 hrs |
| # of machines | 400 | 6000 (60K cores) | 500 cores | 1 (1 core) | 24 (480 cores) |
| Machine specs | N/A | 10 cores, 128 GB RAM | N/A | 4 cores, 12 GB RAM | 20 cores, 256 GB RAM |

- [1] Ahmed, Amr, et al. "Scalable inference in latent variable models." WSDM (2012).
- [2] Li, Mu, et al. "Scaling distributed machine learning with the parameter server." OSDI (2014).
- [3] Wang, Yi, et al. "Towards Topic Modeling for Big Data." arXiv:1405.4402 (2014).
- [4] Li, Aaron Q., et al. "Reducing the sampling complexity of topic models." KDD (2014).
- [5] Yuan, Jinhui, et al. "LightLDA: Big Topic Models on Modest Compute Clusters." arXiv:1412.1576 (2014).