Parallelization of FFT in AFNI (Huang, Jingshan; Xi, Hong)

- Slides: 27

Parallelization of FFT in AFNI. Huang, Jingshan; Xi, Hong. Department of Computer Science and Engineering, University of South Carolina

Motivation
- AFNI: a widely used software package for medical image processing
- Drawback: not a real-time system
- Our goal: make a parallelized version of AFNI
- First step: parallelize the FFT part of AFNI

Outline
- What is AFNI
- FFT in AFNI
- Introduction to MPI
- Our method of parallelization
- Experimental results and analysis
- Conclusion

What is AFNI?
- AFNI stands for Analysis of Functional NeuroImages.
- It is a set of C programs (over 1,000 source code files) for processing, analyzing, and displaying functional MRI (fMRI) data; fMRI is a technique for mapping human brain activity.
- AFNI is an interactive program for viewing the results of 3D functional neuroimaging.

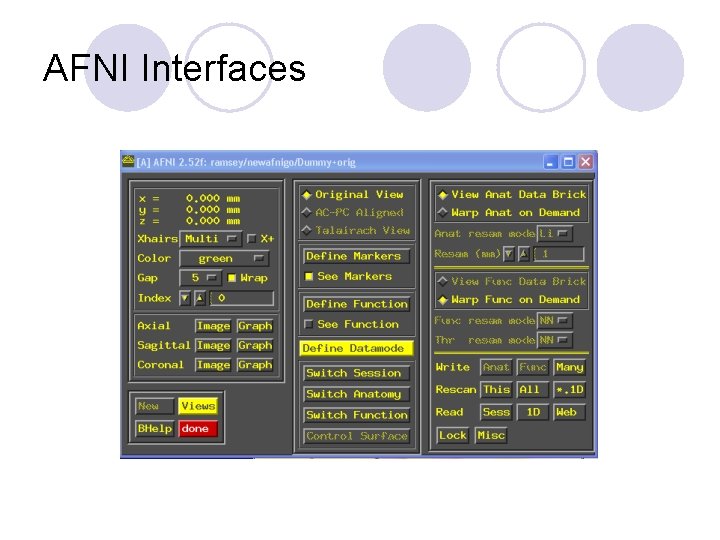

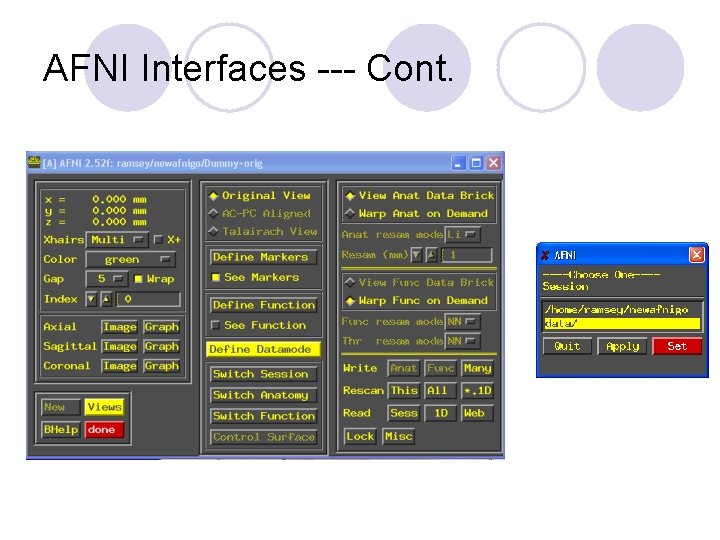

How to run AFNI?
- Log on to the cluster machine (daniel.cse.sc.edu)
- Go to the directory /home/ramsey/newafnigo
- Run “afni”
- The AFNI interface should then appear

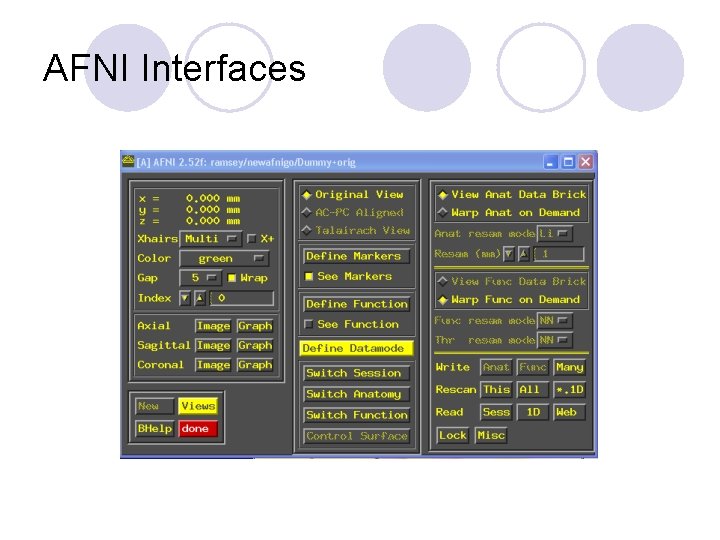

AFNI Interfaces

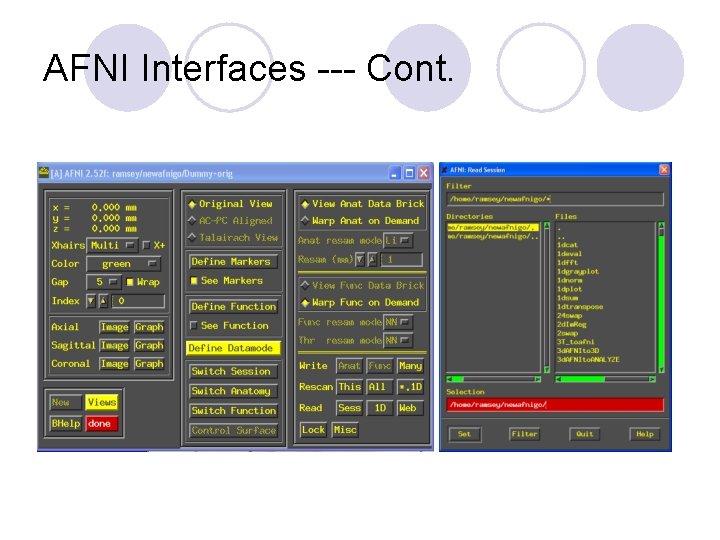

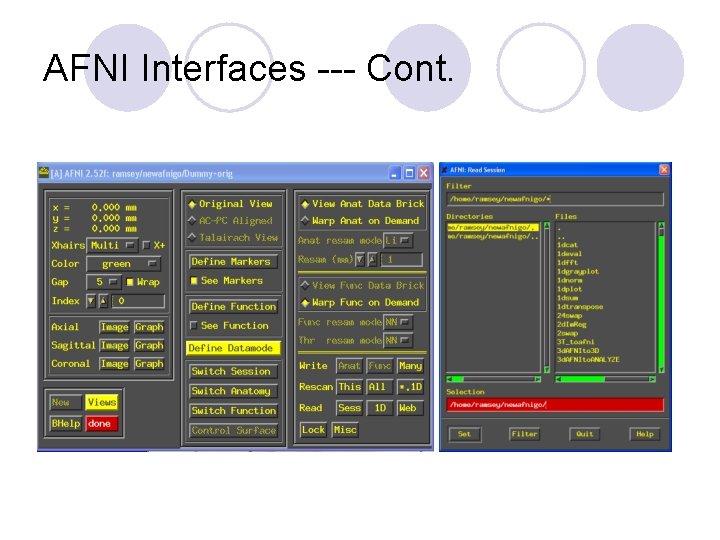

AFNI Interfaces --- Cont.

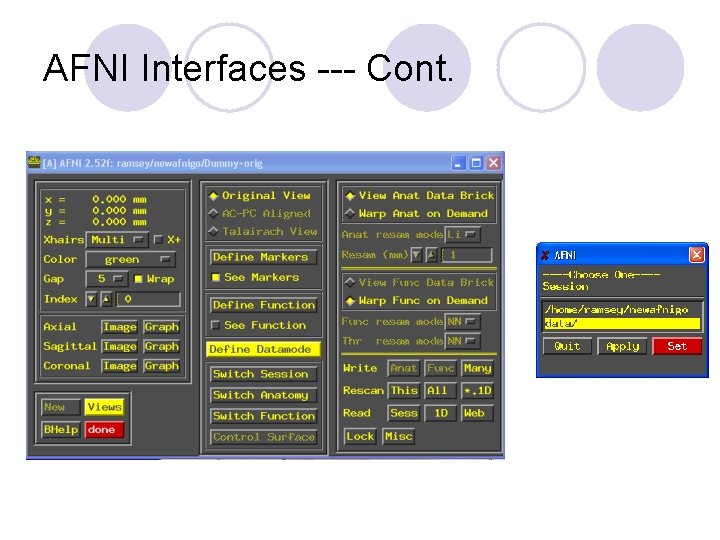

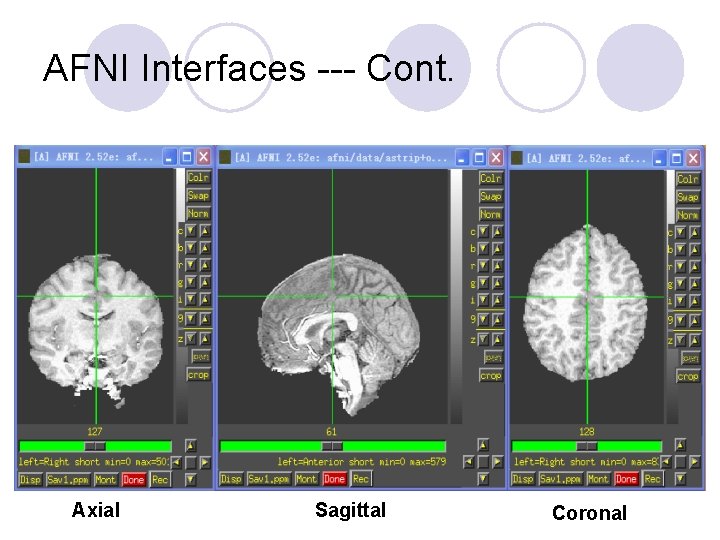

AFNI Interfaces --- Cont.

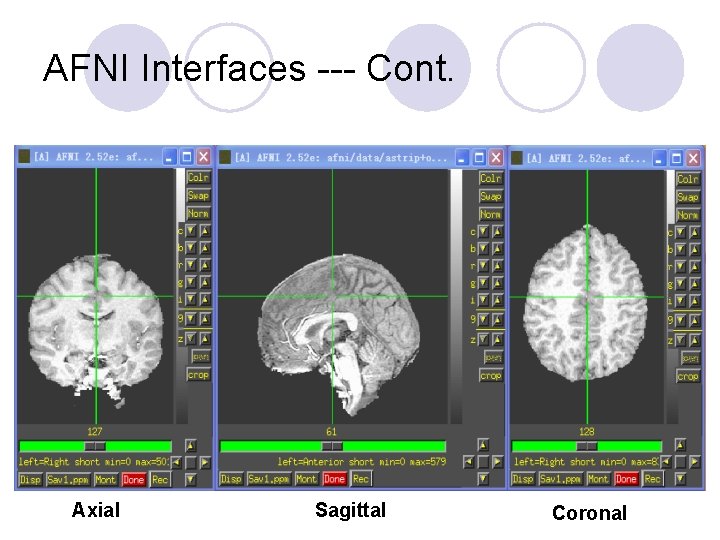

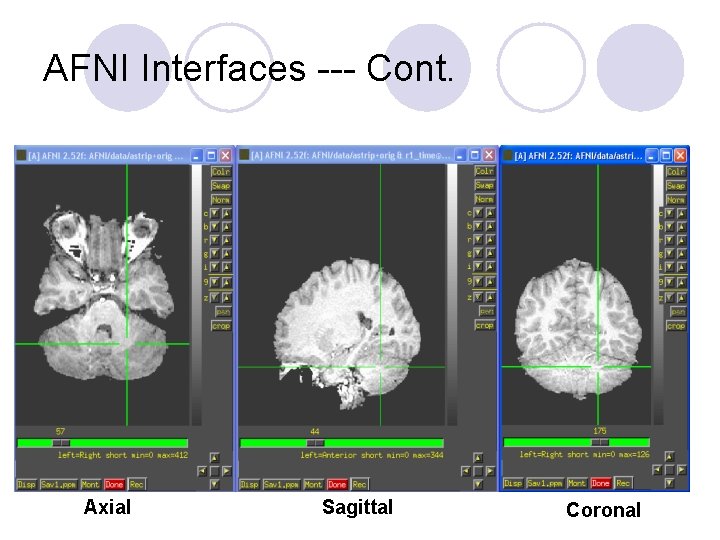

AFNI Interfaces --- Cont. (axial, sagittal, and coronal views)

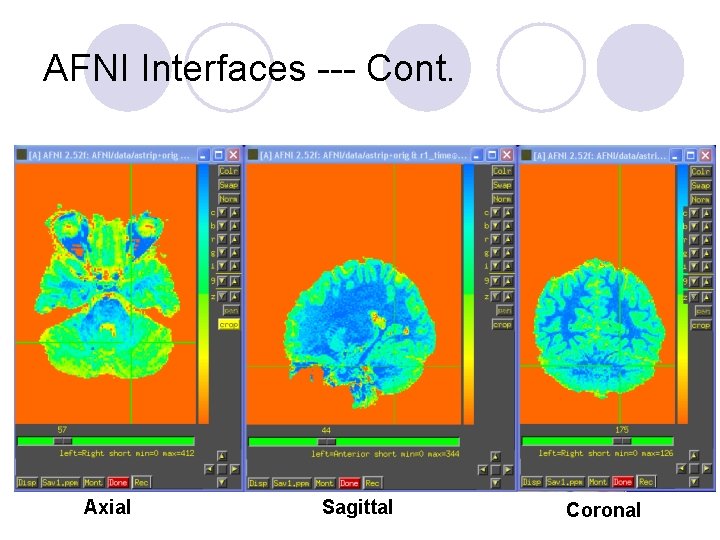

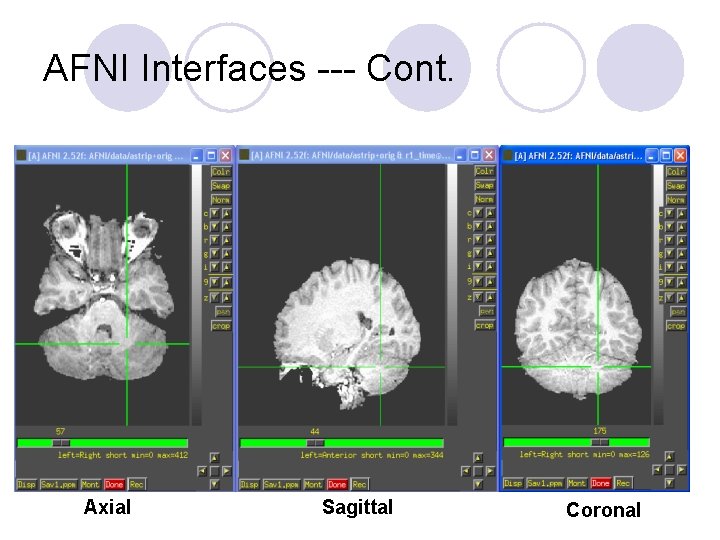

AFNI Interfaces --- Cont. (axial, sagittal, and coronal views)

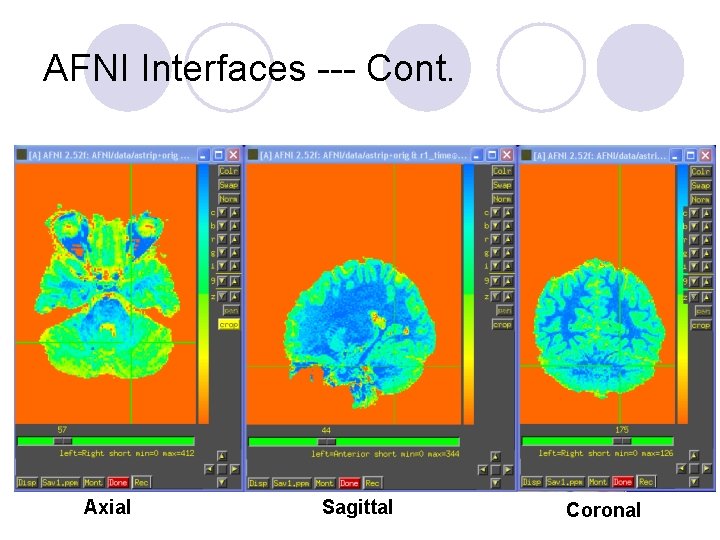

AFNI Interfaces --- Cont. (axial, sagittal, and coronal views)

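FFT in AFNI
- Fast Fourier Transform: an efficient algorithm for computing the discrete Fourier transform (DFT), which maps a finite sequence of samples from the discrete time (or spatial) domain to the discrete frequency domain
- Reduces the number of operations needed for N points from $O(N^2)$ to $O(N \log_2 N)$
- Extensively used in AFNI
- Parallelizing the FFT is therefore of great significance for AFNI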

What is MPI?
- MPI stands for Message-Passing Interface.
- MPI is one of the most widely used approaches to developing parallel programs.
- The MPI standard specifies a library of functions that can be called from C or Fortran programs.
- The foundation of this library is a small group of functions that achieve parallelism through message passing.

What is Message Passing?
- Data are explicitly transmitted from one process to another
- A powerful and very general method of expressing parallelism
- Drawback: it is often called the “assembly language of parallel computing”, because the programmer must manage every data transfer explicitly

What does MPI do for us?
- It makes it possible to write libraries of parallel programs that are both portable and efficient
- Using these libraries hides many of the details of parallel programming
- This makes parallel computing much more accessible to professionals in all branches of science and engineering

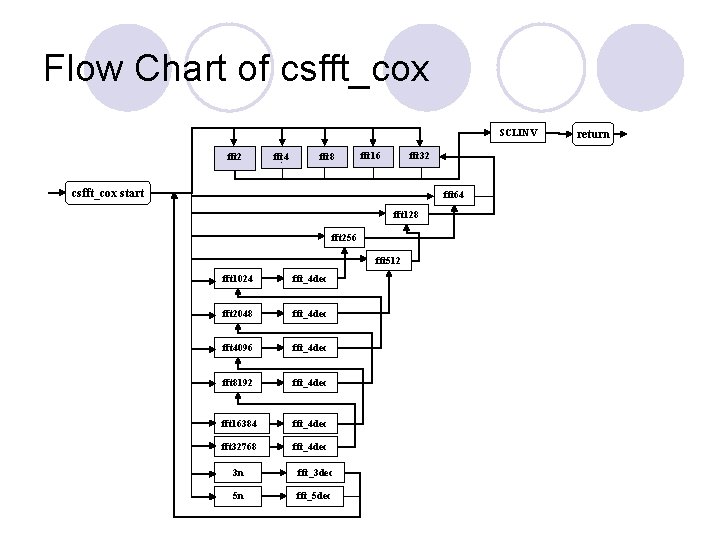

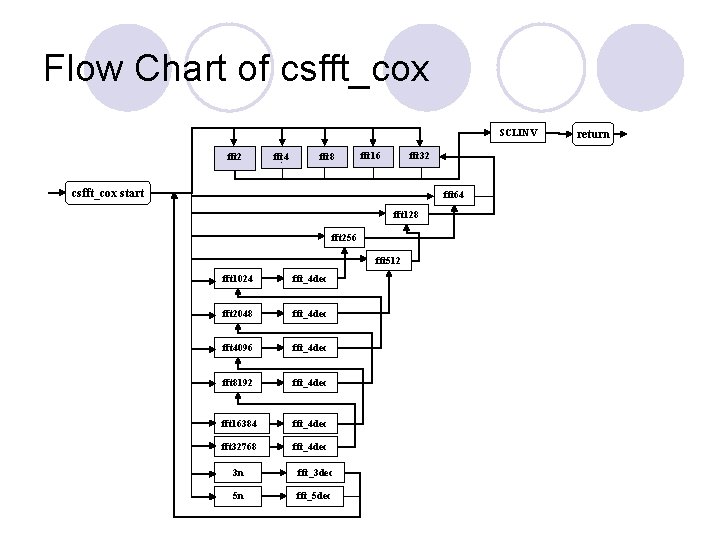

Our Objective
- To parallelize the FFT part of AFNI
- In AFNI, a call to the FFT is in fact a call to the csfft_cox() function, whose structure is shown on the next slide

Flow Chart of csfft_cox

(Flow chart: csfft_cox start; SCLINV; fft2, fft4, fft8, fft16, fft32, fft64, fft128, fft256, fft512, fft1024; fft2048, fft4096, fft8192, fft16384, and fft32768, each calling fft_4dec; length 3n: fft_3dec; length 5n: fft_5dec; return.)
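The dispatch in the flow chart can be summarized as a routing decision on the transform length. The sketch below is a hypothetical reading of the chart, not AFNI's code: the enum, `route_length`, and the assumption that the 3n and 5n cases mean 3·2^k and 5·2^k are all illustrative.

```c
#include <stdbool.h>

/* Hypothetical classification of how a csfft_cox()-style dispatcher could
 * route a transform of length n, read off the flow chart. The names and
 * the exact length limits are illustrative assumptions. */
typedef enum {
    ROUTE_DIRECT,      /* dedicated fft2 ... fft1024 routine          */
    ROUTE_DEC4,        /* fft2048 ... fft32768: fft_4dec, 4 sub-FFTs  */
    ROUTE_DEC3,        /* assumed n = 3 * 2^k: fft_3dec, 3 sub-FFTs   */
    ROUTE_DEC5,        /* assumed n = 5 * 2^k: fft_5dec, 5 sub-FFTs   */
    ROUTE_UNSUPPORTED
} fft_route;

static bool is_pow2(int n) { return n > 0 && (n & (n - 1)) == 0; }

/* Returns the route for length n and, via the out-parameters, how many
 * independent sub-transforms one decimation step produces and their length. */
fft_route route_length(int n, int *num_sub, int *sub_len)
{
    *num_sub = 1;
    *sub_len = n;
    if (is_pow2(n) && n >= 2 && n <= 1024)
        return ROUTE_DIRECT;
    if (is_pow2(n) && n >= 2048 && n <= 32768) {
        *num_sub = 4;
        *sub_len = n / 4;   /* e.g. fft4096 -> 4 x fft1024 */
        return ROUTE_DEC4;
    }
    if (n % 3 == 0 && is_pow2(n / 3)) {
        *num_sub = 3;
        *sub_len = n / 3;
        return ROUTE_DEC3;
    }
    if (n % 5 == 0 && is_pow2(n / 5)) {
        *num_sub = 5;
        *sub_len = n / 5;
        return ROUTE_DEC5;
    }
    return ROUTE_UNSUPPORTED;
}
```

Applied repeatedly, the radix-4 step recurses: a length-16384 transform becomes four length-4096 transforms, each of which becomes four length-1024 transforms handled directly.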

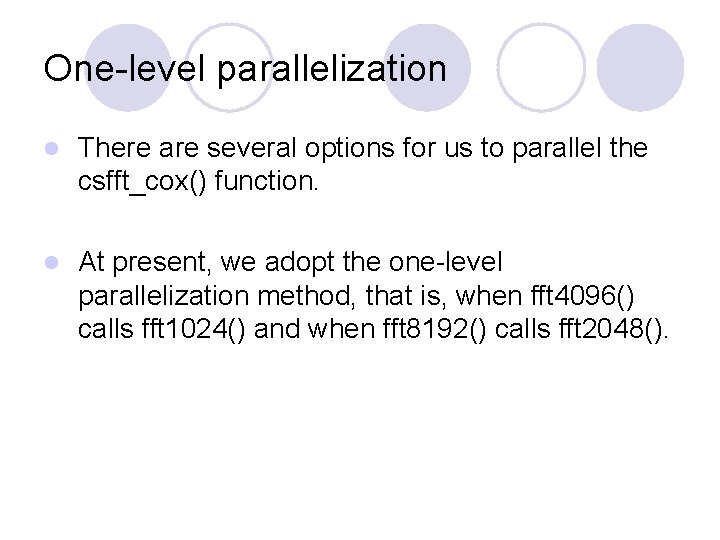

One-level parallelization
- There are several options for parallelizing the csfft_cox() function.
- At present, we adopt one-level parallelization: we parallelize only at the points where fft4096() calls fft1024() and where fft8192() calls fft2048().

Correctness of our parallel code
- Applying the FFT and then the IFFT consecutively returns a set of complex numbers that are almost identical to those in the original data file
- The only difference comes from floating-point round-off error (the same phenomenon occurs in the original code)
- So what is the speedup?

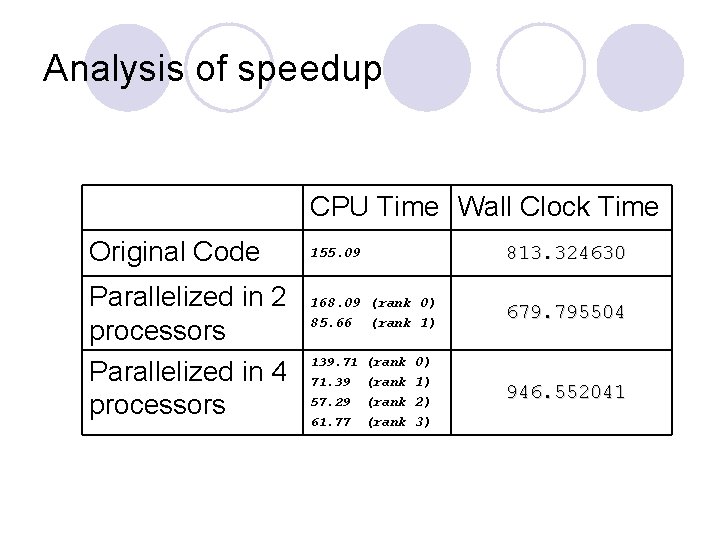

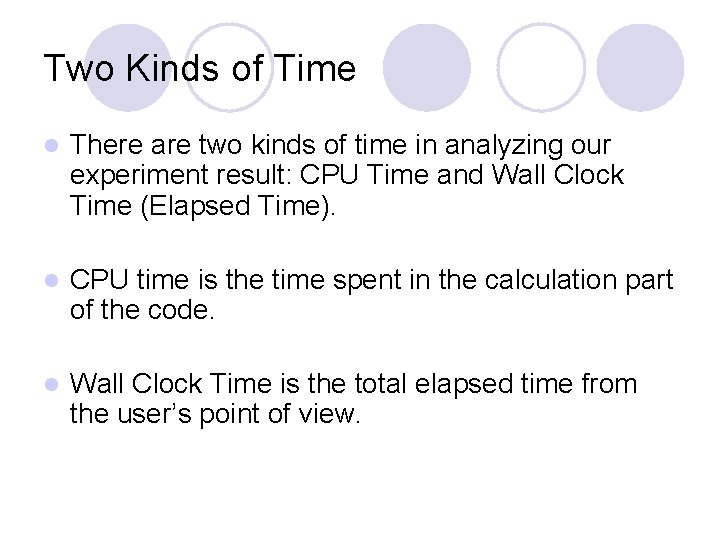

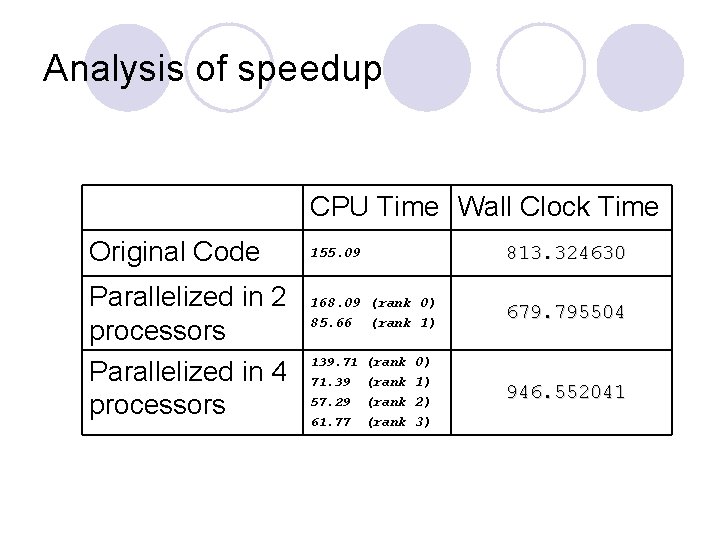

Two Kinds of Time
- There are two kinds of time in analyzing our experimental results: CPU time and wall clock time (elapsed time).
- CPU time is the processor time actually spent executing the computational part of the code.
- Wall clock time is the total elapsed time from the user's point of view, including time spent waiting (for example, while other users' jobs occupy the CPU).
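The two measurements can be taken as sketched below. This assumes a POSIX system: `getrusage()` reports user and system CPU time separately, which resembles the "u" and "s" fields in the logs, although the slides do not say how the authors' harness measured them. `cpu_times` and `wall_seconds` are hypothetical helper names; inside an MPI program, `MPI_Wtime()` is the usual portable wall-clock call.

```c
#define _POSIX_C_SOURCE 200809L
#include <sys/resource.h>
#include <time.h>

/* User and system CPU time consumed so far by this process, in seconds. */
void cpu_times(double *user_s, double *sys_s)
{
    struct rusage ru;
    getrusage(RUSAGE_SELF, &ru);   /* error check omitted in this sketch */
    *user_s = ru.ru_utime.tv_sec + ru.ru_utime.tv_usec / 1e6;
    *sys_s  = ru.ru_stime.tv_sec + ru.ru_stime.tv_usec / 1e6;
}

/* Wall clock (elapsed) time in seconds from a monotonic clock;
 * only differences between two calls are meaningful. */
double wall_seconds(void)
{
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return ts.tv_sec + ts.tv_nsec / 1e9;
}
```

Taking both readings before and after the FFT loop and subtracting gives the two times. On a shared machine the wall clock difference can be much larger than the CPU time, as in the original-code run below (813.32 wall clock versus 155.09 u and 30.60 s).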

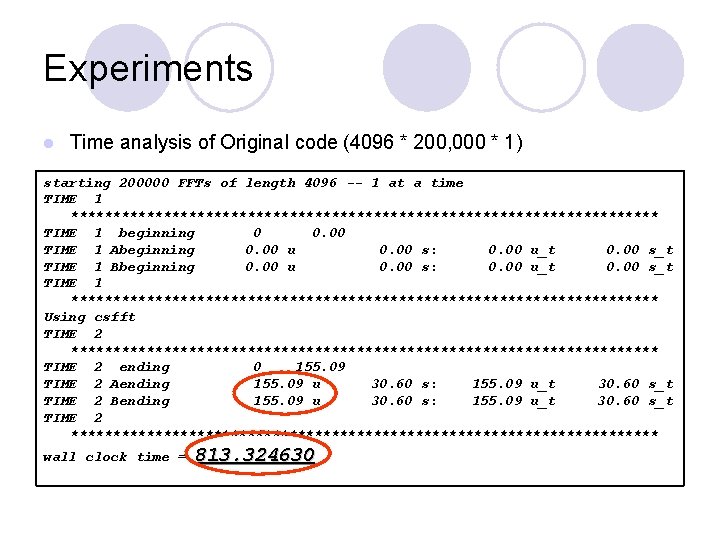

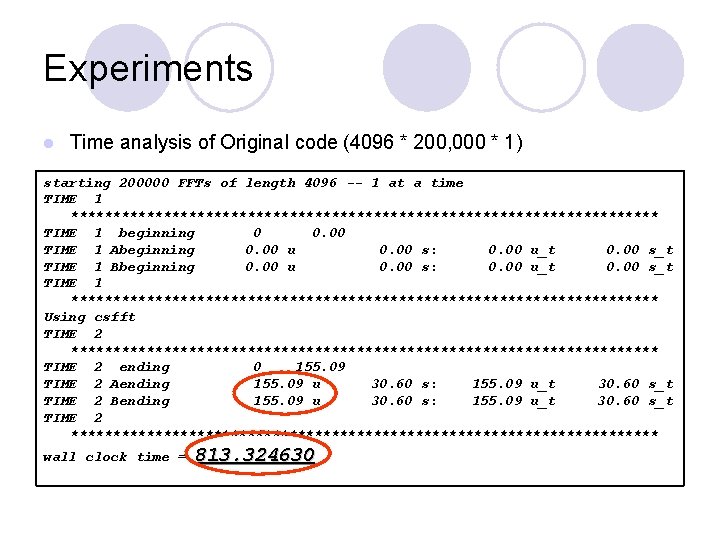

Experiments
- Time analysis of the original code (4096 × 200,000 × 1): 200,000 FFTs of length 4096, one at a time, using csfft
- TIME 1 (beginning): rank 0 at 0.00; lines A and B: 0.00 u, 0.00 s, 0.00 u_t, 0.00 s_t
- TIME 2 (ending): rank 0 at 155.09; lines A and B: 155.09 u, 30.60 s, 155.09 u_t, 30.60 s_t
- Wall clock time = 813.324630

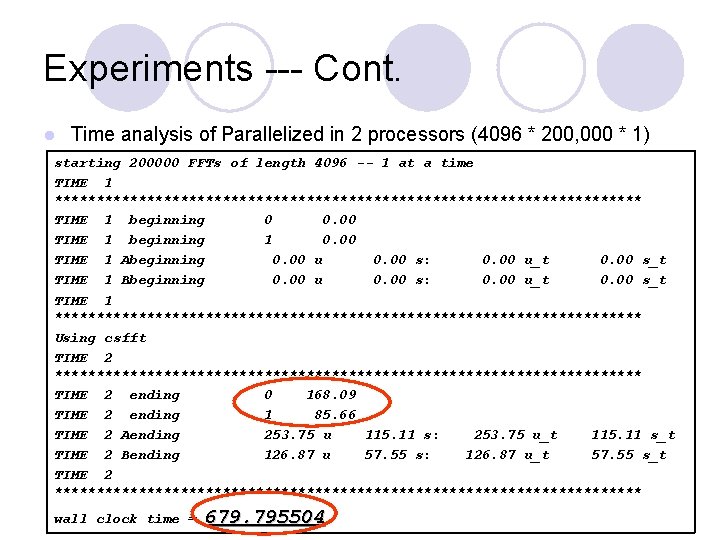

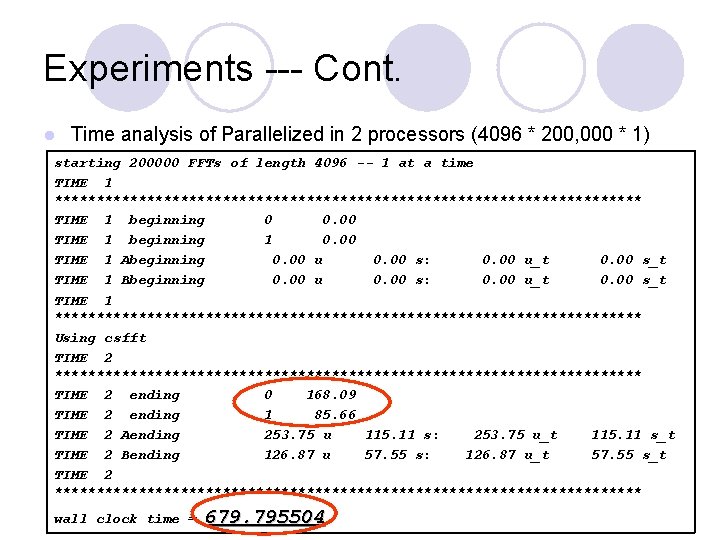

Experiments --- Cont.
- Time analysis of the version parallelized on 2 processors (4096 × 200,000 × 1): 200,000 FFTs of length 4096, one at a time, using csfft
- TIME 1 (beginning): ranks 0 and 1 at 0.00; lines A and B: 0.00 u, 0.00 s, 0.00 u_t, 0.00 s_t
- TIME 2 (ending): rank 0 at 168.09, rank 1 at 85.66
- Line A: 253.75 u, 115.11 s, 253.75 u_t, 115.11 s_t
- Line B: 126.87 u, 57.55 s, 126.87 u_t, 57.55 s_t
- Wall clock time = 679.795504

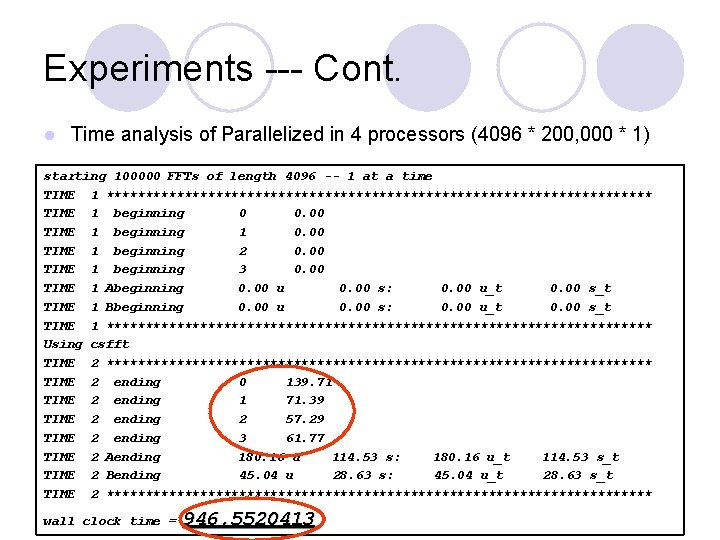

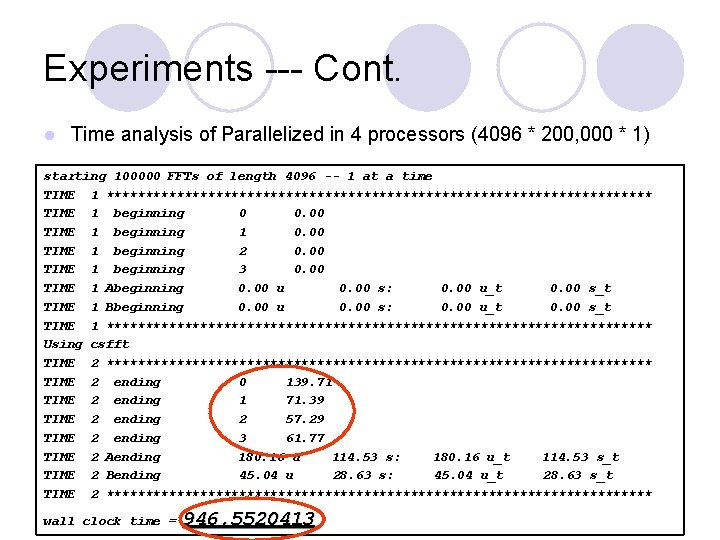

Experiments --- Cont.
- Time analysis of the version parallelized on 4 processors (4096 × 200,000 × 1): the log reports 100,000 FFTs of length 4096, one at a time, using csfft
- TIME 1 (beginning): ranks 0, 1, 2, and 3 at 0.00; lines A and B: 0.00 u, 0.00 s, 0.00 u_t, 0.00 s_t
- TIME 2 (ending): rank 0 at 139.71, rank 1 at 71.39, rank 2 at 57.29, rank 3 at 61.77
- Line A: 180.16 u, 114.53 s, 180.16 u_t, 114.53 s_t
- Line B: 45.04 u, 28.63 s, 45.04 u_t, 28.63 s_t
- Wall clock time = 946.5520413

Analysis of speedup

| Version | CPU Time | Wall Clock Time |
|---|---|---|
| Original code | 155.09 | 813.324630 |
| Parallelized on 2 processors | 168.09 (rank 0); 85.66 (rank 1) | 679.795504 |
| Parallelized on 4 processors | 139.71 (rank 0); 71.39 (rank 1); 57.29 (rank 2); 61.77 (rank 3) | 946.552041 |
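The usual way to summarize such measurements is the speedup S_p = T_1 / T_p and the parallel efficiency E_p = S_p / p, where T_1 is the time of the original code and T_p the time on p processors. The helpers below are a minimal sketch of these standard definitions; the function names are hypothetical.

```c
/* Speedup and parallel efficiency from measured times:
 *   S_p = T_1 / T_p,   E_p = S_p / p
 * where T_1 is the time of the original (serial) code and T_p the
 * time of the version run on p processors. */
double speedup(double t_serial, double t_parallel)
{
    return t_serial / t_parallel;
}

double efficiency(double t_serial, double t_parallel, int p)
{
    return speedup(t_serial, t_parallel) / (double)p;
}
```

Plugging in the wall clock times from the table gives S_2 = 813.324630 / 679.795504 ≈ 1.196 (E_2 ≈ 0.598) and S_4 = 813.324630 / 946.552041 ≈ 0.859.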

Analysis of speedup --- Cont.

There are two main reasons why we did not obtain the ideal speedup:
1. Jobs from different users compete for the same CPUs.
2. Communication cost and other overheads make the ideal speedup unattainable on real machines.
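A simple idealized model shows why both reasons push the speedup below the ideal value p. It is an assumption for illustration, not a fit to the measurements: the computation time T_1 is taken to divide perfectly among p processors, and everything else (communication, synchronization, time lost to other users' jobs) is lumped into an overhead term.

$$
S_p = \frac{T_1}{T_p}, \qquad T_p = \frac{T_1}{p} + T_{\mathrm{overhead}}(p)
\quad\Longrightarrow\quad
S_p = \frac{p}{1 + p\,T_{\mathrm{overhead}}(p)/T_1} < p \quad \text{whenever } T_{\mathrm{overhead}}(p) > 0.
$$

Because the overhead term is multiplied by p in the denominator, adding processors can even lower S_p when the overhead grows with p.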

Conclusion
- We have parallelized the FFT part of the AFNI software package using MPI.
- The results show that, for the FFT algorithm itself, we obtain a speedup of around 30 percent.
- Future work: increase the speedup, and apply the FFT parallelization to the 3dDeconvolve program.

Questions?