Parallelism in the Standard C++: What to Expect in C++17



Artur Laksberg, Microsoft Corp., May 8th, 2014

Agenda:

- Fundamentals
- Task regions
- Parallel algorithms
- Parallelization
- Vectorization

Part 1: The Fundamentals

Existing parallel programming models: RenderScript, OpenMP, CUDA, C++ AMP, PPL, TBB, MPI, OpenACC, OpenCL, Cilk Plus, GCD.

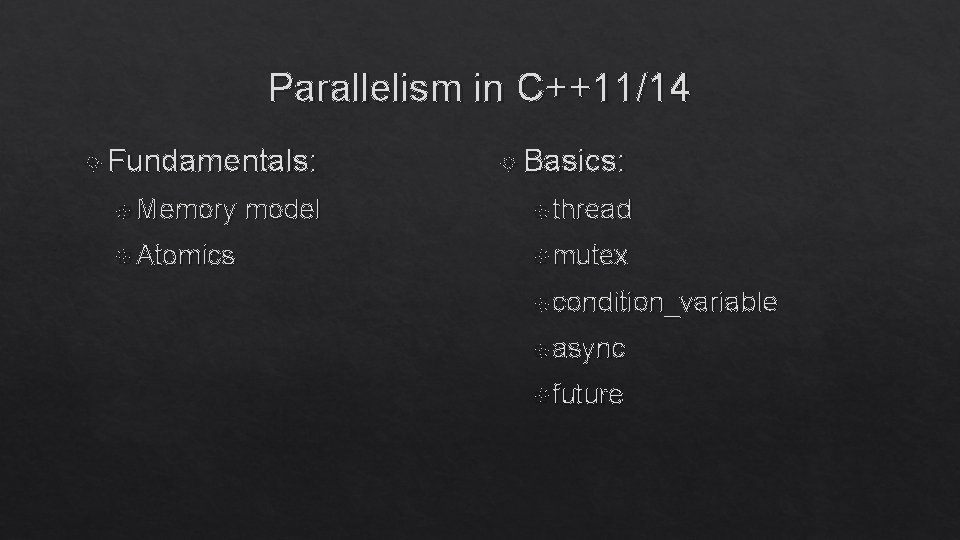

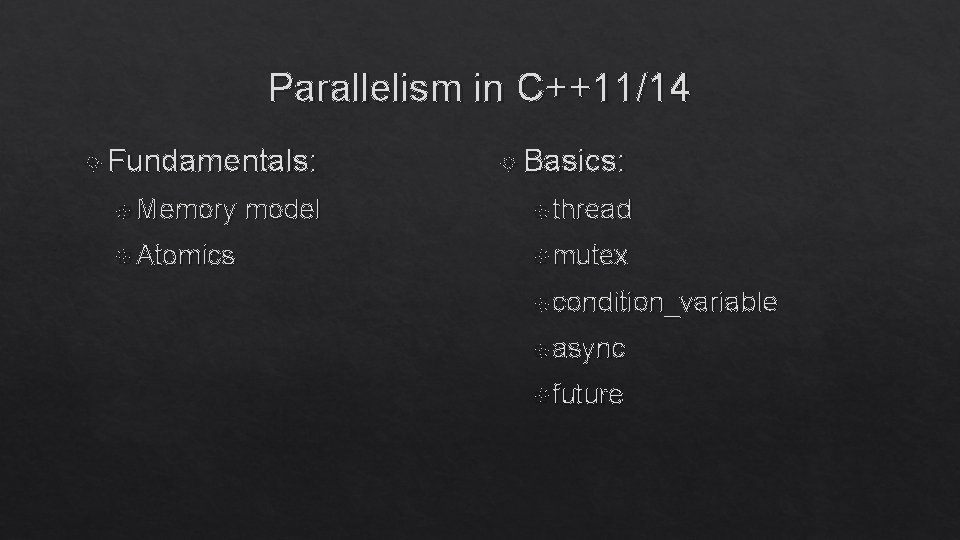

Parallelism in C++11/14:

- Fundamentals: memory model, atomics
- Basics: `std::thread`, `std::mutex`, `std::condition_variable`, `std::async`, `std::future`

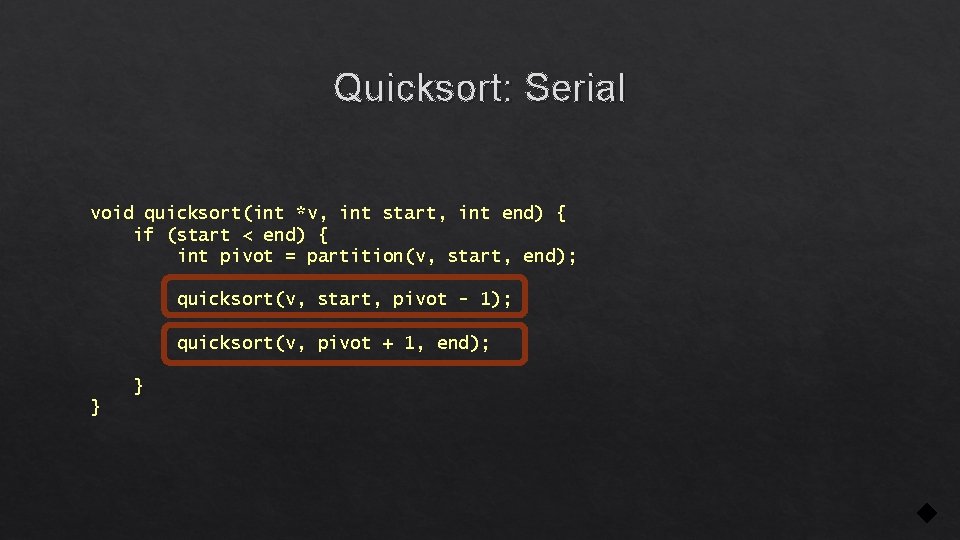

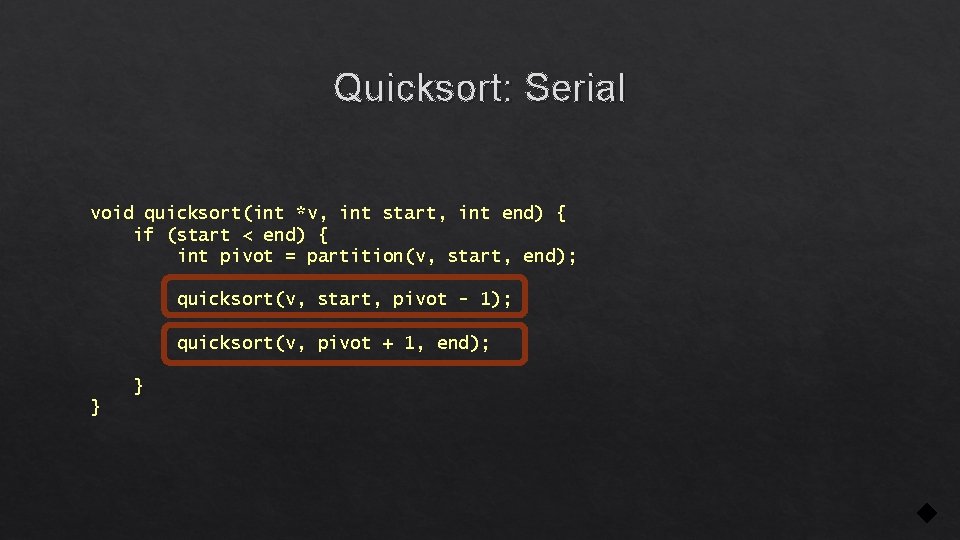

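Quicksort: Serial

```cpp
// Sorts v[start..end] (inclusive). partition() is not shown on the slide:
// it places a pivot at its final sorted index and returns that index.
void quicksort(int* v, int start, int end) {
    if (start < end) {
        int pivot = partition(v, start, end);
        quicksort(v, start, pivot - 1);
        quicksort(v, pivot + 1, end);
    }
}
```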
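The slide leaves `partition` undefined. Here is a runnable version that assumes the Lomuto partition scheme: the last element is the pivot, and the range `[start, end]` is inclusive. The `partition` body is an illustrative assumption, not code from the talk.

```cpp
#include <cassert>
#include <utility>   // std::swap

// Assumed Lomuto partition: uses v[end] as the pivot, moves it to its
// final sorted position within [start, end], and returns that position.
int partition(int* v, int start, int end) {
    int pivot = v[end];
    int i = start;                       // next slot for an element < pivot
    for (int j = start; j < end; ++j) {
        if (v[j] < pivot) {
            std::swap(v[i], v[j]);
            ++i;
        }
    }
    std::swap(v[i], v[end]);             // put the pivot in place
    return i;
}

// The slide's serial quicksort over the inclusive range [start, end].
void quicksort(int* v, int start, int end) {
    if (start < end) {
        int pivot = partition(v, start, end);
        quicksort(v, start, pivot - 1);
        quicksort(v, pivot + 1, end);
    }
}
```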

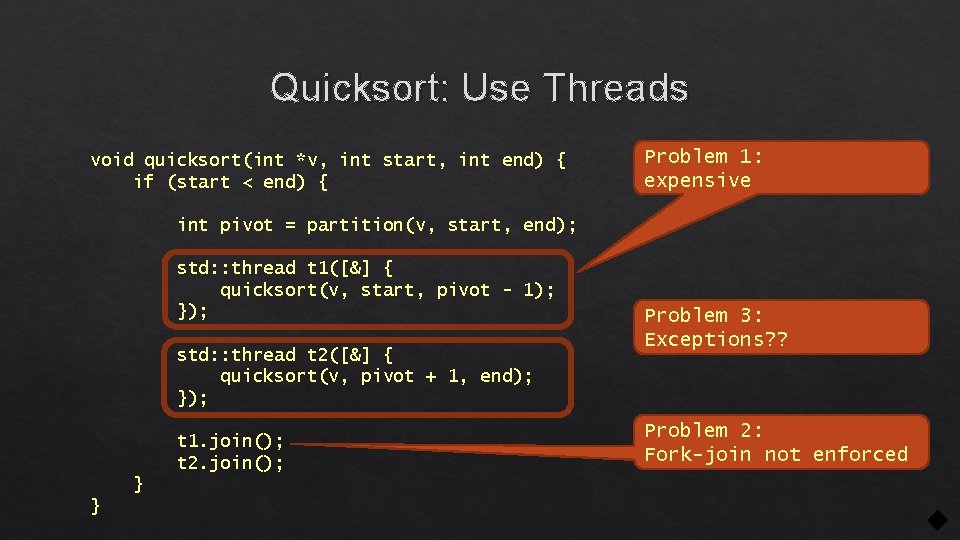

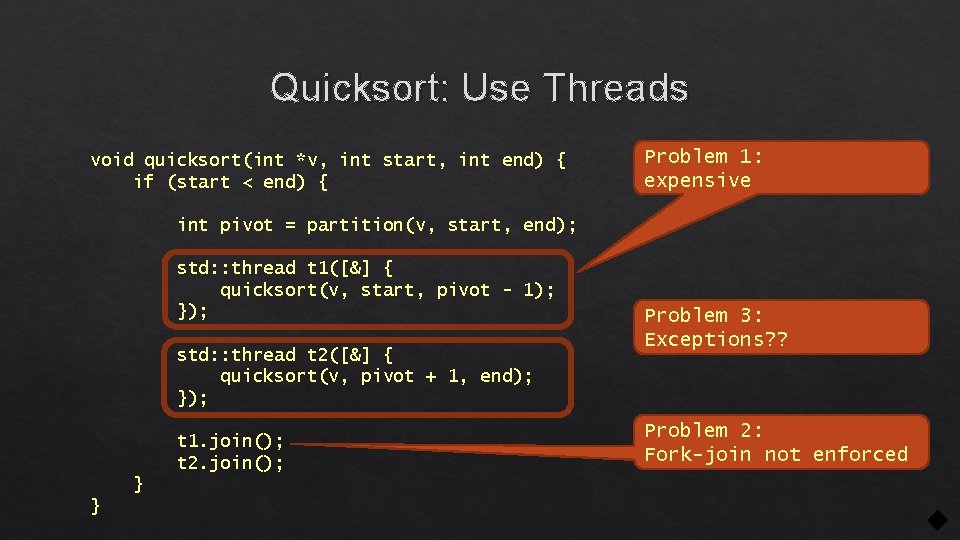

Quicksort: Use Threads

```cpp
#include <thread>

void quicksort(int* v, int start, int end) {
    if (start < end) {
        int pivot = partition(v, start, end);
        // Problem 1: creating a thread per recursive call is expensive.
        std::thread t1([&] { quicksort(v, start, pivot - 1); });
        std::thread t2([&] { quicksort(v, pivot + 1, end); });
        // Problem 2: fork-join is not enforced; nothing forces these joins.
        t1.join();
        t2.join();
        // Problem 3: exceptions? One escaping a thread function calls std::terminate.
    }
}
```
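Before task regions, C++11 already offered a partial fix for Problems 1 to 3 through `std::async`. Calling `future::get()` rethrows an exception thrown by the child. A future returned by `std::async` also waits in its destructor, so the child cannot outlive the call. The sketch below is illustrative and not from the talk. `partition_lomuto` and the `depth` cutoff, which caps how many threads are created, are assumptions.

```cpp
#include <cassert>
#include <future>
#include <utility>

// Assumed Lomuto partition (the slide's partition() is not shown).
int partition_lomuto(int* v, int start, int end) {
    int pivot = v[end];
    int i = start;
    for (int j = start; j < end; ++j)
        if (v[j] < pivot) std::swap(v[i++], v[j]);
    std::swap(v[i], v[end]);
    return i;
}

// Fork the left half with std::async, recurse on the right half here,
// then join with get(). Below depth 0 the recursion stays serial.
void quicksort_async(int* v, int start, int end, int depth) {
    if (start >= end) return;
    int pivot = partition_lomuto(v, start, end);
    if (depth <= 0) {
        quicksort_async(v, start, pivot - 1, 0);
        quicksort_async(v, pivot + 1, end, 0);
        return;
    }
    std::future<void> left = std::async(std::launch::async, quicksort_async,
                                        v, start, pivot - 1, depth - 1);
    // If this call throws, left's destructor still waits for the child.
    quicksort_async(v, pivot + 1, end, depth - 1);
    left.get();  // join; rethrows an exception from the left half
}
```

One caveat: if both halves throw, only the right half's exception propagates and the left one is lost. That limitation is what the later `exception_list` design addresses.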

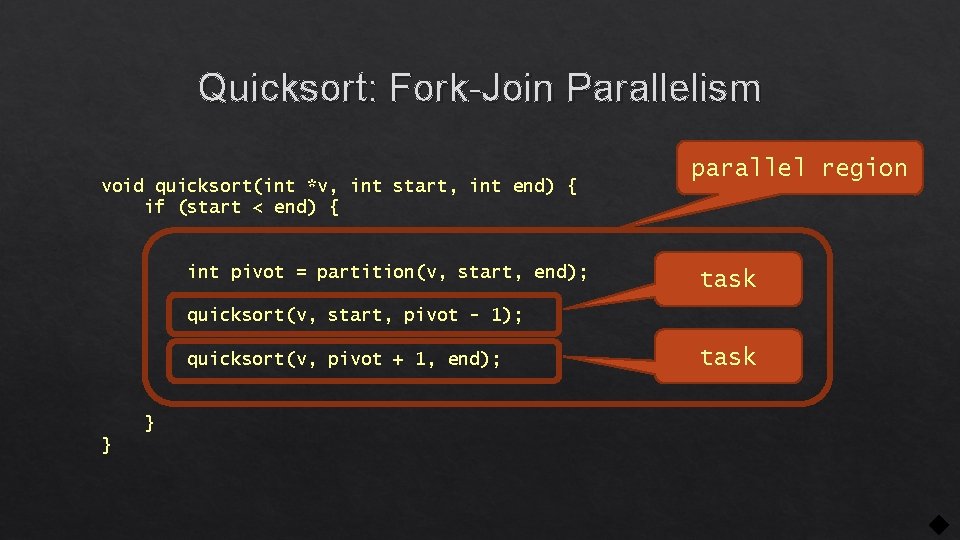

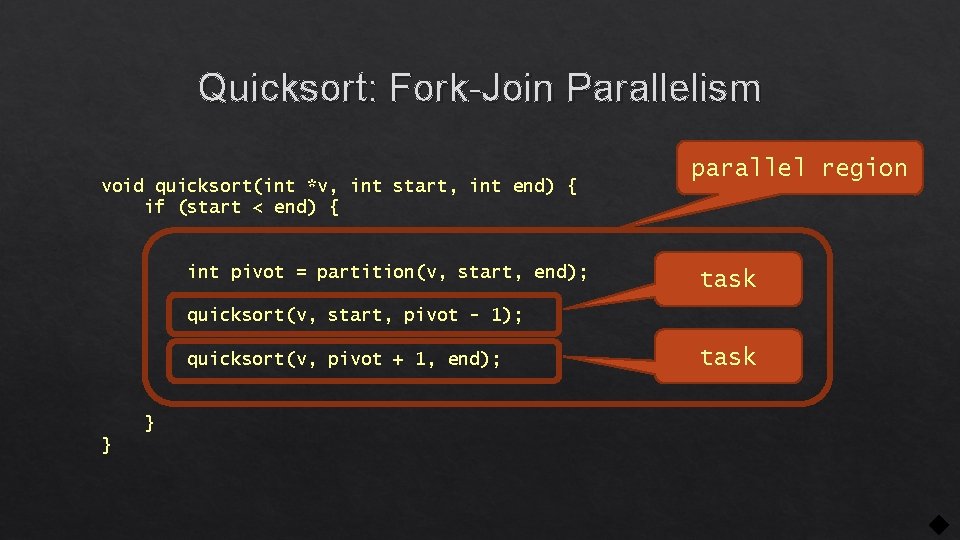

Quicksort: Fork-Join Parallelism

```cpp
void quicksort(int* v, int start, int end) {
    if (start < end) {
        int pivot = partition(v, start, end);
        // parallel region: the two calls are independent tasks
        quicksort(v, start, pivot - 1);   // task
        quicksort(v, pivot + 1, end);     // task
        // end of region: both tasks are joined here
    }
}
```

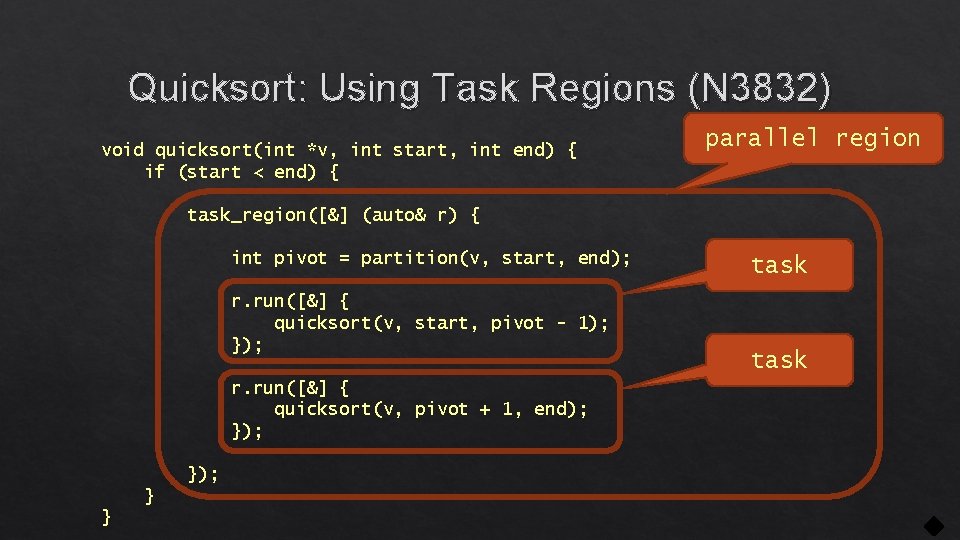

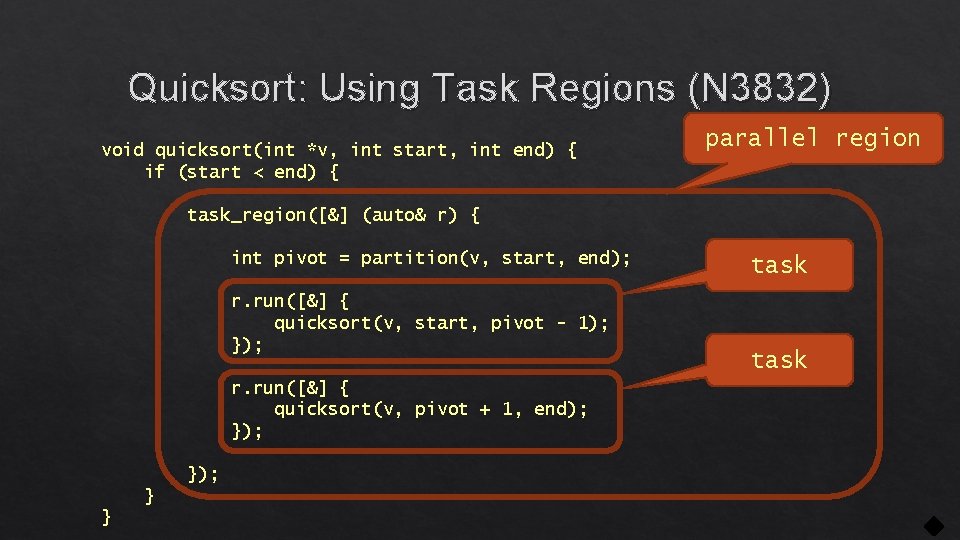

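Quicksort: Using Task Regions (N3832)

```cpp
void quicksort(int* v, int start, int end) {
    if (start < end) {
        // Computed outside the region: the tasks capture pivot by reference,
        // and task_region joins them only after the lambda below returns.
        int pivot = partition(v, start, end);
        task_region([&](auto& r) {                           // parallel region
            r.run([&] { quicksort(v, start, pivot - 1); });  // task
            r.run([&] { quicksort(v, pivot + 1, end); });    // task
        });                                                  // join
    }
}
```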
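The toy `task_region`/`task_region_handle` below, built on `std::async`, makes the fork-join semantics concrete. It is a hypothetical sketch and not the N3832 implementation. Every `run` starts a new thread instead of using a work-stealing scheduler. It also rethrows only the first task exception, whereas N3832 collects them into an `exception_list`.

```cpp
#include <cassert>
#include <exception>
#include <future>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical handle: run() spawns a task; wait_all() joins every task.
class task_region_handle {
public:
    template <class F>
    void run(F&& f) {
        tasks_.push_back(std::async(std::launch::async, std::forward<F>(f)));
    }
    void wait_all() {
        std::exception_ptr first;
        for (auto& t : tasks_) {
            try { t.get(); }
            catch (...) { if (!first) first = std::current_exception(); }
        }
        tasks_.clear();
        if (first) std::rethrow_exception(first);
    }
private:
    std::vector<std::future<void>> tasks_;
};

// Hypothetical task_region: calls f(handle), then joins all spawned tasks,
// so no task outlives the region (fork-join is enforced).
template <class F>
void task_region(F&& f) {
    task_region_handle h;
    try {
        f(h);
    } catch (...) {
        try { h.wait_all(); } catch (...) {}  // join, keep f's exception
        throw;
    }
    h.wait_all();
}

// Assumed Lomuto partition (not shown on the slide).
int partition_lomuto(int* v, int start, int end) {
    int pivot = v[end], i = start;
    for (int j = start; j < end; ++j)
        if (v[j] < pivot) std::swap(v[i++], v[j]);
    std::swap(v[i], v[end]);
    return i;
}

void quicksort(int* v, int start, int end) {
    if (start < end) {
        int pivot = partition_lomuto(v, start, end);
        task_region([&](auto& r) {
            r.run([&] { quicksort(v, start, pivot - 1); });
            r.run([&] { quicksort(v, pivot + 1, end); });
        });
    }
}
```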

Under The Hood…

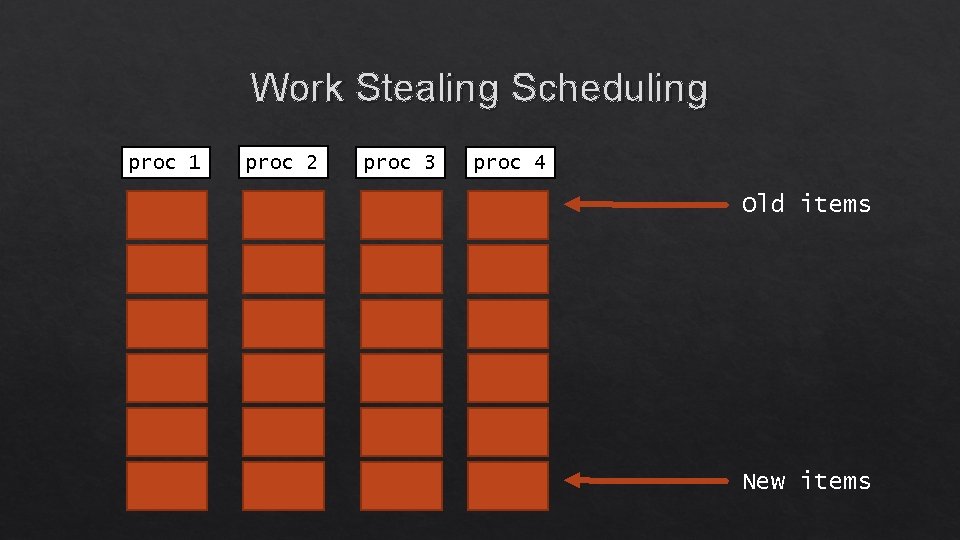

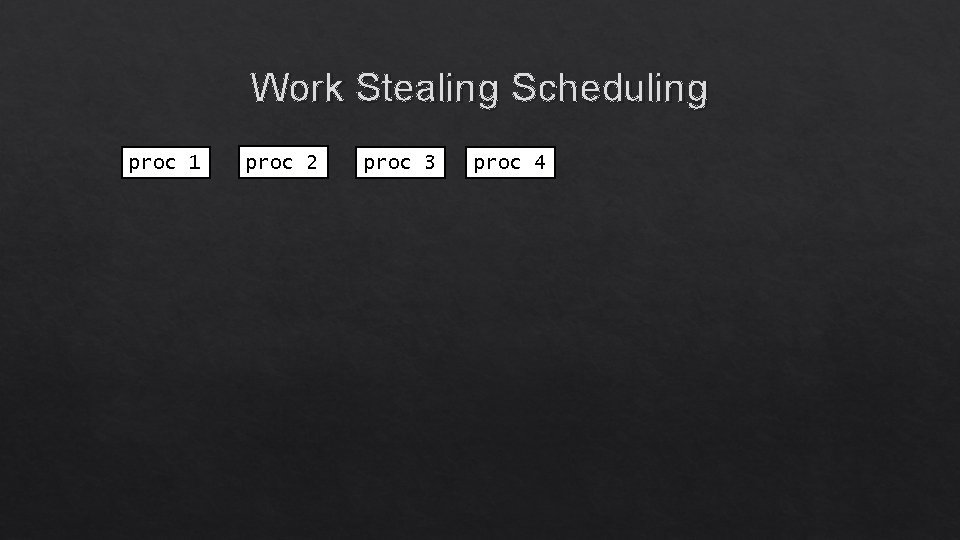

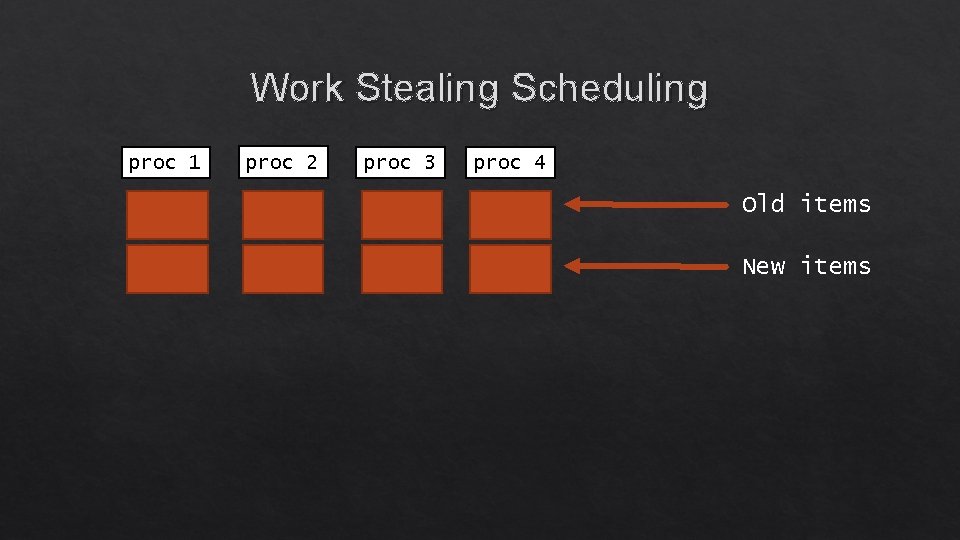

Work Stealing Scheduling (figure: proc 1, proc 2, proc 3, proc 4)

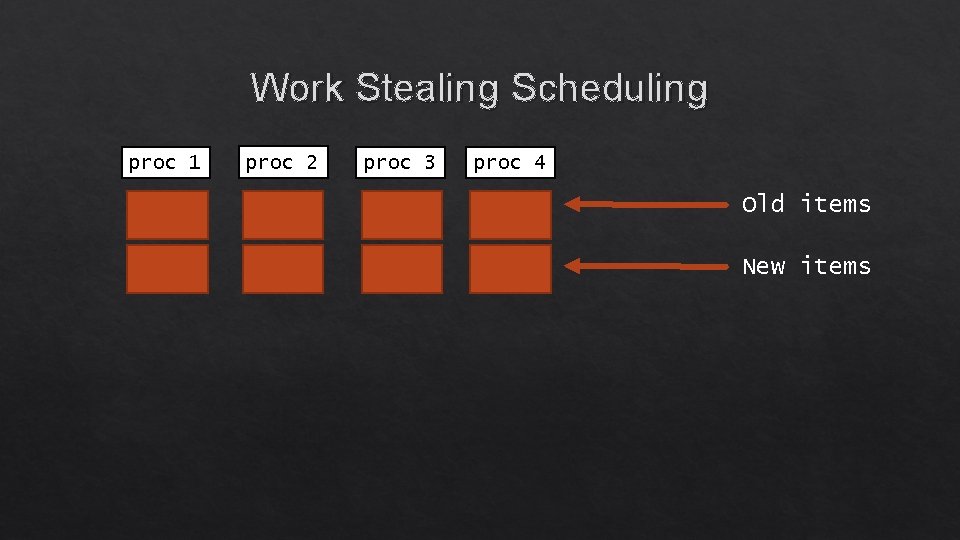

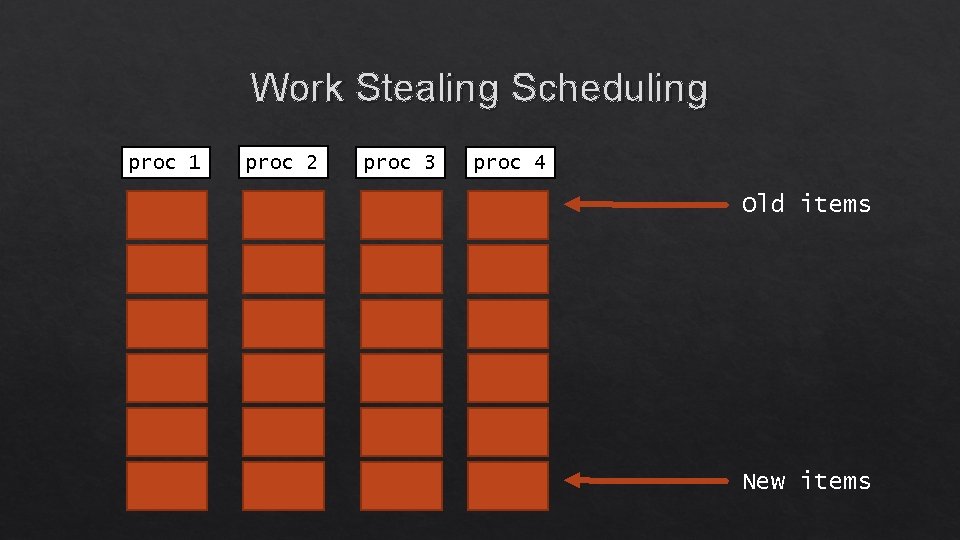

Work Stealing Scheduling (figure: proc 1 to proc 4, with old items and new items)

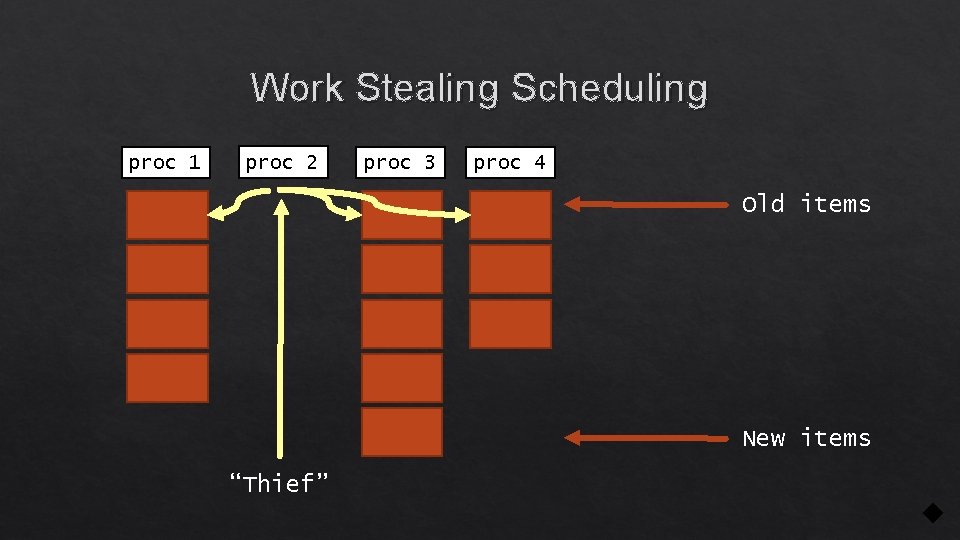


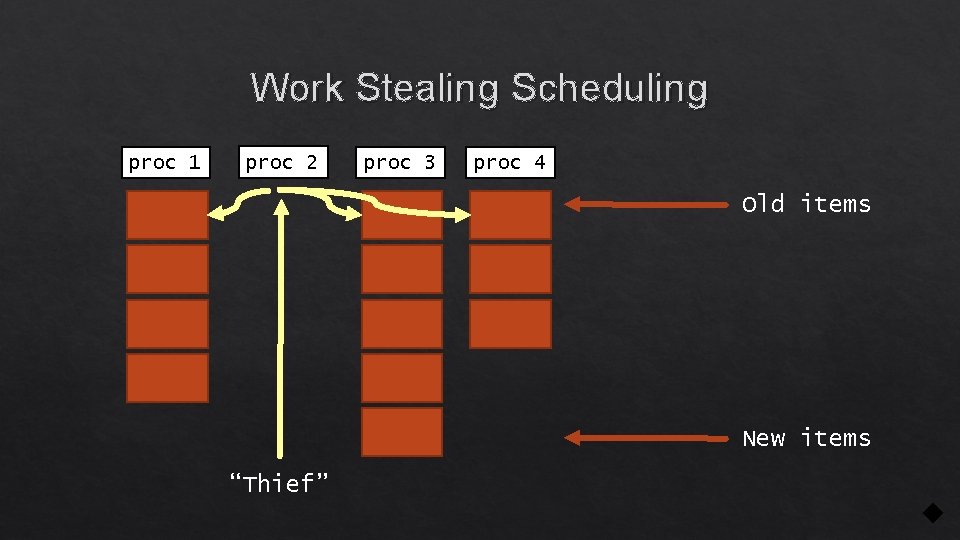

Work Stealing Scheduling (figure: proc 1 to proc 4, with old items, new items, and a "thief")
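The queues in these figures can be sketched as one double-ended queue per processor. The owner pushes and pops new items at one end, while an idle processor (the "thief") steals the oldest item from the other end. This is a simplified, mutex-based illustration and the class name `work_queue` is hypothetical. Real schedulers typically use lock-free deques such as the Chase-Lev deque.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <mutex>
#include <optional>
#include <utility>

class work_queue {
public:
    using task = std::function<void()>;

    // Owner: add a new item at the back.
    void push(task t) {
        std::lock_guard<std::mutex> lock(m_);
        items_.push_back(std::move(t));
    }
    // Owner: take the newest item (LIFO keeps the working set cache-warm).
    std::optional<task> pop() {
        std::lock_guard<std::mutex> lock(m_);
        if (items_.empty()) return std::nullopt;
        task t = std::move(items_.back());
        items_.pop_back();
        return t;
    }
    // Thief: take the oldest item from the front.
    std::optional<task> steal() {
        std::lock_guard<std::mutex> lock(m_);
        if (items_.empty()) return std::nullopt;
        task t = std::move(items_.front());
        items_.pop_front();
        return t;
    }
private:
    std::mutex m_;
    std::deque<task> items_;
};
```

Old items tend to represent larger chunks of remaining work in recursive fork-join programs. For that reason, taking the oldest item is a common choice for a thief.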

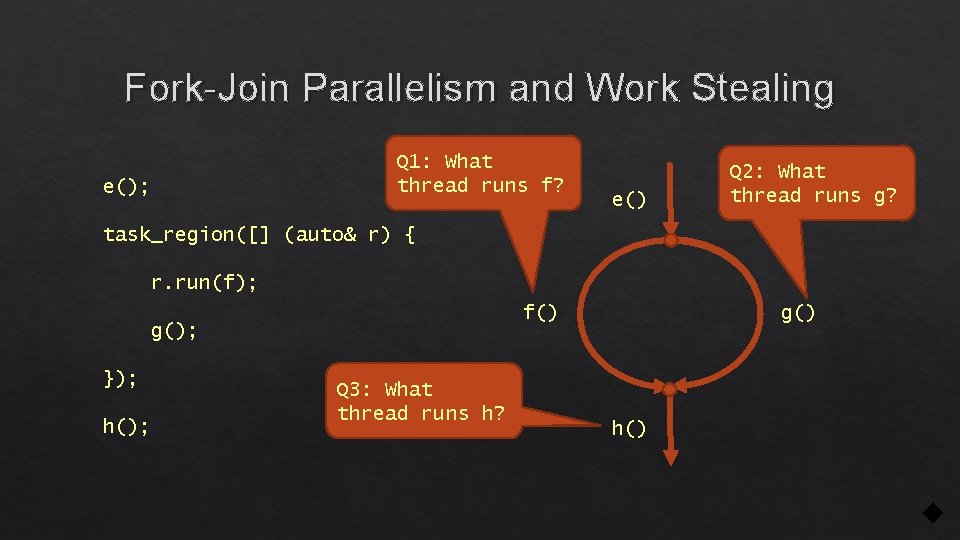

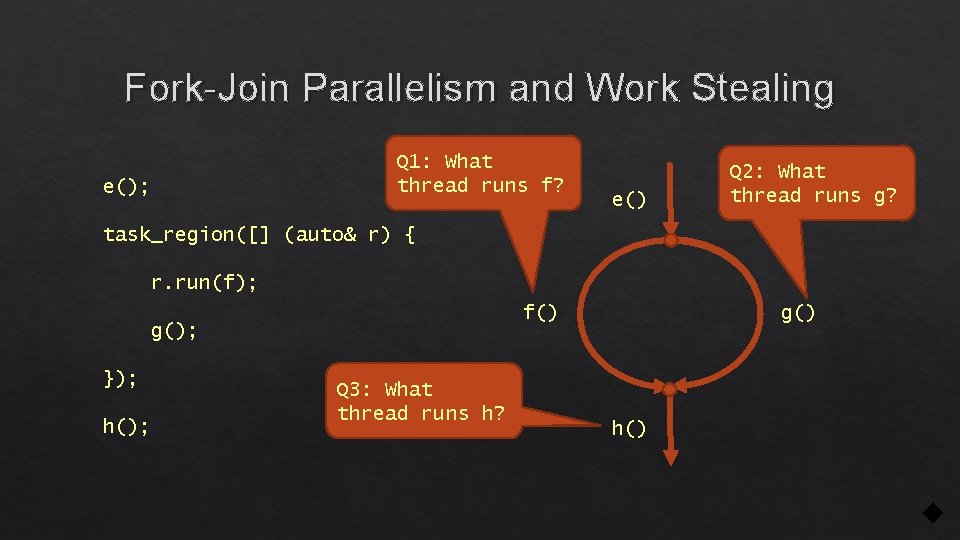

Fork-Join Parallelism and Work Stealing

```cpp
e();
task_region([](auto& r) {
    r.run(f);   // spawn f
    g();
});             // join
h();
```

- Q1: What thread runs f?
- Q2: What thread runs g?
- Q3: What thread runs h?

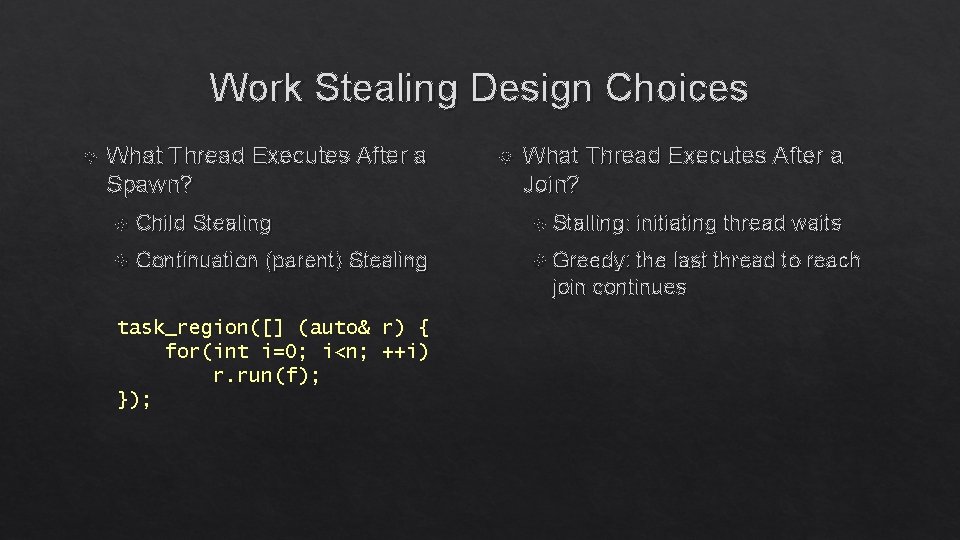

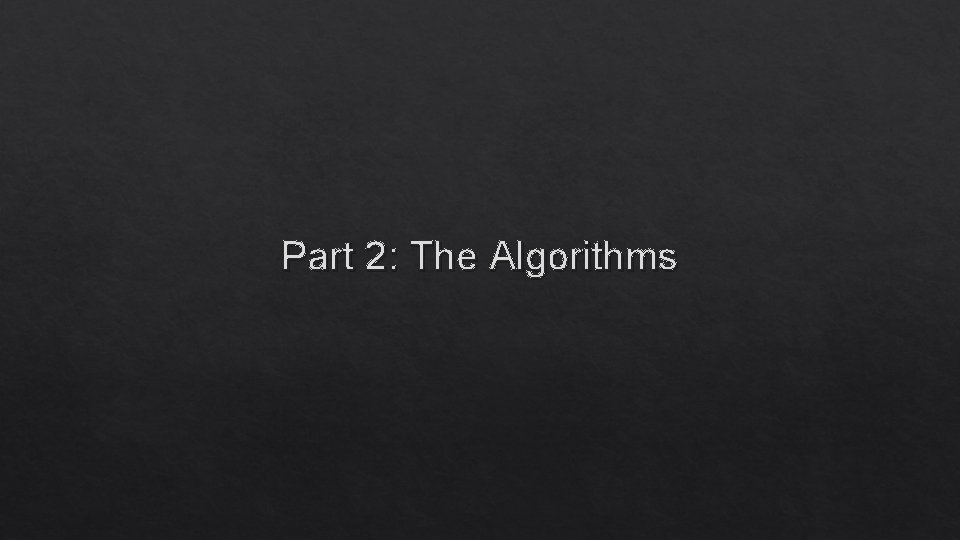

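Work Stealing Design Choices

- What thread executes after a spawn? Child stealing, or continuation (parent) stealing.
- What thread executes after a join? Stalling (the initiating thread waits), or greedy (the last thread to reach the join continues).

```cpp
task_region([&](auto& r) {
    for (int i = 0; i < n; ++i)
        r.run(f);
});
```

With child stealing, the spawning thread keeps running the loop and can queue up to n pending tasks. With continuation stealing, it runs f immediately and leaves only the rest of the loop for a thief to steal.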

Part 2: The Algorithms

Alex Stepanov: Start With The Algorithms

Inspiration: Intel Threading Building Blocks, Microsoft Parallel Patterns Library, C++ AMP, NVIDIA Thrust. Performing parallel operations on containers.

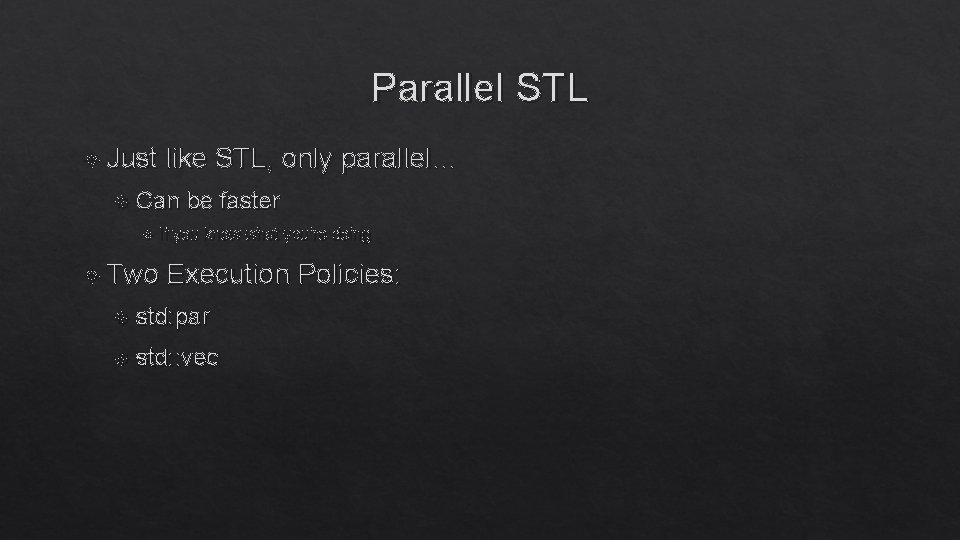

Parallel STL: just like the STL, only parallel… It can be faster, if you know what you're doing. Two execution policies: `std::par` and `std::vec`.

Parallelization: What's a Big Deal? Why isn't it already parallel?

```cpp
std::sort(begin, end, [](int a, int b) { return a < b; });
```

User-provided closures must be thread safe:

```cpp
int comparisons = 0;
// Data race if the comparator runs on several threads at once.
std::sort(begin, end, [&](int a, int b) { comparisons++; return a < b; });
```

So must special member functions, `std::swap`, and so on.
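One way to make the counting comparator safe is to turn the shared counter into a `std::atomic`. This sketch does not rely on a parallel `std::sort`. Instead, it splits the input into two halves, sorts them on two threads, and then merges them. `sort_counting` is a hypothetical helper and not how a library parallel sort is implemented.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

long sort_counting(std::vector<int>& v) {
    std::atomic<long> comparisons{0};
    // Every copy of this lambda refers to the same atomic counter.
    auto counting_less = [&](int a, int b) {
        // Relaxed is enough: the total is only read after join().
        comparisons.fetch_add(1, std::memory_order_relaxed);
        return a < b;
    };
    auto mid = v.begin() + v.size() / 2;
    std::thread left([&] { std::sort(v.begin(), mid, counting_less); });
    std::sort(mid, v.end(), counting_less);   // right half on this thread
    left.join();
    std::inplace_merge(v.begin(), mid, v.end(), counting_less);
    return comparisons.load();
}
```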

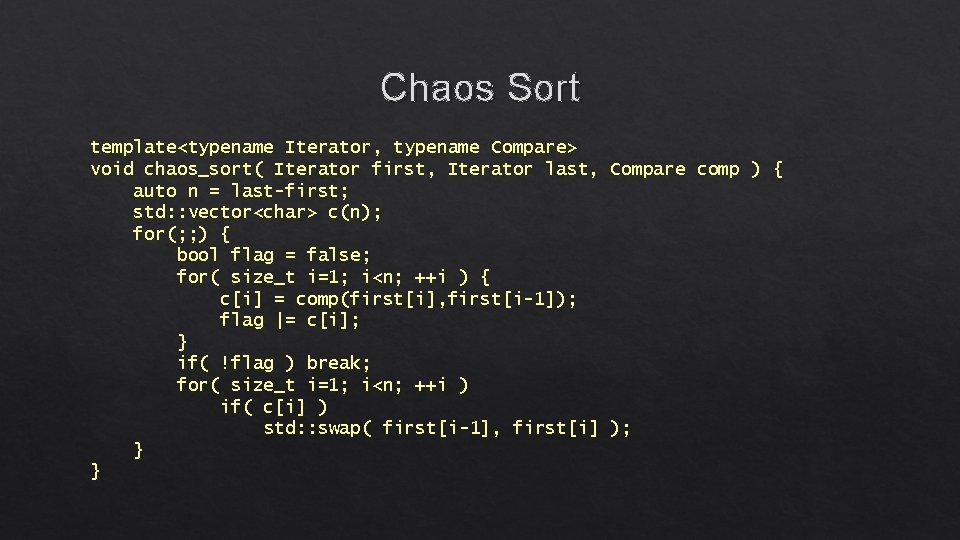

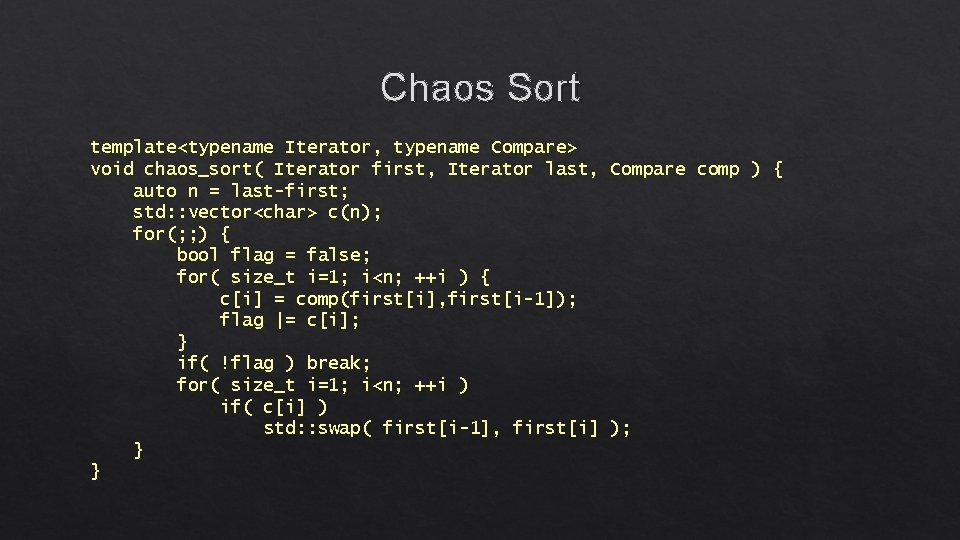

It's a Contract: what the user can do, and what the implementer can do. Asymptotic guarantees: `std::sort` is O(n log n), and `std::stable_sort` is O(n log²(n)). What about parallel sort? What is a valid implementation? (See the next slide.)

Chaos Sort

```cpp
#include <utility>
#include <vector>

template<typename Iterator, typename Compare>
void chaos_sort(Iterator first, Iterator last, Compare comp) {
    auto n = last - first;
    std::vector<char> c(n);
    for (;;) {
        bool flag = false;
        // Mark every adjacent pair that is out of order.
        for (decltype(n) i = 1; i < n; ++i) {
            c[i] = comp(first[i], first[i - 1]);
            flag |= c[i];
        }
        if (!flag) break;   // no out-of-order pairs left: sorted
        // Swap every marked pair.
        for (decltype(n) i = 1; i < n; ++i)
            if (c[i])
                std::swap(first[i - 1], first[i]);
    }
}
```

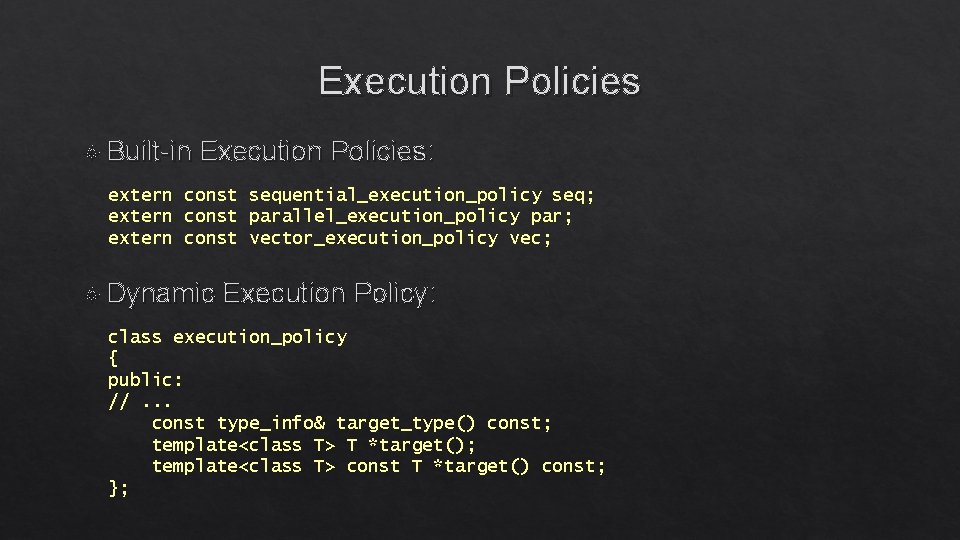

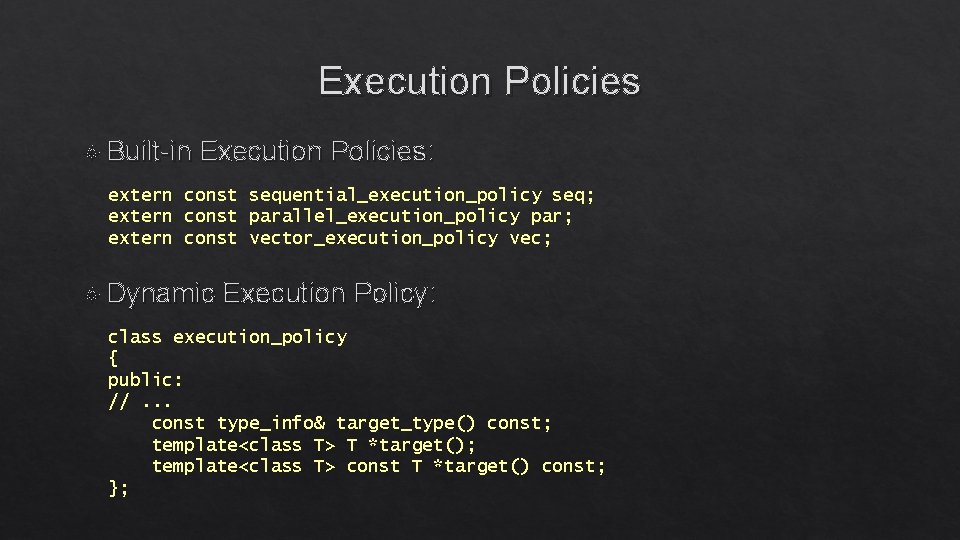

Execution Policies

```cpp
// Built-in execution policies:
extern const sequential_execution_policy seq;
extern const parallel_execution_policy   par;
extern const vector_execution_policy     vec;

// Dynamic execution policy:
class execution_policy {
public:
    // ...
    const type_info& target_type() const;
    template<class T> T* target();
    template<class T> const T* target() const;
};
```
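How can one object hold any of the built-in policies and still answer `target_type()` and `target<T>()`? One plausible approach is type erasure in the style of `std::function::target`. In this sketch, the three policy structs are stand-ins that only mirror the slide's names. The class layout is an assumption, not the Technical Specification's implementation.

```cpp
#include <cassert>
#include <memory>
#include <typeinfo>

// Hypothetical stand-in policy types (names mirror the slide).
struct sequential_execution_policy {};
struct parallel_execution_policy {};
struct vector_execution_policy {};

class execution_policy {
public:
    // Implicit, so a built-in policy converts to execution_policy.
    template <class Policy>
    execution_policy(const Policy& p)
        : self_(std::make_shared<model<Policy>>(p)) {}

    const std::type_info& target_type() const { return self_->type(); }

    // Pointer to the stored policy if it has type T, else nullptr.
    template <class T> T* target() {
        return target_type() == typeid(T) ? static_cast<T*>(self_->get()) : nullptr;
    }
    template <class T> const T* target() const {
        return target_type() == typeid(T) ? static_cast<const T*>(self_->get()) : nullptr;
    }

private:
    struct concept_t {                    // type-erased interface
        virtual ~concept_t() = default;
        virtual const std::type_info& type() const = 0;
        virtual void* get() = 0;
    };
    template <class P> struct model : concept_t {
        explicit model(const P& p) : policy(p) {}
        const std::type_info& type() const override { return typeid(P); }
        void* get() override { return &policy; }
        P policy;
    };
    std::shared_ptr<concept_t> self_;     // shared: copies alias the policy
};
```

An algorithm taking an `execution_policy` could test `target<parallel_execution_policy>()` and dispatch to its parallel path when the result is non-null.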

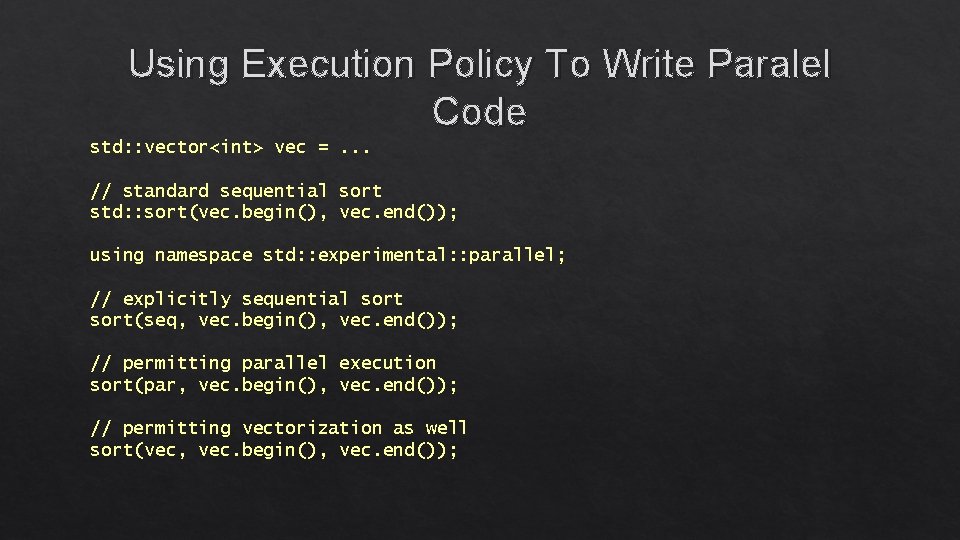

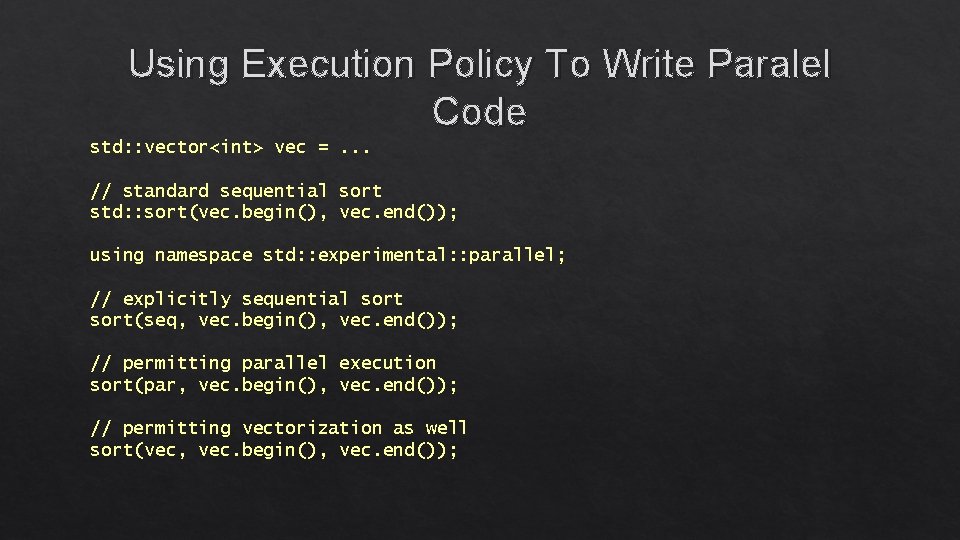

Using Execution Policy To Write Parallel Code

```cpp
std::vector<int> v = ...;   // named v, not vec, so it does not hide the vec policy

// standard sequential sort
std::sort(v.begin(), v.end());

using namespace std::experimental::parallel;

// explicitly sequential sort
sort(seq, v.begin(), v.end());

// permitting parallel execution
sort(par, v.begin(), v.end());

// permitting vectorization as well
sort(vec, v.begin(), v.end());
```

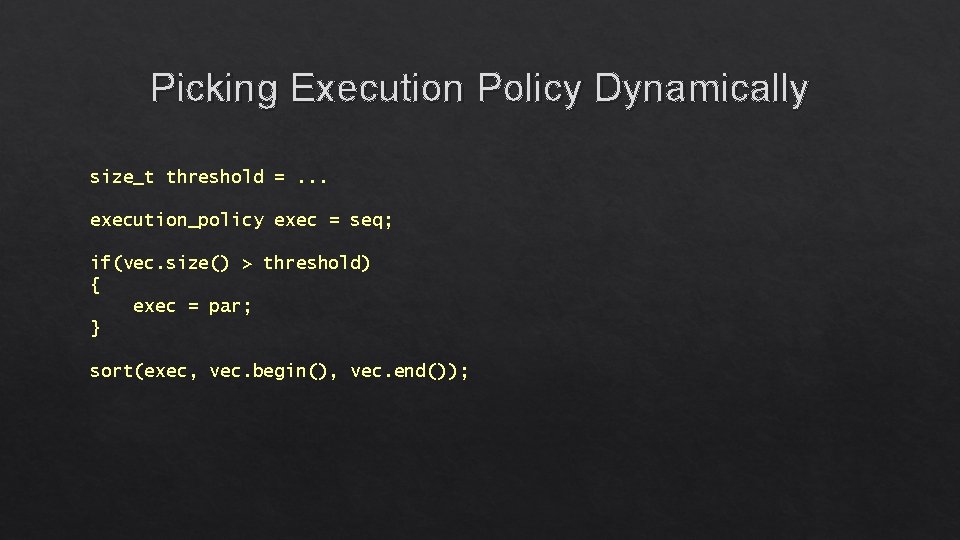

Picking Execution Policy Dynamically

```cpp
size_t threshold = ...;
execution_policy exec = seq;
if (vec.size() > threshold) {
    exec = par;
}
sort(exec, vec.begin(), vec.end());
```
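Below is a runnable sketch of the threshold idea. It assumes a hypothetical two-value `policy` enum in place of `execution_policy`. The parallel path is deliberately simple: it sorts two halves concurrently and then merges them.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <future>
#include <vector>

enum class policy { seq, par };   // hypothetical run-time policy tag

void sort_with(policy p, std::vector<int>& v) {
    if (p == policy::seq) {
        std::sort(v.begin(), v.end());
        return;
    }
    // Toy parallel path: sort the halves concurrently, then merge.
    auto mid = v.begin() + v.size() / 2;
    auto left = std::async(std::launch::async, [&] { std::sort(v.begin(), mid); });
    std::sort(mid, v.end());
    left.get();
    std::inplace_merge(v.begin(), mid, v.end());
}

// Stay sequential for small inputs, where threading overhead dominates.
policy pick_policy(std::size_t n, std::size_t threshold) {
    return n > threshold ? policy::par : policy::seq;
}
```

The threshold is a tuning knob. A good value depends on the machine and the element type and is best found by measurement.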

Exception Handling: in the C++ philosophy, no exception is silently ignored. An `exception_list` is a container of `exception_ptr` objects.

```cpp
try {
    // The initial value (0) comes before the two binary operations.
    r = std::inner_product(std::par, a.begin(), a.end(), b.begin(), 0, func1, func2);
} catch (const exception_list& list) {
    for (auto& exptr : list) {
        // process exception pointer exptr
    }
}
```
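The exception-list contract can be illustrated without the TS. This sketch uses a hypothetical `exception_list`, a thin wrapper over `std::vector<std::exception_ptr>`. It also uses a hypothetical `run_all` that runs tasks on separate threads and reports every failure, not just the first.

```cpp
#include <cassert>
#include <exception>
#include <functional>
#include <future>
#include <stdexcept>
#include <vector>

struct exception_list : std::exception {
    std::vector<std::exception_ptr> exceptions;
    const char* what() const noexcept override { return "exception_list"; }
    auto begin() const { return exceptions.begin(); }
    auto end() const { return exceptions.end(); }
};

// Runs every task, waits for all of them, and throws one exception_list
// holding each task's exception (if any task failed).
void run_all(const std::vector<std::function<void()>>& tasks) {
    std::vector<std::future<void>> futures;
    for (const auto& t : tasks)
        futures.push_back(std::async(std::launch::async, t));
    exception_list errors;
    for (auto& f : futures) {
        try { f.get(); }
        catch (...) { errors.exceptions.push_back(std::current_exception()); }
    }
    if (!errors.exceptions.empty()) throw errors;
}
```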

Vectorization: A Tale From Agriculture



Idea: Fewer Tractors, Wider Plows

![Vectorization: What's a Big Deal?](https://slidetodoc.com/presentation_image_h2/e3878a96b617716313637fae3095478c/image-32.jpg)

Vectorization: What's a Big Deal?

```cpp
int a[n] = ...;
int b[n] = ...;
for (int i = 0; i < n; ++i) {
    a[i] = b[i] + c;
}
```

With SSE2, the compiler turns the loop body into `movdqu` (load), `paddd` (packed add), and `movdqu` (store) on 128-bit `XMMWORD` operands such as `_b$[esp+eax+132]` and `_a$[esp+eax+132]`. This processes four `int`s per instruction, which is conceptually `a[i:i+3] = b[i:i+3] + c;`.

A Vector Lane is not a Thread!

- Taking locks: the "thread" (vector lane) with thread_id x takes a lock… then another "thread" with the same thread_id enters the lock… Deadlock!
- Exceptions: can we unwind 1/4th of the stack?

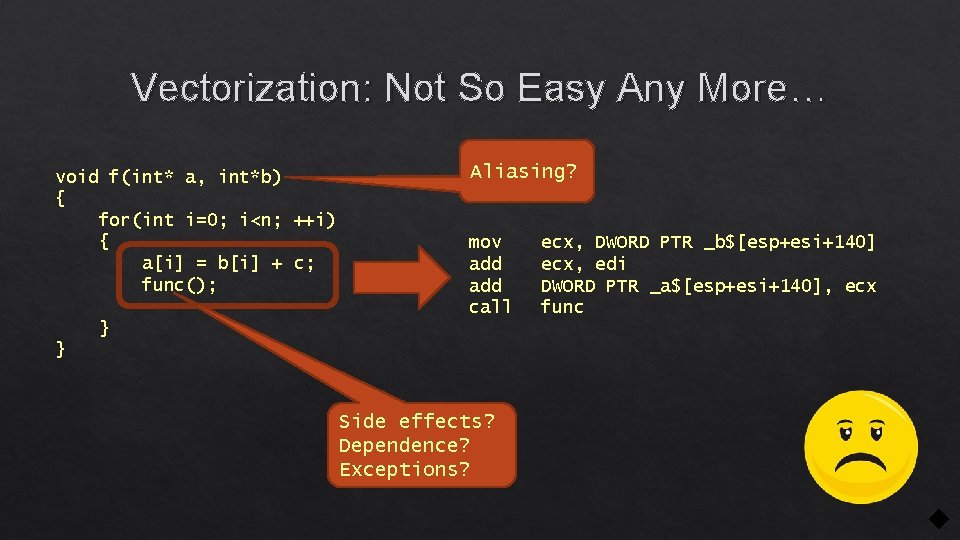

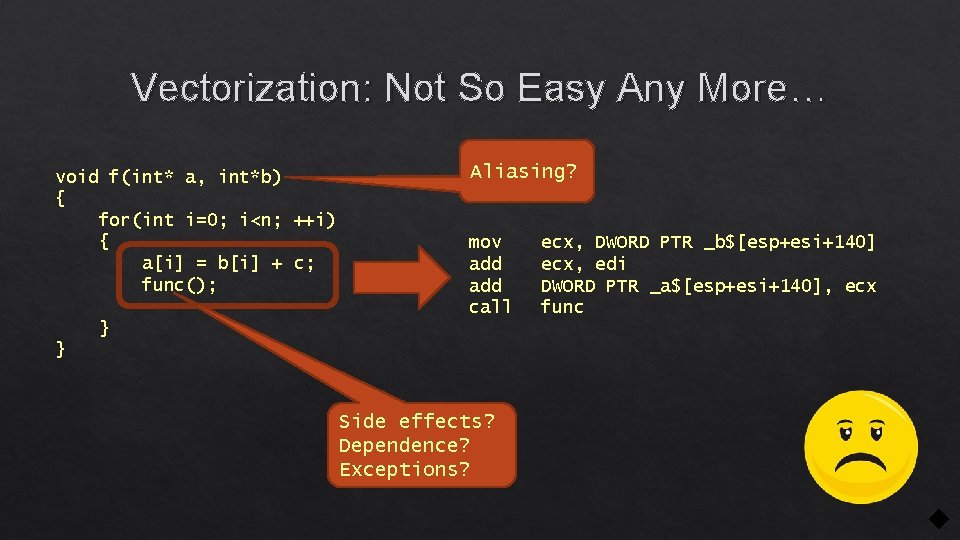

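Vectorization: Not So Easy Any More…

```cpp
void f(int* a, int* b) {
    for (int i = 0; i < n; ++i) {
        a[i] = b[i] + c;
        func();
    }
}
```

Aliasing? Side effects? Dependence? Exceptions? The compiler falls back to scalar code, one element per iteration, with a call to `func` each time:

```asm
mov  ecx, DWORD PTR _b$[esp+esi+140]
add  ecx, edi
mov  DWORD PTR _a$[esp+esi+140], ecx
call func
```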

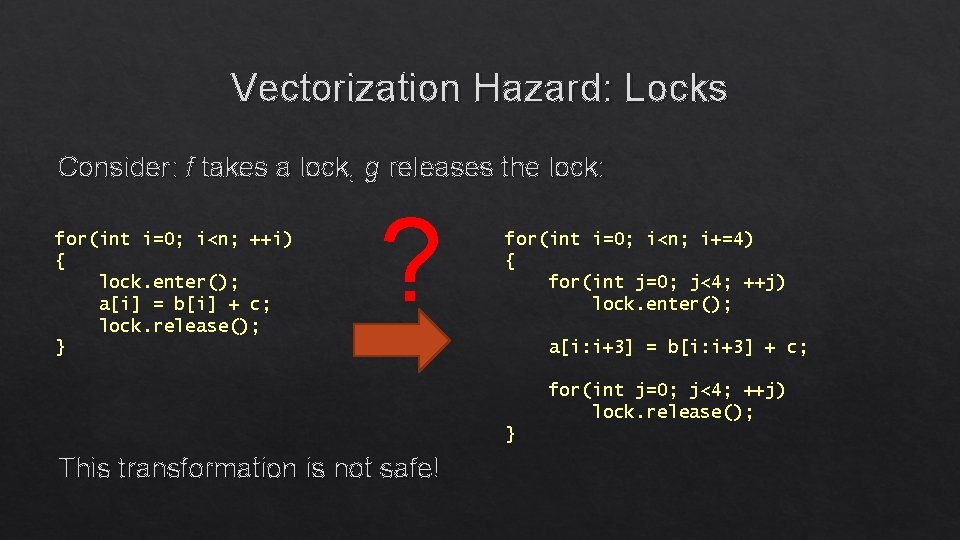

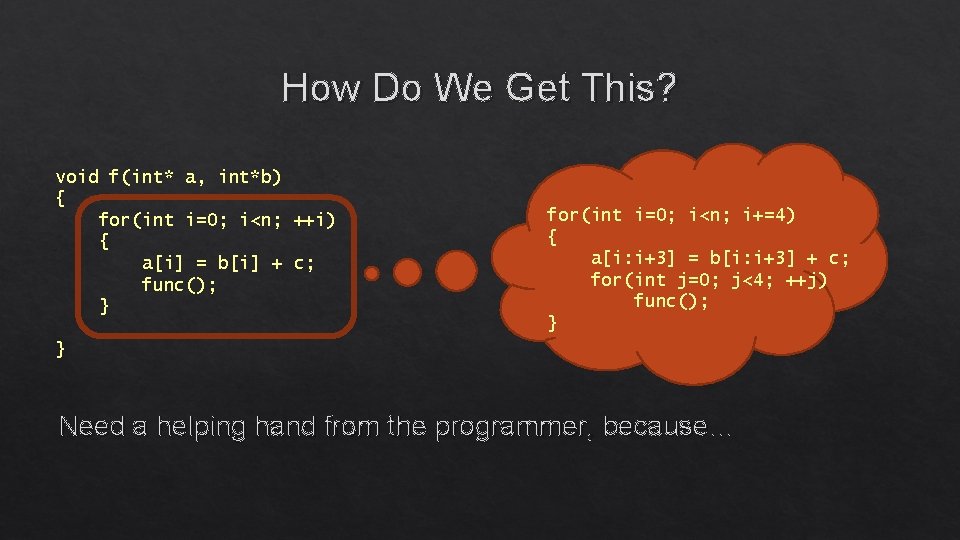

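Vectorization Hazard: Locks

Consider a loop body in which one call takes a lock (`lock.enter()`) and another releases it (`lock.release()`):

```cpp
for (int i = 0; i < n; ++i) {
    lock.enter();
    a[i] = b[i] + c;
    lock.release();
}
```

Can it be vectorized like this?

```
for (int i = 0; i < n; i += 4) {
    // Four lanes, one thread: the second enter() blocks on a lock this thread already holds.
    for (int j = 0; j < 4; ++j) lock.enter();
    a[i:i+3] = b[i:i+3] + c;
    for (int j = 0; j < 4; ++j) lock.release();
}
```

This transformation is not safe!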

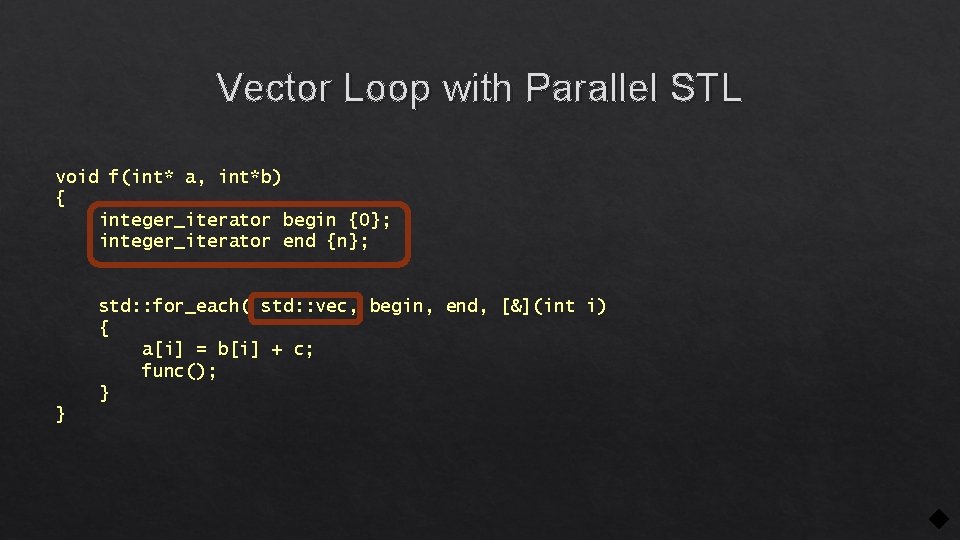

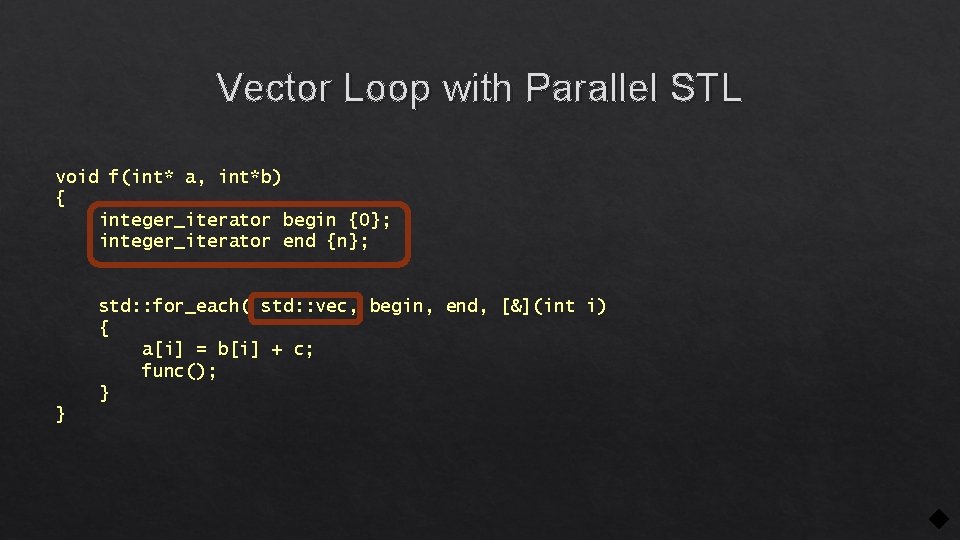

How Do We Get This?

From:

```cpp
void f(int* a, int* b) {
    for (int i = 0; i < n; ++i) {
        a[i] = b[i] + c;
        func();
    }
}
```

To:

```
void f(int* a, int* b) {
    for (int i = 0; i < n; i += 4) {
        a[i:i+3] = b[i:i+3] + c;
        for (int j = 0; j < 4; ++j)
            func();
    }
}
```

We need a helping hand from the programmer, because…
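Written out by hand in standard C++, the strip-mined loop looks roughly like the sketch below. Here `n` and `c` become parameters, `func` is a hypothetical stand-in that counts its calls, and a scalar tail handles an `n` that is not divisible by 4. Whether the inner `j` loop actually becomes SIMD code is up to the optimizer.

```cpp
#include <cassert>

int func_calls = 0;
void func() { ++func_calls; }   // hypothetical stand-in for the slide's func()

// Original form: one add, then one func() call, per element.
void f_scalar(int* a, const int* b, int n, int c) {
    for (int i = 0; i < n; ++i) {
        a[i] = b[i] + c;
        func();
    }
}

// Strip-mined form: four elements, then four func() calls.
// Only valid if a and b do not overlap and func() neither reads a
// nor takes locks (the hazards listed on the earlier slides).
void f_strip_mined(int* a, const int* b, int n, int c) {
    int i = 0;
    for (; i + 4 <= n; i += 4) {
        for (int j = 0; j < 4; ++j)      // a[i:i+3] = b[i:i+3] + c
            a[i + j] = b[i + j] + c;
        for (int j = 0; j < 4; ++j)
            func();
    }
    for (; i < n; ++i) {                 // scalar tail for n % 4 leftovers
        a[i] = b[i] + c;
        func();
    }
}
```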

Vector Loop with Parallel STL

```cpp
void f(int* a, int* b) {
    integer_iterator begin{0};   // iterates over the integers 0..n-1 (not a standard type)
    integer_iterator end{n};
    std::for_each(std::vec, begin, end, [&](int i) {
        a[i] = b[i] + c;
        func();
    });
}
```
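The slide assumes an `integer_iterator`, which the standard library does not provide. Below is a minimal hypothetical version, modeled as an input iterator and used with the sequential `std::for_each`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>

// Hypothetical iterator whose value is the integer it currently holds.
class integer_iterator {
public:
    using iterator_category = std::input_iterator_tag;
    using value_type = int;
    using difference_type = std::ptrdiff_t;
    using pointer = void;
    using reference = int;               // yields values, not references

    explicit integer_iterator(int i) : i_(i) {}
    int operator*() const { return i_; }
    integer_iterator& operator++() { ++i_; return *this; }
    integer_iterator operator++(int) { integer_iterator old = *this; ++i_; return old; }
    friend bool operator==(integer_iterator x, integer_iterator y) { return x.i_ == y.i_; }
    friend bool operator!=(integer_iterator x, integer_iterator y) { return x.i_ != y.i_; }
private:
    int i_;
};

// Sequential version of the slide's index loop (no execution policy).
void f(int* a, const int* b, int n, int c) {
    integer_iterator begin{0};
    integer_iterator end{n};
    std::for_each(begin, end, [&](int i) { a[i] = b[i] + c; });
}
```

The C++17 execution-policy overloads require forward iterators, so a counting iterator meant for them would need a stronger iterator category. C++20's `std::views::iota` offers a standard integer range.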

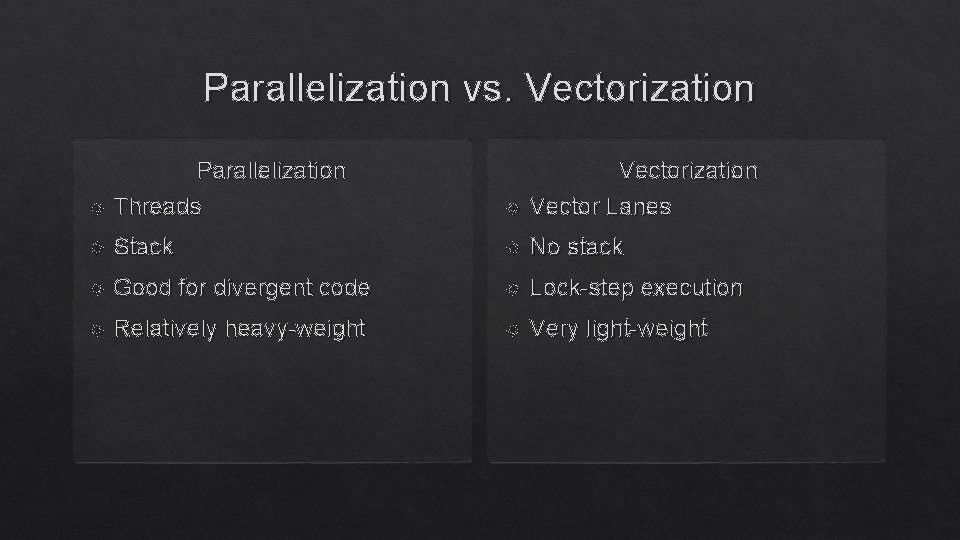

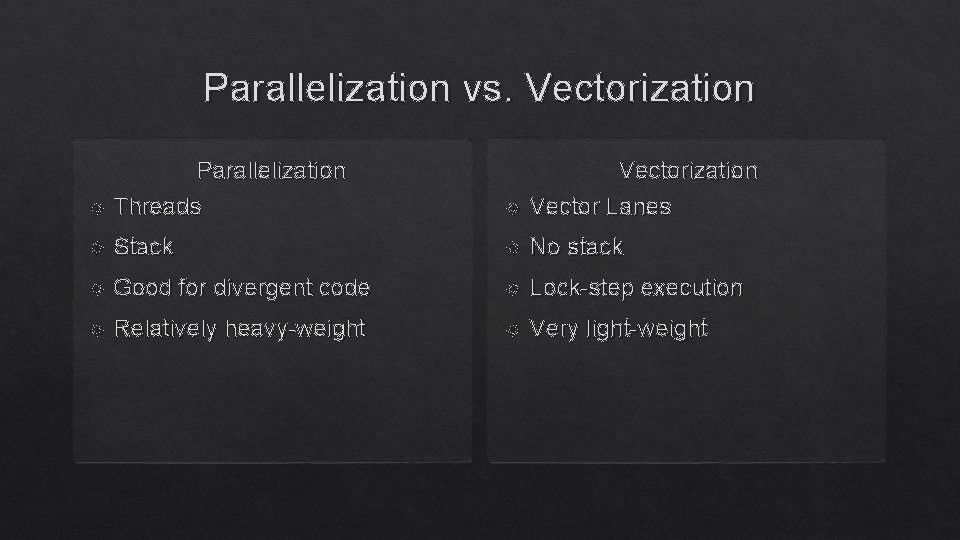

Parallelization vs. Vectorization

| Parallelization | Vectorization |
|---|---|
| Threads | Vector lanes |
| Stack | No stack |
| Good for divergent code | Lock-step execution |
| Relatively heavy-weight | Very light-weight |

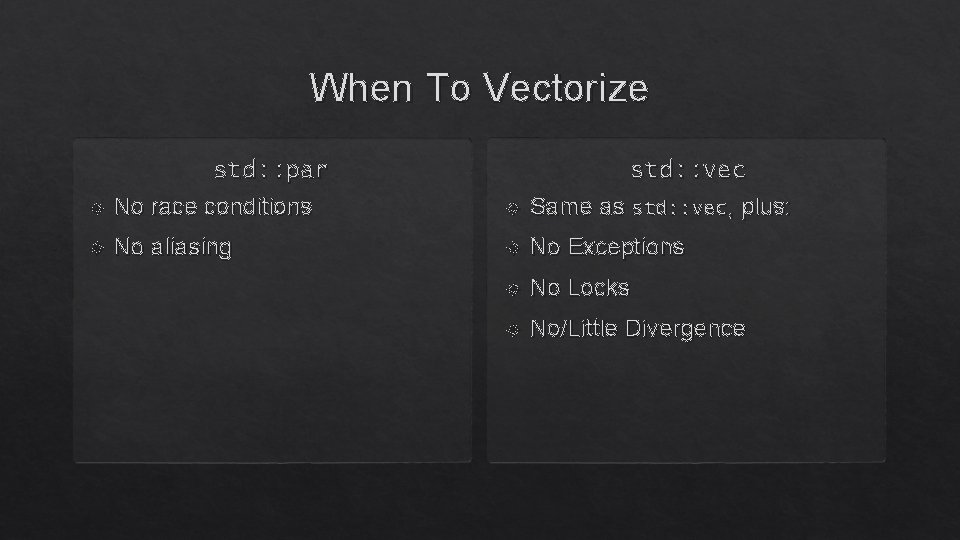

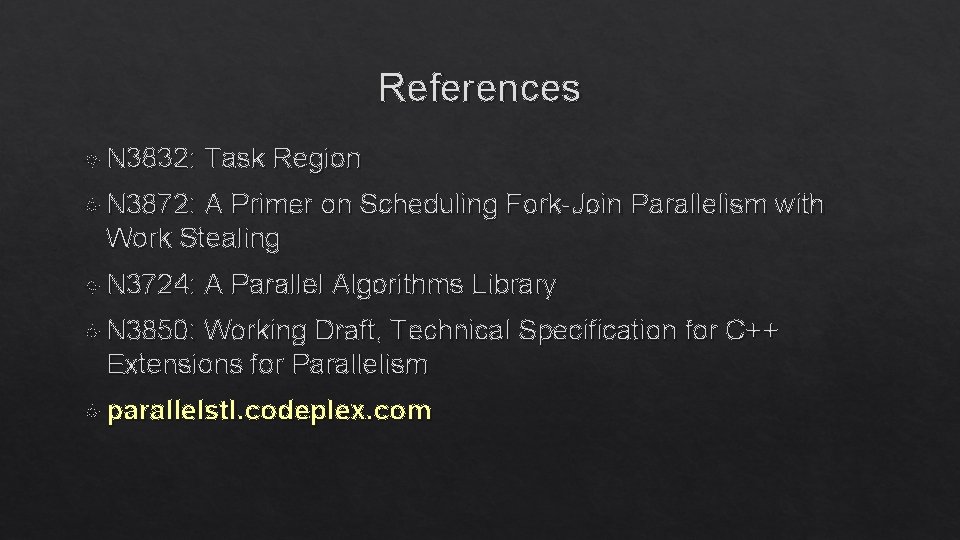

When To Vectorize

- `std::par`: no race conditions.
- `std::vec`: same as `std::par`, plus no aliasing, no exceptions, no locks, and no or little divergence.

References

- N3832: Task Region
- N3872: A Primer on Scheduling Fork-Join Parallelism with Work Stealing
- N3724: A Parallel Algorithms Library
- N3850: Working Draft, Technical Specification for C++ Extensions for Parallelism
- parallelstl.codeplex.com