
Parallel transport prototype Andrei Gheata

Motivation

- Parallel architectures are evolving fast
  - Task parallelism in hybrid configurations
  - Instruction-level parallelism (ILP) is exploited more and more
    - 4 FLOP/cycle on modern processors
- HEP applications use these resources very inefficiently
  - Running on average 0.6 instructions/cycle
  - Bad or inefficient usage of C++ leads to scattered data structures and cache misses (both instruction and data)

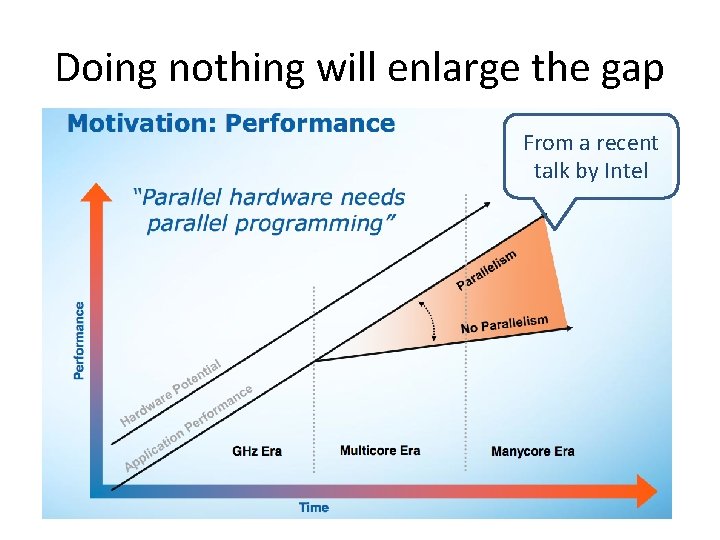

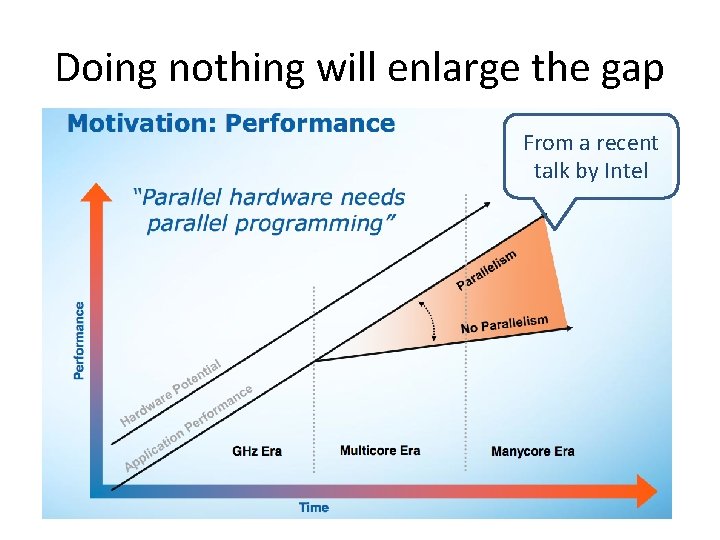

Doing nothing will enlarge the gap

(From a recent talk by Intel)

AliRoot: is there hope?

- Can we do more than running AliRoot in threads and calling it AliRoot-MT?
  - Even that would be a gain…
- Seriously, what could potentially be parallelized?
  - Simulation: most CPU-intensive, best candidate
    - Event parallelism as a first approach, re-design the I/O
  - Reconstruction: quite modular
    - Task-oriented parallelism, re-design the I/O
  - Analysis: we have a modular structure of tasks
    - Pool of threads, pool of tasks, marry them…
    - Possibly exploiting finer-grained parallelism for CPU-intensive track loops

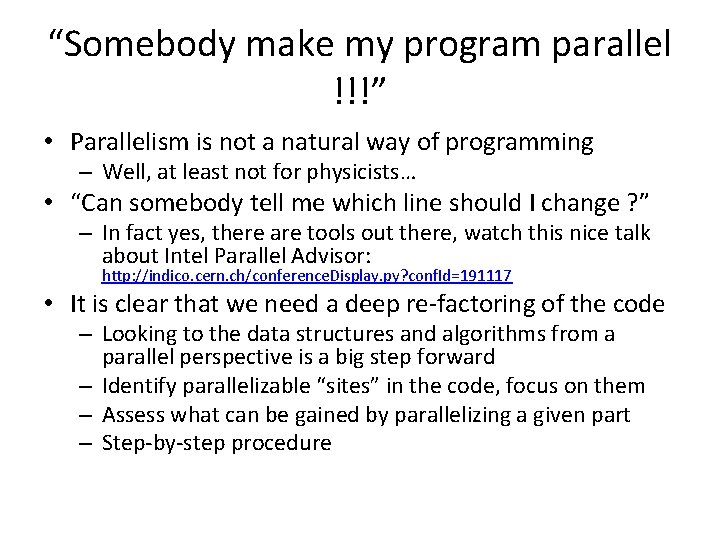

“Somebody make my program parallel!!!”

- Parallelism is not a natural way of programming
  - Well, at least not for physicists…
- “Can somebody tell me which line I should change?”
  - In fact yes, there are tools out there; watch this nice talk about Intel Parallel Advisor: http://indico.cern.ch/conferenceDisplay.py?confId=191117
- It is clear that we need a deep refactoring of the code
  - Looking at the data structures and algorithms from a parallel perspective is a big step forward
  - Identify parallelizable “sites” in the code and focus on them
  - Assess what can be gained by parallelizing a given part
  - Step-by-step procedure

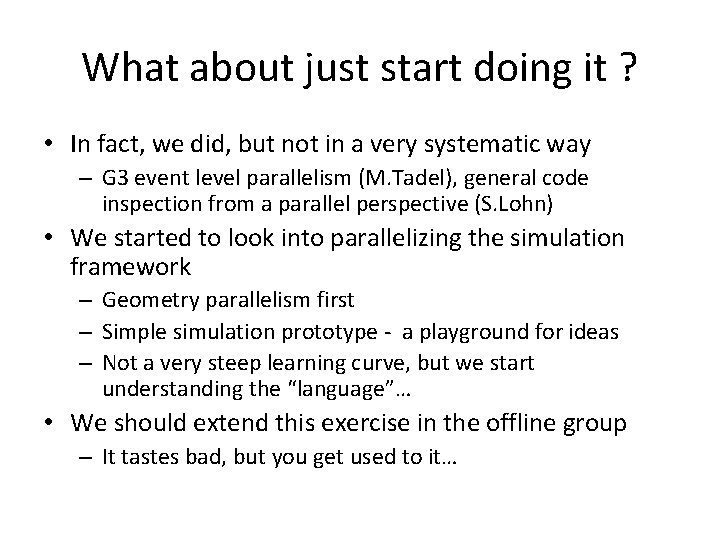

What about just starting to do it?

- In fact we did, but not in a very systematic way
  - G3 event-level parallelism (M. Tadel), general code inspection from a parallel perspective (S. Lohn)
- We started to look into parallelizing the simulation framework
  - Geometry parallelism first
  - Simple simulation prototype: a playground for ideas
  - Not a very steep learning curve, but we are starting to understand the “language”…
- We should extend this exercise in the offline group
  - It tastes bad, but you get used to it…

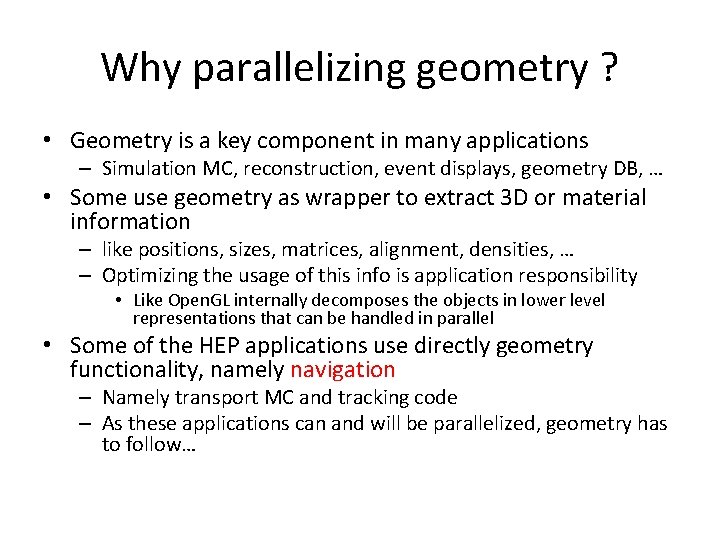

Why parallelize geometry?

- Geometry is a key component in many applications
  - Simulation MC, reconstruction, event displays, geometry DB, …
- Some use geometry as a wrapper to extract 3D or material information
  - Such as positions, sizes, matrices, alignment, densities, …
  - Optimizing the usage of this information is the application's responsibility
    - For example, OpenGL internally decomposes objects into lower-level representations that can be handled in parallel
- Some HEP applications use geometry functionality directly, in particular navigation
  - Namely transport MC and tracking code
  - As these applications can and will be parallelized, geometry has to follow…

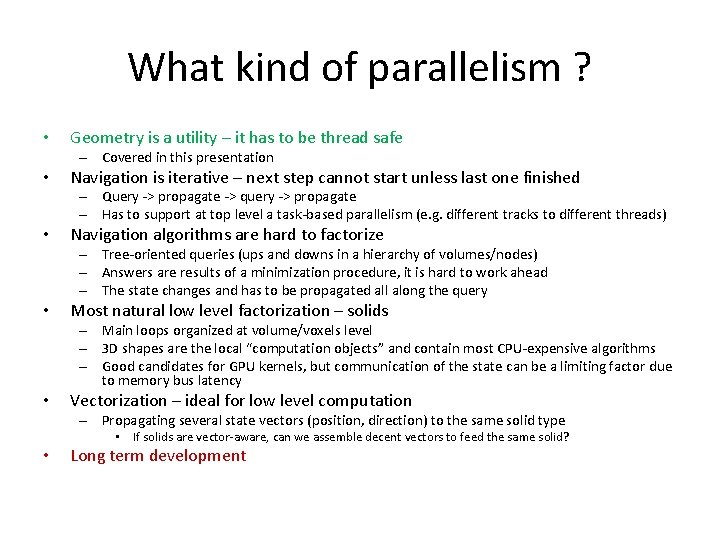

What kind of parallelism?

- Geometry is a utility: it has to be thread safe
  - Covered in this presentation
- Navigation is iterative: the next step cannot start until the previous one has finished
  - Query -> propagate -> query -> propagate
  - Has to support task-based parallelism at the top level (e.g. different tracks to different threads)
- Navigation algorithms are hard to factorize
  - Tree-oriented queries (ups and downs in a hierarchy of volumes/nodes)
  - Answers are results of a minimization procedure, so it is hard to work ahead
  - The state changes and has to be propagated all along the query
- Most natural low-level factorization: solids
  - Main loops organized at volume/voxel level
  - 3D shapes are the local “computation objects” and contain the most CPU-expensive algorithms
  - Good candidates for GPU kernels, but communication of the state can be a limiting factor due to memory bus latency
- Vectorization: ideal for low-level computation
  - Propagating several state vectors (position, direction) to the same solid type
    - If solids are vector-aware, can we assemble decent vectors to feed the same solid?
  - Long-term development
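As an illustration of the vectorization idea (several position/direction states propagated to the same solid type), here is a minimal C++ sketch. It assumes a structure-of-arrays basket and an origin-centered box as the solid; `TrackBasket`, `DistToSlab` and `DistFromInsideBox` are hypothetical names for this example, not ROOT API.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical structure-of-arrays basket: one contiguous array per component,
// so the loop over tracks touches memory sequentially.
struct TrackBasket {
  std::vector<double> x, y, z;    // positions in the local frame of the solid
  std::vector<double> dx, dy, dz; // unit direction components
  std::size_t size() const { return x.size(); }
};

// Distance along one axis to the face of the slab [-half, +half] ahead of the track.
inline double DistToSlab(double pos, double dir, double half) {
  if (dir > 0) return (half - pos) / dir;
  if (dir < 0) return (-half - pos) / dir;
  return std::numeric_limits<double>::infinity(); // parallel to this pair of faces
}

// Distance to exit an origin-centered box (half-lengths hx, hy, hz),
// computed for all tracks of the basket in a single loop.
// Assumes every track starts inside the box.
std::vector<double> DistFromInsideBox(const TrackBasket &b,
                                      double hx, double hy, double hz) {
  const std::size_t n = b.size();
  assert(b.y.size() == n && b.z.size() == n);
  assert(b.dx.size() == n && b.dy.size() == n && b.dz.size() == n);
  std::vector<double> dist(n);
  for (std::size_t i = 0; i < n; ++i) {
    double d = DistToSlab(b.x[i], b.dx[i], hx);
    d = std::fmin(d, DistToSlab(b.y[i], b.dy[i], hy));
    d = std::fmin(d, DistToSlab(b.z[i], b.dz[i], hz));
    dist[i] = d;
  }
  return dist;
}
```

The same solid parameters are reused for every track in the loop, which is what makes grouping tracks by solid type attractive; whether a compiler actually vectorizes such a loop depends on the compiler and flags.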

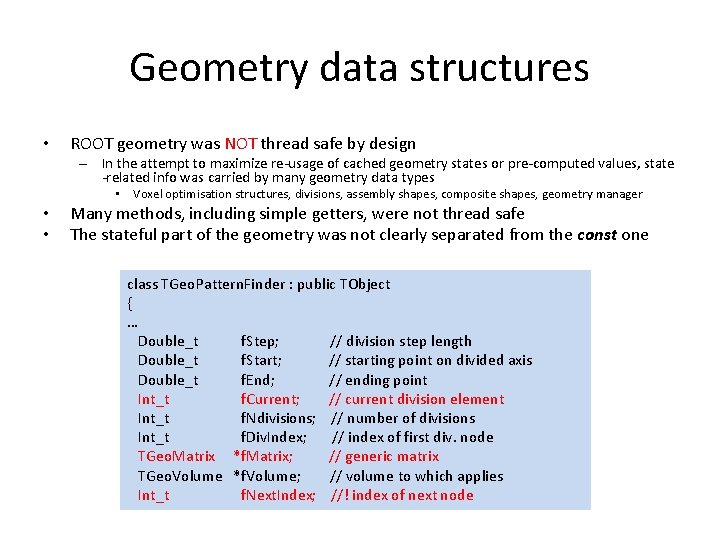

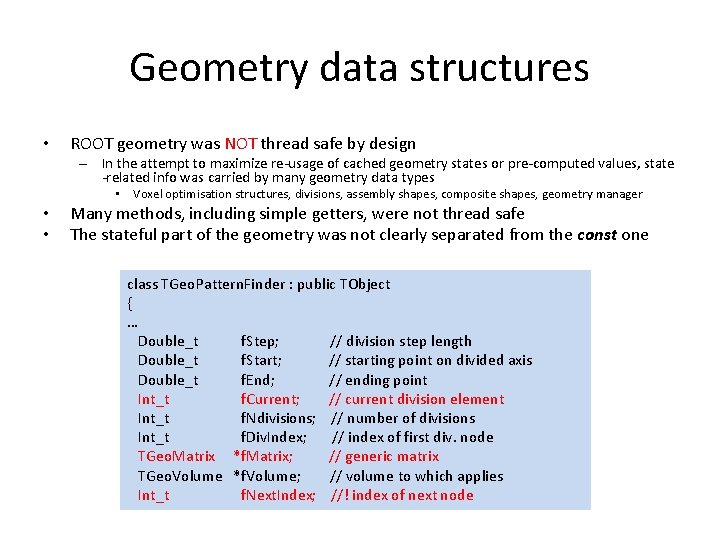

Geometry data structures

- ROOT geometry was NOT thread safe by design
  - In the attempt to maximize re-use of cached geometry states or pre-computed values, state-related info was carried by many geometry data types
    - Voxel optimisation structures, divisions, assembly shapes, composite shapes, geometry manager
- Many methods, including simple getters, were not thread safe
- The stateful part of the geometry was not clearly separated from the const one

Example (TGeoPatternFinder):

    class TGeoPatternFinder : public TObject {
       …
       Double_t     fStep;       // division step length
       Double_t     fStart;      // starting point on divided axis
       Double_t     fEnd;        // ending point
       Int_t        fCurrent;    // current division element
       Int_t        fNdivisions; // number of divisions
       Int_t        fDivIndex;   // index of first div. node
       TGeoMatrix  *fMatrix;     // generic matrix
       TGeoVolume  *fVolume;     // volume to which applies
       Int_t        fNextIndex;  //! index of next node
       …
    };

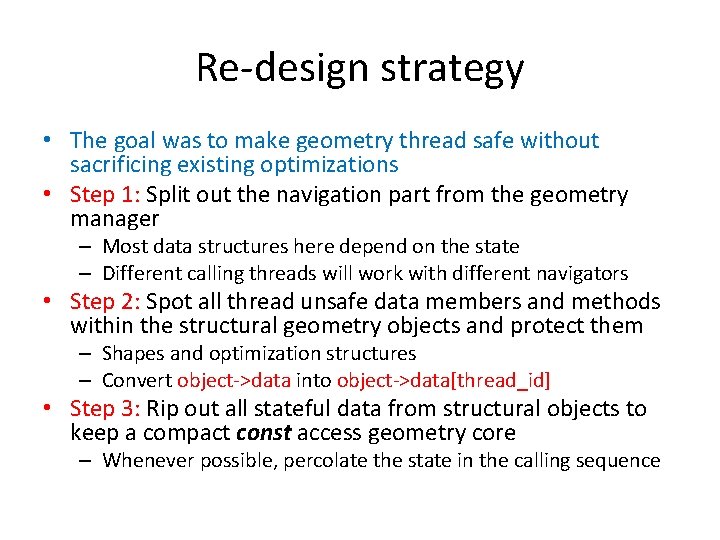

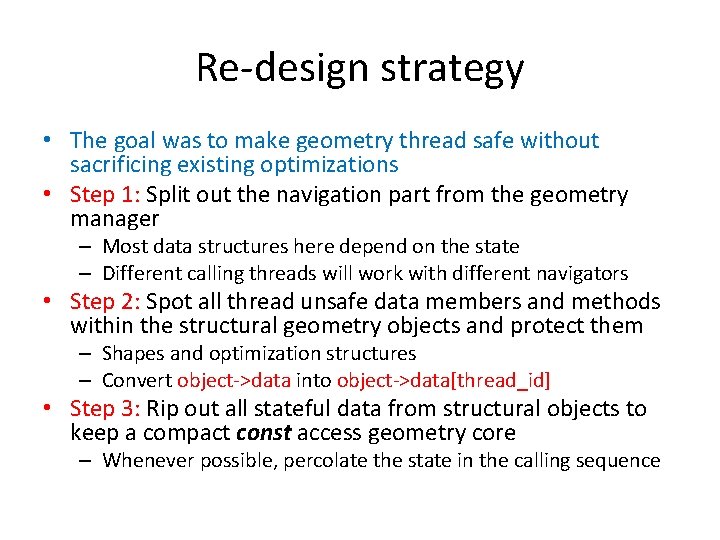

Re-design strategy

- The goal was to make geometry thread safe without sacrificing existing optimizations
- Step 1: Split out the navigation part from the geometry manager
  - Most data structures here depend on the state
  - Different calling threads will work with different navigators
- Step 2: Spot all thread-unsafe data members and methods within the structural geometry objects and protect them
  - Shapes and optimization structures
  - Convert object->data into object->data[thread_id]
- Step 3: Rip out all stateful data from structural objects to keep a compact, const-access geometry core
  - Whenever possible, percolate the state through the calling sequence
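Step 3 (percolating the state through the calling sequence) can be sketched as follows. This is only an illustration under assumptions: `DivisionFinder` and `DivisionState` are hypothetical names loosely modeled on a division finder, not the actual ROOT classes. The structural object keeps only const data, and the "current cell" result is written into a state object owned by the caller.

```cpp
#include <cassert>

// Hypothetical per-caller state, owned by the navigating thread.
struct DivisionState {
  int current = -1; // index of the division cell found by the last query
};

// Hypothetical structural object: holds only const-access data,
// so one instance can be shared by all threads without locking.
class DivisionFinder {
public:
  DivisionFinder(double start, double step, int ndivisions)
      : fStart(start), fStep(step), fNdivisions(ndivisions) {
    assert(step > 0 && ndivisions > 0);
  }

  // const query: the result goes into the caller's state, not into the object.
  bool FindCell(double coord, DivisionState &state) const {
    if (coord < fStart) return false;
    const double q = (coord - fStart) / fStep;
    if (q >= fNdivisions) return false;
    state.current = static_cast<int>(q);
    return true;
  }

private:
  double fStart;      // starting point on the divided axis
  double fStep;       // division step length
  int    fNdivisions; // number of divisions
};
```

Two threads can then query the same `DivisionFinder` concurrently, each with its own `DivisionState`, because the finder itself is never modified.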

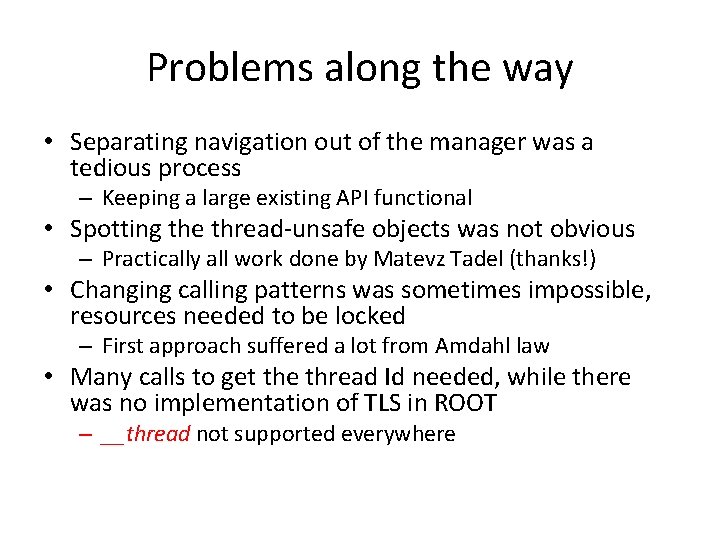

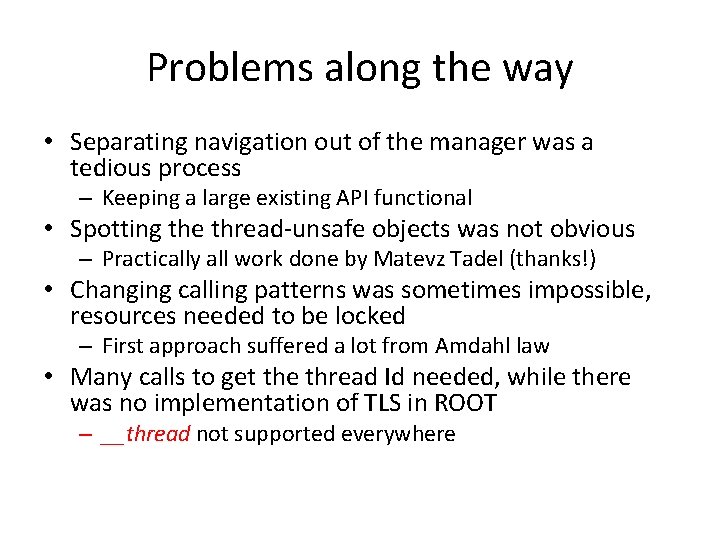

Problems along the way

- Separating navigation from the manager was a tedious process
  - Keeping a large existing API functional
- Spotting the thread-unsafe objects was not obvious
  - Practically all the work was done by Matevz Tadel (thanks!)
- Changing calling patterns was sometimes impossible, so resources needed to be locked
  - The first approach suffered a lot from Amdahl's law
- Many calls to get the thread id were needed, while there was no implementation of TLS in ROOT
  - __thread is not supported everywhere

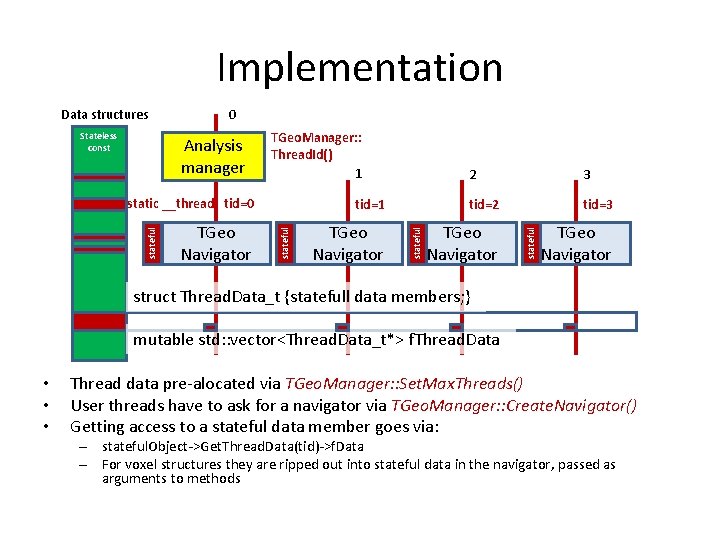

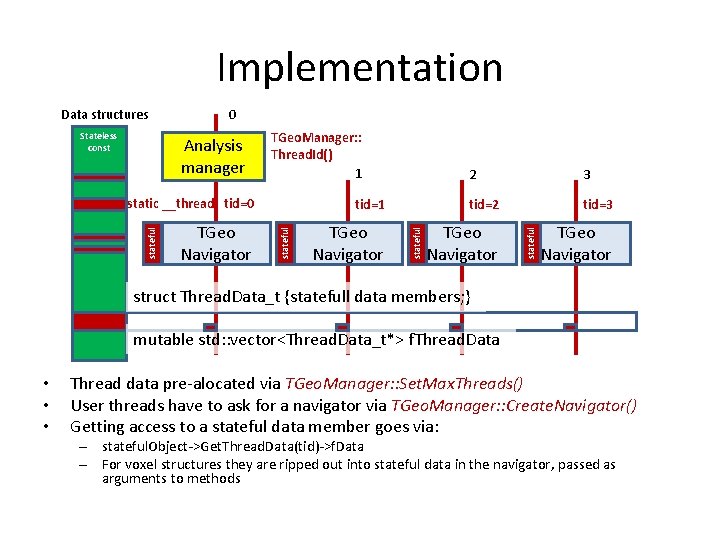

Implementation

Diagram labels: Analysis manager; threads 0, 1, 2, 3; TGeoManager::ThreadId() (static __thread); TGeoNavigator; data structures split into a stateless const part and stateful parts for tid=0, tid=1, tid=2, tid=3.

    struct ThreadData_t { /* stateful data members */ };
    mutable std::vector<ThreadData_t*> fThreadData;

- Thread data is pre-allocated via TGeoManager::SetMaxThreads()
- User threads have to ask for a navigator via TGeoManager::CreateNavigator()
- Getting access to a stateful data member goes via:
  - statefulObject->GetThreadData(tid)->fData
  - For voxel structures, the stateful data is ripped out into the navigator and passed as arguments to methods
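The per-thread slot pattern described above can be sketched in standard C++. This is a simplified illustration, not the ROOT implementation: `StatefulObject` and the free function `ThreadId()` are hypothetical stand-ins, and C++11 `thread_local` is assumed in place of `__thread`.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical stand-in for a thread-id service: each calling thread gets a
// small consecutive id on its first call and keeps it afterwards.
inline int ThreadId() {
  static std::atomic<int> next{0};
  thread_local const int tid = next.fetch_add(1);
  return tid;
}

// Hypothetical structural object whose mutable state lives in per-thread slots.
class StatefulObject {
public:
  struct ThreadData_t {
    int fCurrent = -1; // example of a stateful data member
  };

  // Pre-allocate one slot per thread; call before any thread uses the object.
  void SetMaxThreads(std::size_t n) {
    fThreadData.clear();
    for (std::size_t i = 0; i < n; ++i)
      fThreadData.emplace_back(new ThreadData_t());
  }

  // const access path: each thread only ever touches its own slot,
  // so no lock is needed as long as tid values are distinct per thread.
  ThreadData_t &GetThreadData(int tid) const {
    assert(tid >= 0 && static_cast<std::size_t>(tid) < fThreadData.size());
    return *fThreadData[static_cast<std::size_t>(tid)];
  }

private:
  mutable std::vector<std::unique_ptr<ThreadData_t>> fThreadData;
};
```

A worker would typically call `ThreadId()` once, keep the value, and then use `obj.GetThreadData(tid).fCurrent` wherever the original code used `obj.fCurrent`. Caching the id avoids repeating the lookup on every access.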

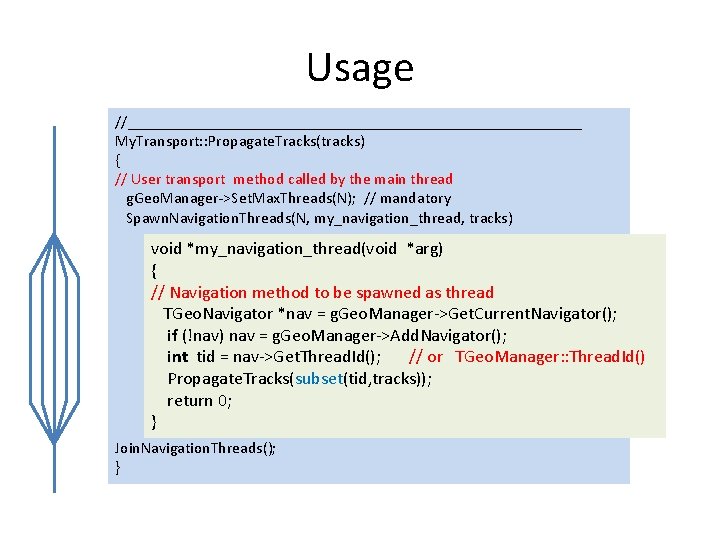

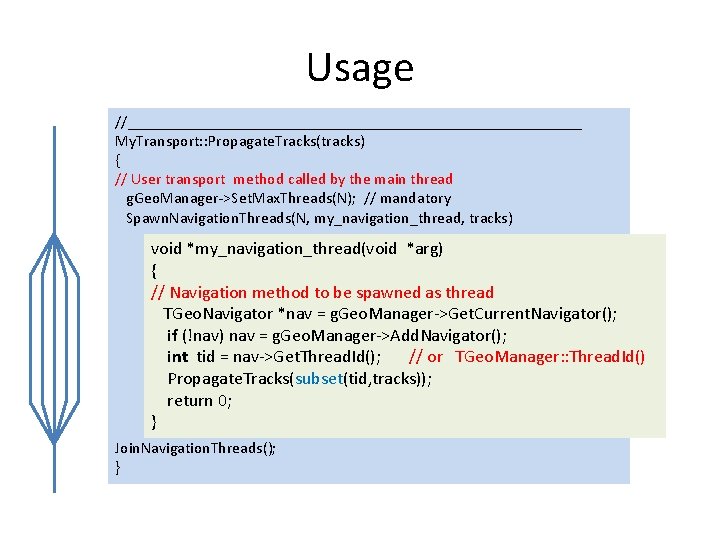

Usage

    // Navigation method to be spawned as a thread
    void *my_navigation_thread(void *arg)
    {
       // tracks come in through arg
       TGeoNavigator *nav = gGeoManager->GetCurrentNavigator();
       if (!nav) nav = gGeoManager->AddNavigator();
       int tid = nav->GetThreadId(); // or TGeoManager::ThreadId()
       PropagateTracks(subset(tid, tracks));
       return 0;
    }

    // User transport method called by the main thread
    MyTransport::PropagateTracks(tracks)
    {
       gGeoManager->SetMaxThreads(N); // mandatory
       SpawnNavigationThreads(N, my_navigation_thread, tracks);
       JoinNavigationThreads();
    }

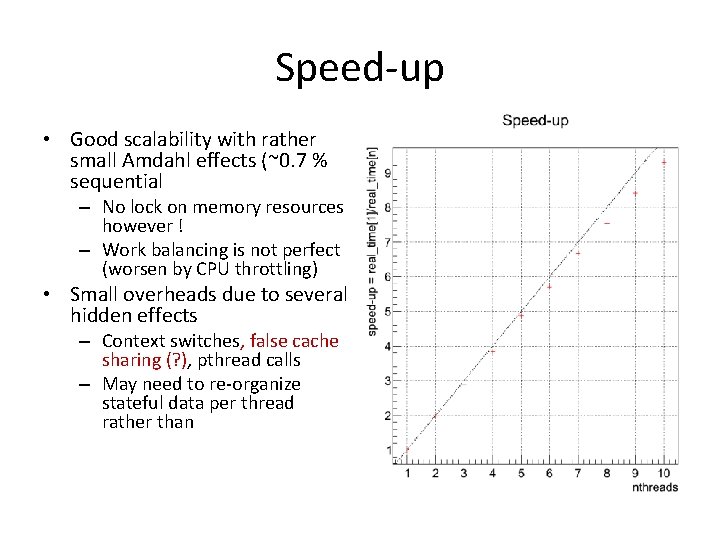

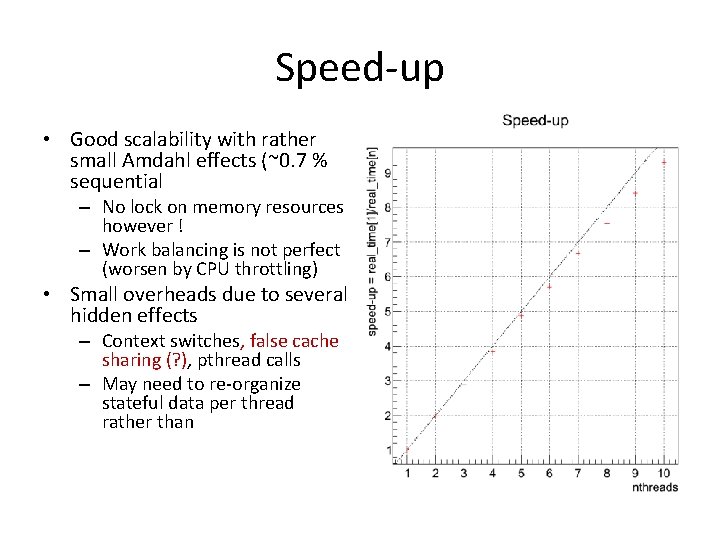

Speed-up

- Good scalability with rather small Amdahl effects (~0.7% sequential)
  - No lock on memory resources, however!
  - Work balancing is not perfect (worsened by CPU throttling)
- Small overheads due to several hidden effects
  - Context switches, false cache sharing (?), pthread calls
  - May need to re-organize stateful data per thread rather than …
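To put the quoted sequential fraction in perspective, Amdahl's law gives the ideal speed-up on $N$ threads for a sequential fraction $s$. The thread counts below are illustrative choices, not measurements from the talk, and the estimate ignores the load-balancing and overhead effects listed above.

$$
S(N) = \frac{1}{s + \dfrac{1 - s}{N}}
$$

With $s \approx 0.007$:

$$
S(12) = \frac{1}{0.007 + 0.993/12} \approx 11.1, \qquad
S(24) = \frac{1}{0.007 + 0.993/24} \approx 20.7, \qquad
\lim_{N \to \infty} S(N) = \frac{1}{s} \approx 143
$$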

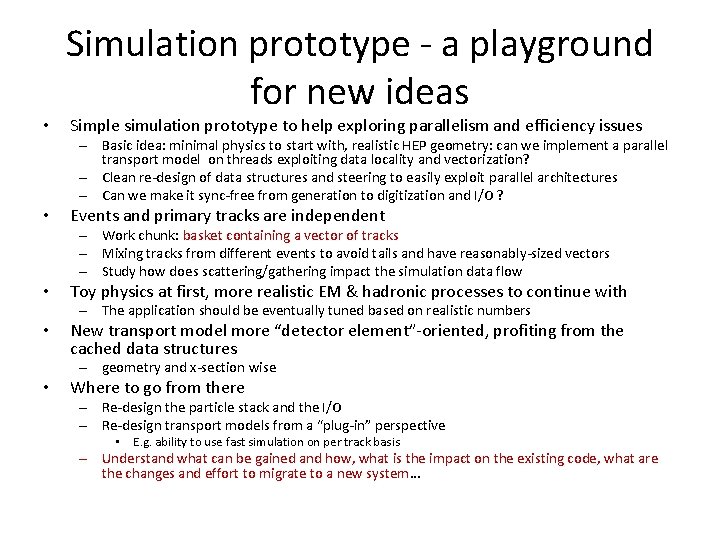

Simulation prototype: a playground for new ideas

- Simple simulation prototype to help explore parallelism and efficiency issues
  - Basic idea: minimal physics to start with, realistic HEP geometry. Can we implement a parallel transport model on threads, exploiting data locality and vectorization?
  - Clean re-design of data structures and steering to easily exploit parallel architectures
  - Can we make it sync-free from generation to digitization and I/O?
- Events and primary tracks are independent
  - Work chunk: a basket containing a vector of tracks
  - Mixing tracks from different events to avoid tails and have reasonably sized vectors
  - Study how scattering/gathering impacts the simulation data flow
- Toy physics at first, more realistic EM and hadronic processes to continue with
  - The application should eventually be tuned based on realistic numbers
- New transport model, more “detector element”-oriented, profiting from the cached data structures (geometry- and cross-section-wise)
- Where to go from there
  - Re-design the particle stack and the I/O
  - Re-design transport models from a “plug-in” perspective
    - E.g. ability to use fast simulation on a per-track basis
  - Understand what can be gained and how, what the impact on the existing code is, and what changes and effort are needed to migrate to a new system…

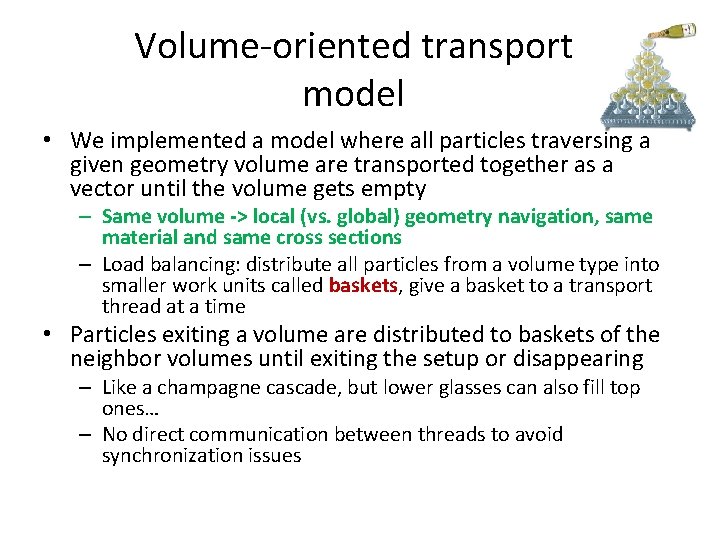

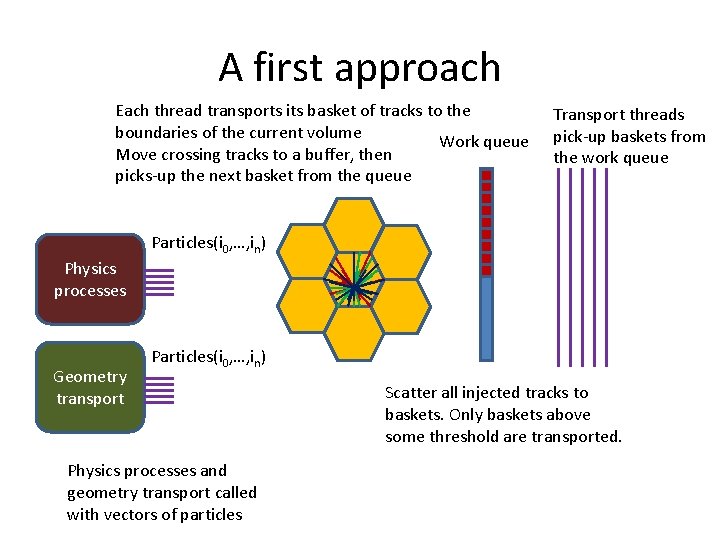

Volume-oriented transport model

- We implemented a model where all particles traversing a given geometry volume are transported together as a vector until the volume is empty
  - Same volume -> local (vs. global) geometry navigation, same material and same cross sections
  - Load balancing: distribute all particles from a volume type into smaller work units called baskets, and give one basket at a time to a transport thread
- Particles exiting a volume are distributed to baskets of the neighbor volumes until they exit the setup or disappear
  - Like a champagne cascade, but lower glasses can also fill top ones…
  - No direct communication between threads, to avoid synchronization issues
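The basket bookkeeping described above can be sketched in a few lines of C++. This is a single-threaded illustration under assumptions: `Track`, `Basket` and `BasketManager` are hypothetical names, a volume is reduced to an integer id, and the fixed basket size is an arbitrary parameter rather than a value from the prototype.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <map>
#include <utility>
#include <vector>

struct Track {
  int event;  // event the track belongs to
  int volume; // id of the volume the track is currently in
};
using Basket = std::vector<Track>;

// Hypothetical scatter step: tracks are grouped per volume; a basket that
// reaches the requested size becomes a work unit ready for a transport thread.
class BasketManager {
public:
  explicit BasketManager(std::size_t basketSize) : fBasketSize(basketSize) {
    assert(basketSize > 0);
  }

  // Tracks from different events may share a basket if they sit in the same volume.
  void AddTrack(const Track &t) {
    Basket &b = fPending[t.volume];
    b.push_back(t);
    if (b.size() == fBasketSize) {
      fReady.push_back(std::move(b));
      b.clear(); // bring the moved-from basket back to a known empty state
    }
  }

  // Push all partially filled baskets, e.g. when too few tracks are left.
  void Flush() {
    for (auto &kv : fPending) {
      if (kv.second.empty()) continue;
      fReady.push_back(std::move(kv.second));
      kv.second.clear();
    }
  }

  // Hand out the next ready basket; returns false if none is available.
  bool PopBasket(Basket &out) {
    if (fReady.empty()) return false;
    out = std::move(fReady.front());
    fReady.pop_front();
    return true;
  }

private:
  std::size_t fBasketSize;
  std::map<int, Basket> fPending; // partially filled basket per volume
  std::deque<Basket> fReady;      // full baskets waiting for transport
};
```

After transporting a basket, a worker would feed the tracks that crossed into neighbor volumes back through `AddTrack` with their new volume id, which is the "champagne cascade" step.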

The beginning

- Realistic geometry + event generator
- Inject event in the volume containing the IP
- More events: better to cut event tails and fill the pipeline better!

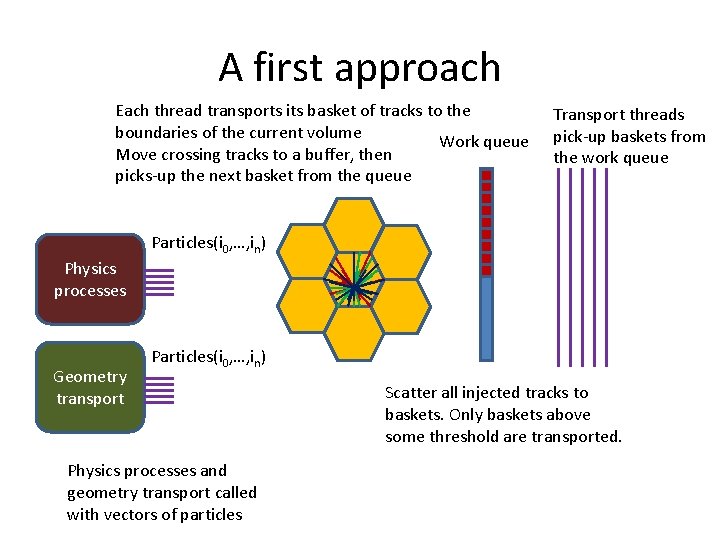

A first approach

- Scatter all injected tracks to baskets. Only baskets above some threshold are transported.
- Transport threads pick up baskets from the work queue
- Physics processes and geometry transport are called with vectors of particles: Particles(i0, …, in)
- Each thread transports its basket of tracks to the boundaries of the current volume
- Each thread moves crossing tracks to a buffer, then picks up the next basket from the queue

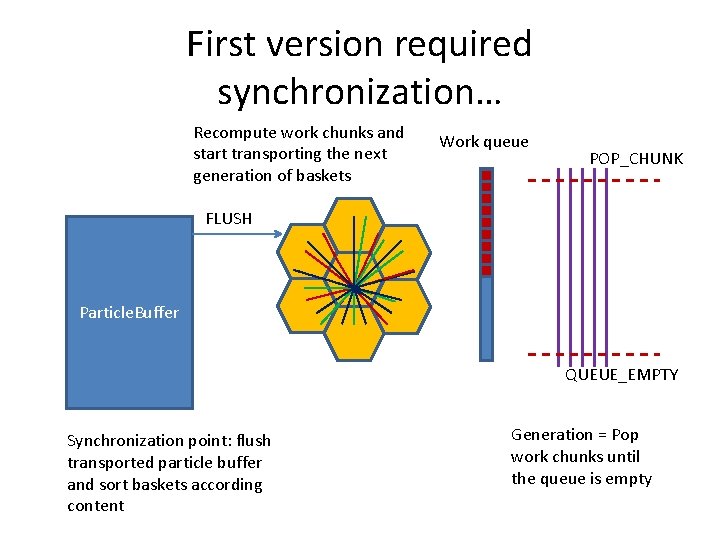

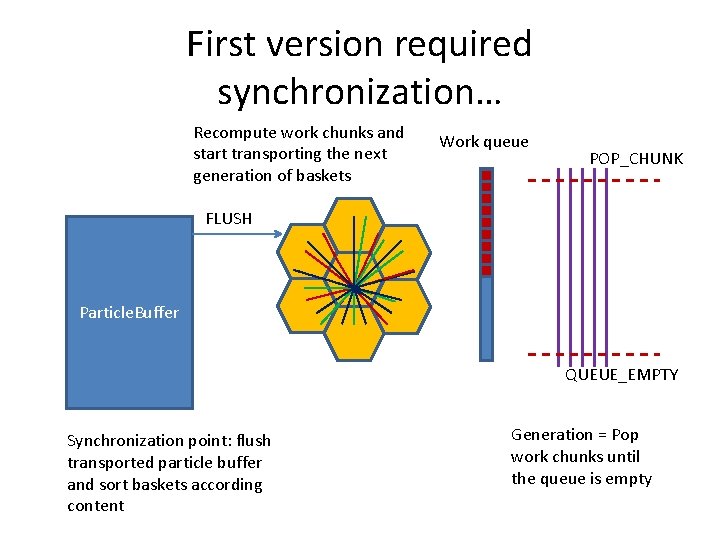

First version required synchronization…

- Generation = pop work chunks (POP_CHUNK) from the work queue until the queue is empty (QUEUE_EMPTY)
- Synchronization point (FLUSH): flush the transported particle buffer (ParticleBuffer) and sort baskets according to content
- Recompute work chunks and start transporting the next generation of baskets
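The generation loop with its synchronization point can be sketched with standard C++ threads. This is a toy model under stated assumptions, not the prototype's code: `WorkQueue`, `TransportGeneration` and `TransportAll` are hypothetical names, and "transport" just decrements a step counter and moves surviving tracks to the next volume id.

```cpp
#include <cassert>
#include <map>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

struct Track { int volume; int stepsLeft; };
using Basket = std::vector<Track>;

// Minimal mutex-protected work queue of baskets.
class WorkQueue {
public:
  void Push(Basket b) {
    std::lock_guard<std::mutex> lock(fMutex);
    fBaskets.push_back(std::move(b));
  }
  bool Pop(Basket &out) { // returns false when the queue is empty
    std::lock_guard<std::mutex> lock(fMutex);
    if (fBaskets.empty()) return false;
    out = std::move(fBaskets.back());
    fBaskets.pop_back();
    return true;
  }
private:
  std::mutex fMutex;
  std::vector<Basket> fBaskets;
};

// One generation: workers pop baskets until the queue is empty and store
// crossing tracks in a private buffer (no direct thread-to-thread exchange).
std::vector<Track> TransportGeneration(WorkQueue &queue, unsigned nthreads) {
  std::vector<std::vector<Track>> buffers(nthreads);
  std::vector<std::thread> workers;
  for (unsigned tid = 0; tid < nthreads; ++tid) {
    workers.emplace_back([&queue, &buffers, tid] {
      Basket basket;
      while (queue.Pop(basket)) {
        for (Track t : basket) {
          // Toy transport: one step, survivors cross into the next volume.
          if (--t.stepsLeft > 0) { ++t.volume; buffers[tid].push_back(t); }
        }
      }
    });
  }
  for (auto &w : workers) w.join(); // synchronization point
  std::vector<Track> crossing;      // flush the per-thread buffers
  for (auto &buf : buffers) crossing.insert(crossing.end(), buf.begin(), buf.end());
  return crossing;
}

// Repeat generations until no track is left; returns the number of generations.
int TransportAll(std::vector<Track> tracks, unsigned nthreads) {
  assert(nthreads > 0);
  int generations = 0;
  while (!tracks.empty()) {
    std::map<int, Basket> byVolume; // re-sort tracks into per-volume baskets
    for (const Track &t : tracks) byVolume[t.volume].push_back(t);
    WorkQueue queue;
    for (auto &kv : byVolume) queue.Push(std::move(kv.second));
    tracks = TransportGeneration(queue, nthreads);
    ++generations;
  }
  return generations;
}
```

The `join()` at the end of each generation is the cost this slide points at: every worker idles until the slowest basket of the generation is done.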

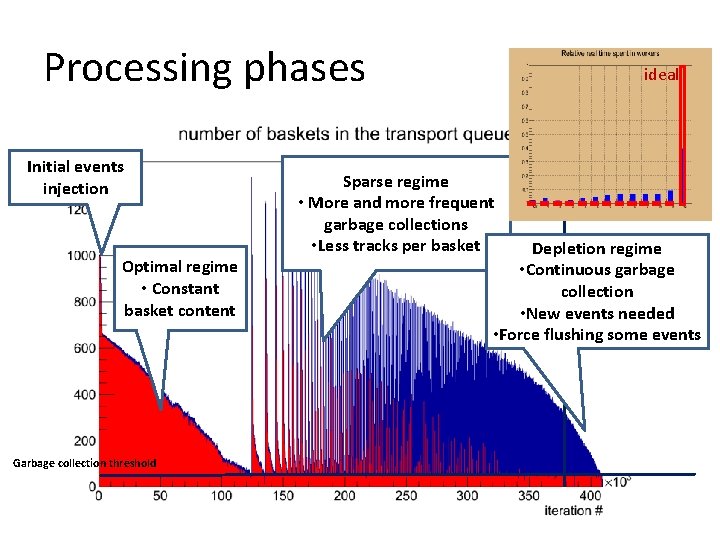

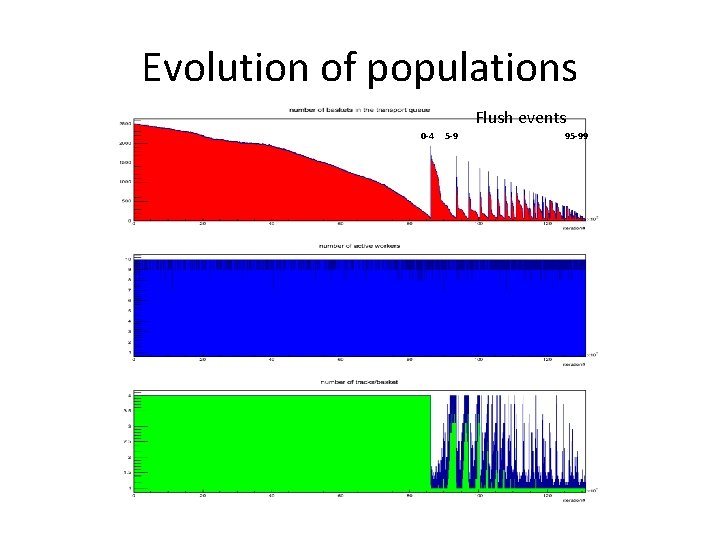

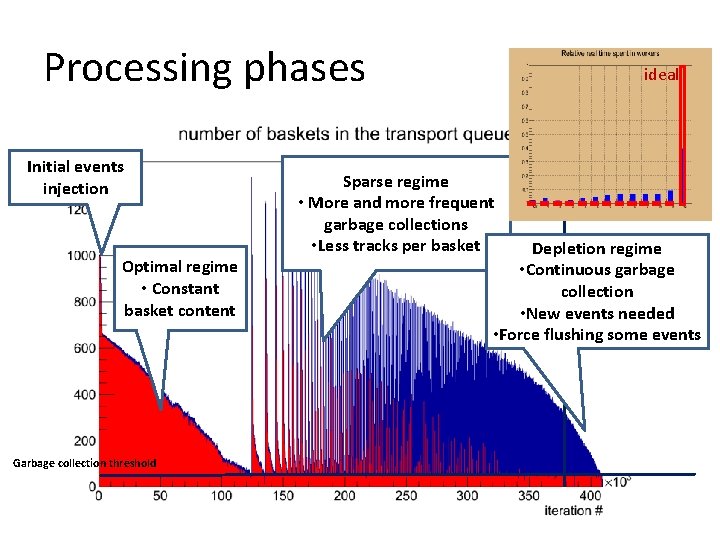

Processing phases

- Initial events injection
- Optimal regime
  - Constant basket content
- Sparse regime
  - More and more frequent garbage collections
  - Fewer tracks per basket
- Depletion regime
  - Continuous garbage collection
  - New events needed
  - Force flushing some events

Plot annotations: garbage collection threshold, ideal.

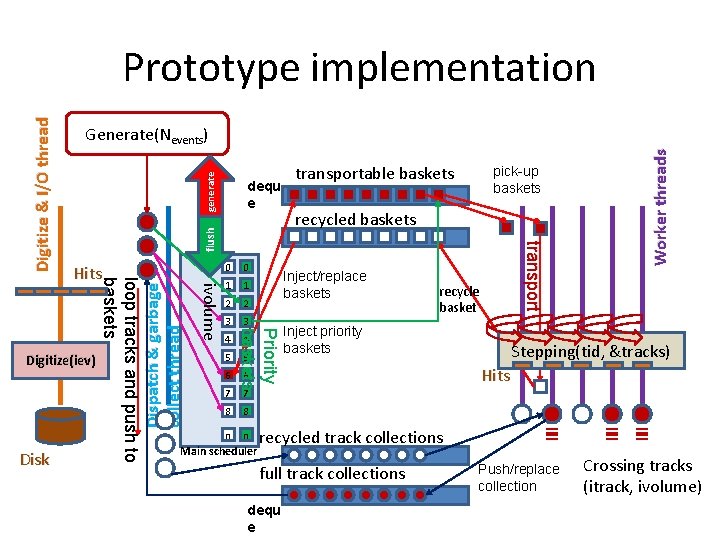

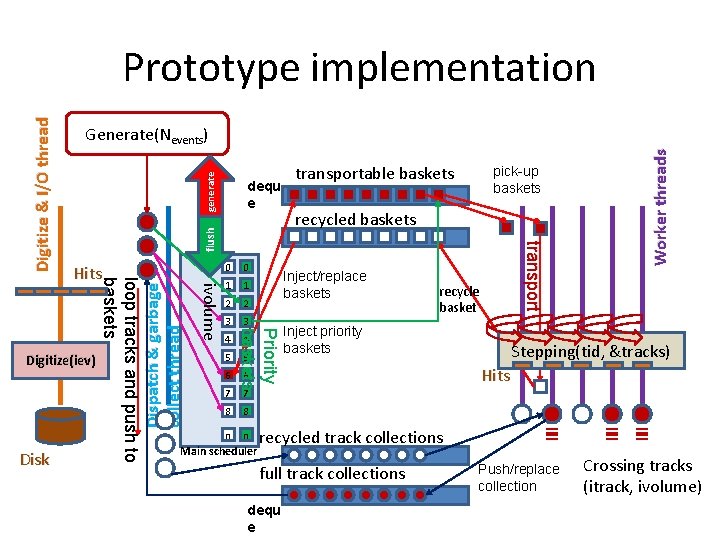

Prototype implementation

Diagram labels:

- Main scheduler (dispatch & garbage collect thread): loop tracks and push to baskets (by ivolume), inject/replace baskets, inject priority baskets, flush
- Deque of transportable baskets (0, 1, 2, …, n), priority baskets, recycled baskets
- Worker threads: pick up baskets, transport with Stepping(tid, &tracks), recycle basket, push/replace collection
- Deque of track collections: full track collections, recycled track collections; crossing tracks are stored as (itrack, ivolume)
- Generate: Generate(Nevents)
- Digitize & I/O thread: Hits, Digitize(iev), Disk

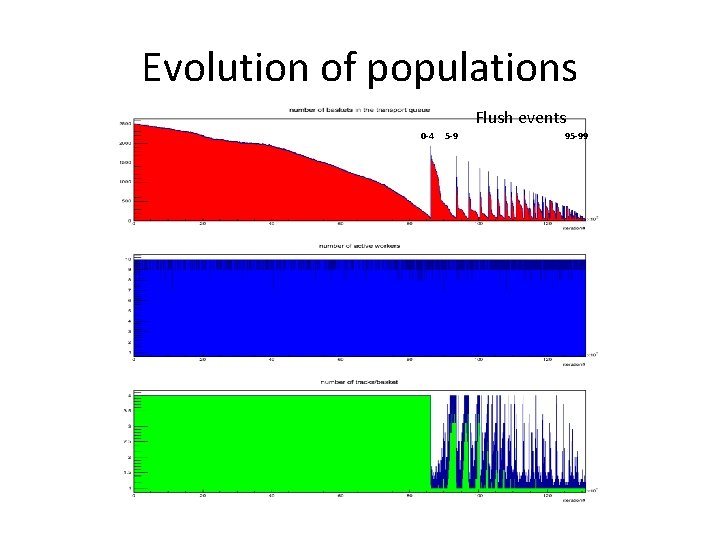

Evolution of populations

Plot annotation: flush events 0-4, 5-9, 95-99

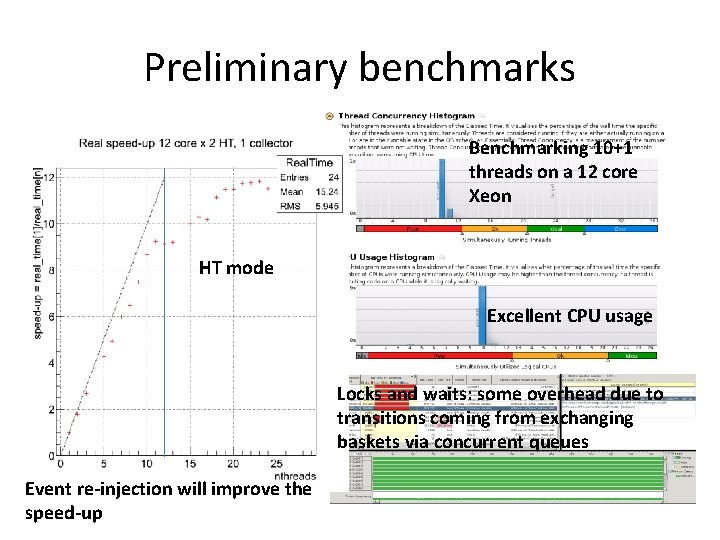

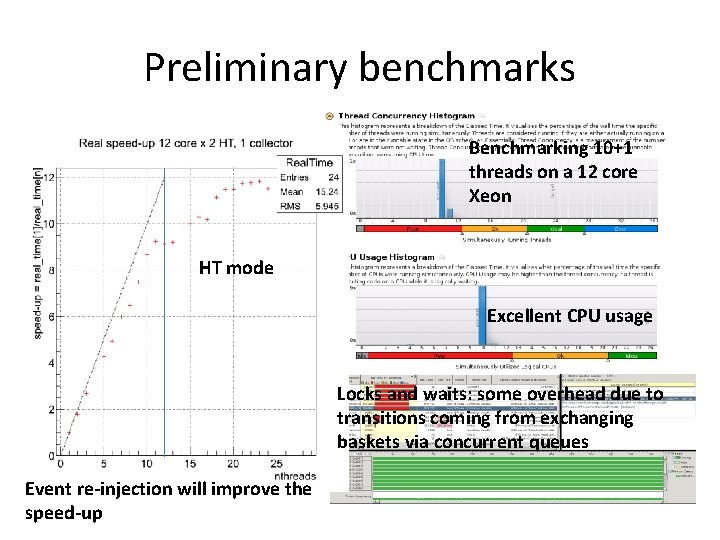

Preliminary benchmarks

- Benchmarking 10+1 threads on a 12-core Xeon in HT mode
- Excellent CPU usage
- Locks and waits: some overhead due to transitions coming from exchanging baskets via concurrent queues
- Event re-injection will improve the speed-up