Parallel Scientific Computing Algorithms and Tools Lecture 1

- Slides: 54

Parallel Scientific Computing: Algorithms and Tools, Lecture #1. APMA 2821A, Spring 2008. Instructors: George Em Karniadakis, Leopold Grinberg

Logistics
- Contact:
  - Office hours: GK: M 2-4 pm; LG: W 2-4 pm
  - Email: {gk, lgrinb}@dam.brown.edu
  - Web: www.cfm.brown.edu/people/gk/APMA2821A
- Textbook:
  - Karniadakis & Kirby, "Parallel Scientific Computing in C++/MPI"
- Other books:
  - Shonkwiler & Lefton, "Parallel and Vector Scientific Computing"
  - Wadleigh & Crawford, "Software Optimization for High Performance Computing"
  - Foster, "Designing and Building Parallel Programs" (available online)

Logistics
- CCV accounts: email Sharon_King@brown.edu
- Prerequisite: C/Fortran programming
- Grading:
  - 5 assignments/mini-projects: 50%
  - 1 final project/presentation: 50%

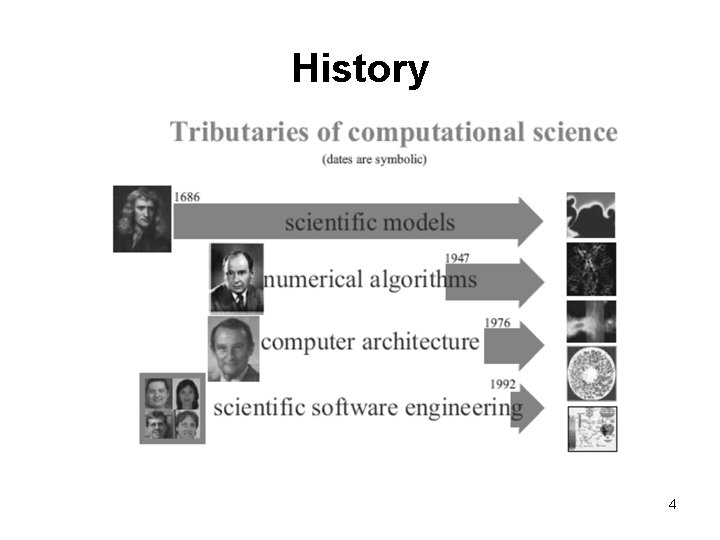

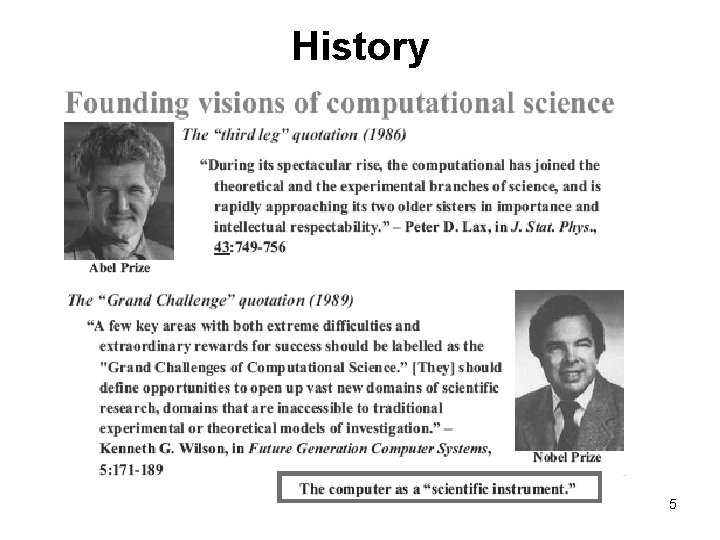

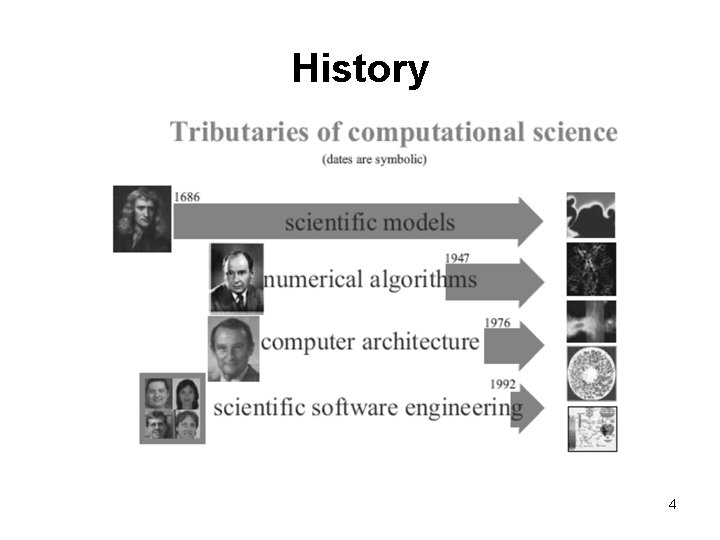

History

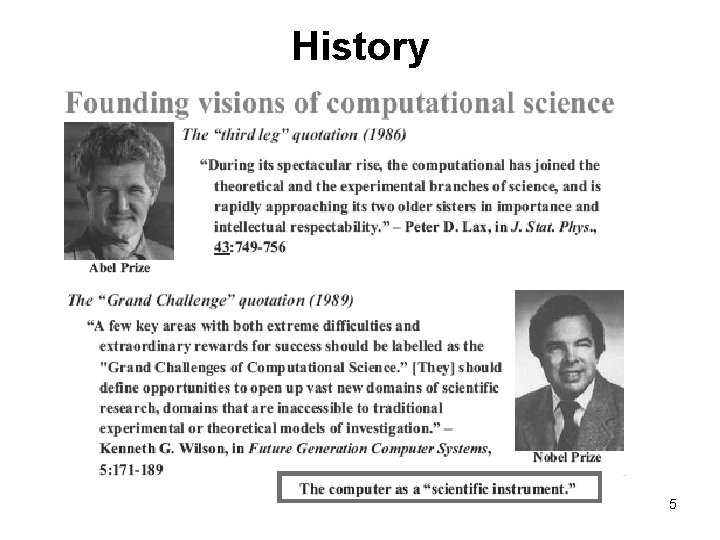

History

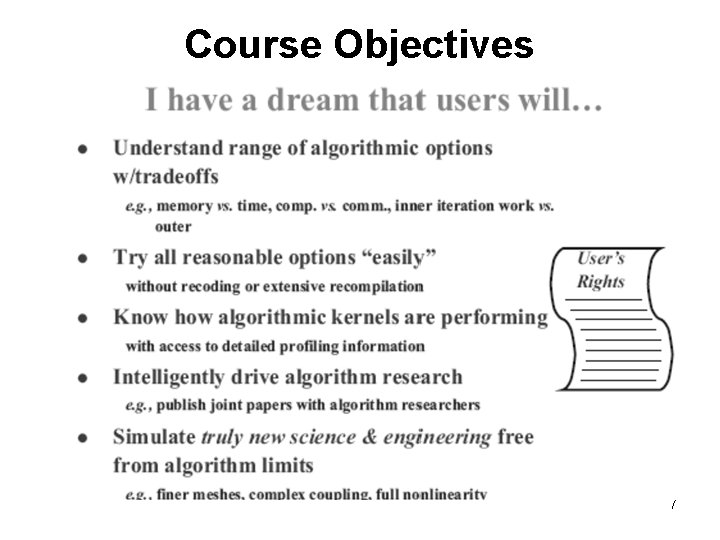

Course Objectives
- Understand the fundamental concepts and programming principles for developing high-performance applications
- Be able to program a range of parallel computers, from PC clusters to supercomputers
- Make efficient use of high-performance parallel computing in your own research

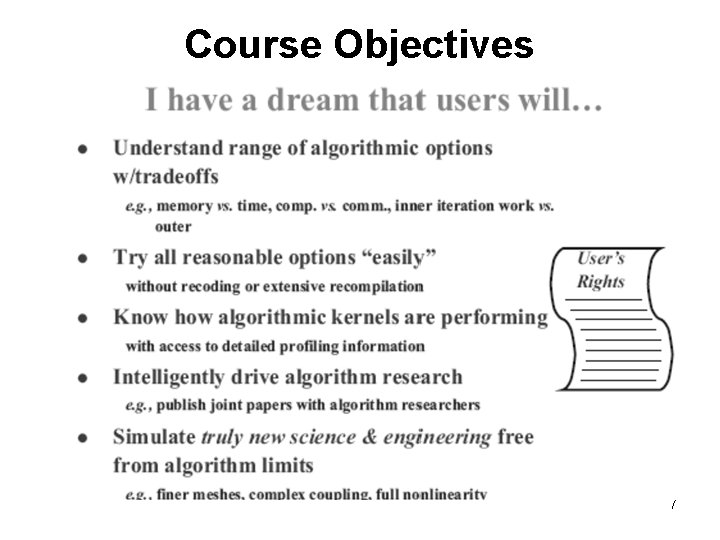

Course Objectives

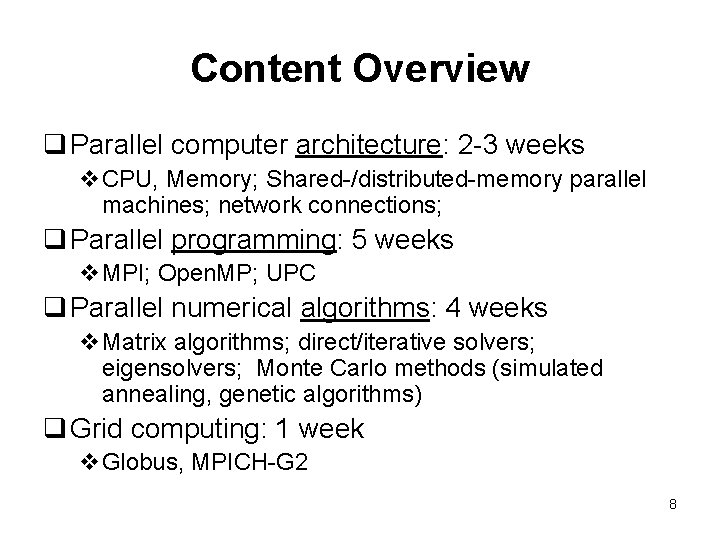

Content Overview
- Parallel computer architecture (2-3 weeks): CPU, memory; shared-/distributed-memory parallel machines; network connections
- Parallel programming (5 weeks): MPI; OpenMP; UPC
- Parallel numerical algorithms (4 weeks): matrix algorithms; direct/iterative solvers; eigensolvers; Monte Carlo methods (simulated annealing, genetic algorithms)
- Grid computing (1 week): Globus, MPICH-G2

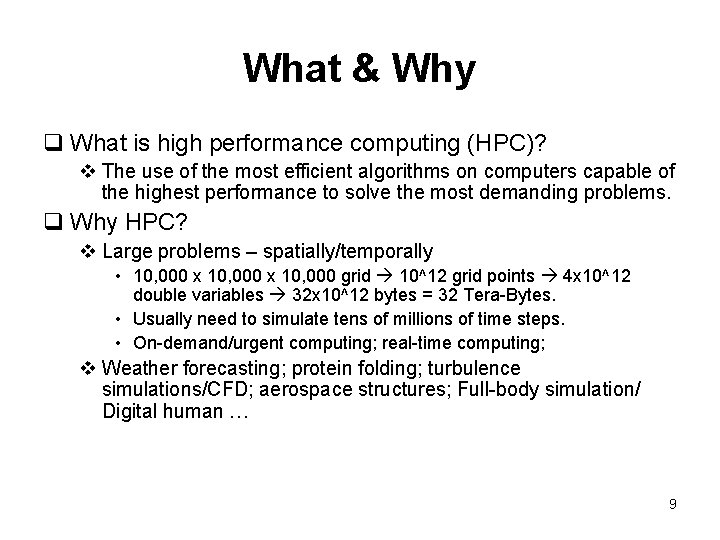

What & Why
- What is high-performance computing (HPC)?
  - The use of the most efficient algorithms on computers capable of the highest performance to solve the most demanding problems.
- Why HPC?
  - Large problems, both spatially and temporally:
    - A 10,000 × 10,000 × 10,000 grid has 10^12 grid points; with 4 double-precision variables per point, that is 4 × 10^12 doubles, or 32 × 10^12 bytes = 32 terabytes.
    - Simulations usually need tens of millions of time steps.
  - On-demand/urgent computing; real-time computing
  - Applications: weather forecasting; protein folding; turbulence simulations/CFD; aerospace structures; full-body simulation/digital human …

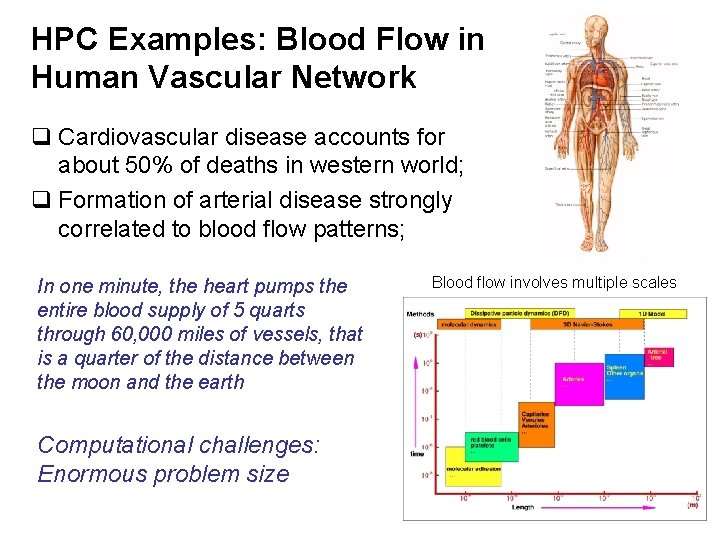

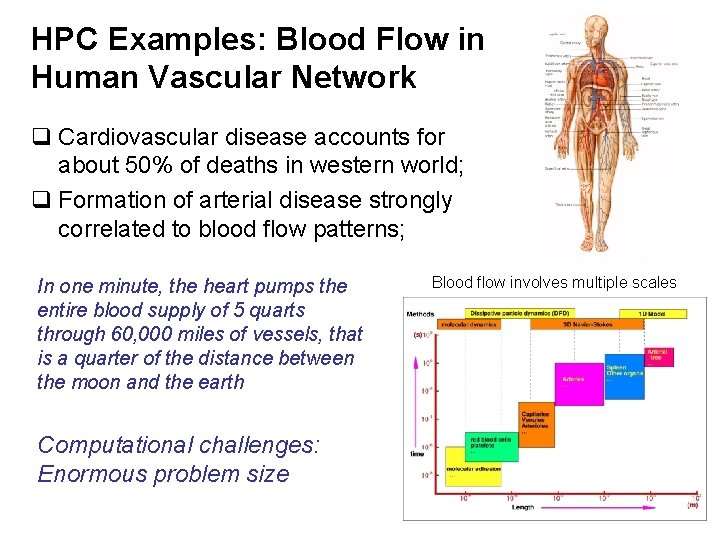

HPC Examples: Blood Flow in Human Vascular Network
- Cardiovascular disease accounts for about 50% of deaths in the western world.
- The formation of arterial disease is strongly correlated with blood flow patterns.
- In one minute, the heart pumps the entire blood supply of 5 quarts through 60,000 miles of vessels, about a quarter of the distance between the Moon and the Earth.
- Blood flow involves multiple scales.
- Computational challenge: enormous problem size.

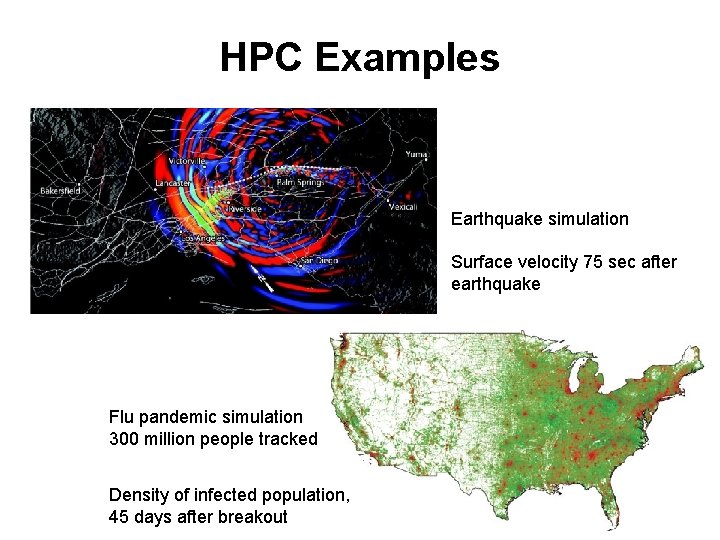

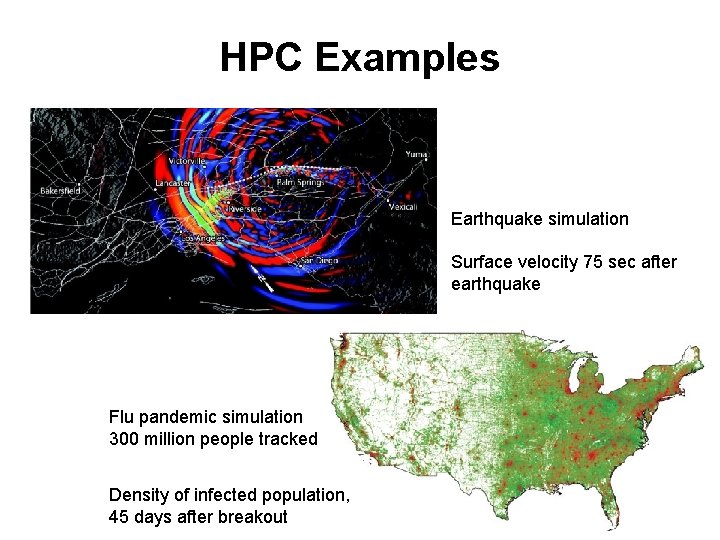

HPC Examples
- Earthquake simulation: surface velocity 75 s after the earthquake
- Flu pandemic simulation: 300 million people tracked; density of the infected population 45 days after the outbreak

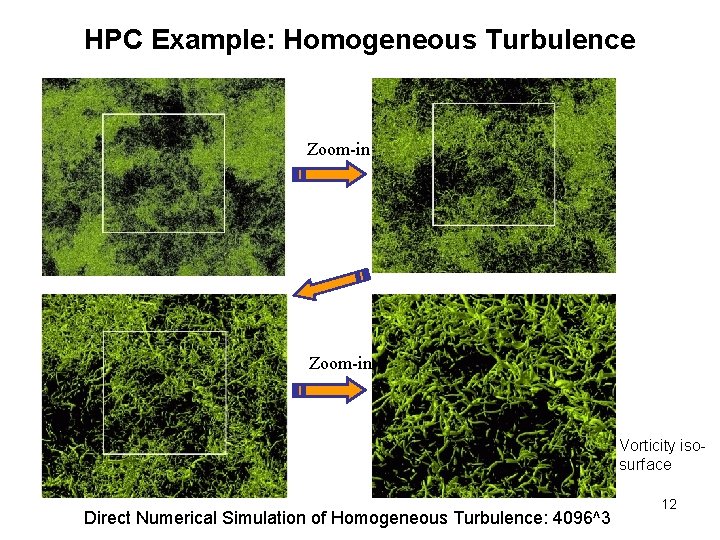

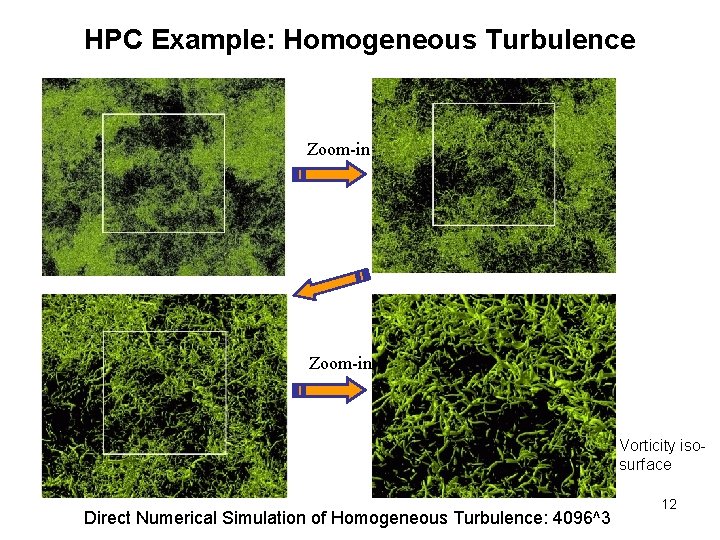

HPC Example: Homogeneous Turbulence
- Direct numerical simulation of homogeneous turbulence at 4096^3 resolution; vorticity isosurface, with a zoom-in

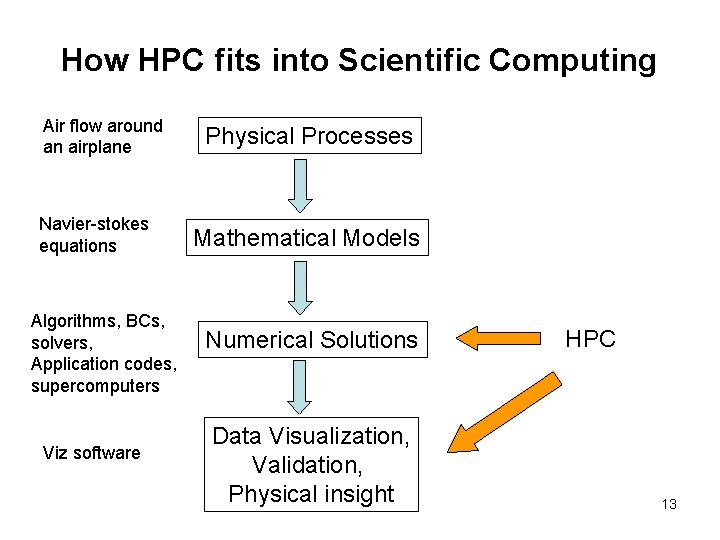

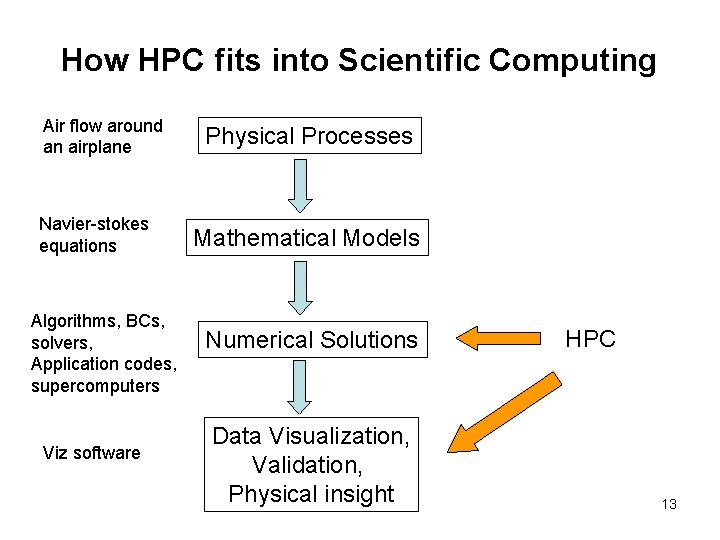

How HPC Fits into Scientific Computing
Figure: the path from physical processes to physical insight, with HPC as one component:
- Physical processes: e.g., air flow around an airplane
- Mathematical models: Navier-Stokes equations
- Numerical solutions: algorithms, BCs, solvers, application codes, supercomputers
- Data visualization, validation, physical insight: viz software

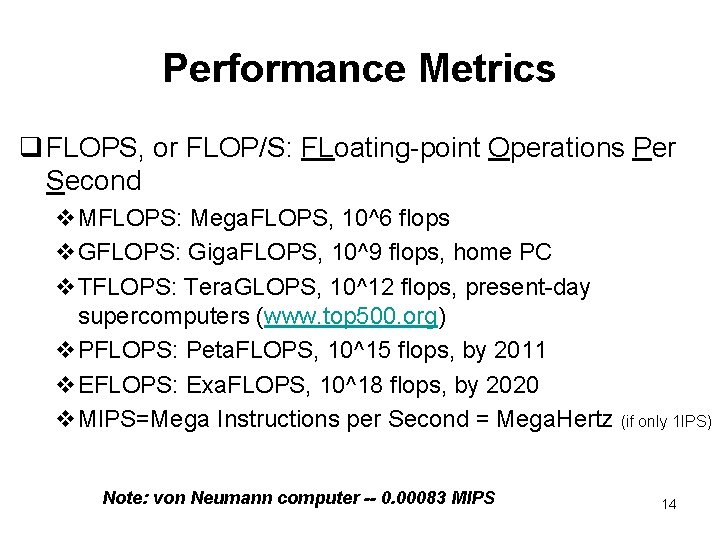

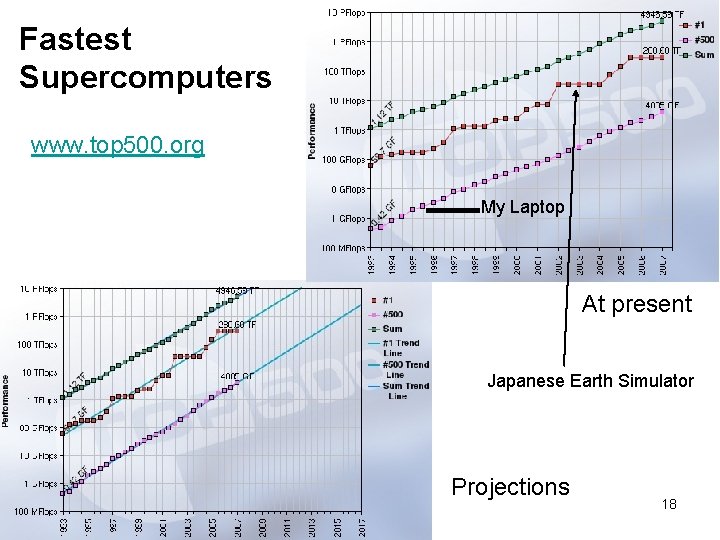

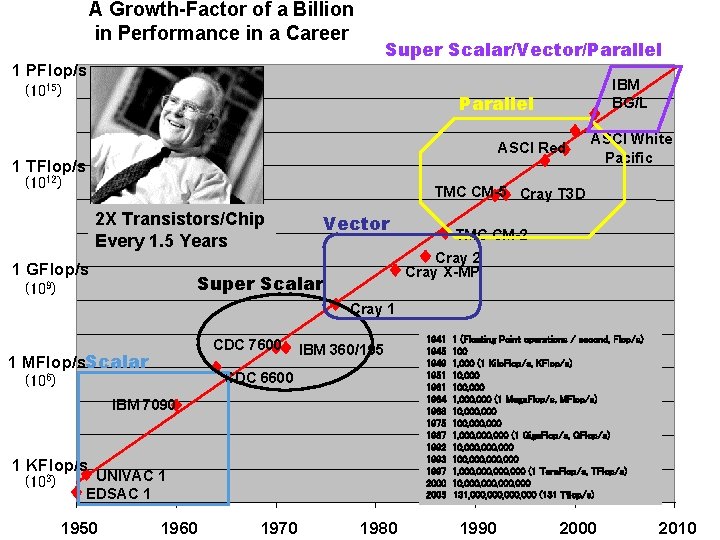

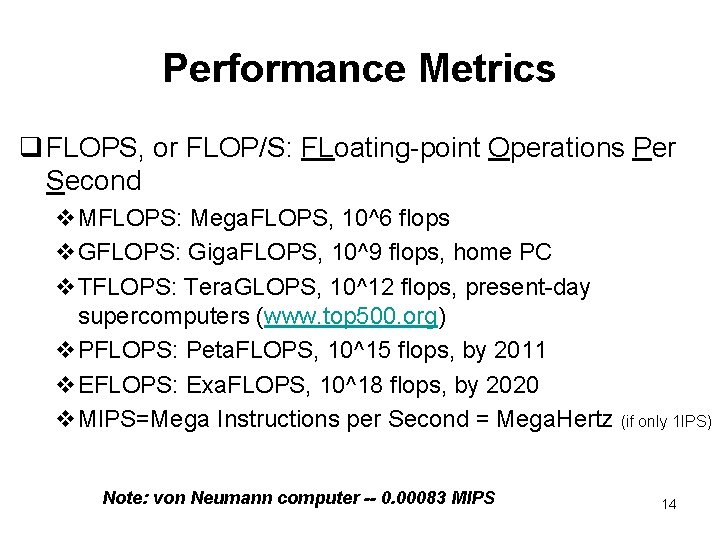

Performance Metrics
- FLOPS, or FLOP/s: floating-point operations per second
  - MFLOPS: megaFLOPS, 10^6 flop/s
  - GFLOPS: gigaFLOPS, 10^9 flop/s (home PC)
  - TFLOPS: teraFLOPS, 10^12 flop/s (present-day supercomputers, www.top500.org)
  - PFLOPS: petaFLOPS, 10^15 flop/s (by 2011)
  - EFLOPS: exaFLOPS, 10^18 flop/s (by 2020)
- MIPS: million instructions per second; this equals the clock rate in MHz if one instruction completes per cycle
- Note: von Neumann computer: 0.00083 MIPS

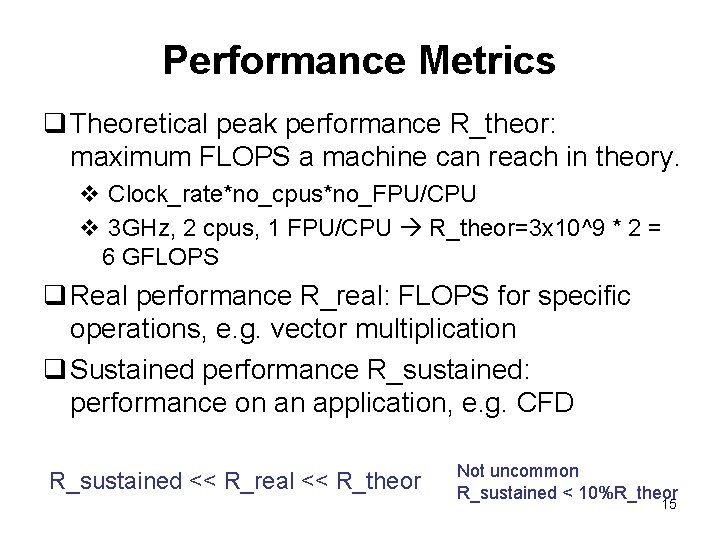

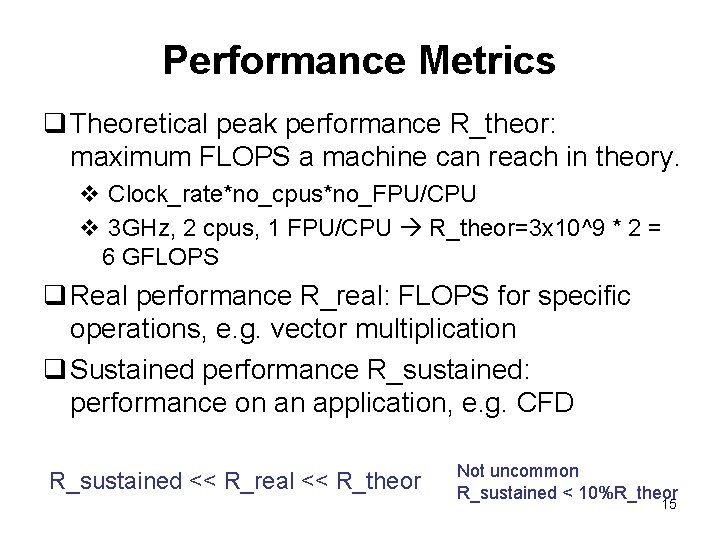

Performance Metrics
- Theoretical peak performance R_theor: the maximum FLOPS a machine can reach in theory.
  - R_theor = clock_rate × no_cpus × no_FPU/CPU (each FPU completing one floating-point operation per cycle)
  - Example: 3 GHz, 2 CPUs, 1 FPU/CPU gives R_theor = 3 × 10^9 × 2 × 1 = 6 GFLOPS
- Real performance R_real: FLOPS for specific operations, e.g., vector multiplication
- Sustained performance R_sustained: performance on an application, e.g., CFD
- R_sustained < R_real < R_theor, often by a wide margin; it is not uncommon for R_sustained to be below 10% of R_theor.

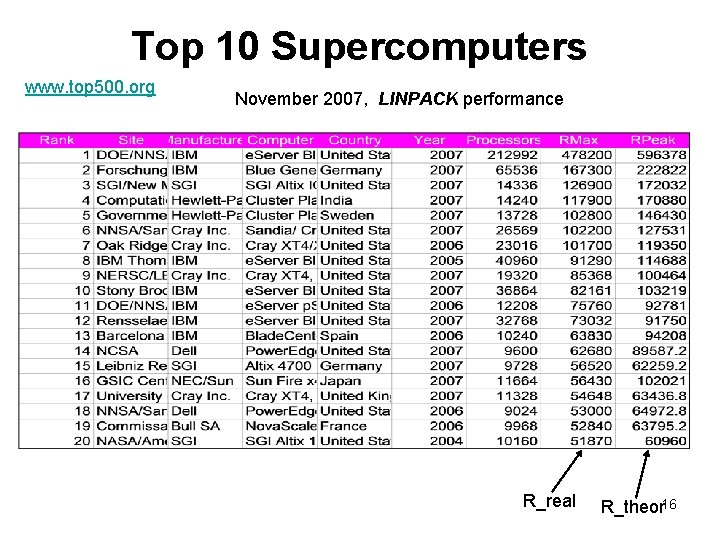

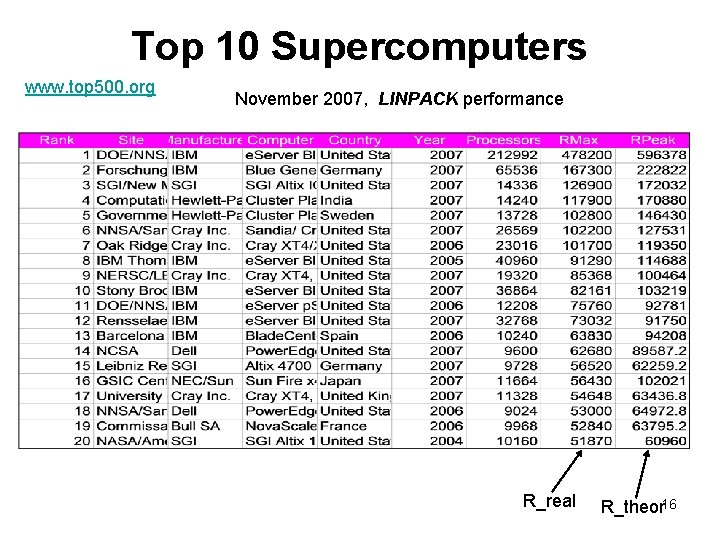

Top 10 Supercomputers (www.top500.org, November 2007): LINPACK performance, R_real and R_theor

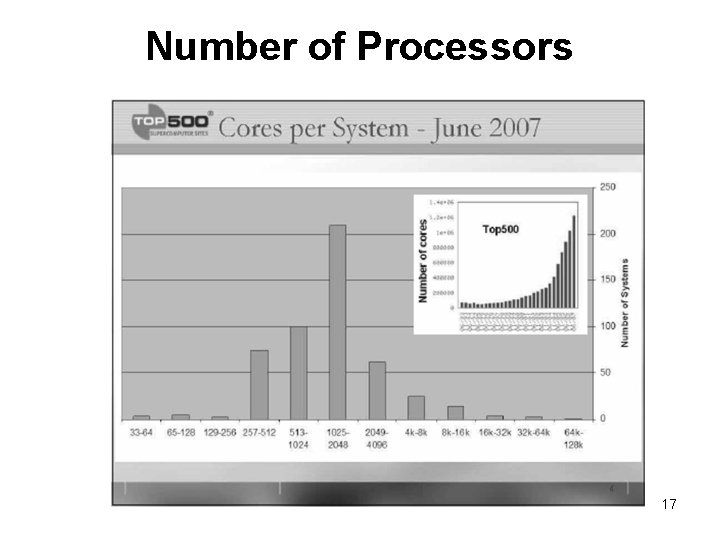

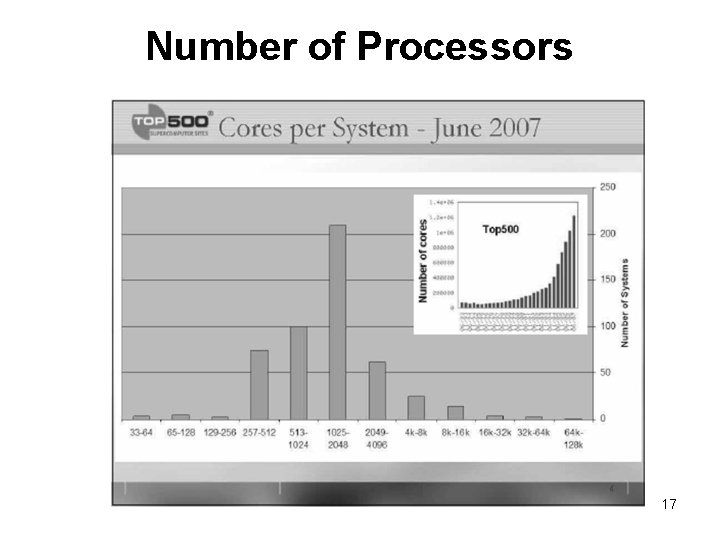

Number of Processors

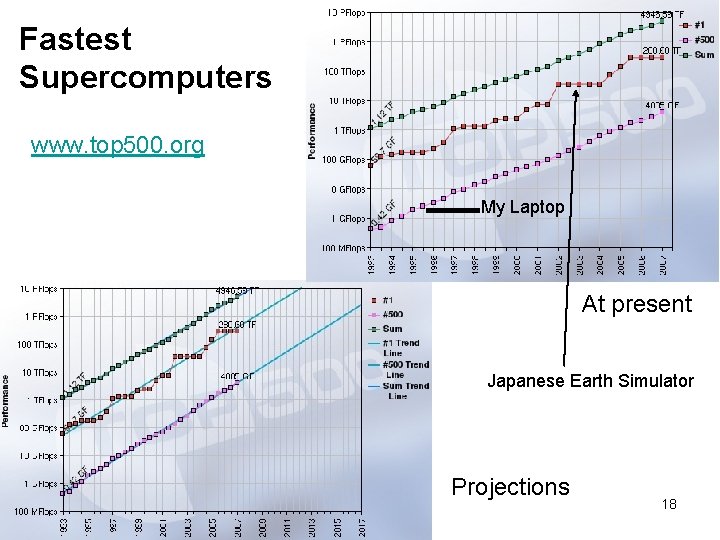

Fastest Supercomputers (www.top500.org). Figure labels: My Laptop; At present; Japanese Earth Simulator; Projections.

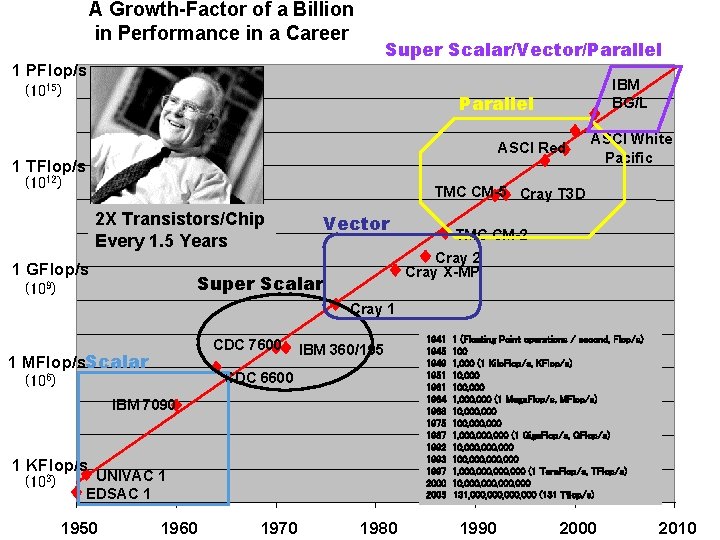

A Growth-Factor of a Billion in Performance in a Career
Figure: performance in floating-point operations per second (log scale, with marks at 1 KFlop/s = 10^3, 1 MFlop/s = 10^6, 1 GFlop/s = 10^9, 1 TFlop/s = 10^12, and 1 PFlop/s = 10^15) versus year, 1941-2010, through the scalar, super scalar, vector, and parallel eras. Annotated trend: 2X transistors/chip every 1.5 years. Machines shown, from earliest: EDSAC 1, UNIVAC 1, IBM 7090, CDC 6600, IBM 360/195, CDC 7600, Cray 1, Cray X-MP, Cray 2, TMC CM-2, TMC CM-5, Cray T3D, ASCI Red, ASCI White Pacific, and IBM BG/L (131 TFlop/s).

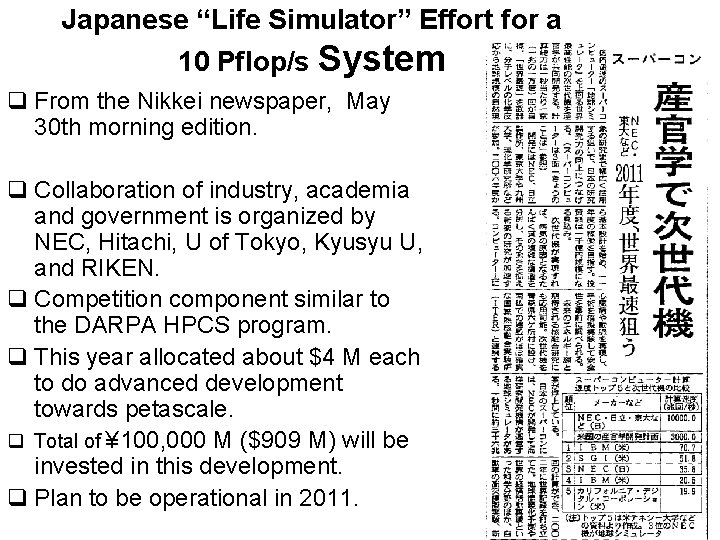

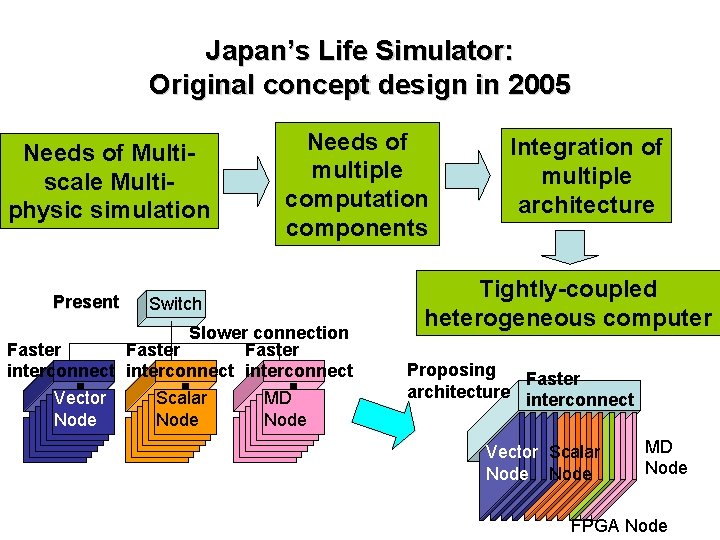

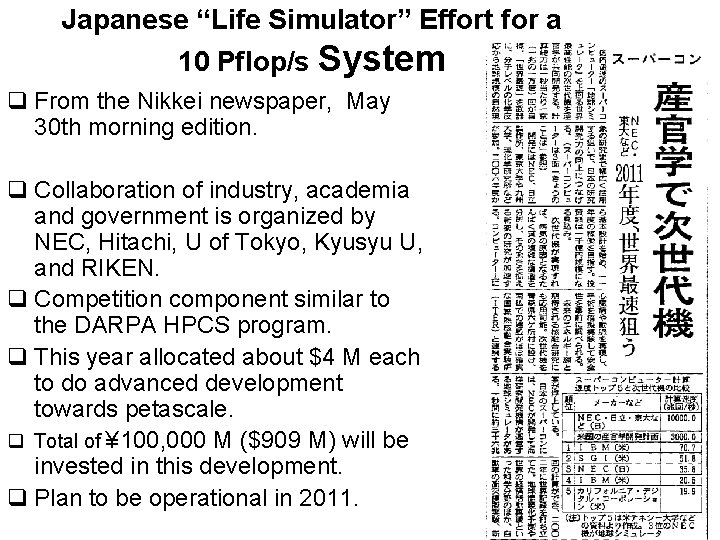

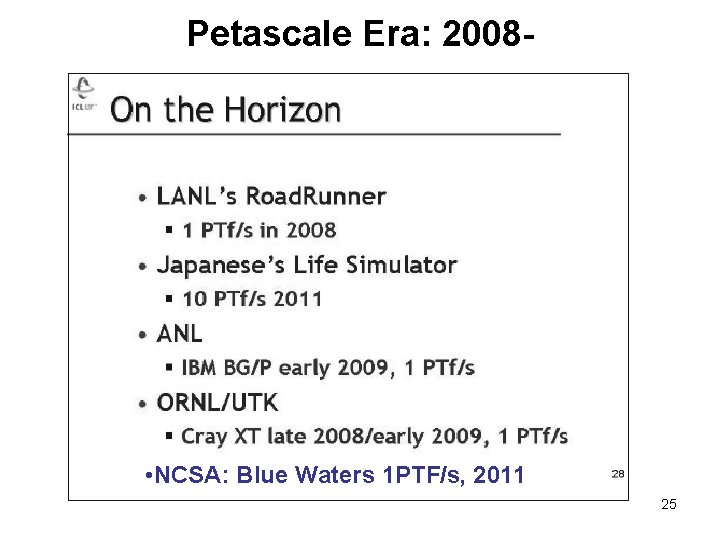

Japanese "Life Simulator" Effort for a 10 Pflop/s System
- From the Nikkei newspaper, May 30th morning edition.
- The collaboration of industry, academia, and government is organized by NEC, Hitachi, U of Tokyo, Kyusyu U, and RIKEN.
- A competition component is similar to the DARPA HPCS program.
- This year, about $4M each was allocated for advanced development toward petascale.
- A total of ¥100,000M ($909M) will be invested in this development.
- The plan is to be operational in 2011.

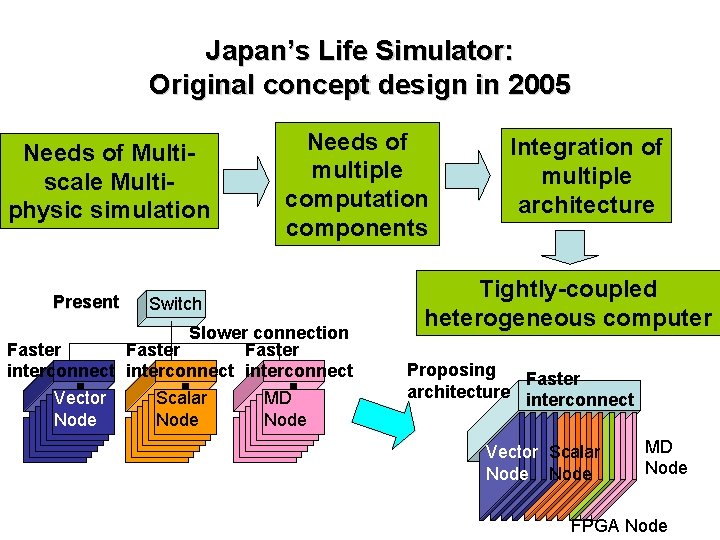

Japan's Life Simulator: Original Concept Design in 2005
Figure: multiscale, multiphysics simulation needs multiple computation components. Present: separate vector, scalar, and MD nodes linked through a switch (slower connection). Proposed: integration of multiple architectures into a tightly-coupled heterogeneous computer, with vector, scalar, MD, and FPGA nodes on a faster interconnect.

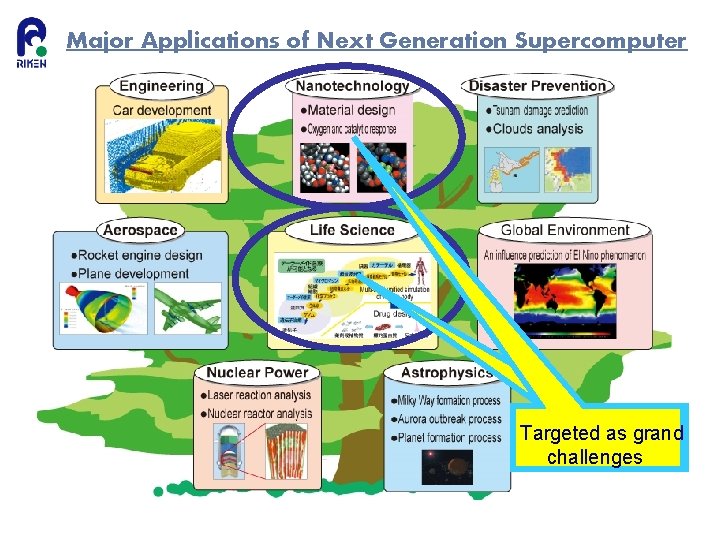

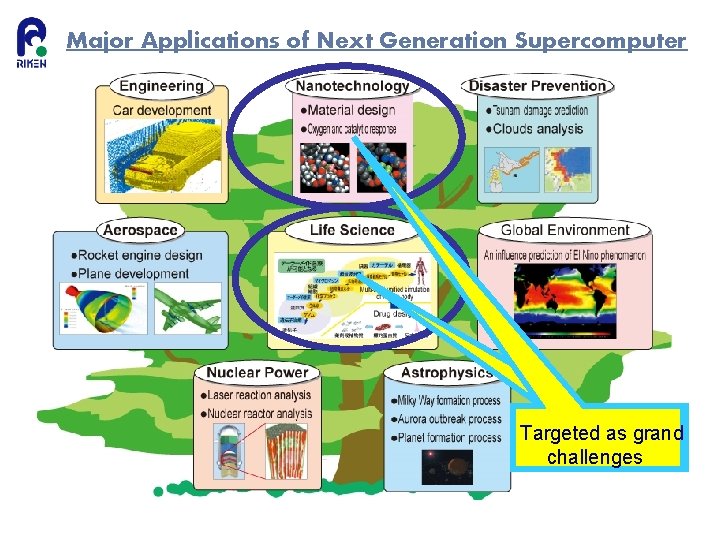

Major Applications of Next Generation Supercomputer Targeted as grand challenges

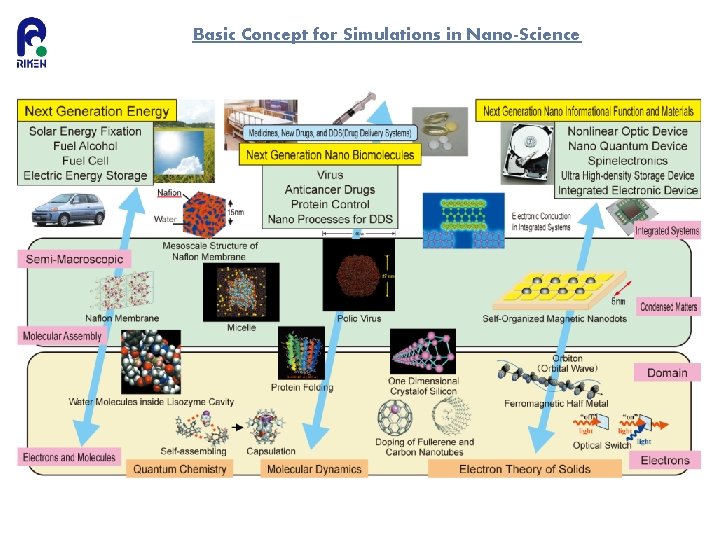

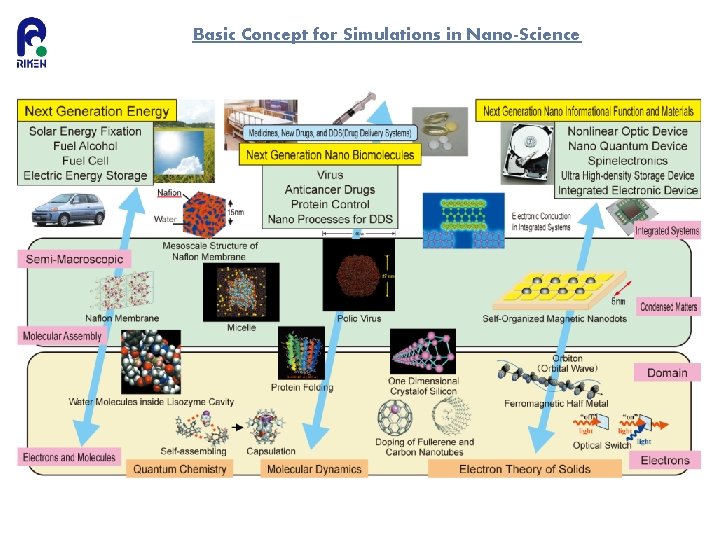

Basic Concept for Simulations in Nano-Science

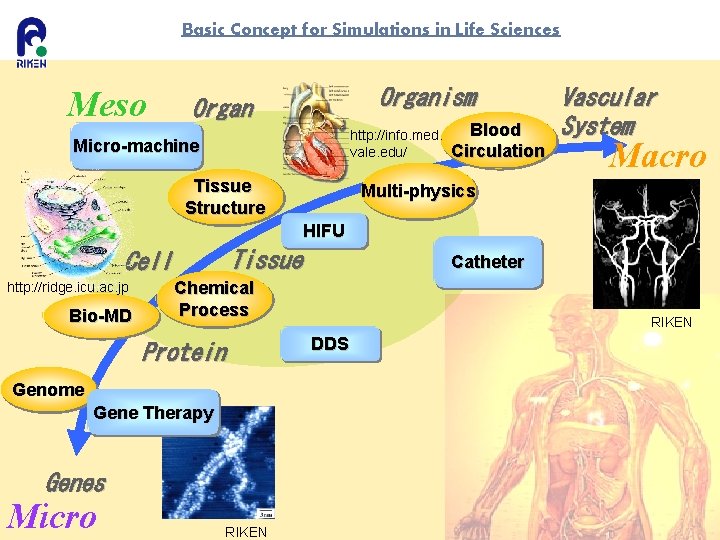

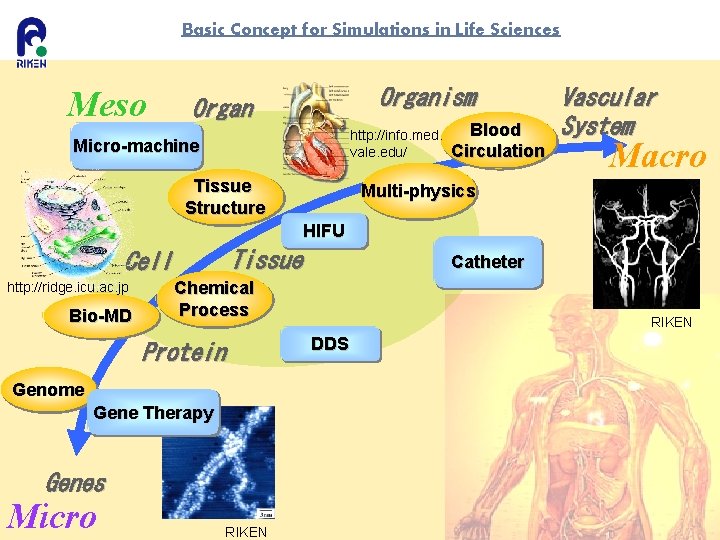

Basic Concept for Simulations in Life Sciences
Figure: simulation targets across micro, meso, and macro scales, including genome, genes, gene therapy, protein, Bio-MD, chemical process, cell, tissue, tissue structure, organ, organism, vascular system, blood circulation, multi-physics, HIFU, micro-machine, micro catheter, and DDS. Image sources: http://info.med.vale.edu/, http://ridge.icu.ac.jp, RIKEN.

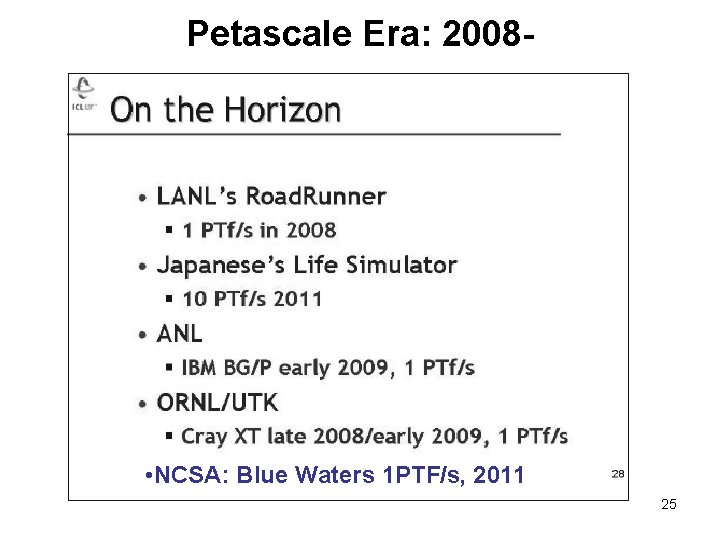

Petascale Era: 2008 onward
- NCSA: Blue Waters, 1 PFlop/s, 2011

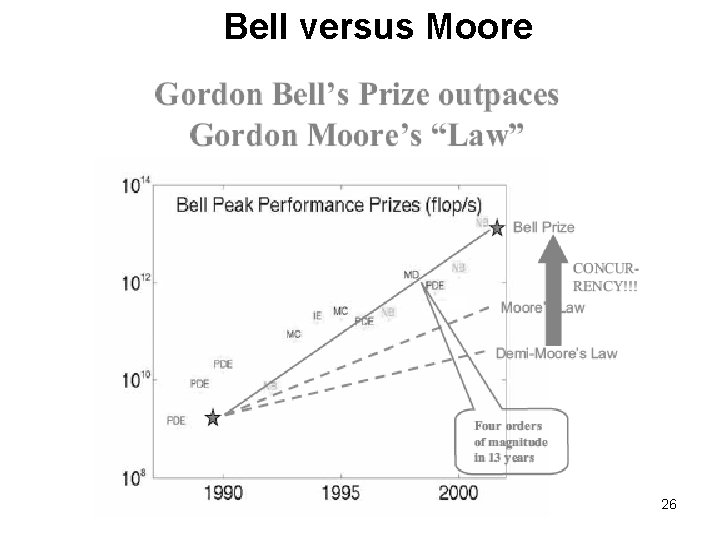

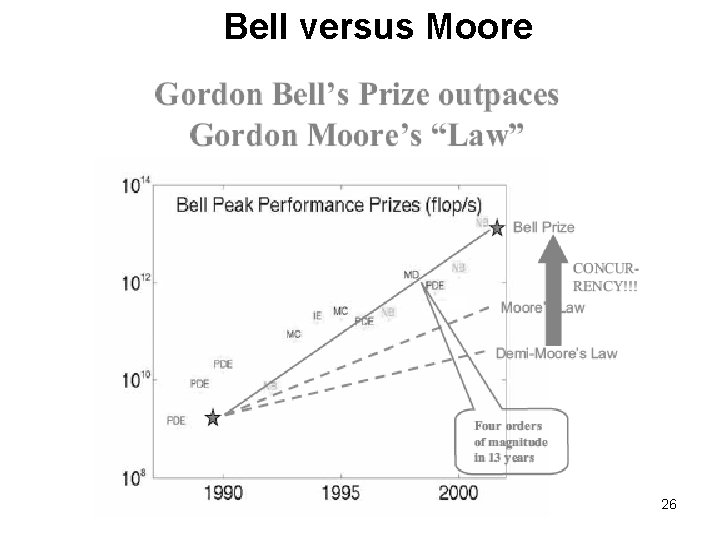

Bell versus Moore

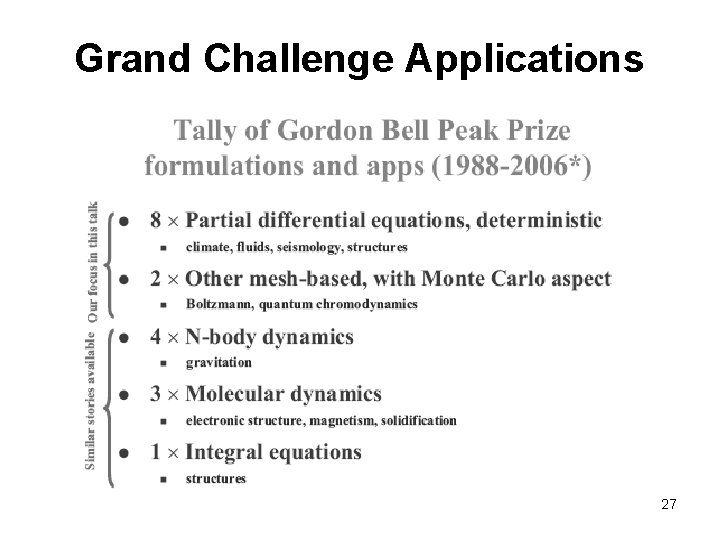

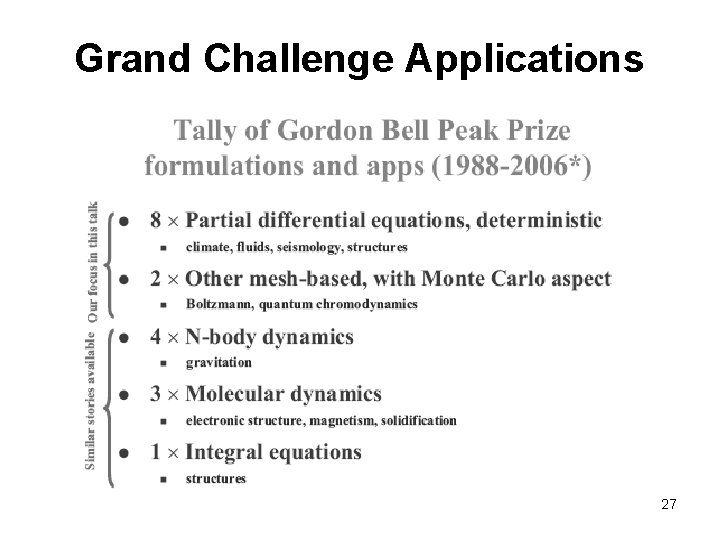

Grand Challenge Applications

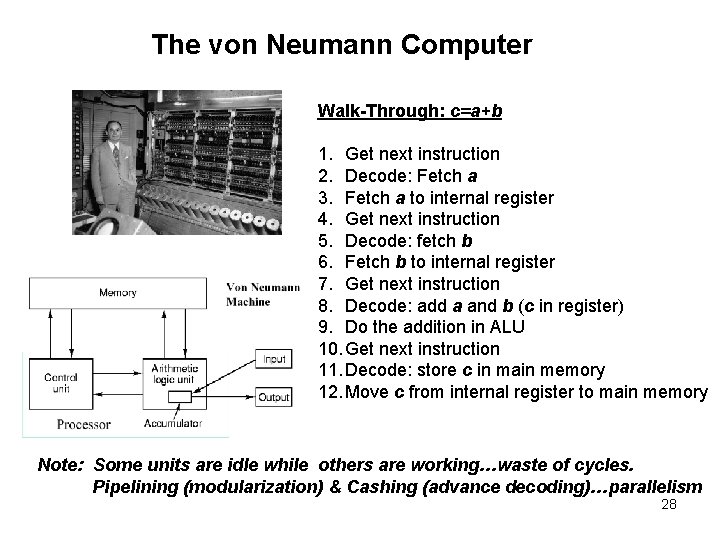

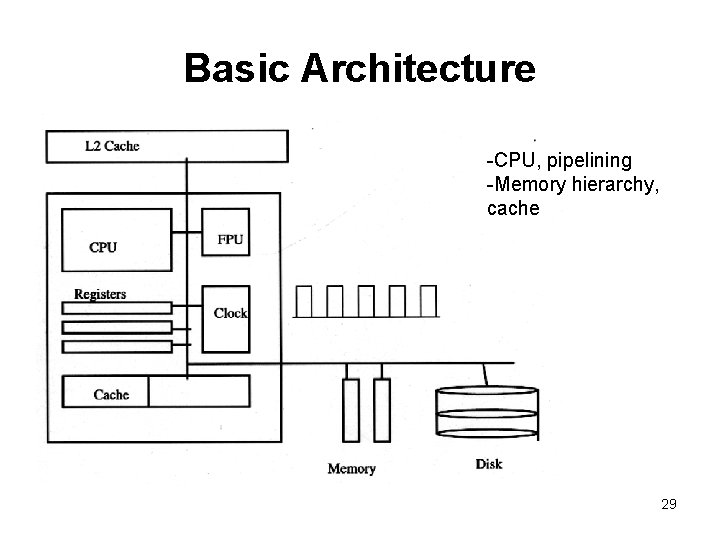

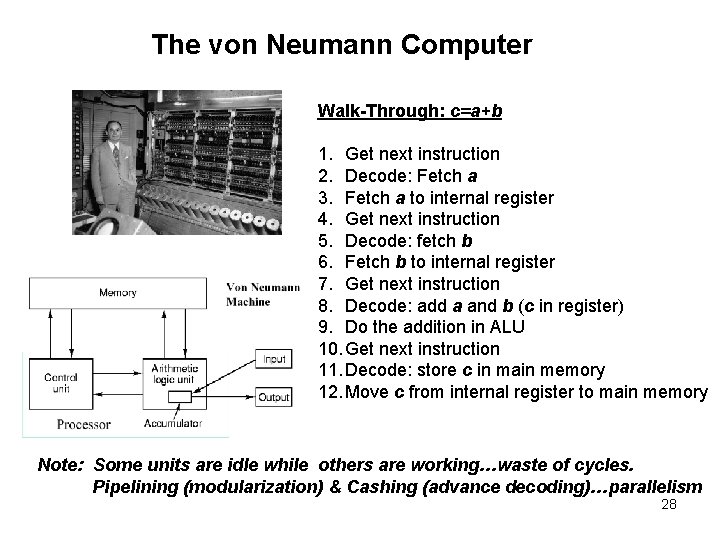

The von Neumann Computer
Walk-through: c = a + b
1. Get next instruction
2. Decode: fetch a
3. Fetch a to internal register
4. Get next instruction
5. Decode: fetch b
6. Fetch b to internal register
7. Get next instruction
8. Decode: add a and b (c in register)
9. Do the addition in the ALU
10. Get next instruction
11. Decode: store c in main memory
12. Move c from internal register to main memory

Note: some units are idle while others are working, which wastes cycles. Pipelining (modularization) and caching (advance decoding) introduce parallelism.

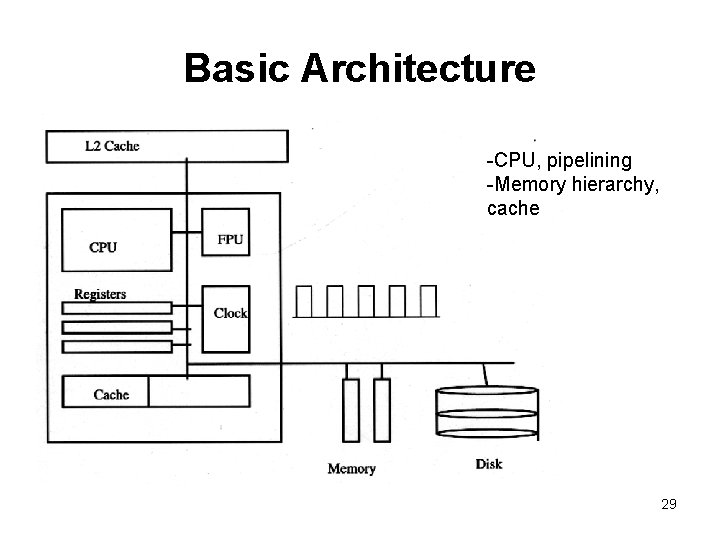

Basic Architecture
- CPU, pipelining
- Memory hierarchy, cache

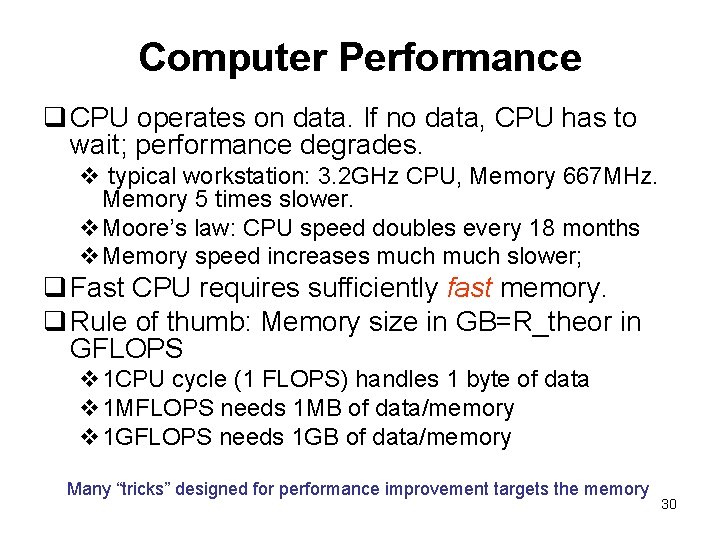

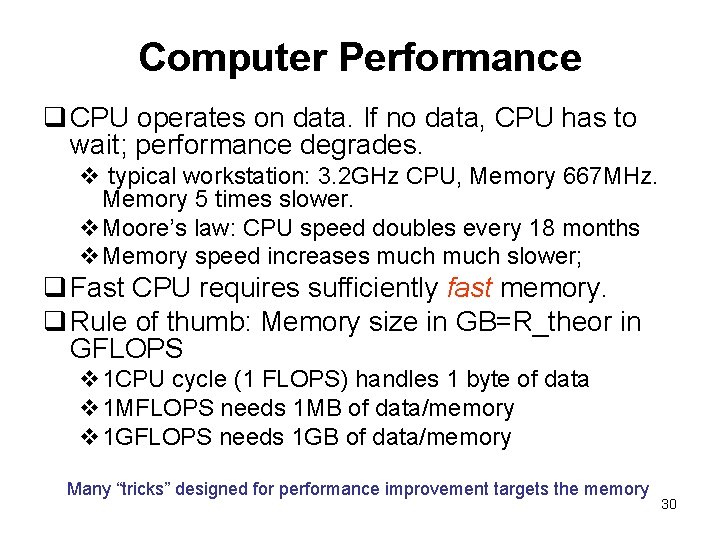

Computer Performance
- The CPU operates on data. If no data is available, the CPU has to wait and performance degrades.
  - Typical workstation: 3.2 GHz CPU, 667 MHz memory, so memory is about 5 times slower.
  - Moore's law: the number of transistors per chip, and historically CPU speed, doubles about every 18 months.
  - Memory speed increases much more slowly.
- A fast CPU requires sufficiently fast memory.
- Rule of thumb: memory size in GB = R_theor in GFLOPS.
  - 1 CPU cycle (1 floating-point operation) handles 1 byte of data.
  - 1 MFLOPS needs 1 MB of data/memory.
  - 1 GFLOPS needs 1 GB of data/memory.
- Many "tricks" designed for performance improvement target the memory.

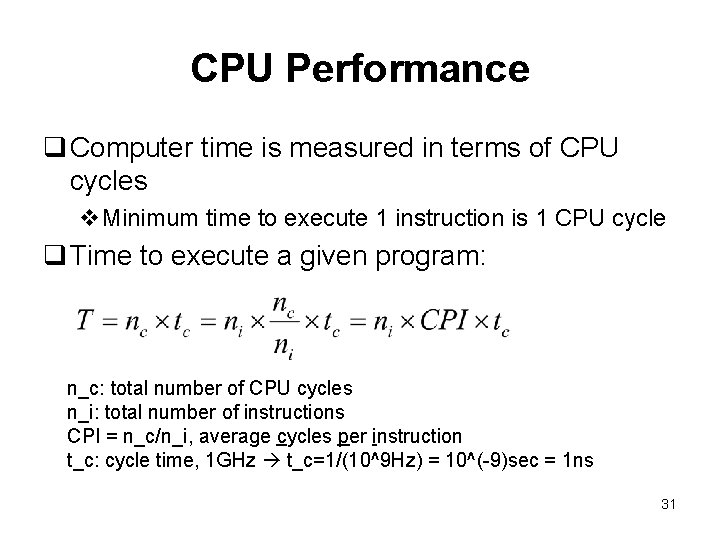

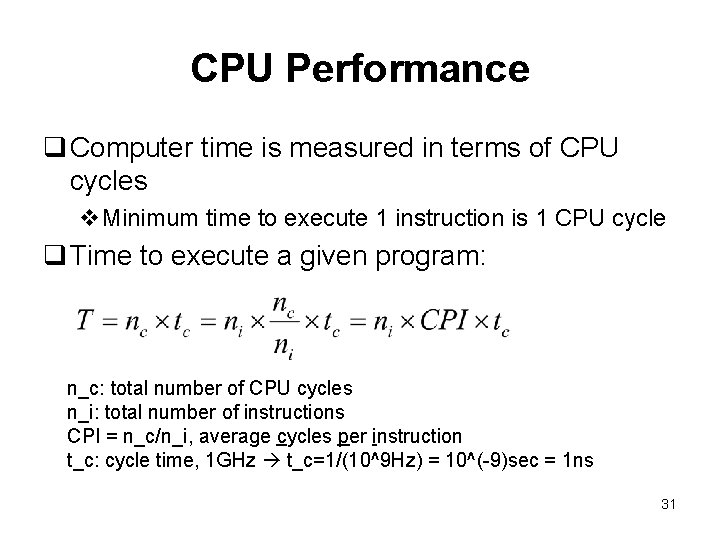

CPU Performance
- Computer time is measured in CPU cycles.
  - The minimum time to execute one instruction is 1 CPU cycle.
- Time to execute a given program: T = n_c × t_c = n_i × CPI × t_c, where
  - n_c: total number of CPU cycles
  - n_i: total number of instructions
  - CPI = n_c/n_i: average cycles per instruction
  - t_c: cycle time; at 1 GHz, t_c = 1/(10^9 Hz) = 10^-9 s = 1 ns

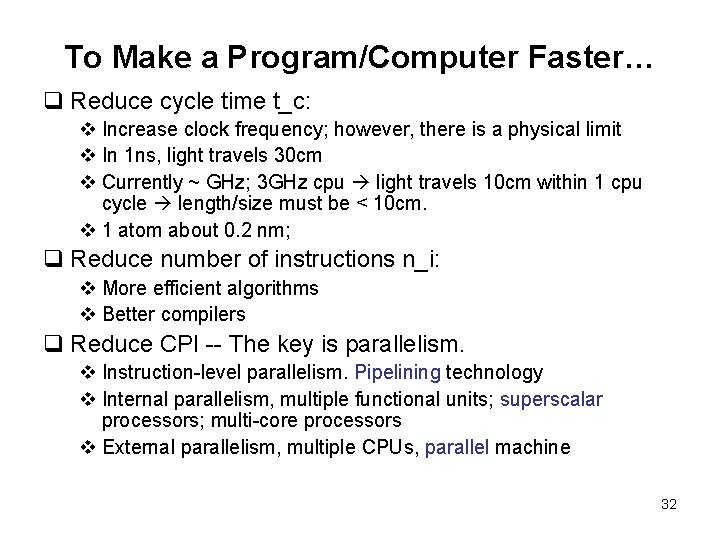

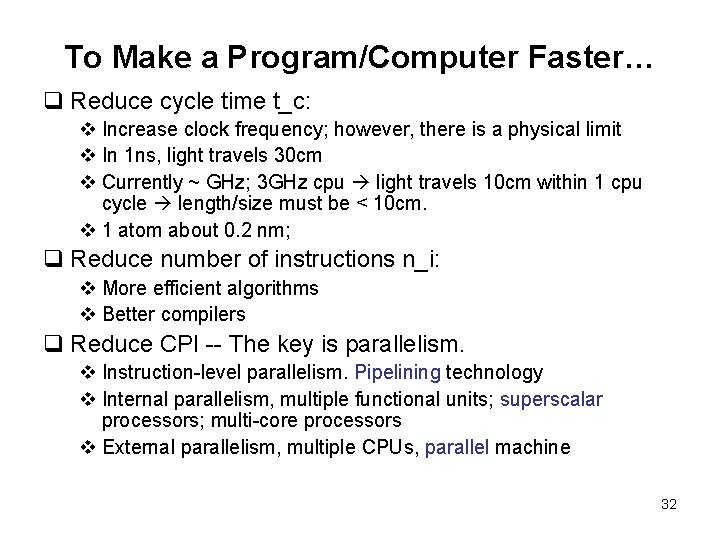

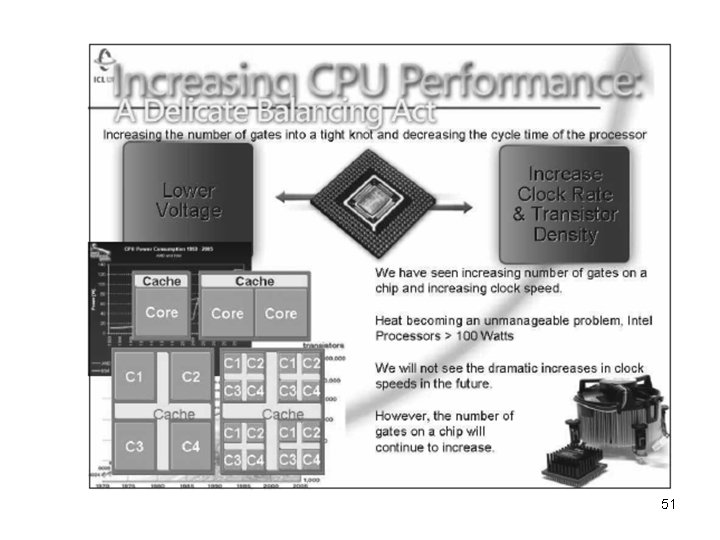

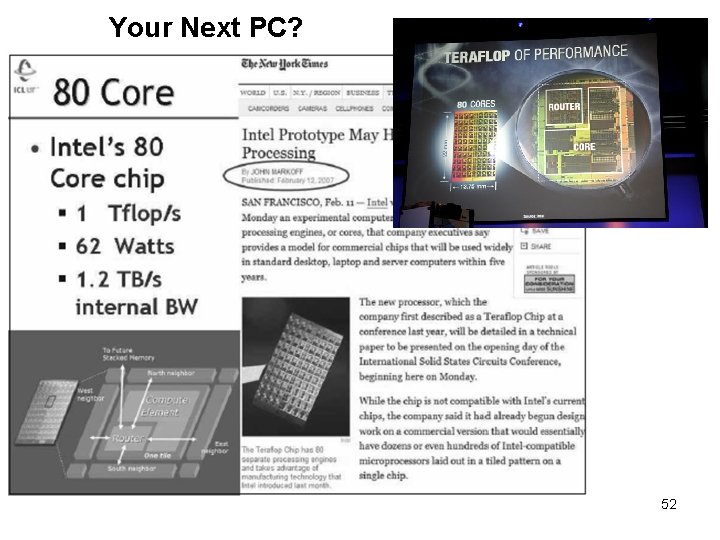

To Make a Program/Computer Faster…
- Reduce the cycle time t_c:
  - Increase the clock frequency; however, there is a physical limit.
  - In 1 ns, light travels 30 cm.
  - Current clocks are ~GHz: at 3 GHz, light travels 10 cm within one CPU cycle, so the length/size must be < 10 cm.
  - One atom is about 0.2 nm across.
- Reduce the number of instructions n_i:
  - More efficient algorithms
  - Better compilers
- Reduce CPI; the key is parallelism:
  - Instruction-level parallelism: pipelining technology
  - Internal parallelism: multiple functional units; superscalar processors; multi-core processors
  - External parallelism: multiple CPUs; parallel machines

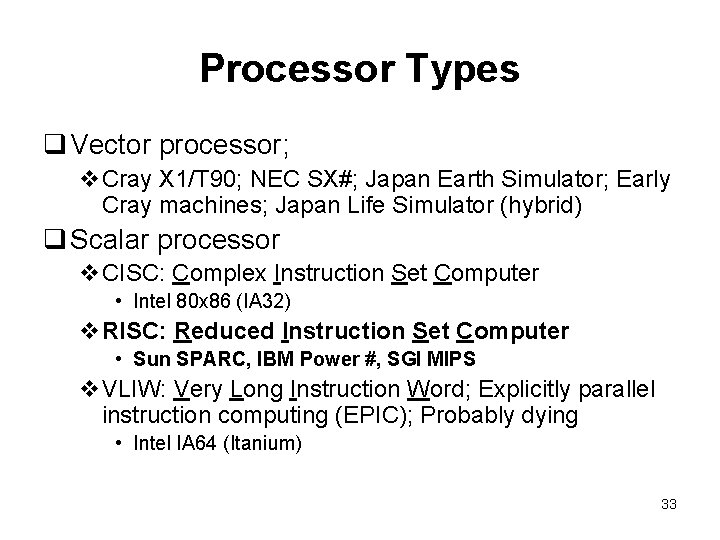

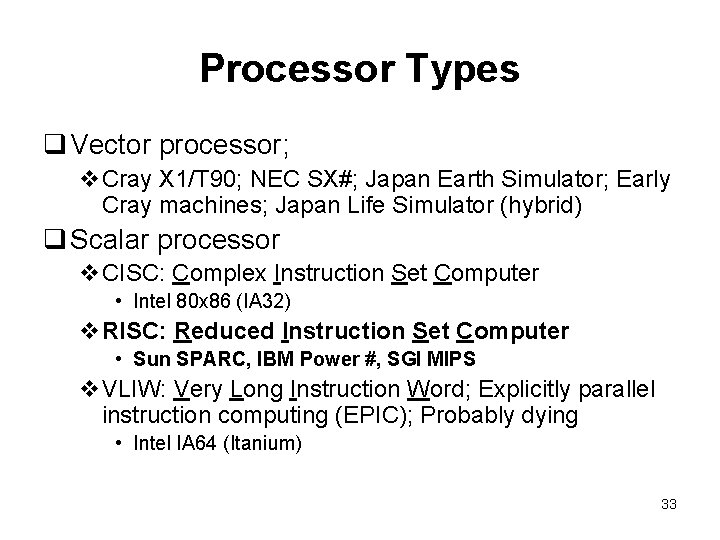

Processor Types
- Vector processors:
  - Cray X1/T90; NEC SX series; Japan Earth Simulator; early Cray machines; Japan Life Simulator (hybrid)
- Scalar processors:
  - CISC: Complex Instruction Set Computer, e.g., Intel 80x86 (IA-32)
  - RISC: Reduced Instruction Set Computer, e.g., Sun SPARC, IBM Power series, SGI MIPS
  - VLIW: Very Long Instruction Word; Explicitly Parallel Instruction Computing (EPIC); probably dying, e.g., Intel IA-64 (Itanium)

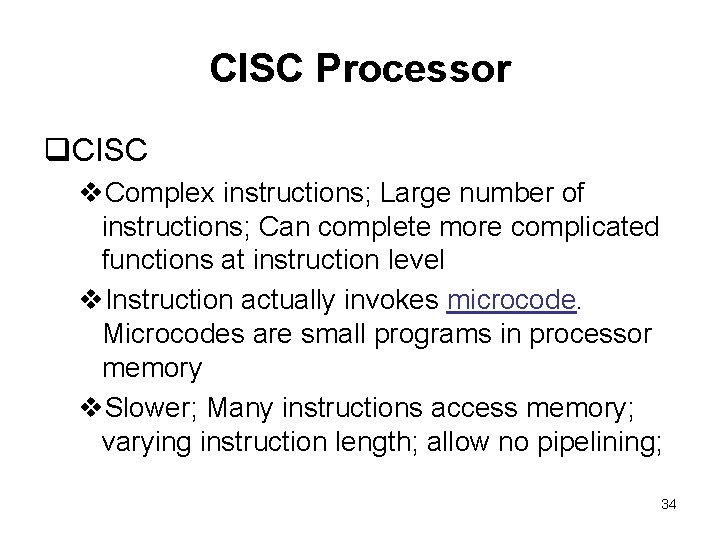

CISC Processor
- Complex instructions; a large number of instructions; can complete more complicated functions at the instruction level
- An instruction actually invokes microcode; microcodes are small programs stored in processor memory
- Slower: many instructions access memory and instruction length varies, which makes pipelining difficult

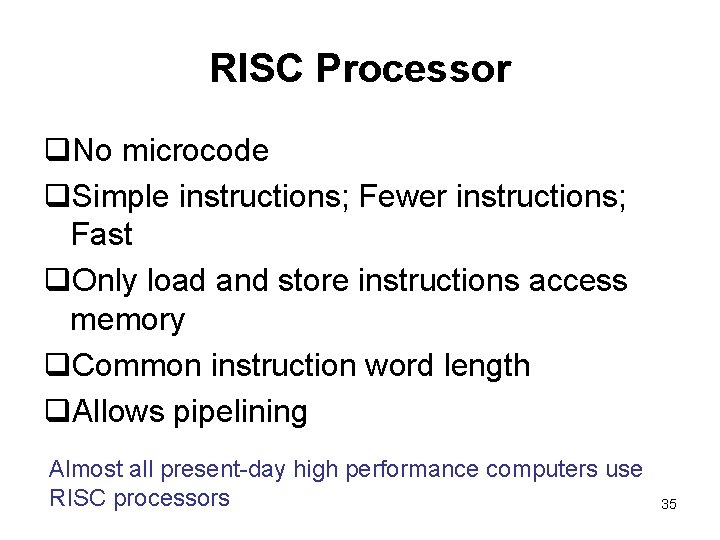

RISC Processor
- No microcode
- Simple instructions; fewer instructions; fast
- Only load and store instructions access memory
- Common (uniform) instruction word length
- Allows pipelining

Almost all present-day high-performance computers use RISC processors.

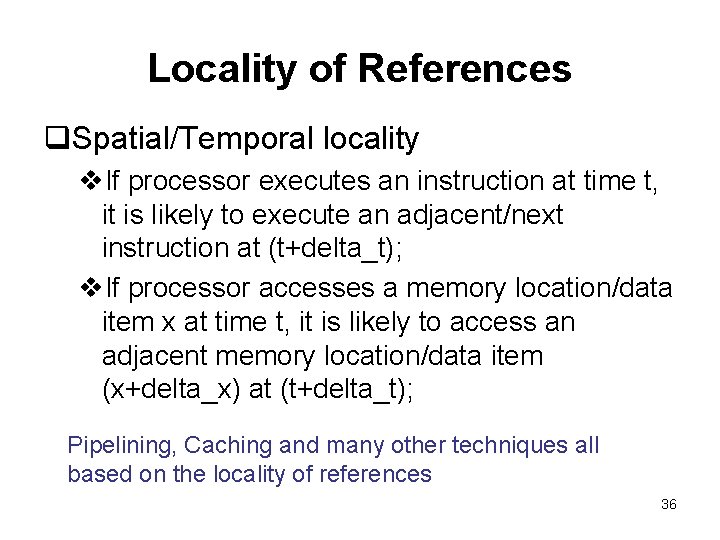

Locality of References
- Spatial/temporal locality:
  - If the processor executes an instruction at time t, it is likely to execute an adjacent/next instruction at (t+delta_t).
  - If the processor accesses a memory location/data item x at time t, it is likely to access an adjacent memory location/data item (x+delta_x) at (t+delta_t).

Pipelining, caching, and many other techniques are all based on the locality of references.

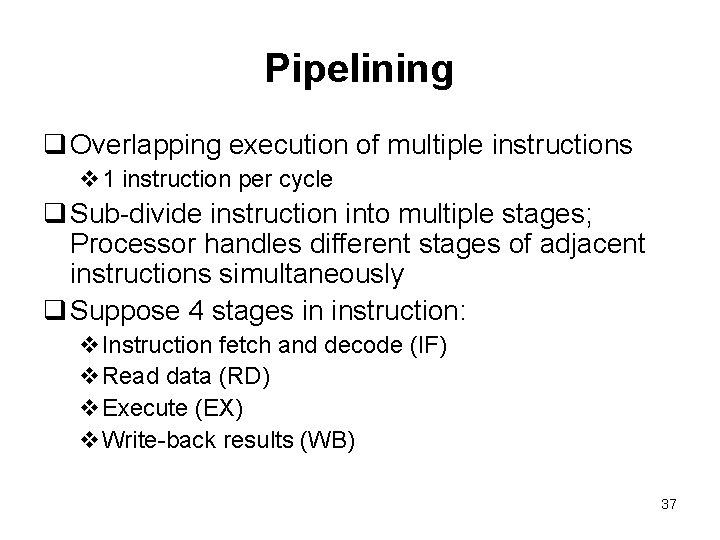

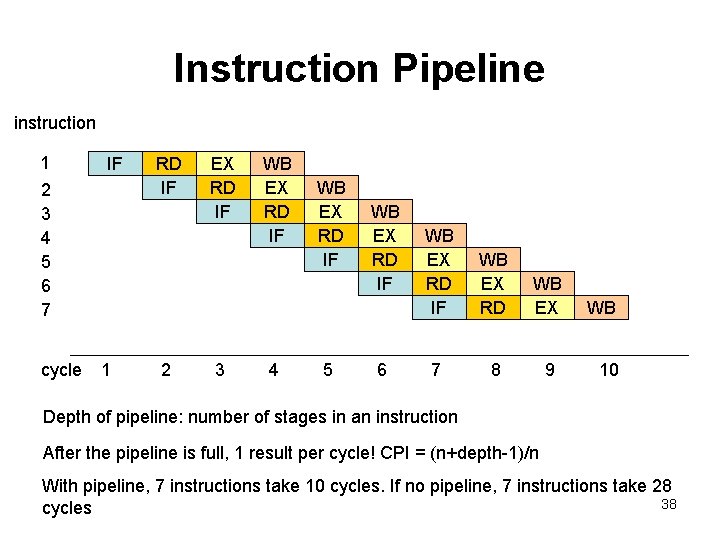

Pipelining
- Overlapping execution of multiple instructions:
  - 1 instruction per cycle
- Sub-divide each instruction into multiple stages; the processor handles different stages of adjacent instructions simultaneously.
- Suppose an instruction has 4 stages:
  - Instruction fetch and decode (IF)
  - Read data (RD)
  - Execute (EX)
  - Write-back results (WB)

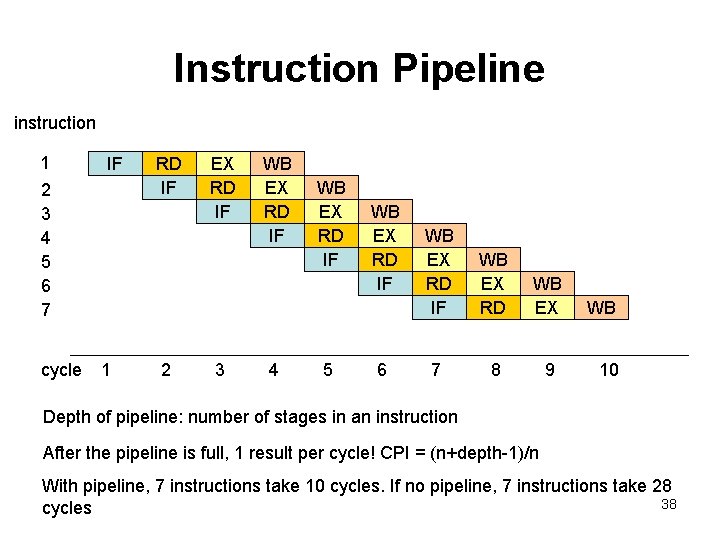

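Instruction Pipeline

Rows are instructions; columns are clock cycles.

| Instr. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | IF | RD | EX | WB | | | | | | |
| 2 | | IF | RD | EX | WB | | | | | |
| 3 | | | IF | RD | EX | WB | | | | |
| 4 | | | | IF | RD | EX | WB | | | |
| 5 | | | | | IF | RD | EX | WB | | |
| 6 | | | | | | IF | RD | EX | WB | |
| 7 | | | | | | | IF | RD | EX | WB |

- Depth of pipeline: the number of stages in an instruction.
- After the pipeline is full, 1 result per cycle!
- CPI = (n + depth - 1)/n
- With the pipeline, 7 instructions take 10 cycles; without it, 7 instructions take 28 cycles.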

Inhibitors of Pipelining
- Dependencies between instructions interrupt pipelining, degrading performance:
  - Control dependence
  - Data dependence

Control Dependence
- Branching: when an instruction occurs after a conditional branch, it is not known beforehand whether that instruction will be executed.
  - Loop: `for (i=0; i<n; i++) …`; `do … enddo`
  - Jump: `goto …`
  - Condition: `if … else …`, e.g., `if (x>y) n=5;`
- Branching in programs interrupts the pipeline and degrades performance. Avoid excessive branching!

Data Dependence
- An instruction depends on data produced by a previous instruction, e.g., `x = 3*j; y = x + 5.0; // y depends on the previous instruction`

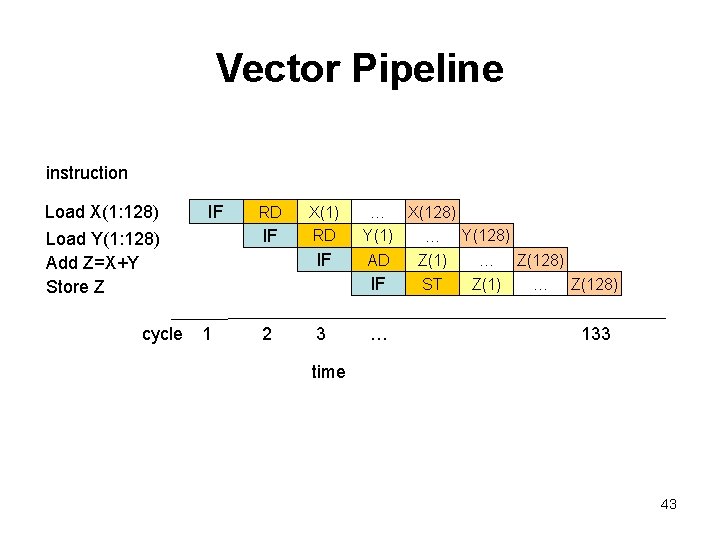

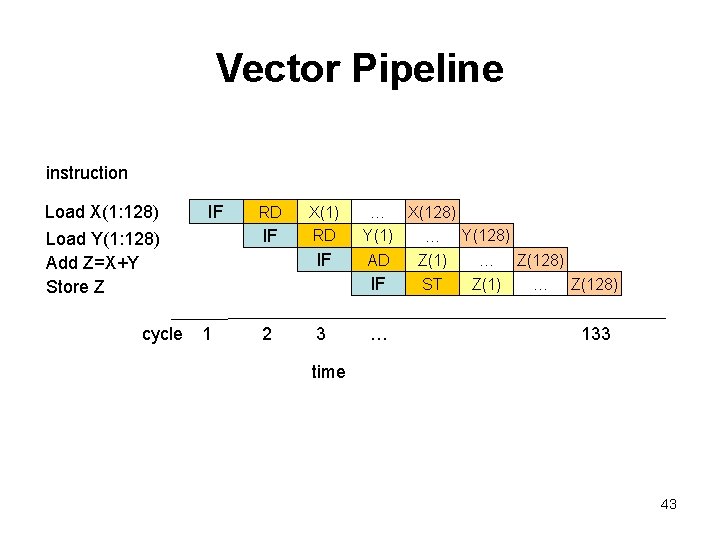

Vector Pipeline
- Vector processors have vector registers that can hold a whole vector, e.g., of 128 elements.
  - Commonly encountered processors are scalar processors, e.g., a home PC.
- Vector processors are efficient for loops involving vectors. The loop `for (i=0; i<128; i++) z[i] = x[i] + y[i];` becomes four vector instructions:
  - Vector Load X(1:128)
  - Vector Load Y(1:128)
  - Vector Add Z = X + Y
  - Vector Store Z

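Vector Pipeline
- Timing of the four vector instructions (Load X(1:128), Load Y(1:128), Add Z = X + Y, Store Z): each instruction is fetched and decoded (IF) once. Its 128 element operations (RD X(1) … X(128), RD Y(1) … Y(128), AD Z(1) … Z(128), ST Z(1) … Z(128)) then stream through the pipeline one per cycle, overlapping with the neighboring instructions.
- The whole loop completes in 133 cycles.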

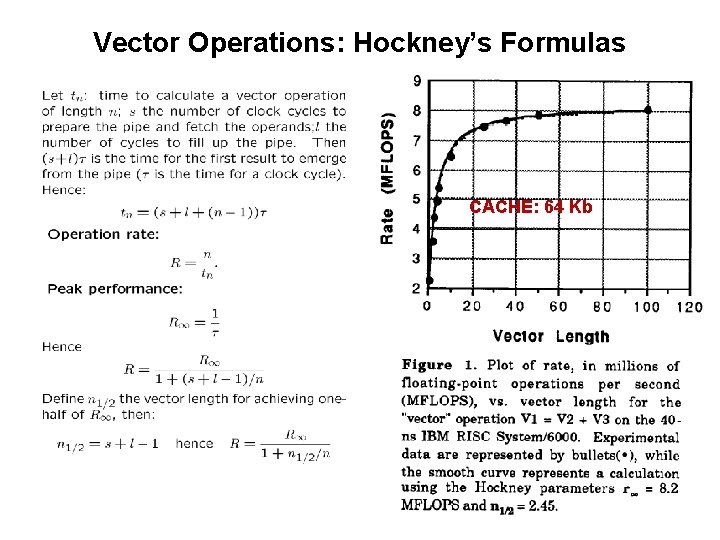

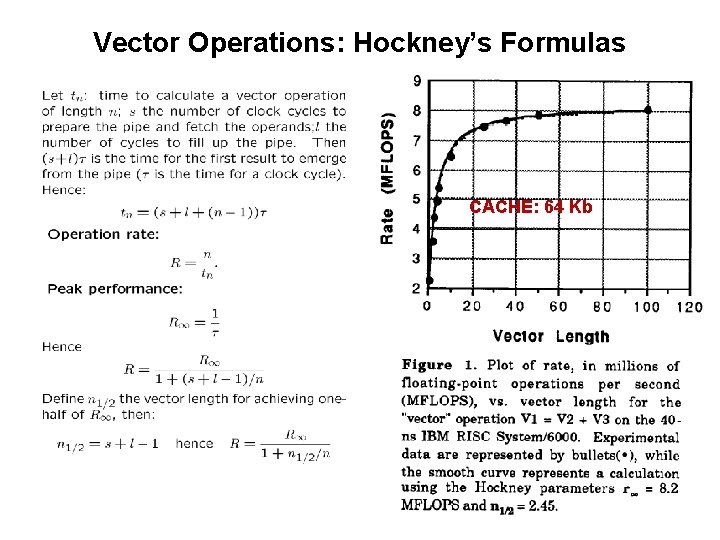

Vector Operations: Hockney's Formulas (cache: 64 KB)

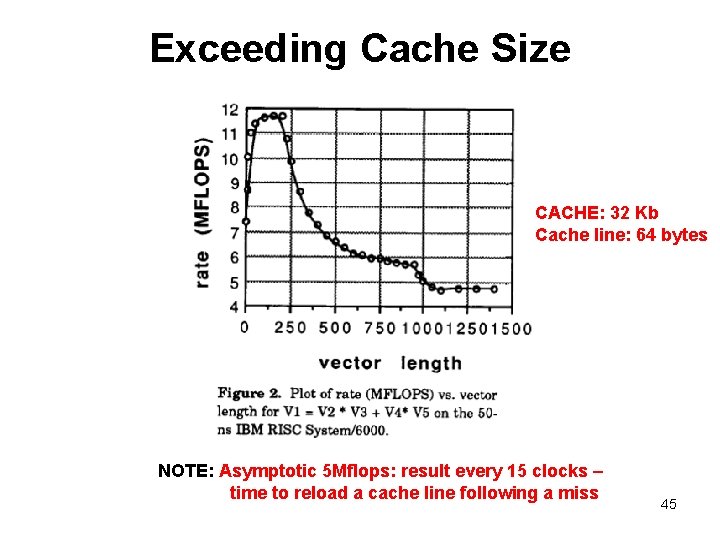

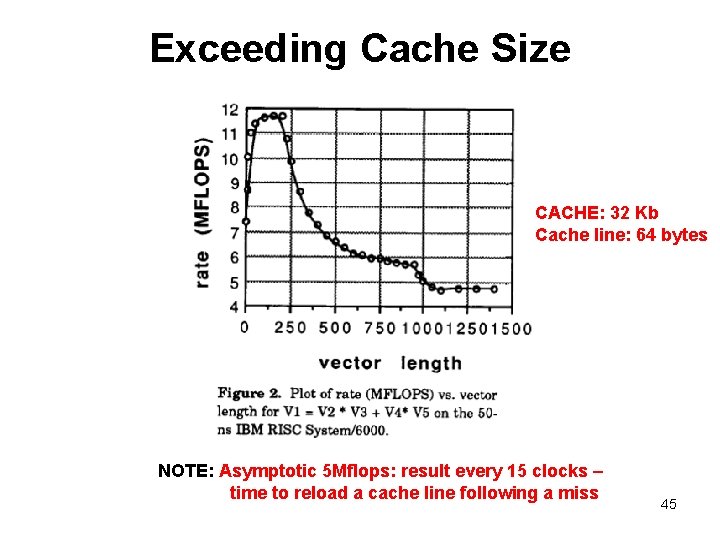

Exceeding Cache Size (cache: 32 KB; cache line: 64 bytes)
Note: the asymptotic rate of 5 Mflops corresponds to one result every 15 clocks, the time to reload a cache line following a miss.

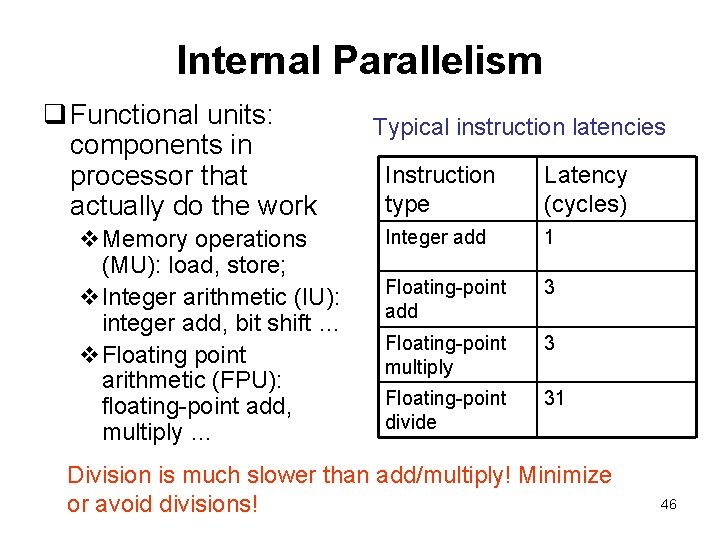

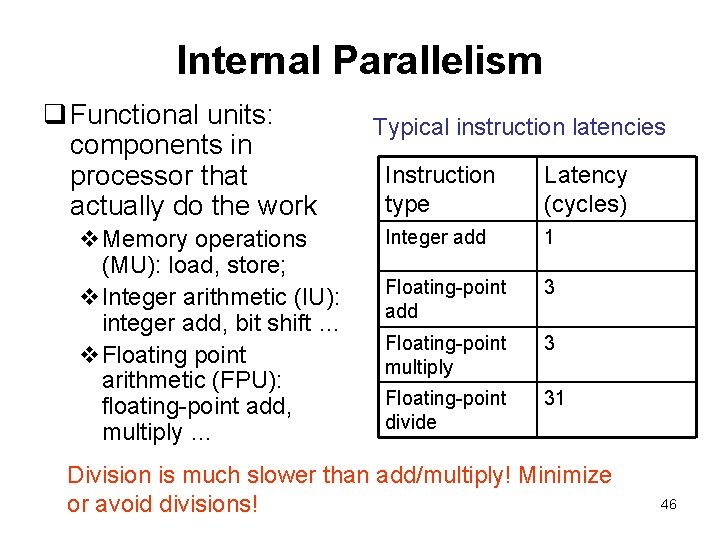

Internal Parallelism
- Functional units: the components in the processor that actually do the work
  - Memory operations (MU): load, store
  - Integer arithmetic (IU): integer add, bit shift, …
  - Floating-point arithmetic (FPU): floating-point add, multiply, …

Typical instruction latencies:

| Instruction type | Latency (cycles) |
|---|---|
| Integer add | 1 |
| Floating-point add | 3 |
| Floating-point multiply | 3 |
| Floating-point divide | 31 |

Division is much slower than addition or multiplication. Minimize or avoid divisions!

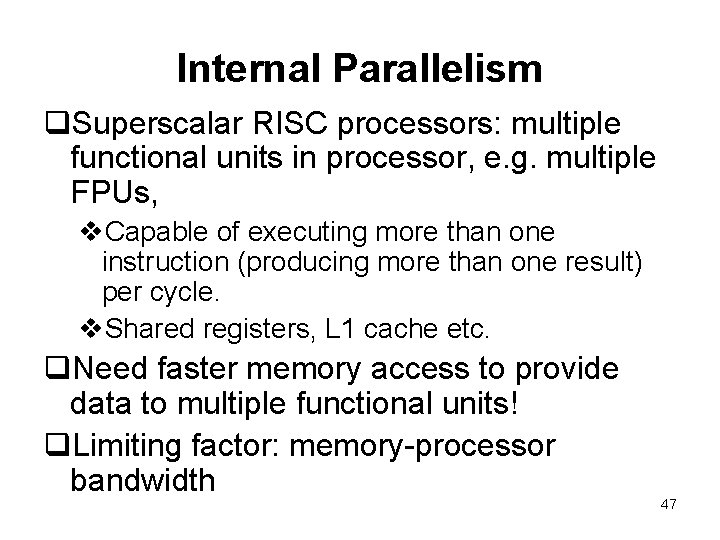

Internal Parallelism
- Superscalar RISC processors: multiple functional units in the processor, e.g., multiple FPUs
  - Capable of executing more than one instruction (producing more than one result) per cycle
  - Shared registers, L1 cache, etc.
- Need faster memory access to provide data to multiple functional units!
- Limiting factor: memory-processor bandwidth

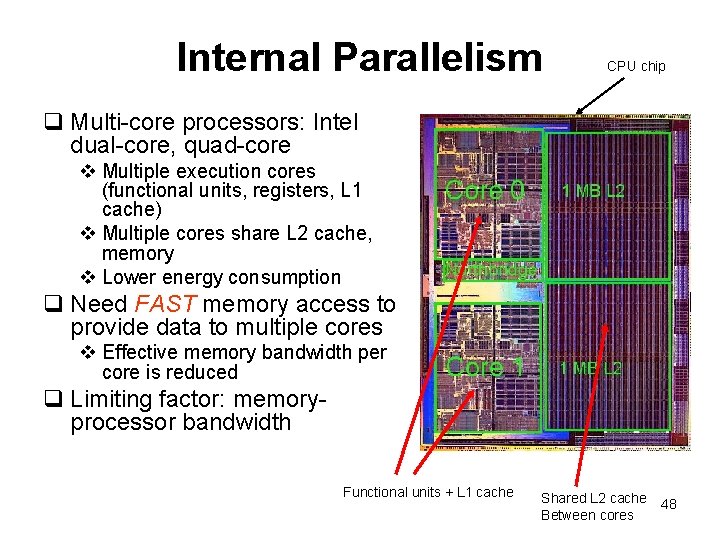

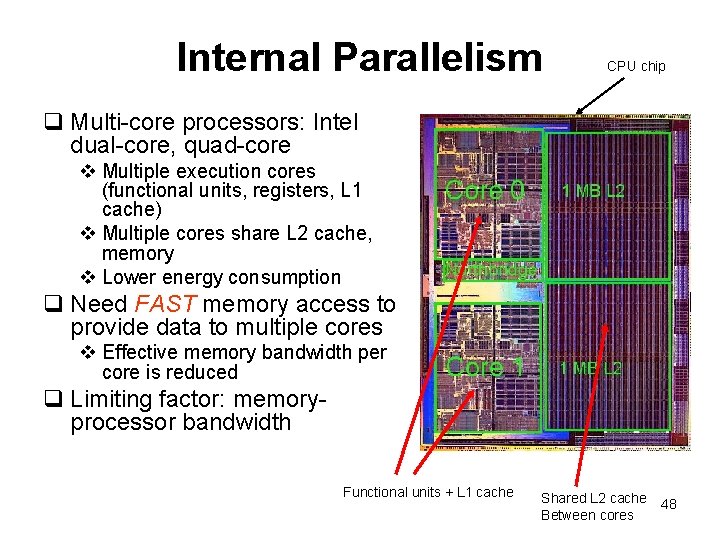

Internal Parallelism
- Multi-core processors: Intel dual-core, quad-core
  - Multiple execution cores (functional units, registers, L1 cache)
  - Multiple cores share the L2 cache and memory
  - Lower energy consumption
- Need FAST memory access to provide data to multiple cores
  - Effective memory bandwidth per core is reduced
- Limiting factor: memory-processor bandwidth

Figure (CPU chip): each core has its own functional units + L1 cache; the L2 cache is shared between cores.

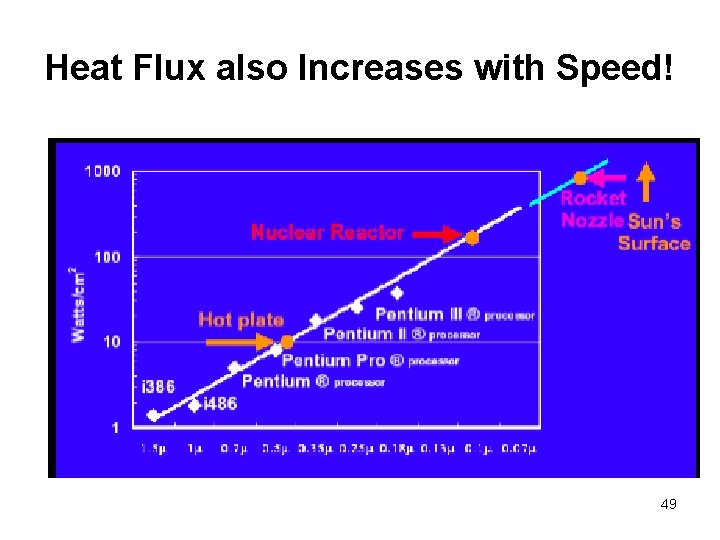

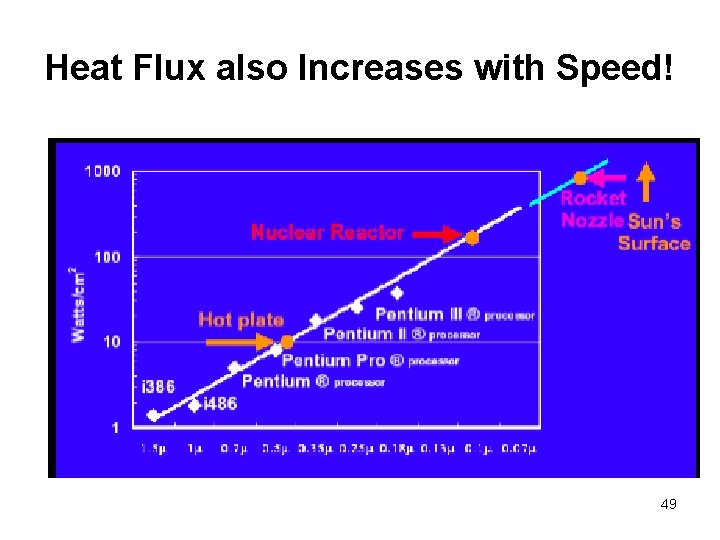

Heat Flux also Increases with Speed!

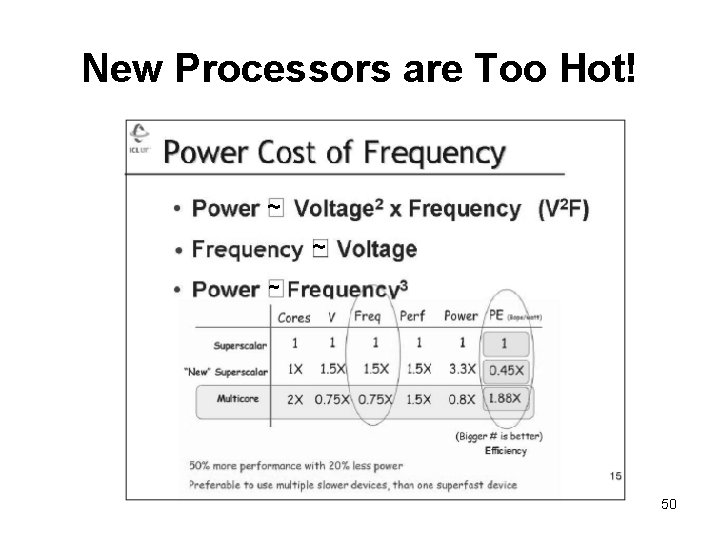

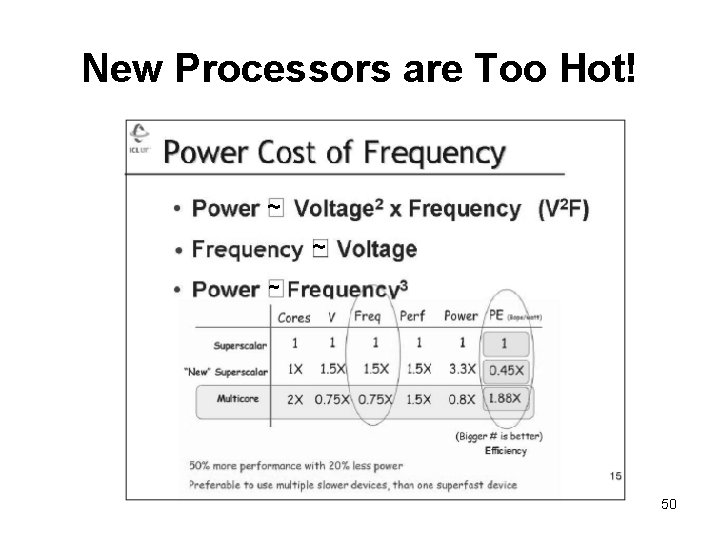

New Processors are Too Hot!

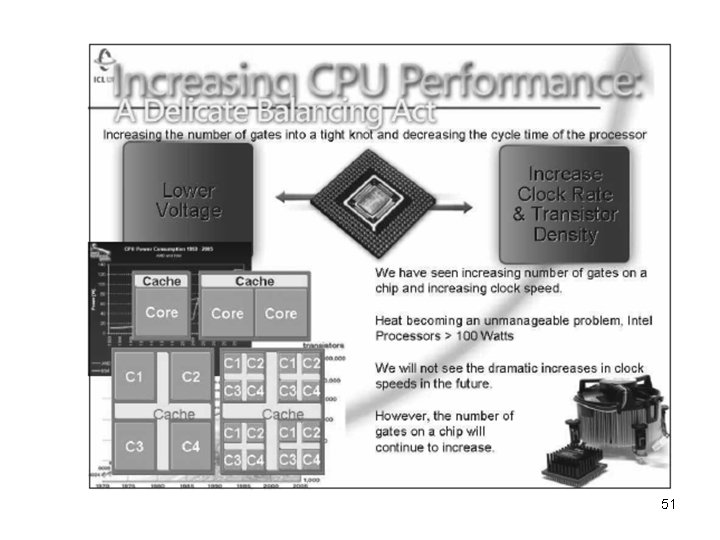


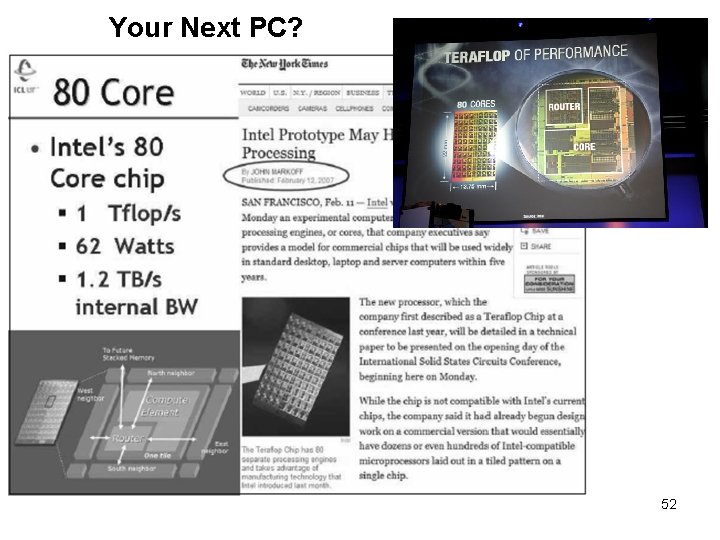

Your Next PC?

External Parallelism
- Parallel machines: will be discussed later

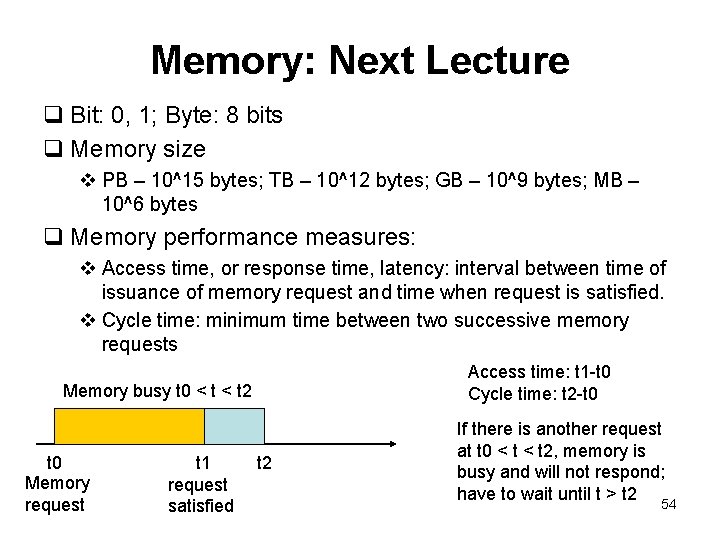

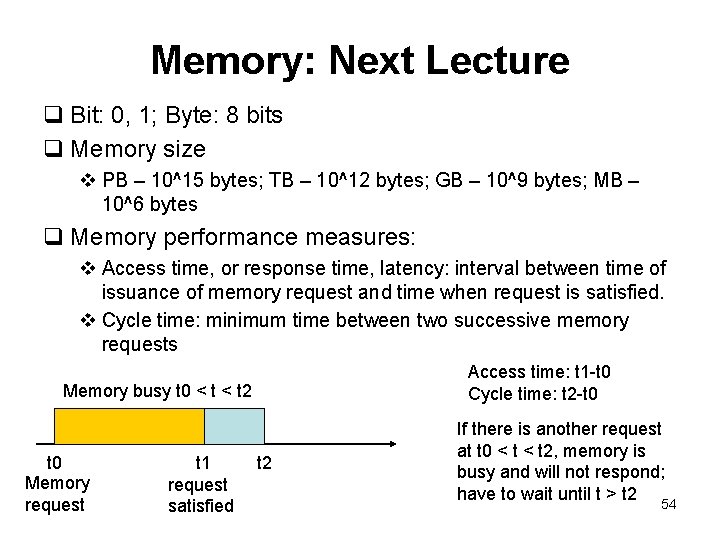

Memory: Next Lecture
- Bit: 0, 1; Byte: 8 bits
- Memory size:
  - PB: 10^15 bytes; TB: 10^12 bytes; GB: 10^9 bytes; MB: 10^6 bytes
- Memory performance measures:
  - Access time (also called response time or latency): the interval between the time a memory request is issued and the time the request is satisfied.
  - Cycle time: the minimum time between two successive memory requests.
- Timeline: a memory request is issued at t0 and satisfied at t1, and memory stays busy until t2. Access time = t1 - t0; cycle time = t2 - t0. If another request arrives at a time t with t0 < t < t2, memory is busy and will not respond; the request has to wait until t2.