
Parallel Programming with PThreads

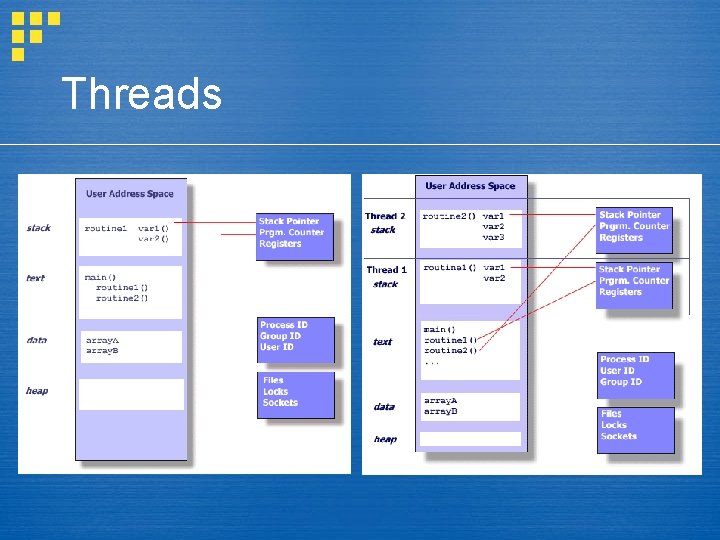

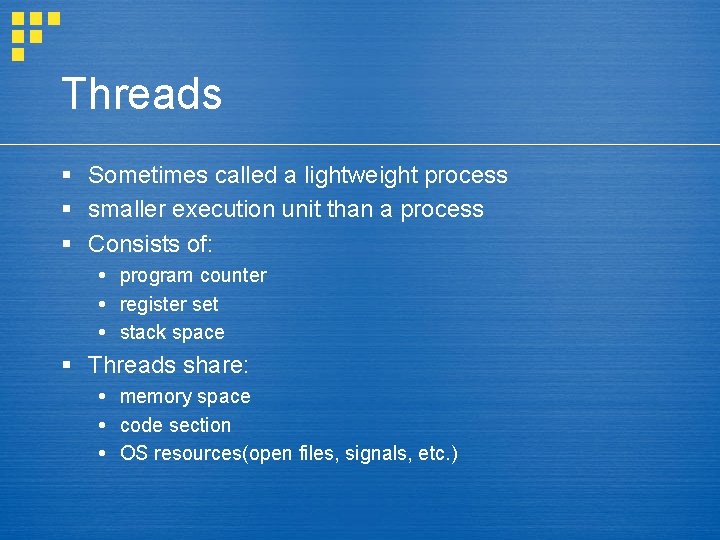

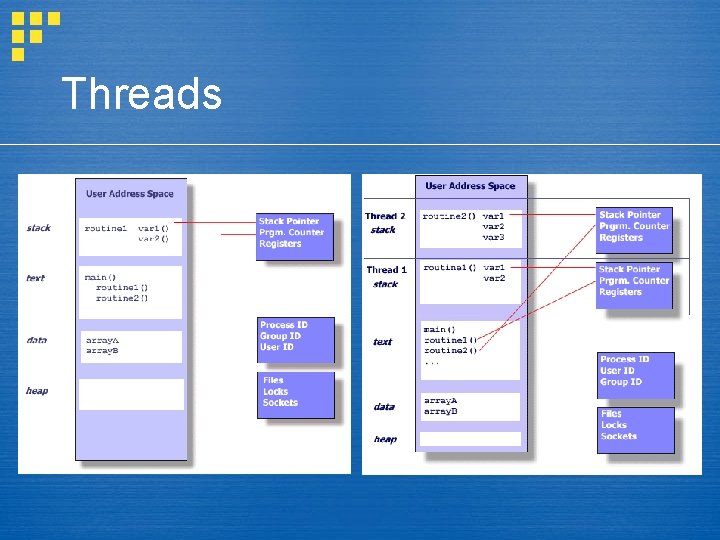

Threads

- Sometimes called a lightweight process: a smaller execution unit than a process.
- Each thread consists of:
  - a program counter
  - a register set
  - stack space
- Threads of the same process share:
  - the memory space
  - the code section
  - OS resources (open files, signals, etc.)

Threads

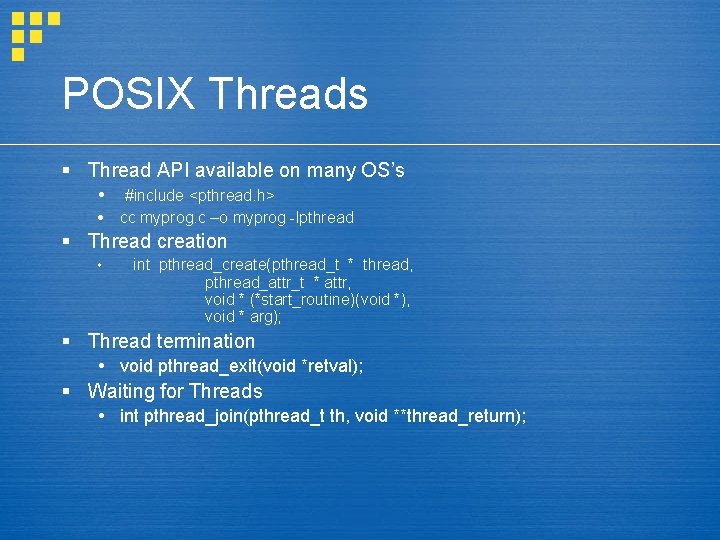

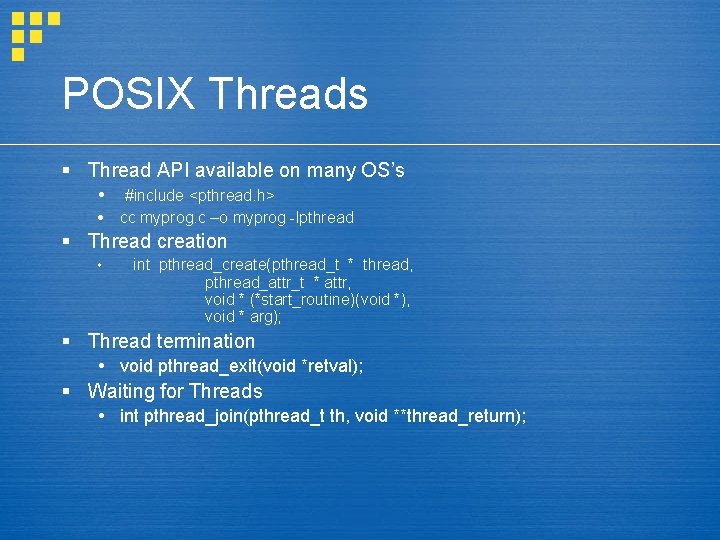

POSIX Threads

The thread API is available on many operating systems. Include the header in the source file:

    #include <pthread.h>

and link with the thread library:

    cc myprog.c -o myprog -lpthread

Thread creation:

    int pthread_create(pthread_t *thread, const pthread_attr_t *attr,
                       void *(*start_routine)(void *), void *arg);

Thread termination:

    void pthread_exit(void *retval);

Waiting for threads:

    int pthread_join(pthread_t th, void **thread_return);
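A minimal sketch of the three calls working together. The names `square_id`, `sum_of_squares`, `struct slot`, and the thread count are made up for illustration; each thread writes only its own slot, so no locking is needed here.

```c
#include <assert.h>
#include <pthread.h>

#define NTHREADS 4   /* arbitrary thread count for this sketch */

/* One slot per thread: its input id and the result it computes. */
struct slot { int id; int square; };

/* Thread start routine: receives a pointer to its own slot. */
static void *square_id(void *arg)
{
    struct slot *s = arg;
    s->square = s->id * s->id;
    pthread_exit(NULL);      /* terminate this thread explicitly */
    return NULL;             /* not reached */
}

/* Creates NTHREADS threads, waits for all of them, and sums the results. */
static int sum_of_squares(void)
{
    pthread_t tid[NTHREADS];
    struct slot slots[NTHREADS];
    int sum = 0;

    for (int i = 0; i < NTHREADS; i++) {
        slots[i].id = i;     /* fill in the data before creating the thread */
        int rc = pthread_create(&tid[i], NULL, square_id, &slots[i]);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < NTHREADS; i++) {
        pthread_join(tid[i], NULL);   /* slots[] stays alive until every join */
        sum += slots[i].square;
    }
    return sum;
}
```

Calling `sum_of_squares()` from `main` adds up the squares of the ids 0 through 3. The slots live on the caller's stack, which is safe only because the caller joins every thread before returning.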

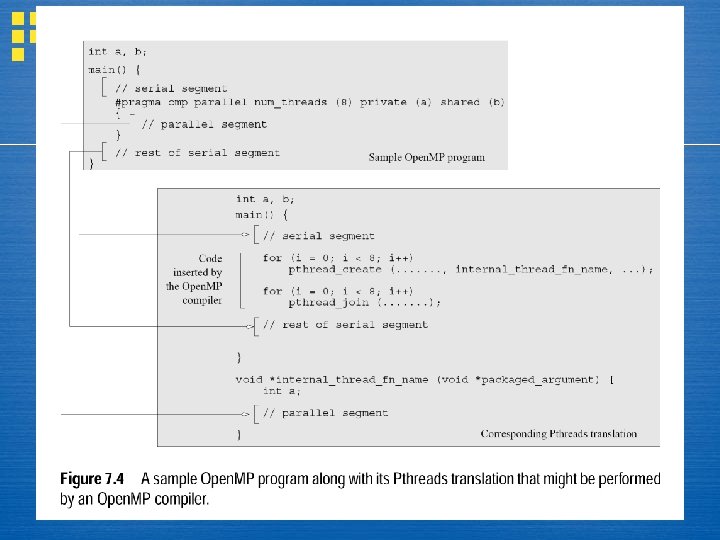

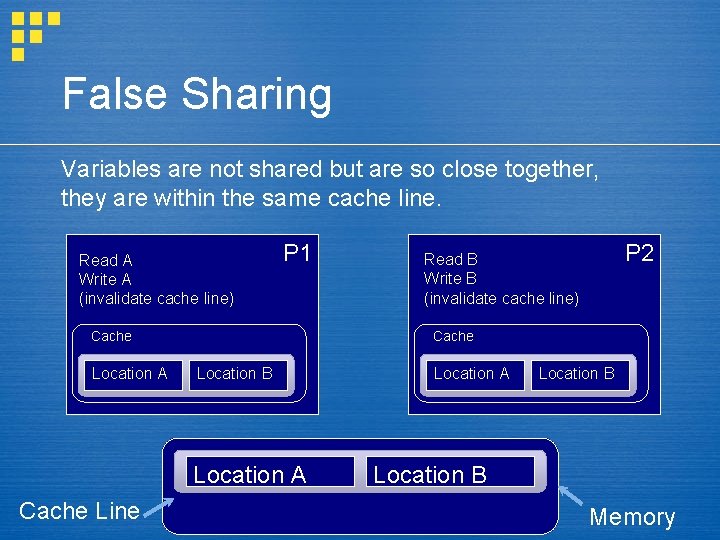

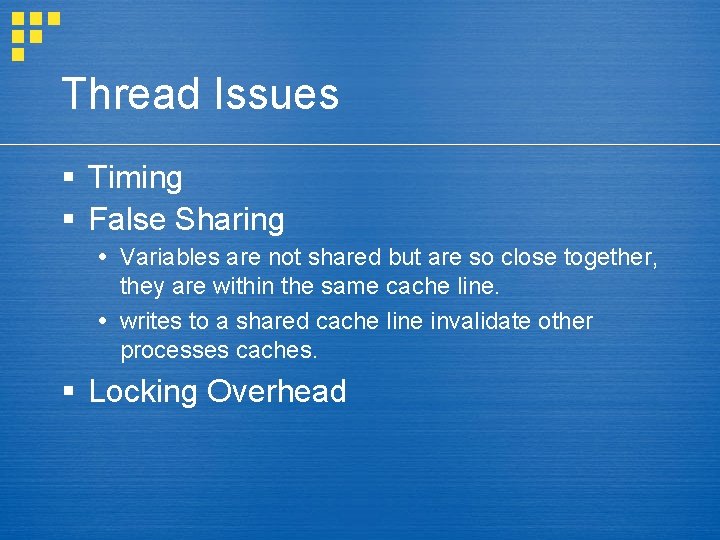

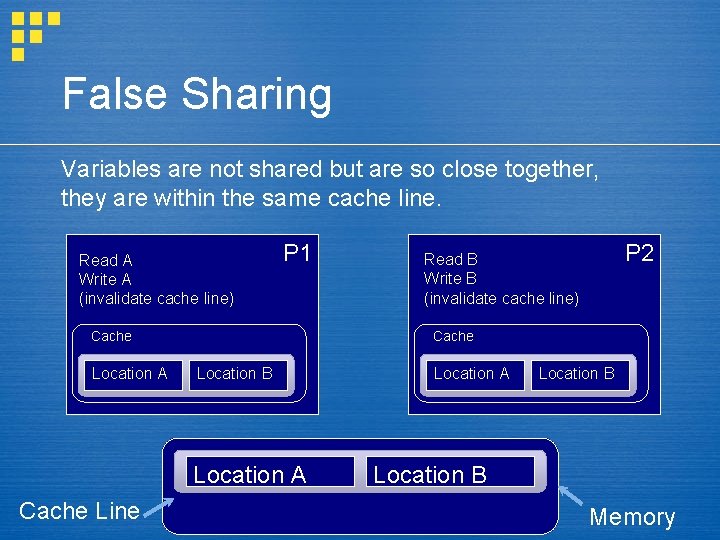

Thread Issues

- Timing
- False sharing: the variables are not shared, but they sit so close together that they fall within the same cache line. A write to that cache line invalidates the copies held in other processors' caches.
- Locking overhead

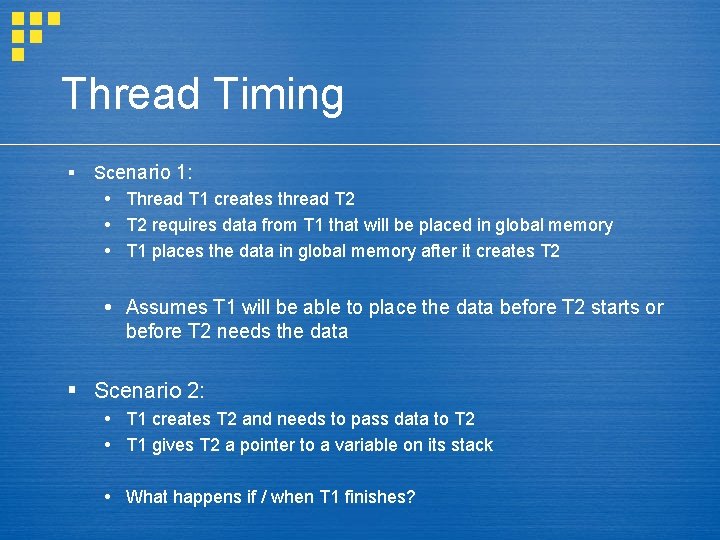

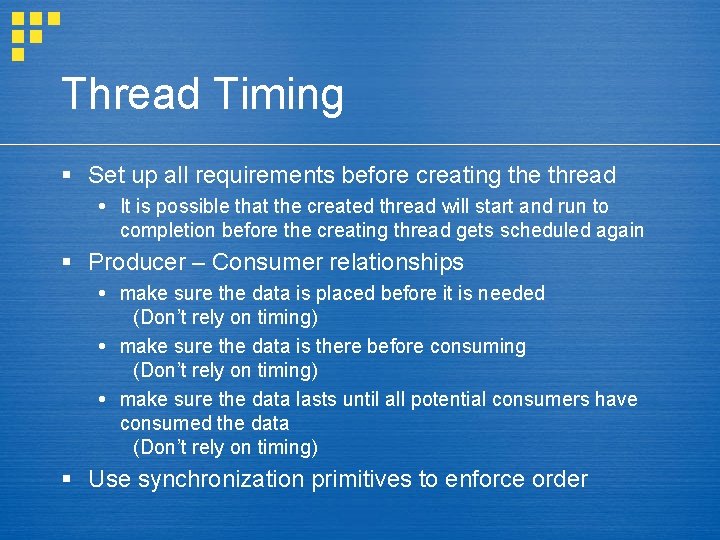

Thread Timing

- Scenario 1:
  - Thread T1 creates thread T2.
  - T2 requires data from T1 that will be placed in global memory.
  - T1 places the data in global memory after it creates T2.
  - This assumes T1 will be able to place the data before T2 starts, or before T2 needs the data.
- Scenario 2:
  - T1 creates T2 and needs to pass data to T2.
  - T1 gives T2 a pointer to a variable on its stack.
  - What happens if or when T1 finishes?

Thread Timing

- Set up all requirements before creating the thread. The created thread may start and run to completion before the creating thread gets scheduled again.
- In producer-consumer relationships, don't rely on timing:
  - make sure the data is placed before it is needed
  - make sure the data is there before consuming it
  - make sure the data lasts until all potential consumers have consumed it
- Use synchronization primitives to enforce order.
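One way to follow these rules, sketched under assumptions: the creator fills in a heap-allocated argument before calling pthread_create, so the data exists before the new thread runs and does not vanish when the creator's stack frame does. `struct job`, `worker`, `start_worker`, and `run_job` are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

/* Hypothetical payload handed from the creator (T1) to the worker (T2). */
struct job { int input; };

/* Worker: takes ownership of the job and returns a heap-allocated result. */
static void *worker(void *arg)
{
    struct job *j = arg;
    int *result = malloc(sizeof *result);
    if (result != NULL)
        *result = j->input * 2;
    free(j);                 /* the worker owns the job once it has started */
    return result;           /* collected by pthread_join */
}

/* Creator: the data is complete BEFORE the thread exists, and it lives
   on the heap, so it outlives this function's stack frame. */
static int start_worker(pthread_t *tid, int input)
{
    struct job *j = malloc(sizeof *j);
    if (j == NULL)
        return -1;
    j->input = input;
    int rc = pthread_create(tid, NULL, worker, j);
    if (rc != 0)
        free(j);             /* no thread was created, so we still own it */
    return rc;
}

/* Starts one worker, waits for it, and returns its result (or -1). */
static int run_job(int input)
{
    pthread_t tid;
    void *ret = NULL;

    if (start_worker(&tid, input) != 0)
        return -1;
    if (pthread_join(tid, &ret) != 0 || ret == NULL)
        return -1;
    int value = *(int *)ret;
    free(ret);
    return value;
}
```

Here `start_worker` can return long before the worker touches its argument, which is exactly Scenario 2; the heap allocation is what makes that safe.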

False Sharing

Variables are not shared, but they are so close together that they fall within the same cache line.

Figure: processor P1 reads and writes location A while processor P2 reads and writes location B. A and B lie in the same cache line in memory, so each write invalidates that line in the other processor's cache.
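A common remedy is to pad or align per-thread data so that each item gets its own cache line. The sketch below assumes a 64-byte line (an assumption; the real size depends on the CPU), and the struct names are illustrative only.

```c
#include <assert.h>
#include <stdalign.h>
#include <stddef.h>

#define CACHE_LINE 64   /* ASSUMED cache line size; check the target CPU */

/* Packed layout: a and b are adjacent, so they very likely share a line. */
struct packed_counters {
    long a;   /* updated by thread 1 */
    long b;   /* updated by thread 2 */
};

/* Padded layout: each counter starts on its own cache-line boundary. */
struct padded_counters {
    alignas(CACHE_LINE) long a;
    alignas(CACHE_LINE) long b;
};

/* Distance in bytes between the two counters in each layout. */
static size_t gap_packed(void)
{
    return offsetof(struct packed_counters, b) - offsetof(struct packed_counters, a);
}

static size_t gap_padded(void)
{
    return offsetof(struct padded_counters, b) - offsetof(struct padded_counters, a);
}
```

The padded layout trades memory for fewer invalidations: the two counters end up at least one full line apart, so a write to one no longer evicts the other from a neighbouring processor's cache.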

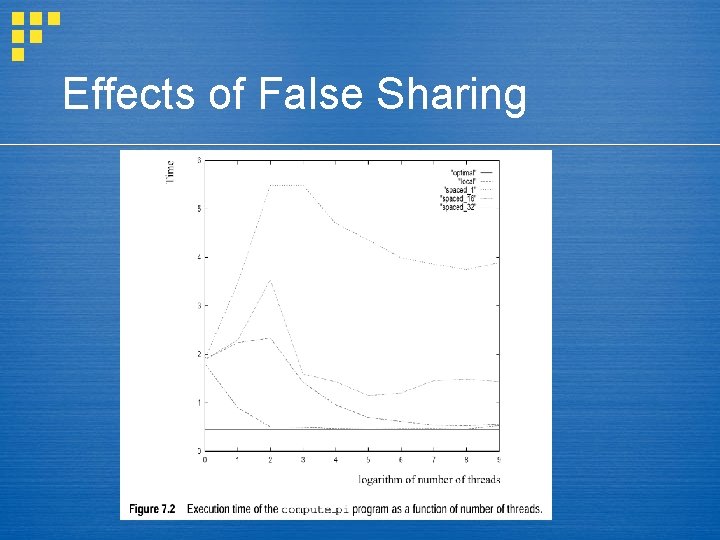

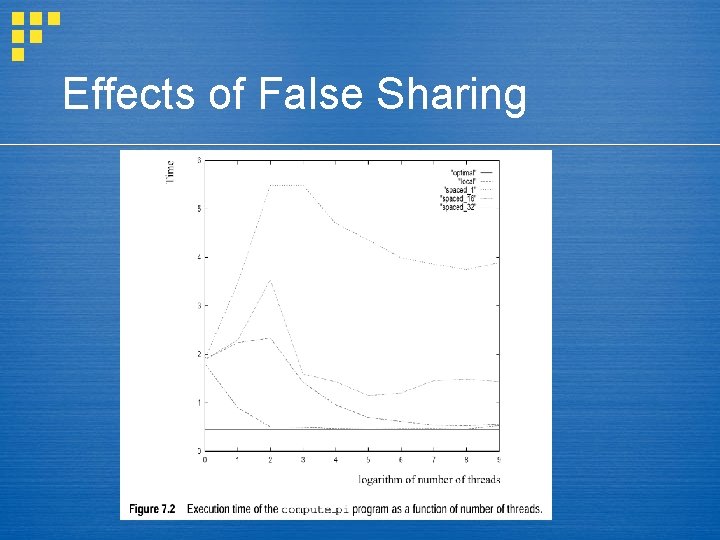

Effects of False Sharing

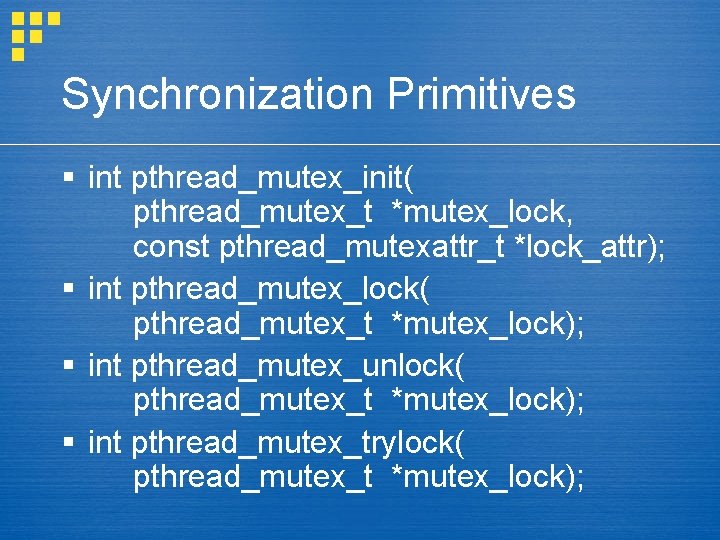

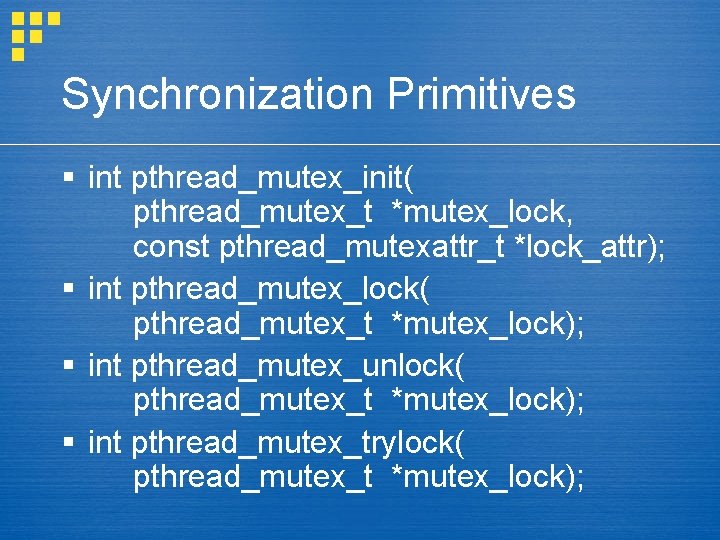

Synchronization Primitives

    int pthread_mutex_init(pthread_mutex_t *mutex_lock,
                           const pthread_mutexattr_t *lock_attr);
    int pthread_mutex_lock(pthread_mutex_t *mutex_lock);
    int pthread_mutex_unlock(pthread_mutex_t *mutex_lock);
    int pthread_mutex_trylock(pthread_mutex_t *mutex_lock);

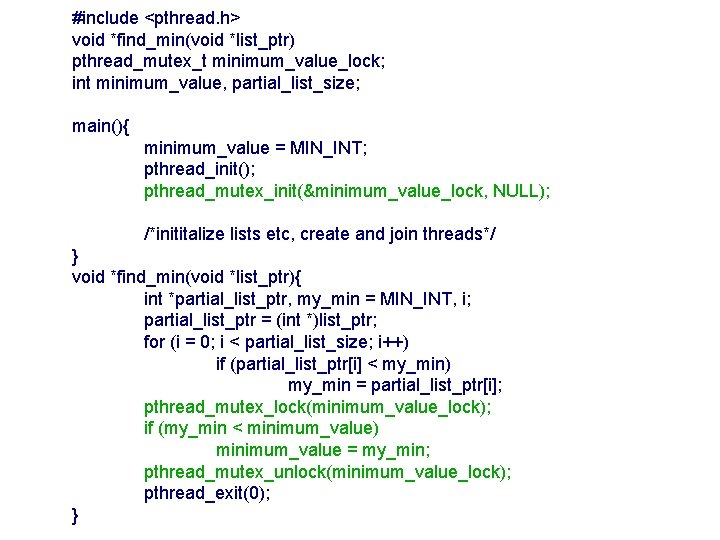

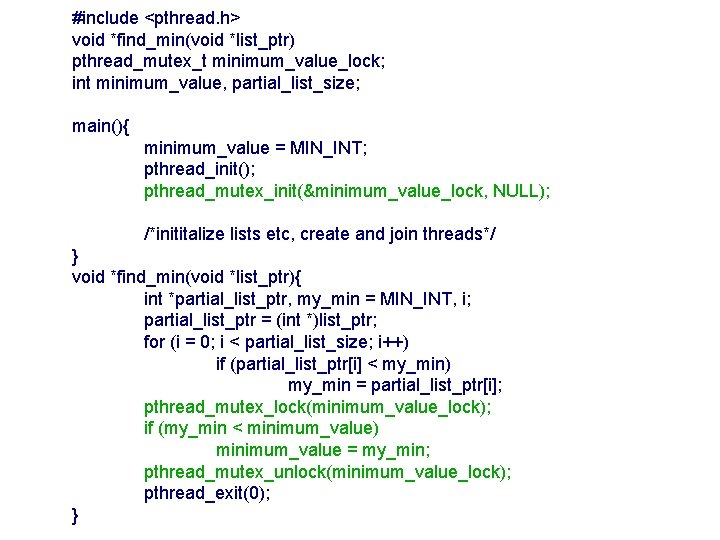

    #include <limits.h>
    #include <pthread.h>

    void *find_min(void *list_ptr);

    pthread_mutex_t minimum_value_lock;
    int minimum_value, partial_list_size;

    int main(void) {
        /* Start above every possible element so any value can replace it. */
        minimum_value = INT_MAX;
        pthread_mutex_init(&minimum_value_lock, NULL);
        /* initialize lists etc., create and join threads */
        return 0;
    }

    void *find_min(void *list_ptr) {
        int *partial_list_ptr = (int *)list_ptr;
        int my_min = INT_MAX, i;

        /* Scan the private sublist without holding the lock. */
        for (i = 0; i < partial_list_size; i++)
            if (partial_list_ptr[i] < my_min)
                my_min = partial_list_ptr[i];

        /* Only the update of the shared minimum is a critical section. */
        pthread_mutex_lock(&minimum_value_lock);
        if (my_min < minimum_value)
            minimum_value = my_min;
        pthread_mutex_unlock(&minimum_value_lock);
        pthread_exit(0);
    }

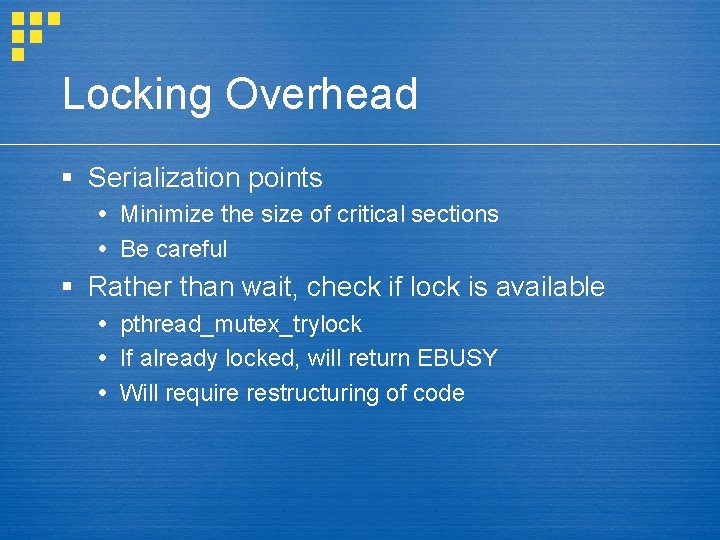

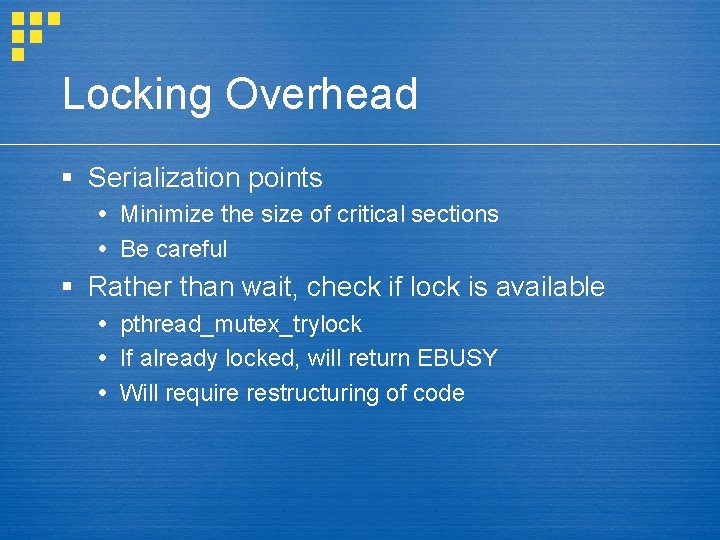

Locking Overhead

- Serialization points
  - Minimize the size of critical sections.
  - Be careful.
- Rather than wait, check whether the lock is available.
  - Use pthread_mutex_trylock.
  - If the mutex is already locked, it returns EBUSY.
  - This will require restructuring the code.
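A small sketch of the trylock pattern: when the lock is busy, the thread sets the work aside and moves on, then flushes the backlog the next time it gets the lock. The names `try_update`, `deferred`, and `done` are invented for this example.

```c
#include <assert.h>
#include <errno.h>
#include <pthread.h>

static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;
static int done = 0;                     /* shared total, protected by lock */
static _Thread_local int deferred = 0;   /* per-thread backlog, no lock needed */

/* Returns 1 if the update was applied now, 0 if it was deferred. */
static int try_update(void)
{
    int rc = pthread_mutex_trylock(&lock);
    if (rc == EBUSY) {
        deferred++;                      /* lock is held: remember the work */
        return 0;
    }
    assert(rc == 0);
    done += 1 + deferred;                /* apply this update plus the backlog */
    deferred = 0;
    pthread_mutex_unlock(&lock);
    return 1;
}

/* Single-threaded walk-through: the first attempt finds the lock held,
   the second succeeds and flushes the deferred update. */
static int demo_trylock(void)
{
    pthread_mutex_lock(&lock);
    int first = try_update();            /* EBUSY path */
    pthread_mutex_unlock(&lock);
    int second = try_update();           /* lock is free now */
    return first == 0 && second == 1 && done == 2;
}
```

The cost of this design is the restructuring the slide mentions: the caller must cope with an update that has not been applied yet.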

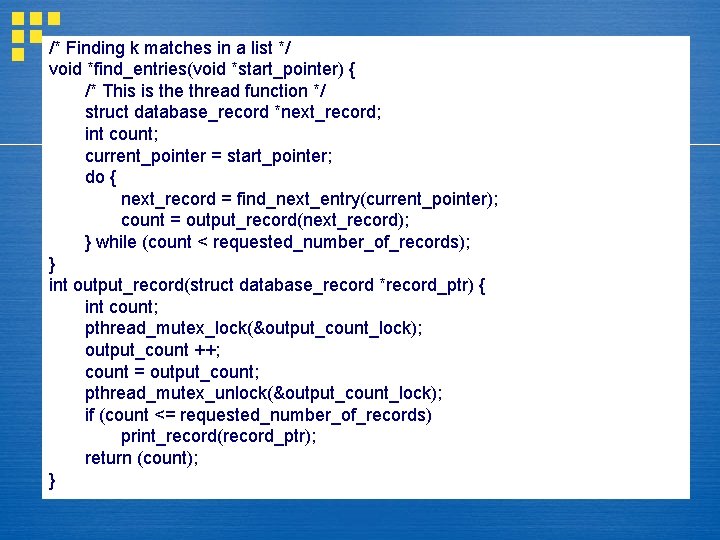

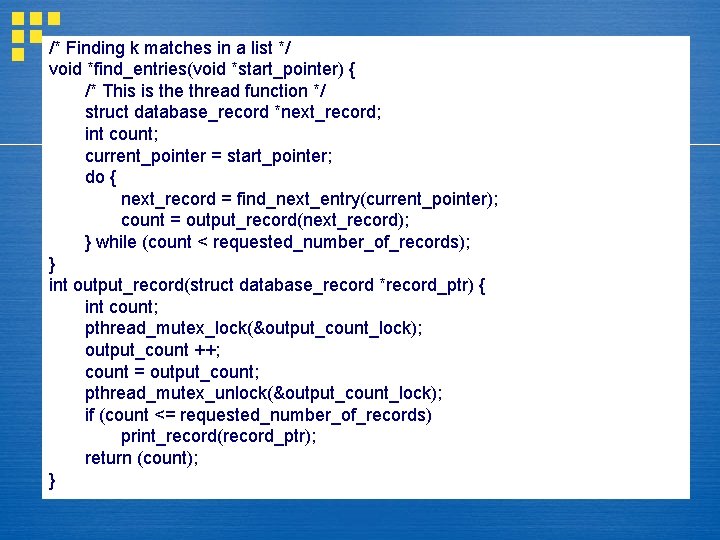

    /* Finding k matches in a list */
    void *find_entries(void *start_pointer) {
        /* This is the thread function */
        struct database_record *next_record;
        int count;

        /* current_pointer is assumed to be declared elsewhere */
        current_pointer = start_pointer;
        do {
            next_record = find_next_entry(current_pointer);
            count = output_record(next_record);
        } while (count < requested_number_of_records);
        return NULL;
    }

    int output_record(struct database_record *record_ptr) {
        int count;

        /* The lock covers only the shared counter, not the printing. */
        pthread_mutex_lock(&output_count_lock);
        output_count++;
        count = output_count;
        pthread_mutex_unlock(&output_count_lock);
        if (count <= requested_number_of_records)
            print_record(record_ptr);
        return (count);
    }

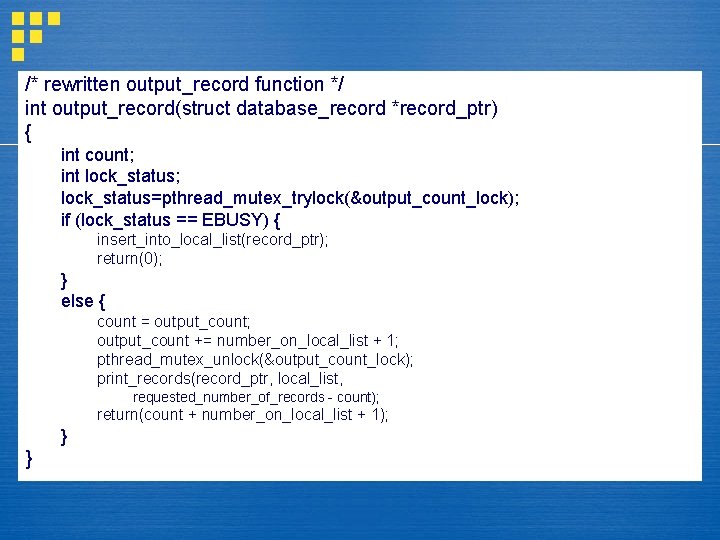

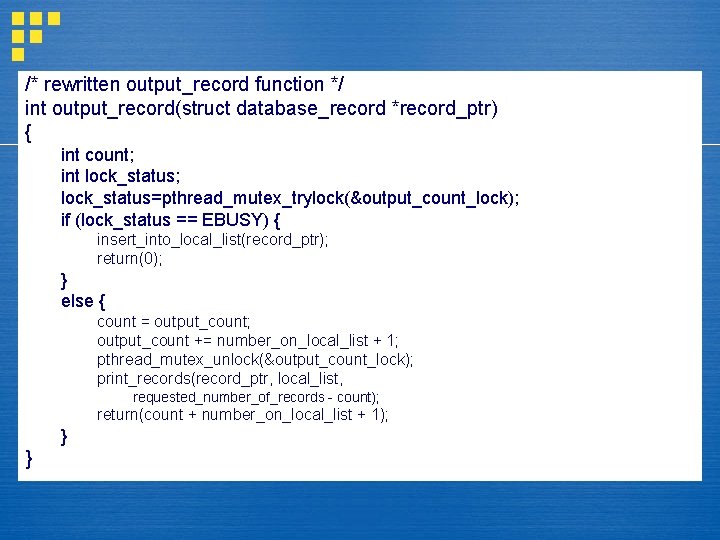

    /* rewritten output_record function */
    int output_record(struct database_record *record_ptr) {
        int count;
        int lock_status;

        lock_status = pthread_mutex_trylock(&output_count_lock);
        if (lock_status == EBUSY) {
            /* Lock is busy: keep the record locally and carry on searching. */
            insert_into_local_list(record_ptr);
            return (0);
        } else {
            count = output_count;
            output_count += number_on_local_list + 1;
            pthread_mutex_unlock(&output_count_lock);
            print_records(record_ptr, local_list,
                          requested_number_of_records - count);
            return (count + number_on_local_list + 1);
        }
    }

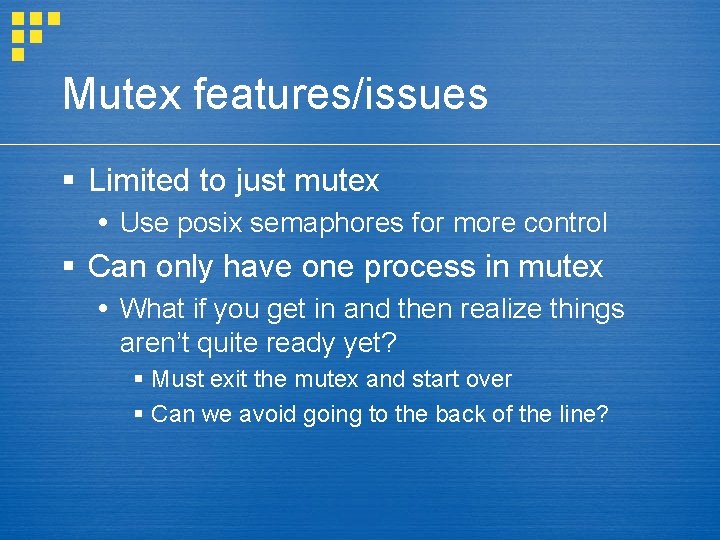

Mutex features/issues

- Limited to just mutual exclusion.
  - Use POSIX semaphores for more control.
- Only one thread can be inside the mutex at a time.
  - What if you get in and then realize things aren't quite ready yet?
  - You must exit the mutex and start over.
  - Can we avoid going to the back of the line?

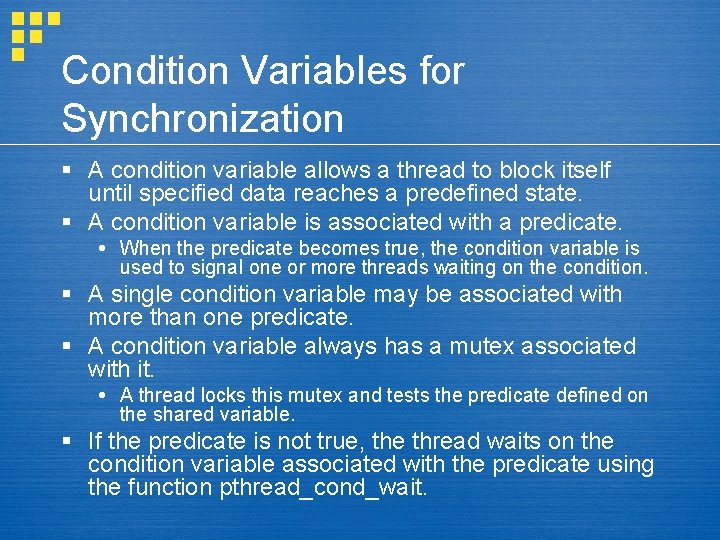

Condition Variables for Synchronization

- A condition variable allows a thread to block itself until specified data reaches a predefined state.
- A condition variable is associated with a predicate. When the predicate becomes true, the condition variable is used to signal one or more threads waiting on the condition.
- A single condition variable may be associated with more than one predicate.
- A condition variable always has a mutex associated with it. A thread locks this mutex and tests the predicate defined on the shared variable.
- If the predicate is not true, the thread waits on the condition variable associated with the predicate using the function pthread_cond_wait.

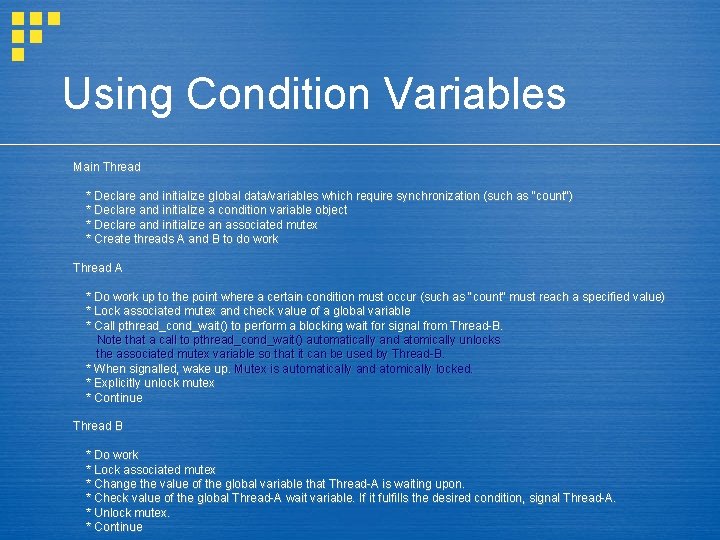

Using Condition Variables

Main thread:

- Declare and initialize global data/variables which require synchronization (such as "count").
- Declare and initialize a condition variable object.
- Declare and initialize an associated mutex.
- Create threads A and B to do work.

Thread A:

- Do work up to the point where a certain condition must occur (such as "count" must reach a specified value).
- Lock the associated mutex and check the value of a global variable.
- Call pthread_cond_wait() to perform a blocking wait for a signal from Thread B. Note that a call to pthread_cond_wait() automatically and atomically unlocks the associated mutex so that it can be used by Thread B.
- When signalled, wake up. The mutex is automatically and atomically locked.
- Explicitly unlock the mutex.
- Continue.

Thread B:

- Do work.
- Lock the associated mutex.
- Change the value of the global variable that Thread A is waiting upon.
- Check the value of the global variable Thread A is waiting on. If it fulfills the desired condition, signal Thread A.
- Unlock the mutex.
- Continue.
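The steps above can be sketched as follows. The limit of 5 and the names `incrementer` and `wait_for_limit` are placeholders; the calling thread plays Thread A. Thread A re-checks the predicate in a loop, which also covers the case where the condition was met before it started waiting and protects against spurious wakeups.

```c
#include <assert.h>
#include <pthread.h>

#define LIMIT 5   /* placeholder threshold for this sketch */

static pthread_mutex_t m  = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t  cv = PTHREAD_COND_INITIALIZER;
static int count = 0;     /* the shared variable the predicate is defined on */

/* Thread B: change the variable under the mutex, signal when the
   predicate (count == LIMIT) becomes true. */
static void *incrementer(void *unused)
{
    (void)unused;
    for (int i = 0; i < LIMIT; i++) {
        pthread_mutex_lock(&m);
        count++;
        if (count == LIMIT)
            pthread_cond_signal(&cv);
        pthread_mutex_unlock(&m);
    }
    return NULL;
}

/* Thread A (the caller): lock, test the predicate, wait while it is false. */
static int wait_for_limit(void)
{
    pthread_t b;
    int seen;

    int rc = pthread_create(&b, NULL, incrementer, NULL);
    assert(rc == 0);
    (void)rc;

    pthread_mutex_lock(&m);
    while (count < LIMIT)                 /* re-check after every wakeup */
        pthread_cond_wait(&cv, &m);       /* unlocks m while blocked */
    seen = count;                         /* m is held again here */
    pthread_mutex_unlock(&m);

    pthread_join(b, NULL);
    return seen;
}
```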

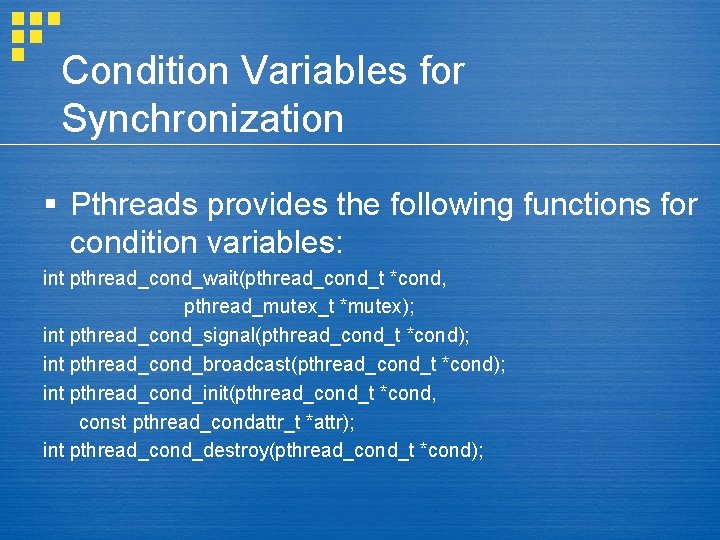

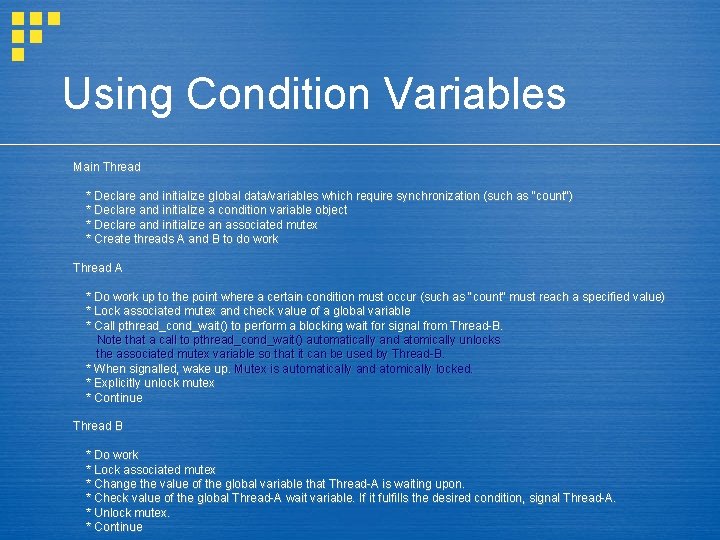

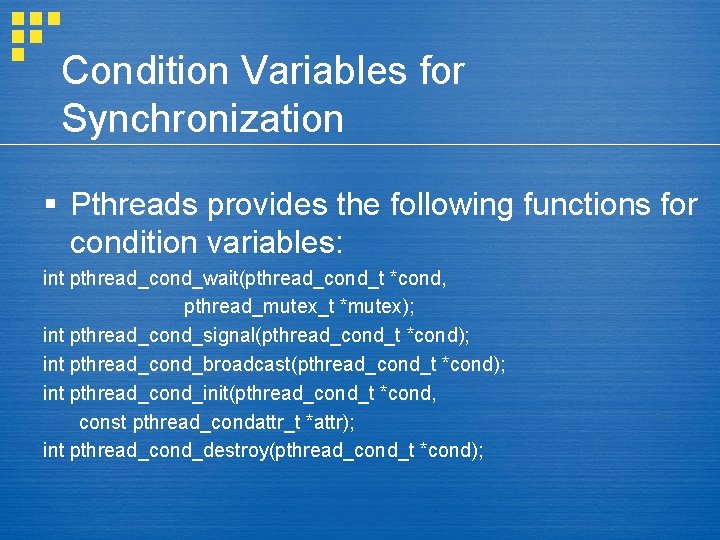

Condition Variables for Synchronization

Pthreads provides the following functions for condition variables:

    int pthread_cond_wait(pthread_cond_t *cond, pthread_mutex_t *mutex);
    int pthread_cond_signal(pthread_cond_t *cond);
    int pthread_cond_broadcast(pthread_cond_t *cond);
    int pthread_cond_init(pthread_cond_t *cond,
                          const pthread_condattr_t *attr);
    int pthread_cond_destroy(pthread_cond_t *cond);

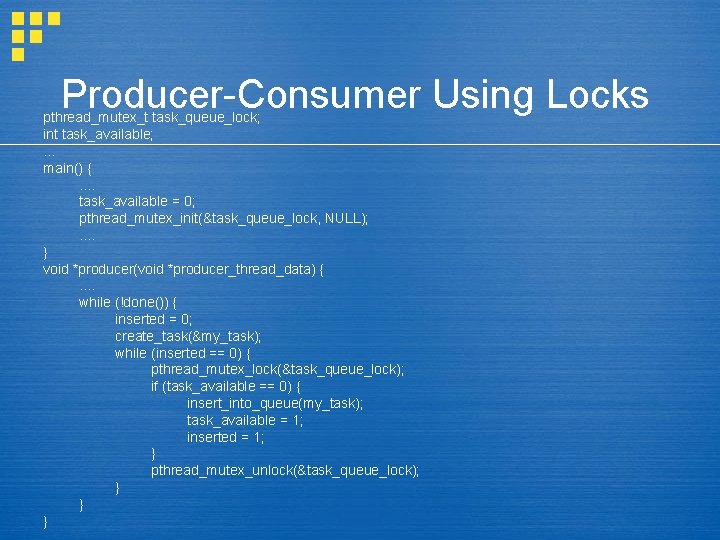

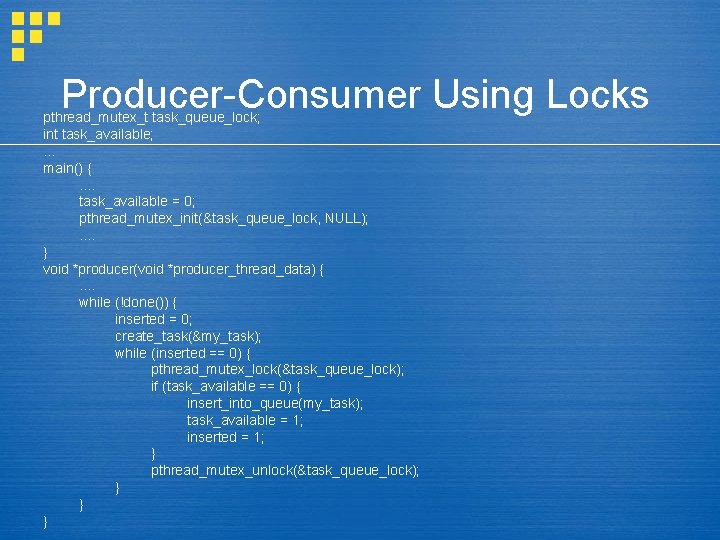

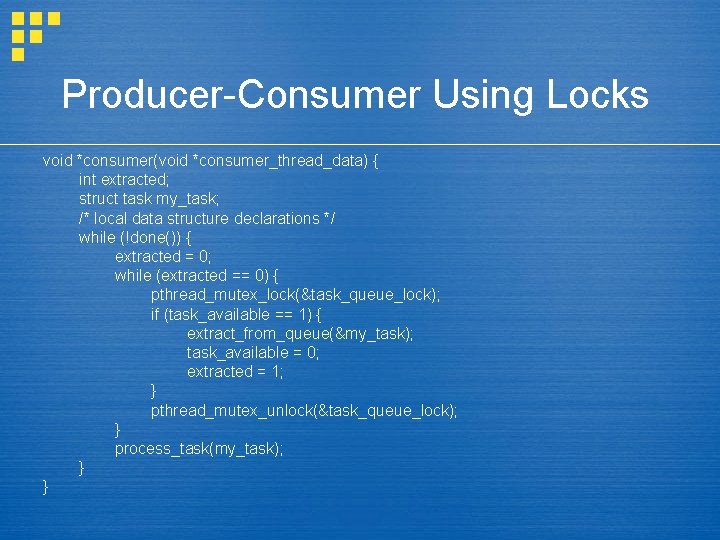

Producer-Consumer Using Locks

    pthread_mutex_t task_queue_lock;
    int task_available;
    /* ... */

    int main(void) {
        /* ... */
        task_available = 0;
        pthread_mutex_init(&task_queue_lock, NULL);
        /* ... */
    }

    void *producer(void *producer_thread_data) {
        /* ... */
        while (!done()) {
            inserted = 0;
            create_task(&my_task);
            /* Keep retrying until the single queue slot is free. */
            while (inserted == 0) {
                pthread_mutex_lock(&task_queue_lock);
                if (task_available == 0) {
                    insert_into_queue(my_task);
                    task_available = 1;
                    inserted = 1;
                }
                pthread_mutex_unlock(&task_queue_lock);
            }
        }
    }

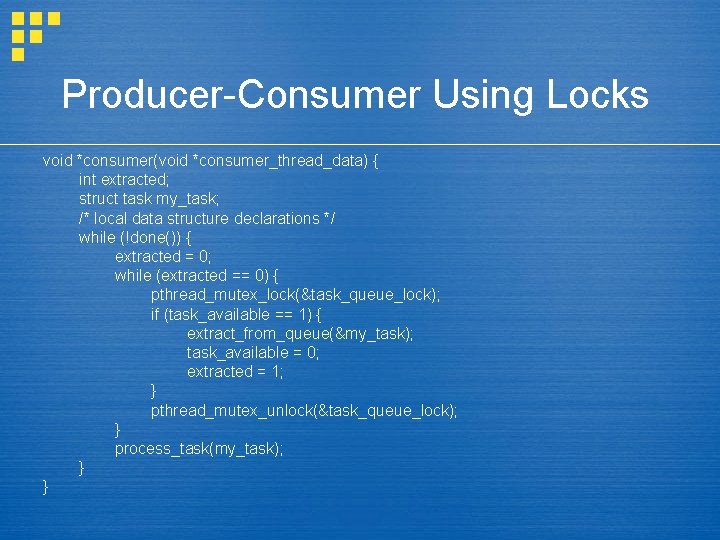

Producer-Consumer Using Locks

    void *consumer(void *consumer_thread_data) {
        int extracted;
        struct task my_task;
        /* local data structure declarations */
        while (!done()) {
            extracted = 0;
            /* Keep polling until a task shows up in the queue slot. */
            while (extracted == 0) {
                pthread_mutex_lock(&task_queue_lock);
                if (task_available == 1) {
                    extract_from_queue(&my_task);
                    task_available = 0;
                    extracted = 1;
                }
                pthread_mutex_unlock(&task_queue_lock);
            }
            process_task(my_task);
        }
    }

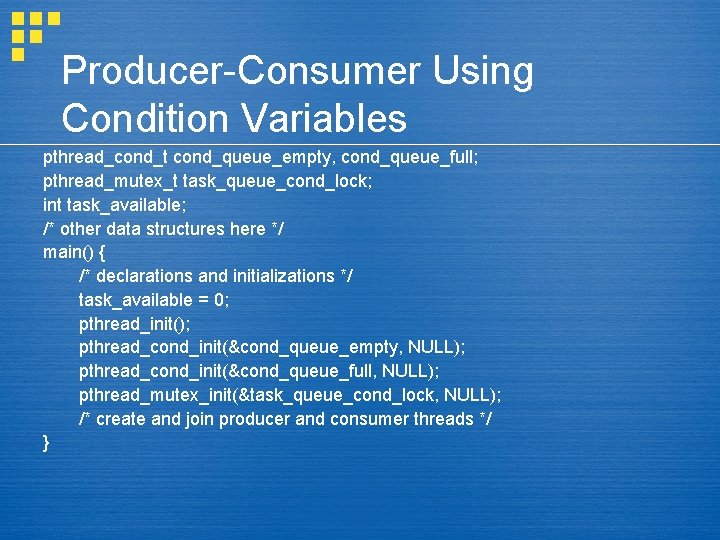

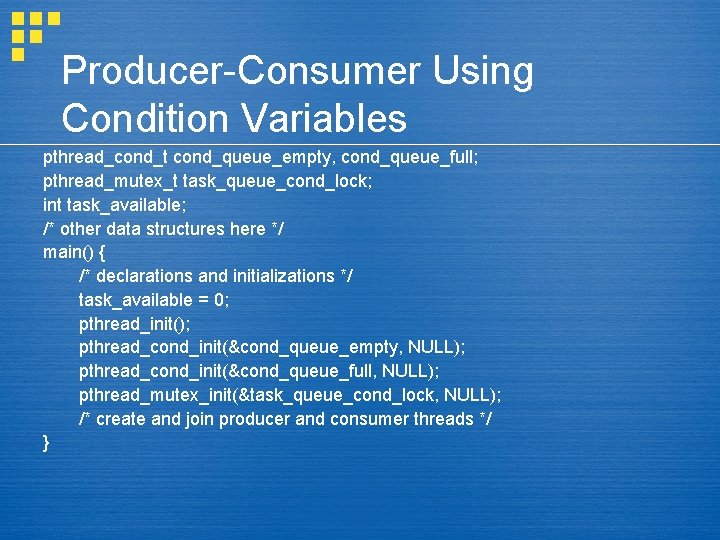

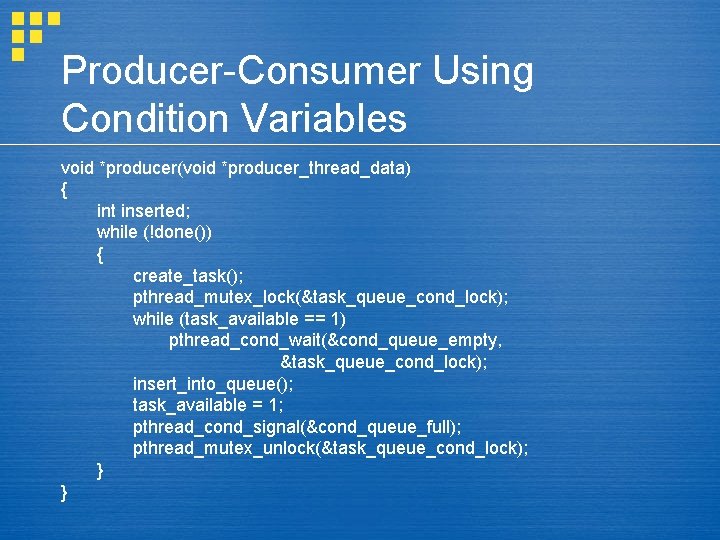

Producer-Consumer Using Condition Variables

    pthread_cond_t cond_queue_empty, cond_queue_full;
    pthread_mutex_t task_queue_cond_lock;
    int task_available;
    /* other data structures here */

    int main(void) {
        /* declarations and initializations */
        task_available = 0;
        pthread_cond_init(&cond_queue_empty, NULL);
        pthread_cond_init(&cond_queue_full, NULL);
        pthread_mutex_init(&task_queue_cond_lock, NULL);
        /* create and join producer and consumer threads */
    }

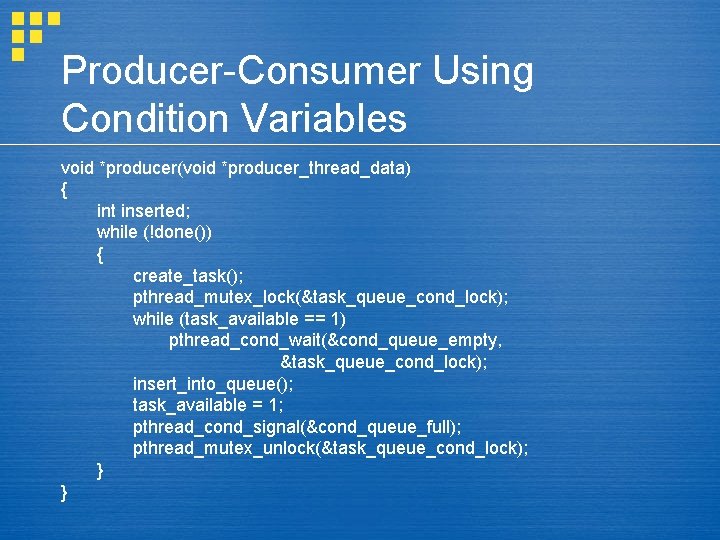

Producer-Consumer Using Condition Variables

    void *producer(void *producer_thread_data) {
        int inserted;
        while (!done()) {
            create_task();
            pthread_mutex_lock(&task_queue_cond_lock);
            /* Sleep until the consumer reports the slot is empty. */
            while (task_available == 1)
                pthread_cond_wait(&cond_queue_empty,
                                  &task_queue_cond_lock);
            insert_into_queue();
            task_available = 1;
            pthread_cond_signal(&cond_queue_full);
            pthread_mutex_unlock(&task_queue_cond_lock);
        }
    }

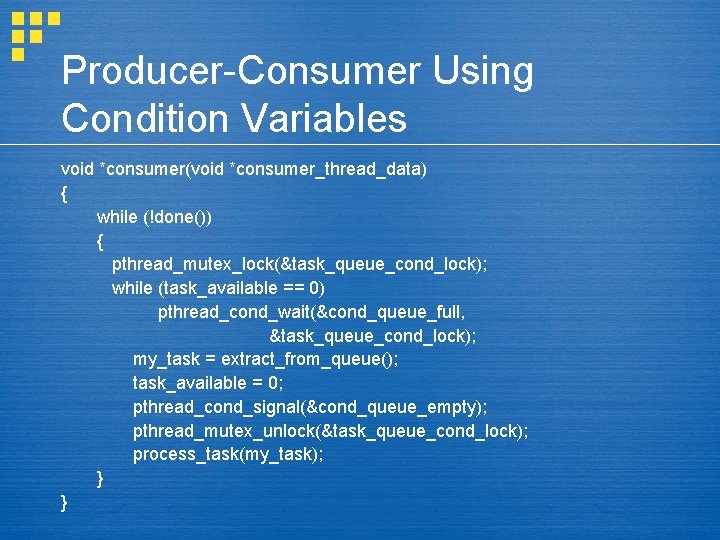

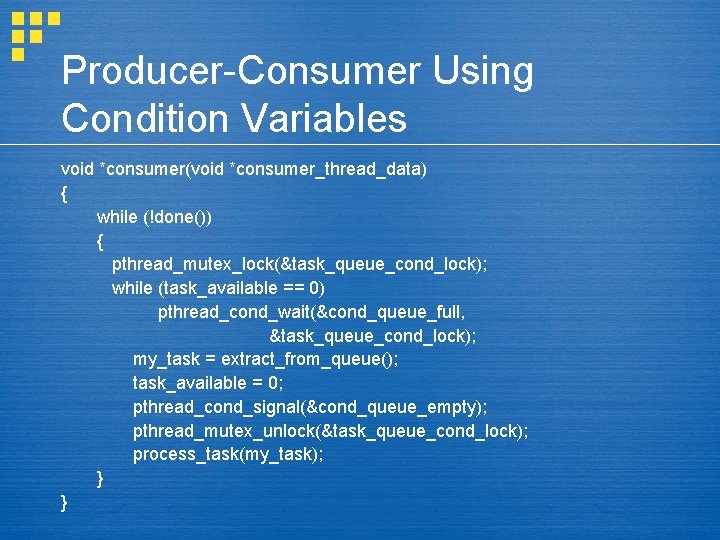

Producer-Consumer Using Condition Variables

    void *consumer(void *consumer_thread_data) {
        while (!done()) {
            pthread_mutex_lock(&task_queue_cond_lock);
            /* Sleep until the producer reports a task is available. */
            while (task_available == 0)
                pthread_cond_wait(&cond_queue_full,
                                  &task_queue_cond_lock);
            my_task = extract_from_queue();
            task_available = 0;
            pthread_cond_signal(&cond_queue_empty);
            pthread_mutex_unlock(&task_queue_cond_lock);
            /* Process outside the lock so the producer can refill the slot. */
            process_task(my_task);
        }
    }

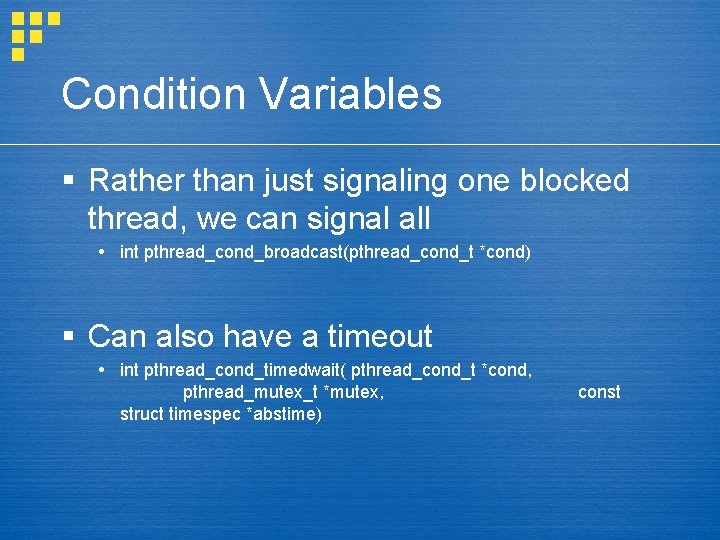

Condition Variables

Rather than signaling just one blocked thread, we can wake all of them:

    int pthread_cond_broadcast(pthread_cond_t *cond);

A wait can also have a timeout, given as an absolute time:

    int pthread_cond_timedwait(pthread_cond_t *cond, pthread_mutex_t *mutex,
                               const struct timespec *abstime);
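Because the timeout is an absolute time, callers usually compute a deadline from the current clock. A sketch under assumptions: the condition variable uses the default clock (CLOCK_REALTIME), and `wait_ready` and `ready` are made-up names. Nothing ever sets `ready` here, so the wait is expected to time out.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <pthread.h>
#include <time.h>

static pthread_mutex_t m  = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t  cv = PTHREAD_COND_INITIALIZER;
static int ready = 0;     /* predicate; never set in this sketch */

/* Waits up to timeout_ms for ready to become nonzero.
   Returns 0 if ready, or the error from the wait (ETIMEDOUT on timeout). */
static int wait_ready(long timeout_ms)
{
    struct timespec deadline;
    int rc = 0;

    /* Build an ABSOLUTE deadline: now + timeout. */
    clock_gettime(CLOCK_REALTIME, &deadline);
    deadline.tv_sec  += timeout_ms / 1000;
    deadline.tv_nsec += (timeout_ms % 1000) * 1000000L;
    if (deadline.tv_nsec >= 1000000000L) {   /* normalize nanoseconds */
        deadline.tv_sec++;
        deadline.tv_nsec -= 1000000000L;
    }

    pthread_mutex_lock(&m);
    while (!ready && rc == 0)                /* loop handles spurious wakeups */
        rc = pthread_cond_timedwait(&cv, &m, &deadline);
    if (ready)
        rc = 0;
    pthread_mutex_unlock(&m);
    return rc;
}
```

Since the deadline is absolute, re-entering the wait after a spurious wakeup does not extend the total time waited.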

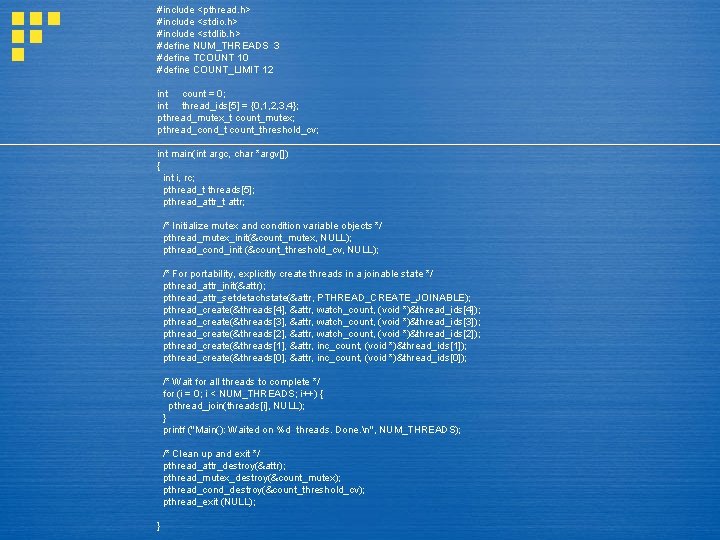

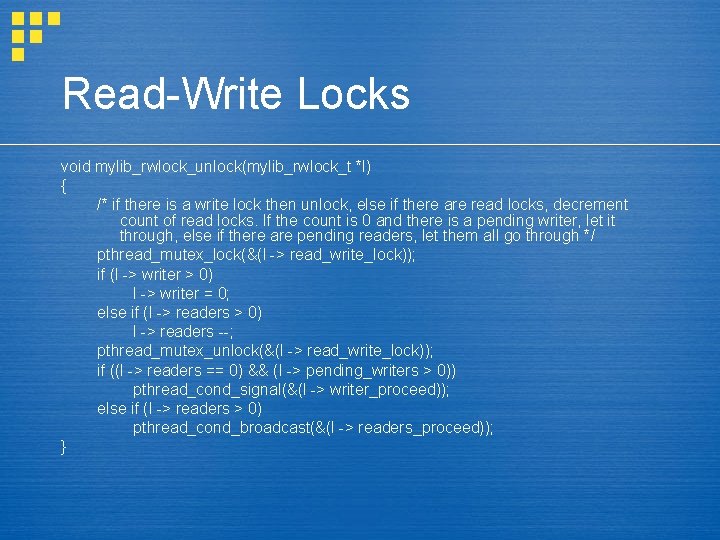

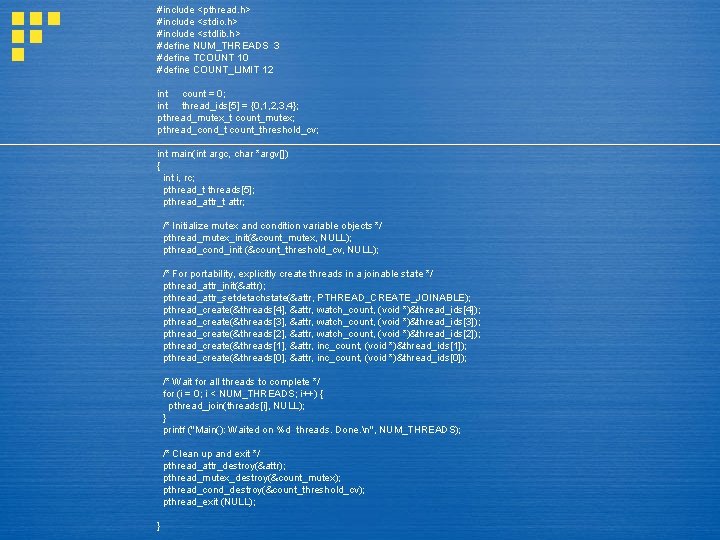

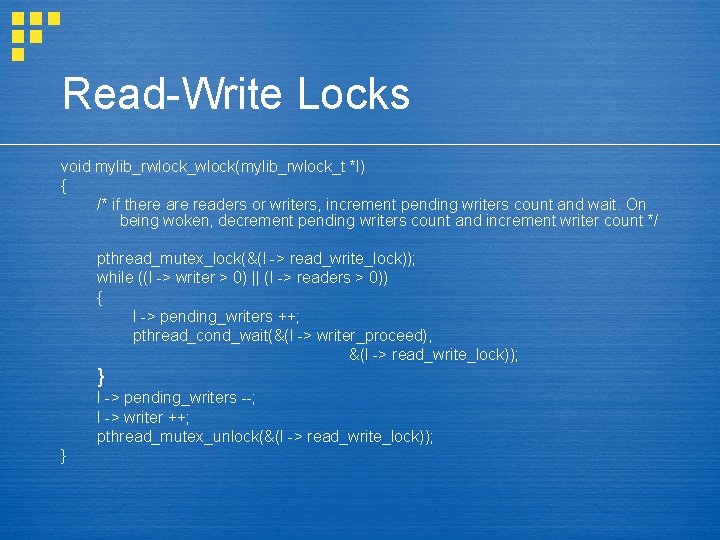

    #include <pthread.h>
    #include <stdio.h>
    #include <stdlib.h>

    #define NUM_THREADS 5   /* three watchers plus two incrementers */
    #define TCOUNT 10
    #define COUNT_LIMIT 12

    int count = 0;
    int thread_ids[NUM_THREADS] = {0, 1, 2, 3, 4};
    pthread_mutex_t count_mutex;
    pthread_cond_t count_threshold_cv;

    void *inc_count(void *idp);
    void *watch_count(void *idp);

    int main(int argc, char *argv[]) {
        int i;
        pthread_t threads[NUM_THREADS];
        pthread_attr_t attr;

        /* Initialize mutex and condition variable objects */
        pthread_mutex_init(&count_mutex, NULL);
        pthread_cond_init(&count_threshold_cv, NULL);

        /* For portability, explicitly create threads in a joinable state */
        pthread_attr_init(&attr);
        pthread_attr_setdetachstate(&attr, PTHREAD_CREATE_JOINABLE);
        pthread_create(&threads[4], &attr, watch_count, (void *)&thread_ids[4]);
        pthread_create(&threads[3], &attr, watch_count, (void *)&thread_ids[3]);
        pthread_create(&threads[2], &attr, watch_count, (void *)&thread_ids[2]);
        pthread_create(&threads[1], &attr, inc_count, (void *)&thread_ids[1]);
        pthread_create(&threads[0], &attr, inc_count, (void *)&thread_ids[0]);

        /* Wait for all threads to complete */
        for (i = 0; i < NUM_THREADS; i++) {
            pthread_join(threads[i], NULL);
        }
        printf("Main(): Waited on %d threads. Done.\n", NUM_THREADS);

        /* Clean up and exit */
        pthread_attr_destroy(&attr);
        pthread_mutex_destroy(&count_mutex);
        pthread_cond_destroy(&count_threshold_cv);
        pthread_exit(NULL);
    }


    /* Uses count, count_mutex, and count_threshold_cv declared with main(). */

    void *inc_count(void *idp) {
        int j, i;
        double result = 0.0;
        int *my_id = idp;

        for (i = 0; i < TCOUNT; i++) {
            pthread_mutex_lock(&count_mutex);
            count++;
            /* Check the value of count and signal waiting threads when the
               condition is reached. Note that this occurs while the mutex
               is locked. */
            if (count == COUNT_LIMIT) {
                pthread_cond_broadcast(&count_threshold_cv);
                printf("inc_count(): thread %d, count = %d Threshold reached.\n",
                       *my_id, count);
            }
            printf("inc_count(): thread %d, count = %d, unlocking mutex\n",
                   *my_id, count);
            pthread_mutex_unlock(&count_mutex);

            /* Do some work so threads can alternate on mutex lock */
            for (j = 0; j < 1000; j++)
                result = result + (double)random();
        }
        pthread_exit(NULL);
    }

    void *watch_count(void *idp) {
        int *my_id = idp;

        printf("Starting watch_count(): thread %d\n", *my_id);
        /* Lock mutex and wait for signal. Note that the pthread_cond_wait
           routine will automatically and atomically unlock the mutex while
           it waits. Also, note that if COUNT_LIMIT is reached before this
           routine is run by the waiting thread, the loop will be skipped to
           prevent pthread_cond_wait from never returning. The test is a
           while loop so the predicate is re-checked after every wakeup. */
        pthread_mutex_lock(&count_mutex);
        while (count < COUNT_LIMIT) {
            pthread_cond_wait(&count_threshold_cv, &count_mutex);
            printf("watch_count(): thread %d Condition signal received.\n",
                   *my_id);
        }
        pthread_mutex_unlock(&count_mutex);
        pthread_exit(NULL);
    }

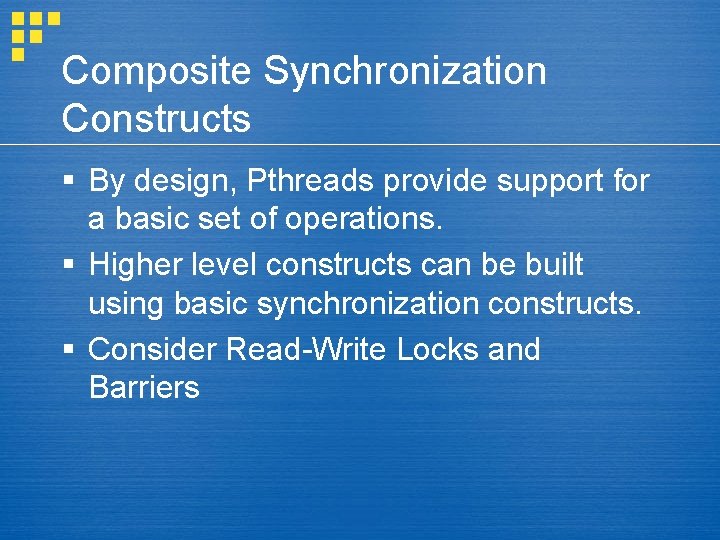

Composite Synchronization Constructs

- By design, Pthreads provides support for a basic set of operations.
- Higher-level constructs can be built using the basic synchronization constructs.
- Consider read-write locks and barriers.

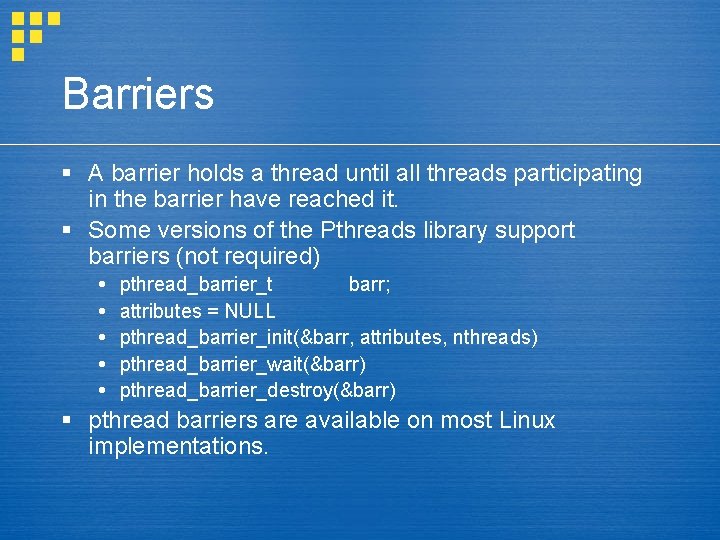

Barriers

- A barrier holds a thread until all threads participating in the barrier have reached it.
- Some versions of the Pthreads library support barriers (they are not required).
- Pthread barriers are available on most Linux implementations.

The calls are:

    pthread_barrier_t barr;
    pthread_barrier_init(&barr, NULL, nthreads);   /* NULL: default attributes */
    pthread_barrier_wait(&barr);
    pthread_barrier_destroy(&barr);
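A sketch of a two-phase computation separated by a barrier, assuming a platform that provides the optional pthread_barrier_t (for example Linux with glibc). The names `phase1`, `phase2`, `worker`, and `run_phases` are illustrative.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>

#define NTHREADS 4   /* arbitrary for this sketch */

static pthread_barrier_t barr;
static int phase1[NTHREADS];   /* each thread writes only its own slot */
static int phase2[NTHREADS];

static void *worker(void *arg)
{
    int id = *(int *)arg;
    int sum = 0;

    phase1[id] = id + 1;              /* phase 1: produce a value */
    pthread_barrier_wait(&barr);      /* nobody continues until all have written */
    for (int i = 0; i < NTHREADS; i++)
        sum += phase1[i];             /* phase 2: safe to read every slot */
    phase2[id] = sum;
    return NULL;
}

/* Runs both phases; returns the common phase-2 result, or -1 on failure. */
static int run_phases(void)
{
    pthread_t tid[NTHREADS];
    int ids[NTHREADS];

    if (pthread_barrier_init(&barr, NULL, NTHREADS) != 0)
        return -1;
    for (int i = 0; i < NTHREADS; i++) {
        ids[i] = i;
        int rc = pthread_create(&tid[i], NULL, worker, &ids[i]);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < NTHREADS; i++)
        pthread_join(tid[i], NULL);
    pthread_barrier_destroy(&barr);

    for (int i = 1; i < NTHREADS; i++)
        if (phase2[i] != phase2[0])
            return -1;                /* a thread read before all had written */
    return phase2[0];
}
```

Without the barrier, a fast thread could sum `phase1` before slower threads had filled in their slots.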

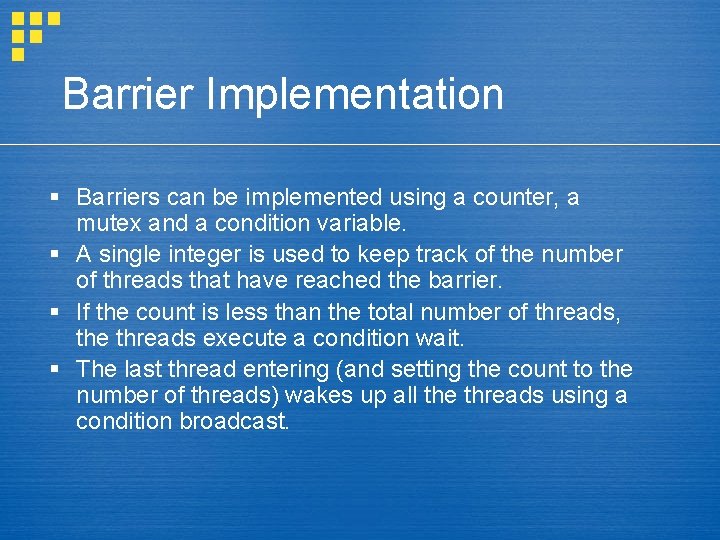

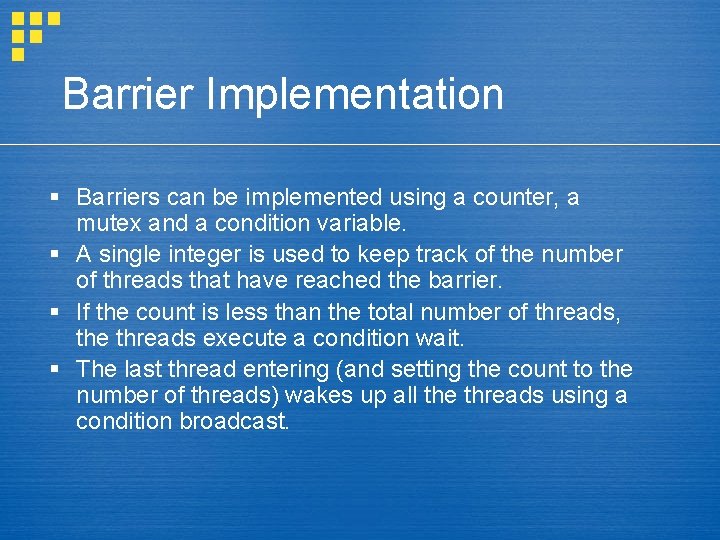

Barrier Implementation

- Barriers can be implemented using a counter, a mutex, and a condition variable.
- A single integer keeps track of the number of threads that have reached the barrier.
- If the count is less than the total number of threads, the arriving thread executes a condition wait.
- The last thread to enter (the one that brings the count up to the number of threads) wakes up all the waiting threads using a condition broadcast.

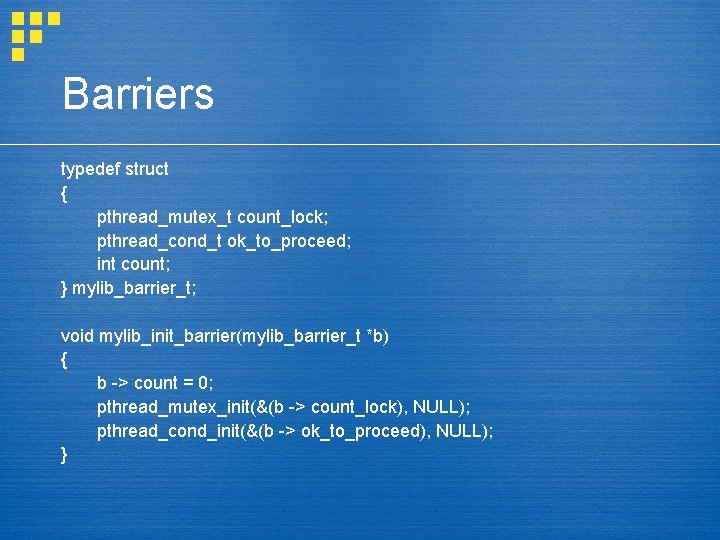

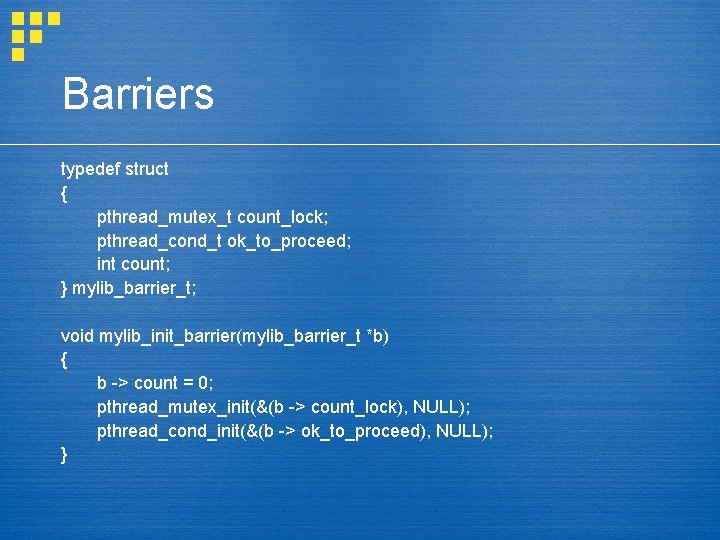

Barriers

    typedef struct {
        pthread_mutex_t count_lock;
        pthread_cond_t ok_to_proceed;
        int count;
    } mylib_barrier_t;

    void mylib_init_barrier(mylib_barrier_t *b) {
        b->count = 0;
        pthread_mutex_init(&(b->count_lock), NULL);
        pthread_cond_init(&(b->ok_to_proceed), NULL);
    }

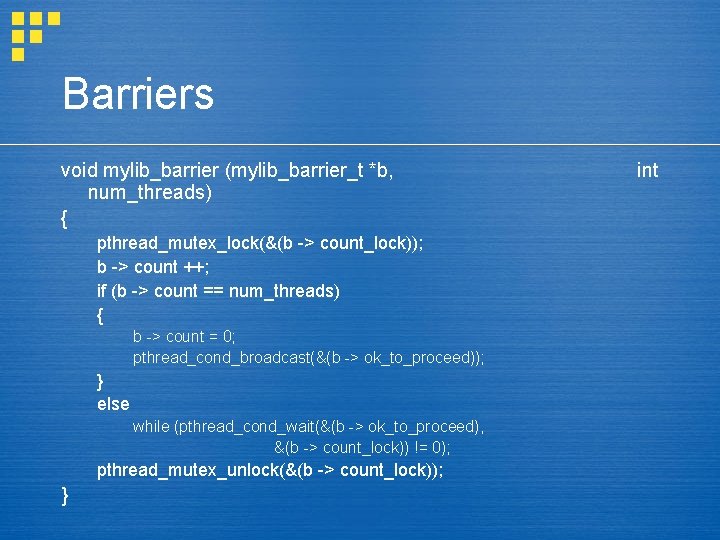

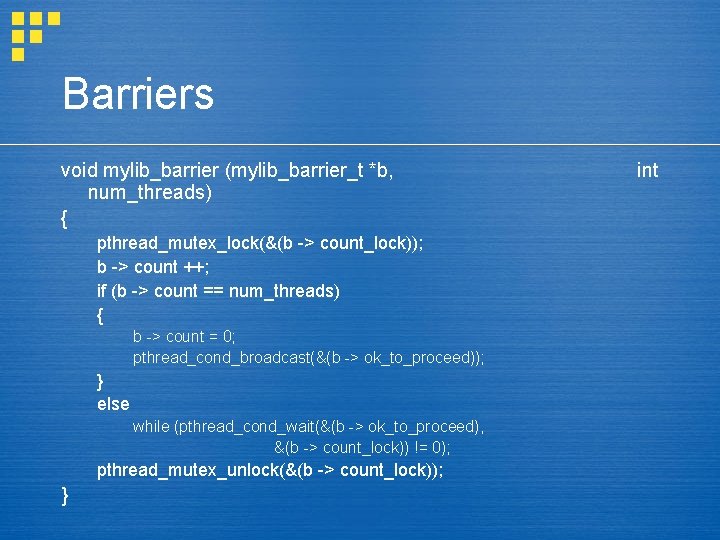

Barriers

    void mylib_barrier(mylib_barrier_t *b, int num_threads) {
        pthread_mutex_lock(&(b->count_lock));
        b->count++;
        if (b->count == num_threads) {
            /* Last thread to arrive: reset the counter and release everyone. */
            b->count = 0;
            pthread_cond_broadcast(&(b->ok_to_proceed));
        } else {
            /* Retry only if the wait itself reports an error. */
            while (pthread_cond_wait(&(b->ok_to_proceed),
                                     &(b->count_lock)) != 0)
                ;
        }
        pthread_mutex_unlock(&(b->count_lock));
    }

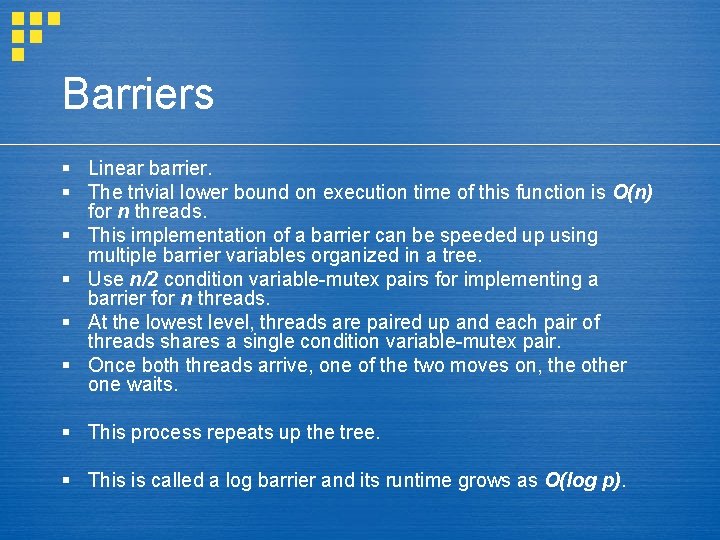

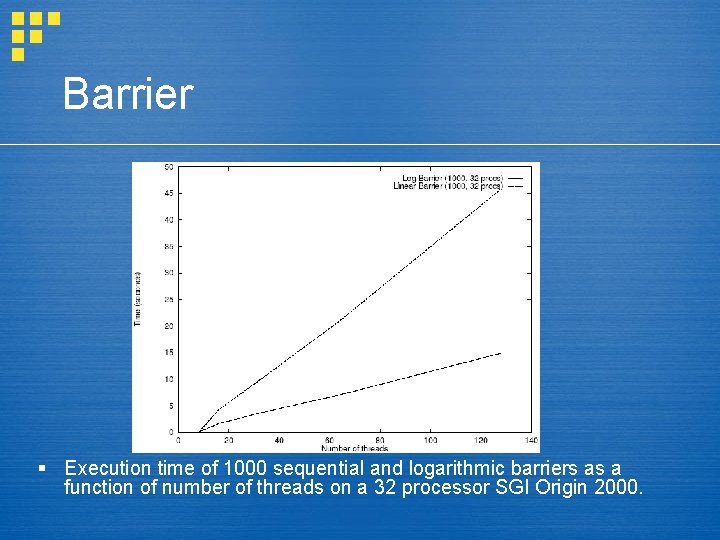

Barriers

- The implementation above is a linear barrier.
- The trivial lower bound on the execution time of this function is O(n) for n threads.
- This implementation can be sped up by using multiple barrier variables organized in a tree.
- Use n/2 condition variable-mutex pairs to implement a barrier for n threads.
- At the lowest level, threads are paired up and each pair of threads shares a single condition variable-mutex pair.
- Once both threads arrive, one of the two moves on and the other one waits.
- This process repeats up the tree.
- This is called a log barrier, and its runtime grows as O(log n).

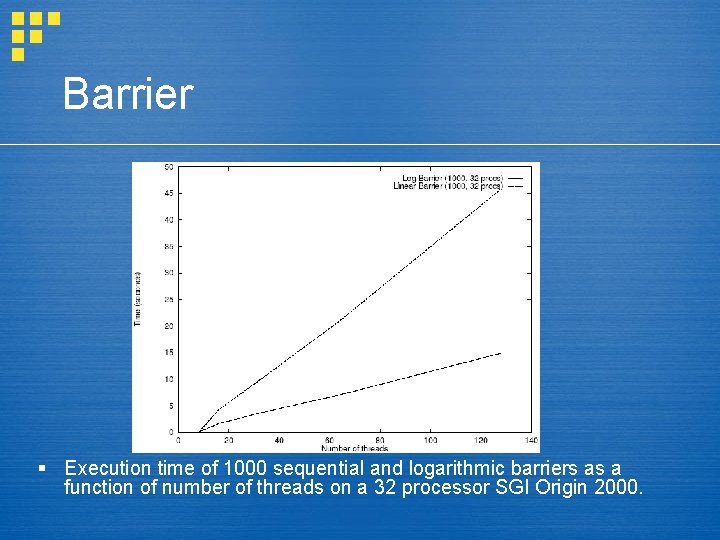

Barrier

Figure: execution time of 1000 sequential and logarithmic barriers as a function of the number of threads on a 32-processor SGI Origin 2000.

Barriers

- The implementations so far use pthread condition variables and mutexes.
- Is this the best way? A condition wait forces a thread to sleep and give up the processor.
- Rather than give up the processor, a thread can just wait on a variable (a busy wait).
- Will it be faster?

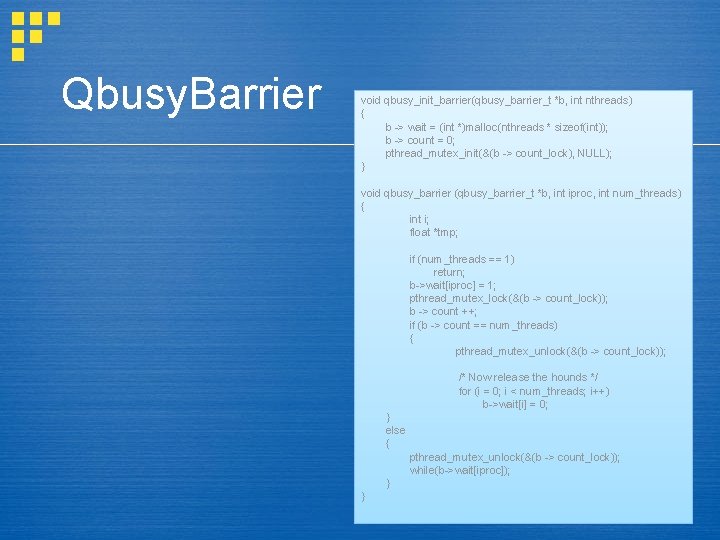

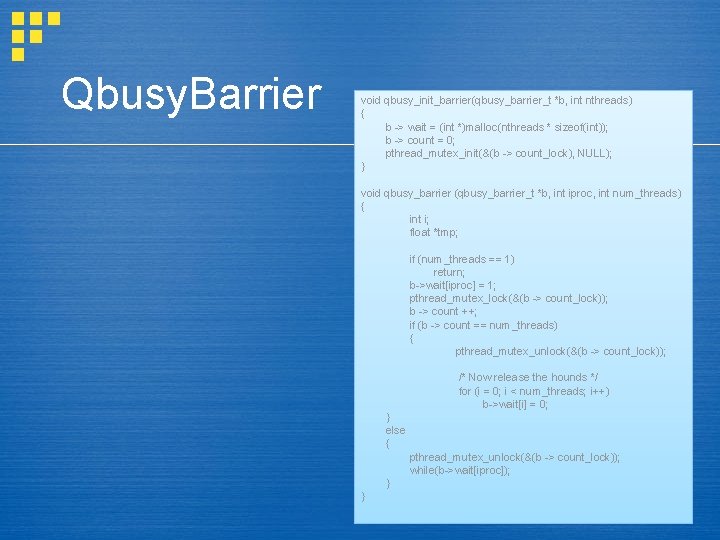

Qbusy Barrier

    void qbusy_init_barrier(qbusy_barrier_t *b, int nthreads) {
        b->wait = (int *)malloc(nthreads * sizeof(int));
        b->count = 0;
        pthread_mutex_init(&(b->count_lock), NULL);
    }

    void qbusy_barrier(qbusy_barrier_t *b, int iproc, int num_threads) {
        int i;

        if (num_threads == 1)
            return;
        b->wait[iproc] = 1;
        pthread_mutex_lock(&(b->count_lock));
        b->count++;
        if (b->count == num_threads) {
            pthread_mutex_unlock(&(b->count_lock));
            /* Now release the hounds */
            for (i = 0; i < num_threads; i++)
                b->wait[i] = 0;
        } else {
            pthread_mutex_unlock(&(b->count_lock));
            /* Busy wait: spin on this thread's own flag instead of sleeping. */
            while (b->wait[iproc])
                ;
        }
    }

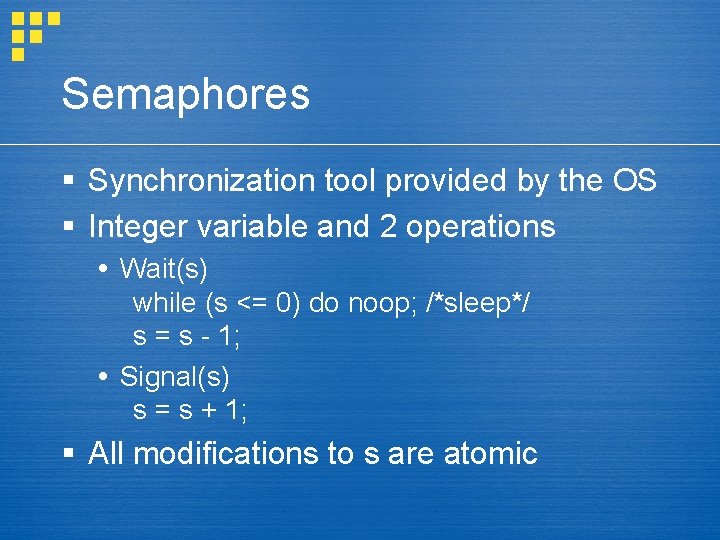

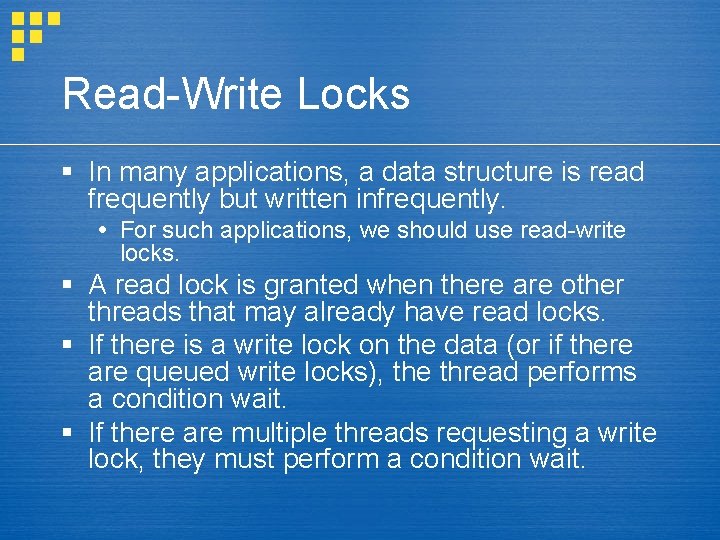

Read-Write Locks

- In many applications, a data structure is read frequently but written infrequently. For such applications, we should use read-write locks.
- A read lock is granted even when other threads already hold read locks.
- If there is a write lock on the data (or if there are queued write locks), the thread requesting a read lock performs a condition wait.
- If multiple threads request a write lock, they must perform a condition wait.
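For comparison with a hand-built lock, POSIX also defines a ready-made read-write lock type, pthread_rwlock_t. The sketch below uses that standard type rather than a custom one; `table_value`, `writer`, `reader`, and `demo_rwlock` are illustrative names, and the thread and update counts are arbitrary.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>

#define WRITERS 2
#define READERS 2
#define UPDATES 1000   /* arbitrary amount of work per thread */

static pthread_rwlock_t table_lock = PTHREAD_RWLOCK_INITIALIZER;
static int table_value = 0;   /* shared data protected by table_lock */

/* Writers take the lock exclusively for each update. */
static void *writer(void *unused)
{
    (void)unused;
    for (int i = 0; i < UPDATES; i++) {
        pthread_rwlock_wrlock(&table_lock);
        table_value++;
        pthread_rwlock_unlock(&table_lock);
    }
    return NULL;
}

/* Readers may hold the lock at the same time as other readers. */
static void *reader(void *unused)
{
    (void)unused;
    for (int i = 0; i < UPDATES; i++) {
        pthread_rwlock_rdlock(&table_lock);
        int v = table_value;
        pthread_rwlock_unlock(&table_lock);
        assert(v >= 0 && v <= WRITERS * UPDATES);
        (void)v;
    }
    return NULL;
}

/* Runs all threads to completion and returns the final value. */
static int demo_rwlock(void)
{
    pthread_t tid[WRITERS + READERS];

    for (int i = 0; i < WRITERS + READERS; i++) {
        int rc = pthread_create(&tid[i], NULL,
                                i < WRITERS ? writer : reader, NULL);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < WRITERS + READERS; i++)
        pthread_join(tid[i], NULL);
    return table_value;   /* safe: every thread has been joined */
}
```

Whether waiting writers take priority over new readers is left to the implementation with the standard type, which is one reason to build a custom lock when the policy matters.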

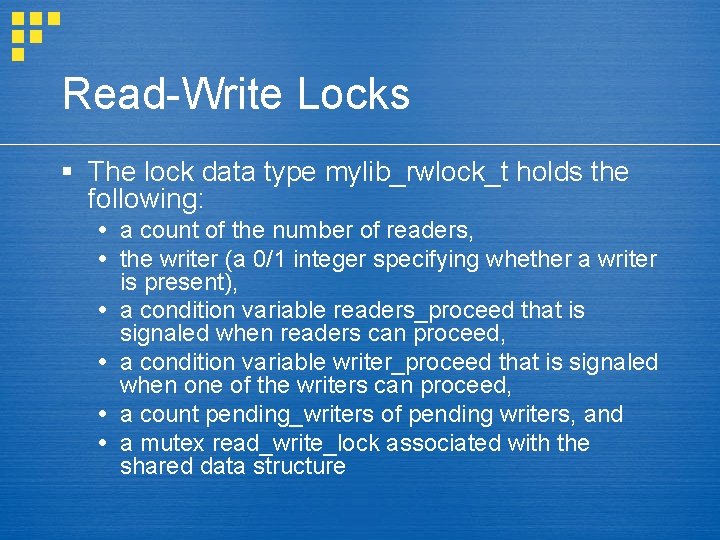

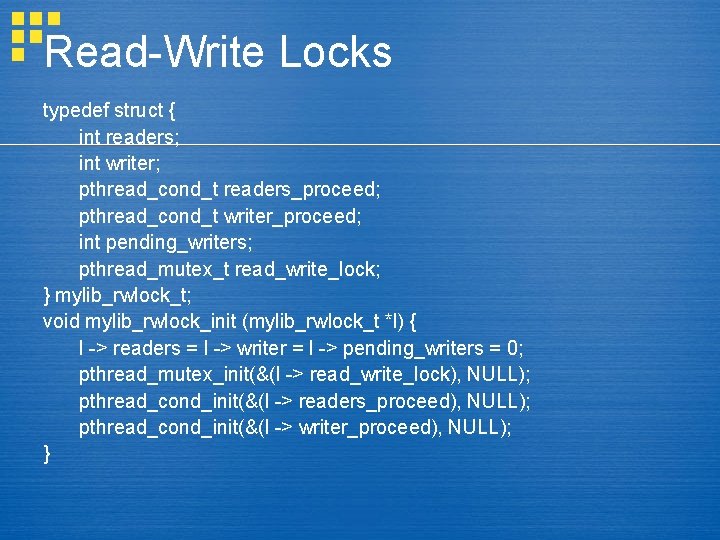

Read-Write Locks

The lock data type mylib_rwlock_t holds the following:

- a count of the number of readers
- the writer (a 0/1 integer specifying whether a writer is present)
- a condition variable readers_proceed that is signaled when readers can proceed
- a condition variable writer_proceed that is signaled when one of the writers can proceed
- a count pending_writers of pending writers
- a mutex read_write_lock associated with the shared data structure

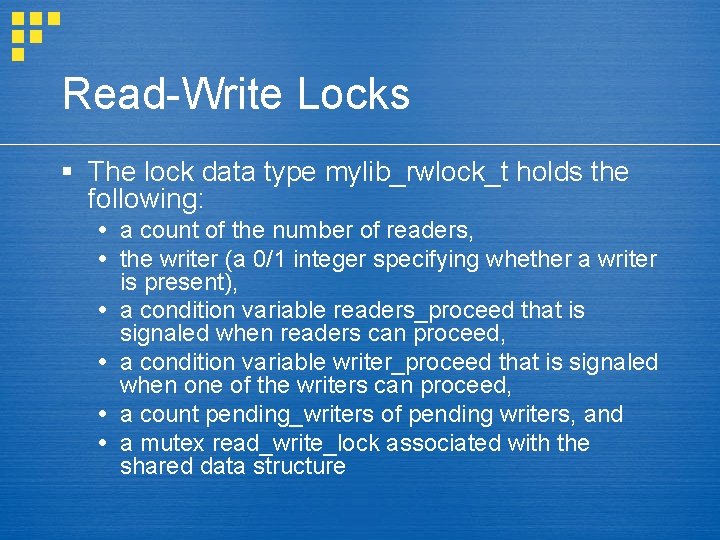

Read-Write Locks

    typedef struct {
        int readers;
        int writer;
        pthread_cond_t readers_proceed;
        pthread_cond_t writer_proceed;
        int pending_writers;
        pthread_mutex_t read_write_lock;
    } mylib_rwlock_t;

    void mylib_rwlock_init(mylib_rwlock_t *l) {
        l->readers = l->writer = l->pending_writers = 0;
        pthread_mutex_init(&(l->read_write_lock), NULL);
        pthread_cond_init(&(l->readers_proceed), NULL);
        pthread_cond_init(&(l->writer_proceed), NULL);
    }

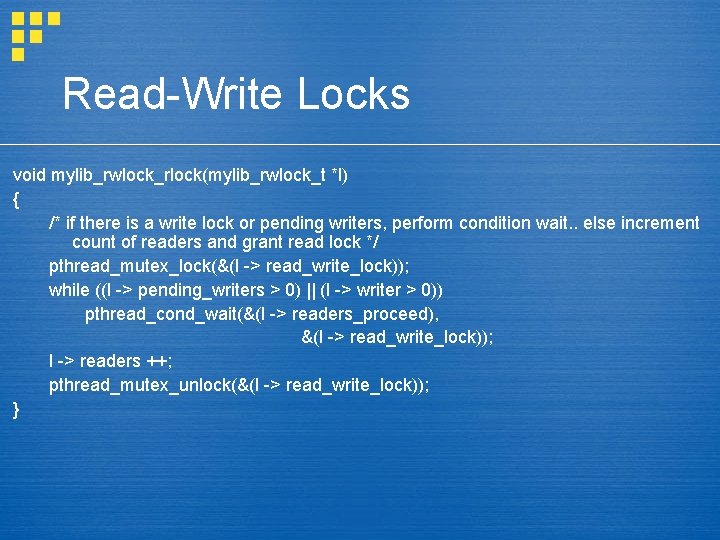

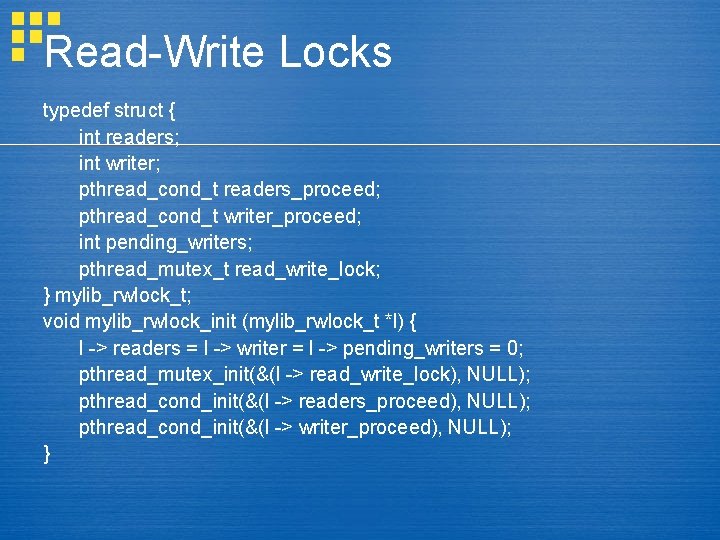

Read-Write Locks

    void mylib_rwlock_rlock(mylib_rwlock_t *l) {
        /* If there is a write lock or pending writers, perform a condition
           wait; else increment the count of readers and grant the read lock. */
        pthread_mutex_lock(&(l->read_write_lock));
        while ((l->pending_writers > 0) || (l->writer > 0))
            pthread_cond_wait(&(l->readers_proceed), &(l->read_write_lock));
        l->readers++;
        pthread_mutex_unlock(&(l->read_write_lock));
    }

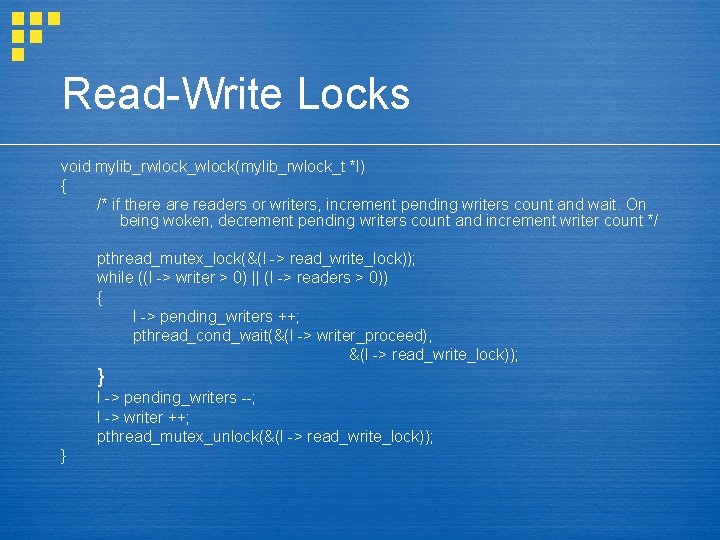

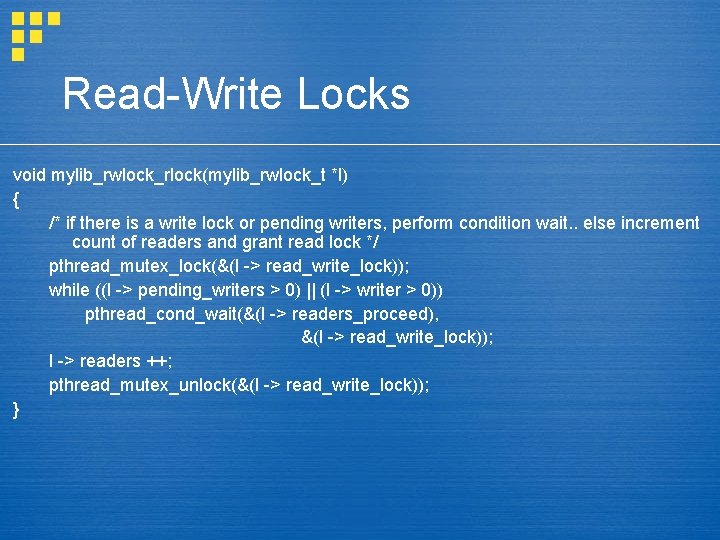

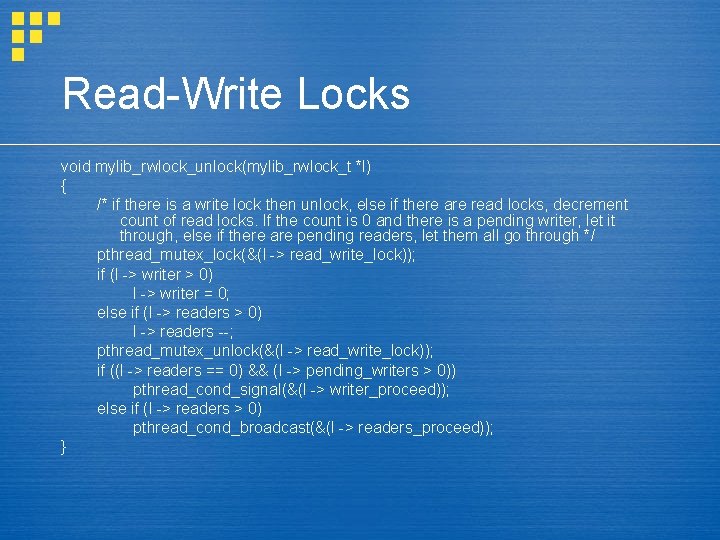

Read-Write Locks

    void mylib_rwlock_wlock(mylib_rwlock_t *l) {
        /* Register as a pending writer, wait while there are readers or a
           writer, then deregister and take the write lock. The pending count
           is changed once on each side of the loop so that it stays balanced
           no matter how many times the wait wakes up. */
        pthread_mutex_lock(&(l->read_write_lock));
        l->pending_writers++;
        while ((l->writer > 0) || (l->readers > 0))
            pthread_cond_wait(&(l->writer_proceed), &(l->read_write_lock));
        l->pending_writers--;
        l->writer++;
        pthread_mutex_unlock(&(l->read_write_lock));
    }

Read-Write Locks

    void mylib_rwlock_unlock(mylib_rwlock_t *l) {
        /* If there is a write lock then unlock, else if there are read locks,
           decrement the count of read locks. If the count is 0 and there is
           a pending writer, let it through; else if no writer is pending,
           let all waiting readers through. The counters are examined while
           the mutex is still held. */
        pthread_mutex_lock(&(l->read_write_lock));
        if (l->writer > 0)
            l->writer = 0;
        else if (l->readers > 0)
            l->readers--;
        if ((l->readers == 0) && (l->pending_writers > 0))
            pthread_cond_signal(&(l->writer_proceed));
        else if (l->pending_writers == 0)
            pthread_cond_broadcast(&(l->readers_proceed));
        pthread_mutex_unlock(&(l->read_write_lock));
    }

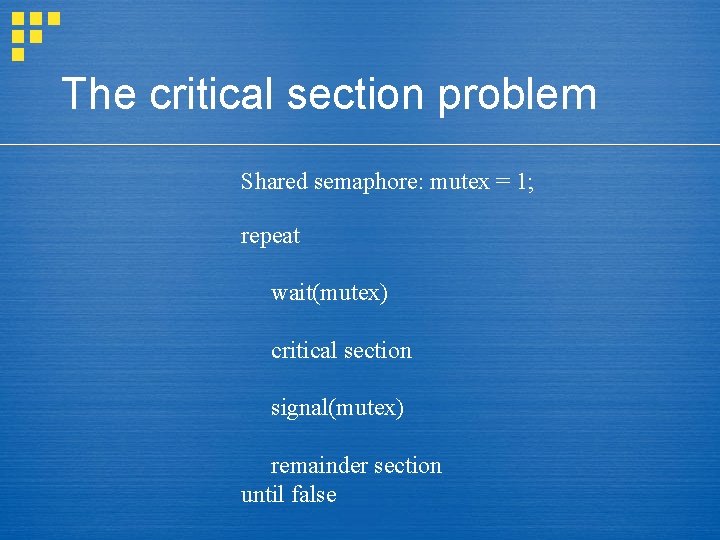

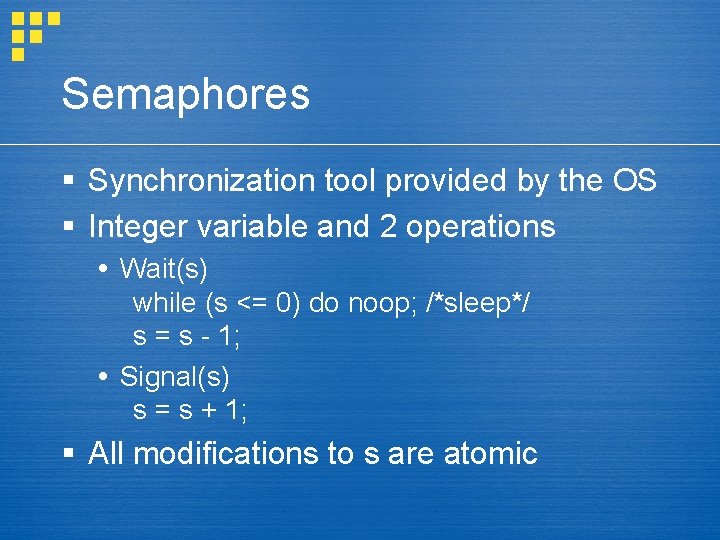

Semaphores

- Synchronization tool provided by the OS.
- An integer variable s and two operations, Wait and Signal.
- All modifications to s are atomic.

The two operations are:

    Wait(s):
        while (s <= 0) do noop;   /* sleep */
        s = s - 1;

    Signal(s):
        s = s + 1;

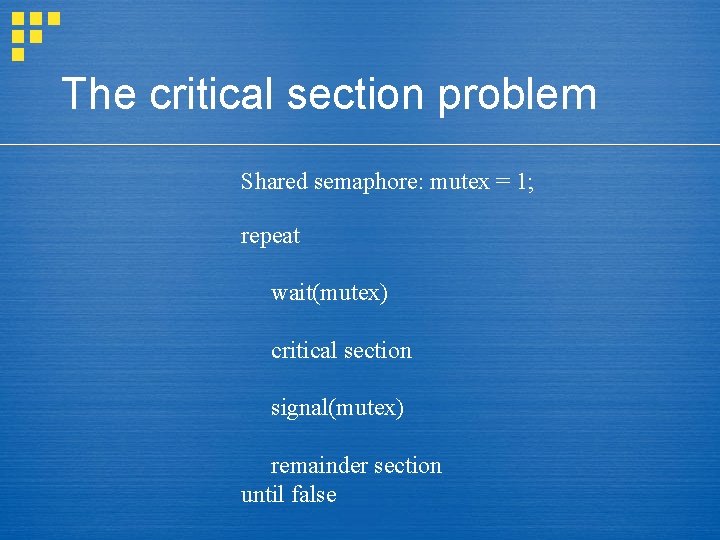

The critical section problem

Shared semaphore: mutex = 1;

    repeat
        wait(mutex)
        critical section
        signal(mutex)
        remainder section
    until false

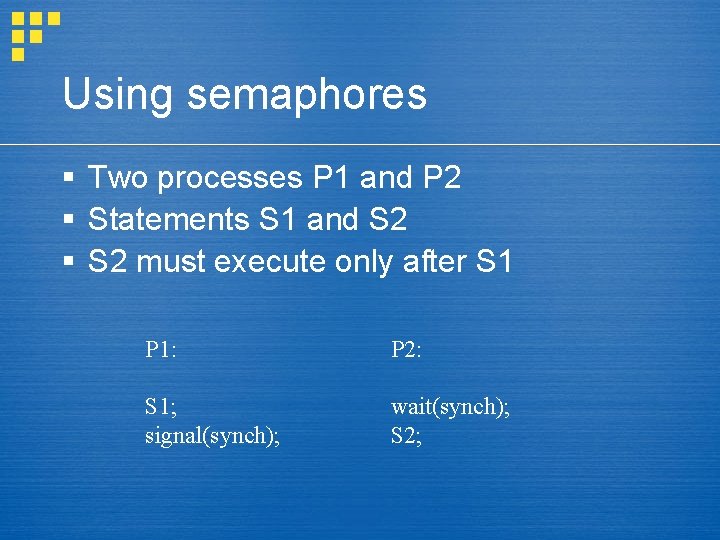

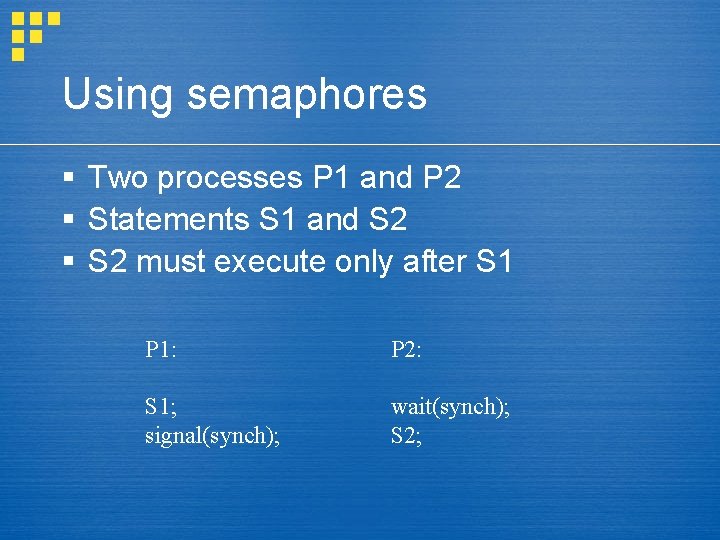

Using semaphores

- Two processes, P1 and P2
- Statements S1 and S2
- S2 must execute only after S1

With a shared semaphore synch initialized to 0:

    P1:                  P2:
    S1;                  wait(synch);
    signal(synch);       S2;
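The same ordering can be sketched with POSIX unnamed semaphores, using threads in place of processes. This assumes a platform where sem_init is supported (Linux, for instance); `p1`, `p2`, `order`, and `run_ordered` are hypothetical names. P2 is started first on purpose, to show that the semaphore and not the start order decides who runs first.

```c
#include <assert.h>
#include <errno.h>
#include <pthread.h>
#include <semaphore.h>

static sem_t synch;          /* initialized to 0: S2 may not run yet */
static int order[2];         /* records which statement ran first */
static int next_slot = 0;

static void *p1(void *unused)
{
    (void)unused;
    order[next_slot++] = 1;  /* S1 */
    sem_post(&synch);        /* signal(synch) */
    return NULL;
}

static void *p2(void *unused)
{
    (void)unused;
    while (sem_wait(&synch) == -1 && errno == EINTR)
        continue;            /* wait(synch), retried if interrupted */
    order[next_slot++] = 2;  /* S2 */
    return NULL;
}

/* Returns 12 when S1 ran before S2. */
static int run_ordered(void)
{
    pthread_t t1, t2;

    int rc = sem_init(&synch, 0, 0);
    assert(rc == 0);
    rc = pthread_create(&t2, NULL, p2, NULL);   /* P2 starts first */
    assert(rc == 0);
    rc = pthread_create(&t1, NULL, p1, NULL);
    assert(rc == 0);
    (void)rc;

    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    sem_destroy(&synch);
    return order[0] * 10 + order[1];
}
```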

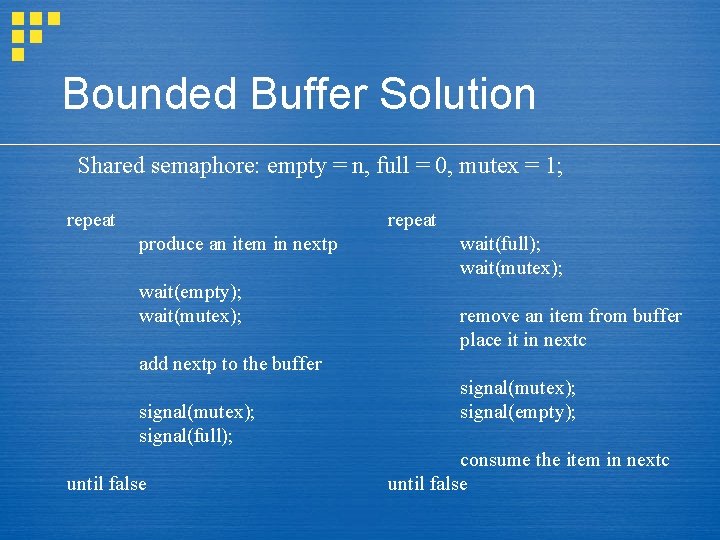

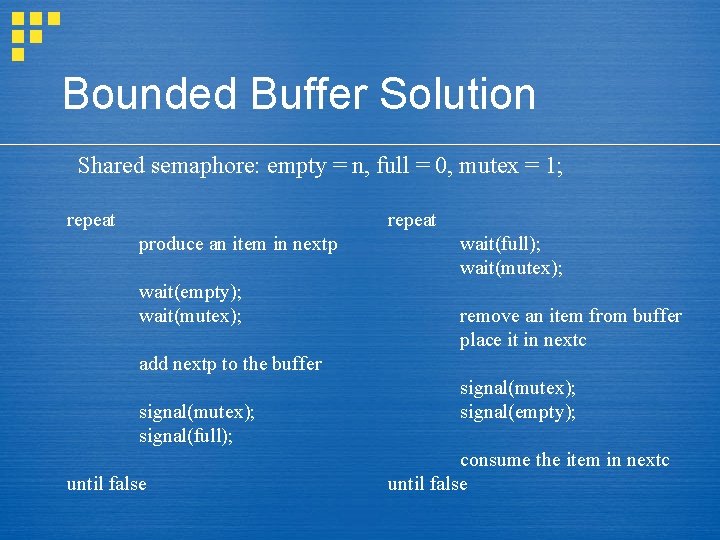

Bounded Buffer Solution

Shared semaphores: empty = n, full = 0, mutex = 1;

Producer:

    repeat
        produce an item in nextp
        wait(empty);
        wait(mutex);
        add nextp to the buffer
        signal(mutex);
        signal(full);
    until false

Consumer:

    repeat
        wait(full);
        wait(mutex);
        remove an item from buffer
        place it in nextc
        signal(mutex);
        signal(empty);
        consume the item in nextc
    until false

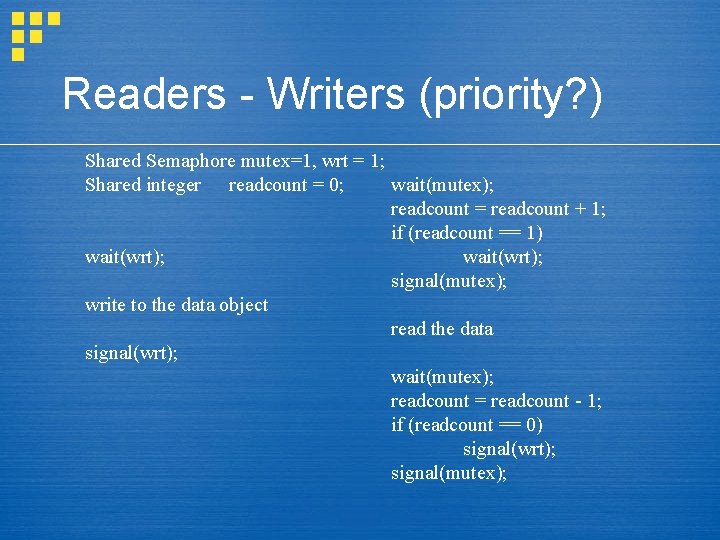

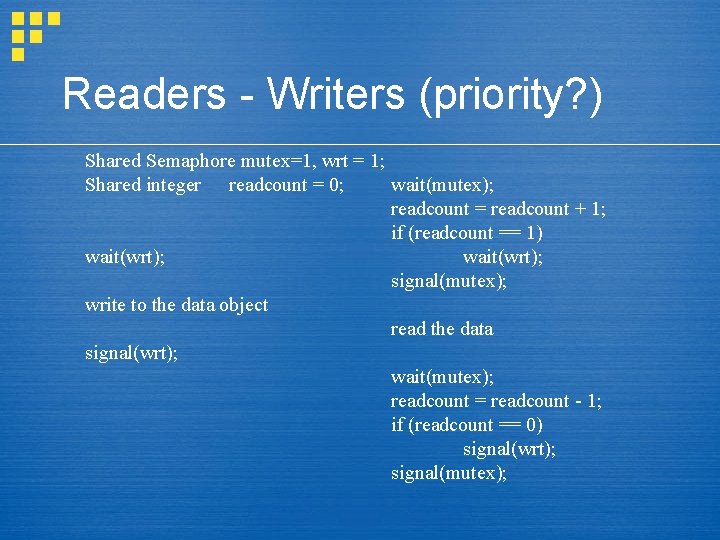

Readers-Writers (priority?)

Shared semaphores: mutex = 1, wrt = 1;
Shared integer: readcount = 0;

Writer:

    wait(wrt);
    write to the data object
    signal(wrt);

Reader:

    wait(mutex);
    readcount = readcount + 1;
    if (readcount == 1) wait(wrt);
    signal(mutex);
    read the data
    wait(mutex);
    readcount = readcount - 1;
    if (readcount == 0) signal(wrt);
    signal(mutex);

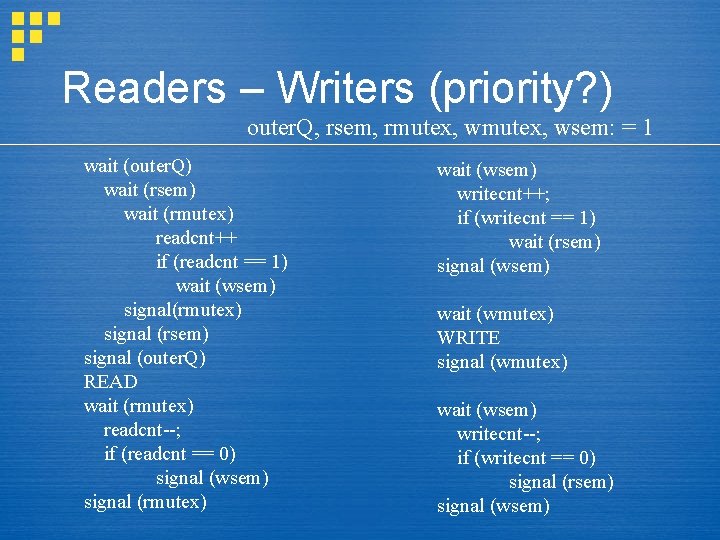

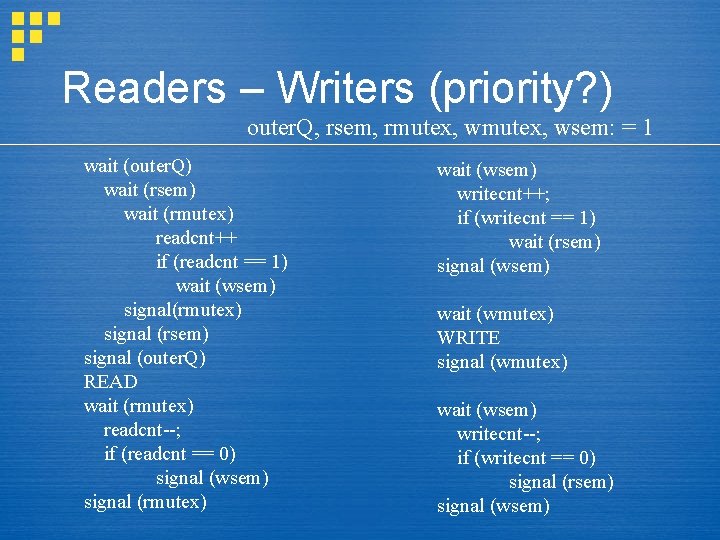

Readers-Writers (priority?)

Shared semaphores: outerQ, rsem, rmutex, wmutex, wsem := 1

Reader:

    wait(outerQ)
        wait(rsem)
            wait(rmutex)
                readcnt++
                if (readcnt == 1) wait(wsem)
            signal(rmutex)
        signal(rsem)
    signal(outerQ)
    READ
    wait(rmutex)
        readcnt--;
        if (readcnt == 0) signal(wsem)
    signal(rmutex)

Writer:

    wait(wmutex)
        writecnt++;
        if (writecnt == 1) wait(rsem)
    signal(wmutex)
    wait(wsem)
    WRITE
    signal(wsem)
    wait(wmutex)
        writecnt--;
        if (writecnt == 0) signal(rsem)
    signal(wmutex)

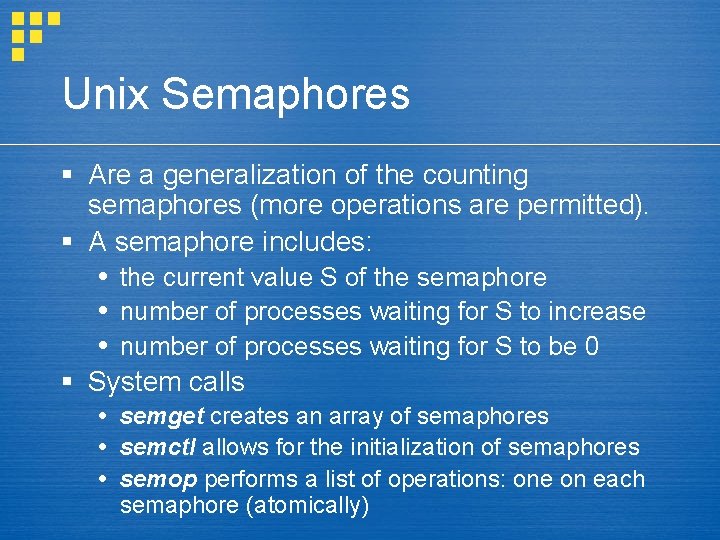

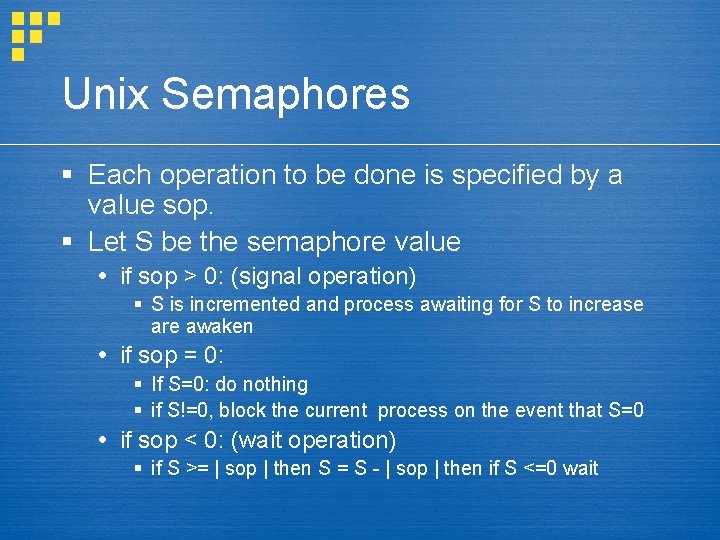

Unix Semaphores

- They are a generalization of counting semaphores (more operations are permitted).
- A semaphore includes:
  - the current value S of the semaphore
  - the number of processes waiting for S to increase
  - the number of processes waiting for S to be 0
- System calls:
  - semget creates an array of semaphores
  - semctl allows for the initialization of semaphores
  - semop performs a list of operations, one on each semaphore (atomically)

Unix Semaphores

- Each operation to be done is specified by a value sop.
- Let S be the semaphore value.
- If sop > 0 (signal operation): S is incremented by sop, and processes waiting for S to increase are awakened.
- If sop = 0:
  - if S = 0, do nothing
  - if S != 0, block the current process until S = 0
- If sop < 0 (wait operation):
  - if S >= |sop|, then S = S - |sop|
  - otherwise, the process waits until S >= |sop|