
Parallel Programming With NVIDIA CUDA Özgür Şahin 200711042

Contents • Introduction: What Is NVIDIA CUDA? • CPU vs. GPU • CUDA SDK • Different Types of CUDA Applications • Programming Models Of GPUs • Motivations For Using CUDA • Programming Model & Important Concepts • My NVIDIA Graphics Card • Summary

What Is NVIDIA CUDA? • CUDA is NVIDIA’s parallel computing architecture that enables dramatic increases in computing performance by harnessing the power of the GPU (graphics processing unit). • CUDA is an acronym for Compute Unified Device Architecture. • Heterogeneous - mixed serial-parallel programming • Scalable - hierarchical thread execution model • Accessible - minimal but expressive changes to C

More About CUDA • CUDA is a platform for performing massively parallel computations on graphics accelerators. • It was first available with NVIDIA's G8x (GeForce 8 series) line of graphics cards (2007). • With CUDA, it becomes practical to tackle tasks such as simulating networks of brain neurons. • CUDA brings supercomputing-class parallel performance to everyday computers.

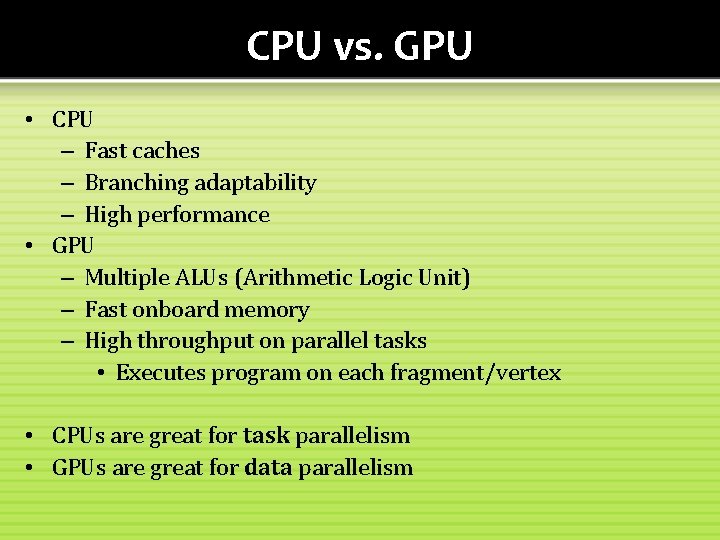

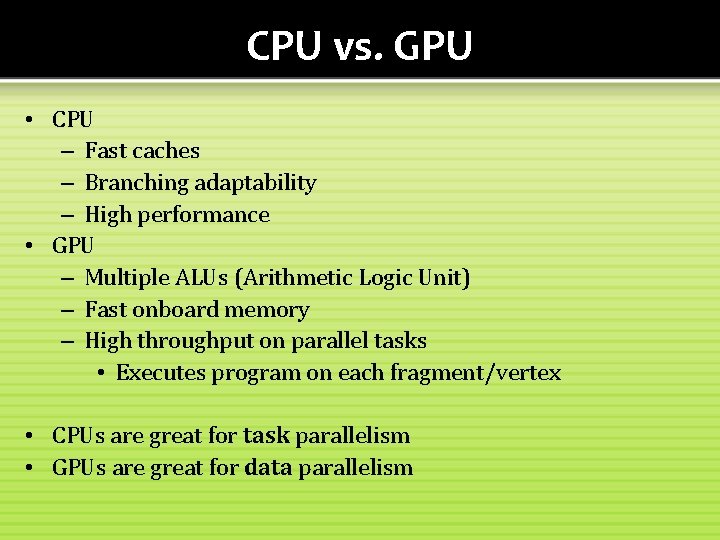

CPU vs. GPU • CPU – Fast caches – Flexible handling of branching – High single-thread performance • GPU – Many ALUs (arithmetic logic units) – Fast onboard memory – High throughput on parallel tasks – Executes the same program on each fragment/vertex • CPUs are well suited to task parallelism • GPUs are well suited to data parallelism
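The task-parallel vs. data-parallel distinction can be made concrete with a small example. The sketch below (not taken from the slides) computes SAXPY, y = a·x + y, once as a serial CPU loop and once as a CUDA kernel in which each GPU thread updates a single element. The function names `saxpy_cpu`, `saxpy_kernel` and `saxpy_gpu` and the block size of 256 threads are illustrative assumptions, and error checking is omitted for brevity.

```cuda
// Illustrative sketch; all function names and the block size are assumptions.
#include <cassert>
#include <cuda_runtime.h>

// Serial CPU version: a single thread walks over every element in turn.
void saxpy_cpu(int n, float a, const float *x, float *y) {
    for (int i = 0; i < n; ++i)
        y[i] = a * x[i] + y[i];
}

// GPU version: the loop disappears; each thread computes exactly one element.
__global__ void saxpy_kernel(int n, float a, const float *x, float *y) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global element index
    if (i < n)                                      // guard the partial last block
        y[i] = a * x[i] + y[i];
}

// Host wrapper: copies data to the GPU, launches one thread per element,
// and copies the result back. Error checking is omitted for brevity.
void saxpy_gpu(int n, float a, const float *x, float *y) {
    assert(n > 0);
    float *d_x = nullptr, *d_y = nullptr;
    size_t bytes = n * sizeof(float);
    cudaMalloc(&d_x, bytes);
    cudaMalloc(&d_y, bytes);
    cudaMemcpy(d_x, x, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_y, y, bytes, cudaMemcpyHostToDevice);

    const int threadsPerBlock = 256;                          // assumed block size
    int blocks = (n + threadsPerBlock - 1) / threadsPerBlock; // round up
    saxpy_kernel<<<blocks, threadsPerBlock>>>(n, a, d_x, d_y);

    cudaMemcpy(y, d_y, bytes, cudaMemcpyDeviceToHost);  // waits for the kernel
    cudaFree(d_x);
    cudaFree(d_y);
}
```

The point to notice is that the GPU version has no loop: the loop index becomes the thread index, and the `if (i < n)` guard handles the last block when n is not a multiple of the block size.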

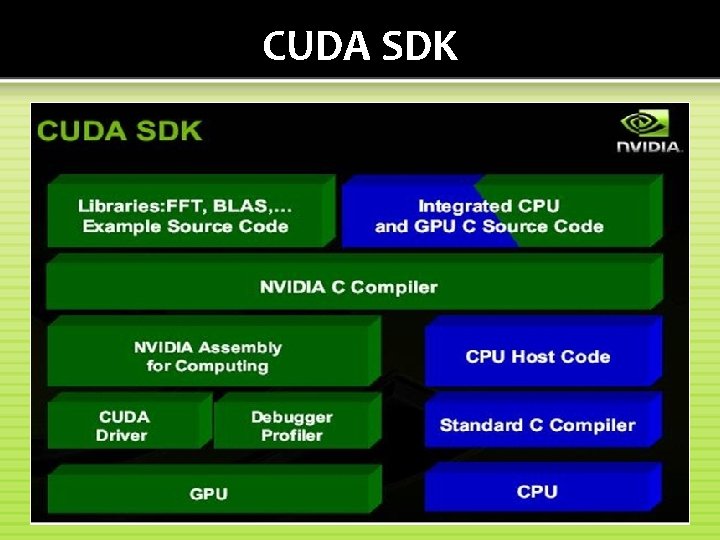

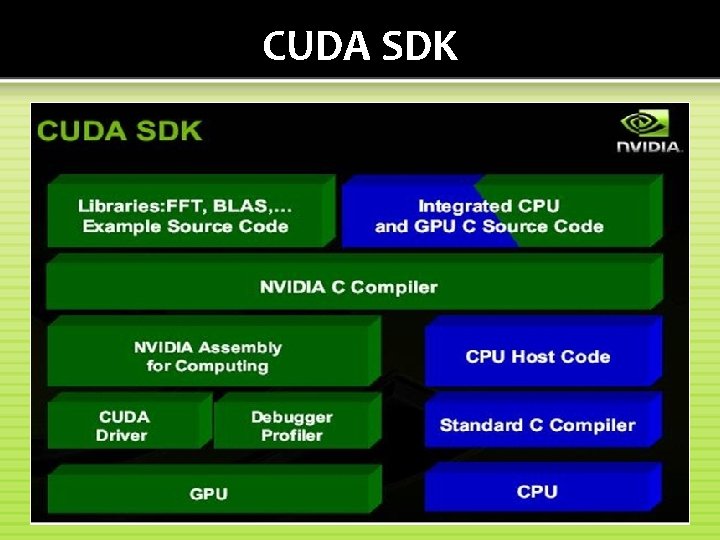

CUDA SDK

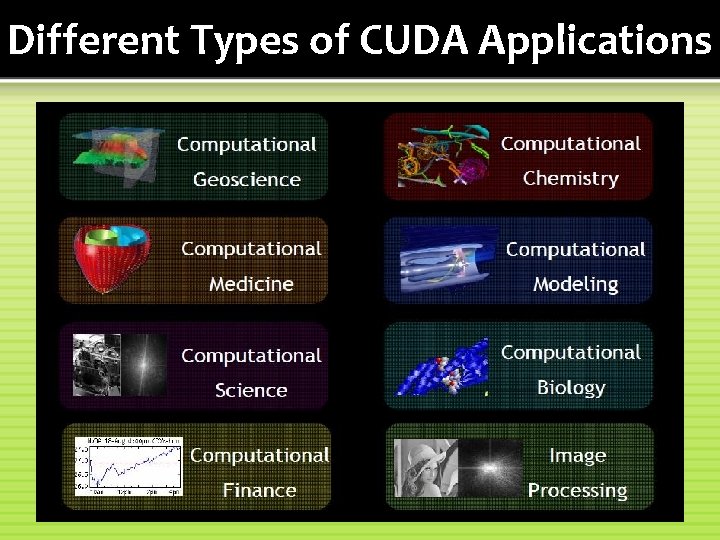

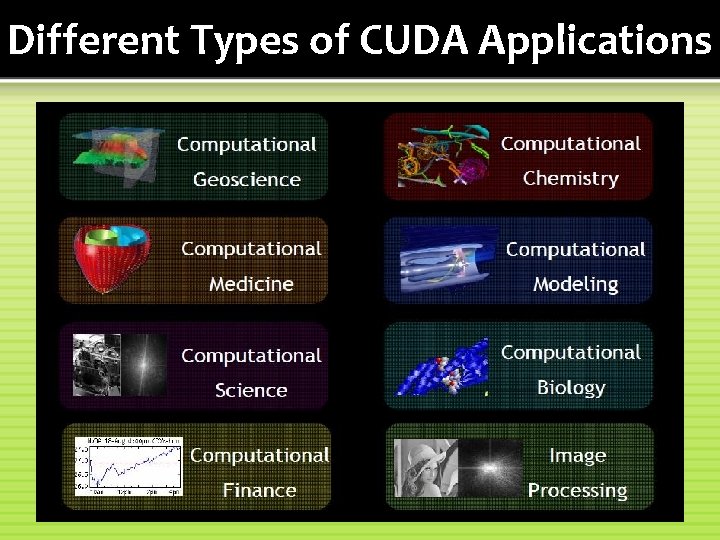

Different Types of CUDA Applications • The use of graphics processing units (GPUs) for rendering is well known, but their power for general parallel computation has only recently been explored. Parallel algorithms running on GPUs have been reported to achieve speedups of up to 100× over comparable CPU algorithms, in applications such as physics simulations, signal processing, financial modeling, neural networks, and many other fields.


Programming Models Of GPUs • GPUs can be programmed under three models: 1) GPU Programming Model 2) GPGPU Programming Model 3) CUDA Programming Model

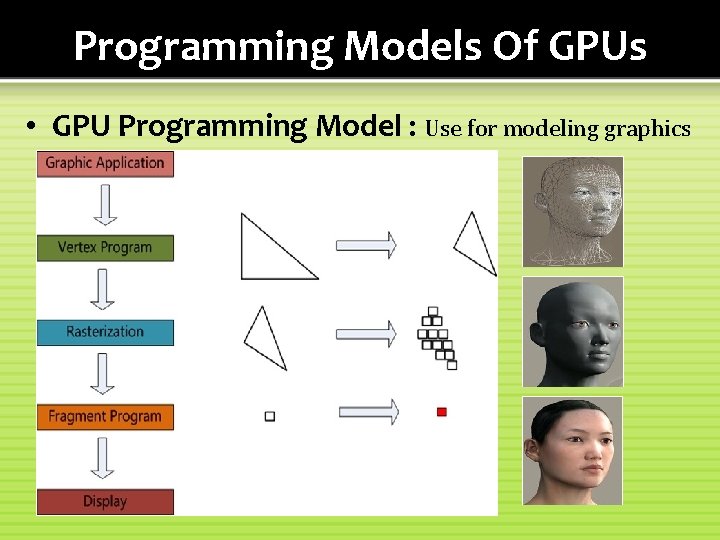

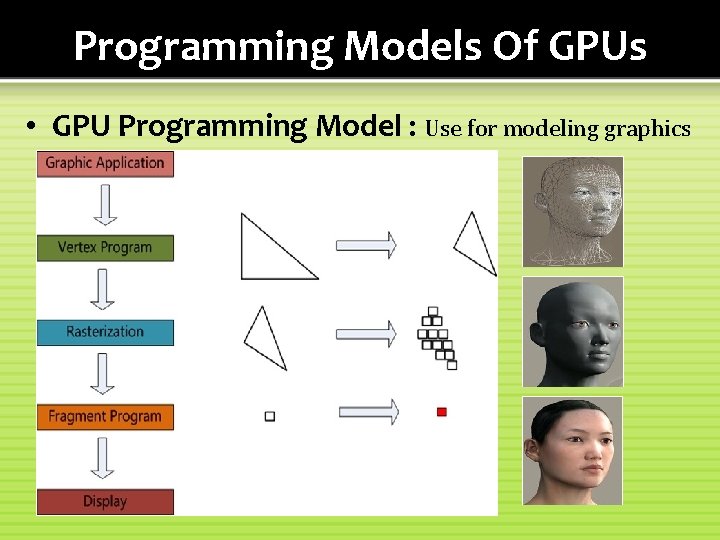

Programming Models Of GPUs • GPU Programming Model: used for rendering graphics

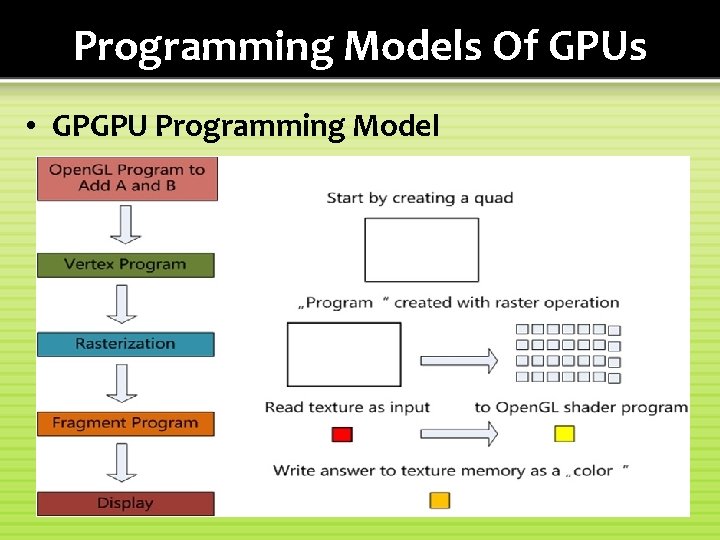

Programming Models Of GPUs • GPGPU Programming Model: General-purpose computing on GPUs (GPGPU, also referred to as GPGP and less often GP²) is the technique of using a GPU, which typically handles computation only for computer graphics, to perform computation in applications traditionally handled by the CPU. • In short, GPGPU tricks the GPU into general-purpose computing by casting the problem as graphics: • Turn data into images ("texture maps") • Turn algorithms into image synthesis ("rendering passes") • Drawbacks: • Steep learning curve • Potentially high overhead of the graphics API • Highly constrained memory layout and access model • The need for many passes drives up bandwidth consumption

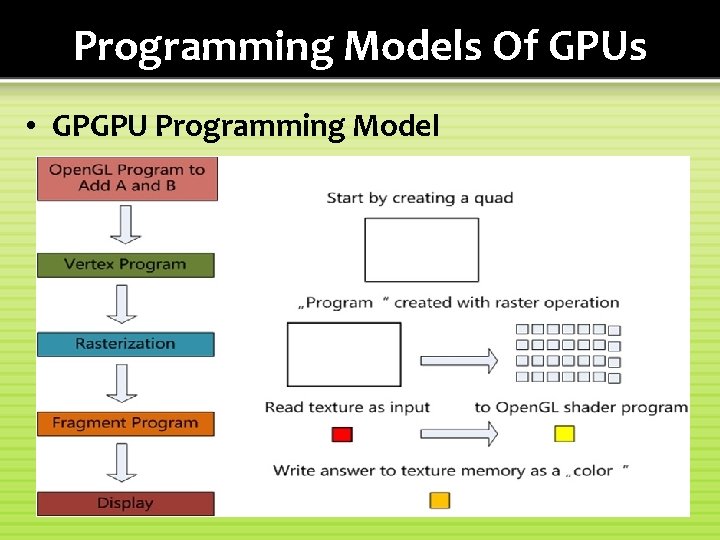

Programming Models Of GPUs • GPGPU Programming Model

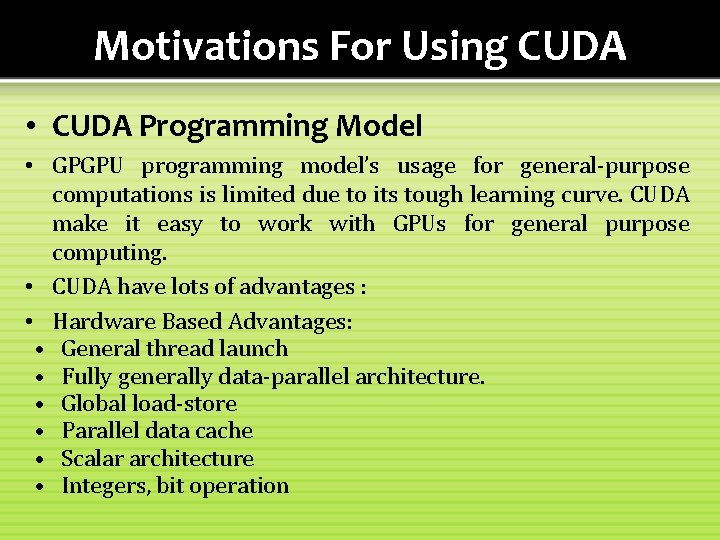

Motivations For Using CUDA • CUDA Programming Model • The GPGPU programming model's usefulness for general-purpose computation is limited by its steep learning curve. CUDA makes it easier to use GPUs for general-purpose computing. • CUDA has many advantages: • Hardware-based advantages: • General thread launch • Fully general data-parallel architecture • Global load/store • Parallel data cache • Scalar architecture • Integer and bitwise operations

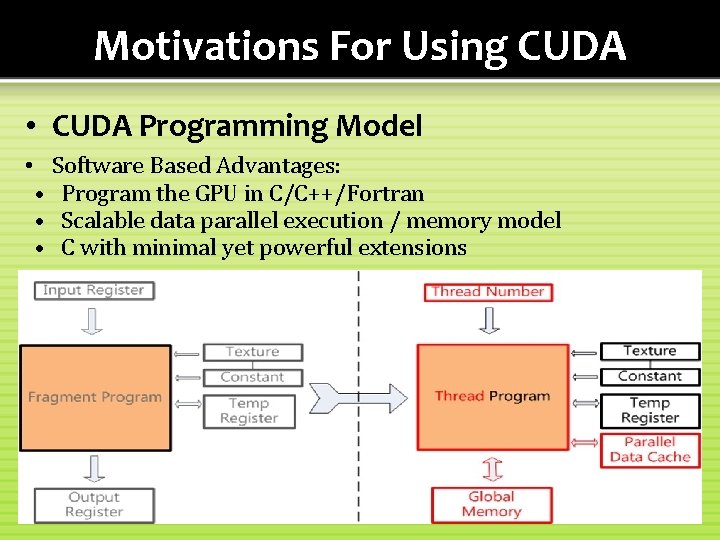

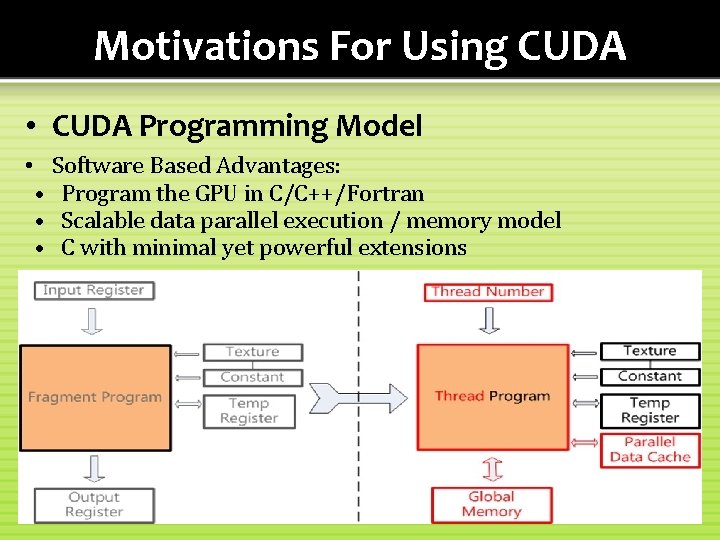

Motivations For Using CUDA • CUDA Programming Model • Software Based Advantages: • Program the GPU in C/C++/Fortran • Scalable data parallel execution / memory model • C with minimal yet powerful extensions
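As a small illustration of "C with minimal extensions", here is a sketch (all names hypothetical, not from the slides) in which an ordinary C function becomes callable from both CPU and GPU code by adding the `__host__ __device__` qualifiers, while `__global__` marks the kernel entry point and `<<<blocks, threads>>>` launches it from the host.

```cuda
// Illustrative sketch; clamp_to_byte, clamp_kernel and clamp_on_gpu are hypothetical names.
#include <cassert>
#include <cuda_runtime.h>

// Plain C arithmetic; the qualifiers ask nvcc to compile it for both CPU and GPU.
__host__ __device__ int clamp_to_byte(int v) {
    return v < 0 ? 0 : (v > 255 ? 255 : v);
}

// __global__ marks a kernel: it runs on the device and is launched from the host.
__global__ void clamp_kernel(int *data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n)
        data[i] = clamp_to_byte(data[i]);  // the same function, called on the GPU
}

// Host wrapper: clamps an array in place on the GPU (error checks omitted).
void clamp_on_gpu(int *host, int n) {
    assert(n > 0);
    int *dev = nullptr;
    cudaMalloc(&dev, n * sizeof(int));
    cudaMemcpy(dev, host, n * sizeof(int), cudaMemcpyHostToDevice);
    clamp_kernel<<<(n + 127) / 128, 128>>>(dev, n);  // the launch-syntax extension
    cudaMemcpy(host, dev, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(dev);
}
```

Apart from the three qualifiers and the launch syntax, everything here is ordinary C, which is the sense in which the extensions are "minimal".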

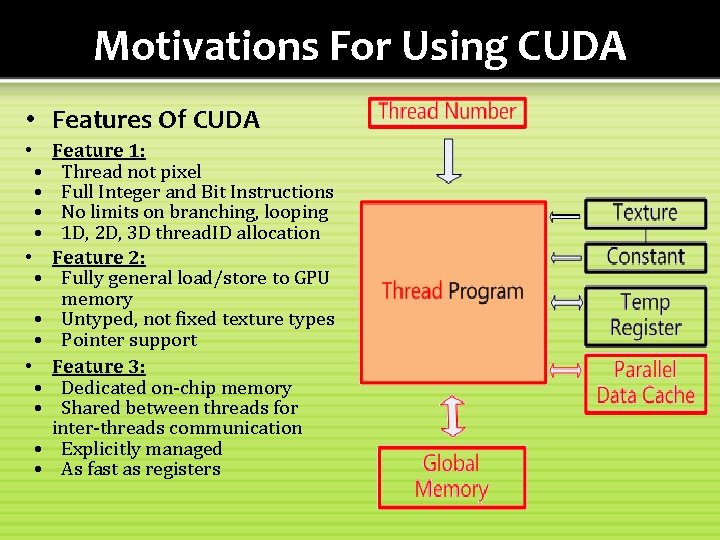

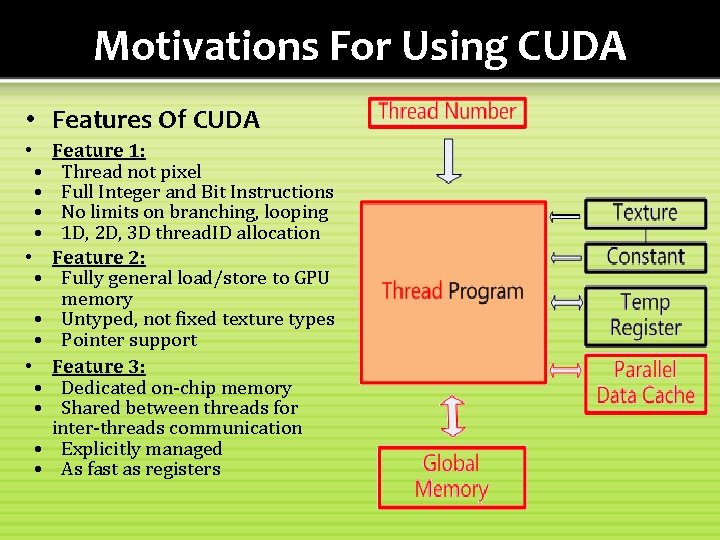

Motivations For Using CUDA • Features Of CUDA • Feature 1: • Threads, not pixels • Full integer and bit instructions • No limits on branching and looping • 1D, 2D, and 3D thread ID allocation • Feature 2: • Fully general load/store to GPU memory • Untyped memory, not fixed texture types • Pointer support • Feature 3: • Dedicated on-chip memory • Shared between threads for inter-thread communication • Explicitly managed • As fast as registers
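The "1D, 2D, 3D thread ID allocation" and pointer-support features can be illustrated with a two-dimensional launch. In this sketch (the function names and the 16 × 16 block shape are assumptions, not from the slides), each thread derives a (row, column) pair from `blockIdx`, `blockDim` and `threadIdx` in the x and y dimensions and adds one element of two matrices stored as ordinary arrays in global memory.

```cuda
// Illustrative sketch; add_matrices, add_matrices_gpu and the 16x16 block are assumptions.
#include <cassert>
#include <cuda_runtime.h>

// Each thread handles one (row, col) element of a rows x cols matrix stored
// in row-major order, using thread/block IDs in two dimensions.
__global__ void add_matrices(const float *a, const float *b, float *c,
                             int rows, int cols) {
    int col = blockIdx.x * blockDim.x + threadIdx.x;  // x dimension -> column
    int row = blockIdx.y * blockDim.y + threadIdx.y;  // y dimension -> row
    if (row < rows && col < cols) {
        int idx = row * cols + col;  // plain pointer arithmetic into global memory
        c[idx] = a[idx] + b[idx];
    }
}

// Host wrapper (error checks omitted for brevity).
void add_matrices_gpu(const float *a, const float *b, float *c, int rows, int cols) {
    assert(rows > 0 && cols > 0);
    size_t bytes = (size_t)rows * cols * sizeof(float);
    float *d_a, *d_b, *d_c;
    cudaMalloc(&d_a, bytes);
    cudaMalloc(&d_b, bytes);
    cudaMalloc(&d_c, bytes);
    cudaMemcpy(d_a, a, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_b, b, bytes, cudaMemcpyHostToDevice);

    dim3 block(16, 16);                         // 256 threads per block (assumed)
    dim3 grid((cols + block.x - 1) / block.x,   // enough blocks to cover
              (rows + block.y - 1) / block.y);  // every column and every row
    add_matrices<<<grid, block>>>(d_a, d_b, d_c, rows, cols);

    cudaMemcpy(c, d_c, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_a);
    cudaFree(d_b);
    cudaFree(d_c);
}
```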

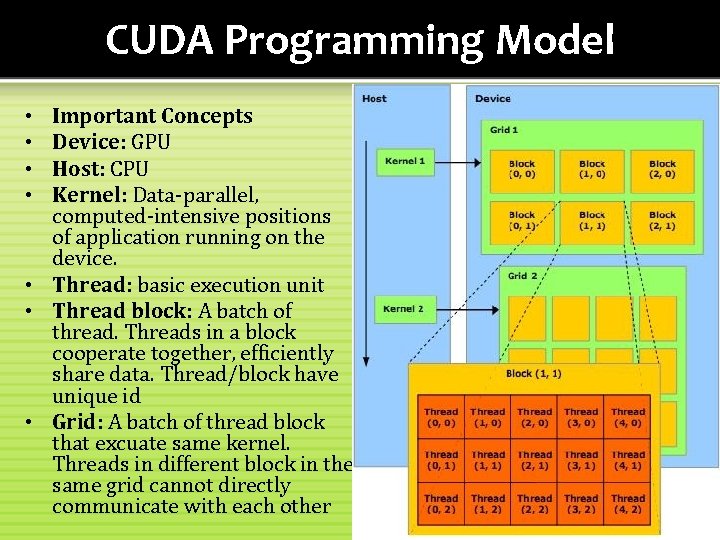

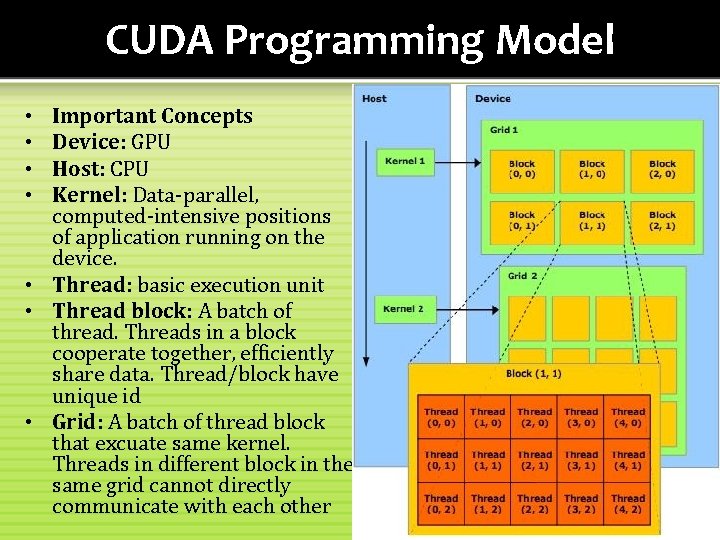

CUDA Programming Model • Important Concepts • Device: the GPU • Host: the CPU • Kernel: a data-parallel, compute-intensive portion of the application that runs on the device • Thread: the basic unit of execution • Thread block: a batch of threads that cooperate and share data efficiently; each thread within a block, and each block within a grid, has a unique ID • Grid: a batch of thread blocks that execute the same kernel; threads in different blocks of the same grid cannot directly communicate with each other
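The relationship between threads, blocks and grids can be observed directly. In the following sketch (names are hypothetical, not from the slides), every thread of a one-dimensional grid writes its global ID, computed as `blockIdx.x * blockDim.x + threadIdx.x`, and the ID of its block into two arrays that are then copied back to the host.

```cuda
// Illustrative sketch; record_ids and launch_record_ids are hypothetical names.
#include <cassert>
#include <cuda_runtime.h>

// blockIdx: which block of the grid; threadIdx: position inside the block;
// blockDim: number of threads per block.
__global__ void record_ids(int *global_id, int *block_id) {
    int gid = blockIdx.x * blockDim.x + threadIdx.x;  // unique across the grid
    global_id[gid] = gid;
    block_id[gid] = blockIdx.x;
}

// Launches a 1-D grid of `blocks` blocks with `threads` threads each
// and copies both ID arrays back to the host (error checks omitted).
void launch_record_ids(int blocks, int threads, int *global_id, int *block_id) {
    assert(blocks > 0 && threads > 0);
    int n = blocks * threads;
    int *d_gid, *d_bid;
    cudaMalloc(&d_gid, n * sizeof(int));
    cudaMalloc(&d_bid, n * sizeof(int));
    record_ids<<<blocks, threads>>>(d_gid, d_bid);  // grid of blocks x threads
    cudaMemcpy(global_id, d_gid, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaMemcpy(block_id, d_bid, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d_gid);
    cudaFree(d_bid);
}
```

For example, with 3 blocks of 4 threads, element 5 of the result holds global ID 5 and block ID 1, because it was written by thread 1 of block 1.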

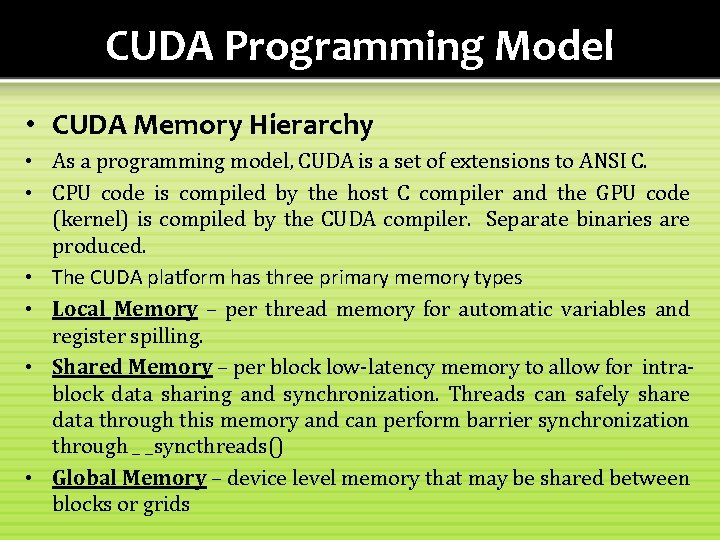

CUDA Programming Model • CUDA Memory Hierarchy • As a programming model, CUDA is a set of extensions to ANSI C. • CPU (host) code is compiled by the host C compiler and GPU code (kernels) by NVIDIA's CUDA compiler; the compiled device code is embedded in the host executable. • The CUDA platform has three primary memory types: • Local memory – per-thread memory for automatic variables that do not fit in registers (register spilling). • Shared memory – per-block, low-latency memory for intra-block data sharing and synchronization. Threads in a block can safely share data through this memory and can perform barrier synchronization with __syncthreads(). • Global memory – device-level memory that can be shared between blocks and between grids.
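Shared memory and `__syncthreads()` are typically used together, as in the classic block-level sum reduction sketched below. This is an illustrative example, not from the slides; `BLOCK`, `block_sum` and `sum_on_gpu` are assumed names. Each block loads its slice of global memory into a `__shared__` array, waits at a barrier, and then halves the number of active threads at each step until one partial sum remains.

```cuda
// Illustrative sketch; BLOCK, block_sum and sum_on_gpu are assumed names.
#include <cassert>
#include <cuda_runtime.h>

#define BLOCK 256  // threads per block; must be a power of two for this reduction

// Each block sums BLOCK elements of `in` into one partial sum in `out`.
__global__ void block_sum(const int *in, int *out, int n) {
    __shared__ int cache[BLOCK];       // shared memory: one copy per block
    int tid = threadIdx.x;
    int i = blockIdx.x * BLOCK + tid;
    cache[tid] = (i < n) ? in[i] : 0;  // global -> shared, padding with zeros
    __syncthreads();                   // all loads finish before anyone reads

    // Tree reduction: halve the number of active threads at each step.
    for (int stride = BLOCK / 2; stride > 0; stride /= 2) {
        if (tid < stride)
            cache[tid] += cache[tid + stride];
        __syncthreads();               // barrier reached by every thread in the block
    }
    if (tid == 0)
        out[blockIdx.x] = cache[0];    // one result per block, written to global memory
}

// Host side: per-block partial sums are copied back and finished on the CPU.
long long sum_on_gpu(const int *host, int n) {
    assert(n > 0);
    int blocks = (n + BLOCK - 1) / BLOCK;
    int *d_in, *d_out;
    cudaMalloc(&d_in, n * sizeof(int));
    cudaMalloc(&d_out, blocks * sizeof(int));
    cudaMemcpy(d_in, host, n * sizeof(int), cudaMemcpyHostToDevice);
    block_sum<<<blocks, BLOCK>>>(d_in, d_out, n);

    int *partial = new int[blocks];
    cudaMemcpy(partial, d_out, blocks * sizeof(int), cudaMemcpyDeviceToHost);
    long long total = 0;
    for (int b = 0; b < blocks; ++b)
        total += partial[b];
    delete[] partial;
    cudaFree(d_in);
    cudaFree(d_out);
    return total;
}
```

Because `__syncthreads()` only synchronizes threads within one block, the kernel produces one partial sum per block, and this sketch adds the partial sums on the CPU.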

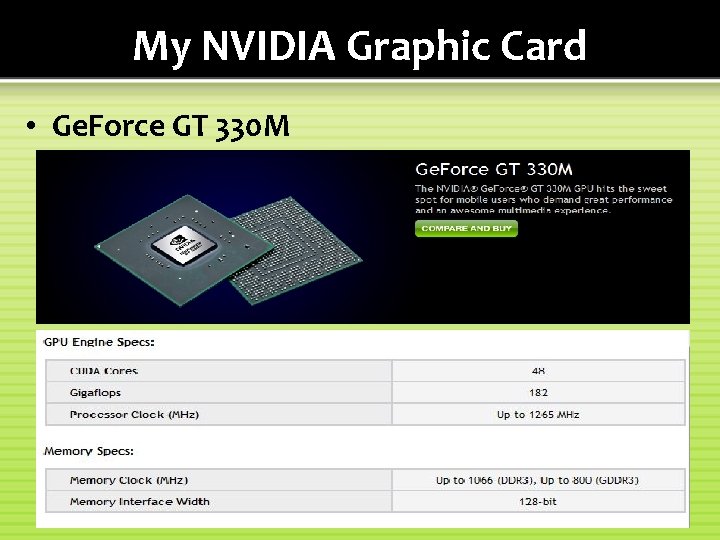

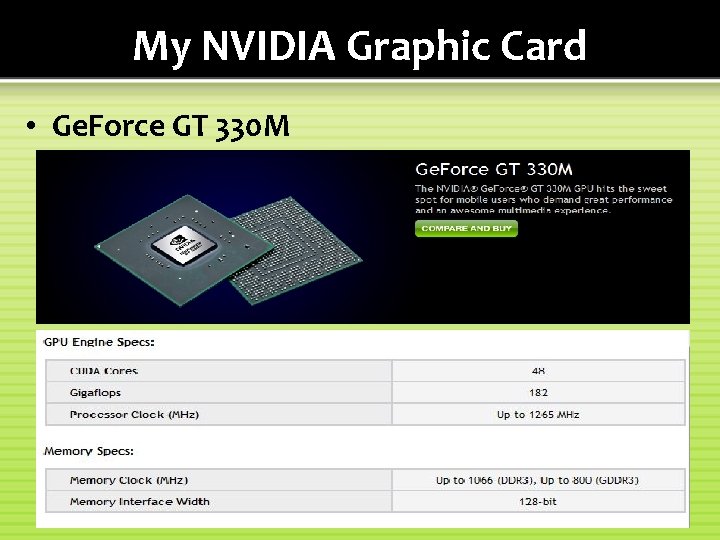

My NVIDIA Graphics Card • GeForce GT 330M
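The properties of the installed card can also be queried from code through the CUDA runtime, similar to what the deviceQuery sample in the CUDA SDK reports. The sketch below uses `cudaGetDeviceCount` and `cudaGetDeviceProperties`; the function name `print_devices` and the particular fields printed are an illustrative choice, not taken from the slides.

```cuda
// Illustrative sketch; print_devices is a hypothetical helper name.
#include <cassert>
#include <cstdio>
#include <cuda_runtime.h>

// Prints a few properties of every CUDA device and returns how many were found
// (0 if no CUDA driver or CUDA-capable GPU is available).
int print_devices(void) {
    int count = 0;
    if (cudaGetDeviceCount(&count) != cudaSuccess)
        return 0;
    for (int d = 0; d < count; ++d) {
        cudaDeviceProp prop;
        cudaGetDeviceProperties(&prop, d);
        printf("Device %d: %s\n", d, prop.name);
        printf("  Compute capability: %d.%d\n", prop.major, prop.minor);
        printf("  Multiprocessors:    %d\n", prop.multiProcessorCount);
        printf("  Global memory:      %zu MB\n", prop.totalGlobalMem / (1024 * 1024));
        printf("  Shared mem / block: %zu KB\n", prop.sharedMemPerBlock / 1024);
        printf("  Max threads/block:  %d\n", prop.maxThreadsPerBlock);
    }
    return count;
}
```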

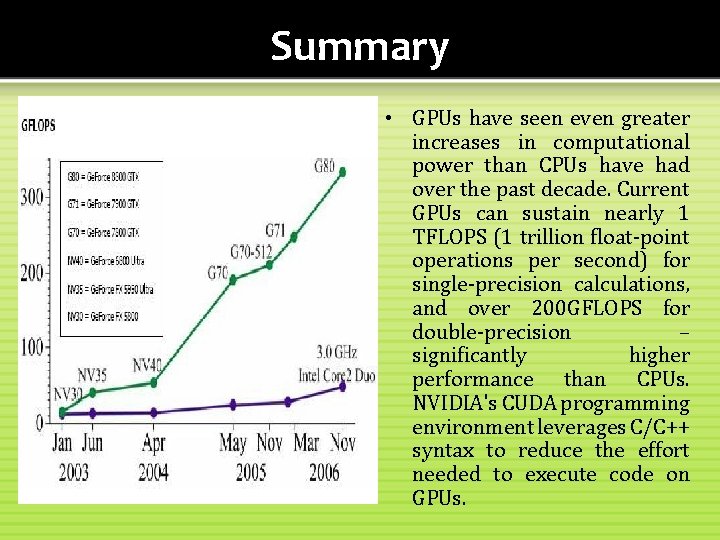

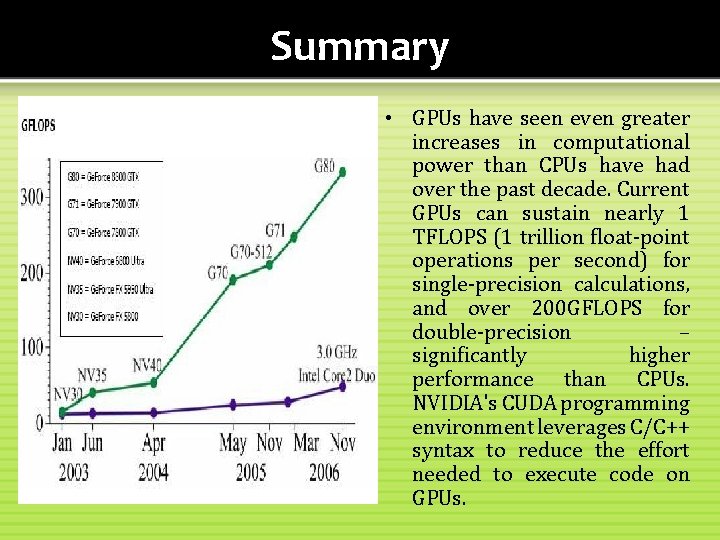

Summary • GPUs have seen even greater increases in computational power than CPUs over the past decade. Current high-end GPUs reach a peak of nearly 1 TFLOPS (1 trillion floating-point operations per second) in single precision and over 200 GFLOPS in double precision, significantly more than contemporary CPUs. NVIDIA's CUDA programming environment builds on C/C++ syntax to reduce the effort needed to run code on GPUs.
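Figures such as 1 TFLOPS are usually quoted as theoretical peak rates. As a rough sketch, a GPU's peak floating-point rate is estimated as the number of cores times the clock frequency times the floating-point operations each core completes per cycle. The numbers below are illustrative assumptions, not the specifications of any card discussed in these slides: 480 cores at 1.4 GHz, each issuing one fused multiply-add (counted as 2 FLOPs) per cycle.

```latex
\text{Peak FLOPS} = N_{\text{cores}} \times f_{\text{clock}} \times F_{\text{cycle}}

480 \times (1.4 \times 10^{9}\,\text{s}^{-1}) \times 2
  = 1.344 \times 10^{12}\ \text{FLOPS} \approx 1.34\ \text{TFLOPS}
```

Sustained throughput in real applications is typically well below this peak, because it is limited by memory bandwidth and by how fully the ALUs are kept busy.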

Summary • CUDA offers • massively parallel computing • at a relatively low price • a highly integrated solution • personal supercomputing • eco-friendly production • an easy-to-learn programming model

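Summary • However, CUDA has some limitations: • lower floating-point precision on some GPUs (early models have limited or no double-precision support) • no recursive function calls on pre-Fermi GPUs (compute capability 1.x) • hard to use for irregular fork/join logic • no concurrency between kernels on pre-Fermi GPUs • Debugging GPU code can be very tedious: kernels cannot report errors directly, so errors must be checked explicitly through the return codes of the runtime API.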
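Although kernels cannot return error codes directly, the CUDA runtime API does report failures through `cudaError_t` return values, and `cudaGetLastError()` returns errors from kernel launches. A widely used idiom is to wrap every runtime call in a checking macro. The `CUDA_CHECK` macro below is a community convention, not part of the CUDA API, and the `fill` kernel and `checked_fill` wrapper are hypothetical examples.

```cuda
// Illustrative sketch; CUDA_CHECK, fill and checked_fill are hypothetical names.
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Wraps a CUDA runtime call and reports the error string plus file/line on failure.
#define CUDA_CHECK(call)                                            \
    do {                                                            \
        cudaError_t err_ = (call);                                  \
        if (err_ != cudaSuccess) {                                  \
            fprintf(stderr, "CUDA error %s at %s:%d\n",             \
                    cudaGetErrorString(err_), __FILE__, __LINE__);  \
            exit(EXIT_FAILURE);                                     \
        }                                                           \
    } while (0)

__global__ void fill(int *out, int value, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) out[i] = value;
}

// Fills `host` with `value` on the GPU, checking every step.
void checked_fill(int *host, int value, int n) {
    assert(n > 0);
    int *dev = nullptr;
    CUDA_CHECK(cudaMalloc(&dev, n * sizeof(int)));
    fill<<<(n + 255) / 256, 256>>>(dev, value, n);
    CUDA_CHECK(cudaGetLastError());       // catches launch-configuration errors
    CUDA_CHECK(cudaDeviceSynchronize());  // catches errors raised while the kernel runs
    CUDA_CHECK(cudaMemcpy(host, dev, n * sizeof(int), cudaMemcpyDeviceToHost));
    CUDA_CHECK(cudaFree(dev));
}
```

Checking both right after the launch and after synchronization matters because kernel launches are asynchronous: an invalid launch configuration is reported immediately, while faults during execution only surface at the next synchronizing call.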

Thank You For Listening • Questions?