Parallel Programming Techniques and Applications Using Networked Workstations


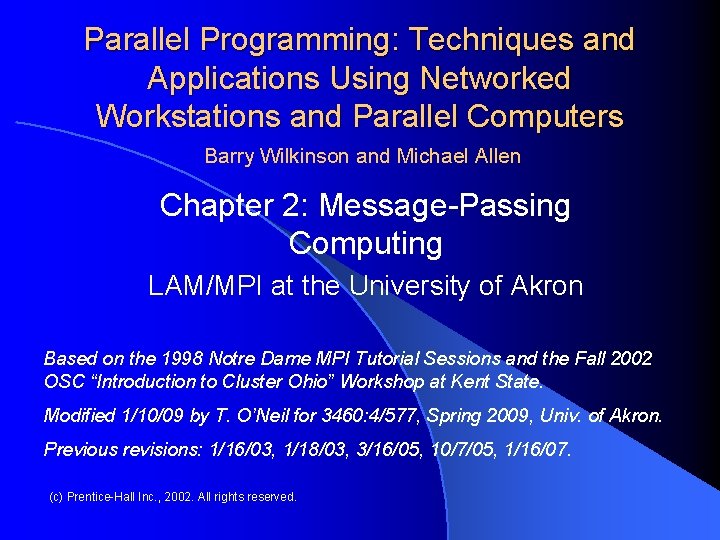

Parallel Programming: Techniques and Applications Using Networked Workstations and Parallel Computers

Barry Wilkinson and Michael Allen

Chapter 2: Message-Passing Computing

LAM/MPI at the University of Akron

Based on the 1998 Notre Dame MPI Tutorial Sessions and the Fall 2002 OSC “Introduction to Cluster Ohio” Workshop at Kent State. Modified 1/10/09 by T. O’Neil for 3460:4/577, Spring 2009, Univ. of Akron. Previous revisions: 1/16/03, 1/18/03, 3/16/05, 10/7/05, 1/16/07.

(c) Prentice-Hall Inc., 2002. All rights reserved.

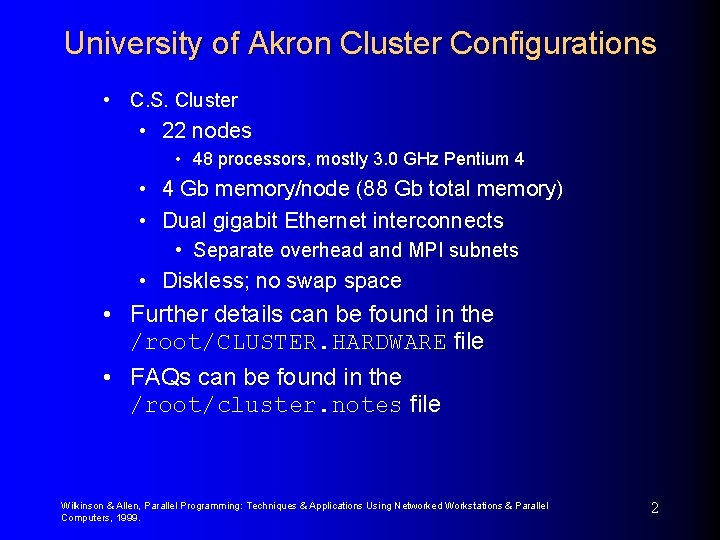

University of Akron Cluster Configurations

- C.S. Cluster
  - 22 nodes
  - 48 processors, mostly 3.0 GHz Pentium 4
  - 4 GB memory/node (88 GB total memory)
  - Dual gigabit Ethernet interconnects
  - Separate overhead and MPI subnets
  - Diskless; no swap space
- Further details can be found in the `/root/CLUSTER.HARDWARE` file
- FAQs can be found in the `/root/cluster.notes` file

Wilkinson & Allen, Parallel Programming: Techniques & Applications Using Networked Workstations & Parallel Computers, 1999.

Accessing the Clusters

- The only way in is to log in remotely to the front-end node of the cluster: `ssh your_id@cluster.cs.uakron.edu`
- `ssh` sends your commands over an encrypted stream, so your passwords and other data can’t be sniffed on the network
- Batch nodes are not connected to the external network

Setting Up LAM/MPI on the Cluster

- The first time you access the cluster, establish your identity before attempting to use other nodes.
  - Enter the command `ssh-keygen` and press Return until you are no longer prompted
  - Then issue the commands
    - `cat ~/.ssh/*.pub >> ~/.ssh/authorized_keys`
    - `cat /root/.ssh/known_hosts >> ~/.ssh/known_hosts`
- Now issue the command `cp /root/lamboot.full .` from your home directory to copy the full boot file there.
- Assuming you don’t need all nodes for your programs, edit this file for your job size and save it as `hostfile`.

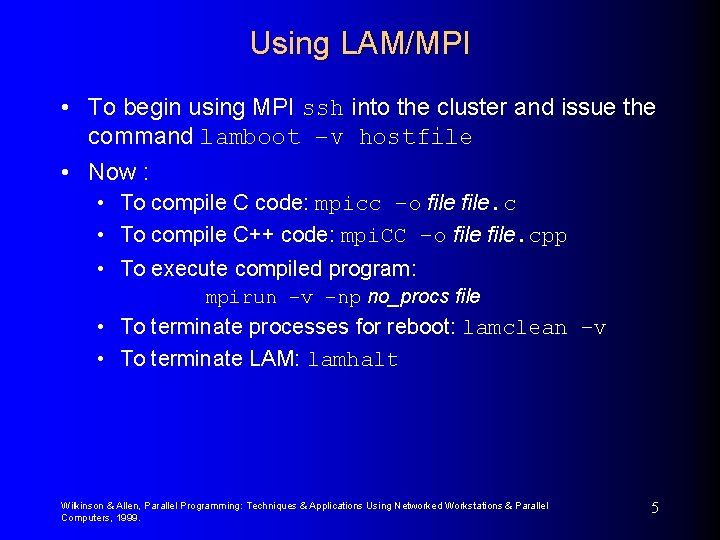

Using LAM/MPI

- To begin using MPI, `ssh` into the cluster and issue the command `lamboot -v hostfile`
- Now:
  - To compile C code: `mpicc -o file file.c`
  - To compile C++ code: `mpiCC -o file file.cpp`
  - To execute the compiled program: `mpirun -v -np no_procs file`
  - To terminate processes for reboot: `lamclean -v`
  - To terminate LAM: `lamhalt`

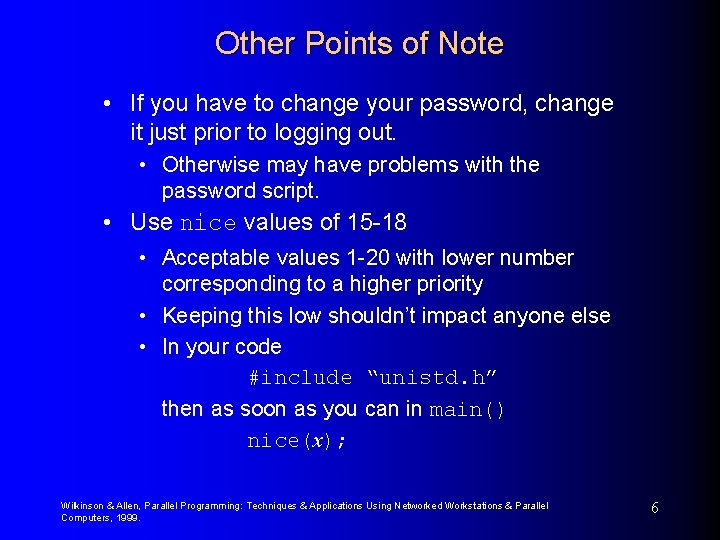

Other Points of Note

- If you have to change your password, change it just prior to logging out.
  - Otherwise you may have problems with the password script.
- Use nice values of 15-18
  - Acceptable values are 1-20, with a lower number corresponding to a higher priority
  - Keeping your priority this low means your jobs shouldn’t impact anyone else
  - In your code, `#include <unistd.h>`, then call `nice(x);` as soon as you can in `main()`