Parallel Programming and Heterogeneous Computing: Opportunities and Challenges


Parallel Programming and Heterogeneous Computing: Opportunities and Challenges of Parallel Programming

Agenda
1. Introduction
2. Exploiting Parallelism
3. Shared Memory
4. Distributed Memory
5. Issues
6. Heterogeneous Hardware

Copyright© 2015, Intel Corporation. All rights reserved. *Other names and brands may be claimed as the property of others.

Agenda: 1. Introduction

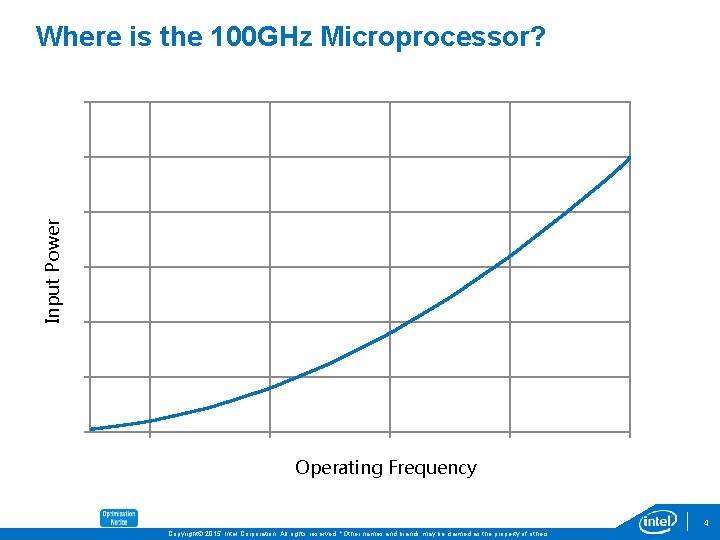

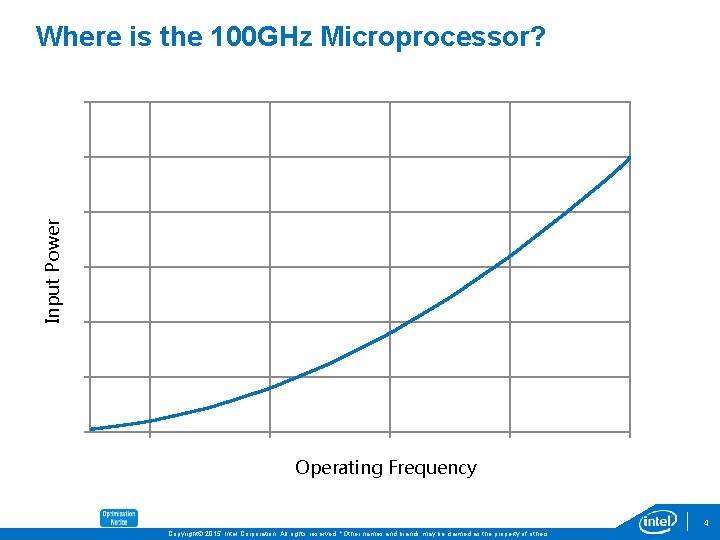

Where is the 100 GHz Microprocessor?

[Figure: input power vs. operating frequency]

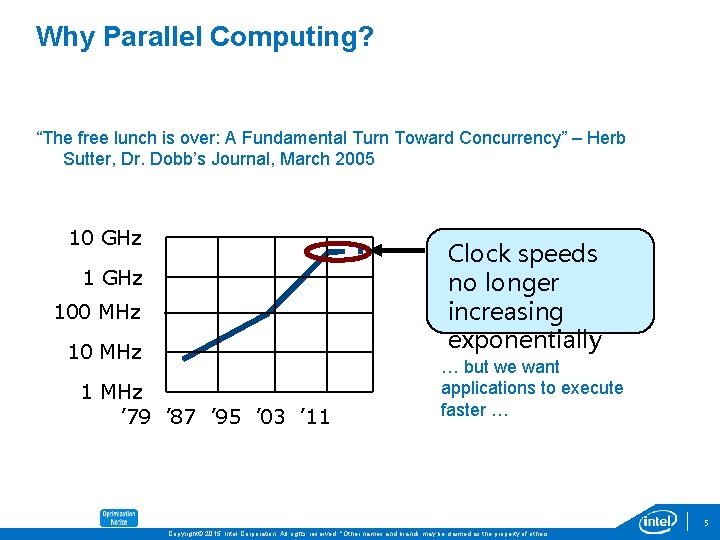

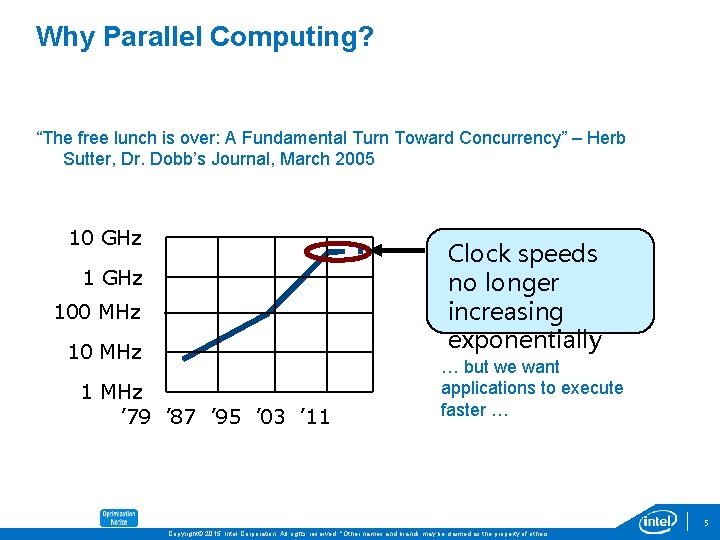

Why Parallel Computing?

“The free lunch is over: A Fundamental Turn Toward Concurrency” (Herb Sutter, Dr. Dobb’s Journal, March 2005)

Clock speeds are no longer increasing exponentially [Figure: clock speed on a log scale from 1 MHz to 10 GHz, 1979 to 2011], but we want applications to execute faster.

How can we accomplish this? Through parallel computing: attempt to speed up the solution of a particular task by
- Dividing the task into sub-tasks
- Executing the sub-tasks simultaneously on multiple processors

Successful attempts therefore require both
- An understanding of where parallelism can be effective
- Knowledge of how to design and implement good solutions

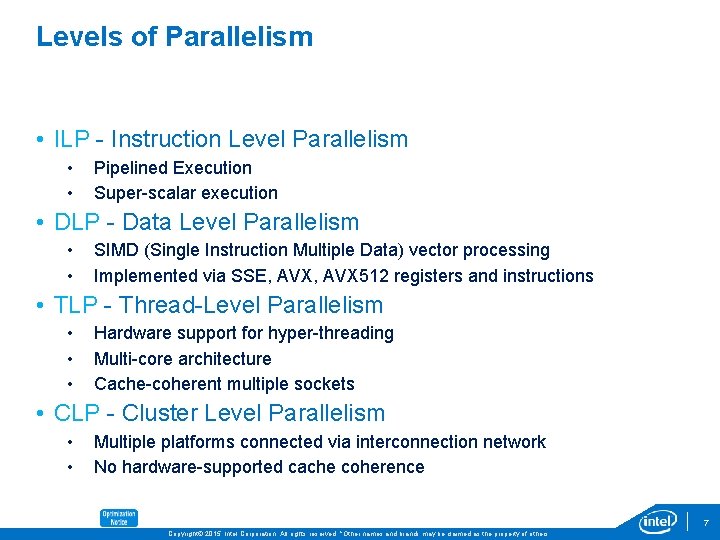

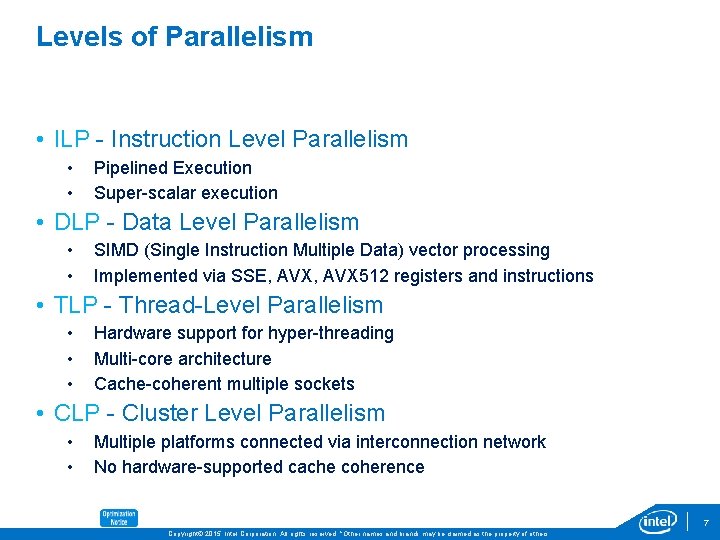

Levels of Parallelism
- ILP: Instruction-Level Parallelism
  - Pipelined execution
  - Superscalar execution
- DLP: Data-Level Parallelism
  - SIMD (Single Instruction Multiple Data) vector processing
  - Implemented via SSE and AVX-512 registers and instructions
- TLP: Thread-Level Parallelism
  - Hardware support for Hyper-Threading
  - Multi-core architecture
  - Cache-coherent multiple sockets
- CLP: Cluster-Level Parallelism
  - Multiple platforms connected via an interconnection network
  - No hardware-supported cache coherence

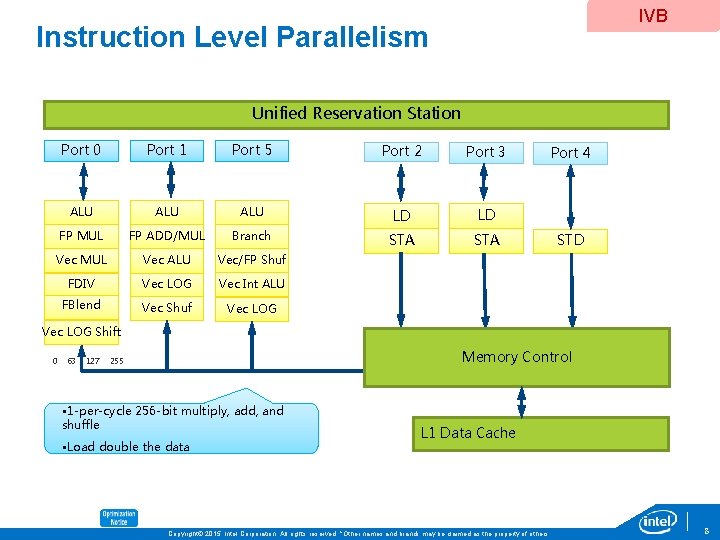

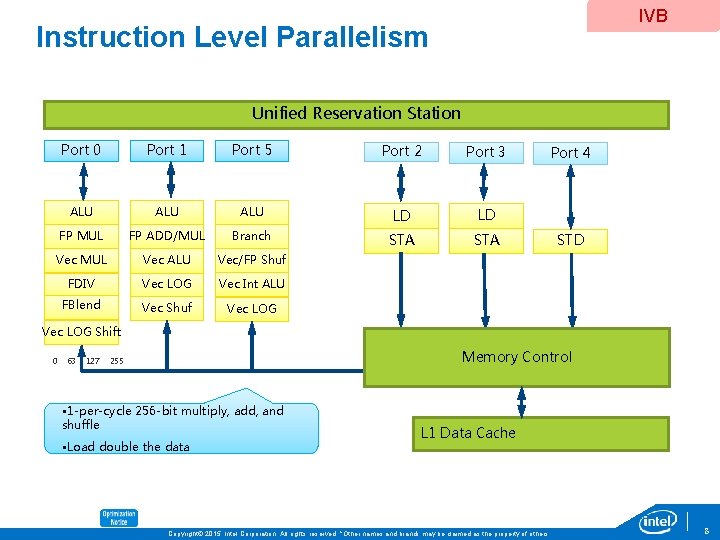

IVB (Ivy Bridge) Instruction-Level Parallelism

[Figure: execution ports fed by a unified reservation station: ports 0, 1, and 5 for integer ALU, FP multiply/add, vector, shuffle, logic, divide, blend, shift, and branch operations; ports 2 and 3 for loads (LD) and store addresses (STA); port 4 for store data (STD); memory control and L1 data cache]
- 1-per-cycle 256-bit multiply, add, and shuffle
- Load double the data

![Single Instruction Multiple Data: for(i = 0; i <= MAX; i++) c[i] = a[i] + b[i]](https://slidetodoc.com/presentation_image_h2/a5b135032ebf0e65eb5e70ebe07e5f1a/image-9.jpg)

Single Instruction Multiple Data

Scalar loop, one element per iteration: `for (i = 0; i <= MAX; i++) c[i] = a[i] + b[i];`

SIMD loop, eight elements per iteration: `for (i = 0; i <= MAX; i += 8) c[i:8] = a[i:8] + b[i:8];`

[Figure: one vector addition adds lanes a[i] … a[i+7] to b[i] … b[i+7], producing c[i] … c[i+7]]
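The `c[i:8]` form above is array-section notation (start:length, as in Intel® Cilk™ Plus): one operation on eight consecutive elements. A minimal portable C sketch of the same idea, assuming `float` arrays and a hypothetical helper named `vec_add`: the loop is strip-mined into chunks of eight lanes (eight 32-bit floats fill one 256-bit AVX register), the inner lane loop is the part a vectorizing compiler can turn into one SIMD add, and a scalar remainder loop handles the last `n % 8` elements. It uses `i < n` bounds rather than the slide's `i <= MAX`.

```c
#include <assert.h>
#include <stddef.h>

#define VLEN 8  /* lanes per chunk: 8 floats x 32 bits = one 256-bit AVX register */

/* Hypothetical helper: c[i] = a[i] + b[i] for 0 <= i < n. */
void vec_add(const float *a, const float *b, float *c, size_t n)
{
    size_t i = 0;
    assert(n == 0 || (a != NULL && b != NULL && c != NULL));

    /* Main loop: one full chunk per iteration, i.e. c[i:8] = a[i:8] + b[i:8].
       "omp simd" requests vectorization of the lane loop; it is ignored unless
       OpenMP (SIMD) support is enabled, e.g. with -fopenmp-simd. */
    for (; i + VLEN <= n; i += VLEN) {
        #pragma omp simd
        for (size_t lane = 0; lane < VLEN; lane++)
            c[i + lane] = a[i + lane] + b[i + lane];
    }

    /* Remainder loop: the last n % VLEN elements, one at a time. */
    for (; i < n; i++)
        c[i] = a[i] + b[i];
}
```

With n = 19, the main loop covers elements 0 to 15 in two chunks and the remainder loop covers 16 to 18.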

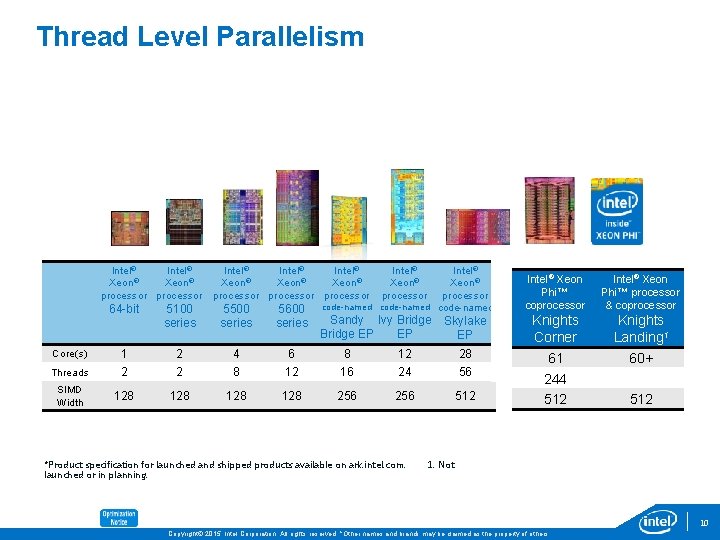

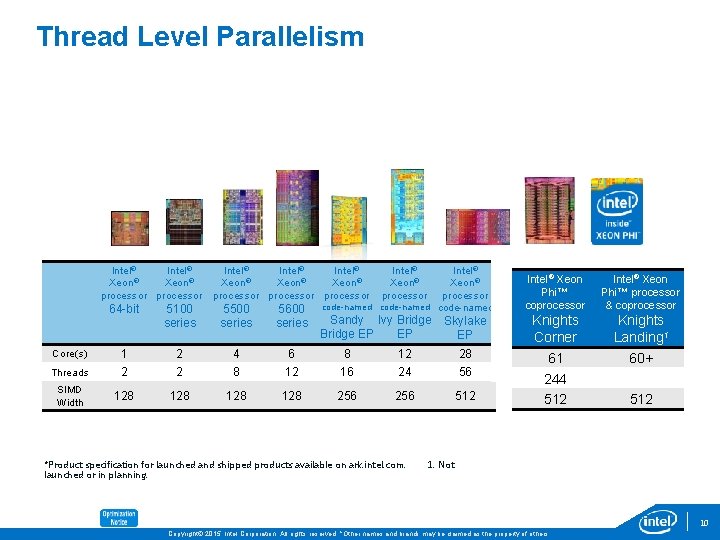

Thread Level Parallelism

| Processor | Cores | Threads | SIMD width (bits) |
|---|---|---|---|
| Intel® Xeon® processor 64-bit | 1 | 2 | 128 |
| Intel® Xeon® processor 5100 series | 2 | 2 | 128 |
| Intel® Xeon® processor 5500 series | 4 | 8 | 128 |
| Intel® Xeon® processor 5600 series | 6 | 12 | 128 |
| Intel® Xeon® processor code-named Sandy Bridge EP | 8 | 16 | 256 |
| Intel® Xeon® processor code-named Ivy Bridge EP | 12 | 24 | 256 |
| Intel® Xeon® processor code-named Skylake EP | 28 | 56 | 512 |
| Intel® Xeon Phi™ coprocessor code-named Knights Corner | 61 | 244 | 512 |
| Intel® Xeon Phi™ processor & coprocessor code-named Knights Landing¹ | 60+ | | 512 |

*Product specification for launched and shipped products available on ark.intel.com. ¹Not launched or in planning.

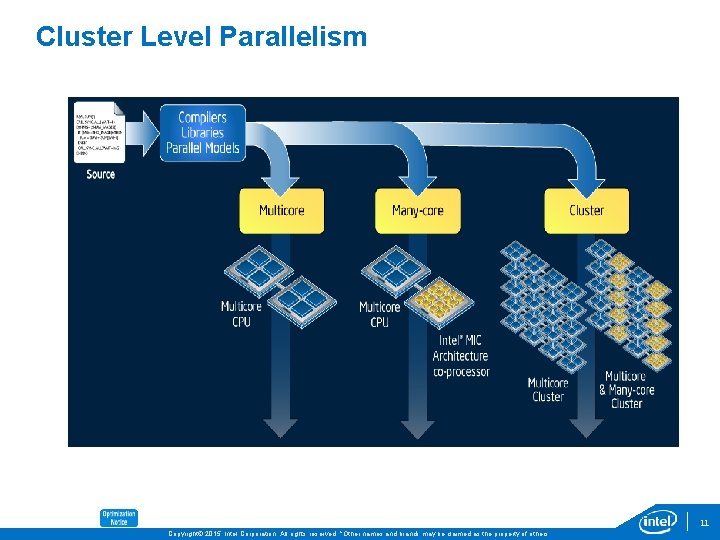

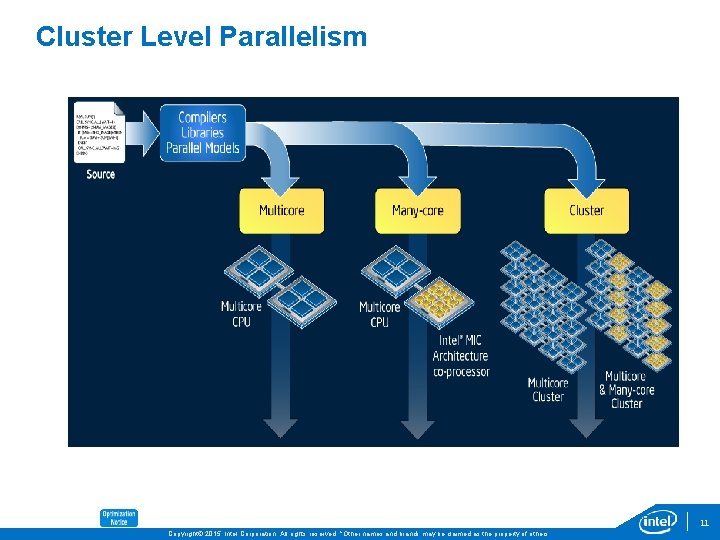

Cluster Level Parallelism

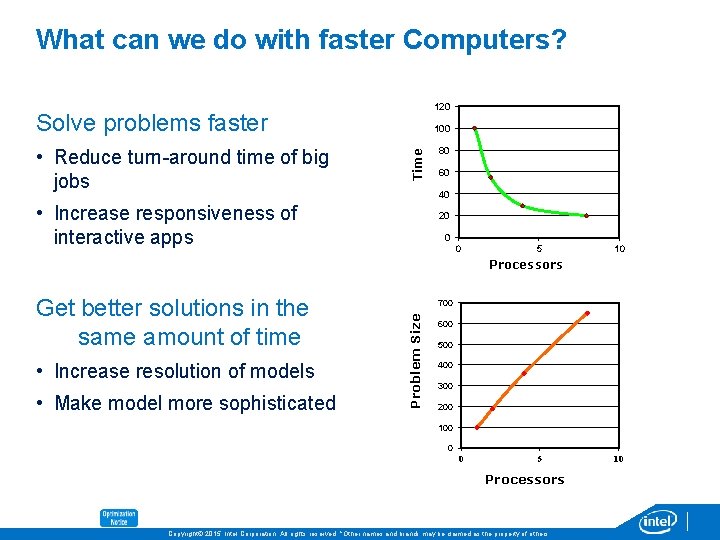

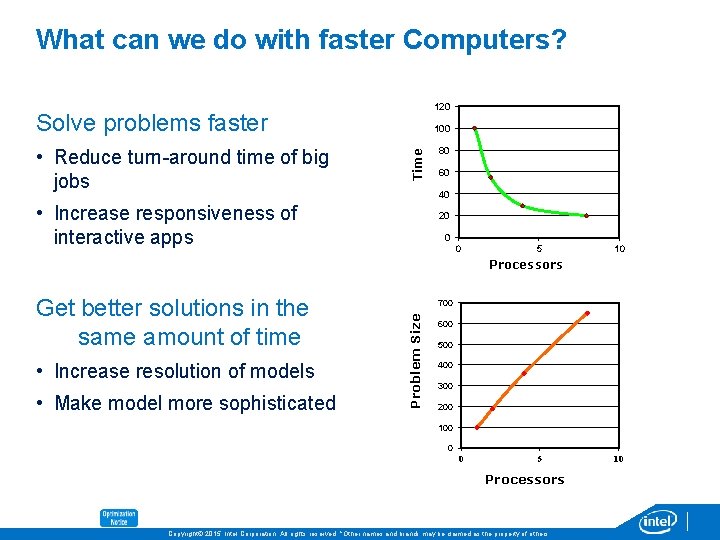

What can we do with faster computers?
- Solve problems faster
  - Reduce turn-around time of big jobs
  - Increase responsiveness of interactive apps
  - [Figure: time vs. number of processors]
- Get better solutions in the same amount of time
  - Increase resolution of models
  - Make models more sophisticated
  - [Figure: problem size vs. number of processors]

Agenda: 2. Exploiting Parallelism

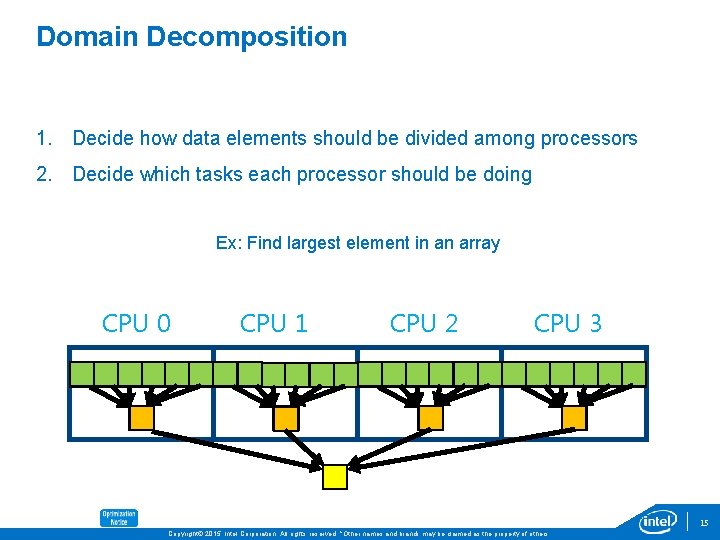

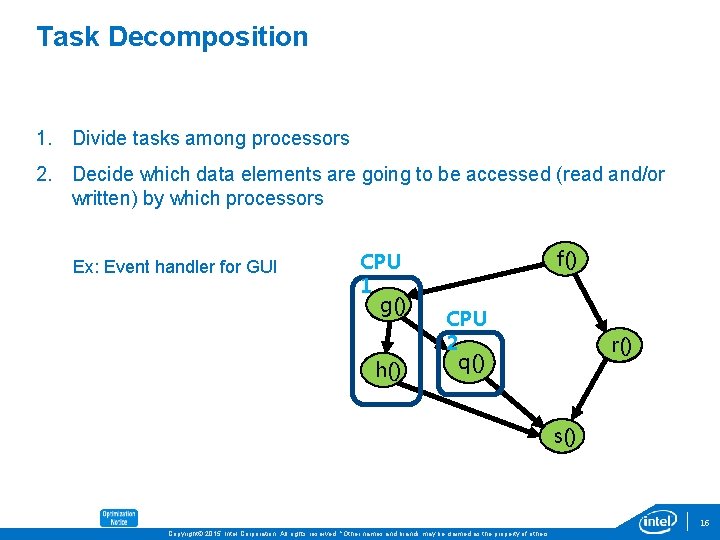

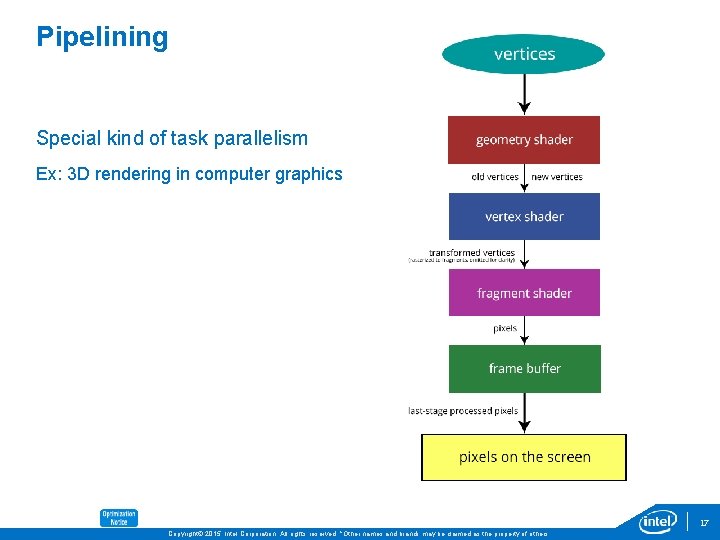

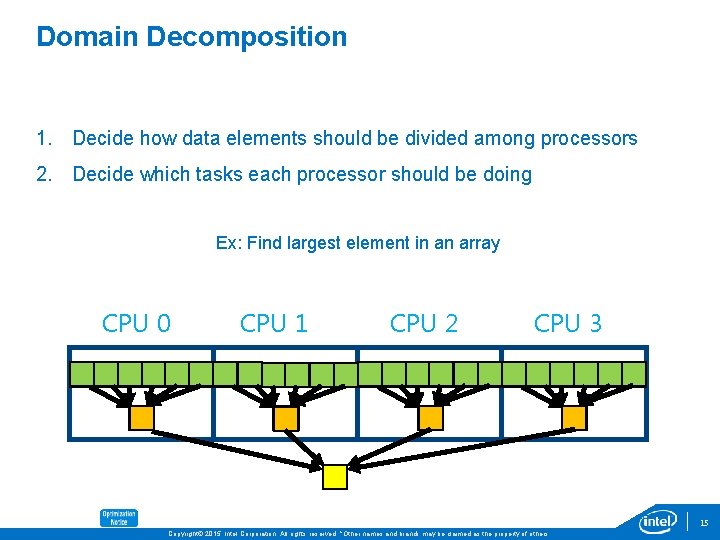

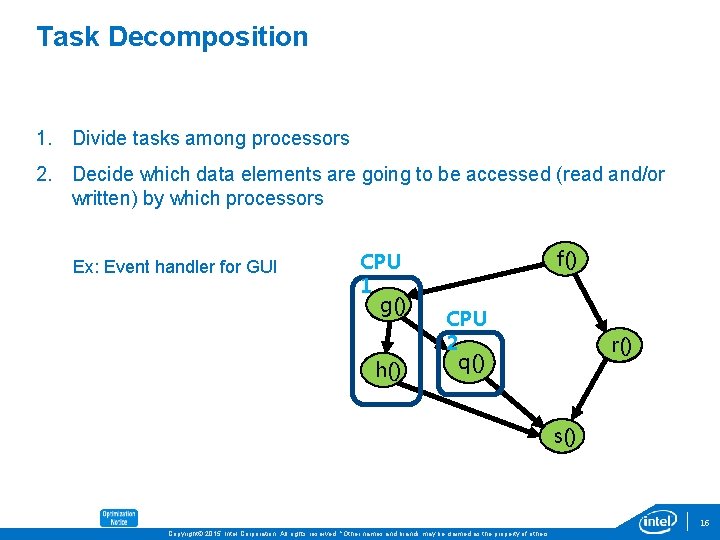

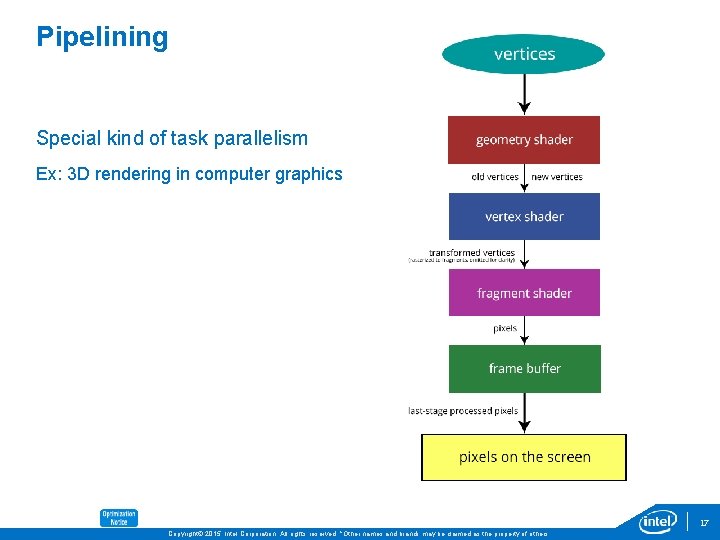

Concepts

Methodology
- Study the problem, sequential program, or code segment
- Look for opportunities for parallelism
- Keep all processors busy doing useful parallel work

Ways to Parallelize
- Domain decomposition (data decomposition)
- Task decomposition (functional decomposition)
- Pipelining

Domain Decomposition
1. Decide how data elements should be divided among processors
2. Decide which tasks each processor should be doing

Ex: Find the largest element in an array [Figure: the array split into four blocks assigned to CPU 0, CPU 1, CPU 2, and CPU 3]
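A minimal sketch of the find-the-largest-element example with POSIX threads, assuming four threads to match the four CPUs in the figure; `parallel_max` and `slice_t` are hypothetical names. Each thread owns one contiguous slice of the array (the data division) and computes a local maximum; the calling thread then combines the four partial results.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define NTHREADS 4   /* one thread per CPU in the figure (assumption) */

/* One thread's share of the domain: the half-open index range [begin, end). */
typedef struct {
    const int *data;
    size_t begin, end;
    int local_max;       /* written only by the thread that owns this slice */
} slice_t;

/* Each thread scans only its own slice. */
static void *slice_max(void *arg)
{
    slice_t *s = arg;
    int m = s->data[s->begin];
    for (size_t i = s->begin + 1; i < s->end; i++)
        if (s->data[i] > m)
            m = s->data[i];
    s->local_max = m;
    return NULL;
}

/* Hypothetical helper: stores max(data[0..n)) in *out; needs n >= NTHREADS.
   Returns 0 on success, -1 if a thread could not be created. */
int parallel_max(const int *data, size_t n, int *out)
{
    pthread_t tid[NTHREADS];
    slice_t sl[NTHREADS];
    int created = 0;
    assert(n >= NTHREADS);   /* guarantees every slice is non-empty */

    /* Divide the data elements into NTHREADS nearly equal slices. */
    for (; created < NTHREADS; created++) {
        sl[created].data = data;
        sl[created].begin = n * created / NTHREADS;
        sl[created].end = n * (created + 1) / NTHREADS;
        if (pthread_create(&tid[created], NULL, slice_max, &sl[created]) != 0)
            break;
    }
    for (int t = 0; t < created; t++)
        pthread_join(tid[t], NULL);
    if (created < NTHREADS)
        return -1;

    /* Combine the per-thread results sequentially. */
    int m = sl[0].local_max;
    for (int t = 1; t < NTHREADS; t++)
        if (sl[t].local_max > m)
            m = sl[t].local_max;
    *out = m;
    return 0;
}
```

Because every thread writes only its own `local_max`, no locking is needed; the only shared data is the read-only input.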

Task Decomposition
1. Divide tasks among processors
2. Decide which data elements are going to be accessed (read and/or written) by which processors

Ex: Event handler for a GUI [Figure: functions f(), g(), and h() run on CPU 1; q(), r(), and s() run on CPU 2]
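A minimal sketch of task decomposition with POSIX threads: two hypothetical tasks, `task_sum` and `task_min`, run as different functions on two threads over the same array. Following the slide's second step, the data accesses are decided up front: both tasks only read `data`, and each writes a separate result field, so no lock is needed.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

/* Hypothetical job: two different tasks over the same read-only array. */
typedef struct {
    const int *data;   /* read by both tasks, written by neither */
    size_t n;
    long sum;          /* written only by task_sum */
    int min;           /* written only by task_min */
} job_t;

static void *task_sum(void *arg)
{
    job_t *j = arg;
    long s = 0;
    for (size_t i = 0; i < j->n; i++)
        s += j->data[i];
    j->sum = s;
    return NULL;
}

static void *task_min(void *arg)
{
    job_t *j = arg;
    int m = j->data[0];
    for (size_t i = 1; i < j->n; i++)
        if (j->data[i] < m)
            m = j->data[i];
    j->min = m;
    return NULL;
}

/* Runs each task on its own thread ("CPU 1" and "CPU 2"). Returns 0 on success. */
int run_tasks(job_t *job)
{
    pthread_t t1, t2;
    assert(job->n > 0);
    if (pthread_create(&t1, NULL, task_sum, job) != 0)
        return -1;
    if (pthread_create(&t2, NULL, task_min, job) != 0) {
        pthread_join(t1, NULL);
        return -1;
    }
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return 0;
}
```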

Pipelining: a special kind of task parallelism

Ex: 3D rendering in computer graphics
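A minimal two-stage pipeline sketch with POSIX threads, assuming a one-slot buffer between the stages; `channel_t`, `stage1`, and `run_pipeline` are hypothetical names, and the squaring and summing work merely stands in for real stages such as those of a 3D rendering pipeline. Stage 2 can process item k while stage 1 is already producing item k+1.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

/* One-slot channel between two pipeline stages (hypothetical helper). */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t changed;   /* signalled whenever full or done changes */
    int value;
    bool full;                /* slot holds an item stage 2 has not taken yet */
    bool done;                /* stage 1 will send no more items */
} channel_t;

static void channel_put(channel_t *ch, int v)
{
    pthread_mutex_lock(&ch->lock);
    while (ch->full)                          /* wait for stage 2 to take the last item */
        pthread_cond_wait(&ch->changed, &ch->lock);
    ch->value = v;
    ch->full = true;
    pthread_cond_broadcast(&ch->changed);
    pthread_mutex_unlock(&ch->lock);
}

/* Returns false once stage 1 is done and the slot is empty. */
static bool channel_get(channel_t *ch, int *v)
{
    pthread_mutex_lock(&ch->lock);
    while (!ch->full && !ch->done)
        pthread_cond_wait(&ch->changed, &ch->lock);
    bool ok = ch->full;
    if (ok) {
        *v = ch->value;
        ch->full = false;
        pthread_cond_broadcast(&ch->changed);
    }
    pthread_mutex_unlock(&ch->lock);
    return ok;
}

static void channel_close(channel_t *ch)
{
    pthread_mutex_lock(&ch->lock);
    ch->done = true;
    pthread_cond_broadcast(&ch->changed);
    pthread_mutex_unlock(&ch->lock);
}

typedef struct { channel_t *ch; int n; } stage1_arg_t;

/* Stage 1 (hypothetical work): produces i*i for i = 1..n. */
static void *stage1(void *p)
{
    stage1_arg_t *a = p;
    for (int i = 1; i <= a->n; i++)
        channel_put(a->ch, i * i);
    channel_close(a->ch);
    return NULL;
}

/* Runs stage 1 on a new thread and stage 2 (accumulate) on the calling thread.
   Returns the sum of the stage-1 outputs, or -1 if the thread could not start. */
long run_pipeline(int n)
{
    channel_t ch = { .value = 0, .full = false, .done = false };
    stage1_arg_t arg = { &ch, n };
    pthread_t producer;
    long total = 0;
    int item;

    assert(n >= 0);
    pthread_mutex_init(&ch.lock, NULL);
    pthread_cond_init(&ch.changed, NULL);
    if (pthread_create(&producer, NULL, stage1, &arg) != 0) {
        total = -1;
    } else {
        while (channel_get(&ch, &item))       /* stage 2 */
            total += item;
        pthread_join(producer, NULL);
    }
    pthread_cond_destroy(&ch.changed);
    pthread_mutex_destroy(&ch.lock);
    return total;
}
```

A deeper buffer would let the stages drift further apart; one slot keeps the sketch short.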

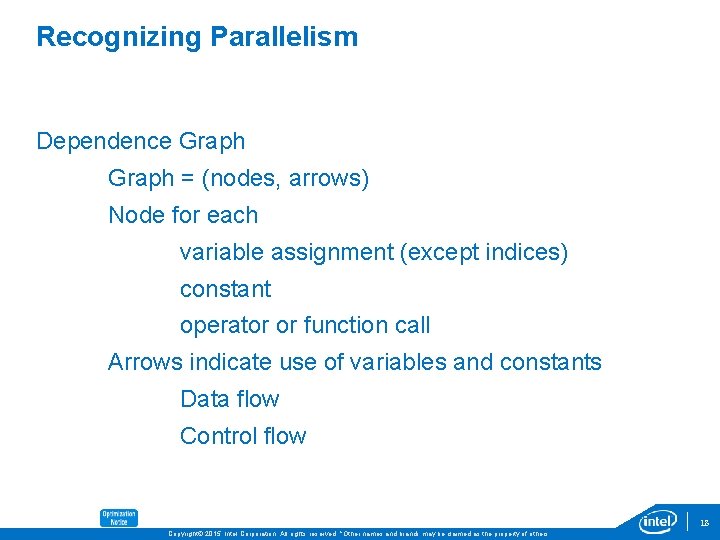

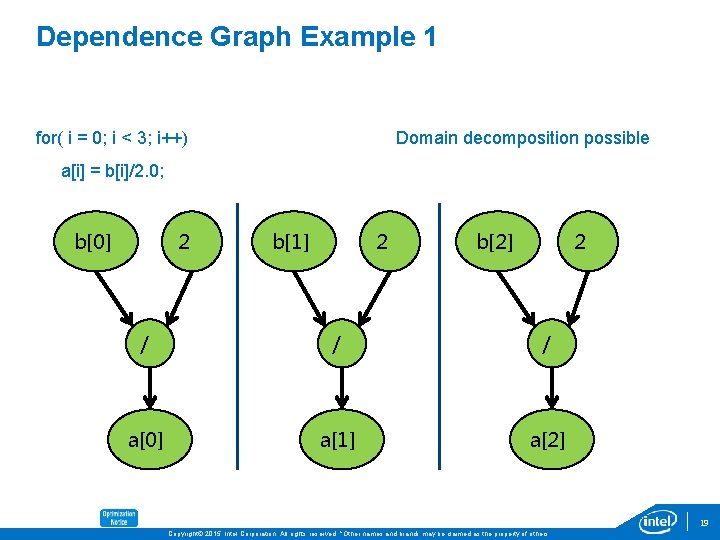

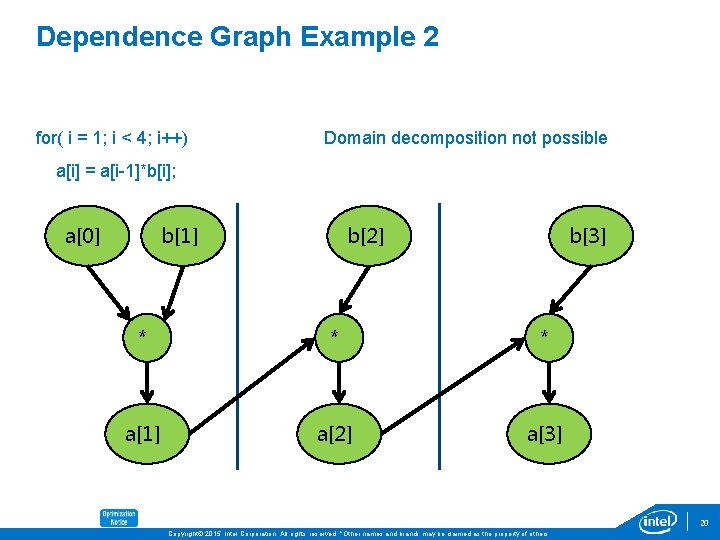

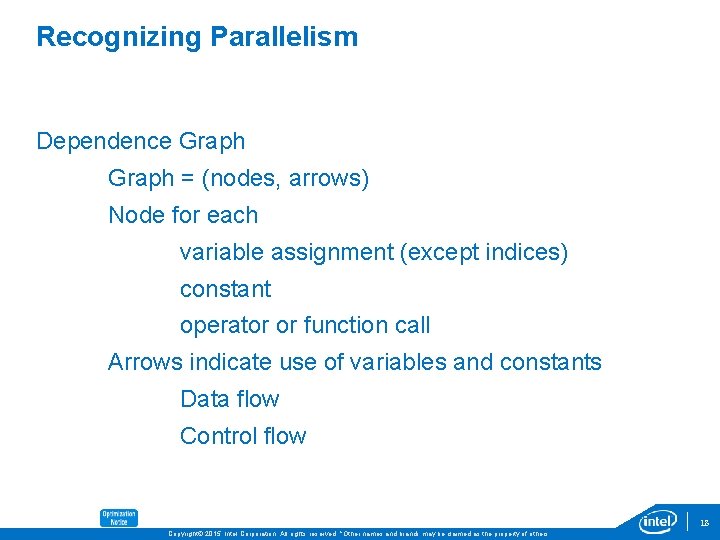

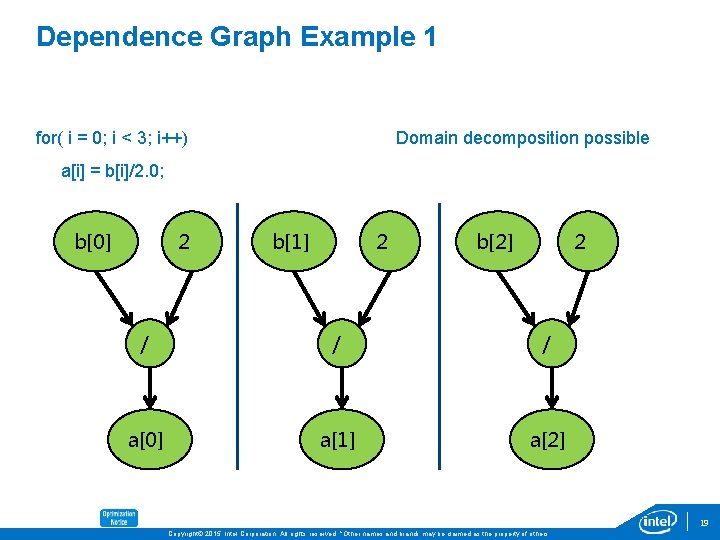

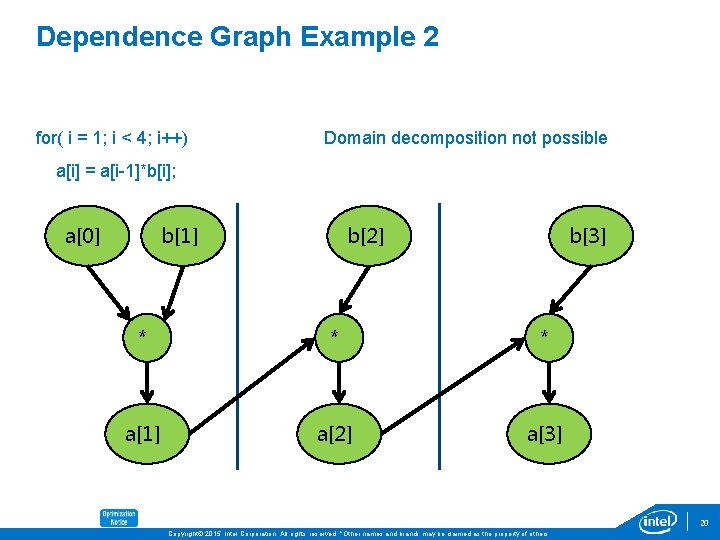

Recognizing Parallelism

Dependence graph = (nodes, arrows)
- Node for each
  - Variable assignment (except indices)
  - Constant
  - Operator or function call
- Arrows indicate use of variables and constants
  - Data flow
  - Control flow

Dependence Graph Example 1

`for (i = 0; i < 3; i++) a[i] = b[i] / 2.0;`

Domain decomposition possible. [Figure: three independent chains; for i = 0, 1, 2, b[i] and the constant 2 feed a division that produces a[i]]

Dependence Graph Example 2

`for (i = 1; i < 4; i++) a[i] = a[i-1] * b[i];`

Domain decomposition not possible. [Figure: a single chain; a[0] * b[1] produces a[1], a[1] * b[2] produces a[2], a[2] * b[3] produces a[3]]
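The two examples as compilable C, a sketch assuming OpenMP for the parallel loop (without `-fopenmp` the pragma is ignored and the loop runs sequentially with the same result). The function names `example1` and `example2` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Example 1: a[i] = b[i] / 2.0. No iteration reads a value written by another
   iteration, so the iterations can be divided among threads. */
void example1(double *a, const double *b, int n)
{
    assert(n == 0 || (a != NULL && b != NULL));
    #pragma omp parallel for
    for (int i = 0; i < n; i++)
        a[i] = b[i] / 2.0;
}

/* Example 2: a[i] = a[i-1] * b[i]. Iteration i reads a[i-1], which iteration
   i-1 writes (a loop-carried dependence), so this loop is left sequential;
   splitting it naively across threads could read a[i-1] before it is written. */
void example2(double *a, const double *b, int n)
{
    assert(n == 0 || (a != NULL && b != NULL));
    for (int i = 1; i < n; i++)
        a[i] = a[i - 1] * b[i];
}
```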

Agenda: 3. Shared Memory

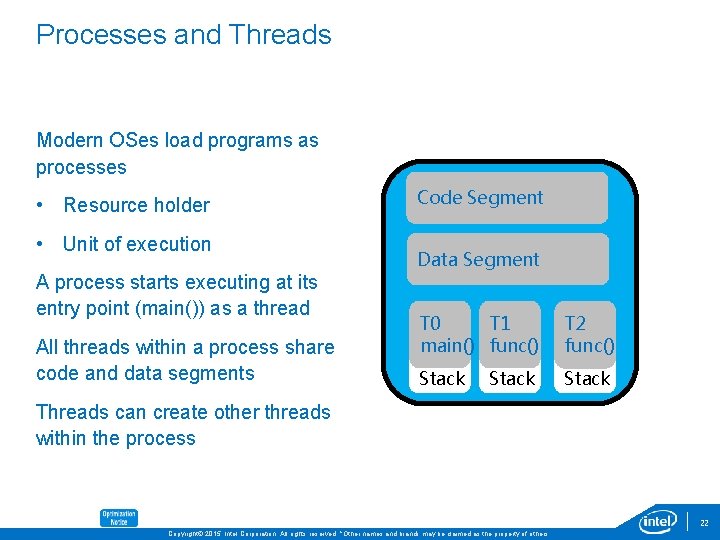

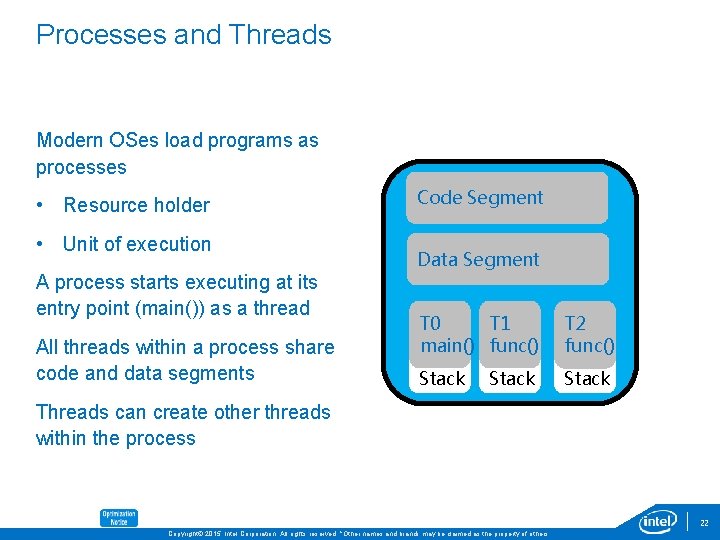

Processes and Threads
- Modern OSes load programs as processes
  - Resource holder
  - Unit of execution
- A process starts executing at its entry point (main()) as a thread
- All threads within a process share code and data segments
- Threads can create other threads within the process

[Figure: threads T0 (running main()), T1 and T2 (running func()) share one code segment and one data segment, and each has its own stack]

What is a Thread?

“A unit of control within a process” (Carver and Tai, Modern Multithreading)
- The main thread executes the program’s main() function
- The main thread may create other threads to execute other functions
- Threads have their own state: program counter (instruction pointer), registers, and stack
- Threads share the process’s data and code, but each has a unique stack
- Threads have lower overhead than processes
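A minimal POSIX-threads sketch of these properties (the names `worker`, `spawn_workers`, and `shared_results` are hypothetical): the main thread creates three threads, each keeps its working variables on its own stack, and all of them write into one array in the process's shared data segment. Each thread writes a distinct element, so there is no race.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <stdint.h>

#define NT 3   /* number of threads the main thread creates (arbitrary) */

/* Data segment: a single copy shared by every thread in the process. */
static int shared_results[NT];

static void *worker(void *arg)
{
    /* id and local are automatic variables: they live on this thread's own stack. */
    int id = (int)(intptr_t)arg;
    int local = 0;
    assert(id >= 0 && id < NT);
    for (int k = 0; k <= id; k++)
        local += 10;
    shared_results[id] = local;   /* shared write; each thread uses its own slot */
    return NULL;
}

/* The main thread creates NT threads and waits for them. Returns 0 on success. */
int spawn_workers(void)
{
    pthread_t tid[NT];
    int created;
    for (created = 0; created < NT; created++)
        if (pthread_create(&tid[created], NULL, worker, (void *)(intptr_t)created) != 0)
            break;
    for (int t = 0; t < created; t++)
        pthread_join(tid[t], NULL);
    return created == NT ? 0 : -1;
}
```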

What are Threads Good for?
- Making programs easier to understand, especially for task parallelism
- Overlapping computation and I/O (or other high-latency events)
- Improving responsiveness of GUIs
- Improving performance through parallel execution

Fork-Join Threading Model
- When the program begins execution, only the master thread is active
- The master thread executes the sequential portions of the program
- For parallel portions of the program, the master thread forks (creates or awakens) additional threads
- At the join (end of the parallel section of the code), the extra threads are suspended or die
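A minimal fork-join sketch using OpenMP, assuming a compiler flag such as `-fopenmp`; without it the pragma is ignored and the master thread runs everything, which gives the same result. `scale_then_sum` is a hypothetical name. The parallel `for` construct is the fork, and the implicit barrier at its end is the join.

```c
#include <assert.h>

/* Hypothetical example: scale v[0..n) in parallel, then sum it sequentially. */
double scale_then_sum(double *v, int n, double scale)
{
    double total = 0.0;
    assert(n >= 0);

    /* Sequential portion: only the master thread is active here. */

    /* Fork: a team of threads divides the iterations among themselves.
       Join: the implicit barrier at the end of the construct; afterwards
       only the master thread continues. */
    #pragma omp parallel for
    for (int i = 0; i < n; i++)
        v[i] *= scale;

    /* Sequential portion again, after the join. */
    for (int i = 0; i < n; i++)
        total += v[i];
    return total;
}
```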

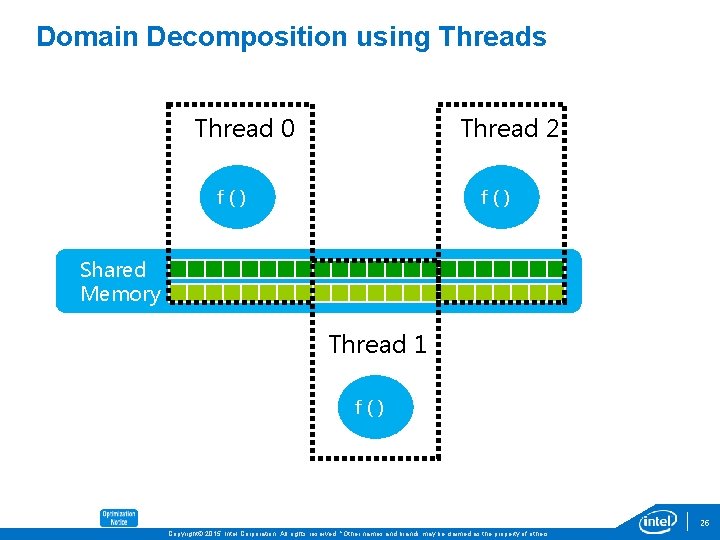

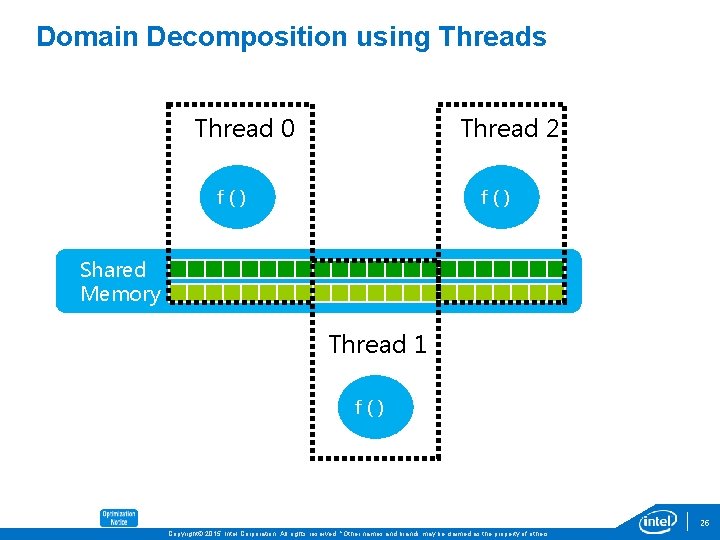

Domain Decomposition using Threads

[Figure: Thread 0, Thread 1, and Thread 2 each run f() on their own part of the data in shared memory]

Agenda: 4. Distributed Memory

Distributed vs. Shared Memory
- Parallel concepts don’t change
- The difference is how information gets passed on
- Shared memory information pools vs. explicit message passing
- Message-Passing Interface (MPI): the most common explicit message-passing standard for distributed-memory systems
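MPI itself needs an MPI library and launcher, so this sketch swaps in plain POSIX `pipe()` and `fork()` to show the same idea of explicit message passing (it is not MPI). The parent and child are separate processes with separate address spaces, so the child's result reaches the parent only because it is explicitly sent and received. `sum_in_child` is a hypothetical name.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: a child process sums data[0..n) and sends the result
   to the parent as a message over a pipe. Returns 0 on success, -1 on error. */
int sum_in_child(const int *data, int n, long *out)
{
    int fd[2];
    assert(n >= 0 && out != NULL);
    if (pipe(fd) != 0)
        return -1;

    pid_t pid = fork();
    if (pid < 0) {
        close(fd[0]);
        close(fd[1]);
        return -1;
    }
    if (pid == 0) {
        /* Child: separate address space. Its copy of data came from fork();
           its result is invisible to the parent unless explicitly sent. */
        long s = 0;
        for (int i = 0; i < n; i++)
            s += data[i];
        close(fd[0]);
        ssize_t w = write(fd[1], &s, sizeof s);   /* "send" */
        close(fd[1]);
        _exit(w == (ssize_t)sizeof s ? 0 : 1);
    }

    /* Parent: "receive" the message, then reap the child. */
    long result = 0;
    close(fd[1]);
    ssize_t r = read(fd[0], &result, sizeof result);
    close(fd[0]);
    int status = 0;
    if (waitpid(pid, &status, 0) != pid)
        return -1;
    if (r != (ssize_t)sizeof result || !WIFEXITED(status) || WEXITSTATUS(status) != 0)
        return -1;
    *out = result;
    return 0;
}
```

In an MPI program the input would typically also be distributed with explicit messages rather than inherited through `fork()`.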

Message-Passing Interface (MPI)
- MPI is a standard that is implemented in the form of libraries, not a language
- Libraries for inter-process communication and data exchange
- Function categories
  - Point-to-point communication
  - Collective communication
  - Communicator topologies
  - User-defined data types
  - Utilities (for example, timing and initialization)

Agenda: 5. Issues

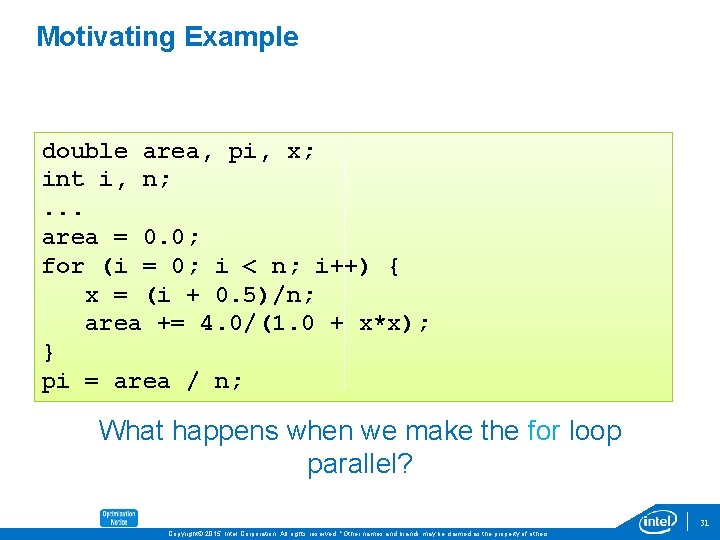

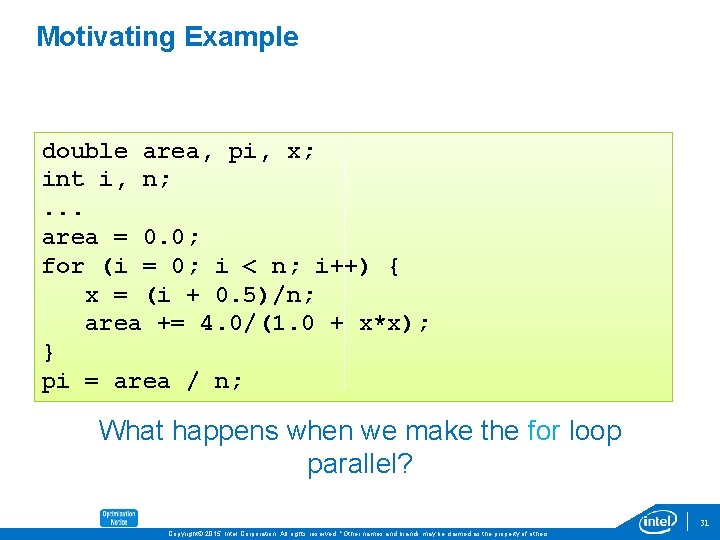

Motivating Example

`double area, pi, x; int i, n; ... area = 0.0; for (i = 0; i < n; i++) { x = (i + 0.5)/n; area += 4.0/(1.0 + x*x); } pi = area / n;`

What happens when we make the for loop parallel?

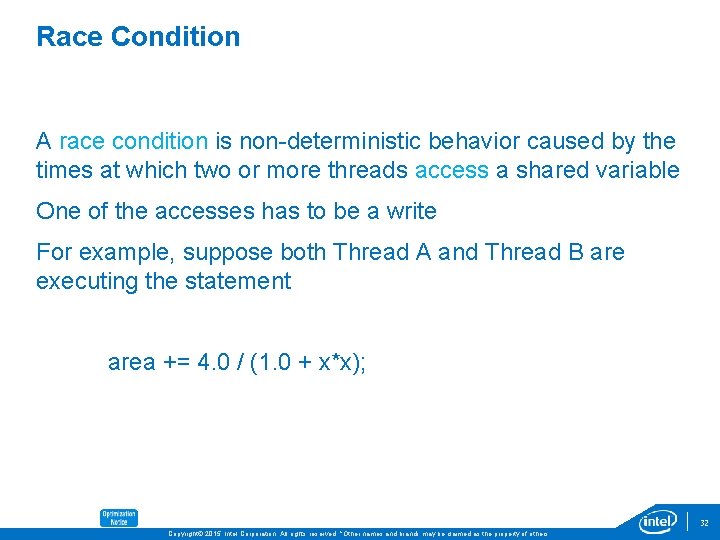

Race Condition

A race condition is non-deterministic behavior caused by the times at which two or more threads access a shared variable, where at least one of the accesses is a write. For example, suppose both Thread A and Thread B are executing the statement `area += 4.0 / (1.0 + x*x);`

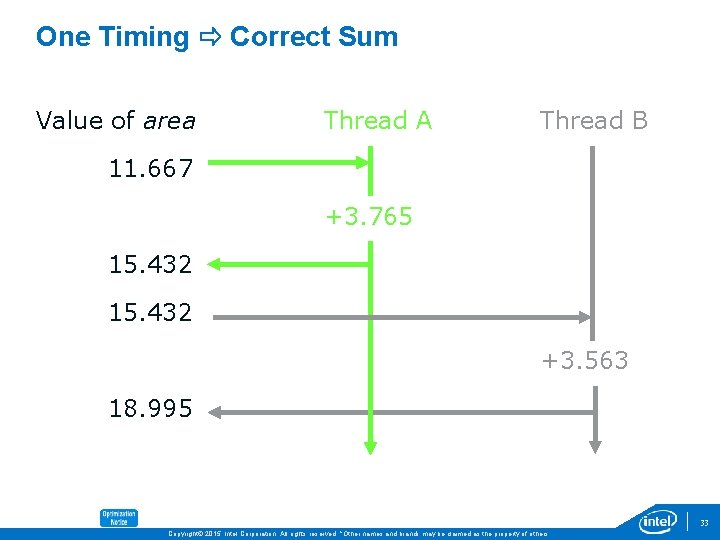

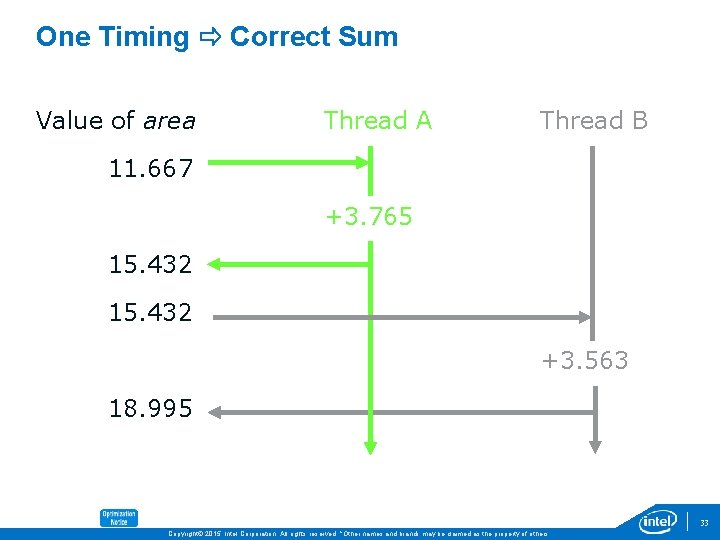

One Timing, Correct Sum

| Value of area | Thread A | Thread B |
|---|---|---|
| 11.667 | +3.765 | |
| 15.432 | | +3.563 |
| 18.995 | | |

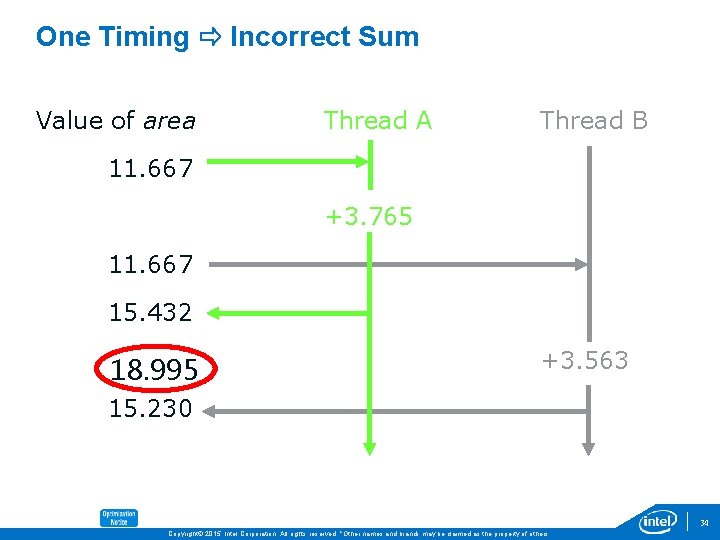

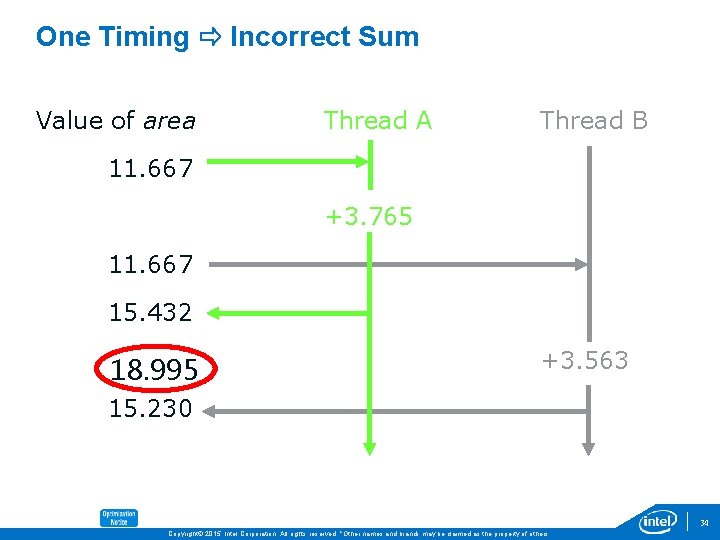

One Timing, Incorrect Sum

| Value of area | Thread A | Thread B |
|---|---|---|
| 11.667 | reads 11.667, +3.765 | |
| 11.667 | | reads 11.667, +3.563 |
| 15.432 | writes 15.432 | |
| 15.230 | | writes 15.230 |

Thread B’s write overwrites Thread A’s update, so area ends at 15.230 instead of the correct 18.995.
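The incorrect timing is possible because `area += ...` is not one indivisible step: it loads `area`, adds, and stores the result. This sketch replays both timings deterministically on a single thread, with each "thread" holding its loaded copy in a local variable; the function names are hypothetical and nothing here runs concurrently.

```c
#include <assert.h>

/* Correct timing: A completes load-add-store before B loads. */
double timing_correct(double area, double da, double db)
{
    double regA = area;   /* A loads area */
    regA += da;           /* A adds its term */
    area = regA;          /* A stores */
    double regB = area;   /* B loads the value A stored */
    regB += db;
    area = regB;          /* B stores */
    return area;
}

/* Incorrect timing: both threads load before either stores. */
double timing_incorrect(double area, double da, double db)
{
    double regA = area;   /* A loads area */
    double regB = area;   /* B loads the same, soon stale, value */
    regA += da;
    regB += db;
    area = regA;          /* A stores its sum */
    area = regB;          /* B overwrites it: A's update is lost */
    return area;
}
```

With the slide's numbers, `timing_correct(11.667, 3.765, 3.563)` yields about 18.995 and `timing_incorrect(11.667, 3.765, 3.563)` yields about 15.230.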

Why Race Conditions are Nasty
- Programs with race conditions exhibit non-deterministic behavior
  - Sometimes they give the correct result
  - Sometimes they give an erroneous result
- Programs work correctly on trivial data sets and a small number of threads
- Errors are more likely to occur when the number of threads or the execution time increases
- Debugging race conditions can be difficult

Mutual Exclusion
- We can prevent the race conditions described earlier by ensuring that only one thread at a time references and updates a shared variable or data structure
- Mutual exclusion refers to a kind of synchronization that allows only a single thread or process to have access to a shared resource at a time
- Mutual exclusion is implemented using some form of locking mechanism

Critical Sections
- A critical section is a portion of code that threads execute in a mutually exclusive fashion
- A critical section can be implemented using synchronization primitives
  - pthread_mutex on Linux*, CRITICAL_SECTION object on Win32*
- Good news: critical sections eliminate race conditions
- Bad news: critical sections are executed sequentially
- More bad news: you have to identify critical sections yourself
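A minimal sketch of a critical section with a POSIX `pthread_mutex_t`, assuming four threads that each increment a shared counter 100000 times; `run_counter` and `increment` are hypothetical names. The lock/unlock pair around `counter++` is the critical section: the final count is always exactly 4 × 100000, at the price of the increments themselves running one at a time.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define NTHREADS 4
#define ITERS 100000

static long counter = 0;   /* shared variable */
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

static void *increment(void *arg)
{
    (void)arg;
    for (int k = 0; k < ITERS; k++) {
        pthread_mutex_lock(&counter_lock);     /* enter the critical section */
        counter++;                             /* load-add-store, one thread at a time */
        pthread_mutex_unlock(&counter_lock);   /* leave the critical section */
    }
    return NULL;
}

/* Runs NTHREADS incrementing threads; returns the final count, or -1 on error. */
long run_counter(void)
{
    pthread_t tid[NTHREADS];
    int created;
    counter = 0;
    for (created = 0; created < NTHREADS; created++)
        if (pthread_create(&tid[created], NULL, increment, NULL) != 0)
            break;
    for (int t = 0; t < created; t++)
        pthread_join(tid[t], NULL);
    return created == NTHREADS ? counter : -1;
}
```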

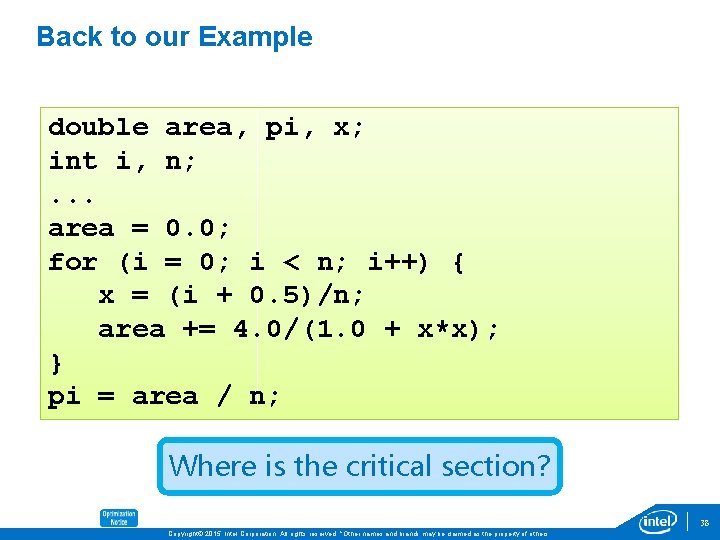

Back to our Example

`double area, pi, x; int i, n; ... area = 0.0; for (i = 0; i < n; i++) { x = (i + 0.5)/n; area += 4.0/(1.0 + x*x); } pi = area / n;`

Where is the critical section?

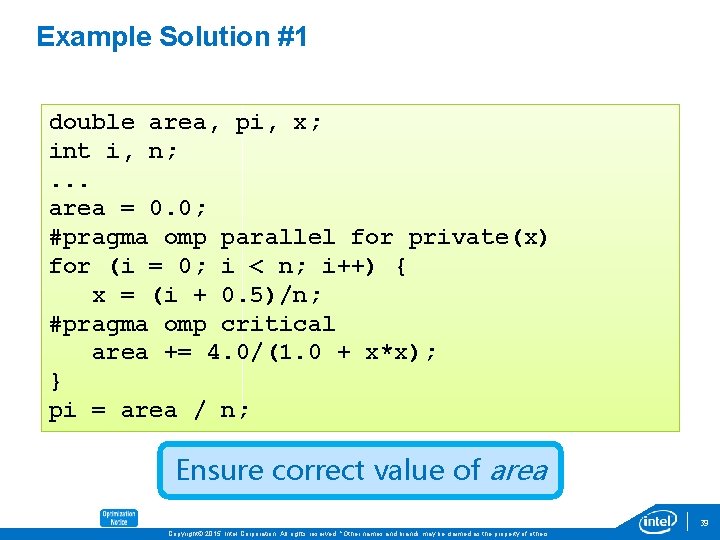

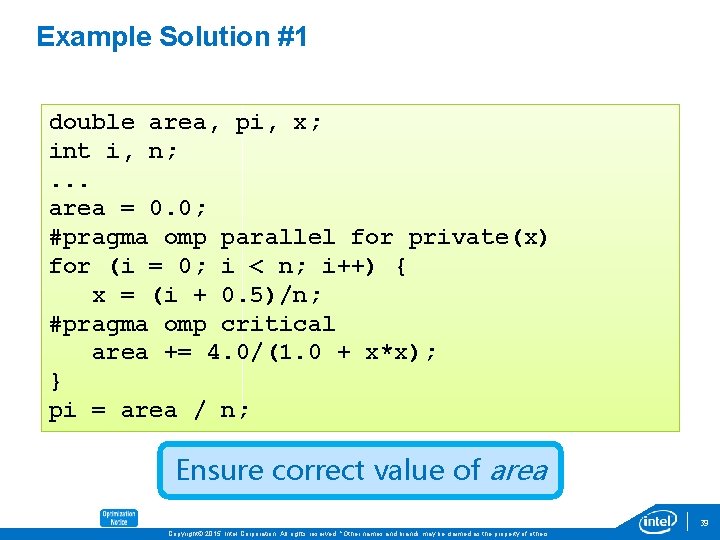

Example Solution #1

`double area, pi, x; int i, n; ... area = 0.0; #pragma omp parallel for private(x) for (i = 0; i < n; i++) { x = (i + 0.5)/n; #pragma omp critical area += 4.0/(1.0 + x*x); } pi = area / n;`

The critical section ensures the correct value of area.

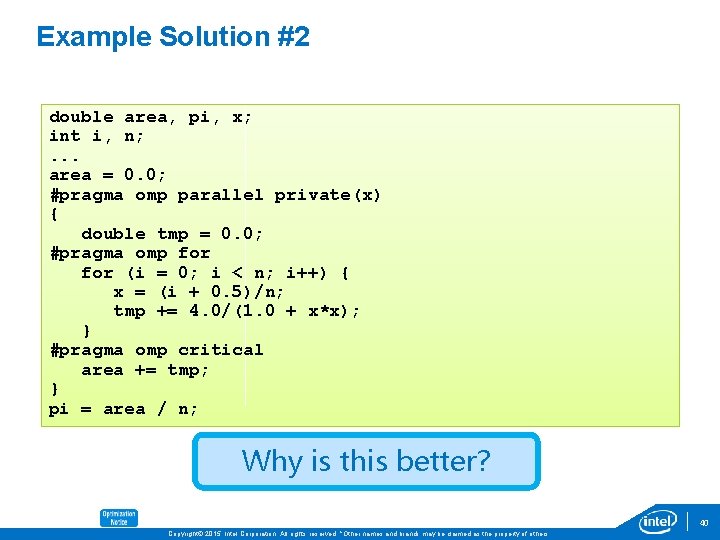

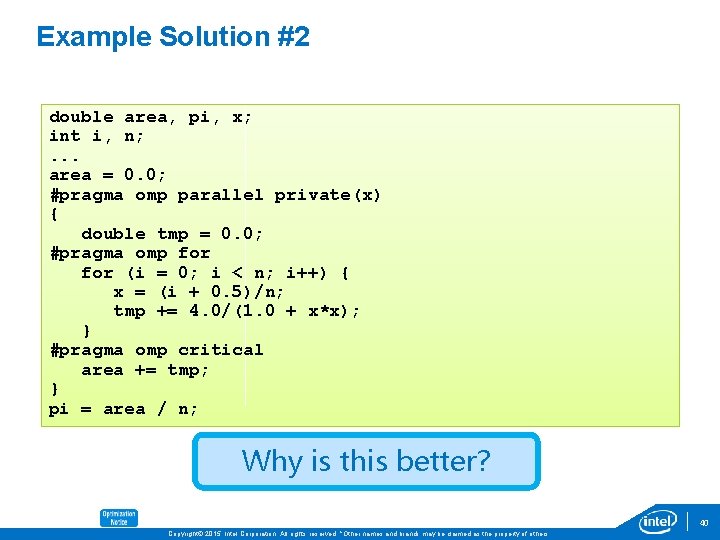

Example Solution #2

`double area, pi, x; int i, n; ... area = 0.0; #pragma omp parallel private(x) { double tmp = 0.0; #pragma omp for` followed by `for (i = 0; i < n; i++) { x = (i + 0.5)/n; tmp += 4.0/(1.0 + x*x); } #pragma omp critical area += tmp; } pi = area / n;`

Why is this better?
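Both solutions as self-contained C functions, a sketch assuming OpenMP (`-fopenmp`; without it the pragmas are ignored and the code runs serially with the same result). The loop computes π as the midpoint-rule approximation of the integral of 4/(1 + x²) from 0 to 1. The sketch declares `x` inside the loop, which makes it private just as `private(x)` does on the slides, and uses `long` for `i` and `n`; the function names are hypothetical. Solution #2 enters the critical section once per thread instead of once per iteration, which is why it serializes far less work.

```c
#include <assert.h>

/* Solution #1: the critical section runs on every iteration. */
double pi_critical(long n)
{
    double area = 0.0;
    assert(n > 0);
    #pragma omp parallel for
    for (long i = 0; i < n; i++) {
        double x = (i + 0.5) / n;        /* declared in the loop: private */
        #pragma omp critical
        area += 4.0 / (1.0 + x * x);
    }
    return area / n;
}

/* Solution #2: each thread sums privately, then enters the critical
   section once to add its partial sum to the shared total. */
double pi_partial(long n)
{
    double area = 0.0;
    assert(n > 0);
    #pragma omp parallel
    {
        double tmp = 0.0;                /* one copy per thread */
        #pragma omp for
        for (long i = 0; i < n; i++) {
            double x = (i + 0.5) / n;
            tmp += 4.0 / (1.0 + x * x);
        }
        #pragma omp critical
        area += tmp;
    }
    return area / n;
}
```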

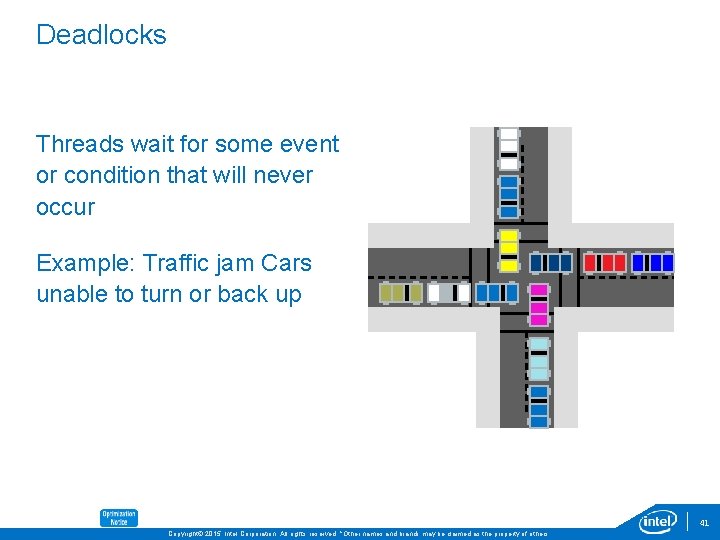

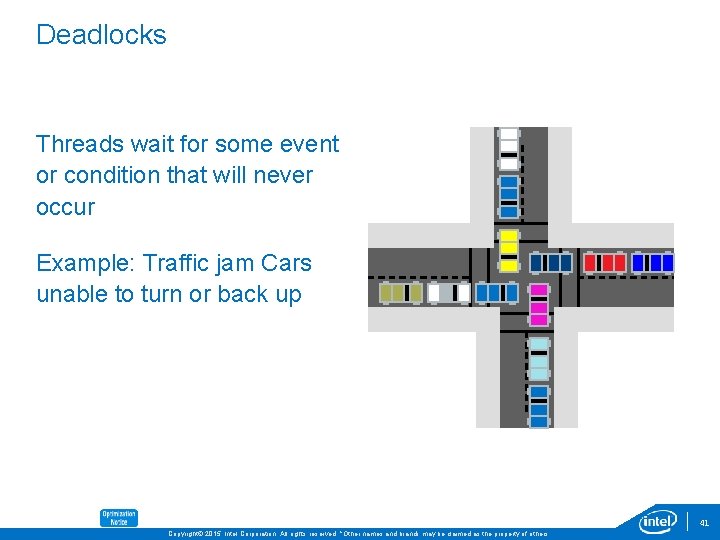

Deadlocks
- Threads wait for some event or condition that will never occur
- Example: a traffic jam, where cars are unable to turn or back up
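A common concrete case is two threads that each hold one lock and wait for the other's. This sketch, with hypothetical `account_t` and `transfer` names, shows one standard way to rule that out: every thread acquires the two mutexes in the same global order (lower `id` first), so a circular wait cannot form even when two threads transfer in opposite directions.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

/* Hypothetical account protected by its own mutex. */
typedef struct {
    pthread_mutex_t lock;
    long balance;
    int id;            /* defines the global lock order */
} account_t;

/* Locking "from" then "to" could deadlock against a thread doing the reverse.
   Always locking the lower id first removes the circular wait. */
void transfer(account_t *from, account_t *to, long amount)
{
    account_t *first = from->id < to->id ? from : to;
    account_t *second = from->id < to->id ? to : from;
    assert(from != to);
    pthread_mutex_lock(&first->lock);
    pthread_mutex_lock(&second->lock);
    from->balance -= amount;
    to->balance += amount;
    pthread_mutex_unlock(&second->lock);
    pthread_mutex_unlock(&first->lock);
}

typedef struct { account_t *from, *to; int times; } job_t;

static void *mover(void *p)
{
    job_t *j = p;
    for (int k = 0; k < j->times; k++)
        transfer(j->from, j->to, 1);
    return NULL;
}

/* Two threads transfer in opposite directions: the pattern that can deadlock
   without a lock order. Returns 0 on success. */
int opposite_transfers(account_t *a, account_t *b, int times)
{
    pthread_t t1, t2;
    job_t j1 = { a, b, times }, j2 = { b, a, times };
    if (pthread_create(&t1, NULL, mover, &j1) != 0)
        return -1;
    if (pthread_create(&t2, NULL, mover, &j2) != 0) {
        pthread_join(t1, NULL);
        return -1;
    }
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return 0;
}
```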

Agenda: 6. Heterogeneous Hardware

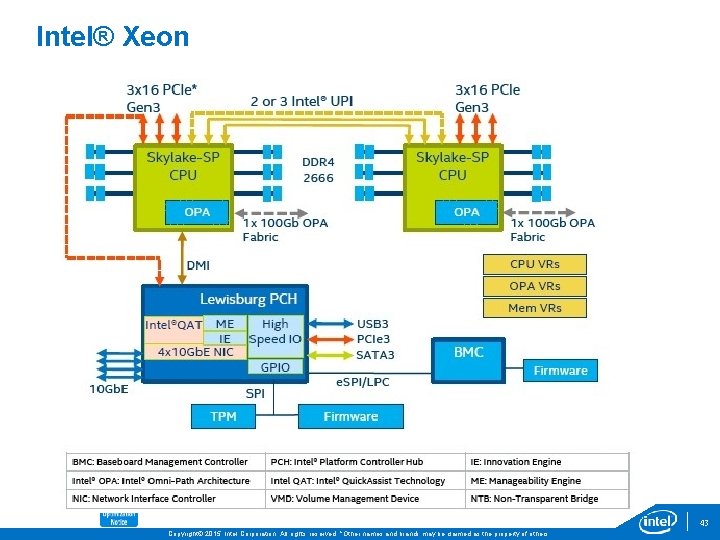

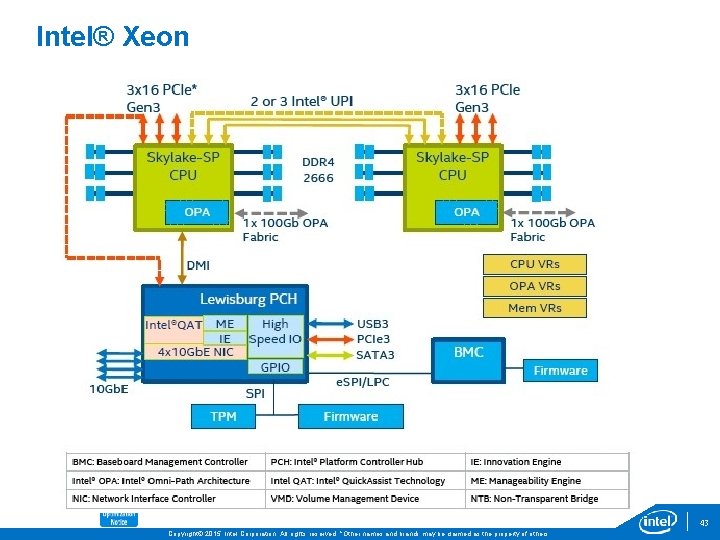

Intel® Xeon®

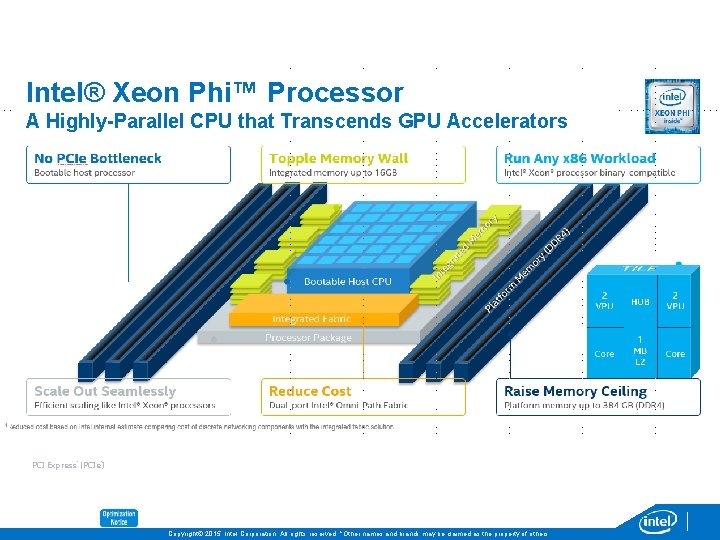

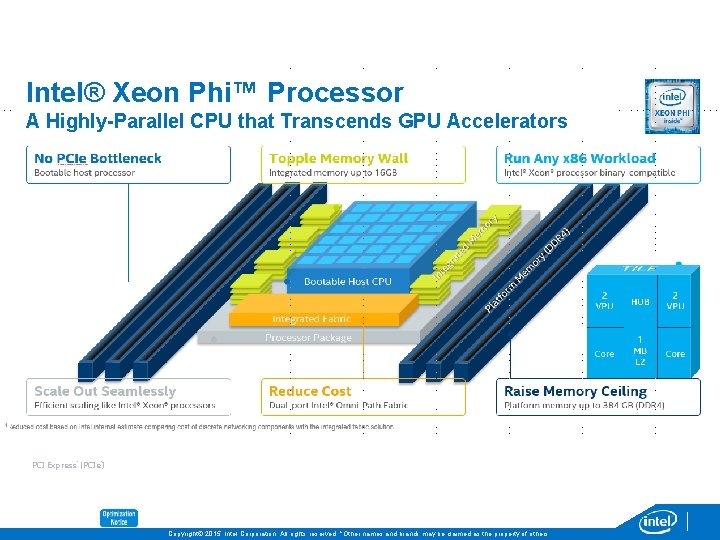

Intel® Xeon Phi™ Processor: A Highly-Parallel CPU that Transcends GPU Accelerators [Figure label: PCI Express* (PCIe*)]

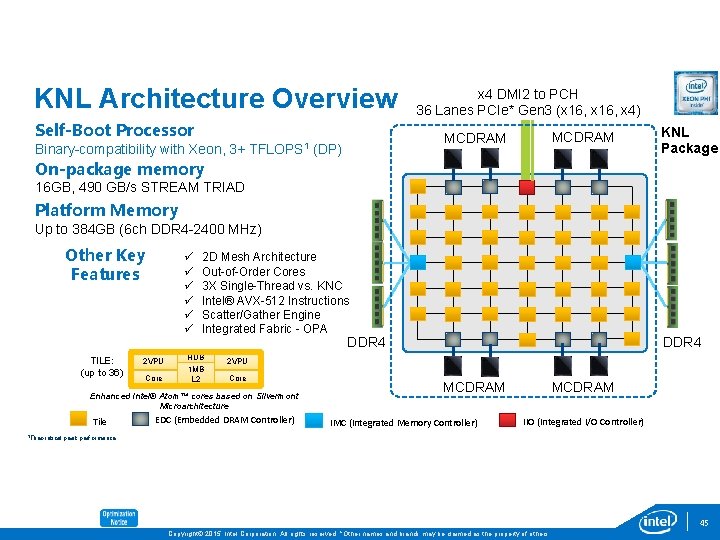

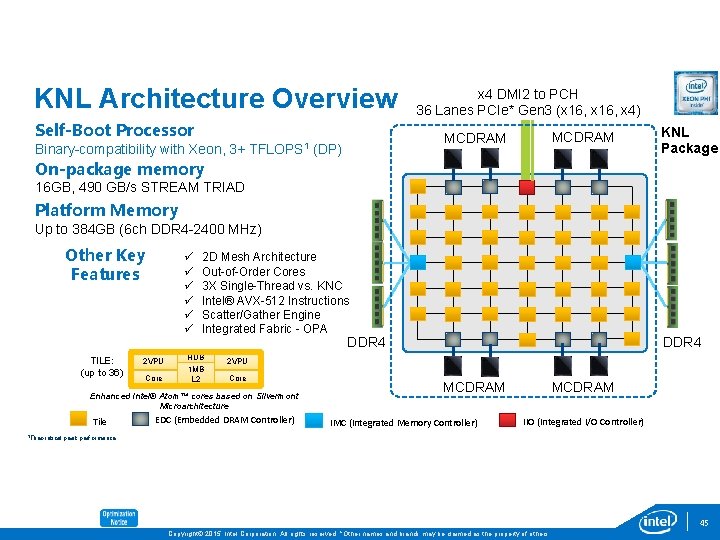

KNL Architecture Overview
- Self-boot processor; binary compatibility with Xeon; 3+ TFLOPS (DP, theoretical peak performance)
- Platform I/O: x4 DMI2 to PCH; 36 lanes PCIe* Gen3 (x16, x4)
- MCDRAM on-package memory: 16 GB, 490 GB/s STREAM TRIAD
- Platform memory: up to 384 GB (6 channels DDR4-2400)
- Tiles (up to 36): each tile holds two cores, each core with 2 VPUs, sharing 1 MB of L2 and a hub
- Cores: enhanced Intel® Atom™ cores based on the Silvermont microarchitecture
- Other key features: 2D mesh architecture; out-of-order cores; 3X single-thread performance vs. KNC; Intel® AVX-512 instructions; scatter/gather engine; integrated fabric (OPA)
- Figure legend: EDC (Embedded DRAM Controller), IMC (Integrated Memory Controller), IIO (Integrated I/O Controller), DDR4, MCDRAM

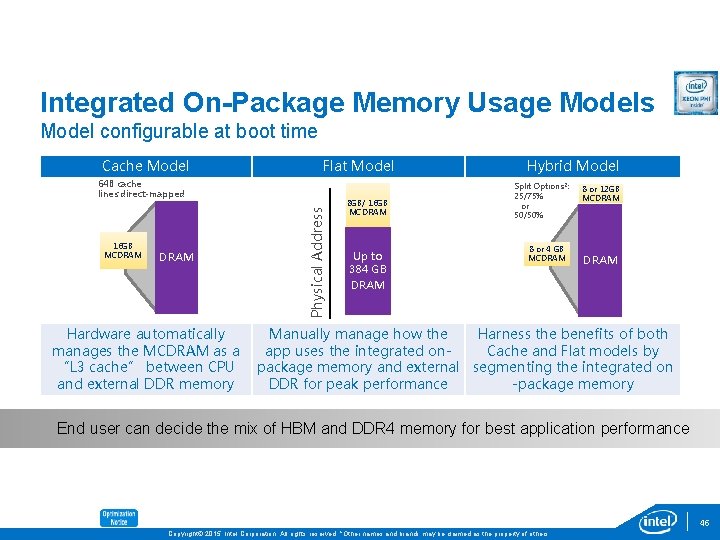

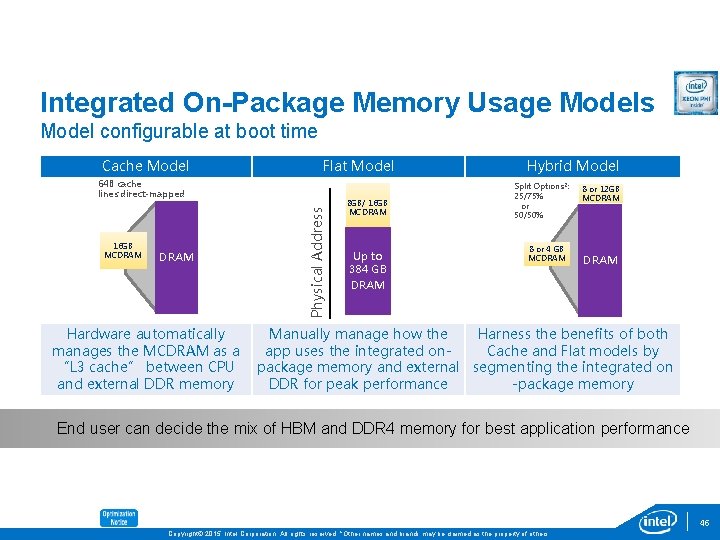

Integrated On-Package Memory Usage Models (model configurable at boot time)
- Cache model: hardware automatically manages the 16 GB MCDRAM as an “L3 cache” between the CPU and external DDR memory (64 B cache lines, direct-mapped)
- Flat model: MCDRAM (8 GB/16 GB) and up to 384 GB of DRAM are both part of the physical address space; manually manage how the app uses the integrated on-package memory and external DDR for peak performance
- Hybrid model: harness the benefits of both the cache and flat models by segmenting the integrated on-package memory; split options 25/75% or 50/50% (8 or 4 GB and 8 or 12 GB of MCDRAM)

End users can decide the mix of HBM and DDR4 memory for best application performance.

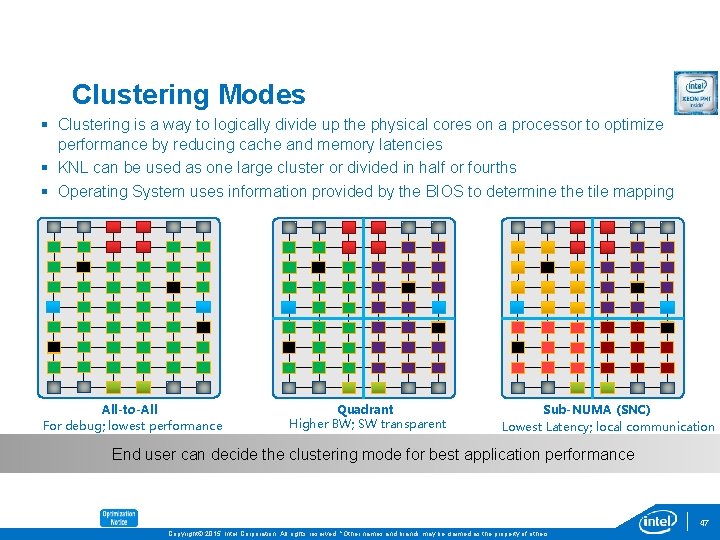

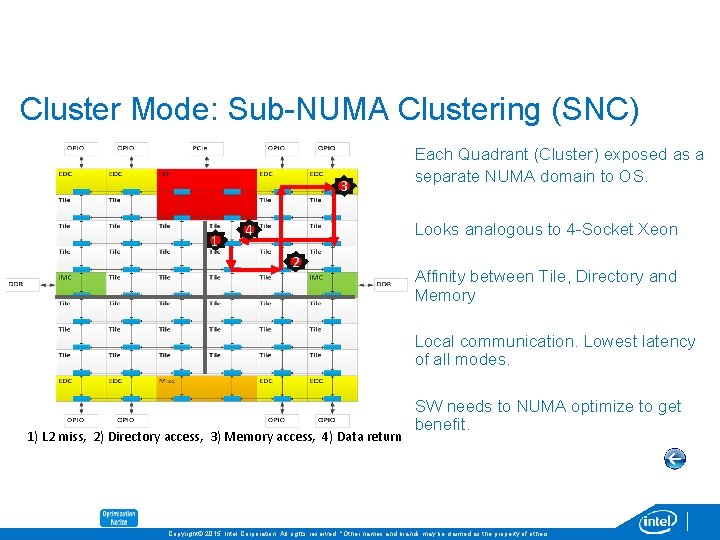

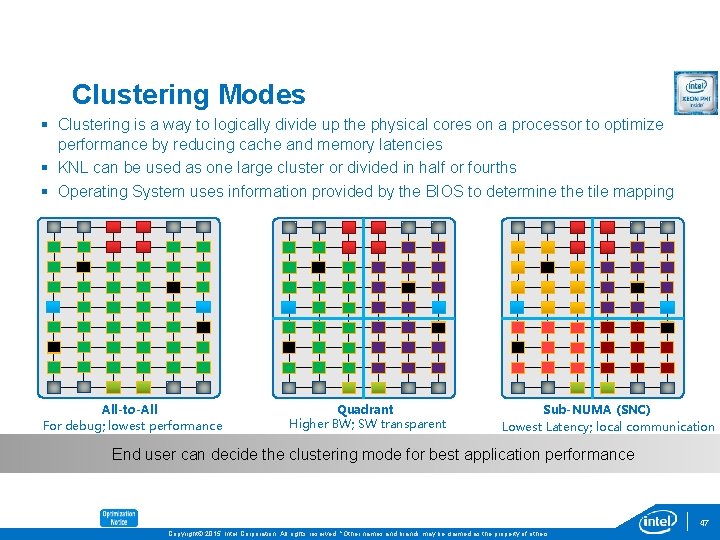

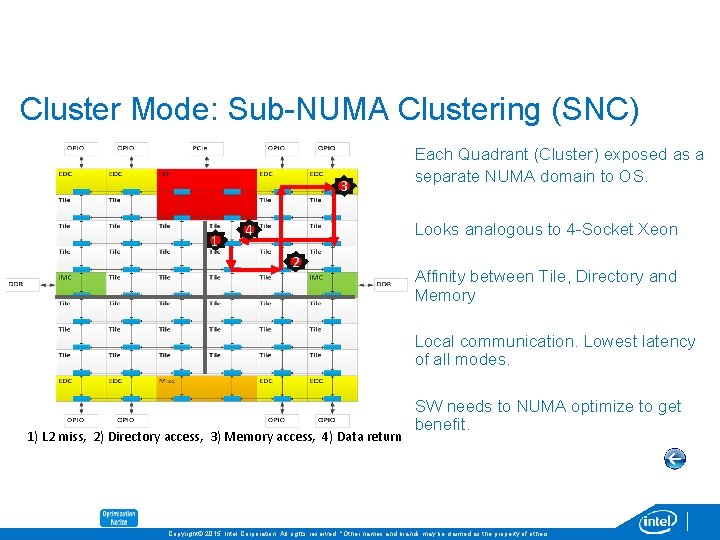

Clustering Modes
- Clustering is a way to logically divide up the physical cores on a processor to optimize performance by reducing cache and memory latencies
- KNL can be used as one large cluster or divided into halves or quarters
- The operating system uses information provided by the BIOS to determine the tile mapping

| Mode | Characteristics |
|---|---|
| All-to-All | For debug; lowest performance |
| Quadrant | Higher BW; SW transparent |
| Sub-NUMA (SNC) | Lowest latency; local communication |

End users can decide the clustering mode for best application performance.

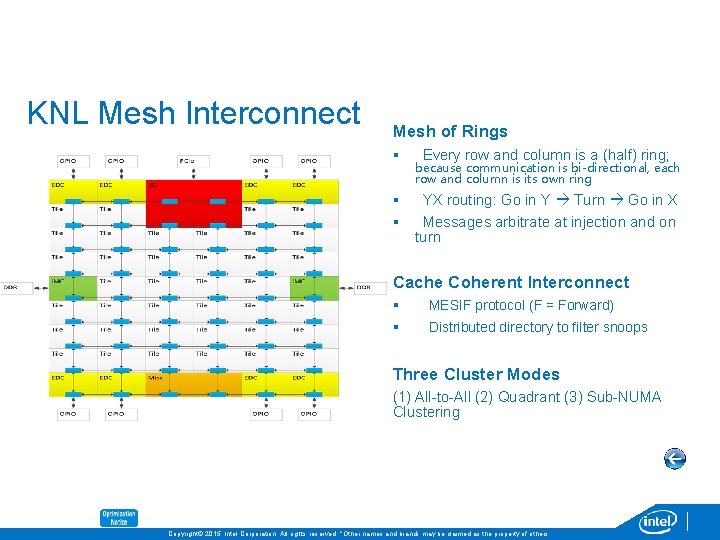

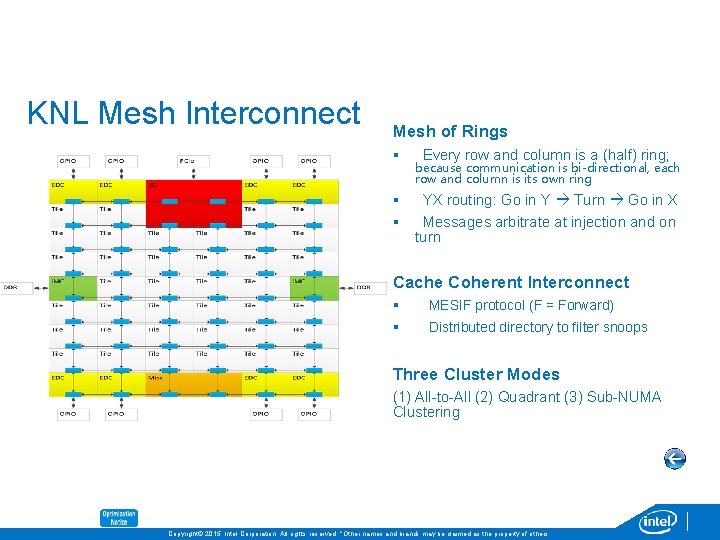

KNL Mesh Interconnect

Mesh of Rings
- Every row and column is a (half) ring; because communication is bi-directional, each row and column is its own ring
- YX routing: go in Y, turn, go in X
- Messages arbitrate at injection and on turn

Cache Coherent Interconnect
- MESIF protocol (F = Forward)
- Distributed directory to filter snoops

Three cluster modes: (1) All-to-All, (2) Quadrant, (3) Sub-NUMA Clustering
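A minimal sketch of YX routing on a 2D mesh, assuming tiles addressed by hypothetical (column x, row y) coordinates: the message first travels along its column until it reaches the destination row, turns once, then travels along that row. It models only the route (the hop count is |Δy| + |Δx|), not the ring structure, arbitration, or timing; `yx_route` and `tile_t` are hypothetical names.

```c
#include <assert.h>

/* A tile position on the mesh: column x, row y (hypothetical coordinates). */
typedef struct { int x, y; } tile_t;

/* Writes the tiles visited after the source into path[] and returns the
   number of hops. Phase 1 moves in Y only; phase 2, after the single turn,
   moves in X only. */
int yx_route(tile_t src, tile_t dst, tile_t *path, int max_hops)
{
    tile_t cur = src;
    int hops = 0;
    while (cur.y != dst.y) {                 /* go in Y */
        cur.y += (dst.y > cur.y) ? 1 : -1;
        assert(hops < max_hops);
        path[hops++] = cur;
    }
    while (cur.x != dst.x) {                 /* turn, then go in X */
        cur.x += (dst.x > cur.x) ? 1 : -1;
        assert(hops < max_hops);
        path[hops++] = cur;
    }
    return hops;
}
```

Routing from (0, 0) to (3, 2) visits (0, 1) and (0, 2), turns there, and then visits (1, 2), (2, 2), (3, 2): five hops.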

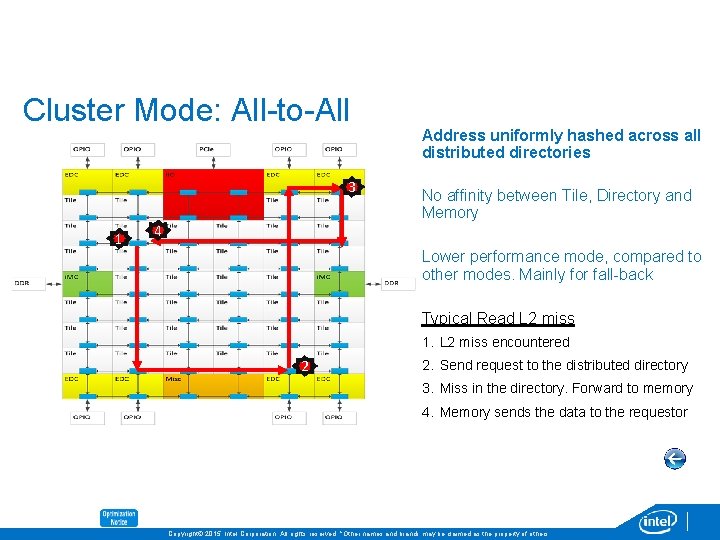

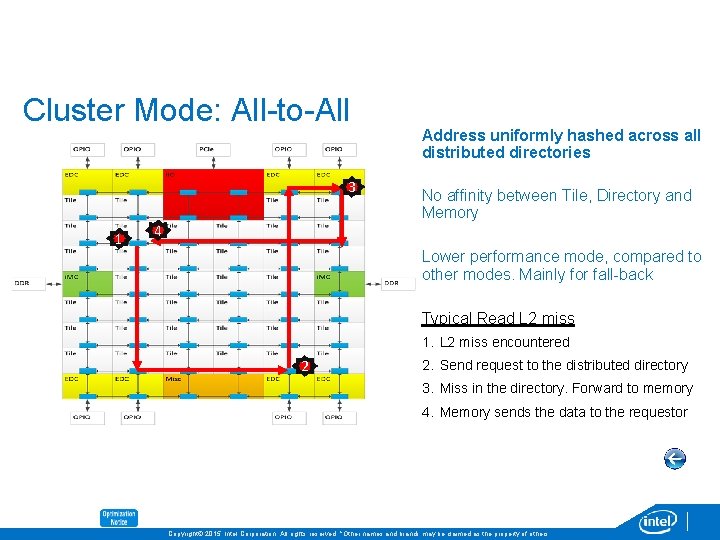

Cluster Mode: All-to-All
- Address uniformly hashed across all distributed directories
- No affinity between tile, directory, and memory
- Lower performance mode compared to the other modes; mainly for fall-back

Typical read L2 miss:
1. L2 miss encountered
2. Send request to the distributed directory
3. Miss in the directory; forward to memory
4. Memory sends the data to the requestor

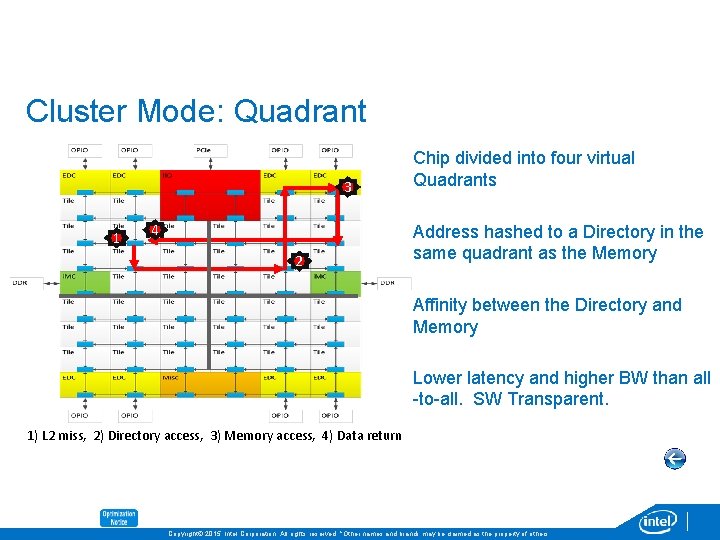

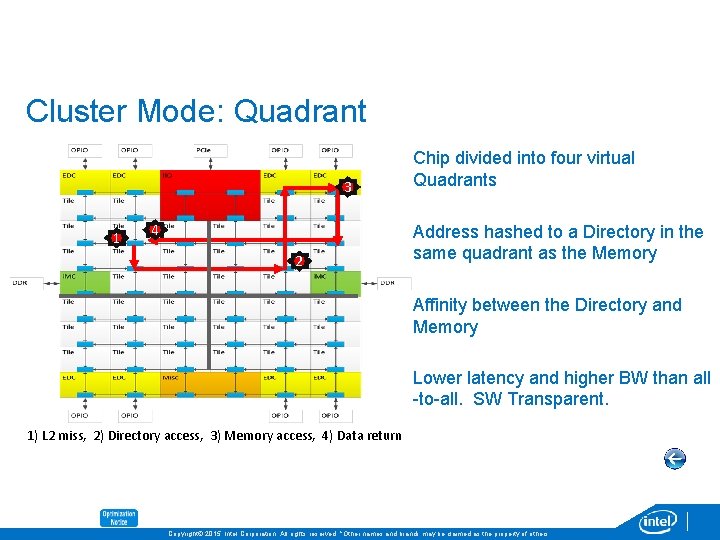

Cluster Mode: Quadrant
- Chip divided into four virtual quadrants
- Address hashed to a directory in the same quadrant as the memory
- Affinity between the directory and memory
- Lower latency and higher BW than All-to-All; SW transparent

Read L2 miss: 1) L2 miss, 2) directory access, 3) memory access, 4) data return
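To make the affinity difference concrete, a toy model: the address-to-memory and address-to-directory hash functions below are invented for illustration (the real KNL hashes are not given here), and tiles are numbered so that each quadrant holds 9 of the 36 tiles. In All-to-All the directory is chosen among all tiles regardless of which quadrant's memory owns the address; in Quadrant mode it is always chosen inside the owning quadrant.

```c
#include <assert.h>

#define TILES 36              /* up to 36 tiles on KNL */
#define QUADRANTS 4
#define TILES_PER_Q (TILES / QUADRANTS)

/* All functions here are hypothetical toys, not the real KNL hashes. */

/* Quadrant whose memory owns an address (toy rule on 4 KB granularity). */
int memory_quadrant(unsigned long addr) { return (int)((addr >> 12) % QUADRANTS); }

/* Quadrant a tile sits in, with tiles numbered quadrant by quadrant. */
int tile_quadrant(int tile) { return tile / TILES_PER_Q; }

/* All-to-All: hash the 64-byte line address over every directory on the chip. */
int directory_all_to_all(unsigned long addr) { return (int)((addr >> 6) % TILES); }

/* Quadrant: hash only over the directories in the owning memory's quadrant. */
int directory_quadrant(unsigned long addr)
{
    return memory_quadrant(addr) * TILES_PER_Q + (int)((addr >> 6) % TILES_PER_Q);
}
```

Under these toy rules, the directory chosen in Quadrant mode always sits in the same quadrant as the memory, while the All-to-All choice often does not.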

Cluster Mode: Sub-NUMA Clustering (SNC)
- Each quadrant (cluster) exposed as a separate NUMA domain to the OS; looks analogous to a 4-socket Xeon
- Affinity between tile, directory, and memory
- Local communication; lowest latency of all modes
- SW needs to be NUMA-optimized to get the benefit

Read L2 miss: 1) L2 miss, 2) directory access, 3) memory access, 4) data return

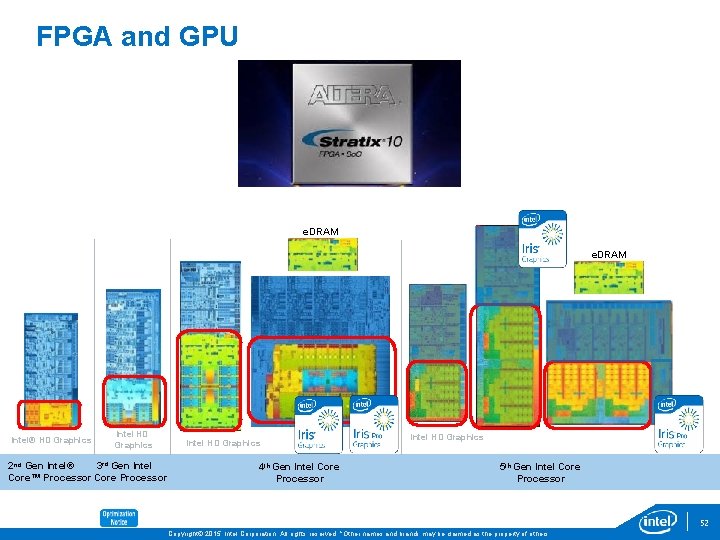

FPGA and GPU

[Figure: Intel® HD Graphics integrated into 2nd, 3rd, 4th, and 5th Gen Intel® Core™ processors; eDRAM]

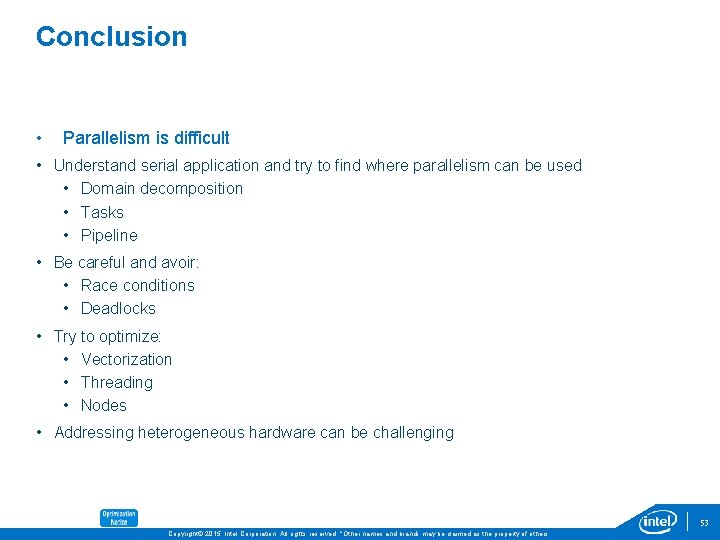

Conclusion
- Parallelism is difficult
- Understand the serial application and try to find where parallelism can be used
  - Domain decomposition
  - Tasks
  - Pipeline
- Be careful and avoid:
  - Race conditions
  - Deadlocks
- Try to optimize:
  - Vectorization
  - Threading
  - Nodes
- Addressing heterogeneous hardware can be challenging

Legal Disclaimer & Optimization Notice

INFORMATION IN THIS DOCUMENT IS PROVIDED “AS IS”. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. INTEL ASSUMES NO LIABILITY WHATSOEVER AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO THIS INFORMATION INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT.

Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors. Performance tests, such as SYSmark and MobileMark, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products.

Copyright © 2015, Intel Corporation. All rights reserved. Intel, Pentium, Xeon Phi, Core, VTune, Cilk, and the Intel logo are trademarks of Intel Corporation in the U.S. and other countries.

Materials distributed for lab sessions may redistribute source codes obtained under various Open Source licenses with additional materials supporting the lab distributed under the Intel Sample Source Code License. A copy of this latter license should be an included file within the distribution and covers this presentation along with lab source samples. The passed-through Open Source projects might not perform as well as the originals, since lab preparation may include the de-optimization of certain sections to provide lab examples for analysis tool exercises.

Optimization Notice

Intel’s compilers may or may not optimize to the same degree for non-Intel microprocessors for optimizations that are not unique to Intel microprocessors. These optimizations include SSE2, SSE3, and SSSE3 instruction sets and other optimizations. Intel does not guarantee the availability, functionality, or effectiveness of any optimization on microprocessors not manufactured by Intel. Microprocessor-dependent optimizations in this product are intended for use with Intel microprocessors. Certain optimizations not specific to Intel microarchitecture are reserved for Intel microprocessors. Please refer to the applicable product User and Reference Guides for more information regarding the specific instruction sets covered by this notice. Notice revision #20110804