Parallel Processing CS 730 Lecture 5 Shared Memory

- Slides: 18

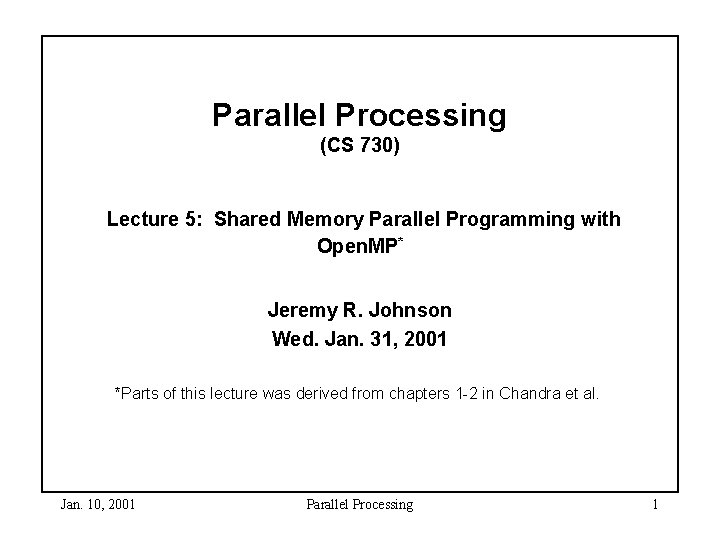

Parallel Processing (CS 730)
Lecture 5: Shared Memory Parallel Programming with OpenMP*

Jeremy R. Johnson
Wed. Jan. 31, 2001

*Parts of this lecture were derived from Chapters 1-2 in Chandra et al.

Jan. 10, 2001 Parallel Processing 1

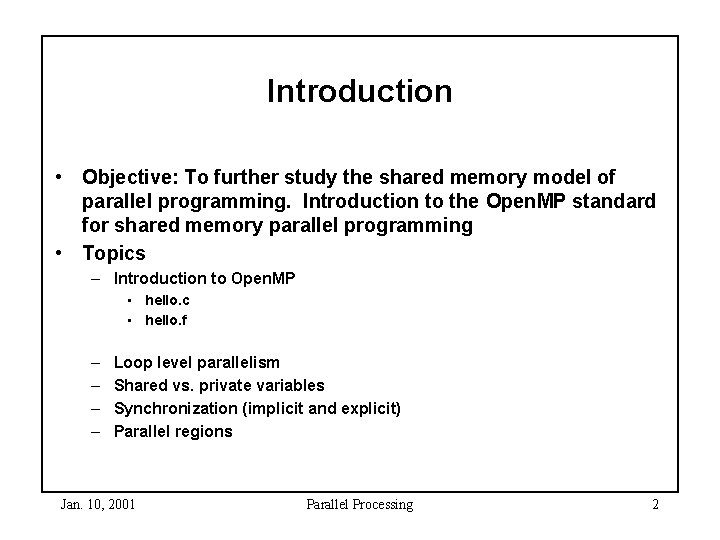

Introduction

- Objective: To further study the shared memory model of parallel programming, with an introduction to the OpenMP standard for shared memory parallel programming.
- Topics
  - Introduction to OpenMP
    - hello.c
    - hello.f
  - Loop level parallelism
  - Shared vs. private variables
  - Synchronization (implicit and explicit)
  - Parallel regions

OpenMP

- Extension to FORTRAN, C/C++
  - Uses directives (comments in FORTRAN, pragmas in C/C++)
    - ignored without compiler support
    - some library support required
- Shared memory model
  - parallel regions
  - loop level parallelism
  - implicit thread model
  - communication via shared address space
  - private vs. shared variables (declaration)
  - explicit synchronization via directives (e.g. critical)
  - library routines for returning thread information (e.g. omp_get_num_threads(), omp_get_thread_num())
  - environment variables used to provide system info (e.g. OMP_NUM_THREADS)
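The point that directives are ignored without compiler support can be illustrated with a minimal C sketch. It assumes the standard `_OPENMP` macro is defined only when OpenMP is enabled; the function name `team_size` and the serial fallback stubs are hypothetical and exist only for this illustration.

```c
#ifdef _OPENMP
#include <omp.h>   /* library routines, available only with OpenMP support */
#else
/* Serial fallbacks: an assumption of this sketch, not part of OpenMP. */
static int omp_get_num_threads(void) { return 1; }
static int omp_get_thread_num(void)  { return 0; }
#endif

/* Returns the number of threads that executed the parallel region.
 * Without OpenMP support the pragma is ignored and the body runs once. */
int team_size(void)
{
    int size = 1;                     /* shared: declared outside the region */
    #pragma omp parallel
    {
        int id = omp_get_thread_num();  /* private: declared inside the region */
        if (id == 0)
            size = omp_get_num_threads();
    }
    return size;
}
```

Built without OpenMP support, `team_size()` returns 1. Built with it, the result normally follows OMP_NUM_THREADS, although the runtime may choose a smaller team.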

Benefits

- Provides incremental parallelism
- Small increase in code size
- Simpler model than message passing
- Easier to use than a thread library
- With hardware and compiler support, finer granularity than message passing

Further Information

- Adopted as a standard in 1997
  - Initiated by SGI
- www.openmp.org
- Chandra, Dagum, Kohr, Maydan, McDonald, Menon, “Parallel Programming in OpenMP”, Morgan Kaufmann Publishers, 2001.

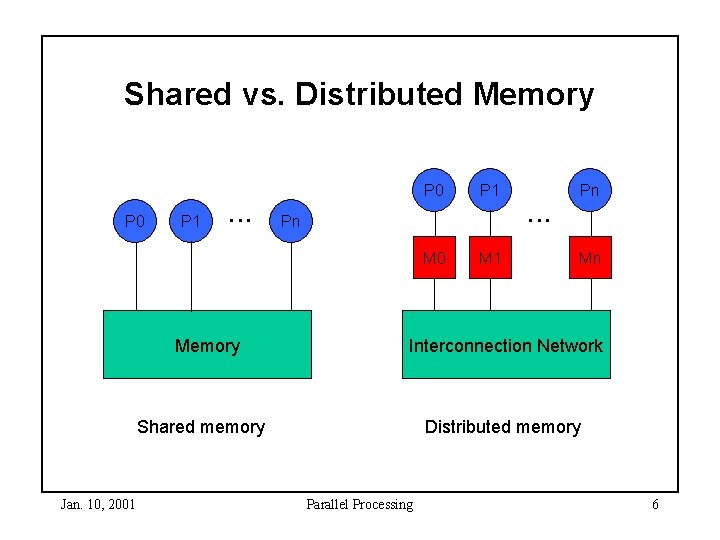

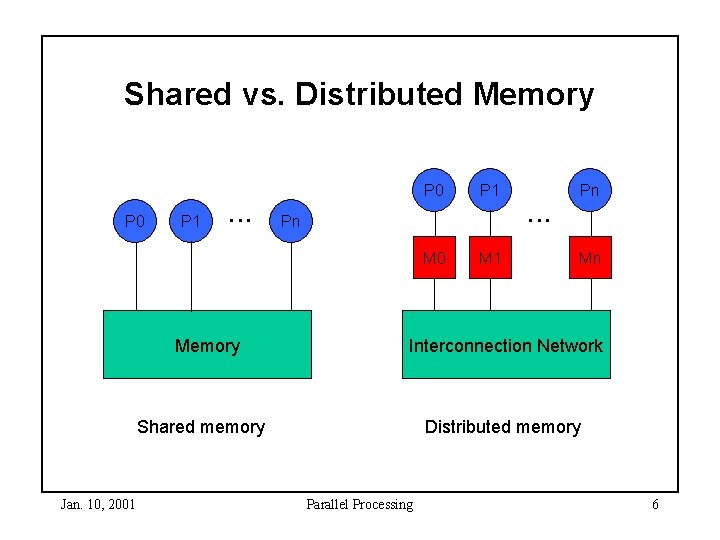

Shared vs. Distributed Memory

[Figure: two diagrams. Shared memory: processors P0, P1, ..., Pn and a single Memory. Distributed memory: processors P0, P1, ..., Pn with local memories M0, M1, ..., Mn and an Interconnection Network.]

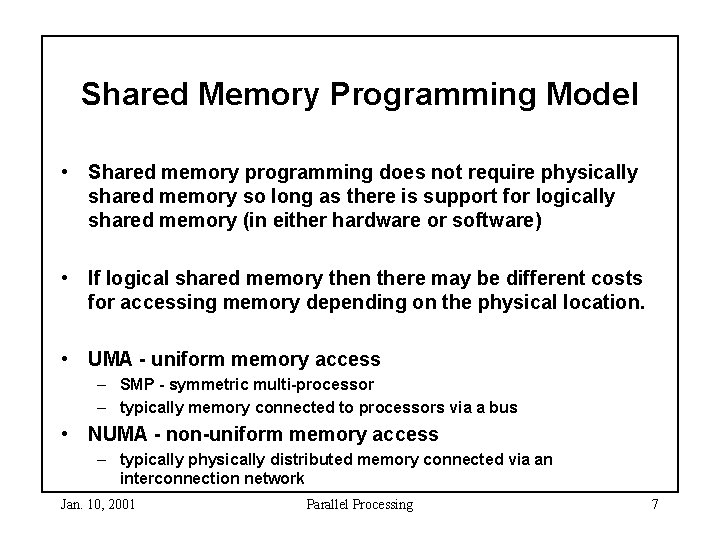

Shared Memory Programming Model

- Shared memory programming does not require physically shared memory, so long as there is support for logically shared memory (in either hardware or software).
- With logically shared memory, the cost of accessing memory may differ depending on its physical location.
- UMA - uniform memory access
  - SMP - symmetric multi-processor
  - typically memory connected to processors via a bus
- NUMA - non-uniform memory access
  - typically physically distributed memory connected via an interconnection network

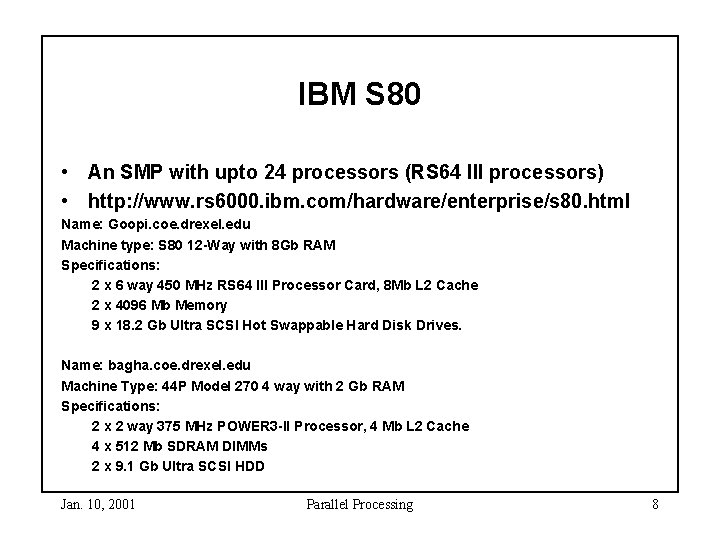

IBM S80

- An SMP with up to 24 processors (RS64 III processors)
- http://www.rs6000.ibm.com/hardware/enterprise/s80.html

Name: Goopi.coe.drexel.edu
Machine type: S80 12-way with 8 GB RAM
Specifications:

- 2 x 6-way 450 MHz RS64 III processor card, 8 MB L2 cache
- 2 x 4096 MB memory
- 9 x 18.2 GB Ultra SCSI hot-swappable hard disk drives

Name: bagha.coe.drexel.edu
Machine type: 44P Model 270 4-way with 2 GB RAM
Specifications:

- 2 x 2-way 375 MHz POWER3-II processor, 4 MB L2 cache
- 4 x 512 MB SDRAM DIMMs
- 2 x 9.1 GB Ultra SCSI HDD

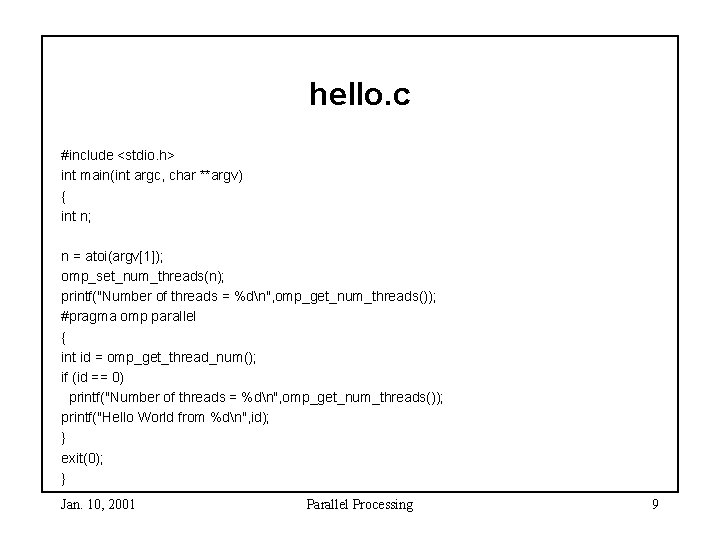

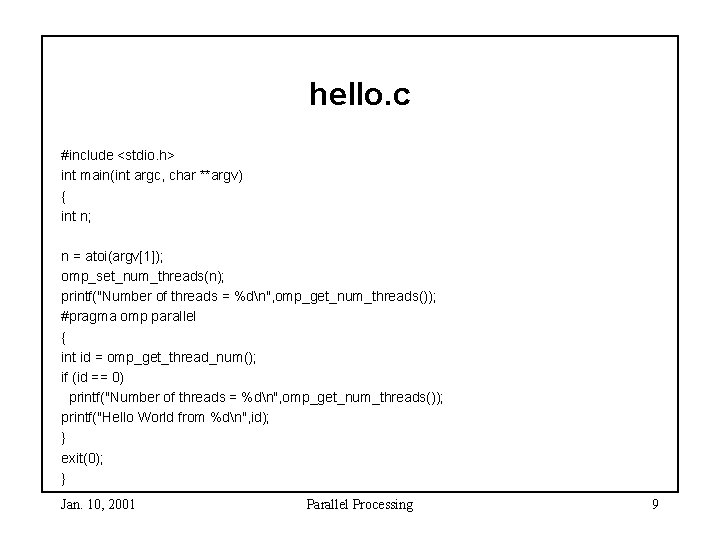

hello.c

    #include <stdio.h>
    #include <stdlib.h>   /* atoi, exit */
    #include <omp.h>      /* OpenMP library routines */

    int main(int argc, char **argv)
    {
        int n;

        if (argc < 2) {
            fprintf(stderr, "usage: %s <num_threads>\n", argv[0]);
            exit(1);
        }
        n = atoi(argv[1]);
        omp_set_num_threads(n);
        /* Outside a parallel region this reports 1. */
        printf("Number of threads = %d\n", omp_get_num_threads());
    #pragma omp parallel
        {
            int id = omp_get_thread_num();
            if (id == 0)
                printf("Number of threads = %d\n", omp_get_num_threads());
            printf("Hello World from %d\n", id);
        }
        exit(0);
    }

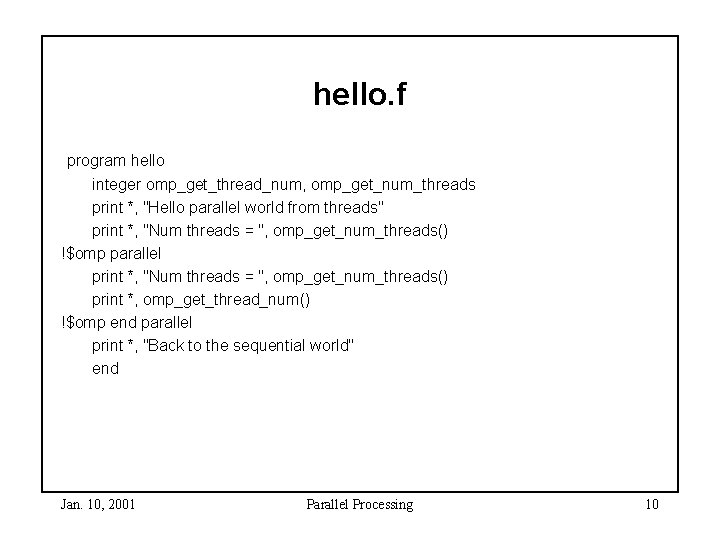

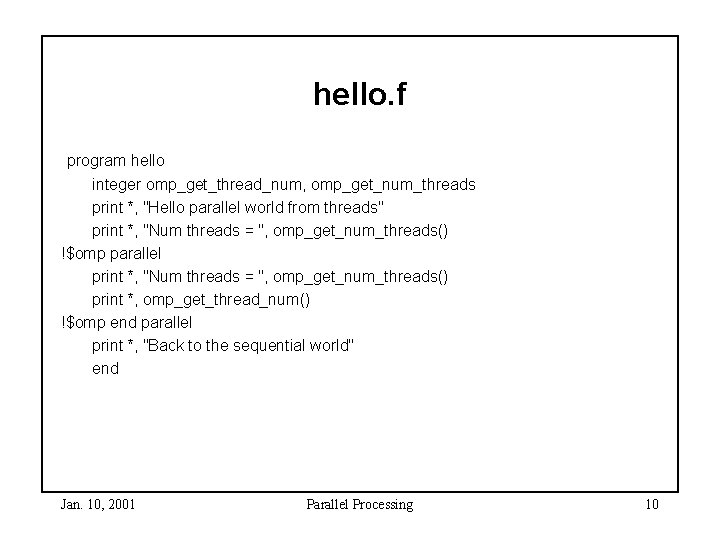

hello.f

          program hello
          integer omp_get_thread_num, omp_get_num_threads
          print *, "Hello parallel world from threads"
          print *, "Num threads = ", omp_get_num_threads()
    !$omp parallel
          print *, "Num threads = ", omp_get_num_threads()
          print *, omp_get_thread_num()
    !$omp end parallel
          print *, "Back to the sequential world"
          end

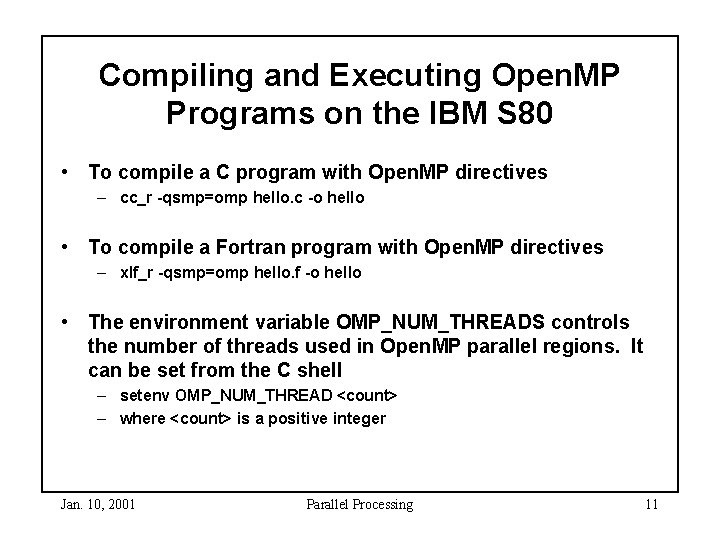

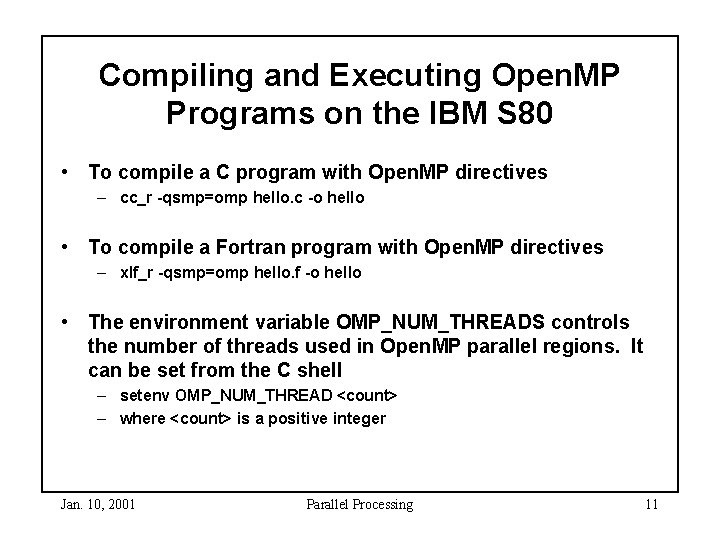

Compiling and Executing OpenMP Programs on the IBM S80

- To compile a C program with OpenMP directives
  - `cc_r -qsmp=omp hello.c -o hello`
- To compile a Fortran program with OpenMP directives
  - `xlf_r -qsmp=omp hello.f -o hello`
- The environment variable OMP_NUM_THREADS controls the number of threads used in OpenMP parallel regions. It can be set from the C shell
  - `setenv OMP_NUM_THREADS <count>`
  - where `<count>` is a positive integer

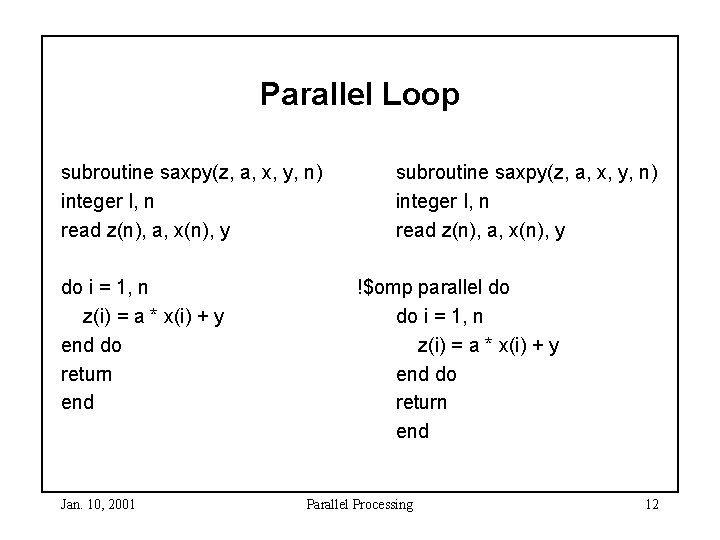

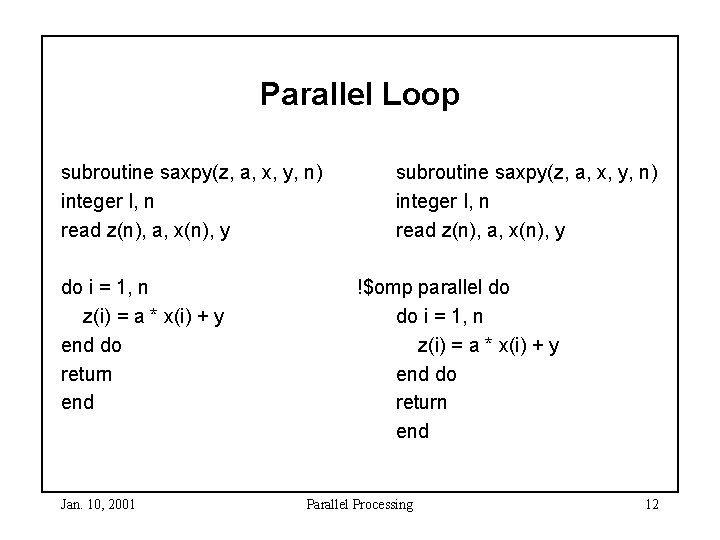

Parallel Loop

Serial:

          subroutine saxpy(z, a, x, y, n)
          integer i, n
          real z(n), a, x(n), y
          do i = 1, n
             z(i) = a * x(i) + y
          end do
          return
          end

Parallel:

          subroutine saxpy(z, a, x, y, n)
          integer i, n
          real z(n), a, x(n), y
    !$omp parallel do
          do i = 1, n
             z(i) = a * x(i) + y
          end do
          return
          end
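For comparison with hello.c, the same loop can be sketched in C. This is an illustrative translation, not code from the lecture: it keeps y as a scalar as in the Fortran version, uses 0-based indexing, and relies on `#pragma omp parallel for`, the C counterpart of `!$omp parallel do`.

```c
/* z[i] = a * x[i] + y for i = 0 .. n-1.
 * Iterations are independent, so they can be divided among threads.
 * The loop index i is private to each thread; z, a, x, y, n are shared. */
void saxpy(float *z, float a, const float *x, float y, int n)
{
    int i;
    #pragma omp parallel for
    for (i = 0; i < n; i++)
        z[i] = a * x[i] + y;
}
```

Compiled without OpenMP support, the pragma is ignored and the loop runs serially with the same result.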

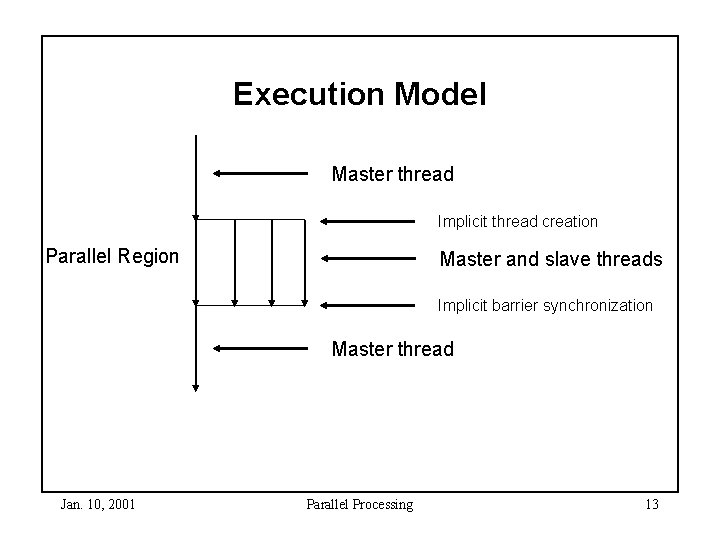

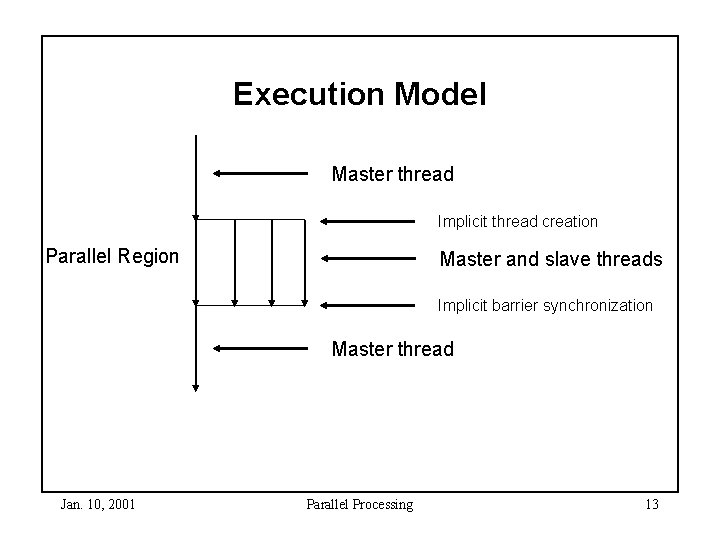

Execution Model

- Master thread
- Implicit thread creation
- Parallel region: master and slave threads
- Implicit barrier synchronization
- Master thread
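The fork-join sequence above can be observed with a small C sketch. The function name `fork_join_check` and the serial fallback stubs are hypothetical. The sketch relies on the implicit barrier at the end of a parallel region: once the master thread continues, every thread in the team has finished its part.

```c
#include <stdlib.h>
#ifdef _OPENMP
#include <omp.h>
#else
/* Serial fallbacks: an assumption of this sketch, not part of OpenMP. */
static int omp_get_max_threads(void) { return 1; }
static int omp_get_num_threads(void) { return 1; }
static int omp_get_thread_num(void)  { return 0; }
#endif

/* Returns 1 if every thread of the team had recorded its work by the
 * time the master thread resumed after the parallel region. */
int fork_join_check(void)
{
    int max = omp_get_max_threads();          /* upper bound on team size */
    int *done = calloc((size_t)max, sizeof *done);
    int team = 1, ok = 1, i;

    if (done == NULL)
        return 0;

    #pragma omp parallel                      /* implicit thread creation */
    {
        int id = omp_get_thread_num();
        if (id == 0)
            team = omp_get_num_threads();
        done[id] = 1;                         /* each thread marks its own slot */
    }                                         /* implicit barrier: all threads join */

    for (i = 0; i < team; i++)                /* master thread only */
        if (!done[i])
            ok = 0;

    free(done);
    return ok;
}
```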

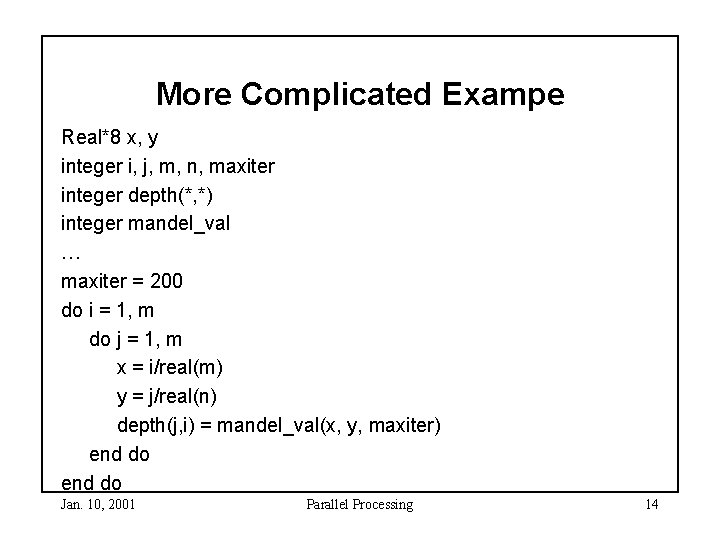

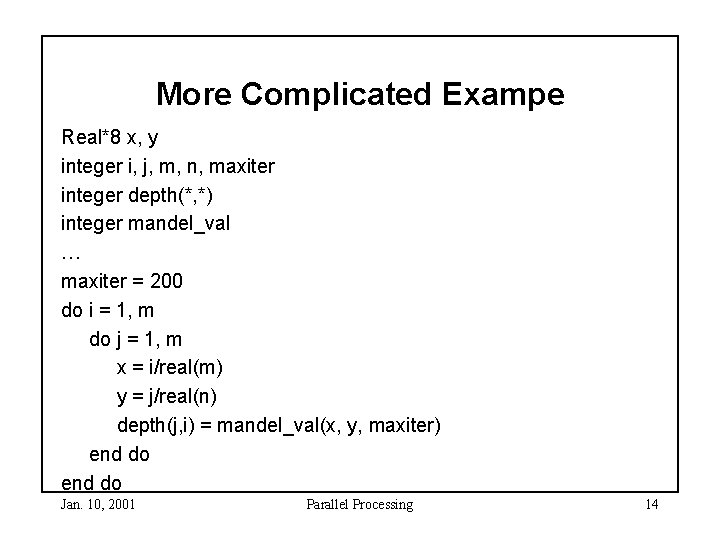

More Complicated Example

          real*8 x, y
          integer i, j, m, n, maxiter
          integer depth(*, *)
          integer mandel_val
          …
          maxiter = 200
          do i = 1, m
             do j = 1, n
                x = i/real(m)
                y = j/real(n)
                depth(j, i) = mandel_val(x, y, maxiter)
             end do
          end do

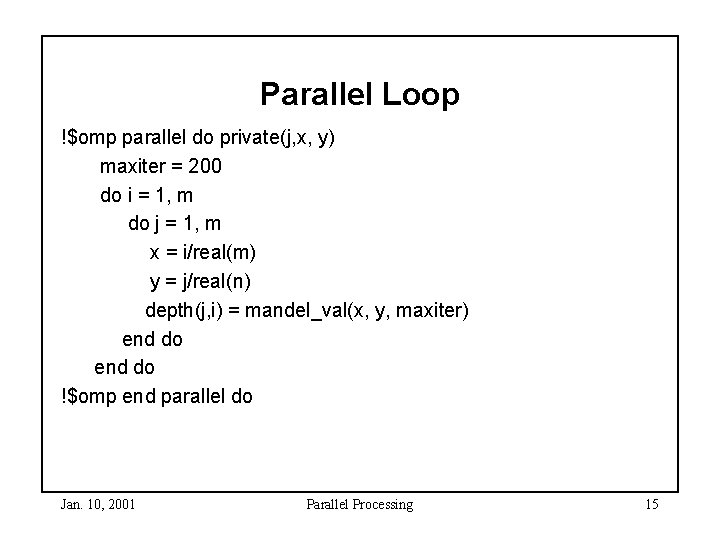

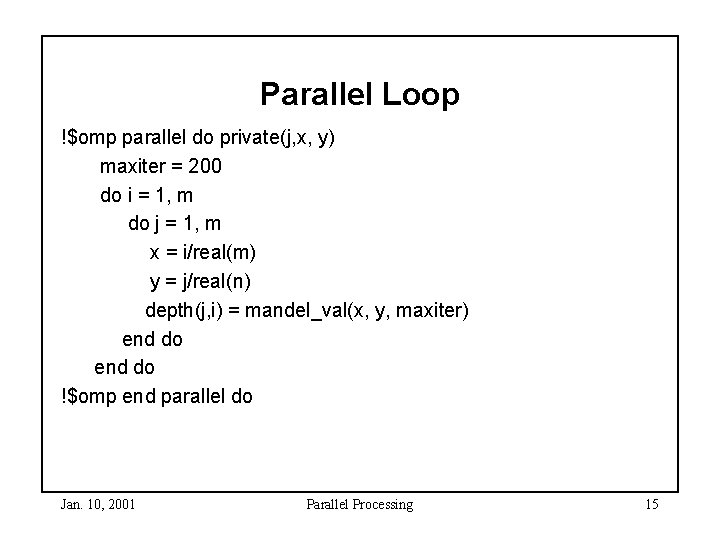

Parallel Loop

    !$omp parallel do private(j, x, y)
          maxiter = 200
          do i = 1, m
             do j = 1, n
                x = i/real(m)
                y = j/real(n)
                depth(j, i) = mandel_val(x, y, maxiter)
             end do
          end do
    !$omp end parallel do

Note: a parallel do directive must be immediately followed by the do loop, so the assignment to maxiter belongs before the directive.

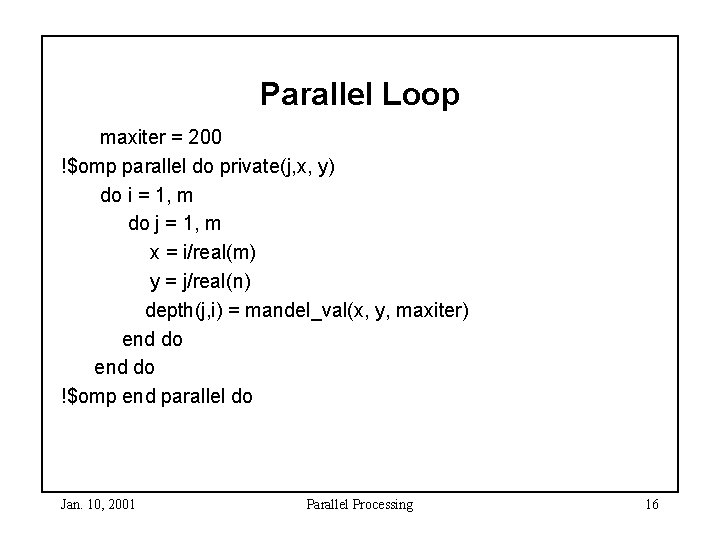

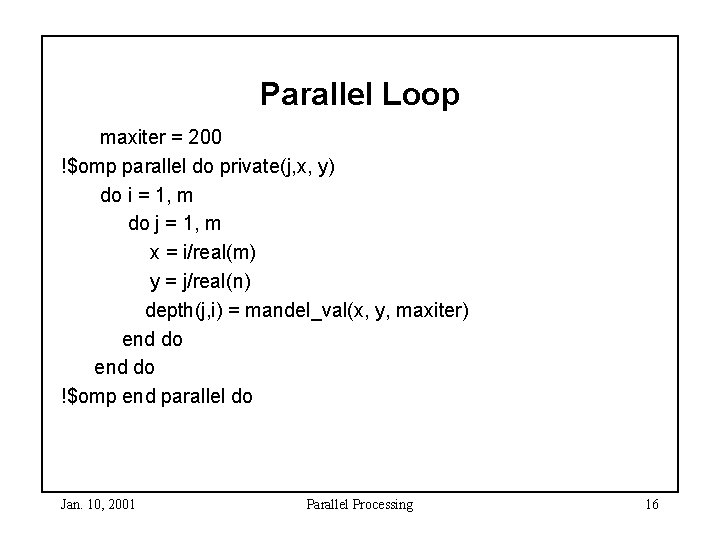

Parallel Loop

          maxiter = 200
    !$omp parallel do private(j, x, y)
          do i = 1, m
             do j = 1, n
                x = i/real(m)
                y = j/real(n)
                depth(j, i) = mandel_val(x, y, maxiter)
             end do
          end do
    !$omp end parallel do
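A C sketch of the same loop nest may help show why j, x, and y must be private. The slides do not define mandel_val, so the escape-time version here is a hypothetical stand-in. The function name `fill_depth` and the row-by-row storage of depth are also assumptions of this sketch.

```c
/* Hypothetical stand-in for mandel_val: number of iterations of
 * z = z*z + c (with c = x + iy) before |z| exceeds 2, capped at maxiter. */
static int mandel_val(double x, double y, int maxiter)
{
    double zr = 0.0, zi = 0.0;
    int k = 0;
    while (k < maxiter && zr * zr + zi * zi <= 4.0) {
        double t = zr * zr - zi * zi + x;
        zi = 2.0 * zr * zi + y;
        zr = t;
        k++;
    }
    return k;
}

/* depth is an m-by-n grid stored row by row: depth[i * n + j].
 * The outer loop is divided among threads. Its index i is private
 * automatically; j, x, y are declared outside the loop, so they would be
 * shared (and overwritten by all threads) unless listed as private. */
void fill_depth(int *depth, int m, int n, int maxiter)
{
    int i, j;
    double x, y;
    #pragma omp parallel for private(j, x, y)
    for (i = 0; i < m; i++) {
        for (j = 0; j < n; j++) {
            x = (i + 1) / (double)m;
            y = (j + 1) / (double)n;
            depth[i * n + j] = mandel_val(x, y, maxiter);
        }
    }
}
```

Each thread writes a disjoint set of rows of depth, so the shared array needs no synchronization.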

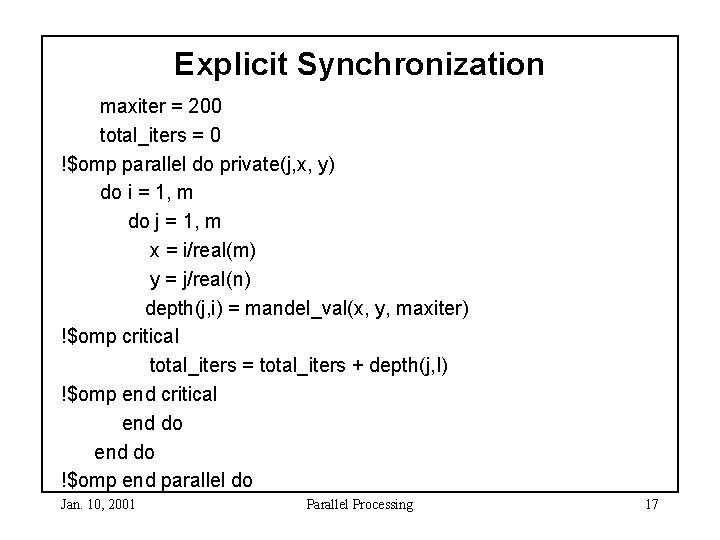

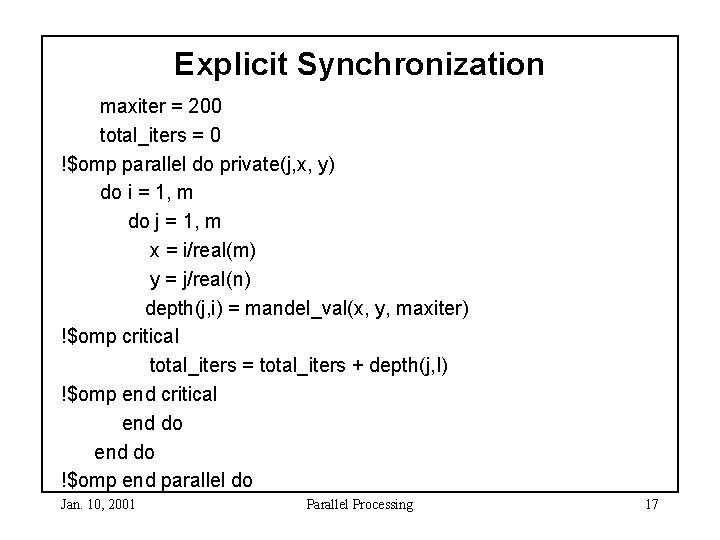

Explicit Synchronization

          maxiter = 200
          total_iters = 0
    !$omp parallel do private(j, x, y)
          do i = 1, m
             do j = 1, n
                x = i/real(m)
                y = j/real(n)
                depth(j, i) = mandel_val(x, y, maxiter)
    !$omp critical
                total_iters = total_iters + depth(j, i)
    !$omp end critical
             end do
          end do
    !$omp end parallel do
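The role of the critical section can be shown in isolation with a short C sketch. To keep it self-contained, an array of precomputed values stands in for the depth results. The function name `sum_with_critical` is hypothetical.

```c
/* Adds up count values into a shared total.
 * total_iters is shared, so the read-modify-write must be protected:
 * the critical directive lets only one thread at a time execute it. */
long sum_with_critical(const int *depth, int count)
{
    long total_iters = 0;
    int i;
    #pragma omp parallel for
    for (i = 0; i < count; i++) {
        #pragma omp critical
        total_iters = total_iters + depth[i];
    }
    return total_iters;
}
```

The result is correct, but threads take turns at the update, so a loop whose body is dominated by the critical section gains little from running in parallel.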

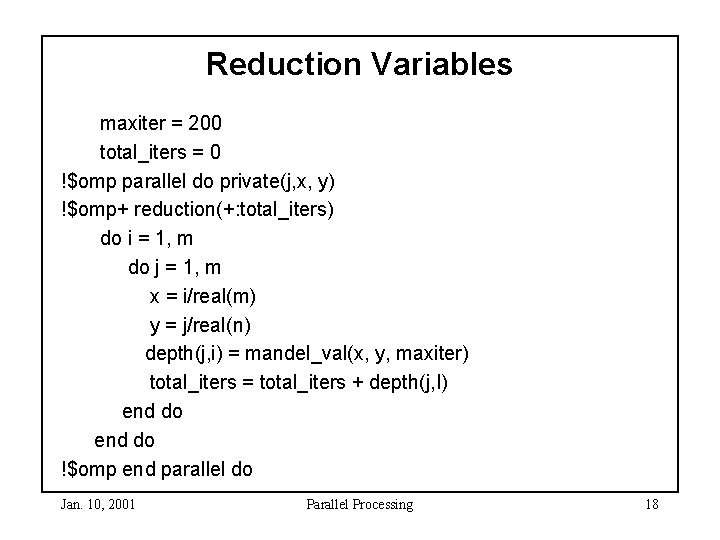

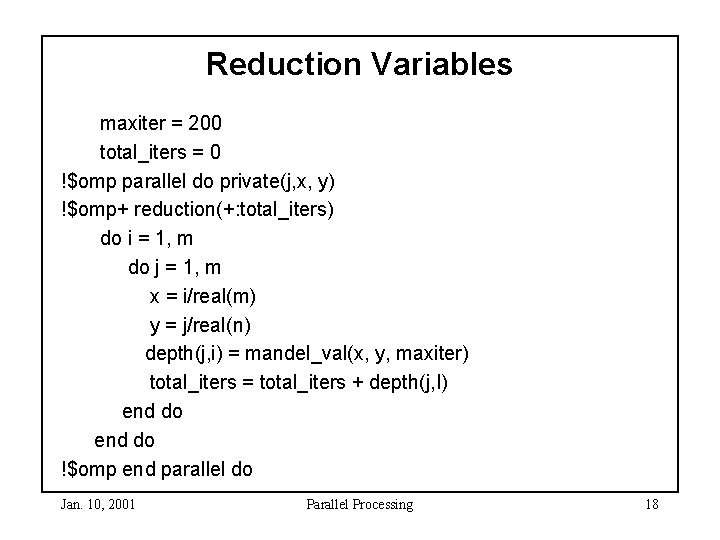

Reduction Variables

          maxiter = 200
          total_iters = 0
    !$omp parallel do private(j, x, y)
    !$omp+ reduction(+: total_iters)
          do i = 1, m
             do j = 1, n
                x = i/real(m)
                y = j/real(n)
                depth(j, i) = mandel_val(x, y, maxiter)
                total_iters = total_iters + depth(j, i)
             end do
          end do
    !$omp end parallel do
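A minimal C sketch of the reduction clause follows. As an assumption for illustration, it sums an array of precomputed values rather than calling mandel_val. The function name `sum_with_reduction` is hypothetical.

```c
/* Adds up count values using a reduction.
 * Each thread accumulates into its own private copy of total_iters,
 * initialized to 0; the copies are combined with + when the loop ends,
 * so no critical section is needed inside the loop body. */
long sum_with_reduction(const int *depth, int count)
{
    long total_iters = 0;
    int i;
    #pragma omp parallel for reduction(+:total_iters)
    for (i = 0; i < count; i++)
        total_iters += depth[i];
    return total_iters;
}
```

Integer addition gives the same total regardless of how iterations are divided. With floating-point values the combination order can change the rounding slightly.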