Parallel Performance Evaluation using the TAU Performance System

- Slides: 57

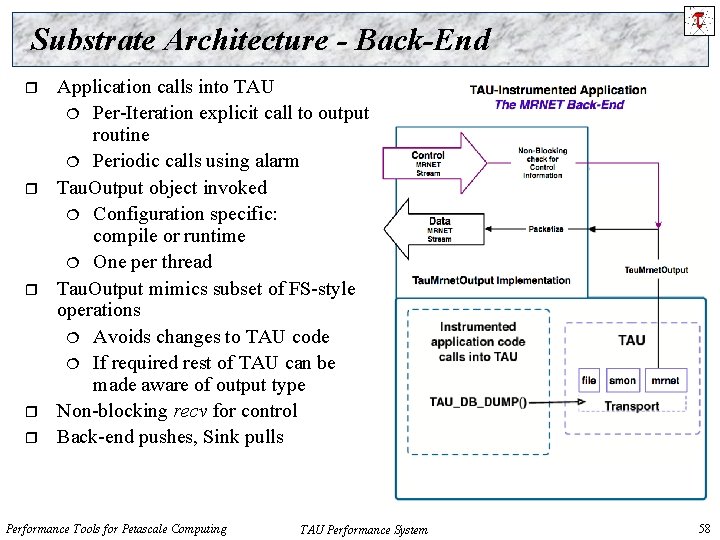

Parallel Performance Evaluation using the TAU Performance System Project

Workshop on Performance Tools for Petascale Computing, 9:30 – 10:30 am, Tuesday, July 17, 2007, Snowbird, UT

Sameer S. Shende, sameer@cs.uoregon.edu, http://www.cs.uoregon.edu/research/tau

Performance Research Laboratory, University of Oregon

Acknowledgements

- Dr. Allen D. Malony, Professor
- Alan Morris, Senior software engineer
- Wyatt Spear, Software engineer
- Scott Biersdorff, Software engineer
- Kevin Huck, Ph.D. student
- Aroon Nataraj, Ph.D. student
- Brad Davidson, Systems administrator

Performance Tools for Petascale Computing TAU Performance System 2

TAU Performance System

- Tuning and Analysis Utilities (15+ year project effort)
- Performance system framework for HPC systems
  - Integrated, scalable, flexible, and parallel
- Targets a general complex system computation model
  - Entities: nodes / contexts / threads
  - Multi-level: system / software / parallelism
  - Measurement and analysis abstraction
- Integrated toolkit for performance problem solving
  - Instrumentation, measurement, analysis, and visualization
  - Portable performance profiling and tracing facility
  - Performance data management and data mining
- Partners: LLNL, ANL, LANL, Research Center Jülich

TAU Parallel Performance System Goals

- Portable (open source) parallel performance system
  - Computer system architectures and operating systems
  - Different programming languages and compilers
- Multi-level, multi-language performance instrumentation
- Flexible and configurable performance measurement
- Support for multiple parallel programming paradigms
  - Multi-threading, message passing, mixed-mode, hybrid, object oriented (generic), component-based
- Support for performance mapping
- Integration of leading performance technology
- Scalable (very large) parallel performance analysis

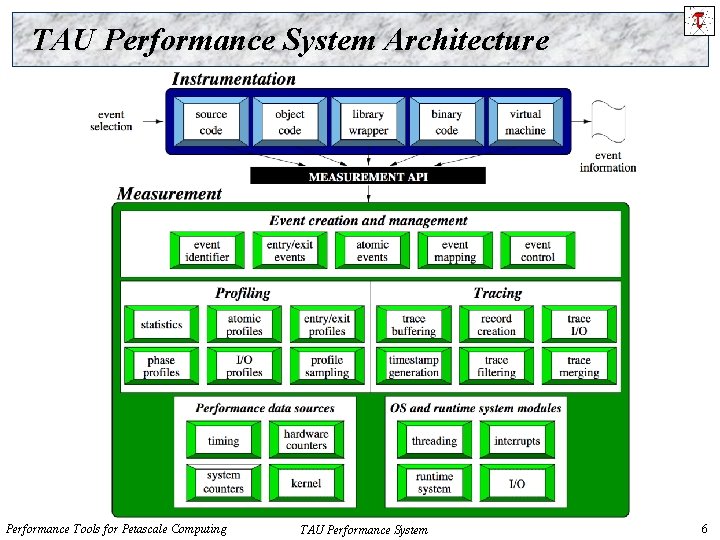

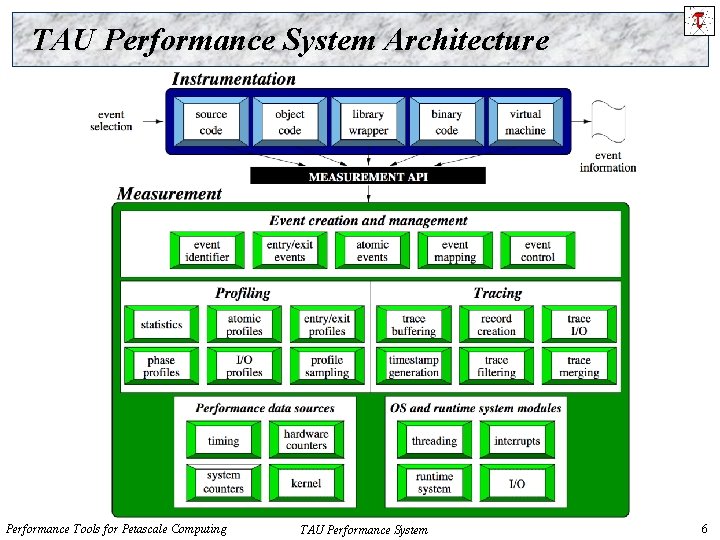

TAU Performance System Architecture

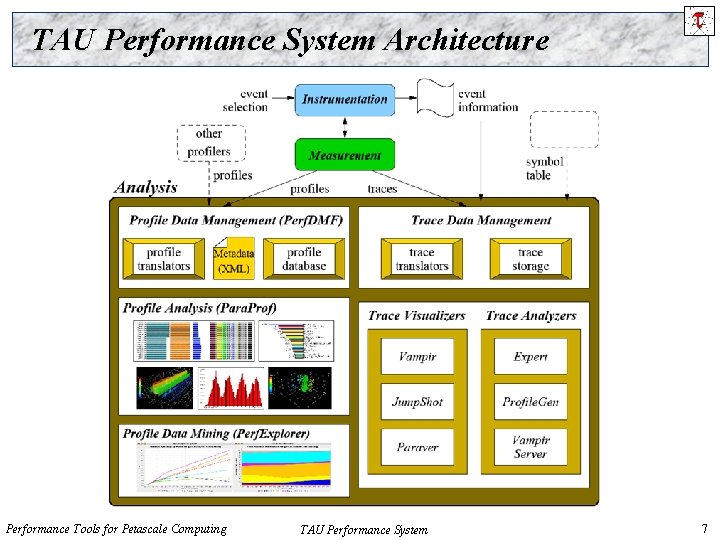

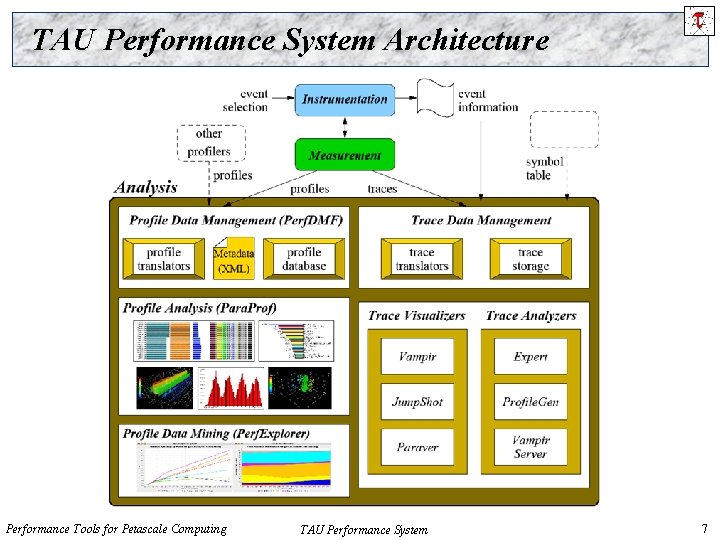

TAU Performance System Architecture

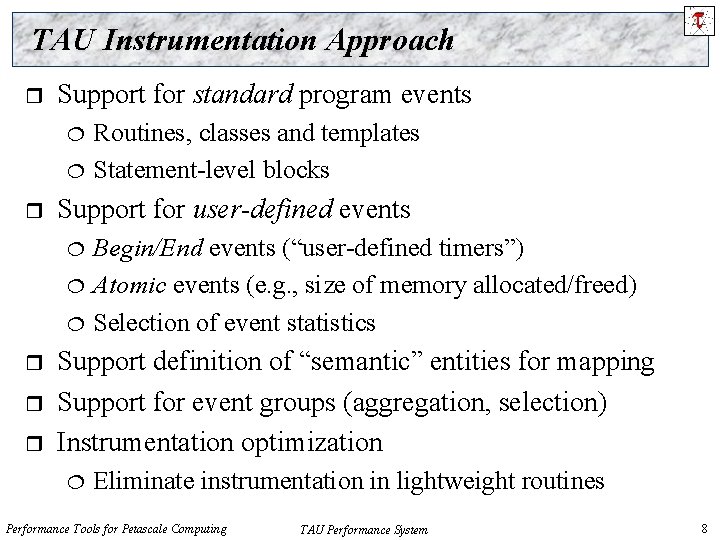

TAU Instrumentation Approach

- Support for standard program events
  - Routines, classes and templates
  - Statement-level blocks
- Support for user-defined events
  - Begin/End events ("user-defined timers")
  - Atomic events (e.g., size of memory allocated/freed)
  - Selection of event statistics
- Support definition of "semantic" entities for mapping
- Support for event groups (aggregation, selection)
- Instrumentation optimization
  - Eliminate instrumentation in lightweight routines
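
The two kinds of user-defined events named on this slide differ in what they record: a begin/end timer measures an interval, while an atomic event records a single value each time it is triggered. The following Python sketch illustrates that distinction only. The `EventRegistry` class and its method names are hypothetical and are not the TAU API.

```python
import time
from collections import defaultdict


class EventRegistry:
    """Toy registry for interval and atomic events (hypothetical, not the TAU API)."""

    def __init__(self):
        self.timers = defaultdict(lambda: {"calls": 0, "inclusive": 0.0})
        self.atomic = defaultdict(list)
        self._active = []  # stack of (name, start time) for running timers

    def start(self, name):
        """Begin event of a user-defined timer."""
        self._active.append((name, time.perf_counter()))

    def stop(self, name):
        """End event: timers must be stopped in reverse order of starting."""
        top, began = self._active.pop()
        if top != name:
            raise RuntimeError(f"timer {name!r} stopped while {top!r} is running")
        record = self.timers[name]
        record["calls"] += 1
        record["inclusive"] += time.perf_counter() - began

    def trigger(self, name, value):
        """Record one sample of an atomic event, e.g. bytes allocated."""
        self.atomic[name].append(value)

    def atomic_stats(self, name):
        """Summary statistics kept for an atomic event."""
        values = self.atomic[name]
        return {
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "mean": sum(values) / len(values),
        }


registry = EventRegistry()
registry.start("solve")
registry.trigger("malloc size", 1024)
registry.trigger("malloc size", 4096)
registry.stop("solve")
stats = registry.atomic_stats("malloc size")
```

Here `stats` summarizes the two allocation samples, while `registry.timers["solve"]` holds the call count and elapsed time of the interval event.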

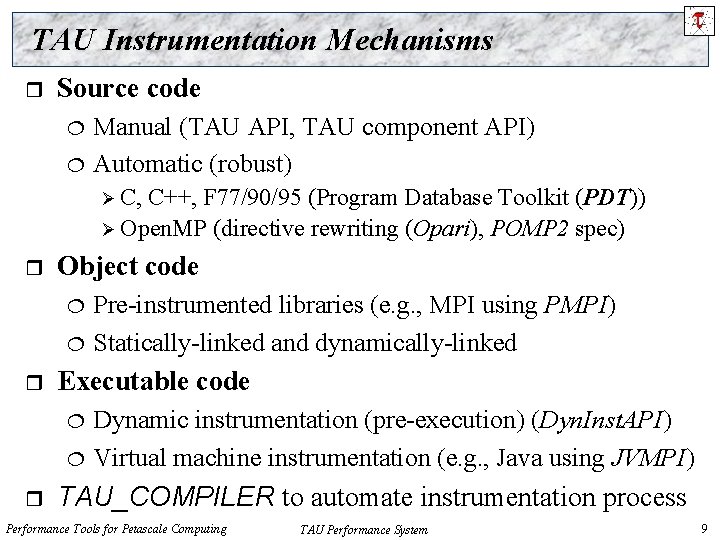

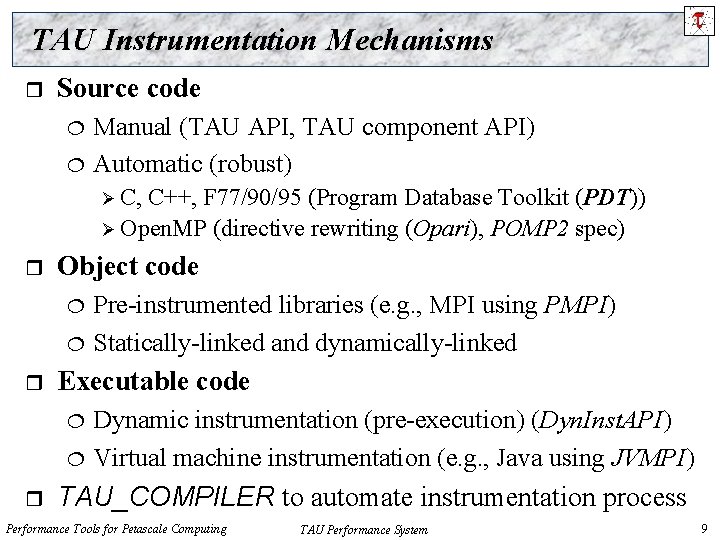

TAU Instrumentation Mechanisms

- Source code
  - Manual (TAU API, TAU component API)
  - Automatic (robust)
    - C, C++, F77/90/95 (Program Database Toolkit (PDT))
    - OpenMP (directive rewriting (Opari), POMP2 spec)
- Object code
  - Pre-instrumented libraries (e.g., MPI using PMPI)
  - Statically-linked and dynamically-linked
- Executable code
  - Dynamic instrumentation (pre-execution) (DyninstAPI)
  - Virtual machine instrumentation (e.g., Java using JVMPI)
- TAU_COMPILER to automate instrumentation process

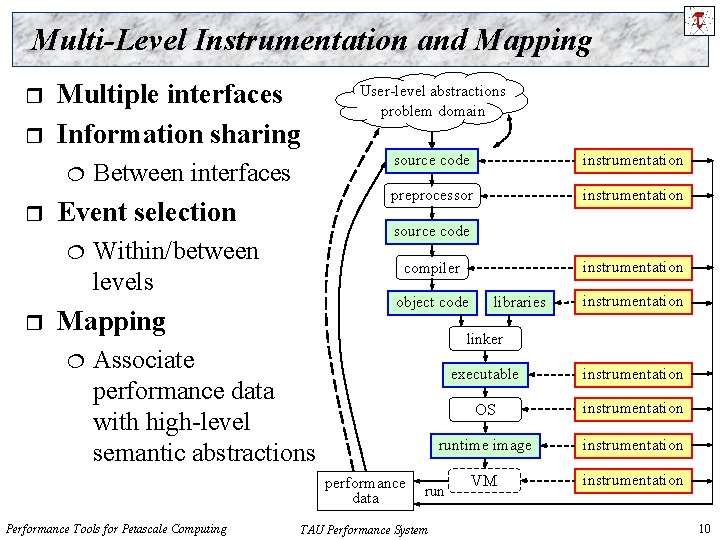

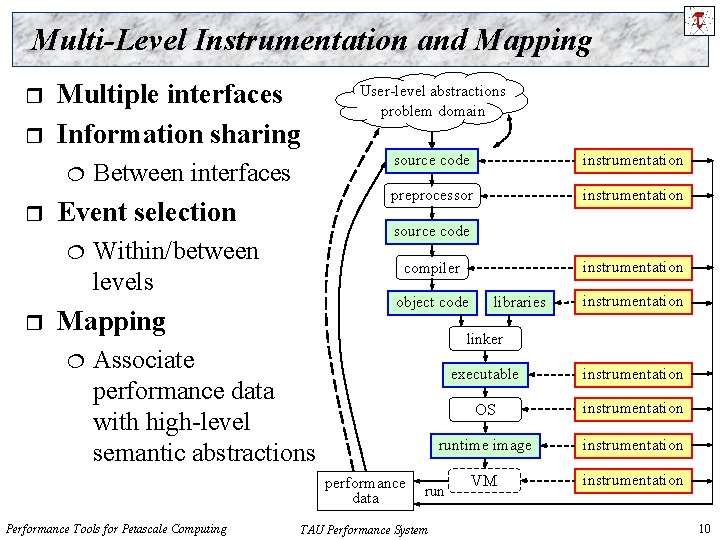

Multi-Level Instrumentation and Mapping

- Multiple interfaces
- Information sharing
  - Between interfaces
- Event selection
  - Within/between levels
- Mapping
  - Associate performance data with high-level semantic abstractions

[Diagram: instrumentation points from user-level abstractions in the problem domain through source code, preprocessor, compiler, object code and libraries, linker, executable, OS, runtime image, and VM, to the performance data produced by a run.]

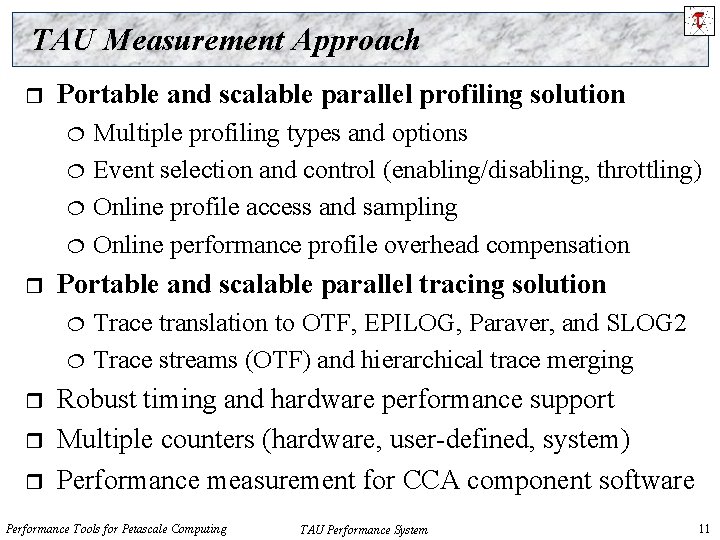

TAU Measurement Approach

- Portable and scalable parallel profiling solution
  - Multiple profiling types and options
  - Event selection and control (enabling/disabling, throttling)
  - Online profile access and sampling
  - Online performance profile overhead compensation
- Portable and scalable parallel tracing solution
  - Trace translation to OTF, EPILOG, Paraver, and SLOG2
  - Trace streams (OTF) and hierarchical trace merging
- Robust timing and hardware performance support
- Multiple counters (hardware, user-defined, system)
- Performance measurement for CCA component software
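
Throttling, mentioned above under event selection and control, means turning off measurement for events that are called very often but do very little work per call, so that instrumentation overhead does not dominate. A minimal Python sketch of such a rule follows. The function name and both thresholds are illustrative assumptions and are not taken from the slides.

```python
def should_throttle(calls, inclusive_usec, max_calls=100_000, min_usec_per_call=10.0):
    """Decide whether to stop measuring an event.

    An event is throttled only if it has been called more than `max_calls`
    times AND its average inclusive time per call is below
    `min_usec_per_call` microseconds. Both thresholds are assumed values.
    """
    if calls <= max_calls:
        return False
    return inclusive_usec / calls < min_usec_per_call


# A tiny accessor called 200,000 times at 2 microseconds per call is throttled.
tiny_accessor = should_throttle(calls=200_000, inclusive_usec=400_000.0)

# A solver called just as often at 100 microseconds per call keeps its timer.
solver = should_throttle(calls=200_000, inclusive_usec=20_000_000.0)
```

Requiring both conditions keeps rarely called routines and frequently called but expensive routines in the profile.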

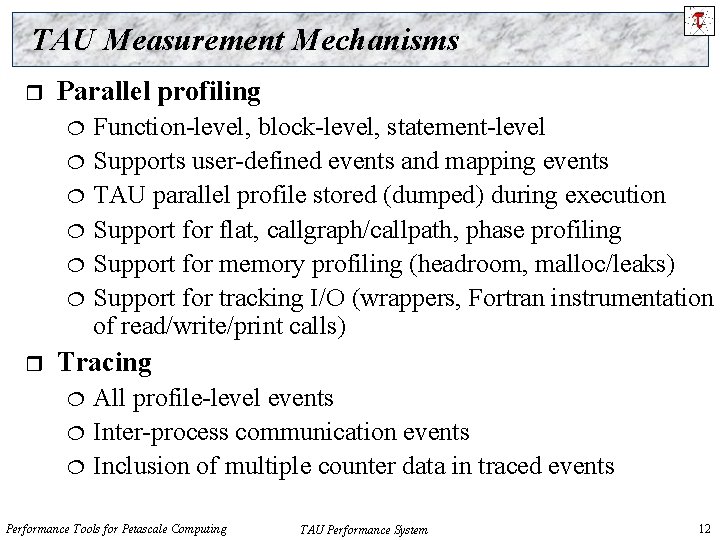

TAU Measurement Mechanisms

- Parallel profiling
  - Function-level, block-level, statement-level
  - Supports user-defined events and mapping events
  - TAU parallel profile stored (dumped) during execution
  - Support for flat, callgraph/callpath, phase profiling
  - Support for memory profiling (headroom, malloc/leaks)
  - Support for tracking I/O (wrappers, Fortran instrumentation of read/write/print calls)
- Tracing
  - All profile-level events
  - Inter-process communication events
  - Inclusion of multiple counter data in traced events

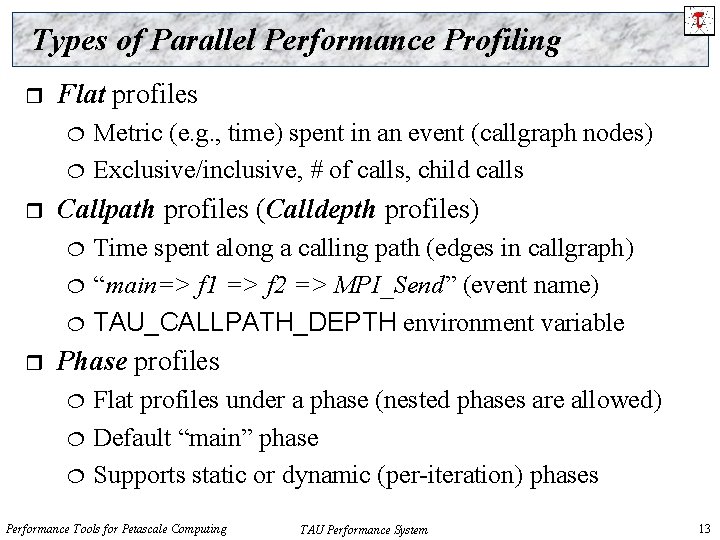

Types of Parallel Performance Profiling

- Flat profiles
  - Metric (e.g., time) spent in an event (callgraph nodes)
  - Exclusive/inclusive, # of calls, child calls
- Callpath profiles (Calldepth profiles)
  - Time spent along a calling path (edges in callgraph)
  - "main => f1 => f2 => MPI_Send" (event name)
  - TAU_CALLPATH_DEPTH environment variable
- Phase profiles
  - Flat profiles under a phase (nested phases are allowed)
  - Default "main" phase
  - Supports static or dynamic (per-iteration) phases
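
The relationship between flat and callpath profiles can be made concrete with a small worked example. The Python sketch below starts from exclusive time per full calling path (made-up numbers), derives the flat exclusive and inclusive times per routine, and then builds callpath event names limited to a given depth, in the spirit of TAU_CALLPATH_DEPTH. The function names are hypothetical, and keeping the last `depth` entries of each path is an assumption about how the depth limit is applied.

```python
from collections import defaultdict

# Exclusive time in seconds for each full calling path (made-up numbers).
exclusive_by_path = {
    ("main",): 1.0,
    ("main", "f1"): 2.0,
    ("main", "f1", "f2"): 3.0,
    ("main", "f1", "f2", "MPI_Send"): 4.0,
}


def flat_profile(paths):
    """Return (exclusive, inclusive) time per routine, ignoring calling context."""
    exclusive = defaultdict(float)
    inclusive = defaultdict(float)
    for path, seconds in paths.items():
        # Exclusive time belongs to the routine at the end of the path.
        exclusive[path[-1]] += seconds
        # Inclusive time is charged to every routine on the path;
        # set() avoids double counting a recursive routine.
        for routine in set(path):
            inclusive[routine] += seconds
    return dict(exclusive), dict(inclusive)


def callpath_profile(paths, depth):
    """Aggregate exclusive time by the last `depth` entries of each path."""
    profile = defaultdict(float)
    for path, seconds in paths.items():
        profile[" => ".join(path[-depth:])] += seconds
    return dict(profile)


exclusive, inclusive = flat_profile(exclusive_by_path)
depth2 = callpath_profile(exclusive_by_path, depth=2)
```

With these inputs, `main` has 1.0 s exclusive and 10.0 s inclusive time, and the depth-2 callpath profile contains the event `f2 => MPI_Send` rather than the full four-entry path.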

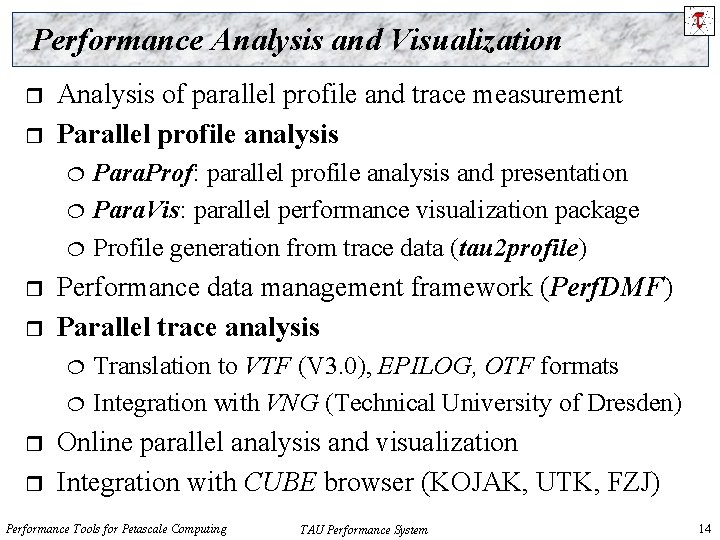

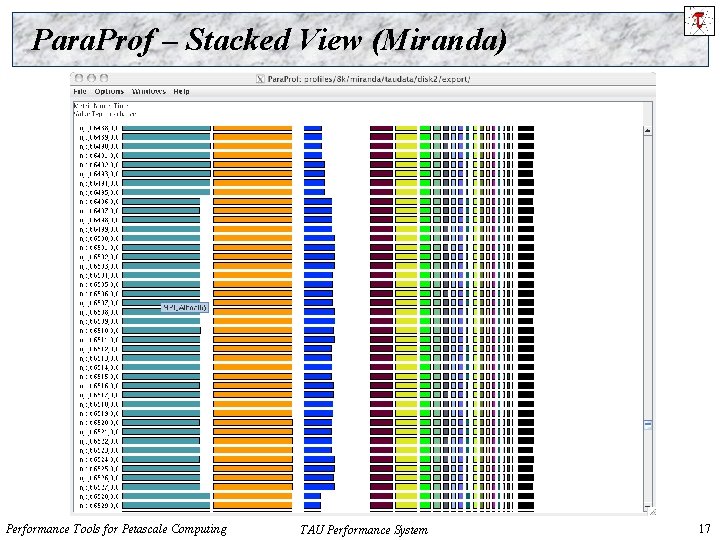

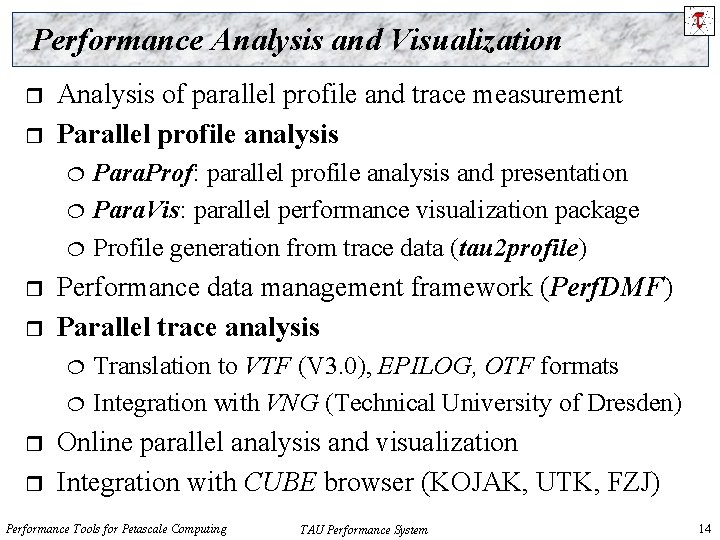

Performance Analysis and Visualization

- Analysis of parallel profile and trace measurement
- Parallel profile analysis
  - ParaProf: parallel profile analysis and presentation
  - ParaVis: parallel performance visualization package
  - Profile generation from trace data (tau2profile)
- Performance data management framework (PerfDMF)
- Parallel trace analysis
  - Translation to VTF (V3.0), EPILOG, OTF formats
  - Integration with VNG (Technical University of Dresden)
- Online parallel analysis and visualization
- Integration with CUBE browser (KOJAK, UTK, FZJ)

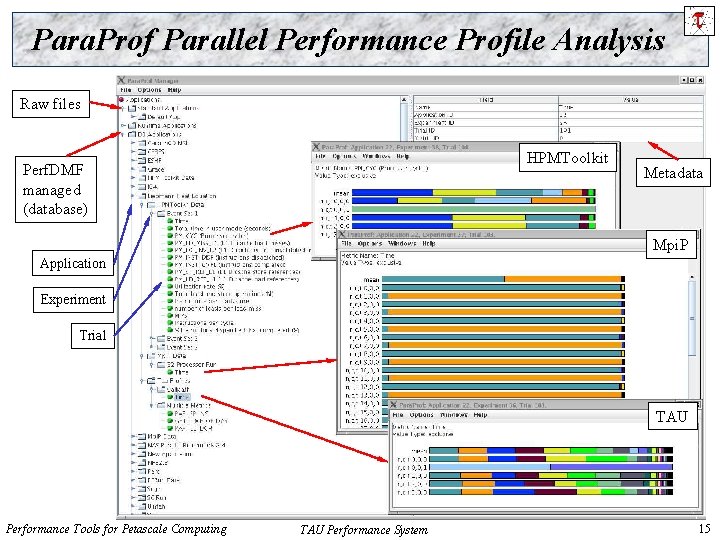

ParaProf Parallel Performance Profile Analysis

[Diagram labels: raw files (TAU, HPMToolkit, mpiP); PerfDMF managed (database); Application, Experiment, Trial; Metadata.]

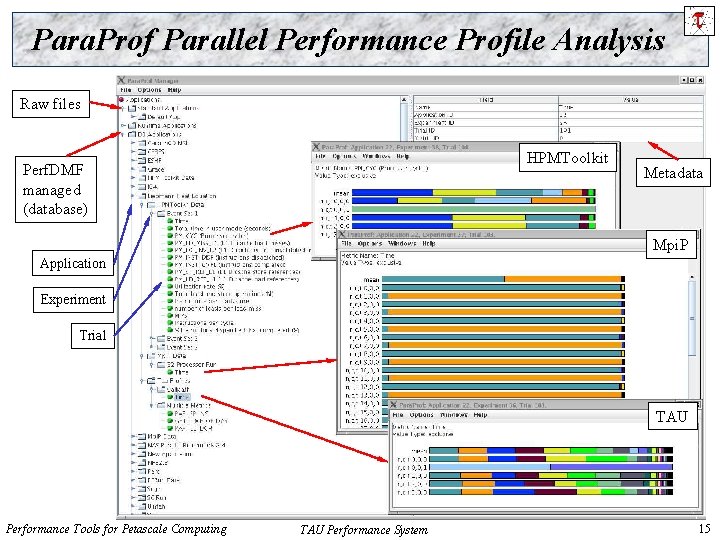

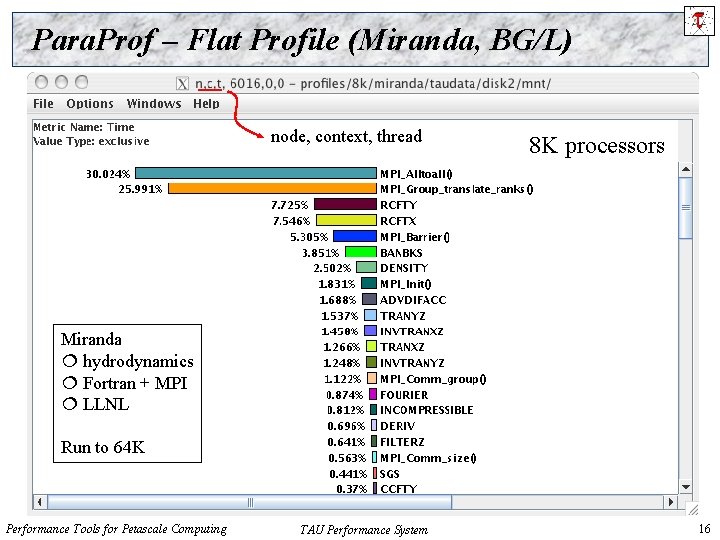

ParaProf – Flat Profile (Miranda, BG/L)

- node, context, thread
- 8K processors
- Miranda: hydrodynamics, Fortran + MPI, LLNL
- Run to 64K

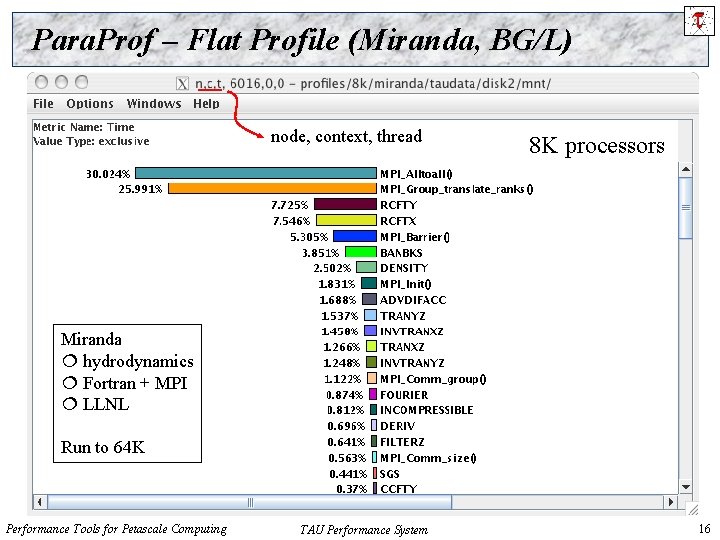

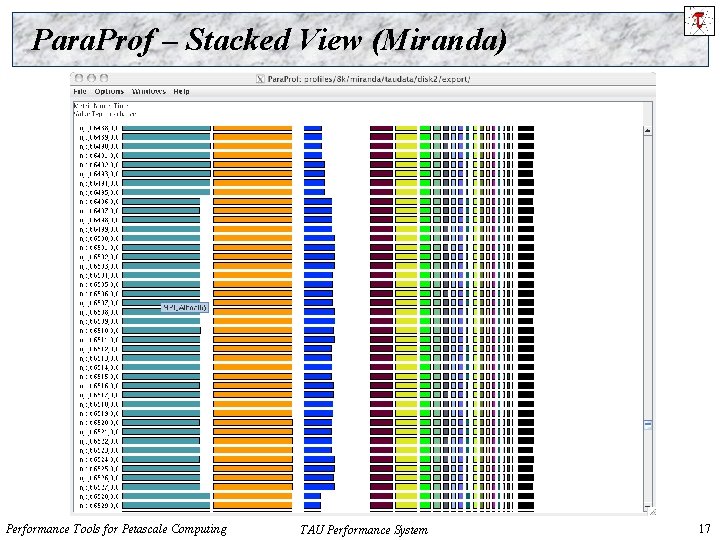

ParaProf – Stacked View (Miranda)

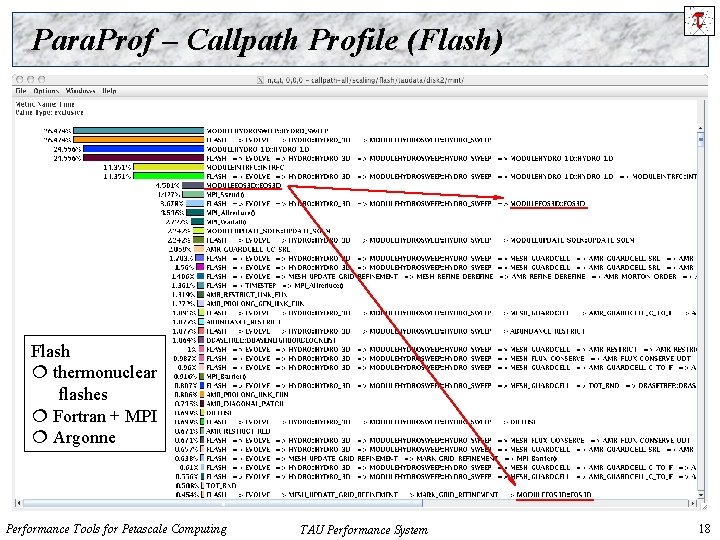

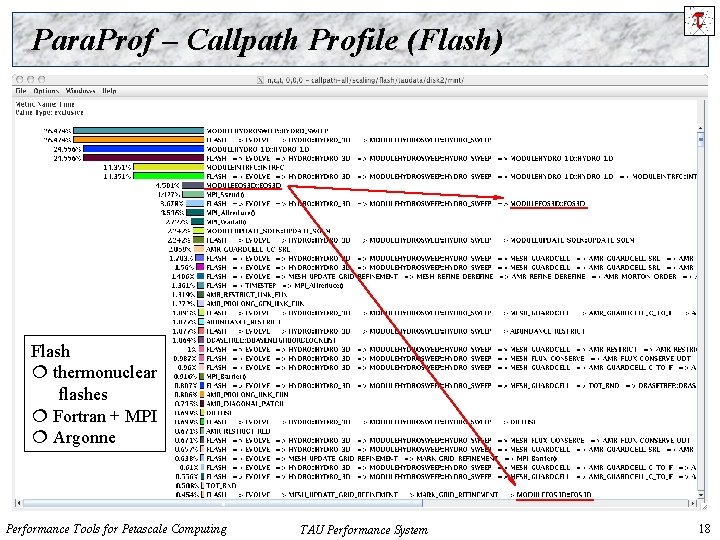

ParaProf – Callpath Profile (Flash)

- Flash: thermonuclear flashes, Fortran + MPI, Argonne

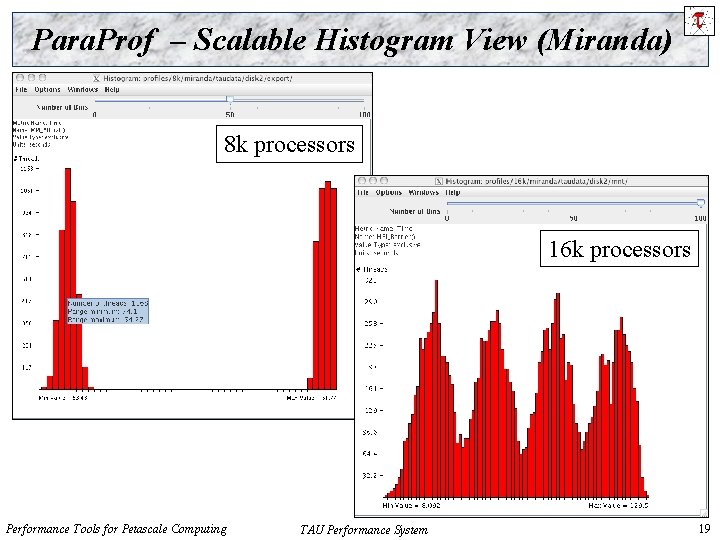

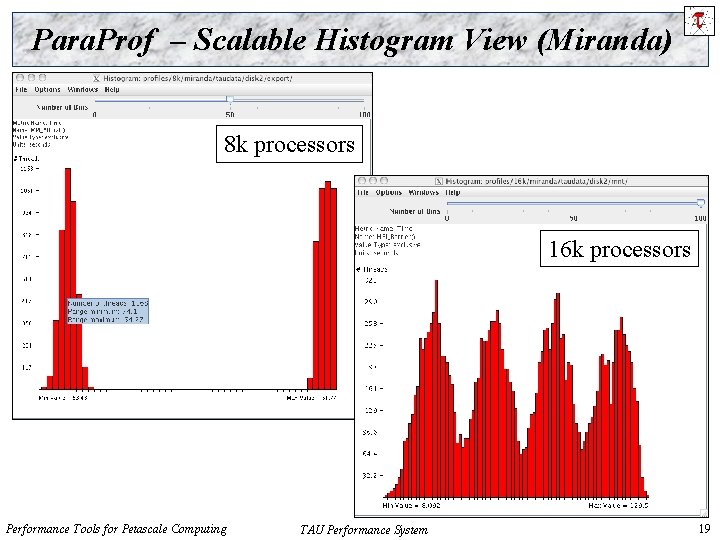

ParaProf – Scalable Histogram View (Miranda)

- 8k processors
- 16k processors

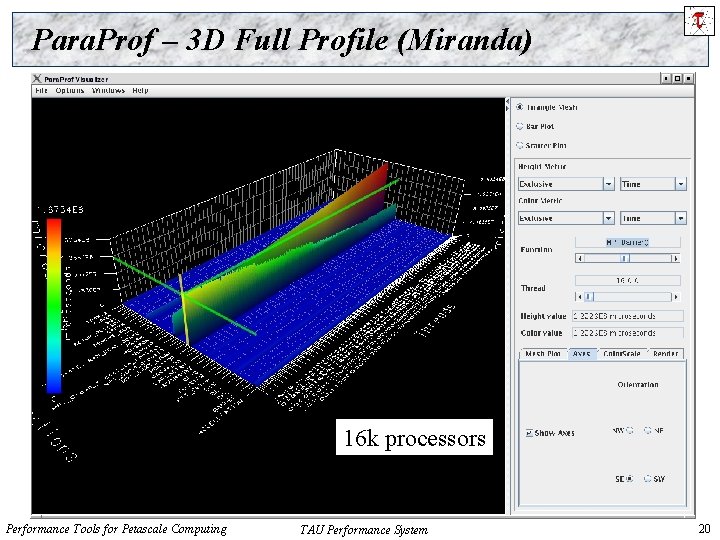

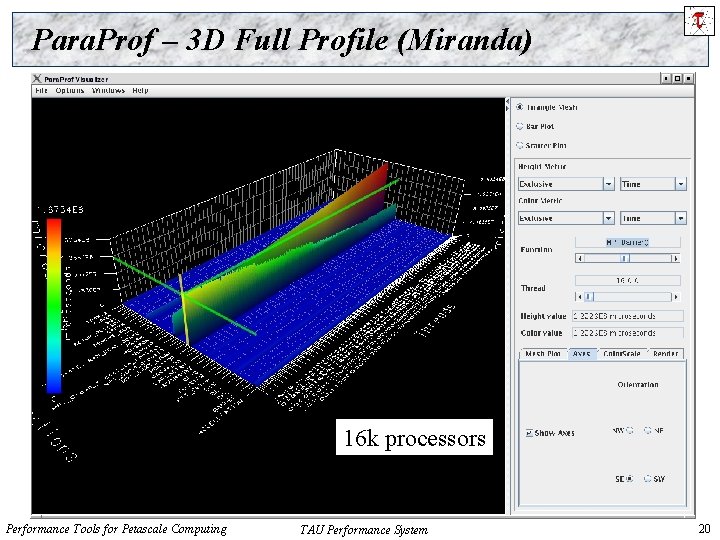

ParaProf – 3D Full Profile (Miranda)

- 16k processors

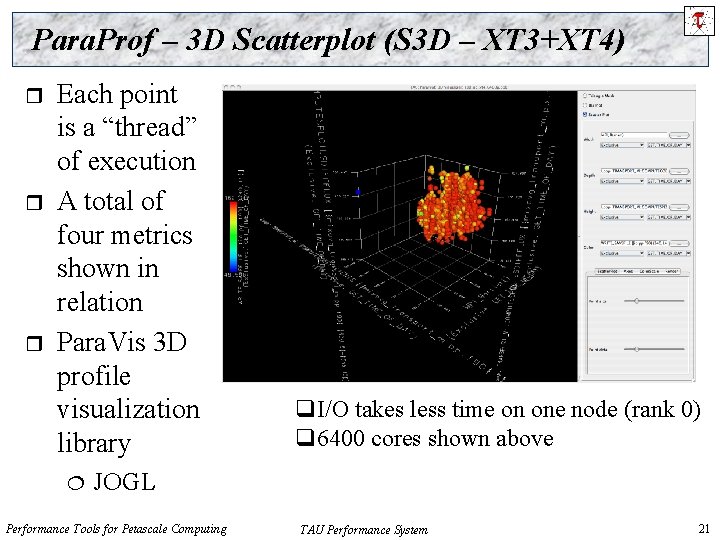

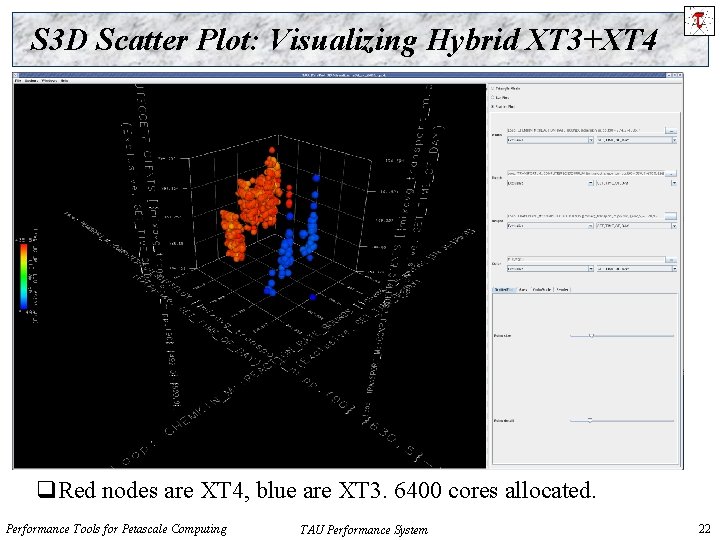

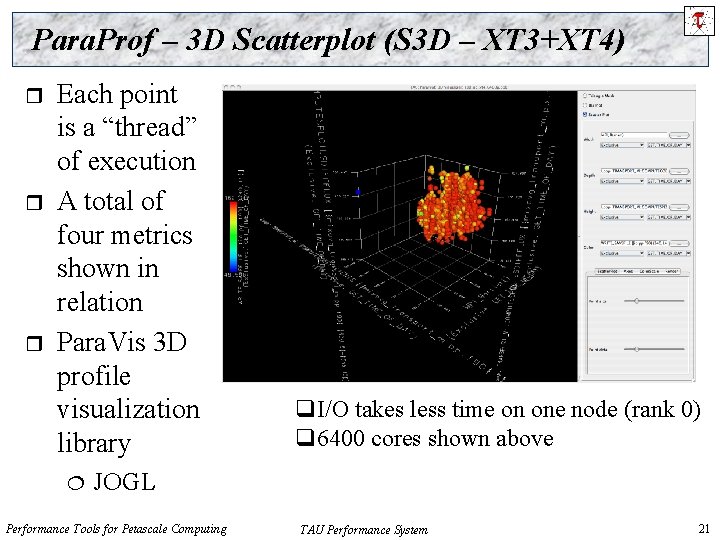

ParaProf – 3D Scatterplot (S3D – XT3+XT4)

- Each point is a "thread" of execution
- A total of four metrics shown in relation
- ParaVis 3D profile visualization library
  - JOGL
- I/O takes less time on one node (rank 0)
- 6400 cores shown above

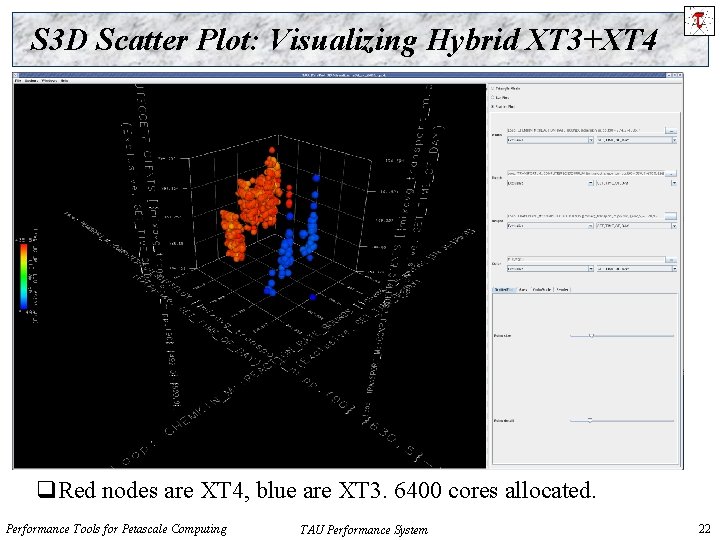

S3D Scatter Plot: Visualizing Hybrid XT3+XT4

- Red nodes are XT4, blue are XT3.
- 6400 cores allocated.

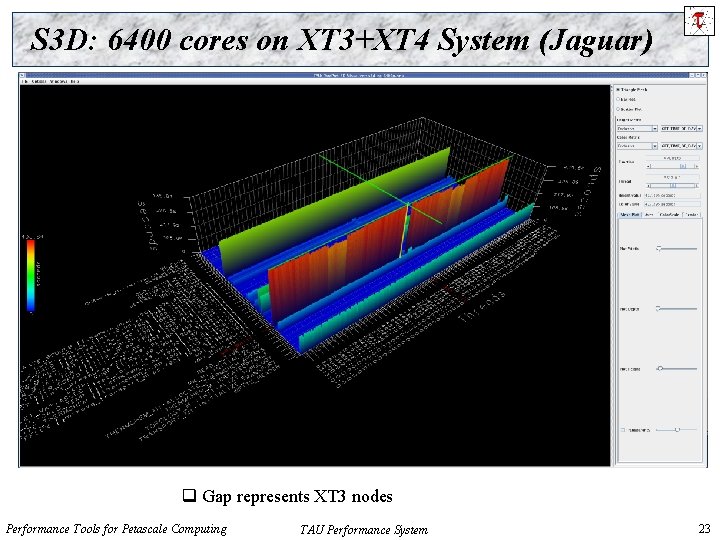

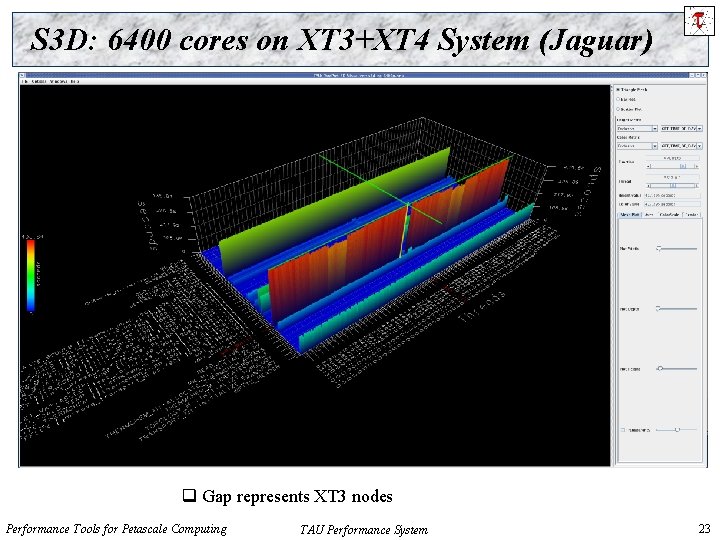

S3D: 6400 cores on XT3+XT4 System (Jaguar)

- Gap represents XT3 nodes

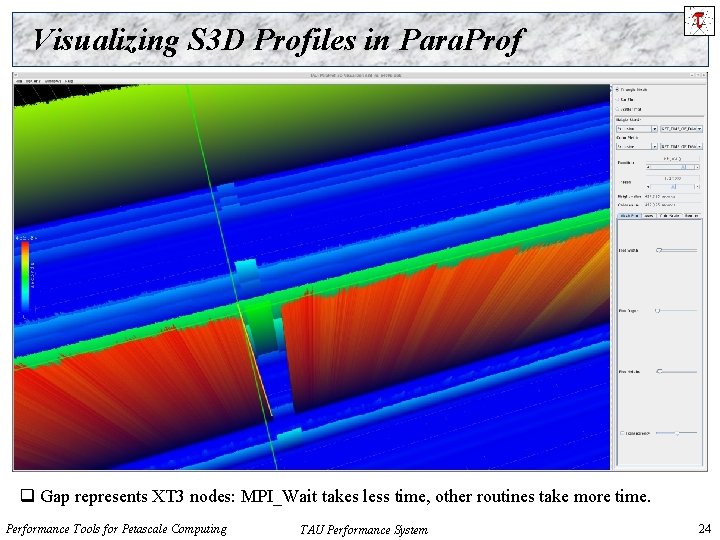

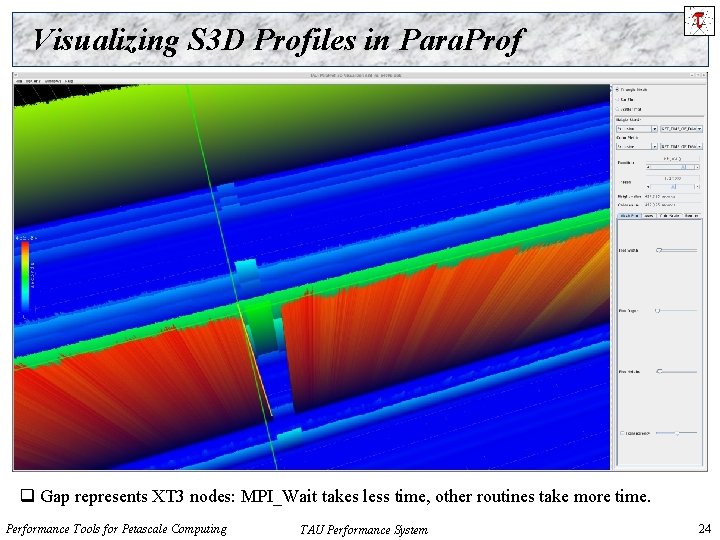

Visualizing S3D Profiles in ParaProf

- Gap represents XT3 nodes: MPI_Wait takes less time, other routines take more time.

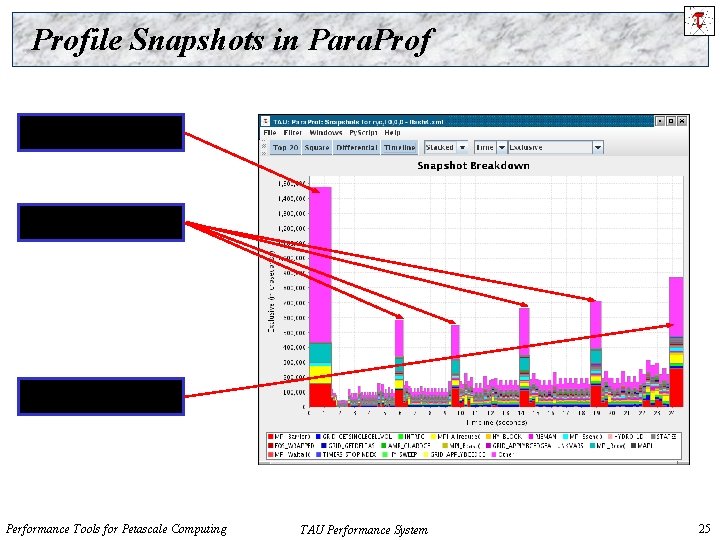

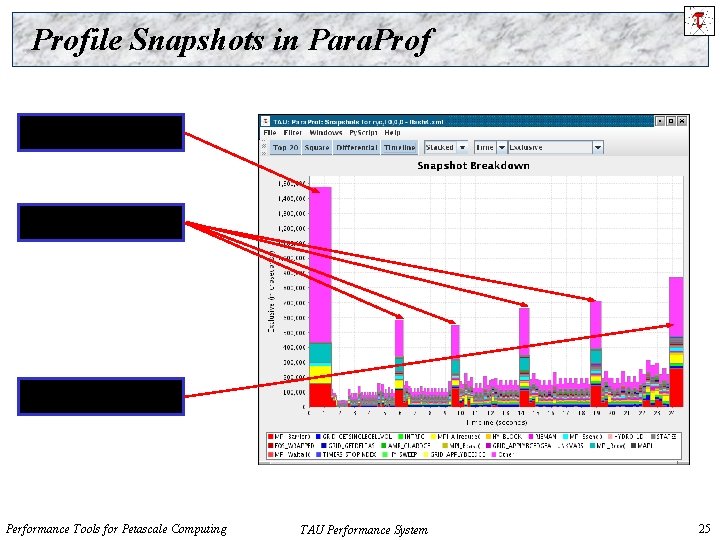

Profile Snapshots in ParaProf

[Figure labels: Initialization, Checkpointing, Finalization.]

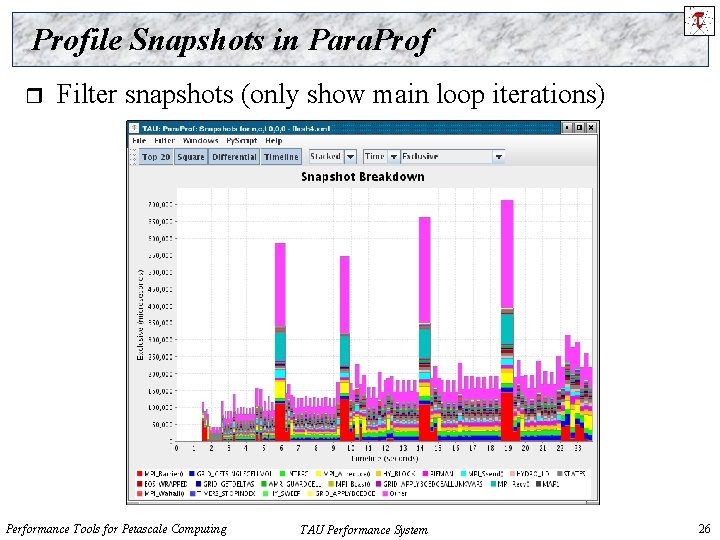

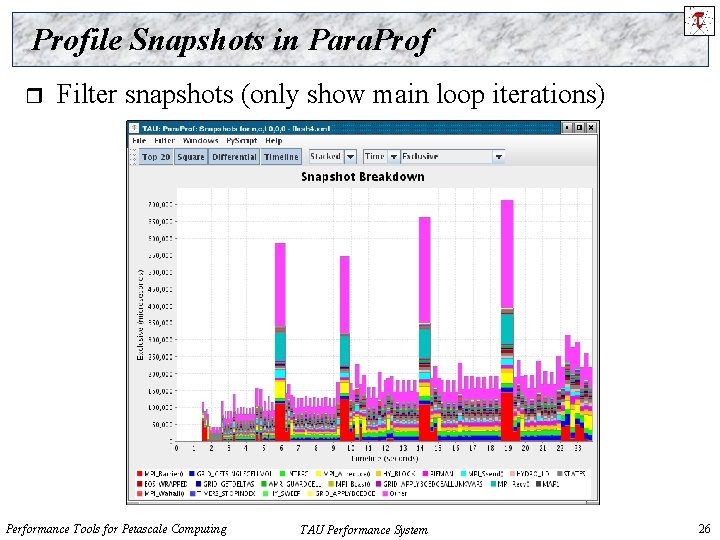

Profile Snapshots in ParaProf

- Filter snapshots (only show main loop iterations)

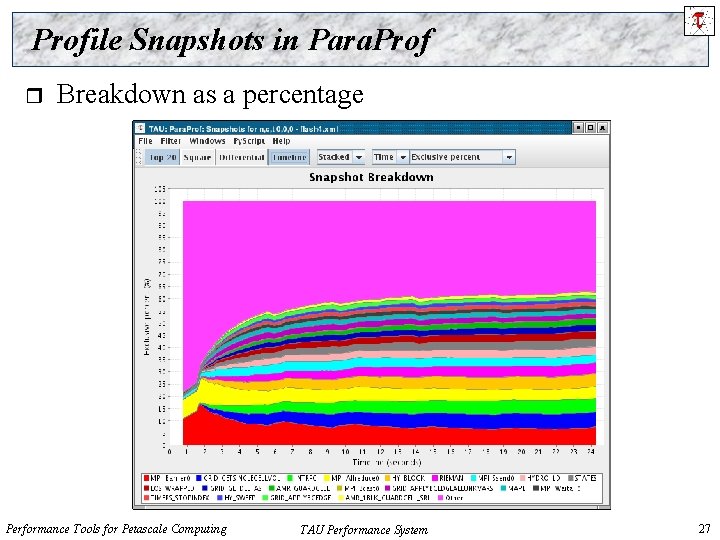

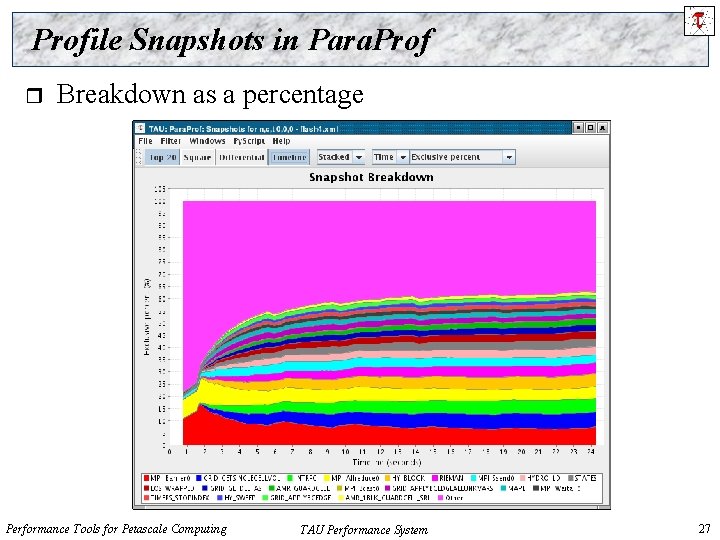

Profile Snapshots in ParaProf

- Breakdown as a percentage

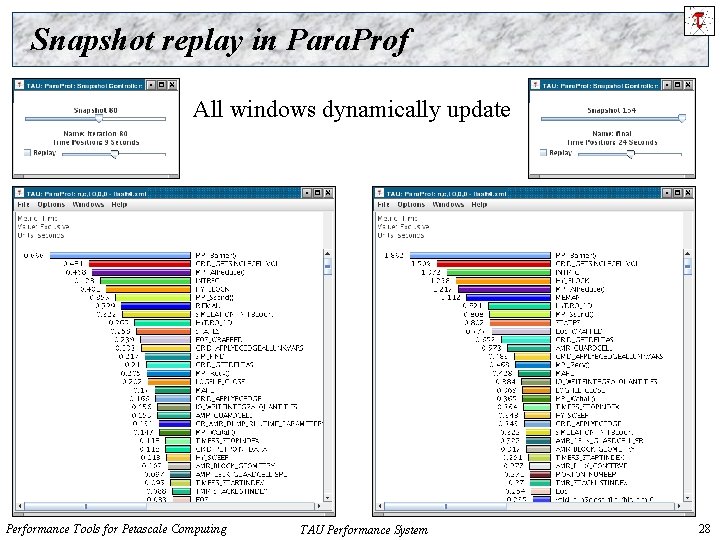

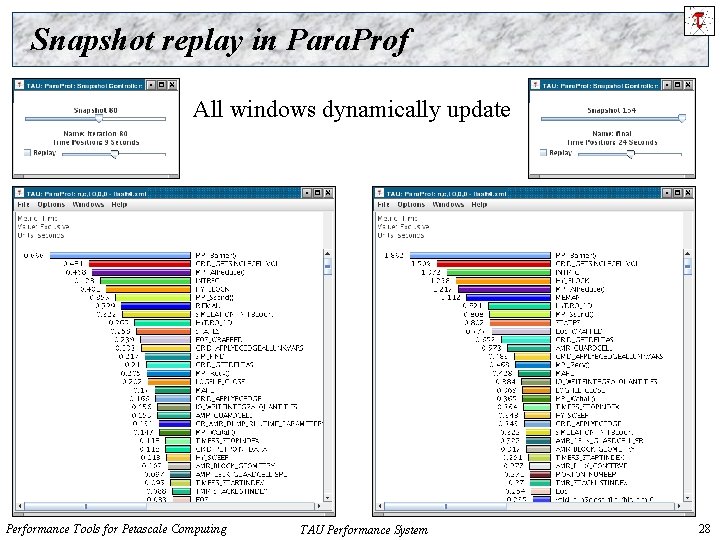

Snapshot replay in ParaProf

- All windows dynamically update

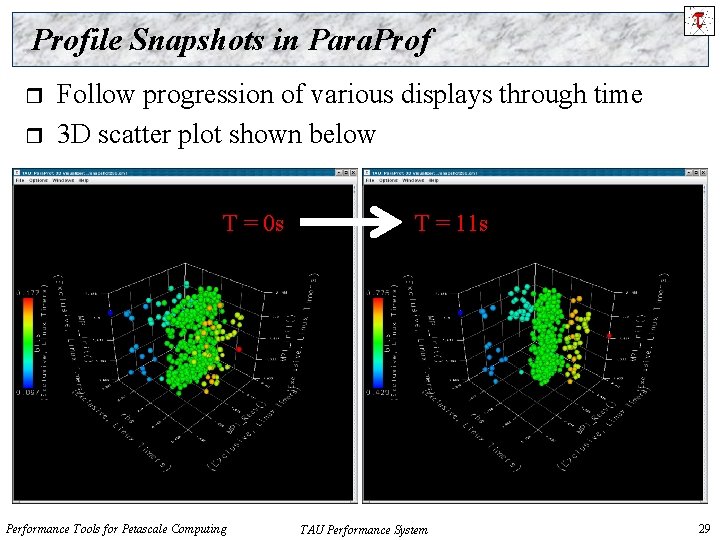

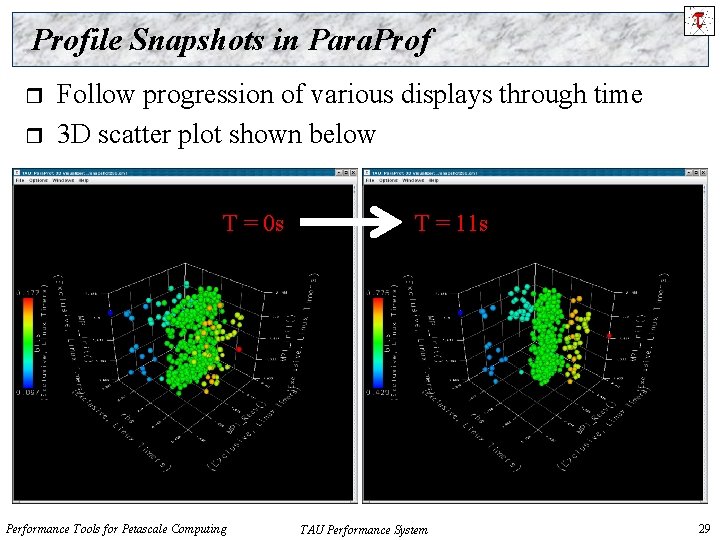

Profile Snapshots in ParaProf

- Follow progression of various displays through time
- 3D scatter plot shown below

[Figure labels: T = 0 s, T = 11 s.]

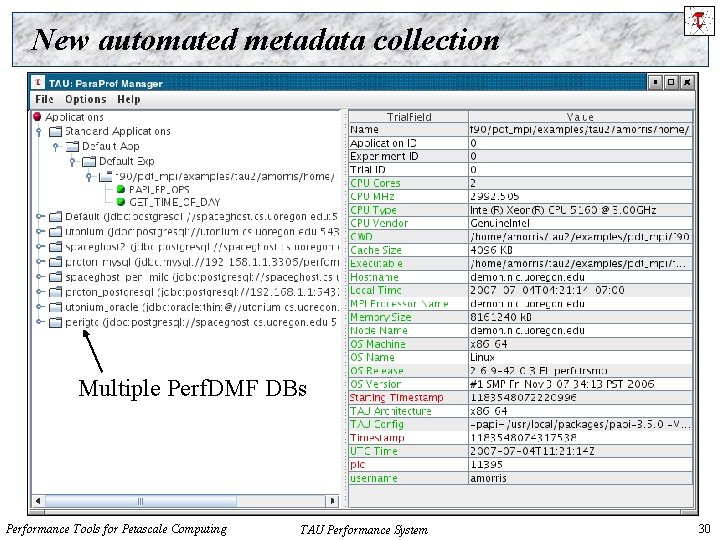

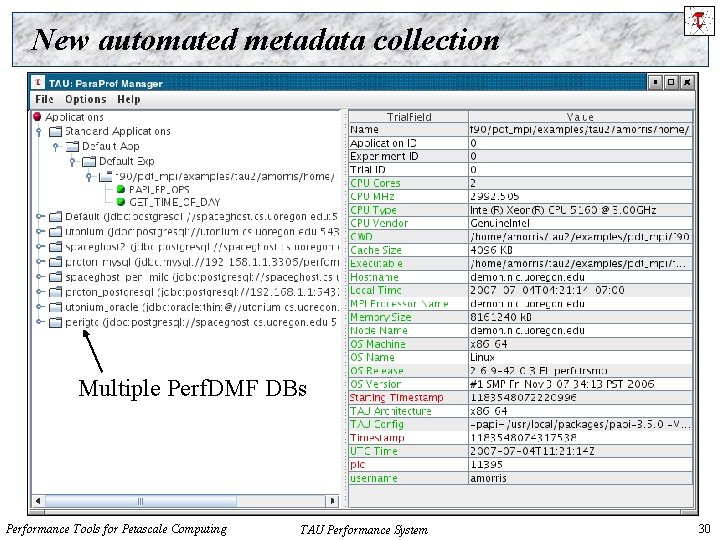

New automated metadata collection

- Multiple PerfDMF DBs

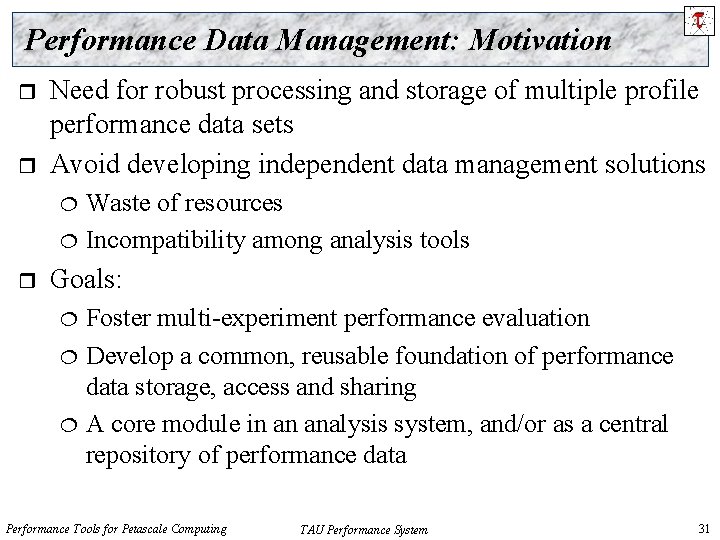

Performance Data Management: Motivation

- Need for robust processing and storage of multiple profile performance data sets
- Avoid developing independent data management solutions
  - Waste of resources
  - Incompatibility among analysis tools
- Goals:
  - Foster multi-experiment performance evaluation
  - Develop a common, reusable foundation of performance data storage, access and sharing
  - A core module in an analysis system, and/or as a central repository of performance data

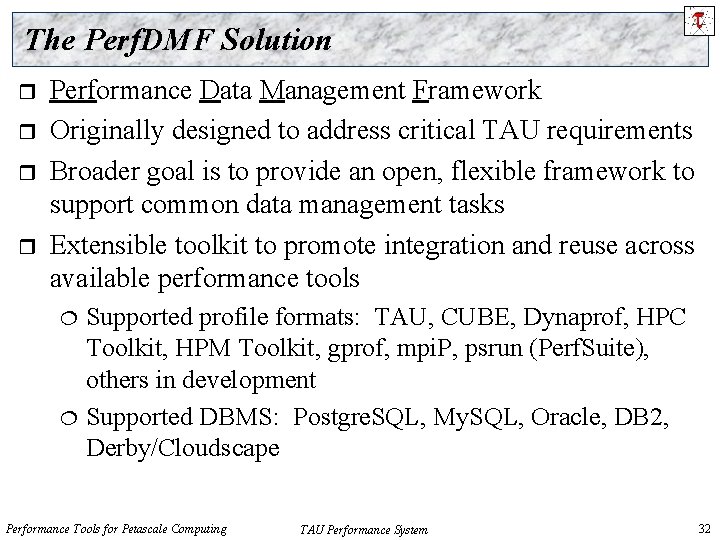

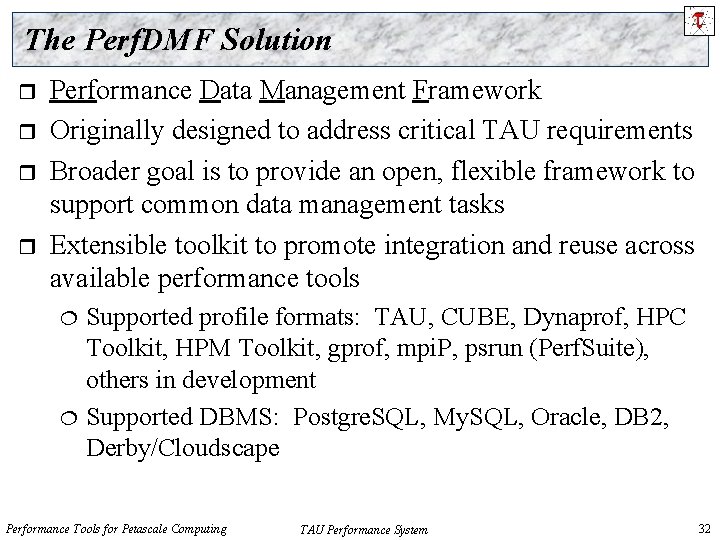

The PerfDMF Solution

- Performance Data Management Framework
- Originally designed to address critical TAU requirements
- Broader goal is to provide an open, flexible framework to support common data management tasks
- Extensible toolkit to promote integration and reuse across available performance tools
  - Supported profile formats: TAU, CUBE, Dynaprof, HPC Toolkit, HPM Toolkit, gprof, mpiP, psrun (PerfSuite), others in development
  - Supported DBMS: PostgreSQL, MySQL, Oracle, DB2, Derby/Cloudscape

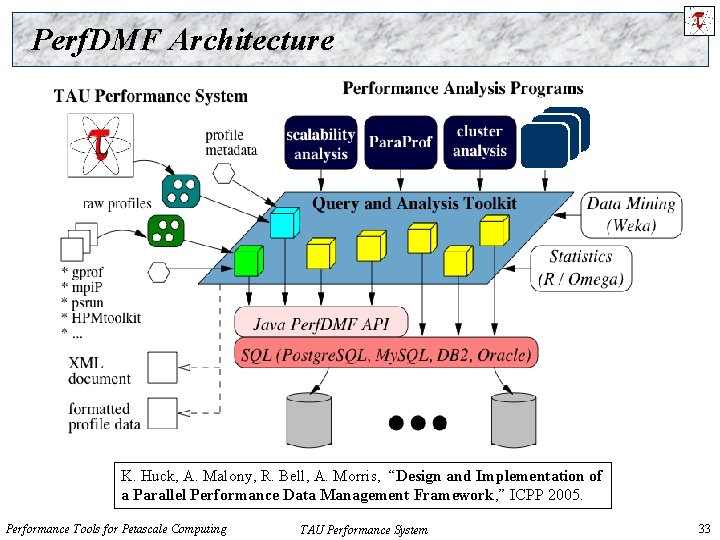

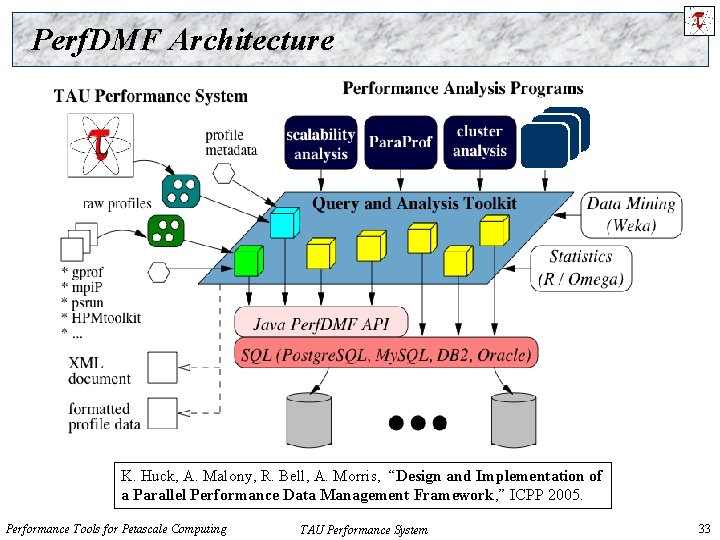

PerfDMF Architecture

K. Huck, A. Malony, R. Bell, A. Morris, "Design and Implementation of a Parallel Performance Data Management Framework," ICPP 2005.

Recent PerfDMF Development

- Integration of XML metadata for each profile
  - Common Profile Attributes
  - Thread/process specific Profile Attributes
  - Automatic collection of runtime information
  - Any other data the user wants to collect can be added
    - Build information
    - Job submission information
  - Two methods for acquiring metadata:
    - TAU_METADATA() call from application
    - Optional XML file added when saving profile to PerfDMF
  - TAU Metadata XML schema is simple, easy to generate from scripting tools (no XML libraries required)
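
The point about generating metadata from scripting tools can be illustrated with a few lines of Python that emit name/value pairs as XML using plain string formatting. This is a sketch only: the element names `metadata`, `attribute`, `name`, and `value` are placeholders chosen for illustration and should not be read as the actual TAU metadata schema, and the example attributes are invented.

```python
from xml.sax.saxutils import escape


def metadata_xml(attributes):
    """Render name/value pairs as a small XML document.

    Element names are hypothetical placeholders, not the real TAU schema.
    """
    lines = ["<metadata>"]
    for name, value in attributes.items():
        lines.append("  <attribute>")
        # escape() replaces &, < and > so arbitrary strings stay well-formed.
        lines.append(f"    <name>{escape(name)}</name>")
        lines.append(f"    <value>{escape(str(value))}</value>")
        lines.append("  </attribute>")
    lines.append("</metadata>")
    return "\n".join(lines)


# Invented build and job-submission information for one trial.
document = metadata_xml({
    "compiler flags": "-O2 & -g",
    "job queue": "batch",
    "nodes requested": 64,
})
```

The resulting `document` string could then be written to a file and supplied alongside a profile, as the second acquisition method on the slide describes.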

Performance Data Mining (Objectives)

- Conduct parallel performance analysis process
  - In a systematic, collaborative and reusable manner
  - Manage performance complexity
  - Discover performance relationship and properties
  - Automate process
- Multi-experiment performance analysis
- Large-scale performance data reduction
  - Summarize characteristics of large processor runs
- Implement extensible analysis framework
  - Abstraction / automation of data mining operations
  - Interface to existing analysis and data mining tools

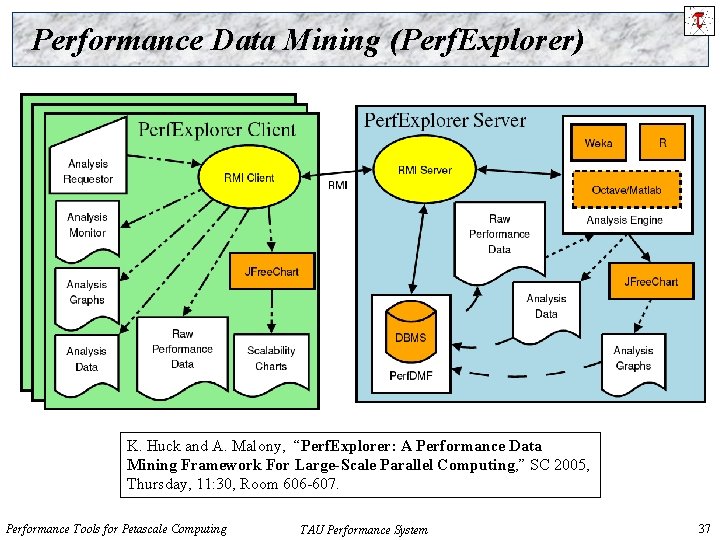

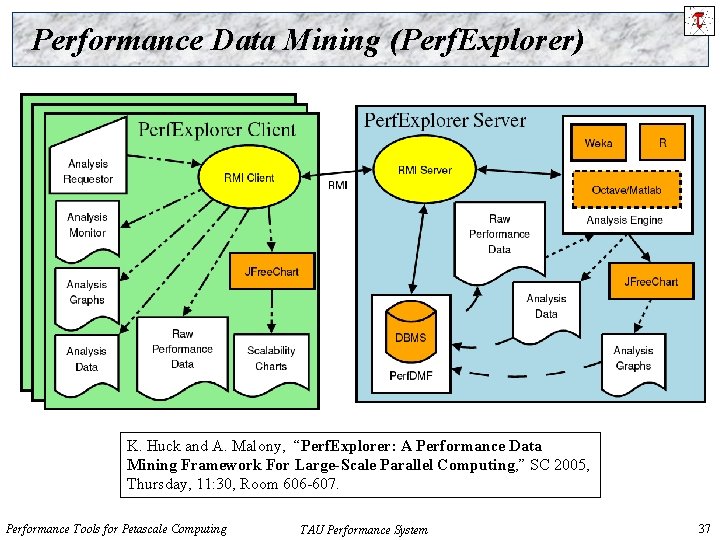

Performance Data Mining (PerfExplorer)

- Performance knowledge discovery framework
  - Data mining analysis applied to parallel performance data
    - comparative, clustering, correlation, dimension reduction, …
  - Use the existing TAU infrastructure
    - TAU performance profiles, PerfDMF
  - Client-server based system architecture
- Technology integration
  - Java API and toolkit for portability
  - PerfDMF
  - R-project/Omegahat, Octave/Matlab statistical analysis
  - WEKA data mining package
  - JFreeChart for visualization, vector output (EPS, SVG)

Performance Data Mining (PerfExplorer)

K. Huck and A. Malony, "PerfExplorer: A Performance Data Mining Framework For Large-Scale Parallel Computing," SC 2005, Thursday, 11:30, Room 606-607.

PerfExplorer Analysis Methods

- Data summaries, distributions, scatterplots
- Clustering
  - k-means
  - Hierarchical
- Correlation analysis
- Dimension reduction
  - PCA
  - Random linear projection
  - Thresholds
- Comparative analysis
- Data management views
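
To show what k-means clustering does with profile data, the Python sketch below groups threads by their per-routine times so that threads with similar behavior end up in the same cluster. It is a plain implementation of Lloyd's algorithm with a deterministic initialization, written for illustration. It is not PerfExplorer's implementation, and the six thread profiles are invented.

```python
import math


def kmeans(points, k, iterations=20):
    """Cluster points with Lloyd's algorithm; returns (labels, centroids)."""
    # Deterministic initialization: k points evenly spaced through the input.
    step = len(points) // k
    centroids = [list(points[i * step]) for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# One (compute seconds, MPI_Wait seconds) pair per thread (invented data).
thread_profiles = [
    (10.0, 1.0), (10.5, 1.2), (9.8, 0.9),   # threads that rarely wait
    (6.0, 5.0), (6.2, 5.1), (5.9, 4.8),     # threads that wait much longer
]
labels, centroids = kmeans(thread_profiles, k=2)
```

With this data the first three threads fall into one cluster and the last three into the other, which is the kind of grouping that lets a run with thousands of threads be summarized by a handful of representative behaviors.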

PerfDMF and the TAU Portal

- Development of the TAU portal
  - Common repository for collaborative data sharing
  - Profile uploading, downloading, user management
  - ParaProf, PerfExplorer can be launched from the portal using Java Web Start (no TAU installation required)
- Portal URL
  - http://tau.nic.uoregon.edu

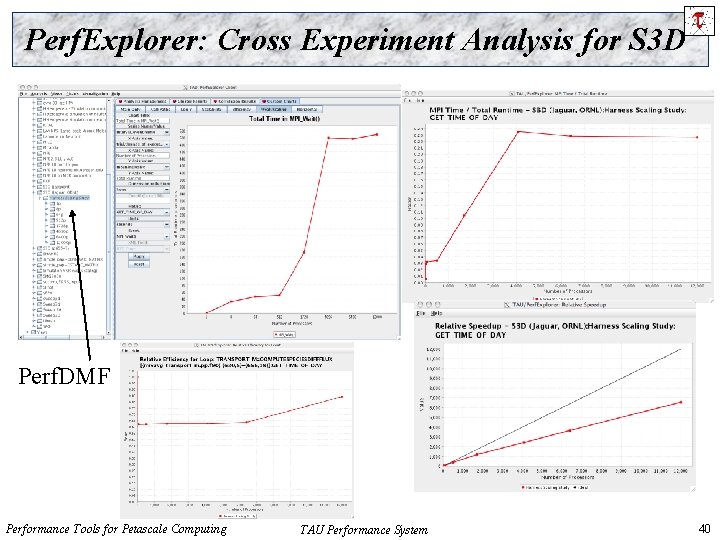

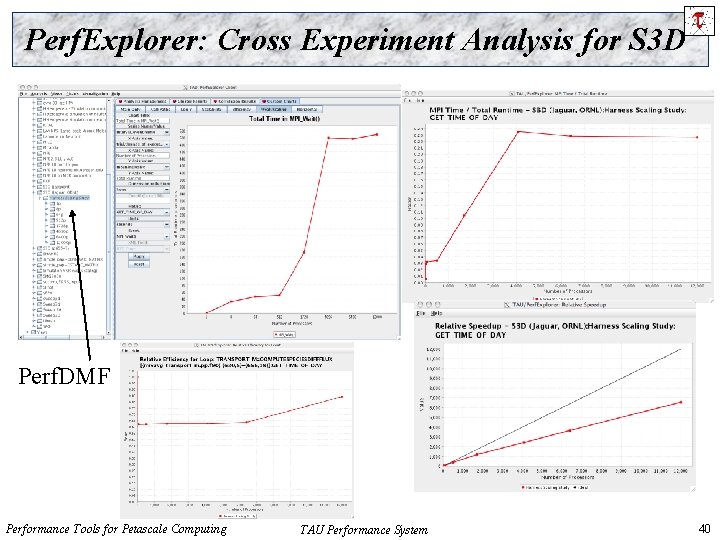

PerfExplorer: Cross Experiment Analysis for S3D

[Figure label: PerfDMF.]

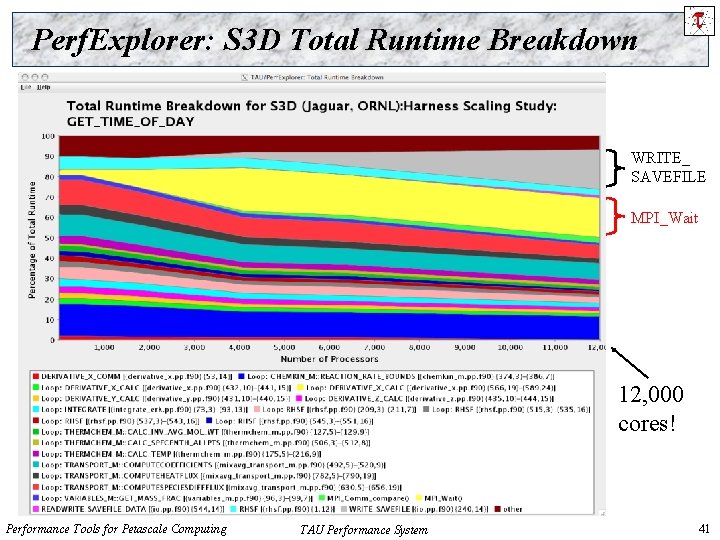

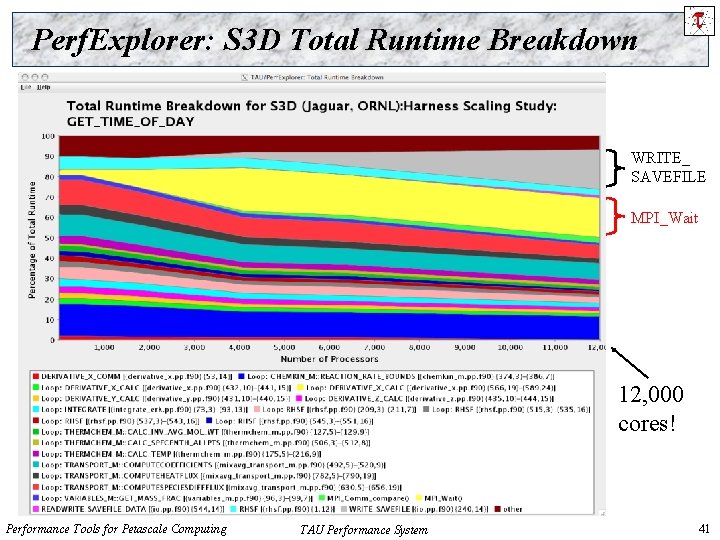

PerfExplorer: S3D Total Runtime Breakdown

[Figure labels: WRITE_SAVEFILE, MPI_Wait, 12,000 cores!]

TAU Plug-Ins for Eclipse: Motivation

- High performance software development environments
  - Tools may be complicated to use
  - Interfaces and mechanisms differ between platforms / OS
- Integrated development environments
  - Consistent development environment
  - Numerous enhancements to development process
  - Standard in industrial software development
- Integrated performance analysis
  - Tools limited to single platform or programming language
  - Rarely compatible with 3rd party analysis tools
  - Little or no support for parallel projects

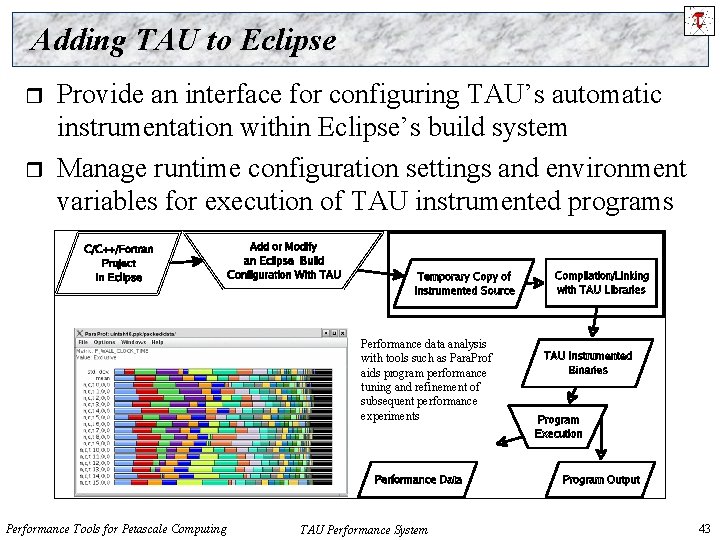

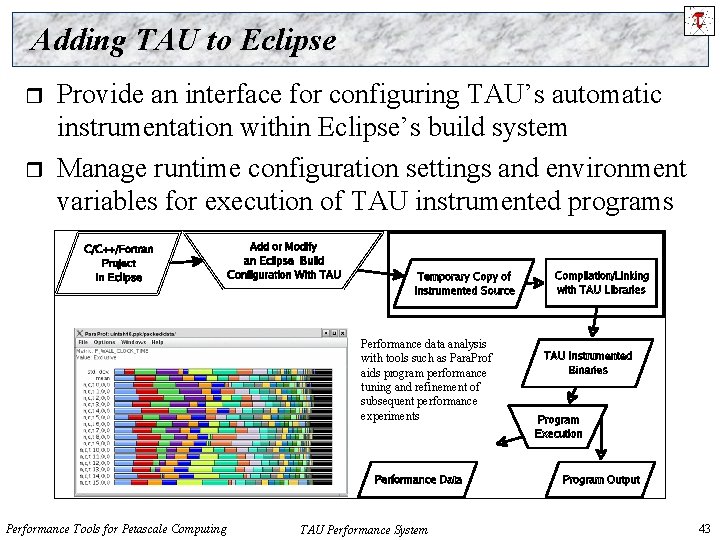

Adding TAU to Eclipse

- Provide an interface for configuring TAU's automatic instrumentation within Eclipse's build system
- Manage runtime configuration settings and environment variables for execution of TAU instrumented programs
- Performance data analysis with tools such as ParaProf aids program performance tuning and refinement of subsequent performance experiments

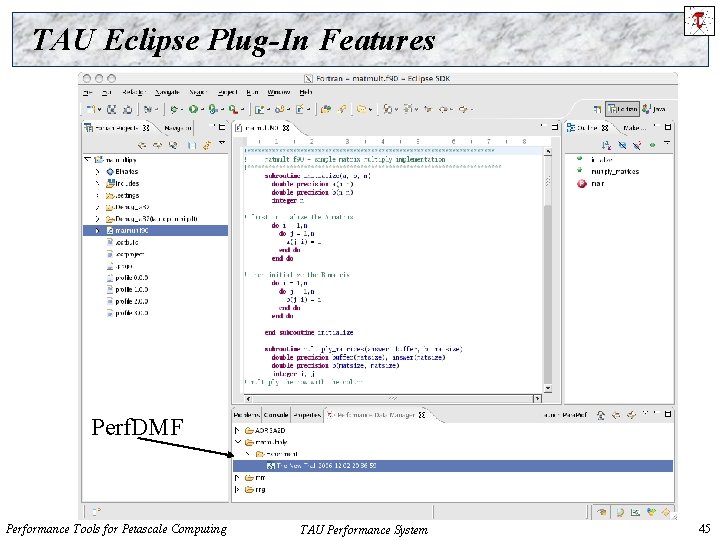

TAU Eclipse Plug-In Features

- Performance data collection
  - Graphical selection of TAU stub makefiles and compiler options
  - Automatic instrumentation, compilation and execution of target C, C++ or Fortran projects
  - Selective instrumentation via source editor and source outline views
  - Full integration with the Parallel Tools Platform (PTP) parallel launch system for performance data collection from parallel jobs launched within Eclipse
- Performance data management
  - Automatically place profile output in a PerfDMF database or upload to TAU-Portal
  - Launch ParaProf on profile data collected in Eclipse, with performance counters linked back to the Eclipse source editor

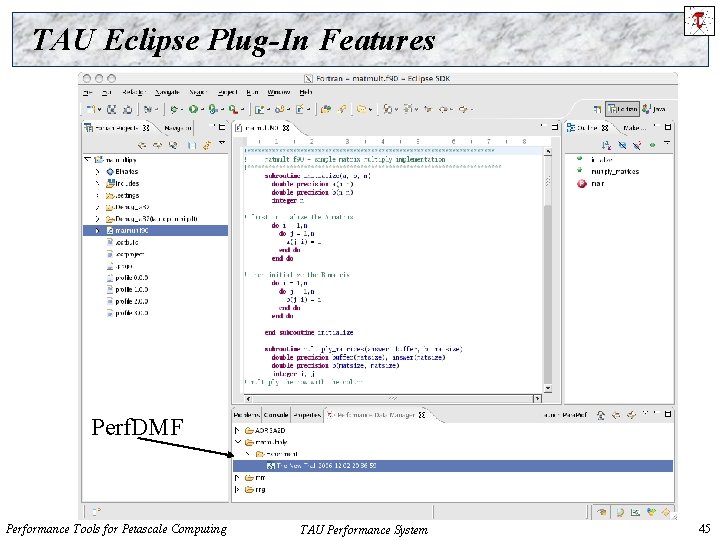

TAU Eclipse Plug-In Features

[Figure label: PerfDMF.]

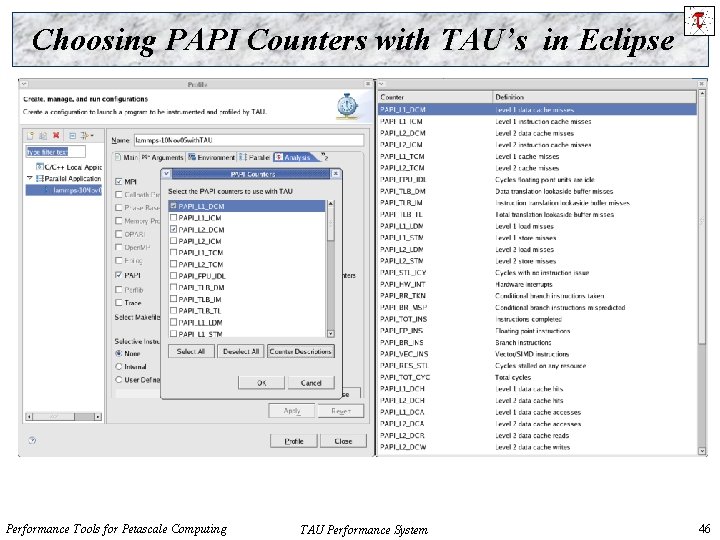

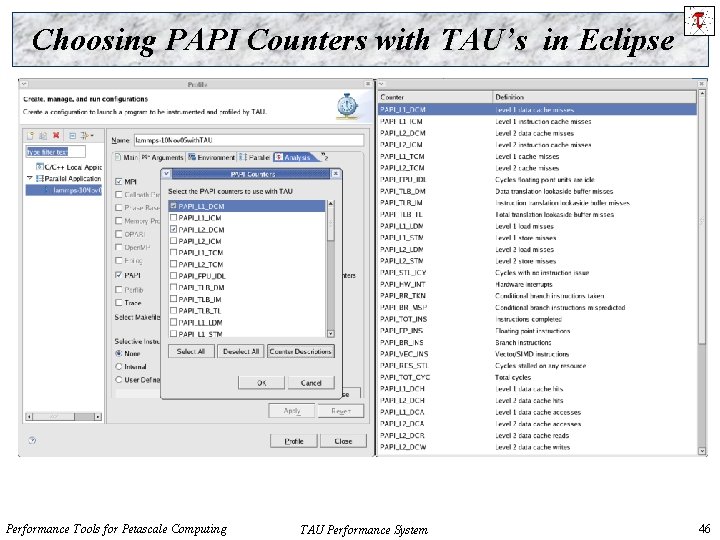

Choosing PAPI Counters with TAU in Eclipse

Future Plug-In Development

- Integration of additional TAU components
  - Automatic selective instrumentation based on previous experimental results
  - Trace format conversion from within Eclipse
- Trace and profile visualization within Eclipse
- Scalability testing interface
- Additional user interface enhancements

KTAU Project

- Trend toward Extremely Large Scales
  - System-level influences are increasingly dominant performance bottleneck contributors
  - Application sensitivity at scale to the system (e.g., OS noise)
  - Complex I/O path and subsystems another example
  - Isolating system-level factors non-trivial
- OS Kernel instrumentation and measurement is important to understanding system-level influences
- But can we closely correlate observed application and OS performance?
- KTAU / TAU (Part of the ANL/UO ZeptoOS Project)
  - Integrated methodology and framework to measure whole-system performance

Applying KTAU+TAU

- How does real OS-noise affect real applications on target platforms?
  - Requires a tightly coupled performance measurement & analysis approach provided by KTAU+TAU
  - Provides an estimate of application slowdown due to noise (and in particular, different noise components: IRQ, scheduling, etc.)
  - Can empower both application and the middleware and OS communities.
  - A. Nataraj, A. Morris, A. Malony, M. Sottile, P. Beckman, "The Ghost in the Machine: Observing the Effects of Kernel Operation on Parallel Application Performance", SC'07.
- Measuring and analyzing complex, multi-component I/O subsystems in systems like BG(L/P) (work in progress).

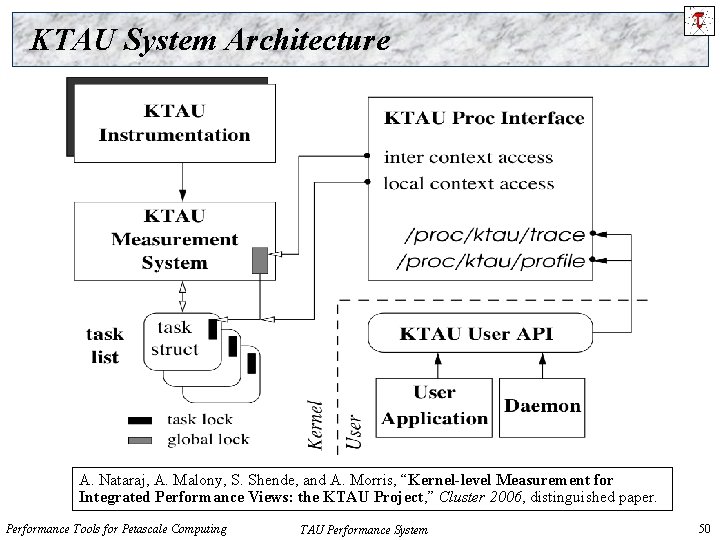

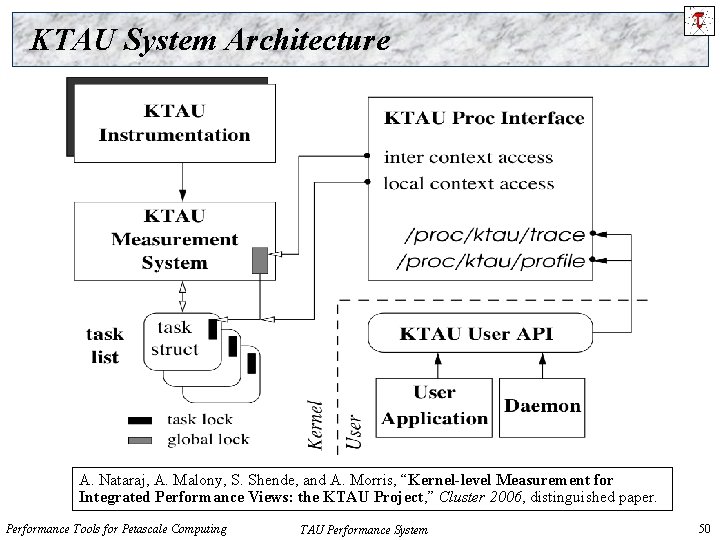

KTAU System Architecture

A. Nataraj, A. Malony, S. Shende, and A. Morris, "Kernel-level Measurement for Integrated Performance Views: the KTAU Project," Cluster 2006, distinguished paper.

TAU: Interoperability

- What we can offer other tools:
  - Automated source-level instrumentation (tau_instrumentor, PDT)
  - ParaProf 3D profile browser
  - PerfDMF database, PerfExplorer cross-experiment analysis tool
  - Eclipse/PTP plugins for performance evaluation tools
  - Conversion of trace and profile formats
  - Kernel-level performance tracking using KTAU
  - Support for most HPC platforms, compilers, MPI-1,2 wrappers
- What help we need from other projects:
  - Common API for compiler instrumentation
    - Scalasca/Kojak and VampirTrace compiler wrappers
    - Intel, Sun, GNU, Hitachi, PGI, …
  - Support for sampling for hybrid instrumentation/sampling measurement
    - HPCToolkit, PerfSuite
  - Portable, robust binary rewriting system that requires no root privileges
    - DyninstAPI
  - Scalable communication framework for runtime data analysis
    - MRNet, Supermon

Support Acknowledgements

- US Department of Energy (DOE)
  - Office of Science
    - MICS, Argonne National Lab
  - ASC/NNSA
    - University of Utah ASC/NNSA Level 1
    - ASC/NNSA, Lawrence Livermore National Lab
- US Department of Defense (DoD)
- NSF Software and Tools for High-End Computing
- Research Centre Juelich
- TU Dresden
- Los Alamos National Laboratory
- ParaTools, Inc.

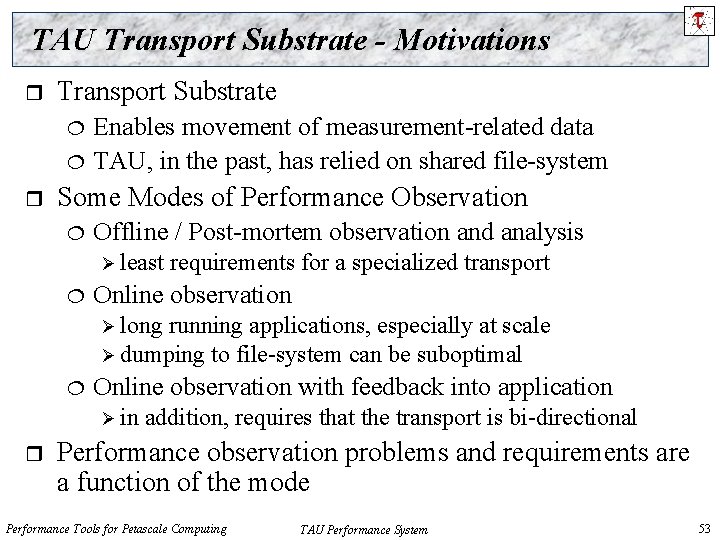

TAU Transport Substrate - Motivations

- Transport Substrate
  - Enables movement of measurement-related data
  - TAU, in the past, has relied on shared file-system
- Some Modes of Performance Observation
  - Offline / Post-mortem observation and analysis
    - least requirements for a specialized transport
  - Online observation
    - long running applications, especially at scale
    - dumping to file-system can be suboptimal
  - Online observation with feedback into application
    - in addition, requires that the transport is bi-directional
- Performance observation problems and requirements are a function of the mode

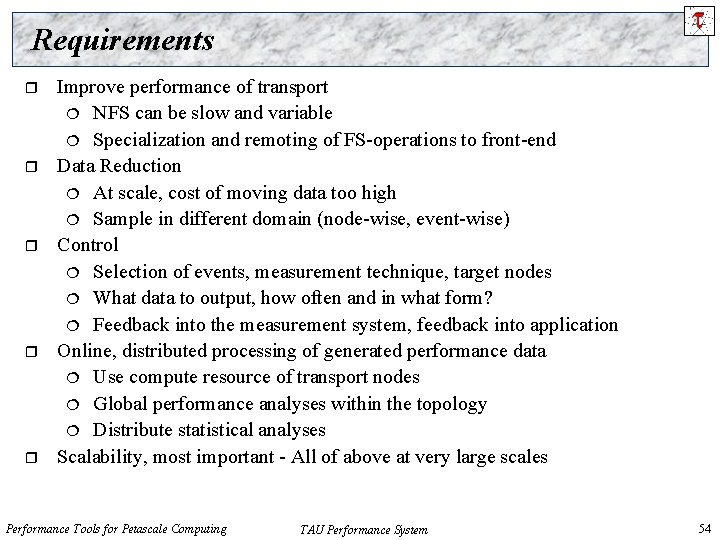

Requirements

- Improve performance of transport
  - NFS can be slow and variable
  - Specialization and remoting of FS-operations to front-end
- Data Reduction
  - At scale, cost of moving data too high
  - Sample in different domain (node-wise, event-wise)
- Control
  - Selection of events, measurement technique, target nodes
  - What data to output, how often and in what form?
  - Feedback into the measurement system, feedback into application
- Online, distributed processing of generated performance data
  - Use compute resource of transport nodes
  - Global performance analyses within the topology
  - Distribute statistical analyses
- Scalability, most important - All of above at very large scales

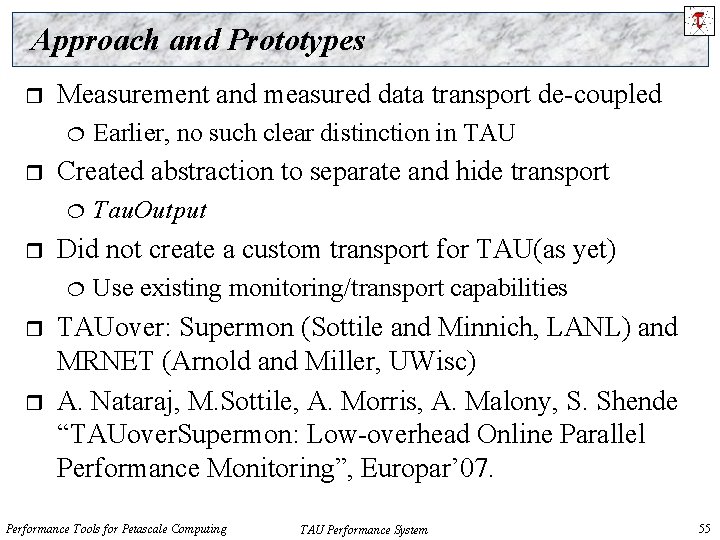

Approach and Prototypes

- Measurement and measured data transport de-coupled
  - Earlier, no such clear distinction in TAU
- Created abstraction to separate and hide transport
  - TauOutput
- Did not create a custom transport for TAU (as yet)
  - Use existing monitoring/transport capabilities
  - TAUover: Supermon (Sottile and Minnich, LANL) and MRNET (Arnold and Miller, UWisc)

A. Nataraj, M. Sottile, A. Morris, A. Malony, S. Shende, "TAUoverSupermon: Low-overhead Online Parallel Performance Monitoring", Europar'07.

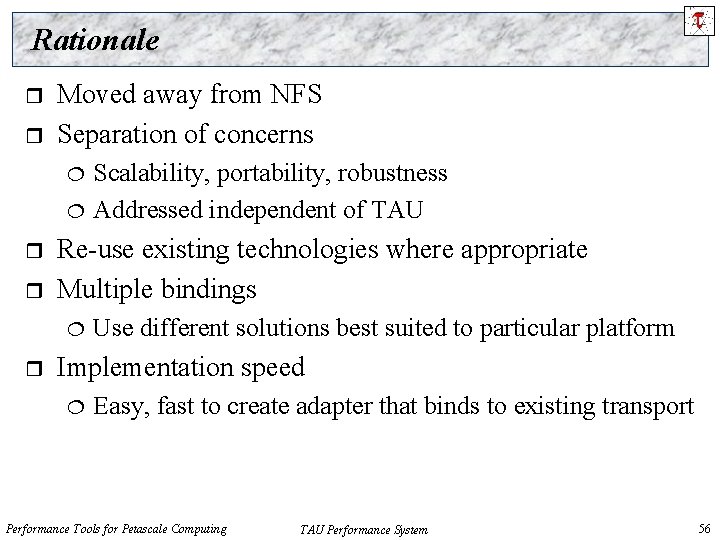

Rationale

- Moved away from NFS
- Separation of concerns
  - Scalability, portability, robustness
  - Addressed independent of TAU
- Re-use existing technologies where appropriate
- Multiple bindings
  - Use different solutions best suited to particular platform
- Implementation speed
  - Easy, fast to create adapter that binds to existing transport

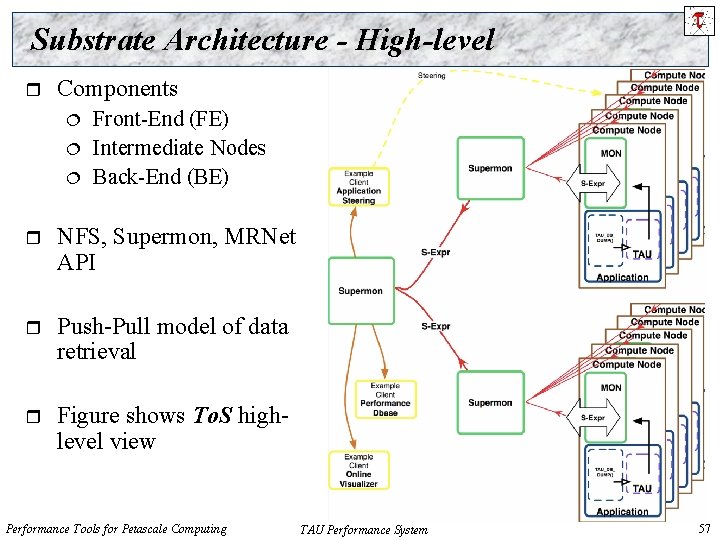

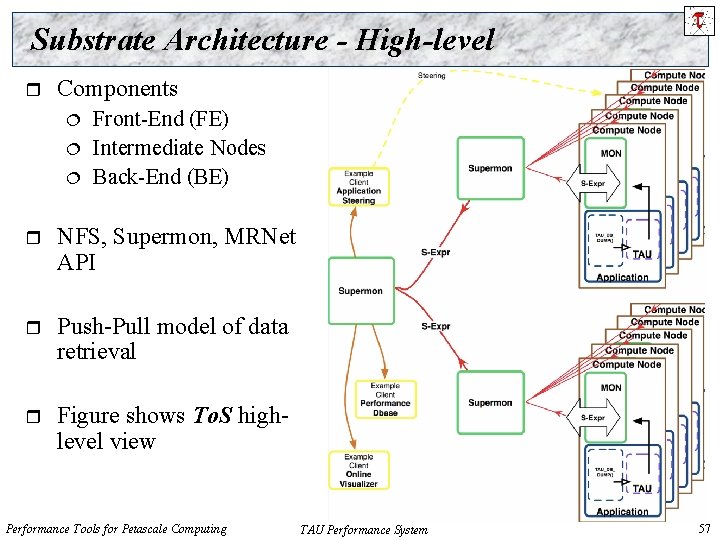

Substrate Architecture - High-level

- Components
  - Front-End (FE)
  - Intermediate Nodes
  - Back-End (BE)
- NFS, Supermon, MRNet API
- Push-Pull model of data retrieval
- Figure shows ToS high-level view

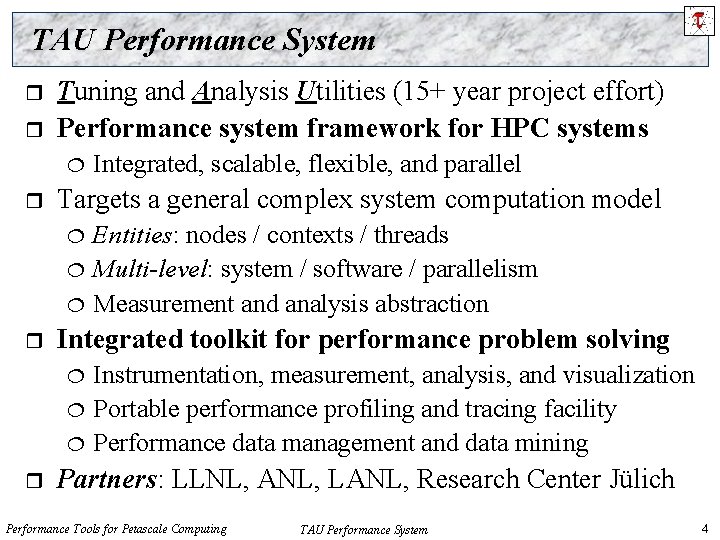

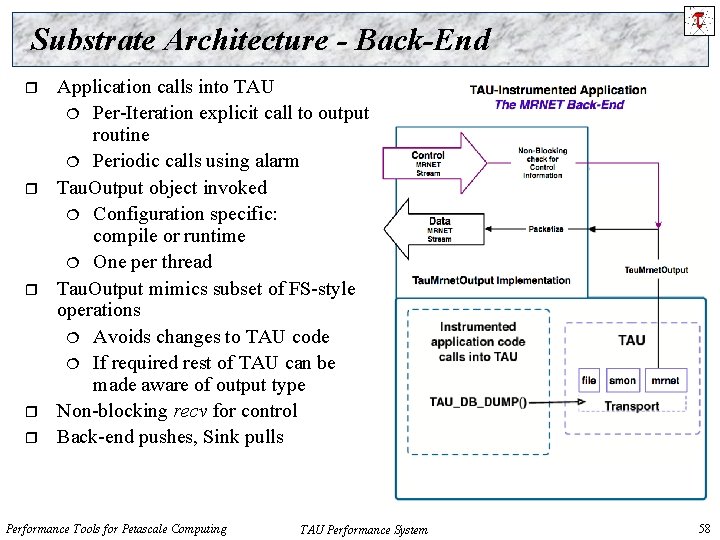

Substrate Architecture - Back-End

- Application calls into TAU
  - Per-Iteration explicit call to output routine
  - Periodic calls using alarm
- TauOutput object invoked
  - Configuration specific: compile or runtime
  - One per thread
- TauOutput mimics subset of FS-style operations
  - Avoids changes to TAU code
  - If required rest of TAU can be made aware of output type
- Non-blocking recv for control
- Back-end pushes, Sink pulls
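
The idea of an output object that mimics file-system style operations, so that the measurement code does not need to know where its data goes, can be sketched in Python as follows. All class and function names here (`FileOutput`, `QueueOutput`, `dump_profile`, `pull`) are hypothetical stand-ins chosen for illustration. They are not the TauOutput interface, and an in-memory queue stands in for a real transport such as Supermon or MRNet.

```python
import io
from collections import deque


class FileOutput:
    """Adapter that writes profile records to a file-like stream."""

    def __init__(self, stream):
        self._stream = stream

    def open(self):
        pass  # the stream is already open in this toy example

    def write(self, data):
        self._stream.write(data)

    def close(self):
        self._stream.flush()


class QueueOutput:
    """Adapter that pushes profile records onto a transport queue."""

    def __init__(self, queue):
        self._queue = queue

    def open(self):
        pass

    def write(self, data):
        self._queue.append(data)  # back-end pushes

    def close(self):
        pass


def dump_profile(output, iteration, timers):
    """Measurement side: uses only open/write/close, whatever the transport."""
    output.open()
    for name, seconds in sorted(timers.items()):
        output.write(f"{iteration} {name} {seconds:.3f}\n")
    output.close()


def pull(queue):
    """Sink side: drain whatever the back-end has pushed so far."""
    drained = list(queue)
    queue.clear()
    return drained


timers = {"solve": 1.5, "MPI_Wait": 0.25}

stream = io.StringIO()
dump_profile(FileOutput(stream), 0, timers)

transport = deque()
dump_profile(QueueOutput(transport), 0, timers)
received = pull(transport)
```

Because `dump_profile` is identical for both adapters, switching from a file to a transport is a configuration choice rather than a change to the measurement code, which is the design point the slide makes.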