Parallel Data Mining and Processing with Hadoop/MapReduce

- Slides: 49

Parallel Data Mining and Processing with Hadoop/MapReduce, CS 240A/290N, Tao Yang

Overview
• What is MapReduce?
• Related technologies
  – Hadoop/Google file system
• Data processing/mining with MapReduce
© Spinnaker Labs, Inc.

Motivations
• Motivations
  – Large-scale data processing on clusters
  – Massively parallel (hundreds or thousands of CPUs)
  – Reliable execution with easy data access
• Functions
  – Automatic parallelization and distribution
  – Fault tolerance
  – Status and monitoring tools
  – A clean abstraction for programmers
    » Functional programming meets distributed computing
    » A batch data processing system

Parallel Data Processing in a Cluster
• Scalability to large data volumes:
  – Scan 1000 TB on 1 node @ 100 MB/s ≈ 116 days
  – Scan on a 1000-node cluster ≈ 2.8 hours
• Cost efficiency:
  – Commodity nodes/network
    » Cheap, but not high-bandwidth, and sometimes unreliable
  – Automatic fault tolerance (fewer admins)
  – Easy to use (fewer programmers)
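The scan times follow from dividing the data volume by the aggregate read bandwidth. A back-of-the-envelope sketch, assuming decimal units (1 TB = 10^12 bytes, 1 MB/s = 10^6 bytes/s) and perfectly linear scaling across nodes, which real clusters only approximate; the class name `ScanTime` is made up for illustration.

```java
import java.util.Locale;

// Back-of-the-envelope scan time: data volume / (per-node bandwidth * node count).
// Assumes decimal units and perfectly linear scaling (no skew, no network limits).
final class ScanTime {
    static double seconds(double terabytes, double mbPerSecPerNode, int nodes) {
        double bytes = terabytes * 1e12;
        double bytesPerSec = mbPerSecPerNode * 1e6 * nodes;
        return bytes / bytesPerSec;
    }

    public static void main(String[] args) {
        double oneNode = seconds(1000, 100, 1);
        double cluster = seconds(1000, 100, 1000);
        System.out.printf(Locale.ROOT, "1 node:     %.1f days%n", oneNode / 86_400);  // 115.7 days
        System.out.printf(Locale.ROOT, "1000 nodes: %.1f hours%n", cluster / 3_600);  // 2.8 hours
    }
}
```

With the slide's inputs this gives 10^7 s (about 116 days) on one node and 10^4 s (about 2.8 hours) on 1000 nodes.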

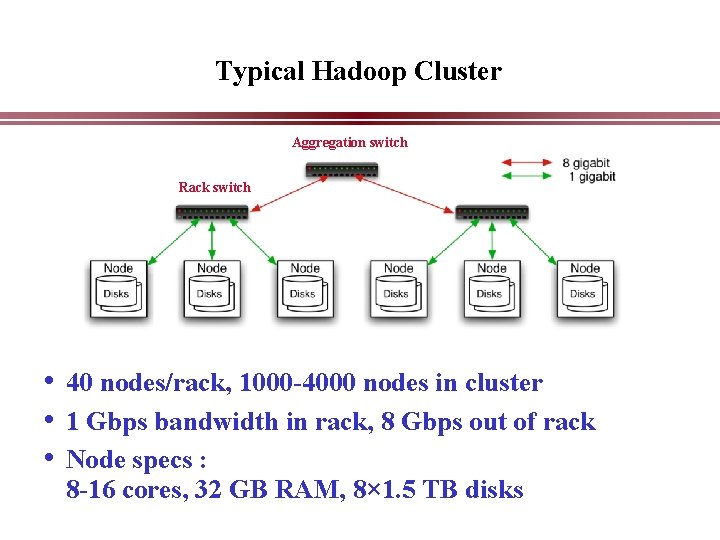

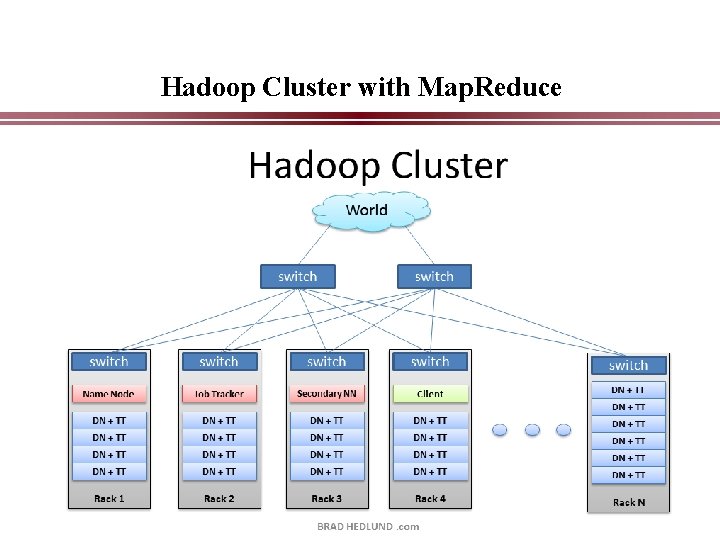

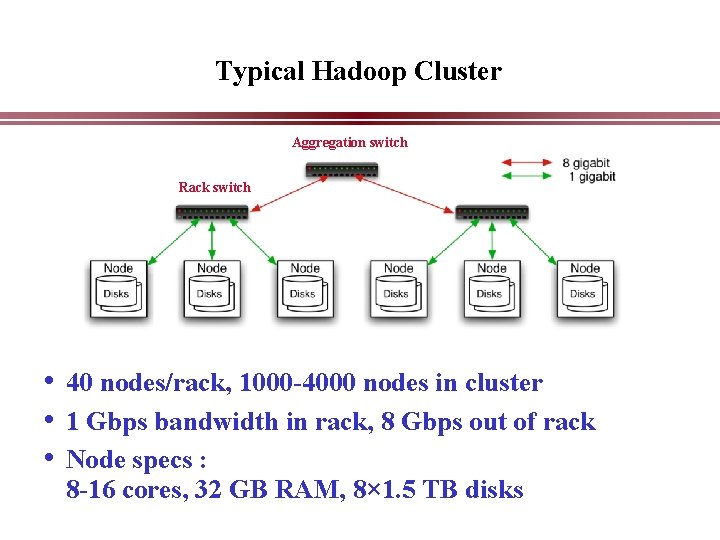

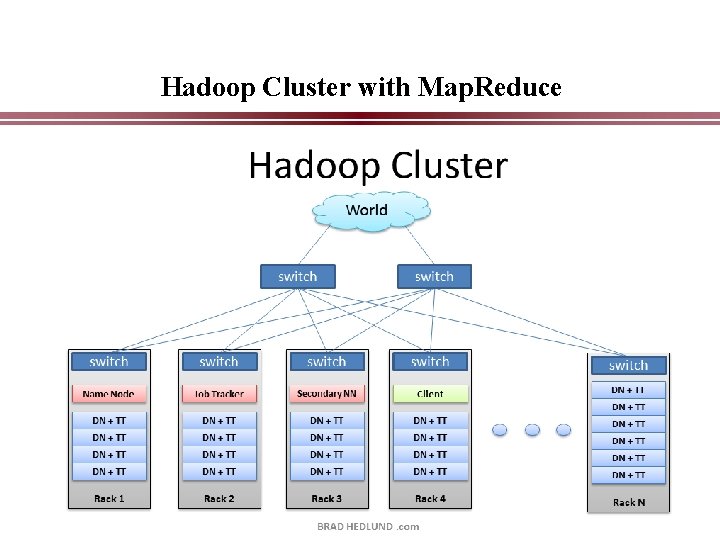

Typical Hadoop Cluster
(Figure: nodes connected to rack switches, which connect to an aggregation switch.)
• 40 nodes/rack, 1000-4000 nodes in cluster
• 1 Gbps bandwidth within a rack, 8 Gbps out of the rack
• Node specs: 8-16 cores, 32 GB RAM, 8 × 1.5 TB disks

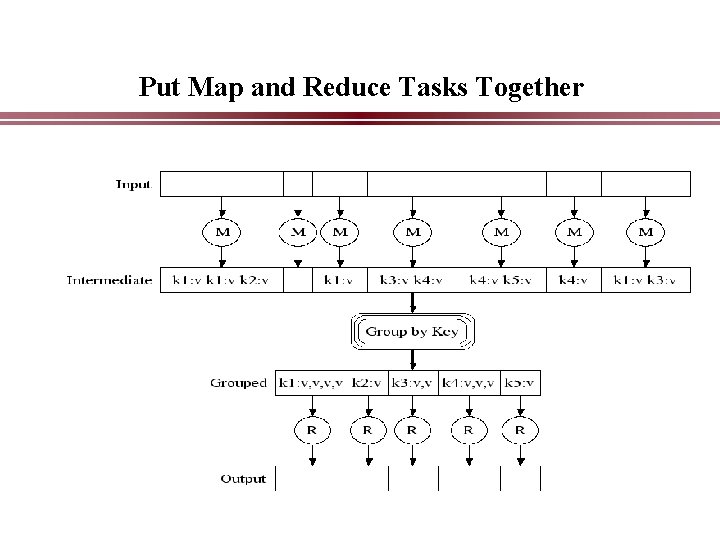

MapReduce Programming Model
• Inspired by the map and reduce operations commonly used in functional programming languages such as Lisp
• A job has multiple map tasks and reduce tasks
• Users implement an interface of two primary methods:
  – Map: (key1, val1) → [(key2, val2), …]
  – Reduce: (key2, [val2, val3, val4, …]) → [val]
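To make the two signatures concrete, here is a hypothetical single-process sketch (not Hadoop's API): `MiniMapReduce.run` applies a user map function to every input pair, groups the intermediate values by key as a stand-in for shuffle and sort, and then calls the user reduce function once per key. It ignores splits, parallelism, and fault tolerance entirely.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.BiFunction;

// Hypothetical single-process model of the MapReduce contract (not Hadoop's API).
final class MiniMapReduce {
    // map:    (k1, v1)            -> list of (k2, v2)
    // reduce: (k2, [v2, v2, ...]) -> list of outputs
    static <K1, V1, K2 extends Comparable<K2>, V2, R> Map<K2, List<R>> run(
            List<Map.Entry<K1, V1>> input,
            BiFunction<K1, V1, List<Map.Entry<K2, V2>>> map,
            BiFunction<K2, List<V2>, List<R>> reduce) {
        // Stand-in for shuffle & sort: group values by key (TreeMap keeps keys sorted).
        Map<K2, List<V2>> groups = new TreeMap<>();
        for (Map.Entry<K1, V1> record : input) {
            for (Map.Entry<K2, V2> kv : map.apply(record.getKey(), record.getValue())) {
                groups.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
            }
        }
        // Reduce phase: one call per distinct intermediate key.
        Map<K2, List<R>> output = new TreeMap<>();
        groups.forEach((key, values) -> output.put(key, reduce.apply(key, values)));
        return output;
    }

    public static void main(String[] args) {
        // Toy job: group words by their first letter.
        List<Map.Entry<Integer, String>> lines =
                List.of(Map.entry(1, "apple banana"), Map.entry(2, "avocado"));
        BiFunction<Integer, String, List<Map.Entry<Character, String>>> byFirstLetter =
                (lineNo, line) -> {
                    List<Map.Entry<Character, String>> pairs = new ArrayList<>();
                    for (String w : line.split(" ")) pairs.add(Map.entry(w.charAt(0), w));
                    return pairs;
                };
        BiFunction<Character, List<String>, List<String>> identity = (letter, words) -> words;
        System.out.println(run(lines, byFirstLetter, identity)); // {a=[apple, avocado], b=[banana]}
    }
}
```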

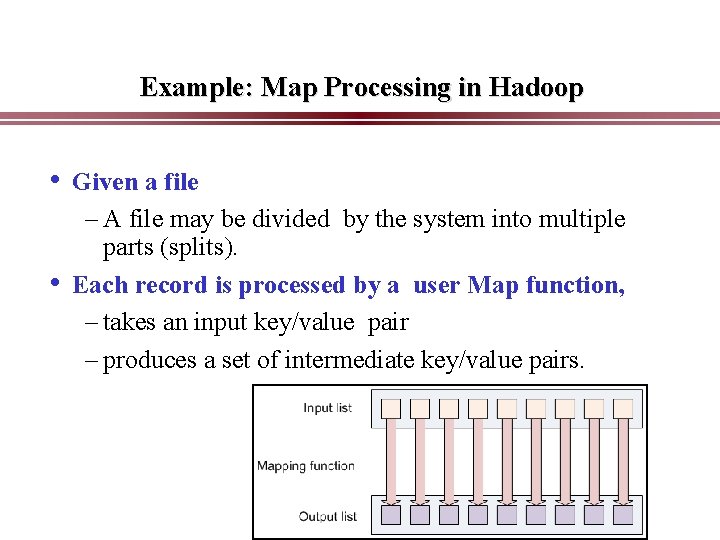

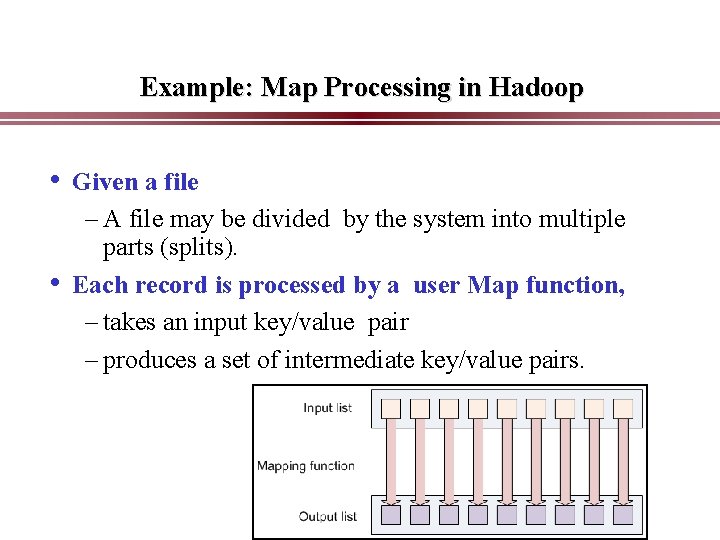

Example: Map Processing in Hadoop
• Given a file
  – The system may divide a file into multiple parts (splits).
• Each record is processed by a user Map function, which
  – takes an input key/value pair
  – produces a set of intermediate key/value pairs.

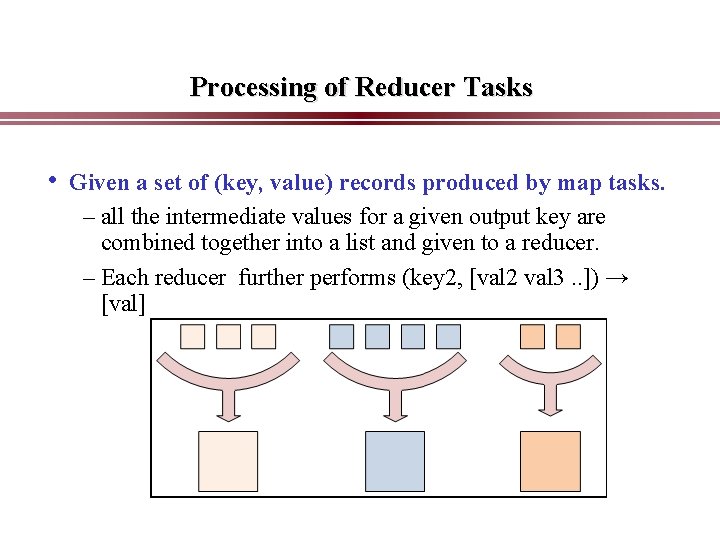

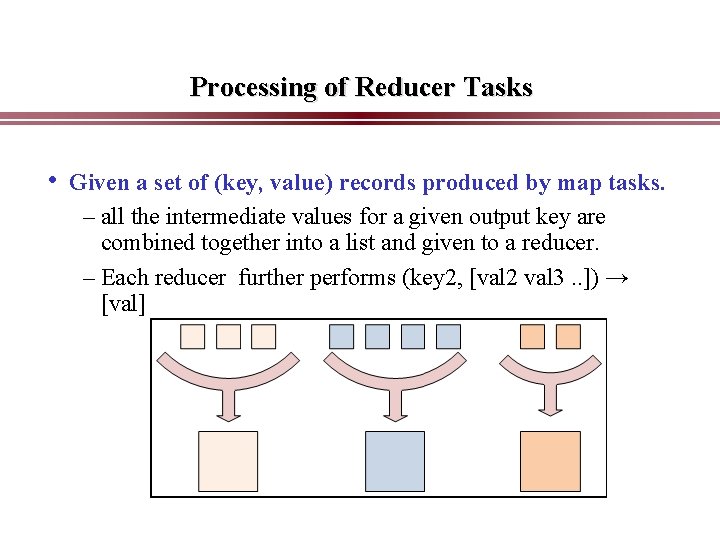

Processing of Reducer Tasks
• Given a set of (key, value) records produced by map tasks:
  – All the intermediate values for a given output key are combined into a list and given to a reducer.
  – Each reducer then performs (key2, [val2, val3, …]) → [val].

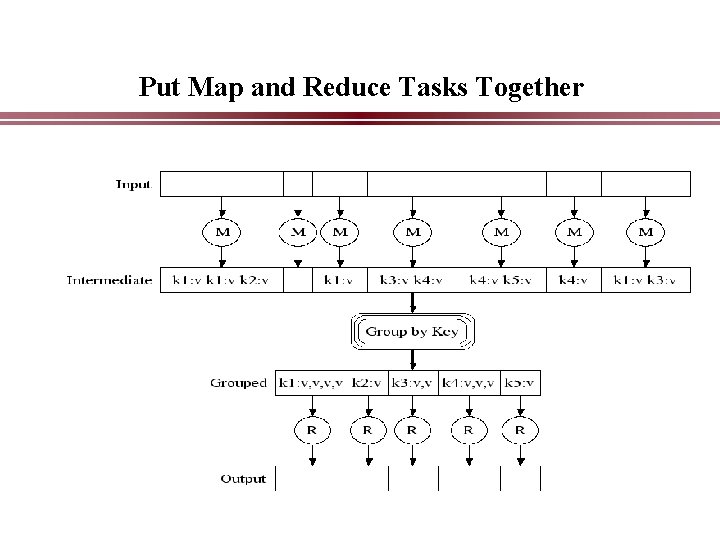

Put Map and Reduce Tasks Together

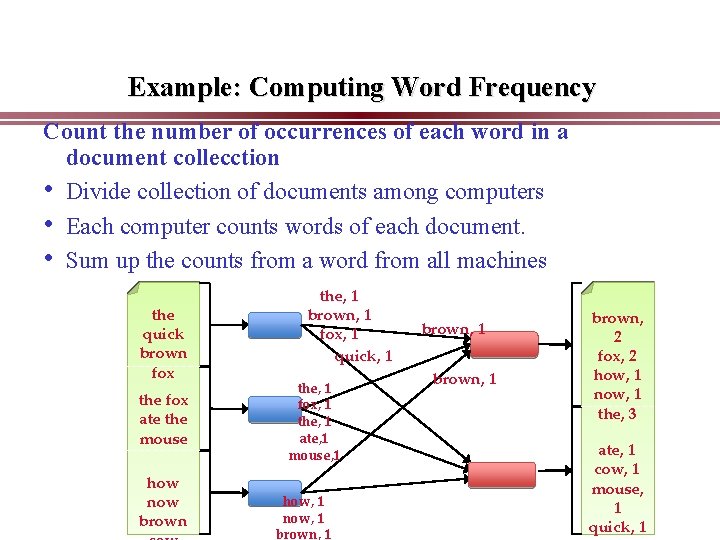

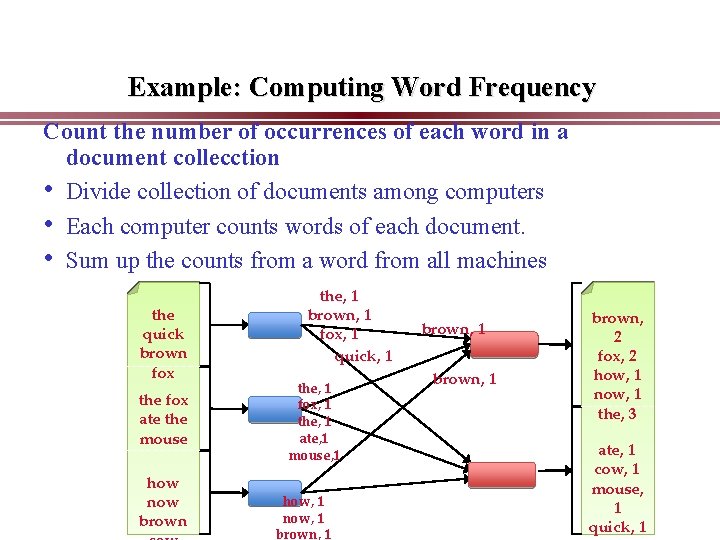

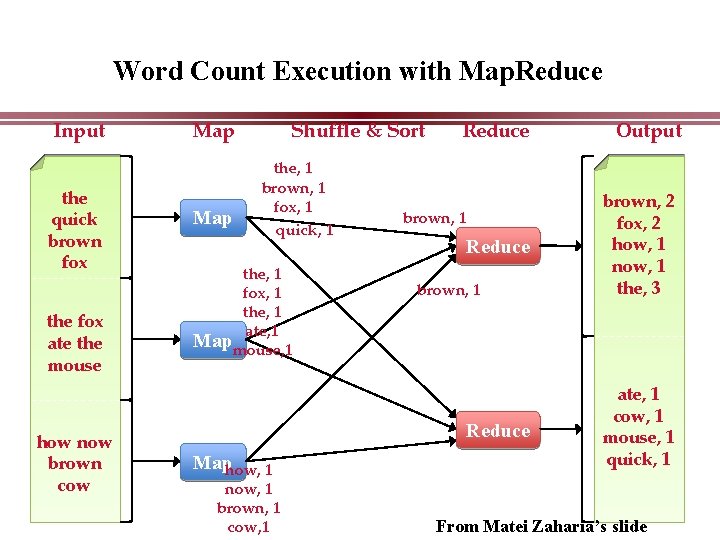

Example: Computing Word Frequency
Count the number of occurrences of each word in a document collection:
• Divide the collection of documents among computers
• Each computer counts the words of each document
• Sum up the counts for each word from all machines
Input: "the quick brown fox" | "the fox ate the mouse" | "how now brown cow"
Map output: (the, 1) (brown, 1) (fox, 1) (quick, 1) | (the, 1) (fox, 1) (the, 1) (ate, 1) (mouse, 1) | (how, 1) (now, 1) (brown, 1) (cow, 1)
Final counts: (brown, 2) (fox, 2) (how, 1) (now, 1) (the, 3) (ate, 1) (cow, 1) (mouse, 1) (quick, 1)

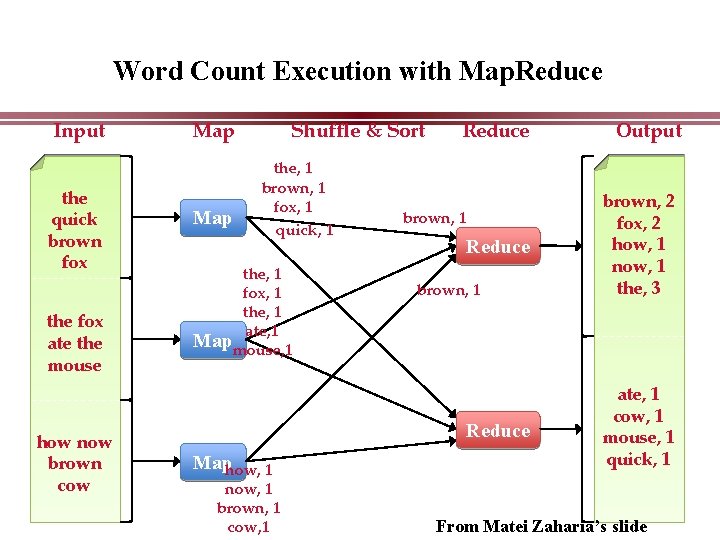

Word Count Execution with MapReduce (from Matei Zaharia's slide)
• Input: "the quick brown fox" | "the fox ate the mouse" | "how now brown cow"
• Map: (the, 1) (brown, 1) (fox, 1) (quick, 1) | (the, 1) (fox, 1) (the, 1) (ate, 1) (mouse, 1) | (how, 1) (now, 1) (brown, 1) (cow, 1)
• Shuffle & Sort, then Reduce (two reducers)
• Output: reducer 1: (brown, 2) (fox, 2) (how, 1) (now, 1) (the, 3); reducer 2: (ate, 1) (cow, 1) (mouse, 1) (quick, 1)

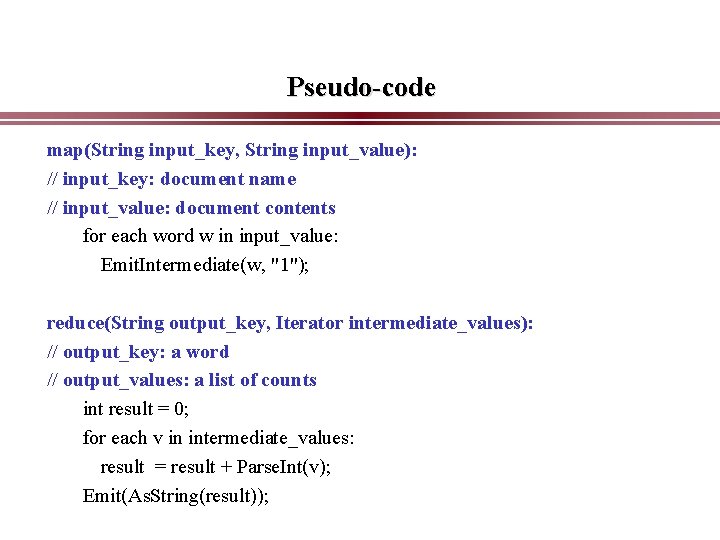

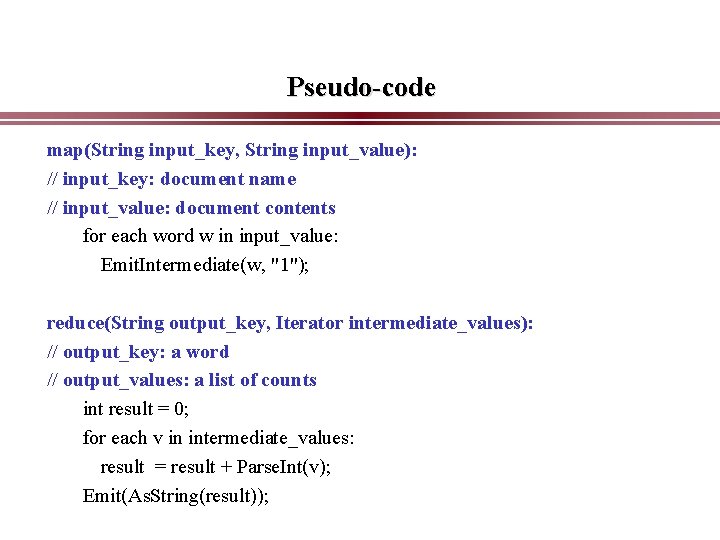

Pseudo-code

map(String input_key, String input_value):
  // input_key: document name
  // input_value: document contents
  for each word w in input_value:
    EmitIntermediate(w, "1");

reduce(String output_key, Iterator intermediate_values):
  // output_key: a word
  // intermediate_values: a list of counts
  int result = 0;
  for each v in intermediate_values:
    result += ParseInt(v);
  Emit(AsString(result));
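A direct Java translation of the pseudo-code above, run in a single process on the three example lines from the word-count slides. `emitIntermediate` and the TreeMap-backed storage are hypothetical stand-ins for the framework's shuffle and output machinery; only the map and reduce bodies mirror the pseudo-code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Single-process translation of the word-count pseudo-code (no Hadoop involved).
final class PseudoWordCount {
    // Hypothetical stand-in for the framework's intermediate storage: key -> values.
    private final Map<String, List<String>> intermediate = new TreeMap<>();
    private final Map<String, String> output = new TreeMap<>();

    void emitIntermediate(String key, String value) {
        intermediate.computeIfAbsent(key, k -> new ArrayList<>()).add(value);
    }

    // inputKey: document name; inputValue: document contents
    void map(String inputKey, String inputValue) {
        for (String w : inputValue.split("\\s+")) {
            if (!w.isEmpty()) emitIntermediate(w, "1");
        }
    }

    // outputKey: a word; intermediateValues: a list of counts (as strings)
    void reduce(String outputKey, List<String> intermediateValues) {
        int result = 0;
        for (String v : intermediateValues) result += Integer.parseInt(v);
        output.put(outputKey, String.valueOf(result)); // Emit(AsString(result))
    }

    Map<String, String> run(Map<String, String> documents) {
        documents.forEach(this::map);        // map phase
        intermediate.forEach(this::reduce);  // reduce phase, keys visited in sorted order
        return output;
    }

    public static void main(String[] args) {
        Map<String, String> docs = new TreeMap<>();
        docs.put("doc1", "the quick brown fox");
        docs.put("doc2", "the fox ate the mouse");
        docs.put("doc3", "how now brown cow");
        // prints {ate=1, brown=2, cow=1, fox=2, how=1, mouse=1, now=1, quick=1, the=3}
        System.out.println(new PseudoWordCount().run(docs));
    }
}
```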

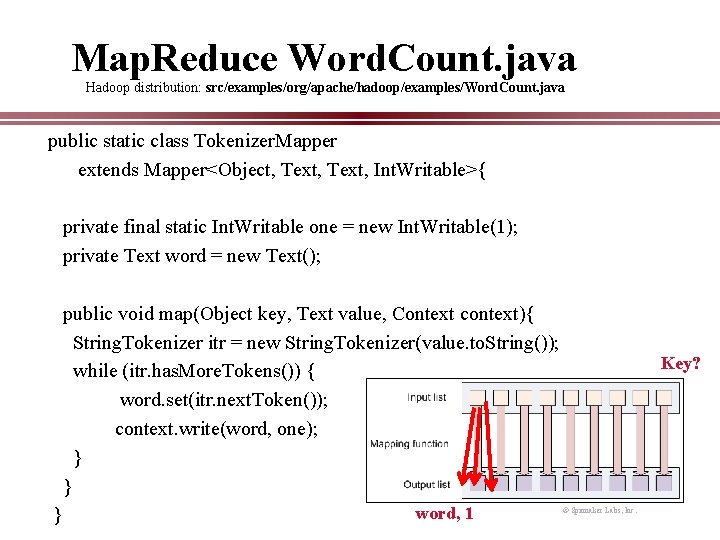

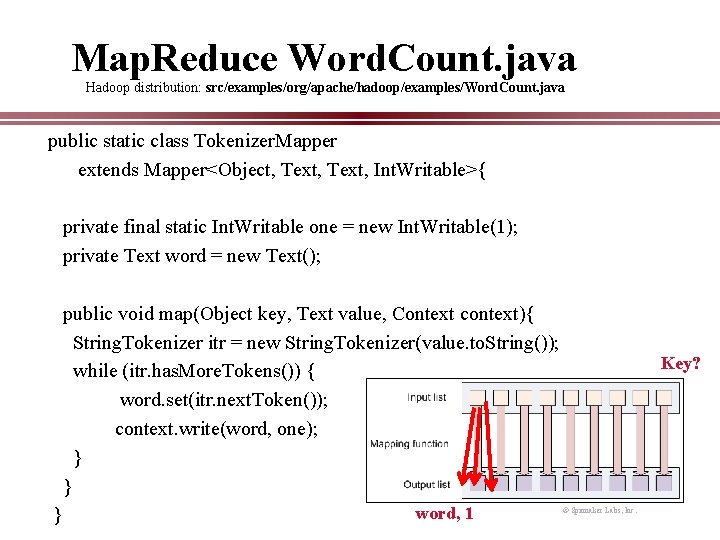

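MapReduce WordCount.java
Hadoop distribution: src/examples/org/apache/hadoop/examples/WordCount.java

import java.io.IOException;
import java.util.StringTokenizer;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

// Nested inside class WordCount. For every word in an input line, emit (word, 1).
public static class TokenizerMapper
    extends Mapper<Object, Text, Text, IntWritable> {
  private final static IntWritable one = new IntWritable(1);
  private Text word = new Text();

  public void map(Object key, Text value, Context context)
      throws IOException, InterruptedException {
    StringTokenizer itr = new StringTokenizer(value.toString());
    while (itr.hasMoreTokens()) {
      word.set(itr.nextToken());
      context.write(word, one);  // output pair: (word, 1); the input key is not used
    }
  }
}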

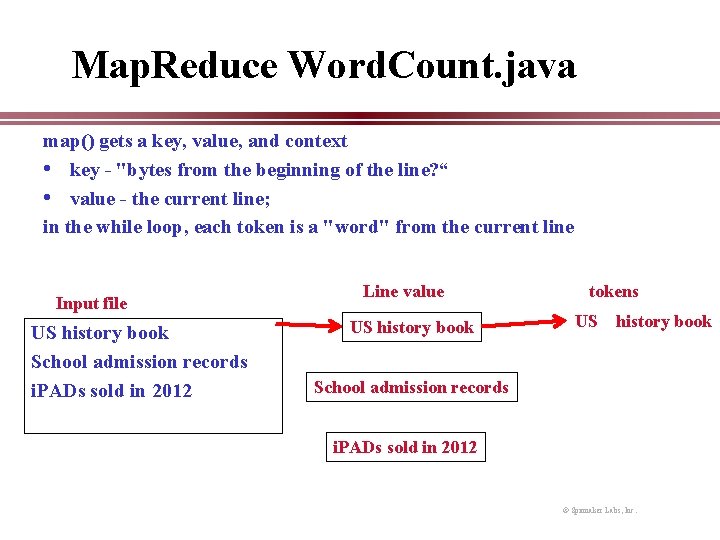

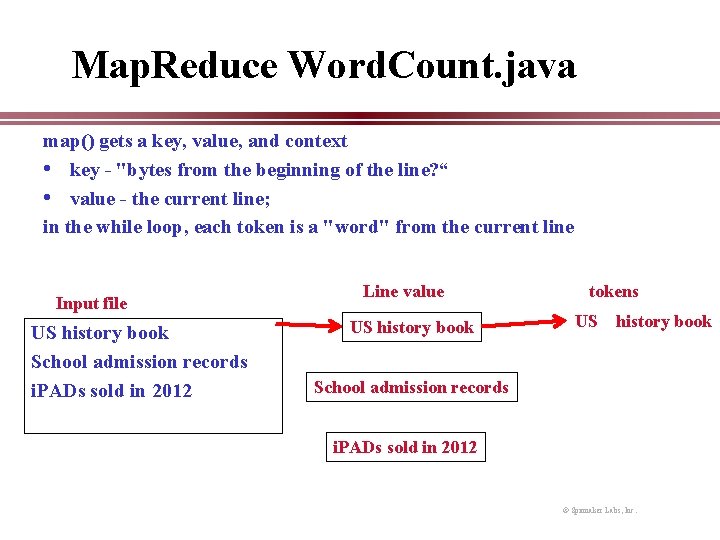

MapReduce WordCount.java
map() gets a key, a value, and a context:
• key: the byte offset at which the current line starts in the file (with the default text input format)
• value: the current line; in the while loop, each token is a "word" from the current line
Input file:
  US history book
  School admission records
  iPADs sold in 2012
(Figure: the line value "US history book" is split into the tokens "US", "history", "book".)
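The (key, value) pairs that map() receives can be reproduced outside Hadoop. A minimal sketch, assuming UTF-8 text with `\n` line endings: it computes each line's starting byte offset (the key that TextInputFormat supplies as a LongWritable) and tokenizes the line with `StringTokenizer`, as `TokenizerMapper` does. `LineRecords` is a hypothetical name; Hadoop's real record reader also handles `\r\n`, compression, and split boundaries.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;

// Mimics the records a line-oriented input format hands to map():
// key = byte offset where the line starts, value = the line without its '\n'.
// Assumes '\n' line endings and UTF-8 text.
final class LineRecords {
    static List<Map.Entry<Long, String>> records(String contents) {
        List<Map.Entry<Long, String>> out = new ArrayList<>();
        long offset = 0;
        int start = 0;
        while (start < contents.length()) {
            int end = contents.indexOf('\n', start);
            if (end < 0) end = contents.length();      // last line without a trailing '\n'
            String line = contents.substring(start, end);
            out.add(Map.entry(offset, line));
            offset += line.getBytes(StandardCharsets.UTF_8).length + 1; // +1 for the '\n'
            start = end + 1;
        }
        return out;
    }

    public static void main(String[] args) {
        String file = "US history book\nSchool admission records\niPADs sold in 2012\n";
        for (Map.Entry<Long, String> r : records(file)) {
            // Same tokenization as TokenizerMapper: split the value on whitespace
            StringTokenizer itr = new StringTokenizer(r.getValue());
            List<String> tokens = new ArrayList<>();
            while (itr.hasMoreTokens()) tokens.add(itr.nextToken());
            System.out.println("key=" + r.getKey() + " tokens=" + tokens);
        }
        // key=0 tokens=[US, history, book]
        // key=16 tokens=[School, admission, records]
        // key=41 tokens=[iPADs, sold, in, 2012]
    }
}
```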

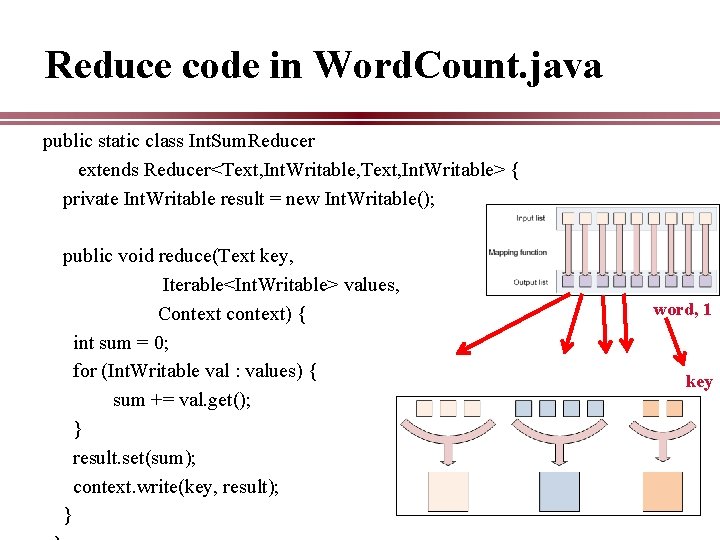

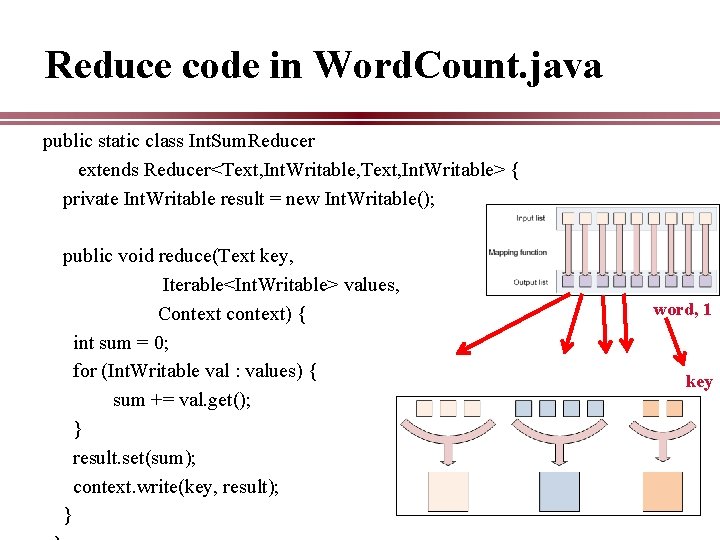

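Reduce code in WordCount.java

import java.io.IOException;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

// Nested inside class WordCount. Sums all counts received for one word.
public static class IntSumReducer
    extends Reducer<Text, IntWritable, Text, IntWritable> {
  private IntWritable result = new IntWritable();

  public void reduce(Text key, Iterable<IntWritable> values, Context context)
      throws IOException, InterruptedException {
    int sum = 0;
    for (IntWritable val : values) {
      sum += val.get();
    }
    result.set(sum);
    context.write(key, result);  // output pair: (word, total count)
  }
}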

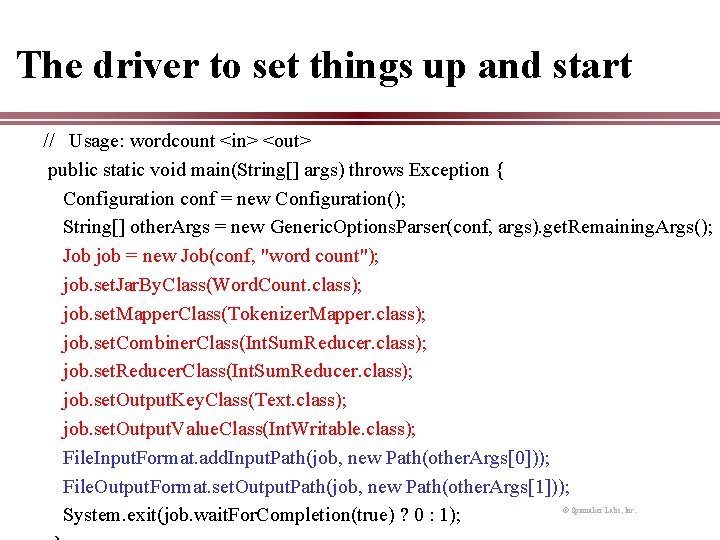

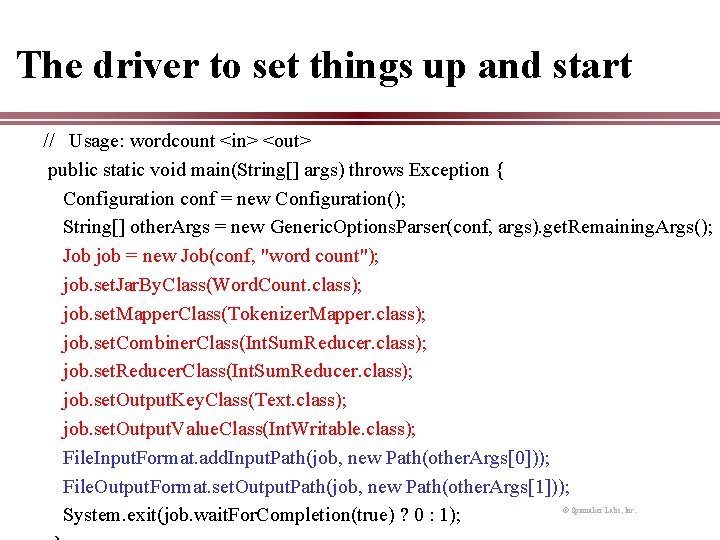

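The driver to set things up and start

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

// Usage: wordcount <in> <out>
public static void main(String[] args) throws Exception {
  Configuration conf = new Configuration();
  // Strip generic Hadoop options (e.g. -D key=value), leaving <in> and <out>
  String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
  if (otherArgs.length != 2) {
    System.err.println("Usage: wordcount <in> <out>");
    System.exit(2);
  }
  Job job = Job.getInstance(conf, "word count");
  job.setJarByClass(WordCount.class);
  job.setMapperClass(TokenizerMapper.class);
  job.setCombinerClass(IntSumReducer.class);  // map-side pre-aggregation
  job.setReducerClass(IntSumReducer.class);
  job.setOutputKeyClass(Text.class);
  job.setOutputValueClass(IntWritable.class);
  FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
  FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
  System.exit(job.waitForCompletion(true) ? 0 : 1);  // block until the job finishes
}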

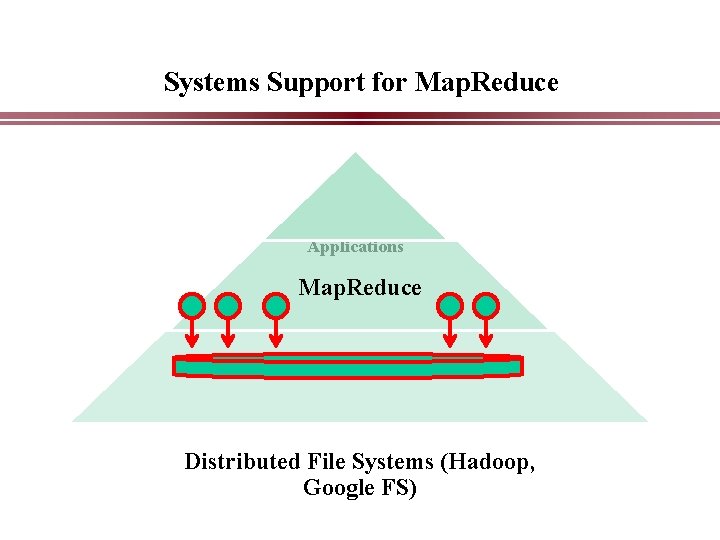

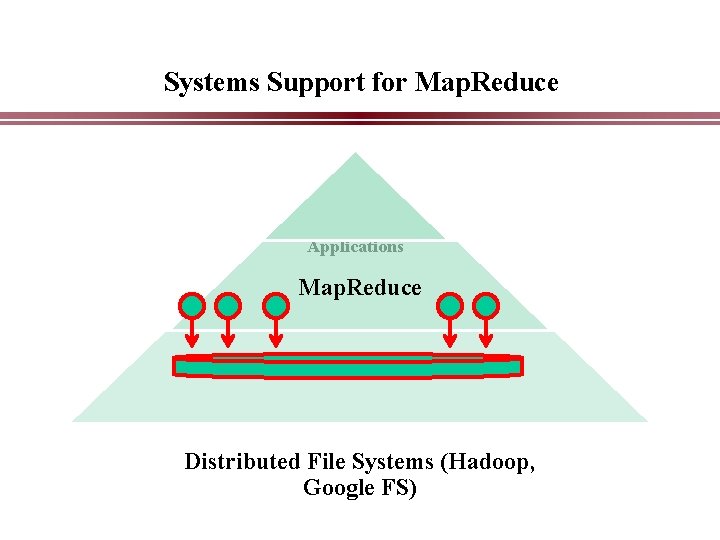

Systems Support for MapReduce Applications
(Figure: MapReduce layered on top of distributed file systems such as Hadoop DFS and Google FS.)

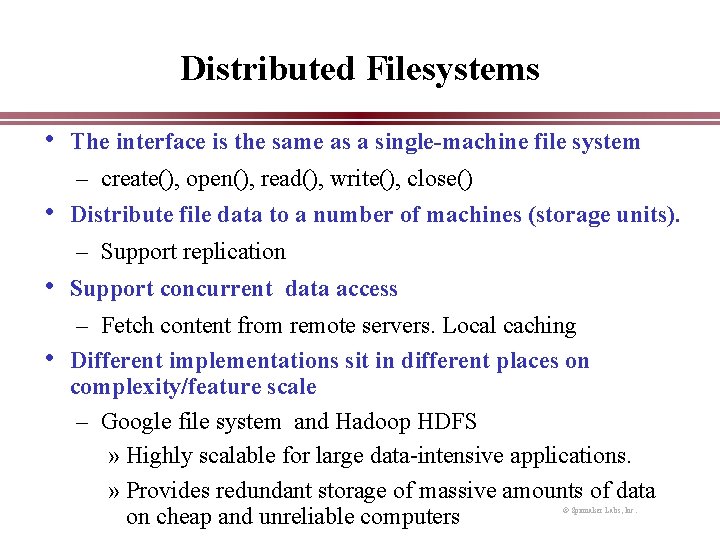

Distributed Filesystems
• The interface is the same as a single-machine file system
  – create(), open(), read(), write(), close()
• Distribute file data to a number of machines (storage units)
  – Support replication
• Support concurrent data access
  – Fetch content from remote servers; local caching
• Different implementations sit at different places on the complexity/feature scale
  – Google file system and Hadoop HDFS
    » Highly scalable for large data-intensive applications
    » Provide redundant storage of massive amounts of data on cheap and unreliable computers

Assumptions of GFS/Hadoop DFS
• High component failure rates
  – Inexpensive commodity components fail all the time
• "Modest" number of HUGE files
  – Just a few million
  – Each is 100 MB or larger; multi-GB files are typical
• Files are write-once, mostly appended to
  – Perhaps concurrently
• Large streaming reads
• High sustained throughput favored over low latency

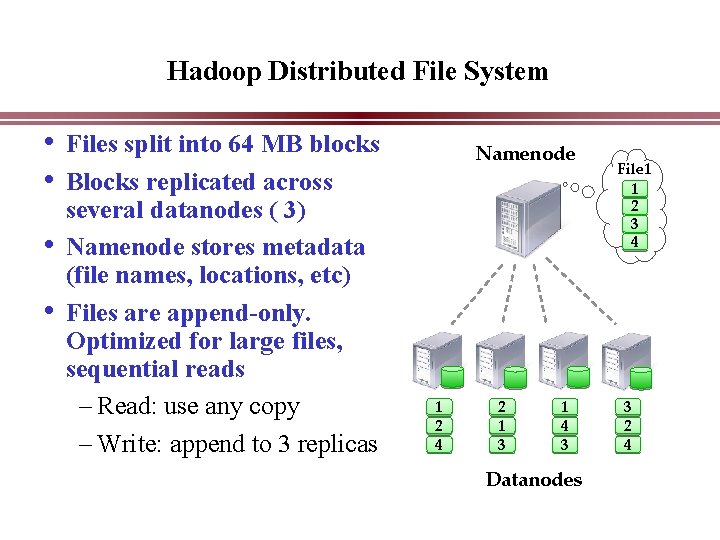

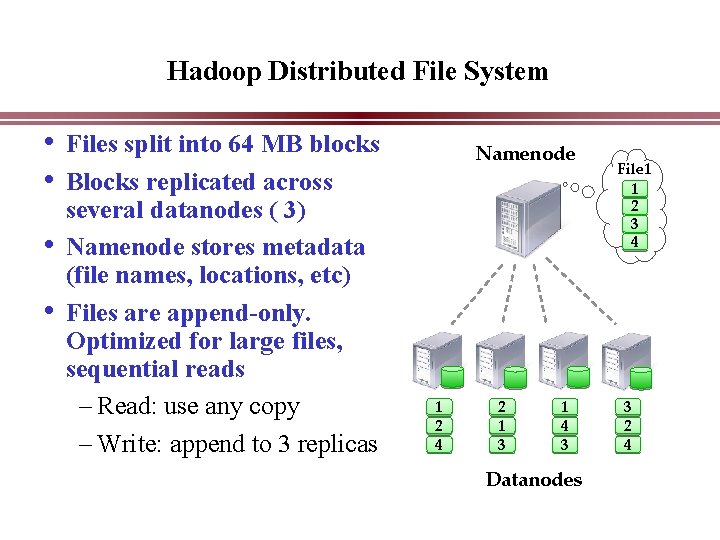

Hadoop Distributed File System
• Files are split into 64 MB blocks (the Hadoop 1.x default; Hadoop 2 and later default to 128 MB)
• Blocks are replicated across several datanodes (usually 3)
• The namenode stores metadata (file names, block locations, etc.)
• Files are append-only; optimized for large files and sequential reads
  – Read: use any copy
  – Write: append to all 3 replicas
(Figure: a namenode and four datanodes; File 1 consists of blocks 1-4, and each block is stored on three different datanodes.)
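A toy model of how a file maps onto blocks and replicas, assuming the 64 MB block size and replication factor 3 from the slide. The round-robin placement is a hypothetical simplification chosen only to guarantee that each block's replicas land on distinct datanodes; real HDFS uses a rack-aware placement policy instead.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative only: split a file into fixed-size blocks and assign each block to
// `replication` distinct datanodes round-robin (NOT HDFS's rack-aware policy).
final class BlockPlacement {
    static final long BLOCK_SIZE = 64L * 1024 * 1024; // 64 MB, as on the slide

    static int numBlocks(long fileBytes, long blockBytes) {
        return (int) ((fileBytes + blockBytes - 1) / blockBytes); // ceiling division
    }

    // For each block, the list of datanode ids holding a replica.
    static List<List<Integer>> place(int numBlocks, int numDatanodes, int replication) {
        if (replication > numDatanodes) throw new IllegalArgumentException("not enough datanodes");
        List<List<Integer>> placement = new ArrayList<>();
        for (int b = 0; b < numBlocks; b++) {
            List<Integer> nodes = new ArrayList<>();
            for (int r = 0; r < replication; r++) {
                nodes.add((b + r) % numDatanodes); // consecutive nodes, so replicas are distinct
            }
            placement.add(nodes);
        }
        return placement;
    }

    public static void main(String[] args) {
        long fileBytes = 200L * 1024 * 1024;            // a 200 MB file
        int blocks = numBlocks(fileBytes, BLOCK_SIZE);  // 4 blocks (the last one partial)
        // prints 4 blocks -> [[0, 1, 2], [1, 2, 3], [2, 3, 0], [3, 0, 1]]
        System.out.println(blocks + " blocks -> " + place(blocks, 4, 3));
    }
}
```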

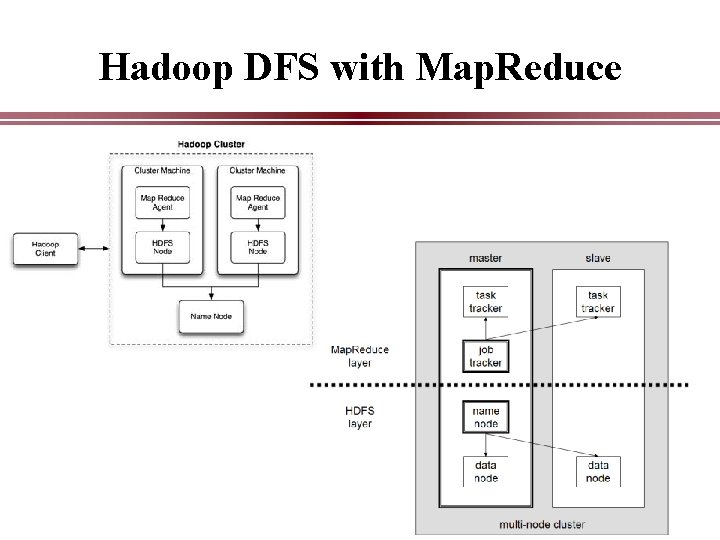

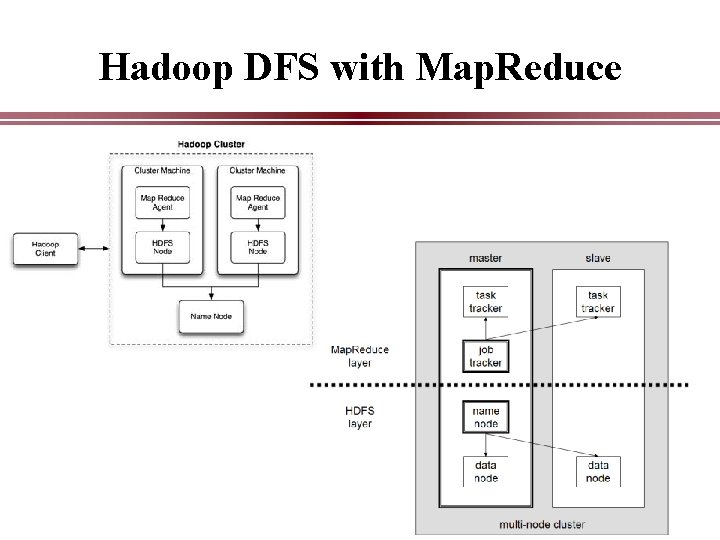

Hadoop DFS with MapReduce

Hadoop Cluster with MapReduce

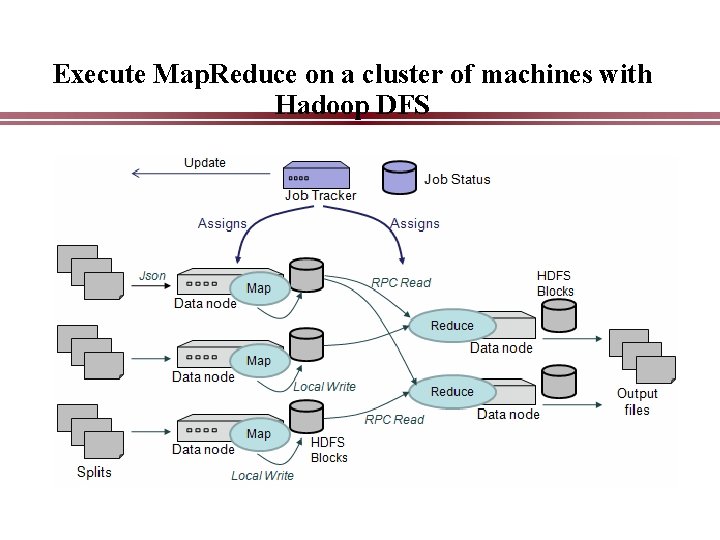

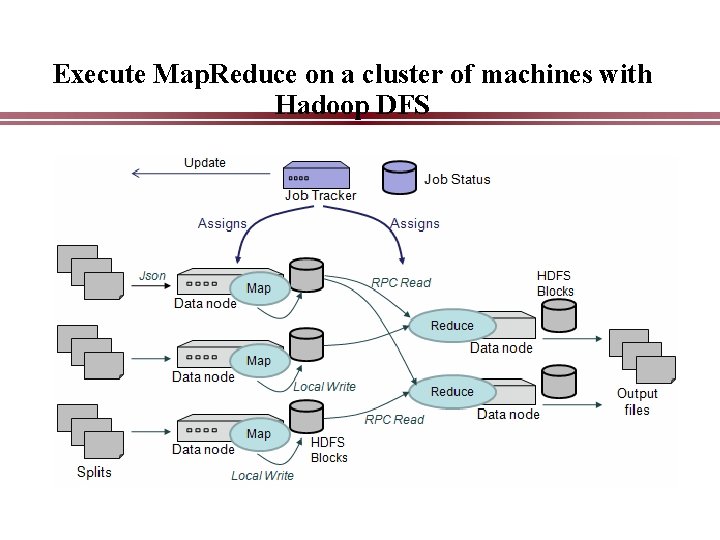

Execute MapReduce on a cluster of machines with Hadoop DFS

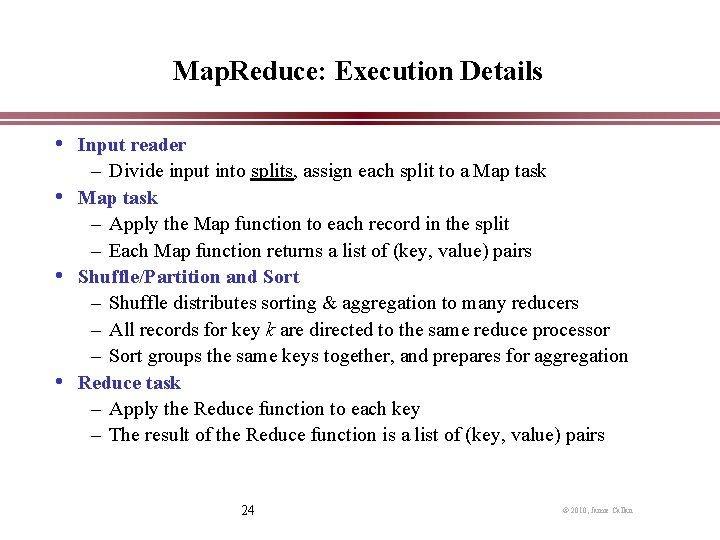

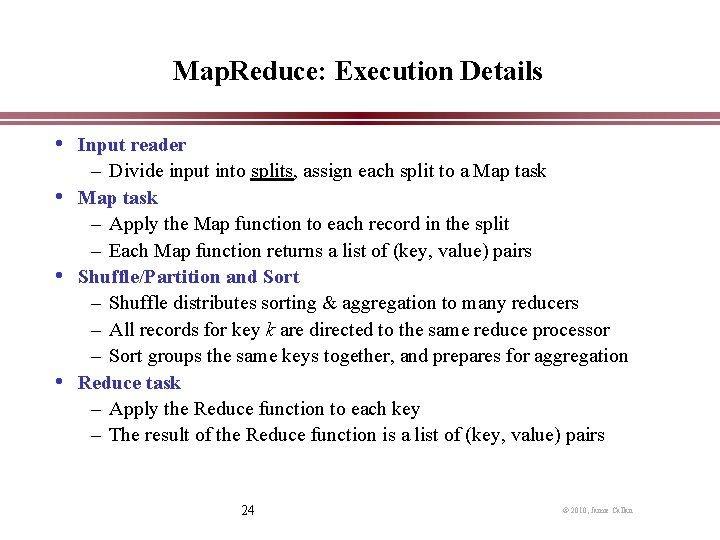

MapReduce: Execution Details
• Input reader
  – Divides the input into splits and assigns each split to a Map task
• Map task
  – Applies the Map function to each record in the split
  – Each Map function call returns a list of (key, value) pairs
• Shuffle/Partition and Sort
  – Shuffle distributes sorting and aggregation to many reducers
  – All records for key k are directed to the same reduce processor
  – Sort groups the same keys together and prepares for aggregation
• Reduce task
  – Applies the Reduce function to each key
  – The result of the Reduce function is a list of (key, value) pairs
© 2010, Jamie Callan
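The rule that all records for key k reach the same reducer is typically implemented by hashing. Hadoop's default `HashPartitioner` computes `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`; the sketch below applies that formula to plain Java strings (the class `Partition` is hypothetical). Because Hadoop's `Text` keys define their own `hashCode`, actual partition numbers in a real job would differ, but the guarantee is the same.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Same formula as Hadoop's default HashPartitioner, applied to plain Strings.
final class Partition {
    static int of(String key, int numReduceTasks) {
        // Mask off the sign bit so negative hash codes still map into [0, numReduceTasks)
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        int reducers = 2;
        Map<Integer, List<String>> byReducer = new TreeMap<>();
        for (String w : "the quick brown fox the fox ate the mouse".split(" ")) {
            byReducer.computeIfAbsent(of(w, reducers), r -> new ArrayList<>()).add(w);
        }
        // Every occurrence of a given word lands in the same reducer's list
        System.out.println(byReducer);
    }
}
```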

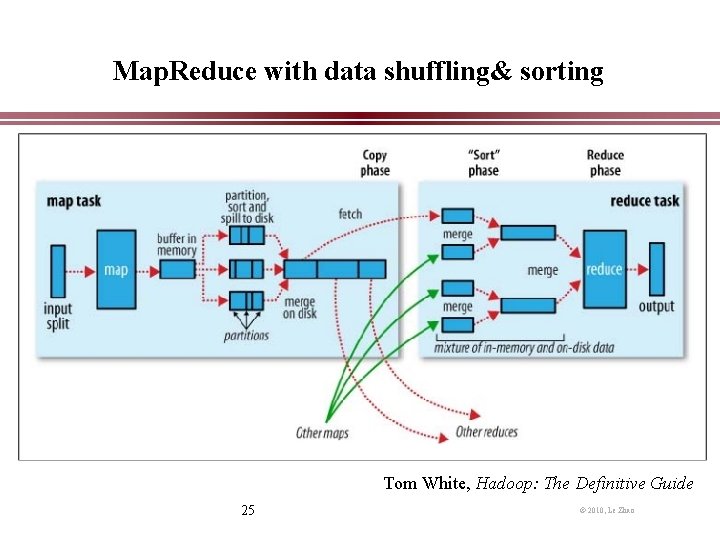

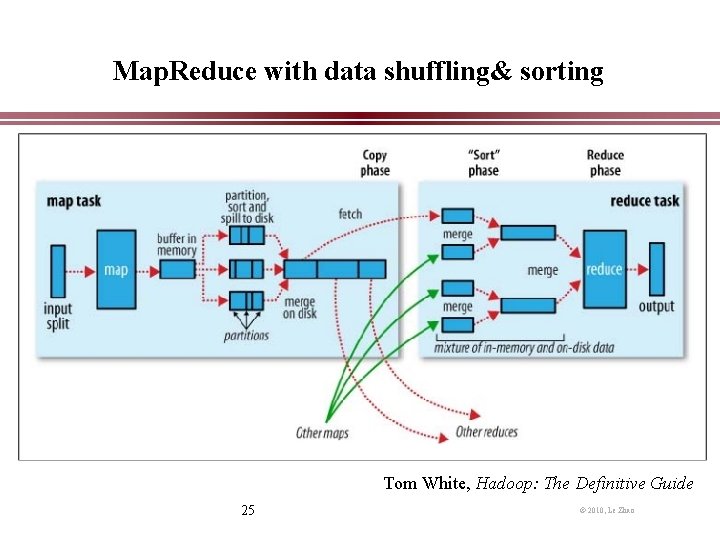

MapReduce with data shuffling & sorting (Tom White, Hadoop: The Definitive Guide) © 2010, Le Zhao

MapReduce: Fault Tolerance
• Handled via re-execution of tasks; task completion is committed through the master
• Mappers save outputs to local disk before serving them to reducers
  – Allows recovery if a reducer crashes
  – Allows running more reducers than the number of nodes
• If a task crashes:
  – Retry on another node
    » OK for a map because it has no dependencies
    » OK for a reduce because the map outputs are on disk
  – If the same task repeatedly fails, fail the job or ignore that input block
  – Note: for fault tolerance to work, user tasks must be deterministic and side-effect-free
• If a node crashes:
  – Relaunch its current tasks on other nodes
  – Relaunch any maps the node previously ran
    » Necessary because their output files were lost along with the crashed node
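Re-execution can be pictured as a bounded retry loop. A hypothetical sketch: `runWithRetries` tries a task on successive nodes and gives up, failing the job, after `maxAttempts`. In Hadoop the attempt limit is a configuration setting; the names and node ids here are illustrative only.

```java
import java.util.List;
import java.util.function.IntFunction;

// Hypothetical scheduler: re-executes a failed task on the next node, up to maxAttempts.
// Re-execution is only safe because the task is deterministic and side-effect-free.
final class RetryScheduler {
    static <T> T runWithRetries(IntFunction<T> taskOnNode, List<Integer> nodes, int maxAttempts) {
        RuntimeException lastFailure = null;
        int attempts = Math.min(maxAttempts, nodes.size());
        for (int attempt = 0; attempt < attempts; attempt++) {
            int node = nodes.get(attempt);        // each retry goes to a different node
            try {
                return taskOnNode.apply(node);
            } catch (RuntimeException e) {
                lastFailure = e;                  // task or node failed: try elsewhere
            }
        }
        throw new IllegalStateException("task failed on every attempt; failing the job", lastFailure);
    }

    public static void main(String[] args) {
        String result = runWithRetries(node -> {
            if (node == 0) throw new RuntimeException("node 0 crashed");
            return "map output written on node " + node;
        }, List.of(0, 1, 2), 4);
        System.out.println(result);  // map output written on node 1
    }
}
```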

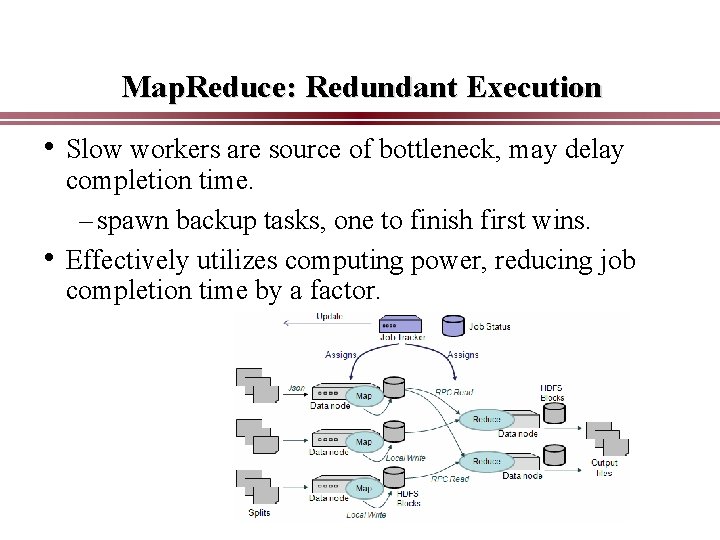

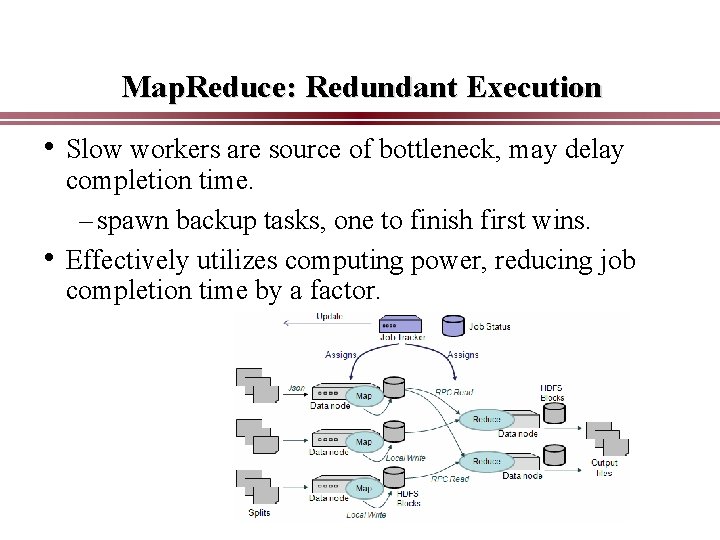

MapReduce: Redundant Execution
• Slow workers (stragglers) are a source of bottlenecks and can delay job completion
  – Spawn backup copies of the remaining tasks; whichever copy finishes first wins
• Effectively utilizes spare computing power and can substantially reduce job completion time
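A minimal sketch of backup tasks using the JDK's `ExecutorService.invokeAny`, which returns the result of the first task to complete successfully and cancels the rest. The worker names and delays are made up; the sleep stands in for a straggling node.

```java
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Backup ("speculative") execution sketch: run copies of the same deterministic task
// and keep whichever finishes first; invokeAny cancels the copies still running.
final class BackupTasks {
    static String firstFinished(List<Callable<String>> copies) {
        ExecutorService pool = Executors.newFixedThreadPool(copies.size());
        try {
            return pool.invokeAny(copies);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for task copies", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("every copy of the task failed", e);
        } finally {
            pool.shutdownNow();  // stop any straggler that is still running
        }
    }

    // One copy of the task on a worker; delayMillis simulates that worker's speed.
    static Callable<String> copyOn(String worker, long delayMillis) {
        return () -> {
            Thread.sleep(delayMillis);
            return "result from " + worker;
        };
    }

    public static void main(String[] args) {
        String winner = firstFinished(List.of(
                copyOn("slow worker", 2_000),
                copyOn("backup on fast worker", 100)));
        System.out.println(winner);  // result from backup on fast worker
    }
}
```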

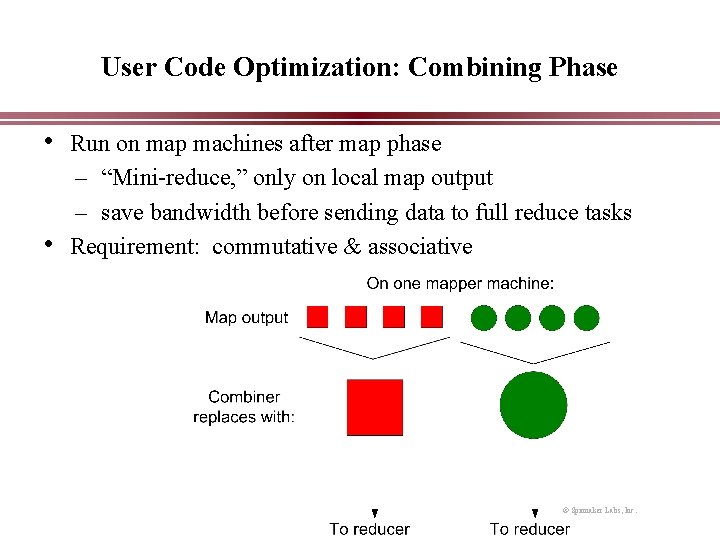

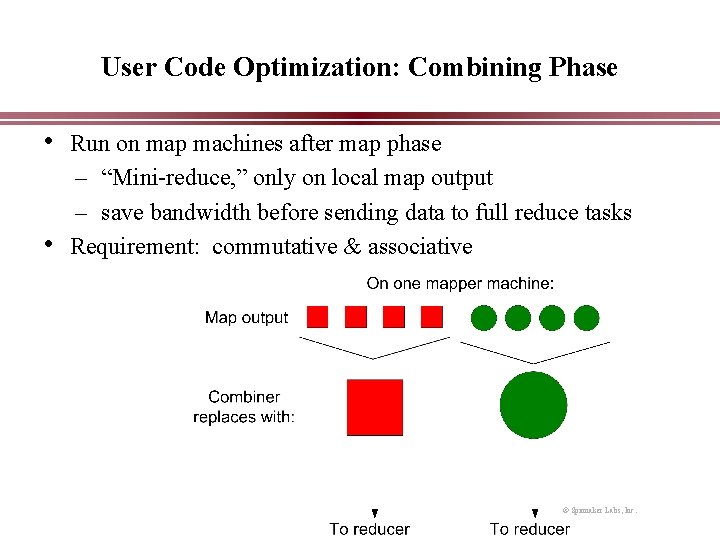

User Code Optimization: Combining Phase
• Runs on map machines after the map phase
  – A "mini-reduce" over local map output only
  – Saves bandwidth before data is sent to the full reduce tasks
• Requirement: the combine operation must be commutative and associative
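A sketch of what a word-count combiner does to a single map task's output, independent of Hadoop: duplicate (word, 1) pairs are summed locally so fewer pairs cross the network. `CombinerDemo` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Combiner sketch: pre-aggregate the (word, 1) pairs of ONE map task before the shuffle.
// Summing is a valid combiner because addition is commutative and associative:
// the reducer can add partial sums in any order and still get the same total.
final class CombinerDemo {
    static List<Map.Entry<String, Integer>> mapOutput(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String w : line.split("\\s+")) pairs.add(Map.entry(w, 1));
        return pairs;
    }

    static Map<String, Integer> combine(List<Map.Entry<String, Integer>> localPairs) {
        Map<String, Integer> partial = new TreeMap<>();
        for (Map.Entry<String, Integer> kv : localPairs) {
            partial.merge(kv.getKey(), kv.getValue(), Integer::sum);
        }
        return partial;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, Integer>> pairs = mapOutput("the fox ate the mouse");
        Map<String, Integer> combined = combine(pairs);
        // prints: 5 pairs before, 4 after: {ate=1, fox=1, mouse=1, the=2}
        System.out.println(pairs.size() + " pairs before, " + combined.size() + " after: " + combined);
    }
}
```

The commutative-and-associative requirement matters: a reducer that computes an average cannot be reused as a combiner directly, because the mean of partial means is generally not the overall mean; combining (sum, count) pairs instead avoids the problem.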

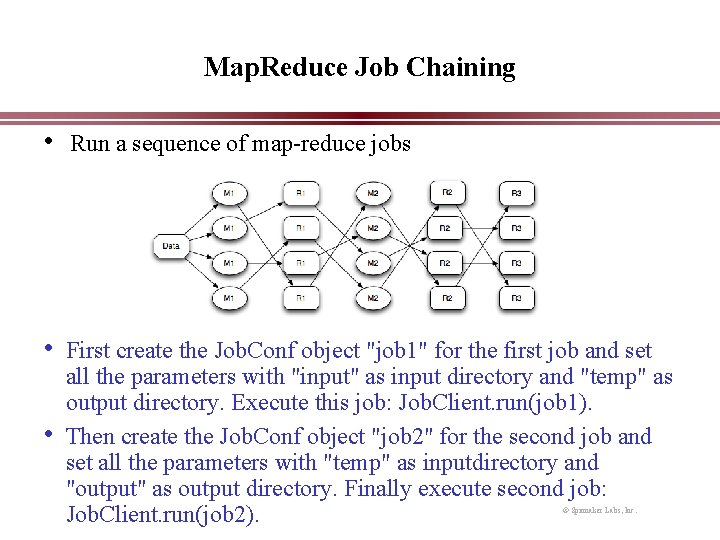

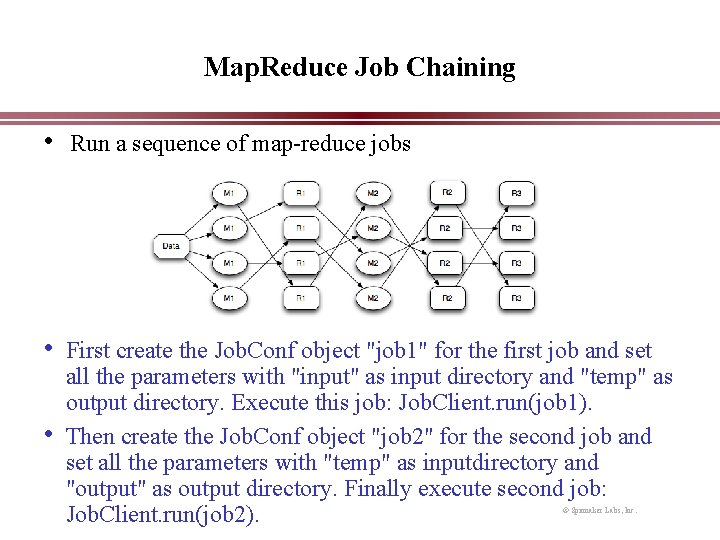

MapReduce Job Chaining
• Run a sequence of MapReduce jobs
• First create the JobConf object "job1" for the first job and set all its parameters, with "input" as the input directory and "temp" as the output directory. Execute this job: JobClient.runJob(job1).
• Then create the JobConf object "job2" for the second job and set all its parameters, with "temp" as the input directory and "output" as the output directory. Finally execute the second job: JobClient.runJob(job2).
• runJob() submits a job and waits until it completes, so job2 starts only after job1 has written "temp".
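The chaining pattern in miniature, without Hadoop: a hypothetical job 1 (word count) produces an in-memory "temp" result, and job 2 reads it and regroups words by their counts. In a real cluster "temp" would be an HDFS directory, and each job would run to completion before the next starts.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Two chained "jobs" in one process; the output of job 1 is the input of job 2.
final class JobChain {
    // Job 1: word -> count
    static Map<String, Integer> job1(List<String> input) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : input) {
            for (String w : line.split("\\s+")) counts.merge(w, 1, Integer::sum);
        }
        return counts;
    }

    // Job 2: map swaps (word, count) to (count, word); reduce collects the words per count.
    static Map<Integer, List<String>> job2(Map<String, Integer> temp) {
        Map<Integer, List<String>> byCount = new TreeMap<>();
        temp.forEach((word, count) -> byCount.computeIfAbsent(count, c -> new ArrayList<>()).add(word));
        return byCount;
    }

    public static void main(String[] args) {
        List<String> input = List.of("the quick brown fox", "the fox ate the mouse", "how now brown cow");
        Map<String, Integer> temp = job1(input);  // "input" -> "temp"
        // prints {1=[ate, cow, how, mouse, now, quick], 2=[brown, fox], 3=[the]}
        System.out.println(job2(temp));           // "temp" -> "output"
    }
}
```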

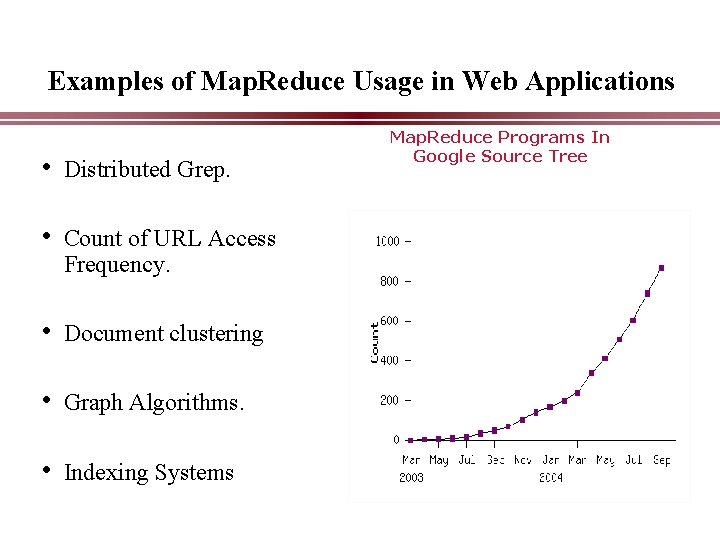

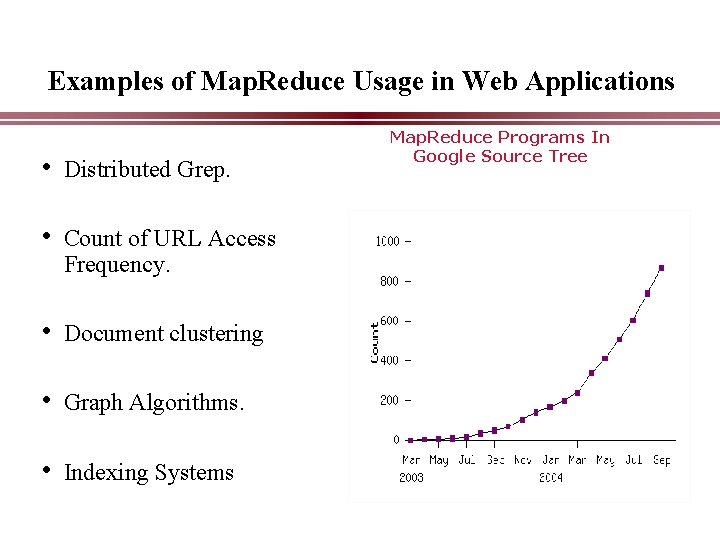

Examples of MapReduce Usage in Web Applications
• Distributed grep
• Count of URL access frequency
• Document clustering
• Graph algorithms
• Indexing systems
(Figure: MapReduce programs in the Google source tree.)

Hadoop and Tools
• Various Linux Hadoop clusters around
  – Cluster + Hadoop: http://hadoop.apache.org
  – Triton sample: ~tyang-ucsb/mapreduce2
  – Amazon EC2
• Windows and other platforms
  – The NetBeans plugin simulates Hadoop
  – The workflow view works on Windows
• Pig Latin, a SQL-like high-level data processing script language
• Hive, data warehouse, SQL
• Mahout, machine learning algorithms on Hadoop
• HBase, distributed data store as a large table

MapReduce Applications: Case Studies
• Map-only processing
• Producing an inverted index for web search
• PageRank graph processing

MapReduce Use Case: Map Only
Data-distributive tasks: map only
• E.g., classify individual documents
• Map does everything
  – Input: (docno, doc_content), …
  – Output: (docno, [class, …]), …
• No reduce tasks
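A sketch of a map-only job: every document is classified on its own, so the map output is already the final output and no shuffle or reduce phase is needed. The keyword rules and class labels are hypothetical placeholders for a real classifier.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Map-only job sketch: (docno, content) -> (docno, [class, ...]); no reduce phase.
final class MapOnlyClassify {
    // Hypothetical keyword rules standing in for a real classifier.
    static final Map<String, String> RULES =
            Map.of("hadoop", "tech", "election", "politics", "goal", "sports");

    static List<String> classify(String content) {
        List<String> classes = new ArrayList<>();
        String lower = content.toLowerCase();
        // Iterate rules in sorted keyword order so the output is deterministic
        for (Map.Entry<String, String> rule : new TreeMap<>(RULES).entrySet()) {
            if (lower.contains(rule.getKey())) classes.add(rule.getValue());
        }
        return classes;
    }

    public static void main(String[] args) {
        Map<Integer, String> docs = new TreeMap<>(Map.of(
                1, "Hadoop cluster setup", 2, "Election results", 3, "Late goal wins match"));
        // Each map call is independent, so splits could be processed on any node in parallel.
        docs.forEach((docno, content) -> System.out.println(docno + " -> " + classify(content)));
        // 1 -> [tech], 2 -> [politics], 3 -> [sports]
    }
}
```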

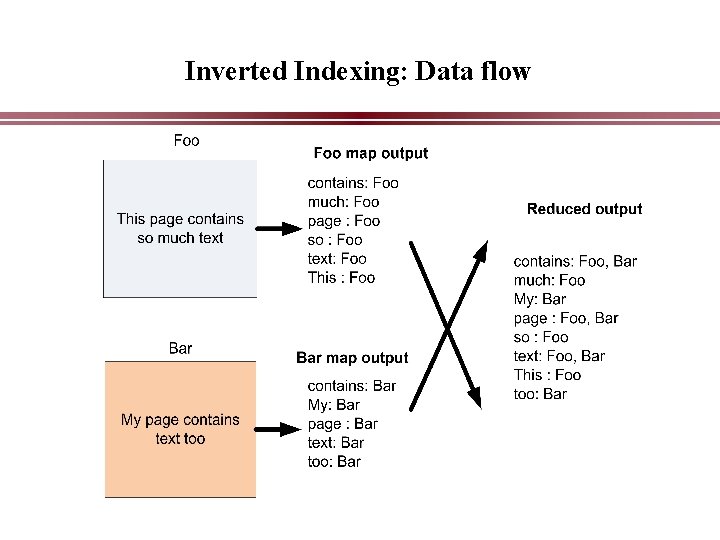

MapReduce Use Case: Inverted Indexing
Preliminaries: construction of inverted lists for document search
• Input: documents: (docid, [term, term, …]), (docid, [term, …]), …
• Output: (term, [docid, …])
  – E.g., (apple, [1, 23, 49, 127, …])

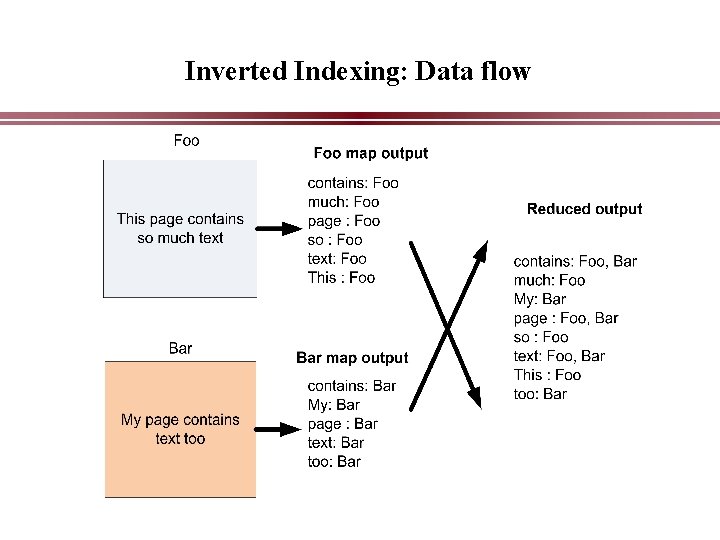

Inverted Indexing: Data flow

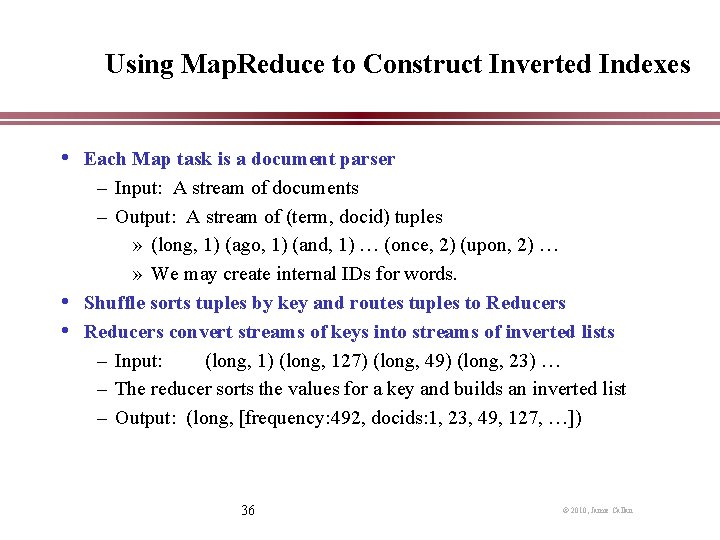

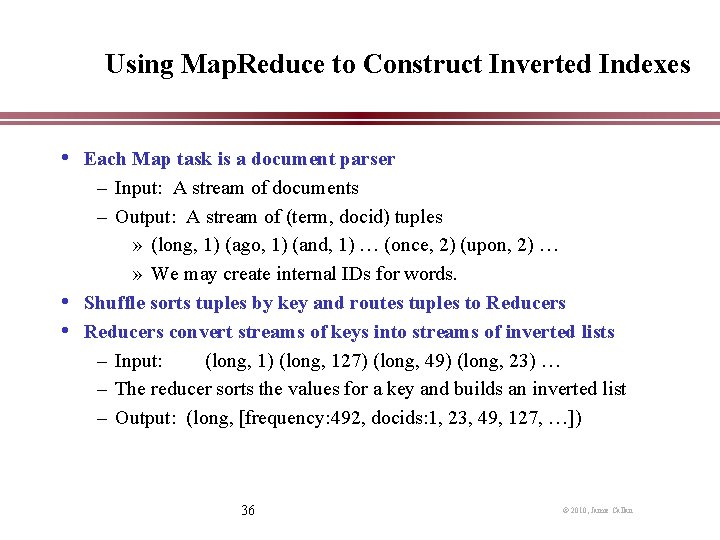

Using MapReduce to Construct Inverted Indexes
• Each Map task is a document parser
  – Input: a stream of documents
  – Output: a stream of (term, docid) tuples
    » (long, 1) (ago, 1) (and, 1) … (once, 2) (upon, 2) …
    » We may create internal IDs for words.
• Shuffle sorts tuples by key and routes tuples to reducers
• Reducers convert streams of keys into streams of inverted lists
  – Input: (long, 1) (long, 127) (long, 49) (long, 23) …
  – The reducer sorts the values for a key and builds an inverted list
  – Output: (long, [frequency: 492, docids: 1, 23, 49, 127, …])
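The parser/reducer split above, simulated in one process. Assumptions: documents are small strings, terms are lowercased runs of word characters, and the reducer keeps each docid once; the slide's frequency field is omitted. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.TreeSet;

// Inverted-index construction in MapReduce style, simulated in one process.
final class InvertedIndex {
    // Map (document parser): emit one (term, docid) tuple per term occurrence.
    static List<Map.Entry<String, Integer>> map(int docid, String text) {
        List<Map.Entry<String, Integer>> tuples = new ArrayList<>();
        for (String term : text.toLowerCase().split("\\W+")) {
            if (!term.isEmpty()) tuples.add(Map.entry(term, docid));
        }
        return tuples;
    }

    // Reduce: sort the docids received for one term (dropping repeats) into its inverted list.
    static List<Integer> reduce(List<Integer> docids) {
        return new ArrayList<>(new TreeSet<>(docids));
    }

    static Map<String, List<Integer>> build(Map<Integer, String> docs) {
        // Shuffle: route every tuple to the group for its term; TreeMap sorts the terms.
        Map<String, List<Integer>> byTerm = new TreeMap<>();
        docs.forEach((docid, text) -> {
            for (Map.Entry<String, Integer> tuple : map(docid, text)) {
                byTerm.computeIfAbsent(tuple.getKey(), k -> new ArrayList<>()).add(tuple.getValue());
            }
        });
        Map<String, List<Integer>> index = new TreeMap<>();
        byTerm.forEach((term, ids) -> index.put(term, reduce(ids)));
        return index;
    }

    public static void main(String[] args) {
        Map<Integer, String> docs = new TreeMap<>();
        docs.put(1, "Long ago and far away");
        docs.put(2, "Once upon a time, long long ago");
        System.out.println(build(docs));
    }
}
```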

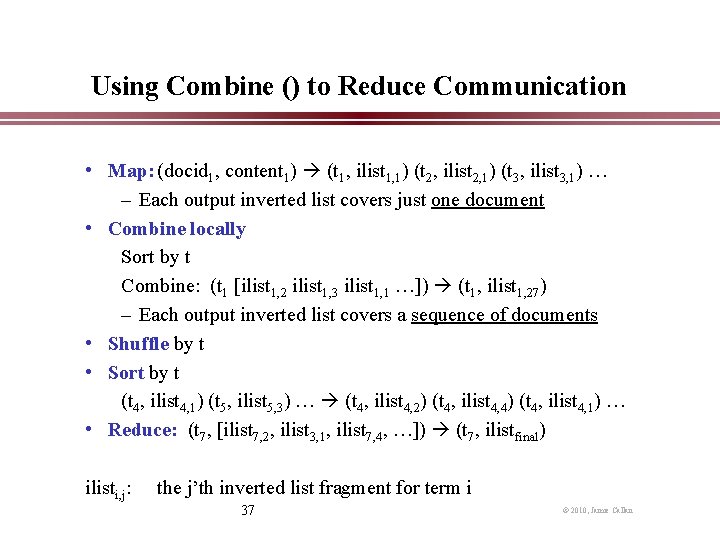

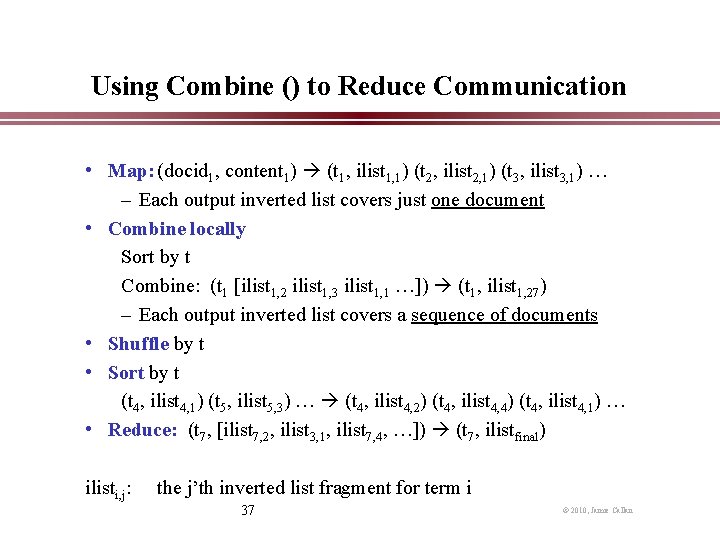

Using Combine() to Reduce Communication
• Map: (docid1, content1) → (t1, ilist_{1,1}) (t2, ilist_{2,1}) (t3, ilist_{3,1}) …
  – Each output inverted list covers just one document
• Combine locally
  – Sort by t
  – Combine: (t1, [ilist_{1,2}, ilist_{1,3}, ilist_{1,1}, …]) → (t1, ilist_{1,27})
  – Each output inverted list covers a sequence of documents
• Shuffle by t
• Sort by t
  – (t4, ilist_{4,1}) (t5, ilist_{5,3}) … → (t4, ilist_{4,2}) (t4, ilist_{4,4}) (t4, ilist_{4,1}) …
• Reduce: (t7, [ilist_{7,2}, ilist_{7,1}, ilist_{7,4}, …]) → (t7, ilist_final)
Here ilist_{i,j} is the j-th inverted list fragment for term i.
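With a combiner, a reducer receives several partial inverted lists for the same term, each already sorted and covering a different range of documents. One way to merge them into ilist_final is a k-way merge over a priority queue; the sketch assumes fragments are sorted lists of integer docids and uses a hypothetical class name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

// K-way merge of sorted inverted-list fragments for one term into the final list.
final class FragmentMerge {
    static List<Integer> merge(List<List<Integer>> fragments) {
        // Heap entries are {docid, fragment index, position in fragment}, ordered by docid.
        PriorityQueue<int[]> heap = new PriorityQueue<>((a, b) -> Integer.compare(a[0], b[0]));
        for (int f = 0; f < fragments.size(); f++) {
            if (!fragments.get(f).isEmpty()) heap.add(new int[] {fragments.get(f).get(0), f, 0});
        }
        List<Integer> merged = new ArrayList<>();
        while (!heap.isEmpty()) {
            int[] top = heap.poll();
            merged.add(top[0]);
            List<Integer> fragment = fragments.get(top[1]);
            int next = top[2] + 1;
            if (next < fragment.size()) heap.add(new int[] {fragment.get(next), top[1], next});
        }
        return merged;
    }

    public static void main(String[] args) {
        // Fragments for one term, arriving from different map tasks in arbitrary order.
        List<List<Integer>> fragments = List.of(List.of(49, 127), List.of(1, 23), List.of(200));
        System.out.println(merge(fragments));  // [1, 23, 49, 127, 200]
    }
}
```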

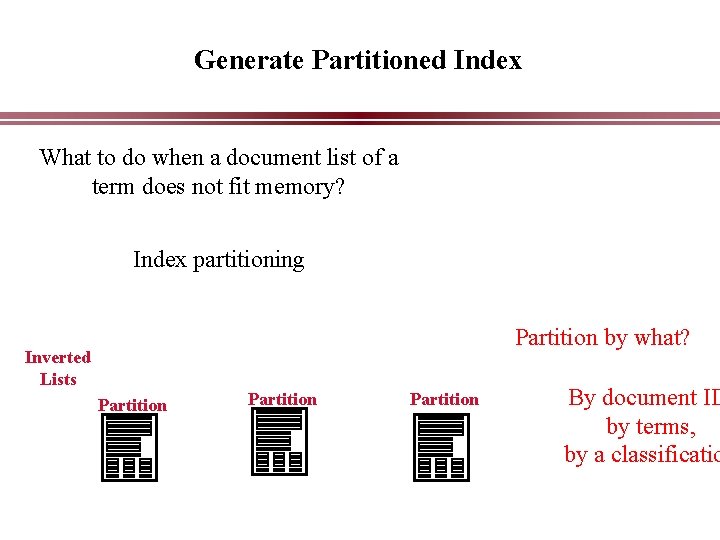

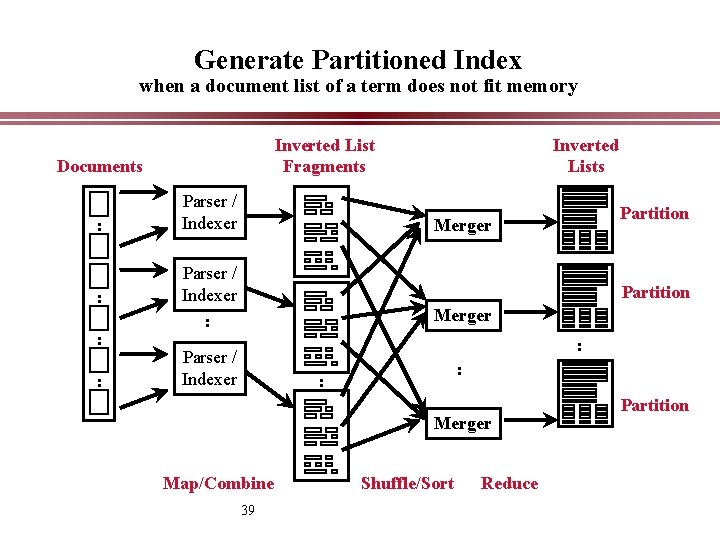

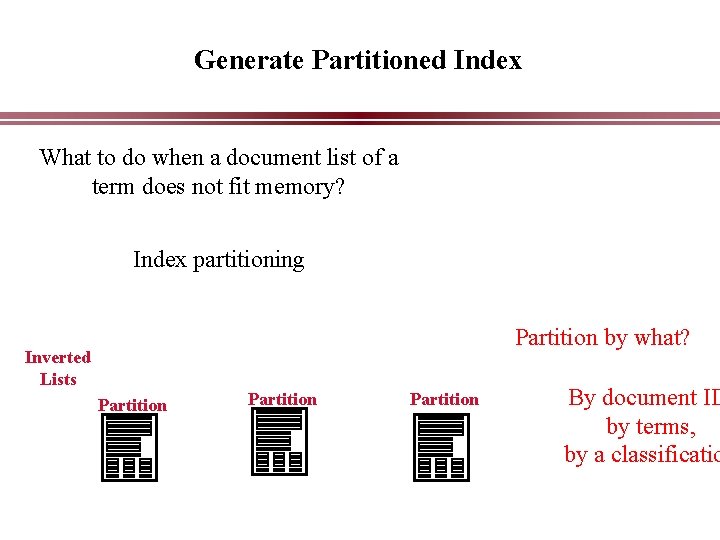

Generate Partitioned Index
• What to do when the document list of a term does not fit in memory?
  – Index partitioning
• Partition by what?
  – By document ID
  – By terms
  – By a classification
(Figure: inverted lists split into partitions.)

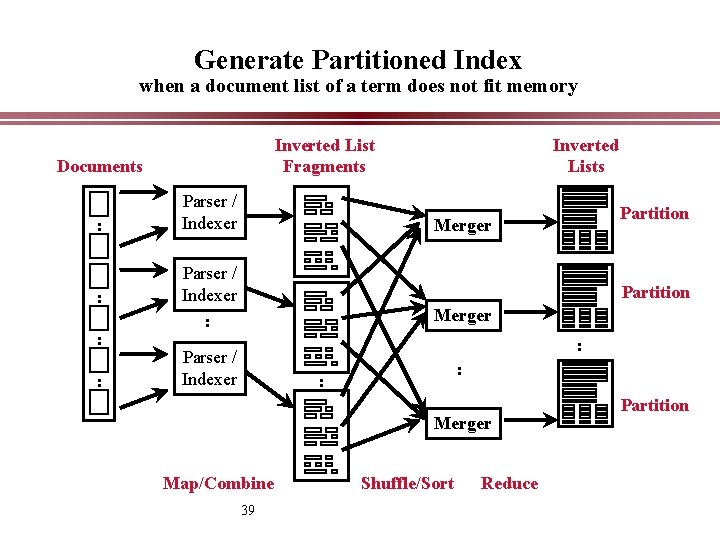

Generate Partitioned Index when the document list of a term does not fit in memory
(Figure: documents are fed to several Parser/Indexer tasks (Map/Combine), which emit inverted list fragments; Shuffle/Sort routes the fragments to Merger tasks (Reduce), each of which writes the inverted lists of one partition.)

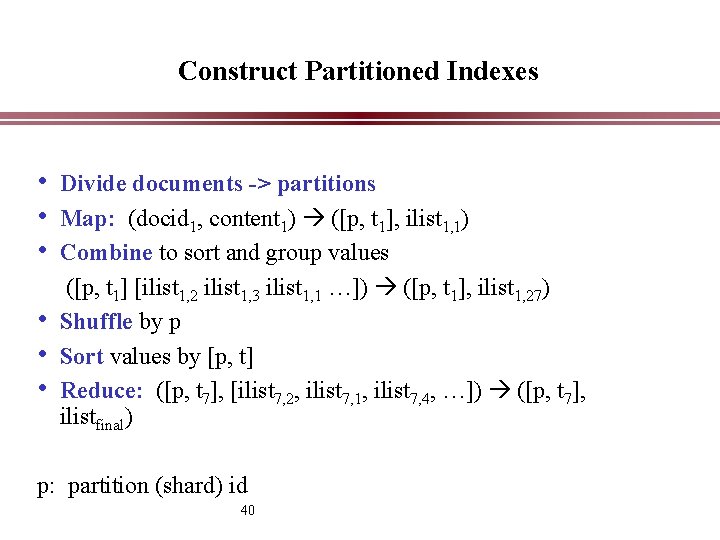

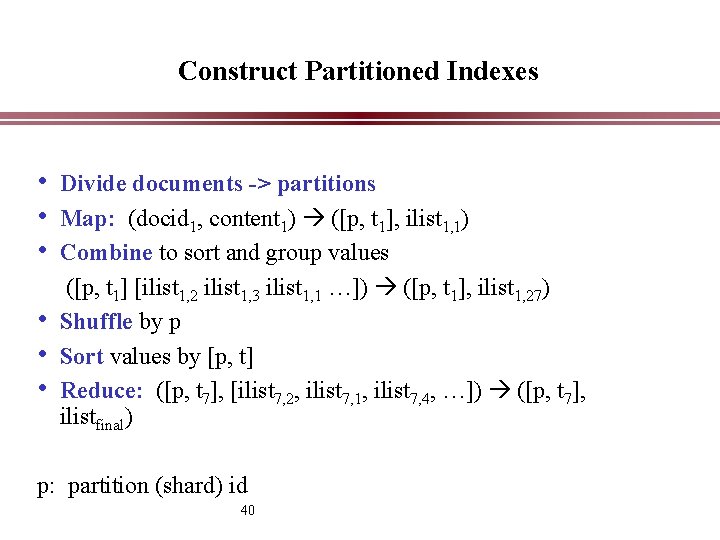

Construct Partitioned Indexes
• Divide documents → partitions
• Map: (docid1, content1) → ([p, t1], ilist_{1,1})
• Combine to sort and group values
  – ([p, t1], [ilist_{1,2}, ilist_{1,3}, ilist_{1,1}, …]) → ([p, t1], ilist_{1,27})
• Shuffle by p
• Sort values by [p, t]
• Reduce: ([p, t7], [ilist_{7,2}, ilist_{7,1}, ilist_{7,4}, …]) → ([p, t7], ilist_final)
p: partition (shard) id
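A sketch of the composite key [p, t]: records are routed to reducers by p alone and sorted by (p, t), so each reducer sees one shard's terms in order. It assumes documents are assigned to partitions by `docid % numPartitions` (one possible document-ID partitioning) and one reducer per partition; all names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Partitioned index sketch with a composite key [p, t] (p = partition/shard id, t = term).
final class ShardedIndex {
    static final class Key {
        final int p;
        final String t;
        Key(int p, String t) { this.p = p; this.t = t; }
        @Override public String toString() { return "[" + p + ", " + t + "]"; }
    }

    // Sort order after the shuffle: by partition id, then by term.
    static final Comparator<Key> BY_P_THEN_T =
            Comparator.<Key>comparingInt(k -> k.p).thenComparing(k -> k.t);

    // Assumption: documents are assigned to partitions by docid modulo the partition count.
    static int partitionOf(int docid, int numPartitions) { return docid % numPartitions; }

    // "Shuffle by p": the target reducer depends on p only, so a whole shard meets in one reducer.
    static int reducerFor(Key key, int numReducers) { return key.p % numReducers; }

    static Map<Key, List<Integer>> build(Map<Integer, String> docs, int numPartitions) {
        Map<Key, List<Integer>> sorted = new TreeMap<>(BY_P_THEN_T);
        docs.forEach((docid, text) -> {
            int p = partitionOf(docid, numPartitions);
            for (String t : text.toLowerCase().split("\\s+")) {
                sorted.computeIfAbsent(new Key(p, t), k -> new ArrayList<>()).add(docid);
            }
        });
        return sorted;
    }

    public static void main(String[] args) {
        Map<Integer, String> docs = new TreeMap<>(Map.of(1, "apple pie", 2, "apple tart", 3, "pie crust"));
        build(docs, 2).forEach((key, ids) ->
                System.out.println("reducer " + reducerFor(key, 2) + ": " + key + " -> " + ids));
        // reducer 0: [0, apple] -> [2]      reducer 0: [0, tart] -> [2]
        // reducer 1: [1, apple] -> [1]      reducer 1: [1, crust] -> [3]
        // reducer 1: [1, pie] -> [1, 3]
    }
}
```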

MapReduce Use Case: PageRank

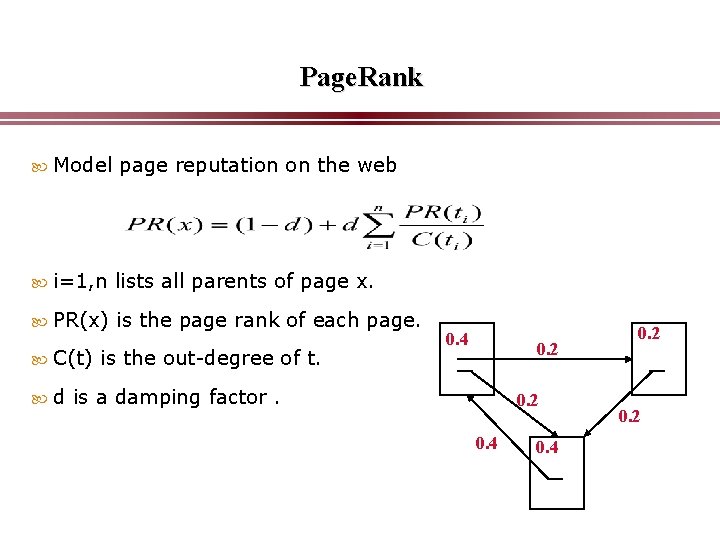

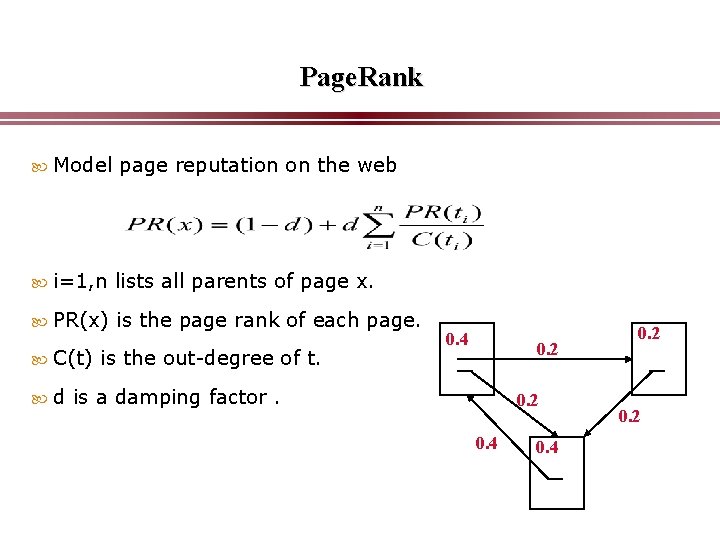

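PageRank
Model page reputation on the web:

$$PR(x) = (1 - d) + d \sum_{i=1}^{n} \frac{PR(t_i)}{C(t_i)}$$

• t_1, …, t_n list all parents of page x (the pages that link to x)
• PR(x) is the PageRank of page x
• C(t) is the out-degree of page t
• d is a damping factor (commonly 0.85)
(Figure: a small example web graph annotated with PageRank values.)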

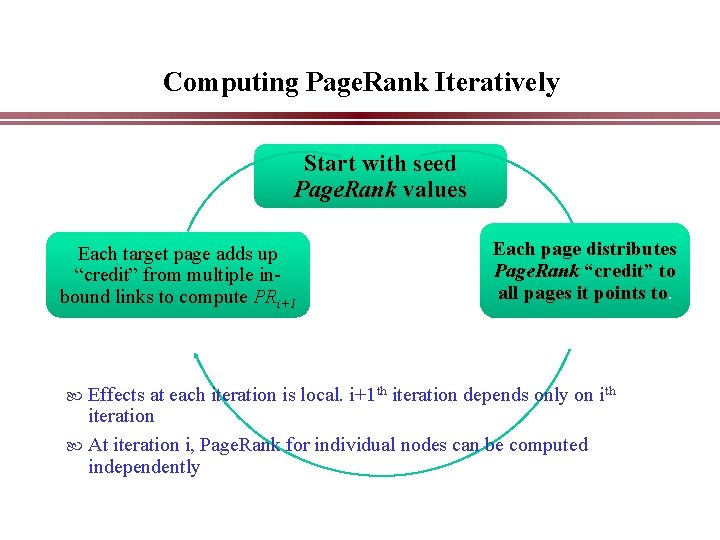

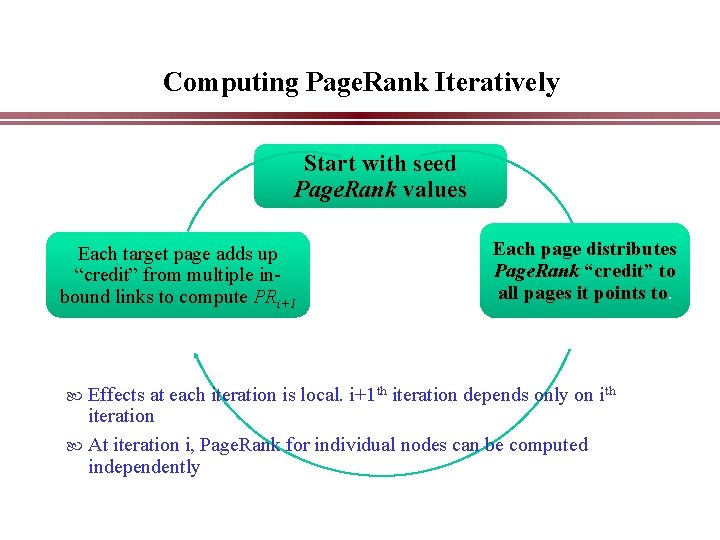

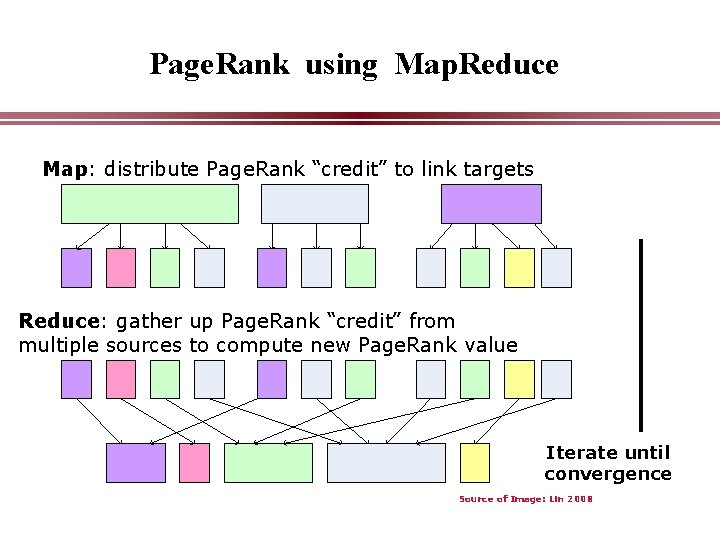

Computing PageRank Iteratively
• Start with seed PageRank values
• Each page distributes PageRank "credit" to all pages it points to
• Each target page adds up "credit" from multiple inbound links to compute its PageRank for iteration i+1
• Effects at each iteration are local: iteration i+1 depends only on iteration i
• At iteration i, the PageRank of individual nodes can be computed independently

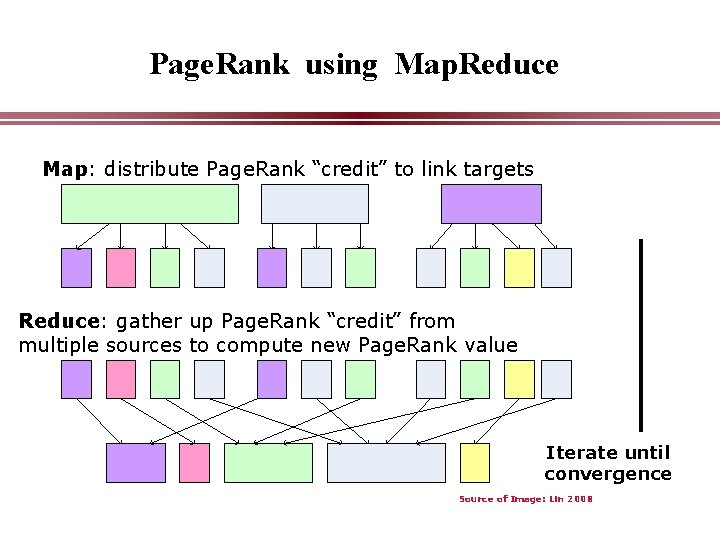

PageRank using MapReduce
• Map: distribute PageRank "credit" to link targets
• Reduce: gather up PageRank "credit" from multiple sources to compute the new PageRank value
• Iterate until convergence
(Source of image: Lin 2008)

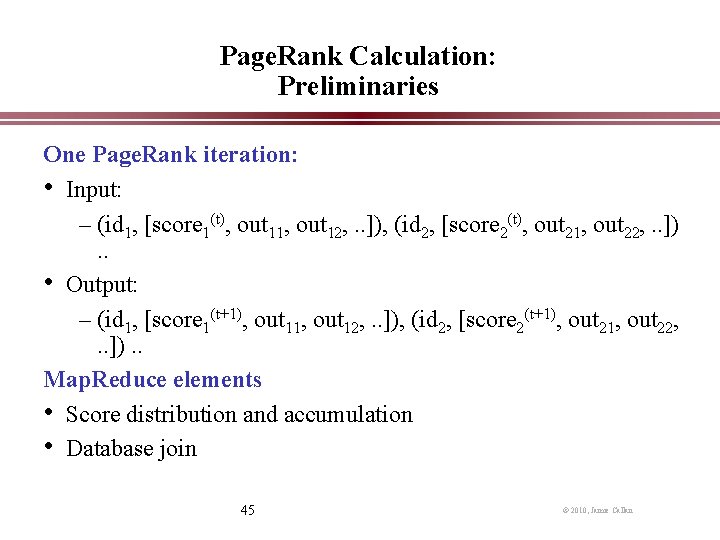

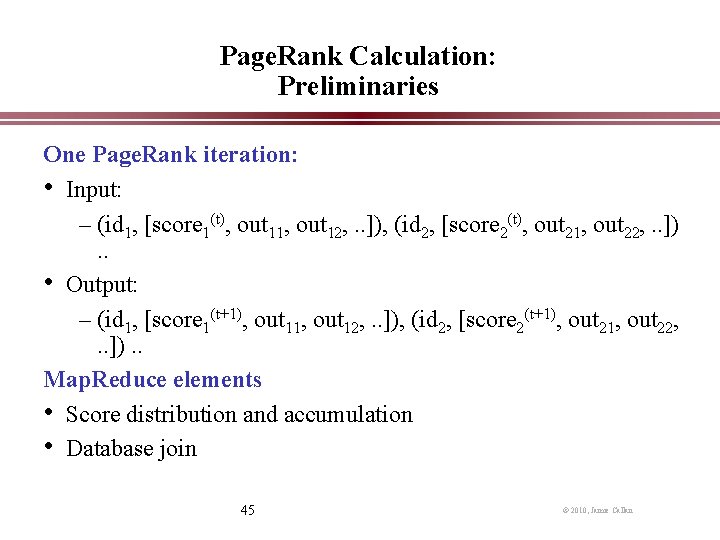

PageRank Calculation: Preliminaries
One PageRank iteration:
• Input:
  – (id1, [score1(t), out11, out12, …]), (id2, [score2(t), out21, out22, …]), …
• Output:
  – (id1, [score1(t+1), out11, out12, …]), (id2, [score2(t+1), out21, out22, …]), …
MapReduce elements:
• Score distribution and accumulation
• Database join

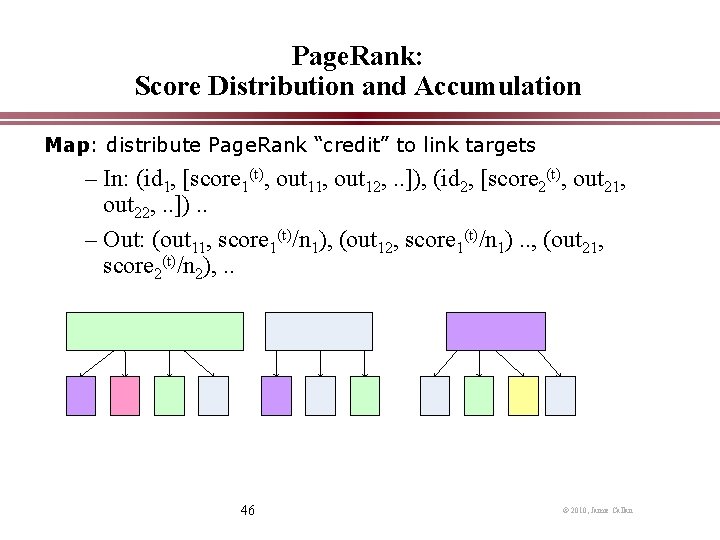

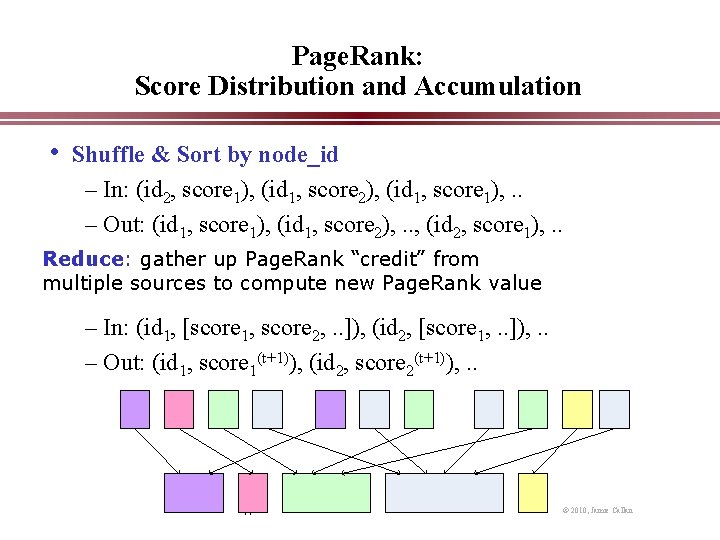

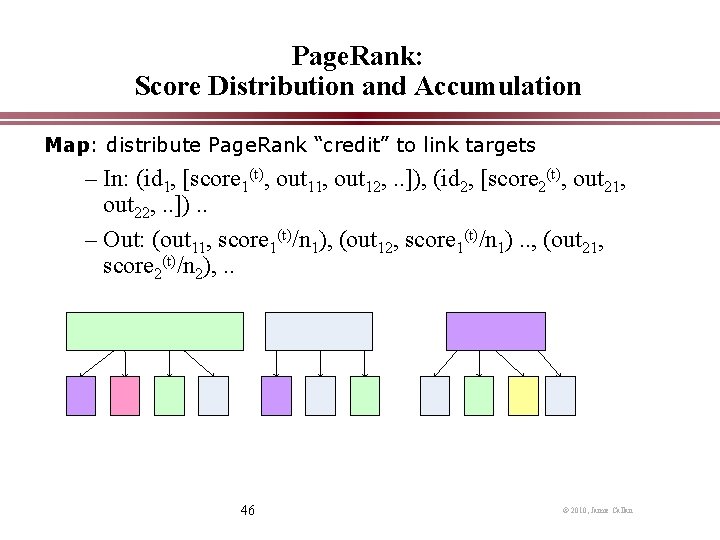

PageRank: Score Distribution and Accumulation
• Map: distribute PageRank "credit" to link targets
  – In: (id1, [score1(t), out11, out12, …]), (id2, [score2(t), out21, out22, …]), …
  – Out: (out11, score1(t)/n1), (out12, score1(t)/n1), …, (out21, score2(t)/n2), …
  – n1, n2: the out-degrees of id1, id2

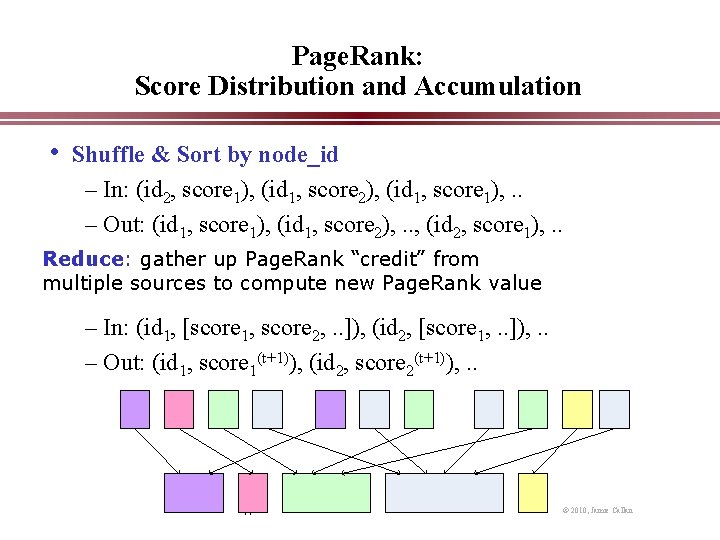

PageRank: Score Distribution and Accumulation
• Shuffle & Sort by node_id
  – In: (id2, score1), (id1, score2), (id1, score1), …
  – Out: (id1, score1), (id1, score2), …, (id2, score1), …
• Reduce: gather up PageRank "credit" from multiple sources to compute the new PageRank value
  – In: (id1, [score1, score2, …]), (id2, [score1, …]), …
  – Out: (id1, score1(t+1)), (id2, score2(t+1)), …
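One PageRank iteration with this map/shuffle/reduce structure, plus the outer loop that iterates until convergence, simulated in one process. Assumptions: the formula PR(x) = (1 − d) + d · Σ PR(t_i)/C(t_i) with d = 0.85, seed values of 1.0, every page present as a key of the adjacency map, and pages without outlinks simply distributing nothing (production implementations redistribute that mass). Each page also registers an empty credit list for itself so that pages with no inlinks still reach the reducer. Names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// PageRank with a map / shuffle / reduce structure, run in one process.
// Assumes every page is a key of `outlinks` (possibly with an empty list) and uses
// PR(x) = (1 - d) + d * sum(PR(t_i) / C(t_i)); dangling pages simply distribute nothing.
final class PageRankMR {
    static Map<String, Double> iterate(Map<String, List<String>> outlinks,
                                       Map<String, Double> scores, double d) {
        // Map: each page sends score / out-degree to every target; shuffle groups by target id.
        Map<String, List<Double>> credit = new TreeMap<>();
        for (Map.Entry<String, List<String>> page : outlinks.entrySet()) {
            String id = page.getKey();
            List<String> outs = page.getValue();
            credit.computeIfAbsent(id, k -> new ArrayList<>());  // keep pages with no inlinks
            for (String target : outs) {
                credit.computeIfAbsent(target, k -> new ArrayList<>()).add(scores.get(id) / outs.size());
            }
        }
        // Reduce: add up the credit for each page and apply the damping factor.
        Map<String, Double> next = new TreeMap<>();
        credit.forEach((id, parts) -> {
            double sum = 0;
            for (double c : parts) sum += c;
            next.put(id, (1 - d) + d * sum);
        });
        return next;
    }

    static Map<String, Double> run(Map<String, List<String>> outlinks, double d,
                                   double tolerance, int maxIterations) {
        Map<String, Double> scores = new TreeMap<>();
        for (String id : outlinks.keySet()) scores.put(id, 1.0);  // seed values
        for (int i = 0; i < maxIterations; i++) {
            Map<String, Double> next = iterate(outlinks, scores, d);
            double change = 0;
            for (String id : next.keySet()) change += Math.abs(next.get(id) - scores.getOrDefault(id, 0.0));
            scores = next;
            if (change < tolerance) break;                          // converged
        }
        return scores;
    }

    public static void main(String[] args) {
        Map<String, List<String>> graph = Map.of(
                "A", List.of("B", "C"), "B", List.of("C"), "C", List.of("A"));
        System.out.println(run(graph, 0.85, 1e-10, 1000));
    }
}
```

On the three-page example in `main`, the scores sum to 3 (the number of pages) at every iteration, and page C, which has the most inlinks, ends with the highest score.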

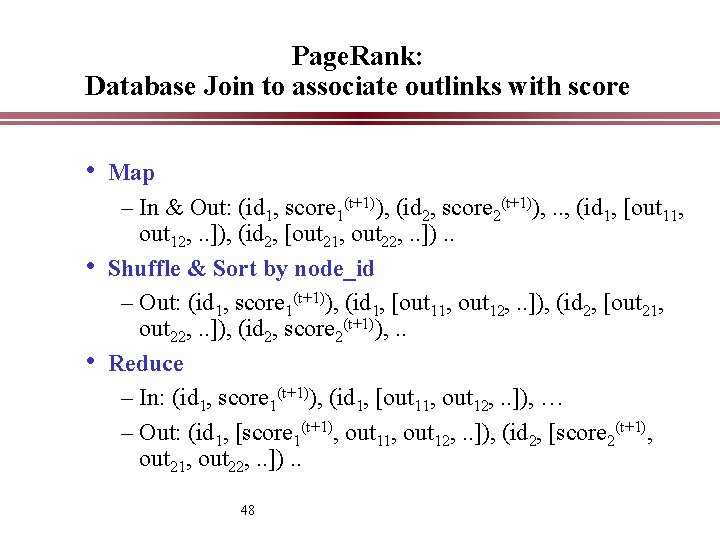

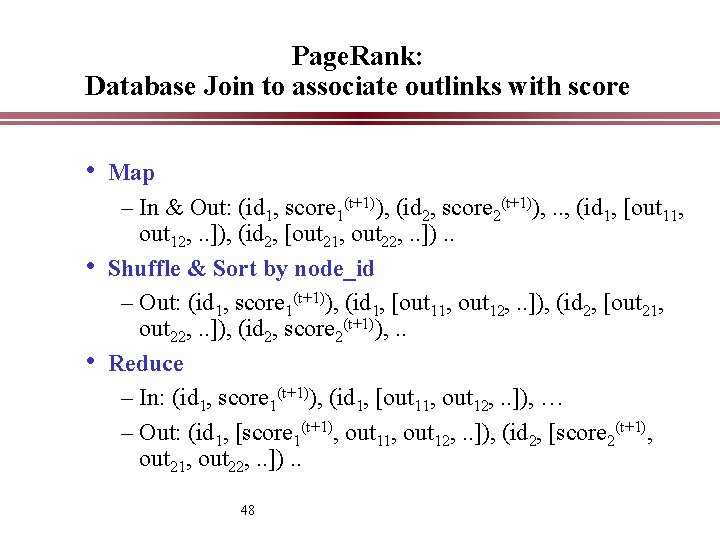

PageRank: Database Join to Associate Outlinks with Scores
• Map
  – In & Out: (id1, score1(t+1)), (id2, score2(t+1)), …, (id1, [out11, out12, …]), (id2, [out21, out22, …]), …
• Shuffle & Sort by node_id
  – Out: (id1, score1(t+1)), (id1, [out11, out12, …]), (id2, [out21, out22, …]), (id2, score2(t+1)), …
• Reduce
  – In: (id1, score1(t+1)), (id1, [out11, out12, …]), …
  – Out: (id1, [score1(t+1), out11, out12, …]), (id2, [score2(t+1), out21, out22, …]), …
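The join can be sketched as a reduce-side join: the map tags each record with its source (score or outlink list), the shuffle brings both records for a node id together, and the reducer reassembles (id, [score, outlinks…]). The `Tagged` class and the string output format are hypothetical choices for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Reduce-side join sketch: new scores and adjacency lists meet at the reducer for each node id.
final class ScoreLinkJoin {
    // Tagged value: exactly one field is non-null, telling the reducer which input it came from.
    static final class Tagged {
        final Double score;
        final List<String> outlinks;
        Tagged(Double score, List<String> outlinks) { this.score = score; this.outlinks = outlinks; }
    }

    static Map<String, String> join(Map<String, Double> scores, Map<String, List<String>> links) {
        // Map (identity plus tagging) and shuffle: group both record types by node id.
        Map<String, List<Tagged>> byId = new TreeMap<>();
        scores.forEach((id, s) -> byId.computeIfAbsent(id, k -> new ArrayList<>()).add(new Tagged(s, null)));
        links.forEach((id, outs) -> byId.computeIfAbsent(id, k -> new ArrayList<>()).add(new Tagged(null, outs)));

        // Reduce: values arrive in no particular order, so pick each part out by its tag.
        Map<String, String> joined = new TreeMap<>();
        byId.forEach((id, values) -> {
            double score = 0.0;
            List<String> outs = List.of();
            for (Tagged v : values) {
                if (v.score != null) score = v.score;
                else outs = v.outlinks;
            }
            StringBuilder sb = new StringBuilder("[").append(score);
            for (String o : outs) sb.append(", ").append(o);
            joined.put(id, sb.append(']').toString());
        });
        return joined;
    }

    public static void main(String[] args) {
        Map<String, Double> newScores = Map.of("A", 1.2, "B", 0.6);
        Map<String, List<String>> links = Map.of("A", List.of("B", "C"), "B", List.of("C"));
        System.out.println(join(newScores, links));  // {A=[1.2, B, C], B=[0.6, C]}
    }
}
```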

Conclusions
• MapReduce advantages
• Application cases
  – Map only: for totally distributive computation
  – Map + Reduce: for filtering and aggregation
  – Inverted indexing: combiner, complex keys
  – PageRank: score distribution and accumulation, database join