Parallel Crawlers: Junghoo Cho, Hector Garcia-Molina, Stanford University

- Slides: 35

Parallel Crawlers Junghoo Cho, Hector Garcia-Molina Stanford University Presented By: Raffi Margaliot Ori Elkin

What Is a Crawler?
§ A program that downloads and stores web pages:
• Starts off by placing an initial set of URLs, S0, in a queue, where all URLs to be retrieved are kept and prioritized.
• From this queue, the crawler gets a URL (in some order), downloads the page, extracts any URLs in the downloaded page, and puts the new URLs in the queue.
• This process is repeated until the crawler decides to stop.
§ Collected pages are later used for other applications, such as a web search engine or a web cache.
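The loop described on this slide can be sketched roughly as follows. This is a minimal illustration, not the authors' crawler: the in-memory `TOY_WEB` graph, the `toy_fetch` helper, and the FIFO ordering are all assumptions made so the example runs without network access.

```python
from collections import deque

# Hypothetical in-memory "web": URL -> URLs linked from that page.
TOY_WEB = {
    "a.com/": ["a.com/1", "b.com/"],
    "a.com/1": ["a.com/"],
    "b.com/": ["b.com/1"],
    "b.com/1": [],
}


def toy_fetch(url):
    """Stand-in for "download the page and extract its URLs"."""
    return TOY_WEB.get(url, [])


def crawl(seed_urls, fetch_links, max_pages):
    """Download up to max_pages pages, starting from the seed set S0."""
    queue = deque(seed_urls)   # URLs waiting to be retrieved
    seen = set(seed_urls)      # URLs already queued, so none is queued twice
    downloaded = []            # pages in download order
    while queue and len(downloaded) < max_pages:
        url = queue.popleft()  # FIFO here; a real crawler may prioritize
        downloaded.append(url)
        for link in fetch_links(url):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return downloaded


pages = crawl(["a.com/"], toy_fetch, max_pages=10)
```

The stopping rule here is simply a page budget; the slide leaves the stopping decision open.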

What Is a Parallel Crawler?
§ As the size of the web grows, it becomes more difficult to retrieve the whole web, or a significant portion of it, using a single process.
§ It becomes imperative to parallelize the crawling process in order to finish downloading pages in a reasonable amount of time.
§ We refer to this type of crawler as a parallel crawler.
§ The main goal in designing a parallel crawler is to maximize its performance (download rate) and minimize the overhead from parallelization.

What’s This Paper About?
§ Propose multiple architectures for a parallel crawler.
§ Identify fundamental issues related to parallel crawling.
§ Propose metrics to evaluate a parallel crawler.
§ Compare the proposed architectures using 40 million pages collected from the web.
§ The results will clarify the relative merits of each architecture and provide a good guideline on when to adopt which architecture.

What We Know Already:
§ Many existing search engines already use some sort of parallelization.
§ There has been little scientific research conducted on this topic.
§ Little is known about the tradeoffs among various design choices for a parallel crawler.

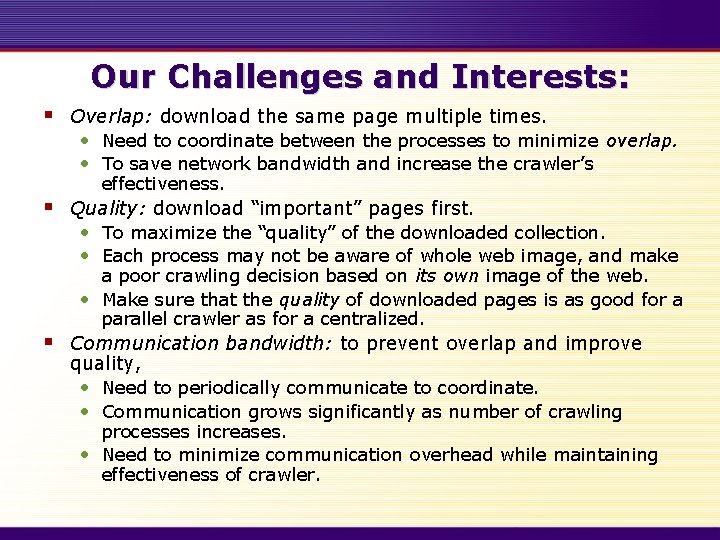

Our Challenges and Interests:
§ Overlap: the same page may be downloaded multiple times.
• Processes need to coordinate to minimize overlap.
• This saves network bandwidth and increases the crawler’s effectiveness.
§ Quality: download “important” pages first.
• To maximize the “quality” of the downloaded collection.
• Each process may not be aware of the whole web image, and may make a poor crawling decision based on its own image of the web.
• Make sure that the quality of downloaded pages is as good for a parallel crawler as for a centralized one.
§ Communication bandwidth: to prevent overlap and improve quality,
• Processes need to communicate periodically to coordinate.
• Communication grows significantly as the number of crawling processes increases.
• Need to minimize communication overhead while maintaining the effectiveness of the crawler.

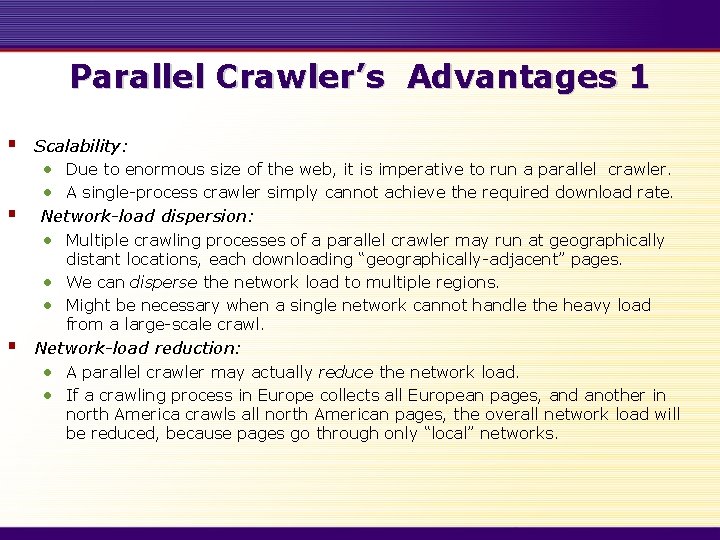

Parallel Crawler’s Advantages 1
§ Scalability:
• Due to the enormous size of the web, it is imperative to run a parallel crawler.
• A single-process crawler simply cannot achieve the required download rate.
§ Network-load dispersion:
• Multiple crawling processes of a parallel crawler may run at geographically distant locations, each downloading “geographically-adjacent” pages.
• We can disperse the network load to multiple regions.
• Might be necessary when a single network cannot handle the heavy load from a large-scale crawl.
§ Network-load reduction:
• A parallel crawler may actually reduce the network load.
• If a crawling process in Europe collects all European pages, and another in North America crawls all North American pages, the overall network load will be reduced, because pages go through only “local” networks.

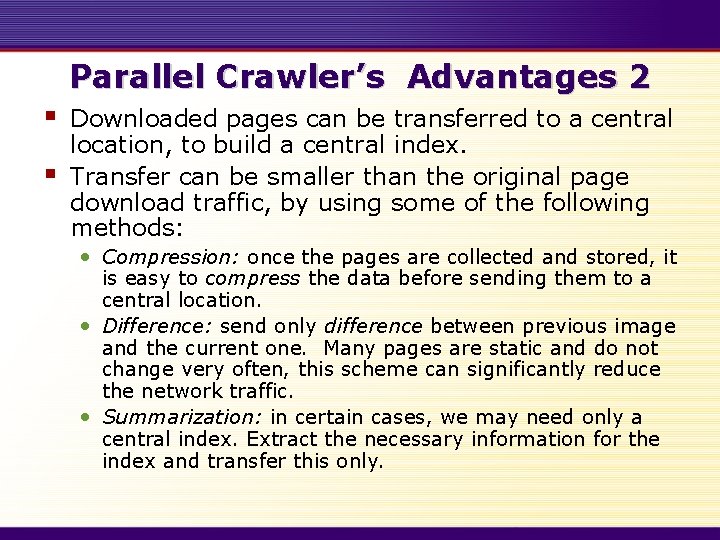

Parallel Crawler’s Advantages 2
§ Downloaded pages can be transferred to a central location, to build a central index.
§ This transfer can be smaller than the original page download traffic, by using some of the following methods:
• Compression: once the pages are collected and stored, it is easy to compress the data before sending it to a central location.
• Difference: send only the difference between the previous image and the current one. Many pages are static and do not change very often, so this scheme can significantly reduce the network traffic.
• Summarization: in certain cases, we may need only a central index. Extract the information necessary for the index and transfer only that.

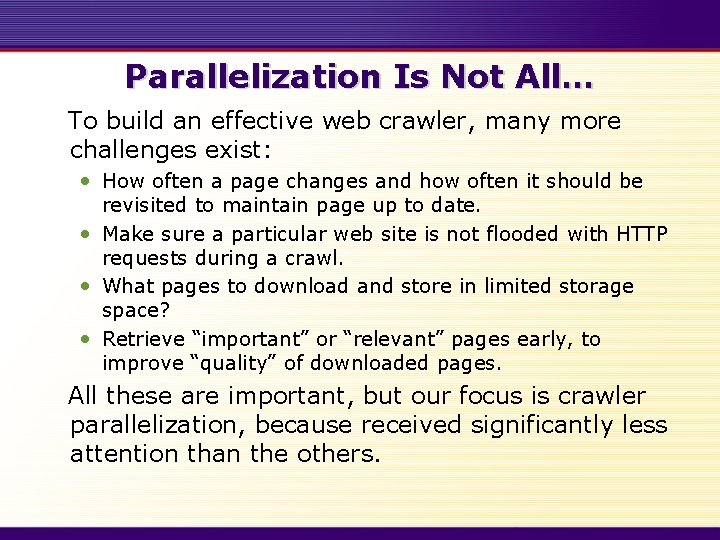

Parallelization Is Not All…
To build an effective web crawler, many more challenges exist:
• How often does a page change, and how often should it be revisited to keep it up to date?
• Make sure a particular web site is not flooded with HTTP requests during a crawl.
• What pages to download and store in limited storage space?
• Retrieve “important” or “relevant” pages early, to improve the “quality” of downloaded pages.
All these are important, but our focus is crawler parallelization, because it has received significantly less attention than the others.

Architecture of Parallel Crawler
§ A parallel crawler consists of multiple crawling processes, each called a “c-proc”.
§ Each c-proc performs single-process crawler tasks:
• Downloads pages from the web.
• Stores downloaded pages locally.
• Extracts URLs from downloaded pages and follows links.
§ Depending on how the c-proc’s split the download task, some of the extracted links may be sent to other c-proc’s.

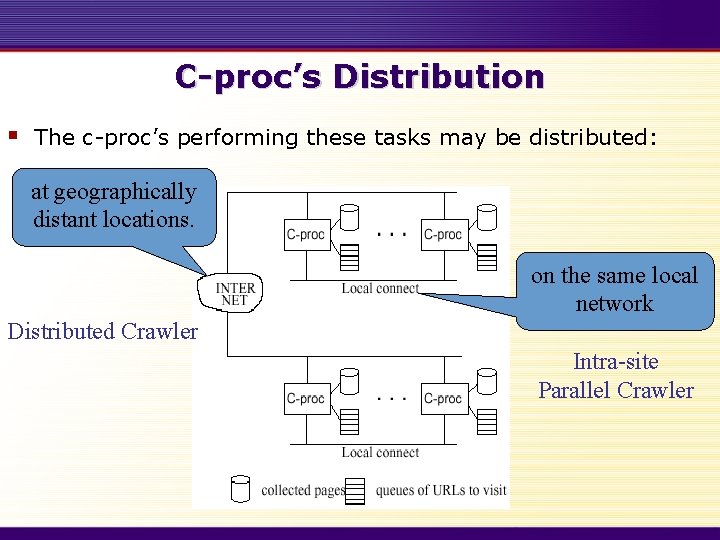

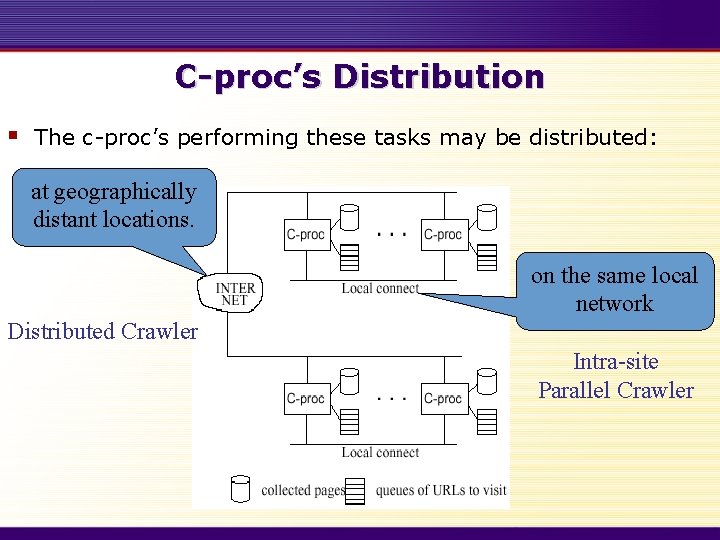

C-proc’s Distribution
§ The c-proc’s performing these tasks may be distributed:
• On the same local network: intra-site parallel crawler.
• At geographically distant locations: distributed crawler.

Intra-site Parallel Crawler
§ All c-proc’s run on the same local network.
§ They communicate through a high-speed interconnect.
§ All c-proc’s use the same local network when they download pages from remote web sites.
§ The network load from c-proc’s is centralized at the single location where they operate.

Distributed Crawler
§ C-proc’s run at geographically distant locations.
§ They are connected by the internet (or a WAN).
§ Can disperse and even reduce the load on the overall network.
§ When c-proc’s run at distant locations and communicate through the internet, how often and how much they need to communicate becomes important.
§ The bandwidth between c-proc’s may be limited and sometimes unavailable, as is often the case with the internet.

Coordination to Avoid Overlap:
§ To avoid overlap, c-proc’s need to coordinate with each other on what pages to download.
§ This coordination can be done in one of the following ways:
• Independent download.
• Dynamic assignment.
• Static assignment.
§ In the next few slides we will explore each of these methods.

Overlap Avoidance: Independent Download
§ Download pages totally independently, without any coordination.
§ Each c-proc starts with its own set of seed URLs and follows links without consulting other c-proc’s.
§ Downloaded pages may overlap. We may hope that this overlap will not be significant if all c-proc’s start from different seed URLs.
§ Minimal coordination overhead. Very scalable.
§ We will not directly cover this option due to its overlap problem.

Overlap Avoidance: Dynamic Assignment
§ A central coordinator logically divides the web into small partitions (using a certain partitioning function) and dynamically assigns each partition to a c-proc for download.
§ The central coordinator constantly decides which partition to crawl next and sends URLs within this partition to a c-proc as seed URLs.
§ The c-proc downloads the pages and extracts links from them. Links to pages in the same partition are followed by the c-proc; links to pages in another partition are reported to the coordinator.
§ The coordinator uses each reported link as a seed URL for the appropriate partition.
§ The web can be partitioned at various granularities. Communication between a c-proc and the central unit may vary depending on the granularity of the partitioning function.
§ The coordinator may become a major bottleneck and may itself need to be parallelized.
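A rough sketch of the coordinator's bookkeeping under dynamic assignment follows. It is only an illustration: the `Coordinator` class, its method names, and the site-level `site_of` partitioning function are hypothetical, and a real coordinator would also track which c-proc holds which partition.

```python
from collections import defaultdict


def site_of(url):
    # Hypothetical partitioning function: one partition per site name.
    return url.split("/", 1)[0]


class Coordinator:
    """Collects reported links and hands them out, one partition at a time."""

    def __init__(self, partition_of):
        self.partition_of = partition_of
        self.pending = defaultdict(set)  # partition -> seed URLs not yet handed out
        self.assigned = set()            # URLs already sent to some c-proc

    def report(self, url):
        """Called for seed URLs and for links a c-proc found in another partition."""
        if url not in self.assigned:
            self.pending[self.partition_of(url)].add(url)

    def next_assignment(self):
        """Return (partition, seed URLs) for an idle c-proc, or None if nothing waits."""
        if not self.pending:
            return None
        partition = min(self.pending)    # arbitrary but deterministic choice
        seeds = self.pending.pop(partition)
        self.assigned |= seeds
        return partition, sorted(seeds)
```

Because every foreign link passes through `report`, the traffic to the coordinator grows with the number of partitions, which is one way to see why it may become a bottleneck.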

Overlap Avoidance: Static Assignment
§ The web is partitioned and assigned to each c-proc before they start to crawl.
§ Every c-proc knows which c-proc is responsible for which page during a crawl, so the crawler does not need a central coordinator.
§ Some pages in a partition may have links to pages in another partition: inter-partition links.
§ A c-proc may handle inter-partition links in a number of modes:
• Firewall mode.
• Cross-over mode.
• Exchange mode.

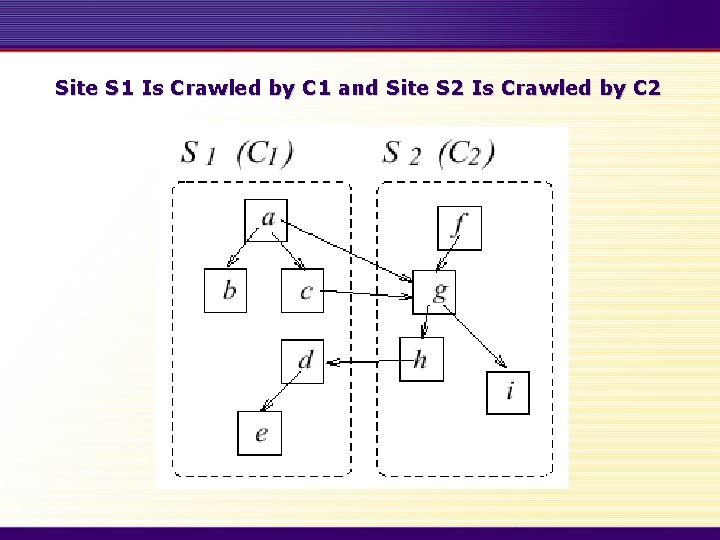

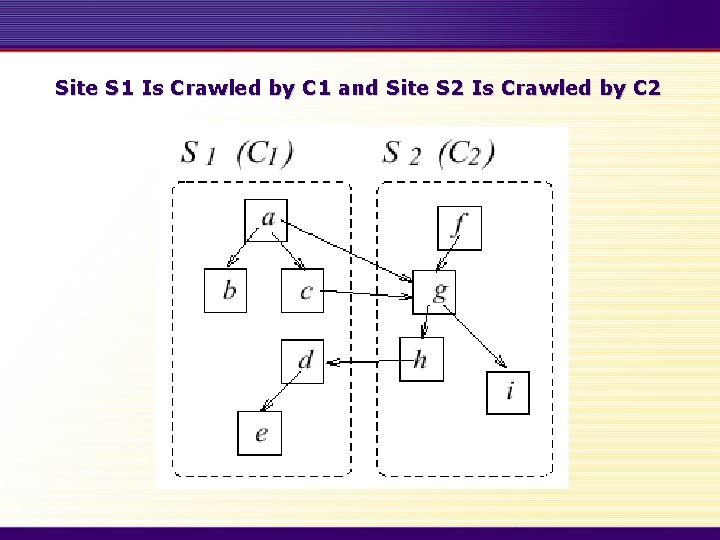

Site S1 Is Crawled by C1 and Site S2 Is Crawled by C2

Static Assignment Crawling Modes: Firewall Mode
§ Each c-proc downloads only the pages within its partition and does not follow any inter-partition link.
§ All inter-partition links are ignored and thrown away.
§ The overall crawler does not have any overlap, but may not download all pages.
§ C-proc’s run independently: no coordination or URL exchanges.

Static Assignment Crawling Modes: Cross-over Mode
§ A c-proc downloads pages within its partition. When it runs out of pages in its partition, it follows inter-partition links.
§ Downloaded pages may overlap, but the overall crawler can download more pages than in firewall mode.
§ C-proc’s do not communicate with each other; each follows only the links it discovered itself.

Static Assignment Crawling Modes: Exchange Mode
§ C-proc’s periodically and incrementally exchange inter-partition URLs.
§ Processes do not follow inter-partition links.
§ The overall crawler can avoid overlap while maximizing coverage.
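The three modes differ only in what a c-proc does with an inter-partition link, which the sketch below tries to make explicit. The `Mode` enum, `route_link`, the three containers, and the two-site `owner_of` assignment are illustrative names and assumptions, not part of the paper.

```python
from enum import Enum


class Mode(Enum):
    FIREWALL = "firewall"
    CROSS_OVER = "cross-over"
    EXCHANGE = "exchange"


def route_link(link, my_id, owner_of, mode, local_queue, foreign_queue, outbox):
    """Decide what one c-proc does with a link it has just extracted."""
    owner = owner_of(link)
    if owner == my_id:
        local_queue.append(link)     # intra-partition link: always followed
    elif mode is Mode.FIREWALL:
        pass                         # inter-partition link is thrown away
    elif mode is Mode.CROSS_OVER:
        foreign_queue.append(link)   # followed only once local_queue is empty
    else:                            # Mode.EXCHANGE
        outbox.setdefault(owner, []).append(link)  # handed to the owning c-proc


def owner_of(url):
    # Hypothetical static assignment: c-proc 0 owns a.com, c-proc 1 owns the rest.
    return 0 if url.startswith("a.com") else 1


def demo(mode):
    """Route one local and one foreign link as c-proc 0 and return the containers."""
    local_queue, foreign_queue, outbox = [], [], {}
    for link in ["a.com/1", "b.com/1"]:
        route_link(link, 0, owner_of, mode, local_queue, foreign_queue, outbox)
    return local_queue, foreign_queue, outbox
```

In this sketch, only cross-over mode can produce overlap (the foreign queue may hold pages another c-proc also downloads), and only exchange mode produces outgoing messages.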

Methods for URL Exchange Reduction
§ Firewall and cross-over modes:
• C-proc’s run independently.
• Pages may overlap (cross-over mode).
• Some pages may not be downloaded (firewall mode).
§ Exchange mode avoids these problems but requires constant URL exchange between c-proc’s.
§ To reduce URL exchange:
• Batch communication.
• Replication.

URL Exchange Reduction: Batch Communication
§ Instead of transferring each inter-partition URL immediately, collect a set of URLs and send them in a batch.
§ A c-proc collects all inter-partition URLs until it has downloaded k pages.
§ It then partitions the collected URLs and sends each group to the appropriate c-proc.
§ It starts to collect a new set of URLs from the next downloaded pages.
§ Advantages:
• Less communication overhead.
• The absolute number of exchanged URLs will decrease.
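The batching steps above can be sketched as follows. `BatchingOutbox`, its method names, and the `send` callback are hypothetical. The per-batch de-duplication with a set is an assumption about why the absolute number of exchanged URLs drops; the slide does not spell out the mechanism.

```python
class BatchingOutbox:
    """Buffers inter-partition URLs and sends them after every k downloaded pages."""

    def __init__(self, k, owner_of, send):
        self.k = k                # batch size, counted in downloaded pages
        self.owner_of = owner_of  # maps a URL to the c-proc responsible for it
        self.send = send          # callback: send(owner, list_of_urls)
        self.buffers = {}         # owner -> set of URLs collected in this batch
        self.pages_in_batch = 0

    def on_page_downloaded(self, inter_partition_links):
        for link in inter_partition_links:
            # A set drops URLs seen more than once within the same batch.
            self.buffers.setdefault(self.owner_of(link), set()).add(link)
        self.pages_in_batch += 1
        if self.pages_in_batch >= self.k:
            self.flush()

    def flush(self):
        """Send one message per owning c-proc, then start a new batch."""
        for owner, urls in sorted(self.buffers.items()):
            self.send(owner, sorted(urls))
        self.buffers = {}
        self.pages_in_batch = 0
```

A larger k means fewer messages, at the cost of other c-proc’s learning about new URLs later.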

URL Exchange Reduction: Replication
§ The number of incoming links to pages on the web follows a Zipfian distribution.
§ Reduce URL exchanges by replicating the most “popular” URLs at each c-proc and no longer transferring them.
§ Identify the k most popular URLs based on the image of the web collected in a previous crawl (or on the fly) and replicate them.
§ This significantly reduces URL exchanges, even if we replicate only a small number of URLs.
§ Replicated URLs may be used as the seed URLs.
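A small sketch of the replication idea, assuming popularity is measured by counting how often each URL appeared as a link target in a previous crawl. The function names and the list-of-link-targets input format are hypothetical.

```python
from collections import Counter


def most_popular(link_targets, k):
    """Pick the k URLs that were linked to most often in a previous crawl."""
    counts = Counter(link_targets)  # URL -> number of incoming links seen
    return {url for url, _ in counts.most_common(k)}


def urls_to_exchange(inter_partition_links, replicated):
    """Drop links to replicated URLs: every c-proc already knows about them."""
    return [url for url in inter_partition_links if url not in replicated]


# Toy data: "p" has three incoming links, "q" two, "r" one.
previous_crawl_targets = ["p", "p", "p", "q", "q", "r"]
replicated = most_popular(previous_crawl_targets, k=1)
remaining = urls_to_exchange(["p", "r", "p", "q"], replicated)
```

Because incoming links are heavily skewed toward a few pages, filtering out even a small replicated set removes a large share of the links that would otherwise be exchanged.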

Ways to Partition the Web: URL-hash Based
§ Partition based on the hash value of the URL.
§ Pages in the same site can be assigned to different c-proc’s.
§ Locality of link structure is not reflected in the partition.
§ There will be many inter-partition links.

Ways to Partition the Web: Site-hash Based
§ Compute the hash value only on the site name of a URL.
§ Pages in the same site will be allocated to the same partition.
§ Only some of the inter-site links will be inter-partition links.
§ This reduces the number of inter-partition links significantly.
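The difference between URL-hash and site-hash partitioning comes down to which string is hashed, as the sketch below illustrates. The choice of MD5 and the helper names are assumptions; any hash that gives the same value on every c-proc would do (Python's built-in `hash()` on strings is randomized per process, so it is avoided here).

```python
import hashlib
from urllib.parse import urlsplit


def _bucket(text, num_partitions):
    """Map a string to a partition number with a hash that is stable across processes."""
    digest = hashlib.md5(text.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def url_hash_partition(url, num_partitions):
    """URL-hash based: hash the whole URL, so one site can span many partitions."""
    return _bucket(url, num_partitions)


def site_hash_partition(url, num_partitions):
    """Site-hash based: hash only the site name, so a site stays in one partition."""
    return _bucket(urlsplit(url).netloc.lower(), num_partitions)
```

With the site-hash function, a link between two pages of the same site can never be an inter-partition link, which is where the reduction in inter-partition links comes from.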

Ways to Partition the Web: Hierarchical
§ Partition the web hierarchically based on the URLs of pages.
§ For example, divide the web into three partitions:
• The .com domain.
• The .net domain.
• All other pages.
§ Fewer inter-partition links: pages may point to more pages in the same domain.
§ In preliminary experiments, there was no significant difference between this scheme and the site-hash based scheme, as long as each scheme splits the web into roughly the same number of partitions.
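The three-way example on this slide could be written as a partitioning function like the one below. The partition numbers 0, 1, and 2 are arbitrary labels chosen for this sketch.

```python
from urllib.parse import urlsplit


def hierarchical_partition(url):
    """Assign a URL to a partition by its top-level domain."""
    host = urlsplit(url).hostname or ""  # hostname is lowercased by urlsplit
    if host.endswith(".com"):
        return 0   # the .com domain
    if host.endswith(".net"):
        return 1   # the .net domain
    return 2       # all other pages
```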

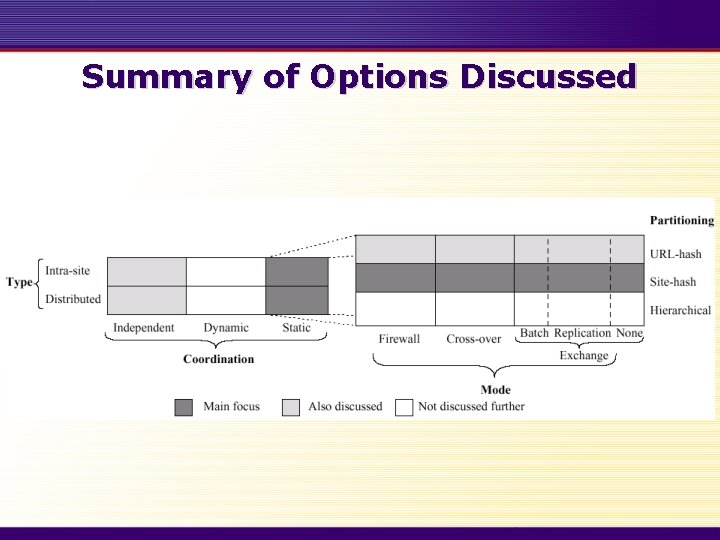

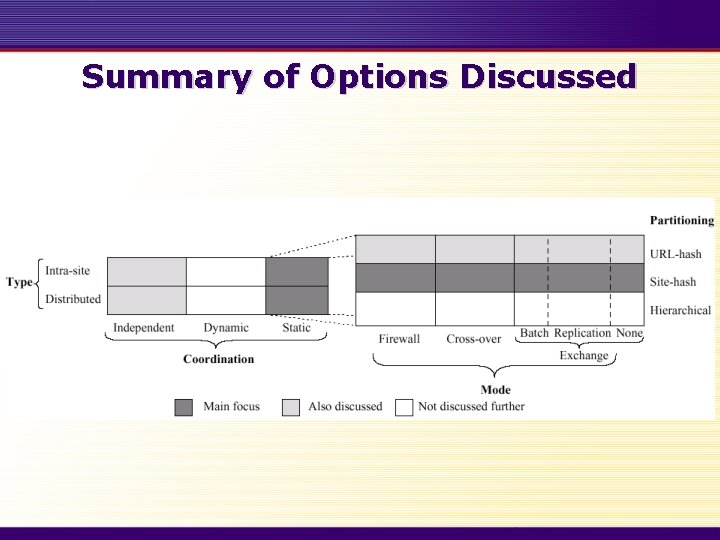

Summary of Options Discussed

Evaluation Models
§ Metrics used in the experiments to quantify the advantages and disadvantages of different parallel crawling schemes:
• Overlap
• Coverage
• Quality
• Communication overhead

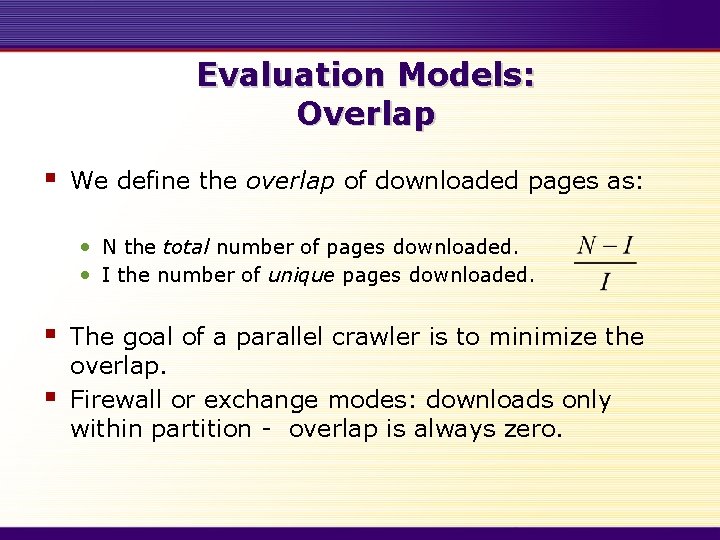

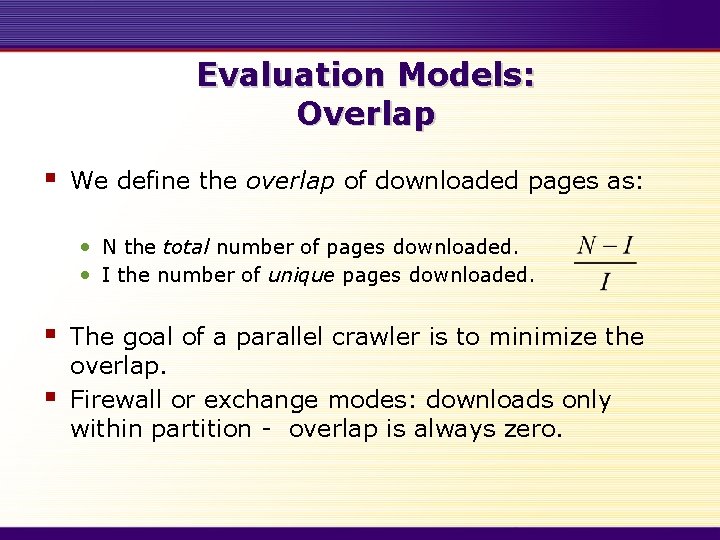

Evaluation Models: Overlap
§ We define the overlap of downloaded pages as overlap = (N - I) / I, where:
• N is the total number of pages downloaded.
• I is the number of unique pages downloaded.
§ The goal of a parallel crawler is to minimize the overlap.
§ Firewall or exchange modes: each c-proc downloads only within its partition, so overlap is always zero.
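As a worked illustration, the overlap can be computed from a combined download log, assuming the definition overlap = (N - I) / I with N and I as on this slide. The function name and the list-of-URLs log format are hypothetical.

```python
def overlap(downloads):
    """Overlap = (N - I) / I for a list of every download made by all c-proc's."""
    n = len(downloads)        # N: total number of pages downloaded
    i = len(set(downloads))   # I: number of unique pages downloaded
    if i == 0:
        return 0.0            # nothing downloaded, so nothing overlaps
    return (n - i) / i


# Two c-proc's both fetched page "a": N = 4, I = 3, overlap = 1/3.
example = overlap(["a", "b", "a", "c"])
```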

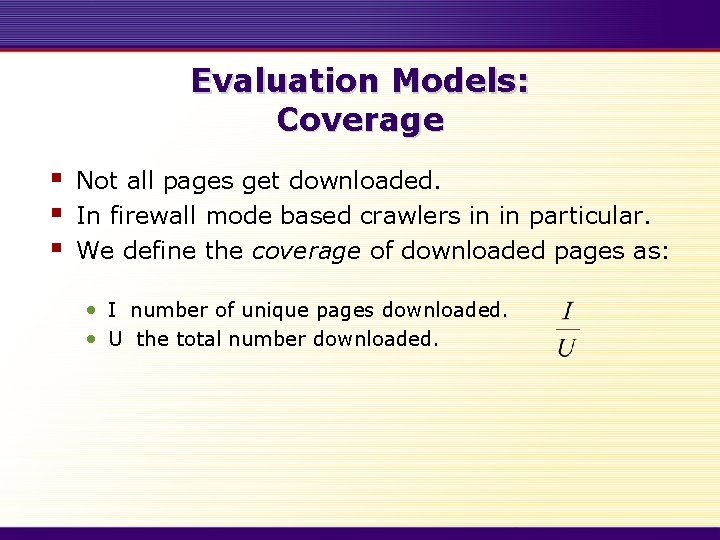

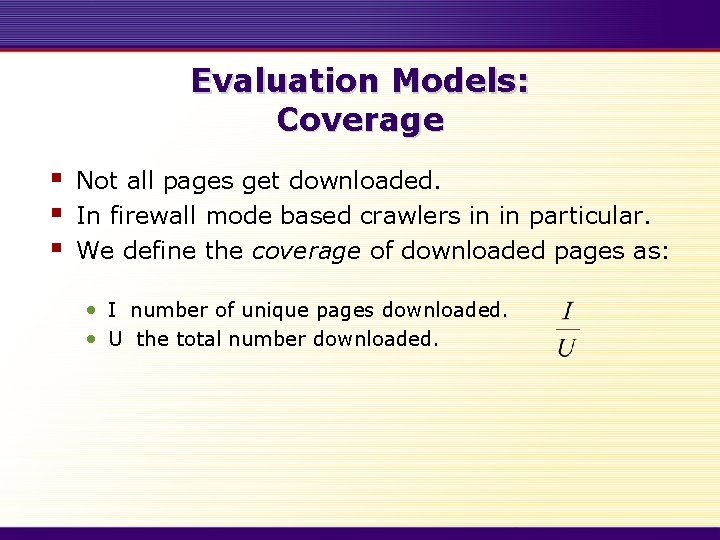

Evaluation Models: Coverage
§ Not all pages get downloaded, in firewall mode based crawlers in particular.
§ We define the coverage of downloaded pages as coverage = I / U, where:
• I is the number of unique pages downloaded.
• U is the total number of pages the overall crawler has to download.
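A small sketch of the coverage computation, assuming coverage = I / U where U counts the pages the overall crawler was supposed to download. The function name and input format are hypothetical.

```python
def coverage(downloads, target_pages):
    """Coverage = I / U: unique pages downloaded over pages that should be downloaded."""
    u = len(set(target_pages))   # U: pages the overall crawler has to download
    if u == 0:
        raise ValueError("target_pages must not be empty")
    i = len(set(downloads))      # I: unique pages actually downloaded
    return i / u


# The crawler reached 2 of the 4 target pages ("a" was fetched twice).
example = coverage(["a", "b", "a"], ["a", "b", "c", "d"])
```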

Evaluation Models: Quality
§ Crawlers cannot download the whole web, so they try to download an “important” or “relevant” section of the web.
§ To implement this policy, a crawler uses an importance metric (such as backlink count).
§ A single-process crawler constantly keeps track of how many backlinks each page has, and first visits the page with the highest backlink count.
§ Pages downloaded in this way may not be the top 1 million pages, because the page selection is not based on the entire web, only on what has been downloaded so far.

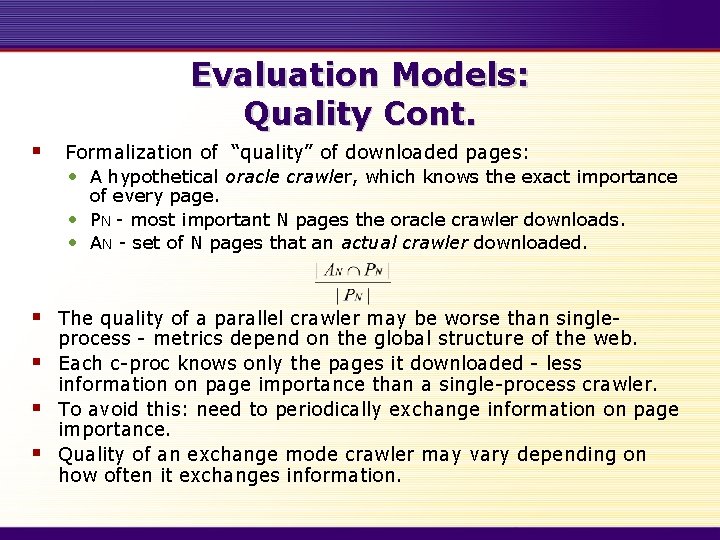

Evaluation Models: Quality Cont.
§ Formalization of the “quality” of downloaded pages:
• A hypothetical oracle crawler knows the exact importance of every page.
• P_N: the N most important pages, which the oracle crawler downloads.
• A_N: the set of N pages that an actual crawler downloaded.
• quality = |A_N ∩ P_N| / |P_N|.
§ The quality of a parallel crawler may be worse than that of a single-process crawler, because importance metrics depend on the global structure of the web.
§ Each c-proc knows only the pages it downloaded, so it has less information on page importance than a single-process crawler.
§ To avoid this, c-proc’s need to periodically exchange information on page importance.
§ The quality of an exchange mode crawler may vary depending on how often it exchanges information.
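The quality metric can be illustrated as follows, assuming quality = |A_N ∩ P_N| / |P_N| and assuming the oracle's knowledge is available as a dictionary of importance scores. The function name, the dictionary format, and the tie-breaking by URL are choices made for this sketch.

```python
def quality(actual_pages, importance):
    """Fraction of the oracle's top-N pages that the actual crawler downloaded."""
    a_n = set(actual_pages)   # A_N: pages the actual crawler downloaded
    n = len(a_n)
    if n == 0:
        raise ValueError("actual_pages must not be empty")
    # P_N: the N most important pages; ties are broken by URL for determinism.
    ranked = sorted(importance, key=lambda url: (-importance[url], url))
    p_n = set(ranked[:n])
    return len(a_n & p_n) / len(p_n)


# Hypothetical importance scores, e.g. backlink counts over the whole web.
scores = {"a": 10, "b": 7, "c": 3, "d": 1}
example = quality(["a", "c"], scores)   # P_2 = {"a", "b"}, so only "a" matches
```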

Evaluation Models: Communication Overhead
§ In exchange mode, c-proc’s need to exchange messages to coordinate their work and to swap their inter-partition URLs periodically.
§ To quantify how much communication is required for this exchange, we define communication overhead as the average number of inter-partition URLs exchanged per downloaded page.
§ Crawlers based on the firewall and cross-over modes do not have any communication overhead, because they do not exchange any inter-partition URLs.
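The definition above is a simple ratio; the sketch below spells it out. The function name and the example numbers are illustrative only, not results from the experiments.

```python
def communication_overhead(urls_exchanged, pages_downloaded):
    """Average number of inter-partition URLs exchanged per downloaded page."""
    if pages_downloaded <= 0:
        raise ValueError("pages_downloaded must be positive")
    return urls_exchanged / pages_downloaded


# Illustrative numbers: 3,000 URLs exchanged while downloading 1,000 pages.
exchange_mode = communication_overhead(3000, 1000)
# Firewall and cross-over crawlers exchange no URLs at all.
firewall_mode = communication_overhead(0, 1000)
```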

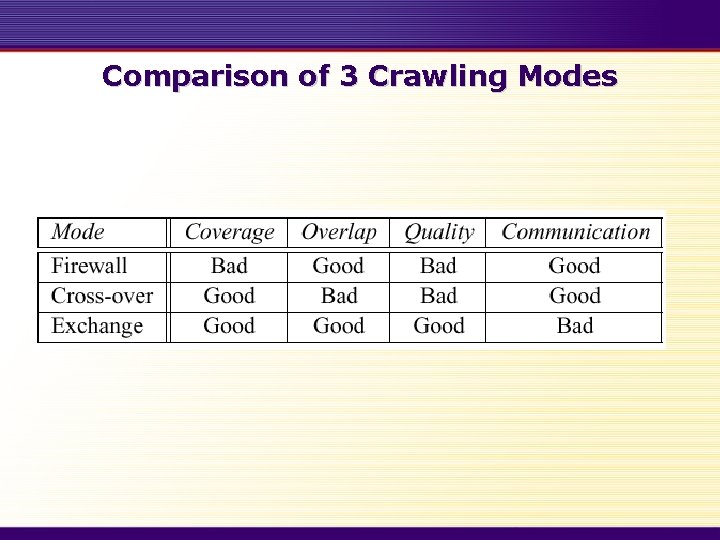

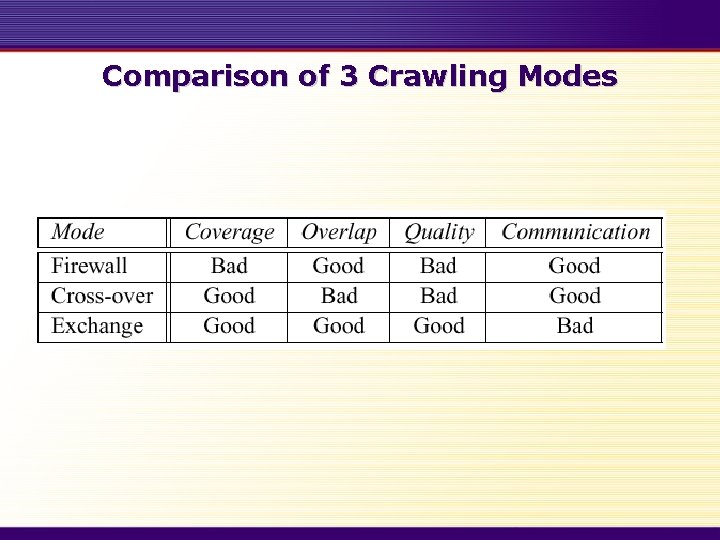

Comparison of 3 Crawling Modes