
PARALLEL COMPUTING TEAM 4: SAHIL ARORA | RASHMI CHAUDHARY | SUMEDHA INAMDAR | DANIEL MOJAHEDI

PRESENTATION OUTLINE • Introduction • Flynn’s Taxonomy • Shared Memory vs Distributed Memory • Handler’s Classification • Grain Size • Parallel Programming

INTRODUCTION • Parallel computing is a type of computation in which processes are executed simultaneously. • Large problems can often be divided into smaller ones, which can then be solved at the same time. • Parallelism can improve performance by distributing the computational load among several processors.

IMPORTANCE OF PARALLEL COMPUTING Challenges Faced with the Von Neumann Architecture • High demand on the bus that transfers data between a single CPU and a single memory slows processing (the Von Neumann bottleneck). • Efforts to reduce the distance between CPU and memory have reached physical limits. • Increasing processor speeds increases energy consumption and heat emissions. Solutions Found in Parallel Computing • More CPU and memory units allow data to be transferred in parallel over multiple buses instead of continually over a single bus. • Multiple cores and computer systems reduce the pressure to increase individual core speeds, and thus reduce (though do not eliminate) energy requirements and heat emissions.

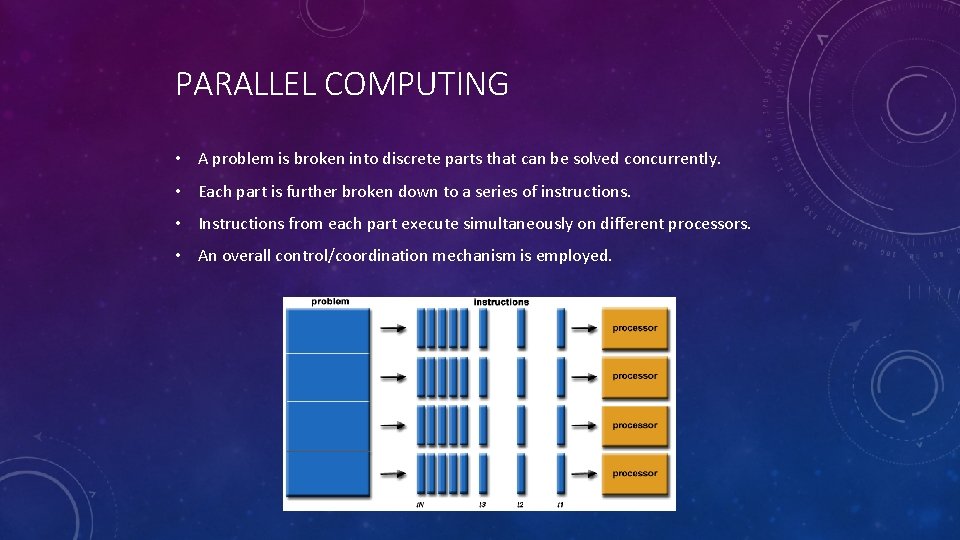

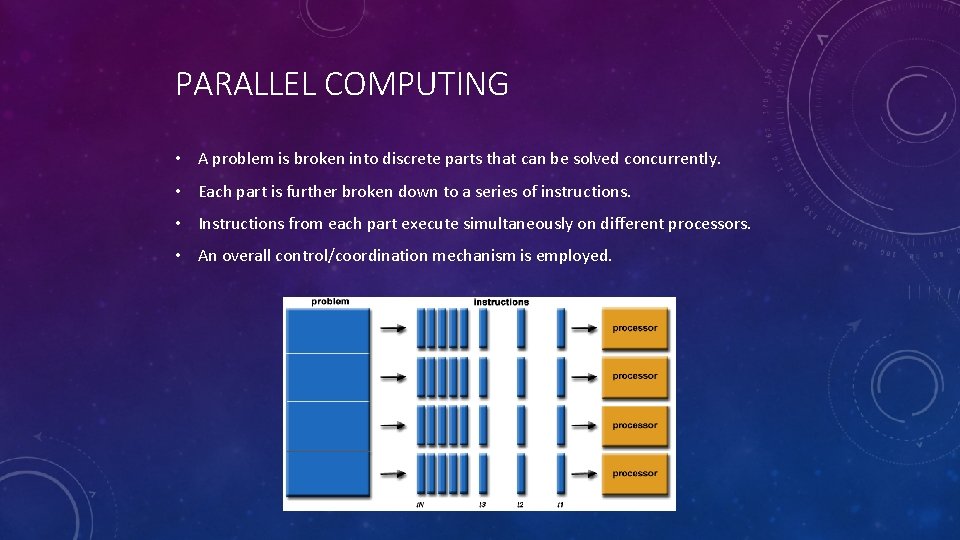

PARALLEL COMPUTING • A problem is broken into discrete parts that can be solved concurrently. • Each part is further broken down to a series of instructions. • Instructions from each part execute simultaneously on different processors. • An overall control/coordination mechanism is employed.

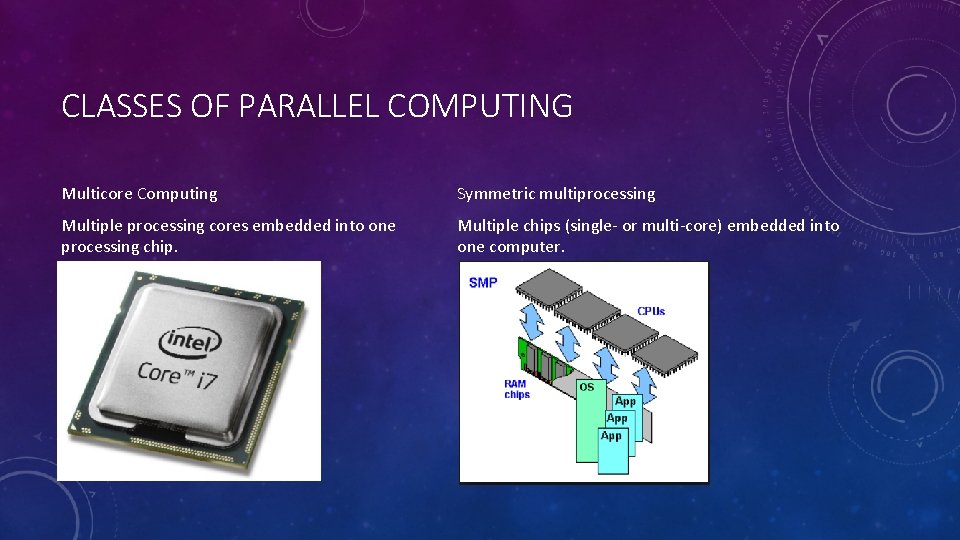

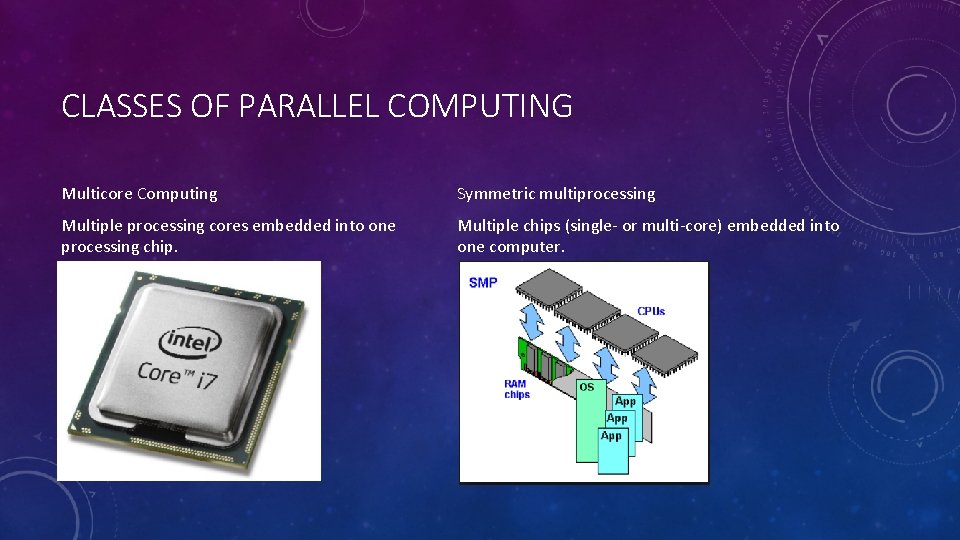

CLASSES OF PARALLEL COMPUTING • Multicore Computing: Multiple processing cores embedded into one processing chip. • Symmetric Multiprocessing: Multiple chips (single- or multi-core) embedded into one computer.

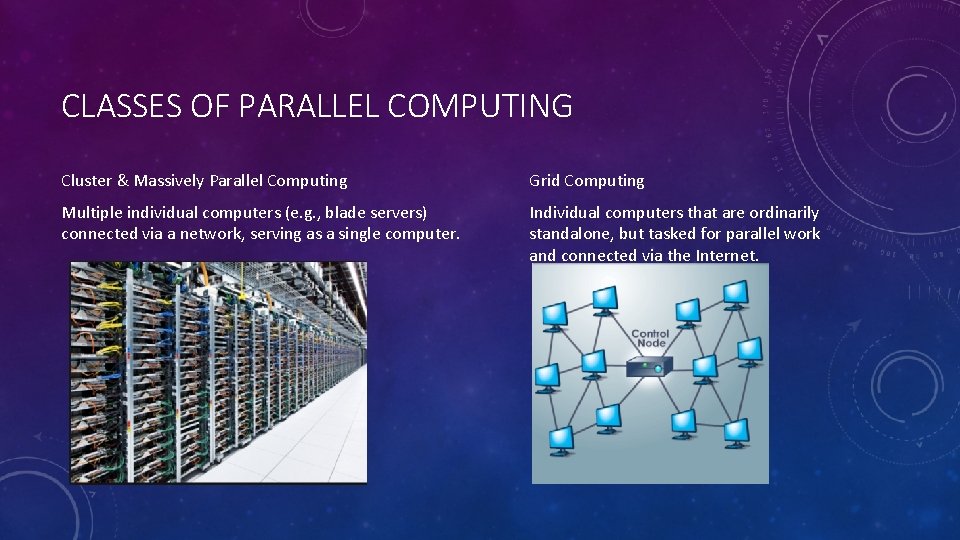

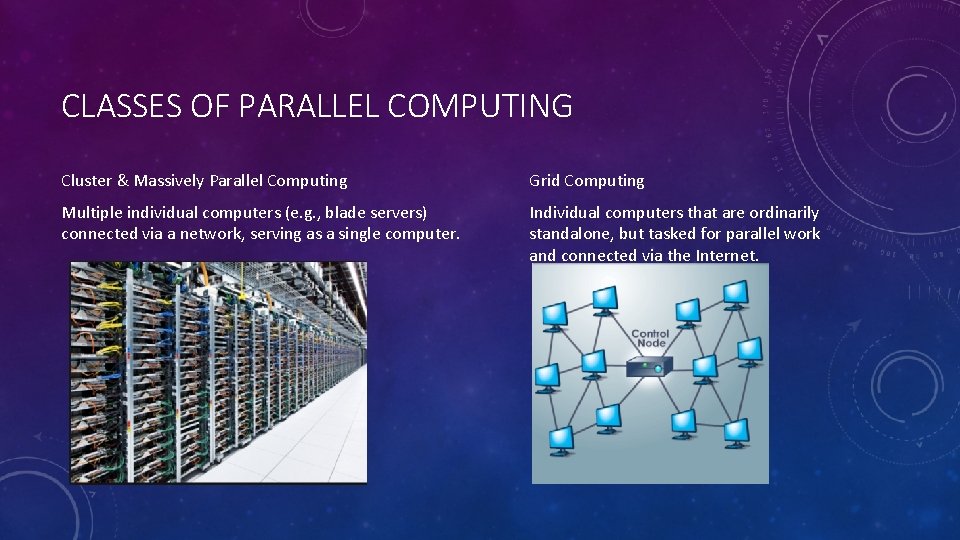

CLASSES OF PARALLEL COMPUTING • Cluster & Massively Parallel Computing: Multiple individual computers (e.g., blade servers) connected via a network, serving as a single computer. • Grid Computing: Individual computers that are ordinarily standalone, but are tasked for parallel work and connected via the Internet.

TYPES OF CLASSIFICATION • Classification based on the instruction and data streams • Classification based on how the memory is accessed • Classification based on the structure of computers • Classification based on grain size

CLASSIFICATION OF PARALLEL COMPUTERS • Flynn’s Taxonomy: based on instruction and data streams. • Structural classification: based on different computer organizations (how memory is accessed). • Handler’s classification: based on levels of pipelining versus parallelism. • Grain size: based on which levels of a program can be parallelized.

FLYNN’S TAXONOMY • One of the more widely used classifications is Flynn's Taxonomy. • Flynn's taxonomy is a classification of computer architectures first proposed by Michael Flynn. • It distinguishes multi-processor computer architectures along two independent dimensions: Instruction Stream and Data Stream. Each dimension can have only one of two possible states: Single or Multiple.

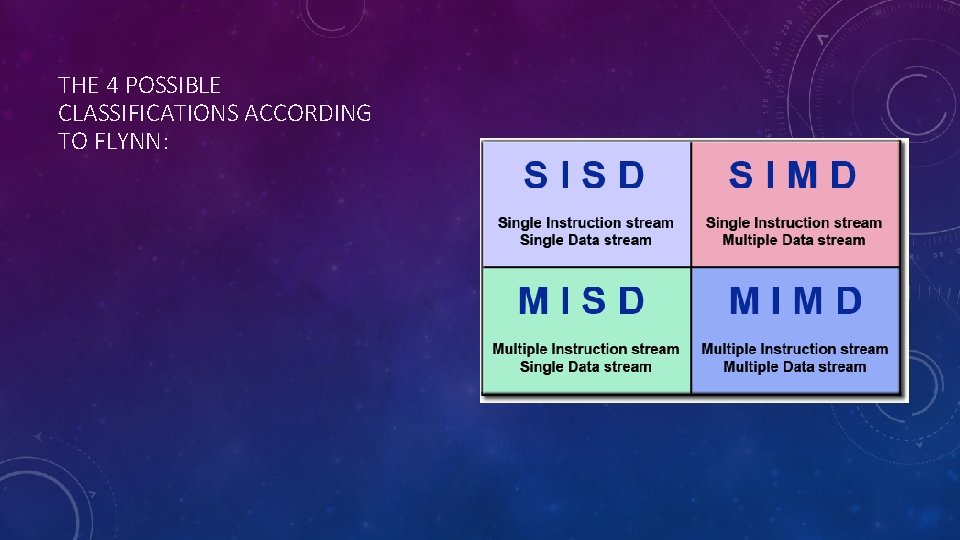

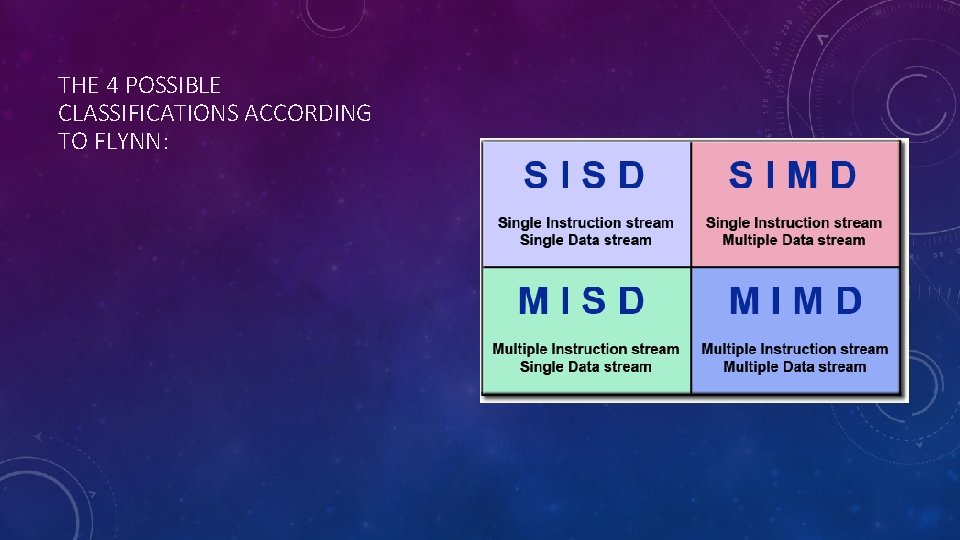

THE 4 POSSIBLE CLASSIFICATIONS ACCORDING TO FLYNN:

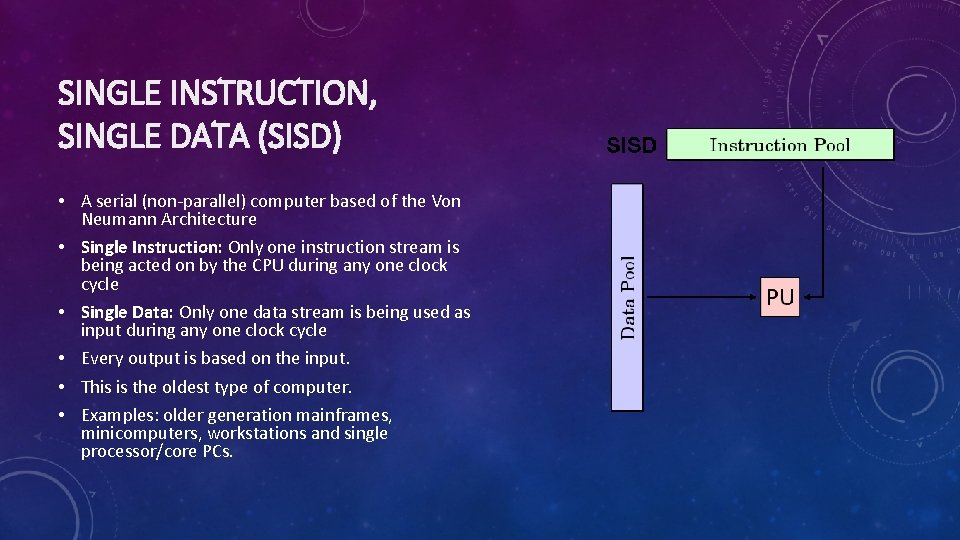

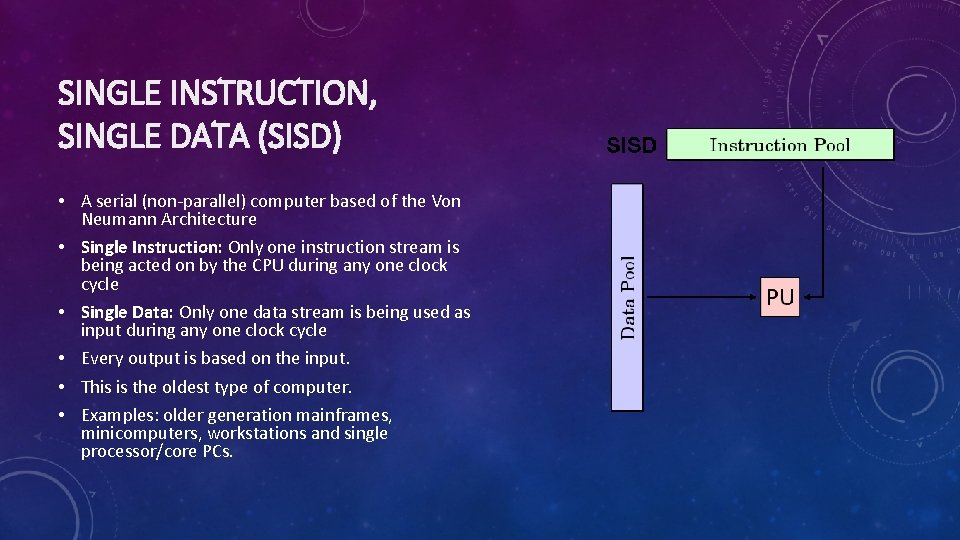

SINGLE INSTRUCTION, SINGLE DATA (SISD) • A serial (non-parallel) computer based on the Von Neumann Architecture. • Single Instruction: Only one instruction stream is being acted on by the CPU during any one clock cycle. • Single Data: Only one data stream is being used as input during any one clock cycle. • Deterministic execution: every output is determined by the input. • This is the oldest type of computer. • Examples: older generation mainframes, minicomputers, workstations and single processor/core PCs.

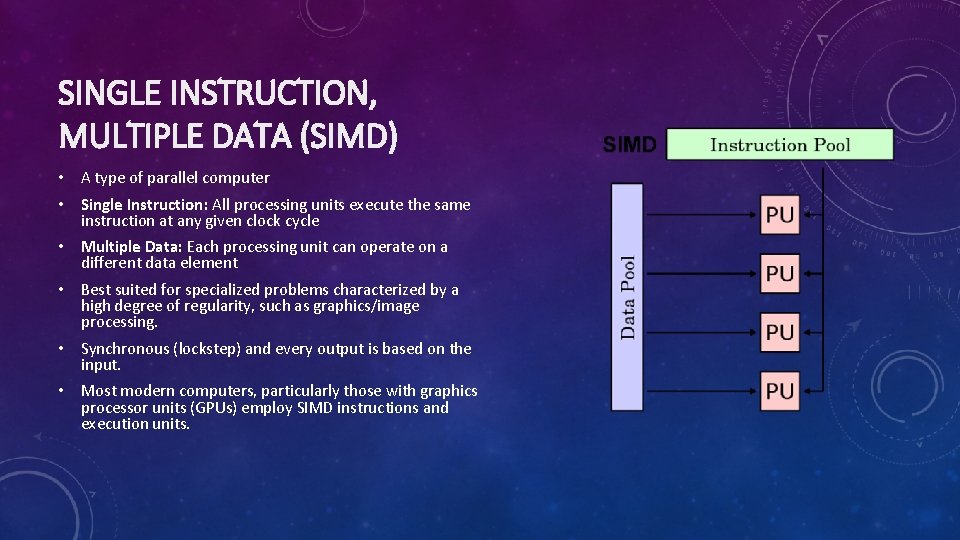

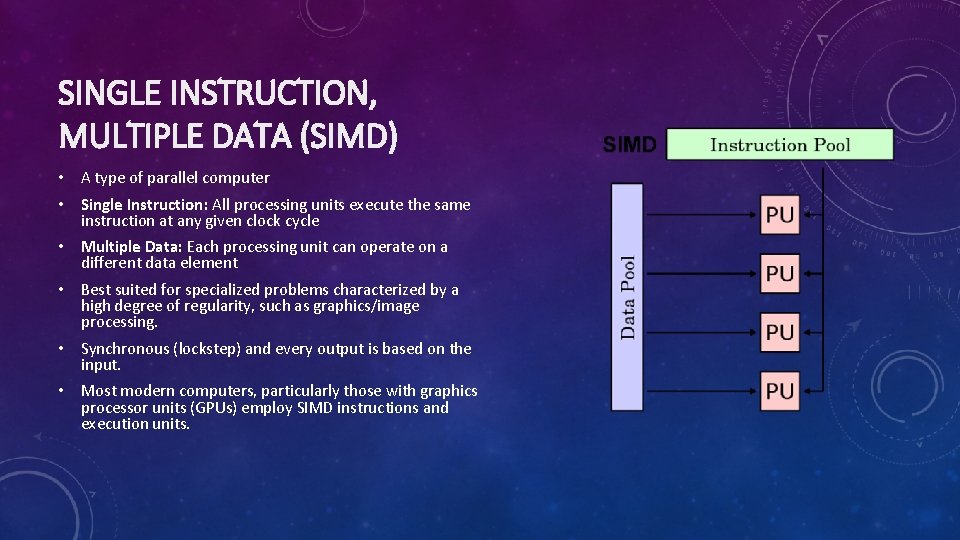

SINGLE INSTRUCTION, MULTIPLE DATA (SIMD) • A type of parallel computer. • Single Instruction: All processing units execute the same instruction at any given clock cycle. • Multiple Data: Each processing unit can operate on a different data element. • Best suited for specialized problems characterized by a high degree of regularity, such as graphics/image processing. • Synchronous (lockstep) and deterministic execution: every output is determined by the input. • Most modern computers, particularly those with graphics processing units (GPUs), employ SIMD instructions and execution units.

SIMD (CONTINUED) • One processor (the front end) starts the program and sends code to the other processors. • In the diagram, the front end is represented by the Instruction Pool, which sends code to the individual PUs; these execute the remaining code. • Each processor executes the same code. • Example: sum the 100 elements of array A using 10 processors P0 to P9:
    for each Pi, i = 0 to 9          // "executed" by the front end
        Ti = 0
        for j = 0 to 9               // Pi sums its segment
            Ti = Ti + A[i * 10 + j]
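The front-end/PE pseudocode can be written as a serial C sketch in which the outer loop simulates the 10 processing elements; on real SIMD hardware each Pi would run the inner loop in lockstep with the others, so at step j every Pi performs the same addition on a different element. The final combining step and the names `simd_sum_segments`, `combine_partial_sums`, `NUM_PE` and `SEG_LEN` are assumptions added for illustration.

```c
#define NUM_PE  10   /* number of processing elements P0..P9 */
#define SEG_LEN 10   /* elements summed by each PE */

/* Each simulated PE i sums its own segment
   A[i*SEG_LEN] .. A[i*SEG_LEN + SEG_LEN - 1] into T[i].
   Here the PEs run one after another inside the outer loop. */
void simd_sum_segments(const int A[NUM_PE * SEG_LEN], int T[NUM_PE])
{
    for (int i = 0; i < NUM_PE; i++) {        /* issued by the front end */
        T[i] = 0;
        for (int j = 0; j < SEG_LEN; j++)     /* Pi sums its segment */
            T[i] += A[i * SEG_LEN + j];
    }
}

/* Assumed final step: combine the partial sums Ti into the total. */
int combine_partial_sums(const int T[NUM_PE])
{
    int total = 0;
    for (int i = 0; i < NUM_PE; i++)
        total += T[i];
    return total;
}
```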

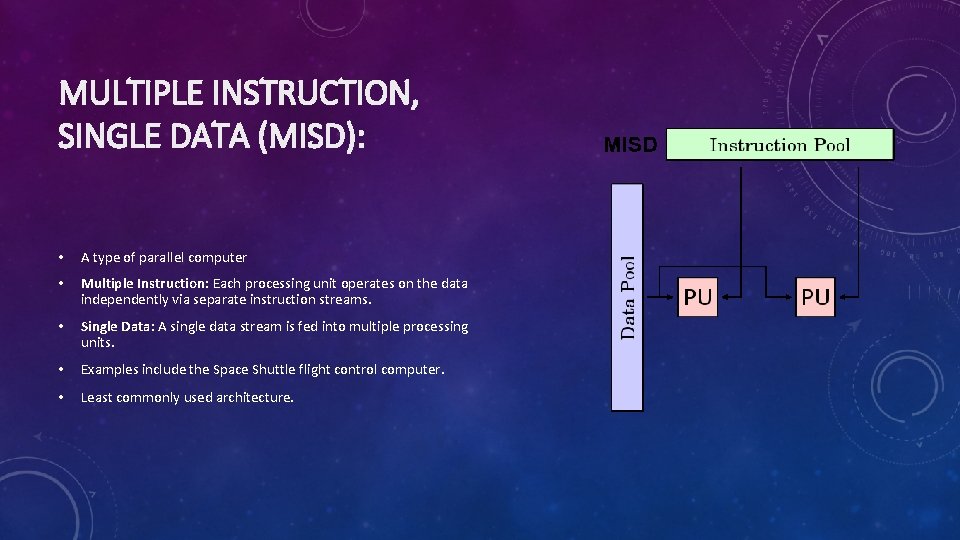

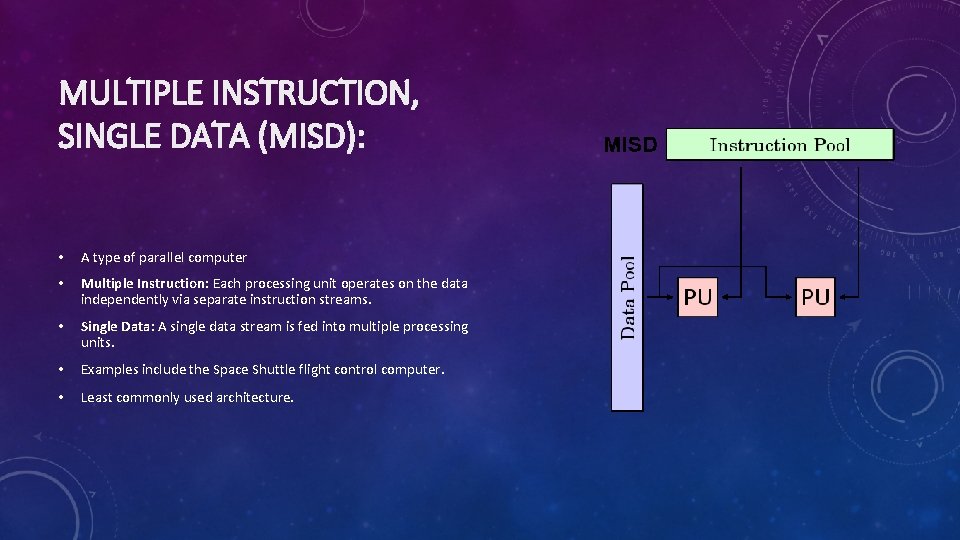

MULTIPLE INSTRUCTION, SINGLE DATA (MISD): • A type of parallel computer • Multiple Instruction: Each processing unit operates on the data independently via separate instruction streams. • Single Data: A single data stream is fed into multiple processing units. • Examples include the Space Shuttle flight control computer. • Least commonly used architecture.

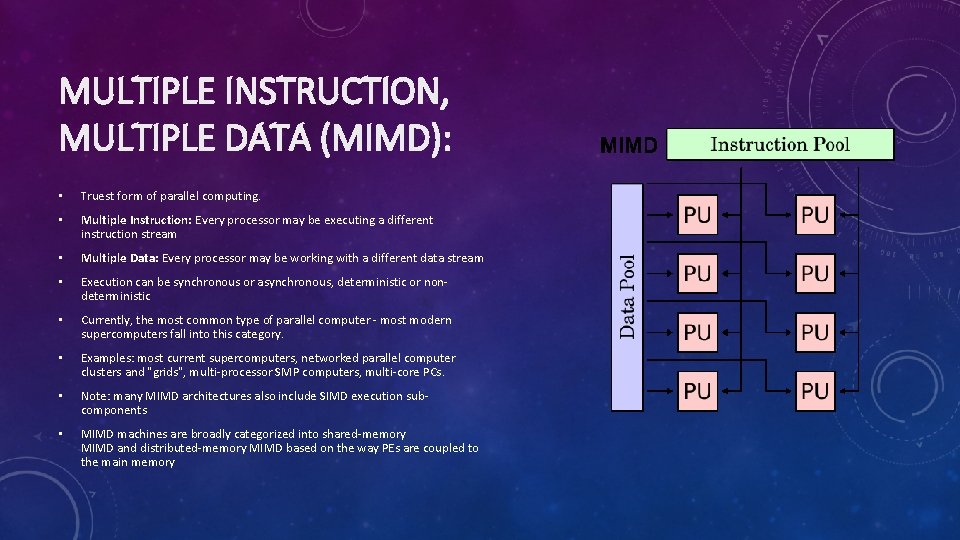

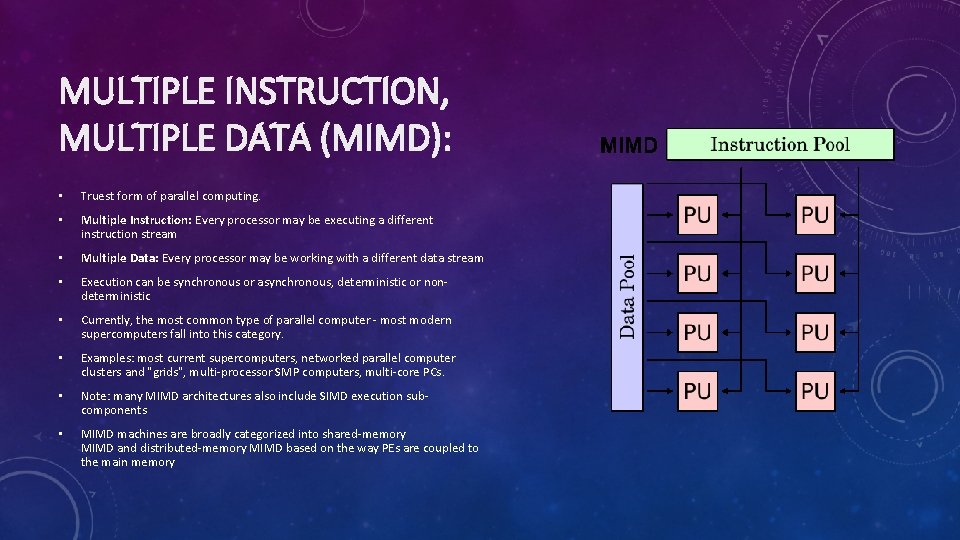

MULTIPLE INSTRUCTION, MULTIPLE DATA (MIMD): • Truest form of parallel computing. • Multiple Instruction: Every processor may be executing a different instruction stream. • Multiple Data: Every processor may be working with a different data stream. • Execution can be synchronous or asynchronous, deterministic or nondeterministic. • Currently the most common type of parallel computer. • Examples: most current supercomputers, networked parallel computer clusters and "grids", multi-processor SMP computers, multi-core PCs. • Note: many MIMD architectures also include SIMD execution subcomponents. • MIMD machines are broadly categorized into shared-memory MIMD and distributed-memory MIMD, based on the way the processing elements (PEs) are coupled to the main memory.

MODERN PARALLEL ARCHITECTURES Basic architectural schemes: • Shared Memory Computers / Tightly Coupled Systems • Distributed Memory Computers / Loosely Coupled Systems

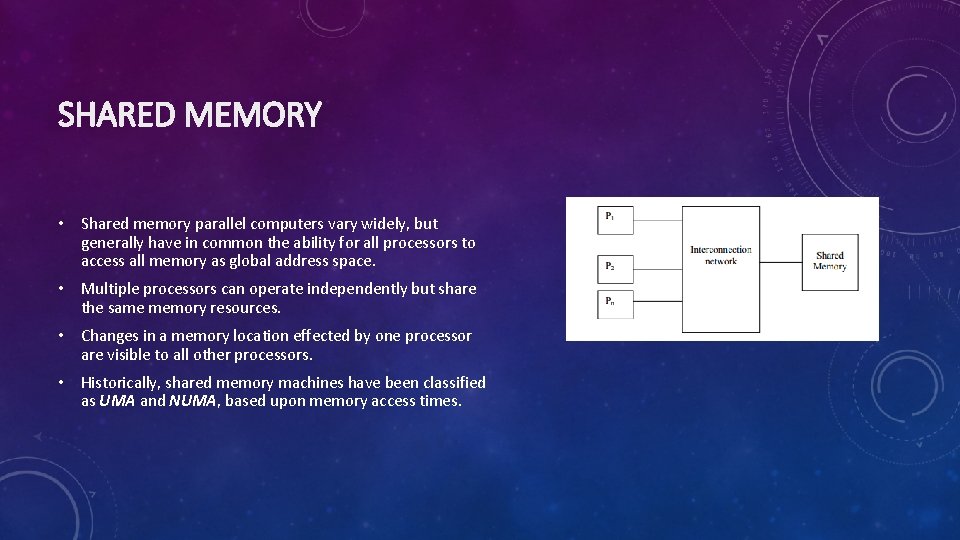

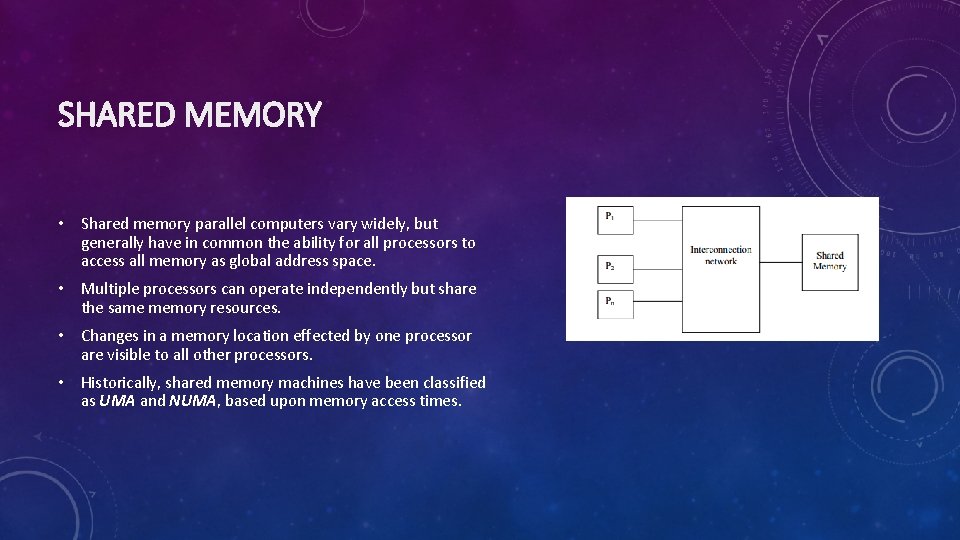

SHARED MEMORY • Shared memory parallel computers vary widely, but generally have in common the ability for all processors to access all memory as global address space. • Multiple processors can operate independently but share the same memory resources. • Changes in a memory location effected by one processor are visible to all other processors. • Historically, shared memory machines have been classified as UMA and NUMA, based upon memory access times.

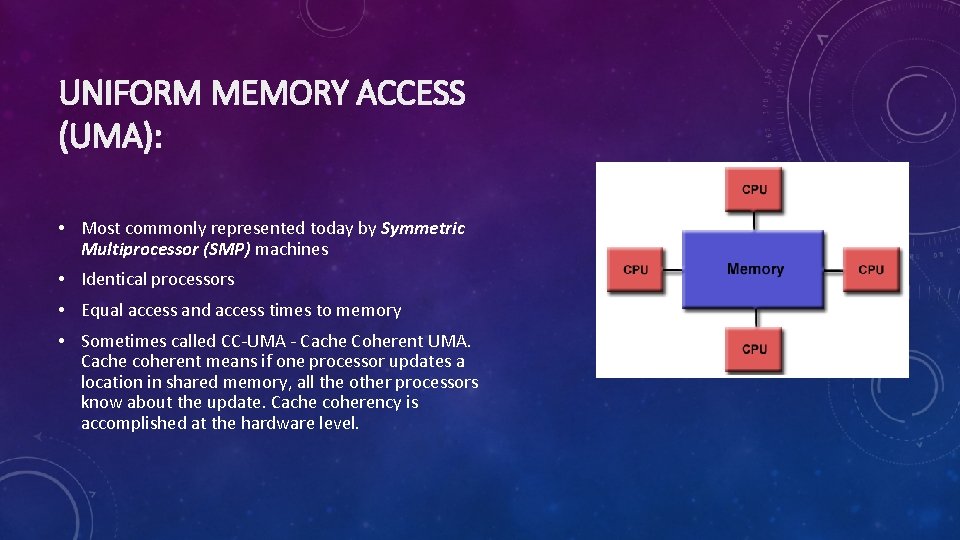

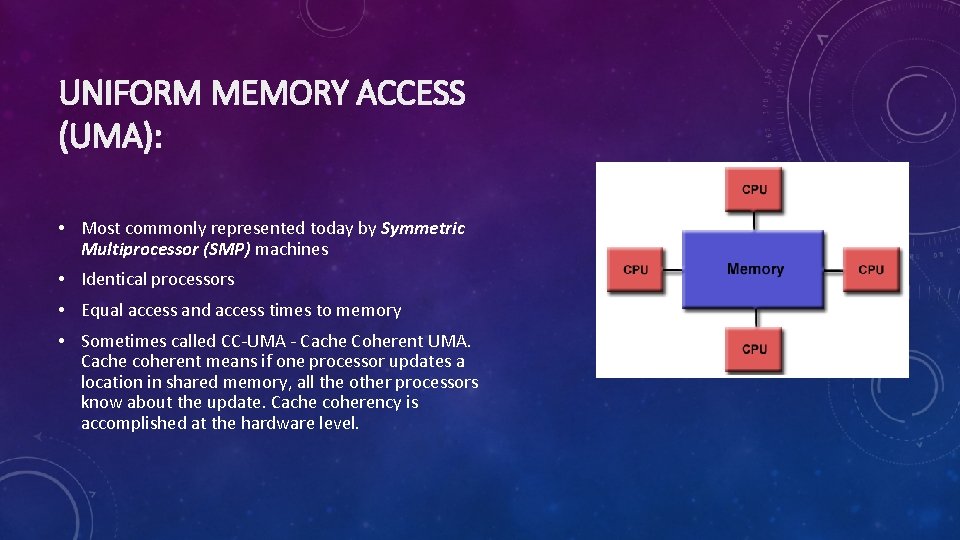

UNIFORM MEMORY ACCESS (UMA): • Most commonly represented today by Symmetric Multiprocessor (SMP) machines • Identical processors • Equal access and access times to memory • Sometimes called CC-UMA - Cache Coherent UMA. Cache coherent means if one processor updates a location in shared memory, all the other processors know about the update. Cache coherency is accomplished at the hardware level.

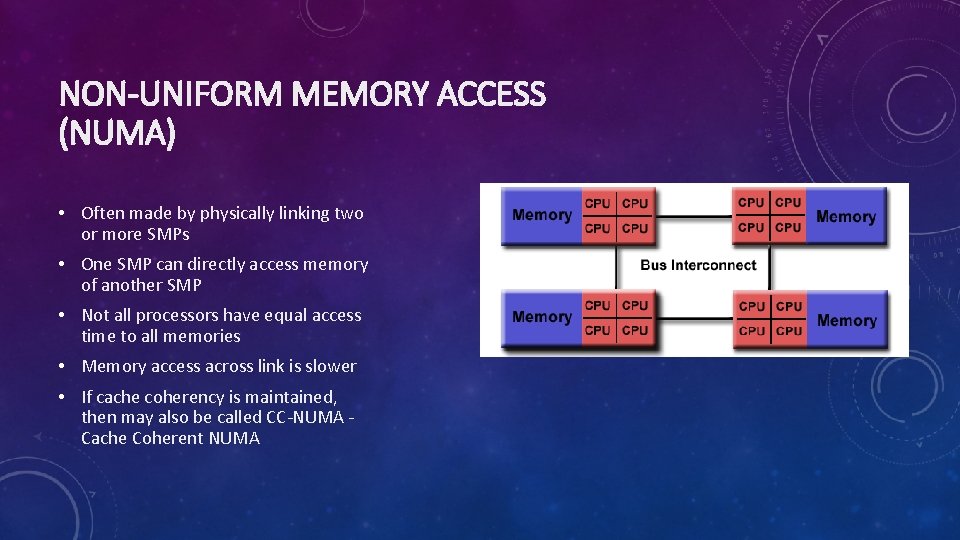

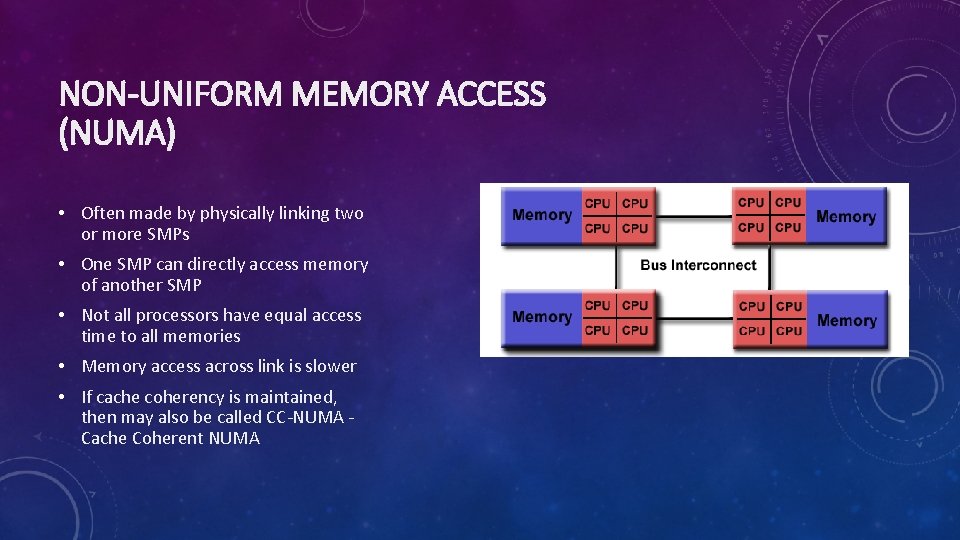

NON-UNIFORM MEMORY ACCESS (NUMA) • Often made by physically linking two or more SMPs • One SMP can directly access memory of another SMP • Not all processors have equal access time to all memories • Memory access across link is slower • If cache coherency is maintained, then may also be called CC-NUMA - Cache Coherent NUMA

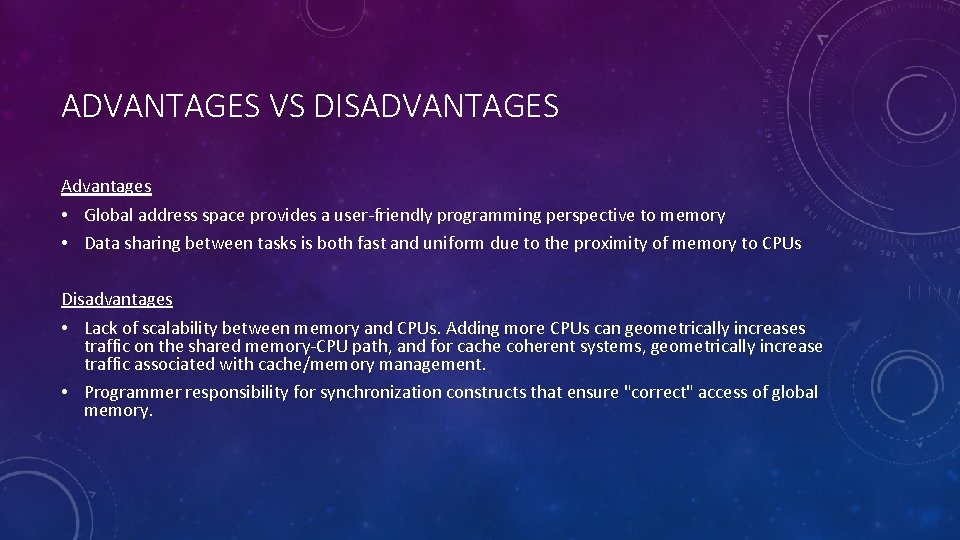

ADVANTAGES VS DISADVANTAGES Advantages • Global address space provides a user-friendly programming perspective to memory. • Data sharing between tasks is both fast and uniform due to the proximity of memory to CPUs. Disadvantages • Lack of scalability between memory and CPUs: adding more CPUs can geometrically increase traffic on the shared memory-CPU path and, for cache coherent systems, geometrically increase traffic associated with cache/memory management. • The programmer is responsible for the synchronization constructs that ensure "correct" access of global memory.

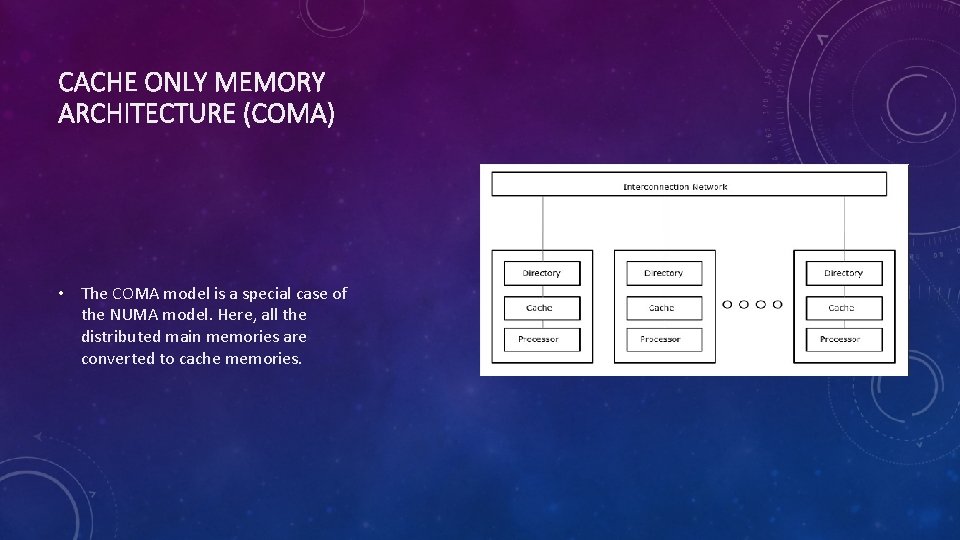

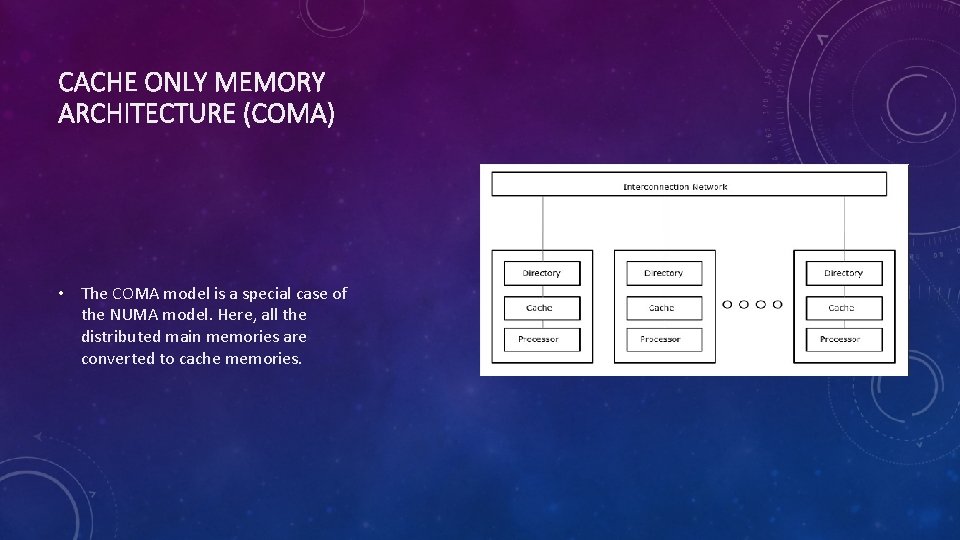

CACHE ONLY MEMORY ARCHITECTURE (COMA) • The COMA model is a special case of the NUMA model. Here, all the distributed main memories are converted to cache memories.

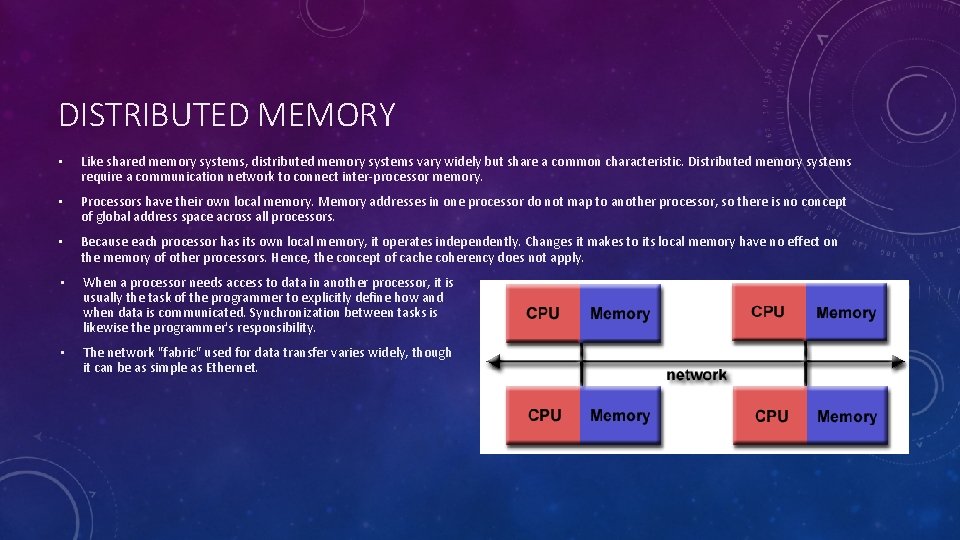

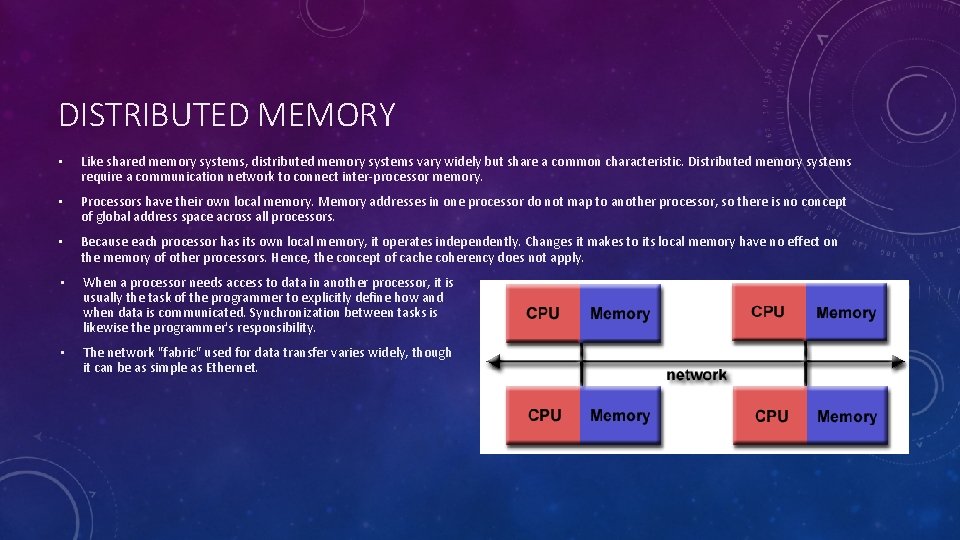

DISTRIBUTED MEMORY • Like shared memory systems, distributed memory systems vary widely but share a common characteristic. Distributed memory systems require a communication network to connect inter-processor memory. • Processors have their own local memory. Memory addresses in one processor do not map to another processor, so there is no concept of global address space across all processors. • Because each processor has its own local memory, it operates independently. Changes it makes to its local memory have no effect on the memory of other processors. Hence, the concept of cache coherency does not apply. • When a processor needs access to data in another processor, it is usually the task of the programmer to explicitly define how and when data is communicated. Synchronization between tasks is likewise the programmer's responsibility. • The network "fabric" used for data transfer varies widely, though it can be as simple as Ethernet.

ADVANTAGES VS DISADVANTAGES Advantages • Memory is scalable with the number of processors. Increase the number of processors and the size of memory increases proportionately. • Each processor can rapidly access its own memory without interference and without the overhead incurred with trying to maintain global cache coherency. • Cost effectiveness: can use commodity, off-the-shelf processors and networking. Disadvantages • The programmer is responsible for many of the details associated with data communication between processors. • It may be difficult to map existing data structures, based on global memory, to this memory organization. • Non-uniform memory access times - data residing on a remote node takes longer to access than node local data.

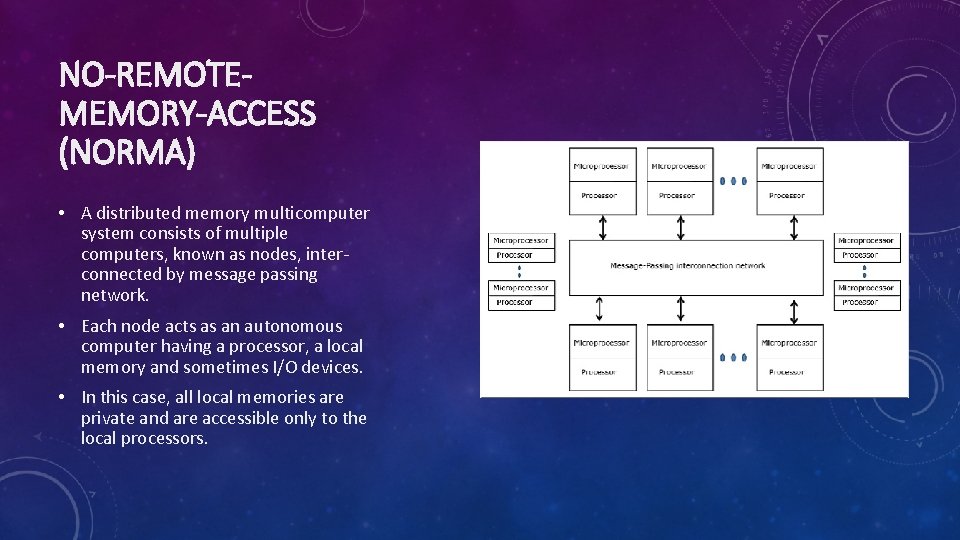

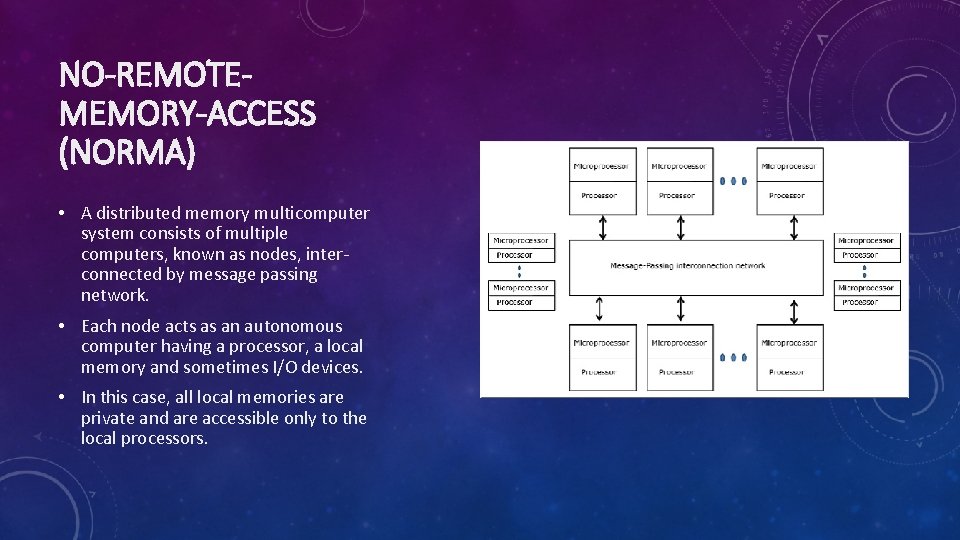

NO-REMOTE-MEMORY-ACCESS (NORMA) • A distributed memory multicomputer system consists of multiple computers, known as nodes, interconnected by a message-passing network. • Each node acts as an autonomous computer having a processor, a local memory and sometimes I/O devices. • In this case, all local memories are private and are accessible only to the local processors.

HYBRID DISTRIBUTED – SHARED MEMORY • The largest and fastest computers in the world today employ both shared and distributed memory architectures. • The shared memory component can be a shared memory machine and/or graphics processing units (GPU). • The distributed memory component is the networking of multiple shared memory/GPU machines, which know only about their own memory - not the memory on another machine. Therefore, network communications are required to move data from one machine to another. • Current trends seem to indicate that this type of memory architecture will continue to prevail and increase at the high end of computing for the foreseeable future.

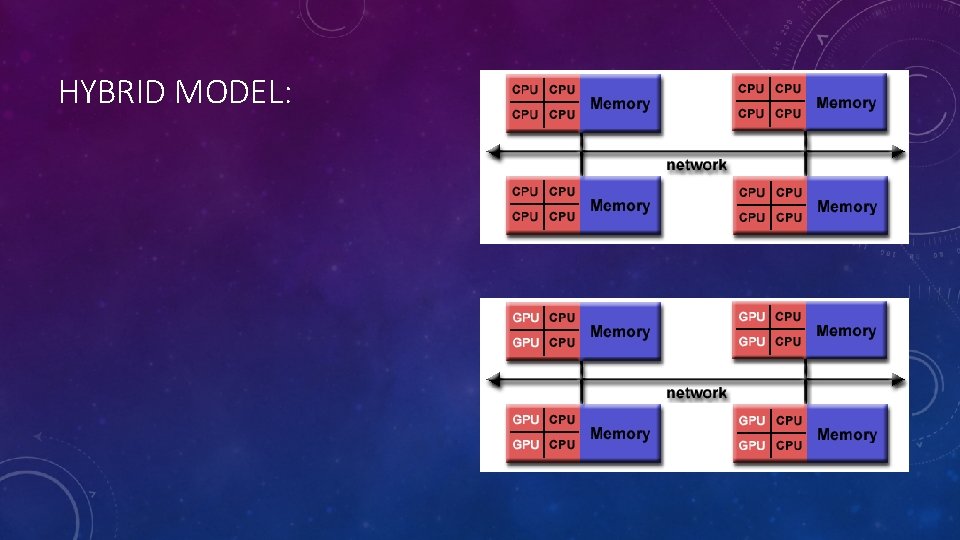

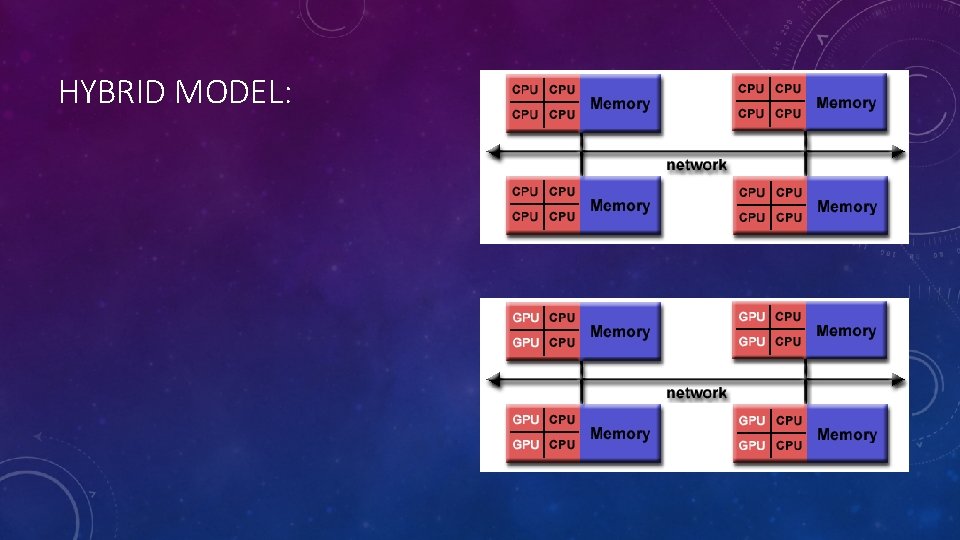

HYBRID MODEL:

ADVANTAGES AND DISADVANTAGES • Increased scalability is an important advantage • Increased programmer complexity is an important disadvantage

HANDLER’S CLASSIFICATION • Classification system for describing the pipelining and parallelism of computers. • Key Terms • Processor control unit (PCU): A Processor or CPU • Arithmetic logic unit (ALU): A Functional Unit or Processing Element • Bit-level circuit (BLC): Logic Circuit Needed to Perform One-bit Operations in the ALU

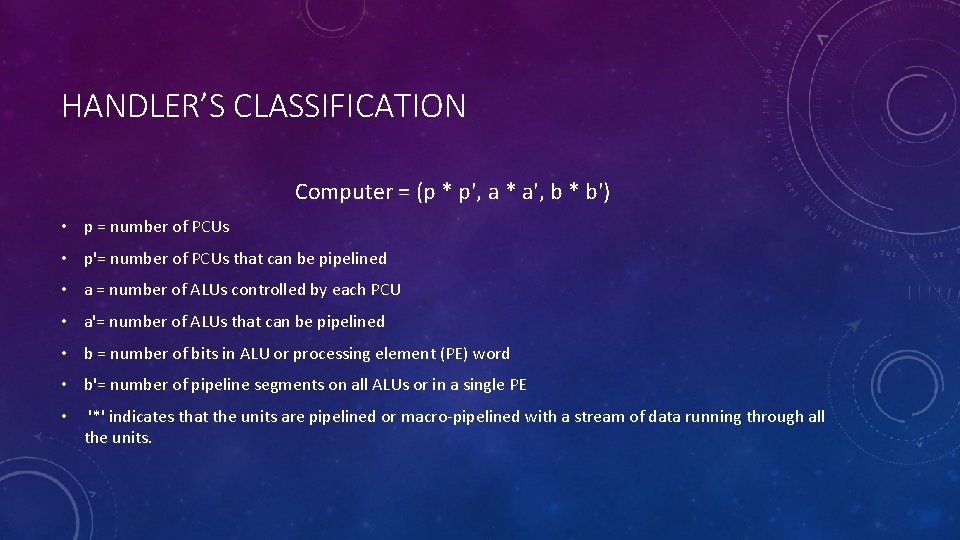

HANDLER’S CLASSIFICATION Computer = (p * p', a * a', b * b') • p = number of PCUs • p'= number of PCUs that can be pipelined • a = number of ALUs controlled by each PCU • a'= number of ALUs that can be pipelined • b = number of bits in ALU or processing element (PE) word • b'= number of pipeline segments on all ALUs or in a single PE • '*' indicates that the units are pipelined or macro-pipelined with a stream of data running through all the units.
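To make the six parameters concrete, the sketch below stores them in a C struct and prints the triple in the slide's notation. The struct and function names, and the example machine (one PCU, four ALUs, 64-bit words processed in an 8-segment pipeline), are hypothetical values chosen for illustration, not a real computer taken from Handler's work.

```c
#include <stdio.h>

/* Handler's triple (p * p', a * a', b * b'), using the slide's symbols. */
typedef struct {
    int p, p_prime;   /* number of PCUs, and PCUs that can be pipelined  */
    int a, a_prime;   /* ALUs per PCU, and ALUs that can be pipelined    */
    int b, b_prime;   /* bits per ALU/PE word, and pipeline segments     */
} handler_triple;

/* Writes the triple into buf as "(p * p', a * a', b * b')" and returns
   the number of characters snprintf would have written. */
int handler_format(const handler_triple *c, char *buf, size_t len)
{
    return snprintf(buf, len, "(%d * %d, %d * %d, %d * %d)",
                    c->p, c->p_prime, c->a, c->a_prime,
                    c->b, c->b_prime);
}
```

For the hypothetical machine, `handler_triple m = {1, 1, 4, 1, 64, 8};` formats as `(1 * 1, 4 * 1, 64 * 8)`.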

GRAIN SIZE The architectures described above, and the needs of the program being run on the architecture, determine the grain size, or level of parallelism, that may be exploited. • Instruction Level: Finest grain of parallelism. Causes the highest overhead to coordinate work. • Loop Level: Can be achieved by the compiler. Can be more difficult to organize, depending on the loop structure. • Procedure Level: Up to the programmer to coordinate. • Program Level: Coarsest grain of parallelism. Can usually be coordinated by the operating system.

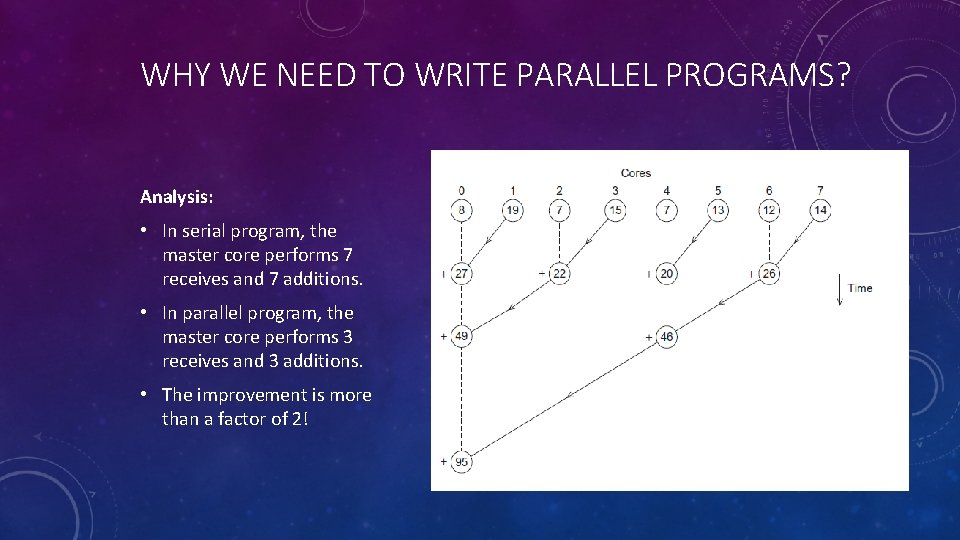

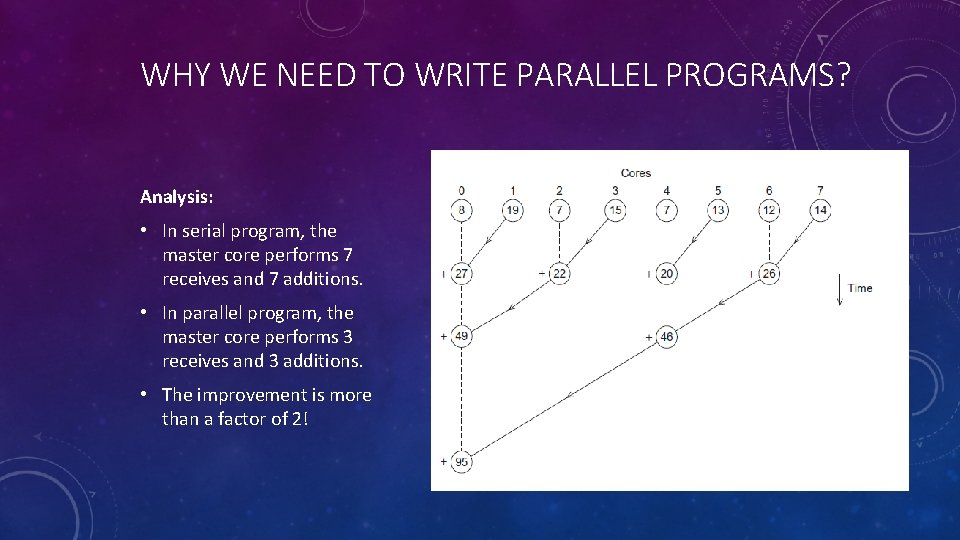

WHY DO WE NEED TO WRITE PARALLEL PROGRAMS? Analysis (global sum on 8 cores): • In the serial program, the master core performs 7 receives and 7 additions. • In the parallel (tree-structured) program, the master core performs 3 receives and 3 additions. • The improvement is more than a factor of 2 (7/3 ≈ 2.3)!
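The 7 versus 3 counts can be reproduced with a small serial C simulation, assuming 8 cores (the master plus the 7 cores it receives from) and assuming the tree-structured scheme pairs cores at distance 1, 2 and 4 in successive rounds. Core 0 plays the master; `tree_sum` and `serial_sum` are hypothetical names, and no real messages are sent: a "receive" is modelled as reading another core's array slot.

```c
/* Serial scheme: core 0 receives every other core's value in turn.
   On return vals[0] holds the total; returns core 0's receive count. */
int serial_sum(int vals[], int p)
{
    int master_steps = 0;
    for (int r = 1; r < p; r++) {
        vals[0] += vals[r];   /* one receive + one addition */
        master_steps++;
    }
    return master_steps;
}

/* Tree-structured scheme: in the round with distance `stride`, core r
   (a multiple of 2*stride) receives from core r + stride and adds.
   On return vals[0] holds the total; returns core 0's receive count. */
int tree_sum(int vals[], int p)
{
    int master_steps = 0;
    for (int stride = 1; stride < p; stride *= 2) {
        for (int r = 0; r + stride < p; r += 2 * stride)
            vals[r] += vals[r + stride];
        master_steps++;       /* core 0 takes part in every round */
    }
    return master_steps;
}
```

With 8 cores, `serial_sum` reports 7 steps for core 0 and `tree_sum` reports 3 (one per round: distances 1, 2 and 4); both leave the same total in `vals[0]`.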

DEPENDENCIES Parallel programming can be quite challenging. Instructions must be independent of one another in order to be run in parallel. Types of Dependencies: • Flow Dependence: The output of one instruction is the input of another. • Antidependence: One instruction overwrites data that was previously read by another instruction. Reordering them can cause data corruption. • Output Dependence: Two instructions write to the same location. Changing their order can leave an invalid final value. • I/O Dependence: Two instructions call for I/O operations on the same file. Such dependencies must be taken into consideration when running programs on parallel computers.
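A minimal C sketch of the first three dependence types, using hypothetical variables; the I/O dependence case is omitted because it needs a file. The statement labels S1 to S6 exist only for the comments. In each pair, running the second statement first (or both at once) would change the result.

```c
/* Demonstrates flow, anti- and output dependences. The values written
   to the out-parameters are the ones produced by the correct order. */
void dependence_examples(int *out_flow, int *out_anti, int *out_output)
{
    int a = 1, b = 2, c, x;

    /* Flow (true) dependence, read-after-write:
       S2 reads c, which S1 writes, so S2 must run after S1. */
    c = a + b;            /* S1: c == 3 */
    *out_flow = c * 2;    /* S2: 6 */

    /* Antidependence, write-after-read:
       S4 overwrites a, which S3 reads. */
    x = a + 10;           /* S3: reads a == 1, so x == 11 */
    a = 100;              /* S4: if run before S3, x would be 110 */
    *out_anti = x;

    /* Output dependence, write-after-write:
       S5 and S6 both write b; the final value must come from S6. */
    b = 5;                /* S5 */
    b = 7;                /* S6 */
    *out_output = b;
}
```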

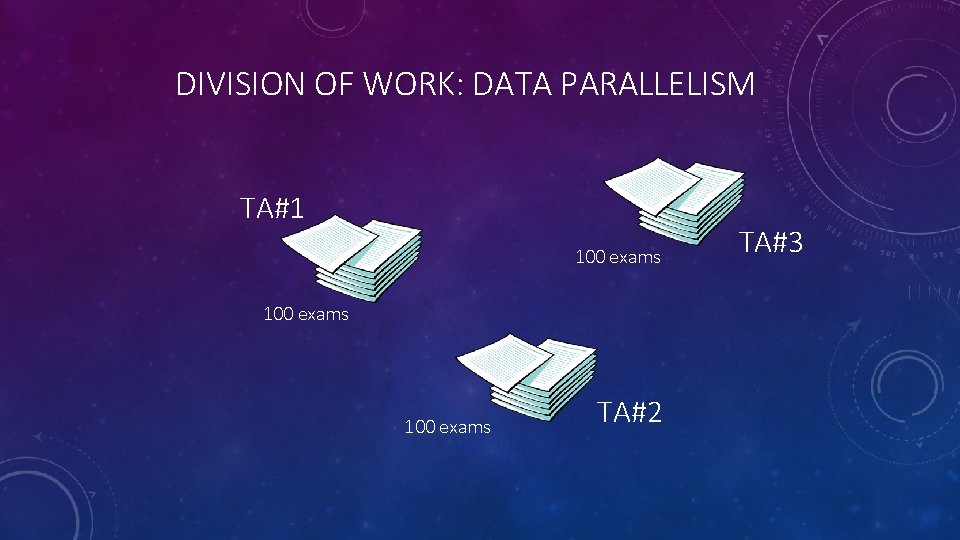

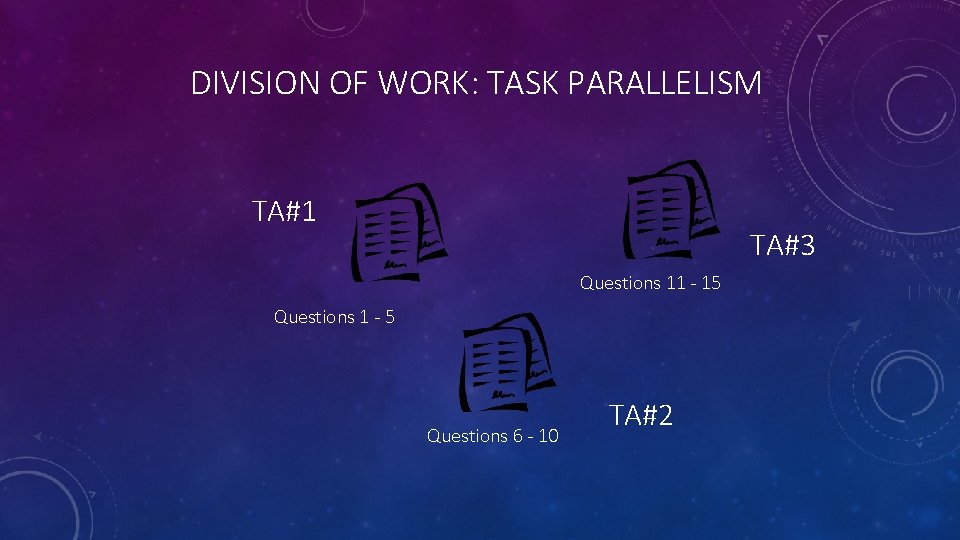

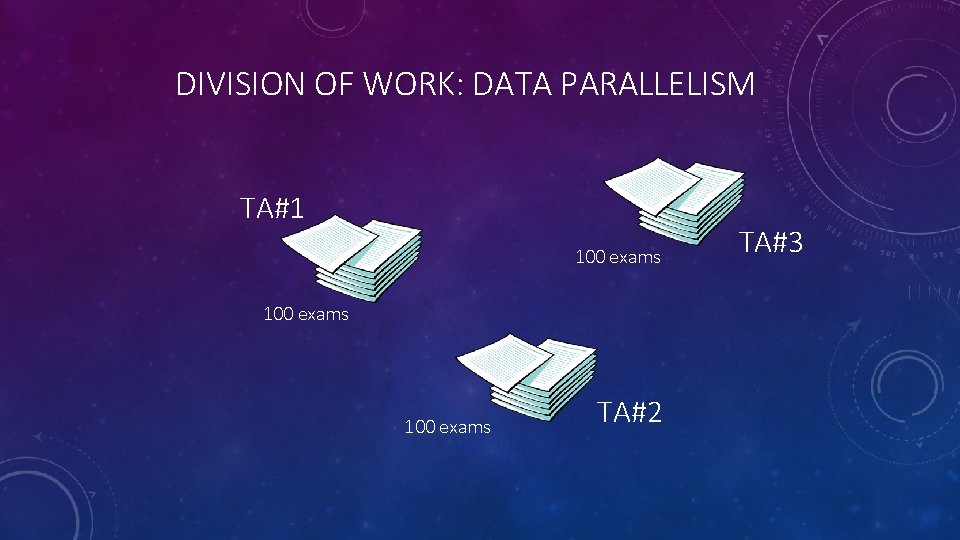

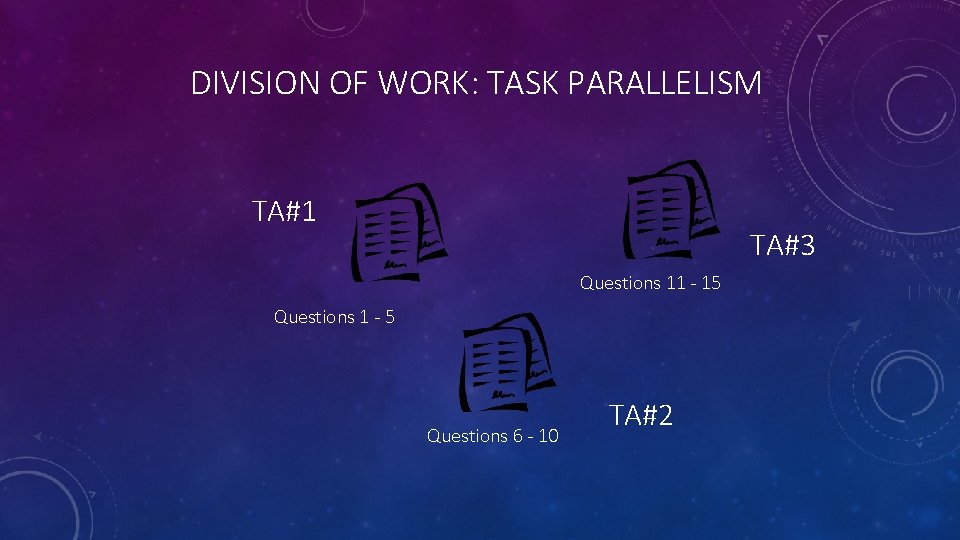

HOW DO WE WRITE PARALLEL PROGRAMS? • Task parallelism • Partition the various tasks carried out in solving the problem among the cores. • Data parallelism • Partition the data used in solving the problem among the cores. • Each core carries out similar operations on its part of the data. Example: three TAs grading 300 exams, each with 15 questions.

DIVISION OF WORK: DATA PARALLELISM • TA#1: 100 exams • TA#2: 100 exams • TA#3: 100 exams

DIVISION OF WORK: TASK PARALLELISM • TA#1: Questions 1 - 5 • TA#2: Questions 6 - 10 • TA#3: Questions 11 - 15
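The two divisions of the exam-grading work can be sketched in C as index ranges. This assumes 300 exams, 15 questions and 3 TAs, with TA#1 taking questions 1 to 5, TA#2 questions 6 to 10 and TA#3 questions 11 to 15; the counts divide evenly, and the function and type names are hypothetical. Indices are 0-based and ranges are half-open, so questions 1 to 5 are indices 0 to 4.

```c
#define NUM_TAS        3
#define NUM_EXAMS    300
#define NUM_QUESTIONS 15

/* Half-open range [first, last) of exam or question indices. */
typedef struct { int first, last; } work_range;

/* Data parallelism: TA `ta` (0, 1, 2 for TA#1..TA#3) grades all
   15 questions on its own block of exams. */
work_range exams_for_ta(int ta)
{
    int per_ta = NUM_EXAMS / NUM_TAS;        /* 100 exams each */
    work_range r = { ta * per_ta, (ta + 1) * per_ta };
    return r;
}

/* Task parallelism: TA `ta` grades its own block of questions on
   all 300 exams. */
work_range questions_for_ta(int ta)
{
    int per_ta = NUM_QUESTIONS / NUM_TAS;    /* 5 questions each */
    work_range r = { ta * per_ta, (ta + 1) * per_ta };
    return r;
}
```

In both cases every TA does the same amount of grading; what differs is whether the data (exams) or the tasks (questions) are split.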

PARALLEL PROGRAM DESIGN : FOSTER’S METHODOLOGY • Partitioning : Divide the computation to be performed and the data operated on by the computation into small tasks. • Communication : Determine what communication needs to be carried out among the tasks identified in the previous step. • Agglomeration or Aggregation : Combine tasks and communications identified in the first step into larger tasks. • Mapping : assign the composite tasks identified in the previous step to processes/threads.
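As one possible illustration of the mapping step, suppose the partitioning step produced n small tasks (say, n array elements to add) and agglomeration groups consecutive tasks, one group per process. The sketch below, with the hypothetical name `block_range`, assigns process `rank` a contiguous block; when p does not divide n, the first n % p processes get one extra task. It is a common block-partitioning scheme, not the only valid mapping.

```c
/* Mapping sketch: give process `rank` (0 <= rank < p) the half-open
   block [*first, *last) of the n tasks. Blocks differ in size by at
   most one, and the first n % p processes get the larger blocks. */
void block_range(int n, int p, int rank, int *first, int *last)
{
    int q   = n / p;    /* base block size */
    int rem = n % p;    /* processes that get one extra task */

    if (rank < rem) {
        *first = rank * (q + 1);
        *last  = *first + q + 1;
    } else {
        *first = rank * q + rem;
        *last  = *first + q;
    }
}
```

For example, with n = 10 and p = 3 the blocks are [0, 4), [4, 7) and [7, 10).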

WHAT WE’LL BE DOING: • Learning to write programs that are explicitly parallel. • Using three APIs: • Message-Passing Interface (MPI) • POSIX Threads (Pthreads) • OpenMP (Open Multi-Processing)

MPI – DISTRIBUTED MEMORY SYSTEM • A library used within conventional sequential languages (Fortran, C, C++). • Based on Single Program, Multiple Data (SPMD). • MPI_Init: Tells MPI to do all the necessary setup. • MPI_Finalize: Tells MPI we’re done, so it can clean up anything allocated for this program. • Point-to-point communication: MPI_Send, MPI_Recv • Collective communications: MPI_Reduce, MPI_Allreduce, MPI_Bcast, MPI_Scatter, MPI_Gather, MPI_Allgather, MPI_Barrier

PTHREADS: SHARED MEMORY SYSTEM • Threads are analogous to a “light-weight” process. • In Pthreads programs, all the threads have access to global variables, while local variables usually are private to the thread running the function. • Race condition: Multiple threads attempt to access a shared resource, at least one of the accesses is an update, and the result depends on the order in which the accesses occur. • Critical section: Block of code that updates a shared resource that can only be updated by one thread at a time. • Avoiding conflicting access to critical sections: busy-waiting, mutexes, semaphores. • Read-write lock: Used when it’s safe for multiple threads to simultaneously read a data structure, but if a thread needs to modify or write to the data structure, then only that thread can access the data structure during the modification.
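A minimal Pthreads sketch of a critical section protected by a mutex: several threads increment one global counter. Without the lock, `counter++` is a race condition (a read-modify-write on a shared variable) and updates can be lost. The names `counter`, `worker` and `run_counter`, and the limit of 64 threads, are hypothetical choices for illustration.

```c
#include <pthread.h>

#define MAX_THREADS 64

/* Shared resource: a global variable visible to all threads. */
static long counter = 0;
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

static void *worker(void *arg)
{
    long n = *(const long *)arg;   /* local copy, private to this thread */
    for (long i = 0; i < n; i++) {
        pthread_mutex_lock(&counter_lock);    /* enter critical section */
        counter++;                            /* update shared variable */
        pthread_mutex_unlock(&counter_lock);  /* leave critical section */
    }
    return NULL;
}

/* Starts up to MAX_THREADS threads, each adding `iterations` to the
   counter, waits for all of them and returns the final value. */
long run_counter(int nthreads, long iterations)
{
    pthread_t threads[MAX_THREADS];
    int created = 0;

    if (nthreads > MAX_THREADS)
        nthreads = MAX_THREADS;
    counter = 0;

    for (int t = 0; t < nthreads; t++) {
        if (pthread_create(&threads[t], NULL, worker, &iterations) != 0)
            break;                 /* stop if a thread cannot be created */
        created++;
    }
    for (int t = 0; t < created; t++)
        pthread_join(threads[t], NULL);

    return counter;
}
```

`iterations` is only read by the threads, so sharing its address is safe. For the read-write lock case, POSIX provides `pthread_rwlock_rdlock`, `pthread_rwlock_wrlock` and `pthread_rwlock_unlock` in place of the mutex calls.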

OPENMP: SHARED MEMORY SYSTEM • OpenMP uses both special functions and preprocessor directives called pragmas. • OpenMP programs start multiple threads rather than multiple processes. • A reduction is a computation that repeatedly applies the same reduction operator to a sequence of operands in order to get a single result. • All intermediate results are stored in the same variable, the reduction variable. • No explicit critical section is required when a reduction clause is used. • By default most systems use a block-partitioning of the iterations in a parallelized for loop.
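A minimal OpenMP sketch of a reduction: summing 1..n in a parallelized for loop. With `reduction(+: sum)` each thread accumulates into a private copy of `sum`, and OpenMP combines the copies with `+` when the loop ends, so no critical section is written. `schedule(static)` explicitly requests contiguous, roughly equal blocks of iterations, one per thread. The function name `omp_sum` is hypothetical; compile with OpenMP enabled (e.g. `gcc -fopenmp`). Without OpenMP the pragma is ignored and the loop simply runs serially with the same result.

```c
/* Returns 1 + 2 + ... + n, computed with an OpenMP sum reduction. */
long long omp_sum(long long n)
{
    long long sum = 0;   /* the reduction variable */

#pragma omp parallel for reduction(+: sum) schedule(static)
    for (long long i = 1; i <= n; i++)
        sum += i;        /* each thread updates its private copy */

    return sum;
}
```

For example, `omp_sum(1000)` returns 500500 regardless of the number of threads.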

THANK YOU!

BIBLIOGRAPHY • Lawrence Livermore National Laboratory. Introduction to Parallel Computing. https://computing.llnl.gov/tutorials/parallel_comp/ • Deng, Y. (2013). Applied parallel computing. Singapore; Hong Kong: World Scientific. (Available online at KSU Library.) • Pacheco, P. (2011). An introduction to parallel programming. Amsterdam; Boston: Morgan Kaufmann. (Available online at KSU Library.) • Razdan, S. (2014). Fundamentals of parallel computing. (Available online at KSU Library.) • Wikipedia. Parallel computing. https://en.wikipedia.org/wiki/Parallel_computing