Parallel computing on the grid: Experiences from computational finance


Parallel computing on the grid: Experiences from computational finance
Ian STOKES-REES, INRIA Sophia-Antipolis, France

Outline
- Reminder: Grid Vision
- Grid Computing Strategies
- Parallel Application Development on the Grid
- ProActive
- PicsouGrid Project

Reminder: Grid Vision
- Federated
- Large scale
- Heterogeneous
- Collaborative
- Dynamic
- Globally distributed

Strategy 1: Infrastructure-level Grid
- Fire-and-forget, non-interactive “tasks”
  - Queued individually
  - Run individually
  - Results collected and collated at a later date
- Example problems: particle physics computing (reconstruction, Monte Carlo simulation)
- Example systems: EGEE/WLCG, NGS, TeraGrid, OSG, Grid5000
- Users: have traditional computing tasks, with no “grid” in them, and just need CPUs to run them on

Strategy 2: Application-level Grid
- Builds on infrastructure grid resources
- Provides a complete application
  - Grid interface built into the application
  - Or the application is the only way to access the underlying grid
- Example applications: eDiaMoND, myGrid, UNICORE
- Users: specific to the application, but are “end users”. They typically don’t expect to “download and install” software; rather, they use a “grid application” designed for their specific needs.

Strategy 3: Library-level grid
- Single-system application linked with a grid library
- (Semi-)transparently handles application deployment and execution across grid resources
- Developers look after “grid” issues either directly or via library APIs/functionality
- Varying levels of transparency in current offerings
- Example libraries: Globus, OMII services, gLite (perhaps not yet), ProActive, MPICH-G2 (Globus MPI), GridMPI
- Users: software developers who want to leverage grid computing in their applications

Parallel Application Development on the Grid
- Big grid resources are out there (WLCG, NGS, OSG, Grid5000)
  - Managed by other people (great!)
  - Not always possible to install software individually on each system and monitor/tweak its operation (not so great!)
- Remember the “Grid Vision”:
  - Heterogeneous
  - Dynamic
  - Federated
- How do we develop parallel algorithms/applications for distributed, heterogeneous systems?

Parallel Application Development on the Grid (II)
- Synchronisation is difficult (obviously)
- Distributed logging is difficult
- Distributed debugging is really difficult
- Requires a slightly different development paradigm:
  - Granularity of computation needs to be coarser
  - Asynchrony is important to avoid blocking
  - Simplicity is important to aid debugging and reduce sources of error

ProActive: Value Proposition
- Java VM to reduce/eliminate hardware and software heterogeneity
  - Forces use of Java everywhere
  - Doesn’t hide performance differences!
- Benefits from reflection and dynamic class loading
  - Wraps objects in a “gridified” subclass
- Provides asynchrony and multi-threading through “Active Objects”
  - Futures
  - Wait-by-necessity
  - And other features auto-magically added onto wrapped classes, either by developers or at run time via the Active Object factory

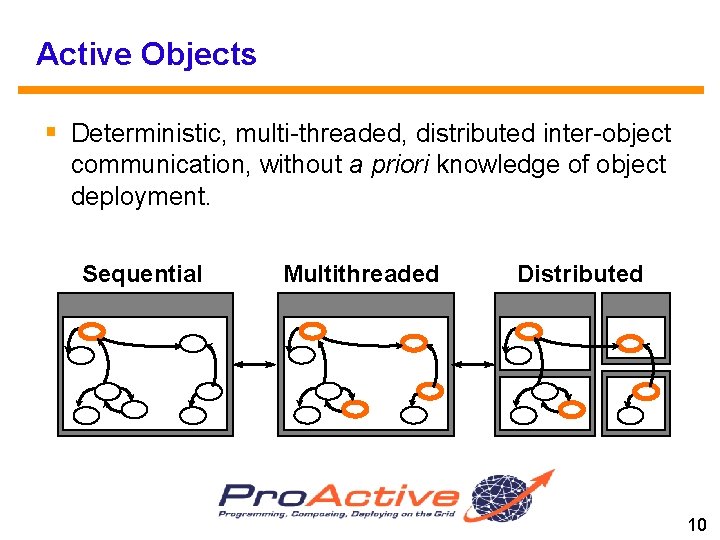

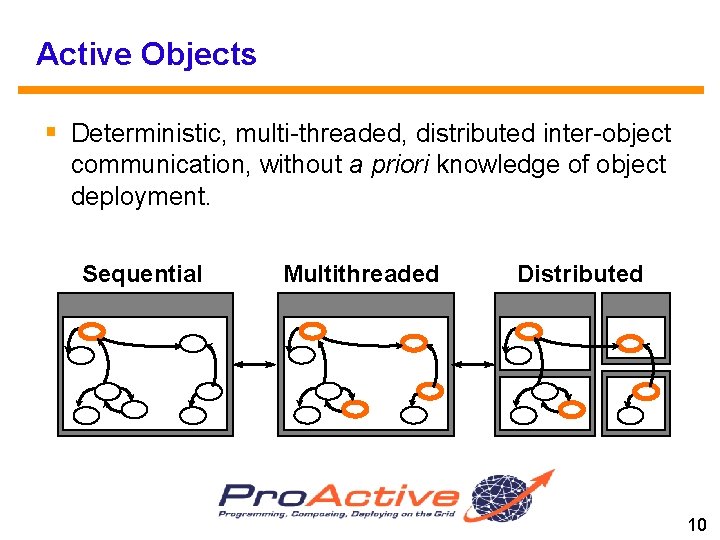

Active Objects
- Deterministic, multi-threaded, distributed inter-object communication, without a priori knowledge of object deployment

(Figure: sequential, multithreaded, and distributed configurations)

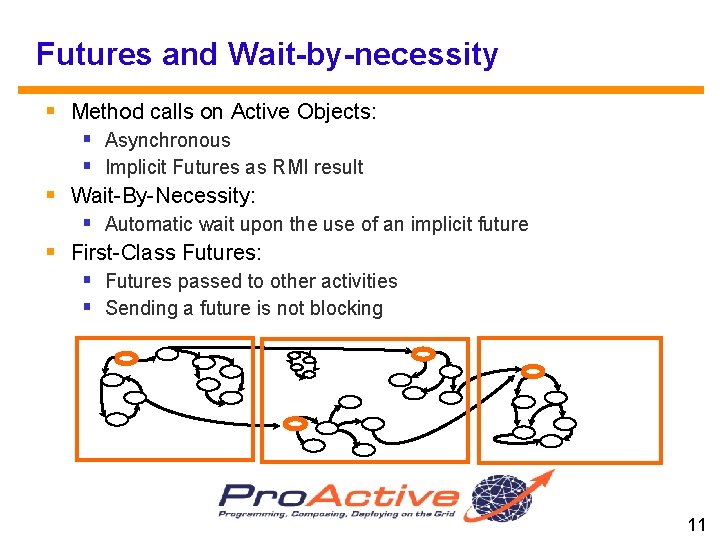

Futures and Wait-by-necessity
- Method calls on Active Objects:
  - Asynchronous
  - Implicit futures as RMI result
- Wait-by-necessity:
  - Automatic wait upon use of an implicit future
- First-class futures:
  - Futures can be passed to other activities
  - Sending a future is not blocking
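The futures semantics above can be imitated with plain Java’s `CompletableFuture`. This is only an analogy and not ProActive’s API: ProActive futures are implicit and the wait happens transparently when the result is used, whereas here the caller calls `join()` explicitly. `slowSquare` stands in for a hypothetical remote method.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class FutureDemo {
    // Hypothetical "remote" method: simulates a slow computation.
    static int slowSquare(int x) {
        try {
            Thread.sleep(200);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return x * x;
    }

    // Asynchronous call: returns a future immediately instead of the result.
    static CompletableFuture<Integer> callAsync(ExecutorService pool, int x) {
        return CompletableFuture.supplyAsync(() -> slowSquare(x), pool);
    }

    // Passing a future on to another activity does not block (first-class future):
    // the follow-up work is chained onto the future rather than waiting for it.
    static CompletableFuture<Integer> addOne(CompletableFuture<Integer> f) {
        return f.thenApply(v -> v + 1);
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        long t0 = System.nanoTime();
        CompletableFuture<Integer> r = callAsync(pool, 7); // returns at once
        CompletableFuture<Integer> s = addOne(r);          // still no blocking
        long issueMs = (System.nanoTime() - t0) / 1_000_000;
        int value = s.join(); // the "wait-by-necessity" point (explicit here)
        System.out.println("issued in ~" + issueMs + " ms, result = " + value);
        pool.shutdown();
    }
}
```

Running `main` should report a result of 50 after roughly 200 ms, while the calls that produce `r` and `s` return almost immediately.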

Creating Active Objects
- `MyClass obj_norm = new MyClass(<params>);`
- `MyClass obj_act = newActive("MyClass", <params>);`
- `Result r1 = obj_norm.foo(param);`
- `Result r2 = obj_act.foo(param);`
- `Result r3 = obj_act.bar(param);`
- `// ...`
- `r2.bar(); // Wait-By-Necessity`

In other words, very little effort is required from the developer to introduce distributed multi-threading into an application.

Active Object Internals
- Standard object vs. active object
- An active object is composed of several objects:
  1. The object being activated (the active object)
  2. A single thread
  3. The queue of pending requests
  4. A set of standard Java objects

(Figure: a standard object compared with an active object, showing the Object, its Proxy, and its Body, numbered as above)
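The components listed above can be mimicked in a few dozen lines of plain Java. In this sketch (an illustration only, not ProActive’s implementation, which generates stub classes and supports richer request-service policies) a hypothetical `CounterProxy` turns each method call into a request, a `Body` owns the request queue and the single serving thread, and every call returns a future immediately. Because one thread serves the queue in FIFO order, results are deterministic.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Function;

class ActiveObjectSketch {
    // 1. The plain (passive) object being activated.
    static class Counter {
        private int total = 0;
        int add(int x) { total += x; return total; }
    }

    // A pending request: the call to apply plus the future it will complete.
    static final class Request<T, R> {
        final Function<T, R> call;
        final CompletableFuture<R> future = new CompletableFuture<>();
        Request(Function<T, R> call) { this.call = call; }
        void serve(T target) {
            try { future.complete(call.apply(target)); }
            catch (RuntimeException e) { future.completeExceptionally(e); }
        }
    }

    // Body: holds the queue of pending requests (3) and the single thread (2).
    static final class Body<T> {
        private final BlockingQueue<Request<T, ?>> queue = new LinkedBlockingQueue<>();
        private final Thread thread;
        Body(T target) {
            thread = new Thread(() -> {
                try {
                    while (true) queue.take().serve(target); // FIFO service, one at a time
                } catch (InterruptedException e) {
                    // interrupted: stop serving requests
                }
            });
            thread.setDaemon(true);
            thread.start();
        }
        <R> CompletableFuture<R> enqueue(Function<T, R> call) {
            Request<T, R> req = new Request<>(call);
            queue.add(req);
            return req.future;
        }
        void stop() { thread.interrupt(); }
    }

    // Proxy: same methods as Counter, but each call becomes a queued request.
    static final class CounterProxy {
        private final Body<Counter> body;
        CounterProxy(Counter c) { body = new Body<>(c); }
        CompletableFuture<Integer> add(int x) { return body.enqueue(c -> c.add(x)); }
        void stop() { body.stop(); }
    }

    public static void main(String[] args) {
        CounterProxy counter = new CounterProxy(new Counter());
        CompletableFuture<Integer> a = counter.add(5);  // returns immediately
        CompletableFuture<Integer> b = counter.add(10); // queued behind the first call
        System.out.println(a.join() + " " + b.join()); // prints "5 15"
        counter.stop();
    }
}
```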

And lots of other nice features…
- P2P interface
- File sharing/distribution
- Security
- Typed group communication (OO SPMD)
- Graphical distributed monitoring/debugging
  - IC2D application
- Timing and performance API (TimIT)
- Object migration
- Load balancing
- Fault tolerance/checkpointing
- Run-time deployment configuration (clusters/nodes)
- Component model
- Plus lots of docs, APIs, tutorials, examples, etc.

Check it out
- Web: http://proactive.objectweb.org
- Email: Ian.Stokes-Rees@inria.fr
- Or, ask me more about it at the pub
- BTW, the group has a spin-off company coming this summer…

PicsouGrid: Computational Finance on the Grid
- What?
  - Option pricing
- Why?
  - Surprisingly, not done much in a grid domain
  - Not many openly available implementations (parallel or not)
- How?
  - ProActive (i.e. Java) on Grid5000 and other grids (WLCG, NGS, DAS-3, …)

High Level Project Objectives
- Framework for distributed computational finance algorithms
- Investigate grid component model: http://gridcomp.ercim.org/
- Implement open source versions of parallel algorithms for computational finance
- Utilise ProActive grid middleware
- Deploy and evaluate on various grid platforms:
  - Grid5000 (France)
  - DAS-3 (Netherlands)
  - EGEE (Europe)

Grid Emphasis
- This presentation and the subsequent paper focus on developing an architecture for parallel grid computing that is:
  - Multi-site (5+)
  - Large scale (500-2000 cores)
  - Long term (days to weeks)
  - Multi-grid (2+)
- Consequently, they de-emphasize computational finance-specific aspects (i.e. algorithms and application domain)
  - However, other team members are working hard on this!

ProActive
- http://www.objectweb.org/proactive
- Java library for distributed computing
- Developed by INRIA Sophia Antipolis, France (Project OASIS)
- 50-100 person-years of R&D work invested
- Provides transparent asynchronous distributed method calls
- Implemented on top of Java RMI
- Fully documented (600-page manual)
- Available under LGPL
- Used in commercial applications
- Graphical debugger

ProActive (II)
- OO SPMD with “Active Objects”
  - Any Java object can automatically be turned into an “Active Object”
  - Utilises Java reflection
  - “Wait-by-necessity” and “futures” let method calls return immediately; a subsequent access to the result object blocks until the result is ready
  - Objects appear local but may be deployed on any system within the ProActive environment (local system/cluster, or remote system, cluster, or grid)
- Easy integration with existing systems
  - Extensions seamlessly support various cluster, network, and grid environments: Globus, ssh, http(s), LSF, PBS, SGE, EGEE, Grid5000

Background – Options
- Options: financial instruments which allow buyers to bet on future asset prices and sellers to reduce the risk of owning an asset
  - Call option: allows the holder to purchase an asset at a fixed price in the future
  - Put option: allows the holder to sell an asset at a fixed price in the future
- Option pricing:
  - European: fixed future exercise date
  - American: can be exercised any time up to the expiry date
  - Basket: option on a weighted set (basket) of underlying assets
  - Barrier: exercise depends on a certain barrier price being reached
- Uses Monte Carlo simulations
- Possibility to aggregate statistical results
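As an illustration of Monte Carlo pricing with aggregatable statistics, the sketch below prices a European call or put under the standard Black-Scholes assumption of geometric Brownian motion. It is not PicsouGrid’s code, and all numerical inputs are hypothetical. Each packet returns a path count, a sum, and a sum of squares, so packets computed on different workers can be merged before the mean and standard error are taken.

```java
import java.util.Random;

class EuropeanMonteCarlo {
    // Partial statistics from one packet of simulations; packets can be merged.
    static final class Stats {
        final long n;
        final double sum, sumSq;
        Stats(long n, double sum, double sumSq) { this.n = n; this.sum = sum; this.sumSq = sumSq; }
        Stats merge(Stats o) { return new Stats(n + o.n, sum + o.sum, sumSq + o.sumSq); }
        double mean() { return sum / n; }
        double stdError() {
            double m = mean();
            double var = (sumSq / n - m * m) * n / (n - 1); // unbiased sample variance
            return Math.sqrt(Math.max(var, 0) / n);
        }
    }

    // Simulates n terminal prices S_T = S0 * exp((r - sigma^2/2) T + sigma sqrt(T) Z)
    // and accumulates the discounted payoffs.
    static Stats simulate(double s0, double strike, double r, double sigma, double t,
                          boolean isCall, long n, long seed) {
        Random rng = new Random(seed);
        double drift = (r - 0.5 * sigma * sigma) * t;
        double vol = sigma * Math.sqrt(t);
        double discount = Math.exp(-r * t);
        double sum = 0, sumSq = 0;
        for (long i = 0; i < n; i++) {
            double st = s0 * Math.exp(drift + vol * rng.nextGaussian());
            double payoff = isCall ? Math.max(st - strike, 0) : Math.max(strike - st, 0);
            double v = discount * payoff;
            sum += v;
            sumSq += v * v;
        }
        return new Stats(n, sum, sumSq);
    }

    public static void main(String[] args) {
        // Hypothetical inputs; 1e6 paths split into 4 independently seeded packets.
        Stats total = new Stats(0, 0, 0);
        for (int p = 0; p < 4; p++) {
            total = total.merge(simulate(100, 100, 0.05, 0.2, 1.0, true, 250_000, 42 + p));
        }
        System.out.printf("call = %.3f +/- %.3f%n", total.mean(), total.stdError());
    }
}
```

With S0 = K = 100, r = 5%, σ = 20% and T = 1, the Black-Scholes closed form gives a call price of about 10.45, which the merged estimate should match within a few standard errors.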

Background – PicsouGrid v1, 2, 3
- Original versions of PicsouGrid utilised:
  - Grid5000
  - ProActive
  - JavaSpaces
- Implemented European Simple, Basket, and Barrier pricing
- Medium-size distributed system: 4 sites, 180 nodes
- Short operational runs (5-10 minutes)
- Fault tolerance mechanisms
- Achieved 90x speed-up with 140 systems (65% efficiency)
- Reported at e-Science 2006 (Amsterdam, Nov 2006): “A Fault Tolerant and Multi-Paradigm Grid Architecture for Time Constrained Problems. Application to Option Pricing in Finance.”

PicsouGrid v3 Performance

(Figure panels: multi-site, peak speed-up, performance degradation)

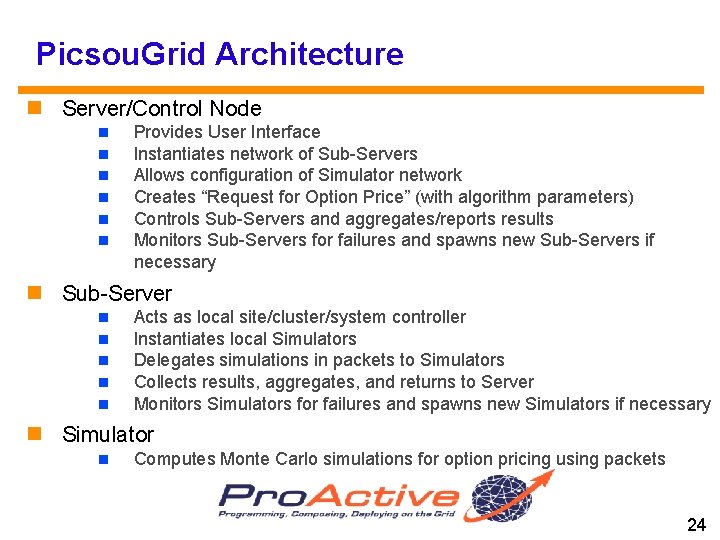

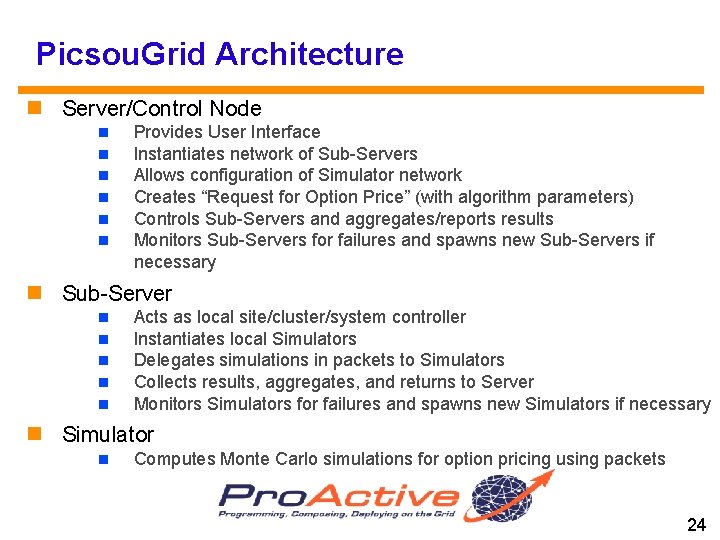

PicsouGrid Architecture
- Server/Control Node
  - Provides user interface
  - Instantiates network of Sub-Servers
  - Allows configuration of Simulator network
  - Creates “Request for Option Price” (with algorithm parameters)
  - Controls Sub-Servers and aggregates/reports results
  - Monitors Sub-Servers for failures and spawns new Sub-Servers if necessary
- Sub-Server
  - Acts as local site/cluster/system controller
  - Instantiates local Simulators
  - Delegates simulations in packets to Simulators
  - Collects results, aggregates them, and returns them to the Server
  - Monitors Simulators for failures and spawns new Simulators if necessary
- Simulator
  - Computes Monte Carlo simulations for option pricing using packets
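A single-JVM sketch of the Server / Sub-Server / Simulator delegation, with thread pools standing in for sites and nodes. The packet sizes, pool sizes, seeding scheme, and the European call used as the per-packet workload are all hypothetical, and the failure monitoring described above is omitted. The key property is that partial results (path count and payoff sum) combine by simple addition, so each Sub-Server can aggregate locally before reporting to the Server.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ThreeTierSketch {
    // Partial result of one or more packets: paths simulated and sum of discounted payoffs.
    static final class Partial {
        final long paths;
        final double sum;
        Partial(long paths, double sum) { this.paths = paths; this.sum = sum; }
        Partial plus(Partial o) { return new Partial(paths + o.paths, sum + o.sum); }
    }

    // Simulator: one packet of European call paths under GBM (hypothetical inputs).
    static Partial simulatePacket(long paths, long seed) {
        Random rng = new Random(seed);
        double s0 = 100, k = 100, r = 0.05, sigma = 0.2, t = 1.0;
        double drift = (r - 0.5 * sigma * sigma) * t, vol = sigma * Math.sqrt(t);
        double sum = 0;
        for (long i = 0; i < paths; i++) {
            double st = s0 * Math.exp(drift + vol * rng.nextGaussian());
            sum += Math.exp(-r * t) * Math.max(st - k, 0);
        }
        return new Partial(paths, sum);
    }

    // Waits for a result, converting checked exceptions into unchecked ones.
    static Partial await(Future<Partial> f) {
        try {
            return f.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        }
    }

    // Sub-Server: delegates packets to its local Simulators and aggregates the results.
    static Partial subServer(int simulators, int packets, long packetSize, long seedBase) {
        ExecutorService local = Executors.newFixedThreadPool(simulators);
        try {
            List<Future<Partial>> pending = new ArrayList<>();
            for (int p = 0; p < packets; p++) {
                long seed = seedBase + p;
                pending.add(local.submit(() -> simulatePacket(packetSize, seed)));
            }
            Partial acc = new Partial(0, 0);
            for (Future<Partial> f : pending) acc = acc.plus(await(f));
            return acc;
        } finally {
            local.shutdown();
        }
    }

    // Server: one Sub-Server per site, then a global aggregation into the option price.
    static double server(int sites, int simulatorsPerSite, int packetsPerSite, long packetSize) {
        ExecutorService siteControllers = Executors.newFixedThreadPool(sites);
        try {
            List<Future<Partial>> pending = new ArrayList<>();
            for (int s = 0; s < sites; s++) {
                long seedBase = 1_000_000L * s; // disjoint seeds per site while packetsPerSite < 1e6
                pending.add(siteControllers.submit(
                        () -> subServer(simulatorsPerSite, packetsPerSite, packetSize, seedBase)));
            }
            Partial total = new Partial(0, 0);
            for (Future<Partial> f : pending) total = total.plus(await(f));
            return total.sum / total.paths;
        } finally {
            siteControllers.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.printf("call price ~ %.3f%n", server(3, 4, 8, 20_000));
    }
}
```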

PicsouGrid Deployment and Operation

(Figure: Client, Server, Sub-Servers, ProActive Workers, reserve workers, DB, and JavaSpace virtual shared memory (up to v3); messages shown: option pricing request, MC simulation packet, MC result, heartbeat monitor)
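Failure detection of the kind performed by the heartbeat monitor can be sketched as a table of last-seen times. This is a hypothetical design, not PicsouGrid’s implementation: the timeout value and worker identifiers are assumptions, and time is passed in explicitly so the logic is easy to test. A Server or Sub-Server would call `reapFailed` periodically and spawn replacements for the workers it returns.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class HeartbeatMonitor {
    private final long timeoutMillis;
    // Last heartbeat time (ms) received from each worker.
    private final Map<String, Long> lastSeen = new ConcurrentHashMap<>();

    HeartbeatMonitor(long timeoutMillis) { this.timeoutMillis = timeoutMillis; }

    // Called by each worker at a regular interval.
    void heartbeat(String workerId, long nowMillis) { lastSeen.put(workerId, nowMillis); }

    // Returns (sorted) the workers silent for longer than the timeout and forgets them,
    // so the caller can spawn replacements and resubmit their packets.
    List<String> reapFailed(long nowMillis) {
        List<String> failed = new ArrayList<>();
        for (Map.Entry<String, Long> e : lastSeen.entrySet()) {
            if (nowMillis - e.getValue() > timeoutMillis) failed.add(e.getKey());
        }
        failed.forEach(lastSeen::remove);
        failed.sort(null);
        return failed;
    }

    public static void main(String[] args) {
        HeartbeatMonitor m = new HeartbeatMonitor(10_000);
        m.heartbeat("worker-1", 0);
        m.heartbeat("worker-2", 0);
        m.heartbeat("worker-1", 8_000);           // worker-2 goes silent
        System.out.println(m.reapFailed(15_000)); // prints [worker-2]
    }
}
```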

PicsouGrid v5 Design Objectives
- Multi-grid
  - Grid5000
  - gLite/EGEE
  - INRIA Sophia desktop cluster
- Decoupled workers
  - Autonomous
  - Independent deployment and operation
  - P2P discovery and acquisition
- Long-running, multi-algorithm
  - Create a “standing” application
  - Augment (or reduce) the P2P worker network based on demand
  - Computational tasks specify algorithm and parameters

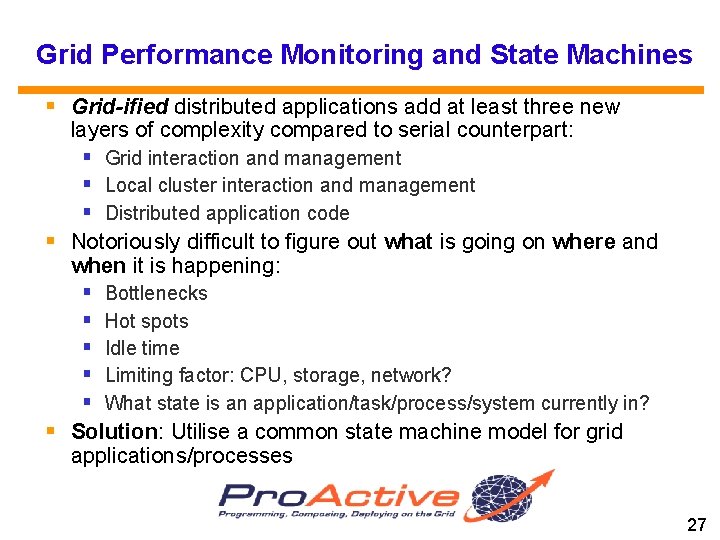

Grid Performance Monitoring and State Machines
- Grid-ified distributed applications add at least three new layers of complexity compared to their serial counterparts:
  - Grid interaction and management
  - Local cluster interaction and management
  - Distributed application code
- Notoriously difficult to figure out what is going on, where, and when it is happening:
  - Bottlenecks
  - Hot spots
  - Idle time
  - Limiting factor: CPU, storage, network?
- What state is an application/task/process/system currently in?
- Solution: utilise a common state machine model for grid applications/processes

Layered System

(Figure: nested layers, from outermost to innermost: Grid, Site, Cluster, Host, Core, VM, Process)

“Proof” of layering
- What I execute on a Grid5000 submit (UI) node:
  `mysub -l nodes=30 es-bench 1e6`
- What eventually runs on the worker node:
  `/bin/sh -c /usr/lib/oarexecuser.sh /tmp/OAR_59658 30 59658 istokes-rees /bin/bash ~/proc/fgrillon1.nancy.grid5000.fr/submit N script-wrapper ~/bin/script-wrapper fgrillon1.nancy.grid5000.fr ~/es-bench 1e6`
- Granted, this is nothing more than good system design and separation of concerns
- We are just looking at the implicit API layers of “the grid”
- Universal interface: command shell, environment variables, and file system

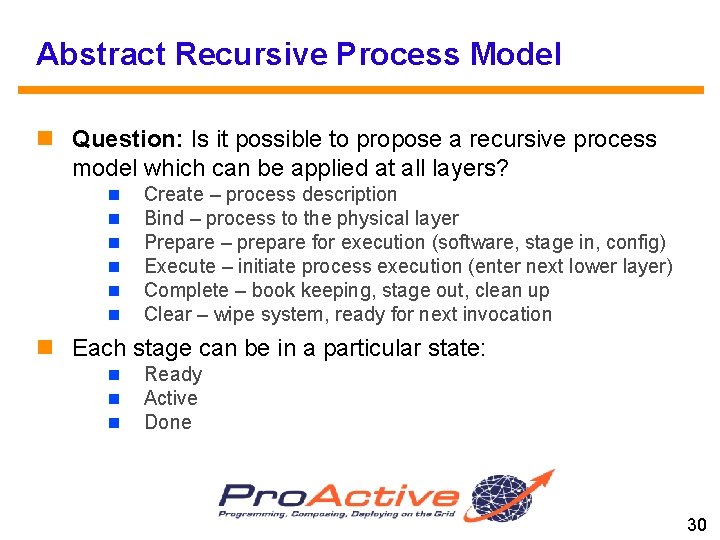

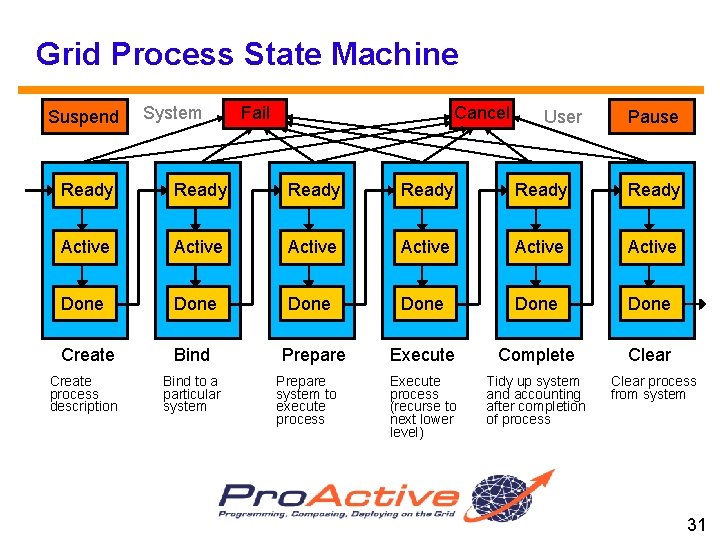

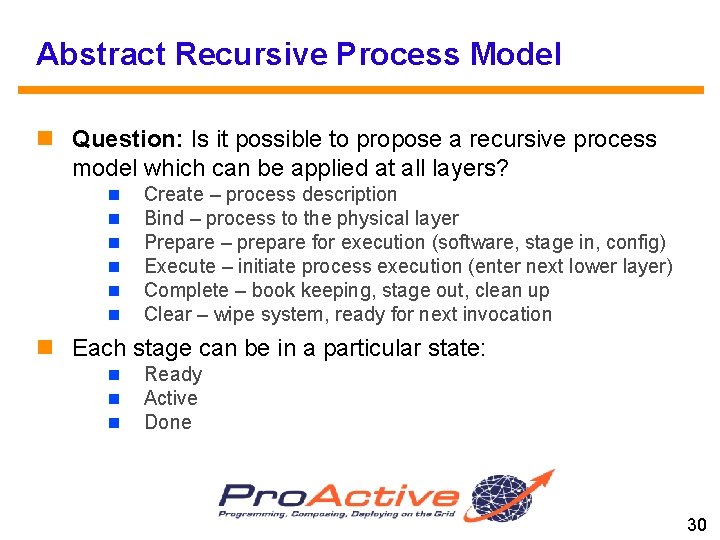

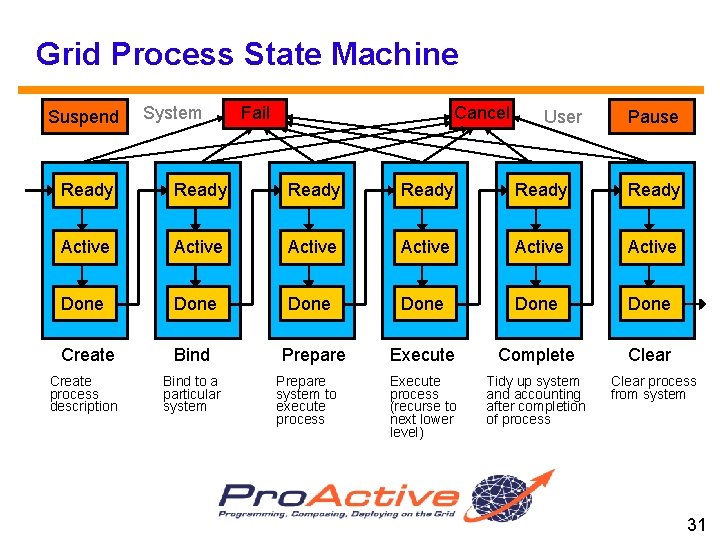

Abstract Recursive Process Model
- Question: is it possible to propose a recursive process model which can be applied at all layers?
  - Create: process description
  - Bind: process to the physical layer
  - Prepare: prepare for execution (software, stage in, config)
  - Execute: initiate process execution (enter next lower layer)
  - Complete: bookkeeping, stage out, clean up
  - Clear: wipe system, ready for next invocation
- Each stage can be in a particular state:
  - Ready
  - Active
  - Done
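One way to express the recursive model in code: every layer of the layered system (Grid, Site, Cluster, Host, Core, VM, Process) walks through the same six stages, each passing through Ready, Active, Done, and the Execute stage recurses into the next lower layer. The stage and state names come from the slide; the `run` method and the `LAYER.STAGE.STATE` trace strings are illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;

class RecursiveProcessModel {
    enum Layer { GRID, SITE, CLUSTER, HOST, CORE, VM, PROCESS } // outermost to innermost
    enum Stage { CREATE, BIND, PREPARE, EXECUTE, COMPLETE, CLEAR }
    enum State { READY, ACTIVE, DONE }

    // Runs the six stages at the given layer; EXECUTE recurses into the next lower layer.
    static void run(Layer layer, List<String> trace) {
        for (Stage stage : Stage.values()) {
            for (State state : State.values()) {
                trace.add(layer + "." + stage + "." + state);
                boolean hasLower = layer.ordinal() + 1 < Layer.values().length;
                if (stage == Stage.EXECUTE && state == State.ACTIVE && hasLower) {
                    run(Layer.values()[layer.ordinal() + 1], trace); // enter next lower layer
                }
            }
        }
    }

    public static void main(String[] args) {
        List<String> trace = new ArrayList<>();
        run(Layer.GRID, trace);
        trace.forEach(System.out::println);
    }
}
```

For seven layers this produces 7 × 6 × 3 = 126 trace entries, and `GRID.EXECUTE.DONE` appears only after every lower layer has reached `CLEAR.DONE`.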

Grid Process State Machine
- Stages, each passing through Ready, Active, and Done:
  - Create: create process description
  - Bind: bind to a particular system
  - Prepare: prepare system to execute process
  - Execute: execute process (recurse to next lower level)
  - Complete: tidy up system and accounting after completion of process
  - Clear: clear process from system
- Exceptional transitions shown in the figure: Suspend, Fail, Cancel, Pause (initiated by the system or the user)

CREAM Job States
- New LCG/EGEE Workload Management System
- Can be mapped to the Grid Process State Machine
  - This only shows one level of mapping
  - In practice, the state machine would be applied at the grid level, LRMS level, and task level
- Timestamps on state entry: Layer.Stage.State

(Figure: CREAM job states mapped onto the Create, Bind, Prepare, Execute, and Suspend stages, with terminal states Done, Failed, and Cancelled)
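With a timestamp recorded on entry to each `Layer.Stage.State`, durations such as queue time or run time become simple subtractions, which is what makes bottlenecks and idle time visible. The sketch below is hypothetical: which pair of states bounds “queue time” is an assumption, as are the sample timestamps.

```java
import java.util.HashMap;
import java.util.Map;

class StateTimestamps {
    // Entry time (ms) for keys of the form "Layer.Stage.State".
    private final Map<String, Long> entered = new HashMap<>();

    void enter(String layer, String stage, String state, long timeMillis) {
        entered.putIfAbsent(layer + "." + stage + "." + state, timeMillis); // first entry wins
    }

    // Elapsed time between entering two states; -1 if either was never reached.
    long between(String fromKey, String toKey) {
        Long from = entered.get(fromKey), to = entered.get(toKey);
        return (from == null || to == null) ? -1 : to - from;
    }

    public static void main(String[] args) {
        StateTimestamps ts = new StateTimestamps();
        ts.enter("Cluster", "Bind", "Active", 1_000);     // assumed: job waiting in the LRMS queue
        ts.enter("Cluster", "Execute", "Active", 61_000); // job starts on a node
        ts.enter("Process", "Execute", "Done", 128_300);  // application finishes
        System.out.println("queued ms: " + ts.between("Cluster.Bind.Active", "Cluster.Execute.Active"));
        System.out.println("run ms: " + ts.between("Cluster.Execute.Active", "Process.Execute.Done"));
    }
}
```

Here `main` prints a queue time of 60000 ms and a run time of 67300 ms.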

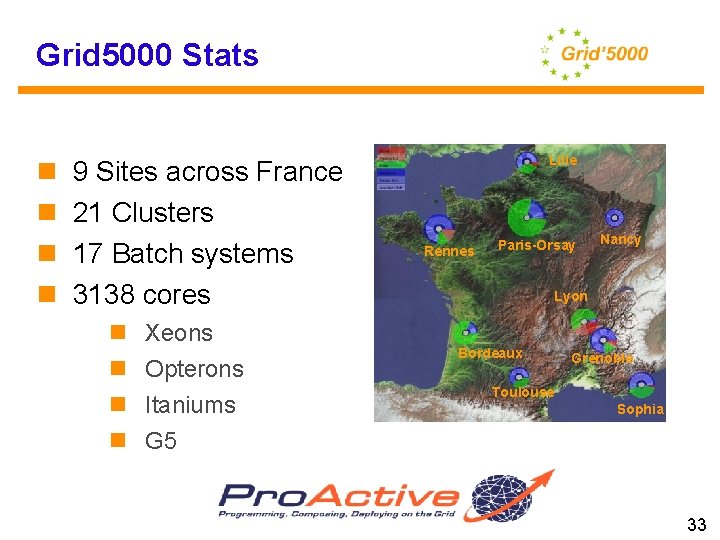

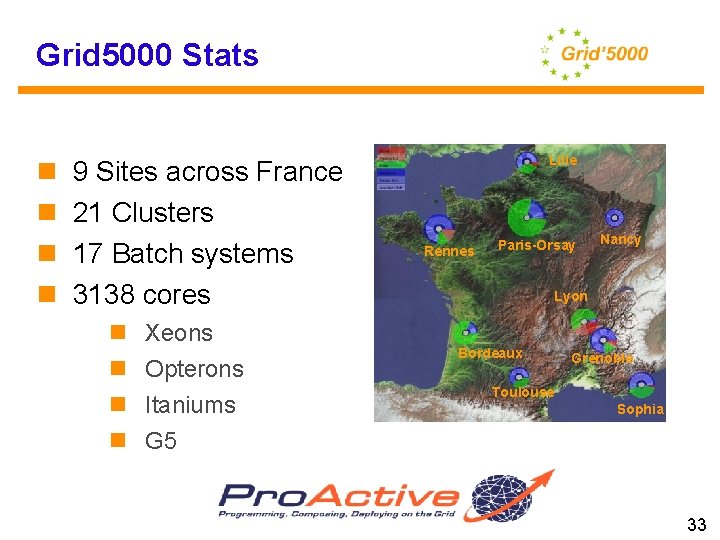

Grid5000 Stats
- 9 sites across France
- 21 clusters
- 17 batch systems
- 3138 cores: Xeons, Opterons, Itaniums
- Sites: Lille, Rennes, Paris-Orsay, Nancy, Lyon, Bordeaux, Grenoble, Toulouse, Sophia

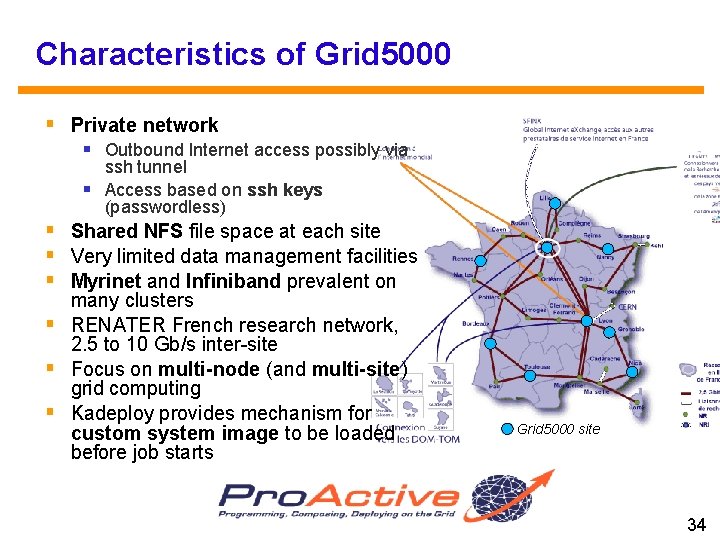

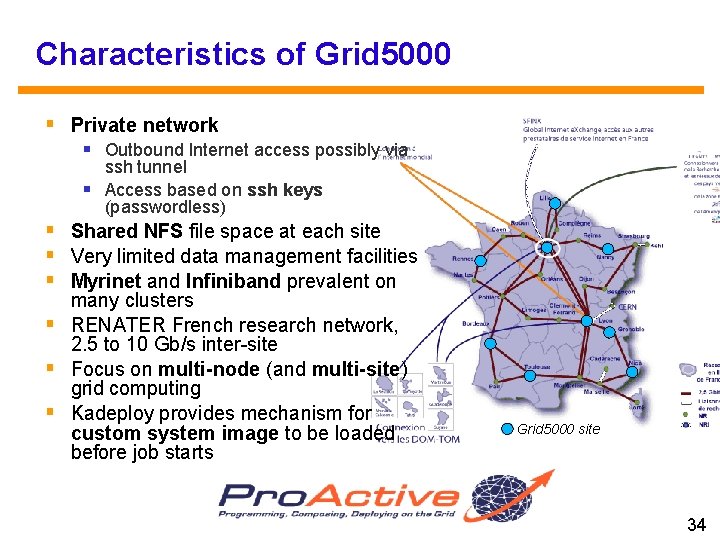

Characteristics of Grid5000
- Private network
  - Outbound Internet access possibly via ssh tunnel
- Access based on ssh keys (passwordless)
- Shared NFS file space at each site
- Very limited data management facilities
- Myrinet and InfiniBand prevalent on many clusters
- RENATER French research network, 2.5 to 10 Gb/s inter-site
- Focus on multi-node (and multi-site) grid computing
- Kadeploy provides a mechanism for a custom system image to be loaded before the job starts

(Figure: a Grid5000 site)

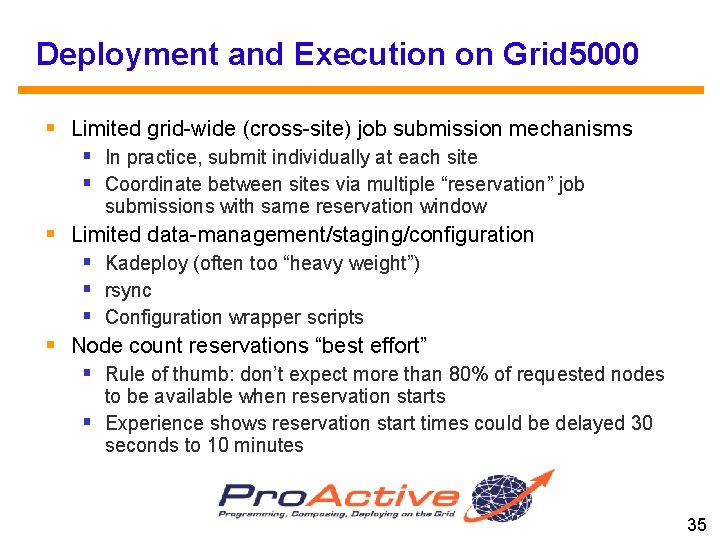

Deployment and Execution on Grid5000
- Limited grid-wide (cross-site) job submission mechanisms
  - In practice, submit individually at each site
  - Coordinate between sites via multiple “reservation” job submissions with the same reservation window
- Limited data management/staging/configuration
  - Kadeploy (often too “heavyweight”)
  - rsync
  - Configuration wrapper scripts
- Node count reservations are “best effort”
  - Rule of thumb: don’t expect more than 80% of requested nodes to be available when the reservation starts
  - Experience shows reservation start times could be delayed by 30 seconds to 10 minutes
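The 80% rule of thumb translates into over-requesting nodes. A minimal sketch, assuming a hypothetical per-site table of needed nodes and cluster sizes: request the smallest count whose 80% covers the need (integer ceiling division avoids floating-point rounding), capped at the cluster size, with all requests sharing the same reservation window.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class ReservationPlanner {
    // Smallest request r with r * availabilityPercent / 100 >= needed (integer ceiling).
    static int nodesToRequest(int needed, int availabilityPercent) {
        return (needed * 100 + availabilityPercent - 1) / availabilityPercent;
    }

    // Per-site requests for one shared reservation window, capped at each cluster's size.
    static Map<String, Integer> plan(Map<String, Integer> neededPerSite,
                                     Map<String, Integer> clusterSize, int availabilityPercent) {
        Map<String, Integer> requests = new LinkedHashMap<>();
        neededPerSite.forEach((site, needed) ->
                requests.put(site, Math.min(nodesToRequest(needed, availabilityPercent),
                                            clusterSize.getOrDefault(site, 0))));
        return requests;
    }

    public static void main(String[] args) {
        Map<String, Integer> needed = new LinkedHashMap<>();
        needed.put("nancy", 40);  // hypothetical node counts
        needed.put("lyon", 100);
        Map<String, Integer> size = Map.of("nancy", 120, "lyon", 110); // hypothetical sizes
        System.out.println(plan(needed, size, 80)); // prints {nancy=50, lyon=110}
    }
}
```

When the cap bites (as for the second site here), the shortfall has to be made up by requesting more nodes elsewhere.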

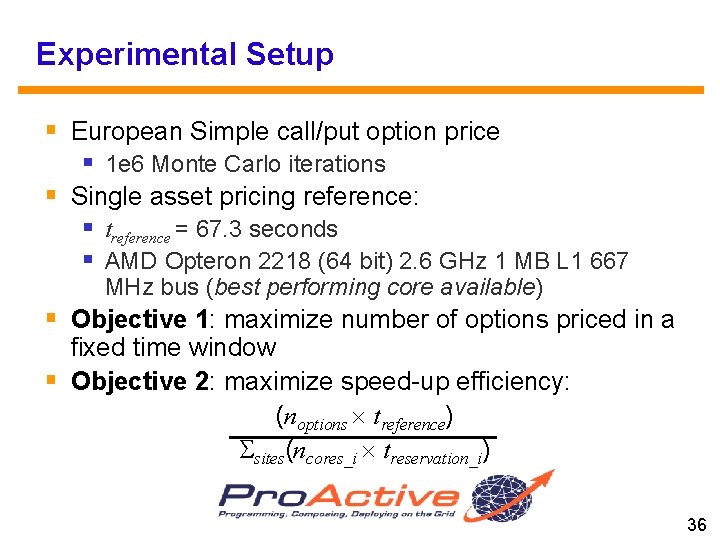

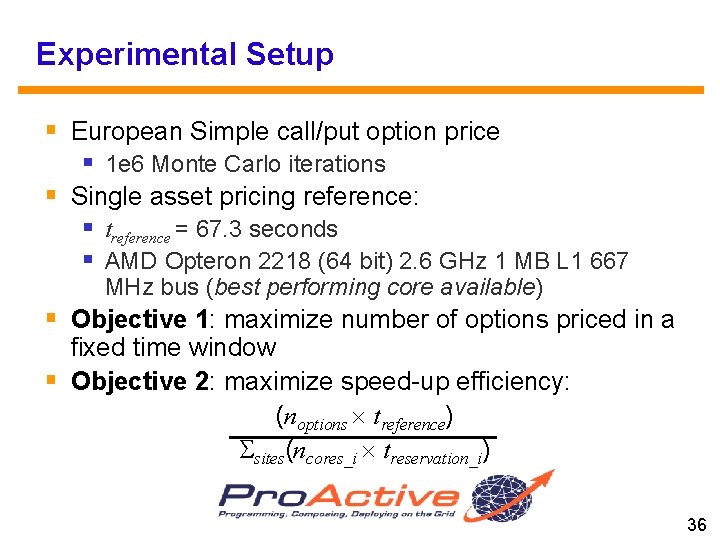

Experimental Setup
- European Simple call/put option price
- 1e6 Monte Carlo iterations
- Single asset pricing reference:
  - t_reference = 67.3 seconds
  - AMD Opteron 2218 (64-bit), 2.6 GHz, 1 MB L2 cache, 667 MHz bus (best performing core available)
- Objective 1: maximize the number of options priced in a fixed time window
- Objective 2: maximize speed-up efficiency:

$$E = \frac{n_{\text{options}} \, t_{\text{reference}}}{\sum_{i \in \text{sites}} n_{\text{cores},i} \, t_{\text{reservation},i}}$$
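Applying the efficiency formula to the figures reported for the two experiments below (1272 options over 85 core-hours, and 894 options over 31.3 core-hours) reproduces the quoted efficiencies. The denominator is the total core time held across all sites, so the sketch takes it directly in core-seconds; the helper `coreSeconds` shows how it would be built from per-site values.

```java
class SpeedupEfficiency {
    static final double T_REFERENCE_S = 67.3; // single-option reference time (s)

    // E = (n_options * t_reference) / sum_i(n_cores_i * t_reservation_i)
    static double efficiency(int nOptions, double coreSecondsOccupied) {
        return nOptions * T_REFERENCE_S / coreSecondsOccupied;
    }

    // Denominator from per-site core counts and reservation times (s).
    static double coreSeconds(int[] cores, double[] reservationSeconds) {
        double total = 0;
        for (int i = 0; i < cores.length; i++) total += cores[i] * reservationSeconds[i];
        return total;
    }

    public static void main(String[] args) {
        System.out.printf("run now:     %.1f%%%n", 100 * efficiency(1272, 85 * 3600.0));   // 28.0%
        System.out.printf("reservation: %.1f%%%n", 100 * efficiency(894, 31.3 * 3600.0)); // 53.4%
    }
}
```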

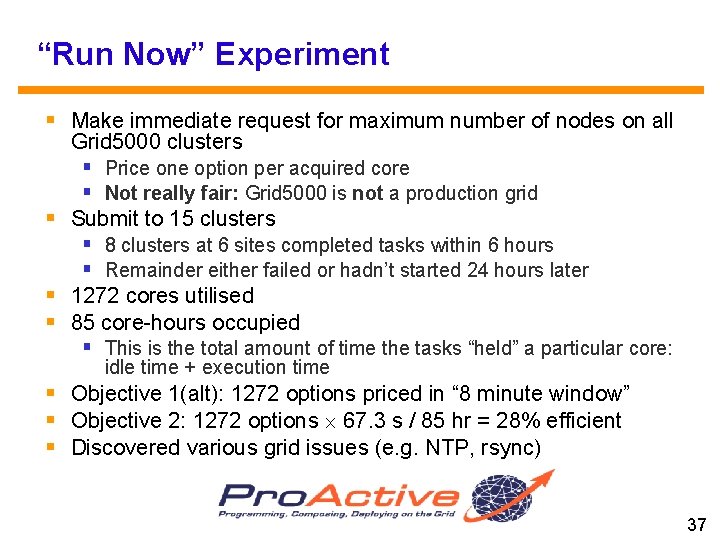

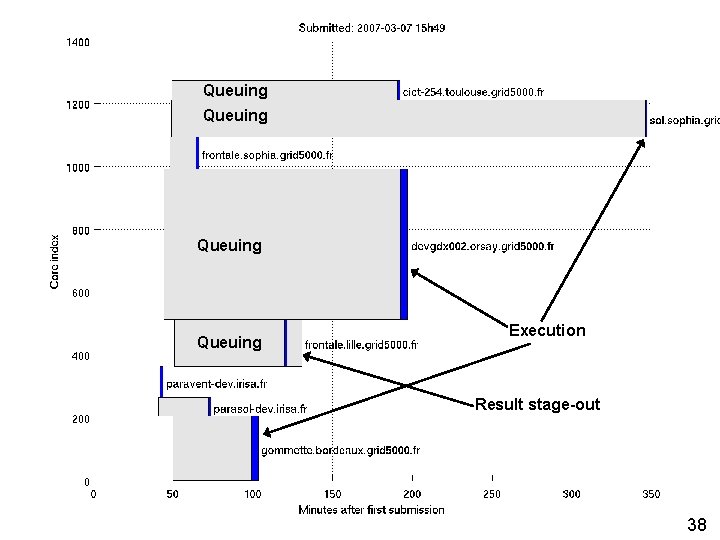

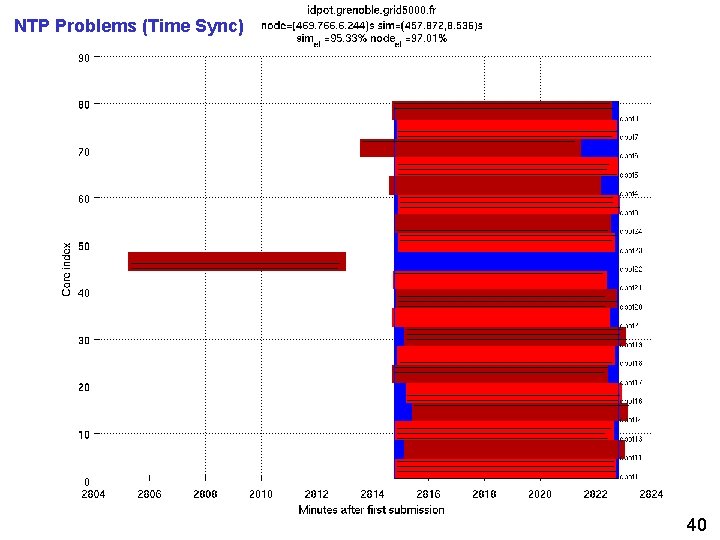

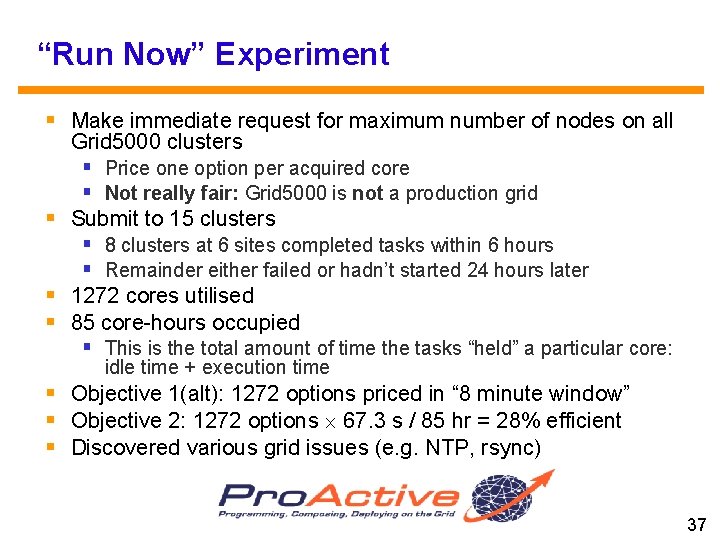

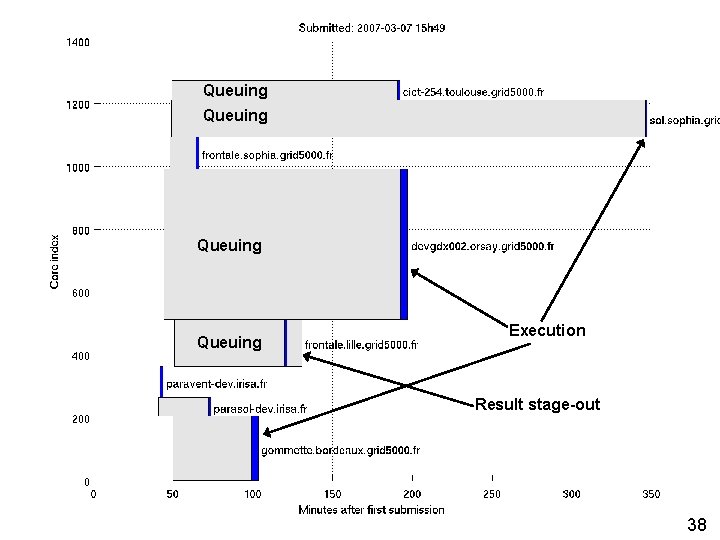

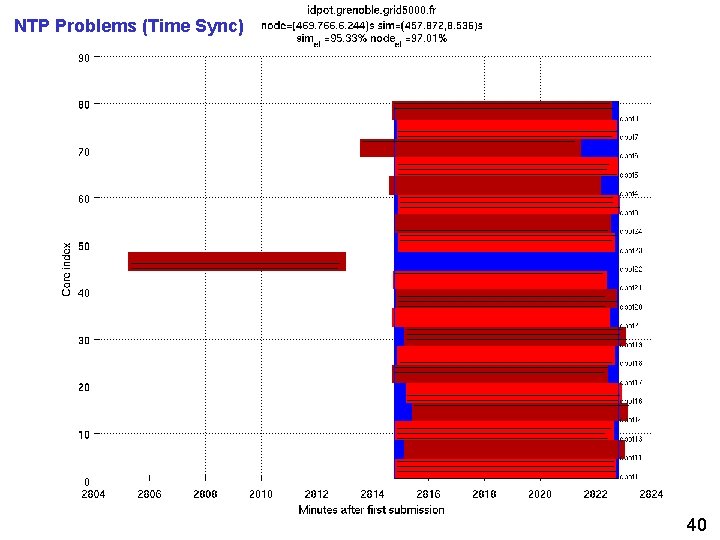

“Run Now” Experiment
- Make an immediate request for the maximum number of nodes on all Grid5000 clusters
- Price one option per acquired core
- Not really fair: Grid5000 is not a production grid
- Submitted to 15 clusters
  - 8 clusters at 6 sites completed tasks within 6 hours
  - The remainder either failed or hadn’t started 24 hours later
- 1272 cores utilised
- 85 core-hours occupied
  - This is the total amount of time the tasks “held” a particular core: idle time + execution time
- Objective 1 (alt): 1272 options priced in an “8 minute window”
- Objective 2: (1272 options × 67.3 s) / 85 core-hours = 28% efficient
- Discovered various grid issues (e.g. NTP, rsync)

(Figure: timeline showing queuing, execution, and result stage-out phases)

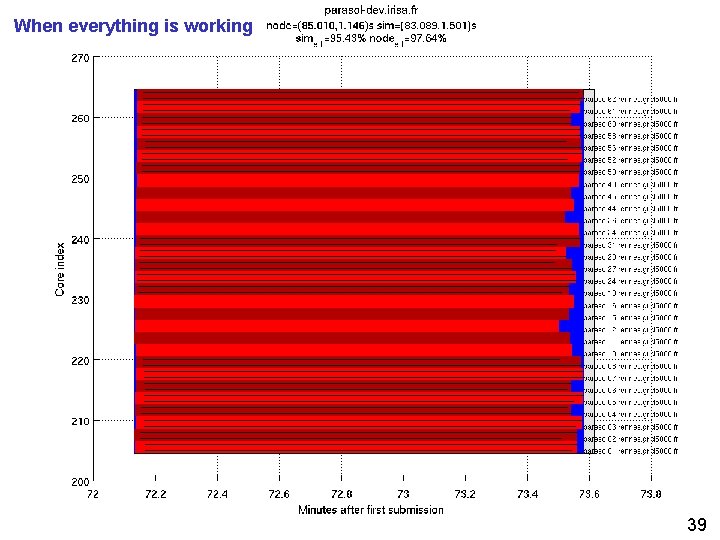

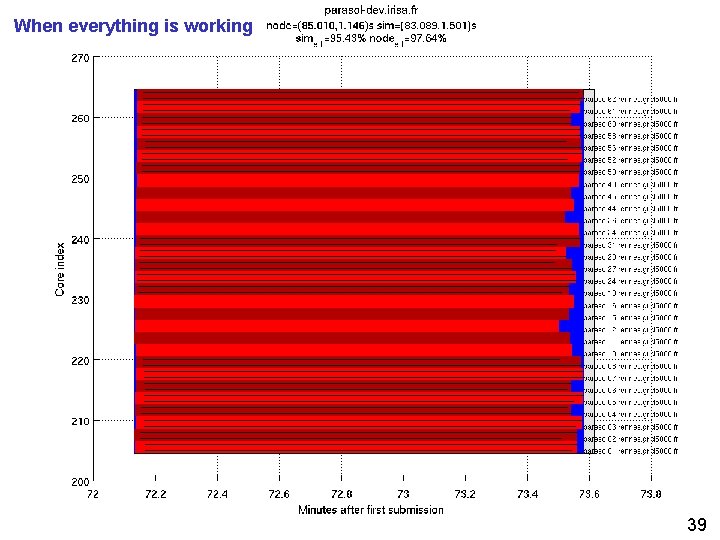

When everything is working

NTP Problems (Time Sync)

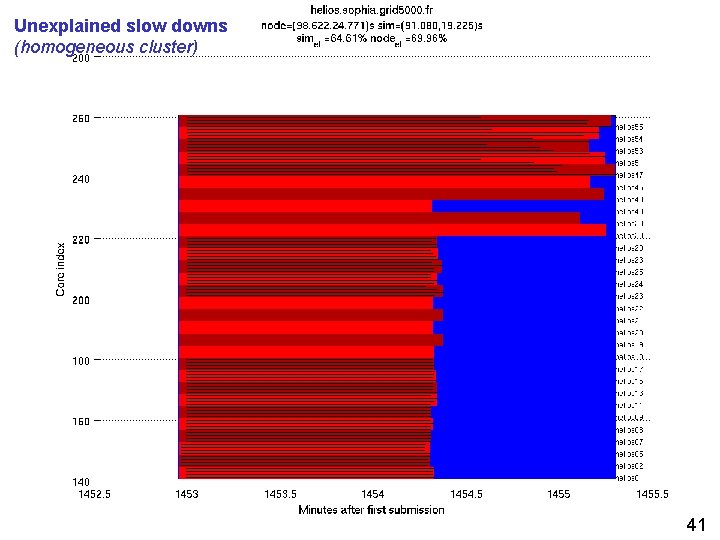

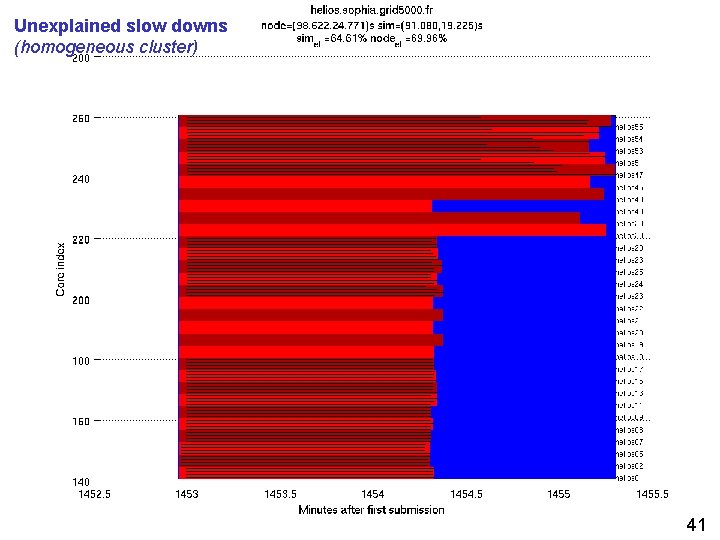

Unexplained slowdowns (homogeneous cluster)

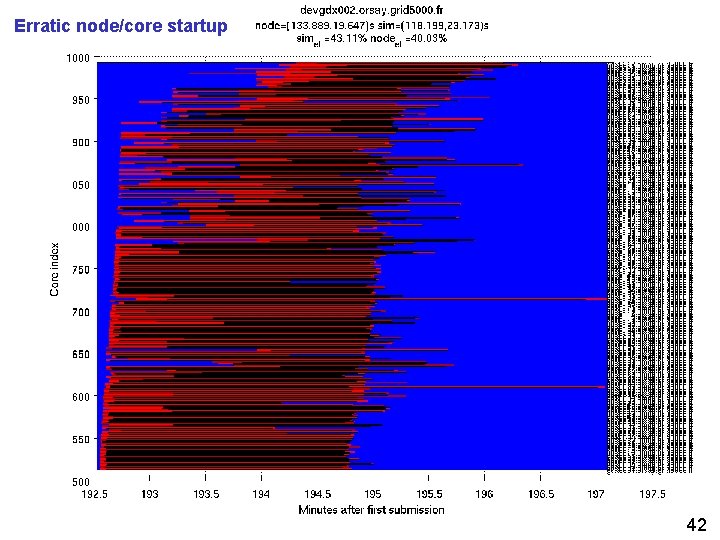

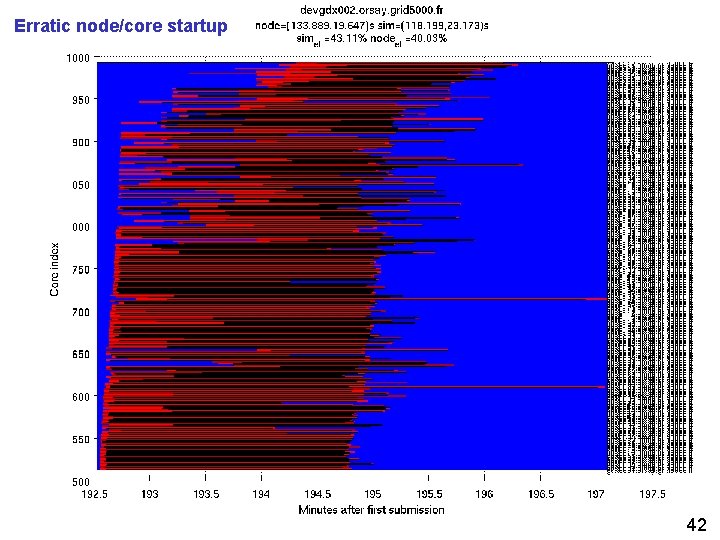

Erratic node/core startup

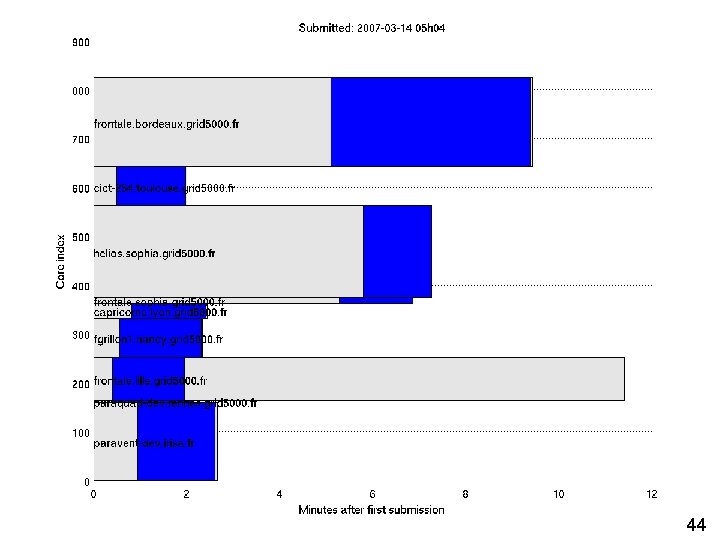

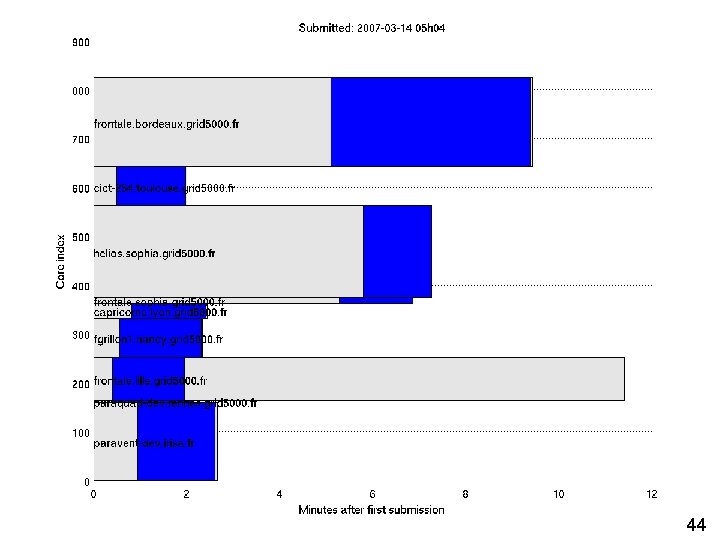

Coordinated Start with Reservation
- Reservation made 12+ hours in advance
  - Confirmed no other reservations for the time slot
- Start time at the “low utilisation” point of 6:05 am
  - 5 minutes allowed for system restarts and Kadeploy re-imaging after the end of reservations running until 6 am
- Submitted to 12 clusters at 8 sites
  - 9 clusters at 7 sites ran successfully
  - 894 cores utilised
  - 31.3 core-hours occupied
  - No cluster reservation started “on time”
    - Start time delays of 20 s to 5.5 minutes
    - Illustrates the difficulty of cross-site coordinated parallel processing
- Objective 1: 894 options priced in a 9.5 minute window
- Objective 2: (894 options × 67.3 s) / 31.3 core-hours = 53.4% efficient
- Still problems (heterogeneous clusters, NTP, rsync)


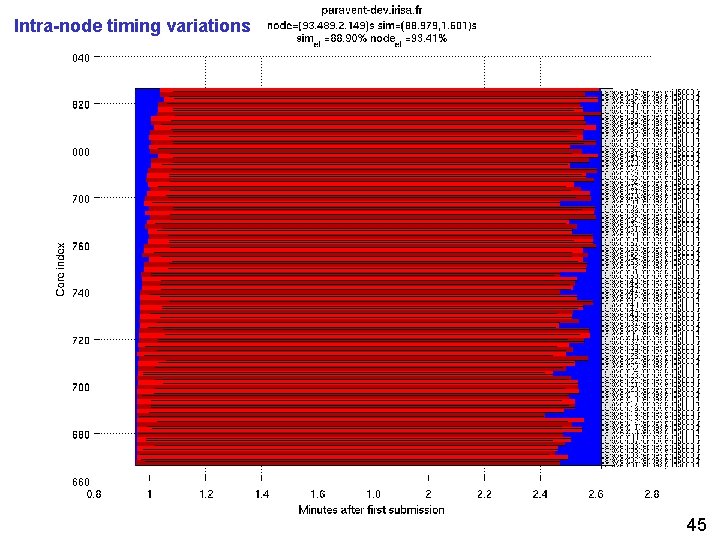

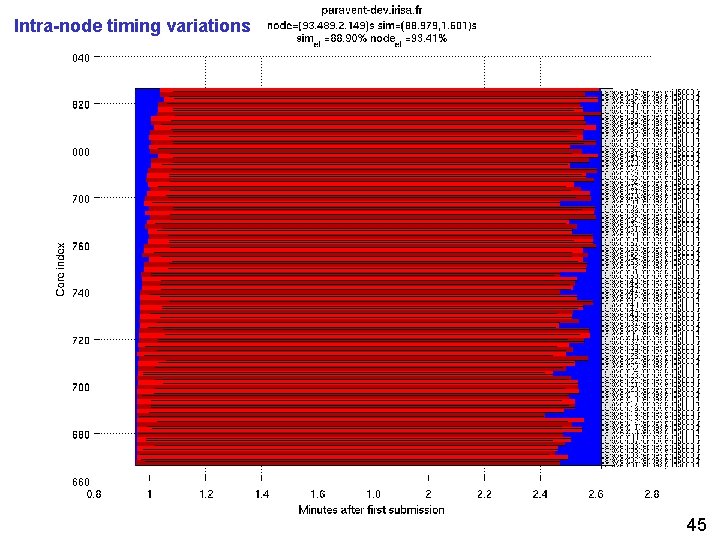

Intra-node timing variations

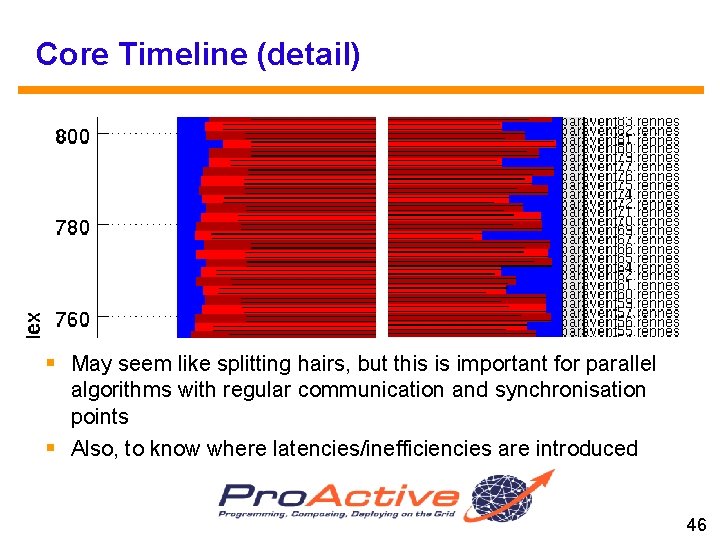

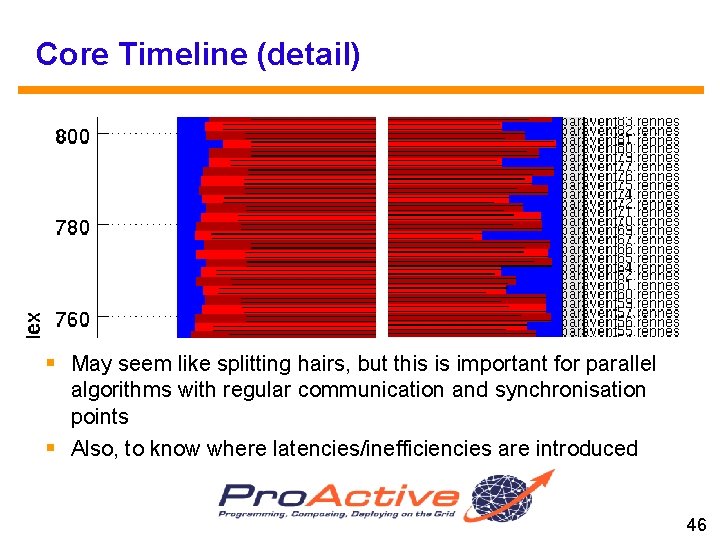

Core Timeline (detail)
- May seem like splitting hairs, but this is important for parallel algorithms with regular communication and synchronisation points
- Also important for knowing where latencies/inefficiencies are introduced

Heterogeneous clusters (hyper-threading on)

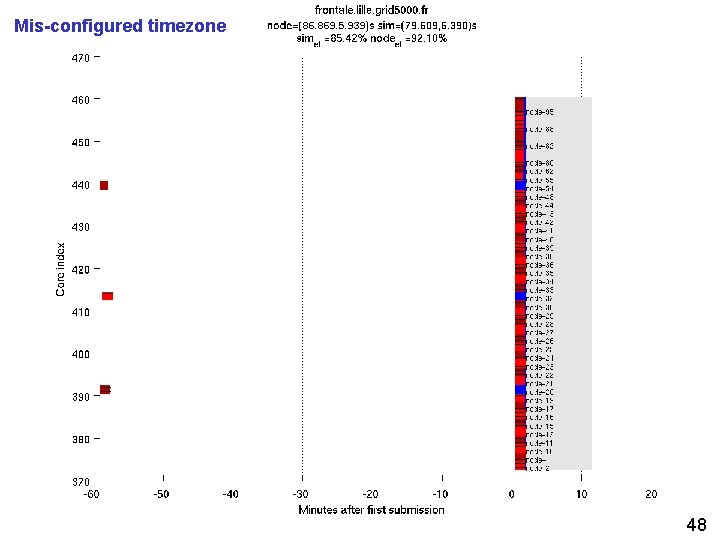

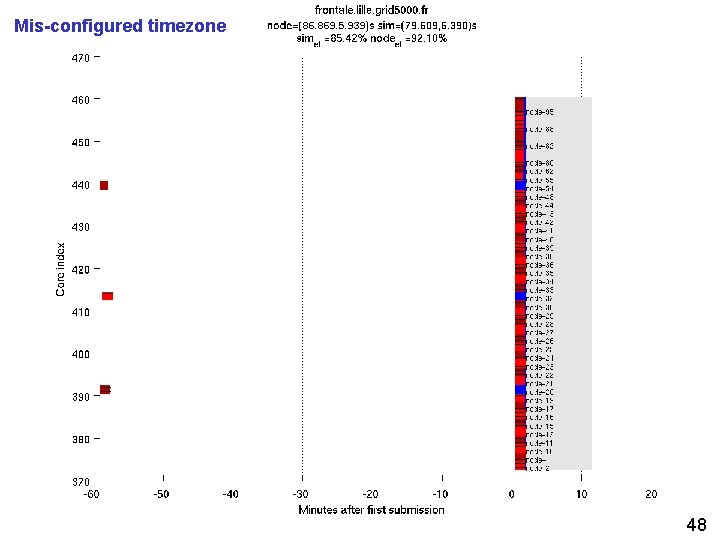

Misconfigured timezone

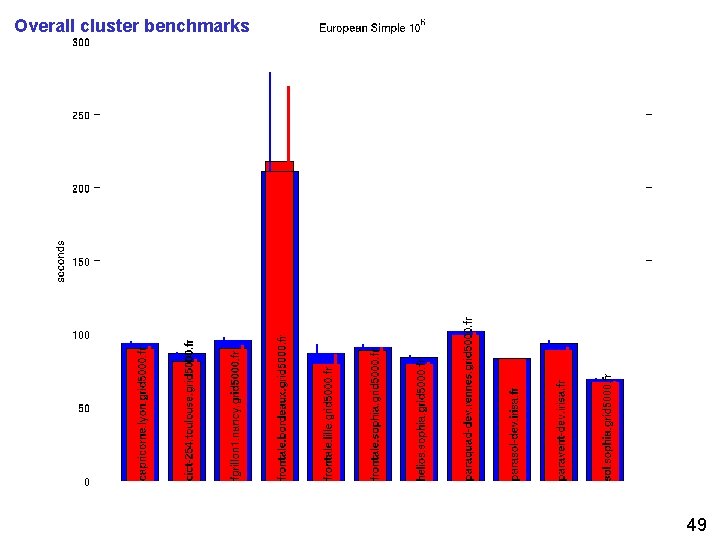

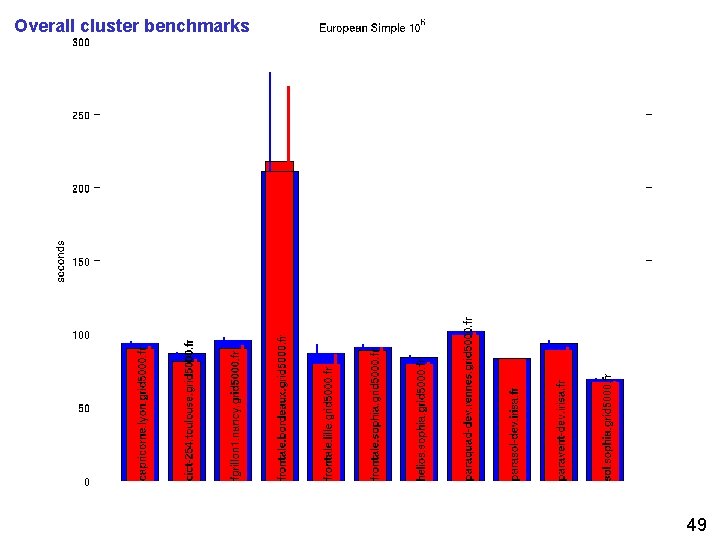

Overall cluster benchmarks

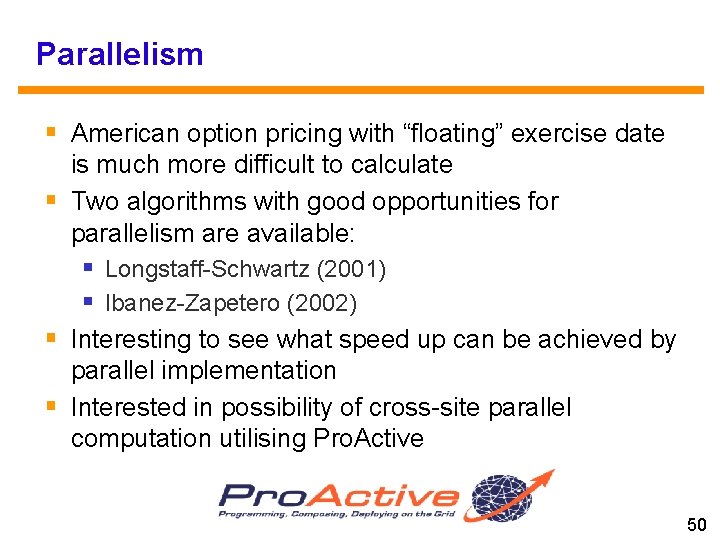

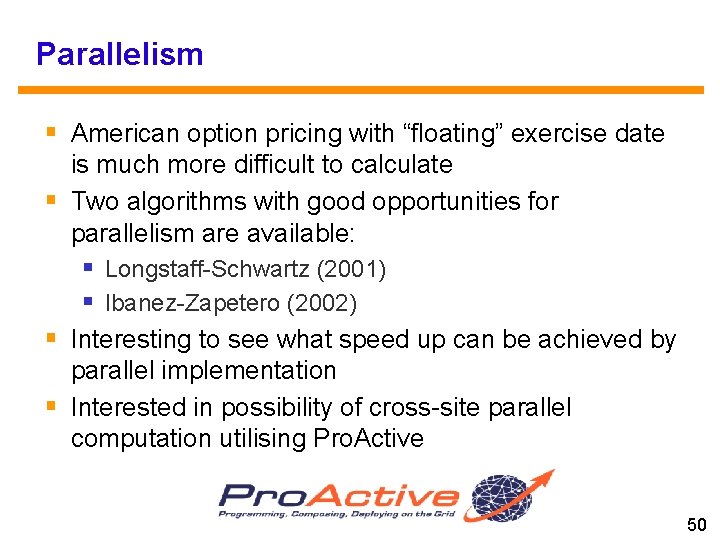

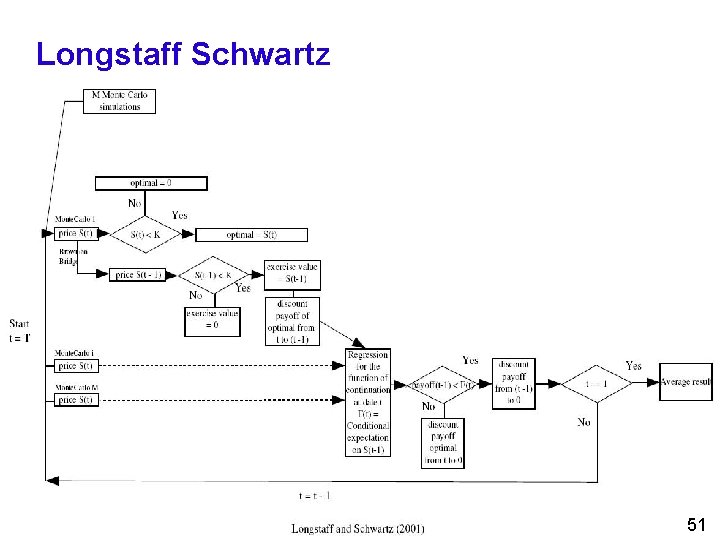

Parallelism
- American option pricing with a “floating” exercise date is much more difficult to calculate
- Two algorithms with good opportunities for parallelism are available:
  - Longstaff-Schwartz (2001)
  - Ibanez-Zapatero (2002)
- Interesting to see what speed-up can be achieved by a parallel implementation
- Interested in the possibility of cross-site parallel computation utilising ProActive

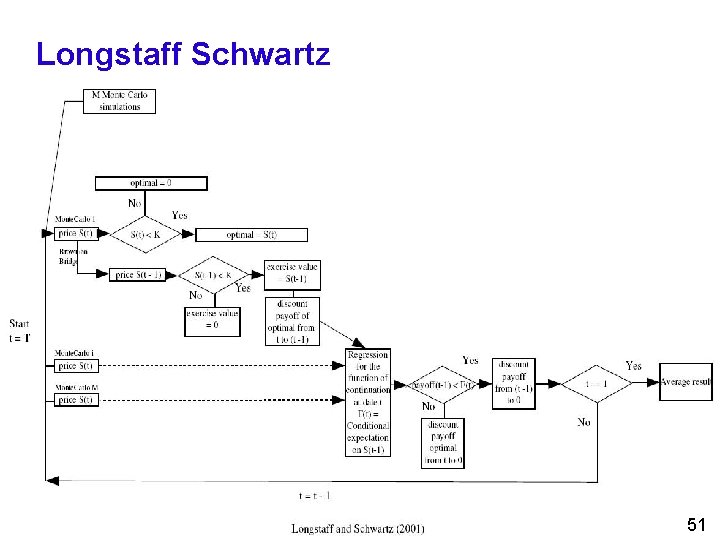

Longstaff-Schwartz
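A minimal sequential sketch of the Longstaff-Schwartz least-squares Monte Carlo method for an American put. It simulates paths forward, then steps backward, regressing discounted future cash flows on the basis {1, x, x²} (x = S/K) over in-the-money paths and exercising where the immediate payoff exceeds the fitted continuation value. This is not PicsouGrid’s implementation; the original paper discusses other basis functions (such as weighted Laguerre polynomials), and a plain quadratic is used here for brevity. The inputs in `main` (S0 = 36, K = 40, r = 6%, σ = 20%, T = 1, 50 exercise dates) are a commonly used test case whose American put value is about 4.48.

```java
import java.util.Random;

class LongstaffSchwartz {
    // Least-squares Monte Carlo price of an American put, basis {1, x, x^2} with x = S/K.
    static double americanPut(double s0, double k, double r, double sigma, double t,
                              int steps, int paths, long seed) {
        Random rng = new Random(seed);
        double dt = t / steps, disc = Math.exp(-r * dt);
        double drift = (r - 0.5 * sigma * sigma) * dt, vol = sigma * Math.sqrt(dt);
        // 1. Simulate all paths forward (independent per path, so trivially parallel).
        double[][] s = new double[paths][steps + 1];
        for (int p = 0; p < paths; p++) {
            s[p][0] = s0;
            for (int j = 1; j <= steps; j++) s[p][j] = s[p][j - 1] * Math.exp(drift + vol * rng.nextGaussian());
        }
        // cash[p]: path p's future cash flow, discounted to the current time step.
        double[] cash = new double[paths];
        for (int p = 0; p < paths; p++) cash[p] = Math.max(k - s[p][steps], 0);
        // 2. Step backwards, deciding exercise vs. continuation at each date.
        for (int j = steps - 1; j >= 1; j--) {
            for (int p = 0; p < paths; p++) cash[p] *= disc;
            // Normal equations A c = b over in-the-money paths: plain sums over paths,
            // so partial sums could be computed per worker and added together.
            double[][] a = new double[3][3];
            double[] b = new double[3];
            int inMoney = 0;
            for (int p = 0; p < paths; p++) {
                if (s[p][j] >= k) continue;
                double x = s[p][j] / k;
                double[] phi = {1, x, x * x};
                for (int u = 0; u < 3; u++) {
                    b[u] += phi[u] * cash[p];
                    for (int v = 0; v < 3; v++) a[u][v] += phi[u] * phi[v];
                }
                inMoney++;
            }
            if (inMoney < 3) continue; // too few points to fit the regression
            double[] c = solve3(a, b);
            for (int p = 0; p < paths; p++) {
                double exercise = k - s[p][j];
                if (exercise <= 0) continue;
                double x = s[p][j] / k;
                if (exercise > c[0] + c[1] * x + c[2] * x * x) cash[p] = exercise; // exercise now
            }
        }
        // 3. Discount from the first exercise date back to time 0 and average.
        double sum = 0;
        for (int p = 0; p < paths; p++) sum += cash[p] * disc;
        return Math.max(sum / paths, Math.max(k - s0, 0));
    }

    // Solves a 3x3 linear system by Gaussian elimination with partial pivoting.
    static double[] solve3(double[][] a, double[] b) {
        double[][] m = new double[3][4];
        for (int i = 0; i < 3; i++) {
            System.arraycopy(a[i], 0, m[i], 0, 3);
            m[i][3] = b[i];
        }
        for (int col = 0; col < 3; col++) {
            int piv = col;
            for (int row = col + 1; row < 3; row++)
                if (Math.abs(m[row][col]) > Math.abs(m[piv][col])) piv = row;
            double[] tmp = m[col]; m[col] = m[piv]; m[piv] = tmp;
            for (int row = col + 1; row < 3; row++) {
                double f = m[row][col] / m[col][col];
                for (int c = col; c < 4; c++) m[row][c] -= f * m[col][c];
            }
        }
        double[] x = new double[3];
        for (int i = 2; i >= 0; i--) {
            double acc = m[i][3];
            for (int c = i + 1; c < 3; c++) acc -= m[i][c] * x[c];
            x[i] = acc / m[i][i];
        }
        return x;
    }

    public static void main(String[] args) {
        System.out.printf("American put ~ %.3f%n", americanPut(36, 40, 0.06, 0.2, 1.0, 50, 50_000, 1));
    }
}
```

A distributed version would split the paths across workers and add the per-worker `a` and `b` sums at each exercise date, which implies one synchronisation point per date.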

Ibanez-Zapatero

Multi-Grids
- Very interested in experimenting with a multi-grid environment:
  - Grid5000
  - gLite/EGEE
  - DAS-3
  - Local cluster/desktop grid/P2P network
- ProActive deploys on LCG (gLite/EGEE)
  - Other ProActive applications have been deployed and run successfully
  - VO problems in Feb/March meant PicsouGrid could not be run on LCG, so no results for ISGC!
- Investigate use of HTTP-based task pools to bridge “grids”

Future for PicsouGrid
- Many more computational finance algorithms have already been developed and need to be similarly benchmarked:
  - Barrier, Basket
  - American (Longstaff-Schwartz and Ibanez-Zapatero)
- “Continuous” operation of option pricing, rather than “one-shot”
- Incorporate dynamic node availability
- Improve modularization/componentization of finance algorithms

Summary of Observations
- Deploying parallel applications in a grid environment continues to be a challenging problem
- Heterogeneity in a grid is pervasive and still hard to deal with
- Understanding performance issues, hot spots, bottlenecks, wasted idle time, and synchronisation points can be aided by a grid process model
- Middleware really is critical: gLite, LRMS, OAR, ProActive, etc. need to provide end users and application developers with a reliable, consistent, and easy-to-use interface to “the grid”

Thank you. Questions?
- https://gforge.inria.fr/projects/picsougrid/
- Ian.Stokes-Rees@inria.fr
