Parallel Computing: Message Passing Interface
EE 8603 - Special Topics on Parallel Computing
Professor: Nagi Mekhiel
Presented by: Leili, Sanaz, Mojgan, Reza
Ryerson University

Outline
• Overview
• Messages and Point-to-Point Communication
• Non-blocking communication
• Collective communication
• Derived data types
• Other MPI-1 features
• Installing and Utilizing MPI
• Experimental results
• Conclusion
• References
06 November 2020, Ryerson University

Overview
[Figure: cluster diagram with the labels Workstation, Other Servers (Web Server, Mail Server), Head Node, Private Network, Compute Nodes, and MPI Interconnect Ring.]

Overview: Parallel Computing
• A task is broken down into subtasks, performed by separate workers or processes.
• Processes interact by exchanging information.
• What do we basically need?
– The ability to start the tasks
– A way for them to communicate

Overview: What is MPI?
• A message passing library specification
– Message-passing model
– Not a compiler specification (i.e., not a language)
– Not a specific product
• Designed for parallel computers, clusters, and heterogeneous networks

Overview: Synchronous Communication
• A synchronous communication does not complete until the message has been received.
– Analogy: a fax or registered mail, where the sender gets a confirmation ("beep", "ok").

Overview: Asynchronous Communication
• An asynchronous communication completes as soon as the message is on the way.
– Analogy: a postcard or email.

Overview: Collective Communications
• Point-to-point communications involve pairs of processes.
• Many message passing systems provide operations which allow larger numbers of processes to participate.

Types of Collective Transfers
• Barrier
– Synchronizes processors.
– No data is exchanged, but the barrier blocks until all processes have called the barrier routine.
• Broadcast (sometimes multicast)
– A broadcast is a one-to-many communication.
– One processor sends one message to several destinations.
• Reduction
– Often useful in a many-to-one communication.

Overview: What’s in a Message?
• An MPI message is an array of elements of a particular MPI datatype.
• All MPI messages are typed: the type of the contents must be specified in both the send and the receive.

Basic C Datatypes in MPI

| MPI datatype | C datatype |
| --- | --- |
| MPI_CHAR | signed char |
| MPI_SHORT | signed short int |
| MPI_INT | signed int |
| MPI_LONG | signed long int |
| MPI_UNSIGNED_CHAR | unsigned char |
| MPI_UNSIGNED_SHORT | unsigned short int |
| MPI_UNSIGNED | unsigned int |
| MPI_UNSIGNED_LONG | unsigned long int |
| MPI_FLOAT | float |
| MPI_DOUBLE | double |
| MPI_LONG_DOUBLE | long double |
| MPI_BYTE | (no corresponding C type) |
| MPI_PACKED | (no corresponding C type) |

Overview: MPI Handles
• MPI maintains internal data structures which are referenced by the user through handles.
• Handles can be returned by and passed to MPI procedures.
• Handles can be copied by the usual assignment operation.

MPI Errors
• MPI routines return an int that can contain an error code.
• The default action on the detection of an error is to cause the parallel operation to abort.
• The default can be changed to return an error code.

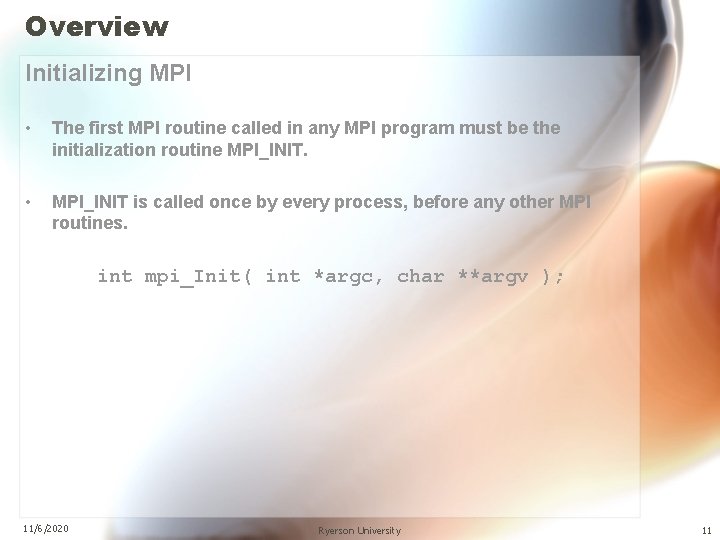

Overview: Initializing MPI
• The first MPI routine called in any MPI program must be the initialization routine MPI_INIT.
• MPI_INIT is called once by every process, before any other MPI routines.

    int MPI_Init(int *argc, char ***argv);

Overview: Skeleton MPI Program

    #include <mpi.h>

    int main(int argc, char **argv)
    {
        MPI_Init(&argc, &argv);   /* must precede any other MPI call */
        /* main part of the program */
        MPI_Finalize();           /* last MPI call in the program */
        return 0;
    }

Overview: Point-to-point Communication
• Always involves exactly two processes
• The destination is identified by its rank within the communicator
• There are four communication modes provided by MPI (these modes refer to sending, not receiving)
– Standard
– Synchronous
– Buffered
– Ready

Overview: Standard Send

    MPI_Send(buf, count, datatype, dest, tag, comm)

where
– buf is the address of the data to be sent
– count is the number of elements of the MPI datatype which buf contains
– datatype is the MPI datatype
– dest is the destination process for the message, specified by the rank of the destination within the group associated with the communicator comm
– tag is a marker used by the sender to distinguish between different types of messages
– comm is the communicator shared by the sender and the receiver

Overview: Synchronous Send

    MPI_Ssend(buf, count, datatype, dest, tag, comm)

– can be started whether or not a matching receive was posted.
– will complete successfully only if a matching receive is posted and the receive operation has started to receive the message sent by the synchronous send.
– provides synchronous communication semantics: a communication does not complete at either end before both processes rendezvous at the communication.
– has non-local completion semantics.

Overview: Buffered Send
• A buffered-mode send
– can be started whether or not a matching receive has been posted. It may complete before a matching receive is posted.
– has local completion semantics: its completion does not depend on the occurrence of a matching receive.
– may need to buffer the outgoing message locally in order to complete. For that purpose, buffer space is provided by the application.

Overview: Ready Mode Send
• A ready-mode send
– may be started only if the matching receive has already been posted; otherwise the call is erroneous.
– has the same semantics as a standard-mode send.
– saves overhead by avoiding handshaking and buffering, so it can complete immediately.

Point-to-Point Communication
• Communication between two processes.
• Source process sends message to destination process.
• Communication takes place within a communicator, e.g., MPI_COMM_WORLD.
• Processes are identified by their ranks in the communicator.
[Figure: processes with ranks 0 to 6 in a communicator; a message travels from a source process to a destination process.]

Point-to-Point Communication
For a communication to succeed:
• Sender must specify a valid destination rank.
• Receiver must specify a valid source rank.
• The communicator must be the same.
• Tags must match.
• Message datatypes must match.
• Receiver’s buffer must be large enough.
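
The conditions above can be modelled as a single predicate. The following is a minimal sketch in plain C that makes no MPI calls; the `envelope` struct, its integer stand-ins for the communicator and the datatype, and the function name `transfer_succeeds` are all hypothetical and only illustrate the checklist. Wildcard receives are deliberately left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified description of one side of a transfer. */
struct envelope {
    int comm_id;      /* stands in for the communicator */
    int source;       /* rank of the sending process */
    int dest;         /* rank of the receiving process */
    int tag;          /* message tag */
    int datatype_id;  /* stands in for the MPI datatype */
    size_t count;     /* elements sent, or capacity of the receive buffer */
};

/* Returns true if the posted receive can accept the message that was sent. */
bool transfer_succeeds(const struct envelope *send,
                       const struct envelope *recv,
                       int comm_size)
{
    assert(send != NULL && recv != NULL && comm_size > 0);

    /* Both ranks must be valid within the communicator. */
    bool ranks_valid = send->dest >= 0 && send->dest < comm_size &&
                       recv->source >= 0 && recv->source < comm_size;

    return ranks_valid &&
           send->comm_id == recv->comm_id &&          /* same communicator */
           send->source == recv->source &&            /* receiver names the sender */
           send->dest == recv->dest &&                /* sender names the receiver */
           send->tag == recv->tag &&                  /* tags match */
           send->datatype_id == recv->datatype_id &&  /* datatypes match */
           recv->count >= send->count;                /* buffer large enough */
}
```

In this model, a receive posted with a different tag or with a buffer smaller than the incoming message is rejected, mirroring the last three bullets of the slide.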

![PointtoPoint Communication Modes Send communication modes synchronous send MPISSEND buffered asynchronous Point-to-Point Communication Modes • Send communication modes: – synchronous send MPI_SSEND – buffered [asynchronous]](https://slidetodoc.com/presentation_image/59e85b38878662259ff38faf3fede1ca/image-21.jpg)

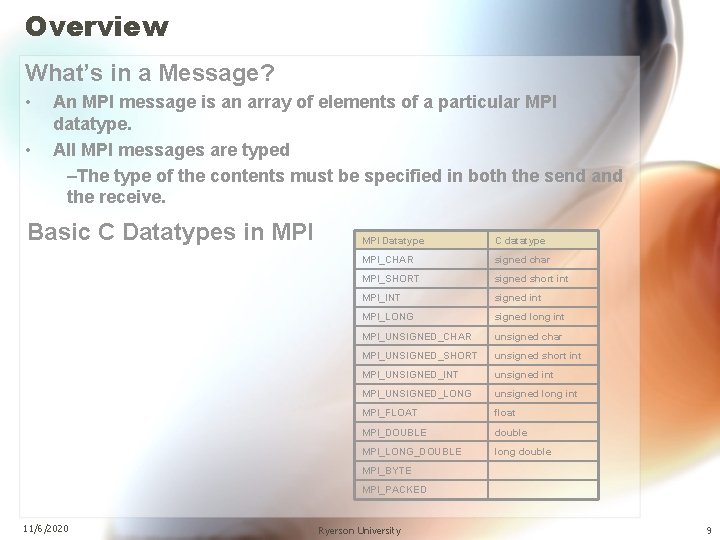

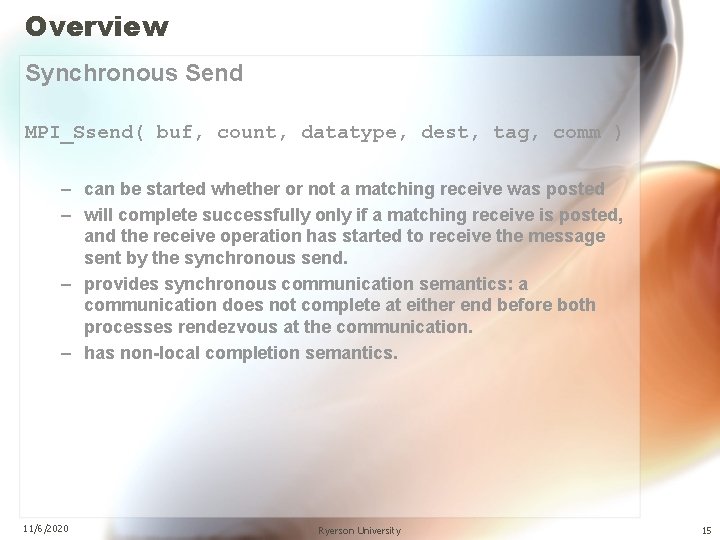

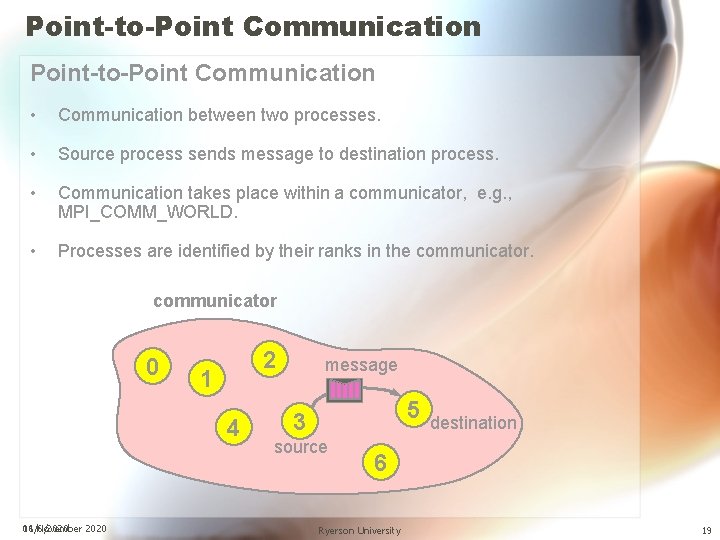

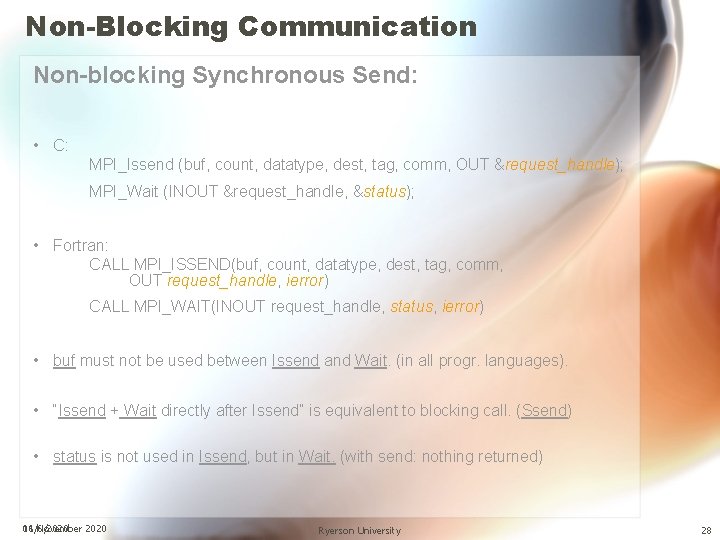

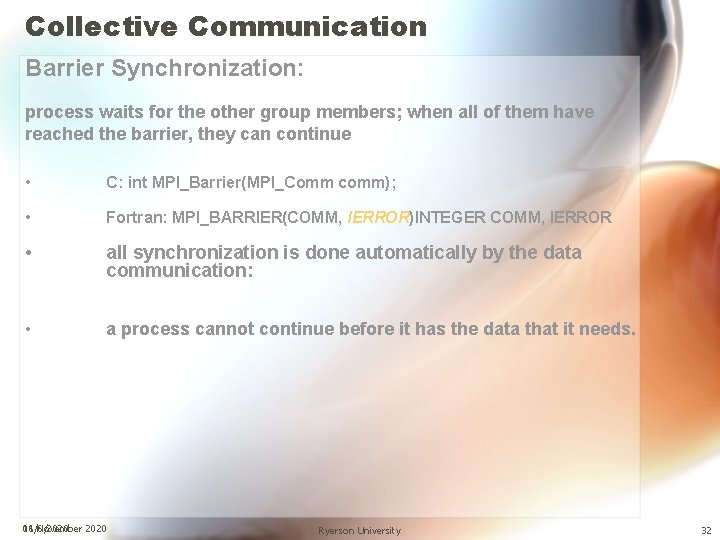

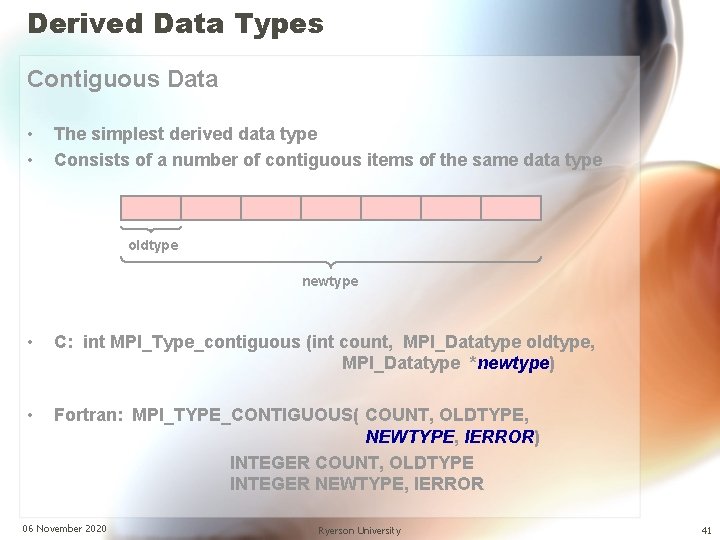

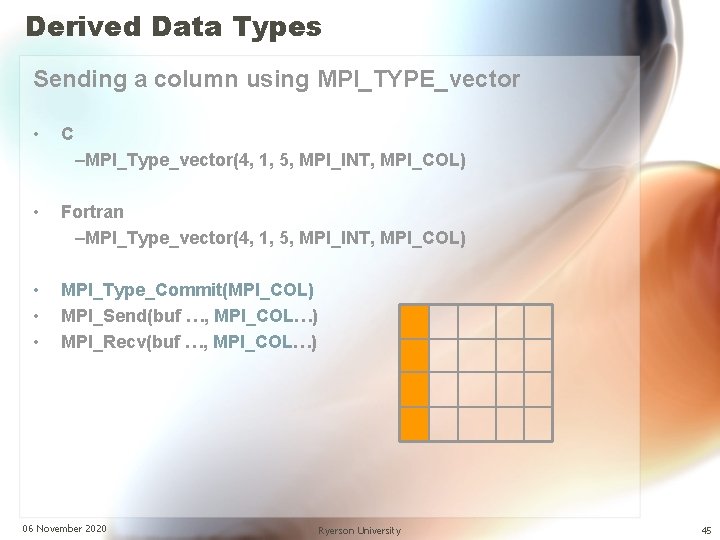

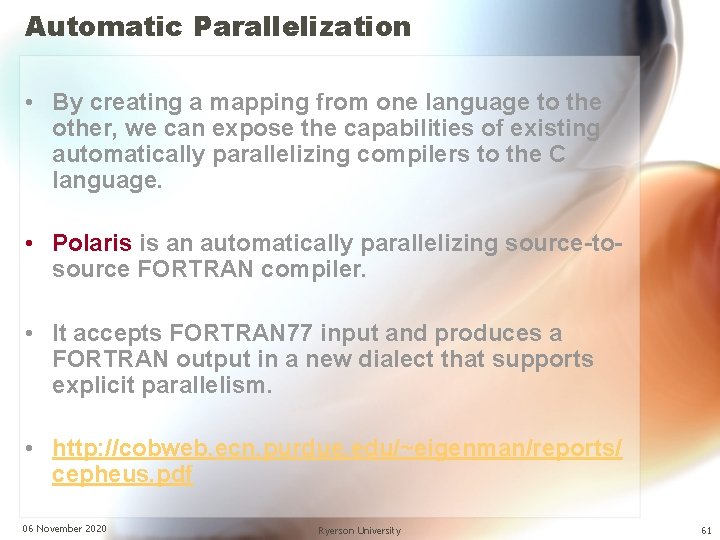

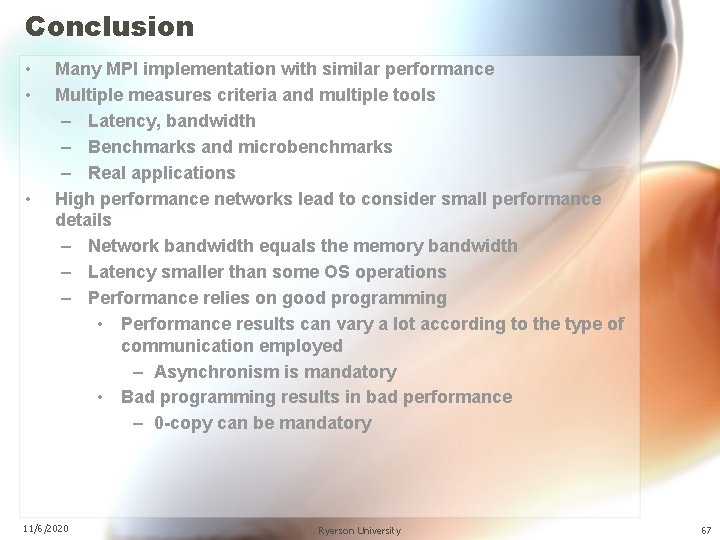

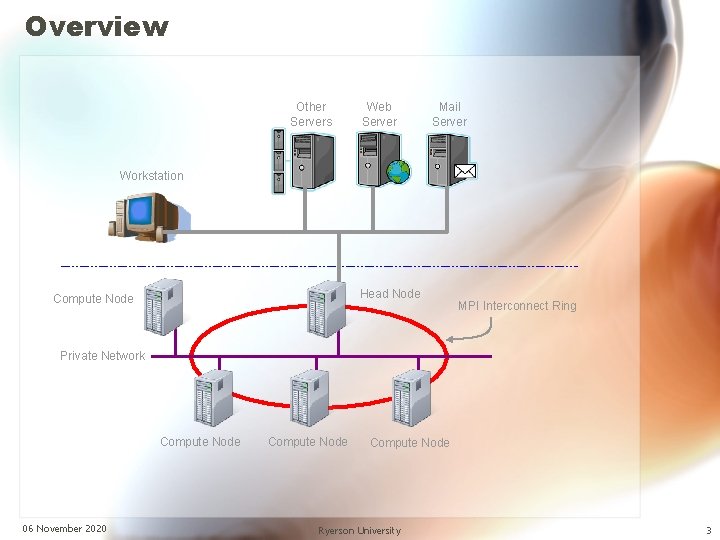

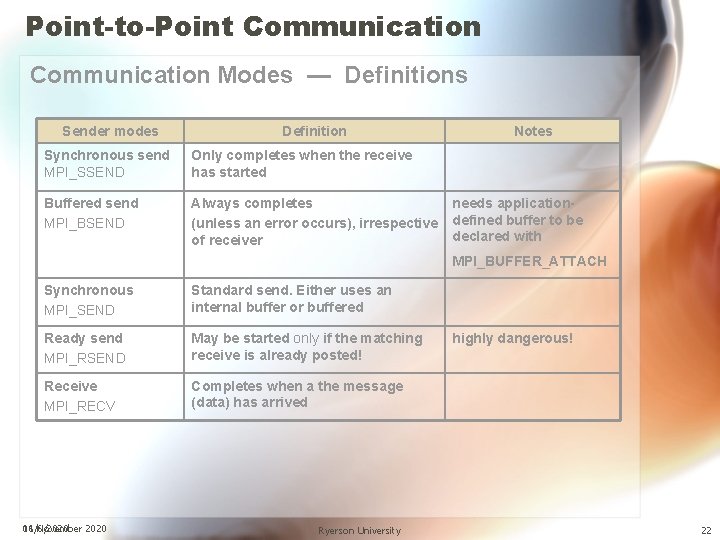

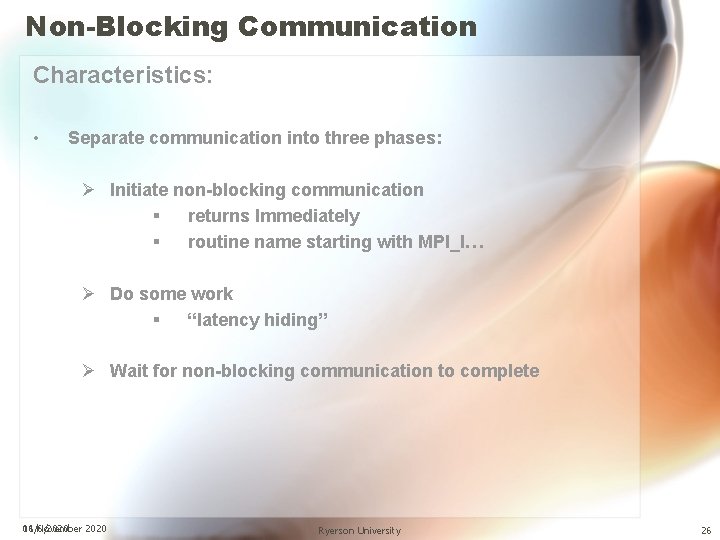

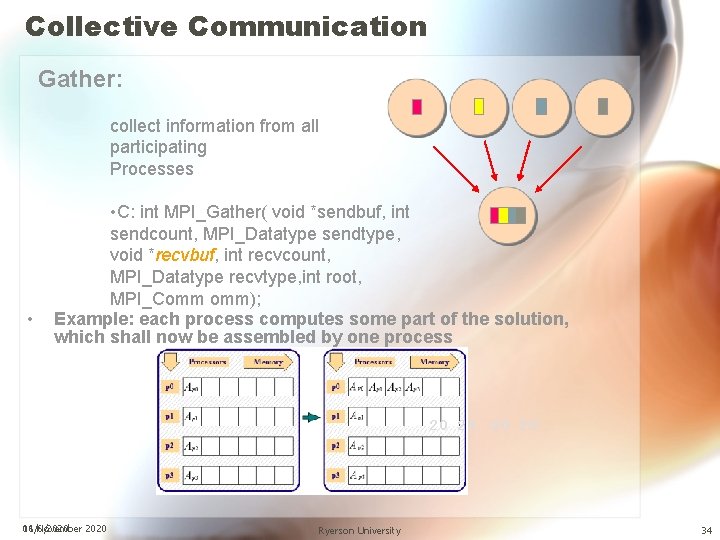

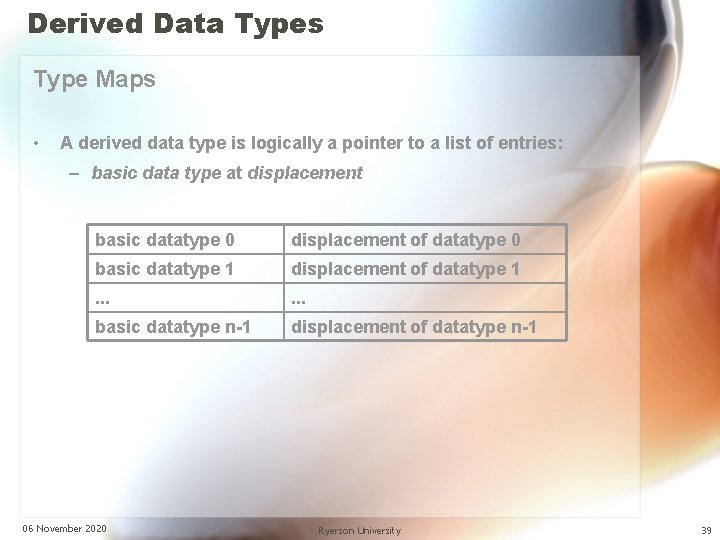

Point-to-Point Communication Modes
• Send communication modes:
– synchronous send: MPI_SSEND
– buffered [asynchronous] send: MPI_BSEND
– standard send: MPI_SEND
– ready send: MPI_RSEND
• Receiving, all modes: MPI_RECV

Point-to-Point Communication Modes: Definitions

| Sender mode | Definition | Notes |
| --- | --- | --- |
| Synchronous send, MPI_SSEND | Only completes when the receive has started | |
| Buffered send, MPI_BSEND | Always completes (unless an error occurs), irrespective of receiver | Needs an application-defined buffer to be declared with MPI_BUFFER_ATTACH |
| Standard send, MPI_SEND | Either synchronous or buffered | Uses an internal buffer |
| Ready send, MPI_RSEND | May be started only if the matching receive is already posted! | Highly dangerous! |
| Receive, MPI_RECV | Completes when the message (data) has arrived | |

Point-to-Point Communication: Message Order Preservation
• Rule for messages on the same connection, i.e., same communicator, source, and destination rank: messages do not overtake each other.
• This is true even for non-synchronous sends.
• If both receives match both messages, then the order is preserved.
[Figure: processes with ranks 0 to 6; two messages travel along the same connection.]

Non-Blocking Communication
Meaning of Blocking and Non-Blocking:
• Blocking: the program will not return from the subroutine call until the copy to/from the system buffer has finished.
• Non-blocking: the program returns from the subroutine call immediately. There is no guarantee that the copy to/from the system buffer has completed, so the user must check for completion before reusing the buffer.

Non-Blocking Communication
Characteristics:
• Separate communication into three phases:
– Initiate non-blocking communication
  · returns immediately
  · routine name starts with MPI_I…
– Do some work
  · “latency hiding”
– Wait for non-blocking communication to complete

Non-Blocking Communication: Non-Blocking Examples
• Non-blocking send
– MPI_Isend(...)
– do some other work
– MPI_Wait(...)
• Non-blocking receive
– MPI_Irecv(...)
– do some other work
– MPI_Wait(...)
• MPI_Wait = waiting until the operation has locally completed

Non-Blocking Communication: Non-blocking Synchronous Send
• C:
    MPI_Issend(buf, count, datatype, dest, tag, comm, OUT &request_handle);
    MPI_Wait(INOUT &request_handle, &status);
• Fortran:
    CALL MPI_ISSEND(buf, count, datatype, dest, tag, comm, OUT request_handle, ierror)
    CALL MPI_WAIT(INOUT request_handle, status, ierror)
• buf must not be used between Issend and Wait (in all programming languages).
• “Issend + Wait directly after Issend” is equivalent to the blocking call (Ssend).
• status is not used in Issend, but in Wait (with send: nothing returned).

Non-Blocking Communication: Non-blocking Receive
• C:
    MPI_Irecv(buf, count, datatype, source, tag, comm, OUT &request_handle);
    MPI_Wait(INOUT &request_handle, &status);
• Fortran:
    CALL MPI_IRECV(buf, count, datatype, source, tag, comm, OUT request_handle, ierror)
    CALL MPI_WAIT(INOUT request_handle, status, ierror)
• buf must not be used between Irecv and Wait (in all programming languages).

Collective Communication
Characteristics:
• Communication takes place within a group of processes.
• Must be called by all processes in a communicator.
• Synchronization may or may not occur.
• All collective operations are blocking (in MPI-1).
• Receive buffers must have exactly the same size as send buffers.

Collective Communication: Barrier
Synchronization: a process waits for the other group members; when all of them have reached the barrier, they can continue.
• C: int MPI_Barrier(MPI_Comm comm);
• Fortran: MPI_BARRIER(COMM, IERROR) with INTEGER COMM, IERROR
• All synchronization is done automatically by the data communication: a process cannot continue before it has the data that it needs.

Collective Communication: Broadcast
• Sends the data to all members of the group given by a communicator.
• C: int MPI_Bcast(void *buf, int count, MPI_Datatype datatype, int root, MPI_Comm comm);
• Example: the first process that finds the solution in a competition informs everyone to stop.

Collective Communication: Gather
Collects information from all participating processes.
• C: int MPI_Gather(void *sendbuf, int sendcount, MPI_Datatype sendtype, void *recvbuf, int recvcount, MPI_Datatype recvtype, int root, MPI_Comm comm);
• Example: each process computes some part of the solution, which shall now be assembled by one process.

Collective Communication: Scatter
Distribution of data among processes.
• C: int MPI_Scatter(void *sendbuf, int sendcount, MPI_Datatype sendtype, void *recvbuf, int recvcount, MPI_Datatype recvtype, int root, MPI_Comm comm);
• Example: two vectors are distributed in order to prepare a parallel computation of their scalar product.
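
The scalar-product example can be sketched as a serial model of the data decomposition. This is plain C without any MPI calls: each loop iteration stands in for one rank working on the chunk it would receive from a scatter, and the final sum stands in for collecting the partial results at the root. The function names `local_dot` and `scattered_dot` are hypothetical, and the vector length is assumed to be divisible by the number of processes, since a scatter sends the same count to every rank.

```c
#include <assert.h>
#include <stddef.h>

/* Dot product of one local chunk, as a single process would compute it. */
double local_dot(const double *x, const double *y, size_t n_local)
{
    double sum = 0.0;
    for (size_t i = 0; i < n_local; i++)
        sum += x[i] * y[i];
    return sum;
}

/* Serial model: split both vectors into nprocs equal chunks,
 * compute one partial result per chunk, and add the partial results. */
double scattered_dot(const double *x, const double *y, size_t n, size_t nprocs)
{
    assert(x != NULL && y != NULL);
    assert(nprocs > 0 && n % nprocs == 0);  /* equal chunk per rank */

    size_t chunk = n / nprocs;
    double total = 0.0;
    for (size_t rank = 0; rank < nprocs; rank++)
        total += local_dot(x + rank * chunk, y + rank * chunk, chunk);
    return total;
}
```

For small integer-valued inputs the result is the same for any process count that divides the vector length; in general, floating-point rounding can make it differ slightly.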

• • • Overview Messages and Point-to-Point Communication Non-blocking communication Collective communication Derived data types Other MPI-1 features Installing and Utilizing MPI Experimental results Conclusion References 06 November 2020 Ryerson University 36

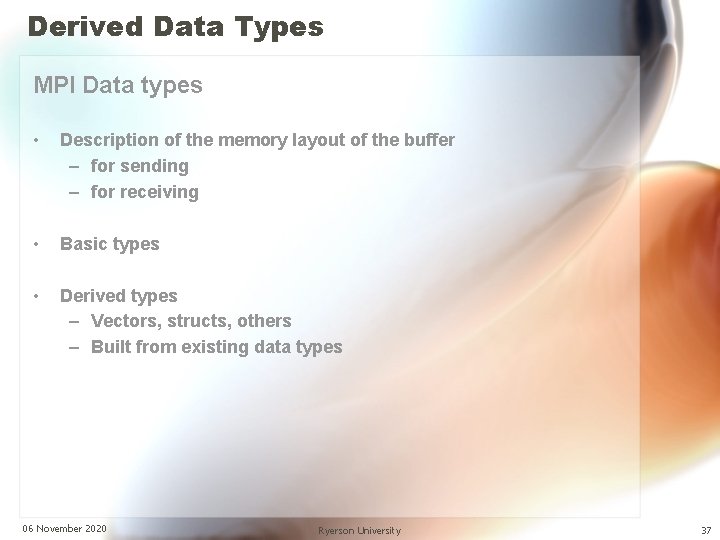

Derived Data Types: MPI Data Types
• Description of the memory layout of the buffer
– for sending
– for receiving
• Basic types
• Derived types
– Vectors, structs, others
– Built from existing data types

![Derived Data Types Data Layout and the Describing Datatype Handle struct bufflayout int ival3 Derived Data Types Data Layout and the Describing Datatype Handle struct buff_layout {int i_val[3];](https://slidetodoc.com/presentation_image/59e85b38878662259ff38faf3fede1ca/image-38.jpg)

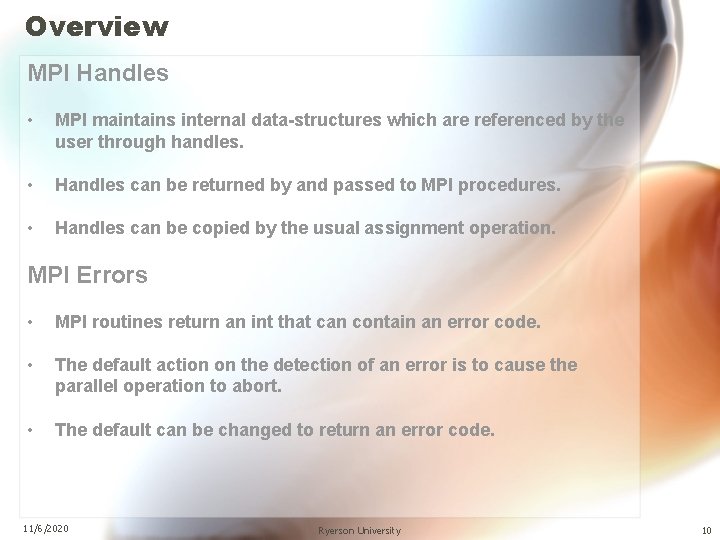

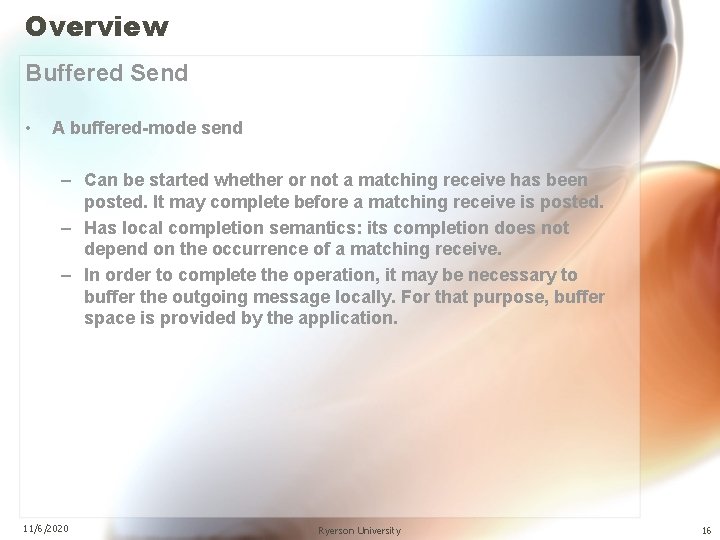

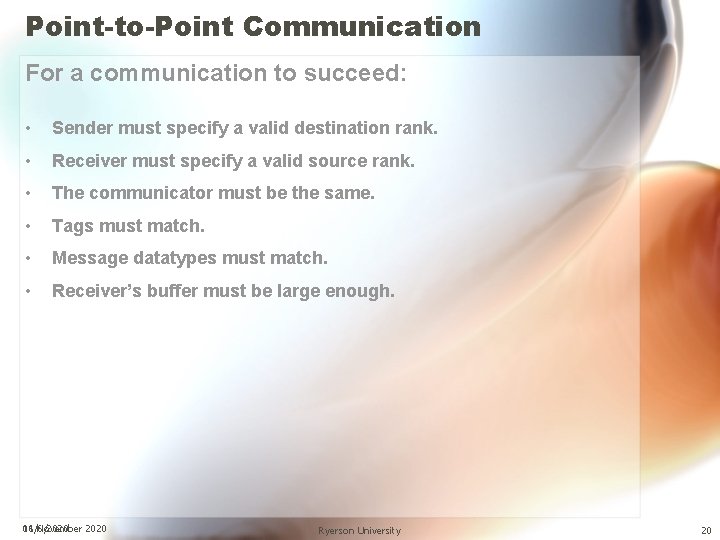

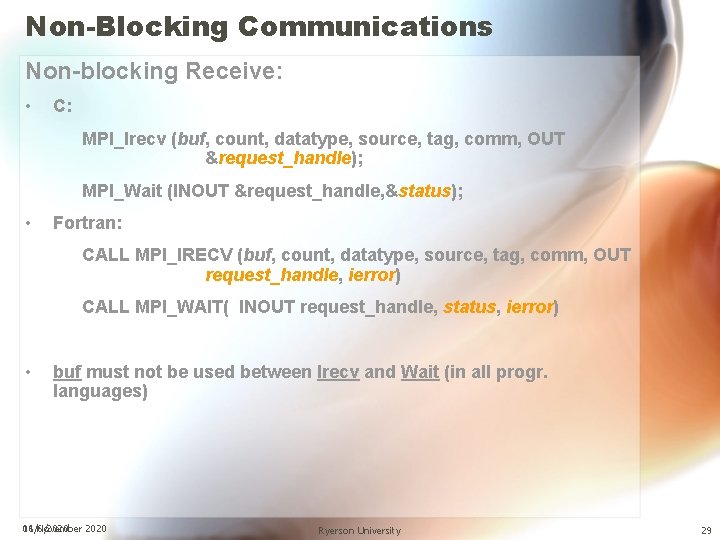

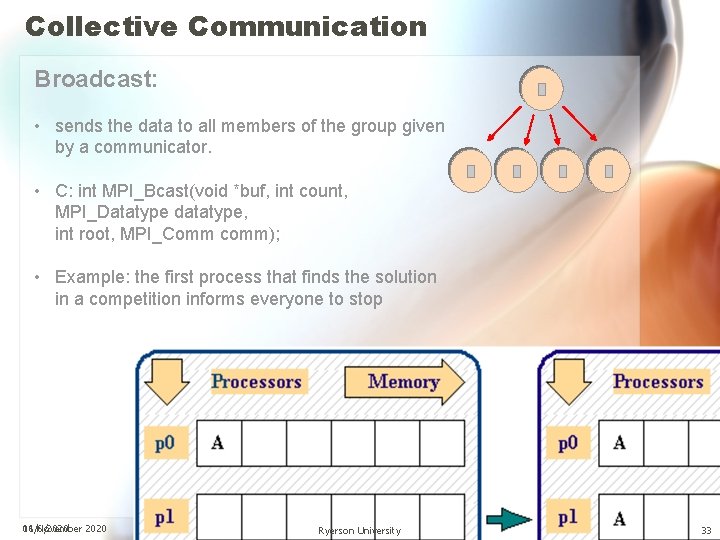

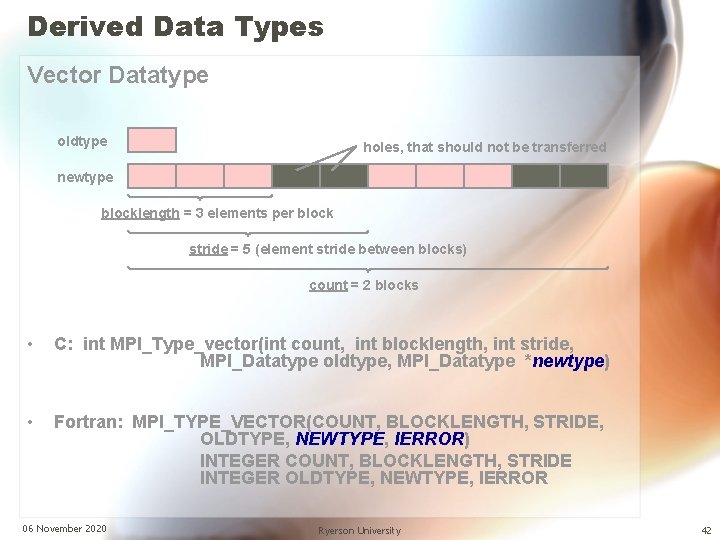

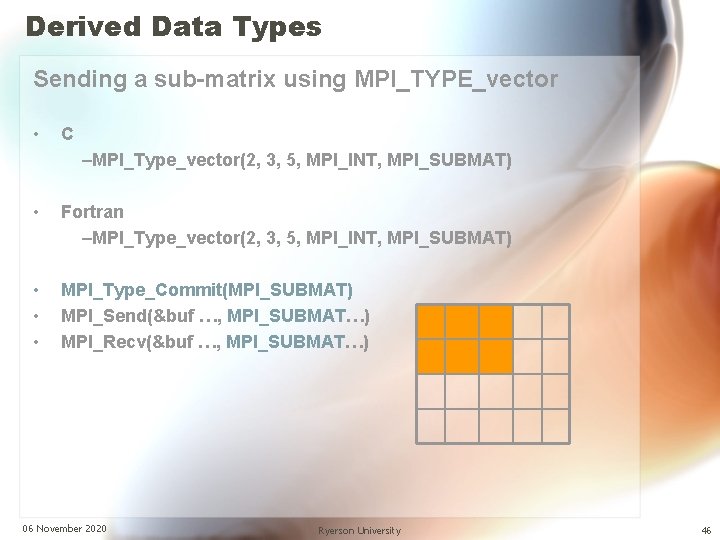

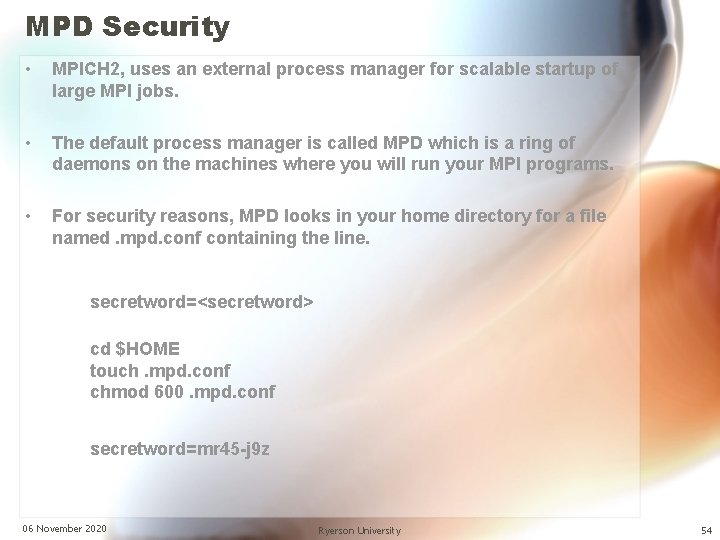

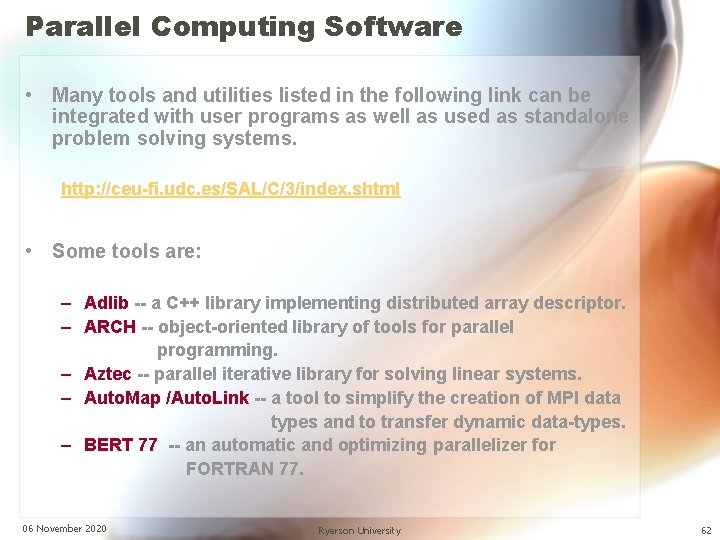

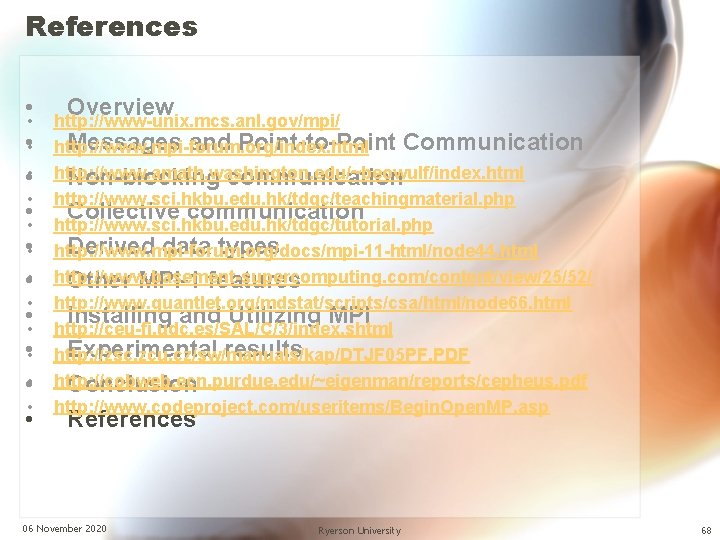

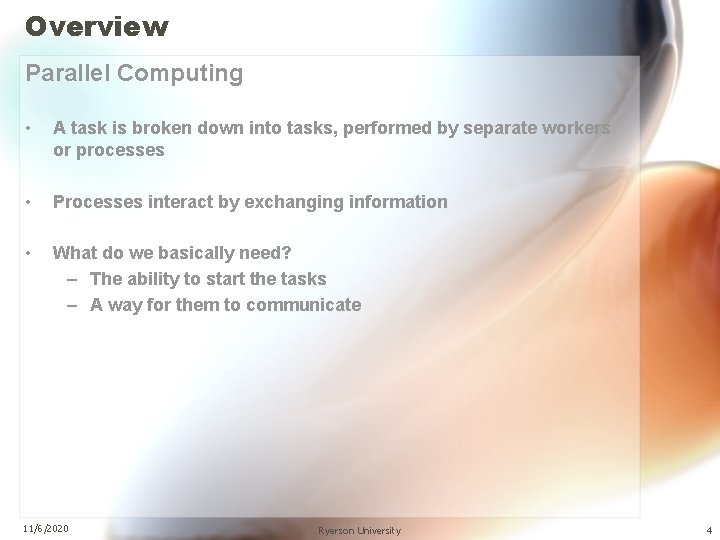

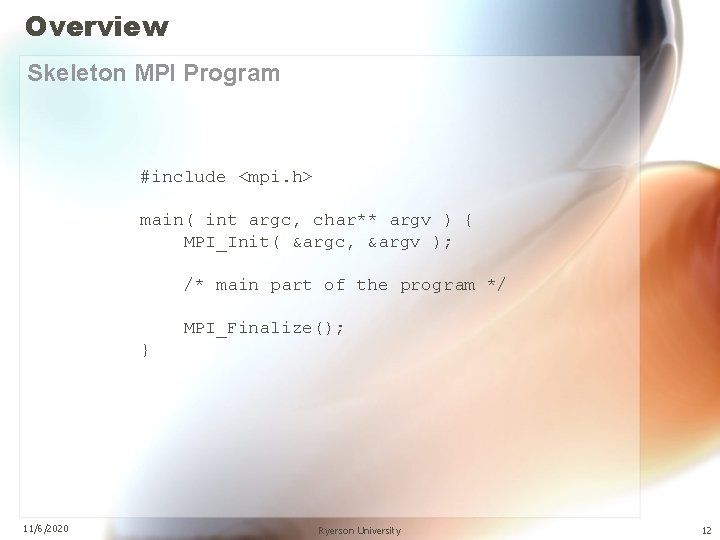

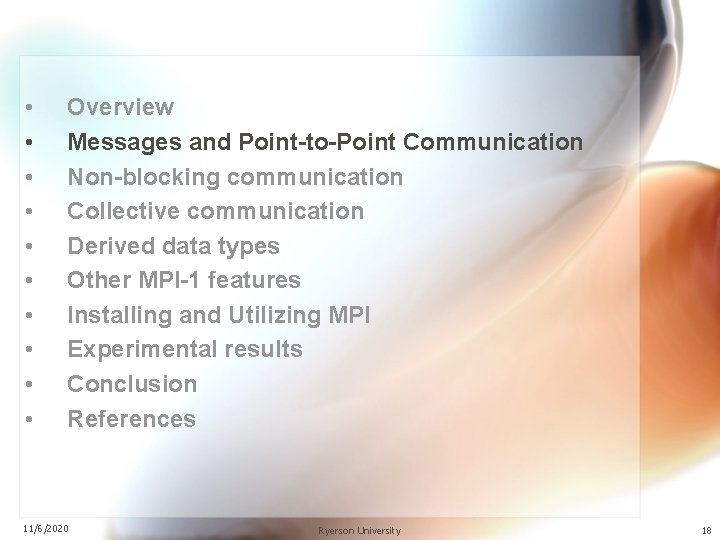

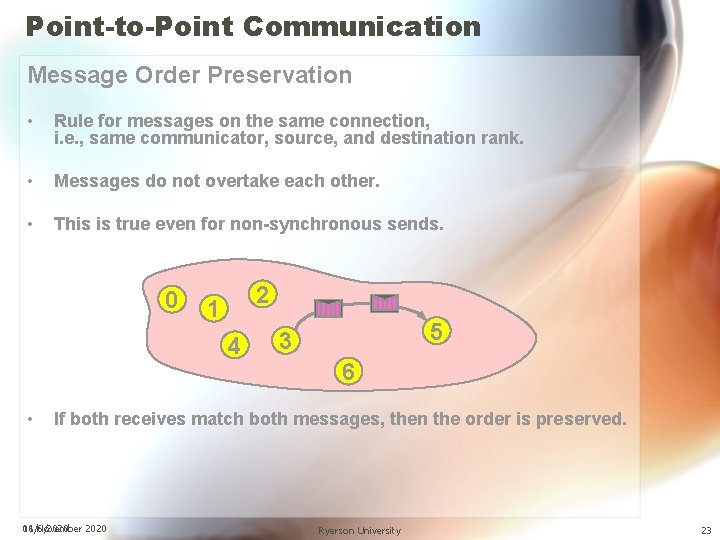

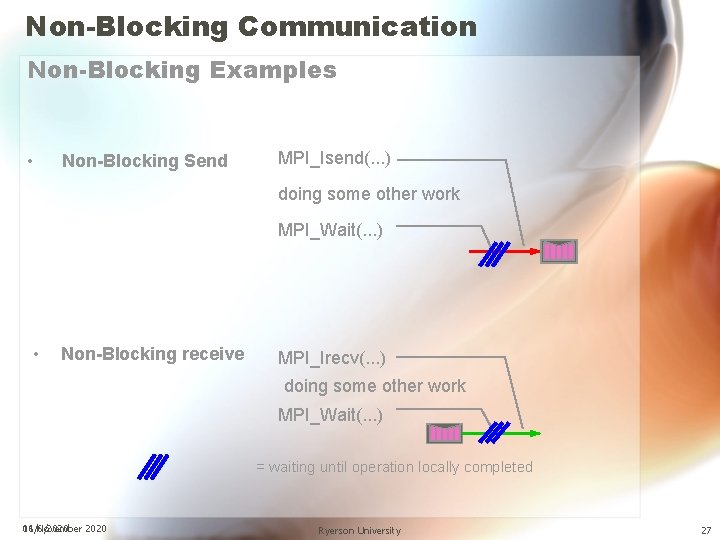

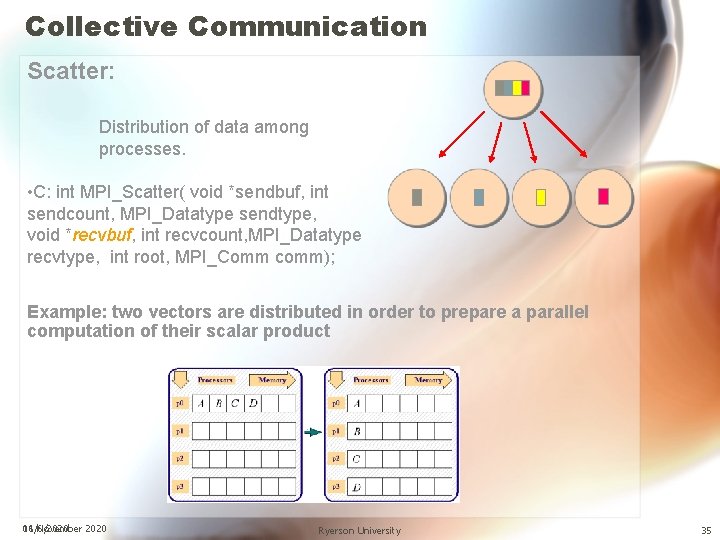

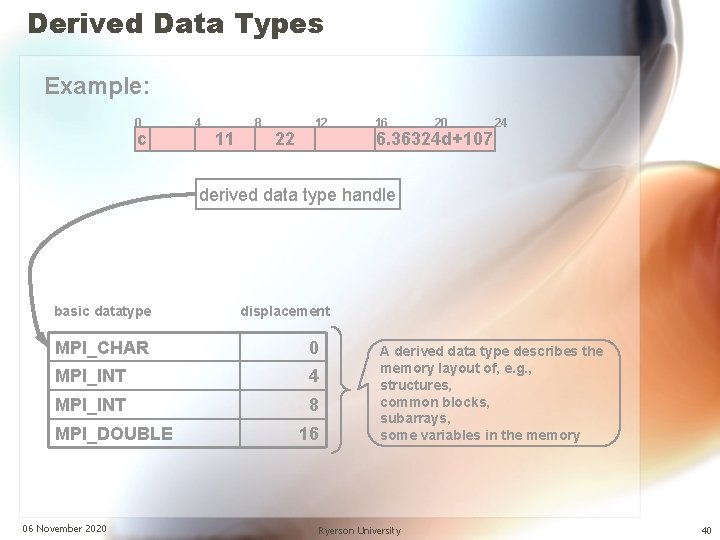

Derived Data Types: Data Layout and the Describing Datatype Handle

    struct buff_layout {
        int    i_val[3];
        double d_val[5];
    } buffer;

    array_of_types[0] = MPI_INT;
    array_of_blocklengths[0] = 3;
    array_of_displacements[0] = 0;
    array_of_types[1] = MPI_DOUBLE;
    array_of_blocklengths[1] = 5;
    array_of_displacements[1] = …;

    MPI_Type_struct(2, array_of_blocklengths, array_of_displacements, array_of_types, &buff_datatype);
    MPI_Type_commit(&buff_datatype);

    MPI_Send(&buffer, 1, buff_datatype, …)

• &buffer is the start address of the data.
• The datatype handle describes the data layout (a block of int followed by a block of double).
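
The slide leaves the second displacement elided because it depends on how the compiler pads the struct. One common way to obtain such byte displacements in C is the standard `offsetof` macro. The sketch below is plain C with no MPI calls; the helper name `buff_layout_displacements` is hypothetical, and this is one possible approach rather than the method used in the original deck.

```c
#include <assert.h>
#include <stddef.h>

/* Same layout as the slide's buffer: 3 ints followed by 5 doubles. */
struct buff_layout {
    int    i_val[3];
    double d_val[5];
};

/* Fills disp[0] and disp[1] with the byte offsets of the int block
 * and the double block inside struct buff_layout. */
void buff_layout_displacements(size_t disp[2])
{
    assert(disp != NULL);
    disp[0] = offsetof(struct buff_layout, i_val);  /* always 0: first member */
    disp[1] = offsetof(struct buff_layout, d_val);  /* includes any padding */
}
```

The second offset is at least `3 * sizeof(int)` and may be larger if the compiler inserts padding to align the doubles, which is why it should be computed rather than hard-coded.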

Derived Data Types: Type Maps
• A derived data type is logically a pointer to a list of entries, each a basic data type at a displacement:

| Basic datatype | Displacement |
| --- | --- |
| basic datatype 0 | displacement of datatype 0 |
| basic datatype 1 | displacement of datatype 1 |
| ... | ... |
| basic datatype n-1 | displacement of datatype n-1 |

Derived Data Types: Example
[Figure: memory from byte 0 to byte 24 holding the character c at 0, the integer 11 at 4, the integer 22 at 8, and the double 6.36324d+107 at 16.]

The derived data type handle refers to this type map:

| Basic datatype | Displacement |
| --- | --- |
| MPI_CHAR | 0 |
| MPI_INT | 4 |
| MPI_INT | 8 |
| MPI_DOUBLE | 16 |

A derived data type describes the memory layout of, e.g., structures, common blocks, subarrays, or some variables in memory.

Derived Data Types: Contiguous Data
• The simplest derived data type
• Consists of a number of contiguous items of the same data type (oldtype), which together form the newtype
• C: int MPI_Type_contiguous(int count, MPI_Datatype oldtype, MPI_Datatype *newtype)
• Fortran: MPI_TYPE_CONTIGUOUS(COUNT, OLDTYPE, NEWTYPE, IERROR) with INTEGER COUNT, OLDTYPE, NEWTYPE, IERROR

Derived Data Types: Vector Datatype
[Figure: a newtype built from blocks of oldtype elements, with holes between the blocks that should not be transferred.]
• blocklength = 3 elements per block
• stride = 5 (element stride between blocks)
• count = 2 blocks
• C: int MPI_Type_vector(int count, int blocklength, int stride, MPI_Datatype oldtype, MPI_Datatype *newtype)
• Fortran: MPI_TYPE_VECTOR(COUNT, BLOCKLENGTH, STRIDE, OLDTYPE, NEWTYPE, IERROR) with INTEGER COUNT, BLOCKLENGTH, STRIDE, OLDTYPE, NEWTYPE, IERROR

Derived Data Types: MPI_TYPE_VECTOR, an example
• Sending the first row of an N*M matrix (in C and in Fortran)
• Sending the first column of an N*M matrix (in C and in Fortran)

Derived Data Types: Sending a row using MPI_TYPE_vector
• C
– MPI_Type_vector(1, 5, 1, MPI_INT, &MPI_ROW)
• Fortran
– MPI_Type_vector(1, 5, 1, MPI_INT, MPI_ROW)
• MPI_Type_commit(&MPI_ROW)
• MPI_Send(&buf …, MPI_ROW …)
• MPI_Recv(&buf …, MPI_ROW …)

Derived Data Types: Sending a column using MPI_TYPE_vector
• C
– MPI_Type_vector(4, 1, 5, MPI_INT, &MPI_COL)
• Fortran
– MPI_Type_vector(4, 1, 5, MPI_INT, MPI_COL)
• MPI_Type_commit(&MPI_COL)
• MPI_Send(buf …, MPI_COL …)
• MPI_Recv(buf …, MPI_COL …)

Derived Data Types: Sending a sub-matrix using MPI_TYPE_vector
• C
– MPI_Type_vector(2, 3, 5, MPI_INT, &MPI_SUBMAT)
• Fortran
– MPI_Type_vector(2, 3, 5, MPI_INT, MPI_SUBMAT)
• MPI_Type_commit(&MPI_SUBMAT)
• MPI_Send(&buf …, MPI_SUBMAT …)
• MPI_Recv(&buf …, MPI_SUBMAT …)
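
To see which elements the (count, blocklength, stride) triples on the row, column, and sub-matrix slides select, the layout can be modelled in plain C. The sketch below makes no MPI calls; `pack_vector` is a hypothetical helper that copies the selected elements into a contiguous buffer. It assumes a 4×5 matrix of int stored row by row, which is inferred from the slide parameters rather than stated in the deck.

```c
#include <assert.h>
#include <stddef.h>

/* Copies the elements selected by a vector layout into out and returns
 * how many were copied. The layout has `count` blocks of `blocklength`
 * elements, and consecutive blocks start `stride` elements apart. */
size_t pack_vector(const int *base, size_t count, size_t blocklength,
                   size_t stride, int *out)
{
    assert(base != NULL && out != NULL);

    size_t n = 0;
    for (size_t block = 0; block < count; block++)
        for (size_t j = 0; j < blocklength; j++)
            out[n++] = base[block * stride + j];  /* skip the holes between blocks */
    return n;
}
```

With a row-major 4×5 matrix starting at `base`, the triple (1, 5, 1) selects the first row, (4, 1, 5) selects the first column, and (2, 3, 5) selects the 2×3 sub-matrix in the top-left corner. Passing a pointer to a different starting element shifts the selection to another row, column, or sub-matrix.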

Other MPI features (1)
• Point-to-point
– MPI_Sendrecv & MPI_Sendrecv_replace
– Null processes, MPI_PROC_NULL (see Chap. 7??, slide on MPI_Cart_shift)
– MPI_Pack & MPI_Unpack
– MPI_Probe: check length (tag, source rank) before calling MPI_Recv
– MPI_Iprobe: check whether a message is available
– MPI_Request_free, MPI_Cancel
– Persistent requests
– MPI_BOTTOM (in point-to-point and collective communication)
• Collective operations
– MPI_Allgather, MPI_Alltoall, MPI_Reduce_scatter
– MPI_...v variants (Gatherv, Scatterv, Allgatherv, Alltoallv)
[Figure: MPI_Allgather turns the per-process items A, B, C into the full set A B C on every process; MPI_Alltoall turns the rows A1 B1 C1 / A2 B2 C2 / A3 B3 C3 into A1 A2 A3 / B1 B2 B3 / C1 C2 C3.]
• Topologies
– MPI_DIMS_CREATE

Other MPI features (2): Error Handling
• The communication should be reliable.
• If the MPI program is erroneous:
– by default: abort, if the error is detected by the MPI library; otherwise, unpredictable behavior
– Fortran: call MPI_Errhandler_set(comm, MPI_ERRORS_RETURN, ierr)
– C: MPI_Errhandler_set(comm, MPI_ERRORS_RETURN);
– then:
  · ierror is returned by each MPI routine
  · the state is undefined after an erroneous MPI call has occurred (only MPI_ABORT(…) should still be callable)

Installing MPI

    sequence:/home/grad/yourname> tar xfz mpich2-1.0.6p1.tar.gz
    sequence:/home/grad/yourname> mkdir mpich2-install
    sequence:/home/grad/yourname> cd mpich2-1.0.6
    sequence:/home/grad/yourname/mpich2-1.0.6> configure --prefix=/home/you/mpich2-install |& tee configure.log

Building MPICH2

    sequence:/home/grad/yourname/mpich2-1.0.6> make |& tee make.log
    sequence:/home/grad/yourname/mpich2-1.0.6> make install |& tee install.log

Building MPICH2 (continued)

    sequence:/home/grad/yourname/mpich2-1.0.6> setenv PATH /home/you/mpich2-install/bin:$PATH

Check that everything is in order at this point by running:

    which mpd
    which mpicc
    which mpiexec
    which mpirun

MPD Security
• MPICH2 uses an external process manager for scalable startup of large MPI jobs.
• The default process manager is called MPD, which is a ring of daemons on the machines where you will run your MPI programs.
• For security reasons, MPD looks in your home directory for a file named .mpd.conf containing the line secretword=<secretword>

    cd $HOME
    touch .mpd.conf
    chmod 600 .mpd.conf

• Example line in .mpd.conf: secretword=mr45-j9z

Bringing up a Ring of One MPD
• The first sanity check consists of bringing up a ring of one MPD on the local machine
– mpd &
• Testing one MPD command
– mpdtrace
• Bringing the “ring” down
– mpdallexit

Bringing up a Ring of MPDs
• Now we will bring up a ring of mpd’s on a set of machines. There is a workaround that starts the daemons by hand:

    mpd &
    mpdtrace -l

• Then log into each of the other machines, put the install/bin directory in your path, and run:

    mpd -h <hostname> -p <port> &

Automatic Ring Startup
• Avoiding the password prompt

    cd ~/.ssh
    ssh-keygen -t rsa
    cp id_rsa.pub authorized_keys

• Starting the ring

    mpdboot -n 4 -f mpd.hosts

Testing the Ring
• Test the ring we have just created

    mpdtrace
    mpdringtest 1000

Testing the Ring (continued)
• Test that the ring can run a multiprocess job

    mpiexec -n <number> hostname
    mpiexec -l -n 30 /bin/hostname

Compiling & Running an MPI Job
• Compilation in C:
– mpicc -o prog prog.c
• Compilation in C++:
– mpiCC -o prog prog.c (Bull)
– mpicxx -o prog prog.cpp (IBM cluster)
• Compilation in Fortran:
– mpif77 -o prog prog.f
– mpif90 -o prog prog.f90
• Executing the program with num processes:
– mprun -n num prog (Bull)
– mpiexec -n num prog (Standard MPI-2)

Automatic Parallelization
• By creating a mapping from one language to the other, we can expose the capabilities of existing automatically parallelizing compilers to the C language.
• Polaris is an automatically parallelizing source-to-source FORTRAN compiler.
• It accepts FORTRAN 77 input and produces FORTRAN output in a new dialect that supports explicit parallelism.
• http://cobweb.ecn.purdue.edu/~eigenman/reports/cepheus.pdf

Parallel Computing Software
• Many tools and utilities listed at the following link can be integrated with user programs as well as used as standalone problem-solving systems: http://ceu-fi.udc.es/SAL/C/3/index.shtml
• Some tools are:
– Adlib: a C++ library implementing a distributed array descriptor.
– ARCH: an object-oriented library of tools for parallel programming.
– Aztec: a parallel iterative library for solving linear systems.
– AutoMap/AutoLink: a tool to simplify the creation of MPI data types and to transfer dynamic data types.
– BERT 77: an automatic and optimizing parallelizer for FORTRAN 77.

• • • Overview Messages and Point-to-Point Communication Non-blocking communication Collective communication Derived data types Other MPI-1 features Installing and Utilizing MPI Experimental results Conclusion References 06 November 2020 Ryerson University 63

![Experimental results LAN For i0 i10 i do A600 500 x B500 600 LAN Experimental results (LAN) For (i=0, i<10, i++) do A[600, 500] x B[500, 600] LAN](https://slidetodoc.com/presentation_image/59e85b38878662259ff38faf3fede1ca/image-64.jpg)

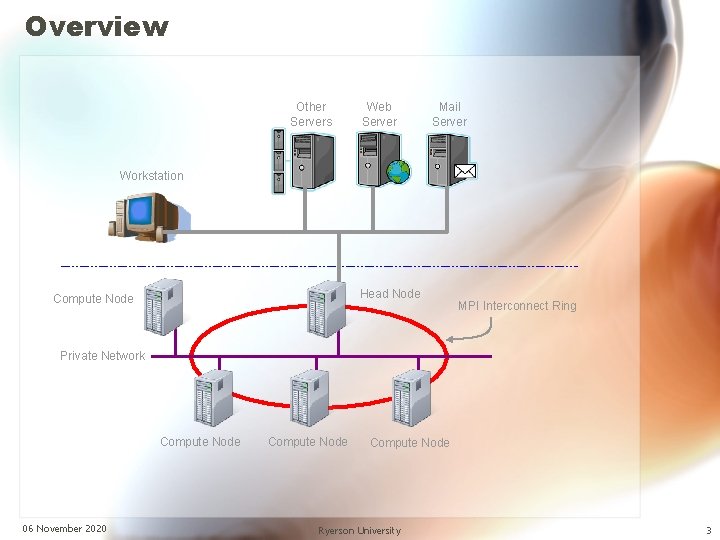

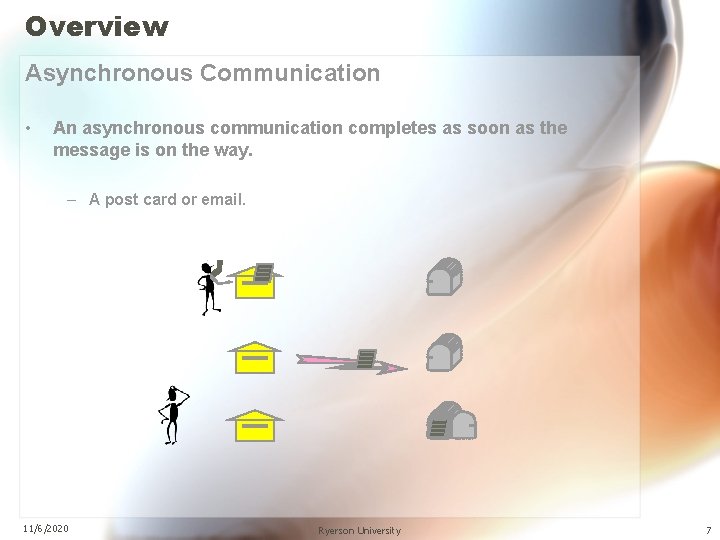

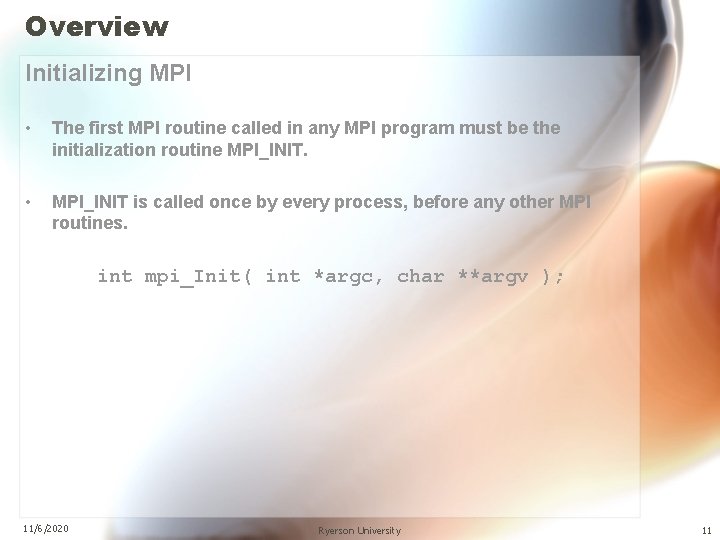

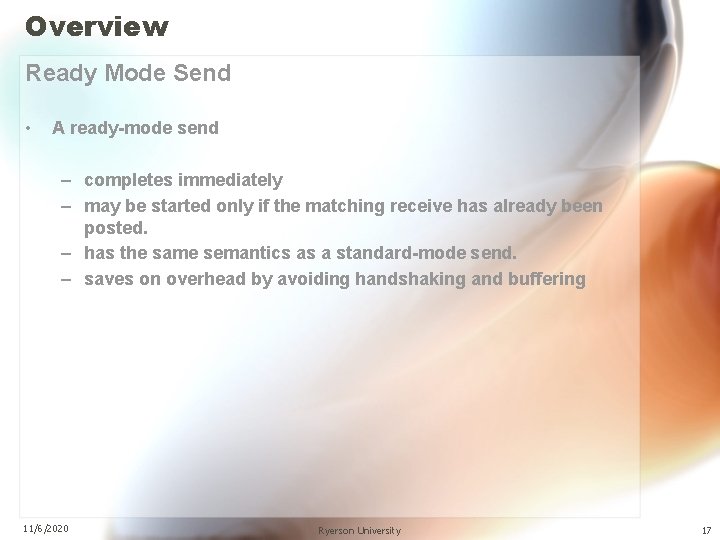

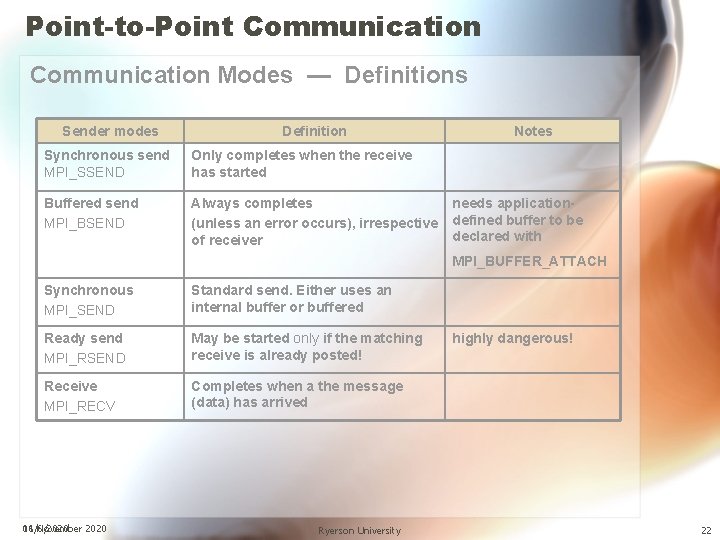

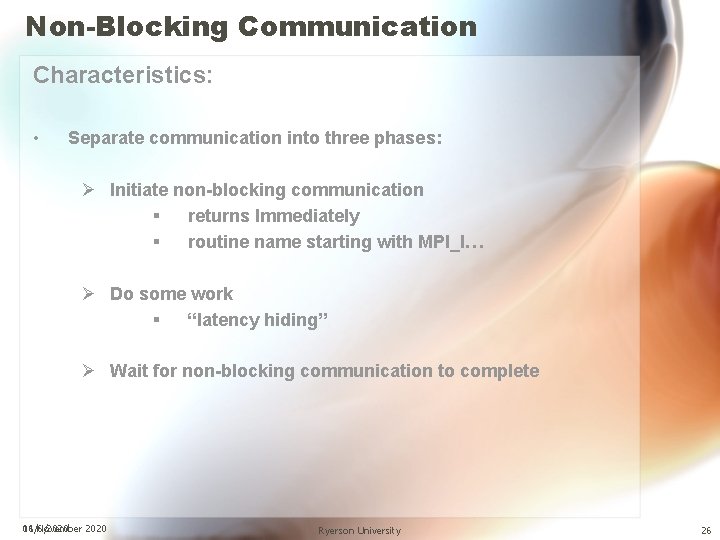

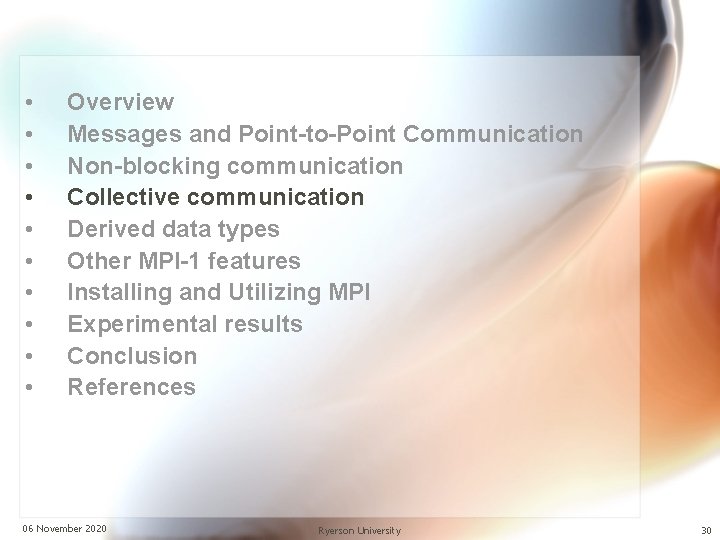

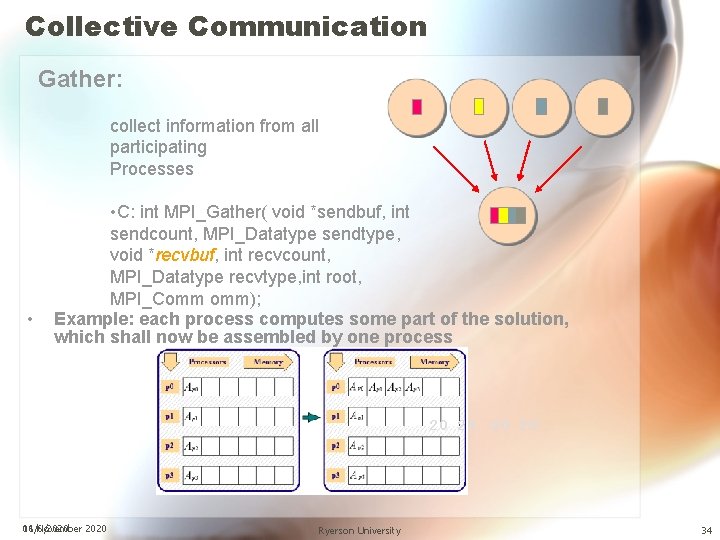

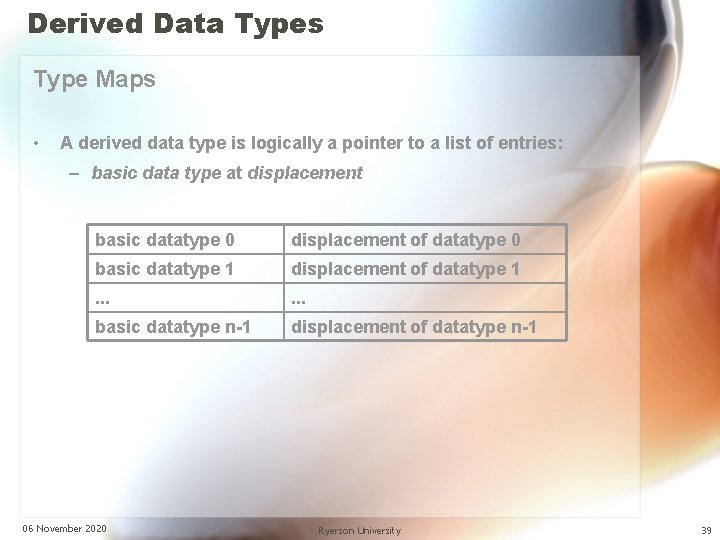

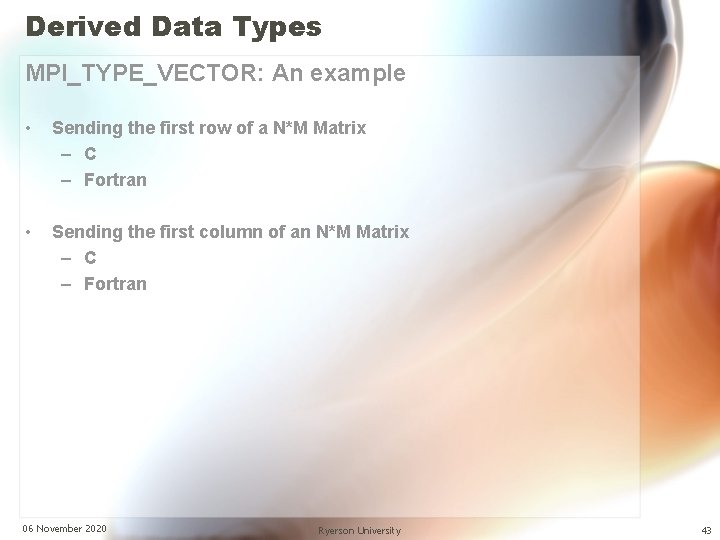

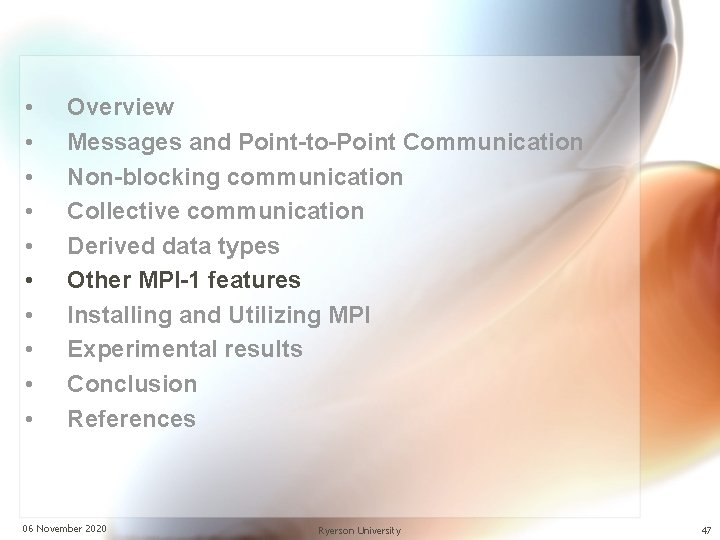

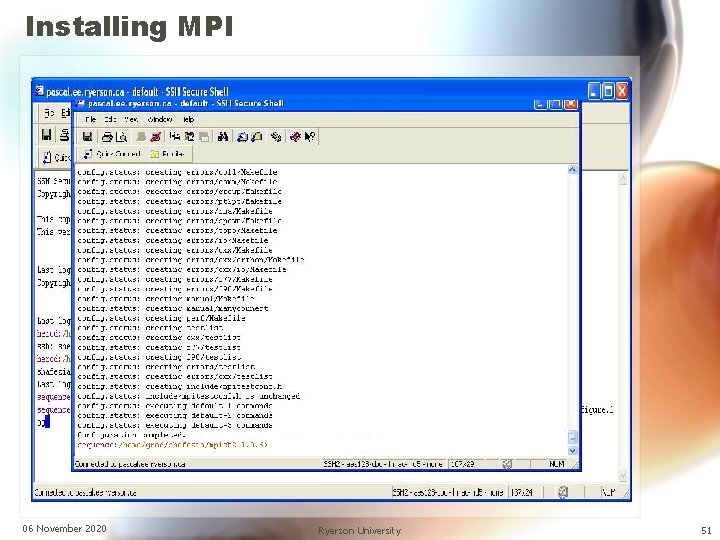

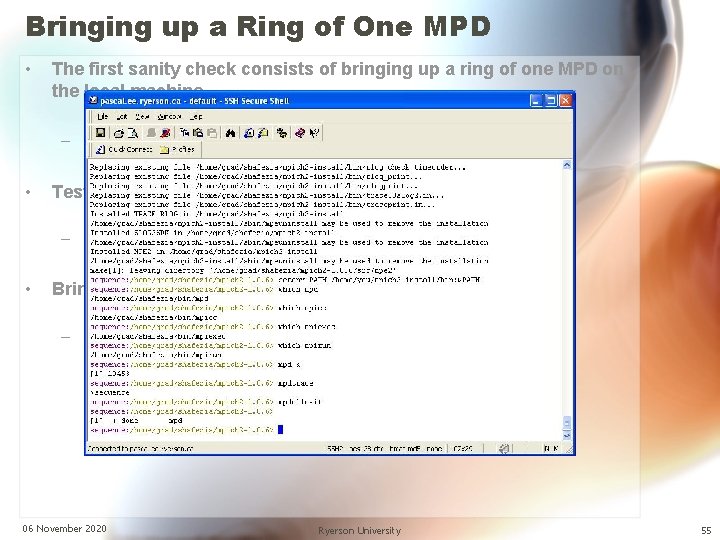

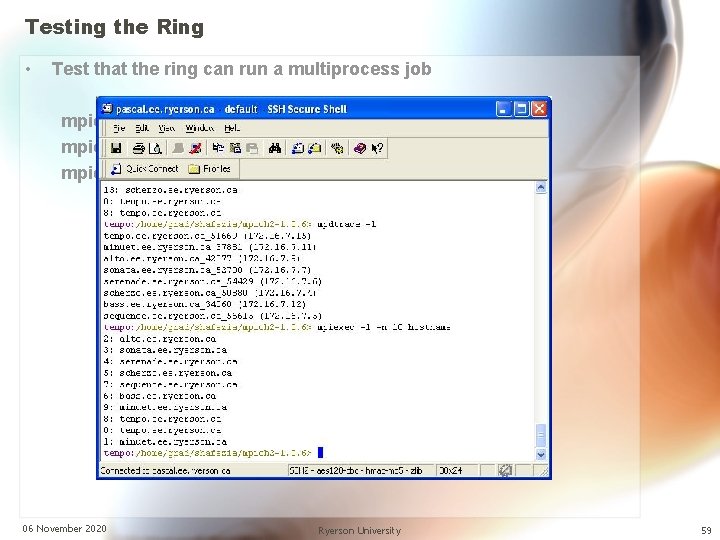

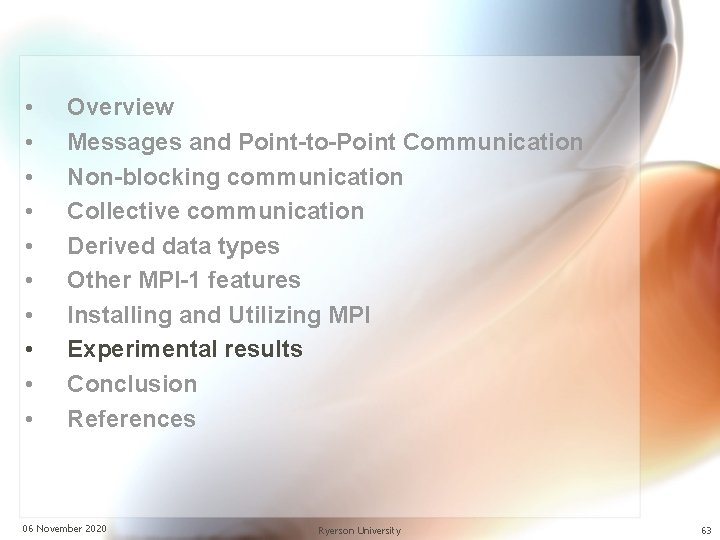

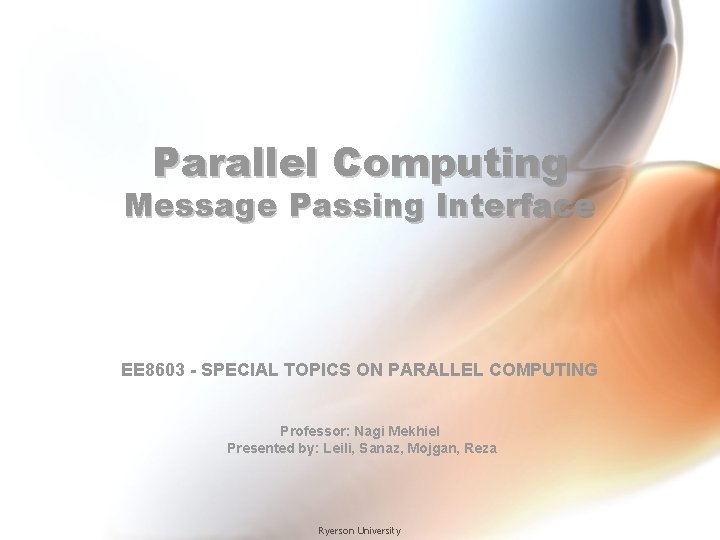

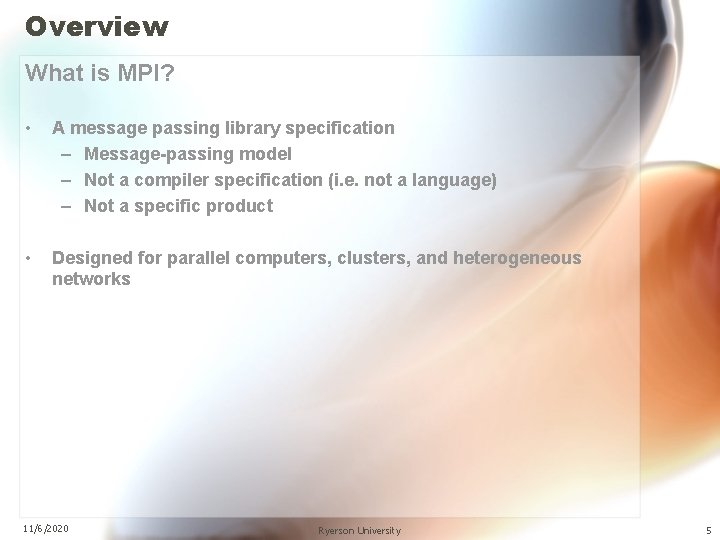

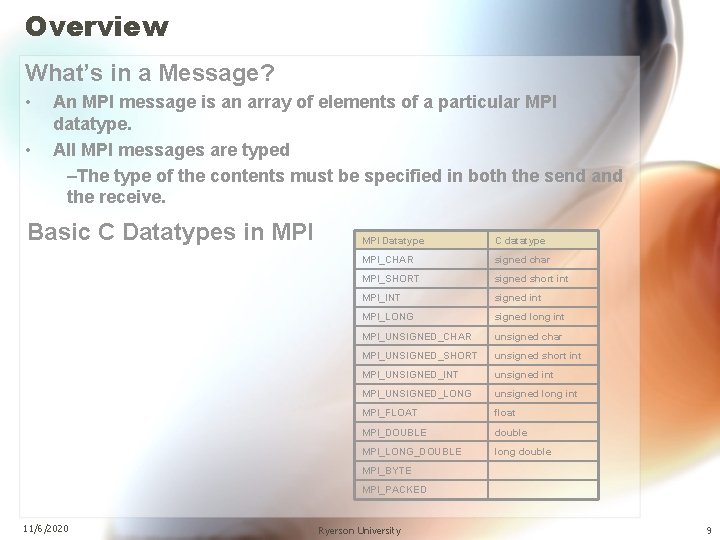

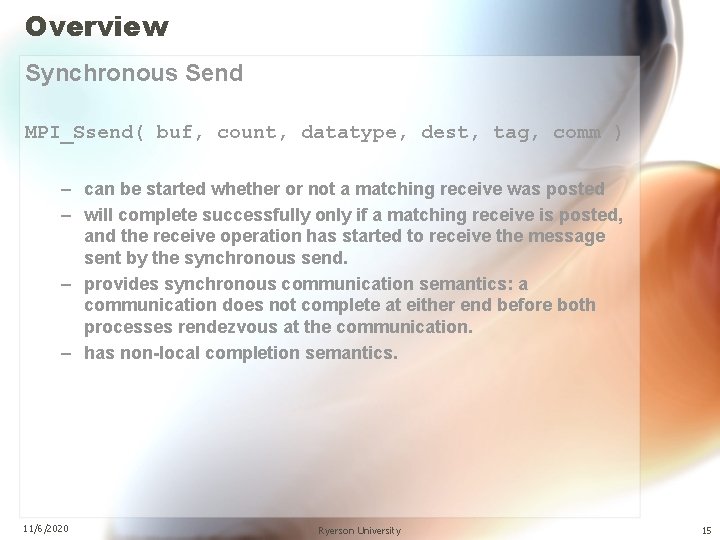

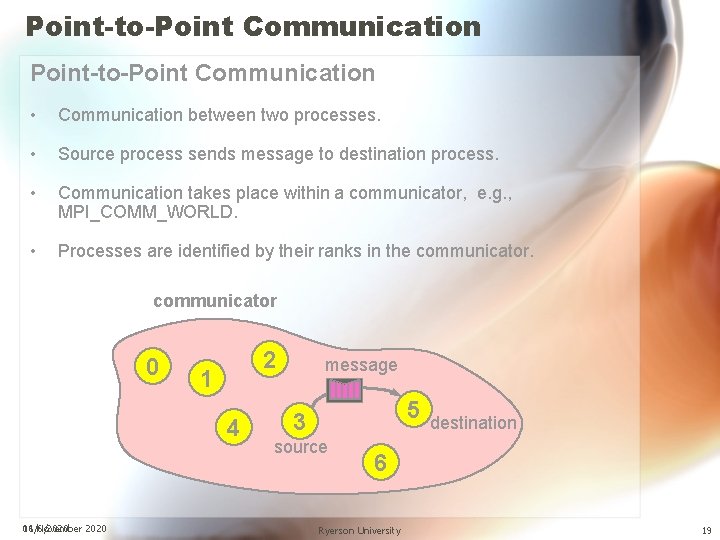

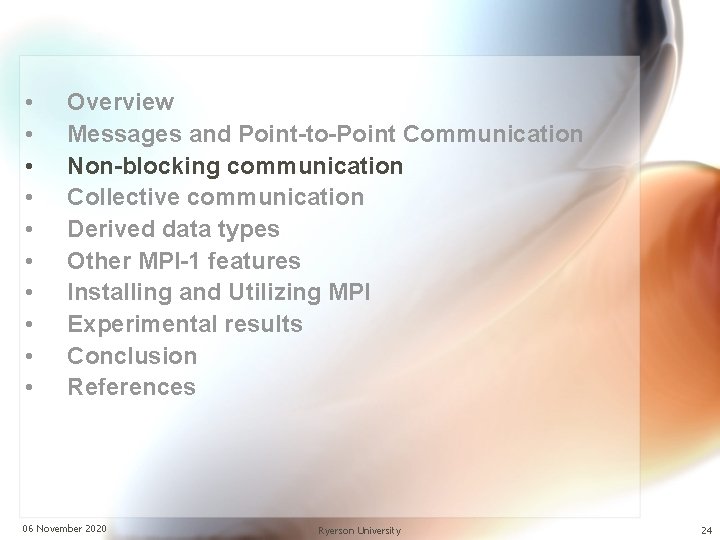

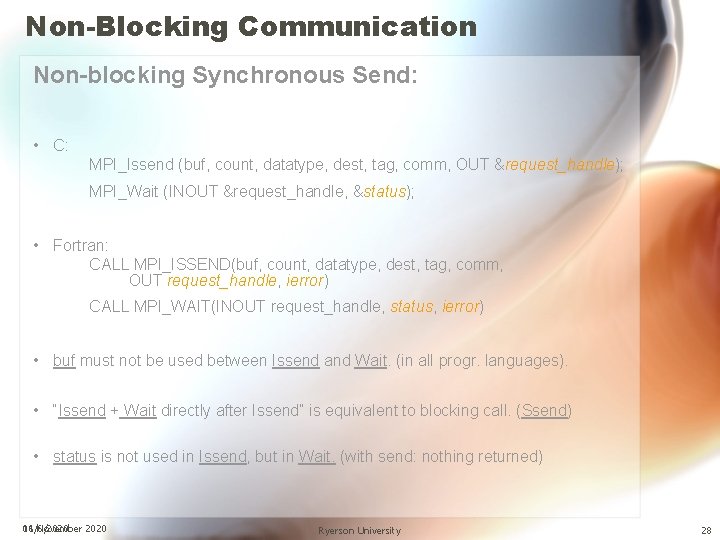

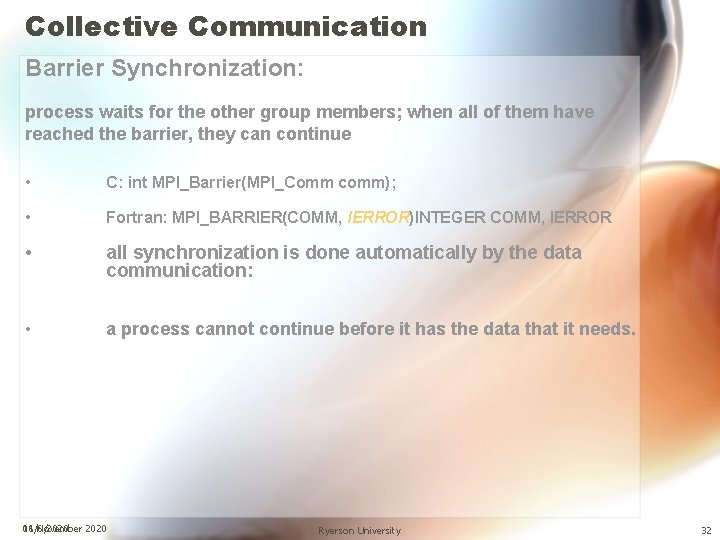

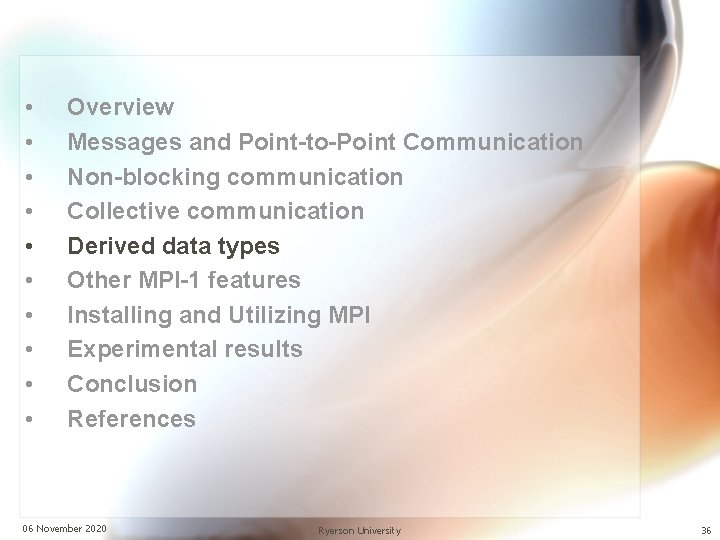

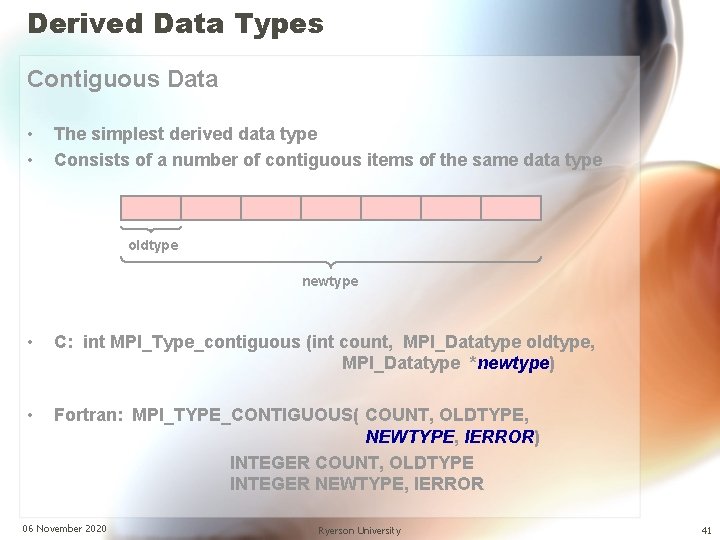

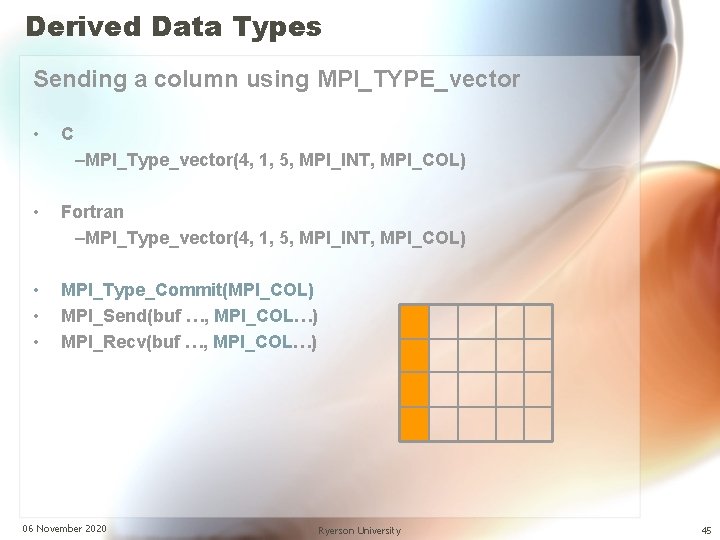

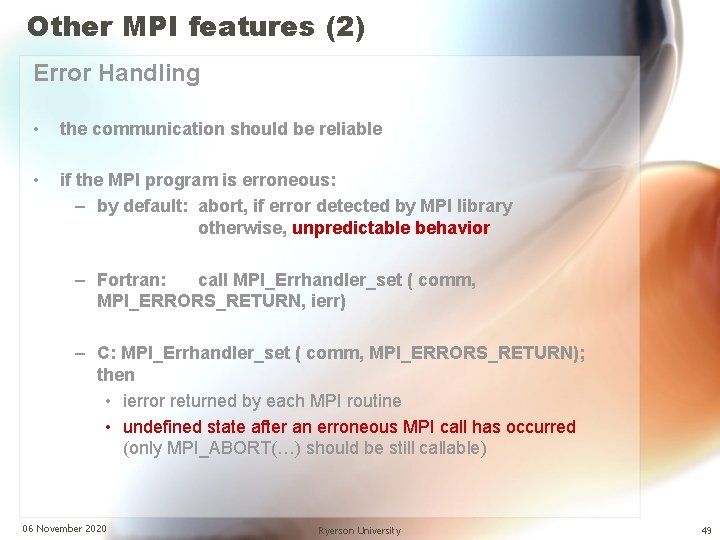

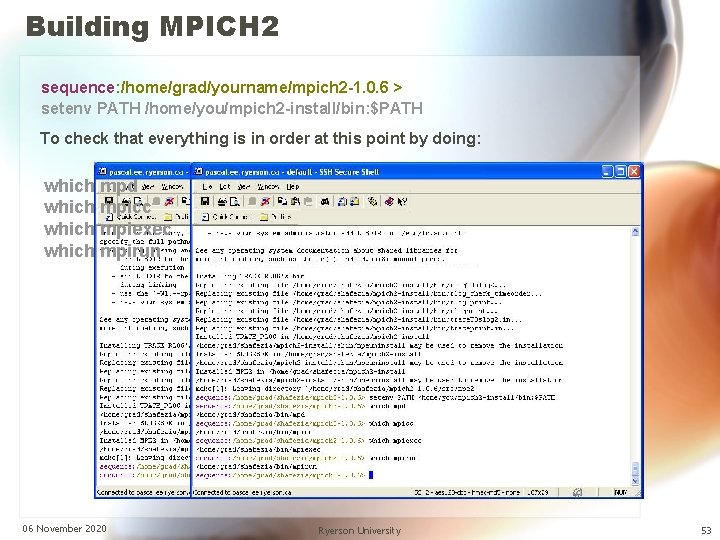

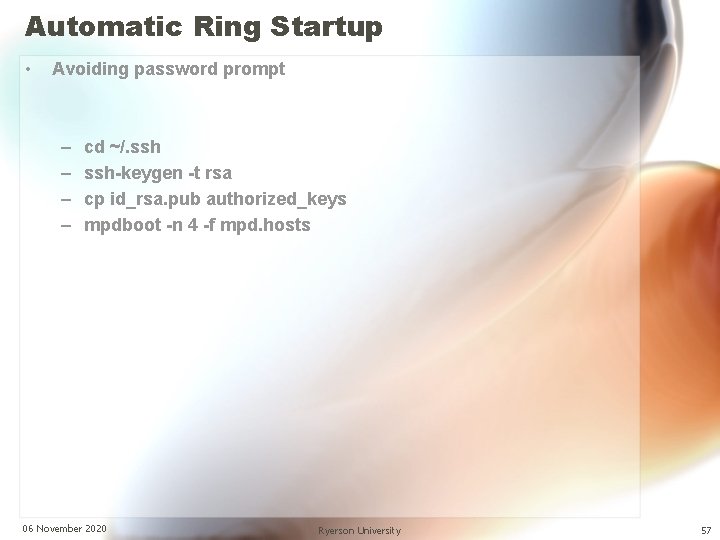

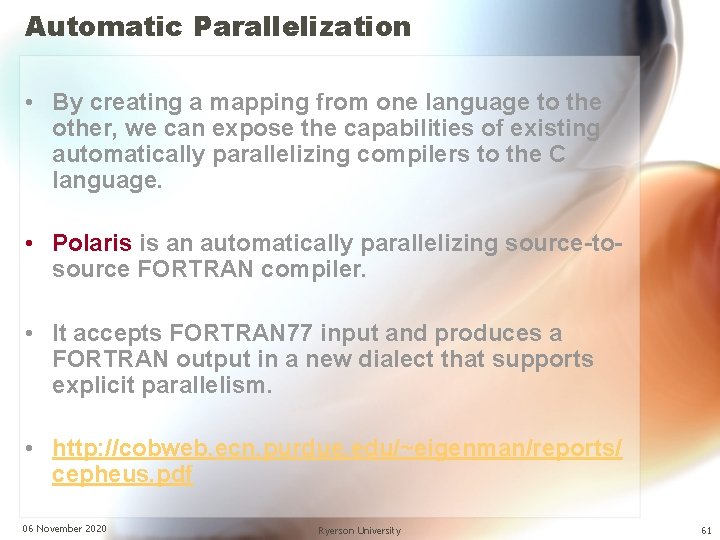

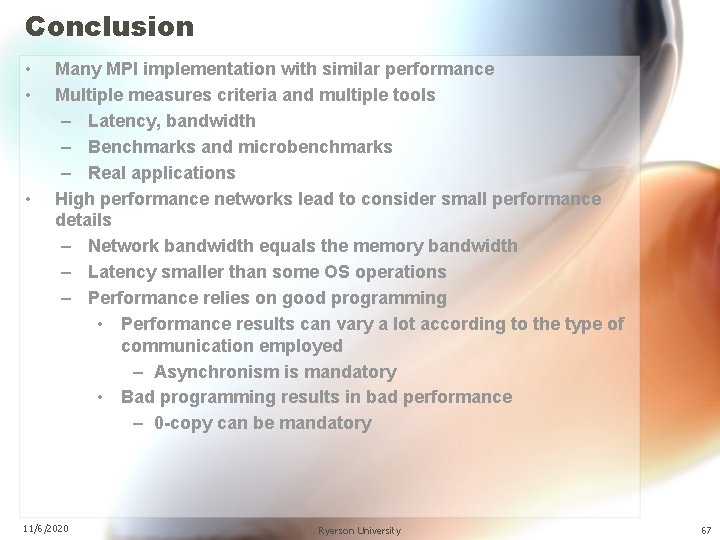

Experimental results (LAN)
Workload: for (i = 0; i < 10; i++) do A[600, 500] x B[500, 600]

| Active servers | 2 | 3 | 5 | 8 | 10 | 15 |
| --- | --- | --- | --- | --- | --- | --- |
| Time | 249 | 154 | 140 | 100 | 115 | 140 |

![Experimental results Wireless For i0 i10 i do A600 500 x B500 600 Wireless Experimental results (Wireless) For (i=0, i<10, i++) do A[600, 500] x B[500, 600] Wireless](https://slidetodoc.com/presentation_image/59e85b38878662259ff38faf3fede1ca/image-65.jpg)

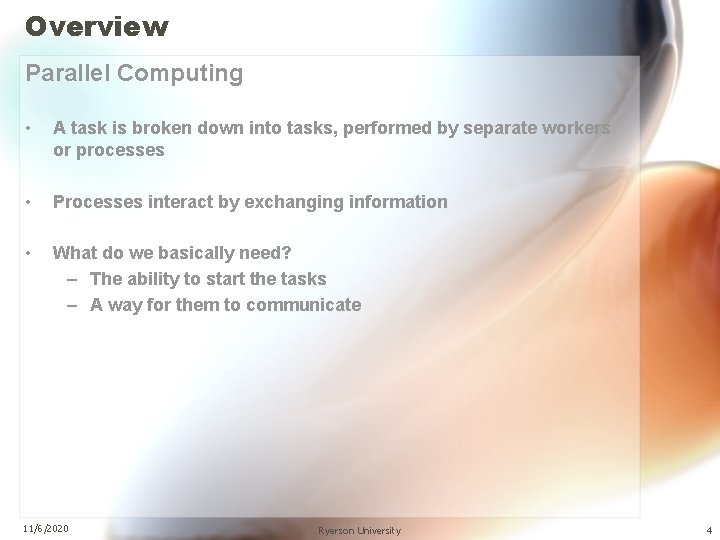

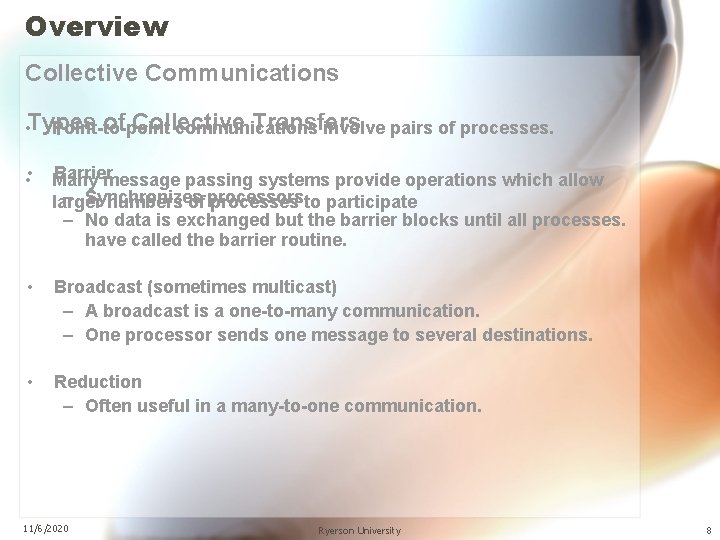

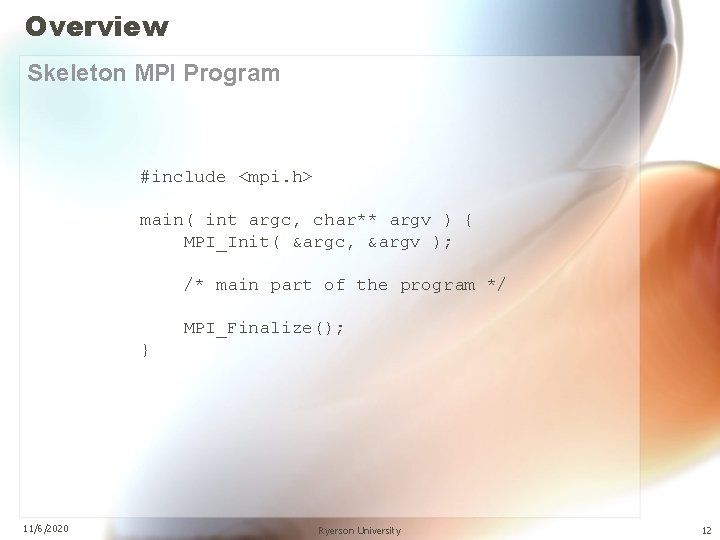

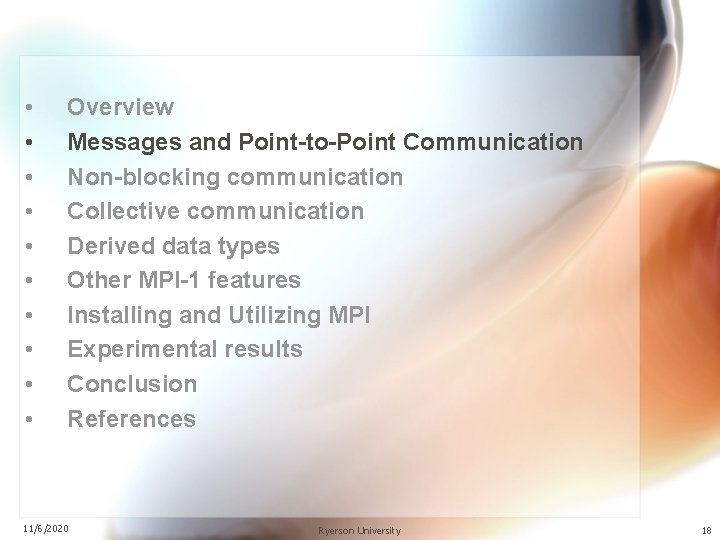

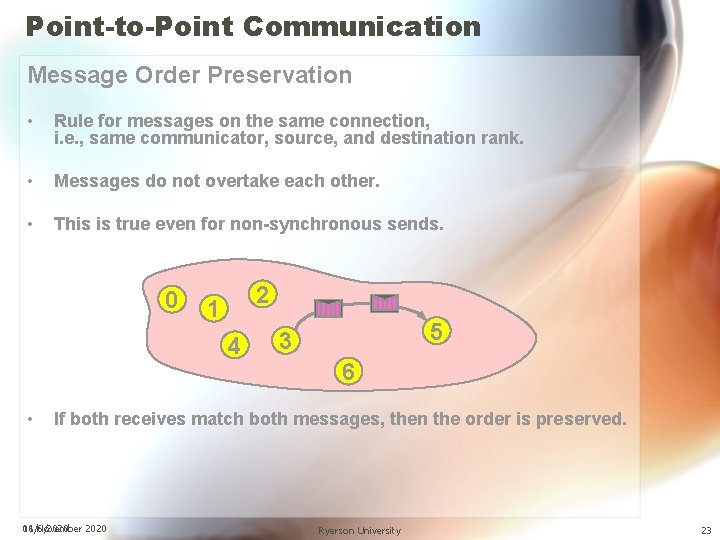

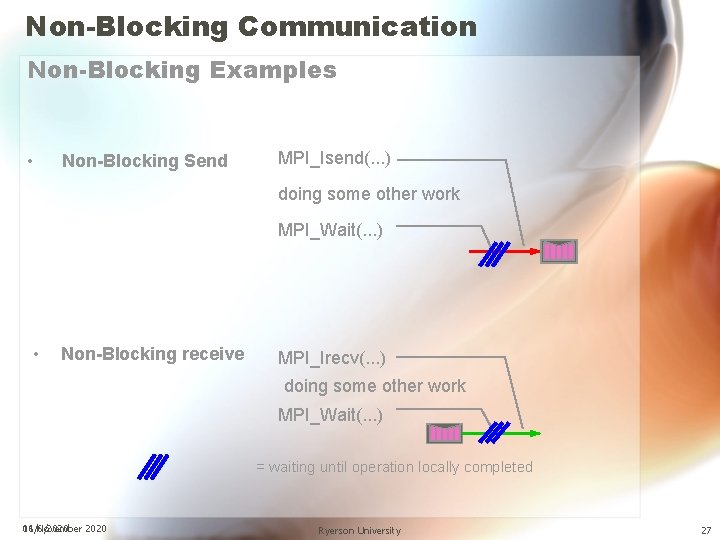

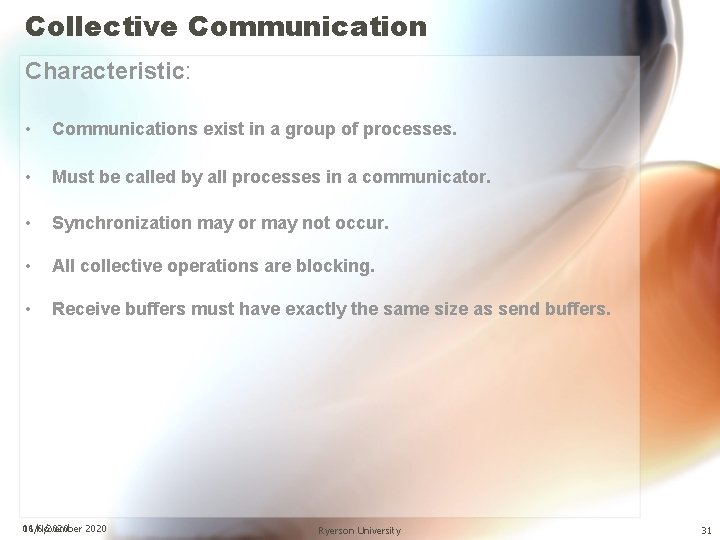

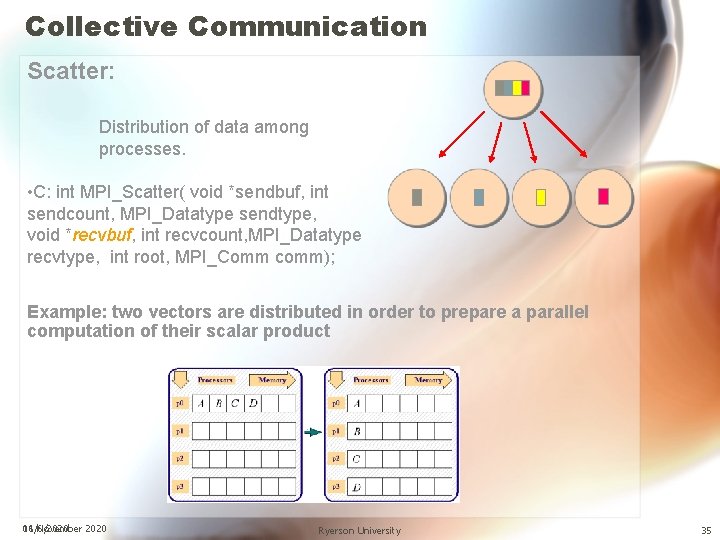

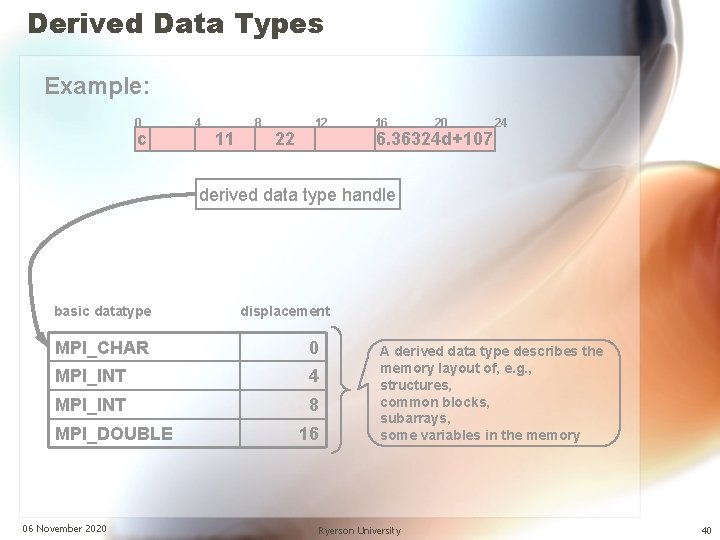

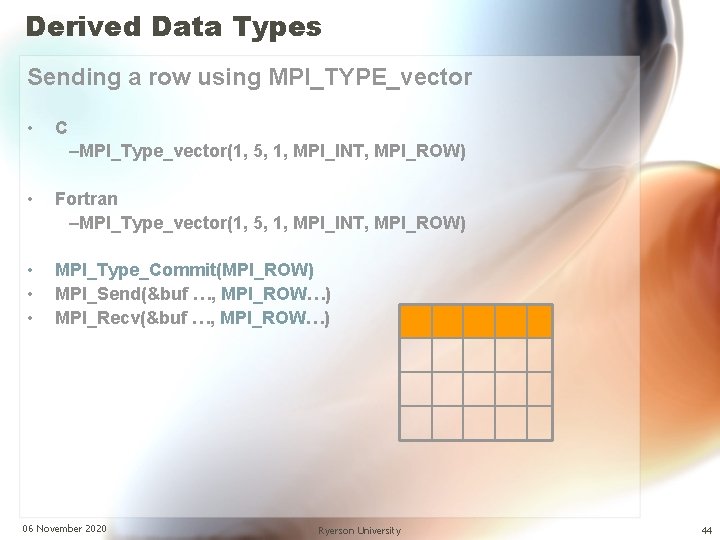

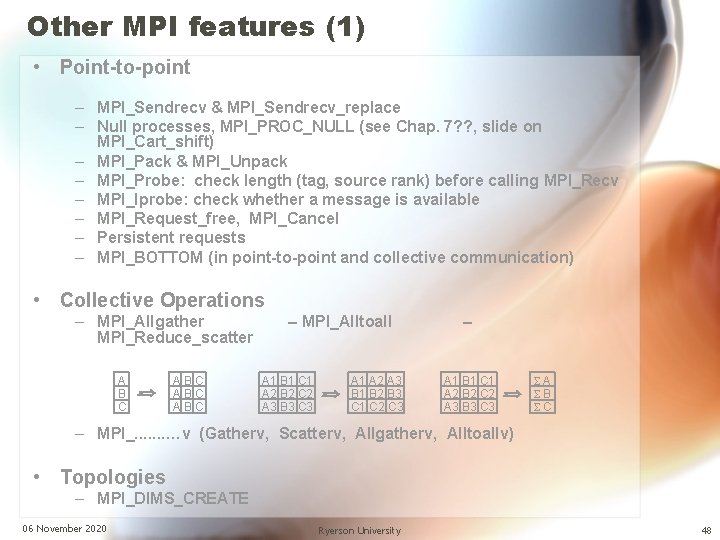

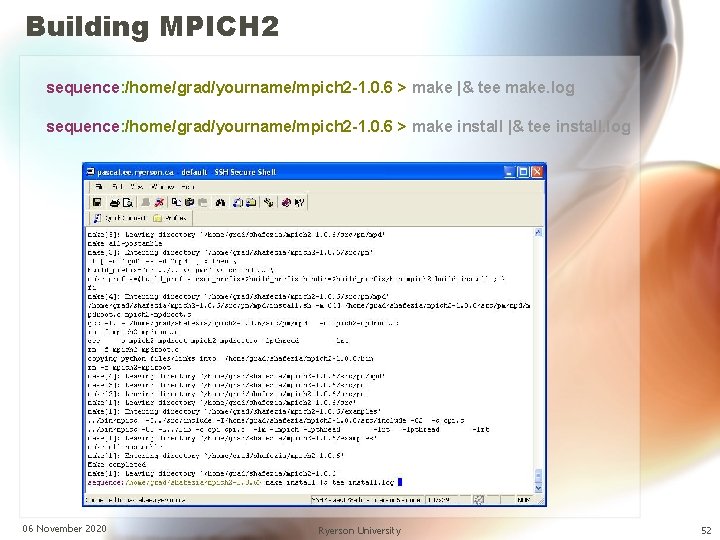

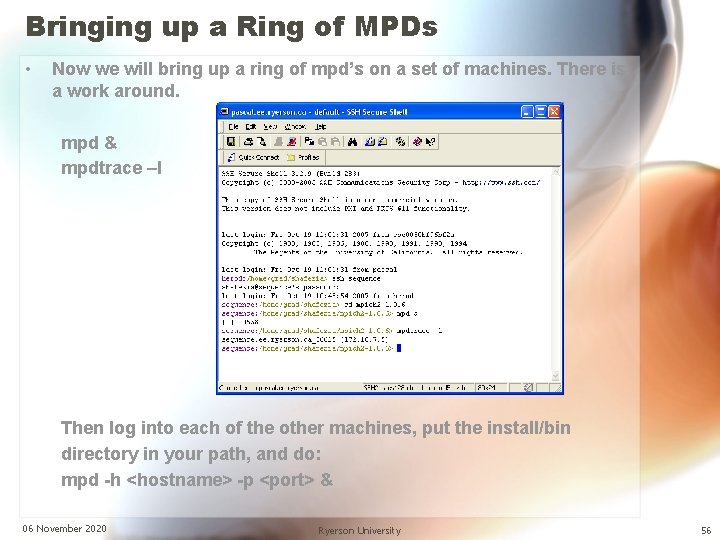

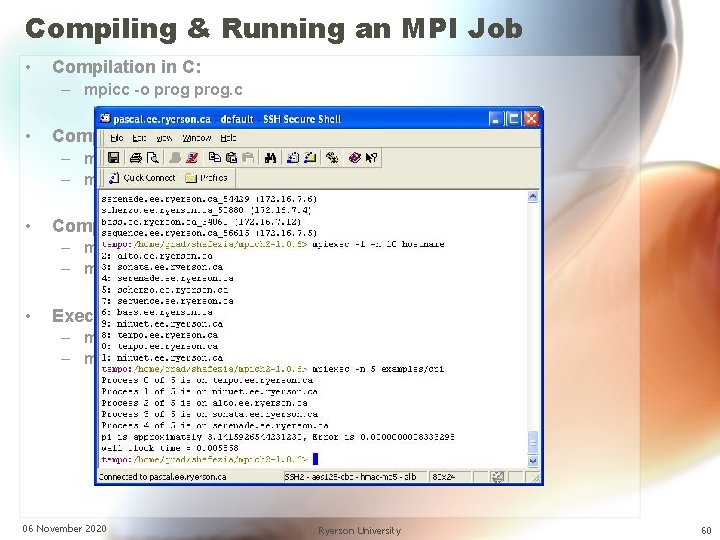

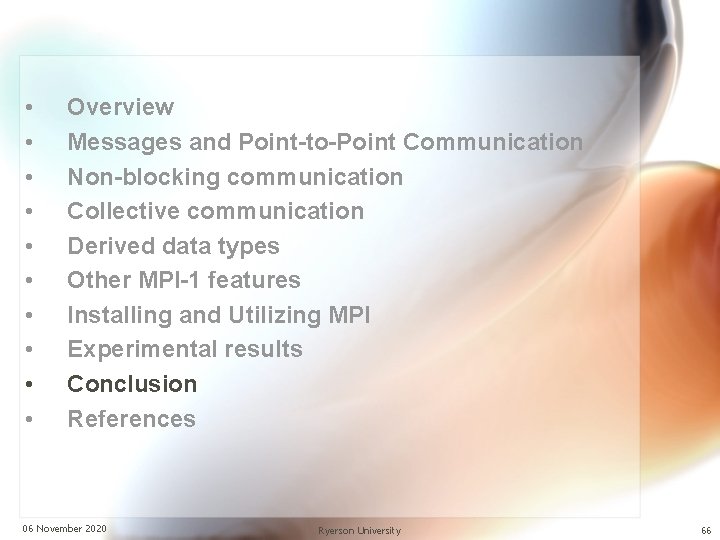

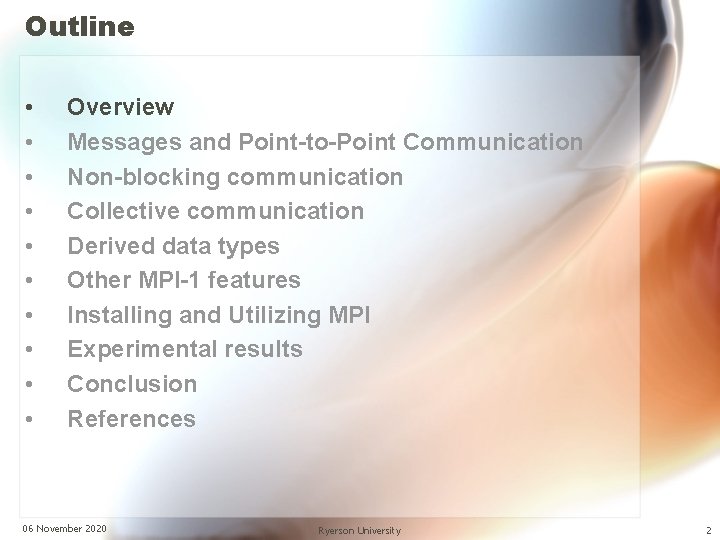

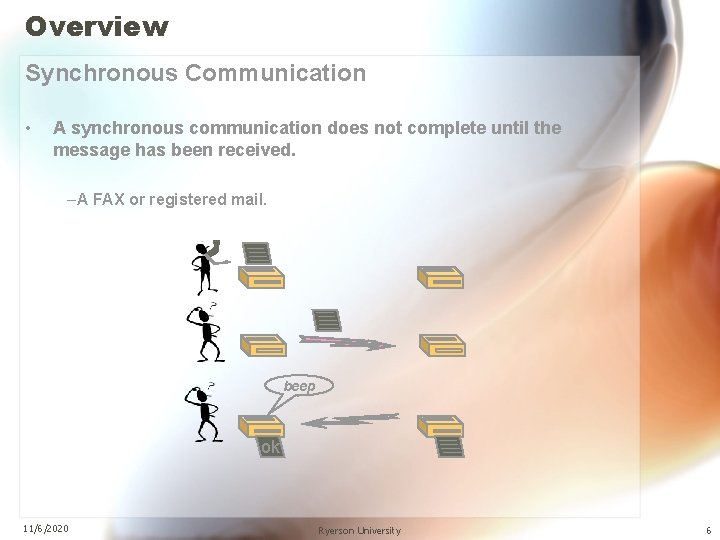

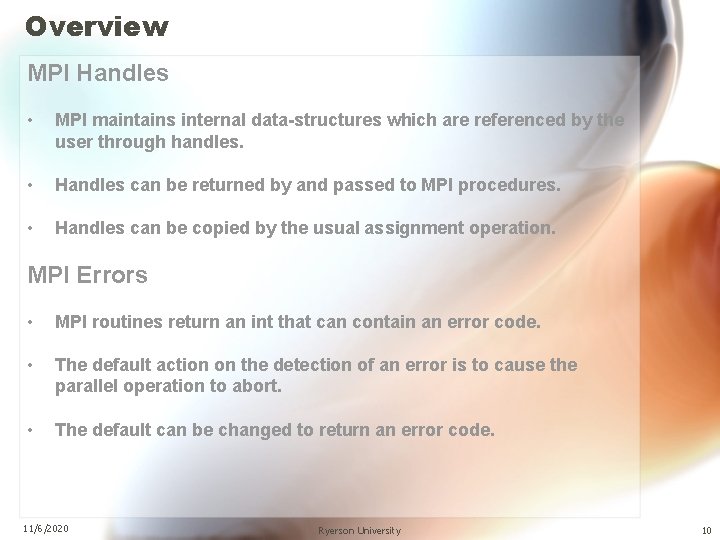

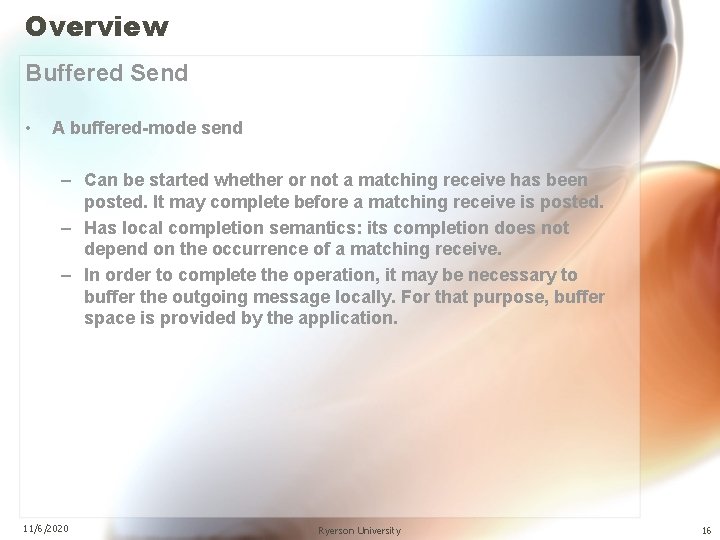

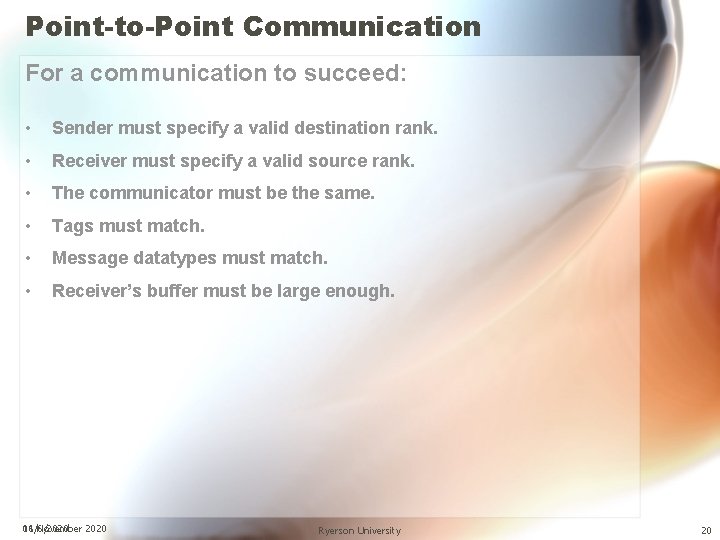

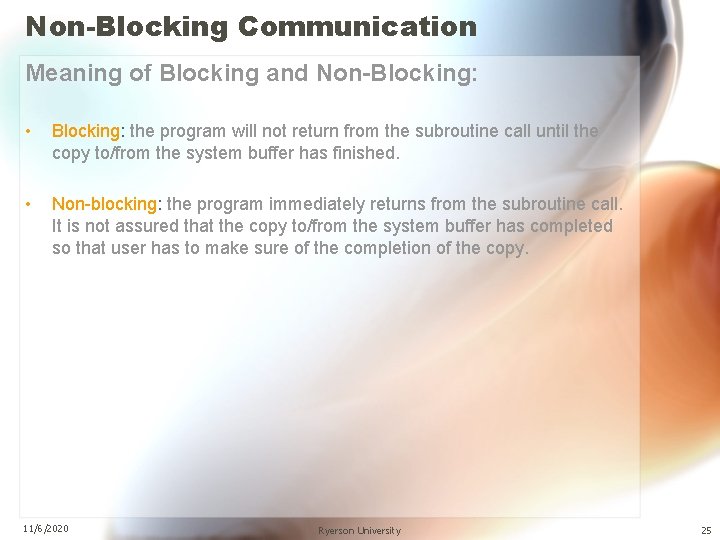

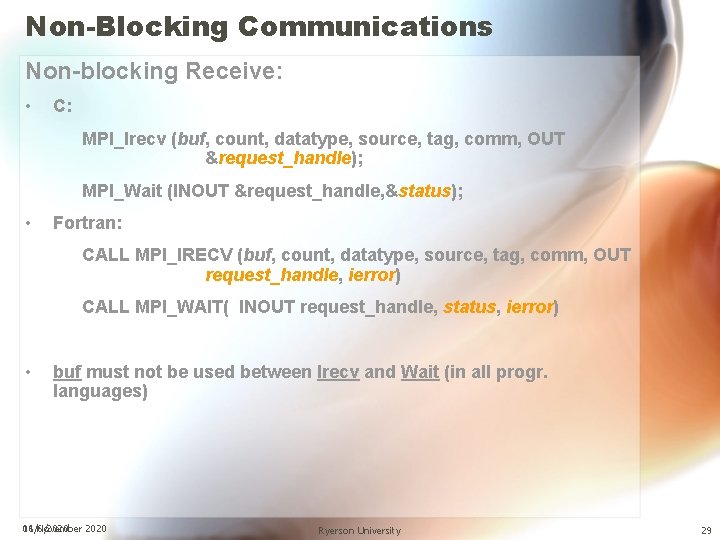

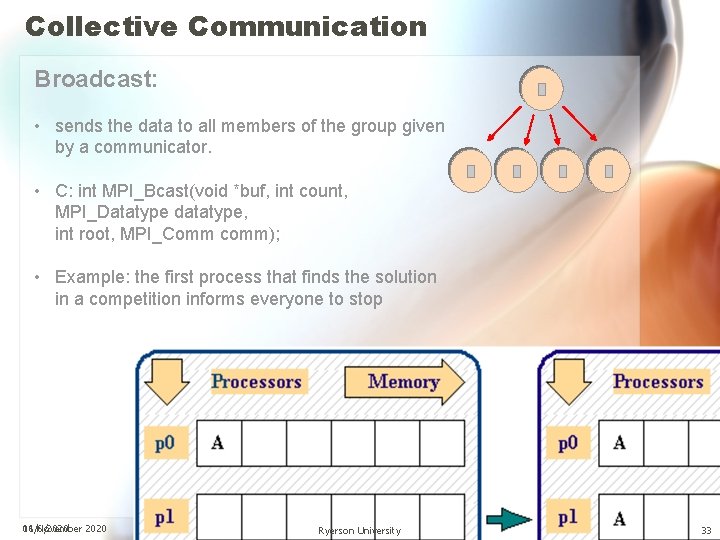

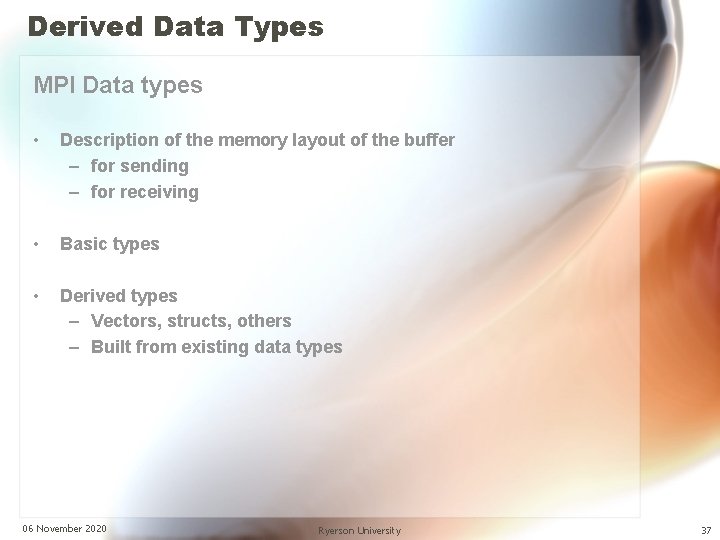

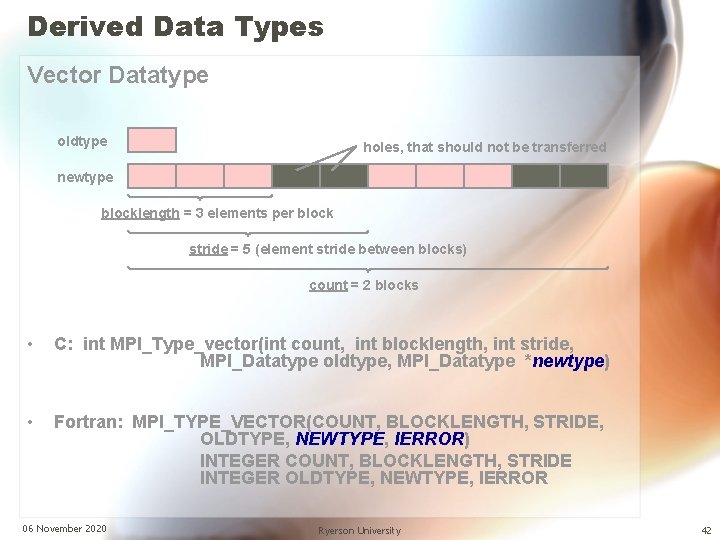

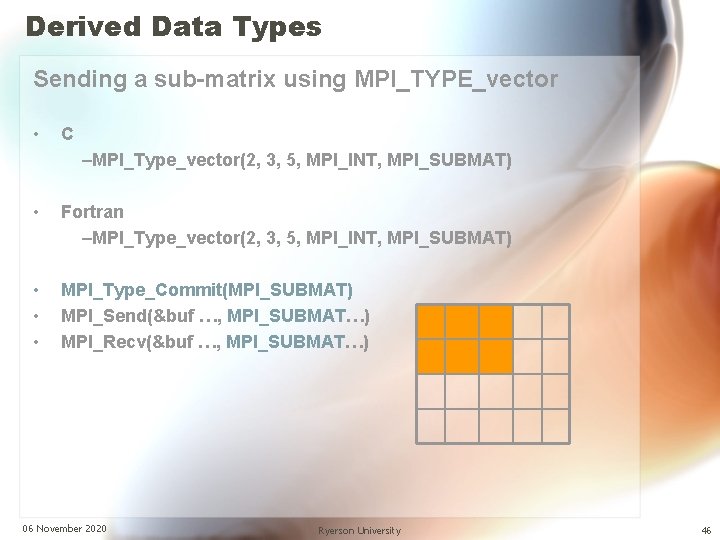

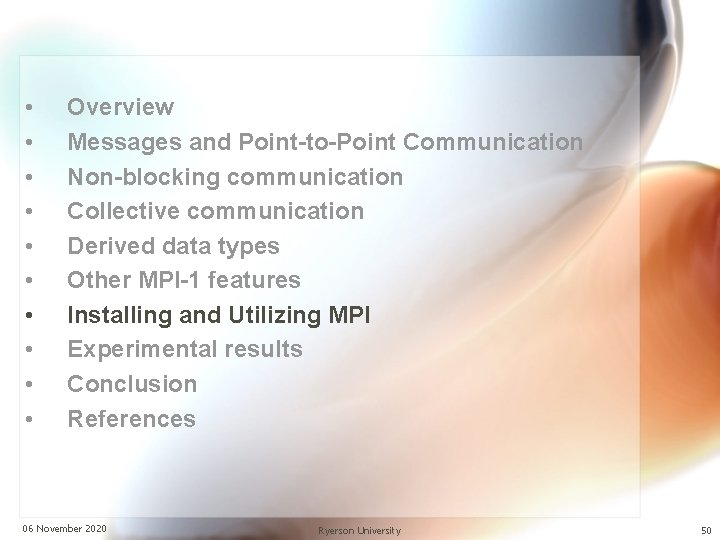

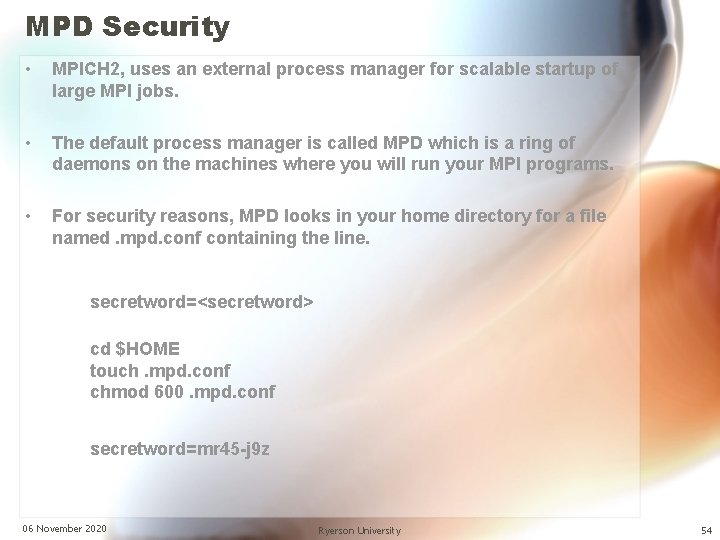

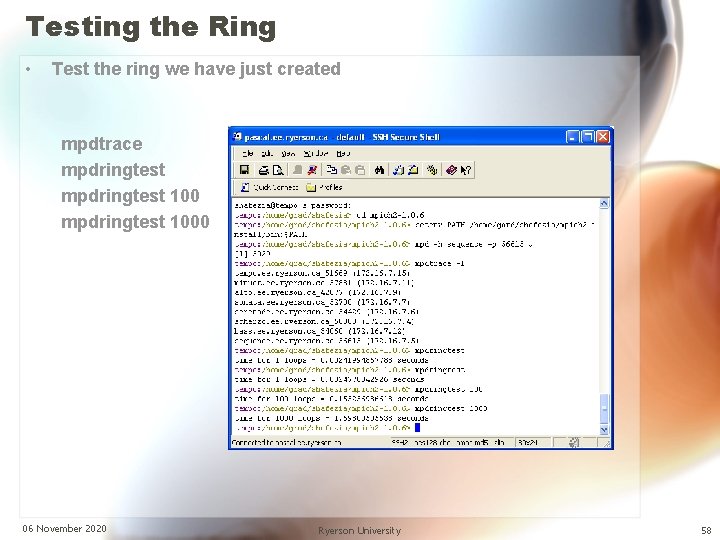

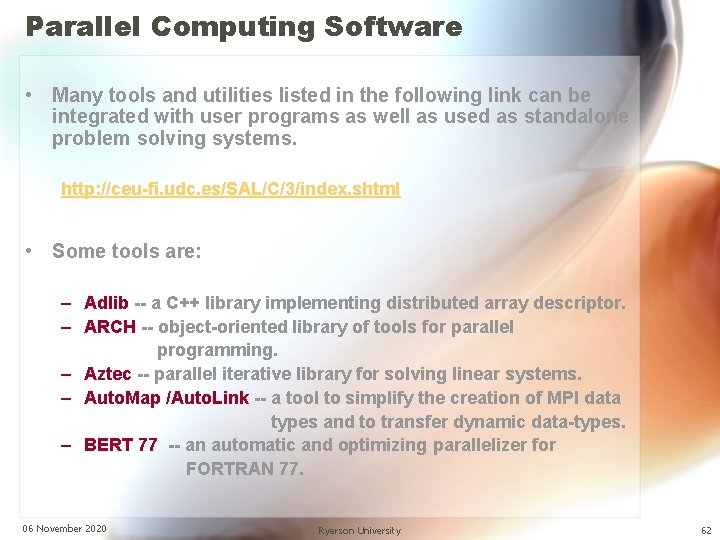

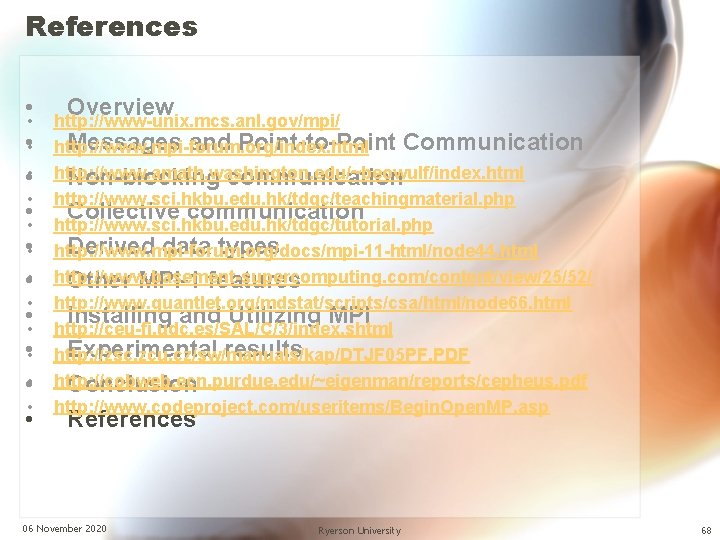

Experimental results (Wireless)
Workload: for (i = 0; i < 10; i++) do A[600, 500] x B[500, 600]

| Active servers | 2 | 3 | 5 | 8 | 10 | 15 |
| --- | --- | --- | --- | --- | --- | --- |
| Time | 413 | 349 | 337 | 324 | 321 | 454 |
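
The LAN and wireless measurements can be compared with two small helpers. This is a minimal sketch in plain C; the function names `fastest_index` and `speedup` are hypothetical, and the speedup is taken relative to the 2-server run because the slides report no single-server time. The slides do not state the unit of the time values, so only ratios are computed.

```c
#include <assert.h>
#include <stddef.h>

/* Index of the smallest measured time in times[0..n-1]. */
size_t fastest_index(const double *times, size_t n)
{
    assert(times != NULL && n > 0);

    size_t best = 0;
    for (size_t i = 1; i < n; i++)
        if (times[i] < times[best])
            best = i;
    return best;
}

/* Speedup of a run relative to a baseline run (larger is better). */
double speedup(double t_baseline, double t)
{
    assert(t_baseline > 0.0 && t > 0.0);
    return t_baseline / t;
}
```

Applied to the tables, the LAN times {249, 154, 140, 100, 115, 140} are fastest at index 3, which is 8 servers, with a speedup of 249/100 = 2.49 over 2 servers. The wireless times {413, 349, 337, 324, 321, 454} are fastest at index 4, which is 10 servers, with a speedup of 413/321, roughly 1.29. In both cases the time rises again with 15 servers.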

Conclusion
• Many MPI implementations with similar performance
• Multiple measurement criteria and multiple tools
– Latency, bandwidth
– Benchmarks and microbenchmarks
– Real applications
• High-performance networks make small performance details matter
– Network bandwidth equals the memory bandwidth
– Latency smaller than some OS operations
– Performance relies on good programming
• Performance results can vary a lot according to the type of communication employed
– Asynchronism is mandatory
• Bad programming results in bad performance
– 0-copy can be mandatory

References
• http://www-unix.mcs.anl.gov/mpi/
• http://www.mpi-forum.org/index.html
• http://www.amath.washington.edu/~beowulf/index.html
• http://www.sci.hkbu.edu.hk/tdgc/teachingmaterial.php
• http://www.sci.hkbu.edu.hk/tdgc/tutorial.php
• http://www.mpi-forum.org/docs/mpi-11-html/node44.html
• http://www.basement-supercomputing.com/content/view/25/52/
• http://www.quantlet.org/mdstat/scripts/csa/html/node66.html
• http://ceu-fi.udc.es/SAL/C/3/index.shtml
• http://zsc.zcu.cz/sw/manuals/kap/DTJF05PF.PDF
• http://cobweb.ecn.purdue.edu/~eigenman/reports/cepheus.pdf
• http://www.codeproject.com/useritems/BeginOpenMP.asp