Parallel Computing CSCI 201 Principles of Software Development

- Slides: 16

Jeffrey Miller, Ph.D.
jeffrey.miller@usc.edu

Outline
• Parallel Computing
• Program

USC CSCI 201L

Parallel Computing
▪ Parallel computing studies software systems in which components running on connected processors or cores communicate with one another, for example through message passing
› Individual threads have only partial knowledge of the problem
› The term "parallel computing" is used for programs that operate within a shared memory space on multiple processors or cores

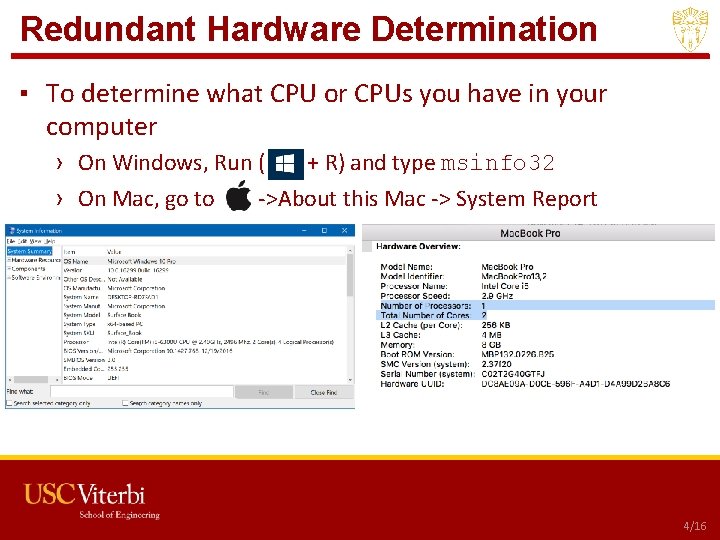

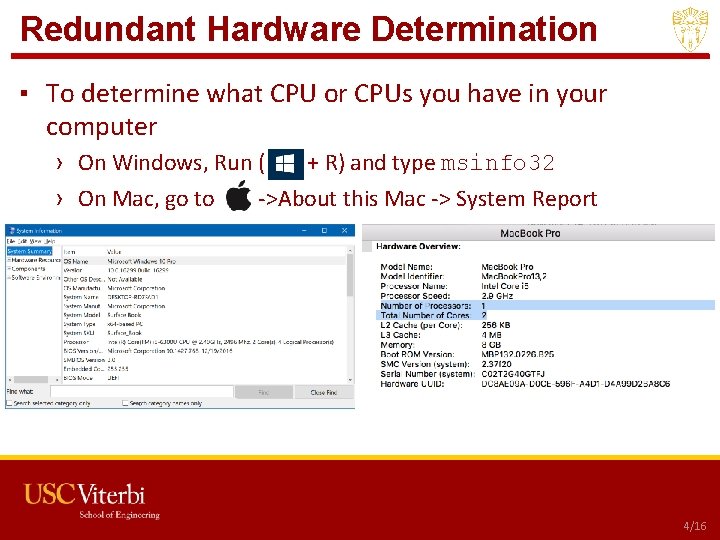

Redundant Hardware Determination
▪ To determine what CPU or CPUs you have in your computer
› On Windows, open Run (Windows key + R) and type msinfo32
› On Mac, go to Apple menu -> About This Mac -> System Report
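The processor count can also be read from inside a Java program. The sketch below uses Runtime.getRuntime().availableProcessors(), the same JDK call the merge sort example in these slides relies on; the class and method names (CoreCount, coreCount) are made up for illustration. The value reported is the number of logical processors available to the JVM, which may differ from the physical core count shown by the system tools.

```java
// Hypothetical helper class; only Runtime.availableProcessors() is a JDK call.
class CoreCount {
    // Number of logical processors the JVM is allowed to use.
    static int coreCount() {
        return Runtime.getRuntime().availableProcessors();
    }

    public static void main(String[] args) {
        System.out.println("available processors: " + coreCount());
    }
}
```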

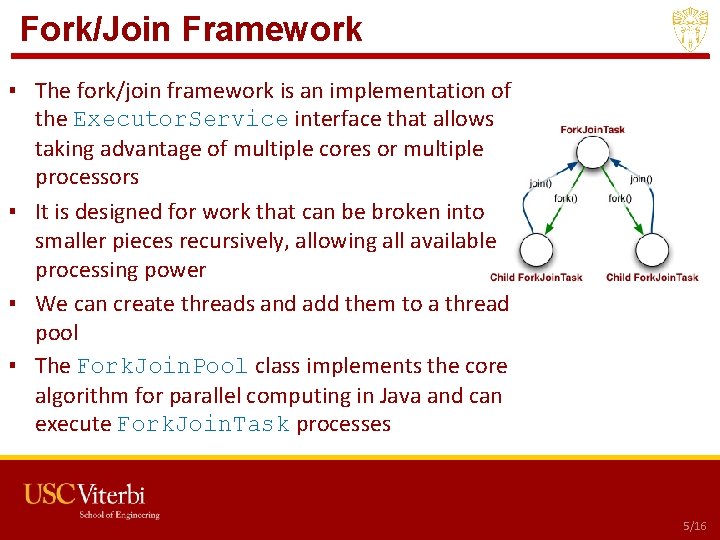

Fork/Join Framework
▪ The fork/join framework is an implementation of the ExecutorService interface that allows taking advantage of multiple cores or multiple processors
▪ It is designed for work that can be broken into smaller pieces recursively, so that the work can use all available processing power
▪ We create tasks and add them to a thread pool
▪ The ForkJoinPool class implements the core algorithm for parallel computing in Java and executes ForkJoinTask objects

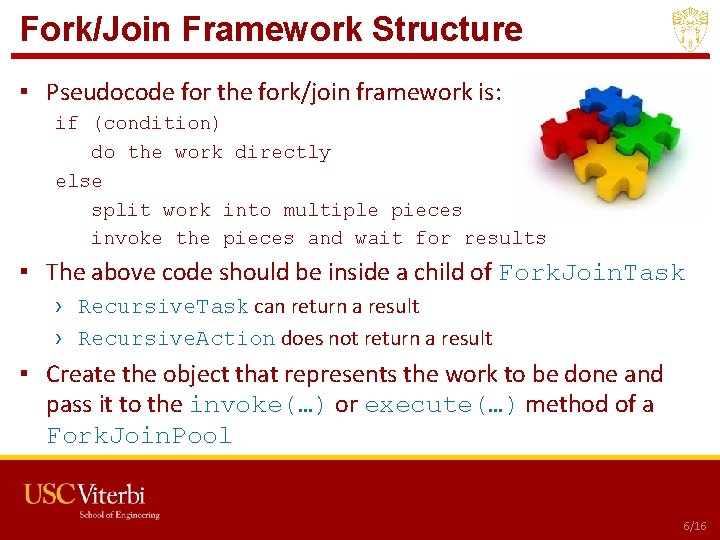

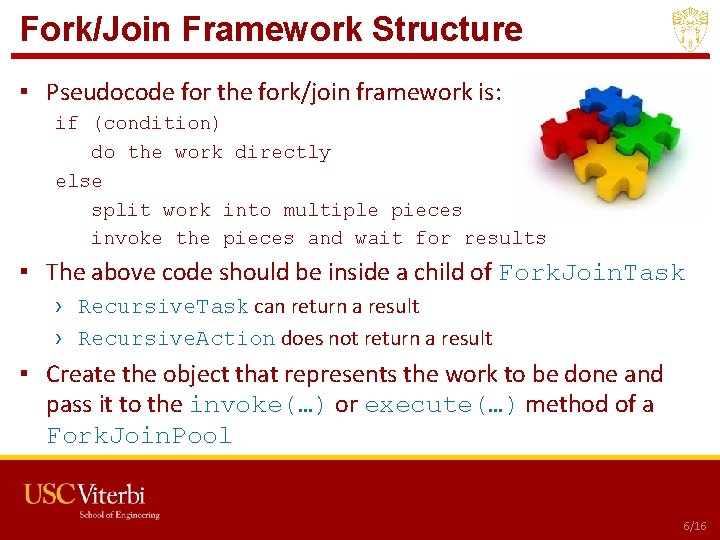

Fork/Join Framework Structure
▪ Pseudocode for the fork/join framework is:

    if (condition)
        do the work directly
    else
        split work into multiple pieces
        invoke the pieces and wait for results

▪ The above code should be inside a child of ForkJoinTask
› RecursiveTask can return a result
› RecursiveAction does not return a result
▪ Create the object that represents the work to be done and pass it to the invoke(…) or execute(…) method of a ForkJoinPool
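The pseudocode above maps onto a RecursiveTask roughly as follows. This is a minimal sketch, not the lecture's own program: the class name RangeSumTask, the helper sum(...), and the THRESHOLD value of 10,000 are assumptions chosen for illustration, and the cutoff would normally be tuned by measurement.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

// Hypothetical task: sums the integers in the inclusive range [lo, hi].
class RangeSumTask extends RecursiveTask<Long> {
    private static final long serialVersionUID = 1L;
    // Assumed cutoff: ranges shorter than this are summed directly.
    static final long THRESHOLD = 10_000;
    private final long lo;
    private final long hi;

    RangeSumTask(long lo, long hi) {
        this.lo = lo;
        this.hi = hi;
    }

    @Override
    protected Long compute() {
        if (hi - lo < THRESHOLD) {
            // "do the work directly"
            long sum = 0;
            for (long i = lo; i <= hi; i++) {
                sum += i;
            }
            return sum;
        }
        // "split work into multiple pieces"
        long mid = lo + (hi - lo) / 2;
        RangeSumTask left = new RangeSumTask(lo, mid);
        RangeSumTask right = new RangeSumTask(mid + 1, hi);
        // "invoke the pieces and wait for results"
        left.fork();                      // left half runs asynchronously
        long rightSum = right.compute();  // right half runs in this thread
        return left.join() + rightSum;    // wait for the left half
    }

    // Convenience wrapper that runs the task on the JDK's common pool.
    static long sum(long lo, long hi) {
        return ForkJoinPool.commonPool().invoke(new RangeSumTask(lo, hi));
    }

    public static void main(String[] args) {
        System.out.println(sum(0, 1_000_000)); // prints 500000500000
    }
}
```

Computing one half in the current thread instead of forking both halves keeps the calling worker busy rather than leaving it idle in join().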

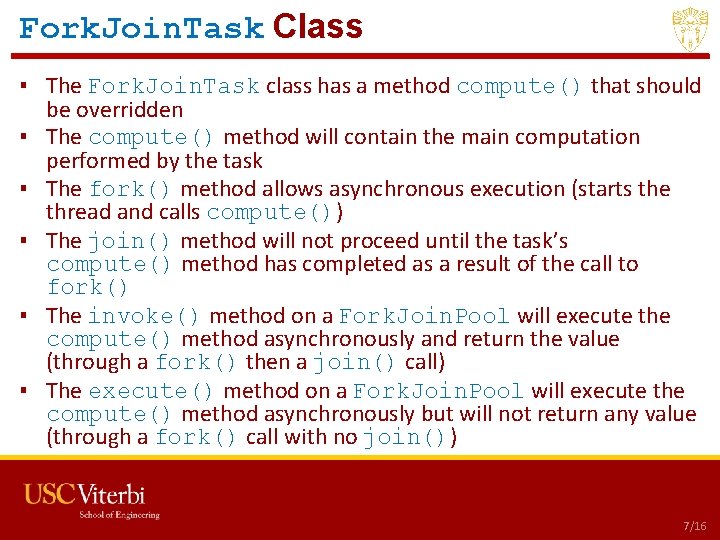

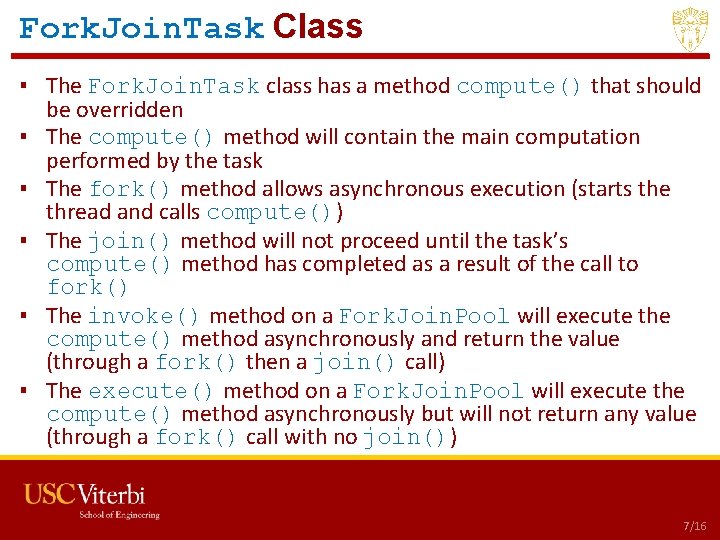

ForkJoinTask Class
▪ RecursiveTask and RecursiveAction, the two children of ForkJoinTask, declare an abstract method compute() that must be overridden
▪ The compute() method contains the main computation performed by the task
▪ The fork() method allows asynchronous execution: it hands the task to the pool, where a worker thread calls compute()
▪ The join() method does not return until the compute() call started by fork() has completed; for a RecursiveTask it returns the result
▪ The invoke() method on a ForkJoinPool runs the task's compute() method in the pool, waits for it to finish, and returns its value (the equivalent of a fork() followed by a join())
▪ The execute() method on a ForkJoinPool schedules the task's compute() method to run asynchronously and returns no value (the equivalent of a fork() with no join())
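The difference between invoke() and execute() can be seen in a small sketch. The task SquareTask and the helper class InvokeVsExecute are hypothetical names introduced only for this illustration; the ForkJoinPool and RecursiveTask calls are standard JDK methods.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

// Hypothetical task that squares a number.
class SquareTask extends RecursiveTask<Integer> {
    private static final long serialVersionUID = 1L;
    private final int n;

    SquareTask(int n) {
        this.n = n;
    }

    @Override
    protected Integer compute() {
        return n * n;
    }
}

class InvokeVsExecute {
    // invoke(): submits the task and blocks until its result is available.
    static int viaInvoke(ForkJoinPool pool, int n) {
        return pool.invoke(new SquareTask(n));
    }

    // execute(): submits the task and returns immediately with no value;
    // the caller collects the result later with join().
    static int viaExecute(ForkJoinPool pool, int n) {
        SquareTask task = new SquareTask(n);
        pool.execute(task);
        return task.join();
    }

    public static void main(String[] args) {
        ForkJoinPool pool = new ForkJoinPool();
        System.out.println(viaInvoke(pool, 7));  // prints 49
        System.out.println(viaExecute(pool, 8)); // prints 64
        pool.shutdown();
    }
}
```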

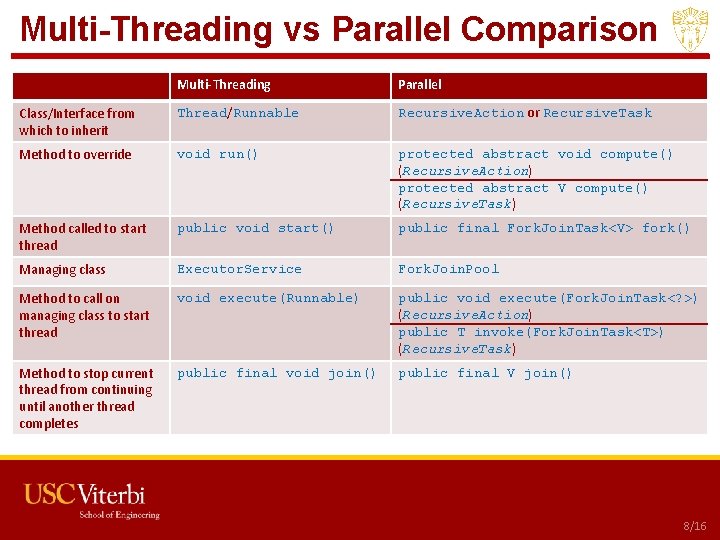

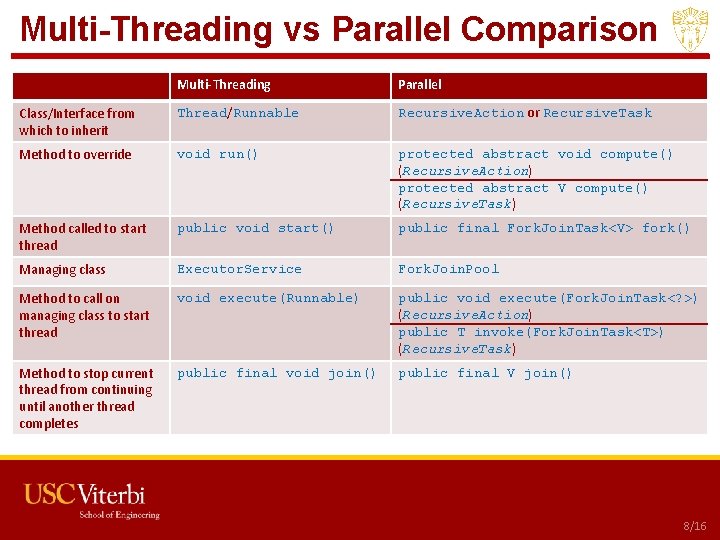

Multi-Threading vs Parallel Comparison

| | Multi-Threading | Parallel |
|---|---|---|
| Class/interface from which to inherit | `Thread` / `Runnable` | `RecursiveAction` or `RecursiveTask` |
| Method to override | `void run()` | `protected abstract void compute()` (`RecursiveAction`); `protected abstract V compute()` (`RecursiveTask`) |
| Method called to start thread | `public void start()` | `public final ForkJoinTask<V> fork()` |
| Managing class | `ExecutorService` | `ForkJoinPool` |
| Method to call on managing class to start thread | `void execute(Runnable)` | `public void execute(ForkJoinTask<?>)` (`RecursiveAction`); `public <T> T invoke(ForkJoinTask<T>)` (`RecursiveTask`) |
| Method to stop current thread from continuing until another thread completes | `public final void join()` | `public final V join()` |
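The two columns of the comparison can be placed side by side in code. The following is an illustrative sketch only: the class ThreadVsForkJoin and its two methods are hypothetical, and each version performs the same trivial piece of work (incrementing a counter once) so that only the API differences stand out.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveAction;
import java.util.concurrent.atomic.AtomicInteger;

class ThreadVsForkJoin {
    // Multi-threading column: override run(), start with start(), wait with join().
    static int withThread() {
        AtomicInteger counter = new AtomicInteger();
        Thread t = new Thread() {
            @Override
            public void run() {
                counter.incrementAndGet();
            }
        };
        t.start();
        try {
            t.join(); // Thread.join() can be interrupted, so it must be guarded
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        return counter.get();
    }

    // Parallel column: override compute(), hand the task to a ForkJoinPool,
    // wait with the task's own join().
    static int withForkJoin() {
        AtomicInteger counter = new AtomicInteger();
        RecursiveAction action = new RecursiveAction() {
            @Override
            protected void compute() {
                counter.incrementAndGet();
            }
        };
        ForkJoinPool pool = new ForkJoinPool();
        pool.execute(action);
        action.join();
        pool.shutdown();
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(withThread());   // prints 1
        System.out.println(withForkJoin()); // prints 1
    }
}
```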

Running Time
▪ Any time you fork a task, there is overhead in moving it to another CPU, executing it, and getting the response back
▪ Parallelizing code does not guarantee an improvement in execution speed
▪ If you fork more threads than you have CPUs, the threads execute concurrently on each CPU (time-slicing, as in multi-threading)

Program
▪ Write a program to add all of the numbers between 0 and 1,000,000.
▪ Do this in a single-threaded manner and then in a parallel manner.
▪ Compare the running times.

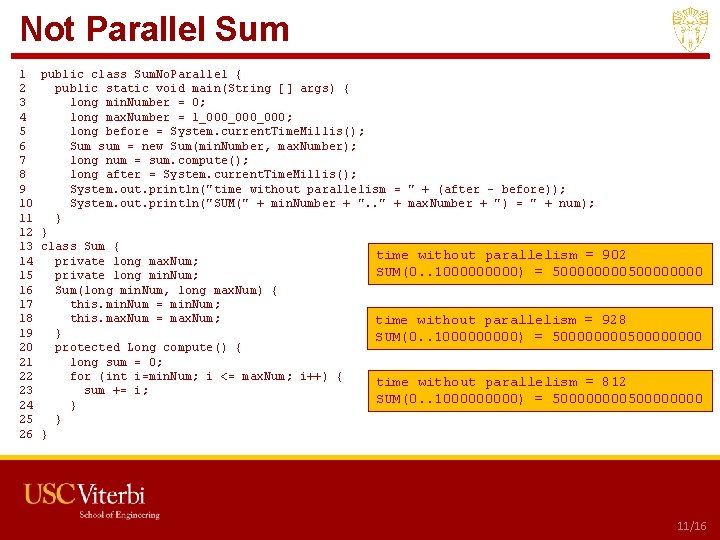

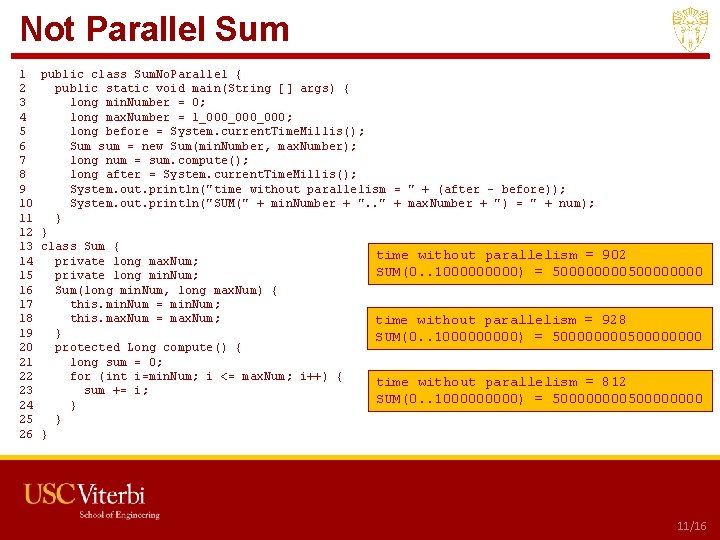

Not Parallel Sum

    public class SumNoParallel {
        public static void main(String[] args) {
            long minNumber = 0;
            long maxNumber = 1_000_000;
            long before = System.currentTimeMillis();
            Sum sum = new Sum(minNumber, maxNumber);
            long num = sum.compute();
            long after = System.currentTimeMillis();
            System.out.println("time without parallelism = " + (after - before));
            System.out.println("SUM(" + minNumber + ".." + maxNumber + ") = " + num);
        }
    }

    class Sum {
        private long maxNum;
        private long minNum;

        Sum(long minNum, long maxNum) {
            this.minNum = minNum;
            this.maxNum = maxNum;
        }

        protected Long compute() {
            long sum = 0;
            // the bounds are long values, so the loop index must be long as well
            for (long i = minNum; i <= maxNum; i++) {
                sum += i;
            }
            return sum;
        }
    }

Output from three runs (times in ms):

    time without parallelism = 902
    SUM(0..1000000) = 500000500000

    time without parallelism = 928
    SUM(0..1000000) = 500000500000

    time without parallelism = 812
    SUM(0..1000000) = 500000500000

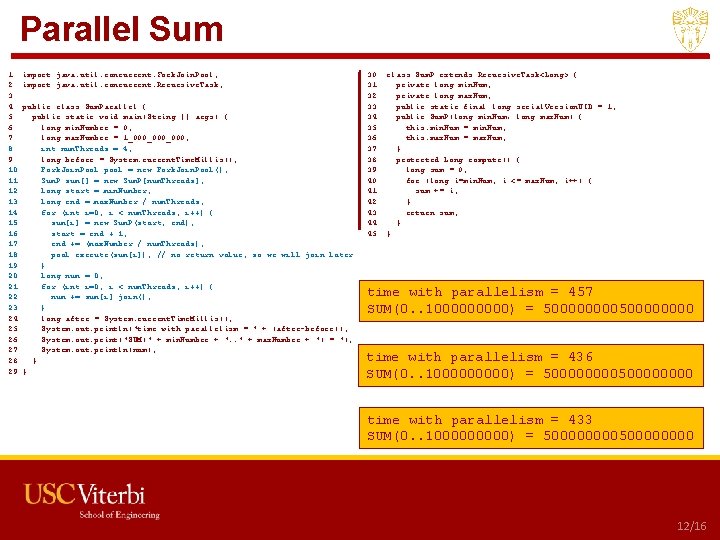

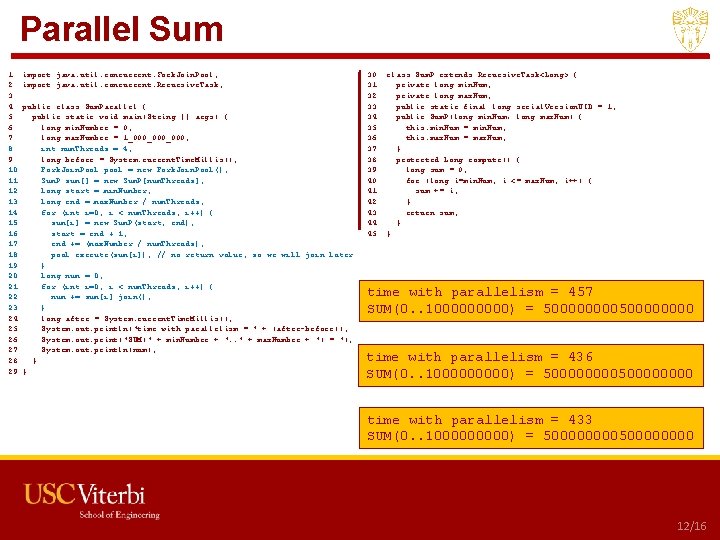

Parallel Sum

    import java.util.concurrent.ForkJoinPool;
    import java.util.concurrent.RecursiveTask;

    public class SumParallel {
        public static void main(String[] args) {
            long minNumber = 0;
            long maxNumber = 1_000_000;
            int numThreads = 4;
            long before = System.currentTimeMillis();
            ForkJoinPool pool = new ForkJoinPool();
            SumP[] sum = new SumP[numThreads];
            long start = minNumber;
            long end = maxNumber / numThreads;
            // give each task one quarter of the range
            for (int i = 0; i < numThreads; i++) {
                sum[i] = new SumP(start, end);
                start = end + 1;
                end += (maxNumber / numThreads);
                pool.execute(sum[i]); // no return value, so we will join later
            }
            long num = 0;
            for (int i = 0; i < numThreads; i++) {
                num += sum[i].join();
            }
            long after = System.currentTimeMillis();
            System.out.println("time with parallelism = " + (after - before));
            System.out.print("SUM(" + minNumber + ".." + maxNumber + ") = ");
            System.out.println(num);
        }
    }

    class SumP extends RecursiveTask<Long> {
        private long minNum;
        private long maxNum;
        public static final long serialVersionUID = 1;

        public SumP(long minNum, long maxNum) {
            this.minNum = minNum;
            this.maxNum = maxNum;
        }

        protected Long compute() {
            long sum = 0;
            for (long i = minNum; i <= maxNum; i++) {
                sum += i;
            }
            return sum;
        }
    }

Output from three runs (times in ms):

    time with parallelism = 457
    SUM(0..1000000) = 500000500000

    time with parallelism = 436
    SUM(0..1000000) = 500000500000

    time with parallelism = 433
    SUM(0..1000000) = 500000500000

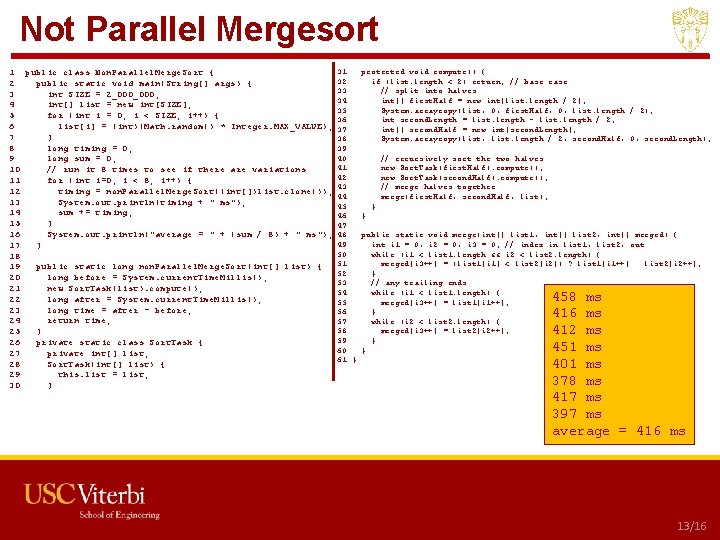

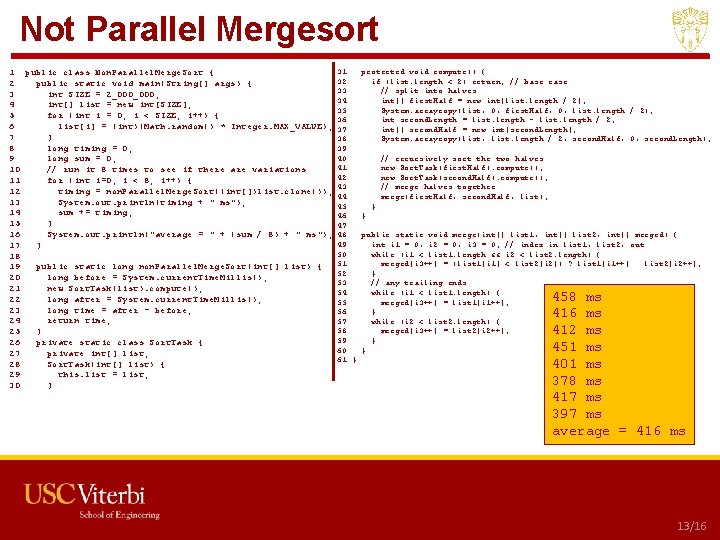

Not Parallel Mergesort

    public class NonParallelMergeSort {
        public static void main(String[] args) {
            int SIZE = 2_000;
            int[] list = new int[SIZE];
            for (int i = 0; i < SIZE; i++) {
                list[i] = (int)(Math.random() * Integer.MAX_VALUE);
            }
            long timing = 0;
            long sum = 0;
            // run it 8 times to see if there are variations
            for (int i = 0; i < 8; i++) {
                timing = nonParallelMergeSort((int[])list.clone());
                System.out.println(timing + " ms");
                sum += timing;
            }
            System.out.println("average = " + (sum / 8) + " ms");
        }

        public static long nonParallelMergeSort(int[] list) {
            long before = System.currentTimeMillis();
            new SortTask(list).compute();
            long after = System.currentTimeMillis();
            long time = after - before;
            return time;
        }

        private static class SortTask {
            private int[] list;

            SortTask(int[] list) {
                this.list = list;
            }

            protected void compute() {
                if (list.length < 2) return; // base case
                // split into halves
                int[] firstHalf = new int[list.length / 2];
                System.arraycopy(list, 0, firstHalf, 0, list.length / 2);
                int secondLength = list.length - list.length / 2;
                int[] secondHalf = new int[secondLength];
                System.arraycopy(list, list.length / 2, secondHalf, 0, secondLength);
                // recursively sort the two halves
                new SortTask(firstHalf).compute();
                new SortTask(secondHalf).compute();
                // merge halves together
                merge(firstHalf, secondHalf, list);
            }
        }

        public static void merge(int[] list1, int[] list2, int[] merged) {
            int i1 = 0, i2 = 0, i3 = 0; // index in list1, list2, out
            while (i1 < list1.length && i2 < list2.length) {
                merged[i3++] = (list1[i1] < list2[i2]) ? list1[i1++] : list2[i2++];
            }
            // any trailing ends
            while (i1 < list1.length) {
                merged[i3++] = list1[i1++];
            }
            while (i2 < list2.length) {
                merged[i3++] = list2[i2++];
            }
        }
    }

Output:

    458 ms
    416 ms
    412 ms
    451 ms
    401 ms
    378 ms
    417 ms
    397 ms
    average = 416 ms

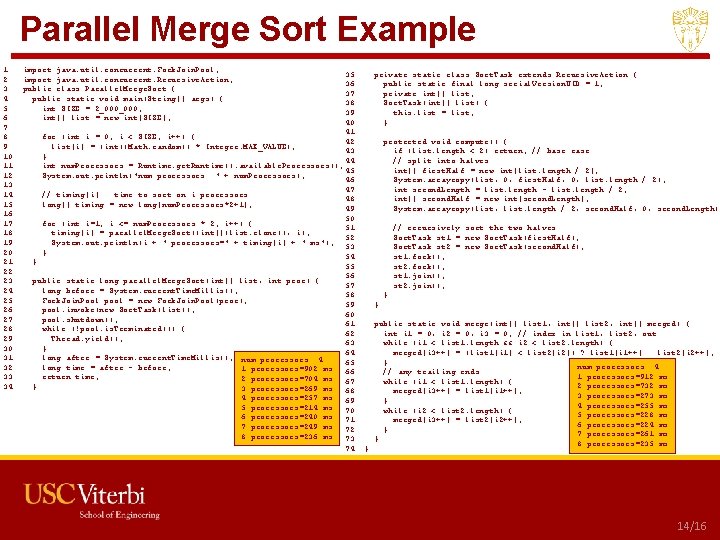

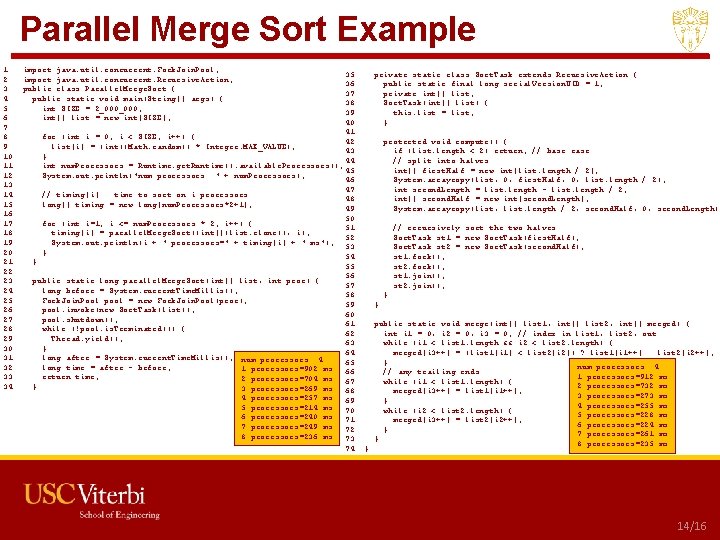

Parallel Merge Sort Example

    import java.util.concurrent.ForkJoinPool;
    import java.util.concurrent.RecursiveAction;

    public class ParallelMergeSort {
        public static void main(String[] args) {
            int SIZE = 2_000;
            int[] list = new int[SIZE];
            for (int i = 0; i < SIZE; i++) {
                list[i] = (int)(Math.random() * Integer.MAX_VALUE);
            }
            int numProcessors = Runtime.getRuntime().availableProcessors();
            System.out.println("num processors: " + numProcessors);

            // timing[i] : time to sort on i processors
            long[] timing = new long[numProcessors * 2 + 1];

            for (int i = 1; i <= numProcessors * 2; i++) {
                timing[i] = parallelMergeSort((int[])list.clone(), i);
                System.out.println(i + " processors=" + timing[i] + " ms");
            }
        }

        public static long parallelMergeSort(int[] list, int proc) {
            long before = System.currentTimeMillis();
            ForkJoinPool pool = new ForkJoinPool(proc);
            pool.invoke(new SortTask(list));
            pool.shutdown();
            while (!pool.isTerminated()) {
                Thread.yield();
            }
            long after = System.currentTimeMillis();
            long time = after - before;
            return time;
        }

        private static class SortTask extends RecursiveAction {
            public static final long serialVersionUID = 1;
            private int[] list;

            SortTask(int[] list) {
                this.list = list;
            }

            protected void compute() {
                if (list.length < 2) return; // base case
                // split into halves
                int[] firstHalf = new int[list.length / 2];
                System.arraycopy(list, 0, firstHalf, 0, list.length / 2);
                int secondLength = list.length - list.length / 2;
                int[] secondHalf = new int[secondLength];
                System.arraycopy(list, list.length / 2, secondHalf, 0, secondLength);
                // recursively sort the two halves
                SortTask st1 = new SortTask(firstHalf);
                SortTask st2 = new SortTask(secondHalf);
                st1.fork();
                st2.fork();
                st1.join();
                st2.join();
                // merge halves together once both subtasks have finished
                merge(firstHalf, secondHalf, list);
            }
        }

        public static void merge(int[] list1, int[] list2, int[] merged) {
            int i1 = 0, i2 = 0, i3 = 0; // index in list1, list2, out
            while (i1 < list1.length && i2 < list2.length) {
                merged[i3++] = (list1[i1] < list2[i2]) ? list1[i1++] : list2[i2++];
            }
            // any trailing ends
            while (i1 < list1.length) {
                merged[i3++] = list1[i1++];
            }
            while (i2 < list2.length) {
                merged[i3++] = list2[i2++];
            }
        }
    }

Output from two runs:

    num processors: 4
    1 processors=902 ms
    2 processors=704 ms
    3 processors=269 ms
    4 processors=257 ms
    5 processors=214 ms
    6 processors=240 ms
    7 processors=249 ms
    8 processors=236 ms

    num processors: 4
    1 processors=912 ms
    2 processors=732 ms
    3 processors=273 ms
    4 processors=255 ms
    5 processors=228 ms
    6 processors=224 ms
    7 processors=261 ms
    8 processors=235 ms


Program
▪ Modify the ParallelMergeSort code to split the array into the same number of sub-arrays as processors/cores on your computer. Does that provide a lower execution time than splitting the array into two sub-arrays? Why or why not?