Parallel Computing 3: Models of Parallel Computations
Ondřej Jakl, Institute of Geonics, Academy of Sci. of the CR

Outline of the lecture
• Aspects of practical parallel programming
• Parallel programming models
• Data parallel
  – High Performance Fortran
• Shared variables/memory
  – compiler’s support: automatic/assisted parallelization
  – OpenMP
  – thread libraries
• Message passing

Parallel programming (1)
• Primary goal: maximization of performance
  – specific approaches are expected to be more efficient than universal ones
    • considerable diversity in parallel hardware
    • techniques/tools are much more dependent on the target platform than in sequential programming
  – understanding the hardware makes it easier to write high-performance programs
    • back to the era of assembly programming?
• On the other hand, standard/portable/universal methods increase productivity in software development and maintenance
• Trade-off between these two goals

Parallel programming (2)
• Parallel programs are more difficult to write and debug than sequential ones
  – parallel algorithms can be qualitatively different from the corresponding sequential ones
    • changing the form of the code may not be enough
  – several new classes of potential software bugs (e.g. race conditions)
  – difficult debugging
  – issues of scalability

General approaches
• Special programming language supporting concurrency
  – theoretically advantageous, in practice not very popular
  – e.g. Ada, Occam, Sisal, etc. (there are dozens of designs)
  – language extensions: CC++, Fortran M, etc.
• Universal programming language (C, Fortran, ...) with a parallelizing compiler
  – autodetection of parallelism in the sequential code
  – easier for shared memory, limited efficiency
    • a matter of the future? (despite 30 years of intense research)
  – e.g. Forge 90 for Fortran (1992), some standard compilers
• Universal programming language plus a library of external parallelizing functions
  – mainstream nowadays
  – e.g. PVM (Parallel Virtual Machine), MPI (Message Passing Interface), Pthreads and others

Parallel programming models
• A parallel programming model is a set of software technologies to express parallel algorithms and match applications with the underlying parallel systems [Wikipedia]
• Considered models:
  – data parallel [just introductory info in this course]
  – shared variables/memory [related to the OpenMP lecture in part II of the course]
  – message passing [continued in the next lecture (MPI)]

Data parallel model

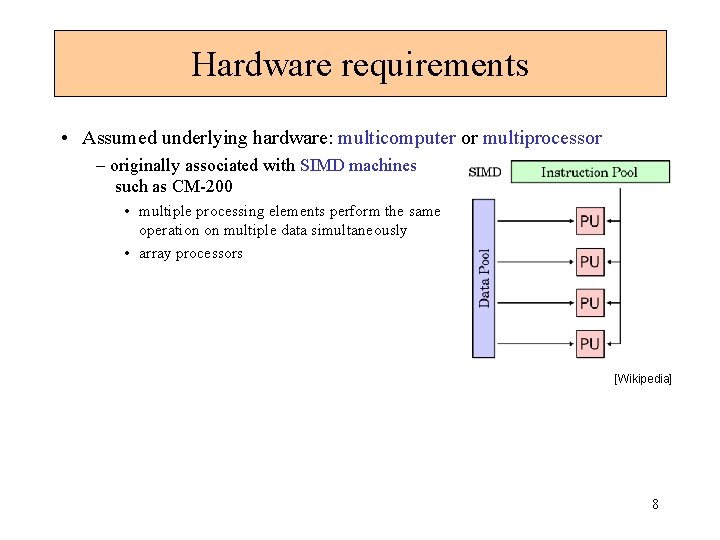

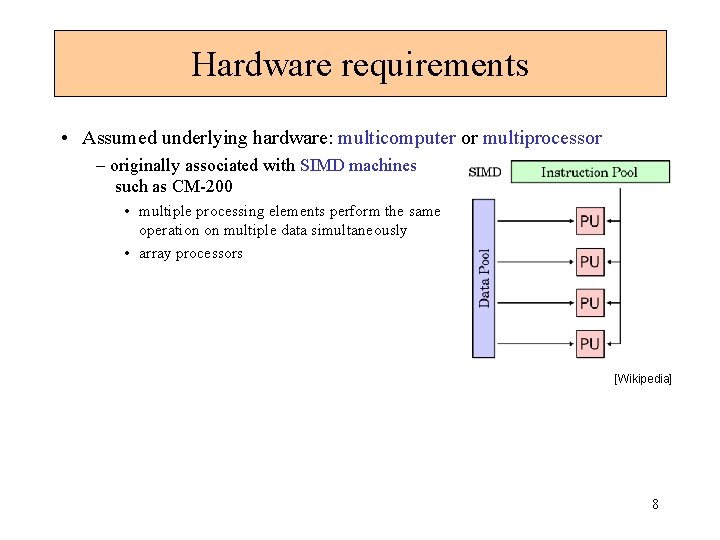

Hardware requirements
• Assumed underlying hardware: multicomputer or multiprocessor
  – originally associated with SIMD machines such as CM-200
    • multiple processing elements perform the same operation on multiple data simultaneously
    • array processors [Wikipedia]

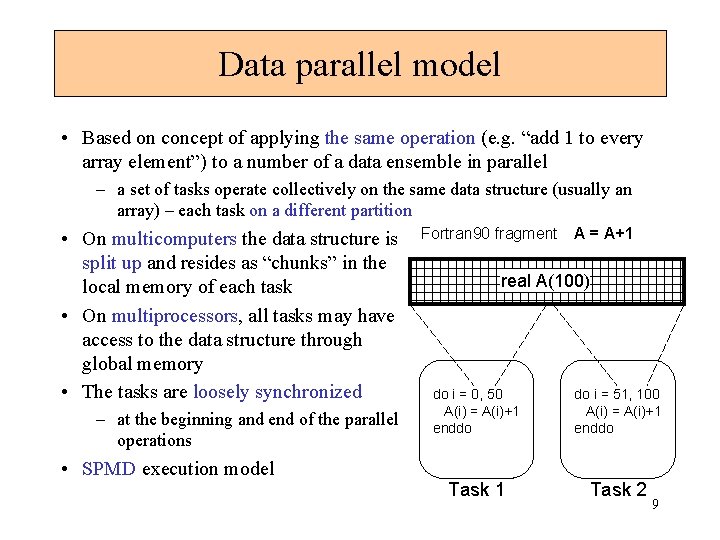

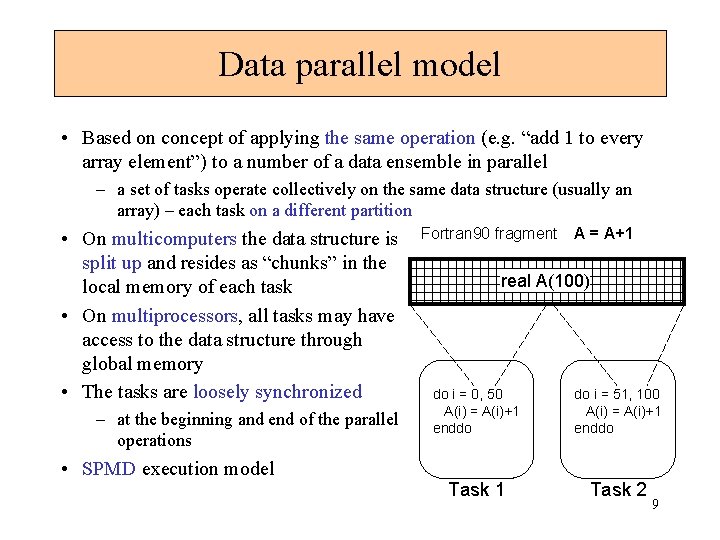

Data parallel model
• Based on the concept of applying the same operation (e.g. “add 1 to every array element”) to all elements of a data ensemble in parallel
  – a set of tasks operate collectively on the same data structure (usually an array)
  – each task works on a different partition
• On multicomputers the data structure is split up and resides as “chunks” in the local memory of each task
• On multiprocessors, all tasks may have access to the data structure through global memory
• The tasks are loosely synchronized
  – at the beginning and end of the parallel operations
• SPMD execution model

Fortran 90 fragment:

    real A(100)
    A = A+1

Task 1:

    do i = 1, 50
      A(i) = A(i)+1
    enddo

Task 2:

    do i = 51, 100
      A(i) = A(i)+1
    enddo
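The partitioning that a data parallel compiler performs behind the scenes can be sketched in C. This is only an illustration under assumptions: the names `block_range`, `block_of` and `add_one_block` are hypothetical, and a simple contiguous block distribution is assumed (in a real data parallel language the compiler chooses and hides the distribution).

```c
#include <assert.h>
#include <stddef.h>

/* Half-open index range [lo, hi) owned by one task (hypothetical type). */
typedef struct {
    size_t lo;
    size_t hi;
} block_range;

/* Split n elements into ntasks contiguous blocks whose sizes differ
   by at most one element. */
block_range block_of(size_t n, size_t ntasks, size_t task)
{
    block_range r;
    assert(ntasks > 0 && task < ntasks);
    r.lo = task * n / ntasks;
    r.hi = (task + 1) * n / ntasks;
    return r;
}

/* Work of a single task: add 1 to every element of its own block only. */
void add_one_block(double *a, size_t n, size_t ntasks, size_t task)
{
    block_range r = block_of(n, ntasks, task);
    for (size_t i = r.lo; i < r.hi; i++)
        a[i] += 1.0;
}
```

With `n = 100` and `ntasks = 2`, task 0 gets indices 0 to 49 and task 1 gets indices 50 to 99, which corresponds to the two loops of the Fortran fragment (shifted by one because C arrays start at 0).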

Characteristics
• Higher-level parallel programming
  – data distribution and communication done by compiler
    • transfer low-level details from programmer to compiler
  – compiler converts the program into standard code with calls to a message passing library (MPI usually); all message passing is done invisibly to the programmer
+ Ease of use
  – simple to write, debug and maintain
    • no explicit message passing
    • single-threaded control (no spawn, fork, etc.)
− Restricted flexibility and control
  – only suitable for certain applications
    • data in large arrays
    • similar independent operations on each element
    • naturally load-balanced
  – harder to get top performance
    • reliant on good compilers

High Performance Fortran
• The best-known data parallel programming language
• HPF version 1.0 in 1993 (extends Fortran 90), version 2.0 in 1997
• Extensions to Fortran 90 to support the data parallel model, including
  – directives to tell the compiler how to distribute data
    • DISTRIBUTE, ALIGN directives
    • ignored as comments by serial Fortran compilers
  – mathematical operations on array-valued arguments
  – reduction operations on arrays
  – FORALL construct
  – assertions that can improve optimization of generated code
    • INDEPENDENT directive
  – additional intrinsics and library routines
• Available e.g. in the Portland Group PGI Workstation package
  – http://www.pgroup.com/products/pgiworkstation.htm
• Nowadays not frequently used

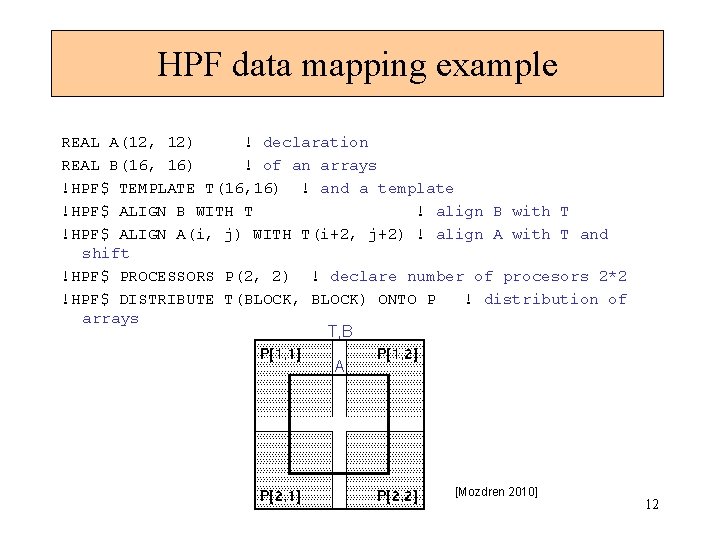

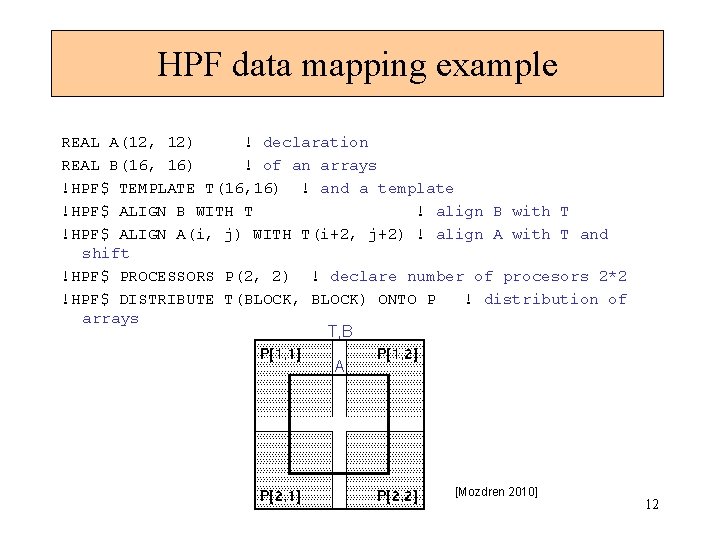

HPF data mapping example

    REAL A(12, 12)                           ! declaration
    REAL B(16, 16)                           ! of the arrays
    !HPF$ TEMPLATE T(16, 16)                 ! and of a template
    !HPF$ ALIGN B WITH T                     ! align B with T
    !HPF$ ALIGN A(i, j) WITH T(i+2, j+2)     ! align A with T and shift
    !HPF$ PROCESSORS P(2, 2)                 ! declare 2*2 processors
    !HPF$ DISTRIBUTE T(BLOCK, BLOCK) ONTO P  ! distribution of T and the aligned arrays B, A

[Mozdren 2010]

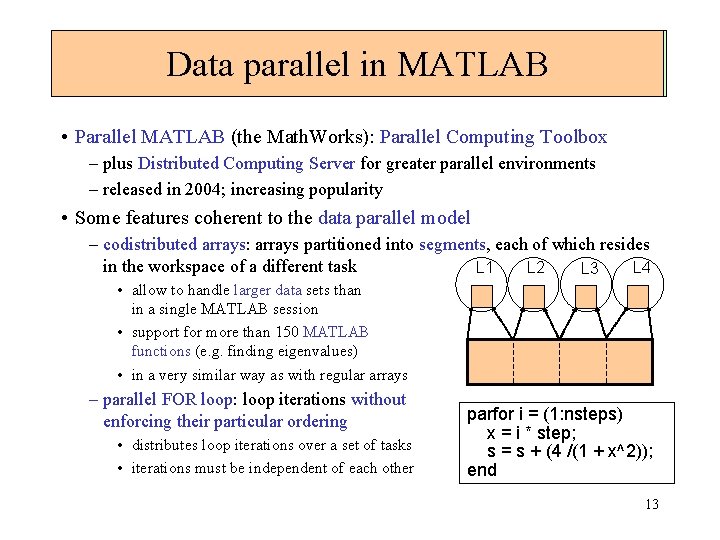

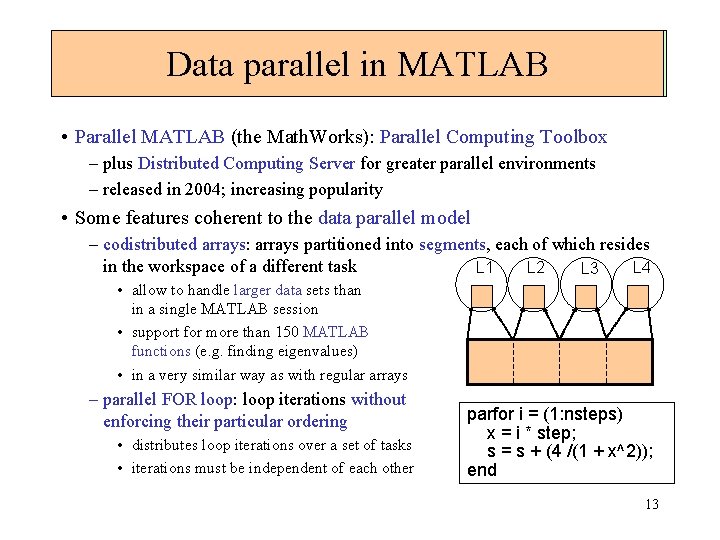

Data parallel in MATLAB
• Parallel MATLAB (The MathWorks): Parallel Computing Toolbox
  – plus Distributed Computing Server for larger parallel environments
  – released in 2004; increasing popularity
• Some features consistent with the data parallel model
  – codistributed arrays: arrays partitioned into segments, each of which resides in the workspace of a different task
    • allow handling larger data sets than in a single MATLAB session
    • support for more than 150 MATLAB functions (e.g. finding eigenvalues)
    • used in a very similar way as regular arrays
  – parallel FOR loop: loop iterations without enforcing their particular ordering
    • distributes loop iterations over a set of tasks
    • iterations must be independent of each other

    parfor i = (1:nsteps)
        x = i * step;
        s = s + (4 / (1 + x^2));
    end

[Figure: codistributed array with segments labelled L1, L2, L3, L4]

Shared variables model

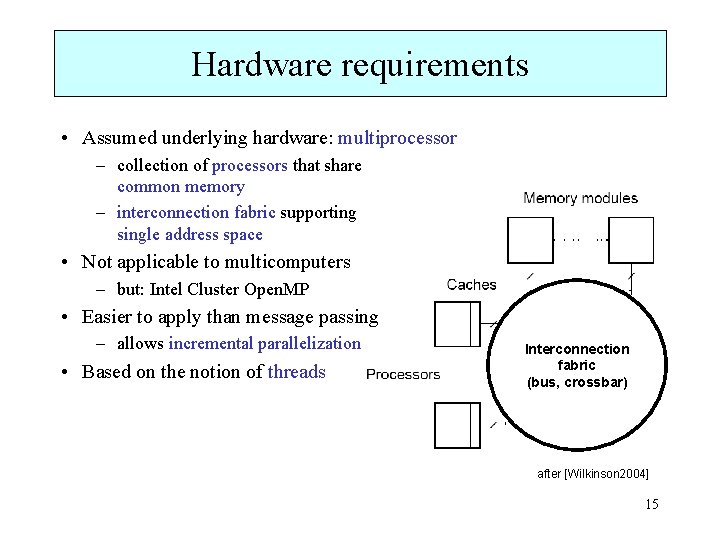

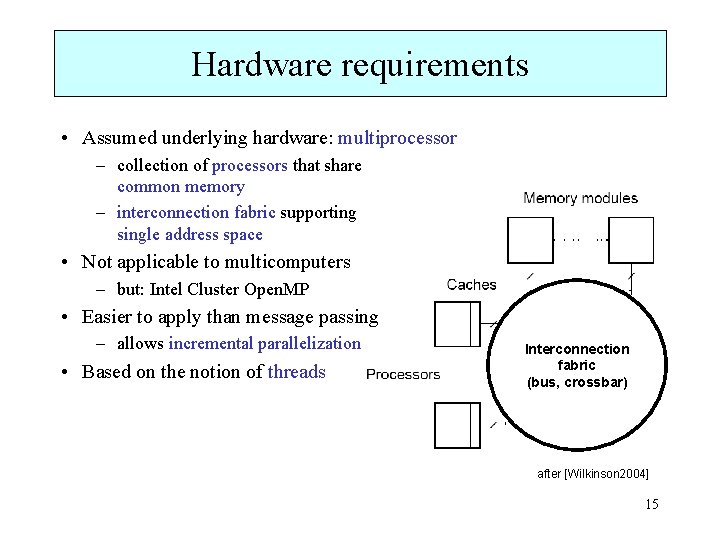

Hardware requirements
• Assumed underlying hardware: multiprocessor
  – collection of processors that share common memory
  – interconnection fabric supporting single address space
• Not applicable to multicomputers
  – but: Intel Cluster OpenMP
• Easier to apply than message passing
  – allows incremental parallelization
• Based on the notion of threads
[Figure: interconnection fabric (bus, crossbar), after [Wilkinson 2004]]

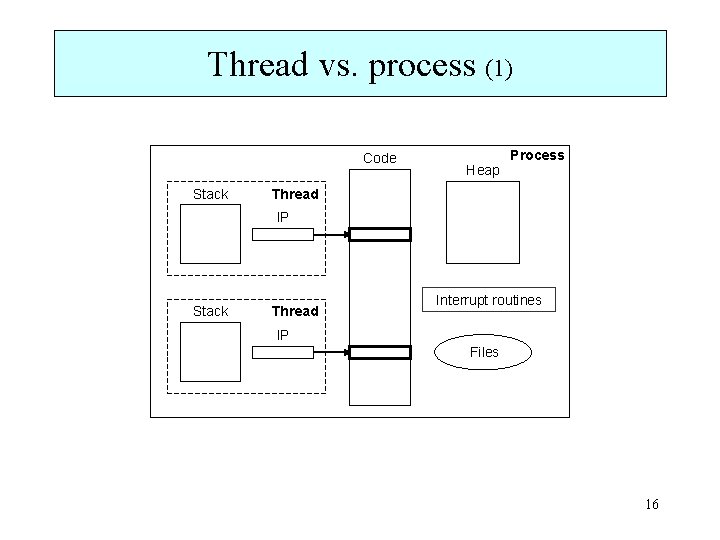

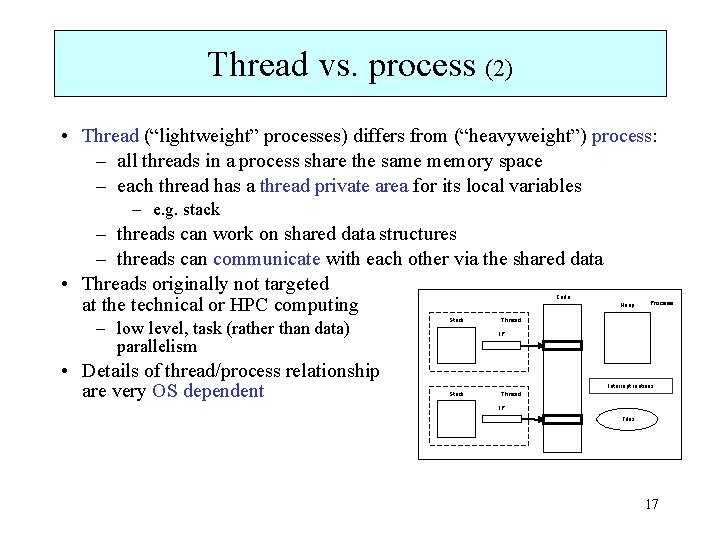

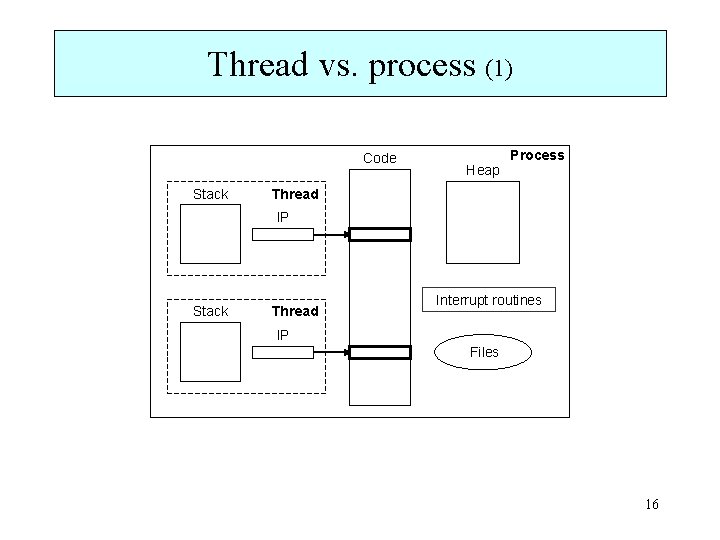

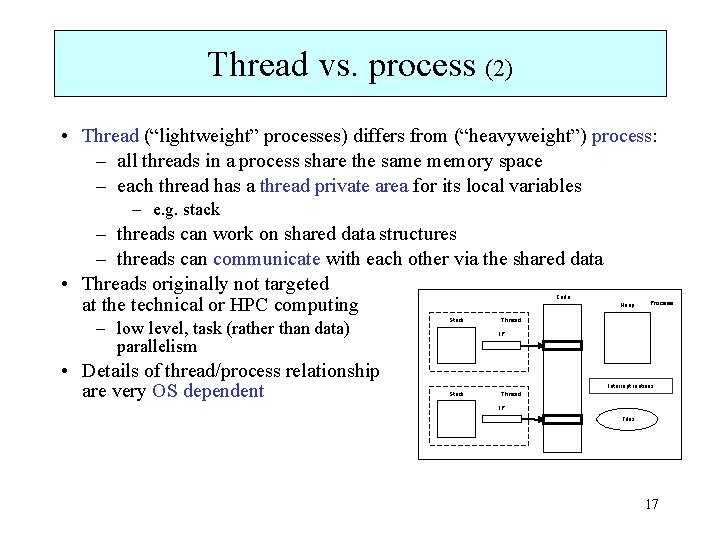

Thread vs. process (1)
[Figure labels: Process with Code, Heap, Files and Interrupt routines; two Threads, each with its own Stack and IP]

Thread vs. process (2)
• A thread (“lightweight” process) differs from a (“heavyweight”) process:
  – all threads in a process share the same memory space
  – each thread has a thread-private area for its local variables
    • e.g. stack
  – threads can work on shared data structures
  – threads can communicate with each other via the shared data
• Threads were not originally targeted at technical or HPC computing
  – low level, task (rather than data) parallelism
• Details of the thread/process relationship are very OS dependent

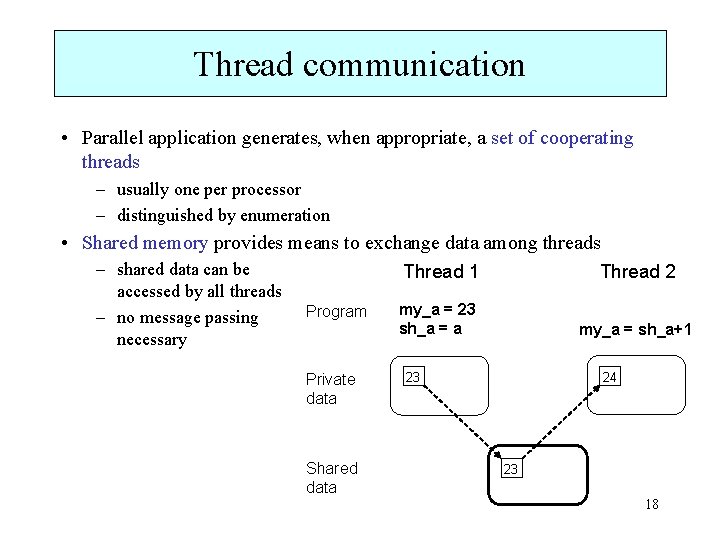

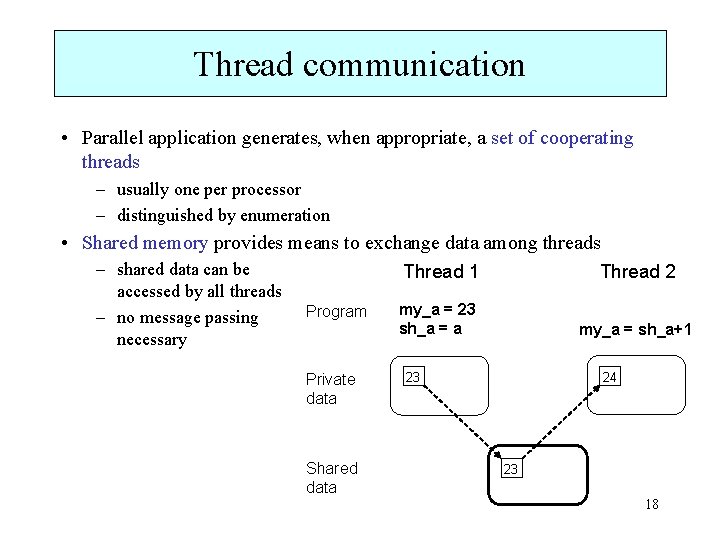

Thread communication
• Parallel application generates, when appropriate, a set of cooperating threads
  – usually one per processor
  – distinguished by enumeration
• Shared memory provides means to exchange data among threads
  – shared data can be accessed by all threads
  – no message passing necessary
[Figure: one program with private and shared data; Thread 1 sets its private my_a = 23 and stores it in the shared sh_a; Thread 2 computes its private my_a = sh_a+1, i.e. 24]

Thread synchronization
• Threads execute their programs asynchronously
• Writes and reads are always nonblocking
• Accessing shared data needs careful control
  – some mechanisms are needed to ensure that the actions occur in the correct order
    • e.g. the write of A in thread 1 must occur before its read in thread 2
• Most common synchronization constructs:
  – master section: a section of code executed by one thread only
    • e.g. initialisation, writing a file
  – barrier: all threads must arrive at a barrier before any thread can proceed past it
    • e.g. delimiting phases of computation (e.g. a timestep)
  – critical section: only one thread at a time can enter a section of code
    • e.g. modification of shared variables
• Makes shared-variables programming error-prone
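The three constructs can be seen together in a small OpenMP sketch (OpenMP itself is introduced later in this lecture). The function name `weighted_sum` and the variable `weight` are hypothetical and the computation is deliberately trivial. If the compiler is run without OpenMP support, the pragmas are ignored and the same code runs sequentially.

```c
#include <assert.h>

/* Returns weight * (1 + 2 + ... + n), where the shared weight (2) is set
   by the master thread only. */
long weighted_sum(int n)
{
    long total = 0;    /* shared: result accumulated by all threads */
    long weight = 0;   /* shared: initialised by the master thread  */

    assert(n >= 0);

    #pragma omp parallel
    {
        long local = 0;          /* private partial sum of this thread */

        #pragma omp master
        weight = 2;              /* master section: executed by one thread only */

        #pragma omp barrier      /* nobody reads weight before it has been set */

        #pragma omp for
        for (int i = 1; i <= n; i++)
            local += weight * i;

        #pragma omp critical
        total += local;          /* critical section: one thread at a time */
    }
    return total;
}
```

Without the barrier, a thread could start the loop while `weight` is still 0; without the critical section, concurrent updates of `total` could be lost.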

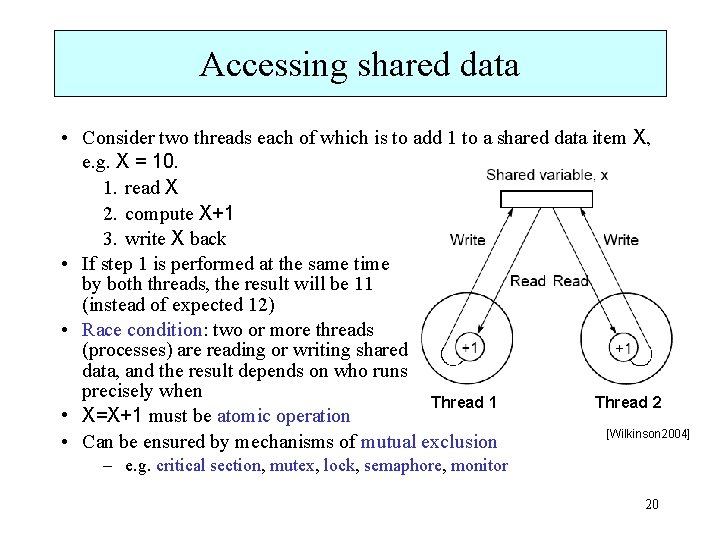

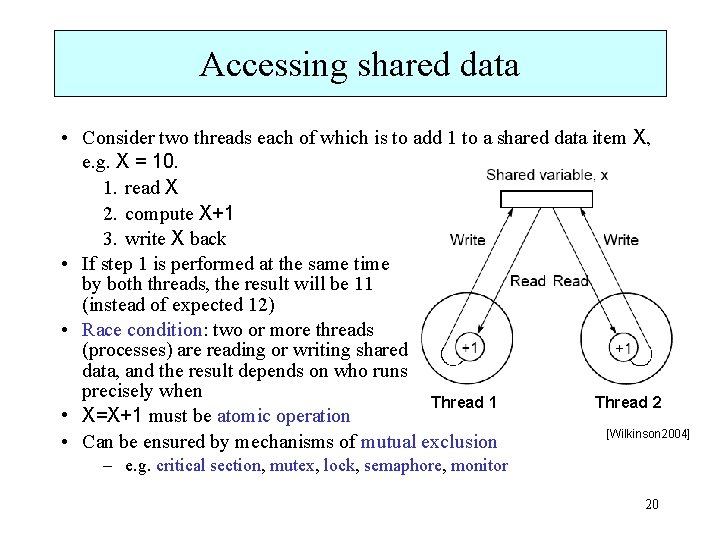

Accessing shared data
• Consider two threads, each of which is to add 1 to a shared data item X, e.g. X = 10:
  1. read X
  2. compute X+1
  3. write X back
• If step 1 is performed at the same time by both threads, the result will be 11 (instead of the expected 12)
• Race condition: two or more threads (processes) are reading or writing shared data, and the result depends on who runs precisely when
• X=X+1 must be an atomic operation [Wilkinson 2004]
• Can be ensured by mechanisms of mutual exclusion
  – e.g. critical section, mutex, lock, semaphore, monitor
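A minimal sketch of the mutual exclusion remedy, assuming POSIX threads are available (they are introduced later in this lecture). The names `add_one`, `x_lock` and `run_two_increments` are hypothetical.

```c
#include <pthread.h>

static int X;                                       /* shared data item */
static pthread_mutex_t x_lock = PTHREAD_MUTEX_INITIALIZER;

/* Thread routine: read X, compute X+1 and write it back as one protected unit. */
static void *add_one(void *arg)
{
    (void)arg;
    pthread_mutex_lock(&x_lock);
    X = X + 1;
    pthread_mutex_unlock(&x_lock);
    return NULL;
}

/* Starts two threads that each add 1 to X.
   Returns the final value of X, or -1 if a thread could not be created. */
int run_two_increments(int initial)
{
    pthread_t t[2];
    int started = 0;

    X = initial;
    while (started < 2 && pthread_create(&t[started], NULL, add_one, NULL) == 0)
        started++;
    for (int i = 0; i < started; i++)
        pthread_join(t[i], NULL);
    return (started == 2) ? X : -1;
}
```

Because the mutex serializes the three steps, `run_two_increments(10)` yields 12 regardless of how the two threads are scheduled. Removing the lock/unlock pair would reintroduce the race described above.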

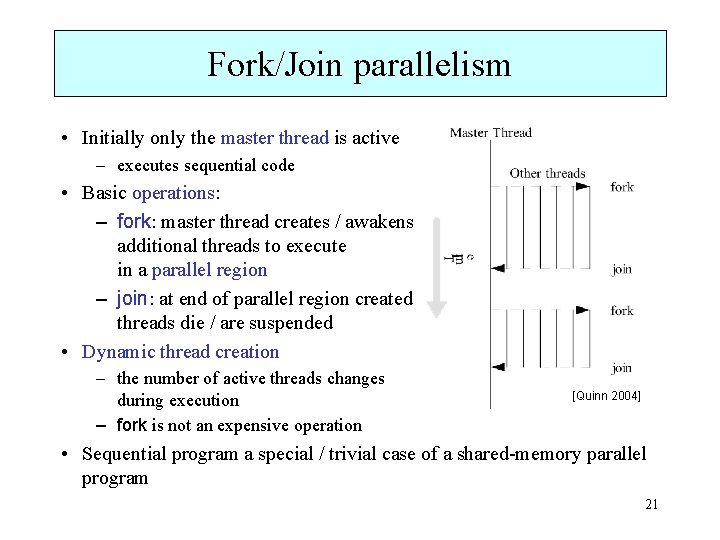

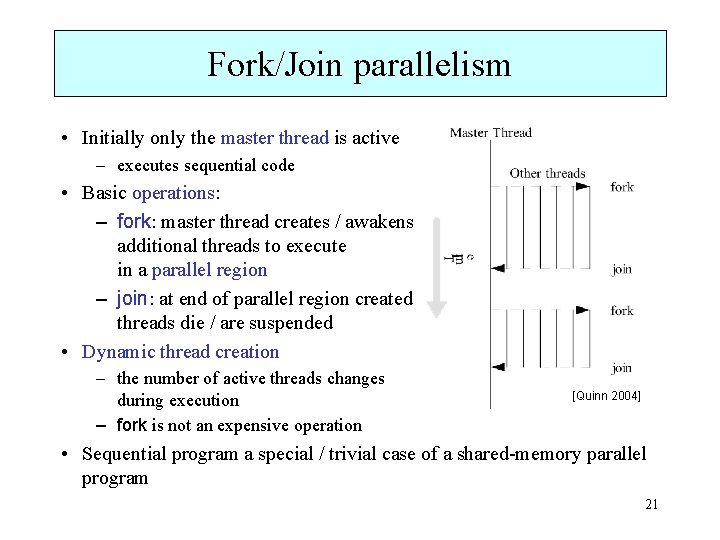

Fork/Join parallelism
• Initially only the master thread is active
  – executes sequential code
• Basic operations:
  – fork: master thread creates / awakens additional threads to execute in a parallel region
  – join: at the end of the parallel region the created threads die / are suspended
• Dynamic thread creation
  – the number of active threads changes during execution
  – fork is not an expensive operation [Quinn 2004]
• A sequential program is a special / trivial case of a shared-memory parallel program

Computer realization
• Compiler’s support:
  – automatic parallelization
  – assisted parallelization
  – OpenMP
• Thread libraries:
  – POSIX threads, Windows threads
[next slides]

Automatic parallelization
• The code is instrumented automatically by the compiler
  – according to the compilation flags and/or environment variables
• Parallelizes independent loops only
  – processed by the prescribed number of parallel threads
• Usually provided by Fortran compilers for multiprocessors
  – as a rule proprietary solutions
• Simple and sometimes fairly efficient
• Applicable to programs with a simple structure
• Examples:
  – XL Fortran (IBM, AIX): -qsmp=auto option, XLSMPOPTS environment variable (the number of threads)
  – Fortran (SUN, Solaris): -autopar flag, PARALLEL environment variable
  – PGI C (Portland Group, Linux): -Mconcur flag

Assisted parallelization
• The programmer provides the compiler with additional information by adding compiler directives
  – special lines of source code with meaning only to a compiler that understands them
    • in the form of stylized Fortran comments or #pragma in C
    • ignored by nonparallelizing compilers
• Assertive and prescriptive directives [next slides]
• Diverse formats of the parallelizing directives, but similar capabilities: a standard is required

Assertive directives
• Hints that state facts that the compiler might not guess from the code itself
• Evaluation is context dependent
• Example: XL Fortran (IBM, AIX)
  – no dependencies (the references in the loop do not overlap, parallelization possible): !SMP$ ASSERT (NODEPS)
  – trip count (average number of iterations of the loop; helps the compiler decide whether to unroll or parallelize the loop): !SMP$ ASSERT (ITERCNT(100))

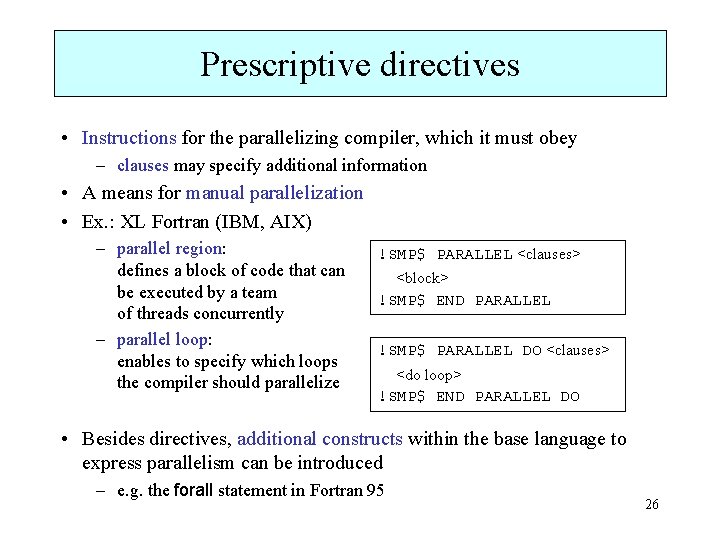

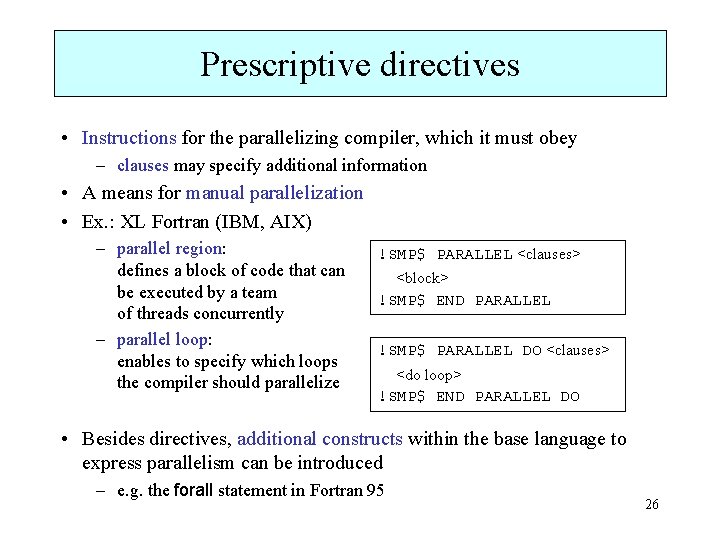

Prescriptive directives
• Instructions for the parallelizing compiler, which it must obey
  – clauses may specify additional information
• A means for manual parallelization
• Example: XL Fortran (IBM, AIX)
  – parallel region: defines a block of code that can be executed by a team of threads concurrently

    !SMP$ PARALLEL <clauses>
      <block>
    !SMP$ END PARALLEL

  – parallel loop: specifies which loops the compiler should parallelize

    !SMP$ PARALLEL DO <clauses>
      <do loop>
    !SMP$ END PARALLEL DO

• Besides directives, additional constructs within the base language to express parallelism can be introduced
  – e.g. the forall statement in Fortran 95

OpenMP
• API for writing portable multithreaded applications based on the shared variables model
  – master thread spawns a team of threads as needed
  – relatively high level (compared to thread libraries)
• A standard developed by the OpenMP Architecture Review Board
  – http://www.openmp.org
  – first specification in 1997
• A set of compiler directives and library routines
• Language interfaces for Fortran, C and C++
  – OpenMP-like interfaces for other languages (e.g. Java)
• Parallelism can be added incrementally
  – i.e. the sequential program evolves into a parallel program
  – single source code for both the sequential and parallel versions
• OpenMP compilers available on most platforms (Unix, Windows, etc.)
[More in a special lecture]

Thread libraries
• Collection of routines to create, manage, and coordinate threads
• Main representatives:
  – POSIX threads (Pthreads)
  – Windows threads (Win32 API)
• Explicit threading is not primarily intended for parallel programming
  – low level, quite complex coding

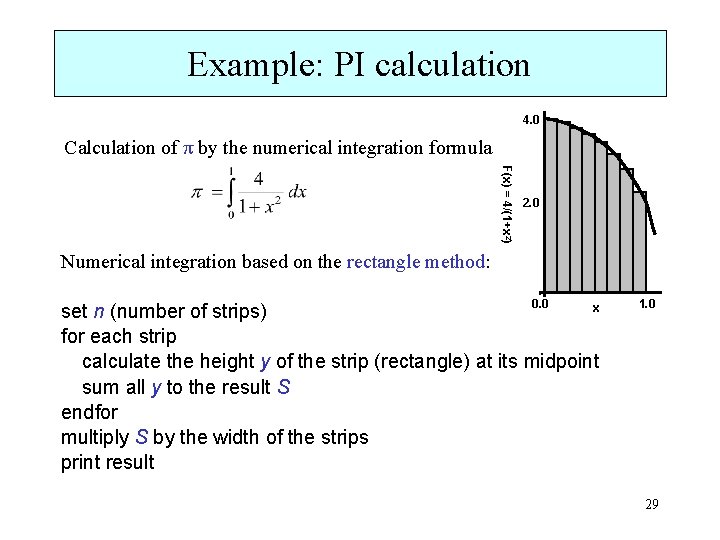

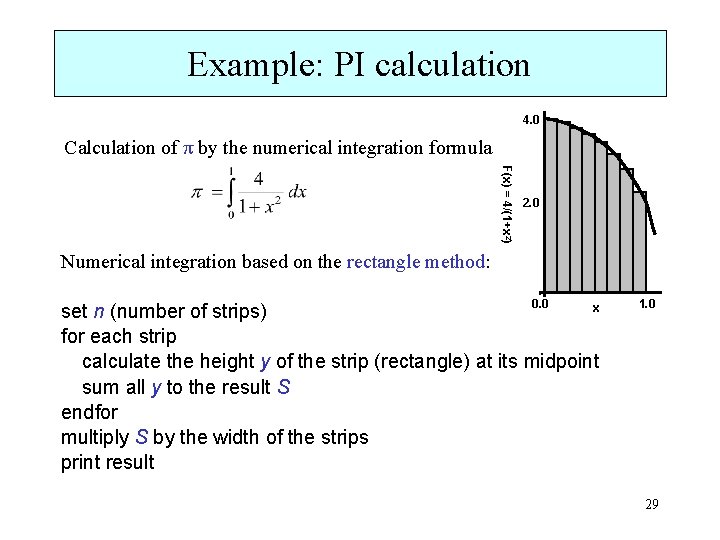

Example: PI calculation
Calculation of π by numerical integration of F(x) = 4/(1+x²) over the interval [0, 1].
Numerical integration based on the rectangle method:

    set n (number of strips)
    for each strip
        calculate the height y of the strip (rectangle) at its midpoint
        sum all y to the result S
    endfor
    multiply S by the width of the strips
    print result

[Figure: graph of F(x) for x from 0.0 to 1.0, with values falling from 4.0 to 2.0]
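For reference, the pseudocode can be written as a plain sequential C function before any threads are added. This is a minimal sketch; the function name `pi_rectangle` is hypothetical, while the loop body matches the one used in the threaded versions on the following slides.

```c
#include <assert.h>

/* Midpoint-rectangle approximation of the integral of 4/(1+x^2) over [0, 1],
   using n strips of equal width. */
double pi_rectangle(long n)
{
    assert(n > 0);
    double step = 1.0 / (double)n;    /* width of one strip */
    double sum = 0.0;                 /* S: sum of the strip heights */

    for (long i = 1; i <= n; i++) {
        double x = (i - 0.5) * step;  /* midpoint of strip i */
        sum += 4.0 / (1.0 + x * x);   /* height y of the strip */
    }
    return sum * step;                /* multiply S by the strip width */
}
```

The iterations are independent of each other except for the accumulation into `sum`, which is why the parallel versions only need to protect (or reduce) that single variable.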

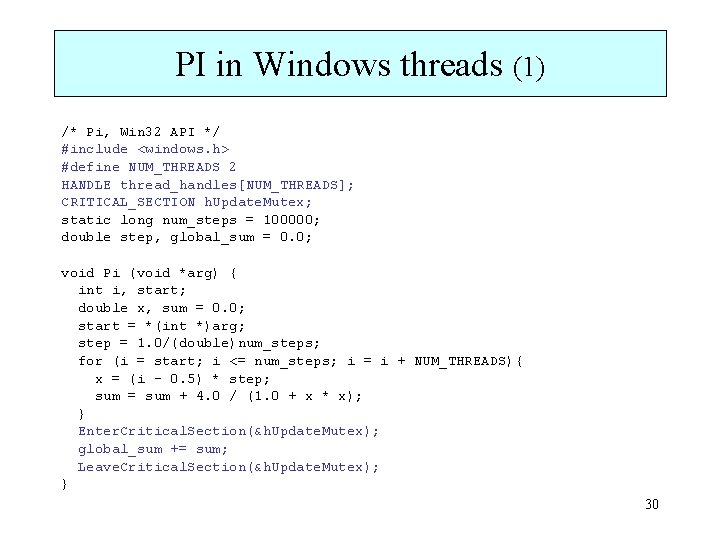

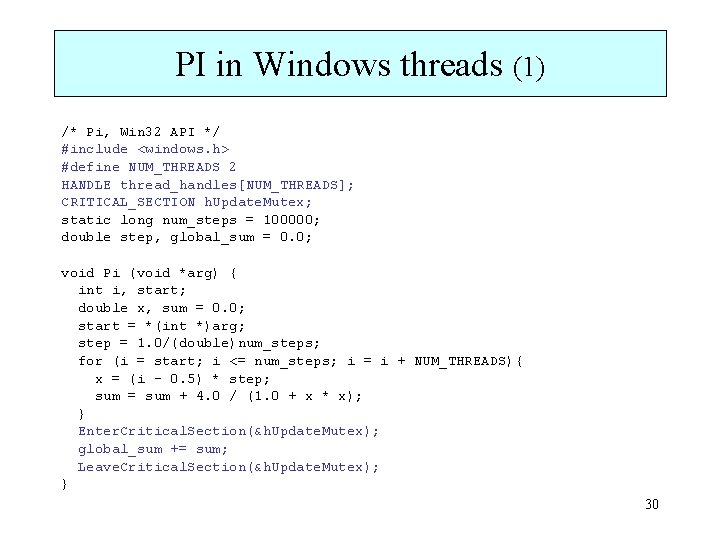

PI in Windows threads (1)

    /* Pi, Win32 API */
    #include <windows.h>
    #include <stdio.h>
    #define NUM_THREADS 2

    HANDLE thread_handles[NUM_THREADS];
    CRITICAL_SECTION hUpdateMutex;
    static long num_steps = 100000;
    double step, global_sum = 0.0;

    /* Thread routine: sums the strips start, start+NUM_THREADS, ... */
    DWORD WINAPI Pi(LPVOID arg)
    {
        int i, start;
        double x, sum = 0.0;

        start = *(int *)arg;
        step = 1.0 / (double)num_steps;   /* every thread stores the same value */
        for (i = start; i <= num_steps; i = i + NUM_THREADS) {
            x = (i - 0.5) * step;
            sum = sum + 4.0 / (1.0 + x * x);
        }
        /* only one thread at a time may update the shared sum */
        EnterCriticalSection(&hUpdateMutex);
        global_sum += sum;
        LeaveCriticalSection(&hUpdateMutex);
        return 0;
    }

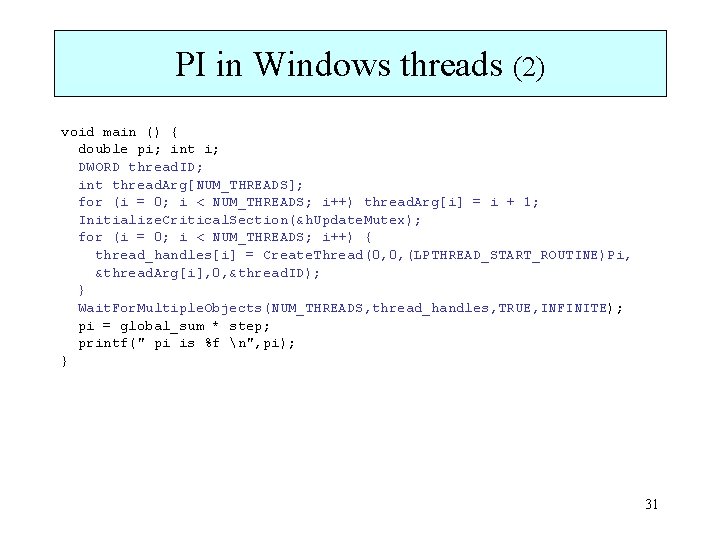

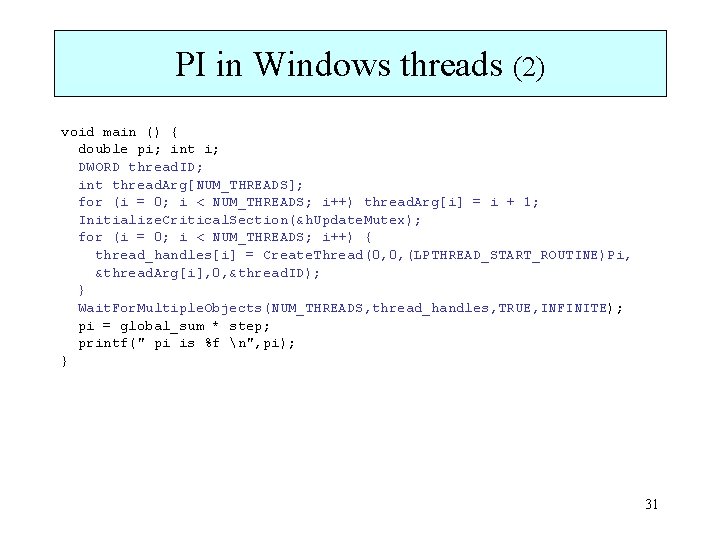

PI in Windows threads (2)

    int main(void)
    {
        double pi;
        int i;
        DWORD threadID;
        int threadArg[NUM_THREADS];

        for (i = 0; i < NUM_THREADS; i++)
            threadArg[i] = i + 1;          /* first strip of each thread */
        InitializeCriticalSection(&hUpdateMutex);
        for (i = 0; i < NUM_THREADS; i++) {
            thread_handles[i] = CreateThread(0, 0, (LPTHREAD_START_ROUTINE)Pi,
                                             &threadArg[i], 0, &threadID);
        }
        /* wait until all threads have finished */
        WaitForMultipleObjects(NUM_THREADS, thread_handles, TRUE, INFINITE);
        pi = global_sum * step;
        printf(" pi is %f\n", pi);
        return 0;
    }

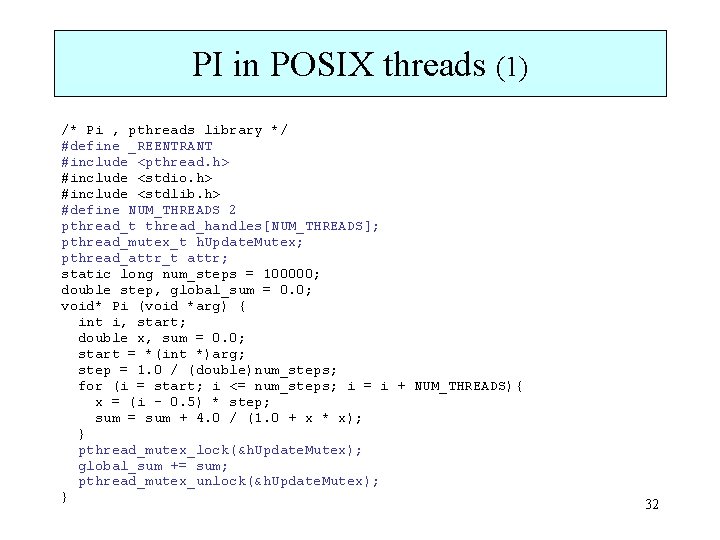

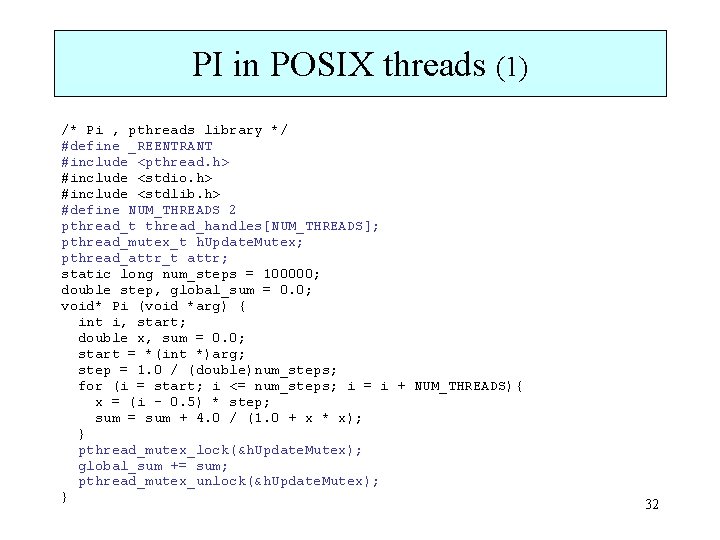

PI in POSIX threads (1)

    /* Pi, pthreads library */
    #define _REENTRANT
    #include <pthread.h>
    #include <stdio.h>
    #include <stdlib.h>
    #define NUM_THREADS 2

    pthread_t thread_handles[NUM_THREADS];
    pthread_mutex_t hUpdateMutex;
    pthread_attr_t attr;
    static long num_steps = 100000;
    double step, global_sum = 0.0;

    /* Thread routine: sums the strips start, start+NUM_THREADS, ... */
    void *Pi(void *arg)
    {
        int i, start;
        double x, sum = 0.0;

        start = *(int *)arg;
        step = 1.0 / (double)num_steps;   /* every thread stores the same value */
        for (i = start; i <= num_steps; i = i + NUM_THREADS) {
            x = (i - 0.5) * step;
            sum = sum + 4.0 / (1.0 + x * x);
        }
        /* only one thread at a time may update the shared sum */
        pthread_mutex_lock(&hUpdateMutex);
        global_sum += sum;
        pthread_mutex_unlock(&hUpdateMutex);
        return NULL;
    }

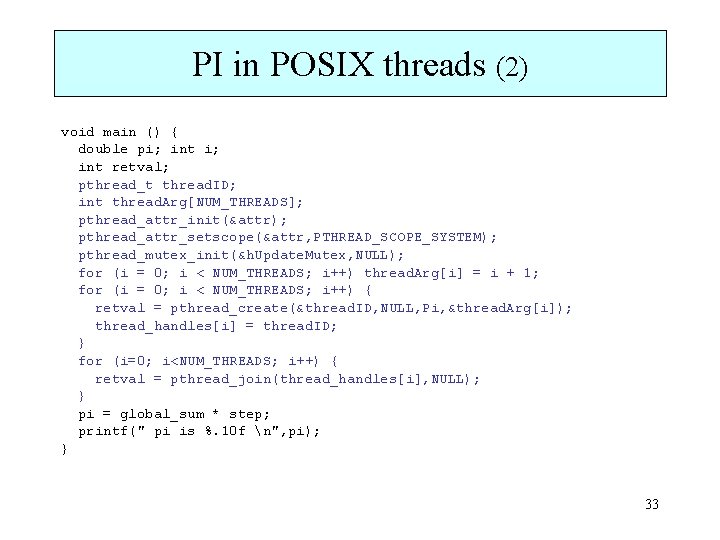

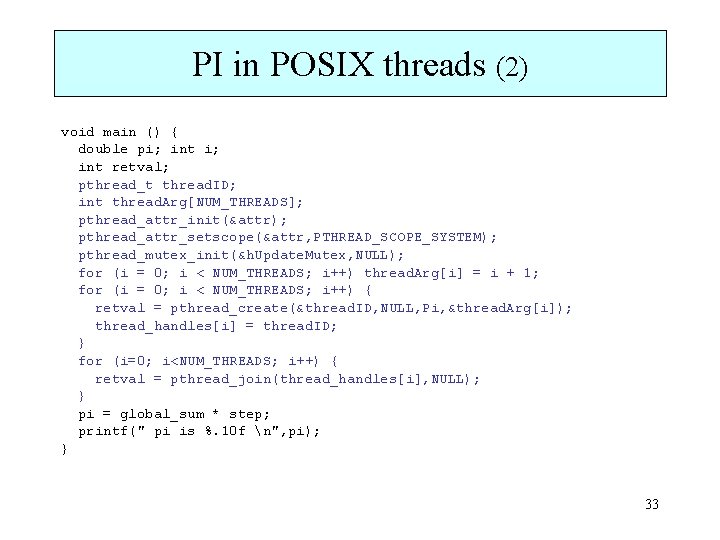

PI in POSIX threads (2)

    int main(void)
    {
        double pi;
        int i, retval;
        pthread_t threadID;
        int threadArg[NUM_THREADS];

        pthread_attr_init(&attr);
        pthread_attr_setscope(&attr, PTHREAD_SCOPE_SYSTEM);
        pthread_mutex_init(&hUpdateMutex, NULL);
        for (i = 0; i < NUM_THREADS; i++)
            threadArg[i] = i + 1;          /* first strip of each thread */
        for (i = 0; i < NUM_THREADS; i++) {
            /* pass attr so that the requested scheduling scope is actually used */
            retval = pthread_create(&threadID, &attr, Pi, &threadArg[i]);
            thread_handles[i] = threadID;
        }
        for (i = 0; i < NUM_THREADS; i++) {
            retval = pthread_join(thread_handles[i], NULL);
        }
        pi = global_sum * step;
        printf(" pi is %.10f\n", pi);
        return 0;
    }

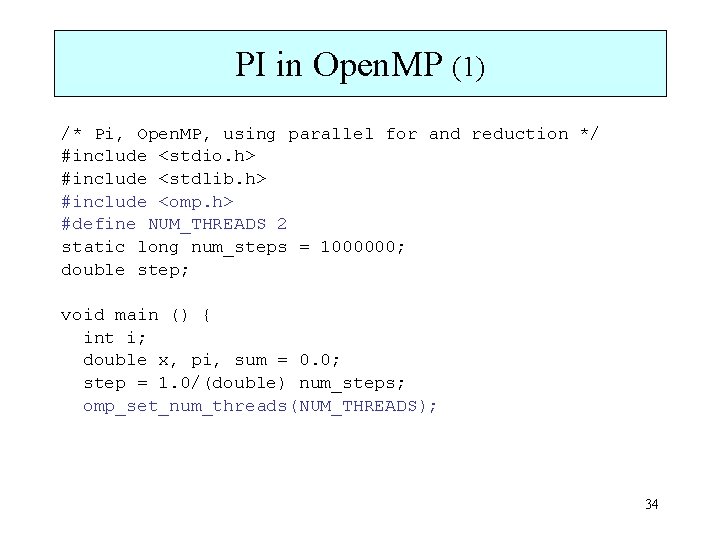

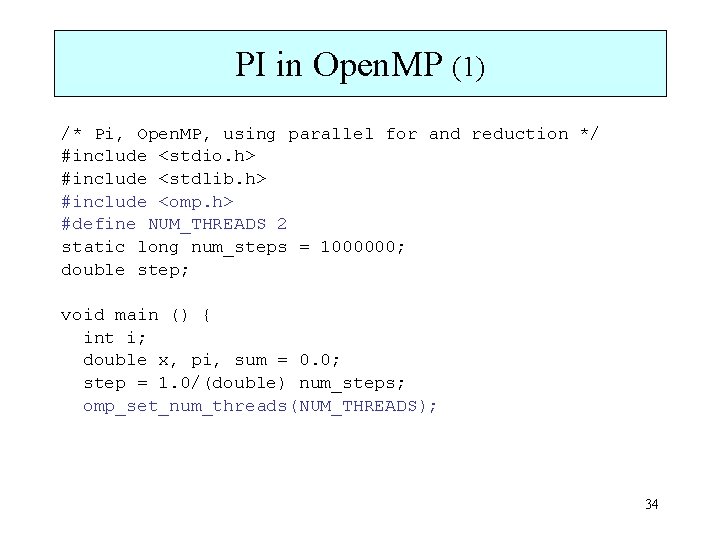

PI in OpenMP (1)

    /* Pi, OpenMP, using parallel for and reduction */
    #include <stdio.h>
    #include <stdlib.h>
    #include <omp.h>
    #define NUM_THREADS 2

    static long num_steps = 1000000;
    double step;

    int main(void)
    {
        int i;
        double x, pi, sum = 0.0;

        step = 1.0 / (double)num_steps;
        omp_set_num_threads(NUM_THREADS);

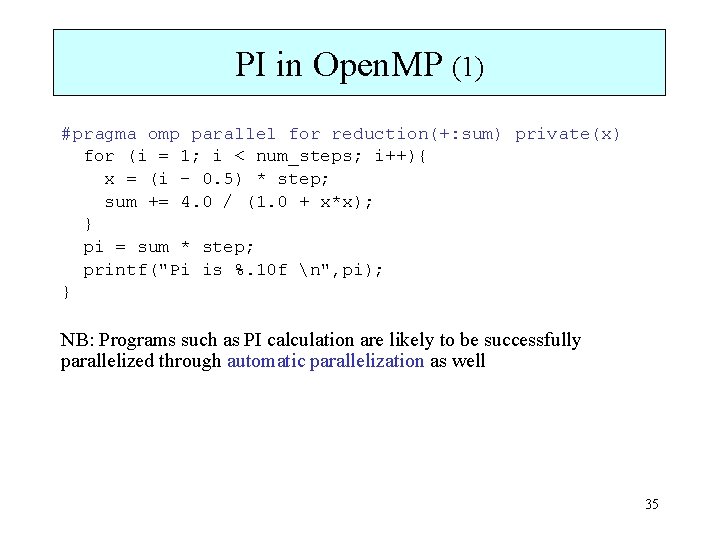

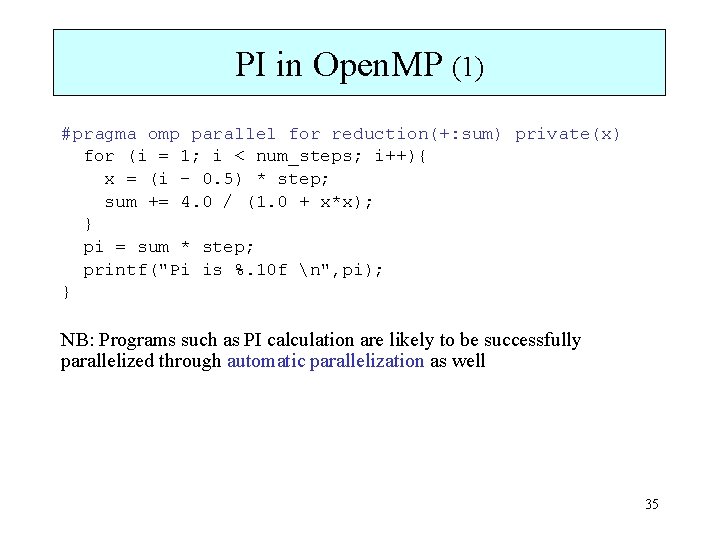

PI in OpenMP (2)

        /* each thread gets a private x and a private partial sum,
           the partial sums are added up at the end of the loop */
        #pragma omp parallel for reduction(+:sum) private(x)
        for (i = 1; i <= num_steps; i++) {
            x = (i - 0.5) * step;
            sum += 4.0 / (1.0 + x * x);
        }
        pi = sum * step;
        printf("Pi is %.10f\n", pi);
    }

NB: Programs such as the PI calculation are likely to be successfully parallelized through automatic parallelization as well

Message passing model

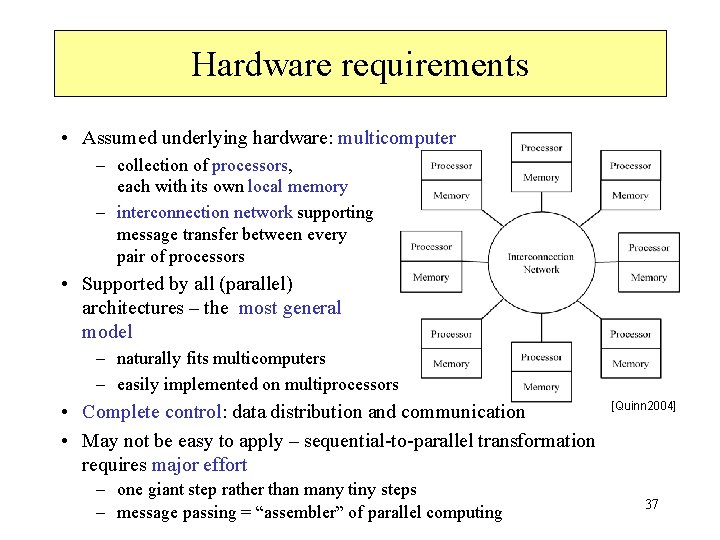

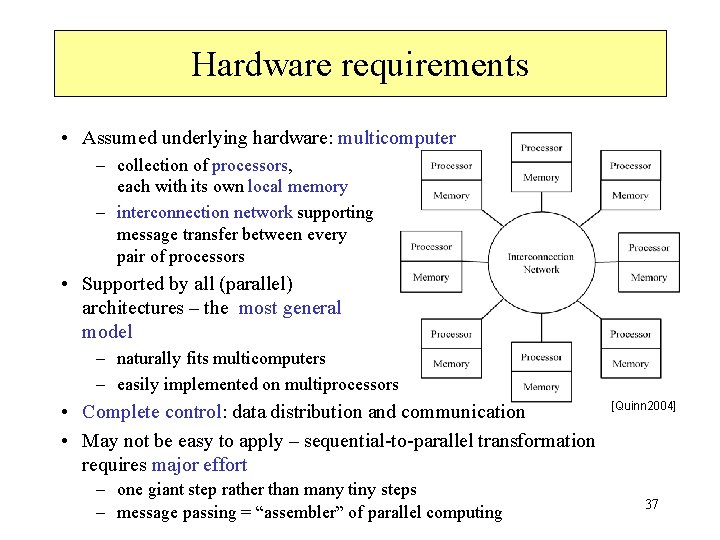

Hardware requirements
• Assumed underlying hardware: multicomputer
  – collection of processors, each with its own local memory
  – interconnection network supporting message transfer between every pair of processors
• Supported by all (parallel) architectures
  – the most general model
  – naturally fits multicomputers
  – easily implemented on multiprocessors
• Complete control: data distribution and communication
• May not be easy to apply
  – sequential-to-parallel transformation requires major effort
  – one giant step rather than many tiny steps
  – message passing = “assembler” of parallel computing [Quinn 2004]

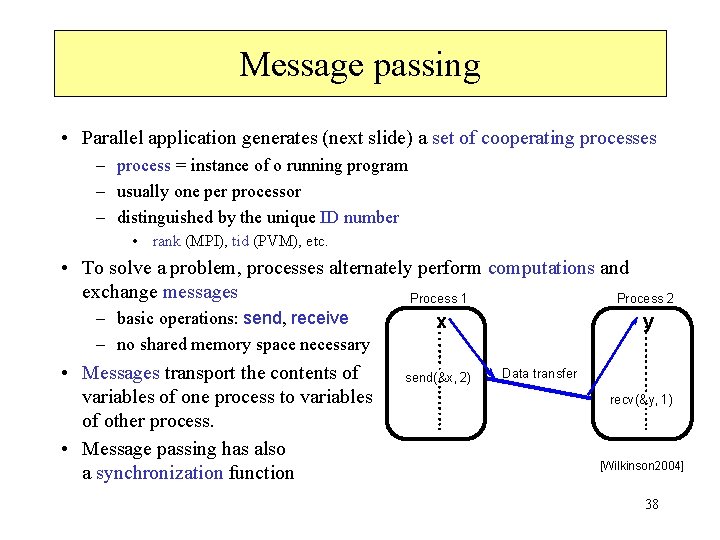

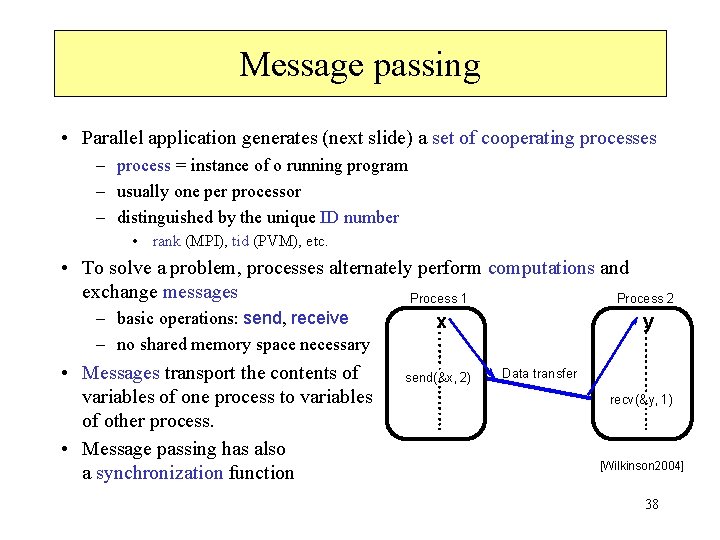

Message passing
• Parallel application generates (next slide) a set of cooperating processes
  – process = instance of a running program
  – usually one per processor
  – distinguished by a unique ID number
    • rank (MPI), tid (PVM), etc.
• To solve a problem, processes alternately perform computations and exchange messages
  – basic operations: send, receive
  – no shared memory space necessary
• Messages transport the contents of variables of one process to variables of another process
• Message passing also has a synchronization function
[Figure: Process 1 calls send(&x, 2), Process 2 calls recv(&y, 1); the data is transferred from x to y [Wilkinson 2004]]
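The send/receive pattern can be tried out without a message passing library. The sketch below is only a stand-in under assumptions: it uses two POSIX pipes and `fork()` in place of a real message passing system such as MPI (covered in the next lecture), and the function name `exchange` is hypothetical.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Process 1 sends x to process 2, which adds 1 and sends the result back.
   Returns 0 and stores the answer in *reply on success, -1 on failure. */
int exchange(int x, int *reply)
{
    int to_p2[2], to_p1[2];
    int rc = -1;

    assert(reply != NULL);
    if (pipe(to_p2) != 0)
        return -1;
    if (pipe(to_p1) != 0) {
        close(to_p2[0]); close(to_p2[1]);
        return -1;
    }

    pid_t pid = fork();
    if (pid < 0) {
        close(to_p2[0]); close(to_p2[1]);
        close(to_p1[0]); close(to_p1[1]);
        return -1;
    }

    if (pid == 0) {                        /* process 2 */
        int y = 0;
        close(to_p2[1]); close(to_p1[0]);
        /* "recv(&y, 1)": blocks until the message from process 1 arrives */
        if (read(to_p2[0], &y, sizeof y) != (ssize_t)sizeof y)
            _exit(1);
        y = y + 1;                         /* local computation */
        /* send the result back to process 1 */
        if (write(to_p1[1], &y, sizeof y) != (ssize_t)sizeof y)
            _exit(1);
        _exit(0);
    }

    /* process 1 */
    close(to_p2[0]); close(to_p1[1]);
    /* "send(&x, 2)" followed by a blocking receive of the answer */
    if (write(to_p2[1], &x, sizeof x) == (ssize_t)sizeof x &&
        read(to_p1[0], reply, sizeof *reply) == (ssize_t)sizeof *reply)
        rc = 0;
    close(to_p2[1]); close(to_p1[0]);
    waitpid(pid, NULL, 0);
    return rc;
}
```

The two processes share no variables: the value of `x` reaches process 2 only as the content of a message, and the blocking `read` calls also provide the synchronization between the processes.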

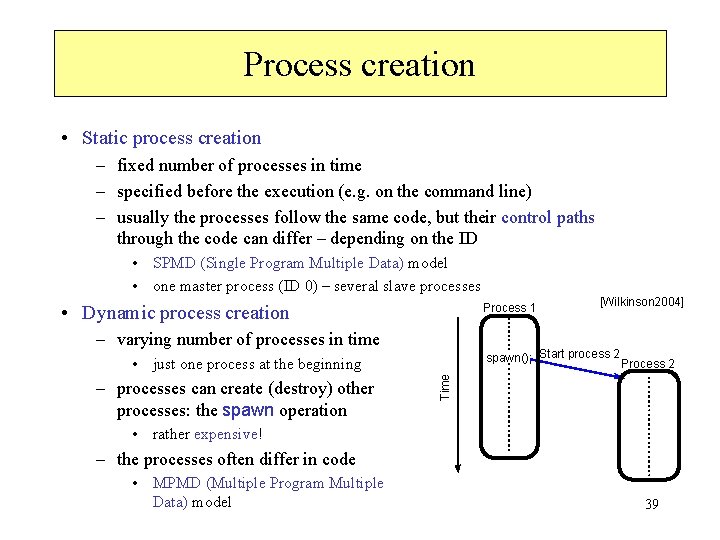

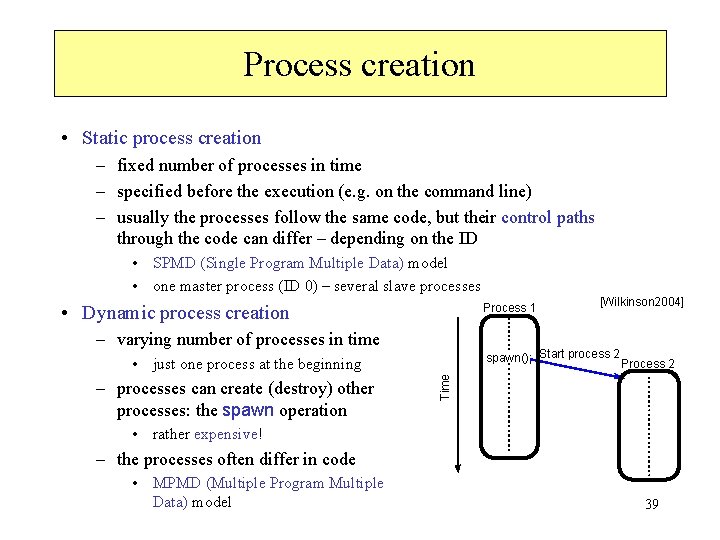

Process creation
• Static process creation
  – the number of processes is fixed over time
  – specified before the execution (e.g. on the command line)
  – usually the processes follow the same code, but their control paths through the code can differ, depending on the ID
    • SPMD (Single Program Multiple Data) model
    • one master process (ID 0) and several slave processes
• Dynamic process creation
  – the number of processes varies over time
    • just one process at the beginning
  – processes can create (destroy) other processes: the spawn operation
    • rather expensive!
  – the processes often differ in code
    • MPMD (Multiple Program Multiple Data) model
[Figure: over time, Process 1 calls spawn(), which starts Process 2 [Wilkinson 2004]]

Point-to-point communication
• Exactly two processes are involved
• One process (sender / source) sends a message and another process (receiver / destination) receives it
  – active participation of processes on both sides usually required
    • two-sided communication
• In general, the source and destination processes operate asynchronously
  – the source may complete sending a message long before the destination gets around to receiving it
  – the destination may initiate receiving a message that has not yet been sent
• The order of messages is guaranteed (they do not overtake each other)
• Examples of technical issues
  – handling several messages waiting to be received
  – sending complex data structures
  – using message buffers
  – send and receive routines: blocking vs. nonblocking

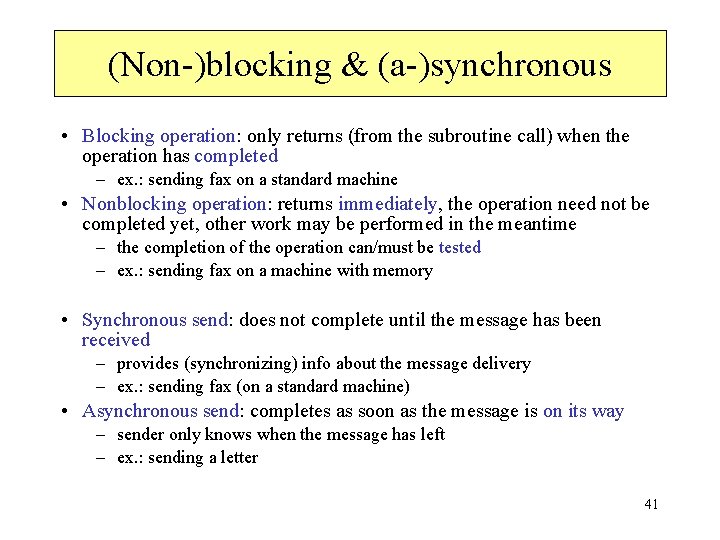

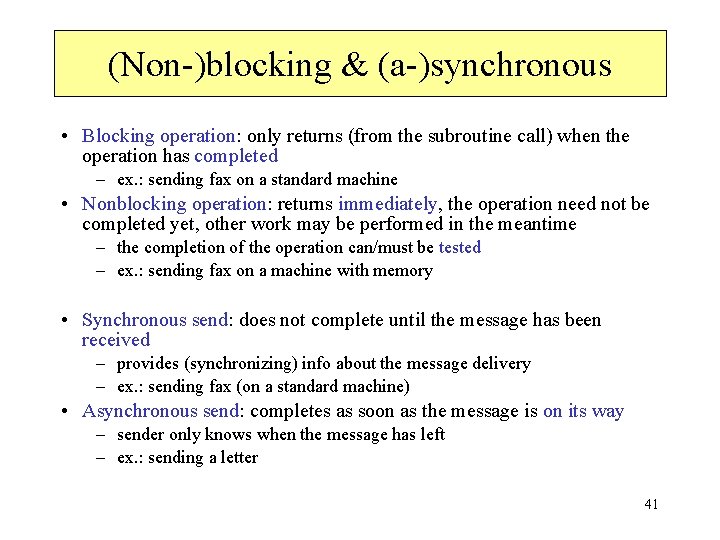

(Non-)blocking & (a-)synchronous
• Blocking operation: only returns (from the subroutine call) when the operation has completed
  – e.g. sending a fax on a standard machine
• Nonblocking operation: returns immediately, the operation need not be completed yet, other work may be performed in the meantime
  – the completion of the operation can/must be tested
  – e.g. sending a fax on a machine with memory
• Synchronous send: does not complete until the message has been received
  – provides (synchronizing) info about the message delivery
  – e.g. sending a fax (on a standard machine)
• Asynchronous send: completes as soon as the message is on its way
  – sender only knows when the message has left
  – e.g. sending a letter
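The difference between a blocking and a nonblocking receive can be observed on an ordinary POSIX pipe. This is a sketch under assumptions, not a message passing system: both ends of the pipe live in one process, and the function name `nonblocking_receive_demo` is hypothetical.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Returns 1 if a nonblocking receive on an empty pipe returned immediately
   with "nothing there yet" and a later receive delivered the message,
   0 otherwise. */
int nonblocking_receive_demo(void)
{
    int fd[2];
    int msg = 7, got = 0, ok = 0;

    if (pipe(fd) != 0)
        return 0;

    /* switch the receiving end to nonblocking mode */
    int flags = fcntl(fd[0], F_GETFL);
    if (flags != -1 && fcntl(fd[0], F_SETFL, flags | O_NONBLOCK) != -1) {
        /* nothing has been sent yet: a blocking read would wait here,
           the nonblocking one returns at once and reports EAGAIN */
        ssize_t n = read(fd[0], &got, sizeof got);
        int would_block = (n == -1 && (errno == EAGAIN || errno == EWOULDBLOCK));

        /* ... other work could be performed here in the meantime ... */

        if (would_block &&
            write(fd[1], &msg, sizeof msg) == (ssize_t)sizeof msg)
            /* testing for completion again: now the message is available */
            ok = (read(fd[0], &got, sizeof got) == (ssize_t)sizeof got &&
                  got == msg);
    }
    close(fd[0]);
    close(fd[1]);
    return ok;
}
```

The first `read` illustrates why the completion of a nonblocking operation must be tested: returning from the call says nothing about whether any data has arrived.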

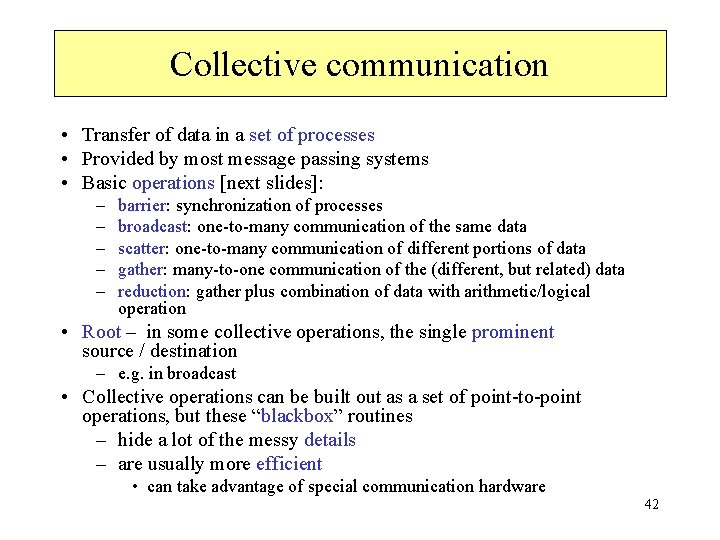

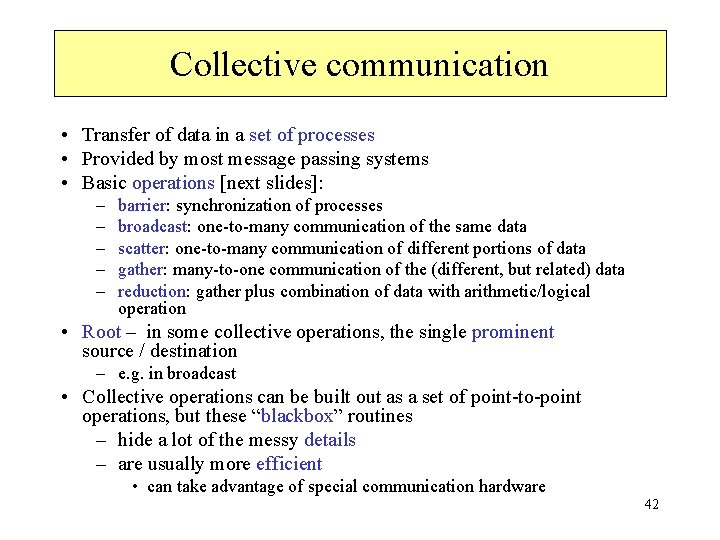

Collective communication
• Transfer of data in a set of processes
• Provided by most message passing systems
• Basic operations [next slides]:
  – barrier: synchronization of processes
  – broadcast: one-to-many communication of the same data
  – scatter: one-to-many communication of different portions of data
  – gather: many-to-one communication of (different, but related) data
  – reduction: gather plus combination of data with an arithmetic/logical operation
• Root
  – in some collective operations, the single prominent source / destination
  – e.g. in broadcast
• Collective operations can be built as a set of point-to-point operations, but these “blackbox” routines
  – hide a lot of the messy details
  – are usually more efficient
    • can take advantage of special communication hardware

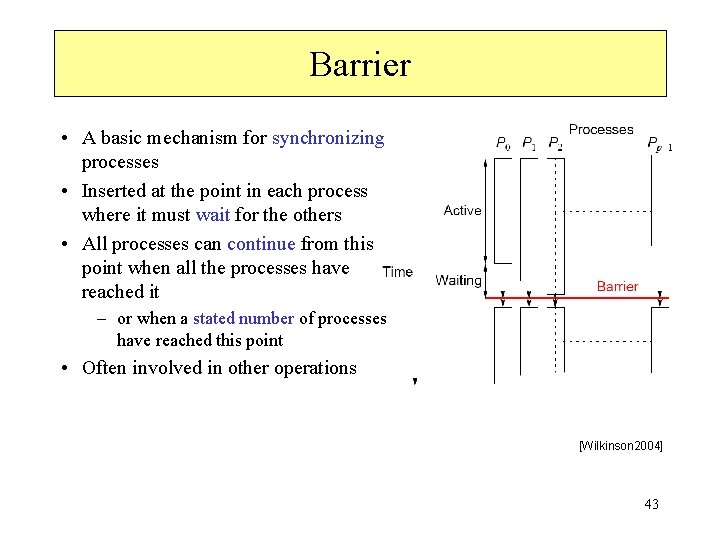

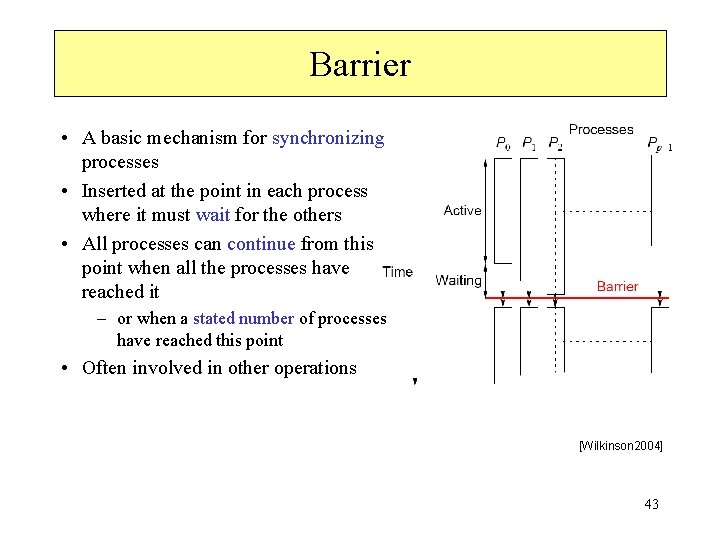

Barrier
• A basic mechanism for synchronizing processes
• Inserted at the point in each process where it must wait for the others
• All processes can continue from this point when all the processes have reached it
  – or when a stated number of processes have reached this point
• Often involved in other operations
[Wilkinson 2004]

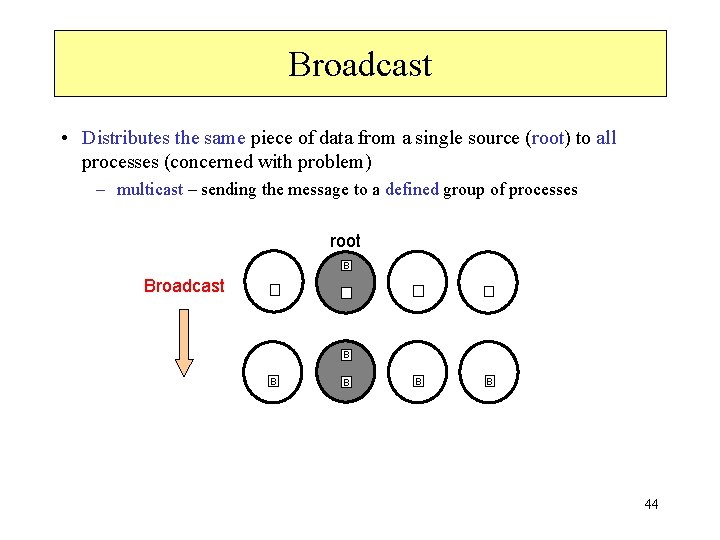

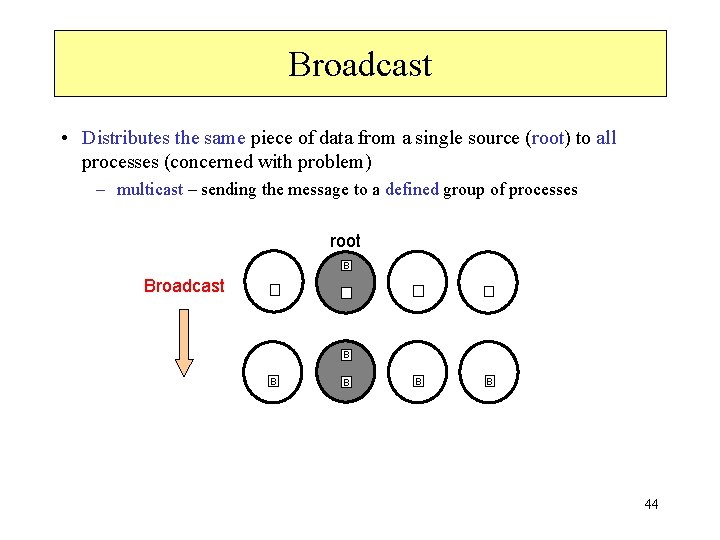

Broadcast
• Distributes the same piece of data from a single source (root) to all processes (concerned with the problem)
  – multicast: sending the message to a defined group of processes
[Figure: the root holds B; after the broadcast every process holds B]

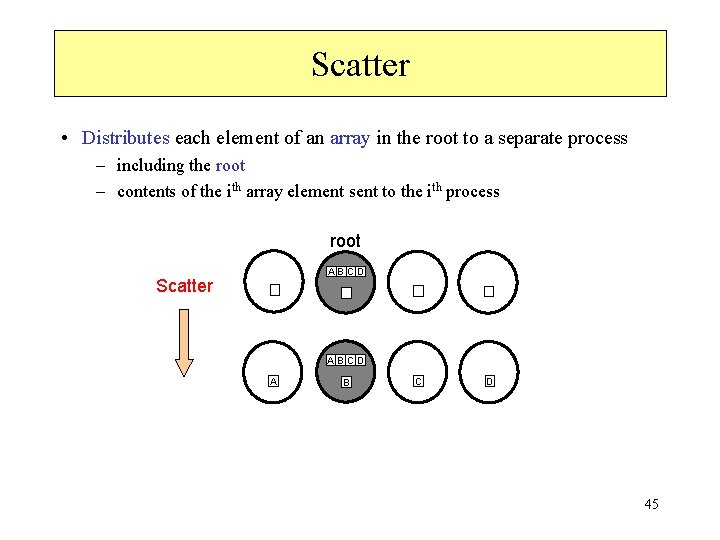

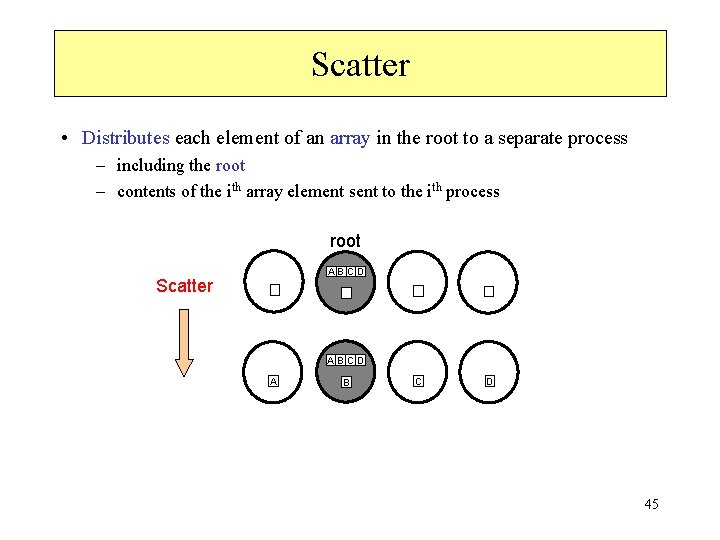

Scatter
• Distributes each element of an array in the root to a separate process
  – including the root
  – contents of the ith array element sent to the ith process
[Figure: the root holds ABCD; after the scatter the processes hold A, B, C and D respectively]

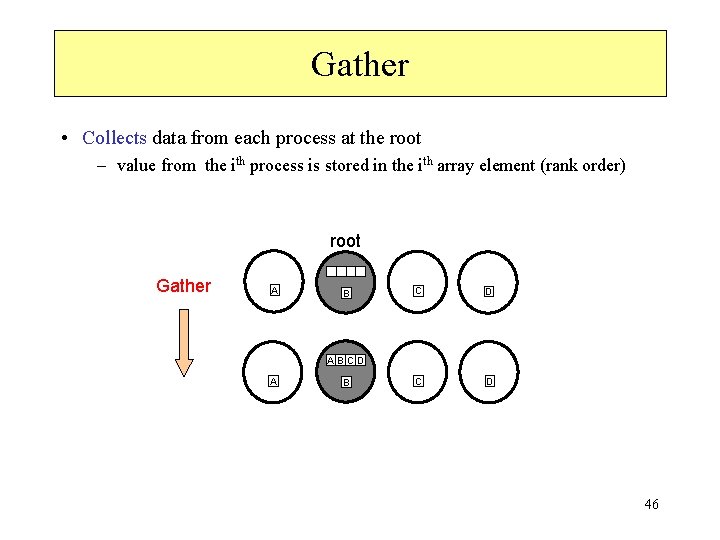

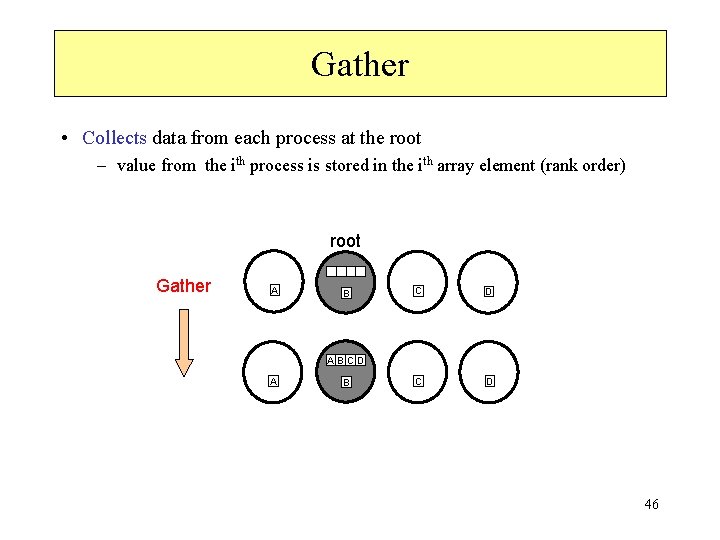

Gather
• Collects data from each process at the root
  – value from the ith process is stored in the ith array element (rank order)
[Figure: the processes hold A, B, C and D; after the gather the root holds ABCD]

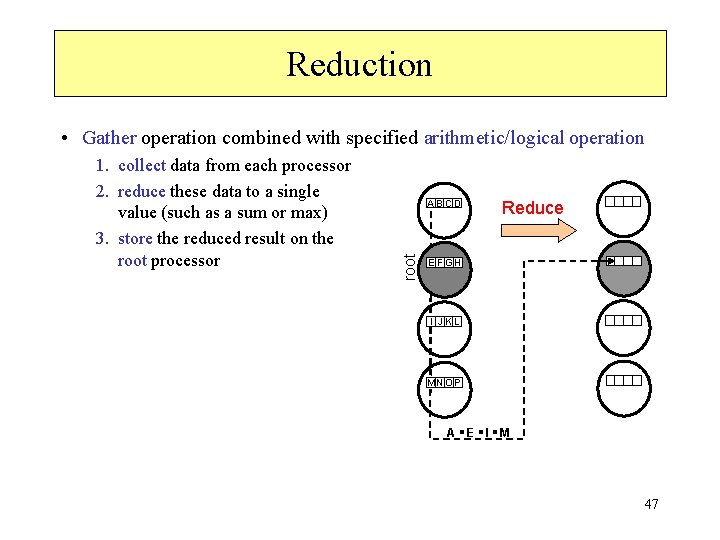

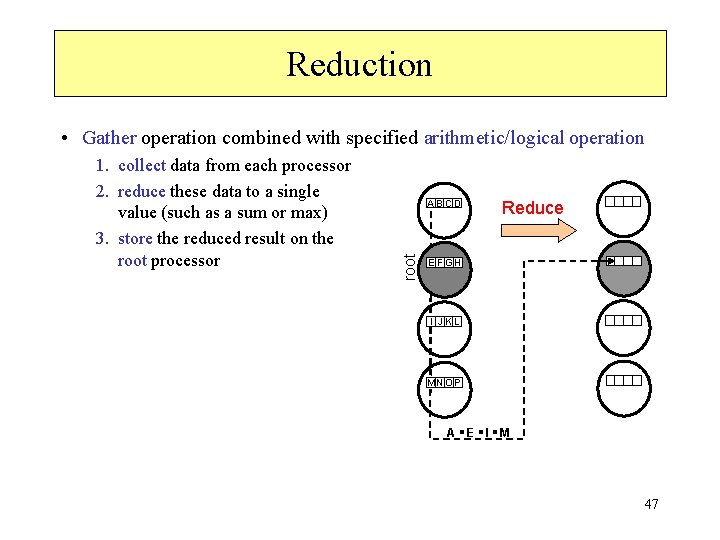

Reduction
• Gather operation combined with a specified arithmetic/logical operation
  1. collect data from each processor
  2. reduce these data to a single value (such as a sum or max)
  3. store the reduced result on the root processor
[Figure: four processes hold ABCD, EFGH, IJKL and MNOP; the reduce operation combines A, E, I and M on the root]
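How a reduction can be assembled from point-to-point steps is sketched below as a sequential simulation. Everything here is an assumption made for illustration: `value[i]` stands for the data held by process i, each addition models one message from process `i + stride` to process `i`, and the binary-tree scheme is only one possible way of organizing the transfers. The function name `tree_reduce_sum` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Simulated sum reduction to root 0 over p "processes".
   After the call value[0] holds the sum of all original values.
   Returns the number of communication rounds that were needed. */
int tree_reduce_sum(double *value, int p)
{
    int rounds = 0;

    assert(value != NULL && p > 0);
    for (int stride = 1; stride < p; stride *= 2) {
        /* in one round, all these transfers could proceed in parallel */
        for (int i = 0; i + stride < p; i += 2 * stride)
            value[i] += value[i + stride];   /* "receive" and combine */
        rounds++;
    }
    return rounds;
}
```

With p processes the tree needs about log2(p) rounds instead of the p-1 sequential receives of a naive gather at the root, which is one reason why the library routines are usually more efficient than hand-written point-to-point code.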

Message passing system (1)
• Computer realization of the message passing model
• Most popular message passing systems (MPS):
  – Message Passing Interface (MPI) [next lecture]
  – Parallel Virtual Machine (PVM)
  – in distributed computing: Corba, Java RMI, DCOM, etc.

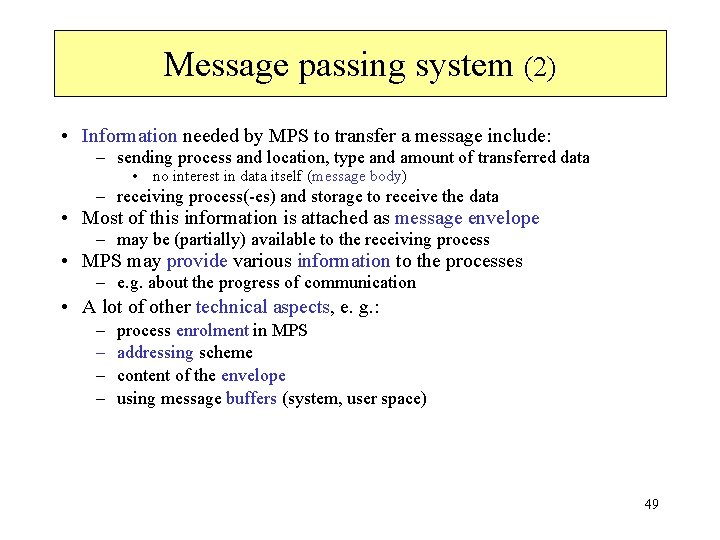

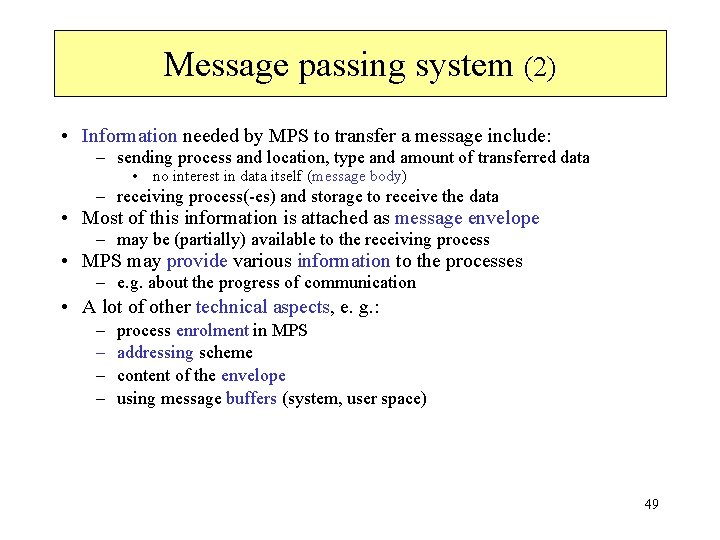

Message passing system (2)
• Information needed by the MPS to transfer a message includes:
  – sending process and location, type and amount of transferred data
    • no interest in the data itself (message body)
  – receiving process(es) and storage to receive the data
• Most of this information is attached as the message envelope
  – may be (partially) available to the receiving process
• The MPS may provide various information to the processes
  – e.g. about the progress of communication
• A lot of other technical aspects, e.g.:
  – process enrolment in the MPS
  – addressing scheme
  – content of the envelope
  – using message buffers (system, user space)

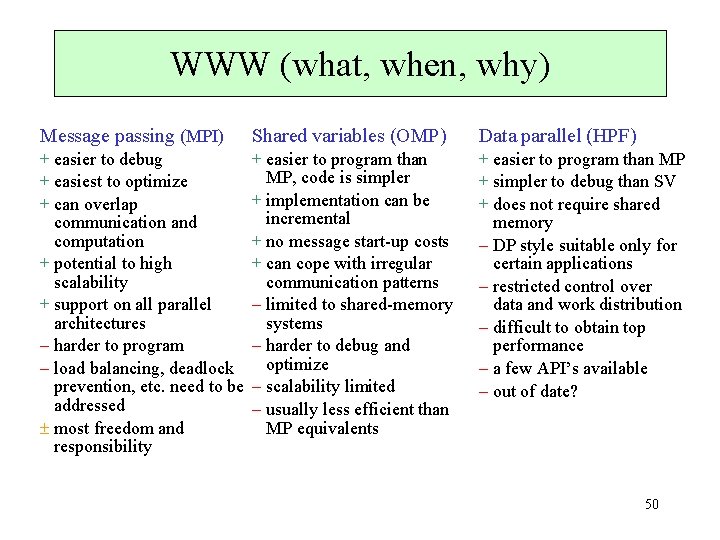

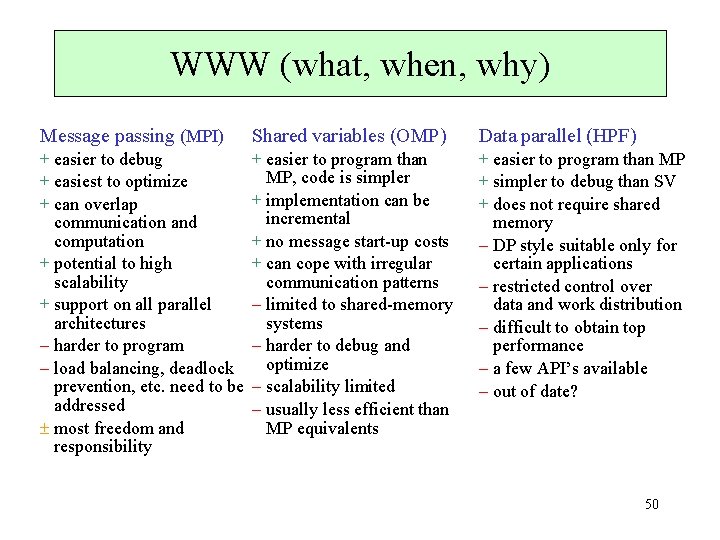

WWW (what, when, why)

Message passing (MPI)
+ easier to debug
+ easiest to optimize
+ can overlap communication and computation
+ potential for high scalability
+ support on all parallel architectures
− harder to program
− load balancing, deadlock prevention, etc. need to be addressed
± most freedom and responsibility

Shared variables (OMP)
+ easier to program than MP, code is simpler
+ implementation can be incremental
+ no message start-up costs
+ can cope with irregular communication patterns
− limited to shared-memory systems
− harder to debug and optimize
− scalability limited
− usually less efficient than MP equivalents

Data parallel (HPF)
+ easier to program than MP
+ simpler to debug than SV
+ does not require shared memory
− DP style suitable only for certain applications
− restricted control over data and work distribution
− difficult to obtain top performance
− few APIs available
− out of date?

Conclusions
• The definition of parallel programming models is not uniform in the literature; other models can be e.g.
  – thread programming model
  – hybrid models, e.g. the combination of the message passing and shared variables models
    • explicit message passing between the nodes of a cluster as well as shared memory and multithreading within the nodes
• Models continue to evolve along with the changing world of computer hardware and software
  – CUDA parallel programming model for the CUDA GPU architecture

Further study
• The message passing model and the shared variables model are treated to some extent in all general textbooks on parallel programming
  – exception: [Foster 1995] almost skips data sharing
• There are plenty of books dedicated to shared objects, synchronisation and shared memory, e.g. [Andrews 2000] Foundations of Multithreaded, Parallel, and Distributed Programming
  – not necessarily focusing on parallel processing
• Data parallelism is usually a marginal topic
