Parallel Algorithms Chapter 1 What is a Parallel


Parallel Algorithms Chapter 1

What is a Parallel Algorithm?

- Imagine you needed to find a lost child in the woods.
- Even in a small area, searching by yourself would be very time-consuming.
- If you gathered some friends and family to help you, you could cover the woods much faster.

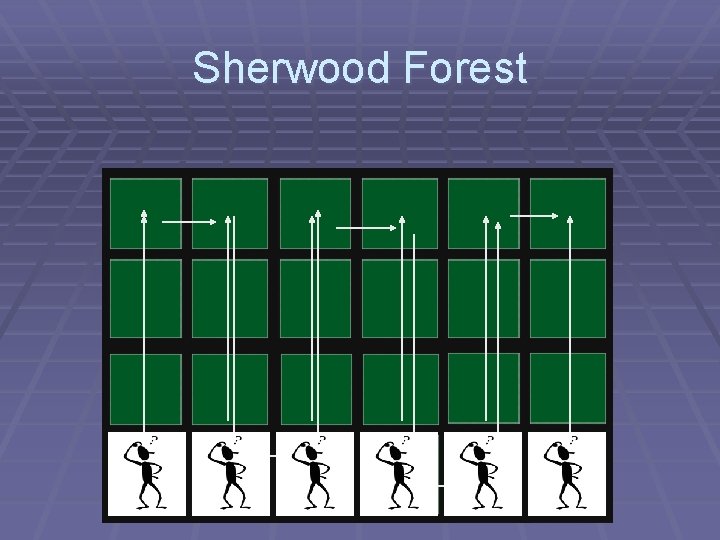

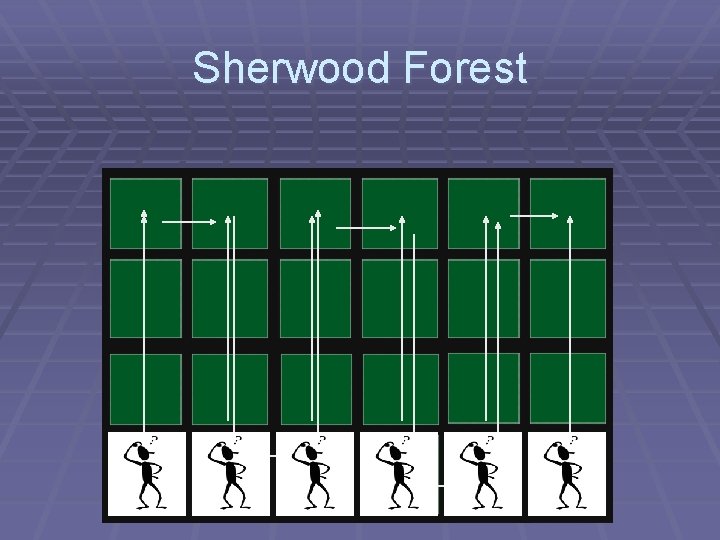

Sherwood Forest

Parallel Architectures

- Architecture:
  1. Structure
     - Content (e.g. implementing the parallel algorithm)
     - Form (e.g. working as a multiple-processor system)
  2. Behavior (e.g. more secure, more reliable, or faster)

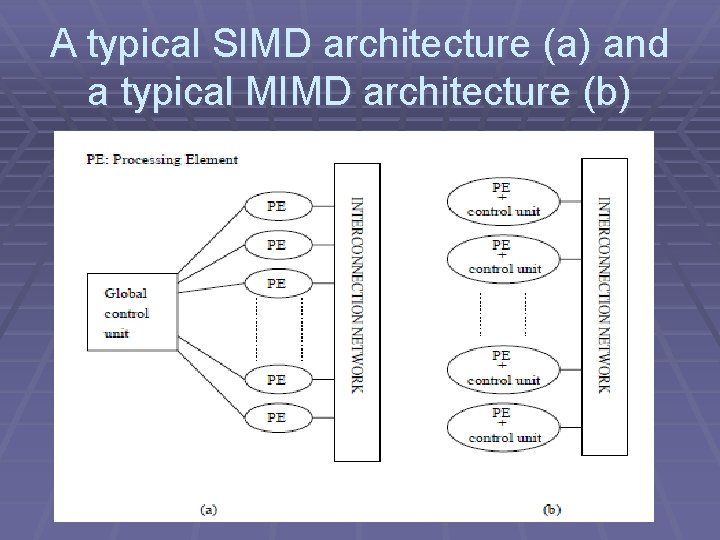

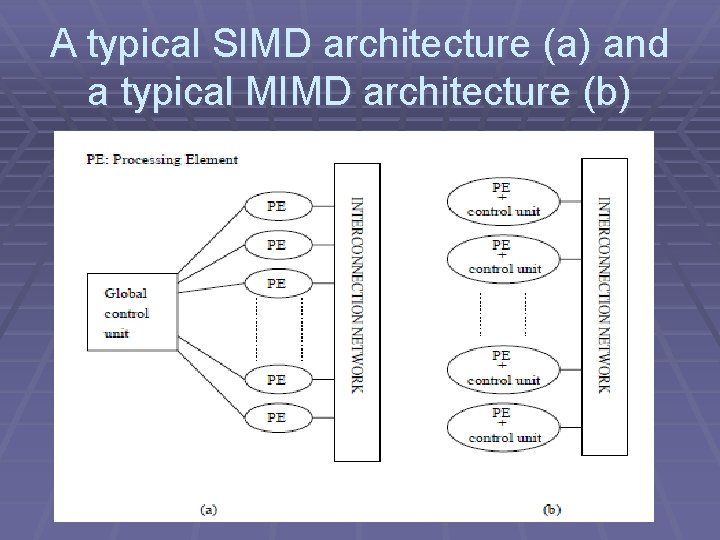

1. Controlling Mechanism

Every processor can work under one global (central) control unit or under its own local (independent) control unit.

1.1 Single Instruction Multiple Data (SIMD)

- All processors execute the same instruction at the same time, each on its own data.
- Simple hardware.
- One global control unit is connected to each processor.
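As a rough illustration of the SIMD idea, the sketch below simulates one global control unit broadcasting a single instruction to every processor. The names `simd_step` and `lanes` are hypothetical and chosen only for this example; real SIMD hardware applies the instruction to all lanes simultaneously, whereas this Python loop only models the behavior.

```python
def simd_step(instruction, lanes):
    """Apply one broadcast instruction to every processor's data item.

    `instruction` plays the role of the global control unit's current
    instruction; `lanes` holds one data item per processor.
    """
    return [instruction(x) for x in lanes]


data = [1, 2, 3, 4]                           # one value per processor
doubled = simd_step(lambda x: x * 2, data)    # same instruction, different data
print(doubled)  # [2, 4, 6, 8]
```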

1.2 Multiple Instruction Multiple Data (MIMD)

- N processors, all doing their own thing.
- Each processor has a local control unit (read and write).
- E.g. If (X==Y) Execute instructions A; Else
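A minimal sketch of the MIMD idea follows: each simulated processor runs its own program on its own data. The functions `total`, `largest`, `ordered`, and `run_mimd` are hypothetical names for this example, and Python threads are used only to model independent control units, not to demonstrate real hardware parallelism.

```python
from concurrent.futures import ThreadPoolExecutor


def total(values):
    return sum(values)


def largest(values):
    return max(values)


def ordered(values):
    return sorted(values)


def run_mimd(tasks):
    """Run each (program, data) pair on its own worker, like N independent processors."""
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = [pool.submit(program, data) for program, data in tasks]
        return [f.result() for f in futures]


# Three processors, three different programs, three different data sets.
tasks = [(total, [1, 2, 3]), (largest, [4, 9, 2]), (ordered, [3, 1, 2])]
results = run_mimd(tasks)
print(results)  # [6, 9, [1, 2, 3]]
```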

A typical SIMD architecture (a) and a typical MIMD architecture (b)

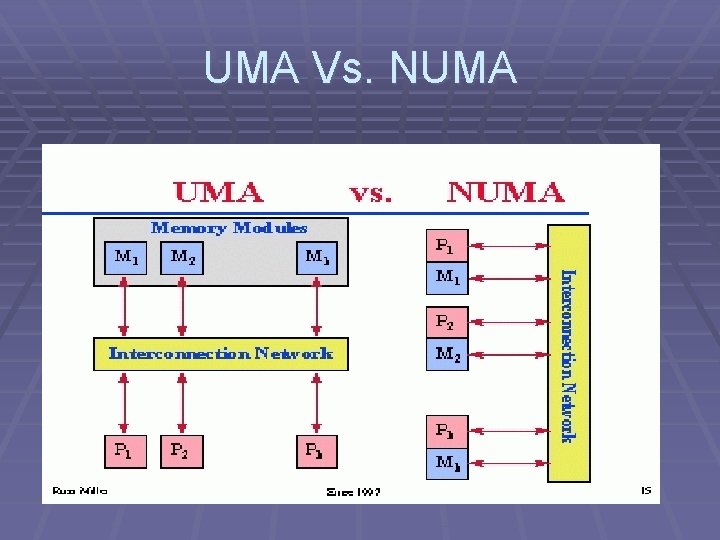

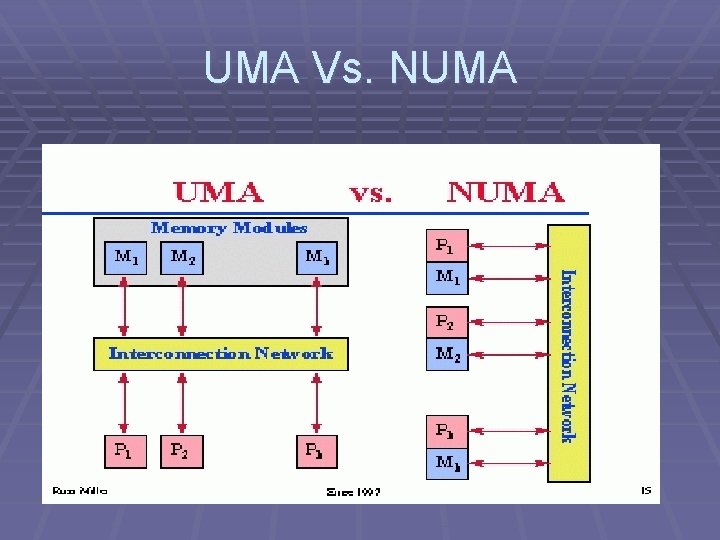

2. Memory Access Issues

2.1 Uniform memory access (UMA)

- All processors have access to all parts of memory.
- Access time to all regions of memory is the same.
- Access time for different processors is the same.

UMA (Continued)

- In the UMA architecture, each processor may use a private cache.
- The UMA model is suitable for general-purpose and time-sharing applications with multiple users.
- It can also be used to speed up the execution of a single large program in time-critical applications.

2. Memory Access Issues (Continued)

2.2 Non-uniform memory access (NUMA)

- All processors have access to all parts of memory.
- A processor's access time differs depending on the region of memory.
- Different processors access different regions of memory at different speeds.

NUMA (Continued)

- Under NUMA, a processor can access its own local memory faster than non-local memory.
- The benefits of NUMA are limited to particular workloads, notably on servers where the data are often strongly associated with certain tasks or users.
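The difference between the two models can be sketched with a toy cost function. The latencies below (`UNIFORM_NS`, `LOCAL_NS`, `REMOTE_NS`) are made-up numbers for illustration only, and the model assumes each processor `i` owns memory region `i`; neither assumption comes from the slides.

```python
UNIFORM_NS = 200  # hypothetical UMA latency, same for every processor and region
LOCAL_NS = 100    # hypothetical NUMA latency for a processor's own region
REMOTE_NS = 300   # hypothetical NUMA latency for another processor's region


def uma_access_time(processor, region):
    # UMA: the cost does not depend on who accesses what.
    return UNIFORM_NS


def numa_access_time(processor, region):
    # NUMA: local memory is faster than non-local memory.
    return LOCAL_NS if processor == region else REMOTE_NS


def total_time(access_time, accesses):
    """Sum the cost of a list of (processor, region) accesses."""
    return sum(access_time(p, r) for p, r in accesses)


local_workload = [(0, 0), (1, 1)]    # every processor touches its own region
remote_workload = [(0, 1), (1, 0)]   # every processor touches the other region

print(total_time(uma_access_time, local_workload))    # 400
print(total_time(uma_access_time, remote_workload))   # 400
print(total_time(numa_access_time, local_workload))   # 200
print(total_time(numa_access_time, remote_workload))  # 600
```

In this toy model, NUMA only pays off when data is placed close to the processor that uses it, which is consistent with the workload caveat above.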

UMA Vs. NUMA

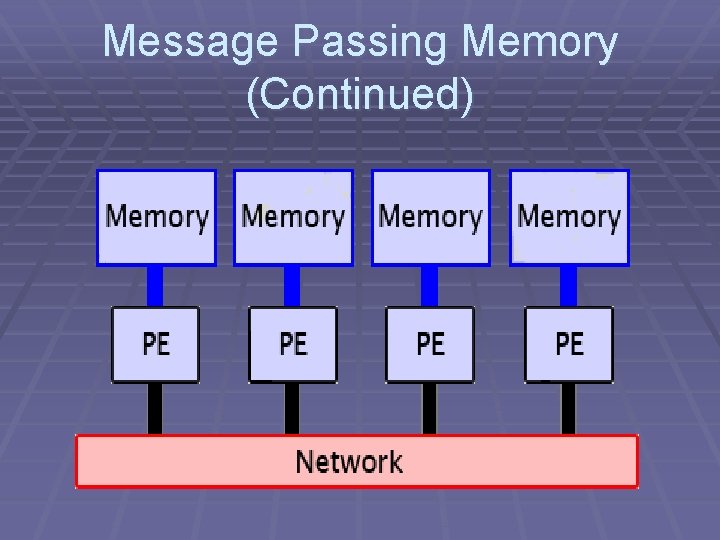

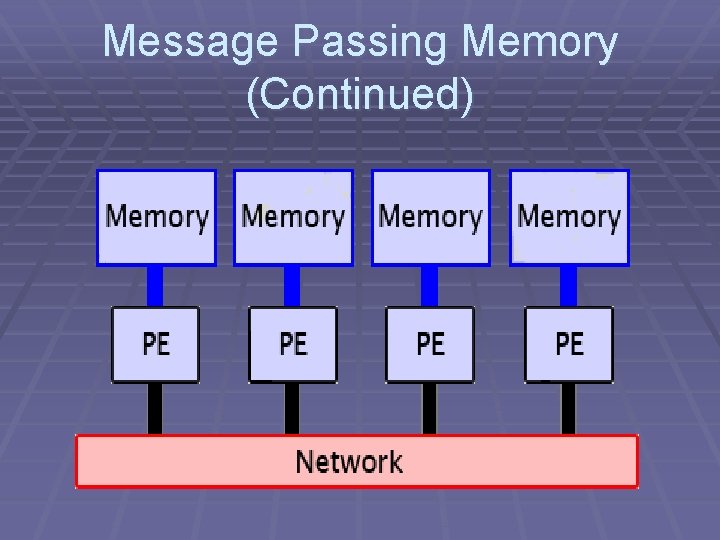

2. Memory Access Issues (Continued)

2.3 Message-Passing Memory

- These platforms consist of a set of processors, each with its own (exclusive) memory.
- Processors communicate by passing messages directly; coordination is achieved by sending and receiving messages.
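A small simulation may help make the message-passing idea concrete. In the sketch below, a worker thread stands in for a processor with exclusive memory, and two queues stand in for the communication links; `worker`, `to_worker`, and `from_worker` are hypothetical names, and a real message-passing platform would use separate machines or processes rather than threads.

```python
import queue
import threading


def worker(inbox, outbox):
    """A simulated processor: it only sees data that arrives as messages."""
    local_memory = []                 # private to this processor
    while True:
        message = inbox.get()         # receive
        if message is None:           # sentinel: no more messages
            break
        local_memory.append(message)
    outbox.put(sum(local_memory))     # send the result back


to_worker = queue.Queue()
from_worker = queue.Queue()
thread = threading.Thread(target=worker, args=(to_worker, from_worker))
thread.start()

for value in [1, 2, 3]:
    to_worker.put(value)              # send
to_worker.put(None)

result = from_worker.get()            # receive
thread.join()
print(result)  # 6
```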

Message Passing Memory (Continued)

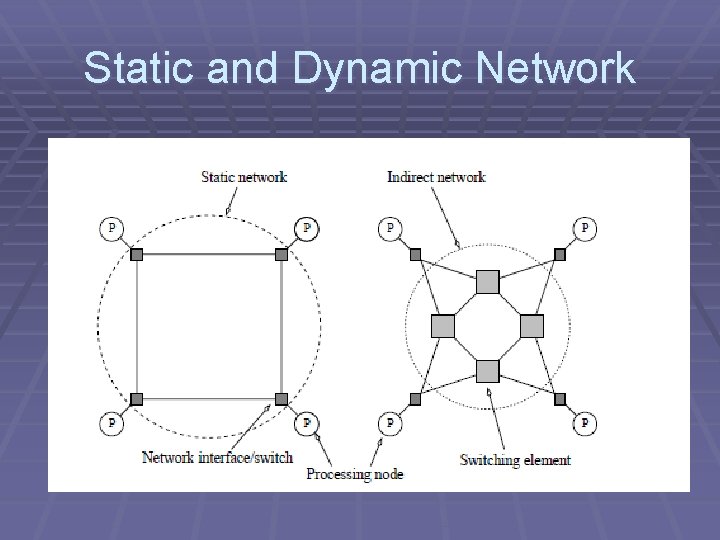

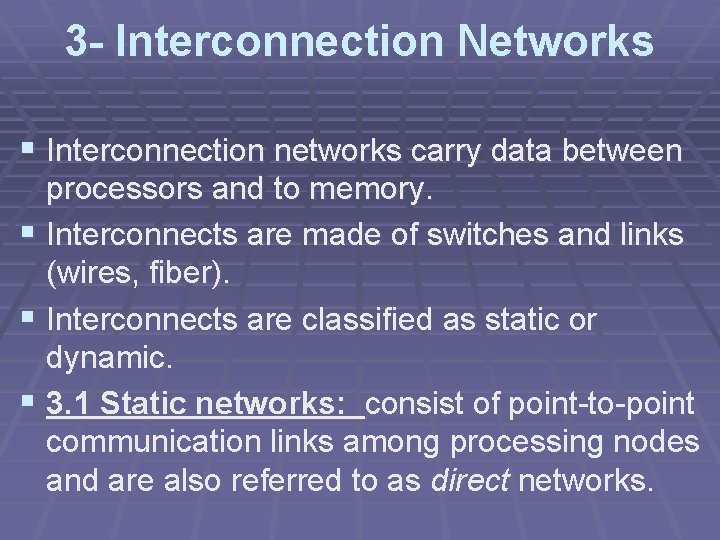

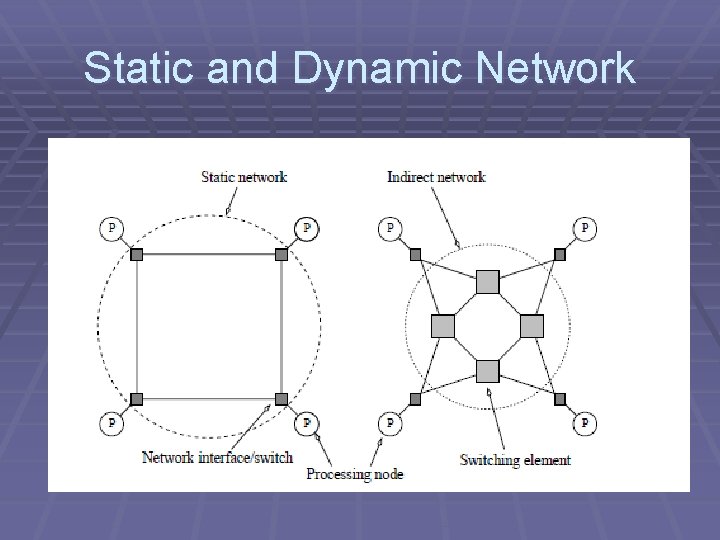

3. Interconnection Networks

- Interconnection networks carry data between processors and to memory.
- Interconnects are made of switches and links (wires, fiber).
- Interconnects are classified as static or dynamic.
- 3.1 Static networks consist of point-to-point communication links among processing nodes and are also referred to as direct networks.

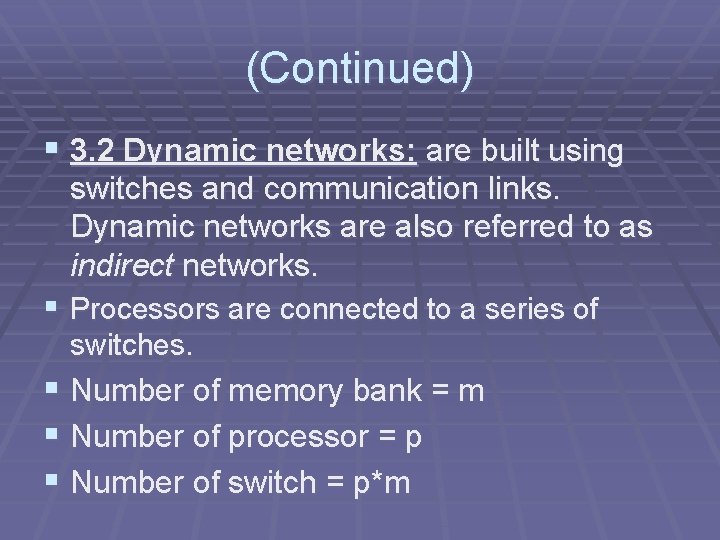

(Continued)

- 3.2 Dynamic networks are built using switches and communication links. They are also referred to as indirect networks.
- Processors are connected to a series of switches.
- Number of memory banks = m
- Number of processors = p
- Number of switches = p*m
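The switch count p*m corresponds to placing one switch at every processor/memory-bank crossing, as in a crossbar. The slides do not name the topology, so treat the crossbar reading, the row-major numbering, and the helper names `crossbar_switch_count` and `switch_for` as assumptions of this sketch.

```python
def crossbar_switch_count(p, m):
    """One switch per (processor, memory bank) pair, so p * m switches in total."""
    return p * m


def switch_for(processor, bank, m):
    """Index of the switch joining `processor` to `bank` in a row-major p x m grid."""
    return processor * m + bank


count = crossbar_switch_count(4, 8)   # 4 processors, 8 memory banks
print(count)                          # 32
print(switch_for(2, 3, 8))            # 19
```

The product form shows why this kind of network becomes expensive quickly: doubling both p and m quadruples the number of switches.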

Static and Dynamic Network

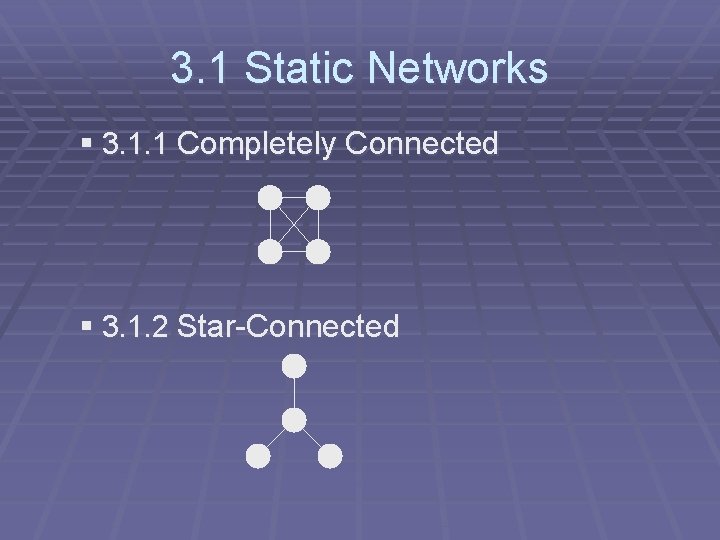

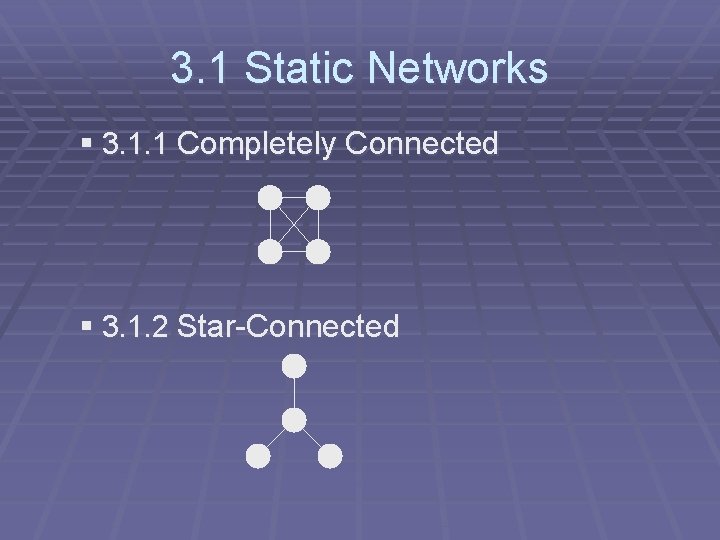

3.1 Static Networks

- 3.1.1 Completely Connected
- 3.1.2 Star-Connected

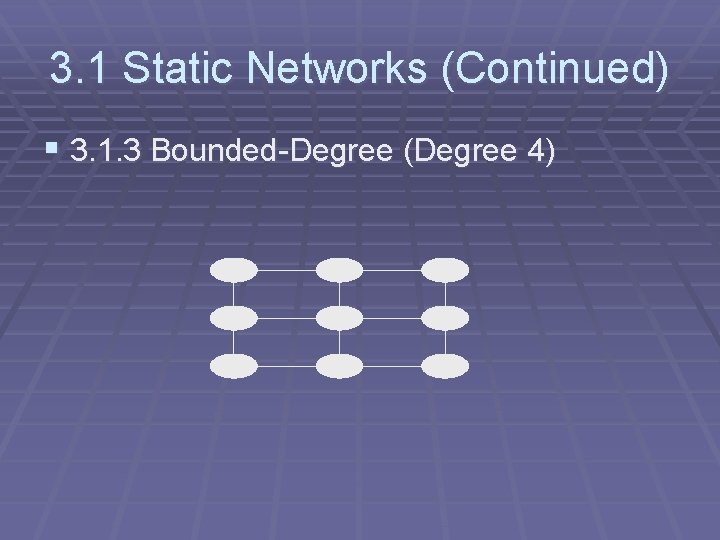

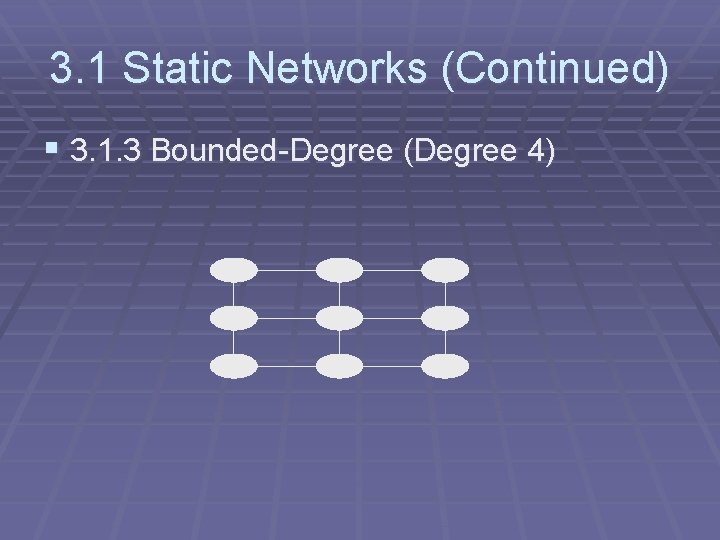

3.1 Static Networks (Continued)

- 3.1.3 Bounded-Degree (Degree 4)

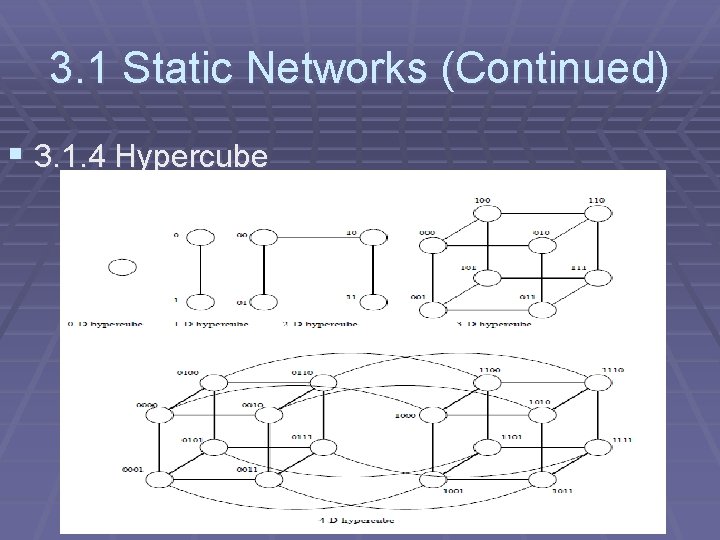

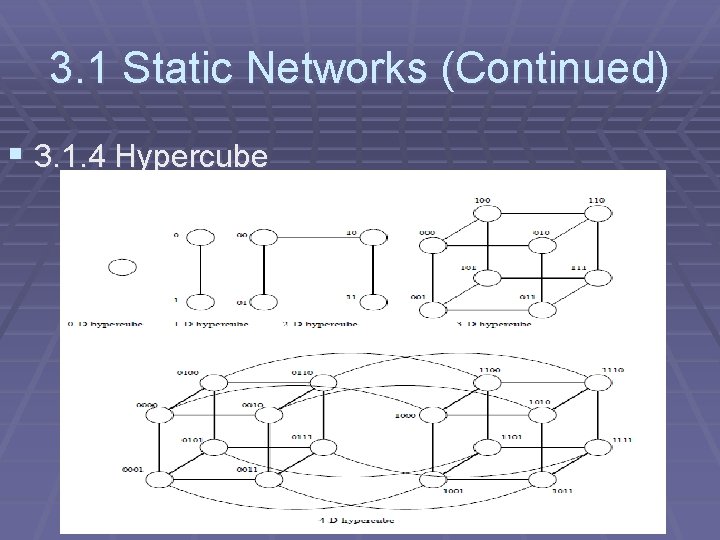

3.1 Static Networks (Continued)

- 3.1.4 Hypercube
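The static topologies listed above differ mainly in how many links they need. The sketch below uses the standard link counts for a completely connected network, a star, and a d-dimensional hypercube, and finds hypercube neighbors by flipping one bit of the node label. The function names are hypothetical, and the formulas are textbook results rather than something stated on the slides.

```python
def completely_connected_links(p):
    """Every pair of the p nodes has its own link."""
    return p * (p - 1) // 2


def star_links(p):
    """One central node linked to each of the other p - 1 nodes."""
    return p - 1


def hypercube_links(d):
    """A d-dimensional hypercube has 2**d nodes, each with d links (each link shared by two nodes)."""
    return d * 2 ** (d - 1)


def hypercube_neighbors(node, d):
    """Neighbors differ from `node` in exactly one of the d label bits."""
    return [node ^ (1 << bit) for bit in range(d)]


print(completely_connected_links(8))  # 28
print(star_links(8))                  # 7
print(hypercube_links(3))             # 12
print(hypercube_neighbors(5, 3))      # [4, 7, 1]
```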

The PRAM Model

- Parallel Random Access Machine
- Theoretical model for parallel machines.
- p processors with uniform access to a large memory bank
- MIMD
- UMA (uniform memory access): equal memory access time for any processor to any address

Memory Protocols

Depending on how simultaneous memory accesses are handled, PRAMs can be divided into four subclasses:

- Exclusive-Read Exclusive-Write (EREW)
- Exclusive-Read Concurrent-Write (ERCW)
- Concurrent-Read Exclusive-Write (CREW)
- Concurrent-Read Concurrent-Write (CRCW)

If concurrent write is allowed, we must decide which value to accept.
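To make the four subclasses concrete, the sketch below classifies one PRAM step by the addresses it reads and writes, and then resolves a concurrent write. The slides do not say which resolution rule to use; the three policies shown ("priority", "common", "arbitrary") are commonly described conventions, and the function names are hypothetical.

```python
from collections import Counter


def weakest_model(reads, writes):
    """Return the most restrictive PRAM subclass that still permits this step.

    `reads` and `writes` list the memory addresses touched by the
    processors during a single step (one entry per access).
    """
    concurrent_read = any(n > 1 for n in Counter(reads).values())
    concurrent_write = any(n > 1 for n in Counter(writes).values())
    return ("C" if concurrent_read else "E") + "R" + ("C" if concurrent_write else "E") + "W"


def resolve_concurrent_write(writes, policy="priority"):
    """Pick the value stored when several processors write to the same cell.

    `writes` is a list of (processor_id, value) pairs.
    """
    if policy == "priority":          # lowest processor id wins
        return min(writes, key=lambda w: w[0])[1]
    if policy == "common":            # allowed only if all values agree
        values = {value for _, value in writes}
        if len(values) != 1:
            raise ValueError("common-write policy requires identical values")
        return values.pop()
    if policy == "arbitrary":         # any writer may win; this sketch takes the first
        return writes[0][1]
    raise ValueError(f"unknown policy: {policy}")


print(weakest_model(reads=[0, 1], writes=[2, 3]))  # EREW
print(weakest_model(reads=[0, 0], writes=[2, 3]))  # CREW
print(weakest_model(reads=[0, 0], writes=[2, 2]))  # CRCW
print(resolve_concurrent_write([(3, 30), (1, 10), (2, 20)], "priority"))  # 10
```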

- Some algorithms for CREW will be discussed in the next session.