

PAIRS: Forming a Ranked List Using Mined, Pairwise Comparisons

Reed A. Coke, David C. Anastasiu, Byron J. Gao

PAIRS
- Pairwise Automatic Inferential Ranking System
- dmlab.cs.txstate.edu/pairs

The Problem
- Given a list of items and, optionally, an attribute to rank them by, how can an online system best generate a ranked list?
- What is the fastest way to get an accurate result?
- What is the most accurate way to get a fast result?

Previous Approaches
- NLP techniques are likely the most accurate
- They can be very costly in terms of time
  - Especially given the nonstandard grammar of Internet text
- PAIRS is an attempt to find a balance between speed and accuracy

Overall Architecture
1. Query Parsing (fast)
2. Comparison Location (slow)
3. Comparison Evaluation (fast)
4. Ranking (fast)

Query Parsing
- Separates the list into pairs
  - e.g., (A, B, C) -> (A, B), (A, C), (B, C)
- This leads to a rapid explosion in the number of searches: n items yield n(n-1)/2 pairs
- Each pair is then expanded into 4 queries
  - e.g., “A vs. B”, “A, B”, etc.
- Finally, the queries are sent alternately to Yahoo and Google using Abstract.Search 2
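The following is a minimal Python sketch of the pairing and query-expansion step. It assumes the items arrive as a plain list.

- Only two of the four per-pair query templates appear on the slide ("A vs. B" and "A, B"), so the sketch uses just those two.
- The names `make_pairs`, `make_queries`, `schedule` and `query_count` are hypothetical.
- The engine labels are plain strings, and no real search API is called. That includes Abstract.Search 2.

```python
from itertools import combinations, cycle

# Only these two templates appear on the slide; the remaining two of the four
# per-pair queries are not specified, so they are left out of this sketch.
KNOWN_TEMPLATES = ("{a} vs. {b}", "{a}, {b}")


def make_pairs(items):
    """(A, B, C) -> (A, B), (A, C), (B, C): n*(n-1)/2 unordered pairs."""
    return list(combinations(items, 2))


def make_queries(pair, templates=KNOWN_TEMPLATES):
    """Expand one pair into one query string per template."""
    a, b = pair
    return [template.format(a=a, b=b) for template in templates]


def schedule(items, engines=("yahoo", "google"), templates=KNOWN_TEMPLATES):
    """Pair up the items, expand each pair, and alternate queries between engines.

    The engine names are plain labels (hypothetical); no search API is called here.
    """
    engine_cycle = cycle(engines)
    return [
        (next(engine_cycle), query)
        for pair in make_pairs(items)
        for query in make_queries(pair, templates)
    ]


def query_count(n_items, queries_per_pair=4):
    """Total number of searches for n items: grows quadratically with n."""
    return n_items * (n_items - 1) // 2 * queries_per_pair


if __name__ == "__main__":
    for engine, query in schedule(["A", "B", "C"]):
        print(f"{engine:>6}: {query}")
    print(query_count(10))  # 180
```

`query_count` makes the explosion concrete. With four queries per pair, 10 items already produce 45 pairs and 180 searches.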

Comparison Location
- Text is retrieved from each unique URL in the search results
- The text is then sent to a Java program that tags the part of speech of each word
- Line by line, the program determines whether each sentence is comparative
- Experimental results for Comparison Location:
  - PAIRS keyword list: 50% recall, 80% precision
  - Ganapathibhotla & Liu list [3]: 97.7% recall, 32% precision

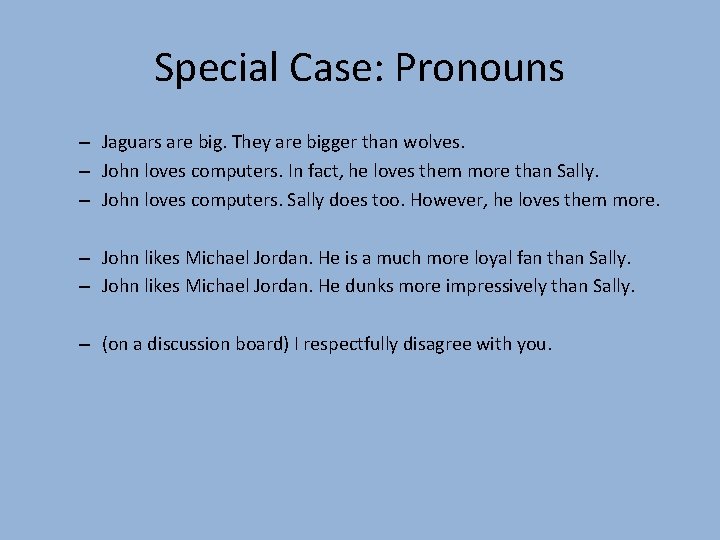

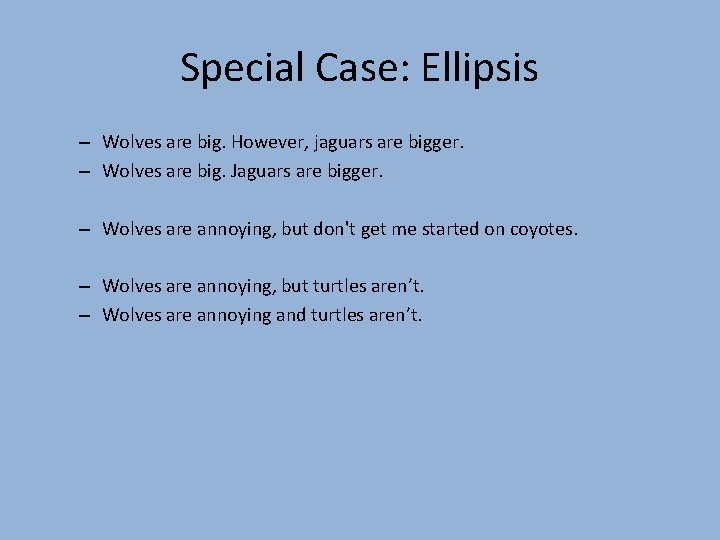
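The two keyword lists above trade precision for recall. One common way to compare such trade-offs is the F-measure, a weighted harmonic mean of precision and recall. It is not part of the original evaluation. The sketch below only recomputes it from the reported figures, and the helper name `f_beta` is hypothetical.

```python
def f_beta(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall (F1 when beta == 1)."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# (precision, recall) as reported on the Comparison Location slide
REPORTED = {
    "PAIRS keyword list": (0.80, 0.50),
    "Ganapathibhotla & Liu list": (0.32, 0.977),
}

if __name__ == "__main__":
    for name, (p, r) in REPORTED.items():
        print(f"{name}: F1 = {f_beta(p, r):.3f}, F2 = {f_beta(p, r, beta=2):.3f}")
```

With β = 1, the PAIRS list scores higher (about 0.615 vs. 0.482). Weighting recall more heavily (β = 2) reverses the order (about 0.541 vs. 0.693). So which list is "better" depends on how costly a missed comparison is relative to a false one.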

Comparison Location (continued)
- A sentence is considered comparative if it:
  - Contains a comparative word
  - Contains both (stemmed) nouns of the pair
- Special cases:
  - Pronouns and ellipsis: keep track of the “relevancy” of previously seen nouns
  - Phrases
- Any comparison found is evaluated immediately
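Here is a rough Python sketch of the two criteria for a comparative sentence. PAIRS relies on the Stanford POS tagger and its own keyword list, and neither is reproduced here.

- `COMPARATIVE_WORDS` is a small hypothetical list.
- `naive_stem` is a crude stand-in for a real stemmer. It only drops one trailing "s", so irregular plurals such as "wolves" are missed.
- The sketch checks one sentence in isolation. A case like "Jaguars are big. They are bigger than wolves." would need the pronoun handling described above.

```python
import re

# Hypothetical keyword list; the actual PAIRS list is not given on the slide.
COMPARATIVE_WORDS = {"better", "worse", "more", "less", "bigger", "smaller", "prefer"}


def naive_stem(word):
    """Crude stand-in for a real stemmer: lowercase and drop one trailing 's'."""
    word = word.lower()
    if word.endswith("s") and len(word) > 3:
        return word[:-1]
    return word


def is_comparative(sentence, pair, comparative_words=COMPARATIVE_WORDS):
    """Apply both criteria: a comparative word, and both (stemmed) nouns of the pair."""
    words = re.findall(r"[a-z]+", sentence.lower())
    stems = {naive_stem(w) for w in words}
    has_comparative_word = any(w in comparative_words for w in words)
    has_both_nouns = all(naive_stem(noun) in stems for noun in pair)
    return has_comparative_word and has_both_nouns


if __name__ == "__main__":
    print(is_comparative("Jaguars are bigger than lions.", ("jaguar", "lion")))  # True
    print(is_comparative("Jaguars and lions are cats.", ("jaguar", "lion")))     # False
```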

Special Case: Pronouns
- Jaguars are big. They are bigger than wolves.
- John loves computers. In fact, he loves them more than Sally.
- John loves computers. Sally does too. However, he loves them more.
- John likes Michael Jordan. He is a much more loyal fan than Sally.
- John likes Michael Jordan. He dunks more impressively than Sally.
- (on a discussion board) I respectfully disagree with you.

Special Case: Ellipsis
- Wolves are big. However, jaguars are bigger.
- Wolves are big. Jaguars are bigger.
- Wolves are annoying, but don't get me started on coyotes.
- Wolves are annoying, but turtles aren't.
- Wolves are annoying and turtles aren't.

Relevance Dictionary
- Keep track of all nouns seen so far
- Each noun's score is affected by how recently and how often it has been mentioned

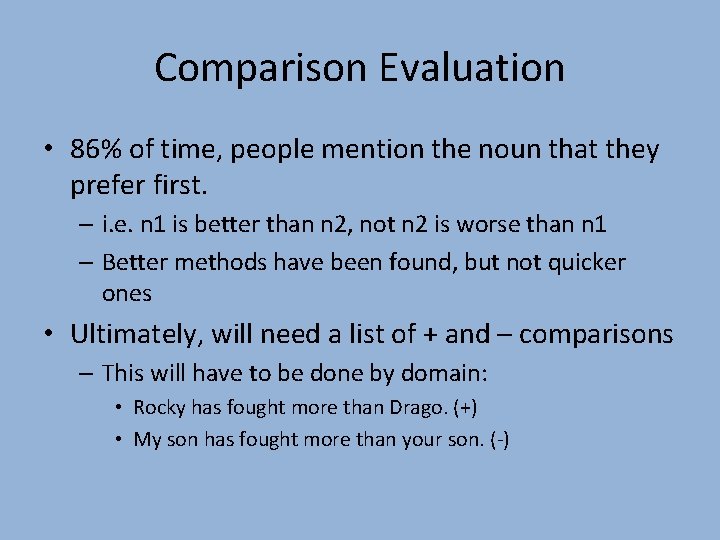
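The Relevance Dictionary can be sketched as a decaying score table. The slide only says that scores depend on recency and frequency. The specific rule below is an assumption: every score is multiplied by a hypothetical `decay` factor per sentence, and each mention adds 1. The sketch also ignores gender and number agreement, so it cannot tell "he" from "them" in the pronoun examples.

```python
from collections import defaultdict


class RelevanceDictionary:
    """Tracks nouns seen so far; scores grow with frequency and fade with distance.

    The decay rule is an assumption; the slide only names recency and frequency.
    """

    def __init__(self, decay=0.5):
        self.decay = decay
        self.scores = defaultdict(float)

    def observe_sentence(self, nouns):
        # Recency: every earlier mention fades when a new sentence is read.
        for noun in list(self.scores):
            self.scores[noun] *= self.decay
        # Frequency: each mention in the current sentence adds weight.
        for noun in nouns:
            self.scores[noun] += 1.0

    def most_relevant(self, exclude=()):
        """Best candidate referent for a pronoun or an elided comparand, or None."""
        candidates = {n: s for n, s in self.scores.items() if n not in exclude}
        if not candidates:
            return None
        return max(candidates, key=candidates.get)


if __name__ == "__main__":
    rd = RelevanceDictionary()
    rd.observe_sentence(["wolf"])    # "Wolves are big."
    rd.observe_sentence(["jaguar"])  # "However, jaguars are bigger."
    # The comparative in sentence 2 names only jaguars, so the elided
    # comparand is taken to be the most relevant other noun.
    print(rd.most_relevant(exclude={"jaguar"}))  # wolf
```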

Comparison Evaluation
- 86% of the time, people mention the noun they prefer first
  - e.g., “n1 is better than n2”, not “n2 is worse than n1”
  - Better methods have been found, but not quicker ones
- Ultimately, a list of positive (+) and negative (-) comparisons will be needed
  - This will have to be built for each domain:
    - Rocky has fought more than Drago. (+)
    - My son has fought more than your son. (-)
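Below is a minimal sketch of the first-mention heuristic, with a per-domain list of negative phrases that flips the default. The domain names, the phrase lists (including the made-up "lost more") and the whole-word matching rule are all hypothetical. The slide does not say how the + and - lists would be represented.

```python
import re

# Hypothetical per-domain phrase lists: a match marks the comparison as "-",
# i.e. the first-mentioned noun is the *less* preferred one.
NEGATIVE_PHRASES = {
    "boxing": {"worse", "lost more"},
    "parenting": {"worse", "fought more"},
}


def _position(text, noun):
    """Index of the first whole-word occurrence of noun in text, or -1."""
    match = re.search(r"\b" + re.escape(noun.lower()) + r"\b", text)
    return match.start() if match else -1


def evaluate(sentence, noun_a, noun_b, negative_phrases=frozenset()):
    """Return (preferred, other) for a comparative sentence mentioning both nouns."""
    text = sentence.lower()
    pos_a, pos_b = _position(text, noun_a), _position(text, noun_b)
    if pos_a < 0 or pos_b < 0:
        raise ValueError("both nouns must appear in the sentence")
    first, second = (noun_a, noun_b) if pos_a <= pos_b else (noun_b, noun_a)
    if any(phrase in text for phrase in negative_phrases):
        return second, first  # "-" comparison: the first-mentioned noun loses
    return first, second      # default: the first-mentioned noun is preferred


if __name__ == "__main__":
    print(evaluate("Rocky has fought more than Drago.", "Drago", "Rocky",
                   NEGATIVE_PHRASES["boxing"]))     # ('Rocky', 'Drago')
    print(evaluate("My son has fought more than your son.", "my son", "your son",
                   NEGATIVE_PHRASES["parenting"]))  # ('your son', 'my son')
```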

Creating the Ranking
- Create a graph with weighted edges
- Brute-force the score of the path from each node to every other node within its connected component
- This results in a ranked list for each component
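The slide leaves the edge weights and the path score unspecified. The sketch below is one plausible reading, and every step of it is an assumption:

- Each evaluated comparison adds 1 to a directed edge from the preferred noun to the other.
- A path's score is the sum of its edge weights.
- A node's score is the sum of its best path scores to every other node in its component. "Best" is found by brute force over all simple paths.
- Nodes are sorted by that score.

All function names are hypothetical. Enumerating simple paths is exponential in the worst case, so this only suits small graphs.

```python
def build_graph(comparisons):
    """Directed graph: graph[winner][loser] = number of times winner was preferred."""
    graph = {}
    for winner, loser in comparisons:
        graph.setdefault(winner, {})
        graph.setdefault(loser, {})
        graph[winner][loser] = graph[winner].get(loser, 0) + 1
    return graph


def components(graph):
    """Connected components, ignoring edge direction."""
    neighbours = {node: set() for node in graph}
    for u, edges in graph.items():
        for v in edges:
            neighbours[u].add(v)
            neighbours[v].add(u)
    seen, result = set(), []
    for start in graph:
        if start in seen:
            continue
        seen.add(start)
        stack, component = [start], []
        while stack:
            node = stack.pop()
            component.append(node)
            for nxt in neighbours[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        result.append(component)
    return result


def best_path_weight(graph, src, dst, visited=frozenset()):
    """Brute force: max summed weight over all simple directed paths src -> dst (0 if none)."""
    visited = visited | {src}
    best = 0
    for nxt, weight in graph[src].items():
        if nxt in visited:
            continue
        if nxt == dst:
            best = max(best, weight)
        else:
            rest = best_path_weight(graph, nxt, dst, visited)
            if rest > 0:  # only count detours that actually reach dst
                best = max(best, weight + rest)
    return best


def rank(comparisons):
    """One ranked list per connected component, highest total path score first."""
    graph = build_graph(comparisons)
    rankings = []
    for component in components(graph):
        score = {
            u: sum(best_path_weight(graph, u, v) for v in component if v != u)
            for u in component
        }
        rankings.append(sorted(component, key=lambda node: (-score[node], node)))
    return rankings


if __name__ == "__main__":
    mined = [("A", "B"), ("A", "B"), ("B", "C"), ("D", "E")]
    print(rank(mined))  # [['A', 'B', 'C'], ['D', 'E']]
```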

Problems
- The system is still slow
- Query parsing needs experiments to determine how many queries are needed per pair
- The system is untested as a whole; it must be tested on a closed set of documents to determine overall precision/recall
- Comparison evaluation could be more graceful
- The graph traversal algorithm could be better

Applications
- PAIRS has several interesting applications:
  - College decisions
  - Product comparison
  - Any sort of popularity contest
  - Taking a majority vote

Future Research
- Polishing each component of PAIRS
- Testing PAIRS on a closed system
- Bridge.Finder

Conclusion
- PAIRS was built from the ground up; the only prebuilt component was the Stanford POS tagger [9, 10]
- Things I learned about research:
  - How to formulate a research topic
  - How to research previous work on a topic
  - Experimentation
  - How to write a technical report
  - How to give a presentation


References
- [1] X. Ding and B. Liu. The utility of linguistic rules in opinion mining. In Proceedings of the 30th annual international ACM SIGIR conference on Research and development in information retrieval, SIGIR '07, pages 811–812, New York, NY, USA, 2007. ACM.
- [2] X. Ding, B. Liu, and P. S. Yu. A holistic lexicon-based approach to opinion mining. In Proceedings of the international conference on Web search and web data mining, WSDM '08, pages 231–240, New York, NY, USA, 2008. ACM.
- [3] M. Ganapathibhotla and B. Liu. Mining opinions in comparative sentences. In Proceedings of the 22nd International Conference on Computational Linguistics - Volume 1, COLING '08, pages 241–248, Stroudsburg, PA, USA, 2008. Association for Computational Linguistics.
- [4] A. Go, R. Bhayani, and L. Huang. Twitter sentiment classification using distant supervision. Technical report, Stanford University, 2010.
- [5] M. Hu and B. Liu. Mining and summarizing customer reviews. In Proceedings of the tenth ACM SIGKDD international conference on Knowledge discovery and data mining, KDD '04, pages 168–177, New York, NY, USA, 2004. ACM.
- [6] N. Jindal and B. Liu. Identifying comparative sentences in text documents. In Proceedings of the 29th annual international ACM SIGIR conference on Research and development in information retrieval, SIGIR '06, pages 244–251, New York, NY, USA, 2006. ACM.
- [7] N. Jindal and B. Liu. Mining comparative sentences and relations. In AAAI'06, 2006.
- [8] B. Liu. Web Data Mining. Springer, 2008.
- [9] K. Toutanova, D. Klein, C. D. Manning, and Y. Singer. Feature-rich part-of-speech tagging with a cyclic dependency network. In Proceedings of the 2003 Conference of the North American Chapter of the Association for Computational Linguistics on Human Language Technology - Volume 1, NAACL '03, pages 173–180, Stroudsburg, PA, USA, 2003. Association for Computational Linguistics.
- [10] K. Toutanova and C. D. Manning. Enriching the knowledge sources used in a maximum entropy part-of-speech tagger. In Proceedings of the 2000 Joint SIGDAT conference on Empirical methods in natural language processing and very large corpora: held in conjunction with the 38th Annual Meeting of the Association for Computational Linguistics - Volume 13, EMNLP '00, pages 63–70, Stroudsburg, PA, USA, 2000. Association for Computational Linguistics.

Questions?