Paging Algorithms Vivek Pai Kai Li Princeton University

- Slides: 22


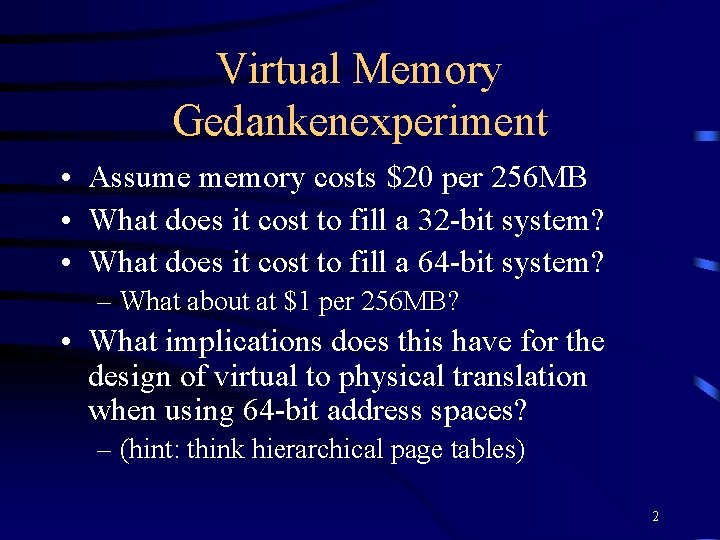

Virtual Memory Gedankenexperiment

- Assume memory costs $20 per 256 MB
- What does it cost to fill a 32-bit system?
- What does it cost to fill a 64-bit system?
  - What about at $1 per 256 MB?
- What implications does this have for the design of virtual-to-physical translation when using 64-bit address spaces?
  - (Hint: think hierarchical page tables)
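The arithmetic behind the thought experiment can be checked with a few lines of Python. This is a sketch that assumes byte-addressable memory and that "filling" a system means populating every byte of its address space; the function name `fill_cost` is made up for illustration.

```python
CHUNK_BYTES = 256 * 2**20  # 256 MB = 2**28 bytes


def fill_cost(address_bits, dollars_per_chunk):
    """Cost to populate every byte of a 2**address_bits address space."""
    chunks = 2**address_bits // CHUNK_BYTES
    return chunks * dollars_per_chunk


print(fill_cost(32, 20))  # 16 chunks      -> 320
print(fill_cost(64, 20))  # 2**36 chunks   -> 1374389534720 (about $1.37 trillion)
print(fill_cost(64, 1))   # 2**36 chunks   -> 68719476736 (about $68.7 billion)
```

A 64-bit address space is therefore almost entirely unpopulated in practice, which is why a flat page table covering all of it is unattractive.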

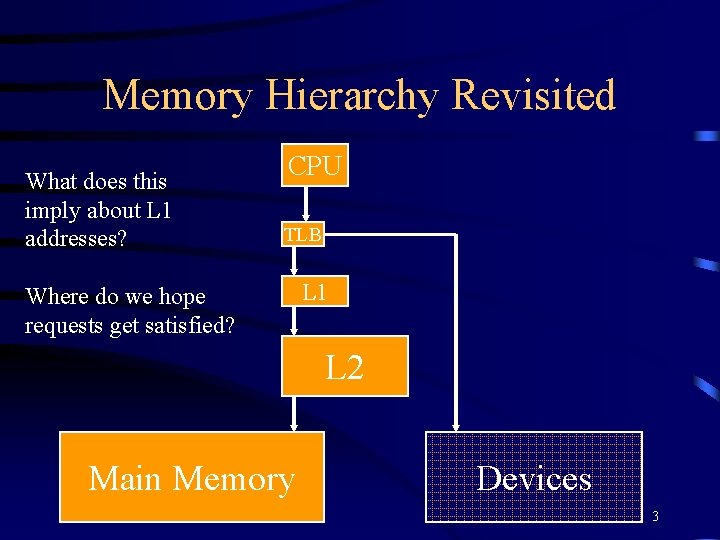

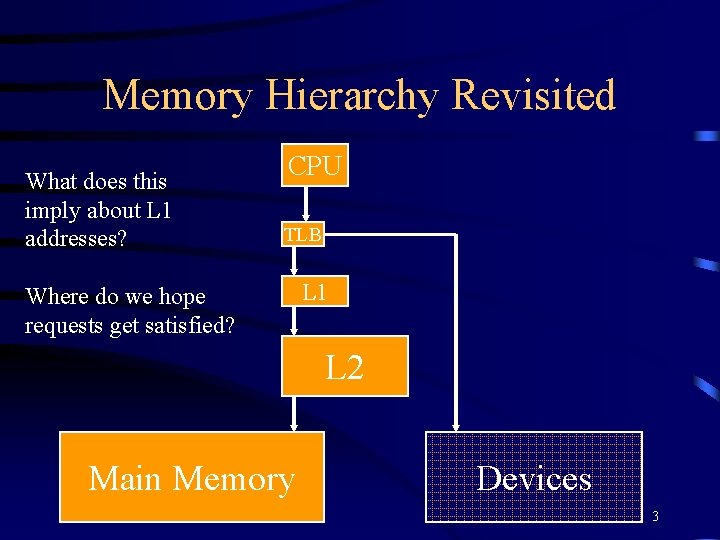

Memory Hierarchy Revisited

[Figure: hierarchy of CPU, TLB, L1, L2, Main Memory, Devices]

- What does this imply about L1 addresses?
- Where do we hope requests get satisfied?

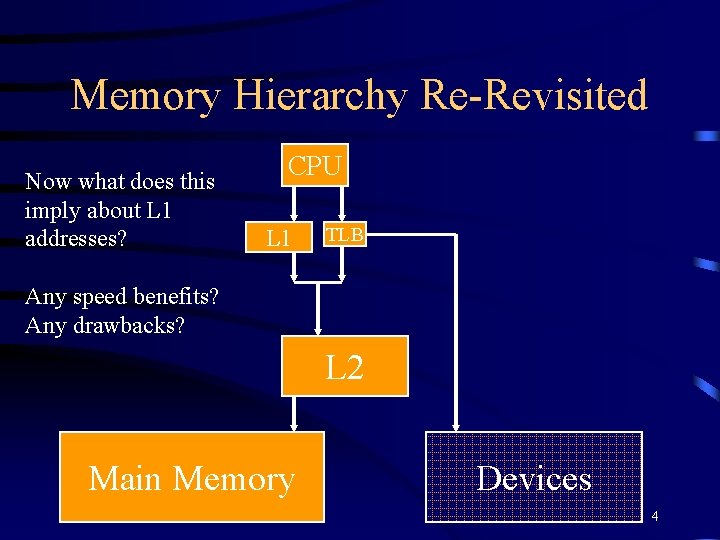

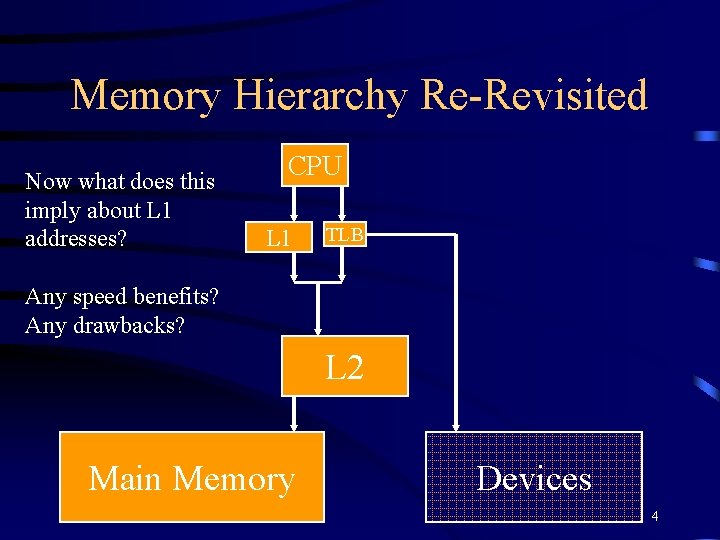

Memory Hierarchy Re-Revisited

[Figure: hierarchy of CPU, L1, TLB, L2, Main Memory, Devices]

- Now what does this imply about L1 addresses?
- Any speed benefits?
- Any drawbacks?

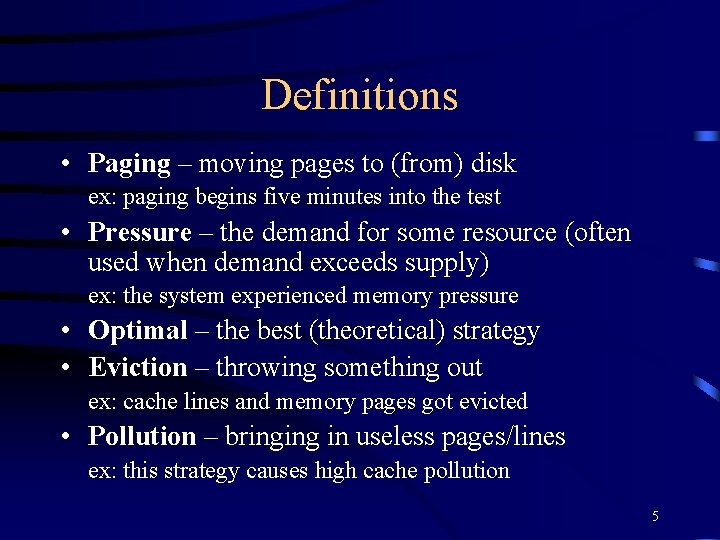

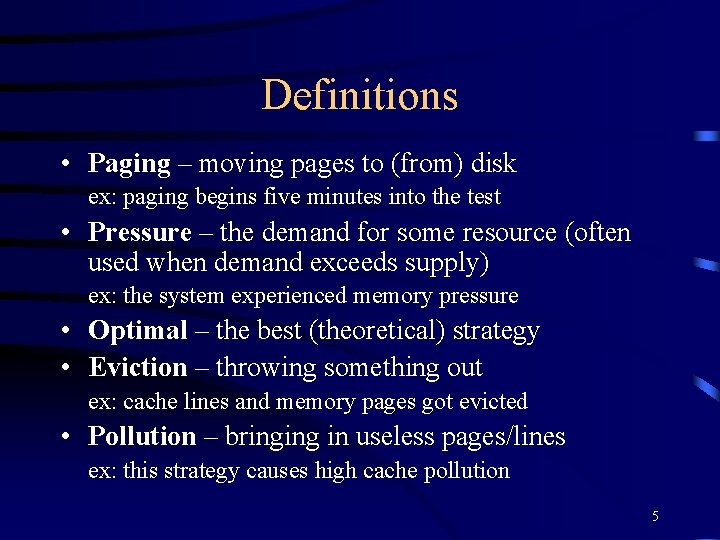

Definitions

- Paging: moving pages to (from) disk
  - ex: paging begins five minutes into the test
- Pressure: the demand for some resource (often used when demand exceeds supply)
  - ex: the system experienced memory pressure
- Optimal: the best (theoretical) strategy
- Eviction: throwing something out
  - ex: cache lines and memory pages got evicted
- Pollution: bringing in useless pages/lines
  - ex: this strategy causes high cache pollution

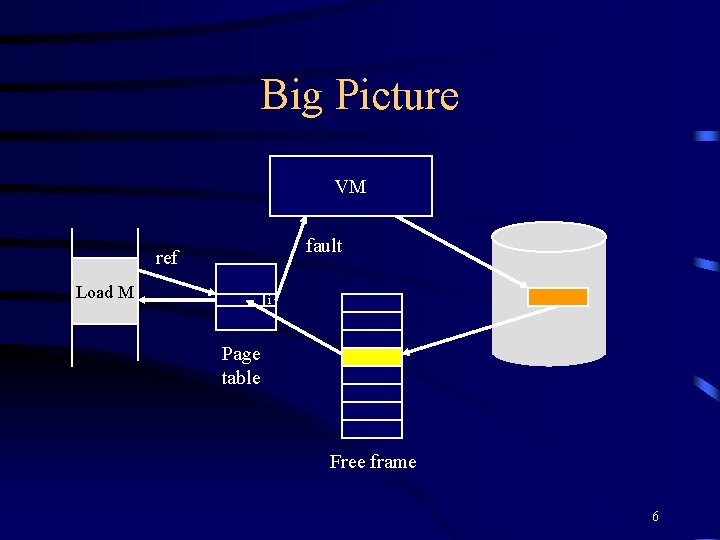

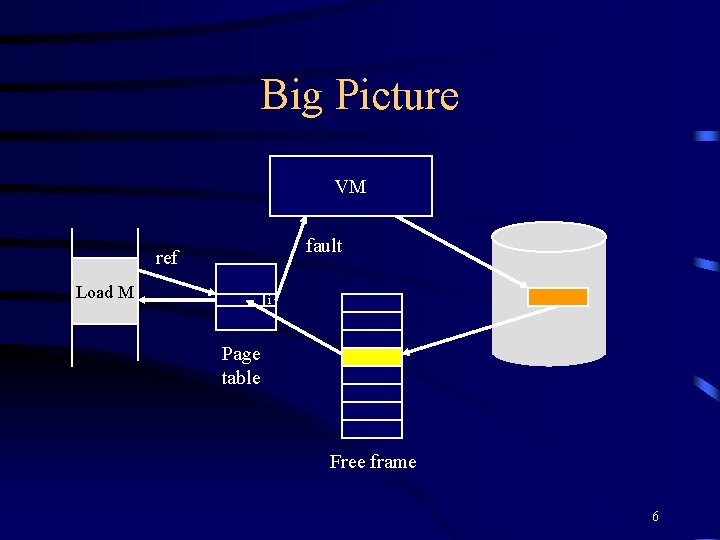

Big Picture

[Figure labels: VM, fault, ref, load M_i, page table, free frame]

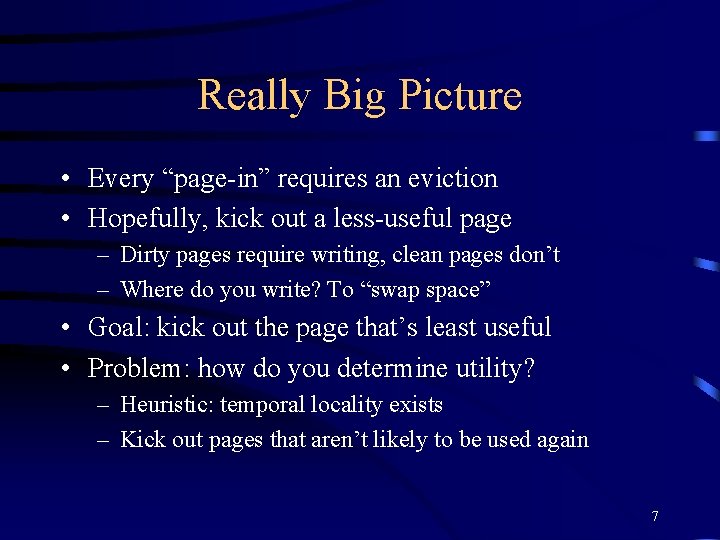

Really Big Picture

- Once memory is full, every "page-in" requires an eviction
- Hopefully, kick out a less-useful page
  - Dirty pages require writing, clean pages don't
  - Where do you write? To "swap space"
- Goal: kick out the page that's least useful
- Problem: how do you determine utility?
  - Heuristic: temporal locality exists
  - Kick out pages that aren't likely to be used again

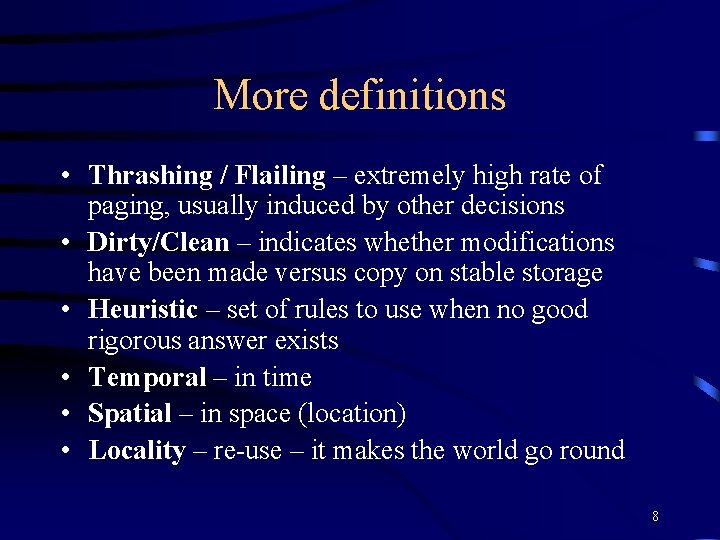

More definitions

- Thrashing / Flailing: extremely high rate of paging, usually induced by other decisions
- Dirty/Clean: indicates whether modifications have been made relative to the copy on stable storage
- Heuristic: set of rules to use when no good rigorous answer exists
- Temporal: in time
- Spatial: in space (location)
- Locality: re-use; it makes the world go round

What Makes This Hard?

- Perfect reference stream hard to get
  - Every memory access would need bookkeeping
- Imperfect information available, cheaply
  - Play around with PTE permissions, info
- Overhead is a bad idea
  - If no memory pressure, ideally no bookkeeping
  - In other words, make the common case fast

Steps in Paging

- Data structures
  - A list of unused page frames
  - Data structure to map a frame to its pid/virtual address
- On a page fault
  - Get an unused frame or a used frame
  - If the frame is used
    - If it has been modified, write it to disk
    - Invalidate its current PTE and TLB entry
  - Load the new page from disk
  - Update the faulting PTE and invalidate its TLB entry
  - Restart the faulting instruction
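The steps above can be sketched as a toy simulation. Everything here is an illustrative assumption rather than a real kernel interface: the class `ToyVM`, its field names, the use of a set to stand in for the TLB, and the choice of the oldest loaded frame as the victim. Disk I/O is not modeled; write-backs are only logged.

```python
from collections import deque


class ToyVM:
    """Toy model of the page-fault path (hypothetical names throughout)."""

    def __init__(self, num_frames):
        self.free_frames = deque(range(num_frames))  # list of unused page frames
        self.frame_owner = {}     # frame -> (pid, vpn)
        self.page_table = {}      # (pid, vpn) -> {"frame": int, "dirty": bool}
        self.tlb = set()          # (pid, vpn) pairs with a cached translation
        self.used_order = deque() # frames in load order (victim choice is an assumption)
        self.disk_writes = []     # log of pages written back to swap

    def handle_fault(self, pid, vpn):
        if self.free_frames:
            frame = self.free_frames.popleft()       # get an unused frame
        else:
            frame = self.used_order.popleft()        # or take a used one
            victim = self.frame_owner[frame]
            if self.page_table[victim]["dirty"]:
                self.disk_writes.append(victim)      # modified: write it to disk
            del self.page_table[victim]              # invalidate the victim's PTE
            self.tlb.discard(victim)                 # and its TLB entry
        # Loading the new page from disk would happen here.
        self.frame_owner[frame] = (pid, vpn)
        self.page_table[(pid, vpn)] = {"frame": frame, "dirty": False}
        self.tlb.discard((pid, vpn))                 # drop any stale translation
        self.used_order.append(frame)
        return frame

    def access(self, pid, vpn, write=False):
        """Return True if the access faulted."""
        key = (pid, vpn)
        faulted = key not in self.page_table
        if faulted:
            self.handle_fault(pid, vpn)
        # "Restart the faulting instruction": the access now succeeds.
        self.tlb.add(key)
        if write:
            self.page_table[key]["dirty"] = True
        return faulted
```

With two frames, writing page 0, reading page 1, and then touching page 2 evicts page 0 and logs one write-back, because page 0 was dirty.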

Optimal or MIN

- Algorithm:
  - Replace the page that won't be used for the longest time
- Pros
  - Minimal page faults
  - This is an off-line algorithm for performance analysis
- Cons
  - No on-line implementation
- Also called Belady's Algorithm
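Because MIN needs the whole future reference string, it can only be run off-line on a recorded trace. A minimal sketch, assuming the trace is a Python list and using the hypothetical function name `min_faults`:

```python
def min_faults(refs, num_frames):
    """Count page faults under MIN for a fully known reference list."""
    frames = set()
    faults = 0
    for i, page in enumerate(refs):
        if page in frames:
            continue
        faults += 1
        if len(frames) == num_frames:
            def next_use(p):
                # Position of the next reference to p, or infinity if none.
                try:
                    return refs.index(p, i + 1)
                except ValueError:
                    return float("inf")
            # Evict the resident page whose next use is farthest away.
            frames.remove(max(frames, key=next_use))
        frames.add(page)
    return faults


refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(min_faults(refs, 3))  # 7
print(min_faults(refs, 4))  # 6
```

The fault counts give a lower bound against which on-line policies can be compared on the same trace.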

Not Recently Used (NRU)

- Algorithm
  - Randomly pick a page from the following (in order)
    - Not referenced and not modified
    - Not referenced and modified
    - Referenced and not modified
    - Referenced and modified
- Pros
  - Easy to implement
- Cons
  - Not very good performance, takes time to classify
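The class ordering can be expressed directly. This sketch assumes the referenced and modified bits are available as a dictionary from page to a `(referenced, modified)` pair; the function name `nru_victim` is hypothetical.

```python
import random

# Classes in the order NRU prefers to evict from: (referenced, modified).
NRU_ORDER = [(0, 0), (0, 1), (1, 0), (1, 1)]


def nru_victim(page_bits, rng=random):
    """Pick a random page from the lowest non-empty class."""
    for cls in NRU_ORDER:
        candidates = [p for p, bits in page_bits.items() if tuple(bits) == cls]
        if candidates:
            return rng.choice(candidates)
    raise ValueError("no resident pages")
```

The classification pass touches every resident page, which is the "takes time to classify" cost noted on the slide.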

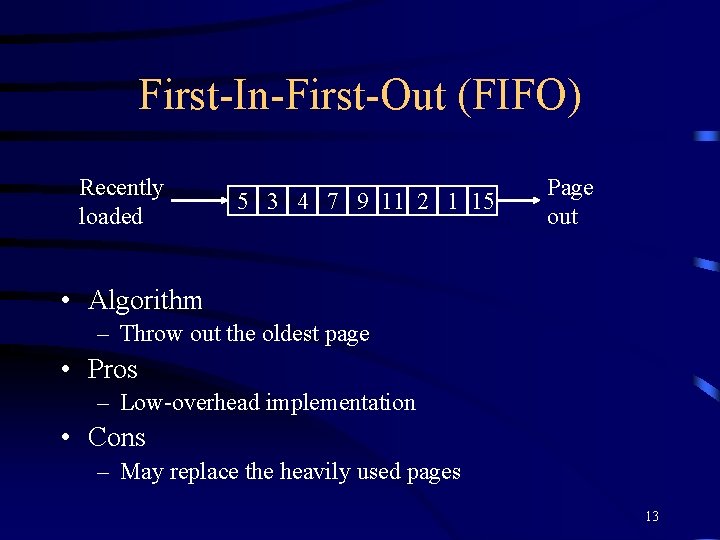

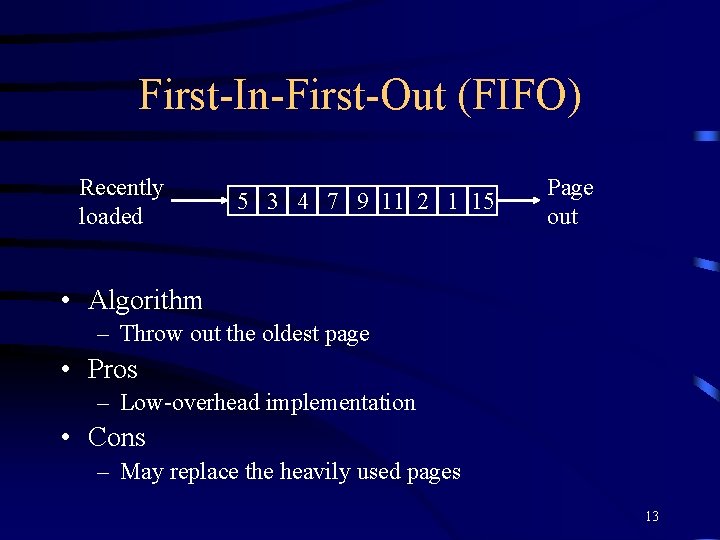

First-In-First-Out (FIFO)

[Figure: queue from "Recently loaded" to "Page out": 5, 3, 4, 7, 9, 11, 2, 1, 15]

- Algorithm
  - Throw out the oldest page
- Pros
  - Low-overhead implementation
- Cons
  - May replace the heavily used pages

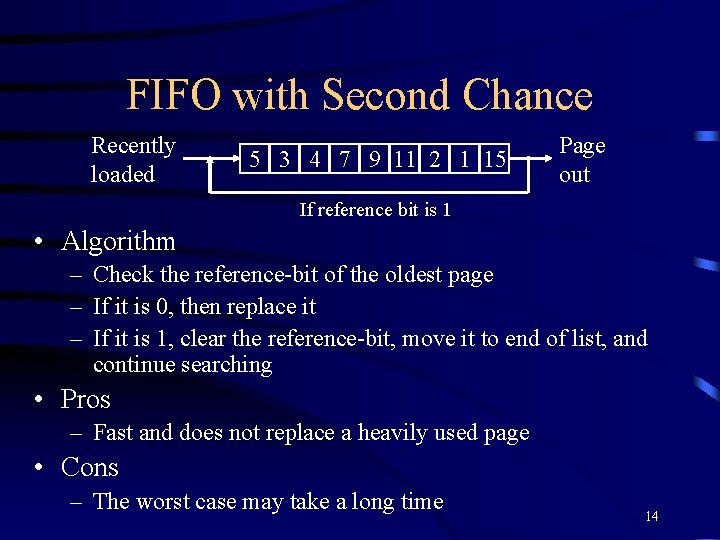

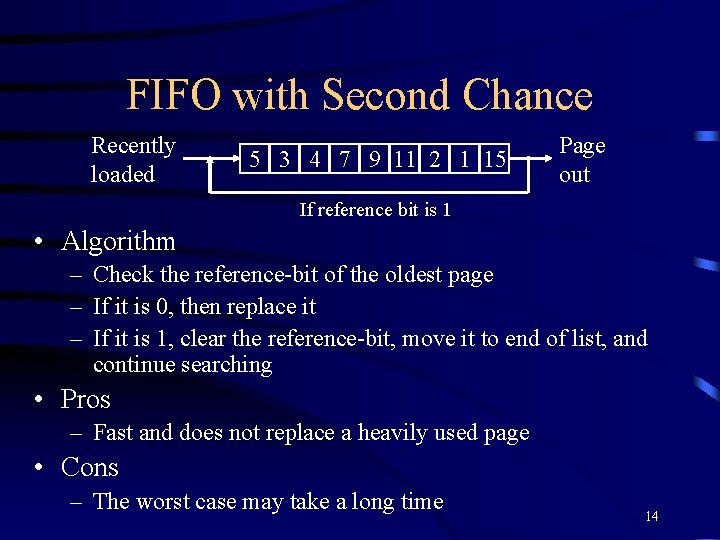

FIFO with Second Chance

[Figure: queue from "Recently loaded" to "Page out": 5, 3, 4, 7, 9, 11, 2, 1, 15; an arrow labeled "If reference bit is 1" leads from the oldest page back to the recently loaded end]

- Algorithm
  - Check the reference bit of the oldest page
  - If it is 0, then replace it
  - If it is 1, clear the reference bit, move it to the end of the list, and continue searching
- Pros
  - Fast and does not replace a heavily used page
- Cons
  - The worst case may take a long time
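The search loop above is short enough to sketch. The assumptions here are that the FIFO list is a `deque` with the oldest page on the left and that reference bits live in a dictionary; the function name `second_chance_victim` is made up for illustration.

```python
from collections import deque


def second_chance_victim(queue, ref_bit):
    """Remove and return the victim; mutates queue and ref_bit."""
    while True:
        page = queue.popleft()      # oldest page
        if ref_bit[page] == 0:
            return page             # not referenced recently: replace it
        ref_bit[page] = 0           # referenced: clear the bit ...
        queue.append(page)          # ... and move it to the end of the list


queue = deque([15, 1, 2])           # 15 is the oldest page
ref_bit = {15: 1, 1: 0, 2: 1}
print(second_chance_victim(queue, ref_bit))  # 1
print(list(queue))                           # [2, 15]
```

If every reference bit is 1, the loop clears all of them and walks the whole list before returning the original oldest page, which is the worst case mentioned on the slide.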

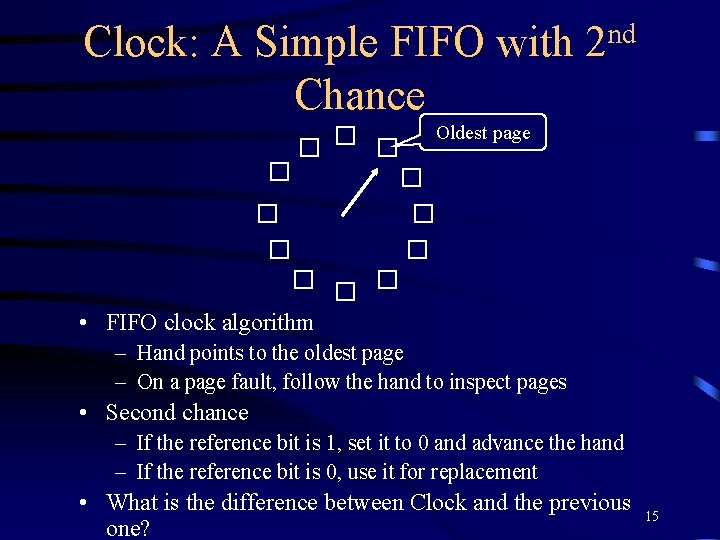

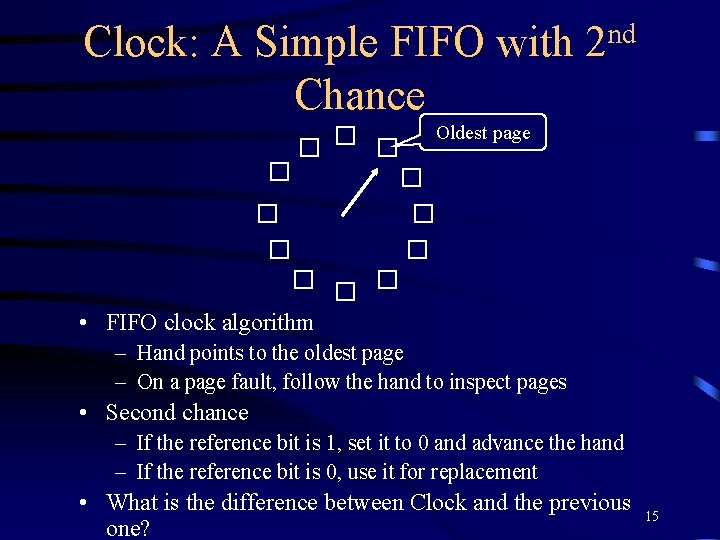

Clock: A Simple FIFO with 2nd Chance

[Figure: pages arranged in a circle; the clock hand points to the oldest page]

- FIFO clock algorithm
  - Hand points to the oldest page
  - On a page fault, follow the hand to inspect pages
- Second chance
  - If the reference bit is 1, set it to 0 and advance the hand
  - If the reference bit is 0, use it for replacement
- What is the difference between Clock and the previous one?
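A minimal sketch of the clock, assuming a fixed array of frames and a reference bit that is set whenever a page is accessed, including the access that loads it. The class name `Clock` and its fields are hypothetical. One way to read the question on the slide is that the policy matches FIFO with second chance and only the data structure changes: pages stay in place and the hand moves, so nothing has to be relinked.

```python
class Clock:
    """Clock replacement over a fixed array of frames (illustrative sketch)."""

    def __init__(self, num_frames):
        self.pages = [None] * num_frames  # page held by each frame
        self.ref = [0] * num_frames       # reference bit per frame
        self.hand = 0                     # points at the oldest page

    def access(self, page):
        """Return True if the access faulted."""
        if page in self.pages:
            self.ref[self.pages.index(page)] = 1
            return False
        # Page fault: follow the hand, giving second chances.
        while self.pages[self.hand] is not None and self.ref[self.hand] == 1:
            self.ref[self.hand] = 0
            self.hand = (self.hand + 1) % len(self.pages)
        self.pages[self.hand] = page      # replace (or fill an empty frame)
        self.ref[self.hand] = 1           # assumption: the loading access sets the bit
        self.hand = (self.hand + 1) % len(self.pages)
        return True


clock = Clock(3)
for p in [1, 2, 3, 4, 2, 5]:
    clock.access(p)
print(clock.pages)  # [4, 2, 5]
```

In the run above, page 2 is referenced again before the second eviction, so the hand skips it and page 3 is replaced instead.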

Enhanced FIFO with 2nd-Chance Algorithm

- Same as the basic FIFO with 2nd chance, except that this method considers both the reference bit and the modified bit
  - (0, 0): neither recently used nor modified
  - (0, 1): not recently used but modified
  - (1, 0): recently used but clean
  - (1, 1): recently used and modified
- Pros
  - Avoid write back
- Cons
  - More complicated
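The slide does not spell out the scan order, so the sketch below assumes one common textbook formulation: sweep once looking for a (0, 0) page, then sweep again looking for a (0, 1) page while clearing reference bits, and repeat. The function name `enhanced_victim` and the dictionary layout of each frame are hypothetical.

```python
def enhanced_victim(frames, hand):
    """Return the index of the frame to replace.

    frames: list of dicts with integer "ref" and "mod" bits (mutated).
    hand:   index where the scan starts.
    """
    n = len(frames)
    for _ in range(2):
        # Pass 1: prefer a page that is neither recently used nor modified.
        for k in range(n):
            i = (hand + k) % n
            if (frames[i]["ref"], frames[i]["mod"]) == (0, 0):
                return i
        # Pass 2: accept a not-recently-used dirty page; clear ref bits as we go.
        for k in range(n):
            i = (hand + k) % n
            if (frames[i]["ref"], frames[i]["mod"]) == (0, 1):
                return i
            frames[i]["ref"] = 0
    raise AssertionError("unreachable when frames is non-empty")
```

After one full round every reference bit is 0, so the second round is guaranteed to find a victim. Preferring clean pages is what avoids the write-back.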

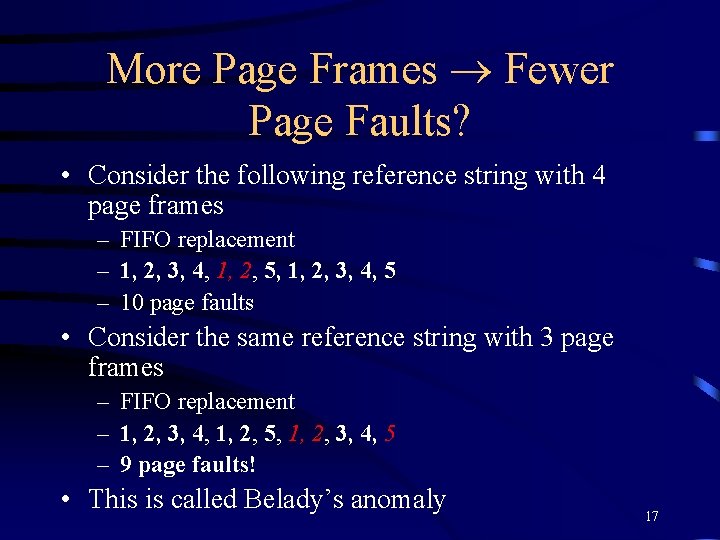

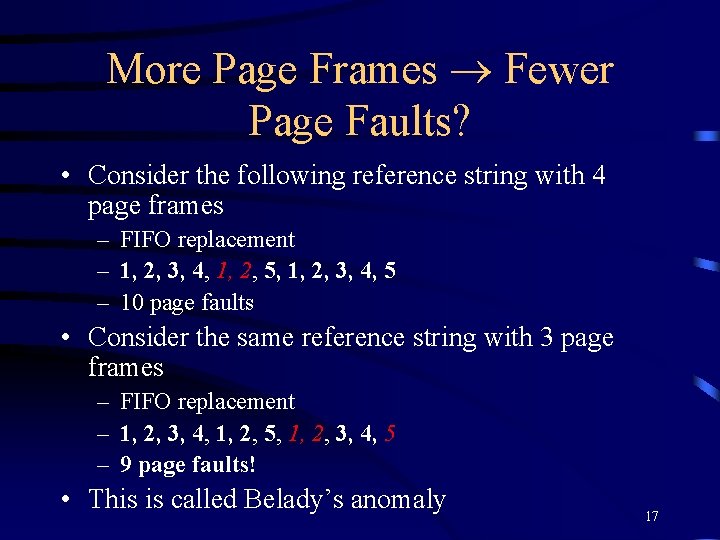

More Page Frames, Fewer Page Faults?

- Consider the following reference string with 4 page frames
  - FIFO replacement
  - 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5
  - 10 page faults
- Consider the same reference string with 3 page frames
  - FIFO replacement
  - 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5
  - 9 page faults!
- This is called Belady's anomaly
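The two fault counts can be reproduced with a small FIFO simulator. The function name `fifo_faults` is hypothetical; the reference string is the one from the slide.

```python
from collections import deque


def fifo_faults(refs, num_frames):
    """Count page faults under FIFO replacement."""
    frames = deque()              # oldest page on the left
    faults = 0
    for page in refs:
        if page in frames:
            continue              # hit: FIFO order is not updated
        faults += 1
        if len(frames) == num_frames:
            frames.popleft()      # throw out the oldest page
        frames.append(page)
    return faults


refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(fifo_faults(refs, 4))  # 10
print(fifo_faults(refs, 3))  # 9
```

Adding a frame makes FIFO worse on this string because the set of pages resident with 3 frames is not always a subset of the set resident with 4 frames.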

Least Recently Used (LRU)

- Algorithm
  - Replace the page that hasn't been used for the longest time
- Question
  - What hardware mechanisms are required to implement LRU?

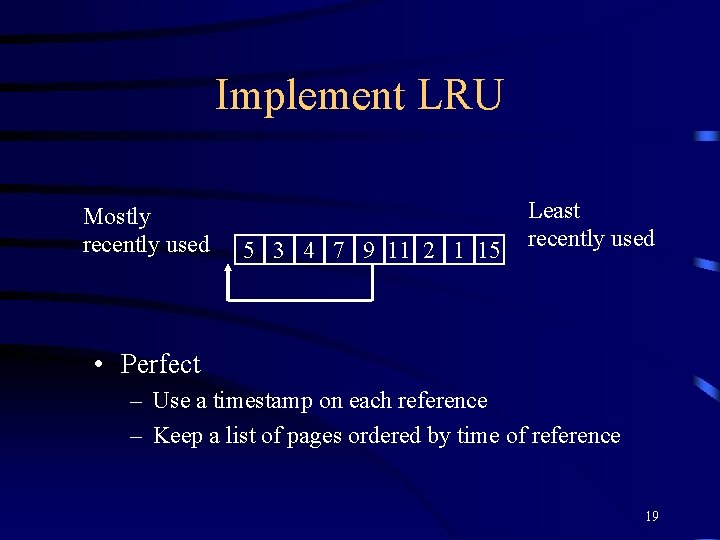

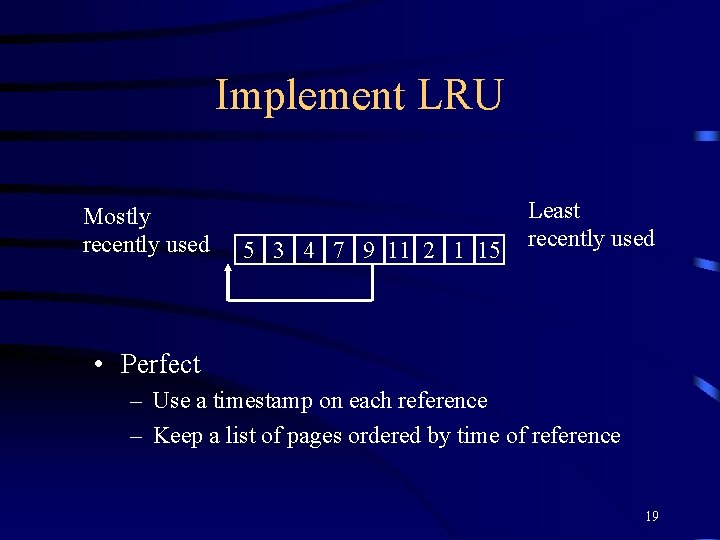

Implement LRU

[Figure: list from "Most recently used" to "Least recently used": 5, 3, 4, 7, 9, 11, 2, 1, 15]

- Perfect
  - Use a timestamp on each reference
  - Keep a list of pages ordered by time of reference
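In software, the ordered list can be modeled with `collections.OrderedDict`, which keeps keys in insertion order and can move a key to the end on each reference. This is a sketch for counting faults on a trace, not a claim about how hardware would do it; the function name `lru_faults` is hypothetical, and the trace reuses the reference string from the Belady's anomaly slide.

```python
from collections import OrderedDict


def lru_faults(refs, num_frames):
    """Count page faults under perfect LRU."""
    frames = OrderedDict()            # least recently used page first
    faults = 0
    for page in refs:
        if page in frames:
            frames.move_to_end(page)  # every reference updates the order
            continue
        faults += 1
        if len(frames) == num_frames:
            frames.popitem(last=False)  # evict the least recently used page
        frames[page] = True
    return faults


refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(lru_faults(refs, 3))  # 10
print(lru_faults(refs, 4))  # 8
```

Note that the list is updated on every hit, not only on faults; that per-reference bookkeeping is what makes perfect LRU expensive.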

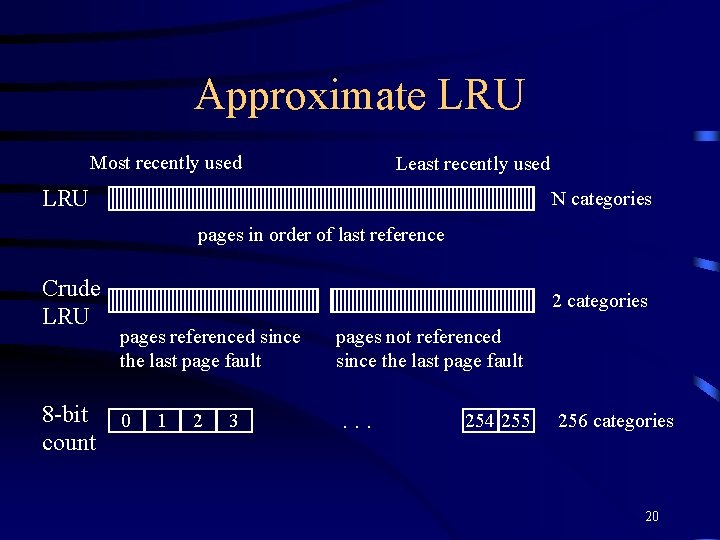

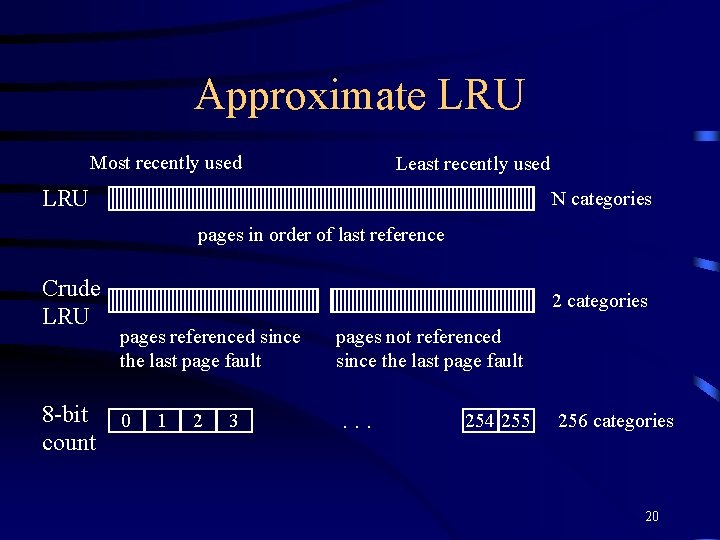

Approximate LRU

[Figure: spectrum from "Most recently used" to "Least recently used"]

- LRU: N categories
  - Pages in order of last reference
- Crude LRU: 2 categories
  - Pages referenced since the last page fault
  - Pages not referenced since the last page fault
- 8-bit count: 256 categories
  - 0, 1, 2, 3, ..., 254, 255

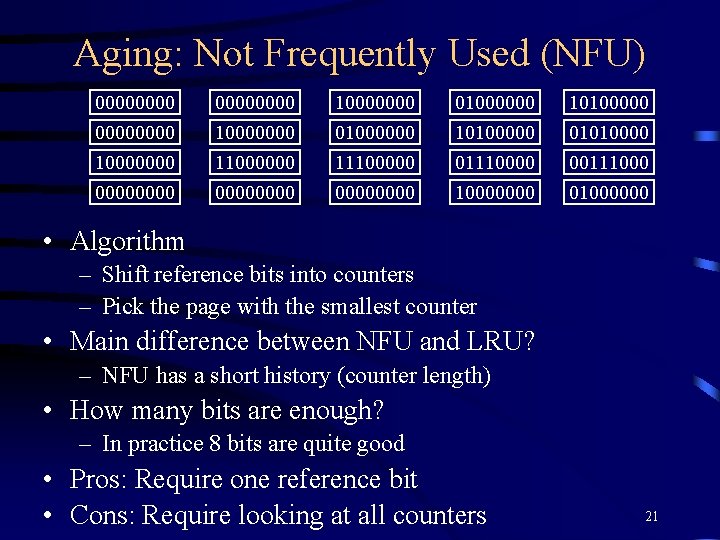

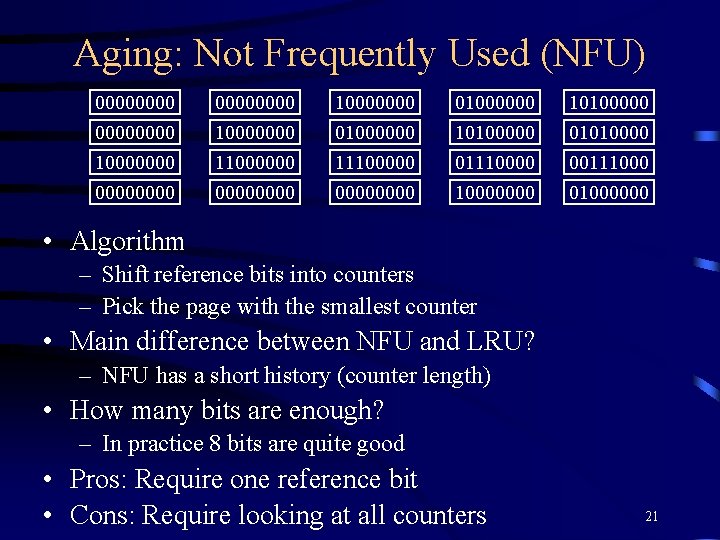

Aging: Not Frequently Used (NFU)

[Figure: 8-bit aging counters for several pages over successive intervals, e.g. 00000000, 10000000, 01000000, 10100000]

- Algorithm
  - Shift reference bits into counters
  - Pick the page with the smallest counter
- Main difference between NFU and LRU?
  - NFU has a short history (counter length)
- How many bits are enough?
  - In practice 8 bits are quite good
- Pros: requires only one reference bit per page
- Cons: requires looking at all counters
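The shift step can be sketched as follows, assuming counters and reference bits are kept in dictionaries keyed by page and that the reference bit is shifted in at the most significant end. The names `age_tick` and `aging_victim` are hypothetical.

```python
def age_tick(counters, ref_bits, width=8):
    """Shift each counter right by one, insert the reference bit on the left,
    then clear the reference bit for the next interval."""
    for page in counters:
        bit = ref_bits.get(page, 0)
        counters[page] = (counters[page] >> 1) | (bit << (width - 1))
        ref_bits[page] = 0


def aging_victim(counters):
    """Pick the page with the smallest counter (requires scanning all of them)."""
    return min(counters, key=counters.get)


counters = {"a": 0, "b": 0}
for a_bit, b_bit in [(1, 0), (0, 1), (1, 0)]:
    age_tick(counters, {"a": a_bit, "b": b_bit})
print(format(counters["a"], "08b"))  # 10100000
print(format(counters["b"], "08b"))  # 01000000
print(aging_victim(counters))        # b
```

A reference older than `width` intervals is shifted out entirely, which is the "short history" that separates this scheme from true LRU.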

Where Do We Get Storage?

- 32-bit VA to 32-bit PA: no space, right?
  - Offset within page is the same
- No need to store offset
  - 4 KB page = 12 bits of offset
  - Those 12 bits are "free" in PTE
- Page # + other info <= 32 bits
  - Makes storing info easy
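The bit budget can be illustrated by packing a 20-bit frame number and up to 12 bits of bookkeeping into one 32-bit word. The flag names and bit positions below are hypothetical and do not correspond to any particular architecture's PTE layout.

```python
PAGE_SHIFT = 12                        # 4 KB page -> 12 offset bits
LOW_MASK = (1 << PAGE_SHIFT) - 1       # 0xFFF

# Hypothetical flag assignments within the 12 "free" bits.
PTE_VALID = 0x1
PTE_REFERENCED = 0x2
PTE_DIRTY = 0x4


def make_pte(frame_number, flags):
    """Pack a 20-bit frame number and 12 bits of flags into 32 bits."""
    return (frame_number << PAGE_SHIFT) | (flags & LOW_MASK)


def translate(pte, vaddr):
    """Physical address: frame number from the PTE, offset from the VA."""
    frame_number = pte >> PAGE_SHIFT
    return (frame_number << PAGE_SHIFT) | (vaddr & LOW_MASK)


pte = make_pte(0xABCDE, PTE_VALID | PTE_DIRTY)
print(hex(pte))                          # 0xabcde005
print(hex(translate(pte, 0x12345678)))   # 0xabcde678
```

The offset never passes through the page table, so the low 12 bits of each entry are available for the reference and dirty bits that the replacement algorithms rely on.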